[table]capposition=top \newfloatcommandcapbtabboxtable[][\FBwidth]

RIM: Reliable Influence-based Active Learning

on Graphs

Abstract

Message passing is the core of most graph models such as Graph Convolutional Network (GCN) and Label Propagation (LP), which usually require a large number of clean labeled data to smooth out the neighborhood over the graph. However, the labeling process can be tedious, costly, and error-prone in practice. In this paper, we propose to unify active learning (AL) and message passing towards minimizing labeling costs, e.g., making use of few and unreliable labels that can be obtained cheaply. We make two contributions towards that end. First, we open up a perspective by drawing a connection between AL enforcing message passing and social influence maximization, ensuring that the selected samples effectively improve the model performance. Second, we propose an extension to the influence model that incorporates an explicit quality factor to model label noise. In this way, we derive a fundamentally new AL selection criterion for GCN and LP–reliable influence maximization (RIM)–by considering quantity and quality of influence simultaneously. Empirical studies on public datasets show that RIM significantly outperforms current AL methods in terms of accuracy and efficiency.

1 Introduction

Graphs are ubiquitous in the real world, such as social, academic, recommendation, and biological networks [43, 34, 35, 11, 32]. Unlike the independent and identically distributed (i.i.d) data, nodes are connected by edges in the graph. Due to the ability to capture the graph information, message passing is the core of many existing graph models assuming that labels and features vary smoothly over the edges of the graph. Particularly in Graph Convolutional Neural Network (GCN) [18], the feature of each node is propagated along edges and transformed through neural networks. In Label Propagation (LP) [28], node labels are propagated and aggregated along edges in the graph.

The message passing typically requires a large amount of labeled data to achieve satisfactory performance. However, labeling data, be it by specialists or crowd-sourcing, often consumes too much time and money. The process is also tedious and error-prone. As a result, it is desirable to achieve good classification results with labeled data that is both few and unreliable. Active Learning (AL) [1] is a promising strategy to tackle this challenge, which minimizes the labeling cost by prioritizing the selection of data in order to improve the model performance as much as possible. Unfortunately, conventional AL methods [3, 9, 22, 46, 23, 31] treat message passing and AL independently without explicitly considering their impact on each other. In this paper, we advocate that a better AL method should unify node selection and message passing towards minimizing labeling cost, and we make two contributions towards that end.

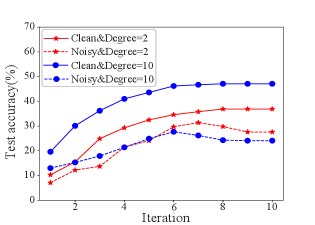

The first contribution is that we quantify node influence by how much the initial feature/label of label node influences the output feature/label of node in GCN/LP, and then connect AL with influence maximization, e.g., the problem of finding a small set of seed nodes in a network that maximizes the spread of influence. To demonstrate this idea, we randomly select different sets of labeled nodes under the clean label and train a 2-layer GCN with a different labeled set on the Cora dataset [18]. For LP, we select two sets with the average node degree of 2 and 10. As shown in Figure 1, the model performance in both GCN/LP tends to increase along with the receptive field/node degree under the clean label, implying the potential gain of increasing the node influence.

Note that in real life, both humans and automated systems are prone to mistakes. To examine the impact of label noise, we set the label accuracy to 50%, and Figure 1(a) and Figure 1(b) show that the test accuracy could even drop with the increase of node influence under the noisy label. This is because the noise of labels will also be widely propagated with node influence increasing, thus diminishing the benefit of influence maximization. Therefore, our second contribution is that we further propose to maximize the reliable influence spread when label noise is taken into consideration. Specifically, each node is associated with a new parameter called the quality factor, indicating the probability that the label given by the oracle is correct. We recursively infer the quality of newly selected nodes based on the smoothing features/labels of previously selected nodes across the graph’s edges, i.e., nodes that share similar features or graph structure are likely to have the same label.

Based on the above insights, we propose a fundamentally new AL selection criterion for GCN and LP–reliable influence maximization (RIM)–by considering both quantity and quality of influence simultaneously. Under a high-quality factor, we enforce the influence of selected label nodes for large overall reaches, while under a low-quality factor, we make mistake penalization to limit the selected node influence. RIM also maintains some nice properties such as submodularity, which allows a greedy approximation algorithm for maximizing reliable influence to reach an approximation ratio of compared with the optimum. Empirical studies on public datasets demonstrate that RIM significantly outperforms the state-of-the-art methods GPA [14] by 2.2%-5.1% in terms of predictive accuracy when the labeling accuracy is 70%, even if it is enhanced with the anti-noise mechanism PTA [7]. Furthermore, in terms of efficiency, RIM achieves up to 4670× and 18652× end-to-end runtime speedups compared to GPA in GPU and CPU, respectively.

In summary, the core contributions of this paper are 1) We open up a novel perspective for efficient and effective AL for GCN and LP by enforcing the feature/label influence with a connection to social influence maximization [20, 10, 4]; 2) To the best of our knowledge, we are the first to consider the influence quality in graph-based AL, and we propose a new method to estimate the influence quantity based on the feature/label smoothing; 3) We combine the influence quality and quantity in a unified RIM framework. The empirical study on both GCNs and LP demonstrates that RIM significantly outperforms the compared baselines in performance and efficiency.

2 Preliminary

2.1 Active Learning

Problem Formulation.

Let be the number of label classes and the ground-truth label for a node be a one-hot vector . Suppose that the entire node set is partitioned into training set (including both the labeled set and unlabeled set ), validation set and test set . Given a graph = (,) with nodes and edges, feature matrix in which , a labeling budget , and a loss function , the goal of an AL algorithm is to select a subset of nodes to label from a noisy oracle with the labeling accuracy , so that it can produce a model with the lowest loss on the test set:

| (1) |

where is the predictive label distribution of node . To measure the influence of feature or label propagation, we focus on being GCN or LP.

AL on graphs.

Both GCN and LP are representative semi-supervised graph models which can utilize additional unlabeled data to enhance the model learning [45], and lots of AL methods are specially designed for these two models. For example, both AGE [3] and ANRMAB [9] adopt uncertainty, density, and node degree when composing query strategies. LP-ME chooses the node that maximizes entropy for itself, while LP-MRE chooses the node such that the acquisition of its label reduces entropy most for the total graph [22].

2.2 Graph Models

LP.

Based on the intuitive assumption that locally connected nodes are likely to have the same label, LP iteratively propagates the label influence to distant nodes along the edges as follows:

| (2) |

where is the initial label matrix consisting of one-hot label indicator vectors, and denote the soft label matrix of the labeled nodes set and unlabeled nodes set in iteration respectively. According to [44], we iteratively set the nodes in back to their initial label since their labels are correct and should not be influenced by the unlabeled nodes.

GCN.

Each node in GCN iteratively propagates its feature influence to the adjacent nodes when predicting a label. Especially, each layer updates the node feature embedding in the graph by aggregating the features of neighboring nodes:

| (3) |

where and are the embeddings of layer and respectively. Specifically, (and ) is the original node feature. is used to aggregate feature vectors of adjacent nodes, where is the adjacent matrix of the graph and is the identity matrix. is the diagonal node degree matrix used to normalize , is a layer-specific trainable weight matrix and is the activation function.

2.3 Influence Maximization

The influence maximization (IM) problem in social networks aims to select nodes so that the number of nodes activated (or influenced) in the social networks is maximized [17]. That being said, given a graph , the formulation is as follows:

| (4) |

where is the set of nodes activated by the seed set under certain influence propagation models, such as Linear Threshold (LT) and Independent Cascade (IC) models [17]. The maximization of is NP-hard. However, if is nondecreasing and submodular with respect to , a greedy algorithm can provide an approximation guarantee of [25]. RIM is the first to connect social influence maximization with graph-based AL under a noisy oracle by defining reliable influence.

3 Reliable Influence Maximization

This section presents RIM, the first graph-based AL framework that considers both the influence quality and influence quantity. At each batch of node selection, RIM first measures the proposed reliable influence quantity and selects a batch of nodes that can maximize the number of activated nodes, and then it updates the influence quality for the next iteration. The above process is repeated until the labeling budget runs out. We will introduce each component of RIM in detail below.

3.1 Influence Propagation

We measure the feature/label influence of a node on by how much change in the input feature/label of affects the aggregated feature/label of after iterations propagation [29, 37].

Definition 1 (Feature Influence).

The feature influence score of node on node after k-step propagation is the L1-norm of the expected Jacobian matrix The normalized influence score is defined as

| (5) |

Definition 2 (Label Influence).

The label influence score of node on node after k-step propagation is the gradient of with respect to :

| (6) |

Given the -step feature/label propagation mechanism, the feature/label influence score captures the sum over probabilities of all possible paths of length from to , which is the probability that a random walk (or variants) starting at ends at after taking steps [37]. Thus, we could take them as the probability that propagates its feature/label to via random walks from the influence propagation perspective.

3.2 Influence Quality Estimation

Most AL works end up fully trusting the few available labels. However, both humans and automated systems are prone to mistakes (i.e., noisy oracles). Thus, we further estimate the label reliability and associate it with influence quality. The intuition behind our method is to exploit the assumption that labels and features vary smoothly over the edges of the graph; in other words, nodes that are close in feature space and graph structure are likely to have the same label. So we can recursively infer the newly selected node’s quality based on the features/labels of previously selected nodes.

Specifically, after iterations of propagation, we calculate the node similarity of node and in LP by measuring the cosine similarity in according to E.q. (2). Like SGC [33], we remove the activate function and the trainable weight and get the new feature matrix as: . For better efficiency in measuring the node similarity, we replace in E.q. (3) with the simplified since its calculation is model-free. Similar to LP, we get the node similarity in GCN by measuring the cosine similarity in . Larger means and are more similar after iterations of feature/label propagation and thus are more likely to have the same label.

Theorem 1 (Label Reliability).

Denote the number of classes as . And assume that the label is wrong with the probability of , and the wrong label is picked uniformly at random from the remaining classes. Given the labeled node and unlabeled node , supposing the oracle labels as and (the ground truth label for , the same notation also applies to ), the reliability of node according to is

| (7) |

where is the labeling accuracy, and measures the similarity between and .

Proof of Theorem 1 is in Appendix A.1. Intuitively, Theorem 1 shows that the label of node is more reliable if (1) The oracle is more proficient and thus has higher labeling accuracy . (2) The labeled node is more similar to and thus leads to larger .

Definition 3 (Influence Quality).

The influence quality of is recursively defined as

| (8) |

where is the label reliability of node with respect to node , and is the normalized label reliability score of node .

For each unlabeled node , we firstly find all labeled nodes which have the same label with and then get the final influence quality with weighted voting [19]. The source node should contribute more in measuring its influence on another node if its label is reliable, i.e., with higher .

3.3 Reliable Influence

Different from the original social influence method which only considers the influence magnitude [16, 38], we measure the influence quantity and get the reliable influence quantity by introducing the influence quality since it is common to have noisy oracles in the labeling process of Active Learning.

Definition 4 (Reliable Influence Quantity Score).

Given the influence quality , the reliable influence quantity score of node on node after k-step feature/label propagation is

| (9) |

where is for GCN and for LP.

The reliable influence quantity score is determined by: (1) The influence quality of the labeled influence source node . (2) The feature/label influence score of on after k-step propagation. From the perspective of random walk, it is harder to get a path from to with larger steps , and the influence score will gradually decay along with the propagation steps. As a result, node can get a highly reliable influence quality score from the labeled node if they are close neighbors and has high influence quality.

In a node classification problem, the node label is dominated by the maximum class in its predicted label distribution. Motivated by this, we assume an unlabeled node can be activated if and only if the maximum influence magnitude satisfies:

| (10) |

where is the maximum influence of on the node , and the threshold is a parameter which should be specially tuned for a given dataset.

Definition 5 (Activated Nodes).

Given a set of labeled seeds , the activated node set is a subset of nodes in that can be activated by :

| (11) |

The threshold means we consider an unlabeled node is influenced as long as there is a -step path from any labeled node, which is equal to measuring whether this unlabeled node can be involved in the entire training process of GCN or LP. In practice, we could choose this threshold value in the case of a tiny budget so that our goal is to involve unlabeled nodes as more as possible. However, if the budget is relatively large, we could choose a positive threshold , which enables the selection process to pay more attention to those weakly influenced nodes.

3.4 Node Selection and Model Training

In order to increase the feature/label influence (smoothness) effect on the graph, we should select nodes that can influence more unlabeled nodes. Due to the impact of the graph structure, the speed of expansion or, equivalently, growth of the influence can change dramatically given different sets of label nodes. This observation motivates us to address the graph data selection problem in the viewpoint of influence maximization defined below.

RIM Objective. Specifically, RIM adopts a reliable influence maximization objective:

| (12) |

By considering both the influence quality and quantity, RIM aims to find a subset of to label so that the number of activated nodes can be maximized.

Reliable Node Selection. Without losing generality, we consider a batch setting where nodes are selected in each iteration. For the first batch, when the initial labeled set , we ignore the influence quality term in E.q. 9 since there are no reference nodes for measuring the label quality from the oracle. For better efficiency in finding selected nodes in each batch, we set the influence quality of each node to the labeling accuracy during the node selection process and then simultaneously update these values according to E.q. 8 after the node selection process of this batch. For the node selection after the first batch, Algorithm 1 provides a sketch of our greedy selection method for the graph models, including both GCN and LP. Given the initial labeled set , query batch size , and labeling accuracy , we first select the node generating the maximum marginal gain (line 3), set its influence quality to the labeling accuracy (line 4), and then update the labeled set (line 5). After getting a batch of labeled nodes, we require the label from a noisy oracle (line 5) and then update their influence quality according to E.q. 8 (line 6).

Theorem 2.

The greedily selected batch node set is within a factor of of the optimal set for the objective of reliable influence maximization.

Proof of Theorem 2 is in Appendix A.2. The node selection strategy in both AGE and ANRMAB relies on the model prediction, while this process in RIM is model-free. Such a characteristic is helpful in practice since the oracle does not need to wait for the end of model training in each batch node selection of RIM. For example, if the model training dominates the end-to-end runtime, the main efficiency overhead of both AGE and ANRMAB is the model training.

Reliable Model Training. Nodes with larger influence quality in should contribute more to the training process, so we introduce the influence quality in the model training. For GCN, we use the weighted cross entropy loss as: , where is the influence quality of node . For each labeled node in LP, we just change its label to .

3.5 Comparison with Prior Works

Existing AL works [40, 8, 2, 5] regarding label noise mainly focus on two phases: noise detection and noise handling. Both the data-driven and model-based methods are designed for noise detection. The former firstly constructs a graph from the dataset and then utilizes graph properties, e.g., the homophily assumption [24] without corrupting graph structure [27], while the latter [40] measures the likelihood of noise by the predicted soft labels [36]. For noise handling, existing works primarily concentrate on three aspects: data correction, objective function modification, and optimization policy modification [13]. However, none of these AL methods is specially designed for graphs and fails to consider the influence quantity imposed by the graph structure, leading to sub-optimal performance.

Meanwhile, there are also works [42, 9, 41] concerning graph-based AL, and they are designed for GCN or LP. Nonetheless, they fail to take into account the noise brought by oracles. That being said, the quality of influence is overlooked. Thus these methods suffer from a lack of robustness, especially when the quantity of noise is substantial. To sum up, current AL methods are unable to consider the quantity and quality of influence simultaneously. To bridge this gap, RIM introduces the influence quality to tackle the noise from the oracle. Besides, the influence quantity has been deliberated to get more nodes involved in semi-supervised learning.

4 Experiments

We now verify the effectiveness of RIM on four real-world graphs. We aim to answer four questions. Q1: Compared with other state-of-the-art baselines, can RIM achieve better predictive accuracy? Q2: How does the influence quality and quantity influence RIM? Q3: Is RIM faster than the compared baselines in the end-to-end AL process? Q4: If RIM is more effective than the baselines, what should be the reason?

4.1 Experiment Setup

Datasets and Baselines. We use node classification tasks to evaluate RIM in both inductive and transductive settings [12] on three citation networks (i.e., Citeseer, Cora, and PubMed) [18] and one large social network (Reddit). The properties of these datasets are summarized in Appendix A.3. We compare RIM with the following baselines: (1) Random: Randomly select the nodes to query; (2) AGE [3]: Combine different query strategies linearly with time-sensitive parameters for GCN; (3)ANRMAB [9]: Adopt a multi-armed bandit mechanism for adaptive decision making to select nodes for GCNs; (4)GPA [14]: Jointly train on several source graphs and learn a transferable active learning policy which can directly generalize to unlabeled target graphs; (5)LP-MRE [22]: Select a node that can reduce the entropy most in LP; (6)LP-ME [22]: Select a node with maximum uncertainty score in LP.

None of these baselines has considered the label noise in AL. For a fair comparison, we have tried several anti-noise mechanisms [13, 27, 36] to fight against noise in GCN and LP, and finally choose PTA [7] to our baselines since it can get the best performance in most datasets. We name AGE enhanced with PTA as AGE+, so do other baselines. Similar to RIM, PTA assigns each labeled node a dynamic label reliability score for model training. PTA computes the label reliability based on the graph proximity and the similarity of the predicted label, while RIM does not consider the model prediction because it may be unreliable in the AL setting that only a few labeled nodes can be used in the initial node selection phases. Unlike PTA, RIM considers the labeling accuracy and combines it with the graph proximity in its influence quality estimation.

Implementations. We use OpenBox [21] for hyper-parameter tuning or follow the original papers to find the optimal hyperparameters for each method. To eliminate randomness, we repeat each method ten times and report the mean test accuracy. The implementation details are shown in Appendix A.4, and our code is available in the supplementary material.

4.2 Experimental Results

Performance on GCN. To answer Q1, We choose the labeling size as nodes per class labeling error rate ranging from to , and then we report the corresponding test accuracy of GCN in Figure 2. Compared to other baselines, RIM consistently outperforms the baselines as the labeling error rate grows. Moreover, even with the anti-noise method, i.e., PTA, the baselines still have a noticeable performance gap from RIM. To demonstrate the improvement of RIM in the noisy situation, we also provide the test accuracy with a labeling error rate set as 0.3. Table 2 shows that GPA+, AGE+, and ANRMAB+ outperform Random in most datasets, as they are specially designed for GCNs. However, RIM further boosts the performance by a significant margin. RIM improves the test accuracy of the best baseline, i.e., GPA+, by 3.5-5.1% on the three citation networks and 2.2% on the Reddit dataset.

| Model | Methods | Cora | Citeseer | PubMed | |

| GCN | Random | 65.6 | 56.3 | 63.3 | 75.2 |

| AGE+ | 72.5 | 61.1 | 68.3 | 77.6 | |

| ANRMAB+ | 72.4 | 63.4 | 68.9 | 77.2 | |

| GPA+ | 72.8 | 63.8 | 69.7 | 77.9 | |

| RIM | 77.9 | 67.5 | 73.2 | 80.1 | |

| LP | Random | 51.7 | 31.4 | 50.4 | 51.3 |

| LP-ME+ | 55.7 | 35 | 56.1 | 53.4 | |

| LP-MRE+ | 59.1 | 41.4 | 58.5 | 54.9 | |

| RIM | 62.4 | 46.7 | 65.5 | 58.5 |

| Method | Cora | Citeseer | PubMed | |||

| No RT | 75.1 | -2.8 | 63.9 | -3.6 | 71.4 | -1.8 |

| No RS | 74.8 | -3.1 | 63.4 | -4.1 | 70.5 | -2.7 |

| No RTS | 73.4 | -4.5 | 61.9 | -5.6 | 68.9 | -4.3 |

| RIM | 77.9 | – | 67.5 | – | 73.2 | – |

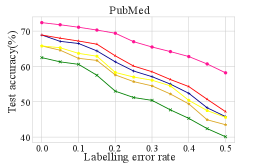

Performance on LP. Following the same settings in GCN, the result of LP is shown in Figure 3. Even if combined with the anti-noise method PTA, RIM consistently outperforms LP-MRE and LP-ME by a large margin as the labeling error rate grows. To demonstrate the improvement of RIM with the existence of noise, we also provide the test accuracy with labeling accuracy set as 0.7. Table 2 shows that both LP-ME+ and LP-MRE+ outperform Random, but RIM further boosts the performance by a significant margin. Especially, RIM improves test accuracy of the best baseline LP-MRE+ by 3.3-7.0% on the three citation networks and 3.6% on Reddit.

Ablation Study. RIM combines both influence quality and influence quantity. To answer Q2 and verify the necessity of each component, we evaluate RIM on GCN while disabling one component at a time when the labeling accuracy is 0.7. We evaluate RIM: (i) without the label reliability score served as the loss weight (called “No Reliable Training (RT)”); (ii) without the label reliability when selecting the node (called “No Reliable Selection (RS)”);(iii) without both reliable component(called “No Reliable Training and Selection (RTS)”). Table 2 displays the results of these three settings.

The influence quality in RIM contributes to both the reliable node selection and reliable training. First, the test accuracy will decrease in all three datasets if reliable training is ignored. For example, the performance gap is as large as 3.6% if reliable training is unused on Citeseer. Adopting reliable training can avoid the bad influence from the nodes with low label reliability. Besides, reliable node selection has a significant impact on model performance on all datasets, and it is more important than reliable training since removing the former will lead to a more substantial performance gap. For example, the gap on PubMed is 2.7%, which is higher than the other gap (1.8%). The more reliable the label is, the more reliable activated nodes we can use to train a GCN.

With the removal of reliable training and selection, the objective of RIM is to maximize the influence quantity (the total number of activated nodes). As shown in Table 2 and 2, RIM still exceeds the AGE+ method by a margin of 0.9%, 0.8%, and 0.6% on Cora, Citeseer, and PubMed, respectively, which verifies the effectiveness of maximizing the influence quantity.

Influence of labeling budget We study the performance of different AL methods under different labeling budgets in order to answer Q1. More concretely, the budget size ranges from to with labeling error rates being 0 and 0.3, respectively, and report the test accuracy of GCN. Figure 5 shows that with the budget size growing, RIM constantly outperforms other baselines, especially when there is more label noise, which shows the robustness of RIM.

Efficiency Comparison. Another key advantage of RIM is its high efficiency in node selection. To answer Q3, we report the end-to-end runtime of each method in Figure 5. In real-world AL settings, the end-to-end runtime of model-based methods (i.e., GPA, AGE, and ANRMAB) includes both labeling time and model training time. However, the labeling time is excluded from our measurement since the quality and proficiency of oracles dramatically influence it.

The left part in Figure 5 shows that RIM obtains a speedup of 22×, 30×, and 172× over ANRMAB on Cora, PubMed, and Reddit and a speedup of 4670×, 950×, and 177× over GPA on Cora, PubMed, and Reddit, respectively on GPUs. It is worth mentioning that, since GPA is a transfer learning method, the training time does not depend on the scale of the target dataset, and thus the runtime of GPA is very close on the three datasets (its details can be found in Appendix A.4). As RIM is model-free and does not rely on GPU, we also evaluate them on CPU environment, and the result is shown in the right part of Figure 5. The speedup grows on all these three datasets. For example, the speedup increases from 4670x to 18652x when the GPU device is unavailable on the Cora dataset.

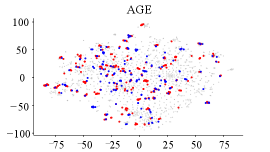

Interpretability. To answer Q4, we evaluate the distribution of the correctly activated nodes, incorrectly activated nodes, and inactivated nodes for AGE, RIM (No RS), and RIM when labeling accuracy is 0.7 for Cora in GCN. The result in Figure 6 shows that AGE has the fewest activated nodes, and nearly half of them are incorrectly activated. RIM (No RS) has the most activated nodes but also gets many nodes activated by incorrectly labeled nodes. Compared to these two methods, RIM has enough activated nodes, and most of them are activated by correctly labeled nodes (i.e., restrain the noisy propagation), which is why RIM performs better in node classification.

5 Conclusion

Both GCN and LP are representative graph models which rely on feature/label propagation. Efficient and effective data selection for the model training is demanding due to its inherent complexity, especially in the real world when the oracle provides noisy labels. In this paper, we propose RIM, a novel AL method that connects node selection with social influence maximization. RIM represents a critical step in this direction by showing the feasibility and the potential of such a connection. To accomplish this, we firstly introduce the concept of feature/label influence and then define their influence quality/quantity. To deal with the oracle noise, we propose a novel criterion to measure the influence quality based on the graph isomorphism. Finally, we connect the influence quality with the influence quantity and propose a new objective that maximizes the reliable influence quantity. Empirical studies on real-world graphs show that RIM outperforms competitive baselines by a large margin in terms of both model performance and efficiency. We are extending RIM to heterogeneous graphs for future work as the current measurement of influence quality cannot be directly used.

Broader Impact

Specifically, RIM can be employed in graph-related areas such as prediction on citation networks, social networks, chemical compounds, transaction graphs, road networks, etc. Each of the usage may bring a broad range of societal benefits. For example, predicting the malicious accounts on transaction networks can help identify criminal behaviors such as stealing money and money laundry. Prediction on road networks can help to avoid traffic overload and saving people’s time. RIM has significant technical-economic and social benefits because it can significantly shorten the labor time and labor intensity of oracles. However, as RIM requires oracles to label each selected node, it also faces the risk of information leakage. In this regard, we encourage researchers to understand the privacy concerns of RIM and investigate how to mitigate possible information leakage.

Acknowledgments and Disclosure of Funding

This work is supported by NSFC (No. 61832001, 6197200), Apple Scholars in AI/ML PhD fellowship, Beijing Academy of Artificial Intelligence (BAAI), PKU-Baidu Fund 2019BD006, and PKU-Tencent Joint Research Lab. Zhi Yang and Bin Cui are the corresponding authors.

References

- [1] C. C. Aggarwal, X. Kong, Q. Gu, J. Han, and S. Y. Philip. Active learning: A survey. In Data Classification: Algorithms and Applications, pages 571–605. CRC Press, 2014.

- [2] M.-R. Bouguelia, S. Nowaczyk, K. Santosh, and A. Verikas. Agreeing to disagree: active learning with noisy labels without crowdsourcing. International Journal of Machine Learning and Cybernetics, 9(8):1307–1319, 2018.

- [3] H. Cai, V. W. Zheng, and K. C.-C. Chang. Active learning for graph embedding. arXiv preprint arXiv:1705.05085, 2017.

- [4] Y. Chen and A. Krause. Near-optimal batch mode active learning and adaptive submodular optimization. In Proceedings of the 30th International Conference on Machine Learning, ICML 2013, Atlanta, GA, USA, 16-21 June 2013, pages 160–168, 2013.

- [5] G. Dasarathy, R. D. Nowak, and X. Zhu. S2: an efficient graph based active learning algorithm with application to nonparametric classification. In Proceedings of The 28th Conference on Learning Theory, COLT 2015, Paris, France, July 3-6, 2015, pages 503–522, 2015.

- [6] A. P. Dawid and A. M. Skene. Maximum likelihood estimation of observer error-rates using the em algorithm. Journal of the Royal Statistical Society: Series C (Applied Statistics), 28(1):20–28, 1979.

- [7] H. Dong, J. Chen, F. Feng, X. He, S. Bi, Z. Ding, and P. Cui. On the equivalence of decoupled graph convolution network and label propagation. In WWW ’21: The Web Conference 2021, Virtual Event / Ljubljana, Slovenia, April 19-23, 2021, pages 3651–3662, 2021.

- [8] J. Du and C. X. Ling. Active learning with human-like noisy oracle. In 2010 IEEE International Conference on Data Mining, pages 797–802. IEEE, 2010.

- [9] L. Gao, H. Yang, C. Zhou, J. Wu, S. Pan, and Y. Hu. Active discriminative network representation learning. In IJCAI International Joint Conference on Artificial Intelligence, 2018.

- [10] D. Golovin and A. Krause. Adaptive submodularity: Theory and applications in active learning and stochastic optimization. J. Artif. Intell. Res., 42:427–486, 2011.

- [11] Q. Guo, X. Qiu, X. Xue, and Z. Zhang. Syntax-guided text generation via graph neural network. Sci. China Inf. Sci., 64(5), 2021.

- [12] W. L. Hamilton, Z. Ying, and J. Leskovec. Inductive representation learning on large graphs. In Advances in Neural Information Processing Systems 30: Annual Conference on Neural Information Processing Systems 2017, December 4-9, 2017, Long Beach, CA, USA, pages 1024–1034, 2017.

- [13] B. Han, Q. Yao, T. Liu, G. Niu, I. W. Tsang, J. T. Kwok, and M. Sugiyama. A survey of label-noise representation learning: Past, present and future. arXiv preprint arXiv:2011.04406, 2020.

- [14] S. Hu, Z. Xiong, M. Qu, X. Yuan, M. Côté, Z. Liu, and J. Tang. Graph policy network for transferable active learning on graphs. In Advances in Neural Information Processing Systems 33: Annual Conference on Neural Information Processing Systems 2020, NeurIPS 2020, December 6-12, 2020, virtual, 2020.

- [15] W. Hu, M. Fey, M. Zitnik, Y. Dong, H. Ren, B. Liu, M. Catasta, and J. Leskovec. Open graph benchmark: Datasets for machine learning on graphs. arXiv preprint arXiv:2005.00687, 2020.

- [16] K. Jung, W. Heo, and W. Chen. Irie: Scalable and robust influence maximization in social networks. In 2012 IEEE 12th International Conference on Data Mining, pages 918–923. IEEE, 2012.

- [17] D. Kempe, J. Kleinberg, and É. Tardos. Maximizing the spread of influence through a social network. In Proceedings of the ninth ACM SIGKDD international conference on Knowledge discovery and data mining, pages 137–146, 2003.

- [18] T. N. Kipf and M. Welling. Semi-supervised classification with graph convolutional networks. In 5th International Conference on Learning Representations, ICLR 2017, Toulon, France, April 24-26, 2017, Conference Track Proceedings, 2017.

- [19] L. I. Kuncheva and J. J. R. Diez. A weighted voting framework for classifiers ensembles. Knowl. Inf. Syst., 38(2):259–275, 2014.

- [20] Y. Li, J. Fan, Y. Wang, and K.-L. Tan. Influence maximization on social graphs: A survey. IEEE Transactions on Knowledge and Data Engineering, 30(10):1852–1872, 2018.

- [21] Y. Li, Y. Shen, W. Zhang, Y. Chen, H. Jiang, M. Liu, J. Jiang, J. Gao, W. Wu, Z. Yang, C. Zhang, and B. Cui. Openbox: A generalized black-box optimization service. In KDD ’21: The 27th ACM SIGKDD Conference on Knowledge Discovery and Data Mining, Virtual Event, Singapore, August 14-18, 2021, pages 3209–3219, 2021.

- [22] J. Long, J. Yin, W. Zhao, and E. Zhu. Graph-based active learning based on label propagation. In International Conference on Modeling Decisions for Artificial Intelligence, pages 179–190. Springer, 2008.

- [23] Y. Ma, R. Garnett, and J. G. Schneider. -optimality for active learning on gaussian random fields. In Advances in Neural Information Processing Systems 26: 27th Annual Conference on Neural Information Processing Systems 2013. Proceedings of a meeting held December 5-8, 2013, Lake Tahoe, Nevada, United States, pages 2751–2759, 2013.

- [24] M. McPherson, L. Smith-Lovin, and J. M. Cook. Birds of a feather: Homophily in social networks. Annual review of sociology, 27(1):415–444, 2001.

- [25] G. L. Nemhauser, L. A. Wolsey, and M. L. Fisher. An analysis of approximations for maximizing submodular set functions - I. Math. Program., 14(1):265–294, 1978.

- [26] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga, et al. Pytorch: An imperative style, high-performance deep learning library. In Advances in Neural Information Processing Systems, pages 8024–8035, 2019.

- [27] S. Paul, S. Chandrasekaran, B. Manjunath, and A. K. Roy-Chowdhury. Exploiting context for robustness to label noise in active learning. arXiv preprint arXiv:2010.09066, 2020.

- [28] F. Wang and C. Zhang. Label propagation through linear neighborhoods. IEEE Transactions on Knowledge and Data Engineering, 20(1):55–67, 2007.

- [29] H. Wang and J. Leskovec. Unifying graph convolutional neural networks and label propagation. arXiv preprint arXiv:2002.06755, 2020.

- [30] K. Wang, Z. Shen, C. Huang, C.-H. Wu, Y. Dong, and A. Kanakia. Microsoft academic graph: When experts are not enough. Quantitative Science Studies, 1(1):396–413, 2020.

- [31] X. Wang, B. Liu, S. Cao, L. Jing, and J. Yu. Important sampling based active learning for imbalance classification. Sci. China Inf. Sci., 63(8):1–14, 2020.

- [32] Y. Wang, Y. Yuan, Y. Ma, and G. Wang. Time-dependent graphs: Definitions, applications, and algorithms. Data Sci. Eng., 4(4):352–366, 2019.

- [33] F. Wu, A. H. S. Jr., T. Zhang, C. Fifty, T. Yu, and K. Q. Weinberger. Simplifying graph convolutional networks. In Proceedings of the 36th International Conference on Machine Learning, ICML 2019, 9-15 June 2019, Long Beach, California, USA, pages 6861–6871, 2019.

- [34] S. Wu, F. Sun, W. Zhang, and B. Cui. Graph neural networks in recommender systems: a survey. arXiv preprint arXiv:2011.02260, 2020.

- [35] S. Wu, Y. Zhang, C. Gao, K. Bian, and B. Cui. Garg: Anonymous recommendation of point-of-interest in mobile networks by graph convolution network. Data Science and Engineering, 5(4):433–447, 2020.

- [36] B. Xu, X. Zhao, and Q. Kong. Using active learning to improve distantly supervised entity typing in multi-source knowledge bases. In CCF International Conference on Natural Language Processing and Chinese Computing, pages 219–231. Springer, 2020.

- [37] K. Xu, C. Li, Y. Tian, T. Sonobe, K. Kawarabayashi, and S. Jegelka. Representation learning on graphs with jumping knowledge networks. In Proceedings of the 35th International Conference on Machine Learning, ICML 2018, Stockholmsmässan, Stockholm, Sweden, July 10-15, 2018, pages 5449–5458, 2018.

- [38] Y. Yang, E. Chen, Q. Liu, B. Xiang, T. Xu, and S. A. Shad. On approximation of real-world influence spread. In Joint European conference on machine learning and knowledge discovery in databases, pages 548–564. Springer, 2012.

- [39] H. Zeng, H. Zhou, A. Srivastava, R. Kannan, and V. K. Prasanna. Graphsaint: Graph sampling based inductive learning method. In 8th International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, April 26-30, 2020. OpenReview.net, 2020.

- [40] C. Zhang and K. Chaudhuri. Active learning from weak and strong labelers. arXiv preprint arXiv:1510.02847, 2015.

- [41] W. Zhang, Y. Shen, Y. Li, L. Chen, Z. Yang, and B. Cui. ALG: fast and accurate active learning framework for graph convolutional networks. In SIGMOD ’21: International Conference on Management of Data, Virtual Event, China, June 20-25, 2021, pages 2366–2374, 2021.

- [42] W. Zhang, Z. Yang, Y. Wang, Y. Shen, Y. Li, L. Wang, and B. Cui. Grain: Improving data efficiency of graph neural networks via diversified influence maximization. Proc. VLDB Endow., 14(11):2473–2482, 2021.

- [43] Z. Zhang, P. Cui, and W. Zhu. Deep learning on graphs: A survey. IEEE Transactions on Knowledge and Data Engineering, 2020.

- [44] X. Zhu. Semi-supervised learning with graphs. Carnegie Mellon University, 2005.

- [45] X. Zhu and A. B. Goldberg. Introduction to semi-supervised learning. Synthesis lectures on artificial intelligence and machine learning, 3(1):1–130, 2009.

- [46] X. Zhu, J. Lafferty, and Z. Ghahramani. Combining active learning and semi-supervised learning using gaussian fields and harmonic functions. In ICML 2003 workshop on the continuum from labeled to unlabeled data in machine learning and data mining, volume 3, 2003.

Appendix A Appendix

A.1 Proof of Theorem 1

Theorem 3 (Label Reliability).

Denote the number of classes as . And assume that the label is wrong with the probability of , and the wrong label is picked uniformly at random from the remaining classes. Given the labelled node and unlabelled node , suppose the oracle labels as and (the ground truth label for , the same notation also applies to ), the reliability of node according to is

| (13) |

where is the labelling accuracy, and is the probability that and actually have the same label.

In practice, we can estimate with redundant votes across oracles (e.g., such as Amazon’s Mechanical Turk) by treating the majority vote as correct labels, like the Dawid-Skene algorithm [6].

Mathematically speaking, what we want is a conditional probability. To be more precise, we have already know that the label for given by the oracle is the same as the ground truth label of , and we want to calculate the probability of the event that the label for given by the oracle is the same as the ground truth label of . Formally, the reliability of node according to is

| (14) |

With the definition of conditional probability, we have

| (15) |

Then we shall calculate the numerator and denominator, respectively.

For denominator, with the law of total probability, we have

| (16) |

Then calculate these two terms separately.

| (17) | |||

The correctness of the second equal sign is due to the independence of the correctness of the oracle and the conformity of ground truth labels of two i.i.d. samples.

| (18) |

Add them and the denominator is solved,

| (19) |

Now calculate the numerator.

| (20) |

Then we have the final answer,

| (21) |

Therefore, the theorem follows.

A.2 Proof of Theorem 2

Definition 6 (Nondecreasing submodular).

Given a set , and the function , is nondecreasing submodular with respect to if and .

Previous work [25] shows a greedy algorithm can provide an approximation guarantee of if is nondecreasing and submodular with respect to .

Consider a batch setting with rounds where nodes are selected in each iteration (see Algorithm 1). Theorem 3.2 states that the greedy selection returns a -approximate to the RIM objective for each batch selection, i.e.,, where is the set of nodes selected in previous rounds.

We can prove is submodular as follows:

For every and , let and . Since , for any , , so we have:

Therefore, the Theorem follows.

A.3 Dataset description

| Dataset | #Nodes | #Features | #Edges | #Classes | #Train/Val/Test | Task type | Description |

| Cora | 2,708 | 1,433 | 5,429 | 7 | 1,208/500/1,000 | Transductive | citation network |

| Citeseer | 3,327 | 3,703 | 4,732 | 6 | 1,827/500/1,000 | Transductive | citation network |

| Pubmed | 19,717 | 500 | 44,338 | 3 | 18,217/500/1,000 | Transductive | citation network |

| 232,965 | 602 | 11,606,919 | 41 | 155,310/23,297/54,358 | Inductive | social network |

Cora, Citeseer, and Pubmed111https://github.com/tkipf/gcn/tree/master/gcn/data are three popular citation network datasets, and we follow the public training/validation/test split in GCN [18]. In these three networks, papers from different topics are considered as nodes, and the edges are citations among the papers. The node attributes are binary word vectors, and class labels are the topics papers belong to.

Reddit is a social network dataset derived from the community structure of numerous Reddit posts. It is a well-known inductive training dataset, and the training/validation/test split in our experiment is the same as that in GraphSAGE [12]. The public version provided by GraphSAINT222https://github.com/GraphSAINT/GraphSAINT [39] is used in our paper. For more specifications about the four aforementioned datasets, see Table 3.

ogbn-arxiv is a directed graph, representing the citation network among all Computer Science (CS) arXiv papers indexed by MAG. The training/validation/test split in our experiment is the same as the public version. The public version provided by OGB333https://ogb.stanford.edu/docs/nodeprop/#ogbn-arxivis used in our paper.

ogbn-papers100M is a paper citation dataset with 111 million papers indexed by MAG [30] in it. This dataset is currently the largest existing public node classification dataset and is much larger than others. We follow the official training/validation/test split and metric released in the official website444https://github.com/snap-stanford/ogb and official paper [15].

A.4 Implementation details

For Cora and Citeseer, the threshold is chosen as 0.05, while for PubMed and Reddit, the threshold is chosen as 0.005.

In terms of GPA [14], so as to obtain its full performance, the pre-trained model released by its authors on Github is adopted. More precisely, for Cora, we choose the model pre-trained on PubMed and Citeseer; for PubMed, we choose the model pre-trained on Cora and Citeseer; for Citeseer and Reddit, we choose the model pre-trained on Cora and PubMed. Other hyper-parameters are all consistent with the released code555https://github.com/zwt233/RIM.

When it comes to AGE [3] and ANRMAB [9], in order to obtain well-trained models and guarantee that the model-based selection criteria employed by them run well, GCN is trained for 200 epochs in each node selection iteration. For LP [28], the number of propagation iterations is set to 10. AGE is implemented with its open-source version and ANRMAB in accordance with its original paper.

In addition, (i.e., the number of classes) nodes are chosen to be labeled in each iteration. As an instance, is chosen as 7 in Cora.

Efficiency measurement is carried out on each of the four datasets. Models are all trained for 2000 epochs to measure the end-to-end runtime. It is worth noting that the runtime of GPA on all four datasets is virtually identical, e.g., the end-to-end runtime with GPU on Cora, PubMed, Citeseer, and Reddit is 22,416s, 21,983s, 22,175s, and 22,319s, respectively, which can be justified by the fact that the RL model of GPA is trained on small datasets, whereas its time complexity is irrelevant to the scale of datasets.

The experiments are conducted on an Ubuntu 16.04 system with Intel(R) Xeon(R) CPU E5-2650 v4 @ 2.20GHz, 4 NVIDIA GeForce GTX 1080 Ti GPUs and 256 GB DRAM. All the experiments are implemented in Python 3.6 with Pytorch 1.7.1 [26] on CUDA 10.1.

A.5 Experiments on OGB datasets

To verify the effectiveness of RIM on large graphs, we add the experiments on ogbn-arxiv and ogbn-papers100M. Due to the large memory cost, GCN cannot be implemented on ogbn-papers100M in a single machine, thus we use the simplified GCN [33] to replace the original GCN here [18].

| Model | Methods | ogbn-arxiv | ogbn-paper100M |

| SGC | Random | 47.7 | 44.6 |

| AGE+ | 53.9 | OOT | |

| ANRMAB+ | 54.1 | OOT | |

| GPA+ | 56.3 | OOT | |

| RIM | 60.8 | 48.7 | |

| LP | Random | 42.6 | 39.1 |

| LP-ME+ | 47.2 | 39.9 | |

| LP-MRE+ | 51.3 | OOT | |

| RIM | 54.9 | 44.3 |

The experimental results in Table 4 shows that RIM has better performance and robustness than other baselines in these two datasets. Note that it takes more than one week for model-based baselines to finish the AL process on the large ogbn-papers100M, and we mark these methods as out-of-time(OOT).