Robot Deformable Object Manipulation via NMPC-generated Demonstrations in Deep Reinforcement Learning

Abstract

In this work, we conducted research on deformable object manipulation by robots based on demonstration-enhanced reinforcement learning (RL). To improve the learning efficiency of RL, we enhanced the utilization of demonstration data from multiple aspects and proposed the HGCR-DDPG algorithm. It uses a novel high-dimensional fuzzy approach for grasping-point selection, a refined behavior-cloning method to enhance data-driven learning in Rainbow-DDPG, and a sequential policy-learning strategy. Compared to the baseline algorithm (Rainbow-DDPG), our proposed HGCR-DDPG achieved 2.01 times the global average reward and reduced the global average standard deviation to 45% of that of the baseline algorithm. To reduce the human labor cost of demonstration collection, we proposed a low-cost demonstration collection method based on Nonlinear Model Predictive Control (NMPC). Simulation experiment results show that demonstrations collected through NMPC can be used to train HGCR-DDPG, achieving comparable results to those obtained with human demonstrations. To validate the feasibility of our proposed methods in real-world environments, we conducted physical experiments involving deformable object manipulation. We manipulated fabric to perform three tasks: diagonal folding, central axis folding, and flattening. The experimental results demonstrate that our proposed method achieved success rates of 83.3%, 80%, and 100% for these three tasks, respectively, validating the effectiveness of our approach. Compared to current large-model approaches for robot manipulation, the proposed algorithm is lightweight, requires fewer computational resources, and offers task-specific customization and efficient adaptability for specific tasks.

Index Terms:

Deformable objects, robotic Manipulation, reinforcement Learning, demonstration, nonlinear model predictive controlI Introduction

Deformable objects play a critical role in numerous key industries and are widely used across various sectors [1]. Their manipulation is a common task in manufacturing [2, 3], medical surgery [4, 5], and service robotics [6, 7, 8]. However, manual handling of deformable objects can be time-consuming, labor-intensive, costly, and may not guarantee efficiency or accuracy. Consequently, robots are often employed to replace human operators for manipulating deformable objects, such as connecting cables on automated assembly lines [9, 10], cutting or suturing soft tissue during medical surgeries [11], and handling fabrics like towels and clothes in home service scenarios [12, 13]. Automating the manipulation of deformable objects with robots can significantly reduce labor costs while improving operational efficiency and precision. Therefore, robotic systems for manipulating deformable objects have attracted considerable attention and research [14].

Currently, the majority of robotic manipulation research focuses on rigid objects, where the deformation caused during grasping is negligible. In contrast, when dealing with deformable objects, robots face many new challenges, including high-dimensional state spaces, complex dynamics, and highly nonlinear physical properties [15]. To address these challenges, some researchers have established dynamical models for deformable objects and designed robotic manipulation strategies based on these models [16, 17]. Nonetheless, ensuring high accuracy in the dynamical model presents significant difficulties, and the derivation of model gradients can be highly complex [15, 18]. To avoid the complexity of dynamical model derivation, some researchers have turned to learning-based methods, particularly reinforcement learning (RL) and imitation learning (IL) [15]. These methods learn control policies from data using learning algorithms, without requiring explicit dynamical modeling. RL involves the agent continuously exploring the action space through trial and error, collecting interaction data with the environment to facilitate learning. Still, in real-world scenarios, the intricacy involved in handling deformable objects frequently results in inefficient learning processes that require extensive time and data, yielding less-than-optimal results. Therefore, it is crucial to set more effective states and action spaces to reduce task complexity based on domain-specific knowledge [19, 20]. With the advancement of deep learning technology, Deep Reinforcement Learning (DRL) is being used to tackle deformable object manipulation problems. Matas et al. [21] trained agents using DRL methods in simulation environments to fold clothes or place them on hangers. Researchers incorporated seven commonly used techniques in the DRL field into the Deep Deterministic Policy Gradient (DDPG) to develop the Rainbow-DDPG algorithm and validated the effectiveness of these techniques through ablation experiments. Additionally, they conducted deformable object manipulation experiments in real scenes through domain randomization. Jangir et al. [22] treated the coordinates of a series of key points on the fabric as the state space, introducing Behavioral Cloning (BC) and Hindsight Experience Replay (HER) to improve the DDPG algorithm for handling tasks involving dynamic manipulation of fabrics by robots. They also studied the impact of key point selection on RL performance. Despite making some progress in learning effective strategies for deformable object manipulation, DRL still faces challenges in terms of learning efficiency due to the inherent complexity of such manipulation tasks, requiring substantial amounts of data and computational resources for training.

Collecting human demonstration data and extracting operational skills using IL algorithms from these demonstrations has also received extensive research attention. Unlike the trial-and-error mechanism of RL, IL completes tasks through observation and imitation of expert behavior. This method has unique advantages in handling tasks that are too complex for RL or where clear rewards are difficult to define [23]. With the continuous development of deep learning technology and hardware infrastructure, recent research has been able to collect a large amount of human demonstration data and utilize deep learning techniques to extract manipulation skills from it [24, 25, 26]. Although extracting manipulation skills from a large amount of human demonstrations can yield decent results, the high manpower cost associated with this approach is often challenging and unsustainable. Some studies combine RL and IL, leveraging human demonstrations to enhance the learning efficiency of RL while also benefiting from RL’s ability for autonomous exploration [21, 22, 27]. Balaguer et al. [27] used the K-means clustering algorithm to categorize human demonstration actions into classes, testing the feasibility of each type of human demonstration action on the robot and selecting the feasible one with the highest reward as the starting point for agent exploration, thus streamlining the search space of RL algorithms. It is worth mentioning that the Rainbow-DDPG algorithm mentioned earlier [21] and the work by Jangir et al. [22] also incorporate human demonstration data to improve the learning efficiency of RL. Undeniably, the morphological diversity of deformable objects imposes higher requirements on the range of operational scenarios covered by demonstration data. To cover as wide a range of operational scenarios as possible, researchers typically need to collect a large amount of demonstration data. Existing studies [24, 25] use manually collected large-scale demonstration data for training purposes, which inevitably incurs significant human resource costs.

To address the challenges of low learning efficiency in RL and the difficulty in collecting IL demonstration data mentioned above, this article adopts a learning-based approach to tackle the problem of deformable object manipulation by robots. The aim is to improve the learning efficiency of algorithms and reduce learning costs. The research aims to optimize existing RL algorithms from two perspectives. First, by integrating IL, we innovatively design the HGCR-DDPG algorithm. It leverages a novel high-dimensional fuzzy-based approach to select grasping points, a refined behavior cloning-inspired method to boost data-driven learning in Rainbow-DDPG, and a sequential policy-learning strategy. This holistic design enhances RL learning efficiency. Second, we develop a low-cost demonstration data collection method using NMPC. It’s built upon a spring-mass model, enabling automated data generation and effective mapping to robot actions, thus reducing data collection costs. Through these, robots can learn manipulation skills with higher efficiency and lower cost, thus operating deformable objects more efficiently in practical applications. Specifically, the main contributions of this article are as follows:

-

•

An RL method enhanced by demonstration to increase the learning efficiency of RL with human demonstration data for training, which is named as HGCR-DDPG.

-

•

A demonstration data collection method in simulation environment based on Nonlinear Model Predictive Control (NMPC) to reduce the cost of demonstration data collection.

-

•

The feasibility of the proposed methods in simulation and real environments through experiments.

The article is divided into 6 sections, with the main research content and their relationships shown in Fig. 1. The specific content arrangement of each section is as follows: Section I is the introduction. Section II addresses the issue of low learning efficiency in RL algorithms by proposing HGCR-DDPG algorithm that combines a High-Dimensional Takagi-Sugeno-Kang (HTSK) fuzzy system, Generative Adversarial Behavior Cloning (GABC) techniques, Rainbow-DDPG, and Conditional Policy Learning (CPL). Section III addresses the issue of high cost of collecting demonstration data by exploring automated demonstration collection techniques and proposes a low-cost demonstration collection method based on NMPC. Section IV presents the simulation and physical experiment settings that validate the methods proposed in this article. Section V presents the experiment results. Section VI provides a summary of the entire article and outlooks future work.

II Problem Formulation

II-A Introduction to the Simulation Environment and the Physical Experiment Platform

A complex deformable object manipulation simulation environment was established using PyBullet [34]. Specifically, a UR5e robot model and a square cloth model with a side length of 0.24 meters were constructed. The cloth model consisted of detailed triangular meshes, with its mass uniformly distributed across the mesh vertices (i.e., nodes). The process of the robot grasping the cloth was simulated by establishing precise anchor connections between the corresponding nodes of the cloth model and the robot end-effector. During the simulation, the cloth model was affected only by gravity, friction with the table surface, and the traction force exerted by the robot. The simulation experiments were conducted on a high-performance server equipped with 48GB of RAM, an Intel E5-2660 v4 processor, and an NVIDIA 3090 graphics card.

The deformable object manipulation system in this article consists of sensor subsystems, decision and control subsystems, and robot motion subsystems, as illustrated in Fig. S1(a). The target object for the experiment is a red square fabric with a side length of 0.24 meters. The sensor subsystem is responsible for capturing image information of the fabric. The robot motion subsystem executes precise grasping and placing actions. The decision and control subsystem extract key features from visual information, utilize the algorithm to generate decision commands, and communicate with the robot motion subsystem via ROS. As depicted in Fig. S1(b), the Intel RealSense D435i camera is fixed in an “eye-to-hand” manner, the UR5e robotic arm is mounted on a dedicated workstation, and the RG2 gripper, serving as the execution end, is installed at the end of the robot.

II-B Problem Formulation for Robotic Manipulation of Deformable Objects under the DRL Framework

This section will provide a detailed introduction to the DRL model of this study from five aspects: task setting, state space, action space, state transition, and reward setting.

II-B1 Task Setting

For a square piece of fabric as a representative deformable object, these tasks are designed: folding along the diagonal, folding along the central axis, and flattening. In each task, the robot is allowed a maximum number of operations, denoted as , which varies among different tasks. At the beginning of each experimental round, the robot returns to its default initial pose, while the initial position of the fabric is set according to the requirements of the specific task and is subject to a certain degree of random noise. The details are as followed:

-

•

Folding along the diagonal: The specific objective of the operation is to achieve perfect alignment of one pair of diagonal endpoints of the fabric, while maintaining the distance between the other pair of diagonal endpoints exactly equal to the length of the fabric diagonal, and ensuring that the area of the fabric is equal to half of its area when fully unfolded.

-

•

Folding along the central axis: Before folding, the two sets of fabric endpoints should be symmetrically arranged relative to the folding axis. After folding, these two sets of endpoints should coincide, while ensuring that the distance between endpoints on the side of the folding axis remains consistent with before folding, and the area of the fabric is equal to half of its area when fully unfolded.

-

•

Flattening: When faced with heavily wrinkled fabric, the robot’s task is to flatten it to its maximum area. At the beginning of each experiment, the fabric is initialized by the robot applying random actions within the first 10 time steps. The robot moves a point on the fabric from its initial position to a placement point within a distance of 0.1 m to 0.2 m during each random step, ensuring sufficient disturbance to generate random wrinkles.

II-B2 State Space

Previous studies [21, 30] often directly fed the visual information of the scene as state inputs to DRL, which is intuitive but results in an overly complex state space. Some research [22] simplifies the state space in simulation by using the coordinates of deformable object feature points as state inputs, which is simple but difficult to directly transfer to the real world. This article adopts a compromise solution. Algorithms from OpenCV are utilized to preprocess the visual information of the scene, and the processed results are then used as state inputs for DRL. The state spaces of three different tasks are introduced as follows.

In both the along-diagonal and along-axis folding tasks, using Canny edge detection [31] and the Douglas-Peucker algorithm in OpenCV, the four right-angle corners of the fabric can be identified. During robot manipulation, considering the relationship between the fabric’s motion speed and the robot’s operation speed, this article, under the premise of relatively slow robot operation, employs the pyramid Lucas-Kanade optical flow tracking method to track the four corners. This article selects the positions of the four corners of the square fabric and the proportion of the fabric’s current area to its area when fully flattened as the state representation in these two folding tasks. This results in a 13-dimensional state space, as shown in Fig. 2(a). The symbols defining the state variables for the folding tasks are as follows:

| (1) |

where respectively represent the three-dimensional coordinates of the four corners of the fabric at time . All coordinates and vectors mentioned in this article are described with respect to the base coordinate system of the robot they are associated with.

In the flattening task, the fabric’s initial state is heavily wrinkled, making it extremely difficult to detect the right-angle corners of the fabric. We use Canny edge detection and the Douglas-Peucker algorithm in OpenCV to fit the contour of the fabric into an octagon, representing the eight points on the contour that best characterize the shape of the fabric. The coordinates of the eight endpoints, the coordinates of the center point of the fabric contour and the proportion of the fabric’s current area to its area when fully flattened are the state representation. Ultimately, the dimensionality of the state space used in the spreading task in this article is 28, as shown in Fig. 2(b). The symbols defining the state variables for the spreading task are as follows:

| (2) |

where respectively represent the three-dimensional coordinates of the eight fitted endpoints of the fabric at time , and represents the three-dimensional coordinates of the center point of the fabric contour at time .

II-B3 Action Space

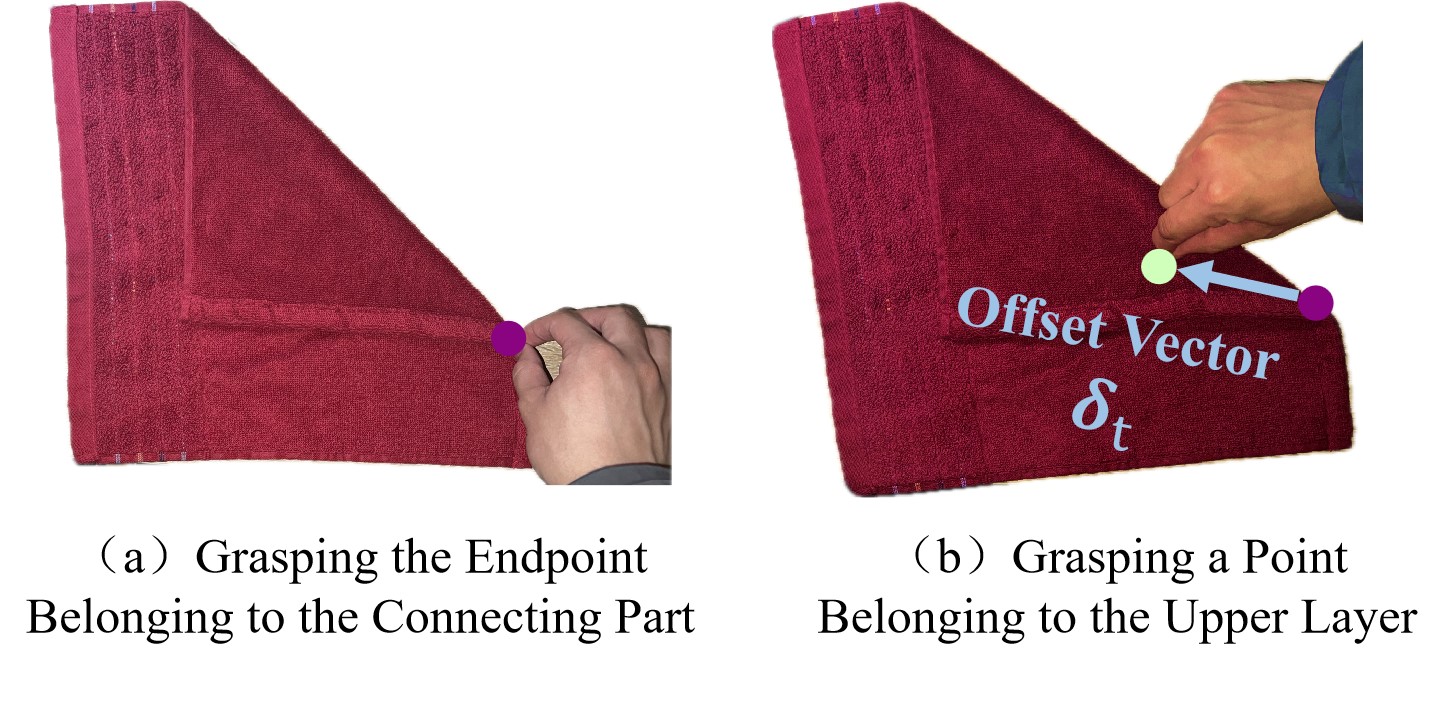

During the process of collecting human demonstrations, we found that humans only need to manipulate the four endpoints of the fabric to complete all folding tasks. In contrast, a strategy solely based on manipulating the endpoints of the fabric outline has minimal effect in flattening tasks. Fig. S1 explains this phenomenon: the fabric in a folded state is divided into upper layer (green), intermediate connecting parts (purple), and lower layer (orange), as shown in Fig. S2(a). Effective relative displacement between the upper and lower layers occurs only when manipulating points on the upper layer, as depicted in Fig. S2(b). Conversely, manipulating the lower layer or the connecting parts, as shown in Fig. S2(c), mostly results in overall movement of the fabric, which is not substantially helpful for flattening tasks.

This article designs a motion vector, as illustrated in Fig. 3. An offset vector is introduced based on the fabric’s edge endpoints to enable the robot to grasp points on the upper layer of the fabric. By adjusting , the robot can grasp any part of the fabric to manipulate it.

This article refines the operation process into three key steps: firstly, selecting one endpoint from the state variables as the base grasping point; secondly, generating an offset vector to accurately adjust the position of the grasping point; thirdly, determining the coordinates of the placement point to guide the robot to complete the entire action from grasping to placing. The representation of the action space is described in (3):

| (3) |

where represents the index of the endpoint selected at time in the state variables, represents the offset vector for time , and represents the coordinates of the placement point at time .

II-B4 State Transition

The state transition from time to time is controlled by as expressed in (3). Initially, the robot determines the coordinates of the corresponding endpoint in based on . Subsequently, by combining , the actual grasping coordinates are calculated, guiding the end effector to execute the grasping action at the position. Finally, the robot moves the end effector to the position, opens the gripper, and completes the placing operation.

II-B5 Reward Setting

In the diagonal folding task, as shown in Fig. 2, the target state of the fabric is when endpoint 1 and endpoint 3 coincide, and the distance between endpoint 2 and endpoint 4 equals the length of the diagonal, with the unfolded area of the fabric equal to half of its fully unfolded area. Assuming the side length of the square fabric is , we define the difference between the fabric state at time and the target state in the diagonal folding task as follows:

| (4) |

The objective of folding along the central axis is to align endpoint 1 with endpoint 2, endpoint 3 with endpoint 4, ensure that the distance between endpoint 1 and endpoint 3 equals the distance between endpoint 2 and endpoint 4, both equal to ls, and the fabric’s unfolded area equals half of its fully unfolded area. We define the gap between the fabric state at time and the target state in the folding along the central axis task as follows:

| (5) |

For in different folding tasks, we define their reward functions as follows:

| (6) |

where represents the completion status of the task, which becomes True when the maximum number of operations, , is reached. is the threshold to measure whether the fabric state has significantly changed. According to (6), the reward mechanism assigns rewards or penalties to the agent based on its immediate actions and states, following the following guidelines:

-

•

At the end of a round, a decisive reward of is given based on the error . The greater the error, the lower the decisive reward. The decisive reward is set to 100 when the is 0.

-

•

If the round is not over and the error significantly decreases, i.e., , indicating the agent is approaching the target, a positive reward of 3 is given to encourage similar behavior.

-

•

Conversely, if the round is not over and the error significantly increases, i.e., , indicating the agent deviates from the target, a negative penalty of -3 is applied to suppress this behavior.

In the flattening task, we directly define the reward function for the flattening task based on the ratio of the fabric’s unfolded area at time to its fully unfolded area:

| (7) |

The criteria followed here are similar to those described in (6), and will not be repeated here.

II-C Establishment and Analysis of the Spring-Mass Model

We introduce a low-cost demonstration collection method based on NMPC. A NMPC problem is built based on the spring-mass particle model, and optimal control strategies are obtained by solving this problem to accomplish specific tasks. It achieves automated generation of demonstration data and significantly cuts data collection costs. However, in complex states of deformable objects (e.g., heavily wrinkled fabric), extracting the state of all particles in real environments poses significant challenges, limiting the feasibility of NMPC in real environments. Therefore, the purpose of the NMPC method is to automatically collect demonstration data in simulation to assist RL training.

The spring-mass particle model adopted in this article is illustrated in Fig. 4(a). This model can be viewed as a system of particles connected by multiple springs, with the mass of the cloth evenly distributed among the particles. The spring-mass particle model established in this article consists of a set of particles denoted by and a set of springs denoted by . The set of neighbors of the -th particle, i.e., the set of particles connected to particle by springs, is defined as , and it is assumed that the neighbor set of each particle is fixed.

We use a square cloth, so , where is the number of particles on one edge of the cloth. The mass of each particle is denoted by , . The position of particle at time is represented by the three-dimensional coordinate vector . The stiffness coefficient of the springs usually depends on the physical properties of the material, while the natural length depends on the initial state of the cloth. We assume that all spring stiffness coefficients and natural lengths are equal, denoted as and , respectively. The spring connecting particle and particle is denoted by (or , which is equivalent). Thus, the neighbor set can be expressed as:

| (8) |

The particles are numbered from left to right and from top to bottom. Specifically, the particles in the first row are numbered from 1 to , the particles in the second row are numbered from to , and so on. Fig. 4(b) illustrates the case when . Particles are initially subjected to the force exerted by the springs, which depends on the relative positions of the particles. For any two particles and connected by a spring, the spring force at time can be expressed as:

| (9) |

where represents the actual distance between particles and at time , which can be calculated using the following equation:

| (10) |

The damping force can be expressed as follows:

| (11) |

where is the damping coefficient, is the velocity vector of particle at time , and , where are the velocity components of particle in the directions, respectively. Each particle is also influenced by gravity , external force , and damping force . In summary, the total force acting on particle at time can be expressed as:

| (12) |

We express the acceleration of particle at time as:

| (13) |

Furthermore, the particle’s velocity can be update as:

| (14) |

Next, we treat as a function of time . Then, the position of particle at time can be obtained by Second-order Taylor expanding at :

| (15) |

This article uses a small time step and sets the damping coefficient to a large positive value to ensure the stability of the model. In the following text, is abbreviated as to simplify the subscript. The update formula for particle position is shown as (16):

| (16) |

III Methodology

III-A HGCR-DDPG Algorithm

III-A1 Algorithm for Selecting Benchmark Grasping Points Based on the HTSK Fuzzy System

In this article, as long as a suitable final is chosen, selecting any reference can promote the task to some extent. Therefore, the selection of the fabric is more closely related to the application range of fuzzy sets. We use the state-action pairs , with the state as input and from the action as output, to construct an HTSK fuzzy system, denoted as , where represents its parameters. The input-output relationship of is represented as follows:

| (17) |

The training data for the selection strategy of the reference grasping point is sourced from the human demonstration dataset . Additionally, data with significant contributions to task progress are continuously supplemented during the interaction between the robot and the environment, denoted as , where is the size of the training dataset, represents the -dimensional state variables of the -th sample, is the index of the reference grasping point for the -th sample, which is also the label of the training dataset, and is the number of candidate reference grasping points. The system learns the mapping relationship between the states and the corresponding reference grasping points in the demonstration dataset, enabling it to predict appropriate reference grasping points under different states.

The primary improvement of HTSK over TSK is reflected in the saturation issues related to the dimensions of the data. In TSK fuzzy systems, the traditional softmax function is used to calculate the normalized firing levels of each fuzzy rule, as follows:

| (18) |

Here, decreases as the dimension of the input data increases, leading to the saturation of the softmax function [28]. In conventional TSK fuzzy systems, typically only the fuzzy rule with the highest receives a non-zero firing level . Consequently, as the data dimension increases, the distinctiveness of all values diminishes, and the classification performance of the TSK fuzzy system declines. To address this saturation issue, HTSK substitutes in the normalized firing levels of each fuzzy rule within the TSK system with its mean value , thereby allowing the normalization process to better accommodate high-dimensional data inputs. In the sixth and seventh layers of the HTSK net [29], based on the softmax function and the probability distribution, the final is selected as the output.

This article adopts the k-means clustering method to initialize which is the parameter of the Gaussian membership function, the cross-entropy loss function to measure the difference between the output of the fuzzy system and the true labels, and the Adam optimizer for gradient descent, with a learning rate of 0.04, a batch size of 64, and a weight decay of 1e-8.

III-A2 GABC-Improved Rainbow-DDPG Algorithm

In Rainbow-DDPG, BC is typically implemented by adding to the loss function of the Actor network, as shown in (19):

| (19) |

The definition of only takes effect when . However, training the Critic network to output accurate Q-values is a time-consuming process, which results in the ineffectiveness of in the early stages of training. Additionally, when the Critic network is fully trained, the replay buffer mainly contains real-time interaction data rather than demonstration data, reducing the probability of sampling demonstration data for training. Therefore, may not have a significant impact, and RL still requires considerable training time to achieve good policies.

We propose GABC to improve Rainbow-DDPG for generating and . We denote the current Actor network as , where represents its parameters. In each state , this network combines with environmental noise to output and , guiding the robot to perform fabric manipulation tasks. The input-output relationship of is represented as follows:

| (20) |

Its current Critic network is denoted as , where represents its parameters. In each state , this network outputs a Q-value through to evaluate the quality of action . Since the quality of and is closely related to the selection of , the input of not only includes and output by the Actor network but also includes output from demonstration data or the .

Assuming that during training, the sampled state-action data pairs are , where . This study denotes as , and sets . Then, in the framework of this article, can be redefined as follows:

| (21) |

To expedite the training of the Critic, GABC introduces a loss term called into the current Critic network’s loss function. Assuming , based on the fact that and the placement point in the human demonstration actions are significantly superior to the offset vector and placement point output by the current Actor network , guides the training of the Critic network by measuring the difference between the -values output by the current Critic network for the actions in the human demonstration data and the -values output for the actions by the current Actor network, under the same state . Specifically, this article sets a pre-training stage, during which, in the pre-training phase, when , is set to 0; otherwise, , as shown in (22):

| (22) |

After pre-training is completed, the Actor network has already acquired a certain policy, and its output actions may not significantly inferior to the actions in the human demonstration data. The introduction of may lead to the training of the Actor network getting stuck in local optima, so that the is removed.

The loss function for the improved Critic network is defined as follows:

| (23) |

where and are the weights of the 1-step and n-step TD loss functions, respectively. is the weight of , set to 1 during the pre-training phase and 0 in subsequent phases. and are similar to the 1-step and n-step TD loss functions [21], and the target functions and can be referenced from TD3 model [32]. Here, we define , , and as two target Critic networks and one target Actor network, with where is the step length of the n-step TD loss function, is the batch size, is the reward, and is the discount factor. Consequently, and are defined as follows:

| (24) |

| (25) |

| (26) |

| (27) |

During training, the introduction of causes the current Critic network to initially tend towards generating larger -values for actions from human demonstrations, the effect of in (21) becomes more pronounced, leading to a more thorough utilization of human demonstrations and thus accelerating the training of the Actor network. Additionally, the training of the current Actor network also depends on the -values output by the current Critic network . Consequently, a current Critic network capable of providing more precise -values will further accelerate the training of the current Actor network.

III-A3 CPL Learning Method

This article adopts the CPL training method illustrated in Fig. 5. At each state , CPL first utilizes the to select , and then, based on , selects and through .

CPL often faces the challenge of loss allocation [30]. It’s difficult to determine whether high rewards obtained for an action are attributed to the grasping policy or the offset vector and placement point selection policy . To address this, the following training approach is adopted. Firstly, each state-action pair is extracted from to form an initial training dataset tailored for the HTSK fuzzy system. Then, is used to train to get . Subsequently, with fixed, based on the to select , the improved Rainbow-DDPG algorithm with GABC enhancement is employed to train the offset vector and placement point selection policy , obtaining .

During the process, data is also collected to supplement the training dataset and continuously train the improved grasping policy parameters . Specifically, when the action executed by the robot at time significantly advances the progress of the task (in folding tasks, , in flattening tasks, ), is added to . After getting a certain amount of new data, is used to retrain the grasping policy to get new parameters .

Combining all the improvements introduced above, we proposed the HGCR-DDPG algorithm. The relevant pseudocode is detailed in Algorithm 1.

III-B Low-Cost Demonstration Collection Based on NMPC

In this article, the objective of NMPC control is to find an optimal control sequence within the prediction horizon to minimize the objective function . The system’s state consists of the coordinates of each particle , and the control inputs are the external forces applied to each particle , where , are the components of the external force in the directions, respectively. Consequently, the state transition equation for the spring-mass model can be expressed as:

| (28) |

where is the velocity of each particle, is the spring force applied to each particle, is the gravity acting on each particle, is the damping force applied to each particle, and is the mass of each particle.

The design of the loss function is based on the distances between particles. Specifically, for the three task objectives, each of which is specified by the distances between particles at time . Taking the particle ordering in Fig. 4 as an example, the target state is redefined as follows:

1. Folding along the diagonal: For any pair of particles and symmetrically positioned about the specified diagonal, in the target state, the distance between them should satisfy:

| (29) |

2. Folding along the central axis: For any pair of particles and symmetrically positioned about the specified central axis, in the target state, the distance between them should satisfy:

| (30) |

3. Flattening: For the particles and at the ends of the two diagonals of the cloth, and for the particles and , in the target state, the distances between them should satisfy:

| (31) |

where is the side length of the cloth in the fully flattened state.

For a given task objective, the loss function can be defined as:

| (32) |

where is the set of all pairs of particles to be considered, is the desired distance between particles and in the target state , is the weight factor used to adjust the relative importance of the differences in distances between different pairs of particles. For a specific task objective, the value of can be chosen based on (29) to (31).

The objective of NMPC is to minimize the cumulative loss within the prediction horizon. (28) is used to predict future states based on the initial state and control inputs:

| (33) |

where and represent the states at time steps and predicted based on the current state and a series of control inputs . Specifically, when , .

In summary, the NMPC in this article can be implemented by solving the following optimization problem in (34):

| (34) | ||||||

| subject to | ||||||

where represents the cumulative loss function over the entire prediction horizon . The term denotes the distance between the -th and -th particles calculated based on at the -th time step. denotes the set of state constraints in the robot’s workspace. We utilized the Interior Point OPTimizer (Ipopt), which is based on interior-point methods for nonlinear programming [33]. By solving the optimization problem described above, we can obtain the optimal control sequence that minimizes the loss function within the prediction horizon.

The optimal control sequence obtained from solving the NMPC problem defines the ideal external forces applied to each particle of the system at time . However, the robot can only apply force to a single particle at any given time. Therefore, to translate NMPC into a practically executable robot control strategy, must be mapped to the robot’s action space. The specific process is illustrated in Fig. 6, and the detailed explanation follows.

Firstly, the optimal control sequence is applied to the dynamic equations of the spring-mass particle model to predict the system’s state at the next time step:

| (35) |

Next, utilizing the PyBullet simulation environment, we obtain the current state vector of the system, from which we extract the coordinates of the model’s endpoints, denoted as , where is the number of endpoints in the state vector.

Subsequently, we analyze each endpoint , identify the nearest 10 particles and the neighboring particles of these identified particles to form the set . Therefore, for all endpoints, we construct a broader set .

By analyzing , we identify the maximum external force acting on all particles in , and determine the index of the endpoint nearest to particle in the state vector as the reference grasping point for the robot. We calculate the displacement vector between particle and endpoint as the grasping offset vector .

Finally, based on the predicted position information , we determine the ideal position of particle at the next time step and designate it as the placement point coordinate , completing the conversion from theoretical control quantities to actual robot motion commands.

In summary, for the optimal control quantity computed by NMPC, we transform it to the action space:

| (36) |

Additionally, due to potential simulation errors in the spring-mass particle model, we utilize the PyBullet simulation environment to correct the errors in the spring-mass particle model at each time step, as illustrated in Fig. S3. After obtaining from NMPC as in (36), it is executed in PyBullet to obtain the new state of the cloth, which is then used as the initial state for the next control cycle, thereby achieving precise updating of the cloth state.

We use to denote whether a demonstration episode has ended. The termination condition for an episode is for folding tasks or for flattening tasks. We collect episodes with relatively high rewards as demonstration data, resulting in the NMPC demonstration dataset , as shown in Algorithm 2.

IV Experimental Setup

IV-A Simulation Experiment Settings of the Improved HGCR-DDPG Algorithm with Human Demonstrations

As shown in Fig. S4, in simulation, humans guide the robot to perform grasping and placing actions by clicking on the grasp point and the placement point with a mouse, respectively. During this process, we identify the endpoint closest to in state , denote its coordinates as , and its index as , and calculate the offset vector as . Subsequently, we obtain the action , and organize it along with state, reward, and other information into a tuple . At the end of each demonstration, all tuples of the round are stored in a dedicated dataset to assist in training the DRL. During data collection, the end condition for rounds of both folding tasks is , and for flattening tasks, the end condition for rounds is .

In Table. I, we present the analysis results of human demonstration datasets for three different tasks (diagonal folding, axial folding, and flattening). For the diagonal folding task, the demonstrators achieve the highest average reward (93.662), the most stable performance (with a reward standard deviation of only 3.105), and the fewest rounds (1.000) to successfully complete the task. While for the axial folding task, the average reward is 87.741, the reward standard deviation is 3.624, and the number of rounds needed to complete the task is 2.868. These metrics indicate that although the quality of task execution remains relatively high, consistency and efficiency have slightly decreased compared to diagonal folding. In the flattening task, although the average reward (87.688) is comparable to that of axial folding, the standard deviation of the reward significantly increased to 5.572, and the number of steps required to complete the task surged to 8.291, indicating the high complexity of the flattening task.

|

|

|

|||||||

|---|---|---|---|---|---|---|---|---|---|

|

93.662 | 3.105 | 1.000 | ||||||

|

87.741 | 3.624 | 2.868 | ||||||

| Flattening | 87.688 | 5.572 | 8.291 |

Human demonstration data was collected to construct , which was used to enhance the training of HGCR-DDPG. This chapter systematically evaluated the effectiveness of three key technical improvements (HTSK, GABC, CPL) introduced in the HGCR-DDPG algorithm and their contributions to model performance through comparative experiments with multiple algorithms. These comparative algorithms include:

1. Rainbow-DDPG: As the baseline model for the experiment, Rainbow-DDPG utilizes the same neural network structure to implement , , and the target networks , and . These networks consist of an input layer, 50 hidden fully connected layers (each with 16 neurons), and an output layer. In this structure, the loss function is defined as (21).

The original Rainbow-DDPG does not use the HTSK fuzzy system but employs a neural network as . During the training process, a cross-entropy loss function is used as the BC loss function for , defined as follows:

| (37) |

where is the number of candidate endpoints. is the Kronecker delta function, which equals 1 when , otherwise it’s 0. is the probability corresponding to the -th endpoint in the output probability distribution .It’s assumed that the grasp point selected from is consistently superior to . The cross-entropy loss function is applied whenever the sampled data comes from ; otherwise, is set to 0. Note that in Rainbow-DDPG, the training strategy does not include CPL. This means is trained in the same way as the current Actor, and its output is not used as part of the input for .

2. Rainbow-DDPG + GABC: Integrates only the GABC into Rainbow-DDPG.

3. Rainbow-DDPG + CPL + HTSK: Incorporates the CPL into Rainbow-DDPG, using HTSK to select the grasp point.

4. Rainbow-DDPG + CPL + Random: Integrates the CPL into Rainbow-DDPG, employing a random sampling strategy to select the grasp point.

5. Rainbow-DDPG + CPL + Uniform: Combines the CPL with Rainbow-DDPG, utilizing a uniform sampling strategy to select the grasp point.

6. Rainbow-DDPG + GABC + CPL + Random: Merges both GABC and CPL into Rainbow-DDPG, employing a random sampling strategy to select the grasp point.

7. Rainbow-DDPG + GABC + CPL + Uniform: Extends Rainbow-DDPG by incorporating both GABC and CPL, selecting the grasp point using a uniform sampling strategy.

To evaluate the performance under different levels of difficulty, we designed two modes, simple and challenging, for each of the three tasks. In the simple mode, the maximum number of operations for each task is set to ; while in the challenging mode, this limit is halved to ( is 2 for fold along diagonal tasks, 4 for fold along axis tasks, and 10 for flatten tasks). Additionally, to investigate the specific impact of the amount of human demonstration data, the models were trained with human demonstration data from 5, 20, and 100 rounds, respectively.

During the training phase, we first performed 20 rounds of pre-training to optimize the Critic network using GABC technique. Subsequently, training proceeded to the regular phase, which consisted of 30 training epochs, with each epoch comprising 20 rounds of training and a batch size () of 64. After executing a set of actions (grasping and manipulation), the policy was updated times. The single interaction update counts () for policies in fold along diagonal tasks, fold along axis tasks, and flatten tasks were 80, 40, and 20, respectively.

At the end of each training epoch, we conducted a testing phase comprising 10 rounds. Specifically, this study initially tested the performance of the initial policy upon completing policy initialization and increased the testing frequency during the pre-training phase (testing every 5 rounds of training). Ultimately, we conducted 35 testing epochs. Furthermore, we conducted experiments with three different random seeds. At the end of the -th testing epoch (), for each seed (), we recorded the total reward obtained by the agent in a single round. Subsequently, the average reward for each seed was computed across the ten testing instances.

Based on the aforementioned processing steps, we define several key performance metrics:

1. The average reward per testing epoch is defined as the average of all values within the -th testing epoch. All reward curves presented in this article are plotted based on . The specific calculation formula is:

| (38) |

2. The average reward , which is the average of all values for .

3. The average standard deviation . Firstly, calculate the standard deviation for for , then calculate the average of all as .

4. Average Reward Ranking can be obtained by ranking all algorithms based on .

5. Average Standard Deviation Ranking can be obtained by ranking all algorithms based on . Lower rankings demonstrate higher stability.

IV-B Experiment Settings for Verifying the Effectiveness of the NMPC Demonstration Dataset

We constructed a point-mass spring-damper model, collected demonstration data, and selected the top 100 rounds with the highest final rewards for each task, forming . Table II provides a detailed analysis of the NMPC demonstration dataset for three specific tasks. Compared to Table I, the NMPC dataset exhibits similar average rewards and standard deviations but need more average number of steps to complete tasks, indicating that NMPC strategies can accomplish tasks with lower operational efficiency.

|

|

|

|||||||

|---|---|---|---|---|---|---|---|---|---|

|

95.1 | 2.4 | 4.4 | ||||||

|

87.1 | 3.5 | 6.1 | ||||||

| Flattening | 80.9 | 6.7 | 9.2 |

In this experiments, we adopt the same experimental settings, evaluation metrics, and numbering system as in Section IV.B. The demonstration dataset generated by the NMPC algorithm is used as the demonstration training set for the HGCR-DDPG model. Three different statistical metrics are also employed in this experiment to compare the effectiveness of HGCR-DDPG models trained with assistance from and . These metrics are Cosine Similarity (CSS), Dynamic Time Warping (DTW), and Pearson Correlation Coefficient (PCC). We have calculated these three similarity measures in terms of both reward and standard deviation, the specific metrics are as follows:

1. Reward Cosine Similarity (RCS): Cosine similarity of the average reward sequence for a single test cycle.

2. Reward Dynamic Time Warping (RDT): DTW similarity of the average reward sequence for a single test cycle.

3. Reward Pearson Correlation (RPC): Pearson correlation coefficient of the average reward sequence for a single test cycle.

4. Standard Deviation Cosine Similarity (SCS): Cosine similarity of the standard deviation of reward sequences obtained with different random seeds.

5. Standard Deviation Dynamic Time Warping (SDT): DTW similarity of the standard deviation of reward sequences obtained with different random seeds.

6. Standard Deviation Pearson Correlation (SPC): Pearson correlation coefficient of the standard deviation of reward sequences obtained with different random seeds.

IV-C Physical Experiment for Deformable Object Robot Manipulation

Hand-eye calibration is used to determine the spatial relationship between the camera coordinate system and the robot coordinate system. Endpoint detection is utilized to extract key endpoints from the fabric’s image. Optical flow tracking is employed for real-time tracking of the fabric endpoints’ positions. The specific processes of these three parts are as follows:

IV-C1 Hand-eye calibration

In this study, the easy-eye-hand software package is utilized for calibration.

IV-C2 Endpoint Detection

During the initial stages of the tasks, this study employs endpoint detection algorithms to extract the pixel coordinates of fabric endpoints in the image. For non-initial states in folding tasks, we use the previous placement point as a reference to determine the current position of the baseline grasp point. Simultaneously, optical flow tracking algorithms are employed to track the movement of the remaining endpoints. After coordinate transformation and hand-eye calibration, the three-dimensional positions of the endpoints in the robot coordinate system are obtained. Combining observable visual information such as the fabric’s area, a comprehensive state vector is formed.

We designed an endpoint recognition algorithm for extracting key endpoints from images of fabrics. The algorithm is based on the Canny edge detection and Douglas-Peucker polygon approximation algorithms, which accurately extract edge information from fabrics and identify several furthest endpoints. First, the input RGB image is converted into a grayscale image. Then, Gaussian blur is applied to the grayscale image to reduce noise influence. Next, the Canny edge detection algorithm is employed to extract the edges of the image. We further refine the edges by contour detection to find the largest contour in the image and apply the Douglas-Peucker algorithm [35] for polygon approximation to simplify the contour. Finally, we obtain a simplified contour containing a series of points, denoted as .

To select the furthest endpoints from the simplified contour (where for folding tasks and for flattening tasks), this study devises a heuristic method called Maximum Minimum Distance Vertices Selection (MMDVS). For any points on the contour, we first define a set containing all possible pairs of points. Then, we compute the Euclidean distance between each pair of points in set and find the minimum distance . By traversing all possible combinations of points in , we find the group of points with the maximum minimum distance , which are the desired endpoints . Additionally, if the number of endpoints in the simplified contour obtained by the Douglas-Peucker algorithm is less than , we adjust the relevant parameters of the Douglas-Peucker algorithm to include new endpoints into the simplified contour set until a sufficient number of endpoints are obtained. The area of the fabric, needed for deep RL, can be obtained by calculating the area of the contour, and the centroid can be obtained by calculating the centroid of the contour by using relevant functions in the OpenCV library.

IV-C3 Optical Flow Tracking

To achieve real-time tracking of the fabric’s shape, this study designs an optical flow-based tracking algorithm. To address the issue that tracking failures can be attributed to occlusion by the end effector, the endpoints that cannot be successfully tracked can be classified into two categories: non-baseline grasp points and baseline grasp points. For non-baseline grasp points, their positions are set to the positions from the last frame before the tracking failure, , and tracking continues based on these positions. For baseline grasp points, after completing the placement action, the placement point is considered as the new position for anchor grasp points.

The comprehensive experimental procedure is shown in Fig. S5. At the beginning of the experiment, the robot and fabric were placed in their initial states. Subsequently, the system operated in a loop according to the following steps until the fabric is manipulated from its initial state to the target state. First, capture and analyze the current state of the fabric to form a comprehensive state vector. Then, this state vector is input into the HGCR-DDPG model pre-trained with to generate instructions. Next, the robot executes actions according to the generated instructions, pushing the fabric towards the desired next state. Finally, after the operation is completed, the system collects and updates the state vector of the fabric, preparing for the next steps of operation.

V Experiment Results

V-A Simulation Results of the Improved HGCR-DDPG Algorithm with Human Demonstrations

Tables S1 and S2 respectively list the algorithm numbers and experiment numbers involved in this article. Table S3 presents the design of 8 control groups in this study. Each control group evaluates the effectiveness of three technical improvements introduced in the HGCR-DDPG algorithm (HTSK, GABC, CPL) by comparing the performance of multiple algorithms. Table S3 also lists the legend styles and expected results of each algorithm in Fig. 7 and Fig. S6.

Fig. 7 and Fig. S6 display the dynamic changes of the average reward of individual test cycles obtained by various algorithms in different simulation experiments under three task settings: folding along the diagonal, folding along the central axis, and flattening. We smoothed the reward curves using a window of length 3. Tables S4, S6, and S8 respectively list the average rewards and average reward rankings achieved by various algorithms in different experiments conducted under the three tasks. Tables S2, S7, and S9 then respectively display the average standard deviations and average standard deviation rankings achieved by various algorithms in different experiments targeting the aforementioned three tasks. The last column of each of these tables shows the average value of the respective metrics ( or ) for each algorithm across all experiments for that task. Table III presents the global average reward (average of ), global average standard deviation (), reward average ranking (average of ), and standard deviation average ranking (average of ) for each algorithm across all experiments. In this section, the optimal indicators are highlighted in bold, and the HGCR-DDPG algorithm proposed in this article is underlined.

|

|

|

|

|

||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 76.3 | 4.8 | 1.1 | 7.1 | ||||||||||||||||

| 2 | 60.1 | 11.2 | 3.6 | 3.9 | ||||||||||||||||

| 3 | 57.1 | 11.9 | 4.8 | 3.3 | ||||||||||||||||

| 4 | 57.8 | 8.1 | 4.7 | 5.2 | ||||||||||||||||

| 5 | 67.4 | 6.7 | 2.7 | 6.4 | ||||||||||||||||

| 6 | 52.2 | 14.0 | 5.7 | 2.7 | ||||||||||||||||

| 7 | 52.4 | 12.7 | 5.9 | 2.7 | ||||||||||||||||

| 8 | 37.9 | 10.6 | 7.5 | 4.8 |

From these figures and tables, we can derive the following key conclusions: Firstly, Algorithm 1 (HGCR-DDPG) demonstrates outstanding performance across all numerical simulation experiments for all tasks. Secondly, HTSK significantly enhances algorithm performance. Under the same marker style, the red curve (representing algorithms using HTSK) generally exhibits significant advantages. In the majority of experimental scenarios, algorithms utilizing HTSK for benchmark grasping point selection outperform those employing random selection strategies, particularly in experimental setups with stringent constraints on the number of operations. Furthermore, the positive impact of GABC is also significant. Among curves of the same color, the curves marked with circles (representing algorithms using the GABC) generally outperform those marked with squares. Although the performance of algorithms incorporating GABC in flattening tasks is not particularly remarkable in terms of standard deviation, its performance surpasses algorithms without GABC in all other experiments. Additionally, the effectiveness of CPL has been validated. It is worth noting that the performance of CPL is influenced by both the benchmark grasping point selection strategy it adopts and the operational constraints in the experiments. The looser the constraints on the number of operations and the more stable the benchmark grasping point selection strategy, the more significant the effect of CPL. Finally, from the Table III, it can be observed that Algorithm 1 (HGCR-DDPG) achieved the best performance across all metrics. Compared to the selected baseline algorithm, namely Algorithm 8 (Rainbow-DDPG), HGCR-DDPG achieved a 2.01-fold improvement in global average reward and successfully reduced the global average standard deviation to 45% of the baseline algorithm, demonstrating a significant performance advantage.

V-B Results of the Experiment for Verifying the Effectiveness of the NMPC Demonstration Dataset

Fig. 8 and Fig. S7 depict the variation curves of the average reward for single test cycles of the HGCR-DDPG model trained with assistance from and for the tasks of folding along the diagonal, folding along the central axis, and flattening. Tables S10, S11, and S12 respectively show the performance of the HGCR-DDPG model assisted by in terms of average reward and average standard deviation for the three tasks, as well as the ratio of the performance achieved by models assisted by to those assisted by .

From these curves and tables, it is evident that in the task of folding along the diagonal, HGCR-DDPG can quickly learn and develop effective strategies regardless of whether or is used. However, in the tasks of folding along the central axis and flattening, as the difficulty increases, the performance difference between HGCR-DDPG assisted by the two demonstration datasets gradually becomes significant. In simplified task settings (Experiments 2.2, 2.4, 2.6, 3.2, 3.4), HGCR-DDPG assisted by demonstrates the ability to learn rapidly, with its performance even reaching or slightly exceeding that of models assisted by . This may be because the NMPC strategy itself performs well in scenarios with a generous number of steps, allowing HGCR-DDPG to effectively extract strategies from its demonstrations. Conversely, under more stringent task settings (Experiments 2.1, 2.3, 2.5, 3.3, 3.5), HGCR-DDPG assisted by is generally lower than models assisted by . This could be attributed to the difficulty of the NMPC strategy in completing tasks within a limited number of steps, thus affecting the performance of HGCR-DDPG under these conditions. This pattern also aligns with the higher average number of steps observed in the NMPC dataset in Table II. The results of Experiments 3.1 and 3.6 show the inherent stochastic factors in the experimental process.

|

|

|

||||||

|---|---|---|---|---|---|---|---|---|

| 76.3 | 4.8 | |||||||

| 76.1 | 4.0 |

Table IV presents a comparison of the overall performance metrics between HGCR-DDPG models assisted by and . The global average reward achieved by the HGCR-DDPG model assisted by is 99.7% of that achieved by the model assisted by , while the global average standard deviation of rewards obtained under different random seeds is 83.3% of that achieved by the model assisted by . This indicates that HGCR-DDPG assisted by exhibits a performance level similar to that of HGCR-DDPG assisted by .

From Table S13, it can be observed that the HGCR-DDPG model trained with and exhibits significant similarity at the sequence level, particularly in RCS and RPC. This emphasizes a strong consistency between the -assisted HGCR-DDPG model and the -assisted model concerning the sequence. However, in terms of the standard deviation of reward sequences obtained with different random seeds, the similarity metrics show significant differences, especially evident in SPC. This difference may stem from two factors: firstly, the inherent randomness of the experiment may lead to some fluctuations in reward curves under different random seeds; secondly, the stylistic differences between NMPC and human-operated strategies may cause the model to adopt different action strategies in specific contexts, thus affecting certain performance metrics.

V-C Results of Physical Experiments on Visual Processing and Robot Manipulation

The endpoint recognition algorithm mainly targets two situations: when the fabric is completely flattened and when it is fully wrinkled. In the case of complete flattening, the endpoint recognition algorithm can accurately identify the four endpoints of the fabric, as shown in the first picture of Fig. 9. In the case of complete wrinkling, the endpoint recognition algorithm can also accurately identify the eight representative endpoints of the fabric, as shown in Fig. S8. This indicates that the endpoint recognition algorithm can accurately identify the endpoints of the fabric under different fabric states, providing accurate initial positions for subsequent optical flow tracking. The optical flow tracking algorithm is primarily used to track the endpoints of the fabric in folding tasks, as shown in Fig. 9. This indicates that the optical flow tracking algorithm can accurately track the endpoints of the fabric when the shape of the fabric changes, providing precise target positions for subsequent robot operations. It is worth noting that in the second-to-last image of Fig. 9, there is a tracking failure for the two endpoints in the top left corner of the fabric. This is caused by occlusion from the end effector, and in such cases, we utilized the method introduced in Section IV.D for supplementation. After the operation is completed, the placement point is treated as the new position of the reference grasping point, ensuring the smoothness of robot operations.

The experimental operation process is illustrated in Fig. S9. In physical experiments, measuring the distance between different endpoints is inconvenient, so several indicators directly computable from visual information were set as follows:

1. Task Completion Rate: For folding along the diagonal, the target shape of the fabric was set as an isosceles right triangle with a side length of 0.24 meters. For folding along the central axis, the target shape of the fabric was set as a rectangle measuring 0.24 meters by 0.12 meters. For flattening, the target shape of the fabric was set as a square with a side length of 0.24 meters. Subsequently, the similarity between the target shape of the fabric and the actual shape was calculated using the ‘cv2.matchShapes()’ function in OpenCV, and this was used as the task completion rate.

2. Success Rate: An experiment was considered successful when the final task completion rate exceeded 0.9.

3. Average Steps: The average number of actions required for the robot to complete a specific task measured the efficiency of the robot’s operations. In this study, due to the thinness of the fabric used, the positioning accuracy of the sensor subsystem in the z-axis direction was extremely strict, with a tolerance of only 2 mm, which greatly increased the likelihood of gripping failure. To address this challenge, a heuristic strategy was adopted in the experiment: first attempt gripping based on the positioning information provided by the sensor subsystem. If the first gripping attempt was unsuccessful (i.e., no improvement in task completion rate), the gripping point was lowered by 2 mm in the z-axis direction and another attempt was made, repeating this process until successful gripping was achieved.

In the process of counting operation steps, this study only included each successful placement action in the total steps, without counting repeated attempts due to gripping failures. 30 experiments were conducted for each of the three tasks, and the aforementioned indicators were recorded. The experimental results are shown in Table V. For the folding along the diagonal task, 93.3% of the trials achieved a task completion score of no less than 0.6, while 90.0% of the trials achieved a task completion score of no less than 0.8. The overall success rate for this task is 83.3%, with an average of 1.1 steps required, indicating a relatively high success rate and fewer required steps. For the folding along the central axis task, 90.0% of the trials reached the standard of a task completion score of at least 0.6, and 86.7% of the trials reached the standard of a task completion score of at least 0.8. The success rate is 80.0%, with an average of 3.9 steps required. Compared to folding along the diagonal, this task requires more steps but still maintains a relatively high success rate. For the flattening task, all trials reached the standards of a task completion score of at least 0.6 and 0.8, with a high success rate of 96.7%. However, the average number of steps required is 13.5 steps, indicating that although the flattening task has the highest success rate, it is also the most time-consuming of the three tasks. This phenomenon can be attributed to the high tolerance for errors in the flattening task. Specifically, even if a certain operation leads to a decrease in task completion score, the robot can still flatten the fabric through subsequent operations. This characteristic leads to a high success rate for the flattening task but also results in an increase in the number of required steps. In summary, the success rates of all three tasks are relatively high, indicating that the experimental setup and methods used perform well in physical operations.

| Task Completion | Task Completion | Success Rate | Average Steps | |

|---|---|---|---|---|

| Diagonal Folding | 93.3% | 90.0% | 83.3% | 1.1 |

| Central Axis Folding | 90.0% | 86.7% | 80.0% | 3.9 |

| Flattening | 100.0% | 100.0% | 96.7% | 13.5 |

V-D Discussion

In the context of robotic manipulation tasks for deformable objects, this article addresses the inefficiency of traditional RL methods by proposing the HGCR-DDPG algorithm. To tackle the issue of high costs associated with traditional human teaching methods, a low-cost demonstration collection method based on NMPC is introduced. The effectiveness of the proposed methods is validated through three experimental scenarios involving folding fabric diagonally, along the midline, and flattening it, both in simulation and real-world experiments. Extensive ablation studies are conducted to substantiate the rationality and efficacy of the algorithms.

Compared to similar research, Matas et al. [21] required nearly 80,000 interactions between the robot and the environment to complete the learning process; Jangir et al. [22] needed approximately 260,000 rounds of interaction data to train their agent; Yang et al. [24] utilized 28,000 pairs of images and actions collected via teleoperation to train a DNN as an end-to-end policy for folding a single towel. This study simplifies the data acquisition process and achieves comparable or even higher success rates than the aforementioned studies, providing novel insights and contributions for future tasks of a similar nature. Currently, more and more research tends to adopt Vision-Language-Action models (VLA) for robotic manipulation. However, such research often requires significant computational resources and is overqualified when dealing with specific tasks. For example, OpenVLA is a 7B-parameter VLA that was trained on 64 A100 GPUs for 14 days. During inference, it requires 15GB of video memory and runs at approximately 6Hz on an NVIDIA RTX 4090 GPU [36]. The largest model of RT-2 uses 55B parameters, and it is infeasible to directly run such a model on standard desktop machines or on-robot GPUs commonly used for real-time robot control [37]. Even TinyVLA requires 1.3B parameters [38]. In contrast, our proposed algorithm shows significant advantages in learning efficiency. Trained on an Intel i5 12400f CPU and NVIDIA RTX 3050 GPU, our algorithm can converge within dozens of epochs, and the entire training process takes at about 4 hours, with a maximum number of 14,183 parameters. Compared with the currently popular approaches based on large models for robot manipulation, the algorithm proposed in this paper has the advantages of being lightweight, requiring low computational resources, and being able to provide task-specific customization and efficient adaptability when handling specific tasks.

VI Conclusion

This article presents a study on deformable object robot manipulation based on demonstration-enhanced RL. To improve the learning efficiency of RL, this article enhances the utilization efficiency of algorithms for demonstration data from multiple aspects, proposing the HGCR-DDPG algorithm and collecting for training. It first uses demonstration data to train the HTSK fuzzy system to select appropriate grasp points, then proposes the GABC to improve the utilization of demonstration data in Rainbow-DDPG, and finally uses CPL to synthesize HTSK and GABC improved Rainbow-DDPG, forming a complete control algorithm for deformable object robot manipulation, namely HGCR-DDPG. Additionally, the effectiveness of the proposed methods is verified through comprehensive simulation experiments. Compared to the baseline algorithm (Rainbow-DDPG), the proposed HGCR-DDPG algorithm achieves a 2.01 times higher global average reward and reduces the global average standard deviation to 45% of the baseline algorithm. To reduce the labor cost of demonstration collection, this article proposes a low-cost demonstration collection method based on NMPC. Based on the established spring-mass model, it uses the NMPC algorithm to control the robot to perform deformable object manipulation tasks in a simulation environment, and uses the trajectories of rounds with higher rewards as demonstration data. Simulation results show that the global average reward obtained by the HGCR-DDPG model trained with is 99.7% of the model trained with , and the global average standard deviation of rewards obtained under different random seeds is 83.3% of the model trained with . This indicates that demonstration data collected through NMPC can be used to train HGCR-DDPG and its effectiveness is comparable to human demonstration data. To verify the feasibility of the proposed methods in a real environment, this article conducts physical experiments on deformable object robot manipulation. Utilizing hardware facilities such as the UR5e robot, OnRobot RG2 gripper, and RealSense D435i camera, this article builds a physical experimental platform for deformable object robot manipulation and uses the -assisted training HGCR-DDPG algorithm on this platform to control the robot to manipulate fabric and perform folding along the diagonal, folding along the central axis, and flattening tasks. The experimental results show that the proposed methods achieve success rates of 83.3%, 80%, and 100% respectively in these three tasks, verifying the effectiveness of the method.

There are still many areas for improvement due to time constraints. Specifically, future work of this article could be expanded in the aspect such as multimodal perception input for RL state vectors, refinement of deformable object dynamic models, and small-sample learning for operations on various deformable objects, etc.

References

- [1] J. Zhu et al., “Challenges and Outlook in Robotic Manipulation of Deformable Objects,” in IEEE Robotics & Automation Magazine, vol. 29, no. 3, pp. 67-77, Sept. 2022.

- [2] P. Long, W. Khalil and P. Martinet, “Modeling & control of a meat-cutting robotic cell,” 2013 16th International Conference on Advanced Robotics (ICAR), Montevideo, Uruguay, 2013, pp. 1-6.

- [3] M. C. Gemici and A. Saxena, “Learning haptic representation for manipulating deformable food objects,” 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 2014, pp. 638-645.

- [4] I. Leizea, A. Mendizabal, H. Alvarez, I. Aguinaga, D. Borro and E. Sanchez, “Real-Time Visual Tracking of Deformable Objects in Robot-Assisted Surgery,” in IEEE Computer Graphics and Applications, vol. 37, no. 1, pp. 56-68, Jan.-Feb. 2017.

- [5] B. Thananjeyan, A. Garg, S. Krishnan, C. Chen, L. Miller and K. Goldberg, “Multilateral surgical pattern cutting in 2D orthotropic gauze with deep reinforcement learning policies for tensioning,” 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 2017, pp. 2371-2378.

- [6] Y. Gao, H. J. Chang and Y. Demiris, “Iterative path optimisation for personalised dressing assistance using vision and force information,” 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea (South), 2016, pp. 4398-4403.

- [7] F. Zhang and Y. Demiris, “Learning garment manipulation policies toward robot-assisted dressing,” in Science Robotics, vol.7, no.65, eabm6010, 2022.

- [8] I. G. Ramirez-Alpizar, M. Higashimori, M. Kaneko, C. -H. D. Tsai and I. Kao, “Dynamic Nonprehensile Manipulation for Rotating a Thin Deformable Object: An Analogy to Bipedal Gaits,” in IEEE Transactions on Robotics, vol. 28, no. 3, pp. 607-618, June 2012.

- [9] J. Huang, T. Fukuda and T. Matsuno, “Model-Based Intelligent Fault Detection and Diagnosis for Mating Electric Connectors in Robotic Wiring Harness Assembly Systems,” in IEEE/ASME Transactions on Mechatronics, vol. 13, no. 1, pp. 86-94, Feb. 2008.

- [10] J. Zhu, B. Navarro, P. Fraisse, A. Crosnier and A. Cherubini, “Dual-arm robotic manipulation of flexible cables,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 2018, pp. 479-484.

- [11] A. Shademan, R. S. Decker, J. D. Opfermann, S. Leonard, A. Krieger and P. C. W. Kim, “Supervised autonomous robotic soft tissue surgery,” in Science Translational Medicine, vpl. 8, no. 337, ra64-337, 2016.

- [12] A. Jevtić et al., ”Personalized Robot Assistant for Support in Dressing,” in IEEE Transactions on Cognitive and Developmental Systems, vol. 11, no. 3, pp. 363-374, Sept. 2019.

- [13] J. Zhu, M. Gienger, G. Franzese and J. Kober, “Do You Need a Hand? – A Bimanual Robotic Dressing Assistance Scheme,” in IEEE Transactions on Robotics, vol. 40, pp. 1906-1919, 2024.

- [14] J. Sanchez, J-A. Corrales, B-C. Bouzgarrou and Y. Mezouar, “Robotic manipulation and sensing of deformable objects in domestic and industrial applications: a survey,” in The International Journal of Robotics Research, vol. 37, no. 7, pp. 688-716, 2018.

- [15] H. Yin, A. Varava and D. Kragi, “Modeling, learning, perception, and control methods for deformable object manipulation,” in Science Robotics, vol.6, no.54, eabd8803, 2021.

- [16] Y. Li, Y. Yue, D. Xu, E. Grinspun and P. K. Allen, “Folding deformable objects using predictive simulation and trajectory optimization,” 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 2015, pp. 6000-6006.

- [17] H. Lin, F. Guo, F. Wang and Y-B. Jia, “Picking up a soft 3D object by “feeling” the grip,” in The International Journal of Robotics Research, vol. 34, no. 11, pp. 1361-1384, 2015.

- [18] D. Navarro-Alarcon and Y. -H. Liu, “Fourier-Based Shape Servoing: A New Feedback Method to Actively Deform Soft Objects into Desired 2-D Image Contours,” in IEEE Transactions on Robotics, vol. 34, no. 1, pp. 272-279, Feb. 2018.

- [19] T. Tamei, T. Matsubara, A. Rai and T. Shibata, “Reinforcement learning of clothing assistance with a dual-arm robot,” 2011 11th IEEE-RAS International Conference on Humanoid Robots, Bled, Slovenia, 2011, pp. 733-738.

- [20] A. Colomé and C. Torras, “Dimensionality Reduction for Dynamic Movement Primitives and Application to Bimanual Manipulation of Clothes,” in IEEE Transactions on Robotics, vol. 34, no. 3, pp. 602-615, June 2018.

- [21] J. Matas, S. James and A. J. Davison. “Sim-to-real reinforcement learning for deformable object manipulation,” 2018 Conference on Robot Learning (CoRL), Zürich, Switzerland, PMLR, 2018, pp. 734–743.

- [22] R. Jangir, G. Alenyà and C. Torras, “Dynamic Cloth Manipulation with Deep Reinforcement Learning,” 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 2020, pp. 4630-4636.

- [23] E. Pignat and S. Calinon, “Learning adaptive dressing assistance from human demonstration,” Robotics and Autonomous Systems, vol. 93, pp. 61-75, 2017.

- [24] P. -C. Yang, K. Sasaki, K. Suzuki, K. Kase, S. Sugano and T. Ogata, “Repeatable Folding Task by Humanoid Robot Worker Using Deep Learning,” in IEEE Robotics and Automation Letters, vol. 2, no. 2, pp. 397-403, April 2017.

- [25] A. Cherubini, J. Leitner, V. Ortenzi and P. Corke, “Towards vision-based manipulation of plastic materials,” 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 2018, pp. 485-490.

- [26] J. Zhu, M. Gienger and J. Kober, “Learning Task-Parameterized Skills From Few Demonstrations,” in IEEE Robotics and Automation Letters, vol. 7, no. 2, pp. 4063-4070, April 2022.

- [27] B. Balaguer and S. Carpin, “Combining imitation and reinforcement learning to fold deformable planar objects,” 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 2011, pp. 1405-1412.

- [28] H. Wang, J. Huang, H. Ru, Z. Fu, H. Lei, D. Wu and H. Wu, “Grasping State Analysis of Soft Manipulator Based on Flexible Tactile Sensor and High-Dimensional Fuzzy System,” in IEEE/ASME Transactions on Mechatronics, doi: 10.1109/TMECH.2024.3445504.

- [29] Y. Cui, Y. Xu, R. Peng, and D. Wu, “Layer normalization for TSK fuzzy system optimization in regression problems,” in IEEE Trans. Fuzzy Syst., vol. 31, no. 1, pp. 254–264, Jan. 2023.

- [30] Y. Wu, W. Yan, T. Kurutach, et al., “Learning to manipulate deformable objects without demonstrations,” Proc. 16th Robot.: Sci. Syst., 2020, [online] Available: https://roboticsproceedings.org/rss16/p065.html.

- [31] J. Canny, “A Computational Approach to Edge Detection,” in IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. PAMI-8, no. 6, pp. 679-698, Nov. 1986.

- [32] S. Fujimoto, H. Hoof and D. Meger, “Addressing function approximation error in actorcritic methods,” 2018 International Conference on Machine Learning (ICML), Stockholm, Sweden, PMLR, 2018, pp. 1587–1596.

- [33] A. Wächter and L. T. Biegler, “On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming,” in Mathematical Programming, vol. 106, pp. 25–57, 2006.