Robust Bayesian Inference for Big Data: Combining Sensor-based Records with Traditional Survey Data

Abstract

Big Data often presents as massive non-probability samples. Not only is the selection mechanism often unknown, but larger data volume amplifies the relative contribution of selection bias to total error. Existing bias adjustment approaches assume that the conditional mean structures have been correctly specified for the selection indicator or key substantive measures. In the presence of a reference probability sample, these methods rely on a pseudo-likelihood method to account for the sampling weights of the reference sample, which is parametric in nature. Under a Bayesian framework, handling the sampling weights is an even bigger hurdle. To further protect against model misspecification, we expand the idea of double robustness such that more flexible non-parametric methods as well as Bayesian models can be used for prediction. In particular, we employ Bayesian additive regression trees, which not only capture non-linear associations automatically but permit direct quantification of the uncertainty of point estimates through its posterior predictive draws. We apply our method to sensor-based naturalistic driving data from the second Strategic Highway Research Program using the 2017 National Household Travel Survey as a benchmark.


1 Introduction

The 21st century is witnessing a re-emergence of non-probability sampling in various domains (Murdoch and Detsky, 2013; Daas et al., 2015; Lane, 2016; Senthilkumar et al., 2018). Probability sampling is facing new challenges, mainly because of a steady decline in response rates (Groves, 2011; Johnson and Smith, 2017; Miller, 2017). At the same time, new modes of data collection via sensors, web portals, and smart devices have emerged that routinely capture a variety of human activities. These automated processes have led to an ever-accumulating massive volume of unstructured information, so-called “Big Data” (Couper, 2013; Kreuter and Peng, 2014; Japec et al., 2015). Being easy to access, inexpensive to collect, and highly detailed, this broad range of data is perceived to be valuable for producing official statistics as an alternative or supplement to probability surveys (Struijs et al., 2014; Kitchin, 2015). However, “Big Data” typically have a self-selecting data-generating process, which can lead to biased estimates. When this is the case, the larger data volume in the non-probability sample increases the relative contribution of selection bias to absolute or squared error. Meng et al. (2018) call this phenomenon a “Big Data Paradox”, and these authors show both theoretically and empirically that the impact of selection bias on the effective sample size can be extremely large.

The motivating application in this article comes from naturalistic driving studies (NDS), which are one real-world example of Big Data for rare event investigations. Since traffic collisions are inherently rare events, measuring accurate pre-crash behaviors as well as exposure frequency in normal driving demands accurate long-term follow-up of the population of drivers. Thus, NDS are designed to continually monitor drivers’ behavior via in-vehicle sensors, cameras, and advanced wireless technologies (Guo et al., 2009). The detailed information collected by NDS is considered a rich resource for assessing various aspects of transportation such as traffic safety, crash causality, and travel patterns (Huisingh et al., 2019; Tan et al., 2017). In particular, we consider the sensor-based Big Data from the second phase of the Strategic Highway Research Program (SHRP2), which is the largest NDS conducted to date. This study recruited a convenience sample from geographically restricted regions (6 US states: Florida, Indiana, New York, North Carolina, Pennsylvania, and Washington) and attempted to oversample both younger and older drivers, leading to potential selection bias in the sample mean of some trip-related variables (Antin et al., 2015). To deal with this, we employ the 2017 National Household Travel Survey (NHTS) as a “reference survey”, which can serve as a probability sample representing the population of American drivers (Santos et al., 2011). While daily trip measures in SHRP2 are recorded via sensors, NHTS asks respondents to self-report their trip measures through an online travel log. By analyzing the data aggregated at the day level, we develop adjusted sensor-based estimates from SHRP2 for measures such as frequency of trips, trip duration, trip speed, and trip starting time, which can be compared with self-reported weighted estimates in NHTS to assess the performance of our proposed methods in terms of bias and efficiency. We also produce estimates of maximum speed, brake use per mile driven, and stop time, which are available only in SHRP2.

Standard design-based approaches cannot be applied to non-probability samples for the simple reason that the probabilities of selection are unknown (Chen et al., 2020). Thus the American Association for Public Opinion Research (AAPOR) task force on non-probability samples recommends that adjustment methods should rely on models and external auxiliary information (Baker et al., 2013). In the presence of a relevant probability sample with a set of common auxiliary variables, which is often called a “reference survey”, two general approaches can be taken: (1) prediction modeling (PM)–fitting models on the non-probability sample to predict the response variable for units in the reference survey (Rivers, 2007; Kim and Rao, 2012; Wang et al., 2015; Kim et al., 2018), and (2) quasi-randomization (QR)–estimating the probabilities of being included in the non-probability sample, also known as propensity scores (PS), while treating the Big Data as a quasi-random sample (Lee, 2006; Lee and Valliant, 2009; Valliant and Dever, 2011). Our focus is on the PM setting, since in our application the key measures of interest are not available in the probability (reference) survey, and our goal is to use prediction to impute them.

Correct specification of the model predicting the outcome is critical for imputation. To help relax this assumption, the PM approach can be combined with the QR method, in a way that the adjusted estimate of a population quantity is consistent if either the propensity or the outcome model holds. Augmented inverse propensity weighting (AIPW) was the first of these so-called “doubly-robust” (DR) methods (Robins et al., 1994), with applications to causal inference (Scharfstein et al., 1999; Bang and Robins, 2005; Tan, 2006; Kang et al., 2007; Tan et al., 2019) and adjustment for non-response bias (Kott, 1994; Kim and Park, 2006; Kott, 2006; Haziza and Rao, 2006; Kott and Chang, 2010). Further extension to multiple robustness has been developed by Han and Wang (2013), where multiple models are specified and consistency is obtained as long as at least one of the models is correctly specified. Chen et al. (2020) offer further adjustments to adapt the AIPW estimator to a non-probability sampling setting where an external benchmark survey is available. While their method employs a modified pseudo-likelihood approach to estimate the selection probabilities for the non-probability sample, a parametric model is used to impute the outcome for units of the reference survey.

In a non-probability sample setting, Rafei et al. (2020) utilized BART in the QR approach outlined in Elliott and Valliant (2017). In this paper, we extend Rafei et al. (2020) in two major ways. First, we blend the QR and PM methods into a novel DR method that is made even more robust by using BART, which provides a strong non-parametric predictive tool by automatically capturing non-linear associations as well as high-order interactions (Chipman et al., 2007). The proposed method separates the propensity model from the sampling weights in a two-step process, allowing a broader range of models to be used for imputing the missing inclusion probabilities. This allows us to consider both parametric (linear and generalized linear models) and non-parametric (BART) models for both the propensity and the outcome. In addition, the posterior predictive distribution produced by BART makes it easier to quantify the uncertainty due to the imputation of pseudo-weights and the outcome variable (Tan et al., 2019; Kaplan and Chen, 2012). Second, we derive asymptotic variance estimators for the QR estimators previously proposed in Rafei et al. (2020), as well as proofs of the double robustness of the proposed DR estimators.

The rest of the article is organized as follows. In Section 2, we develop the theoretical background behind the proposed methods and associated variance estimators. A simulation study is designed in Section 3 to assess the repeated sampling properties of the proposed estimator, i.e. bias and efficiency. Section 4 uses the NHTS to develop adjusted estimates from the SHRP2 using the methods discussed and developed in the previous sections. All the statistical analyses in both the simulations and empirical studies have been performed using R version 3.6.1. The annotated R code is available for public use at https://github.com/arafei/drinfNP. Finally, Section 5 reviews the strengths and weaknesses of the study in more detail and suggests some future research directions.

2 Methods

2.1 Notation

Denote by $U$ a finite population of size $N$. We consider $Y = \{y_1, \dots, y_N\}$, the values of a scalar outcome variable, $y$, and $X = \{x_1, \dots, x_N\}$, the values of a $p$-dimensional vector of relevant auxiliary variables, $x$. Let $S_B$ be a non-probability sample of size $n_B$ selected from $U$. The goal is to estimate an unknown finite population quantity, e.g. $Q(Y)$. Here, the quantity of interest is the finite population mean, which is a function of the outcome variable, i.e. $\bar{y}_U = N^{-1}\sum_{i=1}^{N} y_i$. Suppose $\delta_i^B = I(i \in S_B)$ is the inclusion indicator variable of the “big data” survey of size $n_B$ in $U$. Further, we initially assume that, given $X$, elements in $S_B$ are independent draws from $U$; later, we relax this assumption by allowing $S_B$ to have a single-stage clustering design, as is the case in the real-data application of this article.

Suppose $S_R$ is a parallel reference survey of size $n_R$, for which the same set of covariates, $X$, has been measured. We also define $D = \{d_1, \dots, d_N\}$, the values of a $q$-dimensional vector of design variables for the reference survey. We assume $y_i$ is unobserved for $i \in S_R$; otherwise inference could be drawn directly based on $S_R$. Also, let $\delta_i^R = I(i \in S_R)$ denote the inclusion indicator variable associated with $S_R$ for $i \in U$. To avoid unnecessary complexity, we assume that units of $S_R$ are selected independently. Being a full probability sample implies that the selection mechanism in $S_R$ is ignorable given its design features, i.e. $p(\delta_i^R = 1 \mid y_i, x_i, d_i) = p(\delta_i^R = 1 \mid d_i)$ for $i \in U$. Thus, one can define the selection probabilities and sampling weights in $S_R$ as $\pi_i^R = p(\delta_i^R = 1 \mid d_i)$ and $w_i^R = 1/\pi_i^R$, respectively, for $i \in U$, which we assume are known.

While $X$ and $D$ may overlap or be correlated, we define $x_i^*$, the vector of all auxiliary variables contained in $x_i$ and $d_i$, and $X^* = \{x_1^*, \dots, x_N^*\}$. To be able to make unbiased inference for $\bar{y}_U$, we consider the following assumptions for $S_B$:

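- C1. Positivity: $S_B$ actually does have a probabilistic sampling mechanism, albeit unknown. That means $p(\delta_i^B = 1 \mid x_i^*) > 0$ for all possible values of $x_i^*$ in $U$.

- C2. Ignorability: the selection mechanism of $S_B$ is fully governed by $X^*$, which implies $p(\delta_i^B = 1 \mid y_i, x_i^*) = p(\delta_i^B = 1 \mid x_i^*)$. Then, for $i \in U$, the unknown “pseudo-inclusion” probability associated with $S_B$ is defined as $\pi_i^B = p(\delta_i^B = 1 \mid x_i^*)$.

- C3. Independence: conditional on $X^*$, $S_B$ and $S_R$ are selected independently, i.e. $p(\delta_i^B, \delta_i^R \mid x_i^*) = p(\delta_i^B \mid x_i^*)\, p(\delta_i^R \mid x_i^*)$.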

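Note that the first two assumptions are collectively called “strong ignorability” by Rosenbaum and Rubin (1983). Considering C1-C3, the joint density of $y_i$, $\delta_i^B$, and $\delta_i^R$ given $x_i^*$ can be factorized as below:

$$p(y_i, \delta_i^B, \delta_i^R \mid x_i^*; \theta, \beta, \psi) = p(y_i \mid x_i^*; \theta)\, p(\delta_i^B \mid x_i^*; \beta)\, p(\delta_i^R \mid d_i; \psi), \tag{2.1}$$

where $\theta$, $\beta$, and $\psi$ are some distributional parameters. While $\theta$ and $\beta$ are unknown, $\psi$ may be known as $S_R$ is a probability sample. A QR approach involves modeling $p(\delta_i^B \mid x_i^*; \beta)$, whereas a PM approach deals with modeling $p(y_i \mid x_i^*; \theta)$.

Now suppose $S_B$ and $S_R$ have trivial overlap, i.e. $S_B \cap S_R = \emptyset$. This assumption is reasonable when the sampling fraction in both samples is small. Note that under the ignorability assumption, the propensity model for $S_B$ depends on $X^*$ for the entire population. Thus, given the combined sample, $S = S_B \cup S_R$, with $n = n_B + n_R$ being its size, it is reasonable to expect that the pseudo-inclusion probabilities, the $\pi_i^B$'s, are a function of both $x_i$ and $d_i$ for $i \in S$. Let $Z_i$ be the indicator of subject $i$ belonging to the non-probability sample within the combined sample, where $i \in S$. Note that since $S_B \cap S_R = \emptyset$, $Z_i$ takes the value $1$ for $i \in S_B$ and $0$ for $i \in S_R$, as below: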

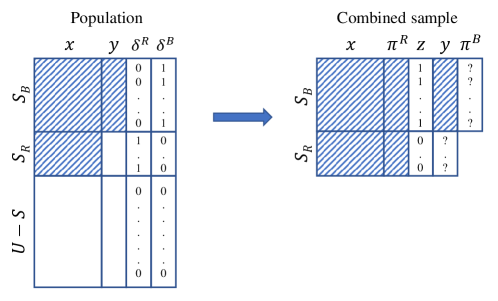

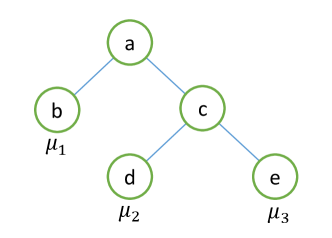

Figure 1 illustrates the data structure in both the finite population and the combined sample.

2.2 Quasi-randomization

In QR, the non-probability sample is treated as if the self-selection mechanism of population units mimics a stochastic process, but with unknown selection probabilities. Attempts are then made to estimate these missing quantities in $S_B$ based on external information. Conditional on $x_i$, suppose $\delta_i^B$ follows a logistic regression model in the finite population. We have

$$\pi_i^B = p(\delta_i^B = 1 \mid x_i; \beta) = \frac{\exp(x_i^\top \beta)}{1 + \exp(x_i^\top \beta)}, \quad i \in U, \tag{2.2}$$

where $\beta$ is a vector of model parameters in $U$. Using a modified pseudo-maximum likelihood estimation (PMLE) approach, Chen et al. (2020) demonstrate that, given $S_R$, a consistent estimate of $\beta$ can be obtained by solving the following estimating equation with respect to $\beta$:

$$\sum_{i \in S_B} x_i - \sum_{i \in S_R} w_i^R\, \pi_i^B(x_i; \beta)\, x_i = 0. \tag{2.3}$$

The estimates of the $\pi_i^B$'s, which we also call propensity scores (PS), are obtained by plugging the solution of Eq. 2.3, i.e. $\hat\beta$, into Eq. 2.2. It is important to note that the PS estimator proposed by Chen et al. (2020) depends implicitly on $d_i$ in addition to $x_i$, because $w_i^R$ is a deterministic function of $d_i$ for $i \in S_R$. Under certain regularity conditions, the authors show that the inverse PS weighted (IPSW) mean from $S_B$ yields a consistent and asymptotically unbiased estimate of the population mean.
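To make the PMLE estimating equation in Eq. 2.3 concrete, the following Python sketch solves it by Newton-Raphson under the logistic model of Eq. 2.2. It is a minimal illustration under stated assumptions, not the implementation of Chen et al. (2020): the function name `solve_pmle`, the intercept-based starting value, and the simulated population are all hypothetical choices made here. The Jacobian involves the reference weights $w_i^R$, which is why off-the-shelf logistic regression routines cannot be used directly.

```python
import numpy as np


def solve_pmle(x_b, x_r, w_r, tol=1e-10, max_iter=100):
    """Solve sum_{S_B} x_i - sum_{S_R} w_i * expit(x_i' beta) * x_i = 0 (Eq. 2.3).

    x_b: (n_B, p) covariates of the non-probability sample, first column = 1.
    x_r: (n_R, p) covariates of the reference sample; w_r: its design weights.
    """
    n_b = x_b.shape[0]
    beta = np.zeros(x_b.shape[1])
    # Hypothetical starting value: intercept matching n_B / (N_hat - n_B).
    beta[0] = np.log(n_b / (w_r.sum() - n_b))
    target = x_b.sum(axis=0)
    for _ in range(max_iter):
        pi = 1.0 / (1.0 + np.exp(-x_r @ beta))
        score = target - (w_r * pi) @ x_r
        # Jacobian of the score: -sum_{S_R} w_i pi_i (1 - pi_i) x_i x_i'
        jac = -(x_r * (w_r * pi * (1.0 - pi))[:, None]).T @ x_r
        step = np.linalg.solve(jac, score)
        beta = beta - step
        if np.max(np.abs(step)) < tol:
            break
    return beta


# Toy data: a simulated population with a hypothetical logistic selection model.
rng = np.random.default_rng(1)
N = 20_000
x_pop = np.column_stack([np.ones(N), rng.normal(size=N)])
s_b = rng.random(N) < 1.0 / (1.0 + np.exp(-(x_pop @ np.array([-4.0, 0.8]))))
idx_r = rng.choice(N, size=1_000, replace=False)  # simple random reference sample
w_r = np.full(1_000, N / 1_000)
beta_hat = solve_pmle(x_pop[s_b], x_pop[idx_r], w_r)
```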

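The solution of Eq. 2.3 is not a typical output of the logistic regression procedures in existing standard software. With one additional assumption, namely mutual exclusiveness of the two samples, i.e. $\delta_i^B \delta_i^R = 0$ for all $i \in U$, we show that estimating the $\pi_i^B$'s can be reduced to modeling $Z_i$ for $i \in S$ instead of modeling $\delta_i^B$ for $i \in U$. Intuitively, one can view the selection process of the $i$-th population unit in $S_B$ as being initially selected in the combined sample ($\delta_i = \delta_i^B + \delta_i^R = 1$) and then being selected in $S_B$ given the combined sample ($Z_i = 1$). By conditioning on $x_i^*$, the selection probabilities in $S_B$ are factorized as

$$\pi_i^B(x_i^*) = p(\delta_i^B = 1 \mid x_i^*) = p(\delta_i = 1 \mid x_i^*)\, p(\delta_i^B = 1 \mid \delta_i = 1, x_i^*) = p(\delta_i = 1 \mid x_i^*)\, p_i(x_i^*), \tag{2.4}$$

where $p_i(x_i^*) = p(Z_i = 1 \mid \delta_i = 1, x_i^*)$. Note that the last expression in Eq. 2.4 results from the definition of $Z_i$ given $\delta_i = 1$. The same factorization can be derived for the selection probabilities in $S_R$. Thus, we have

$$\pi_i^R(x_i^*) = p(\delta_i = 1 \mid x_i^*)\, \{1 - p_i(x_i^*)\}. \tag{2.5}$$

By dividing the two sides of Eqs. 2.4 and 2.5, one can eliminate $p(\delta_i = 1 \mid x_i^*)$ and obtain the pseudo-selection probabilities in $S_B$ as below:

$$\pi_i^B(x_i^*) = \pi_i^R(x_i^*)\, \frac{p_i(x_i^*)}{1 - p_i(x_i^*)}. \tag{2.6}$$

It is clear that $\pi_i^R(x_i^*) = \pi_i^R(d_i)$, as $x_i^*$ contains $d_i$ and the sampling design of $S_R$ is known given $d_i$. As will be seen in Section 2.4, conditioning on $d_i$ is vital for the DR estimator, as Chen’s method is limited to situations where the auxiliary variables used in QR and PM have the same dimension.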

Note that Eq. 2.6 is identical to the pseudo-weighting formula that Elliott and Valliant (2017) derive for a non-probability sample. Unlike the PMLE approach, modeling $p_i(x_i^*)$ in $S$ can be performed using standard binary logistic regression or any alternative classification method, such as supervised machine learning algorithms. Under a logistic regression model, we have

$$p_i(x_i^*; \alpha) = \frac{\exp(x_i^{*\top} \alpha)}{1 + \exp(x_i^{*\top} \alpha)}, \quad i \in S, \tag{2.7}$$

where $\alpha$ denotes the vector of model parameters, estimated via maximum likelihood estimation (MLE). Hence, in situations where $\pi_i^R$ is known or can be calculated for $i \in S_B$, the estimate of $\pi_i^B$ for $i \in S_B$ is given by

$$\hat\pi_i^B = \pi_i^R\, \frac{p_i(x_i^*; \hat\alpha)}{1 - p_i(x_i^*; \hat\alpha)} = \pi_i^R \exp(x_i^{*\top} \hat\alpha), \quad i \in S_B, \tag{2.8}$$

where $\hat\alpha$ denotes the MLE of the logistic regression model parameters, and $\pi_i^R$ is shorthand for $\pi_i^R(d_i)$. Intuitively, the first factor in 2.8 treats $i \in S_B$ as if it were selected under the design of $S_R$, and the second factor attempts to balance the distribution of $x^*$ in $S_B$ with respect to that in $S_R$.
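The PAPW pseudo-inclusion probabilities in Eq. 2.8 only require an ordinary (unweighted) logistic regression of $Z_i$ on $x_i^*$ in the combined sample. The sketch below fits that model by Newton-Raphson in NumPy and then applies Eq. 2.8; the helper names and the toy inputs (including the known $\pi_i^R$ values for $S_B$) are hypothetical, and any standard logistic regression routine could replace `fit_logistic`.

```python
import numpy as np


def fit_logistic(x, z, tol=1e-10, max_iter=100):
    """Unweighted logistic regression MLE via Newton-Raphson (IRLS)."""
    alpha = np.zeros(x.shape[1])
    for _ in range(max_iter):
        p = 1.0 / (1.0 + np.exp(-x @ alpha))
        grad = x.T @ (z - p)
        hess = (x * (p * (1.0 - p))[:, None]).T @ x
        step = np.linalg.solve(hess, grad)
        alpha = alpha + step
        if np.max(np.abs(step)) < tol:
            break
    return alpha


def papw_pseudo_probs(xs_b, xs_r, pi_r_b):
    """Eq. 2.8: pi_B = pi_R * p / (1 - p), with p = P(Z = 1 | x*) fitted on S."""
    x = np.vstack([xs_b, xs_r])
    z = np.concatenate([np.ones(len(xs_b)), np.zeros(len(xs_r))])
    alpha = fit_logistic(x, z)
    p_b = 1.0 / (1.0 + np.exp(-xs_b @ alpha))
    return pi_r_b * p_b / (1.0 - p_b), alpha


rng = np.random.default_rng(2)
xs_b = np.column_stack([np.ones(300), rng.normal(0.5, 1.0, 300)])
xs_r = np.column_stack([np.ones(500), rng.normal(0.0, 1.0, 500)])
pi_r_b = rng.uniform(0.001, 0.01, 300)  # hypothetical known pi_R for S_B units
pi_b_hat, alpha_hat = papw_pseudo_probs(xs_b, xs_r, pi_r_b)
```

Under the logit link the odds $p/(1-p)$ equal $\exp(x^{*\top}\alpha)$, which is why the result coincides with the closed form $\pi_i^R \exp(x_i^{*\top}\hat\alpha)$ in Eq. 2.8.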

Having estimated $\pi_i^B$ based on 2.8 for all $i \in S_B$, one can construct the Hájek-type pseudo-weighted estimator for the finite population mean as below:

$$\hat{\bar y}_{PAPW} = \frac{1}{\hat N_B} \sum_{i \in S_B} \frac{y_i}{\hat\pi_i^B}, \tag{2.9}$$

where $\hat N_B = \sum_{i \in S_B} 1/\hat\pi_i^B$. Hereafter, we refer to the estimator in 2.9 as propensity-adjusted probability weighting (PAPW). Under mild regularity conditions, the ignorability assumption for $S_B$ given $x^*$, the logistic regression model, and the additional assumption that $\delta_i^B \delta_i^R = 0$, Appendix 8.1 shows that this estimator is consistent and asymptotically unbiased for $\bar y_U$. Further, when $\pi_i^R$ is known for $i \in S_B$, the sandwich-type variance estimator for $\hat{\bar y}_{PAPW}$ is given by

| (2.10) | ||||

where

| (2.11) |

where $\hat\pi_i^B$ is the estimated pseudo-selection probability based on 2.8 for $i \in S_B$. See Appendix 8.1 for the derivation.
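For completeness, the Hájek-type PAPW point estimator in Eq. 2.9 is a ratio of pseudo-weighted sums. The small Python function below is a hypothetical helper, not taken from the authors' code; the second call illustrates that multiplying every $\hat\pi_i^B$ by a common constant leaves the estimate unchanged, a convenient property when pseudo-weights are only reliable up to scale.

```python
import numpy as np


def hajek_mean(y, pi):
    """Hajek-type estimator (Eq. 2.9): sum(y_i / pi_i) / sum(1 / pi_i)."""
    w = 1.0 / np.asarray(pi, dtype=float)
    return float(np.sum(w * np.asarray(y, dtype=float)) / np.sum(w))


est = hajek_mean([2.0, 4.0], [0.5, 0.25])           # (4 + 16) / (2 + 4) = 10/3
est_scaled = hajek_mean([2.0, 4.0], [0.05, 0.025])  # every pi divided by 10
```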

In situations where $\pi_i^R$ is unknown for $i \in S_B$, Elliott and Valliant (2017) suggest predicting this quantity for units of the non-probability sample. Note that, in this situation, it is no longer required to condition on $d_i$ in addition to $x_i$. Treating $\pi_i^R$ as a random variable for $i \in S_B$ conditional on $x_i$, one can obtain this quantity by regressing the $\pi_i^R$'s on the $x_i$'s in the reference survey. We have

| (2.12) | ||||

Since the outcome $\pi_i^R$ is continuous and bounded within $(0, 1)$, fitting a Beta regression model is recommended (Ferrari and Cribari-Neto, 2004). Note that $\pi_i^R$ is fixed given $d_i$, as $S_R$ is a probability sample, but conditional on $x_i$, $\pi_i^R$ can be regarded as a random variable.

Rafei et al. (2020) call this approach propensity-adjusted probability prediction (PAPP). This two-step derivation of pseudo-inclusion probabilities is especially useful, as it computationally separates the sampling weights in $S_R$ from the propensity model. When the true model is unknown, this feature enables us to fit a broader and more flexible range of models, such as algorithmic tree-based methods. It is worth noting that modeling $\pi_i^R$ does not impose an additional ignorability assumption on $S_R$ given $x_i$: in the extreme case where $\pi_i^R$ is independent of $x_i$, the weighted and unweighted distributions of $x$ are identical in $S_R$, and therefore the $w_i^R$'s can be safely ignored in propensity modeling.
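A minimal sketch of the two-step PAPP logic follows. To stay dependency-free it swaps the recommended Beta regression for a simpler stand-in, ordinary least squares on $\mathrm{logit}(\pi_i^R)$ (the same transformation used later with BART in Eq. 2.28), so it illustrates the two steps rather than the estimator recommended in the text. The function names and the noiseless toy design are hypothetical.

```python
import numpy as np


def predict_pi_r(x_r, pi_r, x_b):
    """Step 1 (stand-in for Beta regression): regress logit(pi_R) on x in S_R,
    then predict pi_R for S_B units, whose design variables are unobserved."""
    eta = np.log(pi_r / (1.0 - pi_r))
    coef, *_ = np.linalg.lstsq(x_r, eta, rcond=None)
    return 1.0 / (1.0 + np.exp(-x_b @ coef))


def papp_pseudo_probs(pi_r_hat_b, p_b):
    """Step 2: combine predicted pi_R with p = P(Z = 1 | x) fitted on S (Eq. 2.6)."""
    return pi_r_hat_b * p_b / (1.0 - p_b)


rng = np.random.default_rng(3)
x_r = np.column_stack([np.ones(400), rng.normal(size=400)])
x_b = np.column_stack([np.ones(5), np.linspace(-1.0, 1.0, 5)])
pi_r = 1.0 / (1.0 + np.exp(-(-5.0 + 0.5 * x_r[:, 1])))  # noiseless toy design
pi_r_hat_b = predict_pi_r(x_r, pi_r, x_b)
pi_b_hat = papp_pseudo_probs(pi_r_hat_b, np.full(5, 0.5))  # p = 0.5 gives odds 1
```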

2.3 Prediction modeling approach

An alternative approach to deal with selectivity in Big Data is to model the outcome (Smith, 1983). In a fully model-based fashion, this essentially involves imputing $y_i$ for the non-sampled population units, $i \in U \setminus S_B$. When $x_i^*$ is unobserved for non-sampled units, it is recommended that a synthetic population be generated by undoing the selection mechanism of $S_R$ through a non-parametric Bayesian bootstrap method using the design variables in $S_R$ (Dong et al., 2014; Zangeneh and Little, 2015). In the non-probability sample context, Elliott and Valliant (2017) propose an extension of the General Regression Estimator (GREG) for the case where only summary information about $X^*$, such as totals, is known for $U$. In situations where an external probability sample is available with $x_i^*$ measured, an alternative is to limit the outcome prediction to the units in $S_R$ and then use design-based approaches to estimate the population quantity (Rivers, 2007; Kim et al., 2018).

However, to the best of our knowledge, none of the prior literature distinguishes the role of $d_i$ from that of $x_i$ in the conditional mean structure of the outcome, whereas the likelihood factorization in Eq. 2.1 indicates that predicting $y_i$ requires conditioning not only on $x_i$ but also on $d_i$. Suppose $Y$ is a realization of a repeated random sampling process from a super-population under the following model:

$$E(y_i \mid x_i^*) = m(x_i^*; \theta), \quad i \in U, \tag{2.13}$$

where $m(\cdot)$ can be either a parametric model, with $m$ being a continuous differentiable function, or an unspecified non-parametric form. Under the ignorability condition

$$E(y_i \mid x_i^*, \delta_i^B = 1) = E(y_i \mid x_i^*), \tag{2.14}$$

an MLE of $\theta$ can be obtained by regressing $y_i$ on $x_i^*$ in $S_B$. The predictions for units in $S_R$ are then given by

$$\hat y_i = m(x_i^*; \hat\theta), \quad i \in S_R, \tag{2.15}$$

where $\hat\theta$ denotes the MLE of $\theta$. Once $y_i$ is imputed for all units in the reference survey, the population mean can be estimated through the Hájek formula as below:

$$\hat{\bar y}_{PM} = \frac{1}{\hat N_R} \sum_{i \in S_R} w_i^R\, \hat y_i, \tag{2.16}$$

where $\hat N_R = \sum_{i \in S_R} w_i^R$, $w_i^R = 1/\pi_i^R$ for $i \in S_R$, and $\pi_i^R$ is the selection probability for subject $i$.

The asymptotic properties of the estimator in 2.16, including consistency and unbiasedness, have been investigated by Kim et al. (2018). Note that in situations where $\pi_i^R$ is available for $i \in S_B$, one can use $\pi_i^R$ instead of the high-dimensional $d_i$ as a predictor in $m(\cdot)$. This method is known as linear-in-the-weight prediction (LWP) (Scharfstein et al., 1999; Bang and Robins, 2005; Zhang and Little, 2011). However, since outcome imputation relies fully on extrapolation from the model fitted to $S_B$, even minor misspecification of the underlying model can be seriously detrimental to bias correction.
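The PM estimator of Eqs. 2.15-2.16 can be sketched with a linear working model for $m(x_i^*; \theta)$ fitted by ordinary least squares on $S_B$ (least squares coincides with the MLE under normal errors). The names and data below are hypothetical and noiseless, so the result can be checked by hand: with $y = 1 + 2x$, the estimate equals $1 + 2$ times the weighted mean of $x$ in $S_R$.

```python
import numpy as np


def pm_estimate(xs_b, y_b, xs_r, w_r):
    """Fit m(x*; theta) = x*' theta on S_B (Eq. 2.15), impute y on S_R,
    and return the Hajek mean of the imputations (Eq. 2.16)."""
    theta, *_ = np.linalg.lstsq(xs_b, y_b, rcond=None)
    y_hat_r = xs_r @ theta
    return float(np.sum(w_r * y_hat_r) / np.sum(w_r)), theta


rng = np.random.default_rng(4)
xs_b = np.column_stack([np.ones(50), rng.normal(1.0, 1.0, 50)])
y_b = 1.0 + 2.0 * xs_b[:, 1]
xs_r = np.column_stack([np.ones(80), rng.normal(0.0, 1.0, 80)])
w_r = rng.uniform(50.0, 150.0, 80)
est_pm, theta_hat = pm_estimate(xs_b, y_b, xs_r, w_r)
```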

2.4 Doubly robust adjustment approach

To reduce the sensitivity to model misspecification, Chen et al. (2020) reconcile the two aforementioned approaches, i.e. QR and PM, in such a way that estimates remain consistent even if one of the two models is incorrectly specified. Their method involves an extension of the augmented inverse propensity weighting (AIPW) estimator proposed by Robins et al. (1994). When $N$ is known, the expanded AIPW estimator takes the following form:

$$\hat{\bar y}_{DR} = \frac{1}{N} \sum_{i \in S_B} \frac{y_i - m(x_i; \hat\theta)}{\pi_i^B(x_i; \hat\beta)} + \frac{1}{N} \sum_{i \in S_R} w_i^R\, m(x_i; \hat\theta), \tag{2.17}$$

where, given $S_R$, $\beta$ and $\theta$ are estimated using the modified PMLE and MLE methods mentioned in Sections 2.2 and 2.3, respectively. The theoretical proof of the asymptotic unbiasedness of $\hat{\bar y}_{DR}$ under correct specification of either the QR or the PM model is reviewed in Appendix 8.1.

To avoid the PMLE restrictions discussed in Section 2.2, in this study we suggest estimating $\pi_i^B$ for $i \in S_B$ in Eq. 2.17 based on the PAPW/PAPP method, depending on whether $\pi_i^R$ is available for $i \in S_B$ or not. As a result, in situations where $\pi_i^R$ is known for $i \in S_B$, our proposed DR estimator is given by

$$\hat{\bar y}_{DR} = \frac{1}{N} \sum_{i \in S_B} \frac{y_i - m(x_i^*; \hat\theta)}{\hat\pi_i^B} + \frac{1}{N} \sum_{i \in S_R} w_i^R\, m(x_i^*; \hat\theta), \tag{2.18}$$

where $\hat\pi_i^B$ is substituted using Eq. 2.8. We show that this form of the AIPW estimator has the same structure as the one defined by Kim and Haziza (2014) for non-response adjustment in probability surveys. Assuming that $y_i$ is fully observed for $i \in S_R$, let us define the following Horvitz-Thompson (HT) estimator for the population mean:

$$\hat{\bar y}_{HT} = \frac{1}{N} \sum_{i \in S_R} w_i^R\, y_i. \tag{2.19}$$

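Now, one can easily conclude that

$$\begin{aligned} \hat{\bar y}_{DR} - \hat{\bar y}_{HT} &= \frac{1}{N} \sum_{i \in S_B} w_i^R\, \frac{1 - \hat p_i}{\hat p_i}\, \hat e_i - \frac{1}{N} \sum_{i \in S_R} w_i^R\, \hat e_i \\ &= \frac{1}{N} \sum_{i \in S} w_i^R \left(\frac{Z_i}{\hat p_i} - 1\right) \hat e_i, \end{aligned} \tag{2.20}$$

where $\hat p_i = p_i(x_i^*; \hat\alpha)$, $\hat e_i = y_i - m(x_i^*; \hat\theta)$, and $w_i^R = 1/\pi_i^R$ is available for every $i \in S$ in this setting. The formula in 2.20 is similar to the one derived by Kim and Haziza (2014). Therefore, the rest of their theoretical proof of asymptotic unbiasedness, i.e. $E(\hat{\bar y}_{DR} - \hat{\bar y}_{HT}) \approx 0$, should hold for our modified AIPW estimator in 2.18 as well.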
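The algebra behind this decomposition can be checked numerically. Since $1/\hat\pi_i^B = w_i^R(1-\hat p_i)/\hat p_i$ under Eq. 2.8, the difference between the DR estimator (2.18) and the HT estimator (2.19) should equal $N^{-1}\sum_{i \in S} w_i^R (Z_i/\hat p_i - 1)(y_i - \hat m_i)$. The Python sketch below plugs arbitrary, hypothetical numbers into both sides; it verifies the identity only, not any statistical property.

```python
import numpy as np

rng = np.random.default_rng(5)
n_b, n_r, N = 200, 300, 100_000
w_b = rng.uniform(100.0, 500.0, n_b)   # w^R = 1/pi^R, assumed known for S_B
w_r = rng.uniform(100.0, 500.0, n_r)   # reference-sample design weights
p_b = rng.uniform(0.2, 0.8, n_b)       # fitted P(Z = 1 | x*) for S_B units
p_r = rng.uniform(0.2, 0.8, n_r)       # fitted P(Z = 1 | x*) for S_R units
y_b, y_r = rng.normal(size=n_b), rng.normal(size=n_r)  # y_r known only for HT
m_b, m_r = rng.normal(size=n_b), rng.normal(size=n_r)  # outcome-model predictions

pi_b = (1.0 / w_b) * p_b / (1.0 - p_b)                     # Eq. 2.8
dr = (np.sum((y_b - m_b) / pi_b) + np.sum(w_r * m_r)) / N  # Eq. 2.18
ht = np.sum(w_r * y_r) / N                                 # Eq. 2.19

# Combined-sample form of the difference: (1/N) sum_S w (Z/p - 1) e
w = np.concatenate([w_b, w_r])
z = np.concatenate([np.ones(n_b), np.zeros(n_r)])
p = np.concatenate([p_b, p_r])
e = np.concatenate([y_b - m_b, y_r - m_r])
rhs = np.sum(w * (z / p - 1.0) * e) / N
```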

To preserve the DR property for both the point and variance estimators of $\bar y_U$, as suggested by Kim and Haziza (2014), one can solve the following estimating equations simultaneously, given $S$, to obtain the estimates of $\alpha$ and $\theta$. The aim is to cancel the first-order derivative terms in the Taylor-series expansion of $\hat{\bar y}_{DR}$ under QR and PM. These estimating equations are given by

$$\begin{aligned} &\sum_{i \in S} w_i^R \left(\frac{Z_i}{p_i(x_i^*; \alpha)} - 1\right) \dot m(x_i^*; \theta) = 0, \\ &\sum_{i \in S} w_i^R\, Z_i \left(\frac{1}{p_i(x_i^*; \alpha)} - 1\right) \{y_i - m(x_i^*; \theta)\}\, x_i^* = 0, \end{aligned} \tag{2.21}$$

where $\dot m(x_i^*; \theta)$ is the derivative of $m(x_i^*; \theta)$ with respect to $\theta$. Under a linear regression model, $\dot m(x_i^*; \theta) = x_i^*$. Then, given the same regularity conditions, ignorability of $S_B$, the logistic regression model, and the additional imposed assumption that $\delta_i^B \delta_i^R = 0$, one can show that the proposed DR estimator is consistent and approximately unbiased provided that either the QR or the PM model holds.

It is important to note that the system of equations in 2.21 may not have a unique solution unless the covariates in QR and PM have the same dimension. Therefore, the AIPW estimator by Chen et al. (2020) may not be applicable here, as our likelihood factorization suggests that conditioning on $d_i$ is necessary at least for PM. Furthermore, when $\pi_i^R$ is known for $i \in S_B$, one can replace the $q$-dimensional $d_i$ with the one-dimensional $\pi_i^R$ in modeling both QR and PM. Bang and Robins (2005) show that estimators based on a linear-in-weight prediction model remain consistent.
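With a linear outcome model and the logistic model of Eq. 2.7, the two estimating equations decouple: the first, $\sum_{i \in S} w_i^R (Z_i/p_i - 1)\, x_i^* = 0$, involves only $\alpha$ (because $\dot m = x_i^*$), and given $\alpha$ the second is a weighted least-squares problem on $S_B$ with weights $w_i^R(1/p_i - 1) = 1/\hat\pi_i^B$. The Python sketch below exploits this. It assumes $w_i^R$ is known for every unit in $S$ (Scenario I), uses hypothetical names and toy data, and has no safeguards (e.g. step-halving) for hard problems. Note that the first equation forces $\sum_{S_B} x_i^*/\hat\pi_i^B = \sum_{S_R} w_i^R x_i^*$, i.e. the pseudo-weights calibrate $S_B$ to the weighted covariate totals of $S_R$.

```python
import numpy as np


def solve_dr_equations(xs, z, w, y, tol=1e-10, max_iter=100):
    """Solve the DR estimating equations for logistic p and linear m.

    xs: (n, k) covariates x* for S = S_B u S_R, first column = 1.
    z:  1 for S_B, 0 for S_R;  w: w^R for every unit in S (assumed known).
    y:  outcomes; only entries with z == 1 are used.
    """
    b = z == 1
    alpha = np.zeros(xs.shape[1])
    # Hypothetical start: intercept matching the weighted sizes of S_B and S_R.
    alpha[0] = np.log(w[b].sum() / w[~b].sum())
    for _ in range(max_iter):
        p = 1.0 / (1.0 + np.exp(-xs @ alpha))
        f = xs.T @ (w * (z / p - 1.0))                     # first equation
        jac = -(xs * (w * z * (1.0 - p) / p)[:, None]).T @ xs
        step = np.linalg.solve(jac, f)
        alpha = alpha - step
        if np.max(np.abs(step)) < tol:
            break
    p = 1.0 / (1.0 + np.exp(-xs @ alpha))
    wt = w[b] * (1.0 / p[b] - 1.0)                          # equals 1 / pi_B
    xb = xs[b]
    # Second equation: weighted least squares on S_B
    theta = np.linalg.solve((xb * wt[:, None]).T @ xb, (xb * wt[:, None]).T @ y[b])
    return alpha, theta


rng = np.random.default_rng(6)
n_b, n_r = 400, 600
xs = np.column_stack([np.ones(n_b + n_r),
                      np.concatenate([rng.normal(0.3, 1.0, n_b), rng.normal(0.0, 1.0, n_r)])])
z = np.concatenate([np.ones(n_b), np.zeros(n_r)])
w = rng.uniform(1.0, 5.0, n_b + n_r)
y = 2.0 + xs[:, 1] + rng.normal(size=n_b + n_r)
alpha_hat, theta_hat = solve_dr_equations(xs, z, w, y)
```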

2.5 Extension of the proposed method under a two-step Bayesian framework

A fully Bayesian approach specifies a model for the joint distribution of the selection indicator, $\delta_i^B$, and the outcome variable, $y_i$, for $i \in U$ (McCandless et al., 2009; An, 2010). This requires repeatedly generating synthetic populations and fitting the QR and PM models to each of them (Little and Zheng, 2007; Zangeneh and Little, 2015), which can be computationally expensive in a Big Data setting. While joint modeling may result in good frequentist properties (Little, 2004), feedback occurs between the two models (Zigler et al., 2013). This can be controversial in the sense that PS estimates should not be informed by the outcome model (Rubin, 2007). Here, we are interested in modeling the PS and the outcome separately through the two-step framework proposed by Kaplan and Chen (2012). The first step involves fitting Bayesian models to multiply impute the PS and the outcome by randomly subsampling the posterior predictive draws; in the second step, Rubin’s combining rules are used to obtain the final point and interval estimates. This method is not only computationally efficient, as it suffices to fit the models once on the combined sample, but also cuts the undesirable feedback between the models, as they are fitted separately. Bayesian modeling can be performed either parametrically or non-parametrically.

2.5.1 Parametric Bayes

As the first step, we employ Bayesian generalized linear models to multiply impute $p_i(x_i^*)$ for $i \in S$ and $y_i$ for $i \in S_R$, as well as $\pi_i^R$ for $i \in S_B$ if it is unknown. Under a standard Bayesian framework, a set of independent prior distributions is assigned to the model parameters, and, conditional on the observed data, the associated posterior distributions are simulated through an appropriate MCMC method, such as the Metropolis–Hastings algorithm. We propose the following steps:

where $\eta$, $\alpha$, and $\theta$ are the parameters associated with modeling $\pi_i^R$ in a Beta regression, $Z_i$ in a binary logistic regression, and $y_i$ in a linear regression, respectively, and $p(\cdot)$ denotes a prior density function. Note that in situations where $\pi_i^R$ is calculable for $i \in S_B$, the Beta regression step should be skipped, and $x_i$ should be replaced by $x_i^*$ in the logistic regression for $Z_i$. Standard weak or non-informative priors for the regression parameters can be used (Kaplan and Chen, 2012). We also note that the Beta regression step, which is required for estimating $\pi_i^R$ when it is not provided directly or through the availability of $d_i$ in $S_B$, relies on a reasonably strong association between the available $x_i$ and $d_i$ in order to accurately estimate $\pi_i^R$. We explore the effect of differing degrees of this association via simulation in Sections 3.2 and 3.3.

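Suppose $\Theta^{(m)} = (\alpha^{(m)}, \theta^{(m)})$ is the $m$-th unit of a random sample of size $M$ from the MCMC draws associated with the posterior distribution of the model parameters. Then, given that $\pi_i^R$ is known for $i \in S_B$, one can obtain the $m$-th draw of the proposed AIPW estimator as below:

$$\hat{\bar y}_{DR}^{(m)} = \frac{1}{N} \sum_{i \in S_B} \frac{y_i - m(x_i^*; \theta^{(m)})}{\hat\pi_i^{B(m)}} + \frac{1}{N} \sum_{i \in S_R} w_i^R\, \hat y_i^{(m)}, \qquad \hat\pi_i^{B(m)} = \pi_i^R\, \frac{p_i(x_i^*; \alpha^{(m)})}{1 - p_i(x_i^*; \alpha^{(m)})}, \tag{2.22}$$

where $\hat y_i^{(m)}$ corresponds to the $m$-th imputation of $y_i$ for the unobserved values in the probability sample. In situations where $\pi_i^R$ is unknown for $i \in S_B$, the $m$-th draw of the AIPW estimator is given by

| (2.23) |

Having $\hat{\bar y}_{DR}^{(m)}$ for all $m = 1, \dots, M$, Rubin’s combining rule for the point estimate (Rubin, 2004) can be employed to obtain the final AIPW estimator as below:

$$\hat{\bar y}_{DR} = \frac{1}{M} \sum_{m=1}^{M} \hat{\bar y}_{DR}^{(m)}. \tag{2.24}$$

If at least one of the underlying models is correctly specified, we would expect this estimator to be approximately unbiased. Variance estimation under the two-step Bayesian method is discussed in Section 2.6.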
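Once posterior draws are available, the two-step procedure of Eqs. 2.22 and 2.24 reduces to a loop over draws. The Python sketch below takes arrays of draws for $\alpha$ and $\theta$ as given (in practice they would come from an MCMC sampler; here they are hypothetical stand-ins), evaluates Eq. 2.22 for each draw using linear predictions $x_i^{*\top}\theta^{(m)}$ as the imputations, and averages the draws as in Eq. 2.24. The toy example also shows double robustness in its simplest form: when the outcome model reproduces $y$ exactly, the first sum in Eq. 2.22 vanishes and every draw returns the same value regardless of the propensity draws.

```python
import numpy as np


def aipw_draws(alpha_draws, theta_draws, xs_b, y_b, pi_r_b, xs_r, w_r, N):
    """Evaluate Eq. 2.22 for each posterior draw (alpha^(m), theta^(m))."""
    out = []
    for alpha, theta in zip(alpha_draws, theta_draws):
        p_b = 1.0 / (1.0 + np.exp(-xs_b @ alpha))
        pi_b = pi_r_b * p_b / (1.0 - p_b)          # pseudo-inclusion probs, draw m
        m_b, y_hat_r = xs_b @ theta, xs_r @ theta  # linear predictions, draw m
        out.append((np.sum((y_b - m_b) / pi_b) + np.sum(w_r * y_hat_r)) / N)
    return np.array(out)


rng = np.random.default_rng(7)
M, N = 50, 10_000
xs_b = np.column_stack([np.ones(100), rng.normal(size=100)])
xs_r = np.column_stack([np.ones(200), rng.normal(size=200)])
w_r = np.full(200, N / 200)
pi_r_b = np.full(100, 200 / N)
theta_true = np.array([1.0, 2.0])
y_b = xs_b @ theta_true                                   # outcome model holds exactly
alpha_draws = rng.normal([-1.0, 0.5], 0.1, size=(M, 2))   # stand-in posterior draws
theta_draws = np.tile(theta_true, (M, 1))
draws = aipw_draws(alpha_draws, theta_draws, xs_b, y_b, pi_r_b, xs_r, w_r, N)
point = draws.mean()                                      # Eq. 2.24
```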

2.5.2 Non-parametric Bayes

Despite the prominent feature of double robustness, for a given non-probability sample, neither the QR nor the PM model has a known structure in practice. When both working models are invalid, the AIPW estimator will be biased, and a non-robust estimator based on PM may produce a more efficient estimate than the AIPW (Kang et al., 2007). To show the advantage of our modified estimator in 2.18 over the one proposed by Chen et al. (2020), we employ Bayesian Additive Regression Trees (BART) as a predictive tool for multiply imputing the $\pi_i^B$'s in $S_B$ as well as the $y_i$'s in $S_R$. A brief introduction to BART is provided in Appendix 8.2.

Suppose BART approximates a continuous outcome variable through an implicit function $f$ as below:

$$y_i = f(x_i^*) + \epsilon_i, \tag{2.25}$$

where $\epsilon_i \sim N(0, \sigma^2)$ and $f(\cdot)$ is a sum of regression trees. Accordingly, one can train BART on $S_B$ and multiply impute $y_i$ for $i \in S_R$ using the simulated posterior predictive distribution. Regarding QR, we consider two situations. First, $\pi_i^R$ is known for $i \in S_B$. Under this circumstance, it suffices to model $Z_i$ on $x_i^*$ in $S$ to estimate $\pi_i^B$ for $i \in S_B$. For a binary outcome variable, BART uses a data augmentation technique with a probit link to map the $\{0, 1\}$ values to the real line. Suppose

$$\Phi^{-1}\{p(Z_i = 1 \mid x_i^*)\} = h(x_i^*), \tag{2.26}$$

where $\Phi^{-1}$ is the inverse CDF of the standard normal distribution and $h(\cdot)$ is a sum-of-trees function. Hence, using the posterior predictive draws generated by BART in 2.26, $p_i(x_i^*)$ and consequently $\pi_i^B$ can be multiply imputed for $i \in S_B$. For a given imputation $m$, one can expand the DR estimator in 2.18 as below:

$$\hat{\bar y}_{DR}^{(m)} = \frac{1}{N} \sum_{i \in S_B} \frac{\left[1 - \Phi\{h^{(m)}(x_i^*)\}\right] \{y_i - f^{(m)}(x_i^*)\}}{\pi_i^R\, \Phi\{h^{(m)}(x_i^*)\}} + \frac{1}{N} \sum_{i \in S_R} w_i^R\, f^{(m)}(x_i^*), \tag{2.27}$$

where $f^{(m)}$ and $h^{(m)}$ are the sum-of-trees functions associated with the $m$-th MCMC draw in 2.25 and 2.26, respectively, obtained by training BART on $S_B$ (for $f$) and on the combined sample $S$ (for $h$).

Second, in situations where $\pi_i^R$ is not available for $i \in S_B$, we suggest applying BART to multiply impute the missing $\pi_i^R$'s in $S_B$. Since $\pi_i^R$ is continuous and bounded within $(0, 1)$, a logit transformation of the $\pi_i^R$'s can be used as the outcome variable in BART to map the values to the real line. Such a model is presented by

$$\log\left(\frac{\pi_i^R}{1 - \pi_i^R}\right) = k(x_i) + \epsilon_i, \quad \epsilon_i \sim N(0, \sigma_\pi^2), \tag{2.28}$$

where $k(\cdot)$ is a sum-of-trees function approximated by BART. Under this circumstance, the estimate of $\pi_i^B$ based on the $m$-th draw from the posterior distribution is given by

$$\hat\pi_i^{B(m)} = \frac{\exp\{k^{(m)}(x_i)\}}{1 + \exp\{k^{(m)}(x_i)\}} \cdot \frac{\Phi\{h^{(m)}(x_i)\}}{1 - \Phi\{h^{(m)}(x_i)\}}, \quad i \in S_B. \tag{2.29}$$

Having estimated $\hat{\bar y}_{DR}^{(m)}$ for $m = 1, \dots, M$, one can eventually use Rubin’s combining rule (Rubin, 2004) to obtain the final point estimate as in 2.24.
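Given posterior draws of the two sum-of-trees functions, the pseudo-inclusion probabilities of Eq. 2.29 follow from a simple transformation. The sketch below does not fit BART; it assumes the draws $h^{(m)}(x_i)$ (probit scale) and $k^{(m)}(x_i)$ (logit scale) have already been extracted from some BART implementation into arrays of shape (M, n_B), and the function name is hypothetical. The standard normal CDF is computed from `math.erf` to avoid extra dependencies.

```python
import math

import numpy as np

# Standard normal CDF, Phi(t) = (1 + erf(t / sqrt(2))) / 2, applied elementwise.
_norm_cdf = np.vectorize(lambda t: 0.5 * (1.0 + math.erf(t / math.sqrt(2.0))))


def pseudo_probs_from_draws(h_draws, k_draws):
    """Eq. 2.29 for every posterior draw (rows) and S_B unit (columns)."""
    p = _norm_cdf(h_draws)                 # P(Z = 1 | x) via probit link (Eq. 2.26)
    pi_r = 1.0 / (1.0 + np.exp(-k_draws))  # back-transformed logit(pi_R) (Eq. 2.28)
    return pi_r * p / (1.0 - p)


h_draws = np.zeros((2, 3))                         # Phi(0) = 0.5, so the odds equal 1
k_draws = np.full((2, 3), math.log(0.02 / 0.98))   # pi_R = 0.02
pi_b_draws = pseudo_probs_from_draws(h_draws, k_draws)
```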

2.6 Variance estimation

To obtain an unbiased variance estimate for the proposed DR estimator, one needs to account for three sources of uncertainty: (i) the uncertainty due to the estimated pseudo-weights in $S_B$, (ii) the uncertainty due to the predicted outcome in both $S_B$ and $S_R$, and (iii) the uncertainty due to sampling itself. In the following, we consider two scenarios.

2.6.1 Scenario I: $\pi_i^R$ is known for $i \in S_B$

In this scenario, the derivation of the asymptotic variance estimator for $\hat{\bar y}_{DR}$ is straightforward and follows Chen et al. (2020). It is given by

| (2.30) |

where under the sampling design of . is the variance of under the joint sampling design of and the PS model. This quantity can be estimated from as below:

| (2.31) |

Finally, corrects for the bias in under the PM, and is given by

| (2.32) |

where . Since the quantity in 2.32 tends to zero asymptotically under the QR model, the derived variance estimator in 2.30 is DR.

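2.6.2 Scenario II: $\pi_i^R$ is unknown for $i \in S_B$

To estimate the variance of $\hat{\bar y}_{DR}$ in 2.18 under the GLM, we employ the bootstrap repeated replication method proposed by Rao and Wu (1988). For a given replication $b$ ($b = 1, \dots, K$), we draw bootstrap subsamples, $S_B^{(b)}$ and $S_R^{(b)}$, of sizes $n_B - 1$ and $n_R - 1$ with replacement from $S_B$ and $S_R$, respectively. The sampling weights in $S_R^{(b)}$ are updated as below:

$$w_i^{R(b)} = w_i^R\, \frac{n_R}{n_R - 1}\, r_i^{(b)}, \quad i \in S_R, \tag{2.33}$$

where $r_i^{(b)}$ is the number of times the $i$-th unit has been repeated in $S_R^{(b)}$. Let $\hat{\bar y}_{DR}^{(b)}$ be the DR estimate based on the $b$-th combined bootstrap sample, $S^{(b)} = S_B^{(b)} \cup S_R^{(b)}$, using Eq. 2.7. The variance estimator is given by

$$\hat V_{boot}(\hat{\bar y}_{DR}) = \frac{1}{K - 1} \sum_{b=1}^{K} \left(\hat{\bar y}_{DR}^{(b)} - \bar{\hat y}_{DR}\right)^2, \tag{2.34}$$

where $\bar{\hat y}_{DR} = K^{-1} \sum_{b=1}^{K} \hat{\bar y}_{DR}^{(b)}$. Note that when $S_B$ and $S_R$ are clustered, which is the case in our application, bootstrap subsamples are selected from the primary sampling units (PSUs), and $n_B$ and $n_R$ are replaced by their respective numbers of PSUs.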
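A minimal sketch of one Rao-Wu replicate and of the replicate variance follows. It assumes the common choice of $n - 1$ draws with replacement and a replicate-variance divisor of $K - 1$, and the function names are hypothetical; for clustered samples the same code would be applied to PSUs, with each unit inheriting its PSU's multiplicity. In a full implementation, the entire DR procedure (model fitting and the DR estimate) would be rerun on each replicate before the variance is computed.

```python
import numpy as np


def rao_wu_replicate(w, rng):
    """One Rao-Wu (1988) replicate with n - 1 draws with replacement:
    w_i^(b) = w_i * n / (n - 1) * r_i, r_i = multiplicity of unit i."""
    n = len(w)
    r = np.bincount(rng.integers(0, n, size=n - 1), minlength=n)
    return w * (n / (n - 1)) * r


def replicate_variance(estimates):
    """Variance of the K replicate estimates around their mean (divisor K - 1 assumed)."""
    est = np.asarray(estimates, dtype=float)
    return float(np.sum((est - est.mean()) ** 2) / (len(est) - 1))


rng = np.random.default_rng(8)
w_rep = rao_wu_replicate(np.full(10, 3.0), rng)  # total weight is preserved: 30
v = replicate_variance([1.0, 2.0, 3.0])          # (1 + 0 + 1) / 2 = 1
```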

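To estimate the variance of $\hat{\bar y}_{DR}$ under a Bayesian framework, whether parametric or non-parametric, we treat $\pi_i^B$ and $m(x_i^*)$ for $i \in S_B$, and $y_i$ for $i \in S_R$, as missing values in Eq. 2.18 and multiply impute these quantities using the Markov chain Monte Carlo (MCMC) sequence of the posterior predictive distribution generated by the fitted Bayesian models (e.g. BART). For $M$ randomly selected MCMC draws, one can estimate $\hat{\bar y}_{DR}^{(m)}$ for $m = 1, \dots, M$ based on Eq. 2.18. Following Rubin’s combining rule for variance estimation under multiple imputation, the final variance estimate of $\hat{\bar y}_{DR}$ is given as below:

$$\hat V(\hat{\bar y}_{DR}) = \bar W + \left(1 + \frac{1}{M}\right) B, \tag{2.35}$$

where $\bar W = M^{-1} \sum_{m=1}^{M} \hat V(\hat{\bar y}_{DR}^{(m)})$ and $B = (M - 1)^{-1} \sum_{m=1}^{M} \left(\hat{\bar y}_{DR}^{(m)} - \hat{\bar y}_{DR}\right)^2$. We have shown in Appendix 8.1 that the within-imputation component can be approximated by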

| (2.36) |

where . Note that when or is clustered, under a Bayesian framework, it is important to fit multilevel models to obtain unbiased variance (Zhou et al., 2020). In addition, one needs to account for the intraclass correlation across the sample units in for . Further, one may use the extension of BART with random intercept to properly specify the working models under a cluster sampling design (Tan et al., 2016).
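Rubin’s combining rules used for the point estimate (Eq. 2.24) and the variance (Eq. 2.35) can be written in a few lines. In the sketch below, the inputs are hypothetical: `estimates` holds the $M$ draw-specific estimates $\hat{\bar y}_{DR}^{(m)}$ and `within_vars` their within-imputation variances (for example, approximated as in Eq. 2.36).

```python
import numpy as np


def rubin_combine(estimates, within_vars):
    """Rubin's rules: point = mean of estimates; total = W_bar + (1 + 1/M) * B."""
    q = np.asarray(estimates, dtype=float)
    u = np.asarray(within_vars, dtype=float)
    m = len(q)
    w_bar = u.mean()      # average within-imputation variance
    b = q.var(ddof=1)     # between-imputation variance
    return float(q.mean()), float(w_bar + (1.0 + 1.0 / m) * b)


point, total_var = rubin_combine([1.0, 2.0, 3.0], [0.5, 0.5, 0.5])
# point = 2.0; total_var = 0.5 + (4/3) * 1.0
```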

3 Simulation Study

Three simulations are studied in this section to assess the performance of our proposed methods and associated variance estimators in terms of bias and other repeated sampling properties. In Simulation 1, we mimic the simulation design of Chen et al. (2020) to compare the proposed methods. Here, the probability of selection in the probability sample is a fixed linear combination of a subset of the covariates that govern selection into the non-probability sample. In Simulation 2, we separate the design variable for the probability sample from the selection covariate for the non-probability sample in order to consider different associations between these variables, as well as misspecification of the functional form of the means, to assess the advantages of BART in modeling. Simulation 3 extends Simulation 2 to allow for cluster sampling in the probability sample, to better match the design of the National Household Travel Survey in the application.

3.1 Simulation I

The design of our first simulation is inspired by the one implemented in Chen et al. (2020). For all three studies, the non-probability samples are given a random selection mechanism with unequal probabilities, but it is later assumed that these selection probabilities are unknown at the stage of analysis, and the goal is to adjust for the selection bias using a parallel probability sample whose sampling mechanism is known. We conduct the simulation under both asymptotic frequentist and two-step Bayesian frameworks. Consider a finite population of size with being a set of auxiliary variables generated as follows:

| (3.1) |

and is defined as a function of as below:

| (3.2) |

Given , a continuous outcome variable is defined by

| (3.3) |

where , and is defined such that the correlation between and equals , which takes one of the values . Further, associated with the design of , a set of selection probabilities is assigned to the population units through the following logistic model:

where is determined such that . For , we assume where is obtained such that . Hence, is assumed to be known for as long as is observed in . Using these measures of size, we repeatedly draw pairs of samples of sizes and associated with and from through a Poisson sampling method. Note that units in both and are independently selected, and , which might be the case in a Big Data setting. Extensions with and for both frequentist and Bayesian methods are provided in Appendix 8.3.
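The selection step above can be sketched as a logistic model whose intercept is tuned so that the selection probabilities sum to the target expected sample size (our reading of the calibration condition), followed by Poisson sampling, in which each unit is included independently with its own probability. The covariates, slopes, and sizes below are hypothetical placeholders, not the paper’s settings; bisection is used because the expected sample size increases monotonically with the intercept.

```python
import numpy as np


def expit(eta):
    """Inverse logit."""
    return 1.0 / (1.0 + np.exp(-eta))


def calibrate_intercept(lin_pred, target_n, lo=-50.0, hi=50.0, tol=1e-10):
    """Bisection for b0 such that sum(expit(b0 + lin_pred)) equals target_n."""
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if expit(mid + lin_pred).sum() < target_n:
            lo = mid          # expected size too small: raise the intercept
        else:
            hi = mid          # expected size too large: lower the intercept
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)


def poisson_sample(probs, rng):
    """Poisson sampling: unit i is included independently with probability probs[i]."""
    return np.flatnonzero(rng.random(probs.size) < probs)


rng = np.random.default_rng(2021)
N, target_n = 100_000, 1_000                  # hypothetical sizes
x = rng.normal(size=(N, 3))                   # hypothetical covariates
beta = np.array([0.5, -0.3, 0.2])             # hypothetical slopes
lin_pred = x @ beta
b0 = calibrate_intercept(lin_pred, target_n)
pi = expit(b0 + lin_pred)                     # selection probabilities
sample_idx = poisson_sample(pi, rng)          # realized size is random, mean target_n
```

Under Poisson sampling the realized sample size varies from draw to draw; only its expectation equals the target.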

Once and are drawn from , we assume that the ’s for and ’s for are unobserved. The simulation is then iterated times, and in each iteration the bias-adjusted mean, its standard error (SE), and the associated 95% confidence interval (CI) for the mean of are estimated. Under the frequentist approach, the AIPW point estimates are obtained by simultaneously solving the estimating equations in Eq. 2.21. In addition, the proposed two-step method is used to derive the AIPW point estimates under the parametric Bayes. To estimate the variance, we use the DR asymptotic method proposed by Chen et al. (2020) under the frequentist approach and the conditional variance formula in Eq. 2.35 under the Bayesian approach. For the latter, we assign flat priors to the model parameters and simulate the posterior distributions using MCMC draws after discarding an additional draws as burn-in. We then take a random sample of size from the posterior draws to obtain the point and variance estimates.
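To make the structure of an AIPW point estimate concrete, the sketch below assumes the Hájek-normalized doubly robust form described by Chen et al. (2020): a pseudo-weighted mean of residuals in the non-probability sample plus a design-weighted mean of predictions in the probability sample. It takes fitted predictions and estimated pseudo-inclusion probabilities as inputs (from GLM or BART working models) and is illustrative rather than a transcription of Eq. 2.21; the function and argument names are hypothetical.

```python
import numpy as np


def aipw_mean(y_np, m_np, pi_np, m_ref, d_ref):
    """Hajek-type AIPW estimate of a population mean (assumed form).

    y_np, m_np : outcomes and model predictions for the non-probability sample
    pi_np      : estimated pseudo-inclusion probabilities for the non-probability sample
    m_ref      : model predictions for the reference (probability) sample
    d_ref      : design weights of the reference sample
    """
    y_np, m_np, m_ref, d_ref = (np.asarray(a, dtype=float) for a in (y_np, m_np, m_ref, d_ref))
    d_np = 1.0 / np.asarray(pi_np, dtype=float)               # pseudo-weights
    residual_term = np.sum(d_np * (y_np - m_np)) / d_np.sum()  # corrects prediction bias
    prediction_term = np.sum(d_ref * m_ref) / d_ref.sum()      # design-weighted predictions
    return residual_term + prediction_term


# Toy usage: perfect predictions make the residual term vanish.
toy = aipw_mean([1, 2, 3], [1, 2, 3], [0.1, 0.2, 0.5], [2, 4], [10, 30])
```

If the prediction model is correct, the residual term is close to zero; if it is wrong but the propensities are right, the residual term offsets the bias of the prediction term, which is the source of double robustness.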

To evaluate the repeated sampling properties of the competing methods, the relative bias (rBias), relative root mean square error (rMSE), empirical coverage rate of the nominal 95% CIs (crCI), and SE ratio (rSE) are calculated as follows:

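$$\mathrm{rBias}(\hat{\mu}) = 100 \times \frac{1}{K}\sum_{k=1}^{K}\frac{\hat{\mu}_k - \mu}{\mu} \tag{3.4}$$

$$\mathrm{rMSE}(\hat{\mu}) = 100 \times \frac{1}{\mu}\sqrt{\frac{1}{K}\sum_{k=1}^{K}\left(\hat{\mu}_k - \mu\right)^2} \tag{3.5}$$

$$\mathrm{crCI}(\hat{\mu}) = 100 \times \frac{1}{K}\sum_{k=1}^{K} I\left(\left|\hat{\mu}_k - \mu\right| < z_{0.975}\sqrt{v(\hat{\mu}_k)}\right) \tag{3.6}$$

$$\mathrm{rSE}(\hat{\mu}) = \frac{\frac{1}{K}\sum_{k=1}^{K}\sqrt{v(\hat{\mu}_k)}}{\sqrt{\frac{1}{K-1}\sum_{k=1}^{K}\left(\hat{\mu}_k - \bar{\mu}\right)^2}} \tag{3.7}$$

where $\hat{\mu}_k$ denotes the adjusted sample mean from iteration $k$ ($k = 1, \dots, K$), $\bar{\mu} = \frac{1}{K}\sum_{k=1}^{K}\hat{\mu}_k$, $\mu$ is the finite population true mean, $I(\cdot)$ is the indicator function, and $v(\hat{\mu}_k)$ represents the variance estimate of the adjusted mean based on the $k$th sample. Finally, we consider model misspecification to test the DR property by removing from the predictors of the working model. Here .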
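The four criteria can be computed from the K simulated estimates and their variance estimates with a few lines of NumPy. This sketch assumes the usual definitions: rBias, rMSE and crCI expressed in percent, coverage based on normal-theory 95% intervals, and rSE as the average estimated SE divided by the empirical SD of the estimates. The function name is hypothetical.

```python
import numpy as np
from statistics import NormalDist


def simulation_metrics(estimates, variances, true_mean, level=0.95):
    """Repeated-sampling summaries over K simulated estimates (assumed definitions)."""
    est = np.asarray(estimates, dtype=float)
    var = np.asarray(variances, dtype=float)
    err = est - true_mean
    z = NormalDist().inv_cdf(0.5 + level / 2.0)            # about 1.96 for 95%
    r_bias = 100.0 * np.mean(err) / true_mean
    r_mse = 100.0 * np.sqrt(np.mean(err ** 2)) / true_mean
    covered = np.abs(err) < z * np.sqrt(var)               # CI contains the true mean
    cr_ci = 100.0 * np.mean(covered)
    r_se = np.mean(np.sqrt(var)) / np.std(est, ddof=1)     # mean SE / empirical SD
    return {"rBias": r_bias, "rMSE": r_mse, "crCI": cr_ci, "rSE": r_se}


metrics = simulation_metrics([9, 11, 10, 10], [1, 1, 1, 1], 10.0)
```

An rSE near 1 means the variance estimator tracks the Monte Carlo variability; values above 1 indicate overestimation.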

| Method | rBias | rMSE | crCI | rSE | rBias | rMSE | crCI | rSE | rBias | rMSE | crCI | rSE |
|---|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|
| Probability sample () | | | | | | | | | | | | |
| Unweighted | 8.528 | 19.248 | 92.6 | 1.009 | 8.647 | 11.065 | 77.4 | 1.018 | 8.682 | 9.719 | 50.9 | 1.020 |
| Fully weighted | -0.029 | 20.276 | 94.7 | 1.001 | 0.006 | 8.035 | 95.1 | 1.010 | 0.015 | 5.008 | 94.9 | 1.008 |
| Non-probability sample () | | | | | | | | | | | | |
| Unweighted | 31.742 | 32.230 | 0.0 | 1.009 | 31.937 | 32.035 | 0.0 | 1.012 | 31.996 | 32.049 | 0.0 | 1.013 |
| Fully weighted | 0.127 | 6.587 | 95.4 | 1.013 | 0.078 | 2.583 | 95.7 | 1.014 | 0.061 | 1.554 | 95.4 | 1.012 |
| Non-robust adjustment | | | | | | | | | | | | |
| Model specification: True | | | | | | | | | | | | |
| PAPW | -1.780 | 8.088 | 97.0 | 1.107 | -1.906 | 4.734 | 95.7 | 1.103 | -1.947 | 4.186 | 94.0 | 1.100 |
| IPSW | -3.054 | 10.934 | 97.2 | 1.305 | -3.134 | 8.145 | 95.2 | 1.173 | -3.160 | 7.778 | 92.4 | 1.067 |
| PM | 0.490 | 7.577 | 95.2 | 1.007 | 0.190 | 4.668 | 94.6 | 0.991 | 0.095 | 4.204 | 94.6 | 0.985 |
| Model specification: False | | | | | | | | | | | | |
| PAPW | 26.338 | 27.089 | 3.1 | 1.112 | 26.434 | 26.618 | 0.0 | 1.123 | 26.461 | 26.580 | 0.0 | 1.128 |
| IPSW | 28.269 | 28.917 | 0.6 | 1.021 | 28.474 | 28.648 | 0.0 | 1.018 | 28.536 | 28.654 | 0.0 | 1.014 |
| PM | 28.093 | 28.750 | 0.6 | 1.022 | 28.315 | 28.494 | 0.0 | 1.022 | 28.382 | 28.505 | 0.0 | 1.021 |
| Doubly robust adjustment | | | | | | | | | | | | |
| Model specification: QR–True, PM–True | | | | | | | | | | | | |
| AIPW–PAPW | 0.238 | 8.070 | 95.2 | 1.017 | 0.100 | 4.787 | 95.0 | 0.996 | 0.056 | 4.235 | 94.6 | 0.987 |
| AIPW–IPSW | 0.105 | 7.861 | 95.1 | 1.019 | 0.053 | 4.737 | 94.8 | 0.996 | 0.036 | 4.222 | 94.6 | 0.987 |
| Model specification: QR–True, PM–False | | | | | | | | | | | | |
| AIPW–PAPW | 0.311 | 8.197 | 95.4 | 1.021 | 0.172 | 4.988 | 95.0 | 1.013 | 0.127 | 4.460 | 95.2 | 1.011 |
| AIPW–IPSW | 0.222 | 7.962 | 95.5 | 1.024 | 0.170 | 4.901 | 95.4 | 1.019 | 0.152 | 4.405 | 95.3 | 1.018 |
| Model specification: QR–False, PM–True | | | | | | | | | | | | |
| AIPW–PAPW | 0.877 | 13.362 | 96.9 | 1.028 | 0.327 | 6.089 | 95.8 | 1.027 | 0.154 | 4.523 | 95.2 | 1.006 |
| AIPW–IPSW | 0.609 | 12.532 | 96.6 | 1.025 | 0.232 | 5.842 | 95.5 | 1.022 | 0.113 | 4.464 | 95.3 | 1.003 |
| Model specification: QR–False, PM–False | | | | | | | | | | | | |
| AIPW–PAPW | 28.301 | 28.995 | 1.0 | 1.024 | 28.392 | 28.579 | 0.0 | 1.021 | 28.419 | 28.546 | 0.0 | 1.018 |
| AIPW–IPSW | 28.104 | 28.762 | 0.7 | 1.024 | 28.313 | 28.493 | 0.0 | 1.023 | 28.376 | 28.500 | 0.0 | 1.022 |


PAPW: propensity adjusted probability weighting; IPSW: Inverse propensity score weighting; QR: quasi-randomization; PM: prediction model; AIPW: augmented inverse propensity weighting.

| Method | rBias | rMSE | crCI | rSE | rBias | rMSE | crCI | rSE | rBias | rMSE | crCI | rSE |
|---|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|
| Probability sample () | | | | | | | | | | | | |
| Unweighted | 8.528 | 19.248 | 92.6 | 1.009 | 8.647 | 11.065 | 77.4 | 1.018 | 8.682 | 9.719 | 50.9 | 1.020 |
| Fully weighted | -0.029 | 20.276 | 94.7 | 1.001 | 0.006 | 8.035 | 95.1 | 1.010 | 0.015 | 5.008 | 94.9 | 1.008 |
| Non-probability sample () | | | | | | | | | | | | |
| Unweighted | 31.620 | 32.106 | 0.0 | 1.014 | 31.906 | 32.003 | 0.0 | 1.015 | 31.993 | 32.045 | 0.0 | 1.017 |
| Fully weighted | 0.026 | 6.615 | 95.3 | 1.010 | 0.052 | 2.604 | 95.2 | 1.007 | 0.059 | 1.564 | 95.2 | 1.006 |
| Non-robust adjustment | | | | | | | | | | | | |
| Model specification: True | | | | | | | | | | | | |
| PAPW | -1.846 | 8.148 | 96.3 | 1.081 | -1.890 | 4.749 | 96.9 | 1.163 | -1.906 | 4.195 | 96.6 | 1.200 |
| IPSW | 0.113 | 7.566 | 96.5 | 1.076 | 0.117 | 4.302 | 97.7 | 1.140 | 0.117 | 3.759 | 97.9 | 1.164 |
| PM | 0.385 | 7.534 | 95.2 | 1.027 | 0.151 | 4.644 | 95.1 | 1.001 | 0.078 | 4.190 | 95.0 | 0.989 |
| Model specification: False | | | | | | | | | | | | |
| PAPW | 26.290 | 27.041 | 2.3 | 1.051 | 26.499 | 26.687 | 0.0 | 1.071 | 26.562 | 26.684 | 0.0 | 1.083 |
| IPSW | 28.151 | 28.784 | 0.5 | 1.038 | 28.446 | 28.612 | 0.0 | 1.025 | 28.535 | 28.647 | 0.0 | 1.015 |
| PM | 27.981 | 28.641 | 0.8 | 1.040 | 28.291 | 28.472 | 0.0 | 1.025 | 28.384 | 28.510 | 0.0 | 1.015 |
| Doubly robust adjustment | | | | | | | | | | | | |
| Model specification: QR–True, PM–True | | | | | | | | | | | | |
| AIPW–PAPW | 0.115 | 8.093 | 96.9 | 1.097 | 0.057 | 4.764 | 97.1 | 1.121 | 0.037 | 4.219 | 97.2 | 1.130 |
| AIPW–IPSW | 0.009 | 7.803 | 96.6 | 1.083 | 0.019 | 4.704 | 96.7 | 1.106 | 0.020 | 4.206 | 97.0 | 1.114 |
| Model specification: QR–True, PM–False | | | | | | | | | | | | |
| AIPW–PAPW | -0.016 | 7.930 | 97.2 | 1.108 | -0.080 | 4.444 | 97.9 | 1.166 | -0.098 | 3.842 | 98.1 | 1.193 |
| AIPW–IPSW | -0.079 | 7.648 | 96.8 | 1.095 | -0.074 | 4.411 | 97.7 | 1.151 | -0.069 | 3.867 | 97.9 | 1.175 |
| Model specification: QR–False, PM–True | | | | | | | | | | | | |
| AIPW–PAPW | 0.557 | 7.693 | 96.4 | 1.086 | 0.214 | 4.669 | 96.8 | 1.092 | 0.105 | 4.195 | 96.6 | 1.090 |
| AIPW–IPSW | 0.392 | 7.526 | 96.0 | 1.067 | 0.155 | 4.637 | 96.3 | 1.077 | 0.080 | 4.189 | 96.4 | 1.078 |
| Model specification: QR–False, PM–False | | | | | | | | | | | | |
| AIPW–PAPW | 28.167 | 28.864 | 1.4 | 1.096 | 28.359 | 28.549 | 0.0 | 1.082 | 28.416 | 28.548 | 0.0 | 1.068 |
| AIPW–IPSW | 27.990 | 28.647 | 1.0 | 1.069 | 28.289 | 28.471 | 0.0 | 1.059 | 28.379 | 28.506 | 0.0 | 1.049 |


PAPW: propensity adjusted probability weighting; IPSW: Inverse propensity score weighting; QR: quasi-randomization; PM: prediction model; AIPW: augmented inverse propensity weighting.

Table 1 summarizes the results of the first simulation study under the frequentist approach. As illustrated, unweighted estimates of the population mean are biased in both and . For the non-robust estimators, when the working model is valid, PM appears to outperform QR consistently in terms of bias correction across different values. When the QR model is true, PAPW performs slightly better than IPSW with respect to bias, while IPSW tends to overestimate the variance slightly, according to the rSE values. In addition, the smaller rMSE values indicate that PAPW is more efficient than IPSW. For PM, both crCI and rSE reflect accurate variance estimates for all values of . When the working model is incorrect, point estimates from both QR and PM are biased, but the variance estimates remain unbiased. These findings hold across all three values of .

For the DR methods, estimates based on both PAPW and IPSW remain unbiased when at least one of the PM or QR models holds. The values of crCI and rSE also show that the asymptotic variance estimator is DR for both methods. Comparing rMSE values, the AIPW estimate based on IPSW is slightly more efficient than the one based on PAPW. When both models are misspecified, the variance estimates remain unbiased, but the point estimates are severely biased. Finally, the bias of both AIPW estimators decreases as the value of increases, especially when the QR model is misspecified.

For the Bayesian approach, the simulation results are displayed in Table 2. Note that we can no longer use the PMLE approach. Instead, we apply the PAPP method, assuming that is unknown for . As illustrated, PAPP outperforms all the non-robust methods with respect to bias. Surprisingly, the magnitude of bias is even smaller for the Bayesian PAPP than for the QR methods examined under the frequentist framework. In addition, estimates under the Bayesian approach are slightly more efficient than those obtained under the frequentist methods. While the variance is approximately unbiased for , there is evidence that PM and QR increasingly underestimate and overestimate the true variance, respectively, as the value of increases. Regarding the DR methods, the Bayesian and frequentist approaches yield similar results: the DR property holds for all values of when at least one of the working models is correctly specified.

3.2 Simulation II

In the previous simulation study, we violated the ignorable assumption in order to misspecify the working model by dropping a key auxiliary variable. Now, we focus on a situation where the models are misspecified with respect to the functional form of their conditional means. To this end, we include non-linear associations and two-way interactions in constructing the outcome variables as well as the selection probabilities. This also allows us to examine the flexibility of BART as a non-parametric method when the true functional form of the underlying models is unknown. In addition, to simulate a more realistic situation, two separate sets of auxiliary variables are generated this time, associated with the design of , and associated with the design of . However, we allow the two variables to be correlated through a bivariate Gaussian distribution as follows:

| (3.8) |

Note that controls how strongly the sampling design of is associated with that of , which in turn controls the quality of our assumption that can be well estimated by ( in Section 2.5.1). In addition, the values of can be either observed or unobserved for . In this simulation, we set , but later we check other values ranging from to as well.
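The role of the correlation in Eq. 3.8 can be illustrated by drawing the two design variables from a bivariate normal distribution. A minimal sketch, assuming standard normal margins (the paper’s means and variances may differ); the function and variable names are hypothetical:

```python
import numpy as np


def correlated_design_vars(n, rho, rng):
    """Draw n pairs from a standard bivariate normal with correlation rho."""
    cov = np.array([[1.0, rho], [rho, 1.0]])
    draws = rng.multivariate_normal(mean=[0.0, 0.0], cov=cov, size=n)
    return draws[:, 0], draws[:, 1]


rng = np.random.default_rng(7)
# First column: hypothetical design variable of the probability sample;
# second column: hypothetical selection covariate of the non-probability sample.
design_prob, design_nonprob = correlated_design_vars(200_000, 0.8, rng)
```

As the correlation approaches 1, one variable becomes an increasingly good stand-in for the other; as it approaches 0, the two selection mechanisms become unrelated.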

To generate the outcome variable in , we consider the following non-linear model:

| (3.9) |

where , and is determined such that the correlation between and equals for . The function is assumed to take one of the following forms:

| (3.10) |

We then consider an informative sampling strategy with unequal probabilities of inclusion, where the selection mechanism of and depends on and , respectively. Thus, each is assigned two values corresponding to the probabilities of selection in and through a function as below:

| (3.11) | ||||

where and are the indicators of being selected in and , respectively.

Associated with and , independent samples of size and were selected randomly from with Poisson sampling at the first stage and simple random sampling at the second stage. The sample size per cluster, , was and for and , respectively. The model intercepts, and in Eq. 3.11, are obtained such that and . We restrict this simulation to Bayesian analysis based on the proposed PAPW and PAPP methods, but focus on how well the non-parametric Bayes performs compared with the parametric Bayes when the true structure of both underlying models is assumed to be unknown. The rest of the simulation design is similar to that defined in Simulation I, except for the way we specify a working model: we include only the main and linear effects of and in the PM model, and only the main and linear effect of in the QR model. BART’s performance is examined under the assumption that the true functional form of both the QR and PM models is unknown; thus, only main effects are included in BART.

The findings of this simulation for the two-step Bayesian approach with MCMC draws and are shown numerically in Table 3. Regarding the non-robust methods, both QR and PM estimators show unbiased results across the three defined functions, i.e. SIN, EXP and SQR, as long as the working GLM is valid, with the minimum value of rBias associated with the PAPP method. According to the rSE values, there is evidence that PAPW and PAPP overestimate the variance, and PM underestimates it, to some degree, especially under the EXP and SQR scenarios. When the specified GLM is wrong, point estimates are biased for both QR and PM methods across all three functions. However, BART produces approximately unbiased results with smaller values of rMSE than GLM. In general, the PM method outperforms the QR methods under BART with respect to bias, but results based on the PAPP method are more efficient. In addition, BART tends to overestimate the variance under both QR and PM methods.

When it comes to the DR adjustment, Bayesian GLM produces unbiased results across all three defined functions if the working model of either QR or PM holds. However, the variance is slightly underestimated for the SIN function when the specified PM is wrong, and it is overestimated for the EXP function under all model-specification scenarios. As expected, point estimates are biased when the GLM is misspecified for both QR and PM. In contrast, BART tends to produce unbiased estimates consistently across all three functions, and the magnitudes of both rBias and rMSE are smaller for the AIPW estimator based on PAPP than for the one based on PAPW. Finally, as with the non-robust methods, the variance under BART is overestimated compared to the GLM.

| Model-method | rBias (SIN) | rMSE (SIN) | crCI (SIN) | rSE (SIN) | rBias (EXP) | rMSE (EXP) | crCI (EXP) | rSE (EXP) | rBias (SQR) | rMSE (SQR) | crCI (SQR) | rSE (SQR) |
|---|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|---:|
| Probability sample () | | | | | | | | | | | | |
| Unweighted | -17.210 | 23.109 | 80.0 | 0.999 | -8.406 | 11.126 | 78.3 | 1.000 | -17.302 | 20.563 | 65.8 | 1.002 |
| Fully weighted | -0.623 | 17.027 | 94.4 | 0.987 | -0.303 | 7.947 | 94.6 | 0.987 | -0.675 | 13.219 | 94.0 | 0.975 |
| Non-probability sample () | | | | | | | | | | | | |
| Unweighted | 33.063 | 33.379 | 0.0 | 1.003 | 40.307 | 40.409 | 0.0 | 1.079 | 49.356 | 49.570 | 0.0 | 1.016 |
| Fully weighted | 0.019 | 6.010 | 95.1 | 1.006 | 0.005 | 2.755 | 94.9 | 1.005 | 0.009 | 3.948 | 95.0 | 0.992 |
| Non-robust adjustment | | | | | | | | | | | | |
| Model specification: True | | | | | | | | | | | | |
| GLM–PAPW | -0.425 | 9.257 | 96.3 | 1.072 | -0.185 | 4.262 | 98.7 | 1.257 | -0.325 | 6.649 | 98.4 | 1.213 |
| GLM–PAPP | 0.018 | 8.460 | 95.7 | 1.018 | 0.040 | 3.870 | 98.6 | 1.238 | -0.037 | 5.914 | 98.8 | 1.222 |
| GLM–PM | -0.411 | 9.899 | 94.7 | 0.982 | -0.371 | 4.504 | 94.4 | 0.988 | -0.762 | 8.115 | 92.5 | 0.947 |
| Model specification: False | | | | | | | | | | | | |
| GLM–PAPW | 7.180 | 11.635 | 86.4 | 1.027 | 2.511 | 5.299 | 97.2 | 1.316 | 52.170 | 52.559 | 0.0 | 1.102 |
| GLM–PAPP | 7.647 | 11.265 | 78.0 | 0.954 | 3.025 | 5.425 | 96.2 | 1.277 | 53.095 | 53.397 | 0.0 | 1.122 |
| BART–PAPW | 4.035 | 10.078 | 97.0 | 1.217 | 2.811 | 5.129 | 98.4 | 1.472 | 8.356 | 11.082 | 97.2 | 1.468 |
| BART–PAPP | 1.098 | 8.530 | 96.7 | 1.121 | 1.108 | 4.120 | 98.9 | 1.391 | 4.482 | 7.479 | 98.0 | 1.401 |
| GLM–PM | 5.870 | 10.542 | 87.9 | 0.972 | -6.589 | 9.264 | 82.5 | 0.976 | 48.993 | 49.409 | 0.0 | 0.994 |
| BART–PM | 0.577 | 9.635 | 97.0 | 1.115 | 0.087 | 4.501 | 97.5 | 1.155 | 0.249 | 8.276 | 96.1 | 1.062 |
| Doubly robust adjustment | | | | | | | | | | | | |
| Model specification: QR–True, PM–True | | | | | | | | | | | | |
| GLM–AIPW–PAPW | -0.450 | 9.930 | 95.8 | 1.023 | -0.165 | 4.593 | 98.2 | 1.200 | -0.458 | 8.116 | 96.5 | 1.089 |
| GLM–AIPW–PAPP | -0.452 | 9.925 | 95.8 | 1.020 | -0.162 | 4.592 | 98.1 | 1.193 | -0.453 | 8.106 | 96.5 | 1.086 |
| Model specification: QR–True, PM–False | | | | | | | | | | | | |
| GLM–AIPW–PAPW | -0.279 | 9.996 | 93.2 | 0.926 | 0.310 | 5.697 | 98.8 | 1.303 | -0.338 | 7.128 | 97.5 | 1.154 |
| GLM–AIPW–PAPP | -0.134 | 9.418 | 94.1 | 0.961 | 0.508 | 4.977 | 99.5 | 1.475 | -0.275 | 7.376 | 97.6 | 1.152 |
| Model specification: QR–False, PM–True | | | | | | | | | | | | |
| GLM–AIPW–PAPW | -0.411 | 10.098 | 96.1 | 1.024 | -0.176 | 4.715 | 98.5 | 1.234 | -0.771 | 8.122 | 95.5 | 1.057 |
| GLM–AIPW–PAPP | -0.417 | 10.101 | 96.0 | 1.021 | -0.173 | 4.705 | 98.4 | 1.229 | -0.778 | 8.119 | 95.4 | 1.057 |
| Model specification: QR–False, PM–False | | | | | | | | | | | | |
| GLM–AIPW–PAPW | 9.015 | 13.176 | 84.1 | 1.000 | 6.735 | 8.693 | 94.1 | 1.456 | 50.835 | 51.288 | 0.0 | 1.019 |
| GLM–AIPW–PAPP | 9.191 | 12.717 | 84.9 | 1.082 | 6.787 | 8.181 | 96.7 | 1.761 | 51.667 | 52.131 | 0.0 | 1.047 |
| BART–AIPW–PAPW | 0.425 | 10.071 | 97.9 | 1.184 | 0.122 | 4.689 | 99.3 | 1.407 | -0.259 | 8.349 | 98.0 | 1.231 |
| BART–AIPW–PAPP | -0.144 | 9.794 | 97.8 | 1.184 | -0.100 | 4.541 | 99.3 | 1.405 | -0.245 | 8.329 | 97.7 | 1.203 |


PAPW: propensity adjusted probability weighting; PAPP: propensity adjusted probability prediction; QR: quasi-randomization; PM: prediction model; AIPW: augmented inverse propensity weighting.

3.3 Simulation III

Since the non-probability sample in the application of this study is clustered, we performed a third simulation study. To this end, the hypothetical population is assumed to be clustered with clusters, each of size (). Then, three cluster-level covariates, , are defined with the following distributions:

| (3.12) |

where denotes a design variable in , and describes the selection mechanism in . Initially, we set , but later we check other values ranging from to as well. Note that controls how strongly the sampling design of is associated with that of . Furthermore, we assume that both and are observed for units of .

Again, to be able to assess BART’s performance, we consider non-linear associations with polynomial terms and two-way interactions in constructing the outcome variables as well as the selection probabilities. Two outcome variables are studied, one continuous () and one binary (), both of which depend on as follows:

| (3.13) | ||||

| (3.14) |

where , and is determined such that the intraclass correlation equals (Oman and Zucker, 2001; Hunsberger et al., 2008). For each , we then consider the following set of selection probabilities associated with the design of the and :

| (3.15) | ||||

where and are the indicators of being selected in and , respectively. Associated with and , two-stage cluster samples of size and were selected randomly from with Poisson sampling at the first stage and simple random sampling at the second stage. The sample size per cluster, , was and for and , respectively. The model intercepts, and in 3.15, are obtained such that and .
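The two-stage design just described (Poisson sampling of clusters, then simple random sampling of a fixed number of units within each selected cluster) can be sketched as follows. The cluster probabilities, cluster sizes, and per-cluster sample size are hypothetical placeholders, not the values used in the paper; the overall inclusion probability of a sampled unit is the product of the two stage-wise probabilities.

```python
import numpy as np


def two_stage_sample(cluster_probs, cluster_sizes, m_per_cluster, rng):
    """Poisson-sample clusters, then SRS m units without replacement within each.

    Returns a list of (cluster_id, unit_index_within_cluster, inclusion_prob).
    """
    cluster_probs = np.asarray(cluster_probs, dtype=float)
    # Stage 1: each cluster enters independently with its own probability.
    selected = np.flatnonzero(rng.random(cluster_probs.size) < cluster_probs)
    rows = []
    for c in selected:
        size = int(cluster_sizes[c])
        m = min(m_per_cluster, size)
        units = rng.choice(size, size=m, replace=False)   # stage 2: SRS
        pi_unit = cluster_probs[c] * m / size             # overall inclusion probability
        rows.extend((int(c), int(u), float(pi_unit)) for u in units)
    return rows


rng = np.random.default_rng(11)
n_clusters = 500
probs = np.full(n_clusters, 0.2)    # hypothetical cluster selection probabilities
sizes = np.full(n_clusters, 50)     # hypothetical cluster sizes
sample = two_stage_sample(probs, sizes, 10, rng)
```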

The rest of the simulation design is similar to that defined in Simulation II, except for the methods we use for point and variance estimation. In addition to the situation where is known for , we consider a situation where is unobserved for and derive the estimates based on PAPP. Furthermore, unlike in Simulation I, DR estimates are obtained by fitting the QR and PM models separately, and variance estimates are obtained with the bootstrap technique of Rao and Wu (1988) with bootstrap replicates. Finally, under BART, Rubin’s combining rules are employed to derive the point and variance estimates based on the random draws of the posterior predictive distribution. As in Simulation II, we consider different scenarios of model specification. To misspecify a model, we include only the main effects in the working model. Likewise, no interaction or polynomial terms are included as inputs to BART.
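A sketch of a rescaling bootstrap in the spirit of Rao and Wu (1988) for a single-stratum cluster sample, using the common choice of resampling n − 1 of the n sampled clusters with replacement in each replicate. With that choice, a cluster’s replicate weight is its original weight times n/(n − 1) times the number of times it is drawn. The sketch is applied to a weighted (Hájek) mean only; in the study, the whole estimation pipeline (including refitting the QR and PM models) would be repeated within each replicate. Function names and the toy data are hypothetical.

```python
import numpy as np


def weighted_mean(y, w):
    return np.sum(w * y) / np.sum(w)


def rescaling_bootstrap_var(y, w, cluster, n_boot, rng):
    """Bootstrap variance of a weighted mean, resampling n-1 clusters per replicate."""
    y = np.asarray(y, dtype=float)
    w = np.asarray(w, dtype=float)
    ids, cl_index = np.unique(cluster, return_inverse=True)
    n = ids.size
    reps = np.empty(n_boot)
    for b in range(n_boot):
        draws = rng.integers(0, n, size=n - 1)        # n-1 clusters, with replacement
        counts = np.bincount(draws, minlength=n)       # times each cluster was drawn
        adj = counts * n / (n - 1.0)                   # rescaling factor per cluster
        reps[b] = weighted_mean(y, w * adj[cl_index])  # replicate estimate
    return reps.var(ddof=1)


rng = np.random.default_rng(3)
cluster = np.repeat(np.arange(40), 25)                 # 40 hypothetical clusters of 25
y = rng.normal(size=40)[cluster] + rng.normal(size=cluster.size)
w = np.ones(cluster.size)
v_hat = rescaling_bootstrap_var(y, w, cluster, n_boot=500, rng=rng)
```

Resampling whole clusters is what lets the replicate estimates reflect the intraclass correlation.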

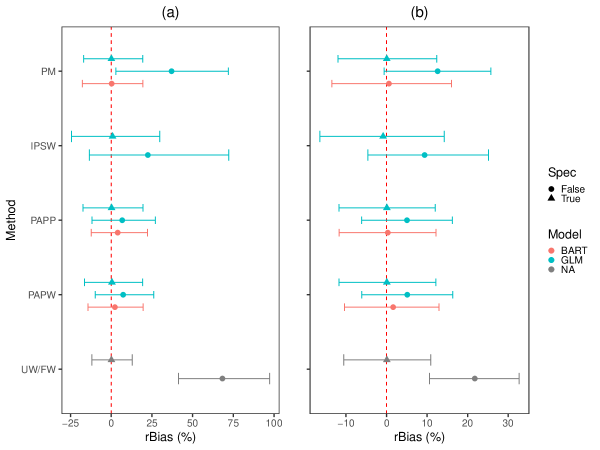

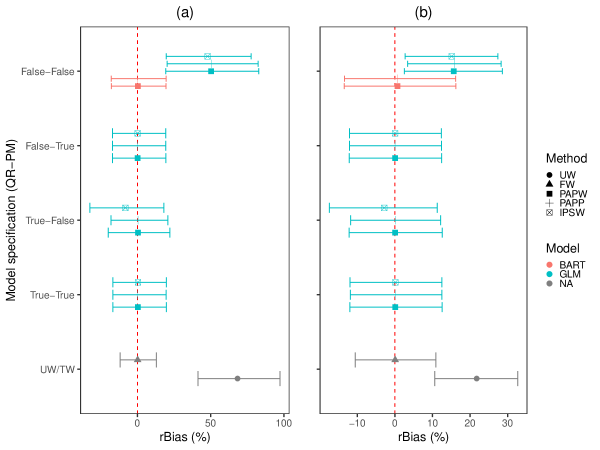

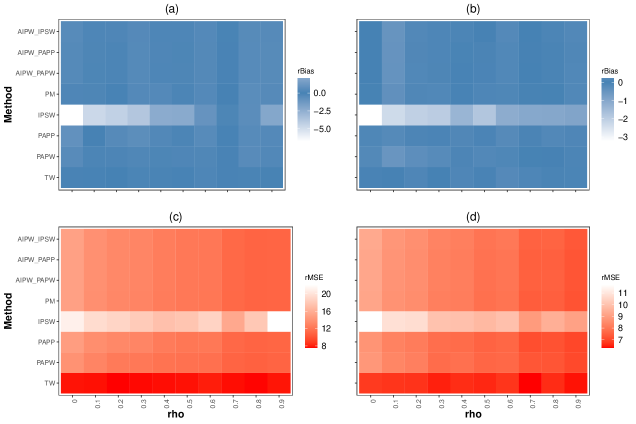

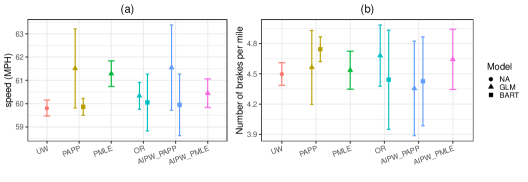

The means of the synthesized for the outcome variables were and . Figure 2 compares the bias magnitude and efficiency across the non-robust methods. As illustrated, point estimates from both and are biased if the true sampling weights are ignored. After adjustment, for both continuous and binary outcomes, the bias is close to zero under both QR and PM methods when the working model is correct. However, the lengths of the error bars reveal that the proposed PAPW/PAPP method is more efficient than IPSW. When only main effects are included in the model, all adjusted estimates are biased except for those based on BART. Note that BART cannot be applied under IPSW. Further details about the simulation results for the non-robust methods are given in Appendix 8.3, where IPSW tends to have slightly larger magnitudes of rBias and rMSE for both and . Also, rSE values close to indicate that the Rao and Wu bootstrap method of variance estimation performs well under both QR and PM approaches. However, nominal coverage is achieved only when the working model is correct.

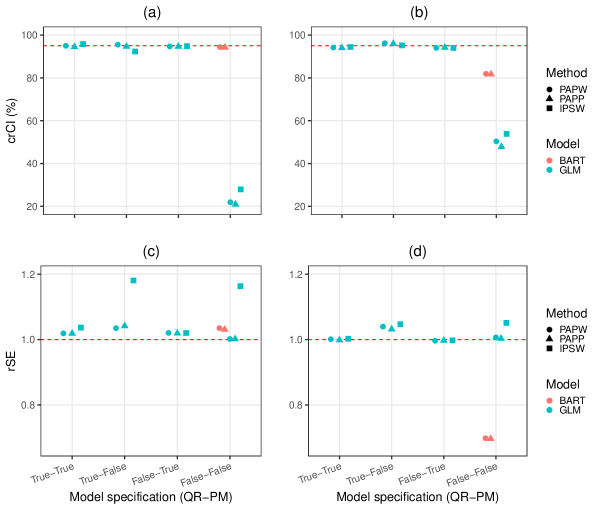

In Figure 3, we depict the results of the DR estimators under different combinations of model specification. AIPW produces unbiased results when either the PM or the QR model holds. However, in situations where the true underlying models for both PM and QR are unknown, the point estimates based on BART remain unbiased under both the PAPW and PAPP approaches. Furthermore, under the GLM, AIPW estimates based on PAPW/PAPP are slightly less biased and more efficient than those based on IPSW when the PM is incorrect. Details of the numerical results can be found in Appendix 8.3, which also compares BART with GLM in the situation where both working models are wrong. Results showing the performance of the bootstrap variance estimator are provided in Figure 4. The crCI values are all close to the nominal level unless both working models are incorrectly specified. When both models are incorrectly specified, the BART approach yields correct variance estimation for the continuous outcome; for the binary outcome, the variance is underestimated and the intervals are anticonservative, although coverage is closer to nominal than for the competing methods. To conclude, when neither the PM nor the QR model is known, BART based on PAPP produces unbiased and efficient estimates with accurate variance.

As the final step, we replicate the simulation for different values of ranging from to to show how stable the competing methods are in terms of rBias and rMSE. Figure 5 depicts changes in rBias and rMSE for different adjustment methods as the value of increases. Generally, rMSE appears to decrease for all competing methods as increases, but for all values of , PAPW and PAPP are less biased than IPSW. It is only when for the continuous variable that IPSW outperforms PAPW/PAPP in bias reduction. However, when is highly correlated with , there is also evidence of better performance by PAPP than IPSW in terms of bias reduction. We believe this is mainly because the stronger association between and implies that the additional ignorable assumption under PAPP is better met, while this correlation causes a sort of collinearity in IPSW, leading to a loss of efficiency. The remaining methods did not show notable changes as the value of increases. Numerical values associated with Figure 5 are provided in Appendix 8.3.

4 Strategic Highway Research Program 2 Analysis

We briefly describe SHRP2, the non-probability sample, and the NHTS, the probability sample, as well as the variables used for statistical adjustment.

4.1 Strategic Highway Research Program 2

SHRP2 is the largest naturalistic driving study conducted to date, with the primary aim to assess how people interact with their vehicle and traffic conditions while driving (of Sciences, 2013). About drivers aged years were recruited from six geographically dispersed sites across the United States (Florida, Indiana, New York, North Carolina, Pennsylvania, and Washington), and over five million trips and million driven miles have been recorded. The average follow-up time per person was days. A quasi-random approach was initially employed to select samples by random cold calling from a pool of pre-registered volunteers. However, because of the low success rate along with budgetary constraints, the investigators later chose to pursue voluntary recruitment. Sites were assigned one of three pre-determined sample sizes according to their population density (Campbell, 2012). The youngest and oldest age groups were oversampled because of the higher crash risk among those subgroups. Thus, one can conclude that the selection mechanism in SHRP2 is a combination of convenience and quota sampling methods. Further description of the study design and recruitment process can be found in Antin et al. (2015).

SHRP2 data are collected in multiple stages. Selected participants are initially asked to complete multiple assessment tests, including executive function and cognition, visual perception, visual-cognitive, physical and psychomotor capabilities, personality factors, sleep-related factors, general medical condition, driving knowledge, etc. In addition, demographic information such as age, gender, household income, education level, and marital status as well as vehicle characteristics such as vehicle type, model year, manufacturer, and annual mileage are gathered at the screening stage. A trip in SHRP2 is defined as the time interval during which the vehicle is operating. The in-vehicle sensors start recording kinematic information, the driver’s behaviors, and traffic events continuously as soon as the vehicle is switched on. Trip-related information such as average speed, duration, distance, and GPS trajectory coordinates are obtained by aggregating the sensor records at the trip level (Campbell, 2012).

4.2 National Household Travel Survey data

In the present study, we use data from the eighth round of the NHTS, conducted from March 2016 through May 2017, as the reference survey. The NHTS is a nationally representative survey, repeated cross-sectionally approximately every seven years. It aims to characterize personal travel behaviors among the civilian, non-institutionalized population of the United States. The 2017 NHTS was a mixed-mode survey, in which households were initially recruited by mail using an address-based sampling (ABS) design. Within the selected households, all eligible individuals aged years were asked to report the trips they made on a randomly assigned weekday through a web-based travel log. Proxy interviews were requested for younger household members who were years old.

The overall sample size was 129,696, of which roughly 20% was used for national representativeness and the remaining 80% was regarded as add-ons for state-level analysis. The recruitment response rate was 30.4%, of which 51.4% reported their trips via the travel logs (Santos et al., 2011). In the NHTS, a travel day is defined from 4:00 AM of the assigned day to 3:59 AM of the following day on a typical weekday. A trip is defined as that made by one person using any mode of transportation. While trip distance was measured by online geocoding, the rest of the trip-related information was based on self-reporting. A total of 264,234 eligible individuals aged 5 years or older took part in the study, for whom 923,572 trips were recorded (McGuckin and Fucci, 2018).
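Because the NHTS travel day runs from 4:00 AM to 3:59 AM the next day, a trip’s travel day can be assigned by shifting its start time back four hours and taking the calendar date. A minimal sketch; the helper name is hypothetical, and applying the same rule to SHRP2 trips would be an assumption, since the text does not say how SHRP2 days were delimited.

```python
from datetime import date, datetime, timedelta

TRAVEL_DAY_START = timedelta(hours=4)   # NHTS travel day begins at 4:00 AM


def nhts_travel_day(trip_start: datetime) -> date:
    """Map a trip start time to its NHTS-style travel day (4:00 AM to 3:59 AM)."""
    return (trip_start - TRAVEL_DAY_START).date()


# A trip starting at 2:30 AM on March 8 belongs to the March 7 travel day.
example_day = nhts_travel_day(datetime(2017, 3, 8, 2, 30))
```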

4.3 Auxiliary variables and analysis plan

Because of the critical role of auxiliary variables in maintaining the ignorable assumption for the selection mechanism of the SHRP2 sample, particular attention was paid to identifying and constructing as many common variables as possible in the combined sample that are expected to govern both the selection mechanism and the outcome variables in SHRP2. However, since the SHRP2 sample is gathered from a limited geographical area, in order to generalize the findings to the American population of drivers we had to assume that no auxiliary variables other than those investigated in this study determine the distribution of the outcome variables. This assumption is in fact embedded in the ignorable condition for SHRP2 given the set of common observed covariates. Three distinct sets of variables were considered: (i) demographic information of the drivers, (ii) vehicle characteristics, and (iii) day-level information. These variables and their levels/ranges are listed in Table 4.

Our focus was on inference at the day level, so SHRP2 trip data were aggregated to the day level. We constructed several trip-related outcome variables that were available in both datasets, such as daily frequency of trips, daily total trip duration, daily total distance driven, mean daily trip average speed, and mean daily start time of trips, as well as daily maximum speed, daily frequency of brakes per mile, and daily percentage of trip with a full stop, which were available only in SHRP2. The final sample sizes of the complete day-level datasets were and in SHRP2 and NHTS, respectively.
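Aggregating trip records to the day level amounts to grouping by driver and day and summarizing within each group. The sketch below uses plain Python with hypothetical field names and computes four of the day-level outcomes named above (trip count, total duration, total distance, and mean trip average speed); it is illustrative, not the study’s processing code.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Trip:
    driver: str
    day: str              # travel-day label, e.g. "2013-05-02" (hypothetical)
    duration_min: float
    distance_mi: float
    avg_speed_mph: float


def daily_outcomes(trips):
    """Summarize trips into one record per (driver, day)."""
    groups = defaultdict(list)
    for t in trips:
        groups[(t.driver, t.day)].append(t)
    out = {}
    for key, ts in groups.items():
        out[key] = {
            "n_trips": len(ts),
            "total_duration_min": sum(t.duration_min for t in ts),
            "total_distance_mi": sum(t.distance_mi for t in ts),
            "mean_avg_speed_mph": sum(t.avg_speed_mph for t in ts) / len(ts),
        }
    return out


trips = [
    Trip("A", "2013-05-02", 20.0, 8.0, 24.0),
    Trip("A", "2013-05-02", 10.0, 3.0, 18.0),
    Trip("B", "2013-05-02", 30.0, 15.0, 30.0),
]
days = daily_outcomes(trips)
```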

To make the two datasets more comparable, we filtered out all NHTS subjects who were not drivers, were younger than years old, or used public transportation or transportation modes other than cars, SUVs, vans, or light pickup trucks. One major structural difference between NHTS and SHRP2 is that in the NHTS, participants’ trips were recorded for only one randomly assigned weekday, while in SHRP2, individuals were followed up for several months or years. Therefore, to properly account for the potential intraclass correlation across sample units in SHRP2, we treated SHRP2 participants as clusters for variance estimation. For BART, we fitted random-intercept BART (Tan et al., 2016). In addition, since the were not observed for units of SHRP2, we employed the PAPP and IPSW methods to estimate pseudo-weights, so variance estimation under the GLM was based on the Rao and Wu bootstrap method throughout the application section.
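For intuition on how PAPW-type pseudo-weights are formed, the sketch below assumes the odds form commonly used for PAPW: after stacking the two samples and modeling membership in the non-probability sample given the auxiliary variables, each non-probability unit’s pseudo-inclusion probability is taken proportional to its probability-sample inclusion probability times the estimated membership odds. Under PAPP, those probability-sample inclusion probabilities are unknown for SHRP2 units and would first be predicted from a model fitted to the NHTS; here both they and the membership propensities (from GLM or BART) are simply inputs. This is an assumed form for illustration, not necessarily the exact estimator of Section 2, and the function name is hypothetical.

```python
import numpy as np


def papw_pseudo_weights(pi_ref_for_np, membership_prob, pop_size=None):
    """Odds-based pseudo-weights for non-probability units (assumed PAPW form).

    pi_ref_for_np   : probability-sample inclusion probabilities for the
                      non-probability units (known, or predicted under PAPP)
    membership_prob : estimated P(unit is in the non-probability sample | x)
                      from a model fitted to the stacked samples
    pop_size        : optional population size used to rescale the weights
    """
    pi_ref = np.asarray(pi_ref_for_np, dtype=float)
    p = np.asarray(membership_prob, dtype=float)
    pseudo_pi = pi_ref * p / (1.0 - p)      # pseudo-inclusion probability
    w = 1.0 / pseudo_pi
    if pop_size is not None:
        w *= pop_size / w.sum()             # normalize to the population size
    return w


weights = papw_pseudo_weights([0.01, 0.02], [0.5, 0.2])
```

The optional normalization changes only the scale of the weights, which cancels in a weighted (Hájek) mean but matters for estimated totals.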

| Auxiliary variables (scale) | Levels/range |
|---|---|
| Demographic information | |
| gender | (female, male) |
| age (yrs) | (16-24, 25-34, 35-44, 45-54, 55-64, 65-74, 75+) |
| race | (White, Black, other) |
| ethnicity | (Hispanic, non-Hispanic) |
| birth country | (citizen, alien) |
| education level | (HS, HS completed, associate, graduate, post-graduate) |
| household income ($1,000) | (0-49k, 50-99k, 100-149k, 150k+) |
| household size | (1, 2, 3-5, 6-10, 10+) |
| job status | (part-time, full time) |
| home ownership | (owner, renter) |
| pop. size of resid. area () | (0-49, 50-200, 200-500, 500+) |
| Vehicle characteristics | |
| age (yrs) | (0-4, 5-9, 10-14, 15-19, 20+) |
| type | (passenger car, Van, SUV, truck) |
| make | (American, European, Asian) |
| mileage (1000km) | (0-4, 5-9, 10, 10-19, 20-49, 50+) |
| fuel type | (gas, other) |
| Day-level information | |
| weekend indicator of trip day | {0,1} |
| season of trip day | (winter, spring, summer, fall) |

4.4 Results

Visual inspection of Figure 7 of Appendix 8.4 suggests that the largest discrepancies between the sample distribution of the auxiliary variables in SHRP2 and their distribution in the population involve participants' age, race, and population size of residential area, as well as vehicle age and vehicle type. The youngest and oldest age groups were oversampled, as were Whites and non-Hispanics. In addition, the proportion of urban dwellers is higher in SHRP2 than in the NHTS. In terms of vehicle characteristics, SHRP2 participants own passenger cars more often than the population average, whereas individuals with other vehicle types are underrepresented in SHRP2.
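The comparison above amounts to contrasting unweighted category shares in SHRP2 with design-weighted shares in NHTS. The minimal sketch below uses hypothetical inputs and function names. A positive gap marks a category that is overrepresented in the non-probability sample.

```python
from collections import defaultdict


def category_shares(values, weights=None):
    """(Weighted) share of each category in a list of values."""
    if weights is None:
        weights = [1.0] * len(values)
    totals = defaultdict(float)
    for value, weight in zip(values, weights):
        totals[value] += weight
    grand_total = sum(totals.values())
    return {category: t / grand_total for category, t in totals.items()}


def share_gaps(sample_values, ref_values, ref_weights):
    """Unweighted sample share minus design-weighted reference share, per category."""
    sample = category_shares(sample_values)
    reference = category_shares(ref_values, ref_weights)
    categories = sorted(set(sample) | set(reference))
    return {c: sample.get(c, 0.0) - reference.get(c, 0.0) for c in categories}


gaps = share_gaps(
    ["16-24", "16-24", "75+", "35-44"],  # hypothetical SHRP2 age groups
    ["16-24", "35-44", "35-44", "75+"],  # hypothetical NHTS age groups
    [1.0, 2.0, 2.0, 1.0],                # hypothetical NHTS design weights
)
```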

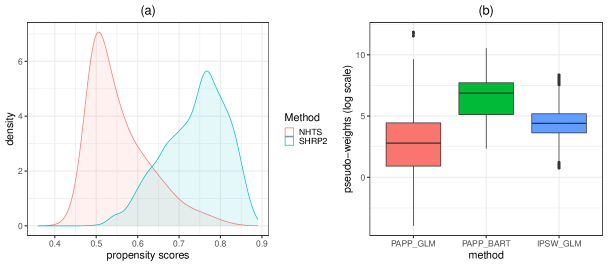

As the first step of QR modeling, we checked for evidence of a lack of common distributional support for the auxiliary variables between the two studies. Figure 6a compares the kernel densities of the PS estimated using BART across the two samples. As illustrated, a notable lack of overlap appears in the left tail of the PS distribution in SHRP2. However, owing to the huge sample size of SHRP2, we believe this does not jeopardize the positivity assumption. The available auxiliary variables are strong predictors of the NHTS selection probabilities for SHRP2: the average pseudo-R² for BART was in a -fold cross-validation.
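The overlap check in Figure 6a is visual. As a numeric companion, the sketch below reports two quantities. The first is the share of SHRP2 propensity scores that fall outside the range observed in NHTS. The second is a histogram-based overlap coefficient, a swapped-in simplification of the kernel density comparison rather than the method used in the paper. Both function names and the default bin count are hypothetical.

```python
def outside_support_share(ps_nonprob, ps_ref):
    """Share of non-probability-sample propensity scores outside the reference range."""
    lo, hi = min(ps_ref), max(ps_ref)
    outside = sum(1 for p in ps_nonprob if p < lo or p > hi)
    return outside / len(ps_nonprob)


def overlap_coefficient(ps_a, ps_b, n_bins=20):
    """Sum over bins of min(share_a, share_b) for scores in [0, 1].

    Returns 1 for identical binned distributions and 0 for disjoint ones.
    """
    def binned_shares(ps):
        counts = [0] * n_bins
        for p in ps:
            counts[min(int(p * n_bins), n_bins - 1)] += 1  # p = 1.0 goes in the last bin
        return [c / len(ps) for c in counts]

    return sum(min(a, b) for a, b in zip(binned_shares(ps_a), binned_shares(ps_b)))
```

A high outside-support share concentrated in one tail would correspond to the left-tail gap visible in Figure 6a.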

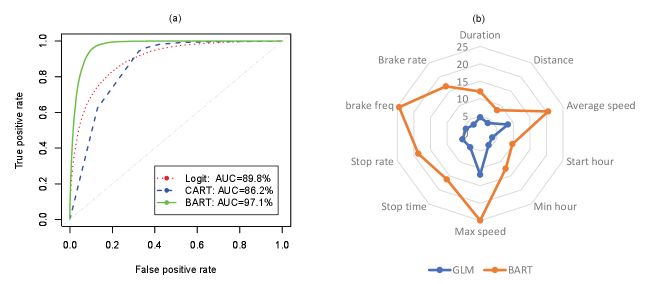

In Figure 6b, we compare the distribution of the estimated pseudo-weights across the QR methods. PAPP based on BART appears to be the only method that does not produce influential weights, while PAPP under GLM yields the most variable pseudo-weights. As can be seen in Figure 13 in Appendix 8.4, BART produces the largest values of the area under the ROC curve (AUC) and the largest values of (pseudo-)R² in the radar plot across the trip-related outcome variables. The comparison is against classification and regression trees (CART) and GLM, when modeling on and on , respectively. Additionally, Figure 14 in Appendix 8.4 shows how pseudo-weighting based on PAPP-BART reduces the imbalance in the distribution of in SHRP2 relative to the weighted distribution in NHTS.
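The following sketch shows how PAPP-type pseudo-weights might be formed from already-estimated quantities and then screened for influential values. It assumes the PAPP form of Elliott (2009), in which the pseudo-inclusion probability is the predicted reference-sample selection probability multiplied by the odds p/(1 - p) of membership in the non-probability sample within the combined sample. The paper's exact formulation and normalization may differ. The Kish effective sample size and the largest weight share are common diagnostics chosen here for illustration. The function names are hypothetical.

```python
import numpy as np


def papp_pseudo_weights(pi_ref_pred, p_nonprob, pop_size=None):
    """Pseudo-weights 1 / (pi_ref * p / (1 - p)), optionally scaled to sum to pop_size.

    pi_ref_pred: predicted reference-sample selection probabilities for the
    non-probability units; p_nonprob: estimated probability of belonging to the
    non-probability sample in the combined sample (assumed PAPP form).
    """
    pi_ref_pred = np.asarray(pi_ref_pred, dtype=float)
    p = np.asarray(p_nonprob, dtype=float)
    pseudo_pi = pi_ref_pred * p / (1.0 - p)
    w = 1.0 / pseudo_pi
    if pop_size is not None:
        w = w * pop_size / w.sum()
    return w


def kish_diagnostics(w):
    """Kish effective sample size, design effect (1 + CV^2), and largest weight share."""
    w = np.asarray(w, dtype=float)
    n_eff = w.sum() ** 2 / np.sum(w ** 2)
    deff = len(w) / n_eff
    max_share = w.max() / w.sum()
    return float(n_eff), float(deff), float(max_share)
```

A single unit carrying a large `max_share`, or a `deff` far above 1, would flag the kind of influential weights seen for the GLM-based methods in Figure 6b.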

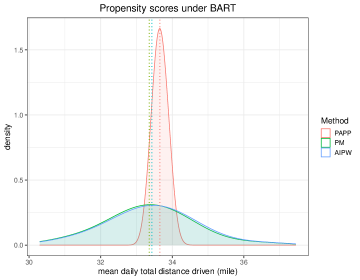

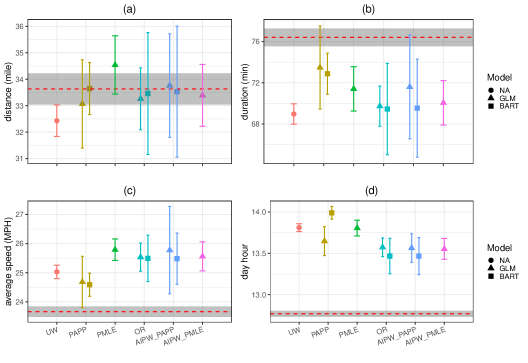

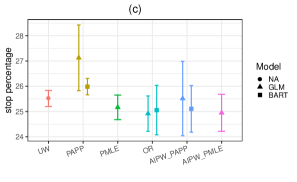

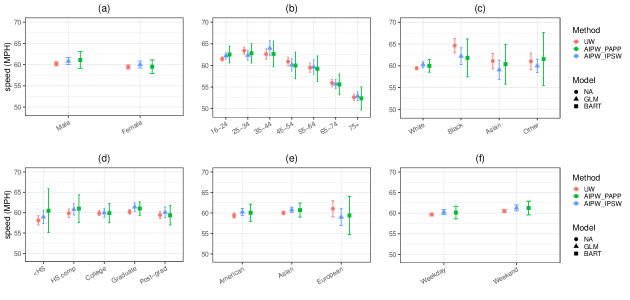

Figure 8 depicts the adjusted sample means for some trip-related measures that were available in both SHRP2 and NHTS. The methods compared include PAPP, IPSW, and PM as the non-robust approaches, and AIPW with PAPP and AIPW with IPSW as the DR approaches. GLM and BART are also compared for all methods except those involving IPSW. As expected, our results suggest that the oversampling of younger and older drivers leads to underestimation of miles driven and trip length, and to overestimation of the time of the first trip of the day. Other factors may also affect these variables, as well as the average speed of a given drive. For three of these four variables (total trip duration, total distance driven, and start hour of daily trips), bias appeared to be reduced relative to the weighted NHTS estimates used as the benchmark, although only trip duration appears to be fully corrected. In Figure 7, we display the posterior predictive density of the mean daily total distance driven under PAPP, PM, and AIPW-PAPP. The narrow variance associated with the PAPP approach arises because the posterior predictive distribution under pseudo-weighting does not account for the clustering effects in SHRP2. This source of uncertainty is instead captured by a separate variance component in Eq. (2.35).
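To make the structure of the DR estimators concrete, the sketch below implements a generic Hájek-type AIPW estimator of a population mean of the kind studied in the doubly robust literature for non-probability samples. It is not the authors' modified AIPW. It adds a pseudo-weighted mean of residuals in the non-probability sample to a design-weighted mean of predictions in the reference sample. The argument names are hypothetical.

```python
import numpy as np


def aipw_mean(y_np, m_np, w_np, m_ref, d_ref):
    """Hajek-type AIPW estimate of a finite population mean.

    y_np, m_np, w_np: outcomes, model predictions, and pseudo-weights for the
    non-probability sample; m_ref, d_ref: predictions and design weights for
    the reference (probability) sample.
    """
    y_np, m_np, w_np, m_ref, d_ref = (
        np.asarray(a, dtype=float) for a in (y_np, m_np, w_np, m_ref, d_ref)
    )
    residual_term = np.sum(w_np * (y_np - m_np)) / np.sum(w_np)  # pseudo-weighted correction
    prediction_term = np.sum(d_ref * m_ref) / np.sum(d_ref)      # design-weighted prediction mean
    return float(residual_term + prediction_term)
```

With perfect predictions the residual term vanishes and the estimate reduces to the PM estimate. With all predictions set to zero it reduces to the pseudo-weighted mean, which is the intuition behind double robustness. In a Bayesian implementation, the function could be applied to each posterior draw of the predictions and pseudo-weights.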

Among the QR methods, PAPP based on BART gave the most accurate estimate with respect to bias for this variable. However, the relatively narrow 95% CI associated with BART may indicate that BART does not properly propagate the uncertainty in pseudo-weighting. For the PM, BART appears to perform as well as GLM, but with wider uncertainty. Consequently, the AIPW estimator performs similarly in terms of bias across the different QR methods. The AIPW estimator based on IPSW, on the other hand, appears to be more efficient than those based on PAPP. However, these findings are not consistent across the outcome variables.

Results for the adjusted means of some SHRP2-specific outcome variables are summarized in Figure 9. These variables are (a) daily maximum speed, (b) frequency of brakes per mile, and (c) percentage of trip duration during which the vehicle is fully stopped. For daily maximum speed, we go one step further and present the DR adjusted means based on IPSW-GLM and PAPP-BART by selected auxiliary variables in Figure 10. As illustrated, higher mean daily maximum speeds are associated with males, the 35-44 age group, Blacks, high school graduates, Asian cars, and weekends. Judging by the lengths of the 95% CIs, AIPW-PAPP-BART consistently produces more efficient estimates than AIPW-IPSW-GLM. Further numerical details of these findings by auxiliary variable are provided in Tables 11-17 in Appendix 8.4.
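Subgroup comparisons like those in Figure 10 rest on summarizing the draws of each subgroup's estimate. The sketch below assumes equal-tailed percentile intervals computed from posterior draws. The paper may construct its intervals differently, for example from a variance estimate with a normal approximation. The function name and inputs are hypothetical.

```python
import numpy as np


def summarize_draws(draws_by_group, level=0.95):
    """Posterior mean and equal-tailed percentile interval for each subgroup."""
    alpha = (1.0 - level) / 2.0
    summary = {}
    for group, draws in draws_by_group.items():
        d = np.asarray(draws, dtype=float)
        lower, upper = np.quantile(d, [alpha, 1.0 - alpha])
        summary[group] = {
            "mean": float(d.mean()),
            "lower": float(lower),
            "upper": float(upper),
            "length": float(upper - lower),  # shorter interval = more efficient estimate
        }
    return summary
```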

5 Discussion

In this study, we proposed a doubly robust (DR) adjustment method for finite population inference in non-probability samples when a well-designed probability sample is available as a benchmark. Combining the ideas of pseudo-weighting and prediction modeling, our method involves a modified version of AIPW, which is DR in the sense that estimates are consistent if either underlying model holds. More importantly, the proposed method permits a wider class of predictive tools to be applied, especially supervised algorithmic methods. To better address model misspecification, we employed BART to multiply impute both the pseudo-inclusion probabilities and the outcome variable. We also proposed a method to estimate the variance of the DR estimator based on the posterior predictive draws simulated by BART. In a simulation study, we assessed the repeated sampling properties of the proposed estimator. Finally, we applied it to real Big Data from naturalistic driving studies with the aim of reducing potential selection bias in the estimates of finite population means.
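One standard way to turn multiple imputations or posterior draws into a single variance estimate is Rubin's combining rules, shown below as an illustrative stand-in. Eq. (2.35) in the paper may differ in its components. The function name is hypothetical. W is the average within-imputation variance, B is the between-imputation variance, and the total variance is W + (1 + 1/M)B.

```python
from statistics import mean, variance


def rubin_combine(estimates, within_variances):
    """Combine M completed-data estimates with Rubin's rules.

    Returns the pooled estimate and the total variance W + (1 + 1/M) * B.
    """
    m = len(estimates)
    if m < 2:
        raise ValueError("need at least two imputations")
    pooled = mean(estimates)
    within = mean(within_variances)  # W: average within-imputation variance
    between = variance(estimates)    # B: between-imputation variance (divisor M - 1)
    return pooled, within + (1 + 1 / m) * between
```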

Overall, the simulation findings revealed that our modified AIPW method produces less biased estimates than its competitors, especially when . When at least one of the working models, i.e. QR or PM, was correctly specified, all the DR methods produced unbiased results, though our estimator was substantially more efficient, with narrower 95% CIs. When both working models were misspecified, however, DR estimates based on the GLM could be severely biased. Under BART, by contrast, the estimates appeared to remain approximately unbiased even though the true structure of both the QR and PM models was unknown to the researcher. Compared with the conventional IPSW estimator, the proposed estimator produced more stable results in terms of bias and efficiency across different sampling fractions and various degrees of association between the sampling designs of and .
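Repeated sampling properties such as those summarized above are typically reported as relative bias, root mean squared error, and nominal 95% CI coverage across simulation replicates. The sketch below computes these metrics under two assumptions: Wald intervals built from per-replicate standard errors, and a nonzero true value. The paper's exact metric definitions may differ, and the function name is hypothetical.

```python
import math


def simulation_metrics(estimates, std_errors, true_value, z=1.96):
    """Relative bias (%), rMSE, and Wald-interval coverage across replicates."""
    n = len(estimates)
    bias = sum(estimates) / n - true_value
    rel_bias_pct = 100.0 * bias / true_value  # assumes true_value != 0
    rmse = math.sqrt(sum((e - true_value) ** 2 for e in estimates) / n)
    covered = sum(
        1 for e, s in zip(estimates, std_errors) if e - z * s <= true_value <= e + z * s
    )
    return {"rel_bias_pct": rel_bias_pct, "rmse": rmse, "coverage": covered / n}
```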

Overall, the results of the application suggest near-total removal of bias for only one of the four variables that can be estimated from the reference survey (daily total distance driven). We believe this partial failure has several sources. First and foremost, the bias observed in the final estimates is very likely to be mixed with measurement error, because we compared the results of sensor data with self-reported data as a benchmark. Second, there was evidence of a departure from the positivity assumption in SHRP2. Studies show that even a slight lack of common support in the distribution of auxiliary variables may lead to inflated variance and aggravated bias (Hill and Su, 2013). Part of this may be because we attempted to generalize the results to the general population of American drivers, while the SHRP2 data were restricted to six states. Another reason might be a deviation from the ignorability assumption: the associations between the auxiliary variables and the outcome variables were relatively weak and varied across variables.

Our study was not without weaknesses. First, our approach assumes the ideal situation where the are available in the non-probability sample, as required by the general theory linking the probability and non-probability samples. In practice it can be difficult to fully meet this requirement. In many practical settings, it might be that only the available subset of is required to fully model selection into the non-probability sample and the outcome variable. Alternatively, the available components of may provide a much better approximation to the true estimates than simply using the non-probability sample without correction. Second, our adjustment method assumes that the two samples are mutually exclusive. However, in many Big Data scenarios (though not the one we consider), the sampling fraction may be non-trivial, so the two samples may overlap substantially. In such a situation, it is important to check how sensitive our proposed pseudo-weighting approach is to this assumption, and extensions that account for duplicate population units in the pooled sample may be feasible. Third, our multiple imputation variance estimator (Eq. 2.35) ignores the covariance between and induced by the weights. This covariance is typically negative, so ignoring it leads to conservative inference, as seen in the modest overestimation of variance for the BART estimates in Simulations 2 and 3. A bootstrap procedure such as that described in the simulation study of Chen et al. (2020) may be an alternative, although it is impractical in our setting given the computational demands of fitting the BART models to each bootstrap sample. Another drawback is that the combined dataset may be subject to differential measurement error in the variables.
This issue is particularly acute in our SHRP2 analysis, because the definition of a trip may not be identical between the two studies. Trip measures in SHRP2 are recorded by sensors, whereas trip measures in the NHTS are self-reported and thus rely on respondents' memory and estimation. Having such error-prone information either as the outcome or as an auxiliary variable may lead to biased results. Finally, we could not use the two-step Bayesian method under GLM in the application. Because the SHRP2 data were clustered, properly estimating the variance of the DR estimators would have required Bayesian generalized linear mixed-effects models, which demanded computational resources beyond our reach. We therefore applied resampling techniques to the actual data instead of a fully Bayesian method.
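The conservativeness noted under the third weakness follows from Var(A + B) = Var(A) + Var(B) + 2Cov(A, B): when Cov(A, B) < 0, adding the two variances overstates the variance of the sum. The simulated example below illustrates the identity. The two components are hypothetical stand-ins for draws of the two terms of a DR estimator and do not reproduce the paper's estimator.

```python
import numpy as np


def variance_decomposition(a, b):
    """Return Var(a), Var(b), Cov(a, b) and Var(a + b) over a set of draws."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    cov = float(np.mean((a - a.mean()) * (b - b.mean())))
    return float(a.var()), float(b.var()), cov, float((a + b).var())


rng = np.random.default_rng(1)
a = rng.normal(size=10_000)                   # stand-in draws of one component
b = -0.6 * a + 0.8 * rng.normal(size=10_000)  # negatively correlated second component
var_a, var_b, cov_ab, var_sum = variance_decomposition(a, b)
```

Here each component has variance close to 1 and the covariance is close to -0.6. The naive sum of variances, close to 2, therefore exceeds the true variance of the sum, close to 0.8.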

There are a number of potential future directions for our research. First, we would like to extend the asymptotic variance estimator under PAPP to the case where cannot be computed for . Alternatively, one may be interested in developing a fully model-based approach. In this approach, a synthetic population is created by undoing the sampling stages via a Bayesian bootstrap method, and the outcome is imputed for the non-sampled units of the population (Dong et al., 2014; Zangeneh and Little, 2015; An and Little, 2008). The synthetic population idea makes it easier to incorporate the design features of the reference survey into the adjustments, especially when Bayesian inference is of interest. While correcting for selection bias, one can also adjust for potential measurement error in the outcome variables if a validation dataset exists in which both mismeasured and error-free values of the variables are observed (Kim and Tam, 2020). When data from multiple sources are combined, auxiliary variables are also likely to be subject to differential measurement error. Hong et al. (2017) propose a Bayesian approach to adjust for a different type of measurement error in a causal inference context. Also, fitting models in a Big Data setting can be computationally demanding. To address this issue, it may be worth extending divide-and-recombine techniques to the proposed DR methods. Finally, as noted by a reviewer, the basic structure of our problem (see Figure 1) approximates that tackled by “data fusion” methods, developed primarily in the computer science literature. While this literature does not appear to have directly addressed issues around sample design, it may be a useful vein of research to mine for future connections to non-probability sampling research.
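The divide-and-recombine idea can be sketched for the simplest case, a weighted mean. The data are split into random subsets, the estimate is computed on each subset, and the subset results are recombined with weights equal to each subset's weight total. For a linear estimator like this, recombination recovers the full-data estimate exactly. For the proposed DR methods with BART, it would only approximate the full-data fit. The function name and the random-split scheme are assumptions for illustration.

```python
import numpy as np


def divide_and_recombine_mean(y, w, n_subsets=4, seed=0):
    """Weighted mean computed on random subsets and recombined by subset weight totals."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y, dtype=float)
    w = np.asarray(w, dtype=float)
    if not 1 <= n_subsets <= y.size:
        raise ValueError("n_subsets must be between 1 and the number of units")
    parts = np.array_split(rng.permutation(y.size), n_subsets)  # "divide" step
    sub_means = np.array([np.sum(w[p] * y[p]) / np.sum(w[p]) for p in parts])
    sub_totals = np.array([np.sum(w[p]) for p in parts])
    return float(np.sum(sub_totals * sub_means) / np.sum(sub_totals))  # "recombine" step
```

In practice, each subset fit could run on a separate worker, which is where the computational savings would come from.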

6 Acknowledgement