Robust Errant Beam Prognostics with Conditional Modeling for Particle Accelerators

Abstract

Particle accelerators are complex and comprise thousands of components, with many pieces of equipment running at their peak power. Consequently, particle accelerators can fault and abort operations for numerous reasons. These faults impact the availability of particle accelerators during scheduled run-time and hamper the efficiency and the overall science output. To avoid these faults, we apply anomaly detection techniques to predict any unusual behavior and perform preemptive actions to improve the total availability of particle accelerators. Semi-supervised Machine Learning (ML) based anomaly detection approaches such as autoencoders and variational autoencoders are often used for such tasks. However, supervised ML techniques such as Siamese Neural Network (SNN) models can outperform unsupervised or semi-supervised approaches for anomaly detection by leveraging the label information. One of the challenges specific to anomaly detection for particle accelerators is the data’s variability due to system configuration changes. To address this challenge, we employ Conditional Siamese Neural Network (CSNN) models and Conditional Variational Auto Encoder (CVAE) models to predict errant beam pulses at the Spallation Neutron Source (SNS) under different system configuration conditions and compare their performance. We demonstrate that CSNN outperforms CVAE in our application.

-

November 2023

Keywords: Anomaly detection, Siamese Model, Conditional Siamese Model, Variational Autoencoder, Conditional Variational Autoencoder, CSNN, CVAE, Spallation Neutron Source, Particle Accelerators, Anomaly Prediction, Prognostics

1 Introduction

The Spallation Neutron Source (SNS) accelerator facility at Oak Ridge National Laboratory (ORNL) delivers a 60 Hz pulsed 1.1 GeV proton beam at 1.7 MW, making it the world’s highest power proton accelerator. The beam is accelerated in a super-conducting linear accelerator and accumulated in a ring to form a very short and intense pulse with an intensity of up to protons. The protons are then directed to a stainless steel vessel filled with liquid mercury where the impact spalls the mercury atoms and neutrons are released [1] for material research. High availability of the SNS accelerator (greater than 95%) is challenging to attain, even with judicious maintenance and monitoring, since errant beam pulses still occur and cause downtime [2]. To reduce downtime, radio-activation of beam line elements, and damage to components, we explored ML methods that use data from existing diagnostic sensors to predict equipment failures and errant beams. Previous studies at the SNS [3, 4], show that precursor information is present in the available data, which can predict an impending fault.

Existing accelerator data provides a large amount of normal samples and a small number of anomaly samples. As such, researchers have been resorting to semi-supervised learning methods such as autoencoder (AE) [5] or Variational AE (VAE) [6] to leverage a large amount of existing normal data samples [7, 8, 9]. AE and VAE models learn to reproduce the normal data samples and predict anomalies based on the reconstruction error (the error between the input data and the corresponding reproduced data). However, our previous study [4] on this application concluded that Siamese neural network (SNN), a supervised learning method, can outperform AE. SNN can also leverage many normal samples and avoid label imbalance as described in Section 4.

Particle accelerators have multiple configuration parameters updated throughout operation for control and optimization. The changes in the configurations impact the equipment behavior and, subsequently, the measurements from the diagnostic sensors, making anomaly detection more challenging. Machine Learning (ML) models trained on the data from a particular beam configuration may no longer be valid for the following accelerator configuration. Conditional models can tackle such shifts in the data distribution by using the beam configuration parameters as conditional inputs. In this research, we study the performance of conditional-SNN (CSNN) and conditional-VAE (CVAE)[10] for predicting impending errant beam pulses using the beam current data from eight different SNS accelerator beam configurations. The results indicate that the CSNN model trained on data from all eight beam configurations outperforms dedicated SNN models trained on data from a single beam configuration. Based on the specific cases studied in this paper, we show that the CSNN outperforms the CVAE which is also trained on data from all beam configurations.

In Section 2, we summarize previous research in anomaly detection, classification, and prognostication as it applies to particle accelerator use cases. In Section 3, we describe the data source, collection, and curation procedures. Section 4 describes the ML methods we explored for this paper. In Section 5, we present the comparison results between the SNN, CSNN, and CVAE . In addition, we present the model architecture and hyper-parameter optimization process. Finally, in Section 6, we present our conclusions and outlook.

2 Previous Work

Anomaly detection problems have been studied with statistics and visualization-based methods for many years. Methods such as K-nearest neighbor clustering [11, 12], quantile method or whisker plots [13], probabilistic envelope-based approach [14] have been applied for anomaly detection in different fields. Recent advancements in ML have been a good fit for many applications, including anomaly prediction. Deep Learning (DL), particularly, is very expressive and can handle large and complex data sets; due to high expressivity and a large number of parameters, it can model large and complex systems.

The use of ML for particle accelerator applications has grown in recent years including, but not limited to, diagnostics [15, 16, 17, 18, 19, 20, 21], anomaly detection/forecasting/classification [4, 22, 23, 24, 25], and optimization/controls [26, 27, 28, 29, 30, 31, 32, 33].

AEs and VAEs are commonly used for anomaly detection, where the samples used during training are assumed to be normal.

Previous work using AEs for particle accelerator sub-systems include the Air Conditioning systems [34] and magnet faults in the Advanced Photon Source storage ring at Argonne National Laboratory [7].

Recently, VAEs have been applied for High Voltage Converter Modulator (HVCM) anomaly detection at SNS accelerator [35, 36].

There have also been methods proposed, such as [8], to deal with impurities in the normal training data for VAEs.

In addition, there have been advances in research to understand uncertainty quantification (UQ) from ML models.

New accelerator physics studies have included UQ to estimate out-of-distribution uncertainties using data-driven ML-based surrogate models.

Examples include developing a UQ-based surrogate model for a cyclotron-based model [37], modeling the FNAL Booster accelerator complex for a reinforcement learning application [31], and uncertainty aware anomaly predictions [4].

Furthermore, recently published studies compared Bayesian Neural Networks (BNN), ensemble methods, and a novel deep Gaussian process approximation method for particle accelerator applications [38, 39, 40, 41].

These new techniques will allow us to leverage the strength of Deep Neural Networks (DNNs) while providing some safety guarantees.

Although these studies show very promising results, they typically do not address how to apply them for longer periods of time with varying operational conditions. Presented in this paper is a study on conditional ML to address the underlying changes in the particle accelerator configurations.

3 Data Description

There are various sensors deployed at SNS including Beam Positional Monitors (BPM), Beam Loss Monitor (BLM), and Differential Current Monitor (DCM). These sensors provide data to perform diagnostics and preemptive maintenance. This research uses beam current data from DCM as described below.

3.1 Data Source and Collection

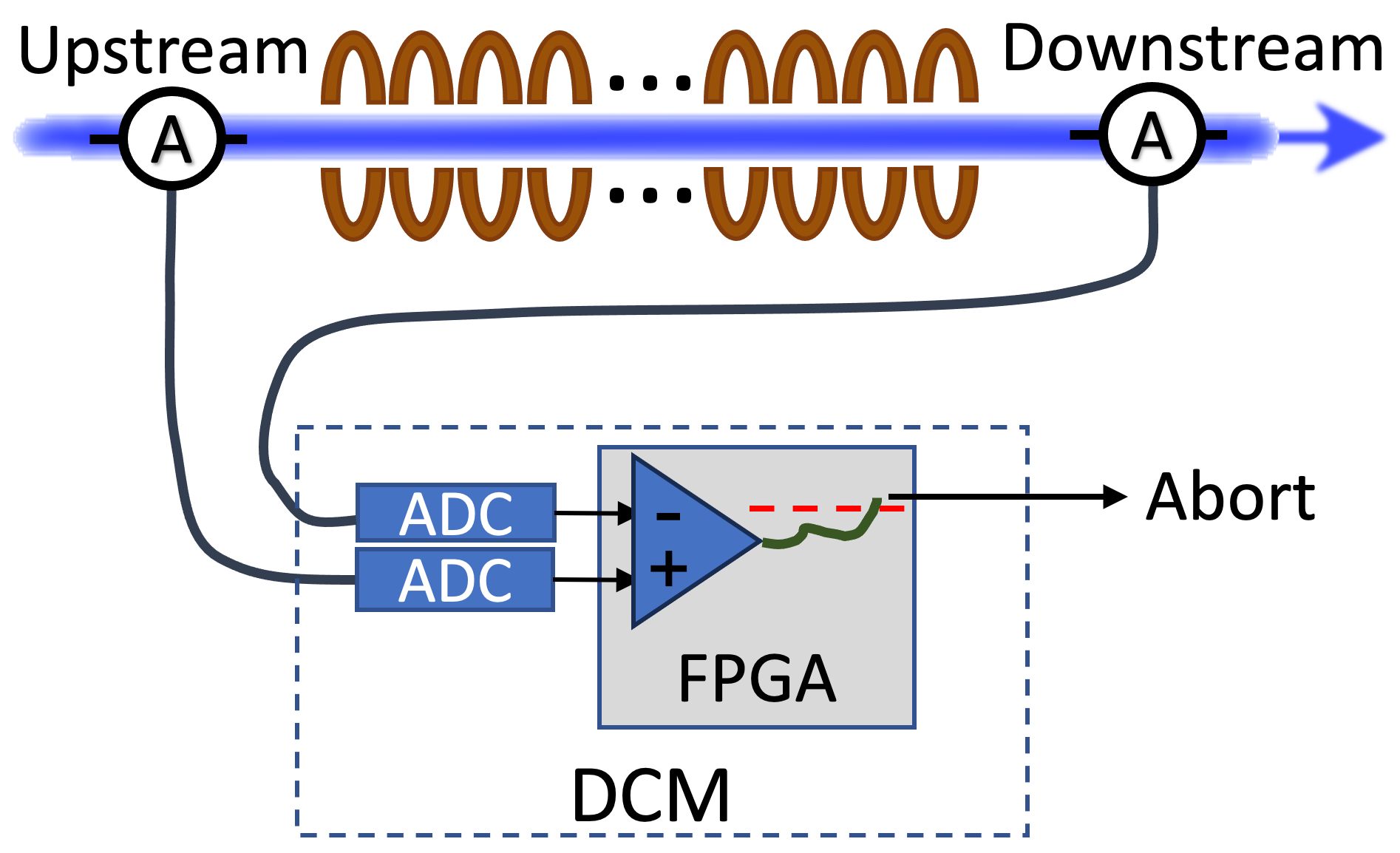

SNS accelerator employs a DCM [42, 43] to protect the Super Conducting Linac (SCL) from adverse effects of beam losses [44]. Figure 1 shows a schematic diagram of the DCM. It continuously monitors upstream and downstream beam current waveforms to detect any beam loss across the SCL. When significant beam loss is detected, it aborts the beam to minimize possible damage or activation. DCM uses a Field Programmable Gate Array (FPGA) and a dedicated communication line with the Machine Protection System (MPS) to enable fast aborts. Initially, the DCM only streamed a subset of the waveforms to a server for storage. At regular intervals, normal waveforms were stored and waveforms right before, during, and right after an errant beam pulse. All waveforms of all beam pulses are streamed to an edge computer for inferences of models and to store the data.

With this study, we aim to predict errant beam pulses before they occur using normal prefault pulses. Our previous studies [4, 3] have shown that there are precursors in the normal pulse to indicate an impending errant beam pulse. To achieve this, the DCM was programmed to archive beam current waveforms preceding errant beam pulses and regularly archive waveforms during normal operation. The prefault normal waveforms are labeled as anomalies and waveforms from the normal operation are labeled as normal (reference) for the ML study.

We also recorded a snapshot of beam configuration settings along with the beam current data for conditional modeling. Eight relevant beam tuning parameters are considered for this study, namely, Ramp-Up-Pulse-Width, Ramp-Up-Pulse-Width-Change, Ramp-Up-Width, Beam-On-Width, Beam-On-Pulse-Width, Ramp-Down-Pulse-Width, Gate-Source-Offset, and Beam-Ref-Gate-Width. The description of these parameters is included in the Appendix.

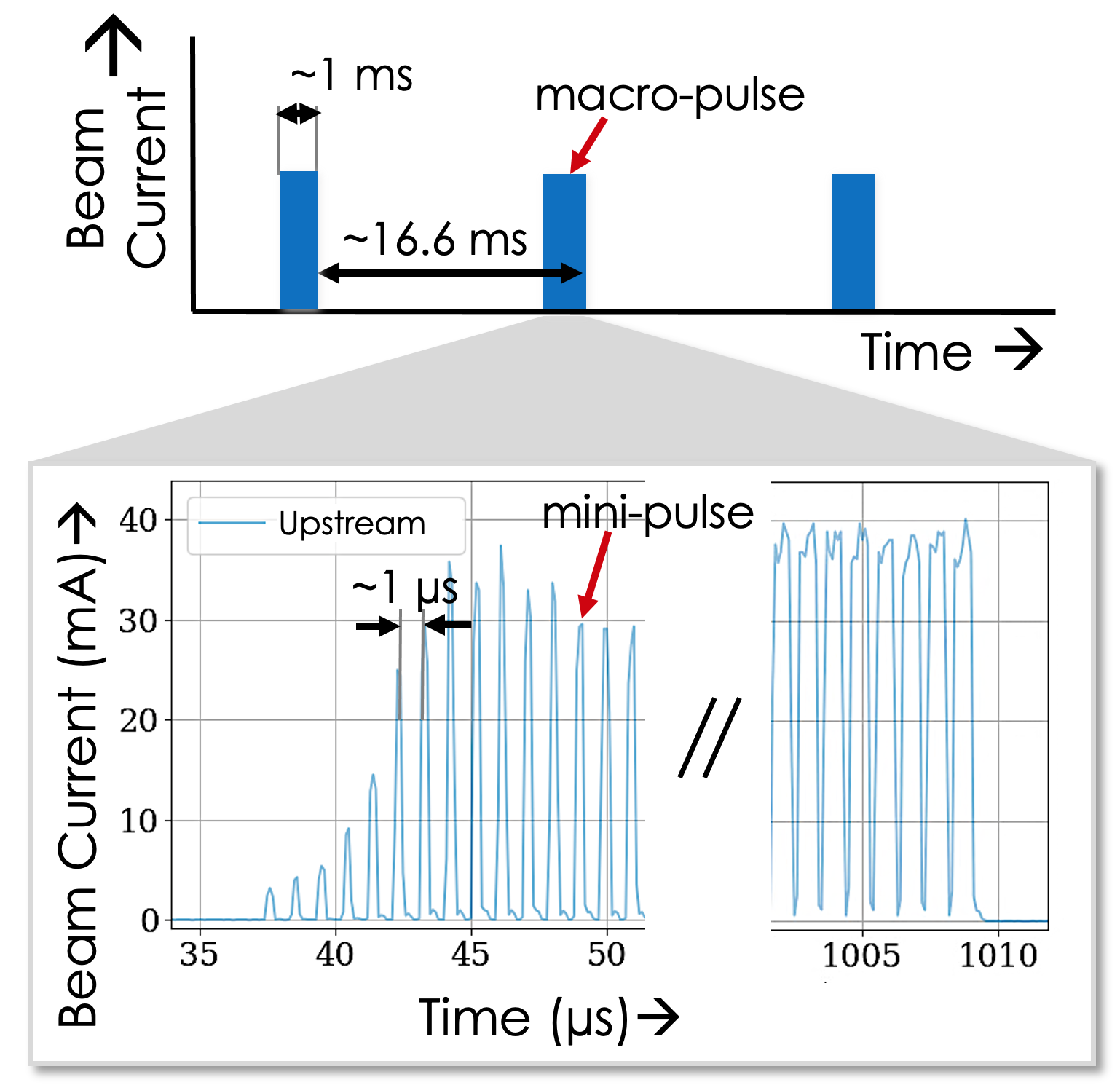

Figure 2 shows an example of the beam current waveform. The top plot shows a series of macro-pulses, a 1 ms long pulse repeated at 60 Hz. This macro-pulse consists of approximately 1000 mini-pulses. Each mini-pulse is 650 ns, followed by a gap of 350 ns. Within each mini-pulse are the micro-pulses, not shown, which are the Radio Frequency (RF) buckets filled with the beam particles spaced at 402.5 MHz. The bottom plot shows a beam pulse’s current waveform with the initial ramp-up in intensity in the beginning of the macro-pulse, as well as the different widths of the mini-pulses during the macro-pulse. This setup is typical for a production-style beam.

The waveform has a length of about 1.2 ms and is digitized at 100 MS/s. The beam pulse’s timestamp, beam configuration parameters, and cycle ID are collected and archived along with the upstream and downstream beam current waveforms.

3.2 Training Data Generator

Each macro pulse comprises 120K time steps; however, for this study, we only use 10K time steps (from time step 3K to 13K) as our research indicated that the pre-cursors for an upcoming errant beam pulse are prevalent in this region. Furthermore, a peak counter approach was employed to exclusively select beam current waveforms associated with neutron production and avoid outliers such as test beams or beam studies. This technique leverages the “find peaks” method in the SciPy library [45]. The parameters for peak identification were set with a minimum height threshold of 2 mA and a stipulated minimum distance of 75 steps, equivalent to 750 ns, between adjacent peaks. The waveforms of the macro-pulse must encompass a minimum of 900 mini-pulses and exhibit a consistent repetition frequency of 60 Hz to indicate that these pulses were produced during full power beam and not during physics studies or testing. These strict requirements effectively filter out any potential outliers in accelerator conditions.

The dataset is divided into three parts, specifically training (64%), validation (16%), and testing (20%) according to their beam setting configurations. All data collected during normal operation was considered normal data. For SNN training, since the model requires two input samples, we developed a training data generator using tensorflow data generator [46] to create combinations of normal-normal data labeled as 0 and normal-prefault data labeled as 1. The data generator has two hyper-parameters: the number of anomalies per batch and the samples per anomaly. The former controls the number of unique prefault beam current samples in a batch, and the latter controls the number of normal beam current waveforms paired with each anomaly. The number of normal-normal samples matches the number of normal-prefault samples per training batch in the data generator. It is important to note that only samples from the same beam configuration are paired with each other. The data generator maintains separate counters for both normal and anomaly waveforms for each configuration setting to iterate over them in sequential order during training and evaluation. To introduce randomness, the normal and anomaly data are shuffled at the end of each epoch.

4 Methods

As discussed in Section 2, there are various techniques to predict anomalies. In this study, we compare VAEs and SNNs with their conditional variants to accommodate data from different beam configurations. Below, we provide a brief background of these techniques.

4.1 Variational Autoencoders

VAEs [6] are commonly used for anomaly detection [47, 35] when there are few anomaly examples. VAEs are normally used for semi-supervised learning and can address imbalanced classification problems. VAEs are trained only on normal data to model the normal behavior of the system. The model identifies any anomalous data using the reconstruction error at inference time. A typical VAE consists of two Artificial Neural Networks (ANNs), an encoder , and a decoder . The maps input space into a reduced representation that is forced to follow a specific well-known distribution, such as a Gaussian distribution. The latent is generated by sampling from and parameters estimated by the . A new parameter is also introduced to reparametrize the sampling layer and allow the model to backpropagate through the entire network. The latent variable is then fed to the to reconstruct the input data. The loss function for a VAE is motivated by variational inference [48] by minimizing the Kullback–Leibler divergence (KLD) [49] between the posterior and the encoded prior distribution :

| (1) |

where the first term is the reconstruction error, the second term computes KLD, and is the harmonic parameter to balance the two.

4.2 Siamese Neural Network Model

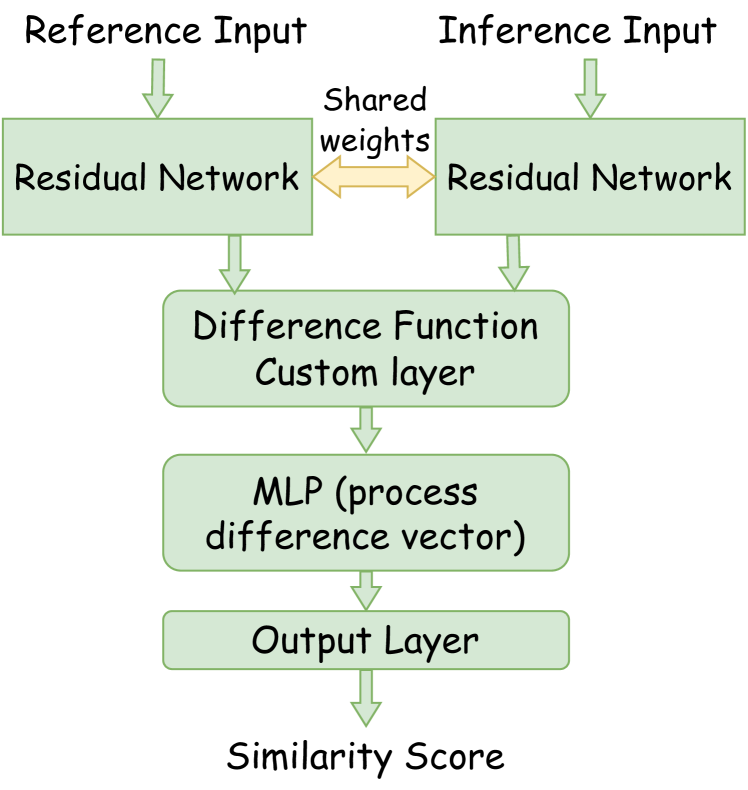

The SNN model takes a different approach to anomaly detection than traditional classification methods, or VAEs, by learning the similarities between a pair of inputs. Instead of focusing on classifying individual data samples, it seeks to capture similarities (or dissimilarities for anomalies). This is achieved by encoding two separate input vectors using twin networks (sharing weights and biases) and then comparing the reduced latent space using a distance metric, as illustrated in Figure 3. The twin networks are deployed to learn and extract the relevant features for similarity prediction between normal-normal and normal-abnormal combinations. As it learns the relevant features, it produces a reduced representation of the original inputs that are then compared using a Lambda difference layer as described in Equation 2.

| (2) |

Here, and are reduced representations of reference and inference inputs, respectively. The output from the difference layer is fed into a multi-layer perceptron (MLP) block with dropouts to avoid over-fitting before the final output layer that produces scalar similarity score between the two inputs.

During training, predicted similarity scores are compared with the ideal scores (labels) using a modified contrastive loss function described in Equation 3.

| (3) |

Here, is the true label, is the predicted similarity score, (set to 5.2 for this study) is a tuning parameter used to emphasize the similar pulses, and is another tuning parameter used to emphasize dissimilar pulses (set to 1 for this study).

This study uses a ResNets [50] model for the twin network with three stacked convolution residual blocks. Each residual block consists of skip connections between two convolution layers to improve the information propagation in the deep model. Each ResNet block consists of a convolution layer, Rectified Linear Unit (ReLU) activation layer, a batch-normalization layer, and a dropout layer. The model architecture parameters are provided in Table 2.

4.3 Conditional Models

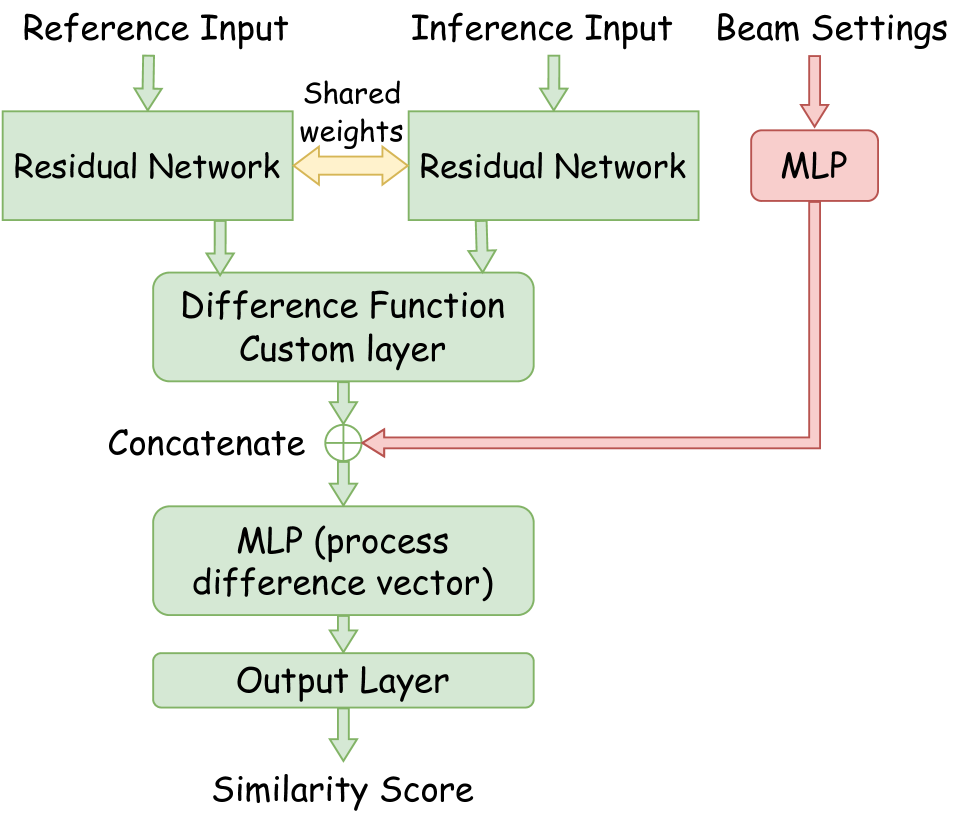

Conditional models learn a relationship between input and output data under given conditions. These conditions can be explicit features or variables influencing the relationship between inputs and outputs. In other words, the model’s predictions depend on specific conditions or contextual information. We employed conditional models that take beam configurations as input to an orthogonal MLP to handle the beam current data belonging to different beam configurations. We compared both CSNN and CVAE for our application.

CSNN consists of the same architecture described in Section 4.2 with an additional conditional input. The conditional input is then passed to an embedding sub-model as shown in red in Figure 4. The configuration embedding MLP consists of 3 dense layers with 64, 32, and 16 nodes respectively along with leaky relu activations and 5% dropouts. The embedding transforms the beam configuration values into a latent space representation, which allows the model to learn the beam configuration landscape and leverage any correlations. The embedded beam configuration is concatenated to the output from the difference layer as shown in Figure 4.

CVAE [10] was proposed to make diverse predictions for different input samples. A CVAE is a type of VAE that introduces a conditional variable into both the encoder and the decoder. The objective function of the VAE can be modified by adding the variable ,

| (4) |

where we condition all of the distributions with . CVAEs are an extension of VAEs by adding the conditional part in the and to associate the input samples with labels. Therefore, at inference time, we have more control to generate samples that belong to specific conditions in contrast to a VAE that does not have control over the generated samples.

All the models in this study are implemented using Tensorflow 2.8 [46] with Adam optimizer [51] for training. For efficient optimization, we used a learning rate decay (callback provided by tensorflow) of 85% using the validation loss plateau with a patience value of 5 epochs. To automatically tune the number of epochs required for convergence, we used an early stopping callback at the validation loss plateau with a patience of 20 epochs.

5 Results

The beam current waveforms belonging to eight different beam configuration instances were used for the training and evaluation of the SNN, CSNN, and CVAE models, and the results are discussed in this section.

| Config Id | CVAE | SNN | CSNN | % improvement by CSNN | |

|---|---|---|---|---|---|

| over CVAE | over SNN | ||||

| 1 | 71.67% | 200.75% | |||

| 2 | 16.45% | 0.00% | |||

| 3 | 85.51% | 0.00% | |||

| 4 | - | 100.00% | |||

| 5 | 33.54% | 71.07% | |||

| 6 | 66.82% | 149.65% | |||

| 7 | 82.48% | 11.38% | |||

| 8 | 22.40% | 2.68% | |||

| Overall | 69.74% | 39.67% | |||

5.1 Evaluation Metric

The goal of this study is to show that ML can improve beam availability and minimize damage to the accelerator by developing advanced fault prediction models and comparing their performance. Any anomaly prediction technique we deploy should not significantly increase the levels of beam aborts or abort duration due to false alarms. Hence, our evaluation metric is built to keep a fixed minimal False Positive Rate (FPR) while achieving a significant True Positive Rate (TPR). Even a modest level of FPR, such as 2%, would noticeably reduce the beam availability and negatively affect the science research program. Based on input from the operators and subject matter experts a FPR of 0.1% is considered the upper acceptable limit.

5.2 Model architecture selection (HPO and NAS)

Deep learning models can be built with a wide range of different configurations and architectures, producing varying results. It is essential to explore and choose the best hyper-parameters and model architecture for a given task.

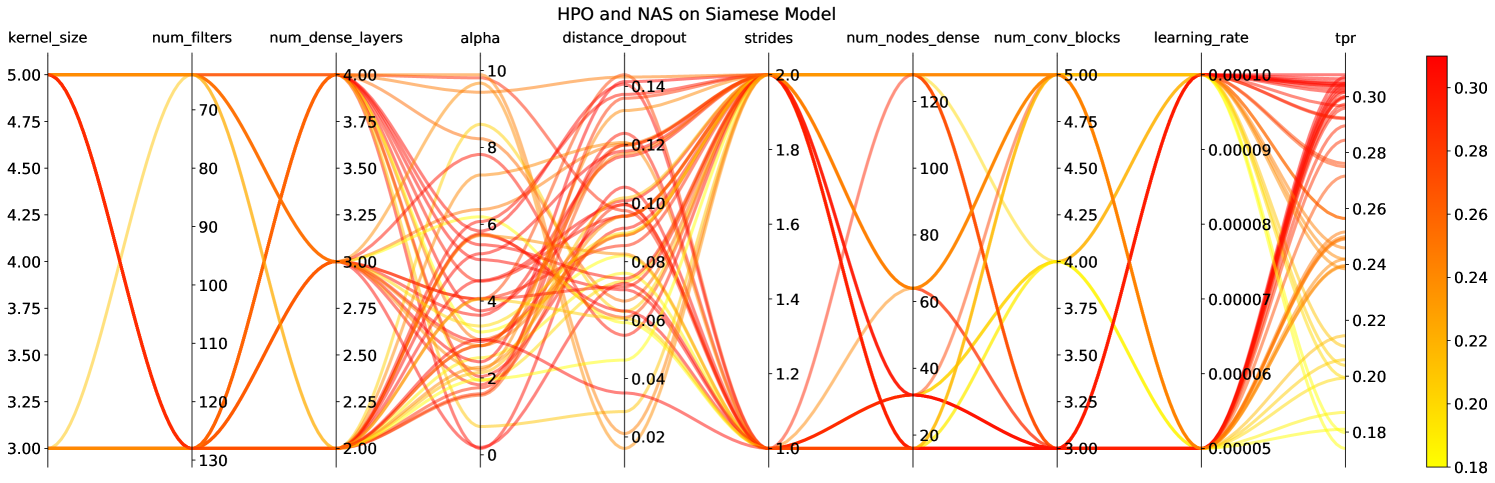

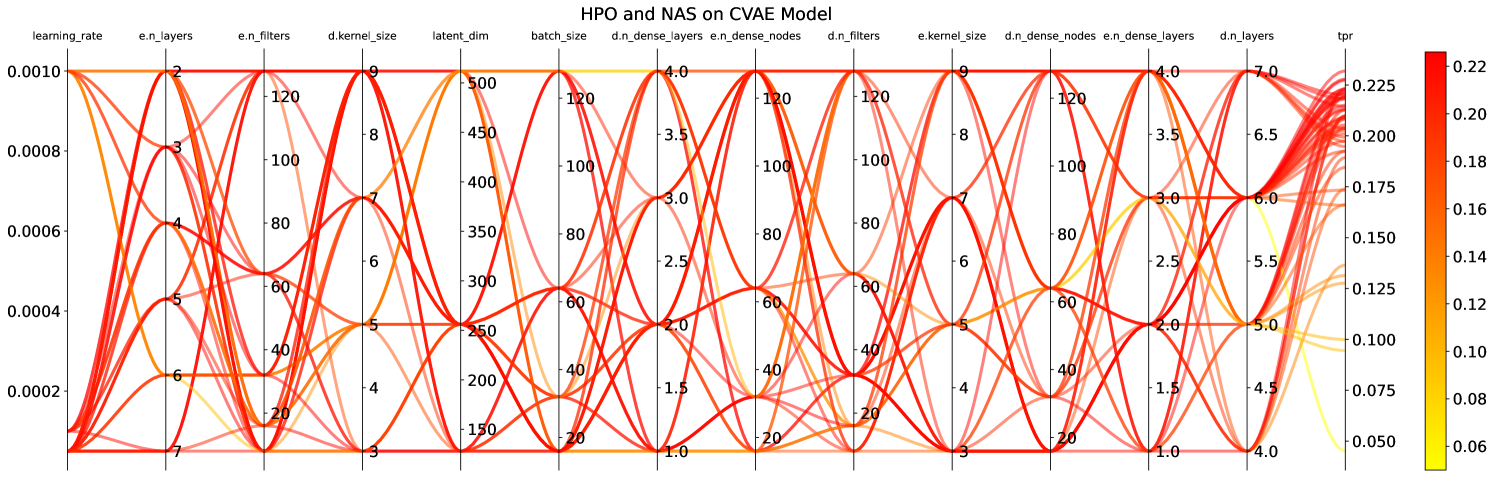

There are multiple HPO and/or NAS toolkits that are available for researchers to use and produce an optimal architecture(s). In this study, we use the Tree-based Parzen Estimator (TPE) [52] algorithm available in the HyperOpt [53] library in python in combination with ml-flow [54] to perform HPO and NAS studies on both Siamese and VAE models in order to pick the best model architecture for the comparison. The model initialization was fixed for this process. The objective was set to minimize validation loss with 50 trials to be consistent with the HyperOpt usage in the literature.

In addition to the numerical hyper-parameters and model architecture parameters shown in Figure 5, we also explore activation functions between ReLU, and Leaky_ReLU for both the models. Based on this study, we compared the model architectures and hyper-parameters that produced the best TPR at the target FPR of 0.1% in the HPO/NAS study.

5.3 CSNN Model results and comparison to individual SNNs

The models were trained on the above-described beam current data set from DCM with the training data generator to learn to produce a low similarity score between normal pulses collected during normal operation (named normal) and normal pulse preceding faults (named anomaly).

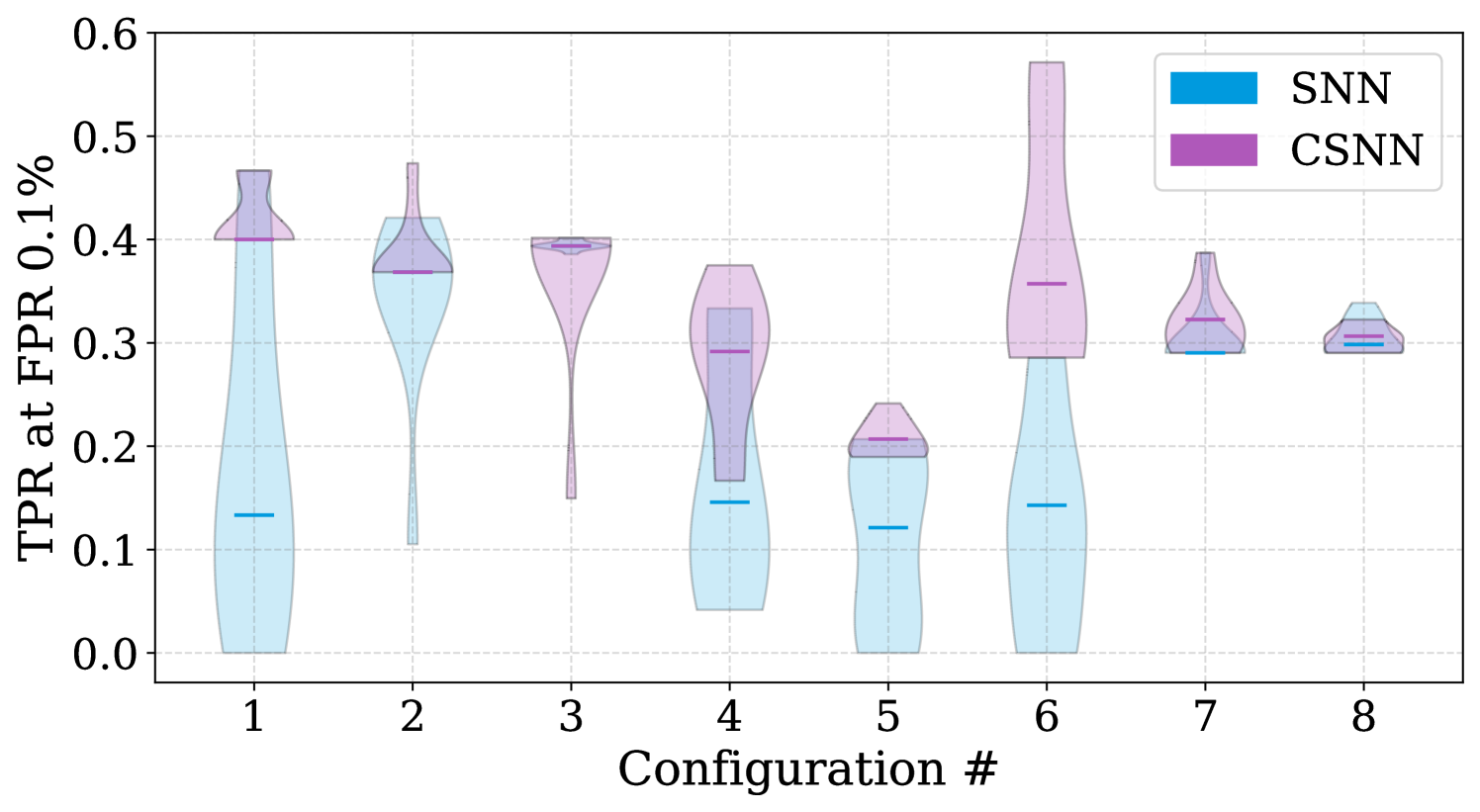

We trained eight individual SNN models on data sets belonging to each beam configuration and a single CSNN model on the entire data set using beam configurations as conditional input. To statistically evaluate the performance, stability, and robustness of the model architecture, we trained each model (SNN and CSNN) 10 times with the same architecture (chosen after architecture optimization as described in Section 5.2), using different weight and bias initialization. Figure 6, compares the prediction performance between SNN and CSNN models regarding TPR at FPR of 0.1%. In the plot, each blue violin represents an ensemble of SNN model inferences on the test data of the beam configuration they are trained on. The corresponding purple violins represent the ensemble of CSNN model inferences on the same test data. The beam configuration ID are marked on the x-axis. As shown in Figure 6, the CSNN outperforms SNN model trained on a single configuration for our application. We believe that this improved performance is due to the CSNN model being able to leverage the correlation between beam current waveforms from different configurations and hence can learn from an increased amount of data.

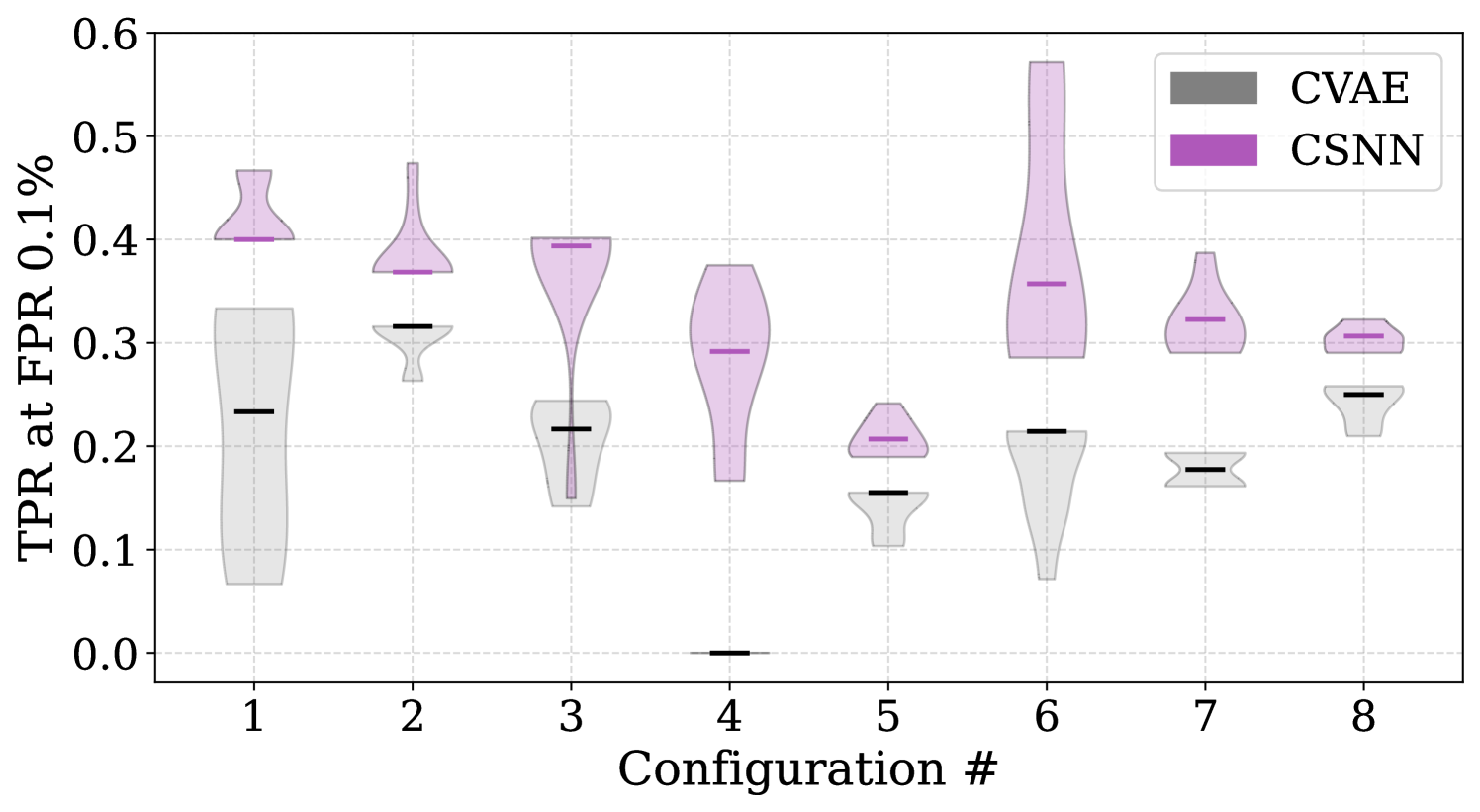

5.4 Comparision of CSNN with CVAE

To understand the stability and robustness of our models, we trained an ensemble of 10 CVAE models with different weights and bias initialization on the same data set. Figure 7 shows the predictive results of comparing CSNN and CVAE regarding TPR at FPR of 0.1%. For all the configurations, CSNN is seen to outperform CVAE. Even though both models, in this case, are trained on the same data set and use beam configuration as a conditional input, we believe that the CSNN model, being a supervised learning method, can leverage the label information and is much more powerful than CVAE. In addition, the CVAE model needs to reproduce all the features to reconstruct the normal waveforms. In contrast, CSNN does not need to learn to reproduce the whole waveforms; instead learns to extract the relevant features to distinguish anomalies from normal.

| Parameter name | CSNN | CVAE |

| Waveform input dimension | [N, 10000, 1] | [N, 10000, 1] |

| config. input dimension | [N, 8] | [N, 8] |

| Conv1D blocks | 3 | 3 (4) |

| Number of kernels | 128 | 128 (128) |

| kernel size | 3 | 6 (6) |

| Activation function | ||

| Number of dense layers | 3 | 3 (3) |

| Unites per Dense layer | 128 | 128 (128) |

| Latent | 40 | 512 |

| Optimizer | Adam | Adam |

| Loss | ||

| Trainable Parameters | 250K | 2.3M |

It is important to note that the two models also vary in architecture and how they learn to distinguish abnormal inputs from normal. However, deep learning models are often compared based on the number of trainable parameters they have. The model architectures are compared in Table 2; the number of trainable parameters in the CVAE (after a light HPO and NAS) is roughly an order of magnitude higher than that in the CSNN model. This indicates that the CVAE requires much more expressivity to learn to reproduce the normal data waveforms. On the other hand, CSNN leverages the label information and does not need to learn to reproduce the entire waveforms. This requires much lower trainable parameters and still outperforms the CVAE model.

6 Conclusions and Outlook

In this paper, we have explored conditional ML models to predict errant beams with varying beam configurations at the SNS accelerator. We have trained and evaluated SNN individually on beam current data belonging to different beam configurations as well as CSNN and CVAE on the entire data set. We have described the data collection, processing, methods, and training procedure along with HPO and NAS studies for optimal model architecture and parameter selection. The comparison between the above three variations has also been presented and it can be concluded that the CSNN outperforms both SNN and CVAE by 39.67% and 69.74% for our application. The CSNN model can achieve more than 30% of TPR at our target FPR which is very promising for any application in the field. We can also move the threshold to adjust FPR as needed to avoid more false aborts while preventing a significant amount of errant beam pulses. While CSNN performs better than SNN and CVAE, more studies are required to fully understand its capabilities to handle a large number of different beam configurations and adaptability in terms of continual learning. We would also like to further explore uncertainty quantification with the CSNN model and explore its usage with continual learning for practical purposes.

The conditional models presented in this paper are capable of handling sudden distribution shifts due to known configuration changes, however, these models have not been tested on gradual and/or sudden data drifts coming from aging machine components or other unexpected events such as field emissions. We would like to further develop the supervised learning approaches to better handle all the data drifts moving forward.

Appendix

In this section, we describe the effect of the conditional inputs on the input data i.e. how the beam configuration changes the beam current waveforms.

Beam current waveform can be decomposed into three sections namely, ramp-up, beam-on, and ramp-down.

The ramp-up is the beginning of the waveform when the beam current gradually increases in amplitude, beam-on is the middle section when the beam current stays high, and the ramp-down section comes at the end when the beam current gradually decreases.

-

1.

Ramp Up Pulse Width is the width of the mini-pulse at the beginning of the ramp-up section.

-

2.

Ramp Up Pulse Width Change is the period in terms of the number of mini-pulses before the width of the mini-pulse is increased by one in the ramp-up section. In other words, if it is set to 5 then the mini-pulse width is increased by one after every 5 mini-pulses.

-

3.

Ramp Up Width is the number of mini-pulses in the ramp-up section.

-

4.

Beam On Pulse Width is the width of the mini-pulse at the beginning of the beam-on section.

-

5.

Beam On Width is the number of mini-pulses in the beam-on section.

-

6.

Ramp Down Pulse Width is the width of the mini-pulse at the beginning of the ramp-down section.

-

7.

Gate Source Offset - Offset of Reference Gate relative to start of source.

-

8.

Beam Reference Gate Width - Width of the macro pulse

References

References

- [1] S Henderson et al 2014 Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment 763 610–673 ISSN 0168-9002 URL https://www.sciencedirect.com/science/article/pii/S0168900214003817

- [2] Peters C, Blokland W, Justice T and Southern T 2018 Minimizing Errant Beam at the Spallation Neutron Source 6th International Beam Instrumentation Conference p TH2AB1

- [3] Reščič M, Seviour R and Blokland W 2020 Nuclear Instruments and Methods in Physics Research, Section A: Accelerators, Spectrometers, Detectors and Associated Equipment 955 ISSN 0168-9002

- [4] Blokland W, Rajput K, Schram M, Jeske T, Ramuhalli P, Peters C, Yucesan Y and Zhukov A 2022 Phys. Rev. Accel. Beams 25(12) 122802 URL https://link.aps.org/doi/10.1103/PhysRevAccelBeams.25.122802

- [5] Rumelhart D E, Hinton G E and Williams R J 1986 Learning Internal Representations by Error Propagation (Cambridge, MA, USA: MIT Press) p 318–362 ISBN 026268053X

- [6] Kingma D P and Welling M 2013 Auto-encoding variational bayes URL https://arxiv.org/abs/1312.6114

- [7] Hu W, Del Vento D and Su S 2021 Proceedings of the 2021 improving scientific software conference Tech. rep. URL https://opensky.ucar.edu/islandora/object/technotes:589

- [8] Humble R, Colocho W, O’Shea F, Ratner D and Darve E 2023 Resilient vae: Unsupervised anomaly detection at the slac linac coherent light source (Preprint 2309.02333)

- [9] Pol A A, Berger V, Cerminara G, Germain C and Pierini M 2020 Anomaly detection with conditional variational autoencoders (Preprint 2010.05531)

- [10] Sohn K, Lee H and Yan X 2015 Learning structured output representation using deep conditional generative models Advances in Neural Information Processing Systems vol 28 ed Cortes C, Lawrence N, Lee D, Sugiyama M and Garnett R (Curran Associates, Inc.) URL https://shorturl.at/gDLP3

- [11] Fukunaga K and Narendra P 1975 IEEE Transactions on Computers C-24 750–753

- [12] Keller J M, Gray M R and Givens J A 1985 IEEE Transactions on Systems, Man, and Cybernetics SMC-15 580–585

- [13] Moumena A and Guessoum A 2015 Security and Communication Networks 8 212–219 (Preprint https://onlinelibrary.wiley.com/doi/pdf/10.1002/sec.974) URL https://onlinelibrary.wiley.com/doi/abs/10.1002/sec.974

- [14] Rajput K and Chen G 2022 Probabilistic envelope based visualization for monitoring drilling well data logging. VISIGRAPP (3: IVAPP) pp 51–62

- [15] Emma C, Edelen A, Hogan M J, O’Shea B, White G and Yakimenko V 2018 Phys. Rev. Accel. Beams 21 112802

- [16] Sanchez-Gonzalez A et al. 2017 Nature Commun. 8 15461 (Preprint 1610.03378)

- [17] Wielgosz M, Skoczeń A and Mertik M 2017 Nucl. Instrum. Meth. A 867 40–50 (Preprint 1611.06241)

- [18] Scheinker A and Gessner S 2015 Phys. Rev. ST Accel. Beams 18 102801

- [19] Scheinker A, Emma C, Edelen A L and Gessner S 2020 Advanced control methods for particle accelerators (ACM4PA) 2019 workshop report Tech. rep. (Preprint 2001.05461) URL https://doi.org/10.2172/1579684

- [20] Scheinker A, Edelen A, Bohler D, Emma C and Lutman A 2018 Phys. Rev. Lett. 121(4) 044801

- [21] Li S, Zacharias M, Snuverink J, Coello de Portugal J, Perez-Cruz F, Reggiani D and Adelmann A 2021 Information 12(121) URL https://www.mdpi.com/2078-2489/12/3/121

- [22] Rescic M, Seviour R and Blokland W 2020 Nucl. Instrum. Meth. A 955 163240

- [23] Reščič M, Seviour R and Blokland W 2022 Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment 1025 166064 ISSN 0168-9002 URL https://www.sciencedirect.com/science/article/pii/S0168900221009888

- [24] Tennant C, Carpenter A, Powers T, Shabalina Solopova A, Vidyaratne L and Iftekharuddin K 2020 Phys. Rev. Accel. Beams 23(11) 114601 URL https://link.aps.org/doi/10.1103/PhysRevAccelBeams.23.114601

- [25] Powers T and Solopova A 2019 CEBAF C100 Fault Classification based on Time Domain RF Signals 19th International Conference on RF Superconductivity (SRF 2019) p WETEB3

- [26] Miskovich S A, Neiswanger W, Colocho W, Emma C, Garrahan J, Maxwell T, Mayes C, Ermon S, Edelen A and Ratner D 2023 Multipoint-bax: A new approach for efficiently tuning particle accelerator emittance via virtual objectives (Preprint 2209.04587)

- [27] Scheinker A, Huang E C and Taylor C 2022 IEEE Transactions on Control Systems Technology 30 2261–2268

- [28] Kaiser J, Stein O and Eichler A 2022 Learning-based optimisation of particle accelerators under partial observability without real-world training Proceedings of the 39th International Conference on Machine Learning (Proceedings of Machine Learning Research vol 162) ed Chaudhuri K, Jegelka S, Song L, Szepesvari C, Niu G and Sabato S (PMLR) pp 10575–10585 URL https://proceedings.mlr.press/v162/kaiser22a.html

- [29] Kirschner J, Mutný M, Krause A, Coello de Portugal J, Hiller N and Snuverink J 2022 Phys. Rev. Accel. Beams 25(6) 062802 URL https://link.aps.org/doi/10.1103/PhysRevAccelBeams.25.062802

- [30] St John J, Herwig C, Kafkes D, Mitrevski J, Pellico W A, Perdue G N, Quintero-Parra A, Schupbach B A, Seiya K, Tran N, Schram M, Duarte J M, Huang Y and Keller R 2021 Phys. Rev. Accel. Beams 24(10) 104601 URL https://link.aps.org/doi/10.1103/PhysRevAccelBeams.24.104601

- [31] Kafkes D and Schram M 2021 Developing Robust Digital Twins and Reinforcement Learning for Accelerator Control Systems at the Fermilab Booster 12th International Particle Accelerator Conference (Preprint 2105.12847)

- [32] Edelen A L, Biedron S G, Chase B E, Edstrom D, Milton S V and Stabile P 2016 IEEE Trans. Nucl. Sci. 63 878–897 (Preprint 1610.06151)

- [33] Hirlaender S and Bruchon N 2020 Model-free and bayesian ensembling model-based deep reinforcement learning for particle accelerator control demonstrated on the fermi fel (Preprint 2012.09737)

- [34] Assmann R, McIntosh P, Fabris A, Bisoffi G, Andrian I and Vinicola G 2023 JACoW IPAC2023 THPL013

- [35] Alanazi Y, Schram M, Rajput K, Goldenberg S, Vidyaratne L, Pappas C, Radaideh M I, Lu D, Ramuhalli P and Cousineau S 2023 Machine Learning with Applications 13 100484 ISSN 2666-8270 URL https://www.sciencedirect.com/science/article/pii/S2666827023000373

- [36] Radaideh M I, Pappas C, Walden J, Lu D, Vidyaratne L, Britton T, Rajput K, Schram M and Cousineau S 2022 Digital Signal Processing 130 103704 ISSN 1051-2004 URL https://www.sciencedirect.com/science/article/pii/S1051200422003219

- [37] Adelmann A 2019 SIAM/ASA Journal on Uncertainty Quantification 7 383–416 (Preprint https://doi.org/10.1137/16M1061928) URL https://doi.org/10.1137/16M1061928

- [38] Mishra A A, Edelen A, Hanuka A and Mayes C 2021 Phys. Rev. Accel. Beams 24(11) 114601 URL https://link.aps.org/doi/10.1103/PhysRevAccelBeams.24.114601

- [39] Schram M, Rajput K, NS K S, Li P, St John J and Sharma H 2023 Phys. Rev. Accel. Beams 26(4) 044602 URL https://link.aps.org/doi/10.1103/PhysRevAccelBeams.26.044602

- [40] Rajput K, Schram M and Somayaji K 2023 Uncertainty aware deep learning for particle accelerators (Preprint 2309.14502)

- [41] Goldenberg S, Schram M, Rajput K, Britton T, Pappas C, Lu D, Walden J, Radaideh M I, Cousineau S and Harave S 2023 Distance preserving machine learning for uncertainty aware accelerator capacitance predictions (Preprint 2307.02367)

- [42] Blokland W and Peters C C 2013 A new differential and errant beam current monitor for the sns* accelerator Proc. 2nd Int. Beam Instrumentation Conf. (IBIC’13) (JACoW Publishing) pp 921–924 URL https://www.osti.gov/biblio/1185366

- [43] Blokland W, Peters C and Southern T 2019 Enhancements to the SNS* Differential Current Monitor to Minimize Errant Beam Proc. IBIC’19 (International Beam Instrumentation Conferenc no 8) (JACoW Publishing, Geneva, Switzerland) pp 146–149 ISBN 978-3-95450-204-2 ISSN 2673-5350 https://doi.org/10.18429/JACoW-IBIC2019-MOPP025 URL http://jacow.org/ibic2019/papers/mopp025.pdf

- [44] Kim S H, Afanador R, Barnhart D, Crofford M, Degraff B, Doleans M, Galambos J, Gold S, Howell M, Mammosser J, McMahan C, Neustadt T, Peters C, Saunders J, Strong W, Vandygriff D and Vandygriff D 2017 Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment 852 20–32 ISSN 0168-9002 URL https://www.sciencedirect.com/science/article/pii/S0168900217301894

- [45] Jones E, Oliphant T, Peterson P et al. 2001– SciPy: Open source scientific tools for Python URL http://www.scipy.org/

- [46] Abadi M, Agarwal A, Barham P, Brevdo E, Chen Z, Citro C, Corrado G S, Davis A, Dean J, Devin M, Ghemawat S, Goodfellow I, Harp A, Irving G, Isard M, Jia Y, Jozefowicz R, Kaiser L, Kudlur M, Levenberg J, Mané D, Monga R, Moore S, Murray D, Olah C, Schuster M, Shlens J, Steiner B, Sutskever I, Talwar K, Tucker P, Vanhoucke V, Vasudevan V, Viégas F, Vinyals O, Warden P, Wattenberg M, Wicke M, Yu Y and Zheng X 2015 TensorFlow: Large-scale machine learning on heterogeneous systems software available from tensorflow.org URL https://www.tensorflow.org/

- [47] An J and Cho S 2015 Special lecture on IE 2 1–18

- [48] Blei D, Kucukelbir A and McAuliffe J 2017 Journal of the American Statistical Association 112 859–877

- [49] Kullback S and Leibler R A 1951 The annals of mathematical statistics 22 79–86

- [50] He K, Zhang X, Ren S and Sun J 2016 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 770–778

- [51] Kingma D P and Ba J 2015 Adam: A method for stochastic optimization 3rd International Conference on Learning Representations, ICLR 2015 (San Diego, CA, USA: ICLR) (Preprint 1412.6980)

- [52] Watanabe S 2023 arXiv preprint arXiv:2304.11127

- [53] Bergstra J, Yamins D and Cox D 2013 Making a science of model search: Hyperparameter optimization in hundreds of dimensions for vision architectures Proceedings of the 30th International Conference on Machine Learning (Proceedings of Machine Learning Research vol 28) ed Dasgupta S and McAllester D (Atlanta, Georgia, USA: PMLR) pp 115–123 URL https://proceedings.mlr.press/v28/bergstra13.html

- [54] Chen A, Chow A, Davidson A, DCunha A, Ghodsi A, Hong S A, Konwinski A, Mewald C, Murching S, Nykodym T, Ogilvie P, Parkhe M, Singh A, Xie F, Zaharia M, Zang R, Zheng J and Zumar C 2020 Developments in mlflow: A system to accelerate the machine learning lifecycle Proceedings of the Fourth International Workshop on Data Management for End-to-End Machine Learning DEEM’20 (New York, NY, USA: Association for Computing Machinery) ISBN 9781450380232 URL https://doi.org/10.1145/3399579.3399867