Robust Estimation of Regression Models with Potentially Endogenous Outliers via a Modern Optimization Lens

Abstract

This paper addresses the robust estimation of linear regression models in the presence of potentially endogenous outliers. Through Monte Carlo simulations, we demonstrate that existing $\ell_1$-regularized estimation methods, including the Huber estimator and the least absolute deviation (LAD) estimator, exhibit significant bias when outliers are endogenous. Motivated by this finding, we investigate $\ell_0$-regularized estimation methods. We propose systematic heuristic algorithms, notably an iterative hard-thresholding algorithm and a local combinatorial search refinement, to solve the combinatorial optimization problem of the $\ell_0$-regularized estimation efficiently. Our Monte Carlo simulations yield two key results: (i) the local combinatorial search algorithm substantially improves solution quality compared to the initial projection-based hard-thresholding algorithm while offering greater computational efficiency than directly solving the mixed integer optimization problem; (ii) the $\ell_0$-regularized estimator demonstrates superior performance in terms of bias reduction, estimation accuracy, and out-of-sample prediction errors compared to $\ell_1$-regularized alternatives. We illustrate the practical value of our method through an empirical application to stock return forecasting.

Key words: Robust estimation, mixed integer optimization, outlier detection, endogeneity

JEL code: C13, C53, C61, C63

1 Introduction

Robust estimation of linear regression models in the presence of outliers has been extensively studied in econometrics and statistics. It is well known that the classical ordinary least squares (OLS) estimator is extremely sensitive to outliers. Various robust estimation methods have been proposed, for example, Huber’s M-estimation (Huber, 1964), the least median of squares (LMS) estimator (Siegel, 1982), and the least trimmed squares (LTS) estimator (Rousseeuw, 1984, 1985), among others. More recently, Lee et al. (2012) propose regularization of the $\ell_1$-norm of case-specific parameters to achieve robust estimation against outliers, and She and Owen (2011) show that any regularized estimator can be formulated as an equivalent iterative thresholding procedure of outlier detection. Refer to Yu and Yao (2017) for a comprehensive survey and detailed comparison.

The traditional literature has focused on the breakdown point and efficiency of a robust estimator (Huber and Donoho, 1983). The finite sample breakdown point of an estimator is the smallest proportion of observations that, when arbitrarily contaminated, can drive the estimation error to infinity. Efficiency, on the other hand, measures the relative estimation efficiency of the robust estimator compared to OLS in the ideal scenario where the error term is normally distributed and there are no outliers. However, there is limited work investigating how robust estimators perform when the outliers are endogenous in the sense that the noise can be arbitrarily correlated with the observed regressors. In this scenario, an outlier not only brings in contamination noise of large magnitude but also introduces model misspecification against the classical linear regression assumptions. The endogeneity arising from the outliers can lead to severe estimation bias.

In this paper, we first examine the widely adopted $\ell_1$-regularized estimator, which includes the Huber estimator and least absolute deviation as special cases, and demonstrate that it is subject to significant bias when outliers are endogenous. As shown in She and Owen (2011), the $\ell_1$-regularized estimation is equivalent to an iterative soft-thresholding procedure with a data-driven thresholding parameter, and hence it does not completely eliminate the detected outliers.

Motivated by this finding, we turn to the $\ell_0$-regularized approach. By restricting the cardinality of the set of outliers, the $\ell_0$-regularized estimator searches for the best subset of observations and the regression coefficients that minimize the least squares objective function. This problem is equivalent to computing the least trimmed squares (LTS) estimator, which is well known to be an NP-hard combinatorial optimization problem (Natarajan, 1995). Following Bertsimas et al. (2016) and Thompson (2022), the $\ell_0$-regularized estimator can be formulated as a mixed integer optimization (MIO) problem. With the continuous advancement in modern optimization software, such as gurobi, and in computational infrastructure, it is now tractable to solve the $\ell_0$-regularized estimation problem with a verifiable globally optimal solution for datasets with up to hundreds of observations. (Footnote 1: gurobi is a commercial optimization solver that specializes in mixed integer optimization. Over the past decade, gurobi has become up to 75-fold faster on mixed integer optimization problems, independent of hardware advancement (Gurobi Optimization, LLC, 2024).) However, the optimization routine relies heavily on the initial values because the problem is non-convex. In addition, the implementation is not scalable: the computational burden becomes increasingly heavy as the sample size and the fraction of outliers grow.

To address the computational challenges, we propose a scalable and efficient heuristic algorithm that combines the iterative hard-thresholding (IHT) algorithm and a local combinatorial search refinement inspired by Hazimeh and Mazumder (2020). Given the initial solution from the IHT algorithm, the local combinatorial search algorithm checks whether swapping a few observations, say one or two, between the estimated sets of inliers and outliers can improve the objective value by solving a small-scale mixed integer optimization problem. According to the Monte Carlo experiments, the heuristic algorithm is more computationally efficient and, in most cases, improves the initial IHT solution to be as good as the solution from solving the original $\ell_0$-regularized MIO problem.

Our contributions are twofold. First, we document through Monte Carlo simulations that existing $\ell_1$-regularized methods are biased in the presence of endogenous outliers, whereas the $\ell_0$-regularized approach does not suffer from this bias. This finding extends the understanding of the properties of robust estimation methods to a new scenario. Second, we propose systematic heuristic algorithms that provide stable and high-quality solutions while being computationally efficient. To the best of our knowledge, this is the first work to apply the idea of local combinatorial search to the context of robust estimation and outlier detection.

To illustrate the practical value of our method, we apply it to an empirical application in stock return forecasting. The results demonstrate that our $\ell_0$-regularized estimator outperforms $\ell_1$-regularized alternatives in terms of out-of-sample prediction errors.

Notations. For a generic vector , , , and . For matrix , we define the Frobenius matrix norm as . Generically, for positive integer . For , is the integer part of the real number . denotes the one vector.

The rest of the paper is organized as follows. Section 2 presents the model setup and motivating examples. Section 3 summarizes the existing $\ell_1$-regularized methods for robust estimation. Section 4 delineates the $\ell_0$-regularized robust methods and the detailed algorithms. The performance of the algorithms is evaluated through Monte Carlo experiments in Section 5. Section 6 illustrates the proposed method in an empirical application of stock return forecasting. Section 7 concludes.

2 Linear Regression with Potentially Endogenous Outliers

This section sets up the linear regression models with potentially endogenous outliers. Suppose we observe samples for individual unit . Let and . Consider the following linear regression model:

| (2.1) |

where for all . The parameter represents the conditional mean shift such that

We refer to the parameters as outlier fixed effects, which are allowed to be arbitrarily correlated with the regressors . An alternative formulation of model (2.1) is:

| (2.2) |

where is the outlier dummy and is the latent outlier fixed effect.

Remark 1.

and are generally allowed to be correlated. For example, , i.e., the shock affects the dependent variable only if it is large enough to pass the threshold . Conversely, implies that the error affects the dependent variable only if it is small enough to avoid detection during the data collection process.

The existence of introduces endogeneity to a subset of observations . The classical ordinary least squares (OLS) estimator is expected to be biased in the presence of endogenous outliers. The following motivating examples demonstrate possible sources of endogenous outliers.

Example 1 (Heterogeneous Coefficients).

Suppose that for ,

while for ,

where the coefficients and are heterogeneous across and potentially correlated with . Define

Then, for ,

which can be reformulated as (2.1) with

where for in general.

Example 2 (Measurement Errors).

Suppose that and are the outcome variable and the regressors of interest, respectively. Assume that there exists a linear relationship between and as

where . However, measurement errors can contaminate the variables during data collection and processing. We observe only for , and for , we observe proxy variables with measurement errors, , where represents measurement errors. Then, the regression model becomes

where

In the case of nonclassical measurement errors, in general, which induces endogeneity to a subset of observations .

The objective is to accurately estimate the regression coefficients in the presence of outliers in the sample.

3 Existing Methods: $\ell_1$-regularized Robust Regression

The most widely used robust estimators of the regression coefficients in the presence of outlier observations are Huber’s M-estimation and the least absolute deviation (LAD) estimation method (Huber, 1964). These two estimators can be understood as special cases of the $\ell_1$-regularized estimator.

Denote . Then, we can present the model (2.1) in matrix form as

| (3.1) |

Let the least squares loss function be

and consider the following $\ell_1$-regularized optimization problem,

| (3.2) |

Given , the closed-form solution is

| (3.3) |

Then the profiled $\ell_1$-regularized objective function becomes

| (3.4) |

In (3.3), the tuning parameter plays the role of a soft-thresholding parameter. When it is fixed, (3.4) becomes the objective function of Huber regression

where

| (3.5) |

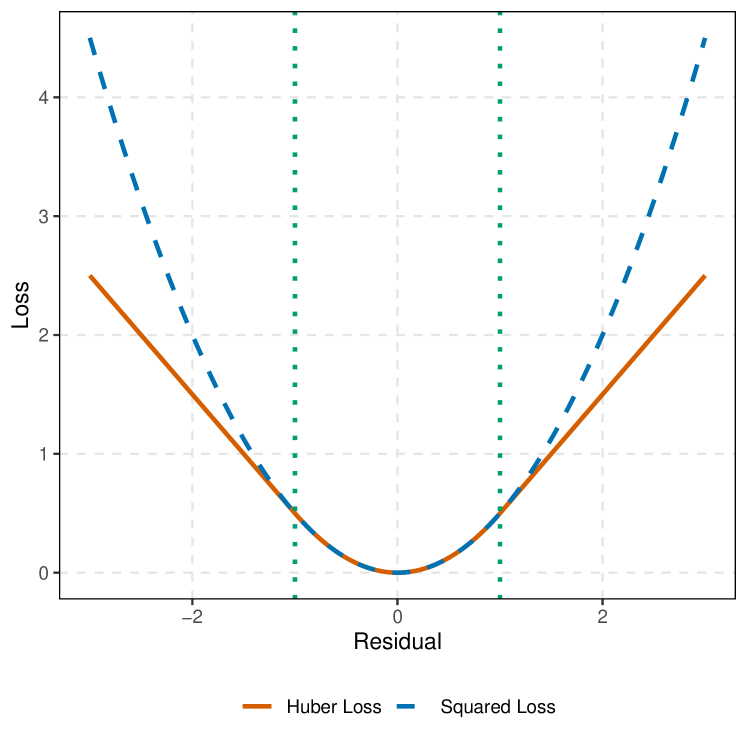

is the Huber loss function (Hastie et al., 2015, p. 26 - 27). As illustrated in Figure 1, the cutoffs and divide the Huber loss function into two regimes: it is a squared loss if and a linear loss in absolute deviation if . If we let so that given and the sample , then we have

as , which shrinks the squared loss region in Figure 1.

The $\ell_1$-regularized estimator unifies the Huber and LAD estimators and offers greater flexibility by allowing the regularization parameter to be chosen in a data-driven manner. However, as a soft-thresholding procedure, as shown in (3.3), it does not completely eliminate the detected outliers from the estimation procedure. This can lead to estimation bias when the outliers are endogenous.
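The contrast between soft- and hard-thresholding can be checked numerically. The sketch below is illustrative only: it assumes the per-observation objective 0.5*(r - a)^2 + lam*|a| for a residual r and shift a (the scaling used in (3.2) may differ), and the function names are hypothetical.

```python
import math

def soft_threshold(r: float, lam: float) -> float:
    """Minimizer of 0.5*(r - a)**2 + lam*abs(a) over the shift a."""
    return math.copysign(max(abs(r) - lam, 0.0), r)

def hard_threshold(r: float, lam: float) -> float:
    """Keep r unchanged if it exceeds the cutoff, otherwise set it to zero."""
    return r if abs(r) > lam else 0.0

def profiled_loss(r: float, lam: float) -> float:
    """Objective value after plugging in the soft-thresholded shift."""
    a = soft_threshold(r, lam)
    return 0.5 * (r - a) ** 2 + lam * abs(a)

def huber_loss(r: float, lam: float) -> float:
    """Huber loss with cutoff lam: quadratic near zero, linear in the tails."""
    return 0.5 * r * r if abs(r) <= lam else lam * abs(r) - 0.5 * lam * lam

lam = 1.0
for r in (-3.0, -0.5, 0.0, 0.8, 4.0):
    # Profiling out the shift reproduces the Huber loss (under the assumed scaling)
    assert math.isclose(profiled_loss(r, lam), huber_loss(r, lam))
    # Part of the residual that still enters the fit after removing the shift
    print(r, r - soft_threshold(r, lam), r - hard_threshold(r, lam))
```

Under this scaling, a flagged residual still contributes an amount of magnitude lam to the fit after soft-thresholding, whereas hard-thresholding removes it entirely, which is the mechanism behind the bias discussed above.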

As demonstrated in the Monte Carlo experiments in Section 5, the $\ell_1$-regularized estimator performs well in terms of estimation bias, root mean squared error (RMSE), and prediction error in DGP 1, where the outlier fixed effects are generated as exogenous random variables. However, in DGP 2 and DGP 3, where the outlier fixed effects are correlated with the regressors, the $\ell_1$-regularized estimator for the slope coefficients exhibits significant bias. This finding motivates the consideration of the hard-thresholding-based $\ell_0$-regularized estimator, as discussed in Section 4.

4 $\ell_0$-regularized Robust Regression

We consider the $\ell_0$-regularization on the outlier fixed effects in the least squares estimation,

| (4.1) |

where and the tuning parameter controls the exact sparsity of the outlier fixed effects .

Remark 2.

The Lagrangian form of the regularized estimation problem

| (4.2) |

where is the penalty function, is often studied in the literature, for example, by She and Owen (2011) and Lee et al. (2012). When , (4.2) is equivalent to classical Huber regression or least absolute deviation (LAD) estimation, depending on the tuning parameter , as detailed in Section 3. She and Owen (2011) show that the iterative procedure of outlier detection based on hard-thresholding, , gives a local minimum of the $\ell_0$-regularized optimization,

| (4.3) |

Note that (4.1) and (4.3) are not equivalent since it is not guaranteed that there exists a corresponding for each . To see this, denote , and . Note that is strictly decreasing in unless the model perfectly fits the data. Suppose is decreasing; then for any , there exists such that the corresponding $\ell_0$-penalized problem gives the same solution as (4.1). However, is not guaranteed to be decreasing. For any local minimum , there is no corresponding that yields the same solution. Scenarios like cointegration can serve as examples. In this paper, we focus on the constrained form of $\ell_0$-regularization (4.1), which allows one to control the exact sparsity of .

As in Bertsimas et al. (2016) and Thompson (2022), (4.1) can be formulated as the following mixed integer optimization (MIO) problem,

| s.t. | ||||

| (4.4) | ||||

in which we introduce a binary variable to model whether an observation is detected as an outlier. corresponds to the cardinality constraint . If , i.e., is labelled as an outlier, then should be nonzero; on the other hand, if , i.e., is labelled as an inlier, then should be exactly 0. This intuition can be translated into the constraint , which can be further modeled via integer optimization using a Special Ordered Set of Type 1 (SOS-1), that is, a set that contains at most one non-zero variable. (Footnote 2: (4.1) can also be formulated as an MIO problem using the big-M method, where provides a sufficiently large bound for . In practice, we set with and is the initial estimator from the heuristic algorithm.) and are user-defined bound parameters that help to tighten the parameter space and improve computational performance.

The optimization problem (4.4) is a well-posed MIO problem, and provable global optimality can be achieved by optimization solvers such as gurobi (Gurobi Optimization, LLC, 2024). The computational efficiency and solution quality are highly dependent on the initial values provided to the solver. Furthermore, as demonstrated in the Monte Carlo experiments in Table 1, directly solving (4.4) does not achieve provable optimality within the prespecified 5-minute timeframe when the sample size and the number of outliers are large. In the following subsection, we propose systematic approximate algorithms to solve (4.1).

4.1 Heuristics

Like best subset selection studied in Bertsimas et al. (2016), the $\ell_0$-regularized robust regression (4.1) is NP-hard (Natarajan, 1995), so no polynomial-time algorithm for it is known. The computational cost of solving for the exact solution increases steeply as the sample size increases. Heuristic algorithms that can find approximate solutions are useful for parameter tuning and for providing warm starts to the solvers. In this section, we follow Bertsimas et al. (2016), Hazimeh and Mazumder (2020), Thompson (2022), and Mazumder et al. (2022) to provide heuristic algorithms for (4.1) based on iterative hard-thresholding, neighborhood search, and local combinatorial search.

4.1.1 Iterative Hard-thresholding

Iterative hard-thresholding (IHT) based on projected gradient descent is a generic heuristic algorithm for general nonlinear programming with sparsity constraints (Beck and Eldar, 2013), and it has been successfully applied to best subset selection in Bertsimas et al. (2016). For problem (4.1), we propose the following iterative hard-thresholding algorithm, which is a simplified version of the projected block-coordinate gradient descent in Thompson (2022, Algorithm 1).

Define the hard-thresholding operator for as

As shown in Bertsimas et al. (2016, Proposition 3), if , then retains the largest (in absolute value) elements of and sets the rest to zero, i.e.

if is an ordering of such that .
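A minimal sketch of the hard-thresholding operator described above, assuming a NumPy vector as input. The function name `hard_threshold` is hypothetical, and ties in absolute value are broken arbitrarily.

```python
import numpy as np

def hard_threshold(v: np.ndarray, k: int) -> np.ndarray:
    """Keep the k largest entries of v in absolute value; set the rest to zero."""
    v = np.asarray(v, dtype=float)
    out = np.zeros_like(v)
    if k <= 0:
        return out
    k = min(k, v.size)
    # Indices of the k largest |v_i|; their internal order does not matter
    keep = np.argpartition(-np.abs(v), k - 1)[:k]
    out[keep] = v[keep]
    return out

# Only the two largest entries in absolute value (-2.0 and -4.5) survive
print(hard_threshold(np.array([0.3, -2.0, 1.1, 0.1, -4.5]), 2))
```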

The iterative hard-thresholding algorithm starts with an initial robust estimator of the regression coefficients and iteratively updates the outlier fixed effects and the coefficients by applying the hard-thresholding operator to the residuals and running least squares estimation based on the support of the updated outlier fixed effects. The details are summarized in Algorithm 1.

Remark 3.

The initial estimator for Algorithm 1 can be obtained by Huber regression or least absolute deviation estimation. Because Algorithm 1 is a simplified version of the projected block-coordinate gradient descent in Thompson (2022, Algorithm 1), the convergence of IHT follows directly from Thompson (2022). In addition, the output of Algorithm 1 is automatically coordinate-wise optimal in the sense that optimizing with respect to one coordinate at a time, while keeping the others fixed, cannot improve the objective (Hazimeh and Mazumder, 2020).
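The iteration described in this subsection can be sketched as follows. This is an illustrative reading of the procedure, not the paper's exact Algorithm 1: the function name `iht`, the stopping rule, and the use of OLS as the initial estimator in the toy example (the text suggests Huber or LAD) are all assumptions.

```python
import numpy as np

def iht(X, y, k, beta_init, max_iter=100, tol=1e-10):
    """Alternate between flagging the k largest residuals and refitting OLS on the rest."""
    n = len(y)
    beta = np.asarray(beta_init, dtype=float)
    outliers = np.zeros(n, dtype=bool)
    prev_obj = np.inf
    for _ in range(max_iter):
        resid = y - X @ beta
        # Hard-thresholding step: the k largest |residuals| become outliers
        outliers = np.zeros(n, dtype=bool)
        if k > 0:
            outliers[np.argpartition(-np.abs(resid), k - 1)[:k]] = True
        # Least squares step on the observations currently labelled as inliers
        beta, *_ = np.linalg.lstsq(X[~outliers], y[~outliers], rcond=None)
        obj = float(np.sum((y[~outliers] - X[~outliers] @ beta) ** 2))
        if prev_obj - obj <= tol:  # stop once the objective no longer improves
            break
        prev_obj = obj
    # Outlier fixed effects absorb the residuals of the flagged observations
    alpha = np.where(outliers, y - X @ beta, 0.0)
    return beta, alpha, outliers

# Toy data: an exact line y = 1 + 2x with five observations shifted by 100
n = 50
x = np.linspace(0.0, 1.0, n)
X = np.column_stack([np.ones(n), x])
y = 1.0 + 2.0 * x
y[[3, 12, 25, 38, 47]] += 100.0

beta_ols, *_ = np.linalg.lstsq(X, y, rcond=None)  # stand-in initial estimator
beta_hat, alpha_hat, flagged = iht(X, y, k=5, beta_init=beta_ols)
print(beta_hat, np.flatnonzero(flagged))
```

In this toy example the shifted observations are far from the line, so the first thresholding step already isolates them; with less clearly separated outliers the result depends on the initial estimator, which motivates the refinement in the next subsection.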

4.1.2 Local Combinatorial Search

Although the iterative hard-thresholding algorithm is fast and easy to implement, it is only guaranteed to converge to a coordinate-wise optimal solution. In this section, we propose a local combinatorial search algorithm, similar to Hazimeh and Mazumder (2020), to further refine the solution.

Note that (4.1) is equivalent to

For an estimate , denote and . Following Hazimeh and Mazumder (2020), is said to be swap-inescapable of order if arbitrarily swapping up to observations between and and then optimizing over the new support cannot improve the objective value. Formally,

| (4.5) |

If solution is swap-inescapable of order , then , associated with the OLS estimates using observations in , is the exact global optimal solution to (4.1). By solving the local combinatorial search problem in (4.5) for small , say or , we improve the IHT algorithm and verify the local combinatorial exactness.

The problem in (4.5) can be formulated as a mixed-integer optimization (MIO) problem given by

| s.t. | ||||

| (4.6) | ||||

The constraints and restrict the number of swaps between and up to . Problem (4.6) has a much smaller search space than the original problem (4.4) when is small. (Footnote 3: When , we can solve the problem by brute force, running least squares estimation on all possible 1-1 swaps between and and comparing the resulting objective values, without invoking the MIO solver.) The heuristics combining IHT and local combinatorial search are summarized in Algorithm 2.

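1. Run Algorithm 1 (IHT) from the initial estimator and take its output as the starting solution.
2. Compute the corresponding estimated sets of inliers and outliers.
3. Solve the local combinatorial search problem in (4.6) given these sets and the chosen swap order, and return its solution.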
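For swaps of order one, the brute-force variant mentioned in the footnote can be sketched as below. The function names, the tolerance, and the best-improvement update rule are assumptions made for illustration; the paper's implementation solves (4.6) with an MIO solver for higher orders.

```python
import numpy as np

def trimmed_rss(X, y, inliers):
    """OLS on the inlier set; returns the residual sum of squares and coefficients."""
    beta, *_ = np.linalg.lstsq(X[inliers], y[inliers], rcond=None)
    resid = y[inliers] - X[inliers] @ beta
    return float(resid @ resid), beta

def one_swap_search(X, y, outliers, tol=1e-10):
    """Repeat the best 1-1 swap between inliers and outliers until none improves."""
    outliers = np.array(outliers, dtype=bool)
    best_obj, best_beta = trimmed_rss(X, y, ~outliers)
    improved = True
    while improved:
        improved = False
        for i in np.flatnonzero(outliers):        # candidate to return to the inliers
            for j in np.flatnonzero(~outliers):   # candidate to flag as an outlier
                cand = outliers.copy()
                cand[i], cand[j] = False, True
                obj, beta = trimmed_rss(X, y, ~cand)
                if obj < best_obj - tol:
                    best_obj, best_beta, best_set = obj, beta, cand
                    improved = True
        if improved:
            outliers = best_set
    return best_beta, outliers, best_obj

# Toy data: exact line y = 1 + 2x with five shifted observations
n = 50
x = np.linspace(0.0, 1.0, n)
X = np.column_stack([np.ones(n), x])
y = 1.0 + 2.0 * x
y[[3, 12, 25, 38, 47]] += 100.0

# Start from a set that wrongly flags observation 0 and misses observation 47
start = np.zeros(n, dtype=bool)
start[[0, 3, 12, 25, 38]] = True
beta_hat, flagged, obj = one_swap_search(X, y, start)
print(beta_hat, np.flatnonzero(flagged), obj)
```

Each sweep fits one regression per inlier-outlier pair, so the cost grows with the product of the two set sizes; this is cheap for order one but is the reason higher orders are delegated to the solver.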

4.1.3 Neighborhood Search

Algorithm 2 is guaranteed to provide a solution that is locally optimal in the sense of swap-inescapability of order . However, the quality of the solution depends on the initial inputs due to the non-convexity. As noted in Thompson (2022) and Mazumder et al. (2022), a neighborhood search procedure can serve as a useful systematic way of perturbing the initial inputs to improve the solution quality of the heuristic algorithm. Let with be the set of candidate sparsity parameters.

Denote and as the outputs of Algorithm 2 initialized with , and . The neighborhood search procedure is summarized in Algorithm 3. As a by-product of the procedure, we obtain a solution for each , which is useful for sensitivity analysis and for the parameter tuning discussed in Section 4.2.

4.2 Tuning Parameter Choice

In both $\ell_1$- and $\ell_0$-regularized estimation methods, the selection of tuning parameters, in (3.2) and in (4.1), plays a critical role. We propose the BIC-type information criteria,

| (4.7) |

For computational efficiency, we use Algorithm 3 to generate solutions for a grid of candidate tuning parameters and select the one that minimizes the BIC,

| (4.8) |

where is the maximum potential number of outliers.

The widely used theoretical property to evaluate robust estimation methods is the finite sample breakdown point, proposed in Hampel (1971) and Huber and Donoho (1983). Suppose the original sample is and the contaminated sample is with observations in the original sample being arbitrarily replaced by outliers. The finite sample breakdown point of an estimator is defined as

Proposition 1.

Proof.

The proof is relegated to Appendix A.1. ∎

5 Monte Carlo Simulation

In this section, we examine the numerical performance of the proposed $\ell_0$-regularized estimation procedure. We compare its coefficient estimation accuracy and prediction error to those of the $\ell_1$-regularized estimation and the classical methods, LAD and OLS.

5.1 Setup

Following the setting in Section 2, we consider the following data generating processes (DGPs).

DGP 1 (Exogenous Outliers). Consider the linear regression model with outliers,

where is the indicator for outliers and is the outlier fixed effect. We generate the regressors by and , where for , and the error term by . Let denote the fraction of outliers. and Let be exogenous shocks to outliers where . The true coefficients are , .

DGP 2 (Endogenous Outliers). This DGP deviates from DGP 1 by allowing the outlier fixed effects to be correlated with the regressors. Let be a linear combination of the innovations to make the outlier fixed effects correlated with the regressors and create endogeneity. The parameter controls the degree of correlation. The remaining components are the same as in DGP 1.

DGP 3 (Predictive Regression with Endogenous Shocks). Consider a linear predictive regression model as studied in Kostakis et al. (2015); Koo et al. (2020); Lee et al. (2022). The dependent variable is generated as

where , , and . The vector of the stacked innovation follows a VAR(1) process , where in which and are empirically estimated from the Welch and Goyal (2008) data as in Lee et al. (2022, Supplements S1). is a vector I(1) process with cointegration rank 1 based on the VECM, where and are the cointegrating matrix and the loading matrix, respectively. are random walks generated by , . Let be the fraction of outliers and define . We impose two outlier periods. Let and be the positions in the sample where the outliers start. The outlier indicator for , and 0 otherwise. The outlier shifts where .

To evaluate each heuristic algorithm in Section 4.1 against solving the full-scale mixed integer optimization problem (4.4), we report the measure Equal MIO, which is the fraction of replications in which the estimates obtained by the algorithm are the same as those of the MIO solution of (4.4). For each implementation invoking the MIO solver, we report the relative optimality gap, which is defined as

where is the attained upper bound of the objective value of the MIO solution and is the dual lower bound of the objective value delivered by the solver. (Footnote 4: The value can be read from the solver output. Details of the definition can be found at https://www.gurobi.com/documentation/current/refman/mipgap2.html.) The relative optimality gap measures the progress of the solver's optimality verification. The solution is verified to be globally optimal if this gap is less than a prespecified tolerance threshold .
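As a small illustration, the gap can be computed from the two bounds as below. The sketch follows the convention in the Gurobi documentation cited in the footnote, |UB - LB| / |UB|, including its handling of a zero upper bound; the function name is hypothetical, and the tolerance of 1e-4 is Gurobi's default and is only assumed here to match the paper's setting.

```python
def relative_gap(upper_bound: float, lower_bound: float) -> float:
    """Relative optimality gap |UB - LB| / |UB| between incumbent and dual bound."""
    if upper_bound == 0.0:
        # Convention: zero gap if both bounds are zero, infinite otherwise
        return 0.0 if lower_bound == 0.0 else float("inf")
    return abs(upper_bound - lower_bound) / abs(upper_bound)

tolerance = 1e-4  # assumed: Gurobi's default relative gap tolerance

for ub, lb in [(10.0, 9.0), (10.0, 9.9995), (0.0, 0.0)]:
    gap = relative_gap(ub, lb)
    print(ub, lb, gap, "verified optimal" if gap < tolerance else "not verified")
```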

For the estimation and out-of-sample prediction performance, we report the bias, root mean squared error (RMSE) and prediction errors. Generically, bias and RMSE are calculated by and , respectively, for true parameter , its estimate across replications. To calculate the prediction error, we generate test data following the same DGP without outliers, , . The prediction error is calculated by

In all experiments, we consider and . All experiments are carried out on a Linux machine with an Intel i9-13900K CPU and the Gurobi optimization solver, version 11.0. The time limit for the solver is set to 5 minutes.
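The bias and RMSE measures described above can be computed from replication-level estimates as in the following sketch. It assumes the standard definitions (mean estimation error and root of the mean squared estimation error across replications); the function name and the toy numbers are illustrative.

```python
import math

def bias_and_rmse(estimates, truth):
    """Bias and root mean squared error of a scalar estimator across replications."""
    errors = [b - truth for b in estimates]
    bias = sum(errors) / len(errors)
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return bias, rmse

# Toy example: four replications of an estimator of a true slope equal to 2
bias, rmse = bias_and_rmse([1.0, 3.0, 2.5, 1.5], truth=2.0)
print(bias, rmse)
```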

5.2 Performance of the Heuristic Algorithms

We begin by evaluating the performance of the heuristic algorithms presented in Section 4.1 in comparison to solving the full-scale mixed integer optimization problem (4.4). We set the parameters for DGP 1 and for DGP 2 and 3 and run replications for each setup. The parameter is set to the true value for all algorithms. For MIO problems (4.4) and (4.6), and where is the initial estimator and .

For each simulated dataset, we implement Algorithm 1, the iterative hard-thresholding (IHT) method. Subsequently, using the IHT estimate as the initial estimator, we apply the local combinatorial search (LCS) algorithm with local exactness levels and , referred to as LCS-1 and LCS-2, respectively. Additionally, we solve the full-scale mixed integer optimization problem (4.4) using the IHT estimate as the warm start. In Table 1, we report the average CPU runtime (in seconds) for each algorithm, the frequency with which the IHT, LCS-1, and LCS-2 estimates equal the MIO solution, and the average relative optimality gap.

| DGP | Sample size | Outlier fraction | CPU time (s): IHT | CPU time (s): LCS-1 | CPU time (s): LCS-2 | CPU time (s): MIO | Equal MIO: IHT | Equal MIO: LCS-1 | Equal MIO: LCS-2 | Optimality gap: LCS-1 | Optimality gap: LCS-2 | Optimality gap: MIO |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DGP 1 | 100 | 0.05 | 0.0004 | 0.0969 | 0.2030 | 0.1596 | 0.91 | 1.00 | 1.00 | 0.0001 | 0.0000 | 0.0000 |
| | 100 | 0.1 | 0.0004 | 0.1169 | 0.8459 | 5.2721 | 0.83 | 1.00 | 1.00 | 0.0001 | 0.0002 | 0.0000 |
| | 100 | 0.2 | 0.0004 | 0.2433 | 1.4303 | 222.29 | 0.59 | 0.93 | 0.99 | 0.0000 | 0.0003 | 0.1298 |
| | 200 | 0.05 | 0.0005 | 0.3674 | 5.2707 | 65.573 | 0.95 | 0.98 | 1.00 | 0.0000 | 0.0004 | 0.0131 |
| | 200 | 0.1 | 0.0005 | 0.6265 | 12.093 | 304.92 | 0.78 | 0.94 | 1.00 | 0.0001 | 0.0009 | 0.3527 |
| | 200 | 0.2 | 0.0005 | 0.7830 | 19.359 | 306.12 | 0.72 | 0.87 | 1.00 | 0.0002 | 0.0009 | 0.7530 |
| | 400 | 0.05 | 0.0007 | 1.7538 | 105.28 | 302.69 | 0.92 | 1.00 | 1.00 | 0.0000 | 0.0010 | 0.4249 |
| | 400 | 0.1 | 0.0007 | 4.3731 | 205.89 | 302.87 | 0.77 | 0.92 | 0.98 | 0.0006 | 0.0010 | 0.7960 |
| | 400 | 0.2 | 0.0008 | 4.7983 | 299.60 | 302.56 | 0.55 | 0.83 | 0.98 | 0.0008 | 0.0045 | 0.9500 |
| DGP 2 | 100 | 0.05 | 0.0004 | 0.1001 | 0.2034 | 0.2160 | 0.88 | 1.00 | 1.00 | 0.0001 | 0.0000 | 0.0000 |
| | 100 | 0.1 | 0.0004 | 0.1264 | 1.0286 | 2.4994 | 0.87 | 1.00 | 1.00 | 0.0002 | 0.0002 | 0.0000 |
| | 100 | 0.2 | 0.0004 | 0.1664 | 1.4692 | 76.835 | 0.65 | 0.94 | 0.99 | 0.0002 | 0.0004 | 0.0176 |
| | 200 | 0.05 | 0.0005 | 0.4235 | 6.0045 | 134.65 | 0.91 | 1.00 | 1.00 | 0.0001 | 0.0006 | 0.0402 |
| | 200 | 0.1 | 0.0005 | 0.6005 | 12.279 | 292.25 | 0.74 | 0.94 | 1.00 | 0.0001 | 0.0009 | 0.3163 |
| | 200 | 0.2 | 0.0005 | 0.8669 | 19.165 | 305.50 | 0.71 | 0.89 | 0.98 | 0.0003 | 0.0010 | 0.6237 |
| | 400 | 0.05 | 0.0006 | 1.9436 | 115.93 | 302.42 | 0.92 | 1.00 | 1.00 | 0.0002 | 0.0010 | 0.4884 |
| | 400 | 0.1 | 0.0007 | 4.9341 | 212.42 | 302.54 | 0.81 | 0.92 | 0.96 | 0.0007 | 0.0010 | 0.8046 |
| | 400 | 0.2 | 0.0007 | 5.2007 | 300.10 | 302.34 | 0.57 | 0.81 | 0.97 | 0.0008 | 0.0030 | 0.9354 |
| DGP 3 | 100 | 0.05 | 0.0008 | 0.3549 | 0.2814 | 0.3953 | 0.86 | 1.00 | 1.00 | 0.0001 | 0.0000 | 0.0000 |
| | 100 | 0.1 | 0.0009 | 0.4673 | 9.9887 | 13.779 | 0.86 | 0.99 | 1.00 | 0.0000 | 0.0005 | 0.0001 |
| | 100 | 0.2 | 0.0010 | 0.5272 | 13.365 | 214.53 | 0.60 | 0.96 | 0.99 | 0.0001 | 0.0007 | 0.2078 |
| | 200 | 0.05 | 0.0014 | 1.8395 | 38.356 | 135.95 | 0.79 | 0.97 | 1.00 | 0.0001 | 0.0008 | 0.0597 |
| | 200 | 0.1 | 0.0013 | 2.2910 | 64.206 | 293.49 | 0.75 | 0.95 | 0.99 | 0.0002 | 0.0011 | 0.4392 |
| | 200 | 0.2 | 0.0016 | 2.3277 | 61.629 | 302.97 | 0.63 | 0.84 | 0.95 | 0.0002 | 0.0009 | 0.8481 |
| | 400 | 0.05 | 0.0019 | 7.4183 | 241.30 | 301.51 | 0.87 | 0.96 | 1.00 | 0.0003 | 0.0062 | 0.5299 |
| | 400 | 0.1 | 0.0097 | 9.0912 | 265.04 | 301.38 | 0.80 | 0.88 | 0.95 | 0.0004 | 0.0075 | 0.8901 |
| | 400 | 0.2 | 0.0063 | 10.169 | 253.46 | 301.07 | 0.51 | 0.78 | 0.93 | 0.0004 | 0.0103 | 0.9900 |

Note: The left panel reports the average computation time of each algorithm in seconds. The middle panel reports the fraction of replications in which the estimates obtained by the algorithm are the same as those of the MIO solution of (4.4). The right panel reports the average, across replications, of the relative optimality gap defined as , where is the attained upper bound of the objective value of the MIO solution and is the dual lower bound of the objective value delivered by the solver. IHT refers to the iterative hard-thresholding in Algorithm 1. LCS-1 and LCS-2 are the local combinatorial search algorithm in Algorithm 2 with and , respectively. MIO refers to solving (4.4) directly with the Gurobi solver.

The key findings presented in Table 1 highlight the performance differences between the heuristic algorithms and the mixed integer optimization (MIO) approach. Notably, within the 5-minute time limit, the MIO method fails to complete the optimality verification for cases where the scale is larger than and . In contrast, the iterative hard-thresholding (IHT) and the local combinatorial search (LCS-1) with are significantly faster. Specifically, IHT runs in milliseconds and LCS-1 achieves optimality in seconds for most cases. LCS-2 takes less than a minute except when and or . In these exceptional cases, the relative optimality gap of LCS-2 remains below 1%, indicating near convergence. Furthermore, the initial estimator, IHT, provides solutions of the same quality as MIO in 50% to 90% of the cases. When advancing to LCS-1, the frequency of obtaining solutions equivalent to MIO increases to approximately 90% at a significantly lower computational cost than MIO. LCS-2 matches the MIO solutions in nearly all replications. These findings underscore the effectiveness and computational efficiency of the heuristic algorithms. In particular, the local combinatorial search algorithm balances solution stability and computational cost, which is important when dealing with large sample sizes and many outliers.

5.3 Comparison between $\ell_0$- and $\ell_1$-Regularized Estimation

In this section, we compare the performance of $\ell_0$- and $\ell_1$-regularized estimation in different scenarios. For the $\ell_0$- and $\ell_1$-regularized estimation, the tuning parameters and are selected using the information criteria (4.7). When choosing , we rely on the neighborhood search algorithm, as detailed in Algorithm 3, to generate the estimates for each candidate . Specifically, in the implementation of Algorithm 3, we set and the local exactness level . We report LCS-2 with selected by BIC as the $\ell_0$-regularized estimation results. In addition, we include ordinary least squares (OLS) and least absolute deviation (LAD) as benchmarks. Bias and root mean squared error (RMSE) are reported for the first slope parameter in all data generating processes (DGPs). For each setup, we run 1000 replications.

The key findings from the Monte Carlo simulation reported in Tables 2 to 4 reveal several important insights. In DGP 1, where the outlier fixed effects are exogenous and there is no endogeneity issue, the bias of the $\ell_0$ method is slightly larger than that of the alternatives. However, the $\ell_0$ method achieves the smallest RMSE and prediction error in most cases. Ordinary least squares (OLS) is not robust to outliers, and its RMSE and prediction error increase significantly as the magnitude of the outlier fixed effects becomes larger. The estimation accuracy of the $\ell_1$ method is unstable when the sample size and the outlier fraction are small, but it outperforms least absolute deviation (LAD) and OLS as the sample size and the outlier fraction increase. In DGP 2 and 3, where endogenous outlier fixed effects are introduced, the $\ell_1$-regularized method, LAD, and OLS exhibit severe estimation bias. In contrast, the $\ell_0$-regularized method is free from estimation bias and achieves the smallest RMSE and prediction error. The accuracy gap widens as , the parameter controlling the degree of endogeneity, and the fraction of outliers increase. These findings demonstrate that the $\ell_0$ method is more robust to the presence of endogenous outliers and provides more accurate estimation.

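| Sample size | Outlier fraction | Bias: L0 | Bias: L1 | Bias: LAD | Bias: OLS | RMSE: L0 | RMSE: L1 | RMSE: LAD | RMSE: OLS | Prediction Error: L0 | Prediction Error: L1 | Prediction Error: LAD | Prediction Error: OLS | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|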

| 100 | 0.05 | 0.0178 | -0.0059 | -0.0068 | 0.0044 | 0.1754 | 0.4331 | 0.2591 | 0.2172 | 1.0455 | 1.0754 | 1.0691 | 1.0708 | |

| 100 | 0.05 | 0.0163 | 0.0061 | -0.0013 | -0.0119 | 0.1687 | 0.2567 | 0.2372 | 0.2618 | 1.0471 | 1.0513 | 1.0647 | 1.1550 | |

| 100 | 0.05 | 0.0095 | 0.0174 | -0.0084 | -0.0001 | 0.1740 | 0.4375 | 0.2403 | 0.4806 | 1.0462 | 1.0899 | 1.0640 | 1.5507 | |

| 100 | 0.1 | 0.0195 | -0.0032 | -0.0009 | -0.0018 | 0.2057 | 0.3765 | 0.4099 | 0.2793 | 1.0634 | 1.0752 | 1.1189 | 1.1139 | |

| 100 | 0.1 | 0.0197 | -0.0129 | 0.0051 | 0.0071 | 0.1952 | 0.4613 | 0.2992 | 0.3372 | 1.0713 | 1.1072 | 1.1006 | 1.3960 | |

| 100 | 0.1 | 0.0217 | -0.0251 | -0.0053 | -0.0206 | 0.1907 | 0.5812 | 0.2687 | 0.6476 | 1.0726 | 1.1381 | 1.0992 | 2.5156 | |

| 100 | 0.2 | 0.0598 | 0.0013 | -0.0037 | -0.0014 | 0.2537 | 0.2037 | 0.3693 | 0.3467 | 1.1117 | 1.0707 | 1.1242 | 1.1861 | |

| 100 | 0.2 | 0.0657 | -0.0023 | 0.0048 | -0.0133 | 0.2930 | 0.4674 | 0.2858 | 0.4629 | 1.2384 | 1.2532 | 1.1423 | 2.2922 | |

| 100 | 0.2 | 0.0886 | 0.0044 | -0.0041 | -0.0359 | 0.3756 | 0.3443 | 0.3889 | 0.8796 | 1.2692 | 1.5191 | 1.1856 | 6.0357 | |

| 200 | 0.05 | 0.0055 | -0.0011 | -0.0005 | -0.0020 | 0.1276 | 0.1125 | 0.1802 | 0.1504 | 1.0227 | 1.0185 | 1.0320 | 1.0338 | |

| 200 | 0.05 | 0.0091 | 0.0069 | 0.0073 | -0.0026 | 0.1226 | 0.2324 | 0.1887 | 0.1904 | 1.0218 | 1.0310 | 1.0340 | 1.1070 | |

| 200 | 0.05 | 0.0074 | 0.0036 | 0.0107 | 0.0086 | 0.1159 | 0.1134 | 0.2214 | 0.3237 | 1.0249 | 1.0246 | 1.0448 | 1.3797 | |

| 200 | 0.1 | 0.0146 | 0.0089 | 0.0060 | 0.0030 | 0.1363 | 0.2345 | 0.1631 | 0.1898 | 1.0254 | 1.0298 | 1.0293 | 1.0527 | |

| 200 | 0.1 | 0.0166 | 0.0137 | 0.0047 | 0.0121 | 0.1278 | 0.2367 | 0.1525 | 0.2437 | 1.0385 | 1.0539 | 1.0449 | 1.3352 | |

| 200 | 0.1 | 0.0140 | 0.0053 | 0.0117 | -0.0005 | 0.1269 | 0.1355 | 0.1899 | 0.4503 | 1.0331 | 1.0522 | 1.0451 | 2.2763 | |

| 200 | 0.2 | 0.0382 | -0.0025 | 0.0039 | -0.0155 | 0.1833 | 0.1406 | 0.2778 | 0.2525 | 1.0544 | 1.0321 | 1.0520 | 1.0980 | |

| 200 | 0.2 | 0.0330 | 0.0049 | 0.0018 | 0.0001 | 0.1775 | 0.1669 | 0.2220 | 0.3259 | 1.0985 | 1.1675 | 1.0926 | 2.1352 | |

| 200 | 0.2 | 0.0337 | -0.0013 | 0.0064 | -0.0189 | 0.1907 | 0.2270 | 0.2291 | 0.6181 | 1.0957 | 1.3804 | 1.1007 | 5.4837 | |

| 400 | 0.05 | 0.0033 | 0.0071 | 0.0021 | 0.0039 | 0.0822 | 0.2162 | 0.0944 | 0.1065 | 1.0119 | 1.0207 | 1.0140 | 1.0194 | |

| 400 | 0.05 | 0.0055 | 0.0028 | 0.0026 | 0.0024 | 0.0825 | 0.0789 | 0.0962 | 0.1294 | 1.0113 | 1.0154 | 1.0148 | 1.0867 | |

| 400 | 0.05 | 0.0041 | 0.0031 | 0.0033 | 0.0089 | 0.0800 | 0.0807 | 0.1000 | 0.2409 | 1.0132 | 1.0173 | 1.0165 | 1.3312 | |

| 400 | 0.1 | 0.0012 | -0.0024 | -0.0013 | -0.0064 | 0.0872 | 0.0852 | 0.1042 | 0.1310 | 1.0122 | 1.0119 | 1.0153 | 1.0273 | |

| 400 | 0.1 | 0.0052 | -0.0007 | 0.0028 | -0.0057 | 0.0857 | 0.0912 | 0.1288 | 0.1710 | 1.0158 | 1.0433 | 1.0277 | 1.2877 | |

| 400 | 0.1 | 0.0069 | 0.0019 | 0.0073 | -0.0055 | 0.0833 | 0.0911 | 0.1721 | 0.3137 | 1.0131 | 1.0351 | 1.0299 | 2.1209 | |

| 400 | 0.2 | 0.0023 | -0.0003 | -0.0017 | 0.0019 | 0.1051 | 0.1033 | 0.1025 | 0.1779 | 1.0152 | 1.0153 | 1.0150 | 1.0465 | |

| 400 | 0.2 | 0.0134 | 0.0040 | 0.0032 | 0.0063 | 0.1099 | 0.1130 | 0.2314 | 0.2254 | 1.0487 | 1.1439 | 1.0823 | 2.0636 | |

| 400 | 0.2 | 0.0172 | -0.0030 | -0.0059 | -0.0163 | 0.1215 | 0.1501 | 0.1191 | 0.4302 | 1.0602 | 1.3277 | 1.0741 | 5.2611 | |

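Note: L0, L1, LAD and OLS refer to the $L_0$-regularized estimator computed by the local combinatorial search algorithm (LCS-2), the $L_1$-regularized estimator defined in (3.2), the least absolute deviation estimator, and the ordinary least squares estimator, respectively. Generically, bias and RMSE are calculated as $\frac{1}{R}\sum_{r=1}^{R}(\hat{\theta}^{(r)}-\theta)$ and $\sqrt{\frac{1}{R}\sum_{r=1}^{R}(\hat{\theta}^{(r)}-\theta)^{2}}$, respectively, for a true parameter $\theta$ and its estimates $\hat{\theta}^{(r)}$ across $R$ replications. We report bias and RMSE for the first slope parameter. The prediction error is calculated on test data generated from the same DGP without outliers.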

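| Sample size | Outlier fraction | Endogeneity parameter | Bias: L0 | Bias: L1 | Bias: LAD | Bias: OLS | RMSE: L0 | RMSE: L1 | RMSE: LAD | RMSE: OLS | Prediction Error: L0 | Prediction Error: L1 | Prediction Error: LAD | Prediction Error: OLS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|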

| 100 | 0.05 | 2 | 0.0136 | -0.0425 | -0.0213 | -0.1039 | 0.1856 | 0.4412 | 0.2999 | 0.2418 | 1.0520 | 1.1012 | 1.0764 | 1.0753 |

| 100 | 0.05 | 5 | 0.0108 | -0.0292 | -0.0293 | -0.2481 | 0.1721 | 0.3388 | 0.3421 | 0.4599 | 1.0454 | 1.0695 | 1.0981 | 1.2632 |

| 100 | 0.05 | 10 | 0.0024 | -0.0455 | -0.0375 | -0.5083 | 0.1695 | 0.5241 | 0.2712 | 0.9381 | 1.0450 | 1.1111 | 1.0725 | 2.0180 |

| 100 | 0.1 | 2 | 0.0124 | -0.0782 | -0.0647 | -0.2000 | 0.1985 | 0.2809 | 0.3104 | 0.3223 | 1.0584 | 1.0694 | 1.0793 | 1.1250 |

| 100 | 0.1 | 5 | 0.0177 | -0.1038 | -0.0633 | -0.5106 | 0.1959 | 0.4527 | 0.3108 | 0.7374 | 1.0617 | 1.0868 | 1.0951 | 1.6280 |

| 100 | 0.1 | 10 | 0.0084 | -0.1197 | -0.0665 | -0.9777 | 0.1932 | 0.3861 | 0.2889 | 1.4546 | 1.0652 | 1.1075 | 1.0973 | 3.4246 |

| 100 | 0.2 | 2 | 0.0470 | -0.1924 | -0.1443 | -0.3914 | 0.2518 | 0.5470 | 0.3400 | 0.5008 | 1.0995 | 1.1424 | 1.1261 | 1.2899 |

| 100 | 0.2 | 5 | 0.0210 | -0.2921 | -0.1563 | -1.0116 | 0.2536 | 0.4487 | 0.3351 | 1.2382 | 1.1436 | 1.1982 | 1.1369 | 2.6585 |

| 100 | 0.2 | 10 | -0.0150 | -0.4833 | -0.1652 | -1.9442 | 0.2628 | 0.6554 | 0.4466 | 2.4123 | 1.1598 | 1.5000 | 1.1400 | 7.3165 |

| 200 | 0.05 | 2 | -0.0039 | -0.0472 | -0.0373 | -0.1026 | 0.1263 | 0.1222 | 0.1418 | 0.1779 | 1.0203 | 1.0179 | 1.0231 | 1.0373 |

| 200 | 0.05 | 5 | 0.0019 | -0.0439 | -0.0357 | -0.2472 | 0.1228 | 0.2360 | 0.1610 | 0.3677 | 1.0200 | 1.0288 | 1.0287 | 1.1555 |

| 200 | 0.05 | 10 | 0.0028 | -0.0480 | -0.0289 | -0.5072 | 0.1209 | 0.1247 | 0.1675 | 0.7180 | 1.0243 | 1.0235 | 1.0350 | 1.6064 |

| 200 | 0.1 | 2 | -0.0058 | -0.0950 | -0.0619 | -0.2023 | 0.1371 | 0.2891 | 0.1853 | 0.2717 | 1.0284 | 1.0398 | 1.0369 | 1.0844 |

| 200 | 0.1 | 5 | 0.0066 | -0.0861 | -0.0538 | -0.4758 | 0.1344 | 0.2542 | 0.2812 | 0.6015 | 1.0284 | 1.0424 | 1.0614 | 1.4231 |

| 200 | 0.1 | 10 | 0.0024 | -0.1185 | -0.0633 | -1.0126 | 0.1321 | 0.1844 | 0.1817 | 1.2477 | 1.0283 | 1.0408 | 1.0515 | 2.6637 |

| 200 | 0.2 | 2 | 0.0132 | -0.1876 | -0.1343 | -0.3975 | 0.1743 | 0.2374 | 0.2047 | 0.4621 | 1.0486 | 1.0667 | 1.0520 | 1.2298 |

| 200 | 0.2 | 5 | 0.0077 | -0.2762 | -0.1411 | -1.0094 | 0.1801 | 0.3342 | 0.3001 | 1.1396 | 1.0629 | 1.1241 | 1.0809 | 2.3628 |

| 200 | 0.2 | 10 | -0.0130 | -0.4515 | -0.1441 | -2.0215 | 0.1803 | 0.5364 | 0.2658 | 2.2574 | 1.0698 | 1.3223 | 1.0785 | 6.3780 |

| 400 | 0.05 | 2 | -0.0055 | -0.0437 | -0.0278 | -0.1000 | 0.0815 | 0.2212 | 0.0907 | 0.1433 | 1.0099 | 1.0209 | 1.0117 | 1.0243 |

| 400 | 0.05 | 5 | 0.0010 | -0.0513 | -0.0274 | -0.2519 | 0.0826 | 0.0952 | 0.1322 | 0.3206 | 1.0115 | 1.0136 | 1.0192 | 1.1145 |

| 400 | 0.05 | 10 | -0.0005 | -0.0543 | -0.0338 | -0.4964 | 0.0829 | 0.0981 | 0.1257 | 0.6225 | 1.0113 | 1.0136 | 1.0172 | 1.4262 |

| 400 | 0.1 | 2 | -0.0112 | -0.0978 | -0.0607 | -0.1992 | 0.0874 | 0.1306 | 0.1100 | 0.2350 | 1.0123 | 1.0219 | 1.0171 | 1.0623 |

| 400 | 0.1 | 5 | -0.0001 | -0.1024 | -0.0568 | -0.4896 | 0.0860 | 0.1349 | 0.2014 | 0.5579 | 1.0116 | 1.0225 | 1.0339 | 1.3413 |

| 400 | 0.1 | 10 | -0.0017 | -0.1138 | -0.0648 | -0.9929 | 0.0854 | 0.1473 | 0.1400 | 1.1230 | 1.0112 | 1.0249 | 1.0237 | 2.3312 |

| 400 | 0.2 | 2 | -0.0307 | -0.2113 | -0.1373 | -0.4016 | 0.1022 | 0.2347 | 0.1827 | 0.4328 | 1.0176 | 1.0613 | 1.0381 | 1.1956 |

| 400 | 0.2 | 5 | -0.0047 | -0.2648 | -0.1350 | -1.0076 | 0.1064 | 0.2933 | 0.2762 | 1.0737 | 1.0207 | 1.0937 | 1.0692 | 2.1861 |

| 400 | 0.2 | 10 | -0.0052 | -0.4260 | -0.1478 | -2.0080 | 0.1020 | 0.4701 | 0.1815 | 2.1353 | 1.0214 | 1.2335 | 1.0410 | 5.6672 |

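Note: L0, L1, LAD and OLS refer to the $L_0$-regularized estimator computed by the local combinatorial search algorithm (LCS-2), the $L_1$-regularized estimator defined in (3.2), the least absolute deviation estimator, and the ordinary least squares estimator, respectively. Generically, bias and RMSE are calculated as $\frac{1}{R}\sum_{r=1}^{R}(\hat{\theta}^{(r)}-\theta)$ and $\sqrt{\frac{1}{R}\sum_{r=1}^{R}(\hat{\theta}^{(r)}-\theta)^{2}}$, respectively, for a true parameter $\theta$ and its estimates $\hat{\theta}^{(r)}$ across $R$ replications. We report bias and RMSE for the first slope parameter. The prediction error is calculated on test data generated from the same DGP without outliers.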

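| Sample size | Outlier fraction | Endogeneity parameter | Bias: L0 | Bias: L1 | Bias: LAD | Bias: OLS | RMSE: L0 | RMSE: L1 | RMSE: LAD | RMSE: OLS | Prediction Error: L0 | Prediction Error: L1 | Prediction Error: LAD | Prediction Error: OLS |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|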

| 100 | 0.05 | 2 | 0.0133 | 0.0374 | 0.0305 | 0.0947 | 0.1181 | 0.1216 | 0.1343 | 0.1707 | 1.0929 | 1.1016 | 1.1199 | 1.1796 |

| 100 | 0.05 | 5 | 0.0028 | 0.0372 | 0.0288 | 0.2316 | 0.1120 | 0.1214 | 0.1335 | 0.3450 | 1.1013 | 1.0985 | 1.1204 | 1.3718 |

| 100 | 0.05 | 10 | -0.0014 | 0.0400 | 0.0233 | 0.4303 | 0.1137 | 0.1283 | 0.1332 | 0.6133 | 1.1252 | 1.1627 | 1.1821 | 2.7509 |

| 100 | 0.1 | 2 | 0.0202 | 0.0912 | 0.0705 | 0.2313 | 0.1379 | 0.1571 | 0.1586 | 0.2903 | 1.2159 | 1.2227 | 1.2517 | 1.3784 |

| 100 | 0.1 | 5 | 0.0051 | 0.1177 | 0.0699 | 0.5802 | 0.1244 | 0.1799 | 0.1529 | 0.6761 | 1.1360 | 1.1734 | 1.1954 | 2.3094 |

| 100 | 0.1 | 10 | 0.0104 | 0.1829 | 0.0787 | 1.1178 | 0.1154 | 0.2515 | 0.1547 | 1.3130 | 1.1591 | 1.2737 | 1.1658 | 6.6681 |

| 100 | 0.2 | 2 | 0.0313 | 0.2201 | 0.1568 | 0.4539 | 0.1541 | 0.2676 | 0.2196 | 0.4995 | 1.1839 | 1.2791 | 1.2140 | 1.7171 |

| 100 | 0.2 | 5 | 0.0128 | 0.3870 | 0.1699 | 1.1336 | 0.1371 | 0.4517 | 0.2315 | 1.2259 | 1.1921 | 1.6585 | 1.2529 | 4.9567 |

| 100 | 0.2 | 10 | 0.0178 | 0.7165 | 0.1692 | 2.2973 | 0.1379 | 0.8299 | 0.2350 | 2.4777 | 1.1395 | 3.0481 | 1.2506 | 17.9627 |

| 200 | 0.05 | 2 | 0.0090 | 0.0459 | 0.0326 | 0.1128 | 0.0837 | 0.0911 | 0.0937 | 0.1493 | 1.1170 | 1.1022 | 1.1237 | 1.1233 |

| 200 | 0.05 | 5 | 0.0021 | 0.0481 | 0.0299 | 0.2854 | 0.0834 | 0.0992 | 0.0998 | 0.3453 | 1.0353 | 1.0283 | 1.0434 | 1.3253 |

| 200 | 0.05 | 10 | -0.0005 | 0.0523 | 0.0292 | 0.5746 | 0.0736 | 0.0962 | 0.0924 | 0.6796 | 1.0445 | 1.0538 | 1.0657 | 2.2574 |

| 200 | 0.1 | 2 | 0.0122 | 0.0927 | 0.0650 | 0.2258 | 0.0927 | 0.1263 | 0.1145 | 0.2563 | 1.0816 | 1.0692 | 1.0776 | 1.1800 |

| 200 | 0.1 | 5 | 0.0066 | 0.1106 | 0.0712 | 0.5705 | 0.0815 | 0.1417 | 0.1155 | 0.6230 | 1.1258 | 1.1599 | 1.1389 | 2.1070 |

| 200 | 0.1 | 10 | -0.0008 | 0.1536 | 0.0654 | 1.1162 | 0.0822 | 0.1931 | 0.1145 | 1.2159 | 1.0499 | 1.1218 | 1.0782 | 4.7269 |

| 200 | 0.2 | 2 | 0.0336 | 0.2183 | 0.1547 | 0.4549 | 0.1168 | 0.2405 | 0.1856 | 0.4767 | 1.0496 | 1.0919 | 1.0602 | 1.4486 |

| 200 | 0.2 | 5 | 0.0125 | 0.3576 | 0.1620 | 1.1350 | 0.0987 | 0.3921 | 0.1970 | 1.1828 | 1.0410 | 1.4231 | 1.1345 | 4.2417 |

| 200 | 0.2 | 10 | 0.0075 | 0.6543 | 0.1656 | 2.2731 | 0.0988 | 0.7136 | 0.2011 | 2.3656 | 1.0560 | 2.2161 | 1.1482 | 13.1995 |

| 400 | 0.05 | 2 | 0.0084 | 0.0527 | 0.0319 | 0.1139 | 0.0575 | 0.0760 | 0.0680 | 0.1335 | 1.0229 | 1.0125 | 1.0149 | 1.0271 |

| 400 | 0.05 | 5 | 0.0033 | 0.0571 | 0.0337 | 0.2849 | 0.0544 | 0.0800 | 0.0712 | 0.3138 | 1.0249 | 1.0412 | 1.0431 | 1.3004 |

| 400 | 0.05 | 10 | 0.0023 | 0.0568 | 0.0334 | 0.5652 | 0.0551 | 0.0812 | 0.0722 | 0.6180 | 1.0471 | 1.0697 | 1.0608 | 2.2000 |

| 400 | 0.1 | 2 | 0.0136 | 0.1077 | 0.0675 | 0.2276 | 0.0660 | 0.1241 | 0.0949 | 0.2433 | 1.0291 | 1.0416 | 1.0408 | 1.1401 |

| 400 | 0.1 | 5 | 0.0062 | 0.1134 | 0.0697 | 0.5633 | 0.0597 | 0.1299 | 0.0945 | 0.5890 | 1.0542 | 1.0938 | 1.0799 | 1.9397 |

| 400 | 0.1 | 10 | 0.0011 | 0.1469 | 0.0691 | 1.1311 | 0.0583 | 0.1676 | 0.0971 | 1.1816 | 1.1474 | 1.1870 | 1.1448 | 4.3749 |

| 400 | 0.2 | 2 | 0.0243 | 0.2288 | 0.1505 | 0.4515 | 0.0795 | 0.2405 | 0.1671 | 0.4632 | 1.0798 | 1.1893 | 1.1186 | 1.5341 |

| 400 | 0.2 | 5 | 0.0057 | 0.3392 | 0.1541 | 1.1227 | 0.0658 | 0.3560 | 0.1717 | 1.1470 | 0.9963 | 1.2354 | 1.0458 | 3.7024 |

| 400 | 0.2 | 10 | 0.0053 | 0.6241 | 0.1604 | 2.2530 | 0.0656 | 0.6515 | 0.1781 | 2.2978 | 1.0662 | 1.9160 | 1.1003 | 11.8648 |

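Note: L0, L1, LAD and OLS refer to the $L_0$-regularized estimator computed by the local combinatorial search algorithm (LCS-2), the $L_1$-regularized estimator defined in (3.2), the least absolute deviation estimator, and the ordinary least squares estimator, respectively. Generically, bias and RMSE are calculated as $\frac{1}{R}\sum_{r=1}^{R}(\hat{\theta}^{(r)}-\theta)$ and $\sqrt{\frac{1}{R}\sum_{r=1}^{R}(\hat{\theta}^{(r)}-\theta)^{2}}$, respectively, for a true parameter $\theta$ and its estimates $\hat{\theta}^{(r)}$ across $R$ replications. We report bias and RMSE for the first slope parameter. The prediction error is calculated on test data generated from the same DGP without outliers.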
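The evaluation metrics described in the table notes can be sketched as follows. Bias and RMSE follow the generic definitions (mean and root mean square of the estimation error across replications). The form of the prediction error is an assumption here: the sketch uses the mean squared prediction error on a clean test sample, and the function names are hypothetical.

```python
import numpy as np

def bias_and_rmse(estimates, truth):
    """Mean and root-mean-square estimation error across Monte Carlo replications."""
    err = np.asarray(estimates, dtype=float) - truth
    return err.mean(), np.sqrt(np.mean(err ** 2))

def prediction_error(beta_hat, X_test, y_test):
    """Assumed form: mean squared prediction error on outlier-free test data."""
    return np.mean((y_test - X_test @ beta_hat) ** 2)

# Three hypothetical slope estimates around a true value of 1.0
bias, rmse = bias_and_rmse([0.9, 1.1, 1.2], truth=1.0)

# Two hypothetical test observations with an intercept column
X_test = np.array([[1.0, 0.0], [1.0, 1.0]])
y_test = np.array([1.0, 4.0])
pe = prediction_error(np.array([1.0, 2.0]), X_test, y_test)
print(bias, rmse, pe)
```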

6 Empirical Illustration: Stock Return Predictability

Linear predictive regression models have been extensively studied for stock return forecasting. For instance, Koo et al. (2020), Lee et al. (2022), and others have applied linear predictive regression to the Welch and Goyal (2008) dataset to investigate stock return predictability. Financial time series often exhibit instability, particularly during episodes such as financial crises, and including these periods in estimation and forecasting can lead to varying results. As an illustration, we apply the data-driven $L_0$- and $L_1$-regularized robust estimation methods to the Welch and Goyal (2008) dataset (retrieved from http://www.hec.unil.ch/agoyal/) and evaluate the out-of-sample stock return prediction performance.

We use monthly data from January 1990 to December 2023, which covers the dot-com bubble period, the 2007-09 financial crisis, and the Covid-19 pandemic. The dependent variable, excess return, is defined as the difference between the continuously compounded return on the S&P 500 index and the three-month Treasury bill rate.

Twelve financial and macroeconomic variables are included in the model as predictors: Dividend Price Ratio, Dividend Yield, Earning Price Ratio, Term Spread, Default Yield Spread, Default Return Spread, Book-to-Market Ratio, Treasury Bill Rates, Long-Term Return, Net Equity Expansion, Stock Variance, and Inflation. Table A.1 in the appendix describes each variable in detail. We apply the $L_0$- and $L_1$-regularized methods to estimate the linear predictive regression model and construct one-month-ahead forecasts recursively with a 10-year rolling window for each month from January 2000 to December 2023. As in the Monte Carlo experiments, LAD and OLS are included as benchmarks.
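The rolling-window forecasting exercise can be sketched as below. This is an illustration under stated assumptions, not the authors' code: OLS stands in for the estimator applied within each window, predictors are assumed to be lagged by one month relative to the return they predict, the data are synthetic, and the name `rolling_ols_forecasts` is hypothetical. With monthly data, a 10-year window corresponds to `window = 120`.

```python
import numpy as np

def rolling_ols_forecasts(y, X, window):
    """One-step-ahead forecasts of y[t+1] from X[t], refitting on the latest `window` pairs."""
    T = len(y)
    preds, targets = [], []
    for t in range(window, T - 1):
        # Training pairs (X[s], y[s+1]) for s = t-window, ..., t-1
        Xw = np.column_stack([np.ones(window), X[t - window:t]])
        yw = y[t - window + 1:t + 1]
        beta, *_ = np.linalg.lstsq(Xw, yw, rcond=None)
        # Forecast next period's value from the current predictors
        x_new = np.concatenate([[1.0], X[t]])
        preds.append(x_new @ beta)
        targets.append(y[t + 1])
    return np.array(preds), np.array(targets)

# Synthetic monthly data (an assumption): weak predictability from the first predictor
rng = np.random.default_rng(1)
T, p, window = 360, 3, 120
X = rng.standard_normal((T, p))
y = np.zeros(T)
y[1:] = 0.1 * X[:-1, 0] + rng.standard_normal(T - 1)

preds, targets = rolling_ols_forecasts(y, X, window)
mpse = np.mean((targets - preds) ** 2)  # mean prediction squared error
print("Number of forecasts:", len(preds))
print("MPSE:", mpse)
```

Replacing the `np.linalg.lstsq` call with a robust estimator is the only change needed to compare methods on the same forecast targets.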

Table 5 compares the mean prediction squared error (MPSE) of the different methods across forecasting periods. We consider the full forecast period from January 2000 to December 2023 and six subperiods defined by the start and end dates of the dot-com bubble burst (Mar. 2000 to Nov. 2000), the financial crisis (Dec. 2007 to Jun. 2009), and the Covid-19 shock (Feb. 2020 to Apr. 2020). The $L_0$-regularized method attains the smallest MPSE over the full period and in every subperiod except the financial crisis, where the $L_1$-regularized method is slightly better. This illustrates the forecast accuracy gain from robustness to potentially endogenous outliers.

| Forecast Period | L0 | L1 | LAD | OLS |
|---|---|---|---|---|
| All periods (Jan. 2000 to Dec. 2023) | **0.00306** | 0.00325 | 0.00400 | 0.00341 |
| Dot-com Bubble Burst (Mar. 2000 to Nov. 2000) | **0.00409** | 0.00455 | 0.00450 | 0.00474 |
| Dot-com - Financial Crisis (Mar. 2001 to Jun. 2009) | **0.00169** | 0.00187 | 0.00207 | 0.00197 |
| Financial Crisis (Dec. 2007 to Jun. 2009) | 0.00937 | **0.00906** | 0.01389 | 0.01082 |
| Financial Crisis - Covid (Dec. 2007 to Feb. 2020) | **0.00128** | 0.00134 | 0.00148 | 0.00145 |
| Covid (Feb. 2020 to Apr. 2020) | **0.06758** | 0.07493 | 0.11073 | 0.07988 |
| Post-Covid (Apr. 2020 to Dec. 2023) | **0.00332** | 0.00378 | 0.00335 | 0.00340 |

Notes: The mean prediction squared error (MPSE) is calculated by averaging the squared forecast errors over the corresponding period. The dates given with each period refer to the forecast period. L0, L1, LAD and OLS refer to the $L_0$-regularized estimator computed by the local combinatorial search algorithm (LCS-2), the $L_1$-regularized estimator defined in (3.2), the least absolute deviation estimator, and the ordinary least squares estimator, respectively. The smallest MPSE in each period is labeled in bold.

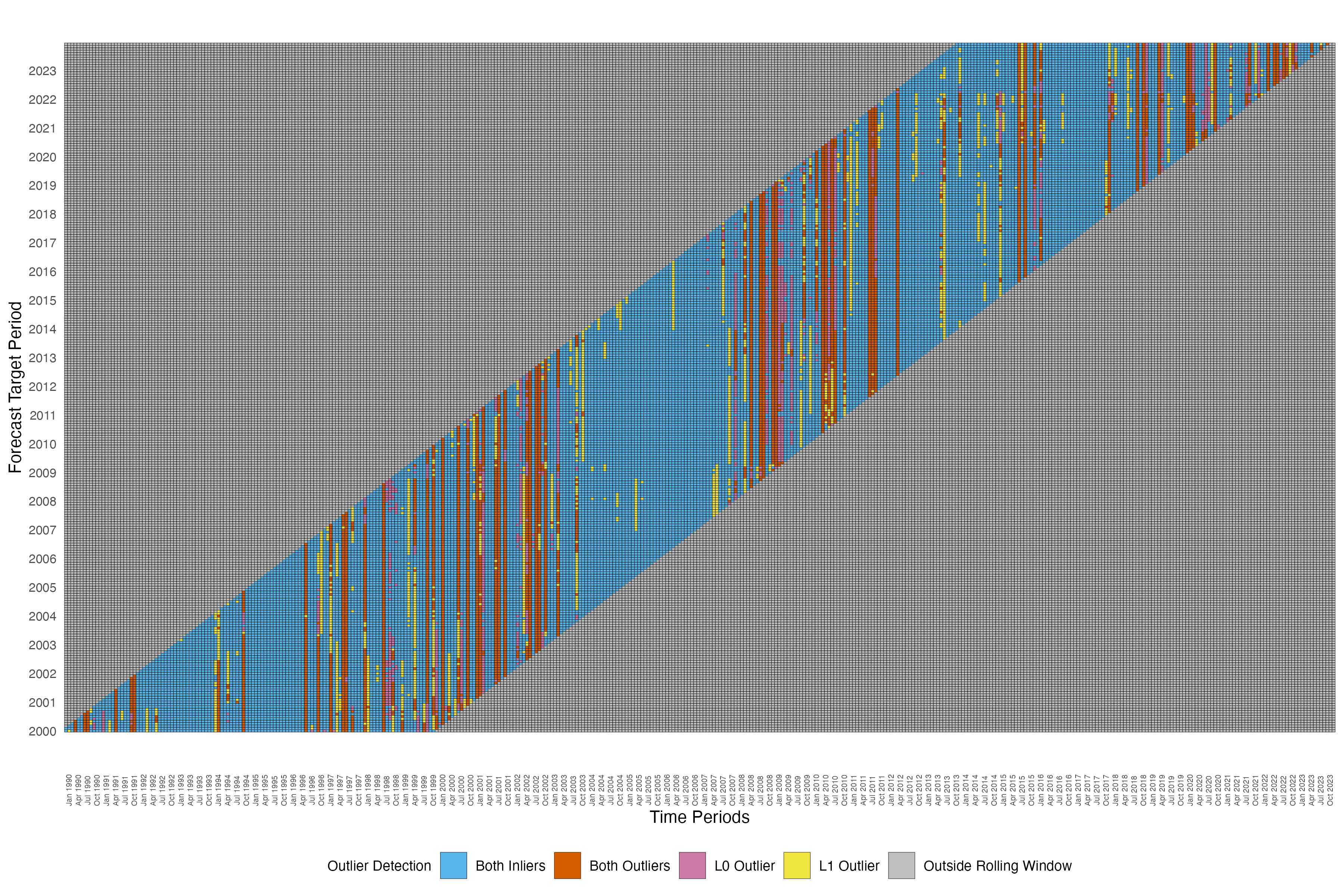

Additionally, Figure 2 illustrates the outliers detected in each rolling window by the $L_0$- and $L_1$-regularized methods. In the grid plot, each row corresponds to a forecast period, and each column corresponds to a period that may be included in the estimation rolling window. Within each row, the highlighted cells represent the estimation window for the corresponding forecast target period. A cell is red if both methods detect the corresponding period as an outlier in that rolling window, blue if both methods detect it as an inlier, and purple or yellow if only one of the two methods detects it as an outlier, with the color indicating which method. As shown in the figure, the detected outliers concentrate around the dot-com bubble, the financial crisis, and the Covid-19 shocks across rolling windows. The two methods detect broadly similar patterns, although one of them also labels some periods outside these concentrated outlier regions.

7 Conclusion

This paper addresses the robust estimation of linear regression models in the presence of potentially endogenous outliers. We demonstrate that existing $L_1$-regularized estimation methods exhibit significant bias when outliers are endogenous and develop $L_0$-regularized estimation methods to overcome this issue. We propose systematic heuristic algorithms, notably an iterative hard-thresholding algorithm and a local combinatorial search refinement, to efficiently solve the combinatorial optimization problem of the $L_0$-regularized estimation. The properties of the $L_0$- and $L_1$-regularized methods are examined through Monte Carlo simulations. We illustrate the practical value of our method with an empirical application to stock return forecasting.

Appendix

Appendix A.1 Proofs

Proof of Proposition 1.

Note that (4.1) is equivalent to

Denote

where

Since is a quadratic function of , we can focus on as we proceed.

We first show by contradiction. Suppose , and the set of uncontaminated sample is with . The objective value with sample in satisfies

then and

| (A.1) |

Suppose

then

This implies

for , which contradicts (A.1). As a result, .

Suppose , then for some with at least one arbitrary outlier, which leads to , and then

Then , which completes the proof. ∎

Appendix A.2 Data Description

Table A.1 summarizes all the variables included and the first-order autocorrelation coefficient of each variable estimated over the whole sample period. As shown in the table, the excess return has an estimated first-order autocorrelation coefficient of 0.0444, which indicates little persistence, similar to the default return spread (dfr), the long-term return of government bonds (ltr), stock variance (svar) and inflation (infl). The other predictors show high persistence, with AR(1) coefficients greater than 0.95.

| Predictor | Description | AR(1) Coef |

|---|---|---|

| ExReturn | Excess Return: the difference between the continuously compounded return on the S&P 500 index and the three-month Treasury Bill rate | 0.0444 |

| dp | Dividend Price Ratio: the difference between the log of the 12-month moving sum dividends and the log of the S&P 500 index | 0.9941 |

| dy | Dividend Yield: the difference between the log of the 12-month moving sum dividends and the log of the lagged S&P 500 index | 0.9941 |

| ep | Earning Price Ratio: the difference between the log of the 12-month moving sum earnings and the log of the S&P 500 index | 0.9904 |

| tms | Term Spread: the difference between the long-term government bond yield and the Treasury Bill rate | 0.9576 |

| dfy | Default Yield Spread: the difference between Moody’s BAA- and AAA-rated corporate bond yields | 0.9717 |

| dfr | Default Return Spread: the difference between the returns of long-term corporate bonds and long-term government bonds | -0.0735 |

| bm | Book-to-Market Ratio: the ratio of the book value to market value for the Dow Jones Industrial Average | 0.9927 |

| tbl | Treasury Bill Rates: the 3-month Treasury Bill rates | 0.9905 |

| ltr | Long-Term Return: the rate of returns of long-term government bonds | 0.0500 |

| ntis | Net Equity Expansion: the ratio of the 12-month moving sums of net issues by NYSE listed stocks over the total end-of-year market capitalization of NYSE stocks | 0.9778 |

| svar | Stock Variance: the sum of the squared daily returns on the S&P 500 index | 0.4714 |

| infl | Inflation: the log growth of the Consumer Price Index (all urban consumers) | 0.4819 |
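A first-order autocorrelation coefficient like those in Table A.1 can be estimated by regressing a series on its own first lag. The sketch below is a minimal illustration: whether the reported coefficients include an intercept in this regression is an assumption, the simulated series is synthetic, and `ar1_coefficient` is a hypothetical name.

```python
import numpy as np

def ar1_coefficient(x):
    """Slope from regressing x[t] on an intercept and x[t-1]."""
    x = np.asarray(x, dtype=float)
    lagged = np.column_stack([np.ones(len(x) - 1), x[:-1]])
    coef, *_ = np.linalg.lstsq(lagged, x[1:], rcond=None)
    return coef[1]

# Synthetic persistent series with true AR(1) coefficient 0.95
rng = np.random.default_rng(3)
T, rho = 5000, 0.95
series = np.zeros(T)
for t in range(1, T):
    series[t] = rho * series[t - 1] + rng.standard_normal()

rho_hat = ar1_coefficient(series)
print("Estimated AR(1) coefficient:", rho_hat)
```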

References

- Beck, A. and Y. C. Eldar (2013). Sparsity constrained nonlinear optimization: Optimality conditions and algorithms. SIAM Journal on Optimization 23(3), 1480–1509.

- Bertsimas, D., A. King, and R. Mazumder (2016). Best subset selection via a modern optimization lens. The Annals of Statistics 44(2), 813–852.

- Gurobi Optimization, LLC (2024). Gurobi Optimizer Reference Manual.

- Hampel, F. R. (1971). A general qualitative definition of robustness. The Annals of Mathematical Statistics 42(6), 1887–1896.

- Hastie, T., R. Tibshirani, and M. Wainwright (2015). Statistical Learning with Sparsity: The Lasso and Generalizations. Chapman & Hall/CRC.

- Hazimeh, H. and R. Mazumder (2020). Fast best subset selection: Coordinate descent and local combinatorial optimization algorithms. Operations Research 68(5), 1517–1537.

- Huber, P. J. (1964). Robust estimation of a location parameter. The Annals of Mathematical Statistics 35(1), 73–101.

- Huber, P. J. and D. Donoho (1983). The notion of breakdown point. In P. J. Bickel, K. Doksum, and J. L. Hodges (Eds.), A Festschrift for Erich L. Lehmann. Chapman and Hall/CRC.

- Koo, B., H. M. Anderson, M. H. Seo, and W. Yao (2020). High-dimensional predictive regression in the presence of cointegration. Journal of Econometrics 219(2), 456–477.

- Kostakis, A., T. Magdalinos, and M. P. Stamatogiannis (2015). Robust econometric inference for stock return predictability. The Review of Financial Studies 28(5), 1506–1553.

- Lee, J. H., Z. Shi, and Z. Gao (2022). On lasso for predictive regression. Journal of Econometrics 229(2), 322–349.

- Lee, Y., S. N. MacEachern, and Y. Jung (2012). Regularization of case-specific parameters for robustness and efficiency. Statistical Science 27(3), 350–372.

- Mazumder, R., P. Radchenko, and A. Dedieu (2022). Subset selection with shrinkage: Sparse linear modeling when the SNR is low. Operations Research.

- Natarajan, B. K. (1995). Sparse approximate solutions to linear systems. SIAM Journal on Computing 24(2), 227–234.

- Rousseeuw, P. J. (1984). Least median of squares regression. Journal of the American Statistical Association 79(388), 871–880.

- Rousseeuw, P. J. (1985). Multivariate estimation with high breakdown point. Mathematical Statistics and Applications 8, 283–297.

- She, Y. and A. B. Owen (2011). Outlier detection using nonconvex penalized regression. Journal of the American Statistical Association 106(494), 626–639.

- Siegel, A. F. (1982). Robust regression using repeated medians. Biometrika 69(1), 242–244.

- Thompson, R. (2022). Robust subset selection. Computational Statistics & Data Analysis 169, 107415.

- Welch, I. and A. Goyal (2008). A comprehensive look at the empirical performance of equity premium prediction. The Review of Financial Studies 21(4), 1455–1508.

- Yu, C. and W. Yao (2017). Robust linear regression: A review and comparison. Communications in Statistics-Simulation and Computation 46(8), 6261–6282.