
Robust Inference for Change Points in High Dimension

Abstract

This paper proposes a new test for a change point in the mean of high-dimensional data based on the spatial sign and self-normalization. The test is easy to implement with no tuning parameters, robust to heavy-tailedness, and theoretically justified with both fixed-$n$ and sequential asymptotics under both the null and alternatives, where $n$ is the sample size. We demonstrate that the fixed-$n$ asymptotics provide a better approximation to the finite sample distribution and thus should be preferred in both testing and testing-based estimation. To estimate the number and locations of change-points when multiple change-points are present, we propose to combine the p-value under the fixed-$n$ asymptotics with the seeded binary segmentation (SBS) algorithm. Through numerical experiments, we show that the spatial sign based procedures are robust with respect to heavy-tailedness and strong coordinate-wise dependence, whereas their non-robust counterparts proposed in Wang et al., (2022) appear to under-perform. A real data example is also provided to illustrate the robustness and broad applicability of the proposed test and its corresponding estimation algorithm.

Keywords: Change Points, High Dimensional Data, Segmentation, Self-Normalization, Spatial Sign

1 Introduction

High-dimensional data analysis often involves testing for and estimating change-points in the mean, a problem that has attracted a lot of attention in statistics recently. See Horváth and Hušková, (2012), Jirak, (2015), Cho, (2016), Wang and Samworth, (2018), Liu et al., (2020), Wang et al., (2022), Zhang et al., (2021) and Yu and Chen, (2021, 2022) for some recent literature. Most of the proposed tests and estimation methods require quite strong moment conditions (e.g., a Gaussian or sub-Gaussian assumption, or a sixth moment assumption), and some of them also require a weak component-wise dependence assumption. There are only a few exceptions, such as Yu and Chen, (2022), who used anti-symmetric and nonlinear kernels in a U-statistics framework to achieve robustness. However, the limiting distribution of their test statistic is non-pivotal and their procedure requires bootstrap calibration, which could be computationally demanding. In addition, their test statistic targets the sparse alternative only. As pointed out in the review paper by Liu et al., (2022), the interest in the dense alternative can be well motivated by real data and is often the type of alternative that practitioners want to detect. For example, copy number variations in cancer cells are commonly manifested as change-points occurring at the same positions across many related data sequences corresponding to cancer samples and biologically related individuals; see Fan and Mackey, (2017).

In this article, we propose a new test for a change point in the mean of high-dimensional data that works for a broad class of data generating processes. In particular, our test targets the dense alternative, is robust to heavy-tailedness, and can accommodate both weak and strong coordinate-wise dependence. Our test is built on two recent advances in high-dimensional testing: the spatial sign based two-sample test developed in Chakraborty and Chaudhuri, (2017) and the U-statistics based change-point test developed in Wang et al., (2022). Spatial sign based tests have been studied in the literature on multivariate data and are usually used to handle heavy-tailedness; see Oja, (2010) for a book-length review. However, it was not until recently that Wang et al., (2015) and Chakraborty and Chaudhuri, (2017) discovered that the spatial sign could also help relax the restrictive moment conditions in high-dimensional testing problems. Wang et al., (2022) advanced the high-dimensional two-sample U-statistic pioneered by Chen and Qin, (2010) to the change-point setting by adopting self-normalization (SN) (Shao, 2010; Shao and Zhang, 2010). Their test targets the dense alternative, but requires a sixth moment assumption and only allows for weak coordinate-wise dependence.

Building on these two recent advances, we propose a spatial signed SN-based test for a change point in the mean of high-dimensional data. Our contribution to the literature is threefold. Firstly, we derive the limiting null distribution of our test statistic under the so-called fixed-$n$ asymptotics, where the sample size $n$ is fixed and the dimension $p$ grows to infinity. We find that the fixed-$n$ asymptotics provide a better approximation to the finite sample distribution when the sample size is small or moderate. We also let $n$ grow to infinity after deriving the $n$-dependent asymptotic distribution, and obtain the limit under the sequential asymptotics (Phillips and Moon, 1999). This type of asymptotics seems new to the high-dimensional change-point literature and may be more broadly adopted in change-point testing and other high-dimensional problems. Secondly, our asymptotic theory covers both scenarios: weak coordinate-wise dependence via $\rho$-mixing, and strong coordinate-wise dependence under the framework of “randomly scaled $\rho$-mixing sequence” (RSRM) in Chakraborty and Chaudhuri, (2017). The process convergence associated with the spatial signed U-process we develop in this paper further facilitates the application of our test under sequential asymptotics where $n$, in addition to $p$, also goes to infinity. In particular, we develop novel theory to establish the process convergence result under the RSRM framework. In general, this requires showing finite dimensional convergence and asymptotic equicontinuity (tightness). For the tightness, we derive a bound for the eighth moment of the increment of the sample path based on a conditional argument under the sequential asymptotics, which is new to the literature. Using this new technique, we provide the unconditional limiting null distribution of the test statistic for the fixed-$n$ and growing-$p$ case. This is stronger than the result in Chakraborty and Chaudhuri, (2017), which is a conditional limiting null distribution.
Thirdly, we extend our test to estimate multiple changes by combining the p-value based on the fixed-$n$ asymptotics and the seeded binary segmentation (SBS) (Kovács et al., 2020). The use of fixed-$n$ asymptotics is especially recommended because popular generic segmentation algorithms such as WBS (Fryzlewicz, 2014) and SBS calculate test statistics over many intervals of small or moderate length, where the sequential asymptotics are less accurate than their fixed-$n$ counterpart in approximating the finite sample distribution. The superiority and robustness of our estimation algorithm are corroborated in a small simulation study.

The rest of the paper is organized as follows. In Section 2, we define the spatial signed SN test. Section 3 studies the asymptotic behavior of the test under both the null and local alternatives. Extensions to estimating multiple change-points are elaborated in Section 4. Numerical studies for both testing and estimation are relegated to Section 5. Section 6 contains a real data example and Section 7 concludes. All proofs with auxiliary lemmas are given in the appendix. Throughout the paper, we denote as the convergence in probability, as the convergence in distribution and as the weak convergence for stochastic processes. The notations and are used to represent vectors of dimension whose entries are all ones and zeros, respectively. For , denote and . For a vector , denotes its Euclidean norm. For a matrix , denotes its Frobenius norm.

2 Test Statistics

Let be a sequence of i.i.d -valued random vectors with mean and covariance . We assume that the observed data satisfies , where and is the mean at time . We are interested in the following testing problem:

| (1) |

In (1), under the null, the mean vectors are constant over time while under the alternative, there is one change-point at unknown time point .

Let denote the spatial sign of a vector . Consider the following spatial signed SN test statistic:

| (2) |

where for ,

| (3) | ||||

| (4) |

Here, the superscript (s) highlights the role that the spatial sign plays in constructing the test statistic. This is in contrast to Wang et al., (2022), who introduced the test statistic

| (5) |

with and defined in the same way as (3) but without spatial sign.

As pointed out by Wang et al., (2022), the limiting distribution of (properly standardized) relies heavily on the covariance (correlation) structure of , which is typically unknown in practice. One may replace it with a consistent estimator, and this is indeed adopted in high dimensional one-sample or two-sample testing problems, see, for example, Chen and Qin, (2010) and Chakraborty and Chaudhuri, (2017). Unfortunately, in the context of change-point testing, the unknown location makes this method practically unreliable. For our spatial sign based test, the limiting distribution of (properly standardized) depends on unknown nuisance parameters that could be a complex functional of the underlying distribution. To this end, following Wang et al., (2022) and Zhang et al., (2021), we propose to adopt the SN technique in Shao and Zhang, (2010) to avoid the consistent estimation of unknown nuisance parameters. SN technique was initially developed in Shao, (2010) and Shao and Zhang, (2010) in the low dimensional time series setting and its main idea is to use an inconsistent variance estimator (i.e. self-normalizer) which is based on recursive subsample test statistic, so that the limiting distribution is pivotal under the null. See Shao, (2015) for a recent review.
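To make the role of the spatial sign concrete, the following is a minimal sketch that assumes the usual definition from the multivariate literature (e.g., Oja, (2010)): a nonzero vector is mapped to the unit vector in its direction, and the zero vector is mapped to itself. The function name `spatial_sign` and the two example vectors are illustrative choices, not part of the paper.

```python
import math
from typing import List, Sequence


def spatial_sign(x: Sequence[float]) -> List[float]:
    """Return x / ||x|| for a nonzero vector and the zero vector otherwise.

    Assumed definition (standard in the spatial sign literature).
    """
    norm = math.sqrt(sum(v * v for v in x))
    if norm == 0.0:
        return [0.0 for _ in x]
    return [v / norm for v in x]


# An extreme observation and a moderate one that point in the same direction
# share the same spatial sign, so neither dominates a sum of spatial signs.
moderate = spatial_sign([3.0, 4.0])
outlier = spatial_sign([3.0e6, 4.0e6])
```

Because every nonzero observation is projected onto the unit sphere, statistics built from spatial signs depend on directions only, which is one way to see why heavy tails have limited influence on them.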

3 Theoretical Properties

We first introduce the concept of $\rho$-mixing; see, e.g., Bradley, (1986). Typical $\rho$-mixing sequences include i.i.d. sequences, $m$-dependent sequences, stationary strong ARMA processes and many Markov chain models.

Definition 3.1 ($\rho$-mixing).

A sequence is said to be $\rho$-mixing if

where denotes the correlation between and , and is the $\sigma$-field generated by . Here is called the $\rho$-mixing coefficient of .

3.1 Assumptions

To analyze the asymptotic behavior of , we make the following assumptions.

Assumption 3.1.

are i.i.d. copies of , where is formed by the first observations from a sequence of strictly stationary and $\rho$-mixing random variables such that and .

Assumption 3.2.

The $\rho$-mixing coefficients of satisfy .

Assumptions 3.1 and 3.2 are imposed in Chakraborty and Chaudhuri, (2017) to analyze the behavior of the spatial sign based two-sample test statistic for the equality of high-dimensional means. In particular, Assumption 3.1 allows us to analyze the behavior of under the fixed-$n$ scenario by letting $p$ go to infinity alone. Assumption 3.2 allows weak dependence among the coordinates of the data, and similar assumptions are also made in, e.g., Wang et al., (2022) and Zhang et al., (2021). The strict stationarity assumption can be relaxed with additional conditions, and the scenario that corresponds to strong coordinate-wise dependence is treated in Section 3.4.

3.2 Limiting Null

We begin by deriving the limiting distribution of when $n$ is fixed while letting $p\to\infty$, and then analyze the large sample behavior of the fixed-$n$ limit by letting $n\to\infty$. Sequential asymptotics of this kind are fairly common in statistics and econometrics; see Phillips and Moon, (1999).

Theorem 3.1.

(i) for any fixed , as , we have

where

with

and is a centered Gaussian process defined on with covariance structure given by:

(ii) Furthermore, if , then

| (6) |

with

and is a centered Gaussian process defined on with covariance structure given by:

Theorem 3.1 (i) states that for each fixed $n$, as $p\to\infty$, the limiting distribution is a functional of a Gaussian process, which is pivotal and can be easily simulated; see Table 1 for tabulated quantiles with $n \in \{10, 20, 30, 40, 50, 100, 200\}$ (based on 50,000 Monte Carlo replications). Theorem 3.1 (ii) indicates that converges in distribution as $n$ diverges, which is indeed supported by Table 1. In fact, is exactly the same as the limiting null distribution obtained in Wang et al., (2022) under the joint asymptotics when both $p$ and $n$ diverge at the same time.

Our spatial signed SN test builds on the test by Chakraborty and Chaudhuri, (2017), where an estimator for the covariance is necessary, as indicated by Section 2.1 therein. However, if the sample size is fixed, their estimator is only unbiased but not consistent. In contrast, the SN technique adopted in this paper enables us to avoid such estimation, and thus makes fixed-$n$ inference feasible in practice. It is worth noting that the test statistics and share the same limiting null distribution under both fixed-$n$ asymptotics and sequential asymptotics.

Our test statistic is based on the spatial signs and only assumes a finite second moment, which is much weaker than the sixth moment assumed in Wang et al., (2022) under joint asymptotics of $p$ and $n$. The fixed-$n$ asymptotics provide a better approximation to the finite sample distribution of and when $n$ is small or moderate, so the corresponding critical values should be preferred to their counterparts derived under the joint asymptotics. Thus, when the data are heavy-tailed and the data length is short, our test is more appealing.

| $n$ | 80% | 90% | 95% | 99% | 99.5% | 99.9% |
|---|---|---|---|---|---|---|
| 10 | 1681.46 | 3080.03 | 5167.81 | 14334.10 | 20405.87 | 46201.88 |
| 20 | 719.03 | 1124.26 | 1624.11 | 3026.24 | 3810.61 | 5899.45 |
| 30 | 633.70 | 965.12 | 1350.52 | 2403.64 | 2988.75 | 4748.03 |
| 40 | 609.65 | 926.45 | 1283.00 | 2292.31 | 2750.01 | 4035.71 |
| 50 | 596.17 | 889.34 | 1224.98 | 2186.99 | 2624.72 | 3846.51 |
| 100 | 594.54 | 881.93 | 1200.31 | 2066.37 | 2482.51 | 3638.74 |
| 200 | 592.10 | 878.23 | 1195.25 | 2049.32 | 2456.71 | 3533.44 |
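The tabulated quantiles can be used directly as critical values. The sketch below stores the 90%, 95% and 99% columns of Table 1 and rejects the null when an observed statistic exceeds the fixed-$n$ quantile; the upper-tailed rejection rule, the function name `reject_null` and the example statistic values are assumptions made for illustration.

```python
from typing import Dict

# 90%, 95% and 99% quantiles copied from Table 1, keyed by sample size n.
CRITICAL_VALUES: Dict[int, Dict[float, float]] = {
    10: {0.90: 3080.03, 0.95: 5167.81, 0.99: 14334.10},
    20: {0.90: 1124.26, 0.95: 1624.11, 0.99: 3026.24},
    30: {0.90: 965.12, 0.95: 1350.52, 0.99: 2403.64},
    40: {0.90: 926.45, 0.95: 1283.00, 0.99: 2292.31},
    50: {0.90: 889.34, 0.95: 1224.98, 0.99: 2186.99},
    100: {0.90: 881.93, 0.95: 1200.31, 0.99: 2066.37},
    200: {0.90: 878.23, 0.95: 1195.25, 0.99: 2049.32},
}


def reject_null(stat: float, n: int, level: float = 0.95) -> bool:
    """Reject when the statistic exceeds the tabulated fixed-n quantile.

    Assumes an upper-tailed test and that n is one of the tabulated sizes.
    """
    return stat > CRITICAL_VALUES[n][level]


# A hypothetical statistic of 2000 observed with n = 10 is below the fixed-n
# 95% quantile, yet it exceeds the n = 200 quantile, which is close to the
# large-n limit.
fixed_n_decision = reject_null(2000.0, n=10)
large_n_decision = reject_null(2000.0, n=200)
```

The gap between the $n = 10$ and $n = 200$ rows shows why borrowing a large-$n$ critical value for a short series would reject far too often.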

3.3 Power Analysis

Denote as the shift in mean under the alternative, and as the limiting average signal. Next, we study the behavior of the test under both fixed () and local alternatives ().

We first consider the case when the average signal is non-diminishing.

Assumption 3.3.

(i) , (ii) as .

Here, Assumption 3.3 (ii) is quite mild and can be satisfied by many weakly dependent sequences such as ARMA sequences.

Theorem 3.2 shows that when the average signal is non-diminishing, both and are consistent tests. Next, we analyze under local alternatives when .

Assumption 3.4.

(i) , (ii) as .

Assumption 3.4 regulates the behavior of the shift size, and is used to simplify the theoretical analysis of under local alternatives. Similar assumptions are also made in Chakraborty and Chaudhuri, (2017). Clearly, when is the identity matrix, Assumption 3.4 (ii) automatically holds if .

Theorem 3.3.

[Local Alternative] Suppose Assumptions 3.1, 3.2 and 3.4 hold. Assume there exists a such that , and , . Then for any fixed , as ,

(i) if , then and ;

(ii) if , then and ;

(iii) if , then and where

and

Furthermore, if , then as ,

| (7) |

where

and for ,

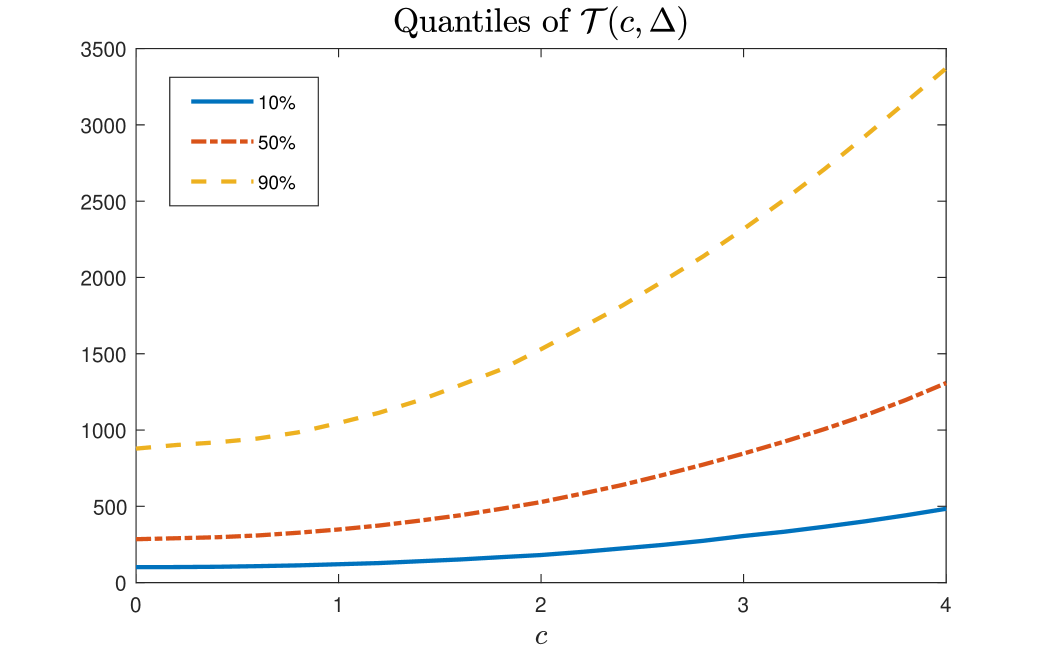

The above theorem implies that the asymptotic power of and depends on the joint behavior of and , holding as fixed. If is the identity matrix, then and will exhibit different power behaviors according to whether converges to zero, infinity, or some constant . In addition, under the local alternative, the limiting distribution of and under the sequential asymptotics coincides with that in Wang et al., (2022) under the joint asymptotics; see Theorem 3.5 therein. In Figure 1, we plot at the 10%, 50% and 90% quantile levels with fixed at , and it suggests that is stochastically increasing with , which further supports the consistency of both tests.

3.4 Analysis Under Stronger Dependence Structure

In this section, we focus on a special class of probability models for high-dimensional data termed “randomly scaled $\rho$-mixing (RSRM)” sequences.

Definition 3.2 (RSRM, Chakraborty and Chaudhuri, (2017)).

A sequence is a randomly scaled $\rho$-mixing sequence if there exist a zero mean $\rho$-mixing sequence and an independent positive non-degenerate random variable such that , .

RSRM sequences introduce a stronger dependence structure among the coordinates than $\rho$-mixing sequences, and many models fall into this category; see, e.g., the elliptically symmetric models in Wang et al., (2015) and the non-Gaussian sequences in Cai et al., (2014).

Assumption 3.5.

Clearly, when is degenerate (i.e., a positive constant), Assumption 3.5 reduces to the model assumed in previous sections. However, when is non-degenerate, Assumption 3.5 imposes a stronger dependence structure on the coordinates of than $\rho$-mixing sequences, and hence results in additional theoretical difficulties. We refer to Chakraborty and Chaudhuri, (2017) for more discussion of RSRM sequences.

Theorem 3.4.

Suppose Assumption 3.5 holds, then under ,

(i) let for any fixed , if and , as , there exist two random variables and dependent on such that,

In general, if the sample size is small and is generated from an RSRM sequence, the unconditional limiting distributions of and as are no longer pivotal due to the randomness in . Nevertheless, using the pivotal limiting distribution in hypothesis testing can still deliver relatively good performance for in both size and power, see Section 5.1 below for numerical evidence. If is also diverging, the same pivotal limiting distribution as presented in Theorem 3.1 (ii) and in Theorem 3.4 of Wang et al., (2022) can still be reached.

Let be the covariance of (or equivalently ). The next theorem provides the asymptotic behavior under local alternatives for the RSRM model.

Theorem 3.5.

(i) let for any fixed , if and , as , there exist two random variables and dependent on such that,

(ii) Furthermore, if we assume and , and , then as , we have

where is defined in (7), and

is a constant.

For the RSRM model, similar to Theorem 3.4 (i), the fixed-$n$ limiting distributions of and are non-pivotal under local alternatives. However, the distribution of under the sequential limit is pivotal while that of is . The multiplicative constant suggests that for the RSRM model, using could be more powerful, as is expected to be monotone in ; see Figure 1 above. This finding coincides with Chakraborty and Chaudhuri, (2017), who showed that spatial sign based U-statistics for testing the equality of two high-dimensional means could be more powerful than the conventional mean-based ones in Chen and Qin, (2010). Thus, when strong coordinate-wise dependence is exhibited in the data, is preferable.

4 Multiple Change-point Estimation

In real applications, in addition to change-point testing, another important task is to estimate the number and locations of the change-points. In this section, we assume there are change-points, denoted by . A commonly used algorithm is binary segmentation (BS), where the data segments are recursively split at the maximal points of the test statistics until the null of no change-points is not rejected for each segment. However, as criticized by many researchers, BS tends to miss potential change-points when non-monotonic change patterns are exhibited. Hence, many algorithms have been proposed to overcome this drawback. Among them, wild binary segmentation (WBS) by Fryzlewicz, (2014) and its variants have become increasingly popular because they are easy to implement. The main idea of WBS is to perform BS on randomly generated sub-intervals so that some sub-intervals localize at most one change-point (with high probability). As pointed out by Kovács et al., (2020), WBS relies on randomly generated sub-intervals, so different researchers may obtain different estimates. Hence, Kovács et al., (2020) propose the seeded binary segmentation (SBS) algorithm, which is based on a deterministic construction of these sub-intervals with relatively cheaper computational costs, so that results are replicable. We therefore combine the spatial signed SN test with SBS to achieve the task of multiple change-point estimation, and we call the resulting procedure SBS-SN(s). We first introduce the concept of seeded sub-intervals.

Definition 4.1 (Seeded Sub-Intervals, Kovács et al., (2020)).

Let denote a given decay parameter. For (i.e. logarithm with base ) define the -th layer as the collection of intervals of initial length that are evenly shifted by the deterministic shift as follows:

where and The overall collection of seeded intervals is
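The construction in Definition 4.1 can be sketched in code. The formulas used below are recalled from Kovács et al., (2020) and should be treated as assumptions: for a decay parameter `a` in $[1/2, 1)$, layer $k$ contains $n_k = 2\lceil (1/a)^{k-1} \rceil - 1$ intervals of length $l_k = n a^{k-1}$, shifted by $s_k = (n - l_k)/(n_k - 1)$, with interval $i$ equal to $(\lfloor (i-1)s_k \rfloor, \lceil (i-1)s_k + l_k \rceil]$. The names `seeded_intervals`, `n` and `a` are illustrative.

```python
import math
from typing import List, Tuple


def seeded_intervals(n: int, a: float = 0.5) -> List[List[Tuple[int, int]]]:
    """Return the layers of seeded intervals (start, end] on (0, n].

    Sketch based on the recalled definition in Kovacs et al. (2020).
    """
    if not 0.5 <= a < 1.0:
        raise ValueError("decay parameter a must lie in [1/2, 1)")
    n_layers = math.ceil(math.log(n) / math.log(1.0 / a))
    layers = []
    for k in range(1, n_layers + 1):
        n_k = 2 * math.ceil((1.0 / a) ** (k - 1)) - 1  # intervals in layer k
        l_k = n * a ** (k - 1)                         # interval length
        s_k = (n - l_k) / (n_k - 1) if n_k > 1 else 0.0  # deterministic shift
        layer = []
        for i in range(1, n_k + 1):
            start = math.floor((i - 1) * s_k)
            # Clip at n to guard against floating point overshoot.
            end = min(n, math.ceil((i - 1) * s_k + l_k))
            layer.append((start, end))
        layers.append(layer)
    return layers


layers = seeded_intervals(100, a=0.5)
```

With `n = 100` and `a = 0.5`, the first layer is the whole sample and the second layer consists of three half-length intervals overlapping by half, so every change-point sits well inside at least one short interval in some layer.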

Let be a decay parameter, denote as the set of seeded intervals based on Definition 4.1. For each sub-interval , we calculate the spatial signed SN test

where and are defined in (3) and (4). We obtain the p-value of the sub-interval test statistic based on the fixed-$n$ asymptotic distribution . SBS-SN(s) then finds the smallest p-value evaluated over all sub-intervals and compares it with a predetermined threshold level . If the smallest p-value is also smaller than , denote the corresponding sub-interval where the smallest p-value is achieved as and estimate the change-point by . Once a change-point is identified, SBS-SN(s) divides the data sample into two subsamples accordingly and applies the same procedure to each of them. The process is implemented recursively until no change-point is detected. Details are provided in Algorithm 1.
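The recursion just described can be sketched generically. In the sketch below, `test` is a hypothetical callable that returns, for a sub-interval `(s, e]`, a p-value together with a candidate split point strictly inside the interval; it stands in for the spatial signed SN statistic and its fixed-$n$ p-value, which are not implemented here. The function name `segment` and the toy test are illustrative assumptions, and the sketch is not a transcription of Algorithm 1.

```python
from typing import Callable, List, Sequence, Tuple

Interval = Tuple[int, int]  # represents (start, end]


def segment(lo: int, hi: int, intervals: Sequence[Interval],
            test: Callable[[int, int], Tuple[float, int]],
            alpha: float) -> List[int]:
    """Recursively estimate change-points inside (lo, hi]."""
    best_p, best_k = None, None
    for s, e in intervals:
        if s < lo or e > hi:
            continue  # only use seeded intervals contained in the segment
        p_value, k = test(s, e)
        if best_p is None or p_value < best_p:
            best_p, best_k = p_value, k
    # Stop when no interval fits or the smallest p-value is not below alpha.
    if best_p is None or best_p >= alpha or not lo < best_k < hi:
        return []
    left = segment(lo, best_k, intervals, test, alpha)
    right = segment(best_k, hi, intervals, test, alpha)
    return left + [best_k] + right


def toy_test(s: int, e: int) -> Tuple[float, int]:
    """Hypothetical oracle: tiny p-value if a true change lies inside (s, e)."""
    inside = [c for c in (30, 70) if s < c < e]
    return (0.0, inside[0]) if inside else (1.0, (s + e) // 2)


estimates = segment(0, 100, [(0, 100), (0, 50), (25, 75), (50, 100)],
                    toy_test, alpha=0.001)
```

Restricting each recursive call to seeded intervals that lie inside the current segment keeps the collection deterministic, so repeated runs on the same data return the same estimates.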

Our SBS-SN(s) algorithm differs from the WBS-SN algorithm in Wang et al., (2022) and Zhang et al., (2021) in two aspects. First, WBS-SN is built on WBS, which relies on randomly generated intervals, while SBS relies on deterministic intervals. As documented in Kovács et al., (2020), WBS is computationally more demanding than SBS. Second, the threshold used in WBS-SN is universal for each sub-interval, depends on the sample size and dimension, and needs to be obtained via extensive Monte Carlo simulations. Generally speaking, WBS-SN requires simulating a new threshold for each new dataset. By contrast, our estimation procedure is based on p-values under the fixed-$n$ asymptotics, which take into account the interval length for each sub-interval . When implementing either WBS or SBS, there will inevitably be intervals of small lengths. Hence, the universal threshold may not be suitable, as it does not take into account the effect of different interval lengths. In order to alleviate the problem of multiple testing, we may set a small threshold number for , such as 0.0001 or 0.0005. Furthermore, WBS-SN requires specifying a minimal interval length, which can affect the finite sample performance. In this work, when generating seeded sub-intervals as in Definition 4.1, the lengths of these intervals are set as integer multiples of 10 to reduce the computational cost of simulating the fixed-$n$ asymptotic distribution . Therefore, we only require the knowledge of for SBS-SN(s) to work, which can be simulated once and for all and does not change with a new dataset.
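One way to turn a pre-simulated null distribution into interval-specific p-values is sketched below. The paper does not state which estimator it uses, so the add-one empirical p-value, the name `empirical_p_value` and the toy draws are assumptions made for illustration; in practice the draws for each interval length would come from simulating the fixed-$n$ limit once and storing them.

```python
from bisect import bisect_left
from typing import Dict, List, Sequence


def empirical_p_value(stat: float, sorted_null_draws: Sequence[float]) -> float:
    """Upper-tail Monte Carlo p-value with the common add-one correction.

    `sorted_null_draws` must be sorted in increasing order.
    """
    b = len(sorted_null_draws)
    # bisect_left gives the index of the first draw >= stat.
    n_at_least = b - bisect_left(sorted_null_draws, stat)
    return (1 + n_at_least) / (1 + b)


# Toy placeholder draws keyed by interval length; real draws would be
# simulated once from the fixed-n limiting distribution and then reused.
null_draws_by_length: Dict[int, List[float]] = {
    10: sorted([1.0, 2.0, 3.0, 4.0]),
    20: sorted([0.5, 1.0, 1.5, 2.0]),
}

# The same statistic is less extreme for the short interval than for the
# longer one, because the reference distribution depends on the length.
p_short = empirical_p_value(3.5, null_draws_by_length[10])
p_long = empirical_p_value(3.5, null_draws_by_length[20])
```

Keying the stored draws by interval length is what lets a single simulation serve every dataset, in contrast to a universal threshold that must be re-simulated for each sample size and dimension.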

5 Numerical Experiments

This section examines the finite sample behavior of the proposed tests and multiple change-point estimation algorithm SBS-SN(s) via simulation studies.

5.1 Size and Power

We first assess the performance of with respect to various covariance structure of the data. Consider the following data generating process with and :

where represents the mean shift vector, and are i.i.d copies of based on the following specifications:

- (i) ;
- (ii) ;
- (iii) ;
- (iv) , , , where are i.i.d random variables;
- (v) , , , where are i.i.d random variables;
- (vi) , , , , where are i.i.d random variables, and is independently generated;
- (vii) , , , , where are i.i.d random variables, and is independently generated;

where is the multivariate $t$ distribution with degrees of freedom and covariance ; is the exponential distribution with mean .

Case (i) assumes that coordinates of are independent and light-tailed; Cases (ii) and (iii) consider the scenario of heavy-tailedness of ; Cases (iv) and (v) assume the coordinates of are consecutive random observations from a stationary AR(1) model with autoregressive coefficient ; and Cases (vi) and (vii) assume the coordinates of are generated from an RSRM with .

Table 2 shows the empirical rejection rate of and in percentage based on 1000 replications under the null with ; dense alternative ; and sparse alternative . We compare the approximation using the limiting null distribution of fixed- asymptotics and sequential asymptotics at level.

| Case | Test | Limit | Null, $n$=10 | Null, $n$=20 | Null, $n$=50 | Null, $n$=100 | Null, $n$=200 | Dense, $n$=10 | Dense, $n$=20 | Dense, $n$=50 | Dense, $n$=100 | Dense, $n$=200 | Sparse, $n$=10 | Sparse, $n$=20 | Sparse, $n$=50 | Sparse, $n$=100 | Sparse, $n$=200 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| (i) | SN | fixed-$n$ | 5.6 | 4.8 | 6.9 | 4.0 | 6.4 | 6.3 | 6.5 | 15.2 | 34.7 | 77.1 | 7.3 | 11.0 | 34.1 | 78.0 | 99.9 |
| (i) | SN | sequential | 27.4 | 9.0 | 7.4 | 4.1 | 6.7 | 29.9 | 11.2 | 16.5 | 35.2 | 77.8 | 33.7 | 18.1 | 34.9 | 78.1 | 99.9 |
| (i) | SN(s) | fixed-$n$ | 5.5 | 4.8 | 6.2 | 4.3 | 6.6 | 6.2 | 5.9 | 15.0 | 33.4 | 76.7 | 7.2 | 10.4 | 33.3 | 77.7 | 99.8 |
| (i) | SN(s) | sequential | 28.5 | 8.7 | 6.8 | 4.4 | 7.1 | 29.8 | 10.8 | 15.7 | 34.6 | 77.6 | 33.5 | 17.3 | 34.6 | 78.6 | 99.8 |
| (ii) | SN | fixed-$n$ | 6.9 | 6.4 | 6.8 | 4.3 | 6.0 | 7.2 | 7.2 | 11.7 | 18.5 | 41.4 | 8.0 | 8.8 | 22.0 | 47.2 | 87.3 |
| (ii) | SN | sequential | 31.8 | 12.6 | 7.6 | 4.3 | 6.2 | 31.8 | 12.4 | 12.8 | 19.0 | 42.5 | 33.9 | 15.3 | 22.9 | 47.5 | 87.4 |
| (ii) | SN(s) | fixed-$n$ | 5.3 | 5.3 | 6.2 | 4.1 | 5.6 | 5.7 | 5.5 | 11.8 | 26.1 | 59.6 | 6.4 | 8.1 | 26.7 | 62.9 | 96.8 |
| (ii) | SN(s) | sequential | 28.2 | 9.7 | 6.7 | 4.2 | 5.7 | 28.5 | 10.0 | 12.6 | 27.0 | 60.4 | 30.8 | 14.4 | 28.0 | 63.2 | 96.8 |
| (iii) | SN | fixed-$n$ | 9.0 | 9.5 | 9.2 | 6.7 | 7.9 | 10.0 | 10.4 | 11.8 | 14.2 | 25.7 | 9.8 | 12.3 | 17.9 | 27.9 | 57.5 |
| (iii) | SN | sequential | 35.8 | 16.1 | 9.6 | 6.9 | 8.5 | 35.6 | 16.1 | 12.6 | 14.7 | 26.0 | 36.8 | 18.7 | 18.8 | 28.7 | 58.2 |
| (iii) | SN(s) | fixed-$n$ | 5.6 | 5.0 | 6.4 | 4.8 | 6.4 | 5.7 | 4.9 | 10.5 | 21.2 | 50.2 | 6.2 | 6.9 | 21.9 | 53.0 | 93.4 |
| (iii) | SN(s) | sequential | 27.5 | 9.6 | 7.0 | 4.9 | 6.8 | 29.2 | 9.3 | 11.4 | 21.7 | 50.8 | 28.6 | 12.8 | 23.0 | 53.9 | 93.7 |
| (iv) | SN | fixed-$n$ | 5.9 | 4.8 | 6.1 | 6.8 | 5.4 | 6.5 | 8.6 | 23.5 | 46.4 | 78.8 | 9.3 | 14.7 | 43.2 | 83.8 | 99.6 |
| (iv) | SN | sequential | 28.1 | 9.2 | 6.7 | 6.9 | 5.7 | 30.9 | 13.8 | 24.6 | 47.4 | 79.1 | 33.9 | 20.1 | 44.4 | 84.0 | 99.6 |
| (iv) | SN(s) | fixed-$n$ | 4.8 | 3.9 | 6.0 | 6.3 | 5.4 | 5.5 | 7.1 | 22.5 | 44.2 | 77.9 | 6.9 | 13.0 | 41.5 | 84.1 | 99.8 |
| (iv) | SN(s) | sequential | 27.6 | 8.2 | 6.5 | 6.6 | 5.4 | 30.6 | 12.2 | 23.5 | 45.3 | 78.1 | 33.1 | 18.8 | 43.0 | 84.6 | 99.8 |
| (v) | SN | fixed-$n$ | 7.0 | 7.6 | 6.0 | 6.8 | 6.1 | 8.6 | 11.1 | 17.5 | 30.1 | 54.2 | 9.4 | 11.3 | 26.7 | 56.7 | 94.0 |
| (v) | SN | sequential | 33.5 | 12.9 | 6.6 | 7.2 | 6.1 | 33.6 | 16.9 | 17.9 | 30.4 | 54.6 | 34.4 | 18.3 | 27.9 | 57.1 | 94.2 |
| (v) | SN(s) | fixed-$n$ | 5.3 | 4.4 | 5.0 | 6.9 | 5.2 | 6.1 | 7.5 | 18.5 | 37.1 | 65.2 | 5.6 | 9.4 | 35.2 | 73.7 | 98.7 |
| (v) | SN(s) | sequential | 29.3 | 8.5 | 5.3 | 7.5 | 5.5 | 30.5 | 11.8 | 19.0 | 37.7 | 65.6 | 30.6 | 14.1 | 35.8 | 74.2 | 98.8 |
| (vi) | SN | fixed-$n$ | 34.7 | 39.7 | 39.2 | 34.6 | 33.6 | 34.6 | 40.7 | 39.4 | 35.6 | 34.2 | 35.0 | 39.6 | 40.1 | 34.3 | 33.8 |
| (vi) | SN | sequential | 60.2 | 46.7 | 40.5 | 34.9 | 34.1 | 62.5 | 47.5 | 40.3 | 36.1 | 34.8 | 60.6 | 46.9 | 41.0 | 34.4 | 34.1 |
| (vi) | SN(s) | fixed-$n$ | 5.0 | 4.2 | 5.3 | 5.9 | 5.9 | 6.0 | 4.8 | 11.3 | 20.1 | 35.3 | 5.6 | 7.1 | 16.8 | 37.2 | 73.5 |
| (vi) | SN(s) | sequential | 27.9 | 8.6 | 5.7 | 6.2 | 6.1 | 28.1 | 10.0 | 12.0 | 20.3 | 35.4 | 28.2 | 11.9 | 17.6 | 38.0 | 74.0 |
| (vii) | SN | fixed-$n$ | 33.7 | 40.6 | 37.9 | 36.5 | 36.6 | 34.3 | 40.3 | 37.9 | 37.0 | 36.9 | 33.5 | 40.6 | 38.3 | 36.9 | 36.8 |
| (vii) | SN | sequential | 61.9 | 47.3 | 38.6 | 37.2 | 36.8 | 62.2 | 46.5 | 39.1 | 37.4 | 37.1 | 61.5 | 47.7 | 39.8 | 37.7 | 36.9 |
| (vii) | SN(s) | fixed-$n$ | 4.3 | 4.4 | 5.2 | 6.4 | 6.0 | 5.1 | 6.2 | 9.5 | 17.5 | 32.9 | 5.1 | 5.8 | 14.1 | 28.5 | 62.5 |
| (vii) | SN(s) | sequential | 30.2 | 8.4 | 5.5 | 6.7 | 6.5 | 30.6 | 10.1 | 10.2 | 17.7 | 33.5 | 30.4 | 9.2 | 15.3 | 29.1 | 63.0 |

We summarize the findings of Table 2 as follows: (1) both and suffer from severe size distortion using sequential asymptotics if $n$ is small (i.e., ); (2) both fixed-$n$ asymptotics and large-$n$ asymptotics work well for and when $n$ is large under weak dependence in coordinates (cases (i)-(v)); (3) and are both accurate in size and comparable in power performance when ’s are light-tailed (cases (i), (ii), (iv) and (v)) if appropriate limiting distributions are used; (4) is slightly oversized compared with under heavy-tailed distributions (case (iii)); (5) when strong dependence is exhibited in coordinates (cases (vi) and (vii)), still works for small $n$ while other combinations of tests and asymptotics generally fail; (6) increasing the data length enhances power under all settings while increasing dependence in coordinates generally reduces power. Overall, the spatial signed SN test using the fixed-$n$ asymptotic critical value outperforms all other tests and should be preferred due to its robustness and size accuracy.

5.2 Segmentation

We then examine the numerical performance of SBS-SN(s) by considering the multiple change-points models in Wang et al., (2022). For and , we generate i.i.d. samples of , where ’s are either normally distributed, i.e., case (i); or RSRM sequences, i.e., case (vii) with autoregressive coefficient We assume there are three change points at 30, 60 and 90. Denote the changes in mean by , and , where , and Here, represents the sparse or dense alternatives while represents the signal strength. For example, we refer to the choice of and as Sparse(2.5) and , as Dense(4).

To assess the estimation accuracy, we consider (1) the differences between the estimated number of change-points and the truth ; (2) Mean Squared Error (MSE) between and the truth ; (3) the adjusted Rand index (ARI) which measures the similarity between two partitions of the same observations. Generally speaking, a higher ARI (with the maximum value of 1) indicates more accurate change-point estimation, see Hubert and Arabie, (1985) for more details.
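For readers who want to reproduce criterion (3), the following sketch computes the adjusted Rand index between the partitions induced by two sets of change-points, using the standard pair-counting formula of Hubert and Arabie, (1985). The helper names, the convention that a change-point at `k` ends a segment at observation `k`, and the example with a missed change-point are illustrative assumptions.

```python
from collections import Counter
from math import comb
from typing import List, Sequence


def labels_from_change_points(n: int, cps: Sequence[int]) -> List[int]:
    """Label observations 1..n by segment; a change-point k ends a segment at k."""
    cp_set, labels, seg = set(cps), [], 0
    for t in range(1, n + 1):
        labels.append(seg)
        if t in cp_set:
            seg += 1
    return labels


def adjusted_rand_index(x: Sequence[int], y: Sequence[int]) -> float:
    """Pair-counting adjusted Rand index between two labelings."""
    index = sum(comb(c, 2) for c in Counter(zip(x, y)).values())
    sum_x = sum(comb(c, 2) for c in Counter(x).values())
    sum_y = sum(comb(c, 2) for c in Counter(y).values())
    expected = sum_x * sum_y / comb(len(x), 2)
    max_index = 0.5 * (sum_x + sum_y)
    if max_index == expected:  # both partitions are trivial
        return 1.0
    return (index - expected) / (max_index - expected)


truth = labels_from_change_points(120, [30, 60, 90])
exact = adjusted_rand_index(truth, labels_from_change_points(120, [30, 60, 90]))
missed = adjusted_rand_index(truth, labels_from_change_points(120, [30, 60]))
```

Recovering all three change-points exactly gives an ARI of 1, while missing the last one lowers the index even though the two detected locations are correct.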

We also report the estimation results using WBS-SN in Wang et al., (2022) and SBS-SN (obtained by replacing WBS with SBS in Wang et al., (2022)). The superiority of WBS-SN over other competing methods can be found in Wang et al., (2022) under similar simulation settings. For SBS based estimation results, we vary the decay rate , and the resulting procedures are denoted by SBS1 and SBS2 respectively. Here, the thresholds in the above algorithms are either a Monte Carlo simulated constant for all sub-interval test statistics, or a prespecified quantile level based on p-values reported by these sub-interval statistics. We fix as the 95% quantile level of the maximal test statistics based on 5000 simulated Gaussian data sets with no change-points as in Wang et al., (2022), while is set as 0.001 (the results using 0.005 are similar and hence omitted). We replicate all experiments 500 times, and results for dense changes and sparse changes are reported in Table 3 and Table 4, respectively.

From Table 3, we find that (1) WBS-SN and SBS-SN tend to produce close estimation accuracy holding the form of and signal strength fixed; (2) different decay rates have some impact on the performance of SBS-SN methods, and when the signal is weak the impact is noticeable; (3) increasing the signal strength of change-points boosts the detection power for all methods; (4) using gives more accurate estimation than using in SBS-SN when data is normally distributed with weak signal level and they work comparably in other settings; (5) when is normally distributed, SBS-SN(s) works comparably with other estimation algorithms while for RSRM sequences, our SBS-SN(s) greatly outperforms other methods. The results in Table 4 are similar. These findings together suggest substantial gain in detection performance using SBS-SN(s) due to its robustness to heavy-tailedness and stronger dependence in the coordinates.

| Setting | Method | Data | Test | Threshold | <-1 | -1 | 0 | 1 | >1 | MSE | ARI |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Dense(2.5) | WBS | Normal | | | 95 | 156 | 246 | 3 | 0 | 1.278 | 0.729 |
| | SBS1 | Normal | | | 76 | 206 | 214 | 4 | 0 | 1.068 | 0.742 |
| | SBS2 | Normal | | | 32 | 195 | 266 | 7 | 0 | 0.660 | 0.809 |
| | SBS1 | Normal | | | 0 | 0 | | 35 | 1 | 0.078 | |
| | SBS2 | Normal | | | 0 | 10 | | 23 | 1 | | |
| | SBS1 | Normal | | | 0 | 1 | | 29 | 1 | | |
| | SBS2 | Normal | | | 0 | 19 | 463 | 18 | 0 | | 0.926 |
| | WBS | RSRM | | | 64 | 89 | 133 | 104 | 110 | 2.668 | 0.461 |
| | SBS1 | RSRM | | | 35 | 58 | 116 | 107 | 184 | 3.612 | 0.462 |
| | SBS2 | RSRM | | | 38 | 72 | 114 | 130 | 146 | 3.032 | 0.470 |
| | SBS1 | RSRM | | | 8 | 6 | 32 | 84 | 376 | 8.792 | 0.653 |
| | SBS2 | RSRM | | | 57 | 43 | 104 | 106 | 233 | 4.508 | 0.532 |
| | SBS1 | RSRM | | | 3 | 65 | 412 | 20 | 0 | 0.194 | 0.880 |
| | SBS2 | RSRM | | | 11 | 121 | 355 | 13 | 0 | 0.356 | 0.850 |
| Dense(4) | WBS | Normal | | | 0 | 7 | 486 | 7 | 0 | 0.028 | 0.948 |
| | SBS1 | Normal | | | 0 | 21 | 467 | 12 | 0 | 0.066 | 0.936 |
| | SBS2 | Normal | | | 0 | 23 | 464 | 13 | 0 | 0.072 | 0.945 |
| | SBS1 | Normal | | | 0 | 0 | 464 | 34 | 2 | 0.084 | 0.937 |
| | SBS2 | Normal | | | 0 | 0 | 476 | 23 | 1 | 0.054 | 0.943 |
| | SBS1 | Normal | | | 0 | 0 | 468 | 31 | 1 | 0.070 | 0.936 |
| | SBS2 | Normal | | | 0 | 0 | 482 | 18 | 0 | 0.036 | 0.942 |
| | WBS | RSRM | | | 64 | 89 | 133 | 104 | 110 | 2.668 | 0.461 |
| | SBS1 | RSRM | | | 26 | 67 | 107 | 115 | 185 | 3.732 | 0.484 |
| | SBS2 | RSRM | | | 33 | 74 | 115 | 125 | 153 | 3.146 | 0.489 |
| | SBS1 | RSRM | | | 27 | 25 | 71 | 93 | 309 | 6.604 | 0.579 |
| | SBS2 | RSRM | | | 39 | 33 | 103 | 114 | 244 | 4.740 | 0.559 |
| | SBS1 | RSRM | | | 0 | 10 | 469 | 20 | 1 | 0.068 | 0.918 |
| | SBS2 | RSRM | | | 0 | 35 | 451 | 14 | 0 | 0.098 | 0.911 |

Note: Top 3 methods are in bold format.
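The ARI column can be read as the adjusted Rand index of Hubert and Arabie, (1985) between the true and the estimated segmentation. A minimal sketch of this computation follows. It assumes that a change-point at location k makes observation k the last point of its segment; this labeling convention and the function names are chosen here for illustration and may differ from the convention used in the simulations.

```python
from math import comb

import numpy as np


def labels_from_change_points(n, change_points):
    """Segment label of each observation 1..n given change-point locations."""
    cps = np.sort(np.asarray(change_points, dtype=int))
    # side="left" keeps observation k in the segment that ends at k.
    return np.searchsorted(cps, np.arange(1, n + 1), side="left")


def adjusted_rand_index(labels_a, labels_b):
    """Adjusted Rand index between two labelings of the same observations."""
    _, a = np.unique(np.asarray(labels_a), return_inverse=True)
    _, b = np.unique(np.asarray(labels_b), return_inverse=True)
    table = np.zeros((a.max() + 1, b.max() + 1), dtype=int)
    np.add.at(table, (a, b), 1)  # contingency table of the two partitions
    sum_cells = sum(comb(int(v), 2) for v in table.ravel())
    sum_rows = sum(comb(int(v), 2) for v in table.sum(axis=1))
    sum_cols = sum(comb(int(v), 2) for v in table.sum(axis=0))
    expected = sum_rows * sum_cols / comb(len(a), 2)
    max_index = 0.5 * (sum_rows + sum_cols)
    if max_index == expected:  # both partitions trivial: perfect agreement
        return 1.0
    return (sum_cells - expected) / (max_index - expected)


if __name__ == "__main__":
    truth = labels_from_change_points(100, [25, 50, 75])
    estimate = labels_from_change_points(100, [26, 50, 74])
    print(adjusted_rand_index(truth, estimate))
```

An ARI of 1 corresponds to identical segmentations, so values close to 1 in the table indicate that both the number and the locations of the change-points are recovered well.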

| Setting | Method | Data | Test | Threshold | <-1 | -1 | 0 | 1 | >1 | MSE | ARI |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Sparse(2.5) | WBS | Normal | | | 78 | 147 | 274 | 1 | 0 | 1.050 | 0.759 |
| | SBS1 | Normal | | | 59 | 214 | 223 | 4 | 0 | 0.928 | 0.760 |
| | SBS2 | Normal | | | 20 | 185 | 287 | 8 | 0 | 0.546 | 0.829 |
| | SBS1 | Normal | | | 0 | 1 | 464 | 34 | 1 | 0.078 | 0.929 |
| | SBS2 | Normal | | | 0 | 13 | 465 | 21 | 1 | 0.076 | 0.930 |
| | SBS1 | Normal | | | 0 | 2 | 470 | 27 | 1 | 0.066 | 0.927 |
| | SBS2 | Normal | | | 0 | 23 | 460 | 17 | 0 | 0.080 | 0.924 |
| | WBS | RSRM | | | 70 | 97 | 121 | 102 | 110 | 2.682 | 0.449 |
| | SBS1 | RSRM | | | 38 | 51 | 110 | 124 | 177 | 3.572 | 0.460 |
| | SBS2 | RSRM | | | 39 | 66 | 122 | 122 | 151 | 3.100 | 0.474 |
| | SBS1 | RSRM | | | 38 | 35 | 75 | 109 | 278 | 5.800 | 0.447 |
| | SBS2 | RSRM | | | 84 | 69 | 129 | 108 | 179 | 3.258 | 0.528 |
| | SBS1 | RSRM | | | 5 | 64 | 414 | 17 | 0 | 0.212 | 0.872 |
| | SBS2 | RSRM | | | 8 | 117 | 364 | 11 | 0 | 0.330 | 0.851 |
| Sparse(4) | WBS | Normal | | | 0 | 7 | 486 | 7 | 0 | 0.028 | 0.958 |
| | SBS1 | Normal | | | 0 | 19 | 468 | 13 | 0 | 0.064 | 0.938 |
| | SBS2 | Normal | | | 0 | 27 | 458 | 15 | 0 | 0.084 | 0.945 |
| | SBS1 | Normal | | | 0 | 0 | 465 | 34 | 1 | 0.076 | 0.938 |
| | SBS2 | Normal | | | 0 | 0 | 477 | 22 | 1 | 0.052 | 0.944 |
| | SBS1 | Normal | | | 0 | 0 | 472 | 27 | 1 | 0.062 | 0.937 |
| | SBS2 | Normal | | | 0 | 0 | 481 | 19 | 0 | 0.038 | 0.943 |
| | WBS | RSRM | | | 58 | 93 | 125 | 106 | 118 | 2.716 | 0.476 |
| | SBS1 | RSRM | | | 32 | 51 | 96 | 133 | 188 | 3.840 | 0.486 |
| | SBS2 | RSRM | | | 32 | 62 | 121 | 117 | 168 | 3.256 | 0.503 |
| | SBS1 | RSRM | | | 27 | 25 | 76 | 97 | 300 | 6.250 | 0.574 |
| | SBS2 | RSRM | | | 70 | 55 | 119 | 117 | 194 | 3.460 | 0.557 |
| | SBS1 | RSRM | | | 0 | 8 | 472 | 20 | 0 | 0.056 | 0.914 |
| | SBS2 | RSRM | | | 0 | 38 | 448 | 14 | 0 | 0.104 | 0.908 |

6 Real Data Application

In this section, we analyze the genomic micro-array (ACGH) dataset for 43 individuals with bladder tumors. The ACGH data contain log intensity ratios of these individuals measured at 2215 different loci on their genome, and copy number variations at the loci can be viewed as change-points in the genome. Hence change-point estimation could be helpful in determining the abnormal regions, as analyzed by Wang and Samworth, (2018) and Zhang et al., (2021). The data is denoted by .

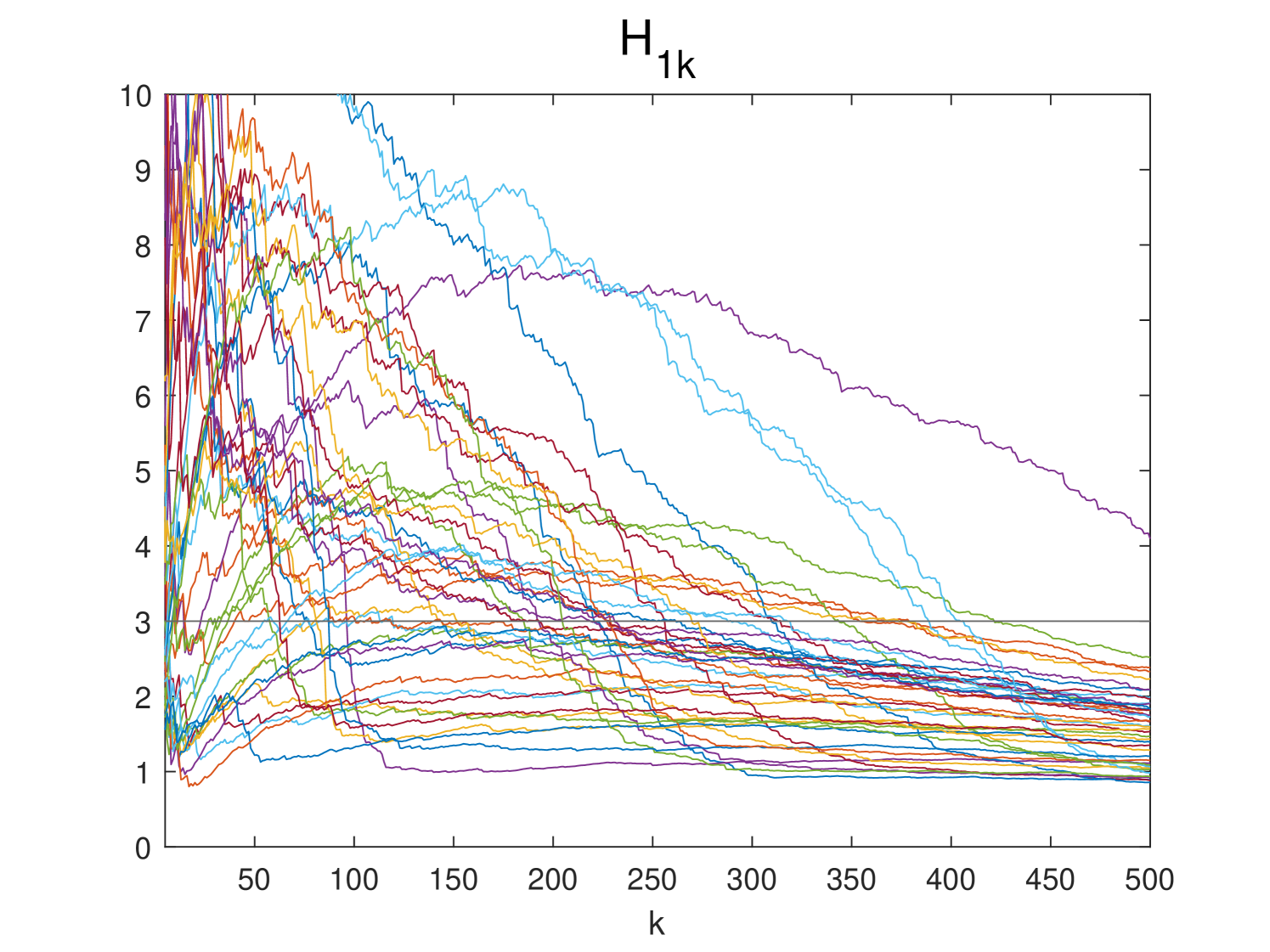

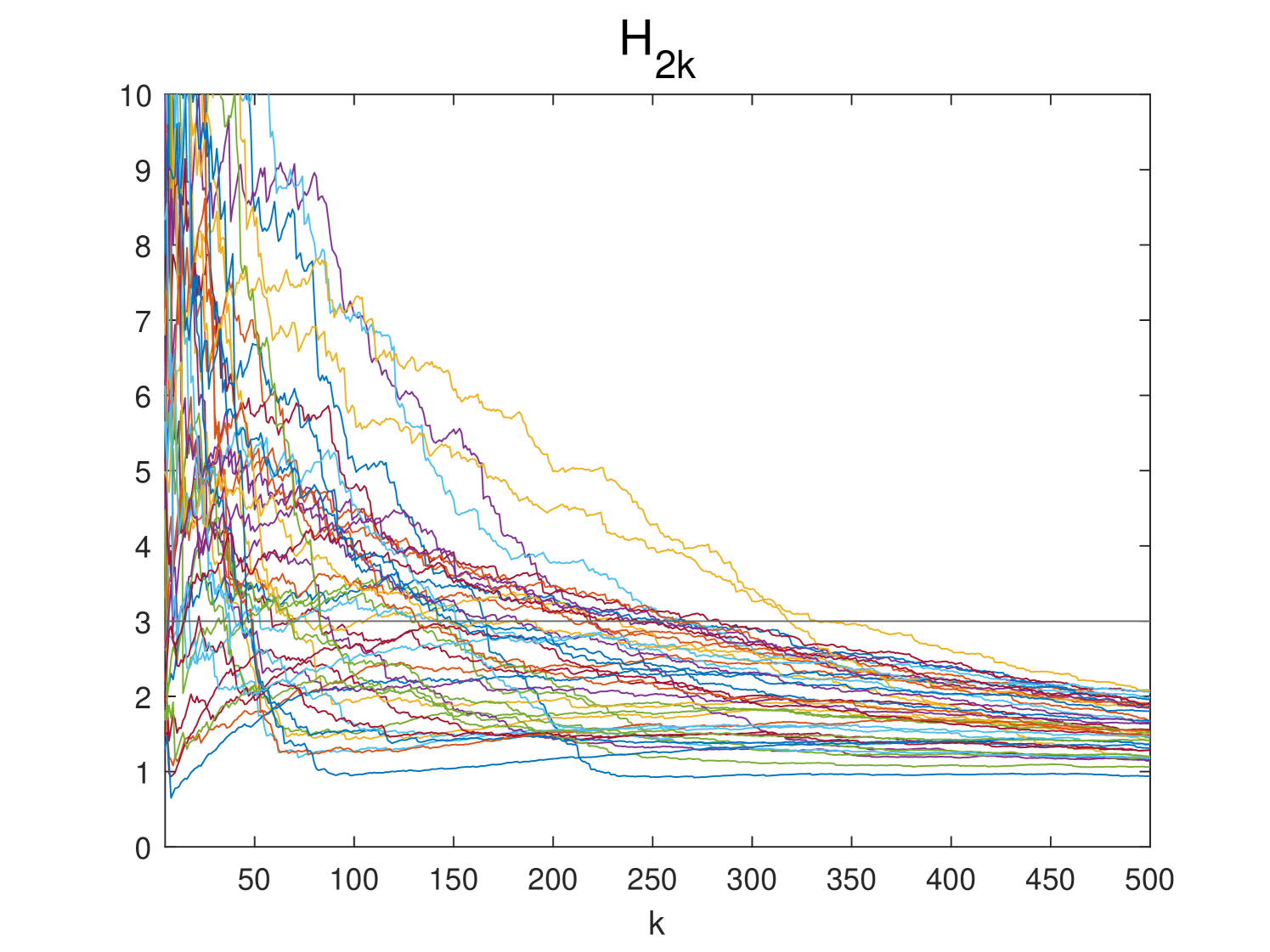

To illustrate the necessity of the robust estimation method proposed in this paper, we use Hill’s estimator to estimate the tail index of a sequence; see Hill, (1975). Specifically, let be the ascending order statistics of the th individual (coordinate) across 2215 observations. For , we give the left-tail and right-tail Hill estimators by

respectively, and they are plotted in Figure 2. From the plot, we see that most of the right-tail and the left-tail indices are below 3, suggesting the data is very likely heavy-tailed.
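The tail-index calculation can be sketched with the standard Hill estimator. This is an illustration under assumptions rather than the exact estimator used for Figure 2: the number of upper order statistics `k` is a free parameter here, the function names are hypothetical, and the left tail is handled by negating the data.

```python
import numpy as np


def hill_tail_index(x, k):
    """Right-tail Hill estimate of the tail index from the k largest values."""
    x = np.sort(np.asarray(x, dtype=float))
    n = x.size
    if not 1 <= k < n:
        raise ValueError("k must satisfy 1 <= k < n")
    threshold = x[n - k - 1]  # the (k+1)-th largest observation
    if threshold <= 0:
        raise ValueError("the threshold order statistic must be positive")
    # Mean log-excess over the threshold estimates 1 / (tail index).
    gamma = np.mean(np.log(x[n - k:] / threshold))
    return 1.0 / gamma


def left_tail_index(x, k):
    """Left-tail estimate, obtained by applying the estimator to -x."""
    return hill_tail_index(-np.asarray(x, dtype=float), k)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Classical Pareto sample with tail index 3, used only as a sanity check.
    sample = rng.pareto(3.0, size=200_000) + 1.0
    print(hill_tail_index(sample, k=2000))
```

Smaller estimated indices correspond to heavier tails, which is how estimates below 3 are interpreted above.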

We take the first 200 loci for our SBS-SN(s) change-point estimation following the practice in Zhang et al., (2021), where the decay rate for the generation of seeded intervals in SBS is . We also compare with the results obtained by Adaptive WBS-SN in Zhang et al., (2021) and with the 20 most significant points detected by INSPECT in Wang and Samworth, (2018). For this dataset, INSPECT acts more like a screening method, as it delivers a total of 67 change-points. In contrast to Adaptive WBS-SN and INSPECT, where the thresholds for change-point estimation are simulated, the threshold used in SBS-SN(s) can be pre-specified, and it reflects a researcher’s confidence in detecting the change-points. We set the p-value threshold as 0.001, 0.005 and 0.01 and the results are as follows:

As we see, increasing the p-value threshold leads to more estimated change-points, and the set of change-points estimated with a larger threshold contains the set estimated with a smaller one. In addition, increasing the threshold from 0.005 to 0.01 only brings in one more estimated change-point, suggesting may be a reasonable choice for the ACGH dataset.

All of our detected change-points at are also detected by INSPECT, i.e., 30(28), 41(40), 56, 72(73), 89(91), 97, 116, 130(131), 136(134, 135), 155, 174, 191. Although most of these points also coincide with those of Adaptive WBS-SN, there are non-overlapping ones. For example, 41, 56 and 130 in SBS-SN(s) seem to be missed by Adaptive WBS-SN, while 102 is missed by our SBS-SN(s) although it is detected by both Adaptive WBS-SN and INSPECT. These results are not really in conflict, as Adaptive WBS-SN targets both sparse and dense alternatives, whereas our procedure aims to detect dense changes with robustness properties.
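The agreement between the two lists above can be checked with a few lines of code. The sketch below assumes that the numbers outside parentheses are the SBS-SN(s) estimates and that the numbers in parentheses are the nearby INSPECT locations (with an unparenthesized value meaning an exact match). Only the INSPECT points quoted above are used, not the full set of 67, and the variable and function names are hypothetical.

```python
# Locations quoted in the text; the reading of the parentheses is an assumption.
sbs_sn_s = [30, 41, 56, 72, 89, 97, 116, 130, 136, 155, 174, 191]
inspect_matches = [28, 40, 56, 73, 91, 97, 116, 131, 134, 135, 155, 174, 191]


def nearest_gap(points, reference):
    """Distance from each point to its closest location in `reference`."""
    return {p: min(abs(p - r) for r in reference) for p in points}


gaps = nearest_gap(sbs_sn_s, inspect_matches)
max_gap = max(gaps.values())                    # largest displacement in loci
exact = [p for p, g in gaps.items() if g == 0]  # points matched exactly

if __name__ == "__main__":
    print("largest displacement:", max_gap)
    print("exact matches:", exact)
```

Under this reading, every SBS-SN(s) estimate lies within two loci of an INSPECT location, and six of the twelve coincide exactly.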

7 Conclusion

In this paper, we propose a new method for testing and estimation of change-points in high-dimensional independent data. Our test statistic builds on two recent advances in high-dimensional testing problems: the spatial sign used in two-sample testing in Chakraborty and Chaudhuri, (2017) and self-normalized U-statistics in Wang et al., (2022), and it inherits many of their advantages, such as robustness to heavy-tailedness and being tuning-free. The test is theoretically justified under both fixed-n asymptotics and sequential asymptotics, and under both the null and alternatives. When the data exhibit stronger dependence among coordinates, we further enhance the analysis by focusing on RSRM models, and find that using the spatial sign leads to power improvement compared with the mean-based tests in Wang et al., (2022). As for multiple change-point estimation, we propose to combine p-values under the fixed-n asymptotics with the SBS algorithm. Numerical simulations demonstrate that the fixed-n asymptotics for the spatial sign based test provide a better approximation to the finite sample distribution, and that the estimation algorithm outperforms the mean-based ones when the data are heavy-tailed and when the coordinates are strongly dependent.
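For readers who want a concrete picture of the spatial sign, a minimal sketch of the map x ↦ x/‖x‖ is given below. It shows only the transformation, not the test statistic itself. The function name and the convention of mapping the zero vector to zero are assumptions made for illustration.

```python
import numpy as np


def spatial_sign(x):
    """Map each row of x to x / ||x||; zero rows are mapped to zero."""
    x = np.asarray(x, dtype=float)
    norms = np.linalg.norm(x, axis=-1, keepdims=True)
    # Divide only where the norm is positive to avoid 0 / 0.
    return np.divide(x, norms, out=np.zeros_like(x), where=norms > 0)


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    heavy = rng.standard_cauchy(size=(5, 100))  # heavy-tailed rows
    print(np.linalg.norm(heavy, axis=1))                # can be very large
    print(np.linalg.norm(spatial_sign(heavy), axis=1))  # all equal to 1
```

Because every nonzero observation is projected onto the unit sphere, a single extreme observation cannot dominate sums of inner products, which is the intuition behind the robustness to heavy tails.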

To conclude, we mention a few interesting topics for future research. Our method builds on the spatial sign and targets dense signals by constructing unbiased estimators for . As pointed out by Liu et al., (2020), many real datasets exhibit both sparse and dense changes, and it would be interesting to combine our approach with the adaptive SN based test in Zhang et al., (2021) to achieve both robustness and adaptiveness. In addition, the independence assumption imposed in this paper may limit its applicability to high-dimensional time series where temporal dependence cannot be neglected. It is desirable to relax the independence assumption by using U-statistics based on trimmed observations, as is adopted in Wang et al., (2022). It would also be interesting to develop robust methods for detecting change-points in quantities other than the mean, such as quantiles, covariance matrices and parameter vectors in high-dimensional linear models.

Supplement to “Robust Inference for Change Points in High Dimension”

This supplementary material contains all the technical proofs for the main paper. Section S.1 contains all the proofs of main theorems and Section S.2 contains auxiliary lemmas and their proofs.

In what follows, let denote the th coordinate of a vector . Denote if there exists such that for .

S.1 Proofs of Theorems

S.1.1 Proof of Theorem 3.1

First, we have that

| (S.8) |

(i) Under , by Theorem 8.2.2 in Lin and Lu, (2010), as , we have almost surely,

| (S.9) |

Then, for any fixed , we have that

| (S.10) |

where clearly , and

Next, since we view as fixed, then for all , , by Theorem 4.0.1 in Lin and Lu, (2010), it follows that . In addition, in view of (S.9) we have , and this implies that .

For , we let

then it follows that

| (S.13) |

Then, by Lemma S.2.1 and the continuous mapping theorem, we have

(ii) The proof is a simplified version of the proof of Theorem 3.4 (ii), hence omitted here.

∎

S.1.2 Proof of Theorem 3.2

Clearly,

and

Note that the construction of (or ) only uses the sample before (or after) the change point, so the change point has no influence on this part. The proof of Theorem 3.1 indicates that and similarly . Hence, it suffices to show and .

If , then

and

Hence,

and

∎

S.1.3 Proof of Theorem 3.3

By symmetry, we only consider the case . Under Assumption 3.4, (S.9) still holds by the Cauchy-Schwarz inequality, so using arguments similar to those in the proof of Theorem 3.1, we have

| (S.14) |

That is, for any triplet , hence it suffices to consider as the results of are similar.

We first note that

hence by Chebyshev’s inequality, for any triplet , we have

| (S.15) |

(i) By similar arguments in the proof of Theorem 3.2, it suffices to show

In fact, by similar arguments used in the proof of Theorem 3.1, we can show that

Then, recall (S.14), the result follows by noting

(ii) As , it follows from the same argument as (S.12).

(iii) As , then we have

Therefore, the continuous mapping theorem together with Lemma S.2.1 indicates that

The last part of the proof is similar to the proof of Theorem 3.5 (ii) below, and is simpler, hence omitted.

∎

S.1.4 Proof of Theorem 3.4

(i) Note that

| (S.16) |

hence given , as , we have almost surely

| (S.17) |

Note that

| (S.18) |

Let

and

Then under ,

Denote that which contains all inner products of and for all , and . By definition, , where is the field generated by , and we further observe that is a continuous functional of and . Hence to derive the limiting distribution of when , it suffices to derive the limiting distribution of .

For any , similar to the proof of Theorem 3.1, by Theorem 4.0.1 in Lin and Lu, (2010) we have

where are i.i.d. standard normal random variables, and we can assume is independent of . For the ease of our notation, we let , for all . Furthermore since , for any and , the characteristic function of is the product of the characteristic function of and that of . By applying the Cramér-Wold device, . Therefore, by continuous mapping theorem, as ,

| (S.19) |

where

| (S.20) |

It is clear that the conditional distribution of given is Gaussian, and for any , , the covariance structure is given by

| (S.21) | ||||

Clearly, when , we have where is defined in (S.10), and the result reduces to (S.11).

As for , note that

Using similar arguments as in (S.19), we have

| (S.22) |

where

| (S.23) |

Similar to , the conditional distribution of given is Gaussian, and for any , , the covariance structure is given by

| (S.24) | ||||

Hence, as ,

(ii)

We shall only show the process convergence . That is similar and simpler. Once the process convergence is obtained, the limiting distributions of and can be easily obtained by the continuous mapping theorem.

The proof for the process convergence contains two parts: the finite dimensional convergence and the tightness.

To show the finite dimensional convergence, we need to show that for any positive integer , any fixed and any ,

where for , . Since both and are Gaussian processes, by Lemma S.2.2 we have

Then by bounded convergence theorem we have

This completes the proof of the finite dimensional convergence.

To show the tightness, it suffices to show that there exists such that

for any (see the proof of equation S8.12 in Wang et al., (2022)).

Since given , is a Gaussian process, we have

By (S.21), for (and similar for ) this reduces to

Note that

We shall analyze first, and WLOG we let . Then we have (with )

Hence,

By rearranging terms we have

Thus by CR-inequality we have . We shall analyze first. Note that

Since and almost surely, we have

By Hölder’s inequality, and the fact that , , and are not identical to any of for any , we have

Therefore,

We repeatedly apply Hölder’s inequality and the above bound for the expectation, and we have for since there are 8 summations in each which take the sum from to , and for since there are only 4 summations in each which take the sum from to . Combining these results we have

We can also show , and . Since the steps are very similar to the arguments for , we omit the details here. Thus, for any , we have

for some positive constant . It is easy to see that

So

since This completes the proof of tightness. ∎
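For reference, the CR-inequality invoked in the tightness argument is, in its standard form, the following. The symbols X, Y, r, m and a_i are generic placeholders and are not the notation of the proof.

```latex
% Generic statement of the c_r-inequality; X, Y are random variables with
% finite r-th absolute moments, and a_1, ..., a_m are real numbers.
\[
  \mathbb{E}|X+Y|^{r} \le c_r\bigl(\mathbb{E}|X|^{r} + \mathbb{E}|Y|^{r}\bigr),
  \qquad
  c_r =
  \begin{cases}
    1, & 0 < r \le 1,\\
    2^{\,r-1}, & r > 1,
  \end{cases}
\]
\[
  \Bigl|\sum_{i=1}^{m} a_i\Bigr|^{r} \le m^{\,r-1}\sum_{i=1}^{m} |a_i|^{r},
  \qquad r \ge 1 .
\]
```

The second form is the one typically used to bound a moment of a sum of finitely many terms by the sum of the individual moments, up to a constant.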

S.1.5 Proof of Theorem 3.5

(i) Under Assumption 3.4, conditional on , we still have almost surely

as conditional on , is still a -mixing sequence.

Recall (S.18), conditional on , we mainly work on since is of a smaller order, where

By symmetry, we only consider the case , and the summation in can be decomposed into

according to the relative location of and .

Then, it is not hard to see that

Under Assumption 3.4, and conditional on , similar to (S.15), we can show for .

while by (S.19), .

Hence, if as , then conditional on , we obtain that

where

| (S.25) |

Hence, we have

For , by similar arguments as above, we have

Hence, we have

(ii) Note that for any such that , as ,

by the law of large numbers for U-statistics (since can be viewed as a two-sample U-statistic). Then using arguments similar to those in the proof of Theorem 3.4 (ii), we have

Note that is deterministic, and recall in the proof of Theorem 3.4 (ii), by similar arguments in the proof of Theorem 3.6 in Wang et al., (2022), we have

Similarly,

The result follows by the continuous mapping theorem. Here, the multiplicative follows by the proof of Theorem 3.2 in Chakraborty and Chaudhuri, (2017).

∎

S.2 Auxiliary Lemmas

Lemma S.2.1.

Proof of Lemma S.2.1

By Cramér-Wold device, it suffices to show that for fixed and , any sequences of , ,

where are integers.

For simplicity, we consider the case of , and by symmetry there are basically three types of enumerations of : (1) ; (2) ; (3) .

Define , and . Then, we can show

For simplicity, we consider Case (2), and using the independence of , one can show that , and are independent. Then by Theorem 4.0.1 in Lin and Lu, (2010), they are asymptotically normal with variances given by

Similarly, we can obtain the asymptotic normality for Case (1) and Case (3).

Hence,

where

Hence, the case of is proved by examining the covariance structure of defined in Theorem 3.1. The cases are similar. ∎
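For reference, the Cramér-Wold device used at the start of this proof can be stated as follows. The symbols Z_n, Z, a and k are generic placeholders and are not the notation of the lemma.

```latex
% Generic statement of the Cramer-Wold device for random vectors in R^k.
\[
  Z_n \xrightarrow{d} Z \ \text{ in } \mathbb{R}^{k}
  \quad\Longleftrightarrow\quad
  a^{\top} Z_n \xrightarrow{d} a^{\top} Z
  \ \text{ for every fixed } a \in \mathbb{R}^{k}.
\]
```

This is why it suffices to establish asymptotic normality of arbitrary fixed linear combinations in order to obtain joint convergence.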

Lemma S.2.2.

As , we have for any and , as ,

There are 9 terms in the covariance structure given in (S.21). For the first one, we have

where the last equality holds by applying the law of large numbers for U-statistics to and , and the last holds by the law of large numbers for U-statistics applied to .

Therefore, similar arguments for other terms indicate that

This is indeed the covariance structure of after tedious algebra. ∎

References

- Bradley, (1986) Bradley, R. C. (1986). Basic properties of strong mixing conditions. In Dependence in probability and statistics, pages 165–192. Springer.

- Cai et al., (2014) Cai, T. T., Liu, W., and Xia, Y. (2014). Two-sample test of high dimensional means under dependence. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 76(2):349–372.

- Chakraborty and Chaudhuri, (2017) Chakraborty, A. and Chaudhuri, P. (2017). Tests for high-dimensional data based on means, spatial signs and spatial ranks. Annals of Statistics, 45(2):771–799.

- Chen and Qin, (2010) Chen, S. X. and Qin, Y.-L. (2010). A two-sample test for high-dimensional data with applications to gene-set testing. Annals of Statistics, 38(2):808–835.

- Cho, (2016) Cho, H. (2016). Change-point detection in panel data via double CUSUM statistic. Electronic Journal of Statistics, 10(2):2000–2038.

- Fan and Mackey, (2017) Fan, Z. and Mackey, L. (2017). Empirical Bayesian analysis of simultaneous changepoints in multiple data sequences. Annals of Applied Statistics, 11(4):2200–2221.

- Fryzlewicz, (2014) Fryzlewicz, P. (2014). Wild binary segmentation for multiple change-point detection. Annals of Statistics, 42(6):2243–2281.

- Hill, (1975) Hill, B. M. (1975). A simple general approach to inference about the tail of a distribution. Annals of Statistics, 3(5):1163–1174.

- Horváth and Hušková, (2012) Horváth, L. and Hušková, M. (2012). Change-point detection in panel data. Journal of Time Series Analysis, 33(4):631–648.

- Hubert and Arabie, (1985) Hubert, L. and Arabie, P. (1985). Comparing partitions. Journal of Classification, 2(1):193–218.

- Jirak, (2015) Jirak, M. (2015). Uniform change point tests in high dimension. Annals of Statistics, 43(6):2451–2483.

- Kovács et al., (2020) Kovács, S., Li, H., Bühlmann, P., and Munk, A. (2020). Seeded binary segmentation: A general methodology for fast and optimal change point detection. arXiv preprint arXiv:2002.06633.

- Lin and Lu, (2010) Lin, Z. and Lu, C. (2010). Limit Theory for Mixing Dependent Random Variables. Springer Science & Business Media.

- Liu et al., (2022) Liu, B., Zhang, X., and Liu, Y. (2022). High dimensional change point inference: Recent developments and extensions. Journal of Multivariate Analysis, 188:104833.

- Liu et al., (2020) Liu, B., Zhou, C., Zhang, X., and Liu, Y. (2020). A unified data-adaptive framework for high dimensional change point detection. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 82(4):933–963.

- Oja, (2010) Oja, H. (2010). Multivariate Nonparametric Methods with R: an Approach Based on Spatial Signs and Ranks. Springer Science & Business Media.

- Phillips and Moon, (1999) Phillips, P. and Moon, H. (1999). Linear regression limit theory for nonstationary panel data. Econometrica, 67(5):1057–1111.

- Shao, (2010) Shao, X. (2010). A self-normalized approach to confidence interval construction in time series. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 72(3):343–366.

- Shao, (2015) Shao, X. (2015). Self-normalization for time series: a review of recent developments. Journal of the American Statistical Association, 110(512):1797–1817.

- Shao and Zhang, (2010) Shao, X. and Zhang, X. (2010). Testing for change points in time series. Journal of the American Statistical Association, 105(491):1228–1240.

- Wang et al., (2015) Wang, L., Peng, B., and Li, R. (2015). A high-dimensional nonparametric multivariate test for mean vector. Journal of the American Statistical Association, 110(512):1658–1669.

- Wang et al., (2022) Wang, R., Zhu, C., Volgushev, S., and Shao, X. (2022). Inference for change points in high-dimensional data via self-normalization. Annals of Statistics, 50(2):781–806.

- Wang and Samworth, (2018) Wang, T. and Samworth, R. J. (2018). High dimensional change point estimation via sparse projection. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 80(1):57–83.

- Yu and Chen, (2021) Yu, M. and Chen, X. (2021). Finite sample change point inference and identification for high-dimensional mean vectors. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 83(2):247–270.

- Yu and Chen, (2022) Yu, M. and Chen, X. (2022). A robust bootstrap change point test for high-dimensional location parameter. Electronic Journal of Statistics, 16(1):1096–1152.

- Zhang et al., (2021) Zhang, Y., Wang, R., and Shao, X. (2021). Adaptive inference for change points in high-dimensional data. Journal of the American Statistical Association, In Press.