Robust mixture regression with Exponential Power distribution

Abstract

Assuming an exponential power distribution for the errors is one way to deal with outliers in regression and clustering, and it can increase the robustness of the analysis. The Gaussian distribution is a special case of the exponential power distribution, and an exponential power distribution can be viewed as a scale mixture of normal distributions. Thus, model selection methods developed for the Gaussian mixture model can be easily extended to the exponential power mixture model.

Moreover, Gaussian mixture models tend to select more components than exponential power mixture models in real-world cases, which means exponential power mixture models are easier to interpret.

In this paper, we develop analyses for mixture regression models in which the errors are assumed to follow an exponential power distribution. The resulting model is robust to outliers, and model selection for it is easy to implement.

Keywords: Robust mixture regression, model selection, exponential power distribution

1 Introduction

Finite mixtures of regression models have been broadly used to deal with heterogeneous data. In most cases, the errors are assumed to follow normal distributions, and the parameters are estimated by the maximum likelihood estimator (MLE). However, this model is very sensitive to outliers.

There are many methods for handling outliers in mixtures of linear regression models. The trimmed likelihood estimator (TLE) (Neykov et al., 2007) uses only the portion of the data with the largest log-likelihoods. The choice of this trimming proportion is very important for the TLE: it should be neither too small nor too large, but it is not easy to choose. Yao et al. (2014) proposed a robust mixture of linear regression models by assuming that the errors follow the t-distribution. Song et al. (2014) dealt with the same problem using the Laplace distribution.

Assuming an exponential power distribution is one way to deal with outliers in regression and clustering (Salazar et al., 2012; Liang et al., 2007; Ferreira and Salazar, 2014). The Gaussian and Laplace distributions are special cases of the exponential power distribution. Leptokurtic members of the family have heavier tails than the Gaussian distribution, which provides protection against outliers. In addition, an exponential power distribution is a scale mixture of normal distributions, which makes it easier to understand.

Outliers are not the only problem. Model selection is also a challenge for mixture models. Gaussian mixture models tend to select more components than exponential power mixture models in real-world cases, which greatly reduces interpretability [7]. One possible explanation is that the exponential power distribution is itself a scale mixture of Gaussian distributions.

In this paper, we introduce robust mixture regression with the exponential power distribution, together with a model selection procedure for it.

2 Exponential Power (EP) Distribution

The density function of the exponential power (EP) distribution with mean 0 is

| (1) |

where $\Gamma(\cdot)$ is the Gamma function. When $0 < p < 2$, the distribution has heavier tails than the Gaussian, which provides protection against outliers. When $p = 1$, Equation (1) defines the Laplace distribution. When $p = 2$, Equation (1) defines the Gaussian distribution.
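As a quick numerical check of the two special cases, the sketch below evaluates one common parameterization of the zero-mean EP density, $f(e) = \frac{p\,\eta^{1/p}}{2\Gamma(1/p)}\exp(-\eta|e|^p)$. This form, the precision-type parameter `eta`, and all function names are assumptions made for illustration and may differ from the parameterization in Equation (1).

```python
import math

def ep_pdf(e, p, eta):
    """Zero-mean exponential power density (assumed parameterization):
    f(e) = p * eta**(1/p) / (2 * Gamma(1/p)) * exp(-eta * |e|**p)."""
    if p <= 0 or eta <= 0:
        raise ValueError("p and eta must be positive")
    norm = p * eta ** (1.0 / p) / (2.0 * math.gamma(1.0 / p))
    return norm * math.exp(-eta * abs(e) ** p)

def laplace_pdf(e, b):
    """Laplace density with location 0 and scale b."""
    return math.exp(-abs(e) / b) / (2.0 * b)

def normal_pdf(e, sigma):
    """Normal density with mean 0 and standard deviation sigma."""
    return math.exp(-0.5 * (e / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

# p = 1 with eta = 1/b reproduces the Laplace density.
diff_laplace = abs(ep_pdf(0.7, 1.0, 2.0) - laplace_pdf(0.7, 0.5))
# p = 2 with eta = 1/(2 * sigma**2) reproduces the Gaussian density.
diff_normal = abs(ep_pdf(0.7, 2.0, 0.5) - normal_pdf(0.7, 1.0))
```

Under this parameterization, the density at a point far from zero is much larger for `p = 1` than for `p = 2`, which is the heavy-tail behaviour described above.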

3 Robust regression with mixture of exponential power distribution

We assume that each observation follows one of the following linear regression models, each with its own mixing probability,

| (2) |

The error term of each component follows an exponential power (EP) distribution.

Assume

The complete likelihood function is

where the latent variable is an indicator: a value of one implies that the observation comes from the corresponding linear regression model.

And the complete log-likelihood function is

In practice, the number of components has to be estimated, so we use a penalty function to select K automatically (Huang et al., 2017).

where

is a very small positive number, is a positive tuning parameter , and is the number.
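To illustrate how a penalty of this kind can drive component selection, the sketch below uses a log-type penalty on the mixing proportions, $n\lambda D\sum_k[\log(\epsilon+\pi_k)-\log\epsilon]$, in the spirit of Huang et al. (2017). The exact expression, the interpretation of `d_f` as a per-component parameter count, and the names `lam`, `d_f` and `eps` are assumptions for illustration, not necessarily the penalty used here.

```python
import math

def log_penalty(pis, n, lam, d_f, eps=1e-6):
    """Assumed log-type penalty on mixing proportions:
    n * lam * d_f * sum_k [log(eps + pi_k) - log(eps)].
    A component whose proportion is exactly 0 contributes nothing."""
    return n * lam * d_f * sum(math.log(eps + pi) - math.log(eps) for pi in pis)

# Same total mass, spread over two components or concentrated in one.
two_components = log_penalty([0.5, 0.5], n=400, lam=0.1, d_f=3)
one_component = log_penalty([1.0, 0.0], n=400, lam=0.1, d_f=3)
```

Because the penalty is smaller when the mass sits in fewer components, subtracting it from the log-likelihood favours solutions in which redundant mixing proportions shrink to zero.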

4 Algorithm

We propose a Generalized Expectation–Maximization algorithm to solve the proposed model.

In the E step, the conditional expectation of the latent indicator, given the data and the current parameter estimates, is computed by Bayes' rule:

| (3) |
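A minimal sketch of such an E step is given below, for a single covariate and the assumed density $f(e) = \frac{p\,\eta^{1/p}}{2\Gamma(1/p)}\exp(-\eta|e|^p)$. The single-covariate setup, the parameter layout, and the names `e_step`, `pis`, `betas`, `etas` and `ps` are hypothetical; the responsibilities are computed on the log scale for numerical stability.

```python
import math

def ep_logpdf(e, p, eta):
    """Log of the assumed zero-mean EP density."""
    return (math.log(p) + math.log(eta) / p - math.log(2.0)
            - math.lgamma(1.0 / p) - eta * abs(e) ** p)

def e_step(x, y, pis, betas, etas, ps):
    """Posterior component probabilities for each observation.

    betas holds one (intercept, slope) pair per component.
    At least one mixing proportion must be positive.
    """
    resp = []
    for xi, yi in zip(x, y):
        logw = []
        for pi_k, (b0, b1), eta_k, p_k in zip(pis, betas, etas, ps):
            if pi_k > 0:
                logw.append(math.log(pi_k) + ep_logpdf(yi - b0 - b1 * xi, p_k, eta_k))
            else:
                logw.append(float("-inf"))  # a removed component gets zero weight
        m = max(logw)
        w = [math.exp(v - m) for v in logw]  # subtract the max before exponentiating
        s = sum(w)
        resp.append([v / s for v in w])
    return resp

resp = e_step(x=[0.0, 1.0], y=[1.0, -2.0], pis=[0.5, 0.5],
              betas=[(1.0, 1.0), (-1.0, -1.0)], etas=[1.0, 1.0], ps=[1.5, 1.5])
```

In this toy call the first point lies on the first regression line and the second point on the second line, so each receives most of its posterior weight from the matching component.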

Then, in the M step, the parameters are updated by maximizing the conditional expectation of the penalized complete log-likelihood.

| (4) |

| (5) |

where .
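For intuition about the scale-type update, suppose the component density has the assumed form $f(e) \propto \eta^{1/p}\exp(-\eta|e|^p)$. Setting the derivative of the responsibility-weighted log-likelihood with respect to $\eta$ to zero then gives $\eta = \sum_i \gamma_i \,/\, (p\sum_i \gamma_i |r_i|^p)$, where $\gamma_i$ are the E-step weights and $r_i$ the residuals. The sketch below implements this closed form; the parameterization and the name `update_eta` are assumptions, and it is not claimed to coincide with the update equations above.

```python
def update_eta(residuals, weights, p):
    """Closed-form maximizer of sum_i w_i * [log(eta)/p - eta * |r_i|**p].

    Assumes at least one weighted residual is nonzero.
    """
    num = sum(weights)
    den = p * sum(w * abs(r) ** p for w, r in zip(weights, residuals))
    return num / den

# With p = 2 and unit weights this equals 1 / (2 * mean squared residual).
eta_gauss = update_eta([1.0, -1.0, 1.0, -1.0], [1.0] * 4, 2.0)
# With p = 1 and unit weights this equals 1 / (mean absolute residual).
eta_laplace = update_eta([2.0, -2.0], [1.0, 1.0], 1.0)
```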

Ignoring the terms that do not involve the regression coefficients, we have

| (6) |

We then use an MM algorithm to estimate the regression coefficients.

Thus

| (7) |

where .

Equation (7) leads to the update

| (8) |
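One standard way to obtain an update of this type, sketched here under assumptions, is to majorize $|r|^p$ for $p \le 2$ by the quadratic $\frac{p}{2}|r^{(t)}|^{p-2}r^2 + (1-\frac{p}{2})|r^{(t)}|^p$, which turns the minimization into weighted least squares with weights $\gamma_i |r_i^{(t)}|^{p-2}$. The single-covariate setup, the clamp `delta` that avoids division by zero, and the function names are hypothetical and not taken from the equations above.

```python
def mm_update_beta(x, y, gamma, beta, p, delta=1e-8):
    """One MM step for minimizing sum_i gamma_i * |y_i - b0 - b1 * x_i|**p, p <= 2."""
    b0, b1 = beta
    # Weights from the quadratic majorizer, with residuals clamped away from 0.
    w = [g * max(abs(yi - b0 - b1 * xi), delta) ** (p - 2.0)
         for xi, yi, g in zip(x, y, gamma)]
    sw = sum(w)
    xbar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    ybar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - xbar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - xbar) * (yi - ybar) for wi, xi, yi in zip(w, x, y))
    new_b1 = sxy / sxx  # weighted least-squares slope
    return ybar - new_b1 * xbar, new_b1

def objective(x, y, gamma, beta, p):
    b0, b1 = beta
    return sum(g * abs(yi - b0 - b1 * xi) ** p for xi, yi, g in zip(x, y, gamma))

# Nine points on the line y = 1 + 2x plus one gross outlier at x = 9.
x = [float(i) for i in range(10)]
y = [1.0 + 2.0 * xi for xi in x]
y[9] = 100.0
gamma = [1.0] * 10

start = (0.0, 0.0)
beta = start
for _ in range(50):
    beta = mm_update_beta(x, y, gamma, beta, p=1.0)
```

With `p = 2` the weights reduce to `gamma`, so a single step is ordinary weighted least squares. With `p = 1` the iteration is an iteratively reweighted least-squares scheme that downweights the outlier.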

Alternatively, the Newton-Raphson method can be used for this update.

5 Simulation

In this section, we demonstrate the effectiveness of the proposed method through a simulation study.

Since the TLE performed worse than the robust mixture regression model based on the t-distribution [4], and the exponential power distribution covers the Laplace distribution as a special case, we only compare

1. the robust mixture regression model based on the t-distribution (MixT),

2. the robust mixture regression model based on the exponential power distribution (MixEP).

To assess the methods, we record the mean squared error (MSE) and the bias of the parameter estimates. To handle the label switching issue, we use the label permutation that minimizes the difference between the estimated parameters and the true parameter values.
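The label alignment and the two summary measures can be sketched as follows. This is a minimal illustration that uses a squared-error distance between parameter vectors and a brute-force search over permutations; the distance, the data layout, and the function names are assumptions.

```python
from itertools import permutations

def align_labels(estimates, truth):
    """Reorder the estimated components to best match the true ones.

    estimates and truth are lists with one parameter tuple per component.
    """
    best = min(
        permutations(range(len(estimates))),
        key=lambda perm: sum(
            (e - t) ** 2
            for k, j in enumerate(perm)
            for e, t in zip(estimates[j], truth[k])
        ),
    )
    return [estimates[j] for j in best]

def mse_and_bias(replicate_estimates, true_value):
    """MSE and bias of one scalar parameter across replications."""
    n = len(replicate_estimates)
    bias = sum(e - true_value for e in replicate_estimates) / n
    mse = sum((e - true_value) ** 2 for e in replicate_estimates) / n
    return mse, bias

# The fitted components come back in the opposite order to the truth.
aligned = align_labels([(-1.0, -1.0), (1.0, 1.0)], [(1.0, 1.0), (-1.0, -1.0)])
mse, bias = mse_and_bias([1.1, 0.9], 1.0)
```

The brute-force search is adequate for a small number of components, since the number of permutations grows factorially.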

Example. Suppose independent and identically distributed samples are drawn from the model

where the latent variable is a component indicator and both error terms follow the same distribution. We consider the following four cases for the error density function (Yao et al., 2014):

Case I: the t-distribution with 1 degree of freedom,

Case II: a contaminated normal mixture,

Case III: a setting with a proportion of high leverage outliers,

Case IV: the standard normal distribution with 10% outlier contamination.
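A data set of the kind used in Case I can be generated along the following lines. This is only a sketch: the mixing proportion, the regression coefficients, and the standard normal covariate are hypothetical placeholders, not the values used in the experiments. The only element taken from the case description is the error law, a t-distribution with 1 degree of freedom, which is the standard Cauchy distribution.

```python
import math
import random

def cauchy_error(rng):
    """Draw from the t-distribution with 1 degree of freedom (standard Cauchy)."""
    return math.tan(math.pi * (rng.random() - 0.5))

def simulate(n, rng, error_sampler, pi1=0.5, beta1=(0.0, 1.0), beta2=(0.0, -1.0)):
    """Two-component mixture of simple linear regressions.

    pi1, beta1 and beta2 are hypothetical values chosen for illustration.
    Returns a list of (x, y, z) triples, where z is the component label.
    """
    data = []
    for _ in range(n):
        z = 0 if rng.random() < pi1 else 1
        x = rng.gauss(0.0, 1.0)  # hypothetical covariate distribution
        b0, b1 = beta1 if z == 0 else beta2
        data.append((x, b0 + b1 * x + error_sampler(rng), z))
    return data

rng = random.Random(0)  # fixed seed so the draw is reproducible
sample = simulate(400, rng, cauchy_error)
```

Other error cases can be explored by passing a different `error_sampler`, for example one that draws from a contaminated normal mixture.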

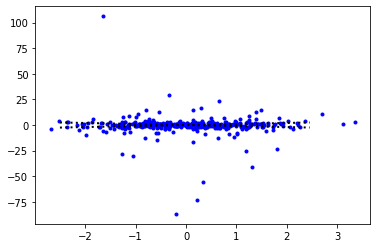

Case I: The number of samples is N = 400.

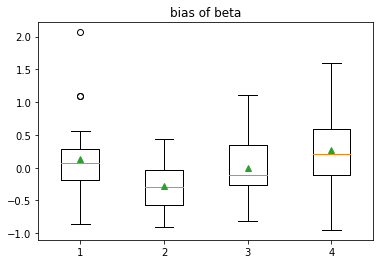

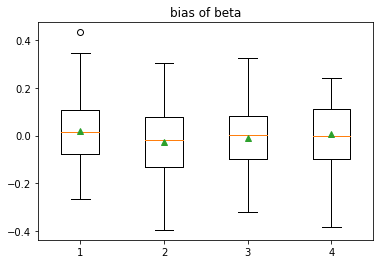

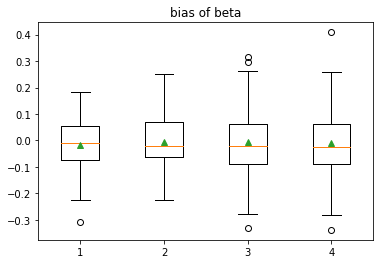

Figure 1 shows the simulated data in Case I. We replicate this experiment 50 times; the comparison of the methods is shown in Figure 2 and Table 1.

Table 1: MSE and bias of the parameter estimates in Case I.

| Method | | | | |
|---|---|---|---|---|
| **MSE** | | | | |
| MixT | 0.0169 | 0.01936 | 0.01491 | 0.0203 |
| MixEP | 0.3973 | 0.2175 | 0.2594 | 0.4337 |
| **Bias** | | | | |
| MixT | -0.0169 | 0.01393 | 0.03333 | 0.001208 |
| MixEP | 0.13677 | -0.2754 | -0.007769 | 0.2658 |

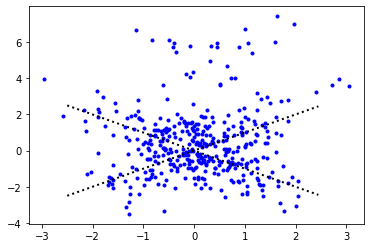

Case II: The number of samples is N = 400.

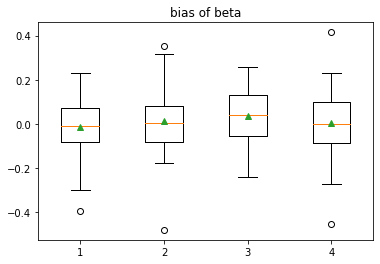

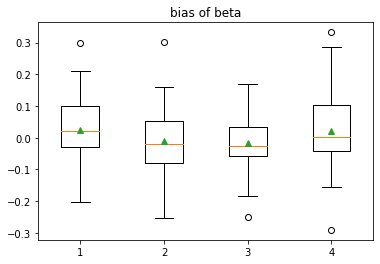

Figure 3 shows the simulated data in Case II. We replicate this experiment 50 times; the comparison of the methods is shown in Figure 4 and Table 2.

Table 2: MSE and bias of the parameter estimates in Case II.

| Method | | | | |
|---|---|---|---|---|
| **MSE** | | | | |
| MixT | 0.0111 | 0.0105 | 0.006161 | 0.01433 |
| MixEP | 0.0189 | 0.02219 | 0.01943 | 0.02024 |
| **Bias** | | | | |
| MixT | 0.02289 | -0.009907 | -0.01731 | 0.02171 |
| MixEP | -0.0116 | -0.0408 | 0.02623 | 0.03788 |

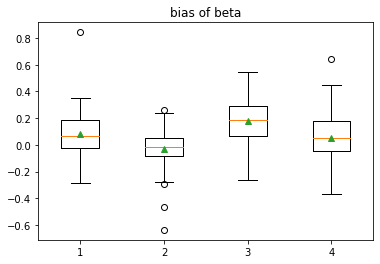

Case III: The number of samples is N = 400.

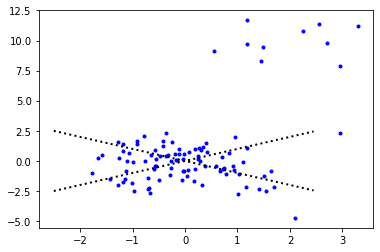

Figure 5 shows the simulated data in Case III. We replicate this experiment 50 times; the comparison of the methods is shown in Figure 6 and Table 3.

Table 3: MSE and bias of the parameter estimates in Case III.

| Method | | | | |
|---|---|---|---|---|
| **MSE** | | | | |
| MixT | 0.01188 | 0.01052 | 0.01857 | 0.02060 |
| MixEP | 0.03807 | 0.03744 | 0.02973 | 0.02881 |
| **Bias** | | | | |
| MixT | -0.01858 | -0.00432 | -0.006022 | -0.008520 |
| MixEP | 0.06676 | -0.08744 | -0.01664 | 0.03178 |

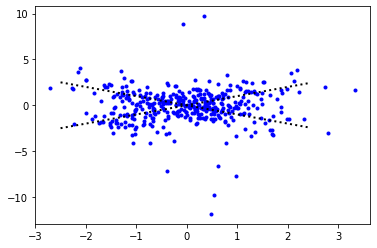

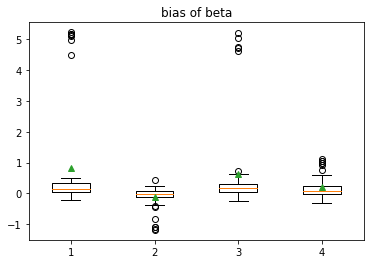

Case IV: The number of samples is N = 400.

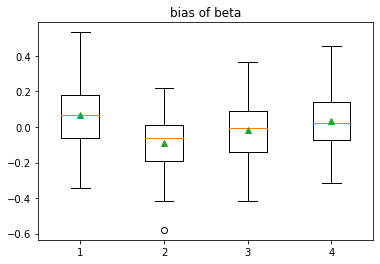

Figure 7 shows the simulated data in Case IV. We replicate this experiment 50 times; the comparison of the methods is shown in Figure 8 and Table 4.

Table 4: MSE and bias of the parameter estimates in Case IV.

| Method | | | | |
|---|---|---|---|---|
| **MSE** | | | | |
| MixT | 3.6119 | 0.1376 | 2.4279 | 0.1662 |
| MixEP | 0.0411 | 0.02742 | 0.06486 | 0.04627 |
| **Bias** | | | | |
| MixT | 0.8222 | -0.1153 | 0.6159 | 0.1979 |
| MixEP | 0.08276 | -0.02965 | 0.1790 | 0.05284 |

It is hard to choose the degrees of freedom for the mixture of t-distributions. In the experiments shown above, the degrees of freedom leading to the best results are used. However, the degrees of freedom selected by BIC can lead to poor results. In addition, selecting the number of components is also a problem for mixture regression with the t-distribution.

6 Conclusion

The adaptively trimmed version of MixT worked well when there are high leverage outliers, but it cannot deal with drift. We cannot select proper degrees of freedom for MixT using BIC when faced with drift.

Adaptively removing abnormal points can improve the estimation accuracy of MixEP. A natural idea is to build a model that covers the drift; this is left for future work.

References

- [1] Huang, T., Peng, H., Zhang, K. (2017). Model selection for Gaussian mixture models. Statistica Sinica, 27(1), 147-169. Retrieved November 4, 2020.

- [2] Lange, K. (2016). MM optimization algorithms. Society for Industrial and Applied Mathematics.

- [3] Weixing Song, Weixin Yao, Yanru Xing, Robust mixture regression model fitting by Laplace distribution, Computational Statistics and Data Analysis, Volume 71, 2014, Pages 128-137, ISSN 0167-9473, https://doi.org/10.1016/j.csda.2013.06.022.

- [4] Weixin Yao, Yan Wei, Chun Yu, Robust mixture regression using the t-distribution, Computational Statistics and Data Analysis, Volume 71, 2014, Pages 116-127, ISSN 0167-9473, https://doi.org/10.1016/j.csda.2013.07.019.

- [5] N. Neykov, P. Filzmoser, R. Dimova, P. Neytchev, Robust fitting of mixtures using the trimmed likelihood estimator, Computational Statistics and Data Analysis, Volume 52, Issue 1, 2007, Pages 299-308, ISSN 0167-9473, https://doi.org/10.1016/j.csda.2006.12.024.

- [6] Wennan Chang, Xinyu Zhou, Yong Zang, Chi Zhang, Sha Cao, A New Algorithm using Component-wise Adaptive Trimming For Robust Mixture Regression, 2020. https://arxiv.org/abs/2005.11599

- [7] X. Cao, Q. Zhao, D. Meng, Y. Chen and Z. Xu, Robust Low-Rank Matrix Factorization Under General Mixture Noise Distributions, IEEE Transactions on Image Processing, vol. 25, no. 10, pp. 4677-4690, Oct. 2016, doi: 10.1109/TIP.2016.2593343.

- [8] Liang F, Liu C, Wang N: A robust sequential Bayesian method for identification of differentially expressed genes. Statistica Sinica 17: 571–597. 2007

- [9] Salazar E, Ferreira MAR, Migon HS: Objective Bayesian analysis for exponential power regression models. Sankhya - Series B 74: 107–125. 2012

- [10] Ferreira, M.A., Salazar, E. Bayesian reference analysis for exponential power regression models. J Stat Distrib App 1, 12 (2014).