22email: {l.xi,yingliang.ma}@uea.ac.uk

Robust Noisy Pseudo-label Learning for Semi-supervised Medical Image Segmentation Using Diffusion Model

Abstract

Obtaining pixel-level annotations in the medical domain is both expensive and time-consuming, often requiring close collaboration between clinical experts and developers. Semi-supervised medical image segmentation aims to leverage limited annotated data alongside abundant unlabeled data to achieve accurate segmentation. However, existing semi-supervised methods often struggle to structure semantic distributions in the latent space due to noise introduced by pseudo-labels. In this paper, we propose a novel diffusion-based framework for semi-supervised medical image segmentation. Our method introduces a constraint into the latent structure of semantic labels during the denoising diffusion process by enforcing prototype-based contrastive consistency. Rather than explicitly delineating semantic boundaries, the model leverages class prototypes centralized semantic representations in the latent space as anchors. This strategy improves the robustness of dense predictions, particularly in the presence of noisy pseudo-labels. We also introduce a new publicly available benchmark: Multi-Object Segmentation in X-ray Angiography Videos (MOSXAV), which provides detailed, manually annotated segmentation ground truth for multiple anatomical structures in X-ray angiography videos. Extensive experiments on the EndoScapes2023 and MOSXAV datasets demonstrate that our method outperforms state-of-the-art medical image segmentation approaches under the semi-supervised learning setting. This work presents a robust and data-efficient diffusion model that offers enhanced flexibility and strong potential for a wide range of clinical applications.

Keywords:

Semi-supervised learning Representation learning Diffusion models Medical image segmentation.1 Introduction

Medical image segmentation, which involves pixel-wise classification, is a critical dense prediction task that plays a vital role in enhancing the accuracy of disease diagnosis, monitoring, and assessment. In recent years, learning-based approaches [25, 24, 1, 16, 19, 14, 31] have significantly outperformed traditional methods, with fully supervised image segmentation achieving higher accuracy due to the abundance of annotated data. In other words, large-scale image datasets [20, 15, 8, 17, 18] with manual annotations are typically required to train robust and generalizable deep neural networks for dense prediction tasks, e.g., image segmentation. However, obtaining pixel-wise annotations is challenging, as it both time-consuming and labor-intensive. Such a massive annotation cost has motivated the community to develop semi-supervised learning methods [13, 33, 5].

Given that unlabeled data is typically abundant in practice, semi-supervised learning has emerged as a compelling approach for medical image segmentation. It leverages limited labeled data in conjunction with large amounts of pseudo-labeled data to iteratively train the segmentation model [21, 13]. In this paradigm, pseudo labels are generated for unlabeled images using a reliable model, and these pseudo-labeled samples are effectively incorporated into training by minimizing their prediction entropy. Nevertheless, semantic misalignment remains a significant challenge in semi-supervised learning. Most existing methods [4, 27] rely primarily on consistency regularization and auxiliary supervision at the output mask level, implicitly enforcing semantic consistency under perturbations (e.g., pseudo labels). However, these approaches often result in overlapping semantic representations in the latent space and overlook distinct semantic boundaries, thereby limiting generalization.

To address the above issue, some previous works have proposed modeling data distribution with deep generative models, including Variational Autoencoders (VAEs) [34], Generative Adversarial Networks (GANs) [35], and specialized medical image segmentation diffusion models [32, 13]. Among these generalist approaches, diffusion models [13] have demonstrated significant potential in alleviating this problem by formulating complex data distributions probabilistically. A prominent branch treats dense prediction tasks as a label-denoising problem [9, 11], employing variational inference to progressively generate predictions from noisy data. While diffusion models are proven capable of capturing the underlying distribution of each semantic category, their full potential to distinctly shape the latent structure of semantic labels remains to be discovered. The precise delineation of semantic boundaries and distinct domains within each semantic representation is crucial for improving overall performance in semi-supervised dense prediction tasks by eliminating ambiguity. These observations motivate our investigation into whether diffusion-based deep generative models can accurately capture and align the precise distribution of semantic labels, enabling the progressive rectification of pseudo-labels for enhanced supervision.

In this paper, we propose a novel diffusion model for semi-supervised medical image segmentation. Specifically, we first use embedding layers to encode dense labels and map these features into a semantic latent space via project layers. To structure the latent space, we introduce explicit class prototypes as fixed anchors in the embedding space and directly optimize the feature representations using a contrastive loss. These non-learnable prototypes guide the model beyond simply optimizing for prediction accuracy. The denoised embedding features are then processed by a diffusion decoder, guided by visual conditions.

Our main contributions are as follows: 1) We propose a novel diffusion-based framework for semi-supervised medical image segmentation, which performs dense label denoising through a diffusion process by reformulating dense prediction as a label denoising task. 2) We introduce a prototype-anchored contrastive learning to structure the latent space of semantic labels, enhancing the quality and robustness of segmentation predictions. 3) We present a new benchmark dataset, Multi-Object Segmentation in X-ray Angiography Videos (MOSXAV) 111https://github.com/xilin-x/MOSXAV, which focuses on the segmentation of multiple objects in X-ray angiography videos and provides high-quality, manually annotated ground truth labels.

To validate these contributions, the proposed method is evaluated on one public benchmark and a newly collected benchmark dataset. Experimental results demonstrate that our algorithm outperforms state-of-the-art (SoTA) medical image segmentation methods under the semi-supervised learning setting.

2 Method

2.1 Preliminaries

Diffusion models [26, 7, 28], which consist of a forward noising process and a reverse denoising process, are widely used in generative tasks. The forward noising process gradually adds noise to the data sample to generate a noisy sample , which can be formulated as =+, where is the Gaussian noise and indicates the time steps. is a monotonically decreasing function to control the signal-to-noise ratio and the degree of corrosion. In the forward process, the original data is iteratively broken towards the pure Gaussian noise . At the training stage, a denosing network parameterized by is trained to predict from by minimizing an objective function, which is a loss most of the time. At the inference stage, the diffusion models perform the reverse denoising process. The neural network follows a Markovian way that recovers from the pure Gaussian noise iteratively. Specifically, the process of is achieved by applying the denoising network to and then using the predicted to make the transition to iteratively.

In perception tasks, the diffusion models usually take the feature as conditions to perform denoising. For example, in the semantic segmentation task, the diffusion models take both the noisy segmentation label and conditional feature as input to perform the denoising, which can be formulated as follows:

| (1) |

where is implemented by the transition rule based on denoising network that takes as conditional input. Our method is based on the conditional diffusion models to perform the medical image segmentation. We propose a label encoding that generates pixel-wise dense label embedded maps as input to the diffusion decoder. We utilize the visual features as the condition.

2.2 Architecture

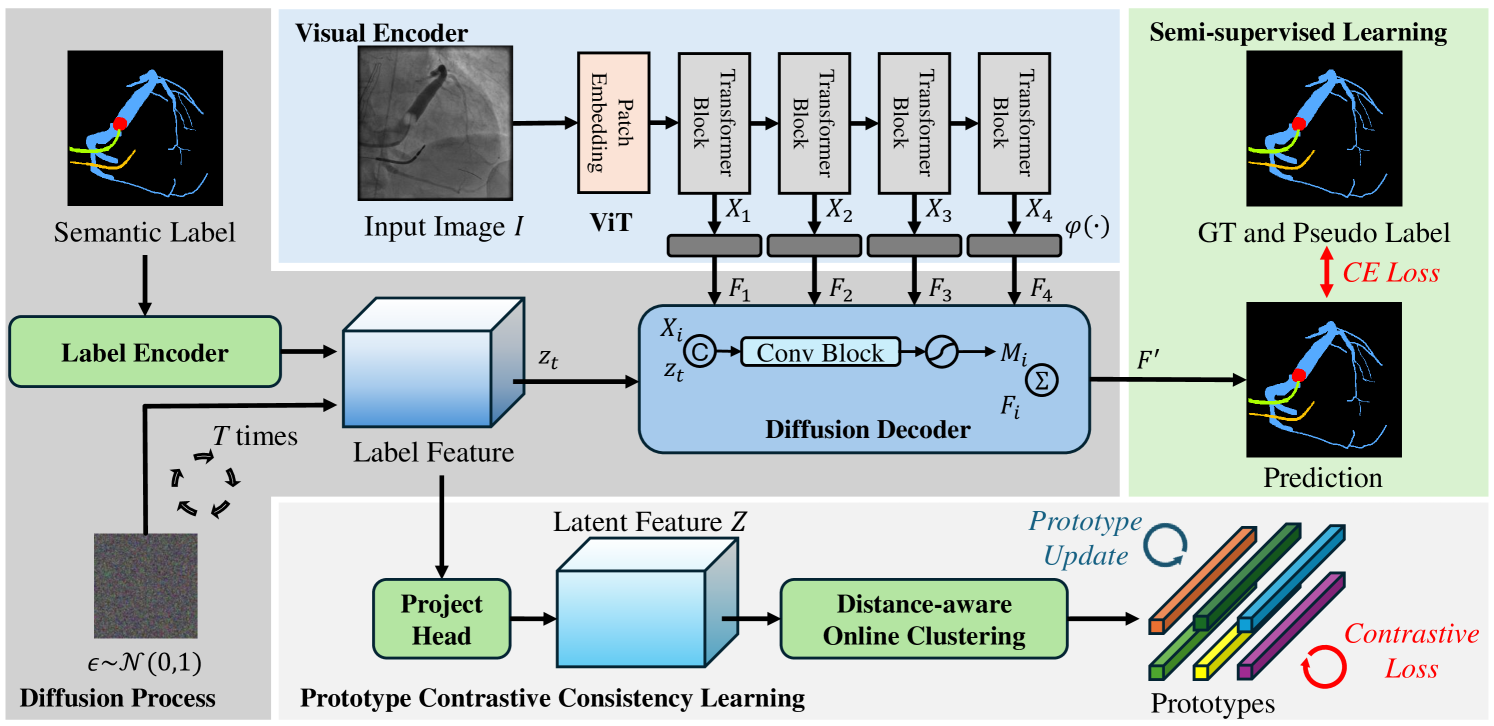

The overall framework of our model is presented in Fig. 1. which comprises a visual encoder, a label encoder, a latent projector, and a diffusion decoder.

Visual encoder. The visual encoder takes an image as input and extracts multi-level features () for diffusion decoder as visual condition to guide denoising. , , and denote the height, width, and channels of one-level feature, respectively. indicates the total number of levels of visual encoder. Specifically, we first utilize a backbone ViT [6] for visual branch to extract visual features. We select features from different layers of the backbone, denoted as (), where is the layer index. The -level features are fed into visual-specific branches to generate the visual-specific multi-level features . The visual-specific branch contains two stacked convolutional blocks, each of which consists of a 33 convolution followed by a batch normalization layer and a GeLU activation layer and a 11 convolution. Moreover, to learn discriminative multi-level visual-specific features, we also design visual-specific auxiliary heads to generate intermediate predictions. These intermediate predictions are supervised by the corresponding semantic ground-truth and pseudo labels () and the visual-specific branches will be updated by the gradient from segmentation prediction. The output of each visual-specific branch acts as the conditions for the diffusion decoder.

Label and latent encoder. We first encode the discrete, dense semantic label map into a latent feature space using a label encoder to capture semantic representations. Given the discrete nature of semantic segmentation labels, we begin by converting them into one-hot representations. The label encoder consists of a 11 convolutional layer and a 33 convolutional layer that transforms the dense labels into label-specific encoded features . This output is then normalized to the range [], and a scaling factor is applied to control the signal-to-noise ratio. This scaling increases the difficulty of the denoising task, thereby encouraging the diffusion decoder to learn more informative representations. To simulate the forward diffusion process, Gaussian noise is added to the label-specific encoded features , producing the corrupted representation . In the forward noising process, the corruption intensity is controlled by [], which decreases over time . Following previous works [9, 23], we adopt a cosine schedule for to modulate the noise level throughout the diffusion process.

Subsequently, we apply a latent encoder composed of a lightweight convolutional layer that performs a non-linear projection, denoted as , producing the latent embedding =. This projection step is commonly used in contrastive learning over convolutional feature maps [3] to obtain more discriminative representations.

Diffusion decoder The decoder takes the noisy label-specific encoded features as input and the visual-specific multi-level features as conditions. We also use the features from the backbone different layers to help model the semantic space. The noisy label map is concatenated with the features and sent to the diffusion decoder to perform level interaction successively with . Specifically, in the level-interaction phase, we perform the interaction at each level. We utilize 2 convolutional blocks (Conv-BatchNorm-GeLU-Conv) and a convolutional layer to map the concatenated feature map and to each feature level and the channel number is transformed from to . The multi-level mask maps are generated by applying a Sigmoid function to the output features of the convolutional block at each level, denoted as (). Then, we use to generate the final aggregated features by =. The aggregated feature is sent to the prediction branch to generate the final prediction, where the prediction branch contains three convolutional blocks. The predictions are then encoded as mentioned in 2.1 to generate the predicted .

2.3 Prototype Contrastive Consistency Learning

Prototype-based learning represents semantic classes using class-specific prototypes and performs classification by comparing inputs to these representative prototypes. Let () denote a set of prototypes corresponding to semantic class . Rather than adopting a parametric prototype learning approach for constraint in semantic latent space, we introduce a non-parametric prototype contrastive learning framework. Specifically, we employ a set of non-learnable class prototypes and apply a contrastive loss directly to these prototypes via a prototype-anchored metric learning strategy. This method enables the latent semantic space to be structured more effectively by incorporating known inductive biases, such as intra-class compactness and inter-class separability, which are often overlooked in traditional approaches. The label-based contrastive loss encourages features of pixels from the same class to cluster closely, while enforcing separation between features from different classes. During training, since the prototypes are representative of the dataset as a whole, these inductive biases can be directly imposed as optimization objectives. This enables us to explicitly shape the embedding space beyond merely optimizing for prediction accuracy.

Inter-class compactness. We begin by grouping the latent embeddings into prototypes for each semantic class in an online clustering manner. After processing all samples in the current batch, each pixel embedding is assigned to the -th prototype of class , where the assignment is determined by = with = and denotes a similarity metric, e.g., the cosine similarity. This prototype assignment leads to a training objective aimed at maximizing the posterior probability of the correct prototype assignment. This can be viewed as a inter-class prototype contrastive learning loss:

| (2) |

where denotes the set of prototypes excluding those belonging to the target class , and the temperature parameter regulates the concentration of the similarity distribution.

Intra-class separability. To further reduce intra-class variation, i.e., to encourage pixel features assigned to the same prototype to form compact clusters, we introduce a loss that regularizes the learned representations by directly minimizing the distance between each embedded pixel and its assigned prototype:

| (3) |

Notably, both and are -normalized. This normalization ensures that the training objective focuses on angular similarity, minimizing intra-class variation while preserving separation between features assigned to different prototypes.

2.4 Training and Inference

During training, we first generate label-specific encoded features , to which noise is added to obtain the noisy representation . This noisy feature is then fed into the diffusion decoder, and the model is trained to perform the denoising. The overall procedure is outlined in Algorithm 1. During inference, the diffusion model starts from an initial Gaussian noise and iteratively denoises the input to approximate the ground truth. This inference process is detailed in Algorithm 2.

We adopt DDIM [28] as the update rule for the noisy map. After predicting at each step, we apply the reparameterization trick to generate the noisy label-specific features for the subsequent step. Following the approaches in [9], we employ asymmetric time intervals during inference. These time intervals are controlled by t_diff in Algorithm 2, which is empirically set to 1.

3 Experiments

3.1 Experimental Setup

Datasets. We evaluate our model using two datasets. First, we employ the public Endoscapes2023 dataset [22], which includes three sub-tasks: surgical scene segmentation, object detection, and critical view of safety assessment. For our experiments, we use the segmentation subset, which comprises 493 annotated frames extracted from 50 laparoscopic cholecystectomy videos. The dataset is officially split into training, validation, and test sets in a 3:1:1 ratio, respectively.

Additionally, we introduce a new benchmark dataset named MOSXAV, comprising 62 X-ray angiography video sequences: 40 from the CADICA dataset [10] and 22 collected from cardiac resynchronization therapy procedures performed at two hospitals. These videos capture the injection and flow of contrast agents through the coronary arteries or coronary sinus along the heart surface. Each video contains 33–70 frames at a resolution of 512512. Vascular regions were annotated by experienced radiologists, with one or two key frames labeled per video—specifically when the contrast agent is most visible. For training and validation, dense annotations are provided every 5 frames across 50 sequences (totaling 2,335 frames). The test set includes 488 frames, all of which are fully annotated.

Evaluation metric. Following conventions [25, 24], mean intersection-over-union (mIoU) is adopted for evaluation.

Implementation Details. We utilize ViT-Large [6] as the visual backbone for our main experiments and ViT-Base for all ablation studies, both producing features with a stride of 16. All models are trained for 40,000 iterations with a batch size of 8. The network is optimized using the Adam optimizer (=0.9, =0.999), with an initial learning rate of 5e-4 and a weight decay of 1e-6 for both the Endoscapes2023 and MOSXAV datasets and a polynomial learning rate scheduler is employed. The semantic segmentation task is supervised using the cross-entropy loss. To generate pseudo masks for Endoscapes2023, we first use object detection bounding boxes as prompts for the MedSAM [16]. The initial masks produced by MedSAM are then refined using SAMRefiner [12] to obtain higher-quality pseudo labels. All the experiments are trained with 2 NVIDIA RTX 6000 GPUs for 40,000 iterations.

3.2 Main Results

To comprehensively evaluate the superiority of our proposed method, we show a comparison of SoTA results on the Endoscapes2023, val and test sets of MOSXAV in Table 1. Our method is evaluated alongside several publicly available baseline segmentation methods from the computer vision community. Note that the ground truth is obtained from the Seg50 subset, while the pseudo labels are generated for the BBox201 subset of the Endoscapes2023 dataset.

| Methods | Endoscapes2023 | MOSXAV | |||

|---|---|---|---|---|---|

| val | val | test | |||

| GT | Pseudo | GT + Pseudo | GT | ||

| mIoU (%) | mIoU (%) | mIoU (%) | mIoU (%) | mIoU (%) | |

| U-Net [25] | 41.44 | 44.09 | 45.64 | 67.58 | 27.81 |

| Attention U-Net [24] | 42.13 | 45.06 | 45.47 | 67.91 | 32.08 |

| TransU-Net [2] | 45.00 | 45.06 | 46.05 | 68.22 | 30.91 |

| CMU-Net [29] | 45.63 | 46.23 | 46.92 | 68.36 | 27.95 |

| CMU-NeXt [30] | 39.95 | 40.97 | 40.61 | 64.11 | 24.85 |

| SwinU-Net [1] | 36.65 | 37.23 | 37.83 | 63.46 | 22.67 |

| MedSegDiff [32] | 50.72 | 51.18 | 51.53 | 69.59 | 39.93 |

| Ours | 55.33 | 56.04 | 56.68 | 73.48 | 43.04 |

Endoscapes2023 dataset. Our method significantly outperforms baseline semantic segmentation approaches under the semi-supervised learning setting (i.e., GT + Pseudo), notably surpassing the diffusion-based model MedSegDiff [32] by a margin of +5.15%. Furthermore, our method also achieves the best performance when trained solely on ground truth or pseudo labels.

MOSXAV val and test sets. Table 1 shows that our method outperforms all baseline semantic segmentation methods on the val set. More importantly, on the challenging test set, it consistently surpasses MedSegDiff, achieving an mIoU improvement from 39.93% to 43.04%.

3.3 Ablation Study

To demonstrate the effectiveness of the different components in our model, we perform an ablation study on the Endoscapes2023 dataset.

[Training Objective ] mIoU (%) 53.04 54.22 54.04 54.47 [Prototype Number ] # Prototype mIoU (%) =1 54.29 =5 54.37 =10 54.47 =20 54.46

Effectiveness of Contrastive Loss. To verify the effectiveness of our overall training objective, we progressively incorporate contrastive learning components defined in Eqs. 2 and 3 into the final loss function. As shown in Table 2, the model with alone achieves an mIoU score of 53.04%. Combing all the losses together leads to the best performance, yielding an mIoU score of 54.47%.

Prototype Number Per Class . Table 2 presents the performance of our approach concerning the number of prototype per class. For =1, we directly represent each class as the mean embedding of its pixel samples. As a result, we set =10 for a better trade-off between accuracy and computation cost.

4 Conclusion

In this paper, we propose a novel diffusion-based framework for semi-supervised medical image segmentation that enhances the robustness of dense predictions, particularly in the presence of noisy pseudo-labels. Our approach introduces a prototype contrastive consistency constraint into the latent structure of semantic labels during the denoising diffusion process. Instead of explicitly delineating semantic boundaries, the model utilizes class prototypes—centralized semantic representations in the latent space—as anchors to guide learning. Additionally, we introduce MOSXAV, a new publicly available benchmark dataset that provides detailed, manually annotated ground truth for multiple anatomical structures in X-ray angiography videos. Extensive experiments demonstrate that our method consistently outperforms SoTA medical image segmentation approaches under the semi-supervised learning setting.

4.0.1 Acknowledgements

This work was supported by EPSRC UK (EP/X023826/1) and MRC impact fund (University of East Anglia).

4.0.2 \discintname

The authors have no competing interests to declare that are relevant to the content of this article.

References

- [1] Cao, H., et al.: Swin-unet: Unet-like pure transformer for medical image segmentation. In: Proceedings of the European Conference on Computer Vision. pp. 205–218 (2022)

- [2] Chen, J., et al.: Transunet: Transformers make strong encoders for medical image segmentation. arXiv preprint arXiv:2102.04306 (2021)

- [3] Chen, T., et al.: A simple framework for contrastive learning of visual representations. In: Proceedings of the International Conference on Machine Learning. vol. 119, pp. 1597–1607 (2020)

- [4] Chen, X., et al.: Semi-supervised semantic segmentation with cross pseudo supervision. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 2613–2622 (2021)

- [5] Chi, H., et al.: Adaptive bidirectional displacement for semi-supervised medical image segmentation. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 4070–4080 (2024)

- [6] Dosovitskiy, A., et al.: An image is worth 16x16 words: Transformers for image recognition at scale. In: International Conference on Learning Representations (2021)

- [7] Ho, J., et al.: Denoising diffusion probabilistic models. In: Advances in Neural Information Processing Systems. vol. 33, pp. 6840–6851 (2020)

- [8] Hong, W.Y., et al.: Cholecseg8k: a semantic segmentation dataset for laparoscopic cholecystectomy based on cholec80. arXiv preprint arXiv:2012.12453 (2020)

- [9] Ji, Y., et al.: Ddp: Diffusion model for dense visual prediction. In: Proceedings of the IEEE International Conference on Computer Vision. pp. 21684–21695 (2023)

- [10] Jiménez-Partinen, A., et al.: Cadica: A new dataset for coronary artery disease detection by using invasive coronary angiography. Expert Systems 41(12), e13708 (2024)

- [11] Le, M.Q., et al.: Maskdiff: Modeling mask distribution with diffusion probabilistic model for few-shot instance segmentation. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 38, pp. 2874–2881 (2024)

- [12] Lin, Y., et al.: Samrefiner: Taming segment anything model for universal mask refinement. In: International Conference on Learning Representations (2025)

- [13] Liu, X., et al.: Diffrect: Latent diffusion label rectification for semi-supervised medical image segmentation. In: Proceedings of Medical Image Computing and Computer-Assisted Intervention (2024)

- [14] Luo, Y., et al.: Edge-enhancement densenet for x-ray fluoroscopy image denoising in cardiac electrophysiology procedures. Medical Physics 49(2), 1262–1275 (2022)

- [15] Ma, J., et al.: Abdomenct-1k: Is abdominal organ segmentation a solved problem? IEEE Transactions on Pattern Analysis and Machine Intelligence 44(10), 6695–6714 (2022)

- [16] Ma, J., et al.: Segment anything in medical images. Nature Communications 15(1), 654 (2024)

- [17] Ma, Y., et al.: A novel real-time computational framework for detecting catheters and rigid guidewires in cardiac catheterization procedures. Medical physics 45(11), 5066–5079 (2018)

- [18] Ma, Y., et al.: Real-time registration of 3d echo to x-ray fluoroscopy based on cascading classifiers and image registration. Physics in Medicine & Biology 66(5), 055019 (2021)

- [19] Ma, Y., et al.: A tensor-based catheter and wire detection and tracking framework and its clinical applications. IEEE Transactions on Biomedical Engineering 69(2), 635–644 (2022)

- [20] Menze, B.H., et al.: The multimodal brain tumor image segmentation benchmark. IEEE Transactions on Medical Imaging 34(10), 1993–2024 (2015)

- [21] Miao, J., et al.: Cross prompting consistency with segment anything model for semi-supervised medical image segmentation. In: Proceedings of Medical Image Computing and Computer-Assisted Intervention. vol. LNCS 15011 (2024)

- [22] Murali, A., et al.: The endoscapes dataset for surgical scene segmentation, object detection, and critical view of safety assessment: Official splits and benchmark. arXiv preprint arXiv:2312.12429 (2023)

- [23] Nichol, A.Q., et al.: Improved denoising diffusion probabilistic models. In: Proceedings of the International Conference on Machine Learning. vol. 139, pp. 8162–8171 (2021)

- [24] Oktay, O., et al.: Attention u-net: Learning where to look for the pancreas. arXiv preprint arXiv:1804.03999 (2018)

- [25] Ronneberger, O., et al.: U-net: Convolutional networks for biomedical image segmentation. In: Medical Image Computing and Computer-Assisted Intervention. pp. 234–241 (2015)

- [26] Sohl-Dickstein, J., et al.: Deep unsupervised learning using nonequilibrium thermodynamics. In: Proceedings of the International Conference on Machine Learning. vol. 37, pp. 2256–2265 (2015)

- [27] Sohn, K., et al.: Fixmatch: Simplifying semi-supervised learning with consistency and confidence. In: Advances in Neural Information Processing Systems. vol. 33, pp. 596–608 (2020)

- [28] Song, J., et al.: Denoising diffusion implicit models. In: International Conference on Learning Representations (2021)

- [29] Tang, F., et al.: Cmu-net: A strong convmixer-based medical ultrasound image segmentation network. In: Proceedings of the IEEE International Symposium on Biomedical Imaging. pp. 1–5 (2023)

- [30] Tang, F., et al.: Cmunext: An efficient medical image segmentation network based on large kernel and skip fusion. In: Proceedings of the IEEE International Symposium on Biomedical Imaging. pp. 1–5 (2024)

- [31] Wahid, F., et al.: Biomedical image segmentation: a systematic literature review of deep learning based object detection methods. arXiv preprint arXiv:2408.03393 (2024)

- [32] Wu, J., et al.: Medsegdiff-v2: Diffusion-based medical image segmentation with transformer. In: Proceedings of the AAAI Conference on Artificial Intelligence. vol. 38, pp. 6030–6038 (2024)

- [33] Zhang, H., et al.: Prototype-augmented mean teacher for robust semi-supervised medical image segmentation. Pattern Recognition 166, 111722 (2025)

- [34] Zhang, X., et al.: Adversarial variational embedding for robust semi-supervised learning. In: Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. p. 139–147 (2019)

- [35] Zhang, Y., et al.: Generator versus segmentor: Pseudo-healthy synthesis. In: Proceedings of Medical Image Computing and Computer-Assisted Intervention. pp. 150–160 (2021)