Robust Offline Active Learning on Graphs

Abstract

We consider the problem of active learning on graphs for node-level tasks, which has crucial applications in many real-world networks where labeling node responses is expensive. In this paper, we propose an offline active learning method that selects nodes to query by explicitly incorporating information from both the network structure and node covariates. Building on graph signal recovery theories and the random spectral sparsification technique, the proposed method adopts a two-stage biased sampling strategy that takes both informativeness and representativeness into consideration for node querying. Informativeness refers to the complexity of graph signals that are learnable from the responses of queried nodes, while representativeness refers to the capacity of queried nodes to control generalization errors given noisy node-level information. We establish a theoretical relationship between generalization error and the number of nodes selected by the proposed method. Our theoretical results demonstrate the trade-off between informativeness and representativeness in active learning. Extensive numerical experiments show that the proposed method is competitive with existing graph-based active learning methods, especially when node covariates and responses contain noises. Additionally, the proposed method is applicable to both regression and classification tasks on graphs.

Key words: Offline active learning, graph semi-supervised learning, graph signal recovery, network sampling

1 Introduction

In many graph-based semi-supervised learning tasks for node-level prediction, labeled nodes are scarce, and the labeling process often incurs high costs in real-world applications. Randomly sampling nodes for labeling can be inefficient, as it overlooks label dependencies across the network. Active learning [29] addresses this issue by selecting informative nodes for labeling by human annotators, thereby improving the performance of downstream prediction algorithms.

Active learning is closely related to the optimal experimental design principle [1] in statistics. Traditional optimal experimental design methods select samples to maximize a specific statistical criterion [25, 11]. However, these methods often are not designed to incorporate network structure, therefore inefficient for graph-based learning tasks. On the other hand, selecting informative nodes on a network is studied extensively in the graph signal sampling literature [14, 22, 26, 8]. These strategies are typically based on the principle of network homophily, which assumes that connected nodes tend to have similar labels. However, a node’s label often also depends on its individual covariates. Therefore, signal-sampling strategies that focus solely on network information may miss critical insights provided by covariates.

Recently, inspired by the great success of graph neural networks (GNNs) [19, 35] in graph-based machine learning tasks, many GNN-based active learning strategies have been proposed. Existing methods select nodes to query by maximizing information gain under different criteria, including information entropy [7], the number of influenced nodes [39], prediction uncertainty [23], expected error reduction [27], and expected model change [30]. Most of these information gain measurements are defined in the spatial domain, leveraging the message-passing framework of GNNs to incorporate both network structure and covariate information. However, their effectiveness in maximizing learning outcomes is not guaranteed and can be difficult to evaluate. This challenge arises from the difficulty of quantifying node labeling complexity in the spatial domain due to intractable network topologies. While complexity measures exist for binary classification over networks [10], their extension to more complex graph signals incorporating node covariates remains unclear. This lack of well-defined complexity measures complicates performance analysis and creates a misalignment between graph-based information measurements and the gradient used to search the labeling function space, potentially leading to sub-optimal node selection.

Moreover, from a practical perspective, most of the previously discussed methods operate in an online setting, requiring prompt labeling feedback from an external annotator. However, this online framework is not always feasible when computational resources are limited [28] or when recurrent interaction between the algorithm and the annotator is impractical, such as in remote sensing or online marketing tasks [33, 37]. Additionally, both network data and annotator-provided labels may contain measurement errors. These methods often fail to account for noise in the training data [18], which can significantly degrade the prediction performance of models on unlabeled nodes [12, 6].

To address these challenges, we propose an offline active learning on graphs framework for node-level prediction tasks. Inspired by the theory of graph signal recovery [14, 22, 8] and GNNs, we first introduce a graph function space that integrates both node covariate information and network topology. The complexity of the node labeling function within this space is well-defined in the graph spectral domain. Accordingly, we propose a query information gain measurement aligned with the spectral-based complexity, allowing our strategy to achieve theoretically optimal sample complexity.

Building on this, we develop a greedy node query strategy. The labels of the queried nodes help identify orthogonal components of the target labeling function, each with varying levels of smoothness across the network. To address data noise, the query procedure considers both informativeness—the contribution of queried nodes in recovering non-smooth components of a signal—and representativeness—the robustness of predictions against noise in the training data. Compared to existing methods, the proposed approach provides a provably effective strategy under general network structures and achieves higher query efficiency by incorporating both network and node covariate information.

The proposed method identifies the labeling function via a bottom-up strategy—first identifying the smoother components of the labeling function and then continuing to more oscillated components. Therefore, the proposed method is naturally robust to high-frequency noise in node covariates. We provide a theoretical guarantee for the effectiveness of the proposed method in semi-supervised learning tasks. The generalization error bound is guaranteed even when the node labels are noisy. Our theoretical results also highlight an interesting trade-off between informativeness and representativeness in graph-based active learning.

2 Preliminaries

We consider an undirected, weighted, connected graph , where is the set of nodes, and is the symmetric adjacency matrix, with element denoting the edge weight between nodes and . The degree matrix is defined as , where denotes the degree of node . Additionally, we observe the node response vector and the node covariate matrix , where the row, , is the -dimensional covariate vector for node . The linear space of all linear combinations of is denoted as . The normalized graph Laplacian matrix is defined as , where is the identity matrix. The matrix is symmetric and positive semi-definite, with real eigenvalues satisfying , and a corresponding set of eigenvectors denoted by . We use to indicate for some . For a set of nodes , indicates its cardinality, and denotes the complement of .

2.1 Graph signal representation

Consider a graph signal , where denotes the signal value at node . For a set of nodes , we define the subspace , where represents the values of on nodes in . In this paper, we consider both regression tasks, where is a continuous response, and classification tasks, where is a multi-class label.

Since serves as a set of bases for , we can decompose in the graph spectral domain as , where is defined as the graph Fourier transform (GFT) coefficient corresponding to frequency . From a graph signal processing perspective, a smaller eigenvalue indicates lower variation in the associated eigenvector , reflecting smoother transitions between neighboring nodes. Therefore, the smoothness of over the network can be characterized by the magnitude of at each frequency . More formally, we measure the signal complexity of using the bandwidth frequency . Accordingly, we define the subspace of graph signals with a bandwidth frequency less than or equal to as . It follows directly that .

2.2 Active semi-supervised learning on graphs

The key idea in graph-based semi-supervised learning is to reconstruct the graph signal within a function space that depends on both the network structure and node-wise covariates, where the frequency parameter controls the size of the space to mitigate overftting. Assume that is the observed noisy realization of the true signal at node , active learning operates in a scenario where we have limited access to on only a subset of nodes , with . The objective is to estimate within using by considering the empirical estimator of as

| (1) |

where is a task-specific loss function. We denote as the minimizer of (1) when responses on all nodes are available, i.e., . The goal of active semi-supervised learning is to design an appropriate function space and select an informative subset of nodes for querying responses, under the query budget , such that the estimation error is bounded as follows:

For a fixed , we wish to minimize the parameter , which converges to as the query budget approaches .

3 Biased Sequential Sampling

In this section, we introduce a function space for recovering the graph signal. Leveraging this function space, we propose an offline node query strategy that integrates criteria of both node informativeness and representativeness to infer the labels of unannotated nodes in the network.

3.1 Graph signal function space

In semi-supervised learning tasks on networks, both the network topology and node-wise covariates are crucial for inferring the graph signal. To effectively incorporate this information, we propose a function class for reconstructing the graph signal that lies at the intersection of the graph spectral domain and the space of node covariates. Motivated by the graph Fourier transform, we define the following function class:

Here, the choice of balances the information from node covariates and network structure. When , spans the full column space of covariates, i.e., , allowing for a full utilization of the original covariate space to estimate the graph signal, but without incorporating any network information. On the other hand, when is close to zero—consider, for example, the extreme case where and —then reduces to , resulting in a loss of critical information provided by the original .

By carefully choosing , however, this function space can offer two key advantages for estimating the graph signal. From a signal recovery perspective, imposes graph-based regularization over node covariates, enhancing generalizability when the dimension of covariates exceeds the query budget or even the network size—conditions commonly encountered in real applications. Additionally, covariate smoothing filters out signals in the covariates that are irrelevant to network-based prediction, thereby increasing robustness against potential noise in the covariates. From an active learning perspective, using enables a bottom-up query strategy that begins with a small to capture the smoothest global trends in the graph signal. As the labeling budget increases, is adaptively increased to capture more complex graph signals within the larger space .

The graph signal can be approximated by its projection onto the space . Specifically, let stack the leading eigenvectors of , where . The graph signal estimation is then given by , where is a trainable weight vector. However, the parameters may become unidentifiable when the covariate dimension exceeds . To address this issue, we reparameterize the linear regression as follows:

| (2) |

where and . Here, , , and denote the left and right singular vectors and the diagonal matrix of the singular values, respectively.

In the reparameterized form , the columns of serve as bases for , thus

. The transformed predictors capture the components of the node covariates constrained within the low-frequency graph spectrum. A graph signal can be parameterized as a linear combination of the columns of , with the corresponding weights identified via

| (3) |

where is the submatrix of containing rows indexed by the query set , and represents the true labels for nodes in . To achieve the identification of , it is necessary that ; otherwise, there will be more parameters than equations in (3). More importantly, since , is only identifiable if has full column rank. Notice that increases monotonically with . If is not carefully selected, the graph signal can only be identified in for some , which is a subspace of .

3.2 Informative node selection

We first define the identification of by the node query set as follows:

Definition 1.

A subset of nodes can identify the graph signal space up to frequency if, for any two functions such that for all , it follows that for all .

Intuitively, the informativeness of a set can be quantified by the frequency corresponding to the space that can be identified. To select informative nodes, we need to bridge the query set in the spatial domain with in the spectral domain. To achieve this, we consider the counterpart of the function space in the spatial domain. Specifically, we introduce the projection space with respect to a subset of nodes as follows: , where

Here, denotes the -th covariate for node in . Theorem 3.1 establishes a connection between the two graph signal spaces and , providing a metric for evaluating the informativeness of querying a subset of nodes on the graph.

Theorem 3.1.

Any graph signal can be identified using labels on a subset of nodes if and only if:

| (4) |

where denotes the complement of in .

We denote the quantity in (4) as the bandwidth frequency with respect to the node set . This quantity can be explicitly calculated and measures the size of the space that can be recovered from the subset of nodes . The goal of the active learning strategy is to select within a given budget to maximize the bandwidth frequency , thus enabling the identification of graph signals with the highest possible complexity.

To calculate the bandwidth frequency , consider any graph signal and its components with non-zero frequency . We use the fact that

where and . Combined with the Rayleigh quotient representation of eigenvalues, the bandwidth frequency can be calculated as

As a result, we can approximate the bandwidth using for a large . Maximizing over then transforms into the following optimization problem:

| (5) |

where represents the budget for querying labels. Due to the combinatorial complexity of directly solving optimization problem (5) by simultaneously selecting , we propose a greedy selection strategy as a continuous relaxation of (5).

The selection procedure starts with and sequentially adds one node to that maximizes the increase in until the budget is reached. We introduce an -dimensional vector with , and define the corresponding diagonal matrix with diagonal entries given by . This allows us to encode the set of query nodes using , where if and if . We then consider the space spanned by the columns of as , and the following relation holds:

Intuitively, acts as a differentiable relaxation of the subspace , enabling perturbation analysis of the bandwidth frequency when a new node is added to . The projection operator associated with can be explicitly expressed as

To quantify the increase in when adding a new node to , we consider the following regularized optimization problem:

| (6) |

The penalty term on the right-hand side of (6) encourages the graph signal to remain in . As the parameter approaches infinity and , the minimization in (6) converges to in (5). The information gain from labeling a node can then be quantified by the gradient of the bandwidth frequency as decreases from 1 to 0:

| (7) |

where is the minimizer of (6) at , which corresponds to the eigenvector associated with the smallest non-zero eigenvalue of the matrix . We then select the node and update the query set as .

When calculating node-wise informativeness in (7), we can enhance computational efficiency by avoiding the inversion of . When is in the neighborhood of , we can approximate:

where and . Then, the node-wise informativeness can be explicitly expressed as:

| (8) |

We find that this approximation yields very similar empirical performance compared to the exact formulation in (7). Therefore, we adopt the formulation in (8) for the subsequent numerical experiments.

3.3 Representative node selection

In real-world applications, we often have access only to a perturbed version of the true graph signals, denoted as , where represents node labeling noise that is independent of the network data. When replacing the true label with in (3), this noise term introduces both finite-sample bias and variance in the estimation of the graph signal . As a result, we aim to query nodes that are sufficiently representative of the entire covariate space to bound the generalization error. To achieve this, we introduce principled randomness into the deterministic selection procedure described in Section 3.2 to ensure that includes nodes that are both informative and representative. The modified graph signal estimation procedure is given by:

| (9) |

where is the weight associated with the probability of selecting node into .

Specifically, the generalization error of the estimator in (9) is determined by the smallest eigenvalue of , denoted as . Given that , our goal is to increase the representativeness of such that is lower-bounded by:

| (10) |

However, the informative selection method in Section 3.2 does not guarantee (10). To address this, we propose a sequential biased sampling approach that balances informative node selection with generalization error control.

The key idea to achieve a lower bound for is to use spectral sparsification techniques for positive semi-definite matrices [4]. Let denote the -th row of the constrained basis . By definition of , it follows that . Inspired by the randomized sampling approach in [21], we propose a biased sampling strategy to construct with and weights such that . In other words, the weighted covariance matrix of the query set satisfies , where is a diagonal matrix with on its diagonal.

We outline the detailed sampling procedure as follows. After the selection, let the set of query nodes be with corresponding node-wise weights . The covariance matrix of is given by , defined as . To analyze the behavior of eigenvalues as the query set is updated, we follow [21] and introduce the potential function:

| (11) |

where and are constants such that , and denotes the trace of a matrix. The potential function quantifies the coherence among all eigenvalues of . To construct the candidate set , we sample node and update , , and such that all eigenvalues of remain within the interval . To achieve this, we first calculate the node-wise probabilities as:

| (12) |

where . We then sample nodes into according to . For each node , the corresponding weight is given by . After obtaining the candidate set , we apply the informative node selection criterion introduced in Section 3.2, i.e., selecting the node , and update the query set and weights as follows:

We then update the lower and upper eigenvalue bounds as follows:

| (13) |

The update rule ensures that increases at a slower rate than , leading to the convergence of the gap between the largest and smallest eigenvalues of , thereby controlling the condition number. Accordingly, the covariance matrix is updated with the selected node as:

| (14) |

With the covariance matrix update rule in (14), the average increment is . Intuitively, the selected node allows all eigenvalues of to increase at the same rate on average. This ensures that continues to approach during the selection process, thus driving the smallest eigenvalue away from zero. Additionally, the selected node remains locally informative within the candidate set . Compared with the entire set of nodes, selecting from a subset serves as a regularization on informativeness maximization, achieving a balance between informativeness and representativeness for node queries.

3.4 Node query algorithm and graph signal recovery

We summarize the biased sampling selection strategy in Algorithm 1. At a high level, each step in the biased sampling query strategy consists of two stages. First, we use randomized spectral sparsification to sample nodes and collect them into a candidate set . Intuitively, the covariance matrix on the updated maintains lower-bounded eigenvalues if a node from is added to . In the second stage, we select one node from based on the informativeness criterion in Section 3.2 to achieve a significant frequency increase in (7).

For initialization, the dimension of the network spectrum , the size of the candidate set , and the constant for spectral sparsification need to be specified. Based on the discussion at the end of Section 3.1, the dimension of the function space is at most , where is the budget for label queries. Therefore, we can set . The parameters and jointly control the condition number .

In practice, we can tune to ensure that the covariance matrix is well-conditioned. Specifically, we can run the biased sampling procedure multiple times with different values of and select the largest such that the condition number of the covariance matrix on the query set is less than 10 [20]. This threshold is a commonly accepted rule of thumb for considering a covariance matrix to be well-conditioned [20]. Additionally, is typically fixed at a small value, following the protocol outlined in [21].

Based on the output from Algorithm 1, we solve the weighted least squares problem in (9):

| (15) |

and recover the graph signal on the entire network as .

Although our theoretical analysis is developed for node regression tasks, the proposed query strategy and graph signal recovery procedure are also applicable to classification tasks. Consider a -class classification problem, where the response on each node is given by . We introduce a dummy membership vector , where if and otherwise. For each class , we first estimate based on (15) with the training data , and then compute the score for class as . The label of an unqueried node is assigned as . Notice that the above score-based classifier is equivalent to the softmax classifier:

since the softmax function is monotonically increasing with respect to each score function .

3.5 Computational Complexity

In the representative sampling stage, the computational complexity of calculating the sampling probability is . We then sample nodes to formulate a candidate set , where the complexity of sampling variables from a discrete probability distribution is [32]. Consequently, the complexity of the representative learning stage is .

In the informative selection stage, we calculate the information gain for each node. This involves obtaining the eigenvector corresponding to the smallest non-zero eigenvalue of the projected graph Laplacian matrix, with a complexity of due to the singular value decomposition (SVD) operation. Subsequently, we compute for each node in the candidate set based on their loadings on the eigenvector, which incurs an additional computational cost of . Therefore, the total complexity of our biased sampling method is . Given a node label query budget , the overall computational cost becomes .

When the dimension of node covariates , we can replace the SVD operation with the Lanczos algorithm to accelerate the informative selection stage. The Lanczos algorithm is designed to efficiently obtain the th largest or smallest eigenvalues and their corresponding eigenvectors using a generalized power iteration method, which has a time complexity of [17]. As a result, the complexity of the proposed biased sampling method reduces to . This is comparable to GNN-based active learning methods, as GNNs and their variations generally have a complexity of per training update [5, 36].

4 Theoretical Analysis

In this section, we present a theoretical analysis of the proposed node query strategy. The results are divided into two parts: the first focuses on the local information gain of the selection process, while the second examines the global performance of graph signal recovery. Given a set of query nodes , the information gain from querying the label of a new node is measured as the increase in bandwidth frequency, defined as . We provide a step-by-step analysis of the proposed method by comparing the increase in bandwidth frequency with that of random selection.

Theorem 4.1.

Define , where denotes the degree of node . Let represent the set of queried nodes prior to the selection. Denote the adjacency matrix of the subgraph excluding as . Let and denote the increase in bandwidth frequency resulting from the label query on a node selected by random sampling and the proposed sampling method, respectively. Let denote the node with the largest magnitude in the eigenvector corresponding to the smallest non-zero eigenvalue of . Then we have:

where if for constants when is sufficient large. Inequality (2) holds given satisfying

The expectation is taken over the randomness of node selection. Both are network-related quantities, where and .

Theorem 4.1 provides key insights into the information gain achieved through different node label querying strategies. While random selection yields a constant average information gain, the proposed biased sampling method guarantees a higher information gain under mild assumptions.

Theorem 4.1 is derived using first-order matrix perturbation theory [2] on the Laplacian matrix . In Theorem 4.1, we assume that the column space of the node covariate matrix is identical to the space spanned by the first eigenvectors of . This assumption simplifies the analysis and the results by focusing on the perturbation of , where is the reduced Laplacian matrix with zero entries in the rows and columns indexed by .

The analysis can be naturally extended to the general setting by replacing with , where is the projection operator defined in Section 3.2. Moreover, under the assumption on the node covariates, the information gain exhibits an explicit dependence on the network statistics, providing a clearer interpretation of how the network structure influences the benefits of selecting informative nodes.

Theorem 4.1 indicates that the improvement of biased sampling is more significant when is larger and are smaller. Specifically, reflects the connectedness of the network, where a better-connected network facilitates the propagation of label information and enhances the informativeness of a node’s label for other nodes. A smaller prevents the existence of dominating nodes, ensuring that the connectedness does not significantly decrease when some nodes are removed from the network.

Notice that the node is the most informative node for the next selection, and measures the number of nodes similar to in the network. Recall that the proposed biased sampling method considers both the informativeness and representativeness of the selected nodes. Therefore, the information gain is less penalized by the representativeness requirement if is small. Additionally, the size of the candidate set should be sufficiently large to ensure that informative nodes are included in .

In Theorem 4.2, we provide the generalization error bound for the proposed sampling method under the weighted OLS estimator. To formally state Theorem 4.2, we first introduce the following two assumptions:

Assumption 1 For the underlying graph signal , there exists a bandwidth frequency such that .

Assumption 2 The observed node-wise response can be decomposed as , where are independent random variables with and .

Theorem 4.2.

Under Assumptions 1 and 2, for the graph signal estimation obtained by training (15) on labeled nodes selected by Algorithm 1, with probability greater than , where , we have

| (16) |

where , and . denotes the expected value with respect to the randomness in observed responses.

Theorem 4.2 reveals the trade-off between informativeness and representativeness in graph-based active learning, which is controlled by the spectral dimension . Since is a monotonic function of , a larger reduces representativeness among queried nodes, thereby increasing variance in controlling the condition number (i.e., the first three terms). On the other hand, a larger reduces approximation bias to the true graph signal (i.e., the fifth and last terms) by including more informative nodes for capturing less smoothed signals.

The RHS of (16) captures both the variance and bias involved in estimating using noisy labels on sampled nodes. Specifically, the first three terms represent the estimation variance arising from controlling the condition number of the design matrix on the queried nodes. The fourth and fifth terms reflect the noise and unidentifiable components in the responses of the queried nodes, while the last term denotes the bias resulting from the approximation error of the space using .

The bias term in Theorem 4.2 can be further controlled if the true signal exhibits decaying or zero weights on high-frequency network components. In addition to , the size of the candidate set also influences the probability of controlling the generalization error. A small places greater emphasis on the representativeness criterion in sampling, increasing the likelihood of controlling the condition number but potentially overlooking informative nodes, thereby increasing approximation bias.

For a fixed prediction MSE, the query complexity of our method is , whereas random sampling incurs a complexity of , where . Our method outperforms random sampling in two key aspects: (1) The information-based selection identifies with fewer queries than random sampling, as shown in Theorem 4.1, and (2) our method achieves an additional improvement by actively controlling the condition number of the covariate matrix, resulting in a logarithmic factor reduction compared to random sampling.

5 Numerical Studies

In this section, we conduct extensive numerical studies to evaluate the proposed active learning strategy for node-level prediction tasks on both synthetic and real-world networks. For the synthetic networks, we focus on regression tasks with continuous responses, while for the real-world networks, we consider classification tasks with discrete node labels.

5.1 Synthetic networks

We consider three different network topologies generated by widely studied statistical network models: the Watts–Strogatz model [34] for small-world properties, the Stochastic block model [16] for community structure, and the Barabási–Albert model [3] for scale-free properties.

Node responses are generated as , where is the true graph signal and is Gaussian noise. The true signal is constructed as , where is the linear coefficient and denotes the leading eigenvectors of the normalized graph Laplacian of the synthetic network. Since our theoretical analysis assumes that the observed node covariates contain noise, we generate as a perturbed version of by adding non-leading eigenvectors of the normalized graph Laplacian. The detailed simulation settings can be found in Appendix B.1.

We compare our algorithm with several offline active learning methods: 1. D-optimal [25] selects subset of nodes to maximize determinant of observed covariate information matrix . 2. RIM [38] selects nodes to maximize the number of influenced nodes. 3. GPT [23] and SPA [13] split the graph into disjoint partitions and select informative nodes from each partition.

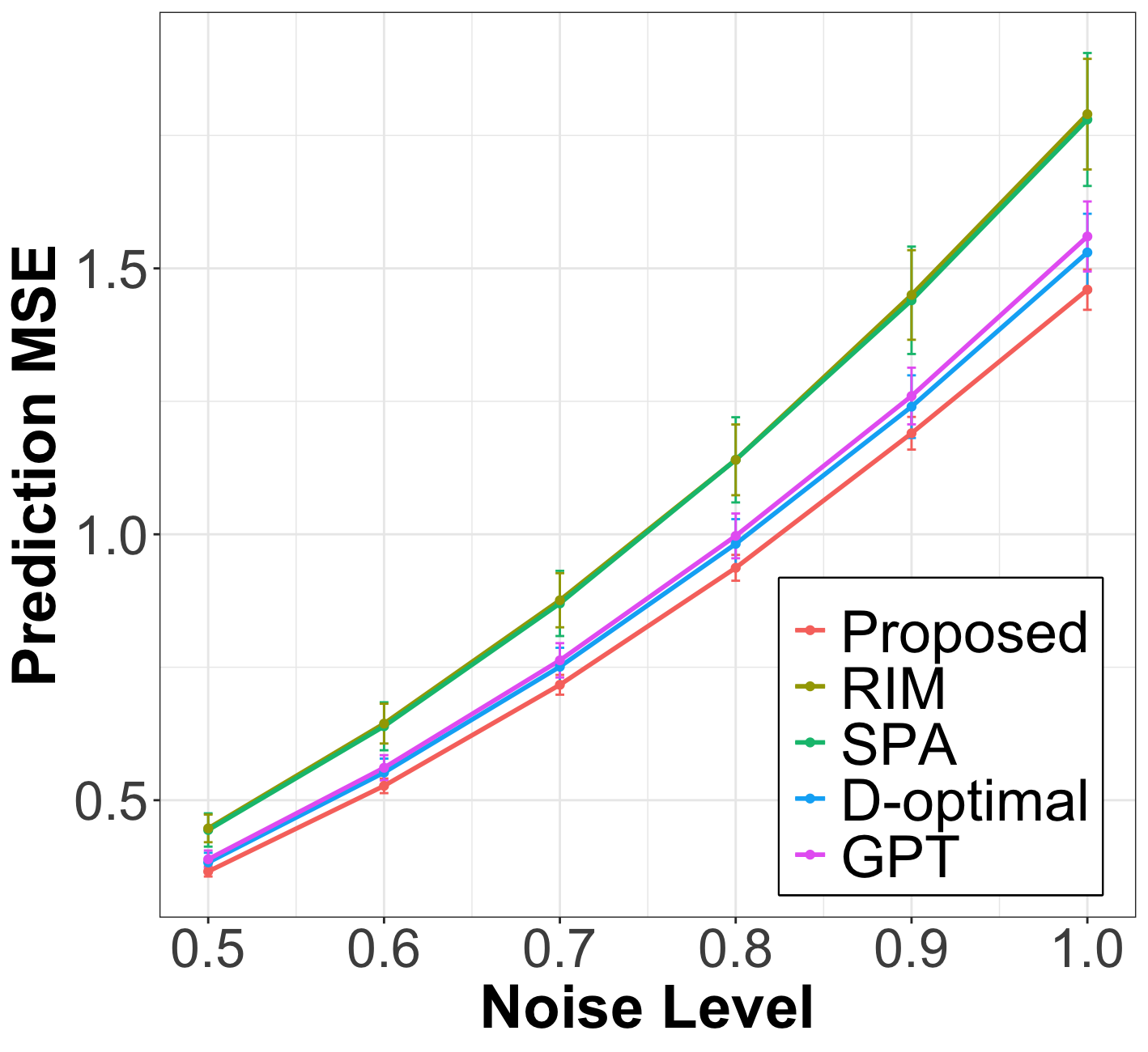

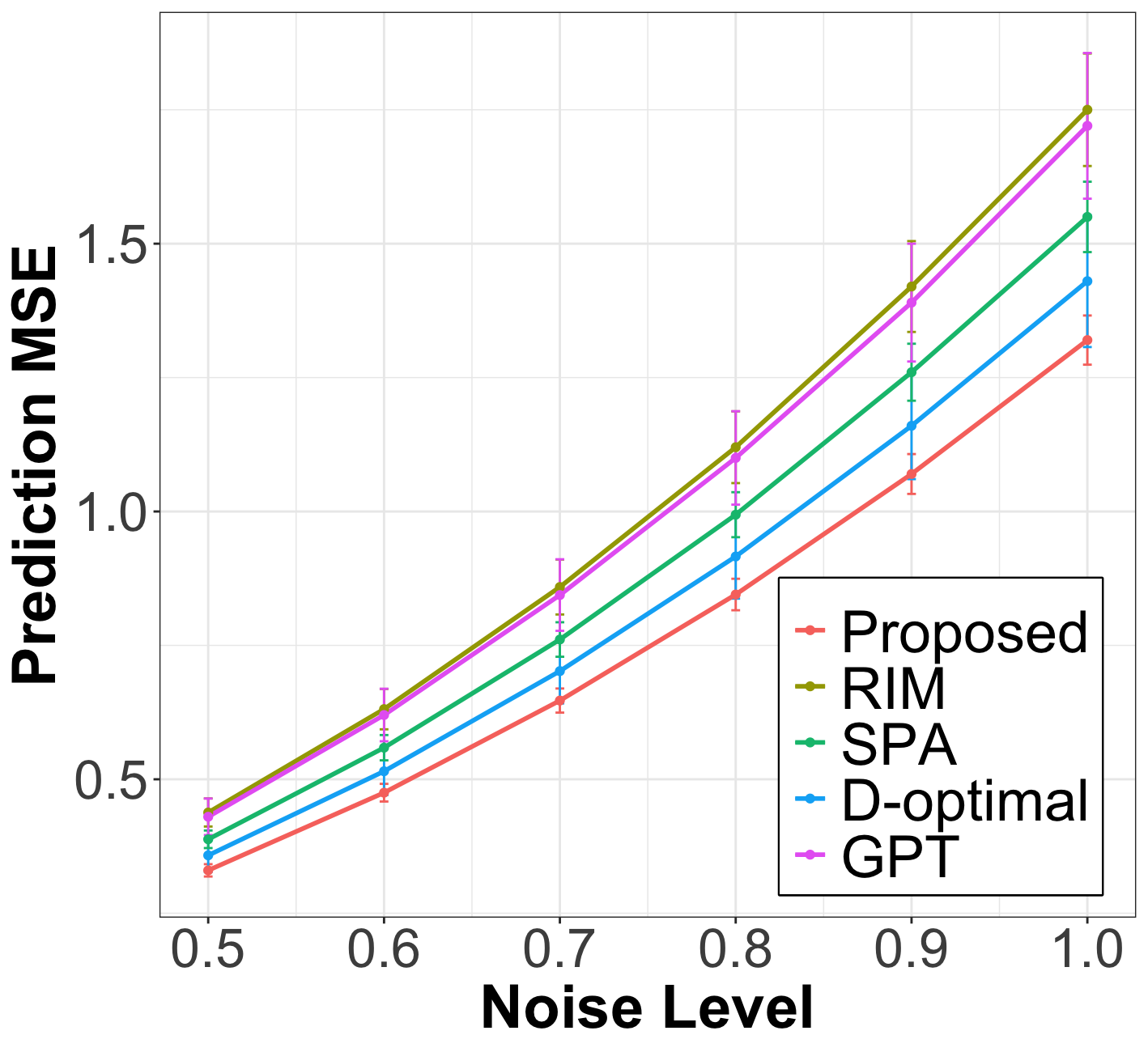

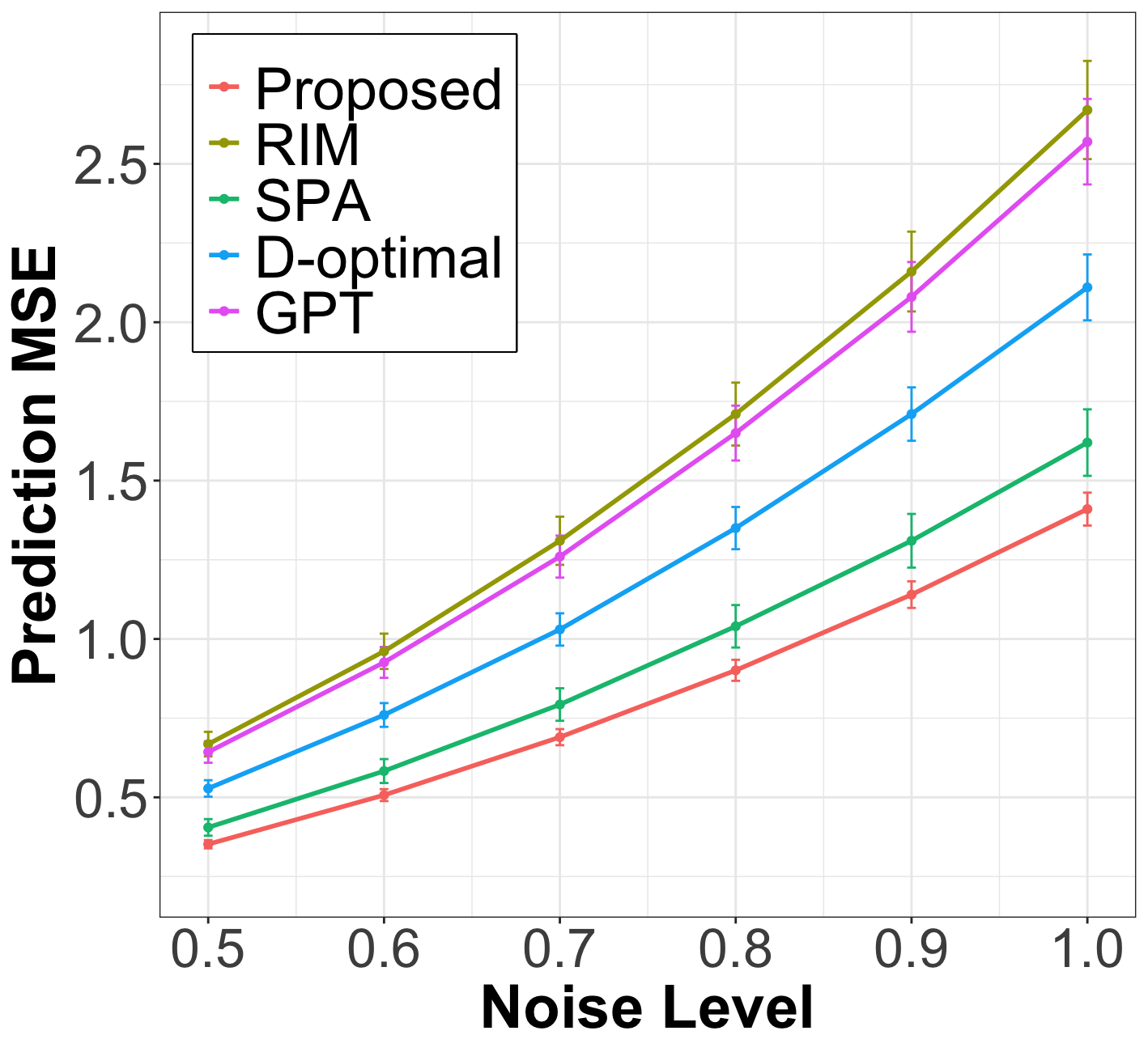

After the node query step, we fit the weighted linear regression from (15) on the labeled nodes, using the smoothed covariates , to estimate the linear coefficient and predict the response for the unlabeled nodes. In Figure 1, we plot the prediction MSE of the proposed method against baselines on unlabeled nodes for various levels of labeling noise . The results show that the proposed method significantly outperforms all baselines across all simulation settings and exhibits strong robustness to noise. The inferior performance of the baselines can be attributed to several factors. D-optimal and RIM fail to account for noise in the node covariates. Meanwhile, partition-based methods like GPT and SPA are highly sensitive to hyperparameters, such as the optimal number of partitions, which limits their generalization to networks lacking a clear community structure.

| Cora () | Pubmed () | Citeseer () | Chameleon () | Texas () | |||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| #labeled nodes | 35 | 70 | 140 | 15 | 30 | 60 | 30 | 60 | 120 | 50 | 75 | 100 | 15 | 30 | 45 |

| Random | 68.2 1.3 | 74.5 1.0 | 78.9 0.9 | 71.2 1.8 | 74.9 1.6 | 78.4 0.5 | 57.7 0.8 | 65.3 1.4 | 70.7 0.7 | 22.4 2.6 | 22.1 2.5 | 21.8 2.1 | 67.0 3.3 | 69.9 3.3 | 73.8 3.2 |

| AGE | 72.1 1.1 | 78.0 0.9 | 82.5 0.5 | 74.9 1.1 | 77.5 1.2 | 79.4 0.7 | 65.3 1.1 | 67.7 0.5 | 71.4 0.5 | 30.0 4.5 | 28.2 4.9 | 28.6 5.0 | 67.9 2.6 | 68.8 3.3 | 72.1 3.6 |

| GPT | 77.4 1.6 | 81.6 1.2 | 86.5 1.2 | 77.0 3.1 | 79.9 2.8 | 81.5 1.6 | 67.9 1.8 | 71.0 2.4 | 74.0 2.0 | 14.1 2.5 | 15.8 2.2 | 16.4 2.4 | 72.6 2.0 | 72.5 3.6 | 74.6 1.8 |

| RIM | 77.5 0.8 | 81.6 1.1 | 84.1 0.8 | 75.0 1.5 | 77.2 0.6 | 80.2 0.4 | 67.5 0.7 | 70.0 0.6 | 73.2 0.7 | 35.5 3.7 | 42.8 3.0 | 34.4 3.5 | 68.5 3.7 | 78.4 3.0 | 74.6 3.7 |

| IGP | 77.4 1.7 | 81.7 1.6 | 86.3 0.7 | 78.5 1.2 | 82.3 1.4 | 83.5 0.5 | 68.2 1.1 | 72.1 0.9 | 75.8 0.4 | 32.5 3.6 | 33.7 3.1 | 33.4 3.5 | 70.8 3.7 | 69.9 3.3 | 76.1 3.6 |

| SPA | 76.5 1.9 | 80.3 1.6 | 85.2 0.6 | 75.4 1.6 | 78.3 2.0 | 73.5 1.2 | 66.4 2.2 | 69.3 1.7 | 73.5 2.0 | 30.2 3.2 | 28.5 2.9 | 31.0 4.4 | 72.0 3.2 | 72.5 3.1 | 74.6 2.1 |

| Proposed | 78.4 1.7 | 81.8 1.8 | 86.5 1.1 | 78.9 1.1 | 79.1 0.6 | 82.3 0.6 | 69.1 1.0 | 72.2 1.3 | 75.5 0.8 | 35.1 2.8 | 35.7 3.0 | 37.2 3.0 | 75.0 1.9 | 79.5 0.8 | 80.4 2.7 |

| Ogbn-Arxiv (Macro-F1) | Ogbn-Arxiv (Micro-F1) | Co-Physics (Macro-F1) | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| #labeled nodes | 160 | 320 | 640 | 1280 | 160 | 320 | 640 | 1280 | 10 | 20 | 40 | |

| Random | 21.9 1.4 | 27.6 1.5 | 33.0 1.4 | 37.2 1.1 | 52.3 0.8 | 56.4 0.8 | 60.0 0.7 | 63.5 0.4 | 58.3 13.8 | 66.9 10.1 | 78.3 7.1 | |

| AGE | 20.4 0.9 | 25.9 1.1 | 31.7 0.8 | 36.4 0.8 | 48.3 2.3 | 54.9 1.6 | 60.0 0.7 | 63.5 0.3 | 63.7 7.8 | 71.0 8.8 | 82.4 3.9 | |

| GPT | 24.2 0.7 | 29.5 0.8 | 36.4 0.5 | 41.0 0.5 | 52.3 0.9 | 56.8 0.8 | 60.7 0.6 | 63.6 0.5 | 75.8 2.7 | 85.8 0.3 | 88.9 0.3 | |

| Proposed | 25.8 1.3 | 34.3 1.4 | 38.3 1.2 | 41.3 1.3 | 53.1 1.3 | 58.0 1.0 | 62.3 1.6 | 64.8 1.0 | 83.5 0.8 | 86.8 1.3 | 89.2 1.2 | |

| Dataset | Size | Time |

|---|---|---|

| Texas | 183 | 0.19 0.03 |

| Chameleon | 2,277 | 0.34 0.18 |

| Cora | 2,708 | 0.30 0.19 |

| Citeseer | 3,327 | 0.26 0.07 |

| Pubmed | 19,717 | 0.48 0.25 |

| Co-Physics | 34,493 | 1.08 0.43 |

| Ogbn-Arxiv | 169,343 | 2.11 0.33 |

5.2 Real-world networks

We evaluate the proposed method for node classification tasks on real-world datasets, which include five networks with varying homophily levels (high to low: Cora, PubMed, Citeseer, Chameleon and Texas) and two large-scale networks (Ogbn-Arxiv and Co-Physics). In addition to the offline methods described in Section 5.1, we also compare our approach with two GNN-based online active learning methods AGE [7] and IGP [39]. In each GNN iteration, AGE selects nodes to maximize a linear combination of heuristic metrics, while IGP selects nodes that maximize information gain propagation.

Unlike regression, node classification with GNNs is a widely studied area of research. Previous works [23, 30, 38, 39] have demonstrated that the prediction performance of various active learning strategies on unlabeled nodes remains relatively consistent across different types of GNNs. Therefore, we employ Simplified Graph Convolution (SGC) [35] as the GNN classifier due to its straightforward theoretical intuition. Since SGC is essentially multi-class logistic regression on low-pass-filtered covariates, it can be approximately viewed as a special case of the regression model defined in (15). Thus, we conjecture that our theoretical analysis can also be extended to classification tasks and leave its formal verification for future work.

The results in Figure 1 demonstrate that the proposed algorithm is highly competitive with baselines across real-world networks with varying degrees of homophily. Our method achieves the best performance on Cora (highest homophily) and Texas (lowest homophily, i.e., highest heterophily) and is particularly effective when the labeling budget is most limited. To handle heterophily in networks like Chameleon and Texas, we expand the graph signal subspace in Algorithm 1 to , combining eigenvectors corresponding to the smallest and largest eigenvalues. Admittedly, relying on a priori knowledge of label construction may be unrealistic, so developing adaptive methods for designing the signal subspace to effectively handle both homophily and heterophily remains a promising direction for future research.

Table 5.1 summarizes the performance on two large-scale networks. The greatest improvement is observed in the Macro-F1 score on Ogbn-Arxiv, with an increase of up to 4.8% at 320 labeled nodes. Moreover, Table 5.1 demonstrates that our algorithm scales efficiently to large networks, with the time cost of querying a single node being approximately 2 seconds when .

6 Conclusion

We propose a graph-based offline active learning framework for node-level tasks. Our node query strategy effectively leverages both the network structure and node covariate information, demonstrating robustness to diverse network topologies and node-level noise. We provide theoretical guarantees for controlling generalization error, uncovering a novel trade-off between informativeness and representativeness in active learning on graphs. Empirical results demonstrate that our method performs strongly on both synthetic and real-world networks, achieving competitiveness with state-of-the-art methods on benchmark datasets. Future work could explore extensions to an online active learning setting that iteratively incorporates node response information to further enhance query efficiency. Additionally, scalability on large graphs could be improved by utilizing the Lanczos method [31] or Chebyshev polynomial approximation [19] during node selection.

References

- [1] Z. Allen-Zhu, Y. Li, A. Singh, and Y. Wang. Near-optimal discrete optimization for experimental design: A regret minimization approach. Mathematical Programming, 2020.

- [2] B. Bamieh. A tutorial on matrix perturbation theory (using compact matrix notation). arXiv preprint arXiv:2002.05001, 2020.

- [3] A.-L. Barabasi and R. Albert. Emergence of scaling in random networks. Science, 1999.

- [4] J. Batson, D. A. Spielman, N. Srivastava, and S.-H. Teng. Spectral sparsification of graphs: theory and algorithms. Communications of the ACM, 2013.

- [5] D. Blakely, J. Lanchantin, and Y. Qi. Time and space complexity of graph convolutional networks. Accessed on: Dec, 2021.

- [6] M.-R. Bouguelia, S. Nowaczyk, K. Santosh, and A. Verikas. Agreeing to disagree: active learning with noisy labels without crowdsourcing. International Journal of Machine Learning and Cybernetics, 2018.

- [7] H. Cai, V. W. Zheng, and K. Chen-Chuan Chang. Active learning for graph embedding. arXiv preprint arXiv:1705.05085, 2017.

- [8] S. Chen, A. Sandryhaila, J. M. F. Moura, and J. Kovacevic. Signal recovery on graphs: Variation minimization. IEEE Transactions on Signal Processing, 2016.

- [9] X. Chen and E. Price. Active regression via linear-sample sparsification. In Conference on Learning Theory, pages 663–695. PMLR, 2019.

- [10] G. Dasarathy, R. Nowak, and X. Zhu. S2: An efficient graph based active learning algorithm with application to nonparametric classification. In Proceedings of The 28th Conference on Learning Theory (COLT), 2015.

- [11] M. Derezinski, M. K. Warmuth, and D. J. Hsu. Leveraged volume sampling for linear regression. In Advances in Neural Information Processing Systems (NeurIPS), 2018.

- [12] J. Du and C. X. Ling. Active learning with human-like noisy oracle. In Proceedings of the 2010 IEEE International Conference on Data Mining (ICDM), 2010.

- [13] R. Fajri, Y. Pei, L. Yin, and M. Pechenizkiy. A structural-clustering based active learning for graph neural networks. In Symposium on Intelligent Data Analysis (IDA), 2024.

- [14] A. Gadde, A. Anis, and A. Ortega. Active semi-supervised learning using sampling theory for graph signals. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD), 2014.

- [15] A. Gadde, A. Anis, and A. Ortega. Active semi-supervised learning using sampling theory for graph signals. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pages 492–501, 2014.

- [16] M. Girvan and M. E. J. Newman. Community structure in social and biological networks. Proceedings of the National Academy of Sciences, 2002.

- [17] G. Golub and C. Loan. Matrix computations. HU Press, 2013.

- [18] N. T. Hoang, T. Maehara, and T. Murata. Revisiting graph neural networks: Graph filtering perspective. In Proceedings of the 25th International Conference on Pattern Recognition (ICPR), 2021.

- [19] T. N. Kipf and M. Welling. Semi-supervised classification with graph convolutional networks. In Proceedings of the 5th International Conference on Learning Representations (ICLR), 2017.

- [20] M. Kutner, C. Nachtsheim, J. Neter, and W. Li. Applied Linear Statistical Models. McGraw-Hill/Irwin, 2004.

- [21] Y. T. Lee and H. Sun. Constructing linear-sized spectral sparsification in almost-linear time. SIAM Journal on Computing, 47(6):2315–2336, 2018.

- [22] P. Di Lorenzo, S. Barbarossa, and P. Banelli. Cooperative and Graph Signal Processing. Academic Press, 2018.

- [23] J. Ma, Z. Ma, J. Chai, and Q. Mei. Partition-based active learning for graph neural networks. Transactions on Machine Learning Research, 2023.

- [24] E. L. Paluck, H. Shepherd, and P. M. Aronow. Changing climates of conflict: a social network experiment in 56 schools. Proceedings of the National Academy of Sciences, page 566–571, 2016.

- [25] F. Pukelsheim. Optimal Design of Experiments (Classics in Applied Mathematics, 50). Society for Industrial and Applied Mathematics, 2018.

- [26] G. Puy, N. Tremblay, R. Gribonval, and P. Vandergheynst. Random sampling of bandlimited signals on graphs. Applied and Computational Harmonic Analysis, 2016.

- [27] F. F. Regol, S. Pal, Y. Zhang, and M. Coates. Active learning on attributed graphs via graph cognizant logistic regression and preemptive query generation. In Proceedings of the 37th International Conference on Machine Learning (ICML), 2020.

- [28] P. Ren, Y. Xiao, X. Chang, P.-Y. Huang, Z. Li, X. Chen, and X. Wang. A survey of deep active learning. ACM Computing Surveys, 2020.

- [29] B. Settles. Active learning literature survey. Technical report, University of Wisconsin Madison, 2010.

- [30] Z. Song, Y. Zhang, and I. King. No change, no gain: Empowering graph neural networks with expected model change maximization for active learning. In Advances in Neural Information Processing Systems (NeurIPS), 2023.

- [31] A. Susnjara, N. Perraudin, D. Kressner, and P. Vandergheynst. Accelerated filtering on graphs using lanczos method. arXiv preprint arXiv:1509.04537, 2015.

- [32] M. Vose. Linear algorithm for generating random numbers with a given distribution. IEEE Transactions on Software Engineering, 1991.

- [33] W. Wang and W. N. Street. Modeling and maximizing influence diffusion in social networks for viral marketing. Applied Network Science, 2018.

- [34] D. J. Watts and S. H. Strogatz. Collective dynamics of ’small-world’ networks. Nature, 1998.

- [35] F. Wu, A. H. S. Jr., T. Zhang, C. Fifty, T. Yu, and K. Q. Weinberger. Simplifying graph convolutional networks. In Proceedings of the 36th International Conference on Machine Learning (ICML), 2019.

- [36] Z. Wu, S. Pan, F. Chen, G. Long, C. Zhang, and P. Yu. A comprehensive survey on graph neural networks. IEEE Transactions on Neural Networks and Learning Systems, 2020.

- [37] Y. Xu, X. Chen, A. Liu, and C. Hu. A latency and coverage optimized data collection scheme for smart cities. In New Advances in Identification, Information and Knowledge in the Internet of Things, 2017.

- [38] W. Zhang, Y. Wang, Z. You, M. Cao, P. Huang, J. Shan, Z. Yang, and B. Cui. Rim: Reliable influence-based active learning on graphs. In Advances in Neural Information Processing Systems (NeurIPS), 2021.

- [39] W. Zhang, Y. Wang, Z. You, M. Cao, P. Huang, J. Shan, Z. Yang, and B. Cui. Information gain propagation: a new way to graph active learning with soft labels. In Proceedings of the 10th International Conference on Learning Representations (ICLR), 2022.

Appendix A Proofs

A.1 Proof of Theorem 3.1

A.2 Proof of Theorem 4.1

Proof: Let be the eigenvectors of and . Without loss of generality, we analyze the case when and the case of no repeated eigenvalues. The analysis for the case of repeated eigenvalues can be similar performed with matrix perturbation analysis on degenerate case [2].

After selecting node , we have the reduced Laplacian matrix

Define as the diagonal matrix containing eigenvalues of . Using the first order perturbation analysis [2], we have . Let and be the column and row of , respectively. Since

we have

Therefore,

Assume corresponding to the smallest non-zero eigenvalue of , then the increase of bandwidth frequency for node is .

For random selection, where node is queried with uniform probability,

For the proposed selection method, define , where , then

Next we perform matrix perturbation analysis, define

where approximate eigenvalues and eigenvectors of . Denote as the column of and assume , we have

To satisfy , we multiply as

Then we consider the normalized as in the following analysis. Assume is the eigenvector to the smallest non-zero eigenvalue, then at the step

where is in terms of the randomness in the proposed sampling procedure.

Based on the approximation

then

Define and . Take and denotes the probability of being selected into candidate set size . Denote , we first upper bound as

We calculate the denominator as

In addition, by Stirling’s approximation, when is large

then combining the above simplification, we have

then we can lower bound the expected value of information gain as

Notice that is a monotone decreasing function of given and fixed, then we can select a such that

where is a constant, therefore .

Next we lower bound the quantity . Denote and , we calculate the lower bound for as

which implies

As a result, as long as we have

A.3 Proof of Theorem 4.2

Proof: Based on the assumption that , we denote and . Therefore, we can represent for some parameter and . For the query set and the corresponding bandwidth frequency , we similarly denote and . We denote as the bases of where is obtained from SVD decomposition where and are left and right singular vectors, respectively. The diagonal matrix contains positive singular values with . The estimation (11) at the end of section 3 is equivalent to the weighted regression problem of ,

where . We have the least squares solution

where

| (19) |

We assume , where and . Notice the oracle satisfies

We decompose the space as

Then we decompose , then

Then we can represent , by solving , we have

From and , we have

Denote , we have

Denote for where is the probability of node being selected to query. Since

we have

Notice that

Notice that and , then where . Therefore, . We first state and then prove the following Lemma 1.

Lemma A.1.

For the output from Algorithm 1 with query budgets, we have

where , and is a constant such that .

Using Lemma 1 we have

with probability larger than where .

In the following, we prove Lemma 1 which is based on Theorem 5.2 in [9] and Lemma 3.5 and 3.6 in [21].

In the following, we denote the accumulated covariance matrix in the selection as , the potential function as , and , where is the th row of . Notice that . At each iteration of algorithm 1, the th node is selected as one of candidates with . For the candidates, we define the following probability

where . Notice that when goes to , the approximate the step 3 in Algorithm 1. Therefore, the probability of node k being query is

where denote all possible candidate set with choosing nodes from nodes, denotes the probability of selecting nodes into conditioning on . Denote as all possible size candidate sets with node always in the set. Then

where we use the fact for any semi-positive definite matrix . In addition,

for any . Denote , then , which implies for any

Similarly, we have

Then from Lemma 3.3 and Lemma 3.4 in [batson2014twice] we have

| (20) | ||||

| (21) |

Define , we show in the following that .

From (20) we have

Define and . Notice that

at each step based on the design of and . and is convex in terms of and . From , we have

then with

Define

then

Since is convex, we have

| (23) |

Then plugin (23), we have

Notice that for selection

where . We consider that the selection process stops when at the iteration that . Notice , when stop at , we have

Then we have . Notice that .

Consider at the th selection

Then

where we use the result that by recursively using and the fact that .

We consider the following reparametrization:

For the th selection, . From previous result, we have with probability with . Notice that and , and

Then if stop at th selection

Denote , then . We find such that then

Therefore,

Then we choose large such that . Given that and , we choose appropriate to satisfy previous requirement on and as

Therefore, we choose such that . Notice that

and mid is defined as , then . Also,

which implies

Notice that for the design matrix in (19), we have where is the accumulated covariance matrix when the query process stops at the the selection. Therefore, the eigenvalues of satisfy . Then

Given that with high probability, . Then for the lower bound,

and upper bound

Then with probability larger than , we have

Consider ,

Finally, check at the th selection

Then we finish the proof of Lemma 1.

Appendix B More on Numerical Studies

B.1 Experimental setups

Synthetic networks The parameters for the three network topologies are: Watts–Strogatz (WS) model () for small world properties, Stochastic block model (SBM) () for community structure, and Barabási-Albert (BA) model () for scale-free properties. We set for all three networks. After generating the networks, we consider them fixed and then simulate and repeatedly using 10 different random seeds. By a slight abuse of notation, we set the node responses and covariates for SBM and WS as and , where and . For the BA model, we set and , where and .

Real-world networks For the proposed method and all baselines, we train a 2-layer SGC model for a fixed 300 epochs. In SGC, the propagation matrix performs low-pass filtering on homophilic networks and high-pass filtering on heterophilic networks. During training, the initial learning rate is set to and weight decay as .

| Dataset | #Nodes | Type | Dataset | #Nodes | Type | ||||

|---|---|---|---|---|---|---|---|---|---|

| SBM | 100 | homophilic | 50 | 10 | Citeseer | 3,327 | homophilic | 1000 | 100 |

| WS | 100 | homophilic | 50 | 10 | Chameleon | 2,277 | heterophilic | 800 | 30, 30 |

| BA | 100 | homophilic | 50 | 15 | Texas | 183 | heterophilic | 60 | 15, 15 |

| Cora | 2,708 | homophilic | 2000 | 200 | Ogbn-Arxiv | 169,343 | homophilic | 1000 | 120 |

| Pubmed | 19,717 | homophilic | 3000 | 60 | Co-Physics | 34,493 | homophilic | 3000 | 150 |

B.2 Visualization

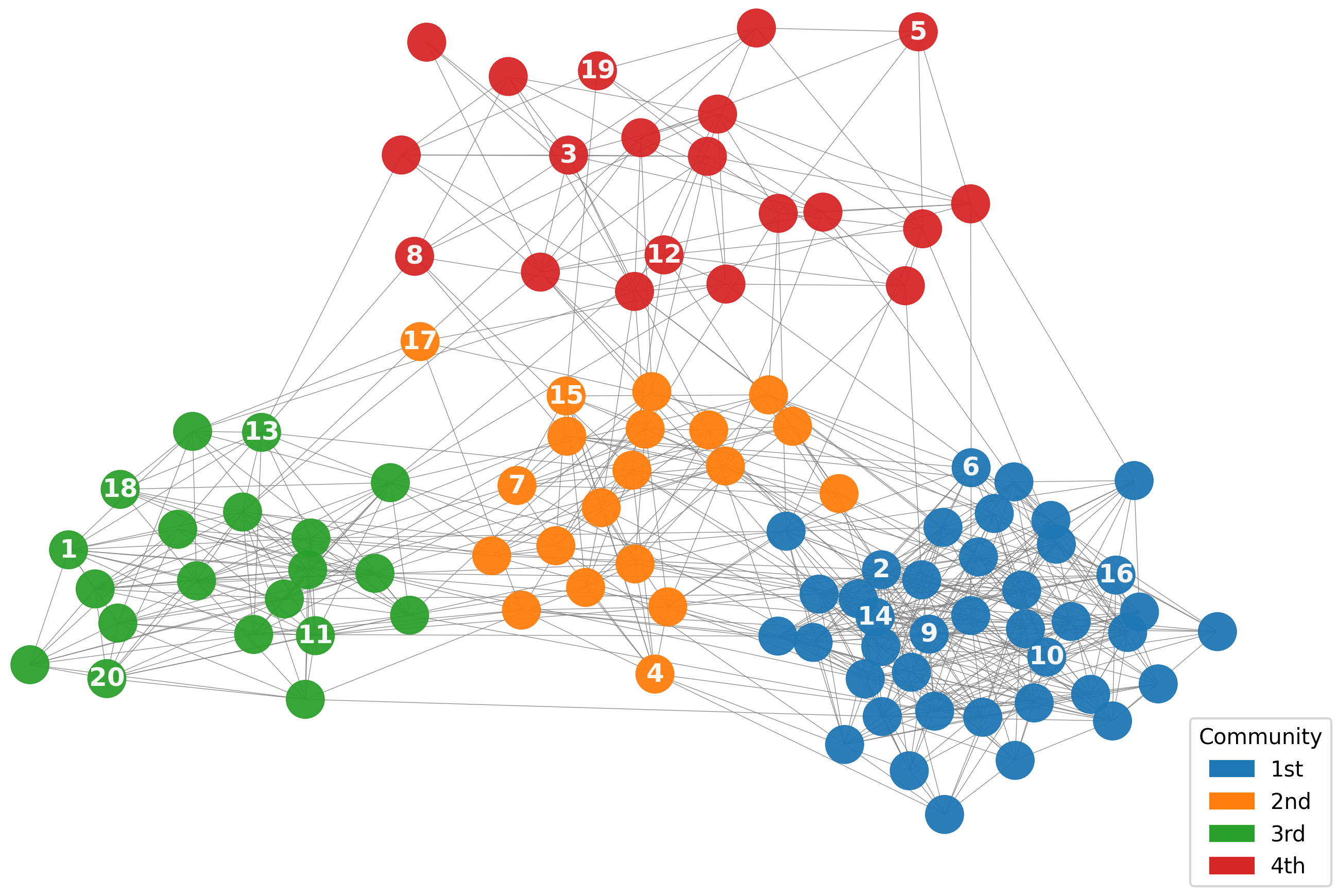

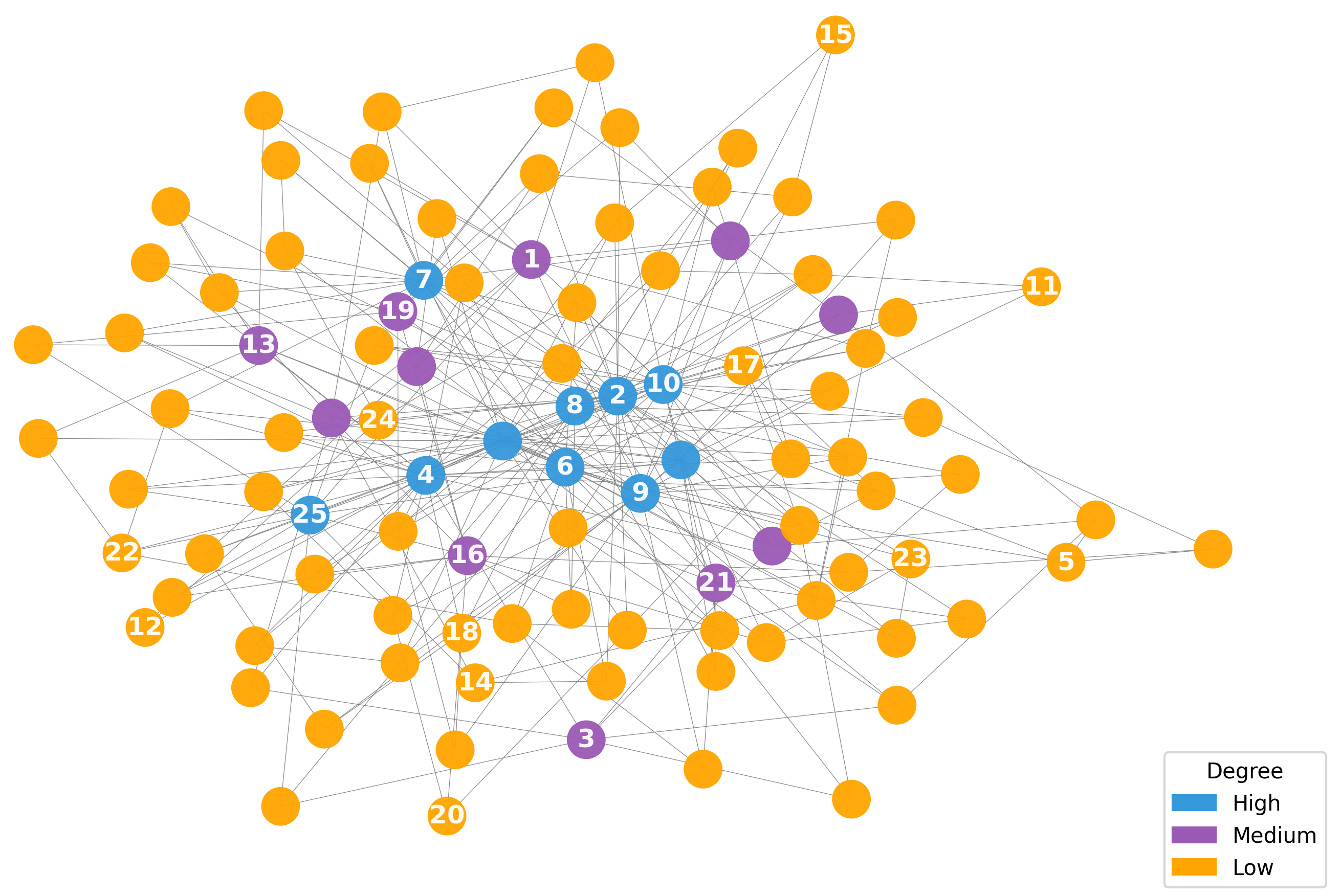

In Figure 2, we visualize the node query process on synthetic networks generated using SBM and BA, as described in Section 5.1. The figure clearly demonstrates that nodes queried by the proposed algorithm adapt to the informativeness criterion specific to each network topology, effectively aligning with the community structure in SBM and the scale-free structure in BA.

B.3 Ablation study

To gain deeper insights into the respective roles of representative sampling and informative selection in the proposed algorithm, we conduct additional experiments on a New Jersey public school social network dataset, School [24], which was originally collected to study the impact of educational workshops on reducing conflicts in schools. As School is not a benchmark dataset in the active learning literature, we did not compare the performance of our method against other baselines in Section 5.2 to ensure fairness. In this dataset with nodes, each node represents an individual student, and edges denote friendships among students. We treat the students’ grade point averages (GPA) as the node responses and select student features—grade level, race, and three binary survey responses—as node covariates using a standard forward selection approach.

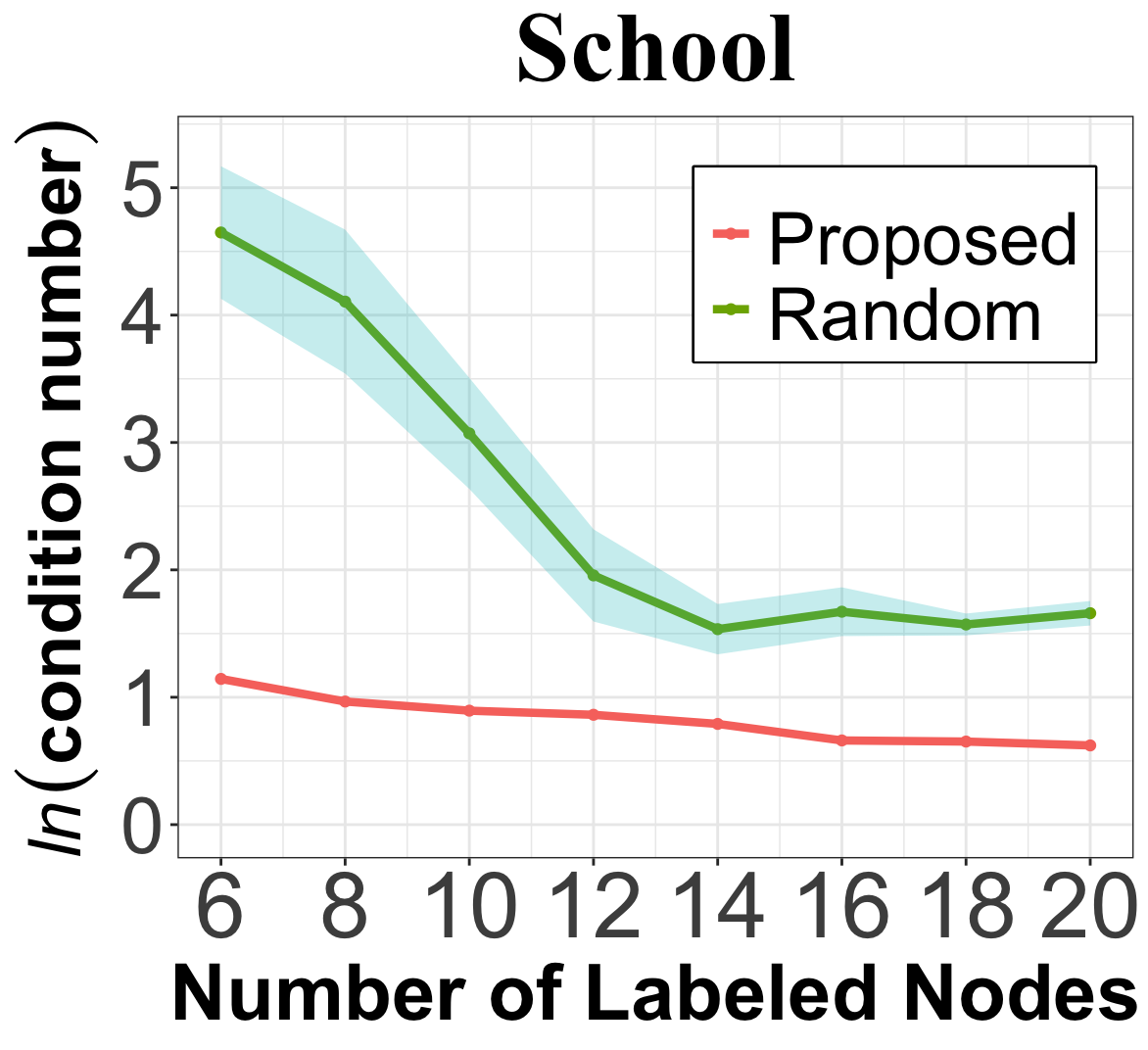

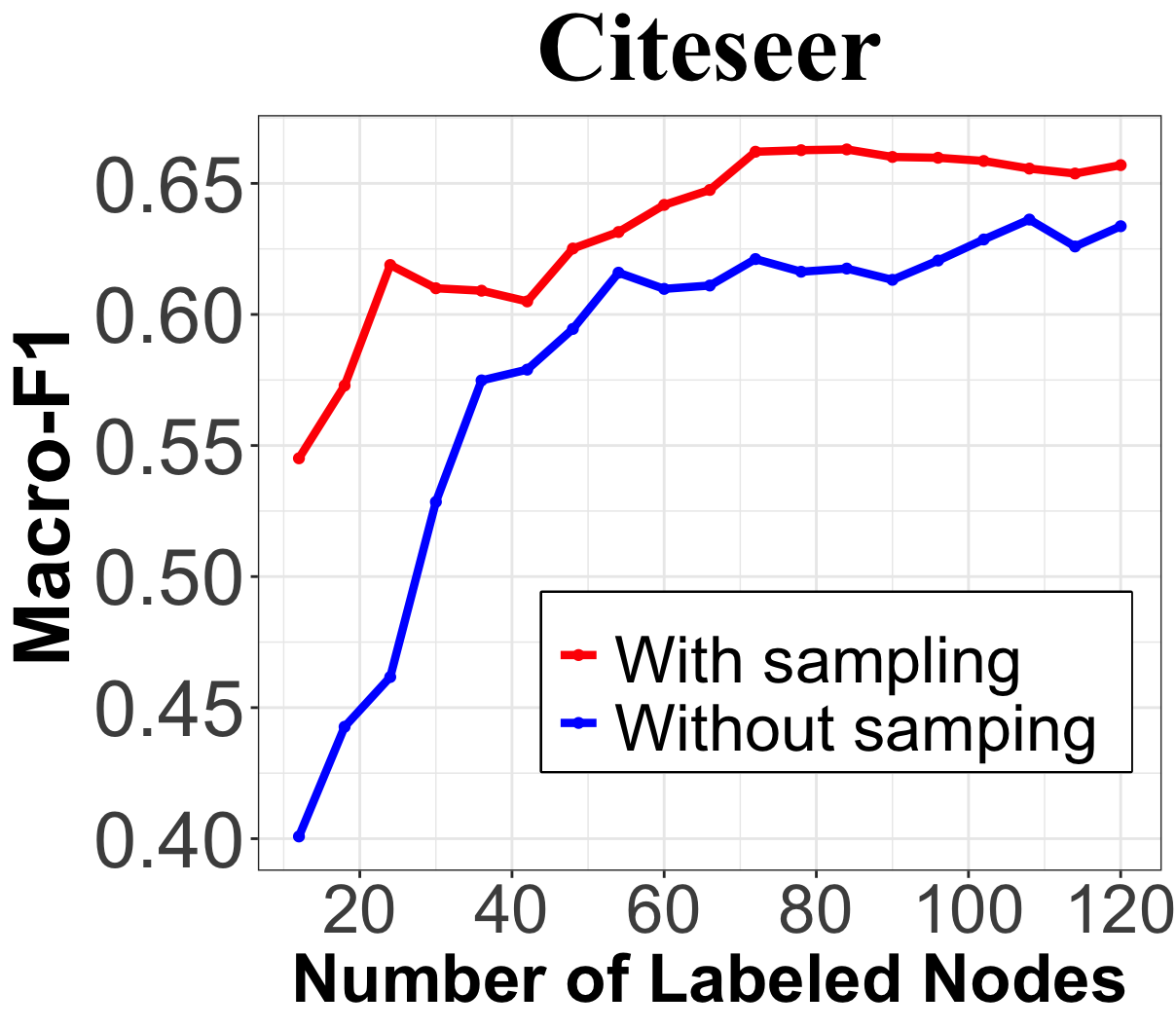

As shown in Section 3.4, the representative sampling in steps 1 and 2 of Algorithm 1 is essential to control the condition number of the design matrix and, consequently, the prediction error given noisy network data. We illustrate in Figure 3(a) the condition number using the proposed method, and compare with the one using random selection. With , the proposed algorithm achieve a significantly lower condition number than random selection, especially when the number of query is small. In the Citeseer dataset, we investigate the prediction performance of Algorithm 1 when removing steps 1 and 2, i.e., setting the candidate set for the selection. Figure 3(b) shows that, with representative sampling, the Macro-F1 score is consistently higher, with a performance gap of up to 15%. Given that node classification on Citeseer is found to be sensitive to labeling noise [38], this result validates the effectiveness of representative sampling in improving the robustness of our query strategy to data noise.

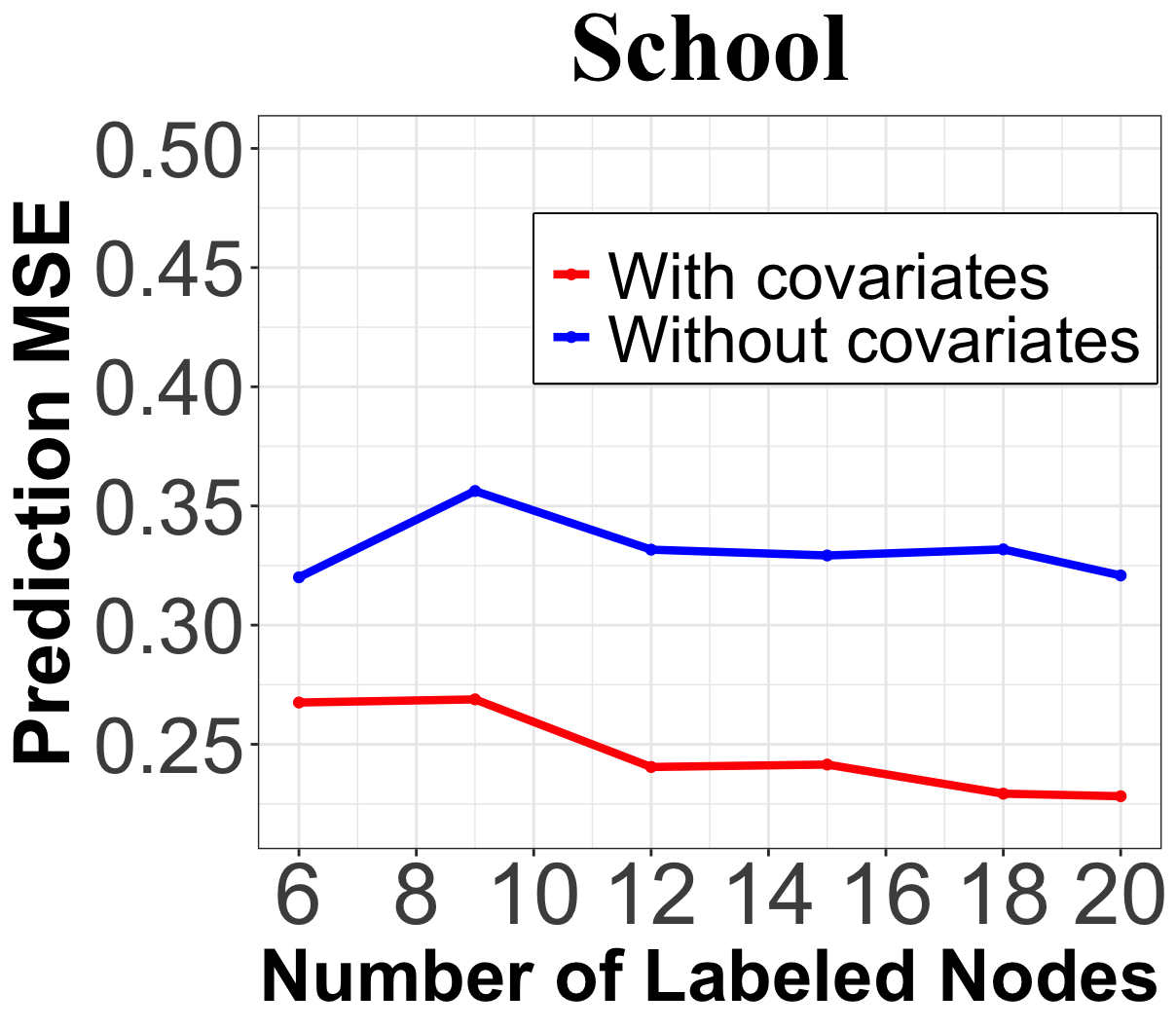

In addition, we examine the ability of the proposed method to integrate node covariates for improving prediction performance. In the School dataset, we compare our method to one that removes node covariates during the query stage by setting as the identity matrix . Figure 3(c) illustrates that the prediction MSE for GPA is significantly lower when incorporating node covariates, thus distinguishing our node query strategy from existing graph signal recovery methods [15] that do not account for node covariate information.

B.4 Code

The implementation code for the proposed algorithm is available at

github.com/Yuanchen-Wu/RobustActiveLearning/.