Email: mgs214505@iisec.ac.jp, otsuka@ai.iisec.ac.jp
Institute: NTT TechnoCross Corporation, Yokohama, Japan

Robustness bounds on the successful adversarial examples in probabilistic models: Implications from Gaussian processes

Abstract

An adversarial example (AE) is an attack on machine learning models, crafted by adding an imperceptible perturbation to the data to induce misclassification. In this paper, we investigate an upper bound on the probability of a successful AE attack under Gaussian Process (GP) classification, a probabilistic inference model. We prove a new upper bound on the probability of a successful AE attack that depends on the AE’s perturbation norm, the kernel function used in the GP, and the distance of the closest pair of points with different labels in the training dataset. Surprisingly, the upper bound holds regardless of the distribution of the sample dataset. We confirm the theoretical result through an experiment using ImageNet. In addition, we show that changing the parameters of the kernel function changes the upper bound on the probability of a successful AE attack.

Keywords:

Gaussian Processes, Adversarial Examples, Robustness Bounds

1 Introduction

1.1 Adversarial Example

Today, machine learning is widely used in various fields, and concerns about its security have emerged. Among attacks on machine learning, the adversarial example (AE) is widely investigated [goodfellow_explaining_2015]. An AE is a sample that differs only slightly from a natural sample yet is misclassified by a machine learning classifier [goodfellow_explaining_2015]. AEs are often crafted against neural networks by adding a small perturbation (adversarial perturbation) to the original input, and various methods have been proposed to generate adversarial perturbations [carlini_towards_2017, goodfellow_explaining_2015].

Since AE is considered an attack method against machine learning, various defense methods against AE have been investigated, including detection methods [feinman_detecting_2017] and adversarial training [cai_curriculum_2018]. However, Carlini & Wagner [carlini_adversarial_2017] reviewed ten detection methods and stated that none of them could survive a white-box AE attack, that is, an attack that uses knowledge of the victim’s architecture. Therefore, defending against AE requires both defense methods and a theoretical basis for them.

In the current study, we investigated the theoretical basis of AE using the Gaussian Process (GP), a stochastic process whose outputs are jointly multivariate normally distributed. GP can be used as a probabilistic model for classification and regression [rasmussen_gaussian_2006], and previous research shows that GP is equivalent to some types of models, such as linear regression and the relevance vector machine. In particular, GP is equivalent to a certain type of neural network [williams_computing_1996]. More specifically, the activation function in the neural network corresponds to the kernel function in the GP [williams_computing_1996].

1.2 Related Research

Several studies have aimed to establish the theoretical basis of AE. Shafahi, Huang, Studer, Feizi & Goldstein [shafahi_are_2019] suggested, using a geometrical method, that AE is inevitable under a certain condition on the distribution of the training data. Blaas et al. [blaas_adversarial_2020] presented an algorithmic method to calculate upper and lower bounds of the value of GP classification in an arbitrary set. Cardelli, Kwiatkowska, Laurenti & Patane [cardelli_robustness_2019] showed an upper bound on the probability of robustness of the GP output in an arbitrary set. Wang, Jha & Chaudhuri [wang_analyzing_2018] showed a method of creating a robust dataset when using a k-nearest-neighbor classifier. Fawzi, Fawzi & Fawzi [fawzi_adversarial_2018] showed a fundamental upper bound on the robustness of classification, on which some later studies are based. Mahloujifar, Diochnos & Mahmoody [mahloujifar_curse_2019] applied their results to broader types of data distributions, and Zhang, Chen, Gu & Evans [zhang_understanding_2020] showed intrinsic robustness bounds for classifiers with a conditional generative model. More specifically, Gilmer et al. [gilmer_relationship_2018] showed a fundamental bound relating to the classification error rate in the field of neural networks, and Bhagoji, Cullina & Mittal [bhagoji_lower_2019] showed lower bounds on adversarial classification loss using optimal transport theory.

Some studies suggest certified defense methods. Randomized smoothing [cohen_certified_2019] is a method for composing "smoothed" classifiers that are derived from arbitrary classifiers. The prediction of an arbitrary input by smoothed classifiers has a safety radius, within which the classification results of the neighborhood inputs are the same as the central input.

Our approach is different from the related studies in the following viewpoints. First, our approach focuses on the training data for the classifier and is easier to use practically. For example, the other studies focus on the geometry of the classifier [shafahi_are_2019] or the upper bound for which some cumbersome calculation with all training data is necessary [blaas_adversarial_2020, cardelli_robustness_2019]. Using our approach, we can analyze how the robustness will change when the distribution of training data of the classifier changes, for example, when some training data are removed from the dataset or moved within the input space. Cohen, Rosenfeld & Kolter’s randomized classifier [cohen_certified_2019] needs Monte Carlo samplings to calculate the variance used in the calculation of the safety radius. However, our approach can provide the predictive variance using GP. Thus, our approach can be used with Cohen, Rosenfeld & Kolter’s randomized smoothing.

Second, our approach can be applied to a wide range of classifiers thanks to GP. For example, the approach of Wang, Jha & Chaudhuri [wang_analyzing_2018] also focuses on how robustness changes when the distribution of the classifier's training data changes, but it is based on the nearest-neighbor method. Our approach is based on GP, which can be applied to broader inference models. Smith, Grosse, Backes & Alvarez [smith_adversarial_2023] have shown adversarial vulnerability bounds for binary GP classification. However, their proof is based on the Gaussian kernel, while our result is based on general characteristics of kernel functions and includes the Gaussian kernel as a special case. Therefore, the current study has a broader application than [smith_adversarial_2023].

1.3 Contributions

Our research has the following contributions.

- Our research shows that the success probability of an AE attack against a given dataset in GP classification with a given kernel function is upper-bounded by a function of the distance between the nearest points that have different labels.

- We confirmed our theoretical result through an experiment using ImageNet with various kernel parameters in GP classification. The experimental results agree well with the theoretical result.

- We showed that changing the parameters of the kernel function changes the theoretical upper bound, and thus our result gives a theoretical basis for methods that enhance robustness.

2 Theoretical Results

2.1 Problem Formulation and Assumption

Let be a dataset with samples that has data points and labels of object classes . The data point is a -dimensional vector, and the label is binary. Namely,

| (1) |

Let

| (2) |

for further simplicity.

We consider the binary classification of the dataset, using GP regression with a certain kernel function [rasmussen_gaussian_2006]. We define a GP regressor . gives the output as a Gaussian distribution . Then we define a probabilistic GP classifier , which is constructed as below:

| (3) |

where is a value sampled from the distribution . Intuitively, is a probabilistic binary classifier whose output is when a sample from the output distribution of is not negative.
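The construction above can be illustrated with a minimal sketch. It assumes noise-free GP regression, labels encoded as +1 and -1, and a Gaussian kernel of the form `theta1 * exp(-||a - b||^2 / theta2)`; the function names, the kernel parameterization, and the `jitter` term are hypothetical choices for illustration and are not taken from the paper.

```python
import numpy as np

def gaussian_kernel(a, b, theta1=1.0, theta2=1.0):
    """Assumed kernel form: theta1 * exp(-||a - b||^2 / theta2)."""
    sq = np.sum(a**2, axis=1)[:, None] + np.sum(b**2, axis=1)[None, :] - 2.0 * a @ b.T
    return theta1 * np.exp(-np.maximum(sq, 0.0) / theta2)

def gp_predict(x_train, y_train, x_test, jitter=1e-10):
    """Noise-free GP regression: predictive mean and variance at each test point."""
    k_train = gaussian_kernel(x_train, x_train) + jitter * np.eye(len(x_train))
    k_cross = gaussian_kernel(x_train, x_test)  # shape (n_train, n_test)
    mean = k_cross.T @ np.linalg.solve(k_train, y_train)
    reduction = np.sum(k_cross * np.linalg.solve(k_train, k_cross), axis=0)
    var = gaussian_kernel(x_test, x_test).diagonal() - reduction
    return mean, np.maximum(var, 0.0)  # clamp tiny negative values from round-off

def sample_classify(mean, var, rng):
    """Draw one sample from N(mean, var) per test point; +1 if non-negative, else -1."""
    draw = rng.normal(mean, np.sqrt(var))
    return np.where(draw >= 0.0, 1, -1)

# Toy usage: two training points with opposite labels.
x_train = np.array([[0.0, 0.0], [3.0, 0.0]])
y_train = np.array([1.0, -1.0])
x_test = np.array([[0.0, 0.0], [1.5, 0.0]])
mean, var = gp_predict(x_train, y_train, x_test)
labels = sample_classify(mean, var, np.random.default_rng(0))
```

Because the classifier samples from the predictive distribution, its output at a given point is random. At the midpoint of the two training points the predictive mean is zero, so either label is drawn with probability one half.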

Let the maximum value of the kernel function between two input points of with different labels be . We write the definition of as follows, without loss of generality:

The value of the kernel function between 2 points is described as s, where gives the maximum value of when is fixed. can be written as a function of , that is

Put such that , where is a constant.

In this paper, we introduce the following assumption.

Assumption 1 (-proximity).

Let be the predictive mean of by GP regression trained with , and be the corresponding value by GP regression trained with . Then, for all such that ,

| (4) |

holds with some .

Intuitively, the former part of Assumption 1 suggests that the predictive mean of the GP regressor with all training data is close to that with only two training data, implying that the effect of training data other than , is small.

2.2 Maximum Success Probability of AE

Theorem 1.

Consider with kernel function trained with . Let be arbitrarily chosen from and be the data point of , such that is the greatest value when is fixed and is taken from . Then, for any such that and , the upper bound of the probability that is upper-bounded with Maximum Success Probability (MSP) function as

| (5) |

where the notations below are used.

| (6) |

| (7) |

| (8) |

| (9) |

The above bound holds for any translation-invariant kernel function , that is, a kernel function that satisfies is constant for all .

The schematic diagram is shown in Fig. 1.

Proof sketch. First, we prove that the predictive variance of GP regression does not increase when training points are added (Lemma 1). Next, we prove that increases monotonically with respect to if (Lemma 2). Then we prove that the probability that is classified as increases monotonically with respect to (Lemma 3), and the theorem follows. ∎

2.3 Proof of Theorem 1

In the proof, we set the notations below.

We construct a GP regressor with the kernel function . In GP regressor, the mean and the variance of the new data point can be calculated as

| (10) |

| (11) |

where

| (12) |

| (13) |

| (14) |
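For reference, the standard noise-free GP regression predictive equations from [rasmussen_gaussian_2006] are sketched below. The symbols $K$, $\boldsymbol{k}_*$, $\boldsymbol{y}$, and $\boldsymbol{x}_*$ are notational assumptions made here, and the paper's own Eqs. 10 to 14 may differ in notation or include an observation-noise term.

```latex
\begin{align}
\mu(\boldsymbol{x}_*) &= \boldsymbol{k}_*^{\top} K^{-1} \boldsymbol{y}, \\
\sigma^2(\boldsymbol{x}_*) &= k(\boldsymbol{x}_*, \boldsymbol{x}_*) - \boldsymbol{k}_*^{\top} K^{-1} \boldsymbol{k}_*, \\
K_{ij} &= k(\boldsymbol{x}_i, \boldsymbol{x}_j), \qquad
(\boldsymbol{k}_*)_i = k(\boldsymbol{x}_i, \boldsymbol{x}_*), \qquad
\boldsymbol{y} = (y_1, \dots, y_N)^{\top}.
\end{align}
```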

Next, we construct a GP classifier whose prediction is if a sample from is greater than 0, and otherwise.

For further calculation, set the below notations

| (15) |

The following lemma is a simpler version of Exercise 4 of Chapter 2 of [rasmussen_gaussian_2006]. The proof is in the supplemental material.

Lemma 1.

Let be the predictive variance of a GP regression at given a training dataset of size , and be the corresponding variance given only the first points of the training dataset. Then, holds.

Proof.

Let

| (16) |

| (17) |

| (18) |

| (19) |

can be written as

| (20) |

where .

Therefore, can be decomposed as

| (21) |

where

| (22) |

Now

| (23) |

Decomposing A results in

| (24) |

Now, proving the lemma is equivalent to proving

| (25) |

We now prove Equation 25. It can be decomposed as:

| (26) |

(M is a 1 × 1 matrix, and can therefore be regarded as a scalar.)

Now is a scalar. Then, consider .

| (27) |

Now consider . This means the predictive variance by GP regression of with a dataset which includes . Therefore, .

∎

Thus, if the two points () in the training dataset of GP regression are fixed, the maximum predictive variance is the variance calculated with the two points as the training dataset only.
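The consequence of Lemma 1 stated above can be checked numerically. The following is a minimal sketch, assuming noise-free GP regression with a Gaussian kernel. The kernel parameters, the random data, and the function names are hypothetical and chosen only for illustration.

```python
import numpy as np

def rbf(a, b, theta1=1.0, theta2=2.0):
    """Assumed kernel form: theta1 * exp(-||a - b||^2 / theta2)."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)
    return theta1 * np.exp(-d2 / theta2)

def predictive_variance(x_train, x_star, jitter=1e-10):
    """Noise-free GP predictive variance at each row of x_star."""
    k_train = rbf(x_train, x_train) + jitter * np.eye(len(x_train))
    k_star = rbf(x_train, x_star)
    reduction = np.sum(k_star * np.linalg.solve(k_train, k_star), axis=0)
    return rbf(x_star, x_star).diagonal() - reduction

rng = np.random.default_rng(0)
x_train = rng.normal(size=(20, 3))   # 20 training points in 3 dimensions
x_star = rng.normal(size=(5, 3))     # 5 query points

var_full = predictive_variance(x_train, x_star)      # all 20 training points
var_two = predictive_variance(x_train[:2], x_star)   # only the first two points

# Lemma 1: adding training points never increases the predictive variance.
print(np.all(var_full <= var_two + 1e-6))
```

The small tolerance in the comparison only absorbs floating-point round-off from the linear solves.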

Next, we calculate the predicted mean under the condition that only and are used as the training dataset. We write the predictive mean as .

Then and y are written as , . Plugging these into Eq. 10, the predicted mean is calculated as follows.

| (29) |

The category boundary is regarded as the point where the predicted mean is 0, therefore on the category boundary holds.

Therefore, category boundary is located where

This implies that the set of points satisfying is the category boundary, and Eq. 29 implies that where the mean is positive.

Similarly, the prediction variance with only as the training dataset is described as follows, plugging the values into Eq. 11:

| (30) |

Considering Lemma 1, Eq. 30 gives the maximum predictive variance of GP regression with the training dataset including .
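The two-point case can be worked out explicitly. The sketch below assumes noise-free GP regression, a translation-invariant kernel with $k(\boldsymbol{x}, \boldsymbol{x}) = c$, and labels $+1$ and $-1$ for the two training points $\boldsymbol{x}_1$ and $\boldsymbol{x}_2$. The shorthand $a = k(\boldsymbol{x}_*, \boldsymbol{x}_1)$, $b = k(\boldsymbol{x}_*, \boldsymbol{x}_2)$, and $\kappa = k(\boldsymbol{x}_1, \boldsymbol{x}_2)$ is introduced here for illustration and may differ from the paper's notation.

```latex
\begin{align}
K &= \begin{pmatrix} c & \kappa \\ \kappa & c \end{pmatrix}, \qquad
K^{-1} = \frac{1}{c^2 - \kappa^2} \begin{pmatrix} c & -\kappa \\ -\kappa & c \end{pmatrix}, \qquad
\boldsymbol{k}_* = \begin{pmatrix} a \\ b \end{pmatrix}, \qquad
\boldsymbol{y} = \begin{pmatrix} 1 \\ -1 \end{pmatrix}, \\
\mu &= \boldsymbol{k}_*^{\top} K^{-1} \boldsymbol{y}
     = \frac{(c + \kappa)(a - b)}{c^2 - \kappa^2}
     = \frac{a - b}{c - \kappa}, \\
\sigma^2 &= c - \boldsymbol{k}_*^{\top} K^{-1} \boldsymbol{k}_*
     = c - \frac{c\,(a^2 + b^2) - 2 \kappa a b}{c^2 - \kappa^2}.
\end{align}
```

Under these assumptions the sign of the mean is the sign of $a - b$ whenever $c > \kappa$, which agrees with the statement that the category boundary is the set of points whose kernel values to the two training points are equal.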

The probability of is the probability that the value of a sample from the output distribution is lower than 0. Therefore, it can be calculated as follows, using the cumulative distribution function of Gaussian distribution

| (31) |

where

| (32) |

Lemma 2.

is monotonically increasing with respect to , under the condition .

Proof.

Let

| (33) |

and put

| (34) |

Equation 34 can be rewritten as:

| (35) |

and calculating partial differentiation,

| (36) |

Then we show that under the condition .

calculating integral when

Let .

Then can be written as

| (37) |

We calculate the integral above using the following Gaussian integrals:

| (38) |

Now

| (39) |

can be written as below, under condition .

| (40) |

Then the Gaussian integral is applied, and the integral is written as below:

| (41) |

calculating integral when

Let and .

Equation 36 can be written as below, using integration by substitution .

| (42) |

, and this is calculated as

| (43) |

This is calculated by two conditions: a) and b) .

a)

Equation 43 can be written as:

| (44) |

In the interval , therefore the integrand over the whole integration interval. Therefore the value of Eq. 44 is greater than 0 regardless of .

b)

From Eq. 41

| (45) |

Therefore

| (46) |

in the interval , the integrand is negative, thus

| (47) |

Equation 47 can be written as

| (48) |

thus

| (49) |

holds.

Therefore, regardless of if , then is monotonically increasing with respect to . ∎

Lemma 2 suggests that the maximum gives the maximum value of . Lemma 1 suggests that the maximum value of the is given by Eq. 30, thus the maximum value of the can be calculated when fixing .
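As a compact sanity check of this monotonicity, assume that the misclassification probability has the form $\Phi(-\mu/\sigma)$, where $\Phi$ and $\phi$ are the standard normal CDF and density, $\mu > 0$ is the predictive mean, and $\sigma > 0$ is the predictive standard deviation. These symbols are assumptions made here and may not match the quantities used in the paper's proof.

```latex
\begin{equation}
\frac{\partial}{\partial \sigma} \Phi\!\left(-\frac{\mu}{\sigma}\right)
= \phi\!\left(-\frac{\mu}{\sigma}\right) \cdot \frac{\mu}{\sigma^{2}} > 0
\qquad \text{for } \mu > 0,\ \sigma > 0 .
\end{equation}
```

Under this assumed form, a larger predictive standard deviation gives a larger probability of a negative sample whenever the mean is positive, so bounding the variance from above bounds the probability from above.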

When satisfies the condition , then are constants. Thus can be regarded as the function of .

Lemma 3.

is monotonically increasing with respect to .

Proof.

Set as the cumulative distribution function of .

| (50) |

Then can be written as

| (51) |

is the cumulative distribution function of the Gaussian distribution, thus it is monotonically increasing with respect to . Now

| (52) |

From the condition, , therefore is positive. is positive by definition, thus is negative.

Set

| (53) |

When is decreasing with respect to , then is decreasing with respect to thus is increasing with respect to .

Below we show that is decreasing with respect to .

the differentiation of can be decomposed as

| (54) |

The inequality below holds for any kernel functions because the determinant of the gram matrix is positive:

| (55) |

Now by the condition, thus holds.

From the condition of the theorem, holds.

Thus,

| (56) |

and

| (57) |

From the condition of the theorem,

| (58) |

holds, therefore

| (59) |

and

| (60) |

holds.

From the discussions above, gives the maximum value of .

Moreover, is monotonically decreasing with respect to , thus for any holds.

Now we prove Theorem 1. From the discussions above, the probability is upper-bounded as

| (61) |

Plugging the inequality below [shafahi_are_2019] into Eq. 61, that is, applying

| (62) |

is a maximum success probability (MSP) function where

| (64) |

2.4 Remarks on Theorem 1

- and indicate the closest points of the two classes from the training dataset in the classification task since the kernel function can be regarded as the function whose output is the similarity between the data points. Practically, can be regarded as an AE whose original data point is and the adversarial perturbation is . AE is formalized as a sample whose distance from the original data points is a small value (or more specifically, a small adversarial perturbation norm) [carlini_towards_2017].

- This theorem indicates that when an AE is crafted using an original input point in GP with adversarial perturbation , the probability that the AE is classified as a different class has a non-trivial upper bound, and that this bound is determined by the kernel function used in the GP regression and the distance between the original data point and the nearest data point from the different class. The distance is measured using the kernel function.

- When the AE is classified as a different class, it can be regarded as a successful attack. Thus, the theorem indicates that the probability of a successful attack using AE is upper-bounded.

- We use GP regression for the classification task. The method using a regressor for classification instead of a classifier was also used in [lee_deep_2018] and produced a good result.

- Intuitively, the probability of successful adversarial examples should be upper-bounded by the distance from the nearest sample to the decision boundary. However, in general, the decision boundary cannot be easily calculated. Instead of considering the decision boundary, we proved that finding the closest points from different classes is sufficient to investigate the robustness of GP.

2.5 Maximum Success Probability of AE within All Data in the Training Set

In this section, we prove the maximum success probability of AE in all data in the training set.

The problem formulation is the same as Theorem 1. Let where and . Set as the maximum of the set , and put such that . Set such that for all and such that and holds.

Theorem 2.

Given and as above, and given arbitrarily from , set such that and . Then, for any , , and , is upper-bounded by the upper bound of , when the MSP function in Theorem 1 increases monotonically with respect to . That is, holds.

The schematic diagram is shown in Fig. 2.

Proof.

When is fixed, can be regarded as a function of provided that the kernel function is translation invariant. Therefore, when in Theorem 1 increases monotonically with respect to , a greater value is given by a bigger . From the condition, the value of the kernel function whose inputs are and is the greatest among the values of the kernel function over pairs of points chosen from and . Thus, the upper bound of is greater than the upper bound obtained with any and the corresponding point in . ∎

2.6 Remarks on Theorem 2

- Theorem 2 indicates that an AE crafted from an original data point succeeds more often when the original data point is closer to the image from another class, provided that the kernel function meets the condition that is monotonically increasing as a function of .

- If the kernel function satisfies the condition that increases monotonically as a function of , it suggests that one of the points of the closest pair is the original data point from which the AE is crafted most successfully in GP classification with that kernel function.

3 Experimental Results

To validate Theorem 1 and to check whether the theorem holds for GP classification with a standard dataset, we conducted the following experiment.

3.1 Devices

The experiments were conducted on an Ubuntu 20.04.3 LTS machine with an Intel(R) Xeon(R) Gold 6140 CPU @ 2.30GHz (72 cores, 144 threads). We used Python 3.8.10, numpy 1.23.5, and scipy 1.10.1 for the calculation.

3.2 Materials

We used ImageNet as a dataset. ImageNet is used under the license described at https://www.image-net.org/download.php. The ImageNet data used were downloaded from https://www.kaggle.com/datasets/liusha249/imagenet10.

3.3 Procedure

We used images of 10 classes from ImageNet, labeled as respectively. We chose samples from ImageNet whose labels are either or ( and are taken pairwise from the labels, which results in 90 combinations). We used 500 samples for each label. The data used in the experiment were those with 3 color channels, randomly cropped to 224 × 224 pixels to unify the size of images with different aspect ratios. Therefore, the input dimension of the data was 150528 (= 224 × 224 × 3). We trained the GP regressor [rasmussen_gaussian_2006] using these samples as training data with the Gaussian kernel. The Gaussian kernel used in the experiment is as follows:

| (65) |

In the experiment, the adversarial perturbation was fixed to 10, and the parameters of the kernel function were varied. That is, the parameters and were used. The same randomly cropped area of each image was used in every condition so that the results can be compared across the conditions.

We decided on the adversarial perturbation size considering the distance between a point and the closest point of the other class (see Figure 5). Most points are not classified as the other class even when the adversarial perturbation of size 10 is added to the points.

We gave the objective variable -1 to the points with label , and 1 to the points with label .

We crafted AE as the point with the following conditions:

- Each AE is crafted by adding perturbation to the original sample of label , resulting in crafting 500 AEs.

- The perturbation is given by moving the original sample by a certain distance ( norm) towards the nearest point whose label is .

A sample of adversarial examples crafted in this experiment is shown in Fig. 3.
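The crafting procedure above can be sketched as follows. The sketch assumes that the distance is measured in the Euclidean (L2) norm, which is an assumption made here since the norm is not recoverable from the text. The function name `craft_ae` and its arguments are hypothetical.

```python
import numpy as np

def craft_ae(x_orig, x_other, eps):
    """Move each row of x_orig a Euclidean distance eps toward its nearest row of x_other.

    Returns the perturbed points and the distance of each original point
    to its nearest point in x_other.
    """
    # Pairwise squared distances, shape (n_orig, n_other).
    d2 = (np.sum(x_orig**2, axis=1)[:, None]
          + np.sum(x_other**2, axis=1)[None, :]
          - 2.0 * x_orig @ x_other.T)
    nearest = x_other[np.argmin(d2, axis=1)]
    direction = nearest - x_orig
    dist = np.linalg.norm(direction, axis=1, keepdims=True)
    unit = direction / np.maximum(dist, 1e-12)  # guard against zero distance
    return x_orig + eps * unit, dist.ravel()

# Toy usage: the nearest opposite-label point to the origin is (3, 4), at distance 5.
x_orig = np.array([[0.0, 0.0]])
x_other = np.array([[3.0, 4.0], [10.0, 0.0]])
x_adv, nearest_dist = craft_ae(x_orig, x_other, eps=1.0)
```

The returned distances are the quantity plotted on the horizontal axis when comparing points by how close they are to the other class.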

Next, we calculated the predicted mean and variance using the GP regressor, and the probability that the regression result is negative (i.e. the classification result is labeled ) given by

| (66) |

This is the empirical probability that the GP classifier assigns an AE to a class other than its original class.
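The probability that a Gaussian-distributed regression output is negative can be computed in closed form from the predictive mean and variance. The following is a minimal sketch using only the Python standard library; the function name `prob_negative` is hypothetical.

```python
import math

def prob_negative(mean, var):
    """P(f < 0) for f ~ N(mean, var) with var > 0.

    Equals Phi(-mean / sqrt(var)), where Phi is the standard normal CDF,
    written here with the complementary error function.
    """
    if var <= 0.0:
        raise ValueError("variance must be positive")
    return 0.5 * math.erfc(mean / math.sqrt(2.0 * var))

# A zero mean gives probability one half; a positive mean gives less than one half.
p_boundary = prob_negative(0.0, 1.0)
p_inside = prob_negative(1.0, 1.0)
```

With the original class encoded as the positive label, this value is the chance that a single draw from the predictive distribution falls on the other class's side.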

Finally, we calculated the theoretical probability that an AE is classified as a class other than its original class, using in Theorem 1 with .

These theoretical and empirical probabilities were calculated for all the 500 points that had the label in each condition. In each condition, the mean and maximum of the theoretical and empirical probabilities were calculated and their mean across the conditions was calculated.

3.4 Result

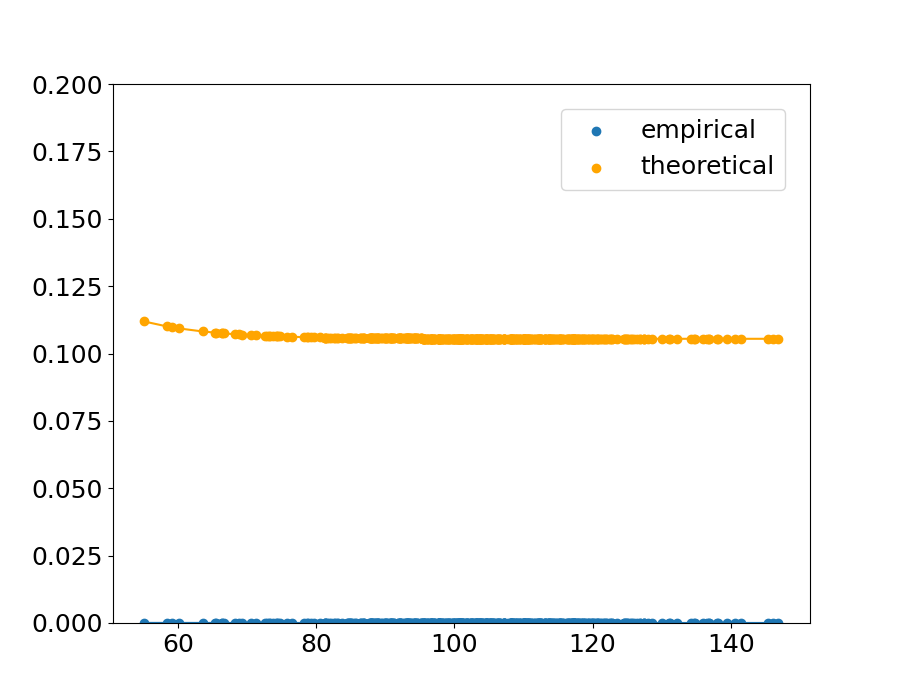

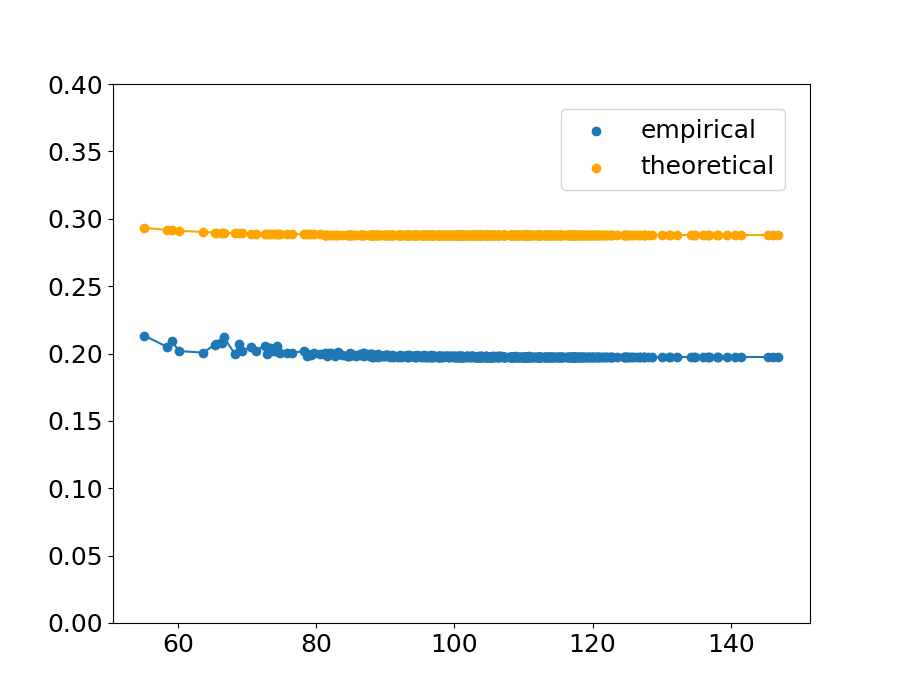

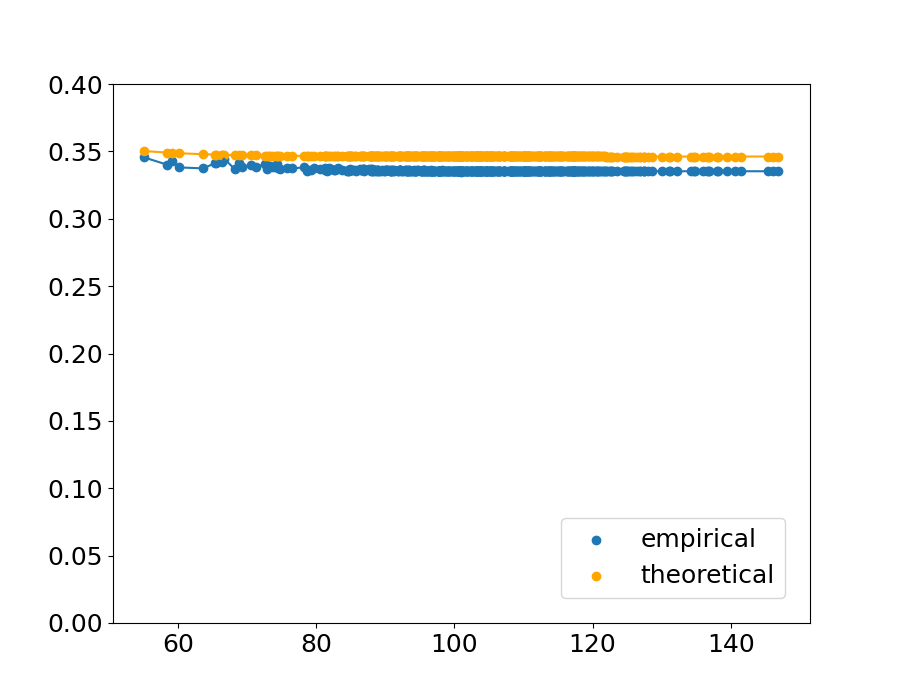

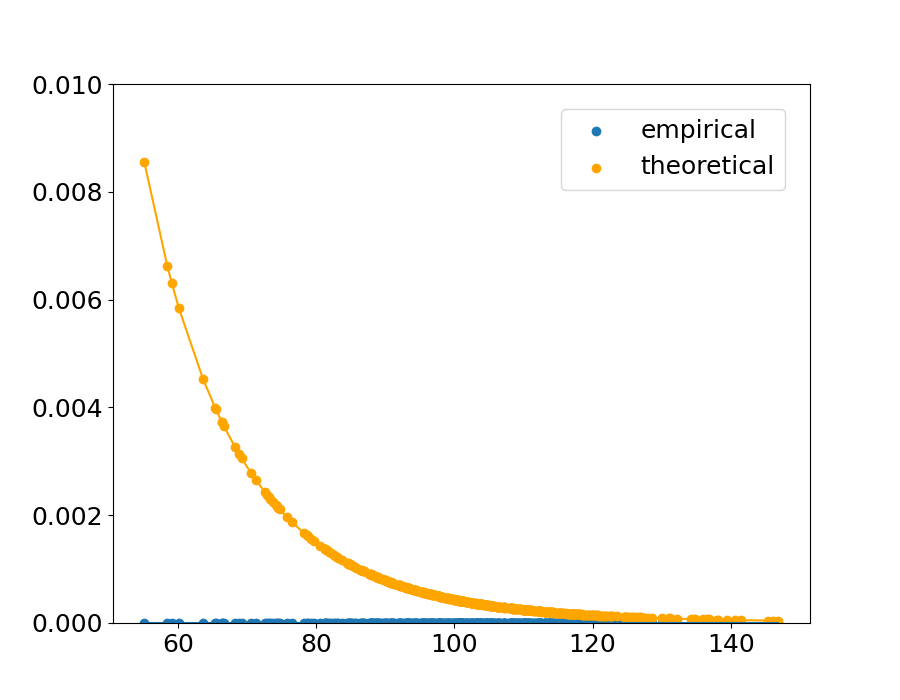

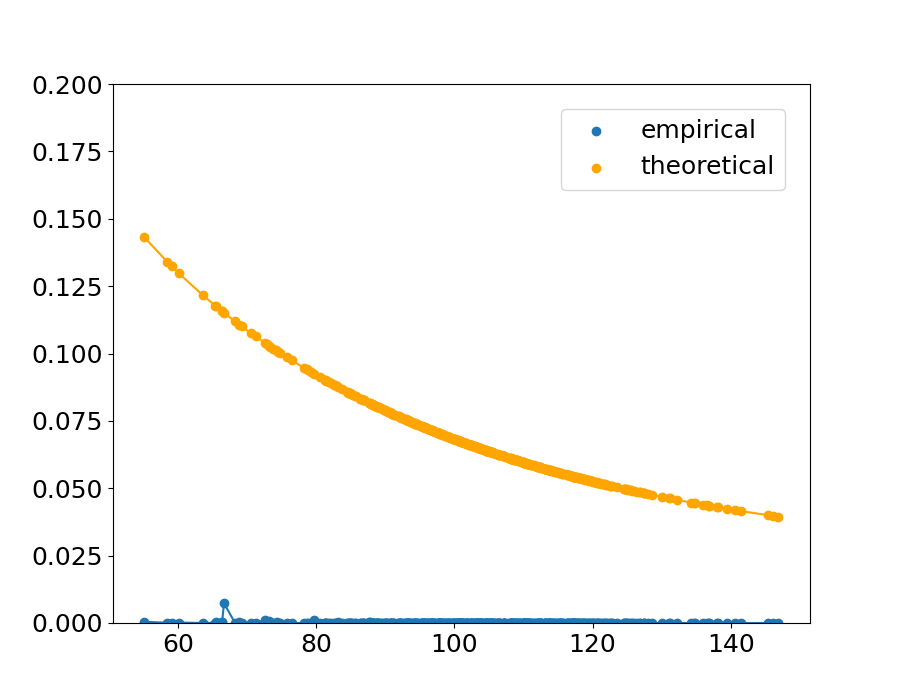

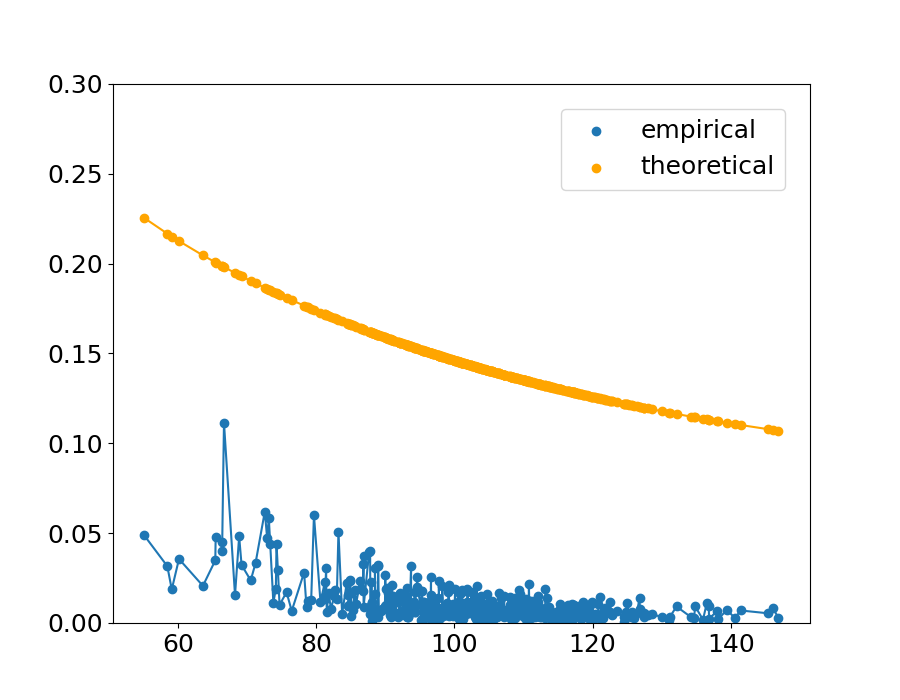

Fig. 4 shows a sample of the theoretical upper bound and the empirical value in the condition . The horizontal axis indicates the distance between a point and the nearest point from the other class, and the vertical axis indicates the theoretical upper bound calculated with Theorem 1 and the empirical value calculated with Eq. 66. The result is shown in Table 1, which indicates the proportion of the points that follow the theorem, and the maximum theoretical value within the and combination (the values shown in Table 1 are the means across the 90 combinations and ).

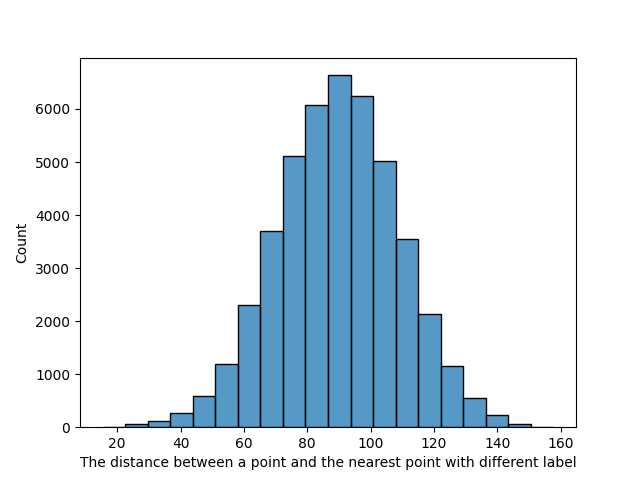

The analysis of the distance between the points and their nearest points that have different labels is shown in Table 2. The mean of the distance is , and if the points are restricted to those that do not follow the theorem (that is, their theoretical values are not larger than the empirical values), the distance is smaller. The overall histogram of the distance between a point and the nearest point with a different label is shown in Figure 5.

An extreme example is shown in (c) of Figure 4. In this condition of kernel parameters, the empirical probability is high and in that condition the theoretical upper bound is tight.

The upper bound does not always hold because in this experiment, the upper bound is calculated with Theorem 1 with , while in the real data, could be positive. However, the fact that the theorem holds with high probability even if is suggests that we can empirically say that Assumption 1 is satisfied in practice.

Theoretically, when the bandwidth of the Gaussian distribution in the kernel function is small, the empirical decision boundary is not affected by the distant points and is determined mainly by the closest points. In that condition, the theoretical upper bound (calculated by two nearest points) is closer to the empirical probability.

| | 0.1 | 0.1 | 0.5 | 0.5 | 1 | 1 |
|---|---|---|---|---|---|---|
| | 10 | 50 | 10 | 50 | 10 | 50 |
| Proportion of points that follow the theorem | 0.9996 | 0.9999 | 0.9991 | 0.9998 | 0.9898 | 0.9997 |
| The mean of the | 0.2095 | 0.1216 | 0.3512 | 0.2616 | 0.3928 | 0.3216 |
| max theoretical value | 0.1538 | 0.1927 | 0.0809 | 0.1291 | 0.0585 | 0.0981 |

| | 0.1 | 0.1 | 0.5 | 0.5 | 1 | 1 |
|---|---|---|---|---|---|---|
| | 10 | 50 | 10 | 50 | 10 | 50 |
| The mean distance between the points | 23.59 | 18.55 | 31.13 | 24.58 | 50.84 | 30.13 |
| which don’t follow the theorem | 5.342 | 4.031 | 8.524 | 13.25 | 10.99 | 14.98 |
| | 89.72 | | | | | |

4 Discussion

4.1 The Interpretation of the Theorems

Our theorems show the fundamental limitation of the AE in GP; the success probability of AE crafted by any method cannot exceed the value of the MSP function in Theorem 1, and the vulnerability of a training dataset to the AE is determined by the closest pair of the data points whose labels are different.

The theorems can be applied to other inference models because GP includes some inference models. In particular, the theoretical bound can be computed for neural networks. Since neural networks with an infinite number of units in the hidden layer and Bayesian neural networks are regarded as GPs [gal_dropout_2016, williams_computing_1996], our results apply to neural networks, resulting in the fundamental limitation of AE in neural networks, as in previous research [blaas_adversarial_2020, fawzi_adversarial_2018, shafahi_are_2019]. Compared with previous research, our result adds the viewpoint of the distance between the samples.

4.2 The Choice of Kernel Functions

Since the predictive mean can be written as the sum of the terms of the kernel functions whose inputs are each training point and test point (Representer Theorem in GP [goos_generalized_2001]), changing the parameter of the kernel functions changes the shape of the decision boundary.

The result of the experiments in Section 3 suggests that the upper bound of the probability changes according to the choice of the kernel functions. This suggests that changing the activation function of a neural network can improve the robustness of that neural network. The change according to the kernel parameter of the Gaussian kernel (Eq. 65) shown in the experiment in Section 3 can be interpreted as below:

- When changes, the value of the Gaussian kernel is multiplied by . With regard to and in Theorem 1, when becomes larger, will remain unchanged and will be larger. Considering that the MSP function (Eq. 5) is based on the CDF of the Gaussian distribution and the CDF of the Gaussian distribution increases monotonically with respect to when , the value of Eq. 5 is greater when is greater.

- When changes, the Gaussian distribution bandwidth in the Gaussian kernel will change. Considering the form of the exponential function, if is larger, the absolute value of the derivative of the Gaussian kernel with respect to at the same point is smaller. This suggests that if is greater, the decay of the value of the Gaussian kernel with respect to is slower, and the effect on the value of the Gaussian kernel by the distance of two points would be greater in the interval focused on in the experiment.

Since is not easily differentiable with respect to , a discrete calculation is required to confirm whether the kernel function satisfies the constraint in Section 2.6 and to determine the kernel function to improve the robustness. However, it is reasonable that if is small (that is, and is too far away), , the point near , cannot be classified as the same class as .

Previous research showed that the activation functions in neural networks are equivalent to the kernel functions in GP[lee_deep_2018, williams_computing_1996]. Therefore, the theoretical result of this paper suggests the theoretical basis for the enhancement method that changes the activation function in neural networks. Previous studies suggest that changing the activation function in neural networks can enhance the robustness against AEs [goodfellow_explaining_2015, gowal_uncovering_2021, taghanaki_kernelized_2019]. Our result shows that the change of the kernel function induces the change of the theoretical upper bound of the successful AEs, and considering that the activation function in neural networks is the counterpart of the kernel function in GP, our result suggests that the robustness enhancement method by changing the activation functions in neural networks has the same basis as our result.

4.3 Open Problems

This paper has the following open problems, which should be addressed by further investigation.

- Theorem 1 is proved under the assumption that the effect of input points other than on the predictive mean is less than . When using the Gaussian kernel, this condition is reasonable because the value of the Gaussian kernel decreases rapidly according to the distance of the input points, and it is confirmed in the experiment that even if is set to 0, over 98% of the points follow the theorem. The theorem under the condition of using another kernel function should be investigated further.

- An experiment with neural networks should be conducted to confirm that one can design a more robust activation function using Theorem 1.

4.4 Limitations

The research has the following limitations.

- The problem formulation of the research is binary classification, not multi-class classification.

- Finding the nearest point can be time-consuming if the number of input points increases. An efficient algorithm, such as a divide-and-conquer algorithm, should be investigated for the search for the nearest point.
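One possible direction for the nearest-point search mentioned in the limitations is a space-partitioning index. The sketch below uses `scipy.spatial.cKDTree` and is not the method used in the paper; the function name `nearest_other_class` is hypothetical. Note that k-d trees tend to lose their advantage over brute force in very high dimensions, such as the 150528-dimensional inputs of the experiment, so this is mainly relevant after dimensionality reduction or for lower-dimensional data.

```python
import numpy as np
from scipy.spatial import cKDTree

def nearest_other_class(x_a, x_b):
    """For each row of x_a, find the nearest row of x_b in Euclidean distance.

    Returns (distances, indices), where indices point into x_b.
    """
    tree = cKDTree(x_b)               # build the index once over the other class
    dist, idx = tree.query(x_a, k=1)  # one nearest neighbour per query point
    return dist, idx

# Toy usage with two points per class.
x_a = np.array([[0.0, 0.0], [5.0, 5.0]])
x_b = np.array([[1.0, 0.0], [5.0, 7.0]])
dist, idx = nearest_other_class(x_a, x_b)
closest_pair = (int(np.argmin(dist)), int(idx[np.argmin(dist)]))
```

The smallest entry of `dist` identifies the closest pair of points with different labels, which is the pair that the theorems single out.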

4.5 Conclusion

In this paper, we showed that in the GP classification, the probability of a successful attack of AE has an upper bound. The experiment showed that the parameter of kernel functions can change the theoretical upper bound of the probability of a successful attack of AE, suggesting that the choice of kernel functions affects the robustness against AE in the GP classification.

4.5.1 Disclosure of Interests.

The authors have no competing interests to declare that are relevant to the content of this article.

References

- [1] Bhagoji, A.N., Cullina, D., Mittal, P.: Lower Bounds on Adversarial Robustness from Optimal Transport. 33rd Conference on Neural Information Processing Systems (Oct 2019), https://doi.org/10.48550/arXiv.1909.12272

- [2] Blaas, A., Patane, A., Laurenti, L., Cardelli, L., Kwiatkowska, M., Roberts, S.: Adversarial Robustness Guarantees for Classification with Gaussian Processes. Proceedings of the Twenty Third International Conference on Artificial Intelligence and Statistics, 108, 3372–3382 (Mar 2020), https://doi.org/10.48550/arXiv.1809.06452

- [3] Cai, Q.Z., Du, M., Liu, C., Song, D.: Curriculum Adversarial Training. In: International Joint Conference on Artificial Intelligence (May 2018), https://doi.org/10.48550/arXiv.1805.04807

- [4] Cardelli, L., Kwiatkowska, M., Laurenti, L., Patane, A.: Robustness Guarantees for Bayesian Inference with Gaussian Processes. The Thirty-Third AAAI Conference on Artificial Intelligence (2019), http://arxiv.org/abs/1809.06452

- [5] Carlini, N., Wagner, D.: Adversarial Examples Are Not Easily Detected: Bypassing Ten Detection Methods. Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security pp. 3–14 (Nov 2017), https://doi.org/10.48550/arXiv.1705.07263

- [6] Carlini, N., Wagner, D.: Towards Evaluating the Robustness of Neural Networks. In: 2017 IEEE Symposium on Security and Privacy (SP). pp. 39–57. IEEE, San Jose, CA, USA (May 2017). https://doi.org/10.1109/SP.2017.49

- [7] Cohen, J.M., Rosenfeld, E., Kolter, J.Z.: Certified Adversarial Robustness via Randomized Smoothing (Jun 2019), https://doi.org/10.48550/arXiv.1902.02918, arXiv:1902.02918 [cs, stat]

- [8] Fawzi, A., Fawzi, H., Fawzi, O.: Adversarial vulnerability for any classifier. Proceedings of the 32nd International Conference on Neural Information Processing Systems. pp. 1186–1195 (Nov 2018), https://doi.org/10.48550/arXiv.1802.08686

- [9] Feinman, R., Curtin, R.R., Shintre, S., Gardner, A.B.: Detecting Adversarial Samples from Artifacts. arXiv:1703.00410 [cs, stat] (Nov 2017), https://doi.org/10.48550/arXiv.1703.00410

- [10] Gal, Y., Ghahramani, Z.: Dropout as a Bayesian Approximation: Representing Model Uncertainty in Deep Learning. Proceedings of The 33rd International Conference on Machine Learning 48, 1050–1059 (Oct 2016), https://doi.org/10.48550/arXiv.1506.02142

- [11] Gilmer, J., Metz, L., Faghri, F., Schoenholz, S.S., Raghu, M., Wattenberg, M., Goodfellow, I.: The Relationship Between High-Dimensional Geometry and Adversarial Examples. arXiv:1801.02774 [cs] (Sep 2018), https://doi.org/10.48550/arXiv.1801.02774

- [12] Goodfellow, I.J., Shlens, J., Szegedy, C.: Explaining and Harnessing Adversarial Examples. International Conference on Learning Representations (Mar 2015), https://doi.org/10.48550/arXiv.1412.6572

- [13] Gowal, S., Qin, C., Uesato, J., Mann, T., Kohli, P.: Uncovering the Limits of Adversarial Training against Norm-Bounded Adversarial Examples. arXiv:2010.03593 [cs, stat] (Mar 2021), https://doi.org/10.48550/arXiv.2010.03593

- [14] Lee, J., Bahri, Y., Novak, R., Schoenholz, S.S., Pennington, J., Sohl-Dickstein, J.: Deep Neural Networks as Gaussian Processes. International Conference on Learning Representations (Mar 2018), https://doi.org/10.48550/arXiv.1711.00165

- [15] Mahloujifar, S., Diochnos, D.I., Mahmoody, M.: The Curse of Concentration in Robust Learning: Evasion and Poisoning Attacks from Concentration of Measure. Proceedings of the AAAI Conference on Artificial Intelligence 33, 4536–4543 (Jul 2019). https://doi.org/10.1609/aaai.v33i01.33014536

- [16] Rasmussen, C.E., Williams, C.K.I.: Gaussian Processes for Machine Learning. Adaptive Computation and Machine Learning, MIT Press, Cambridge, Mass (2006)

- [17] Schölkopf, B., Herbrich, R., Smola, A.J.: A Generalized Representer Theorem. In: Helmbold, D., Williamson, B. (eds.) Computational Learning Theory. Lecture Notes in Computer Science, vol. 2111, pp. 416–426. Springer Berlin Heidelberg, Berlin, Heidelberg (2001). https://doi.org/10.1007/3-540-44581-1_27

- [18] Shafahi, A., Huang, W.R., Studer, C., Feizi, S., Goldstein, T.: Are adversarial examples inevitable? 7th International Conference on Learning Representations (2019), https://doi.org/10.48550/arXiv.1809.02104

- [19] Smith, M.T., Grosse, K., Backes, M., Álvarez, M.A.: Adversarial vulnerability bounds for Gaussian process classification. Machine Learning 112(3), 971–1009 (Mar 2023). https://doi.org/10.1007/s10994-022-06224-6

- [20] Taghanaki, S.A., Abhishek, K., Azizi, S., Hamarneh, G.: A Kernelized Manifold Mapping to Diminish the Effect of Adversarial Perturbations. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition. IEEE (2019), https://doi.org/10.48550/arXiv.1903.01015

- [21] Wang, Y., Jha, S., Chaudhuri, K.: Analyzing the Robustness of Nearest Neighbors to Adversarial Examples. Proceedings of the 35th International Conference on Machine Learning (2018). https://doi.org/10.48550/arXiv.1706.03922

- [22] Williams, C.: Computing with infinite networks. In: Mozer, M., Jordan, M., Petsche, T. (eds.) Advances in Neural Information Processing Systems. vol. 9. MIT Press (1996), https://proceedings.neurips.cc/paper_files/paper/1996/file/ae5e3ce40e0404a45ecacaaf05e5f735-Paper.pdf

- [23] Zhang, X., Chen, J., Gu, Q., Evans, D.: Understanding the intrinsic robustness of image distributions using conditional generative models. In: Chiappa, S., Calandra, R. (eds.) Proceedings of the Twenty Third International Conference on Artificial Intelligence and Statistics. Proceedings of Machine Learning Research, vol. 108, pp. 3883–3893. PMLR (26–28 Aug 2020). https://doi.org/10.48550/arXiv.2003.00378