School of Mathematical Sciences, University of Electronic Science and Technology of China, Chengdu 611731, P. R. China

longwang@pku.edu.cn

Decision theory and game theory; Dynamics of evolution; Stochastic modeling

Role of the effective payoff function in evolutionary game dynamics

Abstract

In most studies of evolutionary game dynamics, the effective payoff is assumed to be a specific function of the payoff. The effective payoff is the quantity that translates the payoff derived from game interactions into reproductive success. How different functional forms of the effective payoff affect evolutionary dynamics has largely been overlooked. We introduce a generalized mapping in which the effective payoff of an individual is a non-negative function of two variables, the selection intensity and the payoff. With this mapping, we study how different effective payoff functions affect evolutionary dynamics in a symmetric mutation-selection process. For standard two-strategy two-player games under weak selection, we find that the condition for one strategy to dominate the other depends on two things: the classical σ-rule, and an extra constant determined by the form of the effective payoff function. Changing the sign of this constant reverses the direction of strategy selection. We take the Moran process and the pairwise comparison process in well-mixed populations as specific models. For these, we find that different fitness or imitation mappings are equivalent under weak selection. Moreover, the sign of the extra constant determines the direction of the one-third law and of risk-dominance for sufficiently large populations. This work thus helps to elucidate how the effective payoff function, as another fundamental ingredient of evolution, affects evolutionary dynamics.

PACS: 02.50.Le, 87.23.Kg, 87.10.Mn

1 Introduction

A Darwinian evolutionary process incorporates three fundamental ingredients: inheritance, mutation, and selection. Owing to perturbations or random drift, genetic processes are frequently accompanied by mutations. These mutations create genotypic or phenotypic variation and ultimately lead to the differences in individual fitness on which selection acts [1]. By integrating game theory with Darwinian evolution [2, 3], evolutionary game theory has become a powerful mathematical framework. It is used to model biological [4, 5] and social [6, 7, 8, 9] evolution in populations of different types of interacting individuals under frequency-dependent selection.

Traditionally, the replicator equation [10, 11] has been widely used to study frequency-dependent selection. It assumes an infinitely large, well-mixed population and usually neglects stochastic effects entirely. If this setting is relaxed to the more realistic case of a finite, well-mixed population subject to fluctuations, the deterministic description is augmented and perturbed by random drift [12, 13, 14]. In such finite populations, one must resort to stochastic evolutionary game dynamics to investigate the evolution of different traits [1, 15]. Besides the classical Wright-Fisher process [12, 16], two microscopic models of strategy spreading in finite populations are the most common: the frequency-dependent Moran process [13, 15] and the imitation-based pairwise comparison process [17, 18, 19]. Beyond the well-mixed setting, there is also considerable interest in evolutionary game dynamics in structured populations [17, 20, 21, 22]. Typically, the spatial geometry of the population is modeled by regular lattices [23, 24, 25, 26, 27, 28] or, more generally, by complex networks [29, 30, 31, 32]. In these models, individuals interact only with their nearest neighbors.

In general, the final evolutionary outcome of a game system is shaped by several ingredients, including the model, the update rule, the mutation rate, and the population structure. Models and update rules determine how strategies spread. Mutation rates measure the intensity of randomness, while the underlying population structure describes the geometry of individual interactions.

Through game interactions, each individual obtains a payoff. This payoff must ultimately be translated into reproductive success, a quantity termed the effective payoff [33]. For example, the effective payoff is the fitness in the Moran process [13, 15] and the imitation probability in the pairwise comparison process [18, 19, 34]. The usual assumption is that the effective payoff takes the form $1 + \beta\pi$, where β is the selection intensity and π the payoff. Under this assumption and weak selection, Tarnita et al. showed that for standard two-strategy two-player games the condition for one strategy to dominate the other is given by a ‘σ-rule’ [33]. This rule holds on any population structure [35]. The parameter σ, termed the structure coefficient, depends only on the population structure, update rule, and mutation rate, and not on the payoff values.

This work has since attracted wide interest [36, 37, 38]. For two-player games with multiple strategies, the rule involves two structure coefficients, and games with three strategies suffice to calculate them [36]. For multi-player games with two strategies, in which d individuals are selected to play a game, the σ-rule depends on d − 1 structure coefficients [37]. For multi-player games with many strategies, the rule becomes quite complicated. The number of structure coefficients required for a symmetric game with d-player interactions and n strategies grows in d like $d^{n-1}$ [38]. Because the effective payoff is a specific function in all these works, the σ-rule does not reflect how the effective payoff function influences evolutionary outcomes.

In evolutionary biology, measuring the genotype-fitness map (i.e., the fitness landscape) remains a challenging problem. It is now accepted that the shape of this map has fundamental effects on the course of evolution [39]. In addition, a Markov chain model has shown that heterogeneity in individual background fitness can act as a suppressor of selection [40]. These findings suggest that the form of the effective payoff function can also strongly affect evolutionary game dynamics, since this function translates the payoff derived from game interactions into reproductive success.

To this end, in this letter we study how different functional forms of the effective payoff affect evolutionary dynamics, thereby extending the results of Tarnita et al. [33]. Suppose the first-order derivative of the effective payoff function with respect to the selection intensity can be written as a linear function of the payoff. We then find that the condition for one strategy to dominate the other depends on the σ-rule and also on an extra constant determined by the effective payoff function. This constant determines the direction of the σ-rule (strategy selection). We also take the Moran process and the pairwise comparison process in well-mixed populations as specific models. For these, we show that different fitness or imitation mappings are equivalent under weak selection. In the limit of large populations, the extra constant determines the direction of the one-third law and of risk-dominance.

2 Model and results

In a structured population with N individuals, we consider stochastic evolutionary dynamics induced by a mutation-selection process. Each player can choose one of two strategies, A and B. Then, depending on the payoff matrix

$$\begin{array}{c|cc} & A & B \\ \hline A & a & b \\ B & c & d \end{array} \qquad (1)$$

players obtain an accumulated payoff by interacting with other individuals according to the underlying population structure. An A player obtains a payoff a when interacting with another A player and b when interacting with a B player. Likewise, a B player obtains c when interacting with an A player and d when interacting with another B player. The total payoff of each player is therefore a linear function of a, b, c, and d without constant terms. The same linear relation holds if the payoff is averaged instead of summed. For instance, an A player that interacts with $n_A$ A players and $n_B$ B players obtains the total payoff $n_A a + n_B b$. To study how effective payoff functions affect evolutionary dynamics, we do not fix a specific form. Instead, we assume that the effective payoff of a player with payoff π is a general function $f(\beta,\pi)$. The parameter β ≥ 0 measures the intensity of selection, and β → 0 corresponds to weak selection [13, 19, 41].

The reproduction of each player depends on the update rule and on its effective payoff, and is subject to mutations. With probability u, a mutation occurs and the offspring adopts a strategy (A or B) at random. Otherwise, with probability 1 − u, the offspring inherits its parent's strategy. For u = 1, there are only mutations and no selection, so strategy choice is completely random. For 0 < u < 1, a mutation-selection equilibrium exists [42, 43].

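For a game with two strategies, the strategy configuration of the population takes values in a finite state space $\mathcal{S}$. The evolutionary dynamics can be captured by a Markov process on this state space. We denote the transition probability from state $S_i$ to state $S_j$ by $P_{ij}$. This probability depends on the update rule and on the effective payoffs of the players, so it can be written as $P_{ij} = P_{ij}\big(f(\beta,\pi_1),\ldots,f(\beta,\pi_N)\big)$, where $\pi_k$ is the payoff of player k. We further assume that $P_{ij}$ is differentiable at β = 0. In the limit of weak selection, $P_{ij}$ then has the first-order Taylor expansion

$$P_{ij} \approx P_{ij}\big|_{\beta=0} + \beta\sum_{k=1}^{N}\frac{\partial P_{ij}}{\partial f_k}\bigg|_{\beta=0}\frac{\partial f}{\partial\beta}(0,\pi_k),$$

where $f_k = f(\beta,\pi_k)$ and the derivatives are evaluated at $f_k = f(0,\pi_k)$.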

Here $f(0,\pi)$ is the baseline effective payoff of each player, and $\frac{\partial f}{\partial\beta}(0,\pi)$ is the first-order coefficient of the selection intensity. Suppose $\frac{\partial f}{\partial\beta}(0,\pi)$ can be written as a linear function of the payoff, that is, $\frac{\partial f}{\partial\beta}(0,\pi) = \lambda\pi + \mu$. The transition probability then becomes

$$P_{ij} \approx P_{ij}\big|_{\beta=0} + \beta\sum_{k=1}^{N}\frac{\partial P_{ij}}{\partial f_k}\bigg|_{\beta=0}(\lambda\pi_k + \mu).$$

The constants λ and μ depend on the choice of the function f and may depend on the entries of the payoff matrix. This condition is not a harsh requirement, since a large class of functions satisfies it. Examples are $1 - \beta + \beta\pi$ [13] (λ = 1, μ = −1), $e^{\beta\pi}$ [37] (λ = 1, μ = 0), and the functions considered in [19].

The payoff of each player is linear in a, b, c, and d without constant terms. Hence, in the first-order term, the payoff entries appear only through the products βλa, βλb, βλc, and βλd. Following the proof given in Ref. [33], strategy A is favored over strategy B if $\lambda(\sigma a + b - c - \sigma d) > 0$. Here "favored" means that A is more abundant than B in the mutation-selection equilibrium. The parameter σ depends on the population structure, update rule, and mutation rate. Accordingly, we have the following theorem:
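The linearity condition on $\partial f/\partial\beta$ at β = 0 can be checked numerically for any candidate effective payoff function. The sketch below is an illustrative check, not the authors' procedure. It approximates the derivative by central finite differences at a few sample payoffs and fits a line to recover λ and μ. The sample payoffs and the step `h` are arbitrary assumptions, and the helper name is hypothetical.

```python
import math


def first_order_coefficients(f, payoffs=(-1.0, 0.0, 1.0, 2.0), h=1e-6):
    """Estimate (lam, mu) in df/dbeta(0, pi) = lam * pi + mu.

    f(beta, pi) is any effective payoff function. The derivative at
    beta = 0 is approximated by a central difference. A least-squares
    line through the (pi, derivative) samples gives lam and mu, and the
    maximal residual measures how badly the linearity condition fails.
    """
    derivs = [(f(h, p) - f(-h, p)) / (2.0 * h) for p in payoffs]
    n = len(payoffs)
    mean_p = sum(payoffs) / n
    mean_d = sum(derivs) / n
    cov = sum((p - mean_p) * (dv - mean_d) for p, dv in zip(payoffs, derivs))
    var = sum((p - mean_p) ** 2 for p in payoffs)
    lam = cov / var
    mu = mean_d - lam * mean_p
    residual = max(abs(dv - (lam * p + mu)) for p, dv in zip(payoffs, derivs))
    return lam, mu, residual


if __name__ == "__main__":
    examples = {
        "linear 1 - beta + beta*pi": lambda b, p: 1.0 - b + b * p,
        "exponential exp(beta*pi)": lambda b, p: math.exp(b * p),
        "reversed exp(-beta*pi)": lambda b, p: math.exp(-b * p),
        "exp(beta*pi**2) (not linear)": lambda b, p: math.exp(b * p * p),
    }
    for name, f in examples.items():
        lam, mu, res = first_order_coefficients(f)
        print(f"{name:32s} lam={lam:+.4f} mu={mu:+.4f} residual={res:.2e}")
```

The first three functions pass with a negligible residual, and the third has λ = −1. The last function has derivative π², which is not linear in π, so its residual is of order one and the Theorem does not apply to it.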

Theorem. Consider a population structure and an update rule that satisfy the following three conditions:

(i) the transition probabilities are differentiable at β = 0;
(ii) the update rule is symmetric for the two strategies A and B;
(iii) in the game given by the matrix entries a = b = 1 and c = d = 0, strategy A is not disfavored.

Then, in the limit of weak selection, when the effective payoff function satisfies $\frac{\partial f}{\partial\beta}(0,\pi) = \lambda\pi + \mu$, strategy A is favored over strategy B if

$$\lambda(\sigma a + b - c - \sigma d) > 0,$$

where λ is a constant that depends on the effective payoff function. σ is the structure coefficient, which depends on the model and the dynamics (population structure, update rule, and mutation rate) but not on the entries of the payoff matrix.

This theorem implies that the classical σ-rule [33] alone cannot determine when one strategy dominates the other. The condition also depends on an additional constant λ determined by the effective payoff function, and λ controls the direction of the σ-rule (strategy selection). If the effective payoff function gives λ > 0, the theorem recovers the classical σ-rule: selection favors A over B when σa + b > c + σd. If instead λ < 0, the classical σ-rule is reversed: selection favors B over A when σa + b > c + σd.
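The following minimal sketch encodes the Theorem's first-order condition to show how the sign of λ flips the verdict. The payoff values and σ = 1 are arbitrary illustrative assumptions, and the function name is hypothetical. For a concrete model, σ must be computed separately.

```python
def favored_strategy(lam, sigma, a, b, c, d):
    """First-order (weak-selection) verdict of the Theorem.

    A is favored over B if lam * (sigma*a + b - c - sigma*d) > 0 and
    B is favored if the product is negative. lam == 0 leaves the
    question to higher-order terms in beta.
    """
    score = lam * (sigma * a + b - c - sigma * d)
    if score > 0:
        return "A"
    if score < 0:
        return "B"
    return "undetermined at first order"


if __name__ == "__main__":
    # Illustrative coordination game (a > c, b < d) with sigma = 1.
    game = dict(a=5.0, b=0.0, c=3.0, d=1.0)
    for lam in (1.0, -1.0, 0.0):
        print(lam, favored_strategy(lam, 1.0, **game))
```

For these values σa + b − c − σd = 1. The verdict is therefore A for λ = 1, B for λ = −1, and open at first order for λ = 0.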

3 Moran and pairwise comparison process

To check the validity of our theorem and to study how the effective payoff function influences the evolutionary outcomes in a specific dynamic process, here we consider the frequency-dependent Moran process and pairwise comparison process. These two processes represent two classes of typical evolutionary dynamics. The former describes how successful strategies spread in the population through genetic reproduction, whereas the latter describes such a process through cultural imitation.

In the Moran process, the effective payoff is the individual fitness, which measures the ability to survive and produce offspring. An individual is selected for reproduction with probability proportional to its fitness. Its identical offspring then replaces another randomly chosen individual. The fitness is usually assumed to be one of two forms: a convex combination of a background fitness (set to one) and the game payoff, $1 - \beta + \beta\pi$ [13, 15], or an exponential function of the payoff, $e^{\beta\pi}$ [37]. For both of these forms, the constant associated with the fitness function equals 1, and our results reduce to the previous ones [13, 18]. Here we instead adopt a generalized mapping, in which the fitness of a player is an arbitrary non-negative function $f(\beta,\pi)$ of the selection intensity and the payoff. Since mutation occurs during reproduction, the transition probabilities are given by

| (2) |

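where $\pi_A(i) = [a(i-1) + b(N-i)]/(N-1)$ and $\pi_B(i) = [ci + d(N-i-1)]/(N-1)$ are the average payoffs of an A player and a B player, respectively, in a population with i A players.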

In the pairwise comparison process, by contrast, the effective payoff is the imitation probability. Two individuals are sampled at random, and the focal player imitates the strategy of the role model with a probability that depends on their payoff comparison [43, 18]. The imitation probability is usually modeled by the Fermi function $1/(1+e^{-\beta\Delta\pi})$, which accounts for noise in the imitation process [24, 17, 25]. This process is therefore also called the Fermi process. When the effective payoff mapping is not specified, however, the imitation probability is given by a general function $g(\beta,\Delta\pi)$. Here Δπ denotes the difference between the average payoffs of strategies A and B. In the presence of mutations, the imitation step takes place with probability 1 − u. Otherwise, with probability u, the focal player adopts a random strategy, A or B. This leads to the transition probabilities

| (3) |

where . Herein, parameters and are defined by and , respectively.

For both processes, the probability of staying in the current state i is $1 - T_i^+ - T_i^-$. Here $T_i^+$ ($T_i^-$) is the probability that the number i of A players increases (decreases) by one, and all other transitions have vanishing probability. In what follows, we first calculate the fixation probabilities and fixation times under weak selection in the absence of mutations. We then derive the criterion under which strategy A is favored over B in this case. Finally, we extend this criterion to small mutation rates.
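Eqs. (2) and (3) include mutation. The sketch below covers only the mutation-free case u = 0 that is used in the fixation analysis. It rests on two assumptions of ours, both following common practice (e.g. Ref. [18]): average payoffs exclude self-interaction, and the two individuals in the pairwise comparison process are sampled with replacement. The fitness f and imitation function g are arbitrary user-supplied functions, and all helper names are hypothetical.

```python
import math


def avg_payoffs(i, N, a, b, c, d):
    """Average payoffs of A and B with i A players (no self-interaction)."""
    pi_A = (a * (i - 1) + b * (N - i)) / (N - 1)
    pi_B = (c * i + d * (N - i - 1)) / (N - 1)
    return pi_A, pi_B


def moran_transitions(i, N, game, beta, f):
    """(T+, T-) for the Moran process without mutation (u = 0)."""
    pi_A, pi_B = avg_payoffs(i, N, *game)
    fA, fB = f(beta, pi_A), f(beta, pi_B)
    total = i * fA + (N - i) * fB                # total fitness of the population
    t_plus = (i * fA / total) * (N - i) / N      # an A reproduces, a B is replaced
    t_minus = ((N - i) * fB / total) * i / N     # a B reproduces, an A is replaced
    return t_plus, t_minus


def pairwise_transitions(i, N, game, beta, g):
    """(T+, T-) for the pairwise comparison process without mutation."""
    pi_A, pi_B = avg_payoffs(i, N, *game)
    pair = (i / N) * ((N - i) / N)         # focal and role model use different strategies
    t_plus = pair * g(beta, pi_A - pi_B)   # a B focal player imitates an A role model
    t_minus = pair * g(beta, pi_B - pi_A)  # an A focal player imitates a B role model
    return t_plus, t_minus


def fermi(beta, dpi):
    """Fermi imitation probability 1 / (1 + exp(-beta * dpi))."""
    return 1.0 / (1.0 + math.exp(-beta * dpi))


if __name__ == "__main__":
    game = (3.0, 0.0, 5.0, 1.0)  # illustrative payoff entries (a, b, c, d)
    N, i = 10, 4
    tp, tm = moran_transitions(i, N, game, 0.1, lambda bt, p: math.exp(bt * p))
    print("Moran:", tp, tm, "stay:", 1 - tp - tm)
    tp, tm = pairwise_transitions(i, N, game, 0.1, fermi)
    print("Pairwise:", tp, tm, "stay:", 1 - tp - tm)
```

At β = 0 both processes reduce to $T_i^\pm = i(N-i)/N^2$. For the Fermi function the ratio $T_i^-/T_i^+$ equals $e^{-\beta[\pi_A(i)-\pi_B(i)]}$ exactly.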

3.1 Fixation probabilities and fixation times

Without mutations, one quantity of central interest is the fixation probability $\phi_i$. It is the probability that i individuals of type A eventually take over the whole population, that is, reach the absorbing state all A. Another important quantity is the average time a single A player needs to reach fixation [44, 45, 46]. The former measures the preference of natural selection, whereas the latter characterizes the speed of evolution.

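First, we adopt the conditions of the Theorem. For the Moran process, the first-order derivative of the fitness function can be written as $\frac{\partial f}{\partial\beta}(0,\pi) = \alpha_1\pi + \alpha_2$. For the pairwise comparison process, the first-order derivative of the imitation probability function can be written as $\frac{\partial g}{\partial\beta}(0,\Delta\pi) = \kappa_1\Delta\pi + \kappa_2$. Here α1 and α2 (κ1 and κ2) are two constants that depend on the choice of the fitness (imitation probability) function and may be related to the entries of the payoff matrix. We introduce the notations $\theta_M = \alpha_1/f_0$ and $\theta_P = 2\kappa_1/g_0$. Here $f_0 = f(0,\pi)$ is the baseline fitness of each player and $g_0 = g(0,\Delta\pi)$ is the probability of random imitation. Under weak selection, the fixation probability of a single A player is then approximately (details in the Supplementary Material)

$$\phi_1 \approx \frac{1}{N} + \frac{\beta\theta}{6N}\left[(N-2)a + (2N-1)b - (N+1)c - (2N-4)d\right], \qquad (4)$$

where θ = θ_M for the frequency-dependent Moran process, and θ = θ_P for the pairwise comparison process.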
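The weak-selection approximation $\phi_1 \approx 1/N + \beta\theta[(N-2)a + (2N-1)b - (N+1)c - (2N-4)d]/(6N)$ can be compared with the exact birth-death formula. That formula is $\phi_1 = 1/(1+\sum_{k=1}^{N-1}\prod_{m=1}^{k}\gamma_m)$ with $\gamma_m = T_m^-/T_m^+$. The sketch assumes self-interaction-free payoff averages. It uses the linear fitness $1-\beta+\beta\pi$ (θ_M = 1) and the Fermi function (θ_P = 1). The game entries and β are arbitrary illustrative values, and the helper names are hypothetical.

```python
import math


def avg_payoffs(i, N, a, b, c, d):
    """Average payoffs with i A players (self-interaction excluded)."""
    pi_A = (a * (i - 1) + b * (N - i)) / (N - 1)
    pi_B = (c * i + d * (N - i - 1)) / (N - 1)
    return pi_A, pi_B


def phi1_exact(N, ratio):
    """phi_1 = 1 / (1 + sum_k prod_{m<=k} gamma_m), with gamma_m = ratio(m)."""
    total, prod = 1.0, 1.0
    for k in range(1, N):
        prod *= ratio(k)
        total += prod
    return 1.0 / total


def phi1_weak(N, game, beta, theta):
    """First-order weak-selection approximation of phi_1."""
    a, b, c, d = game
    bracket = (N - 2) * a + (2 * N - 1) * b - (N + 1) * c - (2 * N - 4) * d
    return 1.0 / N + beta * theta * bracket / (6.0 * N)


def moran_ratio(N, game, beta, f):
    def gamma(i):
        pi_A, pi_B = avg_payoffs(i, N, *game)
        return f(beta, pi_B) / f(beta, pi_A)          # T-/T+ = f_B / f_A
    return gamma


def pairwise_ratio(N, game, beta, g):
    def gamma(i):
        pi_A, pi_B = avg_payoffs(i, N, *game)
        return g(beta, pi_B - pi_A) / g(beta, pi_A - pi_B)
    return gamma


if __name__ == "__main__":
    N, beta, game = 10, 1e-4, (1.0, 2.0, 0.0, 0.5)
    linear = lambda bt, p: 1.0 - bt + bt * p               # theta_M = 1
    fermi = lambda bt, x: 1.0 / (1.0 + math.exp(-bt * x))  # theta_P = 1
    exact_M = phi1_exact(N, moran_ratio(N, game, beta, linear))
    exact_P = phi1_exact(N, pairwise_ratio(N, game, beta, fermi))
    print(exact_M, exact_P, phi1_weak(N, game, beta, theta=1.0))
```

For Nβ ≪ 1, the deviations of both exact values from 1/N agree with the first-order prediction to within a small fraction of that deviation.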

Two kinds of average fixation times for a single A player attract much research attention [44, 18]. The first is the unconditional average fixation time $\tau_1$. It is the expected time until the population reaches one of the two absorbing states, all A or all B, starting from a single A. The second is the conditional average fixation time $\tau_1^A$. It is the expected time a single A player takes to reach the absorbing state all A, given that this state is reached. In the limit of weak selection, we find that the unconditional and conditional fixation times for the Moran process can be approximated as (details in the Supplementary Material)

| (5) |

whereas for the pairwise comparison process, they are given by

| (6) |

where $H_n = \sum_{k=1}^{n} 1/k$ denotes the harmonic number.
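Eqs. (5) and (6) are weak-selection expansions. For any given $T_i^\pm$, the exact times can also be evaluated from the standard birth-death formulas (see, e.g., [18, 44]):

$\tau_1 = \phi_1\sum_{k=1}^{N-1}\sum_{l=1}^{k}\frac{1}{T_l^+}\prod_{m=l+1}^{k}\gamma_m$ and $\tau_1^A = \sum_{k=1}^{N-1}\sum_{l=1}^{k}\frac{\phi_l}{T_l^+}\prod_{m=l+1}^{k}\gamma_m$.

The sketch below evaluates these sums as a numerical cross-check, not as a reproduction of the derivation in the Supplementary Material. Function names are hypothetical.

```python
def fixation_times(N, t_plus, t_minus):
    """Unconditional (tau_1) and conditional (tau_1^A) fixation times of a
    birth-death chain started from a single A.

    t_plus(i) and t_minus(i) are the one-step transition probabilities.
    """
    gamma = [0.0] + [t_minus(i) / t_plus(i) for i in range(1, N)]

    # partial[i] = sum_{k=0}^{i-1} prod_{m=1}^{k} gamma_m, so phi_i = partial[i] / partial[N].
    partial = [0.0] * (N + 1)
    partial[1] = 1.0
    prod = 1.0
    for k in range(1, N):
        prod *= gamma[k]
        partial[k + 1] = partial[k] + prod
    phi = [p / partial[N] for p in partial]

    # Inner sums S_k = sum_{l<=k} w_l prod_{m=l+1}^{k} gamma_m, built via S_k = S_{k-1} gamma_k + w_k.
    s_unc = s_con = 0.0
    tot_unc = tot_con = 0.0
    for k in range(1, N):
        s_unc = s_unc * gamma[k] + 1.0 / t_plus(k)
        s_con = s_con * gamma[k] + phi[k] / t_plus(k)
        tot_unc += s_unc
        tot_con += s_con
    return phi[1] * tot_unc, tot_con


if __name__ == "__main__":
    N = 10
    neutral = lambda i: i * (N - i) / N**2   # Moran process at beta = 0
    tau1, tau1A = fixation_times(N, neutral, neutral)
    H = sum(1.0 / k for k in range(1, N))
    print(tau1, N * H)          # unconditional: N * H_{N-1}
    print(tau1A, N * (N - 1))   # conditional: N(N-1)
```

In the neutral Moran process the sketch reproduces the known values $N H_{N-1}$ and $N(N-1)$ time steps. Passing transition probabilities with β > 0 gives the exact times against which Eqs. (5) and (6) can be compared.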

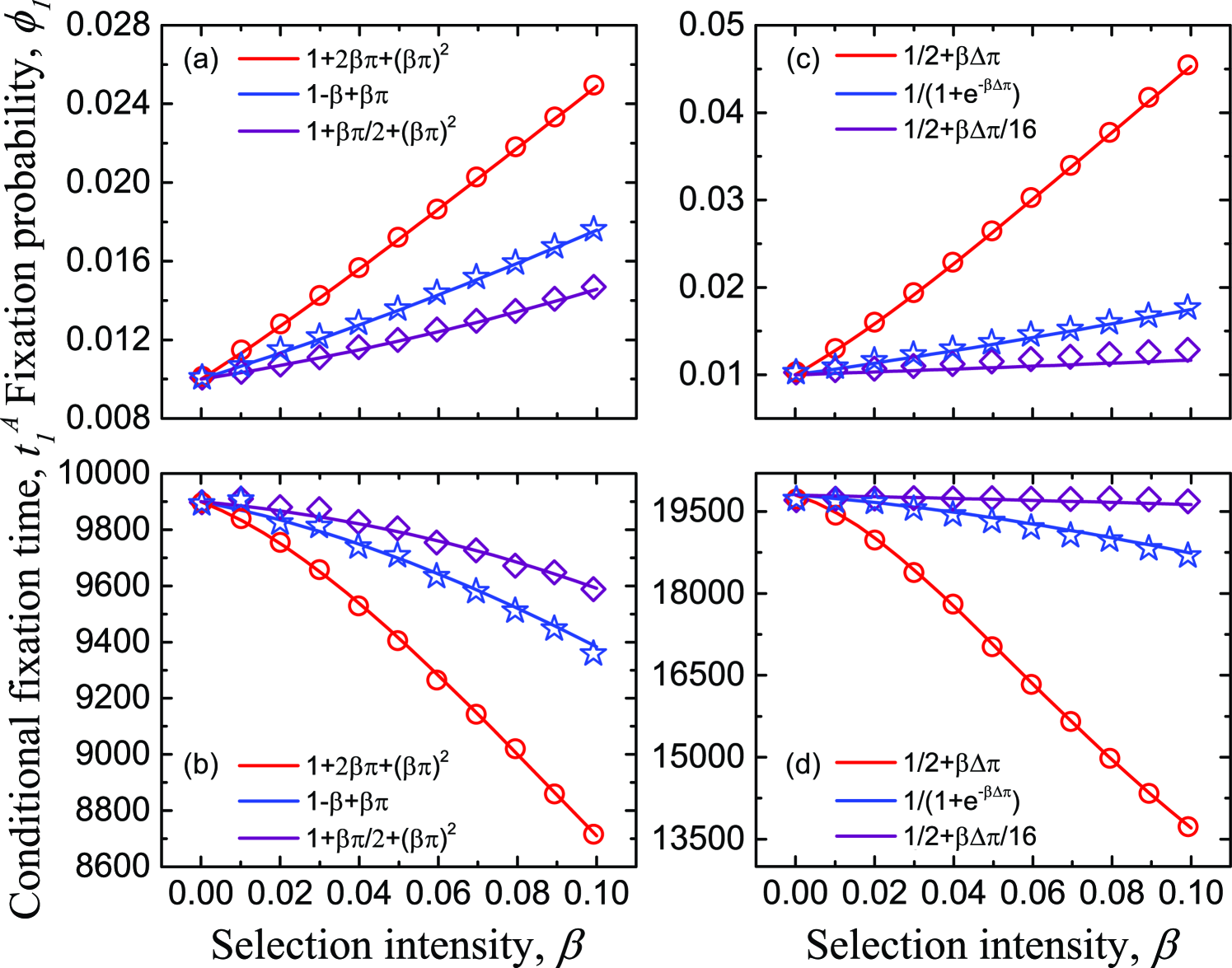

For both the Moran process and the pairwise comparison process, suppose the first-order derivative of the effective payoff function (the fitness or imitation probability function) is a linear function of the payoff. The effective payoff function then influences evolutionary outcomes only through the coefficients θ_M and θ_P multiplying the selection intensity. As the formulae for $\phi_1$ and the fixation times show, these constant coefficients can be absorbed by proper rescaling. They can be absorbed either into the selection intensity (β → θβ) or into a common rescaling of all payoff matrix entries (a, b, c, and d). If the fitness function is linear or exponential in the payoff, or the imitation probability is the Fermi function, both θ_M and θ_P equal 1. This recovers the previous results [18] as special cases. Moreover, under the conditions and , suppose a fitness (imitation probability) function is chosen such that θ_M > 0 (θ_P > 0), and take the constant 1 as the benchmark. Then θ_M > 1 (θ_P > 1) makes the function act as an amplifier of selection, facilitating the fixation of advantageous individuals and decreasing the fixation time. Conversely, 0 < θ_M < 1 (0 < θ_P < 1) makes it act as a suppressor of selection, suppressing the fixation of advantageous individuals and increasing the fixation time. This result holds not only for weak selection but also for intermediate selection intensity (see Fig. 1). The Supplementary Material treats the counterpart case θ_M < 0 (θ_P < 0) with a prisoner's dilemma example based on the scaling theory of dilemma strength [47, 48].
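The sketch below illustrates the amplifier/suppressor statement with a hypothetical family of fitness functions $f = e^{\theta\beta\pi}$. For this family θ_M = θ, and the rescaling β → θβ is exact rather than only first order. The game is an illustrative one in which A strictly dominates B, and the sketch does not reproduce Fig. 1, whose parameters are not restated here. The function name is hypothetical.

```python
import math


def phi1(N, game, beta, f):
    """Exact fixation probability of one A in the Moran process (u = 0),
    assuming self-interaction-free payoff averages."""
    a, b, c, d = game
    total, prod = 1.0, 1.0
    for i in range(1, N):
        pi_A = (a * (i - 1) + b * (N - i)) / (N - 1)
        pi_B = (c * i + d * (N - i - 1)) / (N - 1)
        prod *= f(beta, pi_B) / f(beta, pi_A)   # gamma_i = f_B / f_A
        total += prod
    return 1.0 / total


if __name__ == "__main__":
    N, beta = 20, 0.01
    game = (3.0, 2.0, 1.0, 0.0)  # A dominates B (a > c, b > d)
    for theta in (0.5, 1.0, 2.0):
        # Hypothetical family f = exp(theta * beta * pi), for which theta_M = theta.
        f = lambda bt, p, th=theta: math.exp(th * bt * p)
        print(theta, phi1(N, game, beta, f), "neutral:", 1.0 / N)
```

Here A earns more than B in every state. The fixation probability of the advantageous mutant therefore increases with θ: θ > 1 amplifies and θ < 1 suppresses selection relative to θ = 1.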

3.2 Equivalence

These weak-selection calculations of fixation probabilities and fixation times further show that any two fitness functions in a Moran process are equivalent, and likewise any two imitation probability functions in a pairwise comparison process. Specifically, consider the frequency-dependent Moran process with a generalized fitness function $f(\beta,\pi)$ satisfying $\frac{\partial f}{\partial\beta}(0,\pi) = \alpha_1\pi + \alpha_2$. A fitness function then affects evolutionary outcomes only through the constant factor θ_M multiplying the selection intensity. In this sense, any two fitness mappings meeting this condition are equivalent under weak selection. The difference between their fixation probabilities and fixation times is captured entirely by θ_M, which rescales either the payoff matrix or the intensity of selection.

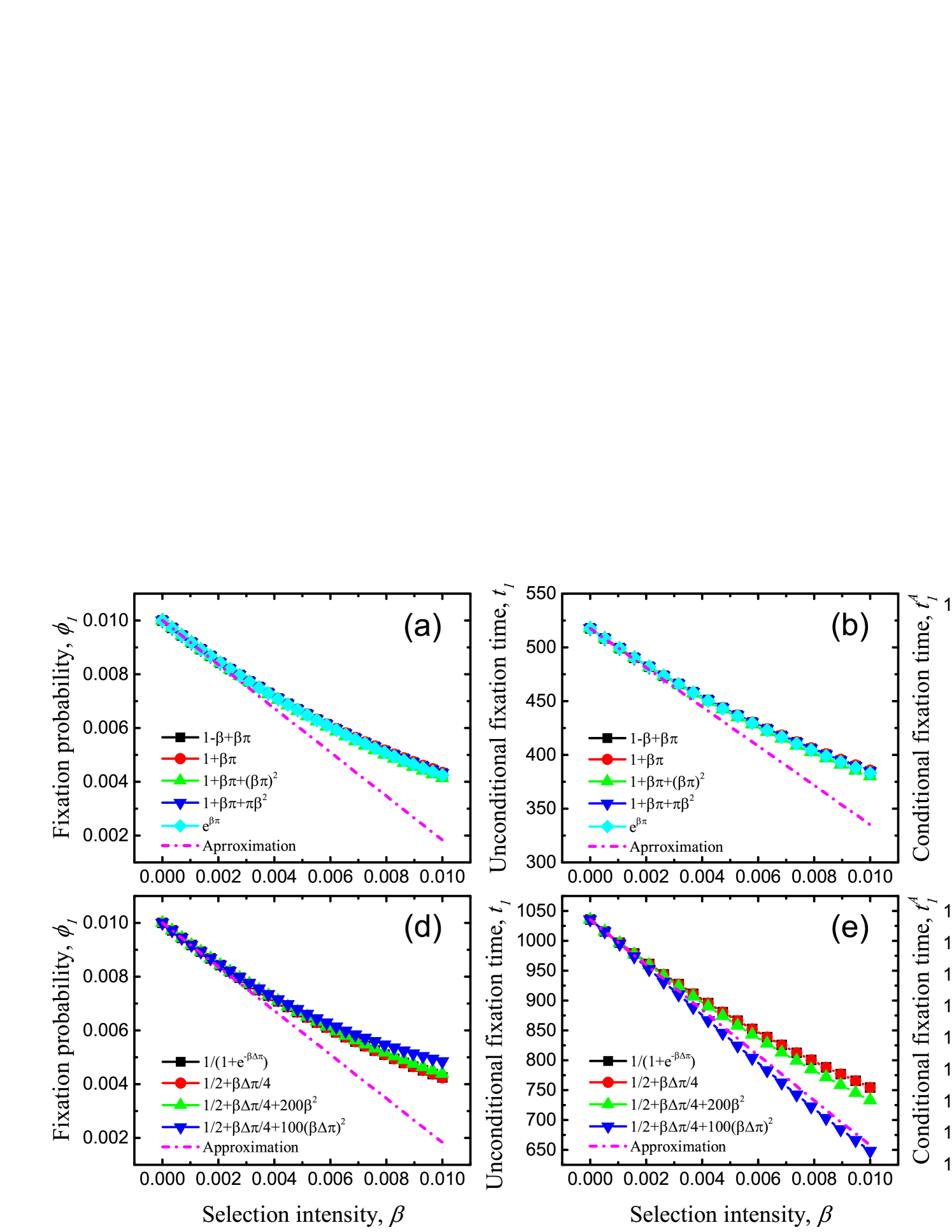

Particularly, if the fitness function adopts one of the function families, and , where and are constant coefficients that guarantee and because the individual fitness is positive [37, 19], then we have the same factor . Interestingly, if , these two function families are equivalent to the prevalent fitness mappings and under weak selection (see Fig. 2). Actually, the Taylor series of at is just the function family when specific coefficients , , and are applied.
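Under weak selection, fitness mappings with the same θ_M give fixation probabilities that agree to first order in β, and this can be checked numerically. The sketch compares the linear and exponential mappings with a third, hypothetical mapping $1 + \tanh(\beta\pi)$, which also has θ_M = 1. It does not reproduce the function families discussed above. The game, N, and β are arbitrary illustrative values.

```python
import math


def phi1(N, game, beta, f):
    """Exact fixation probability of one A in the Moran process (u = 0),
    assuming self-interaction-free payoff averages."""
    a, b, c, d = game
    total, prod = 1.0, 1.0
    for i in range(1, N):
        pi_A = (a * (i - 1) + b * (N - i)) / (N - 1)
        pi_B = (c * i + d * (N - i - 1)) / (N - 1)
        prod *= f(beta, pi_B) / f(beta, pi_A)
        total += prod
    return 1.0 / total


FITNESS = {
    "linear": lambda bt, p: 1.0 - bt + bt * p,
    "exponential": lambda bt, p: math.exp(bt * p),
    "1 + tanh": lambda bt, p: 1.0 + math.tanh(bt * p),  # hypothetical, theta_M = 1
}

if __name__ == "__main__":
    N, beta, game = 20, 1e-3, (1.0, 2.0, 0.0, 0.5)
    for name, f in FITNESS.items():
        print(f"{name:12s} phi_1 - 1/N = {phi1(N, game, beta, f) - 1.0 / N:.6e}")
```

The three mappings differ only at second order in β. Their fixation probabilities therefore differ by much less than their common first-order deviation from 1/N.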

Similarly, consider the pairwise comparison process with a generalized imitation probability function $g(\beta,\Delta\pi)$ satisfying $\frac{\partial g}{\partial\beta}(0,\Delta\pi) = \kappa_1\Delta\pi + \kappa_2$. An imitation probability function then affects evolutionary outcomes only through the constant factor θ_P multiplying the selection intensity. In this sense, any two such imitation probability functions are also equivalent under weak selection. The equivalence has the same meaning as in the Moran process: θ_P alone measures the difference in fixation probabilities and fixation times under weak selection.

Surprisingly, with a completely similar formulation to the fitness function families and , and , where and are constant coefficients which guarantee that and are probability functions and , are two classes of equivalent imitation probability functions. Particularly, if , these two function families are equivalent to the popular Fermi function under weak selection (see Fig. 2). Actually, by choosing specific coefficients, can also become the Taylor series of Fermi function at .
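The same check applies to imitation. The sketch compares the Fermi function with two hypothetical imitation functions, a clipped linear function and a rescaled normal CDF. Both are chosen so that g_0 = 1/2 and κ_1 = 1/4, which gives θ_P = 1 for all three functions. Self-interaction-free payoff averages are assumed, and the parameter values are illustrative.

```python
import math


def phi1_pairwise(N, game, beta, g):
    """Exact fixation probability of one A in the pairwise comparison process (u = 0)."""
    a, b, c, d = game
    total, prod = 1.0, 1.0
    for i in range(1, N):
        dpi = (a * (i - 1) + b * (N - i) - c * i - d * (N - i - 1)) / (N - 1)
        prod *= g(beta, -dpi) / g(beta, dpi)   # gamma_i = T-/T+
        total += prod
    return 1.0 / total


def normal_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


SCALE = math.sqrt(2.0 * math.pi) / 4.0  # makes the probit slope in beta at 0 equal dpi / 4

IMITATION = {
    "Fermi": lambda bt, x: 1.0 / (1.0 + math.exp(-bt * x)),
    "linear": lambda bt, x: min(1.0, max(0.0, 0.5 + bt * x / 4.0)),  # hypothetical
    "probit": lambda bt, x: normal_cdf(SCALE * bt * x),               # hypothetical
}

if __name__ == "__main__":
    N, beta, game = 20, 1e-3, (1.0, 2.0, 0.0, 0.5)
    for name, g in IMITATION.items():
        print(f"{name:7s} phi_1 - 1/N = {phi1_pairwise(N, game, beta, g) - 1.0 / N:.6e}")
```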

3.3 One-third law and risk-dominance

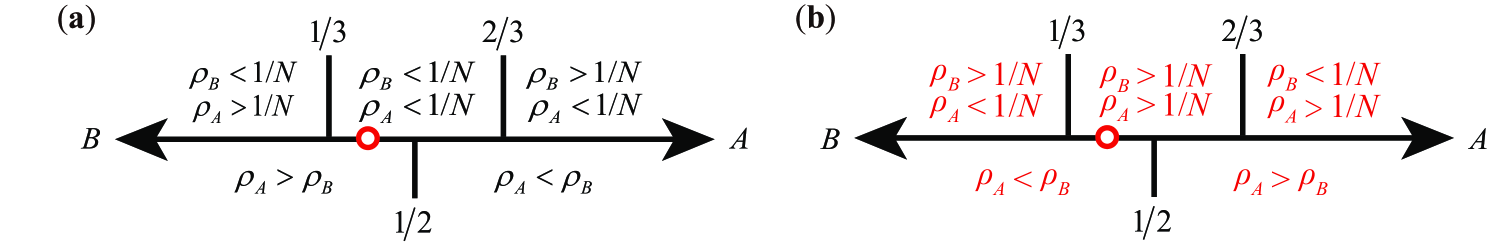

In stochastic evolutionary game dynamics, invasion and fixation are two fundamental concepts describing the spread of strategies in finite populations [13, 15]. Using the neutral game as the benchmark, strategy A is said to fixate in a resident population of B (selection favors A replacing B) if the fixation probability of a single A exceeds its neutral value 1/N [13, 15]. Consider the frequency-dependent Moran process with a fitness function fulfilling the condition above. Under weak selection, selection favors A replacing B if $\theta_M[(N-2)a + (2N-1)b - (N+1)c - (2N-4)d] > 0$ (see Eq. (4)). Similarly, for the pairwise comparison process with an imitation probability function $g(\beta,\Delta\pi)$, the condition is $\theta_P[(N-2)a + (2N-1)b - (N+1)c - (2N-4)d] > 0$ (see Eq. (4)). Since $f_0 > 0$ and $g_0 > 0$, the criterion for selection to favor A replacing B under weak selection is $\lambda[(N-2)a + (2N-1)b - (N+1)c - (2N-4)d] > 0$. Here λ = α1 for the Moran process and λ = κ1 for the pairwise comparison process. Specifically, we have three cases (see Fig. 3):

(i) When λ > 0, selection favors A replacing B ($\phi_1 > 1/N$) if $(N-2)a + (2N-1)b > (N+1)c + (2N-4)d$. For sufficiently large N, this reduces to $a + 2b > c + 2d$. In coordination games ($a > c$, $b < d$), this is the one-third law [13]: the unstable equilibrium $x^* = (d-b)/(a-b-c+d)$ lies below 1/3.

(ii) When λ < 0, the condition becomes $(N-2)a + (2N-1)b < (N+1)c + (2N-4)d$. For N → ∞, the classical one-third law is thus reversed in coordination games.

(iii) When λ = 0, whether selection favors A replacing B depends on the higher-order coefficients of β in $\phi_1$. These coefficients are considerably more tedious to calculate than the linear approximation [19].
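The sign reversal of the one-third law can be checked with exact fixation probabilities. The sketch assumes the Moran process with the hypothetical fitness $e^{\lambda\beta\pi}$, so that λ = θ_M = ±1. It uses an illustrative coordination game whose unstable equilibrium is $x^* = 2/7 < 1/3$. N and β are chosen so that Nβ ≪ 1.

```python
import math


def phi1(N, game, beta, f):
    """Exact fixation probability of one A in the Moran process (u = 0),
    assuming self-interaction-free payoff averages."""
    a, b, c, d = game
    total, prod = 1.0, 1.0
    for i in range(1, N):
        pi_A = (a * (i - 1) + b * (N - i)) / (N - 1)
        pi_B = (c * i + d * (N - i - 1)) / (N - 1)
        prod *= f(beta, pi_B) / f(beta, pi_A)
        total += prod
    return 1.0 / total


def unstable_equilibrium(a, b, c, d):
    """Interior fixed point x* = (d - b) / (a - b - c + d) of a coordination game."""
    return (d - b) / (a - b - c + d)


if __name__ == "__main__":
    game = (6.0, 0.0, 1.0, 2.0)      # coordination game: a > c, b < d
    N, beta = 100, 1e-5
    print("x* =", unstable_equilibrium(*game))   # 2/7, below 1/3
    for lam in (1.0, -1.0):
        # Hypothetical fitness exp(lam * beta * pi), so lambda = theta_M = lam.
        f = lambda bt, p, s=lam: math.exp(s * bt * p)
        print(lam, "phi_1 > 1/N:", phi1(N, game, beta, f) > 1.0 / N)
```

With λ = 1, selection favors A replacing B, as the one-third law predicts. With λ = −1 the same game gives $\phi_1 < 1/N$.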

Beyond the question of whether A replaces B, it is also of interest to ask whether strategy A is selected over strategy B, termed ‘strategy selection’ [33]. Let $\rho_A$ ($\rho_B$) denote the probability that a single individual using strategy A (B) invades and takes over a resident population of B (A) players. Accordingly, $\rho_A = \phi_1$. Moreover, $\rho_B$ equals the probability that N − 1 individuals of type A fail to take over a population containing just a single B individual, so $\rho_B = 1 - \phi_{N-1}$. We introduce the notation $\gamma_i = T_i^-/T_i^+$ for the ratio of the transition probabilities in the absence of mutations (u = 0). The ratio of the two fixation probabilities is then $\rho_B/\rho_A = \prod_{i=1}^{N-1}\gamma_i$. Under weak selection, this ratio can be approximated by

$$\frac{\rho_A}{\rho_B} \approx 1 + \frac{\beta\theta}{2}\left[(N-2)a + Nb - Nc - (N-2)d\right], \qquad (7)$$

where θ = θ_M for the Moran process and θ = θ_P for the pairwise comparison process. From the definitions of θ_M and θ_P, strategy A is selected over strategy B if $\lambda(\sigma a + b - c - \sigma d) > 0$. Here λ = α1 for the Moran process and λ = κ1 for the pairwise comparison process, and σ = (N − 2)/N is the structure coefficient of well-mixed populations [33]. Under weak selection, we have three cases (see Fig. 3):

(i) When λ > 0, strategy A is selected over strategy B if $(N-2)a + Nb > Nc + (N-2)d$. For sufficiently large N, this means that A is risk-dominant ($a + b > c + d$) in coordination games ($a > c$, $b < d$).

(ii) When λ < 0, the condition becomes $(N-2)a + Nb < Nc + (N-2)d$. For N → ∞, A is then selected over B precisely when B is risk-dominant ($a + b < c + d$) in coordination games.

(iii) When λ = 0, whether A is selected over B under weak selection depends on higher-order coefficients of β in $\rho_A/\rho_B$.
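The weak-selection ratio $\rho_A/\rho_B \approx 1 + \frac{\beta\theta}{2}[(N-2)a + Nb - Nc - (N-2)d]$ can be checked numerically for the Moran process. The sketch uses the hypothetical fitness $e^{\lambda\beta\pi}$ (θ_M = λ) and self-interaction-free payoff averages. For this choice the exact ratio is $\exp(\lambda\beta\sum_i[\pi_A(i)-\pi_B(i)])$, and the weak-selection formula is its first-order expansion. The game is an illustrative coordination game in which A is risk-dominant.

```python
import math


def fixation_ratio(N, game, beta, f):
    """Exact rho_A / rho_B = prod_{i=1}^{N-1} T+_i / T-_i for the Moran process."""
    a, b, c, d = game
    ratio = 1.0
    for i in range(1, N):
        pi_A = (a * (i - 1) + b * (N - i)) / (N - 1)
        pi_B = (c * i + d * (N - i - 1)) / (N - 1)
        ratio *= f(beta, pi_A) / f(beta, pi_B)   # T+/T- = f_A / f_B
    return ratio


def ratio_weak(N, game, beta, theta):
    """First-order weak-selection approximation of rho_A / rho_B."""
    a, b, c, d = game
    return 1.0 + 0.5 * beta * theta * ((N - 2) * a + N * b - N * c - (N - 2) * d)


if __name__ == "__main__":
    game = (5.0, 0.0, 3.0, 1.0)   # coordination game with a + b > c + d
    N, beta = 50, 1e-4
    a, b, c, d = game
    sigma = (N - 2) / N            # well-mixed structure coefficient
    print("sigma*a + b - c - sigma*d > 0:", sigma * a + b - c - sigma * d > 0)
    for lam in (1.0, -1.0):
        f = lambda bt, p, s=lam: math.exp(s * bt * p)   # hypothetical, theta_M = lam
        print(lam, fixation_ratio(N, game, beta, f), ratio_weak(N, game, beta, lam))
```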

Suppose we additionally allow small, non-uniform mutation rates between the two strategies. Strategy A is then more abundant than B in the long run if $u_{B\to A}\rho_A > u_{A\to B}\rho_B$ [42, 44, 49]. Here $u_{A\to B}$ and $u_{B\to A}$ denote the mutation rates from A to B and from B to A, respectively. For $u_{A\to B} = u_{B\to A}$, the conclusions obtained above remain valid, which extends our results to small mutation rates.
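In the small-mutation limit, the population is almost always monomorphic. Transitions between all B and all A then occur at rates proportional to $u_{B\to A}\rho_A$ and $u_{A\to B}\rho_B$, since mutant arrival is followed by fixation. The minimal sketch below, with hypothetical names and illustrative numbers, computes the resulting long-run fraction of time spent in the all-A state.

```python
def abundance_of_A(rho_A, rho_B, u_BA, u_AB):
    """Long-run fraction of time in the all-A state for small mutation rates.

    Transitions all-B -> all-A and all-A -> all-B occur at rates
    proportional to u_BA * rho_A and u_AB * rho_B; the common factor N
    (number of potential mutants) cancels.
    """
    to_A = u_BA * rho_A
    to_B = u_AB * rho_B
    return to_A / (to_A + to_B)


if __name__ == "__main__":
    # Equal mutation rates: A is more abundant iff rho_A > rho_B.
    print(abundance_of_A(0.021, 0.019, 1e-4, 1e-4))
    # Biased mutation towards B can overturn the ranking.
    print(abundance_of_A(0.021, 0.019, 1e-4, 2e-4))
```

The fraction exceeds 1/2 exactly when $u_{B\to A}\rho_A > u_{A\to B}\rho_B$. This is the criterion stated above.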

4 Discussion

Most previous studies assume a specific form of the effective payoff function. As a direct consequence, their evolutionary outcomes do not reflect how the effective payoff function affects evolutionary dynamics, and this effect has remained unclear. In this letter, we investigate it by introducing a generalized mapping.

For standard two-strategy two-player games with a specific form of the effective payoff, it has been shown that under weak selection the condition for one strategy to dominate the other is a σ-rule [33]. This rule captures nearly all aspects of evolutionary dynamics but ignores the effect of the effective payoff function. The rule still holds if the effective payoff is an arbitrary non-negative function of the product of payoff and selection intensity, βπ [19, 37]. Even so, the role of the effective payoff function in evolutionary game dynamics has been largely overlooked. We introduce a more general mapping, in which the effective payoff of an individual is a function of both payoff and selection intensity. With this mapping, we find that the condition for a strategy to be selected relies on the σ-rule and also on an extra constant that characterizes the effective payoff function. As an extension, a multi-strategy version is given in the Supplementary Material.

For specific effective payoff forms, linear and exponential functions have been found to lead to identical evolutionary outcomes under weak selection [43, 37, 19]. Here we generalize this equivalence in two ways: to any two fitness functions in a Moran process, and to any two imitation probability functions in a pairwise comparison process. Beyond standard games and weak selection, there is also considerable interest in games with multiple players or strategies [37, 50, 36] and in strong selection [51], which deserve future study.

Acknowledgements.

We thank Bin Wu for helpful discussions and comments. This work was supported by the National Natural Science Foundation of China (Grants No. 61751301, No. 61533001, and No. 61503062).

References

- [1] Nowak M. A., Evolutionary Dynamics: Exploring the Equations of Life (Harvard University Press, Cambridge MA) 2006.

- [2] Maynard Smith J. and Price G. R., Nature, 246 (1973) 15.

- [3] Maynard Smith J., Evolution and the Theory of Games (Cambridge University Press, Cambridge) 1982.

- [4] Turner P. E. and Chao L., Nature, 398 (1999) 441.

- [5] Kerr B., Riley M. A., Feldman M. W. and Bohannan B. J., Nature, 418 (2002) 171.

- [6] Hamilton W. D., J. Theor. Biol., 7 (1964) 1.

- [7] Nowak M. A. and Sigmund K., Nature, 437 (2005) 1291.

- [8] Helbing D. and Yu W., Proc. Natl. Acad. Sci. U.S.A., 106 (2009) 3680.

- [9] Chen X. and Szolnoki A., PLoS Comput. Biol., 14 (2018) e1006347.

- [10] Taylor P. D. and Jonker L. B., Math. Biosci., 40 (1978) 145.

- [11] Hofbauer J. and Sigmund K., Evolutionary Games and Population Dynamics (Cambridge University Press, Cambridge) 1998.

- [12] Van Kampen N. G., Stochastic Processes in Physics and Chemistry, 2nd Edition (Elsevier, Amsterdam) 1997.

- [13] Nowak M. A., Sasaki A., Taylor C. and Fudenberg D., Nature, 428 (2004) 646.

- [14] Sui X., Cong R., Li K. and Wang L., Phys. Lett. A, 379 (2015) 2922.

- [15] Taylor C., Fudenberg D., Sasaki A. and Nowak M. A., Bull. Math. Biol., 66 (2004) 1621.

- [16] Imhof L. A. and Nowak M. A., J. Math. Biol., 52 (2006) 667.

- [17] Szabó G. and Fath G., Phys. Rep., 446 (2007) 97.

- [18] Altrock P. M. and Traulsen A., New J. Phys., 11 (2009) 013012.

- [19] Wu B., Altrock P. M., Wang L. and Traulsen A., Phys. Rev. E, 82 (2010) 046106.

- [20] Szolnoki A., Perc M. and Danku Z., Physica A, 387 (2008) 2075.

- [21] Perc M. and Szolnoki A., New J. Phys., 14 (2012) 043013.

- [22] Perc M., Gómez-Gardeñes J., Szolnoki A., Floría L. M. and Moreno Y., J. R. Soc. Interface, 10 (2013) 20120997.

- [23] Nowak M. A. and May R. M., Nature, 359 (1992) 826.

- [24] Szabó G. and Tőke C., Phys. Rev. E, 58 (1998) 69.

- [25] Hauert C. and Szabó G., Am. J. Phys., 73 (2005) 405.

- [26] Perc M., EPL, 75 (2006) 841.

- [27] Perc M. and Szolnoki A., BioSystems, 99 (2010) 109.

- [28] Chen X., Fu F. and Wang L., Phys. Rev. E, 78 (2008) 051120.

- [29] Ohtsuki H., Hauert C., Lieberman E. and Nowak M. A., Nature, 441 (2006) 502.

- [30] Santos F. C., Santos M. D. and Pacheco J. M., Nature, 454 (2008) 213.

- [31] Fu F., Wang L., Nowak M. A. and Hauert C., Phys. Rev. E, 79 (2009) 046707.

- [32] Chen X. and Wang L., Phys. Rev. E, 77 (2008) 017103.

- [33] Tarnita C. E., Ohtsuki H., Antal T., Fu F. and Nowak M. A., J. Theor. Biol., 259 (2009) 570.

- [34] Huang F., Chen X. and Wang L., Sci. Rep., 8 (2018) 528.

- [35] Allen B., Lippner G., Chen Y.-T., Fotouhi B., Momeni N., Yau S.-T. and Nowak M. A., Nature, 544 (2017) 227.

- [36] Tarnita C. E., Wage N. and Nowak M. A., Proc. Natl. Acad. Sci. U.S.A., 108 (2011) 2334.

- [37] Wu B., Traulsen A. and Gokhale C. S., Games, 4 (2013) 182.

- [38] McAvoy A. and Hauert C., J. Math. Biol., 72 (2016) 203.

- [39] De Visser J. A. G. and Krug J., Nature Rev. Genet., 15 (2014) 480.

- [40] Hauser O. P., Traulsen A. and Nowak M. A., J. Theor. Biol., 343 (2014) 178.

- [41] Wu B., García J., Hauert C. and Traulsen A., PLoS Comput. Biol., 9 (2013) e1003381.

- [42] Fudenberg D., Nowak M. A., Taylor C. and Imhof L. A., Theor. Popul. Biol., 70 (2006) 352.

- [43] Antal T., Nowak M. A. and Traulsen A., J. Theor. Biol., 257 (2009) 340.

- [44] Antal T. and Scheuring I., Bull. Math. Biol., 68 (2006) 1923.

- [45] Taylor C., Iwasa Y. and Nowak M. A., J. Theor. Biol., 243 (2006) 245.

- [46] Sui X., Wu B. and Wang L., Phys. Rev. E, 92 (2015) 062124.

- [47] Wang Z., Kokubo S., Jusup M. and Tanimoto J., Phys. Life Rev., 14 (2015) 56.

- [48] Tanimoto J. and Sagara H., BioSystems, 90 (2007) 105.

- [49] Wu B., Gokhale C. S., Wang L. and Traulsen A., J. Math. Biol., 64 (2012) 803.

- [50] Su Q., Wang L. and Stanley H. E., New J. Phys., 20 (2018) 103030.

- [51] Altrock P. M., Traulsen A. and Nowak M. A., Phys. Rev. E, 95 (2017) 022407.