RPF-Search: Field-based Search for Robot Person Following

in Unknown Dynamic Environments

Abstract

Autonomous robot person-following (RPF) systems are crucial for personal assistance and security but suffer from target loss due to occlusions in dynamic, unknown environments. Current methods rely on pre-built maps and assume static environments, limiting their effectiveness in real-world settings. There is a critical gap in re-finding targets under topographic (e.g., walls, corners) and dynamic (e.g., moving pedestrians) occlusions. In this paper, we propose a novel heuristic-guided search framework that dynamically builds environmental maps while following the target and resolves various occlusions by prioritizing high-probability areas for locating the target. For topographic occlusions, a belief-guided search field is constructed and used to evaluate the likelihood of the target’s presence, while for dynamic occlusions, a fluid-field approach allows the robot to adaptively follow or overtake moving occluders. Past motion cues and environmental observations refine the search decision over time. Our results demonstrate that the proposed method outperforms existing approaches in terms of search efficiency and success rates, both in simulations and real-world tests. Our target search method enhances the adaptability and reliability of RPF systems in unknown and dynamic environments to support their use in real-world applications. Our code, video, experimental results and appendix are available at https://medlartea.github.io/rpf-search/.

I INTRODUCTION

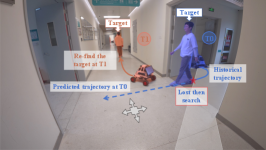

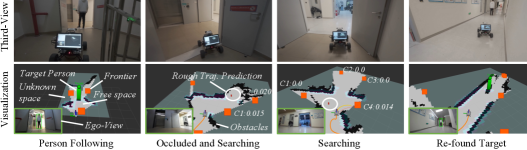

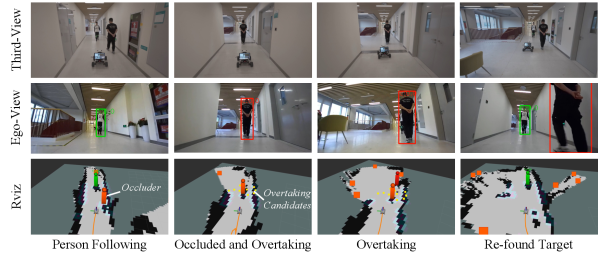

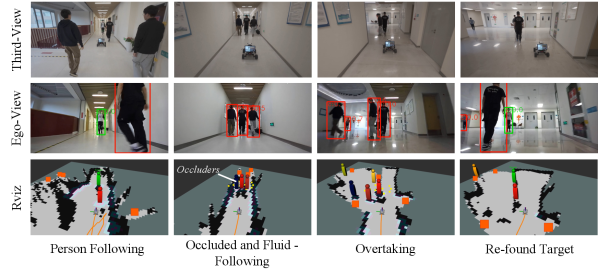

Robot person following[1, 2] (RPF) plays a vital role in human-robot interaction, with applications in personal assistance, security, and service robots. RPF is essential for tasks like elderly care and guided tours, especially in complex and dynamic environments. However, target occlusions due to static objects or moving pedestrians can cause tracking failures. This points to a need for the robot to perform a search task to re-find the target person – a critical component of robust RPF systems, as illustrated in Fig. 1.

Traditional search methods assume known environments and treat the task as a coverage problem[3], maximizing visibility within limited timeframes. These approaches use prior information about both the environment and the target person, such as metric graphs[4], topo-metric graphs[3, 5], or semantic graphs[6] to locate lost targets. Subsequent searches rely on criteria like frequently visited nodes in topometric graphs or objects associated with the person in semantic graphs. While effective in static or known environments, these methods often struggle in complex and unknown settings. Exploration-based techniques[7, 8, 9] select goals from frontiers of the searched region via utility metrics or deep reinforcement learning[9], but neglect motion cues of the lost person, resulting in inefficient searches.

Additionally, some methods leverage trajectory prediction models, such as linear regression[10], Bayesian regression[11], and SVM-based regression[12], to estimate the target’s future position. If unsuccessful, the search often defaults to frontier navigation, repeating the process[12]. Such a greedy strategy ignores environmental dynamics, leading to extended searches. Furthermore, these methods neglect the dynamic behavior of other people in a real environment, resulting in dynamic occlusion of the target person and the risk of robot collision.

To address these limitations, we propose a field-based heuristic-guided search framework that incrementally maps the environment while following the target. The framework prioritizes areas with a high probability of target existence while maximizing environmental exploration and adapts to different types of occlusions:

-

•

Topographic Occlusion: For static obstacles like walls or corners, a belief-guided search field estimates target probability, optimizing information gain, trajectory prediction, path efficiency, and collision avoidance.

-

•

Dynamic Occlusion: When moving pedestrians obstruct the target, the robot switches to a fluid-following field to follow the occluders or adopts an observation-based potential field to overtake them by analyzing their motion patterns.

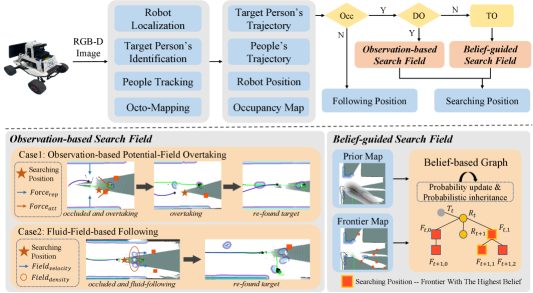

Unlike traditional map-dependent methods, our approach dynamically constructs maps during RPF by focusing on high-probability areas instead of uniform exploration typically employed in active SLAM [7, 8]. This probability representation is derived from our proposed incrementally constructed search fields, which leverage target motion cues and recently observed environmental information. As illustrated in Fig. 2, this adaptive strategy ensures efficient and responsive searching in evolving environments, thereby enhancing the reliability of an RPF system.

II Related Work

II-A Person Search Under Topographic Occlusion

Person search in RPF aims to relocate a lost target. Existing methods often estimate the person’s position using a pre-built map. For example, Goldhoorn et al.[13] employ a Partially Observable Markov Decision Process (POMDP) algorithm to estimate the target location on an occupancy map. To mitigate POMDP’s high computational cost, they later introduced a particle-filter-based approach[4], propagating particles from the target’s last known location and assigning higher weights to those in visible free spaces. The particle with the highest weight is selected as the next search goal.

Beyond occupancy maps, topo-metric graphs[3, 5] and semantic graphs[6] have been used. For instance, Isler et al.[3] employ polygon triangulation to transform a metric map into a tree and use game theory to identify the optimal search node. Some methods incorporate prior knowledge to expedite the search. Bruckschen et al.[5] utilize frequently visited locations in a topo-metric graph, modeling belief states with a hidden Markov model and updating them based on new observations. Similarly, semantic graphs have been used to predict navigation goals based on human-object interactions[6]. These approaches rely on prior information, often framing person search as a coverage problem. However, such prior knowledge is difficult to obtain in real-world RPF scenarios. A practical RPF system must enable robots to follow targets seamlessly in unfamiliar environments, requiring a way to search without pre-built maps. Some methods bypass the need for prior information by leveraging historical trajectories to predict likely destinations. To handle sudden occlusions, techniques such as Kalman filters[14], variational Bayesian regression[11], and least squares linear regression[10] forecast future trajectories. Although map-independent, these methods rely on single predictions, limiting their adaptability to handle unknown environments.

Person search in unknown environments inherently combines exploration with target search. Active SLAM[8] is a closely related research topic, focusing on efficient exploration by selecting optimal frontiers using utility-based metrics[7] or deep reinforcement learning policies[9]. Placed et al.[8] introduce a graph-SLAM utility function to balance localization accuracy and exploration efficiency. Unlike these methods, which prioritize mapping, our approach focuses on effective person search. By detecting frontiers, we construct a belief-guided search field representing the likelihood of the target location. This field is incrementally updated using the target’s past motion cues and recent environmental observations, ensuring efficient and adaptive search.

II-B Person Search Under Dynamic Occlusion

Following a target person in a dynamic environment is challenging, as objects such as people can obscure a robot’s view, causing tracking failures. Effective strategies for searching and re-identifying the target after occlusion are essential. A key difficulty lies in navigating through a dynamic crowd, where one approach is to follow the crowd until the target is re-identified. Crowd-aware navigation methods address this challenge by modeling pedestrian flows. Henry et al.[15] used inverse reinforcement learning (IRL) with Gaussian processes for human-like navigation. Cai et al.[16] proposed a sampling-based flow map algorithm but assumed complete knowledge of pedestrian positions. Dugas et al.[17] introduced a pseudo-fluid model with an observation-based flow-aware planner, while Jacques et al.[18] developed the “Flow Grid” model, enabling the robot to adapt to human motion and optimize paths using a modified A* algorithm. These methods excel in dense crowds or with fast-moving occluders but are less effective when occluders are sparse or slow. In such scenarios, overtaking occluders to re-identify the target becomes a practical and efficient strategy.

Overtaking maneuvers have been widely studied in autonomous driving [19], with approaches generally divided into learning-based and model-based methods. Learning-based methods, such as Chen et al.[20] applying deep reinforcement learning, model human-like overtaking but require large datasets and extensive simulations and face challenges in generalization and explainability. In contrast, model-based methods offer greater interpretability and efficiency. Schwarting et al.[21] developed a nonlinear model predictive control (NMPC) approach accounting for road boundaries, vehicle dynamics, and uncertainties in other traffic participants. Ma et al.[22] used potential field-based methods to enhance safety and comfort during overtaking by incorporating velocity, acceleration, and road boundaries. While effective in vehicular contexts, these methods are not designed for search tasks.

This paper presents a potential field-based strategy to handle occlusions in dynamic environments. By adaptively switching between following and overtaking occluders based on their motion cues, the proposed approach ensures efficient target re-identification in diverse social navigation scenarios.

III Method

The framework of the proposed method is illustrated in Fig. 2. Our approach tracks people and identifies the target person based on our previous work [23]. Additionally, we utilize visual-inertial odometry for robot localization and employ Octomap [24] to map unknown environments. Octomap is a probabilistic-based mapping method that remains robust to dynamic objects.

Leveraging the above perception information, this paper primarily addresses the challenge of person search in unknown and dynamic environments, particularly when the target person is lost due to occlusions. To differentiate between types of occlusions—topographic and dynamic—we use an intersection-over-union (IoU) analysis. If the occluder is identified as a pedestrian via the IoU assessment (i.e., the target person’s bounding box previously interacted with another pedestrian), the system activates an Observation-based Search Field (Sec. III-B) to resume target tracking under dynamic occlusions. In contrast, the presence of static obstacles prompts the deployment of a Belief-guided Search Field (Sec. III-A), enabling smooth adaptation to static occlusions. Detailed method flow can be found in the supplementary materials.

III-A Belief-guided search field

Our approach identifies the optimal search goal using the partially built map, past target trajectory data, and the robot’s current position. It involves three steps: candidate generation, weight calculation, and belief-guided field establishment with a simplified diagram shown in Fig. 3.

III-A1 Candidate Generation

When the target disappears, the robot constructs a belief-guided field to represent the probability of the target’s location. Search candidates are generated using current and historical observation to focus on high-belief regions. In a partially mapped environment, a key issue in our solution involves identifying frontiers, points on the boundary between mapped and unknown regions. These frontiers serve as both observation points and safe navigation locations due to their proximity to mapped free spaces. The belief-guided field is initialized with high values at these frontier points.

Frontiers are detected using Canny Edge Detection on the map[8], with contour centers identified as candidates . These candidates form the high-belief regions guiding the robot’s search. With these candidates, we define the belief-guided field and obtain the searching point as follows:

| (1) |

where represents a position in the environment, is the Gaussian variance, and is the weight of the -th candidate, estimated from the target’s motion cues and environmental information. The maximum operator ensures that overlapping Gaussians prioritize the highest probability, capturing the most likely location.

III-A2 Candidate Weight Calculation

The utility of each candidate is quantified by expected uncertainty in three target variables: information gain , collision risk , and target existence . The candidate weight is computed as:

| (2) |

where represents probabilistic inheritance, capturing probability transfer from farther candidates, and , , and are normalized terms explained below.

Inference Factor : To reduce computational load and quickly locate the target, the inference factor filters candidates based on the maximum distance a pedestrian could travel since last observed, calculated using the maximum velocity of pedestrian. If the distance between a candidate and the last known position of the target exceeds this estimate, it implies the candidate would have been observed if the target were moving in that direction. Thus, further weight calculation is unnecessary for such candidates. The inference factor is defined as:

| (3) |

where is the indicator function, returning one if the condition holds and zero otherwise. is the time elapsed since the target was last seen, and is the Dijkstra-computed distance between the lost position and the candidate in the map.

Information Gain : Information gain prioritizes maximizing observation of unknown areas using entropy to quantify exploration uncertainty [7]. The expected entropy of a candidate is:

| (4) |

where is the occupancy probability of cell , and represents the adjacent cells of the candidate in the map.

Collision Risk : This factor prevents candidates from being too close to obstacles or pedestrians (non-target), reducing collision risk and avoiding invading human comfort space:

| (5) |

where represents the comfortable region around humans [25], is the collision probability with obstacles [26], and ensures numerical stability.

Target Person Existence : To efficiently locate the target, evaluates candidates based on predicted potential locations when the target abruptly disappears. Unlike traditional trajectory prediction relying on global maps, uses human motion cues to estimate potential directions within a limited observation map. It consists of two steps: 1) initial trajectory prediction and 2) probability propagation.

III-A2a Initial Trajectory Prediction

A regression model is constructed based on the target’s historical motion cues, including velocity and positions, to forecast potential trajectories. We use support vector machine regression (SVR), which effectively captures nonlinear relationships and provides accurate fits in constrained environments [12]. The prediction is framed as finding a function, . is the weight vector, is a constant bias vector, and represents a non-linear mapping. Using historical timestamps and positions of the target as the training dataset, the function is learned with SVR theory, enabling estimation of the target’s position at any future timestamp.

III-A2b Probability Propagation

Unlike trajectory predictions using accurate global maps, our algorithm predicts trajectories based on historical human cues and a limited map generated by the robot’s observations. The predicted trajectory is transformed into a probabilistic representation using a Gaussian-based method combined with motion cues. The probability of a point in the trajectory is defined as:

| (6) |

where represents a predicted point, and , the covariance matrix, reflects the probable area of the target based on velocity and social distance [25]:

| (7) |

where , estimated from adjacent points, defines the orientation, resulting in an elliptical probability area in the predicted direction. For predicted items, the probability of the target’s appearance is:

| (8) |

where is the weight of each component. To account for increasing uncertainty over time, weights decrease monotonically:

| (9) |

Probabilistic Inheritance : In unstructured environments, obstacles can significantly restrict visibility, causing the robot to miss detections even at optimal candidate positions. For instance, in Room 1 (Fig. 6(a)), the target (blue dot) is hidden behind Obstacle 1 and Obstacle 2. Without a comprehensive exploration of the room, the robot is likely to miss detecting the target person at this location. To address this, we propose a probabilistic inheritance strategy to balance exhaustive exploration of the room and outside the room. The probability of a candidate inherits from its parent candidate, , the closest candidate from the previous generation. The inherited probability is defined as:

| (10) |

where is the distance between and , and the inheritance probability decays with increasing distance. The normalization factor is adaptive to the room size, ensuring probability decay matches the environment. If candidates inside the room have lower probabilities than previous candidates, the robot transitions from searching inside the room to exploring outside.

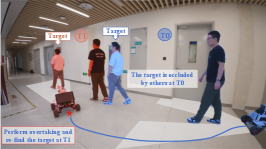

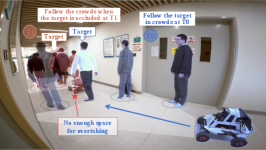

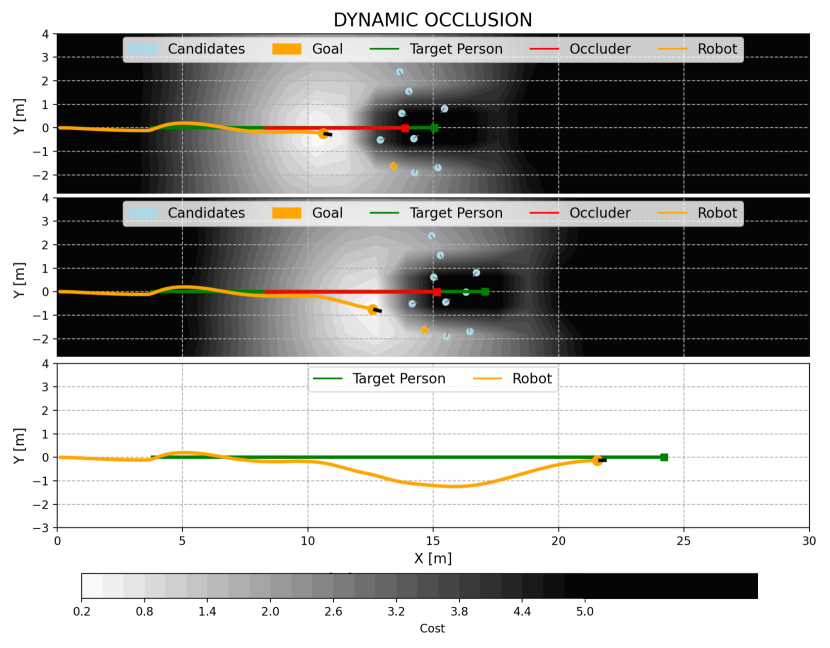

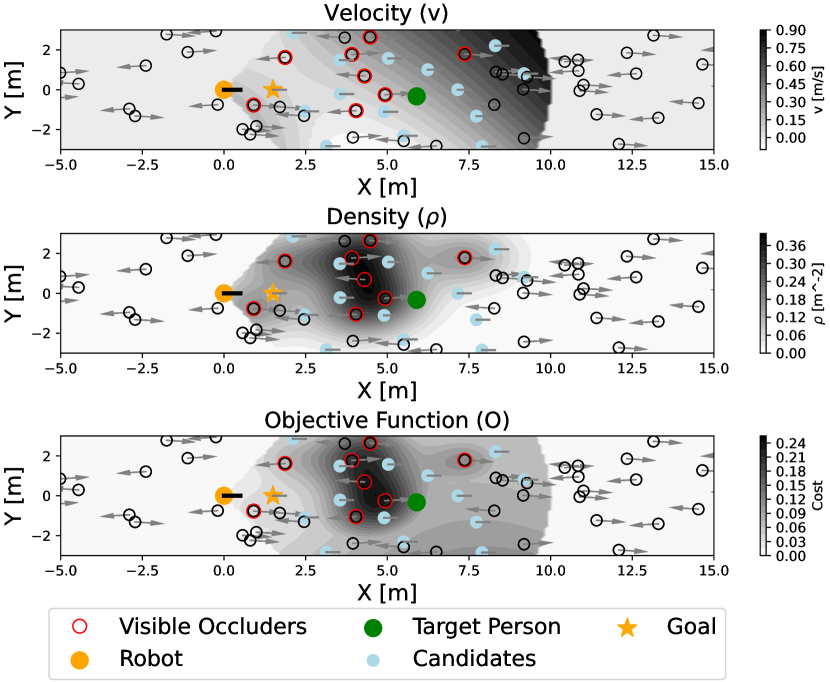

III-B Observation-based search field

Another significant challenge in accurately tracking a target in dynamic environments is dynamic occlusion. Moving pedestrians can obstruct the robot’s view, causing it to lose track of the target. For instance, the target may enter a crowd or random people may walk between the robot and the target. Unlike the belief-guided field used for topographic occlusions, we propose an observation-based search field leveraging the motion cues of occluders to handle dynamic occlusions.

Dynamic occlusions are categorized into two types. Temporary occlusion occurs when a pedestrian briefly crosses between the target and the robot. Here, the occlusion duration is short, allowing the robot to quickly resume tracking without altering its strategy and maintaining the previous track. Long-term occlusion happens when occluders persist in the robot’s view, easily resulting in a track loss. In this case, we categorize occlusions into two types based on the motion cues of the occluder(s) and introduce two corresponding strategies: 1) Observation-Based Potential Field Overtake Strategy, and 2) Fluid Field-Based Following Strategy. Both strategies use vector fields and are triggered when the target is obscured by occluders.

III-B1 Observation-based potential field overtake strategy

When the target is lost due to dynamic occlusion, a natural human response is to overtake the occluders to regain visibility and resume following. To do this, we propose an observation-based potential field overtaking strategy leveraging occluder motion cues. The strategy predicts the occluder trajectory, , and constructs an overtaking field using repulsive and attractive forces inspired by the artificial potential field method. The repulsive force [27] prevents collisions with pedestrians (including occluders) and obstacles:

| (11) |

| (12) |

where is the repulsive gain coefficient, and is the influence distance beyond which the repulsive force ceases. The attractive force guides the robot to optimal positions, using a two-step process: Filter and Plan:

| (13) |

where is the attractive gain coefficient, is the robot’s current position, and represents the observation gain of a candidate given the occluder position and the predicted target position . Here, is a predicted position in front of the occluder. Candidates with higher gains minimize occluder overlap in the image space from the robot’s camera perspective, facilitating better observation of .

We follow the assumption from prior works on person tracking [28, 29, 23], where a person is projected into the image as a bounding box based on the camera’s pose and projection function, . The observation gain is defined as where is the intersection-over-union of two bounding boxes. Additionally, the attractive field incorporates path cost via the direction . The combined field is shown in Fig. 4.

In the filter step, the observation-based potential field filters sampling points to identify the optimal candidate , which maximizes target observation and minimizes trajectory cost. To accelerate selection and ensure smooth navigation, the search area is restricted to the robot’s front, with candidates sampled using the Korobov lattice [30], a method for generating low-discrepancy points. The optimal candidate is determined as:

| (14) |

In addition, we record its minimum cost as . If and the occluders’ maximum velocity is below , the strategy switches to the belief-guided search field (Sec. III-A), suitable for global-scale planning in low-velocity, high-risk environments. If or the occluders’ minimum velocity exceeds , the strategy switches to the fluid-field-based following strategy (Sec. III-B2). Otherwise, becomes the searching point. In the plan step, the attractive and repulsive fields are overlaid, and the gradient of the combined field determines the robot’s movement direction to .

An example of the overtaking process is shown in Fig. 4. A slowly moving distractor (red square) occludes the robot’s view, causing a target loss. In response, an overtaking field is generated, where lighter colors indicate lower costs. With sufficiently low overtaking cost, the robot selects the optimal goal (orange) from candidates (blue) and overtakes the occluder. Initially, the field is nearly symmetric (first row), with low-cost regions on both sides. As the robot moves to the lower region, the path-cost effect reduces the cost there (darker shade), leading to consistent selection of the same overtaking point and producing a smooth overtaking trajectory (orange path, last row).

III-B2 Fluid field-based following strategy

To ensure natural and safe navigation in dynamic occlusion scenarios, following the occluding person is a viable strategy when overtaking is infeasible, such as when occluders move too fast or overtaking costs are too high, risking unsafe behavior. The goal of this strategy is for the robot to follow occluders effectively. Because moving too slowly increases the distance to the occluder, risking target loss, while overtaking may require acceleration, posing collision risks in human-shared environments.

Inspired by the fluid-based navigation model [31, 16], we propose treating detected pedestrians, including occluders, as a pseudo-fluid. This enables the estimation of a velocity field around the robot’s perception, leading to safe and efficient target tracking. The fluid-based vector field is modeled using the following variables.

Velocity Field : To estimate the velocity at , we consider detected pedestrians and use:

| (15) |

where and controls the ”viscosity” of the fluid flow [31].

Density Field : To avoid the robot rushing into high-density areas, the density field is defined as:

| (16) |

where is the number of times has been observed, encouraging navigation to familiar areas, and is a constant to control density smoothness.

Objective Function : The searching point is obtained by minimizing:

| (17) |

where is the robot’s velocity. Candidate points are sampled in front of the robot, and the optimal candidate is selected as the searhcing point. The purpose of this objective function (Eq. 17) is to guide the robot in following occluders by minimizing the deviation from their velocity while accounting for their density. Fig. 5 illustrates the process. With the proposed overtaking and fluid-following fields, our method effectively searches for the target person under dynamic occlusions, ensuring safe navigation in complex environments.

IV Experiments

This section evaluates the effectiveness of the proposed person-search method, particularly with regard to its performance in re-finding a lost target person due to topographic or dynamic occlusions, by comparing it with existing baseline methods under challenging conditions. The parameters of the proposed method are shown in the supplementary materials.

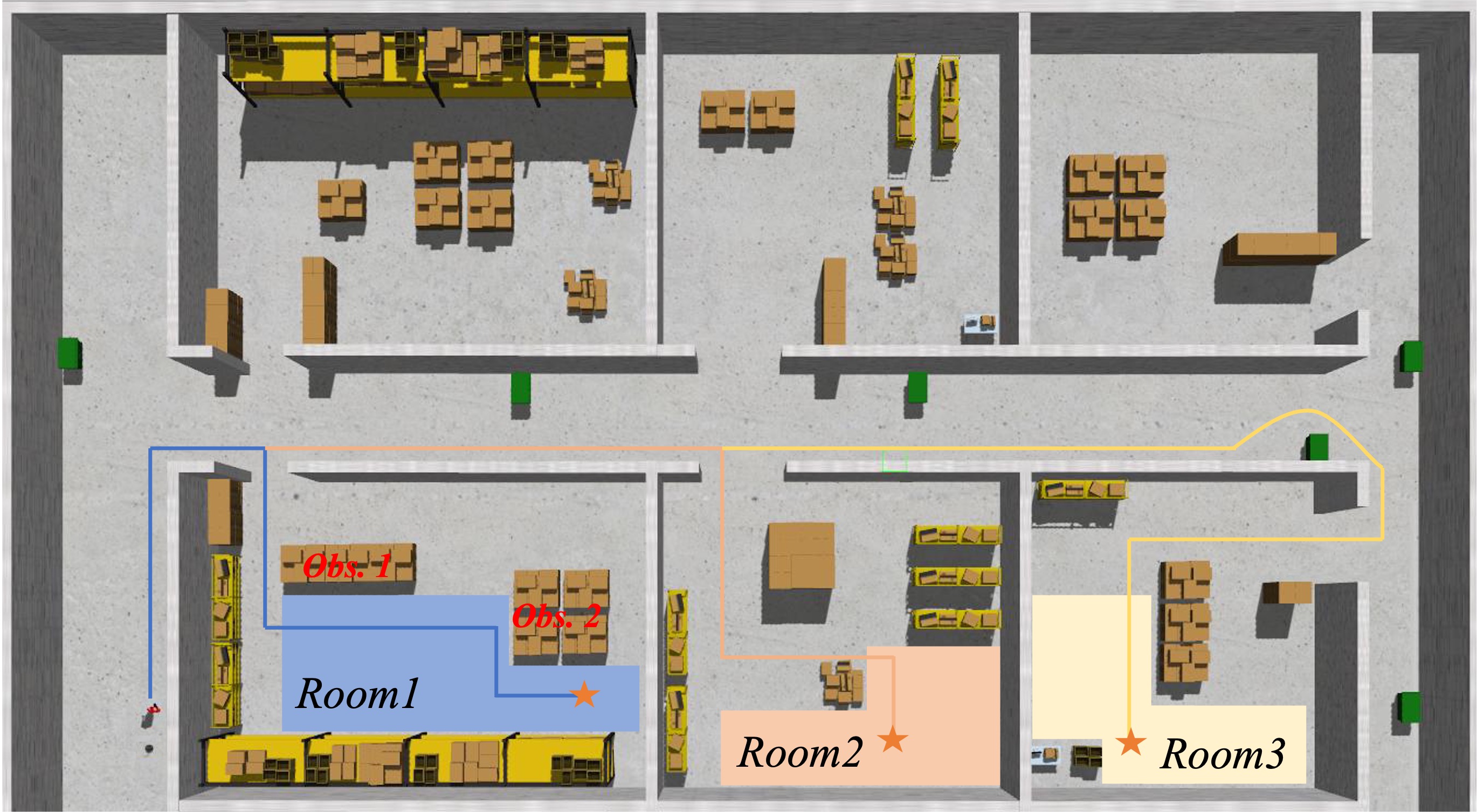

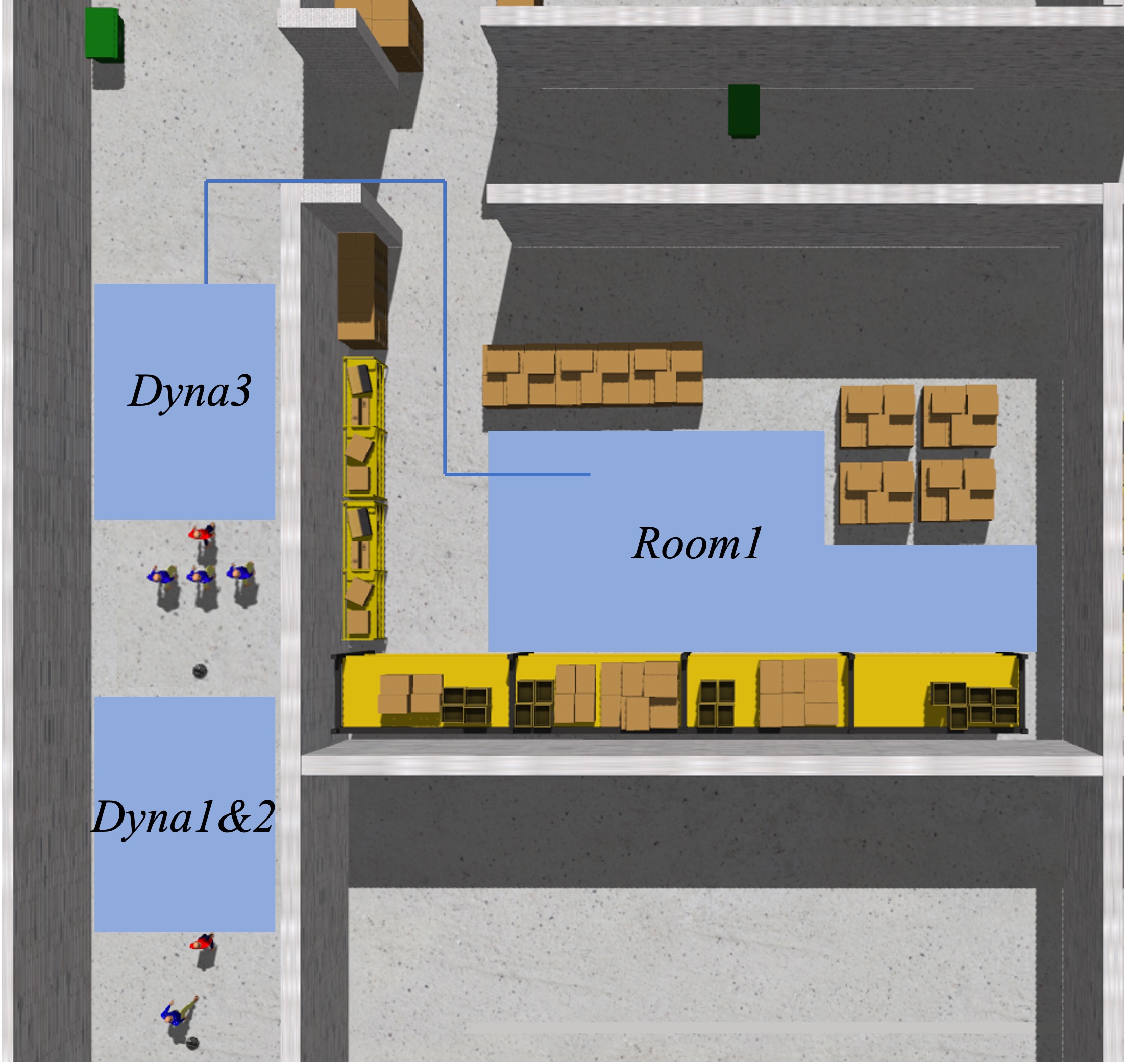

IV-A Experimental Settings

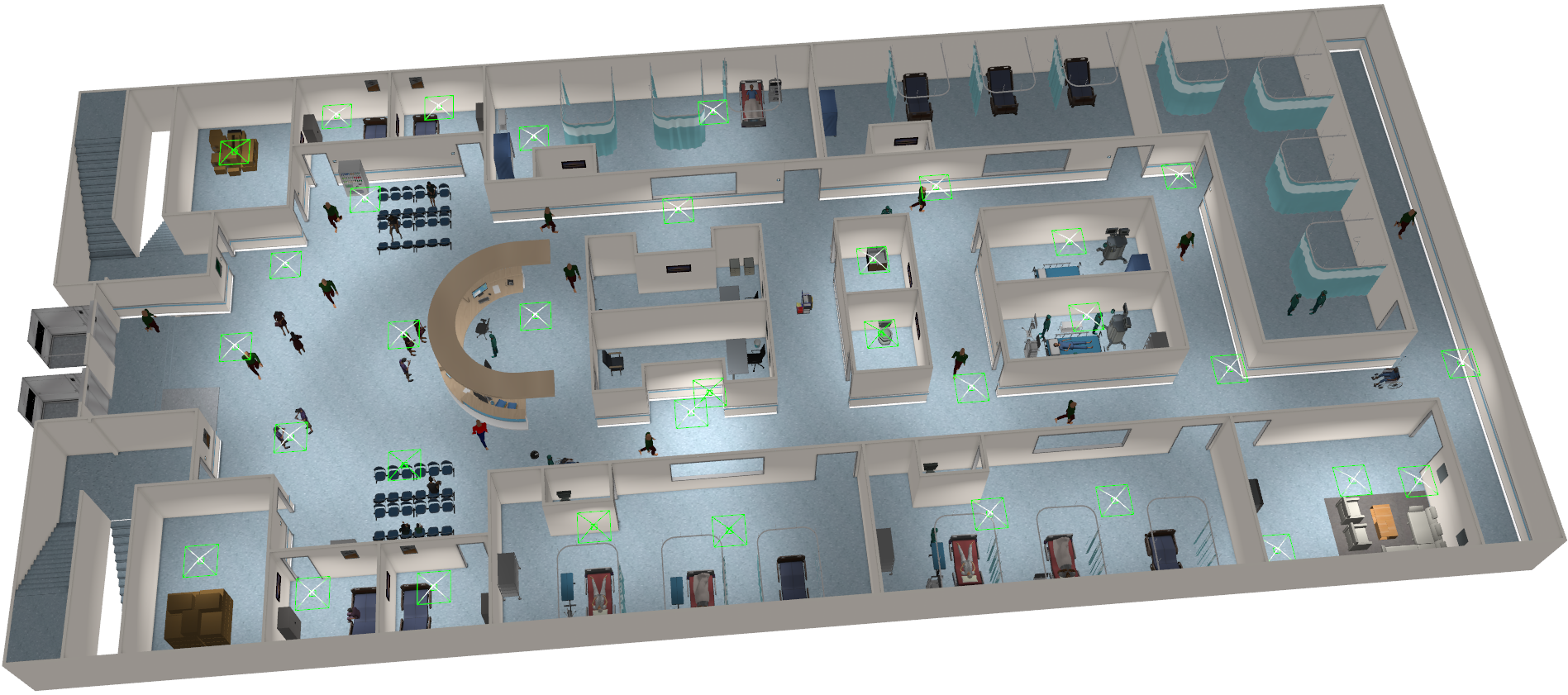

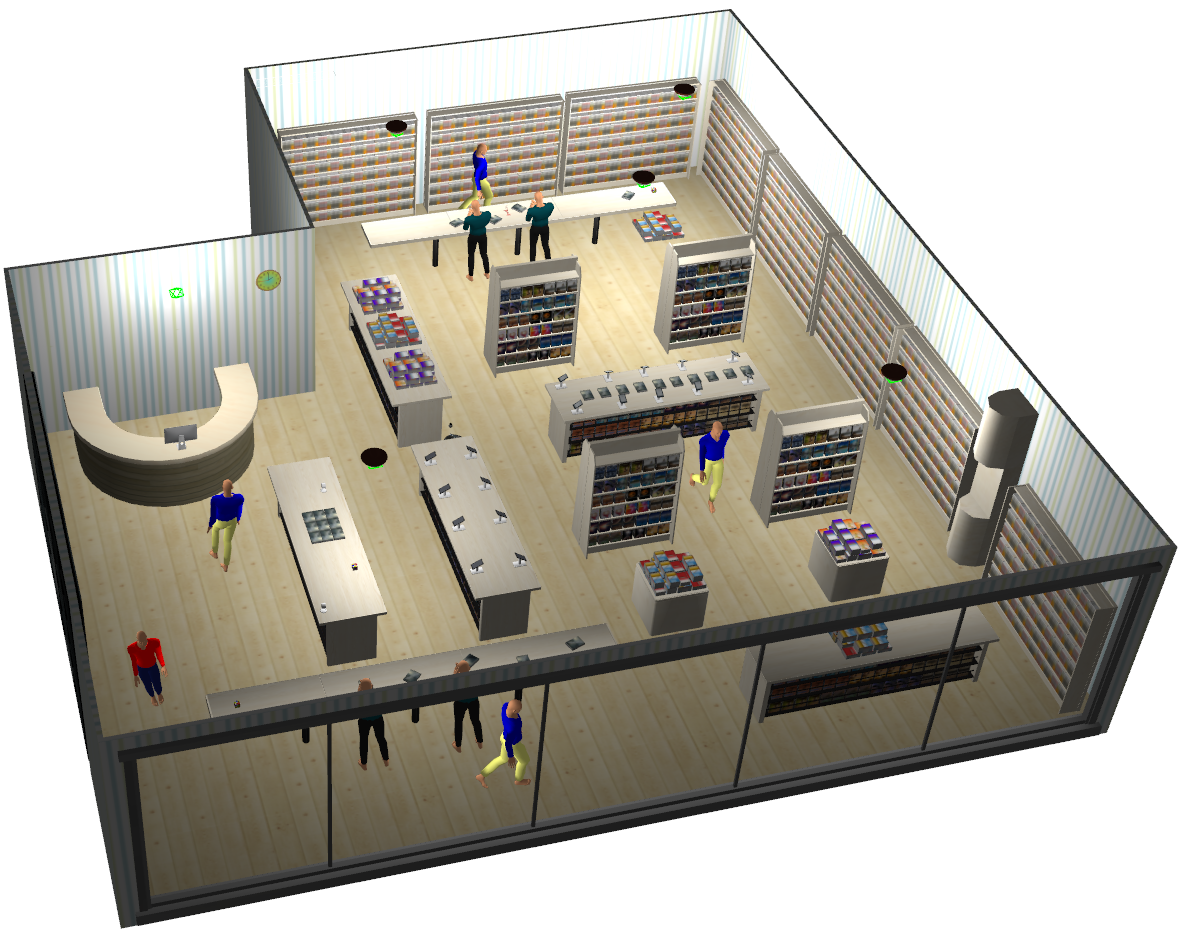

We evaluated our method in both real-world and simulated environments to ensure its robustness. The simulated settings included three room layouts with different scales: Bookstore (small), Hospital (medium), and Factory (large), as shown in Figs. 6(d), 6(c), and 6(a). These environments featured various objects and both static and dynamic individuals to create frequent occlusions, which are challenging for RPF. Additionally, within the factory, we designed six scenarios to assess the robot’s person-search capabilities under topographic and dynamic occlusions (see Figs. 6(a) and 6(b)). These scenarios include difficult U-turns and dynamic occlusions inspired by typical interactions, simulating complex real-life environments. Specifically, we developed three dynamic occlusion experiments: Dyna1: simulates the single-person occlusion with a constant low speed (1.0 m/s). Dyna2: features a single-person occlusion with decreasing speed (from 1.5 m/s to 0.8 m/s). Dyna3: represents multiple-person occlusions.

In the simulated environments, we use a differential-drive robot with a 2D LiDAR for navigation and an RGB-D camera for perception. A target person is re-identified after being observed in over five consecutive frames. Locations are estimated using YOLOX object detection and depth data. Individuals interact according to the social force model [32], ensuring realistic movements. The target’s start and end positions are randomly generated within free space to create various scenarios. Real-world scenarios are illustrated in Fig. 1. Due to page limitations, real-world qualitative and quantitative experimental results, along with all implementation details, are provided in the supplementary materials.

IV-B Compared Algorithms and Evaluation Metrics

To validate the effectiveness of our proposed method, we compared it against several existing person-search baselines. The first baseline, GoToLostLocation (GTLL) [33], directs the robot to the target’s last known location when lost and then rotates to search. The second baseline, GoToPredictedLocation (GTPL) [12], uses an SVR-based model to predict and navigate to the target’s location before searching. Given the scarcity of methods targeting person search in unknown environments, we enhanced these baselines by incorporating a frontier map and a greedy next-best-view (NBV) strategy, resulting in GTLL + Greedy-NBV and GTPL + Greedy-NBV, where the robot first navigates to the initial location and then to the nearest frontier. We also integrated the map-based HB-Particle method [4], which directs the robot to the highest-belief particle with particles propagating into unknown regions, and the information gain-based Active Graph-SLAM [8], which employs frontier-based exploration to reduce pose graph uncertainty.

To assess all methods, we used established person-search metrics: Success Rate (SR) [4, 5], Success Weighted by Inverse Path Length (SPL) [34], and Trajectory and Velocity Analysis (TVA). SR measures the percentage of successful searches within a 180-second time limit over 100 runs per scenario. SPL evaluates search efficiency by considering both success and path optimality. TVA examines the smoothness of robot motion by plotting trajectories, velocities, and distances to the target in various dynamic occlusion scenarios.

IV-C Ablation Experiments

IV-C1 Verification of the inference factor and probabilistic inheritance

To assess the roles of the inference factor and probabilistic inheritance in our belief-guided search framework, we conduct an ablation study with results shown in Table I. Removing either component can cause the robot to navigate incorrectly and significantly reduce its performance. For example, in Room3, SR and SPL were 62% and 60.8%, and 71% and 68.5%, respectively. Similar declines were observed in Factory and Hospital. Visualizations of baselines and our method are shown in the supplementary materials.

Here, we present a visualization of our real-world experiments (see Fig. 7). Initially, the trajectory prediction guided the robot toward the room and left corridor. However, the inference factor incorporated new environmental data, eliminating the left corridor and room candidates (1, 2, and 3) by reducing their belief to zero. This redirected the robot toward the higher-belief candidates in the right corridor (candidate 4 with a 0.014 belief), ensuring a successful search. Without the inference factor, the robot would have followed the initial trajectory, resulting in a failed search.

Furthermore, probabilistic inheritance enables new candidates to inherit beliefs from their parent candidates with decay. This allows the robot to focus on more probable candidates as the search progresses. As shown in the third and fourth subfigures of Fig. 7, candidates in the right corridor continue to inherit belief, guiding the robot to successfully re-find the target. Without probabilistic inheritance, distant candidates would lose all belief, leading to incorrect navigation and search failure. Our approach leverages the local connectivity of frontiers, optimizing search direction towards likely locations. In conclusion, both the inference factor and probabilistic inheritance are essential for effective navigation and search in complex environments, as demonstrated by improved performance in our ablation study.

| Method | Factory∗(%) | Hospital∗(%) | Room3(%) | |||

| SR↑ | SPL↑ | SR↑ | SPL↑ | SR↑ | SPL↑ | |

| w/o inference factor | 68 | 62.0 | 75 | 67.0 | 62 | 60.8 |

| w/o probabilistic inheritance | 56 | 46.4 | 63 | 54.3 | 71 | 68.5 |

| RPF-Search (ours) | 100 | 94.7 | 98 | 94.8 | 100 | 96.2 |

| Method | Dyna1(%) | Dyna2(%) | Dyna3(%) | |||

| SR↑ | SPL↑ | SR↑ | SPL↑ | SR↑ | SPL↑ | |

| w/o obs.-based overtaking field | 25 | 24.4 | 24 | 23.2 | 91 | 87.1 |

| w/o fluid-following field | 95 | 91.6 | 86 | 82.3 | 82 | 78.5 |

| RPF-Search (ours) | 97 | 92.8 | 100 | 95.6 | 92 | 88.0 |

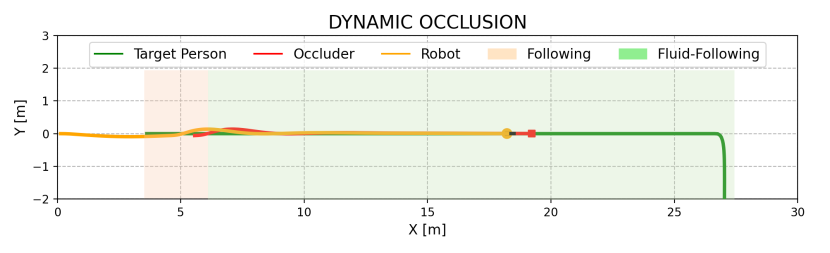

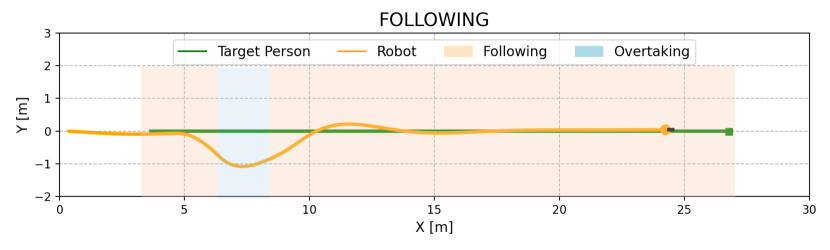

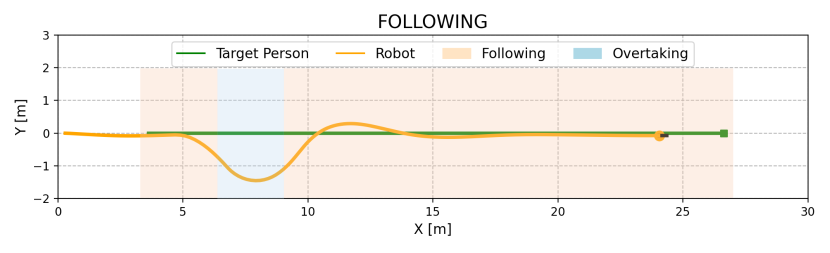

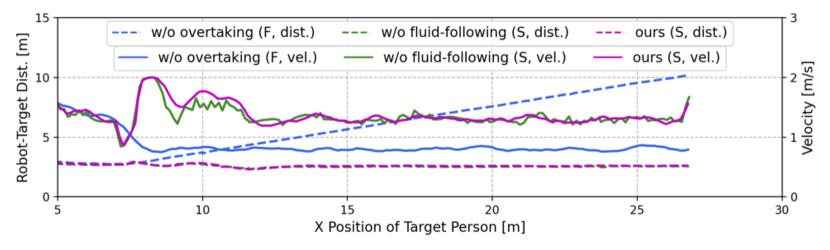

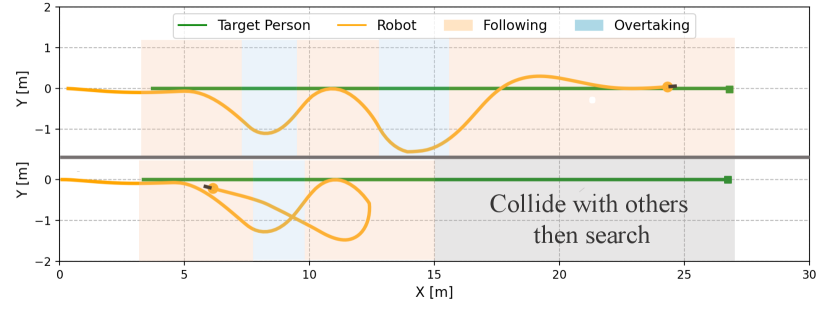

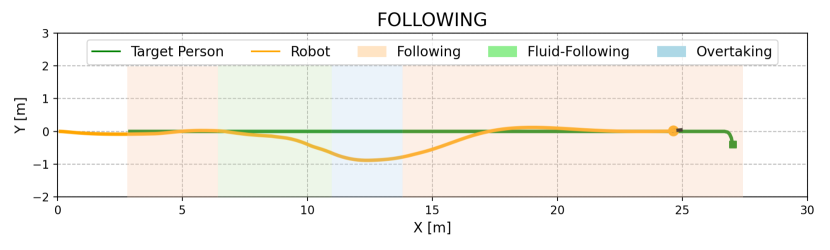

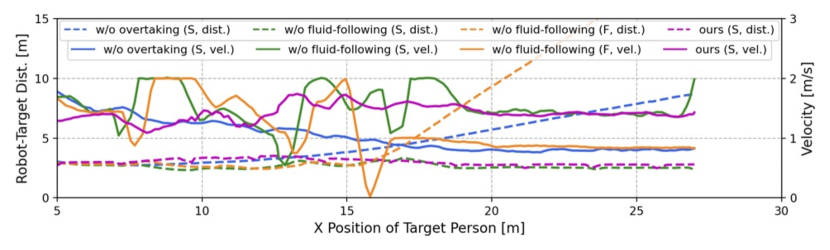

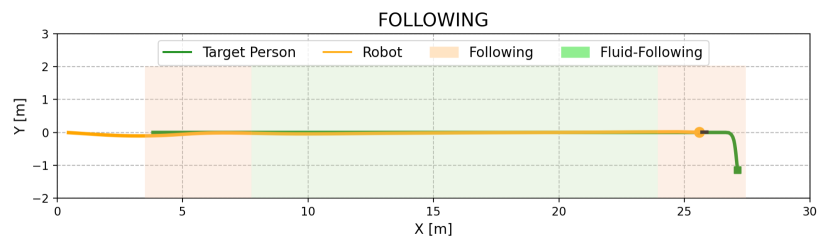

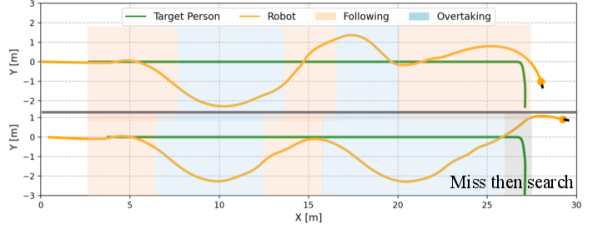

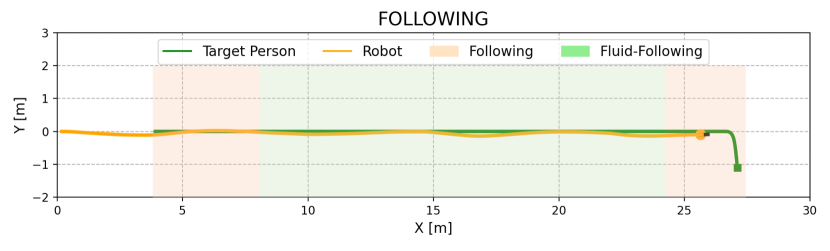

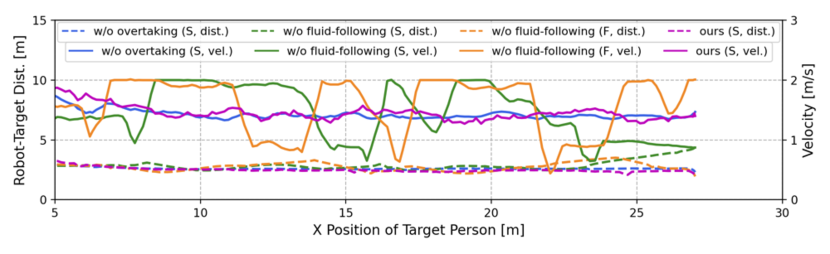

IV-C2 Verification of two field-based strategies

We evaluated the effectiveness of the overtaking and fluid-following fields through an ablation study, with outcomes shown in Table II. In Dyna1, removing the fluid-following field slightly decreased the SR by 2%, whereas removing the observation-based overtaking field caused a drop of 70% in SR. Without overtaking, the robot followed a slow-moving occluder, increasing the target distance and failing to re-identify the target when it turned a corner (Fig. 8(a)). In contrast, the overtaking field enabled the robot to overtake the occluder, maintaining a stable and close distance to the target and successfully completing the search (Fig. 8(b)).

In Dyna2, where the occluder’s speed decreases from 1.5 m/s to 0.8 m/s, the absence of either the observation-based overtaking field or the fluid-following field resulted in reduced performance. Specifically, SR and SPL decreased by 76% and 14%, respectively, without the overtaking field, and by 72.4% and 13.3%, respectively, without the fluid-following field (Table II). Simply following the occluder in this scenario increases the robot-target distance, risking the loss of the target (see Fig. 9(a)). Conversely, direct overtaking can lead to unstable motion or collisions, especially when pursuing a fast-moving occluder (see Fig. 9(b)). Our complete method addresses these issues by overtaking only when clear opportunities arise, such as when the overtaking cost is low and the occluder is moving slowly. This approach ensures smooth trajectories and stable motion, as demonstrated in Figs. 9(c) and 9(d).

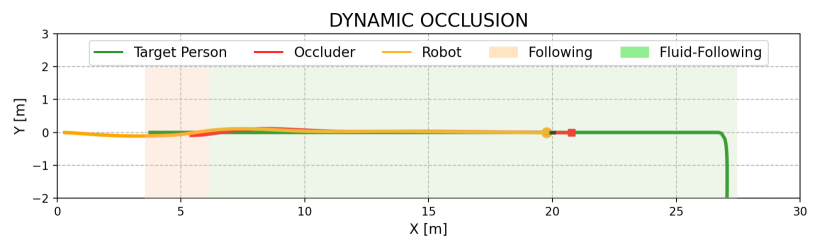

In Dyna3, involving multiple occluders, the absence of the fluid-following field led to frequent and unstable overtaking attempts, resulting in unstable motion and frequent velocity changes (see Fig. 10(b)). In contrast, our complete method employs a fluid-following strategy when overtaking is not feasible, ensuring smooth navigation and successful recovery once the target reappears after multi-person occlusion (refer to Figs. 10(c) and 10(d)). Overall, the overtaking field is essential for maintaining target tracking, while the fluid-following field ensures safe and stable navigation when overtaking is not possible. The combination of both fields significantly enhances person-search ability under dynamic occlusions.

| Method | Room1 | Room2 | Room3 | |||

| SR↑ | SPL↑ | SR↑ | SPL↑ | SR↑ | SPL↑ | |

| GTLL[33] | 8 | 8.0 | 94 | 79.6 | 1 | 1.0 |

| GTPL[12] | 78 | 73.4 | 62 | 53.3 | 45 | 41.8 |

| GTLL[33] + Greedy-NBV | 77 | 74.1 | 94 | 80.5 | 65 | 57.9 |

| GTPL[12] + Greedy-NBV | 94 | 84.6 | 79 | 68.0 | 42 | 40.2 |

| Active Graph-SLAM[8] | 33 | 33.0 | 14 | 21.6 | 61 | 60.8 |

| HB-Particle[4] | 2 | 2.0 | 14 | 12.2 | 0 | 0.0 |

| RPF-Search (ours) | 100 | 96.1 | 100 | 97.1 | 100 | 96.2 |

| Method | Dyna1 | Dyna2 | Dyna3 | |||

| SR↑ | SPL↑ | SR↑ | SPL↑ | SR↑ | SPL↑ | |

| GTLL[33] | 0 | 0.0 | 0 | 0.0 | 0 | 0.0 |

| GTPL[12] | 8 | 8.0 | 5 | 4.9 | 0 | 0.0 |

| GTLL[33] + Greedy-NBV | 51 | 48.8 | 79 | 75.7 | 10 | 9.9 |

| GTPL[12] + Greedy-NBV | 42 | 41.3 | 29 | 28.8 | 34 | 33.3 |

| Active Graph-SLAM[8] | 4 | 4.0 | 1 | 1.0 | 1 | 1.0 |

| HB-Particle[4] | 34 | 33.2 | 12 | 11.5 | 1 | 0.9 |

| RPF-Search (ours) | 97 | 92.8 | 100 | 95.6 | 92 | 88.0 |

| Method | Factory∗ | Hospital∗ | Bookstore∗ | |||

| SR↑ | SPL↑ | SR↑ | SPL↑ | SR↑ | SPL↑ | |

| GTLL[33] | 34 | 32.6 | 32 | 31.0 | 58 | 56.0 |

| GTPL[12] | 80 | 73.9 | 49 | 46.6 | 50 | 48.8 |

| GTLL[33] + Greedy-NBV | 61 | 61.5 | 84 | 81.4 | 88 | 81.5 |

| GTPL[12] + Greedy-NBV | 73 | 67.6 | 65 | 55.9 | 70 | 62.9 |

| Active Graph-SLAM[8] | 19 | 18.4 | 49 | 48.0 | 67 | 66.2 |

| HB-Particle[4] | 8 | 6.9 | 7 | 4.3 | 83 | 69.7 |

| RPF-Search (ours) | 100 | 94.7 | 98 | 94.8 | 98 | 95.7 |

IV-D Topological occlusion scenarios

To independently evaluate the person-search capabilities of both the baseline methods and our approach, we designed three factory scenarios (Room1, Room2, and Room3) focusing on topographic occlusion challenges, including regular paths and U-turns. As shown in Table III, our method achieved the highest SR and SPL in all rooms: 100% SR and 96.1% SPL in Room1, 100% SR and 97.1% SPL in Room2, and 100% SR and 96.2% SPL in Room3. These results outperform the second-best methods by up to +35% in SR and +35.4% in SPL, demonstrating that our approach is more effective in re-identifying targets and enables robots to follow near-optimal paths, reducing unnecessary movements and search time.

Among the baselines, “GTPL + Greedy-NBV” performed well in Room1 with 94% SR and 84.6% SPL by accurately predicting target locations in simpler environments. “GTLL + Greedy-NBV” achieved 94% SR and 80.5% SPL in Room2 since the lost target is usually within the room, allowing the greedy search to effectively locate the person. However, in Room3, limited historical information led “GTPL + Greedy-NBV” to predict trajectories toward the corridor, resulting in a greedy search away from the target room and achieving only 42% SR and 40.2% SPL. Similarly, “GTLL + Greedy-NBV” reached low performance with 65% SR and 57.9% SPL.

In contrast, our method transforms predicted trajectories into probabilistic representations and uses a belief-guided search field that dynamically updates the estimated location by incorporating new environmental data and the target’s past motion cues. This approach allows efficient searching and locating of the person, achieving 100% SR and 96.2% SPL in Room3, significantly outperforming the baseline methods.

IV-E Dynamic Occlusion Scenarios

Dynamic occlusions are common in everyday person-following tasks, especially in environments crowded with people. While robots can often recover tracking after short-term occlusions—since the target person reappears quickly—the greatest challenge arises with long-term occlusions, where other pedestrians obstruct the target for extended periods. As introduced in Fig. 6(b), we designed three dynamic occlusion experiments for simulating realistic long-term occlusion scenarios including Dyna1, Dyna2 and Dyna3. In these scenarios, the target person follows a fixed path into Room1 and is occluded for an extended period in the corridor by other individuals. The optimal search behavior should consider both search efficiency and safe navigation, enabling an intelligent switch between overtaking and following the occluders.

As shown in Table IV, our method achieves superior performance across all dynamic occlusion scenarios. Specifically, we obtained 97% SR and 92.8% SPL in Dyna1, 100% SR and 95.6% SPL in Dyna2, and 92% SR and 88.0% SPL in Dyna3. Existing person-search methods do not explicitly address dynamic occlusion problems and, consequently, perform poorly in these environments. Our method surpasses the second-best baseline by: Dyna1: +46% SR and +44.0% SPL, Dyna2: +21% SR and +19.9% SPL, Dyna3: +58% SR and +54.7% SPL.

IV-F Scenarios with different scales and room structures

The evaluation results of different scales and room structures are shown in Table V, our RPF-Search achieves the highest performance, with 100% SR and 94.7% SPL in Factory, 98% SR and 94.8% SPL in Hospital, and 98% SR and 95.7% SPL in Bookstore. It can be observed that person search in small-scale environments (e.g., Bookstore) is relatively straightforward, with five baselines achieving an SR above 50%, and the second-best method reaching an SR of 88% SR and an SPL of 81.5%. This may be attributed to the fact that the target person is more easily observed as the robot explores the smaller Bookstore environment. In contrast, person search becomes more challenging in larger environments (e.g., Hospital and Factory), where incorrect decisions can lead the robot to move away from the target person. Consequently, only two baselines achieve above 50% SR in Hospital, and three achieve above 50% SR in Factory.

Our method, however, consistently outperforms the second-best approach, with improvements of +20% in terms of SR and +20.8% in terms of SPL in Factory, +14% SR and +13.4% SPL in Hospital, and +10% SR and +14.2% SPL in Bookstore. This demonstrates the effectiveness of our belief-guided field, which leverages newly observed environmental information and the target person’s past motion cues to update the belief of the target person’s location. This approach guides the robot to explore the most probable areas, ultimately leading to a successful re-identification of the target person.

V conclusion

In this paper, we introduce RPF-Search, a novel heuristic-guided, field-based person-search framework designed for robust robot person-following in dynamic and unknown environments. This approach addresses key challenges in human-robot interaction, enabling robots to reliably locate a target person despite frequent occlusions. By constructing an environmental map in real-time and leveraging the motion cues of detected individuals, RPF-Search effectively adapts to both topographic occlusions—utilizing a belief-guided search field—and dynamic occlusions through an observation-based potential field combined with fluid-field dynamics. Experimental results in both simulated and real-world scenarios demonstrate RPF-Search’s significant improvements in search efficiency and success rates compared to traditional person-search methods. This advancement offers a practical and reliable solution for personal assistance and human-robot collaboration. Future work could further enhance these results by incorporating advanced trajectory prediction models and exploring the use of semantic cues.

References

- [1] Q. Yan, J. Huang, Z. Yang, Y. Hasegawa, and T. Fukuda, “Human-following control of cane-type walking-aid robot within fixed relative posture,” IEEE/ASME Trans. Mechatronics, vol. 27, no. 1, pp. 537–548, 2021.

- [2] J. Chen and W.-j. Kim, “A human-following mobile robot providing natural and universal interfaces for control with wireless electronic devices,” IEEE/ASME Trans. Mechatronics, vol. 24, no. 5, pp. 2377–2385, 2019.

- [3] V. Isler, S. Kannan, and S. Khanna, “Randomized pursuit-evasion in a polygonal environment,” IEEE Trans. Robot., vol. 21, no. 5, pp. 875–884, 2005.

- [4] A. Goldhoorn, A. Garrell, R. Alquézar, and A. Sanfeliu, “Searching and tracking people in urban environments with static and dynamic obstacles,” Robot. and Auton. Syst., vol. 98, pp. 147–157, 2017.

- [5] A. Bayoumi, P. Karkowski, and M. Bennewitz, “Speeding up person finding using hidden markov models,” Robot. and Auton. Syst., vol. 115, pp. 40–48, 2019.

- [6] L. Bruckschen, K. Bungert, N. Dengler, and M. Bennewitz, “Predicting human navigation goals based on bayesian inference and activity regions,” Robot. and Auton. Syst., vol. 134, p. 103664, 2020.

- [7] N. Basilico and F. Amigoni, “Exploration strategies based on multi-criteria decision making for searching environments in rescue operations,” Autonomous Robots, vol. 31, pp. 401–417, 2011.

- [8] J. A. Placed and J. A. Castellanos, “A general relationship between optimality criteria and connectivity indices for active graph-slam,” IEEE Robot. Autom. Lett., vol. 8, no. 2, pp. 816–823, 2023.

- [9] F. Niroui, K. Zhang, Z. Kashino, and G. Nejat, “Deep reinforcement learning robot for search and rescue applications: Exploration in unknown cluttered environments,” IEEE Robot. Autom. Lett., vol. 4, no. 2, pp. 610–617, 2019.

- [10] R. Algabri and M.-T. Choi, “Target recovery for robust deep learning-based person following in mobile robots: Online trajectory prediction,” Applied Sciences, vol. 11, no. 9, p. 4165, 2021.

- [11] B.-J. Lee, J. Choi, C. Baek, and B.-T. Zhang, “Robust human following by deep bayesian trajectory prediction for home service robots,” in Proc. IEEE Int. Conf. Robot. Autom., 2018, pp. 7189–7195.

- [12] M. Kim, M. Arduengo, N. Walker, Y. Jiang, J. W. Hart, P. Stone, and L. Sentis, “An architecture for person-following using active target search,” ArXiv, vol. abs/1809.08793, 2018.

- [13] A. Goldhoorn, A. Garrell, R. Alquézar, and A. Sanfeliu, “Continuous real time pomcp to find-and-follow people by a humanoid service robot,” in IEEE Int. Conf. Humanoid Robots. IEEE, 2014, pp. 741–747.

- [14] M. Do Hoang, S.-S. Yun, and J.-S. Choi, “The reliable recovery mechanism for person-following robot in case of missing target,” in IEEE Int. Conf. Ubiquitous Robots and Ambient Intelligence (URAI). IEEE, 2017, pp. 800–803.

- [15] P. Henry, C. Vollmer, B. Ferris, and D. Fox, “Learning to navigate through crowded environments,” in Proc. IEEE Int. Conf. Robot. Autom., 2010, pp. 981–986.

- [16] K. Cai, D. Dugas, R. Siegwart, et al., “Sampling-based path planning in highly dynamic and crowded pedestrian flow,” IEEE Trans. Intell. Transp. Syst., vol. 24, no. 12, pp. 14 732–14 742, 2023.

- [17] D. Dugas, K. Cai, O. Andersson, N. Lawrance, R. Siegwart, and J. J. Chung, “Flowbot: Flow-based modeling for robot navigation,” in Proc. IEEE/RJS Int. Conf. Intell. Robots Syst., 2022, pp. 8799–8805.

- [18] J. Saraydaryan, F. Jumel, and O. Simonin, “Navigation in human flows: planning with adaptive motion grid,” in Proc. IEEE/RJS Int. Conf. Intell. Robots Syst. (IROS) Workshop CrowdNav, 2018.

- [19] S. S. Lodhi, N. Kumar, and P. K. Pandey, “Autonomous vehicular overtaking maneuver: A survey and taxonomy,” Vehicular Communications, vol. 42, p. 100623, 2023.

- [20] X. Chen, J. Wei, X. Ren, K. H. Johansson, and X. Wang, “Automatic overtaking on two-way roads with vehicle interactions based on proximal policy optimization,” in 2021 IEEE Intelligent Vehicles Symposium (IV). IEEE, 2021, pp. 1057–1064.

- [21] W. Schwarting, J. Alonso-Mora, L. Pauli, S. Karaman, and D. Rus, “Parallel autonomy in automated vehicles: Safe motion generation with minimal intervention,” in Proc. IEEE Int. Conf. Robot. Autom. IEEE, 2017, pp. 1928–1935.

- [22] Q. Ma, M. Li, G. Huang, et al., “Overtaking path planning for cav based on improved artificial potential field,” IEEE Trans. Veh. Technol., 2023.

- [23] H. Ye, J. Zhao, Y. Zhan, W. Chen, L. He, and H. Zhang, “Person re-identification for robot person following with online continual learning,” IEEE Robot. Autom. Lett., pp. 1–8, 2024.

- [24] A. Hornung, K. M. Wurm, M. Bennewitz, C. Stachniss, and W. Burgard, “OctoMap: An efficient probabilistic 3D mapping framework based on octrees,” Autonomous Robots, 2013.

- [25] K. Cai, W. Chen, C. Wang, S. Song, and M. Q.-H. Meng, “Human-aware path planning with improved virtual doppler method in highly dynamic environments,” IEEE Trans. Autom. Sci. Eng., vol. 20, no. 2, pp. 1304–1321, 2022.

- [26] C. Fulgenzi, A. Spalanzani, C. Laugier, and C. Tay, “Risk based motion planning and navigation in uncertain dynamic environment,” Inria,” Research Report, Oct. 2010.

- [27] K. Cai, R. Laha, Y. Gong, L. Chen, L. Zhang, L. F. Figueredo, and S. Haddadin, “Demonstration to adaptation: A user-guided framework for sequential and real-time planning,” Proc. IEEE/RJS Int. Conf. Intell. Robots Syst., p. 8, 2024.

- [28] K. Koide and J. Miura, “Identification of a specific person using color, height, and gait features for a person following robot,” Robot. and Auton. Syst., vol. 84, pp. 76–87, 2016.

- [29] H. Ye, J. Zhao, Y. Pan, W. Chen, L. He, and H. Zhang, “Robot person following under partial occlusion,” in Proc. IEEE Int. Conf. Robot. Autom., 2023, pp. 7591–7597.

- [30] N. M. Korobov, “Number-theoretic methods in approximate analysis,” 1963.

- [31] D. Dugas, K. Cai, O. Andersson, N. Lawrance, R. Siegwart, and J. J. Chung, “Flowbot: Flow-based modeling for robot navigation,” in Proc. IEEE/RJS Int. Conf. Intell. Robots Syst., 2022, pp. 8799–8805.

- [32] D. Helbing and P. Molnar, “Social force model for pedestrian dynamics,” Physical review E, vol. 51, no. 5, p. 4282, 1995.

- [33] B. X. Chen, R. Sahdev, and J. K. Tsotsos, “Integrating stereo vision with a cnn tracker for a person-following robot,” in Int. Conf. Comput. Vis. Syst. (ICVS). Springer, 2017, pp. 300–313.

- [34] P. Anderson, A. Chang, et al., “On evaluation of embodied navigation agents,” arXiv preprint arXiv:1807.06757, 2018.

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7a150448-1843-4643-bbd5-3115058ab130/hanjing.jpg) |

Hanjing Ye received his B.E. and M.S. degrees from Guangdong University of Technology in 2019 and 2022, respectively. He is currently pursuing his Ph.D. degree in Robotics at the Southern University of Science and Technology. His research interests involve robot person following, autonomous navigation and human-robot interaction. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7a150448-1843-4643-bbd5-3115058ab130/Kuanqi.jpg) |

Kuanqi Cai is currently a Research Fellow at the HRII Lab, Italian Institute of Technology (IIT). Prior to joining IIT, he was a Research Associate at the Technical University of Munich, from 2023 to 2024. In 2021, he was honored as a Robotics Student Fellow at ETH Zurich. He obtained his B.E. degree from Hainan University in 2018 and his M.E. degree from Harbin Institute of Technology in 2021. His current research interests focus on motion planning and human-robot interaction. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7a150448-1843-4643-bbd5-3115058ab130/zhanyu.jpg) |

Yu Zhan received his B.E. degree in microelectronics from Wuhan University in 2022. He is currently pursuing his Master’s degree from the Southern University of Science and Technology. His research interests include human pose estimation, human-robot interaction and robot vision. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7a150448-1843-4643-bbd5-3115058ab130/bingyi.jpg) |

Bingyi Xia received his B.E. and M.S. degrees from the Southern University of Science and Technology in 2020 and 2023, respectively. He is currently pursuing his Ph.D. degree with the Department of Electronic and Electrical Engineering at Southern University of Science and Technology, Shenzhen, China. His research interests include robot motion planning. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7a150448-1843-4643-bbd5-3115058ab130/Arash.jpg) |

Arash Ajoudani is the director of the Human-Robot Interfaces and Interaction (HRI²) laboratory at IIT and a recipient of the ERC grants Ergo-Lean (2019) and Real-Move (2023). He has coordinated multiple Horizon 2020 and Horizon Europe projects and received numerous awards, including the IEEE Robotics and Automation Society (RAS) Early Career Award (2021) and the KUKA Innovation Award (2018). A Senior Editor of IJRR and a member of the IEEE RAS Administrative Committee (2022-2024), his research focuses on physical human-robot interaction, mobile manipulation, and adaptive control. |

![[Uncaptioned image]](https://cdn.awesomepapers.org/papers/7a150448-1843-4643-bbd5-3115058ab130/hz.png) |

Hong Zhang received his PhD in Electrical Engineering from Purdue University in 1986. He was a Professor in the Department of Computing Science, University of Alberta, Canada for over 30 years before he joined the Southern University of Science and Technology (SUSTech), China, in 2020, where he is currently a Chair Professor. Dr. Zhang served as the Editor-in-Chief of the IROS Conference Paper Review Board (2020-2022) and is currently a member of the IEEE Robotics and Automation Society Administrative Committee (2023-25). He is a Fellow of IEEE and a Fellow of the Canadian Academy of Engineering. His research interests include robotics, computer vision, and image processing. |

APPENDIX

V-A Methodology Supplements

We additionally provide the method flows of our belief-guided search field and observation-based search field as shown in Algorithm. 1 and Algorithm. 2, respectively.

V-B Implementation Details and Metrics

| Simulated Environment | Factory | Hospital | Bookstore | ||||||

| Room1 | Room2 | Room3 | Dyna1 | Dyna2 | Dyna3 | Factory∗ | |||

| Size (mm) | 4070 | 2650 | 1413 | ||||||

| Num. of people | 1 | 1 | 1 | 9 | 9 | 11 | 20 | 20 | 9 |

| Target’s path length (m) | 40.0 | 55.0 | 50.0 | 57.0 | 57.0 | 57.0 | 48.3 | 28.6 | 17.9 |

| Robot | Kobuki differential robot with maximum 2.0 m/s | ||||||||

| Sensors | RGB-D camera (120° FoV, 0.03-10 m) and 2D laser rangefinder (0-20 m) | ||||||||

| Real-World Environment | Large U-turn | Long Hallway (S) | Long Hallway (M) | ||||||

| Size (mm) | 203 | 503 | 503 | ||||||

| Num. of people | 1 | 2 | 4 | ||||||

| Target’s path length (m) | 15 | 42 | 42 | ||||||

| Robot | Scout-Mini differential robot with maximum 1.8 m/s | ||||||||

| Sensors | Stereo camera (120° FoV, 0.03-10 m) | ||||||||

| Parameter | ||||||||

| Value | 1.5 m/s | 0.01 | 1.5 m | 1.3 m | 1.1 | 0.9 | 10.0 | 1.3 m/s |

The parameters of the proposed method are shown in Table. VII. In the simulated environments, our differential-drive robot is equipped with a 2D laser rangefinder (0-20 m) for navigation and an RGB-D camera (120° FoV, 0.03-10 m) for perception. The robot’s maximum velocity is 2.0 m/s, and the simulated people’s speeds range from 0.8 to 1.7 m/s. In our real-world experiments, we employ a Scout-Mini differential-drive robot equipped with a stereo camera that offers a 120° horizontal field of view (FoV) and a range of 0.03–10 meters. The stereo camera provides both RGB and depth images. To maintain consistency with our simulations, all planners and controllers are configured identically. For target person tracking and re-identification, we adopt the vision-based tracking method proposed in [23]. All parameters involving simulated and real-world environments are shown in Table VI.

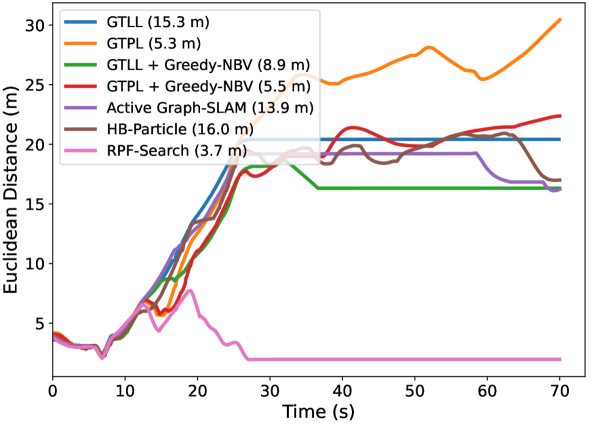

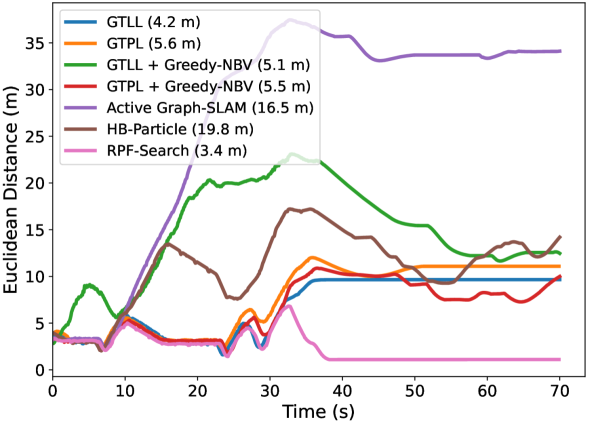

We additionally evaluate the following metric: Euclidean Distance to Target Over Time (EDTOT): An effective person-search approach should help the robot quickly recover the person-following, resulting in a smaller average distance to the target person, so we measure the Euclidean distance between the robot and the target person in each episode.

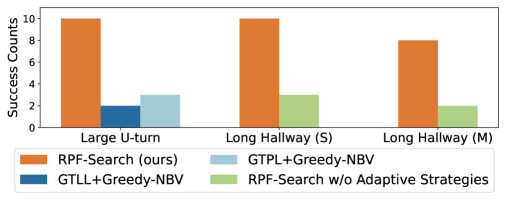

V-C Real-world Experiments

The real-world experiments were conducted in an indoor office environment featuring a long hallway and a large U-turn, specifically designed to test the performance of the algorithm under dynamic and topographic occlusions. In the topographic occlusion experiment (Fig. 1(a)), our proposed algorithm demonstrated significant advantages over GTLL[33] + Greedy-NBV and GTPL[12] + Greedy-NBV, which were the best-performing algorithms in previous comparisons. Notably, our approach achieved perfect tracking recovery, with ten successful runs out of ten — eight more than GTLL and seven more than GTPL, as shown in Fig. 11. This improvement is due to the limitations of GTLL + Greedy-NBV and GTPL + Greedy-NBV, as their reliance on local greedy search often results in suboptimal paths, such as frequent detours or overly conservative trajectories. In contrast, our algorithm leverages a probabilistic distribution to optimize the search process, reducing unnecessary exploration and producing more efficient and natural navigation paths.

In the dynamic occlusion experiments (Figs 1(b) and 1(c)), our algorithm consistently outperformed the compared methods. Unlike existing approaches, which struggle with dynamic occlusions, our algorithm integrates adaptive strategies—such as fluid-following and overtaking—that effectively address varying occlusion scenarios in real-world environments. These adaptive strategies significantly improve the robot’s ability to re-find the target person after occlusions, resulting in a higher success rate. Specifically, our method achieved 7 more successes in Long Hallway (S) and 8 more successes in Long Hallway (M) compared to the version without adaptive strategies, as shown in the experimental data (Fig.11).

Visualizations of the single-person and multi-person occlusion experiments are provided in Fig.12 and Fig.13, respectively. In the single-person occlusion scenario, an observation-based overtaking field is constructed using the motion cues of the occluder. Within this field, overtaking candidates (indicated by yellow points in the figure) are sampled and evaluated. The candidate with the minimum cost, as long as it is below a predefined threshold, is selected as the next search point. Through continuous selection of search points and navigation, the robot smoothly overtakes the occluder and successfully re-finds the target person.

In the multi-person occlusion scenario, our method first constructs the overtaking field. However, because the minimum overtaking cost exceeds a predefined threshold, a fluid field is created using the motion cues of visible occluders to ensure safe navigation and facilitate target searching. The robot follows the fluid field (shown in the second column) until an overtaking opportunity arises (shown in the third column), allowing the robot to resume tracking the target.

V-D Simulated Experiments

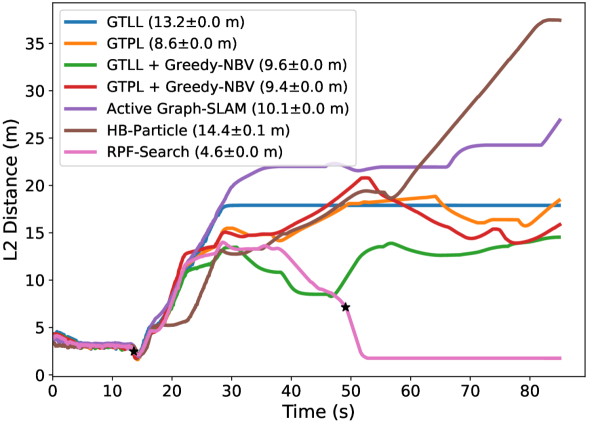

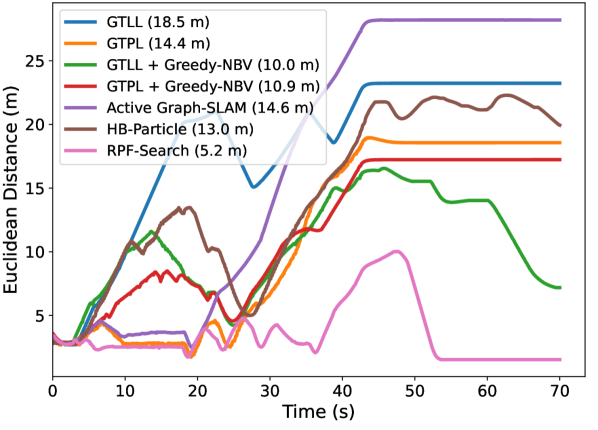

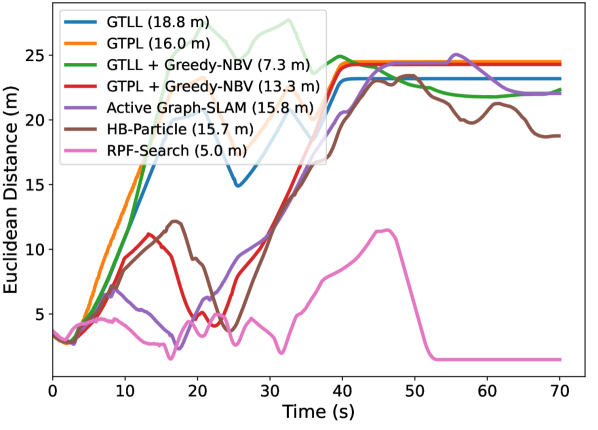

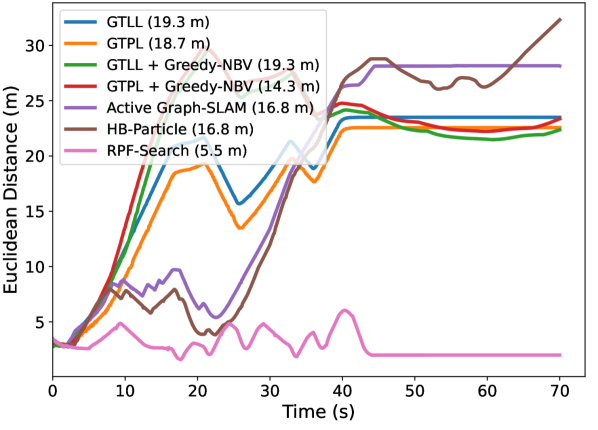

For topographic occlusion, as observed in Fig. 14(a), Fig. 14(b) and Fig. 14(c), when using our RPF-Search method, the distance between the robot and the person quickly converges to the desired following distance after tracking loss. Consequently, as indicated in the legends of these figures, our method achieves the smallest average Euclidean distance to the target, with 3.7 m in Room1, 3.4 m in Room2 and 6.4 m in Room3, surpassing the second-best baseline by reductions of 1.8 m, 0.8 m and 3.0 m, respectively. This demonstrates that our method can help the robot quickly re-find the target person and return to the person-following stage.

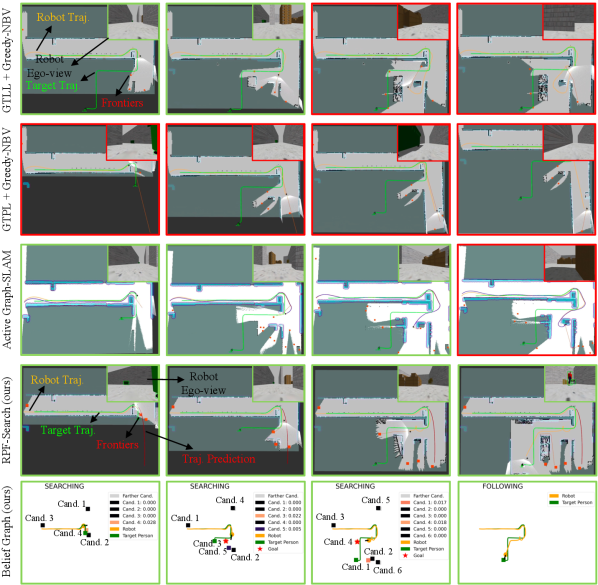

Moreover, we provide a person-search visualization of baselines and our method in Room3 as shown in Fig. 15 where the target person’s route involves making a U-turn corner and entering a room. The visualizations of our method are provided in the last two rows of Fig. 15. Initially, the trajectory prediction (depicted as a red line in the fourth row) directs the robot toward the corridor. However, our method effectively utilizes newly observed environmental information to penalize infeasible candidates through the inference factor (Eq. 3). This factor dictates that if the target person were indeed moving toward the corridor, the robot should have observed them by now. Consequently, as shown in the second belief-guided graph, the belief associated with the corridor candidate reduces to zero; specifically, the belief of ’Cand. 2’ is zero in the second graph. This prompts the robot to redirect its search toward higher-belief candidates within the room, i.e., ’Cand. 3,’ which holds a belief value of 0.022 in the second graph. Without this inference factor, the robot would pursue the target person into the corridor, following the trajectory prediction and ultimately resulting in a failed search.

Additionally, the probabilistic inheritance capability of the belief-guided field (Eq. 10) allows newly observed candidates to inherit belief from parents in a belief-decaying manner. This mechanism enables the robot to continuously adjust its focus toward more probable candidates until locating the target, as shown in the third and fourth graphs of Fig. 15. Due to probabilistic inheritance, as seen in the third graph, ’Cand. 4’ inherits belief from its predecessor in a decayed form, resulting in a belief of 0.018, which guides the robot to continue searching the room until the target person is found. If probabilistic inheritance were removed, the belief of ’Cand. 4’ would drop to zero due to its distance from the probability area defined by the trajectory prediction, causing the robot to navigate incorrectly and leading to a failed search. Our proposed probabilistic inheritance utilizes the local connectivity of newly produced and old frontiers, enabling an optimal selection of inside-room and outside-room explorations.

For dynamic occlusion, EDTOT results shown in Fig. 14(d), Fig. 14(e) and Fig. 14(f) also evidence the effectiveness of our method compared to other baselines. Our method can achieve the lowest average distance with 5.2 m in Dyna1 and 5.0 m in Dyna2 and 5.5 m in Dyna3, surpassing the second-best baseline by reductions of 4.8 m, 2.3 m and 8.8 m, respectively. These significant improvements confirm the effectiveness of our RPF-Search method in handling dynamic occlusions. By incorporating our proposed fluid-following and overtaking fields, the robot can perform safe and smooth search behaviors when dealing with dynamic occlusions.

V-E Limitations and Discussion

Our method demonstrates promising performance for person search in unknown and dynamic environments, but it is not yet an optimal solution. For instance, the current approach relies on a naive Intersection over Union (IoU)-based assessment to trigger the two fields. However, in more complex environments where the target person might transition from dynamic occlusion to topographic occlusion, this simplistic approach can mislead the robot. To address this, a more sophisticated occlusion identification method could be explored. One potential solution is leveraging a large language model or a visual-language model to engage in chain-of-thought reasoning, enabling the system to infer the target person’s occlusion state by analyzing occluders’ motion cues and the surrounding environment.

Furthermore, our method faces challenges in handling long-term disappearances caused by topographic occlusion. Currently, the exclusion of impossible candidates relies heavily on an inference factor that is strongly tied to the duration of the disappearance. When the target is lost for an extended period, this factor becomes uniform across all candidates (frontiers), forcing the method to depend solely on trajectory prediction and probability inheritance. This limitation can result in prolonged search times. To mitigate this, incorporating semantic cues could improve the exclusion process. For example, if prior knowledge indicates that the target person is looking for a drink with an empty bottle, locations such as meeting rooms or gyms would be less likely to contain the person, allowing for more focused and efficient searching.

Additionally, our method can be seamlessly integrated with map-dependent person-search approaches. By dynamically constructing occupancy maps and frontier-based topological graphs during the RPF process, it naturally generates a topo-metric map. The frontiers, serving as nodes, represent safe navigation points and provide maximal observational value. Moreover, during RPF, if the robot accompanies a single user in the same location for an extended period, these nodes can encode the user’s appearance frequency, enabling a more efficient and targeted person search.