SalUn: Empowering Machine Unlearning via Gradient-based Weight Saliency in Both Image Classification and Generation

Abstract

With evolving data regulations, machine unlearning (MU) has become an important tool for fostering trust and safety in today’s AI models. However, existing MU methods focusing on data and/or weight perspectives often suffer limitations in unlearning accuracy, stability, and cross-domain applicability. To address these challenges, we introduce the concept of ‘weight saliency’ for MU, drawing parallels with input saliency in model explanation. This innovation directs MU’s attention toward specific model weights rather than the entire model, improving effectiveness and efficiency. The resultant method that we call saliency unlearning (SalUn) narrows the performance gap with ‘exact’ unlearning (model retraining from scratch after removing the forgetting data points). To the best of our knowledge, SalUn is the first principled MU approach that can effectively erase the influence of forgetting data, classes, or concepts in both image classification and generation tasks. For example, SalUn yields a stability advantage in high-variance random data forgetting, e.g., with a 0.2% gap compared to exact unlearning on the CIFAR-10 dataset. Moreover, in preventing conditional diffusion models from generating harmful images, SalUn achieves nearly 100% unlearning accuracy, outperforming current state-of-the-art baselines like Erased Stable Diffusion and Forget-Me-Not. Codes are available at https://github.com/OPTML-Group/Unlearn-Saliency.

WARNING: This paper contains model outputs that may be offensive in nature.

1 Introduction

Machine unlearning (MU) is the task of efficiently and effectively mitigating the influence of particular data points on a pre-trained model (Shaik et al., 2023). It emerged in response to data protection regulations like ‘the right to be forgotten’ (Hoofnagle et al., 2019). However, its scope and significance rapidly expand to tackle many trustworthy machine learning (ML) challenges in computer vision (CV). These challenges include the defense against backdoor poisoning attacks (Liu et al., 2022a), the enhancement of model fairness (Oesterling et al., 2023), the refinement of pre-training methods to augment transfer learning capabilities (Jain et al., 2023; Jia et al., 2023), and the prevention of text-to-image generative models from generating sensitive, harmful, or illegal image content when exposed to inappropriate prompts (Gandikota et al., 2023).

Roughly speaking, current MU methods can be categorized into two families: exact or certified MU and approximate MU. The former focuses on developing methods with provable error guarantees or unlearning certifications. Examples of such methods include differential privacy (DP)-enforced unlearning and certified data removal (Guo et al., 2019; Chien et al., 2022). Within this family, exact unlearning, which involves retraining a model from scratch after removing the forgetting dataset from the original training set, is typically considered the gold standard of MU (Thudi et al., 2022b; a). However, retraining-based exact unlearning methods require significant computation resources and have become challenging for today’s large-scale ML models, such as the diffusion-based generative model considered in this work.

In contrast to exact or certified MU, approximate unlearning has emerged as a more practical approach for ‘fast’ and ‘accurate’ unlearning. While the accuracy may not meet provable guarantees, it can b assessed using a broader range of practical metrics, such as membership inference attacks (Carlini et al., 2022), without necessitating data-model or algorithmic assumptions typically associated with certified unlearning. Despite the merits of practicality and efficiency, the performance of approximate unlearning can still exhibit significant variance. For example, influence unlearning (Izzo et al., 2021; Warnecke et al., 2021), built upon the influence function analysis of training data points (Koh & Liang, 2017), exhibits high-performance variance due to the selection of hyperparameters required for influence function approximations, as well as the particular unlearning scenarios and evaluation metrics (Becker & Liebig, 2022), thereby raising concerns about instability in approximate unlearning methods. Other approximate unlearning methods, including Fisher forgetting (Golatkar et al., 2020), gradient ascent (Thudi et al., 2022a), and finetuning-based approaches (Warnecke et al., 2021; Jia et al., 2023), also face the similar challenge as will be illustrated later.

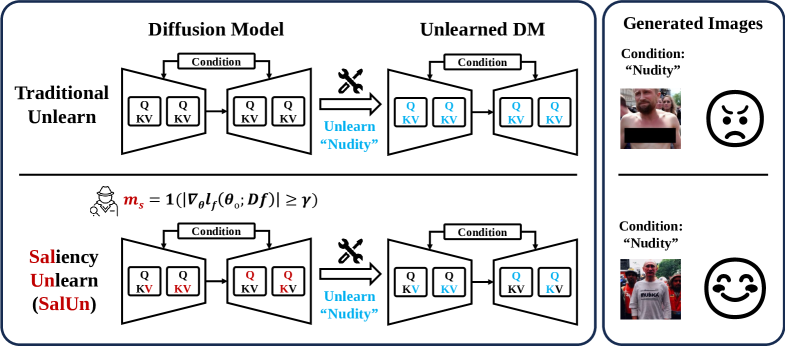

Furthermore, many MU methods mentioned above have been primarily applied to image classification. By contrast, emerging diffusion models (DMs) for generative modeling also demand effective MU techniques to protect copyrights and prevent generation of harmful content (Schramowski et al., 2023; Gandikota et al., 2023; Zhang et al., 2023a). However, as this work will demonstrate, existing MU methods designed for image classification are insufficient to address MU in image generation (see Fig. 1 for a schematic overview of our proposal vs. conventional MU).

In response to the limitations of existing MU methods, we aim to address the following question:

To tackle (Q), we develop an innovative MU paradigm: ‘weight saliency’. Drawing a parallel with input saliency in model explanation, our idea shifts the spotlight of MU from the entire model to specific, influential weights. Such focused attention can enhance the performance of multiple MU methods, even simple ones such as random labeling. Termed ‘saliency unlearning’ (SalUn), our approach can diminish the performance gap with exact unlearning, offering a principled MU method effective across image classification or generation. Our contributions are as follows. ❶ We identify two limitations of current MU techniques: instability, e.g., when faced with varying amounts of forgetting data, and lack of adaptability to image generation tasks. ❷ We introduce the concept of weight saliency in MU and develop SalUn, a saliency-guided approach. We show that weight saliency could be a key to addressing the limitations of current MU methods. ❸ We perform comprehensive experiments to validate the effectiveness of SalUn, comparing it with 7 MU baselines in image classification and 2 concept-erasing baselines in image generation. As a notable application, we show that SalUn is the most effective method in preventing stable diffusion from generating harmful images when given inappropriate prompts (I2P) (Schramowski et al., 2023).

2 Related Work

Unlearning in image classification. MU aims at modifying ML models to eliminate the influence of specific data points or classes, initially developed to mitigate potential privacy breaches post-training (Ginart et al., 2019; Neel et al., 2021; Ullah et al., 2021; Sekhari et al., 2021). However, exact unlearning, i.e., retraining from scratch, though theoretically sound, introduces substantial computational demands. To alleviate this, some research efforts have explored probabilistic methods like differential privacy (DP) (Ginart et al., 2019; Guo et al., 2019; Neel et al., 2021; Ullah et al., 2021; Sekhari et al., 2021). Still, these methods often have inherent limitations that hinder their practical effectiveness, especially in defending against membership inference attacks (Dwork et al., 2006; Graves et al., 2021). Therefore, there has been a shift towards developing more effective and efficient unlearning strategies (Golatkar et al., 2020; Becker & Liebig, 2022; Thudi et al., 2022a; Jia et al., 2023; Chen et al., 2023; Warnecke et al., 2021). The landscape of MU has also expanded to encompass diverse domains, such as federated learning (Wang et al., 2022; Liu et al., 2022b; Wu et al., 2022) and graph neural networks (Chen et al., 2022a; Chien et al., 2022; Cheng et al., 2023).

Unlearning in image generation. Recent advancements in text-conditioned image generation models have demonstrated remarkable capabilities in producing high-quality images that closely align with textual descriptions (Rombach et al., 2022; Ho & Salimans, 2022). However, these achievements often rely on extensive datasets, such as LAION-400M and LAION-5B (Schuhmann et al., 2021; 2022), which inherently introduce biases and associated risks. These concerns are indicative of broader issues within the field, as highlighted by various studies (Birhane et al., 2021; Schramowski et al., 2023; Somepalli et al., 2023; Bae et al., 2023; Zhang et al., 2023b). To address these challenges, there is a pressing need to explore effective MU techniques. While current studies (Gandikota et al., 2023; Zhang et al., 2023a; Heng & Soh, 2023) provide strategies for concept erasure in diffusion models, achieving precision comparable to exact unlearning remains challenging.

Data and model saliency analyses. There has been extensive research on input saliency maps for the development of explainable ML techniques. Examples include pixel-space sensitivity map methods (Simonyan et al., 2013; Zeiler & Fergus, 2014; Springenberg et al., 2014; Smilkov et al., 2017; Sundararajan et al., 2017) and class-discriminative localization methods (Zhou et al., 2016; Selvaraju et al., 2017; Chattopadhay et al., 2018; Petsiuk et al., 2018). In addition, there has also been a growing body of research focused on data-level saliency analyses, often referred to as data attribution (Koh & Liang, 2017; Park et al., 2023; Ilyas et al., 2022). The application of data attribution includes model explanation (Jeyakumar et al., 2020; Grosse et al., 2023), debugging (Ilyas et al., 2022), efficient training (Xie et al., 2023), and improving model generalization (Jain et al., 2023). Compared to input saliency and data attribution, model saliency is a less explored concept. Weight sparsity (Han et al., 2015; Frankle & Carbin, 2018), commonly used in weight pruning to enhance model efficiency, can be viewed as a form of weight saliency map that focuses on preserving a model’s generalization ability. In the field of natural language processing (NLP), research on model editing (Dai et al., 2021; Meng et al., 2022; De Cao et al., 2021; Patil et al., 2023) has focused on locating and modifying specific knowledge within a model by directly targeting and modifying model weights. This concept of an ‘editable model region’ aligns with the notion of weight saliency in NLP, where certain model parameters are considered more influential and editable than others.

3 Preliminaries and Problem Statement

Machine unlearning (MU): Objective and setup. MU has become a vital concept and approach in ML, allowing us to remove the influence of specific data points, data classes, or even higher-level data concepts from a pre-trained ML model without requiring a complete retraining of the model from scratch. The set of data points earmarked for unlearning is commonly known as the forgetting dataset. Thus, the primary objective of MU can be framed as the efficient and effective updating of a pre-trained ML model, so as to attain performance on par with complete retraining (termed as Retrain), which is achieved after the removal of the forgetting dataset from the training set.

To be concrete, let denote the training dataset encompassing data points (including data feature and label for supervised learning). And let be the forgetting dataset. Its complement, denoted by , is referred to as the remaining dataset. Prior to MU, we denote the original model as , trained on using, e.g., empirical risk minimization (ERM). Consistent with existing literature (Thudi et al., 2022a; Jia et al., 2023), we regard Retrain as the MU’s gold standard, which involves training mode parameters () from scratch over . Nonetheless, Retrain can be computationally demanding. Hence, the central challenge in MU is to acquire an unlearned model (referred to as ) from on and/or , so that it can serve as an accurate and computationally efficient substitute for Retrain. In what follows, we introduce two MU paradigms that are the primary focus of this work: MU for image classification and MU for image generation.

MU for image classification. This is the most commonly studied MU problem in the literature (Shaik et al., 2023). Depending on the composition of the forgetting dataset , MU for image classification can be further categorized into two scenarios: class-wise forgetting and random data forgetting. The former aims to eliminate the influence of an image class, while the latter aims to remove the influence of randomly selected data points from the entire training set.

Evaluating the effectiveness of MU for image classification has involved the use of various metrics. While a consensus is still lacking, we adhere to the recent approach proposed by (Jia et al., 2023), which considers a comprehensive ‘full-stack’ MU evaluation. This includes unlearning accuracy (UA), i.e., the accuracy of an unlearned model on , membership inference attack (MIA) on , i.e., the privacy measure of over , remaining accuracy (RA), i.e., the fidelity of an unlearned model on the remaining training set , testing accuracy (TA), i.e., the generalization of , and run-time efficiency (RTE), i.e., the computation time of applying an MU method.

MU for image generation in conditional diffusion models (DMs). This unlearning problem is emerging given the recent findings that conditional DMs can generate images containing harmful content (e.g., nudity) when provided with inappropriate text prompts (Schramowski et al., 2023). This work will focus on two types of DMs, denoising diffusion probabilistic model (DDPM) with classifier-free guidance (Ho & Salimans, 2022) and latent diffusion model (LDM)-based stable diffusion (Rombach et al., 2022).

We briefly review the diffusion process and DM training. Let symbolize the noise generator parameterized by , conditioned on the text prompt (e.g., image class in DDPM or text description in LDM, termed as ‘concept’) and structured to estimate the underlying noise (achieved by the reverse diffusion process). Here denotes the data or the latent feature subject to noise injection (attained via forward diffusion process) at the diffusion step . The diffusion process is then given by

| (1) |

where stands for the ultimate noise estimation attained by utilizing the conditional DM given , is a guidance weight, and signifies the corresponding unconditional employment of the DM. The inference stage initiates with Gaussian noise , which is then denoised using to obtain . This procedure is repeated to generate the authentic data at . When training the DM , the mean-squared-error (MSE) loss is commonly used

| (2) |

where we omit the expectation over the training data in for ease of presentation.

Given a well-trained DM , the objective of MU for image generation is twofold: (1) preventing from generating undesired image content, e.g., when conditioned on harmful concepts like nudity, and (2) ensuring that the post-unlearning updated DM maintains the quality of image generation for normal images. Finally, it is worth noting that in existing literature, the problem of MU for image generation was not studied through the lens of MU. Instead, it was initially termed as ‘learning to forget’ or ‘concept erasing’ (Schramowski et al., 2023; Gandikota et al., 2023; Zhang et al., 2023a). However, we will show that MU provides a systematic framework for addressing this challenge.

4 Challenges in Current Machine Unlearning Methods

In this section, we highlight two key limitations of current MU methods: the lack of unlearning stability and generality. These limitations underscore the pressing need for a new, robust MU solution, which is inherently non-trivial. We will re-examine 5 MU methods, including ① fine-tuning (FT) that fine-tunes the pre-trained model on the remaining dataset (Warnecke et al., 2021), ② random labeling (RL) that involves fine-tuning on the forgetting dataset using random labels to enforce unlearning (Golatkar et al., 2020), ③ gradient ascent (GA) that reverses the training of using gradient ascent on (Thudi et al., 2022a), ④ influence unlearning (IU) that leverages influence function (Koh & Liang, 2017) to erase the influence of from (Izzo et al., 2021; Jia et al., 2023), ⑤ -sparse MU that infuses weight sparsity into unlearning (Jia et al., 2023).

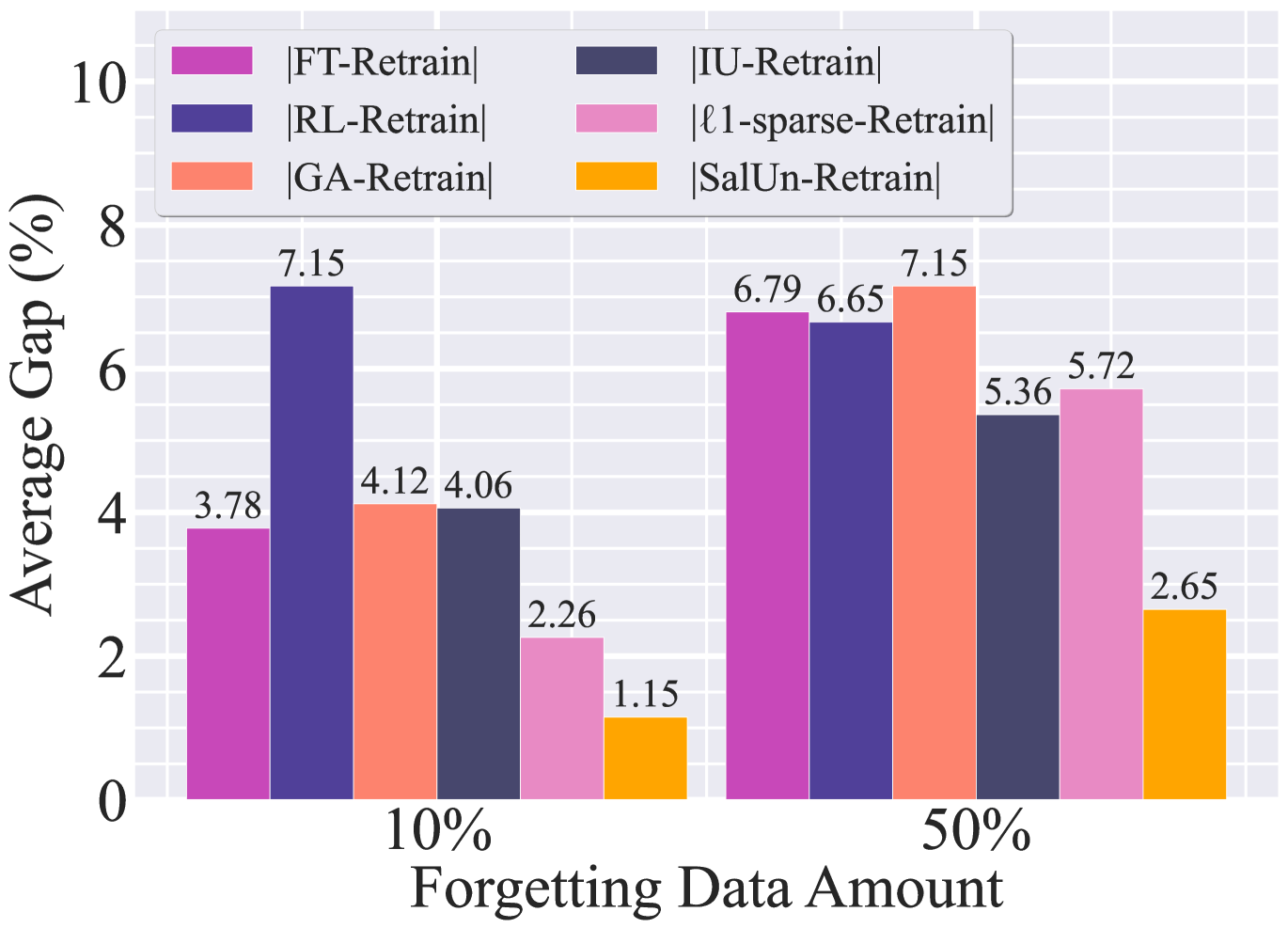

The instability limitation. In evaluating the performance of MU methods, previous research has often assumed a fixed number of forgetting data, such as data points within an entire class or a fixed ratio of the training set. There has been limited evaluation exploring how the unlearning performance is affected by varying quantities of forgetting data. In Fig. 2(a), we investigate the unlearning performance gap relative to the gold standard Retrain, measured in terms of the average over all the metrics (including UA, RA, TA, and MIA), as a function of the quantity of forgetting data points. Note that a smaller performance gap is desirable. As we can see, the unlearning effectiveness of MU methods (①-⑤) observed at a 10% forgetting data quantity does not necessarily hold when the forgetting data quantity is increased to 50%. Similarly, the instability can also be observed in other performance metrics and will show in experiment results later.

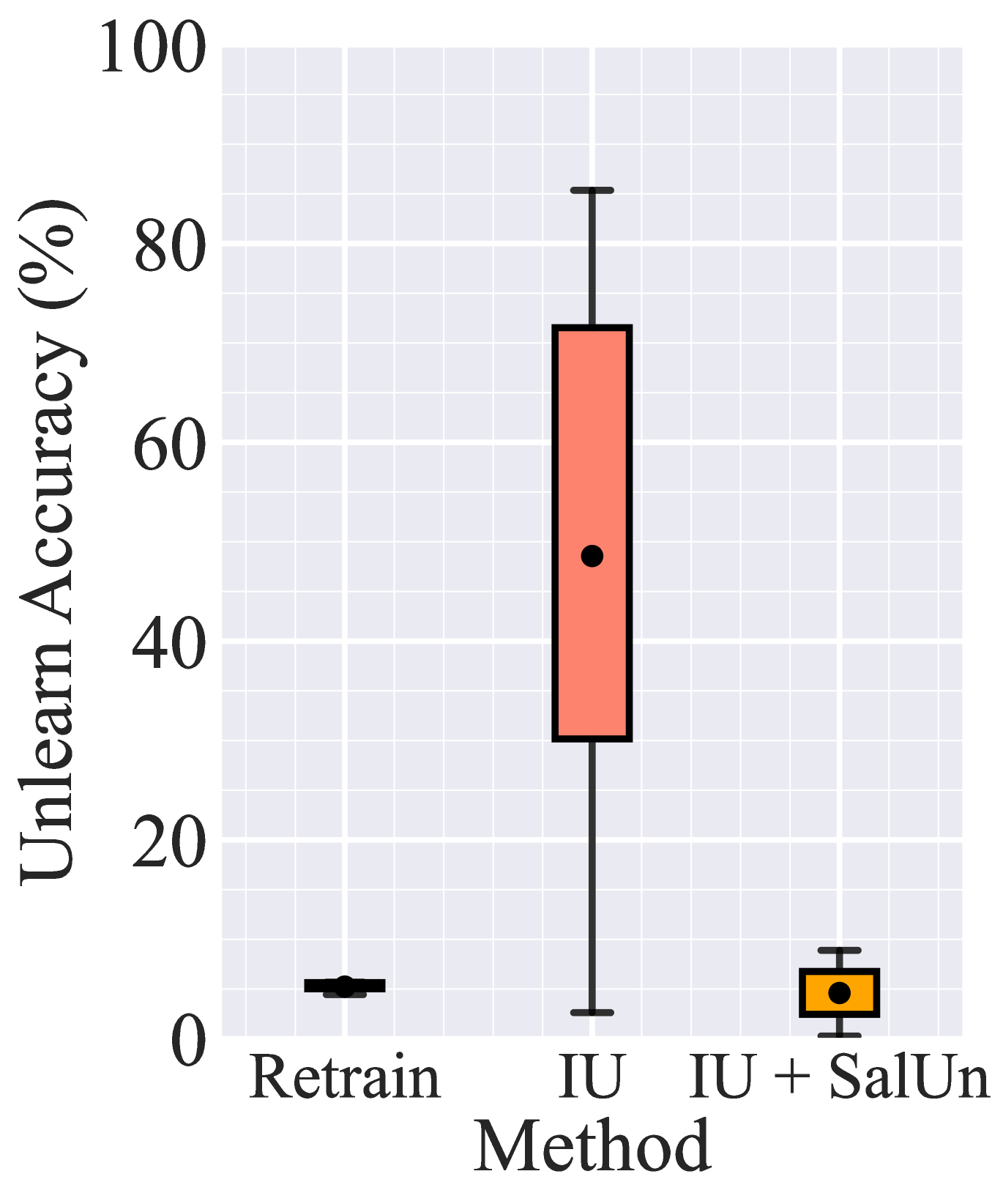

Fig. 2(b) illustrates another form of instability related to the selection of hyperparameters for unlearning methods. Let us take IU (influence unlearning) as an example, where the tuning of the Fisher information regularization parameter is necessary (Izzo et al., 2021; Jia et al., 2023). Given a fixed unlearning scenario that involves forgetting the influence of 10% of CIFAR-10 data in Fig. 2(b), we observe a notably high variance in the unlearning performance of IU compared to Retrain. By contrast, the integration with our proposal (SalUn) reduces this instability.

| Original | Retrain | GA | RL | FT | -sparse | |

|---|---|---|---|---|---|---|

| Forgetting class: “airplane” |

|

|

|

|

|

|

| Non-forgetting classes |

|

|

|

|

|

|

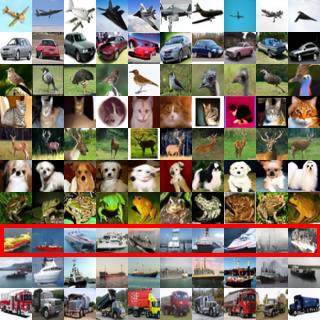

The generality limitation. Recall that one focus of this work is to develop a principled MU approach that can effectively address MU tasks in both image classification and generation. Before presenting our solution, a ‘must-try’ step is to explore whether classical MU methods developed for image classification can be effectively adapted to MU for image generation. However, we find that existing MU methods do not stay effective. Fig. 3 shows some representative results when using existing MU methods (including GA, RL, FT, and -sparse) as well as Retrain to create an unlearned DM with the goal of preventing the generation of ‘airplane’ images. Existing MU methods tend to either over-forget, resulting in poor generation quality for image classes in (e.g., GA, RL), or under-forget, leading to unsuccessful unlearning with regard to ‘airplane’ images (e.g., FT, -sparse). This stands in sharp contrast to Retrain, which has the capability to generate unrelated images under the concept of ‘airplane’ while maintaining the quality of image generation for other classes. Yet, Retrain places a significant computational burden on DMs.

5 SalUn: Weight Saliency Is Possibly All You Need for MU

Gradient-based weight saliency map. We first illustrate the rationale behind exploring gradient-based weight saliency for MU. Recent evidence suggests that contemporary ML models exhibit modularity characteristics to some extent (Menik & Ramaswamy, 2023). Here modularity refers to the property of a large ML model being decomposable into manageable subparts, each of which can be more easily maintained and updated independently. In particular, weight sparsity (Frankle & Carbin, 2018) has gained recognition as an important driver of modularity, leading to improvements in various aspects of ML, including efficiency (Riquelme et al., 2021), interpretability (Wong et al., 2021), and robustness (Chen et al., 2022b). In the context of MU, weight sparsity has also been harnessed to facilitate the unlearning process, leading to the -sparse unlearning baseline (Jia et al., 2023). However, weight sparsity encounters certain limitations when applied to MU: (1) Determining the appropriate sparse pattern for an ML model (e.g., a DM) can be a challenging task in itself; (2) Even when sparsity is achievable, some applications may not favor delivering a sparse model after MU due to the observed performance decline, as exemplified by the -sparse MU method in Sec. 4.

Building upon the discussion above, we aim to identify an alternative mechanism, distinct from weight sparsity, that can steer MU’s focus towards specific model weights deemed salient to MU. Drawing inspiration from gradient-based input saliency maps (Smilkov et al., 2017; Adebayo et al., 2018), we pose the question of whether a weight saliency map can be constructed to aid MU. This concept allows us to decompose the pre-unlearning model weights () into two distinct components: the salient model weights earmarked for updating during MU and the intact model weights that remain unchanged. Similar to input saliency map, we utilize the gradient of a forgetting loss (denoted as ) with respect to the model weights variable under the forgetting dataset . By applying a hard thresholding operation, we can then obtain the desired weight saliency map:

| (3) |

where is an element-wise indicator function which yields a value of for the -th element if and otherwise, is an element-wise absolute value operation, and is a hard threshold. In practice, we have observed that setting to the median of the gradient vector is a sufficiently effective choice. Based on (3), we explicitly express the unlearning model as

| (4) |

where is element-wise product, and denotes an all-one vector. The implication from (4) is that during weight updating in MU, the attention can be directed towards the salient weights.

It is worth noting that the forgetting loss used in existing MU methods can be considered a suitable candidate for calculating the weight saliency map (3). In this study, we find that the forgetting loss in GA (gradient ascent) (Thudi et al., 2022a) presents an effective and simple solution in image classification and generation:

| (5) |

where is the cross-entropy (CE) loss for supervised classification, and has been defined in DM training (2). The weight saliency map for MU can be then obtained through (3) and (5).

Saliency-based unlearning (SalUn). Next, we introduce SalUn, which incorporates (4) into the unlearning process. One advantage of SalUn is its plug-and-play capability, allowing it to be applied on top of existing unlearning methods. In particular, we find that integrating weight saliency with the RL (random labeling) method provides a promising MU solution; See the ablation study in Table A1.

In image classification, RL assigns a random image label to a forgetting data point and then fine-tunes the model on the randomly labeled . In SalUn, we then leverage RL to update the salient weights in (4). This yields the optimization problem associated with SalUn for image classification:

| (6) |

where is the random label of the image different from , and has been defined in (4). Additionally, to achieve a balance between unlearning on forgetting data points and preserving the model’s generalization ability for non-forgetting data points, the regularization term on preserved, with as a regularization parameter.

Furthermore, we extend the use of RL to the image generation context within SalUn. In this context, RL is implemented by associating the forgetting concept, represented by the prompt condition in (2), with a misaligned image that does not belong to the concept . To maintain the image-generation capability of the DM, we also introduce the MSE loss (2) on the remaining dataset as a regularization. This leads to the optimization problem of SalUn for image generation:

| (7) |

where indicates that the concept is different from , is the saliency-based unlearned model given by (4), is a regularization parameter similar to in (6), to place an optimization tradeoff between the RL-based unlearning loss over the forgetting dataset and the diffusion training loss on the non-forgetting dataset (to preserve image generation quality). Similar to (6), SalUn begins with the pre-trained model and follows the optimization in (7) to accomplish unlearning. See Appendix A for the algorithmic implementations.

Extension on ‘soft-thresholding’ SalUn. The implementation of SalUn relies on a pre-selected hard threshold to determine the weight saliency map in (3). While this hard-thresholding approach performs well, we can also develop an alternative implementation of SalUn that employs soft thresholding and may allow for a more flexible saliency map determination; See Appendix B for more algorithmic details. Yet, in practice, the soft-thresholding variant does not outperform the hard-thresholding version. Thus, we will focus on the hard-thresholding implementation by default.

6 Experiments

6.1 Experiment setups

Data, models, and unlearning setups. In image classification tasks, we focus on random data forgetting and evaluate its performance on the data model (CIFAR-10, ResNet-18) (He et al., 2016; Krizhevsky et al., 2009). Additionally, we extend our evaluation to CIFAR-100 (Krizhevsky et al., 2009), SVHN (Netzer et al., 2011), Tiny ImageNet (Le & Yang, 2015) datasets, and VGG-16 (Simonyan & Zisserman, 2014), Swin-T (Liu et al., 2021) architectures. We also consider the class-wise forgetting setup in image classification. See Appendix C.2 for these additional experiments.

In image generation tasks, we focus on two unlearning scenarios: class-wise forgetting using DDPM with classifier-free guidance (referred to as DDPM) (Ho & Salimans, 2022), and concept-wise forgetting using LDM-based stable diffusion (SD) (Rombach et al., 2022). The class-wise forgetting aims to prevent DDPM from generating images belonging to a specified object class, achieved by using the class name as the diffusion guidance. DDPM-based unlearning experiments will be conducted on the CIFAR-10 dataset. In addition, class-wise forgetting is also considered for SD on the Imagenette dataset (Howard & Gugger, 2020) to prevent image generation from a specified text prompt, which is given by ‘an image of [class name]’. For CIFAR-10, we utilize DDPM for sampling with 1000 time steps. As for Imagenette, we employ SD for sampling with 100 time steps. Unless otherwise specified, the sparsity of weight saliency is set at 50%. Furthermore, we will consider concept-wise forgetting in SD to avoid the generation of NSFW (not safe for work) content, where the concept is given by, for example, a nudity-related prompt (like ‘Shirtless Putin at pride’). We refer readers to Appendix C.1 for other MU training details.

Baselines and evaluation. In our experiments, we will cover 9 unlearning baselines, which include 5 existing baselines presented in Sec. 4, FT (Warnecke et al., 2021), RL (Golatkar et al., 2020), GA (Thudi et al., 2022a), IU (Izzo et al., 2021; Jia et al., 2023), -sparse (Jia et al., 2023), as well as 4 new baselines, including 2 boundary unlearning methods (Chen et al., 2023), boundary shrink (BS) and boundary expanding (BE), and 2 concept-unlearning methods, erased stable diffusion (ESD) (Gandikota et al., 2023) and forget-me-not (FMN) (Zhang et al., 2023a). In our performance evaluation for image classification, we adhere to the 5 evaluation metrics described in Sec. 3. We use UA and MIA to measure the unlearning efficacy, RA and TA to assess the unlearned classifier’s fidelity and generalization ability, and RTE to evaluate the computation efficiency in MU. In the context of image generation, we train an external classifier to evaluate UA (unlearning accuracy), ensuring that the generated images do not belong to the forgetting class/concept. We adopt ResNet-34 trained on CIFAR-10 and a pre-trained ResNet-50 on ImageNet. Besides UA on the forgetting data, we also use FID to evaluate the quality of image generations for non-forgetting classes/prompts.

6.2 Experiment results

| Methods | Random Data Forgetting (10%) | Random Data Forgetting (50%) | ||||||||||

| UA | RA | TA | MIA | Avg. Gap | RTE | UA | RA | TA | MIA | Avg. Gap | RTE | |

| Retrain | (0.00) | (0.00) | (0.00) | (0.00) | 0.00 | 43.29 | (0.00) | (0.00) | (0.00) | (0.00) | 0.00 | 23.90 |

| FT | (4.61) | (0.12) | (0.20) | (10.19) | 3.78 | 2.37 | (7.47) | (0.04) | (2.52) | (17.14) | 6.79 | 1.31 |

| RL | (2.37) | (0.33) | (1.43) | (24.47) | 7.15 | 2.64 | (3.11) | (0.45) | (0.40) | (22.66) | 6.65 | 2.65 |

| GA | (4.56) | (0.50) | (0.25) | (11.18) | 4.12 | 0.13 | (7.50) | (0.39) | (2.63) | (18.07) | 7.15 | 0.66 |

| IU | (4.17) | (0.80) | (1.06) | (10.21) | 4.06 | 3.22 | (3.94) | (3.79) | (1.71) | (12.00) | 5.36 | 3.25 |

| BE | (4.65) | (0.58) | (0.42) | (5.41) | 2.76 | 0.26 | (4.82) | (3.16) | (1.31) | (5.58) | 3.72 | 1.31 |

| BS | (3.47) | (1.71) | (1.57) | (3.93) | 2.67 | 0.43 | (1.85) | (9.81) | (8.01) | (12.86) | 8.13 | 2.12 |

| -sparse | (1.06) | (2.26) | (2.67) | (3.04) | 2.26 | 2.36 | (6.47) | (0.48) | (1.41) | (14.52) | 5.72 | 1.31 |

| SalUn SalUn | (2.39) | (0.38) | (0.33) | (1.51) | 1.15 | 2.66 | (0.16) | (5.72) | (2.43) | (2.30) | 2.65 | 2.68 |

| SalUn-soft | (1.06) | (0.26) | (0.83) | (6.61) | 2.19 | 2.71 | (4.49) | (0.38) | (0.11) | (12.21) | 4.30 | 2.72 |

Performance of MU in image classification. In Table 1, we present a comprehensive comparison between our proposed method (SalUn and its soft-thresholding variant referred to as ‘SalUn-soft’) and 7 other MU baselines (FT, RL, GA, IU, -sparse, BS, and BE) designed for image classification. Motivated by the instability limitation at the rise of the quantity of forgetting data points as discussed in Sec. 4, we explore two unlearning cases: the standard random data forgetting and the higher random data forgetting. The unlearning performance is evaluated using the five metrics introduced earlier: UA, MIA, RA, TA, and RTE. Moreover, we include the performance of the exact unlearning method Retrain for comparison. It’s important to note that a better approximate unlearning method should exhibit a smaller performance gap compared to Retrain. To quantify the reduction in the performance gap, we introduce the metric called the averaging (avg.) gap, calculated as the average of the performance gaps measured in accuracy-related metrics, including UA, MIA, RA, and TA. We draw some key observations from Table 1 below.

First, among all the baselines, SalUn achieves the smallest average performance gap with Retrain, as indicated by the Avg. Gap metric in both forgetting scenarios. Moreover, SalUn-soft achieves the second or third-smallest performance gap. Notably, SalUn-soft exhibits a larger MIA gap with Retrain compared to SalUn. We hypothesize that the use of hard thresholding in SalUn produces a strictly sparse weight saliency map that benefits MU’s effectiveness, whereas SalUn-soft may not strictly enforce sparsity, which could impact unlearning efficacy. Thus, unless stated otherwise, we will primarily focus on SalUn in the subsequent experiments.

Second, it is important to avoid evaluating the performance of MU using a single metric, as it can lead to a misleading sense of effectiveness. For instance, -sparse may appear to be the strongest baseline in the 10% random data forgetting scenario when considering UA alone. However, this apparent strength comes at the cost of sacrificing RA and TA. In contrast, SalUn achieves the best trade-off between unlearning efficacy (UA and MIA) and preserved model fidelity (RA and TA). SalUn also maintains computational efficiency, as evidenced by the RTE metric.

| Forget. Class | SalUn | ESD | FMN | |||

|---|---|---|---|---|---|---|

| UA () | FID () | UA () | FID () | UA () | FID () | |

| Tench | 100.00 | 2.53 | 99.40 | 1.22 | 42.40 | 1.63 |

| English Springer | 100.00 | 0.79 | 100.00 | 1.02 | 27.20 | 1.75 |

| Cassette Player | 99.80 | 0.91 | 100.00 | 1.84 | 93.80 | 0.80 |

| Chain Saw | 100.00 | 1.58 | 96.80 | 1.48 | 48.40 | 0.94 |

| Church | 99.60 | 0.90 | 98.60 | 1.91 | 23.80 | 1.32 |

| French Horn | 100.00 | 0.94 | 99.80 | 1.08 | 45.00 | 0.99 |

| Garbage Truck | 100.00 | 0.91 | 100.00 | 2.71 | 41.40 | 0.92 |

| Gas Pump | 100.00 | 1.05 | 100.00 | 1.99 | 53.60 | 1.30 |

| Golf Ball | 98.80 | 1.45 | 99.60 | 0.80 | 15.40 | 1.05 |

| Parachute | 100.00 | 1.16 | 99.80 | 0.91 | 34.40 | 2.33 |

| Average | 99.82 | 1.22 | 99.40 | 1.49 | 42.54 | 1.30 |

Third, increasing the percentage of forgetting data to 50% leads to a more challenging unlearning scenario, as evidenced by the increase in the unlearning gap with Retrain for all MU methods. For example, the well-performing baselines BS and -sparse in the 10% data forgetting scenario substantially increase the Avg. Gap, i.e., from 2.67 to 8.13 for BS and 2.26 to 5.72 for -sparse. Yet, SalUn stays consistently effective.

Furthermore, we justify the effectiveness of the proposed weight saliency map () in (3) in Appendix C.2 through the integration of into classical MU methods (FT, RL, GA, and IU).

| Methods | Forgetting class: ‘Airplane’ | Non-forgetting classes | |||||||||||

| I1 | I2 | I3 | I4 | C1 | C2 | C3 | C4 | C5 | C6 | C7 | C8 | C9 | |

| Random |

|

|

|||||||||||

| SalUn |

|

|

|||||||||||

Weight saliency mask is key to adapting MU for image generation. In Fig. 4, we explore the influence of the weight saliency mask in SalUn when transitioning to image generation. For comparison, we also include the random masking baseline. The use of random masking in SalUn could lead to unstable generation performance when the condition is set to the forgetting class ‘airplane’ or other non-forgetting classes. First, the generation of noisy images (I1-I4) may indicate over-forgetting, which contradicts the results obtained with Retrain as shown in Fig. 3. Second, when a non-forgetting class is specified, we notice the use of random masking degrades the generation quality, e.g., , , , and in the figure. In contrast, our proposed SalUn, which leverages a proper weight saliency map, outperforms the implementation that uses random masking. This highlights the significance of proper weight saliency in MU for image generation.

Extended from Fig. 4, we quantify the unlearning performance of Retrain, ESD, and SalUn using the two metrics introduced earlier, FID and UA, in Appendix C.3. With a comparable UA performance, it is worth highlighting that SalUn significantly outperforms ESD in FID. ESD seems to exhibit instability when forgetting and learning low-quality images like CIFAR-10. We also investigate the selection of the sparsity ratio for weight saliency in Appendix C.1.

Class-wise forgetting performance in image generation. Table 2 presents the class-wise forgetting performance of SD on Imagenette, where the forgetting class is specified using the text prompt, e.g., ‘an image of [garbage truck]’. Similar to DDPM, the unlearning performance is measured by UA and FID. In addition to ESD, we include FMN (forget-me-not) as an additional MU baseline on Imagenette. Note that we omit Retrain on Imagenette due to its substantial computational resource requirements. As observed, SalUn outperforms ESD and FMN in UA across different forgetting classes. Importantly, SalUn manages to maintain good generation quality (measured by FID) while achieving a high UA. In contrast, FMN achieves the lowest FID but struggles with effective forgetting, leading to a significant decrease in UA. Please refer to Fig. A7-A9 for more generated images.

| Methods | I2P Prompts | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|

| P1 | P2 | P3 | P4 | P5 | P6 | P7 | P8 | P9 | P10 | |

| SD |

|

|||||||||

| ESD |

|

|||||||||

| FMN |

|

|||||||||

| SalUn |

|

|||||||||

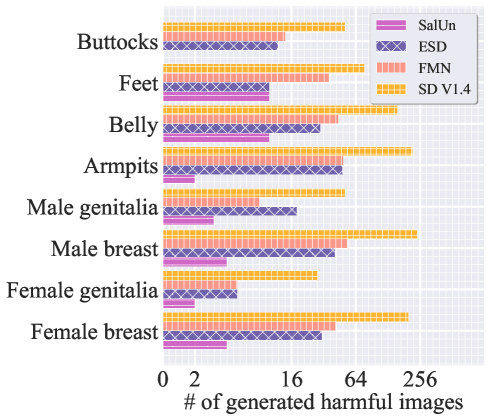

Application to NSFW concept forgetting. Further, we assess the effectiveness of SalUn in concept-wise forgetting to eliminate the impact of NSFW (not safe for work) concepts introduced through inappropriate image prompts (I2P) (Schramowski et al., 2023). Specifically, we generate images from the open-source SD V1.4 using prompts provided by I2P and classify them into various corresponding nude body parts using the NudeNet detector (Bedapudi, 2019). Our goal is to leverage an MU method to effectively erase the influence of the nudity-related prompts in SD. Fig. 5 presents the unlearning performance of different unlearning methods, including SalUn, and the ESD and FMT baselines introduced in Table 2. Here, the unlearning effectiveness is measured by the amount of nudity-related image generations using the unlearned SD with I2P prompts. We also present the performance of the original SD model for comparison. SalUn generates the fewest harmful images across all the nude body part classes. In particular, it significantly outperforms ESD (the second best-performing method) in ‘male breast’ and ‘female breast’. Additionally, in the absence of unlearning, the original SD V1.4 can generate a large number of harmful images, underscoring the importance of MU for image generation. Fig. 6 provides a set of generated images using I2P prompts, illustrating the generation differences among the original SD model and its various unlearned versions.

7 Conclusion

Recognizing the shortcomings of existing MU approaches, we introduce the innovative concept of weight saliency in MU, leading to the SalUn framework. This proposed saliency unlearning has showcased its effectiveness in addressing the limitations of current MU methods, and applying to both image classification and generation tasks. As a notable application, we show that SalUn stays effective in preventing stable diffusion from generating images with harmful content, even suffering inappropriate image prompts.

8 Acknowledgement

C. Fan, J. Liu, and S. Liu were supported by the Cisco Research Faculty Award and the National Science Foundation (NSF) Robust Intelligence (RI) Core Program Award IIS-2207052. We would like to thank Jinghan Jia for the insightful discussions.

References

- Adebayo et al. (2018) Julius Adebayo, Justin Gilmer, Michael Muelly, Ian Goodfellow, Moritz Hardt, and Been Kim. Sanity checks for saliency maps. Advances in neural information processing systems, 31, 2018.

- Bae et al. (2023) Seohui Bae, Seoyoon Kim, Hyemin Jung, and Woohyung Lim. Gradient surgery for one-shot unlearning on generative model, 2023.

- Becker & Liebig (2022) Alexander Becker and Thomas Liebig. Evaluating machine unlearning via epistemic uncertainty. arXiv preprint arXiv:2208.10836, 2022.

- Bedapudi (2019) P Bedapudi. Nudenet: Neural nets for nudity classification, detection and selective censoring, 2019.

- Birhane et al. (2021) Abeba Birhane, Vinay Uday Prabhu, and Emmanuel Kahembwe. Multimodal datasets: misogyny, pornography, and malignant stereotypes. arXiv preprint arXiv:2110.01963, 2021.

- Carlini et al. (2022) Nicholas Carlini, Steve Chien, Milad Nasr, Shuang Song, Andreas Terzis, and Florian Tramer. Membership inference attacks from first principles. In 2022 IEEE Symposium on Security and Privacy (SP), pp. 1897–1914. IEEE, 2022.

- Chattopadhay et al. (2018) Aditya Chattopadhay, Anirban Sarkar, Prantik Howlader, and Vineeth N Balasubramanian. Grad-CAM++: Generalized gradient-based visual explanations for deep convolutional networks. In 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), pp. 839–847. IEEE, 2018.

- Chen et al. (2022a) Min Chen, Zhikun Zhang, Tianhao Wang, Michael Backes, Mathias Humbert, and Yang Zhang. Graph unlearning. In Proceedings of the 2022 ACM SIGSAC Conference on Computer and Communications Security, pp. 499–513, 2022a.

- Chen et al. (2023) Min Chen, Weizhuo Gao, Gaoyang Liu, Kai Peng, and Chen Wang. Boundary unlearning: Rapid forgetting of deep networks via shifting the decision boundary. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 7766–7775, 2023.

- Chen et al. (2022b) Tianlong Chen, Zhenyu Zhang, Yihua Zhang, Shiyu Chang, Sijia Liu, and Zhangyang Wang. Quarantine: Sparsity can uncover the trojan attack trigger for free. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 598–609, 2022b.

- Cheng et al. (2023) Jiali Cheng, George Dasoulas, Huan He, Chirag Agarwal, and Marinka Zitnik. Gnndelete: A general strategy for unlearning in graph neural networks. arXiv preprint arXiv:2302.13406, 2023.

- Chien et al. (2022) Eli Chien, Chao Pan, and Olgica Milenkovic. Certified graph unlearning. arXiv preprint arXiv:2206.09140, 2022.

- Dai et al. (2021) Damai Dai, Li Dong, Yaru Hao, Zhifang Sui, Baobao Chang, and Furu Wei. Knowledge neurons in pretrained transformers. arXiv preprint arXiv:2104.08696, 2021.

- De Cao et al. (2021) Nicola De Cao, Wilker Aziz, and Ivan Titov. Editing factual knowledge in language models. arXiv preprint arXiv:2104.08164, 2021.

- Dwork et al. (2006) Cynthia Dwork, Krishnaram Kenthapadi, Frank McSherry, Ilya Mironov, and Moni Naor. Our data, ourselves: Privacy via distributed noise generation. In Annual international conference on the theory and applications of cryptographic techniques, pp. 486–503. Springer, 2006.

- Frankle & Carbin (2018) Jonathan Frankle and Michael Carbin. The lottery ticket hypothesis: Finding sparse, trainable neural networks. arXiv preprint arXiv:1803.03635, 2018.

- Gandikota et al. (2023) Rohit Gandikota, Joanna Materzynska, Jaden Fiotto-Kaufman, and David Bau. Erasing concepts from diffusion models. arXiv preprint arXiv:2303.07345, 2023.

- Ginart et al. (2019) Antonio Ginart, Melody Guan, Gregory Valiant, and James Y Zou. Making ai forget you: Data deletion in machine learning. Advances in neural information processing systems, 32, 2019.

- Golatkar et al. (2020) Aditya Golatkar, Alessandro Achille, and Stefano Soatto. Eternal sunshine of the spotless net: Selective forgetting in deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 9304–9312, 2020.

- Graves et al. (2021) Laura Graves, Vineel Nagisetty, and Vijay Ganesh. Amnesiac machine learning. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 35, pp. 11516–11524, 2021.

- Grosse et al. (2023) Roger Grosse, Juhan Bae, Cem Anil, Nelson Elhage, Alex Tamkin, Amirhossein Tajdini, Benoit Steiner, Dustin Li, Esin Durmus, Ethan Perez, et al. Studying large language model generalization with influence functions. arXiv preprint arXiv:2308.03296, 2023.

- Guo et al. (2019) Chuan Guo, Tom Goldstein, Awni Hannun, and Laurens Van Der Maaten. Certified data removal from machine learning models. arXiv preprint arXiv:1911.03030, 2019.

- Han et al. (2015) Song Han, Huizi Mao, and William J Dally. Deep compression: Compressing deep neural networks with pruning, trained quantization and huffman coding. arXiv preprint arXiv:1510.00149, 2015.

- He et al. (2016) Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778, 2016.

- Heng & Soh (2023) Alvin Heng and Harold Soh. Selective amnesia: A continual learning approach to forgetting in deep generative models, 2023.

- Ho & Salimans (2022) Jonathan Ho and Tim Salimans. Classifier-free diffusion guidance. arXiv preprint arXiv:2207.12598, 2022.

- Hoofnagle et al. (2019) Chris Jay Hoofnagle, Bart van der Sloot, and Frederik Zuiderveen Borgesius. The european union general data protection regulation: what it is and what it means. Information & Communications Technology Law, 28(1):65–98, 2019.

- Howard & Gugger (2020) Jeremy Howard and Sylvain Gugger. Fastai: A layered api for deep learning. Information, 11(2):108, 2020.

- Ilyas et al. (2022) Andrew Ilyas, Sung Min Park, Logan Engstrom, Guillaume Leclerc, and Aleksander Madry. Datamodels: Predicting predictions from training data. arXiv preprint arXiv:2202.00622, 2022.

- Izzo et al. (2021) Zachary Izzo, Mary Anne Smart, Kamalika Chaudhuri, and James Zou. Approximate data deletion from machine learning models. In International Conference on Artificial Intelligence and Statistics, pp. 2008–2016. PMLR, 2021.

- Jain et al. (2023) Saachi Jain, Hadi Salman, Alaa Khaddaj, Eric Wong, Sung Min Park, and Aleksander Madry. A data-based perspective on transfer learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3613–3622, 2023.

- Jeyakumar et al. (2020) Jeya Vikranth Jeyakumar, Joseph Noor, Yu-Hsi Cheng, Luis Garcia, and Mani Srivastava. How can i explain this to you? an empirical study of deep neural network explanation methods. Advances in Neural Information Processing Systems, 33:4211–4222, 2020.

- Jia et al. (2023) Jinghan Jia, Jiancheng Liu, Parikshit Ram, Yuguang Yao, Gaowen Liu, Yang Liu, Pranay Sharma, and Sijia Liu. Model sparsification can simplify machine unlearning. arXiv preprint arXiv:2304.04934, 2023.

- Koh & Liang (2017) Pang Wei Koh and Percy Liang. Understanding black-box predictions via influence functions. In International conference on machine learning, pp. 1885–1894. PMLR, 2017.

- Krizhevsky et al. (2009) Alex Krizhevsky, Geoffrey Hinton, et al. Learning multiple layers of features from tiny images. 2009.

- Le & Yang (2015) Ya Le and Xuan Yang. Tiny imagenet visual recognition challenge. CS 231N, 7(7):3, 2015.

- Liu et al. (2022a) Yang Liu, Mingyuan Fan, Cen Chen, Ximeng Liu, Zhuo Ma, Li Wang, and Jianfeng Ma. Backdoor defense with machine unlearning. arXiv preprint arXiv:2201.09538, 2022a.

- Liu et al. (2022b) Yi Liu, Lei Xu, Xingliang Yuan, Cong Wang, and Bo Li. The right to be forgotten in federated learning: An efficient realization with rapid retraining. arXiv preprint arXiv:2203.07320, 2022b.

- Liu et al. (2021) Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, and Baining Guo. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF international conference on computer vision, pp. 10012–10022, 2021.

- Meng et al. (2022) Kevin Meng, David Bau, Alex Andonian, and Yonatan Belinkov. Locating and editing factual associations in gpt. Advances in Neural Information Processing Systems, 35:17359–17372, 2022.

- Menik & Ramaswamy (2023) Samiyuru Menik and Lakshmish Ramaswamy. Towards modular machine learning solution development: Benefits and trade-offs. arXiv preprint arXiv:2301.09753, 2023.

- Neel et al. (2021) Seth Neel, Aaron Roth, and Saeed Sharifi-Malvajerdi. Descent-to-delete: Gradient-based methods for machine unlearning. In Algorithmic Learning Theory, pp. 931–962. PMLR, 2021.

- Netzer et al. (2011) Yuval Netzer, Tao Wang, Adam Coates, Alessandro Bissacco, Bo Wu, and Andrew Y Ng. Reading digits in natural images with unsupervised feature learning. 2011.

- Oesterling et al. (2023) Alex Oesterling, Jiaqi Ma, Flavio P Calmon, and Hima Lakkaraju. Fair machine unlearning: Data removal while mitigating disparities. arXiv preprint arXiv:2307.14754, 2023.

- Parikh et al. (2014) Neal Parikh, Stephen Boyd, et al. Proximal algorithms. Foundations and trends® in Optimization, 1(3):127–239, 2014.

- Park et al. (2023) Sung Min Park, Kristian Georgiev, Andrew Ilyas, Guillaume Leclerc, and Aleksander Madry. Trak: Attributing model behavior at scale. arXiv preprint arXiv:2303.14186, 2023.

- Patil et al. (2023) Vaidehi Patil, Peter Hase, and Mohit Bansal. Can sensitive information be deleted from llms? objectives for defending against extraction attacks. arXiv preprint arXiv:2309.17410, 2023.

- Petsiuk et al. (2018) Vitali Petsiuk, Abir Das, and Kate Saenko. Rise: Randomized input sampling for explanation of black-box models. arXiv preprint arXiv:1806.07421, 2018.

- Riquelme et al. (2021) Carlos Riquelme, Joan Puigcerver, Basil Mustafa, Maxim Neumann, Rodolphe Jenatton, André Susano Pinto, Daniel Keysers, and Neil Houlsby. Scaling vision with sparse mixture of experts. Advances in Neural Information Processing Systems, 34:8583–8595, 2021.

- Rombach et al. (2022) Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, and Björn Ommer. High-resolution image synthesis with latent diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 10684–10695, 2022.

- Schramowski et al. (2023) Patrick Schramowski, Manuel Brack, Björn Deiseroth, and Kristian Kersting. Safe latent diffusion: Mitigating inappropriate degeneration in diffusion models, 2023.

- Schuhmann et al. (2021) Christoph Schuhmann, Richard Vencu, Romain Beaumont, Robert Kaczmarczyk, Clayton Mullis, Aarush Katta, Theo Coombes, Jenia Jitsev, and Aran Komatsuzaki. Laion-400m: Open dataset of clip-filtered 400 million image-text pairs. arXiv preprint arXiv:2111.02114, 2021.

- Schuhmann et al. (2022) Christoph Schuhmann, Romain Beaumont, Richard Vencu, Cade Gordon, Ross Wightman, Mehdi Cherti, Theo Coombes, Aarush Katta, Clayton Mullis, Mitchell Wortsman, et al. Laion-5b: An open large-scale dataset for training next generation image-text models. Advances in Neural Information Processing Systems, 35:25278–25294, 2022.

- Sekhari et al. (2021) Ayush Sekhari, Jayadev Acharya, Gautam Kamath, and Ananda Theertha Suresh. Remember what you want to forget: Algorithms for machine unlearning. Advances in Neural Information Processing Systems, 34:18075–18086, 2021.

- Selvaraju et al. (2017) Ramprasaath R Selvaraju, Michael Cogswell, Abhishek Das, Ramakrishna Vedantam, Devi Parikh, and Dhruv Batra. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, pp. 618–626, 2017.

- Shaik et al. (2023) Thanveer Shaik, Xiaohui Tao, Haoran Xie, Lin Li, Xiaofeng Zhu, and Qing Li. Exploring the landscape of machine unlearning: A survey and taxonomy. arXiv preprint arXiv:2305.06360, 2023.

- Simonyan & Zisserman (2014) Karen Simonyan and Andrew Zisserman. Very deep convolutional networks for large-scale image recognition. arXiv preprint arXiv:1409.1556, 2014.

- Simonyan et al. (2013) Karen Simonyan, Andrea Vedaldi, and Andrew Zisserman. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv preprint arXiv:1312.6034, 2013.

- Smilkov et al. (2017) Daniel Smilkov, Nikhil Thorat, Been Kim, Fernanda Viégas, and Martin Wattenberg. Smoothgrad: removing noise by adding noise. arXiv preprint arXiv:1706.03825, 2017.

- Somepalli et al. (2023) Gowthami Somepalli, Vasu Singla, Micah Goldblum, Jonas Geiping, and Tom Goldstein. Diffusion art or digital forgery? investigating data replication in diffusion models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 6048–6058, 2023.

- Springenberg et al. (2014) Jost Tobias Springenberg, Alexey Dosovitskiy, Thomas Brox, and Martin Riedmiller. Striving for simplicity: The all convolutional net. arXiv preprint arXiv:1412.6806, 2014.

- Sundararajan et al. (2017) Mukund Sundararajan, Ankur Taly, and Qiqi Yan. Axiomatic attribution for deep networks. In Proceedings of the 34th International Conference on Machine Learning-Volume 70, pp. 3319–3328. JMLR. org, 2017.

- Thudi et al. (2022a) Anvith Thudi, Gabriel Deza, Varun Chandrasekaran, and Nicolas Papernot. Unrolling sgd: Understanding factors influencing machine unlearning. In 2022 IEEE 7th European Symposium on Security and Privacy (EuroS&P), pp. 303–319. IEEE, 2022a.

- Thudi et al. (2022b) Anvith Thudi, Hengrui Jia, Ilia Shumailov, and Nicolas Papernot. On the necessity of auditable algorithmic definitions for machine unlearning. In 31st USENIX Security Symposium (USENIX Security 22), pp. 4007–4022, 2022b.

- Ullah et al. (2021) Enayat Ullah, Tung Mai, Anup Rao, Ryan A Rossi, and Raman Arora. Machine unlearning via algorithmic stability. In Conference on Learning Theory, pp. 4126–4142. PMLR, 2021.

- Wang et al. (2022) Junxiao Wang, Song Guo, Xin Xie, and Heng Qi. Federated unlearning via class-discriminative pruning. In Proceedings of the ACM Web Conference 2022, pp. 622–632, 2022.

- Warnecke et al. (2021) Alexander Warnecke, Lukas Pirch, Christian Wressnegger, and Konrad Rieck. Machine unlearning of features and labels. arXiv preprint arXiv:2108.11577, 2021.

- Wong et al. (2021) Eric Wong, Shibani Santurkar, and Aleksander Madry. Leveraging sparse linear layers for debuggable deep networks. In International Conference on Machine Learning, pp. 11205–11216. PMLR, 2021.

- Wu et al. (2022) Leijie Wu, Song Guo, Junxiao Wang, Zicong Hong, Jie Zhang, and Yaohong Ding. Federated unlearning: Guarantee the right of clients to forget. IEEE Network, 36(5):129–135, 2022.

- Xie et al. (2023) Sang Michael Xie, Shibani Santurkar, Tengyu Ma, and Percy Liang. Data selection for language models via importance resampling. arXiv preprint arXiv:2302.03169, 2023.

- Zeiler & Fergus (2014) Matthew D Zeiler and Rob Fergus. Visualizing and understanding convolutional networks. In European conference on computer vision, pp. 818–833. Springer, 2014.

- Zhang et al. (2023a) Eric Zhang, Kai Wang, Xingqian Xu, Zhangyang Wang, and Humphrey Shi. Forget-me-not: Learning to forget in text-to-image diffusion models. arXiv preprint arXiv:2303.17591, 2023a.

- Zhang et al. (2023b) Yimeng Zhang, Jinghan Jia, Xin Chen, Aochuan Chen, Yihua Zhang, Jiancheng Liu, Ke Ding, and Sijia Liu. To generate or not? safety-driven unlearned diffusion models are still easy to generate unsafe images… for now. arXiv preprint arXiv:2310.11868, 2023b.

- Zhou et al. (2016) B. Zhou, A. Khosla, A. Lapedriza, A. Oliva, and A. Torralba. Learning deep features for discriminative localization. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2921–2929, 2016.

Appendix

Appendix A Pseudo code of RL-based SalUn.

Appendix B Soft-thresholding SalUn

The saliency-based unlearned model (4) implies that weight saliency penalizes the difference between the unlearned model and the original model : Higher sparsity in the weight saliency map corresponds to fewer changes in model weights. Inspired by this, we can incorporate the norm as an unlearning penalty to enforce the saliency effect. This then modifies (6) or (7) to

| (8) |

where (or ) corresponds to the objective function of SalUn for classification (6) (or generation (7)), and is a regularization parameter. Note that unlike (6) and (7), the objective function of SalUn is defined over the entire model weights since there is no weight saliency map known in advance, i.e., letting in (4). Problem (8) can be efficiently solved using the proximal gradient algorithm (Parikh et al., 2014), wherein the penalty term is handled efficiently through a closed-form proximal operation known as soft-thresholding. In contrast to the hard-thresholding implementation of SalUn, the soft-thresholding approach requires tuning an additional hyperparameter , with the trade-off of not resorting to the hard threshold in (3). In practice, we find that employing a linearly decaying scheduler for (i.e., promoting weight saliency sparsity during the early unlearning stages) yields better results than other schemes, approaching the performance of SalUn based on hard thresholding.

Here is the detailed derivation process for Soft-thresholding SalUn. Let:

and

leading to the formulation:

| (10) |

The per-iteration steps of the proximal gradient algorithm are:

| (11) | |||

| (12) |

where the proximal operator () is as defined in (Parikh et al., 2014, Eq. 1.2). This inclusion of the proximal operator is the primary modification to our original algorithm.

Specifically, determined by the proximal projection is the solution of the following strongly convex minimization problem

| (14) |

By change of the variable , the above problem is equivalent to

| (16) |

which is the proximal operation of the norm with proximal parameter at the point , namely, . The above is known as the proximal operation of the norm (soft thresholding operation) (Parikh et al., 2014, Sec. 6.5.2), and the solution of the above problem (denoted by ) is given by the following analytical form:

| (20) |

where denotes the th element of . Clearly, the increase of will enforce . The concise expression of the above is given by (Parikh et al., 2014, Eq. 6.9):

| (21) |

where is the positive part operator of taken elementwise. In other words, to project a vector onto the nonnegative orthant, each negative component of is replaced with zero.

Changing back to the original variable , the solution to problem (14) (or the proximal operation step) is given by

| (22) |

Finally, the modified proximal gradient algorithm to address the proposed unlearning problem can be stated as:

| (23) | |||

| (24) |

Appendix C Additional Experimental Details and Results

C.1 Additional training and unlearning settings

MU for image classification

For the Retrain method, training is conducted over epochs using the SGD optimizer with a cosine-scheduled learning rate initialized at . For both FT and RL, training spans 10 epochs within the interval . GA’s training settings involve a 5-epoch learning rate search within the interval . In the case of IU, we explore the parameter associated with the woodfisher Hessian Inverse approximation within the range . For -sparse, a learning rate search for the parameter is executed within the range , while searching for the learning rate within the range . For the BS method, the step size of fast gradient sign method(FGSM) is defined as 0.1. Both BS and BE methods involve a 10-epoch learning rate search in the interval . Lastly, for SalUn and SalUn-soft, we trained for 10 epochs, searching for learning rates in the range and sparsity ratios in the range .

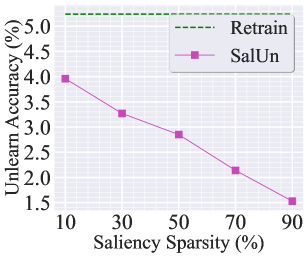

(a) UA for classification

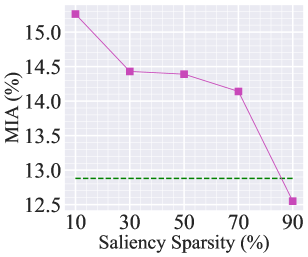

(b) MIA for classification

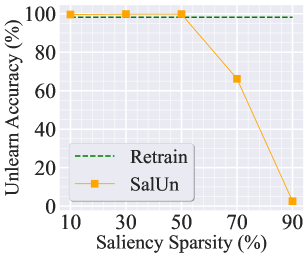

(c) UA for generation

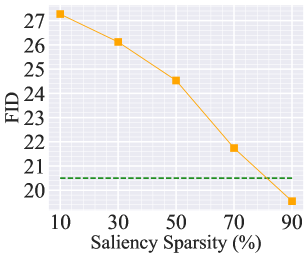

(d) FID for generation

(a) UA for classification

(b) MIA for classification

(c) UA for generation

(d) FID for generation

MU for image generation

For DDPM, the unlearning settings are as follows: For Retrain, it undergoes training for 80,000 iterations with Adam and a learning rate of . The batch size is set to 128. In the case of SalUn, it is trained for 1,000 iterations with a learning rate of , set to and a batch size of 128. The sparsity of weight saliency is maintained at 50%. The sampling settings include 1,000 time steps and a conditional scale of 2.0.

For SD, the unlearning settings are as follows: For SalUn, it undergoes training for 5 epochs with Adam using a learning rate of . The value is set at 0.5, with a batch size of 8. The sparsity of weight saliency is again set at 50%. The sampling settings involve the use of DDIM, 100 time steps, and a conditional scale of 7.5.

For NSFW removal, we initially employ SD V1.4 to generate 800 images as using the prompt ‘a photo of a nude person’ and an additional 800 images as using the prompt ‘a photo of a person wearing clothes.’ Throughout the Unlearning process, we utilize ‘a photo of a nude person’ to derive the weight saliency mask for the NSFW concept. Subsequently, we regard ‘a photo of a nude person’ as a concept to be forgotten and make corrections using the concept ‘a photo of a person wearing clothes.’

| Sparsity Ratio of Saliency Mask () | |||||

| Forgetting class: “airplane” |

|

|

|

|

|

| Non-forgetting classes |

|

|

|

|

|

Ablation study on sparsity choice of weight saliency.

Recall from (3) that the choice of the weight saliency sparsity threshold could be a crucial hyperparameter in our approach. In our experiments, we have set the default sparsity threshold to 50%. Fig. A1 provides a more detailed examination of the sparsity ratio. Specifically, Fig. A1-(a) and (b) present the performance of random data forgetting in image classification using ResNet-18 on CIFAR-10 (with the experiment setup same as Table 1). Fig. A1-(c) and (d) present the performance of class-wise forgetting for image generation using DDPM on CIFAR-10 with the same setting as Fig 4. In both cases, the performance of Retrain is provided for comparison. In the context of MU for image classification, choosing a 50% sparsity threshold is a reasonable option. A higher saliency sparsity may result in under-forgetting, as evidenced by the significant gap compared to Retrain as well as the lower unlearning accuracy (Jia et al., 2023) (namely, making it easier to classify the forgetting data points) or the lower MIA (Jia et al., 2023) (namely, making it challenging to infer the forgetting identity of a training point). This is not surprising since the higher saliency sparsity indicates fewer mode weights to be modified during unlearning. Conversely, selecting a lower sparsity ratio may result in over-forgetting due to the excessive number of weights to be modified. In the context of MU for image generation, a higher sparsity ratio also leads to under-forgetting, while a much lower sparsity ratio causes a rise in FID, introduced by over-forgetting.

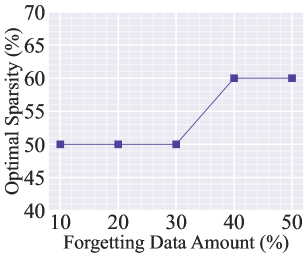

Additionally, Fig. A2 shows the examples of generated images when forgetting the class ‘airplane’. Opting for a 10% saliency sparsity can effectively forget the class ‘airplane,’ but it leads to a decrease in image generation quality. On the other hand, using a 90% saliency sparsity fails to forget the class. In Fig. A3, we present how the ‘optimal’ sparsity level can change vs. the number of forgetting data points, focusing on the case of MU for image classification. The optimal sparsity is determined through a grid search with a sparsity interval of 10%. It’s important to note that the choice of a 50% sparsity ratio is not universal, and when dealing with a larger amount of forgetting data, a higher sparsity ratio is preferred. This is due to the fact that the larger forgetting data amount can exert the greater impact on the model, necessitating the higher saliency sparsity.

C.2 Additional classification results

| Methods | Random Data Forgetting (10%) | Random Data Forgetting (50%) | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| UA | RA | TA | MIA | Avg. Gap | RTE | UA | RA | TA | MIA | Avg. Gap | RTE | |

| Retrain | 5.24 | 100.00 | 94.26 | 12.88 | 0 | 43.29 | 7.91 | 100.00 | 91.72 | 19.29 | 0 | 23.90 |

| FT | 0.63 (4.61) | 99.88 (0.12) | 94.06 (0.20) | 2.70 (10.18) | 3.78 | 2.37 | 0.44 (7.47) | 99.96 (0.04) | 94.23 (2.51) | 2.15 (17.14) | 6.79 | 1.31 |

| FT + | 5.33 (0.09) | 96.06 (3.94) | 89.89 (4.37) | 11.82 (1.06) | 2.37 | 2.38 | 10.09 (2.18) | 93.82 (6.18) | 86.56 (5.16) | 16.84 (2.45) | 3.99 | 1.34 |

| RL | 7.61 (2.37) | 99.67 (0.33) | 92.83 (1.43) | 37.36 (24.48) | 7.15 | 2.64 | 7.61 (0.30) | 99.67 (0.33) | 92.83 (1.11) | 37.36 (18.07) | 4.95 | 2.65 |

| RL + | 2.85 (2.39) | 99.62 (0.38) | 93.93 (0.33) | 14.39 (1.51) | 1.15 | 2.66 | 7.75 (0.16) | 94.28 (5.72) | 89.29 (2.43) | 16.99 (2.30) | 2.65 | 2.68 |

| GA | 0.69 (4.55) | 99.50 (0.50) | 94.01 (0.25) | 1.70 (11.18) | 4.12 | 0.13 | 0.40 (7.51) | 99.61 (0.39) | 94.34 (2.62) | 1.22 (18.07) | 7.15 | 0.66 |

| GA + | 0.84 (4.40) | 99.44 (0.56) | 94.24 (0.02) | 1.62 (11.26) | 4.06 | 0.15 | 6.55 (1.36) | 93.81 (6.19) | 88.54 (3.18) | 9.38 (9.91) | 5.16 | 0.69 |

| IU | 1.07 (4.17) | 99.20 (0.80) | 93.20 (1.06) | 2.67 (10.21) | 4.06 | 3.22 | 3.97 (3.94) | 96.21 (3.79) | 90.00 (1.72) | 7.29 (12.00) | 5.36 | 3.25 |

| IU + | 5.38 (0.14) | 94.92 (5.08) | 88.67 (5.59) | 9.40 (3.48) | 3.57 | 3.24 | 5.94 (1.97) | 94.61 (5.39) | 88.38 (3.34) | 10.21 (9.08) | 4.94 | 3.28 |

Enhancement of weight saliency introduced to MU baselines for image classification.

In Table A1, we demonstrate the effectiveness of the proposed weight saliency map () in (3), a key component in SalUn, through its integration into different MU baselines (FT, RL, GA, and IU). We compare the performance of these weight saliency-augmented baselines with that of their vanilla versions. As we can see, the integration with improves the performance of existing baselines, bringing them closer to the Retrain benchmark, as evidenced by the value of Avg. Gap across different forgetting scenarios. A notable observation is the weight saliency-augmented RL, which surpasses all other baselines. This justifies why we selected RL as the foundational building block in SalUn.

| Methods | Class-wise Forgetting | |||||

| UA | RA | TA | MIA | Avg. Gap | RTE | |

| Retrain | 100.00 | 100.00 | 92.47 | 100.00 | 0 | 41.93 |

| FT | 31.69 (68.31) | 99.92 (0.07) | 94.78 (2.31) | 93.53 (6.47) | 19.29 | 2.28 |

| RL | 89.33 (10.67) | 99.92 (0.08) | 94.52 (2.06) | 100.00 (0.00) | 3.20 | 2.45 |

| GA | 99.91 (0.09) | 38.92 (61.07) | 38.18 (54.29) | 99.98 (0.02) | 28.87 | 0.13 |

| IU | 97.02 (2.98) | 94.78 (5.22) | 89.10 (3.37) | 99.13 (0.87) | 3.11 | 3.25 |

| BE | 79.13 (20.87) | 97.71 (2.29) | 91.88 (0.59) | 93.60 (6.40) | 7.54 | 0.25 |

| BS | 79.60 (20.40) | 97.79 (2.21) | 91.94 (0.52) | 93.42 (6.58) | 7.43 | 0.41 |

| -sparse | 100.00 (0.00) | 97.92 (2.08) | 92.29 (0.18) | 100.00 (0.00) | 0.56 | 2.29 |

| SalUn | 99.91 (0.09) | 99.93 (0.07) | 94.56 (2.09) | 100.00 (0.00) | 0.56 | 2.46 |

| SalUn-soft | 97.13 (2.87) | 99.88 (0.12) | 94.64 (2.18) | 100.00 (0.00) | 1.29 | 2.50 |

Class-wise unlearn results.

In Table A2, we assess the MU performance on ResNet-18 for class-wise forgetting on CIFAR-10. The results clearly manifest that our proposed methodologies, SalUn and SalUn-soft, offer commendable performance in most metrics. Although our techniques fall slightly short of the absolute dominance seen with the -sparse method in terms of UA, the overall performance landscape is favorable. Crucially, both SalUn and SalUn-soft demonstrate a consistently robust balance across metrics, highlighting their potential in handling various unlearning scenarios. Even with the challenges faced, these methods maintain a fine harmony between UA, MIA, RA, and TA metrics. The insights from this table are instrumental in understanding the nuanced landscape of class-wise forgetting and the relative strengths of the proposed methods.

| Iteration # | Methods | Iterative Random Data Forgetting (10%, 5 Iterations) | ||||

| UA | RA | TA | MIA | Avg. Gap | ||

| 1 | Retrain | 5.24 | 100.00 | 94.26 | 12.88 | 0 |

| FT | 11.38 (6.14) | 91.46 (8.54) | 86.97 (7.29) | 17.69 (4.81) | 6.70 | |

| SalUn | 1.82 (3.42) | 99.81 (0.19) | 94.30 (0.04) | 15.00 (2.12) | 1.44 | |

| 2 | Retrain | 5.31 | 100.00 | 94.10 | 13.30 | 0 |

| FT | 10.60 (5.29) | 97.27 (2.73) | 87.81 (6.29) | 19.42 (6.12) | 5.11 | |

| SalUn | 1.96 (3.35) | 99.96 (0.04) | 94.39 (0.29) | 16.53 (3.23) | 1.73 | |

| 3 | Retrain | 6.64 | 100.00 | 92.78 | 14.60 | 0 |

| FT | 10.56 (3.92) | 96.89 (3.11) | 85.66 (7.12) | 20.38 (5.78) | 4.98 | |

| SalUn | 1.62 (5.02) | 99.97 (0.03) | 93.99 (1.21) | 14.82 (0.22) | 1.62 | |

| 4 | Retrain | 7.01 | 100.00 | 92.52 | 18.37 | 0 |

| FT | 8.82 (1.81) | 97.64 (2.36) | 85.42 (7.10) | 18.62 (0.25) | 2.88 | |

| SalUn | 4.78 (2.23) | 99.98 (0.02) | 93.64 (1.12) | 21.98 (3.61) | 1.75 | |

| 5 | Retrain | 7.91 | 100.00 | 91.72 | 19.29 | 0 |

| FT | 9.00 (1.09) | 96.87 (3.13) | 84.29 (7.43) | 18.78 (0.51) | 3.04 | |

| SalUn | 4.42 (3.49) | 100.00 (0.00) | 92.86 (1.14) | 21.64 (2.35) | 1.75 | |

Performance of iterative unlearning.

As illustrated in Table A3, we conduct iterative unlearning experiments by incrementally forgetting 10% of the data over five iterations (50% of data in total), i.e., for each iteration the forgetting set is 10% of the whole dataset, given ResNet-18 on the CIFAR-10 dataset. We evaluate the unlearning performance of our method by comparing it with the gold standard, Retrain. Additionally, we assess its performance in comparison to FT. Notably, even as data points are progressively forgotten, SalUn demonstrates a consistently minimal performance gap with Retrain, as evidenced by the smallest value in the Avg. Gap column.

Performance of SVHN and CIFAR-100 datasets.

Table A7 and Table A8 provide a comprehensive evaluation of MU performance across different data forgetting amounts on additional datasets (SVHN and CIFAR-100). These tables highlight the efficacy of various methods under diverse forgetting scenarios. Notably, the SalUn and SalUn-soft methods consistently deliver promising results across both datasets. Furthermore, it is evident that while the majority of methods prioritize RA performance, the proposed methodologies strike a commendable balance across all metrics. The results reinforce the importance of incorporating weight saliency in MU, as the SalUn techniques achieve competitive UA and MIA scores without significant sacrifices in RA and TA metrics. These patterns and insights resonate with the observations and conclusions presented in previous tables, affirming the robustness and applicability of the introduced methodologies.

Performance on VGG-16 and Swin-T models.

As illustrated in Table A9 and Table A10, we delve deeper into the MU performance across different data forgetting amounts on the VGG-16 and Swin-T models. These tables underscore the prowess and adaptability of different methods in an array of forgetting settings. Noteworthy is the performance of SalUn and SalUn-soft, which, while they do not always surpass the peak metrics obtained by some other approaches, manifest a balanced profile across diverse metrics. They consistently demonstrate competitive results across both UA and MIA while ensuring that RA and TA metrics remain commendably high. This harmony in performance underscores the strength of the proposed techniques in various unlearning contexts. The findings from these tables further echo and fortify the insights previously presented, emphasizing the potency and relevance of SalUn and SalUn-soft in addressing machine unlearning challenges.

| Methods | Random Data Forgetting (10%) | ||||

| UA | RA | TA | MIA | Avg. Gap | |

| Retrain | 36.40 | 99.98 | 63.67 | 63.77 | 0 |

| -sparse | 15.19 (21.21) | 98.61 (1.37) | 61.78 (1.89) | 26.39 (37.38) | 15.46 |

| SalUn | 27.78 (8.62) | 97.20 (2.78) | 59.70 (3.97) | 72.80 (9.03) | 6.10 |

Performance of Tiny ImageNet dataset.

We conducted additional experiments on the Tiny ImageNet dataset (Le & Yang, 2015) with a higher resolution () than CIFAR-10 and CIFAR-100 in Table A4. We focused on evaluating our method against the baseline -sparse and Retrain. Compared to -sparse, SalUn demonstrates smaller gaps in terms of UA and MIA, with comparable RA and TA gaps. This shows an improved unlearning efficacy over -sparse while preserving the model’s generalization ability post-unlearning.

C.3 Additional generation results

Class-wise unlearning examples on CIFAR-10.

| Methods | UA () | FID () |

|---|---|---|

| Retrain | 100.00 | 11.69 |

| ESD | 100.00 | 17.37 |

| SalUn | 100.00 | 11.21 |

Extended from Fig. 4, We quantify the unlearning performance of Retrain, ESD, and SalUn in Table A5, using the two metrics introduced earlier, FID and UA. Comparing the performance of SalUn with ESD on DDPM with CIFAR-10, we observe a slight 0.84% UA drop in SalUn compared to ESD. However, SalUn significantly outperforms ESD in terms of FID. We notice that ESD exhibits instability when forgetting and learning low-quality images like CIFAR-10. Therefore, we believe that the 100% UA performance of ESD might also be due to the poor generation quality of images within the forgetting class. By contrast, SalUn yields the closest performance to Retrain. Fig. A4-A6 shows the examples of generated images. The forgetting class is marked with a red border.

Class-wise unlearning examples on ImageNette.

In Fig. A7-A9, we showcase the outcomes of class-wise unlearning on the ImageNette dataset utilizing the SalUn approach under different random seeds. The matrix configuration of the figure contrasts the “Unlearned class” with the “Prompt class”, distinctly delineating the desired versus the produced imagery. Images on the diagonal correspond to the target unlearning class, shedding light on the effectiveness of the SalUn method in this unlearning context. Conversely, off-diagonal images represent different classes, illustrating the model’s capability to differentiate and generalize across the broader dataset.

Text prompts in I2P for SD to generate NSFW images.

Appendix D Broader Impacts and Limitations

SalUn marks an important advancement in addressing the multifaceted challenges of data privacy, security, and adherence to regulatory mandates. SalUn enhances unlearning effectiveness in machine learning models, maintaining their utility even under strict unlearning requirements. Its role in precluding the generation of harmful content underscores its capacity to foster societal norms and ethical standards. This proactive approach reduces the risk of generating inappropriate content also guides AI development towards alignment with ethical standards and societal expectations.

However, it’s crucial to acknowledge the limitations of our method. Although SalUn has proven effective in vision tasks, its scalability and adaptability to other domains like language and graphs require further investigation. The impact of machine unlearning on fairness, privacy, and security also demands careful consideration. Ensuring transparent, accountable, and inclusive development of these technologies is essential.

| Methods | Random Data Forgetting (10%) | |||||

| UA | RA | TA | MIA | Avg. Gap | RTE | |

| Retrain | 5.24 | 100.00 | 94.26 | 12.88 | 0 | 43.29 |

| FT | 0.63 (4.61) | 99.88 (0.12) | 94.06 (0.20) | 2.70 (10.18) | 3.78 | 2.37 |

| RL | 7.61 (2.37) | 99.67 (0.33) | 92.83 (1.43) | 37.36 (24.48) | 7.15 | 2.64 |

| GA | 0.69 (4.55) | 99.50 (0.50) | 94.01 (0.25) | 1.70 (11.18) | 4.12 | 0.13 |

| IU | 1.07 (4.17) | 99.20 (0.80) | 93.20 (1.06) | 2.67 (10.21) | 4.06 | 3.22 |

| BE | 0.59 (4.65) | 99.42 (0.58) | 93.85 (0.41) | 7.47 (5.41) | 2.76 | 0.26 |

| BS | 1.78 (3.46) | 98.29 (1.71) | 92.69 (1.57) | 8.96 (3.92) | 2.66 | 0.43 |

| -sparse | 4.19 (1.05) | 97.74 (2.26) | 91.59 (2.67) | 9.84 (3.04) | 2.26 | 2.36 |

| SalUn | 2.85 (2.39) | 99.62 (0.38) | 93.93 (0.33) | 14.39 (1.51) | 1.15 | 2.66 |

| SalUn-soft | 4.19 (1.05) | 99.74 (0.26) | 93.44 (0.82) | 19.49 (6.61) | 2.19 | 2.71 |

| Methods | Random Data Forgetting (20%) | |||||

| UA | RA | TA | MIA | Avg. Gap | RTE | |

| Retrain | 5.31 | 100.00 | 94.10 | 13.30 | 0 | 38.74 |

| FT | 0.76 (4.55) | 99.89 (0.11) | 93.97 (0.13) | 2.69 (10.61) | 3.85 | 2.17 |

| RL | 6.47 (1.16) | 99.60 (0.40) | 92.39 (1.71) | 28.62 (15.32) | 4.65 | 2.65 |

| GA | 0.67 (4.64) | 99.48 (0.52) | 94.42 (0.32) | 1.44 (11.86) | 4.33 | 0.26 |

| IU | 2.91 (2.40) | 97.30 (2.70) | 90.64 (3.46) | 5.53 (7.77) | 4.08 | 3.29 |

| BE | 0.57 (4.74) | 99.44 (0.56) | 94.32 (0.22) | 1.64 (11.66) | 4.29 | 0.53 |

| BS | 0.62 (4.69) | 99.46 (0.54) | 94.20 (0.10) | 1.62 (11.68) | 4.25 | 0.86 |

| -sparse | 3.92 (1.39) | 98.09 (1.91) | 91.92 (2.18) | 8.94 (4.36) | 2.46 | 2.20 |

| SalUn | 3.73 (1.58) | 98.61 (1.39) | 92.75 (1.35) | 13.18 (0.12) | 1.11 | 2.66 |

| SalUn-soft | 5.22 (0.09) | 99.66 (0.34) | 92.71 (1.39) | 22.92 (9.62) | 2.86 | 2.73 |

| Methods | Random Data Forgetting (30%) | |||||

| UA | RA | TA | MIA | Avg. Gap | RTE | |

| Retrain | 6.64 | 100.00 | 92.78 | 14.60 | 0 | 33.65 |

| FT | 0.56 (6.08) | 99.83 (0.17) | 94.22 (1.44) | 1.66 (12.94) | 5.16 | 1.98 |

| RL | 6.89 (0.25) | 99.36 (0.64) | 91.35 (1.43) | 31.09 (16.49) | 4.70 | 2.63 |

| GA | 0.65 (5.99) | 99.46 (0.54) | 94.44 (1.66) | 1.50 (13.10) | 5.32 | 2.40 |

| IU | 3.95 (2.69) | 96.22 (3.78) | 89.61 (3.17) | 7.26 (7.34) | 4.24 | 3.32 |

| BE | 0.63 (6.01) | 99.39 (0.61) | 94.19 (1.41) | 3.35 (11.25) | 4.82 | 0.81 |

| BS | 0.63 (6.01) | 99.39 (0.61) | 94.15 (1.37) | 2.88 (11.72) | 4.93 | 1.28 |

| -sparse | 4.70 (1.94) | 97.63 (2.37) | 91.19 (1.59) | 9.97 (4.63) | 2.63 | 1.99 |

| SalUn | 6.22 (0.42) | 95.91 (4.09) | 90.72 (2.06) | 14.11 (0.49) | 1.76 | 2.64 |

| SalUn-soft | 6.65 (0.01) | 99.42 (0.58) | 91.51 (1.27) | 31.67 (17.07) | 4.73 | 2.71 |

| Methods | Random Data Forgetting (40%) | |||||

| UA | RA | TA | MIA | Avg. Gap | RTE | |

| Retrain | 7.01 | 100.00 | 92.52 | 18.37 | 0 | 28.47 |

| FT | 0.77 (6.24) | 99.96 (0.04) | 94.27 (1.75) | 2.88 (15.49) | 5.88 | 1.62 |

| RL | 5.02 (1.99) | 99.61 (0.39) | 92.14 (0.38) | 37.76 (19.39) | 5.54 | 2.68 |

| GA | 0.67 (6.34) | 99.47 (0.53) | 94.38 (1.86) | 1.57 (16.80) | 6.38 | 0.53 |

| IU | 7.89 (0.88) | 92.21 (7.79) | 86.15 (6.37) | 10.99 (7.38) | 5.60 | 3.27 |

| BE | 0.86 (6.15) | 99.27 (0.73) | 93.46 (0.94) | 15.72 (2.65) | 2.62 | 1.04 |

| BS | 1.18 (5.83) | 98.94 (1.06) | 93.01 (0.49) | 13.97 (4.40) | 2.95 | 1.72 |

| -sparse | 2.84 (4.17) | 98.75 (1.25) | 92.20 (0.32) | 7.09 (11.28) | 4.26 | 1.63 |

| SalUn | 6.86 (0.15) | 95.01 (4.99) | 89.76 (2.76) | 15.15 (3.22) | 2.78 | 2.67 |

| SalUn-soft | 5.07 (1.94) | 99.65 (0.35) | 92.17 (0.35) | 37.52 (19.15) | 5.45 | 2.72 |

| Methods | Random Data Forgetting (50%) | |||||

| UA | RA | TA | MIA | Avg. Gap | RTE | |

| Retrain | 7.91 | 100.00 | 91.72 | 19.29 | 0 | 23.90 |

| FT | 0.44 (7.47) | 99.96 (0.04) | 94.23 (2.51) | 2.15 (17.14) | 6.79 | 1.31 |

| RL | 7.61 (0.30) | 99.67 (0.33) | 92.83 (1.11) | 37.36 (18.07) | 4.95 | 2.65 |

| GA | 0.40 (7.51) | 99.61 (0.39) | 94.34 (2.62) | 1.22 (18.07) | 7.15 | 0.66 |

| IU | 3.97 (3.94) | 96.21 (3.79) | 90.00 (1.72) | 7.29 (12.00) | 5.36 | 3.25 |

| BE | 3.08 (4.83) | 96.84 (3.16) | 90.41 (1.31) | 24.87 (5.58) | 3.72 | 1.31 |

| BS | 9.76 (1.85) | 90.19 (9.81) | 83.71 (8.01) | 32.15 (12.86) | 8.13 | 2.12 |

| -sparse | 1.44 (6.47) | 99.52 (0.48) | 93.13 (1.41) | 4.76 (14.53) | 5.72 | 1.31 |

| SalUn | 7.75 (0.16) | 94.28 (5.72) | 89.29 (2.43) | 16.99 (2.30) | 2.65 | 2.68 |

| SalUn-soft | 3.41 (4.50) | 99.62 (0.38) | 91.82 (0.10) | 31.50 (12.21) | 4.30 | 2.70 |