Scalable Bayesian Meta-Learning through Generalized Implicit Gradients

Abstract

Meta-learning is uniquely effective and swift in tackling emerging tasks with limited data. Its broad applicability is revealed by viewing it as a bi-level optimization problem. The resultant algorithmic viewpoint, however, faces scalability issues when the inner-level optimization relies on gradient-based iterations. Implicit differentiation has been considered to alleviate this challenge, but it is restricted to an isotropic Gaussian prior, and only favors deterministic meta-learning approaches. This work markedly mitigates the scalability bottleneck by cross-fertilizing the benefits of implicit differentiation to probabilistic Bayesian meta-learning. The novel implicit Bayesian meta-learning (iBaML) method not only broadens the scope of learnable priors, but also quantifies the associated uncertainty. Furthermore, the ultimate complexity is well controlled regardless of the inner-level optimization trajectory. Analytical error bounds are established to demonstrate the precision and efficiency of the generalized implicit gradient over the explicit one. Extensive numerical tests are also carried out to empirically validate the performance of the proposed method.

1 Introduction

Over the past decade, deep learning (DL) has garnered huge attention from theory, algorithms, and application viewpoints. The underlying success of DL is mainly attributed to massive datasets, with which large-scale and highly expressive models can be trained. On the other hand, the stimulus of DL, namely data, can be scarce. Nevertheless, in several real-world tasks, such as object recognition and concept comprehension, humans can perform exceptionally well even with very few data samples. This prompts the natural question: How can we endow DL with humans' unique intelligence? By doing so, DL’s data reliance can be alleviated and the subsequent model training can be streamlined. Several attempts have emerged in those “stimulus-lacking” domains, including speech recognition (Miao, Metze, and Rawat 2013), medical imaging (Yang et al. 2016), and robot manipulation (Hansen and Wang 2021).

A systematic framework has been explored in recent years to address the aforementioned question, under the terms learning-to-learn or meta-learning (Thrun 1998). In brief, meta-learning extracts task-invariant prior information from a given family of correlated (and thus informative) tasks. Domain-generic knowledge can therein be acquired as an inductive bias and transferred to new tasks outside the set of given ones (Thrun and Pratt 2012; Grant et al. 2018), making it feasible to learn unknown models/tasks even with minimal training samples. One representative example is that of an edge extractor, which can act as a common prior owing to its presence across natural images. Thus, using it can prune degrees of freedom from a number of image classification models. The prior extraction in conventional meta-learning is more of a hand-crafted art; see e.g., (Schmidhuber 1993; Bengio, Bengio, and Cloutier 1995; Schmidhuber, Zhao, and Wiering 1996). This rather “cumbersome art” has been gradually replaced by data-driven approaches. For parametric models of the task-learning process (Santoro et al. 2016; Mishra et al. 2018), the task-invariant “sub-model” can then be shared across different tasks with prior information embedded in the model weights. One typical model is that of recurrent neural networks (RNNs), where task-learning is captured by recurrent cells. However, the resultant black-box learning setup faces interpretability challenges.

As an alternative to model-committed approaches, model-agnostic meta-learning (MAML) transforms task-learning to optimizing the task-specific model parameters, while the prior amounts to initial parameters per task-level optimization, that are shared across tasks and can be learned through differentiable meta-level optimization (Finn, Abbeel, and Levine 2017). Building upon MAML, optimization-based meta-learning has been advocated to ameliorate its performance; see e.g. (Li et al. 2017; Bertinetto et al. 2019; Flennerhag et al. 2020; Abbas et al. 2022). In addition, performance analyses have been reported to better understand the behavior of these optimization-based algorithms (Franceschi et al. 2018; Fallah, Mokhtari, and Ozdaglar 2020; Wang, Sun, and Li 2020; Chen and Chen 2022).

Interestingly, the learned initialization can be approximately viewed as the mean of an implicit Gaussian prior over the task-specific parameters (Grant et al. 2018). Inspired by this interpretation, Bayesian methods have been advocated for meta-learning to further allow for uncertainty quantification in the model parameters. Different from its deterministic counterpart, Bayesian meta-learning seeks a prior distribution over the model parameters that best explains the data. Exact Bayesian inference, however, is barely tractable because the posterior is often non-Gaussian, which prompts pursuing approximate inference methods; see e.g., (Yoon et al. 2018; Grant et al. 2018; Finn, Xu, and Levine 2018; Ravi and Beatson 2019).

MAML and its variants have appealing empirical performance, but optimizing the meta-learning loss with backpropagation is challenging due to the high-order derivatives involved. This incurs complexity that grows linearly with the number of task-level optimization steps, which renders the corresponding algorithms barely scalable. For this reason, scalability of meta-learning algorithms is of paramount importance. One remedy is to simply ignore the high-order derivatives, and rely on first-order updates only (Finn, Abbeel, and Levine 2017; Nichol, Achiam, and Schulman 2018). Alternatively, the so-termed implicit (i)MAML relies on implicit differentiation to eliminate the explicit backpropagation. However, the proximal regularization term in iMAML is confined to be a simple isotropic Gaussian prior, which limits model expressiveness (Rajeswaran et al. 2019).

In this paper, we develop a novel implicit Bayesian meta-learning (iBaML) approach that offers the desirable scalability, expressiveness, and performance quantification, and thus broadens the scope and appeal of meta-learning to real application domains. The contribution is threefold.

- i) iBaML enjoys complexity that is invariant to the number of gradient steps in task-level optimization. This fundamentally breaks the complexity-accuracy tradeoff, and makes Bayesian meta-learning affordable with more sophisticated task-level optimization algorithms.

- ii) Rather than an isotropic Gaussian distribution, iBaML allows for learning more expressive priors. As a Bayesian approach, iBaML can quantify uncertainty of the estimated model parameters.

- iii) Through both analytical and numerical performance studies, iBaML showcases its complexity and accuracy merits over state-of-the-art Bayesian meta-learning methods. When many task-level optimization steps are used, the time and space complexity can be reduced by as much as an order of magnitude.

2 Preliminaries and problem statement

This section outlines the meta-learning formulation in the context of supervised few-shot learning, and touches upon the associated scalability issues.

2.1 Meta-learning setups

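Suppose we are given datasets $\mathcal{D}_t := \{(\mathbf{x}_t^n, y_t^n)\}_{n=1}^{N_t}$, each of cardinality $|\mathcal{D}_t| = N_t$ corresponding to a task indexed by $t \in \{1,\dots,T\}$, where $\mathbf{x}_t^n$ is an input vector, and $y_t^n$ denotes its label. Set $\mathcal{D}_t$ is disjointly partitioned into a training set $\mathcal{D}_t^{\mathrm{trn}}$ and a validation set $\mathcal{D}_t^{\mathrm{val}}$, with $|\mathcal{D}_t^{\mathrm{trn}}| = N_t^{\mathrm{trn}}$ and $|\mathcal{D}_t^{\mathrm{val}}| = N_t^{\mathrm{val}}$ for all $t$. Typically, $N_t$ is limited, and often much smaller than what is required by supervised DL tasks. However, it is worth stressing that the number of tasks $T$ can be considerably large. Thus, $\sum_{t=1}^{T} N_t$ can be sufficiently large for learning a prior parameter vector shared by all tasks; e.g., using deep neural networks.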

A key attribute of meta-learning is to estimate such task-invariant prior information, parameterized by the meta-parameter $\theta$, based on training data across tasks. Subsequently, $\theta$ and $\mathcal{D}_t^{\mathrm{trn}}$ are used to perform task- or inner-level optimization to obtain the task-specific parameter $\phi_t \in \mathbb{R}^d$. The estimate of $\phi_t$ is then evaluated on $\mathcal{D}_t^{\mathrm{val}}$ (and potentially also $\mathcal{D}_t^{\mathrm{trn}}$) to produce a validation loss. Upon minimizing this loss summed over all the training tasks w.r.t. $\theta$, this meta- or outer-level optimization yields the task-invariant estimate of $\theta$. Note that the dimension of $\theta$ is not necessarily identical to that of $\phi_t$; see e.g. (Li et al. 2017; Bertinetto et al. 2019; Lee et al. 2019). As we will see shortly, this nested structure can be formulated as a bi-level optimization problem. This formulation readily suggests application of meta-learning to settings such as hyperparameter tuning that also relies on a similar bi-level optimization (Franceschi et al. 2018).

This bi-level optimization is outlined next for both deterministic and probabilistic Bayesian meta-learning variants.

Optimization-based meta-learning.

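For each task $t$, let $\mathcal{L}_t^{\mathrm{trn}}(\phi_t)$ and $\mathcal{L}_t^{\mathrm{val}}(\phi_t)$ denote the losses over $\mathcal{D}_t^{\mathrm{trn}}$ and $\mathcal{D}_t^{\mathrm{val}}$, respectively. Further, let $\hat{\theta}$ be the meta-parameter estimate, and $\mathcal{R}(\hat{\theta}, \phi_t)$ the regularizer of the learning cost per task $t$. Optimization-based meta-learning boils down to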

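$$\min_{\theta}\ \sum_{t=1}^{T}\mathcal{L}_t^{\mathrm{val}}\big(\phi_t^*(\theta)\big)\quad\text{s.t.}\quad \phi_t^*(\theta)=\arg\min_{\phi_t}\ \mathcal{L}_t^{\mathrm{trn}}(\phi_t)+\mathcal{R}(\theta,\phi_t),\quad t=1,\dots,T \tag{1}$$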

The regularizer $\mathcal{R}$ can be either explicit (as in iMAML) or implicit (as in MAML). Further, the task-invariant meta-parameter is calibrated by $\mathcal{R}$ in order to cope with overfitting. Indeed, an over-parameterized neural network could easily overfit $\mathcal{D}_t^{\mathrm{trn}}$ to produce a tiny $\mathcal{L}_t^{\mathrm{trn}}$ yet a large $\mathcal{L}_t^{\mathrm{val}}$.

As reaching global minima can be infeasible, especially with highly nonconvex neural networks, a practical alternative is an estimator $\hat{\phi}_t$ produced by a function $\hat{\mathcal{A}}_t(\theta)$ representing an optimization algorithm, such as gradient descent (GD), with a prefixed number $K$ of iterations. Thus, a tractable version of (1) is

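$$\min_{\theta}\ \sum_{t=1}^{T}\mathcal{L}_t^{\mathrm{val}}\big(\hat{\phi}_t(\theta)\big)\quad\text{s.t.}\quad \hat{\phi}_t(\theta)=\hat{\mathcal{A}}_t(\theta),\quad t=1,\dots,T \tag{2}$$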

As an example, $\hat{\mathcal{A}}_t$ can be a one-step gradient descent initialized by $\hat{\theta}$ with implicit priors ($\mathcal{R}(\theta,\phi_t)=0$) (Finn, Abbeel, and Levine 2017; Grant et al. 2018), which yields the per-task parameter estimate

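$$\hat{\phi}_t=\hat{\mathcal{A}}_t(\hat{\theta})=\hat{\theta}-\alpha\nabla\mathcal{L}_t^{\mathrm{trn}}(\hat{\theta}) \tag{3}$$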

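where $\alpha$ is the learning rate of GD, and we use the compact gradient notation $\nabla\mathcal{L}_t^{\mathrm{trn}}(\hat{\theta}) := \nabla_{\phi_t}\mathcal{L}_t^{\mathrm{trn}}(\phi_t)\big|_{\phi_t=\hat{\theta}}$ hereafter. For later use, we also define the (unknown) oracle function $\mathcal{A}_t^*(\theta)$ that generates the global optimum $\phi_t^*$.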

Bayesian meta-learning.

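The probabilistic approach to meta-learning takes a Bayesian view of the (now random) vector $\phi_t$ per task $t$. The task-invariant vector $\theta$ is still deterministic, and parameterizes the prior probability density function (pdf) $p(\phi_t;\theta)$. Task-specific learning seeks the posterior pdf $p(\phi_t\mid\mathbf{y}_t^{\mathrm{trn}};\mathbf{X}_t^{\mathrm{trn}},\theta)$, where $\mathbf{X}_t^{\mathrm{trn}} := [\mathbf{x}_t^1,\dots,\mathbf{x}_t^{N_t^{\mathrm{trn}}}]$ and $\mathbf{y}_t^{\mathrm{trn}} := [y_t^1,\dots,y_t^{N_t^{\mathrm{trn}}}]^\top$ collect the inputs and labels of $\mathcal{D}_t^{\mathrm{trn}}$ (⊤ denotes transposition), while the objective per task is to maximize the conditional likelihood $p(\mathbf{y}_t^{\mathrm{val}}\mid\mathbf{y}_t^{\mathrm{trn}};\mathbf{X}_t^{\mathrm{val}},\mathbf{X}_t^{\mathrm{trn}},\theta)$. Along similar lines followed by its deterministic optimization-based counterpart, Bayesian meta-learning amounts to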

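$$\begin{aligned}\max_{\theta}\ &p\big(\{\mathbf{y}_t^{\mathrm{val}}\}_{t=1}^{T}\mid\{\mathbf{y}_t^{\mathrm{trn}}\}_{t=1}^{T};\theta\big)=\prod_{t=1}^{T}\int p(\mathbf{y}_t^{\mathrm{val}}\mid\phi_t;\mathbf{X}_t^{\mathrm{val}})\,p(\phi_t\mid\mathbf{y}_t^{\mathrm{trn}};\mathbf{X}_t^{\mathrm{trn}},\theta)\,d\phi_t\\ \text{s.t.}\ &p(\phi_t\mid\mathbf{y}_t^{\mathrm{trn}};\mathbf{X}_t^{\mathrm{trn}},\theta)\propto p(\mathbf{y}_t^{\mathrm{trn}}\mid\phi_t;\mathbf{X}_t^{\mathrm{trn}})\,p(\phi_t;\theta),\quad t=1,\dots,T\end{aligned}\tag{4}$$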

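where we used that datasets are independent across tasks, and Bayes’ rule in the second line. Through the posterior $p(\phi_t\mid\mathbf{y}_t^{\mathrm{trn}};\mathbf{X}_t^{\mathrm{trn}},\theta)$, Bayesian meta-learning quantifies the uncertainty of the task-specific parameter estimate $\hat{\phi}_t$, thus assessing model robustness. When the posterior of $\phi_t$ is replaced by its maximum a posteriori point estimator $\hat{\phi}_t$, meaning $p(\phi_t\mid\mathbf{y}_t^{\mathrm{trn}};\mathbf{X}_t^{\mathrm{trn}},\theta)=\delta_D(\phi_t-\hat{\phi}_t)$ with $\delta_D$ denoting Dirac’s delta, it turns out that (4) reduces to (1).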

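Unfortunately, the posterior in (4) can be intractable with nonlinear models due to the difficulty of finding analytical solutions. To overcome this, we can resort to the widely adopted approximate variational inference (VI); see e.g. (Finn, Xu, and Levine 2018; Ravi and Beatson 2019; Nguyen, Do, and Carneiro 2020). VI searches over a family of tractable distributions for a surrogate $q(\phi_t;\mathbf{v}_t)$ that best matches the true posterior $p(\phi_t\mid\mathbf{y}_t^{\mathrm{trn}};\mathbf{X}_t^{\mathrm{trn}},\theta)$. This can be accomplished by minimizing the KL-divergence between the surrogate pdf and the true one, where $\mathbf{v}_t$ determines the variational distribution. Considering that the dimension of $\phi_t$ can be fairly high, both the prior and surrogate posterior are often set to be Gaussian ($\mathcal{N}$) with diagonal covariance matrices. Specifically, we select the prior as $p(\phi_t;\theta)=\mathcal{N}(\mathbf{m},\mathbf{D})$ with covariance $\mathbf{D}:=\mathrm{diag}(\mathbf{s})$ and $\theta:=[\mathbf{m}^\top,\mathbf{s}^\top]^\top$, and the surrogate posterior as $q(\phi_t;\mathbf{v}_t)=\mathcal{N}(\boldsymbol{\mu}_t,\boldsymbol{\Sigma}_t)$ with $\boldsymbol{\Sigma}_t:=\mathrm{diag}(\boldsymbol{\sigma}_t^2)$ and $\mathbf{v}_t:=[\boldsymbol{\mu}_t^\top,(\boldsymbol{\sigma}_t^2)^\top]^\top$.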
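With this choice, the task-level objective decomposes conveniently: KL(q‖posterior) equals the expected negative log-likelihood of the training data under q plus KL(q‖prior), up to the log evidence, which does not depend on the variational parameters. The sketch below computes the closed-form KL(q‖prior) term between two diagonal Gaussians; the names `kl_diag_gauss`, `mu`, `var`, `m`, and `s` are our own, and we assume `var` and `s` store variances (not standard deviations). The Monte Carlo estimate serves only as a sanity check.

```python
import numpy as np

def kl_diag_gauss(mu, var, m, s):
    """KL( N(mu, diag(var)) || N(m, diag(s)) ) for diagonal Gaussians.

    mu, var: mean and variance vectors of the surrogate posterior q.
    m, s:    mean and variance vectors of the prior p (all variances > 0).
    """
    mu, var, m, s = map(np.asarray, (mu, var, m, s))
    return 0.5 * np.sum(var / s + (mu - m) ** 2 / s - 1.0 + np.log(s / var))

def log_diag_gauss(x, mean, v):
    # log-density of N(mean, diag(v)) evaluated row-wise on x
    return -0.5 * np.sum(np.log(2 * np.pi * v) + (x - mean) ** 2 / v, axis=-1)

rng = np.random.default_rng(0)
d = 4
mu, var = rng.normal(size=d), rng.uniform(0.5, 2.0, size=d)
m, s = rng.normal(size=d), rng.uniform(0.5, 2.0, size=d)

# Monte Carlo check: KL = E_q[log q - log p]
samples = mu + np.sqrt(var) * rng.standard_normal((200_000, d))
mc = np.mean(log_diag_gauss(samples, mu, var) - log_diag_gauss(samples, m, s))
print(kl_diag_gauss(mu, var, m, s), mc)  # the two values should be close
```

Because every term is element-wise, evaluating this KL and its gradients costs $\mathcal{O}(d)$, which is what makes the diagonal choice attractive in high dimensions.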

To ensure tractable numerical integration over $\phi_t$, the per-task meta-learning loss $-\log p(\mathbf{y}_t^{\mathrm{val}}\mid\mathbf{y}_t^{\mathrm{trn}};\mathbf{X}_t^{\mathrm{val}},\mathbf{X}_t^{\mathrm{trn}},\theta)$ is often relaxed to an upper bound. Common choices include applying Jensen’s inequality (Nguyen, Do, and Carneiro 2020) or an extra VI (Finn, Xu, and Levine 2018; Ravi and Beatson 2019) on (4). For notational convenience, here we will denote this upper bound by $\mathcal{L}_t^{\mathrm{val}}(\mathbf{v}_t,\theta)$. With VI and a relaxed (upper bound) objective, (4) becomes

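$$\begin{aligned}\min_{\theta}\ &\sum_{t=1}^{T}\mathcal{L}_t^{\mathrm{val}}\big(\mathbf{v}_t^*(\theta),\theta\big)\\ \text{s.t.}\ &\mathbf{v}_t^*(\theta)=\arg\min_{\mathbf{v}_t}\ \mathrm{KL}\big(q(\phi_t;\mathbf{v}_t)\,\big\|\,p(\phi_t\mid\mathbf{y}_t^{\mathrm{trn}};\mathbf{X}_t^{\mathrm{trn}},\theta)\big),\quad t=1,\dots,T\end{aligned}\tag{5}$$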

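where $\mathcal{L}_t^{\mathrm{val}}$ depends on $\theta$ in two ways: i) via the intermediate variable $\mathbf{v}_t^*(\theta)$; and, ii) by acting directly on $\theta$. Note that (5) is general enough to cover the case where $\mathcal{L}_t^{\mathrm{val}}$ is constructed using both $\mathcal{D}_t^{\mathrm{trn}}$ and $\mathcal{D}_t^{\mathrm{val}}$; see e.g., (Ravi and Beatson 2019). Similar to optimization-based meta-learning, the difficulty in reaching global optima prompts one to substitute $\mathbf{v}_t^*(\theta)$ with a sub-optimum $\hat{\mathbf{v}}_t(\theta)$ obtained through an algorithm $\hat{\mathcal{A}}_t(\theta)$; i.e.,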

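$$\min_{\theta}\ \sum_{t=1}^{T}\mathcal{L}_t^{\mathrm{val}}\big(\hat{\mathbf{v}}_t(\theta),\theta\big)\quad\text{s.t.}\quad\hat{\mathbf{v}}_t(\theta)=\hat{\mathcal{A}}_t(\theta),\quad t=1,\dots,T \tag{6}$$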
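The Jensen relaxation mentioned above replaces $-\log \mathbb{E}_q[p(\mathbf{y}\mid\phi)]$ by the larger, but sample-friendly, $\mathbb{E}_q[-\log p(\mathbf{y}\mid\phi)]$. The following minimal sketch illustrates the inequality on a hypothetical scalar model ($y_n \sim \mathcal{N}(\phi, \tau^2)$ with $q = \mathcal{N}(\mu, \sigma^2)$); the model, the numbers, and the helper `log_lik` are illustrative assumptions, not the paper's setup.

```python
import numpy as np

def log_lik(phi, y, noise_var):
    """log p(y | phi) for y_n ~ N(phi, noise_var) i.i.d.; phi has shape (S,), y has shape (N,)."""
    resid = y[None, :] - phi[:, None]
    return np.sum(-0.5 * np.log(2 * np.pi * noise_var) - resid ** 2 / (2 * noise_var), axis=1)

rng = np.random.default_rng(1)
y_val = rng.normal(1.0, 0.5, size=10)    # hypothetical validation labels
mu, var, noise_var = 0.8, 0.3, 0.25      # hypothetical surrogate q = N(mu, var) and noise variance

phi = mu + np.sqrt(var) * rng.standard_normal(100_000)  # samples from q
ll = log_lik(phi, y_val, noise_var)

# Per-task loss -log E_q[p(y | phi)], estimated with a numerically stable log-mean-exp
neg_log_marginal = -(np.logaddexp.reduce(ll) - np.log(ll.size))
# Jensen upper bound E_q[-log p(y | phi)]: a plain average of per-sample nll values
expected_nll = -np.mean(ll)
print(neg_log_marginal <= expected_nll)  # True
```

The bound holds even for the sample averages, since the log of a mean is never smaller than the mean of the logs; the price is a looser objective, which is why tighter alternatives such as an extra VI step are also used.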

2.2 Scalability issues in meta-learning

Delay and memory resources required for solving (2) and (6) are arguably the major challenges that meta-learning faces. Here we will elaborate on these challenges in the optimization-based setup, but the same argument carries over to Bayesian meta-learning too.

Consider minimizing the meta-learning loss in (2) using a gradient-based iteration such as Adam (Kingma and Ba 2015). In the $(r+1)$-st iteration, gradients must be computed for a batch of tasks. Letting $\hat{\phi}_t^r := \hat{\mathcal{A}}_t(\hat{\theta}^r)$, where $\hat{\theta}^r$ denotes the meta-parameter in the $r$-th iteration, the chain rule yields the so-termed meta-gradient

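$$\nabla_{\theta}\mathcal{L}_t^{\mathrm{val}}\big(\hat{\mathcal{A}}_t(\theta)\big)\Big|_{\theta=\hat{\theta}^r}=\Big(\frac{d\hat{\mathcal{A}}_t(\hat{\theta}^r)}{d\theta}\Big)^{\top}\nabla\mathcal{L}_t^{\mathrm{val}}(\hat{\phi}_t^r) \tag{7}$$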

where the Jacobian $d\hat{\mathcal{A}}_t(\hat{\theta}^r)/d\theta$ contains high-order derivatives. When $\hat{\mathcal{A}}_t$ is chosen as the one-step GD (cf. (3)), the meta-gradient is

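$$\nabla_{\theta}\mathcal{L}_t^{\mathrm{val}}\big(\hat{\mathcal{A}}_t(\theta)\big)\Big|_{\theta=\hat{\theta}^r}=\big(\mathbf{I}_d-\alpha\nabla^2\mathcal{L}_t^{\mathrm{trn}}(\hat{\theta}^r)\big)\nabla\mathcal{L}_t^{\mathrm{val}}(\hat{\phi}_t^r) \tag{8}$$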

Fortunately, in this case the meta-gradient can still be computed through a Hessian-vector product (HVP), which incurs spatio-temporal complexity $\mathcal{O}(d)$.
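To make the one-step case concrete, the sketch below evaluates the meta-gradient as $\mathbf{g} - \alpha\nabla^2\mathcal{L}^{\mathrm{trn}}\mathbf{g}$ with $\mathbf{g}=\nabla\mathcal{L}^{\mathrm{val}}(\hat{\phi})$, never forming the $d\times d$ Hessian. It assumes a hypothetical logistic training loss and a quadratic validation loss, and it obtains the HVP from two gradient calls by central differences; in PyTorch one would instead use double backpropagation, which we do not reproduce here. The result is checked against a finite-difference gradient of the whole meta-objective.

```python
import numpy as np

rng = np.random.default_rng(0)
d, n, alpha = 5, 20, 0.1
X = rng.normal(size=(n, d))
ylab = np.sign(rng.normal(size=n))  # hypothetical +/-1 labels of the training split
c = rng.normal(size=d)              # hypothetical target of a quadratic validation loss

def grad_trn(phi):
    # gradient of the mean logistic loss: mean_n softplus(-y_n x_n^T phi)
    s = 1.0 / (1.0 + np.exp(ylab * (X @ phi)))  # sigmoid(-y_n x_n^T phi)
    return X.T @ (-ylab * s) / n

def loss_val(phi):
    return 0.5 * np.sum((phi - c) ** 2)

def grad_val(phi):
    return phi - c

def hvp(phi, v, eps=1e-5):
    # Hessian-vector product from two gradient calls; the d x d Hessian is never formed
    return (grad_trn(phi + eps * v) - grad_trn(phi - eps * v)) / (2 * eps)

def meta_grad_one_step(theta):
    phi_hat = theta - alpha * grad_trn(theta)  # one-step GD as in (3)
    g = grad_val(phi_hat)
    return g - alpha * hvp(theta, g)           # (I - alpha * Hessian) g as in (8)

def meta_obj(theta):
    return loss_val(theta - alpha * grad_trn(theta))

theta = rng.normal(size=d)
h = 1e-6
num = np.array([(meta_obj(theta + h * e) - meta_obj(theta - h * e)) / (2 * h) for e in np.eye(d)])
print(np.max(np.abs(meta_grad_one_step(theta) - num)))  # close to zero
```

The transpose in the chain rule can be dropped because the Hessian is symmetric.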

In general, $\hat{\mathcal{A}}_t$ is a $K$-step GD for some $K>1$, which gives rise to high-order derivatives in the meta-gradient. The most efficient computation of the meta-gradient calls for recursive application of HVP $K$ times, which incurs an overall complexity of $\mathcal{O}(Kd)$ in time, and $\mathcal{O}(Kd)$ in space requirements. Empirical wisdom, however, favors a large $K$ because it leads to improved accuracy in approximating the true meta-gradient $\nabla_{\theta}\mathcal{L}_t^{\mathrm{val}}(\mathcal{A}_t^*(\theta))|_{\theta=\hat{\theta}^r}$. Hence, the linear increase of complexity with $K$ will impede the scaling of optimization-based meta-learning algorithms.
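The recursive HVP computation can be sketched as reverse-mode differentiation through $K$ GD steps: the forward pass stores all $K$ iterates (hence $\mathcal{O}(Kd)$ memory), and the backward pass applies $\mathbf{I}-\alpha\nabla^2\mathcal{L}^{\mathrm{trn}}(\phi_k)$ once per stored iterate (hence $K$ HVPs). The function `unrolled_meta_grad` and the quadratic test problem are hypothetical; the quadratic is chosen only because its Jacobian $(\mathbf{I}-\alpha\mathbf{A})^K$ is known in closed form.

```python
import numpy as np

def unrolled_meta_grad(theta, grad_trn, hvp_trn, grad_val, alpha, K):
    """Meta-gradient of L_val(phi_K) w.r.t. theta, where phi_K is K-step GD started at theta.

    The forward pass stores all K iterates (O(Kd) memory); the backward pass applies
    K Hessian-vector products, one per stored iterate (O(Kd) time).
    """
    iterates = [theta]
    for _ in range(K):
        iterates.append(iterates[-1] - alpha * grad_trn(iterates[-1]))
    g = grad_val(iterates[-1])
    for phi_k in reversed(iterates[:-1]):
        # d phi_{k+1} / d phi_k = I - alpha * Hessian(phi_k), which is symmetric
        g = g - alpha * hvp_trn(phi_k, g)
    return g

# Check on a quadratic training loss 0.5 phi^T A phi - b^T phi
rng = np.random.default_rng(0)
d, K, alpha = 6, 10, 0.05
M = rng.normal(size=(d, d))
A = M @ M.T + np.eye(d)  # symmetric positive definite Hessian
b, c = rng.normal(size=d), rng.normal(size=d)

grad_trn = lambda phi: A @ phi - b
hvp_trn = lambda phi, v: A @ v
grad_val = lambda phi: phi - c  # validation loss 0.5 * ||phi - c||^2

theta = rng.normal(size=d)
g_unrolled = unrolled_meta_grad(theta, grad_trn, hvp_trn, grad_val, alpha, K)

# Closed form: phi_K = J theta + const with J = (I - alpha A)^K
J = np.linalg.matrix_power(np.eye(d) - alpha * A, K)
phi_K = theta.copy()
for _ in range(K):
    phi_K = phi_K - alpha * grad_trn(phi_K)
g_closed = J.T @ grad_val(phi_K)
print(np.allclose(g_unrolled, g_closed))  # True
```

Both the stored trajectory and the backward loop grow linearly with `K`, which is exactly the scaling bottleneck described above.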

When computing the meta-gradient, it should be underscored that the forward implementation of the $K$-step GD function has complexity $\mathcal{O}(Kd)$. However, the constant hidden in the $\mathcal{O}$ is much smaller than that of the HVP computation in the backward propagation; typical values of these constants in time and space are discussed in (Griewank 1993; Rajeswaran et al. 2019). For this reason, we will focus on more efficient means of obtaining the meta-gradient function for Bayesian meta-learning. It is also worth stressing that our results in the next section will hold for an arbitrary vector $\theta$ instead of solely the variable $\hat{\theta}^r$ of the $r$-th iteration. Thus, we will use the general vector $\theta$ when introducing our approach, while we will take its value at the point $\theta=\hat{\theta}^r$ when presenting our meta-learning algorithm.

3 Implicit Bayesian meta-learning

In this section, we will first introduce the proposed implicit Bayesian meta-learning (iBaML) method, which is built on top of implicit differentiation. Then, we will provide theoretical analysis to bound and compare the errors of explicit and implicit differentiation.

3.1 Implicit Bayesian meta-gradients

We start by decomposing the meta-gradient in Bayesian meta-learning (6) (henceforth referred to as the Bayesian meta-gradient) using the chain rule

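$$\nabla_{\theta}\mathcal{L}_t^{\mathrm{val}}\big(\hat{\mathbf{v}}_t(\theta),\theta\big)=\Big(\frac{d\hat{\mathbf{v}}_t(\theta)}{d\theta}\Big)^{\top}\nabla_1\mathcal{L}_t^{\mathrm{val}}\big(\hat{\mathbf{v}}_t(\theta),\theta\big)+\nabla_2\mathcal{L}_t^{\mathrm{val}}\big(\hat{\mathbf{v}}_t(\theta),\theta\big) \tag{9}$$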

where $\nabla_1$ and $\nabla_2$ denote the partial derivatives of a function w.r.t. its first and second arguments, respectively. The computational burden in (9) comes from the high-order derivatives present in the Jacobian $d\hat{\mathbf{v}}_t(\theta)/d\theta$.

The key idea behind implicit differentiation is to express the Jacobian $d\mathbf{v}_t^*(\theta)/d\theta$ as a function of $\mathbf{v}_t^*$ itself, so that it can be numerically obtained without using high-order derivatives. The following lemma formalizes how the implicit Jacobian is obtained in our setup. All proofs can be found in the Appendix.

Lemma 1.

Consider the Bayesian meta-learning problem in (5), and let be a local minimum of the task-level KL-divergence generated by . Also, let denote the expected negative log-likelihood (nll) on . If is invertible, then it holds for that

| (10) |

Two remarks are now in order regarding the technical assumption, and connections with iMAML. For notational brevity, define the block matrix

| (11) |

Remark 1.

The invertibility assumption in Lemma 1 ensures uniqueness of $\mathbf{v}_t^*$. Without this assumption, it turns out that $\mathbf{v}_t^*$ can be a singular point, belonging to a subspace where any point is also a local minimum. The Bayesian meta-gradients (9) of the points in this subspace form a set

| (12) |

where † represents pseudo-inverse, and stands for the null space. Upon replacing with , one can generalize Lemma 1, and forgo the invertibility assumption.
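The mechanism behind the set in (12) is the familiar one for singular linear systems: when a symmetric matrix is singular, the minimum-norm solution given by the pseudo-inverse can be shifted by any null-space vector and still solve the system. The sketch below is a generic linear-algebra illustration under that assumption (a random rank-deficient matrix `G`), not an implementation of (12) itself.

```python
import numpy as np

rng = np.random.default_rng(0)
d, r = 5, 3
U = np.linalg.qr(rng.normal(size=(d, d)))[0]
G = U[:, :r] @ np.diag([3.0, 2.0, 1.0]) @ U[:, :r].T  # symmetric PSD with rank r < d (singular)
b = G @ rng.normal(size=d)                             # right-hand side in the range of G

x_min = np.linalg.pinv(G) @ b                          # minimum-norm solution G^+ b
N = U[:, r:]                                           # orthonormal basis of the null space of G
x_other = x_min + N @ rng.normal(size=d - r)           # a null-space shift also solves G x = b

print(np.allclose(G @ x_min, b), np.allclose(G @ x_other, b))  # True True
print(np.linalg.norm(x_min) <= np.linalg.norm(x_other))        # True
```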

Remark 2.

To recognize how Lemma 1 links iBaML with iMAML (Rajeswaran et al. 2019), consider the special case where the covariance matrices of the prior and local minimum are fixed as and for some constant . Since is a constant vector, Lemma 1 boils down to

| (13) |

which coincides with Lemma 1 of (Rajeswaran et al. 2019). Hence, iBaML subsumes iMAML, whose expressiveness is confined because its prior covariance is fixed, while iBaML entails a learnable covariance matrix in the prior $p(\phi_t;\theta)$. In addition, the uncertainty of iMAML’s training posterior can be more challenging to quantify than that in iBaML.
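The iMAML special case can be checked numerically. With the proximal regularizer $\frac{\lambda}{2}\|\phi-\theta\|^2$, the implicit Jacobian of the inner solution is $(\mathbf{I}+\nabla^2\mathcal{L}^{\mathrm{trn}}(\phi^*)/\lambda)^{-1}$, which requires only the Hessian at the solution and none of the optimization trajectory. The sketch below assumes a hypothetical logistic training loss, solves the inner problem with Newton's method, and compares this Jacobian with a finite-difference Jacobian of the inner solver.

```python
import numpy as np

rng = np.random.default_rng(0)
d, n, lam = 4, 30, 2.0
X = rng.normal(size=(n, d))
ylab = np.sign(rng.normal(size=n))  # hypothetical +/-1 labels

def grad_trn(phi):
    s = 1.0 / (1.0 + np.exp(ylab * (X @ phi)))  # sigmoid(-y x^T phi)
    return X.T @ (-ylab * s) / n

def hess_trn(phi):
    s = 1.0 / (1.0 + np.exp(ylab * (X @ phi)))
    return (X.T * (s * (1 - s))) @ X / n

def inner_solve(theta, iters=50):
    # Newton's method on L_trn(phi) + lam/2 ||phi - theta||^2 (strongly convex)
    phi = theta.copy()
    for _ in range(iters):
        g = grad_trn(phi) + lam * (phi - theta)
        H = hess_trn(phi) + lam * np.eye(d)
        phi = phi - np.linalg.solve(H, g)
    return phi

theta = rng.normal(size=d)
phi_star = inner_solve(theta)
J_implicit = np.linalg.inv(np.eye(d) + hess_trn(phi_star) / lam)  # implicit Jacobian (iMAML)

eps = 1e-6
J_fd = np.column_stack([(inner_solve(theta + eps * e) - inner_solve(theta - eps * e)) / (2 * eps)
                        for e in np.eye(d)])
print(np.max(np.abs(J_implicit - J_fd)))  # close to zero
```

iBaML generalizes this by also differentiating through the learnable prior covariance, which is what the fixed-$\lambda$ setup above cannot capture.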

An immediate consequence of Lemma 1 is the so-called generalized implicit gradients. Suppose that $\hat{\mathcal{A}}_t$ involves a sufficiently large $K$ for the sub-optimal point $\hat{\mathbf{v}}_t$ to be close to a local optimum $\mathbf{v}_t^*$. The Bayesian meta-gradient (9) can then be approximated through

| (14) | ||||

The approximate implicit gradient in (14) is computationally expensive due to the matrix inversion, which incurs complexity $\mathcal{O}(d^3)$. To relieve the computational burden, a key observation is that the matrix-inverse-vector product in (14) is the solution of the optimization problem

| (15) |

Given that the square matrix in (15) is by definition symmetric, problem (15) can be efficiently solved using the conjugate gradient (CG) iteration, provided the matrix is also positive definite so that (15) has a unique minimizer. Specifically, the complexity of CG is dominated by the matrix-vector product it evaluates in every iteration, given by

| (16) | ||||

The first term on the right-hand side of (16) is an HVP, and the second is the multiplication of a diagonal matrix with a vector. Because the matrix is diagonal, the latter term boils down to an element-wise product, implying that the complexity of each CG iteration is as low as $\mathcal{O}(d)$. In practice, a small number of CG iterations suffices to produce an accurate estimate of the solution of (15) thanks to the fast convergence rate of CG (Van der Sluis and van der Vorst 1986; Winther 1980). In order to control the total complexity of iBaML, we set the maximum number of CG iterations to a constant $L$.
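A matrix-free CG solver of the kind described above needs only a routine returning the matrix-vector product of (16), i.e., an HVP plus an element-wise scaling. The following is a minimal textbook CG sketch in which `max_iter` plays the role of the iteration cap $L$; the explicit PSD matrix `H` and the vector `diag_vec` are hypothetical stand-ins for the HVP oracle and the diagonal block, since the exact matrix in (14)-(16) is not reproduced here.

```python
import numpy as np

def conjugate_gradient(matvec, b, max_iter, tol=1e-10):
    """Approximately solve A x = b for symmetric positive definite A, given only x -> A x.

    Equivalent to minimizing 0.5 x^T A x - b^T x; stops after at most max_iter iterations.
    """
    x = np.zeros_like(b)
    r = b.copy()  # residual b - A x for x = 0
    p = r.copy()
    rs = r @ r
    for _ in range(max_iter):
        if np.sqrt(rs) < tol:
            break
        Ap = matvec(p)
        step = rs / (p @ Ap)
        x = x + step * p
        r = r - step * Ap
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x

# Hypothetical stand-ins for the HVP oracle and the diagonal term
rng = np.random.default_rng(0)
n = 50
M = rng.normal(size=(n, n)) / np.sqrt(n)
H = M @ M.T                               # PSD "Hessian", accessed only through products
diag_vec = rng.uniform(0.5, 2.0, size=n)  # positive diagonal entries

def matvec(u):
    return H @ u + diag_vec * u  # HVP plus an element-wise (diagonal) product

b = rng.normal(size=n)
x_cg = conjugate_gradient(matvec, b, max_iter=n)
x_exact = np.linalg.solve(H + np.diag(diag_vec), b)
print(np.linalg.norm(x_cg - x_exact) / np.linalg.norm(x_exact))  # small relative error
```

Only a few vectors of length $n$ are kept alive across iterations, which is the source of the low memory footprint; with a well-conditioned matrix, far fewer than $n$ iterations are typically needed.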

Having obtained an approximation of the matrix-inverse-vector product , we proceed to estimate the Bayesian meta-gradient. Let be the output of the CG method with subvectors . Then, it follows from (14) that

where we also used the definition (11). Again, the diagonal-matrix-vector products in (17) can be efficiently computed through element-wise products, which incur complexity $\mathcal{O}(d)$. The step-by-step pseudocode of iBaML is listed under Algorithm 1.

In a nutshell, the implicit Bayesian meta-gradient computation consumes $\mathcal{O}(Ld)$ time, regardless of the optimization algorithm $\hat{\mathcal{A}}_t$. One can even employ more complicated algorithms such as second-order matrix-free optimization (Martens and Grosse 2015; Botev, Ritter, and Barber 2017). In addition, as the time complexity does not depend on $K$, one can increase $K$ to reduce the approximation error in (14). The space complexity of iBaML is only $\mathcal{O}(d)$ thanks to the iterative implementation of CG steps. These considerations explain how iBaML addresses the scalability issue of explicit backpropagation.

3.2 Theoretical analysis

This section deals with performance analysis of both explicit and implicit gradients in Bayesian meta-learning to further understand their differences. Similar to (Rajeswaran et al. 2019), our results will rely on the following assumptions.

Assumption 1.

Vector $\mathbf{v}_t^*$ is a local minimum of the KL-divergence in (5).

Assumption 2.

The meta-loss function is -Lipschitz and -smooth w.r.t. while its partial gradient is -Lipschitz w.r.t. .

Assumption 3.

The expected nll function is -smooth, and has a Hessian that is -Lipschitz.

Assumption 4.

Matrices and are both non-singular; that is, their smallest singular value .

Assumption 5.

Prior variances are positive and bounded, meaning .

Based on these assumptions, we can establish the following result.

Theorem 1 (Explicit Bayesian meta-gradient error bound).

Theorem 1 asserts that the error of the explicit Bayesian meta-gradient relative to the true one depends on the task-level optimization error as well as the error in the Jacobian, where the former captures the Euclidean distance between the local minimum $\mathbf{v}_t^*$ and its approximation $\hat{\mathbf{v}}_t$, while the latter characterizes how the sub-optimal function $\hat{\mathcal{A}}_t$ influences the Jacobian. Both errors can be reduced by increasing $K$ in the task-level optimization, at the cost of $\mathcal{O}(Kd)$ time and space complexity for backpropagating through $\hat{\mathcal{A}}_t$. Ideally, the first error vanishes when $\hat{\mathbf{v}}_t$ is a local optimum, and the second vanishes when choosing $\hat{\mathcal{A}}_t=\mathcal{A}_t^*$.

Next, we derive an error bound for implicit differentiation.

Theorem 2 (Implicit Bayesian meta-gradient error bound).

While the bound on the implicit meta-gradient also depends on the task-level optimization error, the difference from Theorem 1 lies in the CG error. The fast convergence of CG keeps this error tolerable even with a small $L$. As a result, one can opt for a large $K$ to reduce the task-level optimization error, and a small $L$ to obtain a satisfactory approximation of the meta-gradient.

4 Numerical tests

Here we test and showcase on synthetic and real data the analytical novelties of this contribution. Our implementation relies on PyTorch (Paszke et al. 2019), and the code is available at https://github.com/zhangyilang/iBaML.

4.1 Synthetic data

Here we examine the errors of explicit and implicit gradients on a synthetic dataset. The data are generated using the Bayesian linear regression model

| (19) |

where are i.i.d. samples drawn from a distribution that is unknown during meta-training, and is additive white Gaussian noise (AWGN) with known variance . Although the training posterior is tractable in this linear case, we still focus on the VI approximation for uniformity. In this simple linear setting, it can be readily verified that the task-level optimum of (5) is given by

| (20a) | ||||

| (20b) | ||||

where is a vector collecting the diagonal entries of matrix . The true posterior in the linear case is , implying that the posterior covariance matrix is essentially approximated by its diagonal counterpart in VI. Lemma 1 and (9) imply that the oracle meta-gradient is

| (21) | |||
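For intuition about this linear setting, the sketch below computes the standard diagonal-Gaussian VI solution for a linear-Gaussian model with prior $\mathcal{N}(\mathbf{m},\mathrm{diag}(\mathbf{s}))$: the KL(q‖posterior)-optimal mean equals the exact posterior mean, and the optimal variances are the reciprocals of the diagonal of the posterior precision. This is written in our own assumed notation (`X`, `y`, `noise_var`, `m`, `s`) and is not a transcription of (20a)-(20b). The checks confirm that perturbing either the mean or the variances increases the KL, and that these variances never exceed the diagonal of the exact posterior covariance.

```python
import numpy as np

def mean_field_vi_linear(X, y, noise_var, m, s):
    """Diagonal-Gaussian VI for y = X @ phi + e, e ~ N(0, noise_var * I), prior phi ~ N(m, diag(s)).

    Returns the exact posterior mean and precision, plus the mean and variances of the
    diagonal Gaussian q that minimizes KL(q || posterior).
    """
    Lam = X.T @ X / noise_var + np.diag(1.0 / s)                 # exact posterior precision
    mu_post = np.linalg.solve(Lam, X.T @ y / noise_var + m / s)  # exact posterior mean
    var_q = 1.0 / np.diag(Lam)                                   # mean-field variances
    return mu_post, Lam, mu_post.copy(), var_q                   # mean-field mean = exact mean

def kl_diag_to_full(mu_q, var_q, mu_p, Lam_p):
    """KL( N(mu_q, diag(var_q)) || N(mu_p, inv(Lam_p)) )."""
    diff = mu_p - mu_q
    _, logdet_Lam = np.linalg.slogdet(Lam_p)
    return 0.5 * (np.diag(Lam_p) @ var_q + diff @ Lam_p @ diff - mu_q.size
                  - logdet_Lam - np.sum(np.log(var_q)))

# Hypothetical single task: 8 training samples, 3 parameters, unit prior variances
rng = np.random.default_rng(0)
X = rng.normal(size=(8, 3))
y = X @ rng.normal(size=3) + 0.1 * rng.normal(size=8)
mu_post, Lam, mu_q, var_q = mean_field_vi_linear(X, y, noise_var=0.01, m=np.zeros(3), s=np.ones(3))

base = kl_diag_to_full(mu_q, var_q, mu_post, Lam)
print(base < kl_diag_to_full(mu_q, 1.2 * var_q, mu_post, Lam))  # True: other variances do worse
print(base < kl_diag_to_full(mu_q + 0.1, var_q, mu_post, Lam))  # True: other means do worse
print(np.all(var_q <= np.diag(np.linalg.inv(Lam))))             # True: variances are underestimated
```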

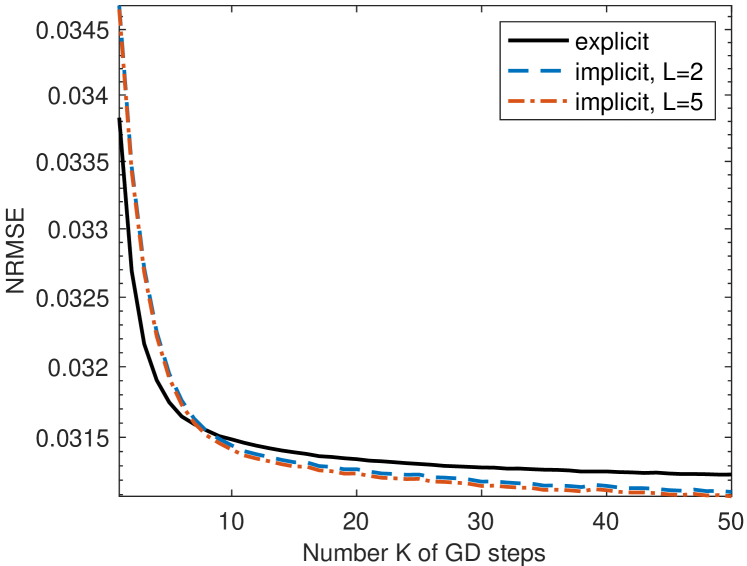

As a benchmark meta-learning algorithm, we selected the amortized Bayesian meta-learning (ABML) in (Ravi and Beatson 2019). The metric used for performance assessment is the normalized root-mean-square error (NRMSE) between the true meta-gradient , and the estimated meta-gradients and ; see also the Appendix for additional details on the numerical test.

Figure 1 depicts the NRMSE as a function of $K$ for the first iteration of ABML, that is at the point . For both explicit and implicit gradients, the NRMSE decreases as $K$ increases, while the former outperforms the latter for , and vice versa for . These observations confirm our analytical results. Intuitively, factors and caused by imprecise task-level optimization dominate the upper bounds for small $K$, thus resulting in large NRMSE. Besides, implicit gradients are more sensitive to task-level optimization errors. One conjecture is that iBaML is developed based on Lemma 1, where the matrix inversion can be sensitive to 's variation. Although the condition number of is purposely set to a large value so that decreases slowly with $K$, a small $L$ suffices to capture implicit gradients accurately. The main reason is that the CG error can become sufficiently small even with only steps, while remains large because GD converges slowly.

4.2 Real data

Next, we conduct tests to assess the performance of iBaML on real datasets. We consider miniImageNet (Vinyals et al. 2016), one of the most widely used few-shot classification datasets. This dataset consists of 60,000 natural images categorized in 100 classes, with 600 samples per class. All images are cropped to have size of 84 × 84. We adopt the dataset splitting suggested by (Ravi and Larochelle 2017), where 64, 16 and 20 disjoint classes are used for meta-training, meta-validation and meta-testing, respectively. The setups of the numerical test follow from the standard $W$-class $S$-shot few-shot learning protocol in (Vinyals et al. 2016). In particular, each task has $W$ randomly selected classes, and each class contains $S$ training images and $Q$ validation images. In other words, we have $N_t^{\mathrm{trn}}=WS$ and $N_t^{\mathrm{val}}=WQ$. We further adopt the typical choices with , , and . It should be noted that the training and validation sets are also known as support and query sets in the context of few-shot learning.
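The episode construction described above can be sketched as follows. The helper `sample_episode` is hypothetical (it is not taken from the released code): given one integer class label per image, it draws a set of classes and, for each class, a disjoint set of training (support) and validation (query) indices. The usage example assumes the 64 meta-training classes with 600 images each, and the commonly used 5-way 1-shot setting with 15 query images per class.

```python
import numpy as np

def sample_episode(labels, n_way, k_shot, n_query, rng):
    """Sample one few-shot task: n_way classes, k_shot training and n_query validation examples per class.

    `labels` holds one integer class label per image in the meta-training split.
    Returns index arrays into `labels` for the training (support) and validation (query) sets.
    """
    classes = rng.choice(np.unique(labels), size=n_way, replace=False)
    trn_idx, val_idx = [], []
    for c in classes:
        idx = rng.permutation(np.flatnonzero(labels == c))[: k_shot + n_query]
        trn_idx.extend(idx[:k_shot])
        val_idx.extend(idx[k_shot:])
    return np.array(trn_idx), np.array(val_idx), classes

# Hypothetical usage: 64 classes x 600 images, 5-way 1-shot tasks with 15 queries per class
rng = np.random.default_rng(0)
labels = np.repeat(np.arange(64), 600)
trn, val, cls = sample_episode(labels, n_way=5, k_shot=1, n_query=15, rng=rng)
print(len(trn), len(val))  # 5 75
```

In practice the selected class labels are also remapped to $0,\dots,W-1$ within each task, so that the classifier head is shared across tasks.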

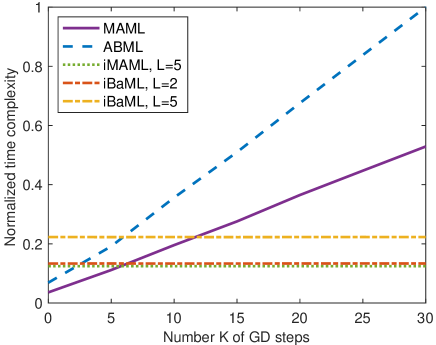

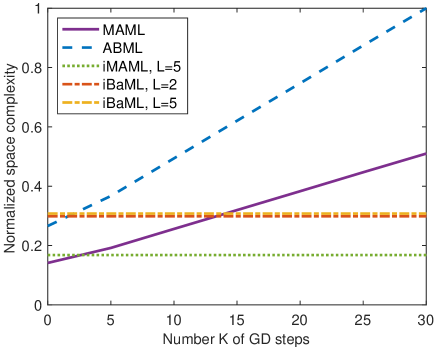

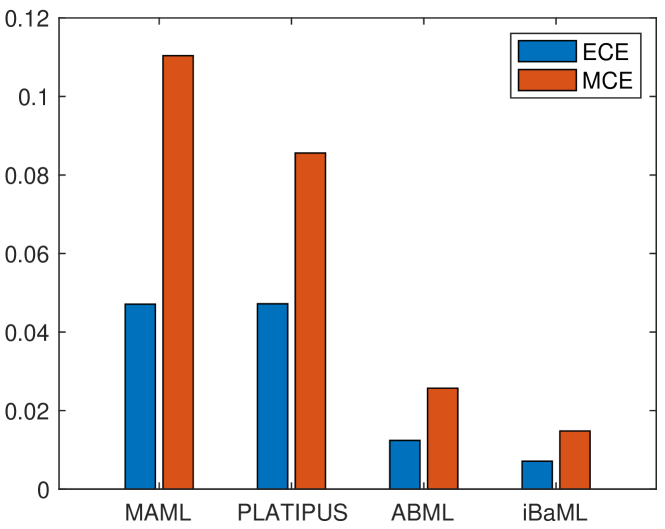

We first empirically compare the computational complexity (time and space) of explicit versus implicit gradients on the -class -shot miniImageNet dataset. Here we are only interested in backward complexity, so the delay and memory requirements of the forward pass of $\hat{\mathcal{A}}_t$ are excluded. Figure 2(a) plots the time complexity of explicit and implicit gradients against $K$. It is observed that the time complexity of the explicit gradient grows linearly with $K$, while the implicit one increases only with $L$ but not $K$. Moreover, the explicit and implicit gradients have comparable time complexity when . As far as space complexity is concerned, Figure 2(b) illustrates that memory usage with explicit gradients is proportional to $K$. In contrast, the memory used by the implicit gradient algorithms is nearly invariant across $K$ values. Such a memory-saving property is important when meta-learning is employed with models of growing degrees of freedom. Furthermore, one may also notice from both figures that MAML and iMAML incur about half the time/space complexities of ABML and iBaML. This is because non-Bayesian approaches only optimize the mean vector of the Gaussian prior, whose dimension is $d$, while the probabilistic methods cope with both the mean and the diagonal covariance matrix of the pdf, with corresponding dimension $2d$. This increase in dimensionality doubles the space-time complexity of gradient computations.

| Method | nll | accuracy |
|---|---|---|
| MAML, | | |
| ABML, | | |
| iBaML, | | |
| iBaML, | | |
| iBaML, | | |

Next, we demonstrate the effectiveness of iBaML in reducing the Bayesian meta-learning loss. The test is conducted on the -class -shot miniImageNet. The model is a standard -layer -channel convolutional neural network, and the chosen baseline algorithms are MAML (Finn, Abbeel, and Levine 2017) and ABML (Ravi and Beatson 2019); see also the Appendix for alternative setups. Due to the large number of training tasks, it is impractical to compute the exact meta-training loss. As an alternative, we adopt the ‘test nll’ (averaged over test tasks) as our metric, and also report the corresponding accuracy. For fairness, we set when implementing the implicit gradients so that the time complexity is similar to the explicit one with . The results are listed in Table 1. It is observed that both nll and accuracy improve with $K$, implying that the meta-learning loss can be effectively reduced at the price of a small error in gradient estimation.

5 Conclusions

This paper develops a novel approach, termed iBaML, to enhance the scalability of Bayesian meta-learning. At the core of iBaML is an estimate of meta-gradients using implicit differentiation. Analysis reveals that the estimation error is upper bounded by task-level optimization and CG errors, and these two can be significantly reduced with only a slight increase in time complexity. In addition, the required computational complexity is invariant to the task-level optimization trajectory, which allows iBaML to deal with complicated task-level optimization. Besides the analytical performance guarantees, extensive numerical tests on synthetic and real datasets demonstrate the appealing merits of iBaML over competing alternatives.

Acknowledgments

This work was supported in part by NSF grants 2220292, 2212318, 2126052, and 2128593.

References

- Abbas et al. (2022) Abbas, M.; Xiao, Q.; Chen, L.; Chen, P.-Y.; and Chen, T. 2022. Sharp-MAML: Sharpness-Aware Model-Agnostic Meta Learning. In Proceedings of the 39th International Conference on Machine Learning, volume 162 of Proceedings of Machine Learning Research, 10–32. PMLR.

- Bengio, Bengio, and Cloutier (1995) Bengio, S.; Bengio, Y.; and Cloutier, J. 1995. On the Search for New Learning Rules for ANNs. Neural Processing Letters, 2(4): 26–30.

- Bertinetto et al. (2019) Bertinetto, L.; Henriques, J. F.; Torr, P.; and Vedaldi, A. 2019. Meta-learning with Differentiable Closed-Form Solvers. In Proceedings of International Conference on Learning Representations.

- Botev, Ritter, and Barber (2017) Botev, A.; Ritter, H.; and Barber, D. 2017. Practical Gauss-Newton Optimisation for Deep Learning. In Proceedings of the 34th International Conference on Machine Learning, volume 70 of Proceedings of Machine Learning Research, 557–565. PMLR.

- Chen and Chen (2022) Chen, L.; and Chen, T. 2022. Is Bayesian Model-Agnostic Meta Learning Better than Model-Agnostic Meta Learning, Provably? In Proceedings of The 25th International Conference on Artificial Intelligence and Statistics, volume 151 of Proceedings of Machine Learning Research, 1733–1774. PMLR.

- Fallah, Mokhtari, and Ozdaglar (2020) Fallah, A.; Mokhtari, A.; and Ozdaglar, A. 2020. On the Convergence Theory of Gradient-Based Model-Agnostic Meta-Learning Algorithms. In Proceedings of the Twenty Third International Conference on Artificial Intelligence and Statistics, volume 108, 1082–1092. PMLR.

- Finn, Abbeel, and Levine (2017) Finn, C.; Abbeel, P.; and Levine, S. 2017. Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks. In Proceedings of the 34th International Conference on Machine Learning, volume 70, 1126–1135. PMLR.

- Finn, Xu, and Levine (2018) Finn, C.; Xu, K.; and Levine, S. 2018. Probabilistic Model-Agnostic Meta-Learning. In Advances in Neural Information Processing Systems, volume 31. Curran Associates, Inc.

- Flennerhag et al. (2020) Flennerhag, S.; Rusu, A. A.; Pascanu, R.; Visin, F.; Yin, H.; and Hadsell, R. 2020. Meta-Learning with Warped Gradient Descent. In Proceedings of International Conference on Learning Representations.

- Franceschi et al. (2018) Franceschi, L.; Frasconi, P.; Salzo, S.; Grazzi, R.; and Pontil, M. 2018. Bilevel Programming for Hyperparameter Optimization and Meta-Learning. In Proceedings of the 35th International Conference on Machine Learning, volume 80, 1568–1577. PMLR.

- Grant et al. (2018) Grant, E.; Finn, C.; Levine, S.; Darrell, T.; and Griffiths, T. 2018. Recasting Gradient-Based Meta-Learning as Hierarchical Bayes. In Proceedings of International Conference on Learning Representations.

- Griewank (1993) Griewank, A. 1993. Some bounds on the complexity of gradients, Jacobians, and Hessians. In Complexity in numerical optimization, 128–162. World Scientific.

- Hansen and Wang (2021) Hansen, N.; and Wang, X. 2021. Generalization in Reinforcement Learning by Soft Data Augmentation. In 2021 IEEE International Conference on Robotics and Automation (ICRA), 13611–13617.

- Kingma and Ba (2015) Kingma, D. P.; and Ba, J. 2015. Adam: A Method for Stochastic Optimization. In Proceedings of International Conference on Learning Representations.

- Lee et al. (2019) Lee, K.; Maji, S.; Ravichandran, A.; and Soatto, S. 2019. Meta-Learning With Differentiable Convex Optimization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

- Li et al. (2017) Li, Z.; Zhou, F.; Chen, F.; and Li, H. 2017. Meta-SGD: Learning to Learn Quickly for Few-Shot Learning. arXiv preprint arXiv:1707.09835.

- Martens and Grosse (2015) Martens, J.; and Grosse, R. 2015. Optimizing Neural Networks with Kronecker-factored Approximate Curvature. In Proceedings of the 32nd International Conference on Machine Learning, volume 37 of Proceedings of Machine Learning Research, 2408–2417. Lille, France: PMLR.

- Miao, Metze, and Rawat (2013) Miao, Y.; Metze, F.; and Rawat, S. 2013. Deep maxout networks for low-resource speech recognition. In 2013 IEEE Workshop on Automatic Speech Recognition and Understanding, 398–403. IEEE.

- Mishra et al. (2018) Mishra, N.; Rohaninejad, M.; Chen, X.; and Abbeel, P. 2018. A Simple Neural Attentive Meta-Learner. In International Conference on Learning Representations.

- Naeini, Cooper, and Hauskrecht (2015) Naeini, M. P.; Cooper, G.; and Hauskrecht, M. 2015. Obtaining Well Calibrated Probabilities Using Bayesian Binning. In Proceedings of the Twenty-Ninth AAAI Conference on Artificial Intelligence, 2901–2907.

- Nguyen, Do, and Carneiro (2020) Nguyen, C.; Do, T.-T.; and Carneiro, G. 2020. Uncertainty in Model-Agnostic Meta-Learning using Variational Inference. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV).

- Nichol, Achiam, and Schulman (2018) Nichol, A.; Achiam, J.; and Schulman, J. 2018. On First-Order Meta-Learning Algorithms. arXiv preprint arXiv:1803.02999.

- Paszke et al. (2019) Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; Desmaison, A.; Kopf, A.; Yang, E.; DeVito, Z.; Raison, M.; Tejani, A.; Chilamkurthy, S.; Steiner, B.; Fang, L.; Bai, J.; and Chintala, S. 2019. PyTorch: An Imperative Style, High-Performance Deep Learning Library. In Advances in Neural Information Processing Systems, volume 32. Curran Associates, Inc.

- Rajeswaran et al. (2019) Rajeswaran, A.; Finn, C.; Kakade, S. M.; and Levine, S. 2019. Meta-Learning with Implicit Gradients. In Advances in Neural Information Processing Systems, volume 32. Curran Associates, Inc.

- Ravi and Beatson (2019) Ravi, S.; and Beatson, A. 2019. Amortized Bayesian Meta-Learning. In Proceedings of International Conference on Learning Representations.

- Ravi and Larochelle (2017) Ravi, S.; and Larochelle, H. 2017. Optimization as a Model for Few-Shot Learning. In Proceedings of International Conference on Learning Representations.

- Santoro et al. (2016) Santoro, A.; Bartunov, S.; Botvinick, M.; Wierstra, D.; and Lillicrap, T. 2016. Meta-Learning with Memory-Augmented Neural Networks. In Proceedings of the 33rd International Conference on Machine Learning, volume 48, 1842–1850. New York, New York, USA: PMLR.

- Schmidhuber (1993) Schmidhuber, J. 1993. A Neural Network that Embeds its Own Meta-Levels. In IEEE International Conference on Neural Networks, 407–412 vol.1.

- Schmidhuber, Zhao, and Wiering (1996) Schmidhuber, J.; Zhao, J.; and Wiering, M. 1996. Simple Principles of Metalearning. Technical report IDSIA, 69: 1–23.

- Thrun (1998) Thrun, S. 1998. Lifelong Learning Algorithms, 181–209. Boston, MA: Springer US. ISBN 978-1-4615-5529-2.

- Thrun and Pratt (2012) Thrun, S.; and Pratt, L. 2012. Learning to Learn. Springer Science & Business Media.

- Van der Sluis and van der Vorst (1986) Van der Sluis, A.; and van der Vorst, H. A. 1986. The rate of convergence of conjugate gradients. Numerische Mathematik, 48(5): 543–560.

- Vinyals et al. (2016) Vinyals, O.; Blundell, C.; Lillicrap, T.; Kavukcuoglu, K.; and Wierstra, D. 2016. Matching Networks for One Shot Learning. In Advances in Neural Information Processing Systems, volume 29. Curran Associates, Inc.

- Wang, Sun, and Li (2020) Wang, H.; Sun, R.; and Li, B. 2020. Global Convergence and Generalization Bound of Gradient-Based Meta-Learning with Deep Neural Nets. arXiv preprint arXiv:2006.14606.

- Winther (1980) Winther, R. 1980. Some Superlinear Convergence Results for the Conjugate Gradient Method. SIAM Journal on Numerical Analysis, 17(1): 14–17.

- Yang et al. (2016) Yang, Y.; Sun, J.; Li, H.; and Xu, Z. 2016. Deep ADMM-Net for Compressive Sensing MRI. In Advances in Neural Information Processing Systems, volume 29. Curran Associates, Inc.

- Yoon et al. (2018) Yoon, J.; Kim, T.; Dia, O.; Kim, S.; Bengio, Y.; and Ahn, S. 2018. Bayesian Model-Agnostic Meta-Learning. In Advances in Neural Information Processing Systems, volume 31. Curran Associates, Inc.

Appendix

A.1 Proof of Lemma 1

Lemma 1 (Restated).

Consider the Bayesian meta-learning problem (5). Let be a local minimum of the task-level KL-divergence generated by ; and let denote the expected negative log-likelihood (nll) on . If is invertible, it then holds for that

| (22) |

Proof.

We first write out the evidence lower bound (ELBO) of the variational inference (VI) problem in (2.1).

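where . Since the log-evidence equals the ELBO plus the KL divergence and does not depend on the variational parameters, minimizing the KL divergence amounts to maximizing the ELBO.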
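The identity behind this step, log-evidence = ELBO + KL(q || posterior), can be checked numerically on a toy conjugate model. The sketch below is illustrative only: it assumes a hypothetical model y = θ + noise with prior N(mu, diag(d)), noise variance sigma2, and an arbitrary diagonal Gaussian q = N(m, diag(s)). None of these names come from the paper. Because the log-evidence is constant in (m, s), the gap it computes is exactly the KL term that VI minimizes.

```python
import numpy as np

def kl_diag_gauss(m_q, v_q, m_p, v_p):
    """KL( N(m_q, diag(v_q)) || N(m_p, diag(v_p)) ), where v_* are variances."""
    return 0.5 * np.sum(v_q / v_p + (m_q - m_p) ** 2 / v_p - 1.0 + np.log(v_p / v_q))

def log_normal(x, mean, var):
    """Sum of log-densities of independent Gaussians N(mean_i, var_i) at x_i."""
    return np.sum(-0.5 * np.log(2 * np.pi * var) - (x - mean) ** 2 / (2 * var))

def elbo(y, m, s, mu, d, sigma2):
    """ELBO of the toy model y = theta + noise, prior N(mu, diag(d)), q = N(m, diag(s))."""
    # E_q[log p(y | theta)] in closed form, using E_q[(y - theta)^2] = (y - m)^2 + s
    exp_loglik = np.sum(-0.5 * np.log(2 * np.pi * sigma2) - ((y - m) ** 2 + s) / (2 * sigma2))
    return exp_loglik - kl_diag_gauss(m, s, mu, d)

rng = np.random.default_rng(0)
dim, sigma2 = 5, 0.3
mu, d = rng.normal(size=dim), rng.uniform(0.5, 2.0, size=dim)   # hypothetical prior
y = rng.normal(mu, np.sqrt(d + sigma2))                          # one draw per coordinate
m, s = rng.normal(size=dim), rng.uniform(0.1, 1.0, size=dim)     # arbitrary q

# Exact posterior and log-evidence of the conjugate model
post_var = 1.0 / (1.0 / d + 1.0 / sigma2)
post_mean = post_var * (mu / d + y / sigma2)
log_evidence = log_normal(y, mu, d + sigma2)

gap = log_evidence - elbo(y, m, s, mu, d, sigma2)
print(np.isclose(gap, kl_diag_gauss(m, s, post_mean, post_var)))  # True
```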

From the definitions and , we can write the desired gradient as a block matrix

| (23) |

where, with a slight abuse of notation, and denote partial gradients. The next step is to express as a function of itself, so that implicit differentiation can be leveraged.

Since is a local minimum of , it is a local maximizer of the ELBO. The first-order necessary condition for optimality thus yields

| (24) |

Upon defining , the KL-divergence of Gaussian distributions can be written as

| (25) |

and after plugging (25) into (24) and rearranging terms, we arrive at

| (26) |

and

| (27) |

where we used to represent the element-wise inverse of a general vector .
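To show where the element-wise inverse arises, here is a short worked derivation. It is a sketch under stated assumptions, and the symbols are our own labels because the extracted equations are missing. Assume a prior $\mathcal{N}(\mu, \operatorname{diag}(d))$, a variational posterior $\mathcal{N}(m_t, \operatorname{diag}(d_t))$, and a task-level objective $F_t = L_t(m_t, d_t) + \mathrm{KL}$, where $L_t$ is the expected nll. The stationarity conditions then take this shape, with $\odot$ and $\oslash$ denoting element-wise product and division:

```latex
\begin{aligned}
\mathrm{KL}\big(\mathcal{N}(m_t,\operatorname{diag}(d_t))\,\|\,\mathcal{N}(\mu,\operatorname{diag}(d))\big)
&= \tfrac12 \sum_i \Big[\tfrac{d_{t,i}}{d_i} + \tfrac{(m_{t,i}-\mu_i)^2}{d_i} - 1 + \log\tfrac{d_i}{d_{t,i}}\Big],\\
\nabla_{m_t} F_t = \nabla_{m_t} L_t + (m_t-\mu)\oslash d = 0
&\;\Longrightarrow\; m_t = \mu - d \odot \nabla_{m_t} L_t,\\
\nabla_{d_t} F_t = \nabla_{d_t} L_t + \tfrac12\big(d^{-1} - d_t^{-1}\big) = 0
&\;\Longrightarrow\; d_t = \big(d^{-1} + 2\,\nabla_{d_t} L_t\big)^{-1}.
\end{aligned}
```

Here $(\cdot)^{-1}$ applied to a vector is the element-wise inverse. Differentiating such fixed-point relations with respect to the prior parameters is what produces linear equations of the kind solved below.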

Then, taking the gradient with respect to on both sides of (26) and applying the chain rule yields

| (28) |

and

| (29) |

Applying the same operation to (27) yields

| (30) |

and

| (31) |

So far, implicit differentiation has expressed each of the four blocks of in terms of the blocks themselves. The last step is therefore to solve the linear equations (28)-(31) for these four blocks.

Solving this linear system entry by entry leads to unwieldy expressions; instead, we rewrite it in a compact matrix form:

| (32) |

Now, the matrix equation can be readily solved to obtain

| (33) |

where the fourth equality comes from (27).

∎
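The central idea of the proof is to obtain the Jacobian of the task-level solution from its optimality condition rather than by unrolling the solver. This can be sanity-checked on a toy problem. The sketch below deliberately uses a simpler MAP-style inner objective than the paper's variational one: a logistic loss plus a diagonal Gaussian prior penalty centered at θ, with hypothetical precisions `lam`. It compares the implicit-function-theorem Jacobian H⁻¹ diag(lam) against central finite differences. The inner solver is an arbitrary choice (gradient descent followed by Newton polishing), which illustrates that the implicit Jacobian depends only on the solution reached.

```python
import numpy as np

rng = np.random.default_rng(1)
n_samples, dim = 20, 3
X = rng.normal(size=(n_samples, dim))
y = rng.choice([-1.0, 1.0], size=n_samples)
lam = rng.uniform(0.5, 2.0, size=dim)                    # hypothetical diagonal prior precision
L_smooth = 0.25 * np.linalg.norm(X, 2) ** 2 + lam.max()  # smoothness constant of the objective

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def grad_hess(v, theta):
    """Gradient/Hessian of sum_i log(1 + exp(-y_i x_i^T v)) + 0.5 * sum(lam * (v - theta)**2)."""
    z = y * (X @ v)
    g = -X.T @ (y * sigmoid(-z)) + lam * (v - theta)
    H = X.T @ (X * (sigmoid(z) * sigmoid(-z))[:, None]) + np.diag(lam)
    return g, H

def inner_solve(theta, gd_iters=500, newton_iters=5):
    """Gradient descent, then a few Newton steps; any convergent solver would do."""
    v = np.zeros(dim)
    for _ in range(gd_iters):
        v = v - grad_hess(v, theta)[0] / L_smooth
    for _ in range(newton_iters):
        g, H = grad_hess(v, theta)
        v = v - np.linalg.solve(H, g)
    return v

def implicit_jacobian(theta):
    # Optimality: g(v*, theta) = 0. Differentiating w.r.t. theta gives
    # H dv*/dtheta - diag(lam) = 0, hence dv*/dtheta = H^{-1} diag(lam).
    _, H = grad_hess(inner_solve(theta), theta)
    return np.linalg.solve(H, np.diag(lam))

def finite_diff_jacobian(theta, eps=1e-6):
    """Central finite differences of the inner solution, one column per theta entry."""
    J = np.zeros((dim, dim))
    for j in range(dim):
        e = np.zeros(dim)
        e[j] = eps
        J[:, j] = (inner_solve(theta + e) - inner_solve(theta - e)) / (2 * eps)
    return J

theta = rng.normal(size=dim)
print(np.allclose(implicit_jacobian(theta), finite_diff_jacobian(theta), atol=1e-5))
```

Only the Hessian at the solution enters `implicit_jacobian`, so its memory cost does not grow with the number of solver iterations.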

A.2 Proof of Theorem 1

Theorem 1 (Explicit meta-gradient error bound, restated).

Proof.

First, it follows from definition (3.1) of the Bayesian meta-gradient that

| (35) |

where Assumption 2 was used in the second and last inequalities. What remains is to bound and .

Using Lemma 1 with Assumption 1, we obtain

| (36) |

where the third equality is from the definition of . Likewise, we also have

| (37) |

Upon defining , and adding intermediate terms, we arrive at

| (38) |

Next, we bound the four summands in (38). Using Assumption 3, it follows that

| (39) |

and

| (40) |

Letting denote the element-wise square of a general vector , we have for the second term that

| (41) |

where the fourth inequality uses Assumption 3 and the definition .

Further, we can use Assumption 4 to establish one of the desired upper bounds

| (44) |

and likewise

| (45) |
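For intuition on why an explicit (unrolled) meta-gradient carries an error tied to the inner optimization trajectory, consider a hypothetical quadratic task loss with Hessian `A`, a prior penalty of weight `lam`, and K steps of gradient descent started at θ. This is a toy illustration, not a reproduction of the bound in Theorem 1. In this setting the unrolled Jacobian obeys J_{k+1} = J_k - α(M J_k - λI) with M = A + λI. Its distance to the exact (implicit) Jacobian λM⁻¹ therefore shrinks exactly like (1 - α λ_min(M))^K.

```python
import numpy as np

rng = np.random.default_rng(2)
dim, lam = 4, 1.0
Q, _ = np.linalg.qr(rng.normal(size=(dim, dim)))
A = Q @ np.diag(np.linspace(1.0, 10.0, dim)) @ Q.T   # hypothetical task-loss Hessian
M = A + lam * np.eye(dim)                            # Hessian of the regularized inner loss
alpha = 1.0 / np.linalg.eigvalsh(M).max()            # step size 1 / lambda_max(M)

J_star = lam * np.linalg.inv(M)                      # exact Jacobian dv*/dtheta

def unrolled_jacobian(K):
    """Jacobian of K GD steps v <- v - alpha*(A v - b + lam*(v - theta)) with v0 = theta."""
    J = np.eye(dim)                                  # d v0 / d theta
    for _ in range(K):
        J = J - alpha * (M @ J - lam * np.eye(dim))
    return J

errors = {K: np.linalg.norm(unrolled_jacobian(K) - J_star, 2) for K in (5, 20, 100)}
rho = 1.0 - alpha * np.linalg.eigvalsh(M).min()      # per-step contraction factor
for K, err in errors.items():
    print(K, err, 0.5 * rho ** K)                    # 0.5 = 1 - lam / lambda_min(M) here
```

With eigenvalues of M in {2, 5, 8, 11}, the error is dominated by the smallest one. It equals 0.5·(9/11)^K, whereas the implicit Jacobian needs no unrolling at all.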

A.3 Proof of Theorem 2

Theorem 2 (Implicit gradient error bound, restated).

A.4 Detailed setups for numerical tests

Synthetic dataset

Across all tests, the dimension , and the standard deviation of the additive white Gaussian noise (AWGN) is . Matrix is randomly generated with condition number , and the linear weights are randomly sampled from the oracle distribution . The sizes of the training and validation sets are fixed as and for . The task-level optimizer is chosen to be -step gradient descent (GD) with learning rate . To run and compute the meta-loss in (2.1), the number of Monte Carlo (MC) samples is set to .
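The synthetic setup can be sketched as follows. This assumes that the matrix with a prescribed condition number is the input (design) matrix of a linear model and that the oracle distribution is a diagonal Gaussian. The function names, dimensions, κ, and noise level are hypothetical placeholders, not the settings used in the experiments. The condition number is imposed by fixing the singular values between two random orthonormal factors.

```python
import numpy as np

def matrix_with_condition_number(n_rows, dim, kappa, rng):
    """Random n_rows x dim matrix (n_rows >= dim) whose 2-norm condition number is kappa."""
    U, _ = np.linalg.qr(rng.normal(size=(n_rows, dim)))   # orthonormal columns
    V, _ = np.linalg.qr(rng.normal(size=(dim, dim)))      # orthogonal matrix
    sing = np.geomspace(1.0, 1.0 / kappa, dim)            # largest / smallest = kappa
    return U @ np.diag(sing) @ V.T

def sample_task(X, mu, d, noise_std, rng):
    """Draw weights from a hypothetical oracle N(mu, diag(d)) and return noisy labels."""
    w = rng.normal(mu, np.sqrt(d))
    y = X @ w + noise_std * rng.normal(size=X.shape[0])   # additive white Gaussian noise
    return w, y

rng = np.random.default_rng(0)
X = matrix_with_condition_number(50, 8, 10.0, rng)
w, y = sample_task(X, np.zeros(8), np.ones(8), 0.1, rng)
print(np.linalg.cond(X))  # approximately 10.0
```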

MiniImageNet

The numerical tests on miniImageNet follow the few-shot learning protocol described in (Vinyals et al. 2016; Finn, Abbeel, and Levine 2017). For meta-level optimization, the total number of iterations is with batch size and meta-learning rate . Following (Ravi and Beatson 2019), the meta-level prior of ABML is set to . For task-level optimization, the learning rate is . In addition, the number of MC runs is set to for meta-training and for evaluation.
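In the episodic protocol, each task is an N-way K-shot problem. N classes are drawn, and each class contributes K labelled support images plus a disjoint set of query images, with labels remapped to 0..N-1. A minimal sampler over a labelled pool might look like the sketch below. The function name, the query-set size, and the toy pool are hypothetical, and image loading is omitted.

```python
import numpy as np

def sample_episode(labels, n_way, k_shot, n_query, rng):
    """Sample an N-way K-shot episode from a labelled pool.

    labels: 1-D array with the class id of every image in the pool.
    Returns support/query index arrays into the pool and episode-local labels 0..n_way-1.
    Assumes every class has at least k_shot + n_query images.
    """
    classes = rng.choice(np.unique(labels), size=n_way, replace=False)
    sup_idx, sup_y, qry_idx, qry_y = [], [], [], []
    for local, c in enumerate(classes):
        pool = rng.permutation(np.flatnonzero(labels == c))   # shuffled images of class c
        sup_idx.append(pool[:k_shot])
        qry_idx.append(pool[k_shot:k_shot + n_query])         # disjoint from the support set
        sup_y.append(np.full(k_shot, local))
        qry_y.append(np.full(n_query, local))
    return (np.concatenate(sup_idx), np.concatenate(sup_y),
            np.concatenate(qry_idx), np.concatenate(qry_y))

rng = np.random.default_rng(0)
labels = np.repeat(np.arange(20), 30)                         # toy pool: 20 classes x 30 images
support_idx, support_y, query_idx, query_y = sample_episode(labels, 5, 1, 15, rng)
```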

Furthermore, to ensure that the entries and of the variances are greater than , we instead optimize and . This is possible because for a general , it holds that .
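One common way to realize such a reparameterization is sketched below. It assumes a softplus map plus a hypothetical floor `EPS`, so that any real-valued parameter maps to a variance strictly above the floor in exact arithmetic. An exponential map, exp(ρ) > 0, works the same way. The specific map and floor here are illustrative choices.

```python
import numpy as np

EPS = 1e-6  # hypothetical lower bound on every variance entry

def softplus(x):
    """Numerically stable log(1 + exp(x)), which is positive for every real x."""
    return np.logaddexp(0.0, x)

def inv_softplus(y):
    """Inverse of softplus for y > 0: log(exp(y) - 1)."""
    return np.log(np.expm1(y))

def to_variance(rho):
    """Map an unconstrained parameter rho to a variance above EPS.

    In floating point, very negative rho (below roughly -40) rounds to exactly EPS.
    """
    return EPS + softplus(rho)

def from_variance(var):
    """Recover the unconstrained parameter from a variance var > EPS."""
    return inv_softplus(var - EPS)

rho = np.array([-10.0, -1.0, 0.0, 3.0, 40.0])
print(to_variance(rho))
```

Gradient-based optimizers can then update `rho` freely, and the positivity constraint never has to be enforced by projection.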