Scalable Extreme Deconvolution

Abstract

The Extreme Deconvolution method fits a probability density to a dataset where each observation has Gaussian noise added with a known sample-specific covariance, originally intended for use with astronomical datasets. The existing fitting method is batch EM, which would not normally be applied to large datasets such as the Gaia catalog containing noisy observations of a billion stars. We propose two minibatch variants of extreme deconvolution, based on an online variation of the EM algorithm, and direct gradient-based optimisation of the log-likelihood, both of which can run on GPUs. We demonstrate that these methods provide faster fitting, whilst being able to scale to much larger models for use with larger datasets.

1 Introduction

Extreme deconvolution is a method that fits Gaussian Mixture Models (GMMs) to noisy data where we know the covariance of the Gaussian noise added to each sample Bovy et al. [2011]. The method was originally developed to perform probabilistic density estimation on the dataset of stellar velocities produced by the Hipparcos satellite Perryman et al. [1997]. The Hipparcos catalogue consists of astrometric solutions (positions and velocities on the sky) and photometry (light intensity) for 118,218 stars, with associated noise covariances provided for each entry.

The successor to the Hipparcos mission, Gaia, aims to produce an even larger catalogue, with entries for an estimated 1 billion astronomical objects The Gaia Collaboration [2016]. Previous work using an extreme deconvolution model on the Gaia catalogue worked with a subset of the data and restricted the number of mixture components, but the intention is to fit models with the full dataset Anderson et al. [2018]. The existing extreme deconvolution algorithm makes a full pass over the dataset before it can update parameters, and the reference implementation requires all the data to fit in memory. To fit such large datasets in reasonable time, we would normally use stochastic or online methods, with updates based on minibatches of data to make the methods practical on GPUs Bottou et al. [2018].

In this work, we develop two minibatch methods for fitting the extreme deconvolution model based on 1) an online variation of the Expectation-Maximisation (EM) algorithm, and 2) a gradient optimizer. Our implementations can run on GPUs, and provide comparable density estimates to the existing method, whilst being much faster to train.

2 Background

The aim of extreme deconvolution is to perform density estimation on a noisy -dimensional dataset , where was generated by adding zero-mean Gaussian noise with known per-datapoint covariance to a projection of a true value ,

| (1) |

We assume that can be modelled by a mixture of Gaussians with components,

| (2) |

parameterised by mixture weight , mean and covariance . As the noise model is Gaussian and the model of the underlying density is a mixture of Gaussians, the probability of is also a Gaussian mixture. The total log-likehood of the model is

| (3) |

Missing data can be handled either by making rank-deficient, or by setting elements of the covariance matrix to very large values.

3 Methods

3.1 Minibatch Expectation-Maximisation

The original method of fitting the extreme deconvolution model used a modification of the Expectation-Maximisation (EM) algorithm for mixture models Dempster et al. [1977]. Here we describe a minibatch version of this algorithm based on Cappé and Moulines [2009]’s online EM algorithm for latent data models. At each iteration , we compute the sufficient statistics of the latent data for each component in the minibatch of size , using our current estimate of the parameters,

| (4) |

The term is the posterior probability of datapoint coming from component . The and terms result from the fact that and are jointly Gaussian, so the distribution of conditioned on is also Gaussian with mean and covariance . The expected sufficient statistics are then summed together over the minibatch,

| (5) |

Stochastic estimates of the sums of sufficient statistics over the whole dataset are then updated with a sufficiently small step size ,

| (6) |

Finally, we normalise the updated sums of expected sufficient statistics to get updated estimates of the parameters,

| (7) |

This procedure is repeated with new randomly-ordered minibatches until convergence. If we set and replace each minibatch with the entire dataset, then the update corresponds to the original batch fitting method. Numerically however, the update for , as written in (7), is inadvisable compared to the batch update given in Bovy et al. [2011]. There is likely to be catastrophic cancellation if the variances of the components are small relative to the means, especially if single precision floats are used, as is standard with GPU computation. In Appendix A we show how the minibatch version of this update can be rewritten in a more numerically stable form.

3.2 Stochastic Gradient Descent

An alternative to EM-based methods is to optimise the log-likelihood directly. The optimization is constrained, because the mixture weights are positive and sum to , and the covariances are positive definite. Directly fitting the log-likelihood with unconstrained gradient-based optimisers requires a transformation of the parameters to remove the constraints Williams [1996]. The mixture weights can be parameterised by taking the softmax of an unconstrained vector , and the covariances represented by its lower triangular Cholesky decomposition , where the diagonal elements of are constrained positive by taking the exponential of unconstrained elements ,

| (8) |

Having removed the constraints, we can optimise the likelihood using any standard minibatch gradient-based optimiser.

For a standard Gaussian mixture model, gradient based optimization has a scaling advantage over EM. There is no need to form the covariance matrix , since the Gaussian density can be evaluated directly from the Cholesky factor in , whereas an EM update is . Unfortunately SGD updates are also for the extreme deconvolution model, as we need to form the covariance for each datapoint.

4 Experiments

We implemented both minibatch approaches in PyTorch, and compared against the reference implementation from Bovy et al. [2011]. To evaluate each method, we used a random sample of rows from the Gaia DR2 source table The Gaia Collaboration [2018]. We selected the 5 primary astrometric features, along with the BP-RP colour and mean magnitude in the G-band. In total there were 2 million rows. Where data were missing, we set the field to zero and the noise variance to a large value. We set the projection to the identity matrix for every sample. This preliminary study uses only a small fraction of the full dataset size, but this allows us to fit the training data into memory, a requirement for use with the original implementation of extreme deconvolution. We used a range of mixture component sizes . In practice we would want to select a value of by cross-validation.

The existing EM method ran on CPU, whilst the minibatch EM and SGD methods ran on GPU. While the absolute times depend strongly on hardware and fine implementation details, they give a sense of the sort of times possible on current workstations, and the relative times across model sizes illustrate how the methods scale. We used a validation set comprising of the rows when developing our experiments. Final model performance was evaluated on a different held-out test set also comprising 10% of the rows at the last stage, with no parameter selection or development done based on this set. Details required for reproducibility are provided in Appendix B.

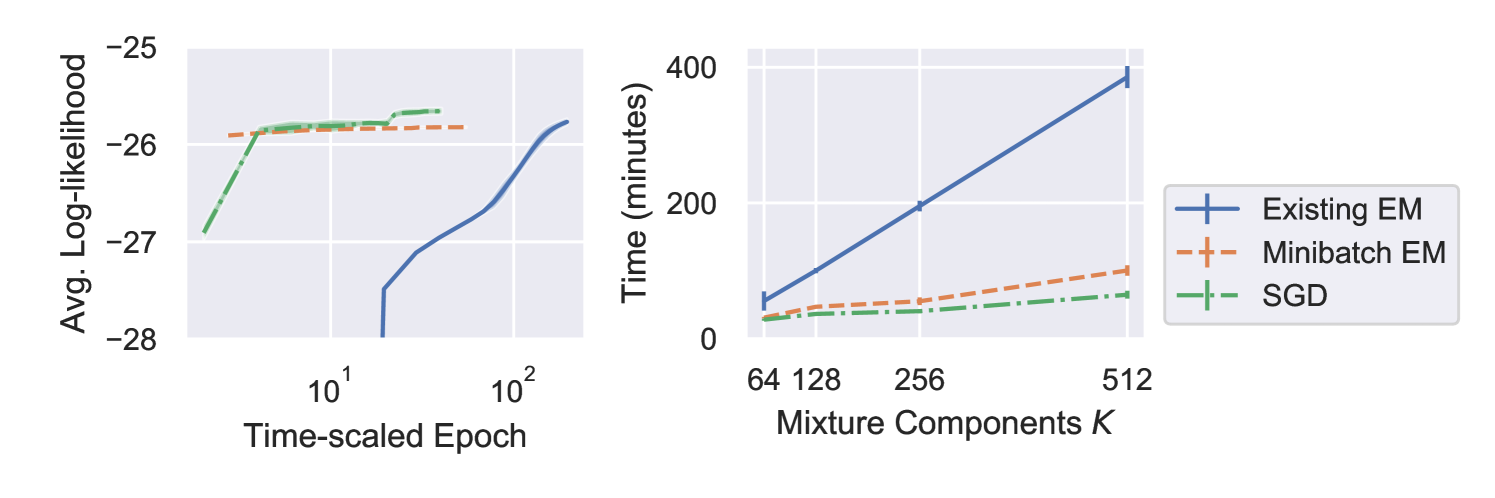

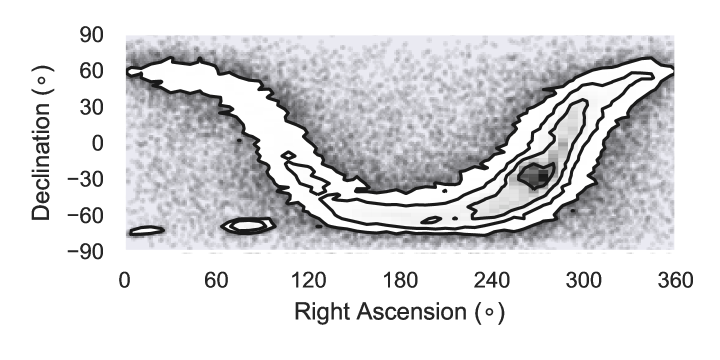

Table 1 reports the validation and test log-likelihoods for each method. The values are similar, but not exactly comparable, as the effect of regularisation differs for each method. Figure 1 plots the training log-likelihood against time-rescaled epoch for , and training time as function of mixture components . Figure 2 shows a 2-D projection from an example model with fitted with the minibatch-EM method.

| Method | K | Validation | Test |

|---|---|---|---|

| Existing EM | 64 | - | |

| Bovy et al. [2011] | 128 | - | |

| 256 | - | ||

| 512 | |||

| Minibatch EM | 64 | - | |

| 128 | - | ||

| 256 | - | ||

| 512 | |||

| SGD | 64 | - | |

| 128 | - | ||

| 256 | - | ||

| 512 |

5 Discussion

Our results have shown that both of our proposed methods perform comparably to the existing method of fitting extreme deconvolution models, whilst converging faster. The results also show that using GPU-based computation speeds up fitting considerably, allowing sublinear scaling of training time with mixture component size .

Further improvements to our approaches are possible. The original paper presents a method of getting out of local maxima by splitting and merging clusters, with the split-merge criteria evaluated on the whole dataset. It should be possible to replace the criteria with stochastic estimates, which would permit them to be used with both the SGD and minibatch EM methods. Our approaches also add more free parameters to be selected, including learning rate and batch size. This adds scope for hyperparameter optimisation to improve the log-likelihood.

In this preliminary study the SGD method provided the best log-likelihood values, was faster to train, and scaled better with component size . In addition, we found SGD to be more numerically stable than minibatch-EM during training. Both minibatch methods will allow us to fit larger models going forwards.

Acknowledgments

We are grateful to David W. Hogg for providing us with the problem and advising us on the Gaia data. Our experiments made use of AstroPy [The Astropy Collaboration, 2013, 2018], corner.py [Foreman-Mackey, 2016], Matplotlib [Hunter, 2007], Numpy [Oliphant, 2006], Pandas [McKinney, 2010], PyTorch [Paszke et al., 2017] and Scikit-learn [Pedregosa et al., 2011]. This work was supported in part by the EPSRC Centre for Doctoral Training in Data Science, funded by the UK Engineering and Physical Sciences Research Council (grant EP/L016427/1) and the University of Edinburgh.

References

- Anderson et al. [2018] L. Anderson, D. W. Hogg, B. Leistedt, A. M. Price-Whelan, and J. Bovy. Improving Gaia Parallax Precision with a Data-driven Model of Stars. The Astronomical Journal, 156(4):145, Sept. 2018.

- Bottou et al. [2018] L. Bottou, F. E. Curtis, and J. Nocedal. Optimization methods for large-scale machine learning. SIAM Rev, 60(2):223–311, 2018.

- Bovy et al. [2011] J. Bovy, D. W. Hogg, and S. T. Roweis. Extreme deconvolution: Inferring complete distribution functions from noisy, heterogeneous and incomplete observations. The Annals of Applied Statistics, 5(2B):1657–1677, June 2011.

- Cappé and Moulines [2009] O. Cappé and E. Moulines. On-line expectation–maximization algorithm for latent data models. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 71(3):593–613, 2009.

- Dempster et al. [1977] A. P. Dempster, N. M. Laird, and D. B. Rubin. Maximum Likelihood from Incomplete Data via the EM Algorithm. Journal of the Royal Statistical Society. Series B (Methodological), 39(1):1–38, 1977.

- Foreman-Mackey [2016] D. Foreman-Mackey. corner.py: Scatterplot matrices in python. The Journal of Open Source Software, 24, 2016.

- Hunter [2007] J. D. Hunter. Matplotlib: A 2d graphics environment. Computing in Science & Engineering, 9(3):90–95, 2007.

- Kingma and Ba [2014] D. P. Kingma and J. Ba. Adam: A Method for Stochastic Optimization. 3rd International Conference for Learning Representations, 2014.

- McKinney [2010] W. McKinney. Data structures for statistical computing in python. In S. van der Walt and J. Millman, editors, Proceedings of the 9th Python in Science Conference, pages 51 – 56, 2010.

- Oliphant [2006] T. Oliphant. NumPy: A guide to NumPy. USA: Trelgol Publishing, 2006.

- Paszke et al. [2017] A. Paszke, S. Gross, S. Chintala, G. Chanan, E. Yang, Z. DeVito, Z. Lin, A. Desmaison, L. Antiga, and A. Lerer. Automatic differentiation in PyTorch. In NIPS Autodiff Workshop, 2017.

- Pedregosa et al. [2011] F. Pedregosa, G. Varoquaux, A. Gramfort, V. Michel, B. Thirion, O. Grisel, M. Blondel, P. Prettenhofer, R. Weiss, V. Dubourg, J. Vanderplas, A. Passos, D. Cournapeau, M. Brucher, M. Perrot, and E. Duchesnay. Scikit-learn: Machine learning in Python. Journal of Machine Learning Research, 12:2825–2830, 2011.

- Perryman et al. [1997] M. A. C. Perryman, L. Lindegren, J. Kovalevsky, E. Høg, U. Bastian, P. L. Bernacca, M. Crézé, F. Donati, M. Grenon, M. Grewing, F. Van Leeuwen, H. Van Der Marel, F. Mignard, C. A. Murray, R. S. Le Poole, H. Schrijver, C. Turon, F. Arenou, M. Froeschlé, and C. S. Petersen. The Hipparcos Catalogue. Astronomy and Astrophysics, 323(1):49–52, 1997.

- Sculley [2010] D. Sculley. Web-scale k-means clustering. In Proceedings of the 19th International Conference on World Wide Web - WWW ’10, page 1177, Raleigh, North Carolina, USA, 2010. ACM Press.

- The Astropy Collaboration [2013] The Astropy Collaboration. Astropy: A community Python package for astronomy. Astronomy & Astrophysics, 558:A33, Oct. 2013.

- The Astropy Collaboration [2018] The Astropy Collaboration. The Astropy Project: Building an Open-science Project and Status of the v2.0 Core Package. The Astronomical Journal, 156:123, Sept. 2018.

- The Gaia Collaboration [2016] The Gaia Collaboration. The Gaia mission. Astronomy and Astrophysics, 595:A1, Nov. 2016.

- The Gaia Collaboration [2018] The Gaia Collaboration. Gaia Data Release 2. Summary of the contents and survey properties. Astronomy & Astrophysics, 616:A1, Aug. 2018.

- Williams [1996] P. M. Williams. Using neural networks to model conditional multivariate densities. Neural Computation, 8(4):843–854, 1996.

Appendix A Stable Covariance Update

In Section 3.1 describing our minibatch-EM method, we noted that the M-step update for the variance of each component as presented in Equation 7 would be prone to catastrophic cancellation as a result of taking a small difference between large values using single-precision floats. Here we present an alternative update for that is less prone to numerical instability, and show that it is equivalent to Equation 7. For clarity we drop the component indicator from the parameters, and add indicators and to distinguish between current and previous estimates of parameters.

First, we define an adjustment operation,

| (9) | ||||

| (10) |

which can be thought of as recentering a scaled variance around a new mean. Equation 9 is how we actually compute the adjustment, to minimise taking small differences between large values, whilst Equation 10 shows the identity we are interested in.

In the M-step at iteration of our minibatch EM approach, we compute estimates of , and as before using Equations 6 and 7. We also compute minibatch-specific parameters using exact sums over the minibatch:

| (11) |

We then compute our new estimate of the variance as a function of the previous estimates , the minibatch values , and the current estimates :

| (12) | ||||

| (13) | ||||

| (14) | ||||

| (15) | ||||

| (16) |

Again, Equation 12 is how we actually compute the update to minimise numerical errors, whilst Equation 16 shows that the update is equivalent to the covariance update defined in Equation 7. Whilst we found this update worked better in practice than a direct implementation, numerical instability is still possible if the standard deviations of the components are small enough relative to the means, and further work is needed to determine if a more stable update can be performed.

Appendix B Experiment Details

Here we provide specific details of our experiments for reproducibility. Code used to run the experiments is available at https://github.com/bayesiains/scalable_xd.

B.1 Dataset

From the Gaia DR2 source table we selected the columns RA, DEC, PARALLAX, PMRA, PMDA, BP_RP and PHOT_G_MEAN_MAG to assemble the observed dataset The Gaia Collaboration [2018]. Random subsampling was done by selecting rows with the value of the RANDOM_INDEX column less than . Noise covariance matrices were assembled using the corresponding error and correlation columns for each variable. Where a column of a row was marked as missing, the corresponding element of was set to zero, the corresponding diagonal element of was set to , and the corresponding off-diagonal elements set to zero. For columns which do not have associated noise, the corresponding diagonal elements of were set to a value of , and corresponding off-diagonal elements to zero.

B.2 Initialisation

For each method, initialisation of the means and weights was done using the estimated counts and centroids after 10 epochs of minibatch k-means clustering Sculley [2010]. Covariances were set to the identity matrix.

B.3 Training

All methods were trained for a total of twenty epochs. Both minibatch methods used a batch size of . For the minibatch-EM method, the step size was started at and reduced by a factor of two after ten epochs. For the SGD method, we used the Adam optimiser with a learning of for the first ten epochs, reducing by a factor of ten for the last ten epochs, and all other parameters set to the suggested defaults Kingma and Ba [2014].

For numerical stability, a very small amount of regularisation was applied to the covariances . Using the original implementation, we set the regularisation constant . For the minibatch-EM method, we added diagonal matrix directly to each covariance matrix after updating them. For the SGD method, we added a penalty term to the loss function. As noted in Section 4, the effect of is not comparable across methods. If we were using larger values of to prevent overfitting rather than just avoiding numerical instability, would be tuned specifically for each method.