Scalable Property Valuation Models via Graph-based Deep Learning

Abstract

This paper aims to enrich the capabilities of existing deep learning-based automated valuation models through an efficient graph representation of peer dependencies, thus capturing intricate spatial relationships. In particular, we develop two novel graph neural network models that effectively identify sequences of neighboring houses with similar features, employing different message passing algorithms. The first strategy consider standard spatial graph convolutions, while the second one utilizes transformer graph convolutions. This approach confers scalability to the modeling process. The experimental evaluation is conducted using a proprietary dataset comprising approximately 200,000 houses located in Santiago, Chile. We show that employing tailored graph neural networks significantly improves the accuracy of house price prediction, especially when utilizing transformer convolutional message passing layers.

Keywords: Property valuation, Deep learning, Peer-dependence valuation models, Real estate appraisal, Graph neural networks.111This is a preprint of a work under submission and thus subject to change. Changes resulting from the publishing process, such as editing, corrections,structural formatting, and other quality control mechanisms may not be reflected in this version of the document.

1 Introduction

The accurate evaluation of property prices plays an indispensable role in various economic facets of society. Serving as a foundational pillar of any economy, the real estate market drives significant property transactions, contributing substantially to a country’s gross domestic product (GDP) [1].

Beyond its macroeconomic implications, on a personal financial scale, property acquisition stands as the most substantial investment for many citizens. Precise property valuations become paramount, ensuring equitable transactions and preventing both overpayment and underselling, this is crucial for a fair and efficient real estate market [1]. Furthermore, the significance of accurately assessing property values extends deeply into the business arena. Real estate agencies and civil construction enterprises hinge their operations on precise property predictors, pivotal in making well-informed decisions [2].

Traditionally rooted in statistical methodologies, property assessment via automated valuation models (AVMs) has witnessed a transformative shift due to the rapid advancement of deep learning (DL) [3]. As such, researchers have redirected their attention toward exploring the application of these cutting-edge models, surpassing the limitations of traditional statistical approaches. In this context, the main virtue of DL models is their capacity to learn spatial pattern effectively using, e.g., recurrent neural networks (RNNs) such as Long Short-Term Memory (LSTM), convolutional neural networks (CNNs), or attention mechanisms [3, 2].

This research contributes to the field of house price prediction by showing the efficacy of incorporating geospatial patterns into the modeling process via graph neural networks (GNNs) with transformer convolutional message passing layers. GNNs can capture intricate relationships and dependencies between entities in a graph, making them well-suited for this task. GNN architectures are highly flexible and adaptable to various types of graphs and tasks. GNNs excel at aggregating both local and global information from neighboring nodes in the graph. By iteratively passing messages between nodes, they can capture hierarchical patterns and dependencies, allowing them to make informed predictions [4]. The main novelty lies in adapting the -nearest similar house sampling (KNHS) approach [5, 6], originally designed for LSTM, to the GNN model. This adaptation yields a scalable framework for real estate appraisal, addressing the limitations of the existing GNN approach for automated valuation.

Our main hypothesis asserts that it is possible to improve the current state-of-the-art AVMs by designing novel strategies based on GNNs. Consequently, this hypothesis suggests that exploring innovative deep-learning strategies tailored to the specific challenges of house price prediction can yield superior results compared to traditional approaches. The findings on a propietary dataset of approximately 200,000 houses in the Santiago housing market highlight the potential for improved accuracy and reliability in predicting house prices, providing valuable insights for real estate academics and practitioners, urban planners, and decision-makers in the housing market. Alongside location data, our dataset encompasses a diverse set of features that characterize the properties. Unlike other benchmark datasets reliant on asking prices, i.e., the amount of money the seller desires a buyer to pay [7], ours considers the final selling prices, thereby mitigating potential biases.

The remainder of this paper is structured as follows: prior studies on DL for property assessment are discussed in Section 2, formalizing relevant aspects of the paper. Next, the proposed GNN models for automated property valuation are presented in Section 3. Experimental results are reported in Section 4. Finally, Section 5 provides the main conclusions of this study, discussing limitations and future extensions.

2 Prior work on automated valuation models

There is a vast literature on property assessment models, ranging from naive techniques to state-of-the-art DL methods. The main approaches are discussed next.

2.1 Comparable sales method

The comparable sales method is the simplest approach to estimate property prices. This method produces an estimate of the market value for a given property by comparing the transaction prices of similar properties [8]. The market value of these similar properties is used as a point of reference, and the estimated price of a given property is derived based on these references and utilizing some form of correction to account for differences that the property may have with its references. In practice, these corrections are typically made through intuition and domain knowledge rather than a quantitative approach.

2.2 Hedonic Regression

Hedonic Regression (HR) models are one of the most widely applied models to estimate the price of a property [9]. This method utilizes a linear regression to measure the contribution of the different property attributes. The basic function of the HR is as follows:

| (1) |

where is the estimated market price of a given property, are the property attributes, are the weights assigned to each property attribute, and is the error term, for .

Although the hedonic regression is a more robust approach than the comparable sales method, as it incorporates a more quantitative method and considers more influential factors, the HR model assumes that the relationship between house attributes follows a linear correlation. This assumption is its pitfall, as the literature shows that the market value of a property also depends on nonlinear relationships [10, 11].

2.3 Traditional machine learning models

The market value of a property depends not only on linear correlations between its features but also on nonlinear relationships. Machine learning models are adept at understanding these nonlinear relationships [10, 11].

Regression trees are a popular machine learning technique used for solving regression problems. These models are a type of supervised learning algorithm that partitions the feature space into a hierarchical structure to make predictions of a target variable. The regression tree comprises several nodes and branches. In the context of property valuation, the root node represents the entire dataset of properties. The model then identifies the best property feature and corresponding threshold and divides the data into two subsets. This process repeats for every subset until reaching the allocated depth of the tree. Once the tree construction is complete, each leaf node represents a final prediction value. For property prediction, the prediction at a leaf node is usually the mean value of a target variable within a subset.

The literature shows that regression trees are capable of capturing the nonlinear relationship in property valuation and can achieve superior results to Hedonic Regression. [12] state that the simplicity and interpretability of these models also make them more suitable than the hedonic model for property assessment. In a study by [13], a random forest outperformed other models, yielding the best results. In contrast, [10] reported best results with Support Vector Machines in the context of real estate value prediction in tourism centers. In [14], best results are achieved with the Ripper Algorithm, a rule-based classification algorithm.

2.4 Local spatial models

Although traditional machine learning methods are capable of capturing the nonlinear relationships in property assessment, they often struggle to fully comprehend the spatial relationships that exist between properties. [12] highlights the influence of various geographical factors, such as distance to points of interest, on property prices. Additionally, [2] shows that peer dependence also plays a pivotal role in property pricing.

To incorporate the geospatial impact into property assessment, models need to define the latent influence that exists locally between a given house and its neighboring properties. One widely used model for this purpose is Locally Weighted Regression (LWR). LWR is a sophisticated regression model that aims to estimate property prices by leveraging the spatial dependencies and relationships that may exist within its neighboring properties. LWR assumes that the price of a given property is not only dependent on its characteristics but also on the characteristics of its surrounding properties [15].

2.5 Deep Learning approaches for real estate appraisal

Most of the research conducted in the field of AVMs today focuses on exploring the application of DL techniques to enhance the predictive capabilities of AVM. Models such as RNN [16] CNN [17], LSTM [18], and GNN [2] have shown promising results that surpass traditional machine learning models [19, 3].

The Peer Dependence Valuation Model (PDVM), proposed by [5], aims to utilize the geospatial relationships among properties using deep learning techniques. The PDVM architecture consists of three main components:

-

1.

-nearest similar house sampling: This is the initial step of the PDVM architecture. It is employed to aggregate sequences of properties and their neighboring houses.

-

2.

Bidirectional long short-term memory (B-LSTM) layer: The B-LSTM layer is utilized to process the sequences generated by the KNHS algorithm. It extracts the representation of the to-be-valued house based on the mutual impact of the houses in the sequence.

-

3.

Fully-connected layer (FC): Finally, an FC serves as a regressor for estimating the house price based on the representations obtained from the previous B-LSTM layers.

Transformers and attention mechanisms have revolutionized natural language processing (NLP) and other sequential data tasks due to their ability to capture long-range dependencies and contextual information more effectively than previous architectures like RNNs and CNNs [20].

At the core of transformers is the self-attention mechanism, which enables the model to weigh the importance of different words (or tokens) in a sequence when processing each word. Unlike traditional sequence-to-sequence models like RNNs, transformers can process the entire input sequence in parallel, making them more efficient for long sequences [20].

Attention-based DL models have been successfully applied in real estate valuation. For instance, [21] utilized a convolutional block attention mechanism for satellite image-based real estate appraisal via CNN. Alternatively, [22] developed an attention-based multimodal fusion model for automated valuation.

Finally, another recent trend in DL is GNN, which incorporates graph theory in the modeling process [23]. A graph is a data structure consisting of vertices or nodes () and edges (). Its structure represents the pairwise relationships between objects. A graph can be defined as follows: . A graph is represented by a matrix, called an adjacency matrix. Given a graph with nodes and edges, its corresponding adjacency matrix will be of dimension , and the value at will describe the pairwise relationship that exists between a and [23].

Graph convolutional networks (GCNs) are well-known DL architectures that perform convolutional operations on graph-structured data [24]. There are two main approaches to convolution: spectral and spatial GCNs, which have distinct advantages and limitations depending on the specific task and characteristics of the graph data. Spectral GCNs operate in the spectral domain, where graph convolution is performed by applying convolutions to the eigenvectors of the graph Laplacian matrix. In contrast, Spatial GCNs operate directly on the spatial domain of the graph, where convolutional operations are performed directly on the nodes and their neighbors in a similar fashion to traditional CNNs [24].

The spatial regression GCN with external attention (A-SRGCNN) is a model proposed in [2], which utilizes spectral GNNs to predict the prices of properties. It employs a spatial graph structure to map the interactions that exist in the housing market and to mimic a locally weighted regression.

After the output of the graph convolutions, it employs external attention to assign weights to the features of the properties. The resulting embedding is then passed to a linear layer to retrieve the final estimated price of each property.

The addition of a second set of locally learnable weights in the graph convolution allows for defining the importance a certain house has on the predicted house, while the graph structure provides a suitable framework to model global interactions. This, combined with the use of a spectral graph structure, further enhances the spatial interactions by utilizing the spectral properties of the graph to understand global interactions.

However, this architecture has some drawbacks in terms of scalability and predictive performance. The graph structure considers an undirected fully-connected graph, meaning that all the nodes are connected to each other. The resulting graph has a size of , where is the number of nodes in the graph. Implementing the graph convolutions in the spectral domain becomes intractable in terms of running times for large datasets. Notice that spectral GCNs often require computationally intensive operations such as eigen-decomposition of the graph Laplacian matrix, which can be computationally expensive for large graphs [24].

Additionally, the fully-connected graph structure of A-SRGCNN is counterproductive when modeling the housing market in large areas. Intuitively, the dependency between two houses that are very far away should be very low. Incorporating these patterns can introduce noise to the model, leading to overfitting.

Based on these limitations, this study proposes two novel GNN-based AVM models inspired by the message-passing scheme introduced in [25], which incorporates self-attention in the message-passing layer to understand the spatial interactions in the housing market. The models are implemented using graph convolutions in the spatial domain to address the memory issue associated with the spectral graph structure of A-SRGCNN. In A-SRGCNN, the primary reason for using external attention is to handle the size of the graph, which is too large to utilize self-attention to model pairwise interactions. Since our approaches are based on Spatial GNNs, we can employ self-attention without incurring computationally intensive operations.

3 The proposed GNN models for automated property valuation

In this section, we propose two GNN methods for real estate appraisal. The main idea is to present the housing market data to a deep neural network by structuring it in a way that represents the spatial structure of the dataset, extending the reasoning behind PDVM [5] to spatial GCNs. The main difference between the two proposed GCNs is that the first one utilizes standard graph convolution layers, while the second one employs transformer graph convolutions. Moreover, the latter approach takes into account edge weights, resulting in a more accurate representation of the neighborhood by incorporating distances between properties.

This section is structured as follows: First, the spatial GCNs are formalized in Section 3.1. Next, the KNHS algorithm developed in [5] is detailed, including the adaptations proposed to improve this approach in the context of GNNs. Finally, the two proposed GCN models for automated property valuation are presented in Section 3.3 and Section 3.4. We refer to these approaches as peer dependence - graph convolutional network (PD-GCN) and peer dependence - transformer graph convolutional network (PD-TGCN), respectively.

3.1 Spatial GCNs

The spatial GCN is a type of message passing scheme that operates in the spatial domain, as opposed to spectral GCN. Message passing is a concept used to describe various algorithms on graphs, including those employed in GNNs. It involves exchanging information (messages) between nodes in a graph, typically using a set of update rules [24].

For each node, information is propagated from its neighboring nodes to create an aggregated representation of each node with its local neighborhood, using convolutional operations.Let denote the matrix of node features serving as input to a convolutional layer. A graph convolution is defined by combining two operations. First, for each node , the information (feature vectors) of its neighbors is aggregated [26]:

| (2) |

This equation can also be expressed as , where the vector defines the weights assigned to each connection in the graph. These weights can be either predetermined or trainable. The weights can be predefined as binary values, indicating whether a node is a neighbor of a given node or not, but they can also be defined as scalar values that indicate the interaction between each node.

Finally, a standard MLP is applied to transform the intermediate representation into the final output of a given layer:

| (3) |

where is a trainable weight matrix and is a trainable bias vector [26].

3.2 -nearest similar house sampling revisited

Assessing the price of a property involves considering various geospatial characteristics, such as its location and the impact of neighboring properties. To model this phenomenon, the KNHS algorithm creates subgroups of houses that most resemble one another, both geographically and in terms of their features [5].

The KNHS algorithm operates in two stages. The first stage identifies the geographical neighbors of a given house feature vector . This is achieved by computing the orthodromic distance between a property’s XY coordinates, typically denoting its main entrance, and those of all other properties within the dataset. It constructs a pairwise distance matrix and selects the properties that fall within a certain distance threshold , designating them as neighbors. Formally, this can be described as:

| (4) |

where

| (5) |

In Eq. (5), and correspond to the latitude and longitude of , respectively. The output of the first stage of the KNHS algorithm will be a matrix containing a sequence for every house containing the feature vectors of all where .

In the second stage of the KNHS algorithm, the comparison of attribute similarities between a given property and its neighbors takes place. First, the Euclidean distance is used between a given and all in to calculate the similarities between a house and all its geospatial neighbors.

| (6) |

where is the number of features. The output sequence of the second stage for a given house will be a sequence of the with the smallest from including . Subsequently, the sequences are ordered so that is in the middle of the sequence and and are the houses most similar to it with the least , and the feature vectors and are the most dissimilar houses with the greatest .

The original KNHS proposed in [5] mainly emphasizes the significance of positioning in the center of the sequence. It suggests that placing the to-be-valued house in this central position allows the model to capitalize on the advantages of both the forward and backward passes related to the LSTM model. However, it does not consider the order of the remaining elements. In the proposed PD-TGCN method, the use of a graph structure and, in particular, edge attributes and weights, has the advantage of utilizing the order of the neighbors and their distance to , incorporating additional information in the modeling process.

In this revised version of the KNHS algorithm, we consider a weighted variant of the distance function that defines the neighborhood (Eq. (6)). The goal is to upweight variables that are more important than others, following the reasoning behind other studies with feature-weighted distance metrics for the computation of -nearest neighbors [27]. The redefined distance measure follows:

| (7) |

3.3 The proposed PD-GCN model

The PD-GCN method consists of two stacked graph convolutions using a mean aggregation function. Each graph convolution layer returns a representation of each node based on its local neighborhood information. These representations use trainable weights at each layer of the graph convolution, allowing it to learn the spatial dependencies that exist between each node and its neighboring nodes. Furthermore, by stacking graph convolutions together, the model is able to learn not only the dependencies from its one-hop neighborhood but also from its -hop neighborhood after stacked graph convolutions, as each layer utilizes the previous representation when aggregating the neighboring nodes’ information [28].

The Graph Convolution block used in the proposed PD-GCN model can be represented through the following expressions. Essentially, each graph convolutional layer updates the feature representation of nodes based on their local neighborhood information, allowing the model to capture and propagate information throughout the graph structure. Let the feature vector of house before the first convolutional layer be denoted as . The feature vector of house computed at the first convolutional layer is as follows:

| (8) |

where

| (9) |

with , being the weight matrices, representing the set of neighbors of house , the number of neighbors of house , and the feature vector of house . The second convolutional layer has the following form:

| (10) |

where

| (11) |

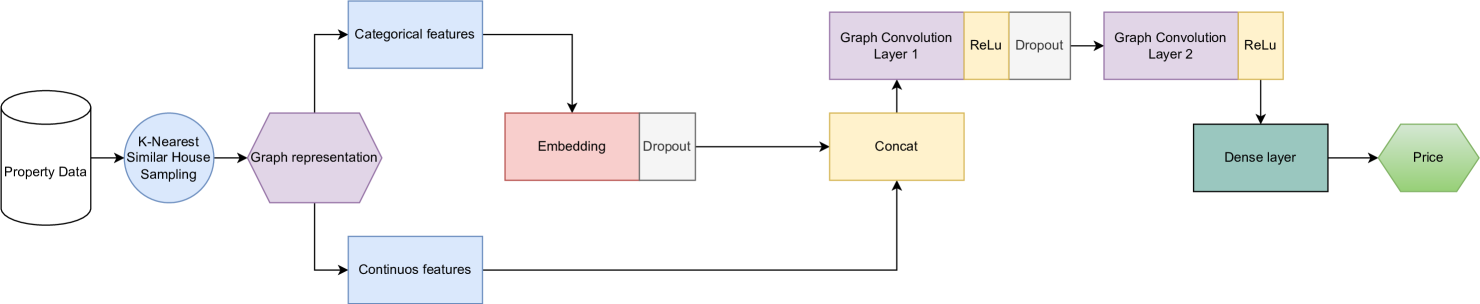

The proposed PD-GCN architecture is depicted in Figure 1. First, the input feature vectors are split into two parts: one containing the continuous features and the other containing the encoded categorical features. The categorical features are then processed through an embedding layer. Subsequently, the categorical embeddings are concatenated back with the continuous features and passed to the GCN block.

The GCN block is the central component of the model and comprises two stacked GCN layers with a mean aggregation function. Each GCN layer extracts the representation of a given house along with its local neighborhood by aggregating the neighborhood information of each house using learnable weights. By stacking two GCN layers, the model not only learns the dependencies within its immediate one-hop neighborhood but also extends its learning to the neighborhoods of its neighbors (two-hop neighborhood). This capability allows the model to capture the complex interactions within the large cities.

The resulting node embeddings from the second convolutional layer are then fed through a dense layer with a ReLU activation function and finally passed through a dense linear layer to obtain the predicted prices of the houses.

3.4 Peer Dependence Transformer Graph Convolution Network (PD-TGCN)

The proposed PD-TGCN model is an adaptation for real estate appraisal of the TGCN model introduced in [25]. The main concept behind the TGCN is to represent interactions between nodes using attention mechanisms, instead of relying on traditional convolutional operations. The self-attention mechanism utilized by the TGCN comprises three components: query, key, and value. The feature vector of each node undergoes three linear transformations to produce query, key, and value vectors. Subsequently, attention scores between nodes are computed by taking the dot product between the query and key vectors, which are then normalized using a softmax operation. Utilizing these attention scores, the TGCN calculates weighted sums of the value vectors to derive the node representations. Through the stacking of multiple TGCN layers, the model is capable of capturing intricate and high-order interactions within the graph structure.

In the proposed PD-TGCN model, the two transformer graph convolutions can be elucidated through the following steps:

First Transformer Convolution Layer:

-

•

Input: The feature vectors of the houses, denoted as , and the attribute vectors of the edges, denoted as

-

•

Query, Key, and Value: The feature vectors are transformed into query , key , and value vectors. The , , and vectors are obtained by applying linear transformations to the input feature vectors. A fourth vector is obtained by an additional linear transformation applied to the edge attribute vector. Formally, for a house , the , , and vectors are:

(12) Similarly for an edge between house and its neighbor , the vector is:

(13) -

•

Self-Attention: The convolutional layer then calculates the self-attention scores using the , and vectors. For each house , the attention score between house and its neighbor is computed as the dot product of their and vectors:

(14) where represents the dimension of and is used to offset the increasing magnitude of the dot products as the dimension grows. The attention weight is then calculated by using a softmax function over all the attention scores of the neighbors of node , transforming the attention scores into a probability distribution where the sum of the attention weights of the neighbors is equal to 1. This scaling is done to determine how much information should be propagated from each neighboring house to house .

(15) -

•

Weighted sum (aggregation): The feature vectors of the neighboring houses and the edge attributes between the house and its neighbors are then aggregated using the attention scores. For each house , the aggregated feature vector is obtained by taking the weighted sum of the vectors of the neighboring houses combined with the edge attributes , where the attention scores act as the weights:

(16) -

•

Linear transformation: Finally, the aggregated feature vector containing the information of house local neighborhood is combined with the original input feature vector of house using a linear transformation:

(17) where is a linear transformation applied to the concatenated input and aggregated feature vectors. The edge attributes are not altered in a transformer graph convolution, in the purpose of maintaining the structural information of the graph.

The output of the first Transformer Convolution Layer is a feature vector of all the house aggregated with its one-hop neighbor features denoted as .

Second Transformer Convolution Layer:

-

•

Input: The output feature vectors from the first layer, containing all nodes aggregated with their one-hop neighbors and the original edge attributes of the graph.

-

•

Query, Key, and Value: Similar to the first layer, the output feature vectors and the edge attribute vectors are transformed into , , and vectors.

-

•

Self-Attention: The self-attention scores and weights are calculated the same way as the steps are performed similarly to the first layer, using the , , and vectors.

-

•

Weighted Sum (Aggregation): The feature vectors of the neighboring nodes are then aggregated using the attention scores. For each node , the aggregated feature vector is obtained by taking the weighted sum of the vectors of the neighboring nodes, where the attention scores act as the weights:

(18) -

•

Linear Transformation: Finally, the aggregated feature vector containing the information of house two-hop neighborhood is combined with the representation of house one-hop neighborhood feature vector using a linear transformation.

(19) The output feature of the second transformer convolution layer is a feature vector of all the house aggregated with its two-hop neighbor features denoted as .

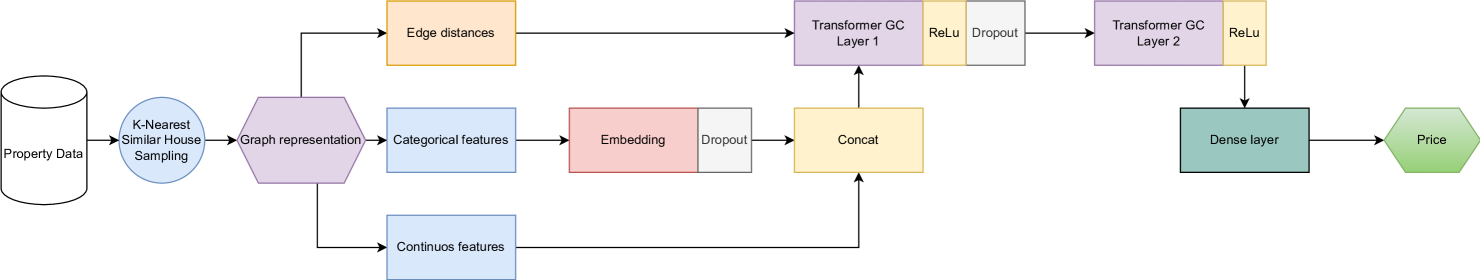

The proposed PD-TGCN architecture is depicted in Figure 2. The model utilizes an embedding layer to train the representations of the categorical features. The output of the embedding layer is then concatenated with continuous features. These concatenated feature vectors are then separately passed with the edge attributes to a Transformer Convolution (TC) block. The TC block contains two stacked TC layers. The first convolution creates a representation of all houses within their one-hop neighborhoods, and by applying a second convolutional layer, the model creates a representation of the two-hop neighborhood as well. Finally, the result from the second TC layer is passed through a linear layer to extract the house prices.

Each transformer graph convolution layer also employs a multi-head attention mechanism. Each head in the transformer model has its own set of query (), key (), and value () transformations. This allows each head to focus on different patterns and dependencies within the data, capturing diverse and complementary information.

For both models, two graph convolution layers were used because of how GNNs work: in each message passing layer, information from neighboring nodes is added to each node, so after one layer, each node will result in the aggregation of its features with those of its first-order neighbors. In the second layer, each node now not only represents its own information but also that of its neighborhood, which then provides a more complete picture of the spatial interactions that exist in the graph. However, including more than two layers leads to the over-smoothing problem. When this phenomenon occurs, information propagated across multiple layers becomes excessively smoothed or averaged out, leading to the loss of important details and nuances in the data [29]. Empirically, we observed that adding more than two layers resulted in worse performance for the model, despite the use of regularization to try to mitigate the effect of over-smoothing.

4 Experimental Results

We applied the proposed GNN models to a proprietary dataset comprising transaction prices of houses in Santiago, Chile. This section is divided as follows: Section 4.1 describes the experimental setting, while a summary of the results is presented in Section 4.2. Finally, results of a sensitivity analysis for the main parameters are reported in Section 4.3.

4.1 Experimental setting and dataset

The final dataset consists of 225,000 houses described by 50 explanatory variables. The data was collected in the 2009-2019 period. All prices are given in UF (unidad de fomento), which serves as the unit of account. Its exchange rate with the Chilean peso is regularly recalibrated to counter inflation, thereby maintaining the purchasing power of the UF nearly steady on a day-to-day basis. This results in a more reliable estimation of a property values in different time periods. The UF value was adjusted semiannually. As a reference, 1 UF = 38.07 USD at 04/17/2024.

The following exploratory variables, we considered for modelling purposes:

-

•

Seven numerical variables describing the property, including its surface area and the appraisal value for taxation purposes.

-

•

Seventeen numerical variables describing the district in which the property is located, such as the number of inhabitants, the number of houses in the district or the total area of the aggregated houses’ lot area in that district.

-

•

Three categorical variables describing both the property and the district, such as the main building material used in construction.

-

•

Twenty-three numerical variables indicating the distance from the property to points of interest, such as subway stations, high schools, shopping centers, bus stops, or hospitals. These variables have shown to be useful in previous studies [30].

The following exclusion criteria were utilized in order to filter out noisy samples:

-

•

The appraisal for taxation purposes tends to be around 30% of the market value. Following an investigation from the Chilean central bank designed to identify inconsistencies [31], only properties where the ratio between the appraisal for taxation purposes and the transaction price is less than 10 and greater than 1 are kept. 3.4% of the samples were deleted in this step.

-

•

Transaction prices outside of the expected range were deleted based on domain knowledge of the Santiago housing market (0.03% of the samples). All values greater than 50,000 UF and less than 400 UF were discarded.

-

•

Only prices per m2 less than 6000 UF and greater than 30 are kept. This range was created based on expert knowledge in the field, discarding 0.03% of the samples.

-

•

Properties sold multiple times in one year were considered market distortions and were therefore discarded (2.3% of the samples).

-

•

All houses containing coordinates that are inconsistent with the commune were also deleted (0.002% of the samples). A commune is the smallest administrative subdivision in Chile, and the province of Santiago encompasses 32 communes.

In order to perform an in-depth analysis of the housing market, eight additional datasets were created by defining groups of adjacent communes. The sample size of each group is reported in Table 1.

| Group | Comunes | Rows |

|---|---|---|

| Group 1 | LO BARNECHEA, LAS CONDES, VITACURA, PROVIDENCIA | 14,799 |

| Group 2 | NUNOA, LA REINA, MACUL, PENALOLEN | 15,489 |

| Group 3 | LA FLORIDA, PUENTE ALTO, LA PINTANA, LA GRANJA | 51,453 |

| Group 4 | SAN RAMON, LA CISTERNA, SAN MIGUEL, SAN JOAQUIN, PEDRO AGUIRRE CERDA | 8268 |

| Group 5 | SAN BERNARDO, EL BOSQUE, LO ESPEJO, CERRILLOS | 19,904 |

| Group 6 | ESTACION CENTRAL, MAIPU, QUINTA NORMAL, LO PRADO, SANTIAGO | 36,956 |

| Group 7 | PUDAHUEL, CERRO NAVIA, RENCA, QUILICURA | 25,661 |

| Group 8 | HUECHURABA, INDEPENDENCIA, RECOLETA, CONCHALI | 9056 |

The datasets were split into training and testing sets with a ratio of 75:25. The mean squared error (MSE) is used as the loss function for all models. It measures the average squared difference between the predicted and actual values. For model evaluation, we consider the mean absolute percentage error (MAPE), which is a widely used metric to assess the accuracy of predictions in property valuation models. It measures the percentage difference between the actual property values and the corresponding model predictions. This makes it easily interpretable in the context of house prices and quantifies how far off the model predictions are from the actual prices on average, in percentage terms.

Regarding variable transformations, the Box-Cox mapping was considered to correct skewness (logarithmic transformation). The min-max scaling was performed on the continuous features. For nominal variables, we use categorical embeddings, as suggested in [32]. This strategy has shown better results than one-hot encoding in deep learning as it captures relationships between categories, being also more efficient in terms of computational costs [32]. As a rule of thumb, the sizes of these categorical embeddings are given by:

| (20) |

4.2 Results summary

We consider Moran’s I [33] for a preliminary geo-spatial analysis. This metric quantifies the degree of similarity between neighboring observations in a spatial dataset. A Moran’s I value ranges from -1 to +1. A positive value indicates positive spatial auto-correlation (i.e., similar values are clustered together), a negative value indicates negative spatial auto-correlation (i.e., similar values are dispersed), and a value close to zero indicates no spatial auto-correlation [33].

The Moran’s I measure for the Santiago housing market is 0.81 with a p-value of 0.001. This indicates a strong positive spatial auto-correlation, implying that properties located near each other tend to have similar prices. The low p-value suggests that the observed Moran’s I value is statistically significant. This result is important as it suggests that models that can accurately model spatial patterns should achieve the best predictive results.

Next, the results in terms of MAPE are presented in Table 2. We compare the proposed PD-GCN and PD-TGCN models with a standard linear regression (LINREG), Random Forest (RF) and Extreme Gradient Boosting (XGBoost), and PDVM as a DL model tailored for real estate appraisal.

| Method | All | Group1 | Group2 | Group3 | Group4 | Group5 | Group6 | Group7 | Group8 |

|---|---|---|---|---|---|---|---|---|---|

| LINREG | 0.246 | 0.213 | 0.283 | 0.233 | 0.312 | 0.210 | 0.228 | 0.204 | 0.288 |

| RF | 0.207 | 0.198 | 0.264 | 0.196 | 0.309 | 0.169 | 0.214 | 0.178 | 0.282 |

| XGBoost | 0.212 | 0.204 | 0.266 | 0.202 | 0.311 | 0.176 | 0.217 | 0.182 | 0.290 |

| PDVM | 0.211 | 0.208 | 0.274 | 0.206 | 0.298 | 0.193 | 0.221 | 0.189 | 0.279 |

| PD-GCN | 0.206 | 0.202 | 0.263 | 0.202 | 0.295 | 0.178 | 0.218 | 0.180 | 0.265 |

| PD-TGCN | 0.204 | 0.198 | 0.259 | 0.197 | 0.310 | 0.173 | 0.215 | 0.177 | 0.266 |

In Table 2, we observe generally good results, with the best MAPE around 20% for most datasets. Among the nine datasets, the proposed models achieve the best results in six, while RF obtained the lowest MAPE in the remaining three datasets. Specifically, PD-GCN and PD-TGCN achieve the best results in two and four datasets, respectively. The GNN models outperform PDVM, which is the main benchmark in this context.

For the main dataset that encompasses all houses (second column in Table 2), the best methods are the proposed PD-TGCN and PD-GCN. This result emphasizes their ability to deal with heterogeneous prices in different areas of the city by defining suitable neighborhoods.

Traditional machine learning models show good results overall, with RF being the third-best model behind the proposed approaches in terms of average MAPE. This can be due to the inclusion of spatial variables in the feature engineering process, such as distances to points of interest.

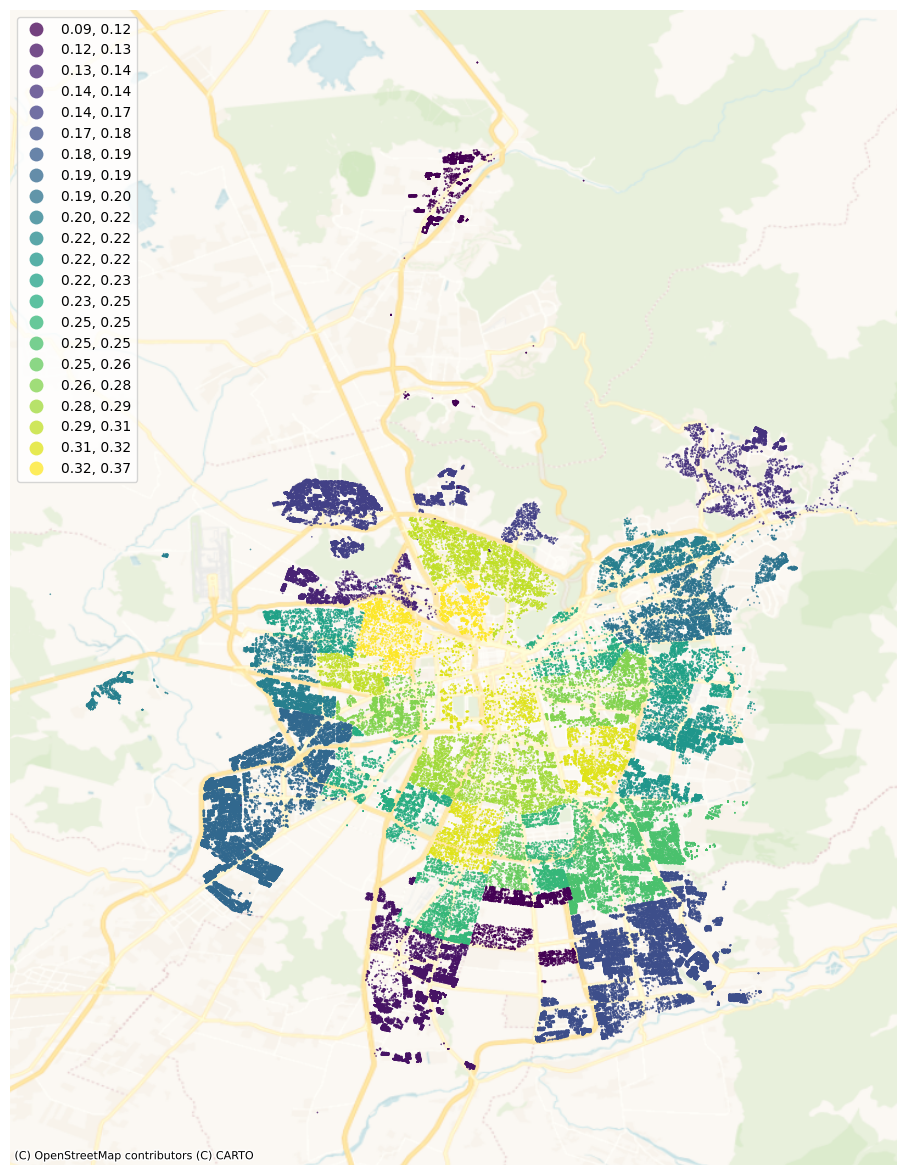

Figure 3 illustrates the distribution of the MAPE measure at the commune level for the PD-TGCN model, which achieved the lowest error. The colors on the map represent the various MAPE values for each commune.

We observe in Figure 3 that the communes located in the city center exhibit the poorest predictive results, whereas the model demonstrates greater efficiency in forecasting property prices for communes situated on the outskirts of Santiago. This trend is consistent across all models. One plausible explanation for this phenomenon is the higher concentration of apartment buildings in the central region of Santiago. Since the data used in this study is limited to houses, the methods cannot fully model the interactions between houses and neighboring apartment buildings. Another source of noise stems from the sale of several downtown houses during this period for the purpose of demolition and subsequent construction of buildings. This activity has contributed to distorting the real estate market in the area.

4.3 Sensitivity analysis

In this section, we discuss the influence of three different variants and parameter values associated with the proposed model. The results are presented in tables 1 and 2 in B. In these tables, the metrics , Root Mean Squared Error (RMSE), and MAPE are reported in the second, third, and fourth columns, respectively. RMSE is the square root of the MSE measure, while computes the proportion of the variance in the target variable that is predictable from the model.

First, we considered different scaling weights for the appraisal for taxation purposes when calculating the Euclidean distance between two houses in the KNHS algorithm. This was done to investigate how the definition of a more similar house impacts the results of different models. The choice to up-weigh this variable was made based on its demonstrated high correlation with home prices. We explore ; see the fifth column in tables 1 and 2.

The results show that the measures are only slightly affected by this scaling factor. The best results are obtained with and , showing that up-weighting the appraisal for taxation purposes has a positive effect in terms of predictive performance.

For the second set of experiments, we explore different values for , the neighbors in the KNHS algorithm, to understand how this parameter influences the predictions of the various models. We explore ; see the sixth column in tables 1 and 2.

In these tables, we observe that greater values improve the results of the KNHS algorithm, achieving the best performance with . We conclude that having a large neighborhood has its benefits in terms of performance; however, a negative consequence is a larger computational cost.

In the last experiment, variants of the KNHS algorithm were tested to evaluate different approaches to connecting neighboring houses. In particular, we compare the standard KNHS algorithm (normal) against random ordering and geospatial k-nearest neighbors (geo); see the seventh column in tables 1 and 2.

Regarding this third experiment, results show that the KNHS algorithm does improve the model results for all three metrics. This indicates that the KNHS algorithm provides important information that the model is able to train upon to understand the Santiago housing market at a deeper level.

The KNHS algorithm was also compared with a geospatial ordering to select the nearest house. The resulting predictions when utilizing this strategy worsen the predictive power for all three metrics when compared with the original KNHS. From this result, we can conclude that the geospatial interactions do not provide sufficient information for the model to improve its understanding of the housing market, and that the second step of the KNHS algorithm of selecting the houses most correlated with each other plays an important role in the model effectiveness.

5 Conclusions

In this study, two graph-based DL models are proposed to predict property prices by leveraging the geospatial interactions that exist between properties. We adapt the KNHS algorithm to model spatial patterns based on neighboring houses, taking advantage of the structure of the spatial GCN model.

The main difference between the two proposed architectures lies in the approach to perform graph convolutions. The PD-GCN method utilizes two standard graph convolutions using a mean aggregation function. In contrast, the PD-TGCN model considers two stacked transformer convolution layers.

Our experimental results on a proprietary dataset for house price prediction in Santiago, Chile, confirm the virtues of the proposed models. The PD-TGCN achieves the best overall results for the nine different sets of experiments designed based on groups of adjacent communes. This method also performs best when all the houses in the sample were considered. The PDVM model, an LSTM-based approach tailored for real estate appraisal, was outperformed by PD-TGCN.

The improvement of the PD-TGCN model when compared with the PD-GCN model also provides insight into the local relationships that exist within a neighborhood. Its improvement originates from the use of the attention mechanism, showcasing that not all neighboring properties affect a given house equally, and understanding these interactions improves the accuracy of the predicted property’s price.

Based on our results, our main hypothesis that it is possible to improve the current state-of-the-art AVMs by designing novel strategies based on GNNs holds. With a 20.4% MAPE, we can also confirm that it is possible to obtain accurate property value evaluation in the Santiago housing market using state-of-the-art artificial intelligence techniques. As a reference, [6] reported a MAPE of 17.5% for the city of Los Angeles using DL, while the best DL models in [5] achieved 20.12% and 29.24% for the cities of Chicago and Detroit, respectively. Our results also confirm the existence of geo-spatial dependencies within the Santiago housing market.

The findings of this study offer promising implications for the broader application of artificial intelligence in the real estate industry and emphasize the need for continual research and development in this area. One limitation of the proposed approach is the use of unimodal models, while the current trend in DL is multimodal learning and information fusion. Multimodal models for real estate appraisal have shown excellent results by, for example, including geographical presentation from street maps in the form of images. Multimodal graph networks are a promising opportunity for future research.

References

- [1] S. Sayce, J. Smith, R. Cooper, and P. Venmore-Rowland, Real Estate Appraisal: from value to worth. John Wiley & Sons, 2009.

- [2] Z. Yang, Z. Hong, R. Zhou, and H. Ai, “Graph convolutional network-based model for megacity real estate valuation,” IEEE Access, vol. 10, pp. 104 811–104 828, 2022.

- [3] S. C. Tekouabou, Ş. C. Gherghina, E. D. Kameni, Y. Filali, and K. Idrissi Gartoumi, “Ai-based on machine learning methods for urban real estate prediction: a systematic survey,” Archives of Computational Methods in Engineering, vol. 31, no. 2, pp. 1079–1095, 2024.

- [4] L. Waikhom and R. Patgiri, “A survey of graph neural networks in various learning paradigms: methods, applications, and challenges,” Artificial Intelligence Review, vol. 56, no. 7, pp. 6295–6364, 2023.

- [5] J. Bin, “Investigations on deep learning techniques for property assessment,” Ph.D. dissertation, University of British Columbia, 2019.

- [6] J. Bin, B. Gardiner, E. Li, and Z. Liu, “Peer-dependence valuation model for real estate appraisal,” Data-Enabled Discovery and Applications, vol. 3, no. 1, p. 2, 2019.

- [7] J. R. Rico-Juan and P. T. de La Paz, “Machine learning with explainability or spatial hedonics tools? an analysis of the asking prices in the housing market in alicante, spain,” Expert Systems with Applications, vol. 171, p. 114590, 2021.

- [8] K. M. Lusht, Real Estate Valuation: Principles and Applications. KML PUblishing, 2001.

- [9] S. Rosen, “Hedonic prices and implicit markets: Product differentiation in pure competition,” Journal of Political Economy, vol. 82, no. 1, pp. 34–55, 1974.

- [10] T. Alkan, Y. Dokuz, A. Ecemiş, A. Bozdağ, and S. S. Durduran, “Using machine learning algorithms for predicting real estate values in tourism centers,” Soft Computing, vol. 27, no. 5, pp. 2601–2613, 2023.

- [11] S. S. Bilgilioğlu and H. M. Yılmaz, “Comparison of different machine learning models for mass appraisal of real estate,” Survey review, vol. 55, no. 388, pp. 32–43, 2023.

- [12] N. Kok, E.-L. Koponen, and C. A. Martinez-Barbosa, “Big data in real estate? from manual appraisal to automated valuation,” The Journal of Portfolio Management, vol. 43, pp. 202–211, 09 2017.

- [13] T. Dimopoulos, H. Tyralis, N. P. Bakas, and D. Hadjimitsis, “Accuracy measurement of random forests and linear regression for mass appraisal models that estimate the prices of residential apartments in nicosia, cyprus,” Advances in Geosciences, vol. 45, pp. 377–382, 2018.

- [14] B. Park and J. K. Bae, “Using machine learning algorithms for housing price prediction: The case of fairfax county, virginia housing data,” Expert systems with applications, vol. 42, no. 6, pp. 2928–2934, 2015.

- [15] B. Huang, B. Wu, and M. Barry, “Geographically and temporally weighted regression for modeling spatio-temporal variation in house prices,” International journal of geographical information science, vol. 24, no. 3, pp. 383–401, 2010.

- [16] J. Bin, S. Tang, Y. Liu, G. Wang, B. Gardiner, Z. Liu, and E. Li, “Regression model for appraisal of real estate using recurrent neural network and boosting tree,” in 2017 2nd IEEE international conference on computational intelligence and applications (ICCIA). IEEE, 2017, pp. 209–213.

- [17] O. Poursaeed, T. Matera, and S. Belongie, “Vision-based real estate price estimation,” Machine Vision and Applications, vol. 29, no. 4, pp. 667–676, 2018.

- [18] L. Yu, C. Jiao, H. Xin, Y. Wang, and K. Wang, “Prediction on housing price based on deep learning,” International Journal of Computer and Information Engineering, vol. 12, no. 2, pp. 90–99, 2018.

- [19] R. B. Abidoye and A. Chan, “Artificial neural network in property valuation: application framework and research trend,” Property Management, vol. 35, pp. 554–571, 2017. [Online]. Available: https://api.semanticscholar.org/CorpusID:158451421

- [20] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in neural information processing systems, vol. 30, 2017.

- [21] J.-P. Kucklick and O. Müller, “Tackling the accuracy-interpretability trade-off: Interpretable deep learning models for satellite image-based real estate appraisal,” ACM Transactions on Management Information Systems, vol. 14, no. 1, pp. 1–24, 2023.

- [22] J. Bin, B. Gardiner, Z. Liu, and E. Li, “Attention-based multi-modal fusion for improved real estate appraisal: a case study in los angeles,” Multimedia Tools and Applications, vol. 78, pp. 31 163–31 184, 2019.

- [23] Z. Liu, Y. Wang, S. Wang, X. Zhao, H. Wang, and H. Yin, “Heterogeneous graphs neural networks based on neighbor relationship filtering,” Expert Systems with Applications, vol. 239, p. 122489, 2024.

- [24] H. T. Phan, N. T. Nguyen, and D. Hwang, “Aspect-level sentiment analysis: A survey of graph convolutional network methods,” Information Fusion, vol. 91, pp. 149–172, 2023.

- [25] Y. Shi, Z. Huang, S. Feng, H. Zhong, W. Wang, and Y. Sun, “Masked label prediction: Unified message passing model for semi-supervised classification,” 2021.

- [26] T. Danel, P. Spurek, J. Tabor, M. Śmieja, Ł. Struski, A. Słowik, and Ł. Maziarka, “Spatial graph convolutional networks,” in International Conference on Neural Information Processing. Springer, 2020, pp. 668–675.

- [27] S. Maldonado, C. Vairetti, A. Fernandez, and F. Herrera, “Fw-smote: A feature-weighted oversampling approach for imbalanced classification,” Pattern Recognition, vol. 124, p. 108511, 2022.

- [28] C. Morris, M. Ritzert, M. Fey, W. L. Hamilton, J. E. Lenssen, G. Rattan, and M. Grohe, “Weisfeiler and leman go neural: Higher-order graph neural networks,” 2021.

- [29] Z. Chen, Z. Wu, Z. Lin, S. Wang, C. Plant, and W. Guo, “Agnn: alternating graph-regularized neural networks to alleviate over-smoothing,” IEEE Transactions on Neural Networks and Learning Systems, 2023.

- [30] L. D’Acci, “Quality of urban area, distance from city centre, and housing value. case study on real estate values in turin,” Cities, vol. 91, pp. 71–92, 2019. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S0264275118308552

- [31] R. Flores, J. Pérez, and F. Uribe, “Valoración de la tierra de uso residencial y su contribución al valor de mercado de la vivienda en chile - bcentral.cl,” Sep 2018. [Online]. Available: https://www.bcentral.cl/documents/33528/133329/eee_126.pdf/1294adcd-dee1-6259-674e-2d20e49ea08e?t=1655149111760

- [32] H. Suenaga, “Deep learning 2: Part 1 lesson 4,” Jul 2018, https://medium.com/@hiromi_suenaga/deep-learning-2-part-1-lesson-4-2048a26d58aa.

- [33] P. A. Moran, “Notes on continuous stochastic phenomena,” Biometrika, vol. 37, no. 1-2, pp. 17–23, 1950.

Appendix A Abbreviations

ASRGCNN: Spacial Regression GCN withh external attention

AVM: Automated Valuation Models

BLSTM: Bi-directional Long Short Term Memory

CNNs: Convolutional Neural Networks

DL: Deep Learning

FC: Fully Connected

GCN: Graph Convolution Network

GDP: Gross Domestic Product

GNN: Graph Neural Network

HR: Hedonic Regression

KNHS: K-Nearest House Sampling

LINREG: Linear Regression

LSTM: Long Short-Term Memory

LWR: Locally Weighted Regression

MAPE: Mean Absolute Percentage Error

MLP: Multilayer perceptron

MSE: Mean Squared Error

NLP: Natural Lenguaje Processing

PD-GCN: Peer dependence graph convolutional network

PD-TGCN: Peer dependence transformer graph convolutional network

PDVM: Peer Dependence Valuation Model

RF: Random Forest

RMSE: Root Mean Squared Error

RNNs: Recurrent Neural Networks

TGCN: Transformer Graph Convolutional Network

UF:Unidad de Fomento (Chilean unit of account)

XGBoost: Extreme Gradient Boosting

Appendix B Tables associated to the sensitivity analysis

| R2 | RMSE | MAPE | weight | k | knhs | |

|---|---|---|---|---|---|---|

| 0 | 0.923 | 788.868 | 0.210 | 3.000 | 6 | normal |

| 5 | 0.926 | 773.212 | 0.206 | 3.000 | 16 | normal |

| 10 | 0.922 | 796.935 | 0.208 | 1.500 | 8 | normal |

| 15 | 0.921 | 798.698 | 0.208 | 1.200 | 8 | normal |

| 20 | 0.923 | 787.499 | 0.207 | 3.000 | 12 | normal |

| 25 | 0.921 | 801.659 | 0.208 | 3.000 | 8 | normal |

| 30 | 0.925 | 779.178 | 0.207 | 1.800 | 16 | normal |

| 35 | 0.925 | 803.784 | 0.208 | 1.800 | 14 | normal |

| 40 | 0.923 | 792.903 | 0.208 | 1.800 | 10 | normal |

| 45 | 0.925 | 781.354 | 0.206 | 0.000 | 16 | normal |

| 50 | 0.923 | 789.607 | 0.208 | 0.000 | 8 | normal |

| 55 | 0.925 | 780.039 | 0.207 | 0.000 | 12 | normal |

| 60 | 0.924 | 783.824 | 0.209 | 1.800 | 6 | normal |

| 65 | 0.922 | 792.220 | 0.207 | 1.800 | 12 | normal |

| 70 | 0.927 | 791.150 | 0.208 | 3.000 | 14 | normal |

| 75 | 0.921 | 799.398 | 0.208 | 3.000 | 10 | normal |

| 80 | 0.922 | 796.864 | 0.208 | 3.000 | 8 | normal |

| 85 | 0.923 | 792.106 | 0.208 | 1.800 | 8 | normal |

| 90 | 0.924 | 784.736 | 0.207 | 1.500 | 16 | normal |

| 95 | 0.925 | 778.020 | 0.206 | 1.200 | 16 | normal |

| 100 | 0.923 | 813.366 | 0.209 | 1.200 | 14 | normal |

| 105 | 0.925 | 800.746 | 0.208 | 1.500 | 14 | normal |

| 110 | 0.923 | 788.276 | 0.208 | 1.800 | 8 | normal |

| 115 | 0.921 | 801.401 | 0.209 | 1.500 | 10 | normal |

| 120 | 0.921 | 801.258 | 0.208 | 1.200 | 10 | normal |

| 125 | 0.922 | 792.040 | 0.207 | 1.200 | 12 | normal |

| 130 | 0.922 | 795.227 | 0.207 | 1.500 | 12 | normal |

| 135 | 0.924 | 781.505 | 0.210 | 1.200 | 6 | normal |

| 140 | 0.923 | 788.882 | 0.210 | 1.500 | 6 | normal |

| 228 | 0.913 | 841.743 | 0.216 | 0.000 | 8 | random |

| 230 | 0.915 | 828.734 | 0.215 | 0.000 | 12 | random |

| 232 | 0.915 | 833.265 | 0.216 | 0.000 | 16 | random |

| R2 | RMSE | MAPE | weight | k | knhs | |

|---|---|---|---|---|---|---|

| 1 | 0.922 | 793.945 | 0.208 | 3.000 | 6 | normal |

| 6 | 0.928 | 766.888 | 0.204 | 3.000 | 16 | normal |

| 11 | 0.919 | 808.776 | 0.206 | 1.500 | 8 | normal |

| 16 | 0.921 | 799.328 | 0.206 | 1.200 | 8 | normal |

| 21 | 0.924 | 781.797 | 0.205 | 3.000 | 12 | normal |

| 26 | 0.920 | 804.999 | 0.207 | 3.000 | 8 | normal |

| 31 | 0.926 | 774.598 | 0.205 | 1.800 | 16 | normal |

| 36 | 0.923 | 814.657 | 0.206 | 1.800 | 14 | normal |

| 41 | 0.920 | 806.809 | 0.206 | 1.800 | 10 | normal |

| 46 | 0.925 | 782.398 | 0.206 | 0.000 | 16 | normal |

| 51 | 0.921 | 802.393 | 0.206 | 0.000 | 8 | normal |

| 56 | 0.922 | 790.932 | 0.206 | 0.000 | 12 | normal |

| 61 | 0.924 | 784.820 | 0.208 | 1.800 | 6 | normal |

| 66 | 0.923 | 790.054 | 0.204 | 1.800 | 12 | normal |

| 71 | 0.923 | 813.824 | 0.206 | 3.000 | 14 | normal |

| 76 | 0.919 | 810.996 | 0.206 | 3.000 | 10 | normal |

| 81 | 0.920 | 803.723 | 0.207 | 3.000 | 8 | normal |

| 86 | 0.921 | 802.361 | 0.207 | 1.800 | 8 | normal |

| 91 | 0.925 | 780.344 | 0.205 | 1.500 | 16 | normal |

| 96 | 0.925 | 781.653 | 0.204 | 1.200 | 16 | normal |

| 101 | 0.925 | 801.828 | 0.206 | 1.200 | 14 | normal |

| 106 | 0.924 | 805.740 | 0.206 | 1.500 | 14 | normal |

| 111 | 0.920 | 803.613 | 0.207 | 1.800 | 8 | normal |

| 116 | 0.920 | 804.636 | 0.206 | 1.500 | 10 | normal |

| 121 | 0.921 | 800.967 | 0.206 | 1.200 | 10 | normal |

| 126 | 0.924 | 784.622 | 0.205 | 1.200 | 12 | normal |

| 131 | 0.925 | 776.349 | 0.205 | 1.500 | 12 | normal |

| 136 | 0.923 | 787.485 | 0.208 | 1.200 | 6 | normal |

| 141 | 0.923 | 789.619 | 0.208 | 1.500 | 6 | normal |

| 225 | 0.921 | 800.011 | 0.210 | 0.000 | 8 | geo |

| 226 | 0.916 | 822.098 | 0.211 | 0.000 | 12 | geo |

| 227 | 0.919 | 810.243 | 0.211 | 0.000 | 16 | geo |

| 229 | 0.920 | 806.569 | 0.210 | 0.000 | 8 | random |

| 231 | 0.917 | 820.268 | 0.211 | 0.000 | 12 | random |

| 233 | 0.918 | 816.082 | 0.211 | 0.000 | 16 | random |