
Email: {birwin, haber}@eoas.ubc.ca

Secant Penalized BFGS: A Noise Robust Quasi-Newton Method Via Penalizing The Secant Condition

Abstract

In this paper, we introduce a new variant of the BFGS method designed to perform well when gradient measurements are corrupted by noise. We show that by treating the secant condition with a penalty method approach motivated by regularized least squares estimation, one can smoothly interpolate between updating the inverse Hessian approximation with the original BFGS update formula and not updating the inverse Hessian approximation. Furthermore, we find the curvature condition is smoothly relaxed as the interpolation moves towards not updating the inverse Hessian approximation, disappearing entirely when the inverse Hessian approximation is not updated. These developments allow us to develop a method we refer to as secant penalized BFGS (SP-BFGS) that allows one to relax the secant condition based on the amount of noise in the gradient measurements. SP-BFGS provides a means of incrementally updating the new inverse Hessian approximation with a controlled amount of bias towards the previous inverse Hessian approximation, which allows one to replace the overwriting nature of the original BFGS update with an averaging nature that resists the destructive effects of noise and can cope with negative curvature measurements. We discuss the theoretical properties of SP-BFGS, including convergence when minimizing strongly convex functions in the presence of uniformly bounded noise. Finally, we present extensive numerical experiments using over 30 problems from the CUTEst test problem set that demonstrate the superior performance of SP-BFGS compared to BFGS in the presence of both noisy function and gradient evaluations.

Keywords:

Quasi-Newton Methods · Secant Condition · Penalty Methods · Least Squares Estimation · Measurement Error · Noise Robust Optimization

1 Introduction

Over the past 50 years, quasi-Newton methods have proved to be some of the most economical and effective methods for a variety of optimization problems. Originally conceived to provide some of the advantages of second order methods without the full cost of Newton’s method, quasi-Newton methods, which are also referred to as variable metric methods Johnson2019_quasiNewton_notes , are based on the observation that by differencing observed gradients, one can calculate approximate curvature information. This approximate curvature information can then be used to improve the speed of convergence, especially in comparison to first order methods, such as gradient descent. There are currently a variety of different quasi-Newton methods, with the Broyden-Fletcher-Goldfarb-Shanno (BFGS) method 10.1093/imamat/6.1.76 ; 10.1093/comjnl/13.3.317 ; 10.2307/2004873 ; 10.2307/2004840 almost certainly being the best known quasi-Newton method.

Modern quasi-Newton methods were developed for problems involving the optimization of smooth functions without constraints. The BFGS method is the best known quasi-Newton method because in practice it has demonstrated superior performance due to its very effective self-correcting properties Nocedal2006 . Accordingly, BFGS has since been extended to handle box constraints doi:10.1137/0916069 , and shown to be effective even for some nonsmooth optimization problems Lewis2013NonsmoothOV . Furthermore, a limited memory version of BFGS known as L-BFGS Liu1989 has become a favourite algorithm for solving optimization problems with a very large number of variables, as it avoids directly storing approximate inverse Hessian matrices. However, BFGS and its relatives were not designed to explicitly handle noisy optimization problems, and noise can unacceptably degrade the performance of these methods.

The authors of doi:10.1137/140954362 make the important observation that quasi-Newton updating is inherently an overwriting process rather than an averaging process. Fundamentally, differencing noisy gradients can produce harmful effects because the resulting approximate curvature information may be inaccurate, and this inaccurate curvature information may overwrite accurate curvature information. Newton’s method can naturally be viewed as a local rescaling of coordinates so that the rescaled problem is better conditioned than the original problem. Quasi-Newton methods attempt to perform a similar rescaling, but instead of using the (inverse) Hessian matrix to obtain curvature information for the rescaling, they use differences of gradients to obtain curvature information. Thus, it should be unsurprising that inaccurate curvature information obtained from differencing noisy gradients can be problematic because it means the resulting rescaling of the problem can be poor, and the conditioning of the rescaled problem could be even worse than the conditioning of the original problem.

With the above in mind, several works have dealt with how to improve the performance of quasi-Newton methods in the presence of noise. Many recent works focus on the empirical risk minimization (ERM) problem, which is ubiquitous in machine learning. For example, in doi:10.1137/140954362 the authors propose a technique designed for the stochastic approximation (SA) regime that employs subsampled Hessian-vector products to collect curvature information pointwise and at spaced intervals, in contrast to the classical approach of computing the difference of gradients at each iteration. This work is built upon in pmlr-v51-moritz16 , where the authors present a stochastic L-BFGS algorithm that draws upon the variance reduction approach of Johnson2013AcceleratingSG . In doi:10.1137/15M1053141 , the authors outline a stochastic damped limited-memory BFGS (SdLBFGS) method that employs damping techniques used in sequential quadratic programming (SQP). A stochastic block BFGS method that updates the approximate inverse Hessian matrix using a sketch of the Hessian matrix is proposed in pmlr-v48-gower16 . Further work on stochastic L-BFGS algorithms, including convergence results, can be found in pmlr-v2-schraudolph07a ; 10.5555/2789272.2912100 ; Zhao2018StochasticLI ; 8626766 .

Despite the importance of the ERM problem due to the current prevalence of machine learning, there are still a variety of important noisy optimization problems that arise in other contexts. In engineering design, numerical simulations are often employed in place of conducting costly, if even feasible, physical experiments. In this context, one tries to find optimal design parameters using the numerical simulation instead of physical experiments. Some examples from aerospace engineering, including interplanetary trajectory and wing design, can be found in Fasano2019 ; doi:10.1002/0470855487 ; doi:10.2514/1.J057294 . Examples from materials engineering include stable composite design doi:10.1177/1464420716664921 and ternary alloy composition Graf2017 , amongst others Munoz-Rojas2016 , while examples from electrical engineering include power system operation doi:10.1002/9780470466971 , hardware verification Gal_2020_HowToCatchALion , and antenna design Koziel2014 . Noise is often an unavoidable property of such numerical simulations, as the simulations can include stochastic internal components, and floating point arithmetic vulnerable to roundoff error. Apart from the analysis of the BFGS method with bounded errors in doi:10.1137/19M1240794 , there is relatively little work on the behaviour of quasi-Newton methods in the presence of general bounded noise. As optimizing noisy numerical simulations does not always fit the framework of the ERM problem, analyses of the behaviour of quasi-Newton methods in the presence of general bounded noise are of practical value when optimizing numerical simulations.

1.1 Contributions

Noise is inevitably introduced into machine learning problems by the approximations required to handle large datasets, and into numerical simulations by finite precision arithmetic and by inherently stochastic components of the simulator. In this paper, we return to the fundamental theory underlying the design of quasi-Newton methods, which allows us to design a new variant of the BFGS method that explicitly handles the corrupting effects of noise. We do this as follows:

1. In Section 2, we review the setup and derivation of the original BFGS method.

2. In Section 3, motivated by regularized least squares estimation, we treat the secant condition of BFGS with a penalty method. This creates a new BFGS update formula that we refer to as secant penalized BFGS (SP-BFGS), which we show reduces to the original BFGS update formula in a limiting case, as expected.

3. In Section 4, we present an algorithmic framework for practically implementing SP-BFGS updating. We also discuss implementation details, including how to perform a line search and choose the penalty parameter in the presence of noise.

4. In Section 5, we discuss the theoretical properties of SP-BFGS, including how the penalty parameter influences the eigenvalues of the approximate inverse Hessian. This allows us to show that under appropriate conditions SP-BFGS iterations are guaranteed to converge linearly to a neighborhood of the global minimizer when minimizing strongly convex functions in the presence of uniformly bounded noise.

5. In Section 6, we study the empirical performance of SP-BFGS updating compared to BFGS updating by performing extensive numerical experiments with both convex and nonconvex objective functions corrupted by function and gradient noise. Results from a diverse set of over 30 problems from the CUTEst test problem set demonstrate that intelligently implemented SP-BFGS updating frequently outperforms BFGS updating in the presence of noise.

6. Finally, Section 7 concludes the paper and outlines directions for further work.

2 Mathematical Background

In this section, as preliminaries to the main results of this paper, we review the setup and derivation of the original BFGS method.

2.1 BFGS Setup

The BFGS method was originally designed to solve the following unconstrained optimization problem

$$\min_{x \in \mathbb{R}^n} \phi(x) \tag{1}$$

with $x \in \mathbb{R}^n$, $\phi : \mathbb{R}^n \to \mathbb{R}$, and $\phi$ being a smooth, twice continuously differentiable, noise-free function. Below, we use the notational conventions of Nocedal2006 , including $\phi_k = \phi(x_k)$. We begin by using the Taylor expansion of $\phi$ to build a local quadratic model $m_k$ of the objective function at the iterate $x_k$ of the optimization procedure

$$m_k(p) = \phi_k + \nabla \phi_k^T p + \frac{1}{2} p^T B_k p \tag{2}$$

where $B_k$ is an $n \times n$ symmetric positive definite matrix that approximates the Hessian matrix (i.e. $B_k \approx \nabla^2 \phi_k$). By setting the gradient of $m_k$ to zero, we see that the unique minimizer $p_k$ of this local quadratic model is

$$p_k = -B_k^{-1} \nabla \phi_k \tag{3}$$

and thus it is natural to update the next iterate as

$$x_{k+1} = x_k + \alpha_k p_k \tag{4}$$

where $\alpha_k$ is the step size along the direction $p_k$, which is often chosen using a line search.

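To avoid computing $B_{k+1}$ from scratch at each iteration $k$, we use the curvature information from recent gradient evaluations to update $B_k$, and thus relatively economically form $B_{k+1}$. A Taylor expansion of $\nabla \phi$ reveals

$$\nabla \phi(x_k + \alpha_k p_k) \approx \nabla \phi(x_k) + \alpha_k \nabla^2 \phi(x_k) p_k \tag{5}$$

and so it is reasonable to require that the new approximate Hessian $B_{k+1}$ satisfies

$$\nabla \phi(x_k + \alpha_k p_k) = \nabla \phi(x_k) + \alpha_k B_{k+1} p_k \tag{6}$$

which rearranges to

$$B_{k+1} \alpha_k p_k = \nabla \phi(x_{k+1}) - \nabla \phi(x_k) \tag{7}$$

Now, define the two new quantities $s_k$ and $y_k$ as

$$s_k = x_{k+1} - x_k = \alpha_k p_k \tag{8a}$$

$$y_k = \nabla \phi(x_{k+1}) - \nabla \phi(x_k) \tag{8b}$$

Thus, we arrive at (9), which is known as the secant condition

$$B_{k+1} s_k = y_k \tag{9}$$

In words, the secant condition dictates that the new approximate Hessian $B_{k+1}$ must map the measured displacement $s_k$ into the measured difference of gradients $y_k$. If we denote the approximate inverse Hessian $H_{k+1} = B_{k+1}^{-1}$, then the secant condition can be equivalently expressed as (10)

$$H_{k+1} y_k = s_k \tag{10}$$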

As $H_{k+1}$ is not yet uniquely determined, to obtain the BFGS update formula, we impose a minimum norm restriction. Specifically, we choose $H_{k+1}$ to be the solution of the following quadratic program over matrices

$$\min_{H} \; \frac{1}{2} \left\| W^{1/2} (H - H_k) W^{1/2} \right\|_F^2 \quad \text{s.t.} \quad H y_k = s_k, \quad H = H^T \tag{11}$$

where $\| \cdot \|_F$ denotes the Frobenius norm, and $W^{1/2}$ the principal square root (see MatrixAnalysisHornJohnson or a similar reference) of a symmetric positive definite weight matrix $W$ satisfying

$$W s_k = y_k \tag{12}$$

As we will see, choosing the weight matrix $W$ to satisfy (12) ensures that the resulting optimization method is scale invariant. The weight matrix $W$ can be chosen to be any symmetric positive definite matrix satisfying (12), and the specific choice of $W$ is not of great importance, as $W$ will not appear directly in the main results of this paper. However, as a concrete example from Nocedal2006 , one could assume $W = \bar{G}_k$, where $\bar{G}_k$ is the average Hessian defined by

$$\bar{G}_k = \int_0^1 \nabla^2 \phi(x_k + \tau \alpha_k p_k) \, d\tau \tag{13}$$

2.2 Solving For The BFGS Update

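To solve the quadratic program given by (11), we set up a Lagrangian involving the constraints. Recalling that

$$\| A \|_F^2 = \mathrm{Tr}(A^T A) \tag{14}$$

this gives the Lagrangian $\mathcal{L}(H, u, \Gamma)$ defined by (15) below

$$\mathcal{L}(H, u, \Gamma) = \frac{1}{2} \mathrm{Tr}\left( W^{1/2} (H - H_k)^T W (H - H_k) W^{1/2} \right) + u^T W (H y_k - s_k) + \mathrm{Tr}\left( \Gamma^T (H - H^T) \right) \tag{15}$$

where $u$ is a vector of Lagrange multipliers associated with the secant condition, and $\Gamma$ is a matrix of Lagrange multipliers associated with the symmetry condition. Taking the derivative of the Lagrangian with respect to the matrix $H$ yields

$$\frac{\partial \mathcal{L}}{\partial H} = W (H - H_k) W + W u y_k^T + \Gamma - \Gamma^T \tag{16}$$

and so we have the Karush-Kuhn-Tucker (KKT) system defined by the three equations (17a), (17b), and (17c) below

$$W (H - H_k) W + W u y_k^T + \Gamma - \Gamma^T = 0 \tag{17a}$$

$$H y_k = s_k \tag{17b}$$

$$H = H^T \tag{17c}$$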

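For brevity, we omit the details of the solution of the KKT system defined above because it is a limiting case of the system solved in Theorem 3.1. For an alternative geometric solution technique, we refer the interested reader to Section 2 of doi:10.1080/10556780802367205 . The minimizer $H_{k+1}$ is given by the well known BFGS update formula

$$H_{k+1} = \left( I - \frac{s_k y_k^T}{y_k^T s_k} \right) H_k \left( I - \frac{y_k s_k^T}{y_k^T s_k} \right) + \frac{s_k s_k^T}{y_k^T s_k} \tag{18}$$

which, if we define the curvature parameter $\rho_k = 1 / (y_k^T s_k)$, can be equivalently written as

$$H_{k+1} = (I - \rho_k s_k y_k^T) H_k (I - \rho_k y_k s_k^T) + \rho_k s_k s_k^T \tag{19}$$

Applying the Sherman-Morrison-Woodbury formula (see 10.2307/2030425 ) to the BFGS update formula immediately above, one can also write the BFGS update in terms of the approximate Hessian $B_{k+1}$ instead of the approximate inverse Hessian $H_{k+1}$. Again, for brevity, the details are omitted because they are a special case of Theorem 3.2 shown later. The result is

$$B_{k+1} = B_k - \frac{B_k s_k s_k^T B_k}{s_k^T B_k s_k} + \frac{y_k y_k^T}{y_k^T s_k} \tag{20}$$

To ensure the updated approximate Hessian $B_{k+1}$ is positive definite, we must enforce that

$$s_k^T B_{k+1} s_k > 0 \tag{21}$$

Substituting $B_{k+1} s_k = y_k$ from the secant condition, the condition (21) becomes

$$s_k^T y_k > 0 \tag{22}$$

which is known as the curvature condition, as it is equivalent to

$$(x_{k+1} - x_k)^T \left( \nabla \phi(x_{k+1}) - \nabla \phi(x_k) \right) > 0 \tag{23}$$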
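As a concrete illustration of the classical update reviewed above, the following is a minimal NumPy sketch, not the authors' implementation. It assumes the standard textbook form of the BFGS inverse Hessian update with curvature parameter `rho = 1 / (s^T y)`; the function name `bfgs_inverse_update` and the small quadratic test problem are hypothetical choices made for illustration.

```python
import numpy as np

def bfgs_inverse_update(H, s, y):
    """Textbook BFGS update of the inverse Hessian approximation H.

    s is the displacement, y is the difference of gradients.
    """
    sy = float(s @ y)
    if sy <= 0.0:
        # The classical update requires the curvature condition s^T y > 0.
        raise ValueError("curvature condition s^T y > 0 violated")
    rho = 1.0 / sy
    V = np.eye(len(s)) - rho * np.outer(s, y)
    return V @ H @ V.T + rho * np.outer(s, s)

# Hypothetical example: for a convex quadratic with Hessian A, y = A s exactly.
A = np.array([[3.0, 1.0], [1.0, 2.0]])
s = np.array([0.5, -0.25])
y = A @ s
H_new = bfgs_inverse_update(np.eye(2), s, y)
```

In this sketch the updated matrix satisfies the secant condition exactly, that is, `H_new @ y` reproduces `s` up to rounding, and it remains symmetric positive definite because `s @ y` is positive.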

3 Derivation Of Secant Penalized BFGS

In this section, having reviewed the construction of the original BFGS method, we now show how treating the secant condition with a penalty method approach motivated by regularized least squares estimation allows one to generalize the original BFGS update.

3.1 Penalizing The Secant Condition

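By applying a penalty method (see Chapter 17 of Nocedal2006 ) to the secant condition instead of directly enforcing the secant condition as a constraint, we obtain the problem

$$\min_{H} \; \frac{1}{2} \left\| W^{1/2} (H - H_k) W^{1/2} \right\|_F^2 + \frac{\beta_k}{2} \left\| W^{1/2} (H y_k - s_k) \right\|_2^2 \quad \text{s.t.} \quad H = H^T \tag{24}$$

where $\beta_k \in [0, \infty]$ is a penalty parameter that determines how strongly to penalize violations of the secant condition. As we will see, one recovers the solution to the constrained problem (11) in the limit $\beta_k \to \infty$, so $\beta_k$ can be intuitively thought of as the cost of violating the secant condition. By treating the symmetry constraint with a matrix of Lagrange multipliers again, we obtain the following Lagrangian

$$\mathcal{L}(H, \Gamma) = \frac{1}{2} \mathrm{Tr}\left( W^{1/2} (H - H_k)^T W (H - H_k) W^{1/2} \right) + \frac{\beta_k}{2} (H y_k - s_k)^T W (H y_k - s_k) + \mathrm{Tr}\left( \Gamma^T (H - H^T) \right) \tag{25}$$

Defining the residual associated with the secant condition as $r = H y_k - s_k$ and $u = \beta_k r$, the first order optimality conditions of (25) can be written as the system

$$W (H - H_k) W + W u y_k^T + \Gamma - \Gamma^T = 0 \tag{26a}$$

$$H y_k - s_k = \frac{u}{\beta_k} \tag{26b}$$

$$H = H^T \tag{26c}$$

Note that, as expected, in the limit $\beta_k \to \infty$, the system given by (26a), (26b), and (26c) reduces to the KKT system given by (17a), (17b), and (17c).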

We now find an explicit closed form solution to the problem given by (24), which is given in Theorem 3.1.

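Theorem 3.1 (SP-BFGS Update)

The update formula given by the solution to (24) is

$$H_{k+1} = (I - \omega_k s_k y_k^T) H_k (I - \omega_k y_k s_k^T) + \omega_k \left[ \frac{\gamma_k}{\omega_k} + (\gamma_k - \omega_k) \, y_k^T H_k y_k \right] s_k s_k^T \tag{27}$$

with $\gamma_k$ and $\omega_k$ defined as

$$\gamma_k = \frac{1}{y_k^T s_k + \frac{1}{\beta_k}}, \qquad \omega_k = \frac{1}{y_k^T s_k + \frac{2}{\beta_k}} \tag{28}$$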

Proof

See Appendix A. ∎

At this point, a few comments are in order regarding the SP-BFGS update given by (27). First, observe that as $\beta_k \to \infty$, we have that $\gamma_k \to \rho_k$ and $\omega_k \to \rho_k$, where $\rho_k = 1 / (y_k^T s_k)$. As a result, when $\beta_k = \infty$, one recovers the original BFGS update, as expected. Second, also observe that as $\beta_k \to 0$, we have that $\gamma_k \to 0$ and $\omega_k \to 0$. As a result, we see that in the case $\beta_k = 0$ the SP-BFGS update reduces to $H_{k+1} = H_k$. This is again expected because as $\beta_k \to 0$, the cost of violating the secant condition goes to zero, and the minimum norm symmetric update is simply $H_{k+1} = H_k$.
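The limiting behaviour described above can be checked numerically. The sketch below is illustrative only and rests on an assumption: it takes the SP-BFGS update to have the form `H_new = V H V^T + [gamma + omega (gamma - omega) y^T H y] s s^T` with `V = I - omega s y^T`, `gamma = 1 / (s^T y + 1/beta)` and `omega = 1 / (s^T y + 2/beta)`. The function name `sp_bfgs_update` and the test vectors are hypothetical.

```python
import numpy as np

def sp_bfgs_update(H, s, y, beta):
    """Assumed form of the SP-BFGS inverse Hessian update (see lead-in)."""
    if beta == 0.0:
        return H.copy()            # zero penalty: the update is skipped
    sy = float(s @ y)
    inv_beta = 1.0 / beta          # evaluates to 0.0 when beta is infinite
    gamma = 1.0 / (sy + inv_beta)
    omega = 1.0 / (sy + 2.0 * inv_beta)
    V = np.eye(len(s)) - omega * np.outer(s, y)
    coeff = gamma + omega * (gamma - omega) * float(y @ H @ y)
    return V @ H @ V.T + coeff * np.outer(s, s)

H0 = np.eye(2)
s = np.array([0.5, -0.25])
y = np.array([1.25, 0.0])          # s^T y = 0.625 > 0

H_bfgs_limit = sp_bfgs_update(H0, s, y, np.inf)  # secant condition enforced
H_mid = sp_bfgs_update(H0, s, y, 1.0)            # secant condition relaxed
H_skip = sp_bfgs_update(H0, s, y, 0.0)           # no update

def residual(H):
    """Norm of the secant condition violation H y - s."""
    return float(np.linalg.norm(H @ y - s))
```

For these inputs, `residual(H_bfgs_limit)` is zero up to rounding, `residual(H_skip)` equals the residual of `H0`, and `residual(H_mid)` lies strictly between the two, which is the interpolation behaviour the text describes.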

We now examine what the analog of the curvature condition (22) is for SP-BFGS. Lemma 1 demonstrates that (29) is the SP-BFGS analog of the BFGS curvature condition (22).

Lemma 1 (Positive Definiteness Of SP-BFGS Update)

If $H_k$ is positive definite, then the $H_{k+1}$ given by the SP-BFGS update (27) is positive definite if and only if the SP-BFGS curvature condition

$$s_k^T y_k > -\frac{1}{\beta_k} \tag{29}$$

is satisfied.

Proof

See Appendix B. ∎

The result in Lemma 1 warrants some discussion. First, the limiting behaviour with respect to $\beta_k$ is consistent with Theorem 3.1. As $\beta_k \to \infty$, condition (29) reduces to the BFGS curvature condition (22). As $\beta_k \to 0$, condition (29) reduces to no condition at all, as $s_k^T y_k > -\infty$ is always true. This is consistent with the observation that when $\beta_k = 0$, the minimum norm symmetric update is $H_{k+1} = H_k$, and in this case $H_{k+1}$ is guaranteed to be positive definite if $H_k$ is positive definite, regardless of $s_k^T y_k$.
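A small numerical check can make the role of the relaxed curvature condition concrete. The sketch below is a hedged illustration: it assumes the SP-BFGS update takes the form stated in the docstring and that the relaxed condition reads `s^T y > -1/beta`; the helper names and the negative-curvature test vectors are hypothetical.

```python
import numpy as np

def sp_bfgs_update(H, s, y, beta):
    """Assumed SP-BFGS update: V H V^T + coeff * s s^T, V = I - omega s y^T."""
    if beta == 0.0:
        return H.copy()
    sy = float(s @ y)
    gamma = 1.0 / (sy + 1.0 / beta)
    omega = 1.0 / (sy + 2.0 / beta)
    V = np.eye(len(s)) - omega * np.outer(s, y)
    coeff = gamma + omega * (gamma - omega) * float(y @ H @ y)
    return V @ H @ V.T + coeff * np.outer(s, s)

def is_positive_definite(M):
    """True when every eigenvalue of the symmetric matrix M is positive."""
    return bool(np.all(np.linalg.eigvalsh(M) > 0.0))

H0 = np.eye(2)
s = np.array([1.0, 0.0])
y = np.array([-0.5, 0.3])          # negative measured curvature: s^T y = -0.5

results = {}
for beta in (1.0, 3.0):
    predicted = bool(s @ y > -1.0 / beta)   # relaxed curvature condition
    observed = is_positive_definite(sp_bfgs_update(H0, s, y, beta))
    results[beta] = (predicted, observed)
```

With `beta = 1.0` the condition holds and the updated matrix is positive definite even though the measured curvature is negative; with `beta = 3.0` the condition fails and the updated matrix is indefinite.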

From the proof of Lemma 1 (see (93)), it is now clear that, with $\gamma_k = 1 / (y_k^T s_k + 1/\beta_k)$,

$$y_k^T H_{k+1} y_k = \frac{\gamma_k}{\beta_k} \, y_k^T H_k y_k + \left( \gamma_k s_k^T y_k \right) s_k^T y_k, \qquad \frac{\gamma_k}{\beta_k} + \gamma_k s_k^T y_k = 1 \tag{30}$$

and so $y_k^T H_{k+1} y_k$ is a convex combination of $y_k^T H_k y_k$ and $s_k^T y_k$. Thus, $H_{k+1}$ interpolates between the current inverse Hessian approximation $H_k$ and the original BFGS update, and as $\beta_k$ decreases, the interpolation is increasingly biased towards the current approximation $H_k$. From a regularized least squares estimation perspective, $\beta_k$ plays the role of a regularization parameter that controls the amount of bias in the estimate of $H_{k+1}$. Note that this behaviour is somewhat similar to the behaviour of Powell damping Powell1978 , although Powell damping was introduced to handle approximating a potentially indefinite Hessian of the Lagrangian in constrained optimization problems, and not noise.

We finish introducing the SP-BFGS update by applying the Sherman-Morrison-Woodbury formula to (27), which allows us to write the update in terms of the approximate Hessian $B_{k+1}$ instead of the approximate inverse Hessian $H_{k+1}$. The result is given in Theorem 3.2.

Theorem 3.2 (SP-BFGS Inverse Update)

The SP-BFGS update formula given by (27) can be written in terms of as

|

|

Proof

See Appendix C. ∎

Note that the limiting behaviour of Theorem 3.2 with respect to $\beta_k$ is again consistent. When $\beta_k = \infty$, we obtain the original BFGS inverse update (20), and when $\beta_k = 0$, we obtain $B_{k+1} = B_k$. One complication with respect to the SP-BFGS inverse update (98) is that $B_{k+1}$ cannot in general be expressed solely in terms of $B_k$ due to the presence of $y_k^T H_k y_k$ (i.e. $y_k^T B_k^{-1} y_k$) in the denominator.

4 Algorithmic Framework

We now outline how to practically implement SP-BFGS updating. We consider the situation where one has access to noise corrupted versions $f(x)$ and $g(x)$ of a smooth function $\phi(x)$ and its gradient $\nabla \phi(x)$ that can be decomposed as

$$f(x) = \phi(x) + \epsilon(x) \tag{31}$$

$$g(x) = \nabla \phi(x) + e(x) \tag{32}$$

In (31) and (32), $\phi$ is a smooth twice continuously differentiable function as in Section 2.1, and $\epsilon(x)$ is a scalar representing noise in the function evaluations. Similarly, $\nabla \phi$ is the gradient of the smooth function $\phi$, while $e(x)$ is a vector representing noise in the gradient evaluations. Similar decompositions are used in DFONoisyFunctionsQuasiNewton ; Gal_2020_HowToCatchALion ; doi:10.1137/19M1240794 .

4.1 Minimization Routine

Algorithm 1 outlines a general procedure for minimizing a noisy function with noisy function and gradient values $f(x)$ and $g(x)$ that can be decomposed as shown in (31) and (32). The inputs to the procedure in Algorithm 1 are a means of evaluating the noisy objective function $f(x)$ and gradient $g(x)$, the starting point $x_0$, and an initial inverse Hessian approximation $H_0$. As the best convergence/stopping test is problem dependent, we note that standard gradient and function value based tests can be employed in conjunction with smoothing and noise estimation techniques (e.g. see Section 3.3.4 of DFONoisyFunctionsQuasiNewton ). In the next several subsections, we discuss how to choose the penalty parameter $\beta_k$ and step size $\alpha_k$, and appropriate courses of action for when the SP-BFGS curvature condition (29) fails.

[Algorithm 1: SP-BFGS minimization routine]
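The overall structure of such a minimization routine can be sketched as follows. This is a hedged illustration under stated assumptions, not a transcription of Algorithm 1: it assumes the update form given in the docstring of `sp_bfgs_update`, a penalty parameter that grows linearly with the step length (`slope * ||s||`), a backtracking line search with a relaxed sufficient decrease test, and a recovery rule that shrinks the penalty when the relaxed curvature condition `s^T y > -1/beta` fails. All function names, parameter names, default values, and the noisy quadratic test problem are hypothetical.

```python
import numpy as np

def sp_bfgs_update(H, s, y, beta):
    """Assumed SP-BFGS update: V H V^T + coeff * s s^T, V = I - omega s y^T."""
    if beta == 0.0:
        return H.copy()
    sy = float(s @ y)
    gamma = 1.0 / (sy + 1.0 / beta)
    omega = 1.0 / (sy + 2.0 / beta)
    V = np.eye(len(s)) - omega * np.outer(s, y)
    coeff = gamma + omega * (gamma - omega) * float(y @ H @ y)
    return V @ H @ V.T + coeff * np.outer(s, s)

def sp_bfgs_minimize(f, g, x0, slope=1e3, shrink=0.5, eps_a=0.0,
                     c1=1e-4, max_iter=100, max_backtracks=30):
    x = np.asarray(x0, dtype=float)
    H = np.eye(x.size)                      # initial inverse Hessian guess
    fx, gx = f(x), g(x)
    for _ in range(max_iter):
        p = -H @ gx                         # quasi-Newton search direction
        alpha = 1.0
        for _ in range(max_backtracks):     # relaxed Armijo backtracking
            f_new = f(x + alpha * p)
            if f_new <= fx + c1 * alpha * float(gx @ p) + 2.0 * eps_a:
                break
            alpha *= 0.5
        else:
            break                           # no acceptable step was found
        x_new = x + alpha * p
        g_new = g(x_new)
        s, y = x_new - x, g_new - gx
        beta = slope * float(np.linalg.norm(s))
        sy = float(s @ y)
        if beta > 0.0 and sy <= -1.0 / beta:
            beta = shrink / (-sy)           # restore s^T y > -1/beta
        H = sp_bfgs_update(H, s, y, beta)
        x, fx, gx = x_new, f_new, g_new
    return x

# Hypothetical noisy quadratic with bounded function and gradient noise.
rng = np.random.default_rng(0)
A = np.diag([1.0, 10.0])
phi = lambda x: 0.5 * float(x @ A @ x)
f = lambda x: phi(x) + 1e-6 * rng.uniform(-1.0, 1.0)
g = lambda x: A @ x + 1e-3 * rng.uniform(-1.0, 1.0, size=2)
x_start = np.array([5.0, -3.0])
x_final = sp_bfgs_minimize(f, g, x_start, eps_a=1e-6)
```

The routine returns the last accepted iterate. Because no stopping test beyond the iteration cap and a failed line search is included, a practical implementation would add a problem dependent convergence test.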

4.2 Choosing The Penalty Parameter

As the choice of $\beta_k$ determines how strongly to bias the estimate of $H_{k+1}$ towards $H_k$, the choice of $\beta_k$ is fundamentally connected to the amount of noise present in the measured gradients $g(x_k)$ and $g(x_{k+1})$. In brief, if the amount of noise present in the measured gradients is large, $\beta_k$ should be small to avoid overfitting the noise, and if the amount of noise present in the measured gradients is small, $\beta_k$ should be large to avoid underfitting curvature information. To make this point more rigorous, we introduce the following assumption.

Assumption 1 (Uniform Gradient Noise Bound)

There exists a nonnegative constant $\bar{\epsilon}_g$ such that

$$\| e(x) \|_2 \leq \bar{\epsilon}_g \quad \forall x \in \mathbb{R}^n \tag{33}$$

As $\nabla \phi$ is continuous, for each $x_k$ we have

$$\lim_{\alpha_k \to 0} \| \nabla \phi(x_k + \alpha_k p_k) - \nabla \phi(x_k) \|_2 = 0 \tag{34}$$

However, due to noise we cannot in general guarantee

$$\lim_{\alpha_k \to 0} \| g(x_k + \alpha_k p_k) - g(x_k) \|_2 = 0 \tag{35}$$

Using the continuity of $\nabla \phi$, Assumption 1, and the triangle inequality, one can conclude that

$$\limsup_{\alpha_k \to 0} \| g(x_k + \alpha_k p_k) - g(x_k) \|_2 \leq 2 \bar{\epsilon}_g \tag{36}$$

As a result, it is now clear that in the presence of uniformly bounded gradient noise, sending the step size $\alpha_k$ to zero, and thus $\| s_k \|_2$ to zero, only bounds the difference of measured gradients $y_k = g(x_{k+1}) - g(x_k)$ within a ball with radius dependent on the gradient noise bound $\bar{\epsilon}_g$.

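As $g(x_k)$ and $g(x_{k+1})$ can be decomposed into smooth and noise components, so can $s_k^T y_k$, giving

$$s_k^T y_k = s_k^T \left( \nabla \phi(x_{k+1}) - \nabla \phi(x_k) \right) + s_k^T \left( e(x_{k+1}) - e(x_k) \right) \tag{37}$$

In conjunction with the Cauchy-Schwarz inequality, Assumption 1 implies that

$$\left| s_k^T \left( e(x_{k+1}) - e(x_k) \right) \right| \leq 2 \bar{\epsilon}_g \| s_k \|_2 \tag{38}$$

and so we have the lower and upper bounds

$$s_k^T \left( \nabla \phi(x_{k+1}) - \nabla \phi(x_k) \right) - 2 \bar{\epsilon}_g \| s_k \|_2 \leq s_k^T y_k \leq s_k^T \left( \nabla \phi(x_{k+1}) - \nabla \phi(x_k) \right) + 2 \bar{\epsilon}_g \| s_k \|_2 \tag{39}$$

From (39), it is clear that the bound on the effect of the noise grows linearly with $\| s_k \|_2$. However, by using the average Hessian $\bar{G}_k$ from (13) and applying Taylor’s theorem to $\nabla \phi$, it is also clear that

$$s_k^T \left( \nabla \phi(x_{k+1}) - \nabla \phi(x_k) \right) = s_k^T \bar{G}_k s_k \tag{40}$$

and so

$$s_k^T \bar{G}_k s_k - 2 \bar{\epsilon}_g \| s_k \|_2 \leq s_k^T y_k \leq s_k^T \bar{G}_k s_k + 2 \bar{\epsilon}_g \| s_k \|_2 \tag{41}$$

where the $s_k^T \bar{G}_k s_k$ term, which scales with $\| s_k \|_2^2$, is due to the true curvature of the smooth function $\phi$, and the $2 \bar{\epsilon}_g \| s_k \|_2$ term is due to noise. Thus, we have now illustrated an important general behaviour given Assumption 1. As $\| s_k \|_2$ dominates $\| s_k \|_2^2$ as $\| s_k \|_2 \to 0$, the effects of noise can dominate the true curvature for small $\| s_k \|_2$. Conversely, as $\| s_k \|_2^2$ dominates $\| s_k \|_2$ as $\| s_k \|_2 \to \infty$, the true curvature can dominate the effects of noise for large $\| s_k \|_2$.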
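To make the scaling argument above tangible, the sketch below compares a quadratic curvature term with a linear noise term as functions of the step length. It is illustrative only and assumes a curvature lower bound of the form `mu * ||s||^2` (for example, from strong convexity with constant `mu`) and a noise bound of the form `2 * eps_g * ||s||`; the names `mu`, `eps_g`, and both functions are hypothetical.

```python
def curvature_and_noise_terms(step_norm, mu, eps_g):
    """Return (assumed curvature lower bound, assumed noise upper bound)."""
    return mu * step_norm ** 2, 2.0 * eps_g * step_norm

def crossover_step_norm(mu, eps_g):
    """Step length at which the two bounds are equal: mu t^2 = 2 eps_g t."""
    return 2.0 * eps_g / mu

mu, eps_g = 2.0, 1e-3
t_star = crossover_step_norm(mu, eps_g)

# Below the crossover the noise bound is larger; above it curvature wins.
small = curvature_and_noise_terms(0.1 * t_star, mu, eps_g)
large = curvature_and_noise_terms(10.0 * t_star, mu, eps_g)
```

Under these assumptions, steps much shorter than `t_star` carry curvature information that can be swamped by noise, which motivates tying the penalty parameter to the step length.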

Given the above analysis, a simple strategy for choosing $\beta_k$ is to make $\beta_k$ grow linearly with $\| s_k \|_2$, such as

$$\beta_k = N_s \| s_k \|_2 \tag{42}$$

where $N_s > 0$ is a slope parameter. As $\| s_k \|_2 \to 0$, $\beta_k \to 0$, which is desirable because the effects of noise likely dominate as $\| s_k \|_2 \to 0$. Increasingly biasing the estimate of $H_{k+1}$ towards $H_k$ reduces how much $H_{k+1}$ can be corrupted by noise, and relaxes the SP-BFGS curvature condition (29), reducing the likelihood of needing to trigger a recovery procedure described in Section 4.4. Also, as shown earlier, because $\nabla \phi$ is continuous, the true difference of gradients is guaranteed to go to zero as $\| s_k \|_2$ approaches zero. As a result, without noise present, it is natural that $H_{k+1} \to H_k$ as $\| s_k \|_2 \to 0$. In the presence of noise, we wish for this behaviour to be preserved. Informally, one can intuitively think of wanting $H_k$ to behave as an approximate average inverse Hessian, and the averaging should remove the corrupting effects of noise, leaving $H_k$ to behave as if no noise were present. Similarly, as $\| s_k \|_2 \to \infty$, $\beta_k \to \infty$, and one recovers the BFGS update in the limit, which is desirable because the effects of noise are likely dominated by the true curvature as $\| s_k \|_2 \to \infty$. The slope parameter $N_s$ dictates how sensitive $\beta_k$ is to $\| s_k \|_2$, and should be set inversely proportional to the gradient noise level (i.e. $N_s \propto 1 / \bar{\epsilon}_g$). Intuitively, if the gradient noise level is low, $\beta_k$ should grow quickly with $\| s_k \|_2$, as the effect of noise diminishes quickly, and vice versa.

It may also be desirable to modify (42) to

$$\beta_k = \max \left\{ N_s \| s_k \|_2 - N_o, \; 0 \right\} \tag{43}$$

where $N_o \geq 0$ is an intercept parameter. The inclusion of $N_o$ allows one to stop updating $H_k$ if $\| s_k \|_2$ is sufficiently small. For example, it may be desirable to stop updating $H_k$ when one is very close to a stationary point, as gradient measurements are likely heavily dominated by noise.
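A rule of this kind is simple to express in code. The following sketch assumes the penalty parameter is computed as `max(slope * ||s|| - intercept, 0)`, which reduces to the purely linear rule when the intercept is zero; the function name `penalty_parameter` and the parameter names `slope` and `intercept` are hypothetical labels for the slope and intercept parameters.

```python
import numpy as np

def penalty_parameter(s, slope, intercept=0.0):
    """Penalty that grows linearly with the step length, clipped at zero.

    A value of 0.0 means the inverse Hessian approximation is not updated.
    """
    step_norm = float(np.linalg.norm(s))
    return max(slope * step_norm - intercept, 0.0)

s = np.array([3.0, 4.0])                                     # ||s|| = 5
beta_linear = penalty_parameter(s, slope=2.0)                # linear rule
beta_clipped = penalty_parameter(s, slope=2.0, intercept=12.0)
```

Here `beta_linear` grows with the step length, while `beta_clipped` is zero because the step is too short relative to the intercept, so the update would be skipped.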

4.3 Choosing The Step Size

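Classically, during BFGS updating $\alpha_k$ is chosen to satisfy the Armijo-Wolfe conditions. As function and gradient evaluations are not corrupted by noise in the classical BFGS setting, we can write the Armijo condition, also known as the sufficient decrease condition, as

$$\phi(x_k + \alpha_k p_k) \leq \phi(x_k) + c_1 \alpha_k \nabla \phi(x_k)^T p_k \tag{44}$$

and the Wolfe condition, also known as the curvature condition, as

$$\nabla \phi(x_k + \alpha_k p_k)^T p_k \geq c_2 \nabla \phi(x_k)^T p_k \tag{45}$$

where $0 < c_1 < c_2 < 1$, with well known choices being $c_1 = 10^{-4}$ and $c_2 = 0.9$. Observe that by adding $-\nabla \phi(x_k)^T p_k$ to both sides of (45) and multiplying by $\alpha_k$, (45) becomes

$$y_k^T s_k \geq (c_2 - 1) \, \alpha_k \nabla \phi(x_k)^T p_k \tag{46}$$

If $p_k$ is a descent direction then $\nabla \phi(x_k)^T p_k < 0$, and combined with $c_2 < 1$ and $\alpha_k > 0$, one sees that (46) implies

$$y_k^T s_k > 0 \tag{47}$$

so (45) effectively enforces (22) when no gradient noise is present.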

In the presence of noisy gradients, we argue that in general it no longer makes sense to enforce the Wolfe condition (45). In the presence of gradient noise, (45) becomes

$$\left( \nabla \phi(x_k + \alpha_k p_k) + e(x_k + \alpha_k p_k) \right)^T p_k \geq c_2 \left( \nabla \phi(x_k) + e(x_k) \right)^T p_k \tag{48}$$

which can behave erratically once the noise vectors $e(x_k + \alpha_k p_k)$ and $e(x_k)$ start to dominate the gradient of $\phi$. For example, the noise vectors $e(x_k + \alpha_k p_k)$ and $e(x_k)$ can cause both sides of (48) to erratically change sign, in which case whether or not the Wolfe condition is satisfied can be governed by randomness more than anything else.

We argue that because the SP-BFGS update allows one to relax the curvature condition based on the value of $\beta_k$ as shown in the SP-BFGS curvature condition (29), it is appropriate to drop the Wolfe condition entirely in the presence of gradient noise and instead employ only a version of the sufficient decrease condition when choosing $\alpha_k$. In the situation where gradient noise is present but function noise is not (i.e. $\epsilon(x) = 0$ in (31)), one can use a backtracking line search based on the sufficient decrease condition, which can guarantee convergence to a neighborhood of a stationary point of $\phi$. The situation where noise is present in both function and gradient evaluations is trickier. Similar to the approach presented in Section 4.2 of DFONoisyFunctionsQuasiNewton , one option is to use a backtracking line search with a relaxed sufficient decrease condition of the form

$$f(x_k + \alpha_k p_k) \leq f(x_k) + c_1 \alpha_k g(x_k)^T p_k + 2 \epsilon_A \tag{49}$$

where $\epsilon_A > 0$ is a noise tolerance parameter and $p_k = -H_k g(x_k)$. In Theorem 4.2 of DFONoisyFunctionsQuasiNewton , the authors show that under Assumptions 1 and 2, using the iteration (4) and a backtracking line search governed by the relaxed Armijo condition (49) with $\epsilon_A > \bar{\epsilon}_f$ guarantees linear convergence to a neighborhood of the global minimizer for strongly convex functions.
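A backtracking line search with a relaxed sufficient decrease test can be sketched as follows. This is a minimal illustration under assumptions, not the cited authors' code: it assumes the acceptance test `f(x + alpha p) <= f(x) + c1 * alpha * g(x)^T p + 2 * eps_a`, where `eps_a` is a noise tolerance, and halves the trial step on each failure. The function name, the parameter names, and the one-dimensional example are hypothetical.

```python
import numpy as np

def backtracking_relaxed_armijo(f, x, p, fx, slope_along_p, eps_a=0.0,
                                c1=1e-4, shrink=0.5, max_backtracks=30):
    """Return an accepted step size, or None if backtracking is exhausted.

    fx is the (possibly noisy) function value at x, and slope_along_p is
    the (possibly noisy) directional derivative g(x)^T p.
    """
    alpha = 1.0
    for _ in range(max_backtracks):
        trial = f(x + alpha * p)
        if trial <= fx + c1 * alpha * slope_along_p + 2.0 * eps_a:
            return alpha
        alpha *= shrink
    return None

# Hypothetical noise-free example: f(x) = x^2 with a steepest descent step.
f = lambda x: float(x @ x)
x = np.array([1.0])
grad = 2.0 * x
p = -grad
alpha = backtracking_relaxed_armijo(f, x, p, f(x), float(grad @ p))
```

In this example the unit step overshoots to `x = -1`, which gives no decrease, so the search halves the step once and accepts `alpha = 0.5`, landing on the minimizer. Setting `eps_a` above zero loosens the test so that bounded function noise cannot cause every trial step to be rejected.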

Assumption 2 (Uniform Function Noise Bound)

There exists a nonnegative constant $\bar{\epsilon}_f$ such that

$$| \epsilon(x) | \leq \bar{\epsilon}_f \quad \forall x \in \mathbb{R}^n \tag{50}$$

We agree with the authors of DFONoisyFunctionsQuasiNewton that it is possible to prove an extension of Theorem 4.2 of DFONoisyFunctionsQuasiNewton to a quasi-Newton iteration with positive definite $H_k$, and briefly outline why in Section 5.2. A quasi-Newton extension of Theorem 4.2 of DFONoisyFunctionsQuasiNewton is relevant to SP-BFGS updating because, as we will formally see in Section 5.1, control of $\beta_k$ makes it possible to uniformly bound the minimum and maximum eigenvalues of $H_k$.

4.4 Failed SP-BFGS Curvature Condition Recovery Procedure

In the classical BFGS scenario where no gradient noise is present, the curvature condition (22) may fail if $\alpha_k$ is not chosen based on the Armijo-Wolfe conditions and $\phi$ is not strongly convex. One of the most common strategies to handle this scenario is to skip the BFGS update (i.e. set $H_{k+1} = H_k$) when this occurs, which corresponds to an SP-BFGS update with $\beta_k = 0$. However, this simple strategy has the downside of potentially producing poor inverse Hessian approximations if updates are skipped too frequently.

Conditionally skipping BFGS updates is an option in the presence of noisy gradients as well. In addition to skipping BFGS updates when (22) fails, as described above, another course of action sometimes recommended in the presence of noise is to replace (22) with

$$s_k^T y_k > \epsilon \tag{51}$$

where $\epsilon$ is a small positive constant, and skip the BFGS update if (51) is not satisfied. This strategy may be somewhat effective if $\epsilon$ is large, but reduces back to the initial update skipping approach as $\epsilon \to 0$. A similar strategy (e.g. see Section 3.3.3 of DFONoisyFunctionsQuasiNewton ) is to replace (22) with

$$s_k^T y_k \geq \epsilon \| s_k \|_2 \| y_k \|_2 \tag{52}$$

and skip the BFGS update if (52) is not satisfied for some $\epsilon \in (0, 1)$. Notice that none of the aforementioned update skipping strategies allow for curvature information to be incorporated if the measured curvature is negative.

Unlike in the classical BFGS scenario, with SP-BFGS updating, curvature information can be incorporated even if the measured curvature is negative by decreasing $\beta_k$ towards zero. In addition to having the option of conditionally skipping updates (i.e. setting $\beta_k = 0$), one can also alternatively relax the SP-BFGS curvature condition by decreasing $\beta_k$ towards zero if (29) fails. Since $\beta_k$ is chosen after $s_k$ and $y_k$ are fixed in Algorithm 1, one can solve for $\beta_k$ values satisfying (29) when $s_k^T y_k < 0$, yielding

$$\beta_k = \frac{N_k}{-s_k^T y_k}, \qquad N_k \in (0, 1) \tag{53}$$

for all , assuming that and . Note that if and and (29) fails, then the measured curvature must be negative. The choice of determines how much to shrink compared to the largest value of that still satisfies (29) and thus guarantees the positive definiteness of . Hence, if the value of produced by (42) or (43) is too large and (29) fails, one can choose an acceptable value of by using (53) and selecting a . Thus, instead of skipping the update (i.e. setting ) if (29) fails, one can reduce towards , which has the effect of reducing the magnitude of the update by increasing how much is biased towards . An approach based on reducing towards never entirely skips incorporating measured curvature information, even if the measured curvature information is negative, but instead weights how heavily the measured curvature information affects .
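The recovery step described above can be sketched in a few lines. The code below is a hedged illustration that assumes the relaxed curvature condition reads `s^T y > -1/beta` and that a failing penalty is replaced by `shrink / (-s^T y)` with `shrink` in (0, 1); the function name `recover_penalty` and the parameter name `shrink` are hypothetical, and skipping the update when the measured curvature is exactly zero is a choice made here for simplicity.

```python
import numpy as np

def recover_penalty(beta, s, y, shrink=0.5):
    """Return a penalty value for which s^T y > -1/beta is satisfied."""
    sy = float(np.dot(s, y))
    if beta == 0.0:
        return beta                      # update is skipped; nothing to fix
    threshold = 0.0 if np.isinf(beta) else -1.0 / beta
    if sy > threshold:
        return beta                      # condition already satisfied
    if sy < 0.0:
        # Largest admissible value is 1 / (-sy); take a fraction of it.
        return shrink / (-sy)
    return 0.0                           # sy == 0 with infinite beta: skip

s = np.array([1.0, 0.0])
y = np.array([-0.5, 0.3])                # measured curvature s^T y = -0.5

beta_ok = recover_penalty(1.0, s, y)     # -0.5 > -1.0, kept as is
beta_fixed = recover_penalty(4.0, s, y)  # -0.5 <= -0.25, so reduced
```

After recovery, `beta_fixed` satisfies the relaxed condition, so the negative curvature measurement is still incorporated, only with reduced weight.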

5 Convergence of SP-BFGS

In this section, we discuss relevant theoretical and convergence properties of SP-BFGS. First, it is important to note that for specific choices of the sequence of penalty parameters $\{ \beta_k \}$, known convergence results already exist. Specifically, if $\beta_k = \infty$ for all $k$, then SP-BFGS updating is equivalent to BFGS updating. Although there are not many works on the convergence properties of BFGS updating in the presence of uniformly bounded noise, such as in Assumptions 1 and 2, in doi:10.1137/19M1240794 the authors provide convergence results for a BFGS variant that employs an Armijo-Wolfe line search and lengthens the differencing interval in the presence of uniformly bounded function and gradient noise. At the other extreme, if $\beta_k = 0$ for all $k$, then one obtains a scaled gradient method for general $H_0$, and this becomes the gradient method when $H_0 = I$. Convergence analyses of the gradient method in the presence of uniformly bounded function and gradient noise for both a fixed step size and backtracking line search are provided in Section 4 of DFONoisyFunctionsQuasiNewton .

Given that perhaps the defining feature of SP-BFGS updating is the ability to vary $\beta_k$ at each iteration, we focus our attention on how varying $\beta_k$ can influence convergence behaviour in this section. As a result, most of the ensuing analysis centers around situations where the condition number of $H_k$ can be bounded. We do not employ the approach of bounding the cosine of the angle between the descent direction and the negative gradient above zero, and then showing that the condition number of $H_k$ is bounded, which is similar to the approaches taken when no noise is present in 10.2307/2157646 ; 10.2307/2157680 , and when noise is present in doi:10.1137/19M1240794 . Although it may be possible to apply the strategies employed in 10.2307/2157646 ; 10.2307/2157680 ; doi:10.1137/19M1240794 to establish convergence results for SP-BFGS, such an analysis is complicated enough that it is beyond the scope of this initial paper.

5.1 The Influence of $\beta_k$ on $H_{k+1}$

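We first examine how $\beta_k$ determines how much the maximum and minimum eigenvalues $\lambda_{\max}(H_{k+1})$ and $\lambda_{\min}(H_{k+1})$ can change. In what follows, $\sigma(A)$ denotes the set of eigenvalues of the matrix $A$. We provide upper bounds on $\lambda_{\max}(H_{k+1})$ and $\lambda_{\max}(B_{k+1})$ via Theorem 5.1. As $B_{k+1} = H_{k+1}^{-1}$, $\lambda_{\min}(H_{k+1}) = 1 / \lambda_{\max}(B_{k+1})$, and putting an upper bound on $\lambda_{\max}(B_{k+1})$ is equivalent to putting a lower bound on $\lambda_{\min}(H_{k+1})$.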

Theorem 5.1 (Eigenvalue Upper Bounds)

Proof

See Appendix D. ∎

With Theorem 5.1 in hand, we now formally see that when , as increases from to , an upper bound on interpolates between and , and an upper bound on interpolates between and . Similarly, when , as increases from towards , an upper bound on interpolates between between and , and an upper bound on interpolates between and . Standard BFGS updating corresponds to setting for all , and as this is the largest possible value of , one can no longer formally guarantee that and are bounded from above at each iteration because the measured curvature may become arbitrarily close to zero due to the effects of noise. The key takeaway is that upper bounds on and can be tightened arbitrarily close to and by shrinking towards zero, as , , and are fixed before the value of is chosen in Algorithm 1.

Thus, if one must enforce a bound of the form or a bound of the form for all , where and are positive constants, there exist nontrivial sequences of sufficiently small with that ensure the bounds hold for all . To see this, observe that the interval can be partitioned into subintervals corresponding to each iteration, and the sum of the subintervals cannot exceed , which can be guaranteed by assigning a small enough value of to each subinterval, as this guarantees the maximum eigenvalue does not grow too much at each iteration . Furthermore, although there clearly exist sequences of that ensure the bounds hold for all that satisfy for all , where is a positive integer, there also exist sequences of that ensure the bounds hold for all where instead only approaches zero in the limit .
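To make the partition argument concrete, the following minimal Python sketch splits a fixed growth budget into per-iteration allowances that are all positive, shrink towards zero only in the limit, and never sum to more than the budget. The names `budget`, `ratio`, and `count` are illustrative assumptions rather than quantities defined in this paper, and the map from an allowance to a penalty parameter value (which would follow from Theorem 5.1) is deliberately left out.

```python
def geometric_allowances(budget, ratio, count):
    """Return `count` positive allowances whose infinite sum equals `budget`.

    The k-th allowance is budget * (1 - ratio) * ratio**k, so the partial
    sums equal budget * (1 - ratio**K) and stay below `budget` for every K.
    """
    if not (0.0 < ratio < 1.0) or budget <= 0.0:
        raise ValueError("need budget > 0 and 0 < ratio < 1")
    return [budget * (1.0 - ratio) * ratio ** k for k in range(count)]


# Hypothetical numbers: the eigenvalue bound may grow by at most 9.0 in total.
allowances = geometric_allowances(budget=9.0, ratio=0.5, count=50)
total_growth = sum(allowances)
```

Any other positive summable sequence would serve equally well; the geometric choice is used here only because its partial sums are easy to verify.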

5.2 Minimization Of Strongly Convex Functions

Having established that SP-BFGS iterations can maintain bounds on the maximum and minimum eigenvalues of the approximate inverse Hessians via sufficiently small choices of , we now consider minimizing strongly convex functions in the presence of bounded noise. We introduce Assumption 3, and the notation to denote the argument of the unique minimum of , and to denote the minimum.

Assumption 3 (Strong Convexity of )

The function is twice continuously differentiable and there exist positive constants such that

| (56) |

We also state a general result in Lemma 2 that establishes a region where may not provide a descent direction with respect to due to noise dominating gradient measurements. Outside of this region, is guaranteed to provide a descent direction for .

Lemma 2 (Region Where Gradient Noise Can Dominate )

Proof

See Appendix E. ∎

Applying Lemma 2 in the context of SP-BFGS updating makes several convergence properties clear. First, if one chooses such that for all (i.e. the eigenvalues of the approximate inverse Hessian are uniformly bounded from above and below for all ), by Lemma 2 it becomes clear that in the presence of gradient noise and absence of function noise (i.e. in (31)), the iterates of SP-BFGS with a backtracking line search based on (49) with in the worst case approach as . To see this, observe that is guaranteed to provide a descent direction outside of and the sufficient decrease condition guarantees that is not too large, while backtracking guarantees that is not too small. For more background, see Chapter 3 of Nocedal2006 .

Second, if both function and gradient noise are present, and one again chooses such that the bounds hold for all , under additional conditions a worst case analysis in Theorem 5.2 shows that an approach using a sufficiently small fixed step size approaches at a linear rate as . For a general quasi-Newton iteration of the form

| (58) |

with constant step size and , Theorem 5.2 establishes linear convergence to the region where noise can dominate (i.e. in Lemma 2).

Theorem 5.2 (Linear Convergence For Sufficiently Small Fixed )

Suppose that Assumptions 1 and 3 hold. Further suppose that is symmetric positive definite and bounded by , where . Let be such that the inequality

| (59) |

is true for all . Let be the iterates generated by (58), where the constant step size satisfies

| (60) |

Then for all such that , one has the Q-linear convergence result

| (61) |

Similarly, for any , one has the R-linear convergence result

| (62) |

Proof

See Appendix F. ∎
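As an informal illustration of the behaviour described by Theorem 5.2, the Python sketch below runs a fixed step iteration of the form (58) on a small strongly convex quadratic with bounded gradient noise. Everything concrete here is an assumption made for illustration: the diagonal Hessian `eigs`, the diagonal matrix `h_diag` standing in for the inverse Hessian approximation, the step size `1 / L`, and the noise model. None of these are taken from conditions (59) or (60).

```python
import math
import random


def noisy_gradient(x, eigs, eps_g, rng):
    """Gradient of 0.5 * sum(a_i * x_i**2) plus noise with Euclidean norm <= eps_g."""
    d = [rng.gauss(0.0, 1.0) for _ in x]
    scale = eps_g * rng.random() / math.sqrt(sum(v * v for v in d))
    return [a * xi + scale * di for a, xi, di in zip(eigs, x, d)]


def fixed_step_quasi_newton(x0, eigs, h_diag, alpha, eps_g, iters, rng):
    """Iterate x <- x - alpha * H * g with a fixed diagonal H and noisy gradient g."""
    x = list(x0)
    for _ in range(iters):
        g = noisy_gradient(x, eigs, eps_g, rng)
        x = [xi - alpha * h * gi for xi, h, gi in zip(x, h_diag, g)]
    return x


eigs = [1.0, 10.0, 100.0]      # hypothetical Hessian eigenvalues
h_diag = [1.0, 1.0, 1.0]       # hypothetical bounded inverse Hessian approximation
alpha = 1.0 / max(eigs)        # illustrative step size, not the bound in (60)
eps_g = 1e-3                   # hypothetical gradient noise bound
x_final = fixed_step_quasi_newton([1.0, 1.0, 1.0], eigs, h_diag, alpha,
                                  eps_g, 2000, random.Random(0))
true_grad_norm = math.sqrt(sum((a * xi) ** 2 for a, xi in zip(eigs, x_final)))
```

With these hypothetical values, the true gradient norm at the final iterate settles at roughly the size of the noise bound `eps_g` instead of converging to zero, which is the qualitative picture of a neighbourhood where noise can dominate.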

Theorem 5.2 can be considered a quasi-Newton extension of Theorem 4.1 from DFONoisyFunctionsQuasiNewton , which lays the foundation for Theorem 4.2 from DFONoisyFunctionsQuasiNewton . To extend the convergence result of Theorem 5.2 to the backtracking line search approach based on (49), see that (60), (115), and Assumption 2 combined imply

| (63) |

and so if , comparing (49) and (63) makes it clear that (49) will be satisfied for sufficiently small . Hence, the backtracking line search always finds an satisfying (49). For brevity, we defer a full, rigorous quasi-Newton extension of Theorem 4.2 of DFONoisyFunctionsQuasiNewton to future work and instead investigate the performance of an approach based on (49) via numerical experiments in Section 6.

6 Numerical Experiments

In this section, we test instances of Algorithm 1 on a diverse set of 33 test problems for unconstrained minimization. The set of test problems includes convex and nonconvex functions, and well known pathological functions such as the Rosenbrock function 10.1093/comjnl/3.3.175 and its relatives. Described in Section 6.1, the first test problem is similar to the one used in the numerical experiments section of doi:10.1137/19M1240794 , and involves an ill conditioned quadratic function. The other 32 problems are selected problems from the CUTEst test problem set gould-orban-cutest , and are used for tests in Section 6.2. Code for running these numerical experiments was written in the Julia programming language doi:10.1137/141000671 , and utilizes the NLPModels.jl orban-siqueira-nlpmodels-2020 , CUTEst.jl orban-siqueira-cutest-2020 , and Distributions.jl 2019arXiv190708611B ; Distributions.jl-2019 packages. In all the numerical experiments that follow, noise was added to function evaluations by uniformly sampling from the interval , and noise was added to the gradient evaluations by uniformly sampling from the closed Euclidean ball .
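The noise model described above can be sketched in a few lines. The experiments in this paper were run in Julia; the Python fragment below is only an illustration, and it assumes the function noise interval is symmetric about zero with half-width `eps_f` and that the ball has radius `eps_g` and is centred at the origin. The function names are hypothetical.

```python
import math
import random


def sample_function_noise(eps_f, rng):
    """Draw scalar noise uniformly from the interval [-eps_f, eps_f]."""
    return rng.uniform(-eps_f, eps_f)


def sample_gradient_noise(n, eps_g, rng):
    """Draw a vector uniformly from the closed Euclidean ball of radius eps_g in R^n."""
    # A normalized Gaussian vector is uniformly distributed on the unit sphere.
    direction = [rng.gauss(0.0, 1.0) for _ in range(n)]
    norm = math.sqrt(sum(d * d for d in direction))
    # Scaling the radius by U**(1/n) makes the density uniform over the volume.
    radius = eps_g * rng.random() ** (1.0 / n)
    return [radius * d / norm for d in direction]


rng = random.Random(0)
f_noise = sample_function_noise(0.5, rng)
g_noise = sample_gradient_noise(5, 1e-2, rng)
```

Sampling the radius as `U**(1/n)` rather than `U` matters in higher dimensions, since most of the volume of a ball lies near its boundary.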

6.1 Ill Conditioned Quadratic Function With Additive Gradient Noise Only

The first test problem is strongly convex and consists of the -dimensional quadratic function given by

| (64) |

where the eigenvalues of are . Consequently, the strong convexity parameter is , the Lipschitz constant is , and the condition number of the Hessian is . For this test problem, no noise was added to the function evaluations (i.e. in (31)), and . As a result, in this scenario from Lemma 2 with (i.e. the smallest possible ) becomes

| (65) |

Following the discussion in Section 4.2, we set the penalty parameters via the formula , which corresponds to a choice of in (42). The term was added as a small perturbation to provide numerical stability. The step size was chosen using a backtracking line search based on the sufficient decrease condition (49) with , where is defined by (32), , and . At each iteration, backtracking started from the initial step size , decreasing by a factor of each time the sufficient decrease condition failed. If the backtracking line search exceeded the maximum number of backtracks, we set . However, the maximum number of backtracks was never exceeded when performing experiments with this first test problem.
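The backtracking procedure just described can be sketched as follows. This is a minimal Python illustration under stated assumptions: the test `f(x + alpha * d) <= f(x) + c1 * alpha * g^T d + slack` is a standard noise-relaxed sufficient decrease condition and may differ in detail from (49), and the default values of `alpha0`, `shrink`, `c1`, `slack`, and `max_backtracks` are placeholders rather than the settings used in the experiments. The sketch returns `None` when the backtrack limit is exceeded and leaves the fallback to the caller.

```python
def backtracking_line_search(f, x, fx, g, d, alpha0=1.0, shrink=0.5,
                             c1=1e-4, slack=0.0, max_backtracks=30):
    """Return a step size passing the relaxed sufficient decrease test, or None."""
    slope = sum(gi * di for gi, di in zip(g, d))  # directional derivative g^T d
    alpha = alpha0
    # One trial at alpha0, then at most `max_backtracks` reductions.
    for _ in range(max_backtracks + 1):
        trial = [xi + alpha * di for xi, di in zip(x, d)]
        if f(trial) <= fx + c1 * alpha * slope + slack:
            return alpha
        alpha *= shrink
    return None


def sum_of_squares(v):
    return sum(vi * vi for vi in v)


# Steepest descent direction from x = (1, 1) on f(v) = v_1^2 + v_2^2.
x = [1.0, 1.0]
g = [2.0, 2.0]
d = [-2.0, -2.0]
step = backtracking_line_search(sum_of_squares, x, sum_of_squares(x), g, d)
```

In this example the first trial overshoots to the point (-1, -1), which does not decrease the function, so the step is halved once and the search returns `0.5`.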

Algorithm 1 was initialized using the matrix and starting point

| (66) |

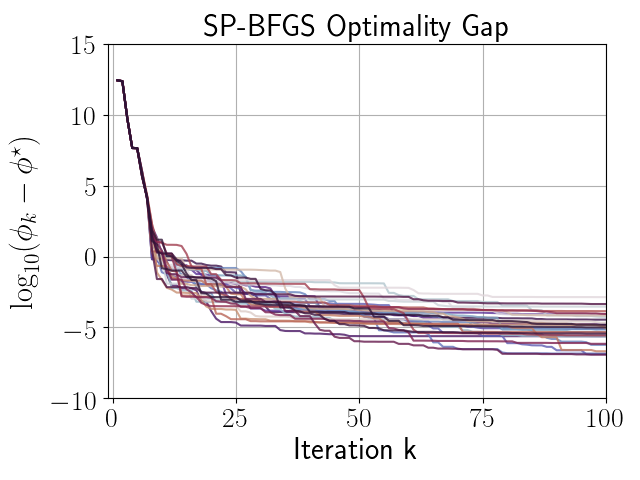

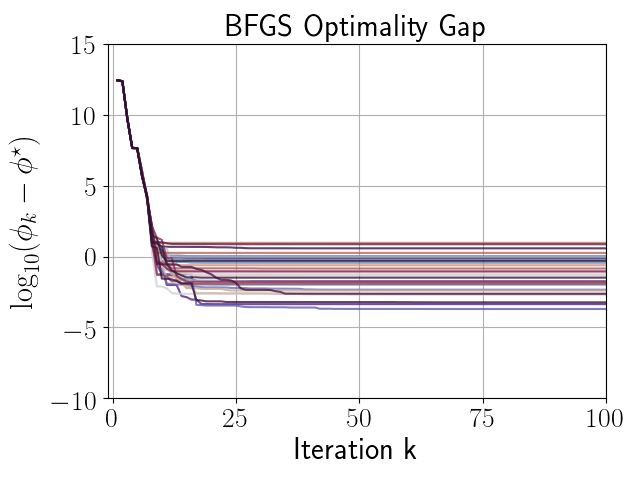

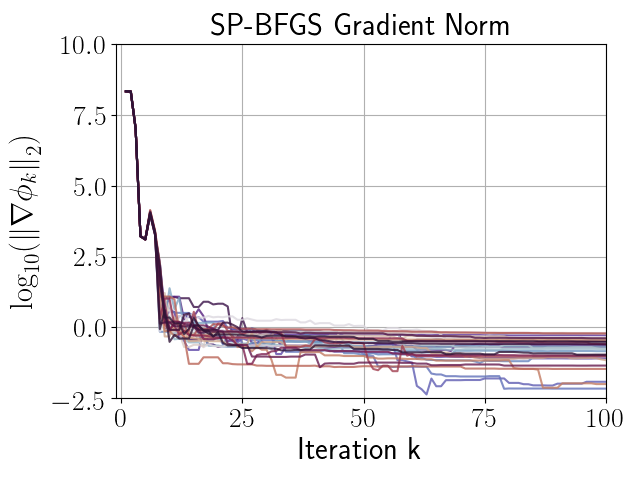

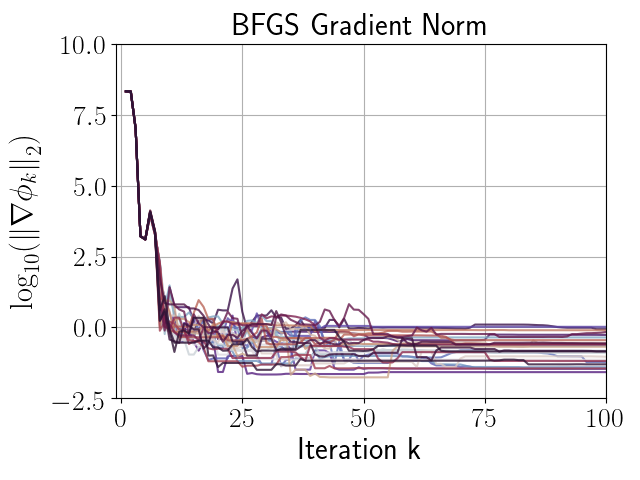

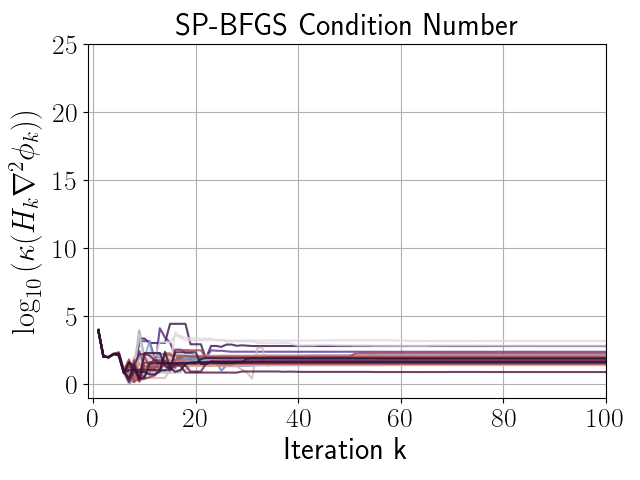

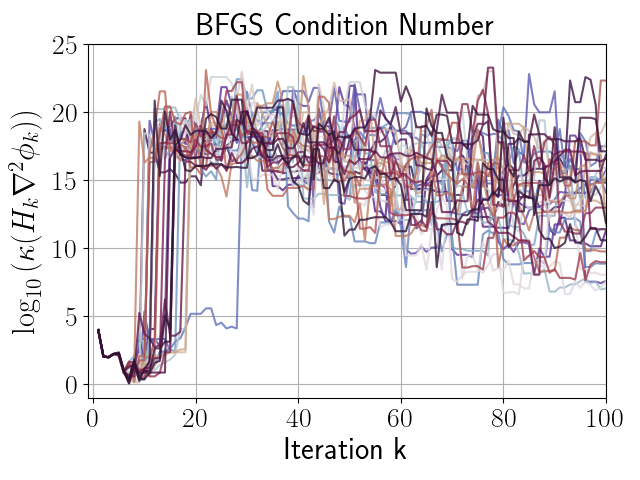

given in (66), with . Figures 3, 3, and 3 compare the performance of independent runs of SP-BFGS vs. BFGS over a fixed budget of iterations. The relevant curvature condition failed an average of total iterations per BFGS run, and total iterations per SP-BFGS run. For the sake of comparability, both SP-BFGS and BFGS skipped the update if the relevant curvature condition failed. Observe that SP-BFGS reduces the objective function value by several more orders of magnitude compared to BFGS on average, and maintains significantly better inverse Hessian approximations than BFGS in the presence of gradient noise.
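For readers who want to see the skipping rule in code, the Python sketch below implements the standard BFGS inverse Hessian update together with a curvature check. It covers only the classical BFGS case, where the update is skipped unless the inner product of `s` and `y` exceeds a tolerance; the SP-BFGS update (27) and its curvature condition (29) are not reproduced here. The function name and the `tol` parameter are illustrative assumptions.

```python
def bfgs_inverse_update(H, s, y, tol=0.0):
    """Return the BFGS update of a symmetric inverse Hessian approximation H.

    Implements (I - rho*s*y^T) H (I - rho*y*s^T) + rho*s*s^T with rho = 1/(s^T y),
    and returns H unchanged when the curvature condition s^T y > tol fails.
    """
    n = len(s)
    sy = sum(si * yi for si, yi in zip(s, y))
    if sy <= tol:
        return H  # curvature condition failed: skip the update
    rho = 1.0 / sy
    Hy = [sum(H[i][j] * y[j] for j in range(n)) for i in range(n)]
    yHy = sum(yi * hyi for yi, hyi in zip(y, Hy))
    coeff = rho * rho * yHy + rho
    return [[H[i][j] - rho * (s[i] * Hy[j] + Hy[i] * s[j]) + coeff * s[i] * s[j]
             for j in range(n)] for i in range(n)]


identity = [[1.0, 0.0], [0.0, 1.0]]
H_new = bfgs_inverse_update(identity, s=[1.0, 0.0], y=[2.0, 1.0])
# The updated matrix satisfies the secant condition H_new * y = s.
secant_lhs = [sum(H_new[i][j] * yj for j, yj in enumerate([2.0, 1.0])) for i in range(2)]
H_skipped = bfgs_inverse_update(identity, s=[1.0, 0.0], y=[-1.0, 0.0])
```

When the gradient difference `y` is corrupted by noise, the inner product of `s` and `y` can be small or negative even for a convex objective, which is why the number of skipped updates is reported alongside the results.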

6.2 CUTEst Test Problems With Various Additive Noise Combinations

The remaining test problems were selected from the CUTEst problem set, the successor of CUTEr gould-orban-toint-cuter . At the time of writing, SIF files and descriptions of all test problems can be found at https://www.cuter.rl.ac.uk/Problems/mastsif.shtml. As a brief summary, some of the problems can be interpreted as least squares type problems (e.g. ARGTRGLS), some of the problems are ill conditioned or singular type problems (e.g. BOXPOWER), some of the problems are well known nonlinear optimization test problems (e.g. ROSENBR) or extensions of them (e.g. ROSENBRTU, SROSENBR), and some of the problems come from real applications (e.g. COATING, HEART6LS, VIBRBEAM). As shown in Tables 2 and 3, the selected CUTEst test problems vary in size from -dimensional to -dimensional.

Using these CUTEst test problems and a fixed budget of objective function evaluations (not iterations) per test, we tested the performance of SP-BFGS compared to BFGS with various combinations of function and gradient noise levels and . For all the experiments in Tables 1, 2, and 3, as well as the additional experiments in Appendix G, both SP-BFGS and BFGS skipped updating if the curvature condition failed. In Tables 1, 2, and 4, the SP-BFGS penalty parameter was set as , as we found heuristically that setting works well in practice for a variety of problems. With regard to the backtracking line search based on (49), we set , , , , and the maximum number of backtracks as . We define as a measure of the optimality gap, and use to denote the smallest value of the true function measured at any point during an algorithm run. The true minimum value for each CUTEst problem was obtained from its SIF file. The sample variance (i.e. the variance with Bessel’s correction) is denoted by .
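The summary statistics reported in the tables can be computed along the following lines. This Python sketch rests on two assumptions that should be checked against the definitions in the text: that the optimality gap is the difference between the best true function value found in a run and the true minimum, and that the statistics are taken over the base 10 logarithm of that gap. The function name and the dictionary keys are hypothetical.

```python
import math
import statistics


def summarize_log_gaps(best_values, true_minimum):
    """Summarize log10 optimality gaps over independent runs.

    Each entry of `best_values` must exceed `true_minimum`, otherwise the
    logarithm is undefined. `statistics.variance` divides by n - 1, which is
    the sample variance with Bessel's correction.
    """
    logs = [math.log10(v - true_minimum) for v in best_values]
    return {
        "mean": statistics.mean(logs),
        "median": statistics.median(logs),
        "min": min(logs),
        "max": max(logs),
        "sample_variance": statistics.variance(logs),
    }


# Three hypothetical runs on a problem whose true minimum is zero.
summary = summarize_log_gaps([1e-2, 1e-4, 1e-6], true_minimum=0.0)
```

Working on a logarithmic scale keeps a single poor run from dominating the mean when the gaps span many orders of magnitude.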

Table 1 compares the performance of SP-BFGS vs. BFGS on the Rosenbrock function (i.e. ROSENBR) corrupted by different combinations of function and gradient noise of varying orders of magnitude. Observe that SP-BFGS outperforms BFGS with respect to the mean and median optimality gap for every noise combination in Table 1, sometimes by several orders of magnitude. Tables 2 and 3 compare the performance of SP-BFGS vs. BFGS on the CUTEst test problems with both function and gradient noise present. Gradient noise was generated using , and function noise was generated using , both to ensure that noise does not initially dominate function or gradient evaluations. Note that as the noise in these numerical experiments is additive, the signal to noise ratio of gradient measurements decreases as a stationary point is approached. Overall, SP-BFGS outperforms BFGS on approximately of the CUTEst problems with both function and gradient noise present, and performs at least as well as BFGS on approximately of these problems. Referring to Appendix G, with only gradient noise present, these percentages become and respectively.

| Mean() | Median() | Min() | Max() | Mean() | |||

| SPBFGS With No Function Noise | |||||||

| -1.4E+01 | -1.4E+01 | -1.8E+01 | -1.2E+01 | 1.4E+00 | 114 | ||

| -1.3E+01 | -1.3E+01 | -1.5E+01 | -8.3E+00 | 2.9E+00 | 104 | ||

| -2.1E+00 | -1.8E+00 | -5.7E+00 | -9.2E-01 | 9.4E-01 | 153 | ||

| 3.5E-02 | 2.9E-01 | -1.9E+00 | 7.9E-01 | 3.9E-01 | 90 | ||

| BFGS With No Function Noise | |||||||

| -1.1E+01 | -1.0E+01 | -1.4E+01 | -8.8E+00 | 1.8E+00 | 263 | ||

| -6.6E+00 | -6.6E+00 | -9.6E+00 | -4.3E+00 | 1.6E+00 | 281 | ||

| -1.5E+00 | -1.2E+00 | -3.3E+00 | -5.4E-01 | 6.3E-01 | 279 | ||

| 1.1E-01 | 4.3E-01 | -2.4E+00 | 6.5E-01 | 4.7E-01 | 373 | ||

| SPBFGS With Low Function Noise Level | |||||||

| -1.4E+01 | -1.4E+01 | -1.5E+01 | -1.3E+01 | 1.9E-01 | 1980 | ||

| -1.0E+01 | -1.0E+01 | -1.2E+01 | -8.0E+00 | 1.3E+00 | 1964 | ||

| -2.1E+00 | -2.0E+00 | -3.6E+00 | -1.6E+00 | 2.0E-01 | 1759 | ||

| 8.7E-02 | 3.1E-01 | -2.2E+00 | 9.1E-01 | 4.5E-01 | 1720 | ||

| BFGS With Low Function Noise Level | |||||||

| -1.1E+01 | -1.2E+01 | -1.5E+01 | -8.7E+00 | 1.7E+00 | 1980 | ||

| -6.6E+00 | -6.5E+00 | -8.8E+00 | -4.7E+00 | 1.2E+00 | 1975 | ||

| -1.2E+00 | -1.1E+00 | -1.8E+00 | -8.6E-01 | 5.9E-02 | 1936 | ||

| 9.5E-02 | 5.1E-01 | -3.1E+00 | 9.2E-01 | 8.5E-01 | 1934 | ||

| SPBFGS With Medium Function Noise Level | |||||||

| -1.4E+01 | -1.4E+01 | -1.5E+01 | -1.3E+01 | 3.4E-01 | 1981 | ||

| -1.0E+01 | -1.0E+01 | -1.3E+01 | -7.5E+00 | 1.5E+00 | 1977 | ||

| -3.4E+00 | -3.0E+00 | -7.5E+00 | -2.0E+00 | 1.7E+00 | 1934 | ||

| -1.8E-01 | 1.7E-01 | -3.7E+00 | 7.4E-01 | 1.0E+00 | 1890 | ||

| BFGS With Medium Function Noise Level | |||||||

| -1.1E+01 | -1.1E+01 | -1.4E+01 | -8.5E+00 | 1.4E+00 | 1981 | ||

| -6.7E+00 | -6.7E+00 | -1.0E+01 | -4.9E+00 | 1.7E+00 | 1979 | ||

| -1.8E+00 | -1.5E+00 | -3.8E+00 | -9.1E-01 | 6.3E-01 | 1961 | ||

| 1.4E-01 | 3.9E-01 | -2.3E+00 | 8.5E-01 | 6.1E-01 | 1953 | ||

| SPBFGS With High Function Noise Level | |||||||

| -1.4E+01 | -1.4E+01 | -1.5E+01 | -1.3E+01 | 2.2E-01 | 1980 | ||

| -1.0E+01 | -1.0E+01 | -1.2E+01 | -7.3E+00 | 9.6E-01 | 1978 | ||

| -3.1E+00 | -2.8E+00 | -5.1E+00 | -1.7E+00 | 8.9E-01 | 1969 | ||

| -2.2E-01 | 1.1E-02 | -1.9E+00 | 8.4E-01 | 7.6E-01 | 1943 | ||

| BFGS With High Function Noise Level | |||||||

| -1.1E+01 | -1.1E+01 | -1.3E+01 | -9.0E+00 | 1.4E+00 | 1980 | ||

| -6.7E+00 | -6.4E+00 | -9.1E+00 | -5.0E+00 | 1.5E+00 | 1980 | ||

| -1.8E+00 | -1.4E+00 | -5.3E+00 | -8.2E-01 | 1.1E+00 | 1973 | ||

| -2.9E-02 | 3.7E-01 | -2.1E+00 | 8.9E-01 | 7.9E-01 | 1965 | ||

SP-BFGS With Function And Gradient Noise

| Problem | Dim. | Mean() | Median() | Min() | Max() | |
|---|---|---|---|---|---|---|
| ARGTRGLS | 200 | 4.5E-02 | 4.8E-02 | 1.7E-02 | 8.0E-02 | 2.5E-04 |
| ARWHEAD | 500 | -2.5E+00 | -2.5E+00 | -2.6E+00 | -2.5E+00 | 2.6E-04 |
| BEALE | 2 | -1.1E+01 | -1.1E+01 | -1.4E+01 | -9.8E+00 | 8.0E-01 |
| BOX3 | 3 | -7.1E+00 | -6.8E+00 | -8.9E+00 | -6.5E+00 | 6.2E-01 |
| BOXPOWER | 100 | -3.8E+00 | -3.8E+00 | -4.2E+00 | -3.5E+00 | 5.0E-02 |
| BROWNBS | 2 | -1.2E+00 | -7.4E-01 | -5.2E+00 | 2.0E+00 | 3.5E+00 |
| BROYDNBDLS | 50 | -6.2E+00 | -6.2E+00 | -6.4E+00 | -6.0E+00 | 6.9E-03 |
| CHAINWOO | 100 | 1.7E+00 | 1.8E+00 | 7.7E-03 | 2.1E+00 | 1.6E-01 |
| CHNROSNB | 50 | -4.2E+00 | -4.0E+00 | -5.5E+00 | -3.6E+00 | 3.8E-01 |
| COATING | 134 | -2.7E-02 | -1.2E-02 | -1.3E-01 | 9.6E-02 | 3.5E-03 |
| COOLHANSLS | 9 | -1.2E+00 | -1.1E+00 | -1.6E+00 | -8.7E-01 | 1.7E-02 |
| CUBE | 2 | -5.2E+00 | -4.7E+00 | -8.9E+00 | -3.1E+00 | 2.2E+00 |
| CYCLOOCFLS | 20 | -8.4E+00 | -8.5E+00 | -9.1E+00 | -6.9E+00 | 3.0E-01 |
| EXTROSNB | 10 | -5.2E+00 | -5.2E+00 | -5.2E+00 | -5.1E+00 | 1.3E-03 |
| FMINSRF2 | 64 | -8.7E+00 | -8.7E+00 | -8.7E+00 | -8.6E+00 | 2.6E-04 |
| GENHUMPS | 5 | 4.1E-02 | 2.4E-01 | -2.9E+00 | 7.8E-01 | 4.5E-01 |
| GENROSE | 5 | -9.4E+00 | -9.3E+00 | -9.9E+00 | -9.1E+00 | 5.6E-02 |
| HEART6LS | 6 | -3.5E-01 | 2.7E-01 | -2.0E+00 | 1.2E+00 | 1.5E+00 |
| HELIX | 3 | -6.1E+00 | -6.0E+00 | -7.4E+00 | -4.5E+00 | 5.0E-01 |
| MANCINO | 30 | -2.1E+00 | -2.1E+00 | -2.5E+00 | -1.9E+00 | 1.2E-02 |
| METHANB8LS | 31 | -3.8E+00 | -3.9E+00 | -4.2E+00 | -3.4E+00 | 3.6E-02 |
| MODBEALE | 200 | 1.1E+00 | 1.0E+00 | 4.7E-01 | 1.8E+00 | 1.8E-01 |
| NONDIA | 10 | -4.2E-03 | -4.3E-03 | -4.4E-03 | -3.2E-03 | 9.1E-08 |
| POWELLSG | 4 | -6.1E+00 | -6.0E+00 | -7.9E+00 | -4.6E+00 | 9.1E-01 |
| POWER | 10 | -3.9E+00 | -3.8E+00 | -4.9E+00 | -3.3E+00 | 1.9E-01 |
| ROSENBR | 2 | -8.6E+00 | -8.5E+00 | -1.1E+01 | -6.3E+00 | 1.8E+00 |
| ROSENBRTU | 2 | -1.8E+01 | -1.8E+01 | -2.0E+01 | -1.7E+01 | 4.0E-01 |
| SBRYBND | 500 | 3.9E+00 | 3.9E+00 | 3.9E+00 | 3.9E+00 | 9.2E-06 |
| SINEVAL | 2 | -1.4E+01 | -1.4E+01 | -1.5E+01 | -1.3E+01 | 3.7E-01 |
| SNAIL | 2 | -1.2E+01 | -1.2E+01 | -1.4E+01 | -1.1E+01 | 2.9E-01 |
| SROSENBR | 1000 | 5.0E-01 | 5.0E-01 | 2.9E-01 | 6.8E-01 | 8.6E-03 |
| VIBRBEAM | 8 | 1.5E+00 | 1.5E+00 | 1.2E+00 | 2.1E+00 | 2.6E-02 |

BFGS With Function And Gradient Noise

| Problem | Dim. | Mean() | Median() | Min() | Max() | |
|---|---|---|---|---|---|---|
| ARGTRGLS | 200 | 5.6E-02 | 5.5E-02 | 2.4E-02 | 8.4E-02 | 2.7E-04 |
| ARWHEAD | 500 | -2.5E+00 | -2.5E+00 | -2.6E+00 | -2.5E+00 | 4.1E-04 |
| BEALE | 2 | -7.7E+00 | -7.8E+00 | -9.7E+00 | -6.1E+00 | 7.1E-01 |
| BOX3 | 3 | -6.5E+00 | -6.5E+00 | -6.7E+00 | -6.4E+00 | 4.7E-03 |
| BOXPOWER | 100 | -3.7E+00 | -3.7E+00 | -4.2E+00 | -3.4E+00 | 3.4E-02 |
| BROWNBS | 2 | 6.8E-01 | 1.3E+00 | -3.2E+00 | 3.1E+00 | 2.9E+00 |
| BROYDNBDLS | 50 | -6.0E+00 | -6.0E+00 | -6.3E+00 | -5.7E+00 | 2.6E-02 |
| CHAINWOO | 100 | 1.7E+00 | 1.7E+00 | 1.2E+00 | 2.1E+00 | 5.9E-02 |
| CHNROSNB | 50 | -4.2E+00 | -4.1E+00 | -5.7E+00 | -3.4E+00 | 4.4E-01 |
| COATING | 134 | -3.7E-02 | -5.7E-02 | -1.6E-01 | 8.0E-02 | 4.1E-03 |
| COOLHANSLS | 9 | -1.0E+00 | -1.0E+00 | -2.0E+00 | -4.5E-01 | 7.2E-02 |
| CUBE | 2 | -1.6E+00 | -1.4E+00 | -3.6E+00 | -9.7E-01 | 4.1E-01 |
| CYCLOOCFLS | 20 | -7.2E+00 | -7.2E+00 | -9.1E+00 | -5.8E+00 | 8.7E-01 |
| EXTROSNB | 10 | -5.2E+00 | -5.2E+00 | -5.2E+00 | -5.1E+00 | 1.8E-03 |
| FMINSRF2 | 64 | -8.6E+00 | -8.7E+00 | -8.8E+00 | -8.2E+00 | 2.8E-02 |
| GENHUMPS | 5 | 1.2E-01 | 1.2E-01 | -1.2E+00 | 8.1E-01 | 2.3E-01 |
| GENROSE | 5 | -7.5E+00 | -7.6E+00 | -9.1E+00 | -6.2E+00 | 7.3E-01 |
| HEART6LS | 6 | 3.1E-01 | 6.1E-01 | -1.9E+00 | 1.2E+00 | 1.4E+00 |
| HELIX | 3 | -4.5E+00 | -4.7E+00 | -7.0E+00 | -2.7E+00 | 1.1E+00 |
| MANCINO | 30 | -1.6E+00 | -1.6E+00 | -1.8E+00 | -1.3E+00 | 1.3E-02 |
| METHANB8LS | 31 | -3.9E+00 | -3.8E+00 | -4.4E+00 | -3.6E+00 | 5.5E-02 |
| MODBEALE | 200 | 1.1E+00 | 1.1E+00 | 2.9E-01 | 1.8E+00 | 1.6E-01 |
| NONDIA | 10 | -3.7E-03 | -3.8E-03 | -4.4E-03 | -2.6E-03 | 3.1E-07 |
| POWELLSG | 4 | -5.2E+00 | -5.2E+00 | -7.6E+00 | -4.2E+00 | 7.1E-01 |
| POWER | 10 | -3.5E+00 | -3.5E+00 | -4.1E+00 | -2.9E+00 | 1.0E-01 |
| ROSENBR | 2 | -5.9E+00 | -5.5E+00 | -9.2E+00 | -4.5E+00 | 1.4E+00 |
| ROSENBRTU | 2 | -1.6E+01 | -1.6E+01 | -1.8E+01 | -1.4E+01 | 1.5E+00 |
| SBRYBND | 500 | 3.9E+00 | 3.9E+00 | 3.9E+00 | 3.9E+00 | 2.7E-05 |
| SINEVAL | 2 | -1.1E+01 | -1.1E+01 | -1.3E+01 | -8.9E+00 | 1.3E+00 |
| SNAIL | 2 | -9.4E+00 | -9.2E+00 | -1.2E+01 | -8.0E+00 | 7.2E-01 |
| SROSENBR | 1000 | 5.4E-01 | 5.4E-01 | 3.6E-01 | 7.8E-01 | 6.9E-03 |
| VIBRBEAM | 8 | 1.7E+00 | 1.7E+00 | 1.2E+00 | 2.0E+00 | 2.9E-02 |

7 Final Remarks

In this paper, we introduced SP-BFGS, a new variant of the BFGS method designed to resist the corrupting effects of noise. Motivated by regularized least squares estimation, we derived the SP-BFGS update by applying a penalty method to the secant condition. We argued that with an appropriate choice of penalty parameter, SP-BFGS updating is robust to the corrupting effects of noise that can destroy the performance of BFGS. We empirically validated this claim by performing numerical experiments on a diverse set of over 30 test problems with both function and gradient noise of varying orders of magnitude. The results of these numerical experiments showed that SP-BFGS can outperform BFGS approximately or more of the time, and performs at least as well as BFGS approximately or more of the time. Furthermore, a theoretical analysis confirmed that with appropriate choices of penalty parameter, it is possible to guarantee that SP-BFGS is not corrupted arbitrarily badly by noise, unlike standard BFGS. In the future, we believe it is worth investigating the performance of SP-BFGS in the presence of other types of noise, including multiplicative stochastic noise and deterministic noise, and also believe it is worthwhile to study the use of noise estimation techniques in conjunction with SP-BFGS updating. The authors are also working to publish a limited memory version of SP-BFGS for high dimensional noisy problems.

Acknowledgements.

EH and BI’s work is supported by the Natural Sciences and Engineering Research Council of Canada (NSERC) and the University of British Columbia (UBC).

Conflict of interest

The authors declare that they have no conflict of interest.

References

- (1) Aydin, L., Aydin, O., Artem, H.S., Mert, A.: Design of dimensionally stable composites using efficient global optimization method. Proceedings of the Institution of Mechanical Engineers, Part L: Journal of Materials: Design and Applications 233(2), 156–168 (2019). DOI 10.1177/1464420716664921. URL https://doi.org/10.1177/1464420716664921

- (2) Berahas, A.S., Byrd, R.H., Nocedal, J.: Derivative-free optimization of noisy functions via quasi-Newton methods. SIAM Journal on Optimization 29, 965–993 (2019)

- (3) Besançon, M., Anthoff, D., Arslan, A., Byrne, S., Lin, D., Papamarkou, T., Pearson, J.: Distributions.jl: Definition and modeling of probability distributions in the JuliaStats ecosystem. arXiv e-prints arXiv:1907.08611 (2019)

- (4) Bezanson, J., Edelman, A., Karpinski, S., Shah, V.B.: Julia: A fresh approach to numerical computing. SIAM Review 59(1), 65–98 (2017). DOI 10.1137/141000671. URL https://doi.org/10.1137/141000671

- (5) Bons, N.P., He, X., Mader, C.A., Martins, J.R.R.A.: Multimodality in aerodynamic wing design optimization. AIAA Journal 57(3), 1004–1018 (2019). DOI 10.2514/1.J057294. URL https://doi.org/10.2514/1.J057294

- (6) Broyden, C.G.: The Convergence of a Class of Double-rank Minimization Algorithms 1. General Considerations. IMA Journal of Applied Mathematics 6(1), 76–90 (1970). DOI 10.1093/imamat/6.1.76. URL https://doi.org/10.1093/imamat/6.1.76

- (7) Byrd, R.H., Hansen, S.L., Nocedal, J., Singer, Y.: A stochastic quasi-Newton method for large-scale optimization. SIAM Journal on Optimization 26(2), 1008–1031 (2016). DOI 10.1137/140954362. URL https://doi.org/10.1137/140954362

- (8) Byrd, R.H., Lu, P., Nocedal, J., Zhu, C.: A limited memory algorithm for bound constrained optimization. SIAM Journal on Scientific Computing 16(5), 1190–1208 (1995). DOI 10.1137/0916069. URL https://doi.org/10.1137/0916069

- (9) Byrd, R.H., Nocedal, J.: A tool for the analysis of quasi-Newton methods with application to unconstrained minimization. SIAM Journal on Numerical Analysis 26(3), 727–739 (1989). URL http://www.jstor.org/stable/2157680

- (10) Byrd, R.H., Nocedal, J., Yuan, Y.X.: Global convergence of a class of quasi-Newton methods on convex problems. SIAM Journal on Numerical Analysis 24(5), 1171–1190 (1987). URL http://www.jstor.org/stable/2157646

- (11) Chang, D., Sun, S., Zhang, C.: An accelerated linearly convergent stochastic L-BFGS algorithm. IEEE Transactions on Neural Networks and Learning Systems 30(11), 3338–3346 (2019)

- (12) Fasano, G., Pintér, J.D.: Modeling and Optimization in Space Engineering: State of the Art and New Challenges. Springer International Publishing (2019)

- (13) Fletcher, R.: A new approach to variable metric algorithms. The Computer Journal 13(3), 317–322 (1970). DOI 10.1093/comjnl/13.3.317. URL https://doi.org/10.1093/comjnl/13.3.317

- (14) Gal, R., Haber, E., Irwin, B., Saleh, B., Ziv, A.: How to catch a lion in the desert: on the solution of the coverage directed generation (CDG) problem. Optimization and Engineering (2020). DOI 10.1007/s11081-020-09507-w. URL https://doi.org/10.1007%2Fs11081-020-09507-w

- (15) Goldfarb, D.: A family of variable-metric methods derived by variational means. Mathematics of Computation 24(109), 23–26 (1970). URL http://www.jstor.org/stable/2004873

- (16) Gould, N.I.M., Orban, D., contributors: The Constrained and Unconstrained Testing Environment with safe threads (CUTEst) for optimization software. https://github.com/ralna/CUTEst (2019)

- (17) Gould, N.I.M., Orban, D., Toint, P.L.: CUTEr a Constrained and Unconstrained Testing Environment, revisited. https://www.cuter.rl.ac.uk (2001)

- (18) Gower, R., Goldfarb, D., Richtarik, P.: Stochastic block BFGS: Squeezing more curvature out of data. In: M.F. Balcan, K.Q. Weinberger (eds.) Proceedings of The 33rd International Conference on Machine Learning, Proceedings of Machine Learning Research, vol. 48, pp. 1869–1878. PMLR, New York, New York, USA (2016). URL http://proceedings.mlr.press/v48/gower16.html

- (19) Graf, P.A., Billups, S.: Mdtri: robust and efficient global mixed integer search of spaces of multiple ternary alloys. Computational Optimization and Applications 68(3), 671–687 (2017). DOI 10.1007/s10589-017-9922-9. URL https://doi.org/10.1007/s10589-017-9922-9

- (20) Güler, O., Gürtuna, F., Shevchenko, O.: Duality in quasi-Newton methods and new variational characterizations of the DFP and BFGS updates. Optimization Methods and Software 24(1), 45–62 (2009). DOI 10.1080/10556780802367205. URL https://doi.org/10.1080/10556780802367205

- (21) Hager, W.W.: Updating the inverse of a matrix. SIAM Review 31(2), 221–239 (1989). URL http://www.jstor.org/stable/2030425

- (22) Horn, R.A., Johnson, C.R.: Matrix analysis, 2nd edn. Cambridge University Press, New York (2013)

- (23) Johnson, R., Zhang, T.: Accelerating stochastic gradient descent using predictive variance reduction. In: Advances in Neural Information Processing Systems, pp. 315–323 (2013)

- (24) Johnson, S.G.: Quasi-Newton optimization: Origin of the BFGS update (2019). URL https://ocw.mit.edu/courses/mathematics/18-335j-introduction-to-numerical-methods-spring-2019/week-11/MIT18_335JS19_lec30.pdf

- (25) Keane, A.J., Nair, P.B.: Computational Approaches for Aerospace Design: The Pursuit of Excellence. John Wiley & Sons, Ltd (2005). DOI 10.1002/0470855487. URL https://onlinelibrary.wiley.com/doi/abs/10.1002/0470855487

- (26) Koziel, S., Ogurtsov, S.: Antenna Design by Simulation-Driven Optimization. Springer International Publishing (2014)

- (27) Lewis, A.S., Overton, M.L.: Nonsmooth optimization via quasi-Newton methods. Mathematical Programming 141, 135–163 (2013)

- (28) Lin, D., White, J.M., Byrne, S., Bates, D., Noack, A., Pearson, J., Arslan, A., Squire, K., Anthoff, D., Papamarkou, T., Besançon, M., Drugowitsch, J., Schauer, M., other contributors: JuliaStats/Distributions.jl: a Julia package for probability distributions and associated functions. https://github.com/JuliaStats/Distributions.jl (2019). DOI 10.5281/zenodo.2647458. URL https://doi.org/10.5281/zenodo.2647458

- (29) Liu, D.C., Nocedal, J.: On the limited memory BFGS method for large scale optimization. Mathematical Programming 45(1), 503–528 (1989). DOI 10.1007/BF01589116. URL https://doi.org/10.1007/BF01589116

- (30) Mokhtari, A., Ribeiro, A.: Global convergence of online limited memory BFGS. Journal of Machine Learning Research 16(1), 3151–3181 (2015)

- (31) Moritz, P., Nishihara, R., Jordan, M.: A linearly-convergent stochastic L-BFGS algorithm. In: A. Gretton, C.C. Robert (eds.) Proceedings of the 19th International Conference on Artificial Intelligence and Statistics, Proceedings of Machine Learning Research, vol. 51, pp. 249–258. PMLR, Cadiz, Spain (2016). URL http://proceedings.mlr.press/v51/moritz16.html

- (32) Muñoz-Rojas, P.A.: Computational Modeling, Optimization and Manufacturing Simulation of Advanced Engineering Materials. Springer (2016)

- (33) Nocedal, J., Wright, S.: Numerical Optimization. Springer, New York (2006)

- (34) Orban, D., Siqueira, A.S., contributors: CUTEst.jl: Julia’s CUTEst interface. https://github.com/JuliaSmoothOptimizers/CUTEst.jl (2020). DOI 10.5281/zenodo.1188851

- (35) Orban, D., Siqueira, A.S., contributors: NLPModels.jl: Data structures for optimization models. https://github.com/JuliaSmoothOptimizers/NLPModels.jl (2020). DOI 10.5281/zenodo.2558627

- (36) Powell, M.J.D.: Algorithms for nonlinear constraints that use Lagrangian functions. Mathematical Programming 14(1), 224–248 (1978). DOI 10.1007/BF01588967. URL https://doi.org/10.1007/BF01588967

- (37) Rosenbrock, H.H.: An Automatic Method for Finding the Greatest or Least Value of a Function. The Computer Journal 3(3), 175–184 (1960). DOI 10.1093/comjnl/3.3.175. URL https://doi.org/10.1093/comjnl/3.3.175

- (38) Schraudolph, N.N., Yu, J., Günter, S.: A stochastic quasi-Newton method for online convex optimization. In: M. Meila, X. Shen (eds.) Proceedings of the Eleventh International Conference on Artificial Intelligence and Statistics, Proceedings of Machine Learning Research, vol. 2, pp. 436–443. PMLR, San Juan, Puerto Rico (2007). URL http://proceedings.mlr.press/v2/schraudolph07a.html

- (39) Shanno, D.F.: Conditioning of quasi-Newton methods for function minimization. Mathematics of Computation 24(111), 647–656 (1970). URL http://www.jstor.org/stable/2004840

- (40) Wang, X., Ma, S., Goldfarb, D., Liu, W.: Stochastic quasi-Newton methods for nonconvex stochastic optimization. SIAM Journal on Optimization 27(2), 927–956 (2017). DOI 10.1137/15M1053141. URL https://doi.org/10.1137/15M1053141

- (41) Xie, Y., Byrd, R.H., Nocedal, J.: Analysis of the BFGS method with errors. SIAM Journal on Optimization 30(1), 182–209 (2020). DOI 10.1137/19M1240794. URL https://doi.org/10.1137/19M1240794

- (42) Zhao, R., Haskell, W.B., Tan, V.Y.F.: Stochastic L-BFGS: Improved convergence rates and practical acceleration strategies. IEEE Transactions on Signal Processing 66, 1155–1169 (2018)

- (43) Zhu, J.: Optimization of Power System Operation. John Wiley & Sons, Ltd (2008). DOI 10.1002/9780470466971. URL https://onlinelibrary.wiley.com/doi/abs/10.1002/9780470466971

Appendix A Proof of Theorem 3.1

To produce the SP-BFGS update, we first rearrange (26a), revealing that

| (67) |

and so the symmetry requirement that means transposing (67) gives

| (68) |

which rearranges to

| (69) |

and so

| (70) |

Next, we right multiply (70) by to get

| (71) |

and use (26b) to get that

| (72) |

We now left multiply both sides by and rearrange, giving

| (73) |

This can be rearranged so that is isolated, giving

| (74) |

To get rid of the on the right hand side, we first left multiply both sides by , and then transpose to get

| (75) |

where we have taken advantage of the fact that the transpose of a scalar returns the same scalar. This now allows us to solve for using some basic algebra, and resulting in

| (76) |

Substituting (76) into (74) gives

| (77) |

Now, if we substitute the expression for in (77) into (70), after some simplification we get

| (78) |

Now, we further simplify by applying that , and thus , revealing

| (79) |

which, after a bit of algebra, reveals that the update formula solving the system defined by (26a), (26b), and (26c) can be expressed as

| (80) |

We can make (80) look similar to the common form of the BFGS update given in (19) by defining the two quantities and as in (28) and observing that completing the square gives

| (81) |

which is equivalent to

| (82) |

concluding the proof.

Appendix B Proof of Lemma 1

The given by (27) has the general form

| (83) |

with the specific choices

| (84) |

By definition, is positive definite if

| (85) |

We first show that (29) is a sufficient condition for to be positive definite, given that is positive definite. By applying (83) to (85), we see that

| (86) |

must be true for the choices of and in (84) if is positive definite. Substituting (84) into (86) reveals that

| (87) |

must be true for all if is positive definite. Both and are always nonnegative. To see that , note that because is positive definite, it has a principal square root , and so

| (88) |

We now observe that if , the right term in (87) is zero if and only if . However, if , then the left term in (87) is zero only when . Hence, the condition guarantees that (87) is true for all excluding the zero vector, and thus that is positive definite. The condition expands to

| (89) |

Using the definitions of and in (28), it is clear that , as can only take nonnegative values. Furthermore, as is positive definite, for all . As it is possible for to be zero, we require . The condition immediately gives (29), as can only be positive if the denominator in its definition is positive. Finally, as can only take nonnegative values, (29) also ensures that is nonnegative, and so when (29) is true, . In summary, we have shown that the condition (29) ensures that the left term in (89) is positive, and the right term nonnegative, so , and thus is positive definite.

We now show that (29) is a necessary condition for to be positive definite, given that is positive definite. If is positive definite, then

| (90) |

assuming . Substituting (26b) into (90) gives

| (91) |

and using (76) shows that (91) is equivalent to

| (92) |

Now, some algebra shows that

| (93) |

and we also know that because is positive definite, for all , by definition , and by the definition of the square of a real number, . As a result,

| (94) |

is guaranteed only if the denominator is positive, which occurs when

| (95) |

This establishes that (29) is a necessary condition for to be positive definite, given that is positive definite, and concludes the proof.
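Lemma 1 can also be sanity-checked numerically. The sketch below is not the authors' code. It assumes that the update (27) has the form $H_{k+1} = (I - \omega s y^T) H (I - \omega y s^T) + \omega[\gamma/\omega + (\gamma - \omega) y^T H y]\, s s^T$ with $\gamma = 1/(s^T y + 1/\beta)$ and $\omega = 1/(s^T y + 2/\beta)$, and that condition (29) reads $s^T y > -1/\beta$. These forms are inferred from the structure of the proof and should be checked against (27) to (29) before being relied on. All function names are hypothetical, and plain Python lists are used to keep the example dependency-free.

```python
import random


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def matvec(A, x):
    return [dot(row, x) for row in A]


def sp_bfgs_update(H, s, y, beta):
    """Assumed form of the SP-BFGS inverse-Hessian update; H must be symmetric."""
    n = len(s)
    sy = dot(s, y)
    gamma = 1.0 / (sy + 1.0 / beta)   # assumed definition of gamma
    omega = 1.0 / (sy + 2.0 / beta)   # assumed definition of omega
    Hy = matvec(H, y)
    yHy = dot(y, Hy)
    # Coefficient of s s^T in the second term of the assumed update.
    d = gamma + omega * (gamma - omega) * yHy
    # Expanding (I - w s y^T) H (I - w y s^T) gives
    # H - w (s Hy^T + Hy s^T) + w^2 (y^T H y) s s^T.
    c = omega * omega * yHy + d
    return [[H[i][j] - omega * (s[i] * Hy[j] + Hy[i] * s[j]) + c * s[i] * s[j]
             for j in range(n)] for i in range(n)]


def is_positive_definite(A):
    """Attempt a Cholesky factorization; it succeeds only if A is numerically PD."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            acc = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if acc <= 0.0:
                    return False
                L[i][i] = acc ** 0.5
            else:
                L[i][j] = acc / L[j][j]
    return True


def random_spd(n, rng):
    """Random symmetric positive definite matrix M M^T + I."""
    M = [[rng.uniform(-1, 1) for _ in range(n)] for _ in range(n)]
    return [[dot(M[i], M[j]) + (1.0 if i == j else 0.0) for j in range(n)]
            for i in range(n)]


def count_mismatches(trials=2000, n=4, seed=0):
    """Count trials where 'H_new is PD' disagrees with 's^T y > -1/beta'."""
    rng = random.Random(seed)
    mismatches = 0
    for _ in range(trials):
        H = random_spd(n, rng)
        s = [rng.uniform(-1, 1) for _ in range(n)]
        y = [rng.uniform(-1, 1) for _ in range(n)]
        beta = 10.0 ** rng.uniform(-0.5, 0.5)
        sy = dot(s, y)
        # Skip draws where gamma or omega is close to a pole.
        if min(abs(sy + 1.0 / beta), abs(sy + 2.0 / beta)) < 1e-2:
            continue
        predicted = sy > -1.0 / beta
        observed = is_positive_definite(sp_bfgs_update(H, s, y, beta))
        mismatches += predicted != observed
    return mismatches


print("mismatches:", count_mismatches())
```

The Cholesky attempt is used as the positive definiteness test because it avoids an eigenvalue solver; draws with $s^T y$ very close to $-1/\beta$ or $-2/\beta$ are skipped, since the assumed $\gamma$ and $\omega$ blow up there.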

Appendix C Proof of Theorem 3.2

The Sherman-Morrison-Woodbury formula says

| $(A + UCV)^{-1} = A^{-1} - A^{-1}U\left(C^{-1} + VA^{-1}U\right)^{-1}VA^{-1}$ | (96) |

Now, observe that the SP-BFGS update (27) can be written in the factored form

| (97) |

Applying the Sherman-Morrison-Woodbury formula (96) to the factored SP-BFGS update (97) with

|

|

yields

|

|

Inverting here gives

and we also have

which is just a matrix with real entries. Now, it becomes clear that

For notational compactness, let

so

where the determinant of is

and we have used the fact that , as this is a scalar quantity. Next,

so fully expanded becomes

|

|

This expression is unwieldy at the moment, so we continue by breaking the problem down further, noting that

and

The above intermediate results further simplify to

|

|

Left and right multiplying the line immediately above by gives

|

|

and thus, after dividing out and applying , we arrive at the following final formula

| (98) |

for the SP-BFGS inverse update, which concludes the proof.
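The Sherman-Morrison-Woodbury identity that drives this proof can be checked numerically on a small example. The sketch below uses the generic form $(A + UCV)^{-1} = A^{-1} - A^{-1}U(C^{-1} + VA^{-1}U)^{-1}VA^{-1}$ with a rank-two correction, which mirrors the structure of the factored update. The letters $A$, $U$, $C$, $V$, the test matrices, and the helper names are illustrative assumptions, not the specific choices made in the proof.

```python
def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def add(A, B, sign=1.0):
    """Return A + sign * B, entry by entry."""
    return [[a + sign * b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]


def inverse(A):
    """Gauss-Jordan inverse with partial pivoting (fine for small matrices)."""
    n = len(A)
    aug = [list(map(float, row)) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-14:
            raise ValueError("matrix is singular to working precision")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def smw_inverse(A_inv, U, C, V):
    """Return (A + U C V)^{-1} from A^{-1} and one small k-by-k inverse."""
    small = inverse(add(inverse(C), matmul(matmul(V, A_inv), U)))
    correction = matmul(matmul(matmul(A_inv, U), small), matmul(V, A_inv))
    return add(A_inv, correction, sign=-1.0)


# Illustrative data: a 3-by-3 matrix A with a rank-two correction U C V.
A = [[2.0, 0.0, 0.0], [0.0, 3.0, 0.0], [0.0, 0.0, 4.0]]
U = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
C = [[1.0, 0.5], [0.5, 2.0]]
V = [list(col) for col in zip(*U)]  # V = U^T

direct = inverse(add(A, matmul(matmul(U, C), V)))
via_smw = smw_inverse(inverse(A), U, C, V)
max_err = max(abs(a - b) for ra, rb in zip(direct, via_smw) for a, b in zip(ra, rb))
print(f"max abs difference: {max_err:.2e}")
```

The point of the identity, as in the proof, is that only a small matrix (here 2-by-2) has to be inverted once the inverse of $A$ is known.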

Appendix D Proof of Theorem 5.1

Referring to Theorem 3.2, taking the trace of both sides of (98) and applying the linearity and cyclic invariance properties of the trace yields

| (99) |

where

| (100) |

| (101) |

with and defined as

| (102) |

We now observe that after applying some basic algebra, and recalling that is positive definite, one can deduce that for all , the following inequalities hold

| (103) |

By minimizing the absolute value of the common denominator in , and using the inequalities above, we can obtain the bounds

| (104) |

| (105) |

As a result,

| (106) |

| (107) |

and applying establishes (55). Similarly, referring to (80) reveals the upper bound

| (108) |

To establish (54), we apply and to the line above, and then factor. This completes the proof.

Appendix E Proof of Lemma 2

Appendix F Proof of Theorem 5.2

As by Assumption 3, applying Taylor’s theorem and using (58) and strong convexity gives

where is some convex combination of and . Proceeding, note that the smallest from Lemma 2 occurs when , and in this case if . Hence, for all possible choices of it is true that if . Combining this with (59) gives

| (113) |

if . With (113) in hand, continuing to bound terms gives

where the last inequality follows from expanding

| (114) |

and using (60). Simplifying the last inequality reveals that

| (115) |

Since is m-strongly convex by Assumption 3, we can apply

| (116) |

as shown in the proof of Lemma 2 (see Appendix E), which combined with (115) and Assumption 1 gives

| (117) |

Subtracting from both sides, we get

| (118) |

which, by subtracting from both sides and simplifying, gives

thus establishing the Q-linear result (61). We obtain (62) by recursively applying the worst case bound in (61), noting that in the worst case if , then the sequence of iterates remains outside of , only approaching in the limit .
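The two subtraction steps and the recursion used at the end of this proof follow a standard pattern, which may be easier to see with generic placeholders. In the sketch below, $a_k$ stands for the quantity being bounded at iteration $k$, $\rho \in (0, 1)$ for the contraction factor, and $c$ for the offset that determines the neighborhood. These symbols are hypothetical stand-ins, not the paper's notation, and the display assumes the one-step bound holds at every iteration considered.

```latex
% Hypothetical placeholders: a_k (bounded quantity), \rho \in (0,1), c (offset).
a_{k+1} \le \rho\, a_k + (1 - \rho)\, c
\;\Longrightarrow\;
a_{k+1} - c \le \rho\, (a_k - c)
\;\Longrightarrow\;
a_k - c \le \rho^{k}\, (a_0 - c).
```

The first implication is the subtraction of $c$ from both sides; the second is the recursive application of the one-step bound, which preserves the inequality because $\rho > 0$.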

Appendix G Extended Numerical Experiments

Tables 4 and 5 compare the performance of SP-BFGS vs. BFGS on the CUTEst test problems with only gradient noise present (i.e. ). Gradient noise was generated using , where the starting point varies by CUTEst problem, to ensure that noise does not initially dominate gradient evaluations. Overall, SP-BFGS outperforms BFGS on approximately of the CUTEst problems with only gradient noise present, and performs at least as well as BFGS on approximately of these problems.

SP-BFGS With Gradient Noise Only

| Problem | Dim. | Mean() | Median() | Min() | Max() | |
|---|---|---|---|---|---|---|
| ARGTRGLS | 200 | -9.6E-02 | -9.6E-02 | -1.0E-01 | -8.5E-02 | 1.9E-05 |
| ARWHEAD | 500 | -2.8E+00 | -2.8E+00 | -2.8E+00 | -2.7E+00 | 1.7E-03 |
| BEALE | 2 | -1.4E+01 | -1.4E+01 | -1.6E+01 | -7.0E+00 | 4.1E+00 |
| BOX3 | 3 | -6.7E+00 | -6.5E+00 | -1.1E+01 | -6.3E+00 | 6.3E-01 |
| BOXPOWER | 100 | -2.7E+00 | -2.7E+00 | -3.1E+00 | -2.3E+00 | 4.6E-02 |
| BROWNBS | 2 | -4.5E+00 | -5.9E+00 | -8.0E+00 | 1.1E+00 | 8.4E+00 |
| BROYDNBDLS | 50 | -5.4E+00 | -5.4E+00 | -5.9E+00 | -5.0E+00 | 3.4E-02 |
| CHAINWOO | 100 | 1.6E+00 | 1.7E+00 | 7.6E-02 | 2.1E+00 | 1.5E-01 |
| CHNROSNB | 50 | -3.2E+00 | -3.0E+00 | -4.9E+00 | -2.6E+00 | 4.5E-01 |
| COATING | 134 | 3.4E-01 | 3.4E-01 | 1.8E-01 | 4.2E-01 | 3.1E-03 |
| COOLHANSLS | 9 | -9.4E-01 | -9.4E-01 | -1.2E+00 | -4.8E-01 | 4.2E-02 |
| CUBE | 2 | -2.7E+00 | -2.5E+00 | -5.8E+00 | -1.7E+00 | 7.5E-01 |
| CYCLOOCFLS | 20 | -7.4E+00 | -7.2E+00 | -9.3E+00 | -5.9E+00 | 8.1E-01 |
| EXTROSNB | 10 | -5.1E+00 | -5.2E+00 | -5.3E+00 | -4.7E+00 | 3.0E-02 |
| FMINSRF2 | 64 | -8.6E+00 | -8.7E+00 | -8.8E+00 | -8.1E+00 | 3.4E-02 |
| GENHUMPS | 5 | -2.7E+00 | -2.6E+00 | -5.2E+00 | -1.0E+00 | 1.1E+00 |
| GENROSE | 5 | -1.2E+01 | -1.2E+01 | -1.4E+01 | -8.9E+00 | 2.0E+00 |
| HEART6LS | 6 | 1.0E+00 | 1.2E+00 | -1.8E+00 | 1.2E+00 | 5.0E-01 |
| HELIX | 3 | -5.7E+00 | -5.9E+00 | -8.7E+00 | -3.4E+00 | 1.4E+00 |
| MANCINO | 30 | -1.0E+00 | -1.0E+00 | -1.4E+00 | -7.0E-01 | 3.7E-02 |
| METHANB8LS | 31 | -3.6E+00 | -3.6E+00 | -4.0E+00 | -3.3E+00 | 3.1E-02 |
| MODBEALE | 200 | 1.2E+00 | 1.2E+00 | 3.8E-01 | 1.9E+00 | 1.8E-01 |
| NONDIA | 10 | -3.5E-03 | -3.6E-03 | -4.3E-03 | -1.1E-03 | 6.6E-07 |
| POWELLSG | 4 | -5.7E+00 | -5.3E+00 | -9.3E+00 | -4.0E+00 | 1.6E+00 |
| POWER | 10 | -3.5E+00 | -3.5E+00 | -4.4E+00 | -2.8E+00 | 1.3E-01 |
| ROSENBR | 2 | -1.1E+01 | -1.2E+01 | -1.4E+01 | -5.1E+00 | 4.4E+00 |
| ROSENBRTU | 2 | -1.9E+01 | -1.9E+01 | -2.2E+01 | -1.7E+01 | 1.1E+00 |
| SBRYBND | 500 | 3.9E+00 | 3.9E+00 | 3.9E+00 | 3.9E+00 | 2.0E-05 |
| SINEVAL | 2 | -1.3E+01 | -1.3E+01 | -1.8E+01 | -1.1E+01 | 3.3E+00 |
| SNAIL | 2 | -1.5E+01 | -1.6E+01 | -1.8E+01 | -1.2E+01 | 1.6E+00 |
| SROSENBR | 1000 | -9.7E-01 | -9.7E-01 | -1.3E+00 | -4.8E-01 | 3.2E-02 |
| VIBRBEAM | 8 | 1.6E+00 | 1.6E+00 | 1.2E+00 | 2.8E+00 | 9.1E-02 |

BFGS With Gradient Noise Only

| Problem | Dim. | Mean() | Median() | Min() | Max() | |
|---|---|---|---|---|---|---|
| ARGTRGLS | 200 | -9.2E-02 | -9.3E-02 | -9.9E-02 | -8.2E-02 | 1.7E-05 |
| ARWHEAD | 500 | -2.5E+00 | -2.5E+00 | -2.6E+00 | -2.5E+00 | 7.6E-04 |
| BEALE | 2 | -8.3E+00 | -8.5E+00 | -1.2E+01 | -5.8E+00 | 3.9E+00 |
| BOX3 | 3 | -6.4E+00 | -6.4E+00 | -6.6E+00 | -6.3E+00 | 2.3E-03 |
| BOXPOWER | 100 | -2.8E+00 | -2.8E+00 | -3.3E+00 | -2.4E+00 | 6.1E-02 |
| BROWNBS | 2 | 6.8E-02 | 1.0E+00 | -8.2E+00 | 3.6E+00 | 1.0E+01 |
| BROYDNBDLS | 50 | -5.1E+00 | -5.1E+00 | -5.3E+00 | -4.9E+00 | 1.5E-02 |
| CHAINWOO | 100 | 1.7E+00 | 1.8E+00 | 1.1E+00 | 2.2E+00 | 5.8E-02 |
| CHNROSNB | 50 | -2.9E+00 | -2.7E+00 | -4.5E+00 | -2.1E+00 | 3.8E-01 |
| COATING | 134 | 3.6E-01 | 3.7E-01 | 2.1E-01 | 4.2E-01 | 2.6E-03 |
| COOLHANSLS | 9 | -5.6E-01 | -6.4E-01 | -1.3E+00 | 1.9E-01 | 1.9E-01 |
| CUBE | 2 | -1.1E+00 | -1.1E+00 | -1.8E+00 | -9.6E-01 | 5.6E-02 |
| CYCLOOCFLS | 20 | -6.5E+00 | -6.5E+00 | -8.3E+00 | -5.1E+00 | 5.4E-01 |
| EXTROSNB | 10 | -5.1E+00 | -5.1E+00 | -5.3E+00 | -4.9E+00 | 8.1E-03 |
| FMINSRF2 | 64 | -8.2E+00 | -8.2E+00 | -8.7E+00 | -7.3E+00 | 1.4E-01 |
| GENHUMPS | 5 | -1.5E+00 | -1.2E+00 | -4.0E+00 | -2.8E-01 | 8.4E-01 |
| GENROSE | 5 | -6.8E+00 | -6.7E+00 | -8.7E+00 | -5.9E+00 | 4.8E-01 |
| HEART6LS | 6 | 1.2E+00 | 1.2E+00 | 1.2E+00 | 1.2E+00 | 1.9E-04 |
| HELIX | 3 | -4.8E+00 | -4.6E+00 | -8.1E+00 | -2.6E+00 | 2.1E+00 |
| MANCINO | 30 | -8.3E-01 | -8.8E-01 | -1.2E+00 | -3.3E-01 | 5.3E-02 |
| METHANB8LS | 31 | -3.5E+00 | -3.4E+00 | -3.9E+00 | -3.3E+00 | 2.8E-02 |
| MODBEALE | 200 | 1.0E+00 | 1.1E+00 | -6.2E-01 | 2.1E+00 | 3.6E-01 |
| NONDIA | 10 | 1.2E-03 | 1.3E-03 | -4.4E-03 | 1.3E-02 | 2.2E-05 |
| POWELLSG | 4 | -5.3E+00 | -5.0E+00 | -8.0E+00 | -3.6E+00 | 1.6E+00 |
| POWER | 10 | -3.4E+00 | -3.4E+00 | -4.3E+00 | -2.8E+00 | 1.4E-01 |
| ROSENBR | 2 | -6.1E+00 | -5.9E+00 | -1.0E+01 | -3.7E+00 | 2.9E+00 |
| ROSENBRTU | 2 | -1.5E+01 | -1.5E+01 | -1.8E+01 | -1.4E+01 | 1.6E+00 |
| SBRYBND | 500 | 3.9E+00 | 3.9E+00 | 3.9E+00 | 3.9E+00 | 2.0E-05 |
| SINEVAL | 2 | -1.2E+01 | -1.3E+01 | -1.7E+01 | -8.5E+00 | 4.0E+00 |
| SNAIL | 2 | -1.1E+01 | -1.1E+01 | -1.6E+01 | -8.2E+00 | 3.5E+00 |
| SROSENBR | 1000 | -9.1E-01 | -8.8E-01 | -1.3E+00 | -5.1E-01 | 3.1E-02 |
| VIBRBEAM | 8 | 1.7E+00 | 1.6E+00 | 1.4E+00 | 2.6E+00 | 1.0E-01 |
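One way to summarize the two tables above is to compare the Mean column problem by problem. The sketch below does this with the rounded values copied from the tables. It assumes that a lower value indicates better performance and that the mean is a reasonable comparison criterion; the criterion actually used for the summary percentages in the text may differ. The names `MEANS` and `compare_means` are hypothetical.

```python
# (problem, SP-BFGS mean, BFGS mean), copied from the Mean column of each table.
MEANS = [
    ("ARGTRGLS", -9.6e-02, -9.2e-02), ("ARWHEAD", -2.8, -2.5),
    ("BEALE", -1.4e+01, -8.3), ("BOX3", -6.7, -6.4),
    ("BOXPOWER", -2.7, -2.8), ("BROWNBS", -4.5, 6.8e-02),
    ("BROYDNBDLS", -5.4, -5.1), ("CHAINWOO", 1.6, 1.7),
    ("CHNROSNB", -3.2, -2.9), ("COATING", 3.4e-01, 3.6e-01),
    ("COOLHANSLS", -9.4e-01, -5.6e-01), ("CUBE", -2.7, -1.1),
    ("CYCLOOCFLS", -7.4, -6.5), ("EXTROSNB", -5.1, -5.1),
    ("FMINSRF2", -8.6, -8.2), ("GENHUMPS", -2.7, -1.5),
    ("GENROSE", -1.2e+01, -6.8), ("HEART6LS", 1.0, 1.2),
    ("HELIX", -5.7, -4.8), ("MANCINO", -1.0, -8.3e-01),
    ("METHANB8LS", -3.6, -3.5), ("MODBEALE", 1.2, 1.0),
    ("NONDIA", -3.5e-03, 1.2e-03), ("POWELLSG", -5.7, -5.3),
    ("POWER", -3.5, -3.4), ("ROSENBR", -1.1e+01, -6.1),
    ("ROSENBRTU", -1.9e+01, -1.5e+01), ("SBRYBND", 3.9, 3.9),
    ("SINEVAL", -1.3e+01, -1.2e+01), ("SNAIL", -1.5e+01, -1.1e+01),
    ("SROSENBR", -9.7e-01, -9.1e-01), ("VIBRBEAM", 1.6, 1.7),
]


def compare_means(rows):
    """Count problems where SP-BFGS has a lower, equal, or higher mean than BFGS."""
    lower = [name for name, sp, bfgs in rows if sp < bfgs]
    equal = [name for name, sp, bfgs in rows if sp == bfgs]
    higher = [name for name, sp, bfgs in rows if sp > bfgs]
    return lower, equal, higher


lower, equal, higher = compare_means(MEANS)
total = len(MEANS)
print(f"SP-BFGS lower mean:  {len(lower)}/{total}")
print(f"Equal (as rounded):  {len(equal)}/{total} {equal}")
print(f"SP-BFGS higher mean: {len(higher)}/{total} {higher}")
```

Because the tables report two significant figures, problems whose means differ only beyond that precision are counted as equal here.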