Self-optimizing loop sifting and majorization for 3D reconstruction

Abstract

Visual simultaneous localization and mapping (vSLAM) and 3D reconstruction methods have made impressive progress. These methods are very promising for autonomous vehicle and consumer robot applications because they can map large-scale environments, such as cities and indoor environments, without much human effort. However, there is still room for improvement in loop detection and optimization. vSLAM systems tend to add loops very conservatively to limit the severe influence of false loops. These conservative checks usually cause correct loops to be rejected, which degrades performance. In this paper, we propose an algorithm that sifts and majorizes loop detections. The proposed algorithm compares the usefulness and effectiveness of different loops with the dense map posterior (DMP) metric. It tests each loop and decides whether to accept it without a single user-defined threshold, and is therefore adaptive to different data conditions. The proposed method is general and agnostic to sensor type (as long as depth or LiDAR readings are available), loop detection, and optimization methods, and it does not require a specific type of SLAM system. It therefore has great potential to be applied in various scenarios. Experiments on public datasets show that the proposed method outperforms state-of-the-art methods.

INTRODUCTION

Due to the rapid development of autonomous vehicles and consumer robots, there is an increasing need for precise 3D maps for route planning, action planning, and navigation. Among 3D mapping methods, visual simultaneous localization and mapping (vSLAM) and 3D reconstruction methods are very promising because they can map large-scale environments, such as cities and indoor environments, without much human effort.

vSLAM and 3D reconstruction methods have made impressive progress. For camera tracking, there are sparse keypoint-based methods [1, 2, 3, 4, 5], direct methods [6, 7], and dense surface-based methods [8, 9]. In addition, IMU measurements are incorporated in several methods [10, 11, 12, 13, 14, 15] to make tracking more accurate. Even though camera tracking algorithms perform well with low drift, the accumulated error still cannot be ignored [16]. To counter it, loop closure detection [17, 18] and optimization [19] are often used, and they have brought substantial improvements. However, the problem is not fully solved yet. Intuitively, the more loops the data contain, the more information is available to recover precise camera trajectories and 3D models. In practice, however, when existing vSLAM systems are run on datasets with loopy motion, mismatches can still be found in the final results. This indicates that loops are not being successfully detected and utilized.

vSLAM systems tend to add loops very conservatively to reduce the severe influence of false loops [16]. These conservative checks result from the imperfect precision of loop detection methods: a substantial fraction of detected loops may be incorrect.

To solve this challenging problem, we propose an algorithm that sifts and majorizes loop detections so that only correct and essential loops are fed into the subsequent optimization steps. The proposed method is tightly coupled with the dense map posterior (DMP) metric [20], which evaluates 3D reconstruction performance without ground-truth measurements. Our algorithm compares the usefulness and effectiveness of different loops and sifts out false and unimportant ones. To the best of our knowledge, the contributions of the proposed algorithm are:

1. The proposed algorithm can sift loop detections based on their impact on loop optimization results.
2. It is the first algorithm that can majorize loop detections, keeping only the important ones while ignoring the less relevant ones.
3. Experiments on public datasets show that it outperforms state-of-the-art methods.

RELATED WORK

To avoid the severe consequences of optimizing with false loops, vSLAM and 3D mapping systems tend to add loops very conservatively. ORB-SLAM2 [1] requires several consistent loop detections in consecutive keyframes before accepting a loop, where detections must share at least one keyframe to be classified as consistent. With this consistency check, ORB-SLAM2 rarely takes false loops into optimization, but at the price of rejecting many correct loops. ElasticFusion [9] evaluates several criteria before taking a loop detection into its optimization pipeline, including the deformation cost and the final state of the Gauss-Newton system. Even after all these checks, good loops are often rejected, and it is not rare for an incorrect loop to be accepted. BundleFusion [4] filters loop correspondences with a cascade of checks, including local geometric and photometric consistency checks and a check on the correspondence residual after optimization. Its local depth discrepancy check bears some similarity to our work. However, the check is limited to a very local region at a downsampled depth resolution and relies on a user-specified threshold. It is therefore not informed about how loop correspondences affect the full 3D model, and its reliance on a user parameter makes it less adaptive. Endres et al. [2] address the problem by pruning edges after optimization based on the discrepancy between the individual transformation estimates before and after optimization. We share their idea of observing the consequences a loop brings to the optimization, but they measure its impact on a sparse graph, whereas we observe it on a full dense 3D model.

Another approach is to treat false loops as outliers and decrease their impact on the optimization [21, 22, 23, 24]. These methods work well in some cases, but their dependence on initial conditions and on the outlier ratio makes them prone to failure. Choi et al. [25] further develop this idea into an algorithm tightly coupled with the dense 3D reconstruction problem by specifying both the pose graph construction and the least-squares information calculation. This method is very effective when a desired camera scan pattern is followed, but it requires keeping surfaces within camera range at all times, which limits its flexibility. It also suffers from the same dependence on initial conditions and outlier ratio.

Because of the difficulty of balancing precision and recall in loop detection, SUN3D [26] turns to a human-in-the-loop approach: objects in the scenes are labeled, and the same objects are connected across frames. This method performs very well in terms of loop precision and recall, but labeling requires too much effort, so it is not practical for processing data on a large scale.

METHOD

To solve the loop sifting problem, we propose the algorithm specified in Algorithm 1. Its inputs are a given set of individual loop detections, the supporting optimization pose graph, and the sensor (e.g., camera and LiDAR) data.

The algorithm has two parts. In the first part, each loop is tested and evaluated individually on the given initial pose graph: the pose graph is optimized with that single loop, a 3D model is fused from the optimized results, and the DMP value of the fused model is evaluated. In other words, this part measures how much improvement each loop provides by itself. Finally, the loops are ranked by their DMP values in ascending order (more effective → less effective → negative impact).

In the second part, all loops are tested and evaluated once more, but differently from the first time. The loops are now tested in sorted order, so the ones that provide more improvement are tested first. When a loop improves on the previous result, it is added to the accepted set and therefore also affects subsequent loop tests. In this way, a loop is accepted only when it improves on the current state. In particular, the first accepted loop must improve on the original tracking result.
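A minimal Python sketch of the two-part procedure follows. It is an illustration under stated assumptions, not the authors' Algorithm 1: `optimize`, `fuse` and `dmp` are hypothetical callbacks standing in for the pose graph optimizer, the surfel fusion step and the DMP metric [20], and the sketch assumes, consistent with the ascending ranking, that a lower DMP value indicates a better model.

```python
from typing import Any, Callable, List, Sequence, Tuple


def sift_and_majorize(
    loops: Sequence[Any],
    pose_graph: Any,
    sensor_data: Any,
    optimize: Callable[[Any, List[Any]], Any],  # hypothetical: (graph, loops) -> optimized poses
    fuse: Callable[[Any, Any], Any],            # hypothetical: (poses, data) -> fused 3D model
    dmp: Callable[[Any], float],                # hypothetical: model -> DMP value (lower assumed better)
) -> Tuple[List[Any], float]:
    def evaluate(selected: List[Any]) -> float:
        """Optimize with the selected loops, fuse a model, and score it."""
        return dmp(fuse(optimize(pose_graph, selected), sensor_data))

    # Part 1: score each loop on its own and rank in ascending DMP order
    # (most effective first, harmful loops last). sorted() is stable,
    # so tied loops keep their input order.
    scored = [(evaluate([loop]), loop) for loop in loops]
    ranked = [loop for _, loop in sorted(scored, key=lambda s: s[0])]

    # Part 2: greedily accept a loop only if it improves on the current best
    # result; the reference starts at the tracking-only result (no loops).
    accepted: List[Any] = []
    best = evaluate([])
    for loop in ranked:
        candidate = accepted + [loop]
        score = evaluate(candidate)
        if score < best:
            accepted, best = candidate, score
    return accepted, best


# Toy usage with made-up scores: loops "a" and "b" close the same region,
# "c" adds a small improvement, and "f" is a false loop.
def toy_dmp(model: frozenset) -> float:
    score = 10.0
    if "a" in model:
        score -= 4.5
    elif "b" in model:
        score -= 4.0
    if "c" in model:
        score -= 1.0
    if "f" in model:
        score += 5.0
    return score


accepted, best = sift_and_majorize(
    ["f", "c", "b", "a"],
    pose_graph=None,
    sensor_data=None,
    optimize=lambda graph, selected: frozenset(selected),
    fuse=lambda poses, data: poses,
    dmp=toy_dmp,
)
print(accepted, best)  # ['a', 'c'] 4.5
```

In the toy run, `b` is rejected because it adds nothing once `a` is accepted, mirroring the majorization behavior, and the false loop `f` is rejected because it worsens the score. For N loops the sketch performs 2N + 1 optimize-fuse-evaluate rounds, which is why a fast fusion step matters.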

IMPLEMENTATION

The proposed method is general and agnostic to loop detection and optimization methods, and it does not require a specific type of vSLAM system. For our experiments, we choose several well-known implementations.

Tracking and optimization pose graph

The proposed method requires an optimization pose graph as input. The only requirement on the pose graph optimization is that it can handle loop closures. In our implementation, we use the sparse image-feature-based tracking and mapping of ORB-SLAM2 [1] with loop detection disabled, and its pose graph serves as the optimization graph for the proposed method. For the purpose of loop sifting and majorization, we find pose graph optimization to be good enough, so the more time-consuming full bundle adjustment is not included.
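To illustrate what loop closure optimization does to a pose graph, here is a deliberately simplified sketch: a 1D pose graph with drifting odometry edges and one loop edge, solved by linear least squares with NumPy. It is not ORB-SLAM2's pose graph optimization, which works on full 3D poses; the function name, measurement values and equal edge weights are illustrative assumptions.

```python
import numpy as np


def optimize_1d_pose_graph(num_poses, edges):
    """Solve a toy 1D pose graph by linear least squares.

    edges: list of (i, j, measured_offset), each meaning x_j - x_i ≈ measured_offset.
    Pose 0 is anchored at the origin to remove the global translation freedom.
    """
    rows, rhs = [], []
    for i, j, offset in edges:
        row = np.zeros(num_poses)
        row[j] += 1.0
        row[i] -= 1.0
        rows.append(row)
        rhs.append(offset)
    # Prior row that anchors pose 0 at 0.
    anchor = np.zeros(num_poses)
    anchor[0] = 1.0
    rows.append(anchor)
    rhs.append(0.0)
    x, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return x


# Odometry reports each step as 1.1 while a loop edge says pose 3 is 3.0 from pose 0.
odometry = [(0, 1, 1.1), (1, 2, 1.1), (2, 3, 1.1)]
loop = [(0, 3, 3.0)]
tracking_only = optimize_1d_pose_graph(4, odometry)
with_loop = optimize_1d_pose_graph(4, odometry + loop)
print(tracking_only, with_loop)
```

Without the loop, the poses simply chain the odometry (0, 1.1, 2.2, 3.3). With the loop edge, the 0.3 inconsistency around the cycle is spread evenly over its four edges (0.075 each), giving poses of about 0, 1.025, 2.05 and 3.075. A false loop edge would spread a wrong correction in exactly the same way, which is why loops must be vetted before optimization.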

ORB-SLAM2 is a well-implemented sparse feature-based SLAM system. In its tracking module, Oriented FAST and Rotated BRIEF (ORB) features are extracted for keypoint matching. Each frame is first tracked against keyframes using a motion estimate and then refined against a local sparse map. Keyframes are created when tracking is weak or when the local bundle adjustment thread is idle. Local bundle adjustment (BA), running in a background thread, corrects the reprojection error of feature correspondences among co-visible keyframes. The tracking module provides a camera pose for each frame and a co-visibility graph across keyframes.

Model fusion

Fusing camera readings into a dense 3D model is an important step. For this step, the 3D model is represented with surfels [27]. Each surfel has seven attributes: a position, a normal, a color, a weight, a radius, an initialization timestamp, and a last-updated timestamp. Thanks to its radius, a surfel can represent a small, locally flat surface patch around its position.
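A surfel with the seven attributes listed above could be represented as sketched below. The `merge` method is an assumption for illustration only: it uses a weighted running average similar to those used in surfel-based systems such as ElasticFusion [9], since the text does not specify the update rule used here, and all names are hypothetical.

```python
import math
from dataclasses import dataclass, replace
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Surfel:
    position: Vec3
    normal: Vec3
    color: Vec3       # e.g. RGB in [0, 1]
    weight: float     # accumulated confidence
    radius: float
    init_time: int    # timestamp of the first observation
    last_update: int  # timestamp of the most recent update

    def merge(self, obs: "Surfel", timestamp: int) -> "Surfel":
        """Fuse a new observation by weighted averaging (illustrative rule, not the paper's)."""
        w = self.weight + obs.weight

        def avg(a: Vec3, b: Vec3) -> Vec3:
            return tuple((self.weight * x + obs.weight * y) / w for x, y in zip(a, b))

        n = avg(self.normal, obs.normal)
        norm = math.sqrt(sum(c * c for c in n)) or 1.0  # guard against a zero-length normal
        return replace(
            self,
            position=avg(self.position, obs.position),
            normal=tuple(c / norm for c in n),
            color=avg(self.color, obs.color),
            weight=w,
            radius=(self.weight * self.radius + obs.weight * obs.radius) / w,
            last_update=timestamp,
        )


s = Surfel((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), (1.0, 0.0, 0.0), 1.0, 0.01, 0, 0)
obs = Surfel((0.5, 0.0, 0.0), (0.0, 0.0, 1.0), (0.0, 0.0, 1.0), 1.0, 0.03, 5, 5)
m = s.merge(obs, timestamp=5)
print(m)
```

The surfel is immutable and `merge` returns a new instance, which keeps the initialization timestamp of the original surfel while updating the last-updated timestamp.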

Even though surfel fusion is fast with an efficient GPU implementation, it still takes a considerable amount of time, and the algorithm fuses a model for every loop test. To improve efficiency, we exploit the fact that surfels can easily be moved rigidly in space. We fuse consecutive frames into scene fragments, which serve as basic blocks, and transform them according to the optimized camera trajectories. In this way, re-fusing the data with updated camera pose estimates is approximated by transforming the scene fragments to their updated locations, so the final results are computed more efficiently.
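The rigid-motion shortcut can be sketched as follows. We assume, for illustration, that each fragment's surfels are stored in world coordinates computed with the initial pose of the fragment's reference frame, so moving the fragment to the optimized pose amounts to applying the correction `T_new @ inv(T_old)` to every surfel. Positions are transformed as points, while normals are only rotated. Function names are hypothetical.

```python
import numpy as np


def move_fragment(positions, normals, T_old, T_new):
    """Rigidly move a fragment's surfels from an old to an optimized reference pose.

    positions, normals: (N, 3) arrays in world coordinates.
    T_old, T_new: 4x4 camera-to-world poses of the fragment's reference frame.
    """
    delta = T_new @ np.linalg.inv(T_old)  # world-to-world correction
    R, t = delta[:3, :3], delta[:3, 3]
    new_positions = positions @ R.T + t   # p' = R p + t for every row
    new_normals = normals @ R.T           # normals rotate but do not translate
    return new_positions, new_normals


def pose(yaw, tx, ty, tz):
    """Build a 4x4 pose from a rotation about z and a translation."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = [tx, ty, tz]
    return T


positions = np.array([[1.0, 0.0, 0.0], [2.0, 0.0, 0.0]])
normals = np.array([[1.0, 0.0, 0.0], [1.0, 0.0, 0.0]])
# Optimization rotates the reference frame by 90 degrees about z and lifts it by 1 along z.
new_p, new_n = move_fragment(positions, normals, np.eye(4), pose(np.pi / 2, 0.0, 0.0, 1.0))
print(np.round(new_p, 6), np.round(new_n, 6))
```

Because the same transform is applied to all surfels of a fragment at once, the cost is linear in the number of surfels and no depth images need to be re-read or re-fused.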

Fragment loop to frame loop conversion

Since there are fewer scene fragments than frames, scene fragment matches need to be converted into camera frame loops. We do this by connecting a reference frame of one scene fragment to all frames of the other fragment, and then repeating the process in the other direction.
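A minimal sketch of this conversion is shown below. It assumes (hypothetically) that each fragment's reference frame is its first frame, that the fragment match gives a rigid transform `T_ab` mapping fragment B's reference coordinates into fragment A's, and that each frame's pose relative to its fragment's reference frame is known from tracking. Each output tuple `(i, j, T_ij)` is a frame-level loop constraint, with `T_ij` mapping frame j's coordinates into frame i's.

```python
import numpy as np


def fragment_loop_to_frame_loops(frames_a, frames_b, rel_a, rel_b, T_ab):
    """Expand one fragment-to-fragment match into frame-to-frame loop constraints.

    frames_a, frames_b: frame ids of fragments A and B; the first id is taken
        as each fragment's reference frame (an assumption of this sketch).
    rel_a, rel_b: dicts mapping frame id -> 4x4 pose of that frame in its
        fragment's reference coordinates (identity for the reference frame).
    T_ab: 4x4 transform from fragment B's reference coordinates to A's.
    """
    ref_a, ref_b = frames_a[0], frames_b[0]
    loops = []
    # Reference frame of A to every frame of B.
    for f in frames_b:
        loops.append((ref_a, f, T_ab @ rel_b[f]))
    # The other direction: reference frame of B to every frame of A.
    T_ba = np.linalg.inv(T_ab)
    for f in frames_a:
        loops.append((ref_b, f, T_ba @ rel_a[f]))
    return loops


def translation(x):
    """4x4 transform that shifts by x along the first axis."""
    T = np.eye(4)
    T[0, 3] = x
    return T


loops = fragment_loop_to_frame_loops(
    [0, 1], [10, 11],
    {0: np.eye(4), 1: translation(0.5)},
    {10: np.eye(4), 11: translation(0.5)},
    translation(5.0),
)
print([(i, j) for i, j, _ in loops])  # [(0, 10), (0, 11), (10, 0), (10, 1)]
```

One fragment match thus yields as many frame loops as the two fragments have frames in total, which keeps the pose graph at frame granularity.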

EXPERIMENTS

Extensive experiments are performed to evaluate the proposed method on two datasets: the Augmented ICL-NUIM dataset [25] and the SUN3D dataset [26]. SMD stands for surface mean distance.

Table 1. Optimization with the key loops identified by our method versus all loops that agree with ground truth (Augmented ICL-NUIM).

| Sequence | Traj. RMSE (key loops) | Traj. RMSE (all loops) | SMD (key loops) | SMD (all loops) | DMP (key loops) | DMP (all loops) |
|---|---|---|---|---|---|---|
| livingroom 1 | 0.082 | 0.175 | 0.027 | 0.059 | 36.9 | 122.8 |
| livingroom 2 | 0.037 | 0.203 | 0.012 | 0.080 | 19.5 | 85.2 |
| office 1 | 0.051 | 0.096 | 0.020 | 0.046 | 143.5 | 200.9 |
| office 2 | 0.036 | 0.085 | 0.014 | 0.024 | 110.5 | 373.2 |
| Average | 0.052 | 0.140 | 0.018 | 0.052 | 77.6 | 195.5 |

Augmented ICL-NUIM dataset

We run experiments on the Augmented ICL-NUIM dataset [25], a synthetic dataset with ground-truth surface models and camera trajectories. It contains four RGB-D sequences, and for each sequence, merged scene fragments are available together with ground-truth registration results. On this dataset, our baseline method is CZK [25], which was published in the same work as the Augmented ICL-NUIM dataset.

Table 2. Loop recall / precision (%) on the Augmented ICL-NUIM dataset.

| Sequence | Registration | CZK | Ours |
|---|---|---|---|
| livingroom 1 | 61.2 / 27.2 | 57.6 / 95.1 | 5.5 / 100 |
| livingroom 2 | 49.7 / 17.0 | 49.7 / 97.4 | 3.9 / 100 |
| office 1 | 64.4 / 19.2 | 63.3 / 98.3 | 2.8 / 100 |
| office 2 | 61.5 / 14.9 | 60.7 / 100 | 0.7 / 100 |

Experiments are conducted to evaluate the loop sifting and majorization performance of the proposed method, based on the precision and recall of the detected loops and of the loops that remain after sifting. Results are reported in table 2. Our method achieves 100% precision, which is desired. Recall, however, drops dramatically after sifting. This decrease is not caused by an overly strict acceptance criterion but by the fact that many loops are not very useful. The remaining loops are the core ones that matter most for better reconstruction quality, which is what we call loop majorization.

Many of the original loops are close to each other and already connected accurately by ORB-SLAM2 tracking. We verify this in another experiment that evaluates the trajectories and reconstructed 3D models obtained by optimizing with the key loops identified by our method and with all loops that agree with ground truth. Results are shown in table 1. More loops do not improve performance; instead, they degrade it. This is because some loops are not very precise: when two loop regions are already well connected, adding an imprecise loop decreases accuracy.
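For reference, the two ground-truth metrics in table 1 can be sketched as follows. The evaluation protocol (alignment, sampling, direction of the distance) is not specified in the text, so this sketch assumes the estimated trajectory is already expressed in the ground-truth frame with one-to-one frame correspondence, and computes SMD one-directionally as the mean distance from each reconstructed point to its nearest ground-truth surface point using a brute-force search. Function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def trajectory_rmse(estimated: Sequence[Point], ground_truth: Sequence[Point]) -> float:
    """Root-mean-square of per-frame camera position errors (no alignment step)."""
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("need two non-empty trajectories of equal length")
    squared = [math.dist(p, q) ** 2 for p, q in zip(estimated, ground_truth)]
    return math.sqrt(sum(squared) / len(squared))


def surface_mean_distance(reconstructed: Sequence[Point], reference: Sequence[Point]) -> float:
    """Mean distance from each reconstructed point to its nearest reference point (brute force)."""
    total = sum(min(math.dist(p, q) for q in reference) for p in reconstructed)
    return total / len(reconstructed)


est = [(0.0, 0.0, 0.0), (1.0, 0.1, 0.0), (2.0, 0.0, 0.2)]
gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
print(trajectory_rmse(est, gt))
print(surface_mean_distance([(0.0, 0.0, 0.05), (1.0, 0.0, 0.0)], [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]))
```

For dense models, a spatial index such as a k-d tree would normally replace the brute-force nearest-neighbor search.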

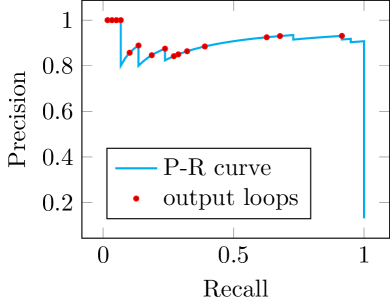

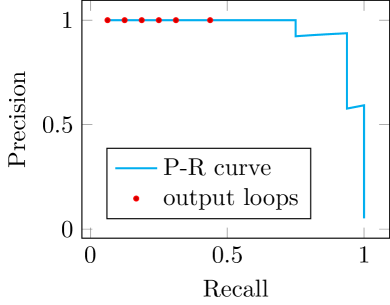

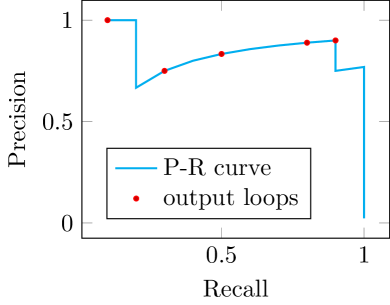

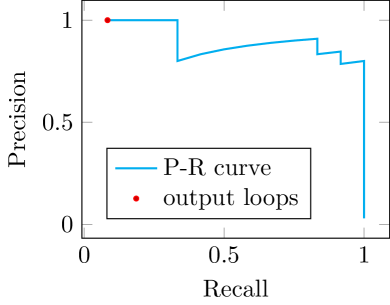

To further understand the proposed method, we plot precision-recall curves of the loop rankings produced by the proposed algorithm in figure 1. All curves start at high precision and stay at high precision values as recall increases. There are a few drop points, which correspond to false-positive loops. Most false-positive loops are at the end of the ranked list, as reflected by the sharp drops at the end of the curves. This indicates that the ranking performs well, with some exceptions. The false-positive loops that remain in the ranking are handled well by the second part of the algorithm, which tests and decides the acceptance of each ranked loop. This shows another strength of our method: it decides the acceptance of correct loops without a single user parameter, even when the ratio of true to false positive loops differs drastically.
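The precision-recall curve of a ranked loop list can be computed by walking down the ranking and evaluating precision and recall after each additional loop, as in the sketch below. It assumes each ranked loop carries a boolean ground-truth label and, by default, measures recall against the number of true loops in the list; the function name is hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


def ranked_pr_curve(labels: Sequence[bool], num_true: Optional[int] = None) -> List[Tuple[float, float]]:
    """Return (recall, precision) after each prefix of a ranked list of loops.

    labels[k] is True if the k-th ranked loop is a true loop.
    num_true defaults to the number of true loops in the list.
    """
    total = sum(labels) if num_true is None else num_true
    if total == 0:
        raise ValueError("no true loops to compute recall against")
    curve, tp = [], 0
    for k, is_true in enumerate(labels, start=1):
        tp += is_true  # count true positives among the first k loops
        curve.append((tp / total, tp / k))
    return curve


# A ranking with one false positive in the middle and two at the end.
for recall, precision in ranked_pr_curve([True, True, False, True, True, False, False]):
    print(f"recall={recall:.2f} precision={precision:.2f}")
```

In this toy ranking, the two trailing false positives only lower precision after recall has already reached its maximum, which is the kind of drop at the end of a curve described above.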

Figure 2. Reconstructed 3D models of the SUN3D sequences maryland_hotel3, 76_studyroom and mit_32_d507 (columns: tracking only; optimized with output loops).

SUN3D dataset

The SUN3D dataset [26] is a large-scale RGB-D database containing many sequences captured at many different places. Among them, eight sequences (listed here: http://sun3d.cs.princeton.edu/listNow.html) are labeled with object annotations and are widely used to evaluate SLAM and 3D reconstruction systems. We follow this common practice and run experiments on these sequences. For sequences harvard_c5, harvard_c6 and harvard_c8, no loops are detected on top of tracking, so we do not include results for them.

Table 3. DMP of different methods on SUN3D sequences.

| Sequence | SUN3D | ORB-SLAM2 | CZK | BundleFusion | Tracking | Ours |
|---|---|---|---|---|---|---|
| mit_32_d507 | 573.95 | 750.60 | 334.17 | 441.15 | 904.25 | 296.59 |
| maryland_hotel3 | 145.85 | 107.50 | 108.91 | 128.86 | 111.83 | 96.56 |
| 76_studyroom | 448.84 | 1191.40 | 282.22 | 256.07 | 358.93 | 193.94 |
| mit_dorm_next | 46.38 | 51.88 | 734.50 | 944.81 | 87.04 | 30.16 |
| mit_lab_hj | 180.49 | 162.98 | 244.86 | 207.94 | 155.86 | 199.57 |

Table 4. Number of loops before and after sifting on SUN3D sequences.

| Sequence | Loops before | Loops after |
|---|---|---|
| mit_32_d507 | 2135 | 34 |
| maryland_hotel3 | 224 | 6 |
| 76_studyroom | 442 | 7 |
| mit_dorm_next | 621 | 6 |
| mit_lab_hj | 219 | 7 |

Quantitatively, we evaluate the DMP metric of different methods and report the results in table 3. We compare with four methods: 1) CZK, an offline method that targets the best surface reconstruction quality; 2) SUN3D, an offline method that adds manual object labeling as a source of loop closures; 3) ORB-SLAM2, a well-known SLAM system whose tracking module is also used in our system; and 4) BundleFusion, a well-engineered real-time dense SLAM system. The DMP results show that the proposed method generally improves on its starting point, the tracking result. Most importantly, it outperforms most of the compared methods. To provide further insight into the proposed method, we also report the number of loop detections and the number of loops that pass our algorithm in table 4.

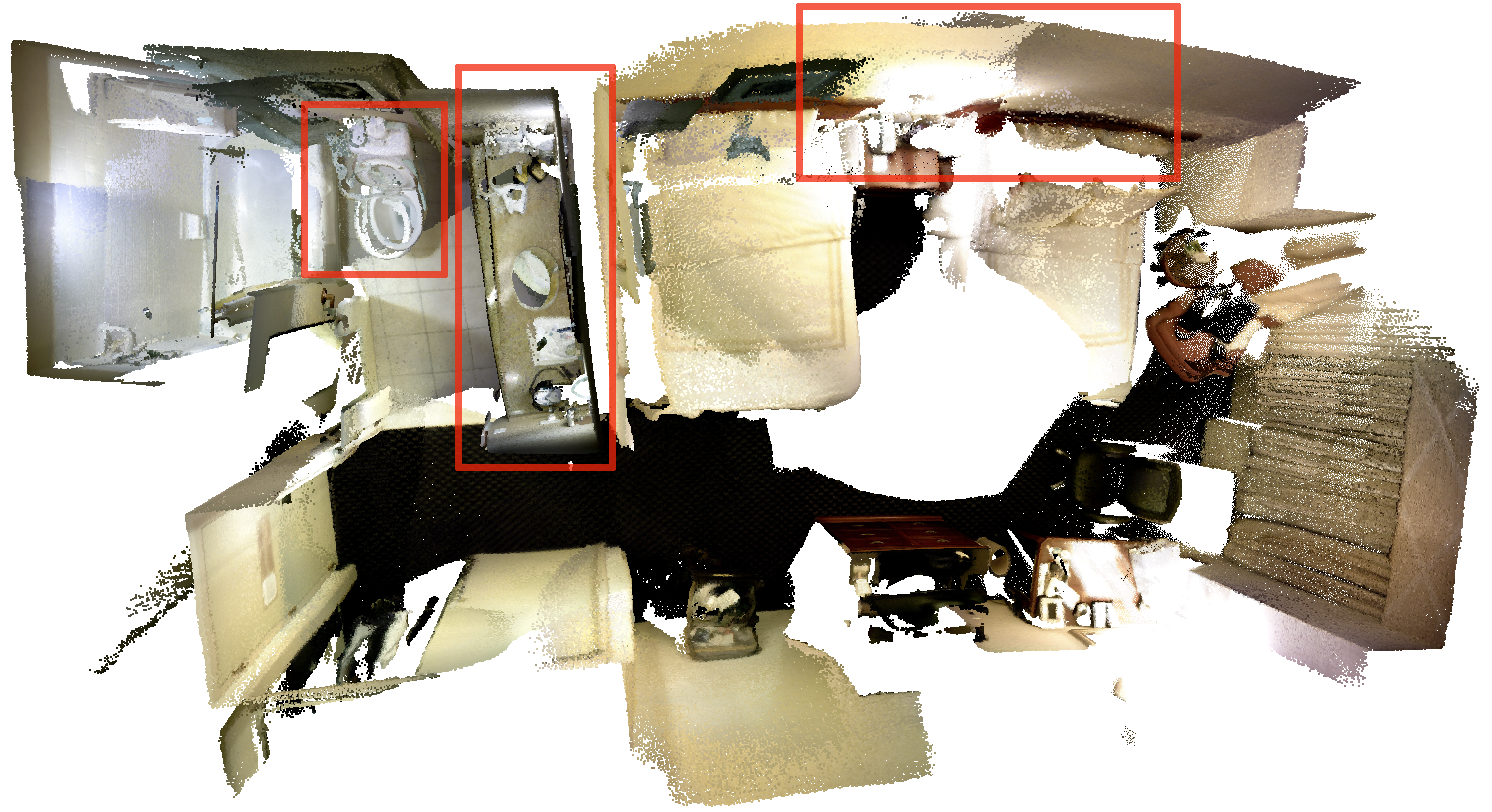

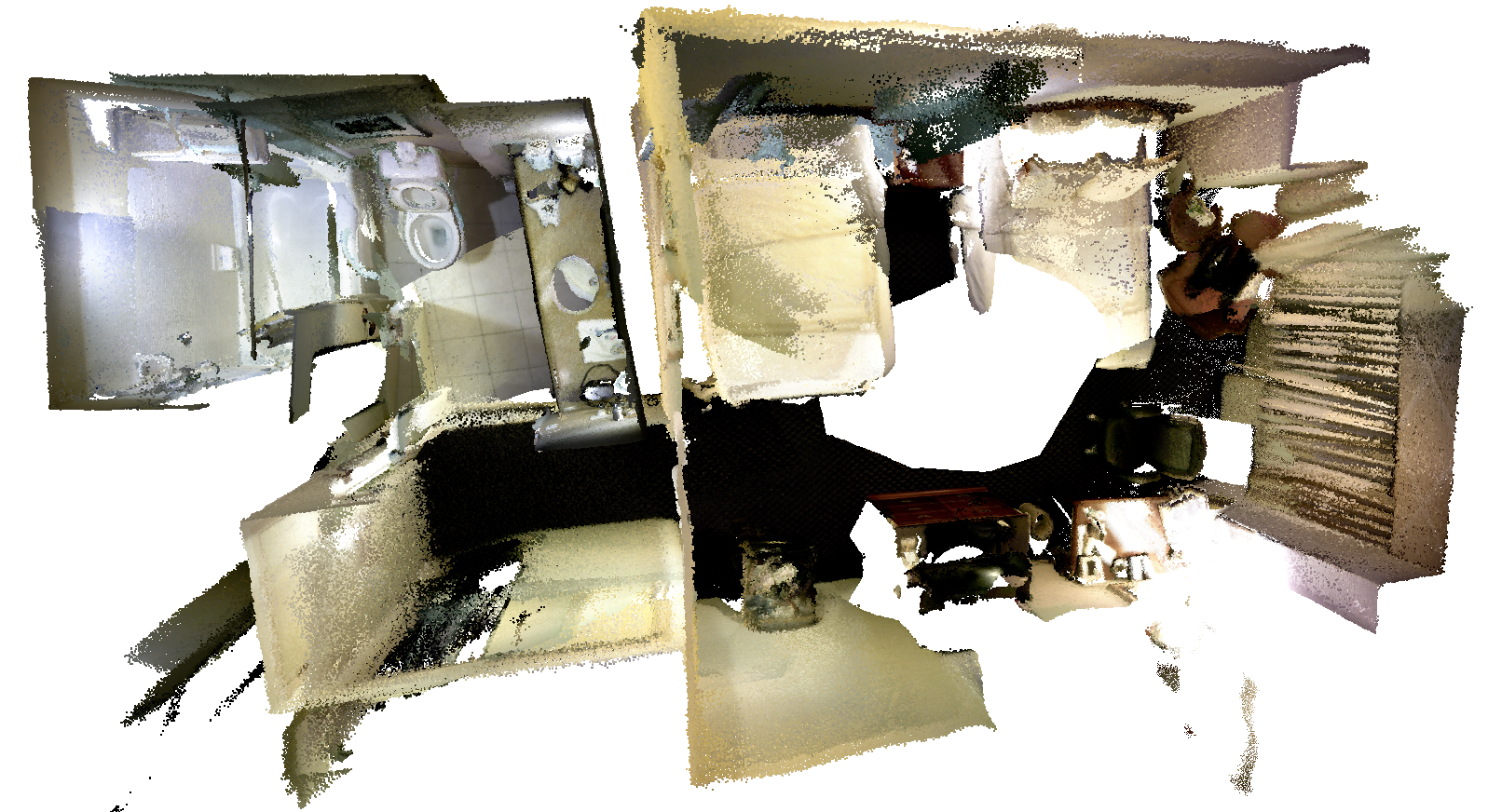

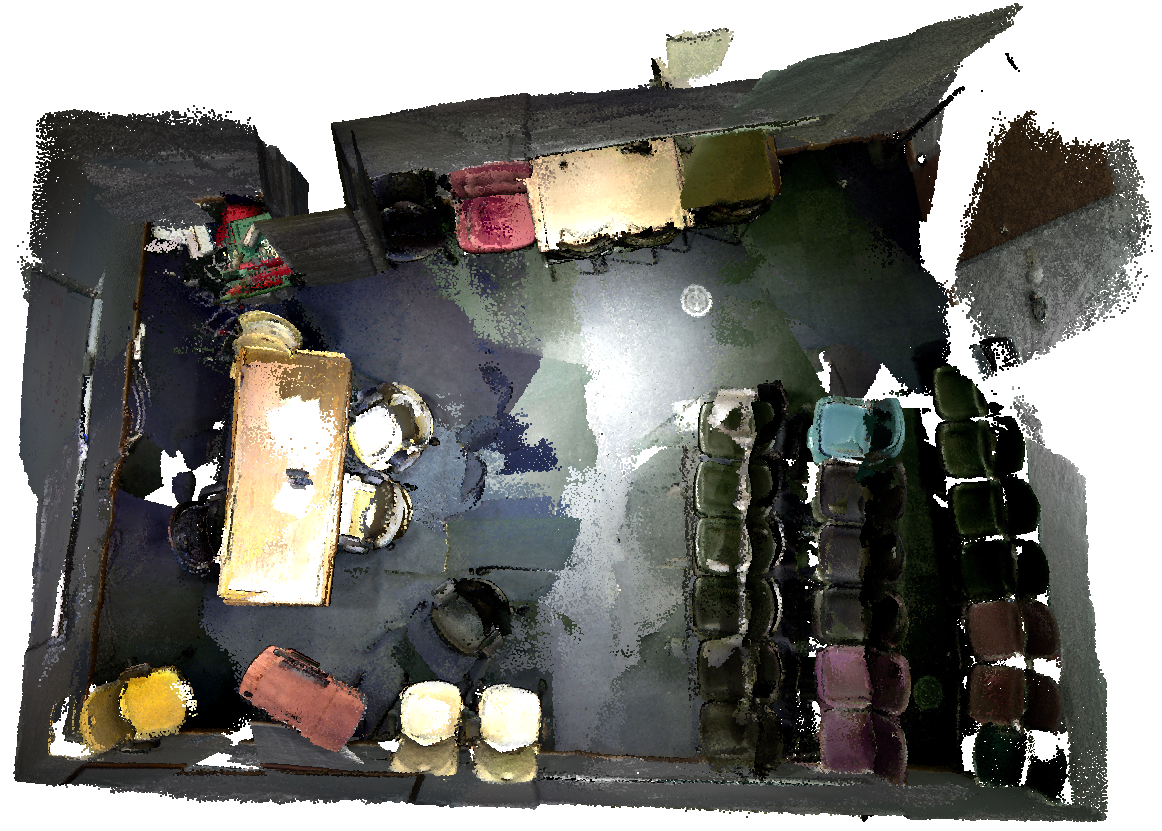

Qualitatively, we show screenshots of reconstructed 3D models in figure 2, where we highlight the mismatches in the tracking results so that readers can better compare them with our results. SUN3D sequences are scanned with very loopy motion in some areas but only once for other parts of the scene, and are therefore considered difficult to process. Readers may refer to http://redwood-data.org/indoor/models.html for CZK results and https://graphics.stanford.edu/projects/bundlefusion/recons.html for BundleFusion results for visual comparison.

CONCLUSION

In this work, we propose an algorithm that sifts and majorizes loop detections. The algorithm tests and decides the acceptance of each loop without a single user-defined threshold. Experiments are conducted on public datasets, including the Augmented ICL-NUIM dataset and the SUN3D dataset. Results show that the proposed method outperforms state-of-the-art methods. It finds key loops with high precision and eliminates significant mismatches when processing SUN3D sequences.

References

- [1] Mur-Artal, R., and Tardos, J. D., 2017. “ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras”. IEEE Transactions on Robotics, 33(5), Oct., pp. 1255–1262.

- [2] Endres, F., Hess, J., Sturm, J., Cremers, D., and Burgard, W., 2014. “3-D Mapping With an RGB-D Camera”. IEEE Transactions on Robotics, 30(1), Feb., pp. 177–187.

- [3] Klein, G., and Murray, D., 2007. “Parallel Tracking and Mapping for Small AR Workspaces”. In Proc. of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, IEEE.

- [4] Dai, A., Nießner, M., Zollhöfer, M., Izadi, S., and Theobalt, C., 2017. “BundleFusion: Real-Time Globally Consistent 3D Reconstruction Using On-the-Fly Surface Reintegration”. ACM Transactions on Graphics, 36(3), May, pp. 1–18.

- [5] Liang, H.-J., Sanket, N. J., Fermuller, C., and Aloimonos, Y., 2019. “SalientDSO: Bringing Attention to Direct Sparse Odometry”. IEEE Transactions on Automation Science and Engineering, 16(4), Oct., pp. 1619–1626.

- [6] Zubizarreta, J., Aguinaga, I., and Montiel, J. M. M., 2020. “Direct Sparse Mapping”. IEEE Transactions on Robotics, 36(4), Aug., pp. 1363–1370.

- [7] Engel, J., Schöps, T., and Cremers, D., 2014. “LSD-SLAM: Large-scale direct monocular SLAM”. In Proc. of the Computer Vision – ECCV 2014. Springer International Publishing, pp. 834–849.

- [8] Newcombe, R. A., Izadi, S., Hilliges, O., Molyneaux, D., Kim, D., Davison, A. J., Kohli, P., Shotton, J., Hodges, S., and Fitzgibbon, A., 2011. “KinectFusion: Real-time dense surface mapping and tracking”. In Proc. of the 2011 10th IEEE International Symposium on Mixed and Augmented Reality, pp. 127–136.

- [9] Whelan, T., Leutenegger, S., Salas-Moreno, R. F., Glocker, B., and Davison, A., 2015. “ElasticFusion: Dense SLAM Without A Pose Graph”. In Proc. of the Robotics: Science and Systems XI, Robotics: Science and Systems Foundation.

- [10] Concha, A., Loianno, G., Kumar, V., and Civera, J., 2016. “Visual-inertial direct SLAM”. In Proc. of the 2016 IEEE International Conference on Robotics and Automation (ICRA), IEEE.

- [11] Usenko, V., Engel, J., Stuckler, J., and Cremers, D., 2016. “Direct visual-inertial odometry with stereo cameras”. In Proc. of the 2016 IEEE International Conference on Robotics and Automation (ICRA), IEEE.

- [12] Nießner, M., Dai, A., and Fisher, M., 2014. “Combining Inertial Navigation and ICP for Real-time 3D Surface Reconstruction”. In Proc. of the Eurographics (Short Papers), Citeseer, pp. 13–16.

- [13] Zhu, A. Z., Atanasov, N., and Daniilidis, K., 2017. “Event-Based Visual Inertial Odometry”. In Proc. of The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

- [14] Dong, J., Fei, X., and Soatto, S., 2017. “Visual-inertial-semantic scene representation for 3D object detection”. In Proc. of The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

- [15] Liu, H., Chen, M., Zhang, G., Bao, H., and Bao, Y., 2018. “ICE-BA: Incremental, consistent and efficient bundle adjustment for visual-inertial SLAM”. In Proc. of The IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

- [16] Cadena, C., Carlone, L., Carrillo, H., Latif, Y., Scaramuzza, D., Neira, J., Reid, I., and Leonard, J. J., 2016. “Past, Present, and Future of Simultaneous Localization and Mapping: Toward the Robust-Perception Age”. IEEE Transactions on Robotics, 32(6), Dec., pp. 1309–1332.

- [17] Galvez-López, D., and Tardos, J. D., 2012. “Bags of binary words for fast place recognition in image sequences”. IEEE Transactions on Robotics, 28(5), Oct., pp. 1188–1197.

- [18] Cummins, M., and Newman, P., 2008. “FAB-MAP: Probabilistic Localization and Mapping in the Space of Appearance”. International Journal of Robotics Research, 27(6), pp. 647–665.

- [19] Kummerle, R., Grisetti, G., Strasdat, H., Konolige, K., and Burgard, W., 2011. “G2o: A general framework for graph optimization”. In Proc. of the 2011 IEEE International Conference on Robotics and Automation, IEEE.

- [20] Zhang, G., and Chen, Y., 2021. “A metric for evaluating 3D reconstruction and mapping performance with no ground truthing”. arXiv:2101.10402.

- [21] Lee, G. H., Fraundorfer, F., and Pollefeys, M., 2013. “Robust pose-graph loop-closures with expectation-maximization”. In Proc. of the 2013 IEEE/RSJ International Conference on Intelligent Robots and Systems, IEEE.

- [22] Sunderhauf, N., and Protzel, P., 2013. “Switchable constraints vs. max-mixture models vs. RRR - A comparison of three approaches to robust pose graph SLAM”. In Proc. of the 2013 IEEE International Conference on Robotics and Automation, IEEE.

- [23] Agarwal, P., Tipaldi, G. D., Spinello, L., Stachniss, C., and Burgard, W., 2013. “Robust map optimization using dynamic covariance scaling”. In Proc. of the 2013 IEEE International Conference on Robotics and Automation, IEEE.

- [24] Sunderhauf, N., and Protzel, P., 2012. “Switchable constraints for robust pose graph SLAM”. In Proc. of 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, IEEE.

- [25] Choi, S., Zhou, Q.-Y., and Koltun, V., 2015. “Robust reconstruction of indoor scenes”. In Proc. of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), IEEE.

- [26] Xiao, J., Owens, A., and Torralba, A., 2013. “SUN3D: A Database of Big Spaces Reconstructed Using SfM and Object Labels”. In Proc. of the 2013 IEEE International Conference on Computer Vision, IEEE.

- [27] Pfister, H., Zwicker, M., van Baar, J., and Gross, M., 2000. “Surfels: Surface Elements as Rendering Primitives”. In Proc. of the 27th annual conference on Computer graphics and interactive techniques - SIGGRAPH '00, ACM Press.