Self-supervised Pseudo Multi-class Pre-training for

Unsupervised Anomaly Detection and Segmentation in Medical Images

Abstract

Unsupervised anomaly detection (UAD) methods are trained with normal (or healthy) images only, but during testing, they are able to classify normal and abnormal (or disease) images. UAD is an important medical image analysis (MIA) method to be applied in disease screening problems because the training sets available for those problems usually contain only normal images. However, the exclusive reliance on normal images may result in the learning of ineffective low-dimensional image representations that are not sensitive enough to detect and segment unseen abnormal lesions of varying size, appearance, and shape. Pre-training UAD methods with self-supervised learning, based on computer vision techniques, can mitigate this challenge, but they are sub-optimal because they do not explore domain knowledge for designing the pretext tasks, and their contrastive learning losses do not try to cluster the normal training images, which may result in a sparse distribution of normal images that is ineffective for anomaly detection. In this paper, we propose a new self-supervised pre-training method for MIA UAD applications, named Pseudo Multi-class Strong Augmentation via Contrastive Learning (PMSACL). PMSACL consists of a novel optimisation method that contrasts a normal image class from multiple pseudo classes of synthesised abnormal images, with each class enforced to form a dense cluster in the feature space. In the experiments, we show that our PMSACL pre-training improves the accuracy of SOTA UAD methods on many MIA benchmarks using colonoscopy, fundus screening and Covid-19 Chest X-ray datasets. The code is made publicly available via this link.

1 Introduction and Background

Detecting and segmenting abnormal lesions from disease screening datasets is a crucial task in medical images analysis (MIA) [54, 57, 35, 29, 2, 20, 38, 33, 32, 37, 59, 10, 51, 12, 30, 63, 9].

A challenging aspect of this problem is that such screening datasets [58, 44] usually contain a disproportionately large number of normal (or healthy) images, and a tiny amount of abnormal (or disease) images that poorly represent all possible disease sub-classes. Instead of designing a fully supervised training approach to handle such a heavily imbalanced labelled dataset with a poor representation of disease sub-classes, we consider in this paper an alternative approach based on unsupervised anomaly detection (UAD) [60, 11, 8], which is trained exclusively with normal images. There are two advantages with such UAD strategy: 1) the acquisition of the training set is straightforward given the large proportion of normal images in screening datasets; and 2) it is not necessary to collect a representative training set containing abnormal images from all disease sub-classes. Nevertheless, this UAD strategy is challenging because the model needs to classify abnormal images without being exposed to them during training.

UAD methods are generally based on a one-class classifier (OCC) that learns a normal image distribution from the normal training images, and test image anomalies (or abnormal images) are detected based on the extent that they deviate from the learned distribution [48, 50, 22, 11, 36, 62, 55, 8, 57, 42, 46, 56, 61]. One fundamental problem in such UAD methods is the learning of effective image feature representations. To detect lesions in medical images, this problem is particularly critical because anomalous lesions may be represented by subtle deviations from normal tissues (e.g., tiny and flat colon polyps) [57]. If not well trained, these UAD methods can overfit the normal training data and learn ineffective image representations, failing to detect and segment subtle abnormal lesions. Previous papers tackle this problem with the use of ImageNet-based pre-trained models, but transferring representations learned from natural images to medical images may not be optimal and can deteriorate the detection performance in medical domain [60].

Another pre-training approach is based on self-supervised learning (SSL) [25, 21, 3, 7, 24, 31, 60, 16, 15, 49], whose effectiveness depends on the relatedness of the pretext tasks and the final MIA classification task, and on assumptions about the training process. SSL pre-training for UAD methods applied to MIA screening problems have shown promising results [60], but they have been sub-optimally explored given that they were adapted from computer vision methods without using MIA domain knowledge in the design of the pretext tasks or in the training process. For instance, even though normal samples can potentially form multiple sub-clusters in the representational space due to the appearance variability within normal training samples, the particular number of such sub-clusters is unknown and hard to define. Hence, in MIA, normal images can be assumed to form a single class, while disease images can be divided into sub-classes characterised by variations in the number and appearance of lesions. Nevertheless, previous SSL methods in UAD [53, 60, 52] extend contrastive learning [7] by sub-dividing the normal class images into multiple classes charaterised by geometric or appearance transformations. Unfortunately, such training process is challenging for MIA UAD that needs to discriminate a single dense class of normal images against a relatively small number of abnormal sub-classes that lie outside the normal class distribution.

In this paper, we propose the Pseudo Multi-class Strong Augmentation via Contrastive Learning (PMSACL), a new self-supervised pre-training method modelled exclusively with normal training images, and designed to learn effective image representations for different types of downstream UAD methods applied to several MIA problems. The main advantage of PMSACL, compared to previous self-supervised pre-training method for MIA applications [60], is that we rely on MIA domain knowledge to design the training and the pretext tasks. In particular, our training uses contrastive learning to classify training samples into multiple dense clusters in terms of Euclidean distance and cosine similarity, with one cluster representing the normal images and the remaining ones representing pseudo sub-classes of the disease images. These pseudo disease sub-classes are synthesised with our MedMix augmentations that simulate a varying number of lesions of different sizes and appearance in the normal training images (see Fig. 2). Please note that our proposed MedMix is inspired by the previous methods based on cut-paste/cut-mix operations from natural and medical images [16, 39, 15, 49]. We summarise our contributions as follows:

-

•

Our PMSACL is the first self-supervised pre-training method specifically designed for MIA UAD applications, where the main advantage lies in the contrastive learning optimisation that learns multiple classes, one for normal images, and the others for pseudo sub-classes of disease images, which are synthesised by our MedMix augmentations by simulating a varying number of lesions of different sizes and appearance.

-

•

We extend our previously published CCD method [60] by proposing two new loss functions to form denser clusters per class, namely: 1) a multi-centring loss to constrain the feature representations of different classes into a subspace around their class centres; and 2) a non-trivial extension of the normalisation of the standard contrastive loss that repels samples from the same class with less intensity than the samples from different classes.

-

•

The proposed PMSACL is shown to learn effective image representations that can adapt well to different types of downstream UAD methods applied to several MIA problems.

We empirically show that PMSACL pre-training significantly improves the performance of two SOTA anomaly detectors, PaDiM [17] and IGD [11]. Extensive experimental results on four different disease screening medical imaging benchmarks, namely, colonoscopy images from two datasets [6, 36], fundus images for glaucoma detection [28] and Covid-19 Chest X-ray (CXR) dataset [64] show that PMSACL can be used to pre-train diverse SOTA UAD methods to improve their accuracy in detecting and segmenting lesions in diverse medical images.

Relationship to Preliminary Work: An early version of this work was presented in our previously published paper [60]. In this new submission, we considerably expand that previous study by: 1) resolving the strong augmentations issue of CCD that does not synthesise medical image anomalies that are relevant for downstream UAD applications; 2) addressing the issues around CCD’s contrastive learning that does not consider that the downstream UAD methods will classify one class of normal images and a few sub-classes of disease images; 3) providing a more comprehensive literature review; 4) adding more experiments using datasets from many medical domains; and 5) including a more in-depth analysis of the proposed PMSACL.

2 Related Work

UAD approaches [48, 50, 22, 11, 36, 62, 55, 8, 57, 42, 34] can be divided into two categories: predictive-based (e.g., DSVDD [47], OC-SVM [13], and deviation network [42]), and generative-based (e.g., auto-encoder [62, 8, 11, 22] and GAN [48, 35, 1]). Predictive-based UAD approaches train a one-class classifier to describe the distribution of normal data, and discriminate abnormal data using their distance/deviation to the normal data distribution; whereas generative-based UAD approaches train deep generative models to learn latent representations of normal images, and detect anomalies based on image reconstruction error [41]. A fundamental challenge in both types of UAD methods is the learning of expressive feature representations from images, which is particularly important in MIA because abnormal medical images may have subtly looking lesions that can be hard to differentiate from normal images. Hence, if not well trained, these UAD models can become over-confident in classifying normal training data and learn ineffective image representations that will fail to enable the detection and segmentation of lesions.

Pre-training is an effective approach to address the representation challenge described above. A heavily explored pre-training approach is based on using ImageNet [18] pre-trained models, but transferring representations learned from natural images to medical images is not straightforward [60]. Alternatively, the representation challenge can also be tackled by pre-training methods based on self-supervised learning (SSL) that learns auxiliary pretext tasks [25, 21, 3, 7, 24, 31]. SSL is a strategy that has produced effective representations for UAD in general computer vision tasks [25, 21, 3, 53]. However, their application to MIA problems needs to be further investigated because it is not clear how to design effective training or pretext tasks that can work well in the detection of subtle lesions in medical images. Previous UAD methods relied on self-supervised pretext tasks based on the prediction of geometric transformations [25, 21, 3] or contrastive learning using standard data augmentation techniques (e.g., scaling, cropping, etc.) [7, 24] to form a large number of image classes characterising similar and dissimilar pairs. These pretext tasks and training strategy are not specifically related to the detection of subtle anomalies in medical images that contain a normal image class and a small number of disease sub-classes, so they may even degrade the detection accuracy of downstream UAD methods [65].

For SSL UAD pre-training in MIA, the only previous work that we are aware is our previously published CCD method [60] that adapts standard contrastive learning and two general computer vision pretext tasks to image anomaly detection and can be applied to multiple downstream UAD methods. Although achieving good results in many benchmarks, the training explored by CCD does not explore the fact that the downstream UAD methods need to recognise one class of normal images and a small number of sub-classes of disease images, and the CCD’s data augmentation will not synthesise relevant medical image anomalies – both issues can challenge the training of downstream UAD approaches.

3 Method

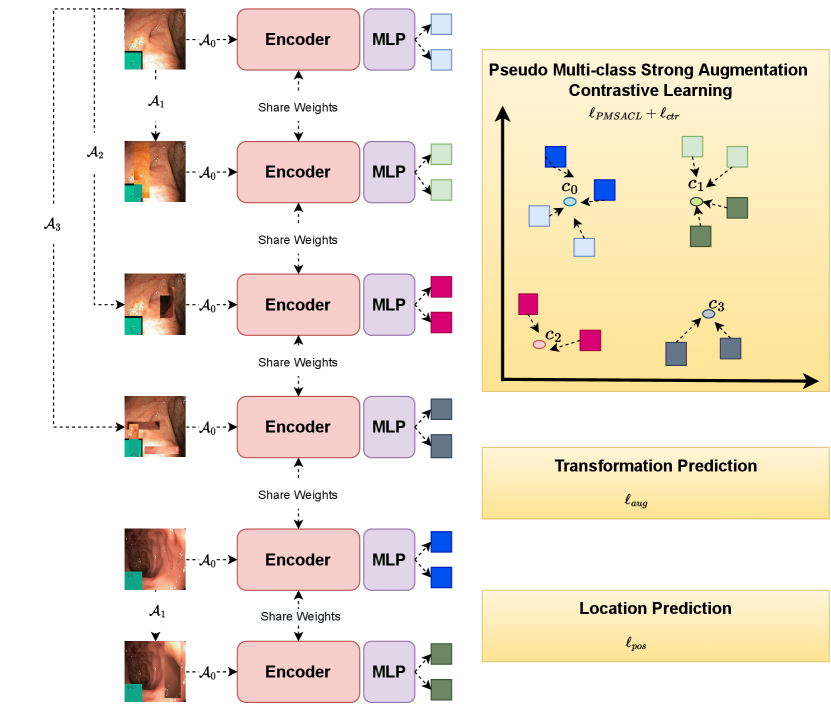

In this section, we introduce the proposed PMSACL pre-training approach depicted in Fig. 1. Given a training medical image dataset , with all images assumed to be from the normal class and (: height, : width, : number of colour channels), our learning strategy involves two stages: 1) the self-supervised pre-training to learn an encoding network (with ), and 2) the fine-tuning of an anomaly detector or segmentation model built from the pre-trained . The approach is evaluated on a testing set , where , and denotes the segmentation mask of the lesion in the image . Below, we first introduce the MedMixdata augmentation in Sec. 3.1, then describe the optimisation proposed for PMSACL in Sec. 3.2, followed by a bried description of the UAD methods in Sec. 3.3.

3.1 MedMix Augmentation

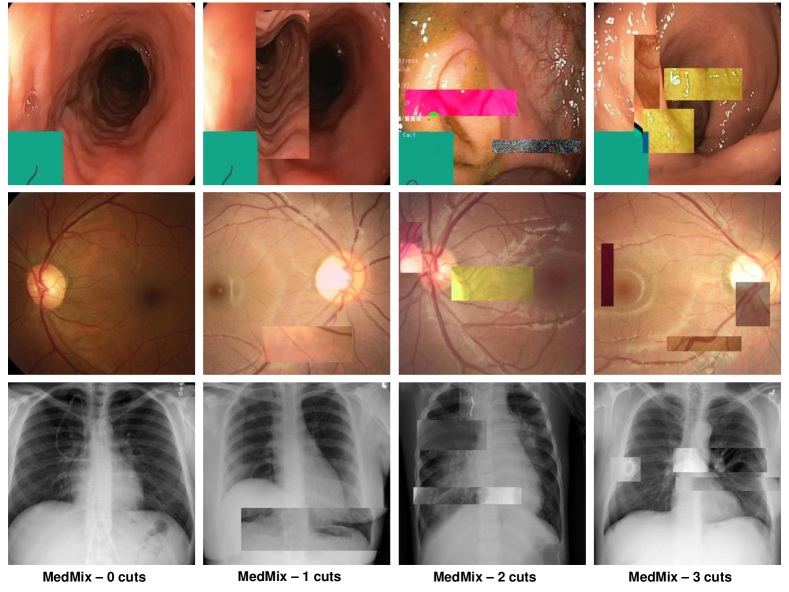

Our MedMix augmentation is designed to augment medical images to simulate multiple lesions. We target a more effective data augmentation for MIA applications than the computer vision augmentations in [60] (e.g., permutations, rotations) that do not simulate medical image anomalies and may yield poor detection performance by downstream UAD methods. We realise that anomalies in different medical domains (e.g., glaucoma and colon polyps) can be visually different, but a commonality among anomalies is that they are usually represented by an unusual growth of abnormal tissue. Hence, we propose the MedMix augmentation to simulate abnormal tissue with a strong augmentation that “constructs” abnormal lesions by the cutting and pasting (from and to normal images) of small and visually deformed patches. This visual deformation is achieved by applying other transformations to patches, such as colour jittering, Gaussian noise and non-linear intensity transformations. This approach is inspired by cutmix [67] and CutPaste [27], where our contribution over those approaches is the intensification of the change present in the cropped patches by the appearance transformations above. These transformations are designed to encourage the model to learn abnormalities in terms of localised image appearance, structure, texture, and colour.

In practice, we design strong augmentation distributions, where includes abnormalities in the image, which means that denotes the normal image distribution and represent the abnormal image distributions, containing anomalous regions. Therefore, our loss targets the classification of MedMix augmentations, as shown in Fig. 2.

3.2 PMSACL Pre-training

The gist of our proposed PMSACL lies in the idea of discriminating the distribution of weakly augmented samples (simulating normal images) from the distributions of different types of strongly augmented samples (simulating multiple classes of abnormal images). Instead of attracting and repelling samples within and between a large number of image classes [53, 60, 52], we propose a new contrastive loss to separate samples from the normal class and samples from pseudo abnormal sub-classes, and to enforce the clusters representing the normal and abnormal sub-classes to be dense. To this end, our proposed loss is defined as:

|

|

(1) |

where denotes the new distribution multi-centring loss, represents the new PMSACL contrastive loss, and are the pretext learning losses to regularise the optimisation [60], and , and are trainable parameters. The loss terms in (1) rely on weak data augmentation distribution, denoted by , and strong data augmentation distributions, represented by , each denoting a different type of augmentation. From each of these distributions, we can sample augmentation functions .

The multi-centring loss in (1) depends on the estimation of the mean representation for each augmentation distribution, computed as

| (2) |

where , with being the mean representation of the training data augmented by the functions sampled from . Note that these mean representations are computed at the beginning of the training and frozen for the rest of the training. The distribution multi-centring loss is then defined as:

| (3) |

which pulls the representations of augmented samples toward their mean representations in (2), making the augmentation clusters dense in Euclidean space.

To further enforce the separation between different clusters and the tightness within each cluster, we introduce a novel contrastive learning loss function. In our contrastive learning, we maximisise the cosine similarity of samples that belong to the same class (i.e., normal or one of the abnormal sub-classes) and minimise the cosine similarity of samples belonging to different classes. An interesting aspect of this optimisation is that samples are centred by their own cluster mean representation from (2), so our contrastive learning, combined with the multi-centred loss in (3) will cluster samples of the same class not only in Euclidean space, but also in inner product space (with cosine measuring similarity between samples). Such re-formulated constrastive learning, combined with the multi-centring loss (3), results in a loss that produces multiple clusters, where cluster contains the normal images and the others, denoted by ), have the synthetised abnormal images. Our PMSACL loss is defined as:

|

|

(4) |

where represents one of two (indexed by ) augmented data obtained from the application of a weak augmentation on a strongly augmented data denoted by with . In (4), we have:

|

|

(5) |

where denotes an indicator function, is defined similarly as in (4), indexes the set of strong augmentations, and indexes one of the two weak augmentations applied to the strongly augmented image. Lastly, to further constrain the normal and strongly augmented data representations in (4), our PMSACL loss minimises the distance between samples centred by their representation means computed as:

| (6) |

where is defined in (2). Also in (4) to map the representations from the same distribution into a denser region of the hyper-sphere [14], we propose a temperature scaling strategy defined as:

| (7) |

where is a scaling factor that controls the shrinkage level of the temperature . As a result, Eq. (7) alters the temperature for the samples that belong to the same strong augmentation distributions (i.e., when ) to a smaller value , which allows smaller amount of repelling strength compared to samples that belongs to strong augmentation distributions (i.e., ). Putting all together, the loss in (4) clusters the image representations into hyper-spheres and regions within the hyper-spheres, where each hyper-sphere and region represent a different type of augmentation.

Inspired by [53, 60], we further constrain the training in (1) with a self-supervised classification constraint that enforces the model to classify the strong augmentation function (Fig. 1):

| (8) |

where is a fully-connected (FC) layer, and is a one-hot vector representing the strong augmentation distribution (i.e., for , and for ).

The final constraint in (1) is based on the relative patch location from the centre of the training image and is adapted for local patches. This constraint is added to learn positional and texture characteristics of the image in a self-supervised manner. Inspired by [19], the positional constraint predicts the relative position of the paired image patches, with its loss defined as

| (9) |

where is a randomly selected fixed-size image patch from , is another image patch from one of its eight neighbouring patches, represents indices to the image lattice, , and is a one-hot encoding of the patch location. The constraints in (8) and (9) are designed to improve training regularisation.

3.3 Anomaly Detection and Segmentation

After pre-training with PMSACL, we fine-tune it with a SOTA UAD, such as IGD [11] or PaDiM [17]. Those methods use the same training set as PMSACL, containing only normal images from healthy patients.

IGD [11] combines three loss functions: 1) two reconstruction losses based on local and global multi-scale structural similarity index measure (MS-SSIM) [66] and mean absolute error (MAE) to train the encoder and decoder , 2) a regularisation loss to train adversarial interpolations from the encoder [5], and 3) an anomaly classification loss to train . The anomaly detection score of image is defined by

| (10) |

where , returns the likelihood that is a normal image, is a hyper-parameter, and

|

|

(11) |

with , and denoting the global and local MS-SSIM scores from the global and local models, respectively [11]. In particular, the Gaussian Anomaly classifier constrains the latent space of the IGD encoder with the following loss:

| (12) |

where the classifier is defined with , represents the encoder parameterised by , with denoting the space of latent embeddings of the auto-encoder. The mean and standard deviation values above are estimated with and , where is a constant that regularises the estimation of to prevent numerical instabilities during training. Such an anomaly classifier optimisation has shown to be less sensitive to outliers compared with other anomaly detection approaches. Anomaly segmentation uses (10) to compute , using global and local models, where is an image patch. This forms a heatmap, where large values of denote anomalous regions. The final heatmap is formed by summing up the global and local heatmaps.

PaDiM [17] utilises the multi-layer features from the pre-trained network to learn a position dependent multi-variate Gaussian distribution of normal image patches. Training uses samples collected from the concatenation of the multi-layer features from each patch position to learn the mean and covariance of the Gaussian model denoted by [17]. Anomaly detection is based on the Mahalanobis distance between the concatenated testing patch feature and the learned Gaussian distribution at that patch position to provide a score of each patch position [17]. In particular, anomaly segmentation is inferred using the following anomaly score map:

| (13) |

and the final score of the whole image is defined as: .

4 Experiments

4.1 Datasets

We test our self-supervised pre-training PMSACL on four health screening datasets, where we run experiments for both anomaly detection and localisation. The datasets for anomaly detection and localisation are: the colonoscopy images of Hyper-Kvasir dataset [6], and the glaucoma dataset using fundus images [28]. We also run anomaly detection without localisation experiments on the following datasets: Liu et al.’s colonoscopy dataset [36], and Covid-19 chest ray dataset [64] – these two datasets do not have lesion segmentation annotations, so we test anomaly detection only.

Hyper-Kvasir is a large multi-class public gastrointestinal imaging dataset [6]. We use a subset of the normal (i.e., healthy) images from the dataset for training. Specifically, 2,100 images from ‘cecum’, ‘ileum’ and ‘bbps-2-3’ are selected as normal, from which we use 1,600 for training and 500 for testing. We also take 1,000 abnormal images and their segmentation masks of polyps to be used exclusively for testing, where all images have size 300 300 pixels.

LAG is a large scale fundus image dataset for glaucoma diagnosis [28]. For the experiments, we use 2,343 normal (negative glaucoma) images for training, and 800 normal images and 1,711 abnormal images with positive glaucoma with annotated attention maps by ophthalmologists for testing, where images are 500 500 pixels. The annotated attention maps are based on eye tracking, in which the maps are used by the ophthalmologists to explore the region of interest for glaucoma diagnosis [28].

Liu et al.’s colonoscopy dataset is a colonoscopy image dataset with 18 colonoscopy videos from 15 patients [36]. The training set contains 13,250 normal (healthy) images without polyps, and the testing set contains 967 images, with 290 abnormal images with polyps and 677 normal (healthy) images without polyps, where all images have size 64 64 pixels.

Covid-X [64] has a training set with 1,670 Covid-19 positive and 13,794 Covid-19 negative CXR images. The test set contains 400 CXR images, consisting of 200 positive and 200 negative images. We train the methods with the 13,794 Covid-19 negative CXR training images and test on the 400 CXR images, where images are 299 299 pixels.

4.2 Implementation Details

For the proposed PMSACL pre-training, we use Resnet18 [23] as the backbone architecture for the encoder , and similarly to previous works [7, 52], we add an MLP to this backbone as the projection head for the contrastive learning, which outputs features in of size 128. All images from the Hyper-Kvasir [6], LAG [28] and Covid-X [64] datasets are resized to 256 256 pixels. For the Liu et al.’s colonoscopy dataset [36], we use the original image size of 64 64 pixels. The batch size is set to 32 and learning rate to 0.01 for the self-supervised pre-training on all datasets. The model is trained using stochastic gradient descent (SGD) optimiser with momentum.

We investigate the impact of different strong augmentations in , including rotation, permutation, cutout, Gaussian noise and our proposed MedMix. For MedMix patches, we randomly apply colour jittering, Gaussian noise, and non-linear intensity transformations (i.e., fisheye and horizontal wave transformations). In particular, the probability of applying the colour jittering, Gaussian noise, fisheye, and horizontal wave transformations is 25%. For the patch selection and placement, we randomly cropped a patch from a random image within the minibatch and randomly paste the cropped patch into a random location of another random image from the minibatch. For non-linear transformation, three randomly selected control points are used to alter the MedMix cropped patches and we follow the default settings from the opencv library. The weak augmentations in are the same as in SimCLR [7], namely: colour jittering, random greyscale, crop, resize, and Gaussian blur. We use the same weak augmentation hyper-parameters as the original SimCLR paper (i.e., Gaussian kernel parameters, crop resolution, etc).

The model pre-trained with PMSACL is fine-tuned with IGD [11] or PaDiM [17]. For IGD [11], we pre-train the global and local models (see Figure 1), where the patch position prediction loss in Eq. 9 is only fine-tuned for the local model. For PaDiM [17], we pre-train the global model and use it to fine-tune the anomaly detection and segmentation models. For the training of IGD [11] and PaDiM [17], we use the hyper-parameters suggested by the respective papers. In our experiments, the local map for IGD is obtained by considering each 3232-pixel patch as an instance and apply our proposed self-supervised learning to it. The global map for IGD is computed based on the whole image sized as 256 256 pixels for Hyper-Kvasir, LAG and Covid-X datasets. For Liu et al.’s colonoscopy dataset, we only train the model globally with the image size 64 64. For the auto-encoder in IGD, we use the setup suggested in [11], where the global model is trained with images of size 256256 pixels or 64 64 for Liu et al.’s colonoscopy dataset, and the local model is trained with image patches of size 3232. For PaDiM [17], we only use the default setup in their work and compute the segmentation mask based on the images of size 256256 pixels for Hyper-Kvasir, LAG and Covid-X datasets, and 64 64 for Liu et al.’s colonoscopy dataset.

4.3 Evaluation Measures

The anomaly detection performance is quantitatively assessed by the area under the receiver operating characteristic curve (AUROC), specificity, sensitivity and accuracy. AUROC assesses anomaly detection by varying the classification threshold and computing the area under the ROC curve. Sensitivity and specificity reflect the percentage of positives and negatives that are correctly detected. Accuracy shows the overall performance of correctly detected samples for both positive and negative images, where the classification threshold is estimated with a small validation set that contains 50 normal and 50 abnormal images that are randomly sampled from the testing set. Note that the validation set is only used for threshold estimation. For anomaly segmentation, the performance is measured by Intersection over Union (IoU), Dice score, and Pro-score [4]. IoU is computed by dividing the intersection by the union between the predicted segmentation and the ground truth mask. Dice also takes the predicted segmentation and the ground truth mask and divides two times their intersection by their sum. Pro-score weights the ground-truth masks of different sizes equally [4] to verify if both large and small abnormal lesions are accurately segmented.

| Methods | AUC | Specificity | Sensitivity | Accuracy |

| DAE [40] | 0.705 | 0.522 | 0.756 | 0.693 |

| OCGAN [43] | 0.813 | 0.691 | 0.811 | 0.795 |

| f-AnoGAN [48] | 0.907 | 0.846 | 0.915 | 0.883 |

| ADGAN [35] | 0.913 | 0.879 | 0.946 | 0.893 |

| MS-SSIM [11] | 0.917 | 0.857 | 0.925 | 0.912 |

| PANDA [45] | 0.937 | 0.805 | 0.919 | 0.917 |

| CutPaste [27] | 0.949 | 0.847 | 0.957 | 0.932 |

| PaDiM [17] | 0.943 | 0.846 | 0.929 | 0.898 |

| CCD - PaDiM | 0.978 | 0.923 | 0.961 | 0.967 |

| PMSACL - PaDiM | 0.996 | 0.966 | 0.981 | 0.983 |

| IGD [11] | 0.939 | 0.858 | 0.913 | 0.906 |

| CCD - IGD | 0.972 | 0.934 | 0.947 | 0.956 |

| PMSACL - IGD | 0.995 | 0.947 | 0.965 | 0.972 |

4.4 Anomaly Detection Results

In this section, we show the anomaly detection results on all datasets.

4.4.1 Hyper-Kvasir

In Table 1, we show the results of anomaly detection on Hyper-Kvasir dataset, where we present results from baseline UAD methods, including OCGAN [43], f-AnoGAN [48], ADGAN [36], and deep autoencoder (DAE) [40] and its variant with MS-SSIM loss [11]. As discussed in Section 3.3, we choose IGD [11] and PaDiM [17] as the anomaly detector for evaluating our proposed PMSACL pre-training approach and compare it with our previously proposed CCD pre-training approach [60] to fine-tune IGD and PaDiM.

Comparing with the baseline UAD methods, the performance of PaDiM and IGD are improved using our PMSACL pre-trained encoder by around 5% and 6% AUC, which achieves SOTA anomaly detection AUC results of 99.6% and 99.5%, respectively, on Hyper-Kvasir. Comparing with our previously proposed CCD pre-training [60], our proposed PMSACL pre-training improves the performance by 2.3% and 1.8% for PaDiM and IGD. This shows that our proposed MedMix and PMSACL loss improve the generalisation ability of the fine-tuning stage for anomaly detection and produce better constrained feature space of normal samples. Moreover, achieving SOTA results on two different types of anomaly detectors suggests that our self-supervised pre-training can produce good representations for both generative and predictive anomaly detectors.

OCGAN [43] constrains the latent space based on two discriminators to force the latent representations of normal data to fall at a bounded area. f-AnoGAN [48] uses an encoder to extract the feature representations of a input image and use a GAN to reconstruct it. ADGAN [35] uses two generators and two discriminators to produce realistic reconstruction of normal samples. These three methods achieve 81.3%, 90.7% and 91.3% AUC on Hyper-Kvasir, respectively, which are well below our self-supervised PMSACL pre-training with IGD and PaDiM. Also, the recently proposed state-of-the-art (SOTA) methods PANDA [45] and CutPaste [27] achieve significantly inferior performance than our PMSACL pre-trained anomaly detectors. Note that CutPaste uses a similar augmentation strategy as MedMix, but with inferior results, indicating the effectiveness of our proposed PMSACL self-supervised loss function. Furthermore, PaDiM with our PMSACL pre-training can achieve the SOTA results of 96.6% specificity, 98.1% sensitivity and 98.3% accuracy. It improves the previous PaDiM using CCD pre-training by 4.3%, 2% and 1.6% for these three evaluation measures. Finally, PaDiM pre-trained with PMSACL significantly outperforms the PaDiM pre-trained with ImageNet [17] by 12%, 5.2% and 8.5% in terms of these three evaluation measures.

| Methods | AUC | Specificity | Sensitivity | Accuracy |

| MS-SSIM [11] | 0.823 | 0.257 | 0.937 | 0.774 |

| f-AnoGAN [48] | 0.778 | 0.565 | 0.899 | 0.763 |

| PANDA [45] | 0.789 | 0.624 | 0.869 | 0.767 |

| CutPaste [27] | 0.745 | 0.372 | 0.788 | 0.685 |

| PaDiM [17] | 0.688 | 0.314 | 0.809 | 0.673 |

| CCD - PaDiM | 0.728 | 0.429 | 0.779 | 0.694 |

| PMSACL - PaDiM | 0.761 | 0.466 | 0.877 | 0.753 |

| IGD [11] | 0.796 | 0.396 | 0.958 | 0.805 |

| CCD - IGD | 0.874 | 0.572 | 0.944 | 0.875 |

| PMSACL - IGD | 0.908 | 0.531 | 0.979 | 0.884 |

4.4.2 LAG

| Methods | AUC | Specificity | Sensitivity | Accuracy |

| DAE [40] | 0.629* | 0.733* | 0.554* | 0.597* |

| OCGAN [43] | 0.592* | 0.716* | 0.534* | 0.624* |

| ADGAN [35] | 0.730* | 0.852* | 0.496* | 0.713* |

| f-AnoGAN [48] | 0.735 | 0.865 | 0.579 | 0.694 |

| PANDA [45] | 0.719 | 0.846 | 0.551 | 0.671 |

| CutPaste [27] | 0.779 | 0.895 | 0.772 | 0.738 |

| PaDiM [17] | 0.741 | 0.851 | 0.738 | 0.751 |

| CCD - PaDiM | 0.789 | 0.946 | 0.792 | 0.767 |

| PMSACL - PaDiM | 0.814 | 0.973 | 0.725 | 0.803 |

| IGD [11] | 0.787 | 0.914 | 0.596 | 0.743 |

| CCD - IGD | 0.837 | 0.985 | 0.774 | 0.815 |

| PMSACL - IGD | 0.851 | 0.986 | 0.792 | 0.829 |

We evaluate the performance of our PMSACL pre-training on the LAG dataset and show results on Table 2. Our PMSACL pre-training improves PaDiM and IGD AUCs by 7.3% and 11.2%, compared with their ImageNet pre-trained model, where the PMSACL pre-trained IGD achieves the SOTA results of 90.8% AUC, 97.9% sensitivity and 88.4% accuracy. Comparing with our previous CCD pre-trained PaDiM and IGD [60], our proposed PMSACL pre-trained PaDiM and IGD surpass them by 3.3% and 3.4% in terms of AUC. The MS-SSIM autoencoder [11], f-AnoGAN [48], PANDA [45], and CutPaste [27] baselines achieve 82.3%, 77.8%, 78.9%, and 74.5% AUC, respectively, which are significantly inferior compared with our PMSACL pre-trained IGD. For LAG, IGD with both reconstruction and anomaly classification constraints can generally outperform PaDiM variants, indicating the superiority of IGD when handling the subtle image features to detect glaucoma.

4.4.3 Liu et al.’s Colonoscopy Dataset

We further test our approach on Liu et al.’s colonoscopy dataset [35], as shown in Table 3. Our PMSACL pre-trained PaDiM improves the ImageNet pre-trained PaDiM by 7.3% AUC, and CCD pre-trained PaDiM by 2.5% of AUC. The IGD with the PMSACL pre-trained encoder achieves the SOTA result of 85.1% AUC, surpassing the previous CCD and ImageNet pre-trained IGD by 1.4% and 6.4% AUC, respectively.

Compared with other UAD approaches, such as f-AnoGAN, ADGAN, OCGAN, PANDA, and CutPaste that achieve 73.5%, 73%, 59.2%, 70.2% and 77.9% AUC, our PMSACL pre-trained IGD and PaDiM produce substantially better results. The gap between PaDiM and IGD may be due to the low resolution of the images in this dataset, which hinders the PaDiM performance that requires dense intermediate feature maps. The additional results of the PMSACL pre-trained IGD are specificity of 98.6%, sensitivity of 79.2%, and accuracy of 82.9%, which demonstrate the robustness of our proposed model.

| Methods | AUC | Specificity | Sensitivity | Accuracy |

| MS-SSIM [11] | 0.634 | 0.572 | 0.406 | 0.577 |

| f-AnoGAN [48] | 0.669 | 0.718 | 0.365 | 0.532 |

| PANDA [45] | 0.629 | 0.762 | 0.447 | 0.591 |

| CutPaste [27] | 0.658 | 0.701 | 0.494 | 0.648 |

| PaDiM [17] | 0.614 | 0.753 | 0.318 | 0.559 |

| CCD - PaDiM | 0.632 | 0.673 | 0.569 | 0.616 |

| PMSACL - PaDiM | 0.658 | 0.749 | 0.467 | 0.615 |

| IGD [11] | 0.699 | 0.885 | 0.490 | 0.688 |

| CCD - IGD | 0.746 | 0.851 | 0.595 | 0.722 |

| PMSACL - IGD | 0.872 | 0.863 | 0.775 | 0.813 |

4.4.4 Covid-X

Table 4 shows that Covid-X results, where our PMSACL pre-trained PaDiM and IGD methods achieve 65.8% and 87.2% AUC on the Covid-X dataset, significantly surpassing their ImageNet pre-trained approaches by 4.4% and 17.2% AUC, and CCD pre-trained by 2.6% and 12.6% AUC. Moreover, our approaches achieve significantly better performance when compared against current SOTA MS-SSIM, f-AnoGAN, PANDA, and CutPaste baselines. The small abnormal lesions in chest X-ray images are hard to detect, so the generative-based anomaly detector IGD can learn more effectively the fine-grained appearances of normal images, leading to better ability to detect unseen anomalous regions during testing with the SOTA results of 87.2% AUC, 77.5% of sensitivity and 81.3% of accuracy. The PMSACL pre-trained IGD achieves 86.3% specificity, which is competitive with the result from IGD pre-trained with ImageNet. It can also be observed that our PMSACL pre-trained PaDiM and IGD improve sensitivity by 14.9% and 28.5%, when compared to the ImageNet pre-trained PaDiM and IGD.

4.4.5 Variability in the Results

We show on Table 5 the standard deviation computed from the AUC, specificity, sensitivity and accuracy results of five different trainings based on different model initialisation of the PMSACL pre-trained PaDiM detector. These results in Table 5 should be studied together with the Tables 1, 2, 4. In general, we conclude that the differences between the methods described in the sections above can be considered significant in most cases given that the standard deviation only varies from to .

| Dataset | AUC | Specificity | Sensitivity | Accuracy |

| Hyper-Kvasir [6] | 0.0084 | 0.0079 | 0.0127 | 0.0036 |

| LAG [28] | 0.0163 | 0.0085 | 0.0105 | 0.0121 |

| Covid-X [64] | 0.0107 | 0.0149 | 0.0092 | 0.0171 |

4.5 Anomaly Localisation Results

In this section, we show the anomaly localisation results on Hyper-Kvasir and LAG.

4.5.1 Hyper-Kvasir

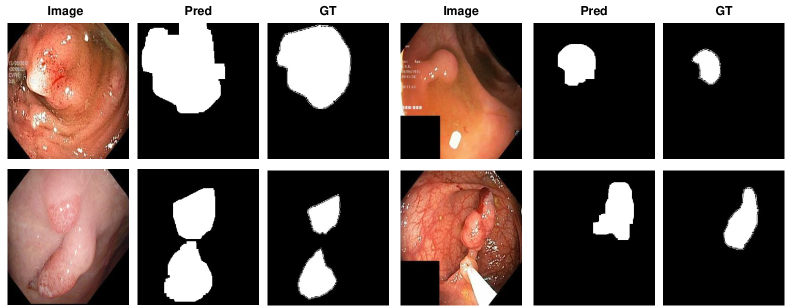

We demonstrate the anomaly localisation performance on Hyper-Kvasir on Table 6. Following [60], we randomly sample 100 abnormal images from the test set and compute the mean segmentation performance over five different such groups of 100 images. The proposed PMSACL pre-training improves the IGD and PaDiM by 1.2% and 2.8% IoU compared with the CCD pre-training, and 8.1% and 6.4% IoU with respect to the ImageNet pre-training, respectively. In addition, our PMSACL pre-trained PaDiM shows the SOTA result of 40.6% IoU and 55.4% Dice, demonstrating the effectiveness of our PMSACL approach for abnormal lesion segmentation. The CCD version of PaDiM achieves the SOTA result of 88.1% Pro-score.

| Methods | IoU | Dice | Pro |

| PaDiM [17] | 0.341 | 0.475 | 0.803 |

| CCD - PaDiM | 0.378 | 0.497 | 0.881 |

| PMSACL - PaDiM | 0.406 | 0.554 | 0.854 |

| IGD [11] | 0.303 | 0.417 | 0.794 |

| CCD - IGD | 0.372 | 0.502 | 0.865 |

| PMSACL - IGD | 0.384 | 0.521 | 0.876 |

4.5.2 LAG

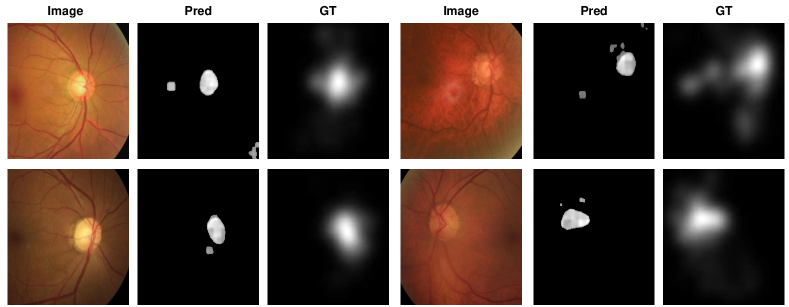

We further demonstrate the segmentation results on LAG dataset on Table 7. The PMSACL pre-trained IGD achieves the SOTA result of 51.6% IoU, 66.7% Dice and 69.3% Pro-score, showing that our model can effectively segment different types of lesions, such as colon polyps or optic disk and cup with Glaucoma. Moreover, PaDiM pre-trained with PMSACL improves PaDiM pre-trained with CCD and ImageNet by 1.3% and 4.8% IoU, respectively. Also, PaDiM with PMSACL pre-training achieves 64.3% Dice and 62.8% Pro-score, which are comparable to the SOTA results by the PMSACL pre-trained IGD.

| Methods | IoU | Dice | Pro |

| PaDiM [17] | 0.427 | 0.579 | 0.596 |

| CCD - PaDiM | 0.462 | 0.612 | 0.634 |

| PMSACL - PaDiM | 0.475 | 0.643 | 0.628 |

| IGD [11] | 0.409 | 0.539 | 0.603 |

| CCD - IGD | 0.509 | 0.645 | 0.677 |

| PMSACL - IGD | 0.516 | 0.667 | 0.693 |

4.6 Qualitative Results

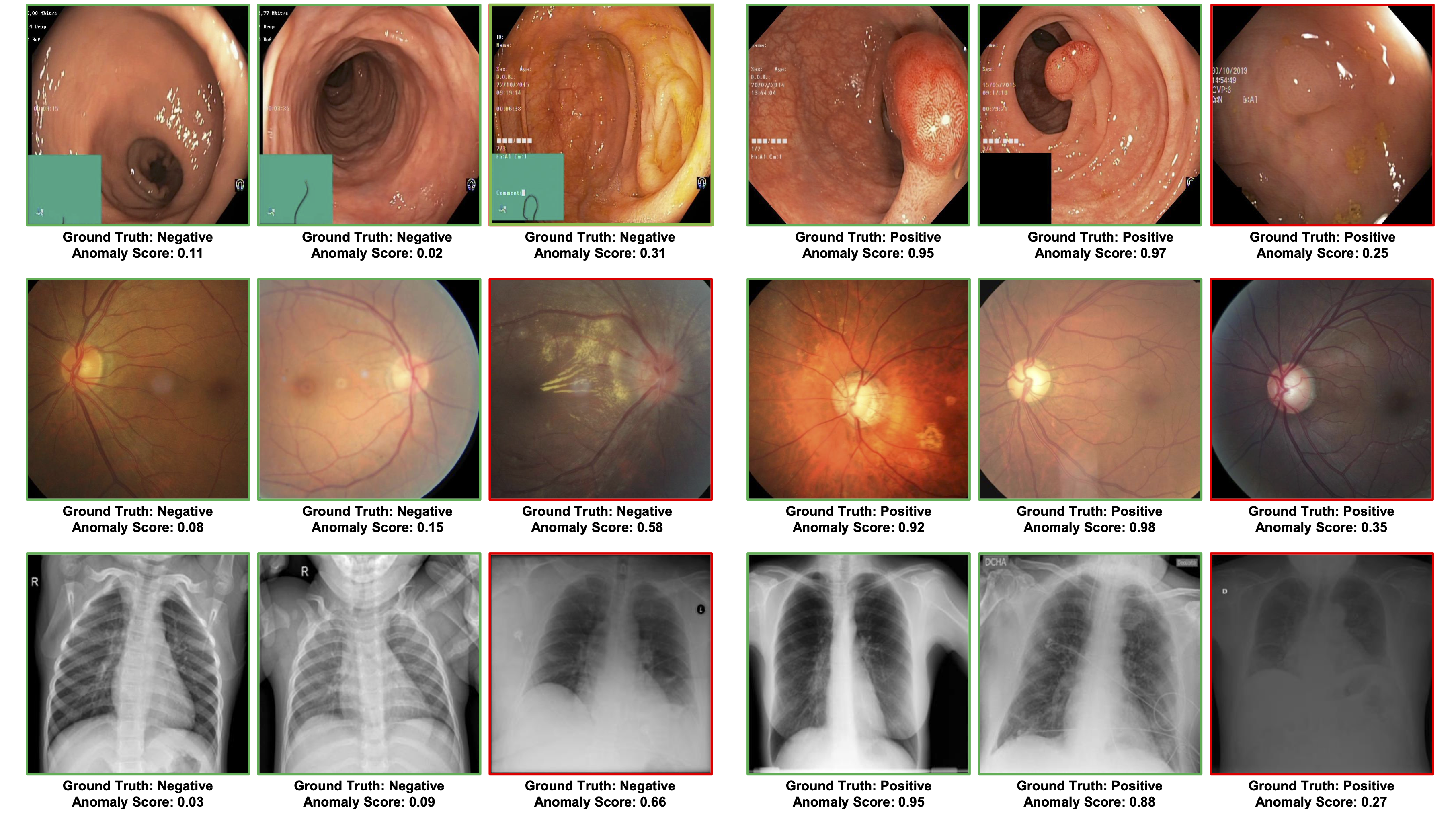

In this section, we show examples of anomaly localisation and detection results, and t-SNE results displaying the distribution of image representations of the normal and pseudo abnormal classes in the feature space.

4.6.1 Anomaly Localisation and Detection Visual Results

The visualisation of polyp localisation results of PaDiM with PMSACL pre-training on Hyper-Kvasir [6] is shown in Fig. 3. Notice that our model can effectively localise colon polyps with various sizes and shapes. We also show the localisation results based on the pixel-level anomaly scores of IGD with PMSACL pre-training on the LAG dataset in Fig. 4.

4.7 Ablation Study

In this section, we study the roles played by PMSACL components. We start by investigating the loss terms in (1). Then we study the impact of using different types of strong data augmentation to generate the pseudo abnormal images and the number of pseudo abnormal classes in MedMix (i.e., the size in (1)). We also compare our approach with other recently proposed self-supervised pre-training approaches.

| CCD | MedMix | AUC - Hyper | AUC - LAG | |||

| ✓ | 0.978 | 0.728 | ||||

| ✓ | ✓ | 0.985 | 0.739 | |||

| ✓ | ✓ | ✓ | 0.990 | 0.745 | ||

| ✓ | ✓ | ✓ | ✓ | 0.993 | 0.753 | |

| ✓ | ✓ | ✓ | ✓ | ✓ | 0.996 | 0.761 |

4.7.1 Loss Terms in PMSACL pre-training

We present an ablation study that shows the influence of each term of our proposed PMSACL pre-training in (1) following PaDiM fine-tuning in Table 8 on Hyper-Kvasir and LAG datasets. Starting from our previous CCD framework [60], which includes the CCD contrastive loss, the strong augmentation loss , and the patch location prediction loss , the performance can reach 97.8% and 72.8% AUC on Hyper-Kvasir and LAG datasets, respectively. Moreover, we notice that MedMix can improve the AUC on both datasets by 1%. Our proposed without temperature scaling strategy and multi-centring loss can improve 0.5% and 0.6% of AUC on both datasets. Then, adding the proposed multi-centring loss and temperature scaling from (7) further boosts the performance to the state-of-the-art results of 99.6% and 76.1% AUC on both datasets. This indicates that the joint training of and with temperature scaling strategy can learn better fine-grained low-dimensional features for the downstream anomaly detectors (i.e., producing denser cluster for normal images).

| Methods | AUC | Specificity | Sensitivity | Accuracy |

| ImageNet | 0.943 | 0.846 | 0.929 | 0.898 |

| SimCLR [7] | 0.945 | 0.794 | 0.942 | 0.914 |

| Rot-Net [21] | 0.938 | 0.856 | 0.905 | 0.905 |

| CSI [53] | 0.946 | 0.952 | 0.914 | 0.933 |

| DROC [52] | 0.931 | 0.954 | 0.881 | 0.914 |

| SupCon [26] | 0.946 | 0.912 | 0.953 | 0.928 |

| CCD [60] | 0.978 | 0.923 | 0.961 | 0.967 |

| PMSACL | 0.996 | 0.966 | 0.981 | 0.983 |

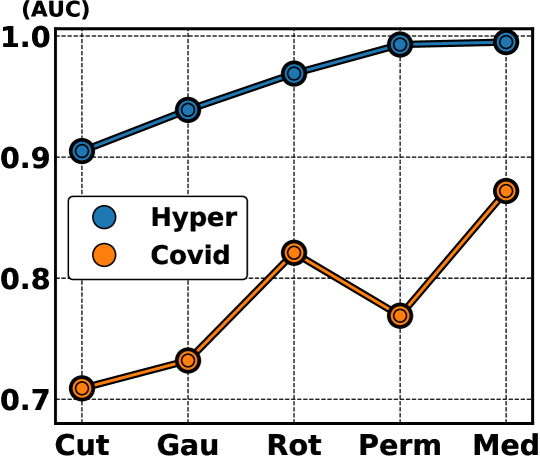

4.7.2 Strong Augmentations

In Fig. 6, we explore the influence of strong augmentation strategies, represented by rotation, permutation, cutout, Gaussian noise and our proposed MedMix on the AUC results of Hyper-Kvasir and Covid-X datasets, based on our self-supervised PMSACL pre-training with IGD as anomaly detector. The performance of our MedMix reaches the SOTA results of 99.5% and 87.2% on those datasets. The second best AUC (96.9%) on Hyper-Kvasir uses random permutations, which were used in CCD pre-training [60], producing an AUC 0.2% worse than our MedMix. For Covid-X, rotation is the second best data augmentation approach with an AUC result that is 5.1% worse than MedMix. Other approaches do not work well with the appearance characteristics of X-ray images, yielding significantly worse results than our MedMix on Covid-X. These results suggest that the use of MedMix as the strong augmentation yields the best AUC results on different medical image benchmarks.

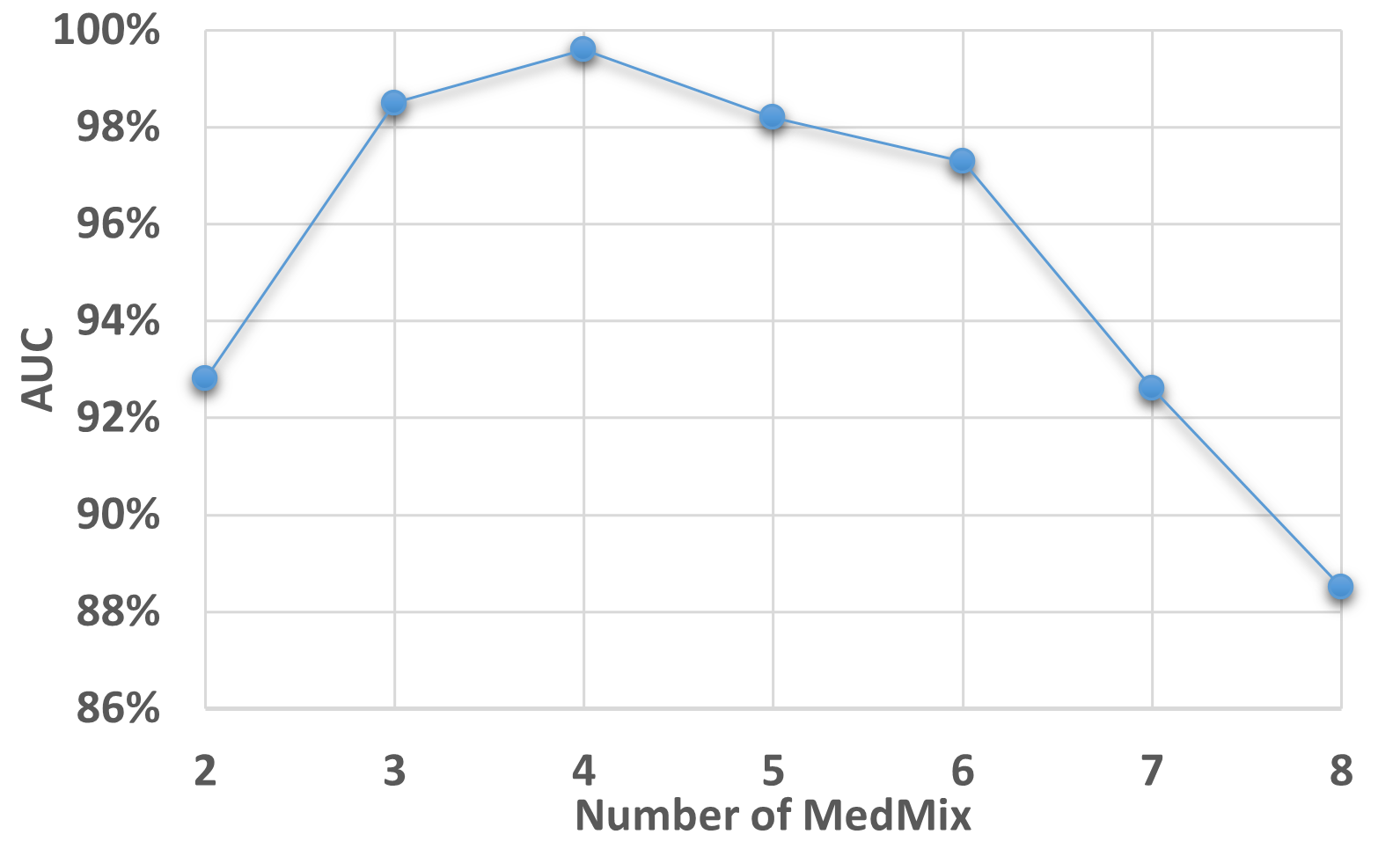

4.7.3 MedMix Augmentations

In Fig. 7, we explore the influence of the number of MedMix augmentation distributions (i.e., ) on the AUC results of Hyper-Kvasir, based on our self-supervised PMSACL pre-training and PaDiM anomaly detector. Our model achieves the best performance when strong augmentation distributions, when it reaches around 98% to 99% AUC. The AUC declines when or . The performance deterioration when is due to an insufficient number of pseudo abnormal training regions from the strong augmentation distributions. When the number of strong augmentation distributions increases to , the pseudo abnormalities may hide most of the normal image regions, causing the model to become over-confident when classifying the pseudo abnormal regions.

4.7.4 Other Self-supervised Methods

In Table 9, we show the results of different pre-training approaches with PaDiM as anomaly detector, on Hyper-Kvasir testing set. It can be observed that our PMSACL approach surpasses the previous SOTA CCD pre-training [60] by 2.2% AUC. Other pre-training methods proposed in computer vision (e.g., ImageNet pre-training, SimCLR [7], Rot-Net [21]) achieve worse results than CCD and PMSACL. An interesting point in this comparison is the relatively poor result from ImageNet pre-training, suggesting that it may not generalise well for anomaly detection in medical images. Finally, our PMSACL achieves better results than previous SOTA UAD SSL approaches CSI [53] and DROC [52] by about 4% to 5% AUC, indicating the effectiveness of our new contrastive loss. We also compare the SOTA supervised contrastive learning SupCon [26], which re-formulates the contrastive loss as a supervised task. To validate the effectiveness of our proposed PMSACL contrastive loss, we adapted SupCon to our pseudo multi-class pre-training paradigm for performance comparison. The anomaly detection performance of our PMSACL significantly surpasses SupCon. We argue that such performance improvement is due to the fact that SupCon does not contrast the samples from the same classes, missing the chance of learning fine-grained normality features between those samples.

| Methods | Hyper | Liu et al. | LAG | Covid |

| Random Centre | 0.902 | 0.792 | 0.803 | 0.795 |

| Equidistant Centre | 0.912 | 0.772 | 0.825 | 0.814 |

| Re-estimate Centre | 0.985 | 0.864 | 0.878 | 0.876 |

| Centres from untrained encoder | 0.995 | 0.851 | 0.908 | 0.872 |

4.7.5 Centering Strategies

As aforementioned, UAD models often suffer from the issue of catastrophic collapse [47, 11], where all training samples are projected to a single point in the representation space. To avoid such an issue, previous UAD methods used a pre-defined class centre and kept it fixed throughout the entire training. We also observe such catastrophic collapse issue when iteratively updating the class centres during our experiments. In particular, the optimisation processes often fail with some of the random seeds and we observed that roughly only one out of five optimisation processes can be successful. Another major drawback of iteratively updating the latent centre through the training process is that such a process often requires a significant amount of computation time due to the large number and resolution of training medical images. From our experiment of re-estimating centres, the overall training time increased about three times. We found that pre-defining the class centres enables the best performance. As shown in Table 10, we added a new ablation study to compare the performance with regard to randomly defined centres, pre-defined equidistant vectors as centres, re-estimated centres over multiple epochs, and our approach of pre-defined centres from an untrained model, on all four medical anomaly detection datasets. The inferior performance of randomly defined centres and pre-defined equidistant centres is because those centres may fall in sub-spaces that are too distant from the actual representation distribution from the beginning of the training process, leading to ineffective optimisation and challenging the optimisation process. Lastly, re-estimating centres every 5 epochs achieves comparable performance than our approaches but sacrificing significant amount of training efficiency and stability.

5 Conclusion

In this paper, we proposed a new self-supervised pre-training approach, namely PMSACL, for UAD methods applied to MIA problems. PMSACL is based on a new contrastive learning optimisation to learn multiple classes of normal and pseudo abnormal images, formed with the proposed MedMix data augmentation that simulates medical abnormalities. After pre-training a UAD model using our PMSACL, we fine-tune it with two SOTA anomaly detecting approaches. Experimental results indicate that our PMSACL pre-training can effectively improve the performance of anomaly detection and segmentation on several medical datasets for both anomaly detectors. In the future, we plan to design a new anomaly detector that suits better the characteristics of our self-supervised PMSACL pre-training.

References

- [1] Samet Akcay, Amir Atapour-Abarghouei, and Toby P Breckon. Ganomaly: Semi-supervised anomaly detection via adversarial training. In Asian conference on computer vision, pages 622–637. Springer, 2018.

- [2] Christoph Baur, Benedikt Wiestler, Shadi Albarqouni, and Nassir Navab. Scale-space autoencoders for unsupervised anomaly segmentation in brain mri. In MICCAI, pages 552–561. Springer, 2020.

- [3] Liron Bergman and Yedid Hoshen. Classification-based anomaly detection for general data. arXiv preprint arXiv:2005.02359, 2020.

- [4] Paul Bergmann, Michael Fauser, David Sattlegger, and Carsten Steger. Uninformed students: Student-teacher anomaly detection with discriminative latent embeddings. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 4183–4192, 2020.

- [5] David Berthelot, Colin Raffel, Aurko Roy, and Ian Goodfellow. Understanding and improving interpolation in autoencoders via an adversarial regularizer. arXiv preprint arXiv:1807.07543, 2018.

- [6] Hanna Borgli and et al. Hyperkvasir, a comprehensive multi-class image and video dataset for gastrointestinal endoscopy. Scientific Data, 7(1):1–14, 2020.

- [7] Ting Chen, Simon Kornblith, Mohammad Norouzi, and Geoffrey Hinton. A simple framework for contrastive learning of visual representations. In ICML, pages 1597–1607. PMLR, 2020.

- [8] Xiaoran Chen, Suhang You, Kerem Can Tezcan, and Ender Konukoglu. Unsupervised lesion detection via image restoration with a normative prior. Medical image analysis, 64:101713, 2020.

- [9] Yuanhong Chen, Fengbei Liu, Hu Wang, Chong Wang, Yu Tian, Yuyuan Liu, and Gustavo Carneiro. Bomd: Bag of multi-label descriptors for noisy chest x-ray classification. arXiv preprint arXiv:2203.01937, 2022.

- [10] Yuanhong Chen, Yuyuan Liu, Chong Wang, Michael Elliott, Chun Fung Kwok, Yu Tian, Fengbei Liu, Helen Frazer, Davis J McCarthy, Gustavo Carneiro, et al. Braixdet: Learning to detect malignant breast lesion with incomplete annotations. arXiv preprint arXiv:2301.13418, 2023.

- [11] Yuanhong Chen, Yu Tian, Guansong Pang, and Gustavo Carneiro. Unsupervised anomaly detection and localisation with multi-scale interpolated gaussian descriptors. arXiv preprint arXiv:2101.10043, 2021.

- [12] Yuanhong Chen, Hu Wang, Chong Wang, Yu Tian, Fengbei Liu, Yuyuan Liu, Michael Elliott, Davis J McCarthy, Helen Frazer, and Gustavo Carneiro. Multi-view local co-occurrence and global consistency learning improve mammogram classification generalisation. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 3–13. Springer, 2022.

- [13] Yunqiang Chen, Xiang Sean Zhou, and Thomas S Huang. One-class svm for learning in image retrieval. In Proceedings 2001 International Conference on Image Processing (Cat. No. 01CH37205), volume 1, pages 34–37. IEEE, 2001.

- [14] Hyunsoo Cho, Jinseok Seol, and Sang-goo Lee. Masked contrastive learning for anomaly detection. arXiv preprint arXiv:2105.08793, 2021.

- [15] J. Cho et al. Self-supervised 3d out-of-distribution detection via pseudoanomaly generation. In MICCAI 2021: Biomedical Image Registration, Domain Generalisation and Out-of-Distribution Analysis, 2021.

- [16] Jihoon Cho, Inha Kang, and Jinah Park. Self-supervised 3d out-of-distribution detection via pseudoanomaly generation. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 95–103. Springer, 2021.

- [17] Thomas Defard, Aleksandr Setkov, Angelique Loesch, and Romaric Audigier. Padim: a patch distribution modeling framework for anomaly detection and localization. arXiv preprint arXiv:2011.08785, 2020.

- [18] Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. Imagenet: A large-scale hierarchical image database. In Computer Vision and Pattern Recognition, 2009. CVPR 2009. IEEE Conference on, pages 248–255. IEEE, 2009.

- [19] Carl Doersch, Abhinav Gupta, and Alexei A Efros. Unsupervised visual representation learning by context prediction. In ICCV, pages 1422–1430, 2015.

- [20] Deng-Ping Fan, Ge-Peng Ji, Tao Zhou, Geng Chen, Huazhu Fu, Jianbing Shen, and Ling Shao. Pranet: Parallel reverse attention network for polyp segmentation. In MICCAI, pages 263–273. Springer, 2020.

- [21] Izhak Golan and Ran El-Yaniv. Deep anomaly detection using geometric transformations. arXiv preprint arXiv:1805.10917, 2018.

- [22] Dong Gong et al. Memorizing normality to detect anomaly: Memory-augmented deep autoencoder for unsupervised anomaly detection. In ICCV, pages 1705–1714, 2019.

- [23] Kaiming He et al. Deep residual learning for image recognition. In CVPR, pages 770–778, 2016.

- [24] Kaiming He et al. Momentum contrast for unsupervised visual representation learning. In CVPR, pages 9729–9738, 2020.

- [25] Dan Hendrycks et al. Using self-supervised learning can improve model robustness and uncertainty. arXiv preprint arXiv:1906.12340, 2019.

- [26] Prannay Khosla, Piotr Teterwak, Chen Wang, Aaron Sarna, Yonglong Tian, Phillip Isola, Aaron Maschinot, Ce Liu, and Dilip Krishnan. Supervised contrastive learning. Advances in Neural Information Processing Systems, 33:18661–18673, 2020.

- [27] Chun-Liang Li, Kihyuk Sohn, Jinsung Yoon, and Tomas Pfister. Cutpaste: Self-supervised learning for anomaly detection and localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 9664–9674, 2021.

- [28] Liu Li et al. Attention based glaucoma detection: A large-scale database and cnn model. In CVPR, pages 10571–10580, 2019.

- [29] Geert Litjens et al. A survey on deep learning in medical image analysis. Medical image analysis, 42:60–88, 2017.

- [30] Fengbei Liu, Yuanhong Chen, Yu Tian, Yuyuan Liu, Chong Wang, Vasileios Belagiannis, and Gustavo Carneiro. Nvum: Non-volatile unbiased memory for robust medical image classification. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 544–553. Springer, 2022.

- [31] Fengbei Liu et al. Self-supervised depth estimation to regularise semantic segmentation in knee arthroscopy. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 594–603. Springer, 2020.

- [32] Fengbei Liu, Yu Tian, Filipe R Cordeiro, Vasileios Belagiannis, Ian Reid, and Gustavo Carneiro. Noisy label learning for large-scale medical image classification. arXiv preprint arXiv:2103.04053, 2021.

- [33] Fengbei Liu, Yu Tian, et al. Self-supervised mean teacher for semi-supervised chest x-ray classification. arXiv preprint arXiv:2103.03629, 2021.

- [34] Yuyuan Liu, Choubo Ding, Yu Tian, Guansong Pang, Vasileios Belagiannis, Ian Reid, and Gustavo Carneiro. Residual pattern learning for pixel-wise out-of-distribution detection in semantic segmentation. arXiv preprint arXiv:2211.14512, 2022.

- [35] Y. Liu, Y. Tian, G. Maicas, L. Z. Cheng Tao Pu, R. Singh, J. W. Verjans, and G. Carneiro. Photoshopping colonoscopy video frames. In ISBI, pages 1–5, 2020.

- [36] Y. Liu, Y. Tian, G. Maicas, L. Z. Cheng Tao Pu, R. Singh, J. W. Verjans, and G. Carneiro. Photoshopping colonoscopy video frames. In ISBI, pages 1–5, 2020.

- [37] Yuyuan Liu, Yu Tian, Chong Wang, Yuanhong Chen, Fengbei Liu, Vasileios Belagiannis, and Gustavo Carneiro. Translation consistent semi-supervised segmentation for 3d medical images. arXiv preprint arXiv:2203.14523, 2022.

- [38] Cheng Tao Pu LZ et al. Computer-aided diagnosis for characterisation of colorectal lesions: a comprehensive software including serrated lesions. Gastrointestinal Endoscopy, 2020.

- [39] D. Zimmerer M. Aubreville and M. Heinrich. Miccai 2021: Biomedical image registration, domain generalisation and out-of-distribution analysis. In MICCAI2021, 2021.

- [40] Jonathan Masci and et al. Stacked convolutional auto-encoders for hierarchical feature extraction. In International Conference on Artificial Neural Networks, pages 52–59. Springer, 2011.

- [41] Guansong Pang, Chunhua Shen, Longbing Cao, and Anton Van Den Hengel. Deep learning for anomaly detection: A review. ACM Computing Surveys (CSUR), 54(2):1–38, 2021.

- [42] Guansong Pang, Chunhua Shen, and Anton van den Hengel. Deep anomaly detection with deviation networks. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, pages 353–362, 2019.

- [43] Pramuditha Perera, Ramesh Nallapati, and Bing Xiang. Ocgan: One-class novelty detection using gans with constrained latent representations. In CVPR, pages 2898–2906, 2019.

- [44] L Pu, Zorron Cheng Tao, et al. Prospective study assessing a comprehensive computer-aided diagnosis for characterization of colorectal lesions: results from different centers and imaging technologies. In Journal of Gastroenterology and Hepatology, volume 34, pages 25–26. WILEY 111 RIVER ST, HOBOKEN 07030-5774, NJ USA, 2019.

- [45] Tal Reiss, Niv Cohen, Liron Bergman, and Yedid Hoshen. Panda: Adapting pretrained features for anomaly detection and segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 2806–2814, 2021.

- [46] Tal Reiss and Yedid Hoshen. Mean-shifted contrastive loss for anomaly detection. arXiv preprint arXiv:2106.03844, 2021.

- [47] Lukas Ruff, Robert Vandermeulen, Nico Goernitz, Lucas Deecke, Shoaib Ahmed Siddiqui, Alexander Binder, Emmanuel Müller, and Marius Kloft. Deep one-class classification. In International conference on machine learning, pages 4393–4402. PMLR, 2018.

- [48] Thomas Schlegl et al. f-anogan: Fast unsupervised anomaly detection with generative adversarial networks. Medical image analysis, 54:30–44, 2019.

- [49] H.M. Schlüter et al. Natural synthetic anomalies for self-supervised anomaly detection and localization. In arXiv:2109.15222, 2021.

- [50] Philipp Seeböck, José Ignacio Orlando, Thomas Schlegl, Sebastian M Waldstein, Hrvoje Bogunović, Sophie Klimscha, Georg Langs, and Ursula Schmidt-Erfurth. Exploiting epistemic uncertainty of anatomy segmentation for anomaly detection in retinal oct. IEEE transactions on medical imaging, 39(1):87–98, 2019.

- [51] Min Shi, Anagha Lokhande, Mojtaba S Fazli, Vishal Sharma, Yu Tian, Yan Luo, Louis R Pasquale, Tobias Elze, Michael V Boland, Nazlee Zebardast, et al. Artifact-tolerant clustering-guided contrastive embedding learning for ophthalmic images in glaucoma. IEEE Journal of Biomedical and Health Informatics, 2023.

- [52] Kihyuk Sohn, Chun-Liang Li, Jinsung Yoon, Minho Jin, and Tomas Pfister. Learning and evaluating representations for deep one-class classification. arXiv preprint arXiv:2011.02578, 2020.

- [53] Jihoon Tack, Sangwoo Mo, Jongheon Jeong, and Jinwoo Shin. Csi: Novelty detection via contrastive learning on distributionally shifted instances. arXiv preprint arXiv:2007.08176, 2020.

- [54] Yu Tian et al. One-stage five-class polyp detection and classification. In 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), pages 70–73. IEEE, 2019.

- [55] Yu Tian et al. Weakly-supervised video anomaly detection with robust temporal feature magnitude learning. arXiv preprint arXiv:2101.10030, 2021.

- [56] Yu Tian, Yuyuan Liu, Guansong Pang, Fengbei Liu, Yuanhong Chen, and Gustavo Carneiro. Pixel-wise energy-biased abstention learning for anomaly segmentation on complex urban driving scenes. In European Conference on Computer Vision, pages 246–263. Springer, 2022.

- [57] Yu Tian, Gabriel Maicas, Leonardo Zorron Cheng Tao Pu, Rajvinder Singh, Johan W Verjans, and Gustavo Carneiro. Few-shot anomaly detection for polyp frames from colonoscopy. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 274–284. Springer, 2020.

- [58] Yu Tian and otherss. Detecting, localising and classifying polyps from colonoscopy videos using deep learning. arXiv preprint arXiv:2101.03285, 2021.

- [59] Yu Tian, Guansong Pang, Fengbei Liu, Yuyuan Liu, Chong Wang, Yuanhong Chen, Johan Verjans, and Gustavo Carneiro. Contrastive transformer-based multiple instance learning for weakly supervised polyp frame detection. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 88–98. Springer, 2022.

- [60] Yu Tian, Guansong Pang, Fengbei Liu, Seon Ho Shin, Johan W Verjans, Rajvinder Singh, Gustavo Carneiro, et al. Constrained contrastive distribution learning for unsupervised anomaly detection and localisation in medical images. MICCAI 2021, 2021.

- [61] Yu Tian, Guansong Pang, Yuyuan Liu, Chong Wang, Yuanhong Chen, Fengbei Liu, Rajvinder Singh, Johan W Verjans, and Gustavo Carneiro. Unsupervised anomaly detection in medical images with a memory-augmented multi-level cross-attentional masked autoencoder. arXiv preprint arXiv:2203.11725, 2022.

- [62] Shashanka Venkataramanan, Kuan-Chuan Peng, Rajat Vikram Singh, and Abhijit Mahalanobis. Attention guided anomaly localization in images. In ECCV, pages 485–503. Springer, 2020.

- [63] Chong Wang, Yuanhong Chen, Yuyuan Liu, Yu Tian, Fengbei Liu, Davis J McCarthy, Michael Elliott, Helen Frazer, and Gustavo Carneiro. Knowledge distillation to ensemble global and interpretable prototype-based mammogram classification models. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pages 14–24. Springer, 2022.

- [64] Linda Wang, Zhong Qiu Lin, and Alexander Wong. Covid-net: A tailored deep convolutional neural network design for detection of covid-19 cases from chest x-ray images. Scientific Reports, 10(1):1–12, 2020.

- [65] Tongzhou Wang and Phillip Isola. Understanding contrastive representation learning through alignment and uniformity on the hypersphere. In ICML, pages 9929–9939. PMLR, 2020.

- [66] Zhou Wang and et al. Multiscale structural similarity for image quality assessment. In The Thrity-Seventh Asilomar Conference on Signals, Systems & Computers, 2003, volume 2, pages 1398–1402. Ieee, 2003.

- [67] Sangdoo Yun, Dongyoon Han, Seong Joon Oh, Sanghyuk Chun, Junsuk Choe, and Youngjoon Yoo. Cutmix: Regularization strategy to train strong classifiers with localizable features. In Proceedings of the IEEE/CVF International Conference on Computer Vision, pages 6023–6032, 2019.