11email: j385chen@uwaterloo.ca, {ben.feng, twirjanto}@uwaterloo.ca 22institutetext: School of Electronic Information and Communications, Huazhong University of Science and Technology

22email: fusichaoupc@163.com, pengqinmu@hust.edu.cn 33institutetext: Cheriton School of Computer Science, University of Waterloo

33email: z43ma@uwaterloo.ca

Semi-supervised Anomaly Detection with Extremely Limited Labels in Dynamic Graphs

Abstract

Semi-supervised graph anomaly detection (GAD) has recently received increasing attention, which aims to distinguish anomalous patterns from graphs under the guidance of a moderate amount of labeled data and a large volume of unlabeled data. Although these proposed semi-supervised GAD methods have achieved great success, their superior performance will be seriously degraded when the provided labels are extremely limited due to some unpredictable factors. Besides, the existing methods primarily focus on anomaly detection in static graphs, and little effort was paid to consider the continuous evolution characteristic of graphs over time (dynamic graphs). To address these challenges, we propose a novel GAD framework (EL2-DGAD) to tackle anomaly detection problem in dynamic graphs with extremely limited labels. Specifically, a transformer-based graph encoder model is designed to more effectively preserve evolving graph structures beyond the local neighborhood. Then, we incorporate an ego-context hypersphere classification loss to classify temporal interactions according to their structure and temporal neighborhoods while ensuring the normal samples are mapped compactly against anomalous data. Finally, the above loss is further augmented with an ego-context contrasting module which utilizes unlabeled data to enhance model generalization. Extensive experiments on four datasets and three label rates demonstrate the effectiveness of the proposed method in comparison to the existing GAD methods.

Keywords:

Graph anomaly detection Semi-supervised learning Extremely limited labels Graph neural network Graph contrastive learning.1 Introduction

Anomaly detection (AD) is the process of identifying irregular patterns in data that deviate from expected behavior [4]. In today’s interconnected world, many datasets from various domains inherently form complex network structures [26, 28, 29, 5, 22]. These network structures are often contaminated with anomalies. For instance, financial networks may exhibit irregular transactional patterns indicative of fraud, while social networks could contain spurious accounts engaged in malicious activities. In light of the inherent complexity of these graph-structured datasets and the severe consequences of ignoring anomalies, there has been a growing research emphasis on graph anomaly detection (GAD) in recent years.

Owing to the rarity of anomaly events in real-world applications, existing GAD methods primarily rely on unsupervised learning-based approaches. These methods typically utilize reconstruction-based [8, 17, 32] and contrastive learning-based techniques [15, 34, 31, 20] to learn normal patterns within unlabeled data. Anomalies are then detected as deviations from these patterns. For example, reconstruction-based methods like DOMINANT [8] and ComGA [17] employ autoencoders to reconstruct graph structures and attributes, whereas anomalies are identified through substantial reconstruction errors. On the other hand, contrastive learning-based methods, such as COLA [15] and PREM [20], detect anomalies by comparing nodes with their surrounding structures and identifying anomalies that have large inconsistencies highlighted via contrastive losses. However, these methods primarily focus on static graphs, failing to consider the dynamic nature of real-world graphs that are characterized by constantly evolving nodes, edges, and attributes. Such dynamic information can be crucial for identifying anomalies. For example, a sudden spike in account creation during off-peak hours could indicate inauthentic behavior, while large financial transfers during low-transaction periods may signal irregular activities.

Recently, detecting anomalies in dynamic graphs has received increasing research interest, which requires models capable of capturing both structural dependencies and temporal patterns [33, 1, 16]. For example, AddGraph [33] processes dynamic graphs as sequences of snapshots, using GCNs [11] and GRUs [6] to model these dependencies within the dynamic graph data; it also applies selective negative sampling by generating negative edges based on node degrees and uses a margin-based loss to ensure normal edges receive lower anomaly scores. Similarly, TADDY [16] operates on discrete graph snapshots, utilizing a transformer model to learn both static graph patterns and their temporal evolution. A binary classifier is trained to distinguish anomalies by comparing real edges with pseudo-anomalous edges. However, these aforementioned methods are primarily unsupervised learning-based and also rely heavily on the quality of the pseudo labels, which may risk identifying noisy samples as anomalies due to the lack of prior anomaly knowledge.

In practice, it is often possible to acquire a limited set of labeled examples. This has spurred increased attention towards semi-supervised GAD, which combines labeled data with unlabeled data to improve detection performance [25, 12, 18, 9, 23]. For instance, SemiADC [18] uses generative adversarial networks to learn the feature distribution of normal nodes and trains a classifier to distinguish these normal features from labeled anomalies. The anomaly scores are computed by combining the classifier output with a temporal consistency score, which is derived from evaluating node behavior across consecutive graph snapshots. Similarly, SAD [23] enhances the node representation learning with a temporal graph encoder that aggregates features across continuous time steps. The model is trained under a supervised deviation network which enforces significant differences between normal and abnormal nodes. It also introduces a pseudo-label-based contrastive learning component to improve performance. Despite the promising results of these semi-supervised dynamic GAD methods, they often hinge on the availability of an adequate number of labeled anomalies. In reality, the rarity and difficulty of accurately identifying anomalies make this assumption impractical in real-world applications, thereby hampering the effectiveness of these methods.

To address these challenges, we propose EL2-DGAD, a novel GAD approach designed to enhance the robustness of existing methods for dynamic graph anomaly detection, especially under the constraints of limited labeled data. Our framework focuses on two critical aspects: improving feature learning in dynamic graph structures and strengthening the robustness of the loss function.

First, we introduce a transformer-based dynamic graph encoder designed to effectively capture both temporal and structural properties of dynamic graphs. Unlike existing methods that primarily focus on local dependencies using GNN-based approaches [27, 23], our encoder leverages both local and global attention mechanisms. The former extracts fine-grained patterns from a node’s neighborhood, while the latter aggregates these patterns across the graph, thereby enhancing the detection of subtle and extensive anomalies across the graph. Furthermore, we integrate continuous-time embeddings into the transformer’s attention mechanisms, allowing our model to seamlessly incorporate temporal dynamics and learn precise, time-sensitive representations. This contrasts with discrete snapshot-based methods [33, 15, 1], which lose precision by grouping events into fixed intervals, making it harder to detect time-sensitive anomalies.

Furthermore, to effectively utilize the limited labels, we propose a novel ego-context hypersphere classification loss. It learns a context-aware hypersphere boundary by considering the “ego” (target instance) and its “context” (historically evolving graph dynamics). By learning a robust dynamic boundary for abundant normal data, rather than modeling the irregular and diverse patterns of anomalies, we reduce the reliance on a large number of labeled anomalies. Instances that deviate from this learned dynamic boundary can be effectively identified as anomalies, even in scenarios with minimal supervision. Lastly, to further improve model generalization, we integrate an ego-context contrastive loss, which ensures the consistency of neighboring information with target instances by utilizing the vast amount of unlabeled data.

To summarize, our main contributions include:

-

•

Novel Problem Setting: To the best of our knowledge, we are the first attempt to investigate and address anomaly detection problem in dynamic graphs with extremely scarce labeling.

-

•

Improving Dynamic Graph Feature Learning: We propose a transformer-based encoder tailored for dynamic graphs. The encoder aims at capturing local and global structural dependencies while integrating continuous-time dynamics, thus enhancing the model’s ability to detect subtle and time-sensitive anomalies within evolving graph structures.

-

•

Enhancing Anomaly Detection with Limited Labels: We propose an ego-context hypersphere classification loss to construct a robust and context-aware boundary for normal patterns, alongside an ego-context contrastive loss to leverage unlabeled data for improved generalization. These two losses jointly enable effective anomaly detection in dynamic graphs under extremely limited labeling conditions.

-

•

Empirical Validation: Extensive experiments on four datasets and three extremely low label rates demonstrate the effectiveness of the proposed method in comparison to existing GAD methods.

2 Methodology

2.1 Problem Formulation

In this paper, we define a dynamic graph as , where represents the set of nodes and consists of temporal edges. Each edge, denoted by , captures an interaction from node to node at time , with serving as the edge feature. To model the temporal dynamics effectively, we introduce two key subgraphs: the ego-graph , which includes all events up to and including time , and the context graph , which contains all events up to time but excludes the event occurring at . Specifically, our method learns to capture the consistency between the ego-graph and its historical context graph. If an event at time significantly deviates from this learned consistency, it is flagged as potentially anomalous.

Our proposed EL2-DGAD framework aims to detect anomalous edges in this dynamic graph setting, where each edge is associated with a latent label , indicating whether the edge is normal () or abnormal (). In this study, we have access to only a limited number of labeled edges, denoted as , while the majority of edges remain unlabeled (), with . The goal is to develop an algorithm that assigns an anomaly score to each edge, represented as , to effectively distinguish between normal and anomalous edges.

2.2 Overview

The overview of EL2-DGAD is shown in Figure 1. Specifically, for each input sample, an ego and a context graph are simultaneously defined. Each of the graphs is encoded through a transformer-based dynamic graph encoder, which builts on top of a series of local and global attentions (Section 2.3). During model training, we introduce an ego-context hypersphere classification loss in Section 2.4.1 that classifies edges by quantifying the similarity between their ego representation and the corresponding dynamic neighboring context. Finally, an ego-context contrastive loss in Section 2.4.2 is incorporated that is designed to mine and learn from the patterns of normality prevalent within the graph based on unlabeled data.

2.3 Graph Encoder

In scenarios with extremely limited labeled data, it is crucial to design a robust model that effectively captures the temporal and structural dependencies within dynamic graphs. These graphs are constantly evolving, and anomalies may manifest through unexpected temporal patterns or deviations from typical dynamic behaviors. For example, a node that is usually inactive suddenly exhibiting a surge in activity could indicate anomalous behavior. In addition to temporal patterns, both the influence of a node’s neighbors and the overall graph structure can reveal anomalies. Neighbor relationships capture local patterns within a node’s immediate vicinity, where anomalies might appear as atypical connections or attribute deviations. Conversely, graph-wide structures represent the global organization of the graph, where anomalies may disrupt community structures or introduce unexpected cross-community interactions. Therefore, a robust backbone model must integrate both local and global information while simultaneously capturing the temporal evolution of the graph.

To address these challenges, we propose a transformer-based dynamic graph encoder designed to effectively capture the temporal and structural properties of dynamic graphs. Drawing inspiration from recent advancements in graph transformers [30, 3], our approach leverages local attention mechanisms to extract fine-grained patterns from a node’s neighborhood and global attention mechanisms to aggregate these patterns across the entire graph, thereby modeling both local and global dependencies. Furthermore, continuous-time embeddings are integrated into the attention mechanism, allowing the model to seamlessly incorporate temporal dynamics.

Concretely, our graph encoder takes a subgraph at time as the input (e.g., or ) and generates node representations that reflect the dynamic status of the nodes. We start by aggregating 1-hop neighborhood information through masked multi-head self-attention, which we refer to as local MHA. To capture the evolving nature of dynamic graphs, we define the entries of , , and at layer as follows:

| (1) | |||

| (2) | |||

| (3) |

where is the output for node from layer , and is a learnable embedding vectors specified by the degree of , as detailed in [30]. MLP denotes the multi-layer perceptrons. , where is the occurring time of edge . is the sinusoidal embedding function [24], which generates a time representation by applying sine and cosine functions to the input time difference , scaled by a range of frequencies.

Then, the attention output of node for a single head can be formulated as:

| (4) |

where is the adjacency matrix of the input graph. Next, we fed the node representation obtained from the local MHA, as the input to the regular multi-head self-attention in [24], which we referred to as the global MHA. Local MHA focuses on learning neighborhood information which is similar to GNNs but with their inherent limitations, whereas global attention broadens the perspective without explicit structural reliance. Thus, this combination enhances the expressiveness of our model in representing graph structures, effectively balancing the strengths of local and global information processing.

Finally, the output of layer is obtained as

| (5) |

where and are the output from the local MHA and global MHA, LN and FFN denote the layer normalization [14] and the feed-forward blocks respectively.

2.4 Model Training

2.4.1 Ego-context HSC loss

To train the model, we incorporate the hypersphere classification (HSC) loss [21], which is well-suited for anomaly detection tasks where labeled anomaly data is limited. Traditional classification approaches often assume that similar data points cluster naturally, but this assumption does not hold for anomalies, which typically do not form distinct clusters [2]. The HSC loss addresses this limitation by constructing a decision boundary in the form of a hypersphere, tailored for distinguishing between normal and anomalous data.

Under our context, the HSC loss of a given sample at is defined as:

| (6) |

where , and are the latent representation and label of an interaction event at time . This formulation is a variant of the cross-entropy loss but with a unique focus: it constructs a spherical decision boundary that compacts normal samples towards a fixed center (the zero vector), while pushing anomalies away by maximizing their distance from this center. In essence, the goal is to pull normal data points closer to the origin, creating a compact representation, and to push out anomalous points to better distinguish them. The final classification output is given by , which represents the probability of an interaction being classified as normal.

To better align with the dynamic nature of graphs, where anomalous interactions are defined relative to their evolving environment, we modify the original HSC loss to explicitly incorporate temporal and structural context. Specifically, we represent the interaction at time using two graph perspectives: the ego graph , which captures the evolving interactions including the event at , and the context graph , which reflects the recent neighborhood structure up to but not including .

The latent representation for the interaction at time is then defined as the difference between these two graph perspectives:

| (7) |

where and are the graph representations for ego and context graphs, respectively. Here, is computed as

| (8) |

where is a two-layer MLP and is the outputs of node for from the graph encoder. A separate graph encoder with different weights is used to compute for the context graph.

By replacing the original latent representation in the HSC loss with , we formulate the ego-context HSC loss as

| (9) |

This modified loss directly measures how the addition of an interaction affects the deviation of the graph representation from the scenario without the interaction. Essentially, classifies interactions based on how well an edge aligns with the established patterns in the evolving graph structure from the past.

2.4.2 Ego-context Contrastive Loss

To mitigate the risk of overfitting to a limited number of labeled samples, we enhance the proposed framework with a contrastive learning component by utilizing the abundant unlabeled data. Here, we aim to harness the rich information in the unlabeled samples, thereby enhancing the ability of the model to discern between normal and anomalous patterns. A straightforward adaptation of the current framework is to maximize the similarity within the ego-context graph pairs from the same edge while minimizing the similarity for pairs from different edges. The underlying assumption is that the majority of edges are aligned with their context, given that the anomalies are rare in real-world situations. Specifically, the loss for an edge occurring at time is defined as:

| (10) |

where represents the context graph representation of a different edge at that is selected randomly within a training batch. The function computes the cosine similarity between two inputs and applies a linear mapping to convert the value within 0 and 1. We use the cosine similarity instead of the one based on Euclidean distance as in Eq. 9 to let the model focus on learning the normal patterns from a different angle without compromising its ability to identify anomalies through the semi-supervised loss. The use of , an MLP projector function, aligns with standard practices in contrastive learning which ensures that the unsupervised learning framework does not overshadow the primary task.

Finally, the overall training loss is defined as:

| (11) |

where is a hyper-parameter controlling the contribution of the contrastive loss.

3 Experiments

| Dataset | # Nodes | # Edges | # Anomalies |

|---|---|---|---|

| UCI | 1899 | 14253 | 415 |

| Digg | 29955 | 87709 | 2554 |

| 10984 | 672447 | 366 | |

| Wikipedia | 9227 | 157474 | 217 |

3.1 Datasets

We evaluate our approach on four dynamic network datasets: UCI [19], Digg [7], Wikipedia [13], and Reddit [13]. The Wikipedia dataset tracks user interactions, where each interaction corresponds to a user editing a wiki page, with the network evolving as users contribute over time. Dynamic labels indicate whether a user is banned from posting. The UCI Messages dataset represents a social network from an online community of students at the University of California, Irvine, where each node denotes a user, and edges signify exchanged messages. The Digg dataset is collected from the social news website Digg.com, which captures user interactions with nodes representing users and edges indicating replies between them. The Reddit dataset is a dynamic network that tracks active users posting across various subreddits, with each interaction representing a user posting in a specific subreddit. Dynamic labels indicate whether a user is banned from posting in a particular subreddit.

Since UCI and Digg do not contain labeled anomalous edges, we introduce synthetic anomalies using an anomaly injection procedure, following a similar setting as in [16]. The process involves clustering nodes into multiple clusters using spectral clustering based on the graph’s structure and then generating a small portion of anomalous edges by randomly connecting nodes from different clusters, ensuring these connections are absent in the original graph. of anomalies are injected to reflect the realistic scenario where anomalies are typically rare. To seamlessly integrate these synthetic anomalies into the dynamic setting, the generated edges are uniformly inserted at random positions among the original edges. Each anomalous edge is assigned a timestamp by randomly selecting a value between the nearest preceding and succeeding normal edges’ timestamps, thus maintaining temporal consistency with the original data.

The datasets are chronologically divided into training, validation, and testing sets based on interaction timings, with a split ratio of 50/20/30. This approach ensures that the model is trained on earlier interactions, validated on more recent data, and tested on the latest interactions, which mirrors real-world scenarios where future data is unknown at training time. In our experiments, we focus on evaluating performance under extremely limited label conditions by providing only 1, 2, or 3 labeled anomaly examples. For the labeled normal samples, we retain a proportion that matches the ratio of labeled anomalies to the total number of anomalies. The summary statistics of all datasets is shown in Table 1.

| Dataset | Wikipedia | UCI | Digg | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Labels | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 |

| AddGraph | 0.631 | 0.641 | 0.663 | 0.732 | 0.732 | 0.754 | 0.739 | 0.740 | 0.742 | 0.578 | 0.580 | 0.582 |

| TGAT | 0.660 | 0.674 | 0.694 | 0.764 | 0.790 | 0.808 | 0.800 | 0.804 | 0.812 | 0.527 | 0.541 | 0.555 |

| GDN | 0.516 | 0.529 | 0.533 | 0.510 | 0.546 | 0.547 | 0.511 | 0.510 | 0.513 | 0.532 | 0.531 | 0.533 |

| SL-GAD | 0.467 | 0.524 | 0.526 | 0.503 | 0.497 | 0.502 | 0.494 | 0.497 | 0.504 | 0.503 | 0.505 | 0.500 |

| TADDY | 0.616 | 0.617 | 0.618 | 0.769 | 0.765 | 0.772 | 0.811 | 0.841 | 0.842 | 0.516 | 0.517 | 0.518 |

| SAD | 0.661 | 0.674 | 0.710 | 0.774 | 0.798 | 0.816 | 0.809 | 0.820 | 0.841 | 0.561 | 0.566 | 0.592 |

| PREM | 0.549 | 0.555 | 0.555 | 0.531 | 0.532 | 0.536 | 0.512 | 0.513 | 0.515 | 0.516 | 0.535 | 0.536 |

| EL2-DGAD | 0.722 | 0.725 | 0.732 | 0.839 | 0.840 | 0.842 | 0.839 | 0.844 | 0.846 | 0.611 | 0.615 | 0.616 |

3.2 Model Implementation

To construct the ego-graph , we include all edges with timestamps up to and including time , capturing the interactions leading up to that specific time point. For the context graph , we similarly include all edges up to time , but explicitly exclude any edge that occurs exactly at time . This differentiation ensures that the context graph only represents historical information, without the current event. To manage the potentially large sizes of and , we implement a sampling strategy that retains a subset of neighboring nodes for each edge endpoint. Specifically, we randomly sample 25, 10, and 5 neighboring nodes at the first, second, and third hops, respectively, with the condition that each sampled neighbor event must have a timestamp no later than the target edge’s timestamp, ensuring that only past interactions are considered.

In addition, our model uses a two-layer transformer with 4 attention heads, and the hidden size is set to 128 across all modules. The contrastive loss weight is set to 0.01 for Digg, UCI, Reddit and 10 for Wikipedia. The models are trained for 20 epochs using the Adam optimizer [10], with a learning rate of 0.0001 for the Digg and UCI datasets, and 0.001 for the Wikipedia and Reddit dataset.

3.3 Baselines and Performance Evaluation

We benchmark our proposed method against several recent GAD models, namely GDN [9], SL-GAD [34], PREM [20], AddGraph [33], TADDY [16], TGAT [27], and SAD [23]. Among these, GDN, SL-GAD, and PREM are designed for static graph anomaly detection, while AddGraph, TADDY, TGAT, and SAD are tailored for dynamic graph settings.

Note that only GDN, TADDY, AddGraph, and SAD can utilize label information by design. For a fair, label-inclusive comparison for other models, we perform a similar setting as in [23]: applying a cross-entropy loss to the edge representations, which are derived from the concatenated node embeddings of the edge endpoints. Specifically, these edge representations are computed using a MLP network, as outlined in Eq. 8. Thus, the performance of all models can be evaluated based on the classification results.

3.4 Comparison with State-of-the-art GAD

Extensive results in Table 2 show that our EL2-DGAD consistently surpasses all comparison methods across four datasets, particularly in scenarios where labeled anomalies are extremely limited. The results highlight the effectiveness of our approach in incorporating both temporal and structural context, enabling the model to maintain robustness even under severe label scarcity.

For models like GDN, PREM, and SL-GAD, which do not incorporate temporal information into their design, the decline in performance underscores the critical importance of capturing temporal dependencies in dynamic graph anomaly detection. Although AddGraph and TADDY are designed for dynamic graphs, their performance still lags behind ours by a significant margin. These methods rely on discrete graph snapshots, where timestamps of events within a single snapshot are assumed to be identical. Consequently, this can miss important details about the continuous evolution of the graph’s structure. Moreover, they lack a robust semi-supervised learning mechanism, making it challenging to effectively utilize the limited labeled data. In contrast, our approach operates directly on continuous dynamic graphs, with the transformer-based encoder attending to the most relevant neighbors based on fine-grained temporal information. Additionally, we introduce an ego-context hypersphere classification loss that enforces the alignment of each edge with its immediate surrounding temporal and structural context. Together, these features enable EL2-DGAD to more efficiently utilize the continuously evolving graph patterns, making it better suited to detect anomalies that are difficult to align with the normal behavior.

While SAD is also a semi-supervised anomaly detection framework designed for dynamic graphs, it exhibits a notable drop in performance under extreme label scarcity. Specifically, SAD relies on a deviation network as the main supervised learning component, using it to generate pseudo-labeled data that guides a separate contrastive learning process. However, when only a few labeled examples are available, the quality of these pseudo-labels declines, undermining the effectiveness of the contrastive learning component. In contrast, our approach decouples the unsupervised learning from the supervised training, allowing the model to retain its ability to generalize effectively even under extremely limited conditions. Furthermore, our approach offers additional advantages. First, the ego-context hypersphere classification loss provides a more precise instance-wise contextual comparison, compared to the deviation network used in SAD, which relies on comparisons to only global statistics. Second, our model employs a more robust transformer-based encoder, compared to the GAT encoder used in SAD, allowing it to more effectively capture temporal interactions between nodes from both local and global perspectives.

3.5 Ablation Experiments

Table 3 presents the results of the ablation study. Overall, EL2-DGAD achieves optimal performance when all components are included. Removing the local MHA leads to a significant performance drop, highlighting its essential role in capturing neighborhood structures. Similarly, omitting the global MHA also results in a noticeable decrease in performance, underscoring the importance of capturing global node interactions. The exclusion of fine-grained timestamp integration within the Transformer’s attention mechanism also considerably degrades performance, especially in the Wikipedia dataset, where a node-to-node interaction can occur at many distinct timestamps. Ignoring these timestamps results in large information loss, as it prevents the model from capturing the temporal dynamics necessary to detect time-sensitive anomalies effectively. This further emphasizes the importance of modeling temporal changes in dynamic graphs. Additionally, the drop in performance without the contrastive loss component indicates the value of leveraging unlabeled data to improve anomaly detection accuracy. Lastly, removing the ego-context contrast from leads to a notable decline in performance. Without ego-context contrast, the model no longer minimizes the distance between each ego graph and its context graph; instead, all normal instances are pulled toward a single center, imposing a stronger regularization. This limits the model’s ability to capture the diverse characteristics of normal instances, thereby reducing its ability to distinguish anomalies from normal events.

| Dataset | Wikipedia | UCI | Digg | ||||||

|---|---|---|---|---|---|---|---|---|---|

| Labels | 1 | 2 | 3 | 1 | 2 | 3 | 1 | 2 | 3 |

| Local MHA | 0.563 | 0.569 | 0.582 | 0.771 | 0.775 | 0.772 | 0.808 | 0.796 | 0.802 |

| Global MHA | 0.694 | 0.709 | 0.716 | 0.835 | 0.836 | 0.838 | 0.802 | 0.807 | 0.800 |

| in MHA | 0.465 | 0.473 | 0.474 | 0.789 | 0.791 | 0.800 | 0.719 | 0.730 | 0.756 |

| 0.653 | 0.683 | 0.683 | 0.838 | 0.837 | 0.840 | 0.815 | 0.821 | 0.821 | |

| ego-context in | 0.650 | 0.683 | 0.704 | 0.824 | 0.824 | 0.837 | 0.730 | 0.745 | 0.745 |

| EL2-DGAD | 0.722 | 0.725 | 0.732 | 0.839 | 0.840 | 0.842 | 0.839 | 0.844 | 0.846 |

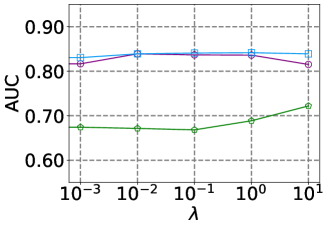

3.6 Parameters Sensitivity

Figure 2 illustrates the AUC performance for three datasets (Digg, UCI, and Wikipedia) as it varies with changes in the balance parameter for the ego-context contrastive learning component and the number of neighbors in the first hop. In Figure 2(a), the Wikipedia dataset shows improved AUC scores with higher values compared to Digg and UCI. This may be due to the greater complexity of anomalies in Wikipedia, making it a more challenging task under extremely limited conditions. Consequently, increasing enhances the model’s ability to capture general patterns of normal samples, which improves the robustness of the model.

In Figure 2(b), AUC performance for all three datasets slightly increases as the number of neighbors rises, indicating that additional neighborhood information helps the model better distinguish between normal and anomalous interactions within the graphs. Overall, the model’s sensitivity to changes in and the number of neighbors remains relatively low, suggesting that it is robust to variations in these hyperparameters.

3.7 t-SNE Visualization

Figure 3 shows t-SNE visualizations of the output embeddings from the layer before anomaly scoring in the Wikipedia dataset, where the selected four models were trained with supervision from only one labeled anomaly. In these visualizations, blue points represent normal instances, while orange points denote anomalies. In the embeddings produced by TGAT and AddGraph, anomalies are dispersed throughout the normal samples, indicating a limited capacity for distinguishing between normal and anomalous instances. Similarly, SAD shows slight improvement with a somewhat better separation, but anomalies remain scattered and intermingled within the normal clusters, lacking a clear boundary.

In contrast, our proposed EL2-DGAD exhibits a relatively clearer separation, with anomalies forming distinct clusters that are well-separated from normal samples. This distinct clustering suggests that EL2-DGAD more effectively learns discriminative embeddings, allowing it to capture the subtle distinctions between normal and anomalous interactions. This improved separation highlights the robustness and effectiveness of our approach for the GAD task, particularly in the challenging scenario of training with extremely limited labeled anomalies.

4 Conclusion

In this paper, we presented EL2-DGAD, the first framework designed to address the anomaly detection problem in dynamic graphs under the challenging condition of extremely limited labeled data. Our approach integrates a transformer-based dynamic graph encoder that captures evolving graph patterns from both local and global perspectives, leveraging continuous-time embeddings to preserve temporal precision. This design is further complemented by an ego-context hypersphere classification loss and an unsupervised ego-context contrastive loss, which together effectively utilize the limited labeled anomalies while harnessing the rich information from abundant unlabeled data. Through extensive experiments on multiple benchmark datasets across varying label rates, EL2-DGAD demonstrates significant performance improvements over existing graph anomaly detection methods. The results validate the effectiveness of our model in accurately detecting anomalies across diverse dynamic graph scenarios, even under severe label constraints.

Acknowledgements

This work was supported in part by the National Key Research and Development Program of China under Grant 2022YFF0712300, in part by the Fundamental Research Funds for the Central Universities under Grant YCJJ20241203.

References

- [1] Cai, L., Chen, Z., Luo, C., Gui, J., Ni, J., Li, D., Chen, H.: Structural temporal graph neural networks for anomaly detection in dynamic graphs. In: Proceedings of the ACM International Conference on Information and Knowledge Management. pp. 3747–3756 (2021)

- [2] Chandola, V., Banerjee, A., Kumar, V.: Anomaly detection: A survey. ACM Computing Surveys 41(3), 1–58 (2009)

- [3] Chen, D., O’Bray, L., Borgwardt, K.: Structure-aware transformer for graph representation learning. In: Proceedings of the International Conference on Machine Learning. pp. 3469–3489 (2022)

- [4] Chen, J., Fu, S., Zhang, Z., Ma, Z., Feng, M., Wirjanto, T.S., Peng, Q.: Towards cross-domain few-shot graph anomaly detection. In: Proceedings of the IEEE International Conference on Data Mining (2024)

- [5] Chen, T., Tsourakakis, C.: Antibenford subgraphs: Unsupervised anomaly detection in financial networks. In: Proceedings of the ACM SIGKDD Conference on Knowledge Discovery and Data Mining. pp. 2762–2770 (2022)

- [6] Chung, J., Gulcehre, C., Cho, K., Bengio, Y.: Empirical evaluation of gated recurrent neural networks on sequence modeling. In: Advances in Neural Information Processing Systems Workshop (2014)

- [7] De Choudhury, M., Sundaram, H., John, A., Seligmann, D.D.: Social synchrony: Predicting mimicry of user actions in online social media. In: Proceedings of the International Conference on Computational Science and Engineering. vol. 4, pp. 151–158 (2009)

- [8] Ding, K., Li, J., Bhanushali, R., Liu, H.: Deep anomaly detection on attributed networks. In: Proceedings of the SIAM International Conference on Data Mining. pp. 594–602 (2019)

- [9] Ding, K., Zhou, Q., Tong, H., Liu, H.: Few-shot network anomaly detection via cross-network meta-learning. In: Proceedings of the Web Conference. pp. 2448–2456 (2021)

- [10] Kinga, D., Adam, J.B., et al.: A method for stochastic optimization. In: Proceedings of the International Conference on Learning Representations (2015)

- [11] Kipf, T.N., Welling, M.: Semi-supervised classification with graph convolutional networks. In: Proceedings of the International Conference on Learning Representations (2017)

- [12] Kumagai, A., Iwata, T., Fujiwara, Y.: Semi-supervised anomaly detection on attributed graphs. In: Proceedings of the International Joint Conference on Neural Networks. pp. 1–8 (2021)

- [13] Kumar, S., Zhang, X., Leskovec, J.: Predicting dynamic embedding trajectory in temporal interaction networks. In: Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. pp. 1269–1278 (2019)

- [14] Lei Ba, J., Kiros, J.R., Hinton, G.E.: Layer normalization. ArXiv e-prints pp. arXiv–1607 (2016)

- [15] Liu, Y., Li, Z., Pan, S., Gong, C., Zhou, C., Karypis, G.: Anomaly detection on attributed networks via contrastive self-supervised learning. IEEE Transactions on Neural Networks and Learning Systems 33(6), 2378–2392 (2022)

- [16] Liu, Y., Pan, S., Wang, Y.G., Xiong, F., Wang, L., Chen, Q., Lee, V.C.: Anomaly detection in dynamic graphs via transformer. IEEE Transactions on Knowledge and Data Engineering 35(12), 12081–12094 (2021)

- [17] Luo, X., Wu, J., Beheshti, A., Yang, J., Zhang, X., Wang, Y., Xue, S.: Comga: Community-aware attributed graph anomaly detection. In: Proceedings of the ACM International Conference on Web Search and Data Mining. pp. 657–665 (2022)

- [18] Meng, X., Wang, S., Liang, Z., Yao, D., Zhou, J., Zhang, Y.: Semi-supervised anomaly detection in dynamic communication networks. Information Sciences 571, 527–542 (2021)

- [19] Opsahl, T., Panzarasa, P.: Clustering in weighted networks. Social Networks 31(2), 155–163 (2009)

- [20] Pan, J., Liu, Y., Zheng, Y., Pan, S.: Prem: A simple yet effective approach for node-level graph anomaly detection. In: Proceedings of the IEEE International Conference on Data Mining. pp. 1253–1258 (2023)

- [21] Ruff, L., Vandermeulen, R.A., Franks, B.J., Müller, K.R., Kloft, M.: Rethinking assumptions in deep anomaly detection. In: Proceedings of the International Conference on Machine Learning Workshop (2021)

- [22] Tang, J., Zhang, J., Yao, L., Li, J., Zhang, L., Su, Z.: Arnetminer: extraction and mining of academic social networks. In: Proceedings of the ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. pp. 990–998 (2008)

- [23] Tian, S., Dong, J., Li, J., Zhao, W., Xu, X., Song, B., Meng, C., Zhang, T., Chen, L., et al.: Sad: Semi-supervised anomaly detection on dynamic graphs. In: Proceedings of the International Joint Conference on Artificial Intelligence. pp. 2306–2314 (2023)

- [24] Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., Polosukhin, I.: Attention is all you need. In: Advances in Neural Information Processing Systems. vol. 30 (2017)

- [25] Wang, D., Lin, J., Cui, P., Jia, Q., Wang, Z., Fang, Y., Yu, Q., Zhou, J., Yang, S., Qi, Y.: A semi-supervised graph attentive network for financial fraud detection. In: Proceedings of the IEEE International Conference on Data Mining. pp. 598–607 (2019)

- [26] Wei, S., Wang, Y., Cheung, Y.m.: A branch elimination-based efficient algorithm for large-scale multiple longest common subsequence problem. IEEE Transactions on Knowledge and Data Engineering 35(3), 2179–2192 (2023)

- [27] Xu, D., Ruan, C., Korpeoglu, E., Kumar, S., Achan, K.: Inductive representation learning on temporal graphs. In: Proceedings of the International Conference on Learning Representations (2020)

- [28] Yang, X., Liu, W., Liu, W.: Tensor canonical correlation analysis networks for multi-view remote sensing scene recognition. IEEE Transactions on Knowledge and Data Engineering 34(6), 2948–2961 (2022)

- [29] Yang, X., Liu, W., Liu, W., Tao, D.: A survey on canonical correlation analysis. IEEE Transactions on Knowledge and Data Engineering 33(6), 2349–2368 (2021)

- [30] Ying, C., Cai, T., Luo, S., Zheng, S., Ke, G., He, D., Shen, Y., Liu, T.Y.: Do transformers really perform badly for graph representation? In: Advances in Neural Information Processing Systems. vol. 34, pp. 28877–28888 (2021)

- [31] Zhang, J., Wang, S., Chen, S.: Reconstruction enhanced multi-view contrastive learning for anomaly detection on attributed networks. In: Proceedings of the International Joint Conference on Artificial Intelligence. pp. 2376–2382 (2022)

- [32] Zhang, Z., Zhao, L.: Unsupervised deep subgraph anomaly detection. In: Proceedings of the IEEE International Conference on Data Mining. pp. 753–762 (2022)

- [33] Zheng, L., Li, Z., Li, J., Li, Z., Gao, J.: Addgraph: Anomaly detection in dynamic graph using attention-based temporal gcn. In: Proceedings of the International Joint Conference on Artificial Intelligence. pp. 4419–4425 (2019)

- [34] Zheng, Y., Jin, M., Liu, Y., Chi, L., Phan, K.T., Chen, Y.P.P.: Generative and contrastive self-supervised learning for graph anomaly detection. IEEE Transactions on Knowledge and Data Engineering 35(12), 12220–12233 (2023)