Sequential Low-Rank Change Detection

Abstract

Detecting emergence of a low-rank signal from high-dimensional data is an important problem arising from many applications such as camera surveillance and swarm monitoring using sensors. We consider a procedure based on the largest eigenvalue of the sample covariance matrix over a sliding window to detect the change. To achieve dimensionality reduction, we present a sketching-based approach for rank change detection using the low-dimensional linear sketches of the original high-dimensional observations. The premise is that when the sketching matrix is a random Gaussian matrix, and the dimension of the sketching vector is sufficiently large, the rank of sample covariance matrix for these sketches equals the rank of the original sample covariance matrix with high probability. Hence, we may be able to detect the low-rank change using sample covariance matrices of the sketches without having to recover the original covariance matrix. We character the performance of the largest eigenvalue statistic in terms of the false-alarm-rate and the expected detection delay, and present an efficient online implementation via subspace tracking.

I Introduction

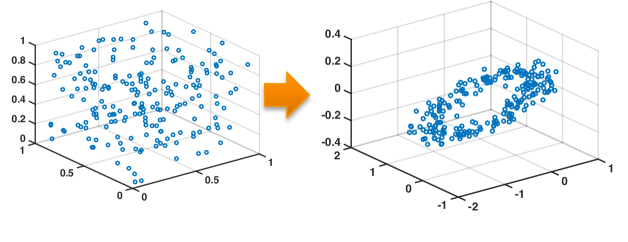

Detecting emergence of a low-rank structure is a problem arising from many high-dimensional streaming data applications, such as video surveillance, financial time series, and sensor networks. The subspace structure may represent an anomaly or novelty that we would like to detect as soon as possible once it appears. One such example is swarm behavior monitoring. Biological swarms consist of many simple individuals following basic rules to form complex collective behaviors [1]. Examples include flocks of birds, schools of fish, and colonies of bacteria. The collective behavior and movement patterns of swarms have inspired much recent research into designing robotic swarms consisting of many agents that use simple algorithms to collectively accomplish complicated tasks, e.g., a swarm of UAVs [2]. Early detection of an emerging or transient behavior that leads to a specific form of behavior is very important for applications in swarm monitoring and control. One key observation, as shown in [1] for classification of behavior purposes, is that many forms of swarm behaviors are represented as low-dimensional linear subspaces. This leads to a low-rank change in the covariance structure of the observations.

In this paper, we propose a sequential change-point detection procedure based on the maximum eigenvalue of the sample covariance, which is a natural approach to detect the emergence of a low-rank signal. We characterize the performance of the detection procedure using two standard performance metrics, the average-run-length (ARL) that is related to the false-alarm-rate, and the expected detection delay. The distribution of the largest eigenvalue of the sample covariance matrix when data are Gaussian is presented in [3], which corresponds to the Tracey-Widom law of order one. However, it is intractable to directly analyze ARL using the Tracey-Widom law. Instead, we use a simple -set argument to decompose the detection statistic into a set of -CUSUM procedures, whose performance is well understood in literature.

Moreover, we present a new approach to detect low-rank changes based on sketching of the observation vectors. Sketching corresponds to linear projection of the original observations: , with . We may use sample covariance matrix of the sketches to detect emergence of a low-rank signal component, since it can be shown that for random projection, it can be shown that when is greater the rank of the signal covariance matrix, the sample covariance matrix of the linear sketches will have the same rank as the signal covariance matrix. We show that the minimum number of sketches is related to the property of the projection and the signal subspace. One key difference of the sketching for low-rank change detection from covariance sketching for low-rank covariance matrix recovery [4] is that, here we do not have to recover the covariance matrix which may require more number of sketches. Hence, sketching is a natural fit to detection problem.

The low-rank change is related to the so-called spiked covariance matrix [3], which assumes that a small number directions explain most of the variance. Such assumption is also made by sparse PCA, where the low-rank component is further assumed to be sparse. The goal of sparse PCA is to estimate such sparse subspaces (see, e.g., [5]). A fixed-sample hypothesis test to determine whether or not there exists a sparse and low-rank component, based on the largest eigenvalue statistic, is studied in [6], where it is shown to asymptotically minimax optimal. Another test statistic for such problems, the so-called Kac-Rice statistic, has been studied in [7]. The Kac-Rice statistic is the conditional survival function of the largest observed singular value conditioned on all other observed singular values, and it has a simple distribution form as uniformly distributed on [0, 1]. However, the statistic involves an infinite integral over the real line, which may not be easy to evaluate, and the test statistic needs to compute all eigenvalues of the sample covariance matrix instead of the largest eigenvalue.

II largest eigenvalue procedure

Assuming a sequence of -dimensional vectors , . There may be a change-point at time such that the distribution of the data stream changes. Our goal is to detect such a change as quickly as possible. Formally, such a problem can be stated as the following hypothesis test:

Here is the noise variance, which is assumed to be known or has been estimated from training data. Assume the signal covariance matrix is low-rank, meaning . The signal covariance matrix is unknown.

One may construct a maximum likelihood ratio statistic. However, since the signal covariance matrix is unknown, we may have to form the generalized likelihood ratio statistic, which replaces the covariance matrix with the sample covariance. This may cause an issue since the statistic involves inversion of the sample covariance matrix, whose numerical property (such as condition number) is usually poor when is large.

Alternatively, we consider the largest eigenvalue of the sample covariance matrix which is a natural detection statistic for detecting a low-rank signal. Assume a scanning window approach. We estimate the sample covariance matrix using samples in a time window of , where is the window size. Assume is chosen sufficiently large and it is greater than the anticipated longest detection delay. Using the ratio of the largest eigenvalue of the sample covariance matrix relative to the noise variance, we form the maximum eigenvalue procedure which is a stopping time given by:

where is the pre-set drift parameter, is the threshold, and denotes the largest eigenvalue of a matrix . Here the index represents the possible change-point location. Hence, samples between corresponds to post-change samples. The sample covariance matrix for post-change samples up to time is given by:

The maximization over corresponds to search for the unknown change-point location. An alarm is fired whenever the detection statistic exceeds the threshold .

III Performance bounds

In this section, we characterize the performance of the procedure in terms of two standard performance metrics, the expected value of the stopping time when there is no change, called the average run length (ARL), and the expected detection (EDD), which is the expected time to stop in the extreme case when the change occurs immediately at .

Our approach is to decompose the maximum eigenvalue statistic to a set of CUSUM procedures. Our argument is based on the -net, which provides a convenient way to discretize unit sphere in our case. The number of such compact set is called the covering number, , which is the minimal cardinality of an -net. The covering number of a unit sphere is given by

Lemma III.1 (Lemma 5.2, [9]).

The unit Euclidean sphere equipped with the Euclidean metric satisfies for every that

The -net help to derive an upper bound of the largest eigenvalue, as stated in the following lemma

Lemma III.2 (Lemma 5.4, [9]).

Let be a symmetric matrix, and let be an -net of for some . Then

Our main theoretical results are the following, which are the lower bound on the ARL and the approximation to EDD of the largest eigenvalue procedure.

Theorem III.3 (Lower bound on ARL).

When

where is the root to the equation , .

The lower bound above can be used to control false-alarm of the procedure. Given a target ARL, we may choose the corresponding threshold .

Let denote the spectral norm of a matrix , which corresponds to the largest eigenvalue. We have the following approximation:

Theorem III.4 (Approximation to EDD).

When

| (1) |

where .

In (1), represents the signal-to-noise ratio. Note that the right-hand-side of (1) is a decreasing function of , which is expected, since the detection delay should be smaller when the signal-to-noise ratio is larger.

The following informal derivation justifies the theorems. First, from Lemma III.1, we have that the detection statistic

For each , , we have

Note that under : , and hence , and

Alternatively, under : , and hence , and hence

Hence, the distribution before the change is random variable, and after the change-point is a scaled random variable. Now define a set of procedures, for each :

Note that each one of these procedures is a CUSUM procedure. Now if set

then due to the above relations, we may bound the average run length of the original procedure in terms of these procedures:

Since each is a CUSUM procedure, whose properties are well understood, we may obtain a lower bound to the ARL of the maximum eigenvalue procedure.

IV Sketching for rank change detection

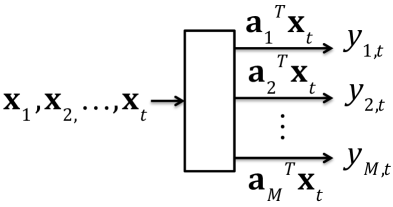

When is large, a common practice is to use a linear projection to reduce data dimensionality. The linear projection maps the original -dimensional vector into a lower dimension -dimensional vector. We refer such linear projection as sketching. One implementation of sketching is illustrated in Fig. 3, where each signal vector is projected by vectors, , …, . The sketching corresponds to linear projection of the original vector:

Define a vector of observations , and

we have , .

One intriguing question is whether we may perform detection of the low-rank change using the linear projections. The answer is yes, as we present in the following. We first show that each linear corresponds to a bi-linear projection of the original covariance matrix. Define the sample covariance matrix of the sketches

| (2) |

A key observation is that for certain choice of , e.g., Gaussian random matrix, when , the rank of is equal to the rank of with probability one. Hence, we may detect change using the largest eigenvalue of the sample covariance matrix of .

A related line of work is covariance sketching, where the goal is to recover a low-rank covariance matrix using the quadratic sketches, which are square of each . This corresponds to only using diagonal entries of the sample covariance matrix (2) of the sketches.

The sketches are still multi-variate Gaussian distributed, and the projection changes the variance. For the sketches, we may consider the following hypothesis test based on the original test form:

Without loss of generality, to preserve noise property (or equivalently, to avoid amplifying noise), i.e., we choose the projection to be orthogonal, i.e., . Moreover, due to the Gaussian form, the analysis for the ARL and EDD of the procedure in Section III still holds, with refined by . Hence, it can be seen from this analysis, that projection affects the signal-to-noise ratio, and hence, not surprisingly, although in principal we only need to be greater than the rank of , in fact, we cannot choose to be arbitrarily small.

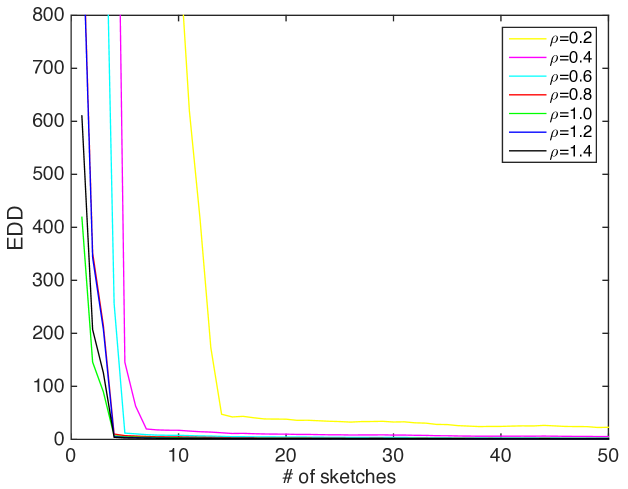

Fig. 4 illustrates this effect. In this example, we use a random subspace of dimension by , and vary the number of sketches , when the post-change covariance matrix of is generated as plus a Gaussian random matrix with rank 3. Then we plot the EDD of the largest eigenvalue procedure when sketches are used. We calibrate the thresholds in each setting so that the ARL is fixed to be 5000. Note that when the number of sketches is sufficiently large (and greater than , the EDD approaches to a small number.

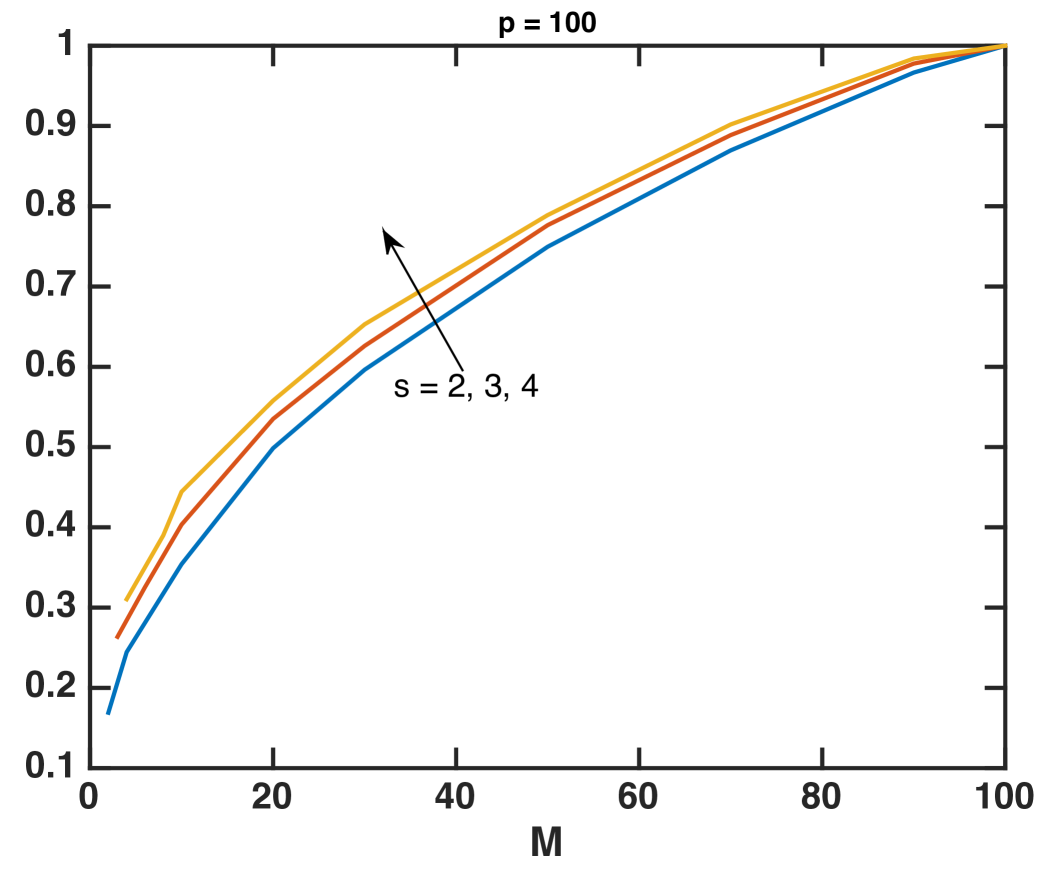

The following analysis may shed some light on how small we may choose to be. Let , which is smaller than the ambient dimension . Since the EDD (1) is a decreasing function of SNR, which is a proportion to . Denote the eigendecomposition of to be . Clearly, SNR for the sketching case will depend on , which depends on the principal angle between two subspaces. Recall that we have required to be a subspace , and hence the SNR will depend on the principal angle between the random projection and the signal subspace . The principal angle between two random subspaces is studied in [10]. The eigenvalues of the sample covariance matrix are jointly Beta distribution. Based on this fact, the following lemma is obtained in [11], which helps us to understand the behavior of the procedure. It characterizes the norm of the fixed unit-norm vector projected by a random subspace.

Lemma IV.1 (Theorem 3 [11]).

Given random subspace , then for any fixed vector with , , and when with , for ,

Using this lemma in our setting, we may obtain a simple lower bound for the :

| (3) |

with high probability, where the last inequality is due to the maximum of a set of Beta random variables. Hence, the number of sketches should scale as .

V Online implementation via subspace tracking

(a):

(b):

There is a connection of the low-rank covariance and subspace model. Assume a rank post-change covariance matrix , and its eigen-decomposion . Then we may expression each observation vector , where is a -dimensional Gaussian random vector with covariance matrix . Before the change, . After the change, and is a -dimensional Gaussian random vector with covariance matrix being . Hence, we may detect the low-rank change by detecting a non-zero , if is known. When is unknown, we may perform an online subspace estimation from a sequence of data.

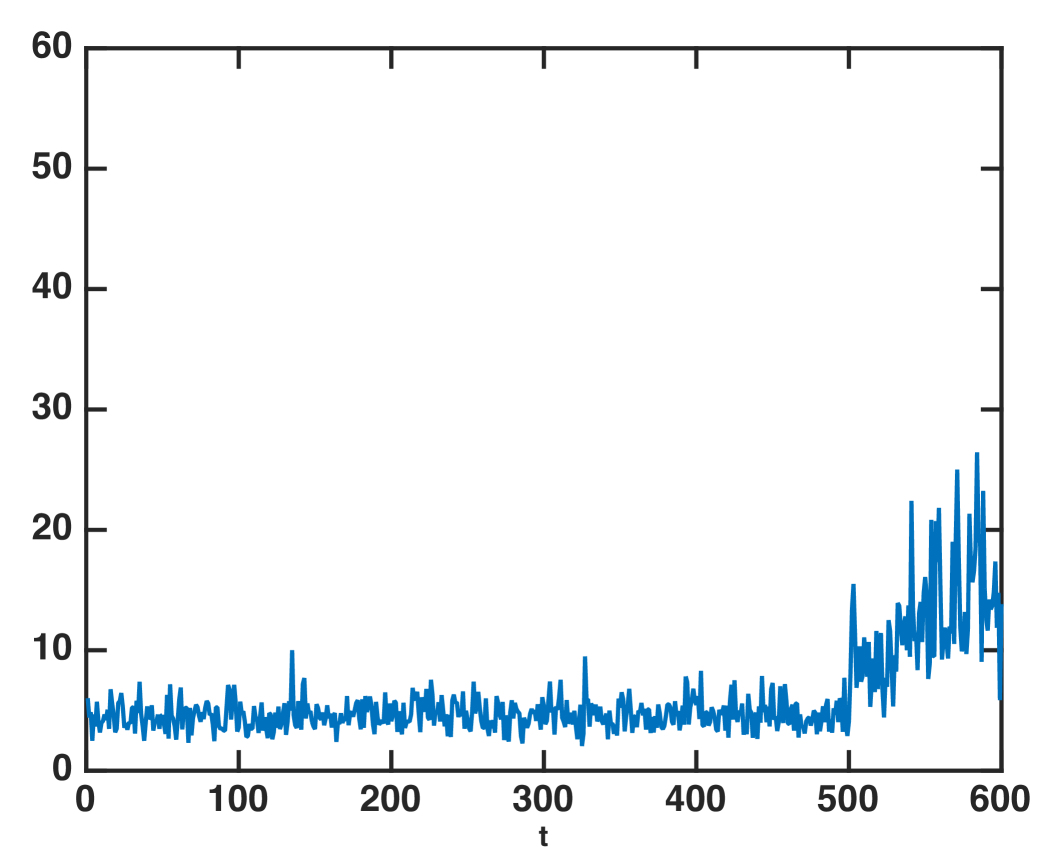

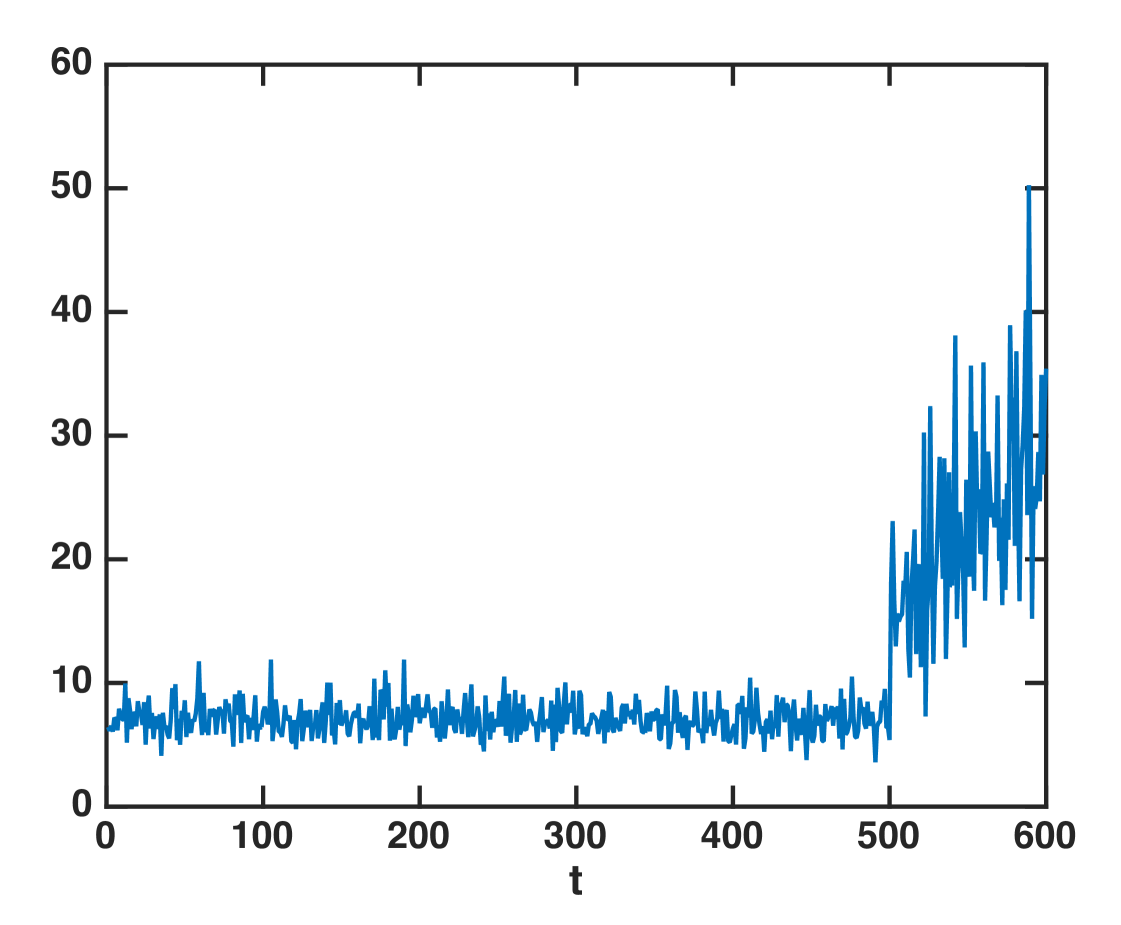

Based on such a connection, we may develop an efficient way to compute the detection statistic online via subspace tracking. This is related to the so-called matched-subspace detection [13], and here we further combine matched-subspace detection with online subspace estimation. Start with an initialization of the subspace , using the sequence of observations , we may update the subspace using stochastic gradient descent on grassmannian manifolds, e.g., via the GROUSE algorithm [12]. Then we perform projection of the next vector , which gives an estimate for . We may claim a change when either (mimicking the largest eigenvalue) or the norm square (mimicking the sum of the eigenvalues) becomes large. Since the subspace tracking algorithm (e.g., GROUSE) can even deal with missing data, this approach allows us to compute the detection statistic even when we can only observe a subset of entries of at each time. Fig. 6 demonstrates the detection statistic computed via GROUSE subspace tracking when only about 70 of the entries can be observed at random locations. There is a change-point at time . Clearly, the detection statistic computed via subspace tracking can detect such a change by raise a large peak.

VI Conclusion

We have presented a sequential change-point detection procedure based on the largest eigenvalue statistic for detecting a low-rank change to the signal covariance matrix. It is related to the so-called spiked covariance model. We present a lower-bound for the average-run-length (ARL) of the procedure when there is no change, which can be used to control false alarm rate. We also present an approximation to the expected detection delay (EDD) of the proposed procedure, which characterizes the dependence of EDD on the spectral norm of the post-change covariance matrix . Our theoretical results are obtained using an -net argument, which leads to a decomposition of the proposed procedure into a set of -CUSUM procedures. We further present a sketching procedure, which linearly projects the original observations into lower-dimensional sketches, and performs the low-rank change detection using these sketches. We demonstrate that when the number of sketches is sufficiently large (on the order of with being the rank of the post-change covariance), the sketching procedure can detect the change with little performance loss relative to the original procedure using full data. Finally, we present an approach to compute the detection statistic online via subspace tracking.

Acknowledgement

The author would like to thank Dake Zhang at Tsinghua University, Lauren Hue-Seversky and Matthew Berger at Air Force Research Lab, Information Directorate for stimulating discussions, and Shuang Li at Georgia Tech for help with numerical examples. This work is partially supported by a AFRI Visiting Faculty Fellowship.

References

- [1] M. Berger, L. Seversky, and D. Brown, “Classifying swarm behavior via compressive subspace learning,” in IEEE International Conference on Robotics and Automation, 2016.

- [2] D. Hambling, “U.S. Navy plans to fly first drone swarm this summer.” http://www.defensetech.org/2016/01/04/u-s-navy-plans-to-fly-first-drone-swarm-this-summer/, Jan. 2016.

- [3] I. M. Johnstone, “On the distribution of the largest engenvalue in principal component analysis,” Ann. Statist., vol. 29, pp. 295–327, 2001.

- [4] Y. Chen, Y. Chi, and A. J. Goldsmith, “Exact and stable covariance estimation from quadratic sampling via convex programming,” IEEE Trans. on Info. Theory, vol. 61, pp. 4034–4059, July 2015.

- [5] T. Cai, Z. Ma, and Y. Wu, “Optimal estimation and rank detection for sparse spiked covariance matrices,” arXiv:1305.3235, 2016.

- [6] Q. Berthet and P. Rigollet, “Optimal detection of sparse principal components in high dimension,” Ann. Statist., vol. 41, no. 1, pp. 1780–1815, 2013.

- [7] J. Taylor, J. Loftus, and R. Tibshrani, “Regression shrinkage and selection via the lasso,” J. Roy. Statist. Soc. Ser. B, vol. 58, pp. 267–288, 1996.

- [8] E. S. Page, “Continuous inspection schemes,” Biometrika, vol. 41, pp. 100–115, June 1954.

- [9] R. Vershynin, “Introduction to the non-asymptotic analysis of random matrices,” arXiv:1011.2037, 2011.

- [10] P.-A. Absil, A. Edelman, and P. Koev, “On the largest principal angle between random subspaces,” Linear Algebra and Appl., 2006.

- [11] Y. Cao, A. Thompson, M. Wang, and Y. Xie, “Sketching for sequential change-point detection,” arXiv:1505.06770, 2015.

- [12] D. Zhang and L. Balzano., “Global convergence of a grassmannian gradient descent algorithm for subspace estimation,” arXiv:1506.07405, 2015.

- [13] L. L. Scharf and B. Friedlander, “Matched subspace detectors,” IEEE Trans. Signal Processing, vol. 42, no. 8, pp. 2146–2157, 1994.