SG-LSTM: Social Group LSTM for Robot Navigation

Through Dense Crowds

Abstract

As personal robots become increasingly accessible and affordable, their applications extend beyond large corporate warehouses and factories to operate in diverse, less controlled environments, where they interact with larger groups of people. In such contexts, ensuring not only safety and efficiency but also mitigating potential adverse psychological impacts on humans and adhering to unwritten social norms become paramount. In this research, we aim to address these challenges by developing a cutting-edge model capable of predicting pedestrian movements and interactions in crowded environments. To this end, we propose a novel approach called the Social Group Long Short-term Memory (SG-LSTM) model, which effectively captures the complexities of human group behavior and interactions within dense surroundings. By integrating social awareness into the LSTM architecture, our model achieves significantly enhanced trajectory predictions. The implementation of our SG-LSTM model empowers navigation algorithms to compute collision-free paths faster and with higher accuracy, particularly in complex and crowded scenarios. To foster further advancements in social navigation research, we contribute a substantial video dataset comprising labeled pedestrian groups, which we release to the broader research community. To thoroughly evaluate the performance of our approach, we conduct extensive experiments on multiple datasets, including ETH, Hotel, and MOT15. We compare various prediction approaches, such as LIN, LSTM, O-LSTM, and S-LSTM, and rigorously assess runtime performance.

I Introduction

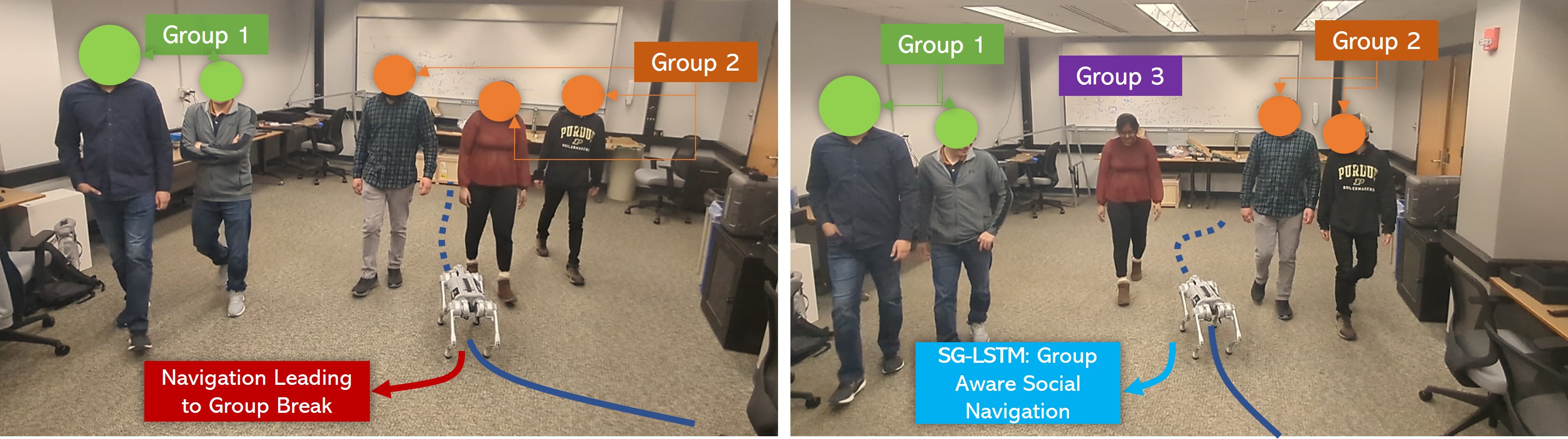

Social navigation is vital in social robotics as it enables robots to navigate and interact with human environments in a socially acceptable and effective way. Social navigation refers to the ability of a robot to move around in space while considering social norms and expectations. This includes navigating around people, avoiding obstacles, and following social conventions such as waiting in line or giving way to others. By incorporating social navigation into their design, social robots can move more smoothly and naturally through human environments, enhancing their social acceptance and effectiveness. This is particularly important for robots designed to interact with humans in public spaces, such as robots that provide guidance or assistance in airports, shopping centers, or hospitals. It also enables robots to interact with humans more effectively by allowing them to understand and respond to social cues and conventions. For example, a robot programmed to navigate a busy hospital corridor must recognize and avoid collisions with people walking in the opposite direction or understand when to yield the right of way to hospital staff rushing to an emergency. Existing socially compliant navigation algorithms are designed to assist service robots in navigating through complex environments safely while respecting social norms and avoiding collisions with pedestrians. However, some of these algorithms treat pedestrians as individual obstacles, making them unsuitable for use in crowded areas [35, 33, 36] where the social dynamics of pedestrians play a more significant role and impact on pedestrian dynamics. Fig. 2 demonstrates a problem that any navigation algorithm would face. A socially-aware navigation algorithm would choose to navigate around and not break a group, whereas a regular navigation algorithm that treats pedestrians as individual obstacles may cause the robot to freeze or decide to break a group.

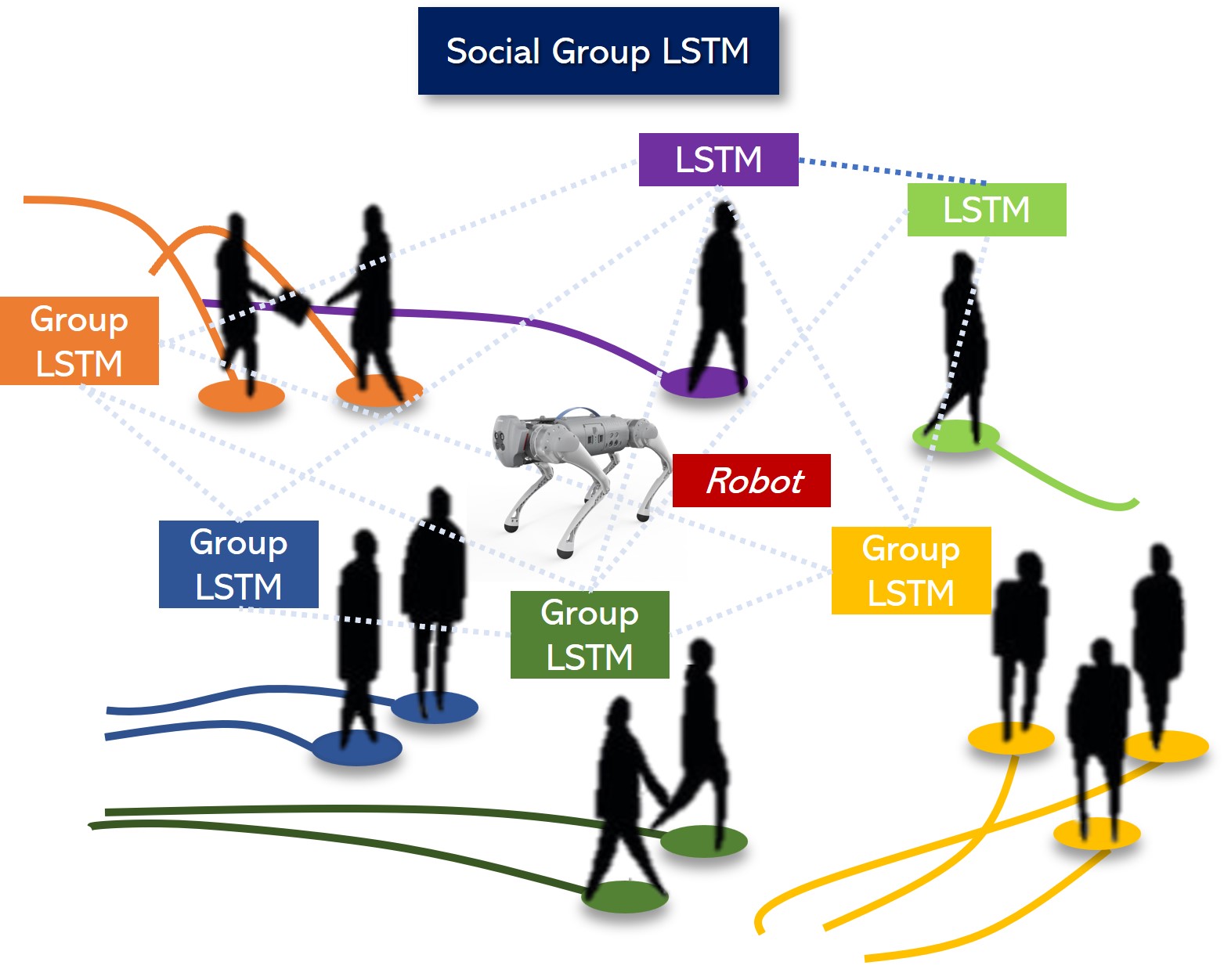

In recent times, algorithms incorporating group cohesion have shown promising results in enhancing agents’ navigation in dense-crowd environments. These algorithms rely on computing group cohesion, which involves analyzing factors like speed, direction, and proximity of individuals within a group to predict how closely they navigate together. However, these approaches have certain limitations, including the need for expensive online computations and reduced accuracy under specific conditions [1]. High crowd density, for example, can significantly slow down the computation of multiple features, negatively affecting navigation performance. Additionally, the effectiveness of these algorithms is hindered when certain features cannot be detected, leading to poorly defined groups. To overcome these challenges, our paper presents an innovative approach that identifies perceptual groups in crowd videos employing a Long Short-term Memory (LSTM) architecture. This socially-compliant trajectory prediction algorithm improves navigation and is illustrated in Fig. 1. Our main contributions are as follows:

-

•

The Social Group Long Short-term Memory (SG-LSTM) model incorporates group dynamics into pedestrian prediction.

-

•

Our hierarchical model independently models pedestrians at both group and individual levels, capturing personal dynamics effectively.

-

•

Our group-optimized approach significantly reduces compute time by over 50%.

-

•

Additionally, we provide a large dataset containing more than 30,000+ human-labeled frames with detailed group information.

For the rest of the paper, we summarize some related work in Sec. II. Sec. III describes the overview and methodology of the system in detail. Followed by Sec. IV, that describes our dataset. In Sec. V, we discuss evaluation metrics, results, and analysis. Finally, Sec. VI discusses future directions and limitations and concludes our approach.

II Related Works

This section reviews existing methods for group identification and pedestrian trajectory prediction.

II-A Human Group Learning

Research has extensively focused on identifying group features and discovering groups using video input. Notably, [2] adopts YOLOv2 for object detection, converting detections into feature vectors and clustering nearby individuals spatially. Objects are initially considered separate clusters, and groups are formed through iterative processes of computing Euclidean distances and updating centroids. Similarly, [4] defines a social group based on proximity, speed, and movement direction of pedestrians. Additionally, Sathyamoorthy et al. [1] propose mathematical models for pedestrian group characterization, considering walking speed, interaction, group size, and proximity as defining factors.

Apart from physical properties like positions and distances in crowds, group identification can also consider collective behaviors [3]. Shao et al. use the Collective Transition (CT) of pedestrians from tracklets to form clusters, defining groups based on high-velocity correlations with anchor tracklets. This approach provides insights into crowd dynamics and social groupings. These studies highlight diverse strategies, utilizing object detection, feature vectorization, clustering, and collective behavior analysis, to effectively identify and categorize groups in crowds from video data.

II-B Social Navigation

This literature review explores a diverse range of research works that contribute to various aspects of efficient and safe navigation in complex scenarios. Cheung et al. [46] propose an innovative vehicle navigation approach based on driver behavior classification, while Chandra et al. [47] introduce CMetric, a driving behavior measure utilizing centrality functions. Randhavane et al. [48] present pedestrian dominance modeling for socially-aware robot navigation, considering pedestrians’ dominance in crowded environments. Bera et al. [49] develop Adapt, a real-time adaptive pedestrian tracking system, and Bera and Manocha [50] present a real-time multilevel crowd-tracking method using reciprocal velocity obstacles. Additionally, Bera et al. [51] introduce GLMP, a real-time pedestrian path prediction model incorporating both global and local movement patterns. Real-time anomaly detection in crowded scenes is addressed by Bera et al. [52], and online parameter learning for data-driven crowd simulation and content generation is proposed by Bera et al. [53]. The research works by Murino et al. [54] explore group and crowd behavior analysis techniques within the domain of computer vision. Furthermore, Bera et al. [55] delve into the concept of “The Socially Invisible Robot,” aiming to enable robots to navigate and interact effectively in social environments. Additionally, Bera et al. [56] present an interactive crowd-behavior learning system facilitating surveillance and training tasks. Chandra et al. [57] propose GraphRQI, a method for classifying driver behaviors using graph spectrums, and Cheung et al. [58] identify driver behaviors using trajectory features to enhance vehicle navigation and safety. These research works collectively contribute to advancing navigation and behavior analysis in various scenarios, providing valuable insights for the development of socially-aware robots and crowd management systems.

The field of computer vision has significantly contributed to modeling pedestrian behavior [5]. The foundational Social Force Model proposed by Helbing and Molnar [5] has found applications in crowd simulation [6] and abnormal behavior detection [9]. Further extensions include joint modeling of pedestrian trajectories and groupings [11] and modeling social and group behavior for multiple people tracking [7]. Notably, there have been studies on people tracking with motion predictions from social forces [8] and detecting social groups in video data [10]. Additionally, image-based motion contexts have been explored for multiple people tracking [12]. Recent works have explored diverse techniques for human activity prediction, including trajectory prediction with PORCA [41], socially-aware navigation using SocioSense [40], and modeling agent interactions with the LSTM-CNN hybrid network [39]. Bera et al. [38] predict pedestrian paths in complex environments. Studies also cover early activity recognition from video streams [31], recognizing human activities from partially observed videos [27], and predicting actions from static scenes [28]. Data-driven activity prediction algorithms have been proposed [29], and Vondrick et al. [30] present a deep learning-based framework for anticipating visual representations. Additionally, the research further explores pedestrian behaviors in stationary crowds, analyzing relationships [17], predicting saliency [18], and socially-aware forecasting [19]. Techniques include data-driven analysis [20], Gaussian process regression flow [21], and trajectory learning [22]. Predictive models cover behavior recognition [23], learning intentions [24], and action forecasting [25]. Kitani et al. [26] forecast future actions from visual input.

Current methods in social navigation often overlook the critical aspect of detecting and preserving the cohesion of social groups in a scene. By neglecting the presence and dynamics of such groups, these approaches may not achieve optimal prediction accuracy and fail to account for the social behavior of individuals. To address this limitation, we present our novel approach, SG-LSTM, which introduces a group-aware paradigm for trajectory prediction. Our approach demonstrates its effectiveness through extensive experiments on various datasets, showcasing its ability to predict socially-compliant trajectories while avoiding collisions with detected groups. By considering group dynamics in trajectory prediction, SG-LSTM represents a significant advancement in the field, promising more reliable and human-friendly robot navigation in complex real-world scenarios.

III Methodology

III-A Overview

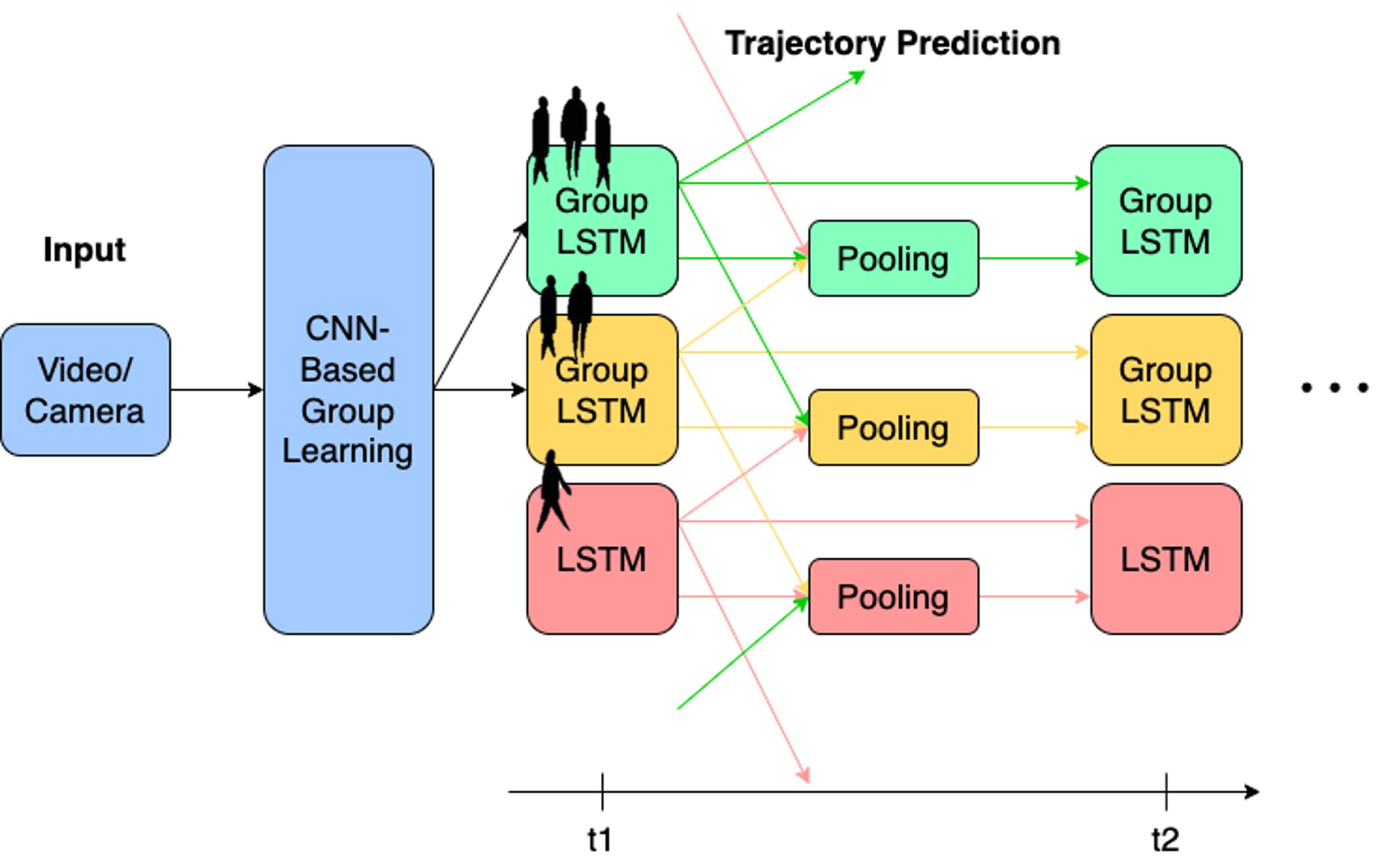

The SG-LSTM takes raw RGB and Depth frames from videos as input. Our CNN-based group learning algorithm then learns to predict groups in dense crowds, providing spatial coordinates of the groups for the trajectory prediction pipeline. The architecture is visualized in Fig. 3, and all symbols used in this paper are summarized in Tab. I.

| Average depth of a group | |

|---|---|

| Centroid of the bounding box | |

| Field of view of the camera | |

| Width of the image frame | |

| Robot’s orientation | |

| Angular displacement of a group w.r.t. the robot | |

| The hidden layer of the LSTM of the group | |

| The vector embedding the input coordinates and hidden tensor of the group at time t | |

| The spatial coordinates of the group w.r.t. the robot at time t | |

| The position of the robot at time t | |

| Speed of the robot | |

| Steering angle of the robot |

III-B Trajectory Prediction Pipeline

Our trajectory prediction algorithm builds upon the Social-LSTM model [32] designed for human trajectory prediction in crowded social scenes. Social-LSTM incorporates social interactions and past trajectories of individuals to predict future movement patterns. Although Social-LSTM is a powerful model for human trajectory prediction in crowded social scenes, it has limitations. Its accuracy tends to decrease with higher pedestrian density or when more agents form groups. By introducing a superior group dynamics model, we enhance the state-of-the-art in two essential ways: achieving more accurate trajectory predictions and better resilience to inaccuracies in detection or sensor output. When we model the group, all pedestrians are considered within one group, allowing the prediction to rely on group trajectories even when tracking for some agents within the group is challenging. This robustness is especially valuable when pedestrian detection algorithms struggle to accurately extract spatial coordinates, particularly for people walking in groups or occluded scenes.

Scaling up Social-LSTM or similar models to handle large and complex scenes with numerous individuals presents challenges due to the substantial computational resources and memory required to capture the social context of the scene. As the model represents each person using an LSTM, a scene with multiple individuals necessitates a corresponding number of LSTMs, leading to increased computational demands. Moreover, these resources may be sub-optimally utilized when dealing with stationary people or individuals moving together as a group. Consequently, there is a growing need for more efficient and scalable trajectory prediction models that can handle such scenarios while maintaining prediction accuracy and computational efficiency.

III-C Problem Formulation

In our approach, we establish spatial coordinates for each detected group as at any given time instant . For pedestrians not belonging to any groups, we treat them as individual groups with a single member. These spatial coordinates are collected over five consecutive time steps, from to , at a frame rate of 30 fps, and then fed into our Social-Group-LSTM model to predict trajectories for the subsequent five-time steps, spanning from to . This time-step configuration allows us to effectively compute and forecast the movement patterns of both grouped and individual pedestrians, enhancing trajectory prediction accuracy and enabling socially-compliant navigation in crowded environments.

III-D Group Localization

Our approach involves training a CNN-based group learning model on our annotated dataset. It builds on a single-stage object learning algorithm, utilizing a spatial pyramid pooling (SPP) module to detect objects at various scales and sizes. This capability proves beneficial for identifying groups with diverse shapes and sizes. Additionally, our approach effectively handles occlusions, where objects in a group partially or fully block each other through a combination of spatial features and attention mechanisms, ensuring accurate object detection within groups.

The trained model outputs bounding boxes around groups in crowded scenes. These group bounding boxes are combined with pedestrian bounding boxes for individuals not belonging to any group, which are already available from the dataset. The bounding boxes are then projected onto depth maps for each scene, and the depth of each pedestrian or group is computed using the centroid of the bounding box. By utilizing the pixel depth value (), the frame width, and the camera’s field of view angle () in radians, we calculate spatial coordinates for all pedestrian groups and ungrouped pedestrians. The angular displacement of each pedestrian/group with respect to the robot can be determined using the centroid of the bounding box and the FOV of the camera, where . The spatial coordinates of the pedestrians/groups are calculated as follows:.

III-E Modeling Social Interactions

In our approach, we employ a social pooling layer, similar to Social-LSTM, to analyze group interactions in a scene. The pooling layer is defined by a tensor for the trajectory, which is given as:

| (1) |

where represents the hidden state corresponding to the group at time step , and is an indicator function that checks if the coordinates corresponding to the neighboring groups of the group, represented as a set , are present in the cell of the grid. This layer effectively captures the social interactions among all the groups in a scene.

The social pooling layer in the Social-Group LSTM model treats each group as a single entity when modeling pedestrian interactions in a scene. This advantageous approach makes it easier for the model to scale up to denser crowds as not every person in the background is represented by an LSTM, unlike the case in Social-LSTM. Consequently, this significantly reduces the number of parameters required to model a densely-crowded scene. Moreover, by grouping people together, the model overcomes any inaccuracies that arise from computing the spatial coordinates of occluded pedestrians in the background, further enhancing the overall accuracy of trajectory predictions.

Model Layers: Our model layers and weights are similar to the Social-LSTM model. The input coordinates and hidden tensor are embedded into the vector and are passed as input to the hidden state () of an LSTM cell with weight W, corresponding to the current time step of the group, .

Trajectory Estimation: The predicted trajectories are assumed to belong to a normal distribution and thus are given as: . Furthermore, the model parameters are trained by minimizing the negative log-Likelihood loss for every trajectory.

| (2) |

The trajectories returned by the model are passed to a navigation system that can use this to compute optimal paths for a robot. Since we predict trajectories using social interactions between pedestrians employing a group detection model, we can say that the robots could leverage this to deploy a socially compliant navigation system that would avoid obstructing through groups.

III-F Robot Navigation

We deploy our algorithm on a Unitree robot dog. The algorithm for predicting paths outlined earlier can also be utilized to navigate through dense crowds or pedestrians without collisions. The approach is based on Generalized Velocity Obstacles (GVO) [37]. The combination of path prediction and GVO is used for car-like robots, taking into account their dynamic constraints.

In this context, we are employing kinematic constraints similar to a car and assuming that the robot can detect the location of dynamic obstacles, such as pedestrians and other robots, nearby, despite sensor noise. We have implemented this method on a robot to navigate a crowded walkway toward its intended destination.

The GVO navigation method is a technique that relies on velocity obstacles to navigate robots that have kinematic constraints. In our situation, we employ kinematic constraints similar to those of a car, and we assume that the robot can detect the positions of nearby moving obstacles, such as pedestrians, albeit with some noise. The robot uses our approach to anticipate the movement of each pedestrian as it navigates through the crowded walkway to reach its intended destination.

A conventional kinematic model [37] is utilized for the robot. The robot’s state is characterized by its position and orientation . It controls the robot’s speed and steering angle, represented by and .

The configuration of the robot is expressed as its position and orientation , and the robot has controls for speed and steering angle, represented by and , respectively.

Assuming that the controls remain unchanged for a given time interval, the position of the robot at a specific time can be determined by the following expression:

The constraints for the robot are specified as follows:

| (3) |

For more details, please refer to [37].

The robot utilizes the prediction scheme we developed to anticipate the paths of the pedestrians and pedestrian groups and prevent any steering actions that could result in a collision. It is assumed that the pedestrians may not actively take measures to avoid colliding with the robot, which implies that the robot is responsible for ensuring collision avoidance.

IV Dataset

IV-A Our SG-LSTM Dataset and Data Annotation

Our dataset (Fig. 4) is based on data collected on the Purdue Campus at West Lafayette and publicly available 3D point cloud, and RGB-D pedestrian dataset [42]. In this study, we manually annotated perceptually-visible pedestrian groups in crowded scenes from RGB frames. We utilized a reference algorithm to aid in labeling, which involved processing RGB frames with 2D bounding boxes of detected pedestrians and their corresponding depth information. Pedestrians’ positions were extrapolated relative to the robot’s coordinates frame using methods presented in [1]. Additionally, we calculated various features, such as group size, walking speed, and proximity of detected pedestrians, crucial for determining group cohesiveness. To classify pedestrians as a group, we referred to the conditions presented in [1]. Subsequently, for all identified groups, we used CVAT.ai to accurately generate 2D bounding boxes. The labeled data was then used to train our model, following the methods explained in Sec. III. The annotated dataset, which includes defaced, time-synced, color, and depth frames, along with the bounding boxes of pedestrian groups and individual pedestrians, will be released alongside this paper.

| Metric and Methods | Datasets | ||

|---|---|---|---|

| Avg. Displacement Error | ETH | MOT15 | Our Dataset |

| Linear | 0.80 | 0.93 | 0.65 |

| LSTM | 0.60 | 0.67 | 0.43 |

| O-LSTM | 0.49 | 0.59 | 0.32 |

| S-LSTM | 0.50 | 0.57 | 0.38 |

| SG-LSTM (Ours) | 0.35 | 0.40 | 0.23 |

| Final Displacement Error | ETH | MOT15 | Our Dataset |

| Linear | 1.31 | 1.01 | 0.91 |

| LSTM | 1.31 | 0.70 | 0.58 |

| O-LSTM | 1.06 | 0.66 | 0.41 |

| S-LSTM | 1.07 | 0.69 | 0.42 |

| SG-LSTM (Ours) | 0.68 | 0.48 | 0.27 |

V Evaluation

In this section, we summarize the performance between our method and the other methods on the metrics chosen for evaluation on our SG-LSTM dataset, the ETH and Hotel dataset, and the MOT15 dataset. Below are the methods we have tested:

-

•

Linear Model: We assumed the pedestrian followed a linear path

-

•

LSTM: Vanilla-LSTM with no social pooling and without grouping

-

•

O-LSTM: LSTM with occupancy maps

-

•

S-LSTM: LSTM with social pooling

-

•

SG-LSTM (Ours): LSTM with social pooling and grouping

And, we used the following metrics to measure the accuracy:

-

•

Average Displacement Error:

We calculate the mean squared error for all the predicted points in trajectories for every individual present in the scene as in [7]. -

•

Final Displacement Error:

We compute the distance between the expected trajectory endpoint and the actual endpoint for every person.

V-A Displacement Errors of Predicted Trajectories

By assuming that the pedestrians traveling as a group follow the same trajectory, we do not need to compute the trajectory for every individual pedestrian. Other trajectory-predicting methods that treat pedestrians as individuals would be less efficient in densely crowded environments due to needing to add trajectories for every person in the scene. And when these methods compute the trajectory for one person, it might treat the surrounding people as individual obstacles. In contrast, our model can avoid these expensive computations by knowing that these people belong to the same group. We show that our assumption that a group of pedestrians shares the same trajectory holds by calculating the average displacement error and the final displacement error between the predicted trajectories and the ground truth, where the ground truth is the trajectories of each person in the scene. The computed error in Tab. II confirms that our assumption holds and does not affect the model’s accuracy.

V-B Runtime Performance

We observe our approach, SG-LSTM, takes, on average, 55.4% less time (Tab. III) than S-LSTM [32]. On average, 30-50% of our tracked pedestrians belong to a group that significantly improves our compute time and is hence more appropriate for edge devices like robots. Lower compute requirements translate to lower costs for both hardware and software development. This means that robot navigation systems can be made more affordable and accessible to a wider range of users. The result is better performance, in terms of accuracy and speed, compared to Social-LSTM.

VI Conclusions, Limitations, and Future Work

We proposed a Social Group Long Short-term Memory (SG-LSTM) model which leverages group dynamics into pedestrian prediction. Our hierarchical model decouples pedestrians on a group and individual level and can model the personal dynamics at both levels. We also show that predicted trajectories can be used for a more socially-efficient navigation system that can leverage this to plan an optimal path for the robot. We also offer runtime numbers and demonstrate that our group-optimized approach leads to over 50% reduction in compute time.

At the same time, there are some limitations to our approach. Pedestrian prediction models like ours often rely solely on the position and motion of pedestrians without considering other contextual factors such as weather, time of day, or the presence of obstacles. Additionally, our model is designed to work only at short distances, such as within a few meters of the robot. This can limit the ability of the robot to plan for long-term interactions with pedestrians. Many pedestrian prediction models are complex and computationally intensive, making them difficult to deploy on resource-constrained robotic platforms.

In the future, we would like to work on these issues. Our model relies on data from a single sensor modality, such as cameras. Future work could explore integrating data from multiple modalities, such as vision, lidar, and radar, to improve the accuracy and robustness of pedestrian prediction models. In the future, we could consider a broader range of contextual information, such as weather conditions, time of day, and pedestrian behavior patterns. This could lead to more accurate and reliable predictions in various scenarios. We could explore how to extend these models to longer time horizons, such as predicting the trajectory of a pedestrian over the next minute or more.

References

- [1] A. J. Sathyamoorthy, U. Patel, M. Paul, N. K. S. Kumar, Y. Savle, and D. Manocha, “CoMet: Modeling Group Cohesion for Socially Compliant Robot Navigation in Crowded Scenes,” IEEE RA-L, vol. 7, no. 2, pp. 1008–1015, Apr. 2022

- [2] M. Yang et al., “Cluster-Based Crowd Movement Behavior Detection,” in 2018 DICTA, Dec. 2018, pp. 1–8

- [3] J. Shao, C. C. Loy, and X. Wang, “Scene-Independent Group Profiling in Crowd,” in 2014 IEEE CVPR, Jun. 2014, pp. 2227–2234

- [4] A. K. Chandran, L. A. Poh, and P. Vadakkepat, “Identifying social groups in pedestrian crowd videos,” in ICAPR, Jan. 2015, pp. 1–6

- [5] D. Helbing and P. Molnar, “Social Force Model for Pedestrian Dynamics,” Phys. Rev. E, vol. 51, no. 5, pp. 4282–4286, May 1995, doi: 10.1103/PhysRevE.51.4282.

- [6] A. Lerner, Y. Chrysanthou, and D. Lischinski, “Crowds by Example,” Computer Graphics Forum, vol. 26, no. 3, pp. 655–664, 2007, doi: 10.1111/j.1467-8659.2007.01089.x.

- [7] S. Pellegrini, A. Ess, K. Schindler, and L. van Gool, “You’ll never walk alone: Modeling social behavior for multi-target tracking,” in 2009 IEEE ICCV, Sep. 2009, pp. 261–268

- [8] M. Luber, J. A. Stork, G. D. Tipaldi, and K. O. Arras, “People tracking with human motion predictions from social forces,” in ICRA, May 2010, pp. 464–469

- [9] R. Mehran, A. Oyama, and M. Shah, “Abnormal crowd behavior detection using social force model,” in CVPR, Jun. 2009, pp. 935–942.

- [10] K. Yamaguchi, A. C. Berg, L. E. Ortiz, and T. L. Berg, “Who are you with and where are you going?,” in CVPR 2011, Jun. 2011, pp. 1345–1352. doi: 10.1109/CVPR.2011.5995468.

- [11] S. Pellegrini, A. Ess, and L. Van Gool, “Improving Data Association by Joint Modeling of Pedestrian Trajectories and Groupings,” in ECCV 2010, Berlin, Heidelberg, 2010, pp. 452–465

- [12] L. Leal-Taixé, M. Fenzi, A. Kuznetsova, B. Rosenhahn, and S. Savarese, “Learning an Image-Based Motion Context for Multiple People Tracking,” in 2014 IEEE CVPR, Jun. 2014, pp. 3542–3549

- [13] A. Treuille, S. Cooper, and Z. Popović, “Continuum crowds,” ACM Trans. Graph., vol. 25, no. 3, pp. 1160–1168, Jul. 2006.

- [14] J. M. Wang, D. J. Fleet, and A. Hertzmann, “Gaussian Process Dynamical Models for Human Motion,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 30, no. 2, pp. 283–298, Feb. 2008.

- [15] G. Antonini, M. Bierlaire, and M. Weber, “Discrete choice models of pedestrian walking behavior,” Transportation Research Part B: Methodological, vol. 40, no. 8, pp. 667–687, Sep. 2006.

- [16] M. K. C. Tay and C. Laugier, “Modelling Smooth Paths Using Gaussian Processes,” in Field and Service Robotics: Results of the 6th International Conference, C. Laugier and R. Siegwart, Eds. Berlin, Heidelberg: Springer, 2008, pp. 381–390.

- [17] S. Yi, H. Li, and X. Wang, “Understanding pedestrian behaviors from stationary crowd groups,” in CVPR, Jun. 2015, pp. 3488–3496.

- [18] H. S. Park and J. Shi, “Social saliency prediction,” in CVPR, Jun. 2015, pp. 4777–4785.

- [19] A. Alahi, V. Ramanathan, and L. Fei-Fei, “Socially-Aware Large-Scale Crowd Forecasting,” in CVPR, Jun. 2014, pp. 2211–2218.

- [20] M. Rodriguez, J. Sivic, I. Laptev, and J.-Y. Audibert, “Data-driven crowd analysis in videos,” in ICCV, Nov. 2011, pp. 1235–1242.

- [21] K. Kim, D. Lee, and I. Essa, “Gaussian process regression flow for analysis of motion trajectories,” in ICCV, Nov. 2011, pp. 1164–1171.

- [22] B. T. Morris and M. M. Trivedi, “Trajectory Learning for Activity Understanding: Unsupervised, Multilevel, and Long-Term Adaptive Approach,” IEEE T-PAMI, vol. 33, no. 11, pp. 2287–2301, Nov. 2011

- [23] J. Azorin-López, M. Saval-Calvo, A. Fuster-Guilló, and A. Oliver-Albert, “A predictive model for recognizing human behaviour based on trajectory representation,” in 2014 IJCNN, Jul. 2014, pp. 1494–1501.

- [24] J. Elfring, R. van de Molengraft, and M. Steinbuch, “Learning intentions for improved human motion prediction,” in 2013 ICAR, Nov. 2013, pp. 1–7.

- [25] Y. Kong, D. Kit, and Y. Fu, “A Discriminative Model with Multiple Temporal Scales for Action Prediction,” in Computer Vision – ECCV 2014, Cham, 2014, pp. 596–611.

- [26] K. M. Kitani, B. D. Ziebart, J. A. Bagnell, and M. Hebert, “Activity Forecasting,” in Computer Vision – ECCV 2012, Berlin, Heidelberg, 2012, pp. 201–214.

- [27] Y. Cao et al., “Recognize Human Activities from Partially Observed Videos,” in 2013 IEEE Conference on Computer Vision and Pattern Recognition, Jun. 2013, pp. 2658–2665.

- [28] T.-H. Vu, C. Olsson, I. Laptev, A. Oliva, and J. Sivic, “Predicting Actions from Static Scenes,” in Computer Vision – ECCV 2014, Cham, 2014, pp. 421–436.

- [29] B. Minor, J. R. Doppa, and D. J. Cook, “Data-Driven Activity Prediction: Algorithms, Evaluation Methodology, and Applications,” in ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 2015, pp. 805–814.

- [30] C. Vondrick, H. Pirsiavash, and A. Torralba, “Anticipating Visual Representations from Unlabeled Video,” in 2016 IEEE CVPR, Jun. 2016, pp. 98–106. doi: 10.1109/CVPR.2016.18.

- [31] M. S. Ryoo, T. J. Fuchs, L. Xia, J. K. Aggarwal, and L. Matthies, “Early Recognition of Human Activities from First-Person Videos Using Onset Representations.” arXiv, Jul. 06, 2015.

- [32] A. Alahi, K. Goel, V. Ramanathan, A. Robicquet, L. Fei-Fei, and S. Savarese, “Social LSTM: Human Trajectory Prediction in Crowded Spaces,” in 2016 IEEE CVPR, Jun. 2016, pp. 961–971

- [33] A. J. Sathyamoorthy, U. Patel, T. Guan, and D. Manocha, “Frozone: Freezing-Free, Pedestrian-Friendly Navigation in Human Crowds,” IEEE Robotics and Automation Letters, vol. 5, no. 3, pp. 4352–4359, Jul. 2020.

- [34] L. Tai, J. Zhang, M. Liu, and W. Burgard, “Socially Compliant Navigation through Raw Depth Inputs with Generative Adversarial Imitation Learning.” arXiv, Feb. 26, 2018

- [35] Y. F. Chen, M. Everett, M. Liu, and J. P. How, “Socially aware motion planning with deep reinforcement learning,” in IROS, Sep. 2017, pp. 1343–1350.

- [36] Y. F. Chen, M. Liu, M. Everett, and J. P. How, “Decentralized Non-communicating Multiagent Collision Avoidance with Deep Reinforcement Learning.” arXiv, Sep. 28, 2016.

- [37] Wilkie, D., Van Den Berg, J., and Manocha, D. (2009, October). Generalized velocity obstacles. In IROS (pp. 5573-5578). IEEE.

- [38] A. Bera, S. Kim, T. Randhavane, S. Pratapa, and D. Manocha, “GLMP- realtime pedestrian path prediction using global and local movement patterns,” in ICRA, May 2016, pp. 5528–5535.

- [39] R. Chandra, U. Bhattacharya, A. Bera, and D. Manocha, “TraPHic: Trajectory Prediction in Dense and Heterogeneous Traffic Using Weighted Interactions,” in CVPR, Jun. 2019, pp. 8475–8484.

- [40] A. Bera, T. Randhavane, R. Prinja, and D. Manocha, “SocioSense: Robot navigation amongst pedestrians with social and psychological constraints,” in IROS, Sep. 2017, pp. 7018–7025.

- [41] Y. Luo, P. Cai, A. Bera, D. Hsu, W. S. Lee, and D. Manocha, “PORCA: Modeling and Planning for Autonomous Driving Among Many Pedestrians,” IEEE Robotics and Automation Letters, vol. 3, no. 4, pp. 3418–3425, Oct. 2018

- [42] Diego Paez-Granados, Yujie He, David Gonon, Lukas Huber, Aude Billard, January 27, 2021, ”3D point cloud and RGBD of pedestrians in robot crowd navigation: detection and tracking”, IEEE Dataport.

- [43] D. Paez-Granados, Y. He, D. Gonon, L. Huber, and A. Billard, “Pedestrian-Robot Interactions on Autonomous Crowd Navigation: Dataset and Metrics,” p. 3.

- [44] A. Ess, B. Leibe, and L. Van Gool, “Depth and Appearance for Mobile Scene Analysis,” in ICCV 2007, pp. 1–8.

- [45] L. Leal-Taixé, A. Milan, I. Reid, S. Roth, and K. Schindler, “MOTChallenge 2015: Towards a Benchmark for Multi-Target Tracking.” arXiv, Apr. 08, 2015.

- [46] E. Cheung, A. Bera, and D. Manocha, ”Efficient and safe vehicle navigation based on driver behavior classification,” in IROS, pp. 4472–4479, 2012.

- [47] R. Chandra, U. Bhattacharya, T. Mittal, A. Bera, and D. Manocha, ”CMetric: A driving behavior measure using centrality functions,” in IROS, pp. 3943–3950, 2016.

- [48] T. Randhavane, A. Bera, E. Kubin, A. Wang, K. Gray, and D. Manocha, ”Pedestrian dominance modeling for socially-aware robot navigation,” in IROS, pp. 3162–3169, 2016.

- [49] A. Bera, N. Galoppo, D. Sharlet, A. Lake, and D. Manocha, ”Adapt: real-time adaptive pedestrian tracking for crowded scenes,” in CVPR, pp. 2125–2134, 2015.

- [50] A. Bera and D. Manocha, ”Realtime multilevel crowd tracking using reciprocal velocity obstacles,” IEEE TVCG, vol. 23, no. 1, pp. 521–530, 2017.

- [51] A. Bera, S. Kim, T. Randhavane, S. Pratapa, and D. Manocha, ”GLMP-Realtime Pedestrian Path Prediction using Global and Local Movement Patterns,” in IROS, pp. 3935–3942, 2016.

- [52] A. Bera, S. Kim, and D. Manocha, ”Realtime anomaly detection using trajectory-level crowd behavior learning,” in ICCV, pp. 2214–2222, 2017.

- [53] A. Bera, S. Kim, and D. Manocha, ”Online parameter learning for data-driven crowd simulation and content generation,” ACM TOG, vol. 36, no. 6, p. 193, 2017.

- [54] V. Murino, M. Cristani, S. Shah, and S. Savarese, ”Group and crowd behavior for computer vision,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 35, no. 12, pp. 2875–2891, 2012.

- [55] A. Bera, T. Randhavane, E. Kubin, A. Wang, K. Gray, and D. Manocha, ”The Socially Invisible Robot: Navigation in the Social World using Robot Entitativity,” in IROS, pp. 4193–4200, 2019.

- [56] A. Bera, S. Kim, and D. Manocha, ”Interactive crowd-behavior learning for surveillance and training,” in CVPR, pp. 8044–8053, 2018.

- [57] R. Chandra, U. Bhattacharya, X. Mittal, Trisha, Li, A. Bera, and D. Manocha, ”GraphRQI: Classifying Driver Behaviors Using Graph Spectrums,” IEEE Transactions on Intelligent Transportation Systems, vol. 19, no. 5, pp. 1588–1598, 2017.

- [58] E. Cheung, A. Bera, E. Kubin, K. Gray, and D. Manocha, ”Identifying driver behaviors using trajectory features for vehicle navigation,” IEEE Transactions on Intelligent Transportation Systems, vol. 13, no. 3, pp. 1181–1191, 2012.