ShadowCast: Controllable Graph Generation

Abstract

We introduce the controllable graph generation problem, formulated as controlling graph attributes during the generative process to produce desired graphs with understandable structures. Using a transparent and straightforward Markov model to guide this generative process, practitioners can shape and understand the generated graphs. We propose ShadowCast, a generative model capable of controlling graph generation while retaining the original graph’s intrinsic properties. The proposed model is based on a conditional generative adversarial network. Given an observed graph and some user-specified Markov model parameters, ShadowCast controls the conditions to generate desired graphs. Comprehensive experiments on three real-world network datasets demonstrate our model’s competitive performance in the graph generation task. Furthermore, we show its effective controllability by directing ShadowCast to generate hypothetical scenarios with different graph structures.

1 Introduction

In many real-world networks, including but not limited to communication, financial, and social networks, graph generative models are applied to model relationships among actors. It is crucial that the models not only mimic the structure of observed networks but also generate graphs with desired properties because it allows for an increased understanding of these relationships. Currently, there are no such methods designed for the effective control of graph generation.

Meaningful interactions between agents are often investigated under different what-if scenarios, which determine the feasibility of the interactions under abnormal and unforeseen circumstances. In such investigations, instead of using actual data, we can generate synthetic data to study and test the systems (Barse et al., 2003; Skopik et al., 2014). However, there are several challenges: data may not be accessible by direct measurement of the system, data may not be available at all, and data produced by generative models cannot be readily understood. To address these challenges, we have to answer a natural and meaningful question: Can we control the generative process to shape and understand the generated graphs?

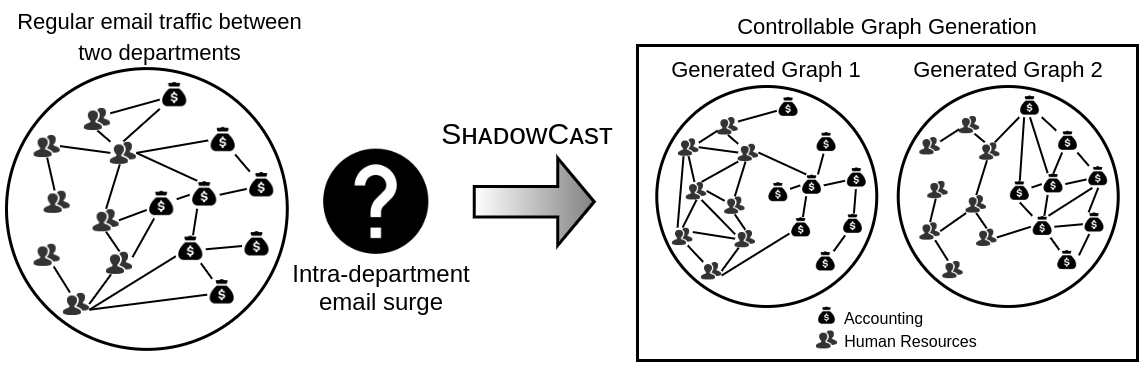

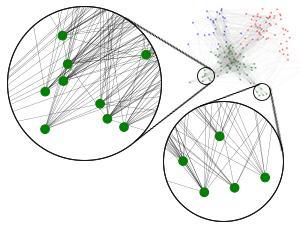

In this work, we introduce the novel problem of controlling graph generation. The goal is to generate graphs of desired shapes by learning to control the associated graph attributes and structure to influence the generative process. We provide an illustrative case study of email communications in an organization with two departments (Figure 1), where interactions of the employees follow a regular pattern during normal operations. Due to limited data, previously observed network information may be missing scenarios of intra-department email surge within either the Human Resources or Accounting departments. When such situations are required for analyzing the system, an ideal model should generate graphs that reflect these scenarios (see box in Figure 1) while maintaining the organizational structure. By effectively controlling the generative process, ShadowCast allows users to generate designed graphs that meet conditions resembling a wide range of possibilities. Overall, this is a meaningful problem because controlling the generative process to understand generated networks proves to be valuable in many applications such as anomaly detection and data augmentation.

Existing graph generative models aim to mimic the structure of given networks, but they cannot easily shape graphs into other desired states. These works either directly capture the graph structure (Cao & Kipf, 2018; Liu et al., 2017; Tavakoli et al., 2017; Zhou et al., 2019; Ma et al., 2018; You et al., 2018; Simonovsky & Komodakis, 2018; Bojchevski et al., 2018) or model node feature information (Kipf & Welling, 2016; Wang et al., 2018; Grover et al., 2019; Zou & Lerman, 2019). Most of them adopt implicit model approaches, such as the popular generative adversarial networks (GANs) (Goodfellow, 2016). Only very recent advances (Li et al., 2018a; Yang et al., 2019) in network generation have started injecting auxiliary information into the model by adding graph-level conditions as additional inputs. However, none of them allows direct control over the generative process, which is needed to address the fundamental challenge of controlling generated graphs.

Another series of work on graph translation attempts to learn a translation mapping from the input domain to the target graph domain. These methods either perform graph topology translation or predict the node attributes using the learned translation mappings. On the one hand, node attributes translation prediction (Battaglia et al., 2016; Li et al., 2018b; Yu et al., 2018; Gao et al., 2018; Jin et al., 2019) aims to predict node attributes given a graph with fixed topology. On the other hand, graph topology translation (Guo et al., 2018; Sun & Li, 2019; Guo et al., 2019) studies the changing of graph topology domain distributions, forming target graph topologies by assuming that the node attributes are fixed. These works in graph topology translation are closest to our problem, where models generate graphs by discovering the underlying translation rules between input and target graphs. Even though translation mappings can create graphs, they only replicate real target graphs and cannot produce graphs with different structures without a provided target graph.

We propose ShadowCast, an approach for controlling graph generation, which addresses the challenge of generating graphs with user-desired structures. It is achieved by using easy-to-understand node attributes that are intended to capture graph semantics in an explicable way. These attributes form the shadow that we control in order to guide the graph generative process. The model architecture is essentially based on conditional GANs (Mirza & Osindero, 2014). The model introduces control by leveraging the conditions, which we manage with a transparent Markov model, as a control vector to influence the generative process. It allows for user-specified parameters such as density distributions to generate designed graphs that are understandable. Finally, the generator captures essential graph structures while exploring a myriad of other possibilities in multifarious networks.

We first evaluate ShadowCast on three real-world social and information networks to demonstrate its competitive performance against several state-of-the-art graph generation methods in mimicking given graphs. Across the three datasets, its generated graphs match most statistics of the real graphs more closely than those of the baselines. In addition, we demonstrate the capability of ShadowCast to produce customized synthetic graphs with different structures through tunable and intuitive parameters, which existing generative models are not designed to perform effectively.

2 Controllable Graph Generation

In this section, we describe the controllable graph generation problem. The core idea of the problem lies in generating graphs of desired structures through the control of their node attributes. We define these attributes and the graph structure as a shadow and introduce our approach, ShadowCast. Since it is a challenge to directly control the generation of graphs due to their complex interconnected nature, we model them through shadows, which can be manipulated to control the graph generation. We depict the problem and our approach in detail below (Sections 2.1 and 2.2).

2.1 Problem Formulation

We focus on the novel problem of controlling graph generation. Consider a graph defined by a set of nodes and a set of edges, where each node is associated with some identity information, e.g., the employee ID. In addition, we induce another graph with the same edge connections as the original graph, which we define as its shadow. Each node in the shadow is associated with some property label, e.g., the employee’s department, and it “shadows” the corresponding node in the original graph. Every node in the graph can be uniquely identified by its identity, whereas the label of each node in the shadow is not necessarily unique. Using a transparent and straightforward Markov model to direct this shadow, we control the generative process and shape graphs with understandable outcomes while preserving the original graph properties. We note that there could be other properties of interest, e.g., degree distribution, or a shadow with different connectivity than the original graph. We leave the inclusion of additional properties as extensions for future work.

In this work, we aim to develop a controllable network graph generative model. By training the model on a graph and its shadow, the model can then monitor the generative process and steer the generation, which aids the controllability of the generated graphs. We define the Controllable Graph Generation problem as follows:

Given a graph and its key node attributes, induce another graph with the same structure, defined as the shadow, whose nodes are labeled by these attributes. Train a model to learn a representative shadow, and control it to generate graphs with understandable structures.

Following this process, we can leverage node properties such as ground-truth labels and other node attributes, valuable in understanding the model-generated results, as a control vector to guide the graph generation.
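As a minimal sketch of how a shadow relates to its graph, the snippet below maps a walk over node identities to the corresponding walk over property labels. The names `to_shadow_walk` and `node_labels`, as well as the toy employee and department data, are illustrative assumptions and are not taken from the paper.

```python
from typing import Dict, Hashable, List, Sequence


def to_shadow_walk(walk: Sequence[Hashable],
                   node_labels: Dict[Hashable, str]) -> List[str]:
    """Replace every node identity in a walk by that node's property label."""
    return [node_labels[node] for node in walk]


# Toy example (hypothetical data): employee IDs labeled by department.
node_labels = {"e1": "HR", "e2": "HR", "e3": "Accounting", "e4": "Accounting"}
graph_walk = ["e1", "e2", "e3", "e4", "e3"]
shadow_walk = to_shadow_walk(graph_walk, node_labels)
print(shadow_walk)
```

Node identities are unique while labels repeat, so many distinct graph walks share the same shadow walk. This is what makes the shadow a compact handle for control.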

2.2 Proposed Model

We propose ShadowCast, a controllable generative model that leverages both conditional modeling and GANs to generate graph-structured data. Our approach is inspired by the graph generative model (Bojchevski et al., 2018) that poses the graph generation problem as learning a distribution of biased random walks over the input graph. However, Bojchevski et al. (2018) is not a controllable generative method.

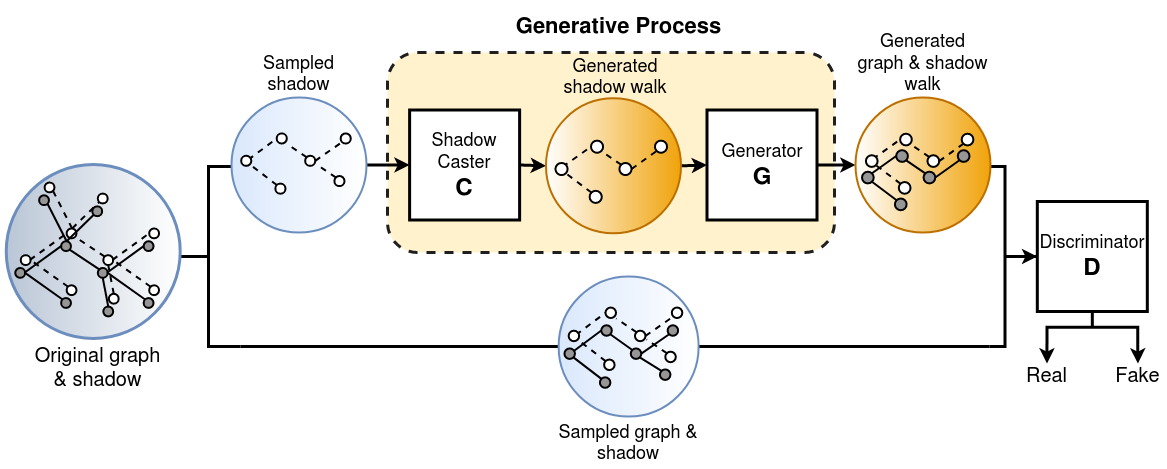

In contrast, we design ShadowCast as a controllable graph generation method. It is based on a conditional GAN framework, which leverages the node attributes as a control vector to influence the generative process. During the training phase, ShadowCast takes an attributed graph as input and induces a shadow whose nodes carry the corresponding attributes. Next, the model trains the shadow caster, which takes in sequences sampled from the shadow and produces representative shadow walks that mimic the real shadow. The generator then takes these representative shadow walks as conditions and generates fake samples of graph walks and shadow walks. The goal of the generator is to capture the distribution of walks over the graph and generate synthetic graph random walks and conditions that are similar to the real walks. At the same time, the discriminator estimates the probability that a graph random walk and its corresponding conditions came from the real graph rather than from the generator, in order to distinguish between the synthetic and real walks.

After training the model, we can specify the Markov model parameters to control the shadow caster, which produces representative shadow walks following the specified dynamics and thereby creates a representative shadow. We then direct the generative process with this designed shadow and influence the generator to generate the desired graphs. We provide details of our model architecture (Figure 2) and design choices below.

Using the conditional GAN framework, we train both the generator and the discriminator conditioned on some extra information: shadow walks sampled from the shadow. By allowing our model to consider any auxiliary information such as ground-truth communities or data from other sources, the model can leverage extra information from different data modalities and directly control the data generation process. For example, by using contextual information in the social communications of an organization, we learn semantically meaningful graph representations. We can then explicitly generate networks of any given context.

Following Mirza & Osindero (2014), we introduce the conditional GAN training for graph shadow random walks and define the loss as:

$$
\min_{G} \max_{D} \; \mathbb{E}_{x \sim p_{\text{data}}(x)} \left[ \log D(x \mid s) \right] + \mathbb{E}_{z \sim p_{z}(z)} \left[ \log \left( 1 - D(G(z \mid s)) \right) \right] \tag{1}
$$

where $G$ and $D$ denote the generator and the discriminator, $x$ is a real graph walk, $s$ is the shadow walk used as the condition, and $z$ is a latent noise from a multivariate standard normal distribution. We represent a social transaction network as an input graph given by a binary adjacency matrix. We then sample sets of fixed-length random walks from this matrix to use as training data for our model. Following Bojchevski et al. (2018), we use a biased second-order random walk sampling strategy (Grover & Leskovec, 2016) in order to better capture both global and local graph structures. One advantageous property of random walks is their invariance under node reordering. Another advantage is that the walks only include connected nodes, which efficiently exploits the sparsity of real-world graphs by considering only the nonzero entries of the adjacency matrix. In the rest of this section, we describe in detail each stage of the ShadowCast generation process and formally present the procedure (Algorithm 1).
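The biased second-order sampling mentioned above can be sketched as follows, in the style of node2vec (Grover & Leskovec, 2016). This is an illustrative sketch rather than the authors' implementation: the function names, the toy edge list, and the values chosen for the return parameter `p` and the in-out parameter `q` are assumptions.

```python
import random
from typing import Dict, List, Optional, Sequence, Set, Tuple


def build_adjacency(edges: Sequence[Tuple[int, int]]) -> Dict[int, Set[int]]:
    """Build an undirected adjacency map from an edge list."""
    adj: Dict[int, Set[int]] = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def biased_walk(adj: Dict[int, Set[int]], start: int, length: int,
                p: float = 1.0, q: float = 1.0,
                rng: Optional[random.Random] = None) -> List[int]:
    """Sample one second-order random walk with node2vec-style biases."""
    rng = rng or random.Random()
    walk = [start]
    while len(walk) < length:
        cur = walk[-1]
        neighbors = sorted(adj[cur])
        if not neighbors:
            break  # isolated node: the walk cannot continue
        if len(walk) == 1:
            walk.append(rng.choice(neighbors))  # first step is unbiased
            continue
        prev = walk[-2]
        weights = []
        for nxt in neighbors:
            if nxt == prev:
                weights.append(1.0 / p)  # step back to the previous node
            elif nxt in adj[prev]:
                weights.append(1.0)      # stay at distance 1 from the previous node
            else:
                weights.append(1.0 / q)  # move outward, to distance 2
        walk.append(rng.choices(neighbors, weights=weights, k=1)[0])
    return walk


# Toy graph (hypothetical): a triangle 0-1-2 with a tail 2-3-4.
adj = build_adjacency([(0, 1), (1, 2), (2, 0), (2, 3), (3, 4)])
walk = biased_walk(adj, start=0, length=8, p=1.0, q=0.5, rng=random.Random(0))
print(walk)
```

Because every step moves along an existing edge, only nonzero entries of the adjacency matrix are ever visited, which is the sparsity advantage noted in the text.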

Shadow Caster

The shadow caster is a sequence-to-sequence model that learns arrays of contiguous node properties from sampled shadow walks on the shadow. Given an observed sequence, the network predicts the following elements one at a time. We model the shadow caster with a long short-term memory (LSTM) neural network (Hochreiter & Schmidhuber, 1997). Given sampled sequences of shadow walks from the shadow as inputs, the shadow caster then generates synthetic shadow walks to mimic the sampled walks.

Generator

The generator is a probabilistic sequential learning model that generates graph random walks conditioned on shadow walks. We model it using another parameterized LSTM network. At each step, the network takes as input the previous memory state of the LSTM, the current additional information (the shadow for that step), and the last node. It produces two values: a vector that parameterizes the probability distribution over the current node, and the current memory state. Next, the current node is sampled from the categorical distribution obtained by applying the softmax function to that vector, and it is represented as a one-hot vector.

In order to initialize the model, we draw a latent noise from a multivariate standard normal distribution and pass it through a hyperbolic tangent function to compute the initial memory state. The generator takes as inputs the noise and the sampled shadow walks, and it outputs graph random walks. Through this process, the generator produces fake random walks.
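The per-step sampling and the initialization described above can be sketched in plain Python. This sketch leaves out the LSTM itself and any training details. The names `init_memory`, `softmax`, and `sample_one_hot` are hypothetical, and applying the hyperbolic tangent elementwise to the noise is a simplifying assumption.

```python
import math
import random
from typing import List, Sequence


def init_memory(dim: int, rng: random.Random) -> List[float]:
    """Draw standard normal noise and squash it with tanh (elementwise, assumed)."""
    noise = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    return [math.tanh(z) for z in noise]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a vector of unnormalized scores."""
    top = max(logits)
    exps = [math.exp(x - top) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_one_hot(logits: Sequence[float], rng: random.Random) -> List[int]:
    """Sample a node index from Cat(softmax(logits)) and return it one-hot."""
    probs = softmax(logits)
    index = rng.choices(range(len(probs)), weights=probs, k=1)[0]
    one_hot = [0] * len(probs)
    one_hot[index] = 1
    return one_hot


rng = random.Random(0)
memory = init_memory(dim=4, rng=rng)
node = sample_one_hot([0.1, 2.0, -1.0], rng)  # hypothetical scores for 3 nodes
print(memory, node)
```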

Discriminator

The discriminator is a binary classification LSTM model. Its goal is to discriminate between real walks sampled from the original graph and fake walks produced by the generator. At each time-step, the discriminator takes two inputs: the current node and the associated shadow, both represented as one-hot vectors. After processing each presented sequence of shadow and graph walks, the discriminator outputs a score between 0 and 1, indicating the probability of a real walk.

After training the model, we have a shadow caster and a generator that can produce synthetic graphs. The shadow caster first constructs shadow walks of some user-defined class distribution (a relatively small number of shadow walks, e.g., 10,000). The generator then takes these shadow walks and generates a large set of random graph walks (a much larger number of random walks than for training, e.g., 10M). We construct a score matrix by counting how often each edge appears in the set of graph walks. We then convert the score matrix into a binary adjacency matrix, first symmetrizing it. At this point, we could use simple binarization strategies such as thresholding or choosing the top-k entries. However, we follow the probabilistic strategy introduced in Bojchevski et al. (2018), which mitigates the issue of leaving out low-degree nodes and producing singletons because the starting node of every walk is random.
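The graph assembly step can be sketched as follows. The sketch assumes, based on our reading of Bojchevski et al. (2018), that the score matrix is symmetrized with an elementwise maximum and that edges are then sampled in two phases: first one edge per visited node, then further edges in proportion to their scores until a target edge count is reached. All function names are hypothetical.

```python
import random
from typing import Iterable, List, Sequence, Set, Tuple


def count_transitions(walks: Iterable[Sequence[int]], n: int) -> List[List[int]]:
    """Score matrix: how often each directed transition appears in the walks."""
    scores = [[0] * n for _ in range(n)]
    for walk in walks:
        for u, v in zip(walk, walk[1:]):
            scores[u][v] += 1
    return scores


def symmetrize(scores: List[List[int]]) -> List[List[int]]:
    """Elementwise maximum of the matrix and its transpose (assumed rule)."""
    n = len(scores)
    return [[max(scores[i][j], scores[j][i]) for j in range(n)] for i in range(n)]


def assemble_graph(scores: List[List[int]], num_edges: int,
                   rng: random.Random) -> Set[Tuple[int, int]]:
    """Probabilistically pick undirected edges from a symmetric score matrix."""
    n = len(scores)
    edges: Set[Tuple[int, int]] = set()
    # Phase 1: give every visited node at least one edge to avoid singletons.
    for i in range(n):
        row = [s if j != i else 0 for j, s in enumerate(scores[i])]
        if sum(row) == 0:
            continue  # node never appeared next to another node
        j = rng.choices(range(n), weights=row, k=1)[0]
        edges.add((min(i, j), max(i, j)))
    # Phase 2: sample remaining edges without replacement, weighted by score.
    candidates = [(i, j) for i in range(n) for j in range(i + 1, n)
                  if scores[i][j] > 0 and (i, j) not in edges]
    while len(edges) < num_edges and candidates:
        weights = [scores[i][j] for i, j in candidates]
        k = rng.choices(range(len(candidates)), weights=weights, k=1)[0]
        edges.add(candidates.pop(k))
    return edges


walks = [[0, 1, 2], [2, 1, 0], [1, 3]]  # toy generated walks (hypothetical)
sym = symmetrize(count_transitions(walks, n=4))
graph_edges = assemble_graph(sym, num_edges=3, rng=random.Random(0))
print(sorted(graph_edges))
```

The first phase may already yield more edges than `num_edges` on small inputs; the sketch keeps them, since dropping one would reintroduce singletons.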

2.3 Controlling Generated Graphs

Different from existing approaches, our model takes shadow walks—a series of random walks on the node properties graph—as inputs to the generator, and it creates graphs with various densities. To answer questions like: “Why did the model generate such graphs? Could we modify it to our desire?”, we generate graphs that are more understandable by controlling these shadow walk inputs. Our goal is to provide controllability and gain insight into how black-box generative models produce graphs.

For any desired graph, we first build a Markov chain to model and construct sequences of node properties based on some user-specified transition distribution. These sequences are then injected into the shadow caster to generate shadow walks that mimic the original shadow. Next, given a trained ShadowCast model and these shadow walks, the generator produces the desired graphs. Through this process, one can control the shadow distributions and study the generated graphs with varying structures.
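For concreteness, the Markov chain over node properties can be written out as below. The notation is an illustrative assumption: $K$ is the number of labels, $\pi$ the initial distribution, $A = (a_{ij})$ the transition matrix, and $y_1, \dots, y_T$ a label sequence of length $T$.

```latex
P(y_1, \dots, y_T) = \pi_{y_1} \prod_{t=2}^{T} a_{y_{t-1} y_t},
\qquad
\sum_{i=1}^{K} \pi_i = 1,
\qquad
\sum_{j=1}^{K} a_{ij} = 1 \ \text{ for all } i .
```

Under this reading, raising a diagonal entry $a_{ii}$ makes sequences stay longer within label $i$, which corresponds to denser intra-group structure in the generated graph.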

3 Related Work

Although many existing works study the generalizability of graph generation methods, effectively controlling graph generation remains an open question. From a broader perspective, we can consider the related problems of (1) constructing generative models for graph-structured data and (2) learning translation mappings between input and target graphs to infer their results.

Graph Generation

Most existing graph generation models are designed to generate graphs mimicking the structure of observed graphs. So far, no generative method that shapes graphs into new desired states has been proposed. In general, we can group these graph generative models into two main families: those that directly model the graph structure (Cao & Kipf, 2018; Liu et al., 2017; Tavakoli et al., 2017; Zhou et al., 2019; Ma et al., 2018; Simonovsky & Komodakis, 2018) and others that study the graph in the context of node representations (Kipf & Welling, 2016; Wang et al., 2018; Grover et al., 2019; Zou & Lerman, 2019). While modeling graph structures approximates the distribution of graphs with minimal assumptions about their structure, modeling node embeddings estimates the probability of each edge’s existence, which effectively models the relational structure of large graphs.

Recently, some works in graph generation have started exploring network structures of various conditions. These works employ graph-level condition information. In one work, Li et al. (2018a) produce some conditional generation results, where the conditions are graph properties such as the number of nodes and edges. Another work, CondGEN (Yang et al., 2019), injects semantics into the graphs by conditioning the model on supplementary contextual information. The model mainly considers multiple small graphs, each with an accompanying semantic condition to learn a distribution over graphs. While GraphRNN (You et al., 2018) is not a direct conditional model, it decomposes the generative process into sequences of nodes, which potentially allows for explicit conditioning. However, these methods only generate graphs mimicking the observed graphs.

To allow state manipulation and controllable graph generation, our model borrows the concept from NetGAN (Bojchevski et al., 2018), which adapts the standard LSTM to learn a distribution of random walks and exploit sparsity in real-world graphs. In contrast to NetGAN, we integrate a condition-based control mechanism to learn a model that generates custom graphs. Due to the challenging nature of the problem, to the best of our knowledge, no work has definitively considered shaping graphs into new desired states.

Graph Translation

Another series of tangential work, graph translation, attempts to learn a translation mapping from the input domain to the target graph domain. These methods, using translation mappings, either perform graph topology translation or predict the node attributes. Existing graph translation models (Battaglia et al., 2016; Li et al., 2018b; Yu et al., 2018; Gao et al., 2018; Jin et al., 2019) learn to predict node attributes given a graph with fixed topology. While predicting node attribute values is useful to model the behavior of nodes, potentially leading to enhanced performance in areas such as anomaly detection, it is limited in generating graphs.

Topology translation methods (Guo et al., 2018; Sun & Li, 2019), which study the task of changing graph topology domain distributions, form target graph topology (i.e., structure, edges) by assuming that the node attributes are fixed. Guo et al. (2018) introduce a GAN-based graph translator using graph convolution, deconvolution layers, and a conditional discriminator to learn the global and local translation mapping. Sun & Li (2019) propose a conditioned graph generation model with two edge generation options. It is based on the GraphRNN model that learns the attention on input graph annotations, sequentially generating the nodes to form a target graph.

However, these models predict either node attributes given a fixed topology or edge attributes given fixed node attributes; they cannot perform both predictions simultaneously. Guo et al. (2019) develop an end-to-end framework for such joint prediction to circumvent the limitations of existing graph translation models, integrating both node and edge translations.

4 Experiments

In this section, we first compare and evaluate our approach with other baseline graph generation methods on three datasets to establish our model’s ability to generate high-quality graphs of complex networks. Next, we demonstrate the controllability of ShadowCast by directing the generative process to create graphs according to specifications. Note that generating graphs mimicking any given graph as closely as possible is not our goal. Our objective is to introduce a controllable graph generative approach that provides insights into generated graphs. Through our experiments, we not only demonstrate that ShadowCast exhibits competitive performance in the task of graph generation, but we also show that our model can generate graphs of different density distributions by controlling the shadows.

Table 1: Statistics of the real graphs and of the graphs generated by each model.

| Graph | Model | ASST | CLUST | CPL | GINI | MD | TC |
|---|---|---|---|---|---|---|---|
| Cora-ML | Real | -0.075 | 0.00277 | 5.636 | 0.485 | 241.0 | 2898.0 |
| | GraphRNN | 0.062 ± 5.5e-4 | 0.00121 ± 2.2e-7 | 1.892 ± 5.7e-5 | 0.119 ± 1.9e-4 | 507.4 ± 2.7 | 9023.8 ± 17.8 |
| | GVAE | -0.324 ± 6.1e-3 | 0.01294 ± 4.2e-4 | 3.481 ± 1.1e-2 | 0.825 ± 1.1e-3 | 121.6 ± 7.0 | 15513.0 ± 186.1 |
| | NetGAN | -0.055 ± 1.5e-3 | 0.00140 ± 2.8e-5 | 4.943 ± 9.5e-3 | 0.407 ± 1.3e-3 | 223.6 ± 2.1 | 1034.6 ± 18.7 |
| | CondGEN | -0.524 ± 2.0e-2 | 0.00524 ± 9.0e-4 | 2.168 ± 1.7e-2 | 0.946 ± 1.5e-3 | 404.0 ± 37.0 | 95843.4 ± 4780.4 |
| | ShadowCast | -0.081 ± 3.1e-3 | 0.00191 ± 1.5e-4 | 5.187 ± 1.0e-2 | 0.459 ± 1.3e-3 | 229.6 ± 7.7 | 1713.6 ± 26.4 |
| Enron | Real | -0.003 | 0.03300 | 2.154 | 0.281 | 74.0 | 4784.0 |
| | GraphRNN | 0.028 ± 6.3e-3 | 0.02154 ± 3.1e-4 | 1.977 ± 5.5e-3 | 0.116 ± 2.0e-3 | 30.8 ± 0.8 | 1221.6 ± 34.4 |
| | GVAE | -0.112 ± 2.1e-2 | 0.04625 ± 1.0e-3 | 2.165 ± 6.9e-3 | 0.288 ± 7.2e-3 | 45.2 ± 1.3 | 5439.2 ± 58.7 |
| | NetGAN | 0.123 ± 1.2e-2 | 0.03051 ± 3.4e-4 | 2.105 ± 3.8e-3 | 0.244 ± 5.8e-3 | 55.8 ± 1.4 | 3486.0 ± 57.9 |
| | CondGEN | -0.287 ± 2.7e-2 | 0.04074 ± 1.5e-3 | 2.102 ± 2.3e-2 | 0.463 ± 7.1e-3 | 70.4 ± 1.7 | 9619.6 ± 183.7 |
| | ShadowCast | -0.004 ± 4.6e-3 | 0.03483 ± 7.8e-4 | 2.214 ± 6.2e-3 | 0.278 ± 1.8e-3 | 73.2 ± 2.6 | 5262.2 ± 42.8 |
| EUcore-top | Real | -0.085 | 0.03105 | 2.885 | 0.433 | 65.0 | 8133.0 |
| | GraphRNN | -0.005 ± 8.6e-3 | 0.00891 ± 1.1e-4 | 2.128 ± 5.2e-3 | 0.118 ± 9.6e-4 | 41.0 ± 0.84 | 2255.2 ± 59.2 |
| | GVAE | -0.257 ± 1.2e-2 | 0.02919 ± 3.8e-4 | 2.579 ± 7.0e-3 | 0.473 ± 2.4e-3 | 68.8 ± 2.3 | 9025.2 ± 127 |
| | NetGAN | -0.028 ± 1.0e-2 | 0.02335 ± 3.1e-4 | 2.642 ± 1.0e-2 | 0.359 ± 1.7e-3 | 62.0 ± 2.2 | 4639.8 ± 28.8 |
| | CondGEN | -0.378 ± 3.8e-2 | 0.01880 ± 1.9e-3 | 2.101 ± 1.2e-2 | 0.720 ± 3.6e-3 | 147.0 ± 10.0 | 26106.4 ± 726.4 |
| | ShadowCast | -0.034 ± 1.1e-2 | 0.02847 ± 3.4e-4 | 2.843 ± 1.0e-2 | 0.435 ± 2.6e-3 | 66.2 ± 1.1 | 7414.4 ± 93.8 |

Datasets

We consider three real-world graphs in social and information networks, where each node belongs to one of the ground-truth communities. Two of the datasets are email communication networks: EUcore-top (348 nodes, 3342 edges, 5 communities) and Enron (154 nodes, 1843 edges, 3 communities). The other dataset, Cora-ML (2810 nodes, 7981 edges, 7 communities), is a commonly used subset of a large author citation dataset. We provide the links to datasets used in our experiments (see Appendix for details).

The two communication networks are as follows. (1) EUcore-top is a network that consists of the top five largest departments in the EUcore email dataset, which was created using anonymized emails from a large European research institution. (2) Enron is a dataset of the Enron email corpus where nodes are employees labeled according to their department information. The citation network, (3) Cora-ML, is a popular benchmark citation dataset. Its nodes are authors labeled according to their paper topic, and edges between them indicate that an author cited another author’s paper.

Baselines

Since guiding the generative process to provide controllable graph generation is a novel task for which no method has yet been developed, we compare our approach against four current state-of-the-art graph generation baselines: GraphRNN (You et al., 2018), GVAE (Simonovsky & Komodakis, 2018), NetGAN (Bojchevski et al., 2018), and CondGEN (Yang et al., 2019). We randomly select a portion of the edges in each graph for training and use the remaining edges for validation and testing. We refer readers to the Appendix for more details about the model implementation settings, baseline models, datasets, and generated graph visualizations.

Performance

We evaluate ShadowCast against existing benchmark generative models (You et al., 2018; Simonovsky & Komodakis, 2018; Bojchevski et al., 2018; Yang et al., 2019) and present the comparison statistics (Table 1). The statistics measuring graphs generated by ShadowCast and the baseline methods are ASST (assortativity), CLUST (clustering coefficient), CPL (characteristic path length), GINI (Gini index), MD (maximum node degree), and TC (triangle count). We compare the statistics of the real graphs with those of the graphs generated by each method; closer mean values indicate greater resemblance to the original graphs and thus better performance. In general, baseline methods succeed at replicating the graphs that are directly modeled. Unsurprisingly, GVAE, designed for generating small graphs, performs well in the smaller Enron and EUcore-top datasets. However, it does not recover statistics of the larger graph Cora-ML well. On the other hand, our model captures all graph properties of the datasets, especially excelling in preserving properties of larger graphs, as shown in its generation of the Cora-ML dataset.
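Three of the reported statistics are simple enough to sketch in plain Python: maximum node degree (MD), triangle count (TC), and the Gini index (GINI). The sketch assumes that the Gini index is taken over the degree distribution, which is one common definition and may differ from the one used in the experiments. The function names and the toy graph are hypothetical.

```python
from itertools import combinations
from typing import Dict, Set

Adjacency = Dict[int, Set[int]]


def max_degree(adj: Adjacency) -> int:
    """MD: the largest number of neighbors of any node."""
    return max(len(neighbors) for neighbors in adj.values())


def triangle_count(adj: Adjacency) -> int:
    """TC: number of triangles, each counted once at its smallest node."""
    count = 0
    for u in adj:
        for v, w in combinations(sorted(adj[u]), 2):
            if u < v and w in adj[v]:
                count += 1
    return count


def degree_gini(adj: Adjacency) -> float:
    """GINI: inequality of the degree distribution (0 means all degrees equal)."""
    degrees = sorted(len(neighbors) for neighbors in adj.values())
    n = len(degrees)
    total = sum(degrees)
    weighted = sum(rank * d for rank, d in enumerate(degrees, start=1))
    return 2.0 * weighted / (n * total) - (n + 1.0) / n


# Toy graph (hypothetical): a triangle 0-1-2 with a pendant node 3 attached to 2.
toy = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3}, 3: {2}}
print(max_degree(toy), triangle_count(toy), degree_gini(toy))
```

Comparing such values between a real graph and a generated graph, statistic by statistic, is the kind of closeness check that Table 1 summarizes.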

ShadowCast, a conditional generative model that considers meaningful auxiliary information (e.g., node labels) of given graphs on top of learning the graph structure, naturally outperforms methods that take an unconditional approach. The baseline methods are designed to generate graphs unconditionally, with the exception of CondGEN. However, CondGEN performs conditional generation with graph-level conditions, which are not as informative as the node-level information we inject into ShadowCast. This rich supplementary node information enables our model to learn better representations of graphs. Hence, ShadowCast achieves such outstanding performance.

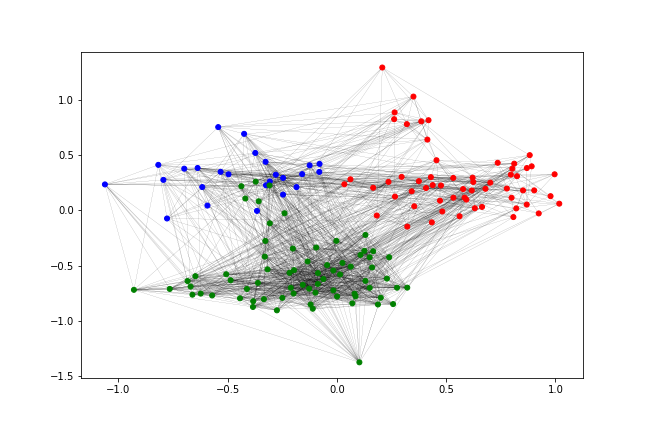

(a) Observed: Enron normal operations

(a) Observed: Enron normal operations

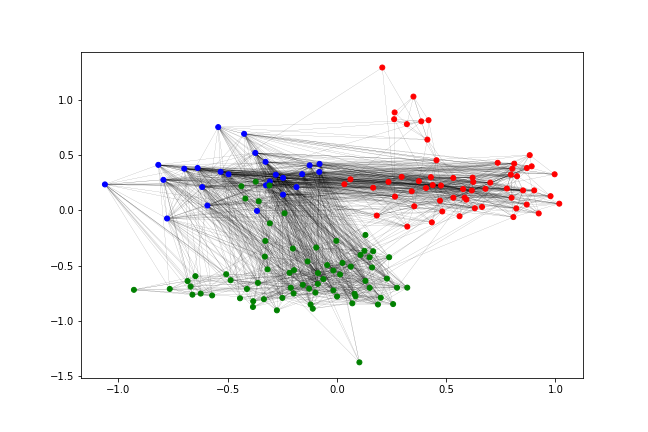

(b) Controlled: Legal (red) internal surge

(b) Controlled: Legal (red) internal surge

(c) Controlled: Finance (green) internal surge

(c) Controlled: Finance (green) internal surge

(d) Controlled: Trading (blue) outgoing surge

(d) Controlled: Trading (blue) outgoing surge

Controlling Generated Graphs

In addition to recreating graphs that closely match the statistics of the input graphs, we demonstrate our model’s ability to generate desired graphs by controlling the parameters of shadows. The shadow gives us a way to gain insight into how graphs are generated and provides controllability. We influence the generative process by constructing shadow walks of a preferred distribution using the shadow caster. First, we create sequences of node ground-truth labels by specifying the parameters of a transparent and straightforward Markov model: (1) an initial probability distribution over labels, where each entry is the probability that the Markov chain starts from the corresponding label, and (2) a transition probability matrix, where each entry represents the probability of moving from one label to another. Next, we input the constructed sequences into the shadow caster, which returns model-generated shadow walks. Finally, by injecting these designed shadows into our trained generator, we generate desired graphs of different structures.
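The first step, constructing label sequences from the two Markov model parameters, can be sketched as follows. The function name `sample_label_sequence` and all probability values are hypothetical and chosen only to illustrate an internal surge in one department; the settings actually used in the experiments are given in the paper's Appendix.

```python
import random
from typing import Dict, List, Optional


def sample_label_sequence(initial: Dict[str, float],
                          transition: Dict[str, Dict[str, float]],
                          length: int,
                          rng: Optional[random.Random] = None) -> List[str]:
    """Sample a label sequence from a first-order Markov chain."""
    rng = rng or random.Random()
    labels = list(initial)
    # Draw the starting label from the initial distribution.
    current = rng.choices(labels, weights=[initial[l] for l in labels], k=1)[0]
    sequence = [current]
    for _ in range(length - 1):
        # Draw the next label from the row of the current label.
        row = transition[current]
        current = rng.choices(labels, weights=[row[l] for l in labels], k=1)[0]
        sequence.append(current)
    return sequence


# Hypothetical parameters: walks mostly start in Legal and tend to stay there.
initial = {"Legal": 0.8, "Trading": 0.1, "Finance": 0.1}
transition = {
    "Legal":   {"Legal": 0.90, "Trading": 0.05, "Finance": 0.05},
    "Trading": {"Legal": 0.40, "Trading": 0.40, "Finance": 0.20},
    "Finance": {"Legal": 0.40, "Trading": 0.20, "Finance": 0.40},
}
sequence = sample_label_sequence(initial, transition, length=16,
                                 rng=random.Random(0))
print(sequence)
```

Each such sequence would then be passed to the shadow caster, so the two dictionaries are the only quantities a user needs to edit to request a different scenario.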

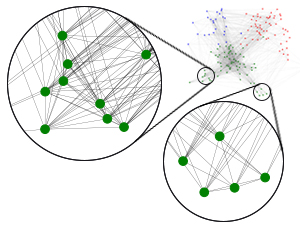

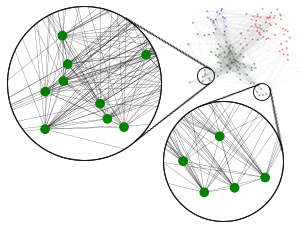

(a) Original graph

(b) Controlled graph by ShadowCast

(c) Random graph construction

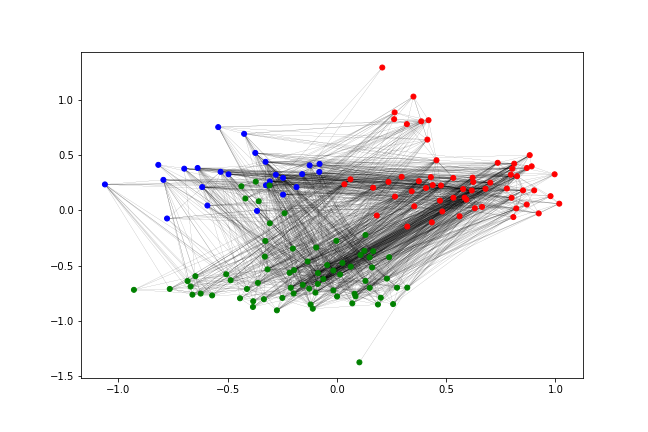

In Figure 3, we show controlled generation examples of the Enron email network, where each employee represented by a node belongs to one of three departments (the Legal, Trading, and Finance offices in the organization). Figure 3(a) is an observed instance of interactions between the departments during normal operations. Due to limited observations, network data of some unprecedented, extraordinary situations may be unavailable. To simulate such occurrences, we can set the distribution of the Legal (red), Trading (blue), and Finance (green) departments with the Markov model parameters to control the generative process. The initial distribution determines how likely a sequence of model-generated shadow walks is to start from a particular department, while the transition probability matrix determines the probability of moving from one department to another. Different configurations of these parameters correspond to different cases, such as (Figure 3(b)) an internal communication surge in the legal team during a court pre-trial period, (Figure 3(c)) an internal surge in the finance department during the financial accounts reporting period, and (Figure 3(d)) increased outgoing communication between the trading team and the other two departments when purchasing a subsidiary trading firm. Thus, by specifying these parameters, we can control and understand the structure of the generated graphs (see Appendix for the specific parameter settings).

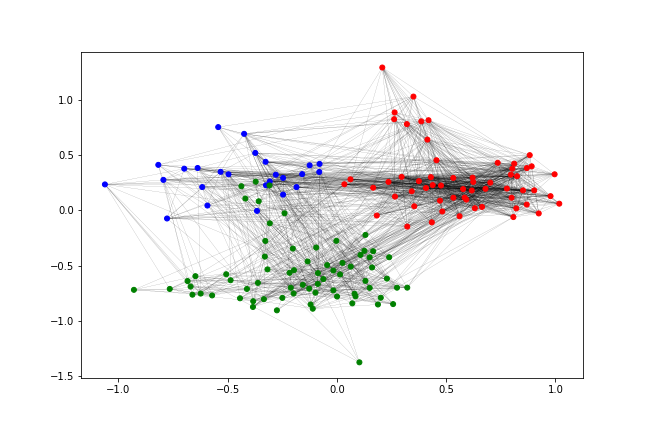

Following the example in Figure 3(c), one might argue that we could also naively create the effect of an internal email surge by randomly removing the finance (green) inter-department edges and adding random intra-department links. While a simple random graph construction could appear legitimate, it tends to have glaring shortcomings. Specifically, it is not clear if this newly formed graph (1) preserves the intrinsic properties of the original network and (2) has an understandable structure. To illustrate the shortcomings, we highlight with circles on the graphs in Figure 4 that the controlled graph (Figure 4(b)) generated by ShadowCast preserves the original graph’s intrinsic properties, whereas the random graph (Figure 4(c)) generated by the Erdös & Rényi (1960) model fails to retain those properties.

In contrast, our approach follows a transparent and straightforward Markov model, providing control over the generated graphs that are modeled based on the original graph. This intuitive approach allows for an increased understanding of the generated graphs. We present a comparison of the graph statistics (Table 2), which shows small absolute differences between the original graph and the generated graphs, indicating that the generated graphs retain the original graph’s intrinsic properties.

5 Conclusion

In this work, we present ShadowCast, a novel controllable graph generative model, which generates designed graphs that are intuitive and understandable. To the best of our knowledge, this method is the first of its kind to specifically address the unique problem of controlling the generative process to produce coherent desired structures of generated graphs. Our model demonstrates how it can leverage graph attributes, directed with a transparent Markov model, as a control vector and allow for adjustable parameters to influence the generative process. By introducing controllability in graph generation, a meaningful problem for a better understanding of generated graph data, we hope to encourage further investigation in this line of work and expand on its applications in different areas.

References

- Barse et al. (2003) Barse, E. L., Kvarnström, H., and Jonsson, E. Synthesizing test data for fraud detection systems. In ACSAC, 2003.

- Battaglia et al. (2016) Battaglia, P., Pascanu, R., Lai, M., Jimenez Rezende, D., and Kavukcuoglu, K. Interaction networks for learning about objects, relations and physics. In Advances in Neural Information Processing Systems, 2016.

- Bojchevski et al. (2018) Bojchevski, A., Shchur, O., Zügner, D., and Günnemann, S. NetGAN: Generating graphs via random walks. In ICML, 2018.

- Cao & Kipf (2018) Cao, N. D. and Kipf, T. MolGAN: An implicit generative model for small molecular graphs. arXiv preprint, arXiv:1805.11973, 2018.

- Erdös & Rényi (1960) Erdös, P. and Rényi, A. On the evolution of random graphs. Publ. Math. Inst. Hung. Acad. Sci., 5:17–61, 1960.

- Gao et al. (2018) Gao, Y., Guo, X., and Zhao, L. Local event forecasting and synthesis using unpaired deep graph translations. In Proceedings of the 2nd ACM SIGSPATIAL Workshop on Analytics for Local Events and News, 2018.

- Goodfellow (2016) Goodfellow, I. NIPS 2016 tutorial: Generative adversarial networks. arXiv preprint, arXiv:1701.00160, 2016.

- Grover & Leskovec (2016) Grover, A. and Leskovec, J. Node2vec: Scalable feature learning for networks. In KDD, 2016.

- Grover et al. (2019) Grover, A., Zweig, A., and Ermon, S. Graphite: Iterative generative modeling of graphs. In ICML, 2019.

- Guo et al. (2018) Guo, X., Wu, L., and Zhao, L. Deep graph translation. arXiv preprint, arXiv:1805.09980, 2018.

- Guo et al. (2019) Guo, X., Zhao, L., Nowzari, C., Rafatirad, S., Homayoun, H., and Pudukotai Dinakarrao, S. M. Deep multi-attributed graph translation with node-edge co-evolution. In 2019 IEEE International Conference on Data Mining (ICDM), 2019.

- Hochreiter & Schmidhuber (1997) Hochreiter, S. and Schmidhuber, J. Long short-term memory. Neural Comput., 9(8):1735–1780, 1997.

- Jin et al. (2019) Jin, W., Yang, K., Barzilay, R., and Jaakkola, T. Learning multimodal graph-to-graph translation for molecule optimization. In International Conference on Learning Representations, 2019.

- Kipf & Welling (2016) Kipf, T. N. and Welling, M. Variational graph auto-encoders. arXiv preprint, arXiv:1611.07308, 2016.

- Li et al. (2018a) Li, Y., Vinyals, O., Dyer, C., Pascanu, R., and Battaglia, P. Learning deep generative models of graphs. arXiv preprint, arXiv:1803.03324, 2018a.

- Li et al. (2018b) Li, Y., Yu, R., Shahabi, C., and Liu, Y. Diffusion convolutional recurrent neural network: Data-driven traffic forecasting. In International Conference on Learning Representations, 2018b.

- Liu et al. (2017) Liu, W., Chen, P.-Y., Cooper, H., Oh, M. H., Yeung, S., and Suzumura, T. Can GAN learn topological features of a graph? arXiv preprint, arXiv:1707.06197, 2017.

- Ma et al. (2018) Ma, T., Chen, J., and Xiao, C. Constrained generation of semantically valid graphs via regularizing variational autoencoders. In NeurIPS, 2018.

- Mirza & Osindero (2014) Mirza, M. and Osindero, S. Conditional generative adversarial nets. arXiv preprint, arXiv:1411.1784, 2014.

- Simonovsky & Komodakis (2018) Simonovsky, M. and Komodakis, N. GraphVAE: Towards generation of small graphs using variational autoencoders. In ICANN, 2018.

- Skopik et al. (2014) Skopik, F., Settanni, G., Fiedler, R., and Friedberg, I. Semi-synthetic data set generation for security software evaluation. In PST, 2014.

- Sun & Li (2019) Sun, M. and Li, P. Graph to graph: a topology aware approach for graph structures learning and generation. In Proceedings of Machine Learning Research, 2019.

- Tavakoli et al. (2017) Tavakoli, S., Hajibagheri, A., and Sukthankar, G. Learning social graph topologies using generative adversarial neural networks. In SBP, 2017.

- Wang et al. (2018) Wang, H., Wang, J., Wang, J., Zhao, M., Zhang, W., Zhang, F., Xie, X., and Guo, M. GraphGAN: Graph representation learning with generative adversarial nets. In AAAI, 2018.

- Yang et al. (2019) Yang, C., Zhuang, P., Shi, W., Luu, A., and Li, P. Conditional structure generation through graph variational generative adversarial nets. In NeurIPS, 2019.

- You et al. (2018) You, J., Ying, R., Ren, X., Hamilton, W. L., and Leskovec, J. GraphRNN: Generating realistic graphs with deep auto-regressive models. In ICML, 2018.

- Yu et al. (2018) Yu, B., Yin, H., and Zhu, Z. Spatio-temporal graph convolutional networks: A deep learning framework for traffic forecasting. In Proceedings of the Twenty-Seventh International Joint Conference on Artificial Intelligence, IJCAI-18, 2018.

- Zhou et al. (2019) Zhou, D., Zheng, L., Xu, J., and He, J. Misc-GAN: A multi-scale generative model for graphs. Frontiers in Big Data, 2:3, 2019.

- Zou & Lerman (2019) Zou, D. and Lerman, G. Encoding robust representation for graph generation. In IJCNN, 2019.