Shape-conditioned 3D Molecule Generation via Equivariant Diffusion Models

Abstract

Ligand-based drug design aims to identify novel drug candidates of similar shapes with known active molecules. In this paper, we formulated an in silico shape-conditioned molecule generation problem to generate 3D molecule structures conditioned on the shape of a given molecule. To address this problem, we developed a translation- and rotation-equivariant shape-guided generative model . consists of an equivariant shape encoder that maps molecular surface shapes into latent embeddings, and an equivariant diffusion model that generates 3D molecules based on these embeddings. Experimental results show that can generate novel, diverse, drug-like molecules that retain 3D molecular shapes similar to the given shape condition. These results demonstrate the potential of in designing drug candidates of desired 3D shapes binding to protein target pockets.

Introduction

Generating novel drug candidates is a critical step in drug discovery to identify possible therapeutic solutions. Conventionally, this process is characterized based on knowledge and experience from medicinal chemists, and is resource- and time-consuming. Recently, computational approaches to molecule generation have been developed to accelerate the conventional paradigm. Existing molecular generative models largely focus on generating either molecule SMILES strings or molecular graphs (Gómez-Bombarelli et al. 2018; Jin, Barzilay, and Jaakkola 2018; Chen et al. 2021), with a recent shift towards 3D molecular structures. Several models (Luo et al. 2021; Peng et al. 2022; Guan et al. 2023) have been designed to generate 3D molecules conditioned on the protein targets, aiming to facilitate structured-based drug design (SBDD) (Batool, Ahmad, and Choi 2019), given that molecules exist in 3D space and the efficacy of drug molecules depends on their 3D structures fitting into protein pockets. However, SBDD relies on the availability of high-quality 3D structures of protein binding pockets, which are lacking for many targets (Zheng et al. 2013).

Different from SBDD, ligand-based drug design (LBDD) (Acharya et al. 2011) utilizes ligands known to interact with a protein target, and does not require knowledge of protein structures. In LBDD, shape-based virtual screening tools such as ROCS (Hawkins, Skillman, and Nicholls 2006) have been widely used to identify molecules with similar shapes to known ligands by enumerating molecules in chemical libraries. However, virtual screen tools cannot probe the novel chemical space. Therefore, it is highly needed to develop generative methods to generate novel molecules with desired 3D shapes.

In this paper, we present a novel generative model for 3D molecule generation conditioned on given 3D shapes. Our method, denoted to as , employs an equivariant shape embedding module to map 3D molecule surface shapes into shape latent embeddings. It then uses a conditional diffusion generative model to generate molecules conditioned on the shape latent embeddings, by iteratively denoising atom positions and atom features (e.g., atom type and aromaticity). During molecule generation, can utilize additional shape guidance by pushing the predicted atoms far from the condition shapes to those shapes. with shape guidance is denoted as . The major contributions of this paper are as follows:

-

•

To the best of our knowledge, is the first diffusion-based method for 3D molecule generation conditioned on 3D molecule shapes.

-

•

leverages a new equivariant shape embedding module to learn 3D surface shape embeddings from cloud points sampled over molecule surfaces.

-

•

uses a novel conditional diffusion model to generate 3D molecule structures. The diffusion model is equivariant to the translation and rotation of molecule shapes. A new weighting scheme over diffusion steps is developed to ensure accurate molecule shape prediction.

-

•

utilizes new shape guidance to direct the generated molecules to better fit the shape condition.

-

•

achieves the highest average 3D shape similarity between the generated molecules and condition molecules, compared to the state-of-the-art baseline.

For reproducibility purposes, detailed parameters in all the experiments, code and data are reported in Supplementary Section LABEL:supp:experiments:parameters.

Related Work

Molecule Generation

A variety of deep generative models have been developed to generate molecules using various molecule representations, incliuding generating SMILES string representations (Gómez-Bombarelli et al. 2018), or 2D molecular graph representations (Jin, Barzilay, and Jaakkola 2018; Chen et al. 2021). Recent efforts have been dedicated to the generation of 3D molecules. These 3D molecule generative models can be divided into two categories: autoregressive models and non-autoregressive models. Autoregressive models generate 3D molecules by sequentially adding atoms into the 3D space (Luo et al. 2021; Peng et al. 2022). While these models ensure the validity and connectivity of generated molecules, any errors made in sequential predictions could accumulate in subsequent predictions. Non-autoregressive models generate 3D molecules using flow-based methods (Garcia Satorras et al. 2021) or diffusion methods (Guan et al. 2023). In these models, atoms are generated or adjusted all together. For example, Hoogeboom et al. (2022) developed an equivariant diffusion model, in which an equivariant network is employed to jointly predict both the positions and features of all atoms.

Shape-Conditioned Molecule Generation

Following the idea of ligand-based drug design (LBDD) (Acharya et al. 2011), previous work has been focused on generating molecules with similar 3D shapes to those of efficacy, based on the observation that structurally similar molecules tend to have similar properties. Papadopoulos et al. (2021) developed a reinforcement learning method to generate SMILES strings of molecules that are similar to known antagonists of DRD2 receptors in 3D shapes and pharmacophores. Imrie et al. (2021) generated 2D molecular graphs conditioned on 3D pharmacophores using a graph-based autoencoder. However, there is limited work that generates 3D molecule structures conditioned on 3D shapes. Adams and Coley (2023) developed a shape-conditioned generative framework for 3D molecule generation. learns a variational autoencoder to generate fragments conditioned on given 3D shapes, and decodes molecules by sequentially attaching fragments with fixed bond lengths and angles. While LBDD plays a vital role in drug discovery, the problem of generating 3D molecule structures conditioned on 3D shapes is still under-addressed.

Definitions and Notations

Problem Definition

Following Adams and Coley (2023), we focus on the 3D molecule generation conditioned on the shape of a given molecule (e.g., a ligand). Specifically, we aim to generate a new molecule , conditioned on the 3D shape of a given molecule , such that 1) is similar to in their 3D shapes, measured by , where is the 3D shape of ; and 2) is dissimilar to in their 2D molecular graph structures, measured by . This conditional 3D shape generation problem is motivated by the fact that in ligand-based drug design, it is desired to find chemically diverse and novel molecules that share similar shapes and similar activities with known active ligands (Ripphausen, Nisius, and Bajorath 2011). Such chemically diverse and novel molecules could expand the search space for drug candidates and potentially enhance the development of effective drugs.

Representations and Notations

We represent a molecule as a set of atoms , where is the number of atoms in ; is the -th atom in ; represents the 3D coordinates of ; and is ’s one-hot atom feature vector indicating the atom type and its aromaticity. Following Guan et al. (2023), bonds between atoms can be uniquely determined by the atom types and the atomic distances among atoms. We represent the 3D surface shape of a molecule as a point cloud constructed by sampling points over the molecular surface. Details about the construction of point clouds from the surface of molecules are available in Supplementary Section LABEL:supp:point_clouds. We denote the point cloud as , where is the number of points in ; is the -th point; and represents the 3D coordinates of . We denote the latent embedding of as , where is the dimension of the latent embedding.

Method

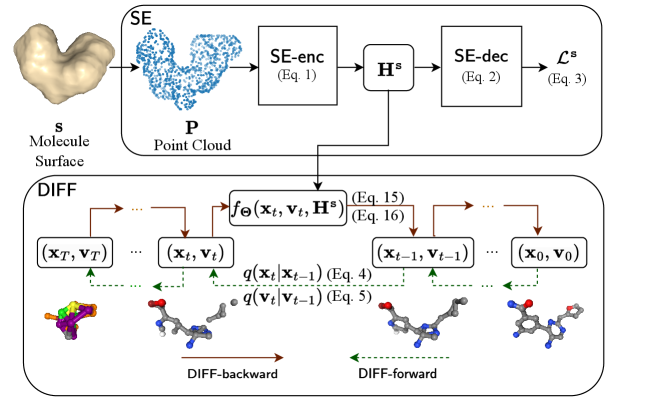

consists of an equivariant shape embedding module that maps 3D molecular surface shapes to latent embeddings, and an equivariant diffusion model that generates 3D molecules conditioned on these embeddings. Figure 1 presents the overall architecture of .

Equivariant Shape Embedding ()

pre-trains a shape embedding module to generate surface shape embeddings . uses an encoder to map to the equivariant latent embedding . employs a decoder to optimize by recovering the signed distances (Park et al. 2019) of sampled query points in 3D space to the molecule surface based on . uses to guide the diffusion process later.

Shape Encoder ()

generates equivariant shape embeddings from the 3D surface shape of molecules, such that is equivariant to both translation and rotation of . That is, any translation and rotation applied to is reflected in accordingly. To ensure translation equivariance, shifts the center of each to zero to eliminate all translations. To ensure rotation equivariance, leverages Vector Neurons (VNs) (Deng et al. 2021) and Dynamic Graph Convolutional Neural Networks (DGCNNs) (Wang et al. 2019) as follows:

where is a VN-based DGCNN network to generate equivariant embedding for each point in ; and is the embedding of generated via a mean-pooling over the embeddings of all the points. Note that generates a matrix as the embedding of each point (i.e., ) to guarantee the equivariance.

Shape Decoder ()

To optimize , learns a decoder to predict the signed distance of a query point sampled from 3D space using Multilayer Perceptrons (MLPs) as follows:

| (1) |

where is the predicted signed distance of , with positive and negative values indicating is inside or outside the surface shape, respectively; is the dot-product operator; is the Euclidean norm of the coordinates of ; is an invariant VN network (Deng et al. 2021) that converts the equivariant shape embedding into an invariant shape embedding. Thus, predicts the signed distance between the query point and 3D surface by jointly considering the position of the query point (), the molecular surface shape () and the interaction between the point and surface . The predicted signed distance is used to calculate the loss for the optimization of (discussed below). As shown in the literature (Deng et al. 2021), remains invariant to the rotation of the 3D molecule surface shapes (i.e., ). We present the sampling process of in the Supplementary Section LABEL:supp:training:shapeemb.

Pre-training

pre-trains by minimizing the squared-errors loss between the predicted and the ground-truth signed distances of query points as follows:

| (2) |

where is the set of sampled query points and is the ground-truth signed distance of query point . By pretraining , learns that will be used as the condition in the following 3D molecule generation.

Shape-Conditioned Molecule Generation

In , a shape-conditioned molecule diffusion model, referred to as , is used to generate a 3D molecule structure (i.e., atom coordinates and features) conditioned on a given 3D surface shape that is represented by the shape latent embedding (Eq. Shape Encoder ()). Following the denoising diffusion probabilistic models (Ho, Jain, and Abbeel 2020), includes a forward diffusion process based on a Markov chain, denoted as , which gradually adds noises step by step to the atom positions and features in the training molecules. The noisy atom positions and features at step are represented as (), and the molecules without any noise are represented as . At the final step , are completely unstructured and resemble a simple distribution like a Normal distribution or a uniform categorical distribution , in which and denotes the identity matrix and identity vector, respectively.

During training, is learned to reverse the forward diffusion process via another Markov chain, referred to as the backward generative process and denoted as , to remove the noises in the noisy molecules. During inference, first samples noisy atom positions and features at step from simple distributions and then generates a 3D molecule structure by removing the noises in the noisy molecules step by step until reaches 1.

Forward Diffusion Process ()

Following the previous work (Guan et al. 2023), at step , a small Gaussian noise and a small categorical noise are added to the continuous atom positions and discrete atom features , respectively. When no ambiguity arises, we will eliminate subscript in the notations and use for brevity. The noise levels of the Gaussian and categorical noises are determined by two predefined variance schedules , where and are selected to be sufficiently small to ensure the smoothness of . The details about variance schedules are available in Supplementary Section LABEL:supp:forward:variance. Formally, for atom positions, the probability of sampled given , denoted as , is defined as follows,

| (3) |

where is a Gaussian distribution of with mean and covariance . Following Hoogeboom et al. (2021), for atom features, the probability of across classes given is defined as follows,

| (4) |

where is a categorical distribution of derived by noising with a uniform noise across classes.

Since the above distributions form Markov chains, the probability of any or can be derived from or :

| (5) | |||||

| (6) | |||||

| (7) |

Note that () is monotonically decreasing from 1 to 0 over . As , and are close to 1, leading to that or approximates or . Conversely, as , and are close to 0, leading to that resembles and resembles .

Using Bayes theorem, the ground-truth Normal posterior of atom positions can be calculated in a closed-form (Ho, Jain, and Abbeel 2020) as below,

| (8) | |||

| (9) |

Similarly, the ground-truth categorical posterior of atom features can be calculated (Hoogeboom et al. 2021) as below,

| (10) | |||

| (11) | |||

| (12) |

where denotes the likelihood of -th class across classes in ; denotes the element-wise product operation; is calculated using and and normalized so as to represent probabilities. The proof of the above equations is available in Supplementary Section LABEL:supp:forward:proof.

Backward Generative Process ()

learns to reverse by denoising from to at , conditioned on the shape latent embedding . Specifically, the probabilities of denoised from are estimated by the approximates of the ground-truth posteriors (Eq. 8) and (Eq. 10). Given that is unknown in the generative process, a predictor is employed to predict at the atom position and feature as below,

| (13) |

where and are the predictions of and at ; is the learnable parameter. Following Ho et al. (2020), with , the probability of denoised from , denoted as , can be estimated by the approximated posterior as below,

| (14) | ||||

where is an estimate of by replacing with its estimate in Eq. 8. Similarly, with , the probability of denoised from , denoted as , can be estimated by the approximated posterior as below,

| (15) |

where is an estimate of by replacing with its estimate in Eq. 10.

Equivariant Shape-Conditioned Molecule Predictor

In , the predictor (Eq. 13) predicts the atom positions and features given the noisy data conditioned on . For brevity, in this subsection, we eliminate the subscript in the notations when no ambiguity arises. leverages two multi-layer graph neural networks: (1) an equivariant graph neural network, denoted as , that equivariantly predicts atom positions that change under transformations, and (2) an invariant graph neural network, denoted as , that invariantly predicts atom features that remain unchanged under transformations. Following the previous work (Guan et al. 2023; Hoogeboom et al. 2022), the translation equivariance of atom position prediction is achieved by shifting a fixed point (e.g., the center of point clouds ) to zero, and therefore only rotation equivariance needs to be considered.

Atom Coordinate Prediction

In , the atom position of at the (+1)-th layer is calculated in an equivariant way as below,

| (16) |

where is the set of -nearest neighbors of based on atomic distances; aggregates the neighborhood information of ; denotes the multi-head attention layer in with heads; is the distance between -th and -th atom positions and at the -th layer; converts into a 3D vector via meaning pooling to adjust the atom position; denotes the equivariant VN-based linear layer (Deng et al. 2021). adjusts the atom positions to fit the shape condition represented by , by considering the current atom positions and the neighborhood information . The learned atom position at the last layer of is used as the prediction of , that is,

| (17) |

Atom Feature Prediction

In , inspired by the previous work (Guan et al. 2023) and VN-Layer (Deng et al. 2021), the atom feature embedding of the -th atom at the (+1)-th layer of is updated in an invariant way as follows,

| (18) |

where denotes the multi-head attention layer in . The learned atom feature embedding at the last layer encodes the neighborhood information of and the conditioned molecular shape, and is used to predict the atom features as follows:

| (19) |

The proof of equivariance in Eq. 16 and invariance in Eq. 18 is available in Supplementary Section LABEL:supp:backward:equivariance and LABEL:supp:backward:invariance.

Model Training

optimizes by minimizing the squared errors between the predicted positions () and the ground-truth positions () of atoms in molecules. Given a particular step , the error is calculated as follows:

| (20) | ||||

where is a weight at step , and is calculated by clipping the signal-to-noise ratio with a threshold . Note that because decreases monotonically as increases from 1 to (Eq. 7), decreases monotonically over as well until it is clipped. Thus, imposes lower weights on the loss when the noise level in is higher (i.e., at later/larger step ). This encourages the model training to focus more on accurately recovering molecule structures when there are sufficient signals in the data, rather than being potentially confused by major noises in the data.

also minimizes the KL divergence (Kullback and Leibler 1951) between the ground-truth posterior (Eq. 10) and its approximate (Eq. 15) for discrete atom features to optimize , following the literature (Hoogeboom et al. 2021). Particularly, the KL divergence at for a given molecule is calculated as follows:

| (21) |

where is a categorical distribution of (Eq. 11); is an estimate of (Eq. 15). The overall loss function is defined as follows:

| (22) |

where is the set of all the molecules in training; is the set of sampled timesteps; is a hyper-parameter to balance () and (). During training, step is uniformly sampled from . The derivation of the loss functions is available in Supplementary Section LABEL:supp:training:loss.

Molecule Generation

with Shape Guidance

During molecule generation, can also utilize additional shape guidance by pushing the predicted atoms to the shape of the given molecule . Particularly, following Adams and Coley et al. (2023), the shape used for guidance is defined as a set of points sampled according to atom positions in . Particularly, for each atom in , 20 points are randomly sampled into from a Gaussian distribution centered at with variance . Given the predicted atom position at step , applies the shape guidance by adjusting the predicted positions to as follows:

| (23) |

where is the weight used to balance the prediction and the adjustment; is the Euclidean distance between and ; is the set of -nearest neighbors of in based on ; is a distance threshold. By doing the above adjustment, the predicted atom positions will be pushed to those of if they are sufficiently far away. Note that the shape guidance is applied exclusively for steps

| (24) |

not for all the steps, and thus it only adjusts predicted atom positions when there are a lot of noises and the prediction needs more guidance. with the shape guidance is referred to as .

Experiments

Data

Following (Adams and Coley 2023), we used molecules in the MOSES dataset (Polykovskiy et al. 2020), with their 3D conformers calculated by RDKit (Landrum et al. 2023). We used the same training and test split as in . Please note that further modifies the generated conformers into artificial ones, by adjusting acyclic bond distances to their empirical means and fixing acyclic bond angles using heuristic rules. Unlike , we did not make any additional adjustments to the calculated 3D conformers, as is designed with sufficient flexibility to accept any 3D conformers as input and generate 3D molecules without restrictions on fixed bond lengths or angles. Limited by the predefined fragment library, also removes molecules with fragments not present in its fragment library. In contrast, we kept all the molecules, as is not based on fragments. Our final training dataset contains 1,593,653 molecules, out of which a random set of 1,000 molecules was selected for validation. Both the and models are trained using this training set. 1,000 test molecules (i.e., conditions) as used in are used to test .

Baselines

We compared and with the state-of-the-art baseline and a virtual screening method over the training dataset, denoted as . As far as we know, is the only generative baseline that generates 3D molecules conditioned on molecule shapes. consists of a fragment-based generative model based on variational autoencoder that sequentially decodes fragments from molecule latent embeddings and shape embeddings, and a rotatable bond scoring framework that adjusts the angles of rotatable bonds between fragments to maximize the 3D shape similarity with the condition molecule. aims to sift through the training set to identify molecules with high shape similarities with the condition molecule. For , we assessed two interpolation levels, and (prior), following the original paper (Adams and Coley 2023). For , and , we generated 50 molecules for each testing molecule (i.e., condition) as the candidates for evaluation. For , we randomly sampled 500 training molecules for each testing molecule, and considered the top-50 molecules with the highest shape similarities as candidates for evaluation.

Evaluation Metrics

We use shape similarity and molecular graph similarity to measure the generated new molecules with respective to the condition . Higher and meanwhile lower indicate better model performance. We also measure the diversity () of the generated molecules, calculated as 1 minus average pairwise among all generated molecules. Higher indicates better performance. Details about the evaluation metrics are available in Supplementary Section LABEL:supp:experiments:metrics.

Performance Comparison

| method | #c% | #u% | (std) | (std) | (std) | (std) | (std) | ||

|---|---|---|---|---|---|---|---|---|---|

| 100.0 | 100.0 | 0.795 | 0.729 (0.039) | 0.226 (0.038) | 0.807 (0.042) | 0.241 (0.087) | 0.759 (0.015) | ||

| (=0.3) | 100.0 | 94.2 | 0.766 | 0.717 (0.083) | 0.349 (0.088) | 0.904 (0.070) | 0.549 (0.243) | 0.677 (0.065) | |

| (=1.0) | 100.0 | 95.0 | 0.760 | 0.670 (0.069) | 0.235 (0.045) | 0.842 (0.061) | 0.271 (0.096) | 0.744 (0.046) | |

| 98.8 | 100.0 | 0.748 | 0.689 (0.044) | 0.239 (0.049) | 0.803 (0.042) | 0.243 (0.068) | 0.712 (0.055) | ||

| 98.7 | 100.0 | 0.749 | 0.746 (0.036) | 0.241 (0.050) | 0.852 (0.034) | 0.247 (0.068) | 0.703 (0.053) |

-

•

Columns represent: “#c%": the percentage of connected molecules; “#u%”: the percentage of unique molecules; “”: the average drug-likeness of generated molecules; “/”: the average of shape or graph similarities between the condition molecules and generated molecules; “std": the standard deviation; “”: the maximum of shape similarities between the condition molecules and generated molecules; “”: the graph similarities between the condition molecules and the molecules with the maximum shape similarities; “”: the diversity among the generated molecules.

Overall Comparison

Table 1 presents the overall comparison of shape-conditioned molecule generation among , , and . As shown in Table 1, achieves the highest average shape similarity 0.7460.036, with 2.3% improvement from the best baseline (0.7290.039), although at the cost of a slightly higher graph similarity (0.2410.050 in vs 0.2260.038 in ). This indicates that could generate molecules that align more closely with the shape conditions than those in the dataset. Furthermore, achieves the second-best performance in maximum shape similarity at 0.8520.034 among all the methods. While it underperforms the best baseline (0.9040.070 for with =0.3) on this metric, achieves substantially lower maximum graph similarity of 0.2470.068 compared with the best baseline (0.5490.243). This highlights the ability of in generating novel molecules that resemble the shape conditions. also achieves the lowest standard deviation values on both the average and maximum shape similarities (0.036 and 0.034, respectively) among all the methods, further demonstrating its ability to consistently generate molecules with high shape similarities.

performs substantially better than on 3D shape similarity metrics (e.g., 0.7460.036 vs 0.6890.044 on ). The superior performance of highlights the importance of shape guidance in the generative process. Although underperforms , it still outperforms with =1.0 in terms of the (i.e., 0.6890.044 vs 0.6700.069).

In terms of the quality of generated molecules, 98.7% of molecules from and 98.8% from are connected, and every connected molecule is unique. with values of 0.3 or 1.0 ensures the 100% connectivity among generated molecules by sequentially attaching fragments. However, out of these connected molecules, 94.2% and 95.0% are unique for with value of 0.3 or 1.0, respectively. In terms of the drug-likeness (QED), both and achieve QED values (e.g., 0.749 for ) close to those of with as 0.3 and 1.0 (e.g., 0.760 for with =0.3). All the generative methods produce slightly inferior QED values to real molecules (0.795 for ). In terms of diversity, and achieve higher diversity values (e.g., 0.7030.053 for ) than with =1.0 (0.6770.065), though slightly lower than with =0.3 and . Overall, and are able to generate connected, unique and diverse molecules with good drug-likeness scores.

Please note that unlike , which neglects distorted bonding geometries in real molecules and limits itself to generating molecules with fixed bond lengths and angles, both and are able to generate molecules without such limitations. Given the superior performance of in shape-conditioned molecule generation, it could serve as a promising tool for ligand-based drug design.

Comparison of Diffusion Weighting Schemes

| method | weights | #c% | #u% | JS divergence | ||

|---|---|---|---|---|---|---|

| bond | C-C | |||||

| 98.8 | 100.0 | 0.748 | 0.095 | 0.321 | ||

| uniform | 89.4 | 100.0 | 0.660 | 0.115 | 0.393 | |

| 98.7 | 100.0 | 0.749 | 0.093 | 0.317 | ||

| uniform | 90.1 | 100.0 | 0.671 | 0.112 | 0.384 | |

-

•

Columns represent: “weights": different weighting schemes; “JS distance of bond/C-C”: the Jensen-Shannon (JS) divergence of bond length among all the bond types (“bond")/carbon-carbon single bonds (“C-C") between real molecules and generated molecules; All the others are identical to those in Table 1.

While previous work (Peng et al. 2023; Guan et al. 2023) applied uniform weights on different diffusion steps, uses different weights (i.e., in Eq. 20). We conducted an ablation study to demonstrate the effectiveness of this new weighting scheme. Particularly, we trained two modules with the varying step weights (with in Eq. 20) and uniform weights, respectively, while fixing all the other hyper-parameters in and . Table 2 presents their performance comparison.

The results in Table 2 show that the different weights on different steps substantially improve the quality of the generated molecules. Specifically, with different weights ensures higher molecular connectivity and drug-likeness than that with uniform weights ( vs for connectivity; vs for QED). with different weights also produces molecules with bond length distributions closer to those of real molecules (i.e., lower Jensen-Shannon divergence), for example, the Jensen-Shannon (JS) divergence of bond lengths between real and generated molecules decreases from 0.115 to 0.095 when different weights are applied. The same trend can be observed for , for which the different weights also improve the generated molecule qualities. Since increases as the noise level in the data decreases (See discussions earlier in “Model Training"), the results in Table 2 demonstrate the effectiveness of the new weighting scheme in promoting new molecules generated more similarly to real ones when the noise level in data is small.

Parameter Study

| JS. bond | |||||||||

|---|---|---|---|---|---|---|---|---|---|

| - | - | 0.748 | 0.094 | 0.689 | 0.239 | 0.803 | 0.243 | ||

| 0.2 | 50 | 0.630 | 0.110 | 0.794 | 0.236 | 0.890 | 0.244 | ||

| 0.2 | 100 | 0.666 | 0.105 | 0.786 | 0.238 | 0.883 | 0.245 | ||

| 0.2 | 300 | 0.749 | 0.093 | 0.746 | 0.241 | 0.852 | 0.247 | ||

| 0.4 | 50 | 0.678 | 0.106 | 0.779 | 0.240 | 0.875 | 0.245 | ||

| 0.4 | 100 | 0.700 | 0.103 | 0.772 | 0.241 | 0.870 | 0.247 | ||

| 0.4 | 300 | 0.752 | 0.093 | 0.738 | 0.242 | 0.845 | 0.247 | ||

| 0.6 | 50 | 0.706 | 0.103 | 0.763 | 0.242 | 0.861 | 0.246 | ||

| 0.6 | 100 | 0.720 | 0.100 | 0.758 | 0.242 | 0.857 | 0.247 | ||

| 0.6 | 300 | 0.753 | 0.093 | 0.731 | 0.242 | 0.838 | 0.247 |

-

•

Columns represent: “”/“”: distance threshold/step threshold in shape guidance; “JS. bond”: the JS divergence of bond length distributions of all the bond types between real molecules and generated molecules; All the others are identical to those in Table 1.

We conducted a parameter study to evaluate the impact of the distance threshold (Eq. 23) and the step threshold (Eq. 24) in the shape guidance. Particularly, using the same trained module, we sampled molecules with different values of and and present the results in Table 3. As shown in Table 3, the average shape similarities and maximum shape similarities consistently decrease as and increase. For example, when , and decreases from 0.794 to 0.763 and 0.890 to 0.861, respectively, as increases from to . Similarly, when , and decreases from 0.794 to 0.746 and 0.890 to 0.852, respectively, as increases from to . As presented in “ with Shape Guidance", larger and indicate stronger shape guidance in . These results demonstrate that stronger shape guidance in could effectively induce higher shape similarities between the given molecule and generated molecules.

It is also noticed that as shown in Table 3, incorporating shape guidance enables a trade-off between the quality of the generated molecules (), and the shape similarities ( and ) between the given molecule and the generated ones. For example, when , increases from 0.630 to 0.749 and decreases from 0.794 to 0.746 as increases from to . These results indicate the effects of and in guiding molecule generation conditioned on given shapes.

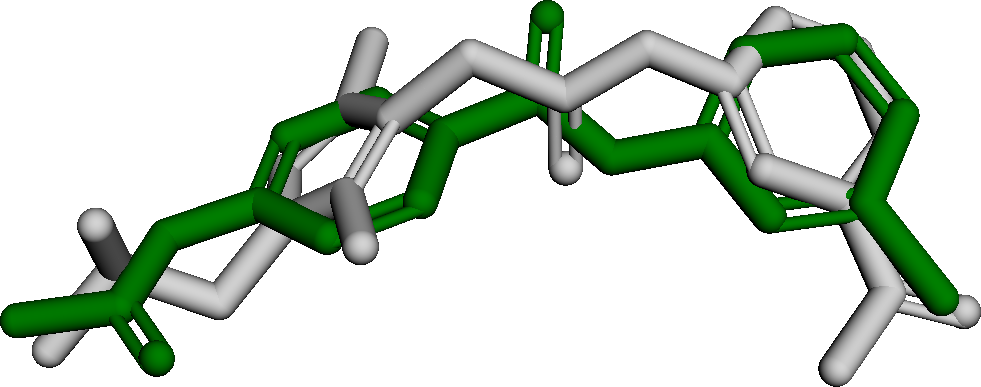

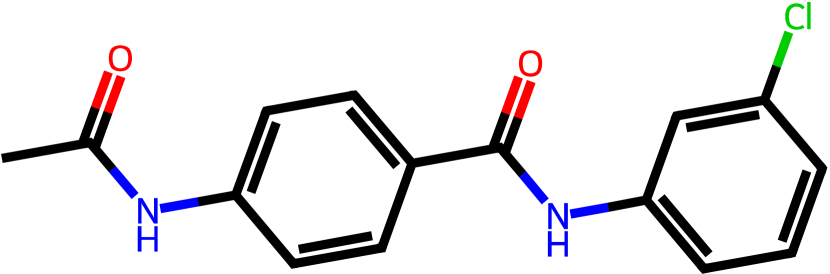

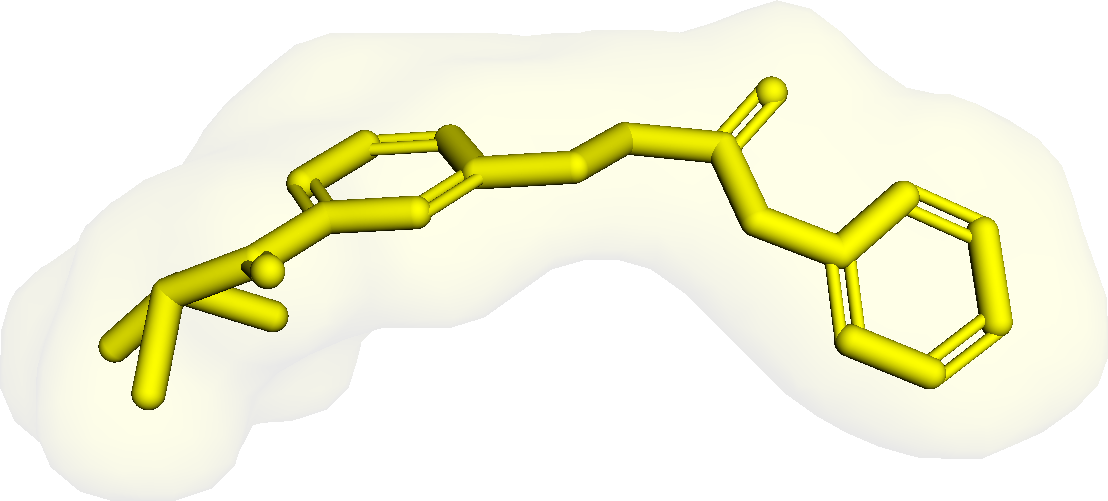

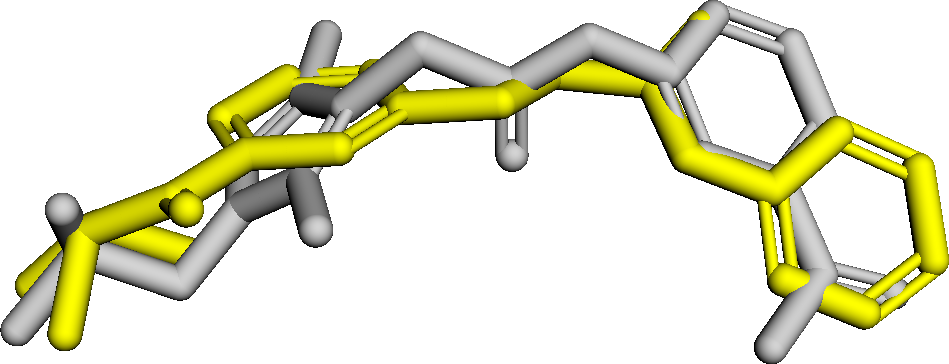

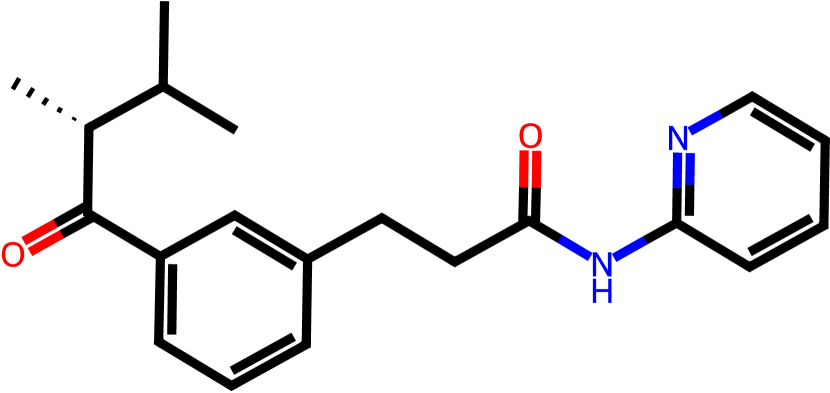

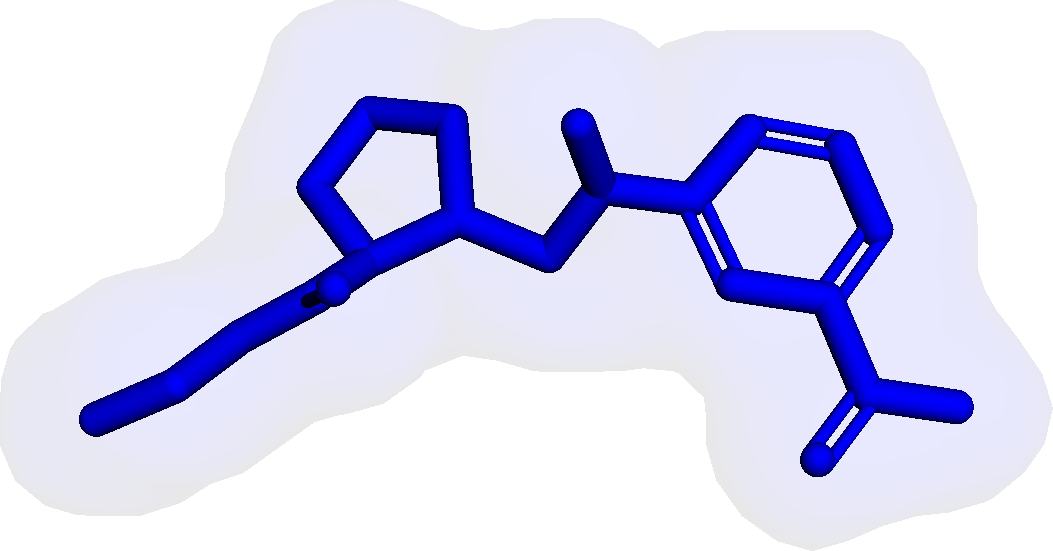

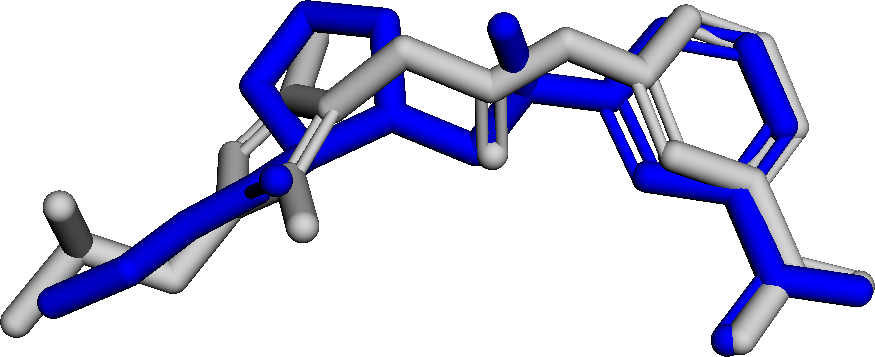

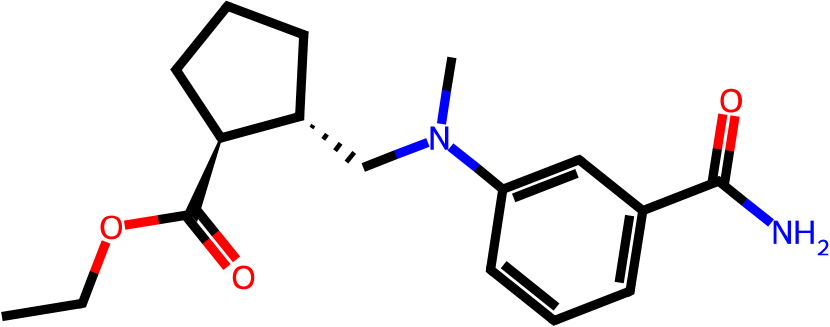

Case Study

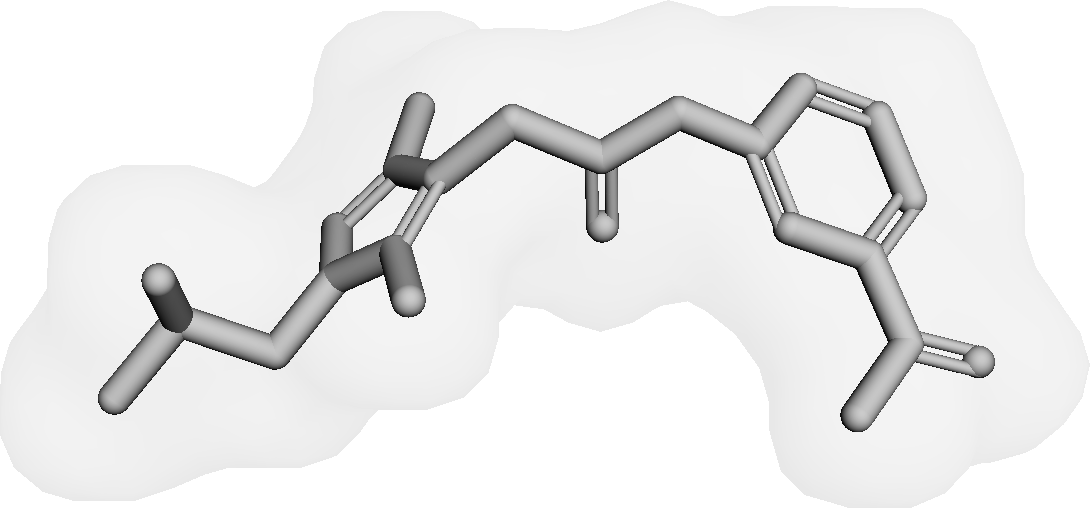

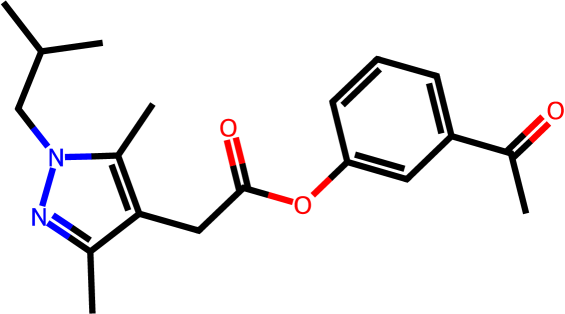

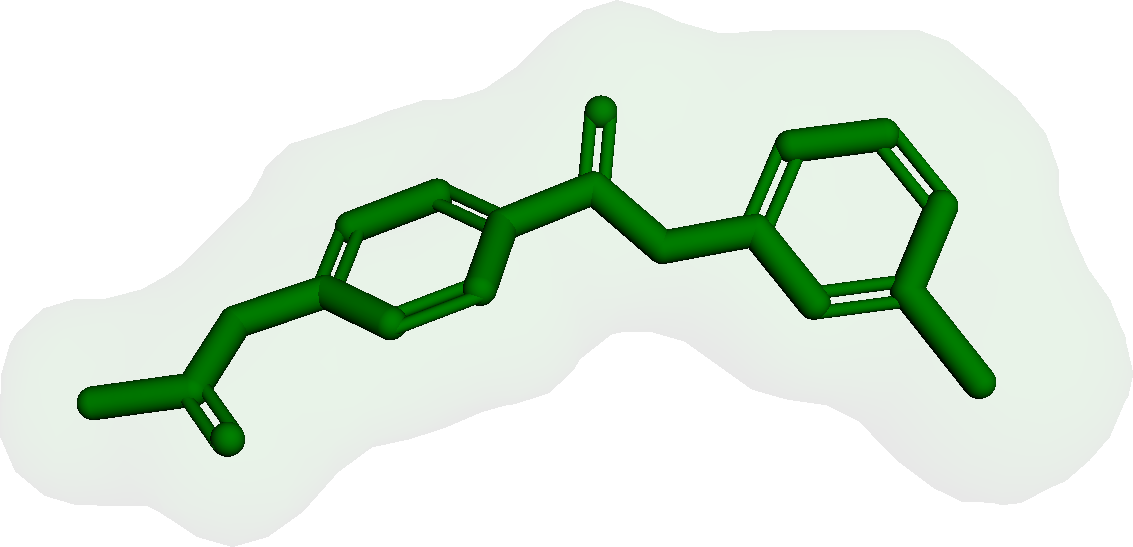

Figure 3 presents three generated molecules from three methods given the same condition molecule. As shown in Figure 3, the molecule generated by has higher shape similarity (0.835) with the condition molecule than those from the baseline methods (0.759 for and 0.749 for ). Particularly, the molecule from has the surface shape (represented as blue shade in Figure 3(d)) most similar to that of the condition molecule. All three molecules have low graph similarities with the condition molecule and higher scores than the condition molecule. This example shows the ability of to generate novel molecules that are more similar in 3D shape to condition molecules than those from baseline methods.

Discussions and Conclusions

In this paper, we develop a novel generative model , which generates 3D molecules conditioned on the 3D shape of given molecules. utilizes a pre-trained equivariant shape encoder to generate equivariant embeddings for 3D shapes of given molecules. Conditioned on the embeddings, learns an equivariant diffusion model to generate novel molecules. To improve the shape similarities between the given molecule and the generated ones, we develop , which incorporates shape guidance to push the generated atom positions to the shape of the given molecule. We compare and against state-of-the-art baseline methods. Our experimental results demonstrate that and could generate molecules with higher shape similarities, and competitive qualities compared to the baseline methods. In future work, we will explore generating 3D molecules jointly conditioned on the shape and the electrostatic, considering that the electrostatic of molecules could also determine the binding activities of molecules.

Acknowledgements

This project was made possible, in part, by support from the National Science Foundation grant nos. IIS-2133650 (X.N.), and The Ohio State University President’s Research Excellence program (X.N.). Any opinions, findings and conclusions or recommendations expressed in this paper are those of the authors and do not necessarily reflect the views of the funding agency.

References

- Acharya et al. (2011) Acharya, C.; Coop, A.; Polli, J. E.; and MacKerell, A. D. 2011. Recent Advances in Ligand-Based Drug Design: Relevance and Utility of the Conformationally Sampled Pharmacophore Approach. Current Computer Aided-Drug Design, 7(1): 10–22.

- Adams and Coley (2023) Adams, K.; and Coley, C. W. 2023. Equivariant Shape-Conditioned Generation of 3D Molecules for Ligand-Based Drug Design. In The Eleventh International Conference on Learning Representations.

- Batool, Ahmad, and Choi (2019) Batool, M.; Ahmad, B.; and Choi, S. 2019. A Structure-Based Drug Discovery Paradigm. International Journal of Molecular Sciences, 20(11): 2783.

- Chen et al. (2022) Chen, Y.; Fernando, B.; Bilen, H.; Nießner, M.; and Gavves, E. 2022. 3D Equivariant Graph Implicit Functions. In Lecture Notes in Computer Science, 485–502. Springer Nature Switzerland.

- Chen et al. (2021) Chen, Z.; Min, M. R.; Parthasarathy, S.; and Ning, X. 2021. A deep generative model for molecule optimization via one fragment modification. Nature Machine Intelligence, 3(12): 1040–1049.

- Deng et al. (2021) Deng, C.; Litany, O.; Duan, Y.; Poulenard, A.; Tagliasacchi, A.; and Guibas, L. J. 2021. Vector Neurons: A General Framework for SO(3)-Equivariant Networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), 12200–12209.

- Garcia Satorras et al. (2021) Garcia Satorras, V.; Hoogeboom, E.; Fuchs, F.; Posner, I.; and Welling, M. 2021. E(n) Equivariant Normalizing Flows. In Ranzato, M.; Beygelzimer, A.; Dauphin, Y.; Liang, P.; and Vaughan, J. W., eds., Advances in Neural Information Processing Systems, volume 34, 4181–4192. Curran Associates, Inc.

- Gómez-Bombarelli et al. (2018) Gómez-Bombarelli, R.; Wei, J. N.; Duvenaud, D.; Hernández-Lobato, J. M.; Sánchez-Lengeling, B.; Sheberla, D.; Aguilera-Iparraguirre, J.; Hirzel, T. D.; Adams, R. P.; and Aspuru-Guzik, A. 2018. Automatic Chemical Design Using a Data-Driven Continuous Representation of Molecules. ACS Central Science, 4(2): 268–276.

- Guan et al. (2023) Guan, J.; Qian, W. W.; Peng, X.; Su, Y.; Peng, J.; and Ma, J. 2023. 3D Equivariant Diffusion for Target-Aware Molecule Generation and Affinity Prediction. In The Eleventh International Conference on Learning Representations.

- Hawkins, Skillman, and Nicholls (2006) Hawkins, P. C. D.; Skillman, A. G.; and Nicholls, A. 2006. Comparison of Shape-Matching and Docking as Virtual Screening Tools. Journal of Medicinal Chemistry, 50(1): 74–82.

- Ho, Jain, and Abbeel (2020) Ho, J.; Jain, A.; and Abbeel, P. 2020. Denoising Diffusion Probabilistic Models. In Larochelle, H.; Ranzato, M.; Hadsell, R.; Balcan, M.; and Lin, H., eds., Advances in Neural Information Processing Systems, volume 33, 6840–6851. Curran Associates, Inc.

- Hoogeboom et al. (2021) Hoogeboom, E.; Nielsen, D.; Jaini, P.; Forré, P.; and Welling, M. 2021. Argmax Flows and Multinomial Diffusion: Learning Categorical Distributions. In Ranzato, M.; Beygelzimer, A.; Dauphin, Y.; Liang, P.; and Vaughan, J. W., eds., Advances in Neural Information Processing Systems, volume 34, 12454–12465. Curran Associates, Inc.

- Hoogeboom et al. (2022) Hoogeboom, E.; Satorras, V. G.; Vignac, C.; and Welling, M. 2022. Equivariant Diffusion for Molecule Generation in 3D. In Chaudhuri, K.; Jegelka, S.; Song, L.; Szepesvari, C.; Niu, G.; and Sabato, S., eds., Proceedings of the 39th International Conference on Machine Learning, volume 162 of Proceedings of Machine Learning Research, 8867–8887. PMLR.

- Imrie et al. (2021) Imrie, F.; Hadfield, T. E.; Bradley, A. R.; and Deane, C. M. 2021. Deep generative design with 3D pharmacophoric constraints. Chemical Science, 12(43): 14577–14589.

- Jin, Barzilay, and Jaakkola (2018) Jin, W.; Barzilay, R.; and Jaakkola, T. 2018. Junction Tree Variational Autoencoder for Molecular Graph Generation. In Dy, J.; and Krause, A., eds., Proceedings of the 35th International Conference on Machine Learning, volume 80 of Proceedings of Machine Learning Research, 2323–2332. PMLR.

- Kingma and Ba (2015) Kingma, D. P.; and Ba, J. 2015. Adam: A Method for Stochastic Optimization. In Bengio, Y.; and LeCun, Y., eds., 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 2015.

- Kong et al. (2021) Kong, Z.; Ping, W.; Huang, J.; Zhao, K.; and Catanzaro, B. 2021. DiffWave: A Versatile Diffusion Model for Audio Synthesis. In International Conference on Learning Representations.

- Kullback and Leibler (1951) Kullback, S.; and Leibler, R. A. 1951. On Information and Sufficiency. The Annals of Mathematical Statistics, 22(1): 79–86.

- Landrum et al. (2023) Landrum, G.; Tosco, P.; Kelley, B.; Ric; Cosgrove, D.; Sriniker; Gedeck; Vianello, R.; NadineSchneider; Kawashima, E.; N, D.; Jones, G.; Dalke, A.; Cole, B.; Swain, M.; Turk, S.; AlexanderSavelyev; Vaucher, A.; Wójcikowski, M.; Ichiru Take; Probst, D.; Ujihara, K.; Scalfani, V. F.; Godin, G.; Lehtivarjo, J.; Pahl, A.; Walker, R.; Francois Berenger; Jasondbiggs; and Strets123. 2023. rdkit/rdkit: 2023_03_2 (Q1 2023) Release.

- Luo et al. (2021) Luo, S.; Guan, J.; Ma, J.; and Peng, J. 2021. A 3D Generative Model for Structure-Based Drug Design. In Beygelzimer, A.; Dauphin, Y.; Liang, P.; and Vaughan, J. W., eds., Advances in Neural Information Processing Systems.

- Nichol and Dhariwal (2021) Nichol, A. Q.; and Dhariwal, P. 2021. Improved Denoising Diffusion Probabilistic Models. In Meila, M.; and Zhang, T., eds., Proceedings of the 38th International Conference on Machine Learning, volume 139 of Proceedings of Machine Learning Research, 8162–8171. PMLR.

- Papadopoulos et al. (2021) Papadopoulos, K.; Giblin, K. A.; Janet, J. P.; Patronov, A.; and Engkvist, O. 2021. De novo design with deep generative models based on 3D similarity scoring. Bioorganic &: Medicinal Chemistry, 44: 116308.

- Park et al. (2019) Park, J. J.; Florence, P.; Straub, J.; Newcombe, R.; and Lovegrove, S. 2019. DeepSDF: Learning Continuous Signed Distance Functions for Shape Representation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

- Peng et al. (2023) Peng, X.; Guan, J.; Liu, Q.; and Ma, J. 2023. MolDiff: Addressing the Atom-Bond Inconsistency Problem in 3D Molecule Diffusion Generation. In Krause, A.; Brunskill, E.; Cho, K.; Engelhardt, B.; Sabato, S.; and Scarlett, J., eds., Proceedings of the 40th International Conference on Machine Learning, volume 202 of Proceedings of Machine Learning Research, 27611–27629. PMLR.

- Peng et al. (2022) Peng, X.; Luo, S.; Guan, J.; Xie, Q.; Peng, J.; and Ma, J. 2022. Pocket2Mol: Efficient Molecular Sampling Based on 3D Protein Pockets. In Chaudhuri, K.; Jegelka, S.; Song, L.; Szepesvari, C.; Niu, G.; and Sabato, S., eds., Proceedings of the 39th International Conference on Machine Learning, volume 162 of Proceedings of Machine Learning Research, 17644–17655. PMLR.

- Polykovskiy et al. (2020) Polykovskiy, D.; Zhebrak, A.; Sanchez-Lengeling, B.; Golovanov, S.; Tatanov, O.; Belyaev, S.; Kurbanov, R.; Artamonov, A.; Aladinskiy, V.; Veselov, M.; Kadurin, A.; Johansson, S.; Chen, H.; Nikolenko, S.; Aspuru-Guzik, A.; and Zhavoronkov, A. 2020. Molecular Sets (MOSES): A Benchmarking Platform for Molecular Generation Models. Frontiers in Pharmacology, 11.

- Ravi et al. (2020) Ravi, N.; Reizenstein, J.; Novotny, D.; Gordon, T.; Lo, W.-Y.; Johnson, J.; and Gkioxari, G. 2020. Accelerating 3D Deep Learning with PyTorch3D. arXiv:2007.08501.

- Ripphausen, Nisius, and Bajorath (2011) Ripphausen, P.; Nisius, B.; and Bajorath, J. 2011. State-of-the-art in ligand-based virtual screening. Drug Discovery Today, 16(9-10): 372–376.

- Vainio, Puranen, and Johnson (2009) Vainio, M. J.; Puranen, J. S.; and Johnson, M. S. 2009. ShaEP: Molecular Overlay Based on Shape and Electrostatic Potential. Journal of Chemical Information and Modeling, 49(2): 492–502.

- Wang et al. (2019) Wang, Y.; Sun, Y.; Liu, Z.; Sarma, S. E.; Bronstein, M. M.; and Solomon, J. M. 2019. Dynamic Graph CNN for Learning on Point Clouds. ACM Trans. Graph., 38(5).

- Wójcikowski, Zielenkiewicz, and Siedlecki (2015) Wójcikowski, M.; Zielenkiewicz, P.; and Siedlecki, P. 2015. Open Drug Discovery Toolkit (ODDT): a new open-source player in the drug discovery field. Journal of Cheminformatics, 7(1).

- Zheng et al. (2013) Zheng, H.; Hou, J.; Zimmerman, M. D.; Wlodawer, A.; and Minor, W. 2013. The future of crystallography in drug discovery. Expert Opinion on Drug Discovery, 9(2): 125–137.

See pages - of supp.pdf