Shapley Sets: Feature Attribution via Recursive Function Decomposition

Abstract

Despite their ubiquitous use, Shapley value feature attributions can be misleading due to feature interaction in both model and data. We propose an alternative attribution approach, Shapley Sets, which awards value to sets of features. Shapley Sets decomposes the underlying model into non-separable variable groups using a recursive function decomposition algorithm with log-linear complexity in the number of variables. Shapley Sets attributes to each non-separable variable group their combined value for a particular prediction. We show that Shapley Sets is equivalent to the Shapley value over the transformed feature set and thus benefits from the same axioms of fairness. Shapley Sets is value function agnostic, and we show theoretically and experimentally how Shapley Sets avoids pitfalls associated with Shapley value based alternatives and is particularly advantageous for data types with complex dependency structure.

Keywords Explainability, Feature Attribution, Shapley Value, Function Decomposition, Separability

1 The Shapley Value and Non-separable Functions

In co-operative game theory, one central question is that of fair division: if players form a coalition to achieve a common goal, how should they split the profits? Let $N = \{1, \dots, n\}$ be the set of players and $2^N$ the set of all coalitions of players. A function $v: 2^N \to \mathbb{R}$ is the $n$-person game in characteristic form, such that $v(S)$ defines the worth of coalition $S \subseteq N$, where $v(\emptyset) = 0$. A solution concept is a mapping assigning a vector $\phi(v) \in \mathbb{R}^n$ to the game $v$. The Shapley value [1] is the most widely known solution concept and uniquely satisfies certain axioms of fairness: efficiency, dummy, symmetry and additivity. Please see [1] for definitions.

Definition 1.1 (Shapley Value).

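For the game $v$, the Shapley value of player $i$ is given as

$$\phi_i(v) = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(n - |S| - 1)!}{n!}\,\big(v(S \cup \{i\}) - v(S)\big) \tag{1}$$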
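Equation 1 can be evaluated exactly by enumerating every coalition that excludes player $i$. The sketch below is a minimal illustration of that reading, with a hypothetical helper name `shapley_values`; because it makes $\mathcal{O}(n\,2^{n})$ calls to $v$ in total, it is only practical for a handful of players.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, v):
    """Exact Shapley values (Equation 1) by enumerating all coalitions.

    players: iterable of hashable player labels.
    v: characteristic function mapping a frozenset of players to its worth.
    """
    players = list(players)
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = frozenset(coalition)
                total += weight * (v(s | {i}) - v(s))  # marginal contribution of i
        phi[i] = total
    return phi


# Hypothetical two-player game: worth only appears when both players cooperate.
game = lambda s: 1.0 if {1, 2} <= s else 0.0
print(shapley_values([1, 2], game))  # {1: 0.5, 2: 0.5}
```

Because the worth of this hypothetical game is created only jointly, symmetry and efficiency force an even split, which is exactly the kind of division of an interaction effect discussed in the rest of this section.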

Under efficiency, the Shapley value decomposes the value of the grand coalition to attribute worth to each individual player. The Shapley value is a fully separable function (Definition 1.2) such that $v(N) = \sum_{i \in N} \phi_i(v)$.

Definition 1.2.

[Additively Separable Function] A function $f(\mathbf{x})$ with variable set $\mathbf{x} = (x_1, \dots, x_n)$ is separable if it has the following form: $f(\mathbf{x}) = \sum_{i=1}^{m} f_i(\mathbf{x}_i)$, $1 \le m \le n$,

where $\mathbf{x}_1, \dots, \mathbf{x}_m$ are non-overlapping sub-vectors of $\mathbf{x}$.

Specifically, the function is also called fully additively separable if $m = n$, while it is regarded as fully non-separable if $m = 1$. While there are other forms of separability, in this paper we use the term separable to refer to additive separability.

The set function $v$ may not be fully separable. Within coalitional games, this is due to interaction between players. Consider, for example, a game $v$ with player set $N$ in which $v(S) \neq \sum_{i \in S} v(\{i\})$ for some coalition $S \subseteq N$; clearly such a game is not fully separable. The non-separable interaction effects within coalitional games are dealt with by solution concepts which map partially separable functions into fully separable ones, allowing an individual attribution of worth to each player. The Shapley value provides an attribution where each player receives an average of their marginal contributions to all coalitions.

1.1 Interaction Effects For Feature Attribution

When applying the Shapley value to feature attribution, there are three functions to consider: the model to be explained, $f$, which operates on a variable set $X = \{x_1, \dots, x_n\}$; the set function $v_f: 2^X \to \mathbb{R}$, which takes as input a set of features $S \subseteq X$ and obtains $f$’s prediction on this coalition of features; and the Shapley value $\phi$, which maps the set function into a fully separable function. Given a particular prediction to attribute, $f(\mathbf{x}^*)$, where $\mathbf{x}^* \in \mathbb{R}^n$, the value function $v_f$ specifies how the subset of features outside the coalition, $\bar S = X \setminus S$, should be removed from $\mathbf{x}^*$.

In coalitional game theory, the Shapley value attributes the difference in value between the grand coalition and the empty set of players. For feature attribution, the Shapley value attributes the change in prediction between the instance and a baseline. Therefore, the value of the empty coalition, $v_f(\emptyset)$, is not guaranteed to be zero but is some uninformative baseline prediction, and thus the value function must account for the non-zero baseline: $v(S) = v_f(S) - v_f(\emptyset)$. As in coalitional games, the Shapley value fairly allocates interaction effects to each feature. Feature interaction may occur in the data, where features are statistically dependent, and/or in the model, where $f$ is not fully separable. The choice of value function, which acts as the interface between the Shapley value and the function $f$, determines the kind of interaction effects the Shapley value must allocate between features.

1.2 When Interaction Occurs in the Data

While the following ideas have previously been discussed [2, 3, 4], we re-frame them here within the context of separability, which allows us to motivate our proposed attribution method, Shapley Sets.

Example 1: Given the binary variable set and function where is the causal ancestor of such that . It is clear that has no impact on from the perspective of the model. However, from the perspective of the data distribution, is dependent on . Changing will result in a change in ; therefore, changing to a value inconsistent with does not make sense. Whether to consider as a separate player in the game and attribute value to it, despite it having no direct influence on the model output, is an open debate in the literature.

Off-manifold Value Functions There are those who argue that features with no impact on the model should receive no attribution [5, 6]. These methods break all statistical relationships between the inputs to the model by using a value function which calculates the impact of each feature on the model independently of its impact on the distribution of other features. This approach was formalised by [6] as

$$v^{\mathrm{int}}_f(S) = \mathbb{E}_{\mathbf{x} \sim p(\mathbf{x})}\big[f(\mathbf{x}^*_S, \mathbf{x}_{\bar S})\big] \tag{2}$$

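The expectation is usually taken over the input distribution $p(\mathbf{x})$. When this distribution is replaced by an arbitrary reference, $v^{\mathrm{int}}_f$ generalises to the form of [7], which uses an arbitrary baseline sample $\mathbf{x}'$:

$$v^{\mathrm{int}}_f(S; \mathbf{x}') = f(\mathbf{x}^*_S, \mathbf{x}'_{\bar S}) \tag{3}$$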
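As a minimal sketch of Equations 2 and 3, the helpers below (hypothetical names `v_int_baseline` and `v_int_expected`) assume that the model `f` maps an `(n_rows, d)` array to `n_rows` predictions and that a coalition $S$ is given as a set of column indices. The empirical version of Equation 2 simply averages Equation 3 over background rows $\mathbf{x}'$, which is what makes it off-manifold: the values outside $S$ come from other rows, regardless of the values fixed in $S$.

```python
import numpy as np


def v_int_baseline(f, x_star, x_ref, S):
    """Equation 3: features in S keep their values in x_star; all others take x_ref's values."""
    z = np.array(x_ref, dtype=float)
    idx = np.array(sorted(S), dtype=int)
    z[idx] = np.asarray(x_star, dtype=float)[idx]
    return float(f(z[None, :])[0])


def v_int_expected(f, x_star, X_background, S):
    """Equation 2, estimated empirically: average Equation 3 over background rows x',
    so features outside S are filled in independently of the values fixed in S."""
    Z = np.array(X_background, dtype=float)
    idx = np.array(sorted(S), dtype=int)
    Z[:, idx] = np.asarray(x_star, dtype=float)[idx]
    return float(np.mean(f(Z)))


# Hypothetical linear model evaluated on a batch of rows.
w = np.array([1.0, 2.0, 3.0])
f = lambda Z: Z @ w
x_star = np.array([1.0, 1.0, 1.0])
print(v_int_baseline(f, x_star, np.zeros(3), {0, 2}))                      # 4.0
print(v_int_expected(f, x_star, [[0.0, 0.0, 0.0], [2.0, 2.0, 2.0]], {1}))  # 6.0
```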

There are those who argue that attributions independent of the statistical interactions in the data are inherently misleading [8, 4]. Firstly, from a causal perspective, if we consider Example 1, the Shapley value via $v^{\mathrm{int}}_f$ would assign zero importance to . An attribution ignoring that is directly responsible for is misleading, especially if the attribution is used to recommend changes. Furthermore, $v^{\mathrm{int}}_f$ evaluates the model on out-of-distribution samples. If we break the causal relationship between and and use their independent expected values in , the model is evaluated on samples which completely misrepresent the truth.

On-manifold Value Functions To combat this problem, on-manifold samples can be obtained using the conditional value function, first introduced by [9], which does consider statistically related features as separate players in the game, allowing the distribution of out-of-coalition features to be affected by the feature in question:

$$v^{\mathrm{cond}}_f(S) = \mathbb{E}\big[f(\mathbf{x}^*_S, \mathbf{x}_{\bar S}) \mid \mathbf{x}_S = \mathbf{x}^*_S\big] \tag{4}$$

$v^{\mathrm{cond}}_f$ is often computed with the observational conditional distribution $p(\mathbf{x}_{\bar S} \mid \mathbf{x}_S = \mathbf{x}^*_S)$, whereby the conditional expectation is calculated over the data distribution. This generates on-manifold data samples which address the problems discussed above. Furthermore, features which have no direct impact on the model but an indirect impact through other features are assigned a non-zero importance, more accurately reflecting reality. However, $v^{\mathrm{cond}}_f$ has two significant issues: its computational complexity, as it requires evaluating the model on multivariate conditional distributions, and the undesirable impact of considering all features as players combined with the efficiency axiom, which we explicate below.

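By assigning a non-zero importance to features that do not directly affect the model, $v^{\mathrm{cond}}_f$ can give misleading explanations which indicate features to change despite their having zero impact on the outcome. This weakness of $v^{\mathrm{cond}}_f$ has been formalised as a “violation of sensitivity” [6]: when the relevance of $x_i$ is defined by $v^{\mathrm{cond}}_f$, $\phi_i(v^{\mathrm{cond}}_f) \neq 0$ does not imply that $f$ depends on $x_i$.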

The failure of sensitivity exhibited by $v^{\mathrm{cond}}_f$ leads to further issues with the generated attributions. Consider Example 1 again, where are binary variables and . Given the input and , under $v^{\mathrm{cond}}_f$, the Shapley attributions for and would both be greater than the attribution for . Clearly, the attribution of violates sensitivity. Now, consider an alternative function which is trained on only two features . As . However, now the Shapley values for and are equal. The relative apparent importances of and depend on whether is considered to be a third feature, even though the two functions are effectively the same.

[10] propose a solution to the failure of sensitivity exhibited by $v^{\mathrm{cond}}_f$, following the intuition: if is known to be the deterministic causal ancestor of , one might want to attribute all effect to and none to . In contrast, [4] argue that the only way to remove the problems arising from the failure of sensitivity is to replace the observational with the interventional conditional distribution. However, both the asymmetric and interventional attributions above require the specification of the causal structure of the phenomenon being modelled. It has been argued [2] that this requirement significantly limits the adoption of either approach. In this paper, we propose an attribution approach which can be used with on- and off-manifold value functions. Under $v^{\mathrm{cond}}_f$, our method generates on-manifold attributions which avoid the failure of sensitivity without requiring any knowledge of the causal structure of the underlying data distribution.

1.3 When Interaction Occurs in the Model

While off-manifold value functions ignore interaction in the data, both on- and off-manifold value functions recognise interaction in the model. It has been recognised, however, that the Shapley value generates misleading attributions in the presence of feature interaction in the model [11].

Example 2: Consider the function and assume that each of the three features is statistically independent, i.e. all interaction between features is defined entirely by the model. Furthermore, it is given that and that our sample to be explained is . The Shapley value under both on- and off-manifold value functions gives equal attributions to each feature. While this attribution makes sense from the perspective of how much each feature contributed to the change in prediction, it does not reflect the true behaviour of the model, where changing the value of or would have twice the impact on the model as changing . In this paper, we propose a solution concept which would group and unlike the Shapley value, award attribution together, resulting in attributions more faithful to the underlying model when used with on- or off-manifold value functions.

2 Shapley Sets of Non-Separable Variable Groups

The problems with Shapley value attributions discussed above arise because the Shapley value assigns individual value to variables belonging to Non-Separable Variable Groups (NSVGs) of the underlying partially separable function (Definition 1.2). Non-separable groups describe the variable groups formed after a complete (or ideal) decomposition of $f$. An NSVG can also be defined as the minimal set of all interacting variables given the function $f$, which we explicate in Definition 2.1.

Definition 2.1 (Non-Separable Variable Group (NSVG)).

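Let $X$ be the set of decision variables and $f$ be a partially separable function satisfying Definition 1.2. If there exist any two candidate decision vectors $\mathbf{x}$ and $\mathbf{x}'$, sampled from the domain of $f$, such that the following property holds for any two mutually exclusive subsets $X_1, X_2 \subset X$ with $X_1 \cup X_2 = X$,

$$f(\mathbf{x}) - f(\mathbf{x}'_{X_1}, \mathbf{x}_{X_2}) \neq f(\mathbf{x}_{X_1}, \mathbf{x}'_{X_2}) - f(\mathbf{x}') \tag{5}$$

then $X$ is a NSVG.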

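Translating Definition 2.1 for feature attribution: given that $v_f$ is a function over the domain of all possible subsets of $X$, we can rewrite Equation 5 in terms of $v_f$, where $v_f$ could represent any of the value functions from the previous section, although in this paper we restrict $v_f \in \{v^{\mathrm{int}}_f, v^{\mathrm{cond}}_f\}$. By setting $X_1 = S_1$ and $X_2 = S_2$, for $v^{\mathrm{int}}_f$, given that $|S_1 \cup S_2|$ is minimised, if there exist any candidate vectors $\mathbf{x}^*$ and $\mathbf{x}'$ such that

$$v^{\mathrm{int}}_f(S_1 \cup S_2) - v^{\mathrm{int}}_f(S_2) \neq v^{\mathrm{int}}_f(S_1) - v^{\mathrm{int}}_f(\emptyset) \tag{6}$$

then $S_1 \cup S_2$ is a NSVG.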

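For $v^{\mathrm{cond}}_f$, given that $|S_1 \cup S_2|$ is minimised, if there exists any candidate vector $\mathbf{x}^*$ such that

$$v^{\mathrm{cond}}_f(S_1 \cup S_2) - v^{\mathrm{cond}}_f(S_2) \neq v^{\mathrm{cond}}_f(S_1) - v^{\mathrm{cond}}_f(\emptyset) \tag{7}$$

then $S_1 \cup S_2$ is a NSVG.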
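A short worked check shows why Equation 6 separates interacting from non-interacting groups. It assumes the baseline form of Equation 3, $v(S) = f(\mathbf{x}^*_S, \mathbf{x}'_{\bar S})$, writes $R = X \setminus (S_1 \cup S_2)$ for the variables held at the baseline in every term, and uses illustrative component names $g$, $h$ and $r$. If $f(\mathbf{x}) = g(\mathbf{x}_{S_1}) + h(\mathbf{x}_{S_2}) + r(\mathbf{x}_R)$, both sides of Equation 6 reduce to the same quantity, whereas a single product term $f(\mathbf{x}) = x_1 x_2$ with $S_1 = \{x_1\}$ and $S_2 = \{x_2\}$ leaves a residual:

```latex
\begin{aligned}
v(S_1 \cup S_2) - v(S_2) &= \big[g(\mathbf{x}^*_{S_1}) + h(\mathbf{x}^*_{S_2}) + r(\mathbf{x}'_R)\big] - \big[g(\mathbf{x}'_{S_1}) + h(\mathbf{x}^*_{S_2}) + r(\mathbf{x}'_R)\big] = g(\mathbf{x}^*_{S_1}) - g(\mathbf{x}'_{S_1}),\\
v(S_1) - v(\emptyset) &= \big[g(\mathbf{x}^*_{S_1}) + h(\mathbf{x}'_{S_2}) + r(\mathbf{x}'_R)\big] - \big[g(\mathbf{x}'_{S_1}) + h(\mathbf{x}'_{S_2}) + r(\mathbf{x}'_R)\big] = g(\mathbf{x}^*_{S_1}) - g(\mathbf{x}'_{S_1}),\\[4pt]
\text{for } f = x_1 x_2:\quad \big(x_1^* x_2^* - x_1' x_2^*\big) - \big(x_1^* x_2' - x_1' x_2'\big) &= (x_1^* - x_1')(x_2^* - x_2').
\end{aligned}
```

The residual vanishes whenever $x_1^* = x_1'$ or $x_2^* = x_2'$, so a single candidate pair can miss an interaction; this is one reason to evaluate the test at more than one sampled candidate vector.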

Given the partially separable function from Example 2, under , is a NSVG as for settings and .

Given the partially separable function from Example 1, under , the set is a NSVG as for setting .

In this paper, we propose an alternative attribution method which, unlike the Shapley value, does not separate NSVGs to assign attribution. We work under the intuition that interacting features, whether the interaction occurs in the model or in the data, should not be considered separate players in the coalitional game but should be awarded value together. In both the examples above, and would receive joint attribution under our proposed method.

Given the partially separable function $f$ satisfying Definition 1.2, variable set $X$, and a specified value function $v_f$, our proposed solution concept $\psi$, which we term Shapley Sets (SS), finds the optimal decomposition of $X$ into the set of NSVGs $\mathcal{S} = \{S_1, \dots, S_m\}$. The resulting variable grouping satisfies Definition 1.2, and each variable group is composed solely of variables which satisfy Definition 2.1. From Definition 1.2, $v_f(X) - v_f(\emptyset) = \sum_{j=1}^{m} \big(v_f(S_j) - v_f(\emptyset)\big)$. Given a prediction to be attributed, $f(\mathbf{x}^*)$, our proposed attribution $\psi(\mathbf{x}^*)$ therefore returns the attribution for each variable group $S_j$, given as:

$$\psi_{S_j}(\mathbf{x}^*) = v_f(S_j) - v_f(\emptyset) \tag{8}$$

Proposition 2.2.

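If we model each NSVG $S_j \in \mathcal{S}$ as a super-feature, such that the player set is $\mathcal{S} = \{S_1, \dots, S_m\}$ and the worth of a coalition $T \subseteq \mathcal{S}$ is $v_f$ evaluated on the union of its super-features, then the Shapley value of each super-feature $S_j$ is equivalent to $\psi_{S_j}(\mathbf{x}^*)$.

Proof.

$$\phi_{S_j}(v_f) = \sum_{T \subseteq \mathcal{S} \setminus \{S_j\}} w(T)\,\big(v_f(T \cup \{S_j\}) - v_f(T)\big),$$

where $w(T) = \frac{|T|!\,(m - |T| - 1)!}{m!}$.

Given that each $S_j$ is a NSVG and the grouping $\mathcal{S}$ satisfies Definition 1.2, we know that $v_f(T \cup \{S_j\}) - v_f(T) = v_f(S_j) - v_f(\emptyset)$ for any $T \subseteq \mathcal{S} \setminus \{S_j\}$. Therefore,

$$\phi_{S_j}(v_f) = \big(v_f(S_j) - v_f(\emptyset)\big) \sum_{T \subseteq \mathcal{S} \setminus \{S_j\}} w(T).$$

It follows that $\phi_{S_j}(v_f) = \psi_{S_j}(\mathbf{x}^*)$, given $\sum_{T \subseteq \mathcal{S} \setminus \{S_j\}} w(T) = 1$.

∎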

Proposition 2.2 shows that the attribution given by Shapley Sets (SS) to the variable group $S_j$, $\psi_{S_j}$, is equivalent to the Shapley value when the game is played over the feature set containing the NSVGs $\mathcal{S}$ for a given $v_f$. SS therefore satisfies the same axioms of fairness as the Shapley value (efficiency, dummy, additivity and symmetry) when played over this feature set. However, we have discussed how, despite its axioms, the Shapley value can generate misleading attributions in the presence of feature interaction. In Section 4 we therefore give practical advantages of SS over the Shapley value. First, however, we provide a method for finding the optimal decomposition of $X$ into its NSVGs.
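Proposition 2.2 can be checked numerically on a small example. The sketch below assumes a hypothetical model whose additive terms are known, so that its NSVGs are $\{x_0, x_1\}$, $\{x_2\}$ and $\{x_3, x_4\}$, and uses the baseline value function of Equation 3 with an all-zero baseline; the helper names are illustrative. It computes the exact Shapley value with each group as a single player and compares it with Equation 8.

```python
from itertools import combinations
from math import factorial

import numpy as np


def baseline_value(f, x_star, x_ref, features):
    """v_f(S) = f(x*_S, x'_rest): features in S come from x_star, all others from x_ref."""
    z = np.array(x_ref, dtype=float)
    idx = np.array(sorted(features), dtype=int)
    z[idx] = np.asarray(x_star, dtype=float)[idx]
    return float(f(z))


def shapley_over_groups(groups, v):
    """Exact Shapley value (Equation 1) in which every player is a whole group of features."""
    m = len(groups)
    phi = []
    for j, group in enumerate(groups):
        others = [g for k, g in enumerate(groups) if k != j]
        total = 0.0
        for size in range(m):
            weight = factorial(size) * factorial(m - size - 1) / factorial(m)
            for T in combinations(others, size):
                union = set().union(*T)  # features held by the super-features in T
                total += weight * (v(union | set(group)) - v(union))
        phi.append(total)
    return phi


def shapley_sets(groups, v):
    """Equation 8: each NSVG receives v_f(S_j) - v_f(empty set)."""
    return [v(set(g)) - v(set()) for g in groups]


# Hypothetical model and instance; the grouping is the one implied by its additive terms.
f = lambda z: 2 * z[0] * z[1] + z[2] + np.sin(z[3]) * z[4]
x_star, x_ref = np.ones(5), np.zeros(5)
v = lambda S: baseline_value(f, x_star, x_ref, S)
groups = [{0, 1}, {2}, {3, 4}]

print(np.allclose(shapley_over_groups(groups, v), shapley_sets(groups, v)))  # True
print(shapley_over_groups([{i} for i in range(5)], v)[:2])  # individual SVs split the 2*x0*x1 term
```

The last line illustrates the behaviour SS is designed to avoid: with individual features as players, the Shapley value divides the worth of the $2x_0x_1$ term evenly between $x_0$ and $x_1$, whereas SS reports it once, for the group.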

3 Computing Shapley Sets

Determining the NSVGs of a function could be achieved manually by partitioning the variable set and determining interaction over every possible candidate vector; however, this would be computationally intractable. Instead, we draw on the large body of literature on function decomposition in global optimization, within which automatic decomposition methods identify NSVGs. We therefore propose a method for calculating SS which is based on the Recursive Differential Grouping algorithm (RDG) introduced in [12].

To identify whether two sets of variables $X_1$ and $X_2$ interact, RDG uses a fitness measure based on Definition 2.1, taking the candidate vectors to be the lower and upper bounds of the domain of $f$. If the difference between the left- and right-hand sides of Equation 5 exceeds a threshold $\epsilon$, computed from $f$ evaluated at randomly selected candidate vectors, then $X_1$ and $X_2$ are deemed by RDG to interact. To adapt RDG for $v^{\mathrm{int}}_f$ and $v^{\mathrm{cond}}_f$, we propose an alternative fitness measure (Definition 3.1) with candidate vectors randomly sampled from the data, which can identify NSVGs in the data and/or in the model.

Definition 3.1 (Shapley Sets Fitness Measure).

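Given two sets of variables $S_1, S_2 \subset X$ and a specified value function $v_f$, if $v_f = v^{\mathrm{int}}_f$ and $\big|\big(v_f(S_1 \cup S_2) - v_f(S_2)\big) - \big(v_f(S_1) - v_f(\emptyset)\big)\big| > \epsilon$ for sampled candidate vectors $\mathbf{x}^*$ and $\mathbf{x}'$, then there is interaction between $S_1$ and $S_2$. Or, if $v_f = v^{\mathrm{cond}}_f$ and the same inequality holds for a sampled candidate vector $\mathbf{x}^*$, then there is interaction between $S_1$ and $S_2$.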

We substitute the SS fitness measure into the RDG algorithm, which identifies NSVGs by recursively identifying the variables with which a given variable set interacts. If $X_1$ and a single variable $x_i$ are found to interact, they are placed into the same NSVG, $X_1 \leftarrow X_1 \cup \{x_i\}$, at which point conditional interaction between $X_1$ and the remaining variables is identified. The algorithm iterates over every variable and returns the set of NSVGs. To compute the SS attributions for a given prediction, we compute $\psi_{S_j}$ (Equation 8) for each NSVG $S_j$. Our full algorithm is shown in Algorithm 2. The runtime of SS is $\mathcal{O}(n \log n)$, as proven in [12].

4 Motivating Shapley Sets

The choice of value function determines the variable grouping generated. Used with $v^{\mathrm{int}}_f$, as interacting features are placed in the same NSVG, the attributions resulting from SS are more faithful to the underlying model. The SS attribution for in Example 2 would be and .

Used with $v^{\mathrm{cond}}_f$, as interacting features are placed in the same NSVG, the attributions resulting from SS do not suffer from the violation of sensitivity described in Section 1.2. Consider again Example 1: as now belong to a NSVG, the SS attributions for are equal across both and , and are therefore robust to whether non-directly impacting features are included in the model. SS offers a further advantage when used to compare attributions under on- and off-manifold value functions. Consider again Example 2, yet now with . The SS attribution via $v^{\mathrm{int}}_f$ would be and . However, if SS were calculated via $v^{\mathrm{cond}}_f$, , indicating that is non-separable and all the features interact.

The comparison between on- and off-manifold SS therefore indicates where the feature interaction takes place. We have thus far provided an alternative attribution method to the Shapley value, SS, which can be computed in $\mathcal{O}(n \log n)$ time, with $n$ being the number of features. SS can be adapted for arbitrary value functions and offers several advantages over Shapley value based attributions when used with on- and off-manifold value functions. In Section 6 we empirically validate the theoretical claims made above, but first we discuss related work.

5 Related Work

As Shapley value based feature attribution has a rich literature, we differentiate SS from the three approaches closest in essence to ours. SS enforces a coalition structure on the Shapley value such that players cannot be considered in isolation from their coalitions. The Owen value is a solution concept for games with an existing coalition structure [13]. The Owen value is the result of a two-step procedure: first, the coalitions play a quotient game among themselves, and each coalition receives a payoff which, in turn, is shared among its individual players in an internal game. Both payoffs are given by applying the Shapley value. This approach is not equivalent to SS, which assumes no prior coalition structure and instead finds the optimal coalition structure, namely the decomposition of $f$ into its NSVGs.

Shapley Residuals [11] capture the extent to which a value function is inessential to a coalition of features. They show, for $S \subseteq X$, that if the game function can be decomposed as $v(T) = v(T \cap S) + v(T \setminus S)$ for all $T \subseteq X$, then the value function is inessential with respect to the coalition $S$. In this way, we can view Shapley residuals as an indication that a coalition is a non-separable variable group. However, Shapley residuals are built on the complex Hodge decomposition [14], are difficult to understand and do not offer a better way of attributing to features. In contrast, SS is built on the idea of additive separability, is easier to understand and less computationally expensive, and proposes a solution to issues with the Shapley value analogous to those that Shapley residuals were designed to identify.

Grouped Shapley Values Determining the Shapley value of grouped variables has been previously suggested in [15, 16], which identify interaction in the data (based on measures of correlation) and then partition the features into groups, after which the Shapley value is calculated. Shapley Sets is distinct from these approaches in the following ways. Firstly, Shapley Sets is capable of uncovering interaction in the model as well as in the data. Secondly, Shapley Sets is designed to find the optimal grouping of the features, such that the Shapley value theoretically reduces to the simple computation in Equation 8. Therefore, the attribution under Shapley Sets requires linear time to compute (given the prior decomposition of the variable set in log-linear time), whereas the grouped Shapley values of [15, 16] still require exponential computation to compute exactly, although this can be approximated. To our knowledge, Shapley Sets is the first contribution to the feature attribution literature which automatically decomposes a function into the optimal variable set by which to award attribution.

6 Experimental Motivation of Shapley Sets

We begin with two synthetic experiments. The first motivates the use of SS in the presence of interaction in the model; the second motivates the use of SS in the presence of interaction in the data. We then compare SS to existing Shapley value (SV) based attribution methods on three benchmark datasets. First, however, we outline how the value functions are computed for our experiments.

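As discussed above, $v^{\mathrm{int}}_f$ can take arbitrary reference vectors as input (Equation 3). For our experiments we select the reference vectors such that $v^{\mathrm{int}}_f$ reduces to Equation 2, with the expectation taken over the empirical input distribution. For the calculation of $v^{\mathrm{cond}}_f$ (Equation 4), as the true conditional probabilities of the underlying data distribution are unknown, we approximate them using the empirical data distribution. Approximating conditional distributions can be achieved by directly sampling from the empirical data distribution. However, as noted in [3], this method suffers as the number of conditioning features grows, due to sparsity in the empirical distribution. We therefore adopt the approach of [3]: under the assumption that each $\mathbf{x}$ is sampled from a multivariate Gaussian with mean vector $\boldsymbol{\mu}$ and covariance matrix $\Sigma$, the conditional distribution $p(\mathbf{x}_{\bar S} \mid \mathbf{x}_S = \mathbf{x}^*_S)$ is also multivariate Gaussian. Partitioning $\boldsymbol{\mu}$ and $\Sigma$ into blocks indexed by $S$ and $\bar S$, we can therefore sample from the conditional Gaussian distribution with expectation vector and covariance matrix given by $\boldsymbol{\mu}_{\bar S \mid S} = \boldsymbol{\mu}_{\bar S} + \Sigma_{\bar S S}\Sigma_{SS}^{-1}(\mathbf{x}^*_S - \boldsymbol{\mu}_S)$ and $\Sigma_{\bar S \mid S} = \Sigma_{\bar S \bar S} - \Sigma_{\bar S S}\Sigma_{SS}^{-1}\Sigma_{S \bar S}$, where $\boldsymbol{\mu}$ and $\Sigma$ are estimated by the sample mean and covariance matrix of the training data.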
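The conditional Gaussian approximation above can be sketched as a Monte Carlo estimate of Equation 4. The helper below is a hypothetical illustration, not code from [3]: it assumes `f` maps an `(n_samples, d)` array to `n_samples` predictions, estimates $\boldsymbol{\mu}$ and $\Sigma$ from `X_train`, and solves a linear system rather than explicitly inverting $\Sigma_{SS}$; `n_samples` and `seed` are illustrative defaults.

```python
import numpy as np


def v_cond_gaussian(f, x_star, X_train, S, n_samples=1000, seed=0):
    """Monte Carlo estimate of v_cond(S) under a multivariate Gaussian assumption."""
    rng = np.random.default_rng(seed)
    x_star = np.asarray(x_star, dtype=float)
    X_train = np.asarray(X_train, dtype=float)
    d = x_star.shape[0]
    S = np.array(sorted(S), dtype=int)
    S_bar = np.setdiff1d(np.arange(d), S)
    if S_bar.size == 0:  # every feature is fixed: no sampling needed
        return float(f(x_star[None, :])[0])
    mu = X_train.mean(axis=0)
    sigma = np.cov(X_train, rowvar=False)
    if S.size == 0:  # nothing to condition on: sample from the joint Gaussian
        cond_mu, cond_sigma = mu, sigma
    else:
        sigma_ss = sigma[np.ix_(S, S)]
        sigma_bs = sigma[np.ix_(S_bar, S)]
        sigma_bb = sigma[np.ix_(S_bar, S_bar)]
        gain = np.linalg.solve(sigma_ss, sigma_bs.T).T  # Sigma_{S_bar S} Sigma_{SS}^{-1}
        cond_mu = mu[S_bar] + gain @ (x_star[S] - mu[S])
        cond_sigma = sigma_bb - gain @ sigma_bs.T
    Z = np.tile(x_star, (n_samples, 1))  # coalition features fixed to x*
    Z[:, S_bar] = rng.multivariate_normal(cond_mu, cond_sigma, size=n_samples)
    return float(np.mean(f(Z)))


rng = np.random.default_rng(1)
x0 = rng.normal(size=5000)
X = np.column_stack([x0, x0 + 0.1 * rng.normal(size=5000)])  # x1 strongly depends on x0
f = lambda Z: Z[:, 1]                                        # hypothetical model that only reads x1
print(v_cond_gaussian(f, [2.0, 0.0], X, {0}))  # approximately 2.0
```

Although the hypothetical model never reads $x_0$, conditioning on $x_0 = 2$ shifts the sampled $x_1$ and hence the prediction, which is the on-manifold behaviour (and the source of the sensitivity issue) discussed in Section 1.2.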

6.1 Synthetic Experiment: Interaction in the Model

| | SS | Shapley Value |
|---|---|---|

We first construct three functions with linear and non-linear feature interactions:

We construct a synthetic dataset of seven features drawn independently from . For each of 100 randomly drawn samples we compute SS under . As there are only seven features, we are able to compute the exact SVs for each feature without relying on a sampling algorithm. As we know the ground truth, we use the mean absolute error (MAE) across all features and samples as our evaluation metric,

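$$\mathrm{MAE} = \frac{1}{N d}\sum_{k=1}^{N}\sum_{i=1}^{d}\big|\phi_{k,i} - \phi^{\mathrm{true}}_{k,i}\big| \tag{9}$$

where $\phi_{k,i}$ is the attribution given by SS or SV to feature $i$ in sample $k$, $\phi^{\mathrm{true}}_{k,i}$ is the corresponding ground truth attribution, $N$ is the number of samples and $d$ is the number of attributed units. As SS calculates an attribution for a set of features, $S_j$, the ground truth attribution is the ground truth value of each NSVG. For example, given and , and .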
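Equation 9 amounts to a single average. A minimal sketch (hypothetical name `mean_absolute_error`), assuming attributions and ground truth are stored as arrays of shape `(samples, units)`, where a unit is a feature for SV and an NSVG for SS:

```python
import numpy as np


def mean_absolute_error(attributions, ground_truth):
    """Equation 9: average |attribution - ground truth| over samples and attributed units."""
    a = np.asarray(attributions, dtype=float)
    g = np.asarray(ground_truth, dtype=float)
    if a.shape != g.shape:
        raise ValueError(f"shape mismatch: {a.shape} vs {g.shape}")
    return float(np.mean(np.abs(a - g)))


print(mean_absolute_error([[1.0, 2.0], [0.0, 1.0]], [[1.0, 1.0], [0.0, 0.0]]))  # 0.5
```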

Results are shown in Table 1. SS is successful in decomposing each function into its NSVGs, and the attributions awarded to each set match the ground truth of the function, giving an MAE of zero for all samples and functions. SV attributions deviate from the ground truth by dividing the value of each NSVG between its individual features, which results in misleading attributions, particularly in the presence of inverse relationships between features. For example, consider the following sub-component , and a particular sample . SV gives a positive attribution but ’s attribution is negative. Under SS, and are considered as non-separable and awarded a positive attribution together. From its SV attribution, a user may opt to change rather than ; however, as these features jointly move the outcome from the baseline to the target, the impact of changing in isolation could be cancelled out by the impact of .

6.2 Synthetic Experiment: Interaction in the Data

| | SS | Shap Marg | Shap Cond |
|---|---|---|---|

We adopt the approach of [8] and propose an underlying linear regression model . We construct a synthetic dataset comprising five features . are all modeled as i.i.d. and drawn independently from . , however, are modeled as dependent features where . We generate a synthetic dataset consisting of samples of each feature and obtain the ground truth labels . We next select a model which is trained on to approximate . We calculate the attributions for each sample in generated by the SV under both $v^{\mathrm{int}}_f$ and $v^{\mathrm{cond}}_f$, and the attributions from SS under $v^{\mathrm{cond}}_f$. To evaluate attributions we use the coefficients of the linear regression model as our ground truth attributions . We use (Equation 9), where the ground truth for feature in sample .

Off-manifold attributions in the presence of interaction in the data recover the ground truth attributions reliably when is a linear model; however, this breaks down when non-linear models are used as the approximating function [8]. We therefore compare attributions under , a linear regression model, and , an XGBoost model.

Results are shown in Table 2 where SS outperforms SV on both and . Under , the MAE is lower for SV Marginal than for SV Conditional, validating the findings in [8].

However, when the non-linear model is used, the attributions from SS and SV under $v^{\mathrm{cond}}_f$ outperform SV under $v^{\mathrm{int}}_f$. The attributions provided by SS outperform those generated by SV across both models. We now verify experimentally the claim that SS under an on-manifold value function avoids the issues related to sensitivity. To do this we add a dummy variable to the dataset such that is not used by . We train another XGBoost model on the new dataset and generate the three sets of attributions as before. Results are shown in Table 2. With the dummy included, the MAE of SV under $v^{\mathrm{cond}}_f$ increases, as the attribution of each non-dummy variable moves further from its true value to accommodate an attribution to the new feature, despite it having no effect on the true output. In contrast, SS includes this dummy feature in the non-separable set , so the resulting attribution to the existing features is unchanged and the MAE remains constant when dummy variables are included, demonstrating SS’s robustness to how the underlying phenomenon is modelled.

| | SS Int | SS Cond | KS | TS |
|---|---|---|---|---|
| B | | | | |
| D | | | | |
| C | | | | |

6.3 Shapley Sets of Real World Benchmarks

We now evaluate SS on real data: the Diabetes, Boston and Correlation datasets from the Shap library [16]. For each dataset we train either an XGBoost or a Random Forest model on the provided training set, obtaining scores of 0.90 (RF), 0.89 (RF) and 0.86 (XGB) respectively. We compute SS attributions for 100 randomly selected samples from the test set under both $v^{\mathrm{int}}_f$ and $v^{\mathrm{cond}}_f$. As the dimensionality of these datasets makes computing the exact Shapley values infeasible, we compare the SS attributions with the most commonly used approximation techniques: Tree Shap (TS) [17] and Kernel Shap (KS) [16]. Under its original implementation, KS approximates an off-manifold value function and breaks the relationship between input features and the data distribution. TS does not make this assumption and is presented as an on-manifold Shapley value approximation. However, in practice TS performs poorly when there is high dependence between features in the dataset [3]. To evaluate the attributions generated by SS, KS and TS in the absence of a ground truth attribution, we use modified versions of the deletion and sensitivity measures which have been used widely across the literature [18]. Deletion is built on the intuition that the magnitude of a feature’s score should reflect its impact on the output. Our metric therefore measures the absolute distance between the target prediction,

and the prediction of a given sample after the most important feature , determined by the attribution method under consideration , has been removed.

| (10) |
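A hedged sketch of the deletion metric for a single sample follows. Two choices are assumptions of this sketch: "removing" a unit (a feature, or an NSVG for SS) means replacing its values with those of a reference vector `x_ref`, and the most important unit is the one with the largest absolute attribution. The target prediction is passed in by the caller, and the helper name is hypothetical.

```python
import numpy as np


def absolute_deletion(f, x_star, x_ref, attribution, target):
    """Deletion score: |target - f(x* with its most important unit removed)|.

    attribution: dict mapping a tuple of feature indices (one feature, or an NSVG
    for SS) to its attribution score.
    """
    top = max(attribution, key=lambda unit: abs(attribution[unit]))
    z = np.array(x_star, dtype=float)
    idx = np.array(top, dtype=int)
    z[idx] = np.asarray(x_ref, dtype=float)[idx]  # assumed removal: reset to reference values
    return abs(target - float(f(z)))


f = lambda z: 2 * z[0] * z[1] + z[2]  # hypothetical model
print(absolute_deletion(f, np.ones(3), np.zeros(3), {(0, 1): 2.0, (2,): 1.0}, target=0.0))  # 1.0
```

Because an SS unit can contain several features, one deletion step may remove more than one feature, which is the reliability concern raised below.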

| | SS Int | SS Cond | KS | TS |
|---|---|---|---|---|
| B | | | | |
| D | | | | |
| C | | | | |

Low AD indicates that the attribution technique has correctly identified an important feature to remove. As SS attributes to sets of features, we allow the removed unit to be a non-separable variable set generated by SS. This may influence the reliability of AD, as a varying number of features is removed from each instance. We therefore also assess the sensitivity of each attribution technique, which calculates the difference between the sum of all the attributions given by the technique and the prediction for the sample. Ideal attributions have a low sensitivity.

$$\mathrm{Sens}(\mathbf{x}^*) = \Big|\sum_{j}\phi_j(\mathbf{x}^*) - f(\mathbf{x}^*)\Big| \tag{11}$$
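A minimal sketch of the sensitivity score for a single sample follows (hypothetical name `sensitivity`). The optional `baseline` argument is an assumption of this sketch rather than part of Equation 11: when attributions satisfy efficiency they sum to $f(\mathbf{x}^*) - v_f(\emptyset)$ rather than to $f(\mathbf{x}^*)$, so passing $v_f(\emptyset)$ keeps a perfectly efficient attribution at zero. With the default of zero it computes the raw gap described above.

```python
import numpy as np


def sensitivity(attributions, prediction, baseline=0.0):
    """Absolute gap between the summed attributions and the (optionally baseline-adjusted) prediction."""
    return float(abs(np.sum(attributions) - (prediction - baseline)))


# Attributions from a hypothetical explanation of a prediction of 3.5 with baseline 0.5.
print(sensitivity([2.0, 1.0], prediction=3.5, baseline=0.5))  # 0.0
print(sensitivity([2.0, 0.5], prediction=3.5, baseline=0.5))  # 0.5
```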

Tables 3 and 4 show that SS has lower (better) deletion than TS and KS across all three datasets. However, KS has the lowest sensitivity score on the Diabetes dataset; we note that for this dataset there is high variance in the sensitivity score for both SS attributions. This can largely be explained by the sensitivity of SS to the setting of $\epsilon$, which is discussed further in Section 7.

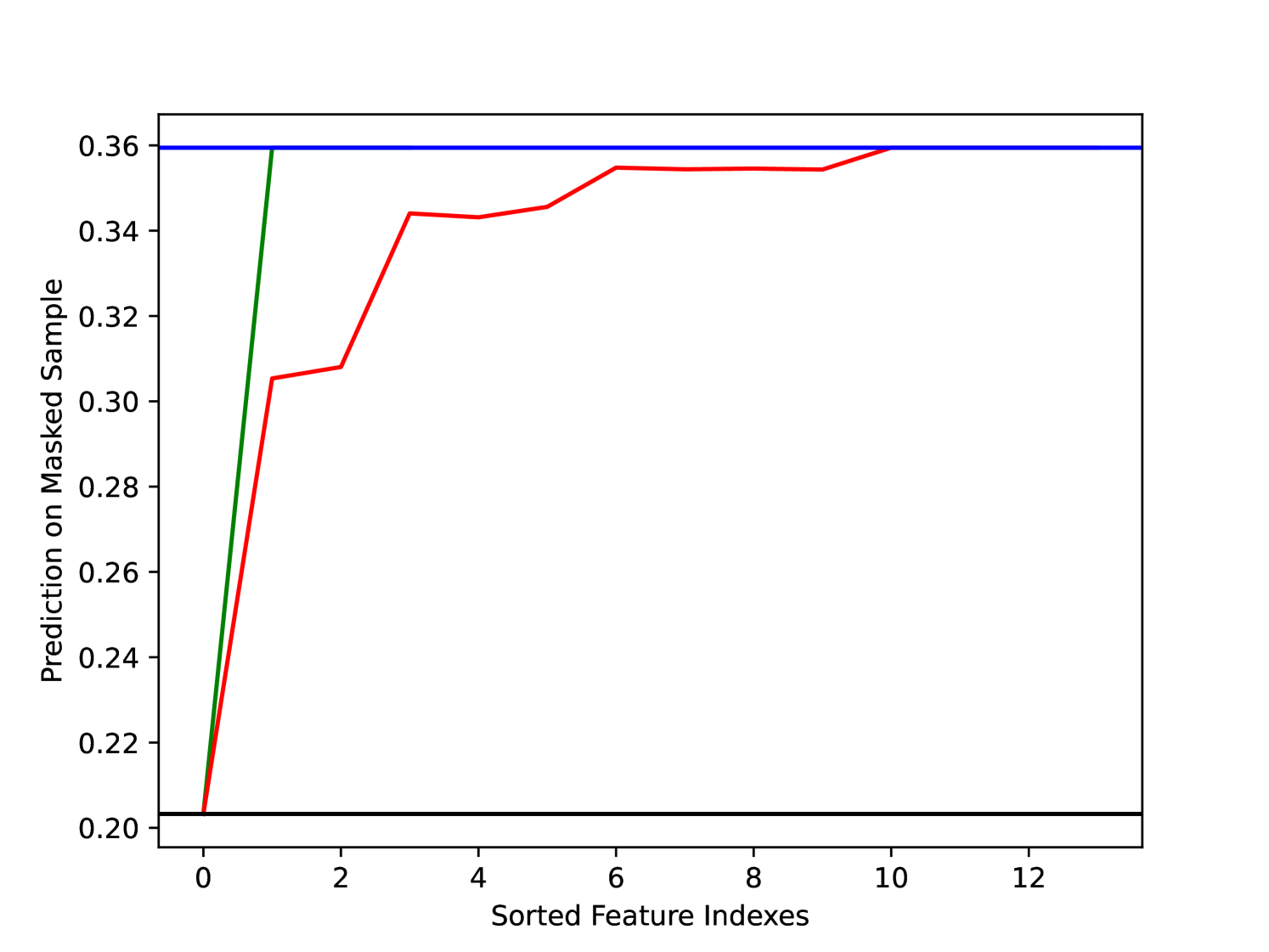

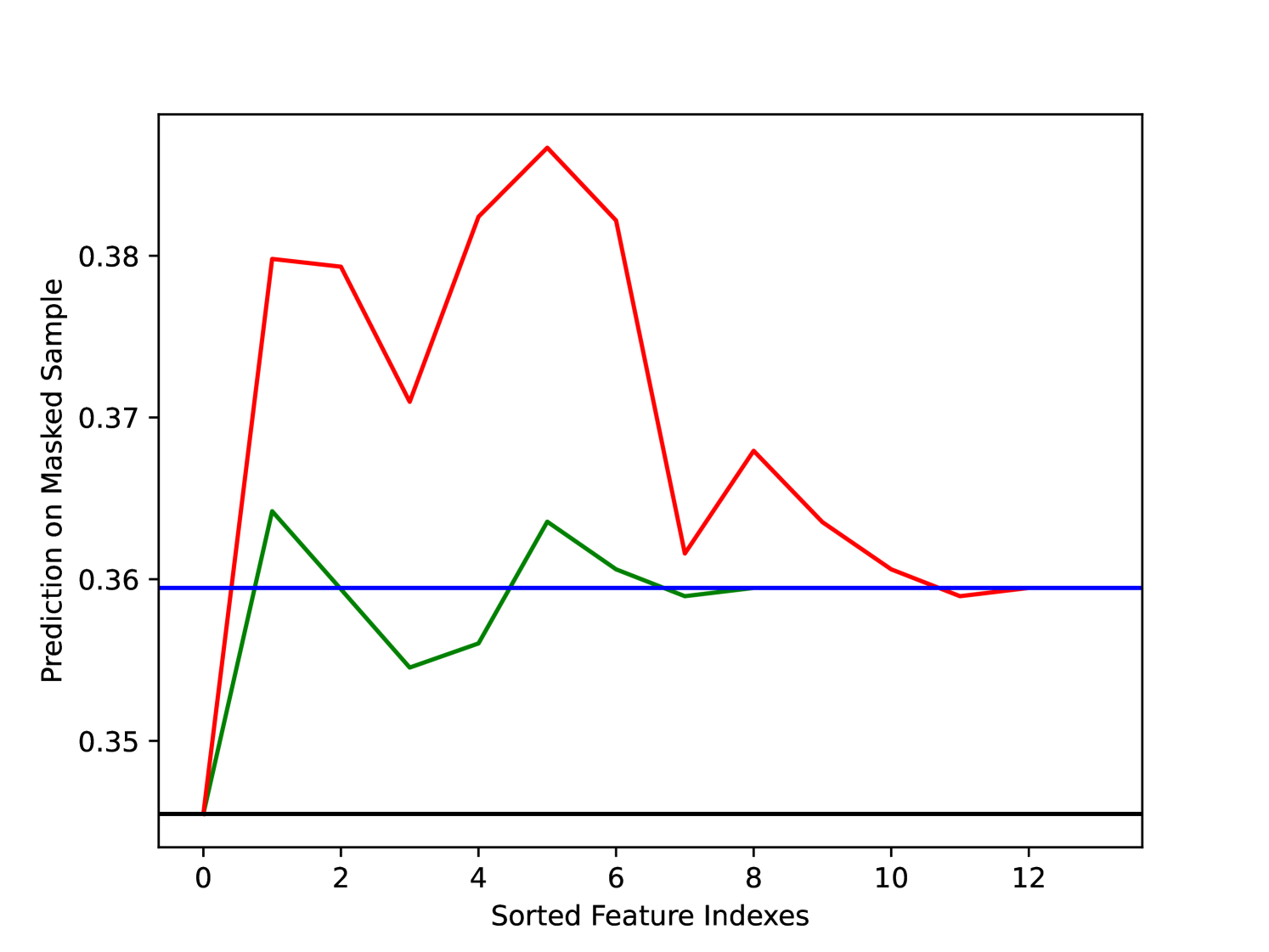

Figure 1 shows the advantage of set attributions over individual attributions. The red and green curves (KS and SS respectively) show the change in prediction as each feature in the sorted attributions is masked consecutively from the input. By considering the effect of sets of interacting features rather than individual features, SS avoids the sub-optimal behaviour of KS, which arises because interaction effects between features in the model mask each other’s importance. Figure 1 also validates the use of the deletion metric to compare individual and set attributions, as it is clear that masking more features does not guarantee a lower deletion score.

7 Conclusions, Limitations and Future Work

This paper has introduced Shapley Sets (SS), a novel method for feature attribution which automatically and optimally decomposes a function into a set of NSVGs by which to award attribution. We have shown how SS generates more faithful explanations than Shapley value-based alternatives in the presence of feature interaction, both in the data and in the model. To our knowledge, SS is the only method in the literature which automatically generates a grouped attribution vector. Below we explore some limitations of SS and ideas for future work.

Sensitivity to Parametrisation: In Algorithm 2, $\epsilon$ determines the degree to which two sets of variables are considered to interact. The original RDG algorithm recommends setting $\epsilon$ proportional to the magnitude of the objective space. This setting works well for SS Interventional. However, we noticed a large variation in the variable grouping generated by SS Conditional under this setting of $\epsilon$. This is not surprising, as $v^{\mathrm{cond}}_f$ is known to be sensitive to feature correlations in the data, and it is difficult to know how much correlational structure to allow before two features are considered to be causally linked. Future work should therefore look at alternative methods of function decomposition which are less dependent on the parametrisation of $\epsilon$ [19].

Assumption of Partially-Separable Model: SS assumes that the model to be explained is partially separable. If we consider the function , SS would result in a single attribution to all three features of . This is not useful from an explanation perspective, although it does inform us about the nature of the underlying model. Furthermore, the assumption of a partially separable function is also made by the Shapley value [2]. Future work should consider function decomposition under a wider class of separability, such as multiplicative separability, where associated algorithms decompose a function into its additively and multiplicatively separable variable sets [19].

Acknowledgments

We would like to thank Giulia Occhini, Alexis Monks, Isobel Shaw, Jennifer Yates, for their invaluable support during the writing of this paper. This work was supported by an Alan Turing Institute PhD Studentship funded under EPSRC grant EP/N510129/1.

References

- [1] Lloyd S Shapley. A value for n-person games. Classics in game theory, 69, 1997.

- [2] I Elizabeth Kumar, Suresh Venkatasubramanian, Carlos Scheidegger, and Sorelle Friedler. Problems with shapley-value-based explanations as feature importance measures. In International Conference on Machine Learning, pages 5491–5500. PMLR, 2020.

- [3] Kjersti Aas, Martin Jullum, and Anders Løland. Explaining individual predictions when features are dependent: More accurate approximations to shapley values. Artificial Intelligence, 298:103502, 2021.

- [4] Tom Heskes, Evi Sijben, Ioan Gabriel Bucur, and Tom Claassen. Causal shapley values: Exploiting causal knowledge to explain individual predictions of complex models. Advances in neural information processing systems, 33:4778–4789, 2020.

- [5] Luke Merrick and Ankur Taly. The explanation game: Explaining machine learning models using shapley values. In International Cross-Domain Conference for Machine Learning and Knowledge Extraction, pages 17–38. Springer, 2020.

- [6] Dominik Janzing, Lenon Minorics, and Patrick Blöbaum. Feature relevance quantification in explainable ai: A causal problem. In International Conference on artificial intelligence and statistics, pages 2907–2916. PMLR, 2020.

- [7] Mukund Sundararajan and Amir Najmi. The many shapley values for model explanation. In International conference on machine learning, pages 9269–9278. PMLR, 2020.

- [8] Giles Hooker, Lucas Mentch, and Siyu Zhou. Unrestricted permutation forces extrapolation: variable importance requires at least one more model, or there is no free variable importance. Statistics and Computing, 31(6):1–16, 2021.

- [9] Erik Štrumbelj and Igor Kononenko. Explaining prediction models and individual predictions with feature contributions. Knowledge and information systems, 41(3):647–665, 2014.

- [10] Christopher Frye, Colin Rowat, and Ilya Feige. Asymmetric shapley values: incorporating causal knowledge into model-agnostic explainability. Advances in Neural Information Processing Systems, 33:1229–1239, 2020.

- [11] Indra Kumar, Carlos Scheidegger, Suresh Venkatasubramanian, and Sorelle Friedler. Shapley residuals: Quantifying the limits of the shapley value for explanations. Advances in Neural Information Processing Systems, 34:26598–26608, 2021.

- [12] Yuan Sun, Michael Kirley, and Saman K Halgamuge. A recursive decomposition method for large scale continuous optimization. IEEE Transactions on Evolutionary Computation, 22(5):647–661, 2017.

- [13] Guillermo Owen. Values of games with a priori unions. In Mathematical economics and game theory, pages 76–88. Springer, 1977.

- [14] Ari Stern and Alexander Tettenhorst. Hodge decomposition and the shapley value of a cooperative game. Games and Economic Behavior, 113:186–198, 2019.

- [15] Martin Jullum, Annabelle Redelmeier, and Kjersti Aas. groupshapley: Efficient prediction explanation with shapley values for feature groups. arXiv preprint arXiv:2106.12228, 2021.

- [16] Scott M Lundberg and Su-In Lee. A unified approach to interpreting model predictions. In I. Guyon, U. V. Luxburg, S. Bengio, H. Wallach, R. Fergus, S. Vishwanathan, and R. Garnett, editors, Advances in Neural Information Processing Systems 30, pages 4765–4774. Curran Associates, Inc., 2017.

- [17] Scott M. Lundberg, Gabriel Erion, Hugh Chen, Alex DeGrave, Jordan M. Prutkin, Bala Nair, Ronit Katz, Jonathan Himmelfarb, Nisha Bansal, and Su-In Lee. From local explanations to global understanding with explainable ai for trees. Nature Machine Intelligence, 2(1):56–67, 2020.

- [18] Arne Gevaert, Axel-Jan Rousseau, Thijs Becker, Dirk Valkenborg, Tijl De Bie, and Yvan Saeys. Evaluating feature attribution methods in the image domain. arXiv preprint arXiv:2202.12270, 2022.

- [19] Minyang Chen, Wei Du, Yang Tang, Yaochu Jin, and Gary G Yen. A decomposition method for both additively and non-additively separable problems. IEEE Transactions on Evolutionary Computation, 2022.