11email: prang@ircam.fr, esling@ircam.fr

Signal-domain representation of symbolic music for learning embedding spaces

Abstract

A key aspect of machine learning models lies in their ability to learn efficient intermediate features. However, the input representation plays a crucial role in this process, and polyphonic musical scores remain a particularly complex type of information. In this paper, we introduce a novel representation of symbolic music data, which transforms a polyphonic score into a continuous signal. We evaluate the ability to learn meaningful features from this representation from a musical point of view. Hence, we introduce an evaluation method relying on principled generation of synthetic data. Finally, to test our proposed representation we conduct an extensive benchmark against recent polyphonic symbolic representations. We show that our signal-like representation leads to better reconstruction and disentangled features. This improvement is reflected in the metric properties and in the generation ability of the space learned from our signal-like representation according to music theory properties.

Keywords:

Representation of music information, embedding spaces, Symbolic music generation1 Introduction

Recent advances in machine learning and deep neural networks have produced extremely promising results. Notably, the fields of computer vision and Natural Language Processing (NLP) have been largely reshaped by these novel approaches Mikolov, Chen\BCBL \BOthers. (\APACyear2013); Bengio (\APACyear2009). Based on these ideas, several models have also been proposed to enhance musical data analysis Park \BBA Lee (\APACyear2015). One of the major reasons behind these successes was the development of efficient embedding spaces for symbolic data Mikolov, Sutskever\BCBL \BOthers. (\APACyear2013); Pennington \BOthers. (\APACyear2014). As these spaces encode semantic relationships between input data as metric properties, they allow to decompose a complex task into two separate and simpler sub-problems. First, a system learns an embedding that disentangles underlying semantic properties of a given type of data, which provides a continuous representation space. Then, another model can learn more advanced tasks based on this adequately organized space. Multiple attempts to mimic NLP embeddings for music have been proposed, based on the premises that they both target symbolic data Huang \BOthers. (\APACyear2016); Madjiheurem \BOthers. (\APACyear2016); Bretan \BOthers. (\APACyear2017). However, these attempts only achieved small enhancements for musical generation and analysis tasks. This lack of adequate embeddings for symbolic music stems from the large discrepancies between the properties of musical and textual data. Indeed, the music vocabulary is smaller, highly repeated, and occurs in a wider variety of contexts in which the semantic properties of each element will be very different. Hence, it is not relevant to encode musical symbols as co-activation matrices Pennington \BOthers. (\APACyear2014) or one-hot vectors Mikolov, Sutskever\BCBL \BOthers. (\APACyear2013) as in the NLP field.

Recently, the success of Variational Auto-Encoder (VAEs) in symbolic music have been demonstrated in the MusicVAE model Roberts \BOthers. (\APACyear2018) which has provided interesting generation results. In addition to a new recurrent architecture, the novelty of this approach comes from the use of an efficient representation of input melodies. Nevertheless, as most recent proposals, this representation only takes into account monophonic melodies, which drastically reduces the scope of its application. Indeed, the musical composition process usually features intricate polyphony, rather than simply stacking monophonic voices together. Motivated by this challenge, we propose in this paper a novel method, which allows to represent any symbolic score as a minimal audio signal. Different MIDI pitches are mapped to prime frequencies and summed across time resulting in a simplified waveform. The process being perfectly invertible, we can retrieve the original score without losing any information. We show that this novel representation (named signal-like) is able to outperform previous propositions on polyphonic data, by comparing it to previous symbolic music representations, namely, the piano-roll, the MIDI-like Oore \BOthers. (\APACyear2018) and the NoteTuple representation Hawthorne \BOthers. (\APACyear2018). To do so, we compute the correlation between the learned embedding and music theory properties, allowing to quantify how different spaces are organized along musical concepts.

2 State-of-the-art

2.1 Embedding spaces

The concept of embedding spaces has originally emerged in the NLP field. Multiple word embedding algorithms have been proposed, the two most notable and widely used contributions being Word2Vec Mikolov, Chen\BCBL \BOthers. (\APACyear2013) and GloVe Pennington \BOthers. (\APACyear2014). By using such vectors as input representation for other machine learning tasks, NLP made colossal improvements. This success of embeddings has paved the way for a wide variety of applications such as sentiment analysis Tang \BOthers. (\APACyear2014), information retrieval Palangi \BOthers. (\APACyear2016) or symbolic music field Madjiheurem \BOthers. (\APACyear2016); Bretan \BOthers. (\APACyear2017); Huang \BOthers. (\APACyear2016).

More recently, the Variational Auto-Encoders (VAEs) Kingma \BBA Welling (\APACyear2013) have provided an elegant approach for learning embedding spaces. Similarly to a classical auto-encoder, these networks are composed of an encoder which embeds the input in a lower dimensional latent space, , followed by a decoder , which tries to reconstruct the original input, so that

Then, the generated outputs are compared to the inputs through a loss function that the network tries to minimize. In the case of VAEs, this loss function is defined by two different terms as follows

| (1) |

The first term (expected log-likelihood) defines a reconstruction loss, which pushes the decoder to produce outputs that are as close as possible to the inputs. Thus, it encourages learning an accurate reconstruction of the data. The second term (Kullback-Leibler divergence) acts as a regularizer, enforcing information sharing between sample-wise distributions. Indeed, forcing the latent distribution of the data to be close to the normal distribution (with zero mean and unit variance) prevents the encoding network to isolate each projection, hence favoring close embedding vectors for similar inputs.

One of the most efficient models for symbolic music based on variational auto-encoding is MusicVAE Roberts \BOthers. (\APACyear2018). In this work, input scores are sliced in few bars and represented using a simplified version of the MIDI-like representation (see next section). The model is defined as a recurrent VAE, where the encoder is a two-layered bidirectional LSTM network that produces a sequence of hidden states from an input sequence . The final encoding is obtained as a function of the last hidden state . For the decoder, the authors rely on a hierarchical recurrent structure composed of two LSTM networks. The first one, called conductor RNN, segments the output target into non-overlapping sub-sequences and produces an embedding vector for each time step. The second LSTM network auto-regressively produces a sequence of distributions over output tokens for each sub-sequence via a softmax output layer.

2.2 Symbolic music representations

The performances of machine learning techniques for symbolic generation is critically influenced by the properties of the input representation. The most common way to represent polyphonic music is through the piano-roll representation. Here, time is discretized with a reference quantum to provide a matrix that represents note activation in musical sequences. Due to its sparsity and its repetitive nature, this representation poses several issues for learning, which warranted the definition of alternate approaches.

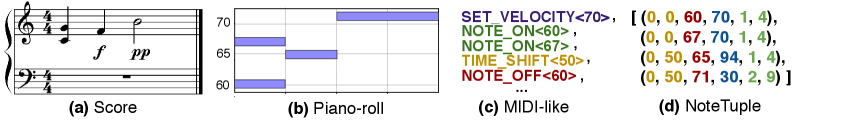

The first alternative representation has been proposed in Oore \BOthers. (\APACyear2018). This MIDI-like approach relies on an event-based vocabulary composed by four main MIDI events. The NOTE_ON events with the corresponding NOTE_OFF events, the TIME_SHIFT events and the SET_VELOCITY events. The resulting representation of an input piece is a variable-length sequence of discrete events taken from this vocabulary. This representation can handle any form of music with polyphony and variable number of voices or time signatures. An example is depicted in figure 1 (c).

However, as the MIDI-like representation relies on the idea of time shifts, all the attributes corresponding to a given note (velocity, note ON and note OFF) may be encoded at very distant positions of a sequence. To alleviate this particular issue, the NoteTuple representation Hawthorne \BOthers. (\APACyear2018) was recently proposed. In this method, each note is represented by a tuple composed by four attributes, namely, the time offset from the previous note, pitch, velocity and duration. An example is displayed in figure 1 (d).

3 Signal-like representation

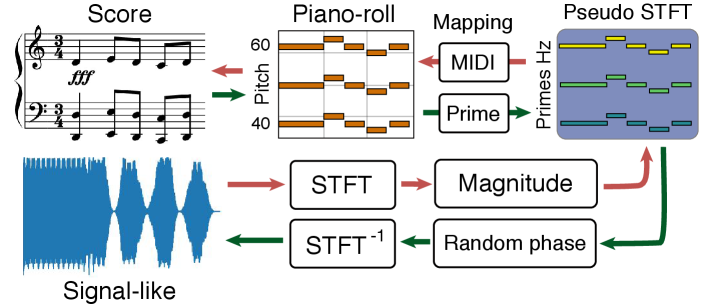

In machine learning applied to music, the audio signal information offers several desirable properties. Indeed, it naturally contains polyphonic information as a decomposable sum. Moreover, there has been some large successes in using raw signal for learning music generation tasks Oord \BOthers. (\APACyear2016). In this paper, we show that relying on a compact signal-like representation for polyphonic symbolic music can lead to large enhancements in tasks related to learning embedding. Hence, we aim to transform any given piano-roll as a spectrogram generating the most compact signal representation possible. To do so, we first map each MIDI pitch to prime numbers, starting from 43 (for MIDI pitch 0) to 2063 (for MIDI pitch 127) and removing consecutive numbers with a gap smaller than 3 (in order to not obtain pseudo frequencies that are too close together). In this representation, harmonic relationships may create phase effects detrimental to the inversion. Indeed, as the frequencies are arbitrary chosen and each represent a single MIDI pitch, the emergence of new harmonics during the inversion process would lead to the addition of notes which are not present in the original score. The use of prime numbers allow to mitigate this undesirable effect. Note that this also restrains the maximum frequency to a rather small value, allowing for a compact resulting signal. Then, we add an artificial phase, by setting imaginary parts to that create a complex matrix. Doing so, we can apply the inverse Short-Time Fourier Transform (STFT) to the resulting matrix and obtain a compact signal-like representation of the score. In our experiments, we use a window size of 2048 with an hop size equal to . Note that this whole process is invertible, as depicted in Figure 2.

4 Evaluation

In order to precisely evaluate how embedding spaces are able to capture musical theory principles, we design a principled generative approach. This allows to obtain large sets of evaluation data with controlled properties, while following known music theory rules. For our experiments, we produce a dataset composed by bars of synthetic chorales (see the support page for details).111https://github.com/MagiCzOOz/signallike-embedding We obtain a set of 21966 realisations from 370 different skeletons where the links between each realisation and its corresponding skeleton have been kept. In this paper, this corpus is used only for evaluation purpose. Hence, none of these data are used during the training.

Based on this corpus, we can analyze different aspects of the organization of learned embedding spaces. First, we can analyze the behavior of different spaces based on tonality. An adequate clustering of the different tonalities across the space would prove its capacity to encode this high level musical information. Second, we can compute the distance between a realisation and its corresponding skeleton based on the number of non-harmonic tones. In this case, if the space is well-organized, we expect these distances to evolve linearly with the number of additional tones. Third, we can compute the distance between a realisation and its corresponding skeleton based on the type of non-harmonic tones. A link between these distances and the note type would show that the embedding space can handle advanced musical concepts.

5 Experiments

In order to compare the efficiency of piano-roll, MIDI-like, NoteTuple and Signal-like representations, we evaluate them with the same common learning approach. Based on the success of the MusicVAE model Roberts \BOthers. (\APACyear2018), we rely on this architecture for our experiments. We use a recurrent encoder with a two-layers bidirectional LSTM of 1024 units. For the decoder, we rely on a hierarchical recurrent architecture with a two-layer unidirectional LSTM with a hidden size of 1024 for both the conductor and decoder. We use a latent size of 256 which largely reduces the input dimensionality while keeping enough information for the reconstruction.

As discussed previously, we train the models on the JSB chorales dataset. We use data augmentation based on transposition as proposed in Hadjeres \BOthers. (\APACyear2017), ensuring that transposed chorales are still correct from a music theory standpoint. This leads to an extended set of 2418 training and 549 testing chorales. As the model takes musical bars as input, we split the MIDI files into separate bars and compute different representations on each bar. We filter bars to have a maximum of 64 events, retaining more than of all the data. After these operations, we obtain 36801 training and 8850 testing bars. Regarding the MIDI-like representation, we introduce a dummy event as padding to obtain a constant-size representation. We use a similar approach for the NoteTuple representation by taking 16 tuples by bar and padding with empty tuples.

All the models are trained with the ADAM optimizer Kingma \BBA Ba (\APACyear2014) with an initial learning rate of and a batch size of . In order to train the piano-roll and signal-like representation, we rely on a Mean Squared Error (MSE) loss. Since the MIDI-like and NoteTuple representation are categorical, we rather rely on a cross-entropy loss.

6 Results

6.1 Reconstruction

First, we compare the capacity of each model to reconstruct the original data, by relying on a frame-level accuracy measure. We divide musical sequences into 16 frames and compute the accuracy as a ratio involving correct (true positives) and wrong (false negatives and positives) active notes in each one of them. We also display separately the best reconstruction accuracy and KL divergence results on the test set in Table 1.

| Input |

|

KL div | |||

| Monophonic | Piano-roll | ||||

| MIDI-like-mono | |||||

| Polyphonic | Piano-roll | ||||

| MIDI-like | - | ||||

| NoteTuple | |||||

| Signal-like | 96.5 |

As we can see, the MIDI-like shows great performance in the reconstruction of monophonic musical bars but are unable to achieve the reconstruction with polyphonic ones. Indeed, due to the nature of the MIDI-like representation, even a unique error on a NOTE_ON or NOTE_OFF event leads to an ill-defined musical sequences with notes that never end or never start. Concerning NoteTuple, the models have been able to encode information about the number of notes, duration and time offsets, which are almost perfectly reconstructed. However, a large number of mistakes in the pitches of individual notes cause the frame-level accuracy score to be low. Hence, despite the use of regularization techniques (dropout, data augmentation) these two representation seem to largely suffer from over-fitting.

On the other hand, great results have been achieved with the signal-like representation. In addition to a better reconstruction accuracy, our representation has improved learning stability by avoiding the exploding gradient problem Pascanu \BOthers. (\APACyear2012) which occurred with the piano-roll representation. Moreover, the robustness of our proposal decreases the loss of reconstruction information while minimizing the KL div and thus lead to a much better trade-off score.

6.2 Music theory analysis

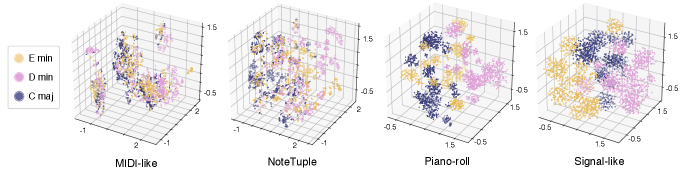

First, we embed unseen synthetic bars in order to compare their respective projections in the different embeddings. As the latent spaces have 128 dimensions, precluding direct visualization, we rely on a t-SNE dimensionality reduction Maaten \BBA Hinton (\APACyear2008) with 1000 iterations and a perplexity of 30. We display in Figure 3 the results of this analysis. It clearly appears that the use of our proposed signal-like representation greatly improves the efficiency of the latent space in encoding a unseen high-level musical feature such as the tonality.

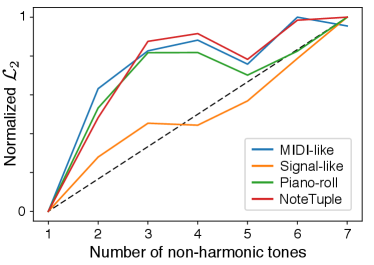

Then, we compute statistics over metadata of the toy dataset in order to evaluate how different spaces have organized the realizations with respect to the number of non-harmonic tones. As we can see in Figure 4, the distances between the original skeleton and its different realizations in the space learned through the signal-like representation provide an almost linear relationship with the number of non-harmonic tones. This highlights the fact that the signal-like space is better organized from a musical point of view.

6.3 Symbolic music generation

To highlight the generative capacity of the latent spaces trained by using our signal-like representation, we generate interpolations between two random points in the latent space, which are available on the support page.222https://github.com/MagiCzOOz/signallike-embedding The generations generally provide smooth evolution from one point to the other, even though the two underlying musical sequences are largely different. Hence, this shows that our signal-like representation can be used in creative applications, while providing organized latent structures.

7 Conclusion

In this paper, we have proposed a new representation for symbolic music and evaluated it through the learning of musical embedding spaces. We have shown that our signal-like representation have improved the quality of the learned spaces for both the reconstruction quality and the organization of the underlying spaces. In future work, we are interested in optimizing the model for our representation to understand various musical qualities. Furthermore, in addition to the pure random latent generation, several creative applications could be explored by relying on these symbolic embedding spaces.

References

- Bengio (\APACyear2009) \APACinsertmetastarbengio2009learning{APACrefauthors}Bengio, Y. \APACrefYearMonthDay2009. \BBOQ\APACrefatitleLearning deep architectures for AI Learning deep architectures for ai.\BBCQ \APACjournalVolNumPagesFoundations and trends® in Machine Learning211–127. \PrintBackRefs\CurrentBib

- Bretan \BOthers. (\APACyear2017) \APACinsertmetastarbretan2017learning{APACrefauthors}Bretan, M., Oore, S., Eck, D.\BCBL \BBA Heck, L. \APACrefYearMonthDay2017. \BBOQ\APACrefatitleLearning and Evaluating Musical Features with Deep Autoencoders Learning and evaluating musical features with deep autoencoders.\BBCQ \APACjournalVolNumPagesarXiv preprint arXiv:1706.04486. \PrintBackRefs\CurrentBib

- Hadjeres \BOthers. (\APACyear2017) \APACinsertmetastarhadjeres2017deepbach{APACrefauthors}Hadjeres, G., Pachet, F.\BCBL \BBA Nielsen, F. \APACrefYearMonthDay2017. \BBOQ\APACrefatitleDeepbach: a steerable model for bach chorales generation Deepbach: a steerable model for bach chorales generation.\BBCQ \BIn \APACrefbtitleProceedings of the 34th International Conference on Machine Learning-Volume 70 Proceedings of the 34th international conference on machine learning-volume 70 (\BPGS 1362–1371). \PrintBackRefs\CurrentBib

- Hawthorne \BOthers. (\APACyear2018) \APACinsertmetastarhawthorne2018transformer{APACrefauthors}Hawthorne, C., Huang, A., Ippolito, D.\BCBL \BBA Eck, D. \APACrefYearMonthDay2018. \BBOQ\APACrefatitleTransformer-NADE for Piano Performances Transformer-nade for piano performances.\BBCQ \BIn \APACrefbtitleNIPS Second Workshop on Machine Learning for Creativity and Design. Nips second workshop on machine learning for creativity and design. \PrintBackRefs\CurrentBib

- Huang \BOthers. (\APACyear2016) \APACinsertmetastarhuang2016chordripple{APACrefauthors}Huang, C\BHBIZ\BPBIA., Duvenaud, D.\BCBL \BBA Gajos, K\BPBIZ. \APACrefYearMonthDay2016. \BBOQ\APACrefatitleChordripple: Recommending chords to help novice composers go beyond the ordinary Chordripple: Recommending chords to help novice composers go beyond the ordinary.\BBCQ \BIn \APACrefbtitleProceedings of the 21st International Conference on Intelligent User Interfaces Proceedings of the 21st international conference on intelligent user interfaces (\BPGS 241–250). \PrintBackRefs\CurrentBib

- Kingma \BBA Ba (\APACyear2014) \APACinsertmetastarkingma2014adam{APACrefauthors}Kingma, D\BPBIP.\BCBT \BBA Ba, J. \APACrefYearMonthDay2014. \BBOQ\APACrefatitleAdam: A method for stochastic optimization Adam: A method for stochastic optimization.\BBCQ \APACjournalVolNumPagesarXiv preprint arXiv:1412.6980. \PrintBackRefs\CurrentBib

- Kingma \BBA Welling (\APACyear2013) \APACinsertmetastarkingma2013auto{APACrefauthors}Kingma, D\BPBIP.\BCBT \BBA Welling, M. \APACrefYearMonthDay2013. \BBOQ\APACrefatitleAuto-encoding variational bayes Auto-encoding variational bayes.\BBCQ \APACjournalVolNumPagesarXiv preprint arXiv:1312.6114. \PrintBackRefs\CurrentBib

- Maaten \BBA Hinton (\APACyear2008) \APACinsertmetastarmaaten2008visualizing{APACrefauthors}Maaten, L\BPBIv\BPBId.\BCBT \BBA Hinton, G. \APACrefYearMonthDay2008. \BBOQ\APACrefatitleVisualizing data using t-SNE Visualizing data using t-sne.\BBCQ \APACjournalVolNumPagesJournal of Machine Learning Research9Nov2579–2605. \PrintBackRefs\CurrentBib

- Madjiheurem \BOthers. (\APACyear2016) \APACinsertmetastarmadjiheurem2016chord2vec{APACrefauthors}Madjiheurem, S., Qu, L.\BCBL \BBA Walder, C. \APACrefYearMonthDay2016. \BBOQ\APACrefatitleChord2Vec: Learning musical chord embeddings Chord2vec: Learning musical chord embeddings.\BBCQ \BIn \APACrefbtitleProceedings of the Constructive Machine Learning Workshop at 30th Conference on Neural Information Processing Systems (NIPS’2016), Barcelona, Spain. Proceedings of the constructive machine learning workshop at 30th conference on neural information processing systems (nips’2016), barcelona, spain. \PrintBackRefs\CurrentBib

- Mikolov, Chen\BCBL \BOthers. (\APACyear2013) \APACinsertmetastarmikolov2013efficient{APACrefauthors}Mikolov, T., Chen, K., Corrado, G.\BCBL \BBA Dean, J. \APACrefYearMonthDay2013. \BBOQ\APACrefatitleEfficient estimation of word representations in vector space Efficient estimation of word representations in vector space.\BBCQ \APACjournalVolNumPagesarXiv preprint arXiv:1301.3781. \PrintBackRefs\CurrentBib

- Mikolov, Sutskever\BCBL \BOthers. (\APACyear2013) \APACinsertmetastarmikolov2013distributed{APACrefauthors}Mikolov, T., Sutskever, I., Chen, K., Corrado, G\BPBIS.\BCBL \BBA Dean, J. \APACrefYearMonthDay2013. \BBOQ\APACrefatitleDistributed representations of words and phrases and their compositionality Distributed representations of words and phrases and their compositionality.\BBCQ \BIn \APACrefbtitleAdvances in neural information processing systems Advances in neural information processing systems (\BPGS 3111–3119). \PrintBackRefs\CurrentBib

- Oord \BOthers. (\APACyear2016) \APACinsertmetastaroord2016wavenet{APACrefauthors}Oord, A\BPBIv\BPBId., Dieleman, S., Zen, H., Simonyan, K., Vinyals, O., Graves, A.\BDBLKavukcuoglu, K. \APACrefYearMonthDay2016. \BBOQ\APACrefatitleWavenet: A generative model for raw audio Wavenet: A generative model for raw audio.\BBCQ \APACjournalVolNumPagesarXiv preprint arXiv:1609.03499. \PrintBackRefs\CurrentBib

- Oore \BOthers. (\APACyear2018) \APACinsertmetastaroore2018time{APACrefauthors}Oore, S., Simon, I., Dieleman, S., Eck, D.\BCBL \BBA Simonyan, K. \APACrefYearMonthDay2018. \BBOQ\APACrefatitleThis time with feeling: Learning expressive musical performance This time with feeling: Learning expressive musical performance.\BBCQ \APACjournalVolNumPagesNeural Computing and Applications1–13. \PrintBackRefs\CurrentBib

- Palangi \BOthers. (\APACyear2016) \APACinsertmetastarpalangi2016deep{APACrefauthors}Palangi, H., Deng, L., Shen, Y., Gao, J., He, X., Chen, J.\BDBLWard, R. \APACrefYearMonthDay2016. \BBOQ\APACrefatitleDeep sentence embedding using long short-term memory networks: Analysis and application to information retrieval Deep sentence embedding using long short-term memory networks: Analysis and application to information retrieval.\BBCQ \APACjournalVolNumPagesIEEE/ACM Transactions on Audio, Speech and Language Processing (TASLP)244694–707. \PrintBackRefs\CurrentBib

- Park \BBA Lee (\APACyear2015) \APACinsertmetastarpark2015music{APACrefauthors}Park, T.\BCBT \BBA Lee, T. \APACrefYearMonthDay2015. \BBOQ\APACrefatitleMusic-noise segmentation in spectrotemporal domain using convolutional neural networks Music-noise segmentation in spectrotemporal domain using convolutional neural networks.\BBCQ \BIn \APACrefbtitle16th International Society for Music Information Retrieval Conference (ISMIR). 16th international society for music information retrieval conference (ismir). \PrintBackRefs\CurrentBib

- Pascanu \BOthers. (\APACyear2012) \APACinsertmetastarpascanu2012understanding{APACrefauthors}Pascanu, R., Mikolov, T.\BCBL \BBA Bengio, Y. \APACrefYearMonthDay2012. \BBOQ\APACrefatitleUnderstanding the exploding gradient problem Understanding the exploding gradient problem.\BBCQ \APACjournalVolNumPagesCoRR, abs/1211.50632417. \PrintBackRefs\CurrentBib

- Pennington \BOthers. (\APACyear2014) \APACinsertmetastarpennington2014glove{APACrefauthors}Pennington, J., Socher, R.\BCBL \BBA Manning, C. \APACrefYearMonthDay2014. \BBOQ\APACrefatitleGlove: Global vectors for word representation Glove: Global vectors for word representation.\BBCQ \BIn \APACrefbtitleProceedings of the 2014 conference on empirical methods in natural language processing (EMNLP) Proceedings of the 2014 conference on empirical methods in natural language processing (emnlp) (\BPGS 1532–1543). \PrintBackRefs\CurrentBib

- Roberts \BOthers. (\APACyear2018) \APACinsertmetastarroberts2018hierarchical{APACrefauthors}Roberts, A., Engel, J., Raffel, C., Hawthorne, C.\BCBL \BBA Eck, D. \APACrefYearMonthDay2018. \BBOQ\APACrefatitleA hierarchical latent vector model for learning long-term structure in music A hierarchical latent vector model for learning long-term structure in music.\BBCQ \APACjournalVolNumPagesarXiv preprint arXiv:1803.05428. \PrintBackRefs\CurrentBib

- Tang \BOthers. (\APACyear2014) \APACinsertmetastartang2014learning{APACrefauthors}Tang, D., Wei, F., Yang, N., Zhou, M., Liu, T.\BCBL \BBA Qin, B. \APACrefYearMonthDay2014. \BBOQ\APACrefatitleLearning sentiment-specific word embedding for twitter sentiment classification Learning sentiment-specific word embedding for twitter sentiment classification.\BBCQ \BIn \APACrefbtitleProceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) Proceedings of the 52nd annual meeting of the association for computational linguistics (volume 1: Long papers) (\BVOL 1, \BPGS 1555–1565). \PrintBackRefs\CurrentBib