SIM-ECG: A Signal Importance Mask-driven ECG Classification System

Abstract

Heart disease is the number one killer, and ECGs can assist in the early diagnosis and prevention of deadly outcomes. Accurate ECG interpretation is critical in detecting heart diseases; however, ECGs are often misinterpreted due to a lack of training or insufficient time spent detecting minute anomalies. Consequently, researchers have turned to machine learning to assist in the analysis. However, existing systems are not as accurate as skilled ECG readers, and black-box approaches to diagnosis lead medical personnel to distrust the diagnoses they are given. To address these issues, we propose a signal importance mask feedback-based machine learning system that continuously accepts feedback, improves accuracy, and explains the resulting diagnosis. This allows medical personnel to quickly glance at the output and accept the results, validate the explanation and diagnosis, or quickly correct areas of misinterpretation, giving feedback to the system for improvement. We have tested our system on a publicly available dataset consisting of healthy and disease-indicating samples. We empirically show that our algorithm outperforms the normal training baseline (without feedback) on standard performance measures such as F-score and macro AUC; we also show that our model generates better interpretability maps.

1 Introduction

Heart disease is the leading cause of death in the US and worldwide for both men and women [13]. In the US, heart disease has been the leading cause of death since 2015, and the number of deaths from heart disease increased by 4.8% from 2019 to 2020 [1]. The Centers for Disease Control and Prevention notes that heart disease accounts for one in every four deaths in the United States each year. These deaths are attributed to many factors, ranging from undetected heart disease causing sudden death, to late detection that may damage heart muscles and require repair, to improper monitoring after successful heart surgery. Every year, more than 5 million Americans are affected by heart failure. The electrocardiogram (ECG), which records the heart’s electrical activity, has long been the preferred and trusted technique for doctors to detect and diagnose these heart conditions. Therefore, accurate ECG analysis is critical for early disease detection and for saving patients’ lives. However, ECG analysis is frequently inaccurate. A recent study found that 30% of myocardial infarction events were misclassified as low risk, with ECG misinterpretation responsible for half of the misclassifications [7]. Misdiagnosis is also a top concern expressed by cardiac patients [9]. It is therefore essential to develop mechanisms to improve analysis accuracy.

The automation of ECG reading has been a long-standing need. Analyzing the signals manually is mundane, requires extreme concentration, causes mental fatigue, and is not reimbursed by insurance companies in proportion to the effort it requires [6]. Consequently, the number of people who can read ECG signals is shrinking, and experienced doctors do not have enough time to scrutinize patients’ ECGs. However, commercial ECG machines only aid with basic preprocessing and analysis: they give information such as PR intervals, QRS duration, irregular rhythms, and a potential indication of abnormal ECG signals. These analyses are not very sophisticated and offer limited assistance in the detection of more complex cardiac conditions [10, 29]. Furthermore, many emerging personal ECG monitoring devices also either provide basic analysis locally or send data for more sophisticated processing and, eventually, human reading [26].

The machine learning community observed this need, and numerous machine learning algorithms have been proposed for disease detection. Convolutional neural networks have been applied to arrhythmia and myocardial infarction detection [12]. Other algorithms such as decision trees, $k$-nearest neighbor, logistic regression, support vector machines [8], and Inception neural networks [20] have also been evaluated. While existing machine learning algorithms have succeeded in classifying basic cardiac conditions, classification of more complex cardiac events is still challenging, and existing solutions are no match for highly skilled human readers. Furthermore, existing solutions provide diagnoses in a black-box manner, requiring medical personnel to carefully analyze the ECG again to validate the algorithm’s interpretations. To address these challenges, we propose a system that continuously collects ECG signal importance mask feedback from expert ECG readers and evolves its detection algorithms to surpass existing static solutions. Furthermore, the proposed system provides a visual interpretation of why the system reached its decision and which portions of the signals were used in making it, allowing for quick validation by the reader. Our model and interpretability maps can save manual labor and provide readers with the precise region of the ECG signals where the disease manifestation is visible, which allows them to focus on validating the results instead of trying to find them in the first place.

2 Related Work

Deep learning has recently gained popularity in heart disease detection from 12-lead ECG signals. Models such as residual convolutional neural networks [21], convolutional neural networks (CNNs) [2, 11], bidirectional long short-term memory (LSTM) networks [16], combined CNN and LSTM models [14], and densely connected CNNs [31] have been used for small scale (-way) classification problems on 12-lead ECG signals. The lack of trust by medical personnel in black-box approaches has led to recent efforts on interpretability analysis of ECG classification models. For example, [32] uses convolutional and recurrent neural networks for arrhythmia classification from 12-lead signals and provides explanations from an attention mechanism. Similarly, [34] performs interpretability analysis using the Shapley additive explanations method. Recurrent neural networks (RNNs) for atrial fibrillation (AF) detection from a single-lead ECG signal, combined with interpretability maps from an attention mechanism, have also been explored [17]. All of the above methods are used for small scale classification (-way) and do not learn from doctors’ feedback. In contrast, our proposed solution simultaneously tackles large-scale (71-way) multi-label classification tasks, with a training procedure that considers doctors’ signal importance masks (sets of time windows in the ECG signal that are most indicative of the diseases exhibited) and provides model interpretability analysis.

Auxiliary information such as bounding boxes in computer vision [5] and rationales in natural language processing [33, 24, 36] has been used to improve model accuracy and interpretability. Theoretical analysis of learning from auxiliary information beyond labels has also been explored [28, 19, 3]. Aligning the attention maps generated by models with the attention maps labeled by experts has been explored as a way to improve the accuracy and interpretability of CNNs [15]. Adding a regularization term to the training loss function that penalizes model gradients outside important regions of the examples’ input features has also been explored [23, 4], and we utilize those ideas in our solution. The use of part localization information to train CNNs with improved accuracy has been proposed in [35]. More recently, adding explanation penalization terms to the loss function, which encourage the model explanation to match the human explanation, has improved the accuracy and interpretability of image and text classification systems [22]. Although using auxiliary information to improve the trustworthiness of image classification and natural language models has been well studied in the computer vision and natural language processing domains, as discussed above, our work is the first to apply it to accurate and interpretable ECG signal-based heart disease prediction.

3 System

The goal of our system is to detect cardiac diseases with high accuracy and to provide its rationale, i.e., to identify the parts of the input ECG responsible for the predicted disease, so that medical personnel can validate the result quickly instead of receiving only a black-box diagnosis. Existing solutions take labeled data to train models and use the trained models to classify new data in the field. This approach is desirable when there is no expert to evaluate the data and the system has to make decisions independently. Under this classic approach, the doctors are presented with a detected cardiac condition that they can accept or reject. This decision process is a black box, and the doctor does not know how the machine arrived at the given classification. Our process starts similarly, but the learning system and the doctor repeatedly communicate with each other using the following protocol: (1) Upon receiving a patient’s ECG, the system detects the patient’s heart condition while also pinpointing the parts of the ECG that are responsible for its prediction; for example, it highlights the difference in peaks in the ECG. This makes the decision process more trustworthy and acceptable to the medical community; (2) The doctors review the system’s prediction and explanation and make their treatment decisions. The doctor can also provide feedback to the system by either confirming the prediction and explanation, or correcting the prediction and providing signal importance masks, i.e., the parts of the ECG they believe are most relevant to the patient’s condition.

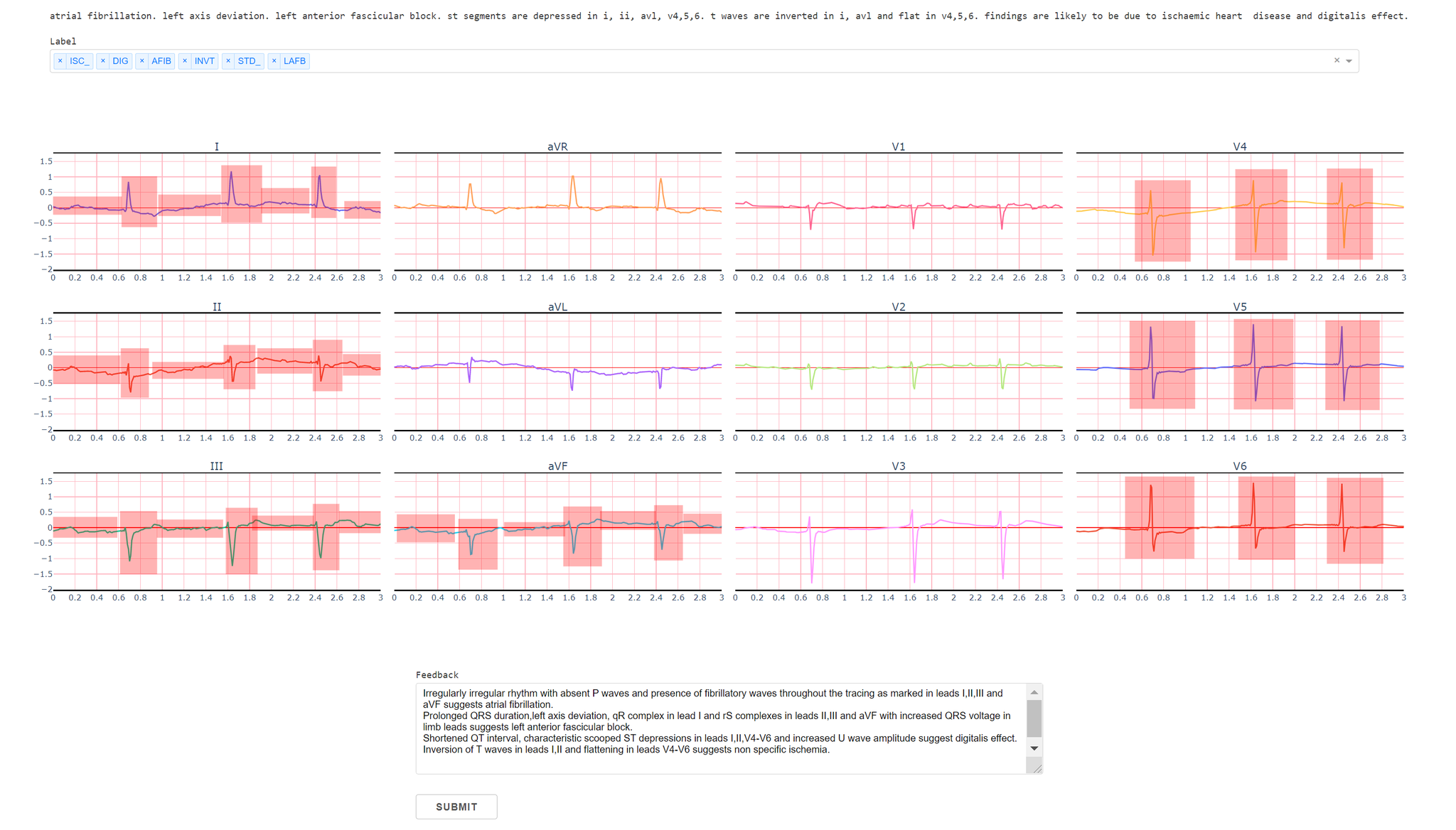

We created a web application where doctors can highlight important regions in 12-lead ECG signals to provide the signal importance masks. Figure 1 shows our interface, where doctors can highlight regions as well as provide feedback in the form of natural language explanations. The web application was built using Flask with a PostgreSQL database. The doctors highlight all important regions for the given labels, and the feedback is stored in the database, which is subsequently used by our model training algorithm. Although we collect doctors’ text explanation feedback in our system, we do not utilize it in our model training; we leave improving ECG classification models using text feedback as interesting future work.
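
As an illustration of how highlighted regions could be turned into a signal importance mask for training, the following is a minimal sketch. The record layout `(lead, start_s, end_s)`, the 100 Hz sampling rate, and the function name `windows_to_mask` are assumptions made for this example; they are not taken from the web application described above.

```python
import numpy as np

N_LEADS = 12         # 12-lead ECG, as in the text
SAMPLING_RATE = 100  # Hz; assumed for illustration only


def windows_to_mask(windows, duration_s, fs=SAMPLING_RATE, n_leads=N_LEADS):
    """Convert highlighted (lead, start_s, end_s) windows into a binary mask.

    Returns an array of shape (n_leads, duration_s * fs) that is 1 inside
    the highlighted regions and 0 elsewhere.
    """
    n_samples = int(round(duration_s * fs))
    mask = np.zeros((n_leads, n_samples), dtype=np.float32)
    for lead, start_s, end_s in windows:
        if not 0 <= lead < n_leads:
            raise ValueError(f"lead index {lead} out of range")
        # Clip each window to the valid sample range of the record.
        start = max(0, int(round(start_s * fs)))
        end = min(n_samples, int(round(end_s * fs)))
        if start < end:
            mask[lead, start:end] = 1.0
    return mask


# Hypothetical feedback: one highlighted region on each of the first two leads.
feedback = [(0, 0.50, 0.80), (1, 1.20, 1.45)]
mask = windows_to_mask(feedback, duration_s=3.0)
print(mask.shape, int(mask.sum()))
```

Storing the raw time windows and converting them to a dense mask only at training time keeps the stored feedback independent of the sampling rate used by the model.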

4 Model Training Algorithm

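Our model training algorithm is inspired by recent works on learning by incorporating interpretable auxiliary information [23]. Recall that we would like to train classification models for 71-way heart disease classification from 12-lead ECG signals with signal importance masks being part of the training data. Specifically, the training data are represented as a set of tuples $\{(x_i, y_i)\}_{i=1}^{n}$, where for each example $i$, $x_i \in \mathbb{R}^{12 \times T}$ is its 12-lead ECG signal representation; in our ECG dataset, $T$ is the number of time samples per lead. $y_i \in \{-1, +1\}^{71}$ is the class labeling, where, for each coordinate $j$, $y_{i,j} = +1$ and $y_{i,j} = -1$ indicate that label $j$ is present and absent, respectively. In our training data, the doctor has provided signal importance masks for some of the examples; we denote by $S \subseteq \{1, \ldots, n\}$ the index set of such examples, and by $m_i \in \{0, 1\}^{12 \times T}$ the mask of example $i \in S$, whose entries equal 1 inside the highlighted regions and 0 elsewhere.

Our model is represented by a function $f(x; \theta)$, where $x$ represents the ECG signal, and $\theta$ represents the model parameter. Given $x$, the model output $f(x; \theta)$ lies in $\mathbb{R}^{71}$, and we use $\hat{y}$ to denote its multi-label classification result, where $\hat{y}_j = +1$ if $f(x; \theta)_j > 0$ and $\hat{y}_j = -1$ otherwise. Our training process will hinge on finding $\theta$ that has a small average multi-label logistic loss on the training examples; here, the multi-label logistic loss of model $\theta$ on a multi-label example $(x, y)$ is defined as $\ell(\theta, (x, y)) = \sum_{j=1}^{71} \ln\left(1 + \exp(-y_j f(x; \theta)_j)\right)$.

Our training objective function follows [23], which proposes a regularized loss objective that takes advantage of signal importance masks, defined as:

$$\min_{\theta} \; \frac{1}{n} \sum_{i=1}^{n} L_i(\theta),$$

where

$$L_i(\theta) = \ell(\theta, (x_i, y_i)) + \lambda \, \mathbb{1}[i \in S] \, \left\| (1 - m_i) \odot \nabla_x \ell(\theta, (x_i, y_i)) \right\|_2^2 \tag{1}$$

and $\lambda > 0$ is a tuning parameter. Each example $i$ can be viewed as introducing two parts of losses that contribute to the training objective: the first part is the standard multi-label logistic loss $\ell(\theta, (x_i, y_i))$; the second part, $\lambda \left\| (1 - m_i) \odot \nabla_x \ell(\theta, (x_i, y_i)) \right\|_2^2$, only applies to examples in $S$, whose signal importance mask is available. This term regularizes the sensitivity of the model with respect to the input using the signal importance masks: it penalizes the model for being too sensitive to parts of the ECGs outside their corresponding signal importance masks.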
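
The regularized objective can be sketched in PyTorch as follows. This is a minimal illustration rather than the authors' implementation: it assumes that the penalized quantity is the input gradient of the per-example loss, that labels are encoded as 0/1 floats (for which `binary_cross_entropy_with_logits` coincides with the logistic loss on ±1 labels), and it uses a hypothetical toy network `TinyECGNet` in place of the real architecture.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class TinyECGNet(nn.Module):
    """Hypothetical stand-in model: one 1D convolution plus a linear head."""

    def __init__(self, n_leads=12, n_labels=71):
        super().__init__()
        self.conv = nn.Conv1d(n_leads, 8, kernel_size=5, padding=2)
        self.head = nn.Linear(8, n_labels)

    def forward(self, x):
        h = torch.relu(self.conv(x))
        return self.head(h.mean(dim=2))  # average over time, then classify


def masked_gradient_loss(model, x, y, mask, has_mask, lam=1.0):
    """Multi-label logistic loss plus a penalty on input gradients outside the mask.

    x:        (batch, 12, T) ECG signals
    y:        (batch, n_labels) float labels in {0, 1}
    mask:     (batch, 12, T) binary masks, 1 = region marked important
    has_mask: (batch,) float, 1 if the example has doctor feedback, else 0
    lam:      regularization strength (the tuning parameter)
    """
    x = x.clone().requires_grad_(True)
    logits = model(x)
    # Per-example logistic loss, summed over labels.
    per_example = F.binary_cross_entropy_with_logits(
        logits, y, reduction="none"
    ).sum(dim=1)
    # Input gradient of the loss; create_graph=True keeps the graph so that
    # the penalty itself can be differentiated with respect to the parameters.
    (grad_x,) = torch.autograd.grad(per_example.sum(), x, create_graph=True)
    outside = (1.0 - mask) * grad_x  # keep only gradients outside the mask
    penalty = outside.pow(2).sum(dim=(1, 2))
    return (per_example + lam * has_mask * penalty).mean()


torch.manual_seed(0)
model = TinyECGNet()
x = torch.randn(4, 12, 300)
y = torch.randint(0, 2, (4, 71)).float()
mask = torch.zeros(4, 12, 300)
mask[:, :, 50:80] = 1.0
has_mask = torch.tensor([1.0, 1.0, 0.0, 0.0])  # only two examples have feedback

loss = masked_gradient_loss(model, x, y, mask, has_mask, lam=10.0)
loss.backward()
```

Summing the per-example losses before calling `torch.autograd.grad` yields per-example input gradients only when examples do not interact in the forward pass; layers such as batch normalization in training mode would couple them.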

5 Experiments

We evaluate our algorithm using the large-scale 71-way multi-label heart disease classification dataset consisting of 12-lead ECG signals provided by [30]. We follow the previously developed pipeline [27] as a baseline system, with folds 1-8 as the training set, fold 9 as the validation set, and fold 10 as the test set. The dataset consists of 10-second recordings of the ECG signals with 17441 training examples, 2193 validation examples, and 2203 test examples. Similarly, we follow the previous evaluation metrics to evaluate and compare the models, based on Fmax and macro-AUC scores [27]. The macro-AUC score is used to account for class imbalance, as the number of normal ECG signals is quite high [30]. We use the inception1d architecture to perform our experiments, which was shown to perform the best among all architectures [27].
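
For reference, macro AUC is the unweighted mean of the per-label AUCs, so rare labels count as much as frequent ones. The sketch below computes it with NumPy using the pairwise-ranking definition of AUC. Skipping labels that have only one class present in the evaluation set is an assumption of this example, not necessarily what the benchmark code in [27] does, and Fmax is not covered here.

```python
import numpy as np


def binary_auc(y_true, scores):
    """AUC as the probability that a positive outranks a negative (ties count 0.5)."""
    pos = scores[y_true == 1]
    neg = scores[y_true == 0]
    if len(pos) == 0 or len(neg) == 0:
        return np.nan  # AUC is undefined when only one class is present
    diff = pos[:, None] - neg[None, :]
    return float((diff > 0).mean() + 0.5 * (diff == 0).mean())


def macro_auc(Y_true, Y_score):
    """Unweighted mean of per-label AUCs, skipping labels where AUC is undefined."""
    aucs = [binary_auc(Y_true[:, j], Y_score[:, j]) for j in range(Y_true.shape[1])]
    return float(np.nanmean(aucs))


# Toy example with 4 records and 2 labels.
Y_true = np.array([[1, 0], [0, 1], [1, 1], [0, 0]])
Y_score = np.array([[0.9, 0.2], [0.1, 0.8], [0.8, 0.3], [0.3, 0.4]])
result = macro_auc(Y_true, Y_score)
print(result)  # 0.875: label 0 has AUC 1.0, label 1 has AUC 0.75
```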

We use PyTorch [18] for all our experiments. The prior approach [27] uses multiple sliding windows of 2.5 seconds and aggregates the predictions from these windows to make a final prediction, which acts as data augmentation during the training phase. We use different signal lengths for training and inference, because providing feedback for the full 10-second signal would take doctors a long time: we only collect feedback on the first 3 seconds of each signal in the training set. To make a fair comparison between our model training and the baseline system, we use the first 3 seconds for training both, but we still use the full 10-second signals for validation and test.
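
The two input regimes can be sketched with NumPy as follows. The 100 Hz sampling rate, the 1.25-second stride, the element-wise maximum used for aggregation, and all function names are assumptions made for illustration; the text above only fixes the 2.5-second window, the 3-second training crop, and the 10-second record length.

```python
import numpy as np

FS = 100  # Hz; assumed sampling rate for illustration


def crop_first_seconds(signal, seconds, fs=FS):
    """Keep only the first `seconds` of a (leads, samples) record."""
    return signal[:, : int(round(seconds * fs))]


def sliding_windows(signal, window_s=2.5, stride_s=1.25, fs=FS):
    """Split a (leads, samples) record into overlapping fixed-length windows."""
    win = int(round(window_s * fs))
    stride = int(round(stride_s * fs))
    starts = range(0, signal.shape[1] - win + 1, stride)
    return np.stack([signal[:, s : s + win] for s in starts])


def predict_full_record(predict_fn, signal, **window_kwargs):
    """Run predict_fn on every window and aggregate per-label scores.

    Aggregation by element-wise maximum is an assumption of this sketch.
    """
    windows = sliding_windows(signal, **window_kwargs)
    scores = np.stack([predict_fn(w) for w in windows])
    return scores.max(axis=0)


# A synthetic 10-second, 12-lead record with one spike late in lead 0.
record = np.zeros((12, 10 * FS), dtype=np.float32)
record[0, 900] = 5.0

train_input = crop_first_seconds(record, 3.0)  # used for training
windows = sliding_windows(record)              # used at inference


def toy_predict(w):
    # Hypothetical scoring function: a single "label" equal to the peak amplitude.
    return np.array([w.max()])


full_score = predict_full_record(toy_predict, record)
print(train_input.shape, windows.shape, full_score)
```

In this toy record the spike lies outside the first 3 seconds, so it is invisible to the training crop but still picked up when the full record is scanned at inference time.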

We collected signal importance mask feedback from doctors for 1359 training examples out of all 17441 training examples. We use Adam optimizer with an initial learning rate of and batch size of 64 for all of our experiments. We repeated our experiment five times.

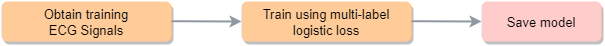

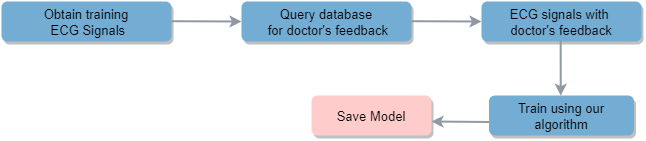

Figure 2 shows the normal training pipeline (Normal for short), while Figure 3 shows our training pipeline (Feedback for short).

5.1 Results

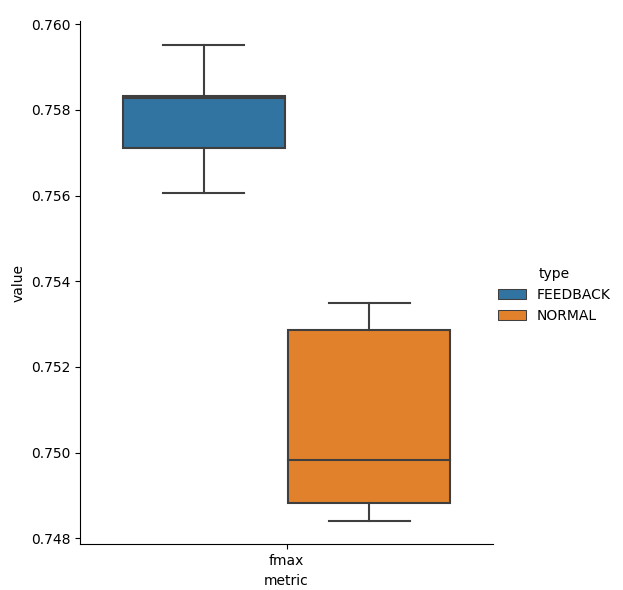

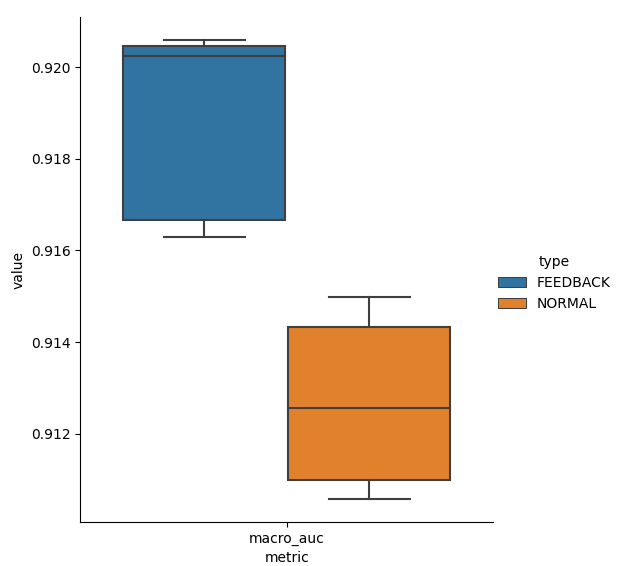

Figure 4 gives boxplots for Fmax and Macro AUC scores on the test dataset for Normal and Feedback. Thanks to incorporating doctors’ feedback in training, Feedback has higher scores than Normal.

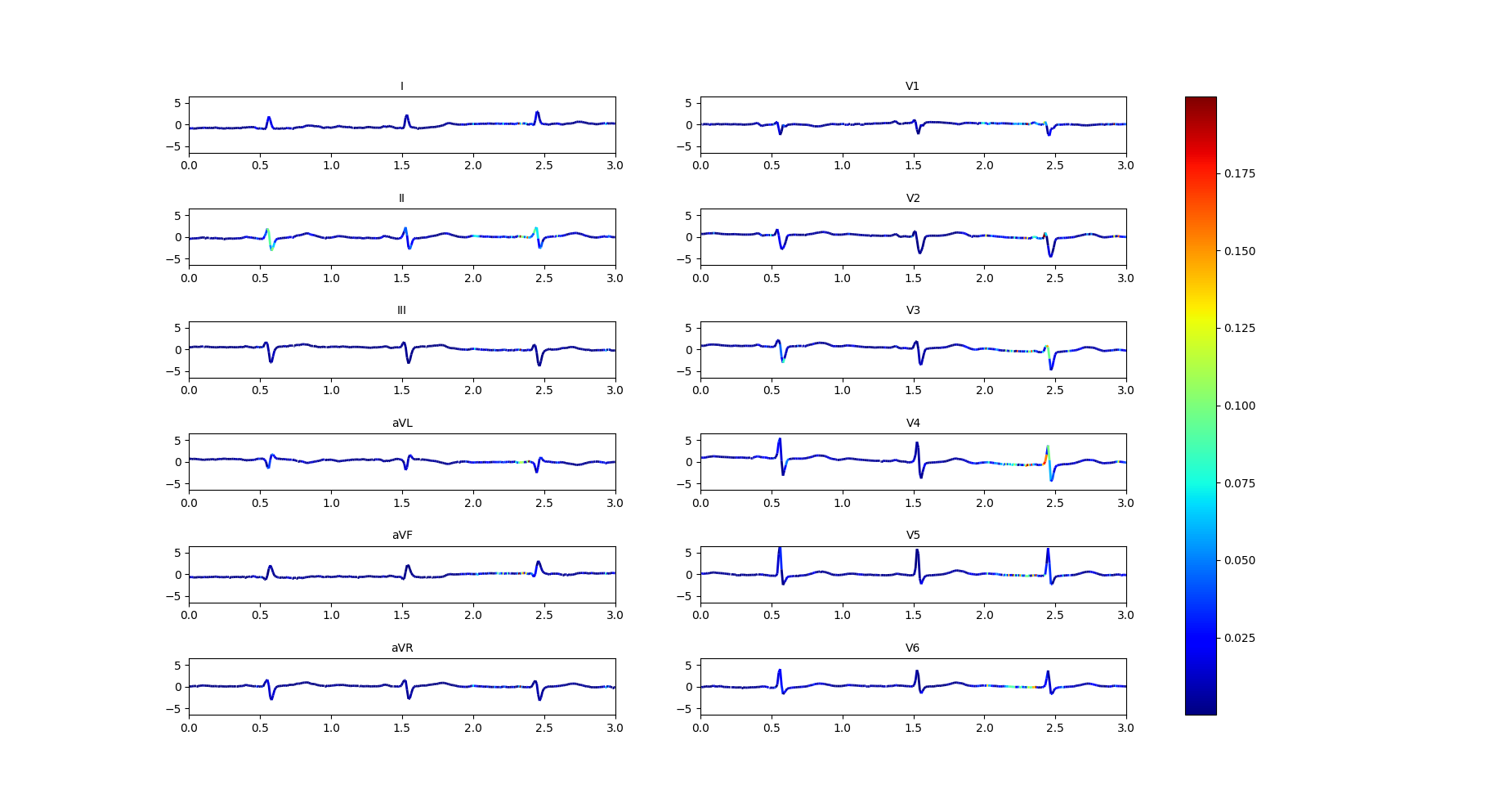

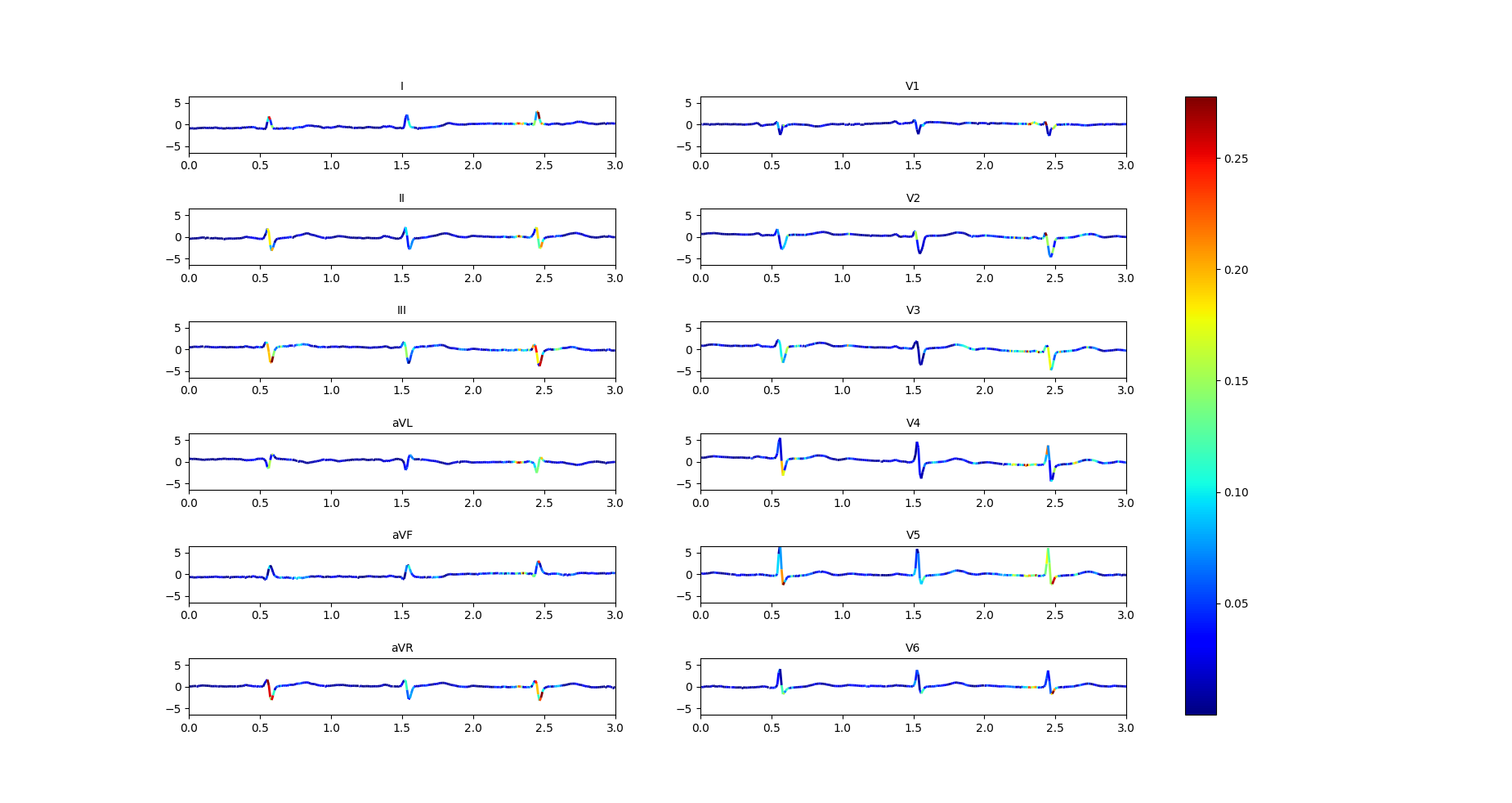

Improvement in interpretability can accelerate ECG reading and evaluation by doctors and is at the core of our design. To generate the interpretability diagrams, we take the gradients of the output with respect to all 12 leads of the input signals and highlight the important regions [25], i.e., regions where the gradients are large. The colormap is given in the right part of Figures 5 and 6, where blue (resp. red) indicates lower (resp. higher) gradient values, and hence regions of lower (resp. higher) importance; regions with colors that interpolate between blue and red indicate intermediate levels of importance.
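
A gradient-based importance map of this kind can be sketched in PyTorch as below. This is an illustrative sketch only: taking the absolute value of the gradient, differentiating a single label's output, scaling each record to [0, 1], and the toy network `TinyECGNet` are all assumptions of the example rather than details given in the text.

```python
import torch
import torch.nn as nn


class TinyECGNet(nn.Module):
    """Hypothetical stand-in model: one 1D convolution plus a linear head."""

    def __init__(self, n_leads=12, n_labels=71):
        super().__init__()
        self.conv = nn.Conv1d(n_leads, 8, kernel_size=5, padding=2)
        self.head = nn.Linear(8, n_labels)

    def forward(self, x):
        h = torch.relu(self.conv(x))
        return self.head(h.mean(dim=2))


def saliency_map(model, x, label_idx):
    """Absolute input gradient of one output score, scaled to [0, 1] per record."""
    model.eval()
    x = x.clone().requires_grad_(True)
    logits = model(x)  # (batch, n_labels)
    # Gradient of the chosen label's score with respect to every input sample.
    (grad_x,) = torch.autograd.grad(logits[:, label_idx].sum(), x)
    sal = grad_x.abs()  # (batch, 12, T)
    peak = sal.amax(dim=(1, 2), keepdim=True).clamp_min(1e-12)
    return sal / peak


torch.manual_seed(0)
model = TinyECGNet()
ecg = torch.randn(2, 12, 1000)  # two synthetic 12-lead records
sal = saliency_map(model, ecg, label_idx=0)
print(sal.shape)
```

The resulting array has one importance value per lead and time sample, so it can be rendered directly as a blue-to-red overlay on the 12-lead plot.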

We presented our interpretability maps to our medical team in a blind study. The interpretability maps generated by our models with feedback were always judged superior to those generated without feedback. Figure 5 and Figure 6 demonstrate this on an example with labels ‘LAFB’: Left Anterior Fascicular Block and ‘SR’: Sinus Rhythm. Both models make correct predictions, but the model trained by Feedback highlights the correct regions while the model trained by Normal does not. Specifically, the doctor commented that: “Left axis deviation, prolonged QRS duration, QR complexes in lead I and RS complexes in leads II, III, AVF and increased QRS voltages in these leads are more accurately highlighted in the model trained by Feedback to show left anterior fascicular block(LAFB). P waves are well marked to show a sinus rhythm (SR) as compared to the model trained by Normal, which doesn’t highlight these important regions.”

6 Conclusion

We have built a system that can collect signal importance masks for 12-lead ECG signals from doctors. We have proposed and evaluated an ECG classification algorithm that utilizes signal importance mask feedback from doctors for accurate and interpretable predictions of 71 heart diseases. Our experiments demonstrate that collecting signal importance mask feedback for only 1359 out of 17441 (7.8%) training examples already improves over the normal training baseline in both Fmax and macro AUC. We conjecture that training with a larger feedback set can lead to even better models. In addition, we have collected natural language explanation feedback from doctors, which can be utilized in future work to train better models. We leave these as interesting avenues for future investigation.

References

- [1] Farida B Ahmad and Robert N Anderson. The leading causes of death in the us for 2020. JAMA, 325(18):1829–1830, 2021.

- [2] Ulas Baran Baloglu, Muhammed Talo, Ozal Yildirim, Ru San Tan, and U Rajendra Acharya. Classification of myocardial infarction with multi-lead ecg signals and deep cnn. Pattern Recognition Letters, 122:23–30, 2019.

- [3] Sanjoy Dasgupta, Akansha Dey, Nicholas Roberts, and Sivan Sabato. Learning from discriminative feature feedback. In NeurIPS, pages 3959–3967, 2018.

- [4] KC Dharma and Chicheng Zhang. Improving the trustworthiness of image classification models by utilizing bounding-box annotations.

- [5] Jeff Donahue and Kristen Grauman. Annotator rationales for visual recognition. In 2011 International Conference on Computer Vision, pages 1395–1402. IEEE, 2011.

- [6] Barbara J Drew, Kathleen Dracup, Rory Childers, John Michael Criley, Gordon Fung, Frank Marcus, David Mortara, Michael Laks, and Mel Scheinman. Finding ecg readers in clinical practice: is it time to change the paradigm? Journal of the American College of Cardiology, 64(5):528–528, 2014.

- [7] Ziad Faramand, Stephanie O. Frisch, Amber DeSantis, Mohammad Alrawashdeh, Christian Martin-Gill, Clifton Callaway, and Salah Al-Zaiti. Lack of significant coronary history and ecg misinterpretation are the strongest predictors of undertriage in prehospital chest pain. Journal of Emergency Nursing, 45(2):161–168, 2019.

- [8] Amin Ul Haq, Jian Ping Li, Muhammad Hammad Memon, Shah Nazir, and Ruinan Sun. A hybrid intelligent system framework for the prediction of heart disease using machine learning algorithms. Mobile Information Systems, 2018, 2018.

- [9] HeartFlow. Misdiagnosis is a top concern of cardiac patients.

- [10] Javier Hoffmann, Safdar Mahmood, Priscile Suawa Fogou, Nevin George, Solaiman Raha, Sabur Safi, Kurt JG Schmailzl, Marcelo Brandalero, and Michael Hübner. A survey on machine learning approaches to ecg processing. In 2020 Signal Processing: Algorithms, Architectures, Arrangements, and Applications (SPA), pages 36–41. IEEE, 2020.

- [11] J Weston Hughes, Taylor Sittler, Anthony D Joseph, Jeffrey E Olgin, Joseph E Gonzalez, and Geoffrey H Tison. Using multitask learning to improve 12-lead electrocardiogram classification. arXiv preprint arXiv:1812.00497, 2018.

- [12] Mohammad Kachuee, Shayan Fazeli, and Majid Sarrafzadeh. Ecg heartbeat classification: A deep transferable representation. In 2018 IEEE International Conference on Healthcare Informatics (ICHI), pages 443–444. IEEE, 2018.

- [13] Patrizio Lancellotti, Mai-Linh Nguyen Trung, Cécile Oury, and Marie Moonen. Cancer and cardiovascular mortality risk: is the die cast? European Heart Journal, 42(1):110–112, 2021.

- [14] Chengsi Luo, Hongxiu Jiang, Quanchi Li, and Nini Rao. Multi-label classification of abnormalities in 12-lead ecg using 1d cnn and lstm. In Machine Learning and Medical Engineering for Cardiovascular Health and Intravascular Imaging and Computer Assisted Stenting, pages 55–63. Springer, 2019.

- [15] Masahiro Mitsuhara, Hiroshi Fukui, Yusuke Sakashita, Takanori Ogata, Tsubasa Hirakawa, Takayoshi Yamashita, and Hironobu Fujiyoshi. Embedding human knowledge in deep neural network via attention map. arXiv preprint arXiv:1905.03540, 5, 2019.

- [16] Ahmed Mostayed, Junye Luo, Xingliang Shu, and William Wee. Classification of 12-lead ecg signals with bi-directional lstm network. arXiv preprint arXiv:1811.02090, 2018.

- [17] Sajad Mousavi, Fatemeh Afghah, and U Rajendra Acharya. Han-ecg: An interpretable atrial fibrillation detection model using hierarchical attention networks. Computers in biology and medicine, 127:104057, 2020.

- [18] Adam Paszke, Sam Gross, Francisco Massa, Adam Lerer, James Bradbury, Gregory Chanan, Trevor Killeen, Zeming Lin, Natalia Gimelshein, Luca Antiga, et al. Pytorch: An imperative style, high-performance deep learning library. Advances in neural information processing systems, 32:8026–8037, 2019.

- [19] Stefanos Poulis and Sanjoy Dasgupta. Learning with feature feedback: from theory to practice. In Artificial Intelligence and Statistics, pages 1104–1113. PMLR, 2017.

- [20] Tahsin Reasat and Celia Shahnaz. Detection of inferior myocardial infarction using shallow convolutional neural networks. In 2017 IEEE Region 10 Humanitarian Technology Conference (R10-HTC), pages 718–721. IEEE, 2017.

- [21] Antônio H Ribeiro, Manoel Horta Ribeiro, Gabriela MM Paixão, Derick M Oliveira, Paulo R Gomes, Jéssica A Canazart, Milton PS Ferreira, Carl R Andersson, Peter W Macfarlane, Wagner Meira Jr, et al. Automatic diagnosis of the 12-lead ecg using a deep neural network. Nature communications, 11(1):1–9, 2020.

- [22] Laura Rieger, Chandan Singh, W James Murdoch, and Bin Yu. Interpretations are useful: penalizing explanations to align neural networks with prior knowledge. arXiv preprint arXiv:1909.13584, 2019.

- [23] Andrew Slavin Ross, Michael C Hughes, and Finale Doshi-Velez. Right for the right reasons: Training differentiable models by constraining their explanations. arXiv preprint arXiv:1703.03717, 2017.

- [24] Manali Sharma and Mustafa Bilgic. Learning with rationales for document classification. Machine Learning, 107(5):797–824, 2018.

- [25] Karen Simonyan, Andrea Vedaldi, and Andrew Zisserman. Deep inside convolutional networks: Visualising image classification models and saliency maps. arXiv preprint arXiv:1312.6034, 2013.

- [26] Annemarijn SM Steijlen, Kaspar MB Jansen, Armagan Albayrak, Derk O Verschure, and Diederik F Van Wijk. A novel 12-lead electrocardiographic system for home use: development and usability testing. JMIR mHealth and uHealth, 6(7):e10126, 2018.

- [27] Nils Strodthoff, Patrick Wagner, Tobias Schaeffter, and Wojciech Samek. Deep learning for ecg analysis: Benchmarks and insights from ptb-xl. arXiv preprint arXiv:2004.13701, 2020.

- [28] Vladimir Vapnik and Akshay Vashist. A new learning paradigm: Learning using privileged information. Neural networks, 22(5-6):544–557, 2009.

- [29] Marko Velic, Ivan Padavic, and Sinisa Car. Computer aided ecg analysis—state of the art and upcoming challenges. In Eurocon 2013, pages 1778–1784. IEEE, 2013.

- [30] Patrick Wagner, Nils Strodthoff, Ralf-Dieter Bousseljot, Dieter Kreiseler, Fatima I Lunze, Wojciech Samek, and Tobias Schaeffter. Ptb-xl, a large publicly available electrocardiography dataset. Scientific data, 7(1):1–15, 2020.

- [31] Chunli Wang, Shan Yang, Xun Tang, and Bin Li. A 12-lead ecg arrhythmia classification method based on 1d densely connected cnn. In Machine Learning and Medical Engineering for Cardiovascular Health and Intravascular Imaging and Computer Assisted Stenting, pages 72–79. Springer, 2019.

- [32] Qihang Yao, Ruxin Wang, Xiaomao Fan, Jikui Liu, and Ye Li. Multi-class arrhythmia detection from 12-lead varied-length ecg using attention-based time-incremental convolutional neural network. Information Fusion, 53:174–182, 2020.

- [33] Omar F Zaidan, Jason Eisner, and Christine Piatko. Machine learning with annotator rationales to reduce annotation cost. In Proceedings of the NIPS* 2008 workshop on cost sensitive learning, pages 260–267, 2008.

- [34] Dongdong Zhang, Samuel Yang, Xiaohui Yuan, and Ping Zhang. Interpretable deep learning for automatic diagnosis of 12-lead electrocardiogram. Iscience, 24(4):102373, 2021.

- [35] Ning Zhang, Jeff Donahue, Ross Girshick, and Trevor Darrell. Part-based r-cnns for fine-grained category detection. In European conference on computer vision, pages 834–849. Springer, 2014.

- [36] Ye Zhang, Iain Marshall, and Byron C Wallace. Rationale-augmented convolutional neural networks for text classification. In Proceedings of the Conference on Empirical Methods in Natural Language Processing. Conference on Empirical Methods in Natural Language Processing, volume 2016, page 795. NIH Public Access, 2016.