Simplification Methods for Sum-of-Squares Programs

Abstract

A sum-of-squares is a polynomial that can be expressed as a sum of squares of other polynomials. Determining if a sum-of-squares decomposition exists for a given polynomial is equivalent to a linear matrix inequality feasibility problem. The computation required to solve the feasibility problem depends on the number of monomials used in the decomposition. The Newton polytope is a method to prune unnecessary monomials from the decomposition. This method requires the construction of a convex hull and this can be time consuming for polynomials with many terms. This paper presents a new algorithm for removing monomials based on a simple property of positive semidefinite matrices. It returns a set of monomials that is never larger than the set returned by the Newton polytope method and, for some polynomials, is a strictly smaller set. Moreover, the algorithm takes significantly less computation than the convex hull construction. This algorithm is then extended to a more general simplification method for sum-of-squares programming.

I Introduction

A polynomial is a sum-of-squares (SOS) if it can be expressed as a sum of squares of other polynomials. There are close connections between SOS polynomials and positive semidefinite matrices [3, 2, 4, 13, 11, 7, 12]. For a given polynomial the search for an SOS decomposition is equivalent to a linear matrix inequality feasibility problem. It is also possible to formulate optimization problems with polynomial sum-of-squares constraints [11, 12]. There is freely available software that can be used to solve these SOS feasibility and optimization problems [14, 8, 1, 6]. Many nonlinear analysis problems, e.g. Lyapunov stability analysis, can be formulated within this optimization framework [11, 12, 19, 20].

Computational growth is a significant issue for these optimization problems. For example, consider the search for an SOS decomposition: given a polynomial and a vector of monomials , does there exist a matrix such that ? The computation required to solve the corresponding linear matrix inequality feasibility problem grows with the number of monomials in the vector . The Newton polytope [15, 18] is a method to prune unnecessary monomials from the vector . This method is implemented in SOSTOOLs [14]. One drawback is that this method requires the construction of a convex hull and this construction itself can be time consuming for polynomials with many terms.

This paper presents an alternative monomial reduction method called the zero diagonal algorithm. This algorithm is based on a simple property of positive semidefinite matrices: if the diagonal entry of a positive semidefinite matrix is zero then the entire row and column must be zero. The zero diagonal algorithm simply searches for diagonal entries of that are constrained to be zero and then prunes the corresponding monomials. This algorithm can be implemented with very little computational cost using the Matlab find command. It is shown that final list of monomials returned by the zero diagonal algorithm is never larger than the pruned list obtained from the Newton polytope method. For some problems the zero diagonal algorithm returns a strictly smaller set of monomials. Results contained in this paper are similar to and preceded by those found in the prior work [9, 21].

The basic idea in the zero diagonal algorithm is then extended to a more general simplification method for sum-of-squares programs. The more general method also removes free variables that are implicitly constrained to be equal to zero. This can improve the numerical conditioning and reduce the computation time required to solve the SOS program. Both the zero diagonal elimination algorithm and the simplification procedure for SOS programs are implemented in SOSOPT [1].

II SOS Polynomials

denotes the set of nonnegative integers, , and is the set of -dimensional vectors with entries in . For , a monomial in variables is given by . is the degree vector associated with the monomial . The degree of a monomial is defined as . A polynomial is a finite linear combination of monomials:

| (1) |

where , , and is a finite collection of vectors in . denotes the set of all polynomials in variables with real coefficients. Using the definition of for a monomial, the degree of is defined as .

A polynomial is a sum-of-squares (SOS) if there exist polynomials such that . The set of SOS polynomials is a subset of and is denoted by . If is a sum-of-squares then . However, non-negative polynomials are not necessarily SOS [16].

Define as the column vector of all monomials in variables of degree : 111Any ordering of the monomials can be used to form . In Equation 2, precedes in the definition of if OR and the first nonzero entry of .

| (2) |

There are monomials in variables of degree . Thus is a column vector of length . If is a polynomial in variables with degree then by definition is a finite linear combination of monomials of degree . Consequently, there exists such that .

Two useful facts from [15] are:

-

1.

If is a sum-of-squares then must have even degree.

-

2.

If is degree () and then .

The following theorem, introduced as the “Gram Matrix” method by [4, 13], connects SOS polynomials and positive semidefinite matrices.

Theorem 1

Let be a polynomial of degree and be the vector of monomials defined in Equation 2. Then is a SOS if and only if there exists a symmetric matrix such that and .

Proof:

() If is a SOS, then there exists polynomials such that . By fact 2 above, for all . Thus, for each there exists a vector, , such that . Define the matrix whose column is and define . Then .

() Assume there exists such that and . Define . There exists a matrix such that . Let denote the column of and define the polynomials . By definition of , . ∎

Determining if an SOS decomposition exists for a given polynomial is equivalent to a feasibility problem:

| (3) |

is constrained to be positive semi-definite and equating coefficients of and imposes linear equality constraints on the entries of . Thus this is a linear matrix inequality (LMI) feasibility problem. There is software available to solve for SOS decompositions [14, 8, 1]. These toolboxes convert the SOS feasibility problem to an LMI problem. The LMI problem is then solved with a freely available LMI solver, e.g. Sedumi [17], and an SOS decomposition is constructed if a feasible solution is found. These software packages also solve SOS synthesis problems where some of the coefficients of the polynomial are treated as free variables to be computed as part of the optimization. These more general SOS optimization problems are discussed further in Section V. Many analysis problems for polynomial dynamical systems can be posed within this SOS synthesis framework [11, 12, 19, 20].

III Newton Polytope

As discussed in the previous section, the search for an SOS decomposition is equivalent to an LMI feasibility problem. One issue is that the computational complexity of this LMI feasibility problem grows with the dimension of the Gram matrix. For a polynomial of degree in variables there are, in general, monomials in and the Gram matrix is . grows rapidly with both the number of variables and the degree of the polynomial. However, any particular polynomial may have an SOS decomposition with fewer monomials. The Newton Polytope [15, 18] is an algorithm to reduce the dimension by pruning unnecessary monomials from .

First, some terminology is provided regarding polytopes [10, 5]. For any set , denotes the convex hull of . Let be a convex set. A point is an extreme point if it does not belong to the relative interior of any segment . In other words, if and such that then . A convex polytope (or simply polytope) is the convex hull of a non-empty, finite set . The extreme points of a polytope are called the vertices. Let be a polytope and let be the (finite) set of vertices of . Then and is a minimal vertex representation of . The polytope may be equivalently described as an intersection of a finite collection of halfspaces, i.e. there exists a matrix and a vector such that . This is a facet or half-space representation of .

The Newton Polytope (or cage) of a polynomial is defined as [15]. The reduced Newton polytope is . The following theorem from [15] is a key result for monomial reduction. 222 A polynomial is a form if all monomials have the same degree. The results in [15] are stated and proved for forms. A given polynomial can be converted to a form by adding a single dummy variable of appropriate degree to each monomial. The results in [15] apply to polynomials by this homogenization procedure.

Theorem 2

If then the vertices of are vectors whose entries are even numbers and .

This theorem implies that any monomial appearing in the vector of an SOS decomposition must satisfy . This forms the basis for the Newton polytope method for pruning monomials: Let be a given polynomial of degree in variables with monomial degree vectors specified by the finite set . First, create the vector consisting of all monomials of degree in variables. There are monomials in this complete list. Second, compute a half-space representation for the reduced Newton polytope . Third, prune out any monomials in that are not elements of . This algorithm is implemented in SOSTOOLs [14]. The third step amounts to checking each monomial in to see if the corresponding degree vector satisfies the half-plane constraints . This step is computationally very fast. The second step requires computing a half-plane representation for the convex hull of . This can be done in Matlab, e.g. with convhulln. However, this step can be time-consuming when the polynomial has many terms ( has many elements). The next section provides an alternative implementation of the Newton Polytope algorithm that avoids constructing the half-space representation of the reduced Newton polytope.

Example: Consider the following polynomial

| (4) |

is a degree four polynomial in two variables. The list of all monomials in two variables with degree is:

| (5) |

The length of is . An SOS decomposition of a degree four polynomial would, in general, include all six of these monomials. The Newton Polytope can be used to prune some unnecessary monomials in this list.

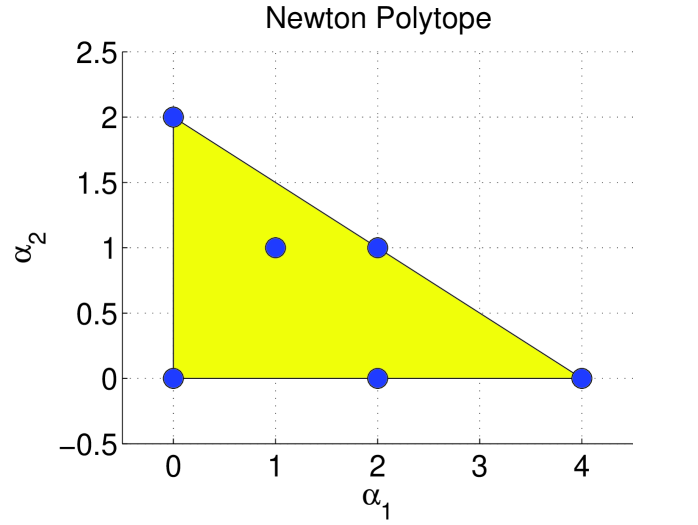

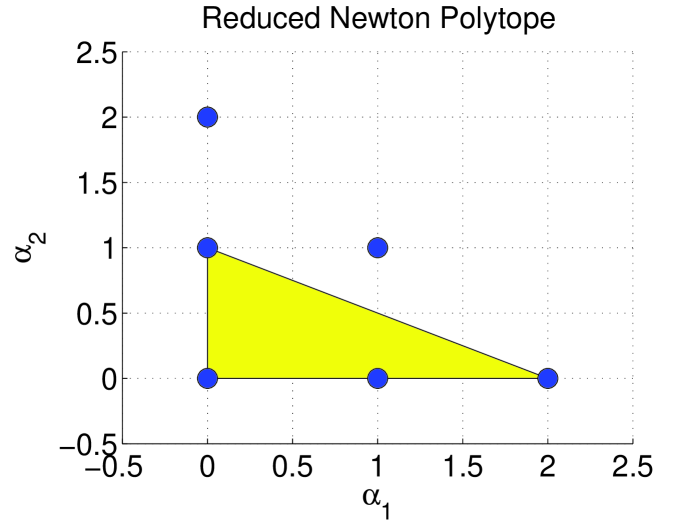

The set of monomial degree vectors for is . These vectors are shown as circles in Figure 1. The Newton Polytope is the large triangle with vertices . Figure 2 shows the degree vectors for the six monomials in (circles) and the reduced Newton polytope (large triangle). The reduced Newton polytope is the triangle with vertices . By Theorem 2, and can not appear in any SOS decomposition of because . These monomials can be pruned from and the search for an SOS decomposition can be performed using only the four monomials in the reduced Newton polytope:

| (6) |

The length of the reduced vector is . The SOS feasibility problem with this reduced vector (Equation 3) is feasible. The following matrix is one feasible solution:

| (7) |

is SOS since and .

IV Zero Diagonal Algorithm

The zero diagonal algorithm searches for diagonal entries of the Gram matrix that are constrained to be zero and then prunes the associated monomials from . The remainder of the section describes this algorithm in more detail.

As mentioned in Section II, equating the coefficients of and leads to linear equality constraints on the entries of . The structure of these equations plays an important role in the proposed algorithm. Let be the vector of all monomials in variables of degree (Equation 2). Define the corresponding set of degree vectors as . is a polynomial in with coefficients that are linear functions of the entries of :

| (8) |

The entries of are not independent: it is possible that for some . The unique degree vectors in Equation 8 are given by the set

| (9) |

The polynomial can be rewritten as:

| (10) |

where . Equating the coefficients of and yields the following linear equality constraints on the entries of :

| (13) |

There exists and such that these equality constraints are given by 333In addition to the equality constraints due to there are also equality constraints due to the symmetry condition . Some solvers, e.g. Sedumi [17], internally handle these symmetry constraints. where is the vector obtained by vertically stacking the columns of . The dimension is equal to the number of elements of .

The zero diagonal algorithm is based on two lemmas.

Lemma 1

If then

| (16) |

Lemma 2

If , , and then where is the vector obtained by deleting the element of and is the matrix obtained by deleting the row and column from .

Lemma 1 follows from Equation 13. means that is the unique decomposition of as a product of monomials in . There is no other decomposition of as a product of monomials in . In this case, places a direct constraint on that must hold for all possible Gram matrices.

Lemma 2 follows from a simple property of positive semidefinite matrices: If and then for . If then an SOS decomposition of , if one exists, does not depend on the monomial and can be removed from .

The zero diagonal algorithm is given in Table I. The sets denote the pruned list of monomial degree vectors at the iterate. The main step in the iteration is the search for equations that directly constrain a diagonal entry to be zero (Step 6). This step can be performed very fast since it can be implemented using the find command in Matlab. Based on Lemma 2, if then the monomial and the row and column of can be removed. This is equivalent to zeroing out the corresponding columns of (Step 7). This implementation has the advantage that and do not need to be recomputed for each updated set . Zeroing out columns of in Step 7 also means that new equations of the form may be uncovered during the next iteration. The iteration continues until no new zero diagonal entries of are discovered. The next theorem proves that if is a SOS then the decomposition must be expressible using only monomials associated with the final set . Moreover, , i.e. the list of monomials returned by the zero diagonal algorithm is never larger than the list obtained from the Newton polytope method. In fact, there are polynomials for which the zero diagonal algorithm returns a strictly smaller list of monomials than the Newton polytope. The second example below provides an instance of this fact.

| 1. | Given: | A polynomial . |

| 2. | Initialization: Set and | |

| 3. | Form : Construct the equality constraint data, and | |

| , obtained by equating coefficients of . | ||

| 4. | Iteration: | |

| 5. | Set , , and | |

| 6. | Search : If there is an equation of the form | |

| then set and where are the | ||

| entries of corresponding to the row and column of . | ||

| 7. | For each set the column of equal to zero. | |

| 8. | Terminate if otherwise return to step 5. | |

| 9. | Return: , , |

Theorem 3

The zero diagonal algorithm terminates in a finite number of steps, , and . Moreover, if then .

Proof:

has elements. The algorithm terminates unless at least one point is removed from . Thus the algorithm must terminate after steps.

To show consider a vertex of . If there exists such that then . This follows from and the definition of a vertex. As a consequence, . By Lemma 1

| (19) |

since was not removed at step 6 during the final iteration and thus . This implies that , i.e. contains all vertices of . Hence .

Finally it is shown that . by Theorem 2 and by the choice of . Thus . Let be the vector of monomials associated with . If then there exists a such that . If the iteration removes no degree vectors then and the proof is complete. Assume the iteration removes at least one degree vector and let be the first removed degree vector. Based on Step 6, constrains . By Lemma 2 the monomial cannot appear in any . Hence . Induction can be used to show holds after each step including the final step . ∎

This algorithm is currently implemented in SOSOPT [1]. The results in Theorem 3 still hold if is chosen to be any set satisfying . Simple heuristics can be used to obtain an initial set of monomials with fewer than elements. can then be used to initialize the zero diagonal algorithm. The next step is to construct the matrix and vector obtained by equating the coefficients of and . This step is required to formulate the LMI feasibility problem and it is not an additional computational cost associated with the zero diagonal algorithm. contains the final reduced set of monomial degree vectors. If at least one degree vector was pruned then the returned matrix and vector may contain entire columns or rows of zeros. These rows/and columns can be deleted prior to passing the data to a a semi-definite programming solver. The next two examples demonstrate the basic ideas of the algorithm.

Example: Consider again the polynomial in Equation 4. The full list of all monomials in two variables with degree consists of six monomials (Equation 5). Equating the coefficients of and yields the following linear equality constraints on the entries of :

A matrix and vector can be constructed to represent these equations in the form . Note that and this implies that for any SOS decomposition of . Thus the monomial can not appear in any SOS decomposition and it can be removed from the list. After eliminating and removing the row and column of , the equality constraints reduce to:

Removing the row and column of is equivalent to zeroing out the appropriate columns of the matrix . This uncovers the new constraint which implies that the monomial can be pruned from the list. After eliminating , the procedure can be repeated once again after removing the row and column of . No new diagonal entries of are constrained to be zero and hence no additional monomials can be pruned from . The final list of monomials consists of four monomials.

| (20) |

The Newton polytope method returned the same list.

Example: Consider the polynomial . The Newton polytope is . The reduced Newton polytope is . The monomial vector corresponding to is:

| (21) |

There are monomials in two variables with degree . For simplicity, assume the zero diagonal algorithm is initialized with . Equating coefficients of and yields the constraint in the first iteration of the zero diagonal algorithm. The monomial is pruned and no additional monomials are removed at the next iteration. The zero diagonal algorithm returns . is a proper subset of . The same set of monomials is returned by the zero diagonal algorithm after 13 steps if is initialized with the degree vectors corresponding to all possible monomials in two variables with degree . This example demonstrates that the zero diagonal algorithm can return a strictly smaller set of monomials than the Newton polytope method.

V Simplification Method for SOS Programs

This section describes a simplification method for SOS programs that is based on the zero diagonal algorithm. A sum-of-squares program is an optimization problem with a linear cost and affine SOS constraints on the decision variables [14]:

| (22) | ||||

are decision variables. The polynomials are given problem data and are affine in :

| (23) |

Theorem 1 is used to convert an SOS program into a semidefinite program (SDP). The constraint can be equivalently written as:

| (24) | |||

| (25) |

If then, in general, must contain all monomials in variables of degree . is a new matrix of decision variables that is introduced when converting an SOS constraint to an LMI constraint. Equating the coefficients of and imposes linear equality constraints on the decision variables and . There exists a matrix and vector such that the linear equations for all SOS constraints are given by where

| (26) |

denotes the vector obtained by vertically stacking the columns of . The dimension is equal to where is (). After introducing a Gram matrix for each constraint the SOS program can be expressed as:

| (27) | ||||

Equation 27 is an SDP expressed in Sedumi [17] primal form. is a vector of free decision variables and contain decision variables that are constrained to lie in the positive semi-definite cone. Sedumi internally handles the symmetry constraints implied by .

The SOS simplification procedure is a generalization of the zero diagonal algorithm. It prunes the list of monomials used in each SOS constraint. It also attempts to remove free decision variables that are implicitly constrained to be zero. Specifically, the constraints in some SOS programs imply both and , i.e. there is an implicit constraint that for some . Appendix A.1 of [19] provides some simple examples of how these implicit constraints can arise in nonlinear analysis problems. For these simple examples it is possible to discover the implicit constraints by examination. For larger, more complicated analysis problems it can be difficult to detect that implicit constraints exist. The SOS simplification procedure described below automatically uncovers some classes of implicit constraints and removes these decision variables from the optimization. This is important because implicit constraints can cause numerical issues for SDP solvers. A significant reduction in computation time and improvement in numerical accuracy has been observed when implicitly constrained variables are removed prior to calling Sedumi.

The general SOS simplification procedure is shown in Table II. To ease the notation the algorithm is only shown for the case of one SOS constraint (). The extension to SOS programs with multiple constraints () is straight-forward. The algorithm is initialized with a finite set of vectors . The Newton polytope of depends on the choice of so must be chosen so that it contains all possible reduced Newton polytopes. One choice is to initialize corresponding to the degree vectors of all monomials in variables and degree . and need to be computed when formulating the SDP so this step is not additional computation associated with the simplification procedure. The last pre-processing step is the initialization of the sign vector . The entries of are , , or if it can be determined from the constraints that is , or , respectively. NaN if no sign information can be determined for . If corresponds to a diagonal entry of then can be initialized to .

The main iteration step is the search for equations that directly constrain any decision variable to be zero (Step 7a). This is similar to the zero diagonal algorithm. The iteration also attempts to determine sign information about the decision variables. Steps 7b-7d update the sign vector based on equality constraints involving a single decision variable. For example, a decision variable must be zero if the decision variable has been previously determined to be and the current equality constraint implies that it must be (Step 7c). These decision variables can be removed from the optimization. Step 8 processes equality constraints involving two decision variables. The logic for this case is omitted due to space constraints. The processing of equality constraints can be performed very fast using the find command in Matlab. Steps 9 and 10 prune monomials and zero out appropriate columns of . The iteration continues until no additional information can be determined about the sign of the decision variables.

| 1. | Given: | Polynomials in variables . Define | |

| 2. | Initialization: Set and choose a finite set | ||

| such that . | |||

| 3. | Form : Construct the equality constraint data, | ||

| and obtained by equating coefficients of | |||

| where and . | |||

| 4. | Sign Data: Initialize the vector to be if | ||

| corresponds to a diagonal entry of . Otherwise set NaN. | |||

| 5. | Iteration: | ||

| 6. | Set , , , and | ||

| 7. | Pro | cess equality equality constraints of the form | |

| where | |||

| 7a. | If then set and | ||

| 7b. | Else if NaN then set sign and | ||

| 7c. | Else if and sign then set | ||

| and | |||

| 7d. | Else if and sign then set | ||

| and | |||

| 8. | Process equality equality constraints of the form | ||

| . | |||

| 9. | If for any , corresponds to a diagonal entry | ||

| then set and where are the | |||

| entries of corresponding to the row and column of . | |||

| 10. | For each set the column of equal to zero. | ||

| 11. | Terminate if and otherwise return to step 6. | ||

| 12. | Return: , , , |

VI Conclusions

The Newton polytope is a method to prune unnecessary monomials from an SOS decomposition. The method requires the construction of a convex hull and this can be time time consuming for polynomials with many terms. This paper presented a zero diagonal algorithm for pruning monomials. The algorithm is based on a simple property of positive semidefinite matrices. The algorithm is fast since it only requires searching a set of linear equality constraints for those having certain properties. Moreover, the set of monomials returned by the algorithm is a subset of the set returned by the Newton polytope method. The zero diagonal algorithm was extended to a more general reduction method for sum-of-squares programming.

VII Acknowledgments

This research was partially supported under the NASA Langley NRA contract NNH077ZEA001N entitled “Analytical Validation Tools for Safety Critical Systems” and the NASA Langley NNX08AC65A contract entitled ’Fault Diagnosis, Prognosis and Reliable Flight Envelope Assessment.” The technical contract monitors are Dr. Christine Belcastro and Dr. Suresh Joshi respectively.

References

- [1] G.J. Balas, A. Packard, P. Seiler, and U. Topcu. Robustness analysis of nonlinear systems. http://www.aem.umn.edu/AerospaceControl/, 2009.

- [2] G. Chesi, A. Garulli, A. Tesi, and A. Vicino. Homogeneous Polynomial Forms for Robustness Analysis of Uncertain Systems. Springer, 2009.

- [3] G. Chesi, A. Tesi, A. Vicino, and R. Genesio. On convexification of some minimum distance problems. In European Control Conference, 1999.

- [4] M.D. Choi, T.Y. Lam, and B. Reznick. Sums of squares of real polynomials. Proc. of Symposia in Pure Math., 58(2):103–126, 1995.

- [5] B. Grünbaum. Convex Polytopes. Springer Verlag, 2003.

- [6] D. Henrion, J. B. Lasserre, and J. Loefberg. Gloptipoly 3: moments, optimization and semidefinite programming. Optimization Methods and Software, 24(4-5):761–779, 2009.

- [7] J.B. Lasserre. Global optimization with polynomials and the problem of moments. SIAM Journal on Optimization, 11(3):796–817, 2001.

- [8] J. Lofberg. Yalmip : A toolbox for modeling and optimization in MATLAB. In Proc. of the CACSD Conference, Taipei, Taiwan, 2004.

- [9] J. Lofberg. Pre- and post-processing sum-of-squares problems in practice. IEEE Transactions on Automatic Control, 54(5):1007–1011, 2009.

- [10] A. Brønsted. An Introduction to Convex Polytopes. Springer-Verlag, 1983.

- [11] P. Parrilo. Structured Semidefinite Programs and Semialgebraic Geometry Methods in Robustness and Optimization. PhD thesis, California Institute of Technology, 2000.

- [12] P. Parrilo. Semidefinite programming relaxations for semialgebraic problems. Mathematical Programming Ser. B, 96(2):293–320, 2003.

- [13] V. Powers and T. Wörmann. An algorithm for sums of squares of real polynomials. J. of Pure and Applied Algebra, 127:99–104, 1998.

- [14] S. Prajna, A. Papachristodoulou, P. Seiler, and P. A. Parrilo. SOSTOOLS: Sum of squares optimization toolbox for MATLAB, 2004.

- [15] B. Reznick. Extremal PSD forms with few terms. Duke Mathematical Journal, 45(2):363–374, 1978.

- [16] B. Reznick. Some concrete aspects of Hilberts 17th problem. Contemporary Mathematics, 253(251-272), 2000.

- [17] J.F. Sturm. Using SeDuMi 1.02, a MATLAB toolbox for optimization over symmetric cones. Optimization Methods and Software, pages 625–653, 1999.

- [18] B. Sturmfels. Polynomial equations and convex polytopes. American Mathematical Monthly, 105(10):907–922, 1998.

- [19] W. Tan. Nonlinear Control Analysis and Synthesis using Sum-of-Squares Programming. PhD thesis, Univ. of Calif., Berkeley, 2006.

- [20] U. Topcu. Quantitative Local Analysis of Nonlinear Systems. PhD thesis, Univ. of California, Berkeley, 2008.

- [21] H. Waki and M. Muramatsu. A facial reduction algorithm for finding sparse sos representations. Technical Report CS-09-02, Dept. of Comp. Science, The University of Electro-Communications, 2009.