Soft Retargeting Network for Click Through Rate Prediction

Abstract.

The study of user interest models has recently received a great deal of attention in click through rate (CTR) prediction. These models aim at capturing user interest from different perspectives, including user interest evolution, session interest, and multiple interests. In this paper, we focus on a new type of user interest, i.e., user retargeting interest. User retargeting interest is defined as a user's click interest in target items that are the same as, or similar to, historically clicked items. We propose a novel soft retargeting network (SRN) to model this specific interest. Specifically, we first calculate the similarity between the target item and each historical item with the help of graph embedding. Then we learn to aggregate the similarity weights to measure the extent of the user's click interest in the target item. Furthermore, we model the evolution of user retargeting interest. Experimental results on public datasets and an industrial dataset demonstrate that our model achieves significant improvements over state-of-the-art models.

1. Introduction

Click through rate prediction plays a vital role in recommender systems and online advertising. Due to the rapid growth of user historical behavior data, user behavior modeling has been widely adopted in CTR prediction; it focuses on capturing the dynamics of user interest from user historical behaviors (Zhou et al., 2018, 2019; Cen et al., 2020; Sun et al., 2019; Feng et al., 2019; Zhang et al., 2021; Chen et al., 2019; Pi et al., 2019, 2020; Ren et al., 2019). These works model user interest from different perspectives, including capturing user interest using attention (Zhou et al., 2018) or transformers (Chen et al., 2019; Sun et al., 2019), user interest evolution (Zhou et al., 2019), user interest interaction among sessions (Feng et al., 2019), multiple interests (Cen et al., 2020), and long-term user behavior (Pi et al., 2019, 2020; Ren et al., 2019).

In this paper, we aim to model a specific user interest, i.e., user retargeting interest. User retargeting interest is a user's click interest in items that are the same as, or similar to, historically clicked items (we name them retargeted items). These items are usually retrieved by a retargeting service (Kantola, 2014) to remind users of the products they visited before, or by the matching stage of an online advertising system to recommend similar products based on user historical behavior. As retargeted items are highly relevant to the user's recent interest, they can dramatically improve user engagement with products/brands in online advertising. It is reported that the average CTR of retargeted ads is 10 times higher than that of regular display ads (Wishpond.com, 2014). We observe a similar phenomenon in our native ads scenario, where the CTR of retargeted items is 2 times higher than that of other items. Although retargeted items are much more likely to be clicked, conventional user behavior models do not distinguish retargeted items from other items, which may underestimate the CTR of retargeted items and lose the opportunity to obtain more clicks and revenue.

To understand user retargeting interest, we examine the cases of target items and user behavior sequences in our scenario. We notice that a retargeted item has a strong correlation with the user's historically clicked items. For a retargeted item, the user has clicked the same or similar items before and left corresponding click records. Our idea is to locate these records and make good use of them to represent user retargeting interest.

We propose a novel soft retargeting network (SRN) to model user retargeting interest. We first calculate similarity weights between target item and historical items with the help of graph embedding. Then we aggregate the similarity weights to measure the extent of user’s click interest on retargeted item. Furthermore, we model the evolution of user retargeting interest. To the best of our knowledge, our study is the first to model user retargeting interest in click through rate prediction.

The rest of the paper is organized as follows: Section 2 introduces the SRN model. Section 3 presents experimental results on public datasets and an industrial dataset. Section 4 concludes the paper.

2. The proposed approach

In this section, we first introduce a hard retargeting network (HRN) as a naive approach to model user retargeting interest. We then elaborate the network structure and key components of SRN.

2.1. Hard retargeting network

To measure a user's interest in a retargeted item, the basic idea of HRN is to count how many times the user has clicked this item in the user behavior sequence and to use the click count to represent user interest. Intuitively, the more often a user has clicked an item before, the more likely the user is to be interested in that item.

HRN first enumerates the items in the user behavior sequence and checks whether the target item and each historical item are the same item by comparing their embedding vectors:

$$ w_i = \begin{cases} 1, & \text{if } e_t = e_i \\ 0, & \text{otherwise} \end{cases} \qquad (1) $$

where $e_t$ is the embedding of target item $t$, $e_i$ is the embedding of historical item $i$, and $w_i$ is the similarity weight between target item $t$ and historical item $i$. Because target items and historical items share the same embedding dictionary, two items are the same if their embedding vectors are equal.

For a user behavior sequence of $n$ historical items, we get a sequence of similarity weights $W = \{w_1, \dots, w_n\}$. $W$ can be viewed as the user's historical click records on the target item.

We perform max pooling on $W$ and get $w_{\max}$ for target item $t$. If $w_{\max} = 1$, the target item is a retargeted item for HRN. In addition, we perform sum pooling on $W$ to get:

$$ c = \sum_{i=1}^{n} w_i \qquad (2) $$

where $c$ corresponds to the historical click number of the target item. We then adopt a binning method to transform $c$ into a categorical feature $\mathrm{bin}(c, b)$, where $\mathrm{bin}$ is an equal-width interval binning method (Dougherty et al., 1995) that discretizes $c$ with bin size $b$. For example, if , . HRN looks up the embedding of this categorical feature from the embedding layer and feeds it into the CTR model for prediction. Although simple, HRN performs particularly well in e-commerce scenarios.

For simplicity, we only consider historical click item sequence during the introduction of HRN and SRN. Our approach can easily be extended to historical click brand/shop/category sequence.
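The HRN feature computation described in this subsection can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: item IDs stand in for the embedding comparison of Eq. (1) (identical IDs map to identical vectors in a shared embedding dictionary), and the function names, the default `bin_size`, and the `max_bin` cap are hypothetical choices made for the example.

```python
from typing import List, Tuple


def hard_similarity_weights(target_item: int, history: List[int]) -> List[int]:
    """Hard weights: 1 where a historical item equals the target item, else 0."""
    return [1 if item == target_item else 0 for item in history]


def hrn_feature(target_item: int, history: List[int],
                bin_size: int = 2, max_bin: int = 5) -> Tuple[int, int, int]:
    """Return (w_max, click_count, bin_id) for one target item.

    bin_size and max_bin are illustrative assumptions, not values from the paper.
    """
    weights = hard_similarity_weights(target_item, history)
    w_max = max(weights, default=0)        # max pooling: 1 means "retargeted item"
    click_count = sum(weights)             # sum pooling: historical click number
    # Equal-width binning of the click count, capped so the vocabulary stays finite.
    bin_id = min(click_count // bin_size, max_bin)
    return w_max, click_count, bin_id


history = [7, 3, 7, 9, 7]
print(hrn_feature(7, history))  # (1, 3, 1)
print(hrn_feature(4, history))  # (0, 0, 0)
```

The resulting `bin_id` is what would be looked up in an embedding table and fed to the CTR model.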

2.2. Soft retargeting network

In HRN, only a target item that is the same as a historical item is treated as a retargeted item. The ratio of retargeted items in the sample data is rather low, e.g., only 5% for item and 15% for shop in our scenario, which heavily limits the performance of HRN. To overcome this limitation, we propose an SRN model to expand the scope of retargeted items and model user retargeting interest at a broader level.

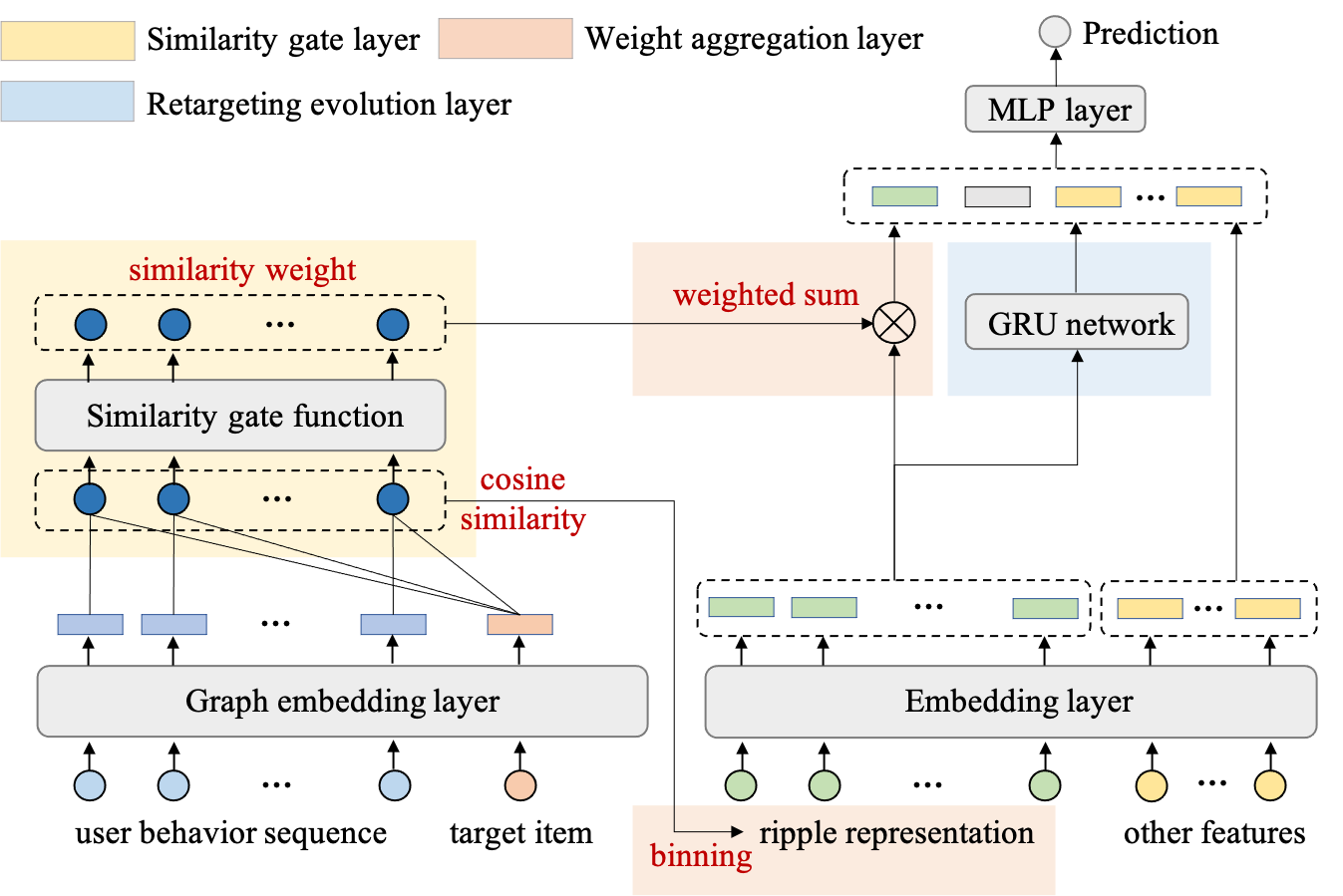

The framework of SRN is illustrated in Fig. 1. The idea of SRN is similar to that of HRN. It computes a sequence of similarity weights for user behavior sequence and aggregates similarity weights to represent user retargeting interest. We will detail the key components of SRN in the following subsections:

Graph embedding layer. To measure the similarity between the target item and historical items, a natural choice is to use the item embedding learned by the CTR model to calculate cosine similarity. However, we find that the quality of CTR embedding is poor in practice, probably because CTR embedding is learned for the CTR task and may be incapable of capturing the similarity between items. Inspired by the success of graph embedding (Hamilton et al., 2017b; Wang et al., 2019; Hamilton et al., 2017a; Ying et al., 2018), we switch to using graph embedding to represent items in SRN. We pre-train a graph network based on user-item interaction records and generate a graph embedding dictionary (see Section 3.1 for more details). The graph embedding layer looks up and generates a graph embedding $g_t$ for target item $t$ and a graph embedding $g_i$ for each historical item $i$.

Similarity gate layer. Based on graph embedding, the similarity gate layer calculates the cosine similarity between target item $t$ and historical item $i$:

$$ s_i = \cos(g_t, g_i) = \frac{g_t \cdot g_i}{\lVert g_t \rVert \, \lVert g_i \rVert} \qquad (3) $$

Note that the cosine similarity $s_i$ is different from the similarity weight $w_i$ used in HRN. Firstly, $s_i$ ranges over $[-1, 1]$ whereas $w_i$ never leaves $[0, 1]$. Secondly, cosine similarity is a metric used to measure the similarity between embedding vectors, whereas similarity weight is the item similarity visually perceived by the user. While there is a strong correlation between them, there are also many cases where they differ.
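As a concrete reference for the cosine similarity computed by the similarity gate layer, here is a minimal pure-Python sketch. The function names are hypothetical, and returning 0 for a zero vector is an assumption made for the example; the text does not say how such a case is handled.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors, in [-1, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # assumption: a zero vector is treated as dissimilar
    return dot / (norm_a * norm_b)


def similarity_sequence(target_emb: Sequence[float],
                        history_embs: Sequence[Sequence[float]]) -> List[float]:
    """One cosine similarity per historical item, in sequence order."""
    return [cosine_similarity(target_emb, h) for h in history_embs]


target = [1.0, 0.0]
history = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
print(similarity_sequence(target, history))  # same, orthogonal, opposite direction
```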

We carefully examine items in our scenario and summarize three correlation rules for the cosine similarity $s_i$ and the similarity weight $w_i$ between target item $t$ and historical item $i$:

- Rule 1: When $s_i = 1$, item $t$ and item $i$ are the same item and $w_i = 1$.

- Rule 2: When $s_i$ is below some threshold (e.g., ), item $t$ and item $i$ can be two different products, e.g., iPhone and microphone. In this case, $w_i$ drops dramatically and should approach 0.

- Rule 3: When $s_i$ is above that threshold but below 1, item $t$ and item $i$ can be the same product sold by different shops, or two products with the same brand, e.g., iPhone 11 and iPhone SE. In this case, $w_i$ should be a large value, e.g., 0.95.

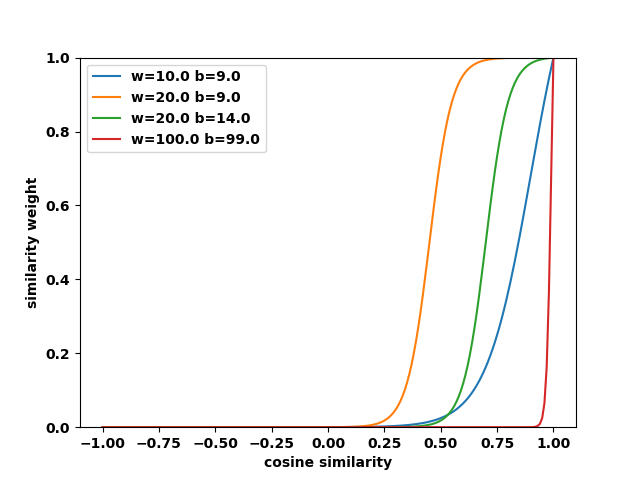

To satisfy these rules, we design a similarity gate function to map to :

| (4) |

| (5) |

where and . Fig. 2 plots the curve of for different values of and . We can see indeed suppresses when is below some threshold and the value of is determined by . When , outputs a high value of and the shape of in is controlled by . In practice, we usually set good initial values for and (e.g., and ) and let CTR model learn the optimal .

For a user behavior sequence of $n$ historical items, the similarity gate layer outputs a sequence of similarity weights $W = \{w_1, \dots, w_n\}$.
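The gate actually used by SRN is the one defined in Eqs. (4) and (5). Purely to illustrate the qualitative behavior demanded by the three rules, the sketch below uses a different, hypothetical stand-in: a logistic curve normalized so that a cosine similarity of 1 maps to a weight of exactly 1. The function name and the `threshold` and `sharpness` parameters (and their default values) are assumptions made for this example and are not the paper's parameters.

```python
import math


def _sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def illustrative_gate(s: float, threshold: float = 0.6, sharpness: float = 20.0) -> float:
    """Hypothetical gate mapping cosine similarity s in [-1, 1] to a weight in (0, 1].

    NOT the function from Eqs. (4)-(5); it only mimics the three rules:
    gate(1) == 1, values far below `threshold` go to ~0, values above it stay high.
    """
    return _sigmoid(sharpness * (s - threshold)) / _sigmoid(sharpness * (1.0 - threshold))


for s in (1.0, 0.8, 0.6, 0.2, -0.5):
    print(f"s={s:+.1f} -> w={illustrative_gate(s):.4f}")
```

In a trainable model, `threshold` and `sharpness` would play the role of learnable parameters initialized to sensible values, which mirrors how the text describes initializing the gate parameters and letting the CTR model tune them.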

Weight aggregation layer. We perform max pooling on the sequence of similarity weights $W$ to get the maximum similarity weight $w_{\max}$, just as in HRN. $w_{\max}$ reflects the likelihood that target item $t$ is a retargeted item: the higher the value of $w_{\max}$, the more likely $t$ is a retargeted item. Because $w_{\max}$ is no longer restricted to exact matches, the scope of retargeted items in SRN is broader than that in HRN.

We can follow the operation in HRN to model user retargeting interest, i.e., sum pooling on and binning. However, the binning operation is non-differentiable and blocks the updating of similarity gate layer. Instead, we put binning operation on and generate a categorical feature (we name it ripple representation). We look up the embedding vector of ripple representation from embedding layer as and represent user retargeting interest as:

| (6) |

As is a linear function with limited model capacity, may be inaccurate when . Ripple representation can complement the limitation of by providing an embedding representation of .

Retargeting evolution layer. We notice that user retargeting interest may evolve over time. Suppose there are two sequences of similarity weights and , weight aggregation layer would produce the same results. However, suggests that user’s interest on target item grows rapidly whereas indicates that user may lose interest in target item. We use GRU network (Cho et al., 2014) to capture the evolution of user retargeting interest:

| (7) |

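We concatenate the user retargeting interest representation produced by the weight aggregation layer with the output of the retargeting evolution layer to get the output of SRN, and feed it into the MLP layer for CTR prediction.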
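The motivation for the retargeting evolution layer, that pooling is blind to order while a recurrent network is not, can be checked with a small sketch. The code below runs a scalar GRU (one input, one hidden unit) over two weight sequences that contain the same values in opposite order. Everything specific here is an assumption for illustration: the two sequences, the hand-picked parameter values, and the update convention `h = (1 - z) * h + z * h_cand`; a real model would learn the parameters and use a vector-valued hidden state.

```python
import math
from typing import Dict, Sequence


def _sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical scalar GRU parameters, chosen by hand for illustration only.
PARAMS: Dict[str, float] = {
    "wz": 1.0, "uz": 0.5, "bz": 0.0,   # update gate
    "wr": 1.0, "ur": 0.5, "br": 0.0,   # reset gate
    "wh": 1.0, "uh": 0.5, "bh": 0.0,   # candidate state
}


def gru_final_state(weights: Sequence[float], p: Dict[str, float] = PARAMS) -> float:
    """Run a one-unit GRU over the weight sequence and return the last hidden state."""
    h = 0.0
    for x in weights:
        z = _sigmoid(p["wz"] * x + p["uz"] * h + p["bz"])
        r = _sigmoid(p["wr"] * x + p["ur"] * h + p["br"])
        h_cand = math.tanh(p["wh"] * x + p["uh"] * (r * h) + p["bh"])
        h = (1.0 - z) * h + z * h_cand
    return h


rising = [0.1, 0.5, 0.9]    # interest in the target item grows over time
falling = [0.9, 0.5, 0.1]   # same weights, but interest fades

# Max and sum pooling cannot tell the two sequences apart ...
print(max(rising) == max(falling), math.isclose(sum(rising), sum(falling)))
# ... whereas the recurrent state depends on the order of the weights.
print(gru_final_state(rising), gru_final_state(falling))
```

With these particular parameters the rising sequence ends in a larger hidden state than the falling one, which is the kind of signal order-invariant pooling discards.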

3. Experiments

In this section we present the experimental setup and conduct experiments to evaluate the performance of our model.

3.1. Experimental setup

Datasets. As SRN is mainly designed for click interest, we choose two public datasets that contain user click records and an industrial dataset for evaluation: 1) Taobao dataset (https://tianchi.aliyun.com/dataset/dataDetail?dataId=649). It is a collection of user behaviors from Taobao's recommender system (Zhu et al., 2018) and contains 89 million records, 1 million users, and 4 million items from 9407 categories. We only use the click behaviors of each user. 2) Alimama dataset (https://tianchi.aliyun.com/dataset/dataDetail?dataId=56). It is a click through rate prediction dataset of display ads provided by Alimama, an online advertising platform in China. It contains 26 million display/click records from 2017-05-06 to 2017-05-13, 1 million users, and 0.85 million items. We use the first 7 days' data as the training sample and the last day's data as the test sample. 3) Industrial dataset. It is collected from our online advertising system for a native ads scenario from 2022-03-18 to 2022-04-17. The dataset contains 780 million display/click records, 10.4 million users, and 0.78 million items. Each record contains a real-time click sequence from the preceding 3 days. We use the first 30 days' data as the training sample and the last day's data as the test sample.

Graph Construction. For each dataset, we pre-train a heterogeneous graph network based on the training sample of the CTR task. The types of graph nodes include user, item, and item side information (brand/shop/category for the Alimama dataset and the industrial dataset, and category for the Taobao dataset). The graph edges include: 1) user-item edge. If user $u$ clicks item $i$, there is an edge between $u$ and $i$. The user-item edge is used as the label in the link prediction task. 2) user-side information edge. If user $u$ clicks an item with side information $a$ (e.g., shop), there is an edge between $u$ and $a$. 3) item-item edge. If item $i$ and item $j$ are adjacent in a user behavior sequence and the time interval between item $i$ and item $j$ is within 60 seconds, there is an edge between $i$ and $j$. 4) item-side information edge. If item $i$ has side information $a$, there is an edge between $i$ and $a$.

Notice that the edges are undirected and unweighted. We then traverse the graph to sample nodes and their neighborhoods according to meta paths. Meta paths include , , , , , . We aggregate the node’s neighborhoods using the HAN approach (Wang et al., 2019) and adopt a link prediction task to supervise the learning of embedding.
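The four edge types can be derived from raw click logs with a short routine. The following is a minimal sketch under stated assumptions, not the authors' pipeline: the log format `(user_id, item_id, timestamp_in_seconds)`, the `item_shop` lookup (shop is used as the only kind of side information), the edge-type labels, and the decision to skip item-item self-loops are all hypothetical choices made for the example. Meta-path sampling and the HAN aggregation are not covered.

```python
from typing import Dict, List, Set, Tuple

Click = Tuple[str, str, int]   # (user_id, item_id, unix timestamp in seconds)
Edge = Tuple[str, str, str]    # (edge_type, node_a, node_b); undirected, unweighted


def build_edges(clicks: List[Click], item_shop: Dict[str, str],
                max_gap_seconds: int = 60) -> Set[Edge]:
    """Build the undirected, unweighted edge set from click records."""
    edges: Set[Edge] = set()
    by_user: Dict[str, List[Tuple[int, str]]] = {}
    for user, item, ts in clicks:
        edges.add(("user-item", user, item))
        shop = item_shop.get(item)
        if shop is not None:
            edges.add(("user-shop", user, shop))   # user-side information edge
            edges.add(("item-shop", item, shop))   # item-side information edge
        by_user.setdefault(user, []).append((ts, item))

    for events in by_user.values():
        events.sort()  # chronological behavior sequence of one user
        for (t0, a), (t1, b) in zip(events, events[1:]):
            # Adjacent clicks within the time window; self-loops skipped (assumption).
            if a != b and t1 - t0 <= max_gap_seconds:
                first, second = sorted((a, b))     # canonical order: edge is undirected
                edges.add(("item-item", first, second))
    return edges


clicks = [("u1", "i1", 0), ("u1", "i2", 30), ("u1", "i3", 200), ("u2", "i2", 5)]
item_shop = {"i1": "s1", "i2": "s1", "i3": "s2"}
for edge in sorted(build_edges(clicks, item_shop)):
    print(edge)
```

Storing edges in a set deduplicates repeated interactions, which matches the statement that the edges are unweighted.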

Competitors. We compare SRN with HRN and some widely used CTR models, including DNN, DIN (Zhou et al., 2018), DIEN (Zhou et al., 2019) and BST (Chen et al., 2019).

Parameter Configuration. Alimama and industrial dataset use historical click item/brand/shop/category sequence. Taobao dataset uses historical click item/category sequence. The maximum sequence length is 100 and 64 for public datasets and industrial dataset respectively. The dimension of CTR embedding and graph embedding are 8 and 32 respectively. The optimizer is Adagrad with learning rate 0.01. For SRN, , is 10, 9 for historical item sequence and 10, 8 for historical brand/shop/category sequence, respectively.

Evaluation Metrics. We use two commonly used metrics, AUC and LogLoss, to evaluate these models.
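For reference, both metrics can be computed directly from labels and predicted click probabilities. The sketch below is a plain-Python illustration with hypothetical function names: AUC is computed as the fraction of (clicked, non-clicked) pairs ranked correctly, with ties counted as half, and LogLoss as the mean binary cross-entropy. The pairwise AUC is quadratic in the number of samples, so it is meant for small examples, not for datasets of the size used here.

```python
import math
from typing import Sequence


def auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Probability that a random positive is scored above a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def log_loss(labels: Sequence[int], probs: Sequence[float], eps: float = 1e-15) -> float:
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for y, p in zip(labels, probs):
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(labels)


labels = [1, 0, 1, 0]
scores = [0.9, 0.1, 0.4, 0.6]
print(auc(labels, scores))       # 0.75: three of the four pairs are ordered correctly
print(log_loss(labels, scores))
```

Higher AUC and lower LogLoss indicate better models.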

Table 1. AUC and LogLoss of all models on the public datasets and the industrial dataset.

| Models | Taobao AUC | Taobao LogLoss | Alimama AUC | Alimama LogLoss | Industrial AUC | Industrial LogLoss |
|---|---|---|---|---|---|---|
| DNN | 0.8557 | 0.3254 | 0.6359 | 0.1941 | 0.6805 | 0.1244 |
| DIN | 0.8731 | 0.3080 | 0.6378 | 0.1938 | 0.6838 | 0.1243 |
| DIEN | 0.8623 | 0.3200 | 0.6369 | 0.1939 | 0.6864 | 0.1240 |
| BST | 0.8573 | 0.3240 | 0.6401 | 0.1936 | 0.6876 | 0.1238 |
| HRN | 0.8838 | 0.2919 | 0.6376 | 0.1938 | 0.6842 | 0.1241 |
| SRN | 0.8853 | 0.2905 | 0.6609 | 0.1904 | 0.6918 | 0.1235 |

Table 2. Retargeting ratio of HRN and SRN in the industrial dataset.

| Models | Item | Brand | Shop | Category |
|---|---|---|---|---|
| HRN | 5.43% | 11.3% | 14.9% | 47.5% |
| SRN () | 33.4% | 13.3% | 26.3% | 51.0% |

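Table 3. AUC on retargeted items and the other items in the industrial dataset (gain over DNN in parentheses).

| Models | Retargeted items | The others |
|---|---|---|
| DNN | 0.6748 | 0.6791 |
| DIEN | 0.6816 (+0.0058) | 0.6861 (+0.0070) |
| BST | 0.6809 (+0.0061) | 0.6847 (+0.0056) |
| SRN | 0.6914 (+0.0166) | 0.6881 (+0.0090) |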

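Table 4. Average intra-category and inter-category cosine similarity for CTR embedding and graph embedding.

| Average similarity | CTR embedding | Graph embedding |
|---|---|---|
| Intra-category | 0.03294 | 0.5413 |
| Inter-category | 0.03285 | 0.0094 |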

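Table 5. Ablation study on the industrial dataset.

| Model | AUC | Diff |
|---|---|---|
| SRN | 0.6918 | |
| SRN without retargeting evolution layer (GRU) | 0.6909 | -0.0009 |
| SRN with CTR embedding instead of graph embedding | 0.6876 | -0.0042 |
| SRN with ripple representation only | 0.6905 | -0.0013 |
| SRN with sum pooling and binning | 0.6886 | -0.0032 |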

3.2. Performance evaluation

As shown in Table 1, HRN achieves solid performance gain compared to DNN base. SRN outperforms HRN with an AUC gain of 0.0015, 0.0233, 0.0076 in public datasets and industrial dataset, respectively. To explain the advantages of SRN over HRN, we assume target item with is retargeted item in SRN and define retargeting ratio for item as the ratio of the total number for retargeted items to sample number in test dataset. Retargeting ratio for brand/shop/category can be defined in the same way. We compare the retargeting ratio of SRN with that of HRN in industrial dataset. Table 2 shows the retargeting ratio of SRN is much higher than HRN for item and shop. It seems that SRN can capture more retargeted items/shops and thus boost the performance significantly.

SRN outperforms DNN, DIN, DIEN, and BST significantly with an AUC gain of at least 0.0122, 0.0208, 0.0042 in the three datasets, respectively. To demonstrate the effectiveness of SRN in modeling user retargeting interest, we compare the performance of SRN with that of baseline models on retargeted items (also selected by ) and the other items in industrial dataset. Table 3 shows that the AUC gain of SRN on retargeted items is much higher than that on the others. For DIEN and BST, the AUC gain on retargeted items is nearly the same as that on the others.

We conduct online A/B experiments to evaluate SRN in our online system. The experiment lasts for 14 days, and SRN achieves a 6.88% CTR gain and a 6.12% RPM gain compared to the production model (DIN+HRN).

3.3. Ablation study

The result of ablation study on industrial dataset is shown in Table 5. Retargeting evolution layer (GRU) brings a notable AUC gain of 0.0009. sets to and relies on ripple representation to represent the interest , which causes an AUC loss of 0.0013. It demonstrates the importance of similarity weights in capturing user retargeting interest. adopts the summing and binning operation used in HRN to aggregate similarity weights, which causes an AUC loss of 0.0032. This suggests that binning operation may lead to sub-optimal performance and weight aggregation layer is more flexible and powerful.

Using CTR embedding instead of graph embedding () causes the performance to decrease dramatically (an AUC loss of 0.0042 in Table 5), which indicates that the quality of graph embedding is much better than that of CTR embedding. To evaluate the quality of graph/CTR embedding, we select the top 100 categories according to PV number and sample 100 items for each category in the industrial dataset. We calculate the cosine similarities between each item and the other items using graph/CTR embedding respectively. For each item, we get its average similarity to items within the same category (intra-category similarity) and to items from different categories (inter-category similarity). We then compute the average intra- and inter-category similarity for graph/CTR embedding respectively. Table 4 shows that intra-category similarity is much higher than inter-category similarity for graph embedding, whereas the difference is negligible for CTR embedding. This demonstrates the superiority of graph embedding in identifying similar items.
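The embedding-quality check described above can be sketched as follows. This is an illustrative reimplementation under assumptions, not the authors' evaluation code: the function names, the dictionary-based inputs, and the toy two-dimensional vectors are hypothetical, and returning NaN when no per-item average exists is a choice made for the example.

```python
import math
from typing import Dict, List, Sequence, Tuple


def _cos(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0.0 and nb > 0.0 else 0.0


def _mean(values: List[float]) -> float:
    return sum(values) / len(values) if values else float("nan")


def avg_intra_inter_similarity(embeddings: Dict[str, Sequence[float]],
                               category: Dict[str, str]) -> Tuple[float, float]:
    """Average, over items, of each item's mean similarity to same-category
    items (intra) and to items from other categories (inter)."""
    intra_means: List[float] = []
    inter_means: List[float] = []
    items = list(embeddings)
    for i in items:
        intra: List[float] = []
        inter: List[float] = []
        for j in items:
            if i == j:
                continue
            sim = _cos(embeddings[i], embeddings[j])
            (intra if category[i] == category[j] else inter).append(sim)
        if intra:
            intra_means.append(_mean(intra))
        if inter:
            inter_means.append(_mean(inter))
    return _mean(intra_means), _mean(inter_means)


# Toy embeddings (hypothetical): two well-separated categories.
emb = {"a1": [1.0, 0.0], "a2": [1.0, 0.1], "b1": [0.0, 1.0], "b2": [0.1, 1.0]}
cat = {"a1": "phone", "a2": "phone", "b1": "shoes", "b2": "shoes"}
print(avg_intra_inter_similarity(emb, cat))
```

An embedding that captures item similarity well should show a large gap between the two returned averages, as Table 4 reports for graph embedding.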

4. Conclusion

In this paper, we propose a novel soft retargeting network to model user retargeting interest. SRN calculates the similarity between target item and historical click items with graph embedding and generates user’s historical click records on items similar to target item. It then aggregates these records to represent user’s interest on retargeted items. The experimental results demonstrate that our model achieves significant improvements over the competitors.

References

- Cen et al. (2020) Yukuo Cen, Jianwei Zhang, Xu Zou, Chang Zhou, Hongxia Yang, and Jie Tang. 2020. Controllable multi-interest framework for recommendation. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. Association for Computing Machinery, New York, NY, USA, 2942–2951.

- Chen et al. (2019) Qiwei Chen, Huan Zhao, Wei Li, Pipei Huang, and Wenwu Ou. 2019. Behavior Sequence Transformer for E-Commerce Recommendation in Alibaba. In Proceedings of the 1st International Workshop on Deep Learning Practice for High-Dimensional Sparse Data (Anchorage, Alaska) (DLP-KDD ’19). Association for Computing Machinery, New York, NY, USA, Article 12, 4 pages. https://doi.org/10.1145/3326937.3341261

- Cho et al. (2014) Kyunghyun Cho, Bart van Merrienboer, Çaglar Gülçehre, Fethi Bougares, Holger Schwenk, and Yoshua Bengio. 2014. Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation. CoRR abs/1406.1078 (2014). arXiv:1406.1078 http://arxiv.org/abs/1406.1078

- Dougherty et al. (1995) James Dougherty, Ron Kohavi, and Mehran Sahami. 1995. Supervised and Unsupervised Discretization of Continuous Features. In Machine Learning Proceedings 1995, Armand Prieditis and Stuart Russell (Eds.). Morgan Kaufmann, San Francisco (CA), 194–202. https://doi.org/10.1016/B978-1-55860-377-6.50032-3

- Feng et al. (2019) Yufei Feng, Fuyu Lv, Weichen Shen, Menghan Wang, Fei Sun, Yu Zhu, and Keping Yang. 2019. Deep Session Interest Network for Click-Through Rate Prediction. In Proceedings of the Twenty-Eighth International Joint Conference on Artificial Intelligence, IJCAI-19. International Joint Conferences on Artificial Intelligence Organization, 2301–2307. https://doi.org/10.24963/ijcai.2019/319

- Hamilton et al. (2017a) William L. Hamilton, Rex Ying, and Jure Leskovec. 2017a. Inductive Representation Learning on Large Graphs. In Proceedings of the 31st International Conference on Neural Information Processing Systems (Long Beach, California, USA) (NIPS’17). Curran Associates Inc., Red Hook, NY, USA, 1025–1035.

- Hamilton et al. (2017b) William L. Hamilton, Rex Ying, and Jure Leskovec. 2017b. Representation Learning on Graphs: Methods and Applications. CoRR abs/1709.05584 (2017). arXiv:1709.05584 http://arxiv.org/abs/1709.05584

- Kantola (2014) Jose Kantola. 2014. The Effectiveness of Retargeting in Online Advertising. Master’s thesis. Aalto University. School of Science. http://urn.fi/URN:NBN:fi:aalto-201412163235

- Pi et al. (2019) Qi Pi, Weijie Bian, Guorui Zhou, Xiaoqiang Zhu, and Kun Gai. 2019. Practice on long sequential user behavior modeling for click-through rate prediction. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. Association for Computing Machinery, New York, NY, USA, 2671–2679.

- Pi et al. (2020) Qi Pi, Guorui Zhou, Yujing Zhang, Zhe Wang, Lejian Ren, Ying Fan, Xiaoqiang Zhu, and Kun Gai. 2020. Search-based user interest modeling with lifelong sequential behavior data for click-through rate prediction. In Proceedings of the 29th ACM International Conference on Information & Knowledge Management. Association for Computing Machinery, 2685–2692.

- Ren et al. (2019) Kan Ren, Jiarui Qin, Yuchen Fang, Weinan Zhang, Lei Zheng, Weijie Bian, Guorui Zhou, Jian Xu, Yong Yu, Xiaoqiang Zhu, et al. 2019. Lifelong sequential modeling with personalized memorization for user response prediction. In Proceedings of the 42nd International ACM SIGIR Conference on Research and Development in Information Retrieval. 565–574.

- Sun et al. (2019) Fei Sun, Jun Liu, Jian Wu, Changhua Pei, Xiao Lin, Wenwu Ou, and Peng Jiang. 2019. BERT4Rec: sequential recommendation with bidirectional encoder representations from transformer. In Proceedings of the 28th ACM International Conference on Information and Knowledge Management (Beijing, China) (CIKM ’19). Association for Computing Machinery, New York, NY, USA, 1441–1450.

- Wang et al. (2019) Xiao Wang, Houye Ji, Chuan Shi, Bai Wang, Yanfang Ye, Peng Cui, and Philip S Yu. 2019. Heterogeneous Graph Attention Network. In The World Wide Web Conference (San Francisco, CA, USA) (WWW ’19). Association for Computing Machinery, New York, NY, USA, 2022–2032. https://doi.org/10.1145/3308558.3313562

- Wishpond.com (2014) Wishpond.com. 2014. 7 Incredible Retargeting Ad Stats. https://blog.wishpond.com/post/97225536354/infographic-7-incredible-retargeting-ad-stats

- Ying et al. (2018) Rex Ying, Ruining He, Kaifeng Chen, Pong Eksombatchai, William L. Hamilton, and Jure Leskovec. 2018. Graph Convolutional Neural Networks for Web-Scale Recommender Systems. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (London, United Kingdom) (KDD ’18). Association for Computing Machinery, New York, NY, USA, 974–983. https://doi.org/10.1145/3219819.3219890

- Zhang et al. (2021) Weinan Zhang, Jiarui Qin, Wei Guo, Ruiming Tang, and Xiuqiang He. 2021. Deep learning for click-through rate estimation. In Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, IJCAI-21, Zhi-Hua Zhou (Ed.). International Joint Conferences on Artificial Intelligence Organization, 4695–4703. https://doi.org/10.24963/ijcai.2021/636 Survey Track.

- Zhou et al. (2019) Guorui Zhou, Na Mou, Ying Fan, Qi Pi, Weijie Bian, Chang Zhou, Xiaoqiang Zhu, and Kun Gai. 2019. Deep interest evolution network for click-through rate prediction. In Proceedings of the AAAI conference on artificial intelligence, Vol. 33. 5941–5948.

- Zhou et al. (2018) Guorui Zhou, Xiaoqiang Zhu, Chenru Song, Ying Fan, Han Zhu, Xiao Ma, Yanghui Yan, Junqi Jin, Han Li, and Kun Gai. 2018. Deep interest network for click-through rate prediction. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. 1059–1068.

- Zhu et al. (2018) Han Zhu, Xiang Li, Pengye Zhang, Guozheng Li, Jie He, Han Li, and Kun Gai. 2018. Learning tree-based deep model for recommender systems. In Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. Association for Computing Machinery, 1079–1088.