SoftED: Metrics for Soft Evaluation of Time Series Event Detection

Abstract

Time series event detectors are evaluated mainly by standard classification metrics focusing solely on detection accuracy. However, inaccuracy in detecting an event can often result from its preceding or delayed effects reflected in neighboring detections. These detections are valuable to trigger necessary actions or help mitigate unwelcome consequences. In this context, current metrics are insufficient and inadequate for the context of event detection. There is a demand for metrics that incorporate both the concept of time and temporal tolerance for neighboring detections. Inspired by fuzzy sets, this paper introduces SoftED metrics, a new set designed for soft evaluating event detectors. They enable the evaluation of the detection accuracy and the degree to which their detections represent events. A new general protocol inspired by competency questions is also introduced to evaluate temporal tolerant metrics for event detection. The SoftED metrics can improve event detection evaluations by associating events and their representative detections, incorporating temporal tolerance in over 36% of the overall conducted detector evaluations compared to the usual classification metrics. Following the proposed evaluation protocol, SoftED metrics were evaluated by domain specialists who indicated their contribution to detection evaluation and method selection.

Please cite the updated journal paper published at https://doi.org/10.1016/j.cie.2024.110728.

Keywords Time Series Event Detection Evaluation Metrics Soft Computing

1 Introduction

In time series analysis, it is often possible to observe a significant change in observations at a certain instant or interval. Such a change generally characterizes the occurrence of an event [30]. An event can represent a phenomenon with a defined meaning in a domain. Event detection is the process of identifying events in a time series. With this process, we may be interested in learning/identifying past events [48, 20, 2, 3, 57, 75, 46, 66], identifying events in real-time (online detection) [73, 1, 5, 43], or even predicting future events before they happen (event prediction) [71, 53, 41, 26, 74]. It is recognized as a basic function in surveillance and monitoring systems and has gained much attention in research for application domains involving large datasets from critical systems [48].

To address the task of time series event detection, several methods have been developed and are surveyed in the literature [32, 12, 29, 15, 16, 10, 6, 56]. Each detection method (detector, for short) specializes in time series that present different characteristics or make assumptions about the data distribution. Therefore, assessing detection performance is important to infer their adequacy for a particular application [24]. In this case, detection performance refers to how accurate an event detector is at identifying events in a time series. Detection performance is generally measured by classification metrics [31].

Currently, standard classification metrics (around since the 1950s), including Recall, Precision, and F1, are usually adopted [37, 62]. Although Accuracy is a specific metric [31], the expression detection accuracy is henceforth used to refer to the ability of a method to detect events correctly. Classification metrics focus mainly on an analysis of detection accuracy. On the other hand, inaccuracy in event detection does not always indicate a bad result, especially when detections are sufficiently close to events.

1.1 Motivating example and problem definition

This section gives an example of the problem of evaluating inaccurate event detections and defines the problem in the paper concerning the demand for adequate detection performance metrics. Consider, for example, a time series containing an event at time , represented in Figure 1. Since detectors A and B are applied to , a user must select one of them as the most adequate for the underlying application. Detector A detects an event at time , while Detector B detects an event at time (). As none of the methods could correctly detect the event at time , based on the usual detection accuracy evaluation, the user would deem both inaccurate and disposable.

However, inaccuracy in detecting an event can often result from its preceding or lingering effects. Take the adoption of a new policy in a business. While a domain specialist may consider the moment of policy enforcement as a company event, its effects on profit may only be detectable a few months later. On the other hand, preparations for policy adoption may be detectable in the antecedent months. Moreover, when accurate detections are not achievable, which is common, detection applications demand events to be identified as soon as possible [37], or early enough to allow necessary actions to be taken, mitigating possible critical system failures or help mitigate urban problems resulting from extreme weather events, for example. In this context, the results of Methods A and B would be valuable to the user. Note that while Detector B seems to anticipate the event, its application to and resulting detection is made after the event’s occurrence. On the other hand, the detection of Detector A came temporally closer to the event, possibly more representative of its effects.

In this context, evaluating event detection is particularly challenging, and the detection accuracy metrics usually adopted are insufficient and inadequate for the task [59]. Standard classification metrics do not consider the concept of time, which is fundamental in the context of time series analysis, and do not reward early detection [1], for example, or any relevant neighboring detections. For the remainder of this paper, neighboring or close detections refer to detections whose temporal distance to events is within a desired threshold. Current metrics only reward true positives (exact matches in event detection). All other results are “harshly” and equally discredited.

In this case, there is a demand to soften the usual concept of detection accuracy and evaluate the methods while considering neighboring detections. However, state-of-the-art metrics designed for scoring anomaly detection, such as the NAB score [37], are still limited [59], while also being biased towards results preceding events. To the best of our knowledge, no metrics available in the literature consider the concept of time and tolerance for detections sufficiently close to time series events. This paper focuses on addressing this demand.

1.2 Contribution

This paper introduces the SoftED metrics, a new set of metrics for evaluating event detectors regarding their detection accuracy and ability to produce neighboring detections to events. Inspired by fuzzy sets, SoftED metrics assess the degree to which a detection represents a particular event. It is an innovative approach since, while fuzzification is commonly applied to time series observations [33], in SoftED, the fuzzification occurs in the time dimension [72]. Hence, they incorporated time and temporal tolerance in evaluating the accuracy of event detection. These are the scenarios that domain specialists and users often face. Up until now, there have been no standard or adequate evaluation metrics for event detection in such scenarios. SoftED metrics soften the standard classification metrics, which are considered in this paper as hard metrics, to support the decision-making regarding the most appropriate method for a given application.

This paper also contributes by introducing a new general protocol for evaluating the performance of metrics in time series event detection. This protocol is designed to evaluate metrics that incorporate temporal tolerance into detection evaluation. It is inspired by a strategy commonly adopted in ontology design. It is based on using competency questions [64, 45].

Computational experiments were conducted to analyze the contribution of the developed metrics against the usual hard and state-of-the-art metrics [37]. The results illustrate that SoftED can improve event detection evaluation by associating events and their representative (neighboring) detections, incorporating temporal tolerance in over 36% of the conducted experiments compared to usual hard metrics. More importantly, following the proposed evaluation protocol, we evaluated the SoftED metrics based on the devised competency questions. Domain specialists confirmed the contribution of SoftED metrics to the problem of detector evaluation.

The remainder of this paper is organized as follows. Section 2 provides concepts on time series event detection and reviews the literature on detection performance metrics for detection evaluation. Section 3 formalizes the developed SoftED metrics. Section 4 presents a quantitative and qualitative experimental evaluation of the developed metrics and their empirical results. Finally, conclusions are made in Section 5.

2 Literature review

This section provides relevant concepts on time series events and their detection and reviews the literature on detection performance metrics and related works. Events are pervasive in real-world time series, especially in the presence of nonstationarity [30, 54]. Commonly, the occurrence of an event can be detected by observing anomalies or change points. Most event detectors in the literature specialize in identifying a specific type of event. There exist methods that can detect multiple events in time series, generally involving the detection of both anomalies and change points [38, 4, 74]. Nonetheless, these methods are still scarce. This paper approaches methods for detecting anomalies, change points, or both.

2.1 Time series events

Events correspond to a phenomenon, generally pre-defined in a particular domain, with an inconstant or irregular occurrence relevant to an application. In time series, events represent significant changes in expected behavior at a certain time or interval [30]. In general, instant events of a given time series = <>, can be identified in a simplified way by using the Equation 1, where represents the length of nearby observations111Due to limited space, the general formalization of event intervals lie outside the scope of this paper.. A temporal component (TC) for an observation is expressed as . The TC can refer to the observation itself or its instant trend, respectively represented as and .

Considering a typical autoregressive behavior [27], one can expect a TC for to be related to previous observations. Let be the expected TC for based on the previous observations, where . Analogously, one can also expect TC for to be explained from the following observations [40]. So let be the expected TC for based on the following observations, where . If a TC for escapes the expected value above a threshold based on previous or following observations [27, 40], it can be considered an event. Equation 1 also considers an event if the expected TC from previous and following observations are different above the threshold .

| (1) |

Anomalies

Most commonly, events detected in time series refer to anomalies. Anomalies appear not to be generated by the same process as most of the observations in the time series [12]. Thus, anomalies can be modeled as isolated observations of nearby data. In this case, an event identified in can be considered an anomaly if it escapes expected TC before and after time point according to in Equation 2. Generally, anomalies are identified by deviations from the time series inherent trend.

| (2) |

Change points

Change points in a time series are the points or intervals in time that represent a transition between different states in a process that generates the time series [60]. In this case, a change point event identified in time follows the expected behavior observed before or after the time point , but not both at the same time according to in Equation 3. It can also refer to a significant difference between the expected trend before or after time point .

| (3) |

2.2 Event detection

Event detection is the process of identifying the occurrence of such events based on data analysis. It is recognized as a basic function in surveillance and monitoring systems. Moreover, it becomes even more relevant for applications based on time series and sensor data analysis [48]. Event detectors found in the literature are usually based on model deviation analysis, classification-based analysis, clustering-based analysis, domain-based analysis, or statistical techniques [12, 31, 48, 3]. Regardless of the adopted detection strategy, an important aspect of any event detector is how the events are reported. Typically, the outputs produced by event detectors are either scores or labels. Scoring detectors assign an anomaly score to each instance in the data depending on the degree to which that instance is considered an anomaly. On the other hand, labeling detectors assign a label (normal or anomalous) to each data instance. Such methods are the most commonly found in the literature [12].

2.3 Detection performance metrics

Detectors might vary performance under different time series [23]. Therefore, there is a demand to compare the results they provide. Such a process aims to guide the choice of suitable methods for detecting events of a time series in a particular application. For comparing event detectors, standard classification metrics, such as F1, Precision, and Recall, are usually adopted [31, 37, 62].

As usual, the standard classification metrics depend on measures of true positives (), true negatives (), false positives (), and false negatives () [31]. In event detection, the refers to the number of events correctly detected (labeled) by the method. Analogously, is the number of observations that are correctly not detected. On the other hand, the measure is the number of detections that did not match any event, that is, false alarms. Analogously, is the number of undetected events. Among the standard classification metrics, Precision and Recall are widely adopted. Precision reflects the percentage of detections corresponding to time series events (exactness), whereas Recall reflects the percentage of correctly detected events (completeness). Precision and Recall are combined in the Fβ metrics [31]. The F1 metric is also widely used to help gauge the quality of event detection balancing Precision and Recall [62].

As discussed in Section 1.1, event detection is particularly challenging to evaluate, event detection is particularly challenging to evaluate. In this context, the Numenta Anomaly Benchmark (NAB) provided a common scoring algorithm for evaluating and comparing the efficacy of anomaly detectors [37]. The NAB score metric is computed based on anomaly windows of observations centered around each event in a time series. Given an anomaly window, NAB uses the sigmoidal scoring function to compute the weights of each anomaly detection. It rewards earlier detections within a given window and penalizes s. Also, NAB allows the definition of application profiles: standard, reward low s, and reward low s. Based on the window size, the standard profile gives relative weights to s, s, and s.

Nonetheless, the NAB scoring system presents challenges for its usage in real-world applications. For example, the anomaly window size is automatically defined as 10% of the time series size, divided by the number of events it contains, and values generally not known in advance, especially in streaming environments. Furthermore, Singh and Olinsky [59] pointed out the scoring equations’ poor definitions and arbitrary constants. Finally, score values increase with the number of events and detections. Every user can tweak the weights in application profiles, making it difficult to interpret and benchmark results from other users or setups.

In addition to NAB [37], this section presents other works related to the problem of analyzing and comparing event detection performance [12]. Recent works focus on the development of benchmarks to evaluate univariate time series anomaly detectors [36, 8, 70]. Jacob et al. [36] provide a comprehensive benchmark for explainable anomaly detection over high-dimensional time series. In contrast, the benchmark developed by Boniol et al. [8] allows the user to assess the advantages and limitations of anomaly detectors and detection accuracy metrics.

Standard classification metrics are generally used for evaluating the ability of an algorithm to distinguish normal from abnormal data samples [12, 62]. Aminikhanghahi and Cook [4] review traditional metrics for change point detection evaluation, such as Sensitivity, G-mean, F-Measure, ROC, PR-Curve, and MSE. Detection evaluation measures have also been investigated in the areas of sequence data anomaly detection [13], time series mining and representation [19], and sensor-based human activity learning [17, 68].

Metrics found in the literature are mainly designed to evaluate the detection of instant anomalies. However, many real-world event occurrences extend over an interval (range-based). Motivated by this, Tatbul et al. [62] and Paparrizos et al. [47] extend the well-known Precision and Recall metrics, and the AUC-based metrics, respectively, to measure the accuracy of detection algorithms over range-based anomalies. Other recent metrics developed for detecting range-based time series anomalies are also included in the benchmark of Boniol et al. [8]. In addition, Wenig et al. [70] published a benchmarking toolkit for algorithms designed for detecting anomalous subsequences in time series [7, 9].

Few works opt to evaluate event detection algorithms based on metrics other than traditional ones. For example, Wang, Vuran, and Goddard [67] calculate the delay until an individual node and the delivery delay in a transmission network detect an event. The work presents a framework for capturing delays in detecting events in large-scale WSN networks with a time-space simulation. Conversely, Tatbul et al. [62] also observe the neighborhood of event detections, not to calculate detection delays, but to evaluate positional tendency in anomaly ranges. Our previous work uses the delay measure to evaluate the bias of algorithms to detect real events in time series [22]. It furthers a qualitative analysis of the tendency of algorithms to detect before or after the occurrence of an event.

Under these circumstances, there is still a demand for event detection performance metrics that incorporate both the concept of time and tolerance for detections sufficiently close to time series events. Therefore, this paper contributes by introducing new metrics for evaluating methods regarding their detection accuracy and considering neighboring detections, incorporating temporal tolerance for inaccuracy in event detection.

3 SoftED

This paper adopts a distance-based approach to develop novel metrics to evaluate the performance of methods for detecting events in time series. The inspiration for the proposed solution is found in soft (or approximate) computing. Soft computing is a collection of methodologies that exploit tolerance for inaccuracy, uncertainty, and partial truth to achieve tractability, robustness, and low solution cost [63]. In this context, the main proposed idea is to soften the hard metrics (standard classification metrics) to incorporate temporal tolerance or inaccuracy in event detection. Such metrics seek to support the decision-making of the most appropriate method for a given application with a basis not only on the usual analysis of the detection accuracy but also on the analysis of the ability of a method to produce detections that are close enough to actual time series events. Henceforth, the proposed approach is Soft Classification Metrics for Event Detection or SoftED. This section formalizes the SoftED metrics.

Figure 2 gives a general idea of the proposed approach, illustrating the key difference between the standard hard evaluation and the proposed soft evaluation. Blue rhombuses represent actual time-series events. Circles correspond to detections produced by a particular detector. The hard evaluation concerns a binary value regarding whether detection is a perfect match to the actual event. In this case, circles are green when they perfectly match the events and red when they do not. Conversely, soft evaluation assesses the degree to which detection relates to a particular event.

3.1 Defining an event membership function

We incorporate a distance-based temporal tolerance for events. It is done by defining the relevance of a particular detection to an event. This section formalizes the proposed approach. Table 1 defines the main variables used in the formalization of SoftED. Given a time series of length containing a set of events, , where , , is the j–th event in occurring at time point . A particular detector applied to produces a set of detections, , where , , is the i–th detection in indicating the time point as a detection occurrence.

| Var. | Value | Description |

| set of time series events | ||

| number of events | ||

| event index | ||

| the j–th event in | ||

| time point | time point where the occurs | |

| set of detections | ||

| number of detections | ||

| detection index | ||

| the i–th detection in | ||

| time point | time point where occurs | |

| time duration | constant of tolerance for event detections |

The degree to which a detection is relevant to a particular event is given by an event membership function as defined in Equation 4 and illustrated in Figure 3(a). The represents a Euclidean distance function, which is of simple computation and interpretation. Moreover, this solution was inspired by Fuzzy sets, where we innovate by fuzzifying the time dimension rather than the time series observations [72]. The definition of considers the acceptable tolerance for inaccuracy in event detection for a particular domain application. The acceptable time range in which an event detection is relevant for allowing an adequate response reaction to a domain event is given by the constant . For example, a meteorologist needs to provide alerts of extreme weather events at least 6 hours in advance, or an engineer needs to intervene in the operation of an overheated piece of machinery within 5 minutes before critical system failure. In this case, they might set to 6 hours or 5 minutes, respectively. However, this approach defines a domain-agnostic default value of , set to , defining a tolerance window of observations enough to hold the central limit theorem.

| (4) |

Figure 3(b) represents the evaluation of for two detections, and , produced by a particular detector. In this context, gives the extent to which a detection represents event , or, in other words, its temporal closeness to a hard true positive (TP) regarding . In that case, detection is closer to a TP, and lies outside the tolerance range given by and could be considered a false positive.

3.2 Maintaining integrity with hard metrics

We are interested in the SoftED metrics, which are still preserving concepts applicable to traditional (hard) metrics. In particular, the SoftED metrics are designed to express the same properties as their hard correspondents. Moreover, they are designed to maintain the reference to the perfect detection performance (score of as in hard metrics) and indicate how close a detector came to it. To achieve this goal, this approach defines constraints necessary for maintaining integrity concerning the standard hard metrics:

-

1.

A given detection must have only one associated score.

-

2.

The total score associated with a given event must not surpass .

The first constraint comes from the idea that the detection should not be rewarded more than once. It avoids the possibility of the total score for surpassing the perfect reference score of . Take, for example, the first scenario, presented in Figure 3(c), in which we have one detection and many close events. The detection is evaluated for events , , and resulting in three different membership evaluation of , , and , respectively. Nevertheless, to maintain integrity with hard metrics, a given detection must not have more than one score. Otherwise, would be rewarded three times, and its total score could surpass the score of a perfect match, which would be .

To address this issue, we devise a strategy for attributing each detection to a particular event . The adopted attribution strategy is based on the temporal distance between and . It facilitates interpretation and avoids the need to solve an optimization problem for each detection. In that case, we attribute to the event that maximizes the membership evaluation . This attribution is given by defined in Equation 5. According to Figure 3(c), is attributed to event given the maximum membership evaluation of . If there is a tie for the maximum membership evaluation of two or more events, represents the set of events to which is attributed. Consequently, we can also derive the set of detections attributed to each event , , defined by Equation 6. The addition of a detection to the set is further conditioned by the tolerance range, that is, a membership evaluation greater than ().

| (5) |

| (6) |

The second constraint defined by this approach comes from the idea that a particular detector should not be rewarded more than once for detecting the same event . It assures that the total score of detections for event does not surpass the perfect reference score of . Take, for example, the second scenario, presented in Figure 3(d), in which many detections are attributed to the same event. The event is present in the sets , , and . Moreover, contains , , and . But to maintain integrity with hard metrics, the total score for () must not surpass the score of a perfect match.

To address this issue, we devise an analogous distance-based strategy for attributing a representative detection to each event . In that case, we attribute to event the detection , contained in , that maximizes the membership evaluation . This attribution is given by defined in Equation 7. According to Figure 3(d), is best represented by detection given the maximum membership evaluation of . Consequently, we can compute the associated score for each event as , defined by Equation 8.

| (7) |

| (8) |

Finally, each detection produced by a particular detector is scored by defined in Equation 9. Representative detections () are scored based on . All other detections are scored . This definition ensures the total score for detections of a particular method does not surpass the number of real events contained in the time series . The Equation 10 holds and maintains the reference to the score of a perfect detection Recall according to usual hard metrics. Furthermore, it penalizes false positives and multiple detections for the same event .

| (9) |

| (10) |

The pseudocode for calculating for each detection is given by Algorithm 1, which defines the function . This function takes three arguments: , , and . The argument is a logical vector of the same length as a time series (), indicating the detections by a given method, and is a logical vector of the same length, indicating the true events in . The argument is an optional parameter (default is set to ) that sets the temporal tolerance for a detection to be considered a match with a true event.

The function in Algorithm 1 first takes the vectors and containing the indices of all events and detections in the input vectors and , respectively. Then, for each -th detection, it finds the indices of all matching events within a distance of (line ) and stores them in . If no matching events exist, is . Otherwise, it calculates the membership scores between each matching event in and the -th detection (line ) according to Equation 4. Finally, the soft score for each detection () is the maximum membership score among the matching events (line ), indicating the best match between the current detection and the events and respecting the constraints necessary for maintaining integrity with hard metrics defined by Equations 5 to 10. Lastly, a vector of soft scores for each detection () is returned.

3.3 Computing the SoftED metrics

The scores computed for each detection , , are used to create soft versions of the hard metrics TP, FP, TN, and FN, as formalized in Table 2. In particular, while the value of TP gives the number of detections that perfectly matched an event (score of ), the sum of scores indicate the degree to which the detections of a method approximate the events contained in time series given the temporal tolerance of observations. Hence, the soft version of the TP metric, is given by . Conversely, the soft version of FN, , indicates the degree to which a detector could not approximate the events in an acceptable time range. The can then be defined as the difference between and the perfect recall score ().

On the other hand, while the value of FP gives the number of detections that did not match an event (score of ), its soft version, , indicates how far the detections of a method came to the events contained in time series given the temporal tolerance of observations. In that sense, is the complement of and can be defined by . Finally, the soft version of TN, , indicates the degree to which a detector could avoid nonevent observations of (). The is given by the difference between and the perfect specificity score ().

Due to the imposed constraints described in Section 3.2, the defined SoftED metrics , , , and , hold the same properties and the same scale as traditional hard metrics. Consequently, using the same characteristic formulas, they can derive soft versions of traditional scoring methods, such as Sensitivity, Specificity, Precision, Recall, and F1. Moreover, SoftED scoring methods still provide the same interpretation while including temporal tolerance for inaccuracy, which is pervasive in time series event detection applications. An implementation of SoftED metrics in R is made publicly available at Github222SoftED implementation, datasets and experiment codes: https://github.com/cefet-rj-dal/softed.

4 Experimental evaluation

SoftED metrics were submitted to an experimental evaluation to analyze their contribution against the traditional hard metrics and the NAB score, the current state-of-the-art detection scoring methods [37]. The SoftED metrics are evaluated based on both complementary analyses: quantitative and qualitative. For that, a large set of computational experiments were performed with the application of several different methods for event detection in real-world and synthetic time series datasets containing ground truth event data. Detection results were evaluated based on SoftED, hard, and NAB metrics. First, this section describes the adopted time series datasets and experimental settings. Finally, the quantitative and qualitative results are presented.

4.1 Datasets

This section presents the datasets selected for evaluating the SoftED metrics. The selected datasets are widely available in the literature and are composed of simulated and real-world time series regarding several different domain applications such as water quality monitoring (GECCO) [51]333The GECCO dataset is provided by the R-package EventDetectR [42]., network service traffic (Yahoo) [69], social media (NAB) [1], oil well exploration (3W) [65], and public health (NMR) 444The NMR dataset was produced by Fiocruz and comprised data on neonatal mortality in Brazilian health facilities from 2005 to 2017. It is publicly available at https://doi.org/10.7303/syn23651701., among others. The selected datasets present over hundred representative time series containing different events. In particular, GECCO, Yahoo, and NAB contain mostly anomalies, while 3W and NMR contain mostly change points. Moreover, the datasets present different nonstationarity and statistical properties to provide a more thorough discussion of the effects of the incorporated temporal tolerance on event detection evaluation on diverse datasets.

4.2 Experimental settings

For evaluating SoftED metrics, a set of up to different event detectors were applied to all time series in the adopted datasets, totalizing event detection experiments. Each experiment comprised an offline detection application, where the methods had access to the entire time series given as input. The applied detectors are implemented and publicly available in the Harbinger framework [55]. It integrates and enables the benchmarking of different state-of-the-art event detectors. These methods encompass searching for anomalies and change points using statistical, volatility, proximity, and machine learning methods. The adopted methods are described in detail by Escobar et al. [22], namely: the Forward and Backward Inertial Anomaly Detector (FBIAD) [40], K-Nearest Neighbors (KNN-CAD) [25], anomalize (based on time series decomposition [21, 58]) [29, 18], and GARCH [11], for anomaly detection; the Exponentially Weighted Moving Average (EWMA) [50], seminal method of detecting change points (SCP) [30], and ChangeFinder (CF) [60], for change point detection; and the machine learning methods based on the use Feed-Forward Neural Network (NNET) [52], Convolutional Neural Networks (CNN) [28, 39], Support Vector Machine (SVM) [14, 49], Extreme Learning Machine (ELM) [34, 61], and K-MEANS [44], for general purpose event detection.

In each experiment, detectors were evaluated using the hard metrics Precision, Recall, and F1. Among them, the F1 was the main metric used for comparison. The NAB score was also computed with the standard application profile for each detector result. The NAB scoring algorithm is implemented and publicly available in the R-package otsad [35]. Anomaly window sizes were automatically set. During the computation of the NAB score, confusion matrix metrics are built. Based on these metrics, the F1 metric of the NAB scoring approach was also computed. Finally, the SoftED metrics were computed for soft evaluation of the applied event detectors. In particular, this experimental evaluation sets the constant of temporal tolerance, , to its default value () unless stated otherwise. Nevertheless, also experimented with values in for sensitivity analysis.

The datasets and codes used in this experimental evaluation were available for reproducibility2.

4.3 Quantitative analysis

This section presents the quantitative analysis of the SoftED metrics. The main goal of this analysis is to assess the effects of temporal tolerance incorporated by SoftED in event detection evaluation. In particular, this section intends to assess (i) whether the proposed metrics can incorporate temporal tolerance to event detection evaluation and, if so, (ii) whether the incorporated temporal tolerance affects the selection of detectors. Answering both questions demands an experimental evaluation to compare the proposed metrics against other baseline and state-of-the-art metrics.

Quantitative experiment 1

The first experiment assesses the number of times SoftED metrics considered more s while evaluating detectors to answer whether SoftED can incorporate temporal tolerance to event detection evaluation. We are interested in comparing SoftED F1 and hard F1 metrics for that. Time series detections where SoftED F1 was higher than its corresponding hard metric represent the incorporation of temporal tolerance. In contrast, detection results that maintained an unchanged F1 score had their evaluation confirmed, representing either perfect recall scenarios in which no tolerance is needed or scenarios with a low rate of neighboring detections in which there are few opportunities for tolerance. Finally, there are inaccurate results, with detections that did not allow temporal tolerance, presenting zero Precision/Recall, and no detections were sufficiently close to events given the defined tolerance level (). In the latter case, the F1 metric cannot be computed.

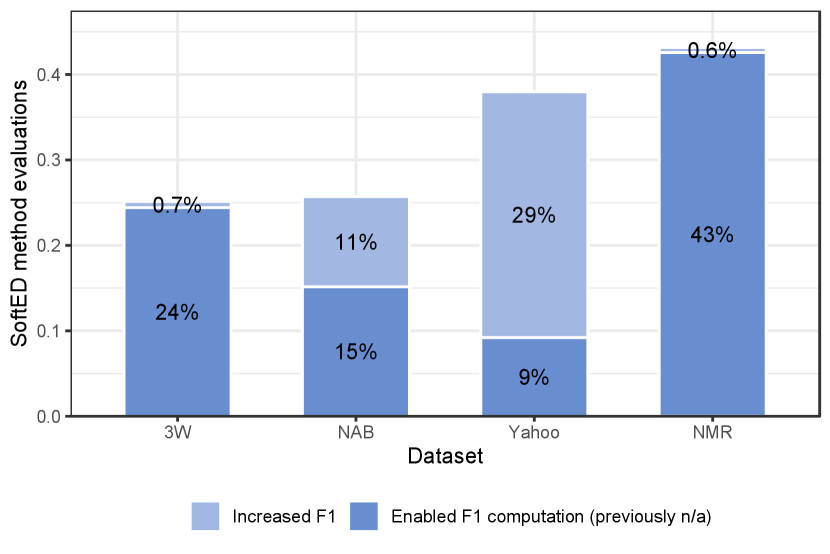

Figure 4(a) compares SoftED F1 and hard F1 metrics for each adopted dataset. The percentage of time series detections where SoftED F1 incorporated temporal tolerance is presented in blue. SoftED metrics incorporated temporal tolerance in over 43% (NMR) and at least 25% (3W) of detector evaluations in all datasets. In total, 36% of the overall conducted time series detections were more tolerantly evaluated (in blue). Furthermore, 45% of all detection results had their evaluation confirmed (in gray), maintaining an unchanged F1 score, reaching a maximum of 64% for the NAB dataset and a minimum of 6% for the NMR dataset. Finally, the other 19% of the overall results corresponded to inaccurate detections that did not allow temporal tolerance (in red). The percentages of inaccurate results (F1 n/a) for each dataset are also in red in Figure 4(a).

Figure 4(b) details the cases of incorporated tolerance. The datasets NAB and Yahoo presented an increase in F1 in 11% and 29% of the cases, respectively (lighter blue). The other respective 15% and 9% are cases in which methods got no s, presenting zero Precision/Recall and non-applicable F1 based on hard metrics (darker blue). Nonetheless, SoftED could score sufficiently close detections, enabling the evaluation of such methods. This is also the case for almost all evaluations of the 3W and NMR datasets incorporating temporal tolerance. In fact, in total, 17% of the overall conducted detection evaluations could not have been made without SoftED metrics incorporating temporal tolerance.

It is possible to observe by Figure 4 that datasets that contained more anomalies (NAB and Yahoo) got more accurate detection results. It occurs as most adopted methods are designed for anomaly detection. Also, the number of anomaly events in their time series gives several opportunities for incorporating temporal tolerance and increasing F1. On the other hand, 3W and NMR datasets, containing only one or two change points per series, got a higher rate of inaccurate detections. These results indicate that change points pose a particular challenge for detection evaluation. SoftED metrics contribute by incorporating temporal tolerance whenever possible and scoring methods that could be disregarded.

Quantitative experiment 2

The second experiment focuses on whether the temporal tolerance incorporated by SoftED can affect the selection of different detectors. For that, we measured the number of times using SoftED metrics as criteria changed the ranking of the best-evaluated detectors. Figure 5 presents the changes in the top-ranked methods for each time series based on the SoftED F1 metric compared to the hard F1. For all datasets, there were changes in the best-evaluated detector (Top 1) in over 74% (NMR) or at least 6% (Yahoo) of the cases (in blue), affecting the recommendation of the most suitable detector for their time series. While the most accurate results maintained their top position (in dark gray), overall adopted time series, 31% of detectors that could have been dismissed became the most prone to selection.

Furthermore, SoftED metrics also caused changes in the second (Top 2) and third-best (Top 3) evaluated methods. Percentages for each dataset are depicted in Figure 5. Considering the adopted time series, 24% of the methods in the Top 2 climbed to that position, while 16% dropped to that position when other methods assumed the Top 1 (in light gray). For methods in the Top 3, 23% climbed to the position, and in 24% of the cases, they were pushed down by methods that climbed to the first two rank positions. Due to the higher rates of perfect recall results in the NAB and Yahoo datasets (Figure 4), most of the methods applied maintained their ranking positions at the top. In contrast, 3W and NMR datasets presented more changes in ranking based on the SoftED metrics, affecting the selection of suitable methods, especially for change point detection.

4.3.1 Complementary analysis

Once the temporal tolerance and its effects in the ranking of detectors are, a complementary analysis can be performed to answer whether the definition of different levels of temporal tolerance affects the computation of the proposed metrics and to what extent. For that, one may conduct a sensitivity analysis by comparing the proposed metrics using different levels of temporal tolerance. The temporal tolerance level of SoftED metrics is given by the constant set to , , and , besides the minimum value of as in the previous experiments. Figure 6 presents the average difference between SoftED and hard Precision and Recall metrics given the different levels of temporal tolerance for each dataset. As temporal tolerance increases, more s were considered, and metrics increased in value, which means the detectors were more tolerantly evaluated. In particular, higher levels of temporal tolerance lead to a decrease in the number of s, which most directly affected Recall values.

A final complementary experiment also aims to answer whether the temporal tolerance incorporated by the proposed metrics differs from that incorporated by NAB score anomaly windows. For that, we measured the number of times the NAB F1 metrics, derived from the NAB scoring algorithm, considered more s than hard F1 metrics while evaluating detectors. This measure is compared against the tolerance incorporated by SoftED metrics presented in Experiment 1 (Figure 4(a)). For datasets 3W, NAB, and Yahoo, NAB increased the incorporated tolerance at 42%, 40%, and 39%, respectively. Whereas, for the NMR dataset, the percentage of incorporated tolerance decreased by 6%.

NAB metrics were more tolerant than SoftED in method evaluations over most datasets, which does not mean better. The tolerance level incorporated by NAB depends directly on the anomaly window size, which is automatically set by the algorithm. Table 3 presents the interval and the average of the anomaly window sizes set for the time series of each dataset. Since automatic anomaly window sizes set for NAB metrics change with each series, it did not allow a comparison under the same standardized conditions. While the tolerance level given by SoftED was consistently set by , giving a tolerance window of observations, the NAB anomaly windows were mostly wider, reaching a maximum of observations or on average for the 3W dataset. Wider anomaly windows allow a greater number of hard s to be considered s, which causes F1 metrics to increase in value. It is similar to what was discussed in Experiment 3, explaining the increase in tolerance opportunities. The inverse is also true, as exemplified by the NMR dataset, for which anomaly windows did not surpass observations, decreasing the number of tolerance opportunities compared to SoftED.

| Dataset | Anomaly window sizes | |

|---|---|---|

| Interval | Mean | |

| 3W | [52, 12626] | 1357 |

| NAB | [0, 902] | 286 |

| Yahoo | [0, 168] | 38 |

| NMR | [0, 14] | 13 |

It is also important to note from Table 3 that the anomaly window size computation proposed by the NAB algorithm allows the definition of zero-sized windows, which do not give any tolerance to inaccuracy as in hard metrics or narrow windows, which are not enough to hold the central limit theorem guaranteed in SoftED results. On the other hand, the NAB window size automatic definition is not domain-dependent. Consequently, domain specialists may find windows too wide or too narrow for their detection application, making the incorporated tolerance and metric results non-applicable or difficult to interpret. In this context, SoftED contributes by allowing domain specialists to define the desired temporal tolerance level for their detector results.

4.4 Qualitative analysis

Although the analysis of Section 4.3 is meant to evaluate the quantitative effects of temporal tolerance, it is not enough to answer whether these effects benefit the evaluation of event detection performance. Hence, there is a demand for a qualitative analysis of the contribution of the temporal tolerance incorporated by the proposed metrics under different scenarios. In this context, a given tolerant metric contributes if its adoption leads to selecting the ideal event detector for a given detection scenario. For that, we established a protocol (Section 4.4.1) and conducted the qualitative analysis (Section 4.4.2).

4.4.1 Metric evaluation protocol

This section proposes a general protocol for evaluating metrics in the context of time series event detection. In particular, this protocol is designed to evaluate metrics that incorporate temporal tolerance into detection. We established six competency questions (CQ)s [64, 45], which encompass a set of questions stated and replied to in natural language [45]. The proposed CQs for this evaluation protocol are designed to qualitatively analyze the contribution of temporal tolerance in event detection under different scenarios. A list of detection scenarios (one for each CQ) was established for that.

Each scenario corresponds to two different detectors (A and B) applied to a particular time series. Their detections are evaluated and compared based on the proposed metrics and other baseline and state-of-the-art metrics. The specialists can then assess the metrics that contribute most in any given scenario. Each scenario is associated with a specific CQ. To succeed in the evaluation, the proposed metric (SoftED) should be affirmative for all CQ.

The CQs (CQ1 to CQ6) are presented as follows. Each scenario (S1 to S6) characterizes the context for their evaluation.

-

CQ1

Do the proposed metrics foster selecting the most suitable detector for precise detection (perfect recall)?

-

S1

Perfect recall: This scenario does not need temporal tolerance. All metrics should be able to reward perfect recalls.

-

CQ2

Do the proposed metrics foster selecting the most suitable detector approximate detection (partial recall)?

-

S2

Event neighborhood: Scenario in which methods produced detections that may not coincide with events but are in their surroundings (neighborhood). Tolerant metrics should be able to reward close detections.

-

CQ3

Do the proposed metrics foster selecting the most suitable detector for symmetric detections?

-

S3

Detection symmetry: Scenario in which methods produced symmetric detections. They have the same distance from the event and differ only in whether they come before or after it. Tolerant metrics should be able to reward close detections regardless of their relative position.

-

CQ4

Do the proposed metrics foster selecting the most suitable detector with fewer false positives?

-

S4

Number of detections: Scenario in which methods produced detections close to the event contained in the series, differing only in the number of detections made. The closest detections have the same distance from the event. Tolerant metrics should be able to reward the closest detections while penalizing unnecessary s.

-

CQ5

Do the proposed metrics foster selecting the most suitable detector where detections are proximate to events?

-

S5

Detection distances: Scenario in which methods produced detections close to events differing only on their distance to them. Tolerant metrics should be able to reward the closest detections.

-

CQ6

Do the proposed metrics foster selecting the most suitable detector resilient of detection bias?

-

S6

Detection bias: Scenario in which metrics are prone to bias in detection evaluation. Methods produced both s and close detections before the event. Tolerant metrics should be able to reward close detections while maintaining the relative weight of s.

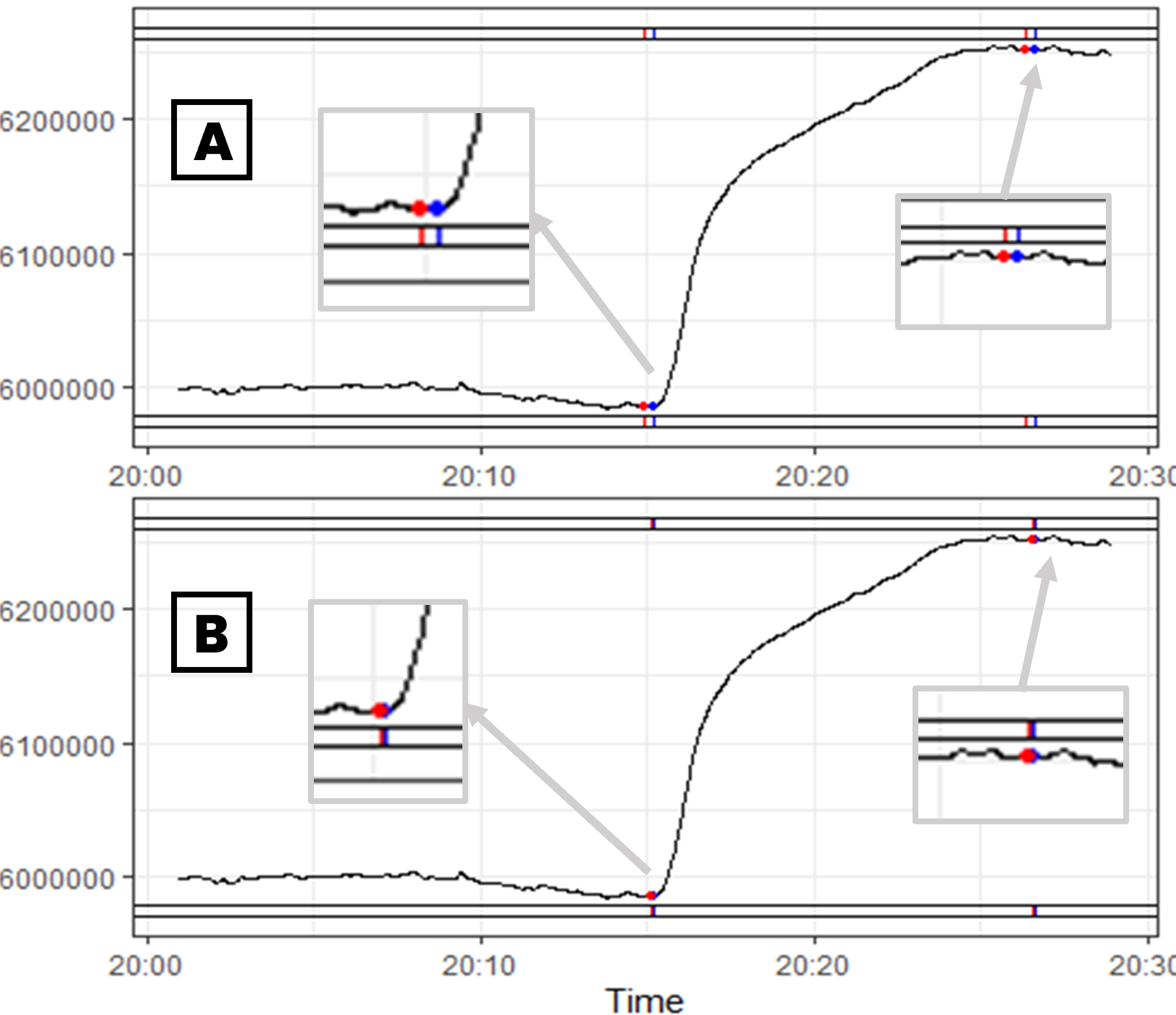

The CQs are evaluated in a survey. The survey explores the problem of selecting the most suitable detector in six scenarios. Two detectors (A and B) were applied to each scenario’s representative time series of the GECCO, 3W, or NMR datasets. The plots of the detection results were presented to participants as in Figure 7, where blue dots represent events, red dots represent detections, and green dots represent detections that match events.

Table 4 is also presented to all participants. It contains detection evaluation metrics computed for detectors A and B for each scenario, namely the F1 metric in its hard and SoftED versions and the NAB score. Values that maximize each metric and could be used to recommend a particular detector are underlined.

Given the results of both methods, the participants are requested to analyze the plots in Figure 7 and answer the first question (Q1):

-

Q1

Which event detector performed better?

Q1 was closed with three disjoint options: Detector A, Detector B, or None. The main goal of Q1 is to get the intuition and personal opinions of the most suitable detector for selection on that particular application scenario.

Next, the participants are requested to analyze the metrics in Table 4 and answer, for each scenario, the second question (Q2):

-

Q2

Which metric corroborates with your opinion?

Q2 was also closed with three joint options: F1, NAB score, or Other. The main goal of Q2 was to assess the metrics (and corresponding evaluation approach) that would foster the selection of the most suitable detector in that particular application scenario.

4.4.2 Qualitative results

This section presents a qualitative analysis of SoftED metrics compared to hard metrics and the NAB score. To this end, following the proposed metric evaluation protocol, we have surveyed participants, of which specialists from three domains: oil exploration, public health, and weather monitoring. We have interviewed three specialists from Petrobras (Brazil oil company), five specialists from the Oswaldo Cruz Foundation (Fiocruz), linked to the Brazilian Ministry of Health, the most prominent institution of science and technology applied to health in Latin America, and five weather forecast specialists from the Rio Operations Center (COR) of the City Hall of Rio de Janeiro. All interviewed specialists work on the problem of time series analysis and event detection daily as part of research projects. Furthermore, the other participants were volunteer students from the Federal Center for Technological Education of Rio de Janeiro (CEFET/RJ) and the National Laboratory for Scientific Computing (LNCC). They were introduced to characterize common sense interpretation.

| Scenario | Detector | Metric | ||

| F1 | NAB score | |||

| Hard | SoftED | |||

| S1 | A | 0.40 | 0.40 | 16.05 |

| B | 1 | 1 | 35.87 | |

| S2 | A | n/a | 0.07 | - 36.53 |

| B | n/a | 0.01 | - 50.17 | |

| S3 | A | n/a | 0.87 | 0.94 |

| B | n/a | 0.87 | 0.77 | |

| S4 | A | n/a | 0.12 | 0.85 |

| B | n/a | 0.6 | 0.85 | |

| S5 | A | n/a | 0.07 | 1.89 |

| B | n/a | 0.87 | 1.76 | |

| S6 | A | 1 | 1 | 0.88 |

| B | n/a | 0.53 | 1 | |

Table 5 presents the domain specialists’ responses to the questions for each scenario. Their winning responses are underlined. Furthermore, student volunteers’ winning responses are given to study how specialist opinion compares with common sense. All participants were also allowed to comment and elaborate on their responses for each scenario in an open question. The remainder of this section further discusses the results of each scenario.

| Scenario | Specialists responses | Volunteer winning responses | ||||||

|---|---|---|---|---|---|---|---|---|

| Q1 | Q2 | Q1 | Q2 | |||||

| Detector A | Detector B | None | F1 | NAB score | Other | |||

| S1 | 1 (8%) | 12 (92%) | 0 (0%) | 12 (92%) | 6 (46%) | 1 (8%) | Detector B (84%) | F1 (96%) |

| S2 | 11 (84%) | 1 (8%) | 1 (8%) | 12 (92%) | 7 (54%) | 1 (8%) | Detector A (88%) | F1 (96%) |

| S3 | 12 (92%) | 0 (0%) | 1 (8%) | 4 (31%) | 12 (92%) | 0 (0%) | Detector A (84%) | NAB score (82%) |

| S4 | 0 (0%) | 12 (92%) | 1 (8%) | 13 (100%) | 1 (8%) | 0 (0%) | Detector B (86%) | F1 (98%) |

| S5 | 3 (23%) | 10 (77%) | 0 (0%) | 11 (85%) | 5 (38%) | 0 (0%) | Detector B (74%) | F1 (86%) |

| S6 | 10 (77%) | 3 (23%) | 0 (0%) | 10 (77%) | 6 (46%) | 0 (0%) | Detector A (82%) | F1 (89%) |

CQ 1

The first scenario refers to a scenario of perfect recall, where Detector A and Detector B detected all events in the GECCO dataset. However, Detector A presents more details (in red). Table 5 shows that almost all specialists (12/13) agreed that Detector B performed better. According to them, Detector B managed to minimize s, presenting a higher Precision rate, indicated by the F1 metric, which was also the winning response for Q2 with 12/13 votes. For this scenario, both hard and SoftED F1 give the same evaluation of Detector B so that both approaches can be used for recommendation.

Nonetheless, specialists (46%) also selected the NAB score, which also corroborates with the recommendation of Detector B. Other specialists said they preferred not to select the NAB score, as they were unfamiliar with the metric and wanted to avoid concluding with this scenario.

CQ 2

The second scenario is based on another time series from the GECCO dataset. It addresses the scenario in which Detector A and Detector B presented detections that, despite not coinciding with the events contained in the series, are in the surroundings or the neighborhood of the events. Furthermore, Detector A and Detector B detections differ in the distance to events. In this case, most specialists (11/13) agreed to select Detector A as giving the best detection performance, for their detections are temporally closer to the events. Both metrics, F1 and NAB score, corroborated with specialists’ opinions recommending Detector A, while F1 was the winning response to Q2. At this point, it is important to note that the hard approach to F1 computation can no longer evaluate the methods, as both results had no Precision or Recall. Hence, the winning response for Q2 regards the F1 metric produced by the SoftED approach as the one that fosters the selection of the best detection performance according to specialists.

CQ 3

The third scenario is based on a time series from the NMR dataset containing monthly neonatal mortality rates for a healthcare facility in Brazil over the years. In this scenario, Detector A and Detector B produced only one detection close to the event contained in the series. The detections of Detector A and Detector B are symmetric. They have the same distance from the event and differ only in whether they come before or after it. In this scenario, almost all specialists (12/13) responded that Detector A gave the best detection performance, as it seems to anticipate the event, allowing time to take prior needed actions. Furthermore, as there was a tie regarding the F1 metrics, the NAB score was the winning response, corroborating with specialists’ opinions.

However, a public health specialist from Fiocruz disagreed and responded that none of the methods performed better, which is corroborated by the F1 metrics. For example, consider implementing a public health policy in which a human milk bank is supposed to decrease neonatal mortality rates. Although it makes sense to detect the first effects of preparing for the implementation of the policy, it may not be reasonable to give greater weight to anticipated detections rather than the detection of the effects after the implementation. They defend:

It is important to deepen the understanding of the context of the event and the reach of its effects (before and after).

CQ 4

The fourth scenario is based on a time series taken from the 3W dataset produced by Petrobras. In this scenario, Detector A and Detector B presented detections close to the event contained in the series, differing only in the number of detections made. The closest detections for both methods have the same distance from the event. For this scenario, except for one specialist that responded None to Q1, all specialists agree that Detector B performed better. As it minimizes the overall s, it increases Precision, which conditions F1, the winning response of Q2, selected by 100% of the specialists. The NAB score indicates a tie between both methods, therefore not penalizing the excess s, and the hard F1 does not provide any evaluation. In this case, the SoftED F1 metric is the only one corroborating with specialists’ opinions.

CQ 5

The fifth scenario is based on another time series from the 3W dataset. This scenario addresses the problem of evaluating methods based on their detection proximity to events. In this scenario, both Detector A and Detector B presented a detection close and antecedent to the two events contained in the series. Detector A and Detector B differ only concerning the distance of their detections to the events. Most specialists (10/13) agreed that Detector B performed better, as they say:

Giving greater weight to detections closer to the actual events seems reasonable.

Again, the only metric that corroborated the specialists’ opinion was the SoftED F1. Furthermore, specialists mentioned that SoftED F1 was approximately 12 times greater for Detector B than Detector A, while the difference in the NAB score did not seem high enough to give the same confidence in Detector A’s results.

CQ 6

Finally, we used another time series of neonatal mortality rates from the NMR dataset for the sixth and final scenario. This scenario addresses the problem of detection bias in detection evaluation. Detector A and Detector B produced a detection related to the event contained in the series. However, Detector A and Detector B detections differ regarding their distance to the event. Detector A managed to correctly detect the time series event, while the detection of Detector B came close before the event. Most specialists (10/13) agreed that Detector A performed better since it produced, for all intents and purposes, a , presenting perfect recall and perfect Precision. On the other hand, the evaluation of Detector B depended solely on the incorporation of temporal tolerance.

As metrics disagree with the recommendation, the F1 metric again corroborates with the specialists’ opinion, being the winning response for Q2. In particular, the SoftED F1 metric is the only approach that recommends Detector A. The hard F1 metric cannot be computed for Detector B, being incomparable. The difference in metric values of SoftED is also greater than for the NAB score, increasing confidence in the recommendation.

4.4.3 Discussion of results

Given different detection evaluation scenarios, the majority of the domain specialists agreed that the most desired detector for selection was the one that minimizes s and s, giving higher Precision and Recall rates while also producing detections that are temporally closer to the events. In this context, the F1 was the metric most corroborated with specialists’ opinions for of the scenarios. In particular, according to specialists in four scenarios, the SoftED F1 metric was the only one that furthered the selection of the most desired detector. To elaborate, a domain specialist from Fiocruz argued that:

For health policies, for example, the SoftED approach makes more sense since the hard and NAB approaches do not seem adequate for events that produce prior and subsequent effects that may have a gradual and non-monotonous evolution.

Volunteer winning responses in Table 5 also indicate that common sense does not differ from specialist opinion, which means the contribution of SoftED metrics is noticeable even to a wider non-specialist public.

There was still one scenario (S3) where the NAB score was the metric that most corroborated with specialists. They claimed that for their usual detection application, it is interesting to have s (warnings) before the event, so there is a time window for measures to be taken to prevent any of its unwelcome effects. Also, the longer the time window set by s preceding the event, the better, as there is more valuable time to take preventive actions.

It is important to mention that, to avoid bias in the responses, the discussion regarding our motivating example of Section 1.1 was not presented before the interviews. Hence, detections that preceded the events were misconceived as event predictions [74]. As presented earlier, detections preceding events can be made past their occurrence. Evaluating methods that anticipate events is not about how temporally distant a preceding detection is from the events. It is actually about the time lag needed for a detector to detect the event accurately. The misconception regarding detections that preceded the events was addressed in detail by the end of the interviews. Furthermore, the specialists see the evaluation regarding detection lags, that is, analyzing its ability to anticipate (or not) the events as complementary to detection performance but also important.

5 Conclusions

This paper introduced the SoftED metrics, which are new softened versions of the standard classification metrics. Inspired by Fuzzy sets, they are designed to incorporate temporal tolerance in evaluating event detection performance in time series applications. SoftED metrics support the comparative analysis of methods based on their ability to accurately produce detections of interest to the user, given their desired tolerance level.

This paper also introduces a new general protocol inspired by competency questions to evaluate temporal tolerant metrics for event detection. Following the proposed evaluation protocol, the SoftED metrics were quantitatively and qualitatively compared against the current state-of-the-art methods. The metrics incorporated temporal tolerance in event detection, enabling evaluations that could not have been made without them while also confirming accurate results. Moreover, specialists noted the contribution of SoftED metrics to the problem of detector evaluation in different domains.

Acknowledgments

The authors thank CNPq, CAPES (finance code 001), FAPERJ, and CEFET/RJ for partially funding this research.

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

References

- [1] S. Ahmad, A. Lavin, S. Purdy, and Z. Agha. Unsupervised real-time anomaly detection for streaming data. Neurocomputing, 262:134–147, 2017.

- [2] M. Ahmed, A. Mahmood, and M. Islam. A survey of anomaly detection techniques in financial domain. Future Generation Computer Systems, 55:278–288, 2016.

- [3] E. Alevizos, A. Skarlatidis, A. Artikis, and G. Paliouras. Probabilistic complex event recognition: A survey. ACM Computing Surveys, 50(5), 2017.

- [4] S. Aminikhanghahi and D. Cook. A survey of methods for time series change point detection. Knowledge and Information Systems, 51(2):339–367, 2017.

- [5] R. Ariyaluran Habeeb, F. Nasaruddin, A. Gani, I. Targio Hashem, E. Ahmed, and M. Imran. Real-time big data processing for anomaly detection: A Survey. International Journal of Information Management, 45:289–307, 2019.

- [6] A. Bl\a’azquez-Garc\a’ıa, A. Conde, U. Mori, and J. Lozano. A Review on Outlier/Anomaly Detection in Time Series Data. ACM Computing Surveys, 54(3), 2021.

- [7] P. Boniol and T. Palpanas. Series2graph: Graph-based subsequence anomaly detection for time series. Proceedings of the VLDB Endowment, 13(11):1821–1834, 2020.

- [8] P. Boniol, J. Paparrizos, Y. Kang, T. Palpanas, R. Tsay, A. Elmore, and M. Franklin. Theseus: Navigating the Labyrinth of Time-Series Anomaly Detection. Proceedings of the VLDB Endowment, 15(12):3702–3705, 2022.

- [9] P. Boniol, J. Paparrizos, T. Palpanas, and M. Franklin. Sand in action: Subsequence anomaly detection for streams. Proceedings of the VLDB Endowment, 14(12):2867–2870, 2021.

- [10] M. Braei and S. Wagner. Anomaly Detection in Univariate Time-series: A Survey on the State-of-the-Art, apr 2020.

- [11] R. Carmona. Statistical Analysis of Financial Data in R. Springer Science & Business Media, dec 2013.

- [12] V. Chandola, A. Banerjee, and V. Kumar. Anomaly detection: A survey. ACM Computing Surveys, 41(3), 2009.

- [13] V. Chandola, V. Mithal, and V. Kumar. Comparative evaluation of anomaly detection techniques for sequence data. In Proceedings - IEEE International Conference on Data Mining, ICDM, pages 743–748, 2008.

- [14] V. Chauhan, K. Dahiya, and A. Sharma. Problem formulations and solvers in linear SVM: a review. Artificial Intelligence Review, 52(2):803–855, 2019.

- [15] D. Choudhary, A. Kejariwal, and F. Orsini. On the Runtime-Efficacy Trade-off of Anomaly Detection Techniques for Real-Time Streaming Data, oct 2017.

- [16] A. Cook, G. Misirli, and Z. Fan. Anomaly Detection for IoT Time-Series Data: A Survey. IEEE Internet of Things Journal, 7(7):6481–6494, 2020.

- [17] D. J. Cook and N. C. Krishnan. Activity Learning: Discovering, Recognizing, and Predicting Human Behavior from Sensor Data. John Wiley & Sons, feb 2015.

- [18] M. Dancho and D. Vaughan. anomalize: Tidy Anomaly Detection, 2020.

- [19] H. Ding, G. Trajcevski, P. Scheuermann, X. Wang, and E. Keogh. Querying and mining of time series data: Experimental comparison of representations and distance measures. In Proceedings of the VLDB Endowment, volume 1, pages 1542–1552, 2008.

- [20] X. Ding, Y. Li, A. Belatreche, and L. Maguire. An experimental evaluation of novelty detection methods. Neurocomputing, 135:313–327, 2014.

- [21] G. Dudek. Neural networks for pattern-based short-term load forecasting: A comparative study. Neurocomputing, 205:64–74, 2016.

- [22] L. Escobar, R. Salles, J. Lima, C. Gea, L. Baroni, A. Ziviani, P. Pires, F. Delicato, R. Coutinho, L. Assis, and E. Ogasawara. Evaluating Temporal Bias in Time Series Event Detection Methods. Journal of Information and Data Management, 12(3), oct 2021.

- [23] H. Fanaee-T and J. Gama. Event labeling combining ensemble detectors and background knowledge. Progress in Artificial Intelligence, 2(2-3):113–127, 2014.

- [24] R. Fontugne, P. Borgnat, P. Abry, and K. Fukuda. MAWILab: Combining diverse anomaly detectors for automated anomaly labeling and performance benchmarking. In Proceedings of the 6th International Conference on Emerging Networking Experiments and Technologies, Co-NEXT’10, 2010.

- [25] A. Gammerman and V. Vovk. Hedging predictions in machine learning. Computer Journal, 50(2):151–163, 2007.

- [26] F. Gmati, S. Chakhar, W. Chaari, and M. Xu. A taxonomy of event prediction methods. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 11606 LNAI:12–26, 2019.

- [27] D. N. Gujarati. Essentials of Econometrics. SAGE, sep 2021.

- [28] T. Guo, J. Dong, H. Li, and Y. Gao. Simple convolutional neural network on image classification. In 2017 IEEE 2nd International Conference on Big Data Analysis, ICBDA 2017, pages 721–724, 2017.

- [29] M. Gupta, J. Gao, C. Aggarwal, and J. Han. Outlier Detection for Temporal Data: A Survey. IEEE Transactions on Knowledge and Data Engineering, 26(9):2250–2267, 2014.

- [30] V. Guralnik and J. Srivastava. Event detection from time series data. In Proceedings of the fifth ACM SIGKDD international conference on Knowledge discovery and data mining, KDD ’99, pages 33–42, New York, NY, USA, 1999. Association for Computing Machinery.

- [31] J. Han, J. Pei, and H. Tong. Data Mining: Concepts and Techniques. Morgan Kaufmann, Cambridge, MA, 4th edition edition, oct 2022.

- [32] V. Hodge and J. Austin. A survey of outlier detection methodologies. Artificial Intelligence Review, 22(2):85–126, 2004.

- [33] E. Hüllermeier. Fuzzy sets in machine learning and data mining. Applied Soft Computing, 11(2):1493–1505, 2011.

- [34] S. Ismaeel, A. Miri, and D. Chourishi. Using the Extreme Learning Machine (ELM) technique for heart disease diagnosis. In 2015 IEEE Canada International Humanitarian Technology Conference, IHTC 2015, 2015.

- [35] A. Iturria, J. Carrasco, F. Herrera, S. Charramendieta, and K. Intxausti. otsad: Online Time Series Anomaly Detectors. Technical report, https://cran.r-project.org/web/packages/otsad/, 2019.

- [36] V. Jacob, F. Song, A. Stiegler, B. Rad, Y. Diao, and N. Tatbul. Exathlon: A benchmark for explainable anomaly detection over time series. Proceedings of the VLDB Endowment, 14(11):2613–2626, 2021.

- [37] A. Lavin and S. Ahmad. Evaluating Real-Time Anomaly Detection Algorithms – The Numenta Anomaly Benchmark. In 2015 IEEE 14th International Conference on Machine Learning and Applications (ICMLA), pages 38–44, dec 2015.

- [38] V. Lawhern, W. Hairston, and K. Robbins. DETECT: A MATLAB Toolbox for Event Detection and Identification in Time Series, with Applications to Artifact Detection in EEG Signals. PLoS ONE, 8(4), 2013.

- [39] B. Lim and S. Zohren. Time-series forecasting with deep learning: A survey. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 379(2194), 2021.

- [40] J. Lima, R. Salles, F. Porto, R. Coutinho, P. Alpis, L. Escobar, E. Pacitti, and E. Ogasawara. Forward and Backward Inertial Anomaly Detector: A Novel Time Series Event Detection Method. In Proceedings of the International Joint Conference on Neural Networks, volume 2022-July, 2022.

- [41] S. Molaei and M. Keyvanpour. An analytical review for event prediction system on time series. In 2015 2nd International Conference on Pattern Recognition and Image Analysis, IPRIA 2015, 2015.

- [42] S. Moritz and F. Rehbach. EventDetectR. Technical report, https://cran.r-project.org/web/packages/EventDetectR/index.html, 2020.

- [43] M. Munir, M. Chattha, A. Dengel, and S. Ahmed. A comparative analysis of traditional and deep learning-based anomaly detection methods for streaming data. In Proceedings - 18th IEEE International Conference on Machine Learning and Applications, ICMLA 2019, pages 561–566, 2019.

- [44] A. Muniyandi, R. Rajeswari, and R. Rajaram. Network anomaly detection by cascading k-Means clustering and C4.5 decision tree algorithm. In Procedia Engineering, volume 30, pages 174–182, 2012.

- [45] N. F. Noy and C. D. Hafner. The state of the art in ontology design: A survey and comparative review. AI magazine, 18(3):53–53, 1997.

- [46] G. Pang, C. Shen, L. Cao, and A. Hengel. Deep Learning for Anomaly Detection: A Review. ACM Computing Surveys, 54(2), 2021.

- [47] J. Paparrizos, P. Boniol, T. Palpanas, R. Tsay, A. Elmore, and M. Franklin. Volume Under the Surface: A New Accuracy Evaluation Measure for Time-Series Anomaly Detection. Proceedings of the VLDB Endowment, 15(11):2774–2787, 2022.

- [48] M. Pimentel, D. Clifton, L. Clifton, and L. Tarassenko. A review of novelty detection. Signal Processing, 99:215–249, 2014.

- [49] Rahul and B. Choudhary. An Advanced Genetic Algorithm with Improved Support Vector Machine for Multi-Class Classification of Real Power Quality Events. Electric Power Systems Research, 191, 2021.

- [50] H. Raza, G. Prasad, and Y. Li. EWMA model based shift-detection methods for detecting covariate shifts in non-stationary environments. Pattern Recognition, 48(3):659–669, 2015.

- [51] F. Rehbach, S. Moritz, S. Chandrasekaran, M. Rebolledo, M. Friese, and T. Bartz-Beielstein. GECCO Industrial Challenge 2018 Dataset. Technical report, https://zenodo.org/record/3884398#.Y9qShK3MJD8, 2018.

- [52] F. Riese and S. Keller. Supervised, semi-supervised, and unsupervised learning for hyperspectral regression. Advances in Computer Vision and Pattern Recognition, pages 187–232, 2020.

- [53] F. Salfner, M. Lenk, and M. Malek. A survey of online failure prediction methods. ACM Computing Surveys, 42(3), 2010.

- [54] R. Salles, K. Belloze, F. Porto, P. Gonzalez, and E. Ogasawara. Nonstationary time series transformation methods: An experimental review. Knowledge-Based Systems, 164:274–291, 2019.

- [55] R. Salles, L. Escobar, L. Baroni, R. Zorrilla, A. Ziviani, V. Kreischer, F. Delicato, P. F. Pires, L. Maia, R. Coutinho, L. Assis, and E. Ogasawara. Harbinger: Um framework para integração e análise de métodos de detecção de eventos em séries temporais. In Anais do Simpósio Brasileiro de Banco de Dados (SBBD), pages 73–84. SBC, sep 2020.

- [56] S. Schmidl, P. Wenig, and T. Papenbrock. Anomaly Detection in Time Series: A Comprehensive Evaluation. Proceedings of the VLDB Endowment, 15(9):1779–1797, 2022.

- [57] G. Sebestyen, A. Hangan, Z. Czako, and G. Kovacs. A taxonomy and platform for anomaly detection. In 2018 IEEE International Conference on Automation, Quality and Testing, Robotics, AQTR 2018 - THETA 21st Edition, Proceedings, pages 1–6, 2018.

- [58] R. H. Shumway and D. S. Stoffer. Time Series Analysis and Its Applications: With R Examples. Springer, apr 2017.

- [59] N. Singh and C. Olinsky. Demystifying Numenta anomaly benchmark. In Proceedings of the International Joint Conference on Neural Networks, volume 2017-May, pages 1570–1577, 2017.

- [60] J.-I. Takeuchi and K. Yamanishi. A unifying framework for detecting outliers and change points from time series. IEEE Transactions on Knowledge and Data Engineering, 18(4):482–492, 2006.

- [61] J. Tang, C. Deng, and G.-B. Huang. Extreme Learning Machine for Multilayer Perceptron. IEEE Transactions on Neural Networks and Learning Systems, 27(4):809–821, 2016.

- [62] N. Tatbul, T. Lee, S. Zdonik, M. Alam, and J. Gottschlich. Precision and recall for time series. In Advances in Neural Information Processing Systems, volume 2018-December, pages 1920–1930, 2018.

- [63] A. Tettamanzi and M. Tomassini. Soft Computing: Integrating Evolutionary, Neural, and Fuzzy Systems. Springer Science & Business Media, apr 2013.

- [64] M. Uschold and M. Gruninger. Ontologies: Principles, methods and applications. The knowledge engineering review, 11(2):93–136, 1996.

- [65] R. Vargas, C. Munaro, P. Ciarelli, A. Medeiros, B. Amaral, D. Barrionuevo, J. Ara\a’ujo, J. Ribeiro, and L. Magalhães. A realistic and public dataset with rare undesirable real events in oil wells. Journal of Petroleum Science and Engineering, 181, 2019.

- [66] H. Wang, M. Bah, and M. Hammad. Progress in Outlier Detection Techniques: A Survey. IEEE Access, 7:107964–108000, 2019.

- [67] Y. Wang, M. Vuran, and S. Goddard. Analysis of event detection delay in wireless sensor networks. In Proceedings - IEEE INFOCOM, pages 1296–1304, 2011.

- [68] J. Ward, P. Lukowicz, and H. Gellersen. Performance metrics for activity recognition. ACM Transactions on Intelligent Systems and Technology, 2(1), 2011.

- [69] Y. Webscope. Labeled anomaly detection dataset. Technical report, https://webscope.sandbox.yahoo.com/catalog.php?datatype=s\&did=70, mar 2015.

- [70] P. Wenig Phillip.Wenig@Hpi.De, S. Schmidl Sebastian.Schmidl@Hpi.De, and T. Papenbrock. TimeEval: A Benchmarking Toolkit for Time Series Anomaly Detection Algorithms. Proceedings of the VLDB Endowment, 15(12):3678–3681, 2022.

- [71] X.-B. Yan, L. Tao, Y.-J. Li, and G.-B. Cui. Research on event prediction in time-series data. In Proceedings of 2004 International Conference on Machine Learning and Cybernetics, volume 5, pages 2874–2878, 2004.

- [72] L. Zadeh. Fuzzy sets. Information and Control, 8(3):338–353, 1965.

- [73] Y. Zhang, N. Meratnia, and P. Havinga. Outlier detection techniques for wireless sensor networks: A survey. IEEE Communications Surveys and Tutorials, 12(2):159–170, 2010.

- [74] L. Zhao. Event Prediction in the Big Data Era: A Systematic Survey. ACM Computing Surveys, 54(5), 2021.

- [75] Y. Zhou, R. Arghandeh, H. Zou, and C. Spanos. Nonparametric Event Detection in Multiple Time Series for Power Distribution Networks. IEEE Transactions on Industrial Electronics, 66(2):1619–1628, 2019.