0020716YYxxxxxxxx \issn1029-0265 \issnp0020-7160 \jvol00 \jnum00 \jyear2011 \jmonthJuly

Solvers and precondtioners based on Gauss-Seidel and Jacobi algorithms for non-symmetric stochastic Galerkin system of equations

Abstract

In this work, solvers and preconditioners based on Gauss-Seidel and Jacobi algorithms are explored for stochastic Galerkin discretization of partial differential equations (PDEs) with random input data. Gauss-Seidel and Jacobi algorithms are formulated such that the existing software is leveraged in the computational effort. These algorithms are also used as preconditioners to Krylov iterative methods. The solvers and preconditiners are compared with Krylov based iterative methods with the traditional mean-based preconditioner [13] and Kronecker-product preconditioner [17] by solving a steady state state advection-diffusion equation, which upon discretization, results in a non-symmetric positive definite matrix on left-hand-side. Numerical results show that an approximate version of Gauss-Seidel algorithm is a good preconditioner for GMRES to solve non-symmetric Galerkin system of equations.

keywords:

Stochastic finite element method; Krylov based iterative methods; Relaxation based preconditioning; Jacobi and Gauss-Seidel algorithms; polynomial chaos1 Introduction

To account for uncertainties in model, data and parameters, physical phenomena are often modeled as partial differential equations (PDEs) with random coefficients. While Monte Carlo sampling methods remain the standard for providing a probabilistic characterization to the solution of these problems, there is growing need for approaches that address the computational challenge associated with statistical sampling of large scale computational models. More recently, methods based on stochastic Galerkin approximations [8, 3] have been increasingly explored as an alternative to Monte Carlo for solving these problems because of their computational and analytical properties, as well as for their ability to provide an approximation to the deterministic mapping that exists between random input parameters and random solution.

Stochastic finite element methods based on intrusive stochastic Galerkin methods ([14, 12, 4, 10]) and non-intrusive stochastic collocation methods ([16, 19, 11, 2]) have gained popularity in recent years. Here the intrusive and the non-intrusive methods are defined as methods which require significant changes to software already available for solving deterministic PDEs and methods that can use this legacy software without modifications, respectively. Both methods exploit solution regularity to improve on the convergence rates of Monte Carlo methods. The first approach translates the stochastic PDE into a coupled set of deterministic PDEs while the second samples the stochastic PDE at a predetermined set of collocation points, resulting in a set of uncoupled deterministic PDEs. The solution at these collocation points is then used to interpolate the solution in the entire random input domain. Extending legacy software to support stochastic collocation methods is simpler than supporting stochastic Galerkin methods (SGMs). Moreover, intrusive SGMs require specialized linear solvers. However, the resulting set of PDEs in the stochastic Galerkin system is much smaller in number than that in the collocation method. For a canonical random diffusion problem, it is shown [5] that SGM using iterative Krylov-based linear solvers and mean-based preconditioning [13] is more efficient than the non-intrusive sparse grid collocation method.

While the stochastic Galerkin method is often considered to be a fully intrusive method, there are in fact a variety of solver approaches for the stochastic Galerkin method with varying level of intrusiveness. In the present work, the less intrusive Gauss-Seidel and Jacobi mean-based algorithms are explored to solve stochastic Galerkin system of equations. We consider these methods to be less intrusive than the Krylov-based methods as they allow reuse of existing deterministic solvers. Moreover preconditioning techniques for Krylov-based methods based on Gauss-Seidel and Jacobi ideas are also explored and compared to traditional mean-based preconditioning. Various iterative methods and preconditioners based on matrix splitting methods to solve stochastic Galerkin system of equations are compared in [14]. The -splitting methods discussed in section 3.1.3 in [14] are similar to the Gauss-Seidel and Jacobi algorithms discussed in this paper. In [14], it was reported that the mean-based preconditioner was more efficient in terms of computational time than the other -splitting methods considered. A symmetric Gauss-Seidel preconditioner was used in [14] which involves one forward and one backward Gauss-Seidel iteration. In the present paper, we use only one forward Gauss-Seidel iteration for preconditioning and hence the computational cost of this preconditioner is half that of the symmetric Gauss-Seidel preconditioner. Although, these solvers and preconditioners can be used to solve any stochastic Galerkin system of equations, we focus here on solving non-symmetric stochastic Galerkin system of equations. Gauss-Seidel and Jacobi algorithms are carefully formulated such that the number of matrix-vector products are minimized. Unlike the mean-based preconditioner, which has information from the mean stiffness matrix only, preconditioners based on Gauss-Seidel and Jacobi algorithms make use of higher order information of the stochastic stiffness matrix. Another preconditioner that makes use of higher order information is the Kronecker product preconditioner defined in [17]. This preconditioner has the Kronecker product structure of the Galerkin stiffness matrix and is constructed to be close to the stochastic stiffness matrix. All these techniques are then compared by solving a non-symmetric test problem with random diffusion coefficient. These comparisons demonstrate a trade-off in computational cost versus intrusiveness with the Krylov-based methods using an approximate Gauss-Seidel or Jacobi mean preconditioner being the most efficient. Main contribution in this paper is to adapt Gauss-Seidel and Jacobi algorithms such that the legacy software can be reused to solve stochastic partial differential equations and to reduce the computational cost of Krylov based iterative methods by using these algorithms as preconditioners in which the number of matrix-vector products is reduced by minimizing the duplicated computations.

This paper is organized as follows. Two models of the input random field are developed in section 3 which dictate very different behavior for the stochastic solution methods considered next. Section 4 describes the stochastic Galerkin method. Various solver and preconditioning methods for solving the stochastic Galerkin system of equations are introduced in section 5. In section 6, a test problem governed by the advection-diffusion equation with a random diffusion coefficient is formulated. In section 7, numerical experiments are carried out to compare the efficiency of various solver and preconditioning methods that have been introduced in section 5. Finally section 8 provides the concluding remarks.

2 Stochastic partial differential equations

Let be an open subset of and be a complete probability space with sample space , -algebra and probability measure . We are interested in studying the following stochastic partial differential equation: find a random field, such that the following holds -almost surely (-a.s.):

| (1) |

subject to the boundary condition

| (2) |

where is a differential operator and is a boundary operator.

3 Input random field model

Uncertainty in stochastic PDEs often arises by treating coefficients in the differential operator as random fields. For computational purposes, each stochastic coefficient must be discretized in both spatial and stochastic domains. To this end, it is often approximated with a truncated series expansion that separates the spatial variable from the stochastic variable resulting in a representation by a finite number of random variables. In the present problem, two cases of random field models are considered. In the first case, the random field is assumed to be charcaterized by a truncated Karhunen-Loève expansion obtained by specifying the covariance function and a product uniform measure on the associated random variables. In the second case, the random field is assumed to have a log-normal distribution, that is where is a Gaussian random field, and is approximated by a truncated polynomial chaos expansion.

3.1 Karhunen-Loève representation

Let be the covariance function of the random field , where denotes mathematical expectation. Then can be approximated through its truncated Karhunen-Loève (K-L) expansion [8] given by

| (3) |

where is the mean of the random field and are solutions of the integral eigenvalue problem

| (4) |

The eigenvalues are positive and non-increasing, and the eigenfunctions are orthonormal, that is,

| (5) |

where is the Kronecker delta. In Eq. 3, are uncorrelated random variables with zero mean. As a first test-case, the diffusion coefficient is modeled as a random field with an exponential covariance function

| (6) |

In addition, the random variables in the K-L expansion are assumed to be independent identically distributed with a uniform distribution.

3.2 Polynomial chaos representation

In general, the K-L expansion results in a representation that, while linear in the KL random variables, involves variables that are statistically dependent with a joint distribution that is data-dependent. For problems driven by experimental evidence, this could present both theoretical and algorithmic challenges. An alternative representation relies on representing the random field directly in the form of a polynomial chaos decomposition [8]. Accordingly, the random field is approximated as

| (7) |

where are orthogonal polynomials with respect to measure of the random variables which are chosen, typically, to be statistically independent. Specifically,

| (8) |

Accordingly, and as a second test-case, the diffusion coefficient , is modeled as a log-normal random field [7] where and is a Gaussian random field with exponential covariance (6). Here, is approximated with a truncated K-L expansion of the form

| (9) |

In this case the random variables are standard normal random variables and thus are independent and can be approximated with a truncated polynomial chaos expansion (7) where are multidimensional Hermite polynomials in gaussian random variables . For a given total polynomial order , the total number of polynomials is .

4 Stochastic Galerkin method

Let be the subspace of the Sobolev space that vanishes on the boundary and is equipped with the norm . Problem (1) can then be written in the following equivalent variational form [9]: find such that

| (10) |

where is a continuous and coercive bilinear form and is a continuous bounded linear functional. In the stochastic Galerkin method, we seek the solution of the variational problem (10) in a tensor product space , where, is finite dimensional space of continuous polynomials corresponding to the spatial discretization of and is the space of random variables spanned by polynomial chaos [8] of order up to . Then the finite dimensional approximation of the exact solution on the tensor product space is given as the solution to

| (11) |

In equation (11) the input random field in the bilinear form can be approximated using either a K-L expansion or a polynomial chaos expansion depending on a choice of model for the random field. The finite dimensional approximation is represented with truncated polynomial chaos expansion, where the multidimensional polynomial chaos are orthogonal with respect to the probability measure of the underlying random variables. The resulting set of coupled PDEs are then discretized using standard techniques such as the finite element or finite difference methods. In the present problem, the set of coupled PDEs are discretized using the finite element method and the resulting system of linear equations can be written as

| (12) |

where , and when is approximated by a truncated K-L expansion, or and when is approximated by a polynomial chaos expansion. Here are the polynomial chaos coefficients of the stiffness matrix (section (3.4) of [13]) and are the polynomial chaos coefficients of the discrete solution vector

| (13) |

Equation (12) can be written in the form of a global stochastic stiffness matrix of size by as

| (14) |

where . We will denote this system as . In practice it is prohibitive to assemble and store the global stochastic stiffness matrix in this form, rather each block of the stochastic stiffness matrix can be computed from the when needed. The stochastic stiffness matrix is block sparse, which means that some of the off-diagonal blocks are zero matrices, because of the fact that the defined above vanishes for certain combination of and . Unlike the off-diagonal blocks, the diagonal blocks have contributions from the mean striffness matrix and thus dominate over the off-diagonals. These properties of block sparsity and diagonal dominance are exploited to develop solvers and preconditioners based on Jacobi and Gauss-Seidel algorithms.

5 Solution methods for stochastic Galerkin systems

In this section, various solver techniques and preconditioning methods for solving the linear algebraic equations arising from stochastic Galerkin discretizations (12) are described. The solver methods discussed are: relaxation methods, namely, a Jacobi mean method and a Gauss-Seidel mean method, and Krylov-based iterative methods [15]. Also various stochastic preconditioners used to accelerate convergence of the Krylov methods are discussed, including mean-based [13], Gauss-Seidel mean, approximate Gauss-Seidel mean, approximate Jacobi mean and Kronecker product [17] preconditioners. The relaxation schemes can be viewed as fixed point iterations on a preconditioned system [15]. In Jacobi and Gauss-Seidel methods, mean splitting is used rather than traditional diagonal block splitting as it allows use of the same mean matrix for all inner deterministic solves (and thus reuse of the preconditioner ).

5.1 Jacobi mean algorithm

In this method, systems of equations of size equal to that of the deterministic system are solved iteratively by updating the right-hand-side to obtain the solution to the stochastic Galerkin system of equations (12):

| (15) |

The above system of equations is solved for using any solution technique appropriate for the mean matrix . Thus existing legacy software can be used with minimal modification to solve the stochastic Galerkin system. In this work, Krylov-based iterative methods with appropriate preconditioners will be used. One cycle of solves from is considered one Jacobi outer iteration, and after each outer iteration, the right-hand-side in equation (15) is updated replacing with the new solution . These outer iterations are continued until the required convergence tolerance is achieved. The Jacobi mean algorithm is shown in Algorithm 1.

1. Choose initial guess and compute residual

2. Iteration count,

3. while do

4. for do

5. Solve

6. end for

7.

8.

9. end while.

Note that for a given outer iteration, all of the right-hand-sides for are available simultaneously, and thus their solution can be efficiently parallelized. Moreover block algorithms optimized for multiple right-hand-sides may be used to further increase performance. Finally this approach does not require a large amount of memory to compute the solution. The disadvantage of the method is that it may not converge or may converge very slowly when the diagonal blocks of the stochastic stiffness matrix are less dominant over off-diagonal blocks.

5.2 Gauss-Seidel mean iterative method

The Gauss-Seidel method considered is similar to the Jacobi method, except the right-hand-side in equation (15) is updated after each deterministic solve with the newly computed . Symbolically this is written

| (16) |

As before, one cycle of solves from is considered one outer iteration of the Gauss-Seidel method, and these outer iterations are repeated until the required convergence tolerance is achieved. Note however that computing the updates as shown here would result in a large number of duplicated matrix-vector products for each outer iteration. Instead, after each is computed by solving the mean linear system, we first compute for all in which is nonzero for any . Then for each corresponding we update . This allows all of the right-hand-sides to be updated as required using the fewest number of matrix-vector products and without resorting to storing intermediate products. The complete Gauss-Seidel algorithm is shown in Algorithm 2.

1. Choose initial guess and compute residual

2. Iteration count,

3. Initialize

4. while do

5.

6. for do

7. Solve

8.

9. for

10.

11. for

12. if then

13.

14.

15. endif

16. end for

17. end for

18.

19. end for

20.

21. end while.

Often this method converges in fewer iterations than the Jacobi method, at the expense of no longer having all of the right-hand-sides available simultaneously. Unlike diagonal block splitting methods defined in [14], mean block splitting is used in both Jacobi and Gauss-Seidel algorithms and hence the left-hand-side matrix is the mean matrix for all inner deterministic problems and only the right-hand-side changes. In such cases recycled Krylov basis methods could be explored to increase performance.

5.3 Krylov based iterative methods with matrix-free operations

Krylov based iterative methods [15] such as the conjugate gradient (CG) method and the generalized minimal residual (GMRES) method can be used to solve the stochastic Galerkin system (12) in which matrix vector products are computed using “matrix-free” operations:

| (17) |

If the matrix vector products are computed from Eq. 17, it is not required to assemble the full stochastic Galerkin stiffness matrix, drastically decreasing memory requirements. However if a large number of iterations of a Krylov method such as GMRES are required, allocation of the Krylov basis may still require a very large amount of memory. Thus good preconditioning strategies for the stochastic Galerkin system are required, several of which will be discussed below.

Mean-based preconditioner

The mean-based preconditioner [13] is given by where is a preconditioner for the mean. The mean-based preconditioner is very efficient to compute and apply, since it only must be generated once from a matrix that is of the size of the deterministic system. However it doesn’t incorporate any higher-order stochastic information, thus its performance degrades as the stochastic dimension, polynomial order, or random field variance increases [12, 17].

Gauss-Seidel preconditioner

One or more outer iterations of the Gauss-Seidel mean algorithm can be used as a preconditioner to the Krylov based iterative methods. An advantage of this method is that the cost of applying the preconditioner can be controlled by adjusting the tolerance of the inner deterministic solves and number of outer iterations. Decreasing this tolerance and increasing the number of outer iterations will reduce the number of iterations in the Krylov method, but make the preconditioner more expensive to apply, and thus these must be balanced to minimize overall computational cost. Generally we have found the cost of the preconditioner to be dominated by solving the mean systems, and thus the performance was improved by loosening the outer solver tolerance or limiting the number of outer iterations. In the results presented below we limited the preconditioner to only one Gauss-Seidel iteration.

Approximate Gauss-Seidel preconditioner

The process of increasing the inner solver tolerance can be taken to its extreme of replacing the inner mean solves by application of the mean preconditioner. As with the Gauss-Seidel preconditioner above, we found experimentally that this approach worked best with only one Gauss-Seidel iteration, and adding additional iterations did not improve the quality of the preconditioner. We also found that the cost of the preconditioner was reduced dramatically if only the first-order terms in the expansion for the stiffness matrix were used in the preconditioner and using higher-order terms did not improve performance. We refer to this as the approximate Gauss-Seidel preconditioner.

Approximate Jacobi preconditioner

Similar to the approximate Gauss-Seidel preconditioner, Jacobi iterations can be used with a preconditioner in place of the mean stiffness matrix. In this case we used two outer Jacobi iterations, since the first iteration is equivalent to mean-based preconditioning (i.e., the additional terms on the right-hand-side of equation (15) are zero). Increasing the number of outer iterations did not improve the efficiency of the overall solver. We refer to this as the approximate Jacobi preconditioner. Both approximate Gauss-Seidel and approximate Jacobi preconditioners are found to be very effective in reducing the number of Krylov iterations and are also not very expensive to apply.

Kronecker product preconditioner

The Kronecker product preconditioner [17] is defined as , where is the mean stiffness matrix and is

| (18) |

where, . Unlike the mean-based preconditioner, the Kronecker product preconditioner incorporates higher order stochastic information allowing the Krylov based algorithm to converge in fewer iterations. However the disadvantage is that the cost of constructing the Kronecker product preconditioner is greater than that for the mean-based preconditioner, and is also more expensive to apply. Over all, solution time is found to be less than that with mean-based preconditioner as reported in [17]. However, we found in our numerical experiments that the approximate Gauss-Seidel and approximate Jacobi preconditioner performed better than the Kronecker product preconditioner.

6 Problem Statement

In this work, a stochastic steady state advection-diffusion equation [20] with Dirichlet boundary conditions is used as a test problem for various solution methods. Assume to be a random field that is bounded and strictly positive, that is,

| (19) |

For the steady-state advection-diffusion equation, we wish to compute a random field , such that the following holds -almost surely (-a.s.):

| (20) | ||||

| (21) |

where is the advection direction, .

Problem (20) can then be written in the following equivalent variational form [9]: find such that

| (22) |

where is the continuous and coercive (from assumption (19)) bilinear form given by

| (23) |

and is the continuous bounded linear functional given by

| (24) |

From the Lax-Milgram lemma, equation (22) has unique a solution in .

7 Numerical illustration

To compare the performance of different solvers and preconditioners discussed above, a 2-D stochastic diffusion equation and a 2-D stochastic advection-diffusion equation presented in section 6 are solved using the stochastic Galerkin method described in section 4. In the above two problems, the diffusion coefficient is modeled as both a random field discretized using a truncated K-L expansion with uniform random variables (section 3.1) and a log-normal random field discretized using a truncated polynomial chaos expansion (section 3.2). In the first case, the orthogonal polynomials used in the stochastic Galerkin method are tensor products of 1-D Legendre polynomials and in the second case tensor products of Hermite polynomials are used. For simplicity a constant unit force is used as the right-hand-side in equation (20). The spatial dimensions are discretized using standard finite element mesh with linear quadrilateral elements. In advection-diffusion equation, the parameter, . The corresponding stochastic Galerkin linear system is constructed using the Stokhos and Epetra packages in Trilinos. For the Jacobi solver, Gauss-Seidel solver and Gauss-Seidel preconditioner the non-symmetric linear systems obtained from the discretization of stochastic advection-diffusion equation are solved via multi-grid preconditioned GMRES provided by the AztecOO and ML packages in Trilinos.

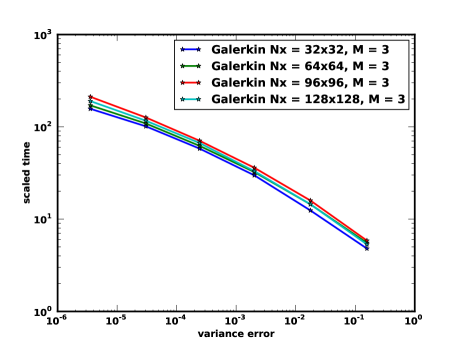

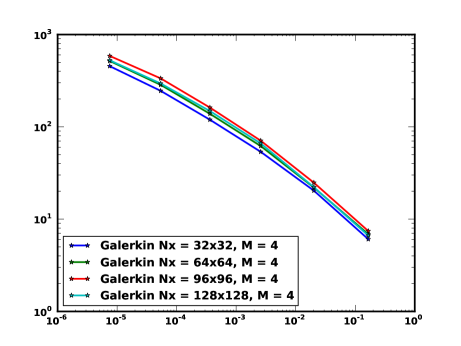

For the numerical comparisons, we chose to discretize the domain into a grid resulting in a total number of nodes, . In figure (1) the solution time for the stochastic Galerkin method, scaled by the deterministic solution time at the mean of the random field, is compared for different mesh sizes (, , and ) for the diffusion problem demonstrating that the solution time does not depend strongly on the mesh size (as is to be expected for the multi-grid preconditioner). The scaled solution time for these solvers and preconditioning techniques as a function of the standard deviation of the input random field, stochastic dimension, and polynomial order are then tabulated in Tables 1–6. In the tables, the number of Krylov iterations for the aforementioned preconditioners and iterations for Gauss-Seidel and Jacobi solvers are provided in parentheses. In the tables, MB, AGS, AJ, GS and KP are the mean-based, approximate Gauss-Seidel, approximate Jacobi, Gauss-Seidel and Kronecker-product preconditioners respectively. GS in the solution methods refers to the Gauss-Seidel mean algorithm (Algorithm-2). “Jacobi” refers to the Jacobi mean algorithm (Algorithm-1). The solution tolerance for all of the stochastic Galerkin solvers is . For the Gauss-Seidel and Jacobi solvers, the inner solver tolerance is . All the computations are performed using a single core of an 8 core, Intel Xeon machine with 2.66 GHz and 16GB Memory.

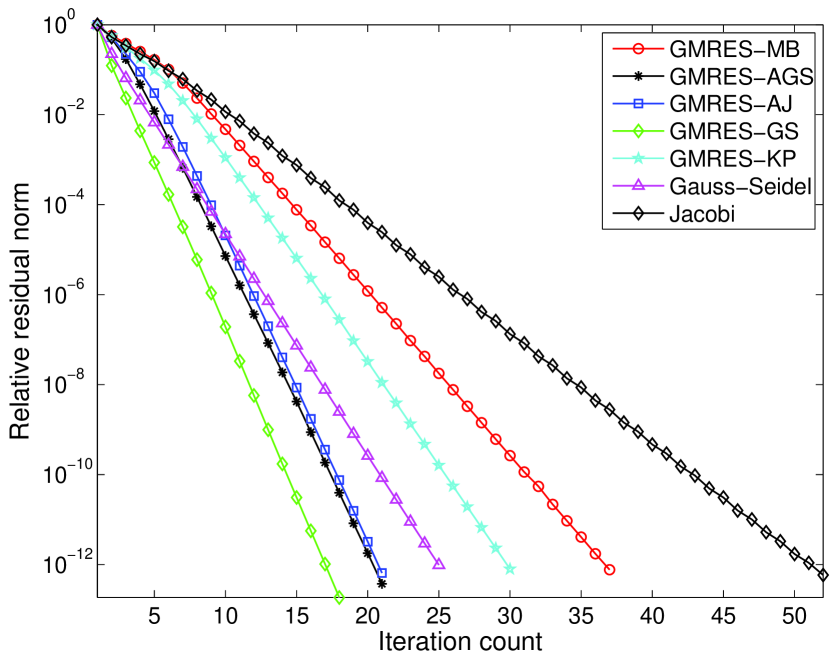

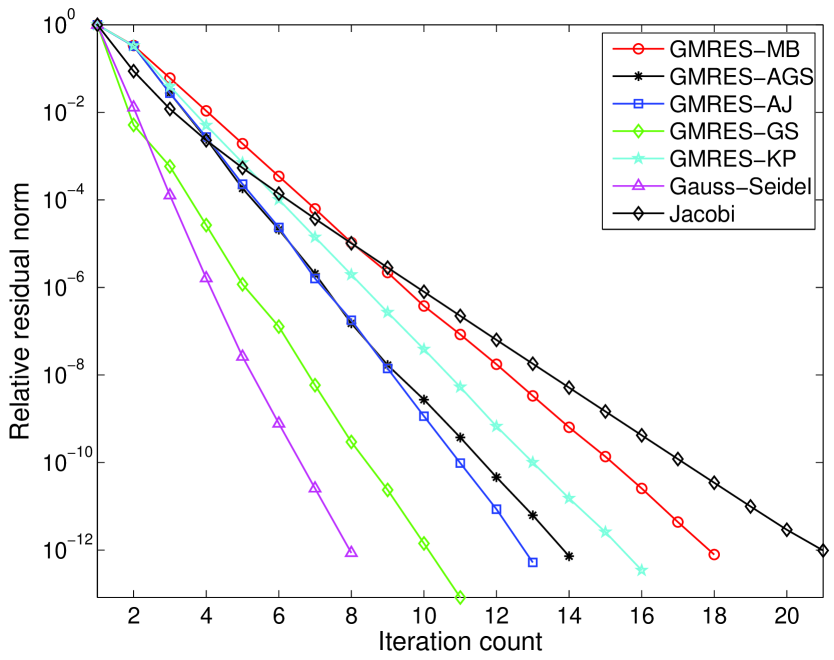

Figures (2) and (3) show the plots of relative residual error vs iteration count for the stochastic Galerkin system with stochastic dimension 4 and polynomial order 4 and standard deviation 0.1. It can be observed that the matrix-free Krylov solver with the Gauss-Seidel preconditioner takes the least number of iterations in case of uniform random field and Gauss-Seidel solver in case of log-normal random field, whereas the Jacobi solver takes highest number of iterations in both cases for a given tolerance. However in terms of solution time, the matrix-free Krylov solver with the approximate Gauss-Seidel preconditioner is the most efficient compared to all other stochastic Galerkin solvers. Comparison in terms of iteration count alone is misleading to evaluate the computational cost because, in each iteration the cost of preconditioner as observed in the case of Gauss-Seidel preconditioner could be very high resulting in higher computational cost even with small number of iterations. Hence we also compare the solution time for all preconditioners and solvers.

In the tables, “Div” means diverged. In the case of diffusion coefficient modeled with uniform random random variables, with small variance (), it can be observed from Tables 1 and 2 that more intrusive Krylov-based stochastic Galerkin solvers are more efficient than less intrusive Gauss-Seidel and Jacobi solvers. Moreover the approximate Gauss-Seidel and Jacobi preconditioners are a significant improvement over the traditional mean-based approach. However as the variance of the random field increases, we see from Table 3 that the Gauss-Seidel and Jacobi solvers suffer considerably, whereas the the Krylov-based approaches (excluding the Gauss-Seidel preconditioner) still perform quite well. This is not unexpected, as the operator becomes more indefinite as the variance increases. Since the efficiency of the preconditioners and solvers based on Gauss-Seidel and Jacobi algorithms depends heavily on the efficiency of the deterministic solver, a good preconditioner for the mean stiffness matrix will significantly improve the above mentioned stochastic Galerkin preconditioners and solvers.

In the case of the log-normal random field, we can see from Tables 4 and 5 that Gauss-Seidel and Jacobi solvers have not performed well in terms of solution time. The Gauss-Seidel solver is comparable to the Krylov solver with the AGS preconditioner only in case of log-normal random field and at higher polynomial chaos order. For higher variance of the random field, we see from Table 6 that the Jacobi solver diverges. This problem might be addressed to some extent by using the true diagonal matrix from global stochastic stiffness matrix as the left-hand-side in the Jacobi solver and preconditioner instead of the mean matrix . From the Krylov iteration count provided in the parentheses of the tables, we can observe that the preconditioners and solvers based on Gauss-Seidel and Jacobi are robust with respect to stochastic dimension and polynomial order, however they are not robust with respect to the variance of the input random field. The numerical results clearly show that the approximate Gauss-Seidel preconditioner performs betters than the mean-based preconditioner. It was reported in [14] that the mean-based preconditioner was more efficient in terms of computational time than the Gauss-Seidel preconditioner. It could be due to the use of symmetric Gauss-Seidel preconditioner and duplicated matrix-vector products in the Gauss-Seidel preconditioner. In our work, we use non-symmetric Gauss-Seidel preconditioner which is half expensive as that of its symmetric version and we also minimize the duplicated matrix-vector products in Gauss-Seidel algorithm (Algorithm-2).

8 Conclusions

In this work, various preconditioners for Krylov-based methods and solver methods based on Gauss-Seidel and Jacobi method are introduced. Results are compared with Krylov based methods (GMRES) with mean-based preconditioning. The less intrusive Gauss-Seidel/Jacobi approaches did not perform well. However these solvers can be used when legacy software has to be used to solve SPDEs with the stochastic Galerkin descritization. The use of approximate Gauss-Seidel or Jacobi preconditioners yields a significant improvement over traditional mean-based preconditioning. The Kronecker product preconditioner proposed in [17] was in between mean-based preconditioner and approximate Gauss-Seidel preconditioner. Block and recycled Krylov methods present additional promising alternatives for improve the efficiency of the Jacobi and Gauss-Seidel solvers.

Acknowledgements

This work was partially supported by the US Department of Energy through the NNSA Advanced Scientific Computing and Office of Science Advanced Scientific Computing Research programs.

References

- [1] B.M. Adams, K.R. Dalbey, M.S. Eldred, D.M. Gay, L.P. Swiler, W.J. Bohnhoff, J.P. Eddy, K. Haskell, and P.D. Hough, DAKOTA, A Multilevel Parallel Object-Oriented Framework for Design Optimization, Parameter Estimation, Uncertainty Quantification, and Sensitivity Analysis, Sandia National Laboratories, tech. rep.sand2010-2183 ed. (2010).

- [2] I. Babuška, F. Nobile, and R. Tempone, A stochastic collocation method for elliptic partial differential equations with random input data, SIAM J. Numer. Anal. 45 (2007), pp. 1005–1034.

- [3] I. Babuška, R. Tempone, and G.E. Zouraris, Galerkin finite element approximations of stochastic elliptic partial differential equations, SIAM J. Numer. Math. 42 (2004), pp. 800–825.

- [4] M. Eiermann, O.G. Ernst, and E. Ullmann, Computationa aspects of the stochastic finite element method, Computing and Visualization in Science 10 (2007), pp. 3–15.

- [5] H.C. Elman, C.W. Miller, E.T. Phipps, and R.S. Tuminaro, Assessment of collocation and galerkin approaches to linear diffusion equations with random data, International Journal for Uncertainty Quantifications 1 (2011), pp. 19–34.

- [6] A. Genz and B. Keister, Fully symmetric interpolatory rules for multiple integrals over infinite regions with gaussian weight, Journal of computational and applied mathematics 71 (1996), pp. 299–309.

- [7] R. Ghanem, Ingredients for a general purpose stochastic finite elements implementation, Computer Methods in Applied Mechanics and Engineering 168 (1999), pp. 19–34.

- [8] R. Ghanem and P. Spanos, Stochastic Finite Elements: A Spectral Approach, Springer-Verlag (1991).

- [9] R.G. Ghanem and A. Doostan, On the construction and analysis of stochastic models: characterization and propagation of the errors associated with limited data, Journal of Computational Physics 213 (2006), pp. 63–81.

- [10] R.G. Ghanem and R.M. Kruger, Numerical solution of spectral stochastic finite element systems, Comput. Methods Appl. Mech. Engrg. 129 (1996), pp. 289–303.

- [11] F. Nobile, R. Tempone, and C.G. Webster, A sparse grid stochastic collocation method for partial differential equations with random input data, SIAM Journal of Numerical Analysis 46 (2008), pp. 2309–2345.

- [12] M.F. Pellissetti and R.G. Ghanem, Iterative solution of systems of linear equations arising in the context of stochastic finite elements, Advances in Engineering Software 31 (2000), pp. 607–616.

- [13] C. Powell and H. Elman, Block-diagonal preconditioning for spectral stochastic finite element systems, IMA Journal of Numerical Analysis 29 (2009), pp. 350–375.

- [14] E. Rosseel and S. Vandewalle, Iterative solvers for the stochastic finite element method, SIAM J. Sci. Comput 32 (2010), pp. 372–397.

- [15] Y. Saad, Iterative Methods for Sparse Linear Systems, SIAM (1996).

- [16] S. Smolyak, Quadrature and interpolation formulas for tensor products of certain classes of functions, Dokl. Akad. Nauk SSSR 148 (1963), pp. 1042–1043.

- [17] E. Ullmann, A kronecker product preconditioner for stochastic galerkin finite element discretizations, SIAM J. Sci. Comput. 32 (2010), pp. 923–946.

- [18] N. Weiner, The homogeneous chaos, American Journal of Mathematics 60 (1963), pp. 897–936.

- [19] D. Xiu and J. Hesthaven, High-order collocation methods for differential equations with random inputs, SIAM Journal of Scientific Computing 60 (2005), pp. 1118–1139.

- [20] X. Xu, A. Janka, and J.A. Désidéri, Cascadic multigrid for the advection-diffusion equation, INRIA, rr4574 ed. (2002).

| Stoch. | Preconditioners for GMRES | GS, Jacobi Solvers | |||||

|---|---|---|---|---|---|---|---|

| dim | MB | AGS | AJ | GS | KP | GS | Jacobi |

| 1 | 12 (24) | 7 (15) | 14 (15) | 65 (12) | 9 (18) | 64 (14) | 114 (27) |

| 2 | 47 (30) | 27 (17) | 48 (17) | 240 (15) | 37 (23) | 256 (19) | 489 (39) |

| 3 | 133 (34) | 74 (19) | 129 (19) | 631 (17) | 106 (27) | 727 (23) | 1353 (46) |

| 4 | 291 (36) | 165 (20) | 285 (20) | 1343 (18) | 246 (29) | 1579 (25) | 3088 (52) |

| 5 | 598 (38) | 339 (21) | 562 (21) | 2571 (19) | 532 (31) | 3098 (27) | 5941 (55) |

| 6 | 1068 (40) | 611 (22) | 1012 (22) | 4994 (21) | 989 (34) | 5808 (30) | 11567 (62) |

| 7 | 1740 (41) | 976 (22) | 1690 (22) | 7589 (21) | 1619 (35) | 9951 (32) | 21199 (64) |

| PC | Preconditioners for GMRES | GS, Jacobi Solvers | |||||

|---|---|---|---|---|---|---|---|

| order | MB | AGS | AJ | GS | KP | GS | Jacobi |

| 2 | 41 (26) | 26 (16) | 45 (16) | 213 (13) | 35 (22) | 233 (17) | 429 (33) |

| 3 | 125 (32) | 70 (18) | 124 (18) | 604 (16) | 102 (26) | 663 (21) | 1278 (43) |

| 4 | 291 (36) | 165 (20) | 285 (20) | 1343 (18) | 246 (29) | 1579 (25) | 3088 (52) |

| 5 | 626 (40) | 336 (21) | 591 (22) | 2654 (20) | 543 (32) | 3302 (29) | 6436 (60) |

| 6 | 1163 (44) | 637 (23) | 1104 (24) | 5082 (22) | 1008 (35) | 6311 (33) | 12558 (68) |

| 7 | 1981 (47) | 1062 (24) | 1825 (25) | 8443 (23) | 1713 (38) | 11143 (36) | 25117 (74) |

| Preconditioners for GMRES | GS, Jacobi Solvers | ||||||

| MB | AGS | AJ | GS | KP | GS | Jacobi | |

| 0.10 | 291 (36) | 165 (20) | 285 (20) | 1343 (18) | 246 (29) | 1579 (25) | 3088 (52) |

| 0.11 | 328 (41) | 182 (22) | 326 (23) | 1556 (21) | 284 (33) | 1960 (31) | 3711 (62) |

| 0.12 | 389 (49) | 218 (26) | 371 (26) | 1921 (25) | 325 (39) | 2404 (38) | 4624 (76) |

| 0.13 | 469 (58) | 257 (30) | 461 (31) | 2430 (30) | 380 (46) | 3039 (48) | 5975 (97) |

| 0.14 | 589 (73) | 324 (38) | 565 (39) | 2968 (38) | 478 (57) | 4123 (65) | 8153 (131) |

| 0.15 | 810 (101) | 437 (52) | 742 (52) | 3949 (52) | 650 (78) | 6110 (96) | 12514 (196) |

| Stoch. | Preconditioners for GMRES | GS, Jacobi Solvers | |||||

|---|---|---|---|---|---|---|---|

| dim | MB | AGS | AJ | GS | KP | GS | Jacobi |

| 1 | 9 (16) | 8 (13) | 12 (12) | 48 (9) | 12 (12) | 36 (8) | 81 (19) |

| 2 | 33 (17) | 26 (13) | 40 (12) | 148 (9) | 40 (12) | 108 (8) | 255 (20) |

| 3 | 89 (17) | 72 (13) | 102 (12) | 396 (10) | 102 (12) | 252 (8) | 627 (21) |

| 4 | 229 (17) | 184 (13) | 241 (12) | 858 (10) | 215 (12) | 507 (8) | 1263 (21) |

| 5 | 594 (17) | 473 (13) | 576 (12) | 1765 (10) | 572 (12) | 915 (8) | 2399 (22) |

| 6 | 1426 (18) | 1061 (13) | 1221 (12) | 3345 (10) | 1370 (15) | 1737 (8) | 4044 (22) |

| PC | Preconditioners for GMRES | GS, Jacobi Solvers | |||||

|---|---|---|---|---|---|---|---|

| order | MB | AGS | AJ | GS | KP | GS | Jacobi |

| 2 | 25 (15) | 23 (13) | 36 (12) | 129 (8) | 36 (12) | 94 (7) | 202 (16) |

| 3 | 77 (16) | 65 (13) | 97 (12) | 353 (9) | 96 (12) | 252 (8) | 564 (19) |

| 4 | 229 (17) | 184 (13) | 241 (12) | 858 (10) | 215 (12) | 507 (8) | 1263 (21) |

| 5 | 733 (18) | 585 (14) | 645 (12) | 1882 (10) | 640 (12) | 1030 (9) | 2631 (24) |

| 6 | 2157 (19) | 1664 (14) | 1784 (13) | 4085 (10) | 1938 (15) | 1731 (9) | 4821 (26) |

| Preconditioners for GMRES | GS, Jacobi Solvers | ||||||

| MB | AGS | AJ | GS | KP | GS | Jacobi | |

| 0.10 | 229 (17) | 184 (13) | 241(12) | 858 (10) | 215 (15) | 507 (8) | 1263 (21) |

| 0.15 | 275 (20) | 214 (15) | 267 (13) | 1020 (12) | 266 (13) | 634 (10) | 2083 (34) |

| 0.20 | 333 (24) | 255 (18) | 285 (14) | 1177 (14) | 285 (14) | 825 (13) | 3889 (62) |

| 0.25 | 391 (28) | 285 (20) | 305 (15) | 1415 (17) | 304 (15) | 1018 (16) | 17511 (254) |

| 0.30 | 464 (33) | 328 (23) | 361 (18) | 1656 (20) | 362 (18) | 1210 (19) | Div |

| 0.35 | 529 (38) | 387 (27) | 660 (32) | 1979 (24) | 664 (32) | 1464 (23) | Div |