Solving Large-Scale Sparse PCA to Certifiable (Near) Optimality

Abstract

Sparse principal component analysis (PCA) is a popular dimensionality reduction technique for obtaining principal components which are linear combinations of a small subset of the original features. Existing approaches cannot supply certifiably optimal principal components with more than of variables. By reformulating sparse PCA as a convex mixed-integer semidefinite optimization problem, we design a cutting-plane method which solves the problem to certifiable optimality at the scale of selecting covariates from variables, and provides small bound gaps at a larger scale. We also propose a convex relaxation and greedy rounding scheme that provides bound gaps of in practice within minutes for s or hours for s and is therefore a viable alternative to the exact method at scale. Using real-world financial and medical datasets, we illustrate our approach’s ability to derive interpretable principal components tractably at scale.

Keywords: Sparse PCA, Sparse Eigenvalues, Semidefinite Optimization

1 Introduction

In the era of big data, interpretable methods for compressing a high-dimensional dataset into a lower dimensional set which shares the same essential characteristics are imperative. Since the work of Hotelling (1933), principal component analysis (PCA) has been one of the most popular approaches for completing this task. Formally, given centered data and its normalized empirical covariance matrix , PCA selects one or more leading eigenvectors of and subsequently projects onto these eigenvectors. This can be achieved in time by taking a singular value decomposition .

A common criticism111A second criticism of PCA is that, as set up here, it uses the sample correlation or covariance matrix. This is a drawback, because sample covariance matrices are poorly conditioned estimators which over-disperses the sample eigenvalues, particularly in high-dimensional settings. In practice, this can be rectified by, e.g., using a shrinkage estimator (see, e.g., Ledoit and Wolf, 2004). We do not do so here for simplicity, but we recommend doing so if using the techniques developed in this paper in practice. of PCA is that the columns of are not interpretable, since each eigenvector is a linear combination of all original features. This causes difficulties because:

-

•

In medical applications such as cancer detection, PCs generated during exploratory data analysis need to supply interpretable modes of variation (Hsu et al., 2014).

-

•

In scientific applications such as protein folding, each original co-ordinate axis has a physical interpretation, and the reduced set of co-ordinate axes should too.

-

•

In finance applications such as investing capital across index funds, each non-zero entry in each eigenvector used to reduce the feature space incurs a transaction cost.

-

•

If , PCA suffers from a curse of dimensionality and becomes physically meaningless (Amini and Wainwright, 2008).

One common method for obtaining interpretable principal components is to stipulate that they are sparse, i.e., maximize variance while containing at most non-zero entries. This approach leads to the following non-convex mixed-integer quadratically constrained problem (see d’Aspremont et al., 2005):

| (1) |

where the constraint forces variance to be explained in a compelling fashion.

1.1 Background and Literature Review

Owing to sparse PCA’s fundamental importance in a variety of applications including best subset selection (d’Aspremont et al., 2008), natural language processing (Zhang et al., 2012), compressed sensing (Candes and Tao, 2007), and clustering (Luss and d’Aspremont, 2010), three distinct classes of methods for addressing Problem (1) have arisen. Namely, (a) heuristic methods which obtain high-quality sparse PCs in an efficient fashion but do not supply guarantees on the quality of the solution, (b) convex relaxations which obtain certifiably near-optimal solutions by solving a convex relaxation and rounding, and (c) exact methods which obtain certifiably optimal solutions, albeit in exponential time.

Heuristic Approaches:

The importance of identifying a small number of interpretable principal components has been well-documented in the literature since the work of Hotelling (1933) (see also Jeffers, 1967), giving rise to many distinct heuristic approaches for obtaining high-quality solutions to Problem (1). Two interesting such approaches are to rotate dense principal components to promote sparsity (Kaiser, 1958; Richman, 1986; Jolliffe, 1995), or apply an penalty term as a convex surrogate to the cardinality constraint (Jolliffe et al., 2003; Zou et al., 2006). Unfortunately, the former approach does not provide performance guarantees, while the latter approach still results in a non-convex optimization problem.

More recently, motivated by the need to rapidly obtain high-quality sparse principal components at scale, a wide variety of first-order heuristic methods have emerged. The first such modern heuristic was developed by Journée et al. (2010), and involves combining the power method with thresholding and re-normalization steps. By pursuing similar ideas, several related methods have since been developed (see Witten et al., 2009; Hein and Bühler, 2010; Richtárik et al., 2020; Luss and Teboulle, 2013; Yuan and Zhang, 2013). Unfortunately, while these methods are often very effective in practice, they sometimes badly fail to recover an optimal sparse principal component, and a practitioner using a heuristic method typically has no way of knowing when this has occurred. Indeed, Berk and Bertsimas (2019) recently compared heuristic methods, including most of those reviewed here, on instances of sparse PCA, and found that none of the heuristic methods successfully recovered an optimal solution in all cases (i.e., no heuristic was right all the time).

Convex Relaxations:

Motivated by the shortcomings of heuristic approaches on high-dimensional datasets, and the successful application of semi-definite optimization in obtaining high-quality approximation bounds in other applications (see Goemans and Williamson, 1995; Wolkowicz et al., 2012), a variety of convex relaxations have been proposed for sparse PCA. The first such convex relaxation was proposed by d’Aspremont et al. (2005), who reformulated sparse PCA as the rank-constrained mixed-integer semidefinite optimization problem (MISDO):

| (2) |

where models the outer product . Note that, for a rank-one matrix , the constraint in (2) is equivalent to the constraint in (1), since a vector is -sparse if its outer product is -sparse. After performing this reformulation, d’Aspremont et al. (2005) relaxed both the cardinality and rank constraints and instead solved

| (3) |

which supplies a valid upper bound on Problem (1)’s objective.

The semidefinite approach has since been refined in a number of follow-up works. Among others, d’Aspremont et al. (2008), building upon the work of Ben-Tal and Nemirovski (2002), proposed a different semidefinite relaxation which supplies a sufficient condition for optimality via the primal-dual KKT conditions, and d’Aspremont et al. (2014) analyzed the quality of the semidefinite relaxation in order to obtain high-quality approximation bounds. A common theme in these approaches is that they require solving large-scale semidefinite optimization problems. This presents difficulties for practitioners because state-of-the-art implementations of interior point methods such as Mosek require memory to solve Problem (3), and therefore currently cannot solve instances of Problem (3) with (see Bertsimas and Cory-Wright, 2020, for a recent comparison). Techniques other than interior point methods, e.g., ADMM or augmented Lagrangian methods as reviewed in Majumdar et al. (2019) could also be used to solve Problem (3), although they tend to require more runtime than IPMs to obtain a solution of a similar accuracy and be numerically unstable for problem sizes where IPMs run out of memory (Majumdar et al., 2019).

A number of works have also studied the statistical estimation properties of Problem (3), by assuming an underlying probabilistic model. Among others, Amini and Wainwright (2008) have demonstrated the asymptotic consistency of Problem (3) under a spiked covariance model once the number of samples used to generate the covariance matrix exceeds a certain threshold; see Vu and Lei (2012); Berthet and Rigollet (2013); Wang et al. (2016) for further results in this direction, Miolane (2018) for a recent survey.

In an complementary direction, Dey et al. (2018) has recently questioned the modeling paradigm of lifting to a higher dimensional space by instead considering the following (tighter) relaxation of sparse PCA in the original problem space

| (4) |

Interestingly, Problem (4)’s relaxation provides a -factor bound approximation of Problem (1)’s objective, while Problem (3)’s upper bound may be exponentially larger in the worst case (Amini and Wainwright, 2008). This additional tightness, however, comes at a price: Problem (4) is NP-hard to solve—indeed, providing a constant-factor guarantee on sparse PCA is NP-hard (Magdon-Ismail, 2017)—and thus (4) is best formulated as a MIO, while Problem (3) can be solved in polynomial time.

More recently, by building on the work of Kim and Kojima (2001), Bertsimas and Cory-Wright (2020) introduced a second-order cone relaxation of (2) which scales to , and matches the semidefinite bound after imposing a small number of cuts. Moreover, it typically supplies bound gaps of less than . However, it does not supply an exact certificate of optimality, which is often desirable, for instance in medical applications.

A fundamental drawback of existing convex relaxation techniques is that they are not coupled with rounding schemes for obtaining high-quality feasible solutions. This is problematic, because optimizers are typically interested in obtaining high-quality solutions, rather than certificates. In this paper, we take a step in this direction, by deriving new convex relaxations that naturally give rise to greedy and random rounding schemes. The fundamental point of difference between our relaxations and existing relaxations is that we derive our relaxations by rewriting sparse PCA as a MISDO and dropping an integrality constraint, rather than using more ad-hoc techniques.

Exact Methods:

Motivated by the successful application of mixed-integer optimization for solving statistical learning problems such as best subset selection (Bertsimas and Van Parys, 2020) and sparse classification (Bertsimas et al., 2017), several exact methods for solving sparse PCA to certifiable optimality have been proposed. The first branch-and-bound algorithm for solving Problem (1) was proposed by Moghaddam et al. (2006), by applying norm equivalence relations to obtain valid bounds. However, Moghaddam et al. (2006) did not couple their approach with high-quality initial solutions and tractable bounds to prune partial solutions. Consequently, they could not scale their approach beyond .

A more sophisticated branch-and-bound scheme was recently proposed by Berk and Bertsimas (2019), which couples tighter Gershgorin Circle Theorem bounds (Horn and Johnson, 1990, Chapter 6) with a fast heuristic due to Yuan and Zhang (2013) to solve problems up to . However, their method cannot scale beyond s, because the bounds obtained are too weak to avoid enumerating a sizeable portion of the tree.

Recently, the authors developed a framework for reformulating convex mixed-integer optimization problems with logical constraints (see Bertsimas et al., 2019), and demonstrated that this framework allows a number of problems of practical relevance to be solved to certifiably optimality via a cutting-plane method. In this paper, we build upon this work by reformulating Problem (1) as a convex mixed-integer semidefinite optimization problem, and leverage this reformulation to design a cutting-plane method which solves sparse PCA to certifiable optimality. A key feature of our approach is that we need not solve any semidefinite subproblems. Rather, we use concepts from SDO to design a semidefinite-free approach which uses simple linear algebra techniques.

Concurrently to our initial submission, Li and Xie (2020) also attempted to reformulate sparse PCA as an MISDO, and proposed valid inequalities for strengthening their formulation and local search algorithms for obtaining high-quality solutions at scale. Our work differs in the following two ways. First, we propose strengthening the MISDO formulation using the Gershgorin circle theorem and demonstrate that this allows our MISDO formulation to scale to problems with s of features, while they do not, to our knowledge, solve any MISDOs to certifiable optimality where . Second, we develop tractable second-order cone relaxations and greedy rounding schemes which allow practitioners to obtain certifiably near optimal sparse principal components even in the presence of s of features. More remarkable than the differences between the works however is the similarities: more than years after d’Aspremont et al. (2005)’s landmark paper first appeared, both works proposed reformulating sparse PCA as an MISDO less than a week apart. In our view, this demonstrates that the ideas contained in both works transcend sparse PCA, and can perhaps be applied to other problems in the optimization literature which have not yet been formulated as MISDOs.

1.2 Contributions and Structure

The main contributions of the paper are twofold. First, we reformulate sparse PCA exactly as a mixed-integer semidefinite optimization problem; a reformulation which is, to the best of our knowledge, novel. Second, we leverage this MISDO formulation to design efficient algorithms for solving non-convex mixed-integer quadratic optimization problems, such as sparse PCA, to certifiable optimality or within of optimality in practice at a larger scale than existing state-of-the-art methods. The structure and detailed contributions of the paper are as follows:

-

•

In Section 2.1, we reformulate Problem (1) as a mixed-integer SDO. We propose a cutting-plane method which solves it to certifiable optimality in Section 2.2. Our algorithm decomposes the problem into a purely binary master problem and a semidefinite separation problem. Interestingly, we show in Section 2.3 that the separation problems can be solved efficiently via a leading eigenvalue computation and does not require any SDO solver. Finally, the Gershgorin Circle theorem has been empirically successful for deriving upper-bounds on the objective value of (1) (Berk and Bertsimas, 2019). We theoretically analyze the quality of such bounds in Section 2.4 and show in Section 2.5 that tighter bounds derived from Brauer’s ovals of Cassini theorem can also be imposed via mixed-integer second-order cone constraints.

-

•

In Section 3, we analyze the semidefinite reformulation’s convex relaxation, and introduce a greedy rounding scheme (Section 3.1) which supplies high-quality solutions to Problem (1) in polynomial time, together with a sub-optimality gap (see numerical experiments in Section 4). To further improve the quality of rounded solution and the optimality gap, we introduce strengthening inequalities (Section 3.2). While solving the strengthened formulation exactly would result in an intractable MISDO problem, solving its relaxation and rounding the solution appears as an efficient strategy to return high-quality solutions with a numerical certificate of near-optimality.

-

•

In Section 4, we apply the cutting-plane and random rounding methods to derive optimal and near optimal sparse principal components for problems in the UCI dataset. We also compare our method’s performance against the method of Berk and Bertsimas (2019), and find that our exact cutting-plane method performs comparably, while our relax+round approach successfully scales to problems an order of magnitude larger and often returns solutions which outperform the exact method at sizes which the exact method cannot currently scale to. A key feature of our numerical success is that we sidestep the computational difficulties in solving SDOs at scale by proposing semidefinite-free methods for solving the convex relaxations, i.e., solving second-order cone relaxations.

Notation:

We let nonbold face characters such as denote scalars, lowercase bold faced characters such as denote vectors, uppercase bold faced characters such as denote matrices, and calligraphic uppercase characters such as denote sets. We let denote the set of running indices . We let denote a vector of all ’s, denote a vector of all ’s, and denote the identity matrix, with dimension implied by the context.

We also use an assortment of matrix operators. We let denote the Euclidean inner product between two matrices, denote the Frobenius norm of a matrix, denote the spectral norm of a matrix, denote the nuclear norm of a matrix, denote the Moore-Penrose psuedoinverse of a matrix and denote the positive semidefinite cone; see Horn and Johnson (1990) for a general theory of matrix operators.

2 An Exact Mixed-Integer Semidefinite Optimization Algorithm

In Section 2.1, we reformulate Problem (1) as a convex mixed-integer semidefinite optimization problem. From this formulation, we propose an outer-approximation scheme (Section 2.2) which, as we show in Section 2.3, does not require solving any semidefinite problems. We improve convergence of the algorithm by deriving quality upper-bounds on Problem’s (1) objective value in Section 2.4 and 2.5.

2.1 A Mixed-Integer Semidefinite Reformulation

Starting from the rank-constrained SDO formulation (2), we introduce binary variables to model whether is non-zero, via the logical constraint if ; note that we need not require that if , since is a symmetric matrix. By enforcing the logical constraint via for sufficiently large , Problem (2) becomes

| s.t. |

To obtain a MISDO reformulation, we omit the rank constraint. In general, omitting a rank constraint generates a relaxation and induces some loss of optimality. Remarkably, this omission is without loss of optimality in this case. Indeed, the objective is convex and therefore some rank-one extreme matrices is optimal. We formalize this observation in the following theorem; note that a similar result—although in the context of computing Restricted Isometry constants and with a different proof—exists (Gally and Pfetsh, 2016):

Theorem 1

Remark 2

Observe that if and we set in Problem (5) and omit the valid inequality then the optimal value of the continuous relaxation is trivially . Indeed, letting be a leading eigenvector of the unconstrained problem (where ), we can set , where the inequality holds since , and , meaning (a) by norm equivalence and (b) and thus solves this continuous relaxation. Therefore, setting if and/or imposing the valid inequality is necessary for obtaining non-trivial relaxations whenever is small.

Proof It suffices to demonstrate that for any feasible solution to (1) we can construct a feasible solution to (5) with an equal or greater payoff, and vice versa.

- •

-

•

Let be a feasible solution to Problem (5), and let be a Cholesky decomposition of , where , and . Observe that since we can perform the Cholesky decomposition on the submatrix of induced by , and “pad” out the remaining entries of each with s to obtain the decomposition of . Therefore, let us set . Then, is a feasible solution to (1) with an equal or greater payoff.

Finally, we let , if , as the minors imply whenever (c.f. Gally and Pfetsh, 2016, Lemma 1).

Theorem 1 reformulates Problem (1) as a mixed-integer SDO. Therefore, we can solve Problem (5) using general branch-and-cut techniques for semidefinite optimization problems (see Gally et al., 2018; Kobayashi and Takano, 2020). However, this approach is not scalable, as it comprises solving a large number of semidefinite subproblems and the community does not know how to efficiently warm-start interior point methods (IPMs) for SDOs.

Alternatively, we propose a saddle-point reformulation of Problem (5) which avoids the computational difficulty of solving a large number of SDOs by exploiting problem structure, as we will show in Section 2.3. The following result reformulates Problem (5) as a max-min saddle-point problem amenable to outer-approximation:

Theorem 3

Problem (5) attains the same optimal value as the following problem:

| (6) | ||||

| (7) | ||||

| s.t. | ||||

Remark 4

The above theorem demonstrates that is concave in , by rewriting it as the infimum of functions which are linear in (Boyd and Vandenberghe, 2004).

Proof Let us introduce auxiliary variables to model the absolute value of and rewrite the inner optimization problem of (5) as

| (8) | ||||||

| s.t. | ||||||

where we associate dual constraint multipliers with primal constraints in square brackets. For such that , the maximization problem induced by satisfies Slater’s condition (see, e.g., Boyd and Vandenberghe, 2004, Chapter 5.2.3), strong duality applies and leads to

| s.t. |

We eliminate from the dual problem above by optimizing over and setting .

Note that for , the primal subproblem is infeasible and the dual subproblem has objective , but this can safely be ignored since is certainly suboptimal.

2.2 A Cutting-Plane Method

Theorem 3 shows that evaluating yields the globally valid overestimator:

where is a supergradient of at , at no additional cost. In particular, we have

where , constitutes an optimal choice of for a fixed . This observation leads to an efficient strategy for maximizing : iteratively maximizing and refining a piecewise linear upper estimator of . This strategy is called outer-approximation (OA), and was originally proposed by Duran and Grossmann (1986). OA works by iteratively constructing estimators of the following form at each iteration :

| (9) |

After constructing each overestimator, we maximize over to obtain , and evaluate and its supergradient at . This procedure yields a non-increasing sequence of overestimators which converge to the optimal value of within a finite number of iterations , since is a finite set and OA never visits a point twice. Additionally, we can avoid solving a different MILO at each OA iteration by integrating the entire algorithm within a single branch-and-bound tree, as proposed by Quesada and Grossmann (1992), using lazy constraint callbacks. Lazy constraint callbacks are now standard components of modern MILO solvers such as Gurobi or CPLEX and substantially speed-up OA. We formalize this procedure in Algorithm 1; note that denotes the set of supergradients of at .

2.3 A Semidefinite-free Subproblem Strategy

Our derivation and analysis of Algorithm 1 indicates that we can solve Problem (1) to certifiable optimality by solving a (potentially large) number of semidefinite subproblems (7), which might be prohibitive in practice. Therefore, we now derive a computationally efficient subproblem strategy which crucially does not require solving any semidefinite programs. Formally, we have the following result:

Theorem 5

For any , optimal dual variables in (7) are

| (10) | |||

| (11) |

where denotes the leading eigenvalue of a matrix, is a permutation such that (resp. ) denotes the entries of where (); is similar, and denotes the th largest element of .

Remark 6

Proof We appeal to strong duality and complementary slackness. Observe that, for any , is the optimal value of a maximization problem over a closed convex compact set. Therefore, there exists some optimal primal solution without loss of generality. Moreover, since the primal has non-empty relative interior with respect to the non-affine constraints, it satisfies the Slater constraint qualification and strong duality holds (see, e.g., Boyd and Vandenberghe, 2004, Chapter 5.2.3). Therefore, by complementary slackness (see, e.g., Boyd and Vandenberghe, 2004, Chapter 5.5.2), there must exist some dual-optimal solution which obeys complementarity with . Moreover, is implied by , while is implied by and . Therefore, by complementary slackness, we can take the constraints , to be inactive when without loss of generality, which implies that if in some dual-optimal solution. Moreover, we also have if , since obeys the dual feasibility constraint , and therefore is itself symmetric.

Next, observe that, by strong duality, in this dual-optimal solution, since only takes non-zero values if and does not contribute to the objective, and is similar.

Next, observe that, by strong duality and complementary slackness, any dual feasible satisfying the above conditions is dual-optimal. Therefore, we need to find an such that

By the generalized Schur complement lemma (see Boyd et al., 1994, Equation 2.41), this is PSD if and only if

-

1.

,

-

2.

, and

-

3.

.

The first two conditions hold because, as argued above, is optimal and therefore feasible, and the conditions are independent of . Therefore, it suffices to pick in order that the third condition holds. We achieve this by setting so the PSD constraint in condition (3) holds with equality.

Finally, let us optimize for to obtain stronger cuts (when we can pick any feasible , but optimizing to set to be as small as possible gives stronger cuts). This is equivalent to solving the univariate minimization problem for each :

Moreover, it is a standard result from max- optimization (see, e.g., Zakeri et al., 2014; Todd, 2018) that

this is achieved by setting to be the largest element of in absolute magnitude, where we include twice since while if .

2.4 Strengthening the Master Problem via the Gershgorin Circle Theorem

To accelerate Algorithm 1, we strengthen the master problem by imposing bounds from the circle theorem. Formally, we have the following result, which can be deduced from (Horn and Johnson, 1990, Theorem 6.1.1):

Theorem 7

For any vector we have the following upper bound on

| (12) |

Observe that this bound cannot be used to directly strengthen Algorithm 1’s master problem, since the bound is not convex in . Nonetheless, it can be successfully applied if we (a) impose a big-M assumption on Problem (1)’s optimal objective and (b) introduce additional binary variables which model whether the th Gershgorin disc is active; recall that each eigenvalue is contained in the union of the discs. Formally, we impose the following valid inequalities in the master problem:

| (13) |

where is the epigraph variable maximized in the master problem stated in Algorithm 1, and is an upper bound on the sum of the largest absolute entries in any column of . Note that we set since if the th column of does not feature in the relevant submatrix of . In the above inequalities, a valid is given by any bound on the optimal objective. Since Theorem 7 supplies one such bound for any given , we can compute

| (14) |

which can be done in time.

To further improve Algorithm 1, we also make use of the Gershgorin circle theorem before generating each outer-approximation cut. Namely, at a given node in a branch-and-bound tree, there are indices where has been fixed to , indices where has been fixed to , and indices where has not yet been fixed. Accordingly, we compute the worst-case Gershgorin bound—by taking the worst-case bound over each index such that has not yet been fixed to , i.e.,

If this bound is larger than our incumbent solution then we generate an outer-approximation cut, otherwise the entire subtree rooted at this node does not contain an optimal solution and we use instruct the solver to avoid exploring this node via a callback.

Our numerical results in Section 4 echo the empirical findings of Berk and Bertsimas (2019) and indicate that Algorithm 1 performs substantially better when the Gershgorin bound is supplied in the master problem. Therefore, it is interesting to theoretically investigate the tightness, or at least the quality, of Gershgorin’s bound. We supply some results in this direction in the following proposition:

Proposition 8

Suppose that is a scaled diagonally dominant matrix as defined by Boman et al. (2005), i.e., there exists some vector such that

Then, letting , the Gershgorin circle theorem provides a -factor approximation, i.e.,

| (15) |

Remark 9

Observe that, for a fixed , the ratio need only be computed over indices such that . Moreover, for a partially specified —which might arise at an intermediate node in a branch-and-bound tree generated by Algorithm 1—the ratio need only be computed over indices where is unspecified or set to . This suggests that the quality of the Gershgorin bound improves upon branching.

Remark 10

In particular, if is a diagonal matrix, then Equation (13)’s bound is tight - which follows from the fact that the spectrum of and the discs coincide if and only if is diagonal (see, e.g, Horn and Johnson, 1990, Chapter 6). Alternatively, if is a diagonally dominant matrix then and the Gershgorin circle theorem provides a factor approximation.

Proof Scaled diagonally dominant matrices have scaled diagonally dominant principal minors—this is trivially true because

for the same vector and therefore the following chain of inequalities holds

where the second inequality follows because , the third inequality follows from the scaled diagonal dominance of the principal submatrices of , and the fourth inequality holds because the leading eigenvalue of a PSD matrix is at least as large as each diagonal.

2.5 Beyond Gershgorin: Further Strengthening via Brauer’s Ovals of Cassini

Given the relevance of Gershgorin’s bound, we propose, in this section, a stronger —yet more expensive to implement— upper bound, based on an generalization of the Gershgorin Circle theorem, namely Brauer’s ovals of Cassini.

First, we derive a new upper-bound on that is at least as strong as the one presented in Theorem 7 and often strictly stronger (Horn and Johnson, 1990, Chapter 6):

Theorem 11

For any vector , we have the following upper bound on :

| (16) |

where is the absolute sum of off-diagonal entries in the th column of the submatrix of induced by .

Proof Let us first recall that, per Brauer (1952)’s original result, all eigenvalues of a matrix are contained in the union of the following ovals of Cassini:

where is the absolute sum of off-diagonal entries in the th column of . Next, let us observe that, if is a dominant eigenvalue of a PSD matrix then and, in the th oval, the bound reduces to

| (17) |

which, by the quadratic formula, implies an upper bound is . The result follows because if the th row of cannot be used to bound .

Theorem 11’s inequality can be enforced numerically as mixed-integer second order cone constraints. Indeed, the square root term in (16) can be modeled using second-order cone, and the bilinear terms only involve binary variables and can be linearized. Completing the square in Equation (17), (16) is equivalent to the following system of mixed-integer second-order cone inequalities:

where is a product of binary variables which can be modeled using, e.g., the McCormick inequalities , and is an upper bound on the right-hand-side of the inequality for any , which can be computed in time in much the same manner as a big- constant was computed in the previous section. Note that we do not make use of these inequalities directly in our numerical experiments, due to their high computational cost. However, an interesting extension would be to introduce the binary variables dynamically, via branch-and-cut-and-price (Barnhart et al., 1998).

Since the bound derived from the ovals of Cassini (Theorem 11) is at least as strong as the Gershgorin circle’s one (Theorem 7), it satisfies the same approximation guarantee (Proposition 8). In particular, it is tight when is diagonal and provides a factor approximation for diagonally dominant matrices. Actually, we now prove a stronger result and demonstrate that Theorem 11 provides a factor bound on for doubly diagonally dominant matrices—a broader class of matrices than diagonally dominant matrices (see Li and Tsatsomeros, 1997, for a general theory):

Proposition 12

Let be a doubly diagonally dominant matrix, i.e.,

where is the sum of the off-diagonal entries in the th column of . Then, we have that

| (18) |

Proof Observe that if then

The result then follows in essentially the same fashion as Proposition 8.

3 Convex Relaxations and Rounding Methods

For large-scale instances, high-quality solutions can be obtained by solving a convex relaxation of Problem (5) and rounding the optimal solution. In Section 3.1, we propose relaxing in (5) to and applying a greedy rounding scheme. We further tighten this relaxation using second-order cones constraints in Section 3.2.

3.1 A Boolean Relaxation and a Greedy Rounding Method

We first consider a Boolean relaxation of (5), which we obtain222Note that we omit the constraints when we develop our convex relaxations, since they are essentially dominated by the constraint we introduce in the next section; we introduced these inequalities to improve our semidefinite-free subproblem strategy for the exact method. by relaxing to . This gives , i.e.,

| (19) |

A useful strategy for obtaining a high-quality feasible solution is to solve (19) and set for indices corresponding to the largest ’s in (19) as proposed in the randomized case for general integer optimization problems by Raghavan and Tompson (1987). We formalize this in Algorithm 2. We remark that rounding strategies for sparse PCA have previously been proposed (see Fountoulakis et al., 2017; Dey et al., 2017; Chowdhury et al., 2020), however, the idea of rounding and then optimizing for appears to be new.

Remark 13

Our numerical results in Section 4 reveal that explicitly imposing a PSD constraint on in the relaxation (19)—or the ones derived later in the following section—prevents our approximation algorithm from scaling to larger problem sizes than the exact Algorithm 1 can already solve. Therefore, to improve scalability, the semidefinite cone can be safely approximated via its second-order cone relaxation, , plus a small number of cuts of the form as presented in Bertsimas and Cory-Wright (2020).

Remark 14

Rather than relaxing and greedily rounding , one could consider a higher dimensional relax-and-round scheme where we let model the outer product via , , , and require that . Indeed, a natural “round” component of such a relax-and-round scheme is precisely Goemans-Williamson rounding (Goemans and Williamson, 1995; Bertsimas and Ye, 1998), which performs at least as well as greedy rounding in both theory and practice. Unfortunately, some preliminary numerical experiments indicated that Goemans-Williamson rounding is not actually much better than greedy rounding in practice, and is considerably more expensive to implement. Therefore, we defer the details of the Goemans-Williamson scheme to Appendix A, and do not consider it any further in this paper.

3.2 Valid Inequalities for Strengthening Convex Relaxations

We now propose valid inequalities which allow us to improve the quality of the convex relaxations discussed previously. Note that as convex relaxations and random rounding methods are two sides of the same coin (Barak et al., 2014), applying these valid inequalities also improves the quality of the randomly rounded solutions.

Theorem 15

Proof The first inequality is trivial. The second inequality holds because is indeed a valid relaxation of Problem (1). Indeed, follows from the cardinality and big-M constraints. The semidefinite constraint impose second-order cone constraints on the minors of , , which can be aggregated into (see Bertsimas and Cory-Wright, 2020, for derivations).

Finally, suppose that an optimal solution to Problem (20) is of rank one, i.e., the optimal matrix can be decomposed as . Then, the SOCP inequalities imply that . However, , which implies that , i.e., for any index such that . Since , this implies that , i.e., also solves Problem (2).

As our numerical experiments will demonstrate and despite the simplicity of our rounding mechanism in Algorithm 2, the relaxation (20) provides high-quality solutions to the original sparse PCA problem (1), without introducing any additional variables. We remark that other inequalities, including the second-order cone inequalities proposed in Li and Xie (2020, Lemma 2 (ii)), could further improve the convex relaxation; we leave integrating these inequalities within our framework as future work.

4 Numerical Results

We now assess the numerical behavior of the algorithms proposed in Section 2 and 3. To bridge the gap between theory and practice, we present a Julia code which implements the described convex relaxation and greedy rounding procedure on GitHub333https://github.com/ryancorywright/ScalableSPCA.jl. The code requires a conic solver such as Mosek and several open source Julia packages to be installed.

4.1 Performance of Exact Methods

In this section, we apply Algorithm 1 to medium and large-scale sparse principal component analysis problems, with and without Gershgorin circle theorem bounds in the master problem. All experiments were implemented in Julia , using Gurobi and JuMP.jl , and performed on a standard Macbook Pro laptop, with a GHz -Core Intel i9 CPU, using GB DDR4 RAM. We compare our approach to the branch-and-bound algorithm444The solve times for their method, as reported here, differ from those reported in Berk and Bertsimas (2019) due to a small typo in their implementation (line of their branchAndBound.jl code should read “if ”, not “if ” in order to correctly compute the Gershgorin circle theorem bound); correcting this is necessary to ensure that we obtain correct results from their method. developed by Berk and Bertsimas (2019) on the UCI pitprops, wine, miniboone, communities, arrythmia and micromass datasets, both in terms of runtime and the number of nodes expanded; we refer to Berk and Bertsimas (2019); Bertsimas and Cory-Wright (2020) for descriptions of these datasets. Note that we normalized all datasets before running the method (i.e., we compute the leading sparse principal components of correlation matrices). Additionally, we warm-start all methods with the solution from the method of Yuan and Zhang (2013), to maintain a fair comparison.

Table 1 reports the time for Algorithm 1 (with and without Gershgorin circle theorem bounds in the master problem) and the method of Berk and Bertsimas (2019) to identify the leading -sparse principal component for , along with the number of nodes expanded, and the number of outer approximation cuts generated. We impose a relative optimality tolerance of for all approaches , i.e., terminate each method when where denotes the current objective bound and denotes the current incumbent objective value. Note that denotes the dimensionality of the correlation matrix, and denotes the target sparsity.

| Dataset | Alg. 1 | Alg. 1+ Circle Theorem | Method of B.+B. | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| Time(s) | Nodes | Cuts | Time(s) | Nodes | Cuts | Time(s) | Nodes | |||

| Pitprops | 0.06 | |||||||||

| 0.02 | 0.02 | |||||||||

| Wine | 0.02 | |||||||||

| 0.02 | ||||||||||

| Miniboone | 0.01 | 0.01 | ||||||||

| 0.01 | ||||||||||

| 0.01 | ||||||||||

| Communities | () | 0.20 | ||||||||

| () | 0.34 | |||||||||

| ( | ( | |||||||||

| Arrhythmia | () | 4.17 | ||||||||

| ( | () | (0.83%) | ||||||||

| () | ||||||||||

| Micromass | 24.31 | |||||||||

| 241.86 | ||||||||||

| ( | () | |||||||||

Our main findings from these experiments are as follows:

-

•

For smaller problems, the strength of Algorithm 1’s cuts allows it to outperform state-of-the-art methods such as the method of Berk and Bertsimas (2019). Moreover, for larger problem sizes, the adaptive branching strategy performs comparably to Algorithm 1. This suggests that the relative merits of both approaches are roughly even, and which method is preferable may depend on the problem data.

-

•

Generating outer-approximation cuts and valid upper bounds from the Gershgorin circle theorem are both powerful ideas, but the greatest aggregate power appears to arise from intersecting these bounds, rather than using one bound alone.

-

•

Once both and are sufficiently large (e.g. and ), no approach is able to solve the problem to provable optimality within s. This motivates our study of convex relaxations and randomized rounding methods in the next section.

4.2 Convex Relaxations and Randomized Rounding Methods

In this section, we apply Algorithm 2 to obtain high quality convex relaxations and feasible solutions for the datasets studied in the previous subsection, and compare the relaxation to a difference convex relaxation developed by d’Aspremont et al. (2008), in terms of the quality of the upper bound and the resulting greedily rounded solutions. All experiments were implemented using the same specifications as the previous section. Note that d’Aspremont et al. (2008)’s upper bound555 Strictly speaking, d’Aspremont et al. (2008) does not actually write down this formulation in their work. Indeed, their bound involves dual variables which cannot be used directly to generate feasible solutions via greedy rounding. However, the fact that this bound and (d’Aspremont et al., 2008, Problem (8)) are dual to each other follows directly from strong semidefinite duality, and therefore we refer to this formulation as being due to d’Aspremont et al. (2008) (it essentially is). which we compare against is:

| (21) |

where is a Cholesky decomposition of , and we obtain feasible solutions from this relaxation by greedily rounding an optimal in the bound à la Algorithm 2. To allow for a fair comparison, we also consider augmenting this formulation with the inequalities derived in Section 3.2 to obtain the following stronger yet more expensive to solve relaxation:

| (22) | ||||

We first apply these relaxations on datasets where Algorithm 1 terminates, hence the optimal solution is known and can be compared against. We report the quality of both methods with and without the additional inequalities discussed in Section 3.2, in Tables 2-3 respectively666For the instances of (21) or (22) where we used SCS version (with default parameters) instead of Mosek, since Mosek required more memory than was available in our computing environment, and SCS takes an augmented Lagrangian approach which is less numerically stable but requires significantly less memory. That is, (21)’s formulation is too expensive to solve via IPMs on a laptop when ..

| Dataset | Alg. 2 with (19) | Alg. 2 with (21) | ||||||

| R. gap | O. gap | Time(s) | R. gap | O. gap | Time(s) | |||

| Pitprops | ||||||||

| Wine | ||||||||

| Miniboone | ||||||||

| Dataset | Alg. 2 with (20) | Alg. 2 with (22) | ||||||

| R. gap | O. gap | Time(s) | R. gap | O. gap | Time(s) | |||

| Pitprops | ||||||||

| Wine | ||||||||

| Miniboone | ||||||||

Observe that applying Algorithm 2 without the additional inequalities (Table 2) yields rather poor relaxations and randomly rounded solutions. However, by intersecting our relaxations with the additional inequalities from Section 3.2 (Table 3), we obtain extremely high quality relaxations. Indeed, with the additional inequalities, Algorithm 2 using formulation (20) identifies the optimal solution in all instances (0% O. gap), and always supplies a bound gap of less than . Moreover, in terms of obtaining high-quality solutions, the new inequalites allow Problem (20) to perform as well or better as Problem (21), despite optimizing over one semidefinite matrix, rather than semidefinite matrices. This suggests that Problem (20) should be considered as a viable, more scalable and more accurate alternative to existing SDO relaxations such as Problem (21). For this reason, we shall only consider using Problem (20)’s formulation for the rest of the paper.

We remark however that the key drawback of applying these methods is that, as implemented in this section, they do not scale to sizes beyond which Algorithm 1 successfully solves. This is a drawback because Algorithm 1 supplies an exact certificate of optimality, while these methods do not. In the following set of experiments, we therefore investigate numerical techniques to improve the scalability of Algorithm 2.

4.3 Scalable Dual Bounds and Randomized Rounding Methods

To improve the scalability of Algorithm 2, we relax the PSD constraint on in (19) and (20). With these enhancements, we demonstrate that Algorithm 2 can be successfully scaled to generate high-quality bounds for matrices.

As discussed in Remark 13, we can replace the PSD constraint by requiring that the two by two minors of are non-negative: . Second, we consider adding linear inequalities of the form , for some vector (see Bertsimas and Cory-Wright, 2020, for a discussion). Table 4 reports the performance of Algorithm 2 (with the relaxation (20)) with these two approximations of the positive semidefinite cone, “Minors” and “Minors + 20 inequalities” respectively. Note that we report the entire duality gap (i.e. do not break the gap down into its relaxation and objective gap components) since, as reflected in Table 1, some of these instances are currently too large to solve to optimality.

| Dataset | Minors | Minors + 20 inequalities | ||||

| Gap | Time(s) | Gap | Time(s) | |||

| Pitprops | ||||||

| Wine | ||||||

| Miniboone | ||||||

| Communities | ||||||

| Arrhythmia | ||||||

| Micromass | ||||||

Observe that if we impose constraints on the minors only then we obtain a solution certifiably within of optimality in seconds (resp. minutes) for s (resp. s). Moreover, adding linear inequalities, we obtain a solution within of optimality in minutes (resp. hours) for s (resp. s). Moreover, the bound gaps compare favorably to Algorithm 1 and the method of Berk and Bertsimas (2019) for instances which these methods could not solve to certifiable optimality. For instance, for the Arrhythmia dataset when we obtain a bound gap of less than in s, while the method of Berk and Bertsimas (2019) obtains a bound gap of in s. This illustrates the value of the proposed relax+round method on datasets which are currently too large to be optimized over exactly.

To conclude this section, we explore Algorithm 2’s ability to scale to even higher dimensional datasets in a high performance setting, by running the method on one Intel Xeon E5–2690 v4 2.6GHz CPU core using 600 GB RAM. Table 5 reports the methods scalability and performance on the Wilshire , and Arcene UCI datasets. For the Gisette dataset, we report on the methods performance when we include the first and rows/columns (as well as all rows/columns). Similarly, for the Arcene dataset we report on the method’s performance when we include the first , or rows/columns. We do not report results for the Arcene dataset for , as computing this requires more memory than was available (i.e. GB RAM).

We do not report the method’s performance when we impose linear inequalities for the PSD cone, as solving the relaxation without them is already rather time consuming. Moreover, we do not impose the minor constraints to save memory, do not impose when to save even more memory, and report the overall bound gap, as improving upon the randomly rounded solution is challenging in a high-dimensional setting.

| Dataset | Algorithm 2 (SOC relax)+Inequalities | |||

| Bound gap | Time(s) | |||

| Wilshire | ||||

| Gisette | ||||

| Gisette | ||||

| Gisette | ||||

| Arcene | ||||

| Arcene | ||||

| Arcene | ||||

These results suggest that if we solve the SOC relaxation using a first-order method rather than an interior point method, our approach could successfully generate certifiably near-optimal PCs when s, particularly if combined with a feature screening technique (see d’Aspremont et al., 2008; Atamtürk and Gomez, 2020).

4.4 Performance of Exact and Approximate Methods on Synthetic Data

We now compare the exact and approximate methods against existing state-of-the-art methods in a spiked covariance matrix setting. We use the experimental setup laid out in d’Aspremont et al. (2008, Section 7.1). We recover the leading principal component of a test matrix777This statement of the test matrix is different to d’Aspremont et al. (2008, Section 7.1), who write , rather than . However, it agrees with their source code. , where , , is a noisy matrix with i.i.d. standard uniform entries, is a vector of signals such that

| (23) |

and is the signal-to-noise ratio. The methods which we compare are:

-

•

Exact: Algorithm 1 with Gershgorin inequalities and a time limit of s.

- •

-

•

Greedy: as proposed by Moghaddam et al. (2006) and laid out in (d’Aspremont et al., 2008, Algorithm 1), start with a solution of cardinality and iteratively augment this solution vector with the index which gives the maximum variance contribution. Note that d’Aspremont et al. (2008) found this method outperformed the other methods (approximate greedy, thresholding and sorting) they considered in their work.

-

•

Truncated Power Method: as proposed by Yuan and Zhang (2013), alternate between applying the power method to the solution vector and truncating the vector to ensure that it is -sparse. Note that Berk and Bertsimas (2019) found that this approach performed better than other state-of-the-art methods across the real-world datasets studied in the previous section of this paper and often matched the performance of the method of Berk and Bertsimas (2019)—indeed, it functions as a warm-start for the later method.

-

•

Sorting: sort the entries of by magnitude and set for the largest entries of , as studied in d’Aspremont et al. (2008). This naive method serves as a benchmark for the value of optimization in the more sophisticated methods considered here.

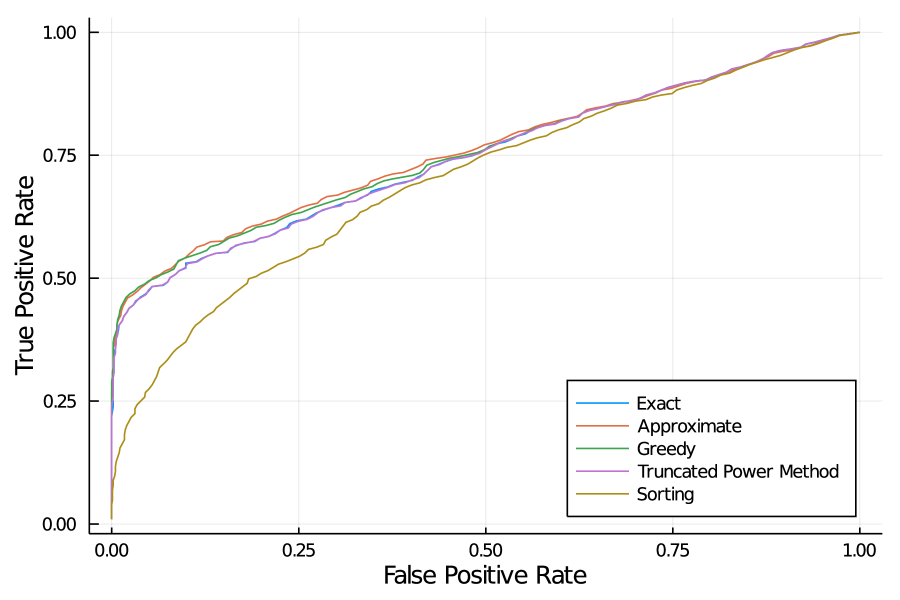

Figures 1 depicts the ROC curve (true positive rate vs. false positive rate for recovering the support of ) over synthetic random instances, as we vary for each instance. We observe that among all methods, the sorting method is the least accurate, with a substantially larger false detection rate for a given true positive rate than the remaining methods (AUC). The truncated power method and our exact method888Note that the exact method would dominate the remaining methods if given an unlimited runtime budget. Its poor performance reflects its inability to find the true optimal solution within seconds. then offer a substantial improvement over sorting, with respective AUCs of and . The greedy method then offers a modest improvement over them (AUC) and the approximate relax+round method is the most accurate (AUC).

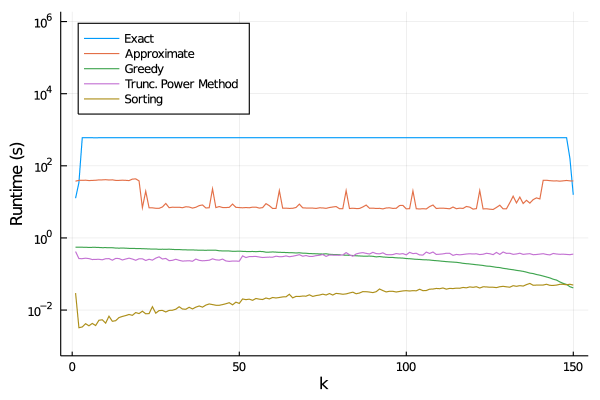

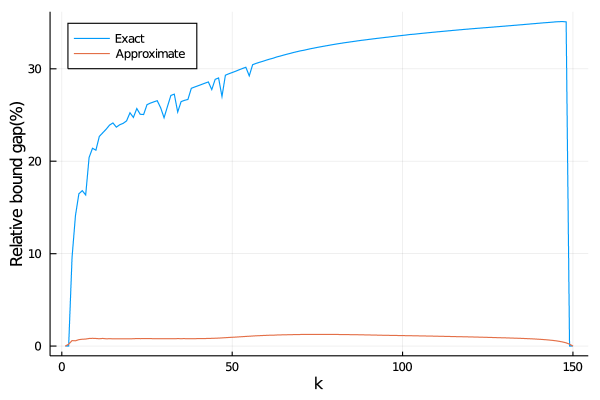

In addition to support recovery, Figure 2 reports average runtime (left panel) and average optimality gap (right panel) over the same instances. Observe that among all methods, only the exact and the approximate relax+round methods provide optimality gaps, i.e., numerical certificates of near optimality. On this metric, relax+round supplies average bound gaps of or less on all instances, while the exact method typically supplies bound gaps of or more. This comparison illustrates the tightness of the valid inequalities from Section 3.2 that we included in the relaxation. Moreover, the relax+round method converges in less than one minute on all instances. All told, the relax+round method is the best performing method overall, although if is set to be sufficiently close to or all methods behave comparably. In particular, the relax+round method should be preferred over the exact method, even though the exact method performs better at smaller problem sizes.

4.5 Summary and Guidelines From Experiments

In summary, our main findings from our numerical experiments are as follows:

-

•

For small or medium scale problems where or , exact methods such as Algorithm 1 or the method of Berk and Bertsimas (2019) reliably obtain certifiably optimal or near-optimal solutions in a short amount of time, and should therefore be preferred over other methods. However, for larger-scale sparse PCA problems, exact methods currently do not scale as well as approximate or heuristic methods.

-

•

For larger-scale sparse PCA problems, our proposed combination of solving a second-order cone relaxation and rounding greedily reliably supplies certifiably near-optimal solutions in practice (if not in theory) in a relatively small amount of time. Moreover, it outperforms other state-of-the-art heuristics including the greedy method of Moghaddam et al. (2006); d’Aspremont et al. (2008) and the Truncated Power Method of Yuan and Zhang (2013). Accordingly, it should be considered as a reliable and more accurate alternative for problems where s.

-

•

In practice, for even larger-scale problem sizes, we recommend using a combination of these methods: a computationally cheaper method (with set in the s) as a feature screening method, to be followed by the approximate relax+round method (with set in the s) and/or the exact method, if time permits.

5 Three Extensions and their Mixed-Integer Conic Formulations

We conclude by discussing three extensions of sparse PCA where our methodology applies.

5.1 Non-Negative Sparse PCA

One potential extension to this paper would be to develop a certifiably optimal algorithm for non-negative sparse PCA (see Zass and Shashua, 2007, for a discussion), i.e., develop a tractable reformulation of

Unfortunately, we cannot develop a MISDO reformulation of non-negative sparse PCA mutatis mutandis Theorem 1. Indeed, while we can still set and relax the rank-one constraint, if we do so then, by the non-negativity of , lifting yields:

| (24) | ||||

| s.t. |

where denotes the completely positive cone, which is NP-hard to separate over and cannot currently be optimized over tractably (Dong and Anstreicher, 2013). Nonetheless, we can develop relatively tractable mixed-integer conic upper and lower bounds for non-negative sparse PCA. Indeed, we can obtain a fairly tight upper bound by replacing the completely positive cone with the larger doubly non-negative cone , which is a high-quality outer-approximation of , indeed exact when (Burer et al., 2009).

Unfortunately, this relaxation is strictly different in general, since the extreme rays of the doubly non-negative cone are not necessarily rank-one when (Burer et al., 2009). Nonetheless, to obtain feasible solutions which supply lower bounds, we could inner approximate the completely positive cone with the cone of non-negative scaled diagonally dominant matrices (see Ahmadi and Majumdar, 2019; Bostanabad et al., 2020).

5.2 Sparse PCA on Rectangular Matrices

A second extension would be to extend our methodology to the non-square case:

| (25) |

Observe that computing the spectral norm of a matrix is equivalent to:

| (26) |

where, in an optimal solution, stands for , stands for and stands for —this can be seen by taking the dual of (Recht et al., 2010, Equation 2.4).

Therefore, by using the same argument as in the positive semidefinite case, we can rewrite sparse PCA on rectangular matrices as the following MISDO:

| (27) | ||||

| s.t. | ||||

5.3 Sparse PCA with Multiple Principal Components

A third extension where our methodology is applicable is the problem of obtaining multiple principal components simultaneously, rather than deflating after obtaining each principal component. As there are multiple definitions of this problem, we now discuss the extent to which our framework encompasses each case.

Common Support:

Perhaps the simplest extension of sparse PCA to a multi-component setting arises when all principal components have common support. By retaining the vector of binary variables and employing the Ky-Fan theorem (c.f. Wolkowicz et al., 2012, Theorem 2.3.8) to cope with multiple principal components, we obtain the following formulation in much the same manner as previously:

| (28) |

Notably, the logical constraint if , which formed the basis of our subproblem strategy, still successfully models the sparsity constraint. This suggests that (a) one can derive an equivalent subproblem strategy under common support, and (b) a cutting-plane method for common support should scale equally well as with a single component.

Disjoint Support:

In a sparse PCA problem with disjoint support (Vu and Lei, 2012) , simultaneously computing the first principal components is equivalent to solving:

| (29) | ||||

where is a binary variable denoting whether feature is a member of the th principal component. By applying the technique used to derive Theorem 1 mutatis mutandis, and invoking the Ky-Fan theorem (c.f. Wolkowicz et al., 2012, Theorem 2.3.8) to cope with the rank- constraint, we obtain:

| (30) | ||||

where is a binary matrix denoting whether features and are members of the same principal component; this problem can be addressed by a cutting-plane method in much the same manner as when .

Acknowledgments

We are grateful to the three anonymous referees and the associate editor for many valuable comments which improved the paper.

References

- Ahmadi and Majumdar [2019] A. A. Ahmadi and A. Majumdar. DSOS and SDSOS optimization: More tractable alternatives to sum of squares and semidefinite optimization. SIAM Journal on Applied Algebra and Geometry, 3(2):193–230, 2019.

- Amini and Wainwright [2008] A. A. Amini and M. J. Wainwright. High-dimensional analysis of semidefinite relaxations for sparse principal components. In 2008 IEEE international symposium on information theory, pages 2454–2458. IEEE, 2008.

- Atamtürk and Gomez [2020] A. Atamtürk and A. Gomez. Safe screening rules for l0-regression. Optimization Online, 2020.

- Bao et al. [2011] X. Bao, N. V. Sahinidis, and M. Tawarmalani. Semidefinite relaxations for quadratically constrained quadratic programming: A review and comparisons. Mathematical Programming, 129(1):129, 2011.

- Barak et al. [2014] B. Barak, J. A. Kelner, and D. Steurer. Rounding sum-of-squares relaxations. In Proceedings of the forty-sixth annual ACM symposium on Theory of computing, pages 31–40, 2014.

- Barnhart et al. [1998] C. Barnhart, E. L. Johnson, G. L. Nemhauser, M. W. Savelsbergh, and P. H. Vance. Branch-and-price: Column generation for solving huge integer programs. Operations Research, 46(3):316–329, 1998.

- Ben-Tal and Nemirovski [2002] A. Ben-Tal and A. Nemirovski. On tractable approximations of uncertain linear matrix inequalities affected by interval uncertainty. SIAM Journal on Optimization, 12(3):811–833, 2002.

- Berk and Bertsimas [2019] L. Berk and D. Bertsimas. Certifiably optimal sparse principal component analysis. Mathematical Programming Computation, 11(3):381–420, 2019.

- Berthet and Rigollet [2013] Q. Berthet and P. Rigollet. Optimal detection of sparse principal components in high dimension. Annals of Statistics, 41(4):1780–1815, 2013.

- Bertsimas and Cory-Wright [2020] D. Bertsimas and R. Cory-Wright. On polyhedral and second-order-cone decompositions of semidefinite optimization problems. Operations Research Letters, 48(1):78–85, 2020.

- Bertsimas and Van Parys [2020] D. Bertsimas and B. Van Parys. Sparse high-dimensional regression: Exact scalable algorithms and phase transitions. Annals of Statistics, 48(1):300–323, 2020.

- Bertsimas and Ye [1998] D. Bertsimas and Y. Ye. Semidefinite relaxations, multivariate normal distributions, and order statistics. In Handbook of Combinatorial Optimization, pages 1473–1491. Springer, 1998.

- Bertsimas et al. [2017] D. Bertsimas, J. Pauphilet, and B. Van Parys. Sparse classification and phase transitions: A discrete optimization perspective. arXiv preprint arXiv:1710.01352, 2017.

- Bertsimas et al. [2019] D. Bertsimas, R. Cory-Wright, and J. Pauphilet. A unified approach to mixed-integer optimization problems with logical constraints. arXiv preprint arXiv:1907.02109, 2019.

- Boman et al. [2005] E. G. Boman, D. Chen, O. Parekh, and S. Toledo. On factor width and symmetric h-matrices. Linear Algebra and its Applications, 405:239–248, 2005.

- Bostanabad et al. [2020] M. S. Bostanabad, J. Gouveia, and T. K. Pong. Inner approximating the completely positive cone via the cone of scaled diagonally dominant matrices. Journal on Global Optimization, 76:383–405, 2020.

- Boyd and Vandenberghe [2004] S. Boyd and L. Vandenberghe. Convex optimization. Cambridge university press, Cambridge, 2004.

- Boyd et al. [1994] S. Boyd, L. El Ghaoui, E. Feron, and V. Balakrishnan. Linear matrix inequalities in system and control theory, volume 15. Studies in Applied Mathematics, Society for Industrial and Applied Mathematics, Philadelphia, PA, 1994.

- Brauer [1952] A. Brauer. Limits for the characteristic roots of a matrix iv. Duke Mathematical Journal, 19:75–91, 1952.

- Burer et al. [2009] S. Burer, K. M. Anstreicher, and M. Dür. The difference between 5 5 doubly nonnegative and completely positive matrices. Linear Algebra and its Applications, 431(9):1539–1552, 2009.

- Candes and Tao [2007] E. Candes and T. Tao. The Dantzig selector: Statistical estimation when p is much larger than n. Annals of Statistics, 35(6):2313–2351, 2007.

- Chan et al. [2016] S. O. Chan, D. Papailliopoulos, and A. Rubinstein. On the approximability of sparse PCA. In Conference on Learning Theory, pages 623–646, 2016.

- Chen et al. [2019] Y. Chen, Y. Ye, and M. Wang. Approximation hardness for a class of sparse optimization problems. Journal of Machine Learning Research, 20(38):1–27, 2019.

- Chowdhury et al. [2020] A. Chowdhury, P. Drineas, D. P. Woodruff, and S. Zhou. Approximation algorithms for sparse principal component analysis. arXiv preprint arXiv:2006.12748, 2020.

- d’Aspremont et al. [2005] A. d’Aspremont, L. E. Ghaoui, M. I. Jordan, and G. R. Lanckriet. A direct formulation for sparse pca using semidefinite programming. In Advances in neural information processing systems, pages 41–48, 2005.

- d’Aspremont et al. [2008] A. d’Aspremont, F. Bach, and L. E. Ghaoui. Optimal solutions for sparse principal component analysis. Journal of Machine Learning Research, 9(Jul):1269–1294, 2008.

- Dey et al. [2017] S. S. Dey, R. Mazumder, M. Molinaro, and G. Wang. Sparse principal component analysis and its -relaxation. arXiv preprint arXiv:1712.00800, 2017.

- Dey et al. [2018] S. S. Dey, R. Mazumder, and G. Wang. A convex integer programming approach for optimal sparse PCA. arXiv preprint arXiv:1810.09062, 2018.

- Dong and Anstreicher [2013] H. Dong and K. Anstreicher. Separating doubly nonnegative and completely positive matrices. Mathematical Programming, 137(1-2):131–153, 2013.

- Dong et al. [2015] H. Dong, K. Chen, and J. Linderoth. Regularization vs. relaxation: A conic optimization perspective of statistical variable selection. arXiv:1510.06083, 2015.

- Duran and Grossmann [1986] M. A. Duran and I. E. Grossmann. An outer-approximation algorithm for a class of mixed-integer nonlinear programs. Mathematical Programming, 36(3):307–339, 1986.

- d’Aspremont and Boyd [2003] A. d’Aspremont and S. Boyd. Relaxations and randomized methods for nonconvex QCQPs. EE392o Class Notes, Stanford University, 1:1–16, 2003.

- d’Aspremont et al. [2014] A. d’Aspremont, F. Bach, and L. El Ghaoui. Approximation bounds for sparse principal component analysis. Mathematical Programming, 148(1-2):89–110, 2014.

- Fountoulakis et al. [2017] K. Fountoulakis, A. Kundu, E.-M. Kontopoulou, and P. Drineas. A randomized rounding algorithm for sparse PCA. ACM Transactions on Knowledge Discovery from Data (TKDD), 11(3):1–26, 2017.

- Gally and Pfetsh [2016] T. Gally and M. E. Pfetsh. Computing restricted isometry constants via mixed-integer semidefinite programming. Optimization Online, 2016.

- Gally et al. [2018] T. Gally, M. E. Pfetsch, and S. Ulbrich. A framework for solving mixed-integer semidefinite programs. Optimization Methods & Software, 33(3):594–632, 2018.

- Goemans and Williamson [1995] M. X. Goemans and D. P. Williamson. Improved approximation algorithms for maximum cut and satisfiability problems using semidefinite programming. Journal of the ACM (JACM), 42(6):1115–1145, 1995.

- Hein and Bühler [2010] M. Hein and T. Bühler. An inverse power method for nonlinear eigenproblems with applications in 1-spectral clustering and sparse PCA. In Advances in neural information processing systems, pages 847–855, 2010.

- Horn and Johnson [1990] R. A. Horn and C. R. Johnson. Matrix analysis. Cambridge university press, 1990.

- Hotelling [1933] H. Hotelling. Analysis of a complex of statistical variables into principal components. Journal of educational psychology, 24(6):417, 1933.

- Hsu et al. [2014] Y.-L. Hsu, P.-Y. Huang, and D.-T. Chen. Sparse principal component analysis in cancer research. Translational cancer research, 3(3):182, 2014.

- Jeffers [1967] J. N. Jeffers. Two case studies in the application of principal component analysis. Journal of the Royal Statistical Society: Series C (Applied Statistics), 16(3):225–236, 1967.

- Jolliffe [1995] I. T. Jolliffe. Rotation of principal components: choice of normalization constraints. Journal of Applied Statistics, 22(1):29–35, 1995.

- Jolliffe et al. [2003] I. T. Jolliffe, N. T. Trendafilov, and M. Uddin. A modified principal component technique based on the lasso. Journal of computational and Graphical Statistics, 12(3):531–547, 2003.

- Journée et al. [2010] M. Journée, Y. Nesterov, P. Richtárik, and R. Sepulchre. Generalized power method for sparse principal component analysis. Journal of Machine Learning Research, 11(2), 2010.

- Kaiser [1958] H. F. Kaiser. The varimax criterion for analytic rotation in factor analysis. Psychometrika, 23(3):187–200, 1958.

- Kim and Kojima [2001] S. Kim and M. Kojima. Second order cone programming relaxation of nonconvex quadratic optimization problems. Optimization methods and software, 15(3-4):201–224, 2001.

- Kobayashi and Takano [2020] K. Kobayashi and Y. Takano. A branch-and-cut algorithm for solving mixed-integer semidefinite optimization problems. Computational Optimization & Applications, 75(2):493–513, 2020.

- Ledoit and Wolf [2004] O. Ledoit and M. Wolf. A well-conditioned estimator for large-dimensional covariance matrices. Journal of multivariate analysis, 88(2):365–411, 2004.

- Li and Tsatsomeros [1997] B. Li and M. Tsatsomeros. Doubly diagonally dominant matrices. Linear Algebra and Its Applications, 261(1-3):221–235, 1997.

- Li and Xie [2020] Y. Li and W. Xie. Exact and approximation algorithms for sparse PCA. arXiv preprint arXiv:2008.12438, 2020.

- Luss and d’Aspremont [2010] R. Luss and A. d’Aspremont. Clustering and feature selection using sparse principal component analysis. Optimization & Engineering, 11(1):145–157, 2010.

- Luss and Teboulle [2013] R. Luss and M. Teboulle. Conditional gradient algorithms for rank-one matrix approximations with a sparsity constraint. SIAM Review, 55(1):65–98, 2013.

- Magdon-Ismail [2017] M. Magdon-Ismail. NP-hardness and inapproximability of sparse PCA. Information Processing Letters, 126:35–38, 2017.

- Majumdar et al. [2019] A. Majumdar, G. Hall, and A. A. Ahmadi. A survey of recent scalability improvements for semidefinite programming with applications in machine learning, control, and robotics. arXiv preprint arXiv:1908.05209, 2019.

- Miolane [2018] L. Miolane. Phase transitions in spiked matrix estimation: information-theoretic analysis. arXiv preprint arXiv:1806.04343, 2018.

- Moghaddam et al. [2006] B. Moghaddam, Y. Weiss, and S. Avidan. Spectral bounds for sparse pca: Exact and greedy algorithms. In Advances in neural information processing systems, pages 915–922, 2006.

- Quesada and Grossmann [1992] I. Quesada and I. E. Grossmann. An LP/NLP based branch and bound algorithm for convex MINLP optimization problems. Computers & Chemical Engineering, 16(10-11):937–947, 1992.

- Raghavan and Tompson [1987] P. Raghavan and C. D. Tompson. Randomized rounding: a technique for provably good algorithms and algorithmic proofs. Combinatorica, 7(4):365–374, 1987.

- Raghavendra and Steurer [2010] P. Raghavendra and D. Steurer. Graph expansion and the unique games conjecture. In Proceedings of the forty-second ACM symposium on Theory of computing, pages 755–764, 2010.

- Recht et al. [2010] B. Recht, M. Fazel, and P. A. Parrilo. Guaranteed minimum-rank solutions of linear matrix equations via nuclear norm minimization. SIAM Review, 52(3):471–501, 2010.

- Richman [1986] M. B. Richman. Rotation of principal components. Journal of climatology, 6(3):293–335, 1986.

- Richtárik et al. [2020] P. Richtárik, M. Jahani, S. D. Ahipaşaoğlu, and M. Takáč. Alternating maximization: Unifying framework for 8 sparse PCA formulations and efficient parallel codes. Optimization and Engineering, pages 1–27, 2020.

- Todd [2018] M. J. Todd. On max-k-sums. Mathematical Programming, 171(1):489–517, 2018.

- Vu and Lei [2012] V. Vu and J. Lei. Minimax rates of estimation for sparse PCA in high dimensions. In Artificial intelligence and statistics, pages 1278–1286, 2012.

- Wang et al. [2016] T. Wang, Q. Berthet, and R. J. Samworth. Statistical and computational trade-offs in estimation of sparse principal components. Annals of Statistics, 44(5):1896–1930, 2016.

- Witten et al. [2009] D. M. Witten, R. Tibshirani, and T. Hastie. A penalized matrix decomposition, with applications to sparse principal components and canonical correlation analysis. Biostatistics, 10(3):515–534, 2009.

- Wolkowicz et al. [2012] H. Wolkowicz, R. Saigal, and L. Vandenberghe. Handbook of semidefinite programming: theory, algorithms, and applications, volume 27. Springer Science & Business Media, 2012.

- Yuan and Zhang [2013] X.-T. Yuan and T. Zhang. Truncated power method for sparse eigenvalue problems. Journal of Machine Learning Research, 14(Apr):899–925, 2013.

- Zakeri et al. [2014] G. Zakeri, D. Craigie, A. Philpott, and M. Todd. Optimization of demand response through peak shaving. Oper. Res. Letters, 42(1):97–101, 2014.

- Zass and Shashua [2007] R. Zass and A. Shashua. Nonnegative sparse PCA. In Advances in neural information processing systems, pages 1561–1568, 2007.

- Zhang et al. [2012] Y. Zhang, A. d’Aspremont, and L. El Ghaoui. Sparse PCA: Convex relaxations, algorithms and applications. In Handbook on Semidefinite, Conic and Polynomial Optimization, pages 915–940. Springer, 2012.

- Zou et al. [2006] H. Zou, T. Hastie, and R. Tibshirani. Sparse principal component analysis. Journal of computational and graphical statistics, 15(2):265–286, 2006.

A A Doubly Non-Negative Relaxation and a Goemans-Williamson Rounding Scheme

The MISDO formulation (5) we derived in Section 2 features big- constraints of the form . We did not include the equally valid inequalities , because they are redundant with the fact that is symmetric. Actually, (5) is equivalent to

| (31) |

The formulation above features products of binary variables .Therefore, unlike several other problems involving cardinality constraints such as compressed sensing, relaxations of sparse PCA benefit from invoking an optimization hierarchy (see d’Aspremont and Boyd, 2003, Section 2.4.1, for a counterexample specific to compressed sensing). In particular, let us model the outer product by introducing a matrix and imposing the semidefinite constraint . We tighten the formulation by requiring that and imposing the linear inequalities . Hence, we obtain:

| (32) | ||||

Problem (32) is a doubly non-negative relaxation, as we have intersected the Shor and RLT relaxations. This is noteworthy, because doubly non-negative relaxations dominate most other popular relaxations with variables (Bao et al., 2011, Theorem 1).

Relaxation (32) is amenable to a Goemans-Williamson rounding scheme (Goemans and Williamson, 1995). Namely, let denote optimal choices of in Problem (32), be normally distributed random vector such that , and be a rounding of the vector such that for the largest entries of ; this is, up to feasibility on , equivalent to the hyperplane rounding scheme of Goemans and Williamson (1995) (see Bertsimas and Ye, 1998, for a proof). We formalize this procedure in Algorithm 3. As Algorithm 3 returns one of multiple possible ’s, a computationally useful strategy is to run the random rounding component several times and return the best solution.

A very interesting question is whether it is possible to produce a constant factor guarantee on the quality of Algorithm 3’s rounding, as Goemans and Williamson (1995) successfully did for binary quadratic optimization. Unfortunately, despite our best effort, this does not appear to be possible as the quality of the rounding depends on the value of the optimal dual variables, which are hard to control in this setting. This should not be too surprising for two distinct reasons. Namely, (a) sparse regression, which reduces to sparse PCA (see d’Aspremont et al., 2008, Section 6.1) is strongly NP-hard (Chen et al., 2019), and (b) sparse PCA is hard to approximate within a constant factor under the Small Set Expansion (SSE) hypothesis (Chan et al., 2016), meaning that producing a constant factor guarantee would contradict the SSE hypothesis of Raghavendra and Steurer (2010).

We close this appendix by noting that a similar in spirit (although different in both derivation and implementation) combination of taking a semidefinite relaxation of and rounding à la Goemans-Williamson has been proposed for sparse regression problems (Dong et al., 2015).