Solving Multi-Group Neutron Diffusion Eigenvalue Problem with Decoupling Residual Loss Function

Abstract

In the midst of the neural network’s success in solving partial differential equations, tackling eigenvalue problems using neural networks remains a challenging task. However, the Physics Constrained-General Inverse Power Method Neural Network (PC-GIPMNN) approach was proposed and successfully applied to solve the single-group critical problems in reactor physics. This paper aims to solve critical problems in multi-group scenarios and in more complex geometries. Hence, inspired by the merits of traditional source iterative method, which can overcome the ill-condition of the right side of the equations effectively and solve the multi-group problem effectively, we propose two residual loss function called Decoupling Residual loss function and Direct Iterative loss function. Our loss function can deal with multi-group eigenvalue problem, and also single-group eigenvalue problem. Using the new residual loss functions, our study solves one-dimensional, two-dimensional, and three-dimensional multi-group problems in nuclear reactor physics without prior data. In numerical experiments, our approach demonstrates superior generalization capabilities compared to previous work.

keywords: deep learning, eigenvalue problem, nuclear reactors, multi-group problem

1 Introduction

The neutron diffusion equations are fundamental equations in nuclear reactor physics, which are used to describe the transport behavior of neutrons in a nuclear reactor and derived by neutron diffusion theory [1]. Depending on different physical situations and assumptions, the neutron diffusion equations can be further developed into more complex equations, such as the multi-group diffusion equations, neutron noise equations, and so on. The neutron diffusion equation can be simplified from the Boltzmann transport equation, which accurately describes the neutron transport process, and the neutron diffusion equation includes single-group, multi-group, transient and steady-state problems. The neutron diffusion equations can be used for fuel management to determine the optimal arrangement of fuel rods, thereby maximizing fuel utilization. The neutron diffusion equations can also be used for reactor operation control to maintain the stability and safety performance of the reactor, such as controlling the power of the reactor by controlling the neutron flux in the reactor.

Over the past few decades, researchers have developed a range of solution methods for problems such as finite difference[2], finite element[3], finite volume[4] [5] ,nodal expansion[6] and methods of characteristic[7], among others. However, with the potential of neural networks being explored in various fields, there is an urgent need for research in using neural networks to solve physically relevant multi-group neutron diffusion eigenvalue problems (NDEPs) in nuclear reactor scenarios.

As early as 1994, Dissanayake et al.[8] attempted to use neural network methods to solve simple cases of linear and nonlinear problems. In 1998, Lagris et al.[9] presented a more comprehensive algorithmic framework for using neural networks to solve partial differential equations. Numerous researchers have made continuous efforts in the development of related works. Currently, the most popular framework is the Physics-Informed Neural Networks (PINNs) proposed by Raissi et al.[10] This framework directly links neural networks with the information of physical equations through the form of a loss function. It is evident that neural network based methods for solving partial differential equations offer several advantages:

-

•

Independence from mesh generation: Neural networks do not rely on mesh files for solving PDEs. Instead, they utilize collected training samples or data points, which eliminates the need for grid generation and adapts well to irregular or complex geometries.

-

•

Integration of observational data: Neural networks have the capability to incorporate observational or experimental data into the learning process. This allows them to effectively combine the physical information from the equations with the available data, resulting in enhanced accuracy and predictive capabilities compared to traditional solver algorithms that solely rely on the physics of the equations.

-

•

Handling high-dimensional problems: Neural networks demonstrate their advantage in dealing with high-dimensional problems, overcoming the curse of dimensionality. Neural networks can effectively learn and represent complex relationships in high-dimensional spaces, making them suitable for a wide range of problems with multiple variables or parameters.

The PINNs method has also been applied in inverse problems [11] [12], as well as in numerical solutions for solving stochastic differential equations [13] [14]. For interface problems that are more commonly encountered in practical applications, there are also related studies [15] [16] [17].

Recently, neural network algorithms have been gradually maturing, and they have been instrumental in solving engineering problems with increased robustness and efficiency. Cheng et al.[18] [19] simplified the process of data assimilation (DA) using neural networks. Gong et al.[20] utilized the data-enabled physics-informed approach to solve the neutron field and coefficients in nuclear reactor physics. Phillips et al.[21] replaced the discrete forms in traditional numerical methods with convolutional kernels from CNNs for numerical computation, yielding satisfactory results.

Based on the aforementioned advantages, there also have been numerous works applying neural network algorithms to solve PDEs in various problem domains. Researchers utilize Physics-informed PointNet [22] to predict the velocity and pressure fields of two-dimensional steady incompressible flow in porous media. Zhou et al.[23] proposed the Multi-Scale Physics Constrained Neural Network (MSPCNN) to solve fluid dynamics problems by integrating fidelity terms at multiple scales. Mao et al.[24] utilized the PINN framework with the integration of prior data point losses to solve one-dimensional and two-dimensional forward and inverse problems in high-speed flow. These examples highlight the strong capability of neural network algorithms to integrate physical information with data points, which is crucial for solving real-world physical problems.

Specifically, there is still considerable research being conducted on PDEs in the field of nuclear reactor physics, which is also the focus of this paper. We have listed the relevant articles in Table 1.

The eigenvalue problem in one dimension was solved for a single material by Wang et al[26]. Yang et al.[27] utilized the Data Enabled Physics Informed Neural Network (DEPINN) approach, where observed data points are incorporated into the neural network for solving the problem. This approach eliminates the need for regularization techniques. On the other hand, Yang et al.[28] [29] focused on solving eigenvalue problems solely based on the information provided by the physical equations, without considering any prior data points:

-

•

The main emphasis of Generalized Inverse Power Method is to combine neural networks with the traditional power iteration method. It represents a practical implementation of neural networks within the computational framework of traditional eigenvalue problems.

-

•

Physics Constrained refers to the application of neural networks to enforce the continuity of energy groups and flux, which effectively enhances the fidelity of the physical modeling itself.

-

•

Based on the definition of eigenvalues and in combination with control equations, this paper adopts Rayleigh-Quotient expression to accelerate the convergence of eigenvalues. The objective is to improve the convergence rate of the eigenvalue solution.

However, there have been no reported studies utilizing neural networks to solve the multi-group eigenvalue problem without the introduction of prior data points. The multi-group problem is particularly relevant in nuclear reactor physics, where there is a strong engineering demand for its solution. Therefore, the main contributions of this paper are as follows:

-

•

This paper introduces a novel loss function called decoupling loss function that effectively handles the ill-conditioned structure of the governing equations when solving multi-group neutron diffusion problems. It is designed to be applicable even in the case of degenerating into a single-group scenario.

-

•

In this paper, the decoupling loss function is applied to multidimensional (1D, 2D, and 3D) multi-group complex interface problems. This marks the first application of neural network-based solutions to multi-group neutron diffusion problems in real 3D physical scenarios of nuclear reactors.

-

•

In this paper, detailed numerical experiments are conducted for different reactor geometries, including sampling from training points and applying interface conditions. These experiments provide a solid foundation for future research in this field.

These contributions highlight the advancements made in applying neural networks to solve the multi-group eigenvalue problem, shedding light on the challenges and potential solutions in the field of nuclear reactor physics.

The subsequent content of this paper is as follows. In Section 2, we provide an overview of problems being solved. In Section 3, we delve into the details of the neural network algorithm employed in this study. We presents the numerical results obtained from applying the neural network algorithm to specific problems in Section 4. Finally, we draw conclusions in Section 5.

| Method | NoEG | NoM | Dim | Eig | TD | Model |

|---|---|---|---|---|---|---|

| PIDL[30] | 1 | 1 | 2 | - | Diffusion | |

| 1 | 2 | 2 | - | - | Diffusion | |

| SVD-AE[31] | 1 | 2 | 1 | - | Diffusion | |

| 1 | 4 | 2 | - | Diffusion | ||

| BDPINN[32] | 1 | 2 | 1 | - | - | Transport |

| 1 | 2 | 2 | - | - | Transport | |

| 2 | 3 | 2 | - | - | Transport | |

| BC-cPINN[26] | 1 | 1/2/5 | 1 | - | - | Diffusion |

| 1 | 1/2 | 1 | - | Diffusion | ||

| DEPINN[27] | 1 | 1 | 1/2 | - | Diffusion | |

| 2 | 4 | 2 | - | Diffusion | ||

| PC-GIPMNN[29] | 1 | 3 | 1 | - | Diffusion | |

| 1 | 4/6 | 2 | - | Diffusion | ||

| PINNs[33] | 1 | 2 | 2 | - | - | Diffusion |

| 1 | 2/3 | 2 | - | Diffusion | ||

| 2 | 1/3 | 1 | - | - | Diffusion | |

| 2 | 2 | 2 | - | - | Diffusion | |

| 2 | 3 | 1/2 | - | Diffusion | ||

| Present work | 2 | 2/3/4/5 | 1/2/3 | - | Diffusion |

-

*

‘NoEG’, ‘NoM’, ‘Dim’, ‘Eig’ and ‘TD’ mean number of energy group, number of material, dimension, eigenvalue and time-dependent, respectively.

-

*

In the table, we use ‘’ and ‘-’ to indicate whether the model covers a particular scenario. ‘’ represents ‘yes’ and indicates that the model covers the scenario, while ‘-’ represents ‘no’ and indicates that the model does not cover the scenario.

-

*

Among the aforementioned work, the problems being solved in this paper are based on realistic models of nuclear reactor cores [34] and are solved without introducing any observation points.

2 Problem Statement

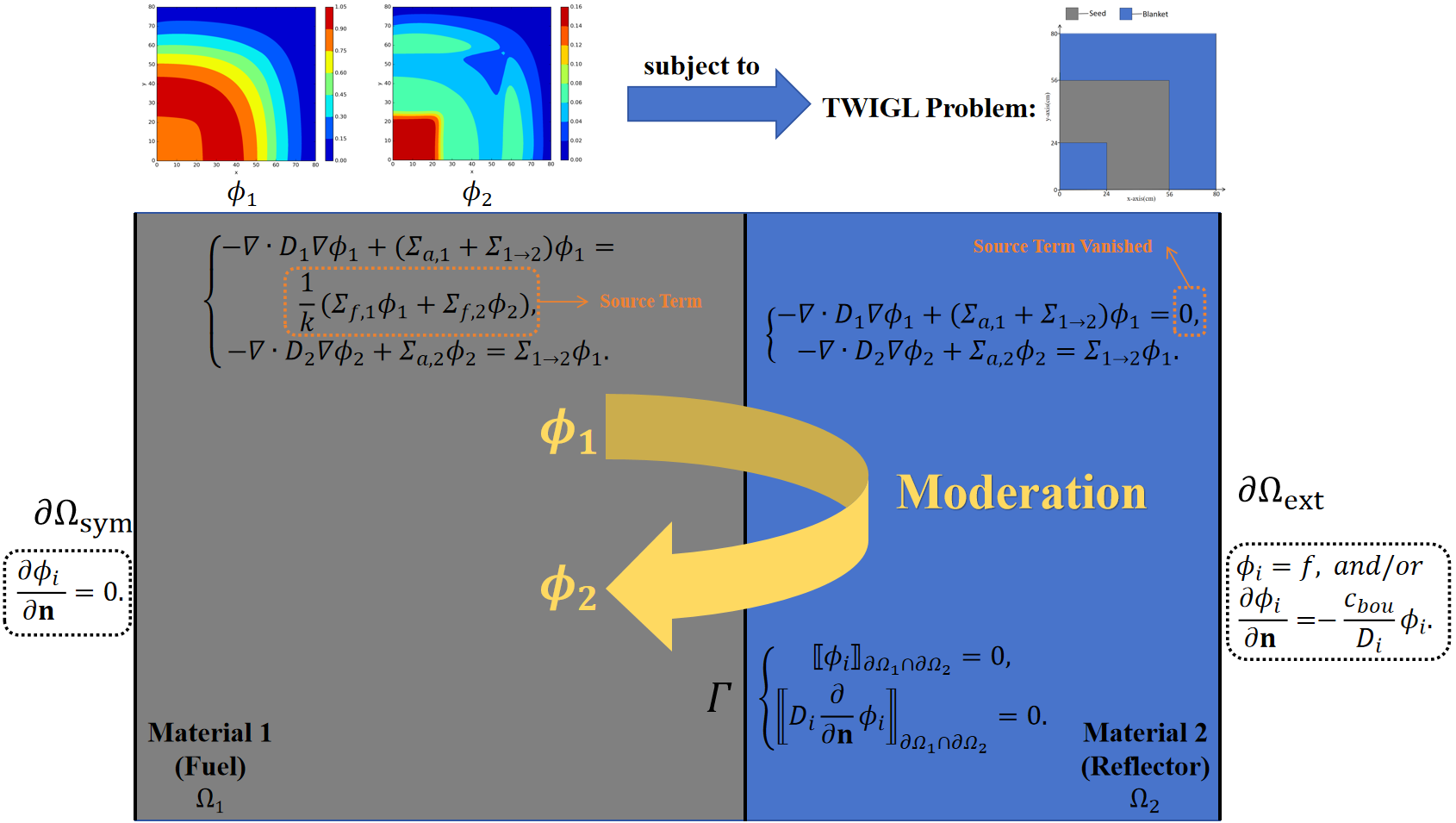

First, let us consider a non time-dependent two-group diffusion problem where the physical equations include the fast group and thermal group in (1), which is defined in domain . The diffusion of neutrons in two groups can be described by coupled diffusion equations that account for processes such as neutron sources, absorption, scattering, and moderation. The left-hand side of the equations considers the diffusion and reaction processes for both fast and thermal neutron groups, with neutrons from the fast group transitioning into the thermal group. This system of equations assumes that fission neutrons only fall within the fast group range; hence, the source term in the thermal group equation originates solely from the moderation process of the fast group.

| (1) |

| (2) |

where represent diffusion coefficient, macroscopic absorption cross section and fission cross section, respectively. stands for scattering cross section from fast group to thermal group. and denote the eigenvalue and eigenfunctions, respectively. The boundary conditions for the problem depend on the specific physical problem at hand. However, we can summarize them into (2), where represent different energy groups, represents the normal direction, is a constant coefficient for the Robin condition, indicates the symmetric boundary where the homogeneous Neumann condition is applied, while and represent different types of external boundaries, where applies the Dirichlet boundary condition and applies the Robin boundary condition. For different test cases, the applied boundary conditions vary. The specific details will be discussed in Section 4.

From mathematical perspectives, (1) is a system of eigenvalue partial differential equations, defined on the domain . The number of solutions to such problems are infinite, but for such a critical system of neutron diffusion problems, the primary concern in nuclear reactors is to determine the maximum value of and the corresponding values of and when reaches its maximum. This value of is referred to as , the effective multiplication factor. It can be observed that obtaining the maximum value of and the corresponding and is equivalent to solving for the smallest eigenvalue and eigenvector of equation (1). Since this paper only considers the solution of the effective multiplication factor, the term will be used to denote hereinafter.

Neutron diffusion eigenvalue problems originate from nuclear reactor cores. The nuclear reactor core is a complex geometrical region composed of multiple materials, which leads to the coefficients in the equations appearing as piecewise constants, and the smoothness of the solutions is also affected at the interfaces. Therefore, we will introduce interface conditions suitable for neutron diffusion eigenvalue problems, which are crucial for solving such problems. For a problem consisting of two regions (Figure.1), we impose the following constraints on the solution at the interface:

| (3) |

for , where

| (4) |

| (5) |

| (6) |

and means interface between any pair of materials. If it is a multi-interface problem, we can apply the same conditions (3) at the interfaces between each pair of materials.

3 Neural Networks

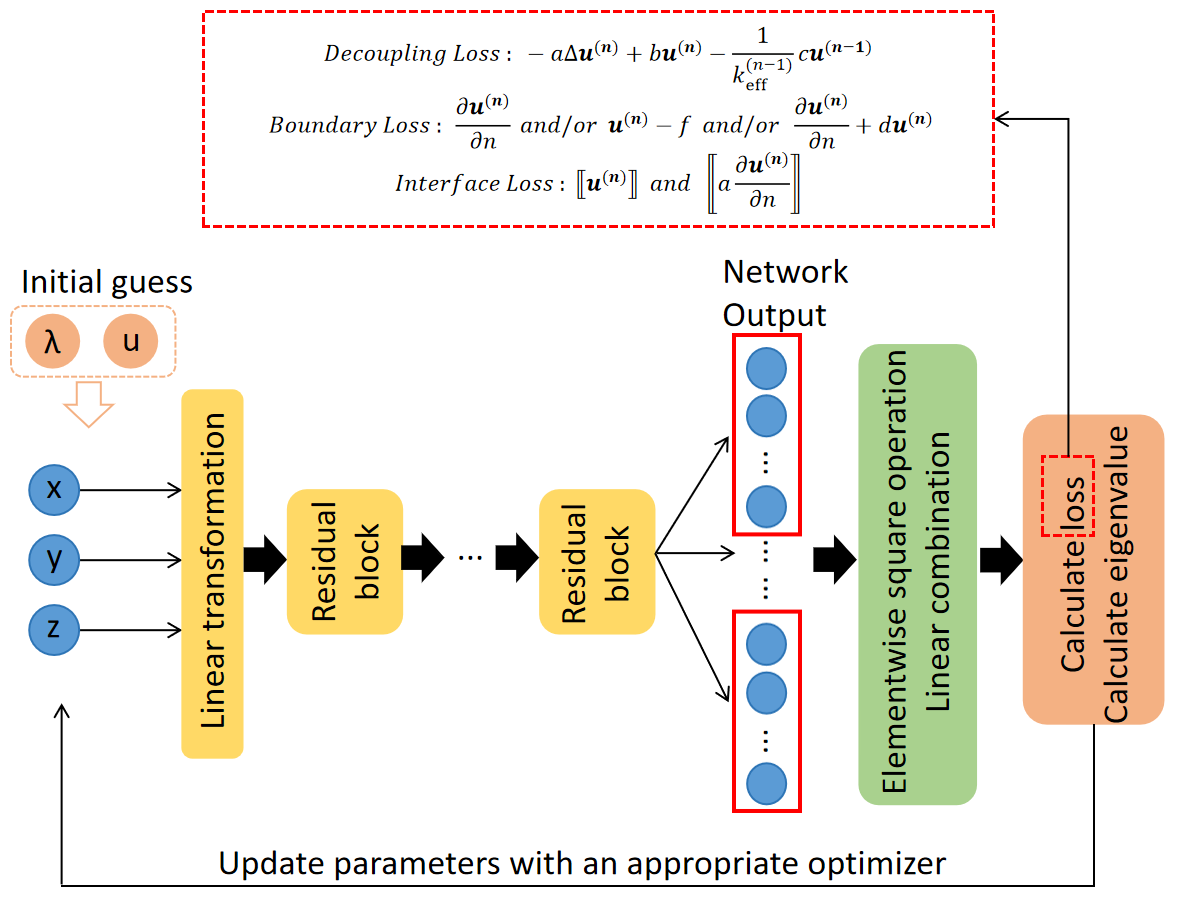

In this paper, we choose a residual neural network as an approximator, which typically consists of an input layer, residual block(s), identity connections and output layer. Here, denote weight matrix and bias, respectively, and is activation function. Meanwhile we define as residual block. For residual neural networks, the first step is to use linear transformations to change the dimensionality of the input data to match the number of neurons within the residual block. We have the flexibility to choose the number of residual blocks and the number of neurons in every residual block, while the sizes of the input and output need to be determined based on the specific problem at hand.

If the input data and the number of neurons in every residual block is , the and . In the residual block, there is also an identity mapping, which we denote as . In the case where we need to solve a system of partial differential equations involving functions, the output layer should produce results. Therefore, the dimensions of the weight matrix and bias in the output layer will be determined by the number . However, there is no activation function before the output layer, as shown in (9). For clarity, we have put a schematic diagram of the algorithm in Figure 2.

| (7) |

| (8) |

| (9) |

3.1 Network Structure

For a PDE system with unknown functions, a straightforward approach is to define the neural network as a -dimensional input and -dimensional output network. However, this approach clearly cannot achieve the crucial interface conditions. For a multi-region problem with regions, in practice, we can adjust the setup to one neural network by using neurons in the output layer to represent solutions in each of the regions. Therefore, we want to build a neural network with -dimension input and -dimension output, where is the number of different regions. is the restriction of the function to the region . Here, is a vector of components. Thus output of neural network is presented as (10) and interface condition can be implemented as (11) (12).

| (10) |

| (11) |

| (12) |

The numerical solution by neural network is a weighted linear combination of respect to :

| (13) |

However, in this paper, we only consider the case where , as the case where has already been mentioned in [29].

3.2 Loss function

In this section, our loss functions will be presented in accordance with the partitioning of our training points. and are used to represent the residual training points, boundary training points, and interface condition training points of the equation, respectively.

3.2.1 Residual loss

The critical state of the steady-state neutron diffusion equation is characterized by a system of eigenvalue equations involving multiple energy group variables (14). Each equation contains variables from different energy groups and here we consider a general form with a shift of eigenvalue:

| (14) | ||||

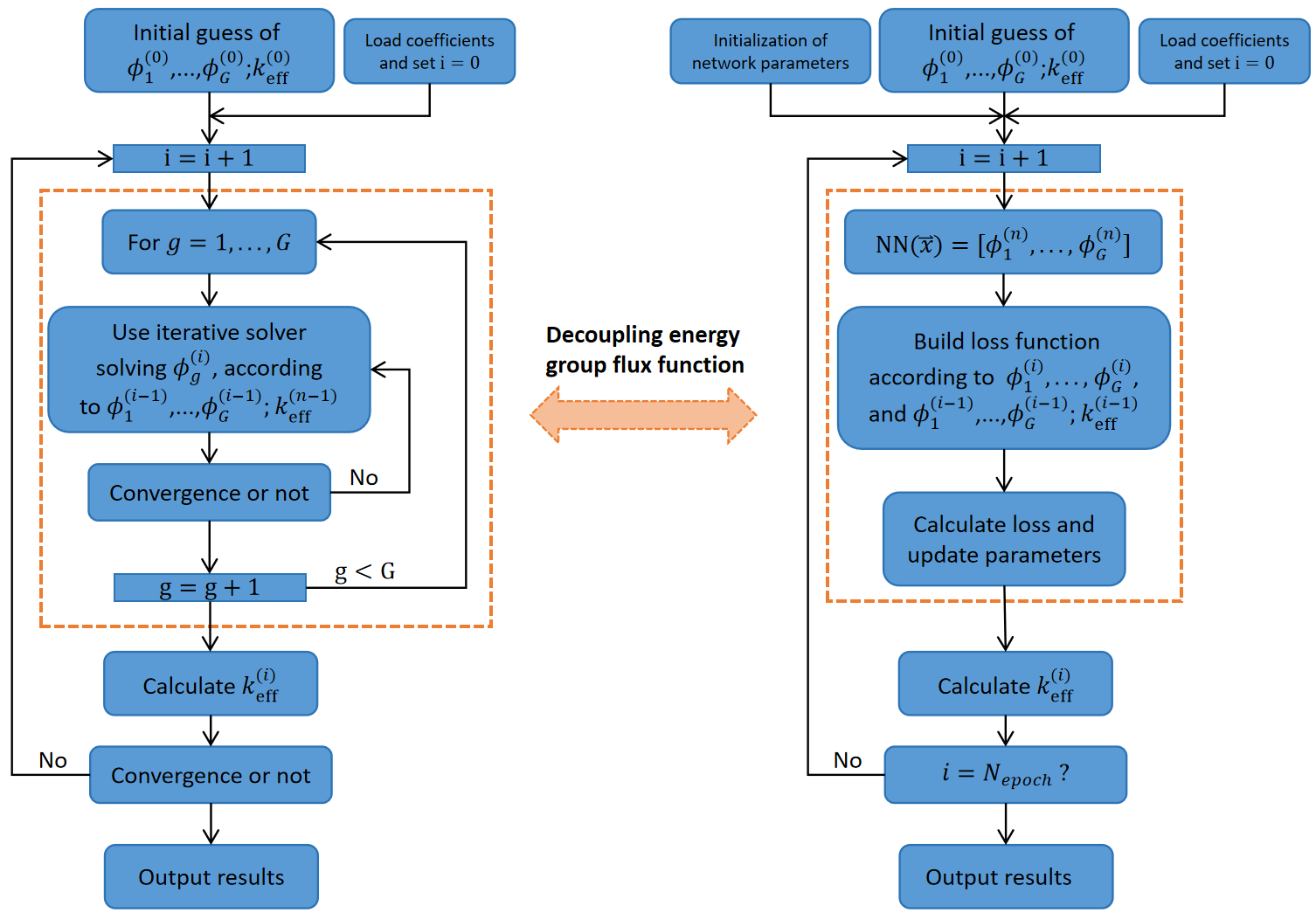

In conventional computational methods , researchers commonly use source iteration, also known as power iteration [35], to solve the aforementioned problem. Firstly, conventional methods need to discretize the equation on the grid points with some specific finite difference scheme. We derive the discretized form into following:

| (15) |

where matrix and contain diffusion absorption and scattering terms from (14) left hand side, matrix represents fission terms from right hand side of (14) and vector contains the value on the grid points.

The source iteration approach involves providing an initial guess, substituting it into the right-hand side of the equation system, and sequentially solving equations for different energy groups. This process yields a new set of values. Then, based on the physical meaning of , the value for this iteration step is computed, resulting in a new right-hand side term. During the iteration, if we disregard the scattering of neutrons from the lower energy group to the higher energy group [36], the process can be simplified as follows:

| (16) |

where

| (17) |

We rewrite the formation (16) (17) into block-matrix form for further discussion:

| (18) |

where

| (19) | |||

and , where are block matrix and are results in iteration. The key in the source iteration approach is to modify the left side of (18) into diagonal block matrix, and the right hand side of (18) is replaced by a known source term:

| (20) |

As a result, we can equivalently transform the solution of equation (14) into a sequential solution of equation (16) or (20) for . In this case, the right-hand side becomes a fixed source term rather than an eigenvalue term. In this paper, we consider a neutron diffusion equation with , as described in (14):

| (21) |

Based on the decoupling concept of conventional solving methods for multi-group neutron diffusion equations (16)-(20), we have also adopted a similar technique in designing the loss function for (21), where the fast and thermal group fluxes are independently solved using two distinct iterative equations. Therefore, we replace the remaining irrelevant group fluxes with the functions obtained in the previous step of the neural network (22b) (22d) while the relevant group functions are represented by the current output of the neural network (22a) (22c). This ensures that the entire residual function corresponds to a partial differential equation with a single unknown function. By utilizing (23), we obtain the final form of the loss function, which is named the Decoupling loss function. It is worth noting that in the loss function of neural networks, there is no need for discretization of the equations. We typically employ automatic differentiation [37] to handle the derivative operations that arise in the equations.

| (22a) | ||||

| (22b) | ||||

| (22c) | ||||

| (22d) | ||||

| (23) |

We present the application of the neural network solving process using the new Decoupling loss function and the traditional solving method in Figure 3 to illustrate their connection and differences.

Alternatively, in the case where decoupling is not considered, if the inverse power method is used to solve for the minimum eigenvalue, the terms involving eigenvalue pairs usually rely on information from the previous iteration step. However, in certain problem settings, the information of can be lost due to coefficients being zero. In (24a)-(24d), we separate the equation into parts that are related to the eigenvalue and those that are unrelated. We place them on the left and right sides of the equation respectively. Then, we replace in the second equation with (24c), in order to maintain the iteration information of the previous step’s when . Therefore, we also propose a Direct Iterative loss function (25) that addresses this issue:

| (24a) | ||||

| (24b) | ||||

| (24c) | ||||

| (24d) | ||||

| (25) |

3.2.2 Boundary loss

However, for the boundary training points , the specific form of the loss function needs to be determined based on the applied boundary conditions. Therefore, we provide a more detailed classification here, dividing into , , and , and defining separate boundary loss functions (26a) (26b) (26c) for each. Clearly, . For the sake of convenience in the subsequent discussion, we collectively refer to the loss functions (26a) (26b) (26c) corresponding to , , and as .

| (26a) | ||||

| (26b) | ||||

| (26c) | ||||

3.2.3 Interface loss

When it comes to setting the loss function, solely relying on boundary conditions and the governing equations is often insufficient to solve the multi-region eigenvalue problem. We must introduce crucial interface conditions (3) between different materials to accurately capture the behavior of the system. For the interface condition training points , since there are constraints on both the primitive function and its first-order derivative, we have defined two types of loss functions, (27) and (28), respectively.

| (27) |

| (28) |

In the subsequent numerical experiments section, different scenarios will correspond to different boundary losses. The specific settings will be elaborated in Section 4 of the paper. The total loss should be written in the following form, where the represent the weights for different loss terms:

| (29) |

3.3 Eigenvalue

For the critical problem in nuclear reactors, it is not only necessary to determine the unknown functions but also to find the eigenvalues. In non-eigenvalue problems, each coefficient in the equation is known, which significantly simplifies the problem-solving process. However, in eigenvalue problems, the challenge lies in efficiently obtaining eigenvalues to an acceptable level of accuracy and subsequently utilizing neural networks to compute the corresponding eigenfunctions. This is where the introduction of the Rayleigh-Quotient (30) becomes crucial. Here, only the set of points used for the residual part has been selected to approximate the expression for the Rayleigh-Quotient.

| (30) | ||||

3.4 Algorithm

In the case of critical in nuclear reactor physics, we only need to find the minimum eigenvalue. Moreover, at this stage, the corresponding physical quantity on the computational domain should be non-negative. Therefore, our neural network design includes a post-processing step to ensure that the output satisfies the non-negativity condition. We achieve this by applying an element-wise squaring operation to the output of the neural network , ensuring that the computed function values are non-negative. Our algorithm is presented in Algorithm 1.

4 Numerical Experiments

In this section, we plan to show numerical results for solving few eigenvalue problems. We give statements of problem to be solved by our method. And we will propose corresponding evaluation criteria to verify the validity of numerical results in Section 4.1. Further, we will give details, numerical solutions and related evaluation results of one-dimensional problem [38], two-dimensional and three-dimensional TWIGL problem [39], and two-dimensional and three-dimensional IAEA problem [34] with different residual loss function applied.

As mentioned earlier, we have a total of three training point sets for our training points. The boundary point set consists of points strictly defined on the boundary, and different examples will be used as uniform sampling points on boundary according to different resolutions. The interface point set and residual point set are disjoint, meaning that if a coordinate point is subject to an interface condition, it will not be subjected to the equation loss condition, even if it satisfies both conditions.

4.1 Error criterion

In this section, we propose our evaluation method for the quality of numerical solutions. Additionally, due to the engineering application of the problem, we will provide acceptance criteria for the numerical solutions in practical problems. We will continue to use the symbols introduced in Section 3 and define our solution as .To evaluate the quality of the numerical solutions, we will introduce a high-fidelity solution obtained by FreeFEM++ [40] as our reference solution, which is defined as .

Next, we will provide different relative error formulation for the solution in terms of the -norm:

| (31) |

where the subscript denotes relative, and the superscript means -norm. Here, can only take and .

Since the eigenvalues in these cases are scalars and real numbers, the relative error with respect to the minimal eigenvalue is defined as follows:

| (32) |

4.2 1-D Problem

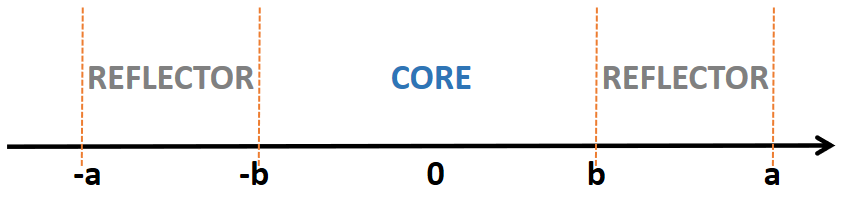

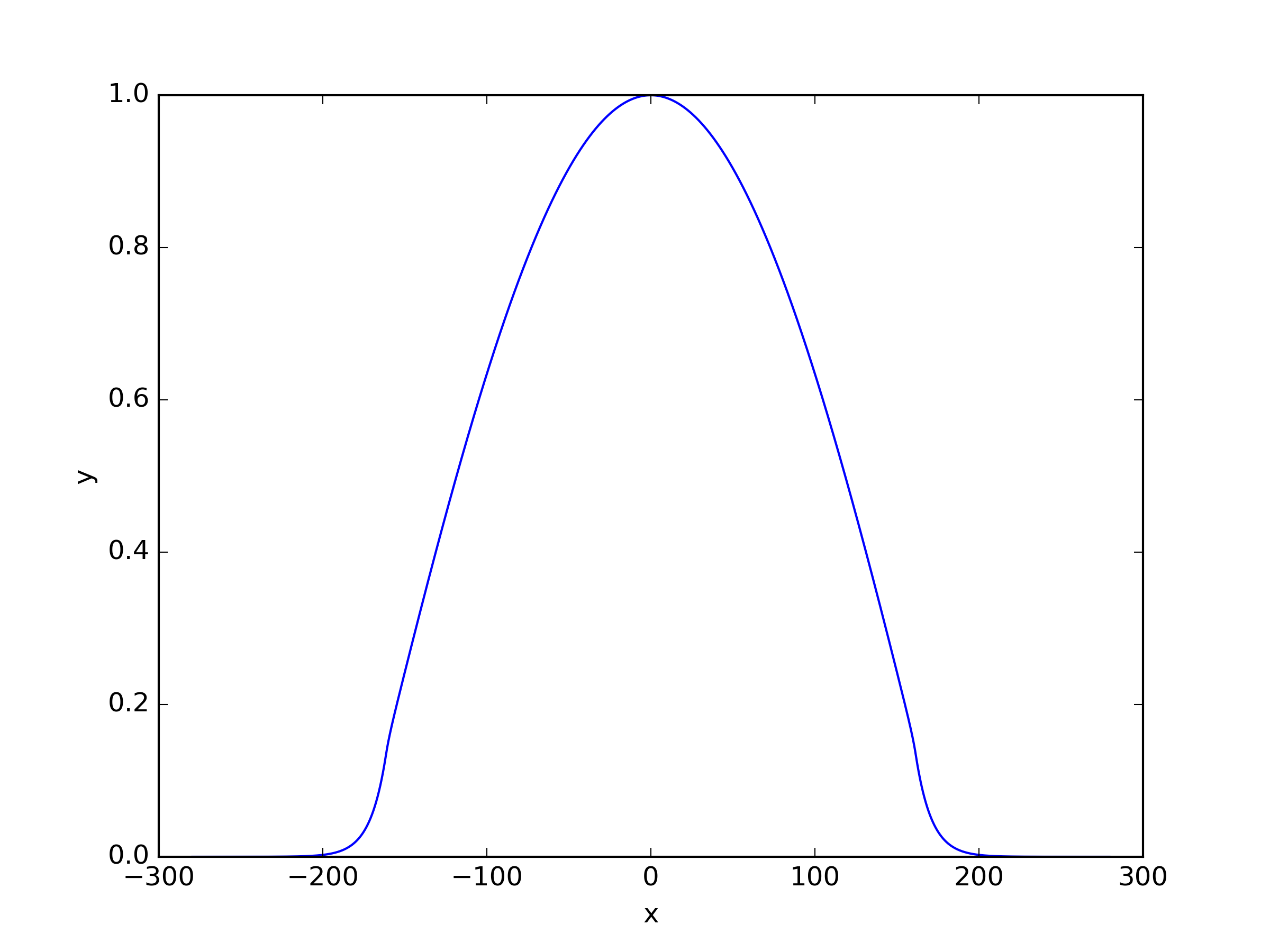

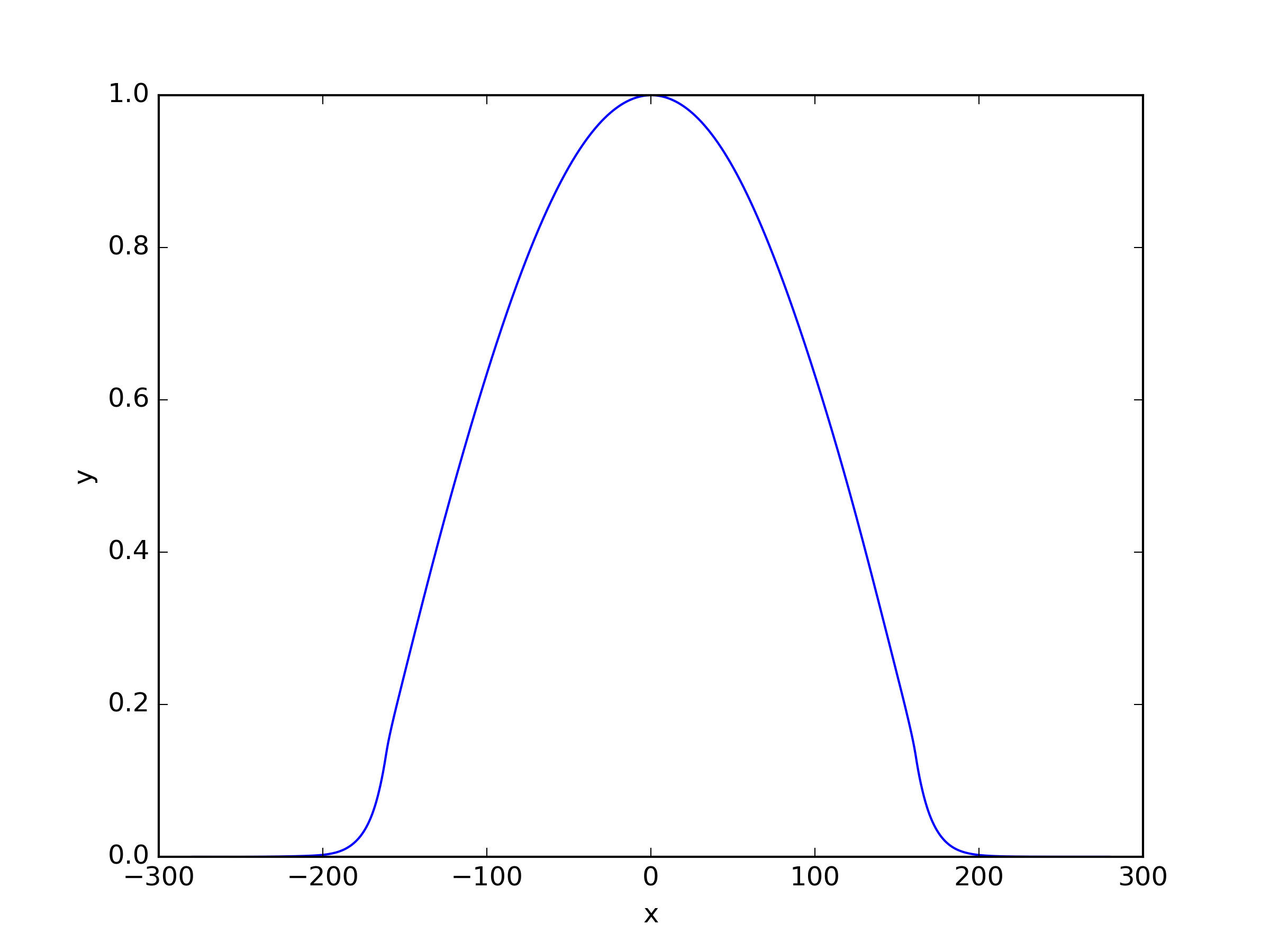

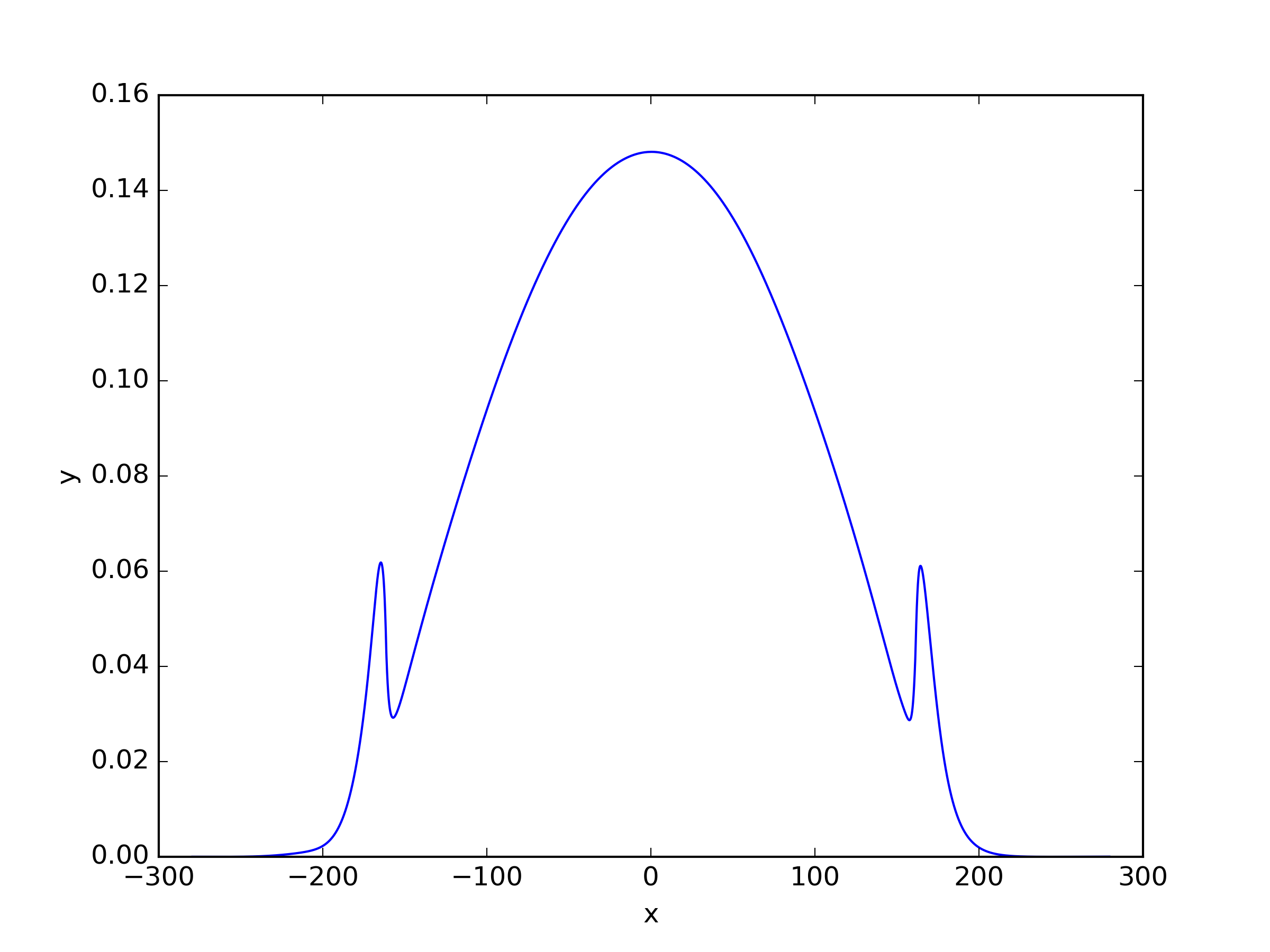

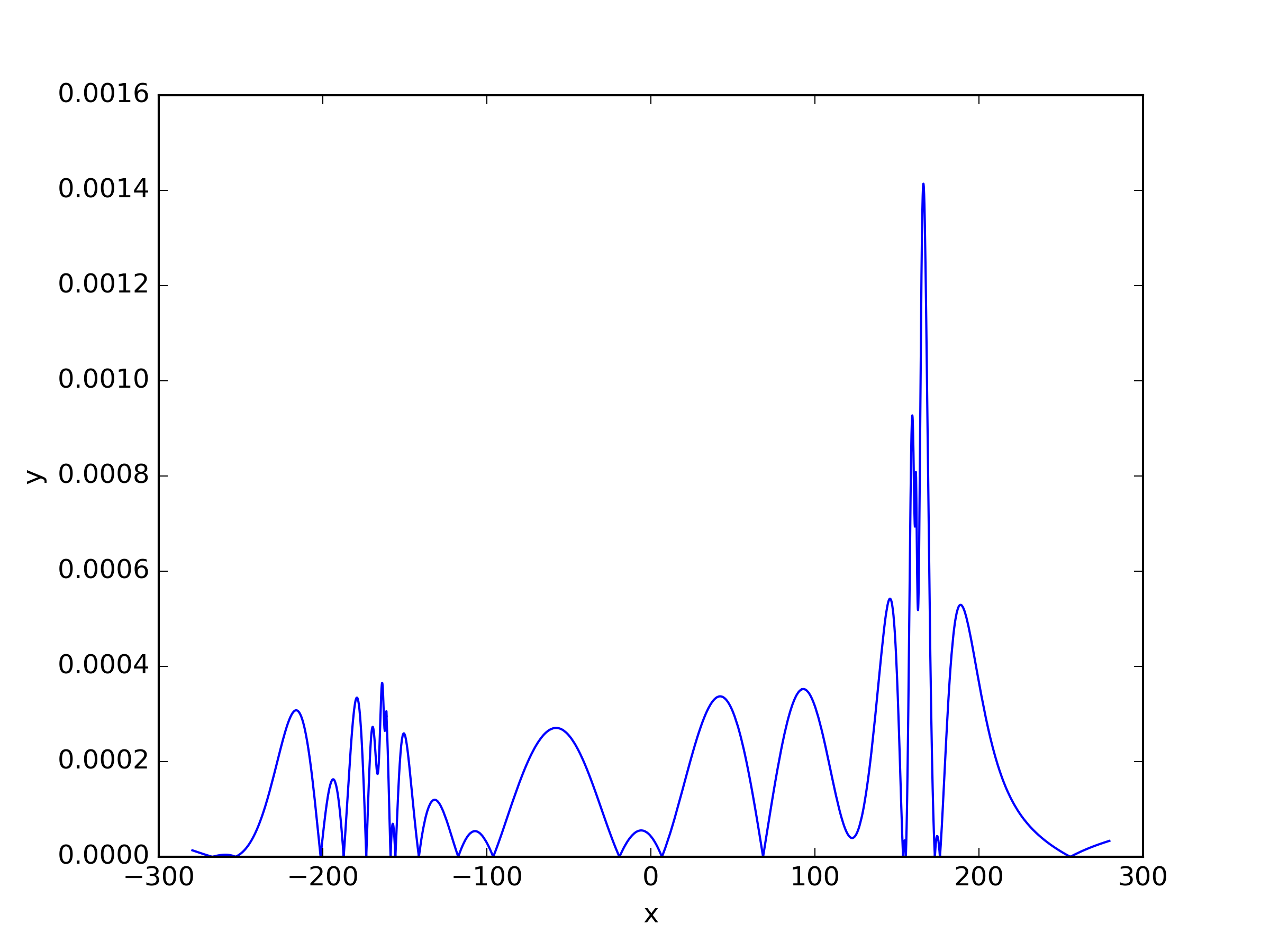

This problem originates from the core of the Swedish Ringhals-4 pressurized water reactor. The cross-section data for various materials were obtained from simulation calculations, then transformed using homogenization techniques to make them applicable to the one-dimensional scenario. In this paper, this is considered as the benchmark problem for the one-dimensional case, and the corresponding data is presented in Table 2. The computational domain for this problem is depicted in the Figure 4, consisting of three regions and two types of materials. Robin boundary conditions are applied at the endpoints of the domain, so we apply (26c) as the boundary loss function and .

We solved the problem using FreeFEM++ on a uniform grid with as a reference solution. The reference eigenvalue is . Similarly, for our neural network, we selected 11,181 uniformly distributed sampling points as our training data, where 11,179 are residual points and 2 are interface points. We chose 2 residual blocks, with 20 neurons per layer in each residual block. The Adam optimizer with a learning rate of was used for epochs optimization during the training process.

| Core | 1.4376 | 0.3723 | 0.0115 | 0.1019 | 0.0151 | 0.0057 | 0.1425 |

| Reflector | 1.3116 | 0.2624 | -0.0098 | 0.0284 | 0.0238 | 0.0 | 0.0 |

We applied three different residual loss functions to this one-dimensional case, and for (23) and (25), we added a shift . When the shift term for and is set to 0, it corresponds to the same loss function. The results of the solutions can be seen in Table 3 and Figure 5. Here refers to inverse power method residual loss function proposed in [29], and we also apply this residual loss function in other problems. The expression of it is shown as (33). We observed that the inverse power method loss function (33), which was effective for solving single-group problems, did not perform well for multi-group problems. Surprisingly, showed the best performance in this case.

| (33) | ||||

| Shift | ||||||

|---|---|---|---|---|---|---|

| 0 | 1.7623e-04 | 4.8424e-02 | 2.9796e-02 | 1.7779e-01 | 5.0444e-02 | |

| 0 | 1.7743e-04 | 4.5991e-03 | 2.3375e-03 | 2.3234e-02 | 6.8866e-03 | |

| 1 | 7.2184e-04 | 2.6505e-02 | 1.6108e-02 | 1.4934e-01 | 3.8318e-02 | |

| 1 | 1.0351e-04 | 2.3804e-03 | 1.7113e-03 | 9.5453e-03 | 2.9083e-03 |

4.3 2-D Problem

4.3.1 TWIGL

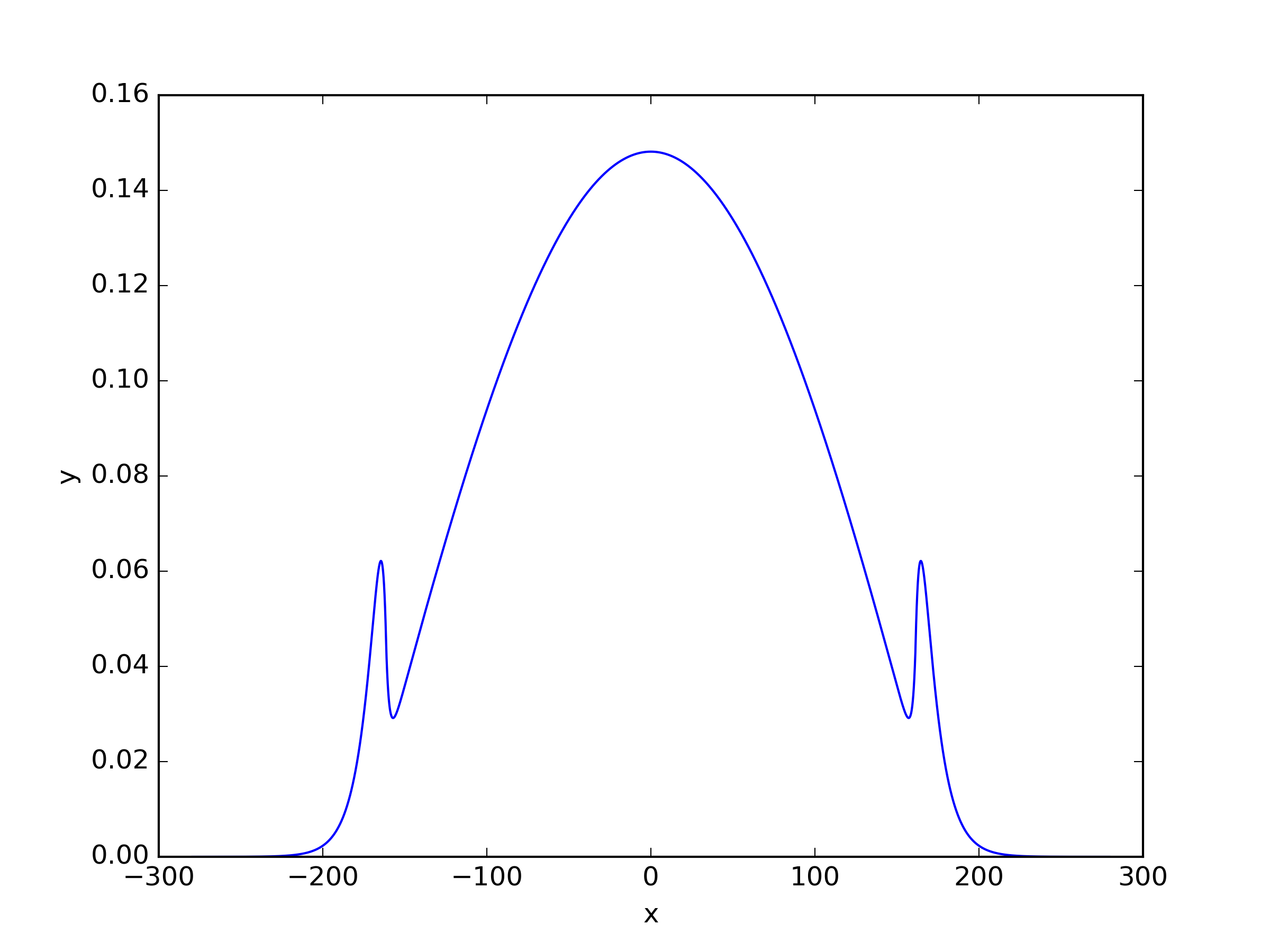

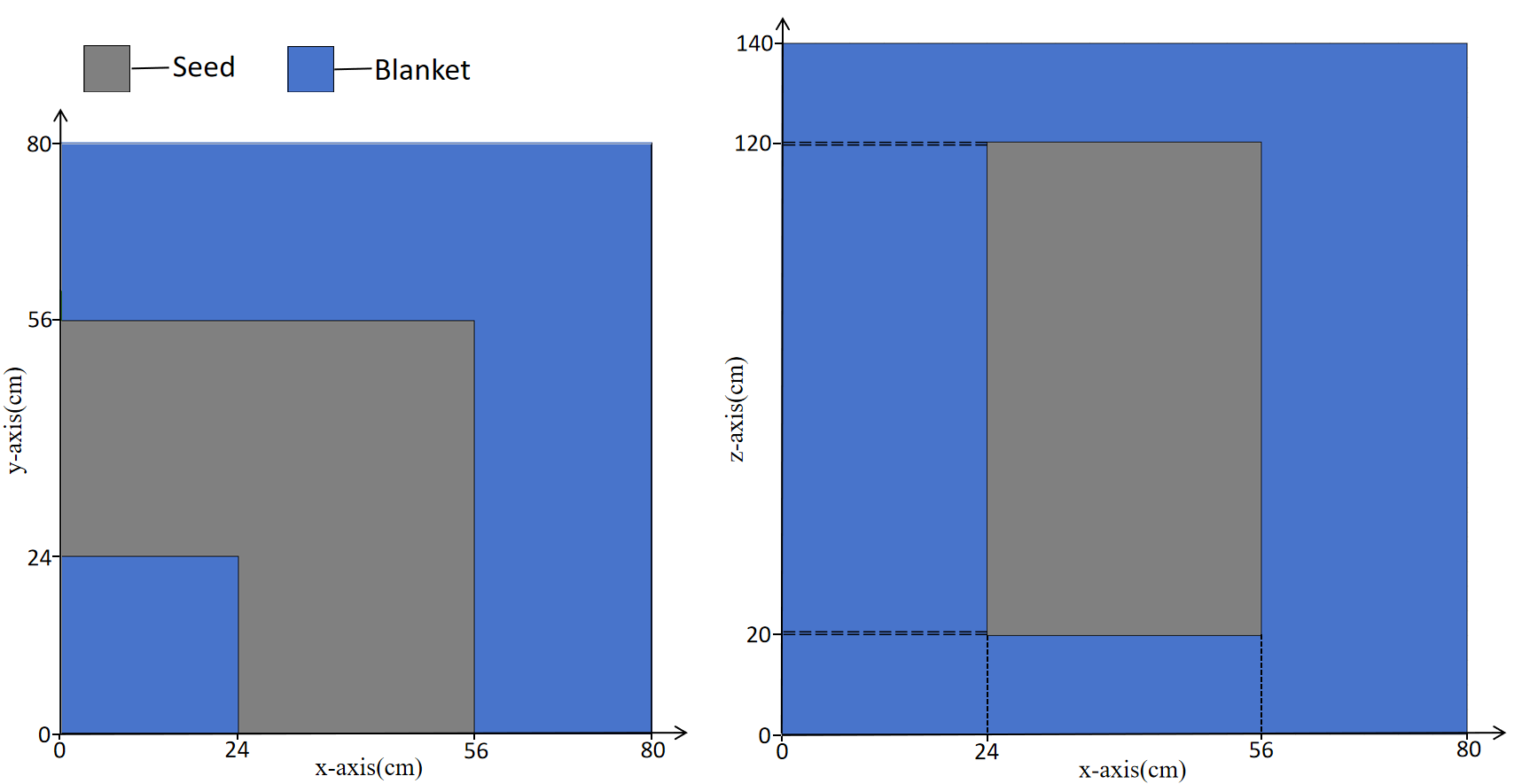

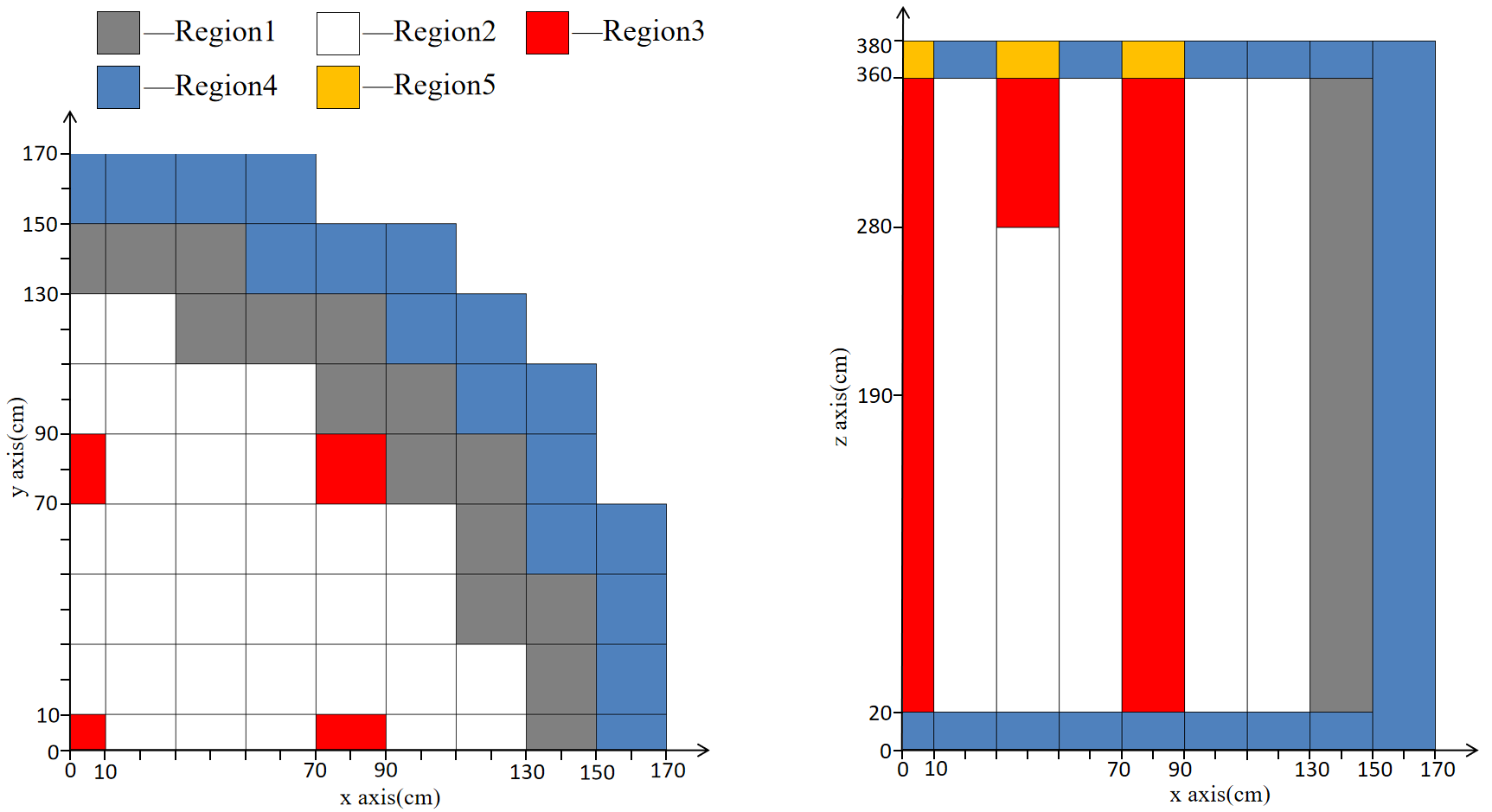

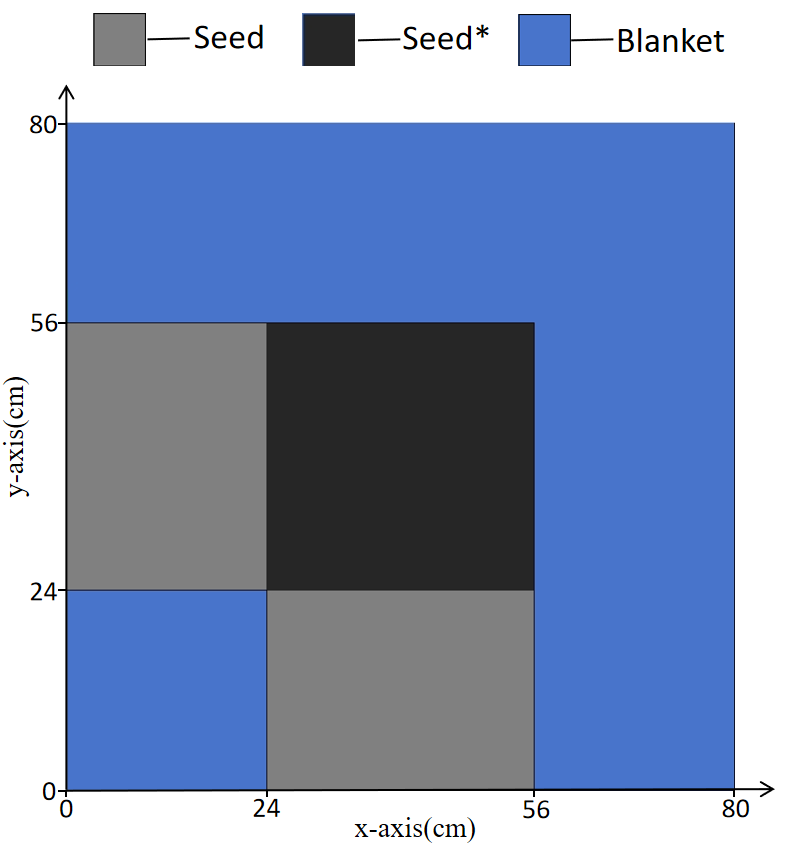

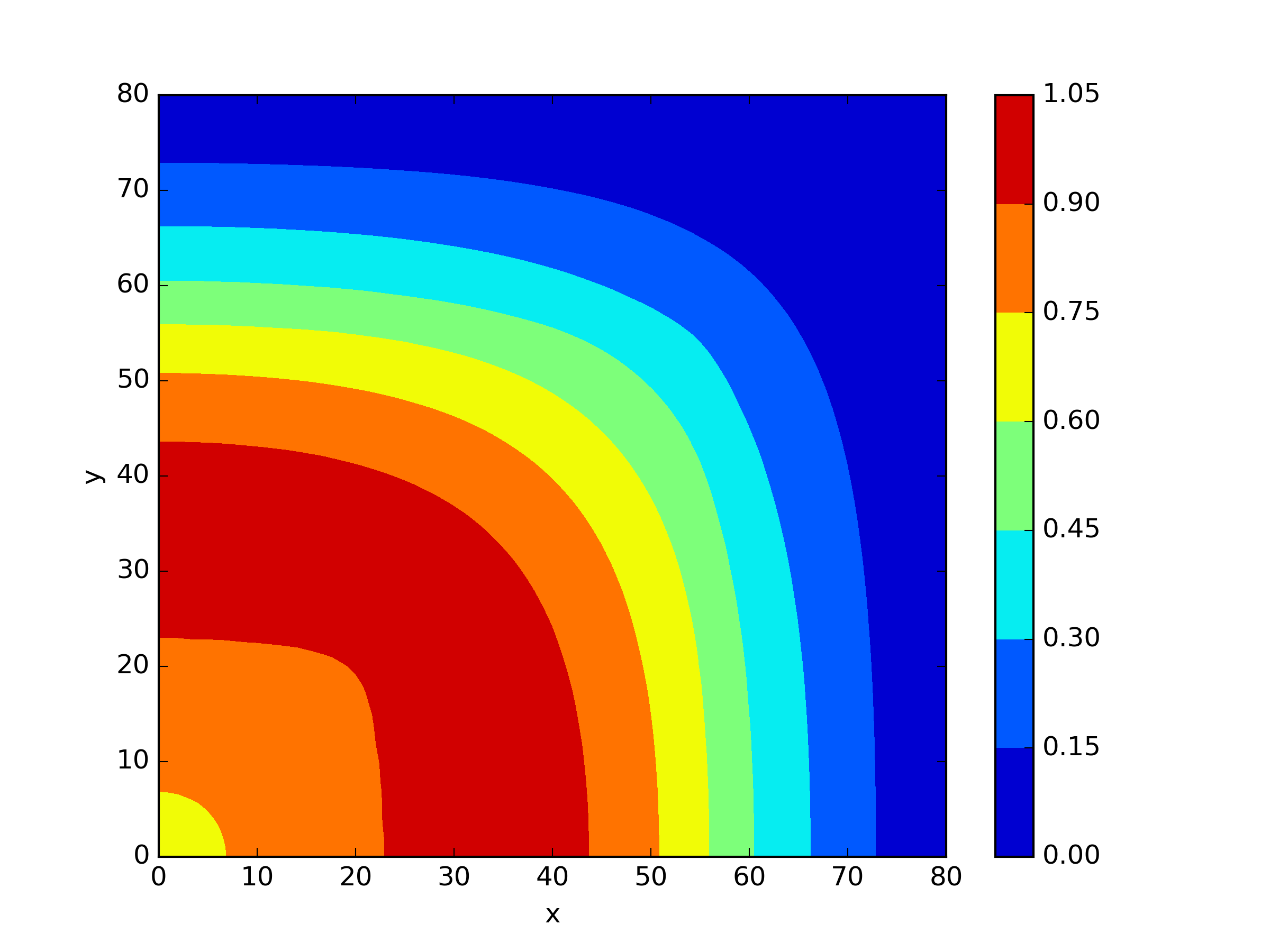

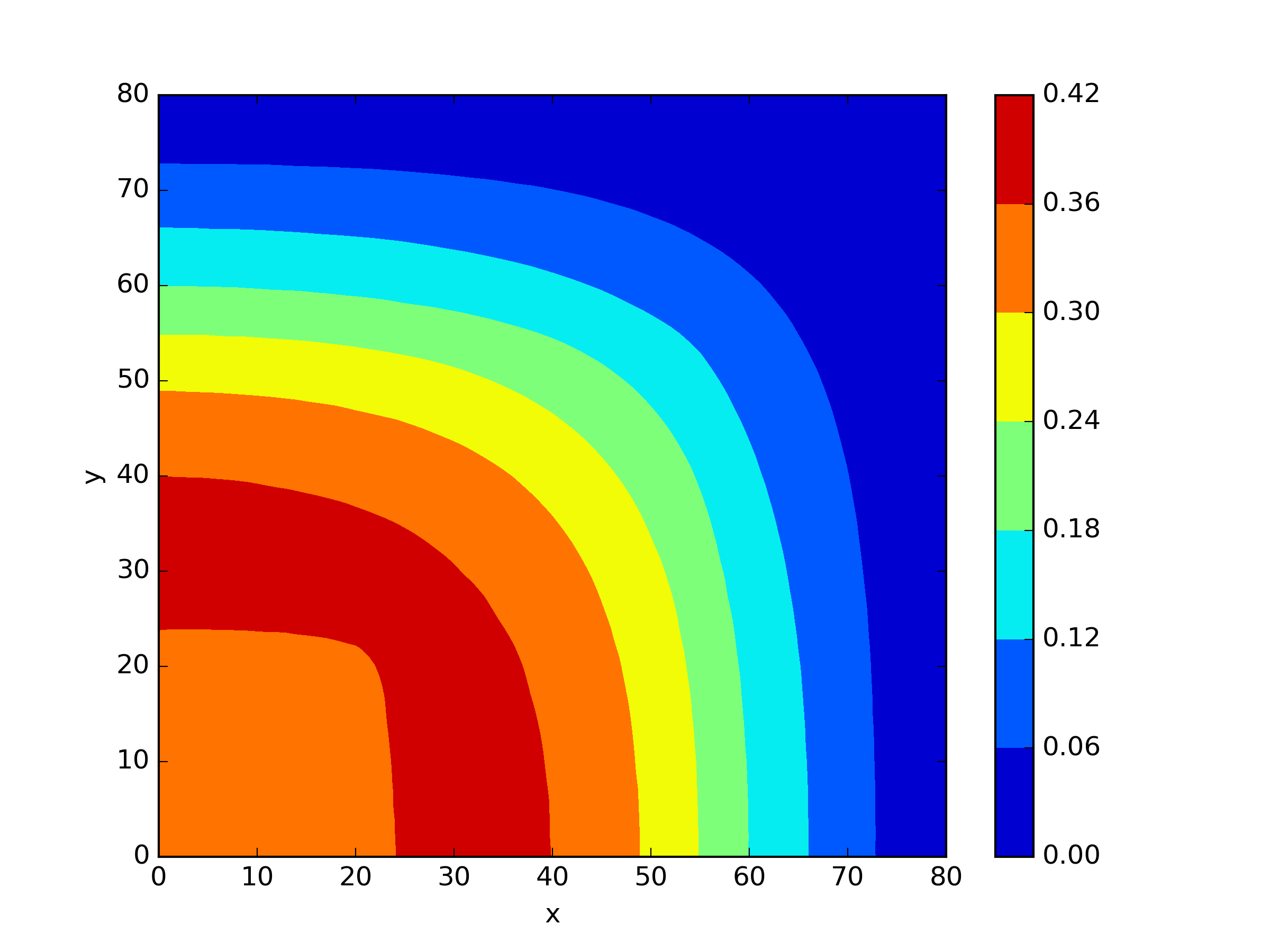

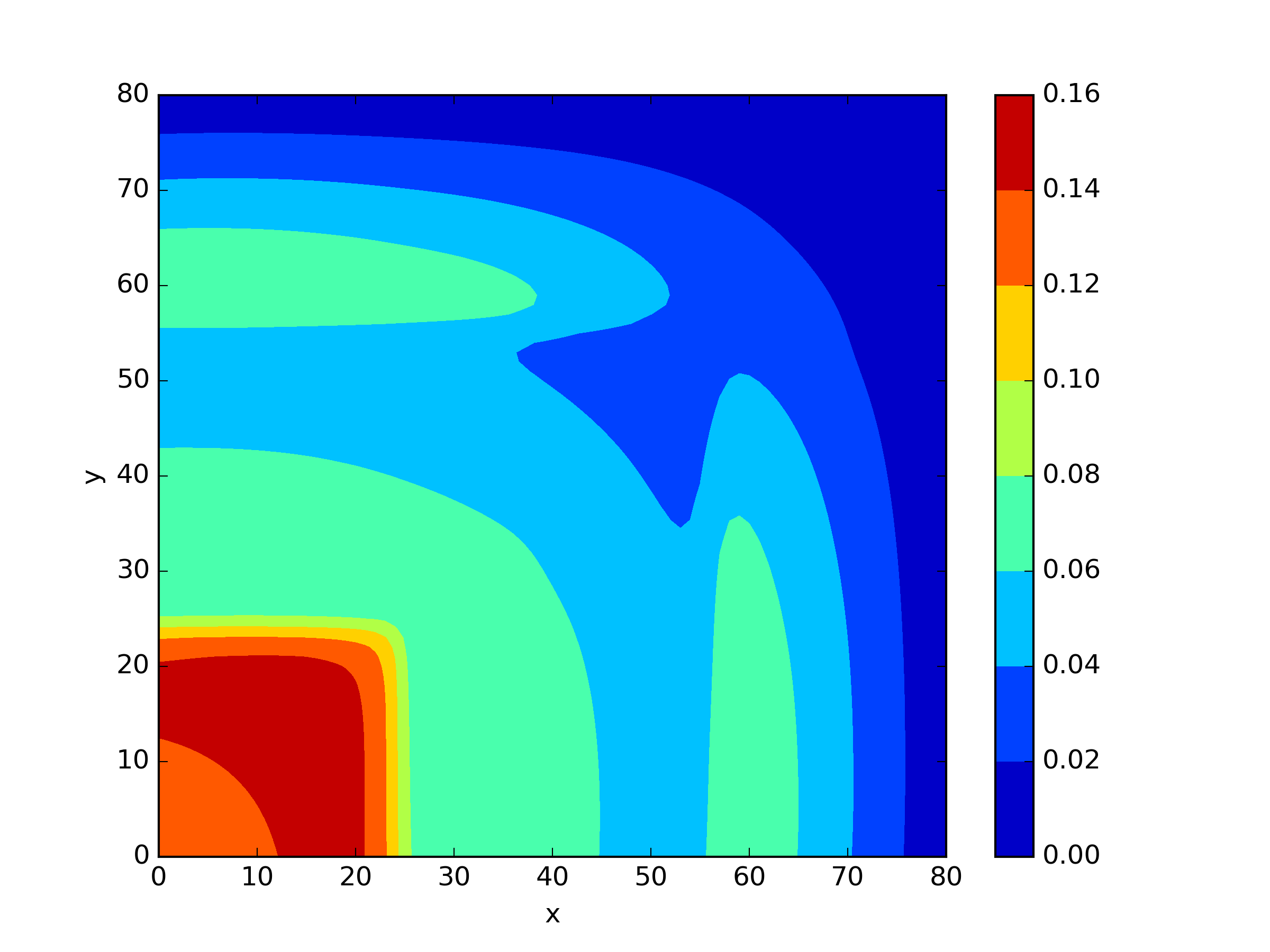

The TWIGL model is a square-shaped nuclear reactor core with dimensions of 160 centimeters for both length and width. For the critical case, the geometric region consists of only two materials: seed and blanket. By exploiting the symmetry of the reactor core, we can reduce the computational domain of the partial differential equation to one-fourth of its original size (Figure 6). At the real outer boundary, homogeneous Dirichlet boundary conditions are applied, while homogeneous Neumann boundary conditions are set at the symmetric boundaries. Therefore, we will apply the Neumann boundary loss function (26b) at the boundaries x=0 and y=0, and the Dirichlet boundary loss function (26a) at the boundaries x=80 and y=80. The corresponding coefficients are presented in the Table 4.

We solved the problem using the finite element method with 320 points on the boundaries with intervals and , and then constructed the internal element mesh using the Delaunay algorithm. This critical eigenvalue solved by FreeFEM is . For our neural network, we have selected a total of 6,399 residual points and 162 interface points. We chose 4 residual blocks, with 32 neurons per layer in each residual block. The Adam optimizer with a learning rate of was used for epochs optimization during the training process.

| Region | |||||||

|---|---|---|---|---|---|---|---|

| Seed | 1.4 | 0.4 | 0.01 | 0.15 | 0.01 | 0.007 | 0.2 |

| Blanket | 1.3 | 0.5 | 0.008 | 0.05 | 0.01 | 0.003 | 0.06 |

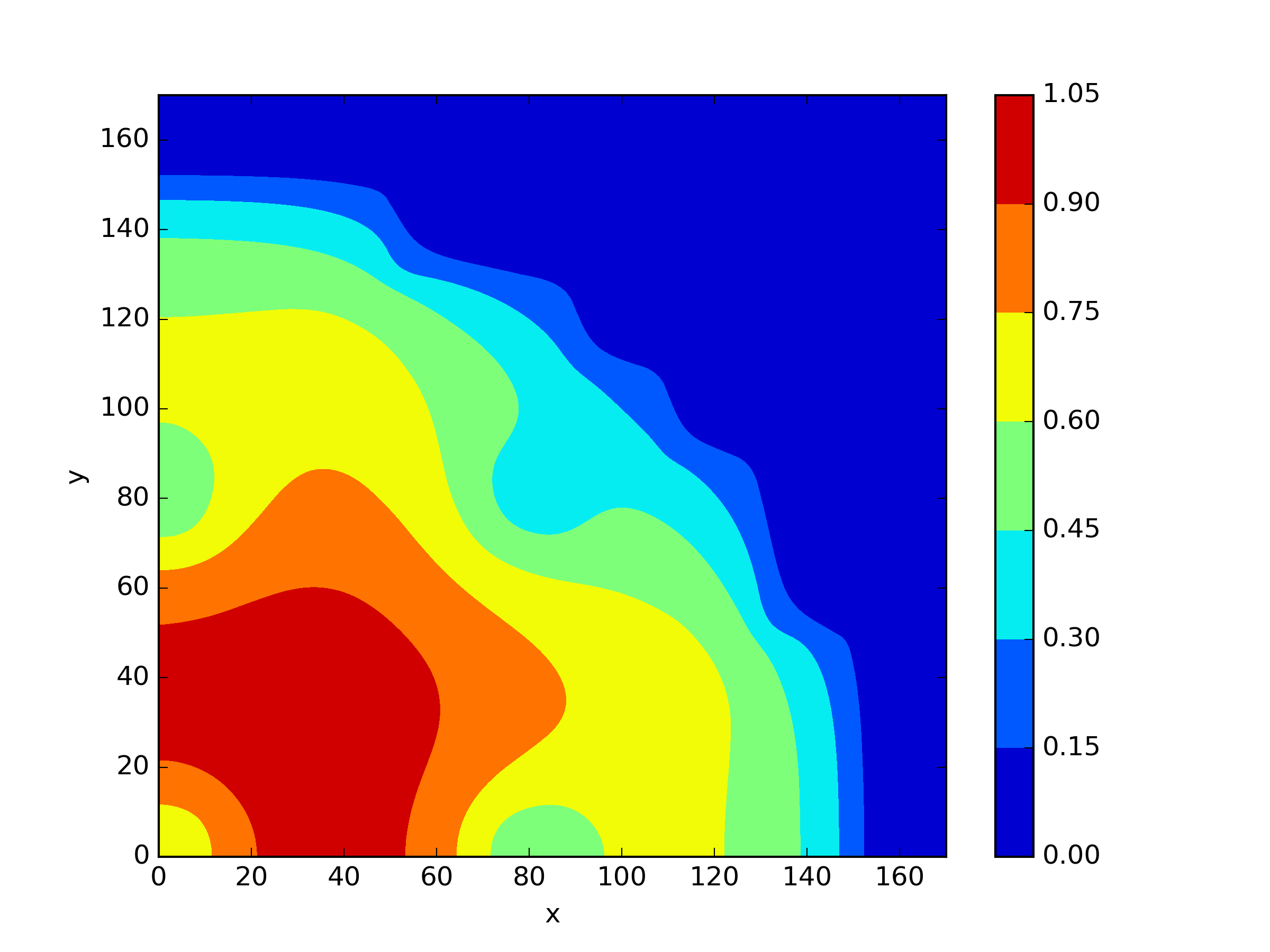

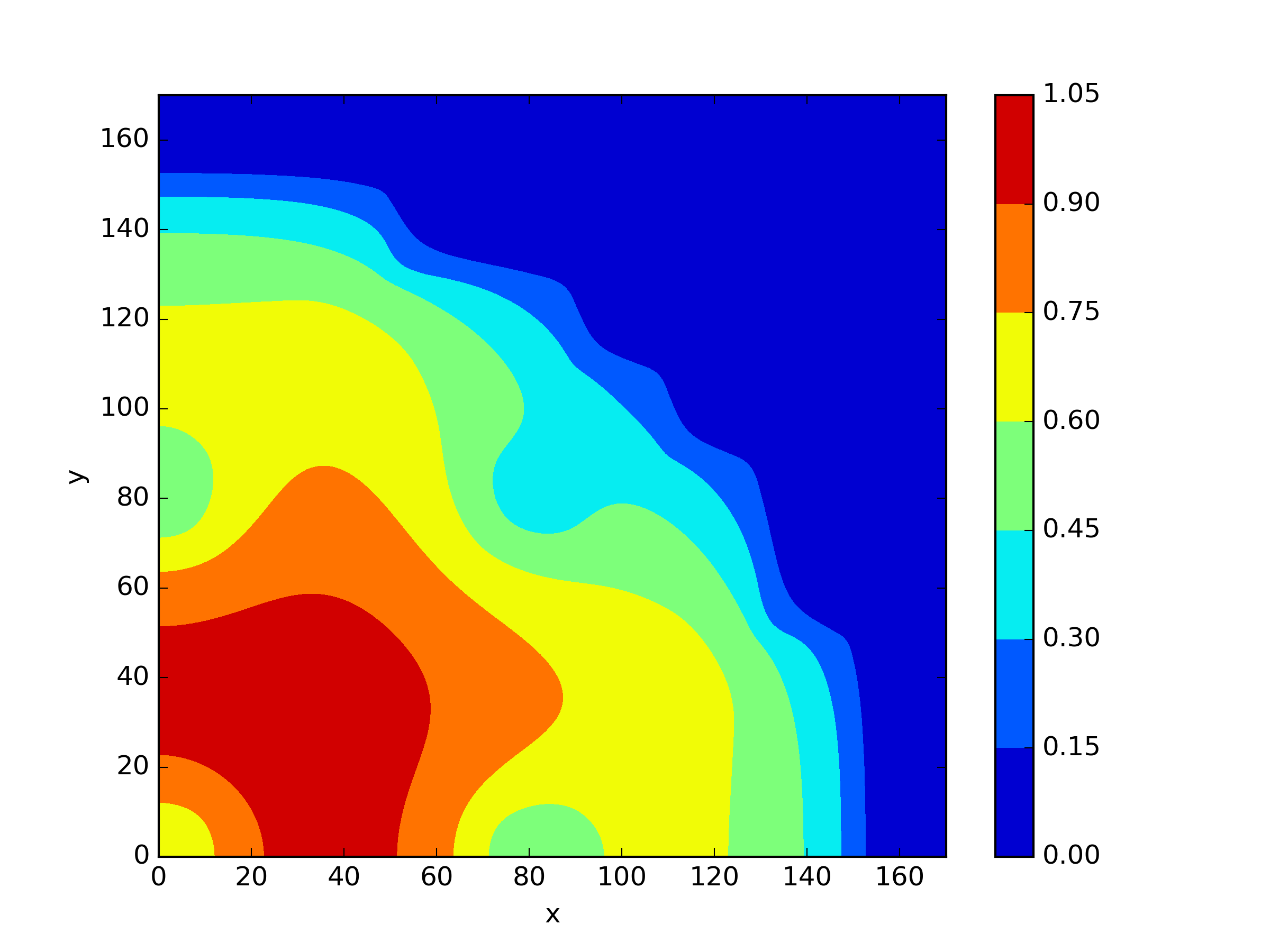

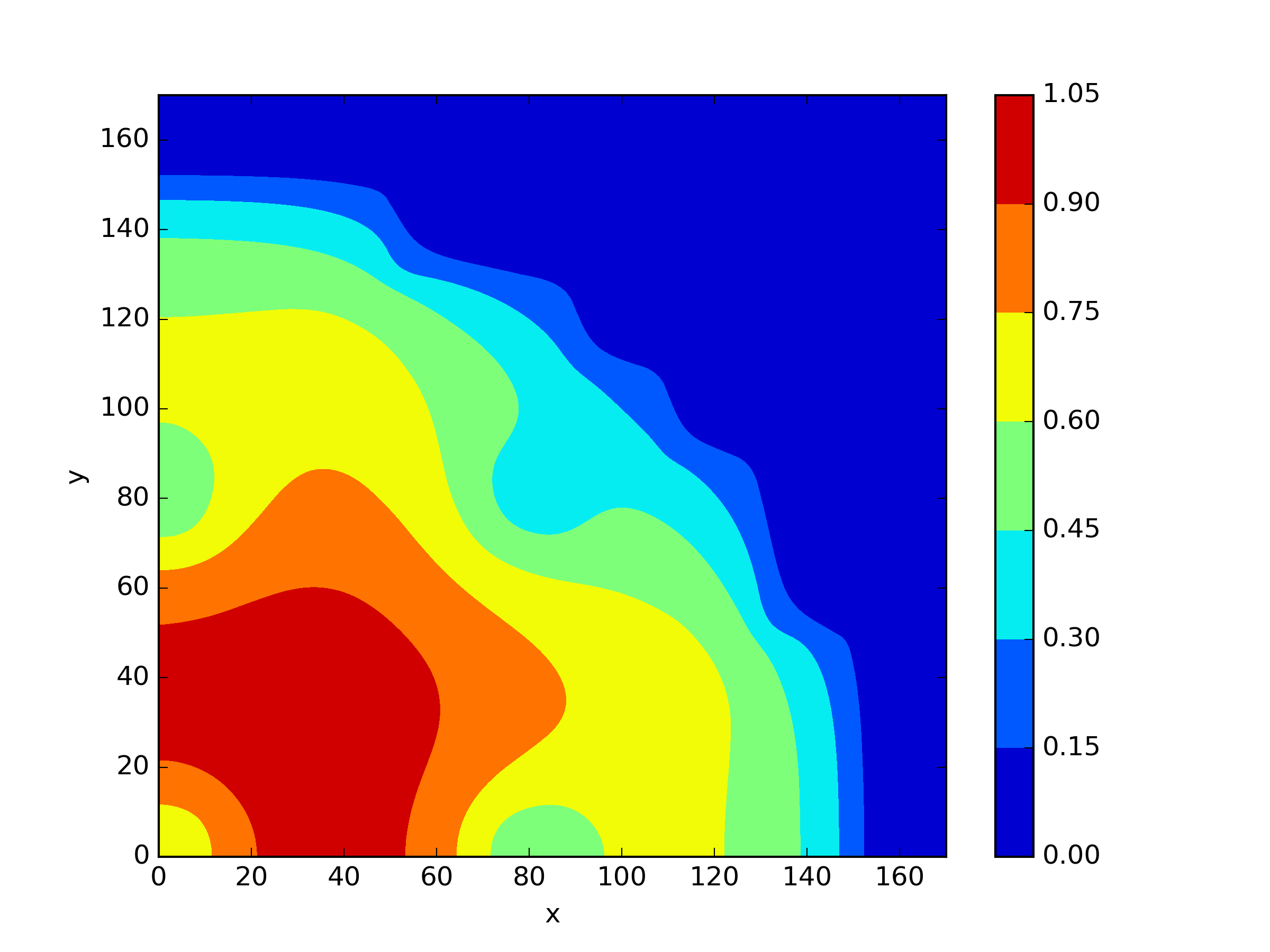

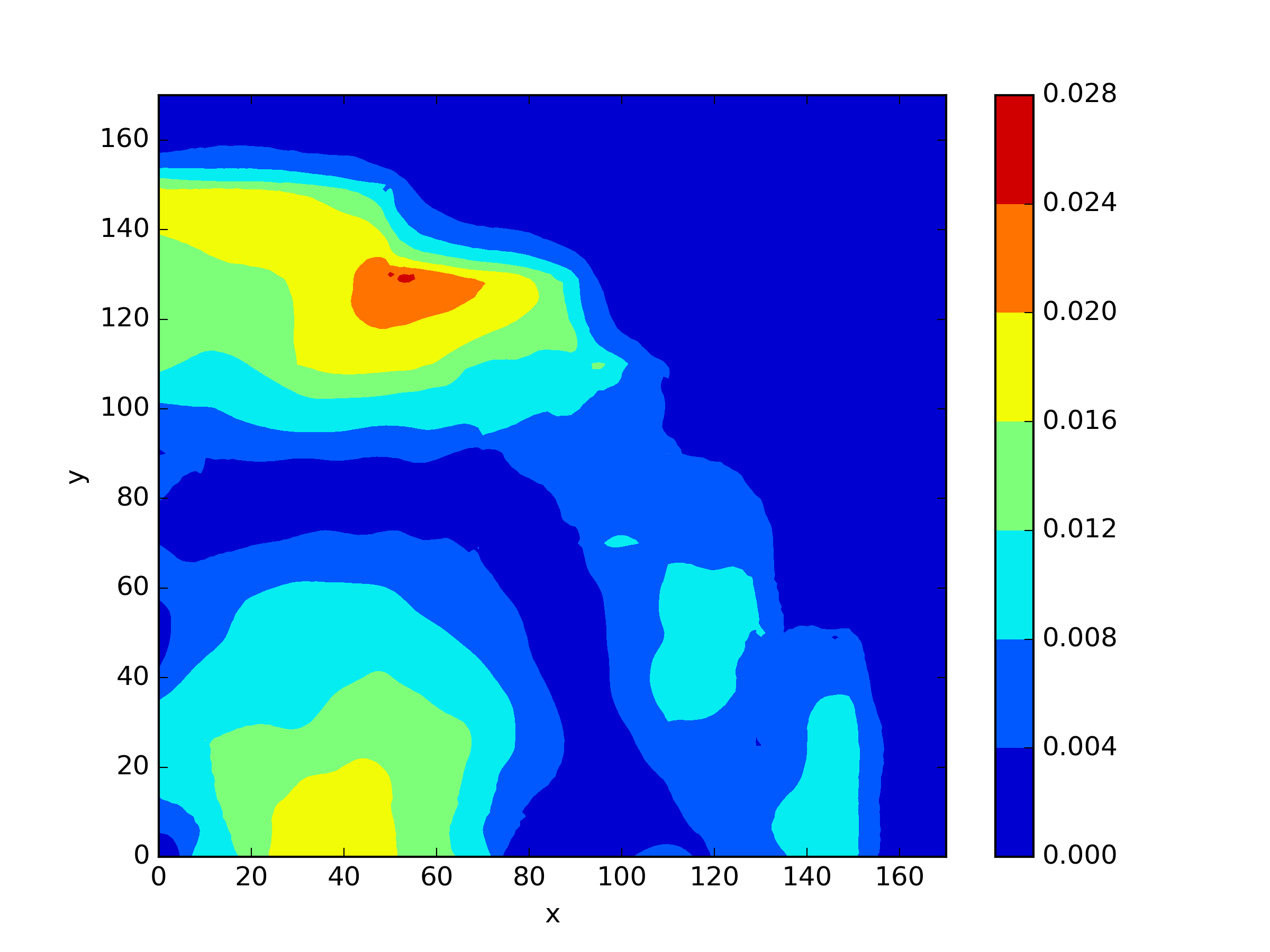

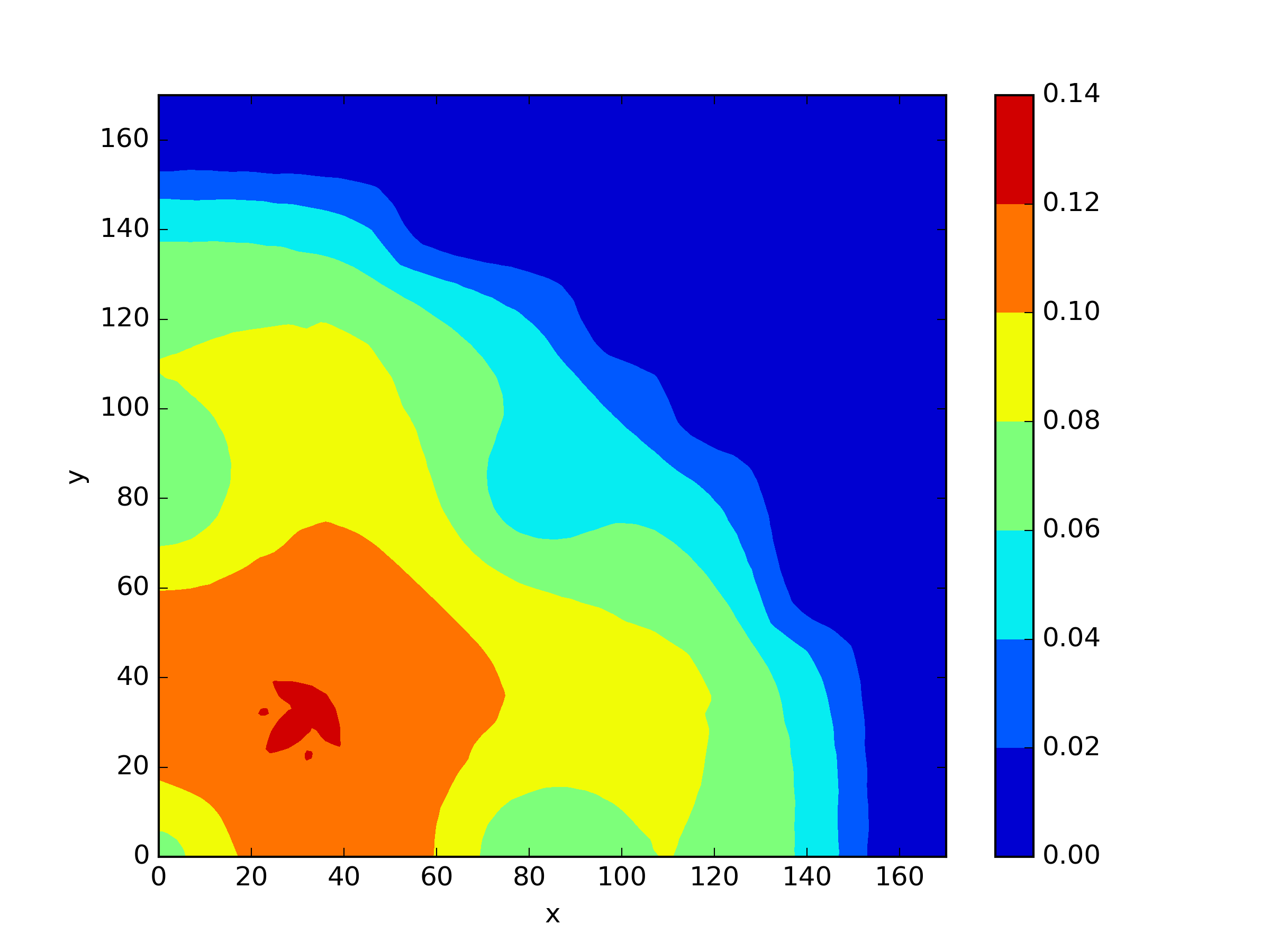

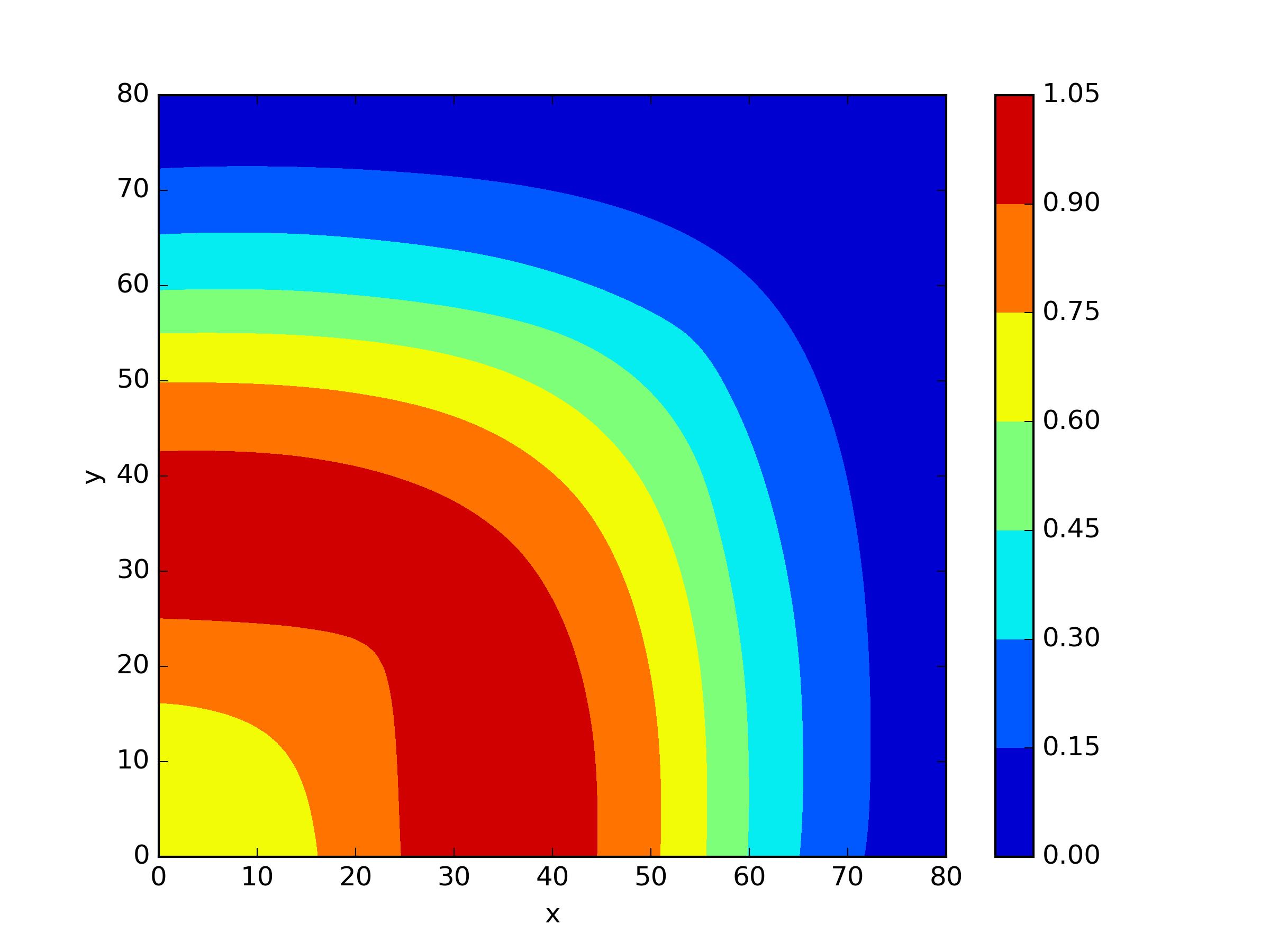

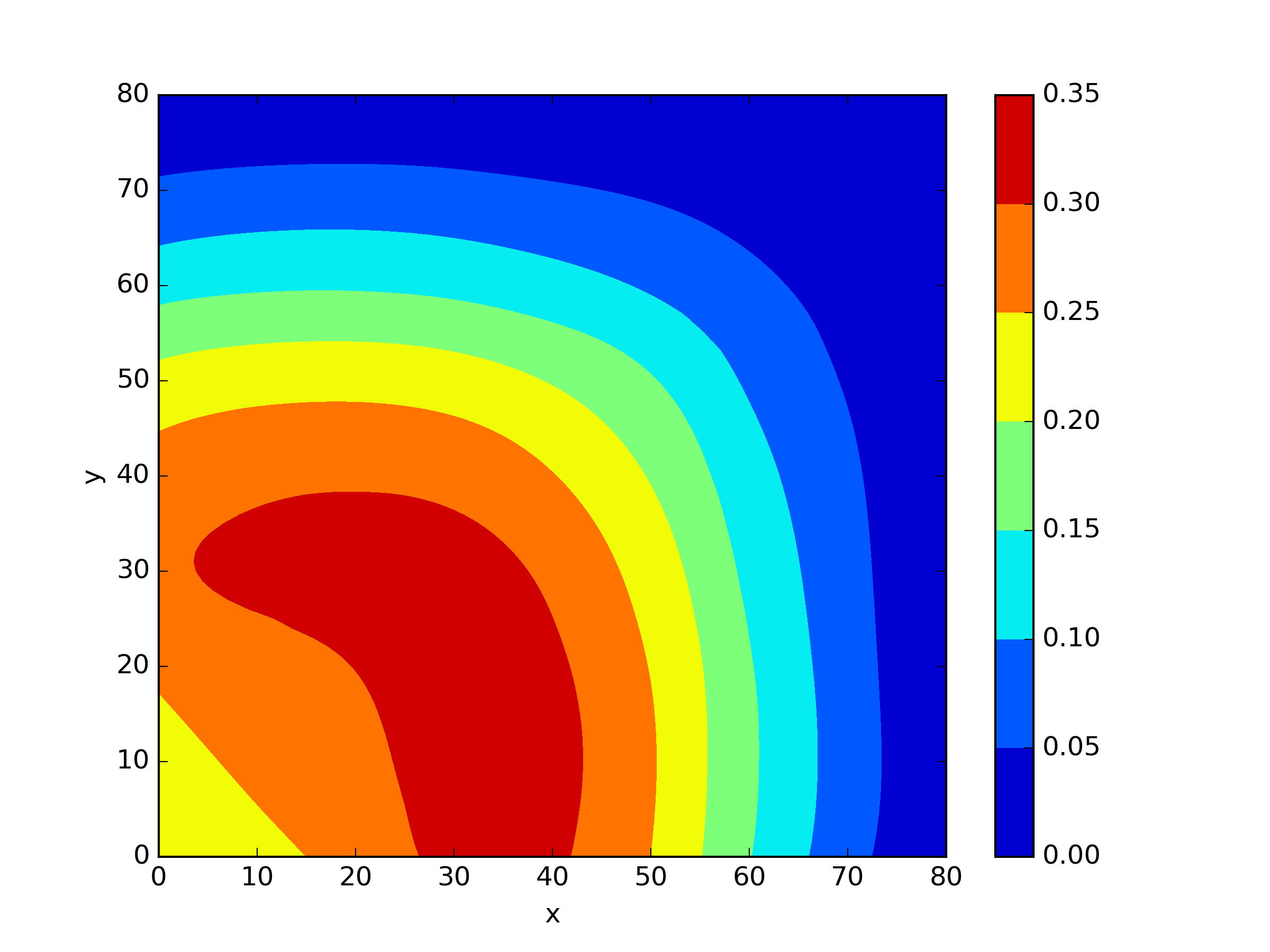

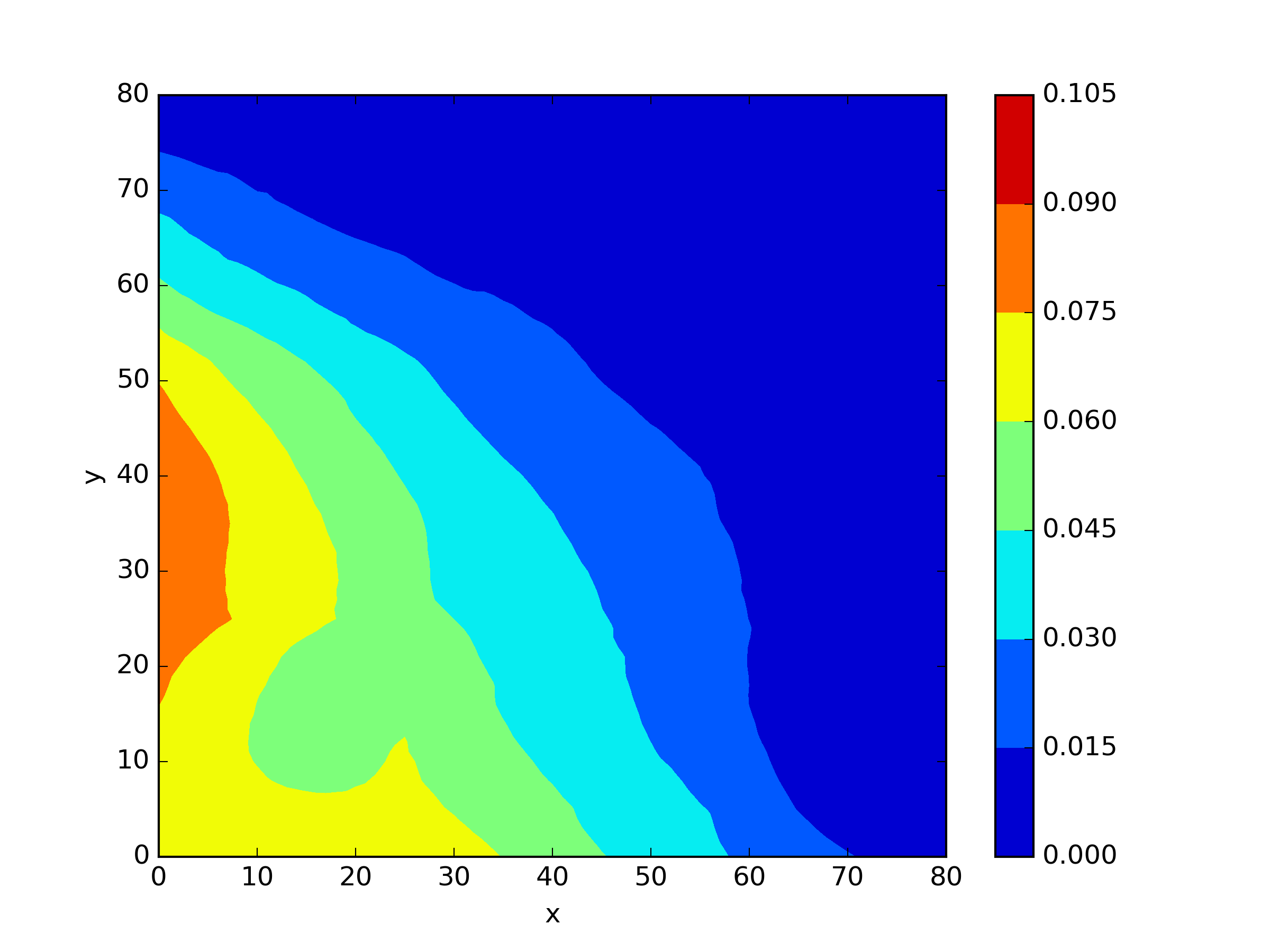

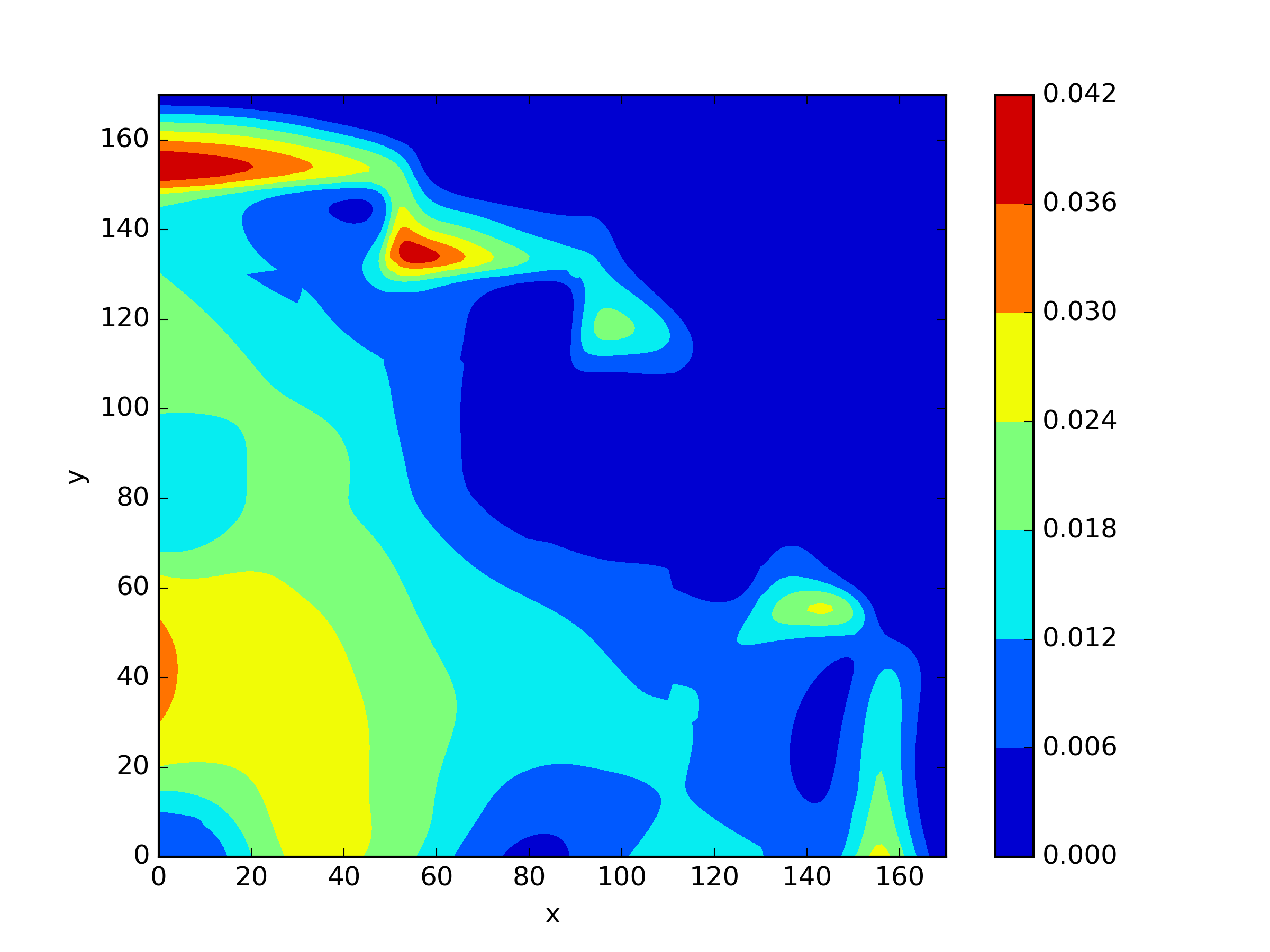

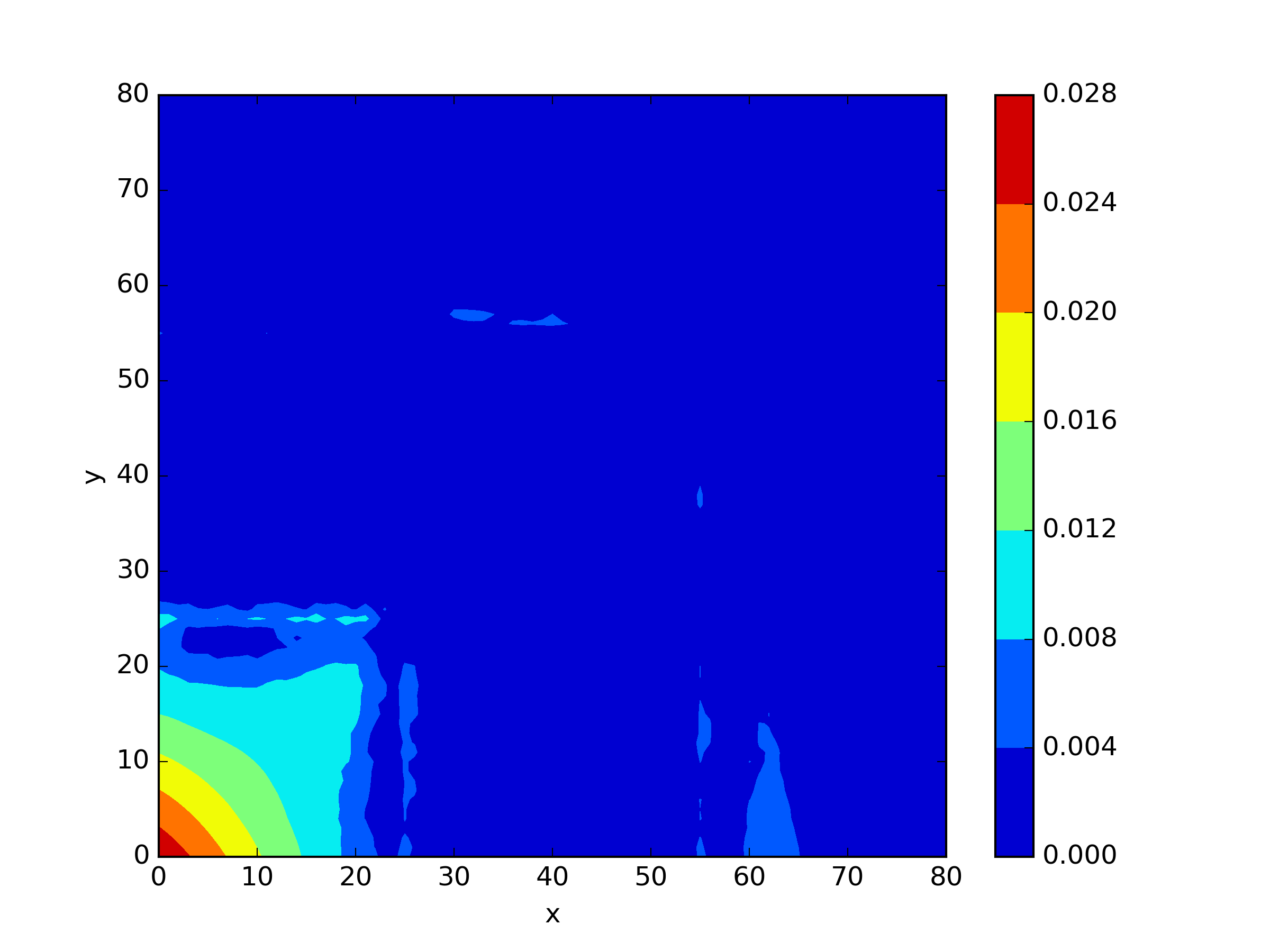

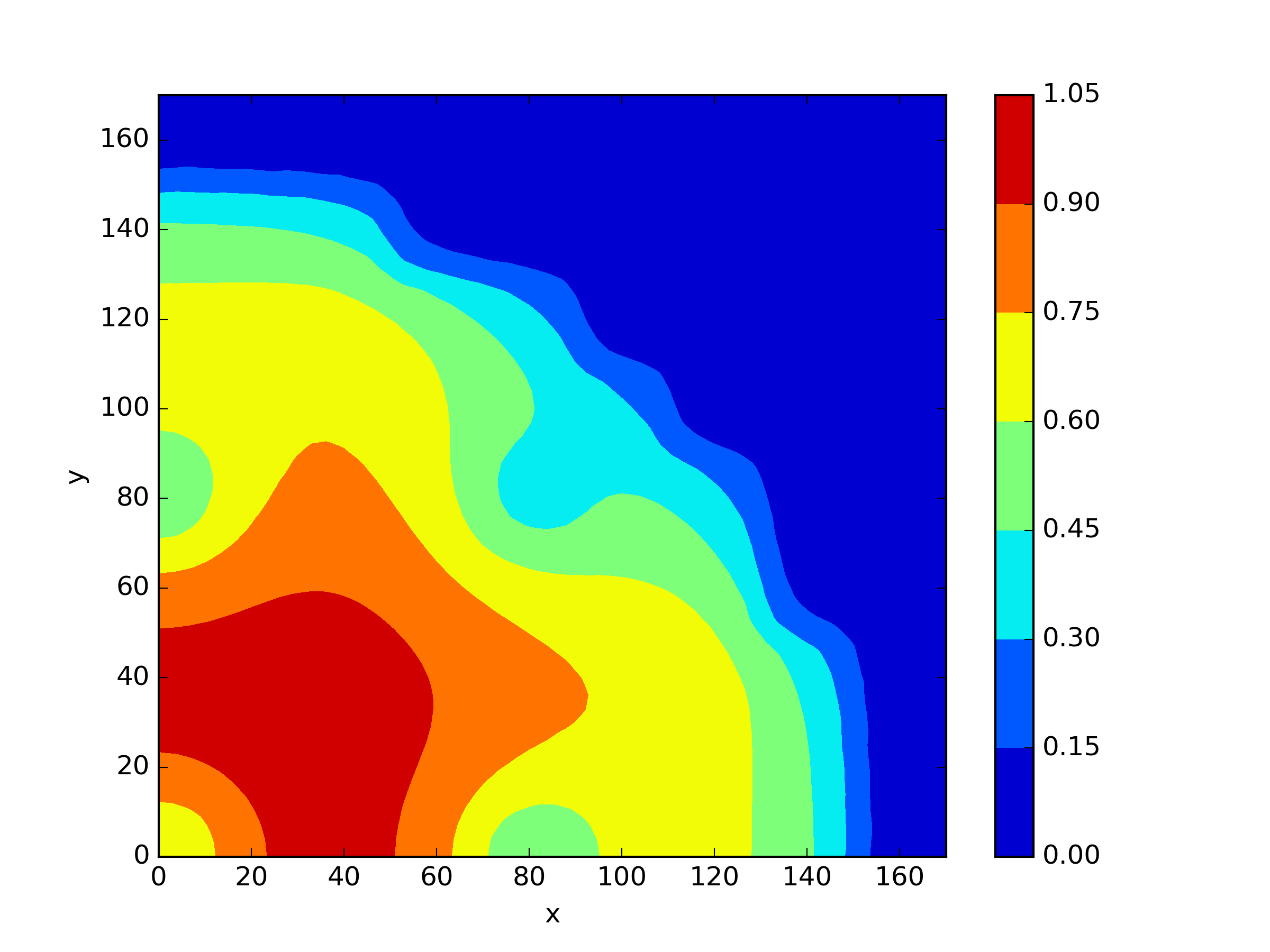

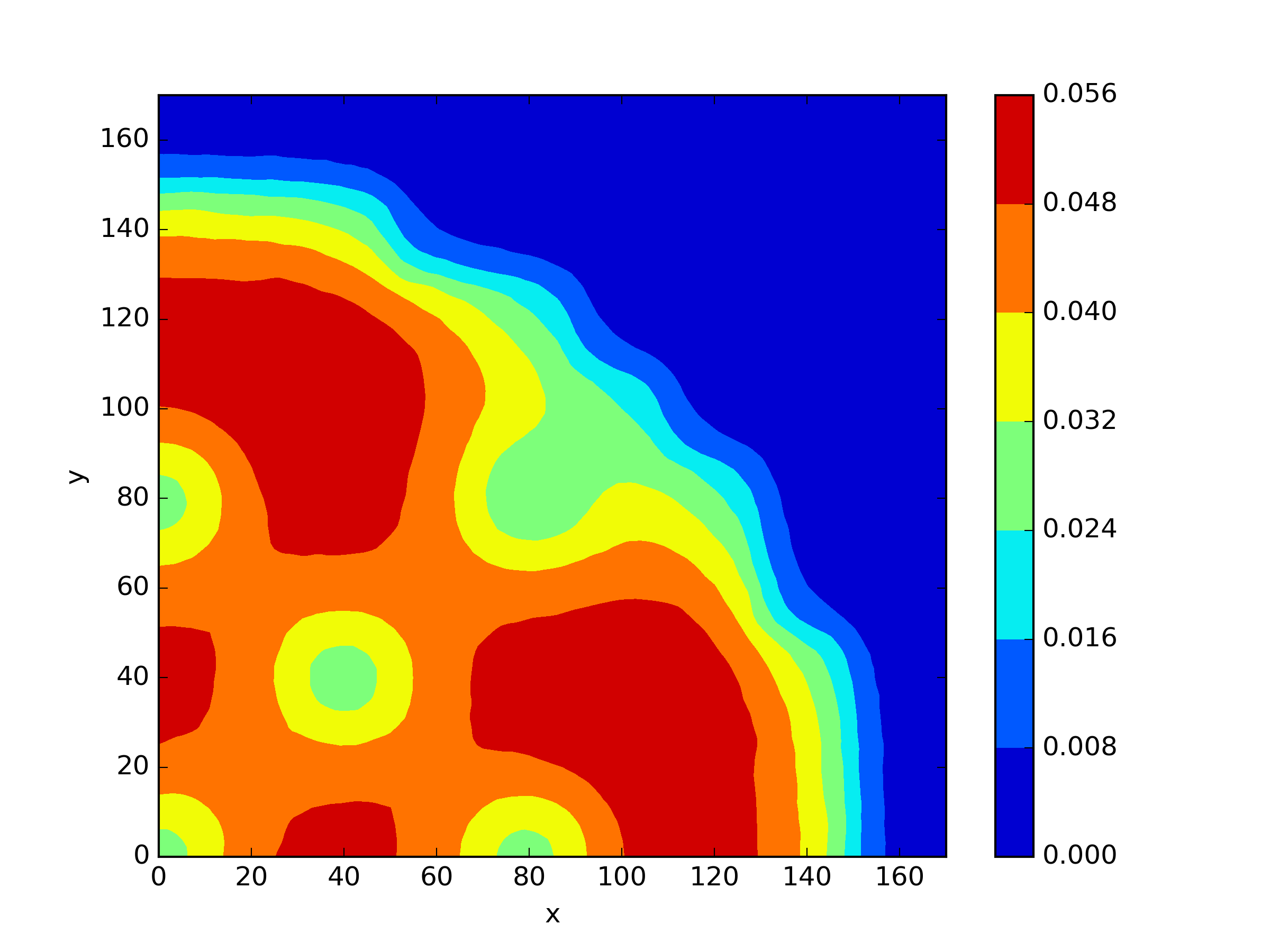

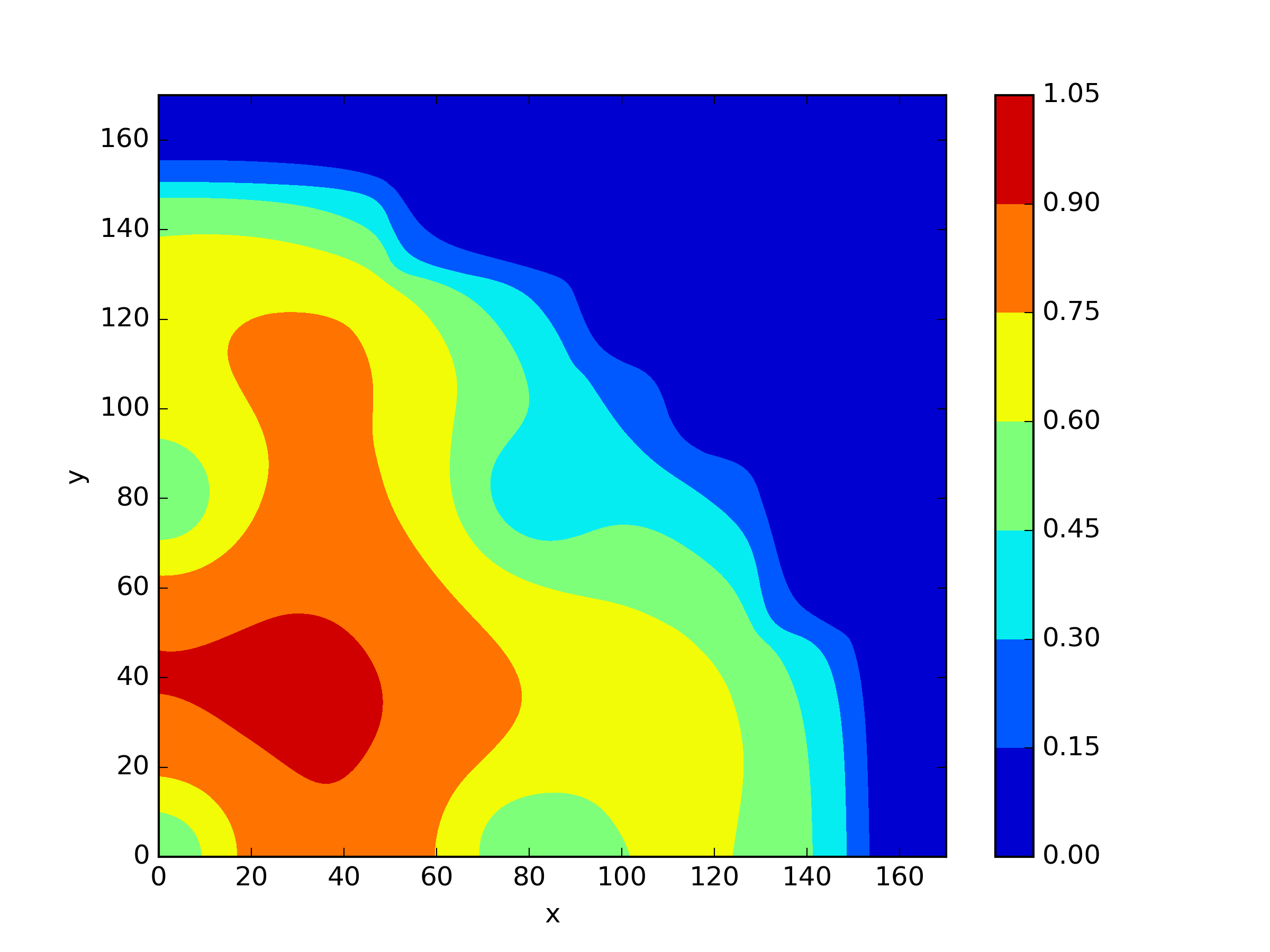

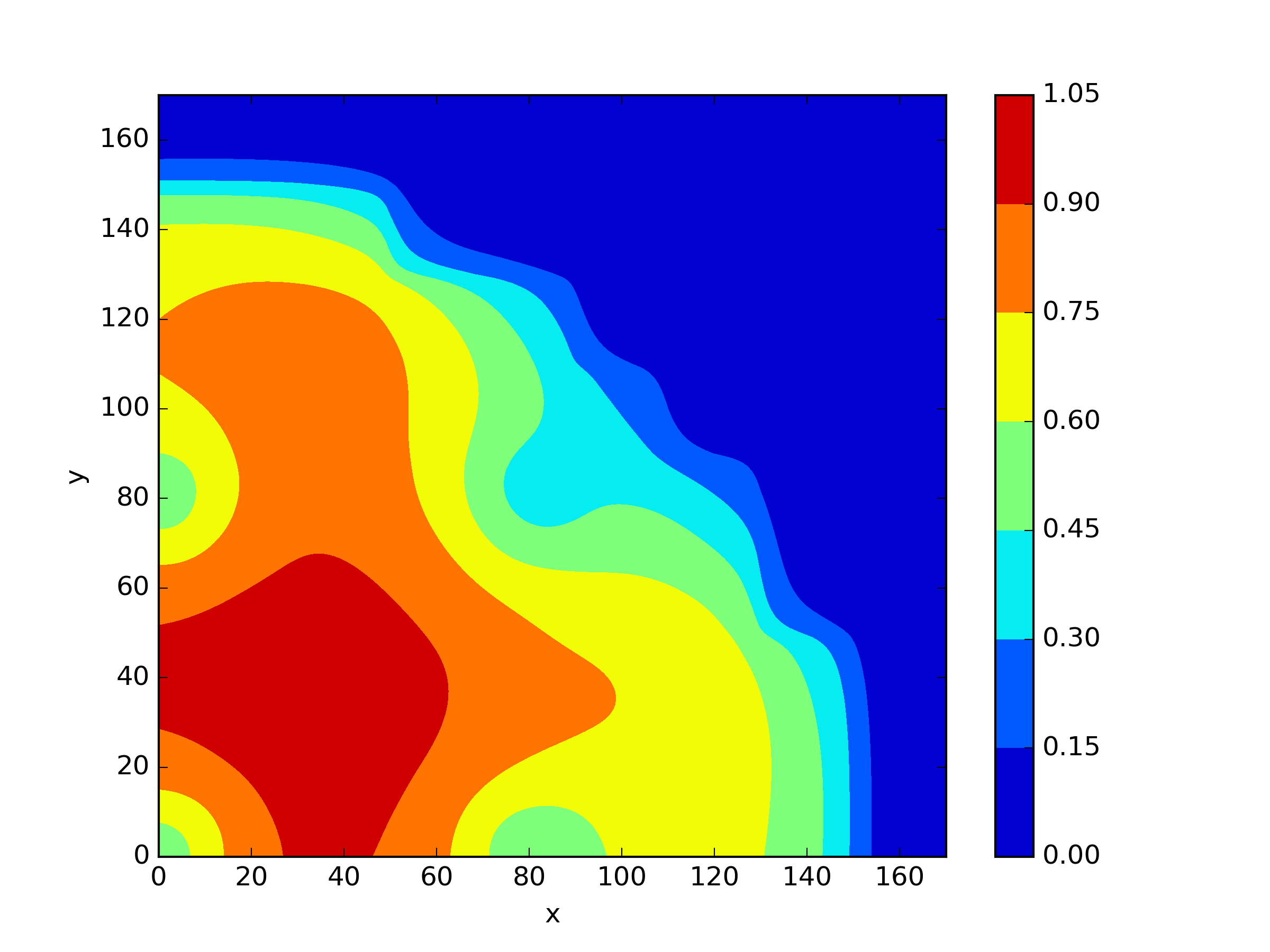

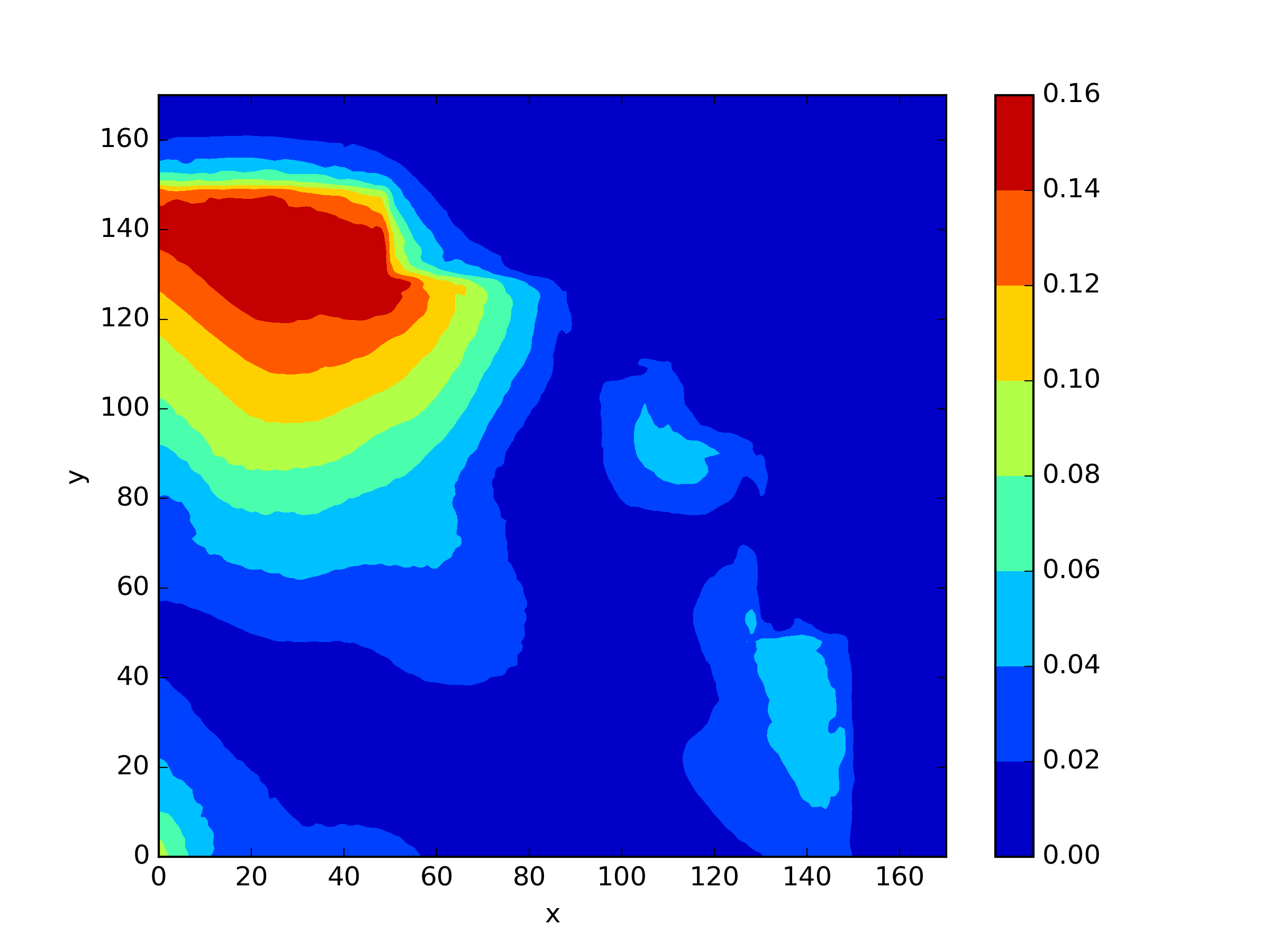

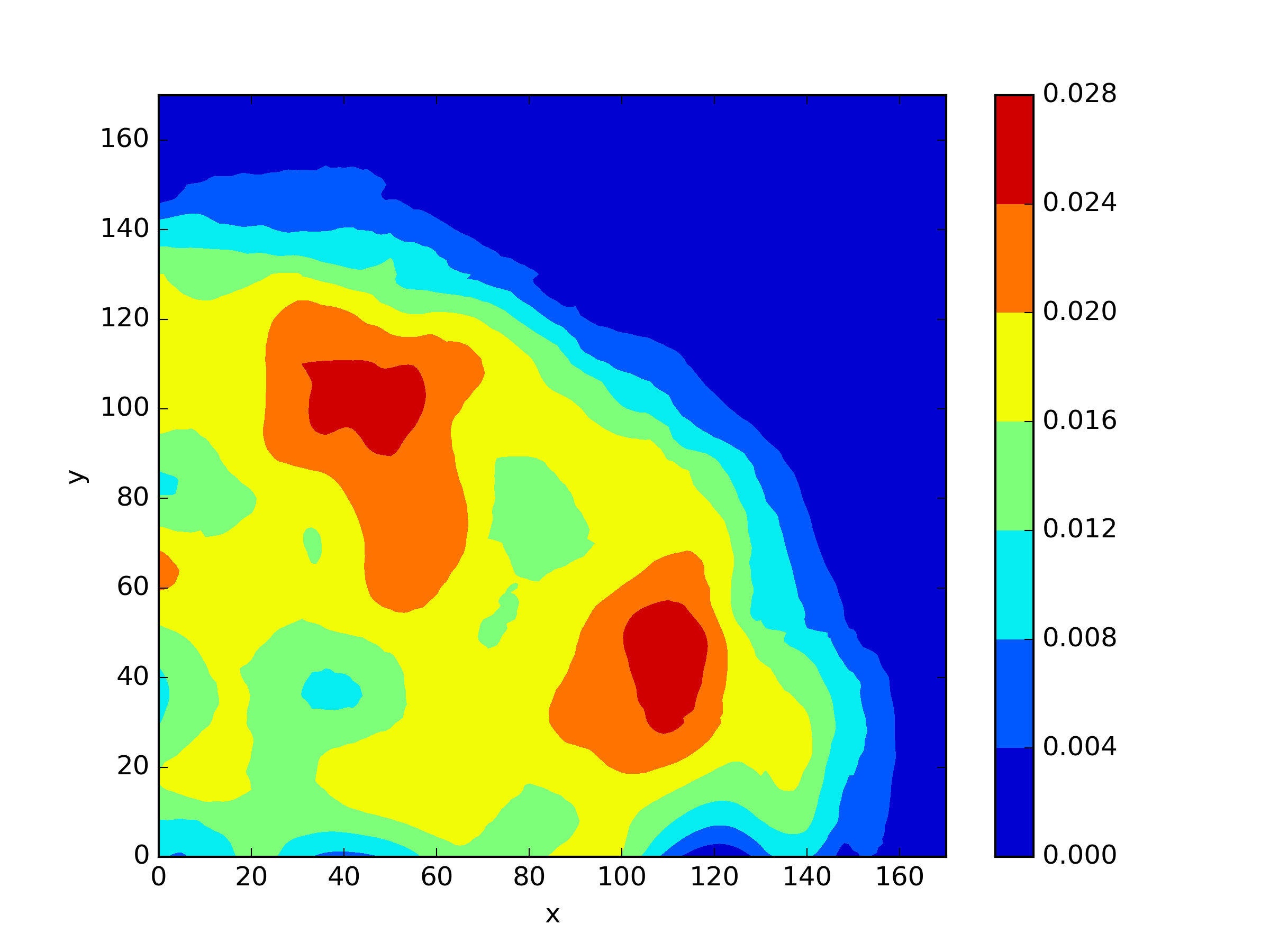

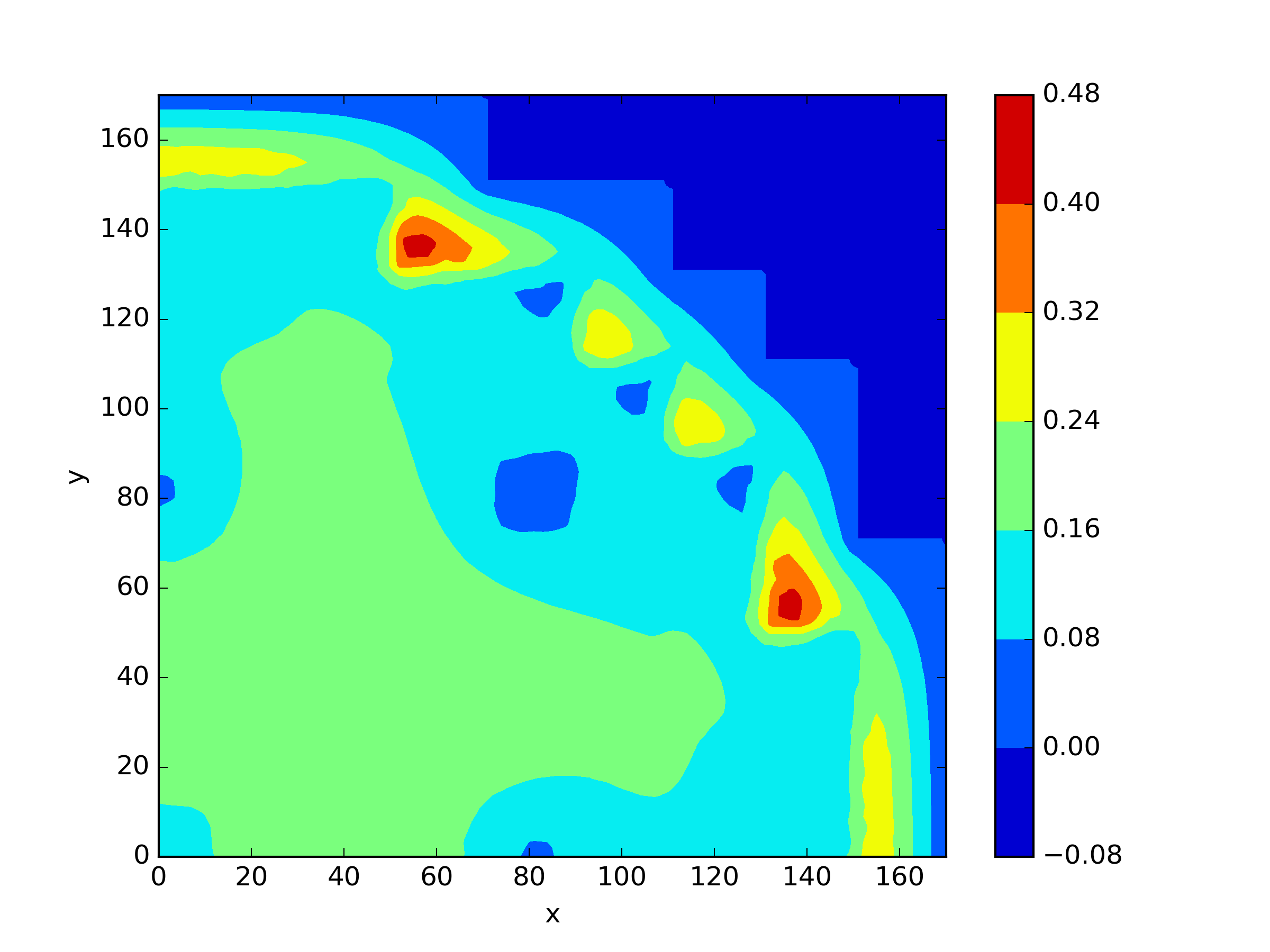

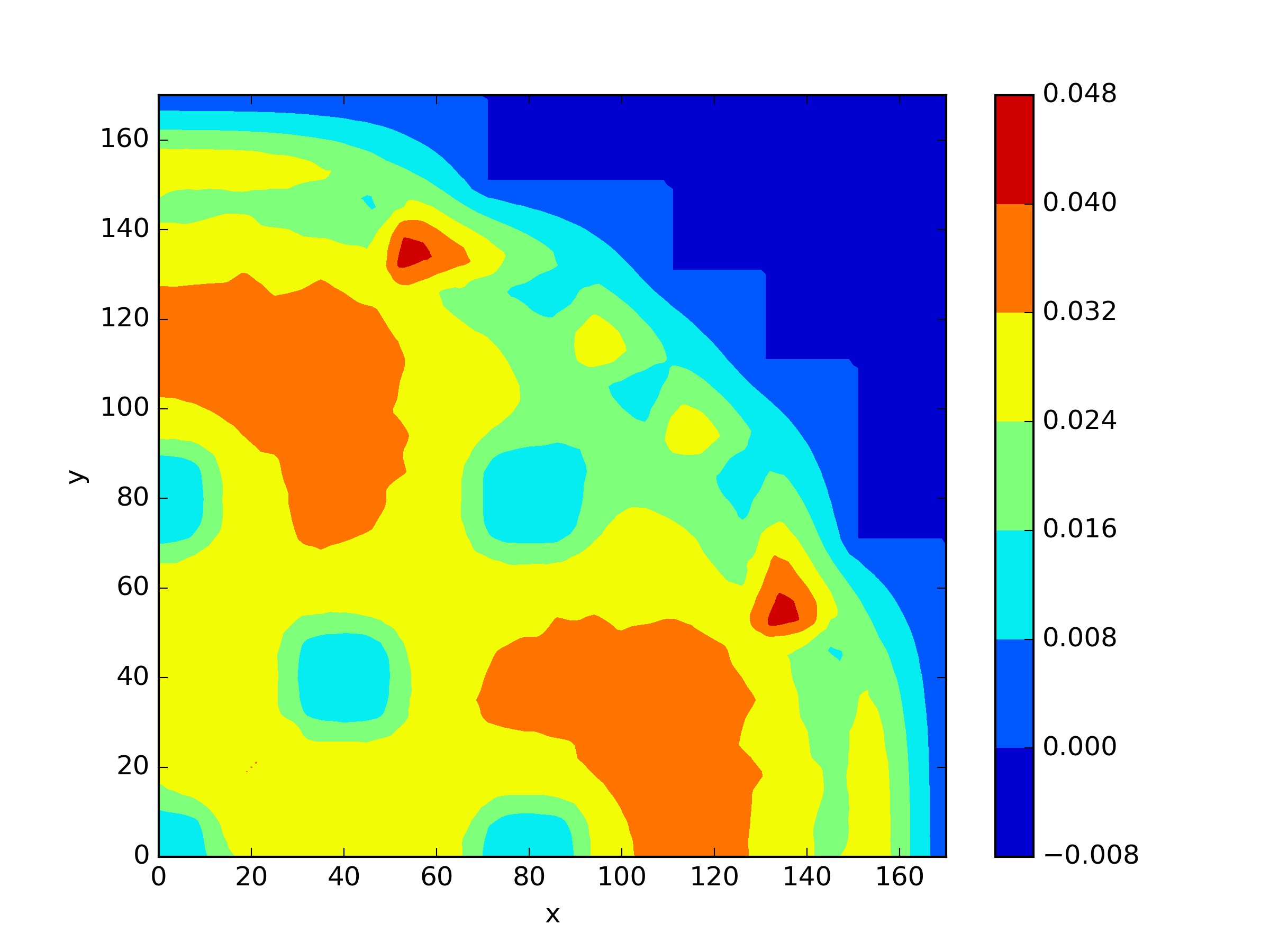

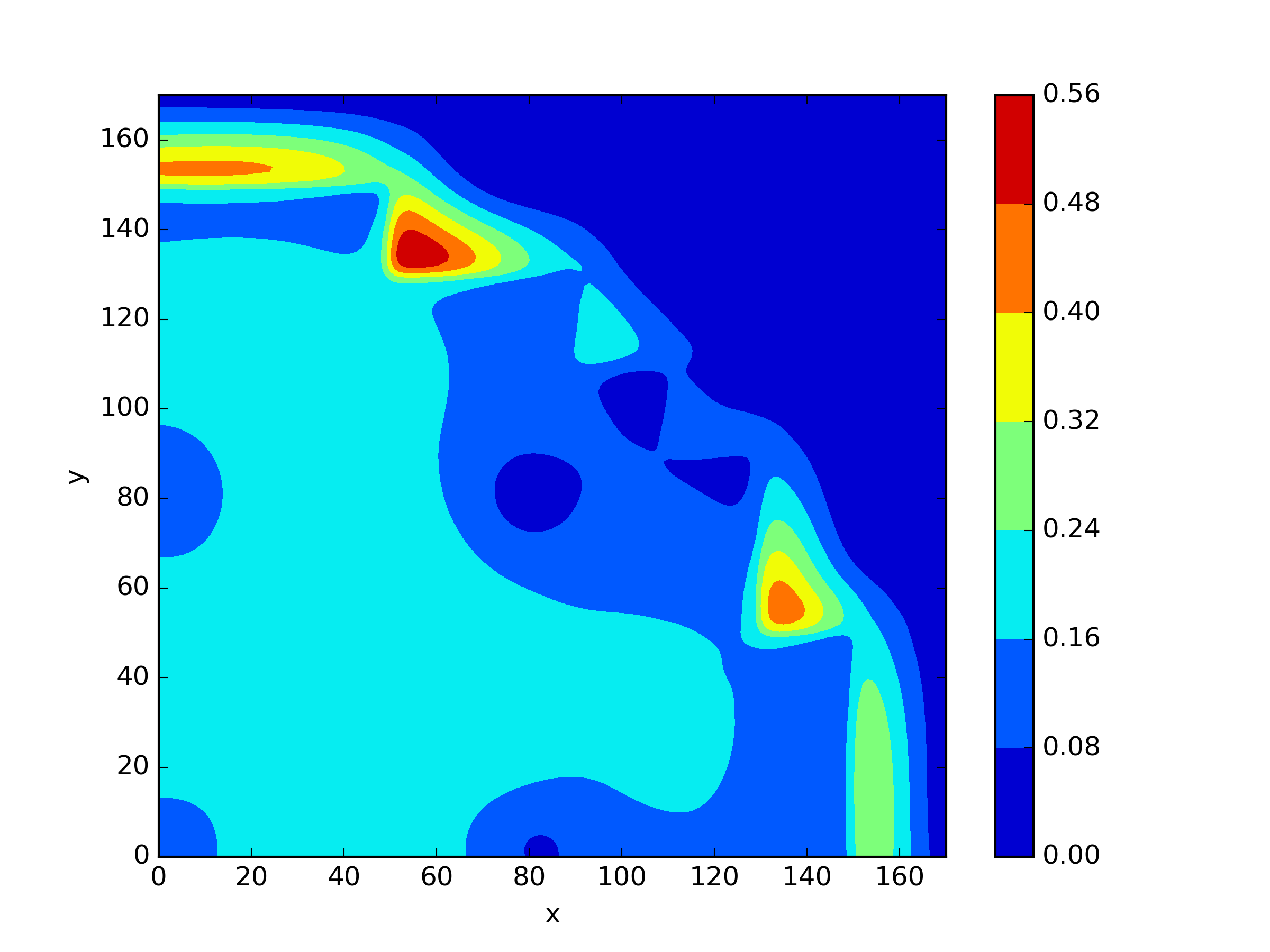

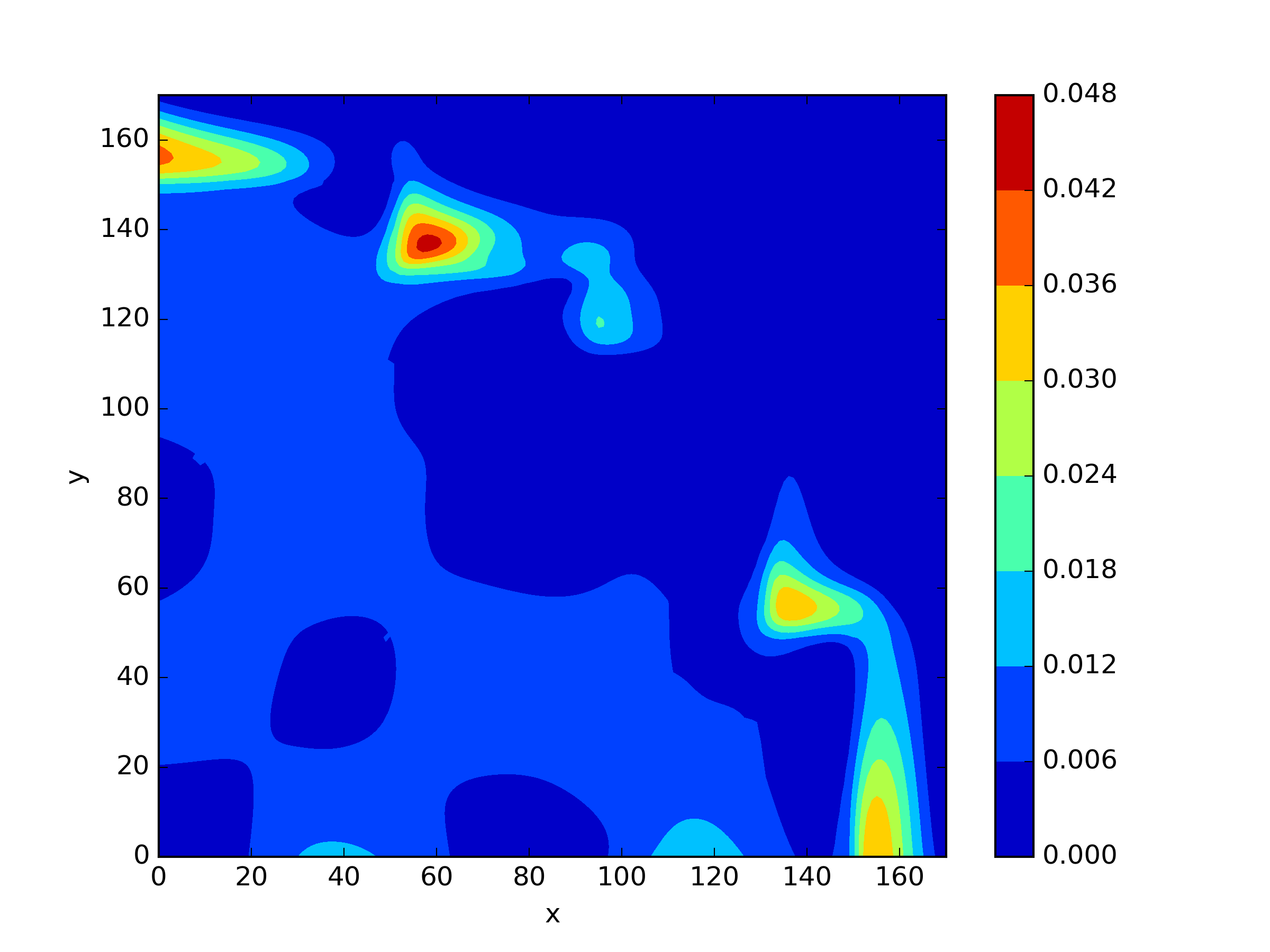

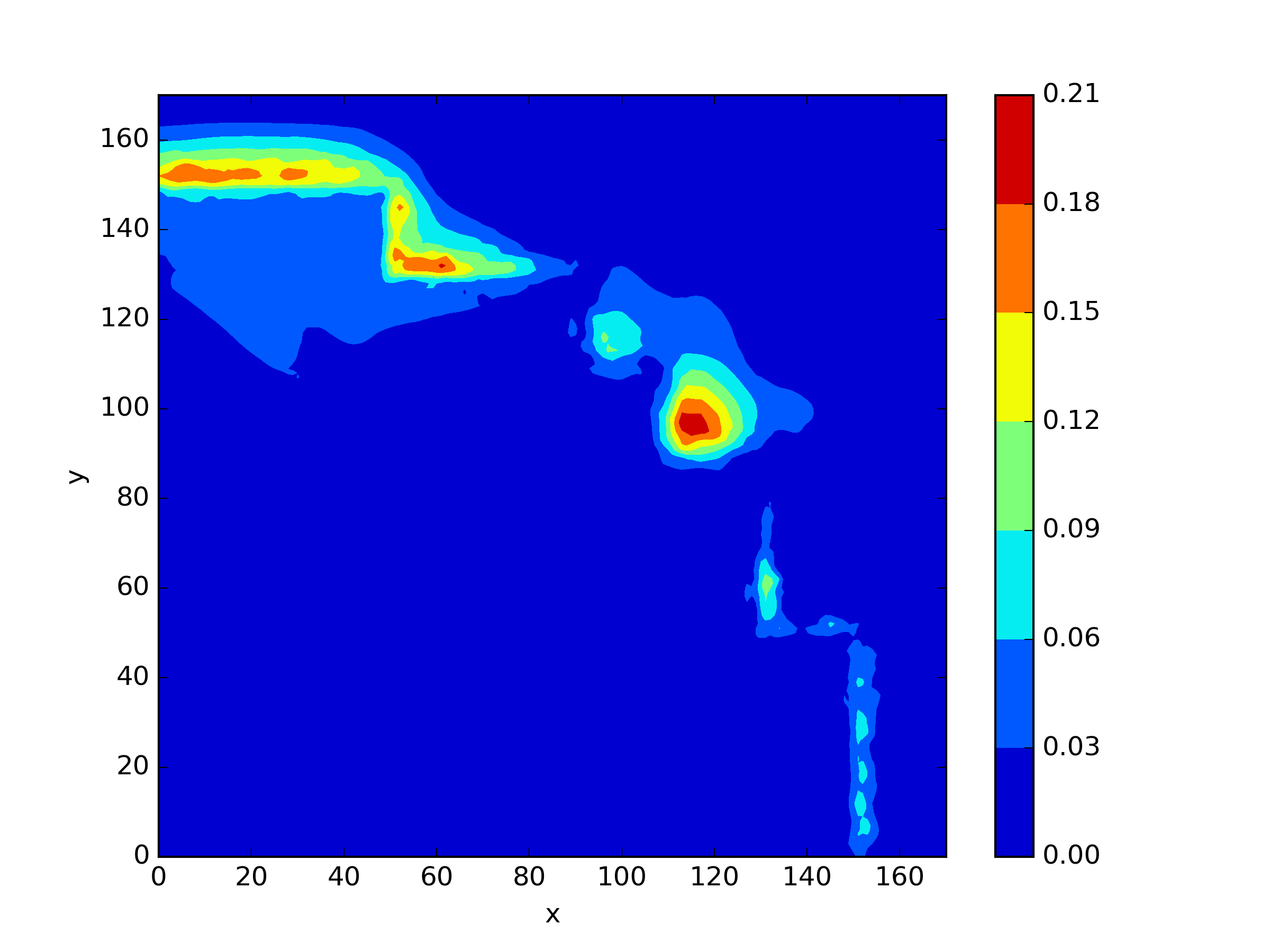

We applied and mentioned earlier to this scenario. For , we only tested the approach without shift. For and , we attempted to use a shift value of 1. The results are presented in Table 5 and Figure 7. We found that did not exhibit any significant disadvantages in solving this problem. However, it can be observed that the methods with a shift in and achieved slightly higher accuracy compared to the method without shift.

| Shift | ||||||

|---|---|---|---|---|---|---|

| 0 | 4.6363e-03 | 3.4499e-02 | 1.3948e-02 | 5.1534e-02 | 2.4732e-02 | |

| 0 | 4.3026e-03 | 3.4864e-02 | 1.6327e-02 | 5.6210e-02 | 2.7221e-02 | |

| 1 | 3.3199e-03 | 2.1937e-02 | 8.9844e-03 | 5.3010e-02 | 2.9896e-02 | |

| 1 | 3.9902e-03 | 3.1621e-02 | 1.4112e-02 | 5.4007e-02 | 2.6699e-02 |

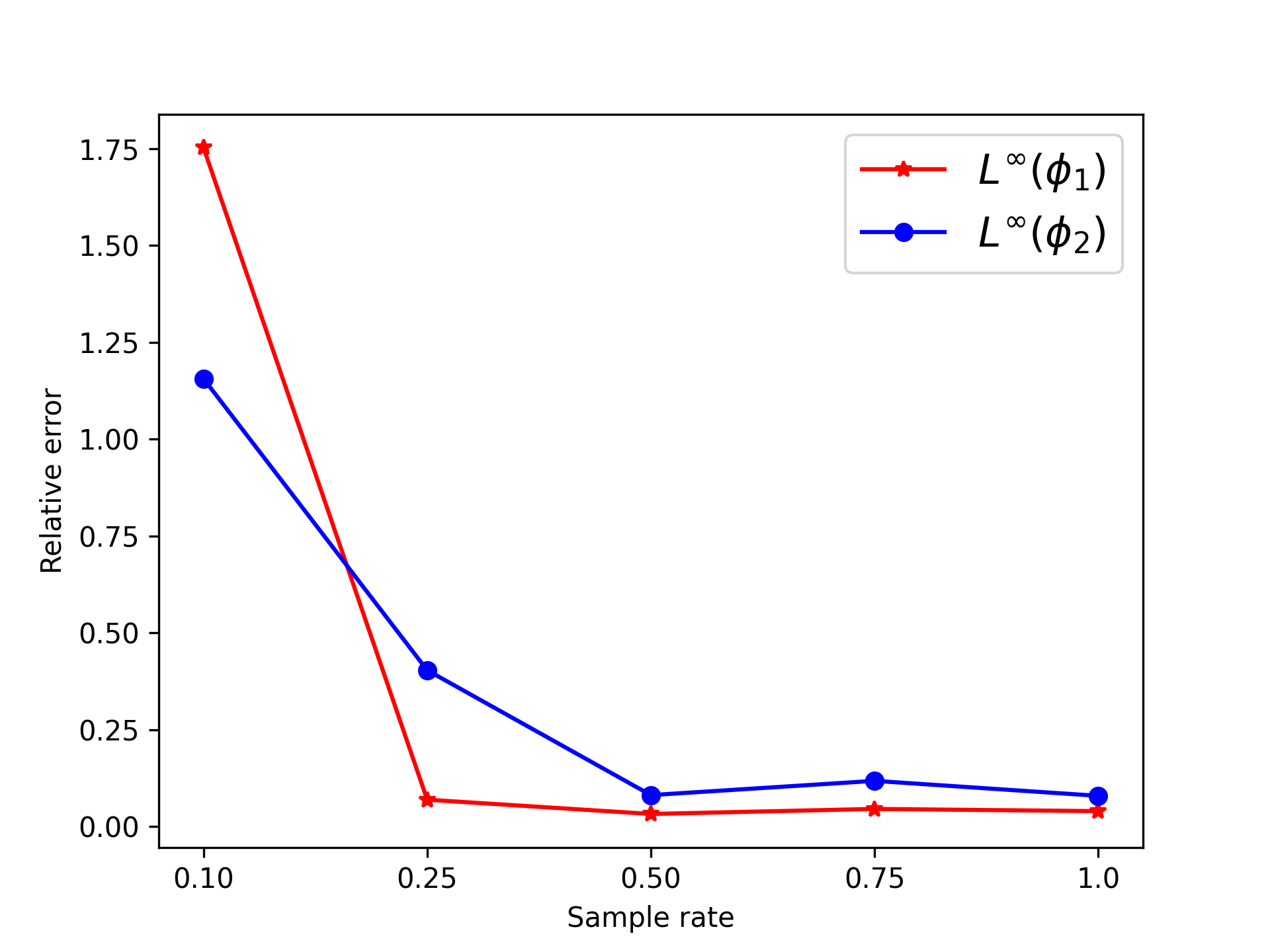

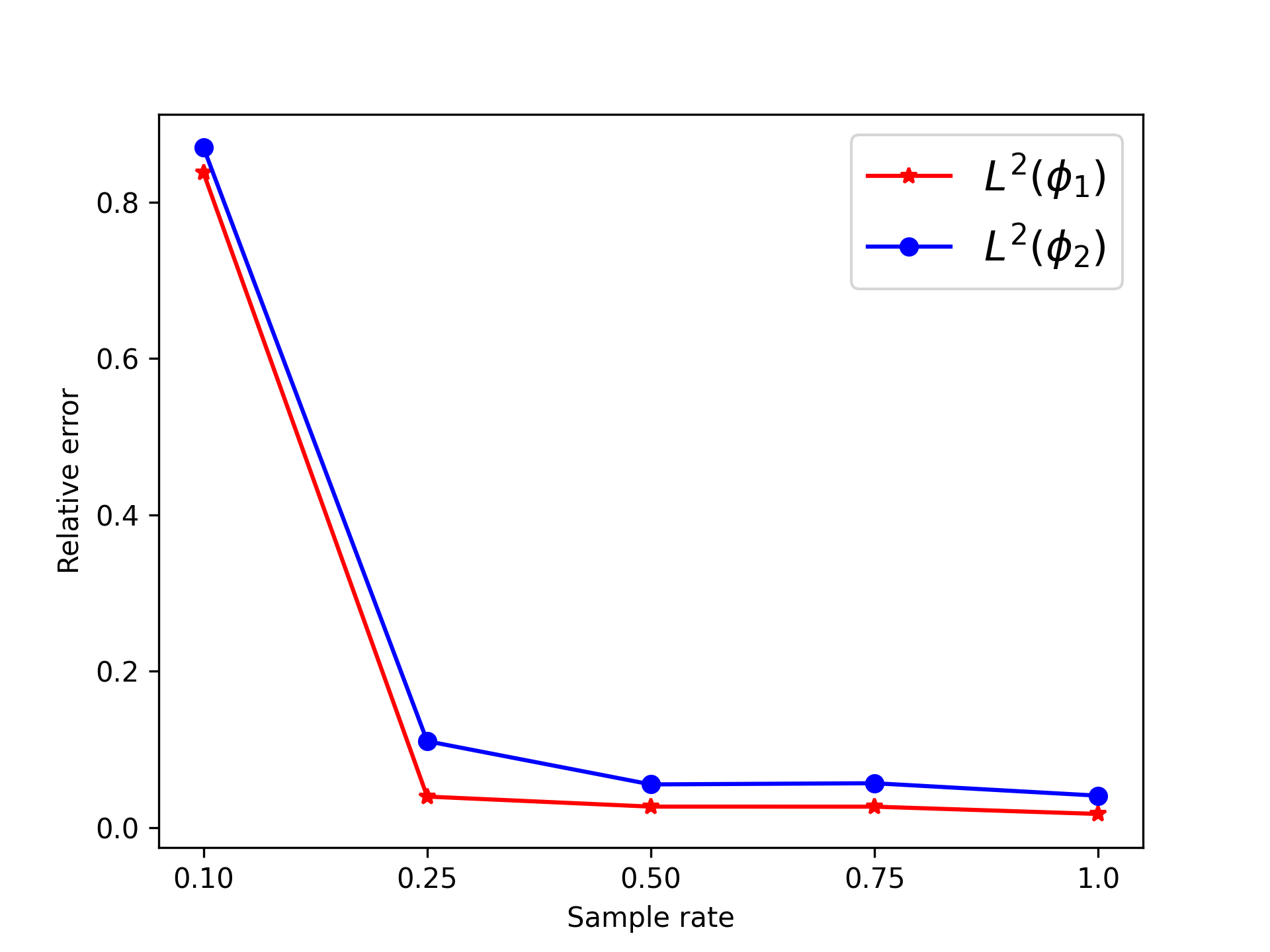

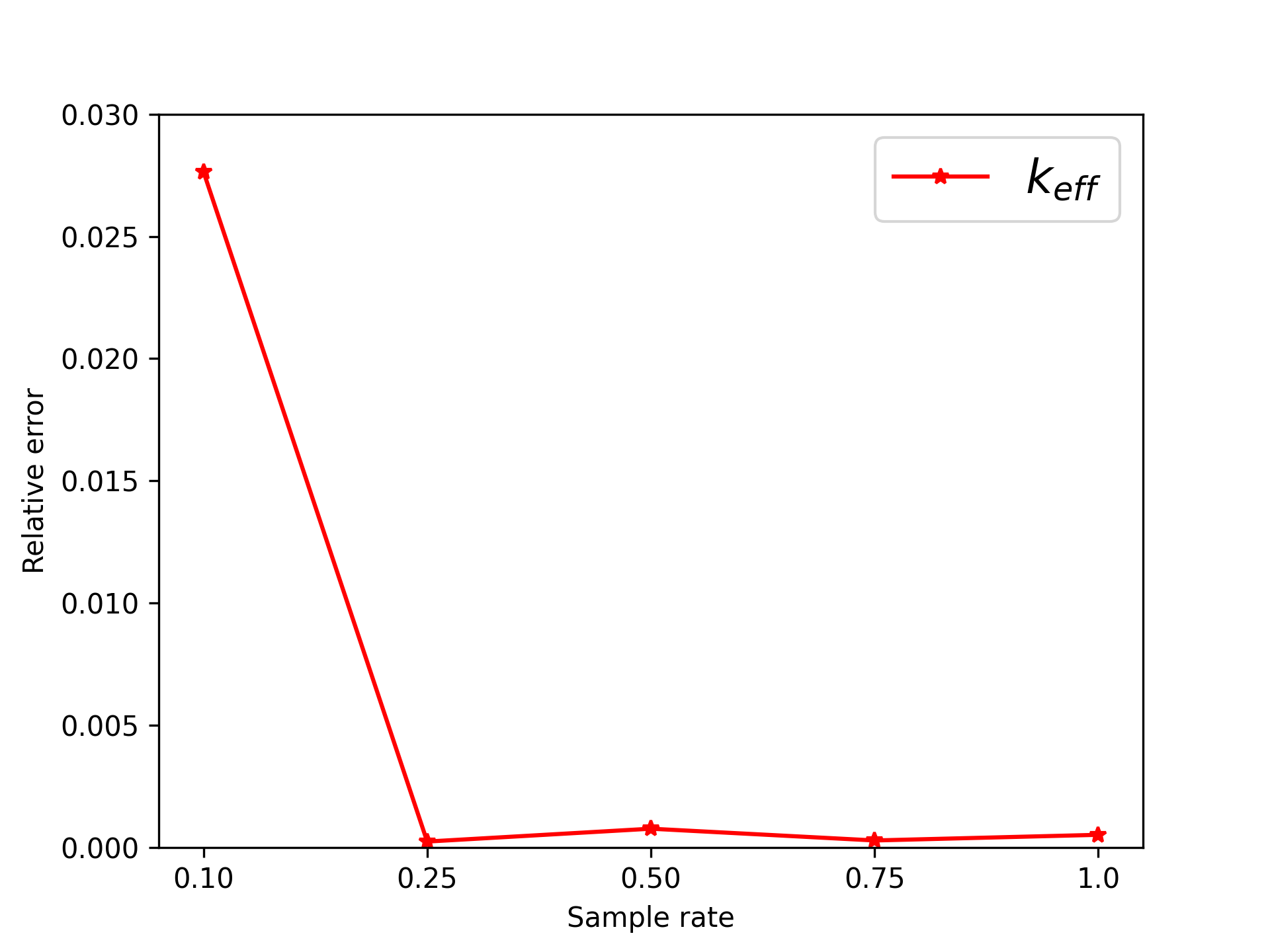

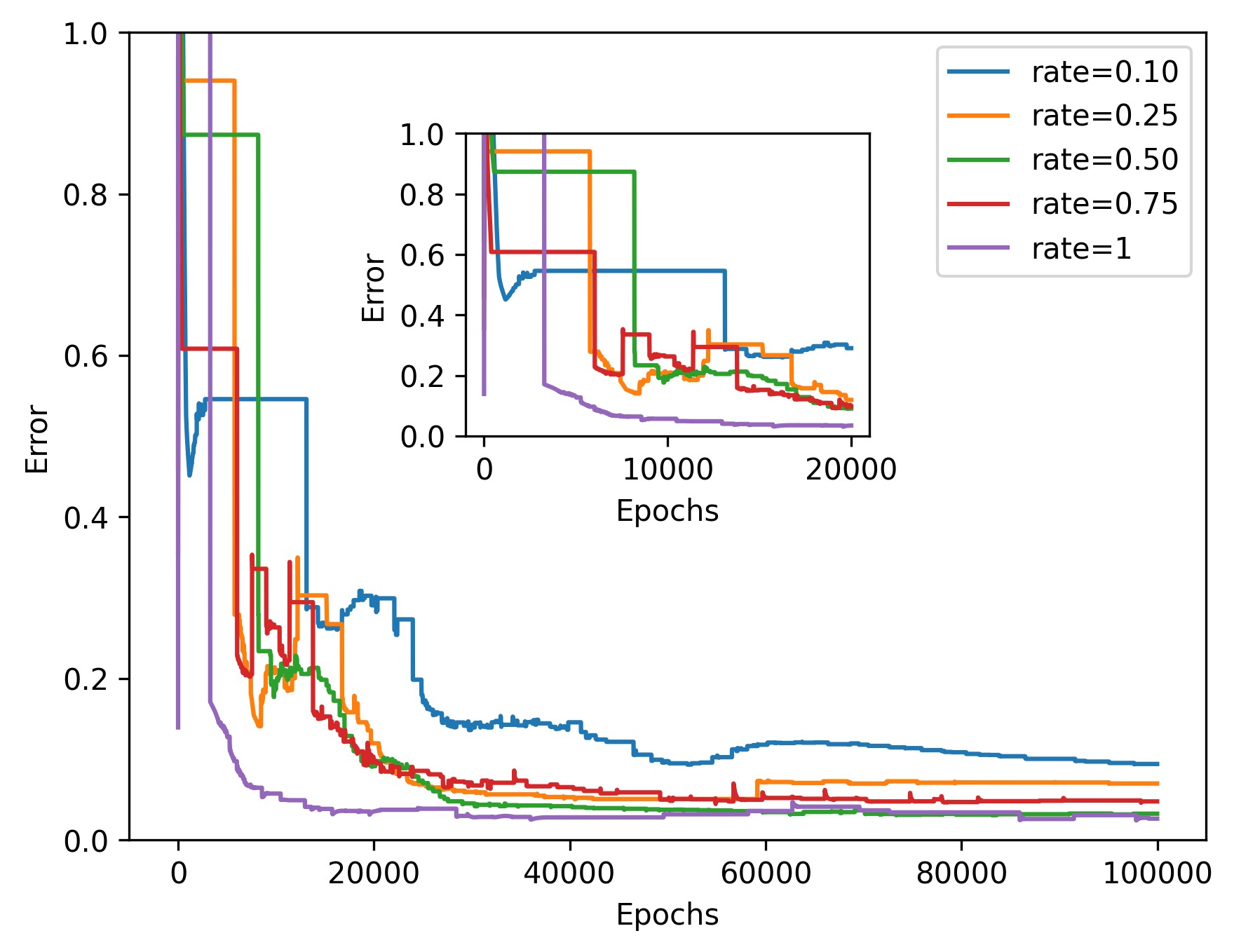

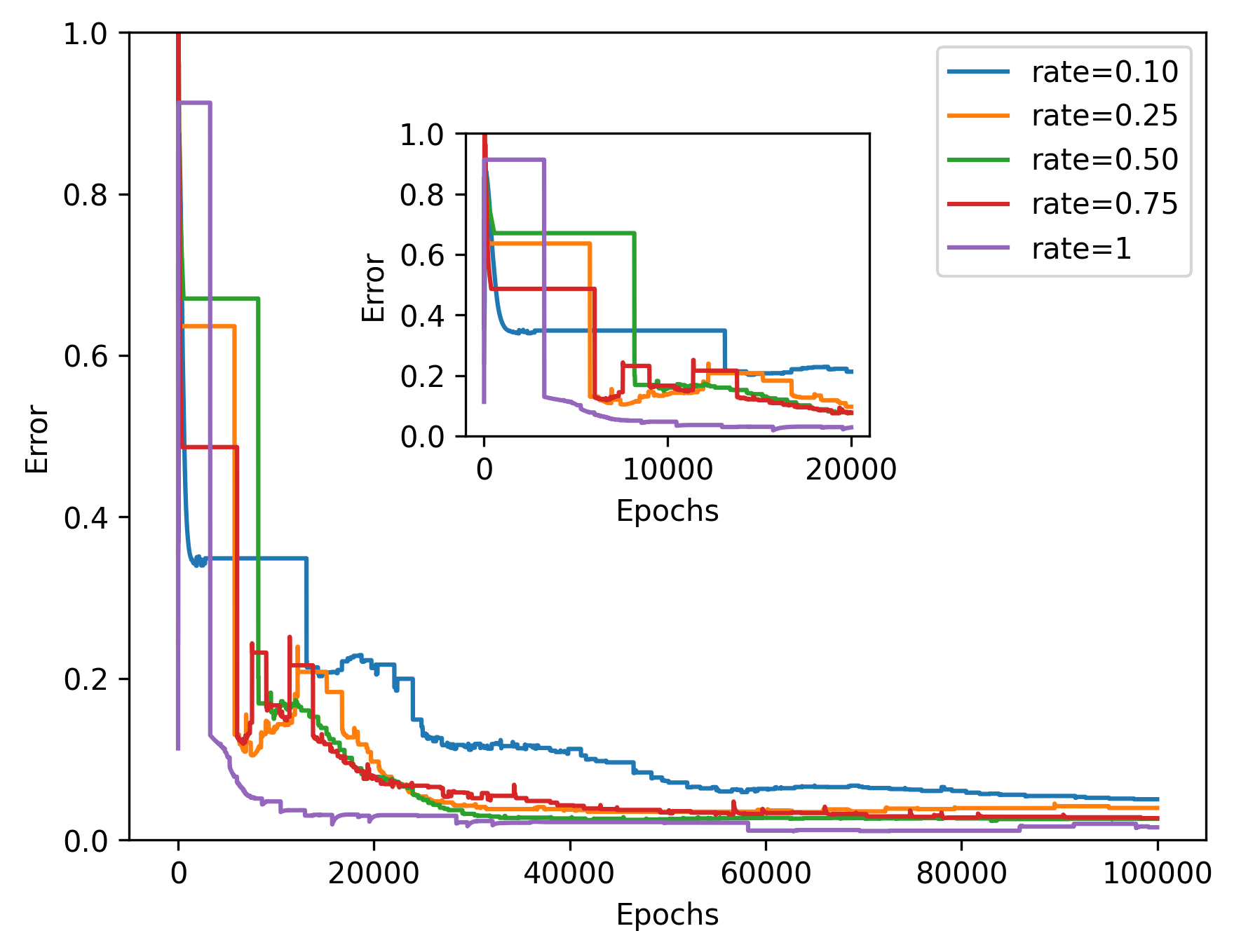

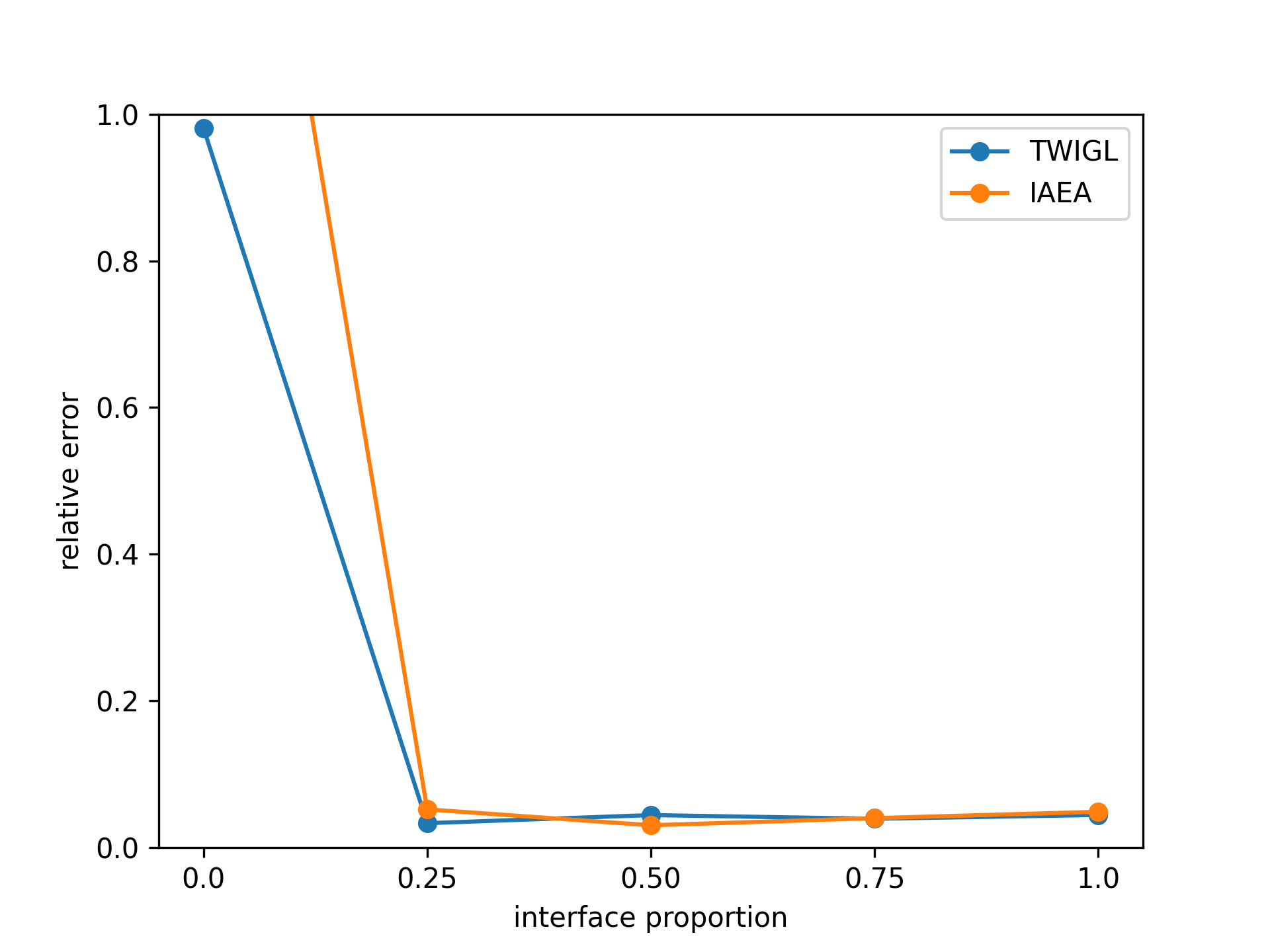

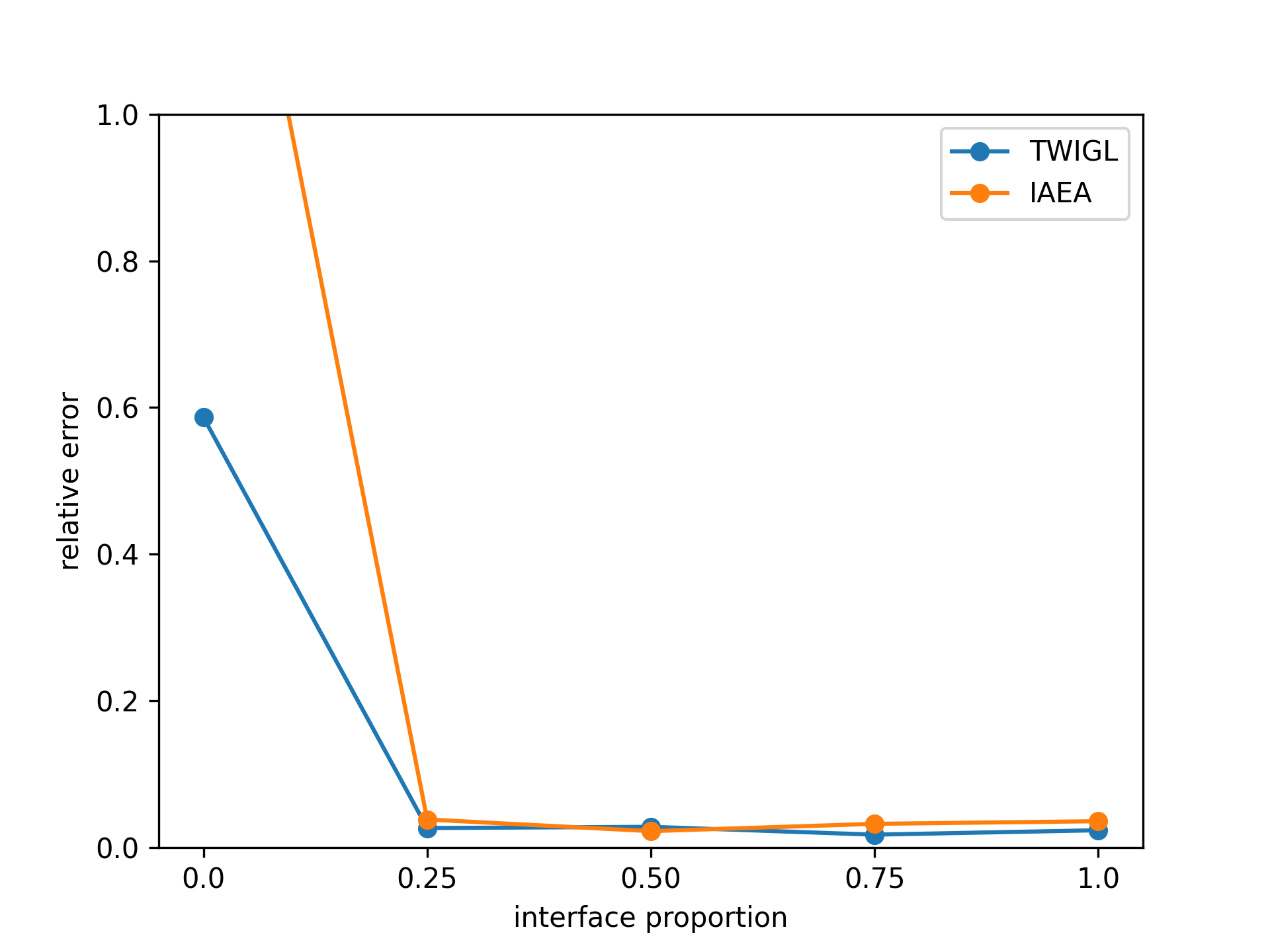

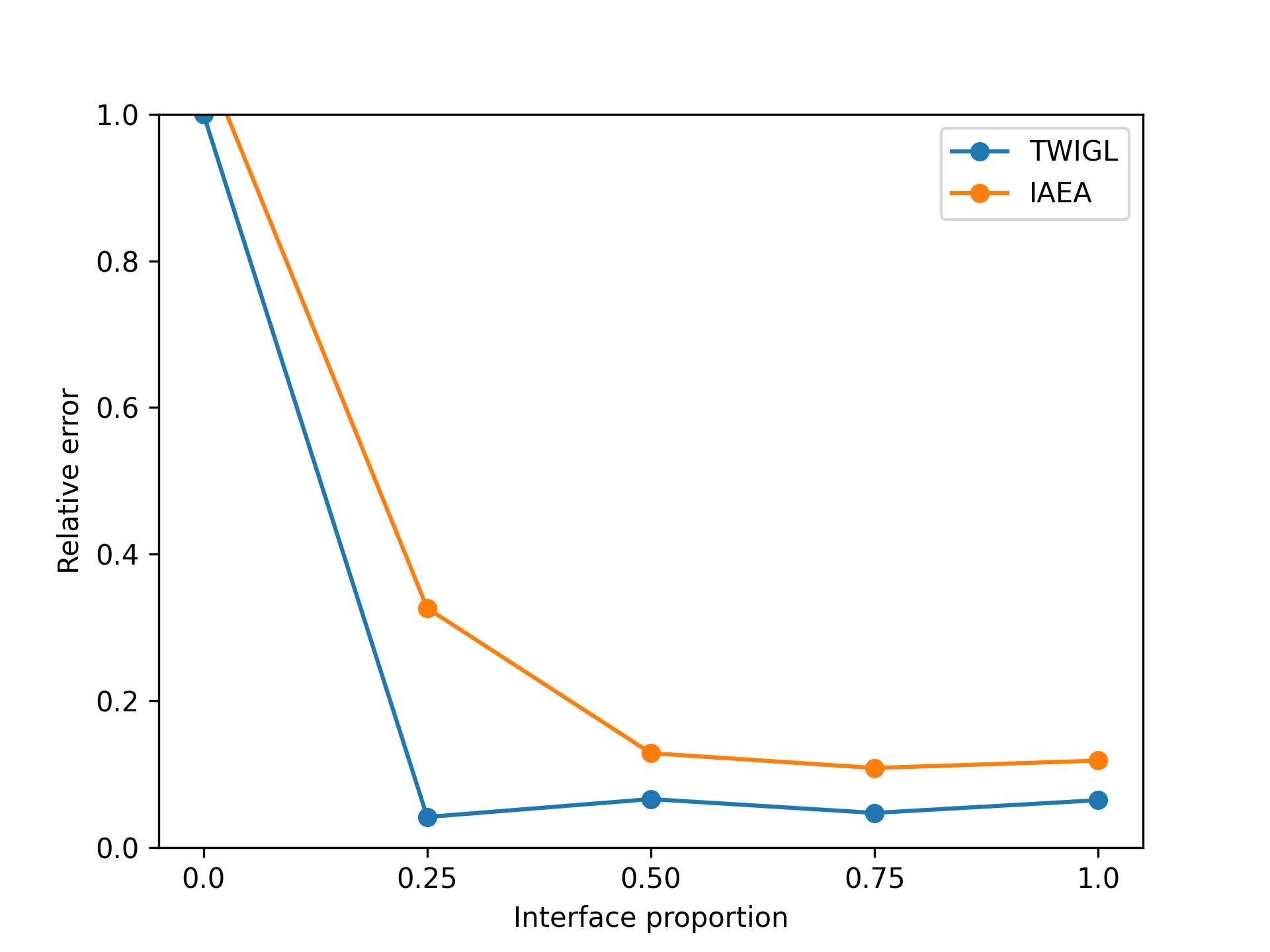

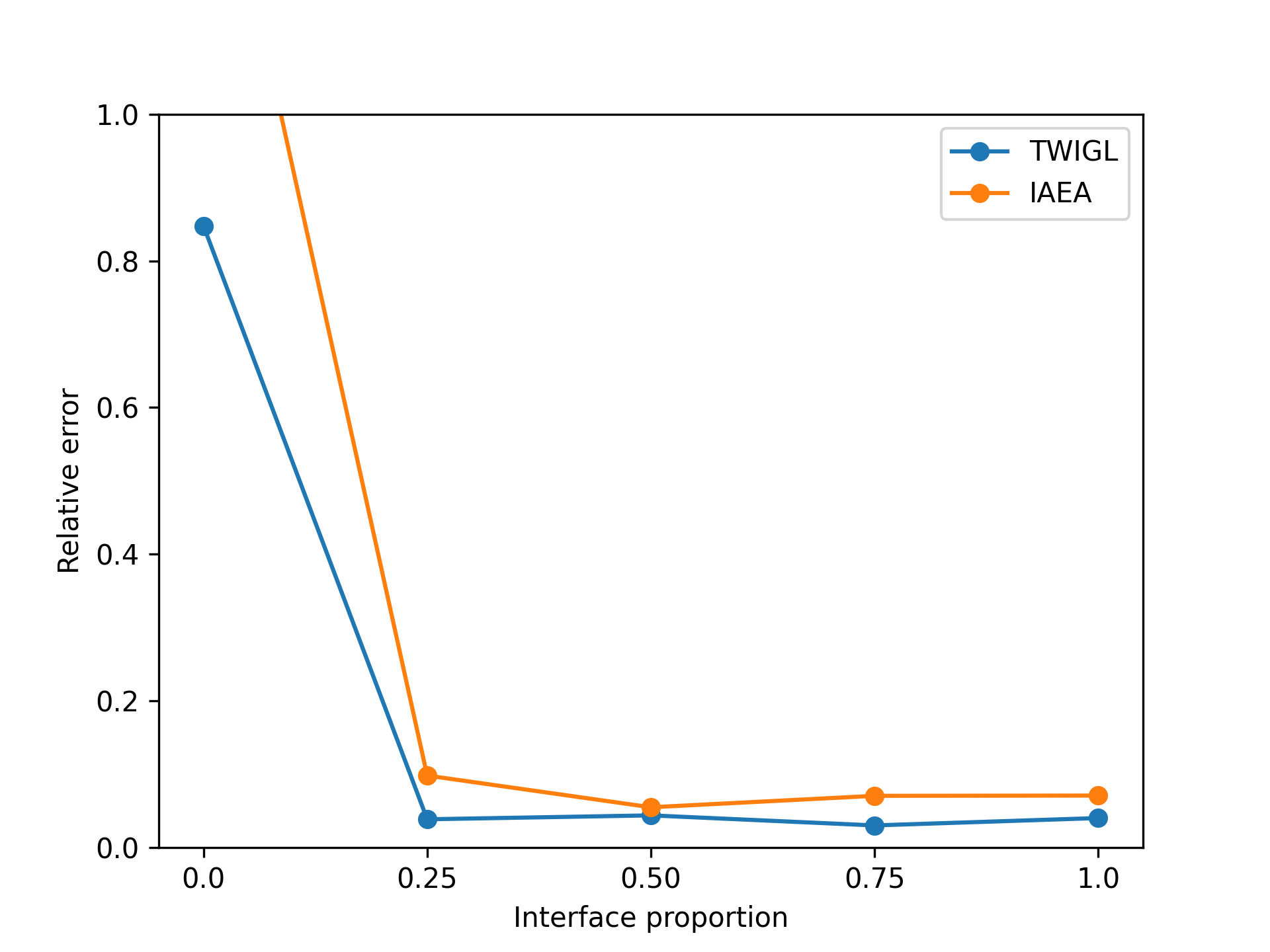

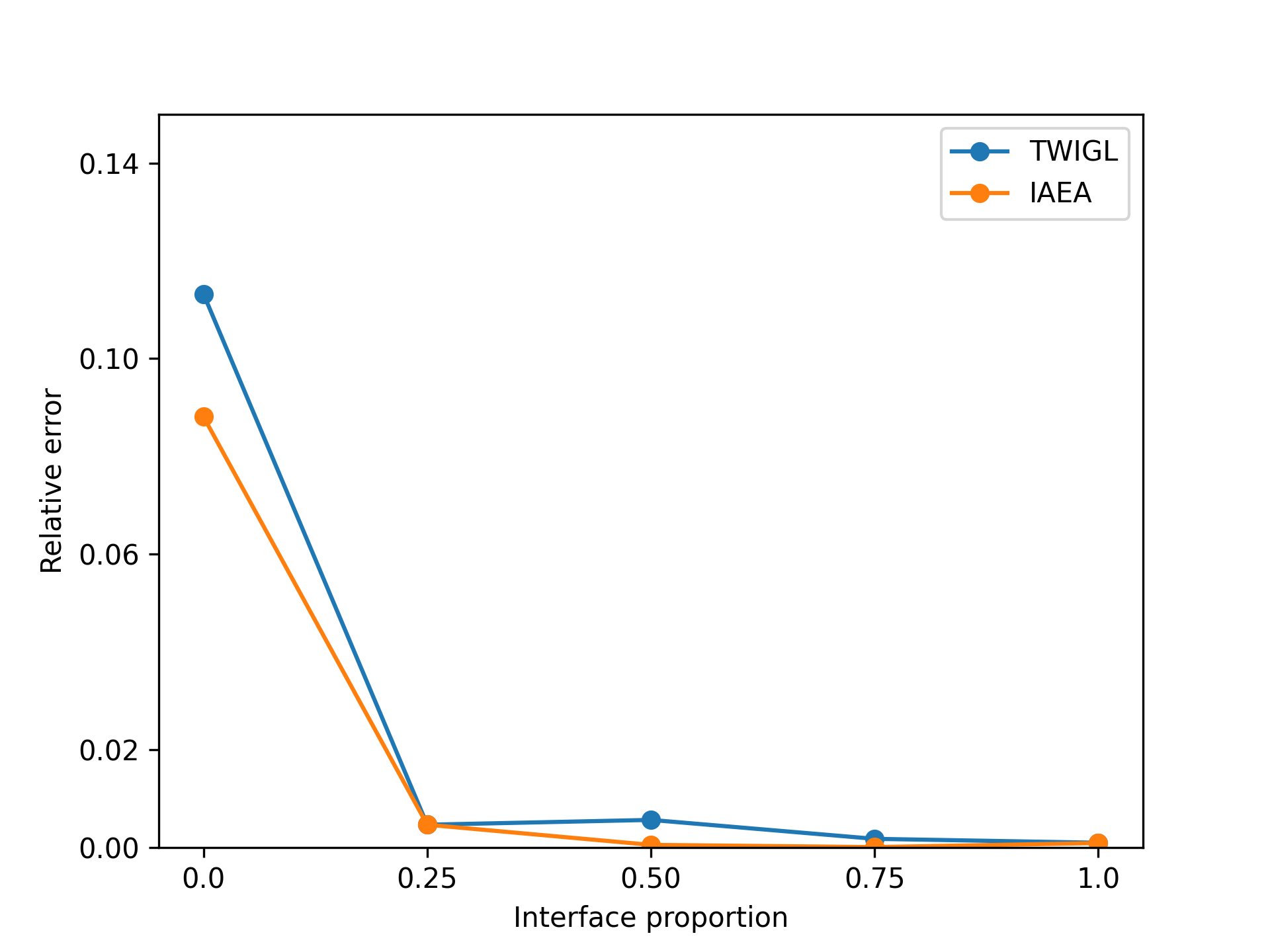

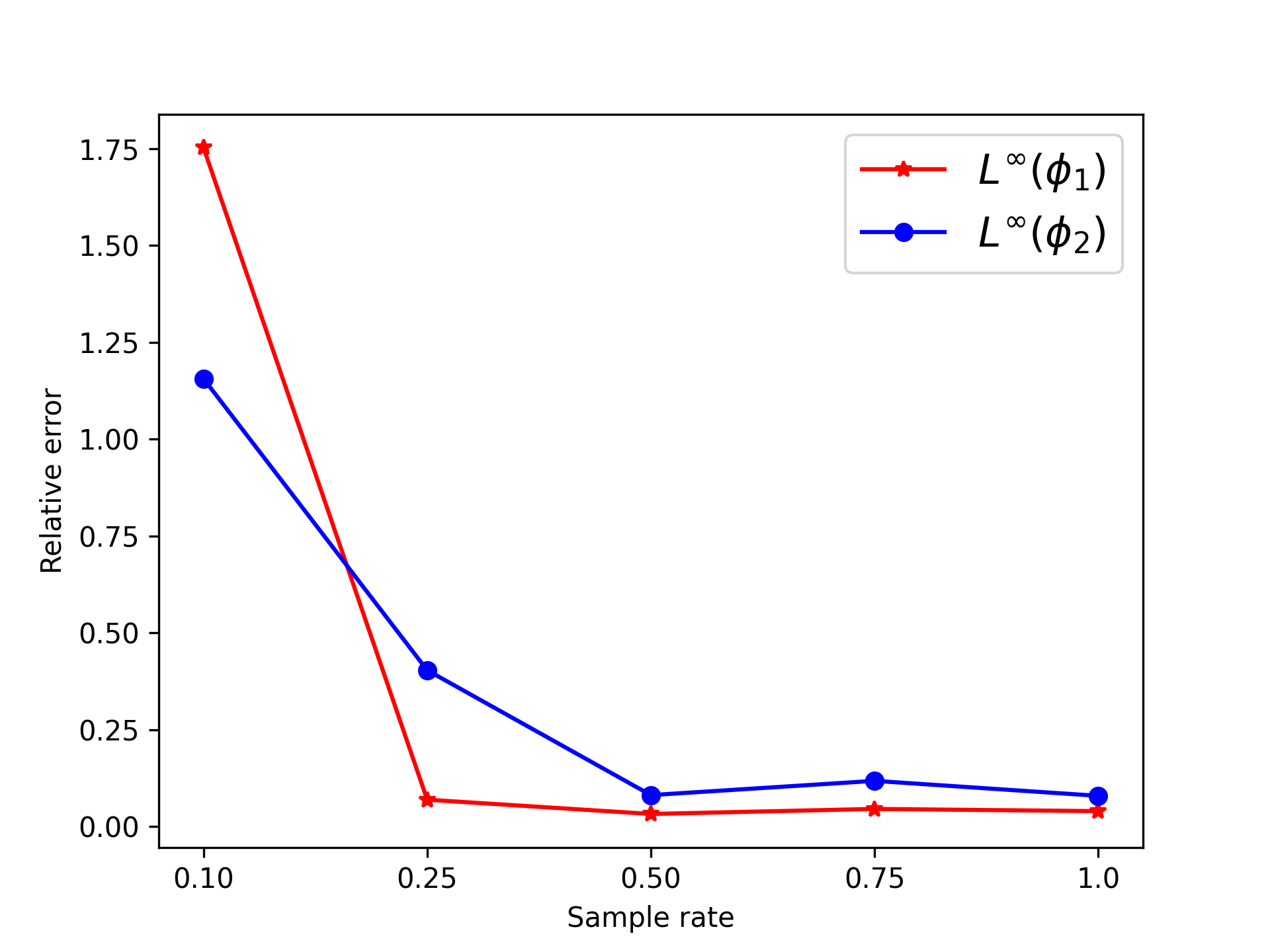

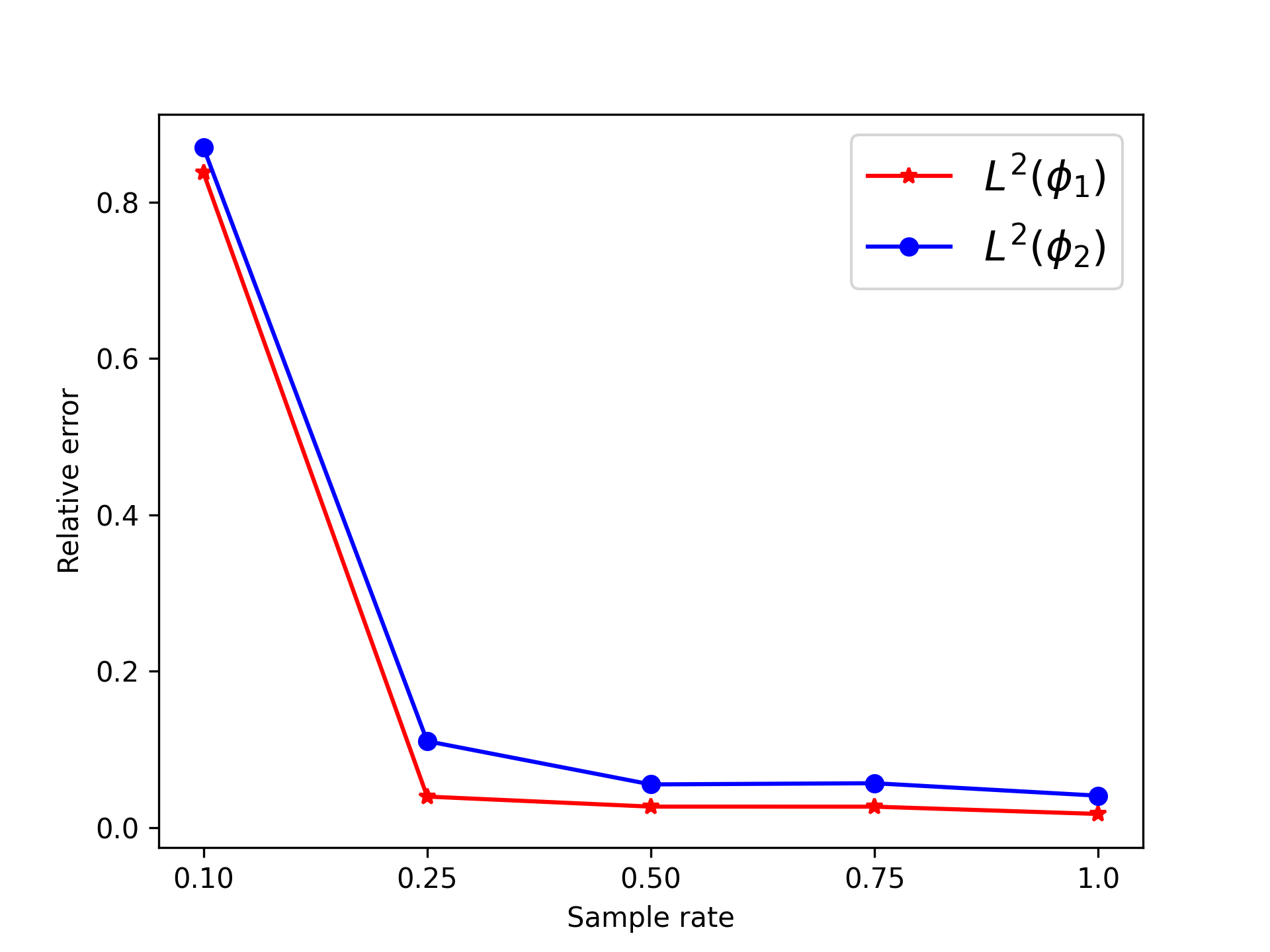

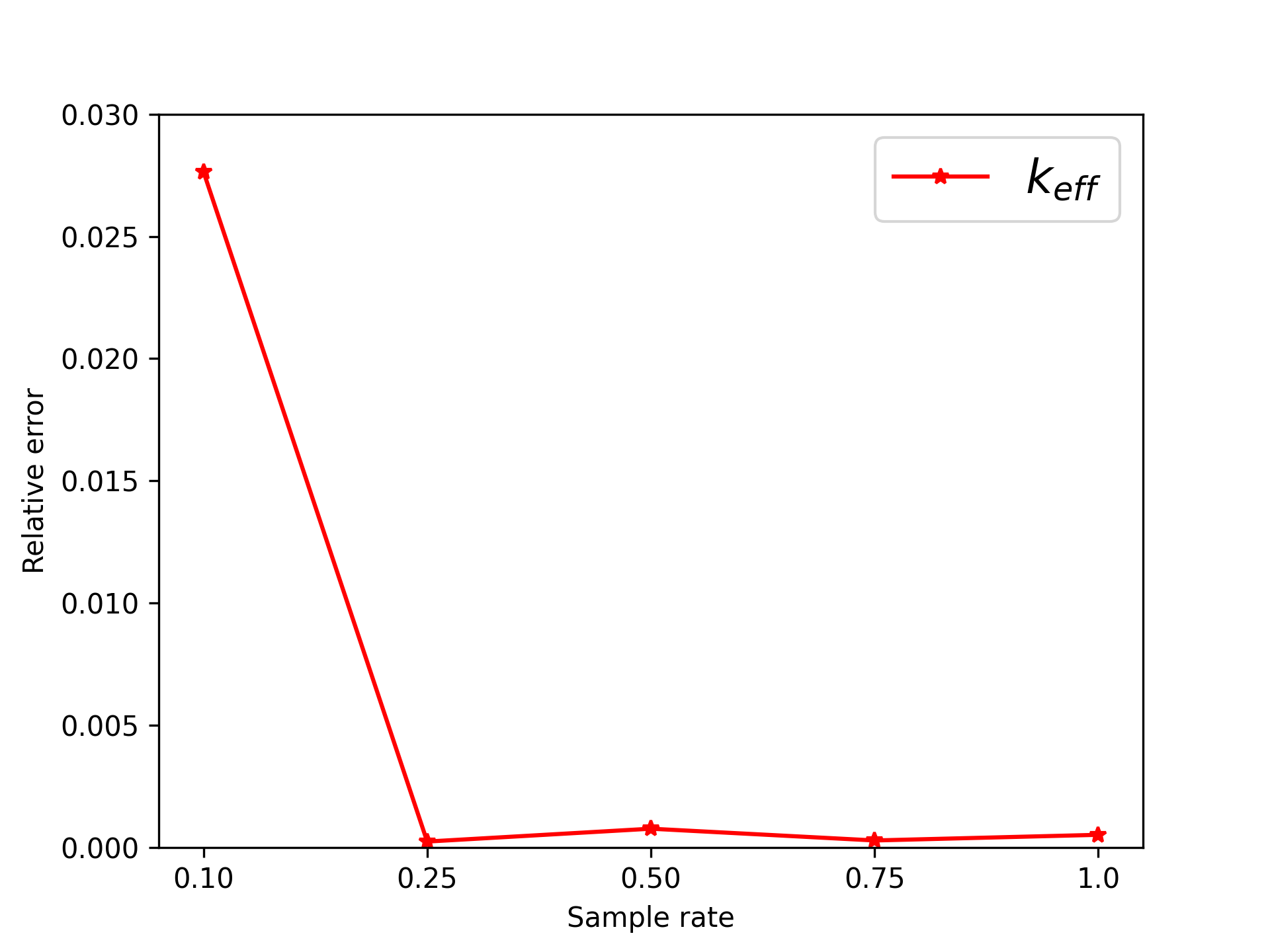

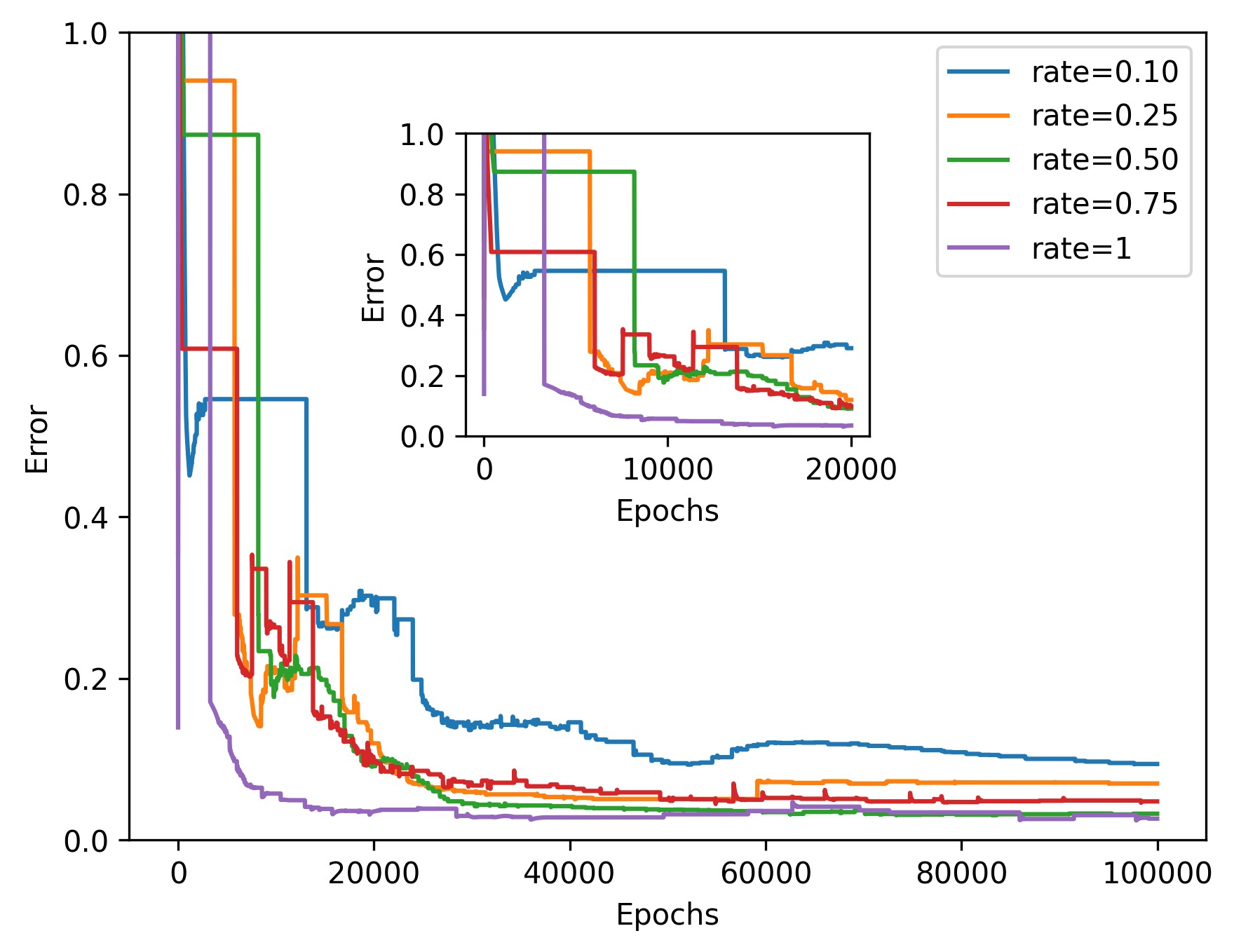

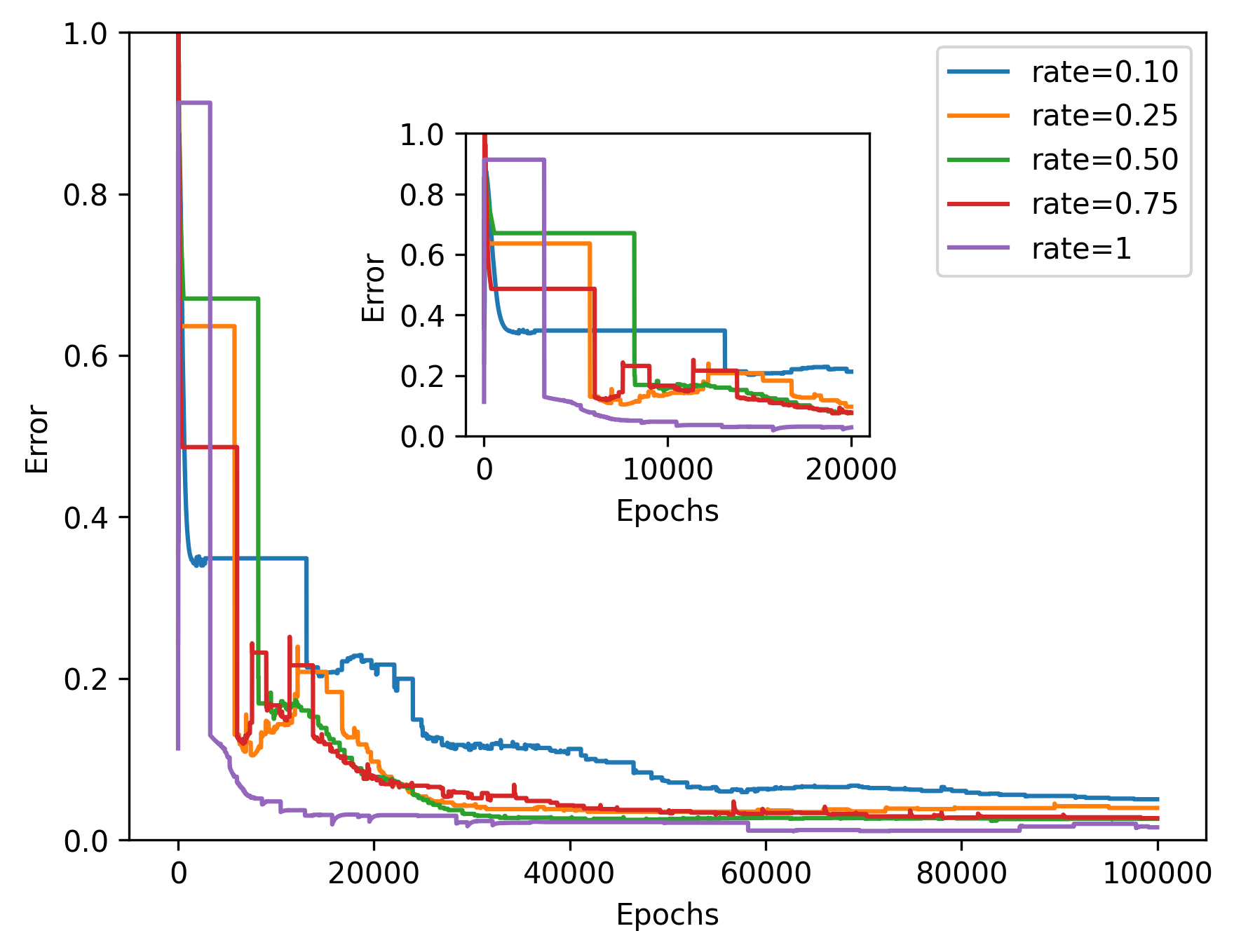

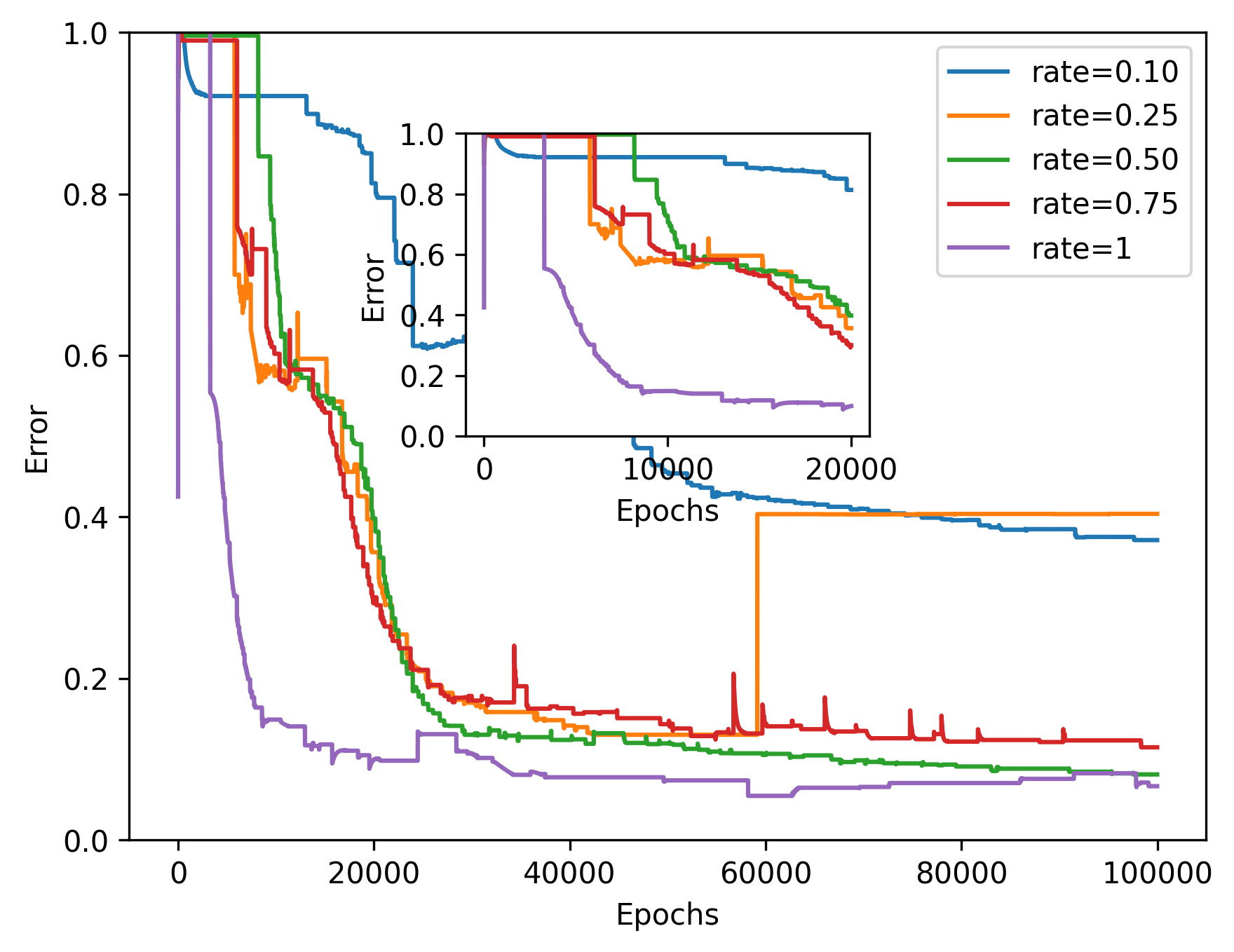

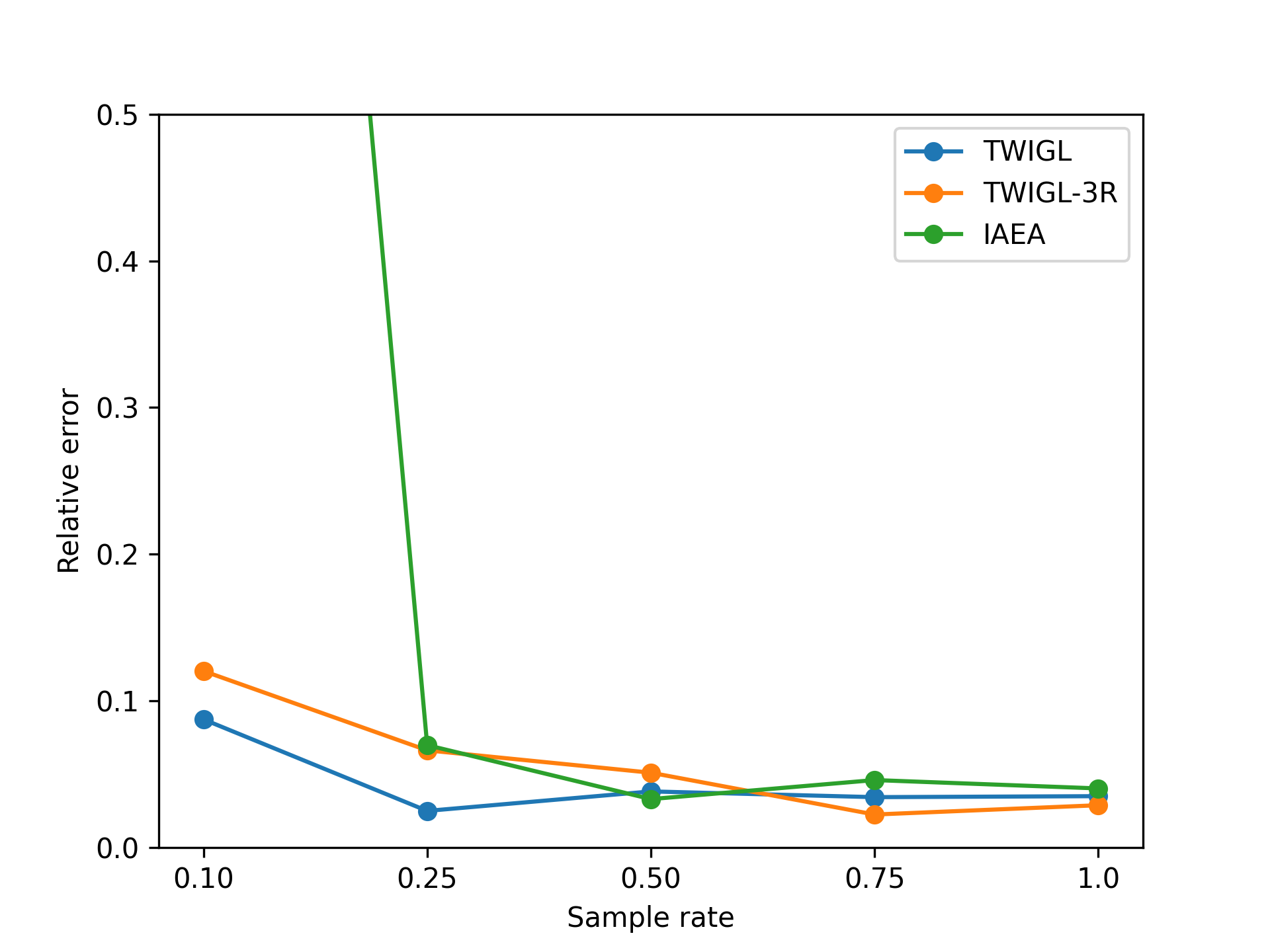

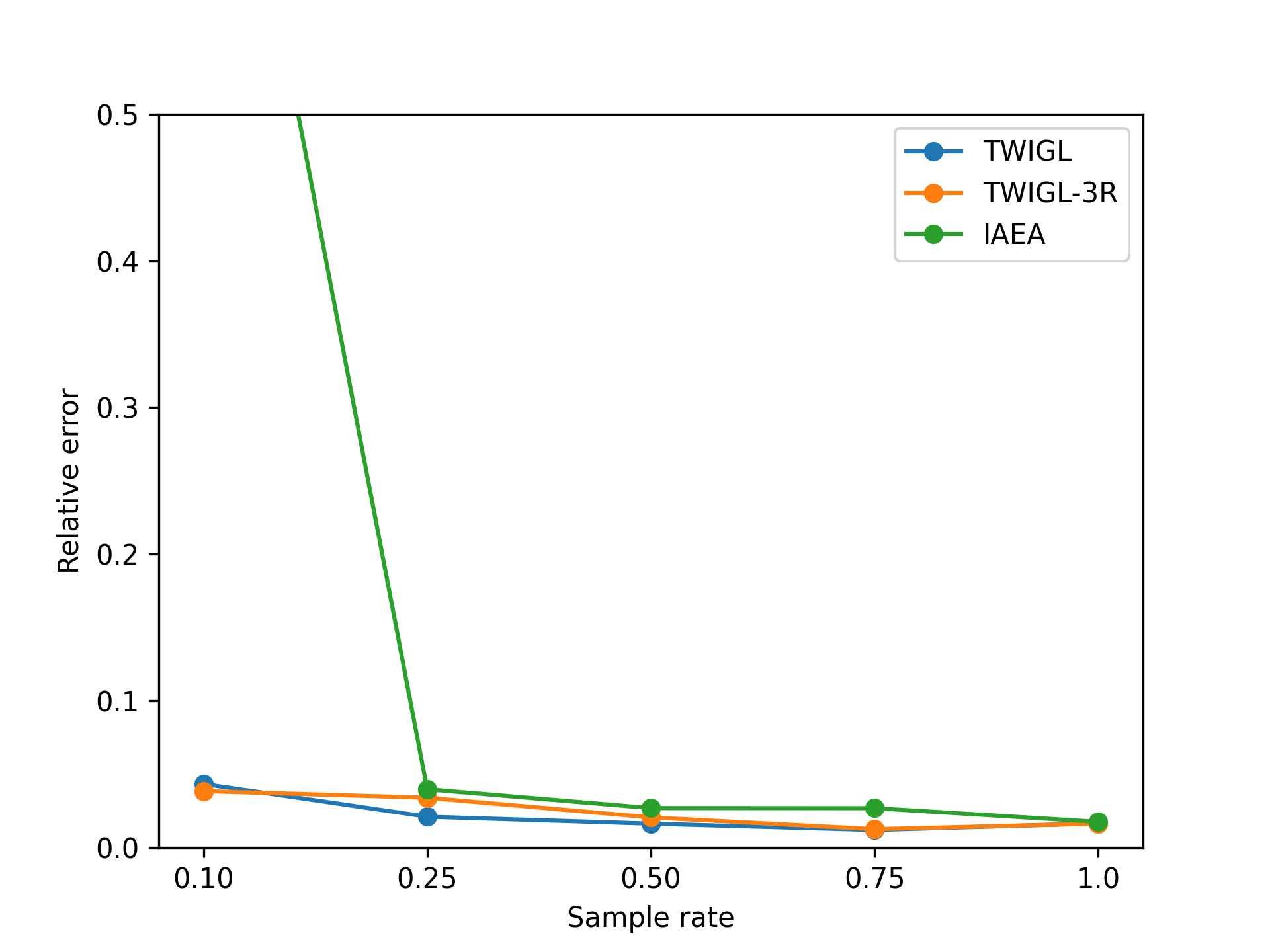

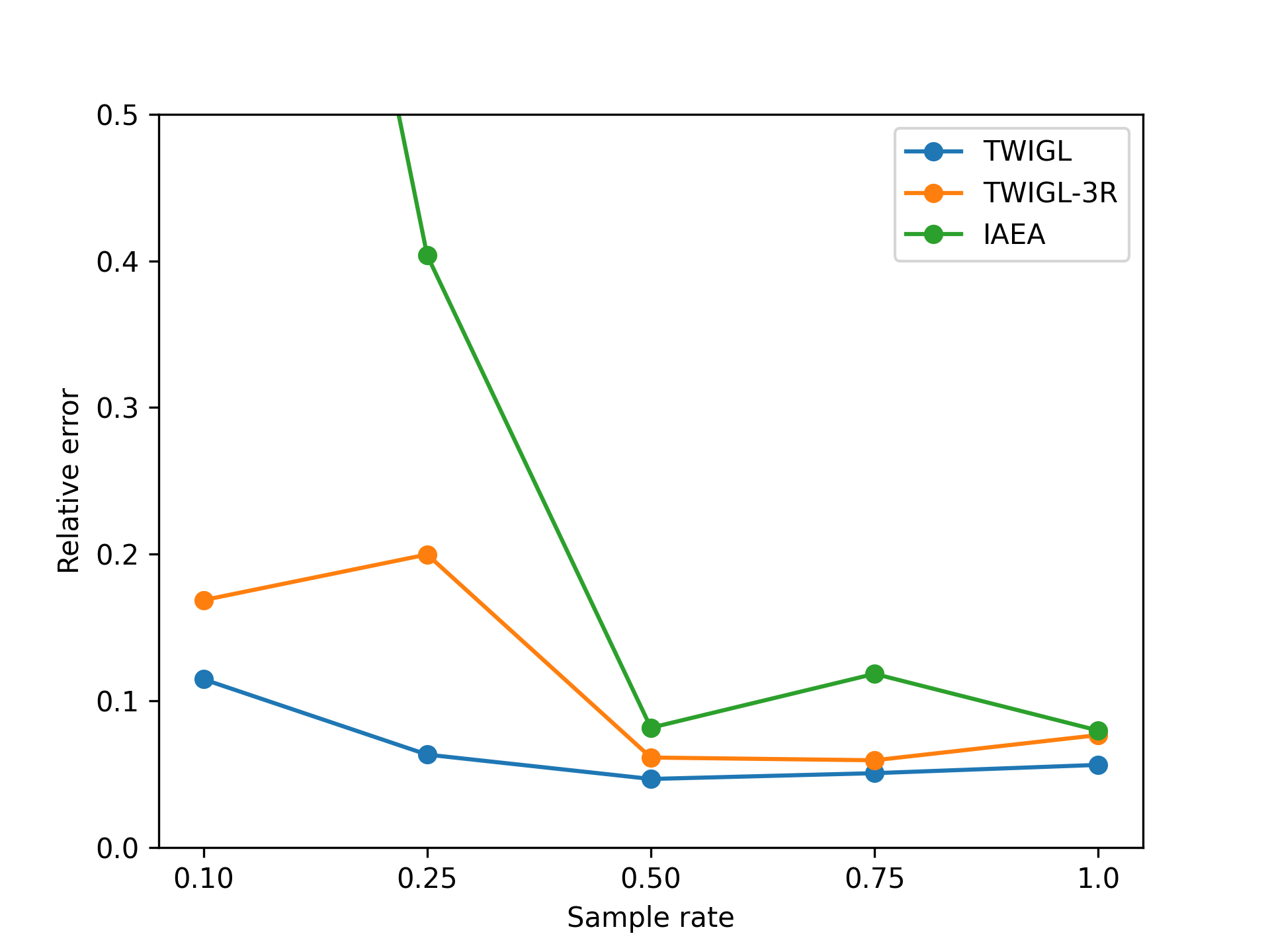

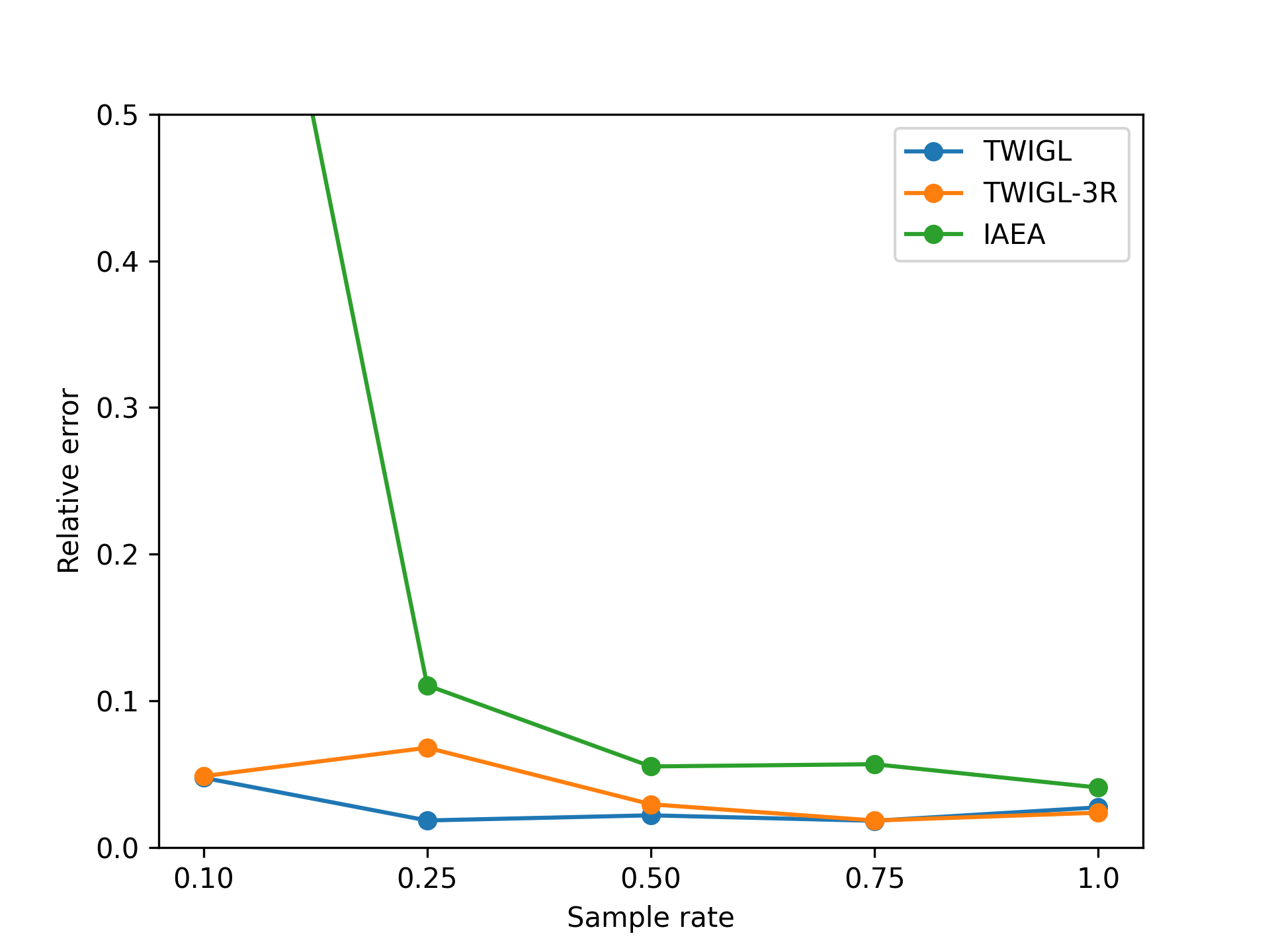

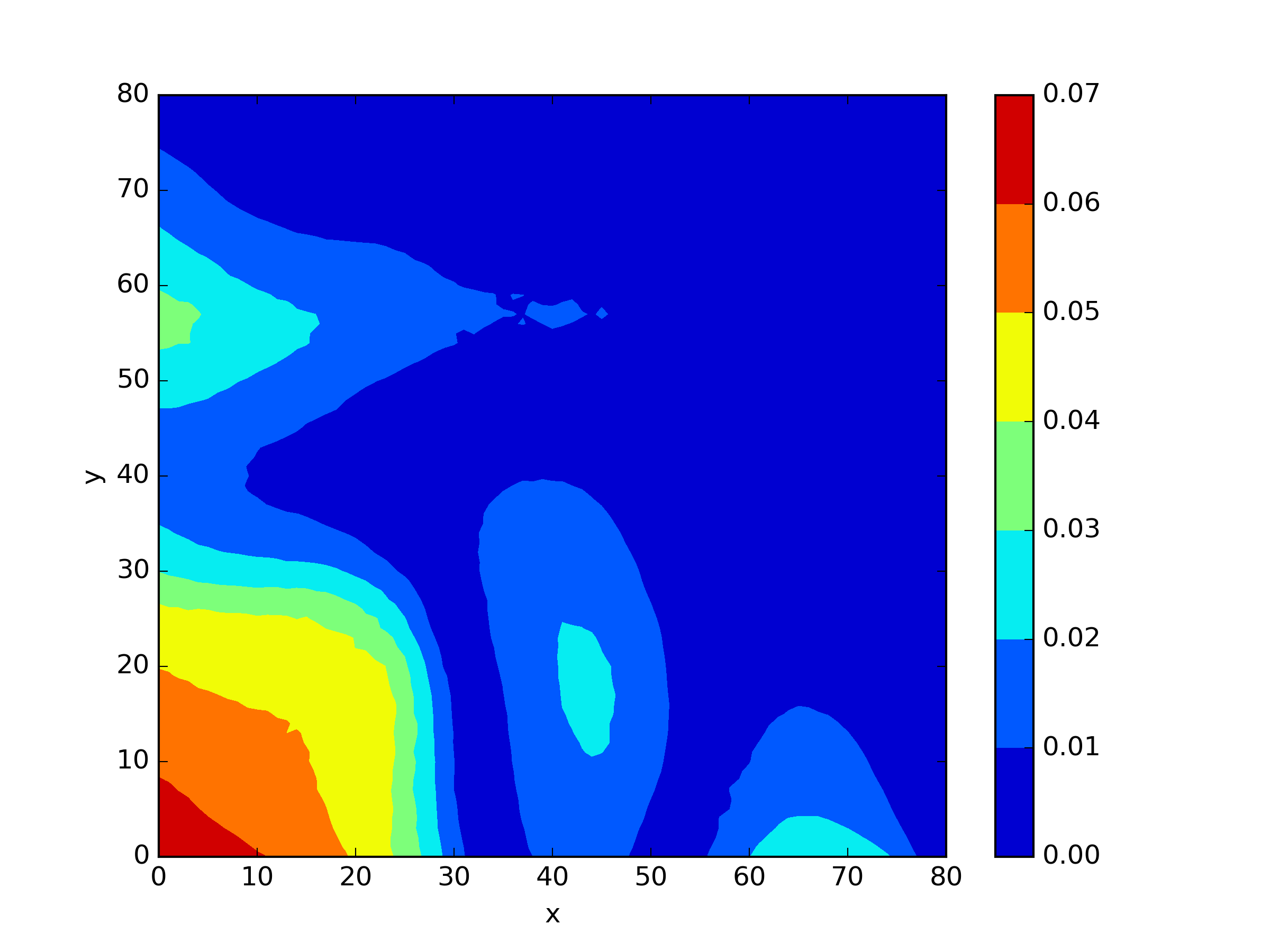

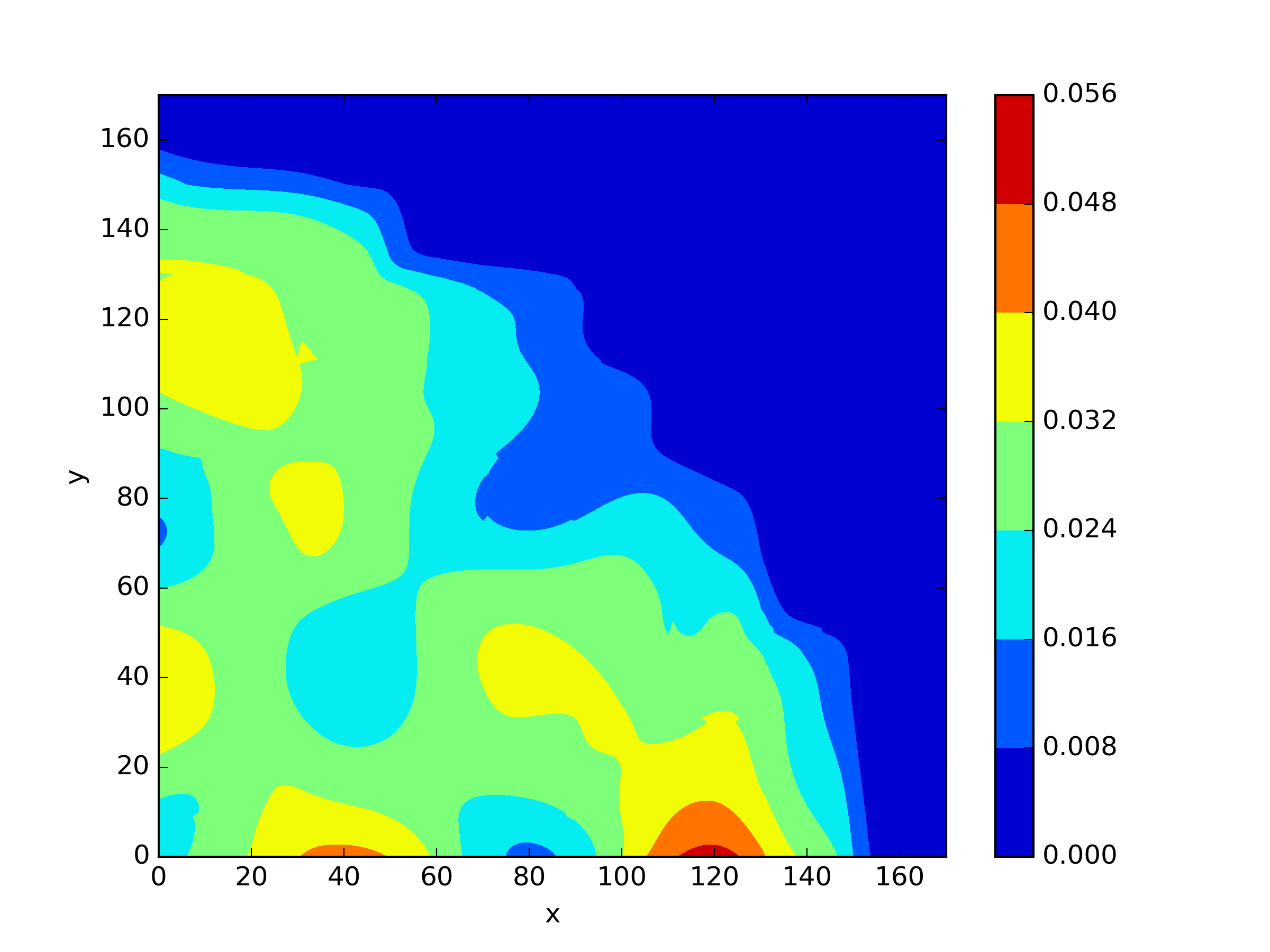

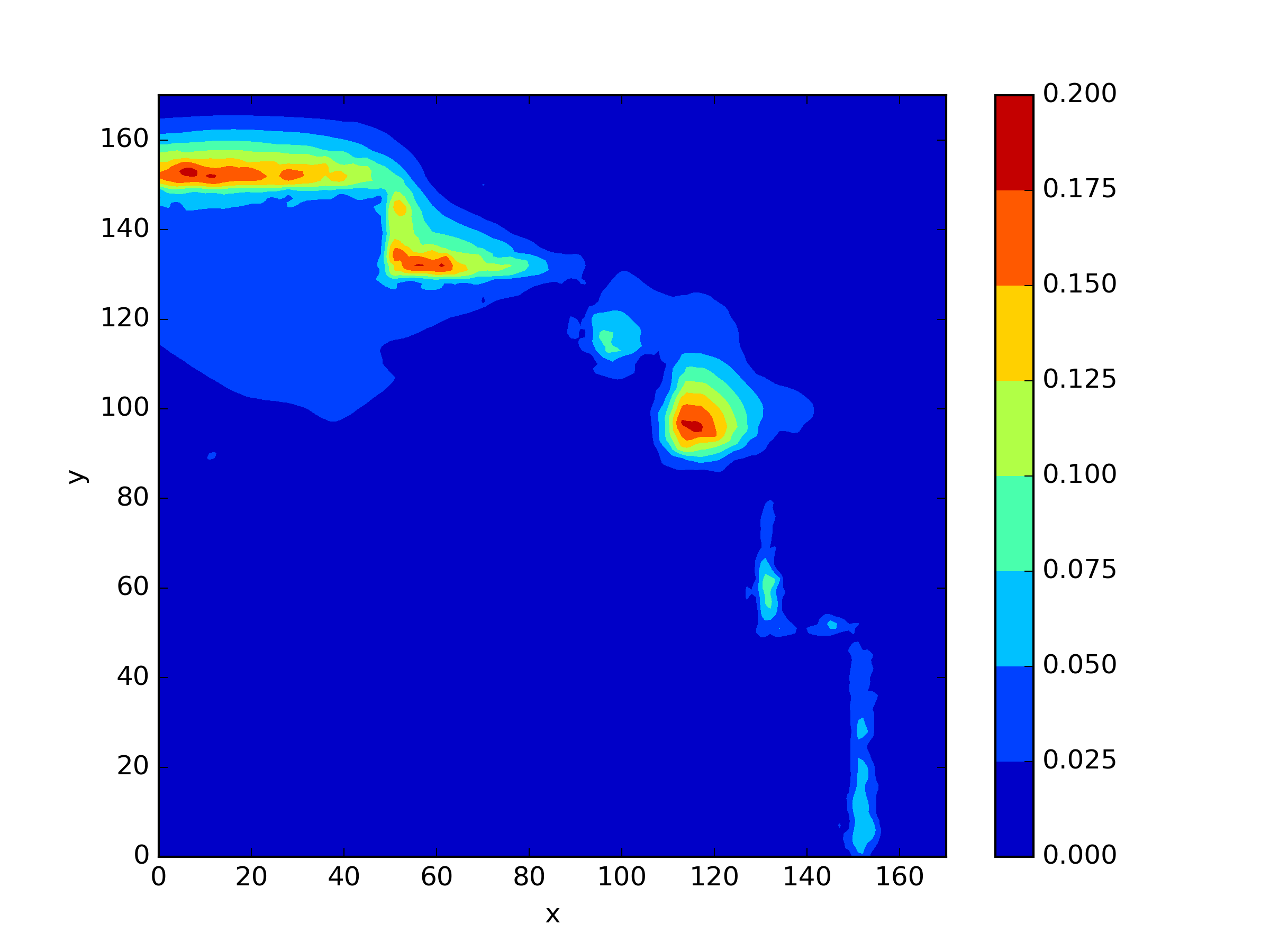

In the two-dimensional problem of TWIGL, we conducted a series of experiments regarding the number of sampling points and the solution accuracy. In these experiments, we initially used 6561 sampling points as the complete set of sampling points. In the mentioned paper, the sampling rates of 0.1, 0.25, 0.5, and 0.75 for residual points refer to using only , and of the residual points as training data, respectively. For example, a sampling rate of 0.5 means that only half of the residual points, which amounts to 3,281 points, are used for residual loss function training. We found that a significant reduction in the number of sampling points, such as a sampling rate of 0.1, leads to a noticeable decrease in accuracy (Figure 8). Reducing the sampling rate will invariably result in slower convergence of the neural network (Figure 9).

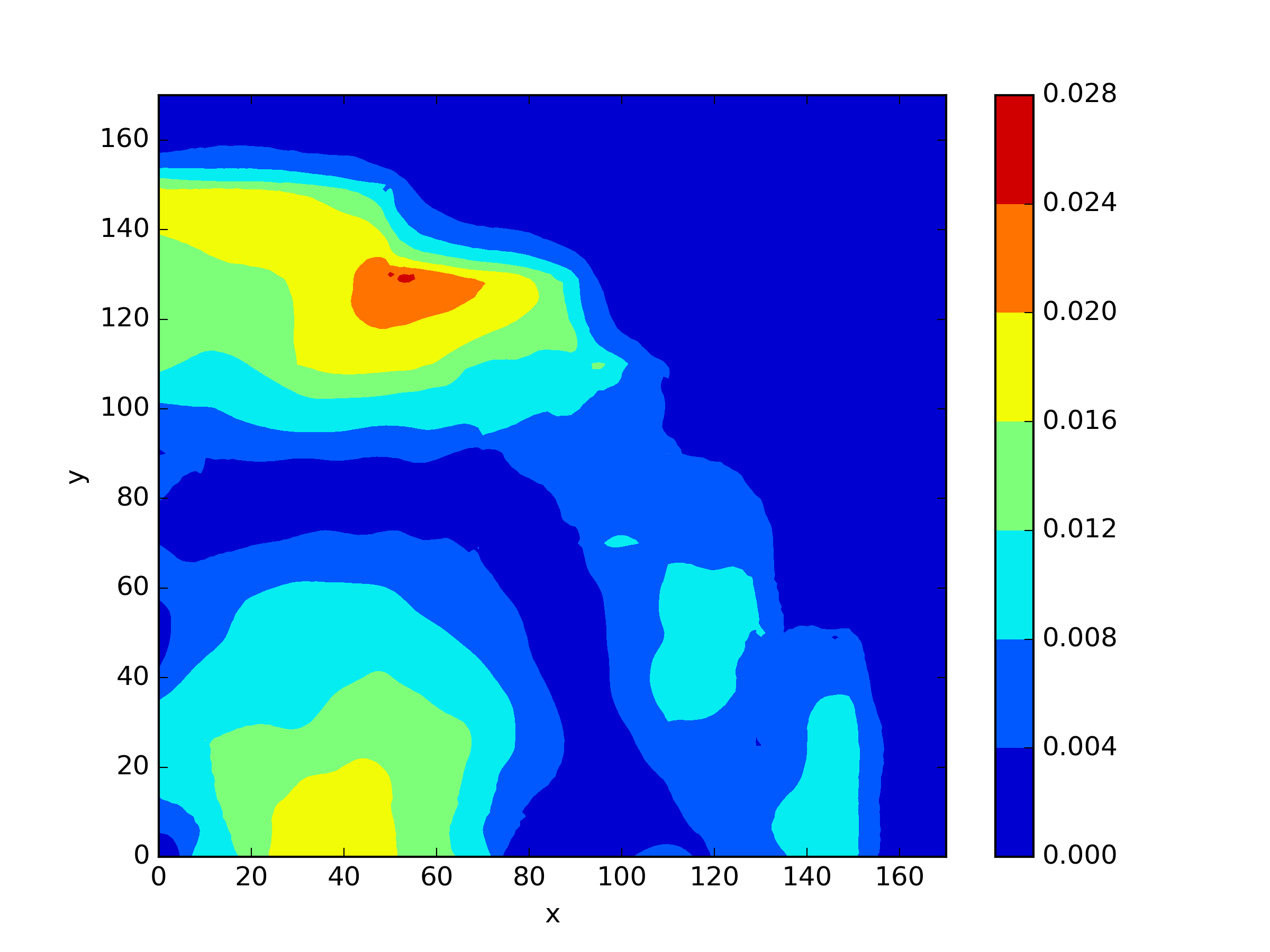

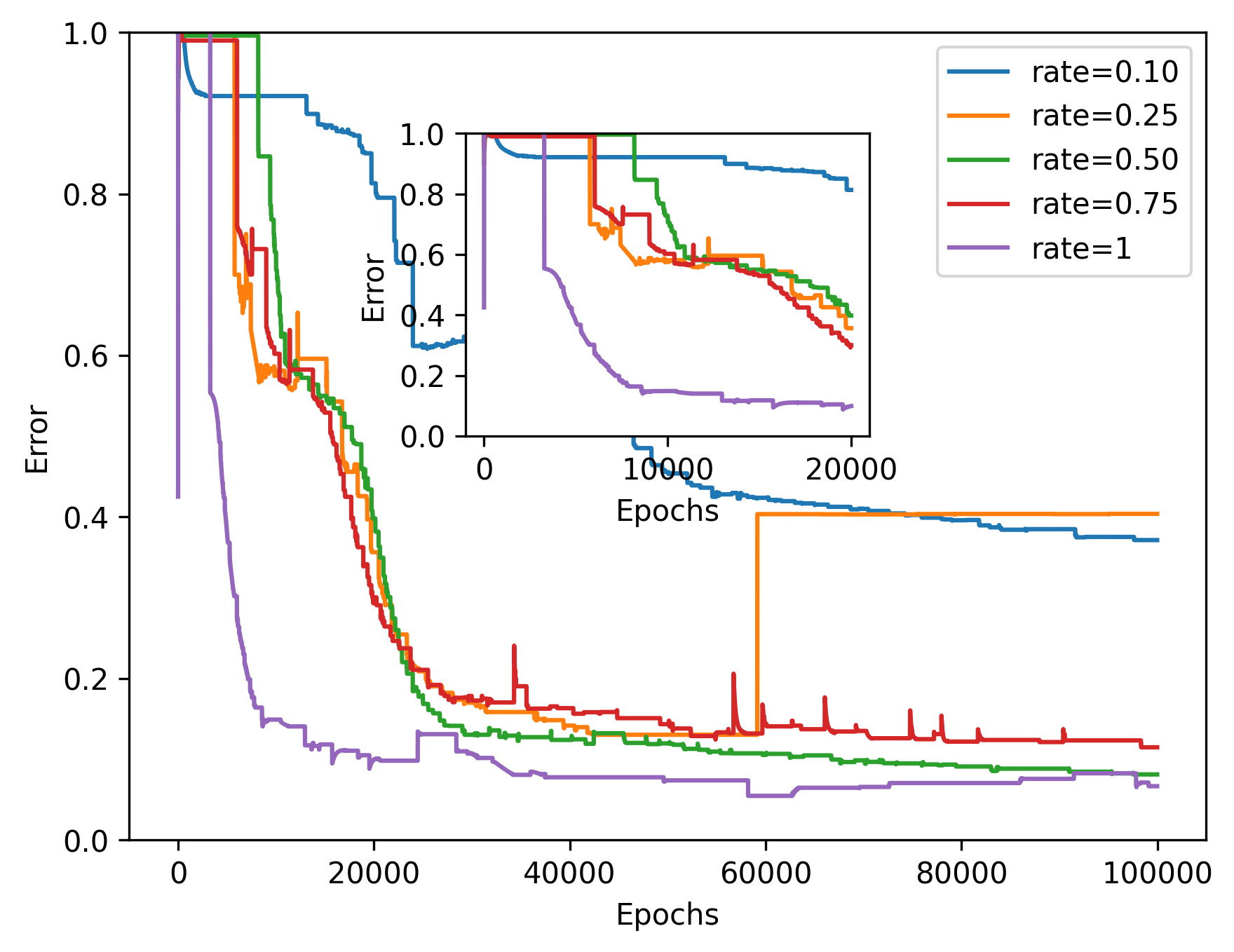

Additionally, we conducted another series of experiments to examine the impact of introducing interface conditions on the numerical experiments. In this experiment, while keeping the number of training points constant, we reduced the number of points corresponding to the interface conditions. As a result, the training points for the equation loss term increased. We tested the numerical examples using models ranging from fully incorporating interface conditions to not including them at all. The results of this experiment indicate that neural network completely fails to solve the problem when interface conditions are not introduced (Figure 10 and 11).

4.3.2 IAEA

The IAEA benchmark problem is a classic benchmark case widely used for the development of reactor physics computational programs, as published by the Argonne National Laboratory. It refers to a three-dimensional neutron diffusion eigenvalue problem. The computational domain of the problem is illustrated in Figure 12, and it also includes a two-dimensional problem, which is a cross-section at . The material parameters are presented in Table 6. The computational domain includes fuel, control rods, reflector, and their corresponding mixed regions, with a total of four regions corresponding to four sets of coefficients. We apply the Neumann loss function (26b) at the boundaries and , and impose Robin boundary conditions (26c) at the external boundaries where . The critical eigenvalue of this problem is , which is solved by FreeFEM++ with intervals and .

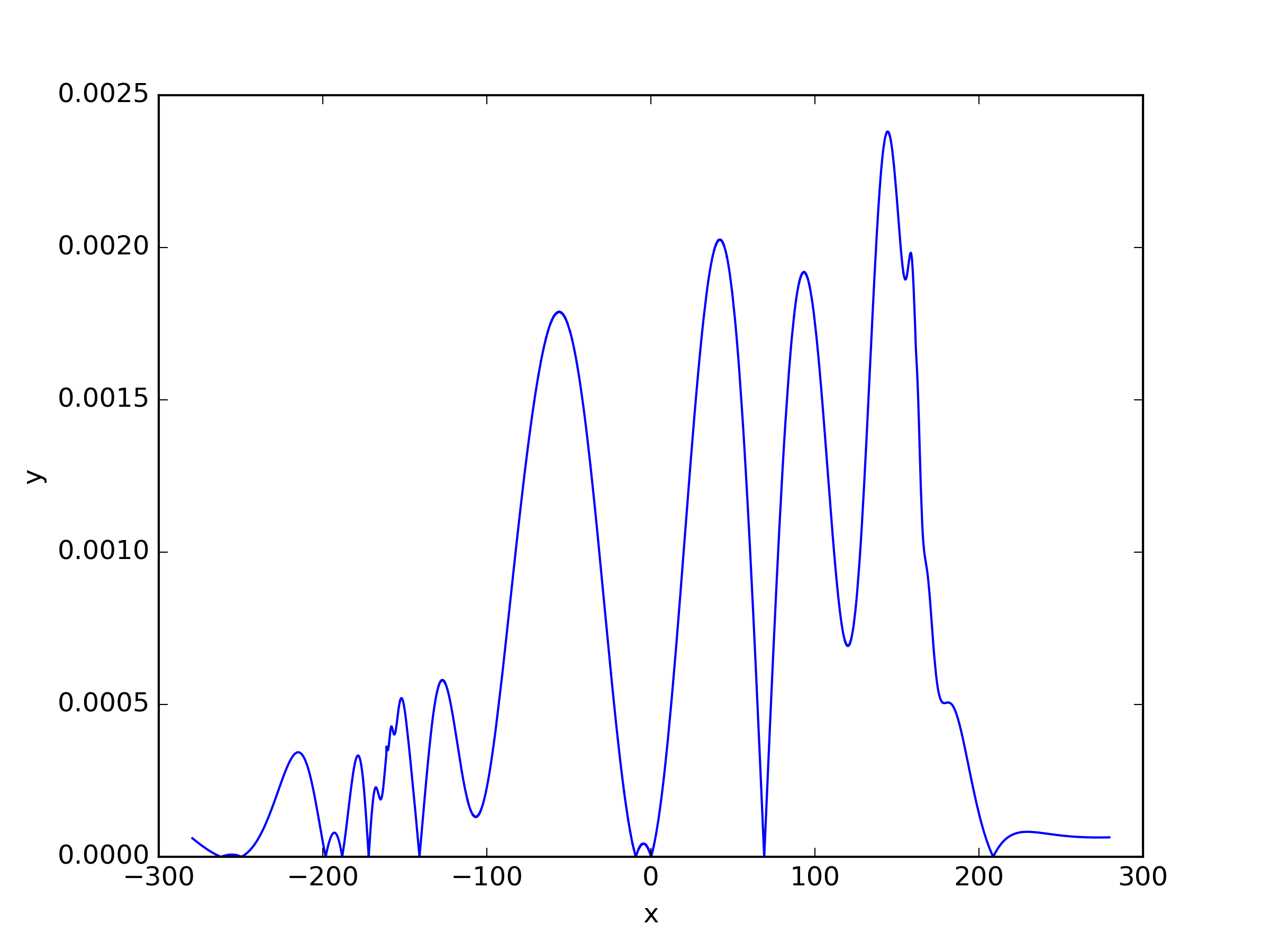

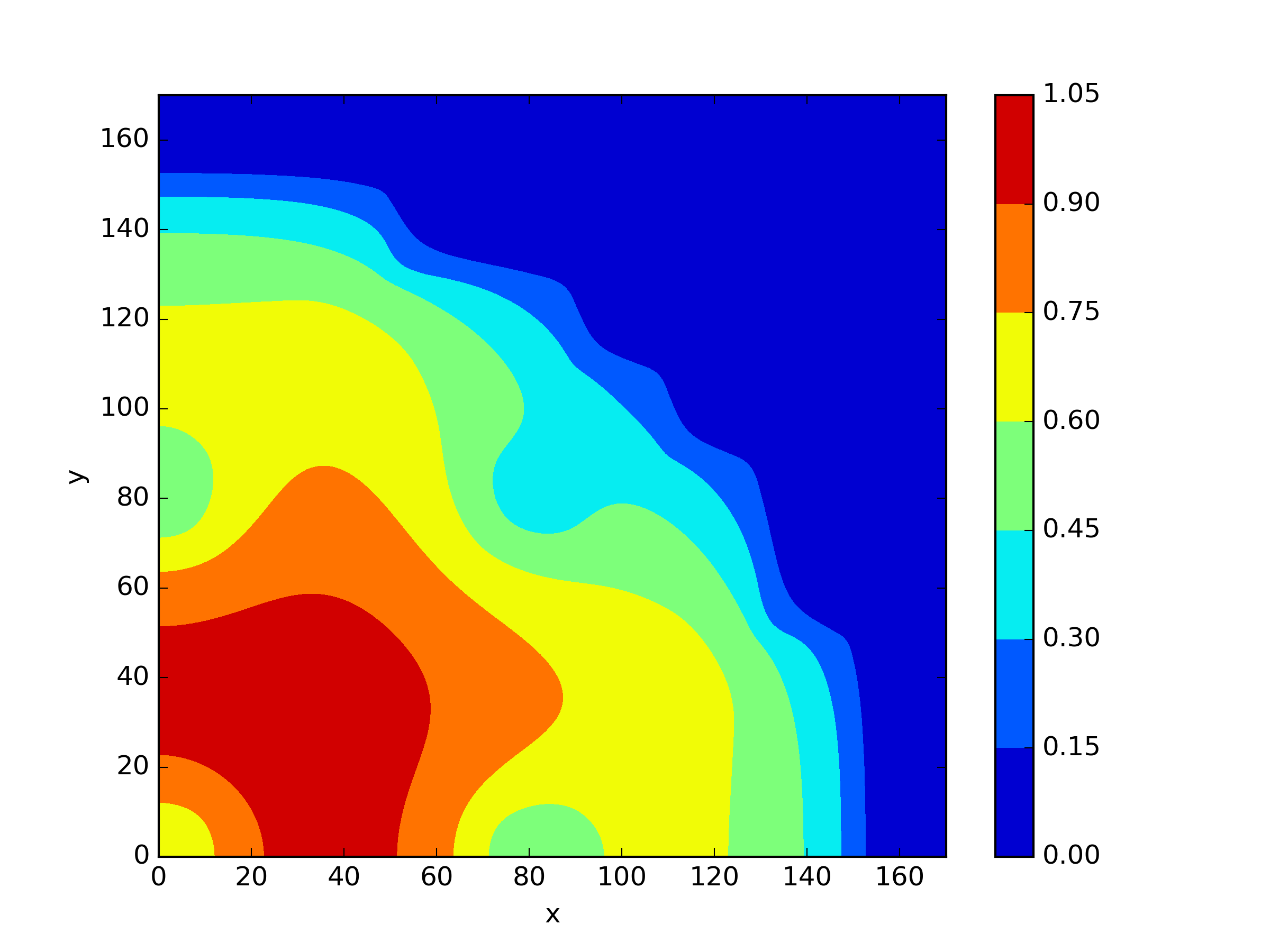

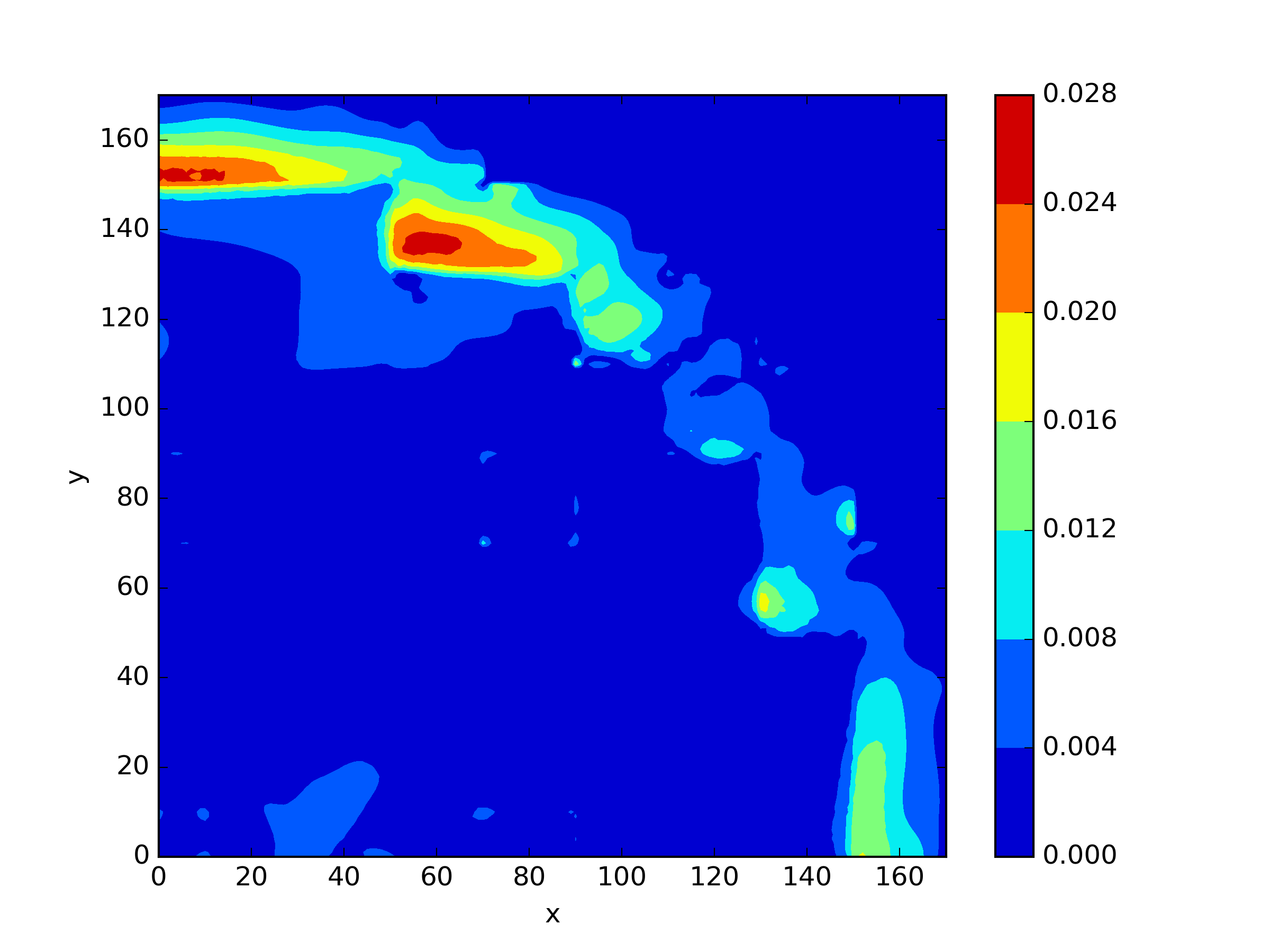

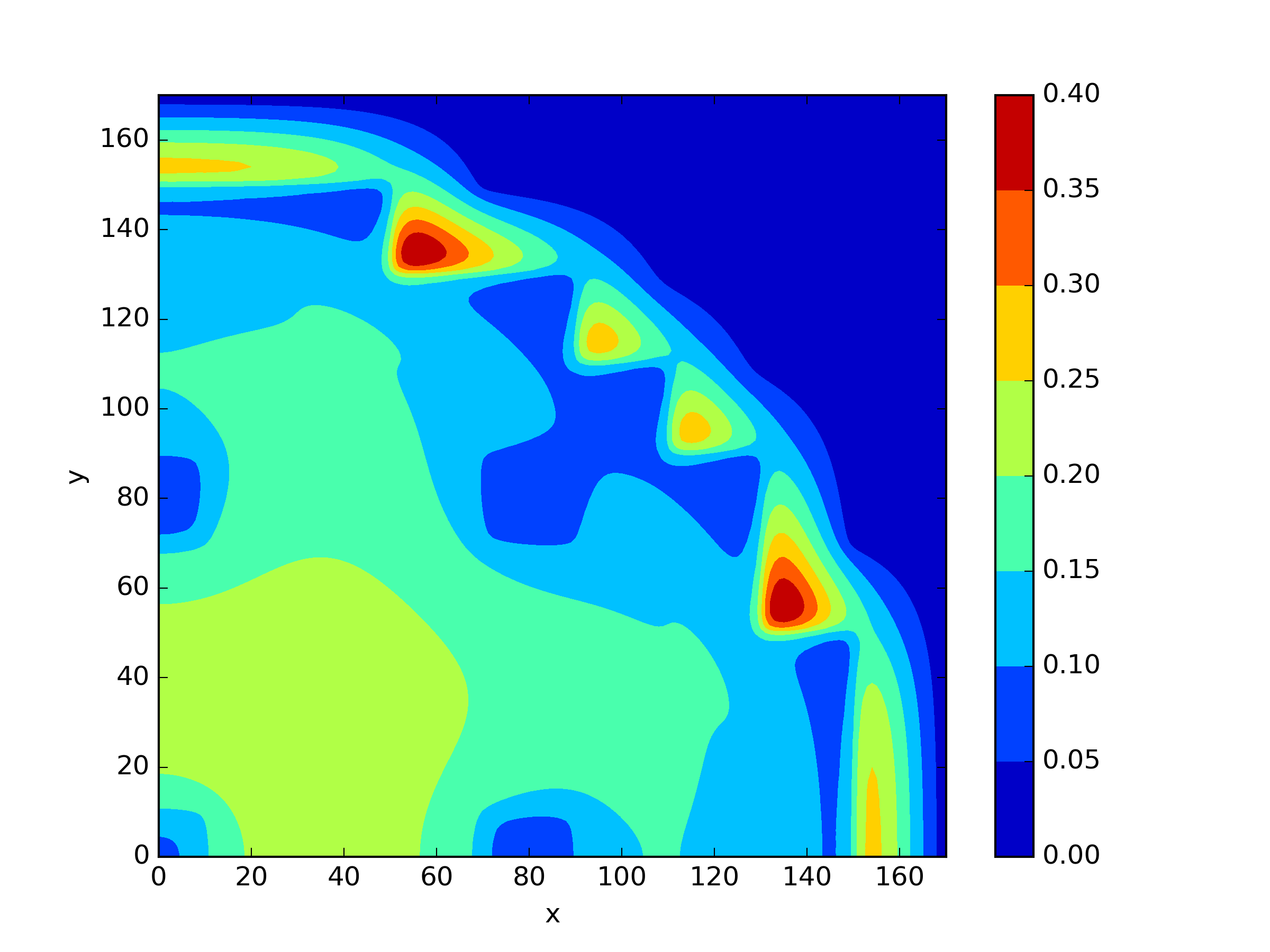

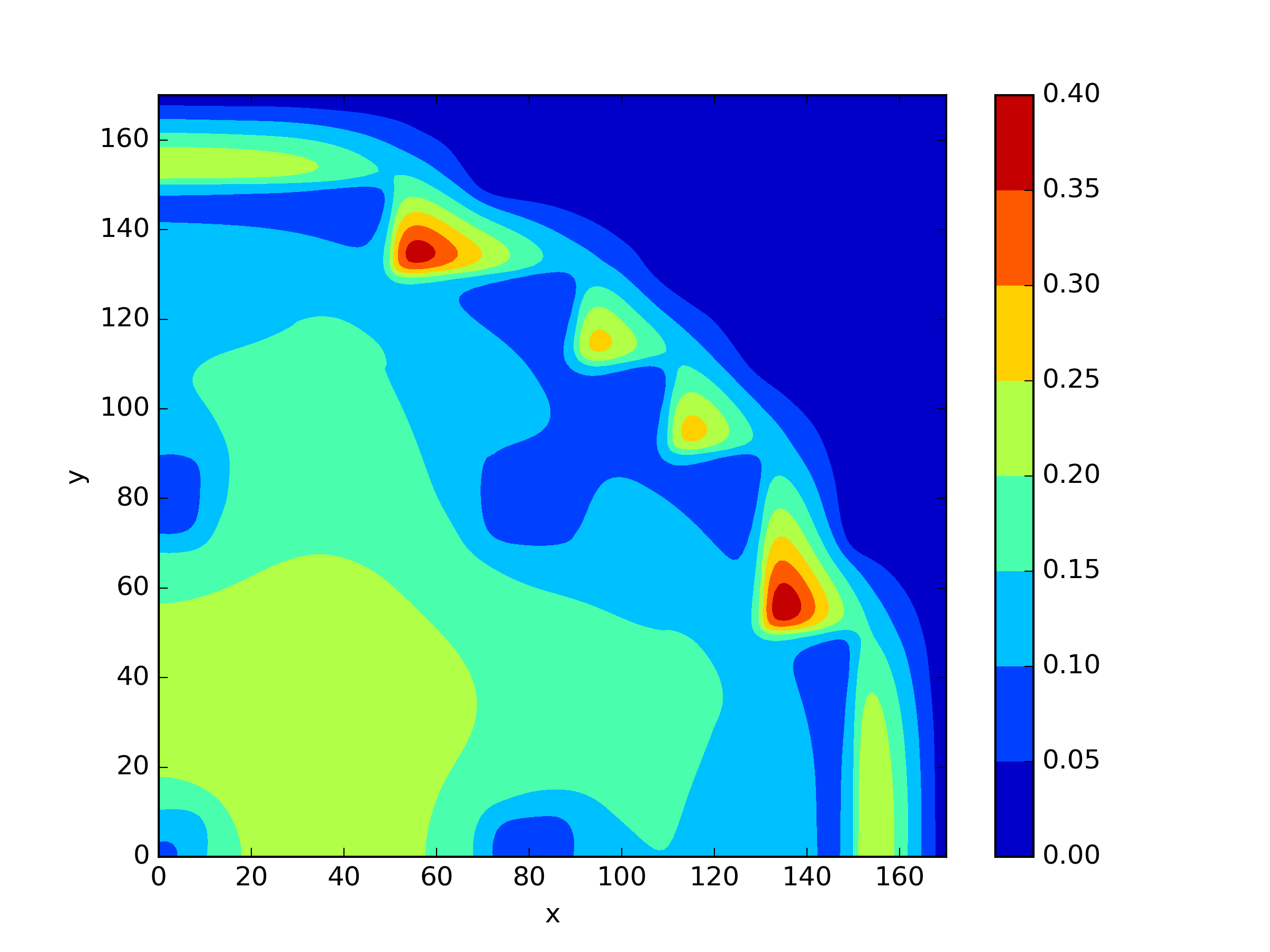

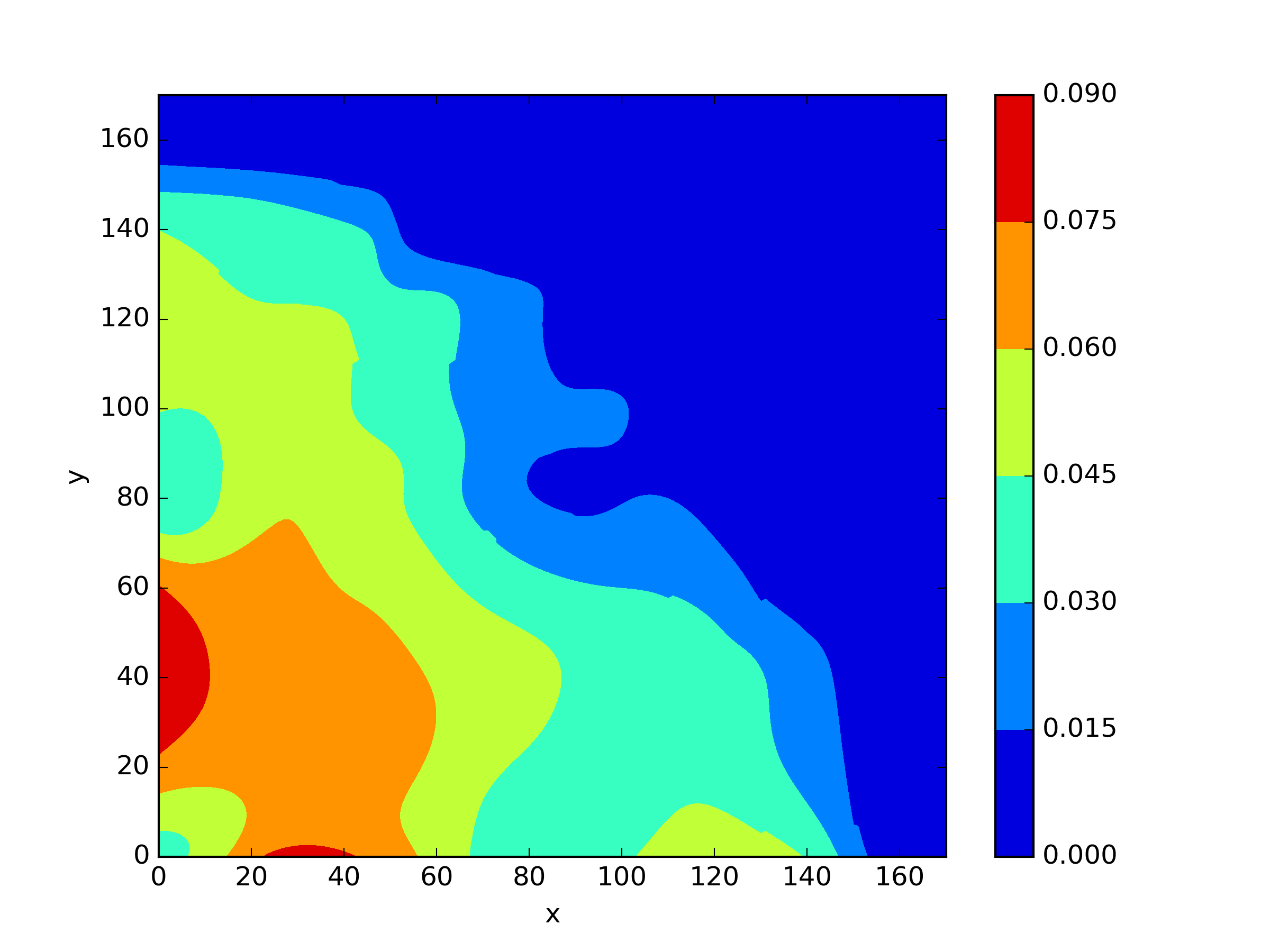

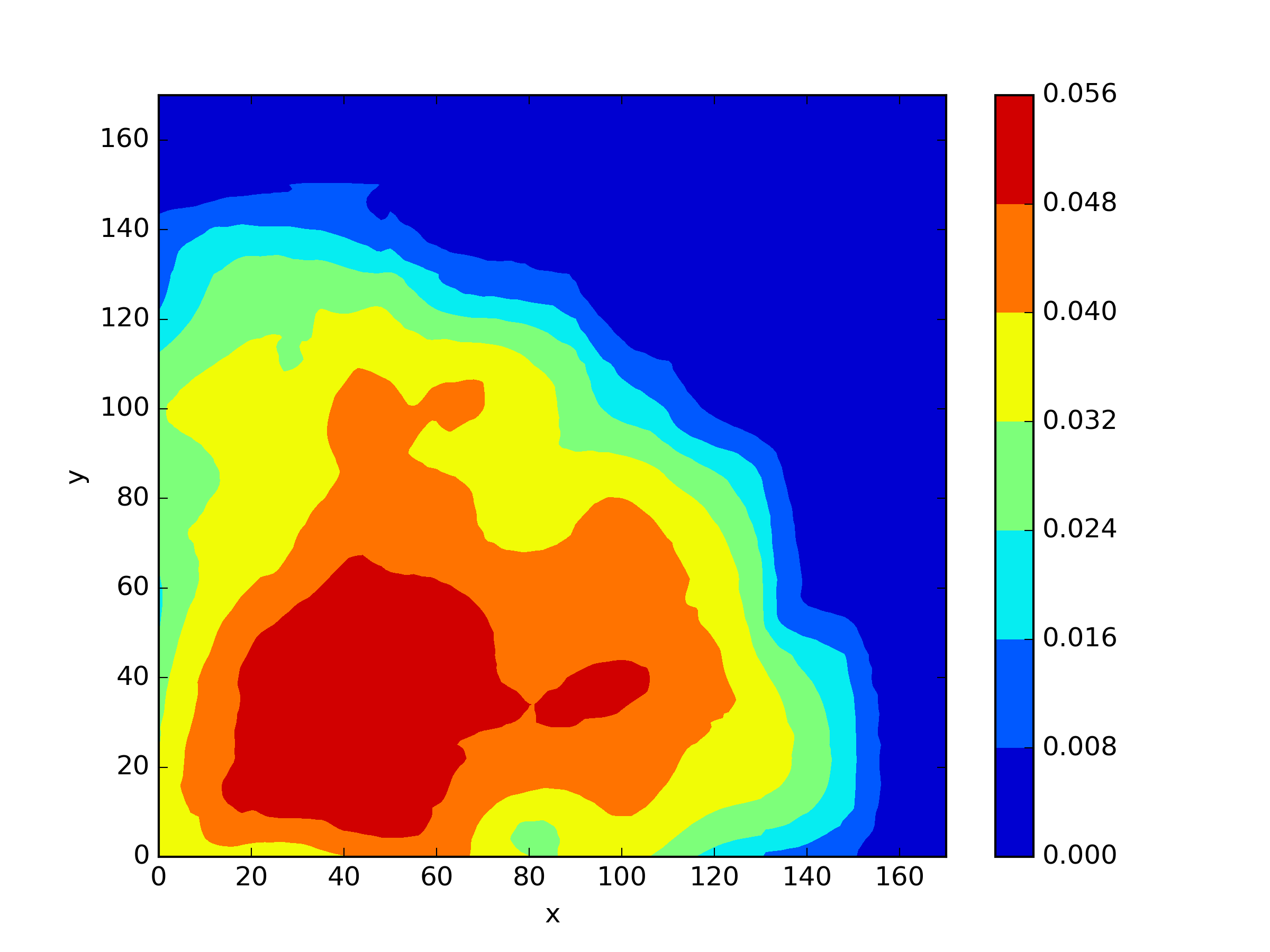

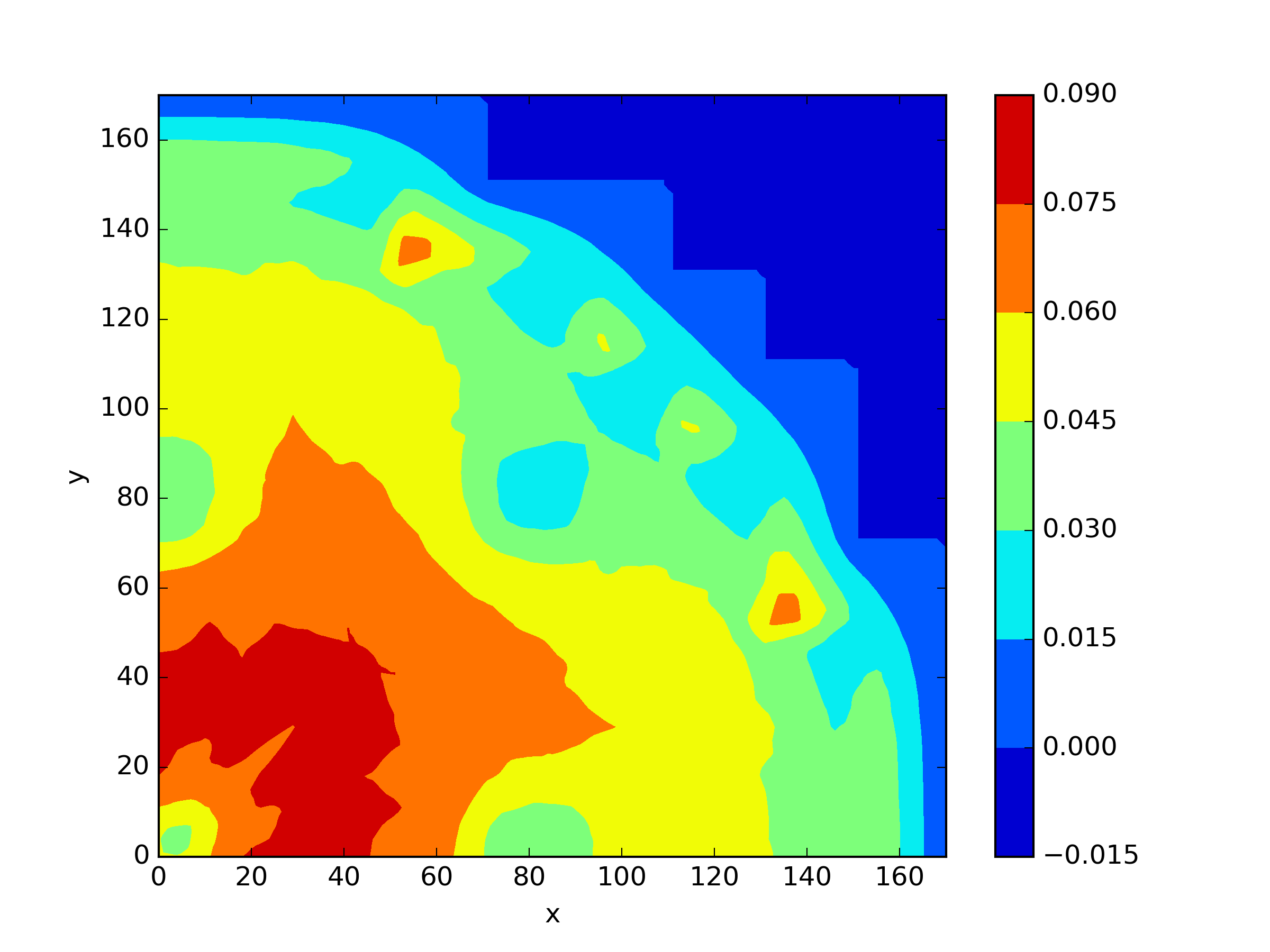

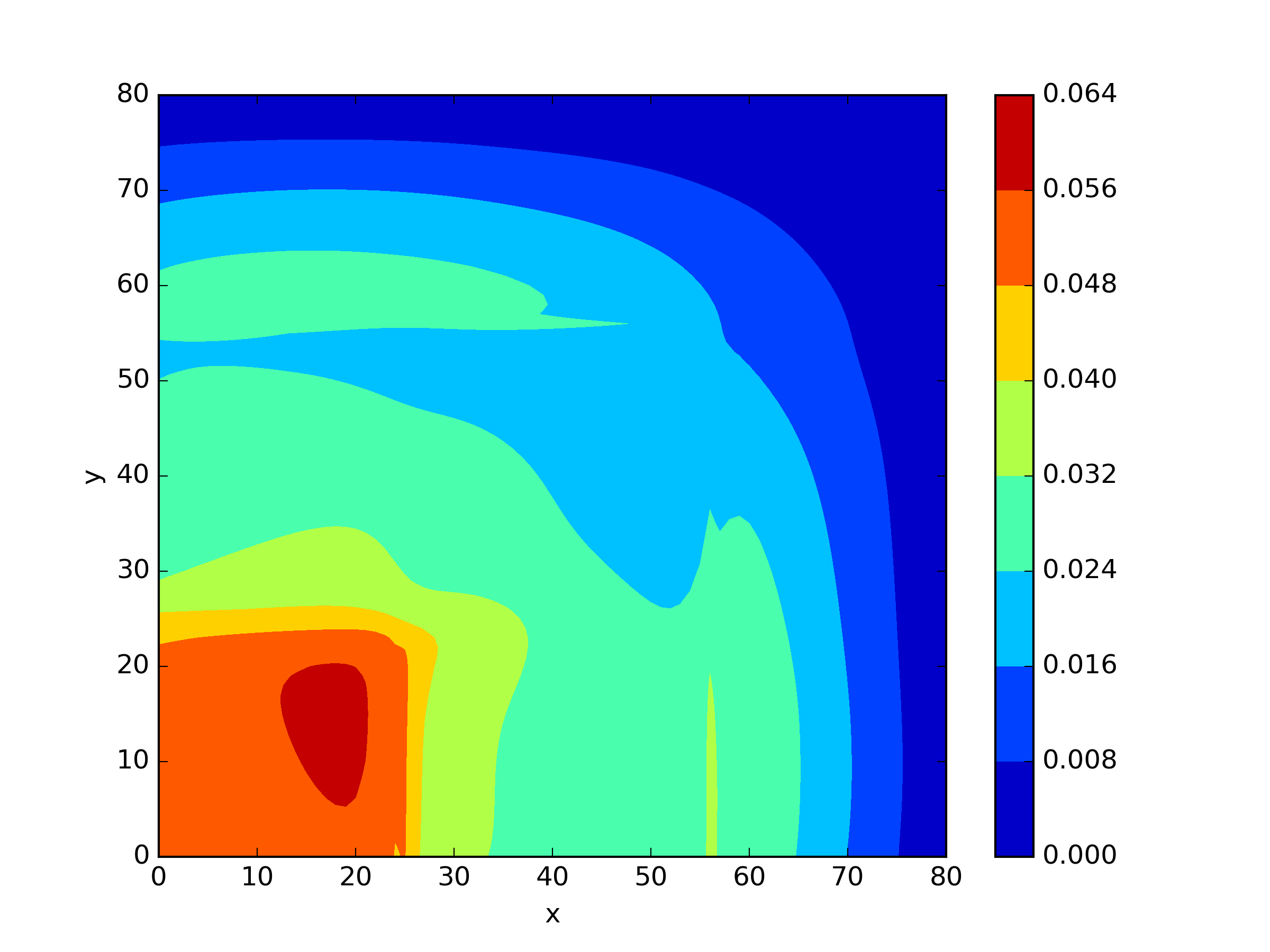

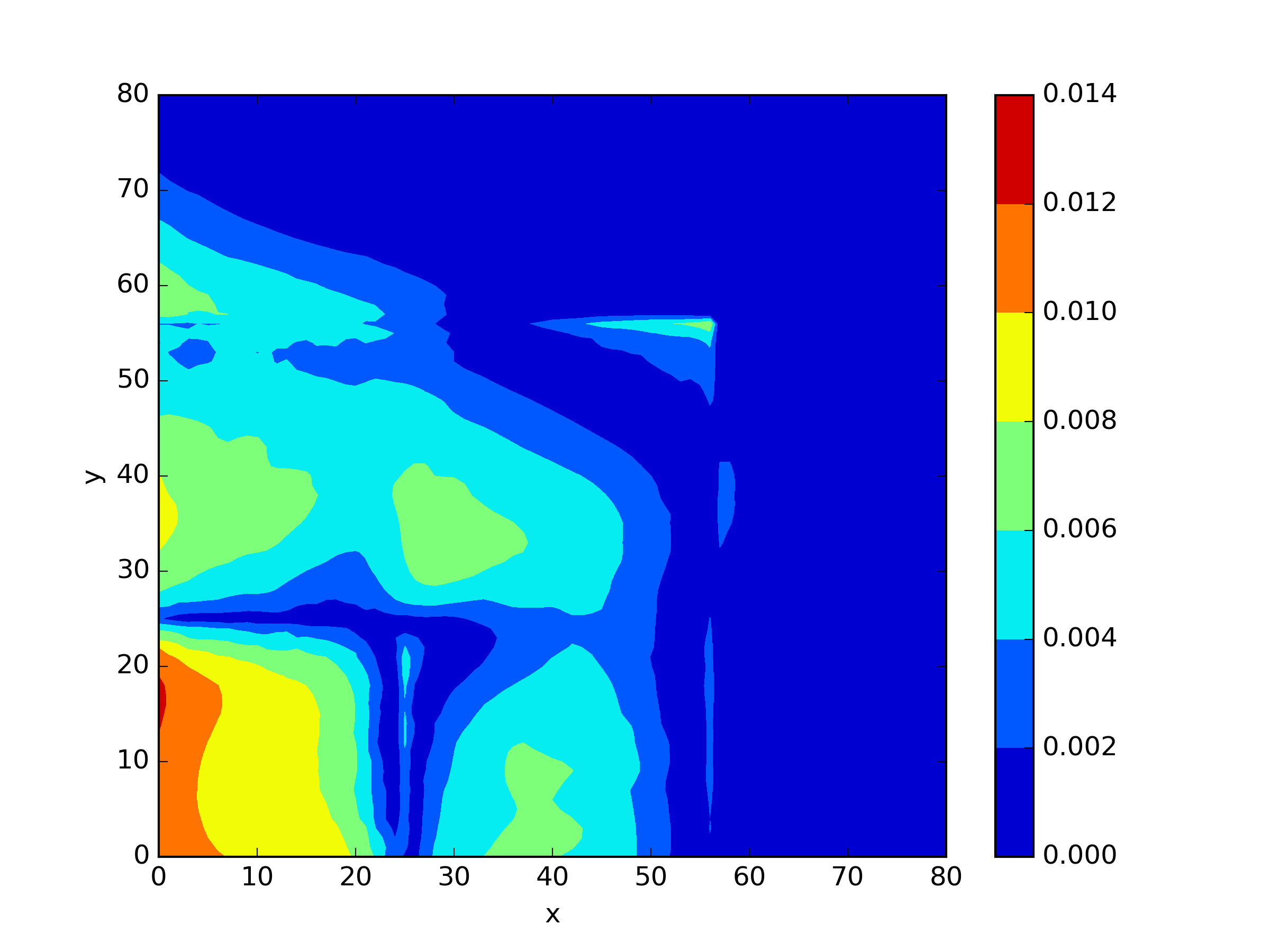

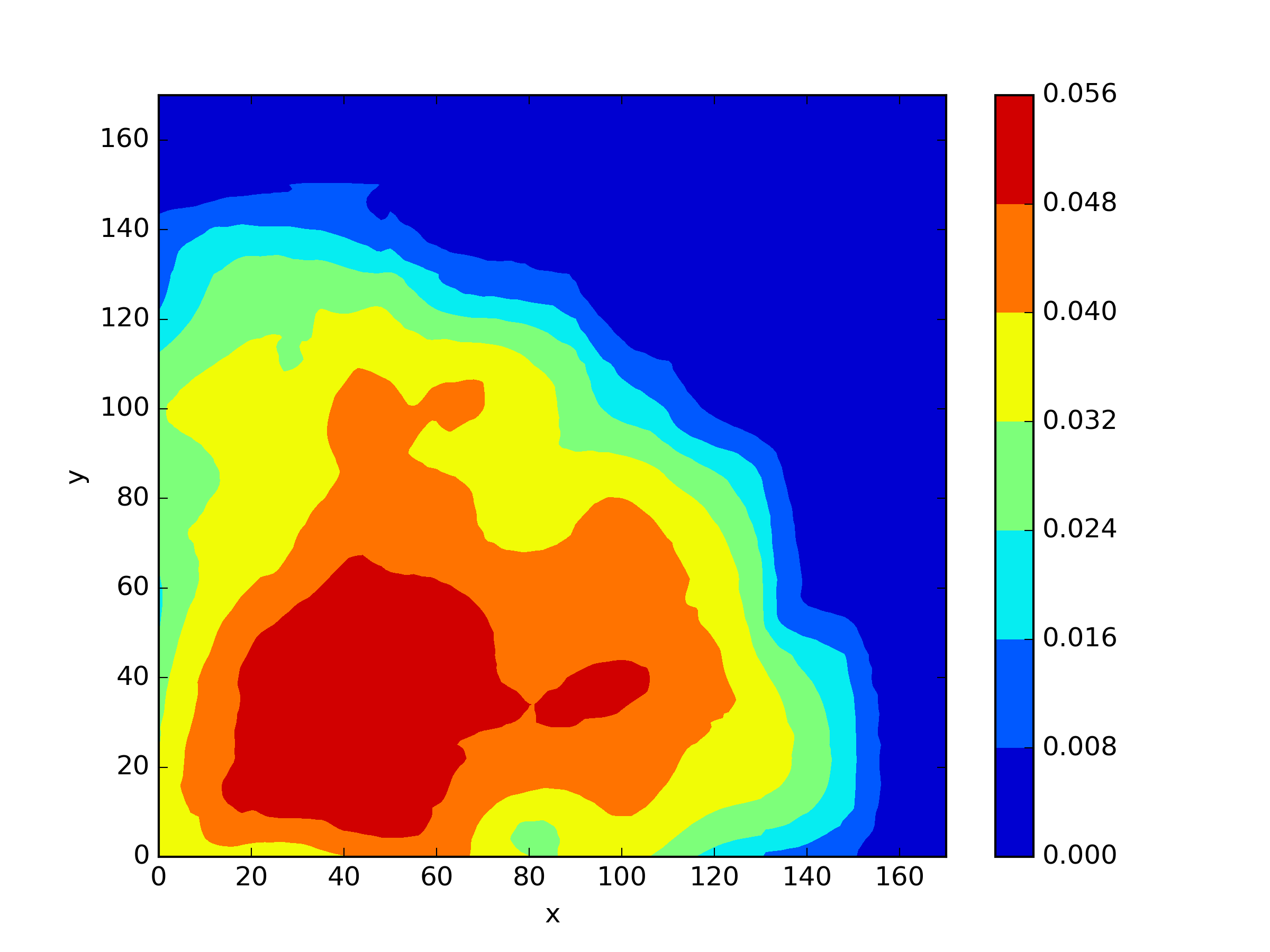

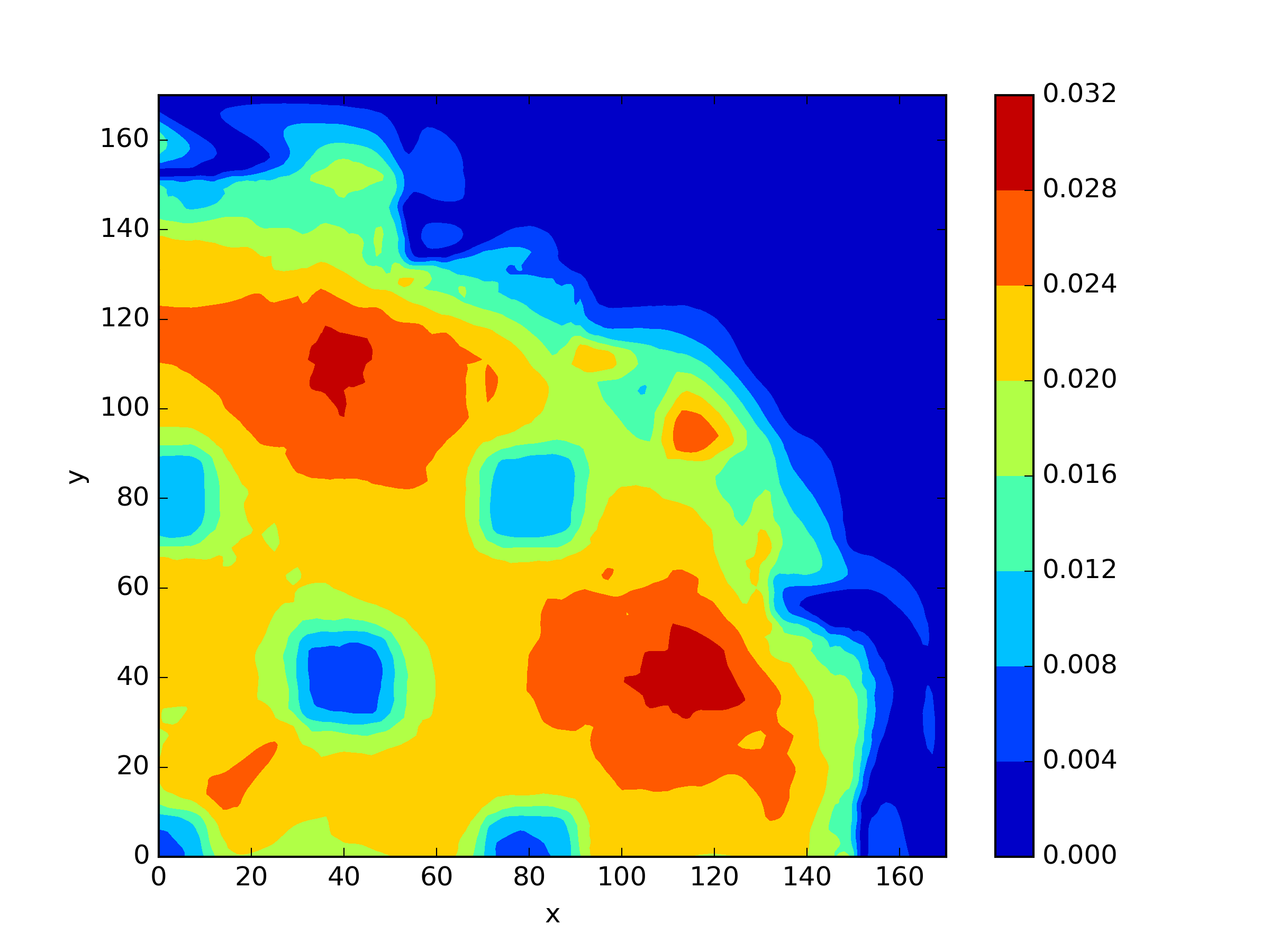

Indeed, the IAEA problem is larger in scale and involves more materials compared to the TWIGL problem, which increases the difficulty of solving it using neural networks. We chose 4 residual blocks, with 40 neurons per layer in each residual block. The Adam optimizer with a learning rate of was used for epochs optimization during the training process. For the two-dimensional IAEA problem, we have selected 23,738 residual points and 703 interface points. We applied the three residual loss function mentioned earlier to this problem as well (Table 7 and Figure 13). In this scenario, the drawback of is amplified, as evident from the table. The errors of are significantly larger because discards the information of during the iteration process, resulting in a decrease in the solution accuracy, while and continue to maintain a good level of accuracy. Furthermore, the results with shift show slightly better performance.

| Region-1 | 1.5 | 0.4 | 0.01 | 0.085 | 0.02 | 0.0 | 0.135 |

| Region-2 | 1.5 | 0.4 | 0.01 | 0.08 | 0.02 | 0.0 | 0.135 |

| Region-3 | 1.5 | 0.4 | 0.01 | 0.13 | 0.02 | 0.0 | 0.135 |

| Region-4 | 2.0 | 0.3 | 0.0 | 0.01 | 0.04 | 0.0 | 0.0 |

| Region-5 | 2.0 | 0.3 | 0.0 | 0.055 | 0.04 | 0.0 | 0.0 |

| Shift | ||||||

|---|---|---|---|---|---|---|

| 0 | 3.5993e-04 | 5.8825e-02 | 3.4499e-02 | 1.5972e-01 | 7.1278e-02 | |

| 0 | 7.8179e-04 | 2.9658e-02 | 2.2165e-02 | 8.3564e-02 | 5.0352e-02 | |

| 1 | 5.1034e-04 | 4.0134e-02 | 1.7337e-02 | 7.9622e-02 | 4.0727e-02 | |

| 1 | 6.9851e-04 | 2.6378e-02 | 1.5618e-02 | 6.5194e-02 | 3.5830e-02 |

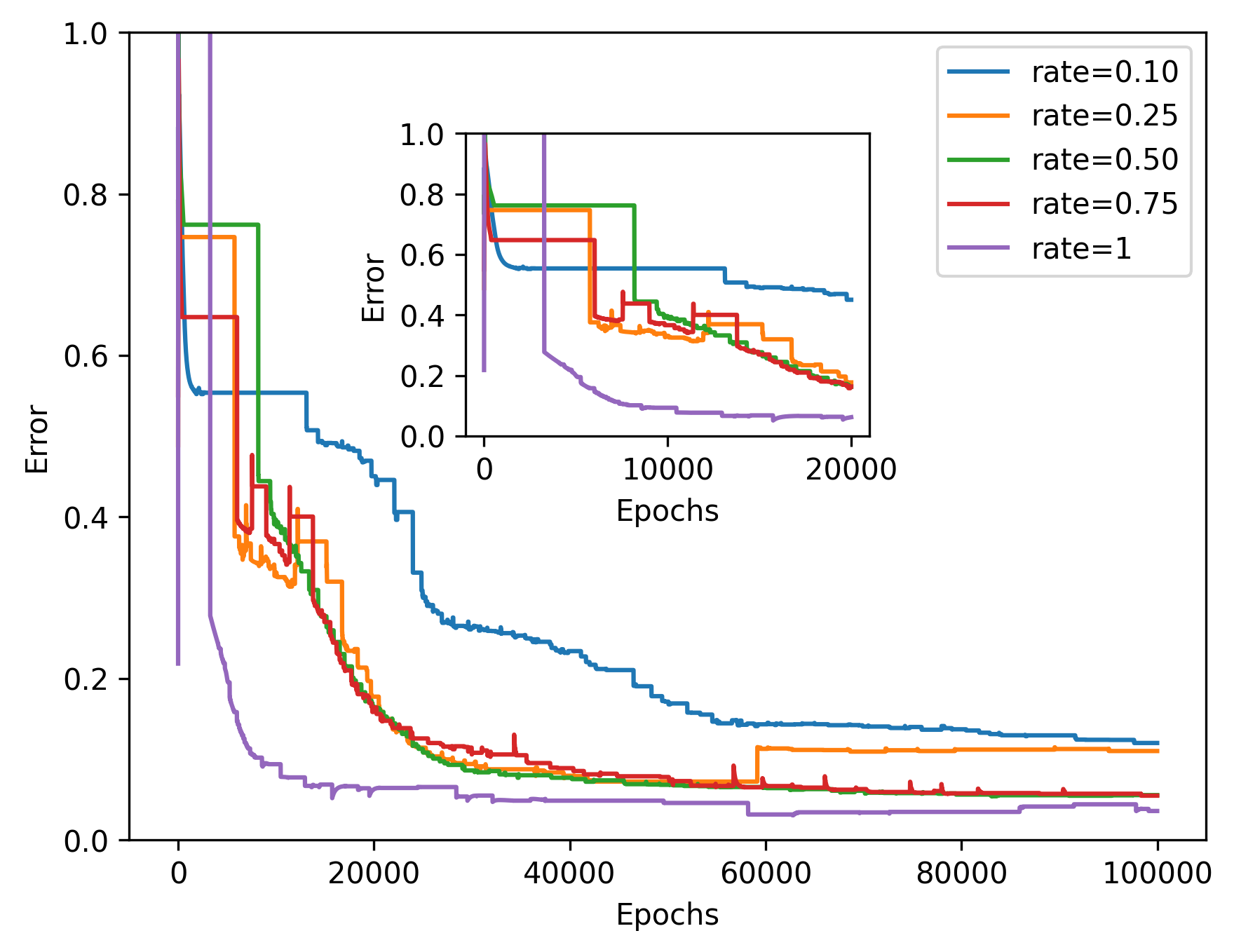

Similarly, we investigated the impact of the number of sampling points on the solution accuracy for the two-dimensional problem of IAEA. We conducted tests using five different levels of training point numbers. The experimental results (Figure 14 and Figure 15), in the context of this more complex problem, clearly demonstrate the importance of the number of sampling points for problem solving. Having a greater number of sampling points yields higher accuracy in the results.

To investigate the effectiveness of applying interface conditions to the IAEA problem, we conducted an experiment related to interface conditions. Based on the experimental results (Figure 10 and Figure 11), it is evident that when interface conditions are not applied at all, the problem remains unsolvable. Moreover, for more complex problems, the implementation of interface conditions becomes even more crucial.

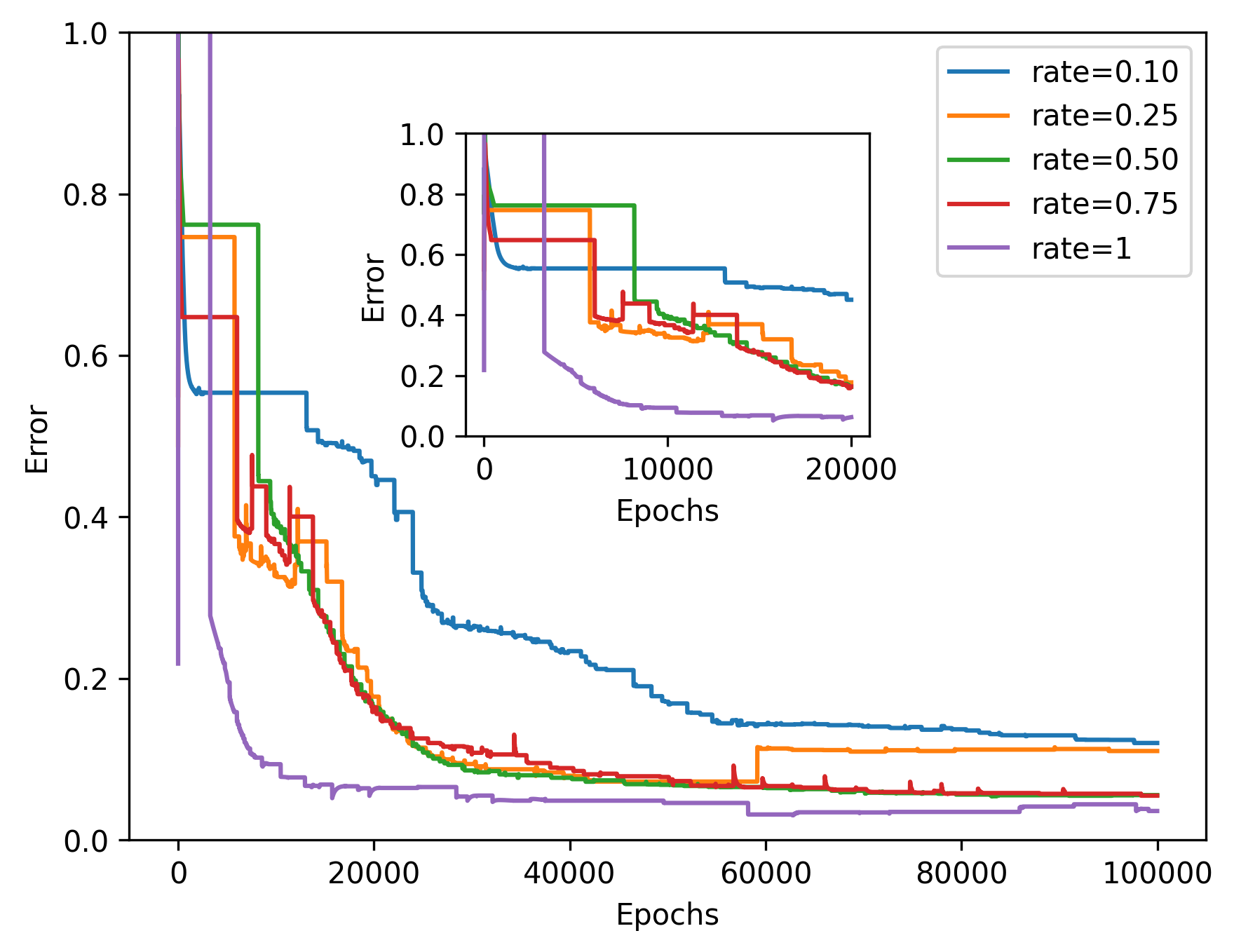

4.3.3 3-Region TWIGL

In this section, we also considered a slightly more complex version of the TWIGL problem. We extended the computational domain from two materials to three materials, resulting in more intricate interface conditions (Figure 16). The boundary conditions for this case are the same as the previous TWIGL problem. Subsequently, we performed neural network computations for this problem at different sampling rates, comparing them with the performance of the best-performing algorithm for TWIGL, as well as two other two-dimensional problems. The set of training points is consistent with the TWIGL problem. The results are illustrated in Figure 17.

| Region | |||||||

|---|---|---|---|---|---|---|---|

| Seed* | 1.35 | 0.45 | 0.009 | 0.1 | 0.01 | 0.005 | 0.13 |

From the Figure 17, it is evident that as we progress from TWIGL to 3-R TWIGL and then to the IAEA problem, the average error increases. This implies that the size and complexity of the problem significantly affect the accuracy of our neural network solution. However, it is worth noting that regardless of the case, there are always instances where the results satisfy a threshold of less than 0.08 or even 0.05, which are the engineering acceptance criteria.

4.4 3-D Problem

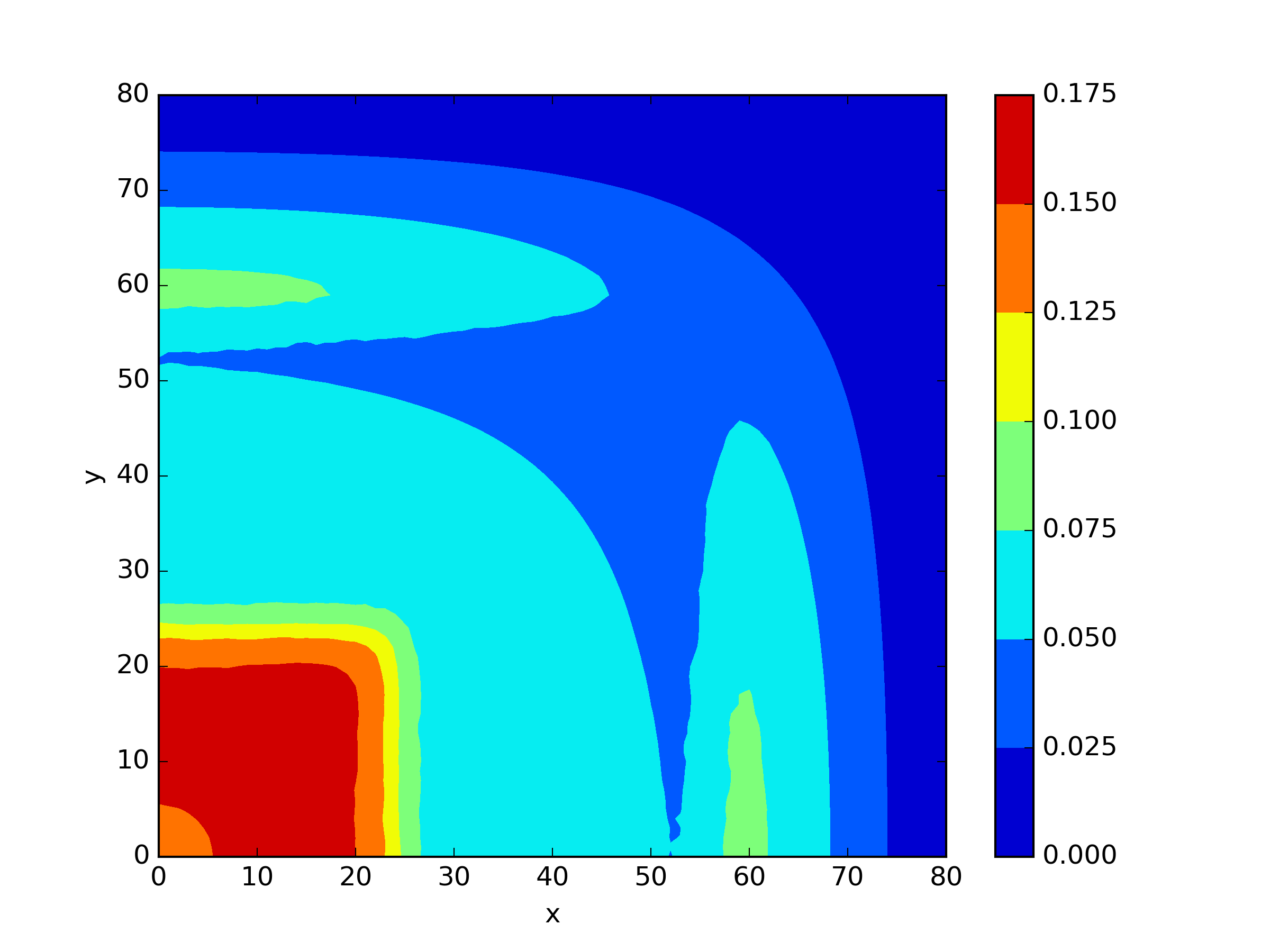

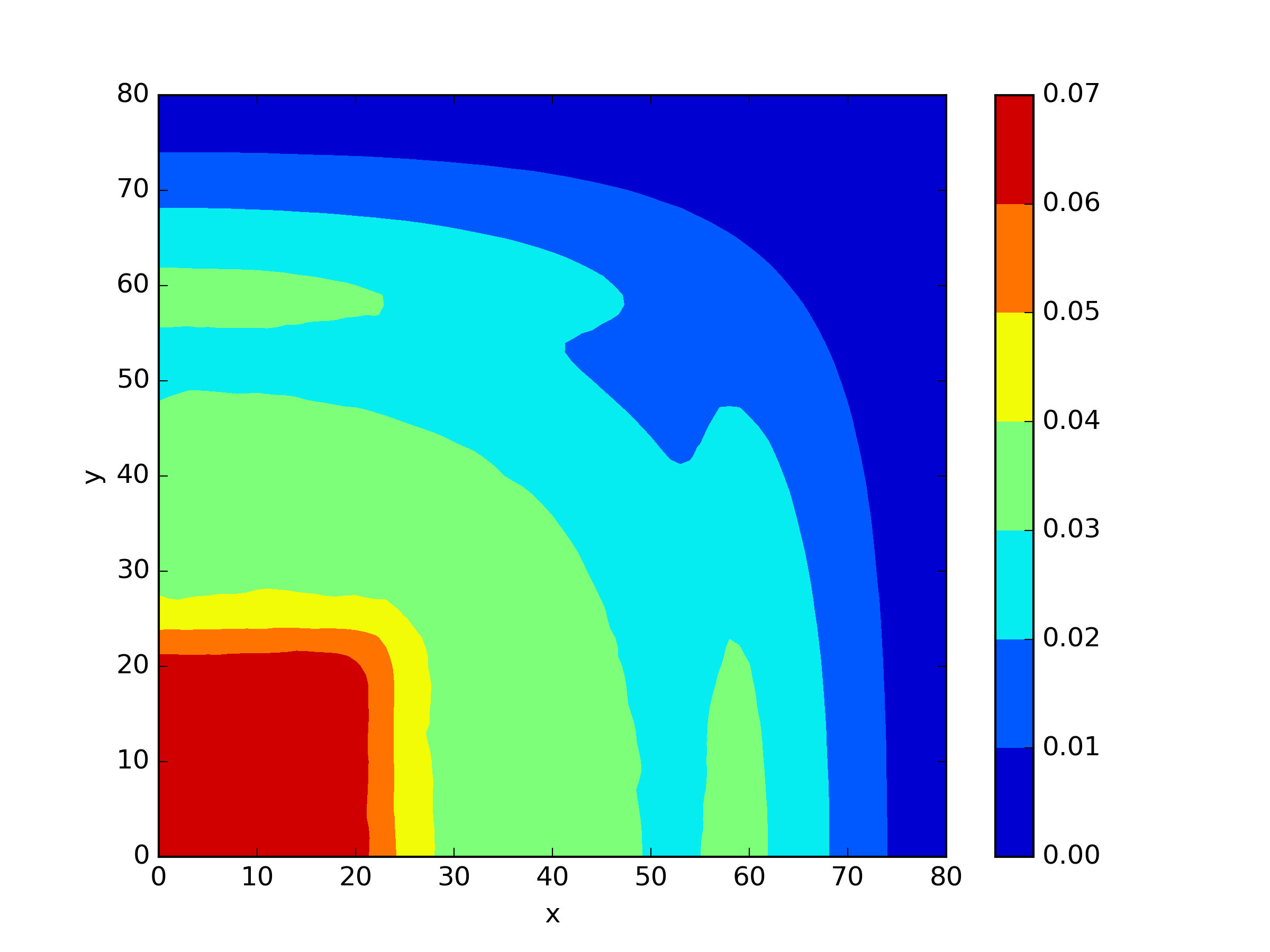

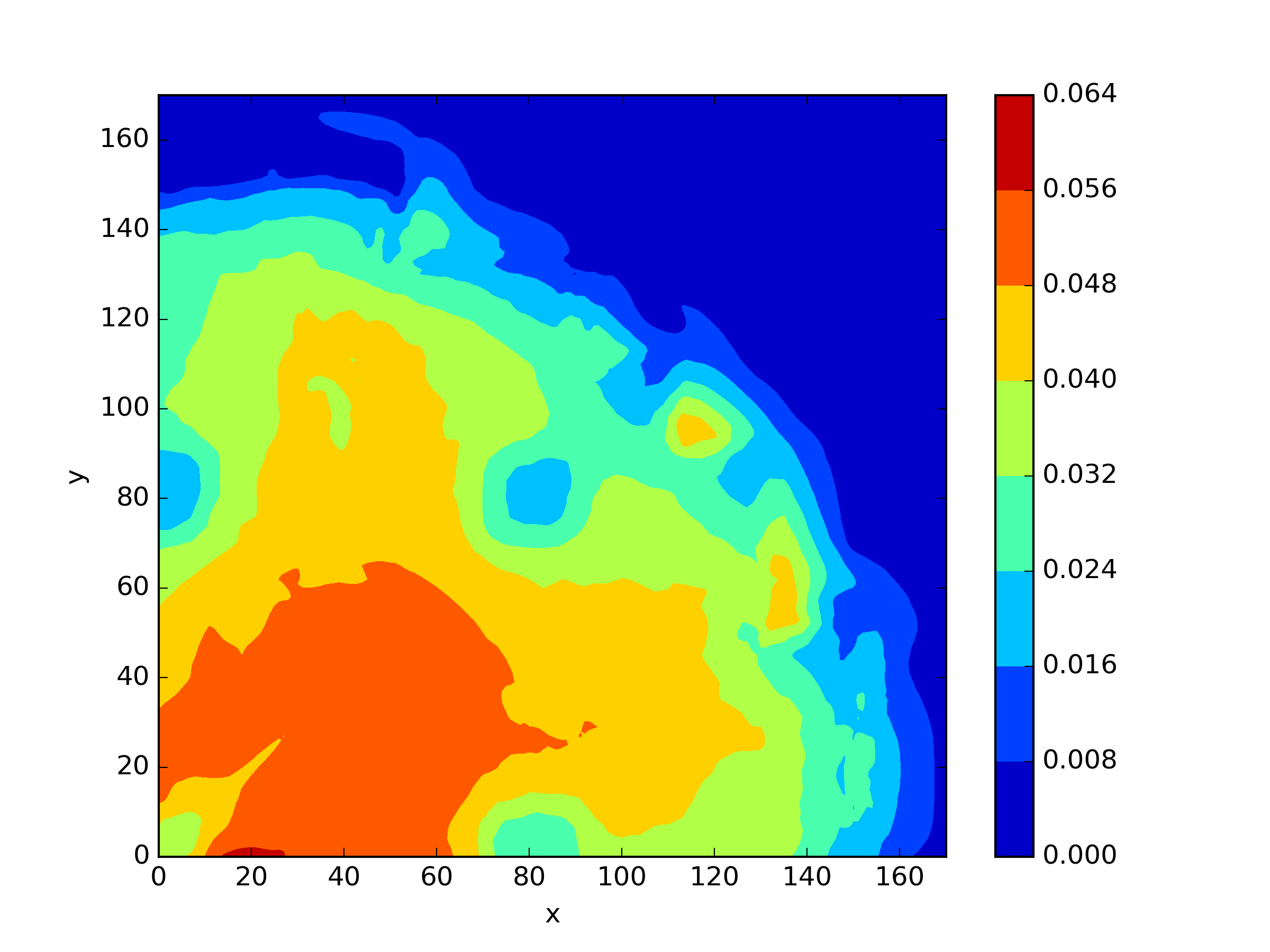

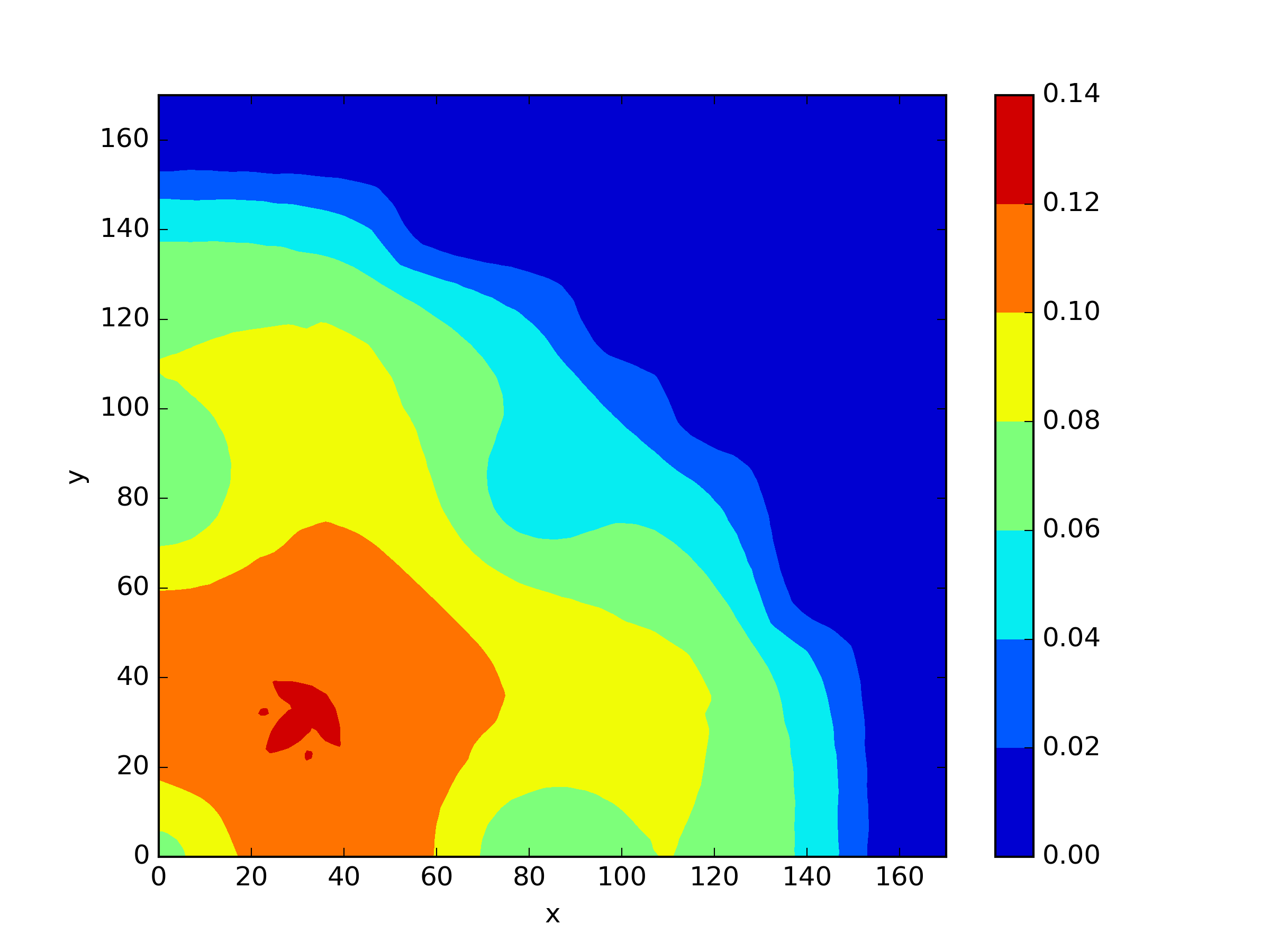

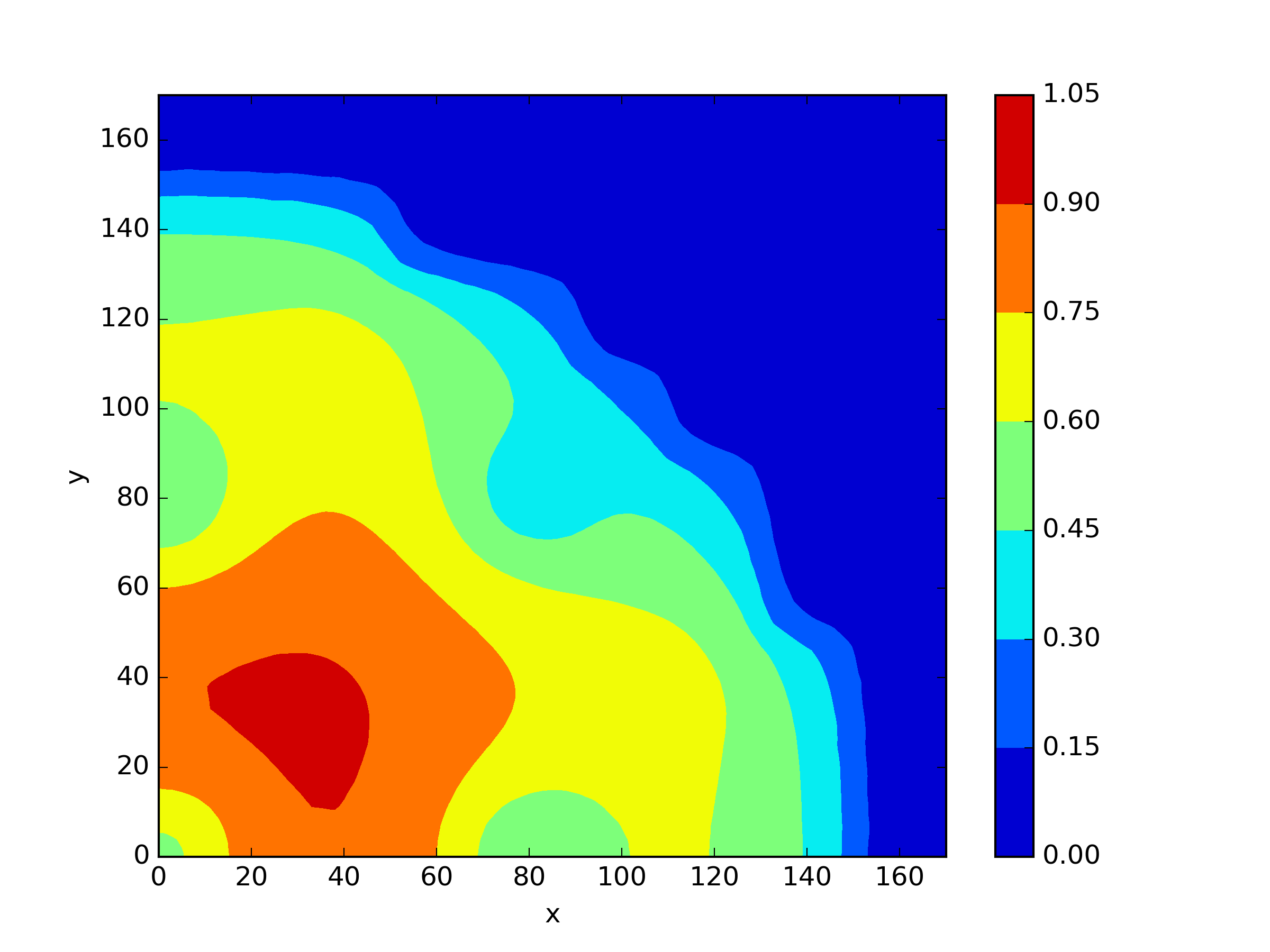

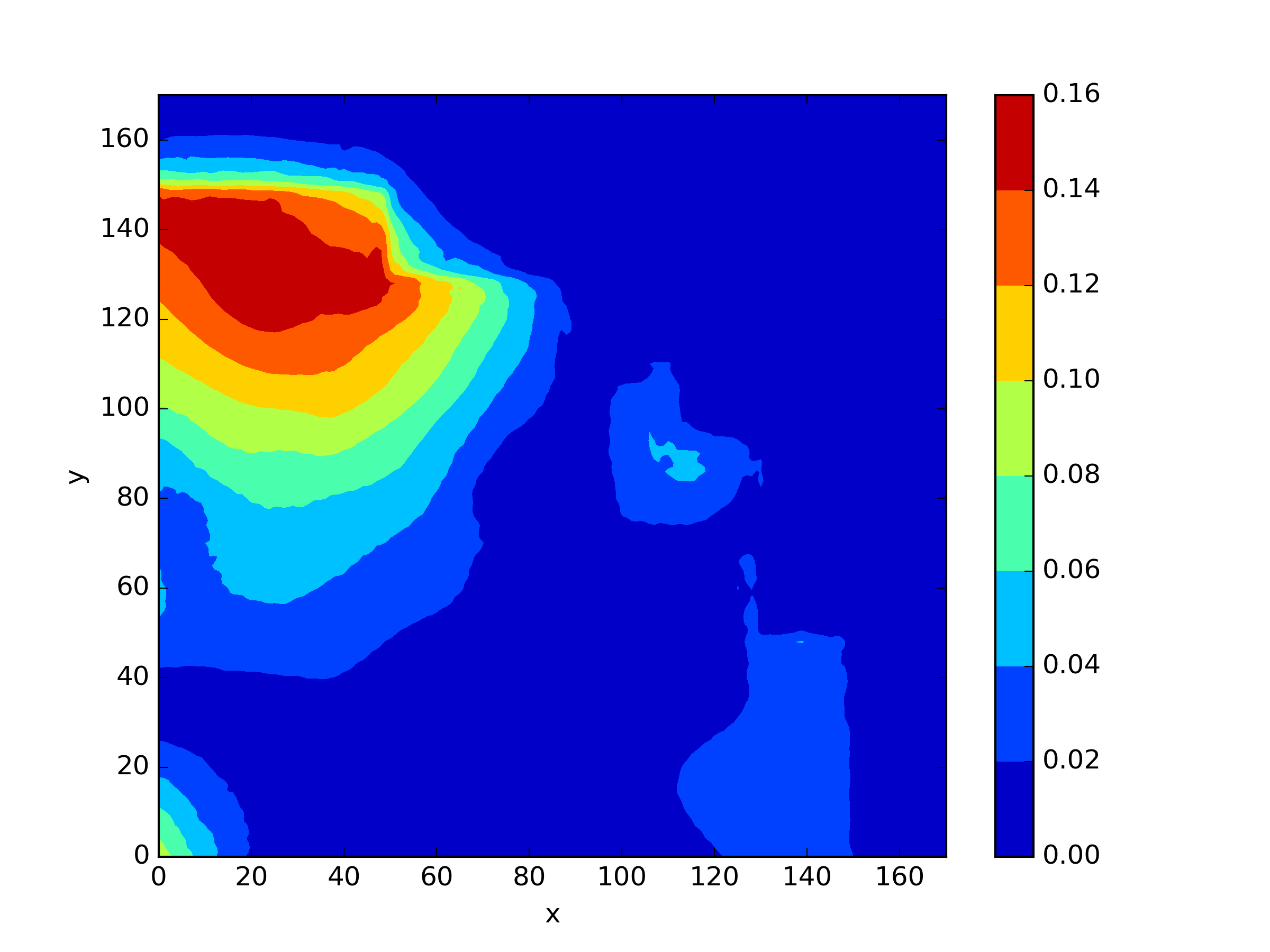

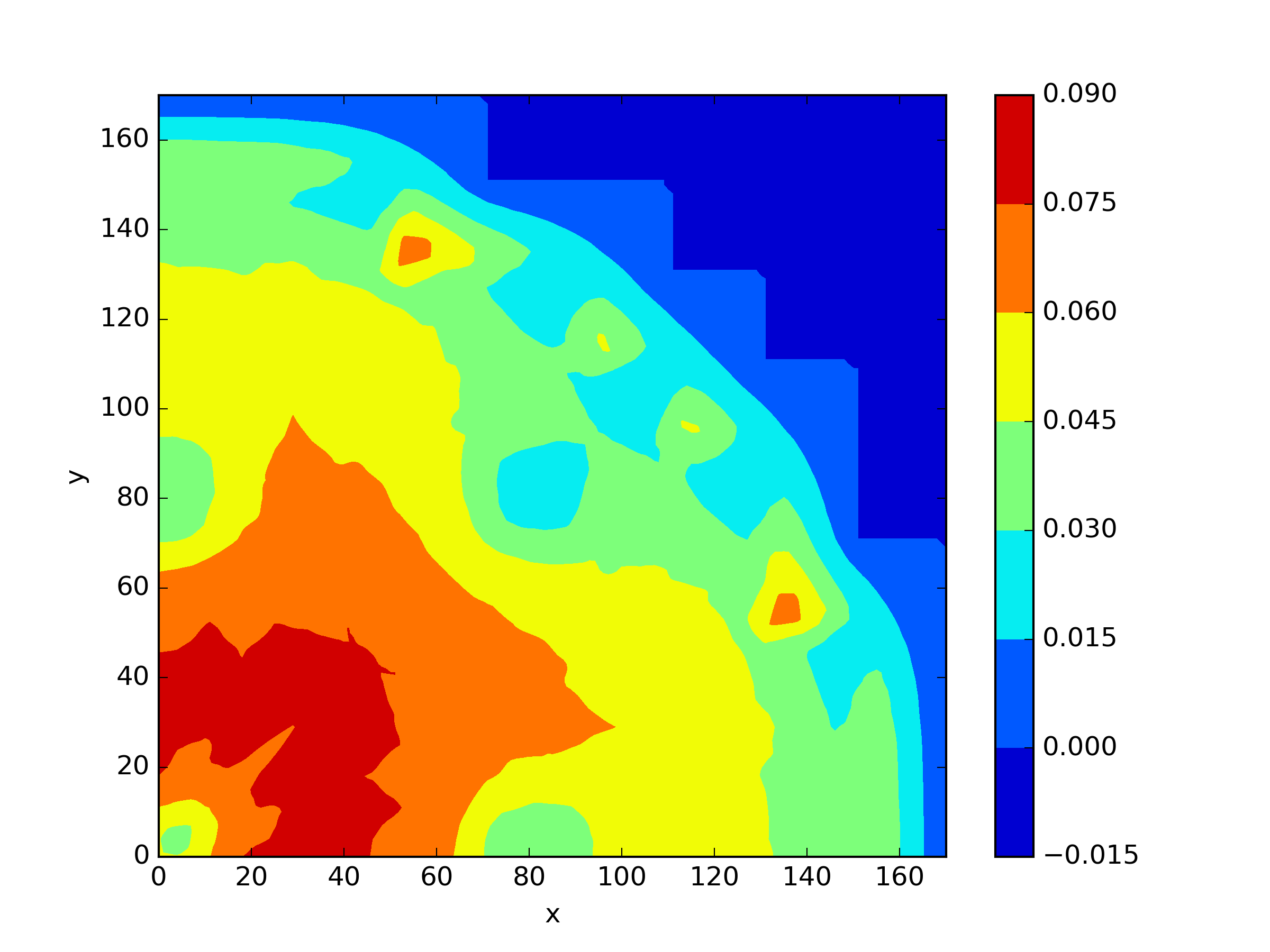

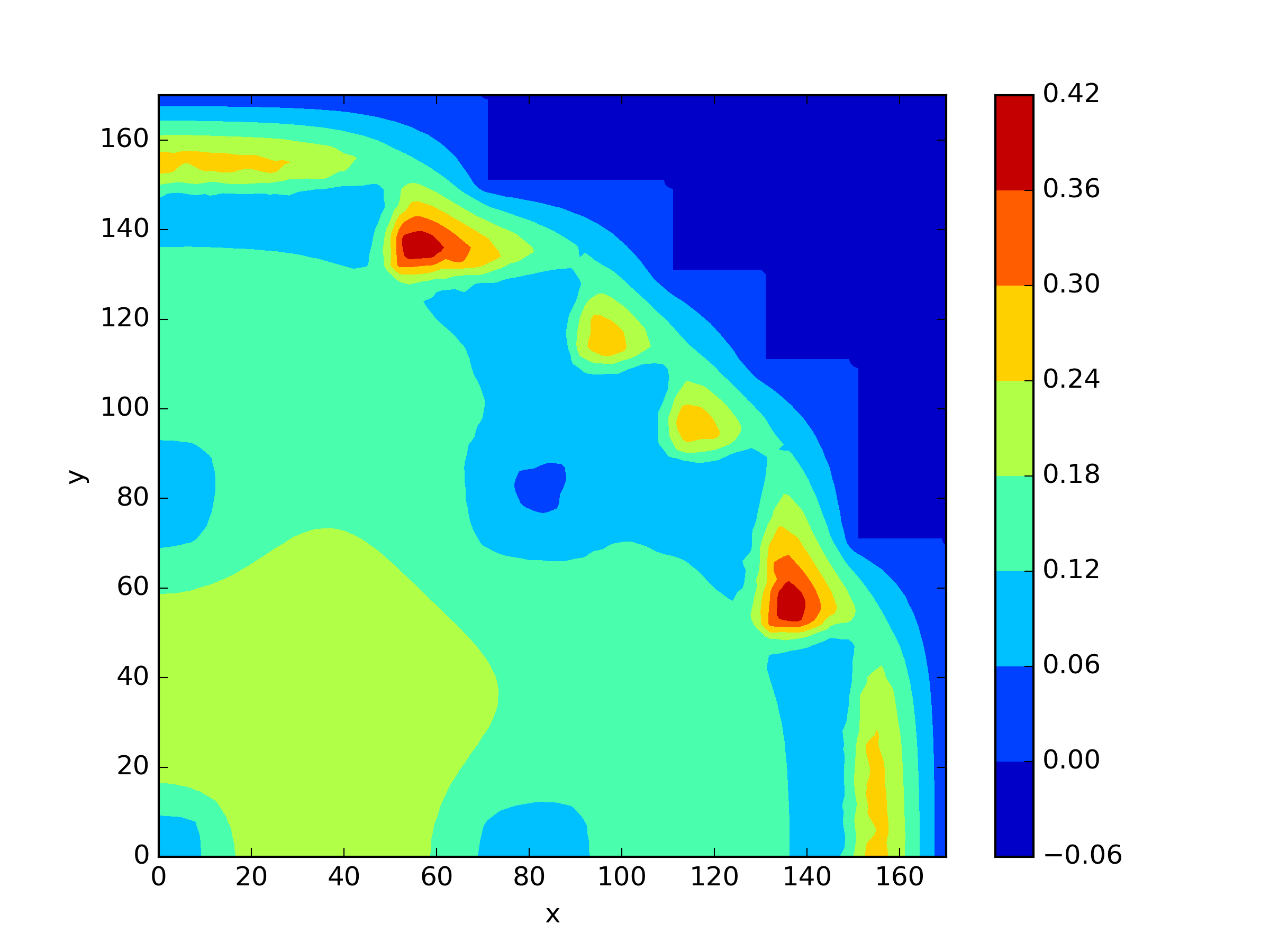

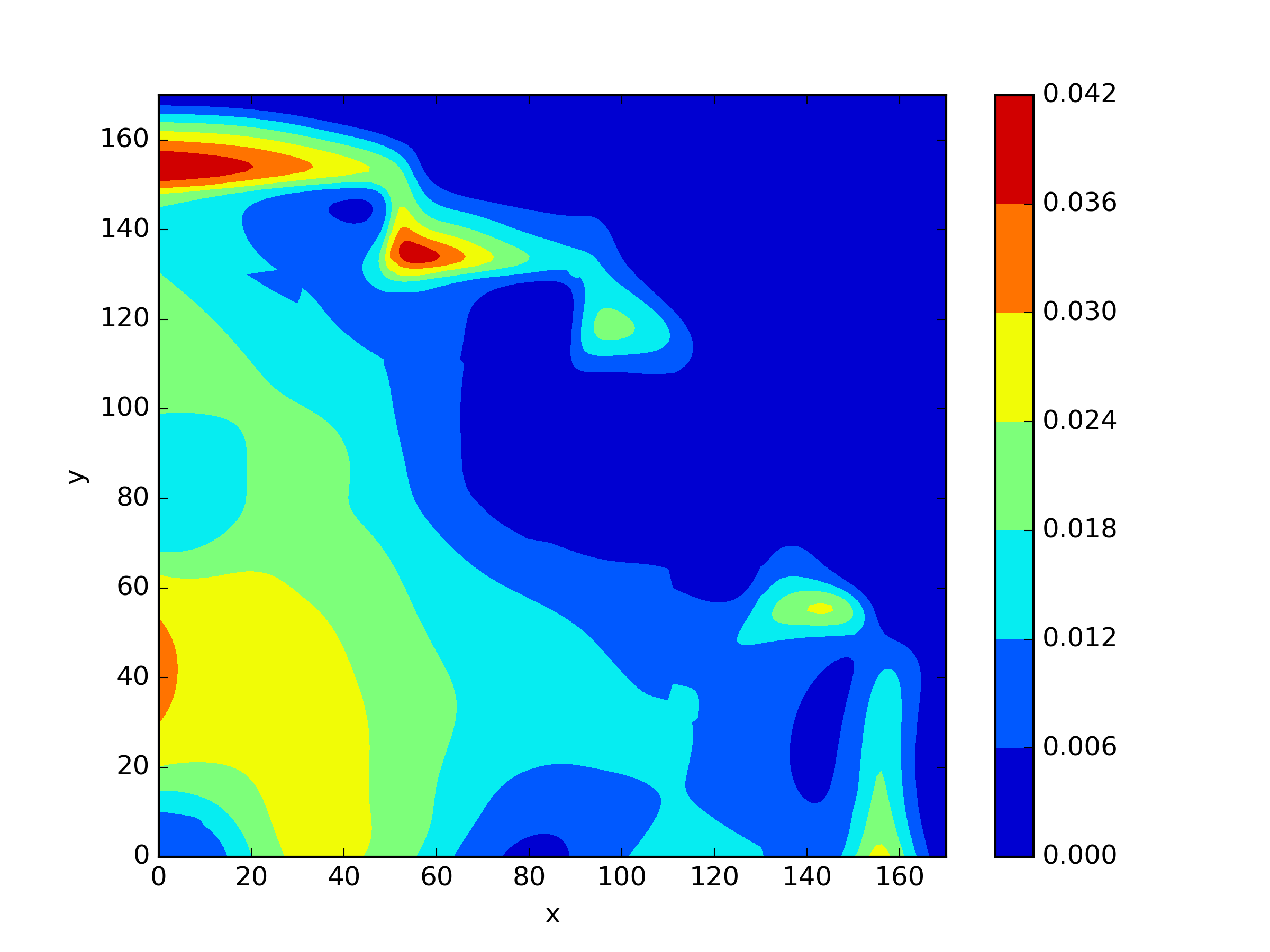

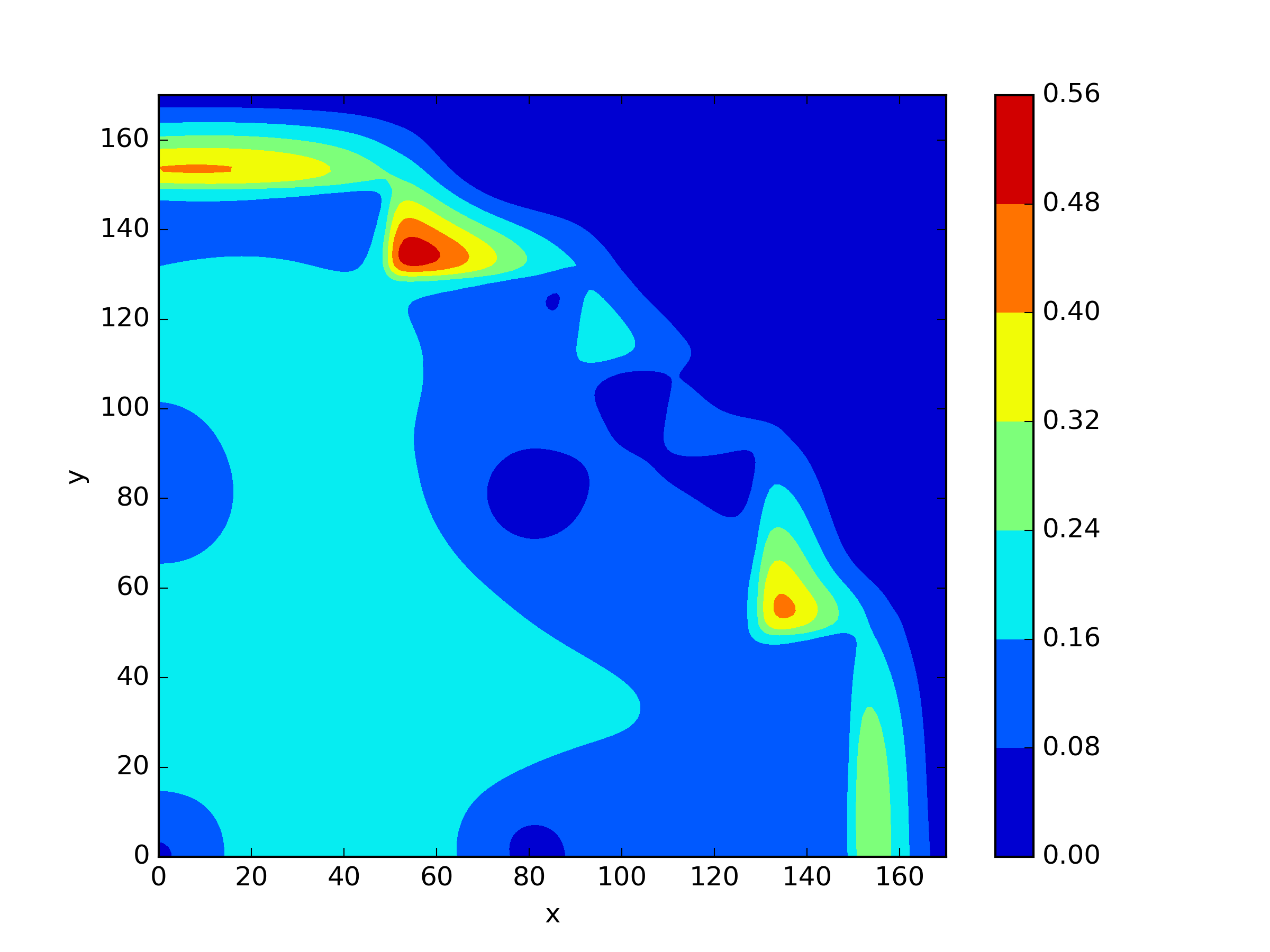

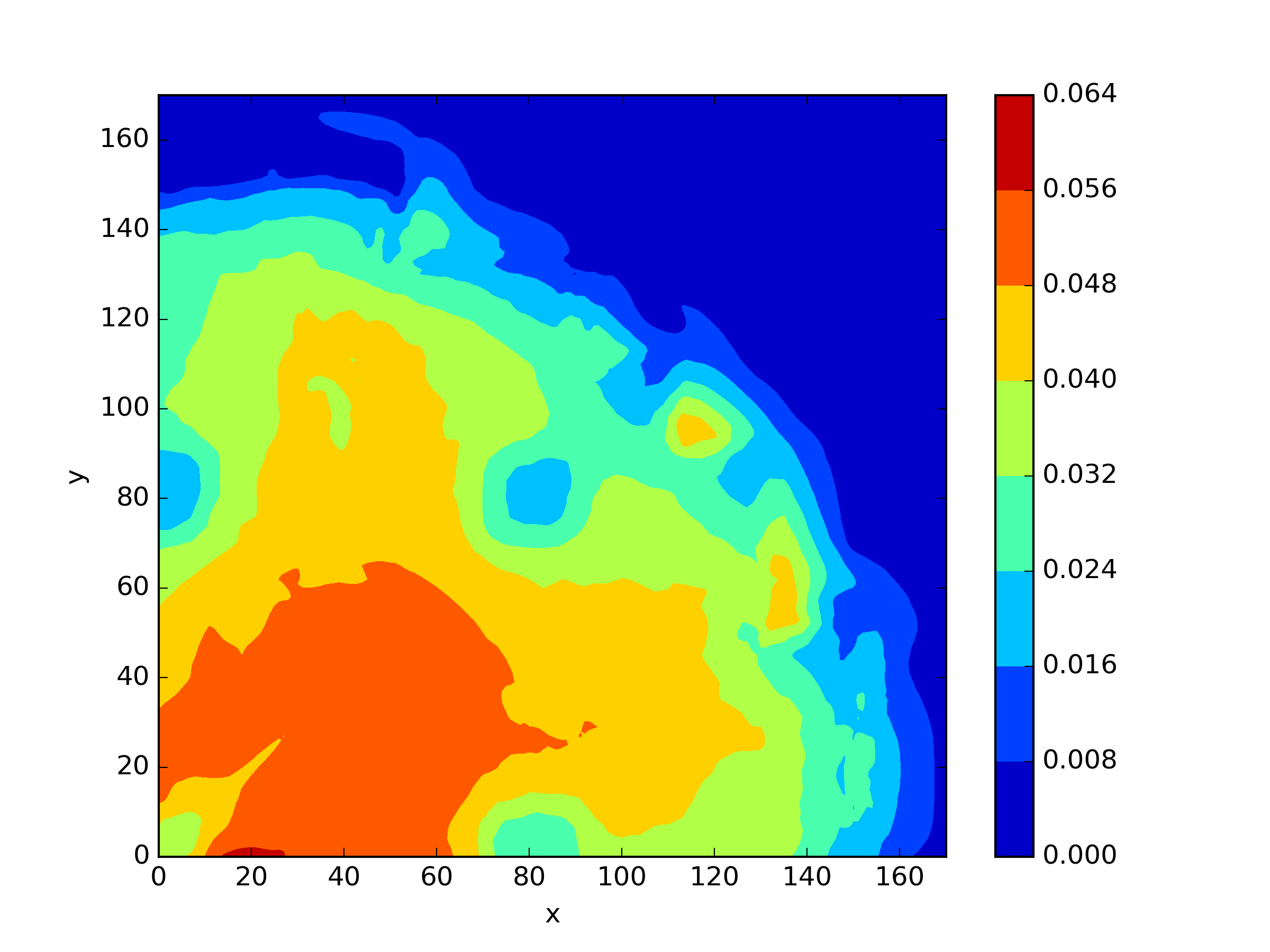

In the case of the 3-D TWIGL and IAEA problems, we initially employed FreeFEM++ as our reference solution. For discretization, we utilized a grid with intervals of 1 in the x and y directions, and intervals of 10 in the z direction. For these two three-dimensional problems, the boundary conditions from the two-dimensional problems naturally extend to the three-dimensional problems. The top and bottom surfaces of the three-dimensional computational domain are considered as the boundaries corresponding to the external interfaces. Quadratic polynomial elements were employed for the numerical approximation. Critical eigenvalue of three-dimensional TWIGL problem is and three-dimensional IAEA problem is .

Our neural network was trained using a sampling approach where we considered all integer coordinate points within the computational domain. For the 3-D TWIGL problem, we selected 4 residual blocks, each containing 80 neurons in a single layer. As for the 3-D IAEA problem, we opted for 6 residual blocks, each with 60 neurons in a single layer. For both problems, The optimization algorithm remained Adam with a learning rate of and we have selected (25) as residual loss function. For three-dimensional problems, the TWIGL problem comprises 9,027,249 residual training points and 21,384 interface points; the IAEA problem includes 93,903,717 residual points and 284,772 interface points.

| TWIGL-3D | 6.2617e-03 | 5.5424e-02 | 8.1373e-02 |

| IAEA-3D | 3.4704e-03 | 1.2472e-01 | 2.6125e-01 |

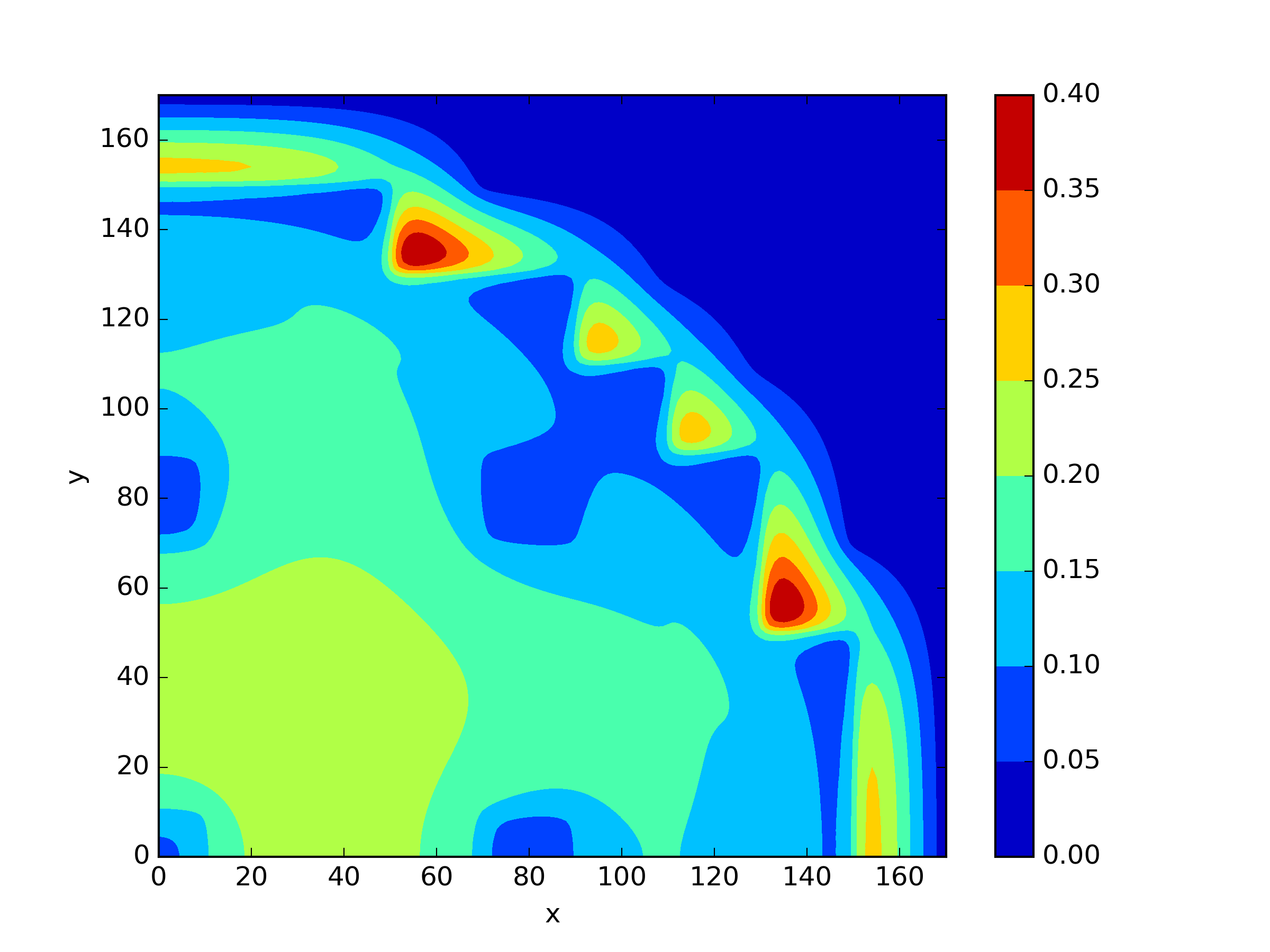

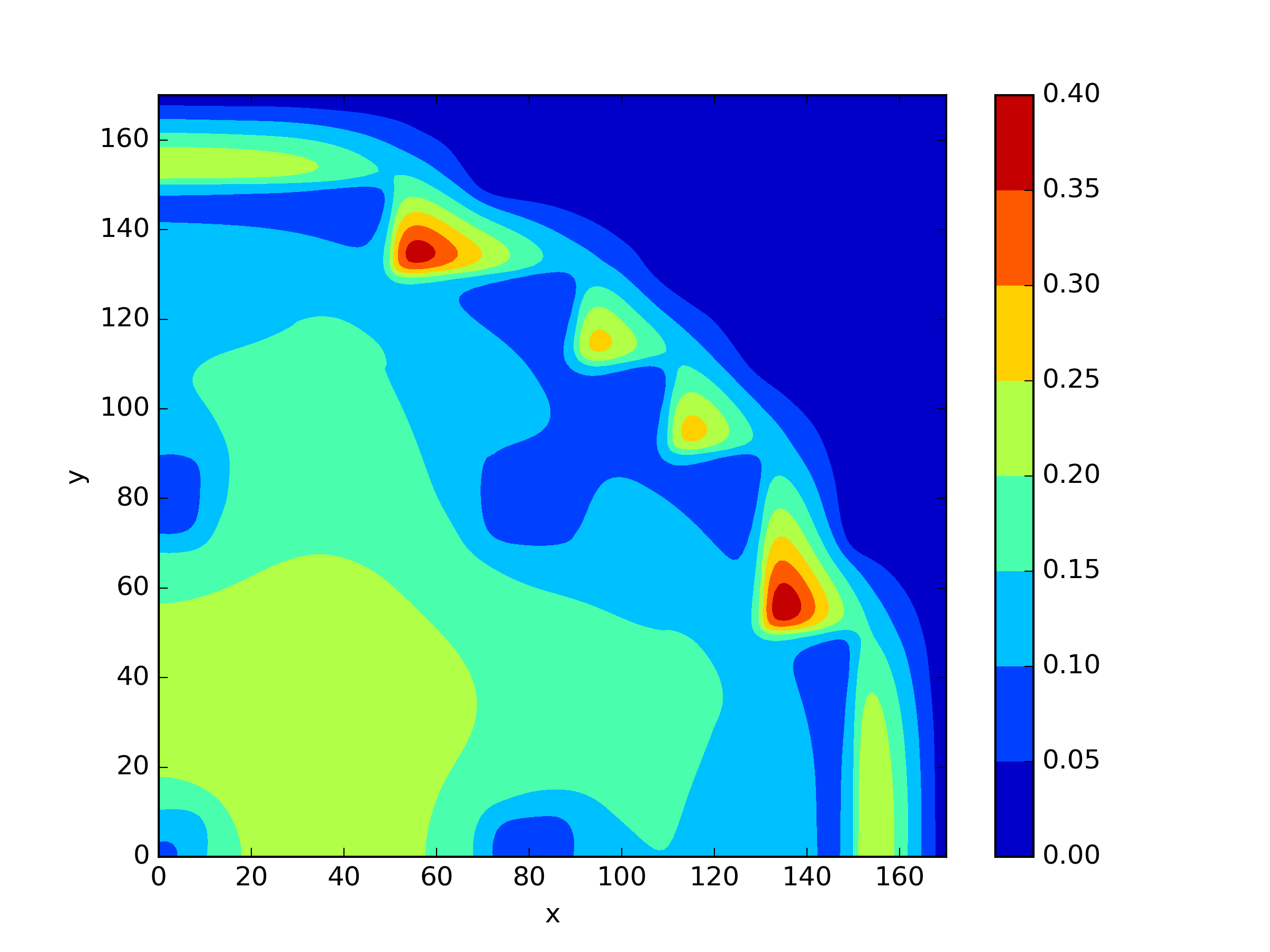

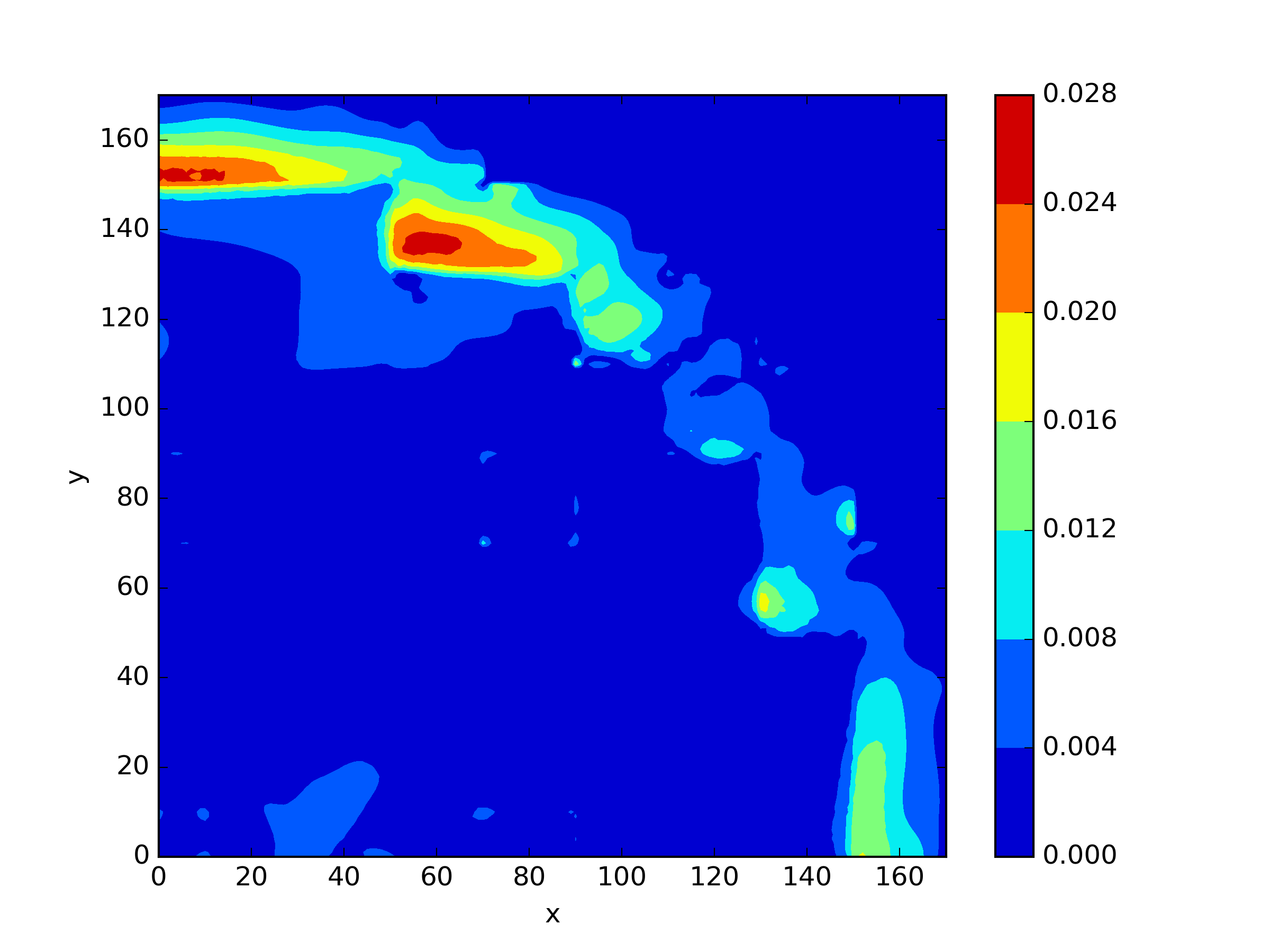

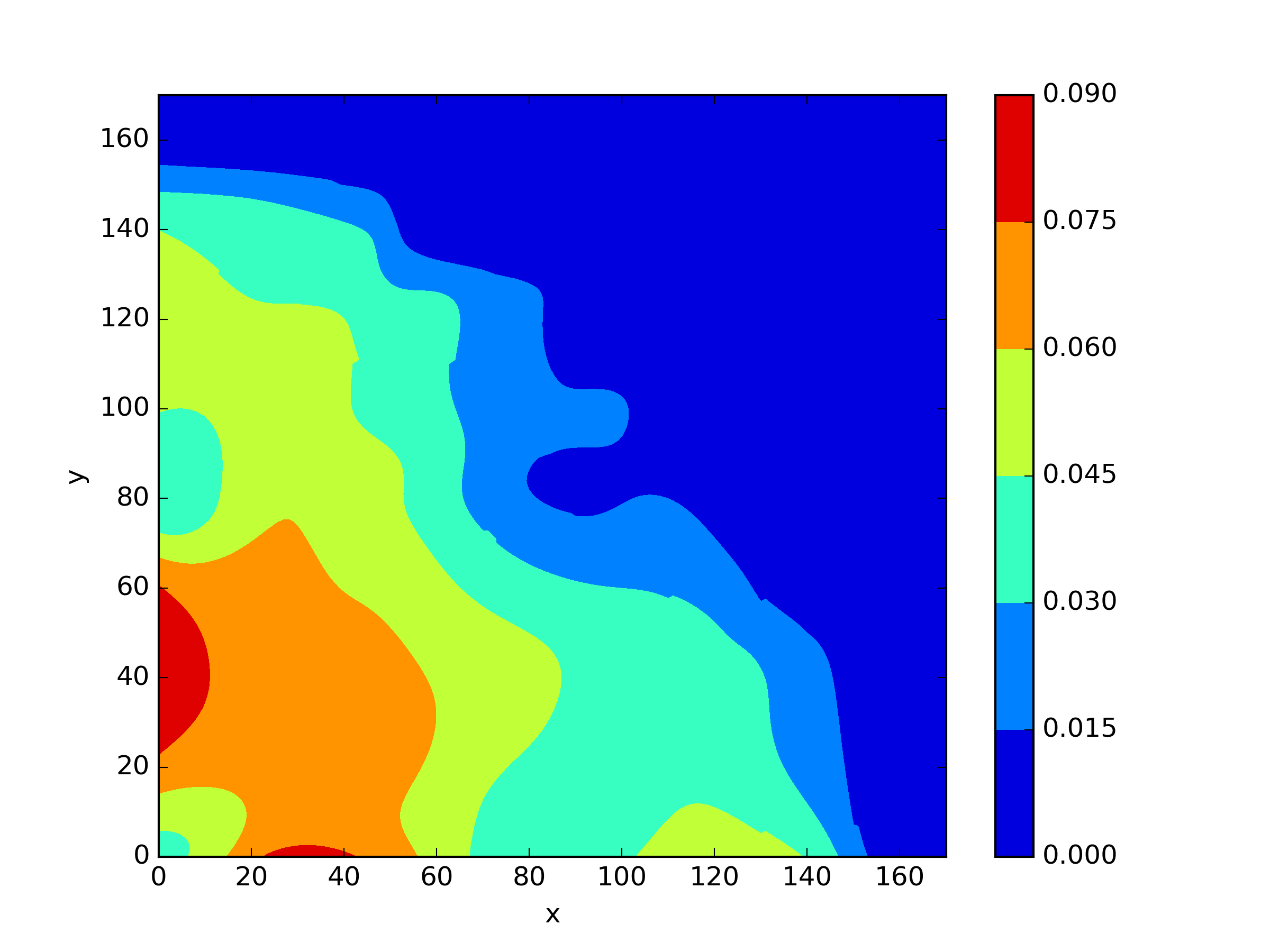

The numerical results Table 9 Figure 18 and Figure 19 indicate that our neural network algorithm has captured most of features of the 3D case in both the TWIGL and IAEA problems. The significant infinite error in in the IAEA problem is primarily due to the sharp variations in the solution within the reflector region, where vanishes resulting in disappearance of right hand side of (1). In contrast, the solution within the core region is relatively easier to obtain, as evidenced by the comparison of the solutions between IAEA and TWIGL. There is still room for improvement, as we are in the face of realistic problems.

5 Conclusion

The main contribution of this paper are the application of neural networks to solve multi-group neutron diffusion eigenvalue problem in nuclear reactor physics and proposal of two new residual loss function. We have successfully solved multiple physical problems in one-dimensional and two-dimensional within an acceptable range of error, even with random initial guess of eigenvalue and eigenfunctions. Two new loss functions, decoupling residual loss function (23) and direct iterative residual loss function (25), are proposed and compared with the previous PC-GIPMNN method for two-group neutron diffusion eigenvalue problem.

Numerous numerical experiments based on benchmark problem in nuclear reactor physics reveal the following points:

-

•

For the same problem, increasing the number of sampling points leads to higher accuracy. However, for a fixed neural network structure, the accuracy will not infinitely improve. Nevertheless, increasing the number of sampling points can accelerate the convergence of the solution.

-

•

For the same interface eigenvalue problem, the introduction of interface conditions is crucial for solving the problem itself. The accurate modeling of interface conditions significantly impacts the quality of the solution.

-

•

As the geometric complexity and scale of the solved problem increase, the difficulty of the solution also rises. However, neural networks still possess the capability to capture high-precision solutions even in challenging scenarios.

These findings highlight the importance of sampling points, interface conditions, and the ability of neural networks when combined with decoupling residual loss function to handle complex and large-scale problems while achieving acceptable accurate solutions.

| Case | Time |

|---|---|

| One-dimensional problem | 0.4644 s |

| Two-dimensional TWIGL problem | 1.6541 s |

| Two-dimensional TWIGL-3R problem | 2.0659 s |

| Two-dimensional IAEA problem | 2.4642 s |

| Three-dimensional TWIGL problem | 24.1786 s |

| Three-dimensional IAEA problem | 293.1382 s |

On the other hands, the ability of neural network to solve complex three-dimensional problems with high accuracy can be challenging due to the increased computational complexity. More sophisticated approaches and large training dataset may be required to improve the accuracy of the neural network solution for three-dimensional problems. We have observed that there is still a contradiction between a large number of sampling points and minimizing the solution time (Table 10) within the framework of this paper. In the case of three-dimensional problems, the accuracy achieved by the neural network is not yet satisfactory.

Many researchers have discovered challenges and confusions also existing in using neural networks to solve PDEs during their research process [41] [42]. At the same time, maintaining solution accuracy as the problem becomes larger and more complex is a goal that many researchers are actively pursuing, as referenced in [43] and [44].

It is interesting to further improve the accuracy of decoupling residual loss function and direct iterative residual loss function applied to this kind of problem. The experiments in this paper show that it is crucial to combine appropriate physical information as a loss function.

The Boltzmann transport equation in nuclear reactor physics involves the transport and interactions of neutrons in reactor materials, considering position, direction, energy, and time. We conduct a series of research on issues related to neutron diffusion models which is derived from Boltzmann transport equation under simplified assumptions with the aim of exploring the efficient integration of deep learning with precise nuclear reactor physics models. This, in turn, enables the development of deep learning frameworks for neutron transport coupled with thermal-hydraulics, structural mechanics, and other physical fields.

Hence, for our future work:

-

•

Hoping to have a deeper understanding of the physical properties of the transport equation and deriving a new type of loss function which could significantly overcome the obstacle caused by larger and more complex geometry domain.

-

•

Exploring techniques to strike a balance between the number of training points, time of solving problems, and solution accuracy.

-

•

Paying attention to the problem of multi-physics coupling solved by neural network, hoping to overcome the problems encountered in this process by hybrid model.

More than these, we also remain attentive to the research of sampling strategy and other neural network theory.

Acknowledgments

This research was partially supported by the Natural Science Foundation of Shanghai (No.23ZR1429300), Innovation Funds of CNNC (Lingchuang Fund, Contract No.CNNC-LCKY-202234), the Project of the Innovation Center of Science Technology and Industry(Contract No.HDLCXZX-2023-HD-039-02), Natural Science Foundation of Sichuan Province, China (2023NSFSC0075) , the National key R & D Program of China (No.2022YFE03040002) and the National Natural Science Foundation of China (No.11971020, No.12371434).

References

- [1] G. I. Bell, S. Glasstone S, Nuclear reactor theory, US Atomic Energy Commission, Washington, DC (United States), (1970).

- [2] Y. M. Hamada, Higher order compact finite difference schemes for steady and transient solutions of space–time neutron diffusion model, Ann. Nucl. Energy., 175(2022), 109177.

- [3] K. Azekura, New finite element solution technique for neutron diffusion equations, J. Nucl. Sci. Technol., 17.2(1980), 89-97.

- [4] C. Demaziere, CORE SIM: A multi-purpose neutronic tool for research and education, Ann. Nucl. Energy., 38.12(2011), 2698-2718.

- [5] Á. Bernal, R. Miró, D. Ginestar, et al. Resolution of the generalized eigenvalue problem in the neutron diffusion equation discretized by the finite volume method, Abstr. Appl. Anal., Hindawi, 1(2014): 913043.

- [6] P. J. Turinsky, R. M. K. Al-Chalabi, P. Engrand, et al. NESTLE: Few-group neutron diffusion equation solver utilizing the nodal expansion method for eigenvalue, adjoint, fixed-source steady-state and transient problems, No. EGG-NRE-11406. EG and G Idaho, Inc., Idaho Falls, ID (United States); Los Alamos National Lab.(LANL), Los Alamos, NM (United States), (1994).

- [7] S. Tao, Y. Xu, Neutron transport analysis of C5G7-TD benchmark with PANDAS-MOC, Ann. Nucl. Energy., 169(2022), 108966.

- [8] M. Dissanayake, N. Phan‐Thien, Neural‐network‐based approximations for solving partial differential equations, Commun. Numer. Meth. En., 10.3(1994), 195-201.

- [9] I. E. Lagaris, A. Likas, D. I. Fotiadis, Artificial neural networks for solving ordinary and partial differential equations, IEEE. T. Neural. Networ., 9.5 (1998): 987-1000.

- [10] M. Raissi, P. Perdikaris, G. E. Karniadakis, Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations, J. Comput. Phys., 378(2019), 686-707.

- [11] M. Raissi, A. Yazdani, G. E. Karniadakis, Hidden fluid mechanics: Learning velocity and pressure fields from flow visualizations, Science., 367.6481(2020), 1026-1030.

- [12] Y. Chen, L. Lu, G. E. Karniadakis, et al. Physics-informed neural networks for inverse problems in nano-optics and metamaterials, Opt. Express., 28.8 (2020), 11618-11633.

- [13] D. Zhang, L. Guo, G. E. Karniadakis, Learning in modal space: Solving time-dependent stochastic PDEs using physics-informed neural networks, SIAM. J. Sci. Comput., 42.2 (2020), A639-A665.

- [14] L. Yang, D. Zhang, G. E. Karniadakis, Physics-informed generative adversarial networks for stochastic differential equations, SIAM. J. Sci. Comput., 42.1(2020), A292-A317.

- [15] S. Wu, B. Lu, INN: Interfaced neural networks as an accessible meshless approach for solving interface PDE problems, J. Comput. Phys., 470(2022), 111588.

- [16] W. F. Hu, T. S. Lin, M. C. Lai, A discontinuity capturing shallow neural network for elliptic interface problems, J. Comput. Phys., 469(2022), 111576.

- [17] W. F. Hu, Y. J. Shih, T. S. Lin, et al. A shallow physics-informed neural network for solving partial differential equations on static and evolving surfaces, Comput. Method. Appl. M., 418(2024), 116486.

- [18] S. Cheng, J. Chen, C. Anastasiou, et al. Generalised latent assimilation in heterogeneous reduced spaces with machine learning surrogate models. J. Sci. Comput., 94.1 (2023), 11.

- [19] S. Cheng, C. Liu, Y. Guo, et al. Efficient deep data assimilation with sparse observations and time-varying sensors, J. Comput. Phys., 496(2024), 112581.

- [20] H. Gong, S. Cheng, Z. Chen, et al. Data-enabled physics-informed machine learning for reduced-order modeling digital twin: application to nuclear reactor physics, Nucl. Sci. Eng., 196.6 (2022), 668-693.

- [21] Phillips, Toby RF, et al. An autoencoder‐based reduced‐order model for eigenvalue problems with application to neutron diffusion, Int. J. Numer. Meth. Eng., 122.15(2021), 3780-3811.

- [22] A. Kashefi, T. Mukerji, Prediction of fluid flow in porous media by sparse observations and physics-informed PointNet, Neural. Networks., 167(2023), 80-91.

- [23] H. Zhou, S. Cheng, R. Arcucci, Multi-fidelity physics constrained neural networks for dynamical systems, Comput. Method. Appl. M., 420(2024), 116758.

- [24] Z. Mao, A. D. Jagtap, G. E. Karniadakis, Physics-informed neural networks for high-speed flows, Comput. Method. Appl. M., 360(2020), 112789.

- [25] K. Nath, X. Meng, D. J. Smith, et al. Physics-informed neural networks for predicting gas flow dynamics and unknown parameters in diesel engines, Sci. REP-UK, 13.1(2023), 13683.

- [26] J. Wang, X. Peng, Z. Chen, et al. Surrogate modeling for neutron diffusion problems based on conservative physics-informed neural networks with boundary conditions enforcement, Ann. Nucl. Energy., 176(2022), 109234.

- [27] Y. Yang, H. Gong, S. Q. Zhang, et al. A data-enabled physics-informed neural network with comprehensive numerical study on solving neutron diffusion eigenvalue problems, Ann. Nucl. Energy., 183(2023), 109656.

- [28] Q. H. Yang, Y. T. Deng, Y. Yang, et al. Neural networks based on power method and inverse power method for solving linear eigenvalue problems, Comput. Math. Appl., 147(2023), 14-24.

- [29] Q. H. Yang, Y. Yang, Y. T. Deng, et al. Physics-Constrained neural network for solving discontinuous interface K-eigenvalue problem with application to reactor physics, Nucl. Sci. Tech., 34.10(2023), 161.

- [30] Y. Xie, Y. Wang, Y. Ma, et al. Neural network based deep learning method for multi-dimensional neutron diffusion problems with novel treatment to boundary, J. Nucl. Eng., 2.4(2021), 533-552.

- [31] T. R. F. Phillips, C. E. Heaney, P. N. Smith, et al,. An autoencoder‐based reduced‐order model for eigenvalue problems with application to neutron diffusion, Int. J. Numer. Meth. Eng., 122.15(2021), 3780-3811.

- [32] Y. Xie, Y. Wang, Y. Ma, Boundary dependent physics-informed neural network for solving neutron transport equation, Ann. Nucl. Energy., 195(2024), 110181.

- [33] M. H. Elhareef, Z. Wu, Physics-informed neural network method and application to nuclear reactor calculations: A pilot study, Nucl. Sci. Eng., 197.4(2023), 601-622.

- [34] G. Theler, F. J. Bonetto, A. Clausse, Solution of the 2D IAEA PWR Benchmark with the neutronic code milonga, Actas de la Reunión Anual de la Asociación Argentina de Tecnología Nuclear, XXXVIII, (2011).

- [35] W. M. Stacey, Nuclear reactor physics, John Wiley and Sons, (2018).

- [36] S. Marguet, The physics of nuclear reactors, Springer, (2018).

- [37] A. Paszke, S. Gross, S. Chintala, et al. Automatic differentiation in pytorch, (2017).

- [38] C. Demazière, C. Sunde, Calculation of the eigenfunctions and corresponding eigenvalues of the two-group diffusion equation in heterogeneous systems, Proceedings of the Joint International Topical Meeting on Mathematics and Computation and Supercomputing in Nuclear Applications (M and C+ SNA 2007), (2007), 15-19.

- [39] L. A. Hageman, J. B. Yasinsky, Comparison of alternating-direction time-differencing methods with other implicit methods for the solution of the neutron group-diffusion equations, Nucl. Sci. Eng., 38.1(1969), 8-32.

- [40] F. Hecht, New development in FreeFem++, J. Numer. Math., 20.3-4(2012): 251-266.

- [41] T. G. Grossmann, U. J. Komorowska, J. Latz, et al, Can physics-informed neural networks beat the finite element method?, IMA J. Appl. Math., (2024): hxae011.

- [42] A. Krishnapriyan, A. Gholami, S. Zhe, et al, Characterizing possible failure modes in physics-informed neural networks, Adv. Neural Inf. Process. Syst., 34(2021), 26548-26560.

- [43] K. Tang, X. Wan, C. Yang, DAS-PINNs: A deep adaptive sampling method for solving high-dimensional partial differential equations, J. Comput. Phys., 476(2023), 111868.

- [44] Y. Yang, Q. H. Yang, Y. T. Deng, et al., Moving sampling physics-informed neural networks induced by moving mesh PDE, Neural. Networks., 180(2024), 106706.