First Author, Second Author, Third Author

1 University of Washington, Seattle WA 98195, USA, jianghao@uw.edu

2 University of Washington, Seattle WA 98195, USA, matt45@uw.edu

3 Texas Tech University, Lubbock TX 79409, USA, kgolubni@ttu.edu

Solving the option Forecast problem by a numerical method for the Black-Scholes Equation with Machine Learning Classification Model

Abstract

In this study, we propose novel classification models that integrate the Quasi-Reversibility Method (QRM) with advanced machine learning techniques to enhance the prediction of option prices. The QRM, well known for solving the Black-Scholes equation under challenging conditions, forecasts option prices one day in advance [3]. By leveraging the QRM-derived minimizer with machine learning classification models, we aim to categorize options as either increasing or decreasing in value. This integration enables more refined categorization of financial instruments, blending numerical analysis and predictive modeling.

Using these classifications, we implement trading strategies to enhance the predictive power of QRM extrapolations. These strategies leverage both historical data trends and QRM forecasts, potentially improving trading efficiency, especially in volatile markets. To validate our approach, we collected 23,548 real-world options data points across various time periods and market conditions, creating a comprehensive dataset to rigorously test our models. This data, combined with the QRM minimizer, trains machine learning models, including decision trees, random forests, gradient boosting classifiers, k-nearest neighbors, and neural networks.

The models’ performance is assessed using metrics such as accuracy, precision, and recall to evaluate the framework’s consistency and reliability. Our goal is to identify which models, paired with QRM-derived features, perform most effectively for option price prediction. This study ultimately aims to provide a robust predictive tool that is practical for short-term trading and grounded in established mathematical finance methods.

keywords:

Black-Scholes equation, stock, options, classification model, ill-posed problem, quasi-reversibility method, random forest, decision tree

1 Introduction and Overview

The stock market, also known as the equity market, consists of buyers and sellers trading ownership of businesses. To profit in such a market, one must intuitively guess market trends, aiming to buy low and sell high. However, due to the market’s volatility and the ill-posed nature of predicting exact stock prices caused by noisy data and model sensitivity, accurate predictions remain challenging. Therefore, a more generalized approach, such as estimating market gains or losses, is often more feasible.

Options trading involves contracts that grant the right to buy or sell stock in the future. Predicting whether an option will trade higher can lead to market profits. We developed the Quasi-Reversibility Method (QRM) to capture market movements by solving the ill-posed problem of the Black–Scholes model to predict option prices one day in advance [3]. Our research utilizes the QRM-derived minimizer, which predicts future option data, combined with other stock market features to forecast market movements. By integrating these option price predictions, we aim to improve the accuracy of predicting whether the overall market will gap up or down, acknowledging the short-term inefficiencies that may exist in the market.

We propose using ensemble methods, such as gradient boosting trees and random forests, as well as other advanced classification techniques including k-nearest neighbors, and neural networks, to classify future market data. By combining these classification models with QRM, we aim to capture critical market features that influence overall price movements, assuming limited market volatility in the short term. Our approach involves designing weak learners to classify market movements into different categories and then improving the results by minimizing the loss function.

This paper first introduces the Quasi-Reversibility Method (QRM) employed in our research, along with a brief summary of prior work in this area. We then present the classification models, including gradient boosting, random forests, k-nearest neighbors, and neural networks, and explain how they are applied to the results obtained from QRM. The findings are demonstrated through an analysis of key features and confusion matrices, followed by a summary of the evaluation metrics precision, recall, and accuracy. Finally, we discuss future developments and potential directions for this research. If the proposed classification models yield favorable results, they could enhance the profitability of options trading by improving the prediction of market movements.

2 Quasi-Reversibility Method and Black–Scholes Model

2.1 Black-Scholes Equation

The Black-Scholes equation is a backward parabolic partial differential equation used in mathematical finance. The proposed model uses initial boundary conditions for the stock of interest to simulate a more accurate options trading strategy. Since it is an ill-posed problem, we introduce the variable to solve the equation backward, where at any given time , we have:

| (1) |

The Black-Scholes equation is given by:

| (2) |

Assuming that the function satisfies the Black-Scholes equation with a volatility coefficient , we can rewrite the equation as:

| (3) |

with the initial condition:

| (4) |

where is the payoff function, is the stock price, and at maturity time . We assume the risk-free interest rate to be zero, and thus, represents the price of an option at time [6].

2.2 Quasi-Reversibility Method

We introduce this method to solve Black-Scholes equations with certain approximations. We aim to solve the following equation:

| (5) |

with the Dirichlet boundary conditions:

| (6) |

and initial conditions:

| (7) |

where:

| (8) |

| (9) |

with , and . represents the partial differential operator for the Black-Scholes equation, where is the stock price, is the volatility of the option, and are the bid and ask prices of the option, and and are the bid and ask prices of the stock. We assume that the bid price is always less than the ask price.

The method involves five steps to solve the Black-Scholes equations:

- First, solve for the dimensionless variable, namely .
- Second, perform interpolation and extrapolation to forecast the option price.
- Third, apply the initial and boundary conditions to the partial differential equation (PDE).
- Fourth, apply regularization so that the problem can be solved numerically.
- Finally, solve the minimization problem using finite differences and regularization [6].

2.3 Analysis

Assuming , the functions:

| (10) |

allow us to find the solution that satisfies the following conditions:

| (11) |

| (12) |

| (13) |

We will perform a general convergence analysis based on a new Carleman estimate, assuming the time interval is within a short period. Let’s consider a general case with the given properties. Given a non-negative constant and let be . Suppose there are two non-negative constants with . Suppose the functions satisfy:

| (14) |

Then, the general version of the original problem is formulated as:

| (15) |

with the boundary condition:

| (16) |

and the initial condition:

| (17) |

We seek a convergent solution of this general problem [11]. Our initial results using neural networks (NN) were presented in [6], where we tested three methods: the quasi-reversibility method for solving ill-posed problems, and two models based on multilayer fully connected NNs, one for classification and one for regression. Those results are reported in Table 1.

| Method | Accuracy | Precision | Recall |

|---|---|---|---|

| QRM | 49.77% | 55.77% | 52.43% |

| Binary Classification | 56.36% | 59.56% | 70.22% |

| Neural Network | 55.42% | 60.32% | 61.29% |

3 Classification Algorithms

3.1 Introduction

Classification algorithms are a subset of supervised machine learning techniques that aim to categorize input data into predefined classes or labels. These algorithms learn from labeled training data, where the input features are associated with known output labels, to build a model that can predict the class of new, unseen data. Common classification algorithms include decision trees, random forests, k-nearest neighbors (KNN), and neural networks. Each algorithm has its strengths and is chosen based on the nature of the dataset and the specific requirements of the problem. For instance, decision trees are easy to interpret and visualize, while neural networks can model complex relationships in data. The performance of classification algorithms is typically evaluated using metrics such as accuracy, precision, recall, and the F1 score. These metrics provide insights into how well the model distinguishes between different classes. Properly selecting and tuning these algorithms is crucial for developing effective predictive models in various domains, from medical diagnosis to stock market forecasting [4].

3.2 Decision Tree

A decision tree is a classification algorithm that utilizes a tree-like model. This model includes a root node with no incoming edges, decision nodes with exactly one incoming edge and at least one outgoing edge, and leaf nodes with only incoming edges and no outgoing edges. The incoming edge represents the result of a previous decision, while the outgoing edges represent possible outcomes of current decisions. It is also possible to have branches consisting of multiple nodes. Note that the decision tree classifies data into final categories based on these decision paths [5].

The concept behind a decision tree is straightforward. By using specific features of the data, the tree splits the data at each node based on the values of these features, with each outgoing edge from a node representing a possible outcome from the decision made. While a decision tree does not have to be binary—meaning nodes can have more than two outgoing edges—we will use a binary tree in our case because we want to capture the binary movement of options as either increasing or decreasing. We will feed the tree with data derived from the QRM minimizer and market features, splitting the data into training, validation, and test sets. Subsequently, we will create trees with different combinations of features to generate outcomes for our trading strategy. Below is an illustration showing a basic binary decision tree, which we refer to as a classification tree (see Figure 1).

3.3 Data Split

Data splitting is the process used to determine the decisions at each node of a decision tree. Generally, we select a feature that divides the data roughly in half to serve as the root node, which helps to keep the tree balanced. At the same time, we design the leaf nodes to have a bias towards one of the classifying classes, enabling us to make a final decision.

3.3.1 Entropy

Entropy measures the disorder, or unpredictability, of the class labels in a set of data. It indicates how cleanly the data can be assigned to a single class. The formula for entropy is:

$$E(S) = -\sum_{i=1}^{C} p_i \log_2 p_i \tag{18}$$

where $E(S)$ is the entropy, or disorder, of the set $S$, $p_i$ is the probability of encountering class $i$ in $S$, and $C$ is the total number of classes. For a binary problem such as ours, entropy ranges from 0 to 1. An entropy of 0 is the lowest value and signals no disorder: all data points belong to a single class. For training data this is undesirable, because a set containing one class gives the model nothing to distinguish. Conversely, an entropy of 1 indicates that the two classes are evenly balanced, which is ideal for training. Entropy is also used to calculate the information gain at each node.
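As a small illustration of the entropy measure described above, the following sketch computes base-2 Shannon entropy for a list of class labels. The function name `entropy` and the toy label lists are assumptions made for this example and are not taken from our experiments.

```python
import math
from collections import Counter


def entropy(labels):
    """Base-2 Shannon entropy of a sequence of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    # p * log2(1 / p) summed over the classes that actually occur
    return sum((c / n) * math.log2(n / c) for c in Counter(labels).values())


balanced = ["up", "down", "up", "down"]   # two classes, evenly mixed
pure = ["up", "up", "up", "up"]           # a single class

print(entropy(balanced))  # 1.0
print(entropy(pure))      # 0.0
```

The two calls reproduce the extreme cases for a binary problem: an evenly mixed set has entropy 1 and a single-class set has entropy 0.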

3.3.2 Information Gain

Information Gain measures the change in entropy resulting from splitting the data on a feature. The formula is as follows:

$$IG(S) = E(S) - \sum_{j=1}^{k} \frac{N_j}{N} E(S_j) \tag{19}$$

where $E(S)$ represents the measure of disorder before the split, $N_j$ denotes the number of observations in split $j$, $N$ is the total number of observations before the split, $k$ is the number of splits, and $E(S_j)$ is the disorder of the target feature in split $j$. By subtracting the weighted entropy of all child nodes from the entropy of the parent node, we can determine how much "information" we have gained or lost. A higher Information Gain indicates a larger reduction in entropy or disorder. Information Gain is used to assess whether a split was beneficial by evaluating how much "noise" was eliminated by the split.
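The calculation can be sketched in a few lines: the parent entropy minus the size-weighted entropy of the child splits. The helper names and the toy splits below are hypothetical and serve only to show one perfectly informative split and one uninformative split.

```python
import math
from collections import Counter


def entropy(labels):
    """Base-2 Shannon entropy of a sequence of class labels."""
    n = len(labels)
    return sum((c / n) * math.log2(n / c) for c in Counter(labels).values())


def information_gain(parent, children):
    """Parent entropy minus the size-weighted entropy of the child splits."""
    n = len(parent)
    weighted = sum(len(child) / n * entropy(child) for child in children)
    return entropy(parent) - weighted


parent = ["up"] * 5 + ["down"] * 5

# A split that separates the classes completely
perfect_split = [["up"] * 5, ["down"] * 5]
# A split whose children are as mixed as the parent
useless_split = [["up"] * 2 + ["down"] * 2, ["up"] * 3 + ["down"] * 3]

gain_perfect = information_gain(parent, perfect_split)
gain_useless = information_gain(parent, useless_split)
print(gain_perfect, gain_useless)
```

The perfect split removes all disorder (gain of 1 bit), while the second split leaves both children as mixed as the parent (gain of 0).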

3.3.3 Gini Impurity

Gini Impurity measures the probability that a randomly chosen instance would be misclassified if it were labeled at random according to the distribution of class labels in the dataset. The formula is:

$$G = 1 - \sum_{i=1}^{C} p_i^2 \tag{20}$$

where $C$ is the number of classes, and $p_i$ is the probability of an item being labeled as class $i$. To calculate Gini Impurity, one sums the squared probabilities for each class, $\sum_{i=1}^{C} p_i^2$, and subtracts this value from 1. Gini Impurity ranges from 0 to $1 - 1/C$: 0 represents complete purity (all elements belong to a single class), and the maximum is reached when elements are distributed equally across the classes. For two classes the maximum is 0.5, and the value approaches 1 only as the number of equally likely classes grows.
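A minimal sketch of the Gini calculation follows, assuming class labels are given as a plain Python list. The function name `gini_impurity` and the example label sets are illustrative only.

```python
from collections import Counter


def gini_impurity(labels):
    """One minus the sum of squared class proportions."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


pure = ["up"] * 6                      # single class
binary_even = ["up", "down"] * 3       # two classes, equal shares
four_even = ["a", "b", "c", "d"] * 2   # four classes, equal shares

print(gini_impurity(pure))         # 0.0
print(gini_impurity(binary_even))  # 0.5
print(gini_impurity(four_even))    # 0.75
```

The third case shows why a value above 0.5 requires more than two classes: with four equally likely classes the impurity is 1 - 1/4.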

3.4 Hyperparameter Selection

Hyperparameters define a model much as the rules of a sport define that sport. Models can be told apart by their hyperparameters, because these answer questions of model design: the maximum depth of a tree, the maximum number of nodes, or the learning rate for gradient descent. Hyperparameters are not learned from the data; they are fixed before training and determine how the algorithm behaves during execution, so tuning them is essential to reduce the influence of noise and improve accuracy. The selection process can vary. One might adjust hyperparameters manually, or use a method such as Grid Search, which builds a model for each combination of values on a predefined grid, evaluates each model, and selects the one with the best results. Another method, Random Search, does not use a predefined set of values; instead it samples each hyperparameter at random from a specified distribution [10].
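The difference between the two search strategies can be sketched without any machine learning library. In the example below, `validation_score` is a hypothetical stand-in for a cross-validated model score, and the parameter names, ranges, and grid values are assumptions chosen for illustration; they are not the settings used in our experiments.

```python
import itertools
import random


def validation_score(max_depth, learning_rate):
    """Hypothetical stand-in for a cross-validated score (peaks at depth 4, rate 0.1)."""
    return 1.0 - 0.02 * (max_depth - 4) ** 2 - 5.0 * (learning_rate - 0.1) ** 2


def grid_search(grid):
    """Score every combination on the grid; return (best_score, best_params)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = validation_score(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


def random_search(n_iter, seed=42):
    """Score n_iter randomly sampled settings; return (best_score, best_params)."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_iter):
        params = {
            "max_depth": rng.randint(1, 10),           # sampled from a discrete range
            "learning_rate": rng.uniform(0.01, 0.5),   # sampled from a continuous range
        }
        score = validation_score(**params)
        if best is None or score > best[0]:
            best = (score, params)
    return best


grid = {"max_depth": [2, 4, 6, 8], "learning_rate": [0.01, 0.1, 0.3]}
grid_score, grid_params = grid_search(grid)
rand_score, rand_params = random_search(n_iter=12)
print(grid_params, rand_params)
```

Grid Search evaluates all 12 grid points, whereas Random Search spends the same budget of 12 evaluations on values that need not lie on any grid.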

3.5 Ensemble Methods

An ensemble method combines a group of base learners into a final decision, either by averaging their outputs or by voting, where the decision follows the majority opinion. Since an individual model may exhibit high bias or high variance, combining models can reduce these issues, either by training the same kind of model on different random samples of the data or by combining different kinds of models. This principle underlies bagging and boosting: bagging trains weak learners in parallel, while boosting trains them sequentially. We employ these methods to mitigate the potential for high bias and high variance.

3.6 Gradient Descent

Gradient descent is a first-order iterative optimization method that seeks a local minimum of a differentiable function. Gradient boosting builds on it to reduce the inaccuracies of our model, turning a weak model into a stronger one by addressing its shortcomings. The idea is to evaluate the gradient at the current position and then take a step in the opposite direction, which is the direction of steepest descent. This is analogous to descending a hill along the steepest path. A parameter known as the learning rate determines the size of each step. A large learning rate may overshoot the minimum and climb the opposite slope, while a small learning rate is more accurate but can be slow and susceptible to saddle points, which can mislead the algorithm into concluding that a local minimum has been reached (Figure 2). We use a loss function to gauge our progress down the hill; it measures the difference between the actual output and our model's prediction, which we refer to as the error. We strive to minimize the loss function, ideally reaching the global minimum. However, the algorithm has limitations: it is a greedy method that considers only the current location, so it may settle in a local minimum that is not the optimal solution [8].
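The effect of the learning rate can be seen on a one-dimensional example. The sketch below minimizes the hypothetical function $f(x) = (x - 3)^2$, whose gradient is $2(x - 3)$; the function, starting point, and the two learning rates are assumptions chosen only to contrast a convergent run with an overshooting one.

```python
def gradient_descent(grad, x0, learning_rate, n_steps):
    """Repeatedly step against the gradient; return every visited point."""
    x = x0
    path = [x]
    for _ in range(n_steps):
        x = x - learning_rate * grad(x)
        path.append(x)
    return path


def grad(x):
    """Gradient of f(x) = (x - 3)^2, which has its minimum at x = 3."""
    return 2.0 * (x - 3.0)


small_steps = gradient_descent(grad, x0=0.0, learning_rate=0.1, n_steps=50)
large_steps = gradient_descent(grad, x0=0.0, learning_rate=1.1, n_steps=50)

print(abs(small_steps[-1] - 3.0))  # distance to the minimum shrinks
print(abs(large_steps[-1] - 3.0))  # distance to the minimum grows
```

With a rate of 0.1 each step shrinks the distance to the minimum by a factor of 0.8, while a rate of 1.1 multiplies it by 1.2 in magnitude, so the iterates jump back and forth across the minimum and move away from it.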

3.7 Gradient Classification

Here, we aim to apply gradient descent to our decision tree to enhance accuracy. First, we introduce the mean squared error, which evaluates the quality of our splits. Since we are addressing an optimization problem—specifically, aiming for a classification to determine the labels of different classes—we will utilize the log-loss function and strive to minimize it for improved accuracy.

The idea is to use some input data along with a differentiable loss function. For classification, we employ the log-loss function, which is defined as follows:

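$$L(y_i, p_i) = -\left[ y_i \log p_i + (1 - y_i) \log(1 - p_i) \right] \tag{21}$$

where $y_i \in \{0, 1\}$ is the true label of observation $i$ and $p_i$ is its predicted probability. The logarithmic loss is a metric that evaluates the prediction probability corresponding to the true label. With this loss function, writing $F(x)$ for the model output and $n$ for the number of observations, we can proceed with the iterations, repeating steps 2 to 5 for $m = 1, \dots, M$:

1. Initialize our model with a constant value:

   $$F_0(x) = \arg\min_{\gamma} \sum_{i=1}^{n} L(y_i, \gamma) \tag{22}$$

2. Compute the pseudo-residuals for each data point:

   $$r_{im} = -\left[ \frac{\partial L(y_i, F(x_i))}{\partial F(x_i)} \right]_{F = F_{m-1}}, \quad i = 1, \dots, n \tag{23}$$

3. Fit a regression tree $h_m(x)$ to the pseudo-residuals.

4. Compute the multiplier $\gamma_m$ by solving the one-dimensional optimization problem:

   $$\gamma_m = \arg\min_{\gamma} \sum_{i=1}^{n} L\left(y_i, F_{m-1}(x_i) + \gamma h_m(x_i)\right) \tag{24}$$

5. Update the model:

   $$F_m(x) = F_{m-1}(x) + \gamma_m h_m(x) \tag{25}$$

The final output, $F_M(x)$, serves as our boosted decision tree model [10]. Because each iteration fits a new tree to the gradient of the log-loss, every tree corrects errors left by the trees before it, which can significantly improve the accuracy of our decision tree. Additionally, cross-validation helps mitigate overfitting in deeper trees, which show higher training accuracy.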
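The five steps can be sketched from scratch on toy data. This is a simplified illustration and not the implementation used for our results: it assumes a single feature, uses one-split regression stumps in place of full regression trees, works with log-odds scores so that the pseudo-residual is the label minus the predicted probability, and finds the multiplier with a ternary search on a bounded interval. All function names and the data are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def total_log_loss(y, scores):
    """Log-loss summed over observations, written in terms of log-odds scores."""
    return sum(math.log(1.0 + math.exp(s)) - yi * s for yi, s in zip(y, scores))


def fit_stump(x, residuals):
    """Least-squares regression stump: (threshold, left_value, right_value)."""
    best = None
    values = sorted(set(x))
    for a, b in zip(values, values[1:]):
        threshold = (a + b) / 2.0
        left = [r for xi, r in zip(x, residuals) if xi <= threshold]
        right = [r for xi, r in zip(x, residuals) if xi > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = (sum((r - left_mean) ** 2 for r in left)
               + sum((r - right_mean) ** 2 for r in right))
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1], best[2], best[3]


def line_search(y, scores, h, upper=10.0, iterations=60):
    """Ternary search on [0, upper] for the multiplier minimizing the convex loss."""
    lower = 0.0
    for _ in range(iterations):
        g1 = lower + (upper - lower) / 3.0
        g2 = upper - (upper - lower) / 3.0
        loss1 = total_log_loss(y, [s + g1 * hi for s, hi in zip(scores, h)])
        loss2 = total_log_loss(y, [s + g2 * hi for s, hi in zip(scores, h)])
        if loss1 < loss2:
            upper = g2
        else:
            lower = g1
    return (lower + upper) / 2.0


def gradient_boost(x, y, n_rounds):
    rate = sum(y) / len(y)
    f0 = math.log(rate / (1.0 - rate))            # step 1: best constant log-odds
    scores = [f0] * len(y)
    stumps = []
    for _ in range(n_rounds):
        residuals = [yi - sigmoid(s) for yi, s in zip(y, scores)]     # step 2
        threshold, left_value, right_value = fit_stump(x, residuals)  # step 3
        h = [left_value if xi <= threshold else right_value for xi in x]
        gamma = line_search(y, scores, h)                             # step 4
        scores = [s + gamma * hi for s, hi in zip(scores, h)]         # step 5
        stumps.append((threshold, gamma * left_value, gamma * right_value))
    return f0, stumps


def raw_score(model, xi):
    f0, stumps = model
    return f0 + sum(left if xi <= t else right for t, left, right in stumps)


def predict_proba(model, xi):
    return sigmoid(raw_score(model, xi))


# Toy data: the label tends to be 1 for larger x, with two exceptions
x = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
y = [0, 0, 1, 0, 0, 1, 1, 0, 1, 1]

one_round = gradient_boost(x, y, n_rounds=1)
ten_rounds = gradient_boost(x, y, n_rounds=10)
print(round(predict_proba(one_round, 1), 3), round(predict_proba(one_round, 10), 3))
```

After a single round the stump splits the data at 5.5 and the line search moves each side to its observed class frequency, so the predicted probabilities are 0.2 on the left and 0.8 on the right. Further rounds reduce the training loss, which is also why cross-validation is needed to detect overfitting.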

3.8 Random Forest

We employ bagging, which draws random subsets of the data to create multiple diverse training sets. A model is trained independently on each set, and the predictions are combined by majority vote or averaging to obtain a more accurate estimate while reducing overfitting [9]. In this project, we use the random forest algorithm, which applies bagging to grow numerous random, weakly correlated decision trees, a "forest." To keep the correlation among trees low, random forests consider only a random fraction of the features, a process known as "the random subspace method," whereas a single decision tree uses all provided features. The data split is performed based on the Gini, entropy, or log-loss criterion, determined through hyperparameter tuning. Additionally, to identify important features, we calculate the importance of a feature by assessing the decrease in node impurity weighted by the probability of reaching that node:

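$$ni_j = w_j C_j - w_{\text{left}(j)} C_{\text{left}(j)} - w_{\text{right}(j)} C_{\text{right}(j)} \tag{26}$$

where $ni_j$ is the importance of node $j$ and:

- $w_j$ = weighted samples that reach node $j$,
- $C_j$ = impurity of node $j$,
- $\text{left}(j)$ = left split on node $j$,
- $\text{right}(j)$ = right split on node $j$ [10].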
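A worked example may make the node-importance calculation concrete. The sketch below evaluates the weighted impurity decrease for two hypothetical nodes and then aggregates per feature by summing node importances and normalizing them to sum to one, which is the usual convention for impurity-based importances. The feature names, weights, and impurity values are invented for illustration and are not results from our data.

```python
from collections import defaultdict


def node_importance(w_node, c_node, w_left, c_left, w_right, c_right):
    """Weighted impurity of a node minus the weighted impurity of its two children."""
    return w_node * c_node - w_left * c_left - w_right * c_right


def feature_importances(nodes):
    """Sum node importances per splitting feature, then normalize to sum to 1."""
    totals = defaultdict(float)
    for feature, *stats in nodes:
        totals[feature] += node_importance(*stats)
    grand_total = sum(totals.values())
    return {feature: value / grand_total for feature, value in totals.items()}


# (feature, w_node, C_node, w_left, C_left, w_right, C_right); hypothetical values
nodes = [
    ("qrm_minimizer", 1.0, 0.50, 0.6, 0.32, 0.4, 0.18),       # root split
    ("implied_volatility", 0.6, 0.32, 0.3, 0.10, 0.3, 0.20),  # split of the left child
]

root_importance = node_importance(1.0, 0.50, 0.6, 0.32, 0.4, 0.18)
importances = feature_importances(nodes)
print(root_importance, importances)
```

Here the root contributes 0.5 - 0.192 - 0.072 = 0.236 and the second node contributes 0.192 - 0.03 - 0.06 = 0.102, so the first feature carries roughly 70% of the total importance.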

Although random forest is known for its accuracy, it can be an extremely time-consuming process because it trains many trees simultaneously. Moreover, interpreting the results from such a large collection of decision trees can be challenging. This is why we utilize feature importance metrics to aid in interpreting the results. Finally, we choose to apply the random forest algorithm to our data because we believe that the market exhibits certain patterns. By correctly identifying and acting on these patterns, we can enhance the predictions made from the QRM. Furthermore, since our primary goal is to classify future options as either increasing or decreasing, having majority votes from different trees can reduce overfitting and bias within the short period of option price predictions.

3.9 Neural Networks

Neural networks are a fundamental component of artificial intelligence, modeled after the human brain’s architecture. They consist of layers of interconnected nodes, or neurons, that process input data and adjust their connections to minimize prediction errors through a process known as backpropagation. This iterative learning process allows neural networks to improve their accuracy over time. Neural networks are particularly effective for complex tasks involving large amounts of data, such as image and speech recognition. Advances in deep learning, which involves neural networks with multiple hidden layers, have significantly improved their ability to model intricate patterns and relationships in data [1].

3.10 K-Nearest Neighbors

The k-nearest neighbors (KNN) algorithm is a simple, yet powerful, machine learning technique used for classification and regression. KNN classifies a new data point based on the majority class of its k-nearest neighbors, determined by a distance metric such as Euclidean distance. As a non-parametric method, KNN does not make assumptions about the underlying data distribution, making it flexible and easy to implement. However, it can be computationally intensive with large datasets due to the necessity of calculating distances for all training samples. KNN is particularly effective for small datasets with clear class boundaries and is frequently employed in pattern recognition and recommendation systems [2].
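The voting rule can be sketched in a few lines, assuming Euclidean distance and two numeric features per point. The function name `knn_predict` and the toy training points are assumptions for this example.

```python
import math
from collections import Counter


def knn_predict(train_x, train_y, query, k=3):
    """Label a query point by majority vote among its k nearest training points."""
    # Distance from the query to every training sample, nearest first
    neighbors = sorted(
        (math.dist(point, query), label) for point, label in zip(train_x, train_y)
    )
    votes = Counter(label for _, label in neighbors[:k])
    return votes.most_common(1)[0][0]


train_x = [(0, 0), (0, 1), (1, 0), (5, 5), (5, 6), (6, 5)]
train_y = ["Down", "Down", "Down", "Up", "Up", "Up"]

print(knn_predict(train_x, train_y, (0.5, 0.5)))  # Down
print(knn_predict(train_x, train_y, (5.5, 5.5)))  # Up
```

Every prediction computes a distance to all training samples, which is the source of the computational cost noted above for large datasets.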

4 Result and Data

4.1 Introduction

The results were generated using a combination of machine learning methods, with the output of the Quasi-Reversibility Method (QRM) included as a significant feature in our analysis. We ensured the reproducibility of our results by setting the random state to 42, which keeps the randomized steps consistent across different runs of the experiment. Furthermore, we used real market data sourced from Bloomberg, so that our findings are grounded in actual market conditions.

4.2 Classification Matrix

We define the option price on a given day as the average of the bid and ask prices. All the results below were calculated based on the following confusion matrix labels:

- A predicted label of Up means that we predicted the option price would increase the next day.
- A predicted label of Down means that we predicted the option price would decrease the next day.
- A true label of Up means that the real option price increased the next day.
- A true label of Down means that the real option price decreased the next day.
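The labels above, together with accuracy, precision, and recall, can be computed as in the following sketch. The quotes are hypothetical (bid, ask) pairs, the function names are invented for this example, and treating an unchanged price as Down is an assumption made here because the definitions above do not cover ties.

```python
def mid_price(bid, ask):
    """Option price for a day: the average of the bid and ask prices."""
    return (bid + ask) / 2.0


def movement_label(today, tomorrow):
    """'Up' if the mid price rises the next day, else 'Down' (ties count as 'Down', an assumption)."""
    return "Up" if mid_price(*tomorrow) > mid_price(*today) else "Down"


def confusion_counts(true_labels, predicted_labels):
    """Count true/false positives and negatives, with 'Up' as the positive class."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for truth, guess in zip(true_labels, predicted_labels):
        if guess == "Up":
            counts["TP" if truth == "Up" else "FP"] += 1
        else:
            counts["FN" if truth == "Up" else "TN"] += 1
    return counts


def scores(counts):
    """Return (accuracy, precision, recall) from confusion counts."""
    tp, fp, fn, tn = (counts[key] for key in ("TP", "FP", "FN", "TN"))
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return accuracy, precision, recall


# Hypothetical (bid, ask) quotes for six options on two consecutive days
quotes_today = [(1.00, 1.10), (2.00, 2.20), (0.50, 0.60),
                (3.00, 3.10), (4.00, 4.20), (5.00, 5.10)]
quotes_tomorrow = [(1.10, 1.20), (1.90, 2.10), (0.55, 0.65),
                   (2.90, 3.00), (4.10, 4.30), (5.10, 5.20)]

true_labels = [movement_label(a, b) for a, b in zip(quotes_today, quotes_tomorrow)]
predicted_labels = ["Up", "Up", "Down", "Down", "Up", "Up"]

counts = confusion_counts(true_labels, predicted_labels)
accuracy, precision, recall = scores(counts)
print(counts, accuracy, precision, recall)
```

In this toy case three of the four Up predictions are correct, so precision is 0.75, and three of the four true Up movements are found, so recall is also 0.75.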

4.3 Decision Tree

Since the tree structure is too complex for demonstration purposes, we will only show a partial tree representing the first few layers (Figure 4).

4.4 Decision Tree with Gradient Boosting

Since the tree structure is too complex for demonstration purposes, we will only show a partial tree representing the first few layers (Figure 6).

4.5 Random Forest

Since the tree structure is too complex for demonstration purposes, we will only show a partial tree representing the first few layers (Figure 8).

4.6 KNN

Since the full KNN decision boundary is too complex for demonstration purposes, we will only show part of the boundary (Figure 10).

4.7 Neural Network

Since the full neural network is too complex for demonstration purposes, we will only show partial neuron graphs (Figure 12).

4.8 Stock Market Data

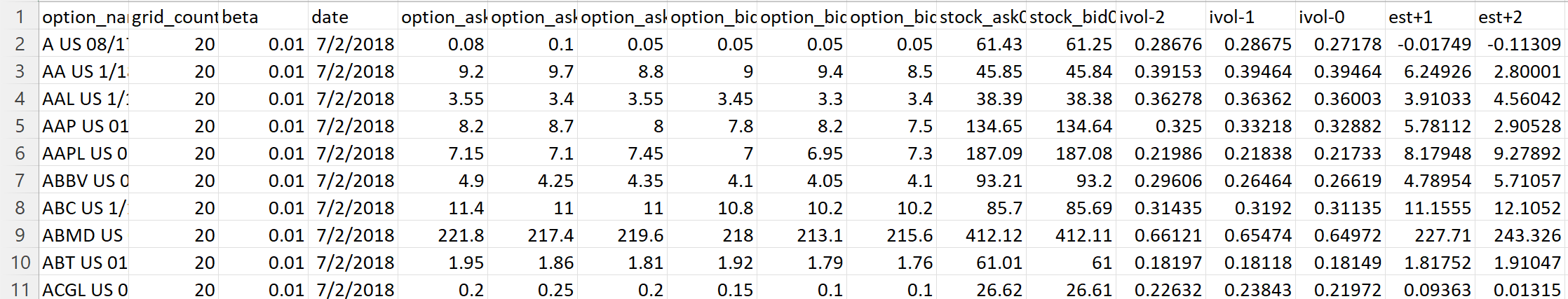

The data used for this project were sourced from Bloomberg.com [7]. The dataset comprises stock prices, implied volatility, option prices, QRM estimators, and estimator errors across five different days for 23,548 unique stocks. This extensive dataset was selected to ensure a comprehensive representation of the entire stock market, avoiding biases toward any specific sector. We believe this diverse dataset provides a robust foundation for achieving more reliable and promising results. Additionally, the chosen features are instrumental in predicting future movements in options, which are subsequently utilized as inputs for our classification models.

4.9 Result

| Method | Accuracy | Precision | Recall |

|---|---|---|---|

| Decision Tree | 58.74% | 62.17% | 77.53% |

| Gradient Boosting | 72.2% | 75.18% | 79.25% |

| Random Forest | 66.64% | 68.67% | 80.38% |

| K Nearest Neighbors | 53.22% | 59.7% | 64.73% |

| Neural Network | 67.36% | 69.6% | 79.75% |

5 Summary and Future Development

Although accurately predicting exact option prices remains challenging due to market volatility, we can make reliable predictions about whether an option's price will increase or decrease. These predictions are derived from market features extracted from the dataset of 23,548 entries and the minimizer obtained using the Quasi-Reversibility Method (QRM). Various classification models were tested, and their results are summarized in the table above. Based on our assumptions, we interpret the precision as a key indicator of profitability when buying a call option. Using the Decision Tree model, we achieved a precision of 62.17%, which improved to 68.67% with the Random Forest model and further to 75.18% with Gradient Boosting (Table 2). Compared to the prior results in Table 1, which showed a precision of 60.32% using a Neural Network, our current models demonstrate a notable improvement in precision and profitability potential.

However, the current approach only predicts whether an option will increase or decrease in value one day in advance, without accounting for factors such as transaction fees, holding periods, or longer-term market volatility. Additionally, the dataset spans a relatively short timeframe, which may limit its ability to capture longer-term market trends. Furthermore, the model currently classifies non-profitable options as price increases, which could skew the interpretation of results.

In future work, we plan to enhance our methodology by predicting the percentage change in option prices rather than simply classifying price movements as increases or decreases. This improvement will provide a more detailed understanding of market dynamics and help traders identify options with higher profit potential. Additionally, we aim to refine the Quasi-Reversibility Method (QRM) minimizer to improve its robustness and accuracy in handling noisy market data, incorporating techniques such as adaptive regularization for more reliable predictions. We also plan to explore advanced machine learning models beyond tree-based classifiers that are capable of capturing complex temporal patterns.

Appendix

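First, we have some input for our classification:

$$\{(x_i, y_i)\}_{i=1}^{n} \tag{27}$$

Also, we have a loss function:

$$L(y_i, F(x)) \tag{28}$$

where

1. $x_i$ - The input data fed into the model,
2. $y_i$ - The output we are trying to predict.

Likelihood Function: The likelihood function describes the joint probability of the observed data as a function of the parameters of our input. For a single observation with predicted probability $p_i$:

$$P(y_i \mid x_i) = p_i^{y_i} (1 - p_i)^{1 - y_i} \tag{29}$$

The likelihood function is the product of all such probabilities:

$$\mathcal{L} = \prod_{i=1}^{n} p_i^{y_i} (1 - p_i)^{1 - y_i} \tag{30}$$

Here, we use the log-likelihood function:

$$\log \mathcal{L} = \sum_{i=1}^{n} \left[ y_i \log p_i + (1 - y_i) \log(1 - p_i) \right] \tag{31}$$

Here, $y_i$ must be binary (either 0 or 1), and $p_i$ represents the predicted probability. Since we aim to improve predictions, we want to maximize the log-likelihood function. To use this as our loss function, we simply negate it:

$$L = -\sum_{i=1}^{n} \left[ y_i \log p_i + (1 - y_i) \log(1 - p_i) \right] \tag{32}$$

Minimizing the log-loss function corresponds to a better fit. Since the likelihood function is a probability function, we convert it into a function of the corresponding odds using the logit function:

$$\log(\text{odds}) = \log\left( \frac{p}{1 - p} \right) \tag{33}$$

where $\frac{p}{1 - p}$ represents the odds of the given probability $p$. Let's perform some algebraic manipulations on the loss for a single observation:

$$-\left[ y \log p + (1 - y) \log(1 - p) \right] = -y \log\left( \frac{p}{1 - p} \right) - \log(1 - p) \tag{34}$$

Let $\log(\text{odds})$ be denoted as $z$. Then, solve for $p$:

$$p = \frac{e^{z}}{1 + e^{z}} \tag{35}$$

Substituting back:

$$\log(1 - p) = \log\left( \frac{1}{1 + e^{z}} \right) = -\log\left(1 + e^{z}\right) \tag{36}$$

Substituting these into the log-loss function:

$$L = -y z + \log\left(1 + e^{z}\right) \tag{37}$$

Differentiating the loss function with respect to $z$:

$$\frac{\partial L}{\partial z} = -y + \frac{e^{z}}{1 + e^{z}} \tag{38}$$

This can be simplified to Predicted minus Observed:

$$\frac{\partial L}{\partial z} = -y + p \tag{39}$$

$$\frac{\partial L}{\partial z} = p - y \tag{40}$$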

[13]
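The "Predicted minus Observed" result can be checked numerically. The sketch below writes the single-observation log-loss as a function of the log-odds, $-y z + \log(1 + e^{z})$ with $z$ the log-odds, and compares the analytic derivative with a central finite difference. The function names, the step size, and the test points are assumptions made for this check.

```python
import math


def log_loss(y, log_odds):
    """Single-observation log-loss expressed in terms of the log-odds."""
    return -y * log_odds + math.log(1.0 + math.exp(log_odds))


def analytic_gradient(y, log_odds):
    """Predicted probability minus observed label."""
    p = math.exp(log_odds) / (1.0 + math.exp(log_odds))
    return p - y


def numeric_gradient(y, log_odds, eps=1e-6):
    """Central finite-difference approximation of the derivative."""
    return (log_loss(y, log_odds + eps) - log_loss(y, log_odds - eps)) / (2.0 * eps)


# Largest disagreement between the two gradients over a few test points
max_gap = max(
    abs(analytic_gradient(y, z) - numeric_gradient(y, z))
    for y in (0, 1)
    for z in (-2.0, -0.5, 0.0, 0.7, 3.0)
)
print(max_gap)
```

The two gradients agree to within numerical error, and at log-odds of 0 the gradient is 0.5 - y, since the predicted probability there is 0.5.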

References

- [1] Su-Hyun Han, Ko Woon Kim, SangYun Kim, Young Chul Youn, Artificial Neural Network: Understanding the Basic Concepts without Mathematics. Available from: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC6428006/, 17(3): 83–89, 2018 Sep. DOI: 10.12779/dnd.2018.17.3.83 (accessed 7 November 2024).

- [2] IBM. What is the K-nearest neighbors algorithm? Available from: https://www.ibm.com/topics/knn#:~:text=The%20k%2Dnearest%20neighbors%20(KNN,used%20in%20machine%20learning%20today. (accessed 7 November 2024).

- [3] Michael V Klibanov, Andrey V Kuzhuget, Kirill V Golubnichiy, An ill-posed problem for the Black-Scholes equation for a profitable forecast of prices of options on real market data, Inverse Problems, 32(1), 2016. DOI: 10.1088/0266-5611/32/1/015010

- [4] Scikit-learn. Supervised learning. Available from: https://scikit-learn.org/stable/supervised_learning.html (accessed 7 November 2024).

- [5] L. Breiman, J. Friedman, R. Olshen, C. Stone, Classification and Regression Trees, Wadsworth, Belmont, CA, 1984. DOI: 10.1201/9781315139470

- [6] Mikhail V. Klibanov, Kirill V. Golubnichiy, Andrey V. Nikitin, Quasi-Reversibility Method and Neural Network Machine Learning for Forecasting of option Prices, Advances in Inverse Problems for Partial Differential Equations, 2023. DOI: https://doi.org/10.1090/conm/784

- [7] University of Washington. Bloomberg Terminal. Available from: https://bloomberg.com (accessed 7 November 2024).

- [8] Lemaréchal, C., Cauchy and the Gradient Method, Doc Math Extra: 251–254, 2012. DOI: 10.1007/978-1-4614-1948-85

- [9] Breiman, L., Random Forests, Machine Learning 45, 5–32, 2001. DOI: 10.1023/A:1010933404324

- [10] Pedregosa, F., Varoquaux, G., Gramfort, A., Michel, V., Thirion, B., Grisel, O., Blondel, M., Prettenhofer, P., Weiss, R., Dubourg, V., Vanderplas, J., Passos, A., Cournapeau, D., Brucher, M., Perrot, M., Duchesnay, E., Scikit-learn: Machine Learning in Python, Journal of Machine Learning Research, 12(2825–2930), 2011. DOI: https://doi.org/10.48550/arXiv.1201.0490

- [11] Klibanov, Michael V., Shananin, Aleksander A., Golubnichiy, Kirill V., Kravchenko, Sergey M., Forecasting options Prices via the Solution of an Ill-Posed Problem for the Black-Scholes Equation, Inverse Problems, 38(11), 2022. DOI: 10.1088/1361-6420/ac91ec

- [12] Pedro Santa-Clara, Alessio Saretto, Option strategies: Good deals and margin calls, Journal of Financial Markets, Volume 12, Issue 3, 2009. DOI: https://doi.org/10.1016/j.finmar.2008.10.001

- [13] Jurafsky, Daniel, Martin, James H., Speech and Language Processing: An Introduction to Natural Language Processing, Computational Linguistics, and Speech Recognition, Prentice Hall PTR (3rd ed.). pp 76-95, 2020.