Sparse Bayesian Estimation of Parameters in Linear-Gaussian State-Space Models

Abstract

State-space models (SSMs) are a powerful statistical tool for modelling time-varying systems via a latent state. In these models, the latent state is never directly observed. Instead, a sequence of data points related to the state is obtained. The linear-Gaussian state-space model is widely used, since it allows for exact inference when all model parameters are known; however, this is rarely the case. The estimation of these parameters is a very challenging but essential task to perform inference and prediction. In the linear-Gaussian model, the state dynamics are described via a state transition matrix. This model parameter is known to be hard to estimate, since it encodes the relationships between the state elements, which are never observed. In many applications, this transition matrix is sparse, since not all state components directly affect all other state components. However, most parameter estimation methods do not exploit this feature. In this work we propose SpaRJ, a fully probabilistic Bayesian approach that obtains sparse samples from the posterior distribution of the transition matrix. Our method explores sparsity by traversing a set of models that exhibit differing sparsity patterns in the transition matrix. Moreover, we also design new effective rules to explore transition matrices within the same level of sparsity. This novel methodology has strong theoretical guarantees, and unveils the latent structure of the data generating process, thereby enhancing interpretability. The performance of SpaRJ is showcased in an example with dimension 144 in the parameter space, and in a numerical example with real data.

Index Terms:

Bayesian methods, graphical inference, Kalman filtering, parameter estimation, sparsity detection, state-space modelling, Markov chain Monte Carlo.

I Introduction

State-space models (SSMs) are a flexible statistical framework for the probabilistic description of time-variable systems via coupled series of hidden states and associated observations. These models are used to decode motor neuron kinematics from hand movements [1], perform epidemiological forecasting for policy makers [2, 3], and to determine the trajectory of lunar spacecraft from noisy telemetry data [4], among many other applications. In general, SSMs are used in many important problems within, but not limited to, signal processing, statistics, and econometrics [5]. In some cases, the true SSM is perfectly known, and the interest is in inferring the sequence of underlying hidden states. In the Bayesian paradigm, estimating the sequence of hidden states is achieved by obtaining a sequence of posterior distributions of the hidden state, known as filtering distributions [6]. The linear-Gaussian SSM (LGSSM) is the case where the state and observation models are linear with Gaussian noises. In this case, the sequence of exact filtering distributions is obtained via the Kalman filtering equations [7]. In the case of non-linear dynamics, the filtering distributions must be approximated, for instance via the extended Kalman filter [8] or the unscented Kalman filter [9]. In even more generic models, such as those with non-Gaussian noise, particle filters (PFs) are often used, which approximate the state posterior distributions via Monte Carlo samples [10, 11, 12, 13, 14]. All of these filtering methods assume that the model parameters are known. However, model parameters are often unknown, and must therefore be estimated. Parameter estimation is a much more difficult task than filtering, and is also more computationally expensive, since most parameter estimation algorithms require a large number of evaluations of the filtering equations to yield acceptable parameter estimates. 
There exist several generic methods to do this, with techniques based on Markov chain Monte Carlo (MCMC) [15] and expectation maximisation (EM) [6] arguably being the most commonly used parameter estimation techniques for state-space models.

When estimating model parameters, it is crucial that the estimates reflect the structure of the underlying system. For instance, in real-world systems, the underlying dynamics are often composed of simple units, with each unit interacting with only a subset of the overall system, but when observed together these units exhibit complex behaviour [16]. This structure can be recovered by promoting sparsity in the parameter estimates. In addition to better representing the underlying system, sparse parameter estimates have several other advantages. By promoting sparsity, uninformative terms are removed from the inference, thereby reducing the dimension of the parameter space and improving model interpretability. Furthermore, parameter sparsity allows us to infer the connectivity of the state space [17], which is useful in several applications, such as biology [18, 19], social networks [20], and neuroscience [21]. In state-space models, the sparsity structure can be represented as a directed graph, with the nodes signifying the state variables, and edges signifying interactions between variables. In the LGSSM specifically, this graph can be represented by an adjacency matrix with the same sparsity pattern as the transition matrix. This interpretation of a sparse transition matrix as a weighted directed graph was recently proposed in the GraphEM algorithm [17, 22, 23], in which a sparse point-wise maximum-a-posteriori estimator for the transition matrix of the LGSSM is obtained via an EM algorithm. However, this point estimator does not quantify uncertainty, and therefore does not allow a probabilistic evaluation of sparsity. The capability to quantify and propagate the uncertainty of an estimate is highly desired in modern applications, as it allows for more informed decision-making processes, as well as providing a better understanding of the underlying model dynamics.

In this work, we propose the sparse reversible jump (SpaRJ) algorithm, a fully Bayesian method to estimate the state transition matrix in LGSSMs. This matrix is probabilistically approximated by a stochastic measure constructed from samples obtained from the posterior of this model parameter. The method (a) promotes sparsity in the transition matrix, (b) quantifies the uncertainty, including sparsity uncertainty in each element of the transition matrix, and (c) provides a probabilistic interpretation of (order one) Granger causality between the hidden state dimensions, which is interpreted as a probabilistic network of how the information flows between consecutive time steps.

SpaRJ exploits desirable structural properties within the SSM, which present computational advantages w.r.t. other well-established MCMC methods such as particle MCMC [15], namely a decrease in computational cost for a given performance, or an improvement in performance for a given computational cost.

Our method is built on a novel interpretation of sparsity in the transition matrix as a model constraint. SpaRJ belongs to the family of reversible jump Markov chain Monte Carlo (RJMCMC) methods [24, 25], a framework for the simultaneous sampling of both model and parameter spaces. We note that RJMCMC is not a single algorithm, but a wide family of methods (as is the case for MCMC methods). Thus, specific algorithms require significant design choices so that the RJMCMC approach can be applied in different scenarios [24, 25, 26, 27]. In the case of SpaRJ, we design both specific transition kernels and parameter rejuvenation schemes, so that the algorithm can efficiently explore both the parameter space and the sparsity of the parameter in a hierarchical fashion. As RJMCMC is itself a modified Metropolis-Hastings method, the solid theoretical guarantees of both precursors are inherited by our proposed algorithm, such as the asymptotic correctness of the distribution of both model and parameter [24]. Our method outperforms the current state-of-the-art methods in two numerical experiments. We test SpaRJ in a synthetic example with dimension up to 144 in the parameter space. In this example, a total of $2^{144}$ models are to be explored (i.e., the number of distinct sparsity patterns). Then, we run a numerical example with real time series of daily temperature measurements. The novel probabilistic graphical interpretation allows recovery of a probabilistic (Granger) causal graph, showcasing the large impact that this novel approach can have in relevant applications of science and engineering. The model transition kernels used by SpaRJ are designed to exploit sparse structures that are common in many applications, which reduces the computational complexity of the resulting (sparse) models once the transition matrix has been estimated (see for instance [16]).
SpaRJ retains strong theoretical guarantees, inherited from the underlying Metropolis-Hastings method, thanks to careful design of the transition kernels, e.g., preserving the convergence properties of the algorithm. Extending our methodology to parameters other than the transition matrix is readily possible. In particular, we make explicit both a model and a parameter proposal for extension to the state covariance parameter $\mathbf{Q}$.

Contributions. The main contributions of this paper¹ are summarised as follows:

¹ A limited version of this work was presented by the authors in the conference paper [28], which contains a simpler version of the method with no theoretical discussion, methodological insights, or exhaustive numerical validation.

- The proposed SpaRJ algorithm is the first method to estimate the state transition matrix $\mathbf{A}$ of LGSSMs probabilistically (i.e., treating $\mathbf{A}$ as a random variable rather than a fixed unknown) under sparsity constraints. This is achieved by sampling the posterior distribution of $\mathbf{A}$ under a new interpretation of sparsity as a model. This new capability allows for powerful inference to be performed with enhanced interpretability in this relevant model, e.g., the construction of a probabilistic Granger causal network mapping the state space, which was not possible before.

- SpaRJ is the first method to quantify the uncertainty associated with the occurrence of sparsity in SSMs, e.g., the probability of sparsity occurring in a given element of the transition matrix. This capability is unique among parameter estimation techniques in this field.

- Our method proposes an interpretation of sparsity as a model, allowing the use of RJMCMC for sparsity detection in state-space modelling. This is the first RJMCMC method to have been applied to sparsity recovery in state-space models, probably because RJMCMC methods require careful design of several parts of the algorithm, especially for high-dimensional parameter spaces such as the matrix-valued parameters of the LGSSM.

Structure. In Section II we introduce the components of the problem and some of the underlying algorithms, as well as the notation we will use. Section III presents the method, which is elaborated further in Section IV. We present several challenging numerical experiments in Section V, showcasing the performance of our method and comparing it to a recent method with similar goals. We provide some concluding remarks in Section VI.

II Background

II-A State-space models

Let us consider the additive linear-Gaussian state-space model (LGSSM), given by

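$$
\begin{aligned}
\mathbf{x}_t &= \mathbf{A}\mathbf{x}_{t-1} + \mathbf{q}_t, \\
\mathbf{y}_t &= \mathbf{H}\mathbf{x}_t + \mathbf{r}_t,
\end{aligned}
\tag{1}
$$

for $t = 1, \dots, T$, where $\mathbf{x}_t \in \mathbb{R}^{d_x}$ is the hidden state with associated observation $\mathbf{y}_t \in \mathbb{R}^{d_y}$ at time $t$, $\mathbf{A} \in \mathbb{R}^{d_x \times d_x}$ is the state transition matrix, $\mathbf{H} \in \mathbb{R}^{d_y \times d_x}$ is the observation matrix, $\mathbf{q}_t \sim \mathcal{N}(\mathbf{0}, \mathbf{Q})$ is the state noise, and $\mathbf{r}_t \sim \mathcal{N}(\mathbf{0}, \mathbf{R})$ is the observation noise. The state prior is $\mathbf{x}_0 \sim \mathcal{N}(\boldsymbol{\mu}_0, \boldsymbol{\Sigma}_0)$, with $\boldsymbol{\mu}_0$ and $\boldsymbol{\Sigma}_0$ known. We assume that the model parameters remain fixed over time.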

A common task in state-space modelling is the estimation of the sequence of distributions $p(\mathbf{x}_t \mid \mathbf{y}_{1:t})$ for $t = 1, \dots, T$, also known as the filtering distributions. In the case of the LGSSM, these distributions are obtained exactly via the Kalman filter equations [6, 7]. The linear-Gaussian assumption is not overly restrictive, as many systems can be approximated via linearisation, and Gaussian noise is very common in continuous-valued problems.

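Note that the posterior distribution of any given parameter can be factorised as

$$
p(\theta \mid \mathbf{y}_{1:T}) \propto p(\mathbf{y}_{1:T} \mid \theta)\, p(\theta), \tag{2}
$$

where $\theta$ is the parameter of interest, $p(\theta)$ is the prior ascribed to the parameter, and the likelihood $p(\mathbf{y}_{1:T} \mid \theta)$ is extracted from the Kalman filter via the recursion

$$
p(\mathbf{y}_{1:T} \mid \theta) = \prod_{t=1}^{T} p(\mathbf{y}_t \mid \mathbf{y}_{1:t-1}, \theta),
\qquad
p(\mathbf{y}_t \mid \mathbf{y}_{1:t-1}, \theta) = \mathcal{N}\big(\mathbf{y}_t;\, \mathbf{H}\mathbf{m}_{t|t-1},\, \mathbf{H}\mathbf{P}_{t|t-1}\mathbf{H}^\top + \mathbf{R}\big), \tag{3}
$$

where $\mathbf{m}_{t|t-1}$ and $\mathbf{P}_{t|t-1}$ are the predictive mean and covariance of $\mathbf{x}_t$ given $\mathbf{y}_{1:t-1}$, computed by the Kalman filter, and $\mathbf{y}_{1:0}$ denotes the empty set [6]. This factorisation gives the target distribution for estimating parameters in an LGSSM. In this work we focus on probabilistically estimating $\mathbf{A}$, and therefore we are interested in the posterior $p(\mathbf{A} \mid \mathbf{y}_{1:T})$.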
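To make the likelihood evaluation in Eqs. (2) and (3) concrete, the following is a minimal NumPy sketch of a Kalman filter that accumulates $\log p(\mathbf{y}_{1:T} \mid \theta)$ for the model in Eq. (1). It is an illustration under our own assumptions, not the authors' implementation: the function name `kalman_loglik` and its argument order are hypothetical, `y` is a $(T, d_y)$ array, and `mu0`, `Sigma0` stand for the prior mean and covariance of $\mathbf{x}_0$.

```python
import numpy as np


def kalman_loglik(y, A, H, Q, R, mu0, Sigma0):
    """Return log p(y_{1:T} | A, H, Q, R) for the LGSSM in Eq. (1).

    y has shape (T, d_y); the state prior is x_0 ~ N(mu0, Sigma0).
    """
    m, P = mu0.copy(), Sigma0.copy()
    loglik = 0.0
    for y_t in y:
        # Prediction: p(x_t | y_{1:t-1}) = N(m_pred, P_pred)
        m_pred = A @ m
        P_pred = A @ P @ A.T + Q
        # Predictive distribution of the observation: N(H m_pred, S), as in Eq. (3)
        S = H @ P_pred @ H.T + R
        v = y_t - H @ m_pred  # innovation
        _, logdet = np.linalg.slogdet(S)
        loglik += -0.5 * (len(y_t) * np.log(2.0 * np.pi) + logdet
                          + v @ np.linalg.solve(S, v))
        # Update: p(x_t | y_{1:t}) = N(m, P), with gain K = P_pred H^T S^{-1}
        K = np.linalg.solve(S, H @ P_pred).T
        m = m_pred + K @ v
        P = P_pred - K @ S @ K.T
    return loglik
```

Each call makes one pass over the data, so every likelihood evaluation in a sampler built on Eq. (2) costs one full Kalman filter run.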

In LGSSMs, $\mathbf{H}$ and $\mathbf{R}$ are frequently assumed to be known as parameters of the observation instrument, but $\mathbf{A}$ and $\mathbf{Q}$ are often unknown. For the purposes of this work, we assume that all parameters except $\mathbf{A}$ are known, or are suitably estimated, although our method can be extended to all parameters of the linear-Gaussian state-space model, as discussed in Section IV-E.

II-B Parameter estimation in SSMs

The estimation of the parameters of a state-space model is, in general, a difficult and computationally intensive task [6, 15]. This difficulty follows from the state dynamics not being directly observed, and stochasticity in the observations.

In this work we focus on Bayesian techniques, since our method is Bayesian and this focus allows easier comparison. Frequentist methods for parameter estimation in SSMs are, however, common, with some relevant references being [29, 30, 31].

There are two main approaches to estimating and summarising parameters in state-space models, which we can broadly classify as point estimation methods and probabilistic methods.

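Point estimation methods. The goal of a point estimation method is to find a single parameter value that is, in some way, the value that best summarises the parameter given the data. An archetypal point estimate is the maximum likelihood estimator (MLE) [32]. In a state-space model, when estimating a parameter denoted $\theta$, the MLE, denoted $\hat{\theta}_{\mathrm{MLE}}$, is given by

$$
\hat{\theta}_{\mathrm{MLE}} = \arg\max_{\theta}\, p(\mathbf{y}_{1:T} \mid \theta).
$$

The MLE is fundamentally a frequentist estimator, and hence no prior distribution is used. The Bayesian equivalent to the maximum likelihood estimator is the maximum a posteriori (MAP) estimator, denoted $\hat{\theta}_{\mathrm{MAP}}$, and given by

$$
\hat{\theta}_{\mathrm{MAP}} = \arg\max_{\theta}\, p(\theta \mid \mathbf{y}_{1:T}) = \arg\max_{\theta}\, p(\mathbf{y}_{1:T} \mid \theta)\, p(\theta),
$$

from which we see that the MLE is the MAP if $p(\theta)$ is constant, i.e., under a flat prior.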

A common method for point estimation, in LGSSMs and in general, is the expectation-maximisation (EM) algorithm [33]. In the case of the LGSSM, explicit formulae exist for the conditional MLE of all parameters, and thus the model parameters can be estimated iteratively. The EM algorithm allows for all model parameters to be estimated simultaneously, but converges much more slowly as the number of parameters to estimate increases [6]. Furthermore, this method does not allow for quantification of the uncertainty in the resulting estimates.

Probabilistic methods. Probabilistic (or distributional) methods estimate the target probability density function (pdf) of the parameter given the data, often through the generation of Monte Carlo samples. In the case of state-space models, for a parameter $\theta$ the target distribution is $p(\theta \mid \mathbf{y}_{1:T})$, and the set of Monte Carlo samples is $\{\theta^{(s)}\}_{s=1}^{S}$, with $\theta^{(s)} \sim p(\theta \mid \mathbf{y}_{1:T})$. A common class of methods used to obtain these samples is Markov chain Monte Carlo (MCMC), with methods such as particle MCMC [15] seeing wide use in SSMs. MCMC is a class of sampling methods that construct, and subsequently sample from, a Markov chain that has the target as its equilibrium distribution [26]. The elements of this chain are then taken to be Monte Carlo samples from the target distribution, although typically a number of the initial samples (the burn-in) are discarded to ensure that the samples used are from after the chain has converged [26].
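As an illustration of the MCMC principle described above (a chain whose equilibrium distribution is the target, with initial samples discarded as burn-in), the following sketch implements a generic random-walk Metropolis-Hastings sampler. The function name `rwmh`, the Gaussian random-walk proposal and the toy target are assumptions for illustration; in an SSM, `log_target` would be the log-prior plus the Kalman filter log-likelihood.

```python
import numpy as np


def rwmh(log_target, theta0, n_iter, step, burn_in, seed=0):
    """Random-walk Metropolis-Hastings; returns the post-burn-in samples."""
    rng = np.random.default_rng(seed)
    theta = np.asarray(theta0, dtype=float)
    log_p = log_target(theta)
    samples = []
    for _ in range(n_iter):
        # Gaussian random-walk proposal centred at the current state
        proposal = theta + step * rng.standard_normal(theta.shape)
        log_p_prop = log_target(proposal)
        # The proposal is symmetric, so the acceptance ratio is the target ratio
        if np.log(rng.uniform()) < log_p_prop - log_p:
            theta, log_p = proposal, log_p_prop
        samples.append(theta.copy())  # on rejection the current state is repeated
    return np.array(samples[burn_in:])
```

For example, `rwmh(lambda th: -0.5 * np.sum((th - 3.0) ** 2), np.zeros(1), 20000, 1.0, 2000)` targets $\mathcal{N}(3, 1)$ and returns 18,000 post-burn-in samples whose mean should be close to 3.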

Note that there exist probabilistic methods, such as the Laplace approximation [34] and variational inference [35], that provide analytical approximations and do not directly give samples. These methods are seldom used in state-space modelling. Probabilistic methods give more flexibility than point estimates, as they capture distributional behaviour and inherently quantify uncertainty, while still allowing point estimates to be computed from the samples; for example, the MAP estimate can be approximated by the sample with the highest posterior density.

II-C Sparse modelling

When fitting and designing statistical models, the presence of sparsity in parameters is often desirable, as it reduces the number of relevant variables, thus easing interpretation and simplifying inference. Furthermore, real systems are often made up of several interacting dense blocks that, when taken as a whole, exhibit complex dynamics [16]. Sparse estimation methods allow for this structure to be recovered, resulting in estimates that reflect the structure of the underlying system. Sparsity is ubiquitous within signal processing, where decomposing a signal into a sparse combination of components is very common [36, 37, 38], and such decompositions can be parameterised via model parameters. Furthermore, within signal processing there exist a number of sparse Bayesian methods, such as [39, 40, 41, 42], although these do not operate within the paradigm of state-space modelling.

There are several approaches to estimating model parameters such that sparsity may be present. One approach is to construct many models with unique combinations of sparse and dense elements, fit all of these models, and then select the best model according to some criterion (see [43, 44, 45] for examples). This approach is conceptually sound, but computationally expensive for even a small number of parameters $K$, as $2^K$ models must be fitted in order to obtain likelihood estimates or other goodness-of-fit metrics. Another approach is to estimate the model parameters under a sparsity-inducing penalty, the classic example of such a penalty being the LASSO [46, 47]. This approach, commonly called regularisation, requires only one model to be fitted rather than many, but increases the computational complexity of fitting the model. This single regularised estimate is, in most cases, more expensive to compute than a non-regularised estimate as required by the previous approach, but this cost is typically far less than the cumulative cost of all required estimates in the previous approach. Regularisation is a common way to obtain sparse estimates, and can be extended to Bayesian modelling and estimation in the form of sparsity-inducing priors [47, 48].
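The following sketch illustrates why an $\ell_1$ (LASSO) penalty yields exact zeros: its proximal step is a soft-thresholding operator that sets small coefficients to exactly zero. It solves a generic linear-regression LASSO by the iterative shrinkage-thresholding algorithm (ISTA); this is a standard textbook illustration rather than a method used in this paper, and the function names are hypothetical.

```python
import numpy as np


def soft_threshold(z, tau):
    """Proximal operator of tau * ||.||_1: shrinks entries and zeroes small ones."""
    return np.sign(z) * np.maximum(np.abs(z) - tau, 0.0)


def lasso_ista(X, y, lam, n_iter=5000):
    """Minimise 0.5 * ||y - X b||^2 + lam * ||b||_1 by proximal gradient (ISTA)."""
    L = np.linalg.norm(X, 2) ** 2  # Lipschitz constant of the smooth part's gradient
    b = np.zeros(X.shape[1])
    for _ in range(n_iter):
        grad = X.T @ (X @ b - y)  # gradient of the least-squares term
        b = soft_threshold(b - grad / L, lam / L)
    return b
```

With an orthonormal design ($X = I$) the solution reduces to soft-thresholding $y$ directly, so `lasso_ista(np.eye(4), np.array([3.0, 0.5, -2.0, 0.1]), 1.0)` returns values equal to `[2, 0, -1, 0]`, with two coefficients exactly zero.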

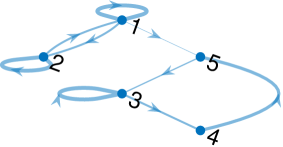

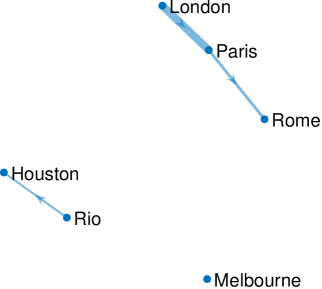

In LGSSMs, a sparse estimate of the transition matrix $\mathbf{A}$ can be interpreted as the adjacency matrix of a weighted directed graph $\mathcal{G}$, with the nodes being the state elements, and the edges the corresponding non-zero elements of $\mathbf{A}$ [17, 22]. We illustrate this with an example in Fig. 1. We note that there exist a number of graph estimation methods that can be applied to time series data, such as [49, 50, 51, 52], although these methods do not exploit the structure of the state-space model. Furthermore, these methods often impose an acyclicity constraint, which prevents the estimated graphs from containing cycles, even though cycles are a common feature of real-world dynamical systems, for example those resulting from discretised systems of ODEs.

The graph $\mathcal{G}$ thus encodes the linear, between-step relationships of state elements, simplifying model interpretation. Under this graphical interpretation, $A_{ij}$ being non-zero implies that knowledge of the $j$th element of the state improves the prediction of the $i$th element. The presence of an edge from node $j$ to node $i$ implies a Granger-causal relationship between the state elements, as knowledge of the past values of the $j$th element at time $t-1$ improves the prediction of the $i$th element at time $t$, which is precisely the definition of a Granger-causal relationship [53].
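As a small illustration of this reading of $\mathbf{A}$, the sketch below lists the directed edges $j \to i$ implied by the non-zero entries $A_{ij}$ under $\mathbf{x}_t = \mathbf{A}\mathbf{x}_{t-1} + \mathbf{q}_t$. It uses 0-based indices and includes self-loops from the diagonal; the function name and the `tol` argument are hypothetical additions.

```python
import numpy as np


def granger_edges(A, tol=0.0):
    """Edges (j, i), meaning j -> i, of the directed graph with adjacency matrix A.

    Under x_t = A x_{t-1} + q_t, a non-zero A[i, j] means the j-th state
    element at time t-1 enters the prediction of the i-th element at time t.
    """
    rows, cols = np.nonzero(np.abs(A) > tol)
    return [(int(j), int(i)) for i, j in zip(rows, cols)]
```

For `A = np.array([[0.9, 0.0, 0.0], [0.5, 0.8, 0.0], [0.0, 0.0, 0.7]])`, the result is `[(0, 0), (0, 1), (1, 1), (2, 2)]`: state element 0 Granger-causes element 1, and each element depends on its own past.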

II-D Reversible jump Markov chain Monte Carlo

Reversible jump Markov chain Monte Carlo (RJMCMC) was proposed as a method for Bayesian model selection [24], and has since seen use in fields such as ecology [54], Gaussian mixture modelling [27], and hidden Markov modelling [25]. RJMCMC has also been applied within the realm of signal processing, with some relevant references being [55, 56, 57]. However, RJMCMC has not been applied to the estimation of the sparsity of model parameters within signal processing.

RJMCMC is an extension of the Metropolis-Hastings algorithm that allows for the sampling of a discrete model space, and thus the inclusion of many models within a single sampling chain. RJMCMC is a hierarchical sampler, with an upper layer sampling the models, and a lower layer sampling the posterior distribution of the parameters within the model. This hierarchy allows the use of standard MCMC methods for the lower layer, with the difficulty coming in designing the upper layer [27].

RJMCMC traverses the model space via transition kernels between pairs of models, with the jumps occurring probabilistically. This lends the model space an interpretation as a directed graph, with nodes representing the models and edges representing the jumps between models. Let $\Theta_k$ be the parameter space associated with model $k$, and $\theta_k \in \Theta_k$ an associated realisation of the model parameters. Denote by $p_{k \to k'}$ the probability of jumping from model $k$ to model $k'$. Note that $p_{k \to k'}$ is zero if and only if $p_{k' \to k}$ is zero. Let $k$ be the current model, and $k'$ be a candidate model. In order to construct a Markov kernel for the transition between models, a symmetry constraint is imposed, i.e., if it is possible to jump from $k$ to $k'$, it must also be possible to jump from $k'$ to $k$ [26]. In general, however, the parameter spaces $\Theta_k$ and $\Theta_{k'}$ do not have equal dimension, hence it is not possible to construct an invertible mapping between them, violating the required symmetry. Reversible jump MCMC addresses this by introducing a dimension matching condition [24]; the spaces $\Theta_k$ and $\Theta_{k'}$ are augmented with simulated draws $\mathbf{u} \sim g_{k \to k'}$ and $\mathbf{u}' \sim g_{k' \to k}$ from selected distributions such that

$$
(\theta_{k'}, \mathbf{u}') = h_{k \to k'}(\theta_k, \mathbf{u}),
\qquad
\dim(\theta_k) + \dim(\mathbf{u}) = \dim(\theta_{k'}) + \dim(\mathbf{u}'),
$$

where $h_{k \to k'}$ is a bijection, and $g_{k \to k'}$, $g_{k' \to k}$ are known distributions.

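The parameter mappings and stochastic draws change the equilibrium distribution of the chain, which means that, without correction, sampling would not be asymptotically correct. This is counteracted by modifying the acceptance ratio; in the case of jumping from model $k$ to model $k'$, the acceptance probability is given by

$$
\alpha = \min\left\{1,\;
\frac{\pi(k', \theta_{k'})\, p_{k' \to k}\, g_{k' \to k}(\mathbf{u}')}
     {\pi(k, \theta_k)\, p_{k \to k'}\, g_{k \to k'}(\mathbf{u})}
\left| \frac{\partial h_{k \to k'}(\theta_k, \mathbf{u})}{\partial (\theta_k, \mathbf{u})} \right|
\right\}, \tag{4}
$$

where $\pi(k, \theta_k)$ is the (unnormalised) posterior density associated with model $k$, evaluated at $\theta_k$. The proposal $(k', \theta_{k'})$ is accepted with probability $\alpha$, and is otherwise rejected. On rejection, the previous value of the chain, $(k, \theta_k)$, is kept.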
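To show how the terms of Eq. (4) combine in practice, the sketch below evaluates $\log\alpha$ in log-space, which avoids underflow when posterior densities are tiny. The function and argument names are hypothetical; `log_g_back` and `log_g_fwd` are zero when a move has no auxiliary draws, and `log_abs_jac` is zero for volume-preserving maps such as the identity.

```python
import math


def rj_log_acceptance(log_pi_new, log_pi_old, p_back, p_fwd,
                      log_g_back=0.0, log_g_fwd=0.0, log_abs_jac=0.0):
    """log(alpha) of Eq. (4), computed in log-space.

    log_pi_new/old : log of the unnormalised posterior pi(k', theta_k') / pi(k, theta_k)
    p_back, p_fwd  : model-jump probabilities p_{k'->k} and p_{k->k'}
    log_g_back/fwd : log-densities of the auxiliary draws u' and u (0.0 if none)
    log_abs_jac    : log |det| of the Jacobian of the bijection h_{k->k'}
    """
    log_ratio = (log_pi_new + math.log(p_back) + log_g_back
                 - log_pi_old - math.log(p_fwd) - log_g_fwd + log_abs_jac)
    return min(0.0, log_ratio)  # log of min{1, ratio}
```

For instance, with equal posterior values, `rj_log_acceptance(-10.0, -10.0, p_back=0.25, p_fwd=0.5)` equals $\log 0.5$, reflecting that the reverse jump is proposed half as often as the forward jump.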

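In this way, given data $\mathbf{y}_{1:T}$, RJMCMC samples the joint posterior $p(k, \theta_k \mid \mathbf{y}_{1:T})$. However, in the case where only $\theta_k$ is of interest, following standard Monte Carlo rules, we can discard the samples of $k$ to obtain samples from the marginal posterior of the parameters [26].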

Reversible jump MCMC incorporates many models into a single chain, so it is simple to compare or average models. However, the parameter mappings and model jump probabilities must be well designed. Poor selection of these parameters will typically lead to poor mixing in the model space [24, 26]. Our method explores only a single overall model, which simplifies the mappings. We impose a pairwise structure on the model space, simplifying the jumps significantly.

II-E Model definitions and notation

In order to use RJMCMC to explore sparsity in the transition matrix of a linear-Gaussian state-space model, we must first construct a set of candidate sub-models of the LGSSM that exhibit various sparsity levels. To this end we introduce the notation in Table I.

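| Notation | Meaning |
|---|---|
| $\mathcal{M}_s$ | Model at iteration $s$ |
| $\mathcal{D}_s$ | Indices of dense elements in $\mathbf{A}$ under $\mathcal{M}_s$ |
| $\Theta_s$ | Parameter space sampled at iteration $s$ |
| $K_s$ / $\bar{K}_s$ | Number of dense/sparse elements at iteration $s$ |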

Denote by $\mathcal{M}_s$ the model selected by the algorithm at iteration $s$. This model is uniquely defined by the associated set of indices of dense elements in $\mathbf{A}$, which we denote $\mathcal{D}_s$. Note that there are thus $2^{d_x^2}$ models in our model space, precluding parallel evaluation of all models even for small $d_x$. It is therefore not possible to use methods such as Bayes factors or marginal likelihood to compare models, as is standard in Bayesian model selection in state-space models [43, 45], since these require every model to be evaluated, which is computationally infeasible. The number of elements of $\mathcal{D}_s$, denoted by $K_s$, is the number of dense elements at iteration $s$, and therefore the number of non-zero elements of $\mathbf{A}_s$. Denote by $\bar{K}_s = d_x^2 - K_s$ the number of sparse elements at iteration $s$. If the true value of a parameter is known, then it will be presented without subscript or superscript. We denote the complement of a set by a superscript $c$, and note that $\mathcal{D}_s^c$ denotes the set of indices of elements of $\mathbf{A}$ that are sparse at iteration $s$.
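As a worked count (our own arithmetic from the definitions above), each of the $d_x^2$ elements of $\mathbf{A}$ is either dense or sparse, and grouping the models by their number of dense elements $K$ gives

```latex
\#\{\mathcal{M}\} = \sum_{K=0}^{d_x^2} \binom{d_x^2}{K} = 2^{d_x^2},
\qquad
d_x = 12:\quad 2^{144} \approx 2.23 \times 10^{43}.
```

For a $12 \times 12$ transition matrix (144 elements, the parameter dimension quoted in the abstract), this is far too many models to enumerate, which is why the model space must be explored by sampling.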

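Each model has an associated parameter space, which we denote by $\Theta_s$. As the parameter space of a sparse parameter is $\{0\}$, we therefore have

$$
\Theta_s = \prod_{(i,j) \in \mathcal{D}_s} \mathcal{X}_{ij} \times \prod_{(i,j) \in \mathcal{D}_s^c} \{0\}, \tag{5}
$$

where $\mathcal{X}_{ij}$ is the support for $A_{ij}$. In the Bayesian paradigm, we can interpret the sparsity of model $\mathcal{M}_s$ as a prior constraint induced by $\mathcal{D}_s$, under which the elements indexed by $\mathcal{D}_s^c$ are always of value $0$, and the elements indexed by $\mathcal{D}_s$ are distributed according to the prior on $\mathbf{A}$. This is equivalent to fixing the value of the elements of $\mathbf{A}$ indexed by $\mathcal{D}_s^c$ to $0$, as in Eq. (5).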
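The structure of Eq. (5) can be mimicked with a boolean mask over the entries of $\mathbf{A}$. The sketch below, with hypothetical helper names and 0-based indices, builds the mask from an index set $\mathcal{D}_s$ and maps an arbitrary matrix into $\Theta_s$ by fixing the entries indexed by $\mathcal{D}_s^c$ to zero.

```python
import numpy as np


def mask_from_indices(dense_idx, d_x):
    """Boolean d_x-by-d_x mask that is True exactly on the index set D_s."""
    mask = np.zeros((d_x, d_x), dtype=bool)
    for i, j in dense_idx:
        mask[i, j] = True
    return mask


def project_to_model(A, dense_mask):
    """Map A into Theta_s: keep the dense entries, fix the sparse entries to 0."""
    return np.where(dense_mask, A, 0.0)
```

Here `mask.sum()` plays the role of $K_s$ and `(~mask).sum()` that of $\bar{K}_s$.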

We denote the identity matrix by , and the -vector with all elements equal to by . We denote by any unspecified parameters of a function or distribution. For example, means that the parameters of the distribution are unspecified, typically as they are irrelevant to the discussion.

We provide a table of our most used acronyms in Table II.

| Abbreviation | Meaning |
|---|---|
| MCMC | Markov chain Monte Carlo |
| RJMCMC | Reversible jump MCMC |
| MH | Metropolis-Hastings |
| RWMH | Random walk Metropolis-Hastings |
| EM | Expectation-maximisation |
| SSM | State-space model |
| LGSSM | Linear-Gaussian state-space model |

III The SpaRJ algorithm

We now present the SpaRJ algorithm, a novel RJMCMC method to obtain sparse samples from the posterior distribution of the transition matrix of an LGSSM. We present our method in Algorithm 1 for estimating the transition matrix $\mathbf{A}$, although the algorithm can be adapted to estimate any unknown parameter of the LGSSM. Note that our method samples the joint posterior $p(\mathcal{M}, \mathbf{A} \mid \mathbf{y}_{1:T})$ hierarchically, by first sampling the model $\mathcal{M}_s$ and then sampling $\mathbf{A}_s$ conditional on $\mathcal{M}_s$. However, as we are only interested in the posterior of the transition matrix $\mathbf{A}$, we marginalise out the model by discarding the samples of $\mathcal{M}_s$ when performing inference [26].

In order to apply our method, we must provide the values of all known parameters of the LGSSM (used when evaluating the Kalman filter), and initial values for the unknown quantities $\mathcal{M}_0$ and $\mathbf{A}_0$. We initialise the model sampling by setting $\mathcal{M}_0$ to the fully dense model. The initial value $\mathbf{A}_0$ can be selected in a number of ways, such as randomly or via optimisation [26]. We obtain the initial log-likelihood, $\ell_0$, by running a Kalman filter with the chosen initial value $\mathbf{A}_0$, giving $\ell_0 = \log p(\mathbf{y}_{1:T} \mid \mathbf{A}_0)$. We define a prior $p(\mathbf{A})$ on the transition matrix, with some examples given in Section IV-C2. The prior distribution incorporates our prior knowledge of the value of the transition matrix $\mathbf{A}$, and can be used to promote sparsity. However, it is not required in order to recover sparse samples, and can be chosen to be uninformative or diffuse.

The method iterates $S$ times, each iteration yielding a single sample, and outputs $S$ samples, $\{\mathbf{A}_s\}_{s=1}^{S}$. While adapting the number of iterations is possible, e.g., by following [58], we present the method with $S$ fixed so as to provide a simpler algorithm. Note that at each iteration $s$ we start in model $\mathcal{M}_{s-1}$, with transition matrix $\mathbf{A}_{s-1}$ and log-likelihood $\ell_{s-1}$. Each iteration is split into three steps: model proposal (Step 1), parameter proposal (Step 2), and accept-reject (Step 3). We note that the model is not fixed, and is sampled at each iteration, allowing for evidence-based recovery of sparsity.

Step 1: Propose $\mathcal{M}'_s$. At iteration $s$, we retain the previous model with probability (w.p.) $1 - p_{\mathrm{jump}}$, hence setting $\mathcal{M}'_s = \mathcal{M}_{s-1}$. If a model jump occurs, we set the proposed model $\mathcal{M}'_s$ to be sparser than $\mathcal{M}_{s-1}$ w.p. $p_{\mathrm{sparse}}$, and denser otherwise. To create a sparser model, we select a number of dense elements to make sparse. To create a denser model, we select a number of sparse elements to make dense. The number of elements to change, $J$, is drawn from a truncated Poisson distribution (see Appendix -B) with rate parameter $\lambda$, whose range is restricted in order to bias the jump towards models close to the previous model. This distribution is chosen because its support is easily scaled, which is needed as the maximal jump distance changes with the current model. Note that this distribution does not form a prior over the model space, but is instead used to generate jump kernels, which are then used to explore the model space. The model space prior is discussed in Section IV-C3, and is by default diffuse, i.e., $p(\mathcal{M}) \propto 1$. The truncated Poisson distribution is simple to sample, as, having finite support, it is a special case of the categorical distribution. The elements to change are then selected uniformly. The proposed model $\mathcal{M}'_s$ is always strictly denser than, strictly sparser than, or identical to $\mathcal{M}_{s-1}$, following the construction of model jumps in Section IV-A2.
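The following NumPy sketch mirrors Step 1 as described above. It represents a model by a boolean mask of dense entries, and assumes that the truncated Poisson distribution has support $\{1, \dots, J_{\max}\}$ with probabilities proportional to $\lambda^j / j!$; the exact definition is in Appendix -B, which is not reproduced here. When only one jump direction is available (the fully dense or fully sparse model), that direction is taken deterministically, as noted in Section IV-A1. All function and argument names are hypothetical.

```python
import math

import numpy as np


def truncated_poisson(lam, j_max, rng):
    """Draw J in {1, ..., j_max} with P(J = j) proportional to lam**j / j! (assumed form)."""
    j = np.arange(1, j_max + 1)
    log_w = j * math.log(lam) - np.array([math.lgamma(k + 1) for k in j])
    w = np.exp(log_w - log_w.max())  # stabilise before normalising
    return int(rng.choice(j, p=w / w.sum()))


def propose_model(dense_mask, p_jump, p_sparse, lam, rng):
    """Step 1 sketch: return a proposed boolean mask of dense entries."""
    if rng.uniform() >= p_jump:
        return dense_mask.copy()  # retain the previous model
    flat = dense_mask.ravel().copy()
    can_sparsify, can_densify = flat.any(), (~flat).any()
    if can_sparsify and can_densify:
        go_sparse = rng.uniform() < p_sparse
    else:
        go_sparse = bool(can_sparsify)  # only one direction is available
    # Candidate entries: dense ones to remove, or sparse ones to add
    candidates = np.flatnonzero(flat) if go_sparse else np.flatnonzero(~flat)
    j = truncated_poisson(lam, len(candidates), rng)
    chosen = rng.choice(candidates, size=j, replace=False)  # uniform selection
    flat[chosen] = not go_sparse  # True makes entries dense, False makes them sparse
    return flat.reshape(dense_mask.shape)
```

Because entries are only ever switched in one direction per call, the proposed mask is always a subset or a superset of the current one.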

Step 2: Propose $\mathbf{A}'_s$. If the proposed model $\mathcal{M}'_s$ differs from the previous model $\mathcal{M}_{s-1}$, then the parameters $\mathbf{A}_{s-1}$ are mapped to $\mathbf{A}'_s$ via Eq. (11), a modified identity mapping. This mapping is augmented with stochastic draws if the dimension of the parameter space increases, and has elements removed if the dimension decreases. The Jacobian matrix of this mapping is the identity, so its determinant is one and it does not appear in the acceptance ratio.

If the proposed model $\mathcal{M}'_s$ is the same as the previous model $\mathcal{M}_{s-1}$, then $\mathbf{A}'_s$ is sampled from the conditional posterior $p(\mathbf{A} \mid \mathcal{M}_{s-1}, \mathbf{y}_{1:T})$. To achieve this, we use a random walk Metropolis-Hastings (RWMH) sampler. The RWMH sampler requires a single run of the Kalman filter per iteration, which is the most computationally expensive component of the algorithm. Only a single run is needed because the accept-reject decision is made jointly in Step 3: since the RWMH proposal is assessed together with the model proposal, the separate accept-reject step of the RWMH sampler can be omitted. We cover the parameter proposal process further in Section IV-B. Note that any sampler can be used, even a non-MCMC method, with RWMH chosen for simplicity, computational speed, and to give a baseline statistical performance.
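Within a fixed model, a random-walk proposal only needs to perturb the dense entries. The sketch below (hypothetical names, Gaussian increments with scale `step` assumed) keeps the sparse entries at zero; since the proposal is symmetric, its density cancels in the Step 3 acceptance ratio.

```python
import numpy as np


def rw_propose(A_prev, dense_mask, step, rng):
    """Gaussian random-walk proposal restricted to the dense entries of A."""
    noise = step * rng.standard_normal(A_prev.shape)
    return np.where(dense_mask, A_prev + noise, 0.0)  # sparse entries stay at 0
```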

Step 3: Metropolis accept-reject. Once the model and parameter values have been proposed, a Metropolis-Hastings acceptance step is performed. We run a Kalman filter with $\mathbf{A}'_s$ to calculate the log-likelihood of the proposal, $\ell'_s = \log p(\mathbf{y}_{1:T} \mid \mathbf{A}'_s)$.

Prior knowledge is included via a function of the prior probability densities, denoted $f$, which encodes both our prior knowledge of the parameter values and of the model (hence the sparsity). A wide range of prior distributions can be used, our preference being the Laplace distribution, with the associated $f$ given in Eq. (14), which is known to promote sparsity in Bayesian inference [47]. Note that the prior is not required to yield sparse samples, but is useful to combat potential over-fitting resulting from the large number of parameters to fit. If we denote by $p(\mathbf{A})$ our prior on the transition matrix, then $f(\mathbf{A}) = \log p(\mathbf{A})$. In Section IV-C2, we provide suggestions for choosing a prior, and hence $f$.

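When defining the model space in Section II-E, we noted that each model is uniquely determined by the sparsity structure it imposes, with this structure being present in all samples of $\mathbf{A}$ generated from this model. We can therefore assess the model against our prior knowledge solely based on the sample structure, without a separate prior on the model space. An example of such a function is the $\ell_0$-norm, which penalises the number of non-zero elements, a property determined entirely by the model that can be assessed via the samples. The log-acceptance ratio of the proposed values $\mathcal{M}'_s$ and $\mathbf{A}'_s$ is given by $\log\alpha_s = \ell'_s - \ell_{s-1} + f(\mathbf{A}'_s) - f(\mathbf{A}_{s-1}) + c_s$, where the correction term $c_s$, which accounts for the asymmetry of the model jumps, is given in Appendix -C. The model and parameter proposals are jointly accepted with probability $\min(1, \alpha_s)$, and are otherwise rejected. If the proposals are accepted, then we set $\mathcal{M}_s = \mathcal{M}'_s$, $\mathbf{A}_s = \mathbf{A}'_s$, and $\ell_s = \ell'_s$. Otherwise, we set $\mathcal{M}_s = \mathcal{M}_{s-1}$, $\mathbf{A}_s = \mathbf{A}_{s-1}$, and $\ell_s = \ell_{s-1}$.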
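The sketch below combines the pieces of Step 3 under stated assumptions: a Laplace prior with scale $b$ on the dense entries only, whose log-density keeps its normalising constant (this matters because the number of dense entries changes between models), and a term `log_correction` standing in for the Appendix -C correction, which is passed in rather than computed. The function names are hypothetical, and Eq. (14) may differ in detail.

```python
import numpy as np


def log_laplace_prior(A, dense_mask, b):
    """Log-density of i.i.d. Laplace(0, b) priors on the dense entries of A only."""
    a = np.abs(A[dense_mask])
    return float(np.sum(-np.log(2.0 * b) - a / b))


def accept_reject(ell_new, ell_old, f_new, f_old, log_correction, rng):
    """Joint accept/reject of the proposed model and matrix; True on acceptance."""
    log_alpha = ell_new - ell_old + f_new - f_old + log_correction
    return bool(np.log(rng.uniform()) < min(0.0, log_alpha))
```

In a full sampler, `ell_new` would come from a Kalman filter run with the proposed matrix, and on rejection the previous model, matrix and log-likelihood would simply be carried forward.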

IV Algorithm design

We now detail the three steps of Algorithm 1 as presented in Section III. This section is structured to follow the steps of the algorithm for clarity and reproducibility.

IV-A Step 1: Model sampling

In order to explore potential sparsity of using RJMCMC, we design a model jumping scheme that exploits the structure inherent to the model space.

IV-A1 Model jumping scheme (steps 1.1 and 1.2 in Alg. 2)

At each iteration, the algorithm proposes to jump models with probability (w.p.) , as in Step 1 of Algorithm 2. If the algorithm proposes a model jump, then the proposed model will be sparser than w.p. , and denser than otherwise. If no model jump is proposed, then .

There are thus three distinct outcomes of the model jumping step: retention of the previous model, a proposed jump to a sparser model, or a proposed jump to a denser model. Note that in some cases it is not possible to jump in both directions (for example, no denser model exists when the current model is fully dense), and hence if the jumping scheme proposes a model jump, the jump direction is deterministic. This changes the model jump probability, with the results detailed in Appendix -C.
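A sketch of the three-way model jumping decision, including the deterministic direction at the boundaries of the model space. The names `p_jump` (probability of proposing a jump) and `p_sparse` (probability that a proposed jump is sparser) are hypothetical stand-ins for the hyper-parameters of Algorithm 2, and the current model is summarised only by its number of dense entries. The companion function returns the probability of proposing a given direction, which is the quantity that changes at the boundaries and must enter the acceptance ratio.

```python
import random


def jump_direction(n_dense: int, n_total: int, p_jump: float, p_sparse: float,
                   rng: random.Random) -> str:
    """Return "stay", "sparser" or "denser" for a model with n_dense of n_total entries dense."""
    if n_total < 1:
        raise ValueError("the matrix must have at least one entry")
    if rng.random() >= p_jump:
        return "stay"
    can_sparser, can_denser = n_dense > 0, n_dense < n_total
    if can_sparser and can_denser:
        return "sparser" if rng.random() < p_sparse else "denser"
    # At a boundary of the model space only one direction exists.
    return "sparser" if can_sparser else "denser"


def direction_probability(direction: str, n_dense: int, n_total: int,
                          p_jump: float, p_sparse: float) -> float:
    """Probability that jump_direction proposes `direction` from the given model."""
    can_sparser, can_denser = n_dense > 0, n_dense < n_total
    if direction == "stay":
        return 1.0 - p_jump
    if direction == "sparser" and can_sparser:
        return p_jump * (p_sparse if can_denser else 1.0)
    if direction == "denser" and can_denser:
        return p_jump * ((1.0 - p_sparse) if can_sparser else 1.0)
    return 0.0
```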

IV-A2 Model space adjacency (steps 1.2s and 1.2d in Alg. 2)

Given the jump direction from Step 1.1, we denote by the number of elements that are to be made sparse or dense. We draw (see Appendix -B), where is the maximum jump distance in the chosen direction, equal to if jumping denser, and if jumping sparser. The rate should be chosen with to prefer jumps to closely related models. We find experimentally that gives good results, and note that is equivalent to a scheme in which the sparsity can change by one element only. Due to the small size of this space, a grid search would also be possible. The resulting model jump probabilities are asymmetric, with the modification to the acceptance ratio given in Appendix -C.
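The jump-size draw can be implemented as a categorical draw with truncated-Poisson weights, which is also why its cost is negligible (Section IV-E). The parameterisation below is an assumption, since Appendix B is not reproduced here: the jump size k takes values 1, ..., k_max and k - 1 follows a Poisson distribution truncated to {0, ..., k_max - 1}, so a rate of zero always gives single-element jumps. The function names are hypothetical.

```python
import random


def truncated_poisson_pmf(rate: float, k_max: int) -> list:
    """P(k) for k = 1, ..., k_max, where k - 1 ~ Poisson(rate) truncated to {0, ..., k_max - 1}.

    ASSUMPTION: this shifted, truncated parameterisation is illustrative only.
    """
    if k_max < 1:
        raise ValueError("k_max must be at least 1")
    # Unnormalised Poisson weights rate**j / j!, built recursively to avoid huge factorials.
    weights = [1.0]
    for j in range(1, k_max):
        weights.append(weights[-1] * rate / j)
    total = sum(weights)
    return [w / total for w in weights]


def draw_jump_size(rate: float, k_max: int, rng: random.Random) -> int:
    """Number of entries to flip, drawn as a categorical over 1, ..., k_max."""
    pmf = truncated_poisson_pmf(rate, k_max)
    return rng.choices(range(1, k_max + 1), weights=pmf, k=1)[0]
```

The same pmf evaluated for the reverse move (with its own k_max) gives the jump-size term of the model proposal probability.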

In order to provide a set of candidate models for , we impose an adjacency condition. We say model is densely -adjacent to model if both and , and sparsely -adjacent if both and . In other words, if the model differs in elements from in a given direction only, then it is -adjacent in that direction; if the model differs from in both the sparse and dense directions, e.g. two elements are sparser and one element is denser, then it is not adjacent to . Note that, if is sparsely -adjacent to , then is densely -adjacent to , satisfying the reversibility condition of RJMCMC. With this adjacency condition, for a given and jump direction, the proposal is uniformly selected from the models -adjacent to in the given direction. Note that, given , it is theoretically possible to reach any model in a maximum of two jumps: one to the maximally sparse model and one jump denser to the desired model.
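Uniform selection among the k-adjacent models in a given direction amounts to choosing k entries uniformly at random from those eligible to flip. A minimal sketch, assuming the model is stored as a boolean mask over the entries of the transition matrix (True for dense); `propose_adjacent_model` is a hypothetical name. It also returns the number of adjacent models, since the probability of selecting any one of them is the reciprocal of this count.

```python
import math
import random

import numpy as np


def propose_adjacent_model(dense_mask: np.ndarray, k: int, direction: str,
                           rng: random.Random):
    """Propose a model k-adjacent to `dense_mask` (True = dense) in one direction.

    direction is "sparser" (k dense entries become sparse) or "denser" (k sparse
    entries become dense). Returns the new mask, the flipped (row, col) indices and
    the number of k-adjacent models in that direction.
    """
    eligible_value = direction == "sparser"  # sparser: flip dense (True) entries
    candidates = [tuple(int(v) for v in ix)
                  for ix in np.argwhere(dense_mask == eligible_value)]
    if not 1 <= k <= len(candidates):
        raise ValueError("k must be between 1 and the number of eligible entries")
    flipped = rng.sample(candidates, k)  # every k-subset is equally likely
    new_mask = np.array(dense_mask, dtype=bool, copy=True)
    for i, j in flipped:
        new_mask[i, j] = not eligible_value
    return new_mask, flipped, math.comb(len(candidates), k)
```

Flipping the same entries back in the opposite direction recovers the original mask, which is the reversibility property noted above; the counts in the two directions generally differ, which is one source of the asymmetry corrected in the acceptance ratio.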

It is possible to extend this proposal technique to jumping in both directions simultaneously, rather than requiring combinations of birth and death moves to achieve the same result. We omit this for simplicity; moreover, the natural way to handle such moves is to evaluate each jump with its own accept-reject step, which effectively recovers the current scheme. It is also possible to propose as a randomly selected list of indices with length , effectively shuffling the sparse elements around. This could potentially allow for more robust exploration of the posterior, but such moves would be very unlikely to be accepted. We therefore believe our proposal method to be a good compromise between simplicity and robustness, with good performance as evidenced by Section V.

IV-A3 Choice of parameters for model jumps

The values of the hyper-parameters and affect the acceptance rate of the proposed models and parameter values. It is known that an acceptance rate close to is optimal for a random walk Metropolis-Hastings sampler [59], and this works well as a rule of thumb for RJMCMC algorithms [60]. We aim to have our within-model samples accepted at close to this rate, and thus must not propose to change model too often. This is because model changes can significantly alter the conditional posterior , often leading to a low acceptance probability. We recommend using a model retention probability of . We find this gives enough iterations per model to average close to the optimal acceptance rate, whilst also proposing to jump models relatively frequently, allowing for exploration of sparsity. We recommend setting , making the model proposal process symmetric, although can reflect prior knowledge of the sparsity of , with larger indicating a preference for sparsity. The algorithm is relatively insensitive to the values of and , so these parameters can be chosen easily. However, and can be tuned during the burn-in period, with the objective of reaching a given acceptance rate. We find that using a value of works well for both parameters, due to the structure of the model space and the restricted parameter space of stable LGSSMs.

IV-B Step 2: Parameter sampling and mapping

Since our method applies MCMC to sample the posterior distribution , we must define a parameter proposal routine. Once a model has been proposed, the algorithm proposes a parameter value, , which is constrained to the parameter space of . The process by which we generate the parameter proposal depends on whether or not the proposed model is the same as the previous model . If , the conditional posterior of the transition matrix, , is sampled. Otherwise, the parameter value is mapped to the parameter space of .

IV-B1 Sampling under a given model (Step 2.1)

To generate the parameter proposal , we sample from , the posterior distribution of the transition matrix under the model . This distribution can be written as

| (6) | ||||

where is the prior assigned to the transition matrix under the proposed model, which can be written

| (7) |

with deriving from , and denoting a point mass at . By substituting into eq. (3) we obtain

| (8) |

and can hence evaluate , allowing us to sample from .

We propose to sample from this distribution using a random walk Metropolis-Hastings (RWMH) sampler. For the walk distribution, we use a Laplace distribution for each element of , with all steps element-wise distributed i.i.d. , with discussed below. We can thus view our proposed parameter proposal as drawing from

| (9) |

The Laplace distribution is selected primarily due to its relationship with our proposed prior distribution for , itself a Laplace distribution [47]. In addition, the mass concentration of the Laplace distribution means that the walk will primarily propose values close to the previous value, increasing the acceptance rate, while its heavier-than-Gaussian tails occasionally propose values further from the accepted value, improving the mixing of the sample chain. The value of is chosen to give a within-model acceptance rate near the optimal rate of [59], with consistently yielding rates close to this. A grid search suffices to select the value of this parameter as, for stable systems, the space of feasible values is small ( is recommended).
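A sketch of the within-model random-walk proposal, assuming the model is stored as a boolean mask over the entries of the transition matrix (True for dense). Steps are drawn only for dense entries, so sparse entries remain exactly zero and the proposal stays in the current model's parameter space. The names `propose_within_model`, `log_q` and `scale` are hypothetical; `log_q` illustrates that the Laplace walk is symmetric, so the proposal terms cancel in the Metropolis ratio.

```python
import numpy as np


def propose_within_model(A: np.ndarray, dense_mask: np.ndarray, scale: float,
                         rng: np.random.Generator) -> np.ndarray:
    """Add i.i.d. Laplace(0, scale) steps to the dense entries of A; sparse entries stay 0."""
    step = rng.laplace(loc=0.0, scale=scale, size=A.shape)
    return np.where(dense_mask, A + step, 0.0)


def log_q(A_to: np.ndarray, A_from: np.ndarray, dense_mask: np.ndarray,
          scale: float) -> float:
    """Log-density of moving from A_from to A_to under the Laplace random walk."""
    diff = np.abs(A_to - A_from)[dense_mask]
    return float(np.sum(-diff / scale - np.log(2.0 * scale)))
```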

IV-B2 Completion distributions (steps 2.2s, 2.2d)

In our algorithm, when a model jump occurs, the dimension of the parameter space always changes. For example, jumping to a sparser model is equivalent in the parameter space to discarding parameters and decreasing the dimension of the parameter space. However, if jumping to a denser model, hence increasing the dimension of the parameter space, we require a method to assign values to the new parameters. RJMCMC accomplishes this by augmenting the parameter mapping from model to with draws from a completion distribution , defined for each possible model jump [24].

Rather than defining a distribution for every pair of models, we exploit the numerical properties of sparsity to define a global completion distribution . In our samples, sparse elements take the value zero. In order to propose parameters close to the previous parameters, we draw the values of newly dense elements so that they are close to zero. To accomplish this, we choose a Laplace distribution as the global completion distribution , due to its mass concentration and its relation to the prior we propose in Section IV-C2. The parameter is subject to choice, and for stable systems we find that performs well, although the parameter could be tuned during the burn-in period. A simple grid search suffices to select the value of this parameter, as the method is robust to parameter specification via the accept-reject step [26]. For stable systems, the search space for this parameter is small, approximately , so a grid search is most efficient in terms of computational cost. If the prior is chosen as per the recommendations in Section IV-C2, then we can interpret this renewal process as drawing the values for newly dense elements from the prior.
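A minimal sketch of drawing values for newly dense elements from a zero-centred Laplace completion distribution, together with the joint log-density of the draws, which is needed for the completion correction in the acceptance ratio. The scale argument and the function names are hypothetical placeholders.

```python
import numpy as np


def laplace_logpdf(u, scale: float) -> float:
    """Sum of the log-densities of i.i.d. Laplace(0, scale) values."""
    u = np.asarray(u, dtype=float)
    return float(np.sum(-np.abs(u) / scale - np.log(2.0 * scale)))


def draw_completion(k: int, scale: float, rng: np.random.Generator):
    """Draw values for k newly dense entries and return them with their joint log-density."""
    u = rng.laplace(0.0, scale, size=k)  # concentrated near zero, the value of a sparse entry
    return u, laplace_logpdf(u, scale)
```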

IV-B3 Mapping between parameter spaces (steps 2.2s, 2.2d)

In order to jump models, we must be able to map between the parameter spaces of the previous model and the proposal. This is, in general, a difficult task [24, 26], but is eased in our case as the models we are sampling are specific cases of the same model, and thus the parameters are the same between models. We therefore use an augmented identity mapping to preserve the interpretation of parameter values between models. Written in terms of , this mapping is given by

| (10) | ||||

with i.i.d. . In order to obtain the Jacobian required to evaluate eq. (4), the transformation must be written as applied to the parameter space, giving

| (11) |

As sparse elements are, by construction, not in the parameter space, they are not present in the transformation, and are taken to be zero in by definition. The Jacobian of the parameter mapping given by eq. (11) is an identity matrix, and hence the Jacobian determinant term in eq. (4) is constant and equal to one.
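The augmented identity mapping can be sketched as a pair of functions, assuming newly dense or newly sparse entries are given as a list of (row, column) indices. Entries shared by both models are copied unchanged, so the map only relabels coordinates and its Jacobian determinant is one. The function names are hypothetical.

```python
import numpy as np


def map_denser(A, flipped, u):
    """Jump to a denser model: copy A and give each newly dense entry its completion draw."""
    A_new = np.array(A, dtype=float, copy=True)
    for (i, j), value in zip(flipped, u):
        A_new[i, j] = value
    return A_new


def map_sparser(A, flipped):
    """Jump to a sparser model: zero the newly sparse entries.

    The discarded values are returned because they play the role of the completion
    draws u of the reverse (denser) move when evaluating the acceptance ratio.
    """
    A_new = np.array(A, dtype=float, copy=True)
    removed = np.array([A_new[i, j] for i, j in flipped])
    for i, j in flipped:
        A_new[i, j] = 0.0
    return A_new, removed
```

Applying `map_sparser` after `map_denser` with the same indices returns the original matrix and the completion draws, which is the dimension-matching pairing that RJMCMC requires.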

IV-C Step 3: MH accept-reject

In this section, we first discuss the MH acceptance ratio, and then the way that prior knowledge of the transition matrix is incorporated, and how this relates to the model space. We then discuss the implications of sparsity in the samples.

IV-C1 Modified acceptance ratio

The modified Metropolis-Hastings acceptance ratio for our method is given by

| (12) |

The first term is a correction for detailed balance, required due to the stochastic completion of the parameter mappings. The second term is analogous to the modification required when using an asymmetric proposal in RWMH, but relating to the model space. The last term of the expression is the standard symmetric Metropolis acceptance ratio. Note that the Jacobian term from eq. (4) is equal to one in our case, and is therefore omitted.
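The three terms described above combine additively on the log scale. The sketch below is a generic RJMCMC log-acceptance ratio under the stated assumption that the Jacobian term equals one; it is not a transcription of eq. (12), and the argument and function names are hypothetical. For a jump to a denser model, the completion draws enter through `log_g_forward`; for a jump to a sparser model, the discarded values enter through `log_g_reverse`, because the reverse (denser) move would have had to draw them.

```python
import math


def log_model_proposal(p_direction: float, p_size: float, n_adjacent: int) -> float:
    """log q(M' | M): direction probability x jump-size probability x uniform adjacent choice."""
    return math.log(p_direction) + math.log(p_size) - math.log(n_adjacent)


def log_acceptance_ratio(loglik_new: float, loglik_old: float,
                         logprior_new: float, logprior_old: float,
                         log_q_model_reverse: float, log_q_model_forward: float,
                         log_g_reverse: float = 0.0, log_g_forward: float = 0.0) -> float:
    """Generic log RJMCMC acceptance ratio with unit Jacobian (a sketch)."""
    completion = log_g_reverse - log_g_forward             # stochastic completion correction
    model = log_q_model_reverse - log_q_model_forward      # asymmetric model proposal
    target = (loglik_new + logprior_new) - (loglik_old + logprior_old)  # Metropolis term
    return completion + model + target
```

For a within-model move, the completion and model terms are zero and the ratio reduces to the standard symmetric Metropolis ratio.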

IV-C2 Incorporating prior knowledge

The prior distribution, , quantifies our pre-existing knowledge of , including both its values and its sparsity structure. We do not enforce sparsity via this distribution. Since it encodes our knowledge of all elements of , we call it the overall prior. We can interpret the prior of conditional on a given model , or , as encoding the sparsity from the model, and write it as in eq. (7). In this way, we can interpret the sparsity constraint as a series of priors. The relationship between the prior conditional on the model and the overall prior is given by

| (13) |

where is the overall prior, is the conditional prior containing sparsity in elements indexed by , given in eq. (7), and is the prior assigned to the model space, which is described in Section IV-C3.

We apply the prior via the function , where is a vector of prior hyper-parameters. When written in terms of the prior,

We recommend an element-wise Laplace prior on the transition matrix , given by with subject to choice, which results in

| (14) |

after combining all to yield . This is the Bayesian counterpart of the LASSO penalty in regression [46, 47], which is known to promote sparsity; penalties and priors are equivalent in this sense, as both encode prior knowledge of a parameter. Experimentally, we find that choosing consistently results in good performance. For more information on selecting parameters for the Laplace prior, and on the Laplace prior in general, we refer the reader to [47].
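For concreteness, a sketch of the element-wise Laplace log-prior. It assumes the overall prior is evaluated over all entries of the transition matrix, with sparse entries equal to zero; under that assumption the normalising constant is the same for every model and cancels in the acceptance ratio, leaving the LASSO penalty. The `scale` argument is a hypothetical stand-in for the prior parameter.

```python
import numpy as np


def laplace_log_prior(A, scale: float) -> float:
    """Element-wise Laplace(0, scale) log-prior over all entries of A.

    Equals -||A||_1 / scale minus a constant that depends only on A.size, so it
    cancels when comparing matrices of the same shape. Sparse (zero) entries add
    nothing to the L1 norm.
    """
    A = np.asarray(A, dtype=float)
    return float(-np.abs(A).sum() / scale - A.size * np.log(2.0 * scale))
```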

If the parameter is not determined based on prior knowledge, a simple grid search can be used to select its value, as explained above. Furthermore, if the prior distribution itself is used as the completion distribution , then dense values are re-initialised using the prior, reinforcing the interpretation of the prior as our existing knowledge. Note that using this prior is not required to recover sparsity, as sparsity results from the model sampling. We have performed several runs using a diffuse prior on the parameter space, with results similar to those obtained with our suggested prior. Priors other than the Laplace prior, such as the ridge prior or a diffuse prior, can be used without compromising the recovery of sparsity. We use the LASSO penalty for its connection to sparsity, as well as for direct comparability with the existing literature.

As the parameter proposal uses a standard MCMC scheme, a prior sensitivity analysis can be used to determine the effect of the chosen prior. In order to validate our recommendations, we have run multiple sensitivity analyses for the diffuse prior and several Laplace priors of varying scale, and have observed that the results are independent of the prior in all but the most extreme cases in which the prior is nearly a point mass.

IV-C3 Model space prior

As the model space encodes only the sparsity of , a prior on that incorporates this structure is also implicitly a prior on the model space. If no such prior is applied, then the implicit prior on the model space is diffuse, with . This follows from evaluating at an arbitrary under each model via Eq. (13), giving when has the sparsity structure induced by , noting if does not have this structure. As this holds for all models, it follows that , and as the model space is discrete and finite, we can obtain an explicit value for the prior. Note that a diffuse prior is, in general, not allowed on the model space if using a posteriori model comparison methods [44]. However a diffuse prior is standard for RJMCMC [24, 26, 27], as the model space is sampled, and the model dynamically assessed alongside the parameter. This diffuse prior encodes our lack of prior knowledge as to the specific sparsity structure of .
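To make the size of the model space and the resulting diffuse prior concrete, here is a short worked calculation. It assumes, for illustration, that the state dimension is d and that each of the d² entries of the transition matrix may independently be sparse or dense; the symbols below are introduced only for this calculation.

```latex
|\mathcal{M}| = 2^{d^2}, \qquad
p(\mathcal{M}_k) = \frac{1}{|\mathcal{M}|} = 2^{-d^2} \quad \text{for every model } \mathcal{M}_k,
\qquad \text{e.g. } d = 3:\; |\mathcal{M}| = 2^{9} = 512, \quad
d = 12:\; |\mathcal{M}| = 2^{144} \approx 2.2 \times 10^{43}.
```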

IV-C4 Probabilistic Granger causality

In LGSSMs, an element of the state space Granger-causes element if knowledge of at time improves the prediction of at time . We can therefore derive probabilistic Granger-causal relationships from our samples of the transition matrix, as the sampler assesses the proposals using their likelihood, which is equivalent to assessing their predictive capabilities. These probabilistic Granger-causal relationships are powerful, as they allow the probability of a relationship between variables to be quantified.

Note that being zero in the transition matrix does not necessarily mean independence of the state elements, but directed conditional independence on the scale of one time step. This conditional independence means that does not Granger-cause , however may indirectly affect through another variable over multiple time steps.
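Estimating these probabilistic Granger-causal relationships from the sampler output reduces to counting non-zero entries. A minimal sketch, assuming post-burnin samples are stacked in an array of shape (number of samples, d, d) and that the state evolves as x_t = A x_{t-1} + noise, so that a non-zero entry (i, j) corresponds to a one-step link from element j to element i. The function name is hypothetical.

```python
import numpy as np


def edge_probabilities(samples) -> np.ndarray:
    """Monte Carlo estimate of P(A[i, j] != 0 | data) from post-burnin samples.

    Entry [i, j] is the estimated posterior probability that state element j at
    time t - 1 directly affects element i at time t (a one-step link j -> i).
    """
    samples = np.asarray(samples)
    return (samples != 0).mean(axis=0)
```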

IV-D Extending SpaRJ to other parameters

Our method can be used to obtain sparse estimates of any of the parameters of the LGSSM, although some modifications are required to extend the formulation given above (for the transition matrix). Extending our method to the matrix requires only for the parameter and model proposal to be changed to reflect the size of .

Extending the method to covariance matrices requires the proposal value to be constrained such that the resulting matrix is positive semi-definite. If the method does not jump models, remaining in model , a possible proposal distribution for the state covariance proposal is

| (15) |

where is larger than , with working well experimentally. This proposal has expectation close to , and is positive semi-definite. Note that this distribution is not applied to if estimating only , e.g., in Section III or in other subsections of Section IV, and applies only to the extension to sampling the covariance parameter. We enforce the sparsity structure of in , by setting elements sparse under to after sampling but before the accept-reject step, replacing Step 2.1 in Algorithm 3, where the model does not change.

The model proposal step (Step 2.2 in Algorithm 3) would also need to be modified, with the diagonal being dense at all times, and enforcing indices and to have the same sparsity. The model space is therefore reduced, and model adjacency is assessed via only the upper triangular. This modification to the model proposal process completes the alterations required to use our method to sparsely sample the state covariance matrix. Note that the covariance parameters cannot be interpreted as encoding state connections, and therefore cannot be interpreted graphically in the same way as in the transition matrix.
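A sketch of the covariance extension, under explicit assumptions. Eq. (15) is not reproduced here, so a Wishart draw with integer ν ≥ d degrees of freedom and scale Q/ν is used as a stand-in: it has expectation exactly Q and is positive semi-definite. The sparsity pattern is taken from the upper triangle and mirrored, with the diagonal always dense, as described above. Because zeroing off-diagonal entries can destroy positive definiteness, the sketch returns `None` in that case so the caller can treat the proposal as rejected; this handling, and the function name, are illustrative choices not taken from the text.

```python
import numpy as np


def propose_covariance(Q, nu: int, dense_mask, rng: np.random.Generator):
    """Propose a state covariance with a symmetric sparsity pattern (sketch).

    ASSUMPTION: Wishart(nu, Q / nu) stands in for eq. (15). For integer nu it is the
    sum of nu outer products of N(0, Q / nu) vectors, so its expectation is Q.
    """
    d = Q.shape[0]
    X = rng.multivariate_normal(np.zeros(d), Q / nu, size=nu)  # shape (nu, d)
    W = X.T @ X
    W = 0.5 * (W + W.T)  # remove round-off asymmetry
    # Sparsity is defined on the upper triangle, mirrored, with the diagonal always dense.
    upper = np.triu(np.asarray(dense_mask, dtype=bool), k=1)
    keep = upper | upper.T | np.eye(d, dtype=bool)
    Q_new = np.where(keep, W, 0.0)
    try:
        np.linalg.cholesky(Q_new)  # zeroing entries can break positive definiteness
    except np.linalg.LinAlgError:
        return None  # illustrative choice: treat as an automatic rejection
    return Q_new
```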

IV-E Computational cost

The computational cost of our method is very similar to that of regular MCMC methods when applied to state-space models. The most computationally intensive component of the algorithm is the evaluation of the Kalman filtering equations, with this being over of the computational time in our testing. The additional costs compared to a random walk Metropolis-Hastings (RWMH) method are one or two draws from a uniform distribution, zero or one draw from a truncated Poisson distribution (equivalent to a categorical distribution), and some additional array accesses and comparisons. These extra costs are negligible compared to the cost of evaluating the Kalman filtering equations, with the computational cost and complexity being determined by the matrix operations therein, resulting in a complexity of for our algorithm, where the dominating and terms result from the matrix operations performed by the Kalman filter. The computational cost of our method is empirically demonstrated in Section V, and is essentially the same as that of a standard MCMC method that does not explore sparsity. Thus, in practice, given the cost of the filtering equations, sparsity is explored at negligible additional cost.
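Since the Kalman filter dominates the cost, it is worth seeing where the cubic terms come from. The following is a standard prediction-error-decomposition log-likelihood for an LGSSM, assuming the model x_t = A x_{t-1} + q_t, y_t = H x_t + r_t with q_t ~ N(0, Q), r_t ~ N(0, R) and x_0 ~ N(m0, P0); it is a generic textbook implementation with a hypothetical function name, not the authors' code. Each step involves matrix products, a Cholesky factorisation and linear solves, all cubic in the dimensions.

```python
import numpy as np


def kalman_loglik(y, A, H, Q, R, m0, P0) -> float:
    """Log-likelihood log p(y_1:T) of a linear-Gaussian state-space model.

    y has shape (T, d_y); A is (d_x, d_x), H is (d_y, d_x); Q and R are covariances.
    """
    m = np.asarray(m0, dtype=float)
    P = np.asarray(P0, dtype=float)
    loglik = 0.0
    for y_t in np.asarray(y, dtype=float):
        # Prediction step: the matrix products cost O(d_x^3).
        m = A @ m
        P = A @ P @ A.T + Q
        # Innovation v and its covariance S.
        v = y_t - H @ m
        S = H @ P @ H.T + R
        L = np.linalg.cholesky(S)  # S = L L^T
        w = np.linalg.solve(L, v)  # w = L^{-1} v, so w @ w = v^T S^{-1} v
        # log N(v; 0, S) = -0.5 * (v^T S^{-1} v + log det S + d_y log(2 pi))
        loglik -= 0.5 * (w @ w + 2.0 * np.sum(np.log(np.diag(L)))
                         + v.size * np.log(2.0 * np.pi))
        # Update step with gain K = P H^T S^{-1} (P and S are symmetric).
        K = np.linalg.solve(S, H @ P).T
        m = m + K @ v
        P = P - K @ S @ K.T
    return float(loglik)
```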

V Numerical study

We now present the results of three sets of simulation studies to evaluate our method, showcasing the performance of SpaRJ in several scenarios. The section is divided into three synthetic data experiments and one real data problem. First, we evaluate the method with isotropic covariance matrices over variable and . Next, we investigate the effect of known and unknown anisotropic state covariance over variable and . The third experiment explores the effect of the true level of sparsity in the transition matrix on the quality of inference. We then use real data to recover geographical relationships from global temperature data. Finally, we explore the convergence characteristics of the method and check guarantees.

For the synthetic experiments, we generate observations following eq. (1), with , , and take and unless stated otherwise. The state covariance matrix is specified per study. We generate transition matrices and synthetic data for . Whilst this may seem limited in dimension, this equates to performing inference in and dimensional spaces, as each element of the transition matrix is an independent parameter. Furthermore, we sample the model space, which is of size , e.g. when . In all experiments, we run SpaRJ for iterations, discarding the first as burn-in. The matrix is generated using an EM scheme, initialised at a random element-wise standard normal matrix. We set and in all cases. The LASSO penalty is used, with chosen per experiment. We use a truncated Poisson distribution for the jump size, with .
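A sketch of the data-generating step for the synthetic experiments, assuming eq. (1) has the standard linear-Gaussian form x_t = A x_{t-1} + q_t, y_t = H x_t + r_t with q_t ~ N(0, Q) and r_t ~ N(0, R). The function name and the zero initial state default are hypothetical choices.

```python
import numpy as np


def simulate_lgssm(A, H, Q, R, T: int, rng: np.random.Generator, x0=None):
    """Simulate T steps; returns states (T, d_x) and observations (T, d_y)."""
    d_x, d_y = A.shape[0], H.shape[0]
    x = np.zeros(d_x) if x0 is None else np.asarray(x0, dtype=float)
    xs, ys = np.empty((T, d_x)), np.empty((T, d_y))
    for t in range(T):
        x = A @ x + rng.multivariate_normal(np.zeros(d_x), Q)  # state noise q_t ~ N(0, Q)
        xs[t] = x
        ys[t] = H @ x + rng.multivariate_normal(np.zeros(d_y), R)  # obs. noise r_t ~ N(0, R)
    return xs, ys
```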

We contrast our proposed method with GraphEM [17, 22], an algorithm with similar goals based on proximal optimisation. In addition, we compare with the conditional Granger causality (CGC) method of [19] and the DAG-based method (DAGMA) of [52]. These methods do not exploit the state-space model structure, and are trained only on the observations . We note that there are no other RJMCMC-based methods that are applicable to this problem. We therefore compare with a reference MCMC implementation that does not exploit sparsity, and is hence dense in all elements of the estimate. This is equivalent to running our method, but with except for the corresponding to the fully dense matrix, hence effectively removing Step 1 in Algorithm 1 and Section III. We report RMSE, precision, recall, specificity (true negative rate), and F1 score, with a sparse element encoded as a positive and a dense element as a negative.
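With sparse elements encoded as positives, the metrics reduce to counts over the entries of the transition matrix. The sketch below (hypothetical function name) follows that convention. Precision is undefined when no element is classified as sparse, which is why the dense reference MCMC has specificity 1, recall 0, no precision, and F1 score 0 in the tables that follow.

```python
import numpy as np


def sparsity_metrics(true_sparse, pred_sparse) -> dict:
    """Classification metrics for sparsity recovery, with 'sparse' as the positive class.

    Both inputs are boolean arrays over the entries of the transition matrix.
    Ratios with a zero denominator are returned as NaN.
    """
    t = np.asarray(true_sparse, dtype=bool)
    p = np.asarray(pred_sparse, dtype=bool)
    tp = int(np.sum(p & t))    # sparse entries correctly estimated as sparse
    fp = int(np.sum(p & ~t))   # dense entries wrongly estimated as sparse
    fn = int(np.sum(~p & t))   # sparse entries wrongly estimated as dense
    tn = int(np.sum(~p & ~t))  # dense entries correctly estimated as dense
    nan = float("nan")
    return {
        "specificity": tn / (tn + fp) if tn + fp else nan,
        "recall": tp / (tp + fn) if tp + fn else nan,
        "precision": tp / (tp + fp) if tp + fp else nan,
        # Equals 2PR / (P + R) whenever that is defined, and 0 when tp == 0.
        "f1": 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else nan,
    }
```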

We use these metrics, which are associated with classification rather than regression, because our method outputs truly sparse samples, and therefore allows parameters to be classified as sparse or dense without thresholding their numerical values or using confidence/credible intervals. We take an element to be sparse under SpaRJ by majority vote of the samples. We average the metrics over 100 independent runs of each algorithm. The average time taken for a run to complete is also given, with the runs being performed in parallel on an 8-core processor. No special effort was put into optimising any single method, and all methods that use the Kalman filter use the same implementation thereof. Note that the DAGMA implementation is GPU accelerated, whereas all other methods use only the CPU. RMSE for SpaRJ and for the reference MCMC is calculated with respect to the mean of the post-burnin samples for each chain. Note that the RMSE is computed relative to , not the sequence of underlying hidden states as is often the case. RMSE is not meaningful for CGC, as it estimates only connectivity. We generate our matrices by drawing the dense elements from a standard normal distribution, and then dividing the matrix by its largest singular value to give a stable system.
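The matrix generation, the majority-vote classification, and the RMSE of the posterior mean described above can be sketched as follows. Assumptions: the model is a boolean mask of dense entries (with at least one dense entry), ties in the vote are classified as dense, and RMSE is taken as the root mean squared element-wise error. Dividing by the largest singular value gives unit spectral norm, which bounds the spectral radius by one. The function names are hypothetical.

```python
import numpy as np


def random_sparse_transition(dense_mask, rng: np.random.Generator) -> np.ndarray:
    """Draw dense entries from N(0, 1), zero the rest, then scale to unit spectral norm."""
    A = np.where(dense_mask, rng.standard_normal(np.shape(dense_mask)), 0.0)
    return A / np.linalg.norm(A, ord=2)  # ord=2 on a matrix: largest singular value


def summarise_samples(samples, A_true):
    """Majority-vote sparsity pattern and RMSE of the posterior-mean estimate."""
    samples = np.asarray(samples, dtype=float)
    pred_sparse = (samples == 0).mean(axis=0) > 0.5  # ties count as dense
    A_mean = samples.mean(axis=0)
    rmse = float(np.sqrt(np.mean((A_mean - A_true) ** 2)))
    return pred_sparse, rmse
```

For example, a block-diagonal mask with 2 × 2 blocks for a 6-dimensional state (an illustrative choice) is `np.kron(np.eye(3), np.ones((2, 2))).astype(bool)`.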

V-A Synthetic data validation

V-A1 Isotropic covariances and

We test the performance of the method with isotropic covariance matrices and .

Dimension 3 matrix. We generate for dimension with sparsity in one element per row and one element per column. We set , and .

Dimension 6 block diagonal matrix. We generate for dimension as a block diagonal matrix with blocks. We set , , and .

Dimension 12 block diagonal matrix. We generate for dimension as a block diagonal matrix with blocks. We set , , and .

| dim. | method | RMSE | spec. | recall | prec. | F1 | Time (s) |
|---|---|---|---|---|---|---|---|
| 3 | GraphEM | 0.099 | 0.86 | 0.98 | 0.79 | 0.88 | 0.043 |
| 3 | SpaRJ | 0.092 | 0.98 | 0.99 | 0.99 | 0.99 | 0.68 |
| 3 | CGC | – | 0.85 | 0.95 | 0.87 | 0.92 | 0.32 |
| 3 | DAGMA | 0.49 | 0.17 | 0.67 | 0.29 | 0.40 | 0.25 |
| 3 | MCMC | 0.103 | 1 | 0 | – | 0 | 0.65 |
| 6 | GraphEM | 0.103 | 0.83 | 0.90 | 0.91 | 0.91 | 0.22 |
| 6 | SpaRJ | 0.094 | 0.88 | 0.96 | 0.94 | 0.95 | 3.2 |
| 6 | CGC | – | 0.87 | 0.93 | 0.91 | 0.90 | 1.6 |
| 6 | DAGMA | 0.27 | 0.17 | 0.96 | 0.69 | 0.87 | 0.62 |
| 6 | MCMC | 0.114 | 1 | 0 | – | 0 | 3.3 |
| 12 | GraphEM | 0.090 | 0.85 | 0.77 | 0.96 | 0.85 | 0.93 |
| 12 | SpaRJ | 0.071 | 0.83 | 0.89 | 0.91 | 0.90 | 14.5 |
| 12 | CGC | – | 0.80 | 0.67 | 0.75 | 0.71 | 6.5 |
| 12 | DAGMA | 0.16 | 0.25 | 0.98 | 0.86 | 0.92 | 1.0 |
| 12 | MCMC | 0.107 | 1 | 0 | – | 0 | 14.4 |

Table III shows good performance from SpaRJ, which extracts the sparsity structure in all examples. Furthermore, point estimates resulting from SpaRJ are consistently closer to the true value than those from comparable methods, as evidenced by the lower RMSE. Note that in all cases the DAG-based method recovered overly sparse graphs, as evidenced by its poor specificity scores. We further note that DAGMA is designed to recover acyclic graphs, whereas all graphs here are cyclic, further degrading its performance.

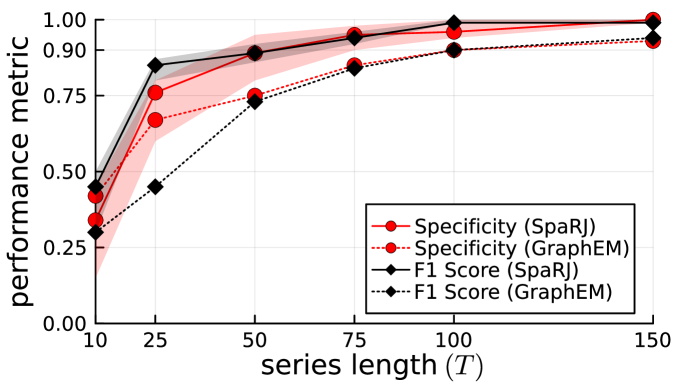

In order to test the relationship between the recovered values and the number of observations , we now demonstrate our method for different values of using the same system as previously. In Figure 2, we show averaged metrics over 100 independent runs for SpaRJ and GraphEM. We see that the longer the series, the better the overall performance, with SpaRJ performing better overall than GraphEM.

The change in the quality of inference with the time series length illustrated in Figure 2 is typical for parameter estimation methods in state-space modelling, as a longer series gives more statistical information with which to perform inference.

V-A2 Known anisotropic state covariance

We now generate synthetic data using a less favourable regime, under an anisotropic state covariance . In order to do this, we note that all covariance matrices can be expressed in the form

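$$
Q = U \Lambda U^\top, \qquad \Lambda = \operatorname{diag}(\lambda_1, \ldots, \lambda_d), \tag{16}
$$

where $U$ is an orthogonal matrix, and $\lambda_1, \ldots, \lambda_d$ are the eigenvalues of $Q$, with $\lambda_1 \geq \cdots \geq \lambda_d \geq 0$.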

To generate a covariance matrix, we first generate an orthogonal matrix following the algorithm of [61]. We then draw , and sort in descending order. Finally, we obtain via evaluation of Eq. (16). In this way, we generate a random positive definite matrix, with all elements non-zero.
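A sketch of this construction. Reference [61] is not reproduced here; as a stand-in, the orthogonal matrix is drawn from the Haar distribution via the QR decomposition of a Gaussian matrix with a sign correction, a standard method. The eigenvalue distribution is also an assumption (uniform on a hypothetical interval [low, high]), since the distribution used in the paper is not specified in this section. The function names are hypothetical.

```python
import numpy as np


def haar_orthogonal(d: int, rng: np.random.Generator) -> np.ndarray:
    """Draw a Haar-distributed d x d orthogonal matrix (QR of a Gaussian matrix)."""
    Z = rng.standard_normal((d, d))
    q_mat, r_mat = np.linalg.qr(Z)
    # Fix the sign ambiguity of QR so the result is exactly Haar distributed.
    return q_mat * np.sign(np.diag(r_mat))


def random_covariance(d: int, rng: np.random.Generator, low=0.1, high=1.0) -> np.ndarray:
    """Random covariance U diag(lam) U^T with eigenvalues sorted in descending order.

    ASSUMPTION: lam ~ Uniform(low, high) is a placeholder eigenvalue distribution.
    """
    U = haar_orthogonal(d, rng)
    lam = np.sort(rng.uniform(low, high, size=d))[::-1]  # descending order
    return (U * lam) @ U.T  # scales column j of U by lam[j]
```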

To allow direct comparison with the previous results, we use the same set of model parameters as before, except we randomly generate the covariance for each system as above.

| dim. | method | RMSE | spec. | recall | prec. | F1 | Time (s) |
|---|---|---|---|---|---|---|---|
| 3 | GraphEM | 0.093 | 0.98 | 0.85 | 0.97 | 0.88 | 0.052 |
| 3 | SpaRJ | 0.087 | 0.98 | 0.99 | 0.98 | 0.99 | 0.67 |
| 3 | CGC | – | 0.72 | 0.93 | 0.43 | 0.59 | 0.32 |
| 3 | DAGMA | 0.61 | 0.17 | 0.33 | 0.17 | 0.22 | 0.25 |
| 3 | MCMC | 0.107 | 1 | 0 | – | 0 | 0.68 |
| 6 | GraphEM | 0.09 | 0.99 | 0.48 | 0.98 | 0.63 | 0.24 |
| 6 | SpaRJ | 0.07 | 0.88 | 0.90 | 0.94 | 0.92 | 3.3 |
| 6 | CGC | – | 0.63 | 0.65 | 0.32 | 0.45 | 1.7 |
| 6 | DAGMA | 0.26 | 0.25 | 0.96 | 0.71 | 0.82 | 0.62 |
| 6 | MCMC | 0.09 | 1 | 0 | – | 0 | 3.3 |
| 12 | GraphEM | 0.097 | 0.98 | 0.30 | 0.99 | 0.46 | 0.95 |
| 12 | SpaRJ | 0.082 | 0.95 | 0.83 | 0.99 | 0.90 | 14.4 |
| 12 | CGC | – | 0.75 | 0.57 | 0.43 | 0.49 | 6.5 |
| 12 | DAGMA | 0.16 | 0.25 | 0.97 | 0.86 | 0.91 | 1.0 |
| 12 | MCMC | 0.099 | 1 | 0 | – | 0 | 14.4 |

In Table IV, we see that there is only a small apparent difference in performance between isotropic and anisotropic state covariances, provided that the covariance is known. This is expected, as a known covariance would not affect the estimation of the value of the state transition matrix. However, when estimating sparsity, the anisotropic nature of the state covariance does have an effect. This is because the state elements are now coupled through correlated noise in addition to the transition matrix, which is not the case for an isotropic covariance. There is thus a small drop in metrics in all cases due to this additional source of error. We note that whilst DAGMA may seem to perform well due to its high F1 scores, it achieves this by recovering an overly sparse graph, as indicated by the low specificity. For example, only 9 elements are recovered as dense in the dimensional system, out of a true 24 dense elements, which does not represent the underlying system well.

We now perform a sensitivity analysis, in which we vary the strength of the prior by varying , and observe the effect on the results. We perform this analysis on the system with a known anisotropic covariance. The results of this analysis are presented in Table V. The results are essentially insensitive to the prior parameter (RMSE between 0.081 and 0.085, F1 score between 0.89 and 0.90), meaning that the parameter can be chosen without excess computation or prior knowledge.

| RMSE | spec. | recall | prec. | F1 | Time (s) |
|---|---|---|---|---|---|
| 0.082 | 0.95 | 0.83 | 0.99 | 0.90 | 14.2 |
| 0.085 | 0.95 | 0.83 | 0.98 | 0.90 | 14.1 |
| 0.081 | 0.94 | 0.83 | 0.99 | 0.89 | 14.3 |
| 0.083 | 0.93 | 0.82 | 0.99 | 0.90 | 14.3 |
| 0.081 | 0.94 | 0.82 | 0.99 | 0.89 | 14.2 |
| 0.082 | 0.94 | 0.82 | 0.99 | 0.90 | 14.2 |

V-A3 Estimated unknown anisotropic covariance

In many scenarios, the true value of the state covariance is unknown, and must be estimated. As we wish to assess the performance of our method in this scenario, we use the same true state covariance as Section V-A2, but input an estimated state covariance. However, as both and are now unknown, we must estimate both parameters in order to obtain an estimate for . We therefore iteratively estimate and using their analytic maximisers, and input the resulting estimate for into the tested methods. The estimated resulting from this initialisation is discarded, and is not used in our method, nor in any other method.

| dim. | method | RMSE | spec. | recall | prec. | F1 | Time (s) |
|---|---|---|---|---|---|---|---|
| 3 | GraphEM | 0.123 | 0.75 | 0.72 | 0.62 | 0.65 | 0.05 |
| 3 | SpaRJ | 0.099 | 0.87 | 0.98 | 0.80 | 0.89 | 0.64 |
| 3 | CGC | – | 0.72 | 0.93 | 0.43 | 0.59 | 0.32 |
| 3 | DAGMA | 0.61 | 0.17 | 0.33 | 0.17 | 0.22 | 0.25 |
| 3 | MCMC | 0.127 | 1 | 0 | – | 0 | 0.68 |
| 6 | GraphEM | 0.097 | 0.68 | 0.38 | 0.70 | 0.49 | 0.21 |
| 6 | SpaRJ | 0.078 | 0.75 | 0.53 | 0.81 | 0.63 | 3.2 |
| 6 | CGC | – | 0.63 | 0.65 | 0.32 | 0.45 | 1.7 |
| 6 | DAGMA | 0.26 | 0.25 | 0.96 | 0.71 | 0.82 | 0.62 |
| 6 | MCMC | 0.152 | 1 | 0 | – | 0 | 3.5 |
| 12 | GraphEM | 0.102 | 0.76 | 0.34 | 0.88 | 0.49 | 0.92 |
| 12 | SpaRJ | 0.074 | 0.60 | 0.53 | 0.88 | 0.65 | 14.7 |
| 12 | CGC | – | 0.75 | 0.57 | 0.43 | 0.49 | 6.5 |
| 12 | DAGMA | 0.16 | 0.25 | 0.97 | 0.86 | 0.91 | 1.0 |
| 12 | MCMC | 0.124 | 1 | 0 | – | 0 | 15.0 |

We see in Table VI that our method performs well under these challenging conditions, achieving the lowest RMSE in every setting and a higher F1 score than GraphEM and CGC. Note that the CGC and DAGMA metrics are unchanged from the previous section, as these methods do not require an estimate of . The deterioration of metrics is expected in this experiment, as we are inferring both the value of and the value of from the same data, with the estimation of being conditional on the estimated value of . However, the sparsity structures of the SpaRJ estimates are better than those of comparable methods, and the parameter value is still well estimated, as evidenced by the RMSE values.

V-A4 Variable levels of sparsity

We now explore the performance of our method under variable levels of sparsity. To facilitate direct comparison, all other parameters of the state-space model remain the same between sparsity levels, as well as the matrix from which is generated. This experiment is performed on a transition matrix, with , , , and .

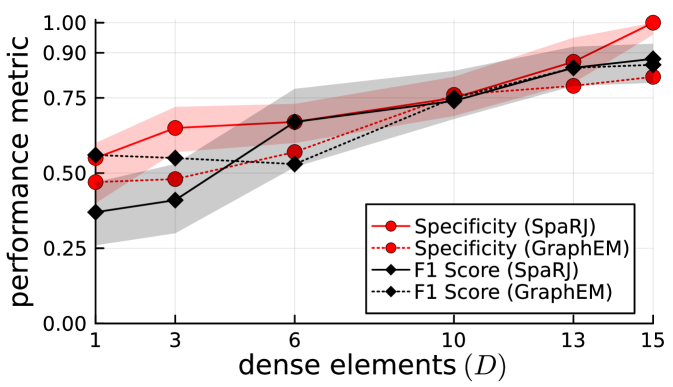

Note that, in systems with many dense elements, the effect of each element can be emulated by changing the values of a number of other elements, making sparsity recovery difficult in these cases. Our algorithm performs well in general, outperforming GraphEM except for particularly dense transition matrices, as discussed below.

We observe from Figure 3 that both methods generally perform better as the number of sparse elements increases. This is because, when more elements are sparse, changes in sparsity produce sharper changes in the likelihood, making competing models easier to distinguish. For particularly dense transition matrices, GraphEM outperforms SpaRJ, because the model proposal step of SpaRJ requires more transitions to walk the larger space. This could be remedied with a more informative prior for these sampling regimes. For example, a penalty relating to the number of sparse elements could be incorporated into the prior. This ease of encoding prior preferences in the model space is a strength of SpaRJ, and is not possible in comparable methods. Furthermore, SpaRJ can be assisted by GraphEM via the provision of a sparse initial value , which will greatly speed up convergence. We have not done this for any of the numerical experiments, but in practice we recommend doing so.

V-B Application to global temperature data

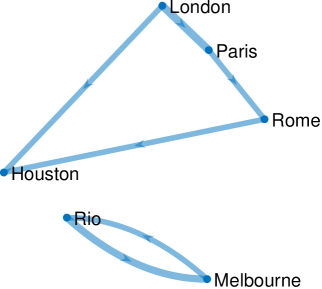

We now apply our method to real data. We use the average daily temperature of 324 cities from 1995 to 2021, curated by the United States Environmental Protection Agency [62]. We subset the data to the cities of London (GB), Paris (FR), Rome (IT), Melbourne (AU), Houston (US), and Rio de Janeiro (BR) in 2010. We subset to a single year to avoid missing data.

We set the parameters as follows: and . We estimate using the EM scheme detailed in Section V-A3, and set as per the data specification. The results for GraphEM are shown in Figure 4(a), and those for SpaRJ in Figure 4(b). In Figure 4, edge thickness for GraphEM is proportional to the number of independent runs in which an edge appears, whereas for SpaRJ it is proportional to the number of post-burn-in samples, across chains, in which the edge is present.
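The edge weights described above amount to inclusion frequencies. The sketch below assumes the samples are stacked in a NumPy array of shape `(num_samples, d, d)` and, writing A for the transition matrix (our notation here), follows the convention `x_t = A x_{t-1} + noise`, so a non-zero entry `A[i, j]` is read as an edge from state j to state i. The function name and the `tol` threshold are illustrative. For GraphEM, the same function can be applied to the stack of point estimates from independent runs.

```python
import numpy as np


def edge_frequencies(samples: np.ndarray, tol: float = 0.0) -> np.ndarray:
    """Fraction of samples in which each entry A[i, j] is non-zero.

    samples: array of shape (num_samples, d, d) holding post-burn-in draws of
    the transition matrix (or one point estimate per independent run).
    Entry (i, j) of the result estimates P(edge j -> i present) under the
    convention x_t = A x_{t-1} + noise.
    """
    present = np.abs(samples) > tol
    return present.mean(axis=0)


# Four draws of a 2x2 matrix: A[0, 0] always non-zero, A[0, 1] in half of them.
draws = np.zeros((4, 2, 2))
draws[:, 0, 0] = 0.9
draws[:2, 0, 1] = 0.3
print(edge_frequencies(draws))
```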

It is well known that weather phenomena are highly non-linear and are driven by both local and global factors. Not all driving factors are recorded in the data, which makes extracting statistically causal relationships between locations challenging. For example, neither barometric pressure nor rainfall is used, although both would assist with localisation [63].

First, the geographical relationships between the cities are well recovered by SpaRJ: in Figure 4(b), the European cities form one cluster, Melbourne is separate, and Rio weakly affects Houston.

Second, note that there is no ground truth to compare against in this problem. GraphEM recovers the graph in Figure 4(a), which does not reflect the geographical positions of the cities: it contains connections across large distances, which are not physically plausible. In addition, unlike the output of SpaRJ, this graph cannot be interpreted probabilistically. On the other hand, GraphEM is generally faster than SpaRJ.

The SpaRJ estimate, presented in Figure 4(b), offers the capacity for additional inference. By contrast, in Figure 4(a), all edges recovered by GraphEM have a similar thickness, indicating that each is recovered by many independent runs of the algorithm. This is a desirable characteristic of GraphEM, as it indicates good convergence, although it does not admit a probabilistic interpretation of state connectivity.

SpaRJ recovers the edges probabilistically, which is apparent from the variable edge thicknesses in Figure 4(b). In SpaRJ, the proportion of post-burn-in samples in which a given edge is present estimates the probability that this edge is present. This is of particular interest when inferring potential causal relationships, as in this example. This property follows from the broader capacity of SpaRJ to provide Monte Carlo uncertainty quantification. For example, a credible interval for the probability of an edge being present can easily be obtained by bootstrapping the output of SpaRJ; this is not possible with GraphEM, which is designed to converge to a point estimate.
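One way to attach an interval to such an edge probability is a moving-block bootstrap of the chain output, which resamples runs of consecutive samples so that the autocorrelation of the Markov chain is roughly preserved. The sketch below is an illustrative choice of bootstrap: the block length, number of replicates, seed and function name are assumptions, not settings from the experiments above. It quantifies the Monte Carlo uncertainty of the estimated inclusion probability for a single edge.

```python
import numpy as np


def edge_probability_interval(indicator, block_len=50, num_boot=2000,
                              level=0.95, seed=0):
    """Moving-block bootstrap interval for the probability an edge is present.

    indicator: 1-D sequence with one 0/1 value per post-burn-in sample,
    equal to 1 when the edge is present in that sample.
    Returns (point estimate, lower bound, upper bound).
    """
    x = np.asarray(indicator, dtype=float)
    n = x.size
    block_len = min(block_len, n)
    num_blocks = int(np.ceil(n / block_len))
    rng = np.random.default_rng(seed)
    boot_means = np.empty(num_boot)
    for b in range(num_boot):
        # Block start indices in [0, n - block_len], so every block is full length.
        starts = rng.integers(0, n - block_len + 1, size=num_blocks)
        resampled = np.concatenate([x[s:s + block_len] for s in starts])[:n]
        boot_means[b] = resampled.mean()
    alpha = 1.0 - level
    lower, upper = np.quantile(boot_means, [alpha / 2, 1 - alpha / 2])
    return x.mean(), lower, upper


# Synthetic indicator series for illustration only (not SpaRJ output).
rng = np.random.default_rng(1)
series = (rng.random(5000) < 0.3).astype(int)
print(edge_probability_interval(series))
```

A longer block better captures slow mixing at the cost of a noisier interval; the block length should exceed the chain's autocorrelation time.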

We used a value of in GraphEM for this estimation. However, increasing further would not remove the long-distance edges first: the self-self edges would disappear before them (i.e., zeros would appear on the diagonal of before any edges between cities were removed). Comparing the results in Figure 4, we see that the output of SpaRJ is more physically plausible once geophysical properties are taken into account. It is not reasonable for the weather in one city to affect that of another across very large distances and oceans on a daily timescale, and the parameter estimate should reflect this. This spatial isolation is present in the SpaRJ estimate in Figure 4(b), but absent from the GraphEM result in Figure 4(a).

V-C Assessing convergence

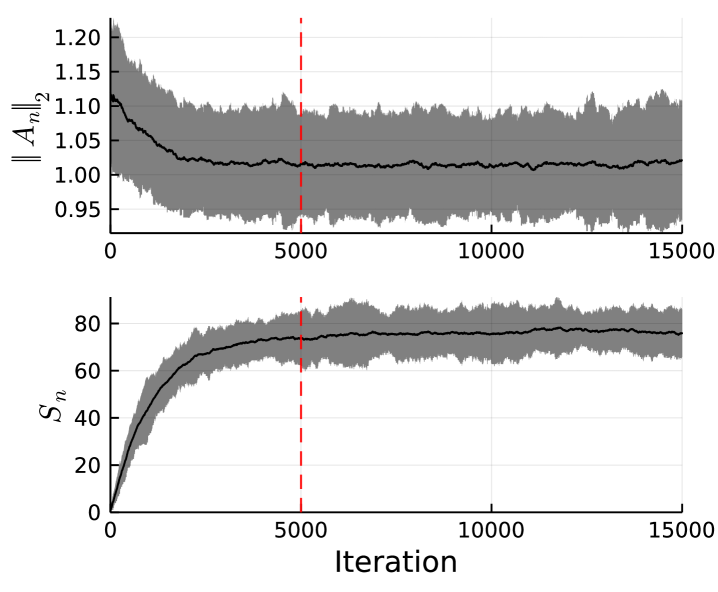

As our method is an MCMC method, we do not seek pointwise convergence; rather, we require convergence in distribution to the target distribution. However, we cannot use standard metrics such as [64] to assess convergence, as these assume that the same parameters are estimated at all iterations, which is not the case in our method. Methods specific to RJMCMC algorithms have been proposed in the literature [brooks1998convergence], but they break down for large model spaces in which few models are visited, as is the case here. Nevertheless, owing to the tight link between the model space and the parameter space, we can assess convergence via a combination of model metrics and parameter statistics [26].

To assess convergence properly, we must consider both the model space and the parameter space. Since the model space is closely linked to the values in the parameter space, we can do so by jointly monitoring parameter and model metrics.

We track both the spectral norm of the sampled (a parameter metric) and the number of sparse elements (a model metric), and plot them in Figure 5. We observe that the chain converges quickly in both the parameter space and the model space, and that convergence appears to occur before the end of the burn-in period. This is the case for all examples, with the exemplar system being the slowest to converge.

The time to convergence can be reduced in several ways, such as by using better estimates of the model parameters. Parameters such as and also alter the speed of convergence, although the manner in which they do so depends on the true dynamics. Convergence is faster for lower-dimensional and slower for larger , because a larger matrix has more entries to estimate and hence requires sampling from a higher-dimensional space. The sampler also converges faster for longer series. Experimentally, sparser models benefit from a larger , whereas denser models benefit from a smaller . Increasing speeds up convergence in the parameter space, but slows it in the model space.

Convergence could also be improved by using a gradient-based sampler for the parameter posterior, as the gradient is available in closed form [6]. However, we found that the faster convergence does not justify the increased computational cost and reduced modularity. Finally, our method inherits the convergence guarantees of RWMH and RJMCMC; therefore, for a finite-dimensional parameter space, the chain is guaranteed to converge to the target distribution given sufficient iterations.
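The two traces described above can be computed from a stored chain as follows. This is a minimal sketch assuming the chain is kept as a NumPy array of shape `(num_iterations, d, d)`; plotting is omitted, and a "sparse" element is taken to be an entry exactly equal to zero, as in a model where that entry is switched off.

```python
import numpy as np


def convergence_traces(chain: np.ndarray):
    """Per-iteration convergence diagnostics for sampled transition matrices.

    chain: array of shape (num_iterations, d, d).
    Returns the spectral norm (largest singular value) of each sample, a
    parameter-space metric, and the number of exactly-zero entries of each
    sample, a model-space metric.
    """
    spectral_norm = np.linalg.norm(chain, ord=2, axis=(1, 2))
    num_sparse = np.count_nonzero(chain == 0.0, axis=(1, 2))
    return spectral_norm, num_sparse


chain = np.stack([
    np.diag([2.0, 0.5]),                  # norm 2, two zeros
    np.array([[3.0, 0.0], [4.0, 0.0]]),   # norm 5, two zeros
    np.ones((2, 2)),                      # norm 2, no zeros
])
norms, zeros = convergence_traces(chain)
print(norms, zeros)
```

Plotting both traces against the iteration index, and marking the end of burn-in, reproduces the kind of visual check described above.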

VI Conclusion

In this work we have proposed the SpaRJ algorithm, a novel Bayesian method for recovering sparse estimates of the transition matrix of a linear-Gaussian state-space model. In addition, SpaRJ provides Bayesian uncertainty quantification of Granger causality between state elements, following from the interpretation of the transition matrix as representing information flow within an LGSSM. The method, built on reversible jump Markov chain Monte Carlo, has strong theoretical guarantees, displays performance exceeding state-of-the-art methods both in challenging synthetic experiments and on real-world data, and exhibits great potential for extension.

-A Guidance for choice of parameters

| Parameter | Hint | Recommended value(s) |
|---|---|---|
|  | Increase to promote sparsity |  |
|  | Decrease to increase acceptance rate | , |
|  | Increase to increase acceptance rate |  |
|  | Increase to promote sparsity |  |
|  | Decrease to increase acceptance rate |  |

-B Truncated Poisson distribution

Denote the Poisson distribution with rate that is left-truncated at and right-truncated at by . This distribution has support , and probability mass function of

| (17) |
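Because its support is finite, this distribution can be evaluated and sampled exactly. The sketch below assumes both truncation points are included in the support, i.e. {low, ..., high}, and that the rate is positive; the function names are illustrative. Weights are normalised on the log scale to avoid overflow of `rate**k / k!` over long supports.

```python
import math
import random


def truncated_poisson_probs(rate: float, low: int, high: int) -> dict:
    """Probability mass function of a Poisson(rate) truncated to {low, ..., high}.

    Assumes rate > 0 and 0 <= low <= high (both endpoints included).
    """
    support = range(low, high + 1)
    log_w = [k * math.log(rate) - math.lgamma(k + 1) for k in support]
    m = max(log_w)  # subtract the maximum before exponentiating
    w = [math.exp(lw - m) for lw in log_w]
    total = sum(w)
    return {k: wk / total for k, wk in zip(support, w)}


def sample_truncated_poisson(rate: float, low: int, high: int,
                             rng: random.Random) -> int:
    """Draw one value by weighted sampling over the finite support."""
    pmf = truncated_poisson_probs(rate, low, high)
    return rng.choices(list(pmf), weights=list(pmf.values()), k=1)[0]


pmf = truncated_poisson_probs(rate=2.0, low=1, high=3)
print(pmf)  # approximately {1: 0.375, 2: 0.375, 3: 0.25}
rng = random.Random(0)
print([sample_truncated_poisson(2.0, 1, 3, rng) for _ in range(10)])
```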

-C Correction terms

To maintain detailed balance in the sampling chain, we must account for the unequal model transition probabilities, which we do via a correction term. This term arises from the RJMCMC acceptance probability,

| (18) |

in which is the ratio of the probability of the reverse jump to that of the forward jump. All other terms in the acceptance ratio are calculated in Algorithm 1, with the Jacobian term ignored as per Section IV-B3.

As we use log likelihoods and log acceptance ratios, we compute our correction on the log scale. For a given jump distance , we denote this log correction term , with when jumping sparser, and when jumping denser. This term is equal to the term in the acceptance ratio in Step 3 of Algorithm 1. The calculations for sparser and denser jumps proceed similarly, so we detail only the derivation for sparser jumps.
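On the log scale, the accept/reject step can be carried out without exponentiating the ratio. This is a minimal sketch assuming the standard reversible-jump form, in which the log acceptance ratio is the sum of the log-likelihood difference, the log-prior difference and the log correction term, with the Jacobian omitted as in Section IV-B3. The argument names are hypothetical and do not follow the notation of Algorithm 1.

```python
import math
import random


def log_acceptance(log_lik_new: float, log_lik_old: float,
                   log_prior_new: float, log_prior_old: float,
                   log_correction: float) -> float:
    """log(alpha) = min(0, log target ratio + log correction); Jacobian omitted."""
    log_ratio = (log_lik_new - log_lik_old) + (log_prior_new - log_prior_old) + log_correction
    return min(0.0, log_ratio)


def accept(log_alpha: float, rng: random.Random) -> bool:
    """Accept with probability exp(log_alpha) without computing exp."""
    u = 1.0 - rng.random()  # uniform on (0, 1], so log(u) is always defined
    return math.log(u) <= log_alpha


rng = random.Random(42)
la = log_acceptance(-100.0, -99.0, 0.0, 0.0, 0.0)  # log(alpha) = -1
freq = sum(accept(la, rng) for _ in range(20000)) / 20000
print(la, freq)  # acceptance frequency approximates exp(-1)
```

Comparing `log(u)` with `log(alpha)` avoids overflow when the likelihood ratio is very large and underflow when it is very small.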

The forward jump is a jump sparser, which occurs with probability . When jumping sparser, we truncate the jump distribution at . Hence, the probability of drawing a given jump length for the jump distance is . Given , the probability of choosing a given set of elements in the forward jump is . Multiplying these terms, we obtain

The reverse jump is a jump denser, which occurs with probability . When jumping denser, we truncate the jump distribution at , the number of sparse elements after the forward jump. Hence, the probability of drawing a given for the jump distance is . Given , the probability of choosing a given set of elements in the reverse jump is . Multiplying these terms, we obtain

From these two expressions, we obtain the acceptance ratio

Writing we then have

| (19) |

with the corresponding term for the denser jump being

| (20) |
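The structure of the jump correction described above can be checked numerically. This sketch rests on assumptions the text does not fix: jump lengths follow a Poisson distribution left-truncated at 1 (at least one element moves) and right-truncated at the number of elements available to move; the elements to move are chosen uniformly without replacement, with probability one over a binomial coefficient; and the direction is chosen with a fixed probability `p_sparser`. All names are hypothetical, and the boundary cases at MS and MD, which require the adjustments of Table VIII, are not handled.

```python
import math


def log_trunc_poisson(k: int, rate: float, low: int, high: int) -> float:
    """Log pmf of Poisson(rate) restricted to {low, ..., high}."""
    log_w = [j * math.log(rate) - math.lgamma(j + 1) for j in range(low, high + 1)]
    m = max(log_w)
    log_norm = m + math.log(sum(math.exp(lw - m) for lw in log_w))
    return k * math.log(rate) - math.lgamma(k + 1) - log_norm


def log_jump_prob(direction: str, n: int, s: int, d: int, rate: float,
                  p_sparser: float = 0.5) -> float:
    """Log probability of proposing one specific jump of d elements.

    n: number of entries in the transition matrix; s: sparse (zero) entries
    before the jump. A sparser jump zeroes d of the n - s dense entries; a
    denser jump re-activates d of the s sparse entries.
    """
    if direction == "sparser":
        p_dir, available = p_sparser, n - s
    else:
        p_dir, available = 1.0 - p_sparser, s
    if not 1 <= d <= available:
        raise ValueError("jump length must be between 1 and the available entries")
    return (math.log(p_dir)
            + log_trunc_poisson(d, rate, 1, available)
            - math.log(math.comb(available, d)))


def log_correction(direction: str, n: int, s: int, d: int, rate: float,
                   p_sparser: float = 0.5) -> float:
    """Log ratio of the reverse-jump to the forward-jump proposal probability."""
    if direction == "sparser":
        reverse = log_jump_prob("denser", n, s + d, d, rate, p_sparser)
    else:
        reverse = log_jump_prob("sparser", n, s - d, d, rate, p_sparser)
    return reverse - log_jump_prob(direction, n, s, d, rate, p_sparser)


# 2x2 matrix (n = 4) with one zero, proposing to zero one more entry.
print(log_correction("sparser", n=4, s=1, d=1, rate=1.0))  # log(5/3)
```

By construction, the denser correction from the post-jump state is the negative of the sparser one, mirroring the relationship between the sparser-jump and denser-jump terms above.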

When jumping to or from maximal density (MD) or maximal sparsity (MS), further adjustment is required. In these cases, we replace following Table VIII.

| Jump | to MS | to MD | from MS | from MD |
|---|---|---|---|---|

References

- [1] M. Aghagolzadeh and W. Truccolo, “Latent state-space models for neural decoding,” in 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. IEEE, 2014, pp. 3033–3036.

- [2] E. S. Knock, L. K. Whittles, J. A. Lees, P. N. Perez-Guzman, R. Verity, R. G. FitzJohn, K. A. M. Gaythorpe, N. Imai, W. Hinsley, L. C. Okell, A. Rosello, N. Kantas, C. E. Walters, S. Bhatia, O. J. Watson, C. Whittaker, L. Cattarino, A. Boonyasiri, B. A. Djaafara, K. Fraser, H. Fu, H. Wang, X. Xi, C. A. Donnelly, E. Jauneikaite, D. J. Laydon, P. J. White, A. C. Ghani, N. M. Ferguson, A. Cori, and M. Baguelin, “Key epidemiological drivers and impact of interventions in the 2020 SARS-CoV-2 epidemic in England,” Science Translational Medicine, vol. 13, no. 602, Jul. 2021.

- [3] S. N. Wood and E. C. Wit, “Was R less than 1 before the English lockdowns? On modelling mechanistic detail, causality and inference about Covid-19,” PLOS ONE, vol. 16, no. 9, pp. 1–19, Sep. 2021. [Online]. Available: https://doi.org/10.1371/journal.pone.0257455

- [4] M. S. Grewal and A. P. Andrews, “Applications of Kalman filtering in aerospace 1960 to the present [historical perspectives],” IEEE Control Systems Magazine, vol. 30, no. 3, pp. 69–78, 2010.

- [5] J. D. Hamilton, “A standard error for the estimated state vector of a state-space model,” Journal of Econometrics, vol. 33, no. 3, pp. 387–397, 1986.

- [6] S. Särkkä, Bayesian filtering and smoothing. Cambridge University Press, 2013, no. 3.

- [7] R. E. Kalman, “A new approach to linear filtering and prediction problems,” Transactions of the ASME–Journal of Basic Engineering, vol. 82, no. Series D, pp. 35–45, 1960.

- [8] G. A. Einicke and L. B. White, “Robust extended Kalman filtering,” IEEE Transactions on Signal Processing, vol. 47, no. 9, pp. 2596–2599, 1999.

- [9] E. A. Wan and R. Van Der Merwe, “The unscented Kalman filter for nonlinear estimation,” in Proceedings of the IEEE 2000 Adaptive Systems for Signal Processing, Communications, and Control Symposium (Cat. No. 00EX373). IEEE, 2000, pp. 153–158.

- [10] N. Gordon, D. Salmond, and A. F. M. Smith, “Novel approach to nonlinear and non-Gaussian Bayesian state estimation,” IEE Proceedings-F Radar and Signal Processing, vol. 140, pp. 107–113, 1993.

- [11] P. M. Djuric, J. H. Kotecha, J. Zhang, Y. Huang, T. Ghirmai, M. F. Bugallo, and J. Miguez, “Particle filtering,” IEEE Signal Processing Magazine, vol. 20, no. 5, pp. 19–38, 2003.

- [12] A. Doucet and A. M. Johansen, “A tutorial on particle filtering and smoothing: Fifteen years later,” Handbook of Nonlinear Filtering, vol. 12, pp. 656–704, 2009.

- [13] V. Elvira, L. Martino, M. F. Bugallo, and P. M. Djuric, “Elucidating the auxiliary particle filter via multiple importance sampling [lecture notes],” IEEE Signal Processing Magazine, vol. 36, no. 6, pp. 145–152, 2019.

- [14] N. Branchini and V. Elvira, “Optimized auxiliary particle filters: adapting mixture proposals via convex optimization,” in Uncertainty in Artificial Intelligence. PMLR, 2021, pp. 1289–1299.

- [15] C. Andrieu, A. Doucet, and R. Holenstein, “Particle Markov chain Monte Carlo methods,” Journal of the Royal Statistical Society: Series B (Statistical Methodology), vol. 72, no. 3, pp. 269–342, 2010.

- [16] D. J. Watts and S. H. Strogatz, “Collective dynamics of ‘small-world’ networks,” Nature, vol. 393, no. 6684, pp. 440–442, 1998.

- [17] E. Chouzenoux and V. Elvira, “GraphEM: EM algorithm for blind Kalman filtering under graphical sparsity constraints,” in ICASSP 2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2020, pp. 5840–5844.

- [18] A. Pirayre, C. Couprie, L. Duval, and J. Pesquet, “BRANE Clust: Cluster-assisted gene regulatory network inference refinement,” IEEE/ACM Transactions on Computational Biology and Bioinformatics, vol. 15, no. 3, pp. 850–860, May 2018.

- [19] D. Luengo, G. Rios-Munoz, V. Elvira, C. Sanchez, and A. Artes-Rodriguez, “Hierarchical algorithms for causality retrieval in atrial fibrillation intracavitary electrograms,” IEEE Journal of Biomedical and Health Informatics, vol. 23, no. 1, pp. 143–155, Jan. 2019.

- [20] C. Ravazzi, R. Tempo, and F. Dabbene, “Learning influence structure in sparse social networks,” IEEE Transactions on Control of Network Systems, vol. PP, pp. 1–1, Dec. 2017.

- [21] J. Richiardi, S. Achard, H. Bunke, and D. Van De Ville, “Machine learning with brain graphs,” IEEE Signal Processing Magazine, vol. 30, no. 3, pp. 58–70, 2013.

- [22] V. Elvira and É. Chouzenoux, “Graphical inference in linear-Gaussian state-space models,” IEEE Transactions on Signal Processing, vol. 70, pp. 4757–4771, 2022.

- [23] E. Chouzenoux and V. Elvira, “GraphIT: Iterative reweighted algorithm for sparse graph inference in state-space models,” in ICASSP 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2023, pp. 1–5.

- [24] P. J. Green, “Reversible jump Markov chain Monte Carlo computation and Bayesian model determination,” Biometrika, vol. 82, no. 4, pp. 711–732, 1995.