Sparsity in Optimal Randomized Classification Trees

Abstract

Decision trees are popular Classification and Regression tools and, when small-sized, easy to interpret. Traditionally, a greedy approach has been used to build the trees, yielding a very fast training process; however, controlling sparsity (a proxy for interpretability) is challenging. In recent studies, optimal decision trees, where all decisions are optimized simultaneously, have shown a better learning performance, especially when oblique cuts are implemented. In this paper, we propose a continuous optimization approach to build sparse optimal classification trees, based on oblique cuts, with the aim of using fewer predictor variables in the cuts as well as along the whole tree. Both types of sparsity, namely local and global, are modeled by means of regularizations with polyhedral norms. The computational experience reported supports the usefulness of our methodology. In all our data sets, local and global sparsity can be improved without harming classification accuracy. Unlike greedy approaches, our ability to easily trade in some of our classification accuracy for a gain in global sparsity is shown.

keywords:

Data mining , Optimal Classification Trees , Global and Local Sparsity , Nonlinear ProgrammingSee FrontPage

1 Introduction

Decision trees [40] are a popular non-parametric tool for Classification and Regression in Statistics and Machine Learning [21]. Since they are rule-based, when small-sized, they are deemed to be leaders in terms of interpretability [1, 2, 9, 15, 17, 23, 27, 28, 32, 36].

It is well-known that the problem of building optimal decision trees is NP-complete [22].

For this reason, classic decision trees have been traditionally designed using greedy procedures in which at each branch node of the tree, some purity criterion is (locally) optimized. For instance, CARTs [8] employ a greedy and recursive partitioning procedure which is computationally cheap, especially since orthogonal cuts are implemented, i.e., one single predictor variable is involved in each branching rule. These rules are of maximal sparsity at each branching node (excellent local sparsity), making classic decision trees locally easy to interpret. However, when deep, they become to be harder to interpret since many predictor variables are, in general, involved across all branching rules (not so good global sparsity).

Addressing global sparsity is a challenge in decision trees and, to the best of our knowledge, this has not been tackled appropriately in the literature. Standard CARTs or Random Forests (RFs) [5, 7, 13, 16] cannot manage it due to the greedy construction of the trees. Nonetheless, some attempts have been made, see [11, 12]. Classic decision trees usually select their orthogonal cuts at each branch node by optimizing an information theory criterion among all possible predictor variables and thresholds. The regularization framework in [11] considers a penalty to this criterion for predictor variables that have not appeared yet in the tree. This approach is refined in [12], by also including the importance scores of the predictor variables, obtained in a preprocessing step running a preliminary RF.

The mainstream trend of using a greedy strategy in the construction of decision trees may lead to myopic decisions, which, in turn, may affect the overall learning performance. The major advances in Mathematical Optimization [10, 30, 33] have led to different approaches to build decision trees with some overall optimality criterion, called hereafter optimal classification trees. It is worth mentioning recent proposals which grow optimal classification trees of a pre-established depth, both deterministic [4, 14, 18, 37, 38] and randomized [6]. The deterministic approaches formulate the problem of building the tree as a mixed-integer linear optimization problem. Such approach is the most natural, since many discrete decisions are to be made when building a decision tree. Although the results of such optimal classification trees are encouraging, the inclusion of integer decision variables makes the computing times explode, giving rise to models trained over a small subsample of the data set [18] and, as customary, with a CPU time limit being imposed to the optimization solver. On the other hand, a continuous optimization-based approach to build optimal randomized classification trees is proposed in [6]. This is achieved by replacing the yes/no decisions in traditional trees by probabilistic decisions, i.e., instead of deciding at each branch node if an individual goes either to the left or to the right child node in the tree, the probability of going to the left is sought. The numerical results in [6] illustrate the good performance achieved in very short time. All these optimization-based approaches are flexible enough to address critical issues that the greedy nature of classic decision trees would find it difficult, such as preferences on the classification performance in some class where misclassifying is more damaging [6, 37, 38], or controlling the number of predictor variables used along the tree (local and global sparsity).

Optimal classification trees have been grown with both orthogonal [4, 14, 18] and oblique cuts [3, 4, 6, 29, 37, 38]. Oblique cuts are more flexible than orthogonal ones since a combination of several predictor variables is allowed in the branching. Trees based on oblique cuts lead to similar or even better learning performance than those based on orthogonal cuts, and, at the same time, they exhibit a shallow depth, since several orthogonal cuts may be reduced to one single oblique cut. Apart from the flexibility that we can borrow from them, many integer decision variables associated with orthogonal cuts are not present in the oblique ones, which eases the optimization. Therefore, optimal classification trees based on oblique cuts require a lower training computing time while showing much more promising results in terms of accuracy. However, this comes at the expense of damaging interpretability, since, in principle, all the predictor variables could appear in each branching rule. In this paper, we tackle this issue.

We propose a novel optimized classification tree, based on the methodology in [6] and, therefore, in oblique cuts, that yields rules/trees that are sparser, and thus enhance interpretability. We model this as a continuous optimization problem.

As in the classic LASSO model [35], sparsity is sought by means of regularization terms.

We model local sparsity with the -norm, and the global sparsity with the -norm. The reguralization has been applied to other classifiers, for instance, Support Vector Machines [25, 26, 41], but the is more popular. A novel continuous-based approach for building this sparse optimal randomized classification tree is provided. Theoretical results on the range of the sparsity parameters are shown. Our numerical results, where well-known real data sets are used, illustrate the efectiveness of our methodology: sparsity in optimal classification trees improves without harming learning performance. In addition, our ability to trade in some of our classification accuracy, still being superior to CART, to be comparable to CART in terms of global sparsity is shown.

The remainder of the paper is organized as follows. In Section 2 we detail the construction of the Sparse Optimal Randomized Classification Tree. Some theoretical properties are given in Section 3. In Section 4, our numerical experience is reported.

Finally, conclusions and possible lines of future research are provided in Section 5.

2 Sparsity in Optimal Randomized Classification Trees

2.1 Introduction

We assume given a training sample , where represents the -dimensional vector of predictor variables of individual , and indicates the class membership. Without loss of generality, we assume .

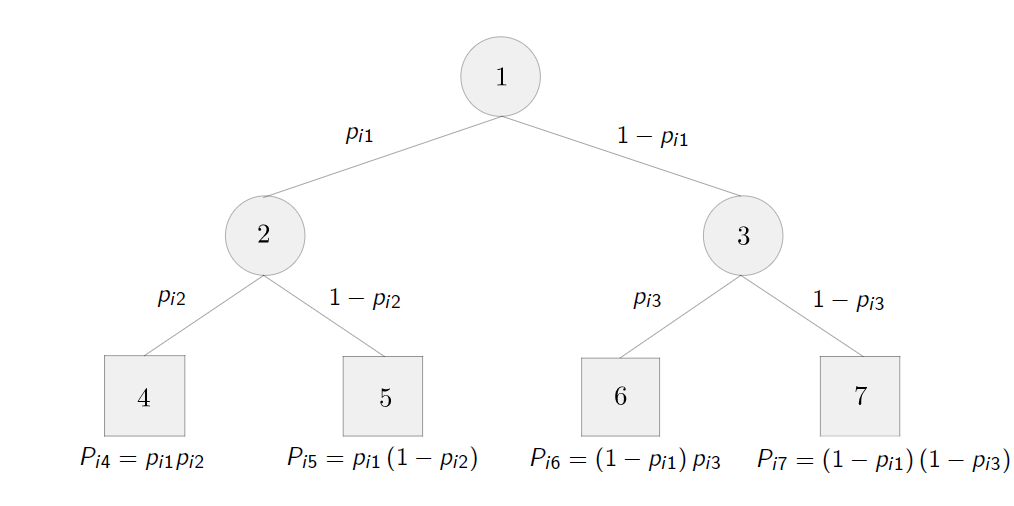

Sparse Optimal Randomized Classification Trees, addressed in this paper, extend the Optimal Randomized Classification Trees (ORCTs) in [6]. An ORCT is an optimal binary classification tree of a given depth , obtained by minimizing the expected misclassification cost over the training sample. Figure 1

shows the structure of an ORCT of depth . Unlike classic decision trees, oblique cuts, on which more than one predictor variable takes part, are performed. ORCTs are modeled by means of a Non-Linear Continuous Optimization formulation. The usual deterministic yes/no rule at each branch node is replaced by a smoother rule: a probabilistic decision rule at each branch node, induced by a cumulative density function (CDF) , is obtained. Therefore, the movements in ORCTs can be seen as randomized: at a given branch node of an ORCT, a random variable will be generated to indicate by which branch an individual has to continue. Since binary trees are built, the Bernoulli distribution is appropriate, whose probability of success will be determined by the value of this CDF, evaluated over the vector of predictor variables. More precisely, at a given branch node of the tree, an individual with predictor variables will go either to the left or to the right child nodes with probabilities and , respectively, where and are decision variables. For further details on the construction of ORCTs, the reader is referred to [6]. Sparse ORCT, S-ORCT, minimizes the expected misclassification cost over the training sample regularized with two polyhedral norms.

The following notation is needed:

| Parameters | |

| depth of the binary tree, | |

| number of individuals in the training sample, | |

| number of predictor variables, | |

| number of classes, | |

| training sample, where and | |

| set of individuals in the training sample belonging to class , | |

| misclassification cost incurred when classifying an individual , whose class is , in class , , | |

| univariate continuous CDF centered at , used to define the probabilities for an individual to go to the left or the right child node in the tree. We will assume that is the CDF of a continuous random variable with density , | |

| local sparsity regularization parameter, | |

| global sparsity regularization parameter, | |

| Nodes | |

| set of branch nodes, | |

| set of leaf nodes, | |

| set of ancestor nodes of leaf node whose left branch takes part in the path from the root node to leaf node , , | |

| set of ancestor nodes of leaf node whose right branch takes part in the path from the root node to leaf node , , | |

| Decision variables | |

| coefficient of predictor variable in the oblique cut at branch node , with being the matrix of these coefficients, . The expressions and will denote the -th row and the -th column of , respectively, | |

| location parameter at branch node , being the vector that comprises every , i.e., , | |

| probability of being assigned to class label for an individual at leaf node , being the matrix such that . | |

| Probabilities | |

| probability of individual going down the left branch at branch node . Its expression is , | |

| probability of individual falling into leaf node . Its expression is | |

| expected misclassification cost over the training sample. Its expression is . |

2.2 The formulation

With these parameters and decision variables, the S-ORCT is formulated as follows:

| (1) | ||||

| s.t. | (2) | |||

| (3) | ||||

| (4) | ||||

| (5) | ||||

| (6) |

In the objective function we have three terms, the first being the expected misclassification cost in the training sample, while the second and the third are regularization terms. The second term addresses local sparsity, since it penalizes the coefficients of the predictor variables used in the cuts along the tree. Instead, the third term controls whether a given predictor variable is ever used across the whole tree, thus addressing global sparsity. The -norm is used as a group penalty function, by forcing the coefficients linked to the same predictor variable to be shrunk simultaneously along all branch nodes. Note that both local and global sparsity are equivalent when dealing with depth , as there is a single cut across the whole tree.

In terms of the feasible region, for each leaf node , represents the probability that an individual at node is assigned to class . Constraints (2) force that such probabilities sum to , while constraints (3) force the sum of the probabilities along all leaf nodes assigned to class to be at least one.

Theorem 1 guarantees the existence of an optimal deterministic solution, i.e., such probabilities will all be in , and thus (6) can be replaced by

| (7) |

Constraints (6) and (7) will be used interchangeably when needed.

Proof.

The continuity of the objective function (1), defined over a compact set, ensures the existence of an optimal solution of the optimization problem (1)-(6), by Weierstrass Theorem. Let be an optimal solution. Fixed , then is optimal to the following problem in the decision variables :

This is a transportation problem, to which the integrality of an optimal solution is well-known to hold, i.e., there exists for all such that is also optimal for (1)-(6). ∎

Theorem 1 gives a new interpretation of constraints (2)-(3): if (7) is used instead of (6), when takes the value , then all the individuals at node are labelled as ; and , otherwise. Constraints (2) state that any leaf node must be labelled with exactly one class label, and constraints (3) state that each class has at least one node with such label.

Once the optimization problem is solved, the S-ORCT predicts the class of a new unlabeled observation with predictor vector with a probabilistic rule, namely, we estimate the probability of being in class as . If a deterministic classification rule is sought, we allocate to the most probable class. Moreover, if prior probabilities are given, one can also use the Bayes rule.

ORCTs were also shown to deal effectively with controlling the correct classification rate on different classes. This idea can also be applied to S-ORCTs. Hence, given the classes to be controlled and their corresponding desired performances , the expectation of achieving each performance guarantee can be computed with the ORCT parameters, provided that the following set of constrainsts is added to the model:

| (8) |

With these constraints we have a direct control on the classification performance in each class separately. This is useful when dealing with imbalanced data sets.

2.3 A smooth reformulation

Problem (1)-(6) is non-smooth due to the norms and appearing in the objective function. A smooth version is easily obtained by rewritting both regularization terms using new decision variables. Since the first regularization term includes absolute values,

decision variables , are split into their positive and negative counterparts , respectively, holding and . Similarly, we denote and . Regarding the second regularization term, new decision variables are needed:

and have to force .

3 Theoretical properties

This section discusses some theoretical properties enjoyed by the S-ORCT. Let us consider the objective function of (1)-(6). When taking and large enough, the first term related to the performance of the classifier becomes negligible and therefore will shrink to . The tree with is the sparsest possible tree though not the best promising one from the accuracy point of view, since none of the predictor variables is used to classify. In this case, the probability of an individual with predictor variables being assigned to class is independent of , and nothing more than the distribution of classes is available. In this section, we derive upper bounds for the sparsity parameters, and , in the sense that above these bounds the sparsest tree (with ) is a stationary point of the S-ORCT, that is, there exists such that the necessary optimality condition with respect to is satisfied. This is done in Theorems 2 and 3.

Theorem 2.

Let . For

is a stationary point of the S-ORCT.

Proof.

Let be such that they satisfy the assumptions.

By Theorem 1, there exists optimal solution to (1)-(6) satisfying . In the following we will show that is a stationary point of the S-ORCT, i.e.,

| (19) |

where is the subdifferential operator.

For every , we have that

Hence,

if, and only if,

if, and only if, there exist such that

if, and only if, there exist such that

Let us consider

and check that the conditions are satisfied:

Therefore, the desired result follows. ∎

A stronger result is proven for the S-ORCT of depth and . Since local and global sparsity are equivalent for the S-ORCT of depth , without loss of generality, we can assume that . Therefore, the objective function of the S-ORCT of depth can be written as:

where

| (20) |

and

A technical lemma is needed to prove the desired result.

Lemma 1.

For any allocation rule , the objective function of the S-ORCT of depth , , is monotonic in when .

Proof.

Fixed , and ,

where

and

Thus,

Since is a probability density function, the expression will always have the same sign for any value of and the desired result follows. ∎

Theorem 3.

For

| (21) |

is a stationary point of the S-ORCT of depth .

Proof.

Using the monotonicity of proven in Lemma 1 and Theorem 2 with , we have that for

| (22) |

where is as in (20), is a stationary point of thr S-ORCT. The remainder of the proof is devoted to rewriting (22) as in (21).

We proceed with the calculation of the gradient.

For :

where

and

Thus,

Now, we look for the maximum among every possible allocation of the decision variables , i.e.:

where

and .

A finite number of transportation problems is to be solved, with the form:

| s.t. | |||

for which the integrality property holds. Then, we only have as possible solutions: or . Thus, the optimal objective is obtained as follows:

Let us define

and the result holds when

∎

4 Computational experience

4.1 Introduction

The aim of this section is to illustrate the performance of our sparse optimal randomized classification trees S-ORCT’s. We have run our model for a grid of values of the sparsity regularization parameters and . The message that can be drawn from our experimental experience is twofold. First, we show empirically that our S-ORCT can gain in both local and global sparsity, without harming classification accuracy. Second, we benchmark our approach against CART, the classic approach to build decision trees, which considers orthogonal cuts and therefore has the best possible local sparsity. We show that we are able to trade in some of our classification accuracy, still being superior to CART, to be comparable to CART in terms of global sparsity.

The S-ORCT smooth formulation (9)-(16) has been implemented using Pyomo optimization modeling language [19, 20] in Python 3.5 [31]. As solver, we have used IPOPT 3.11.1 [39], and have followed a multistart approach, where the process is repeated times starting from different random initial solutions. For CART, the implementation in the rpart R package [34] is used. Our experiments have been conducted on a PC, with an Intel® CoreTM i7-2600 CPU 3.40GHz processor and 16 GB RAM. The operating system is 64 bits.

The remainder of the section is structured as follows. Section 4.2 gives details on the procedure followed to test S-ORCT. In Sections 4.3 and 4.4, respectively, we discuss the results for local and global sparsities separately, while in Section 4.5 we present results when both sparsities are simultaneously taken into account. Finally, Section 4.6 statistically compares S-ORCT versus CART in terms of classification accuracy and global sparsity.

4.2 Setup

An assorted collection of well-known real data sets from the UCI Machine Learning Repository [24] has been chosen for the computational experiments. Table 2 lists their names together with their number of observations, number of predictor variables and number of classes with the corresponding class distribution. In our pursuit of building small and, therefore, less complex trees, the construction of S-ORCTs has been restricted to depth for two-class problems and depth for three- and four- class problems.

| Data set | Abbrev. | Class distribution | |||

| Monks-problems-3 | Monks-3 | 122 | 11 | 2 | 51% - 49% |

| Monks-problems-1 | Monks-1 | 124 | 11 | 2 | 50% - 50% |

| Monks-problems-2 | Monks-2 | 169 | 11 | 2 | 62% - 38% |

| Connectionist-bench-sonar | Sonar | 208 | 60 | 2 | 55% - 45% |

| Ionosphere | Ionosphere | 351 | 34 | 2 | 64% - 36% |

| Breast-cancer-Wisconsin | Wisconsin | 569 | 30 | 2 | 63% - 37% |

| Credit-approval | Creditapproval | 653 | 37 | 2 | 55% - 45% |

| Pima-indians-diabetes | Pima | 768 | 8 | 2 | 65% - 35% |

| Statlog-project-German-credit | Germancredit | 1000 | 48 | 2 | 70% - 30% |

| Banknote-authentification | Banknote | 1372 | 4 | 2 | 56% - 44% |

| Ozone-level-detection-one | Ozone | 1848 | 72 | 2 | 97% - 3% |

| Spambase | Spam | 4601 | 57 | 2 | 61% - 39% |

| Iris | Iris | 150 | 4 | 3 | 33.3%-33.3%-33.3% |

| Wine | Wine | 178 | 13 | 3 | 40%-33%-27% |

| Seeds | Seeds | 210 | 7 | 3 | 33.3%-33.3%-33.3% |

| Balance-scale | Balance | 625 | 16 | 3 | 46%-46%-8% |

| Thyroid-disease-ann-thyroid | Thyroid | 3772 | 21 | 3 | 92.5%-5%-2.5% |

| Car-evaluation | Car | 1728 | 15 | 4 | 70%-22%-4%-4% |

Each data set has been split into two subsets: the training subset (75%) and the test subset (25%). The corresponding S-ORCT is built on the training subset and, then, accuracy, local and global sparsities are measured. The out-of-sample accuracy over the test subset is denoted by acc. Local sparsity is denoted by and reads as the average percentage of predictor variables not used per branch node:

Global sparsity, , is measured as the percentage of predictor variables not used at any of the branch nodes, i.e., across the whole tree:

Note that when , local and global sparsity are measuring the same since there is a single cut across the whole tree. The training/testing procedure has been repeated ten times in order to avoid the effect of the initial split of the data. The results shown in the tables represent the average of such ten runs to each of the three performance criteria.

In what follows, we describe the choices made for the parameters in S-ORCT. Equal misclassification weights, , have been used for the experiments. We have added the set of constraints (8) with . The logistic CDF has been chosen for our experiments:

with a large value of , namely, . The larger the value of , the closer the decision rule defined by is to a deterministic rule. We will illustrate that a small level of randomization is enough for obtaining good results. We have trained S-ORCT, as formulated in (9)-(16), for pairs of values for starting from followed by the grid , and, similarly, followed by the grid . We start solving the optimization problem with , where the multistart approach uses 20 random initial solutions. We continue solving the optimization problem for but with larger values of . Once all values of are executed, we start the process all over again with the next value of in the grid. For pair , we feed the corresponding optimization problem with the 20 solutions resulting from the problem solved for the previous pair. For a given initial solution, the computing time taken by the S-ORCT typically ranges from 0.33 seconds (in Monks-1) to 22.27 seconds (in Thyroid).

For CART, the default parameter setting in rpart is used.

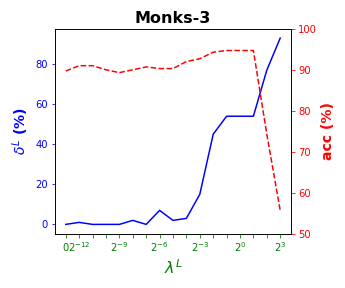

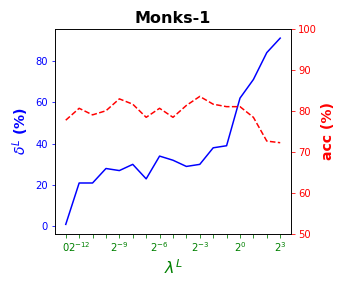

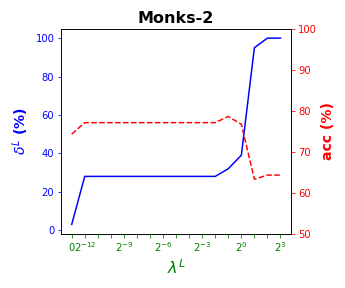

4.3 Results for local sparsity

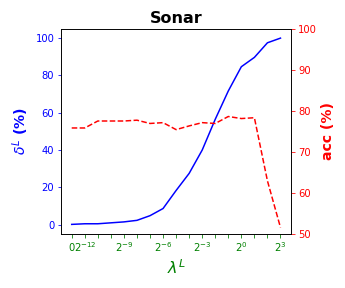

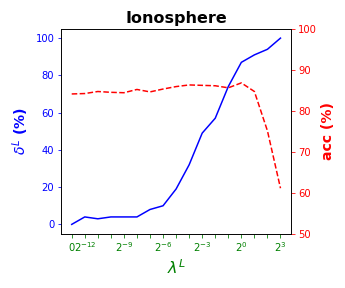

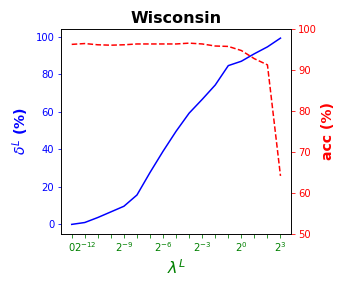

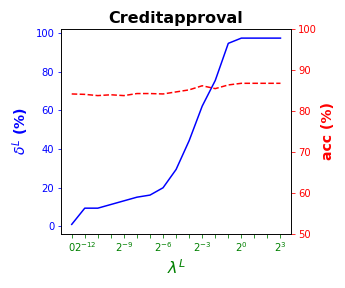

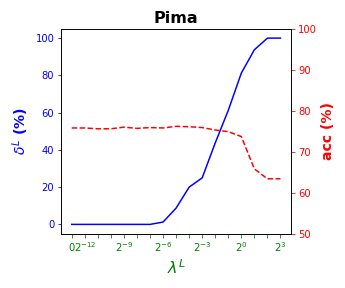

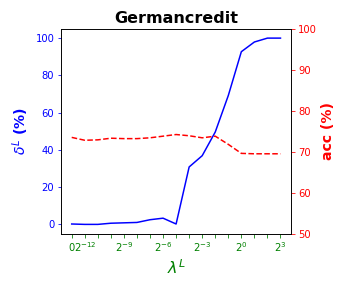

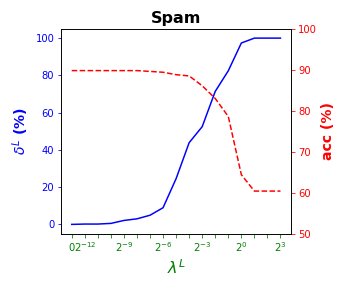

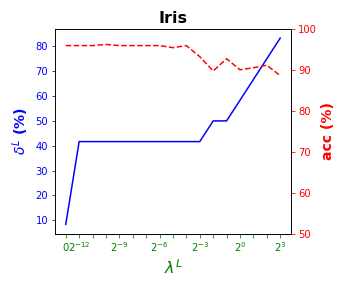

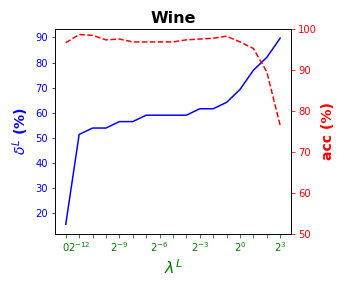

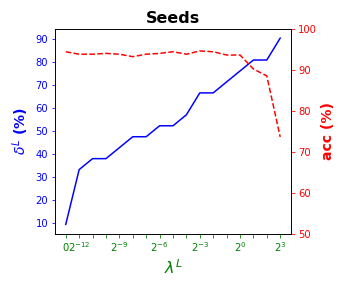

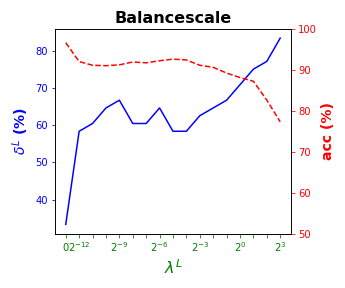

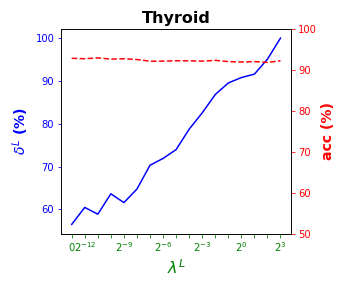

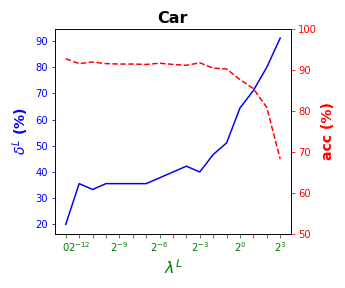

Tables 3 and 4 present the results of the so-called local S-ORCT, i.e., when and thus only local sparsity is taken into account. Figures 2 and 3 depict these results per data set, by showing simultaneously (blue solid line) and acc (red dashed line) as a function of the grid of the ’s considered. As expected, the larger the , the larger the . The sparsest tree is shown in most of the data sets for large values of the parameter , where the best solution in terms of sparsity is obtained but the worst possible one in terms of accuracy. In terms of accuracy, the best rates are sometimes achieved when not all the predictor variables are included in the model. For instance, best performance is reached when sparsity is about for Pima, the for Monks-1, the for Monks-2, the for Germancredit, the for Car, the for Thyroid, the for Monks-3, the for Iris, the for Sonar, the for both Wine and Seeds and the for Ionosphere. We highlight the Creditapproval data set, on which one single predictor variable can already guarantee very good accuracy. For Ozone, accuracy remains over the for the grid of ’s considered. Accuracy might be slightly damaged but a great gain in sparsity is obtained. This is the case for Banknote, Spam, Balance or Wisconsin, which present a loss of accuracy lower than the percentage point (p.p.), p.p., p.p. and p.p. but , , and of local sparsity is reached, respectively.

| Monks-3 | Monks-1 | Monks-2 | Sonar | Ionosphere | Wisconsin | Creditapproval | Pima | Germancredit | Banknote | Ozone | Spam | |||||||||||||

| acc | acc | acc | acc | acc | acc | acc | acc | acc | acc | acc | acc | |||||||||||||

| 0 | 89.7 | 1 | 77.7 | 3 | 74.3 | 0 | 75.8 | 0 | 84.1 | 0 | 96.2 | 1 | 84.1 | 0 | 75.8 | 0 | 73.5 | 0 | 99.0 | 0 | 96.5 | 0 | 89.8 | |

| 1 | 91.0 | 21 | 80.6 | 28 | 77.1 | 0 | 75.8 | 4 | 84.2 | 1 | 96.4 | 9 | 84.0 | 0 | 75.8 | 0 | 72.8 | 0 | 99.0 | 4 | 96.6 | 0 | 89.8 | |

| 0 | 91.0 | 21 | 79.0 | 28 | 77.1 | 0 | 77.5 | 3 | 84.7 | 4 | 96.1 | 9 | 83.7 | 0 | 75.6 | 0 | 72.9 | 0 | 99.0 | 10 | 96.5 | 0 | 89.8 | |

| 0 | 90.0 | 28 | 80.0 | 28 | 77.1 | 1 | 77.5 | 4 | 84.5 | 7 | 96.0 | 11 | 83.9 | 0 | 75.6 | 1 | 73.3 | 0 | 99.1 | 18 | 96.4 | 1 | 89.8 | |

| 0 | 89.3 | 27 | 82.9 | 28 | 77.1 | 2 | 77.5 | 4 | 84.4 | 10 | 96.1 | 13 | 83.7 | 0 | 76 | 1 | 73.2 | 3 | 99.1 | 29 | 96.5 | 2 | 89.8 | |

| 2 | 90.0 | 30 | 81.6 | 28 | 77.1 | 2 | 77.7 | 4 | 85.2 | 16 | 96.3 | 15 | 84.2 | 0 | 75.7 | 1 | 73.2 | 3 | 99.1 | 44 | 96.4 | 3 | 89.8 | |

| 0 | 90.7 | 23 | 78.4 | 28 | 77.1 | 5 | 76.9 | 8 | 84.6 | 28 | 96.3 | 16 | 84.2 | 0 | 75.9 | 2 | 73.4 | 3 | 99.0 | 62 | 96.4 | 5 | 89.6 | |

| 7 | 90.3 | 34 | 80.6 | 28 | 77.1 | 9 | 77.1 | 10 | 85.3 | 39 | 96.3 | 20 | 84.1 | 1 | 75.8 | 3 | 73.8 | 3 | 98.7 | 78 | 96.6 | 9 | 89.4 | |

| 2 | 90.3 | 32 | 78.4 | 28 | 77.1 | 18 | 75.4 | 19 | 85.9 | 50 | 96.3 | 29 | 84.6 | 9 | 76.2 | 0 | 74.2 | 23 | 98.5 | 83 | 96.6 | 25 | 88.8 | |

| 3 | 92.0 | 29 | 81.3 | 28 | 77.1 | 28 | 76.3 | 32 | 86.3 | 59 | 96.5 | 44 | 85.1 | 20 | 76.1 | 31 | 73.9 | 15 | 98.3 | 87 | 96.6 | 44 | 88.5 | |

| 15 | 92.7 | 30 | 83.5 | 28 | 77.1 | 40 | 77.1 | 49 | 86.2 | 67 | 96.3 | 62 | 86.1 | 25 | 75.9 | 37 | 73.4 | 10 | 98.0 | 90 | 96.7 | 52 | 86.1 | |

| 45 | 94.3 | 38 | 81.6 | 28 | 77.1 | 56 | 76.9 | 57 | 86.1 | 74 | 95.8 | 75 | 85.4 | 44 | 75.3 | 50 | 73.8 | 25 | 98.0 | 92 | 96.7 | 71 | 83 | |

| 54 | 94.7 | 39 | 81.0 | 32 | 78.6 | 72 | 78.6 | 74 | 85.6 | 85 | 95.7 | 95 | 86.3 | 61 | 74.9 | 69 | 71.8 | 25 | 97.5 | 95 | 96.7 | 82 | 78.6 | |

| 54 | 94.7 | 62 | 81.0 | 39 | 76.7 | 85 | 78.1 | 87 | 86.8 | 87 | 94.7 | 97 | 86.7 | 81 | 73.7 | 93 | 69.6 | 25 | 96.7 | 96 | 96.7 | 97 | 64.4 | |

| 54 | 94.7 | 71 | 78.4 | 95 | 63.3 | 90 | 78.3 | 91 | 84.7 | 91 | 92.7 | 97 | 86.7 | 94 | 65.8 | 98 | 69.5 | 50 | 85.8 | 97 | 96.7 | 100 | 60.4 | |

| 77 | 74.3 | 84 | 72.6 | 100 | 64.3 | 98 | 62.9 | 94 | 75.1 | 95 | 91.2 | 97 | 86.7 | 100 | 63.4 | 100 | 69.5 | 50 | 84.0 | 100 | 96.7 | 100 | 60.4 | |

| 93 | 55.7 | 91 | 72.2 | 100 | 64.3 | 100 | 51.5 | 100 | 61.1 | 99 | 64.1 | 97 | 86.7 | 100 | 63.4 | 100 | 69.5 | 100 | 56.3 | 100 | 96.7 | 100 | 60.4 | |

| Iris | Wine | Seeds | Balance | Thyroid | Car | |||||||

| acc | acc | acc | acc | acc | acc | |||||||

| 8 | 95.9 | 15 | 96.6 | 10 | 94.4 | 33 | 96.6 | 57 | 92.8 | 20 | 92.7 | |

| 42 | 95.9 | 51 | 98.6 | 33 | 93.8 | 58 | 92.0 | 61 | 92.7 | 36 | 91.5 | |

| 42 | 95.9 | 54 | 98.4 | 38 | 93.8 | 60 | 91.1 | 59 | 92.9 | 33 | 91.9 | |

| 42 | 96.2 | 54 | 97.3 | 38 | 94.0 | 65 | 91.0 | 64 | 92.6 | 36 | 91.5 | |

| 42 | 95.9 | 56 | 97.5 | 43 | 93.8 | 67 | 91.2 | 62 | 92.7 | 36 | 91.4 | |

| 42 | 95.9 | 56 | 96.8 | 48 | 93.2 | 60 | 91.9 | 65 | 92.5 | 36 | 91.4 | |

| 42 | 95.9 | 59 | 96.8 | 48 | 91.3 | 60 | 91.7 | 70 | 92.1 | 36 | 91.3 | |

| 42 | 95.9 | 59 | 96.8 | 52 | 94.0 | 65 | 92.2 | 72 | 92.1 | 38 | 91.6 | |

| 42 | 95.4 | 59 | 96.8 | 52 | 94.4 | 58 | 92.6 | 74 | 92.2 | 40 | 91.3 | |

| 42 | 95.9 | 59 | 97.3 | 57 | 93.8 | 58 | 92.4 | 79 | 92.2 | 42 | 91.1 | |

| 42 | 93.2 | 62 | 97.5 | 67 | 94.6 | 63 | 91.1 | 83 | 92.1 | 40 | 91.7 | |

| 50 | 89.7 | 62 | 97.7 | 67 | 94.4 | 65 | 90.6 | 87 | 92.3 | 47 | 90.4 | |

| 50 | 92.7 | 64 | 98.2 | 71 | 93.6 | 67 | 89.2 | 90 | 92.0 | 51 | 90.2 | |

| 58 | 90.0 | 69 | 96.8 | 76 | 93.6 | 71 | 88.1 | 91 | 91.9 | 64 | 87.6 | |

| 67 | 90.5 | 77 | 95.2 | 81 | 90.2 | 75 | 87.2 | 92 | 92.0 | 71 | 85.4 | |

| 75 | 91.1 | 82 | 89.5 | 81 | 88.5 | 77 | 82.6 | 95 | 91.8 | 80 | 80.8 | |

| 83 | 88.6 | 90 | 76.4 | 91 | 73.6 | 83 | 77.3 | 100 | 92.2 | 91 | 68.2 | |

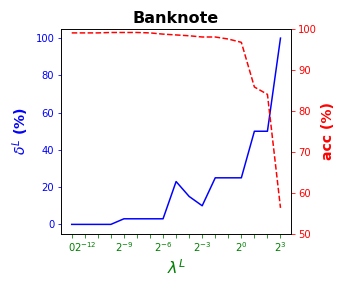

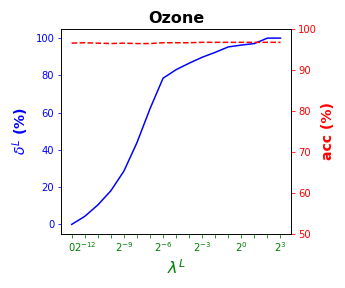

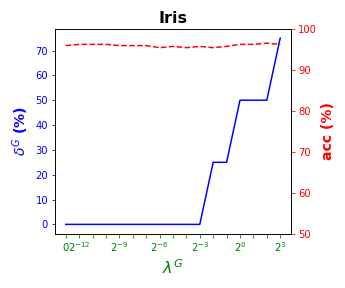

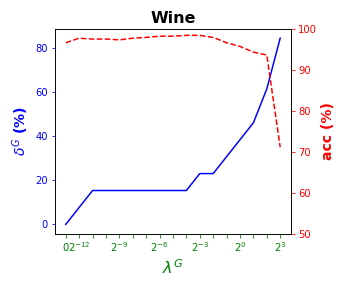

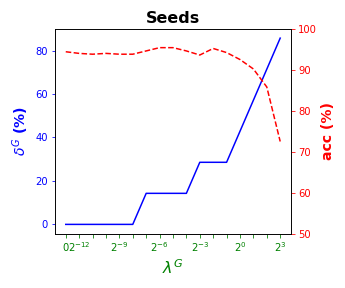

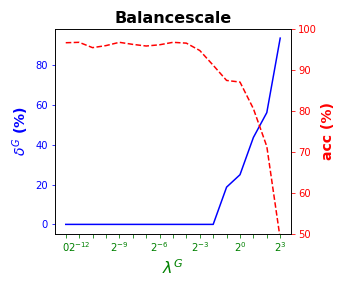

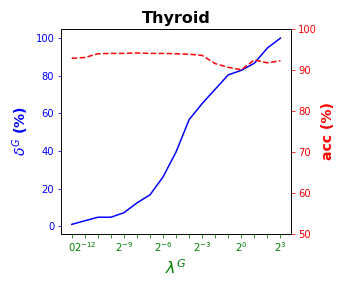

4.4 Results for global sparsity

This section is devoted to the global S-ORCT, i.e., when and thus only global sparsity is taken into account. We focus on depth , since for global sparsity is equal to local sparsity. Similarly to Subsection 4.3, Table 5

| Iris | Wine | Seeds | Balance | Thyroid | Car | |||||||

| acc | acc | acc | acc | acc | acc | |||||||

| 0 | 95.9 | 0 | 96.6 | 0 | 94.4 | 0 | 96.6 | 1 | 92.8 | 0 | 92.7 | |

| 0 | 96.2 | 18 | 97.7 | 0 | 94.0 | 0 | 96.7 | 3 | 93.0 | 0 | 93.4 | |

| 0 | 96.2 | 15 | 97.5 | 0 | 93.8 | 0 | 95.4 | 5 | 93.9 | 0 | 93.7 | |

| 0 | 96.2 | 15 | 97.5 | 0 | 94.0 | 0 | 95.9 | 5 | 93.9 | 0 | 94.1 | |

| 0 | 95.9 | 15 | 97.3 | 0 | 93.8 | 0 | 96.7 | 7 | 94.0 | 0 | 94.0 | |

| 0 | 95.9 | 15 | 97.7 | 0 | 93.8 | 0 | 96.2 | 12 | 94.1 | 0 | 94.7 | |

| 0 | 95.9 | 15 | 97.9 | 14 | 94.6 | 0 | 95.8 | 17 | 94.0 | 0 | 95.0 | |

| 0 | 95.4 | 15 | 98.2 | 14 | 95.4 | 0 | 96.1 | 26 | 94.0 | 0 | 94.9 | |

| 2 | 95.7 | 15 | 98.2 | 14 | 95.4 | 0 | 96.7 | 40 | 93.9 | 0 | 94.9 | |

| 0 | 95.4 | 15 | 98.4 | 14 | 94.6 | 0 | 96.5 | 57 | 93.8 | 0 | 94.7 | |

| 0 | 95.7 | 23 | 98.4 | 29 | 93.6 | 0 | 94.7 | 65 | 93.5 | 7 | 94.6 | |

| 25 | 95.4 | 23 | 97.9 | 29 | 95.2 | 0 | 91.1 | 73 | 91.5 | 7 | 94.1 | |

| 25 | 95.7 | 31 | 96.6 | 29 | 94.2 | 19 | 87.4 | 81 | 90.6 | 13 | 92.2 | |

| 50 | 96.2 | 39 | 95.7 | 43 | 92.5 | 25 | 87.0 | 83 | 90.0 | 27 | 86.7 | |

| 50 | 96.2 | 46 | 94.3 | 57 | 90.2 | 44 | 80.5 | 87 | 92.4 | 47 | 79.8 | |

| 50 | 96.5 | 62 | 93.6 | 71 | 85.8 | 56 | 71.3 | 95 | 91.7 | 73 | 68.2 | |

| 75 | 96.2 | 85 | 71.1 | 86 | 72.5 | 94 | 48.8 | 100 | 92.2 | 80 | 68.2 | |

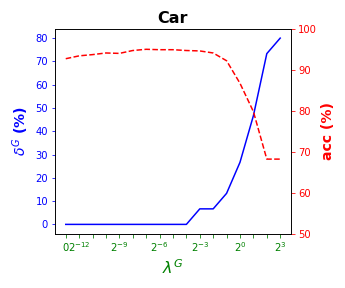

presents the results of the global S-ORCT, while Figure 4

visualizes these results by showing simultaneously, per data set, (blue solid line) and acc (red dashed line) as a function of the grid of the ’s considered. As for local sparsity, as grows, increases. For Iris and Seeds, a similar classification accuracy to that with all of the predictor variables is obtained while removing the and of them, respectively. For Wine, the best rates of accuracy are obtained with of global sparsity. A loss of less than p.p. of accuracy is observed for Balance but of predictor variables are not being used, respectively. Car remains around the accuracy rate of while using half of the predictor variables. Thyroid, an imbalanced data set, is over the of accuracy for the whole grid of ’s considered.

4.5 Results for local and global sparsity

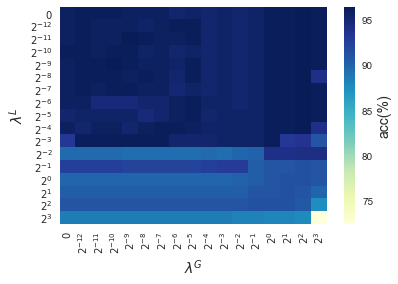

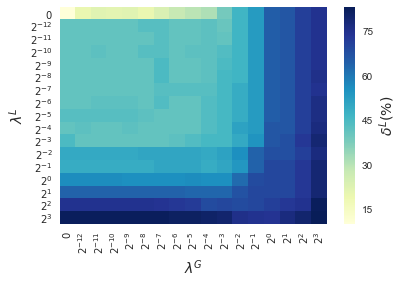

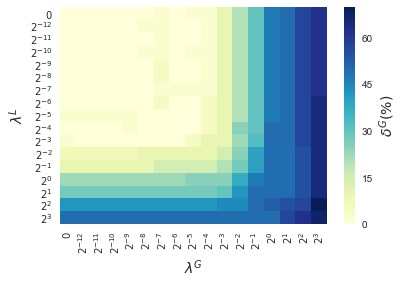

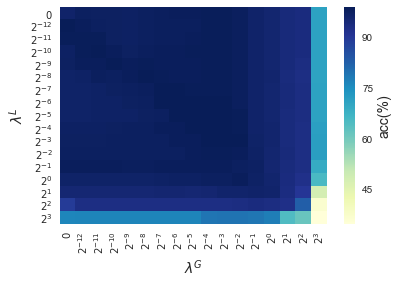

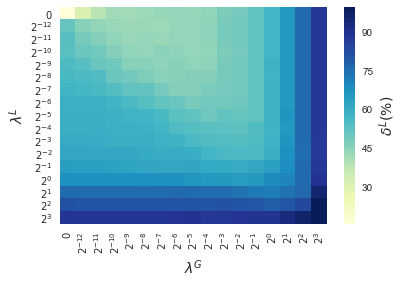

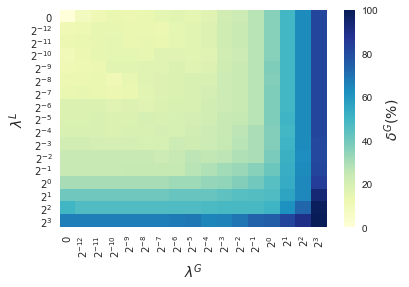

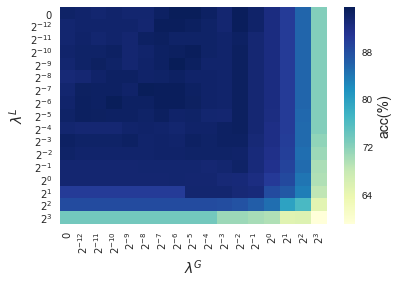

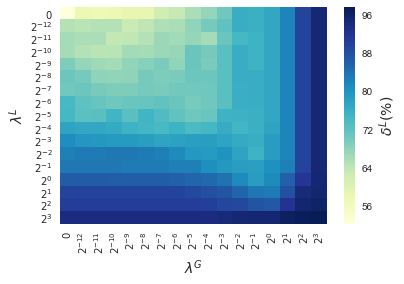

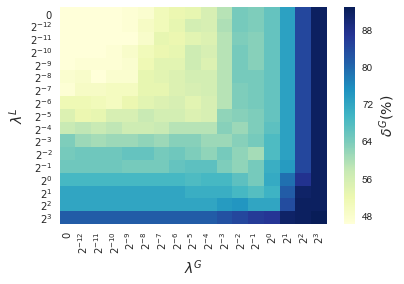

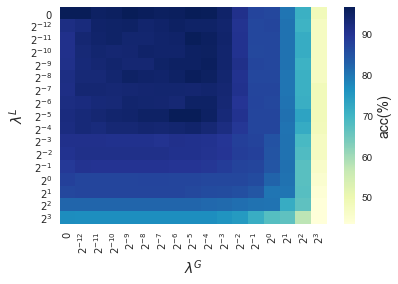

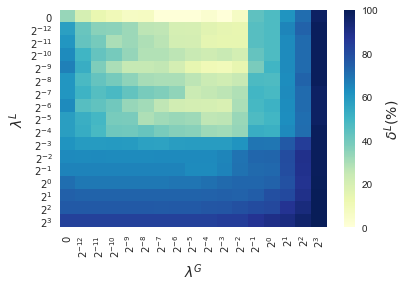

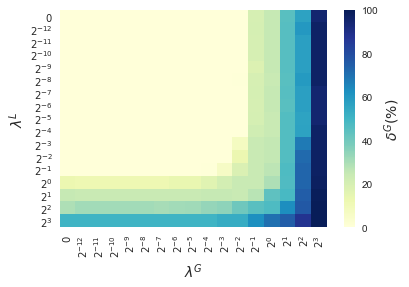

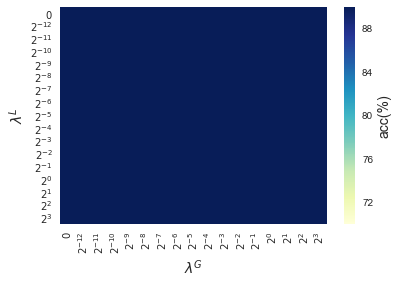

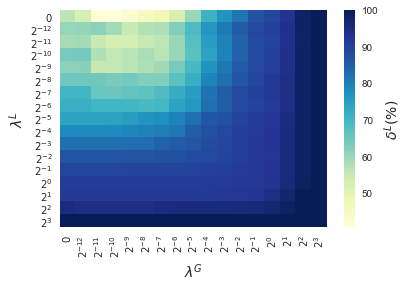

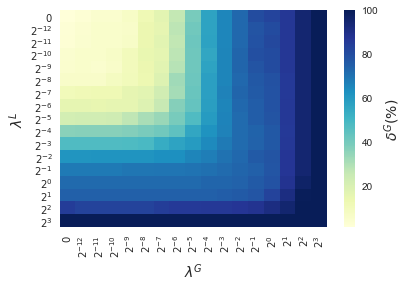

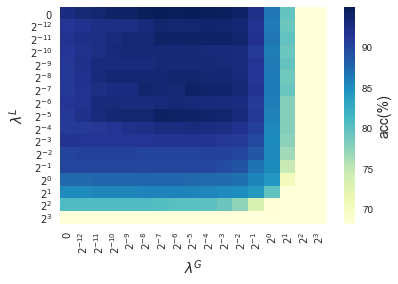

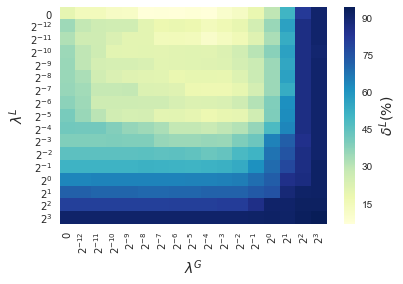

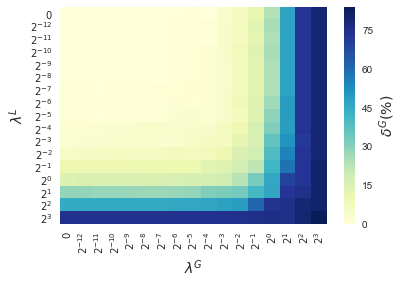

In this section, results enforcing local and global sparsity are presented by means of heatmaps, as seen in Figure 5. The experiment has been conducted on data sets of and classes, for which S-ORCTs of depth are built. For each dataset, three heatmaps are depicted as a function of the grid of the sparsity regularization parameters, and : the average out-of-sample accuracy, acc, and the local and global sparsities, and , respectively, obtained over the ten runs performed. The color bar of each heatmap goes from light green to dark blue, being the latter the maximum accuracy, local sparsity or global sparsity achieved, respectively. As a general behavior, the best rates of accuracy are not always achieved only for , but also for other pairs of the chosen grid, i.e., the data set remains equally well explained while needing less information. As before, according to local sparsity, for a fixed , has a growing trend. A similar behavior is observed for when is fixed. It is also worth mentioning that small changes of quickly lead to a gain in . Nevertheless, as expected, the gain in is slower for the same range in .

4.6 Comparison S-ORCT versus CART

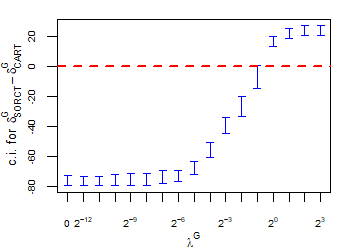

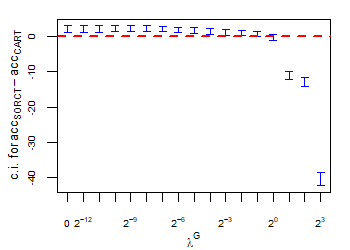

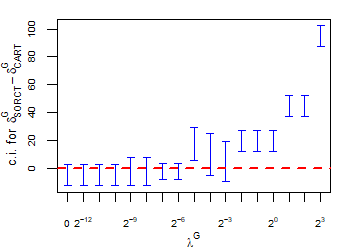

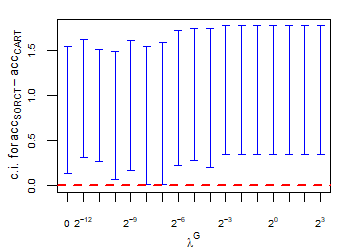

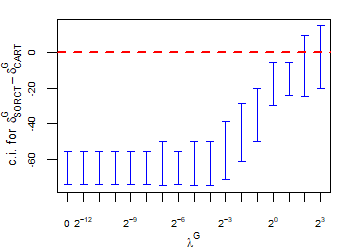

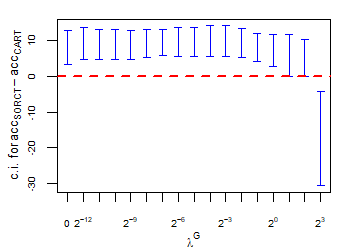

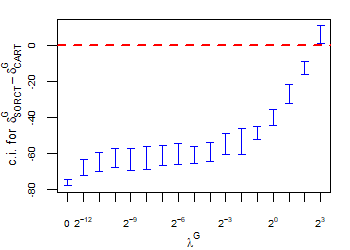

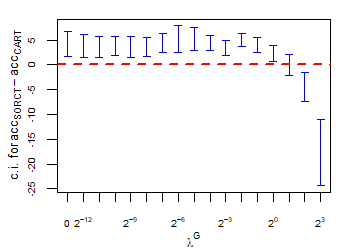

A statistical comparison between the proposed S-ORCT and CART, the classic approach to build decision trees, is provided in this section. As stated in the introduction of the paper, CARTs, as many other approaches that implement orthogonal cuts [4, 14, 18], are leaders in terms of local sparsity. Thus, the comparison S-ORCT versus CART is performed in terms of accuracy and global sparsity. Tables 3 and 5 for S-ORCT have been considered for the experiment.

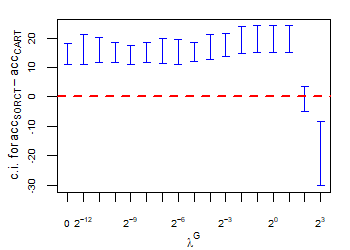

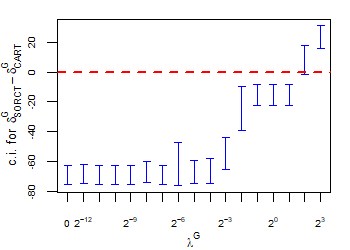

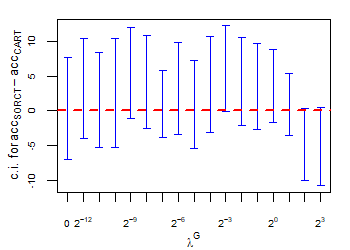

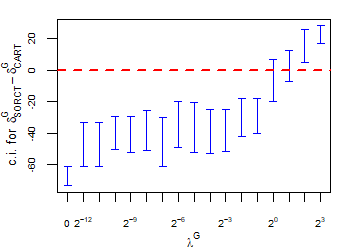

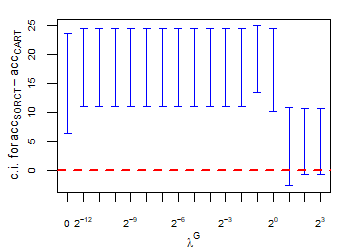

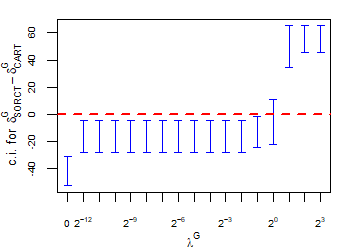

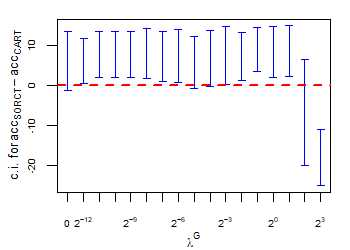

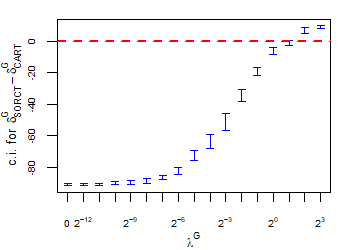

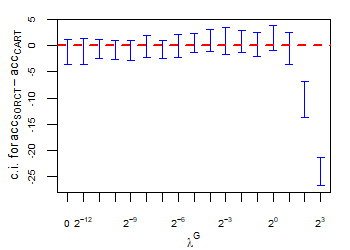

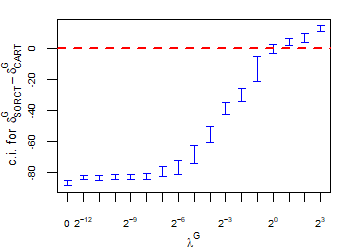

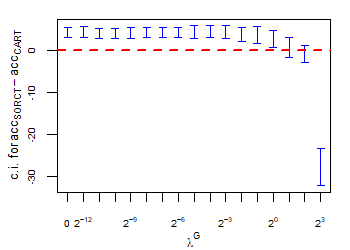

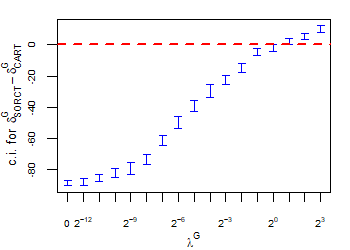

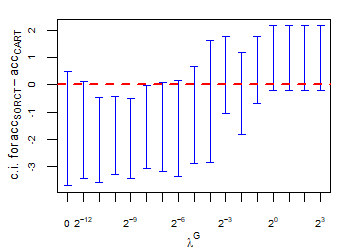

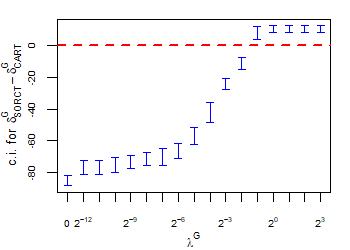

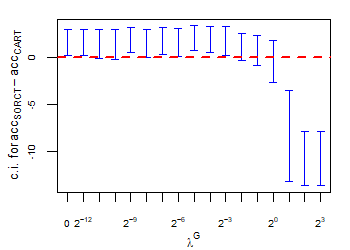

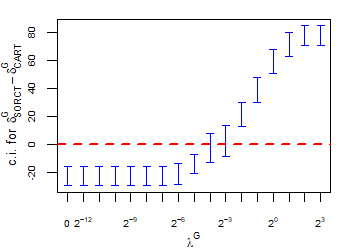

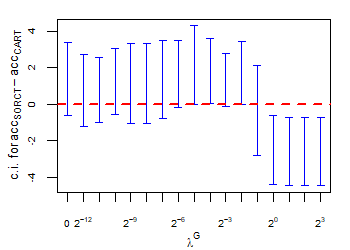

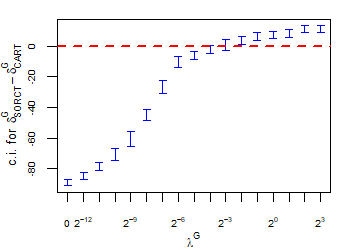

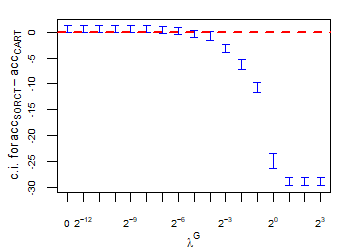

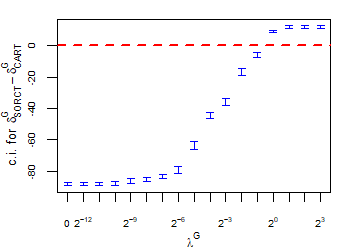

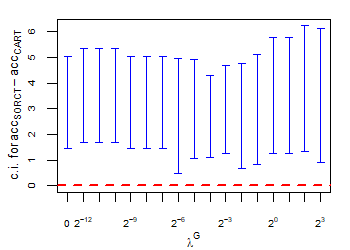

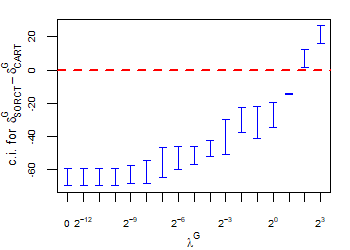

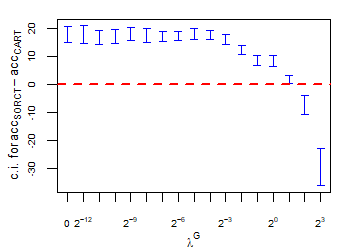

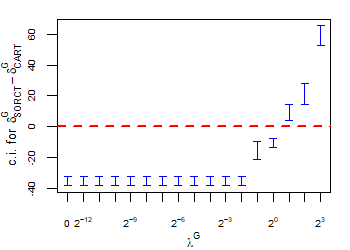

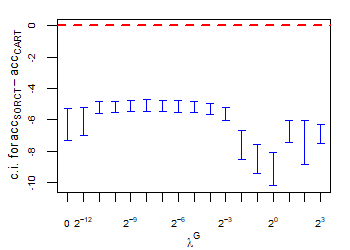

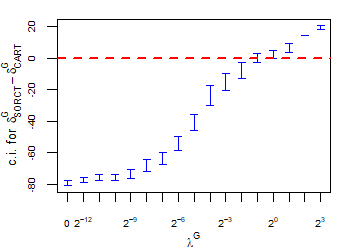

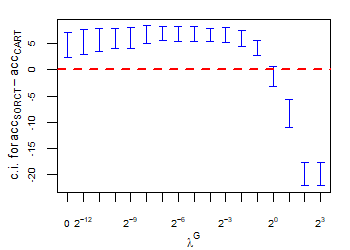

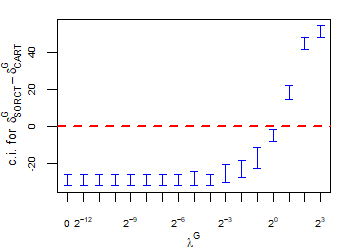

CART has been trained and tested over the same ten runs as S-ORCT. For each pair S-ORCT versus CART, two hypothesis tests for the equality of means of paired samples were carried out, one for accuracy and another for global sparsity, assuming normality, at a significance level. For this task, the t.test function in R has been used. Figure 6 depicts, for each data set, the resulting confidence intervals (blue solid line) at the confidence level for the difference in average accuracy (on the left) and global sparsity (on the right) between S-ORCT and CART. The red dashed horizontal line represents the null hypothesis in each case. Except for Creditapproval and Thyroid, for the smaller values of , our approach is significantly better than, or at least comparable to, CART in terms of accuracy, while CART is significantly better than, or at least comparable to, in terms of global sparsity. For the larger values of , our approach starts to be comparable and then dominate CART in terms of global sparsity at the cost of accuracy.

5 Conclusions and future research

Recently, several proposals focused on building optimal classification trees are found in the literature to address the shortcomings of the classic greedy approaches. In this paper, we have proposed a novel continuous optimization-based approach, the Sparse Optimal Randomized Classification Tree (S-ORCT), in which a compromise between good classification accuracy and sparsity is pursued. Local and global sparsity in the tree are modeled by including in the objective function norm-like regularizations, namely, and , respectively. Our numerical results illustrate that our approach can improve both sparsities without harming classification accuracy. Unlike CART, we are able to easily trade in some of our classification accuracy for a gain in global sparsity.

Some extensions of our approach are of interest. First, this metholodogy can be extended straightaway to a regression tree counterpart, where the response variable is continuous. Second, categorical data is addressed in this paper through the inclusion of dummy predictor variables. For a given categorical predictor variable, and by means of an -norm regularization, one can link all its dummies across all the branch nodes in the tree, with the aim of better modeling its contribution to the classifier. Third, it is known that bagging trees tends to enhance accuracy. An appropiate bagging scheme of our approach, where sparsity is a key point, is a nontrivial design question.

Acknowledgements. This research has been financed in part by research projects EC H2020 MSCA RISE NeEDS (Grant agreement ID: 822214), COSECLA - Fundación BBVA, MTM2015-65915R, Spain, P11-FQM-7603 and FQM-329, Junta de Andalucía, the last three with EU ERF funds. This support is gratefully acknowledged.

References

- Athey [2018] Athey, S. (2018). The impact of machine learning on economics. In The Economics of Artificial Intelligence: An Agenda. University of Chicago Press.

- Baesens et al. [2003] Baesens, B., Setiono, R., Mues, C., & Vanthienen, J. (2003). Using neural network rule extraction and decision tables for credit-risk evaluation. Management Science, 49, 312–329.

- Bennett & Blue [1996] Bennett, K. P., & Blue, J. (1996). Optimal decision trees. Rensselaer Polytechnic Institute Math Report, 214.

- Bertsimas & Dunn [2017] Bertsimas, D., & Dunn, J. (2017). Optimal classification trees. Machine Learning, 106, 1039–1082.

- Biau & Scornet [2016] Biau, G., & Scornet, E. (2016). A random forest guided tour. Test, 25, 197–227.

- Blanquero et al. [2018] Blanquero, R., Carrizosa, E., Molero-Río, C., & Romero Morales, D. (2018). Optimal Randomized Classification Trees. https://www.researchgate.net/publication/326901224_Optimal_Randomized_Classification_Trees.

- Breiman [2001] Breiman, L. (2001). Random forests. Machine Learning, 45, 5–32.

- Breiman et al. [1984] Breiman, L., Friedman, J., Stone, C. J., & Olshen, R. A. (1984). Classification and regression trees. CRC press.

- Carrizosa et al. [2011] Carrizosa, E., Martín-Barragán, B., & Romero Morales, D. (2011). Detecting relevant variables and interactions in supervised classification. European Journal of Operational Research, 213, 260–269.

- Carrizosa & Romero Morales [2013] Carrizosa, E., & Romero Morales, D. (2013). Supervised classification and mathematical optimization. Computers & Operations Research, 40, 150–165.

- Deng & Runger [2012] Deng, H., & Runger, G. (2012). Feature selection via regularized trees. In The 2012 International Joint Conference on Neural Networks (IJCNN) (pp. 1–8). IEEE.

- Deng & Runger [2013] Deng, H., & Runger, G. (2013). Gene selection with guided regularized random forest. Pattern Recognition, 46, 3483–3489.

- Fernández-Delgado et al. [2014] Fernández-Delgado, M., Cernadas, E., Barro, S., & Amorim, D. (2014). Do we need hundreds of classifiers to solve real world classification problems? Journal of Machine Learning Research, 15, 3133–3181.

- Firat et al. [2018] Firat, M., Crognier, G., Gabor, A. F., Zhang, Y., & Hurkens, C. (2018). Constructing classification trees using column generation. arXiv preprint arXiv:1810.06684, .

- Freitas [2014] Freitas, A. (2014). Comprehensible classification models: a position paper. ACM SIGKDD Explorations Newsletter, 15, 1–10.

- Genuer et al. [2017] Genuer, R., Poggi, J.-M., Tuleau-Malot, C., & Villa-Vialaneix, N. (2017). Random Forests for Big Data. Big Data Research, 9, 28–46.

- Goodman & Flaxman [2016] Goodman, B., & Flaxman, S. (2016). European Union regulations on algorithmic decision-making and a “right to explanation”. arXiv preprint arXiv:1606.08813, .

- Günlük et al. [2018] Günlük, O., Kalagnanam, J., Menickelly, M., & Scheinberg, K. (2018). Optimal Decision Trees for Categorical Data via Integer Programming. arXiv preprint arXiv:1612.03225v2, .

- Hart et al. [2017] Hart, W. E., Laird, C. D., Watson, J.-P., Woodruff, D. L., Hackebeil, G. A., Nicholson, B. L., & Siirola, J. D. (2017). Pyomo–Optimization Modeling in Python volume 67. (2nd ed.). Springer Science & Business Media.

- Hart et al. [2011] Hart, W. E., Watson, J.-P., & Woodruff, D. L. (2011). Pyomo: modeling and solving mathematical programs in Python. Mathematical Programming Computation, 3, 219–260.

- Hastie et al. [2009] Hastie, T., Tibshirani, R., & Friedman, J. (2009). The Elements of Statistical Learning. (2nd ed.). New York: Springer.

- Hyafil & Rivest [1976] Hyafil, L., & Rivest, R. L. (1976). Constructing optimal binary decision trees is NP-complete. Information Processing Letters, 5, 15–17.

- Jung et al. [2017] Jung, J., Concannon, C., Shroff, R., Goel, S., & Goldstein, D. G. (2017). Simple rules for complex decisions. arXiv preprint arXiv:1702.04690, .

- Lichman [2013] Lichman, M. (2013). UCI Machine Learning Repository. http://archive.ics.uci.edu/ml. University of California, Irvine, School of Information and Computer Sciences.

- Maldonado et al. [2017] Maldonado, S., Bravo, C., Lopez, J., & Perez, J. (2017). Integrated framework for profit-based feature selection and SVM classification in credit scoring. Decision Support Systems, 104, 113–121.

- Maldonado & Lopez [2017] Maldonado, S., & Lopez, J. (2017). Synchronized feature selection for support vector machines with twin hyperplanes. Knowledge-Based Systems, 132, 119–128.

- Martens et al. [2007] Martens, D., Baesens, B., Van Gestel, T., & Vanthienen, J. (2007). Comprehensible credit scoring models using rule extraction from support vector machines. European Journal of Operational Research, 183, 1466–1476.

- Martín-Barragán et al. [2014] Martín-Barragán, B., Lillo, R., & Romo, J. (2014). Interpretable support vector machines for functional data. European Journal of Operational Research, 232, 146–155.

- Norouzi et al. [2015] Norouzi, M., Collins, M., Johnson, M. A., Fleet, D. J., & Kohli, P. (2015). Efficient non-greedy optimization of decision trees. In Advances in Neural Information Processing Systems (pp. 1729–1737).

- Olafsson et al. [2008] Olafsson, S., Li, X., & Wu, S. (2008). Operations research and data mining. European Journal of Operational Research, 187, 1429–1448.

- Python Core Team [2015] Python Core Team (2015). Python: A dynamic, open source programming language. Python Software Foundation. URL: https://www.python.org.

- Ridgeway [2013] Ridgeway, G. (2013). The pitfalls of prediction. National Institute of Justice Journal, 271, 34–40.

- Silva [2017] Silva, A. P. D. (2017). Optimization approaches to supervised classification. European Journal of Operational Research, 261, 772–788.

- Therneau et al. [2015] Therneau, T., Atkinson, B., & Ripley, B. (2015). rpart: Recursive Partitioning and Regression Trees. URL: https://CRAN.R-project.org/package=rpart R package version 4.1-10.

- Tibshirani et al. [2015] Tibshirani, R., Wainwright, M., & Hastie, T. (2015). Statistical Learning with Sparsity. The Lasso and Generalizations. Chapman and Hall/CRC.

- Ustun & Rudin [2016] Ustun, B., & Rudin, C. (2016). Supersparse linear integer models for optimized medical scoring systems. Machine Learning, 102, 349–391.

- Verwer & Zhang [2017] Verwer, S., & Zhang, Y. (2017). Learning decision trees with flexible constraints and objectives using integer optimization. In International Conference on AI and OR Techniques in Constraint Programming for Combinatorial Optimization Problems (pp. 94–103). Springer.

- Verwer et al. [2017] Verwer, S., Zhang, Y., & Ye, Q. C. (2017). Auction optimization using regression trees and linear models as integer programs. Artificial Intelligence, 244, 368–395.

- Wächter & Biegler [2006] Wächter, A., & Biegler, L. T. (2006). On the implementation of an interior-point filter line-search algorithm for large-scale nonlinear programming. Mathematical Programming, 106, 25–57.

- Yang et al. [2017] Yang, L., Liu, S., Tsoka, S., & Papageorgiou, L. G. (2017). A regression tree approach using mathematical programming. Expert Systems with Applications, 78, 347–357.

- Zou & Yuan [2008] Zou, H., & Yuan, M. (2008). The F-infinity norm support vector machine. Statistica Sinica, 18, 379–398.