Spatial Transformer Point Convolution

Abstract

Point clouds are unstructured and unordered in the embedded 3D space. In order to produce consistent responses under different permutation layouts, most existing methods aggregate local spatial points through maximum or summation operation. But such an aggregation essentially belongs to the isotropic filtering on all operated points therein, which tends to lose the information of geometric structures. In this paper, we propose a spatial transformer point convolution (STPC) method to achieve anisotropic convolution filtering on point clouds. To capture and represent implicit geometric structures, we specifically introduce spatial direction dictionary to learn those latent geometric components. To better encode unordered neighbor points, we design sparse deformer to transform them into the canonical ordered dictionary space by using direction dictionary learning. In the transformed space, the standard image-like convolution can be leveraged to generate anisotropic filtering, which is more robust to express those finer variances of local regions. Dictionary learning and encoding processes are encapsulated into a network module and jointly learnt in an end-to-end manner. Extensive experiments on several public datasets (including S3DIS, Semantic3D, SemanticKITTI) demonstrate the effectiveness of our proposed method in point clouds semantic segmentation task.

1 Introduction

Recent advances in 3D hardware sensors (e.g., 3D scanners, LiDARs and RGB-D cameras) have improved the accessibility of point clouds, which can intuitively reflect the spatial geometric information of objects in 3D space. Recently, 3D semantic segmentation of point clouds, which aims to label each point with a corresponding category information, has drawn increasing attention due to its broad applicability to the fields of general environment perception. It enables various higher-level potential applications, including autonomous driving, human-computer interaction, virtual reality and robotics.

With the significant progress of deep learning on grid-shaped images/videos [13, 7], recent studies began to explore how to apply convolutional neural networks (CNN) on irregular-structured point clouds [22, 24]. For example, some previous methods converted point clouds data to regular data representation by employing multi-view images [29, 15] or voxels [20, 42, 23], and then CNN-like operations (e.g., 2D CNN and 3D CNN) can be performed on unstructured point clouds. Although these methods have made some progress, the projection and voxelization steps inevitably lead to information loss of original 3D data. Furthermore, as a pioneering work, PointNet [22] was proposed to directly process point clouds without intermediate data conversion. It employed the shared multi-layer perceptron (MLP) to learn features of each point and then a symmetric function is used to aggregate global features. This network can achieve permutation invariance for unordered 3D point clouds, but cannot well consider the local geometric information between points (including direction, shape and topological structure). Subsequently, several dedicated neural modules have been proposed to aggregate local geometric information of point clouds for further boosting the semantic segmentation performance, such as Pointnet++ [24], RS-CNN [19], Geo-CNN [45], PointWeb [47] and ShellNet [46]. For example, PointNet++ adopted a hierarchical neural network to process a set of points sampled in a metric space [24]; RS-CNN defined the low-level relation as a compact feature vector with 10 channels, then contextual shape-aware representation was learned for all points [19].

However, most of these approaches always aggregate/mix these geometric information together to explore the relationship between a point and its neighbor points. Such aggregated methods essentially belong to the isotropic filtering on all operated points therein, which tends to lose the information of 3D geometric structures.

In this paper, we propose a novel spatial transformer point convolution (STPC) method to achieve anisotropic convolution filtering on point clouds, and finally boost the performance of 3D semantic segmentation task. Given a 3D point clouds sample, our proposed STPC framework can predict the point-wise categorization in an end-to-end fashion. Inspired by the classic bag-of-words model [26, 27], we specifically build a spatial direction dictionary to represent these latent spatial directions/geometric components of local point clouds data. By virtue of the dictionary, these unordered and unstructured neighbor points can be coordinated into the canonical dictionary space by using sparse direction encoding. Subsequently, in the transformed dictionary space, a typical image-like convolution operation can be applied to perform the anisotropic filtering process for robustly capturing these finer geometric structure information of point clouds. Both dictionary learning and encoding processes are encapsuled into a network module, and the entire spatial transformer point convolution is also jointed to optimize in an end-to-end neural network. The proposed STPC framework can better capture these subtle geometric structure information, especially for those finer variances of local regions in 3D point clouds.

We summarize the main contributions as three folds:

-

•

We propose a novel spatial transformer point convolution (STPC) framework to deal with semantic segmentation on point cloud data, which successfully performs anisotropic convolution filtering on unstructured data like the standard convolution on images.

-

•

We propose a direction dictionary induced spatial direction encoding method, which transforms unordered neighbor points into a latent atom-coordinated system and further well encode those subtle structure variances of local neighbor points.

-

•

Comprehensive evaluations on three point cloud datasets (including S3DIS, Semantic3D, SemanticKITTI) demonstrate the superiority of our proposed STPC when compared with other state-of-the-art methods in the point cloud semantic segmentation problem.

2 Related Work

In this section, we focus on point clouds data analysis and briefly review three types of existing deep learning methods according to their underlying technologies.

Multiview-based convolution methods: Some existing methods [29, 15, 4] project point clouds into a set of renderings from different viewpoints and then the standard convolutional neural network can be applied. MVCNN[29] projected a 3D object into multiple views and extracted the corresponding view-wise features, then simply max-pools multi-view features into a global descriptor for accurate object recognition. Felix et al.[15] projected a 3D point clouds onto 2D planes from multiple virtual camera views. Then, a multi-stream FCN was used to predict pixel-wise scores on synthetic images. The final semantic label of each point was obtained by fusing the reprojected scores over different views. However, these view-based convolution methods lost spatial information during projection, and can not fully exploit the underlying geometric and structural information. Also, the performance of multi-view semantic segmentation methods is sensitive to viewpoint selection and occlusions.

Voxelization-based convolution methods: Volumetric representation naturally preserves the neighborhood structure of 3D point clouds. Its regular data format allows direct application of standard 3D convolutions [20, 42, 31, 10, 16]. VoxNet [20] introduced a volumetric occupancy network to achieve robust 3D object recognition. 3D ShapeNet [42] was proposed to represent 3D shape by a probability distribution of binary variables on voxel grids. To alleviate the problem of computation and memory footprint growing cubically with the resolution, Kd-trees [11] and octrees [25, 37] were introduced to efficiently model point clouds. However, the voxelization step inherently causes discretization artifacts and information loss. It is non-trivial to select an appropriate grid resolution in practice.

Point-based methods. The point-based method directly takes raw point clouds as input without converting them to other formats. As a pioneering work, PointNet [22] used shared multi-layer perceptron to learn features of each point and then a symmetric function was used to aggregate global features. This network can achieve permutation invariance for unordered 3D point clouds but the local geometric information between points can not be captured. Subsequently, neighbouring feature pooling methods [24, 47, 46], graph message passing methods [35, 36, 38], kernel-based convolution methods [2, 5, 9, 32], and attention-based aggregation methods [43, 45] were proposed to learn per-point local features. Although these methods have shown promising results, they did not implement the anisotropic filtering on point clouds. To generate anisotropic filtering on point clouds, PointCNN [17] learnt a -transformation from the input points to weight the input features associated with the points and permute the points into a latent and potential canonical order. TangentConv [30] was proposed by projecting local neighbor points to local tangent planes and processing them with 2D convolutions. A-CNN [12] can order neighboring in a clockwise/counterclockwise manner on a tangent plane and then apply annular convolutions. FPConv [18] performed a local flattening by learning a weight map to softly project surrounding points onto a 2D grid. Our STPC is different from the above anisotropic methods in three folds: i) introduces a flexible spatial direction dictionary as the latent coordinate system (each atom may be viewed as a coordinate direction), ii) uses the inspirit of sparse coding and transformer to sparsely encode neighbor points into the dictionary system, and iii) filter kernels are defined on latent coordinate directions for anisotropic convolution.

3 Our Approach

3.1 Overview

The standard convolution on images/videos is conditioned to grid shape region, and performs anisotropic filtering in order to encode subtle texture appearances. The point clouds data are often embedded in an unstructured and non-regular 3D space, and thus the standard convolution kernels are difficult to be defined on point clouds data due to its irregular property. Most existing convolution methods on point clouds [22, 24] take the sum-/max-aggregation on local neighbor points without considering the local space topology of points therein. As a contrast, we attempt to construct an anisotropic filtering on 3D point clouds to better encode these finer characteristics of points in each local 3D spatial region. To this end, we introduce spatial direction dictionaries to coordinate these nearest points into latent normal spaces, where each atom of the dictionary implicitly defines a spatial direction. The previous filter operated on all nearest points can be untied to adapt to different atoms, which naturally results to anisotropic convolution filtering analogous to the standard convolution. This anisotropic filtering can be named as spatial transformer point convolution (STPC).

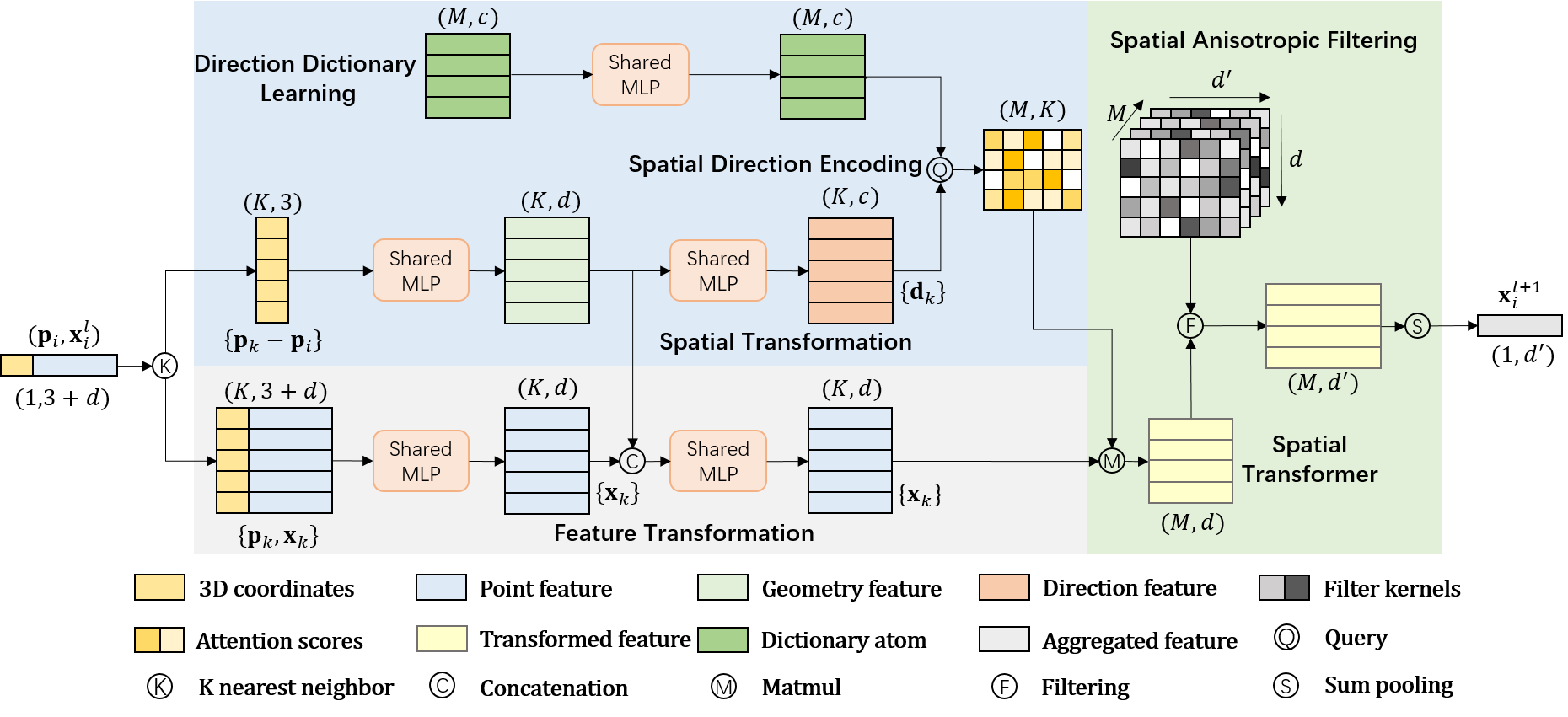

Given one reference point , we can sample the nearest neighbor points as a set . Obviously, each point has 3D coordinate value, denoted as 111Here the lowercase denotes one general point, while the bold-type denotes its spatial x-y-z coordinates. . Point clouds often carry some additional attributes, e.g., RGB color or intermediate learnt features. We denote the attributes of point as a vector . Next, we perform an anisotropic filtering on the point set to produce a robust response of the reference point . Below we define one-time spatial transformer point convolution process, which can be encapsulated into an STPC module as one layer. Formally,

| (1) | ||||

| (2) | ||||

| (3) | ||||

| (4) |

where is the layer number of neural network, is the number of atoms in the spatial direction dictionary , is the encoding coefficient w.r.t the point , is the accumulated component at the -th atom for all neighbor points of , is the anisotropic convolution response of point . In the above formulas,

-

•

Direction dictionary leaning . Due to spatial encoding, this dictionary should emphasize spatial information (source from points positions), rather than attribute information. Meantime, the dictionary should be dynamic and depended on prior states by accompanying with stacked modules.

-

•

Spatial direction encoding . The point is represented with the atoms of the direction dictionary by using sparse coding or nearest neighbor reconstruction. The representation coefficients describe the latent coordinate information of this point in the dictionary space. The spatial transformation is used to extract spatial features from 3D coordinates.

-

•

Spatial transformer . The neighbor points set is projected onto atom points, and forms a regular coordinate system defined by atoms. The feature transformation is used to learn point features/attributes.

-

•

Anisotropic convolution (i.e., STPC) . As the dictionary implicitly defines a regular coordinate system, the spatial transformed features just corresponds to the atoms therein. Hence, we could perform different filtering for each of them, which really works like the standard convolution on different relative spatial positions.

3.2 Direction Dictionary Learning

In order to produce consistent responses under different permutation layouts, most existing methods mix these geometric information together to explore the relationship between a point and its neighbor points. Intuitively, these geometric/direction information of point clouds lie in a dense space with several latent components/directions. Inspired by the idea of bag-of-words, here we learn a spatial direction dictionary in the -dimensional space, which can be then used to sparsely quantize local neighborhood structure of point clouds. Specifically, we build a spatial direction dictionary with atoms through random initialization, where each atom refer to a spatial direction information. Here the initialized dictionary can be noted as . Due to the sparsity of point clouds, a certain number of atoms are sufficient to encode each neighborhood region, and the redundancy and expensive computing costs can be also avoided in practice. With the prior states of direction dictionary in previous layer, we can dynamically optimize/update it while satisfying the constrain of atom decorrelation.

| (5) |

where and denote the states of direction dictionary in the -th and -th layers, the dictionary learning function is implemented by a shared multi-layer perceptron. In the direction dictionary learning process, we use the cosine similarity to measure the similarity between each of them.

| (6) |

where the greater the cosine similarity is, the more similar the spatial directions between and are. The constraint is that the atoms of the spatial direction dictionary are discrete and not similar between them. We can iteratively learn the spatial direction dictionary by minimizing the above constraint, and then the atoms can discretely represent different spatial directions in the high-dimensional dictionary space.

3.3 Spatial Direction Encoding

For a single point, its x-y-z coordinates can not fully explore the local geometric information with its neighbor points, which is important for the semantic segmentation task. Several recent works[45, 19, 8] have explicitly embedded the low-level elements of neighbor points to encode local features. These above methods focus on expressing local structure relationships by employing multiple low-level elements, but the distinguishable spatial direction information of each point would be drowned out. Therefore, we introduce a spatial direction encoding method to capture and represent implicit geometric structures and distinguishable direction information. For the -th point, its spatial direction information can be represented as , and each of them can be encoded as:

| (7) |

where the point set refers to the nearest neighbor points of the point , is the feature dimension of , and the spatial transformation is performed by the shared multi-layer perceptron for extracting the spatial direction information.

In the -th convolution layer, we can encode the local spatial information of point by employing the direction dictionary and spatial direction information . For one nearest point of the point , we can encode it with the atoms of the direction dictionary by using sparse coding. Analogous to the self-attention mechanism [34] in the NLP field, and refer to a query and one dictionary atom, respectively. For describing the latent coordinate information of the point , we can get the representation coefficient by computing the correlation between spatial direction information and the atom . Specifically, we use the cosine similarity as Eqn. (6) to measure the correlation between them and an exponential function is used to make the representation coefficients more sparse. The spatial encoding process can be expressed as computing the direction-dependent relationship between and each atom :

| (8) |

where may be viewed as the attention score/coefficient of the point w.r.t the atom . Thus the spatial information of the point in the dictionary space is encoded as . Besides, the study on sparse coding [44] demonstrates that the sparsity of representation coefficients is important to overcomplete dictionaries. Hence, we discard those trivial coefficients in by setting a small threshold value (i.e., = 0.01), which results into sparse representation in practice.

3.4 Spatial Anisotropic Filtering

To encode the unordered local information of the point , we introduce a spatial transformer for projecting the neighbor points set into a canonical order dictionary space. After obtaining the attention score, the feature of all neighbor points can be transformed into the canonical ordered dictionary space.

| (9) | ||||

where the feature transformation refers to the feature extracting process by employing the shared multi-layer perceptron, the x-y-z coordinates and the geometry-aware features of the point are used as inputs. Thus, any disordered neighborhood can be transformed into the canonical ordered dictionary space, and then we can perform an anisotropic convolution filtering to produce a robust response of the point .

| (10) | ||||

where denotes the spatial transformed features with the ordered structure in the dictionary space, refers to the learnt weights for performing anisotropic convolution.

4 Experiments

4.1 Experiment Setting

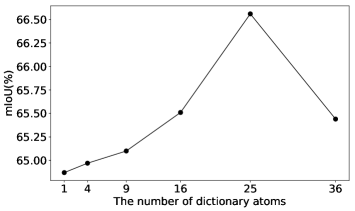

To demonstrate the efficiency of our proposed STPC method, we conduct semantic segmentation experiments on three large-scale point clouds datasets, including S3DIS [1], Semantic3D [6] and SemanticKITTI [3]. Given an point clouds as input, the widely-used encoder-decoder architecture is used to predict the point-wise label in an end-to-end way. In the encoding stage, we stack five STPC modules to extract per-point features, each of which is followed by a random sampling operation [8] to reduce the size of the point clouds. In the decoding stage, five upsampling layers are stacked to propagate features by employing the nearest point interpolation technique. Meanwhile, skip connection is applied to concatenate the upsampled features with the intermediate features produced by encoding layers. To predict the categories of input point clouds, we stack several fully-connected layers on this responses, and use the cross-entropy loss during training. Follow the same protocols as in [8], we split piont cloud data into training set and testing set, and adopt the same original features as inputs (3D coordinates and color information for S3DIS and Semantic3D, and only 3D coordinates for SemanticKITTI). We adopt the mean IoU (mIoU), mean class Accuracy (mAcc) and Overall Accuracy (OA) over the total classes as the standard metrics. The learning rate is initialized at 0.01 and decreases by 5% after each epoch. The number of nearest points K is set as 16. As in RandLA-Net [8], we use the Adam optimizer with default parameters and the same data processing methods. Our learnt spatial direction dictionary contains 25 atoms (i.e., ), the dimension of each atom is 16 (i.e., ), each of which represents a different spatial direction. For smooth updates, the learning rate of direction dictionary learning is set to 0.01 times the global network. All models in our experiment are trained and tested based on an NVIDIA RTX2080Ti GPU.

| Method | OA(%) | mAcc(%) | mIoU(%) | ceil. | floor | wall | beam | col. | wind. | door | table | chair | sofa | book. | board | clut. |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| PointNet [22] | - | 49.0 | 41.1 | 88.8 | 97.3 | 69.8 | 0.1 | 3.9 | 46.3 | 10.8 | 58.9 | 52.6 | 5.9 | 40.3 | 26.4 | 33.2 |

| SegCloud [31] | - | 48.9 | 57.4 | 90.1 | 96.1 | 69.9 | 0.0 | 18.4 | 38.4 | 23.1 | 70.4 | 75.9 | 40.9 | 58.4 | 13.0 | 41.6 |

| TangentConv [30] | - | 62.2 | 52.6 | 90.5 | 97.7 | 74.0 | 0.0 | 20.7 | 39.0 | 31.3 | 77.5 | 69.4 | 57.3 | 38.5 | 48.8 | 39.8 |

| SPGraph [14] | 86.4 | 66.5 | 58.0 | 89.4 | 96.9 | 78.1 | 0.0 | 42.8 | 48.9 | 61.6 | 84.7 | 75.4 | 69.8 | 52.6 | 2.1 | 52.2 |

| PointCNN [17] | 85.9 | 63.9 | 57.2 | 92.3 | 98.2 | 79.4 | 0.0 | 17.6 | 22.8 | 62.1 | 74.4 | 80.6 | 31.7 | 66.7 | 62.1 | 56.8 |

| PointConv [41] | 85.4 | 64.7 | 58.3 | 92.8 | 96.3 | 77.0 | 0.0 | 18.2 | 47.7 | 54.3 | 87.9 | 72.8 | 61.6 | 65.9 | 33.9 | 49.3 |

| PAT [45] | - | 70.8 | 60.1 | 93.0 | 98.5 | 72.3 | 1.0 | 41.5 | 85.1 | 38.2 | 57.7 | 83.6 | 48.1 | 67.0 | 61.3 | 33.6 |

| PointWeb [47] | 87.0 | 66.7 | 60.3 | 92.0 | 98.5 | 79.4 | 0.0 | 21.1 | 59.7 | 34.8 | 76.3 | 88.3 | 46.9 | 69.3 | 64.9 | 52.5 |

| KPConv rigid [32] | - | 70.9 | 65.4 | 92.6 | 97.3 | 81.4 | 0.0 | 16.5 | 54.5 | 69.5 | 80.2 | 90.1 | 66.4 | 74.6 | 63.7 | 58.1 |

| KPConv deform [32] | - | 72.8 | 67.1 | 92.8 | 97.3 | 82.4 | 0.0 | 23.9 | 58.0 | 69.0 | 81.5 | 91.0 | 75.4 | 75.3 | 66.7 | 58.9 |

| Our STPC | 88.5 | 75.3 | 66.6 | 91.7 | 96.7 | 82.1 | 0.0 | 37.3 | 64.7 | 52.6 | 79.7 | 89.2 | 76.3 | 72.5 | 66.5 | 56.1 |

| Method | mIoU(%) | OA(%) | man-made | natural | high veg. | low veg. | buildings | hard scape | scanning art. | cars |

|---|---|---|---|---|---|---|---|---|---|---|

| SegCloud [31] | 61.3 | 88.1 | 83.9 | 66.0 | 86.0 | 40.5 | 91.1 | 30.9 | 27.5 | 64.3 |

| ShellNet [46] | 69.3 | 93.2 | 96.3 | 90.4 | 83.9 | 41.0 | 94.2 | 34.7 | 43.9 | 70.2 |

| GACNet [36] | 70.8 | 91.9 | 86.4 | 77.7 | 88.5 | 60.6 | 94.2 | 37.3 | 43.5 | 77.8 |

| SPGraph [14] | 73.2 | 94.0 | 97.4 | 92.6 | 87.9 | 44.0 | 83.2 | 31.0 | 63.5 | 76.2 |

| KPConv rigid [32] | 74.6 | 92.9 | 90.9 | 82.2 | 84.2 | 47.9 | 94.9 | 40.0 | 77.3 | 79.7 |

| RGNet [33] | 74.7 | 94.5 | 97.5 | 93.0 | 88.1 | 48.1 | 94.6 | 36.2 | 72.0 | 68.0 |

| RandLA-Net [8] | 77.4 | 94.8 | 95.6 | 91.4 | 86.6 | 51.5 | 95.7 | 51.5 | 69.8 | 76.8 |

| Our STPC | 76.2 | 94.8 | 97.9 | 93.0 | 88.1 | 49.3 | 95.4 | 47.3 | 59.0 | 79.6 |

4.2 Results and Comparisons

S3DIS dataset[1]: Table 1 shows the performance of our STPC method and comparisons with several state-of-the-arts on OA, mAcc and mIoU metrics. our STPC method can significantly outperform four baselines: 2.1% over SPGraph [14], 2.6% over PointCNN [17], 3.1% over PointConv [41] and 1.5% over PointWeb [47] in term of OA score. When compared with these existing point clouds semantic segmentation approaches, our method in term of mAcc score also achieves the best result, representing improvement of 26.3%, 13.1%, 11.4%, 4.5%, and 2.5% over PointNet [22], TangentConv [30], PointCNN [17], PAT [45], KPConv deform [32]. The proposed STPC method can also obtain a better performance than these exiting methods on mIoU score, except for KPConv deform method [32]. Furthermore, we also report the mIoU scores for each class in Table 1. Generally, when compared with these baselines, our STPC method shows the comparable performance, e.g, 76.3% vs 75.4% [32] for sofa, 89.2% vs 91.0 [32] for chair, 66.5% vs 64.9 [47] for board, and 82.1% vs 79.4% [17] for wall. It demonstrates that our STPC method performs very well on predicting the point-wise labels by considering the anisotropic convolution filtering technology on 3D point clouds data.

Semantic3D dataset [6]: We report the results of comparisons between the baselines and our STPC method on the Semantic3D dataset (reduced-8). As shown in Table 2, comparisons with previous methods [31, 46, 32, 33] demonstrate that our proposed STPC obtains the best performance, achieving improvements of 0.3% over RGNet [33], 1.9% over KPConv rigid [32], 1.6% over ShellNet [46] and 6.7% over SegCloud [31] in term of OA score. The STPC achieves 76.2% in term of OA score, which can outperform most existing methods [31, 46, 32, 33] , except the RandLA-Net method [8]. For example, when compared with the classic SPGraph [14], we can boost the semantic segmentation performance by 0.8 and 3.0% in terms of OA and mIoU scores, respectively. Furthermore, when predicting the labels for each category, the mIoU scores for man-made and natural can achieve the best performance with 97.9% and 93.0% segmentation results. This indicates that our SPTC can better capture these finer local features of point clouds and then improve the discriminative capability of semantic segmentation network.

SemanticKITTI dataset [3]: We finally compare the proposed STPC method with two families of recent point clouds semantic segmentation approaches, including point based methods [22, 14, 24, 30, 8] and projection based approaches [39, 40, 3, 21]. The quantitative comparison of segmentation results is reported in Table 3. When compared with all point based methods and projection based approaches, the STPC can achieve the best performance in term of mIoU score, and also obtain the better results in most categories (11 out of 19). Especially in the motorcyclist category, the mIoU results of all these existing methods are quite low (e.g., 0.0% with PointNet [22], SPLATNet [28] and PointNet++ [24], 0.9% with SqueezeSeg [39], 7.2% with RandLA-Net [8]), but we can achieve 15.3% segmentation result, which is much higher than the second-ranked TangentConv method [30] by improving 7.2%. Compared with the best projection based approach (i.e., RangeNet53++ [21]), we outperform it by 2.4% in term of mIoU score, and obtain the better results in 14 categories. Compared with the best point based method (i.e., RandLA-Net [8]), we outperform it by 0.7% in term of mIoU score, and achieve the better results in 16 categories. Some visualization results between our STPC method and the compared RandLA-Net [8] can be found in Fig. 4. These above experimental results indicate that our proposed STPC method can better predict the point-wise category information of point clouds data by improving the predictive capability of the network.

| Methods | Size |

mIoU(%) |

road |

sidewalk |

parking |

other-ground |

building |

car |

truck |

bicycle |

motorcycle |

other-vehicle |

vegetation |

trunk |

terrain |

person |

bicyclist |

motorcyclist |

fence |

pole |

traffic-sign |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| SqueezeSeg [39] | 64*2048 pixels | 29.5 | 85.4 | 54.3 | 26.9 | 4.5 | 57.4 | 68.8 | 3.3 | 16.0 | 4.1 | 3.6 | 60.0 | 24.3 | 53.7 | 12.9 | 13.1 | 0.9 | 29.0 | 17.5 | 24.5 |

| SqueezeSegV2 [40] | 39.7 | 88.6 | 67.6 | 45.8 | 17.7 | 73.7 | 81.8 | 13.4 | 18.5 | 17.9 | 14.0 | 71.8 | 35.8 | 60.2 | 20.1 | 25.1 | 3.9 | 41.1 | 20.2 | 36.3 | |

| DarkNet21Seg [3] | 47.4 | 91.4 | 74.0 | 57.0 | 26.4 | 81.9 | 85.4 | 18.6 | 26.2 | 26.5 | 15.6 | 77.6 | 48.4 | 63.6 | 31.8 | 33.6 | 4.0 | 52.3 | 36.0 | 50.0 | |

| DarkNet23Seg [3] | 49.9 | 91.8 | 74.6 | 64.8 | 27.9 | 84.1 | 86.4 | 25.5 | 24.5 | 32.7 | 22.6 | 78.3 | 50.1 | 64.0 | 36.2 | 33.6 | 4.7 | 55.0 | 38.9 | 52.2 | |

| RangeNet53++ [21] | 52.2 | 91.8 | 75.2 | 65.0 | 27.8 | 87.4 | 91.4 | 25.7 | 25.7 | 34.4 | 23.0 | 80.5 | 55.1 | 64.6 | 38.3 | 38.8 | 4.8 | 58.6 | 47.9 | 55.9 | |

| PointNet [22] | 50K pts | 14.6 | 61.6 | 35.7 | 15.8 | 1.4 | 41.4 | 46.3 | 0.1 | 1.3 | 0.3 | 0.8 | 31.0 | 4.6 | 17.6 | 0.2 | 0.2 | 0.0 | 12.9 | 2.4 | 3.7 |

| SPGraph [14] | 17.4 | 45.0 | 28.5 | 0.6 | 0.6 | 64.3 | 49.3 | 0.1 | 0.2 | 0.2 | 0.8 | 48.9 | 27.2 | 24.6 | 0.3 | 2.7 | 0.1 | 20.8 | 15.9 | 0.8 | |

| SPLATNet [28] | 18.4 | 64.6 | 39.1 | 0.4 | 0.0 | 58.3 | 58.2 | 0.0 | 0.0 | 0.0 | 0.0 | 71.1 | 9.9 | 19.3 | 0.0 | 0.0 | 0.0 | 23.1 | 5.6 | 0.0 | |

| PointNet++ [24] | 20.1 | 72.0 | 41.8 | 18.7 | 5.6 | 62.3 | 53.7 | 0.9 | 1.9 | 0.2 | 0.2 | 46.5 | 13.8 | 30.0 | 0.9 | 1.0 | 0.0 | 16.9 | 6.0 | 8.9 | |

| TangentConv [30] | 40.9 | 83.9 | 63.9 | 33.4 | 15.4 | 83.4 | 90.8 | 15.2 | 2.7 | 16.5 | 12.1 | 79.5 | 49.3 | 58.1 | 23.0 | 28.4 | 8.1 | 49.0 | 35.8 | 28.5 | |

| RandLA-Net [8] | 53.9 | 90.7 | 73.7 | 60.3 | 20.4 | 86.9 | 94.2 | 40.1 | 26.0 | 25.8 | 38.9 | 81.4 | 61.3 | 66.8 | 49.2 | 48.2 | 7.2 | 56.3 | 49.2 | 47.7 | |

| Our STPC | 50K pts | 54.6 | 90.8 | 74.1 | 63.6 | 5.3 | 90.7 | 94.7 | 34.4 | 48.9 | 39.7 | 24.5 | 82.7 | 62.1 | 67.5 | 51.1 | 48.9 | 15.3 | 61.5 | 51.4 | 47.9 |

| methods | mIoU(%) |

|---|---|

| Conv. on unordered points | 64.15 |

| Isotropic Conv. after max-pooling | 65.31 |

| Isotropic Conv. after mean-pooling | 64.17 |

| Isotropic Conv. after sum-pooling | 64.87 |

| Anisotropic Conv. (ours) | 66.56 |

4.3 Ablation Study

In this section, we conduct the following ablation studies for our spatial transformer point convolution module. All ablated networks are trained on area 14 and 6, and tested on area 5 of S3DIS dataset.

As illustrated in Figure.3, we compare the semantic segmentation performance with different number of atoms (i.e., ) in the spatial direction dictionary. When the number of atoms increases from 1 to 25, the performance of point clouds semantic segmentation is also improving from 64.87% to 66.56% in term of mIoU score. If the number of atoms continues to increase, the segmentation performance would be slightly decline. Our STPC method achieves the best result when the number of dictionary atoms is 25 (). If the number of atoms is too small, the local geometric information cannot be captured finely in the anisotropic convolution filtering process. When the number of atoms equals to 1, our method would degenerate to the sum operation, while if the number of atoms is too large, the learnt weights tend to average on each atom, and these finer features would be lost. Therefore, a suitable number of dictionary atoms can make the proposed SPTC method to capture the feature varieties of point clouds.

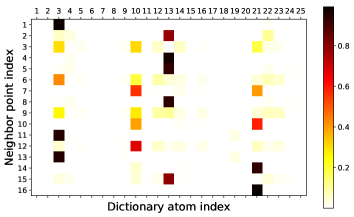

For better understanding the direction dictionary learning process, we visualize the learned representation coefficients in Eqn. 8, which can reflect the direction-dependent relationship between one neighbor point and each atom . As shown in Fig.3, the vertical ordinates refer to the index of the K nearest neighbor points (i.e., , ) and the horizontal ordinates denote the index of dictionary atoms (i.e., , ). The local neighbor points around the point can be projected onto different dictionary atoms. It indicates that the feature-dense local point clouds can be sparsely encoded by sparse discrete direction dictionary.

For exploring the effectiveness of the anisotropic filtering/convolution in our network, we evaluate the segmentation performance with several network variants on the S3DIS dataset. Specifically, we directly perform convolution on unordered point clouds (“Conv. on unordered points"), and conduct isotropic convolution by aggregating local spatial points after max-, mean-, and sum-pooling operations, named “Isotropic Conv. after max-pooling", “Isotropic Conv. after mean-pooling", and “Isotropic Conv. after sum-pooling", respectively. As can be seen in Table 4, when compared with “Conv. on unordered points", our proposed anisotropic filtering can improve the segmentation perform from 64.15% to 66.56%. When compared with three different isotropic convolutions, the performance of our proposed anisotropic convolution is better than three isotropic convolution methods, representing improvements of 1.25%, 2.39% and 1.69% over “Isotropic Conv. after max-pooling", “Isotropic Conv. after mean-pooling", and “Isotropic Conv. after sum-pooling". It proves that our anisotropic filtering method (i.e., STPC) can capture and represent implicit geometric structures, which is more robust to express those finer variances of local regions.

5 Conclusion

In this work, we track the point clouds semantic segmentation problem with the spatial transformer point convolution method, which can better predict the point-wise category information of 3D point clouds. By employing the spatial direction information of point clouds, we learn the spatial direction dictionary to represent those latent geometric components. By projecting these unordered neighbor points into the canonical dictionary space, we introduce the spatial transformer point convolution to perform the anisotropic filtering process. The direction dictionary learning, spatial direction encoding, spatial anisotropic filtering processes can be integrated into an unified network and jointly optimized in an end-to-end fashion. Extensive experimental results clearly demonstrate the effectiveness of the proposed spatial transformer point convolution method.

Broader Impact

Future Societal Consequences. Recent advances in 3D hardware sensors (e.g., 3D scanners, LiDARs and RGB-D cameras) has improved the accessibility of point clouds, which can intuitively reflect the spatial geometric information of objects in 3D space. 3D semantic segmentation of point clouds, which aims to label each point with a corresponding category information, has drawn increasing attention due to its broad applicability to the fields of general environment perception. It enables various higher-level potential applications, including autonomous driving, human-computer interaction, virtual reality and robotics. Meanwhile, with the development of artificial intelligence, deep learning has made significantly progress in the field of computer vision.

This work "Spatial Transformer Point Convolution" focuses on the anisotropic convolution filtering method on point clouds. Different from these previous methods, our proposed STPC can perform the anisotropic convolution filtering on point clouds by expressing those finer variances of local regions, and then further improve the performance of point clouds semantic segmentation. Extensive experiments have proved its discriminative capability.

This work will be of great significance for the applications in the 3D semantic segmentation domain and will further promote the development of artificial intelligence.

Ethical Consideration. This work mainly performs scene understating in 3D space, and facilitate the development of some high-level vision applications. For example, it can help understand our living environment to facilitate the human-computer interaction. For the negative outcome, it will depend on the specific task and the criteria for assessing positive and negative. Besides, in this paper, all used datasets are publically available as academic research, and the evaluation metrics are also standard.

References

- [1] Iro Armeni, Sasha Sax, Amir R Zamir, and Silvio Savarese. Joint 2d-3d-semantic data for indoor scene understanding. arXiv preprint arXiv:1702.01105, 2017.

- [2] Matan Atzmon, Haggai Maron, and Yaron Lipman. Point convolutional neural networks by extension operators. arXiv preprint arXiv:1803.10091, 2018.

- [3] Jens Behley, Martin Garbade, Andres Milioto, Jan Quenzel, Sven Behnke, Cyrill Stachniss, and Jurgen Gall. Semantickitti: A dataset for semantic scene understanding of lidar sequences. In Proceedings of the IEEE International Conference on Computer Vision, pages 9297–9307, 2019.

- [4] Yifan Feng, Zizhao Zhang, Xibin Zhao, Rongrong Ji, and Yue Gao. Gvcnn: Group-view convolutional neural networks for 3d shape recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 264–272, 2018.

- [5] Fabian Groh, Patrick Wieschollek, and Hendrik P A Lensch. Flex-convolution (million-scale point-cloud learning beyond grid-worlds). arXiv: Computer Vision and Pattern Recognition, 2018.

- [6] Timo Hackel, Nikolay Savinov, Lubor Ladicky, Jan D Wegner, Konrad Schindler, and Marc Pollefeys. Semantic3d. net: A new large-scale point cloud classification benchmark. arXiv preprint arXiv:1704.03847, 2017.

- [7] Kaiming He, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. Deep residual learning for image recognition. In CVPR, pages 770–778, 2016.

- [8] Qingyong Hu, Bo Yang, Linhai Xie, Stefano Rosa, Yulan Guo, Zhihua Wang, Niki Trigoni, and Andrew Markham. Randla-net: Efficient semantic segmentation of large-scale point clouds. arXiv preprint arXiv:1911.11236, 2019.

- [9] Binh-Son Hua, Minh-Khoi Tran, and Sai-Kit Yeung. Pointwise convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 984–993, 2018.

- [10] Jing Huang and Suya You. Point cloud labeling using 3d convolutional neural network. In 2016 23rd International Conference on Pattern Recognition (ICPR), pages 2670–2675. IEEE, 2016.

- [11] Roman Klokov and Victor Lempitsky. Escape from cells: Deep kd-networks for the recognition of 3d point cloud models. In Proceedings of the IEEE International Conference on Computer Vision, pages 863–872, 2017.

- [12] Artem Komarichev, Zichun Zhong, and Jing Hua. A-cnn: Annularly convolutional neural networks on point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 7421–7430, 2019.

- [13] Alex Krizhevsky, Ilya Sutskever, and Geoffrey E. Hinton. Imagenet classification with deep convolutional neural networks. In Advances in neural information processing systems, pages 1097–1105, 2012.

- [14] Loic Landrieu and Martin Simonovsky. Large-scale point cloud semantic segmentation with superpoint graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4558–4567, 2018.

- [15] Felix Järemo Lawin, Martin Danelljan, Patrik Tosteberg, Goutam Bhat, Fahad Shahbaz Khan, and Michael Felsberg. Deep projective 3d semantic segmentation. In International Conference on Computer Analysis of Images and Patterns, pages 95–107. Springer, 2017.

- [16] Truc Le and Ye Duan. Pointgrid: A deep network for 3d shape understanding. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 9204–9214, 2018.

- [17] Yangyan Li, Rui Bu, Mingchao Sun, Wei Wu, Xinhan Di, and Baoquan Chen. Pointcnn: Convolution on x-transformed points. In Advances in neural information processing systems, pages 820–830, 2018.

- [18] Yiqun Lin, Zizheng Yan, Haibin Huang, Dong Du, Ligang Liu, Shuguang Cui, and Xiaoguang Han. Fpconv: Learning local flattening for point convolution. arXiv preprint arXiv:2002.10701, 2020.

- [19] Yongcheng Liu, Bin Fan, Shiming Xiang, and Chunhong Pan. Relation-shape convolutional neural network for point cloud analysis. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 8895–8904, 2019.

- [20] Daniel Maturana and Sebastian Scherer. Voxnet: A 3d convolutional neural network for real-time object recognition. In 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 922–928. IEEE, 2015.

- [21] Andres Milioto, Ignacio Vizzo, Jens Behley, and Cyrill Stachniss. Rangenet++: Fast and accurate lidar semantic segmentation. In Proc. of the IEEE/RSJ Intl. Conf. on Intelligent Robots and Systems (IROS), 2019.

- [22] Charles R Qi, Hao Su, Kaichun Mo, and Leonidas J Guibas. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 652–660, 2017.

- [23] Charles R Qi, Hao Su, Matthias Nießner, Angela Dai, Mengyuan Yan, and Leonidas J Guibas. Volumetric and multi-view cnns for object classification on 3d data. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 5648–5656, 2016.

- [24] Charles Ruizhongtai Qi, Li Yi, Hao Su, and Leonidas J Guibas. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Advances in neural information processing systems, pages 5099–5108, 2017.

- [25] Gernot Riegler, Ali Osman Ulusoy, and Andreas Geiger. Octnet: Learning deep 3d representations at high resolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3577–3586, 2017.

- [26] Fabrizio Sebastiani. Machine learning in automated text categorization. ACM Computing Surveys, 34(1):1–47, 2002.

- [27] Josef Sivic and Andrew Zisserman. Efficient visual search of videos cast as text retrieval. IEEE Transactions on Pattern Analysis and Machine Intelligence, 31(4):591–606, 2009.

- [28] Hang Su, Varun Jampani, Deqing Sun, Subhransu Maji, Evangelos Kalogerakis, Ming-Hsuan Yang, and Jan Kautz. Splatnet: Sparse lattice networks for point cloud processing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 2530–2539, 2018.

- [29] Hang Su, Subhransu Maji, Evangelos Kalogerakis, and Erik Learned-Miller. Multi-view convolutional neural networks for 3d shape recognition. In Proceedings of the IEEE international conference on computer vision, pages 945–953, 2015.

- [30] Maxim Tatarchenko, Jaesik Park, Vladlen Koltun, and Qian-Yi Zhou. Tangent convolutions for dense prediction in 3d. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3887–3896, 2018.

- [31] Lyne Tchapmi, Christopher Choy, Iro Armeni, JunYoung Gwak, and Silvio Savarese. Segcloud: Semantic segmentation of 3d point clouds. In 2017 international conference on 3D vision (3DV), pages 537–547. IEEE, 2017.

- [32] Hugues Thomas, Charles R Qi, Jean-Emmanuel Deschaud, Beatriz Marcotegui, Francois Goulette, and Leonidas J Guibas. Kpconv: Flexible and deformable convolution for point clouds. In Proceedings of the IEEE International Conference on Computer Vision, pages 6411–6420, 2019.

- [33] Giang Truong, Syed Zulqarnain Gilani, Syed Mohammed Shamsul Islam, and David Suter. Fast point cloud registration using semantic segmentation. In 2019 Digital Image Computing: Techniques and Applications (DICTA), pages 1–8. IEEE, 2019.

- [34] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Lukasz Kaiser, and Illia Polosukhin. Attention is all you need. In Advances in neural information processing systems, pages 5998–6008, 2017.

- [35] Chu Wang, Babak Samari, and Kaleem Siddiqi. Local spectral graph convolution for point set feature learning. pages 56–71, 2018.

- [36] Lei Wang, Yuchun Huang, Yaolin Hou, Shenman Zhang, and Jie Shan. Graph attention convolution for point cloud semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 10296–10305, 2019.

- [37] Pengshuai Wang, Yang Liu, Yuxiao Guo, Chunyu Sun, and Xin Tong. O-cnn: octree-based convolutional neural networks for 3d shape analysis. ACM Transactions on Graphics, 36(4):72, 2017.

- [38] Yue Wang, Yongbin Sun, Ziwei Liu, Sanjay E Sarma, Michael M Bronstein, and Justin Solomon. Dynamic graph cnn for learning on point clouds. ACM Transactions on Graphics, 38(5):146, 2019.

- [39] Bichen Wu, Alvin Wan, Xiangyu Yue, and Kurt Keutzer. Squeezeseg: Convolutional neural nets with recurrent crf for real-time road-object segmentation from 3d lidar point cloud. In 2018 IEEE International Conference on Robotics and Automation (ICRA), pages 1887–1893. IEEE, 2018.

- [40] Bichen Wu, Xuanyu Zhou, Sicheng Zhao, Xiangyu Yue, and Kurt Keutzer. Squeezesegv2: Improved model structure and unsupervised domain adaptation for road-object segmentation from a lidar point cloud. In 2019 International Conference on Robotics and Automation (ICRA), pages 4376–4382. IEEE, 2019.

- [41] Wenxuan Wu, Zhongang Qi, and Li Fuxin. Pointconv: Deep convolutional networks on 3d point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 9621–9630, 2019.

- [42] Zhirong Wu, Shuran Song, Aditya Khosla, Fisher Yu, Linguang Zhang, Xiaoou Tang, and Jianxiong Xiao. 3d shapenets: A deep representation for volumetric shapes. In Proceedings of the IEEE conference on computer vision and pattern recognition, pages 1912–1920, 2015.

- [43] Saining Xie, Sainan Liu, Zeyu Chen, and Zhuowen Tu. Attentional shapecontextnet for point cloud recognition. pages 4606–4615, 2018.

- [44] Jianchao Yang, Kai Yu, Yihong Gong, and Thomas S Huang. Linear spatial pyramid matching using sparse coding for image classification. pages 1794–1801, 2009.

- [45] Jiancheng Yang, Qiang Zhang, Bingbing Ni, Linguo Li, Jinxian Liu, Mengdie Zhou, and Qi Tian. Modeling point clouds with self-attention and gumbel subset sampling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3323–3332, 2019.

- [46] Zhiyuan Zhang, Binh-Son Hua, and Sai-Kit Yeung. Shellnet: Efficient point cloud convolutional neural networks using concentric shells statistics. In Proceedings of the IEEE International Conference on Computer Vision, pages 1607–1616, 2019.

- [47] Hengshuang Zhao, Li Jiang, Chi-Wing Fu, and Jiaya Jia. Pointweb: Enhancing local neighborhood features for point cloud processing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5565–5573, 2019.