Spatio-temporal point processes with deep non-stationary kernels

Abstract

Point process data are becoming ubiquitous in modern applications, such as social networks, health care, and finance. Despite the powerful expressiveness of the popular recurrent neural network (RNN) models for point process data, they may not successfully capture sophisticated non-stationary dependencies in the data due to their recurrent structures. Another popular type of deep model for point process data is based on representing the influence kernel (rather than the intensity function) by neural networks. We take the latter approach and develop a new deep non-stationary influence kernel that can model non-stationary spatio-temporal point processes. The main idea is to approximate the influence kernel with a novel and general low-rank decomposition, enabling efficient representation through deep neural networks and computational efficiency and better performance. We also take a new approach to maintain the non-negativity constraint of the conditional intensity by introducing a log-barrier penalty. We demonstrate our proposed method’s good performance and computational efficiency compared with the state-of-the-art on simulated and real data.

1 Introduction

Point process data, consisting of sequential events with timestamps and associated information such as location or category, are ubiquitous in modern scientific fields and real-world applications. The distribution of events is of great scientific and practical interest, both for predicting new events and understanding the events’ generative dynamics (Reinhart, 2018). To model such discrete events in continuous time and space, spatio-temporal point processes (STPPs) are widely used in a diverse range of domains, including modeling earthquakes (Ogata, 1988, 1998), the spread of infectious diseases (Schoenberg et al., 2019; Dong et al., 2021), and wildfire propagation Hering et al. (2009).

A modeling challenge is to accurately capture the underlying generative model of event occurrence in general spatio-temporal point processes (STPP) while maintaining the model efficiency. Specific parametric forms of conditional intensity are proposed in seminal works of Hawkes process (Hawkes, 1971; Ogata, 1988) to tackle the issue of computational complexity in STPPs, which requires evaluating the complex multivariate integral in the likelihood function. They use an exponentially decaying influence kernel to measure the influence of a past event over time and assume the influence of all past events is positive and linearly additive. Despite computational simplicity (since the integral of the likelihood function is avoided), such a parametric form limits the model’s practicality in modern applications.

Recent models use neural networks in modeling point processes to capture complicated event occurrences. RNN (Du et al., 2016) and LSTM (Mei and Eisner, 2017) have been used by taking advantage of their representation power and capability in capturing event temporal dependencies. However, the recurrent structures of RNN-based models cannot capture long-range dependency (Bengio et al., 1994) and attention-based structure (Zhang et al., 2020; Zuo et al., 2020) is introduced to address such limitations of RNN. Despite much development, existing models still cannot sufficiently capture spatio-temporal non-stationarity, which are common in real-world data (Graham et al., 2013; Dong et al., 2021). Moreover, while RNN-type models may produce strong prediction performance, the models consist of general forms of network layers and the modeling power relies on the hidden states, thus often not easily interpretable.

A promising approach to overcome the above model restrictions is point process models that combine statistical models with neural network representation, such as Zhu et al. (2022) and Chen et al. (2020), to enjoy both the interpretability and expressive power of neural networks. In particular, the idea is to represent the (possibly non-stationary) influence kernel based on a spectral decomposition and represent the basis functions using neural networks. However, the prior work (Zhu et al., 2022) is not specifically designed for non-stationary kernel and the low-rank representation can be made significantly more efficient, which is the main focus of this paper.

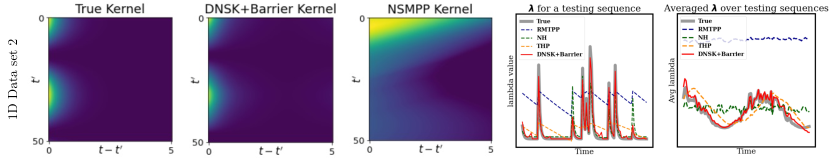

Contribution. In this paper, we develop a non-stationary kernel (referred to as DNSK) for (possibly non-stationary) spatio-temporal processes that enjoy efficient low-rank representation, which leads to much improved computational efficiency and predictive performance. The construction is based on an interesting observation that by reparameterize the influence kernel from the original form of , (where is the historical even time, and is the current time) to an equivalent form (which thus is parameterized by the displacement instead), the rank can be reduced significantly, as shown in Figure 1. This observation inspired us to design a much more efficient representation of the non-stationary point processes with much fewer basis functions to represent the same kernel.

In summary, the contributions of our paper include

-

•

We introduce an efficient low-rank representation of the influence kernel based on a novel “displacement” re-parameterization. Our representation can well-approximate a large class of general non-stationary influence kernels and is generalizable to spatio-temporal kernels (also potentially to data with high-dimensional marks). Efficient representation leads to lower computational cost and better prediction power, as demonstrated in our experiments.

-

•

In model fitting, we introduce a log-barrier penalty term in the objective function to ensure the non-negative conditional intensity function so the model is statistically meaningful, and the problem is numerically stable. This approach also enables the model to learn general influence functions (that can have negative values), which is a drastic improvement from existing influence kernel-based methods that require the kernel functions to be non-negative.

-

•

Using extensive synthetic and real data experiments, we show the competitive performance of our proposed methods in both model recovery and event prediction compared with the state-of-the-art, such as the RNN-based and transformer-based models.

1.1 Related works

The original work of A. Hawkes (Hawkes, 1971) provides classic self-exciting point processes for temporal events, which express the conditional intensity function with an influence kernel and a base rate. Ogata (1988, 1998) propose a parametric form of spatio-temporal influence kernel which enjoys strong model interpretability and efficiency. However, such simple parametric forms own limited expressiveness in characterizing the complex events’ dynamic in modern applications.

Neural networks have been widely adopted in point processes (Xiao et al., 2017; Chen et al., 2020). Du et al. (2016) incorporates recurrent neural networks and Mei and Eisner (2017) use a continuous-time invariant of LSTM to model event influence with exponential decay over time. These RNN-based models may be unable to capture complicated event dependencies due to the recurrent structure. Zhang et al. (2020); Zuo et al. (2020) introduce self-attentive structures into point processes for their capability to memorize long-term influence by dealing with an event sequence as a whole. The main limitation is that they assume a dot-product-based score function and assume linearly decaying of event influence. Omi et al. (2019) propose a fully-connected neural network to model the cumulative intensity function to go beyond parametric decaying influence. However, the event embeddings are still generated by RNN, and fitting cumulative intensity function by neural networks lacks model interpretability. Note that all the above models tackle temporal events with categorical marks, which are inapplicable in continuous time and location space.

Recent works adopt neural networks in learning the influence kernel function. The kernel introduced in Okawa et al. (2021) uses neural networks to model the latent dynamic of time interval but still assumes an exponentially decaying influence over time. Zhu et al. (2022) proposes a kernel representation using spectral decomposition and represents feature functions using deep neural networks to harvest powerful model expressiveness when dealing with marked event data. Our method considers an alternative novel kernel representation that allows the general kernel to be expressed further low-rankly.

2 Background

Spatio-temporal point processes (STPPs). (Reinhart, 2018; Moller and Waagepetersen, 2003) have been widely used to model sequences of random events that happen in continuous time and space. Let denote the event stream with time and location of th event. The event number is also random. Given the observed history before time , an STPP is then fully characterized by the conditional intensity function

| (1) |

where is a ball centered at with radius , and the counting measure is defined as the number of events occurring in . Naturally for any arbitrary and . In the following, we omit the dependency on history and use common shorthand . The log-likelihood of observing on is given by (Daley et al., 2003)

| (2) |

Neural point processes. parameterize the conditional intensity function by taking advantage of recurrent neural networks (RNNs). In Du et al. (2016), an input vector which extracts the information of event and the associated event attributes (can be event mark or location) is fed into the RNN. A hidden state vector is updated by , where is a mapping fulfilled by recurrent neural network operations. The conditional intensity function on is then defined as , where is an exponential transformation that guarantees a positive intensity. In Mei and Eisner (2017) the RNN is replaced by a continuous-time LSTM module with hidden states defined on and a Softplus function . Attention-based models are introduced in Zuo et al. (2020); Zhang et al. (2020) to overcome the inability of RNNs to capture sophisticated event dependencies due to their recurrent structures.

Hawkes process. (Hawkes, 1971) is a well-known generalized point process model. Assuming the influences from past events are linearly additive, the conditional intensity function takes the form of

| (3) |

where is an influence kernel function that captures event interactions. Commonly the kernel function is assumed to be stationary, that is, only depends on and , which limits the model expressivity. In this work, we aim to capture complicated non-stationarity in spatio-temporal event dependencies by leveraging the strong approximation power of neural networks in kernel fitting.

3 Low-rank deep non-stationary kernel

Due to the intricate dependencies between events, it is challenging to choose the form of kernel function that achieves great model expressiveness while enjoying high model efficiency. In this section, we introduce a unified model with a low-rank deep non-stationary kernel to capture the complex heterogeneity in events’ influence over spatio-temporal space.

3.1 Kernel with history and spatio-temporal displacement

For the influence kernel function , by using the displacements in and as variables, we first re-parameterize the kernel as , where the minus in refers to element-wise difference between and when . Then we achieve a finite-rank decomposed representation based on (truncated) singular value decomposition (SVD) for kernel functions (Mollenhauer et al., 2020) (which can be understood as the kernel version of matrix SVD, where the eigendecomposition is based on Mercer’s Theorem (Mercer, 1909)), and that the decomposed spatial (and temporal) kernel functions can be approximated under shared basis functions (cf. Assumption A.2). The resulting approximate finite-rank representation is written as (details are in Appendix A.1)

| (4) |

Here are two sets of temporal basis functions that characterize the temporal influence of event at and the decaying effect brought by elapsed time . Similarly, spatial basis functions capture the spatial influence of event at and the decayed influence after spreading over the displacement of . The corresponding weight at different spatio-temporal ranks combines each set of basis functions into a weighted summation, leading to the final expression of influence kernel .

To further enhance the model expressiveness, we use a fully-connected neural network to represent each basis function. The history or displacement is taken as the input and fed through multiple hidden layers equipped with Softplus non-linear activation function. To allow for inhibiting influence from past events (negative value of influence kernel ), we use a linear output layer for each neural network. For an influence kernel with temporal rank and spatial rank , we need independent neural networks for modeling.

The benefits of our proposed kernel framework lies in the following: (i) The kernel parameterization with displacement significantly reduces the rank needed when representing the complicated kernel encountered in practice as shown in Figure 1. (ii) The non-stationarity of original influence of historical events over spatio-temporal space can be conveniently captured by in-homogeneous , , making the model applicable in modeling general STPPs. (iii) The propagating patterns of influence are characterized by , which go beyond simple parametric forms. In particular, when the events’ influence has finite range, i.e. there exist and such that the influence decays to zero if or , we can restrict the parameterization of and on a local domain instead of , which further reduce the model complexity. Details of choosing kernel and neural network architectures are described in Appendix C.

Remark 1 (the class of influence kernel expressed).

The proposed deep kernel representation covers a large class of non-stationary kernels generally used in STPPs. In particular, the proposed form of the kernel does not need to be positive semi-definite or even symmetric (Reinhart, 2018). The low-rank decomposed formulation (4) is of SVD-type (cf. Appendix A.1). While each (and ) can be viewed as stationary (i.e., shift-invariant), the combination with left modes in the summation enables to model spatio-temporal non-stationarity. The technical assumptions A.1 and A.2 do not require more than the existence of a low-rank decomposition motivated by kernel SVD. As long as the many functions , , and , are sufficiently regular, they can be approximated and learned by a neural network. The universal approximation power of neural networks enables our framework to express a broad range of general kernel functions, and the low-rank decomposed form reduces the modeling of a spatio-temporal kernel to finite many functions on time and space domains (the right modes are on truncated domains), respectively.

4 Efficient computation of model

We consider model optimization through Maximum likelihood estimation (MLE) (Reinhart, 2018). The resulting conditional intensity function could now be negative by allowing inhibiting historical influence. A common approach to guarantee the non-negativity is to adopt a nonlinear positive activation function in the conditional intensity (Du et al., 2016; Zhu et al., 2022). However, the integral of such a nonlinear intensity over spatio-temporal space is computationally expensive. To tackle this, we first introduce a log-barrier to the MLE optimization problem to guarantee the non-negativity of conditional intensity function and maintain its linearity. Then we provide a computationally efficient strategy that benefits from the linearity of the conditional intensity. The extension of the approach to point process data with marks is given in Appendix B.

4.1 Model optimization with log-barrier

We re-denote in (2) by in terms of model parameter . The constrained MLE optimization problem for model parameter estimation can be formulated as:

Introduce a log-barrier method (Boyd et al., 2004) to ensure the non-negativity of , and penalize the values of on a dense enough grid . The log-barrier is defined as

| (5) |

where indicate the index of the gird, and is a lower bound of conditional intensity function on the grid to guarantee the feasibility of logarithm operation. The MLE optimization problem can be written as

| (6) | ||||

where is a weight to control the trade-off between log-likelihood and log-barrier; and can be set accordingly during the learning procedure. Details can be found in Appendix A.2.

Note that previous works (Du et al., 2016; Mei and Eisner, 2017; Pan et al., 2021; Zuo et al., 2020; Zhu et al., 2022) use a scaled positive transformation to guarantee non-negativity conditional intensity function. Compared with them, the log-barrier method preserves the linearity of the conditional intensity function. As shown in Table 1, such a log-barrier method enables efficient model computation (See more details in Section 4.2) and enhance the model recovery power.

4.2 Model computation

The log-likelihood computation of general STPPs (especially those with general influence function) is often difficult and requires numerical integral and thus time-consuming. Given a sequence of events of number , the complexity of neural network evaluation is of for the term of log-summation and of () when using numerical integration for the double integral term with sampled points in a multi-dimensional space. In the following, we circumvent the calculation difficulty by proposing an efficient computation for with complexity of neural network evaluation through a domain discretization strategy.

Computation of log-summation.

The first log-summation term in (2) can be written as:

| (7) |

Note that each only needs to be evaluated at event time and each is evaluated at all the event location . To avoid the redundant evaluations of over every pair of , we set up a uniform grid over time horizon and evaluate on the grid. The value of can be obtained by linear interpolation with values on two adjacent grid points of . By doing so, we only need to evaluate for times on the grids. Note that can be simply feed with when without any neural network evaluation.

Here we directly evaluate since numerical interpolation is less accurate in location space. Note that one does not need to evaluate every pair of index . Instead, we have . We use to other pairs of .

Computation of integral.

A benefit of our approach is that we avoid numerical integration for the conditional intensity function (needed to evaluate the likelihood function), since the design of the kernel allows us to decompose the desired integral to integrating basis functions. Specifically, we have

| (8) | ||||

To compute the integral of , we take the advantage of the pre-computed on the grid . Let . Then can be computed by the linear interpolation of values of at two adjacent grid points (in ) of . In particular, evaluated on equals to the cumulative sum of divided by the grid width.

The integral of can be estimated based on a grid in since it decays outside the ball. For each , , where . Thus the integral is well estimated with the evaluations of on grid set . Note that in practice we only evaluate on once and use subsets of the evaluations for different . More details about grid-based computation can be found in Appendix A.3.

Computation of log-barrier.

The barrier term is calculated in a similar way as (7) by replacing with , i.e. we use interpolation to calculate and evaluate on a subset of , .

4.3 Computational complexity

| 1D Data set 1 | 3D Data set 1 | |||

|---|---|---|---|---|

| Model | #Parameters | Training time | #Parameters | Training time |

| NSMPP | ||||

| DNSK+Barrier | ||||

The evaluation of and over events costs complexity. The evaluation of is of complexity since it relies on the grid . The evaluation of costs no more than complexity. We note that are all constant that much less than event number , thus the overall computation complexity will be . We compare the model training time per epoch for a baseline equipped with a softplus activation function (NSMPP) and our model with log-barrier method (DNSK+Barrier) on a 1D synthetic data set and a 3D synthetic data set. The quantitative results in Table 1 demonstrates the efficiency improvement of our model by using log-barrier technique. More details about the computation complexity analysis can be found in Appendix A.4.

5 Experiment

We use large-scale synthetic and real data sets to demonstrate the superior performance of our model and present the results in this section. Experimental details and results can be found in Appendix C. Codes will be released upon publication.

Baselines.

We compare our method (DNSK+Barrier) with: (i) RMTPP (RMTPP) (Du et al., 2016); (ii) Neural Hawkes (NH) (Mei and Eisner, 2017); (iii) Transformer Hawkes process (THP) (Zuo et al., 2020); (iv) Parametric Hawkes process (PHP+exp) with exponentially decaying spatio-temporal kernel; (v) Neual spectral marked point processes (NSMPP) (Zhu et al., 2022); (vi) DNSK without log-barrier but with a non-negative Softplus activation function (DNSK+Softplus). We note that RMTPP, NH and THP directly model conditional intensity function using neural networks while others learn the influence kernel in the framework of (3). In particular, NSMPP designs the kernel based on singular value decomposition but parameterizes it without displacement. The model parameters are estimated using the training data via Adam optimization method (Kingma and Ba, 2014). Details of training can be found in Appendix A.2 and C.

5.1 Synthetic data experiments

Synthetic data sets.

To show the effectiveness of DNSK+Barrier, we conduct all the models on three temporal data sets and three spatio-temporal data sets generated by following true kernels: (i) 1D exponential kernel (ii) 1D non-stationary kernel; (iii) 1D infinite rank kernel; (iv) 2D exponential kernel; (v) 3D non-stationary inhibition kernel; (vi) 3D non-stationary mixture kernel. Data sets are generated using thinning algorithm in Daley and Vere-Jones (2008). Each data set is composed of sequences. Details of kernel formulas and data generation can be found in Appendix C.

We consider two performance metrics for testing data evaluation: Mean relative error (MRE) of the predicted intensity and log-likelihood. The true and predicted can be calculated using (4) with true and learned kernel. The MRE for one test trajectory is defined as and the averaged MRE over all test trajectories is reported. The log-likelihood for observing each testing sequence can be computed according to (2), and the average predictive log-likelihood per event is reported. The log-likelihood shows the model’s goodness-of-fit, and the intensity evaluation further reflects the model’s ability to recover the underlying mechanism of event occurrence and predict the future.

| Model | 1D Data set 1 | 1D Data set 2 | 1D Data set 3 | 2D Data set 1 | 3D Data set 1 | 3D Data set 2 |

|---|---|---|---|---|---|---|

| RMTPP | ||||||

| NH | ||||||

| THP | ||||||

| PHP+exp | ||||||

| NSMPP | ||||||

| DNSK+Softplus | ||||||

| DNSK+Barrier |

The heat maps in Figure 2 visualize the results of non-stationary kernel recovery for DNSK+Barrier and NSMPP on 1D Data set 2 and 3 (The true kernel used in 1D Data set 3 is the one in Figure 1). DNSK+Barrier recovers the true kernel more accurately than NSMPP, indicating the strong representation power of the low-rank kernel parameterization with displacements. Line charts in Figure 2 present the recovered intensities with the true ones (dark grey curves). It demonstrates that our method can accurately capture the temporal dynamics of events. In particular, the average conditional intensity over multiple testing sequences shows the model’s ability to recover data non-stationarity over time. While DNSK+Barrier successfully captures the non-stationarity among data, both RMTPP and NH fail to do so by showing a flat curve of the averaged intensity. Note that THP with positional encoding recovers the data non-stationarity (as shown in two figures in the last column). However, our method still outperforms THP which suffers from limited model expressiveness when complicated propagation of event influence is involved (see two figures in the penultimate column).

Tabel 2 summarized the quantitative results of testing log-likelihood and MRE. It shows that DNSK+Barrier has superior predictive performance against baselines in characterizing the dynamics of data generation in spatio-temporal space. Specifically, with evidently over-parameterization for 1D Data set 1 generated by a stationary exponentially decaying kernel, our model can still approximate the kernel and recover the true conditional intensity without overfitting, which shows the adaptiveness of our model. Moreover, DNSK+Barrier enjoys outstanding performance gain when learning a diverse variety of complicated non-stationary kernels. The comparison between DNSK+Softplus and DNSK+Barrier proves that the model with log-barrier achieves a better recovery performance by maintaining the linearity of the conditional intensity. THP outperforms RMTPP in non-stationary cases but is still limited due to its pre-assumed parametric form of influence propagation. More results about kernel and intensity recovery can be found in Appendix C.

5.2 Real data results

Real data sets.

We provide a comprehensive evaluation of our approach on several real-world data sets: we first use two popular data sets containing time-stamped events with categorical marks to demonstrate the robustness of DNSK+Barrier in marked STPPs (refer to Appendix B for detailed definition and kernel modeling): (i) Financial Transactions. (Du et al., 2016). This data set contains transaction records of a stock in one day with time unit milliseconds and the action (mark) of each transaction. We partition the events into different sequences by time stamps. (ii) StackOverflow (Leskovec and Krevl, 2014): The data is collected from the website StackOverflow over two years, containing reward records for users who promote engagement in the community. Each user’s reward history is treated as a sequence.

Next, we demonstrate the practical versatility of the model using the following spatio-temporal data sets: (i) Southern California earthquake data provided by Southern California Earthquake Data Center (SCEDC) contains time and location information of earthquakes in Southern California. We collect 19,414 records from 1999 to 2019 with magnitude larger than 2.5 and partition the data into multiple sequences by month with average length of 40.2. (ii) Atlanta robbery & burglary data. Atlanta Police Department (APD) provides a proprietary data source for city crime. We extract 3420 reported robberies and 14958 burglaries with time and location from 2013 to 2019. Two crime types are preprocessed as separate data sets on a 10-day basis with average lengths of 13.7 and 58.7.

Finally, the model’s ability to tackle high-dimensional marks is evaluated with Atlanta textual crime data. The proprietary data set provided by APD records 4644 crime incidents from 2016 to 2017 with time, location, and comprehensive text descriptions. The text information is preprocessed by TF-IDF technique, leading to a -dimensional mark for each event.

| Financial | StackOverflow | |||||

| Model | Testing | Time RMSE | Type Accuracy | Testing | Time RMSE | Type Accuracy |

| RMTPP | ||||||

| NH | ||||||

| THP | –1.231 | |||||

| NSMPP | ||||||

| DNSK+Softplus | ||||||

| DNSK+Barrier | –0.709 | 0.153 | 0.630 | 4.833 | 0.508 | |

Table 3 summarizes the results of models dealing with categorical marks. Event time and type prediction are evaluated by Root Mean Square Error (RMSE) and accuracy, respectively. We can see that DNSK+Barrier outperforms the baselines in all prediction tasks by providing less time RMSE and higher type accuracy.

For real-world spatio-temporal data, we report average predictive log-likelihood per event for the testing set since MRE is not applicable. Besides, we perform online prediction for earthquake data to demonstrate the model predicting ability. The probability density function which represents the conditional probability that the next event will occur at given history can be written as The predicted time and location of the next event can be computed as , We predict the the time and location of the last event in each sequence. The mean absolute error (MAE) of the predictions is computed. The quantitative results in Table 4 show that DNSK+Barrier provides more accurate predictions than other alternatives with higher event log-likelihood.

To demonstrate our model’s interpretability and power to capture heterogeneous data characteristics, we visualize the learned influence kernels and predicted conditional intensity for two crime categories in Figure 3. The first column shows kernel evaluations at fixed geolocation in downtown Atlanta which intuitively reflect the spatial influence of crimes in that neighborhood. The influence of a robbery in the downtown area is more intensive but regional, while a burglary which is hard to be responded to by police in time would impact a larger neighborhood along major highways of Atlanta. We also provide the predicted conditional intensity over space for two crimes. As we can observe, DNSK+Barrier captures the occurrence of events in regions with a higher crime rate, and crimes of the same category happening in different regions would influence their neighborhoods differently. We note that this example emphasizes the ability of the proposed method to recover data non-stationarity with different sequence lengths, and improve the limited model interpretability of other neural network-based methods (RMTPP, NH, and THP) in practice.

| South California Earthquake | Atlanta Robbery | Atlanta Burglary | Atlanta Textual Crime | |||

|---|---|---|---|---|---|---|

| Model | Testing | Time MAE | Location MAE | Testing | Testing | Testing |

| RMTPP | / | |||||

| NH | / | |||||

| THP | / | |||||

| PHP+exp | ||||||

| NSMPP | ||||||

| DNSK+Softplus | ||||||

| DNSK+Barrier | 1.474 | 0.431 | ||||

-

•

1RMTPP, NH, and THP are not applicable when dealing with high-dimensional data.

For Atlanta textual crime data, we borrow the idea in Zhu and Xie (2022) by encoding the highly sparse TF-IDF representation into a binary mark vector with dimension using Restricted Boltzmann Machine (RBM) (Fischer and Igel, 2012). The average testing log-likelihoods per event for each model are reported in Table 4. The results show that DNSK+Barrier outperforms PHP+exp in Zhu and Xie (2022) and NSMPP by achieving a higher testing log-likelihood. We visualize the basis functions of learned influence kernel by DNSK+Barrier in Figure A.4 in Appendix.

6 Conclusion

We propose a deep non-stationary kernel for spatio-temporal point processes using a low-rank parameterization scheme based on displacement, which enables the model to be further low-rank when learning complicated influence kernel, thus significantly reducing the model complexity. The non-negativity of the solution is guaranteed by a log-barrier method which maintains the linearity of the conditional intensity function. Based on that, we propose a computationally efficient strategy for model estimation. The superior performance of our model is demonstrated using synthetic and real data sets.

Acknowledgement

The work of Dong and Xie are partially supported by by an NSF CAREER CCF-1650913, and NSF DMS-2134037, CMMI-2015787, DMS-1938106, and DMS-1830210.

References

- Reinhart [2018] Alex Reinhart. A review of self-exciting spatio-temporal point processes and their applications. Statistical Science, 33(3):299–318, 2018.

- Ogata [1988] Yosihiko Ogata. Statistical models for earthquake occurrences and residual analysis for point processes. Journal of the American Statistical association, 83(401):9–27, 1988.

- Ogata [1998] Yosihiko Ogata. Space-time point-process models for earthquake occurrences. Annals of the Institute of Statistical Mathematics, 50(2):379–402, 1998.

- Schoenberg et al. [2019] Frederic Paik Schoenberg, Marc Hoffmann, and Ryan J Harrigan. A recursive point process model for infectious diseases. Annals of the Institute of Statistical Mathematics, 71(5):1271–1287, 2019.

- Dong et al. [2021] Zheng Dong, Shixiang Zhu, Yao Xie, Jorge Mateu, and Francisco J Rodríguez-Cortés. Non-stationary spatio-temporal point process modeling for high-resolution covid-19 data. arXiv preprint arXiv:2109.09029, 2021.

- Hering et al. [2009] Amanda S Hering, Cynthia L Bell, and Marc G Genton. Modeling spatio-temporal wildfire ignition point patterns. Environmental and Ecological Statistics, 16(2):225–250, 2009.

- Hawkes [1971] Alan G Hawkes. Spectra of some self-exciting and mutually exciting point processes. Biometrika, 58(1):83–90, 1971.

- Du et al. [2016] Nan Du, Hanjun Dai, Rakshit Trivedi, Utkarsh Upadhyay, Manuel Gomez-Rodriguez, and Le Song. Recurrent marked temporal point processes: Embedding event history to vector. In Proceedings of the 22nd ACM SIGKDD international conference on knowledge discovery and data mining, pages 1555–1564, 2016.

- Mei and Eisner [2017] Hongyuan Mei and Jason M Eisner. The neural hawkes process: A neurally self-modulating multivariate point process. Advances in neural information processing systems, 30, 2017.

- Bengio et al. [1994] Yoshua Bengio, Patrice Simard, and Paolo Frasconi. Learning long-term dependencies with gradient descent is difficult. IEEE transactions on neural networks, 5(2):157–166, 1994.

- Zhang et al. [2020] Qiang Zhang, Aldo Lipani, Omer Kirnap, and Emine Yilmaz. Self-attentive hawkes process. In International conference on machine learning, pages 11183–11193. PMLR, 2020.

- Zuo et al. [2020] Simiao Zuo, Haoming Jiang, Zichong Li, Tuo Zhao, and Hongyuan Zha. Transformer hawkes process. In International conference on machine learning, pages 11692–11702. PMLR, 2020.

- Graham et al. [2013] Michael Graham, Jarno Kiviaho, and Jussi Nikkinen. Short-term and long-term dependencies of the s&p 500 index and commodity prices. Quantitative Finance, 13(4):583–592, 2013.

- Zhu et al. [2022] Shixiang Zhu, Haoyun Wang, Zheng Dong, Xiuyuan Cheng, and Yao Xie. Neural spectral marked point processes. In International Conference on Learning Representations, 2022. URL https://openreview.net/forum?id=0rcbOaoBXbg.

- Chen et al. [2020] Ricky TQ Chen, Brandon Amos, and Maximilian Nickel. Neural spatio-temporal point processes. arXiv preprint arXiv:2011.04583, 2020.

- Xiao et al. [2017] Shuai Xiao, Junchi Yan, Xiaokang Yang, Hongyuan Zha, and Stephen Chu. Modeling the intensity function of point process via recurrent neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, volume 31, 2017.

- Omi et al. [2019] Takahiro Omi, Kazuyuki Aihara, et al. Fully neural network based model for general temporal point processes. Advances in neural information processing systems, 32, 2019.

- Okawa et al. [2021] Maya Okawa, Tomoharu Iwata, Yusuke Tanaka, Hiroyuki Toda, Takeshi Kurashima, and Hisashi Kashima. Dynamic hawkes processes for discovering time-evolving communities’ states behind diffusion processes. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, pages 1276–1286, 2021.

- Moller and Waagepetersen [2003] Jesper Moller and Rasmus Plenge Waagepetersen. Statistical inference and simulation for spatial point processes. CRC press, 2003.

- Daley et al. [2003] Daryl J Daley, David Vere-Jones, et al. An introduction to the theory of point processes: volume I: elementary theory and methods. Springer, 2003.

- Mollenhauer et al. [2020] Mattes Mollenhauer, Ingmar Schuster, Stefan Klus, and Christof Schütte. Singular value decomposition of operators on reproducing kernel hilbert spaces. In Advances in Dynamics, Optimization and Computation: A volume dedicated to Michael Dellnitz on the occasion of his 60th birthday, pages 109–131. Springer, 2020.

- Mercer [1909] J Mercer. Functions ofpositive and negativetypeand theircommection with the theory ofintegral equations. Philos. Trinsdictions Rogyal Soc, 209:4–415, 1909.

- Boyd et al. [2004] Stephen Boyd, Stephen P Boyd, and Lieven Vandenberghe. Convex optimization. Cambridge university press, 2004.

- Pan et al. [2021] Zhimeng Pan, Zheng Wang, Jeff M Phillips, and Shandian Zhe. Self-adaptable point processes with nonparametric time decays. Advances in Neural Information Processing Systems, 34, 2021.

- Kingma and Ba [2014] Diederik P. Kingma and Jimmy Ba. Adam: A method for stochastic optimization, 2014. URL https://arxiv.org/abs/1412.6980.

- Daley and Vere-Jones [2008] Daryl J Daley and David Vere-Jones. An Introduction to the Theory of Point Processes. Volume II: General Theory and Structure. Springer, 2008.

- Leskovec and Krevl [2014] Jure Leskovec and Andrej Krevl. Snap datasets: Stanford large network dataset collection, 2014.

- Zhu and Xie [2022] Shixiang Zhu and Yao Xie. Spatiotemporal-textual point processes for crime linkage detection. The Annals of Applied Statistics, 16(2):1151–1170, 2022.

- Fischer and Igel [2012] Asja Fischer and Christian Igel. An introduction to restricted boltzmann machines. In Iberoamerican congress on pattern recognition, pages 14–36. Springer, 2012.

- Lewis and Shedler [1979] PA W Lewis and Gerald S Shedler. Simulation of nonhomogeneous poisson processes by thinning. Naval research logistics quarterly, 26(3):403–413, 1979.

Appendix A Additional methodology details

A.1 Derivation of (4)

We denote , the variables , , and , where the sets . Viewing the spatial and temporal variables, i.e., and , as left and right mode variables, respectively, the kernel function SVD [Mollenhauer et al., 2020, Mercer, 1909] of gives that

| (A.1) |

We assume that the SVD can be truncated at with a residual of for some small , and this holds as long as the singular values decay sufficiently fast. To fulfill the approximate finite-rank representation, it suffices to have the scalars and the functions and so that the expansion approximates the kernel , even if they are not SVD of the kernel. This leads to the following assumption:

Assumption A.1.

There exist coefficients , and functions , s.t.

| (A.2) |

To proceed, one can apply kernel SVD again to and respectively, and obtain left and right singular functions that potentially differ for different . Here, we impose that across , the singular functions of are the same (as shown below, being approximately same suffices) set of basis functions, that is,

As we will truncate to be up to a finite rank again (up to an residual) we require the (approximately) shared singular modes only up to . Similarly as above, technically it suffices to have a finite-rank expansion to achieve the error without requiring them to be SVD, which leads to the following assumption where we assume the same condition for :

Assumption A.2.

For the and in (A.2), up to an error,

(i) The temporal kernel functions can be approximated under a same set of left and right basis functions, i.e., there exist coefficients , and functions , for , s.t.

| (A.3) |

(ii) The spatial kernel functions can be approximated under a same set of left and right basis functions, i.e., there exist coefficients , and functions , for , s.t.

| (A.4) |

A.2 Algorithms

A.3 Grid-based model computation

In this section, we elaborate on the details of the grid-based efficient model computation.

In Figure A.1, we visualize the procedure of computing the integrals of and in (8), respectively. Panel (a) illustrates the calculation of . As explained in Section 4.2, the evaluations of only happens on the grid over (since when ). The value of on the grid can be obtained through numerical integration. Then given , the value of is calculated using linear interpolation of on two adjacent grid points of . Panel (b) shows the computation of . Given , since when . Then is discretized into the grid , and can be calculated based on the value of on the grid points in (the deep red dots in Figure A.1(b)) using numerical integration.

| Spatial resolution: | |||

|---|---|---|---|

| Temporal resolution: | 1000 | 1500 | 3000 |

| 30 | |||

| 50 | |||

| 100 | |||

To evaluate the sensitivity of our model to the chosen grids, we compare the performance of DNSK+Barrier on 3D Data set 2 using grids with different resolutions. The quantitative results of testing log-likelihood and intensity prediction error are reported in Table A.1. We use for the experiments in the main paper. As we can see, the model shows similar performances when a higher grid resolution is used and works slightly less accurately but still better than other baselines with less number of grid points. It reveals that our choice of grid resolution is accurate enough to capture the complex dynamics of event occurrences for this non-stationary data, and the model performance is robust to different grid resolutions.

In practice, the grids can be flexibly chosen to reach the balance of model accuracy and computational efficiency. For instance, the number of uniformly distributed grid points along one dimension can be chosen around , where is the average number of events in one observed sequence. Note that or would be far less than the total number of observed events because we use thousands of sequences ( in our synthetic experiments) for model learning. And the grid size can be even smaller when it comes to non-Lebesgue-measured space.

A.4 Details of computational complexity

We provide the detailed analysis of the computation complexity of in Section 4.3 as following:

Computation of log-summation. The evaluation of and over events costs complexity. The evaluation of is of complexity since it relies on the grid . With the assumption that the conditional intensity is bounded by a constant in a finite time horizon [Lewis and Shedler, 1979, Daley et al., 2003, Zhu et al., 2022], for each fixed , the cardinality of set is less than , which leads to a complexity of evaluation.

Computation of integral. The integration of only relies on numerical operations of on grids without extra evaluations of neural networks. The integration of depends on the evaluation on grid of complexity.

Computation of barrier. on grid is estimated by numerical interpolation of previously computed on grid . Additional neural network evaluations of cost no more than complexity.

Appendix B Deep non-stationary kernel for Marked STPPs

In marked STPPs [Reinhart, 2018], each observed event is associated with additional information describing event attribute, denoted as . Let denote the event sequence. Given the observed history , the conditional intensity function of a marked STPPs is similarly defined as:

where is a ball centered at with radius . The log-likelihood of observing on is given by

B.1 Kernel incorporating marks

One of the salient features of our spatio-temporal kernel framework is that it can be conveniently adopted in modeling marked STPPs with additional sets of mark basis functions . We modify the influence kernel function accordingly as following:

Here and represented by independent neural networks model the influence of historical mark and current mark , respectively. Since the mark space is always categorical and the difference between and is of little practical meaning, we use and to model and separately instead of modeling .

B.2 Log-barrier and model computation

The conditional intensity for marked spatio-temporal point processes at can be written as:

We need to guarantee the non-negativity of over the space of . When the total number of unique categorical mark in is small, the log-barrier can be conveniently computed as the summation of on grids . In the following we focus on the case that is high-dimensional with number of unique marks.

For model simplicity we use non-negative and in this case (which can be done by adding a non-negative activation function to the linear output layer in neural networks). We re-write and denote as following:

Note that the function in the brackets are only with regard to . We denote it as (since it is in the th rank of mark). Since , the non-negativity of can be guaranteed by the non-negativity of . Thus we apply log-barrier method on . The log-barrier term becomes:

Since our model is low-rank, the value of will not be large.

For the model computation, the additional evaluations for on events is of complexity and the evaluations for only depends on the unique number of marks which at most of . The log-barrier method does not introduce extra evaluation in mark space. Thus the overall computation complexity for DNSK in marked STPPs is still .

Appendix C Additional experimental results

In this section we provide details of data sets and experimental setup, together with additional experimental results.

Synthetic data sets.

To show the robustness of our model, we generate three temporal data sets and three spatio-temporal data sets using the following kernels:

-

(i)

1D Data set 1 with exponential kernel: .

-

(ii)

1D Data set 2 with non-stationary kernel: .

-

(iii)

1D Data set 3 with infinite rank kernel:

-

(iv)

2D Data set 1 with exponential kernel: .

-

(v)

3D Data set 1 with non-stationary inhibition kernel:

, where .

-

(vi)

3D Data set 2 with non-stationary mixture kernel:

, where , and .

Note that kernel (iii) is the one we illustrated in Figure 1, which is of infinite rank according to the formulas. In Figure 1, the value matrix of and are the kernel evaluations on a same uniform grid. As we can see, the rank of the value matrix of the same kernel is reduced from to after changing to the displacement-based kernel parameterization.

Details of Experimental setup.

For RMTPP and NH we test embedding size of and choose for experiments. For THP we take the default experiment setting recommended by Zuo et al. [2020]. For NSMPP we use the same model setting in Zhu et al. [2022] with rank . Each experiment is implemented by the following procedure: Given the data set, we split of the sequences as training set and as testing set.

We use independent fully-connected neural networks with two-hidden layers for each basis function. Each layer contains hidden nodes. The temporal rank of DNSK+Barrier is set to be for synthetic data (i), (ii), (iv), (v), for (vi), and for (iii). The spatial rank is for synthetic data (iv), (v) and for (vi). The temporal and spatial rank for real data are both set to be through cross validation. For each real data set, the is chosen to be around and is for each data set since the location space is normalized before training. The hyper-parameter of DNSK+Softplus are the same as DNSK+Barrier. For RMTPP, NH, and THP the batch size is and the learning rate is . For others, the batch size is and the learning rate is . The quantitative results are collected by running each experiment for independent times. All experiments are implemented on Google Colaboratory (Pro version) with 25GB RAM and a Tesla T4 GPU.

C.1 Synthetic results with 2D & 3D kernel

In this section we present additional experiment results for the synthetic data sets with 2D exponential and 3D non-stationary mixture kernel. Our proposed model successfully recovers the kernel and event conditional intensity in both case. Note that the recovery of 3D mixture kernel demonstrates the capability of our model to handle complex event dependency with mixture patterns by conveniently setting time and mark rank to be more than 1.

C.2 Atlanta textual crime data with high-dimensional marks

Figure A.4 visualizes the fitting and prediction results of DNSK+Barrier. Our model presents an decaying pattern in temporal effect and captures two different patterns of spatial influence for incidents in the northeast. Besides, the in-sample and out-of-sample intensity predictions demonstrate the ability of DNSK to characterize the event occurrences by showing different conditional intensities.