Spectral Embedding of Graph Networks

Abstract

We introduce an unsupervised graph embedding that trades off local node similarity and connectivity, and global structure. The embedding is based on a generalized graph Laplacian, whose eigenvectors compactly capture both network structure and neighborhood proximity in a single representation. The key idea is to transform the given graph into one whose weights measure the centrality of an edge by the fraction of the number of shortest paths that pass through that edge, and employ its spectral proprieties in the representation. Testing the resulting graph network representation shows significant improvement over the sate of the art in data analysis tasks including social networks and material science. We also test our method on node classification from the human-SARS CoV-2 protein-protein interactome.

1 Introduction

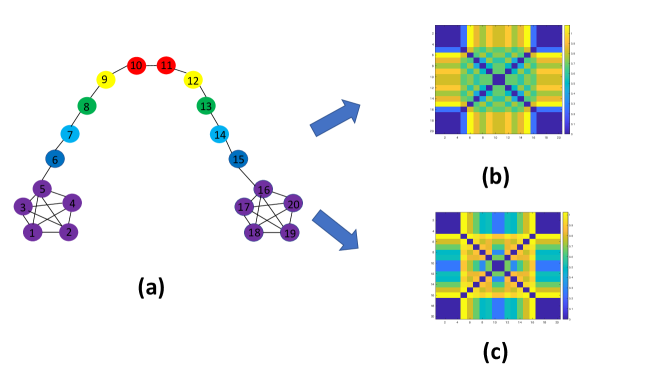

Consider the “barbell” graph in Fig. 1(a). There are two qualitatively different kinds of nodes: Those on the handle (6 through 15), and those at its ends (1-5 and 16-20). Some nodes play a structural role, such as 5 and 16 that join the ends to the handle. Our goal is to design a representation of the graph that can capture both the local similarity between adjacent nodes, as well as the non-local similarity of distant nodes based on their structural properties. Such a representation would empower network topology analysis in fields as diverse as material science, social science, biology and commerce. The key contribution of this paper is an unsupervised approach to learn an embedding function associated with each node, that trades off local and structural similarity. At the core is a method that employs a set of two basis functions to represent the graph structure.

We propose to employ basis functions that allow to compactly capture local and structural similarity using the edge betweeness centrality graph. For a graph with vertices in the set and edge weights in , we measure the centrality of an edge by the fraction of the number of shortest paths that pass through that edge, called edge betweenness centrality (EBC). From the betweenness centrality of the edges of , we construct a modified graph with the same connectivity of , but edges weighted by their EBC value. We call the resulting graph the edge betweeness centrality graph (BCG), and indicate it with . Note that the EBC computes the importance of an edge in the network in terms of how often it is on shortest paths between origin and destination nodes. We use the spectral proprieties of the BCG to define our embedding in a way that captures non-local variations.

We consider two properties of the BCG, which are not necessarily manifest in the original graph : First, edges with high EBC tend to distribute almost uniformly in different parts of real-world networks. Second, the EBC can take a broad range of values across such networks. We use these properties to construct graph embeddings, and show that they are informative of the structure of the graph.

The embedding we propose captures both local as well as structural properties that each node can exhibit simultaneously. While structural information is localized, in the sense of being associated to a particular node, it is not local, as it depends on properties of nodes beyond its neighbors.

Our approach is reminiscent of uncertainty principles developed in graph harmonic analysis [3], that explore to what extent signals can be represented simultaneously in the vertex and spectral domains. We extend this approach to characterize the relation between the spectral and non-local graph domain spread of signals defined on the nodes. We do so using a generalized Laplacian whose node embeddings simultaneously capture local and structural properties.

One can employ the generalized Laplacian derived from our approach, by applying off-the-shelf techniques to generate graph representations that capture complex patterns in the graph networks. While in general there is no single “true” universal way to capture embedding distances between nodes on the graph, our approach allows flexibility in generating embeddings which can be useful for several tasks. We show our method’s viability in a number of unsupervised learning tasks including node classification in social networks, node in protein-to-protein interaction (PPI) networks which include the human-SARS- CoV-2 protein-protein interactome, clustering and forecasting failure of edges in material science.

2 Related Work

We employ BCG as a tool to rank the importance of edges in the graph network given an adjacency matrix.

Graph centrality based measurements, such as vertex centrality, eigenvector centrality [20], and edge betweeness centrality [27] have been widely used in network analysis and its diverse applications [7, 10]. Feature learning in a graph , which aims to learn a function from the graph nodes into a vector space , has recently gained considerable interest in network analysis [22, 30, 29]. Graph convolutional networks [24, 11, 37] are among the most successful for graph based feature learning, combining the expressiveness of neural networks with graph structures.

Our work is related to uncertainty principles in graphs [3] and studied in later works [35]. [3] suggests extending traditional uncertainty principles in signal processing to more general domains such as irregular and unweighted graphs [32]. [3] provides definitions of graph and spectral spreads, where the graph spread is defined for an arbitrary vertex and studies to what extend a signal can be localized both in the graph and spectral domains.

Other related work includes different approaches in manifold learning [31], [2], [36], manifold regularization [18], [13], [15], [16] [14], [17], [12], graph diffusion kernels [9] and kernel methods widely used in computer graphics [4, 34] for shape detection. Most methods assume that signals defined over the graph nodes exhibit some level of smoothness with respect to the connectivity of the nodes in the graph and therefore are biased to capture local similarity.

3 Preliminaries and Definitions

Consider an undirected, weighted graph with nodes and edges where denotes the weight of an edge between nodes and . The degree of a node is the sum of the weights of edges connected to it. The combinatorial graph Laplacian is denoted by , and defined as , with the diagonal degree matrix with entries . The eigenvalues and eigenvectors of are denoted by and , respectively.

3.0.1 The Edge Betweeness Centrality Graph (BCG)

The edge betweeness centrality (EBC) is defined as

| (1) |

where is the number of the shortest distance paths from node to node and is the number of those paths that includes the edge . Next we defined the BCG which modifies the edges in to the EBC weights and shares the same connectivity as . Formally the similarity matrix representing connectivity between nodes in the graph is given by

| (2) |

Note that while the graph shares the same connectivity as , the spectral representation given by the eigensystem of the graph Laplacian , the eigenvalues and their associated eigenvectors and , are rather different. Importantly, the eigenvectors provide a different realization of the graph structure in comparison to the eigenvectors of an unweighted graph, that is captured by a different diffusion process around each vertex.

3.0.2 Total Vertex and Spectral Spreads

The vertex spread of a general weighted and undirected graph is defined as follows:

Definition 1

(Total vertex domain spread)

The global spread of a non zero signal with respect to a matrix (corresponding to an arbitrary affinity matrix) is defined as

| (3) |

where corresponds to vertices connected in the similarity graph .

Definition 2

(Spectral spread) [3]

The spectral spread of a non zero signal with respect to a similarity affinity matrix is defined as

| (4) |

where

| (5) |

is the graph Fourier transform of the signal with respect to the eigenvalue .

To distinguish between the vertex and spectral spreads of the input similarity matrix and the edge betweeness centrality graph , we denote the total vertex domain and spectral spreads of the former as while the latter is denoted as , respectively.

4 Proposed Node Embeddings

To find node embeddings that represent the local and structural properties in the graph, we utilize the revisited definitions of vertex and spectral spreads of the graph provided in the previous section. We focus on the spreads of the BCG , and embeddings using that delineates a trade-off between local connectivity and structural role properties of the graph’s nodes. The trade-off will be realized by characterizing the region enclosing all possible pairs of vertex and spectral spreads of the BCG . Specifically, searching for the lower boundary of the feasibility region of the vertex and spectral spreads of is shown to yield a generalized eigenvalue problem, whose corresponding eigenvectors producing a representation which trade-off between local and structural node similarity.

The induced edge rankings from in results in changes of the associated eigenvalues and eigenvectors, which now reflects the communication network using the EBC measurement.

4.1 The Graph-Spectral Feasibility Domain

We derive the proposed graph representation from the curve enclosing the feasibility domain of vertex and spectral spreads of the BCG. The definition below is given for a general weighted graphs.

Definition 3

Feasibility domain of

The feasibility domain of the spectral and graph spreads corresponding to the BCG is

| (6) |

Note that contains all possible values that can be obtained on with respect to a non zero signal .

4.2 Graph Differentiation

The lower boundary of the curve enclosing the feasibility domain of the spreads , with respect to a unit norm vector is defined as

| (7) |

To solve (7), we use the Lagrangian

| (8) |

with . Differentiating the Lagrangian and comparing the results to zero, we obtain the following generalized eigenvalue problem:

Denoting

| (10) |

we can write the generalized eigenvalue problem (9) using the matrix pencil

| (11) |

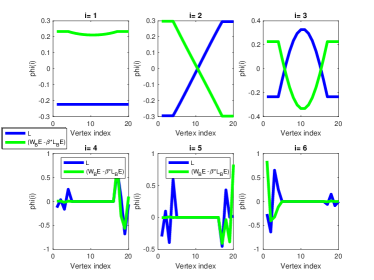

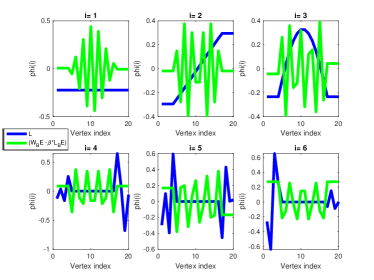

The scalar can be considered as a parameter that controls the trade-off between the total vertex and spectral spreads. An example for the trade-off in the barbell graph as captured by ) is illustrated in Fig. (a) and (b) using = -200, and = -0.2, respectively. Functions colored with green correspond to the eigenvectors of ), functions colored with blue correspond to the eigenvectors of . As can be seen, when is large, the eigenvectors of ) reveal structure which is similar to the eigenvectors of , while small values of produce structure which is similar to those corresponding to .

4.3 Graph Representation and Interpretation

For analysis and practical considerations it is often useful to encode the network captured in using a semi positive definite operator. For practical consideration, we transform into a semi positive definite matrix using a simple perturbation matrix , where , , where is the smallest eigenvalue of , and is the identity matrix. Setting

| (12) |

ensures that is semi positive definite (SPD) matrix. Letting and be the eigenvalues corresponding to and , respectively, we can see that the choice made in (12) ensures that the original spacing in eigenvalues of is preserved in . The embedding method proposed using is coined Graph Spectral Spread Embedding (GSSE). Note that there is a geometric interpretation which is related to the way was obtained from the lower boundary curve enclosing the feasibility domain ; Setting instead another perturbation matrix with and defining

| (13) |

where corresponds to the minimum eigenvalue of . we have that

| (14) |

which defines a half plane in .

Properties: The feasibility domain is a bounded set since

| (15) |

where corresponds to the largest eigenvalue of the affinity graph. We provide quantitative analysis that bound the largest eigenvalue of the betweeness graph centrality, by the largest value of the vertex betweeness centrality of the graph (see Lemma 2 in the appendix).

4.4 Embedding using Sylvester equation

In this section we construct a node embedding using a linear mapping between the subspaces and , which capture local and structural properties. We exploit the fact that the correspondence between the nodes represented by the two subspaces is known, and propose using the solution of a Sylvester equation as the node feature representation. This choice is motivated by Roth’s removal rule, which states that one can find a complementary subspace by solving the Sylvester equation. and can be considered two different operators with known correspondence, the identity map. Thus, the resulting embedding given by the eigensystem of the Sylvester equation will be composed of two hybrid representations associated with the graph networks and local connectivity. The method is coined Graph Sylvester Embedding (GSE).

The Sylvester operator is used to express the eigenvalues and eigenvectors of and using a single operator , imposing commutativity, where we solve

| (16) |

for , a scalar regularizer, is the identity matrix, where . The resulting eigensystem of is described in the next Lemma below.

Lemma 1

Let , and in the Sylvester equation (17), be symmetric. Assume that for some . Let , , , and the corresponding eigenvectors and eigenvalues of and , respectively. Moreover, assume that for all . Let be the solution to the Sylvester system (17). Then the associated eigenvalues and eigenvectors of are and , respectively.

Applying Lemma 1 with , and we obtain that the set of eigenvectors corresponding to the solution to the Sylvester equation are

, where correspond to the set of eigenvectors of , , respectively.

The proposed embedding is constructed from the set of eigenvectors and associated eigenvalues corresponding to and , respectively. The performance of these embeddings is now evaluated empirically.

5 Experimental Results

We evaluate our method on both synthetic and real world networks in several applications including social, commerce and material science. We also report preliminary results on a recent dataset on protein interaction in Covid-19.

Baseline: To apply our approach in an unsupervised learning settings, we employ an off-the-shelf spectral descriptor, the Wave Kernel Descriptor (WKS) [4]. Given an input of eigenvectors, their associated eigenvalues and a parameter , the WKS descriptor constructs a feature vector for each node . To construct a graph embedding we compute the smallest eigenvalues of and their associated eigenvectors and provide it as an input to the the WKS descriptor. Note that when using Sylvester embedding, we compute the largest eigenvalues and their associated eigenvectors corresponding to the solution .

The baseline is therefore the WKS descriptor applied to . As shown in the experimental results, the improvement in comparison to the baseline is up to 84%.

5.1 Node Classification

Datasets. We compare our method using the Barbell graph (Figure 1) for a qualitative comparison, and the mirrored Karate network for a quantitative comparison of node classification of structural similarity. The mirrored Karate network (based on a experiment suggested in [30]) is composed of two copies corresponding to the Karate Club network [38], where each node has a mirror node in the duplicated graph. We follow the exact same protocol which was suggested in [19], where the performance is evaluated by measuring the percentage of nodes whose nearest neighbor in the embedding space corresponds to its structural equivalent role.

Barbell Graph Our graph embeddings is able to identify nodes 5 and 16 as ones that stand out and have a large distance in the embeddings space from the rest of the nodes to which they are connected to (nodes 1 to 4 and 17 to 20, that are considered locally similar to 5 and 16, respectively). For example, the heat maps shown in Fig.1(b) and (c) represent embeddings using the same number of eigenvectors corresponding to and respectively. In these cases, nodes 5 and 16 do not stands out and their distances to locally similar nodes is very small. Karate network We compare to a number of state-of-the-art methods in node feature embeddings including node2vec [22] struc2vec [30], Deep Walk [29], and GraphWave [19]. The baseline which is the WKS descriptor [4] combined with the Laplacian of the unweighted graph is also reported in Table 1, where our approach shows a significant improvement over the baseline. The experimental results in Table (showing the best and average results for the varying number of edges) demonstrates that our method consistently performs well for a varying number of mirrored edges, and is improving the accuracy by more than comparing to the best method.

| Method/Data | Best | Avg. |

|---|---|---|

| DeepWalk [29] | 8.5 | 8.5 |

| WKS [4] | 15.6 | |

| RolX [23] | 84.5 | 82.2 |

| n2vec[22] | 8.5 | 8.5 |

| s2vec[30] | 59.4 | 52.5 |

| GraphWave [19] | 86 | 83 |

| GSE | 100 | 88 |

| GSSE | 100 | 88 |

5.2 Node Classification on SARS-CoV-2 PPI Networks

Effective representation of nodes with similar network topology plays an important role in the problem of protein protein interaction, where graph networks that describe protein-protein interaction (PPI) encode valuable information that can provide insights of biological function. To understand the development of COVID-19, the analysis of PPI networks is ongoing [26]. It is important to develop robust computational methods that can reveal biological processes and host signaling pathways that may be used by SARS-CoV-2 to infect cells.

One promising approach aims to develop drugs that target human proteins that the virus requires. Recent research responding to COVID-19 aims to prioritize additional human protein by employing the known human protein interactors of SARS-CoV-2 proteins and a whole-genome protein interaction network in order to predict potential interactors with high accuracy [25].

Another important problem in studying PPI networks is the problem of network alignment or node correspondence which, by finding topological similarities between networks, can be useful to understanding biological function and evolution.

We consider a problem of node classification by finding node correspondence using

network alignment on a recently published dataset of human proteins that physically interact with SARS-CoV-2 [21].

Dataset: We test using the STRING network from the STRING database, a PPI network that consists of 18,886 nodes and 977,789 edges. The STRING network includes 330 human proteins that physically interact with SARS-CoV-2. It is likely to have both false positives and false negatives edges. For instance, the relative expression of the viral protein could increase the number of interacting partners detected, generating false positives [25].

We aim to find network alignment between two copies of the STRING network, given the STRING network and an additional STRING network which is created by randomly removing 10 and 20 percentage of noisy edges. Each of the networks includes 330 nodes corresponding to the known human protein interactors of SARS-CoV-2. Given the node correspondence of the protein interactors of SARS-CoV-2, we connect each pair of nodes corresponding to the same human protein interactors of SARS-CoV-2 with an edge, which is resulted in a network composed of the two STRING networks. We then apply the proposed GSSE and GSE to generate node embeddings. For comparison, we compute the node embeddings using graph embeddings methods including Laplacian Eigenmaps (LE) [5], Locally Linear Embedding (LLE)[31], Hope [28], and Regularized Laplacian (RL)[41], which are given the same input network. Additionally, we compare to network alignment methods, such as Isorank [33] and Final [39] and iNEAT [40]. For the network alignments methods, we provide as an input the corresponding affinity graphs and a matrix with the known correspondence between the nodes that correspond to human proteins that physically interact with SARS-CoV-2.

Table 4 shows the percentage of nodes whose nearest neighbor corresponds to its true node correspondence using GSE and GSSE in the STRING network, which is compared to Graph Embeddings and network alignment methods. It is evident that graph embedding methods such as LLE and LE, which are rooted in manifold learning that is biased to local similarity and heavily relies on the graph smoothness are not effective for this task. Network alignments methods perform better, while our proposed GSE is improving the accuracy by more than comparing to the best method.

| Method/noisy edges percentage | 10 | 20 |

|---|---|---|

| LE [5] | 5.38 | 5.38 |

| LLE [31] | 1.5 | 1.3 |

| RL [41] | 1 | - |

| HOPE [28] | 2 | - |

| Isorank [33] | 41 | 40 |

| Final [39] | 58.2 | 56.6 |

| iNEAT [40] | 63.8 | 56.1 |

| GSE | 76.4 | 60 |

| GSSE | 48 | 20.21 |

5.3 Forecasting Failed Edges

Forecasting fracture locations in a progressively failing disordered structure is a crucial task in structural materials [7]. Recently, networks were used to represent 2 dimensional (2D) disordered lattices and have been shown a promising ability to detect failures locations inside 2D disordered lattices. A promising aspect of this line of work is the possibility to assess failure locations of a structure without needing to study its detailed energetic states [7]. In [7], it has been shown that the EBC can serve as a meaningful measurement to forecast the failure locations observed on a contact networks in 2D granular network. As failure locations were shown to occur predominately at locations that have large EBC values, [7] proposed a thresholding scheme to compare the EBC of each edge to the mean EBC in order to forecast edges corresponding to failed beams.

Dataset: The set of disordered structures was derived from experimentally determined force networks in granular materials [6]. The network data is available in the Dryad repository [8].

We tested 6 different initial networks, with mean degrees , 3.0, 3.6, following the same datasets corresponding to different initial granular configurations.

For GSSE and GSE we used and , respectively, in all experiments. Table 3 shows the success rates in forecasting failed edges for the 6 networks. In comparison to the forecasting approach proposed in [8], our framework performs consistently better. In particular, the proposed GSE which is based on Sylvester embedding provides the best results for all networks.

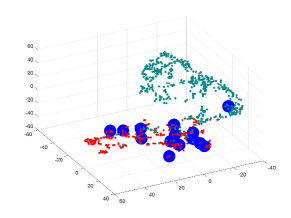

We employ the tools developed in Sect. 4, with simple adaptation to create edge embedding. First, by constructing node embedding, and then using the concatenated nodes features to construct edge embeddings. While our method is guided by the finding that failed edges occur predominantly in edges above the mean EBC, it doesn’t rely on a single thersholding criteria to asses failed edges. Instead, we defer to make decisions about failing edges in the edge embedding space, which provides an effective tool to process, analyze and visualize the network.

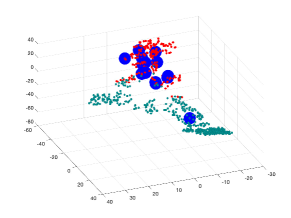

Fig. 3 (b) and (c) are showing visualization using t-SNE of our proposed methods for edge embedding. The points colored in red correspond to the edges whose values are above the mean EBC, while the points colored in turquoise correspond to edges bellow the mean EBC. The blue enlarged dots correspond to the failed edges in the system which were successfully detected by each method. In the examples illustrated, our proposed embeddeding successfully forecast all failed edges, which were mapped to the same cluster including edges whose EBC value was below the mean value. Table 3 shows a quantitative results: the forecasting approach proposed [7] will miss failed edges whose EBC is below the EBC mean, while embeddings using standard spectral embedding was found even less successful.

6 Discussion

We proposed a graph embedding to capture the structure of a graph with both local and global characteristics, by incorporating basis functions that can be derived from the spectral representation of a graph coined the BCG. The BCG is obtained by modifying the original edges based on the edge betweeness centrality (EBC), which measures the fractional number of shortest paths that are passing through an edge. Our work focuses on methods to learn the structure of graph networks without the need for supervision. This can be beneficial in performing data without the need for human annotation. Besides the cost of time and effort, this means that the data does not need to be seen by humans, which improves security and reduces the risk of accidental sharing of private data.

We have proposed two different approaches: The first, GSSE, is rooted in the study of signal localization on irregular graphs, and it trades off vertex and spectral domains to balance representations that capture local and structural similarity between the graph nodes. The second, GSE is motivated by similar considerations is derived from solving a Sylvester equation. The advantage is that it allows more flexibility and control beyond scalar modulation between the two bases.

Uncertainty principles inspire our work to incorporate the edge betweeness graph centrality (BCG) as a building block to create new graph topology that reflects node’s structural role. In particular, the edge value in the edge betweeness graph centrality (EBC) is a useful tool to create an oscillatory pattern of higher frequencies which is also vertex localized. In contrast, unweighted graphs or graphs with very similar edges have limited ability to represent structural similarity as they associate signals decaying slowly with a larger graph neighborhood.

6.1 Limitations and Future Work

Computational Complexity: The implementation of our approach is computationally intensive, with most of the burden falling on the computation of the BCG, which is where is the total number of edges and is the total number of nodes, thus approximately for sparse graphs. The execution time of our method with a Python code implementation using Intel Core i7 7700 Quad-Core 3.6GHz with 64B Memory on the Cora dataset with approximately 2700 nodes takes 24.9 seconds.

The local clustering coefficient: Another limitation is concerned with applying our method to graphs in which the average local clustering coefficient is small, in particular when the graph contains a large number of vertices whose clustering coefficient is zero.

If the degree of a node is 0 or 1, the corresponding clustering coefficient of that node is 0. In clustering tasks, our approach is shown to improves the baseline when the average local clustering coefficient is not too small, and is less effective when the average local clustering coefficient is very small.

Future work include expanding our approach to address semi-supervised applications, for example by incorporating generalized graph Laplacian or BCG in training using Graph convolutional networks. Another direction is to explore alternative approaches for finding a trade-off between local and structural similarity, tailored to a specific domain application using a functional applied to the adjacency graph in a similar spirit to the approach we propose with the BCG.

Acknowledgments

This work was supported in part by ONR grant #N00014-19-1-2229. The authors wish to thank Mason Porter for helpful discussions and suggestions in the earlier formulation of the manuscript. We also want to thank Yoram Louzoun, Roded Sharan, and Jie Chen for valuable feedback on the manuscript. We are also thankful for Simon Kasif, T. M. Murali, Mark Crovella, Catherine M. Della Santina, and Jeffrey Law for sharing insights from their work on Identifying Human Interactors of SARS-CoV-2 Proteins for COVID-19 using network label propagation, as well as sharing the STRING dataset.

References

- [1] On spectral clustering: Analysis and an algorithm. In: ADVANCES IN NEURAL INFORMATION PROCESSING SYSTEMS (2001)

- [2] Laplacian eigenmaps for dimensionality reduction and data representation. Neural Computation (2003)

- [3] Agaskar, A., Lu, Y.M.: A spectral graph uncertainty principle. IEEE Transactions on Information Theory 59(7)

- [4] Aubry, M., Schlickewei, U., Cremers, D.: The wave kernel signature: A quantum mechanical approach to shape analysis. In: ICCV Workshops. pp. 1626–1633. IEEE Computer Society (2011)

- [5] Belkin, M., Niyogi, P.: Laplacian eigenmaps for dimensionality reduction and data representation. Neural Computation 15(6), 1373–1396 (2003)

- [6] Berthier, E., Kollmer, J.E., Henkes, S.E., Liu, K., Schwarz, J.M., Daniels, K.E.: Rigidity percolation control of the brittle-ductile transition in disordered networks. Phys. Rev. Materials (2019)

- [7] Berthier, E., Porter, M.A., Daniels, K.E.: Forecasting failure locations in 2-dimensional disordered lattices. Proceedings of the National Academy of Sciences 116(34)

- [8] Berthier, E.e.a.: Rigidity percolation control of the brittle-ductile transition in disordered networks dryad, dataset,. Phys. Rev. Materials (2019)

- [9] Coifman, R.R., Lafon, S., Lee, A.B., Maggioni, M., Nadler, B., Warner, F., Zucker, S.W.: Geometric diffusions as a tool for harmonic analysis and structure definition of data: Diffusion maps. Proceedings of the National Academy of Sciences of the United States of America (2005)

- [10] CUCURINGU, M., ROMBACH, P., LEE, S.H., PORTER, M.A.: Detection of core periphery structure in networks using spectral methods and geodesic paths. European Journal of Applied Mathematics

- [11] Defferrard, M., Bresson, X., Vandergheynst, P.: Convolutional neural networks on graphs with fast localized spectral filtering. In: Advances in Neural Information Processing Systems 29 (2016)

- [12] Deutsch, S., Bertozzi, A., Soatto, S.: Zero shot learning with the isoperimetric loss. Tech. rep., University of California, Los Angeles (UCLA) (2020)

- [13] Deutsch, S., Kolouri, S., Kim, K., Owechko, Y., Soatto, S.: Zero shot learning via multi-scale manifold regularization. CVPR (2017)

- [14] Deutsch, S., Masi, I., Soatto, S.: Finding structure in point cloud data with the robust isoperimetric loss. In: Scale Space and Variational Methods in Computer Vision - 7th International Conference, SSVM 2019, Hofgeismar, Germany, June 30 - July 4, 2019, Proceedings (2019)

- [15] Deutsch, S., Medioni, G.: Intersecting manifolds: Detection, segmentation, and labeling. In: Proceedings of the Twenty-Fourth International Joint Conference on Artificial Intelligence (2015)

- [16] Deutsch, S., Medioni, G.: Unsupervised learning using the tensor voting graph. In: Scale Space and Variational Methods in Computer Vision (SSVM) (2015)

- [17] Deutsch, S., Medioni, G.G.: Learning the geometric structure of manifolds with singularities using the tensor voting graph. Journal of Mathematical Imaging and Vision 57(3), 402–422 (2017)

- [18] Deutsch, S., Ortega, A., Medioni, G.: Manifold denoising based on spectral graph wavelets. International Conference on Acoustics, Speech and Signal Processing (ICASSP) (2016)

- [19] Donnat, C., Zitnik, M., Hallac, D., Leskovec, J.: Learning structural node embeddings via diffusion wavelets. Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining - KDD 18 (2018)

- [20] Freeman, L.: A set of measures of centrality based on betweenness. Sociometry (1977)

- [21] Gordon, D.E., Jang, G.M., Bouhaddou, M., Xu, J., Obernier, K., O’Meara, M.J., Guo, J.Z., Swaney, D.L., Tummino, T.A., Huettenhain, R., Kaake, R.M., Richards, A.L., Tutuncuoglu, B., Foussard, H., Batra, J., Haas, K., Modak, M., Kim, M., Haas, P., Polacco, B.J., Braberg, H., Fabius, J.M., Eckhardt, M., Soucheray, M., Bennett, M.J., Cakir, M., McGregor, M.J., Li, Q., Naing, Z.Z.C., Zhou, Y., Peng, S., Kirby, I.T., Melnyk, J.E., Chorba, J.S., Lou, K., Dai, S.A., Shen, W., Shi, Y., Zhang, Z., Barrio-Hernandez, I., Memon, D., Hernandez-Armenta, C., Mathy, C.J., Perica, T., Pilla, K.B., Ganesan, S.J., Saltzberg, D.J., Ramachandran, R., Liu, X., Rosenthal, S.B., Calviello, L., Venkataramanan, S., Liboy-Lugo, J., Lin, Y., Wankowicz, S.A., Bohn, M., Sharp, P.P., Trenker, R., Young, J.M., Cavero, D.A., Hiatt, J., Roth, T.L., Rathore, U., Subramanian, A., Noack, J., Hubert, M., Roesch, F., Vallet, T., Meyer, B., White, K.M., Miorin, L., Rosenberg, O.S., Verba, K.A., Agard, D., Ott, M., Emerman, M., Ruggero, D., García-Sastre, A., Jura, N., von Zastrow, M., Taunton, J., Ashworth, A., Schwartz, O., Vignuzzi, M., d’Enfert, C., Mukherjee, S., Jacobson, M., Malik, H.S., Fujimori, D.G., Ideker, T., Craik, C.S., Floor, S., Fraser, J.S., Gross, J., Sali, A., Kortemme, T., Beltrao, P., Shokat, K., Shoichet, B.K., Krogan, N.J.: A sars-cov-2-human protein-protein interaction map reveals drug targets and potential drug-repurposing. Nature (2020)

- [22] Grover, A., Leskovec, J.: node2vec: Scalable feature learning for networks (2016)

- [23] Henderson, K., Gallagher, B., Eliassi-Rad, T., Tong, H., Basu, S., Akoglu, L., Koutra, D., Faloutsos, C., Li, L.: Rolx: Structural role extraction & mining in large graphs. In: Proceedings of the 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. KDD ’12 (2012)

- [24] Kipf, T.N., Welling, M.: Semi-supervised classification with graph convolutional networks. In: Proceedings of the 5th International Conference on Learning Representations. ICLR ’17 (2017)

- [25] Law, J.N., Akers, K., Tasnina, N., Santina, C.M.D., Kshirsagar, M., Klein-Seetharaman, J., Crovella, M., Rajagopalan, P., Kasif, S., Murali, T.M.: Identifying human interactors of sars-cov-2 proteins and drug targets for covid-19 using network-based label propagation (2020)

- [26] MP, M.L., Middleton, P.: Ongoing clinical trials for the management of the covid-19 pandemic. trends pharmacol sci. (2020)

- [27] Newman, M.E.: Community structure in social and biological networks. Proceedings of the National Academy of Sciences (2002)

- [28] Ou, M., Cui, P., Pei, J., Zhang, Z., Zhu, W.: Asymmetric transitivity preserving graph embedding. In: KDD (2016)

- [29] Perozzi, B., Al-Rfou, R., Skiena, S.: Deepwalk. Proceedings of the 20th ACM SIGKDD international conference on Knowledge discovery and data mining - KDD (2014)

- [30] Ribeiro, L.F., Saverese, P.H., Figueiredo, D.R.: struc2vec. Proceedings of the 23rd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining - KDD (2017)

- [31] Roweis, S., Saul, L.: Nonlinear dimensionality reduction by locally linear embedding. SCIENCE 290, 2323–2326 (2000)

- [32] Shuman, D., Narang, S., Frossard, P., Ortega, A., Vandergheynst, P.: The emerging field of signal processing on graphs: Extending high-dimensional data analysis to networks and other irregular domains. IEEE Signal Processing Magazine (2013)

- [33] Singh, R., Xu, J., Berger, B.: Global alignment of multiple protein interaction networks with application to functional orthology detection. Proceedings of the National Academy of Sciences (2008)

- [34] Sun, J., Ovsjanikov, M., Guibas, L.: A concise and provably informative multi-scale signature based on heat diffusion. Computer Graphics Forum (2009)

- [35] Teke, O., Vaidyanathan, P.P.: Uncertainty principles and sparse eigenvectors of graphs. IEEE Transactions on Signal Processing 65(20), 5406–5420 (2017)

- [36] Tenenbaum, J., de Silva, V., Langford, J.: A global geometric framework for nonlinear dimensionality reduction. Science (2000)

- [37] Veličković, P., Cucurull, G., Casanova, A., Romero, A., Liò, P., Bengio, Y.: Graph Attention Networks. International Conference on Learning Representations (2018)

- [38] Zachary, W.: An information flow model for conflict and fission in small groups. Journal of Anthropological Research

- [39] Zhang, S., Tong, H.: Final: Fast attributed network alignment. In: KDD (2016)

- [40] Zhang, S., Tong, H., Tang, J., Xu, J., Fan, W.: Incomplete network alignment: Problem definitions and fast solutions. ACM Trans. Knowl. Discov. Data 14(4) (2020)

- [41] Zhou, D., Schölkopf, B.: A regularization framework for learning from graph data. In: ICML 2004 (2004)

7 Appendix

The Sylvester operator is used to express the eigenvalues and eigenvectors of and using a single operator , imposing commutativity, where we solve

| (17) |

for , a scalar regularizer, is the identity matrix. We take , and , where .

The resulting eigensystem of is described in the next Lemma below.

Lemma 1

Let , and in the Sylvester equation (17), be symmetric. Assume that for some . Let , , , and the corresponding eigenvectors and eigenvalues of and , respectively. Moreover, assume that for all . Let be the solution to the Sylvester system (17). Then the associated eigenvalues and eigenvectors of are and , respectively.

Proof of Lemma 1 Let be an arbitrary eigenvector of . Multiplying (17) by we obtain

| (18) |

since is an eigenvalue of with associated eigenvalue we have

| (19) |

Multiplying both sides of the equation above by we obtain

| (20) |

since is assumed to be a symmetric matrix, we have that the left eigenvector with corresponding eigenvalue is also a right eigenvector with the same eigenvalue , hence

| (21) |

Rearranging the equation above and dividing by we obtain

| (22) |

Next, note that must be a symmetric matrix since and are assumed to be symmetric matrices. Therefore, taking the transpose on of both size of the equation above we obtain:

| (23) |

thus

| (24) |

and since , then is an eigenvector of with an associated eigenvalue .

The next Lemma, Lemma 2 , states that the largest eigenvalue of the betweeness graph centrality, , is bounded by the largest value of the vertex betweeness centrality of the graph.

Lemma 2

Let denote the number of shortest distance paths passing between vertex and that are passing through a vertex . We have that the largest eigenvalue of the betweeness centrality graph satisfies:

| (25) |

Proof of Lemma 2: Let be the eigenvector associated with the largest eigenvalue of the geodesic edge betweeness centrality graph. By definition of the betweeness centrality graph we have that

| (26) |

where the right hand is a result of the largest eigenvalue of a symmetric similarity matrix being bounded by the maximal vertex degree of the graph. On the other hand, for any vertex we have the equality

| (28) |

and thus the proof is concluded.

Note that the bound is not sharp for all graphs since in general there is no equality between the largest eigenvalue of a graph and the value of the node with maximal degree.

7.1 Additional experiments on Node Classification using SARS-CoV-2 PPI Networks

We report additional experimental results on the SARS- CoV-2 PPI networks for the task of node classification using network alignment. We follow a similar protocol as in the previous experiments that aim to find network alignment between two copies of the STRING network. An additional STRING network is created by randomly removing 20 of noisy edges from the STRING network. In this experiment, we randomly subsample the set of the 332 nodes corresponding to the known SARS-CoV-2 interactors and use only 163 nodes as the given known node correspondence. Since Graph Embeddings are found less effective for this task, we only compare to network alignments methods, including Isorank (Singh, Xu, and Berger 2008), Final (Zhang and Tong 2016), and iNEAT (Zhang et al. 2020). Table 1 shows the percentage of nodes whose nearest neighbor corresponds to its true node correspondence using GSE in the STRING network. The comparison results demonstrate that our method remains robust even when using a much smaller number of correspondence nodes.

| Method/noisy edges percentage | 20 |

|---|---|

| Isorank (Singh, Xu, and Berger 2008) | 23 |

| Final (Zhang and Tong 2016) | 36 |

| iNEAT (Zhang et al. 2020) | 37 |

| GSE | 58 |

7.2 Clustering

Datasets. The European air-traffic network [30] is a data collected from the statistical office of the European union. The network has 399 nodes and 5,995 edges. Airport activity is measured by the total number of landings plus takeoffs in the corresponding period [30]. The Cora dataset [Cora] is a citation network where nodes are documents and edges are citation links. The network has 2,708 nodes and 5,429 edges. Evaluation: We evaluate our approach in clustering accuracy comparing to the ground truth using purity measure. We compute node feature representation for each node and then construct a new graph which is based on the nearest neighbor graph based on the new features. We compare our method to a number of clustering methods, including Spectral Clustering [1] and Affinity Propagation.

Summary of the experimental results: on the

European air-traffic network The experimental results, compare to spectral clustering and affinity propagation and measured in clustering accuracy by purity evaluation, results in an improvement of 24.1 and 28.2 using GSSE, and 20 and 20.3 using GSE, respectively, while using BCG directly we obtain an improvement of 6 and 10.

Citation network For the Cora dataset, the performance comparing to spectral clustering and affinity propagation using the GSSE and GSE shows an improvements of less than , while using the BCG shows an improvement of 29.35 comparing to spectral clustering and affinity propagation.