∎

22email: harry.oviedo@cimat.mx 33institutetext: H. Lara 44institutetext: Universidade Federal de Santa Catarina, Campus Blumenau, Blumenau, Brazil.

44email: hugo.lara.urdaneta@ufsc.br

Spectral Residual Method for Nonlinear Equations on Riemannian Manifolds

Abstract

In this paper, the spectral algorithm for nonlinear equations (SANE) is adapted to the problem of finding a zero of a given tangent vector field on a Riemannian manifold. The generalized version of SANE uses, in a systematic way, the tangent vector field as a search direction and a continuous real–valued function that adapts this direction and ensures that it verifies a descent condition for an associated merit function. In order to speed up the convergence of the proposed method, we incorporate a Riemannian adaptive spectral parameter in combination with a non–monotone globalization technique. The global convergence of the proposed procedure is established under some standard assumptions. Numerical results indicate that our algorithm is very effective and efficient solving tangent vector field on different Riemannian manifolds and competes favorably with a Polak–Ribiére–Polyak Method recently published and other methods existing in the literature.

Keywords:

Tangent vector field Riemannian manifold Nonlinear system of equations Spectral residual method Non–monotone line search.MSC:

65K05, 90C30, 90C56, 53C21.1 Introduction

In this work, we consider the problem of finding a zero of a tangent vector field over a Riemannian manifold , with an associated Riemannian metric (a local inner product which induces a corresponding local metric). The problem can be mathematically formulated as the solution of the following nonlinear equation

| (1) |

where is a continuously differentiable tangent vector field, and denotes the tangent bundle of , i.e., is the union of all tangent spaces at points in the manifold. Here, denotes the zero vector of the tangent space . This kind of problem appears frequently in several applications, for example: statistical principal component analysis oja1989neural , where the Oja’s flow induces the associated vector field, total energy minimization in electronic structure calculations martin2020electronic ; saad2010numerical ; zhang2014gradient , linear eigenvalue problems manton2002optimization ; wen2016trace , dimension reduction techniques in pattern recognition kokiopoulou2011trace ; turaga2008statistical ; zhang2018robust , Riemannian optimization problems, where the Riemannian gradient flow leads to the associated tangent vector field absil2009optimization ; edelman1998geometry ; ring2012optimization , among others.

Problem (1) is closely related to the problem of minimizing a differentiable function over the manifold ,

| (2) |

where is a smooth function. Different iterative methods have been developed for solving (2). Some popular schemes are based on gradient method cedeno2018projected ; iannazzo2018riemannian ; manton2002optimization ; oviedoimplicit ; oviedo2019scaled ; oviedo2020two ; oviedo2019non , conjugate gradient methods absil2009optimization ; edelman1998geometry ; zhu2017riemannian , Newton’s method absil2009optimization ; sato2014riemannian , or quasi–Newton methods absil2009optimization . All these numerical methods can be used to find a zero of the following tangent vector field equation,

| (3) |

where denotes the Riemannian gradient of , which is a particular case of problem (1). The Riemannian line–search methods, designed to solve the optimization problem (2) construct a sequence of points using the following recursive formula

| (4) |

where is a retraction (see Definition 1), and is a descent direction, i.e, verifies the inequality for all . Among the Riemannian line–search methods, the Riemannian gradient approach exhibits the lowest cost per iteration. This method uses the gradient vector field to define the search direction by , at each iteration.

In the literature, there are some iterative algorithms addressing the problem (1). In adler2002newton ; dedieu2003newton ; li2005convergence were developed several Riemannian Newton methods for the solution of tangent vector fields on general Riemannian manifolds. Among the features of Newton’s method, the requirement of using second–order information and geodesics (that involves the computation of exponential mapping) to ensure keeping into the corresponding manifold, leads to the growth of computational cost. In addition, the authors in breiding2018convergence proposed a Riemannian Gauss–Newton method to address the solution of (1) through the optimization problem (2). Recently, in yao2020riemannian was introduced a Riemannian conjugate gradient to deal with the numerical solution of (1), that does not need derivative computation (it does not use the Jacobian of ), and that incorporates the use of retractions (see Definition 1), which is a mapping that generalizes the definition of geodesics, and that was introduced by Absil in absil2009optimization to deal with optimization problems on matrix manifolds.

In the Euclidean case in (1), i.e., for the solution of standard nonlinear system of equations, the authors in cruz2003nonmonotone introduced a method called SANE, which uses the residual as a search direction. Then the trial point, at each iteration, is computed by , where is a spectral coefficient based on the Barzilai–Borwein step–size barzilai1988two ; raydan1993barzilai . This iterative process uses precisely the functional , in order to define the search direction. It’s feature of been a derivative–free procedure, is highly attractive, lowering the storage requirements and the computational cost per iteration.

Motivated by the Riemannian gradient and SANE methods, in this paper, we introduce RSANE, which is a generalization of SANE to tackle the numerical solution of nonlinear equations on Riemannian manifolds. In particular, we modified the update formula of SANE by incorporating a retraction, in order to guarantee that each point belongs to the desired manifold. By following the ideas of the Riemannian Barzilai–Borwein method developed by Iannazzo et.al in iannazzo2018riemannian , we propose an extension of the spectral parameter to the case of Riemannian manifolds, using mappings so–called scaled vector transport. In addition, we present the convergence analysis of the proposed method obtained under the Zhang–Hager globalization strategy zhang2004nonmonotone . Finally, some numerical experiments are reported to illustrate the efficiency and effectiveness of our proposal.

The rest of this paper is organized as follow. To do this article self–contained, we briefly review, in Section 2, some concepts and tools from Riemannian geometry that it can be founded in absil2009optimization . In Section 3, we present our proposed Riemannian spectral residual method (RSANE) for solving (1). Section 4 is devoted to the convergence analysis concerning our proposed method. In Section 5 numerical tests are carried out, in order to illustrate the good performance of our approach considering the computing of eigenspaces associated to given symmetric matrices using both simulated data and real data. Finally, conclusions and perspectives are provided in Section 7.

2 Preliminaries on Riemannian Geometry

In this section, we briefly review some notions and tools of Riemannian geometry crucial for understanding this paper, by summarizing absil2009optimization .

Let be a Riemannian manifold with an associated Riemannian metric , and let be its tangent vector space at a given point . In addition, let a smooth scalar function defined on the Riemannian manifold , the Riemannian gradient of at , denoted by , is defined as the unique element of that verifies

where is the function that takes any point to the directional derivative of in the direction , evaluated at . In the particular case that is a Riemannian submanifold of an Euclidean space , we have an explicit evaluation of the gradient: let a smooth function defined on and let , then the Riemannian gradient of evaluated at is equal to the orthogonal projection of the standard gradient of onto , that is,

| (5) |

This result provides us an important tool to compute the Riemannian gradient, which will be useful in the experiments section.

Another fundamental concept for this work is retraction. This can be seen as a smooth function that pragmatically approximates the notion of geodesics edelman1998geometry . Now we present its rigorous definition.

Definition 1 (absil2009optimization )

A retraction on a manifold is a smooth mapping from the tangent bundle onto with the following properties. Let denote the restriction of to .

-

1.

, where denotes the zero element of

-

2.

With the canonical identification, , satisfies

where denotes the identity mapping on .

The second condition in Definition 1 is known as local rigidity condition.

The concept of vector transport, which appears in absil2009optimization , provides us a tool to perform operations between two or more vectors that belong to different tangent spaces of , and can be seen as a relaxation of the purely Riemannian concept of parallel transport edelman1998geometry .

Definition 2 (absil2009optimization )

A vector transport on a manifold is a smooth mapping

satisfying the following properties for all where denote the Whitney sum, that is,

-

1.

There exists a retraction , called the retraction associated with , such that

where denotes the foot of the tangent vector .

-

2.

for all .

-

3.

, for all and .

Next, the concept of isometry zhu2017riemannian is established, which is a property satisfied by some vector transports.

Definition 3 (zhu2017riemannian )

A vector transport on a manifold is called isometric if it satisfies

| (6) |

for all , where is the retraction associated with .

3 Spectral Approach for Tangent Vector Field on Riemannian Manifolds

In this section, we shall establish our proposal RSANE. An intuitive way to solve (1) is to promote the reduction of the residual , which we can achieve by solving the following auxiliar manifold constrained optimization problem

| (7) |

We can deal with this optimization model using some Riemannian optimization method. Nevertheless, we are interested in directly solving the Riemannian nonlinear equation (1). For this purpose, we consider the following iterative method, based on the SANE method,

| (8) |

Firstly, the vector is not necessarily a descent direction for the merit function and secondly, that does not necessarily belong to the manifold . We can overcome the first one, by modifying this vector with the sign of , following the same idea used in SANE method, in order to force satisfies the descent condition. Observe that the Riemannian gradient method of can be computed by

| (9) |

that is, to compute the Riemannian gradient of at , we need to calculate the adjoint of the Jacobian of evaluated at .

On the other hand, the second disadvantage can be easily remedied by incorporating a retraction, similarly to the scheme (4). Keeping in mid all theses considerations, we now propose our Riemannian spectral residual method, which computes the iterates recursively by

| (10) |

where represents the step–size, is a retraction and the search direction is determined by

| (11) |

where is defined by . Observe that is a continuous function for all , a crucial property to our convergence analysis.

3.1 A Nonmonotone Line Search with a Riemannian Spectral Parameter

In the scenario of the solution of nonlinear systems of equations over , the SANE method uses a spectral parameter inspired by the Barzilai–Borwein step–size, originally introduced in barzilai1988two to speed up the convergence of gradient–type methods, in the context of optimization. SANE computes this spectral parameter as follow

| (12) |

where , , and denotes the Jacobian matrix of the vector–valued function .

In the framework of Riemannian manifolds, the vectors and lie in different tangent spaces, then the difference between these vectors may not be well defined (this is only well defined over linear manifolds). The same drawback occurs with the difference between the points and . Therefore, we cannot directly use the parameter (12) to address the numerical solution of (1). In iannazzo2018riemannian Iannazzo et. al. was extended the Barzilai–Borwein step–sizes in the context of optimization on Riemannian manifolds, through the use of a vector transport (see Definition 2). This strategy transports the calculated directions to the correct tangent space, providing a way to overcome the drawback. Guided by the descriptions contained in iannazzo2018riemannian , we propose the following generalization of the spectral parameter (12),

| (13) |

where ,

| (14) |

and

| (15) |

where is any vector transport satisfying the Ring–Wirth non–expansive condition,

| (16) |

Another alternative for the spectral parameter is

| (17) |

In order to take advantage of both spectral parameters and , we adopt the following adaptive strategy

| (18) |

Note that it is always possible to define a transporter (a function that sends vectors from a tangent space to another tangent space) that satisfies the condition (16), by scaling,

| (19) |

This function, introduced by Sato and Iwai in sato2015new , is referred as scaled vector transport. Observe that (19) is not necessarily a vector transport. However for the extension of the spectral parameter to the setting of Riemannian manifolds, it is not strictly mandatory. In fact, it is enough having a non–expansive transporter available. Therefore, we will use the scaled vector transport (19), in the construction of the vectors and in equations (14)–(15).

Since the spectral parameter does not necessarily reduces the value of the merit function at each iteration, the convergence result could be invalid. We can overcome this drawback by incorporating a globalization strategy, which guarantees convergence by regulating the step–size only occasionally (see cruz2003nonmonotone ; raydan1997barzilai ; zhang2004nonmonotone ). In the seminal paper cruz2003nonmonotone , the authors consider the globalization technique proposed by Grippo et.al. in grippo1986nonmonotone , in the definition of SANE method.

We could define our Riemannian generalization of SANE incorporating this non–monotone technique, and so analyze the convergence following the ideas described in cruz2003nonmonotone ; iannazzo2018riemannian . Instead of that, in this work, to define RSANE we adopt a more elegant globalization strategy proposed by Zhang and Hager in zhang2004nonmonotone . Specifically, we compute where is the smallest integer satisfying

| (20) |

where each value is given by a convex combination of and the previous as

for , starting at and . In the sequel our generalization RSANE will be described in detail.

Remark 1

In Algorithm 1, we replace the nonmonotone condition (20) by

| (21) |

We remark that with this relaxed condition, Algorithm 1 is well defined. In fact, if at iteration the procedure does not stop at Step 4, then is a descent direction (see Lemma 2), and for all there exists such that the non–monotone Zhang–Hager condition (20) holds by continuity, for sufficiently small(a proof of this fact appears in zhang2004nonmonotone ). In addition, it follows form Step 4, (20) and Lemma 2 that

which implies that the relaxed condition (21) is also verified for all that satisfy (20).

Remark 2

The bottleneck of Algorithm 1 appears in step 2, since to calculate , we must compute the Riemannian gradient of , which implies evaluating the Jacobian of (see equation (9)). However, given a retraction , can be approximated using finite differences as follow

| (22) | |||||

where is a small real number. The fact that this approximation does not need the explicit knowledge of the Jacobian operator is useful for large–scale problems.

The following lemma establishes that in most cases is positive.

Lemma 1

Let be computed as in (13), then when one of the following cases holds

-

1.

;

-

2.

and .

Proof

Since then . Suppose that (a) holds, then

which implies that .

On the other hand, if (b) holds, from the Cauchy–Schwarz inequality, we find that

and so

and hence , which proves the lemma.

The same theoretical result is verified for spectral parameter , and therefore it is also valid for the adaptive parameter .

Notice that if the transporter is isometric (see Definition 3), then the second condition of item (b) in Lemma 1 is reduced to . In addition, observe that if we set in Algorithm 1, then we have that for all . Therefore this condition would always be verified under these choices of and .

Lemma 2

4 Convergence Analysis

In this section, we analyse the global convergence for our Algorithm 1 under mild assumptions. Our analysis consists on a generalization of the global convergence of line–search methods for unconstrained optimization, presented in zhang2004nonmonotone and an adaptation of Theorem 4.3.1 in absil2009optimization .

The following lemma establishes that is bounded below by the sequence .

Lemma 3

Proof

Firstly, we define by

observe that the derivative of is

it follows from the non–monotone condition (21) that

| (24) |

which implies that for all . Hence, the function is nondecreasing, and for all . Then, taking we obtain

| (25) |

which completes the proof.

In order to prove the global convergence of our proposed algorithm, we need the following asymptotic property.

Lemma 4

Any infinite sequence generated by Algorithm 1 verifies the following property

| (26) |

Proof

By the construction of Algorithm 1 and using Lemma 2, we have

| (27) |

Hence, is monotonically decreasing and bounded below by zero, therefore it converges to some limit . It follows from Step 7 and Step 12 in Algorithm 1 that

| (28) |

Merging this result with the fact that , we have

| (29) |

which proves the lemma.

The theorem below establishes a global convergence property concerning Algorithm 1. The proof can be seen as a modification to that of Theorem 3.4 in yao2020riemannian , and to that of Theorem 4.1 in oviedoimplicit .

Theorem 4.1

Algorithm 1 either terminates at a finite iteration where , or it generates an infinite sequence such that

Proof

Let us assume that Algorithm 1 does not terminate, and let be any accumulation point of the sequence . We may assume that , taking a subsequence if necessary. By contradiction, suppose that there exists such that

| (30) |

In view of (30) and Lemma 4 we have

| (31) |

Firstly, we define the curve for all , which is smooth due to the differentiability of the retraction . Since the parameter is chosen by carrying out a backtracking process, then , for all greater than some , where is the smallest positive integer number such that the relaxed nonmonotone condition (21) is fulfilled. Thus, the scalar violates the condition (21), i.e., it holds

| (32) |

where the last inequality is obtained using Lemma 3.

Let us set , then (32) is equivalent to

| (33) |

It follows from the mean value theorem, that there exists such that for all , or equivalently

| (34) |

In view of the continuity of functions , , , , the smoothness and local rigidity condition of the retraction , and taking limit in (34), we arrive at

or equivalently,

| (35) |

where . Since Algorithm 1 does not terminate, form Step 3 we have

| (36) |

Applying limits in (36) we find that . Merging this last result with (35), we arrive at

Since then we have , this last result contradicts (30), which completes the proof.

By Theorem 4.1, we obtain the following theoretical consequence under compactness assumptions.

Corollary 1

Let be an infinite sequence generated by Algorithm 1. Suppose that the level set is compact (which holds in particular when the Riemannian manifold is compact). Then

Proof

Remark 3

The main drawback of Algorithm 1 is that it can prematurely terminate with a bad breakdown (). One way to remedy this problem is to use as the search direction, if a bad breakdown occurs at –th iteration; and then in the next iteration, we can retry using the steps of Algorithm 1. We may even use any tangent direction such that , as long as a bad breakdown happens, in order to overcome this difficulty.

5 Numerical Experiments

In order to give further insight into the RSANE method we present the results of some numerical experiments. We test our algorithm on some randomly generated gradient tangent vector fields on three different Riemannian manifold, involving the unit sphere, the Stiefel manifold and oblique manifold. All experiments have been performed on a intel(R) CORE(TM) i7–4770, CPU 3.40 GHz with 1TB HD and 16GB RAM memory. The algorithm was coded in Matlab with double precision. The running times are always given in CPU seconds. For numerical comparisons, we consider the SANE method proposed in cruz2003nonmonotone , and the recently published Riemannian Derivative–Free Conjugate gradient Polak–Ribiére–Polyak method (CGPR) for the numerical solution of tangent vectors field yao2020riemannian . The Matlab codes of our RSANE, SANE and CGPR are available in: http://www.optimization-online.org/DB_HTML/2020/09/8028.html

6 Implementation details

In our implementation, in addition to monitoring the residual norm , we also check the relative changes of the two consecutive iterates and their corresponding residual values

| (38) |

Here denotes the Frobenius norm of the matrix . In the case when is a vector, this norm is reduced to the standard norm on . Although the residual is meaningless in the Riemannian context, for our numerical experiments, we will only consider Riemannian manifolds embedded in the Euclidean space , and the residual is well defined for these types of manifolds.

We let all the algorithms run up to iterations and stop them at iteration if , or and , or

Here, the defaults values of and are -5, -15, -15 and , respectively. In addition, in Algorithm 1 we use , e-3 (the initial step–size ), -10, +10, , and -4 as defaults values.

6.1 Considered manifolds and their geometric tools

In this subsection, we present three Riemannian manifolds that we will use for the numerical experiments in the remainder subsections, as well as some tools necessary for the algorithms, associated with each manifold, such as vectors transports and retractions.

Firstly we considere the unit sphere given by

| (39) |

It is well–known that the tangent space of the unit sphere at is given by . Let be endowed with the inner product inherited from the classical inner product on ,

Then, with this inner product defines an dimensional Riemannian sub–manifold of . The retraction on is chosen as in absil2009optimization ,

for all and . In addition, for this particular manifold, we consider the vector transport based on orthogonal projection

Notice that this vector transport verifies the Ring–Wirth non-expansive condition (16).

The second Riemannian manifold considered in this section is the Stiefel manifold, which is defined as

| (40) |

where denotes the identity matrix of size –by–. By differentiating both sides of , we obtain the tangent space of at , given by . Let be endowed with induced Riemannian metric from , i.e.,

| (41) |

The pair , where is the inner product given in (41), forms a Riemannian sub–manifold of the Euclidean space , and its dimension is equal to , absil2009optimization . For our numerical experiments concerning the Stiefel manifold we will use the retractions, introduced in absil2009optimization , given by

| (42) |

and the retraction based on the matrix polar decomposition

| (43) |

for all and . In equation (42), denotes the orthogonal factor obtained form the QR–factorization of , such that where belongs and is the upper triangular matrix with strictly positive diagonal elements. Additionally, we consider the following vector transport

| (44) |

where is any of the two retractions defined in (42)–(43), and is the function that assigns to the matrix its symmetric part. This vector transport is inspired by the orthogonal projection on the tangent space of the Stiefel manifold. Moreover, the function (44) verifies the Ring–Wirth non-expansive condition (16).

Our third example, the oblique manifold, is defied as

| (45) |

where denotes the matrix with all its off–diagonal entries assigned to zero. The tangent space associated to at is given by . Again, if we endow with the inner product inherited from the standard inner product on , given by (41), then the becomes an embedded Riemannian manifold on . For this particular manifold, we consider the retraction, which appears in absil2006joint , defined by

| (46) |

for all and . We will use another vector transport based on the orthogonal projection operator on ,

| (47) |

for all and .

6.2 Eigenvalues computation on the sphere

For the first test problem, we consider the standard eigenvalue problem

| (48) |

where is a given symmetric matrix. The values of that verify (48) are known as eigenvalues of and the corresponding vectors are the eigenvectors. A simple way to compute extreme eigenvalues of is by minimizing (or maximizing) the associated Rayleigh quotient

| (49) |

This function is a continuously differentiable map , whose gradient is given by . It is clear that any eigenvector and its corresponding eigenvalue satisfy that , and thus in that case , is a critical point of , i.e., . By noting that if, and only if is a solution of the following nonlinear system of equations

| (50) |

we can address directly this eigenvalue problem by using SANE method. On the other hand, by introducing the constraint , the nonlinear system can be cast to the following tangent vector field, defined on a Riemannian manifold (the unit sphere)

| (51) |

Note that for all , we have . Therefore effectively maps point on the sphere to its corresponding tangent space, i.e., defines a tangent vector field.

For illustrative purposes, in this subsection we compare the numerical performance of the SANE method solving (50), versus

its generalized Riemannian version RSANE solving (51). To do this, we consider 40 instances of symmetric and positive definite sparse matrices taken from the UF sparse Matrix collection davis2011university 111The SuiteSparse Matrix Collection tool–box is available in https://sparse.tamu.edu/, which contains several matrices that arise in real applications. For this experiment, we stop both algorithms when is founded a vector that satisfies the inequality , where , with e-5. Note that for our proposed algorithm , for all generated by RSANE, since our proposal preserves the constraint , hence this stop criterion is well defined for our algorithm. The starting vector was generated by , where .

The numerical results associated to this experiments are summarized in Table 1. In this table, we report the number of iteration (Nitr); the CPU–time in seconds (Time); the residual value (NrmF), where denotes the estimated solution obtained by each algorithm; and the number of function evaluations (Nfe). In the case of SANE this is the number of times that SANE evaluates the map, while for our RSANE, Nfe denotes the number of times that it evaluates the functional .

We observe, from Table 1, that the RSANE algorithm is a robust option to solve linear eigenvalue problems. The performance of both SANE and RSANE varied over different matrices. We note that our RSANE is very competitive for large–scale problems. Indeed, the RSANE algorithm outperforms its Euclidean version (SANE) in general terms, since RSANE took on average a total of 3644.1 iterations and 31.167 seconds to solve all the 40 problems, while SANE took 34.079 seconds and 3864.3 iterations to solve all the instances on average. It is worth noting that although RSANE failed on problem bcsstk13, it can become successful on bcsstk16, while both methods take the maximum number of iterations on problems bcsstk27, bcsstk28 and nasa4704.

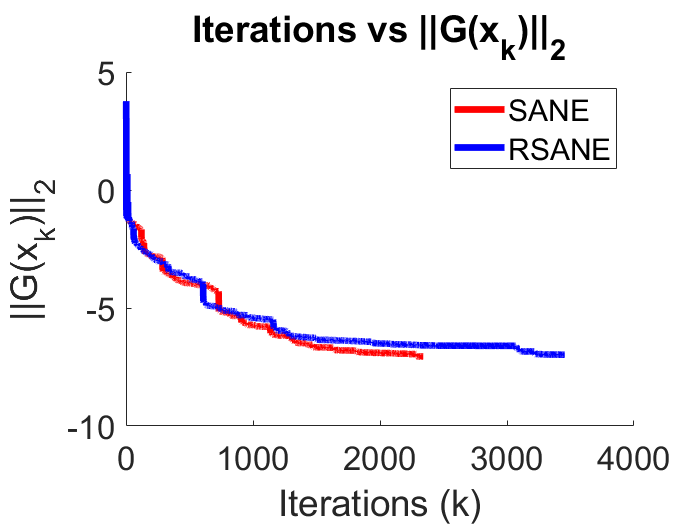

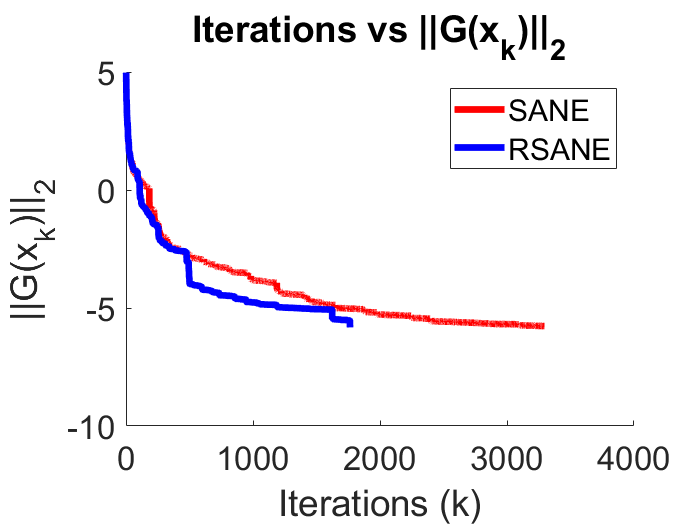

In Figure 1, we plot the convergence history of SANE and RSANE methods for the particular instances “1138_bus” and “s3rmt3m1”, respectively. From this figure, we note that RSANE can converge faster than SANE, which is illustrated in Figure 1 (b). However the opposite conclusion is obtained from Figure 1(a).

| SANE | RSANE | ||||||||

|---|---|---|---|---|---|---|---|---|---|

| Name | n | Nfe | NrmF | Nitr | Time | Nfe | NrmF | Nitr | Time |

| 1138_bus | 1138 | 10621 | 9.70e-6 | 2791 | 0.284 | 14778 | 7.21e-6 | 3781 | 0.449 |

| af_0_k101 | 503625 | 2269 | 1.95e-5 | 692 | 51.875 | 2074 | 1.99e-5 | 644 | 49.273 |

| af_1_k101 | 503625 | 1798 | 2.00e-5 | 560 | 39.915 | 1702 | 1.99e-5 | 451 | 33.397 |

| af_2_k101 | 503625 | 2683 | 2.00e-5 | 796 | 59.179 | 1421 | 1.93e-5 | 451 | 34.259 |

| af_3_k101 | 503625 | 2207 | 1.93e-5 | 664 | 49.175 | 1757 | 1.92e-5 | 541 | 42.234 |

| af_4_k101 | 503625 | 2281 | 1.98e-5 | 694 | 51.156 | 1758 | 1.85e-5 | 545 | 43.613 |

| af_5_k101 | 503625 | 2651 | 1.76e-5 | 789 | 58.730 | 1975 | 1.79e-5 | 607 | 47.731 |

| af_shell3 | 504855 | 305 | 1.99e-5 | 119 | 6.989 | 328 | 1.79e-5 | 125 | 8.026 |

| af_shell7 | 504855 | 452 | 1.14e-5 | 165 | 10.204 | 363 | 1.53e-5 | 133 | 8.851 |

| apache1 | 80800 | 15377 | 1.88e-5 | 3992 | 14.466 | 34224 | 1.54e-5 | 8434 | 34.905 |

| apache2 | 715176 | 58685 | 1.93e-5 | 13970 | 801.886 | 52821 | 6.89e-6 | 12516 | 785.019 |

| bcsstk10 | 1086 | 2450 | 1.30e-5 | 737 | 0.063 | 1954 | 1.87e-5 | 603 | 0.054 |

| bcsstk11 | 1473 | 883 | 1.76e-5 | 302 | 0.034 | 5421 | 2.00e-5 | 1501 | 0.199 |

| bcsstk13 | 2003 | 19254 | 1.98e-5 | 3293 | 1.372 | 88608 | 3.08e-4 | 15000 | 6.523 |

| bcsstk14 | 1806 | 76 | 7.15e-6 | 35 | 0.006 | 4674 | 1.94e-5 | 1341 | 0.283 |

| bcsstk16 | 4884 | 105001 | 7.49e+0 | 15000 | 24.274 | 1430 | 1.41e-5 | 458 | 0.386 |

| bcsstk27 | 1224 | 64534 | 3.43e-4 | 15000 | 2.937 | 63935 | 2.11e-4 | 15000 | 3.064 |

| bcsstk28 | 4410 | 62724 | 2.32e-4 | 15000 | 13.300 | 63527 | 1.83e-4 | 15000 | 14.293 |

| bcsstk36 | 23052 | 54355 | 2.00e-5 | 12861 | 76.108 | 26406 | 1.99e-5 | 6561 | 38.742 |

| bcsstm12 | 1473 | 11960 | 1.94e-5 | 3017 | 0.295 | 8632 | 1.99e-5 | 2268 | 0.240 |

| bcsstm23 | 3134 | 14536 | 1.98e-5 | 3670 | 0.500 | 14820 | 2.00e-5 | 3735 | 0.652 |

| bcsstm24 | 3562 | 8255 | 2.00e-5 | 2228 | 0.312 | 6285 | 1.85e-5 | 1721 | 0.267 |

| cfd1 | 70656 | 2693 | 2.00e-5 | 801 | 6.265 | 2201 | 1.83e-5 | 659 | 5.238 |

| ex15 | 6867 | 197 | 7.92e-6 | 76 | 0.027 | 9578 | 1.97e-5 | 2606 | 1.334 |

| fv1 | 9604 | 371 | 1.81e-5 | 136 | 0.061 | 474 | 1.85e-5 | 170 | 0.0949 |

| fv2 | 9801 | 369 | 1.54e-5 | 138 | 0.066 | 334 | 1.51e-5 | 127 | 0.0637 |

| fv3 | 9801 | 506 | 1.82e-5 | 183 | 0.077 | 371 | 1.52e-5 | 136 | 0.0664 |

| Kuu | 7102 | 5050 | 3.28e-6 | 1479 | 1.533 | 2674 | 4.82e-6 | 819 | 0.9281 |

| mhd4800b | 4800 | 1959 | 1.98e-5 | 605 | 0.146 | 1784 | 1.96e-5 | 561 | 0.1363 |

| msc23052 | 23052 | 43296 | 1.99e-5 | 10410 | 62.815 | 37779 | 1.99e-5 | 9217 | 56.9276 |

| Muu | 7102 | 29 | 1.78e-5 | 13 | 0.006 | 28 | 1.70e-5 | 12 | 0.0052 |

| nasa4704 | 4704 | 63663 | 8.21e-5 | 15000 | 7.418 | 63440 | 5.79e-5 | 15000 | 8.264 |

| s1rmq4m1 | 5489 | 9000 | 2.00e-5 | 2411 | 2.394 | 11052 | 1.99e-5 | 2933 | 3.2589 |

| s1rmt3m1 | 5489 | 23019 | 1.98e-5 | 5895 | 4.566 | 13688 | 1.93e-5 | 3592 | 3.1067 |

| s2rmq4m1 | 5489 | 9839 | 2.00e-5 | 2564 | 2.384 | 10292 | 1.96e-5 | 2683 | 2.774 |

| s2rmt3m1 | 5489 | 17422 | 2.00e-5 | 4437 | 3.504 | 21669 | 1.93e-5 | 5385 | 4.9953 |

| s3rmq4m1 | 5489 | 6063 | 1.85e-5 | 1710 | 1.500 | 5422 | 1.20e-5 | 1517 | 1.4757 |

| s3rmt3m1 | 5489 | 12250 | 1.76e-5 | 3295 | 2.467 | 6331 | 1.52e-5 | 1764 | 1.3742 |

| s3rmt3m3 | 5357 | 13090 | 1.89e-5 | 3461 | 2.569 | 9911 | 1.83e-5 | 2669 | 2.1663 |

| sts4098 | 4098 | 22153 | 1.89e-5 | 5583 | 2.335 | 17664 | 1.93e-5 | 4499 | 2.0278 |

6.3 A nonlinear eigenvalue problem on the Stiefel manifold

As a second experiment, we investigate the performance our RSANE method applied to deal with the following Stiefel manifold constrained nonlinear eigenvalue problem

| (52a) | |||||

| (52b) | |||||

where , where is the pseudo–inverse matrix of the discrete Laplacian operator , and , here denotes the vector containing the diagonal elements of the matrix . Observe that can be seen as a block of eigenvalues of the nonlinear matrix . In fact, in the special case that the operator is constant and , this problem is reduced to the linear eigenvalue problem (48). This kind of nonlinear eigenvalue problem appear frequently in total energy minimization in electronic structure calculations cedeno2018projected ; saad2010numerical . Pre–multiplying by both sides of (52a) and using (52b) we obtain , so substituting this result in (52a), we obtain the following tangent vector field defined on the Stiefel manifold

| (53) |

where .

In Table 2 we report the results obtained by running the RSANE and CGPR methods on the problem of finding a zero of (53), with six possible choices for the pair , obtained by varying and in and , respectively. For comparison purposes, we repeat our experiments over 30 different random generated starting points for each pair of and report the averaged number of iteration (Nitr), the averaged number of the evaluation of the functional (Nfe), the averaged total computing time in seconds (Time) and the averaged residual (NrmF) given by , where denotes the estimated solution obtained by the respective algorithm for the –th starting point, for all . In addition, for all the experiments we fix , e-4 as the tolerance for the termination rule based on residual norm . In this table, RSANE_polar and RSANE_qr denotes our Algorithm 1 using the retractions defined in (43) and (42), respectively. Similarly, CGPR_polar and CGPR_qr denotes the Riemannian Derivative–Free conjugate gradient Polak–Ribiére–Polyak method developed in yao2020riemannian using the retractions (43) and (42), respectively.

As shown in Table 2, RSANE is superior to the Riemannian conjugate gradient method solving nonlinear eigenvalues problems for different choices of . In particular, we note that for problems with , our proposal basically converges in half of the iterations that the CGPR takes to reach the desired precision.

| Method | Nitr | Time | NrmF | Nfe | Nitr | Time | NrmF | Nfe |

|---|---|---|---|---|---|---|---|---|

| RSANE-polar | 70.0 | 0.022 | 7.85e-6 | 167.2 | 632.9 | 0.898 | 2.10e-4 | 2170.1 |

| RSANE-qr | 67.8 | 0.016 | 6.87e-6 | 161.9 | 620.6 | 0.505 | 4.10e-4 | 2126.9 |

| CGPR-polar | 79.0 | 0.029 | 8.30e-5 | 231.3 | 1209.4 | 2.747 | 1.46e-3 | 6586.4 |

| CGPR-qr | 78.2 | 0.024 | 8.05e-5 | 229.3 | 996.8 | 1.296 | 3.30e-4 | 5451.3 |

| RSANE-polar | 65.0 | 0.200 | 8.10e-6 | 151.6 | 311.6 | 2.048 | 7.79e-6 | 955.4 |

| RSANE-qr | 68.0 | 0.211 | 7.43e-6 | 160.0 | 311.5 | 1.928 | 8.79e-6 | 954.6 |

| CGPR-polar | 74.3 | 0.284 | 8.14e-5 | 209.3 | 737.5 | 7.757 | 8.86e-5 | 3601.4 |

| CGPR-qr | 73.6 | 0.280 | 8.17e-5 | 210.2 | 767.1 | 7.583 | 9.12e-5 | 3731.1 |

| RSANE-polar | 68.9 | 0.628 | 7.55e-6 | 159.8 | 316.3 | 5.272 | 8.12e-6 | 968.9 |

| RSANE-qr | 66.8 | 0.604 | 6.86e-6 | 153.4 | 321.3 | 5.294 | 8.03e-6 | 987.6 |

| CGPR-polar | 73.5 | 0.820 | 7.57e-5 | 208.5 | 790.3 | 21.224 | 9.36e-5 | 3880.0 |

| CGPR-qr | 77.4 | 0.860 | 6.87e-5 | 219.6 | 881.2 | 23.505 | 9.50e-5 | 4347.7 |

6.4 Joint diagonalization on the oblique manifold

In this subsection we analyze the numerical behaviour of our RSANE method solving the nonlinear equation based on the tangent vector field obtained from the Riemannian gradient of the scalar function , defined by

| (54) |

where and are given symmetric matrices. The minimization of this function on is frequently used to perform independent component analysis, see absil2006joint . It is well–known that the problem of minimizing (54) is closely related to the problem of finding a zero to the Riemannian gradient of the function , which is given by

| (55) |

for details see Section 3 in absil2006joint . In order to study the numerical performance of our RSANE compared with the CGPR method, we consider the following tangent vector field

| (56) |

which is obtained form the Riemannian gradient of .

Now, we present a computational comparison considering the RSANE and CGPR yao2020riemannian methods on the solution of the tangent vector field (56). To do this, we set , and study the numerical behavior of both methods for the pairs of values y . For each pair , we repeat 30 independent runs of RSANE and CGPR, generating the symmetric matrices as follows

where was a diagonal matrix, whose diagonal elements are given by , for all ; and the were randomly generated matrices, whose entries follow a standard normal distribution. This particular structure of the ’s matrices was taken from zhu2017riemannian . The starting point was randomly generated using the following Matlab commands

for all . For this comparison, we use e- as the tolerance for the stopping rule based on the residual norm .

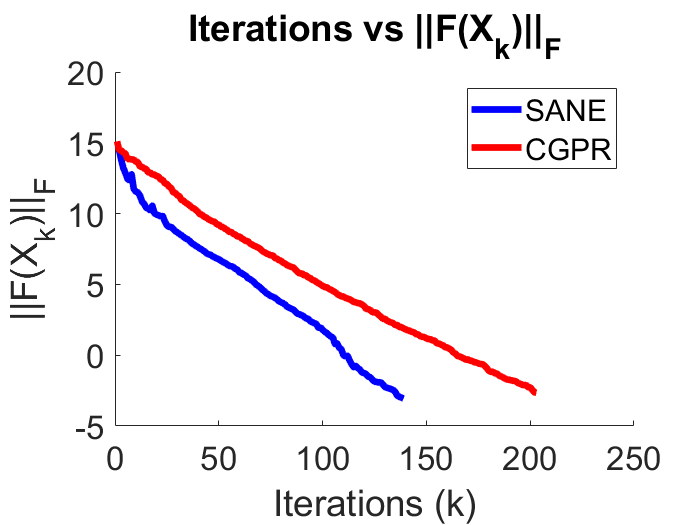

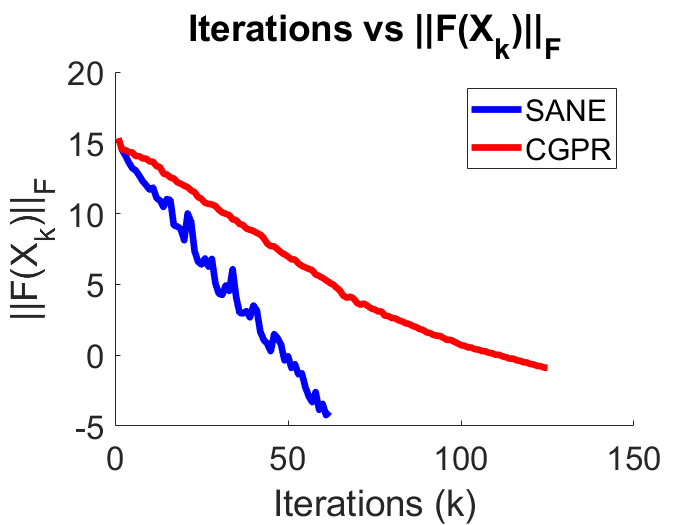

The mean of number of iteration, number of evaluation of , total computational time in seconds, and the residual “NrmF” defined as in the previous subsection, are reported in Table 3. In addition, in Figure 2 we plot the numerical behavior associated to the average residual curve throughout the iterations, for all the methods and for each pairs .

From Table 3 and Figure 2, we can see that both RSANE and CGPR can find an approximation of the solution of problem (56) with the pre–established precision for the residual norm, in the two cases of considered. In addition, we clearly observe that RSANE performs better than CGPR in terms of the mean value of iterations and CPU–time.

| Method | Nitr | Time | NrmF | Nfe |

| RSANE | 157.8 | 3.950 | 6.85e-6 | 390.0 |

| CGPR | 238.8 | 7.589 | 7.84e-6 | 741.8 |

| RSANE | 68.1 | 4.894 | 6.50e-6 | 142.5 |

| CGPR | 153.4 | 14.342 | 7.59e-6 | 416.1 |

7 Concluding Remarks

In this paper, a Riemannian residual approach for finding a zero of a tangent vector field is proposed. The new approach can be seen

as an extended version of the SANE method developed in cruz2003nonmonotone , for the solution of large–scale nonlinear systems

of equations. Since the proposed method systematically uses the tangent vector field for building the search direction, RSANE is very easy

to implement and has low storage requirements, which is suitable for solving large–scale problems. In addition, our proposal uses a modification

of the Riemannian Barzilai–Borwein step–sizes introduced in iannazzo2018riemannian , combined with the Zhang–Hager globalization

strategy zhang2004nonmonotone , in order to guarantee the convergence of the associated residual sequence.

The preliminary numerical results show that RSANE performs efficiently, dealing with several tangent vector fields considering both real and simulated data, and different Riemannian manifolds. In particular, RSANE is competitive against its Euclidean version (SANE), solving large–scale eigenvalue problems. Additionally, RSANE outperforms the derivative-free conjugate gradient algorithm recently published in yao2020riemannian , on two considered matrix manifolds.

References

- [1] P-A Absil and Kyle A Gallivan. Joint diagonalization on the oblique manifold for independent component analysis. In 2006 IEEE International Conference on Acoustics Speech and Signal Processing Proceedings, volume 5, pages V–V. IEEE, 2006.

- [2] P-A Absil, Robert Mahony, and Rodolphe Sepulchre. Optimization algorithms on matrix manifolds. Princeton University Press, 2009.

- [3] Roy L Adler, Jean-Pierre Dedieu, Joseph Y Margulies, Marco Martens, and Mike Shub. Newton’s method on riemannian manifolds and a geometric model for the human spine. IMA Journal of Numerical Analysis, 22(3):359–390, 2002.

- [4] Jonathan Barzilai and Jonathan M Borwein. Two-point step size gradient methods. IMA journal of numerical analysis, 8(1):141–148, 1988.

- [5] Paul Breiding and Nick Vannieuwenhoven. Convergence analysis of riemannian gauss–newton methods and its connection with the geometric condition number. Applied Mathematics Letters, 78:42–50, 2018.

- [6] Oscar Susano Dalmau Cedeno and Harry Fernando Oviedo Leon. Projected nonmonotone search methods for optimization with orthogonality constraints. Computational and Applied Mathematics, 37(3):3118–3144, 2018.

- [7] Timothy A Davis and Yifan Hu. The university of florida sparse matrix collection. ACM Transactions on Mathematical Software (TOMS), 38(1):1, 2011.

- [8] Jean-Pierre Dedieu, Pierre Priouret, and Gregorio Malajovich. Newton’s method on riemannian manifolds: covariant alpha theory. IMA Journal of Numerical Analysis, 23(3):395–419, 2003.

- [9] Alan Edelman, Tomás A Arias, and Steven T Smith. The geometry of algorithms with orthogonality constraints. SIAM journal on Matrix Analysis and Applications, 20(2):303–353, 1998.

- [10] Luigi Grippo, Francesco Lampariello, and Stephano Lucidi. A nonmonotone line search technique for newton’s method. SIAM Journal on Numerical Analysis, 23(4):707–716, 1986.

- [11] Bruno Iannazzo and Margherita Porcelli. The riemannian barzilai–borwein method with nonmonotone line search and the matrix geometric mean computation. IMA Journal of Numerical Analysis, 38(1):495–517, 2018.

- [12] Effrosini Kokiopoulou, Jie Chen, and Yousef Saad. Trace optimization and eigenproblems in dimension reduction methods. Numerical Linear Algebra with Applications, 18(3):565–602, 2011.

- [13] William La-Cruz and Marcos Raydan. Nonmonotone spectral methods for large-scale nonlinear systems. Optimization Methods and Software, 18(5):583–599, 2003.

- [14] Chong Li and Jinhua Wang. Convergence of the newton method and uniqueness of zeros of vector fields on riemannian manifolds. Science in China Series A: Mathematics, 48(11):1465–1478, 2005.

- [15] Jonathan H Manton. Optimization algorithms exploiting unitary constraints. IEEE Transactions on Signal Processing, 50(3):635–650, 2002.

- [16] Richard M Martin. Electronic structure: basic theory and practical methods. Cambridge university press, 2020.

- [17] Erkki Oja. Neural networks, principal components, and subspaces. International journal of neural systems, 1(01):61–68, 1989.

- [18] Harry Oviedo. Implicit steepest descent algorithm for optimization with orthogonality constraints.

- [19] Harry Oviedo and Oscar Dalmau. A scaled gradient projection method for minimization over the stiefel manifold. In Mexican International Conference on Artificial Intelligence, pages 239–250. Springer, 2019.

- [20] Harry Oviedo, Oscar Dalmau, and Hugo Lara. Two adaptive scaled gradient projection methods for stiefel manifold constrained optimization. Numerical Algorithms, pages 1–21, 2020.

- [21] Harry Oviedo, Hugo Lara, and Oscar Dalmau. A non-monotone linear search algorithm with mixed direction on stiefel manifold. Optimization Methods and Software, 34(2):437–457, 2019.

- [22] Marcos Raydan. On the barzilai and borwein choice of steplength for the gradient method. IMA Journal of Numerical Analysis, 13(3):321–326, 1993.

- [23] Marcos Raydan. The barzilai and borwein gradient method for the large scale unconstrained minimization problem. SIAM Journal on Optimization, 7(1):26–33, 1997.

- [24] Wolfgang Ring and Benedikt Wirth. Optimization methods on riemannian manifolds and their application to shape space. SIAM Journal on Optimization, 22(2):596–627, 2012.

- [25] Yousef Saad, James R Chelikowsky, and Suzanne M Shontz. Numerical methods for electronic structure calculations of materials. SIAM review, 52(1):3–54, 2010.

- [26] Hiroyuki Sato. Riemannian newton’s method for joint diagonalization on the stiefel manifold with application to ica. arXiv preprint arXiv:1403.8064, 2014.

- [27] Hiroyuki Sato and Toshihiro Iwai. A new, globally convergent riemannian conjugate gradient method. Optimization, 64(4):1011–1031, 2015.

- [28] Pavan Turaga, Ashok Veeraraghavan, and Rama Chellappa. Statistical analysis on stiefel and grassmann manifolds with applications in computer vision. In 2008 IEEE Conference on Computer Vision and Pattern Recognition, pages 1–8. IEEE, 2008.

- [29] Zaiwen Wen, Chao Yang, Xin Liu, and Yin Zhang. Trace-penalty minimization for large-scale eigenspace computation. Journal of Scientific Computing, 66(3):1175–1203, 2016.

- [30] Teng-Teng Yao, Zhi Zhao, Zheng-Jian Bai, and Xiao-Qing Jin. A riemannian derivative-free polak–ribiére–polyak method for tangent vector field. Numerical Algorithms, pages 1–31, 2020.

- [31] Hongchao Zhang and William W Hager. A nonmonotone line search technique and its application to unconstrained optimization. SIAM journal on Optimization, 14(4):1043–1056, 2004.

- [32] Teng Zhang and Yi Yang. Robust pca by manifold optimization. The Journal of Machine Learning Research, 19(1):3101–3139, 2018.

- [33] Xin Zhang, Jinwei Zhu, Zaiwen Wen, and Aihui Zhou. Gradient type optimization methods for electronic structure calculations. SIAM Journal on Scientific Computing, 36(3):C265–C289, 2014.

- [34] Xiaojing Zhu. A riemannian conjugate gradient method for optimization on the stiefel manifold. Computational optimization and Applications, 67(1):73–110, 2017.