SPONGE Extension

Regularized spectral methods for clustering signed networks

Abstract

We study the problem of -way clustering in signed graphs. Considerable attention in recent years has been devoted to analyzing and modeling signed graphs, where the affinity measure between nodes takes either positive or negative values. Recently, [CDGT19] proposed a spectral method, namely SPONGE (Signed Positive over Negative Generalized Eigenproblem), which casts the clustering task as a generalized eigenvalue problem optimizing a suitably defined objective function. This approach is motivated by social balance theory, where the clustering task aims to decompose a given network into disjoint groups, such that individuals within the same group are connected by as many positive edges as possible, while individuals from different groups are mainly connected by negative edges. Through extensive numerical simulations, SPONGE was shown to achieve state-of-the-art empirical performance. On the theoretical front, [CDGT19] analyzed SPONGE, as well as the popular Signed Laplacian based spectral method under the setting of a Signed Stochastic Block Model, for equal-sized clusters, in the regime where the graph is moderately dense.

In this work, we build on the results in [CDGT19] on two fronts for the normalized versions of SPONGE and the Signed Laplacian. Firstly, for both algorithms, we extend the theoretical analysis in [CDGT19] to the general setting of unequal-sized clusters in the moderately dense regime. Secondly, we introduce regularized versions of both methods to handle sparse graphs – a regime where standard spectral methods are known to underperform – and provide theoretical guarantees under the same setting of a Signed Stochastic Block Model. To the best of our knowledge, regularized spectral methods have so far not been considered in the setting of clustering signed graphs. We complement our theoretical results with an extensive set of numerical experiments on synthetic data.

Keywords: signed clustering, graph Laplacians, stochastic block models, spectral methods, regularization techniques, sparse graphs.

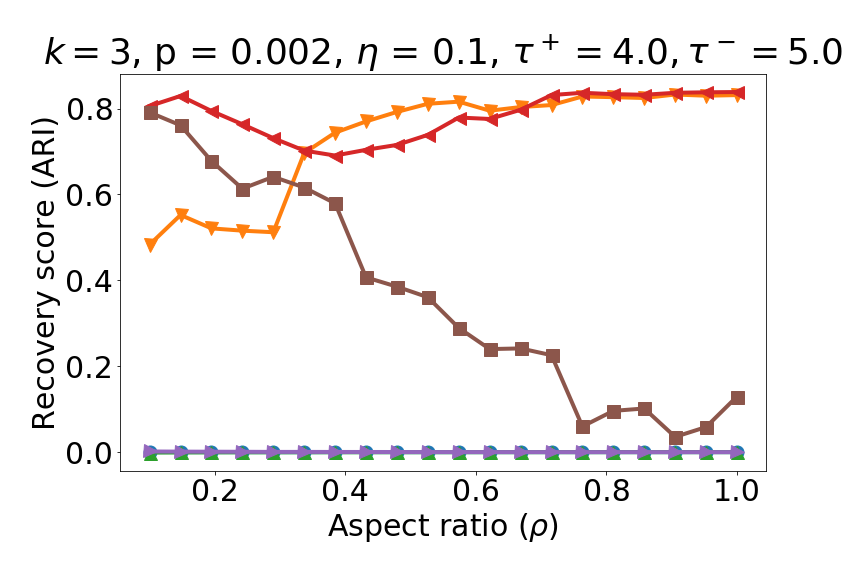

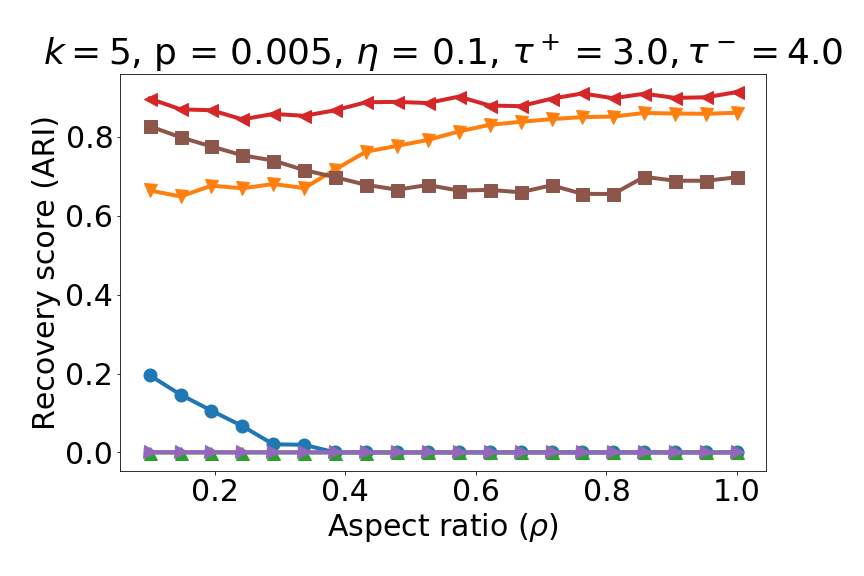

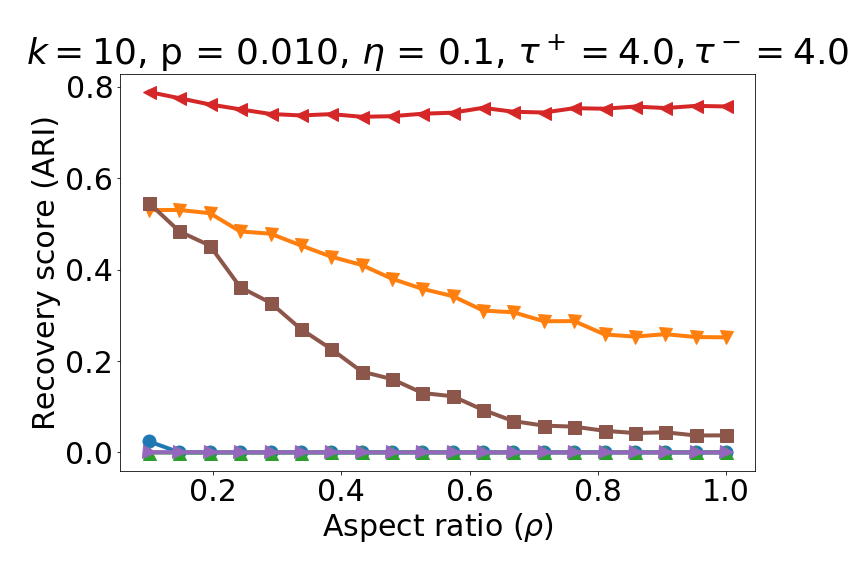

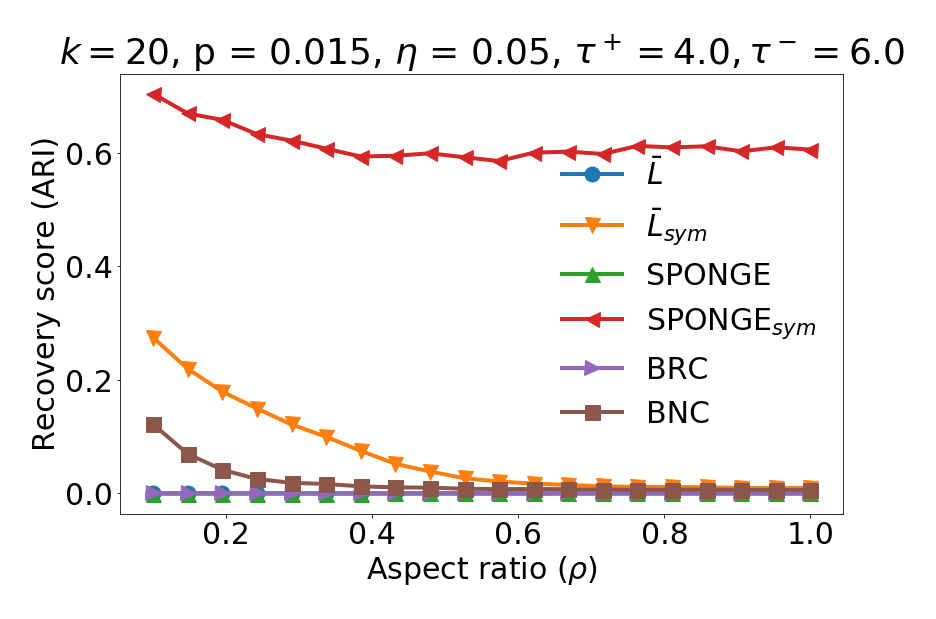

1 Introduction

Signed graphs.

The recent years have seen a significant increase in interest for analysis of signed graphs, for tasks such as clustering [CHN+14, CDGT19], link prediction [LHK10, KSSF16] and visualization [KSL+10]. Signed graphs are an increasingly popular family of undirected graphs, for which the edge weights may take both positive and negative values, thus encoding a measure of similarity or dissimilarity between the nodes. Signed social graphs have also received considerable attention to model trust relationships between entities, with positive (respectively, negative) edges encoding trust (respectively, distrust) relationships.

Clustering is arguably one of the most popular tasks in unsupervised machine learning, aiming at partitioning the node set such that the average connectivity or similarity between pairs of nodes within the same cluster is larger than that of pairs of nodes spanning different clusters. While the problem of clustering undirected unsigned graphs has been thoroughly studied for the past two decades (and to some extent, also that of clustering directed graphs in recent years), a lot less research has been undertaken on studying signed graphs.

Spectral clustering and regularization.

Spectral clustering methods have become a fundamental tool with a broad range of applications in areas including network science, machine learning and data mining [vL07]. The attractivity of spectral clustering methods stems, on one hand, from its computational scalability by leveraging state-of-the-art eigensolvers, and on the other hand, from the fact that such algorithms are amenable to a theoretical analysis under suitably defined stochastic block models that quantify robustness to noise and sparsity of the measurement graph. Furthermore, on the theoretical side, understanding the spectrum of the adjacency matrix and its Laplacians, is crucial for the development of efficient algorithms with performance guarantees, and leads to a very mathematically rich set of problems. One such example from the latter class is that of Cheeger inequalities for general graphs, which relate the dominant eigenvalues of the Laplacian to edge expansion on graphs [Chu96], extended to the setup of directed graphs [Chu05], and more recently, to the graph Connection Laplacian arising in the context of the group synchronization problem [BSS13], and higher-order Cheeger inequalities for multiway spectral clustering [LGT14]. There has been significant recent advances in theoretically analyzing spectral clustering methods in the context of stochastic block models; for a detailed survey, we refer the reader to the comprehensive recent survey of Abbe [Abb17].

In general, spectral clustering algorithms for unsigned and signed graphs typically have a common pipeline, where a suitable graph operator is considered (e.g., the graph Laplacian), its (usually ) extremal eigenvectors are computed, and the resulting point cloud in is clustered using a variation of the popular -means algorithm [RCY+11]. The main motivation for our current work stems from the lack of statistical guarantees in the above literature for the signed clustering problem, in the context of sparse graphs and large number of clusters . The problem of -way clustering in signed graphs aims to find a partition of the node set into disjoint clusters, such that most edges within clusters are positive, while most edges across clusters are negative, thus altogether maximizing the number of satisfied edges in the graph. Another potential formulation to consider is to minimize the number of (unsatisfied) edges violating the partitions, i.e, the number of negative edges within clusters and positive edges across clusters.

A regularization step has been introduced in the recent literature motivated by the observation that properly regularizing the adjacency matrix of a graph can significantly improve performance of spectral algorithms in the sparse regime. It was well known beforehand that standard spectral clustering often fails to produce meaningful results for sparse networks that exhibit strong degree heterogeneity [ACBL13, J+15]. To this end, [CCT12] proposed the regularized graph Laplacian where , for . The spectral algorithm introduced and analyzed in [CCT12] splits the nodes into two random subsets and only relies on the subgraph induced by only one of the subsets to compute the spectral decomposition. Tai and Karl [QR13] studied the more traditional formulation of a spectral clustering algorithm that uses the spectral decomposition on the entire matrix [NJW+02], and proposed a regularized spectral clustering which they analyze. Subsequently, [JY16] provided a theoretical justification for the regularization , where denotes the all ones matrix, partly explaining the empirical findings of [ACBL13] that the performance of regularized spectral clustering becomes insensitive for larger values of regularization parameters, and show that such large values can lead to better results. It is this latter form of regularization that we would be leveraging in our present work, in the context of clustering signed graphs. Additional references and discussion on the regularization literature are provided in Section 1.2.

Motivation & Applications.

The recent surge of interest in analyzing signed graphs has been fueled by a very wide range of real-world applications, in the context of clustering, link prediction, and node rankings. Such social signed networks model trust relationships between users with positive (trust) and negative (distrust) edges. A number of online social services such as Epinions [epi] and Slashdot [sla] that allow users to express their opinions are naturally represented as signed social networks [LHK10]. [BSG+12] considered shopping bipartite networks that encode like and dislike preferences between users and products. Other domain specific applications include personalized rankings via signed random walks [JJSK16], node rankings and centrality measures [LFZ19], node classification [TAL16], community detection [YCL07, CWP+16], and anomaly detection, as in [KSS14] which classifies users of an online signed social network as malicious or benign. In the very active research area of synthetic data generation, generative models for signed networks inspired by Structural Balance Theory have been proposed in [DMT18]. Learning low-dimensional representations of graphs (network embeddings) have received tremendous attention in the recent machine learning literature, and graph convolutional networks-based methods have also been proposed for the setting of signed graphs, including [DMT18, LTJC20], which provide network embeddings to facilitate subsequent downstream tasks, including clustering and link prediction.

A key motivation for our line of work stems from time series clustering [ASW15], an ubiquitous task arising in many applications that consider biological gene expression data [FSK+12], economic time series that capture macroeconomic variables [Foc05], and financial time series corresponding to large baskets of instruments in the stock market [ZJGK10, PPTV06]. Driven by the clustering task, a popular approach in the literature is to consider similarity measures based on the Pearson correlation coefficient that captures linear dependence between variables and takes values in . By construing the correlation matrix as a weighted network whose (signed) edge weights capture the pairwise correlations, we cluster the multivariate time series by clustering the underlying signed network. To increase robustness, tests of statistical significance are often applied to individual pairwise correlations, indicating the probability of observing a correlation at least as large as the measured sample correlation, assuming the null hypothesis is true. Such a thresholding step on the -value associated to each individual sample correlation [HLN15], renders the correlation network as a sparse matrix, which is one of the main motivations of our current work which proposes and analyzes algorithms for handling such sparse signed networks. We refer the reader to the popular work of Smith et al. [SMSK+11] for a detailed survey and comparison of various methodologies for turning time series data into networks, where the authors explore the interplay between fMRI time series and the network generation process. Importantly, they conclude that, in general, correlation-based approaches can be quite successful at estimating the connectivity of brain networks from fMRI time series.

Paper outline.

This paper is structured as follows. The remainder of this Section 1 establishes the notation used throughout the paper, followed by a brief survey of related work in the signed clustering literature and graph regularization techniques for general graphs, along by a brief summary of our main contributions. Section 2 lays out the problem setup leading to our proposed algorithms in the context of the signed stochastic block model we subsequently analyze. Section 3 is a high-level summary of our main results across the two algorithms we consider. Section 4 contains the analysis of the proposed SPONGEsym algorithm, for both the sparse and dense regimes, for general number of clusters. Similarly, Section 5 contains the main theoretical results for the symmetric Signed Laplacian, under both sparsity regimes as well. Section 6 contains detailed numerical experiments on various synthetic data sets, showcasing the performance of our proposed algorithms as we vary the number of clusters, the relative cluster sizes, the sparsity regimes, and the regularization parameters. Finally, Section 7 is a summary and discussion of our main findings, with an outlook towards potential future directions. We defer to the Appendix additional proof details and a summary of the main technical tools used throughout.

1.1 Notation

We denote by a signed graph with vertex set , edge set , and adjacency matrix . We will also refer to the unsigned subgraphs of positive (resp. negative) edges (resp. ) with adjacency matrices (resp. ), such that . More precisely, and , with , and . We denote by the signed degree matrix, with the unsigned versions given by and . For a subset of nodes , we denote its complement by .

For a matrix , denotes its spectral norm , i.e., its largest singular value, and denotes its Frobenius norm. When is a symmetric matrix, we denote be the matrix whose columns are given by the eigenvectors corresponding to the smallest eigenvalues, and let denote the range space of these eigenvectors. We denote the eigenvalues of by , with the ordering

We also denote to be the -th row of . We denote (resp. ) the all ones column vector of size (resp. ) and . denotes the square identity matrix of size and is shortened to when . is the matrix of all ones. Finally, for , we write if there exists a universal constant such that . If and , then we write .

1.2 Related literature on signed clustering and graph regularization techniques

Signed clustering.

There exists a very rich literature on algorithms developed to solve the -way clustering problem, with spectral methods playing a central role in the developments of the last two decades. Such spectral techniques optimize an objective function via the eigen-decomposition of a suitably chosen graph operator (typically a graph Laplacian) built directly from the data, in order to obtain a low-dimensional embedding (most often of dimension or ). A clustering algorithm such as -means or -means++ is subsequently applied in order to extract the final partition.

Kunegis et al. in [KSL+10] introduced the combinatorial Signed Laplacian for the 2-way clustering problem. For heterogeneous degree distributions, normalized extensions are generally preferred, such as the random-walk Signed Laplacian , and the symmetric Signed Laplacian . Chiang et al. [CWD12] pointed out a weakness in the Signed Laplacian objective for -way clustering with , and proposed instead a Balanced Normalized Cut (BNC) objective based on the operator . Mercado et al. [MTH16] based their clustering algorithm on a new operator called the Geometric Mean of Laplacians, and later extended this method in [MTH19] to a family of operators called the Matrix Power Mean of Laplacians. Previous work [CDGT19] by a subset of the authors of the present paper introduced the symmetric SPONGE objective using the matrix operator , using the unsigned normalized Laplacians and regularization parameters . This work also provides theoretical guarantees for the SPONGE and Signed Laplacian algorithms, in the setting of a Signed Stochastic Block Model.

In [MTH16] and [MTH19], Mercado et al. study the eigenspaces - in expectations and in probability - of several graph operators in a certain Signed Stochastic Block Model. However, this generative model differs from the one proposed in [CDGT19] that we analyze in this work. In the former, the positive and negative adjacency matrices do not have disjoint support, contrary to the latter. Moreover, their analysis is performed in the case of equal-size clusters. We will later show in our analysis that their result for the symmetric Signed Laplacian is not applicable in our setting.

Hsieh et al. [HCD12] proposed to perform low-rank matrix completion as a preprocessing step, before clustering using the top eigenvectors of the completed matrix. For , [Cuc15] showed that signed clustering can be cast as an instance of the group synchronization [Sin11] problem over , potentially with constraints given by available side information, for which spectral, semidefinite programming relaxations, and message passing algorithms have been considered. In recent work, [CPv19] proposed a formulation for the signed clustering problem that relates to graph-based diffuse interface models utilizing the Ginzburg-Landau functionals, based on an adaptation of the classic numerical Merriman-Bence-Osher (MBO) scheme for minimizing such graph-based functionals [MSB14]. We refer the reader to [Gal13] for a recent survey on clustering signed and unsigned graphs.

In a different line of work, known as correlation clustering, Bansal et al. [BBC04] considered the problem of clustering signed complete graphs, proved that it is NP-complete, and proposed two approximation algorithms with theoretical guarantees on their performance. On a related note, Demaine and Immorlica [DEFI06] studied the same problem but for arbitrary weighted graphs, and proposed an O() approximation algorithm based on linear programming. For correlation clustering, in contrast to -way clustering, the number of clusters is not given in advance, and there is no normalization with respect to size or volume.

Regularization in the sparse regime.

In many applications, real-world networks are sparse. In this context, regularization methods have increased the performance of traditional spectral clustering techniques, both for synthetic Stochastic Block Models and real data sets [CCT12, ACBL13, JY16, LLV15].

Chaudhuri et al. [CCT12] regularize the Laplacian matrix by adding a (typically small) weight to the diagonal entries of the degree matrix with . Amini et al. [ACBL13] regularize the graph by adding a weight to every edge, leading to the Laplacian with and . Le et al. [LLV17] proved that this technique makes the adjacency and Laplacian matrices concentrate for inhomogeneous Erdős-Rényi graphs. Zhang et al. [ZR18] showed that this technique prevents spectral clustering from overfitting through the analysis of dangling sets. In [LLV17], Le et al. propose a graph trimming method in order to reduce the degree of certain nodes. This is achieved by reducing the entries of the adjacency matrix that lead to high-degree vertices. Zhou and Amini [ZA18] added a spectral truncation step after this regularization method, and proved consistency results in the bipartite Stochastic Block Model.

Very recently, regularization methods using powers of the adjacency matrix have been introduced. Abbe et al. [ABARS20] transform the adjacency matrix into the operator , where the indicator function is applied entrywise. With this method, spectral clustering achieves the fundamental limit for weak recovery in the sparse setting. Very similarly, Stefan and Massoulié [SM19] transform the adjacency matrix into a distance matrix of outreach , which links pairs of nodes that are far apart w.r.t the graph distance.

1.3 Summary of our main contributions

This work extends the results obtained in [CDGT19] by a subset of the authors of our present paper. This previous work introduced the SPONGE algorithm, a principled and scalable spectral method for the signed clustering task that amounts to solving a generalized eigenvalue problem. [CDGT19] provided a theoretical analysis of both the newly introduced SPONGE algorithm and the popular Signed Laplacian-based method [KSL+10], quantifying their robustness against the sampling sparsity and noise level, under the setting of a Signed Stochastic Block Model (SSBM). These were the first such theoretical guarantees for the signed clustering problem under a suitably defined stochastic graph model. However, the analysis in [CDGT19] was restricted to the setting of equally-sized clusters, which is less realistic in light of most real world applications. Furthermore, the same previous line of work considered the moderately dense regime in terms of the edge sampling probability, in particular, it operated in the setting where , i.e., . Many real world applications involve large but very sparse graphs, with , which provides motivation for our present work.

We summarize below our main contributions, and start with the remark that the theoretical analysis in the present paper pertains to the normalized version of SPONGE (denoted as SPONGEsym) and the symmetric Signed Laplacian, while [CDGT19] analyzed only the un-normalized versions of these signed operators. The experiments reported in [CDGT19] also considered such normalized matrix operators, and reported their superior performance over their respective un-normalized versions, further providing motivational ground for our current work.

-

(i)

Our first main contribution is to analyze the two above-mentioned signed operators, namely SPONGEsym and the symmetric Signed Laplacian, in the general SSBM model with and unequal-cluster sizes, in the moderately dense regime. In particular, we evaluate the accuracy of both signed clustering algorithms by bounding the mis-clustering rate of the entire pipeline, as achieved by the popular -means algorithm.

-

(ii)

Our second contribution is to introduce and analyze new regularized versions of both SPONGEsym and the symmetric Signed Laplacian, under the same general SSBM model, but in the sparse graph regime , a setting where standard spectral methods are known to underperform. To the best of our knowledge, this sparsity regime has not been previously considered in the literature of signed networks; such regularized spectral methods have so far not been considered in the setting of clustering signed networks, or more broadly in the signed networks literature, where such regularization could prove useful for other related downstream tasks. One important aspect of regularization techniques is the choice of the regularization parameters. We show that our proposed algorithms can benefit from careful regularization and attain a higher level of accuracy in the sparse regime, provided that the regularization parameters scale as an adequate power of the average degree in the graph.

2 Problem setup

This section details the two algorithms for the signed clustering problem that we will analyze subsequently, namely, SPONGEsym(Symmetric Signed Positive Over Negative Generalized Eigenproblem) and the symmetric Signed Laplacian, along with their respective regularized versions.

2.1 Clustering via the SPONGEsym algorithm

The symmetric SPONGE method, denoted as SPONGEsym, aims at jointly minimizing two measures of badness in a signed clustering problem. For an unsigned graph and , we define the cut function , and denote the volume of by .

For a given cluster set , is the total weight of edges crossing from to and is the sum of (weighted) degrees of nodes in . With this notation in mind and motivated by the approach of [CKC+16] in the context of constrained clustering, the symmetric SPONGE algorithm for signed clustering aims at minimizing the following two measures of badness given by and . To this end, we consider “merging” the objectives, and aim to solve

where denote trade-off parameters. For -way signed clustering into disjoint clusters , we arrive at the combinatorial optimization problem

| (1) |

Let denote respectively the degree matrix and un-normalized Laplacian associated with , and denote the symmetric Laplacian matrix for (similarly for ). For a subset , denote to be the indicator vector for so that equals if , and is otherwise. Now define the normalized indicator vector where

In light on this, one can verify that

Hence (1) is equivalent to the following discrete optimization problem

| (2) |

which is NP-Hard. A common approach to solve this problem is to drop the discreteness constraints, and allow to take values in . To this end, we introduce a new set of vectors such that they are orthonormal with respect to the matrix , i.e., . This leads to the continuous optimization problem

| (3) |

Note that the above choice of vectors is not really a relaxation of (2) since are not necessarily ()-orthonormal, but (3) can be conveniently formulated as a suitable generalized eigenvalue problem, similar to the approach in [CKC+16]. Indeed, denoting , and , (3) can be rewritten as

the solution to which is well known to be given by the smallest eigenvectors of

see for e.g. [ST00, Theorem 2.1]. However this is not practically viable for large scale problems, since computing itself is already expensive. To circumvent this issue, one can instead consider the embedding in corresponding to the smallest generalized eigenvectors of the symmetric definite pair . There exist many efficient solvers for solving large scale generalized eigenproblems for symmetric definite matrix pairs. In our experiments, we use the LOBPCG (Locally Optimal Block Preconditioned Conjugate Gradient method) solver introduced in [Kny01].

One can verify that is an eigenpair111With denoting its eigenvalue, and the corresponding eigenvector. of iff is a generalized eigenpair of . Indeed, for symmetric matrices with , it holds true for that

Therefore, denoting to be the matrix consisting of the smallest eigenvectors of , and to be the matrix of the smallest generalized eigenvectors of , it follows that

| (4) |

Hence upon computing , we will apply a suitable clustering algorithm on the rows of such as the popular -means++ [AV07], to arrive at the final partition.

Remark 2.1.

Clustering in the sparse regime.

We also provide a version of SPONGEsym for the case where is sparse, i.e., the graph has very few edges and is typically disconnected. In this setting, we consider a regularized version of SPONGEsym wherein a weight is added to each edge (including self-loops) of the positive and negative subgraphs, respectively. Formally, for regularization parameters , let us define to be the regularized adjacency matrices for the unsigned graphs respectively. Denoting to be the degree matrix of , the normalized Laplacians corresponding to are given by

Given the above modifications, let denote the matrix consisting of the smallest eigenvectors of

For the same reasons discussed earlier, we will consider the embedding given by the smallest generalized eigenvectors of the matrix pencil , namely where

as in (44). The rows of can then be clustered using an appropriate clustering procedure, such as -means++.

Remark 2.2.

Regularized spectral clustering for unsigned graphs involves adding to the adjacency matrix, followed by clustering the embedding given by the smallest eigenvectors of the normalized Laplacian (of the regularized adjacency), see for e.g. [ACBL13, LLV17]. To the best of our knowledge, regularized spectral clustering methods have not been explored thus far in the context of sparse signed graphs.

2.2 Clustering via the symmetric Signed Laplacian

The rationale behind the use of the (un-normalized) Signed Laplacian for clustering is justified by Kunegis et al. in [KSL+10] using the signed ratio cut function. For ,

| (5) |

For -way clustering, minimizing this objective corresponds to minimizing the number of positive edges between the two classes and the number of negative edges inside each class. Moreover, (5) is equivalent to the following optimization problem

where is the set of vectors of the form .

However, Gallier [Gal16] noted that this equivalence does not generalize to , and defined a new notion of signed cut, called the signed normalized cut function. For a partition with membership matrix ,

with the -th column of . Compared to (5), this objective also penalizes the number of negative edges across two subsets, which may not be a desirable feature for signed clustering. Minimizing this function with a relaxation of the constraint that leads to the following problem

The minimum of this problem is obtained by stacking column-wise the eigenvectors of corresponding to the smallest eigenvalues, i.e. . Therefore, one can apply a clustering algorithm to the rows of the matrix to find a partition of the set of nodes .

In fact, we will consider using only the smallest eigenvectors of and applying the -means++ algorithm on the rows of . This will be justified in our analysis via a stochastic generative model, namely the Signed Stochastic Block Model (SSBM), introduced in the next subsection. Under this model assumption, we will see later that the embedding given by the smallest eigenvectors of the symmetric Signed Laplacian of the expected graph has distinct rows (with two rows being equal if and only if the corresponding nodes belong to the same cluster).

Clustering in the sparse regime.

When is sparse, we propose a spectral clustering method based on a regularization of the signed graph, leading to a regularized Signed Laplacian. To this end, for , recall the regularized adjacency matrices , with degree matrices , for the unsigned graphs respectively. In light of this, the regularized signed adjacency and degree matrices are defined as follows

with . Our regularized Signed Laplacian is the symmetric Signed Laplacian on this regularized signed graph, i.e.

| (6) |

Similarly to the symmetric Signed Laplacian, our clustering algorithm in the sparse case finds the smallest eigenvectors of and applies the -means algorithm on the rows of .

Remark 2.3.

For the choice , the regularized Laplacian becomes

with . This regularization scheme is very similar to the degree-corrected normalized Laplacian defined in [CCT12].

2.3 Signed Stochastic Block Model (SSBM)

Our work theoretically analyzes the clustering performance of SPONGEsym and the symmetric Signed Laplacian algorithms under a signed random graph model, also considered previously in [CDGT19, CPv19]. We recall here its definition and parameters.

-

•

: the number of nodes in network;

-

•

: the number of planted communities;

-

•

: the probability of an edge to be present;

-

•

: the probability of flipping the sign of an edge;

-

•

: an arbitrary partition of the vertices with sizes .

We first partition the vertices (arbitrarily) into clusters where . Next, we generate a noiseless measurement graph from the Erdős-Rényi model , wherein each edge takes value if both its endpoints are contained in the same cluster, and otherwise. To model noise, we flip the sign of each edge independently with probability . This results in the realization of a signed graph instance from the SSBM ensemble.

Let denote the adjacency matrix of , and note that are independent random variables. Recall that , where are the adjacency matrices of the unsigned graphs respectively. Then, are independent, and similarly are also independent. But for given with , and are dependent. Let denote the degree of a node in cluster , for in the graph . Moreover, under this model, the expected signed degree matrix is the scaled identity matrix , with .

Remark 2.4.

Contrary to stochastic block models for unsigned graphs, we do not require (for the purpose of detecting clusters) that the intra-cluster edge probabilities to be different from those of inter-cluster edges, since the sign of the edges already achieves this purpose implicitly. In fact, it is the noise parameter that is crucial for identifying the underlying latent cluster structure.

To formulate our theoretical results we will also need the following notations. Let denote the fraction of nodes in cluster , with (resp. ) denoting the fraction for the largest (resp. smallest) cluster. Hence, the size of the largest (resp. smallest) cluster is (resp. ). Following the notation in [LR15], we will denote to be the class of “membership” matrices of size , and denote to be the ground-truth membership matrix containing distinct indicator row-vectors (one for each cluster), i.e., for and ,

We also define the normalized membership matrix corresponding to , where for and ,

3 Summary of main results

We now summarize our theoretical results for SPONGEsym and the symmetric Signed Laplacian methods, when the graph is generated from the SSBM ensemble.

3.1 Symmetric SPONGE

We begin by describing conditions under which the rows of the matrix approximately preserve the ground truth clustering structure. Before explaining our results, let us denote the matrix to be the analogue of for the expected graph, i.e.,

where . We first show that for suitable values of (with large enough), the smallest eigenvectors of , denoted by , are given by , for some rotation matrix . Hence, the rows of have the same clustering structure as that of . Denoting to be the matrix consisting of the smallest generalized eigenvectors of , and recalling (4), we can relate and via

| (7) |

It turns out that when , and in light of the expression for from (24), we arrive at , where is as in (18). Since is invertible, it follows that has distinct rows, with the rows that belong to the same cluster being identical. The remaining arguments revolve around deriving concentration bounds on , which imply (for large enough) that the distance between the column spans of and is small, i.e., there exists an orthonormal matrix such that is small. Finally, the expressions in (4) and (7) altogether imply that is small, which is an indication that the rows of approximately preserve the clustering structure encoded in .

The above discussion is summarized in the following theorem, which is our first main result for SPONGEsym in the moderately dense regime.

Theorem 3.1 (Restating Theorem 4.13; Eigenspace alignment of SPONGEsym in the dense case).

Assuming , suppose that are chosen to satisfy

where satisfy one of the following conditions

-

1.

and , or

-

2.

and .

Then and , where is a rotation matrix, and is as defined in (18). Moreover, for any , there exists a constant such that the following is true. If satisfies

with as in (45), then with probability at least , there exists an orthogonal matrix such that

Let us now interpret the scaling of the terms and in Theorem 3.1, and provide some intuition.

-

1.

In general, when no assumption is made on the noise level , we have and the requirement on is . Then a sufficient set of conditions on are

(8) Moreover, we see from (45) that , and thus . Hence, a sufficient condition on is

- 2.

-

3.

When , then , which might lead one to believe that the clustering performance improves accordingly. This is not the case however, since when is large, then and , which means that clustering the rows of (resp. ) is roughly equivalent to clustering the rows of (resp. ). Moreover, note that for large , we have and and thus the negative subgraph has no effect on the clustering performance.

SPONGEsym in the sparse regime.

Notice that the above theorem required the sparsity parameter , when is large enough. This condition on is essentially required to show concentration bounds on in Lemma 4.11, which in turn implies a concentration bound on (see Lemma 4.12). However, in the sparse regime is of the order , and thus Lemma 4.11 does not apply in this setting. In fact, it is not difficult to see that the matrices will not concentrate222See for e.g., [LLV17]. around in the sparse regime. On the other hand, by relying on a recent result in [LLV17, Theorem 4.1] on the concentration of the normalized Laplacian of regularized adjacency matrices of inhomogeneous Erdős-Rényi graphs in the sparse regime (see Theorem 4.15), we show concentration bounds on and , which hold when and (see Lemma 4.16). As before, these concentration bounds can then be shown to imply a concentration bound on (see Lemma 4.17). Other than these technical differences, the remainder of the arguments follow the same structure as in the proof of Theorem 3.1, thus leading to the following result in the sparse regime.

Theorem 3.2 (Restating Theorem 4.18 ).

Assuming , suppose are chosen to satisfy

where satisfy one of the following conditions

-

1.

and , or

-

2.

and .

Then and , where is a rotation matrix, and is as defined in (18). Moreover, there exists a constant such that for and , if satisfies

and , then with probability at least , there exists a rotation so that

Here, , with as defined in (45).

The following remarks are in order.

-

1.

It is clear that can neither be too small (since this would imply lack of concentration), nor too large (since this would destroy the latent geometries of ). The choice provides a trade-off, and leads to the bounds when (see Lemma 4.16).

-

2.

In general, for , it suffices that satisfy (8) and . As discussed earlier, , and hence it suffices that .

Mis-clustering error bounds.

Thus far, our analysis has shown that under suitable conditions on and , the matrix (or in the sparse regime) is close to for some rotation , with the rows of preserving the ground truth clustering. This suggests that by applying the -means clustering algorithm on the rows of (or ) one should be able to approximately recover the underlying communities. However, the -means problem for clustering points in is known to be NP-Hard in general, even for or [ADHP09, Das08, MNV12]. On the other hand, there exist efficient -approximation algorithms (for ), such as, for e.g., the algorithm of Kumar et al. [KSS04] which has a running time of .

Using standard tools [LR15, Lemma 5.1], we can bound the mis-clustering error when a -approximate -means algorithm is applied on the rows of (or ), provided the estimation error bound is small enough. In the following theorem, the sets , contain those vertices in for which we cannot guarantee correct clustering.

Theorem 3.3 (Re-Stating Theorem 4.20).

Under the notation and assumptions of Theorem 3.1, let be a -approximate solution to the -means problem . Denoting

it holds with probability at least that

| (9) |

In particular, if satisfies

then there exists a permutation matrix such that , where .

In the sparse regime, the above statement holds under the notation and assumptions of Theorem 3.2 with replaced with , and with probability at least .

We remark that when , the bound on becomes independent of and is of the form . This is also true for the mis-clustering bound in (9), which is of the form

3.2 Symmetric Signed Laplacian

We now describe our results for the symmetric Signed Laplacian. We recall that and denote the adjacency and degree matrices of the expected graph, under the SSBM ensemble. We define

| (10) |

to be the normalized Signed Laplacian of the expected graph. Moreover, denotes the aspect ratio, measuring the discrepancy between the smallest and largest cluster sizes in the SSBM.

We will first show that for large enough, the smallest eigenvectors of , denoted by , are given by , with a matrix whose columns are the smallest eigenvectors of a matrix defined in Lemma 5.1. We will then prove that the rows of impart the same clustering structure as that of . The remaining arguments revolve around deriving concentration bounds on , which imply, for and large enough, that the distance between the column spans of and is small, i.e. there exists a unitary matrix such that is small. Altogether, this allows us to conclude that the rows of approximately encode the clustering structure of . The above discussion is summarized in the following theorem, which is our first main result for the symmetric Signed Laplacian, in the moderately dense regime.

Theorem 3.4 (Eigenspace alignment in the dense case).

Assuming , , , suppose the aspect ratio satisfies

| (11) |

and suppose that, for , it holds true that

| (12) |

Then there exists a universal constant , such that with probability at least , there exists an orthogonal matrix such that

where is a matrix whose columns are the smallest eigenvectors of the matrix defined in Lemma 5.1.

Remark 3.5.

(Related work) As previously explained, for the special case where and with equal-size clusters, a similar result was proved in [CDGT19, Theorem 3]. Under a different SSBM model, the Signed Laplacian clustering algorithm was analyzed by Mercado et al. [MTH19] for general . Although their generative model is more general than our SSBM, their results on the symmetric Signed Laplacian do not apply here. More precisely, one assumption of Theorem 3 [MTH19] translates into our model as , which does not hold for and .

Remark 3.6.

(Assumptions) The condition on the aspect ratio (11) is essential to apply a perturbation technique, where the reference is the setting with equal-size clusters, i.e. (see Lemma 5.3). In the sparsity condition (12), we note that the constant scales quadratically with the number of classes and as with the error on the eigenspace. However, we conjecture that this assumption is only an artefact of the proof technique, and that the result could hold for more general graphs with very unbalanced cluster sizes.

Regularized Signed Laplacian.

We now consider the sparse regime and show that we can recover the ground-truth clustering structure up to some small error using the regularized Signed Laplacian , provided that , and are large enough, and that the regularization parameters are well-chosen. We denote to be the equivalent of the regularized Laplacian for the expected graph in our SSBM, i.e.

with , resp. , denoting the adjacency matrix, resp. the degree matrix, of the expected regularized graph. The next theorem is an intermediate result, which provides a high probability bound on and .

Theorem 3.7.

(Error bound for the regularized Signed Laplacian) Assuming , , and regularization parameters , , it holds true that for any , with probability at least , we have

| (13) |

with an absolute constant. Moreover, it also holds true that

| (14) |

In particular, for the choice , if , we obtain

Remark 3.8.

The above theorem shows the concentration of our regularized Laplacian towards the regularized Laplacian (13) and the Signed Laplacian (14) of the expected graph. More precisely, if for some well-chosen parameters , these upper bounds are small, e.g , then we have since (see Appendix E).

Using this concentration bound, we can show that the eigenspaces and are “close”, provided that , is close enough to 1, and is well-chosen. This is stated in the next theorem.

Theorem 3.9 (Eigenspace alignment in the sparse case).

Remark 3.10.

In the sparse setting, the constant before the factor in the sparsity condition (15) scales as . However for fixed, it would hold if as .

Remark 3.11.

In practice, one can choose the regularization parameters by first estimating the sparsity parameter , e.g. from the fraction of connected pairs of nodes

then choosing so that . However, from this analysis, it is not clear how one would suitably choose and .

Mis-clustering error bounds.

Since and are “close” to , we recover the ground-truth clustering structure up to some error, which we quantify in the following theorem, where we bound the mis-clustering rate when using a -approximate -means error on the rows of (resp. ).

Theorem 3.12.

(Number of mis-clustered nodes) Let and , and suppose that and satisfy the assumptions of Theorem 3.4 (resp. Theorem 3.9 and ). Let be the -approximation of the -means problem

Let and . Then with probability at least (resp. ), there exists a permutation such that and

In particular, the set of mis-clustered nodes is a subset of .

4 Analysis of SPONGE Symmetric

This section contains the proof of our main results for SPONGEsym, divided over the following subsections. Section 4.1 describes the eigen-decomposition of the matrix , thus revealing that a subset of its eigenvectors contain relevant information about . Section 4.2 provides conditions on which ensure that (for some rotation matrix ), along with a lower bound on the eigengap . Section 4.3 then derives concentration bounds on using standard tools from the random matrix literature. These results are combined in Section 4.4 to derive error bounds for estimating and up to a rotation (using the Davis-Kahan theorem). The results summarized thus far pertain to the “dense” regime, where we require when is large. Section 4.5 extends these results to the sparse regime where , for the regularized version of SPONGEsym. Finally, we conclude in Section 4.6 by translating our results from Sections 4.4 and 4.5 to obtain mis-clustering error bounds for a -approximate -means algorithm, by leveraging previous tools from the literature [LR15].

4.1 Eigen-decomposition of

The following lemma shows that a subset of the eigenvectors of indeed contain information about , i.e., the ground-truth clustering.

Lemma 4.1 (Spectrum of ).

Let , and denote the expected degree of a node in cluster , . Let , , , and , for , for some . Let the columns of contain eigenvectors of which are orthogonal to the column span of . It holds true that

| (16) |

where is a rotation matrix, and is a diagonal matrix, such that , where

| (17) |

| (18) |

Proof.

We first consider the spectrum of , followed by that of and , which altogether will reveal the spectral decomposition of .

Analysis in expectation of the spectra of . Without loss of generality, we may assume that cluster contains the first vertices, cluster the next vertices and similarly for the remaining clusters. Note that , where for , straightforward calculations reveal that , and . One can rewrite the matrices in the more convenient form

| (19) |

since the column vectors of are eigenvectors of , and the eigenvalues of are apparent because is a diagonal matrix. Note that (19) is true in general, and does not make any assumption on the placement of the vertices into their respective cluster. Furthermore, one can verify that admits the eigen-decomposition

| (20) |

and similarly, can be decomposed as

Analysis of the spectra of and . We start by observing that

| (21) |

In light of (20), one can write as

| (22) |

Similarly, using the expression for , the expression for can be written as

| (23) |

Combining (19), (22), and (23) into (21), we readily arrive at

| (24) |

where and are defined as in the statement of the lemma. The spectral decomposition of now follows trivially using (24), along with the spectral decomposition . ∎

Lemma 4.1 reveals that we need to extract the -informative eigenvectors from the -eigenvectors of . Clearly, it suffices to recover any orthonormal basis for the column span of , since the rows of any such corresponding matrix (one instance of which is ) will exhibit the same clustering structure as .

4.2 Ensuring and bounding the spectral gap

In this section, our aim is to show that, for suitable values of , the eigenvectors corresponding to the smallest eigenvalues of are given by , i.e., . This is equivalent to ensuring (recall Lemma 4.1) that

| (25) |

Moreover, we will need to find a strictly positive lower-bound on the spectral gap , as it will be used later on, in order to show that the column span of is close to that of . We first consider the equal-sized clusters case, and then proceed to the general-sized clusters case.

4.2.1 Spectral gap for equal-sized clusters

When the cluster sizes are equal, the analysis is considerably cleaner than the general setting. Let us first establish notation specific to the equal-sized clusters case.

Remark 4.2 (Notation for the equal-sized clusters).

In the following lemma, we show the exact value of .

Lemma 4.3 (Bounding the spectral norm of ).

For equal-sized clusters, the following holds true

Proof.

The lemma follows directly from Lemma D.1. ∎

Next, we derive conditions on which ensure .

Lemma 4.4 (Conditions on and ).

Suppose , and , . If , satisfy

-

1.

-

2.

Then it holds true that , i.e.,

Proof.

Recalling the expression for from Lemma 4.3, we will ensure that each term inside the max is less than . To derive the first condition of the lemma, we simply ensure that

Before deriving the second condition, let us note additional useful bounds on which will be needed later.

-

1.

.

-

2.

Since , we obtain that . This also implies that .

-

Therefore, combining the above two bounds, we arrive at

-

3.

.

-

4.

Since , it holds that .

-

5.

Therefore, combining the above two conditions yields

To derive the second condition, we need to ensure , which is equivalent to

Now, we can lower bound “term 2” in the above equation as

Hence from the above two equations, we observe that it suffices that satisfy

∎

Next, we derive sufficient conditions on which ensure a lower bound on the spectral gap

Lemma 4.5 (Conditions on , and lower-bound on spectral gap).

Suppose , then the following holds.

-

1.

If satisfy

then , and , i.e., .

-

2.

If and satisfy

then , and , i.e., .

Proof.

We need to ensure the following two conditions for a suitably chosen .

| (26) | ||||

| (27) |

1. Ensuring (26)

We can rewrite (26) as

| (28) |

Using the expressions for , we can write the coefficients of the terms as follows.

Moreover, using the bounds on derived in Lemma 4.4, we can lower bound the RHS term in (28) as

From the above considerations, we see that (28) is ensured provided

| (29) |

We outline two possible ways in which (29) is ensured.

- •

- •

2. Ensuring (27)

Note that one can rewrite (27) as

| (32) |

Since , (32) is ensured provided

which in turn holds if each LHS term is respectively less than half of the RHS term. This leads to the condition

Finally, plugging the choices and in the above equation, and combining it with the conditions derived for ensuring (26), we readily arrive (after minor simplifications) at the statements in the Lemma. ∎

4.2.2 Spectral gap for the general case

For the general-sized clusters case, it is difficult to find the exact value of . Therefore, in the following lemma, we show an upper bound on this quantity by bounding the spectral norms of and .

Lemma 4.6 (Bounding the spectral norm of and ).

Recall . Then it holds true that

| (33) | ||||

| (34) |

From the above two inequalities, it follows that

The proof of the above lemma is deferred to Appendix D.

Remark 4.7.

Recall ; with a slight abuse of notation, let denote the degree of the largest cluster (of size ). As before, we now derive conditions on which ensure , or equivalently,

| (35) |

Additionally, we find sufficient conditions on which ensure a lower bound on the spectral gap . These are shown in the following lemma.

Lemma 4.8 (Conditions on , and Lower-Bound on Spectral Gap).

Suppose , then the following is true.

-

1.

If satisfy

(36) then , i.e., .

- 2.

-

3.

The statement in part () also holds for the choice , and provided .

Proof.

From (35) and Lemma 4.6, it suffices to show for that

| (38) |

For the stated condition on , it is easy to verify that

Using these bounds in (38), observe that it suffices that satisfy

| (39) |

To establish the second part of the Lemma, we begin by rewriting (39) as

| (40) |

and observe that

| (41) |

This verifies (37) in the statement of the Lemma. The “moreover” part is established by ensuring that each term on the LHS of (37) is a sufficiently small fraction of the RHS term. In particular, it is enough to choose this fraction to be for the first two terms, and for the third term.

4.3 Concentration bound for

In this section, we bound the “distance” between and , i.e., . This is shown via individually bounding the terms , and . To this end, we first recall the following Theorem from [CR11].

Theorem 4.9 (Bounding , [CR11]).

Let denote the normalized Laplacian of a random graph, and the normalized Laplacian of the expected graph. Let be the minimum expected degree of the graph. Choose . Then there exists a constant such that, if , then with probability at least , it holds true that

Remark 4.10.

A similar result appears in [Imb09] for the (unsigned) inhomogeneous Erdős-Rényi model, where , with the smallest expected degree of the graph.

Using Theorem 4.9, we readily obtain the following concentration bounds for and .

Lemma 4.11 (Bounding ).

Assuming , there exists a constant such that if , then with probability at least ,

Proof.

Note that the minimum expected degrees of the positive and negative subgraphs are given by , respectively. For the stated condition on , it is easily seen that

| (42) |

Invoking Theorem 4.9, and observing that are ensured for the stated condition on , the statement follows via the union bound. ∎

Next, using the above lemma, we can upper bound . This will help us show that and are “close”.

Lemma 4.12 (Bounding ).

Proof.

Since are positive definite, therefore using Proposition C.2, we obtain the bound

| (43) |

We know that and . Moreover, by Weyl’s inequality [Wey12] (see Appendix B). Hence (43) simplifies to

where the last inequality can be verified by examining the expression of in (24), and noting from the definition of that holds (via Weyl’s inequality). ∎

4.4 Estimating and up to a rotation

We are now ready to combine the results of the previous sections to show that if are large enough, then the distance between the subspaces spanned by and is small, i.e., there exists an orthonormal matrix such that is close to . For chosen suitably, we have seen in Lemma 4.8 that for a rotation , hence this suggests that the rows of will then also approximately preserve the clustering structure of .

With as defined in Lemma 4.12 recall from (4), (7) that can be written as

| (44) |

Therefore if , then using the expression for from (24) we see that , and thus the rows of also preserve the ground truth clustering structure. Moreover, if is small, then it can be shown to imply a bound on . Hence the rows of will approximately preserve the clustering structure of .

Before stating the theorem, let us define the terms

| (45) |

Theorem 4.13.

Assuming , suppose are chosen to satisfy

where satisfy one of the following conditions.

-

1.

and , or

-

2.

and .

Then and where is a rotation matrix, and is as defined in (18). Moreover, for any , there exists a constant such that the following is true. If satisfies

with as in (45), then with probability at least , there exists an orthogonal matrix such that

Proof.

We will first simplify the upper bound on in Lemma 4.12, starting by bounding . If , it is easy to verify that which implies . Moreover, we observe from Lemma 4.11 that is ensured if where . These considerations altogether imply

| (46) |

where in the penultimate inequality we used , and the last inequality uses Lemma 4.11.

Next, we will use the Davis-Kahan theorem [DK70] (see Appendix B) for bounding the distance . Applied to our setup, it yields

| (47) |

provided . From Weyl’s inequality, we know that . Moreover, under the stated conditions on , we obtain from Lemma 4.8 the bound

where in the last inequality we used the simplifications and in the expressions for . Hence using (46), we observe that if

then the RHS of (47) can be bounded as

It follows that there exists an orthogonal matrix so that

for the stated bound on . This establishes the first part of the Theorem.

In order to bound , we obtain from (44) that

| (48) |

The term can be bounded as

| (49) |

where the penultimate inequality uses Proposition C.1, and the last inequality follows from Lemma 4.11 with a minor simplification of the constant. Plugging (49) in (48) leads to the stated bound for . ∎

4.5 Clustering sparse graphs

We now turn our attention to the sparse regime where . In this regime, Lemma 4.11 is no longer applicable since it requires . In fact, it is not difficult to see that the matrices will not concentrate around in this sparsity regime. To circumvent this issue, we will aim to show that the normalized Laplacian corresponding to the regularized adjacencies concentrate around , for carefully chosen values of .

To show this, we rely on the following theorem from [LLV17], which states that the symmetric Laplacian of the regularized adjacency matrix is close to the symmetric Laplacian of the expected regularized adjacency matrix, for inhomogeneous Erdős-Rényi graphs.

Theorem 4.14 (Theorem 4.1 of [LLV17]).

Consider a random graph from the inhomogeneous Erdős-Rényi model (), and let . Choose a number . Then, for any , being an absolute constant, with probability at least

| (50) |

The above result leads to a bound on the distance between and the normalized Laplacian of the expected (un-regularized) adjacency matrix.

Theorem 4.15 (Concentration of Regularized Laplacians).

Consider a random graph from the inhomogeneous Erdős-Rényi model (), and let , . Choose a number . Then, for any , being an absolute constant, with probability at least

| (51) |

Proof.

To establish the above lemma we make use of triangle inequality, where we use the fact that . We know the bound on the first term on the RHS from Lemma 4.14 (which holds with probability ). To bound the second term on the RHS, note that

The second term of the inequality can be easily bounded as follows.

To analyse the first term, we observe that

where in the first inequality we use the fact that , and in the last inequality we use the fact that for two numbers if then . We have all the components to plug into the triangle inequality, which yields the desired statement of the theorem. ∎

We now translate Theorem 4.15 to our setting for and show that if for large enough, then for the choices , the bounds hold with sufficiently high probability.

Lemma 4.16.

Let and . Then for the choices , and any , there exists a constant such that with probability at least , it holds true that

| (52) | ||||

| (53) |

Proof.

We will apply Theorem 4.15 to the subgraphs . Let us denote to be the quantity , and to be the minimum expected degree for the positive and negative subgraphs, respectively. From the SSBM model, it can be verified that . We also know that and , where for the stated condition on , satisfy the bounds in (42). The latter can be written as

Let us denote for convenience. In order to show (52), we obtain from Theorem 4.15 that, with probability at least ,

where the last inequality uses . Now note that if , then the above bound simplifies to

| (54) |

Choosing such that , or equivalently, , and plugging this in (54), we arrive at (52). Clearly, is equivalent to the stated condition on . The bound in (53) follows in an identical manner and is omitted. ∎

We are now in a position to write the bound on in terms of , in a completely analogous manner to Lemma 4.12.

Lemma 4.17 (Adapting Lemma 4.12 for the sparse regime).

Next, we derive the main theorem for SPONGEsym in the sparse regime, which is the analogue of Theorem 4.13. The first part of the Theorem remains unchanged, i.e., for large enough and chosen suitably, we have and for a rotation , and . The remaining arguments follow the same outline of Theorem 4.13, i.e., (a) using Lemma 4.17 and Lemma 4.16 to obtain a concentration bound on (when ), and (b) using the Davis-Kahan theorem to show that the column span of is close to . The latter bound then implies that is close (up to a rotation) to , where we recall

| (55) |

with as defined in Lemma 4.17.

Theorem 4.18.

Assuming , suppose are chosen to satisfy

where satisfy one of the following conditions.

-

1.

and , or

-

2.

and .

Then and where is a rotation matrix, and is as defined in (18). Moreover, there exists a constant such that for and , if satisfies

and , then with probability at least , there exists a rotation so that

Here, with as defined in (45).

Proof.

We will first simplify the upper bound on in Lemma 4.17. Note that implies , and moreover, we can bound uniformly (from (52), (53)) as

| (56) |

Note that if . Under these considerations, the bound in Lemma 4.17 simplifies to

Following the steps in the proof of Theorem 4.13, we observe that is guaranteed to hold, provided

Then, we obtain via the Davis-Kahan theorem that there exists an orthogonal matrix such that

for the stated bound on in the theorem. This establishes the first part of the theorem.

In order to bound , first observe that

| (57) |

The second term can be bounded as

| (58) |

where the penultimate inequality uses Proposition C.1, and the last inequality uses (56). Plugging (58) into (57) leads to the stated bound for . ∎

4.6 Mis-clustering rate from -means

We now analyze the mis-clustering error rate when we apply a -approximate -means algorithm (e.g., [KSS04]) on the rows of (respectively, in the sparse regime). To this end, we rely on the following result from [LR15], which when applied to our setting, yields that the mis-clustering error is bounded by the estimation error (or in the sparse setting). By an -approximate algorithm, we mean an algorithm that is provably within an factor of the cost of the optimal solution achieved by -means.

Lemma 4.19 (Lemma 5.3 of [LR15], Approximate -means error bound).

For any , and any two matrices , such that with , let be a -approximate solution to the -means problem so that

and . For any , define then

| (59) |

Moreover, if

| (60) |

then there exists a permutation matrix such that , where .

Combining Lemma 4.19 with the perturbation results of Theorem 4.13 and Theorem 4.18, we readily arrive at mis-clustering error bounds for SPONGEsym.

Theorem 4.20 (Mis-clustering error for SPONGEsym).

Under the notation and assumptions of Theorem 4.13, let be a -approximate solution to the -means problem . Denoting

it holds with probability at least that

In particular, if satisfies

then there exists a permutation matrix such that , where .

In the sparse regime, the above statement holds under the notation and assumptions of Theorem 4.18 with replaced with , and with probability at least .

Proof.

Since has rank at most , we obtain from Theorem 4.13 that

| (61) |

We now use Lemma 4.19 with and . It follows from (44) and Lemma 4.1 that where . Denoting , we can write , where is the ground truth membership matrix, and for each , it holds true that

From (18), one can verify using Weyl’s inequality that

where the last inequality holds if . The above considerations imply that . Now with as defined in the statement, we obtain from (59) and (61) that

where the last inequality uses for . This yields the first part of the Theorem.

For the second part, we need to ensure (60) holds. Using (61) and the expression for , it is easy to verify that (60) holds for the stated condition on .

Finally, the statement for the sparse regime readily follows in an analogous manner (replacing with ), by following the same steps as above. ∎

5 Concentration results for the symmetric Signed Laplacian

This section contains proofs of the main results for the symmetric Signed Laplacian, in both the dense regime and the sparse regime . Before proceeding with an overview of the main steps, for ease of reference, we summarize in the Table below the notation specific to this section.

| Notation | Description |

|---|---|

| symmetric Signed Laplacian | |

| population Signed Laplacian | |

| regularized Laplacian | |

| population regularized Laplacian | |

| regularization parameters | |

| expected signed degree | |

| aspect ratio |

The proof of Theorem 3.4 is built on the following steps. In Section 5.1, we compute the eigen-decomposition of the Signed Laplacian of the expected graph . Then in Section 5.2, we show and are “close”, and obtain an upper bound on the error . Finally, in Section 5.3, we use the Davis-Kahan theorem (see Theorem B.2) to bound the error between the subspaces and . To prove Theorem 3.7, in Section 5.4, we first use a decomposition of the set of edges and characterize the behaviour of the regularized Signed Laplacian on each subset. This leads in Section 5.5 to the error bounds of Theorem 3.7. Finally, the proof of Theorem 3.9, that bound the error on the eigenspace, relies on the same arguments as Theorem 3.4 and can be found in Section 5.6. Similarly to the approach for SPONGEsym, the mis-clustering error is obtained using a ()-approximate solution of the -means problem applied to the rows of (resp. ). This solution contains, in particular, an estimated membership matrix . The bound on the mis-clustering error of the algorithm given in Theorem 3.12 is derived using Lemma 4.19 (Lemma 5.3 of [LR15]), in Section 5.7.

5.1 Analysis of the expected Signed Laplacian

In this section, we compute the eigen-decomposition of the matrix . In particular, we aim at proving a lower bound on the eigengap between the and smallest eigenvalues. For equal-size clusters, there is an explicit expression for this eigengap.

5.1.1 Matrix decomposition

Lemma 5.1.

Let denote the normalized membership matrix in the SSBM. Let be a matrix whose columns are any orthonormal base of the subspace orthogonal to . The Signed Laplacian of the expected graph has the following decomposition

| (62) |

with , and is a matrix such that

| (63) |

Proof.

On one hand, we recall from Section 2.3 that the expected degree matrix is a scaled identity matrix , with . Thus, any vector is an eigenvector of with corresponding eigenvalue , and it holds true that

| (64) |

We can infer from Lemma 5.1 that the spectrum of is the union of the spectrum of the matrix and . Moreover, denoting , we have . For a SSBM with equal-size clusters, we are able to find explicit expressions for the eigenvalues of .

5.1.2 Spectrum of the Signed Laplacian: equal-size clusters

In this section, we assume that the clusters in the SSBM have equal sizes . In this case,

and denoting by the matrix in this setting of equal clusters, we may write

| (66) |

Hence, the spectrum of contains only two different values. The largest one has multiplicity 1, and is the corresponding largest eigenvector. The remaining eigenvalues are all equal. In fact, we have

One can easily check that these eigenvalues are positive, and that the following inequality holds true

We finally have

Note that for , and the spectrum of contains only two values . For , . Writing the spectral decomposition

with and being the matrix of eigenvectors associated to , we conclude that . In fact, since has distinct rows and is a unitary matrix, also has distinct rows. As is the all one’s vector , has distinct rows as well. These observations are summarized in the following lemma and lead to the expression of the eigengap.

Lemma 5.2 (Eigengap for equal-size clusters).

For the SSBM with clusters of equal-size , we have that , where corresponds to the smallest eigenvectors of . Moreover, with the eigengap defined as

it holds true that

| (67) |

5.1.3 Non-equal-size clusters

In the general setting of non-equal-size clusters, it is difficult to obtain an explicit expression of the spectrum of . Thus, using a perturbation method, we establish a lower bound on the eigengap, provided that the aspect ratio is close to 1. Recall that

| (68) |

We note that this matrix is of the form , with being a diagonal matrix and a vector. Using again the spectral decomposition

| (69) |

where is the largest eigenvector and contains the smallest eigenvectors of , we would like to ensure that the smallest eigenvectors of are related to the eigenvectors of in the following way . Note that is not necessarily the all one’s vector, and has at least distinct rows. To this end, we will like to ensure that

| (70) |

From Weyl’s inequality (see Theorem B.1), we know that

which in particular implies

Moreover, when , and when . Thus, for Condition 70 to be true, it suffices to ensure

using (67). In this case, we indeed have that . As it will be convenient later, we will ensure a slightly stronger condition, i.e.

| (71) |

Now we compute the error . We recall that and denote , then (68) becomes

Using (66), we obtain

| (72) |

For the first term on the RHS, we have

| (73) |

while for the second term on the RHS, we have

| (74) |

By combining (73) and (74) into (72), we arrive at

using that . Now since and from Condition 71, it suffices that satisfies

Finally, we can compute

Hence we arrive at the following lemma.

Lemma 5.3 (General lower-bound on the eigengap).

For a SSBM with clusters of general sizes and aspect ratio satisfying

it holds true that , where corresponds to the smallest eigenvectors of . Furthermore, we can lower-bound the spectral gap as

We will now show that concentrates around the population Laplacian , provided the graph is dense enough.

5.2 Concentration of the Signed Laplacian in the dense regime

In the moderately dense regime where , the adjacency and the degree matrices concentrate towards their expected counterparts, as increases. This can be established using standard concentration tools from the literature.

Lemma 5.4.

We have the following concentration inequalities for and

-

1.

,

In particular, there exists a universal constant such that

-

2.

If ,

Proof.

For the first statement, we recall that is a symmetric matrix, with and with independent entries above the diagonal . We denote . If lie in the same cluster,

If lie in different clusters,

One can easily check that in both cases, it holds true that

Thus we can conclude that for each , the following holds

Hence, . Moreover, . Therefore, we can apply the concentration bound for the norm of symmetric matrices by Bandeira and van Handel [BvH16, Corollary 3.12, Remark 3.13] (recalled in Appendix A.2) with , in order to bound . For any given , we have that

with probability at least , where only depends on .

For the second statement, we apply Chernoff’s bound (see Appendix A.1) to the random variables where we note that are independent Bernoulli random variables with mean . Hence, . Let and assuming that (so that ), we obtain

using that . Applying the union bound, we finally obtain that

∎

Lemma 5.5.

If , and , then with probability at least , it follows that

Proof.

We first note that using the proof of Lemma 5.4, with probability at least , we have that , with . Consequently,

since for . We now apply the first inequality of Proposition C.2 with . We obtain

It remains to prove that . It holds since is a diagonal matrix, thus and similarly to Lemma E.1, it is straightforward to prove that , therefore .

∎

Lemma 5.6.

Under the assumptions of Theorem 3.4, if , then with probability at least there exists a universal constant such that

5.3 Proof of Theorem 3.4

The proof of this theorem relies on the Davis-Kahan theorem. Using Weyl’s inequality (see Theorem B.1) and Lemma 5.6, we obtain for all ,

In particular, for the -th smallest eigenvalue,

For , we will like to ensure that

| (75) |

From Lemma 5.3, if , then . Then for the previous condition (75) to hold, it is sufficient that

| (76) |

with . We note that since , hence (76) implies that .

With this condition, we now apply the Davis-Kahan theorem (Theorem B.2)

Using Proposition B.3, there then exists an orthogonal matrix so that

5.4 Properties of the regularized Laplacian in the sparse regime

The analysis of the signed regularized Laplacian differs from the one of unsigned regularized Laplacian. In particular, Lemma 4.14 cannot be directly applied, since the trimming approach of the adjacency matrix for unsigned graphs is not available in this case. However, we will also use arguments by Le et al. in [LLV15] and [LLV17] for unsigned directed adjacency matrices in the inhomogeneous Erdős-Rényi model . More precisely, in Section 5.4.1, we will prove that the adjacency matrix concentrates on a large subset of edges called the core. On this subset, the unregularized (resp. regularized) Laplacian also concentrates towards the expected matrix (resp. ). In Section 5.4.2, we will show that on the remaining subset of nodes, the norm of the regularized Laplacian is relatively small.

5.4.1 Properties of the signed adjacency and degree matrices

In this section, we adapt the results by [LLV17] for the signed adjacency matrix and the degree matrix in our SSBM. Similarly to Theorem 2.6 [LLV17] (see Theorem A.3), the following lemma shows that the set of edges can be decomposed into a large block, and two blocks with respectively few columns and few rows.

Lemma 5.7.

(Decomposition of the set of edges for the SSBM) Let be the signed adjacency matrix of a graph sampled from the SSBM. For any , with probability at least , the set of edges can be partitioned into three classes and such that

-

1.

the signed adjacency matrix concentrates on

with a constant;

-

2.

(resp. ) intersects at most columns (resp. rows) of ;

-

3.

each row (resp. column) of (resp. ) has at most non-zero entries.

Remark 5.8.

We underline that this lemma is valid because the unsigned adjacency matrices and have disjoint support. We do not know if similar results could be obtained for the Signed Stochastic Block Model defined by Mercado et al. in [MTH16].

Proof.

We denote (resp. ) the upper (resp. lower) triangular part of the unsigned adjacency matrices. Using this decomposition, we have

We note that have disjoint supports, and each of them has independent entries. We can hence apply Theorem A.3 to each of these matrices, where we note that for each matrix

With probability at least , there exists four partitions of that have the subsequent properties. For e.g., for ,

-

•

;

-

•

(resp. ) intersects at most columns (resp. rows) of ;

-

•

each row (resp. column) of (resp. ) have at most ones.

We note that this decomposition holds simultaneously for and . Taking the unions of these subsets,

and similarly for and , we have, with the triangle inequality

with . Moreover, each row of (resp. each column of ) has at most entries equal to 1 and entries equal to , which means at most non-zero entries. Finally (resp. ) intersects at most rows (resp. columns) of . ∎

For the degree matrix , we use inequality (4.3) from [LLV17]. Recall that the degree of node is which is a sum of independent Bernoulli variables with bounded variance . We can thus find an upper bound on the error . This bound is weaker than the one obtained in Lemma 5.4 with the assumption .

Lemma 5.9.

There exists a constant such that for any , with probability at least , it holds true

5.4.2 Properties of the regularized Laplacian outside the core

In this section, we will bound the norm of the Signed Laplacian restricted to the subsets of edges and . The following “restriction lemma” is an extension of Lemma 8.1 in [LLV15] for Signed Laplacian matrices.

Lemma 5.10.

(Restriction of Signed Laplacian) Let be a symmetric matrix, its regularized form as described in Section 2.2, and . We denote the regularized degree matrix , and the modified “Laplacian” and the matrix such that the entries outside of are set to 0. Let such that the degree of each node in is less that times the the corresponding degree in . Then we have

Proof.

We denote (resp. ) the degree matrix of (resp. ) and its regularized “Laplacian” (it is not necessarily a symmetric matrix) where

By definition of , . Since in , some entries in are set to 0, we have that for all ,

Moreover, by assumption, . We denote , and , and now we have

Because and , by sub-multiplicativity of the norm, we thus obtain

In addition, by considering the symmetric matrix

we have . In fact, is equal to the identity matrix minus the regularized Laplacian of

Using Appendix E, we can conclude that the eigenvalues of are between -1 and 1, leading to . Hence, we finally arrive at . ∎

Remark 5.11.

We note that this lemma is not specific to the rows of the matrix , and one could also derive the same lemma with the assumptions on the columns of the matrix.

5.5 Error bounds w.r.t the expected regularized Laplacian and expected Signed Laplacian

In this section, we prove an upper bound on the errors and from Theorem 3.7. We will use the decomposition of the set of edges from Lemma 5.7, and sum the errors on each of these subsets of edges. We recall that on the subset , we have an upper bound on . We will also use the fact that the regularized degrees are lower-bounded by the regularization parameter . On the subsets and , we will use Lemma 5.10 to upper bound the norm of the regularized Laplacian.

Lemma 5.12.

Under the assumptions of Theorem 3.7, for any , with probability at least , we have

| (77) |

Proof.

Let with

We will bound the norm of on , and the norms of and on the residuals . We first use the triangle inequality to obtain

1. Bounding the norm .

Denoting , we have that

| (78) | ||||

| (79) |

To upper bound (78) by (79), we have used the simplification trick in the proof of [LLV17, Theorem 4.1] which we now recall. Firstly, the second factor of (78) can be upper bounded in the following way. For ,

| (80) |

where the inequality comes from the fact that . Secondly, we use the inequality and we recall that by definition, we can bound the first factor of (78) by . This finally leads to (79).

2. Bounding the norm .

We first note that

We also recall that . Hence, using Lemma 5.7, with probability at least , we have

| (82) |

3. Bounding .

Using the proof of Lemma 5.7, each row of has at most non-zeros entries and intersects at most columns. Thus, for all

as . We can thus apply Lemma 5.10 with , and we arrive at

We also obtain the same bound for . Similarly, we have and

We arrive at , and finally, we also have .

4. Bounding .