StarVQA: Space-Time Attention for

Video Quality Assessment

Abstract

The attention mechanism is blooming in computer vision nowadays. However, its application to video quality assessment (VQA) has not been reported. Evaluating the quality of in-the-wild videos is challenging due to the unknown of pristine reference and shooting distortion. This paper presents a novel space-time attention network for the VQA problem, named StarVQA. StarVQA builds a Transformer by alternately concatenating the divided space-time attention. To adapt the Transformer architecture for training, StarVQA designs a vectorized regression loss by encoding the mean opinion score (MOS) to the probability vector and embedding a special vectorized label token as the learnable variable. To capture the long-range spatiotemporal dependencies of a video sequence, StarVQA encodes the space-time position information of each patch to the input of the Transformer. Various experiments are conducted on the de-facto in-the-wild video datasets, including LIVE-VQC, KoNViD-1k, LSVQ, and LSVQ-1080p. Experimental results demonstrate the superiority of the proposed StarVQA over the state-of-the-art. Code and model will be available at: https://github.com/DVL/StarVQA.

Index Terms:

video quality assessment, in-the-wild videos, synthetic distortion, attention, TransformerI Introduction

For the last few years, user-generated content (UGC) has shown an explosive growth on major social platforms, such as TikTok, Facebook, Instagram, YouTube, and Twitter [20, 6]. This causes a serious problem in content storage, streaming, and usage. Primarily, a deluge of low-quality videos captured by some amateur videographers in severe environments floods into the Internet. It is an urgent task for video quality assessment (VQA) tools to screen these videos according to their quality. However, evaluating the perceptual quality of in-the-wild videos is extremely hard because neither pristine reference nor shooting distortion is available [18].

Convolutional neural networks (CNNs) have delivered remarkable performance on a wide range of computer vision tasks. For example, some deep CNN-based VQA models were proposed [12, 33, 31, 14, 15, 7, 30, 26, 29], yielding promising results on the synthetically distorted video datasets. In [12], DeepVQA employed the deep CNN and aggregation network to learn spatiotemporal visual sensitivity maps. In [33], Zhang et al. exploited transfer learning to develop a general-purpose VQA framework. You and Korhonen [31] used 3D convolution network to extract local spatiotemporal features from small clips in the video. VSFA [14, 15] utilized the pre-trained ResNet-50 to extract spatial features of each frame. RIRNet [7] designed a motion perception network to fuse the motion information from different temporal frequencies. PVQ [30] extracted the 2D and 3D features to train a time series regressor and predict both the global and local space-time quality. Tu et al. [26] proposed to combine the spatiotemporal scene statistics and high-level semantic information for video quality prediction. Such semantic information was also oriented to design the VQA method in [29]. Unfortunately, the above methods still struggle on the performance improvement on the in-the-wild videos [30, 24, 11] since both the reference videos and distortion types are not available, and the receptive field of convolutional kernels is limited [1].

The success of attention mechanism in natural language processing (NLP) has recently inspired approaches in computer vision by integrating Transformers into CNN [16] [28] or taking the place of CNN completely [23] [21]. For example, Vision Transformer (ViT) [8] (a pure Transformer-based architecture) has outperformed its convolutional counterparts in image classification tasks. Transformer does not use any convolutions but is based on multi-headed self-attention [27]. This mechanism is particularly effective in modeling the long-term dependency of sequential language. Videos and sentences are both sequential. Thus, one expects that such self-attention will be effective for video modeling as well [3]. Inspired by ViT, several Transformer-based models [1, 3, 9, 22, 17, 5] were developed for video classification tasks. These models lead to higher classification accuracy compared with 3D convolutional networks. Unlike the classification tasks that aim at distinguishing among multiple different discrete values, the regression tasks need to output a continuous real value as close to the ground truth as possible. Researches showed that transferring existing classification networks to regression tasks performed not well [8, 3, 32]. As noted by A. C. Bovik [4], “Unlike human participation in crowdsourced picture labeling experiments like ImageNet, where each human label might need only 0.5-1.0 seconds to apply, human quality judgments on pictures generally required 10-20x that amount to time for a subject to feel comfortable in making their assessments on a Likert scale [10].” In general, a clip of video has a long duration including hundreds of images, and its perceptual quality difference from other videos with different content is extremely subtle. Can the Transformer be applied to VQA? If yes, how to implement it effectively? These questions become the original motivation of this work.

In this paper, we design a space-time attention network for VQA, named StarVQA. It is a pure Transformer-based model without using any convolution operations. As a result, StarVQA can capture the long-range spatiotemporal dependencies of a video sequence and guarantee a very fast convergence speed. The major contributions of this work are three-fold: 1) A novel Transformer network is built. To our best knowledge, this is the first work to apply Transformer to the VQA problem. 2) A vectorized regression loss function is designed, which can facilitate the training of StarVQA. 3) Experiments are performed on four benchmark in-the-wild video datasets and show that StarVQA achieves competitive performance compared with five state-of-the-art methods.

II Proposed StarVQA

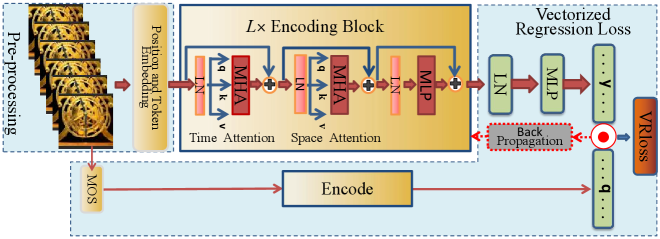

In this paper, matrices, vectors, and scalar variables are in bold uppercase, bold lowercase, and italic lowercase, respectively. , , and denote the integer set , the floor operation, and the transpose operation, respectively. The overall framework of the proposed StarVQA is shown in Fig. 1, which consists of pre-processing, encoding blocks (the divided space-time attention concatenated with a residual connection block), and vectorized regression loss modules. In the following, we describe each of them in detail.

Pre-processing Module. To match the input of the StarVQA network, we need to pre-process the video sequences. First, we select frames from each video sequence according to equal-interval sampling, and then crop the selected frame to size in a random way, where and denote the height and weight of the cropped frame respectively, and the number 3 means the three color channels of R, G, and B. Next, the cropped video frame is divided into many non-overlapping patches with size. Thus, there are patches in total for each cropped frame. Then each patch is flattened into a column vector with dimensions. Denote the column vector as the -th patch of the -th selected frame, where and .

Since the self-attention mechanism can capture the long-range dependences of spatiotemporal information [3], we use a spatiotemporal position vector (denoted as where is set to the dimensions of a patch) to encode each patch into an initial embedding vector by

| (1) |

where denotes a learnable matrix. Next, we add in the first position of the sequence of embedding vectors for and a special learnable vector representing the embedding of the vectored label token. Finally, we obtain the input of the first encoding block of the StarVQA network (denoted as ) as follows:

| (2) |

Time-attention Module. With the input , we can calculate the query (), key (), value () vectors of the first encoding block. For each patch, the values of , , and of the current block can be successively calculated from the output of the previous block . For convenience, hereafter and can take 0 value due to an addition of a label token. The calculation process is expressed as

| (3) |

| (4) |

| (5) |

where denotes LayerNorm [2], , , and denote the learnable query, key, and value matrices on the -th encoding block respectively, and denotes an index over multi-headed attentions and denotes the total number of attention heads. The latent dimension for each attention head is set to .

Next, we compute the weight of self-attention. As done in [3], we use an alternative, more efficient architecture for spatiotemporal attention, where time-attention and space-attention are separately applied one after the other. First, time-attention is computed by comparing each patch with all the patches at the same spatial location. Thus, we have

| (6) |

where SM() denotes the softmax activation function. It can be seen from Eq. (6) that the time-attention coefficient is extracted when is fixed to a constant. Then, the encoding coefficients can be calculated by using the self-attention weight, which is expressed by

| (7) |

The concatenation of these encoding coefficient vectors from all heads is projected by

| (8) |

where is a learnable mapping matrix with size.

Space-attention Module. To compute the , , and values of spatial self-attention, we only need to take as the input of function of Eqs. (3)-(5). Similar to the temporal self-attention mechanism, the weight of spatial self-attention is computed by

| (9) |

Therefore, we obtain the encoding weight of spatial self-attention as follows

| (10) |

Like Eq. (8), the output of space-attention can be written by

| (11) |

Finally, this output is passed through a multilayer perceptron (MLP), using residual connections after each operation:

| (12) |

By now, we obtain the output of the encoding block . And this output will be taken as the input of the encoding block until .

Vectorized Regression Loss Module. Our attempt to construct the end-to-end Transformer-based networks and use existing loss functions (such as -norm, hinge, and cross-entropy losses) to train such networks [8, 3] perform poorly in regression tasks. To adapt the Transformer architecture, we propose to embed a unique vectorized label token to the input of encoding blocks as the learnable variable. Accordingly, the mean opinion score (MOS) is encoded in a vector form. Based on this observation, we design a vectorized regression (VR) loss function for the training of StarVQA.

After obtaining the output of overall encoding blocks, the embedded label token can be taken out to compute the loss. In this module, we employ MLP and SM to generate a probability vector, which is expressed as

| (13) |

Inspired by [32], we encode the MOS of a video sequence to the probability vector. For this purpose, we first scale the MOS to the interval of [0.0, 5.0]. Then the scaled MOS is encoded to a probability vector by

| (14) |

where denotes an anchor vector. In Eq. (14), represents the probability of the -th anchor corresponding to the MOS. Finally, our VR loss is written as

| (15) |

where and denote the inner product operation and -norm, respectively. With this loss function, we can train the StarVQA network until convergence. In the last, a linear SVR model can be used to decode the output vector and predict the scores of video sequences.

III Experimental Results

Experimental Setup. Our network is built on the Pytorch framework and trained on a machine equipped with four Tesla P100 GPUs. Four in-the-wild VQA datasets are used for the verification, including KoNViD-1k [11], LIVE-VQC [24], LSVQ [30], and LSVQ-1080p [30]. By convention, of the dataset is for training, and the remaining is for the test. The performance results are averaged on 10 random rounds. Besides, the related parameters are set to , , , , and .

Datasets Description. LIVE-VQC contains 585 videos labeled by MOS of [0.0, 100.0] with resolution from 240p to 1080p. KoNViD-1k contains 1,200 videos labeled by MOS of [0.0, 5.0] with fixed resolution 960p. LSVQ (excluding LSVQ-1080p) contains 38,811 videos labeled by MOS of [0.0, 100.0] with diverse resolutions. LSVQ-1080p consists of 3,573 videos (more than 93% with resolution 1080p or higher), which are all extracted from the original LSVQ. Note that LSVQ-1080p does not contain any overlapping samples with LSVQ and is specifically designed for the performance verification on high-resolution videos.

Convergence Speed. According to our experiment on the KoNViD-1k dataset, StarVQA achieves 0.72 on both SROCC and PLCC performances when the number of epochs is only five. After the number of epochs exceeds ten, the performance of StarVQA remains almost unchanged. This shows that StarVQA can be well trained at breakneck speed.

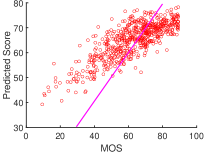

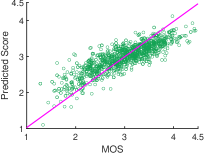

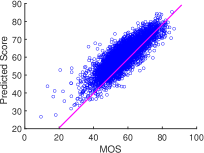

Overall Performance on Individual Dataset. The scatter plot of predicted quality scores on different datasets is shown in Fig. 2. We can see that the outputs of StarVQA are all close to the ground truths. This visually demonstrates that the performance of StarVQA remains stable on video sequences from different datasets. Especially, StarVQA on LSVQ gets the most closely centered on the reference line among the three datasets. This is because LSVQ contains a large number of samples, which is most suitable for the Transformer-based networks.

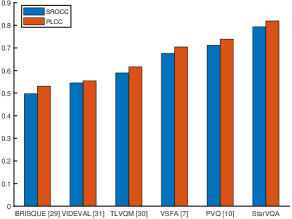

Performance Comparison with state-of-the-art. In this part, we compare the proposed StarVQA with five state-of-the-art methods, including BRISQUE [19], TLVQM [13], VIDEVAL [25], VSFA [14], and PVQ [30]. Comparison results are shown in Table I and Fig. 3. It can be seen from Table I that StarVQA performs the best on the KoNViD-1k and LSVQ datasets. Nevertheless, our model does not get state-of-the-art performance on LIVE-VQC. This implies that the Transformer architecture may not be very suitable for small datasets. Promisingly, for high-resolution videos, the advantage of the Transformer architecture becomes obvious. From Fig. 3, it is clearly shown that StarVQA surpasses all the competitors when pre-trained on LSVQ and tested on LSVQ-1080p.

Performance on Cross-dataset. To verify the generalization of StarVQA, we conduct a cross-dataset experiment. The result is shown in Table II. Note that the comparison result for the cross-LSVQ test on LSVQ-1080p has shown in Fig. 3. It can be seen from Table II and Fig. 3 that StarVQA shows good generalization.

| LIVE-VQC | KoNViD-1k | LSVQ | ||||

| Models | SROCC | PLCC | SROCC | PLCC | SROCC | PLCC |

| BRISQUE [19] | 0.592 | 0.638 | 0.657 | 0.658 | 0.579 | 0.576 |

| TLVQM [13] | 0.799 | 0.803 | 0.773 | 0.769 | 0.772 | 0.774 |

| VIDEVAL[25] | 0.752 | 0.751 | 0.783 | 0.780 | 0.794 | 0.783 |

| VSFA [14] | 0.773 | 0.795 | 0.773 | 0.775 | 0.801 | 0.796 |

| PVQ [30] | 0.827 | 0.837 | 0.791 | 0.786 | 0.827 | 0.828 |

| StarVQA | 0.732 | 0.808 | 0.812 | 0.796 | 0.851 | 0.857 |

| Training dataset | LSVQ | |||

| Testing datasets | LIVE-VQC | KoNViD-1k | ||

| Models | SROCC | PLCC | SROCC | PLCC |

| BRISQUE [19] | 0.524 | 0.536 | 0.646 | 0.647 |

| TLVQM [13] | 0.670 | 0.691 | 0.732 | 0.724 |

| VIDEVAL [25] | 0.630 | 0.640 | 0.751 | 0.741 |

| VSFA [14] | 0.734 | 0.772 | 0.784 | 0.794 |

| PVQ [30] | 0.770 | 0.807 | 0.791 | 0.795 |

| StarVQA | 0.753 | 0.809 | 0.842 | 0.849 |

IV Conclusion

Based on the attention mechanism [27] and TimeSformer [3], this paper has developed a novel space-time attention network for video quality assessment, named StarVQA. To the best of our knowledge, we are the first to apply Transformer to the VQA field. Furthermore, a new vectorized regression loss function has designed to adapt the Transformer-based architecture for training. Experimental results show that StarVQA achieves competitive performance compared with five typical VQA methods. This work broadens Transformer to a new application and demonstrates that the attention has excellent potential in the VQA field.

It is worth mentioning that the result presented in this paper is encouraging. As the first-of-its-kind efforts in the application of Transformer to VQA, great progress may be made with increasing frames selected from a video sequence. The number of frames used in the experiments only takes 8 due to the computation and memory constraints (i.e. 4Tesla P100 GPUs). According to the result reported in TimeSformer [3], the accuracy improvement on video classification tasks almost linearly increases as the number of input frames increases. Therefore, we would like to point out that if using clips of 32 or more frames, StarVQA will be a significant departure from current state-of-the-art convolutional models. Besides, we wonder how many encoding blocks used in StarVQA are in a good balance between performance and computation. Under the same computational cost, the convergence comparison of StarVQA with popular CNNs is necessary to be analyzed rigorously. These are worth our further investigation in future.

References

- [1] Anurag Arnab, Mostafa Dehghani, Georg Heigold, Chen Sun, Mario Lučić, and Cordelia Schmid. ViVit: A video vision transformer. arXiv preprint arXiv:2103.15691, 2021.

- [2] Jimmy Lei Ba, Jamie Ryan Kiros, and Geoffrey E Hinton. Layer normalization. arXiv preprint arXiv:1607.06450, 2016.

- [3] Gedas Bertasius, Heng Wang, and Lorenzo Torresani. Is space-time attention all you need for video understanding? arXiv preprint arXiv:2102.05095, 2021.

- [4] Alan C. Bovik. Weeping and gnashing of teeth: Teaching deep learning in image and video processing classes. In Proceedings of IEEE Southwest Symposium on Image Analysis and Interpretation (SSIAI), pages 125–129, 2020.

- [5] Adrian Bulat, Juan-Manuel Perez-Rua, Swathikiran Sudhakaran, Brais Martinez, and Georgios Tzimiropoulos. Space-time mixing attention for video transformer. arXiv preprint arXiv:2106.05968, 2021.

- [6] Li-Heng Chen, Christos G Bampis, Zhi Li, and Alan C Bovik. Learning to distort images using generative adversarial networks. IEEE Signal Processing Letters, 27:2144–2148, 2020.

- [7] Pengfei Chen, Leida Li, Lei Ma, Jinjian Wu, and Guangming Shi. RIRNet: Recurrent-in-recurrent network for video quality assessment. In Proceedings of ACM International Conference on Multimedia (ACM-MM), pages 834–842, 2020.

- [8] Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, et al. An image is worth 16x16 words: Transformers for image recognition at scale. In Proceedings of International Conference on Learning Representations (ICLR), pages 1–12, 2021.

- [9] Haoqi Fan, Bo Xiong, Karttikeya Mangalam, Yanghao Li, Zhicheng Yan, Jitendra Malik, and Christoph Feichtenhofer. Multiscale vision transformers. arXiv preprint arXiv:2104.11227, 2021.

- [10] D Ghadiyaram and Alan C. Bovik. Massive online crowdsourced study of subjective and objective picture quality. IEEE transactions on image processing, 25(1):372–387, 2016.

- [11] Vlad Hosu, Franz Hahn, Mohsen Jenadeleh, Hanhe Lin, Hui Men, Tamás Szirányi, Shujun Li, and Dietmar Saupe. The konstanz natural video database (KoNViD-1k). In Proceedings of ACM International Conference on Quality of Multimedia Experience (QoMEX), pages 1–6, 2017.

- [12] Woojae Kim, Jongyoo Kim, Sewoong Ahn, Jinwoo Kim, and Sanghoon Lee. Deep video quality assessor: From spatio-temporal visual sensitivity to a convolutional neural aggregation network. In Proceedings of European Conference on Computer Vision (ECCV), pages 219–234, 2018.

- [13] Jari Korhonen. Two-level approach for no-reference consumer video quality assessment. IEEE Transactions on Image Processing, 28(12):5923–5938, 2019.

- [14] Dingquan Li, Tingting Jiang, and Ming Jiang. Quality assessment of in-the-wild videos. In Proceedings of ACM International Conference on Multimedia (ACM-MM), pages 2351–2359, 2019.

- [15] Dingquan Li, Tingting Jiang, and Ming Jiang. Unified quality assessment of in-the-wild videos with mixed datasets training. International Journal of Computer Vision, 129(4):1238–1257, 2021.

- [16] Wentao Liu, Zhengfang Duanmu, and Zhou Wang. End-to-end blind quality qssessment of compressed videos using deep neural networks. In Proceedings of ACM International Conference on Multimedia (ACM-MM), pages 546–554, 2018.

- [17] Ze Liu, Jia Ning, Yue Cao, Yixuan Wei, Zheng Zhang, Stephen Lin, and Han Hu. Video swin transformer. arXiv preprint arXiv:2106.13230, 2021.

- [18] Shankhanil Mitra, Rajiv Soundararajan, and Sumohana S Channappayya. Predicting spatio-temporal entropic differences for robust no reference video quality assessment. IEEE Signal Processing Letters, 28:170–174, 2021.

- [19] Anish Mittal, Anush Krishna Moorthy, and Alan Conrad Bovik. No-reference image quality assessment in the spatial domain. IEEE Transactions on image processing, 21(12):4695–4708, 2012.

- [20] Omnicore. Tiktok by the numbers.

- [21] Niki Parmar, Ashish Vaswani, Jakob Uszkoreit, Lukasz Kaiser, Noam Shazeer, Alexander Ku, and Dustin Tran. Image transformer. In Proceedings of International Conference on Machine Learning (ICML), pages 4055–4064, 2018.

- [22] Mandela Patrick, Dylan Campbell, Yuki M Asano, Ishan Misra Florian Metze, Christoph Feichtenhofer, Andrea Vedaldi, Jo Henriques, et al. Keeping your eye on the ball: Trajectory attention in video transformers. arXiv preprint arXiv:2106.05392, 2021.

- [23] Prajit Ramachandran, Niki Parmar, Ashish Vaswani, Irwan Bello, Anselm Levskaya, and Jonathon Shlens. Stand-alone self-attention in vision models. In Proceedings of Advances in Neural Information Processing Systems (NeurIPS), pages 68–80, 2019.

- [24] Zeina Sinno and Alan Conrad Bovik. Large-scale study of perceptual video quality. IEEE Transactions on Image Processing, 28(2):612–627, 2018.

- [25] Zhengzhong Tu, Yilin Wang, Neil Birkbeck, Balu Adsumilli, and Alan C Bovik. UGC-VQA: Benchmarking blind video quality assessment for user generated content. IEEE Transactions on Image Processing, 30:4449–4464, 2021.

- [26] Zhengzhong Tu, Xiangxu Yu, Yilin Wang, Neil Birkbeck, Balu Adsumilli, and Alan C Bovik. RAPIQUE: Rapid and accurate video quality prediction of user generated content. IEEE Open Journal of Signal Processing, to be published, doi: 10.1109/OJSP.2021.3090333.

- [27] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N Gomez, Łukasz Kaiser, and Illia Polosukhin. Attention is all you need. In Proceedings of Advances in Neural Information Processing Systems (NIPS), pages 5998–6008, 2017.

- [28] Xiaolong Wang, Ross Girshick, Abhinav Gupta, and Kaiming He. Non-local neural networks. In Proceedings of IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 7794–7803, 2018.

- [29] Wei Wu, Qinyao Li, Zhenzhong Chen, and Shan Liu. Semantic information oriented no-reference video quality assessment. IEEE Signal Processing Letters, 28:204–208, 2021.

- [30] Zhenqiang Ying, Maniratnam Mandal, Deepti Ghadiyaram, and Alan C Bovik. Patch-VQ: ‘patching up’ the video quality problem. In Proceedings of IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 14019–14029, 2021.

- [31] Junyong You and Jari Korhonen. Deep neural networks for no-reference video quality assessment. In Proceedings of IEEE International Conference on Image Processing (ICIP), pages 2349–2353, 2019.

- [32] Hui Zeng, Lei Zhang, and Alan C Bovik. Blind image quality assessment with a probabilistic quality representation. In Proceedings of IEEE International Conference on Image Processing (ICIP), pages 609–613, 2018.

- [33] Yu Zhang, Xinbo Gao, Lihuo He, Wen Lu, and Ran He. Blind video quality assessment with weakly supervised learning and resampling strategy. IEEE Transactions on Circuits and Systems for Video Technology, 29(8):2244–2255, 2019.