[1]\fnmFei \surSha [1]\fnmLeonardo\surZepeda-Núñez

1]\orgdivGoogle Research, \orgaddress\cityMountain View, \stateCA, \countryUSA 2]\orgdivCalifornia Institute of Technology, \orgaddress\cityPasadena, \stateCA, \countryUSA

Statistical Downscaling via High-Dimensional Distribution Matching with Generative Models

Abstract

Statistical downscaling is a technique used in climate modeling to increase the resolution of climate simulations. High-resolution climate information is essential for various high-impact applications, including natural hazard risk assessment. However, simulating climate at high resolution is intractable. Thus, climate simulations are often conducted at a coarse scale and then downscaled to the desired resolution. Existing downscaling techniques are either simulation-based methods with high computational costs, or statistical approaches with limitations in accuracy or application specificity. We introduce Generative Bias Correction and Super-Resolution (GenBCSR), a two-stage probabilistic framework for statistical downscaling that overcomes the limitations of previous methods. GenBCSR employs two transformations to match high-dimensional distributions at different resolutions: (i) the first stage, bias correction, aligns the distributions at coarse scale, (ii) the second stage, statistical super-resolution, lifts the corrected coarse distribution by introducing fine-grained details. Each stage is instantiated by a state-of-the-art generative model, resulting in an efficient and effective computational pipeline for the well-studied distribution matching problem. By framing the downscaling problem as distribution matching, GenBCSR relaxes the constraints of supervised learning, which requires samples to be aligned. Despite not requiring such correspondence, we show that GenBCSR surpasses standard approaches in predictive accuracy of critical impact variables, particularly in predicting the tails (99% percentile) of composite indexes composed of interacting variables, achieving up to 4-5 folds of error reduction.

Significance statement

Methods for downscaling coarse global climate projections to regional high resolution information fall into two categories: physics-based simulations that are computationally demanding and statistical approaches that doe not model high-dimensional distributions thus incapable of capturing important inter-variable spatio-temporal correlations. The proposed paradigm is powered by state-of-the-art generative models. It avoids the high computational cost of physical simulations, and is able to capture extreme events at the tails of the distributions more accurately than competing methods. Thus, our approach opens the door to downscaling large climate ensembles, enabling impact-relevant local climate risk assessments.

1 Introduction

Statistical downscaling is a crucial tool for studying and correlating simulations of complex dynamical systems at multiple spatio-temporal scales. For example, in climate simulations, the computational complexity of general circulation models (GCMs) [1] grows at least cubically111The number of horizontal degrees of freedom grows quadratically with resolution, while the number of time-steps increases linearly in order to satisfy the CFL condition [2]. with respect to the horizontal resolution, quickly becoming prohibitive [3]. This severely limits the resolution of long-running climate projections, the cornerstone of climate modeling, particularly as large ensembles are routinely required to assess the risk induced by climate change [4, 5], which are necessary to inform climate adaptation policies.

Consequently, climate data at impact-relevant scales needs to be downscaled from coarser lower-resolution models’ outputs. This is a challenging task: coarser models do not resolve small-scale dynamics, thus creating bias [6, 3, 7], and they lack the necessary physical details (for instance, orographic weather effects [8]) to be of practical use for regional or local climate impact studies [9, 10], including risk assessment of extreme weather events [11, 12] such as extreme flooding [13, 14], heat waves [15], or wildfires [16]. This has resulted in an acute need for high-resolution, fine-grained data for economic sectors likely to be impacted by such phenomena [17, 18, 19, 20]. In return, this need has spurred the development of downscaling techniques and frameworks [21, 22], including recent machine-learning based ones [23, 24, 25, 26, 27, 28, 29].

At the most abstract level, statistical downscaling [21, 22] learns a statistical map (e.g., quantile mapping or generalized linear regression) from low- to high-resolution data. However, it poses several unique challenges. First, unlike supervised machine learning (ML), there is typically no natural pairing of samples from the low-resolution model (such as climate models [30]) with samples from higher-resolution ones (such as weather models that assimilate observations [31]) due to the chaotic divergence of the underlying physics. That is, a coarse sample at time does not correspond to a fine-grained sample at the same — the former is not a downsampled version of the later, and finding a temporal alignment to match them is futile.

While such pairing does not truly exist [23], several recent studies in climate sciences have relied in synthetically-generated approximately-paired datasets via dynamical downscaling. Dynamical downscaling runs costly hand-tuned regional high-resolution models that incorporate the coarse data through forcing and boundary conditions [32, 33]. Despite being the current method of choice for obtaining regional climate information [34], dynamical downscaling is computationally expensive, resulting in limited data availability, restricted geographic coverage, and inadequate uncertainty quantification of the high-resolution samples. In summary, requiring data in correspondence for training severely limits the potential applicability of supervised ML methodologies, despite their promising results [24, 25, 35, 26, 27, 28].

Second, unlike the setting of (image) super-resolution [36], in which an ML model learns the (pseudo) inverse of a downsampling operator [37, 38], downscaling additionally requires bias correction. Super-resolution can be recast as frequency extrapolation [39], in which the model reconstructs high-frequency content, while matching the low-frequency information of a low-resolution input. However, the restriction of the target high-resolution data may not match the distribution of the low-resolution data in Fourier space [40]. Therefore, debiasing is necessary to correct the Fourier spectrum of the coarse input to render it admissible for the target distribution [41]. Debiasing allows us to address the crucial yet challenging prerequisite of aligning the low-frequency statistics between the low- and high-resolution datasets.

Given these two difficulties, statistical downscaling can be naturally framed as aligning two probability distributions, at different resolution222This problem is related to the well-known Gromov-Wasserstein problem [42]., linked by an unknown map; such a map emerges from both distributions representing the same underlying physical system, albeit with different characterizations of the system’s statistics at multiple spatio-temporal resolutions. The core challenge is then: how do we structure the downscaling map so that the (probabilistic) alignment can effectively remediate the bias introduced by the coarser data distribution?

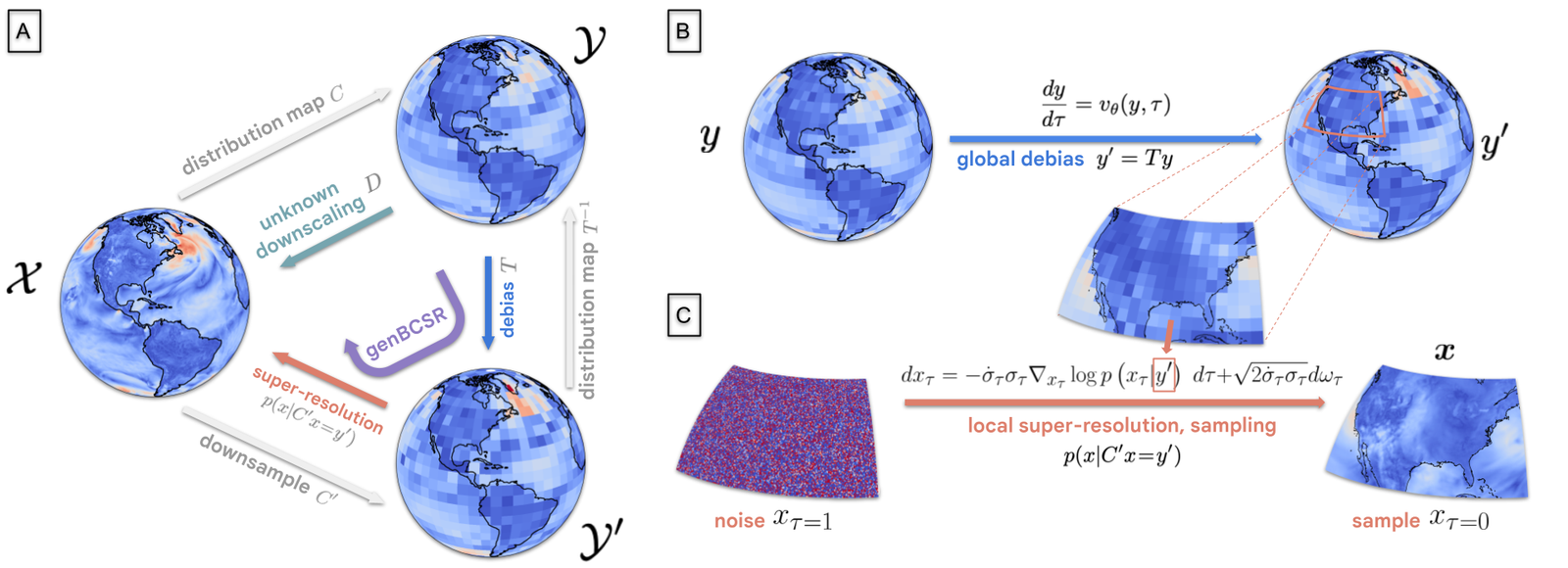

In this work, we introduce GenBCSR, Generative Bias Correction and Super-Resolution, a framework for statistical downscaling that induces a factorization of two tasks: bias correction (or debiasing) and super-resolution (or upsampling), which are instantiated using state-of-the-art generative models and applied to a challenging downscaling problem.

The bias correction step is reduced to the alignment of two distribution manifolds: the weather manifold and the climate simulation manifold. These manifolds stem from the dissipative nature of the underlying dynamical systems, which induces the data distribution to concentrate on a relatively low-dimensional manifold. The alignment is achieved using a rectified flow approach [43] that approximately solves an optimal transport problem [44], which preserves the marginals of each manifold. As such, the alignment depends on the upstream physics of the climate model, as it dictates the underlying structure of the climate simulation manifold.

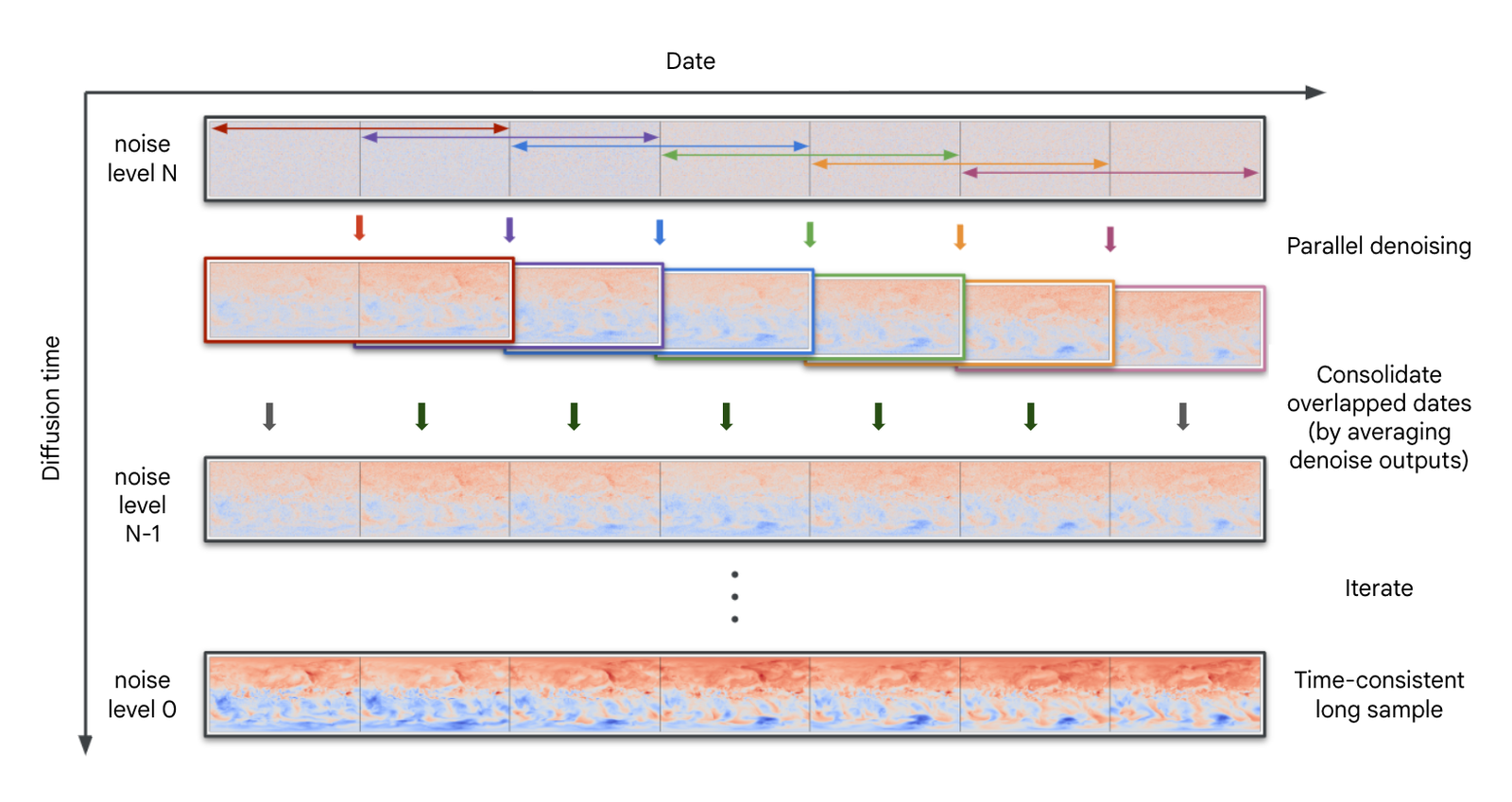

The super-resolution step is a purely statistical task that relies in learning local correlations in the weather data. As such, it is completely decoupled from the climate physics modeling and it is performed only on the weather manifold, for which, we employ a probabilistic diffusion model [45] coupled with a domain decomposition technique [46] to perform regional upsampling in both space and time, obtaining arbitrarily long time-coherent samples.

We showcase our methodology by downscaling an ensemble of coarse climate simulations [47] to high-resolution local weather sequences. Even when using a handful of climate trajectories for training, we show that our strategy is able to accurately capture statistics for the full ensemble. We also show that our methodology vastly outperforms the popular BCSD method, even when the latter is trained using the full ensemble, at capturing inter-variable and spatiotemporal correlations necessary for compound events such as heat-streaks and cyclones, while remaining competitive at capturing pointwise statistics.

2 Methods

As statistical downscaling is designed to address probabilistic questions, it focuses on distributions rather than individual trajectories. Typically, this involves processing an ensemble of climate trajectories, whose snapshots are subsequently downscaled to the desired resolution.

We formulate the statistical downscaling problem by considering two stochastic processes, and with , representing a high-resolution weather process and low-resolution simulated climate process [48] respectively, governed by

| (1) | ||||

| (2) |

where embodies the generally unknown high-fidelity dynamics of , and the dynamics of are often parameterized by a stochastically forced GCM [49], in which the form of is a modelling choice. Each stochastic process333For simplicity in exposition, we follow [49] where the important time-varying effects of the seasonal and diurnal cycles have been ignored, along with jump process contributions. is associated with a time-dependent measure, and , such that and , each governed by their corresponding Fokker-Planck equations. We assume a time-invariant unknown statistical model that relates and via a possibly nonlinear downsampling map. For brevity, we omit the time-dependency of the random variables and in subsequent discussion.

In general, (2) is calibrated via measurement functionals to (1) using a single observed trajectory: the historical weather. The goal of statistical downscaling is to approximate the inverse of with a downscaling map , trained on data for , for a finite horizon , such that for . Here, denotes the push-forward measure of through , and is assumed to be time-independent.

Setup

Given its probabilistic nature, we reformulate the task of finding as sampling from a conditional distribution [50]. We define the operator , where is the identity map, such that , where is the underlying unknown joint distribution. Assuming this joint distribution admits a conditional decomposition, we have:

| (3) |

where is time-independent. Thus, we recast statistical downscaling as learning to sample from , which allows us to compute statistics of interest of via Monte-Carlo methods. A cornerstone of our approach is leveraging the map to compute this conditional probability: given a low-resolution realization , we rewrite as the conditional probability distribution .

Finally, as is assumed time-independent we model the elements and as random variables with marginal distributions, and where and . Thus, our objective is to sample given only access to marginal samples of and .

Main idea

In general, downscaling is an ill-posed problem as is unknown. Therefore, we seek to approximate so the statistical properties of are preserved given samples of . In particular, such a map should satisfy . Thus, following [41], we impose a structured ansatz to approximate by factorizing it as the composition of a known, non-invertible downsampling map444Here we suppose that the downsampling map acts both in space and in time, by using interpolation in space, and by averaging in time using a window of one day. and an invertible debiasing map :

| (4) |

or alternatively, . This factorization explicitly decouples the two objectives of downscaling: debiasing and upsampling.

The range of defines an intermediate space of debiased/low-resolution samples with measure (see Fig. 1). The joint space is built by projecting samples of into , i.e., ; see Fig. 1(a). Using these spaces, we decompose the statistical downscaling problem into the following two sub-problems:

Generative methods

In this paper we leverage two state-of-the-art generative AI techniques to build the bias correction and super-resolution maps: the bias correction step is instantiated by a conditional flow matching method [43], whereas the super-resolution step is instantiated by a conditional denoising diffusion model [51] coupled with a domain decomposition strategy [46] to upsample both in space and time and create time-coherent sequences.

Bias correction

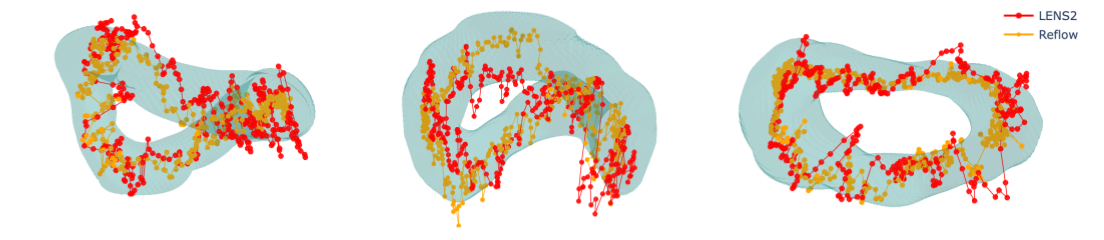

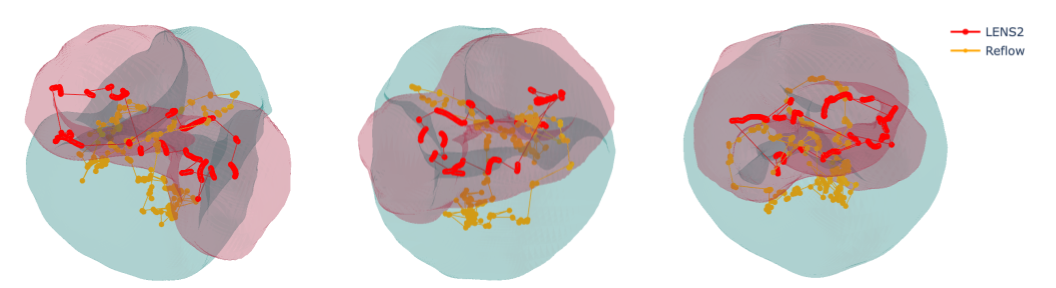

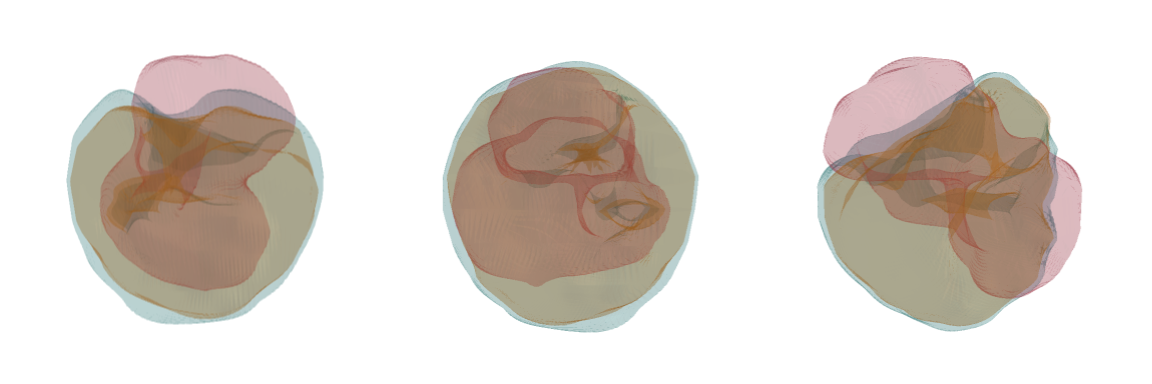

For debiasing, we leverage manifold alignment, which arises in many applications, such as domain adaptation [52], where it seeks to generalize models by matching the training and testing distributions, particularly when they are concentrated in low-dimensional manifolds. The debiasing map T, which aligns both the climate and weather manifolds (for a visualization see S7.2), also needs to preserve large features of the input, while correcting its statistical properties. Thus, colloquially, the transformation should not "move" too much mass with respect to the input .

This notion has a long story in applied mathematics going back to Gaspar Monge in the late 1700s and Leonid Kantorovich in the 50s, who formalized this idea, and kicked off the field of optimal transport [44]. Optimal transport seeks to solve the problem

| (5) |

for a cost function , which we assume to be the Euclidean distance. Note that by construction, the debiasing map satisfies the required constraints.

Due to limitations of existing methods for solving (5) (which are briefly summarized in S1.1), we adopt a rectified flow approach [43], a methodology under the umbrella of generative models. Rectified flow solves an optimal transport problem, and it has empirically shown to generalize well while being able scale to large dimensions.

Thus we define the map as the resolvent of a ODE given by

| (6) |

whose vector field is parametrized by a neural network (see S2.1.1 for further details). By identifying the input of the map as the initial condition, we have that .

We train by solving

| (7) |

where , and is the set of couplings with marginals given by (4). The choice of the coupling is essential to obtain a correct mapping as most of the physical information, such as seasonality, is encoded in this coupling. Once the model is trained, we solve (6) using an adaptive Runge-Kutta solver. One of the main advantages of this approach relies on the fact that trajectories of smooth autonomous systems555The system in (6) is not technically autonomous, but a simple change of variables to can produce an equivalent autonomous system. can not intersect, thus as the input distribution shifts due the climate change signal, the push-forward to also needs to shift to avoid intersecting trajectories. This is an important property to preserve and we demonstrate later in Figure 5.

Super-resolution

Following alignment, the resulting samples are super-resolved in space and in time to reconstruct extended trajectories. Due to the statistical nature of this task, we leverage a conditional diffusion model, given its ability to capture underlying distributions, which is vital due to the potentially large super-resolution factor. However, this process introduces two significant challenges: i) the presence of distribution shifts in the climate projection statistics, which we aim to preserve, and ii) computational resource constraints, which require the trajectories to be processes in batches, impacting its temporal coherence.

To avoid pollution stemming from color shifts [53], due to the inherent distribution shifts in our downstream tasks, we further decompose the upsampling stage in two steps: a linear step, corresponding to cubic interpolation, and a generative step that samples the conditional residual between the original sample and its interpolation. This procedure increases robustness to distribution shifts, as the interpolation is equivariant to shifts in the mean [29].

We denote the low-resolution debiased short sequence stemming from applying to a sequence of adjacent snapshots. Following Figure 1 the upsampling stage requires sampling from , where is the high-resolution spatio-temporal sequence, is the downsampling operator, which downsamples (by interpolation plus a spectral cut-off) in space and computes daily averages in time. We define as a linear deterministic upsampling map666In principle this step can be instantiate by any deterministic upsampling map., which spatially interpolates and replicates the result across the time dimension. Then, if we define the residual as we can recast the super-resolution step as sampling from

| (8) |

This expression is instantiated by a conditional diffusion model [51] which is trained in very short trajectories pairs . This allows to define the paired residual . During training, we learn a denoiser by optimizing

| (9) |

where is a training distribution for the noise levels . predicts the clean sample of the residual given a noisy sample [54]. More details on the diffusion model formulation and training are included in S2.2.1. The learned becomes part of the sampling SDE where for a fixed coarse input , we sample the residual by solving

| (10) |

backwards in diffusion time from to with terminal condition , where is the noise schedule.

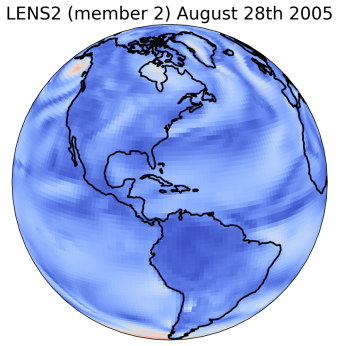

To generate long time-coherent sequences we leverage the mathematical underpinnings of sampling with diffusion models, which is reduced to solving a reverse underdamped Langevin SDE along an artificial diffusion time. As such, the temporal component of the samples becomes just another dimension, thus rendering it amenable to domain decomposition techniques, as the ones used in video generation [46].The sample generation is decomposed along the time axis in overlapping domains, at each time step each domain is advanced in diffusion time, and continuity is imposed in the overlapping regions, with an appropriate correction on the statistics of the noise, which ultimately results in time-coherent samples of arbitrary length. Further details can be found in S2.2.4.

3 Results

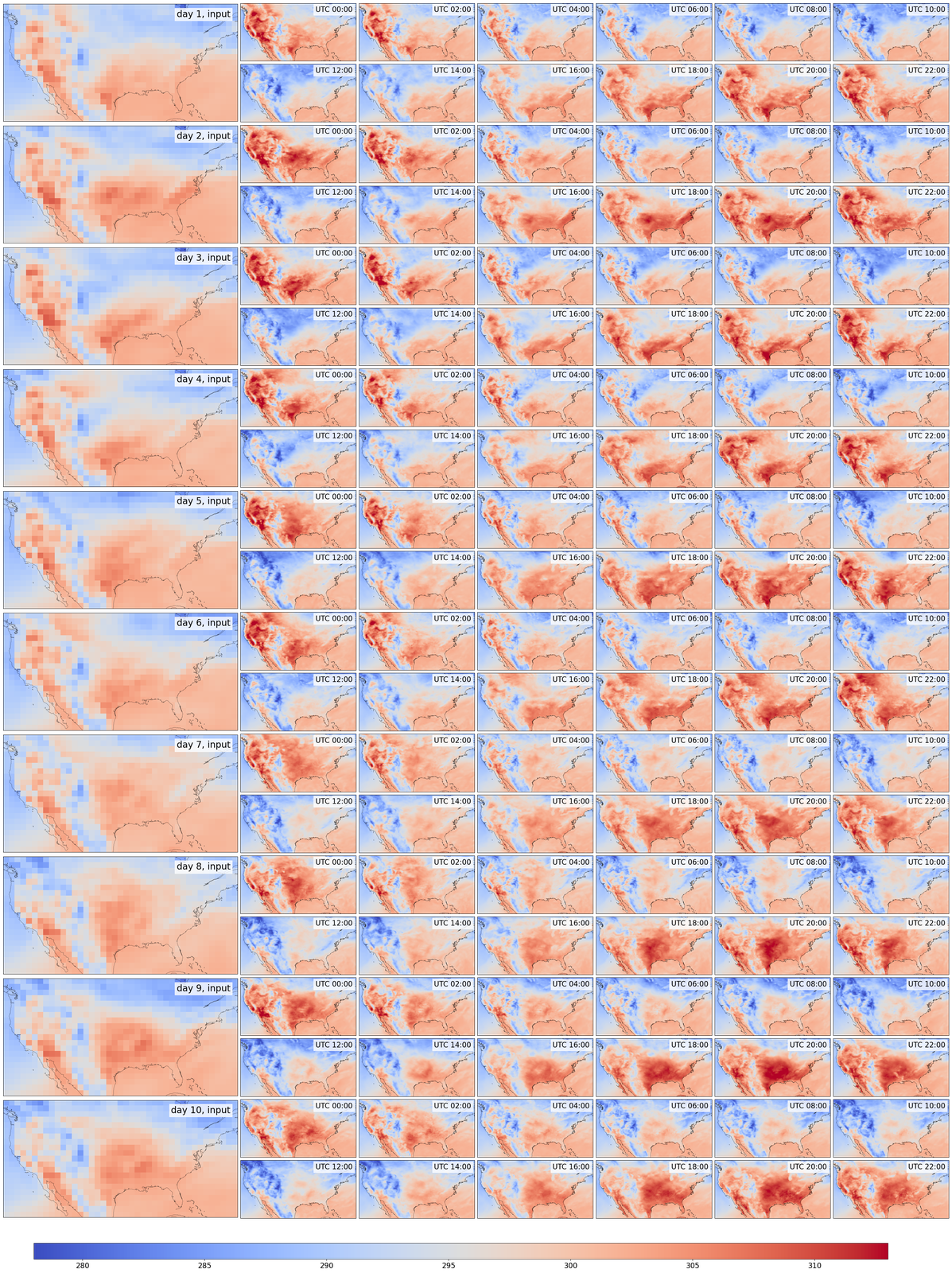

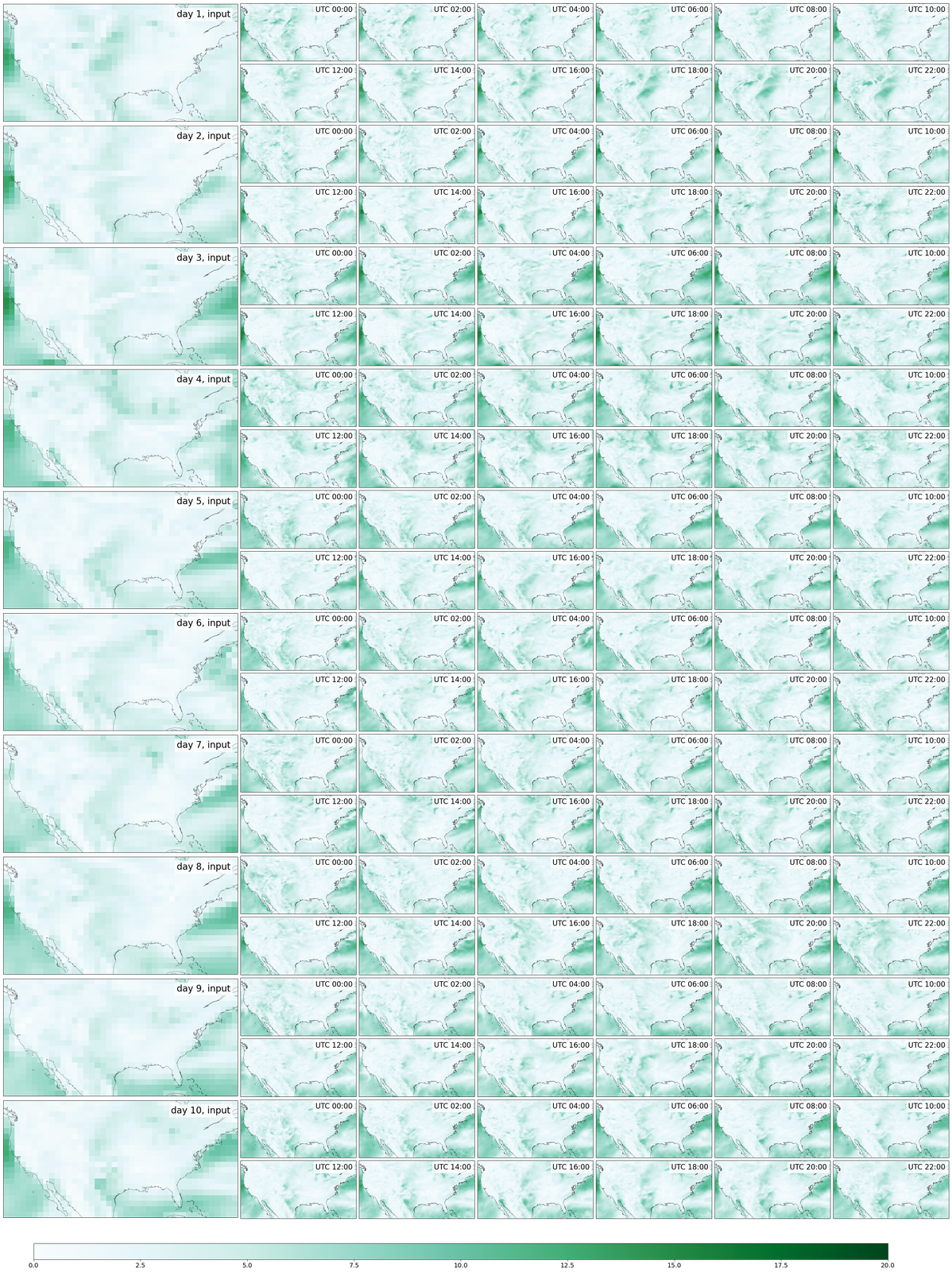

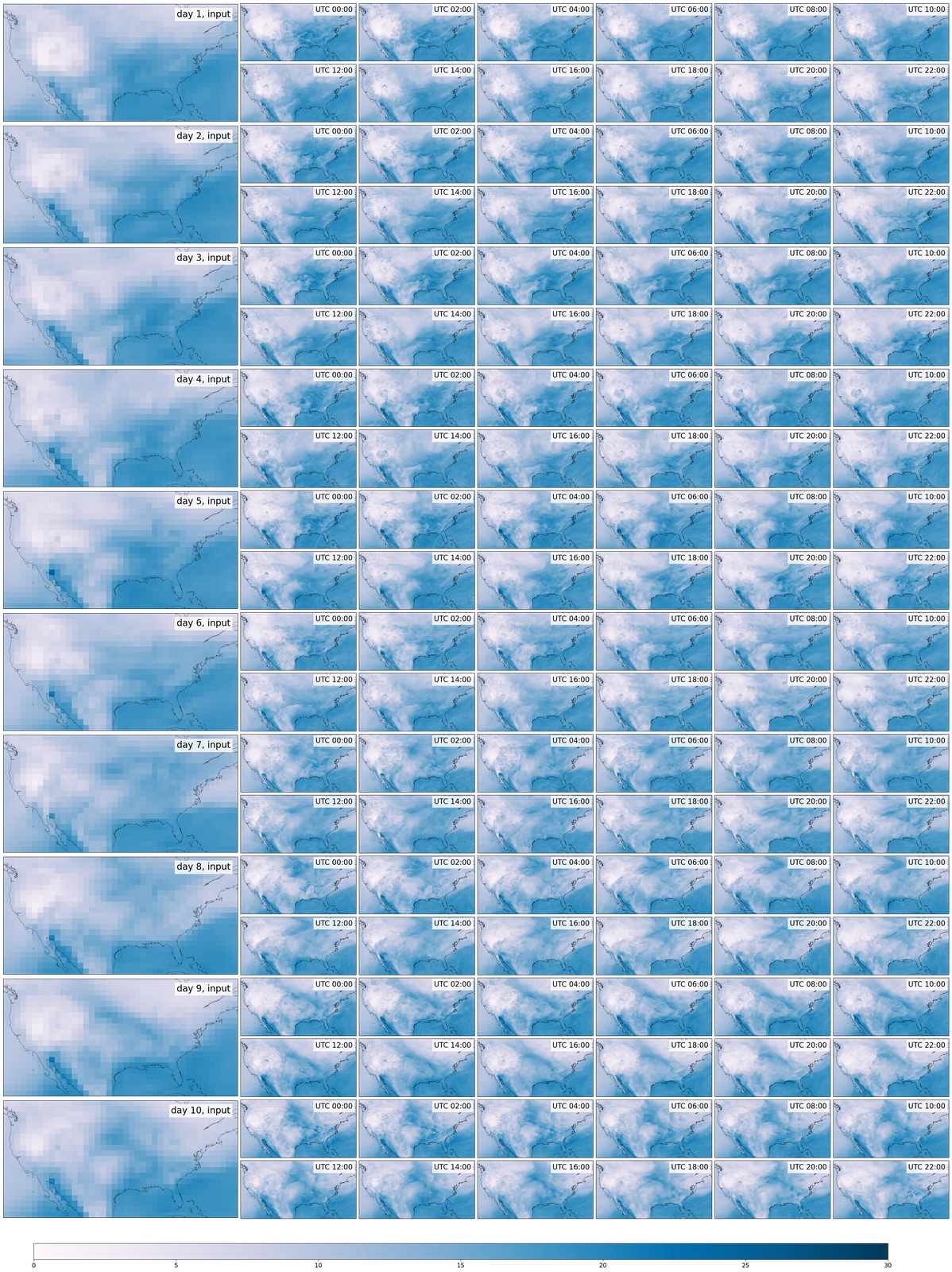

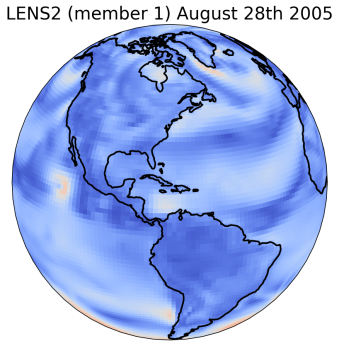

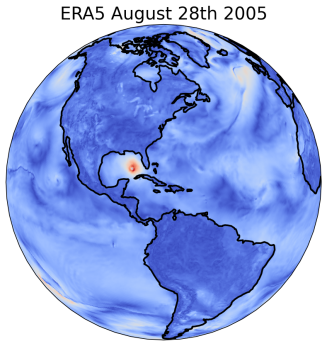

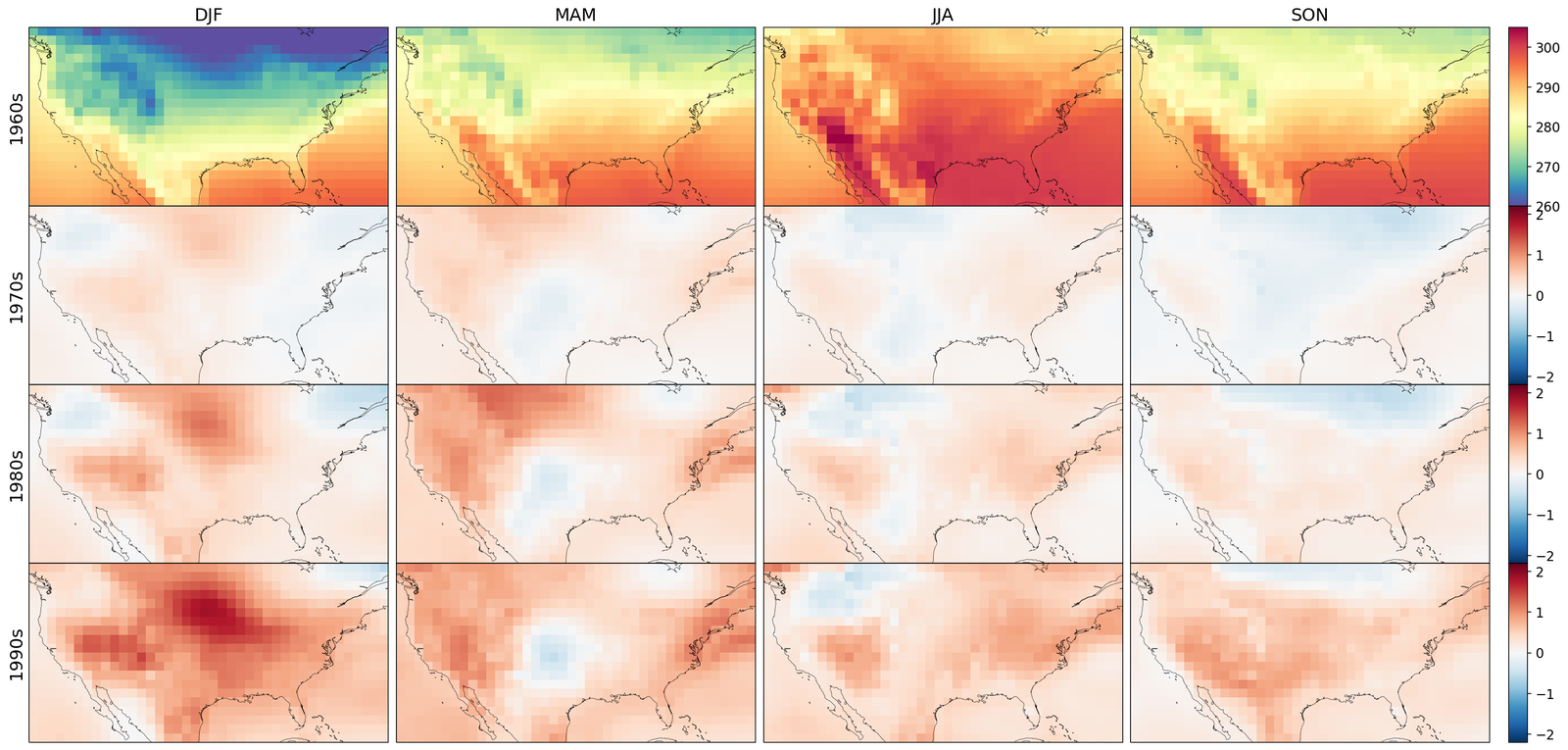

We illustrate the performance of our proposed method on a real-world, large-scale downscaling task. The coarse-resolution input is derived from the 100-member ensemble produced by the CESM2 Large Ensemble Community Project (LENS2) [47], regridded to a 1.5-degree (150 km) spatial resolution and daily averaged. Our objective is to statistically downscale key surface variables, including temperature, wind speed, humidity and sea-level pressure, to match the finer spatial-temporal characteristics of corresponding variables in the ERA5 reanalysis dataset [31], with a target resolution of 0.25-degree (25 km) in space and bi-hourly in time. This results in increasing the total resolution by a factor of 432 (). The bias correction step is applied globally, followed by regional super-resolution within a domain enclosing the Conterminous United States (CONUS)777Our approach is not region-specific. We have included results for other regions in S6.. We train the debiasing stage using as input data from 4 LENS2 ensemble members covering the period 1959-2000, and as target ERA5 reanalysis data for the same period, regridded to the LENS2 spatial-temporal resolution. Model selection and validation is performed against data from the entire LENS2 ensemble covering 2001 to 2010. The upsampling stage is trained by pairing the coarse-grained ERA5 data to its native resolution bi-hourly version. We present results for the test period from 2011 to 2020, by comparing various statistics against ERA5 observations during the same period. To prevent the results from being dominated by seasonal cycles, which have overwhelming effects on the total variations, we concentrate our evaluation on the month of August across all years.

Baselines.

We compare results from GenBCSR to output from the bias correction and spatial disaggregation (BCSD) method, a widely used statistical climate downscaling technique [55, 56, 57] (detailed in S2.3.1). BCSD employs quantile mapping (QM) between biased coarse-resolution inputs and an unbiased high-resolution reference climatology to correct biases and enhance spatial resolution, followed by a temporal disaggregation step using weighted analogs. Despite its widespread use, BCSD has notable limitations [58]. It cannot capture multivariate dependencies, often resulting in inconsistencies between related variables, and it struggles to accurately represent extreme events such as cyclones or heatwaves. These shortcomings are largely addressed by GenBCSR, as supported by the evidence presented below.

To assess the impact of the debiasing step, we also examine two alternative configurations of GenBCSR: one combining the QM-based debiasing step with generative super-resolution (QMSR) and another applying generative super-resolution directly to the original LENS2 trajectories without debiasing (SR).

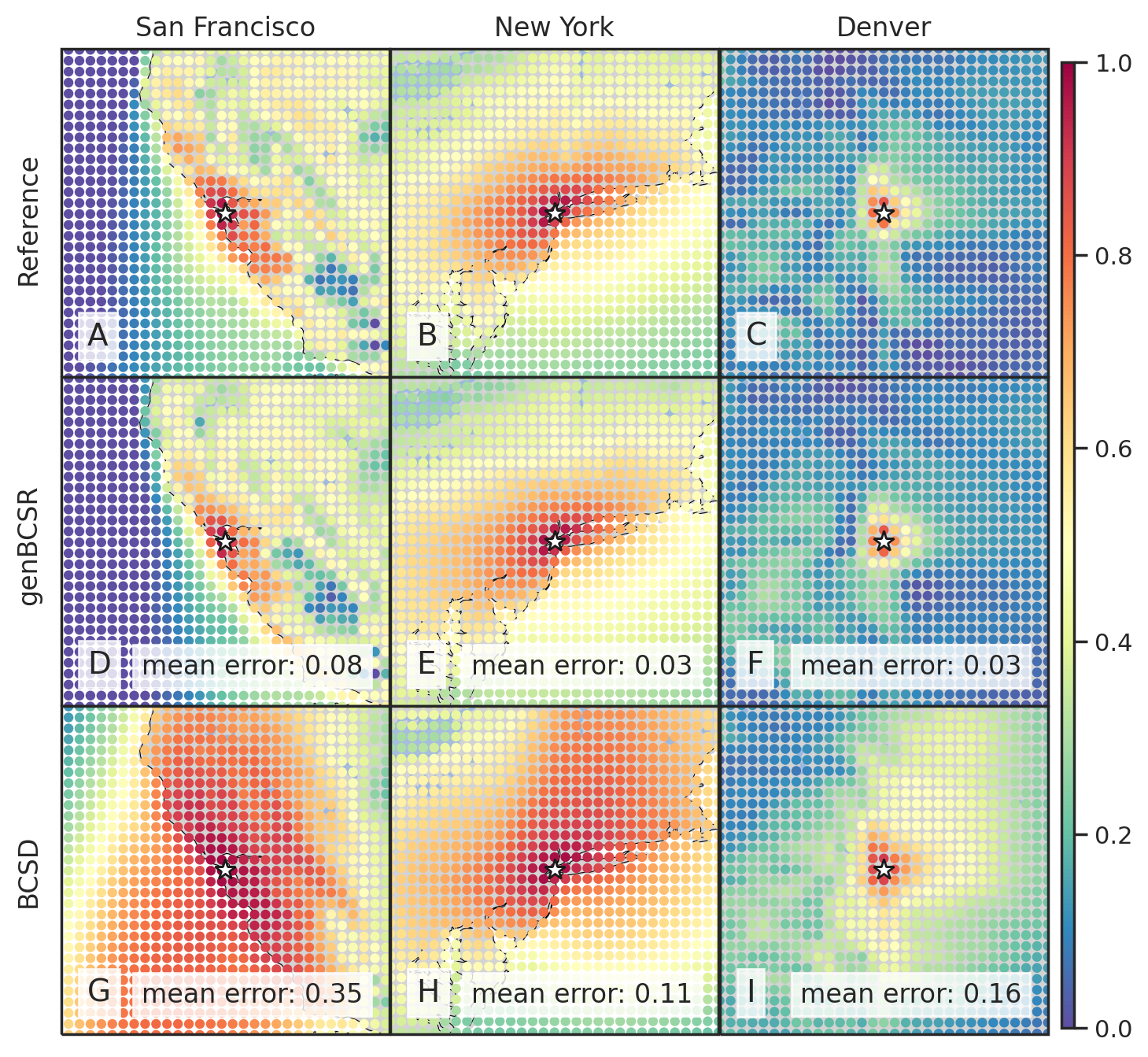

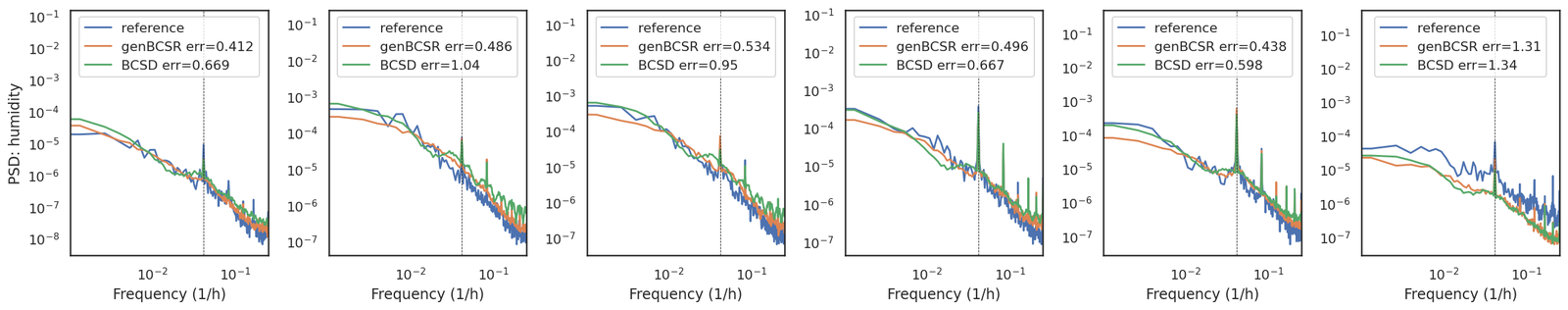

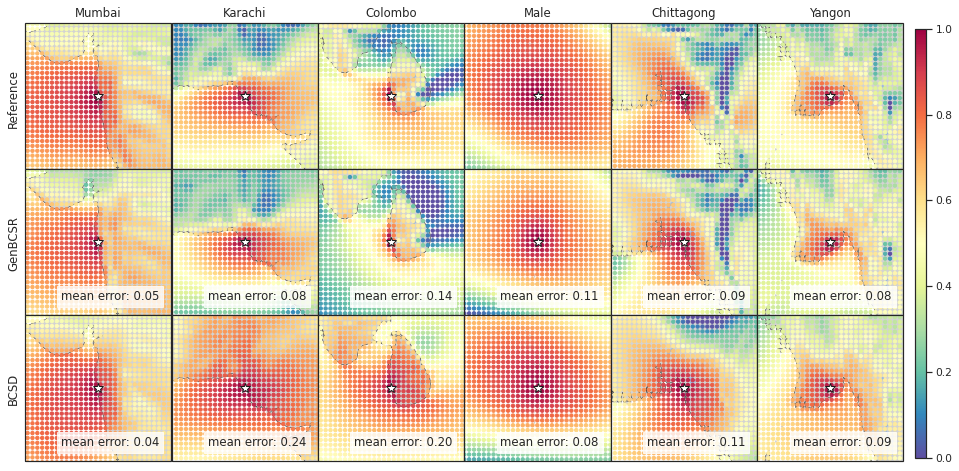

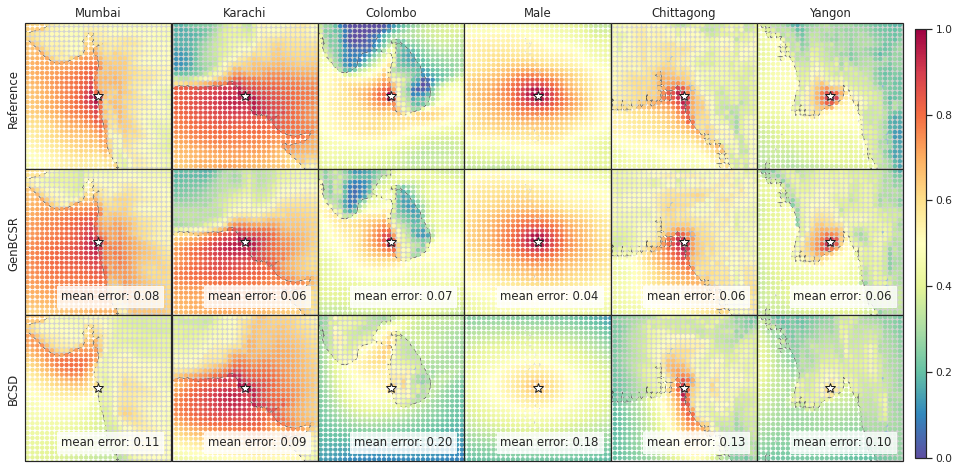

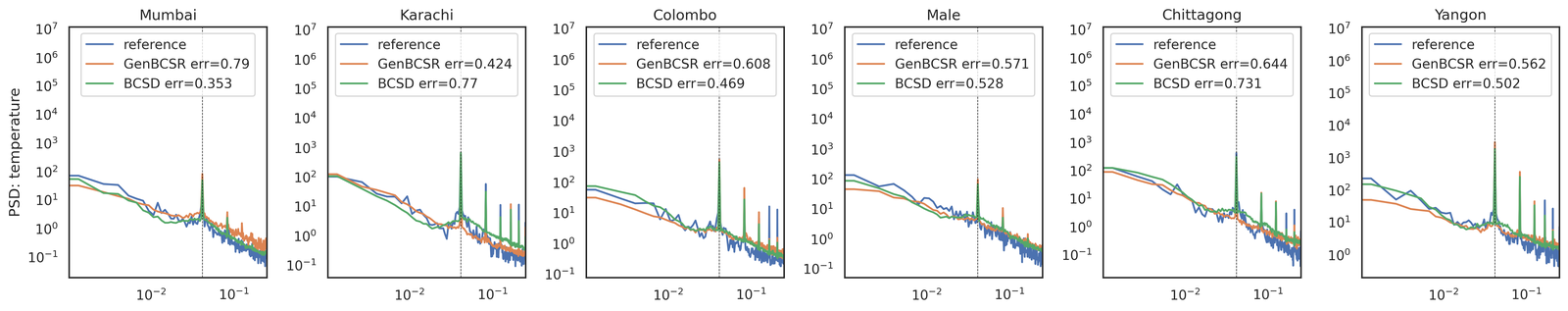

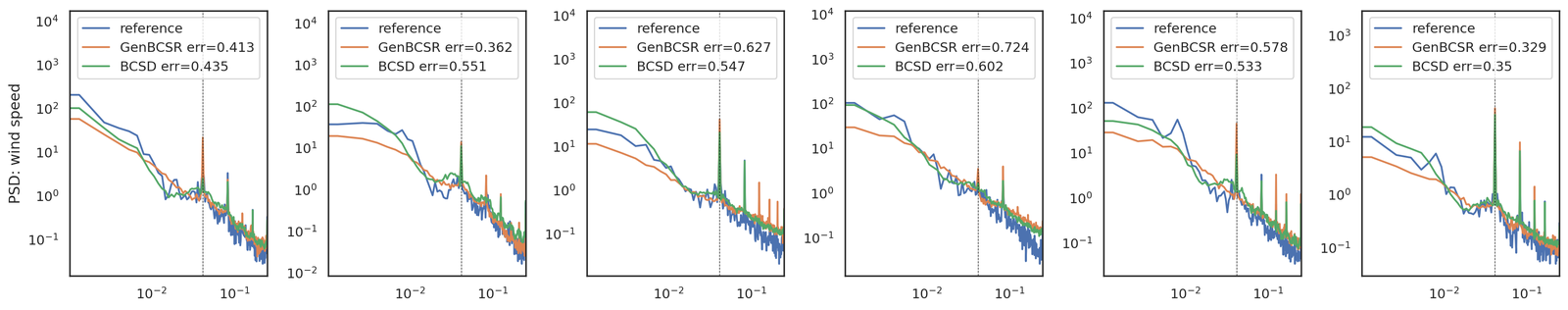

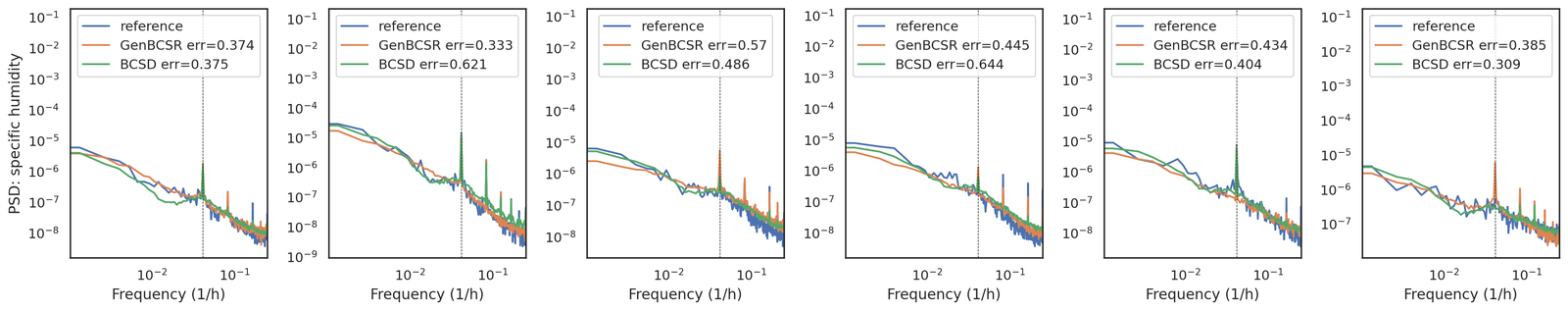

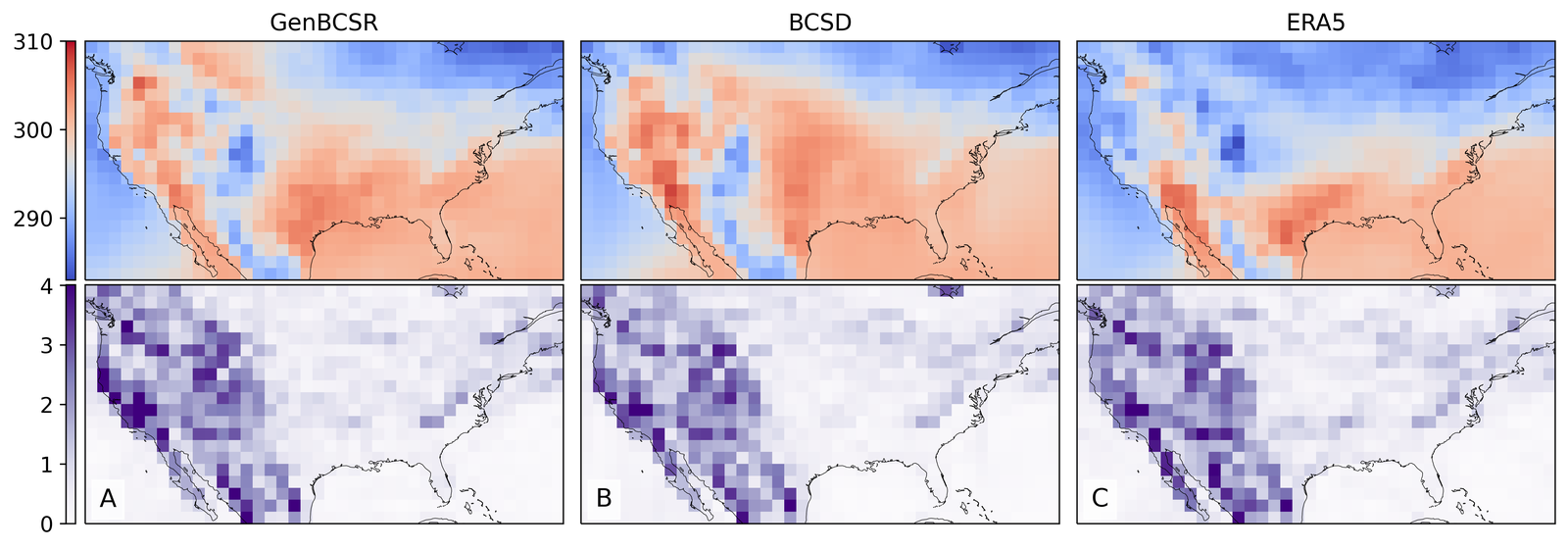

Consistent representations of multi-scale variability.

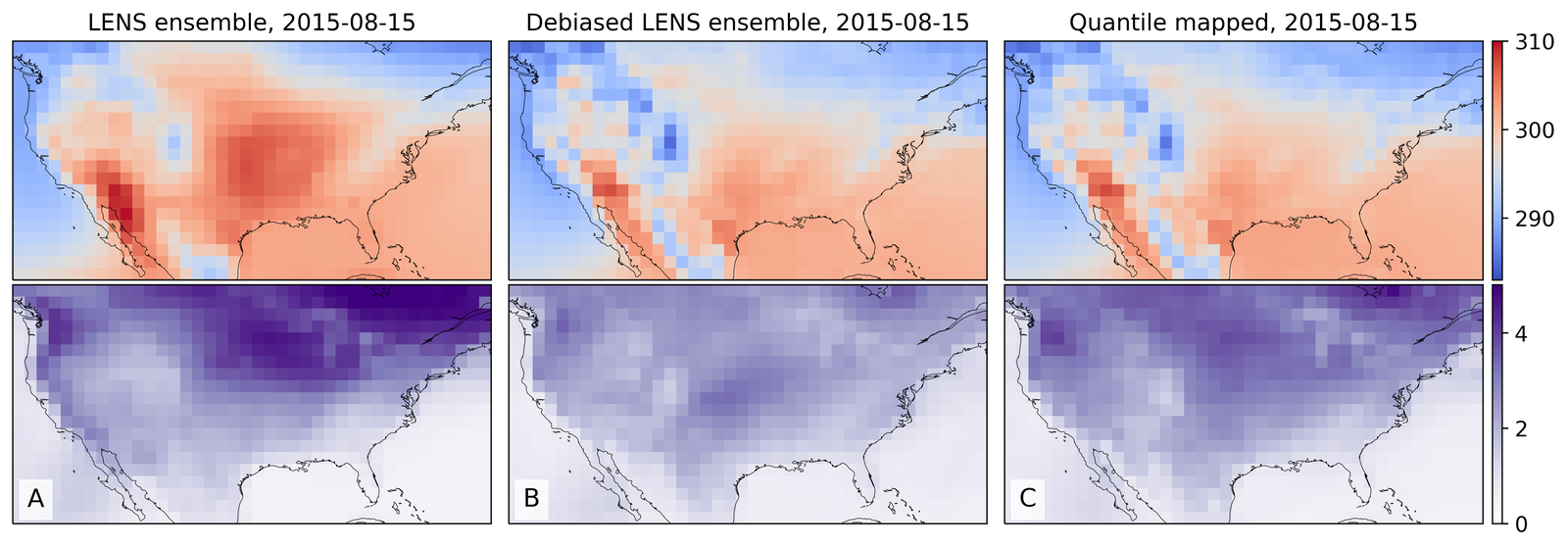

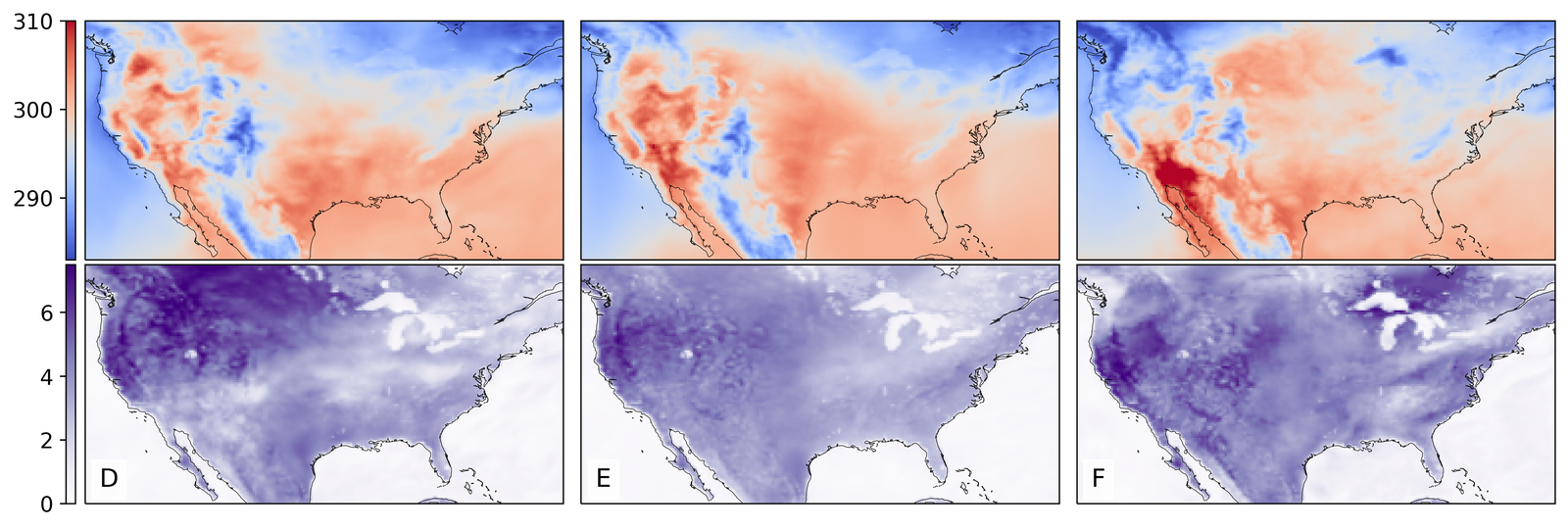

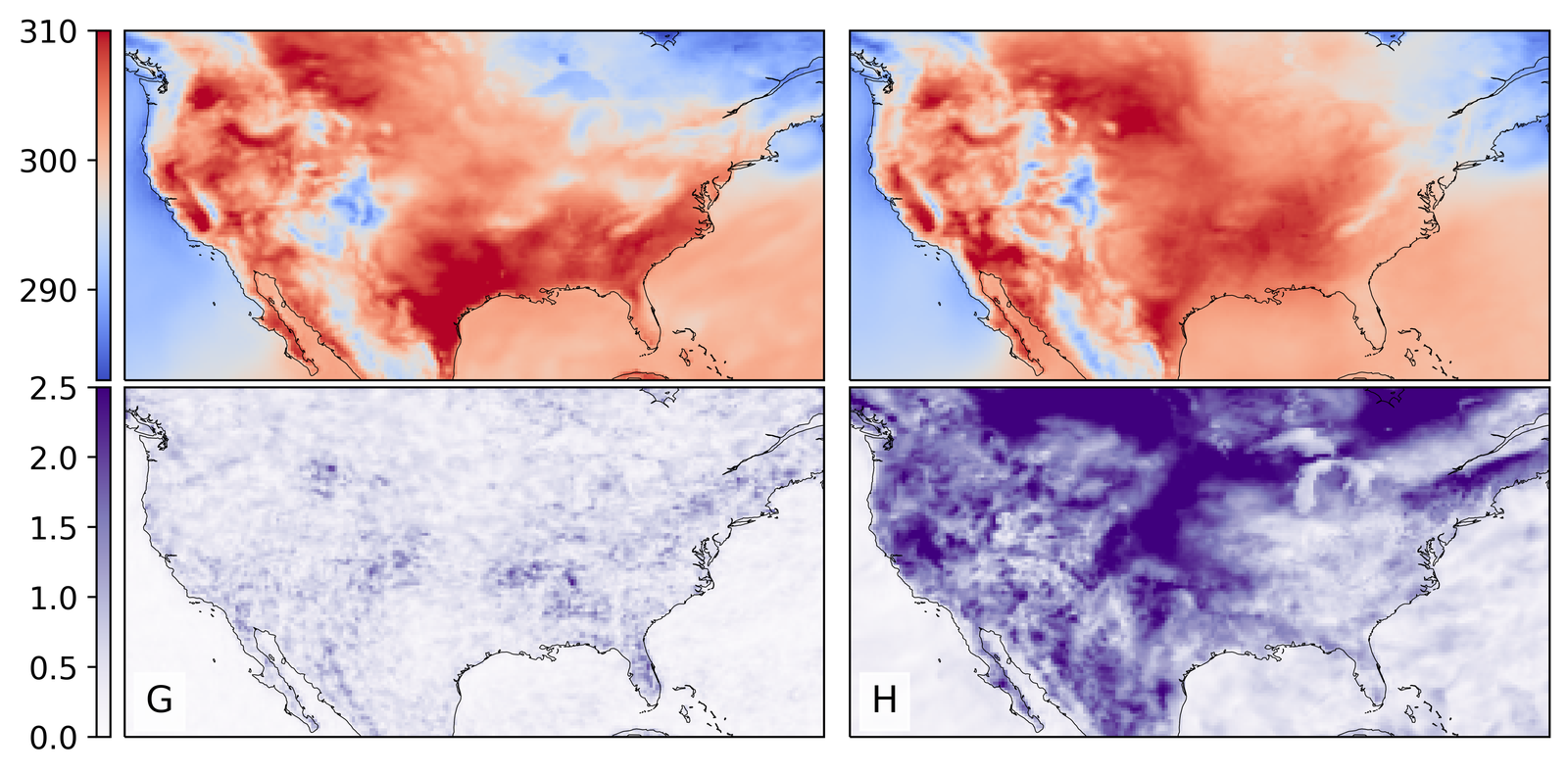

As shown in Figure 2, GenBCSR achieves consistency with large-scale inputs while introducing realistic fine-scale variability. In the debiasing step, GenBCSR modifies the original inputs (A) to better align with coarsened ERA5 observations (D). During the super-resolution step, GenBCSR (E) preserves the large-scale conditioning (B) more effectively than BCSD (F), which displays noticeable deviations from its inputs (C). Additionally, GenBCSR introduces small-scale variability, capturing spatial, diurnal, and systematic uncertainty components (S7.3). This balance between large-scale consistency and fine-scale detail highlights GenBCSR’s strength in producing reliable multi-scale downscaling results.

Effective debiasing leads to accurate inter-variable correlations and tail probabilities.

| Variable | Mean Absolute Bias | Wasserstein Distance | Mean Absolute Error, 99% | |||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| GenBCSR | BCSD | QMSR | SR | GenBCSR | BCSD | QMSR | SR | GenBCSR | BCSD | QMSR | SR | |

| Temperature (K) | 0.74 | 0.70 | 0.65 | 2.68 | 0.79 | 0.78 | 0.76 | 2.72 | 0.88 | 1.07 | 1.02 | 3.17 |

| Wind speed (m/s) | 0.19 | 0.17 | 0.16 | 1.90 | 0.28 | 0.31 | 0.25 | 1.92 | 0.64 | 0.73 | 0.59 | 2.66 |

| Specific humidity (g/kg) | 0.49 | 0.45 | 0.46 | 1.55 | 0.55 | 0.54 | 0.52 | 1.63 | 0.56 | 0.81 | 0.69 | 1.77 |

| Sea-level pressure (Pa) | 68.28 | 64.13 | 63.47 | 184.53 | 67.46 | 66.92 | 65.40 | 189.23 | 97.31 | 133.29 | 111.01 | 276.87 |

| Relative humidity (%) | 2.89 | 3.21 | 3.10 | 9.35 | 3.12 | 4.43 | 3.43 | 9.52 | 3.05 | 14.00 | 4.07 | 8.16 |

| Heat index (K) | 0.58 | 0.75 | 0.72 | 2.54 | 0.64 | 0.89 | 0.77 | 2.59 | 1.35 | 1.77 | 1.50 | 4.02 |

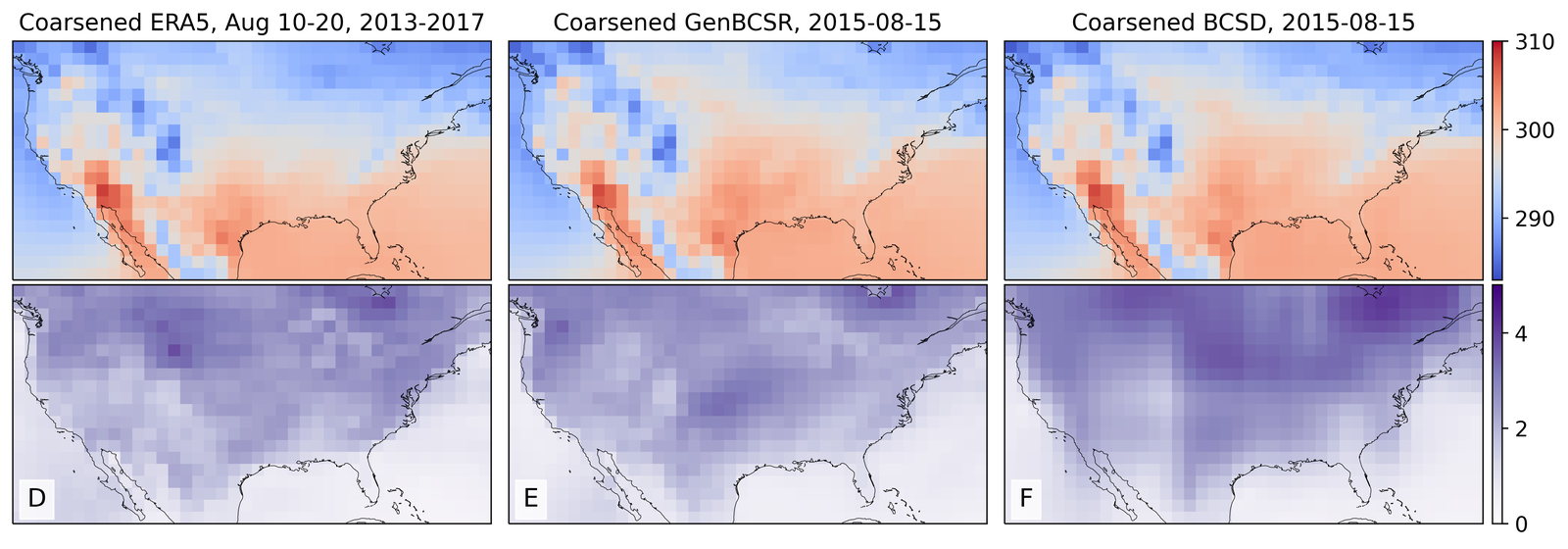

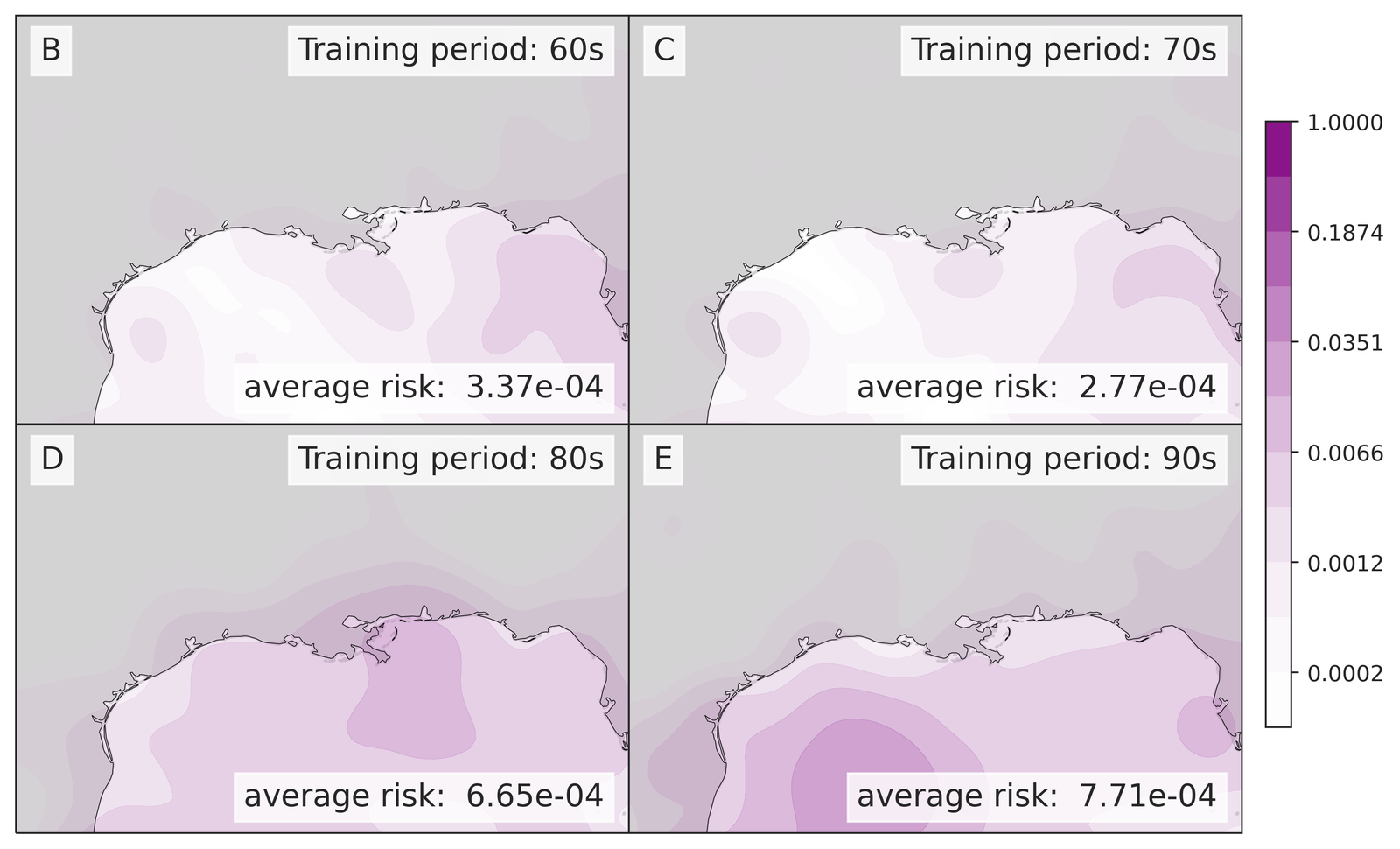

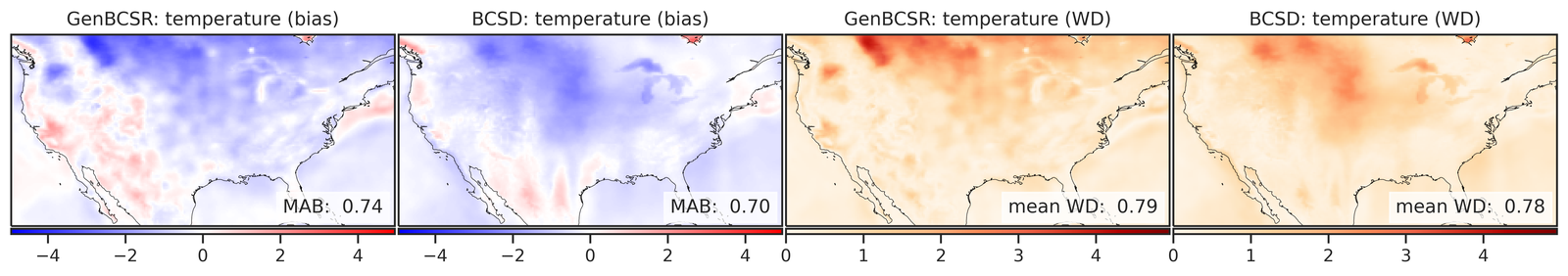

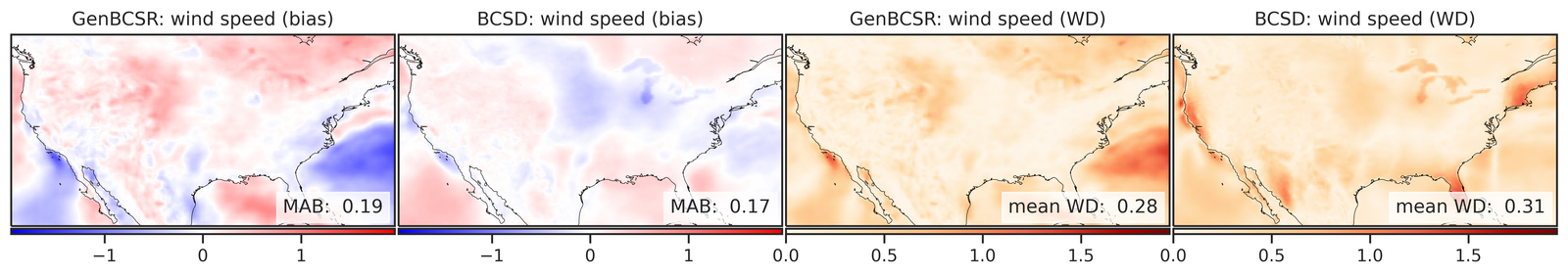

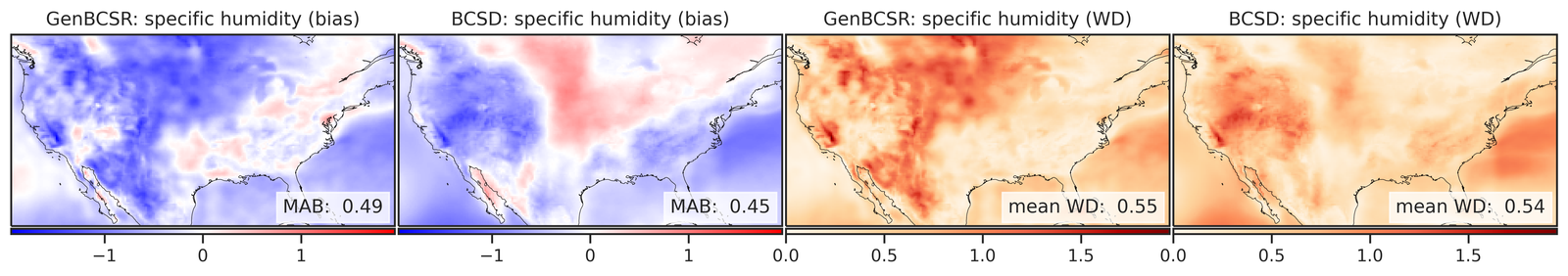

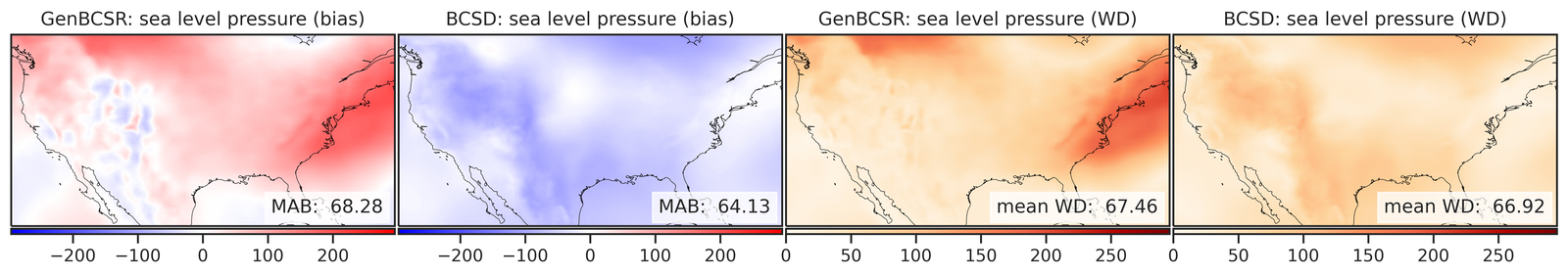

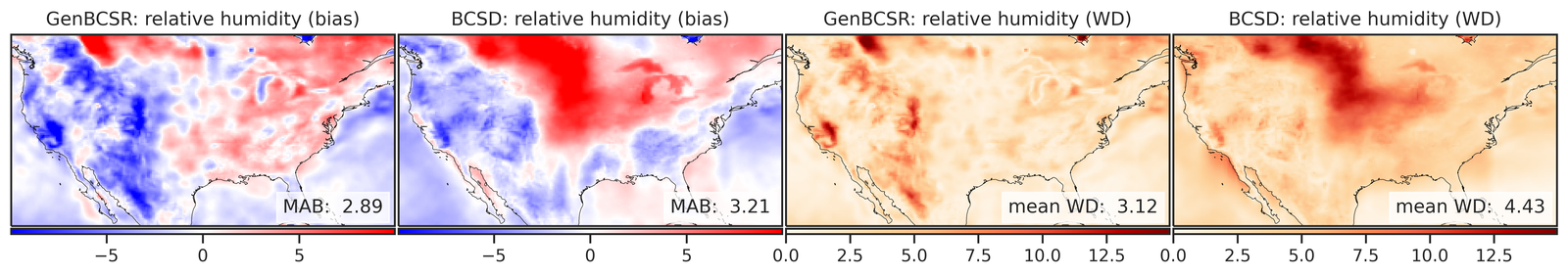

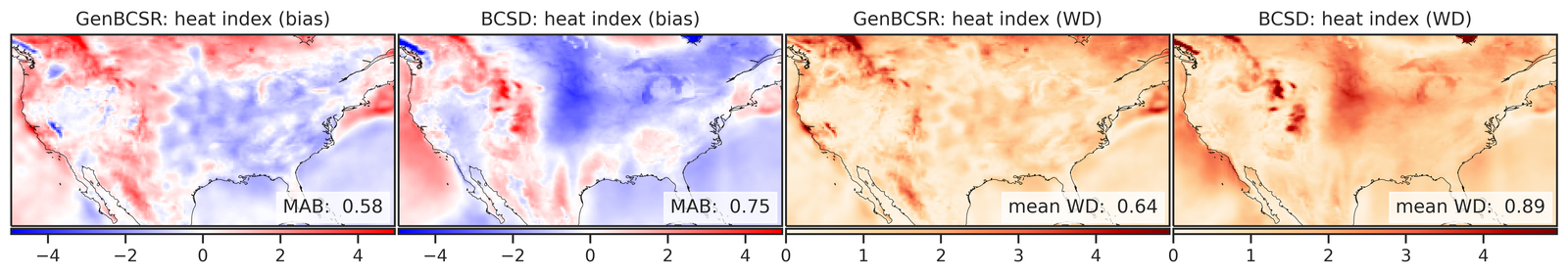

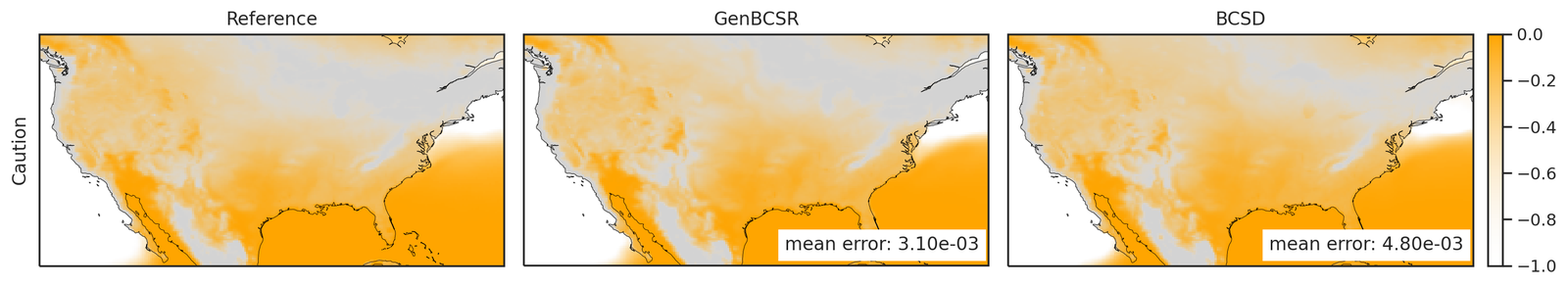

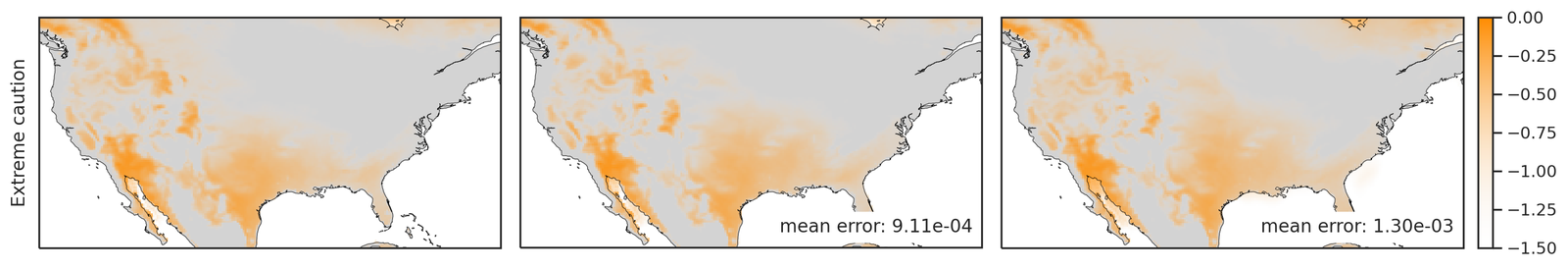

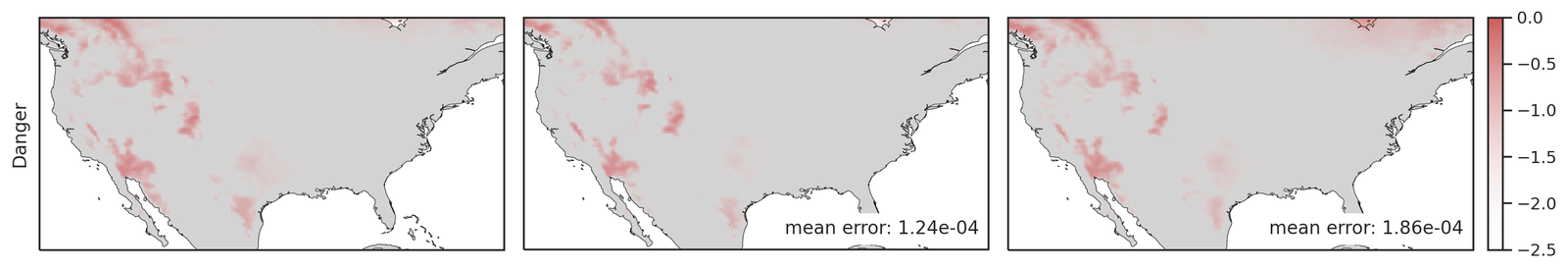

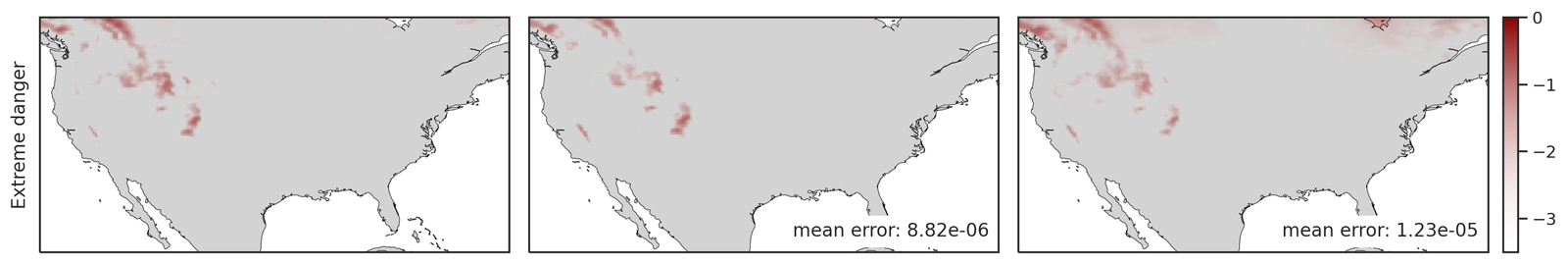

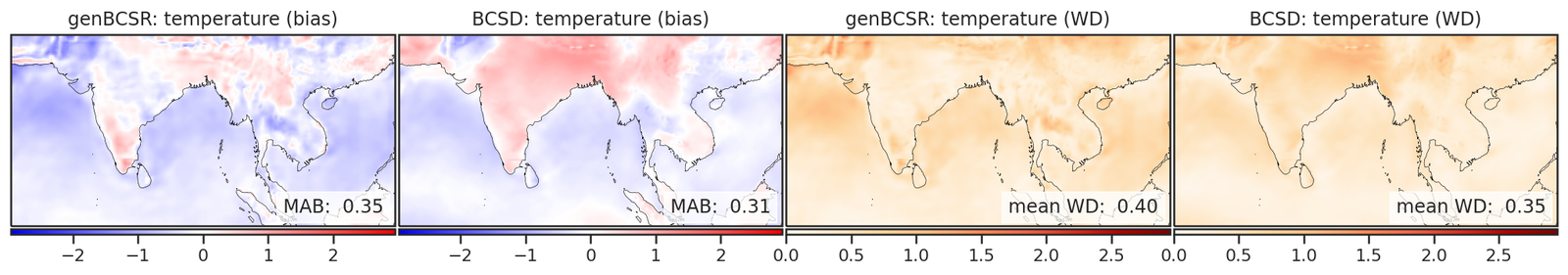

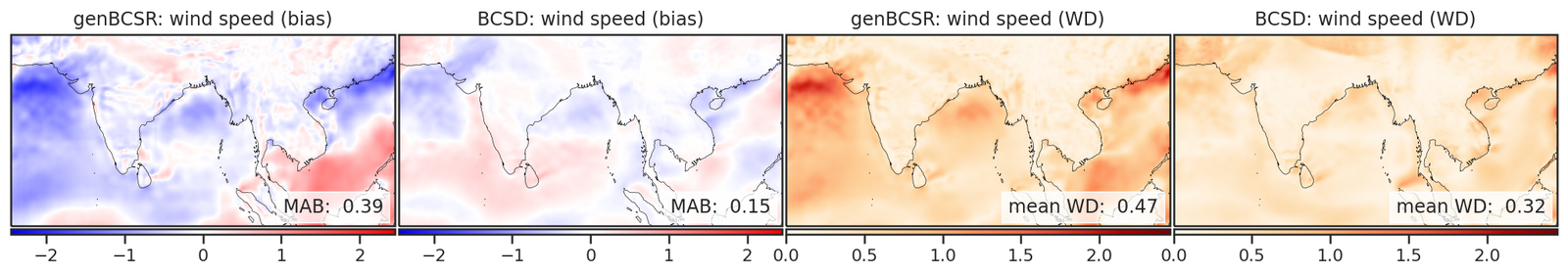

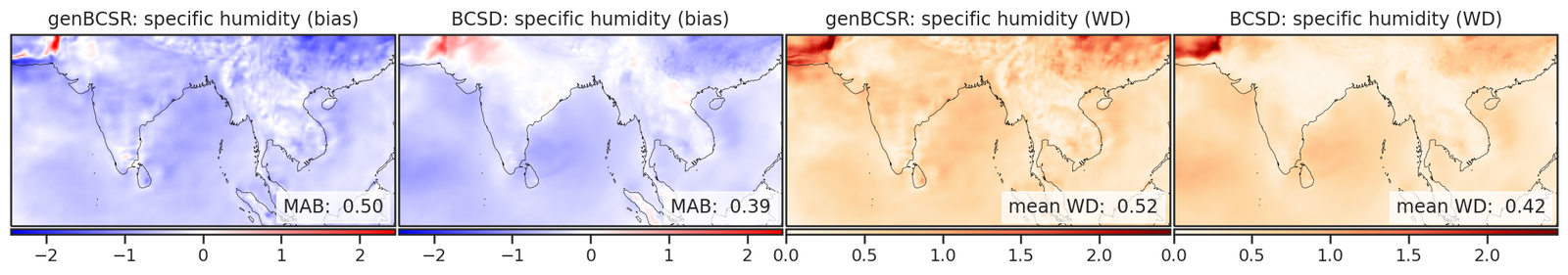

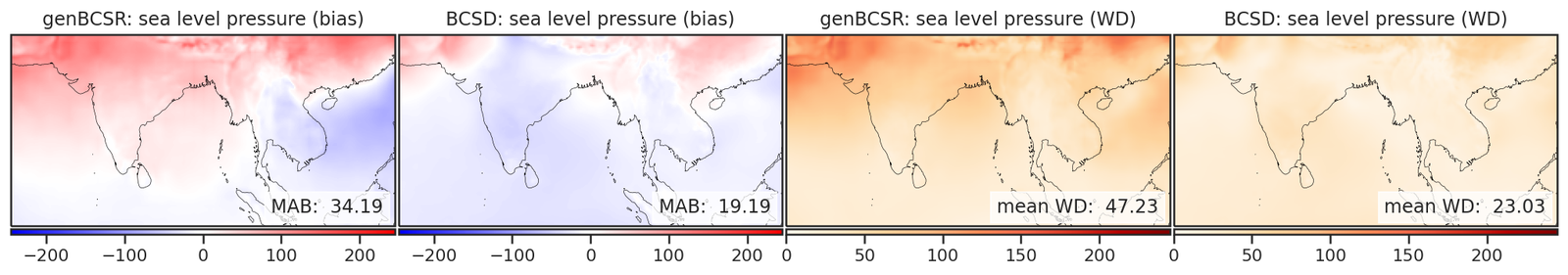

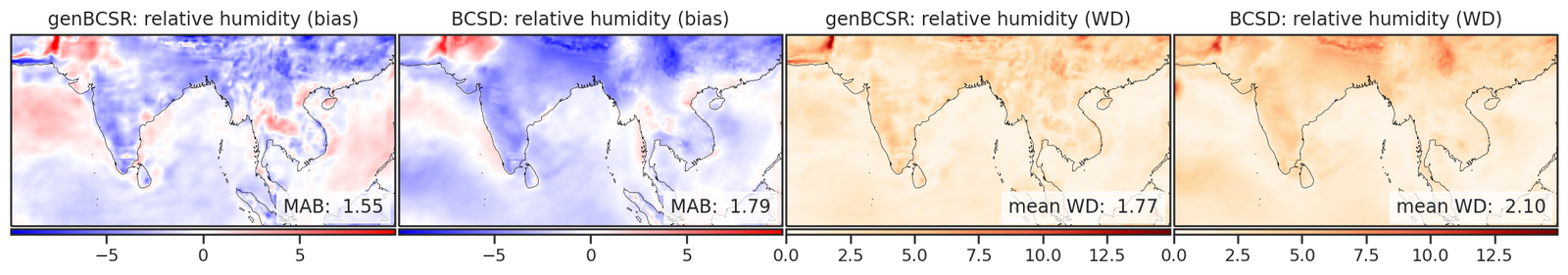

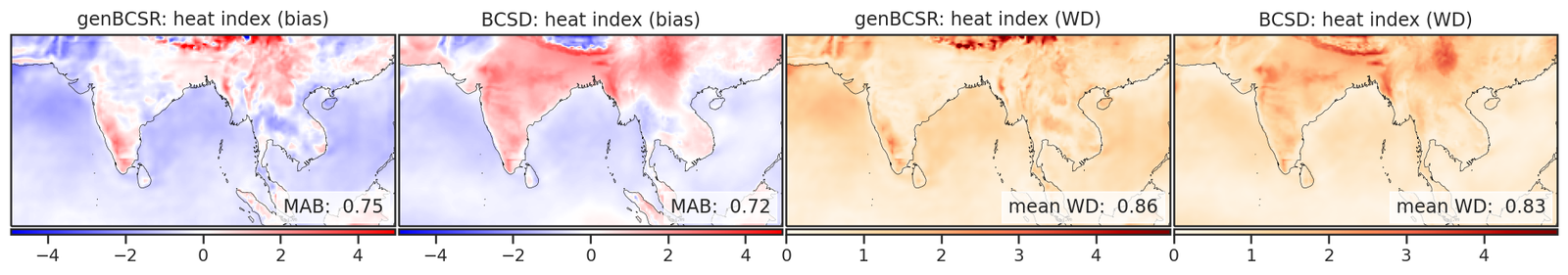

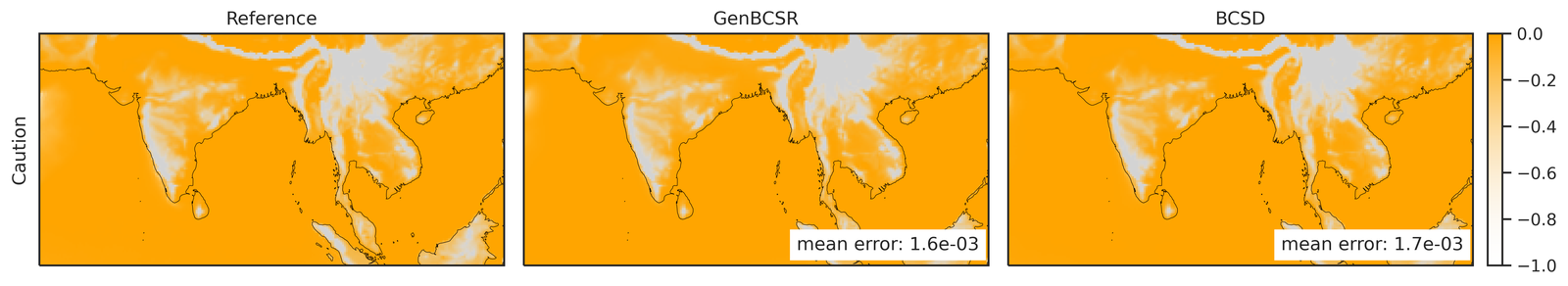

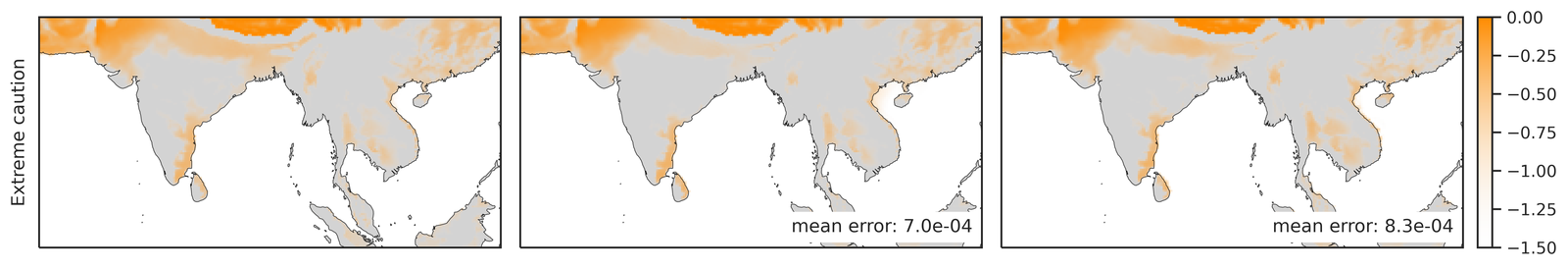

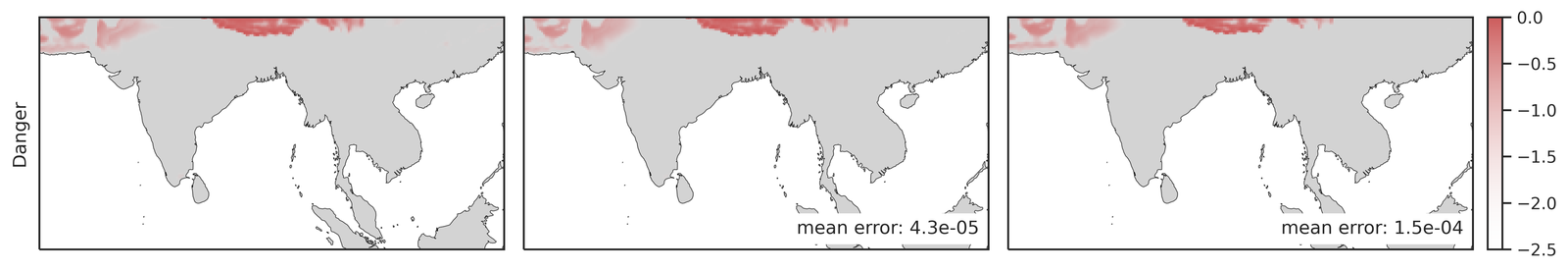

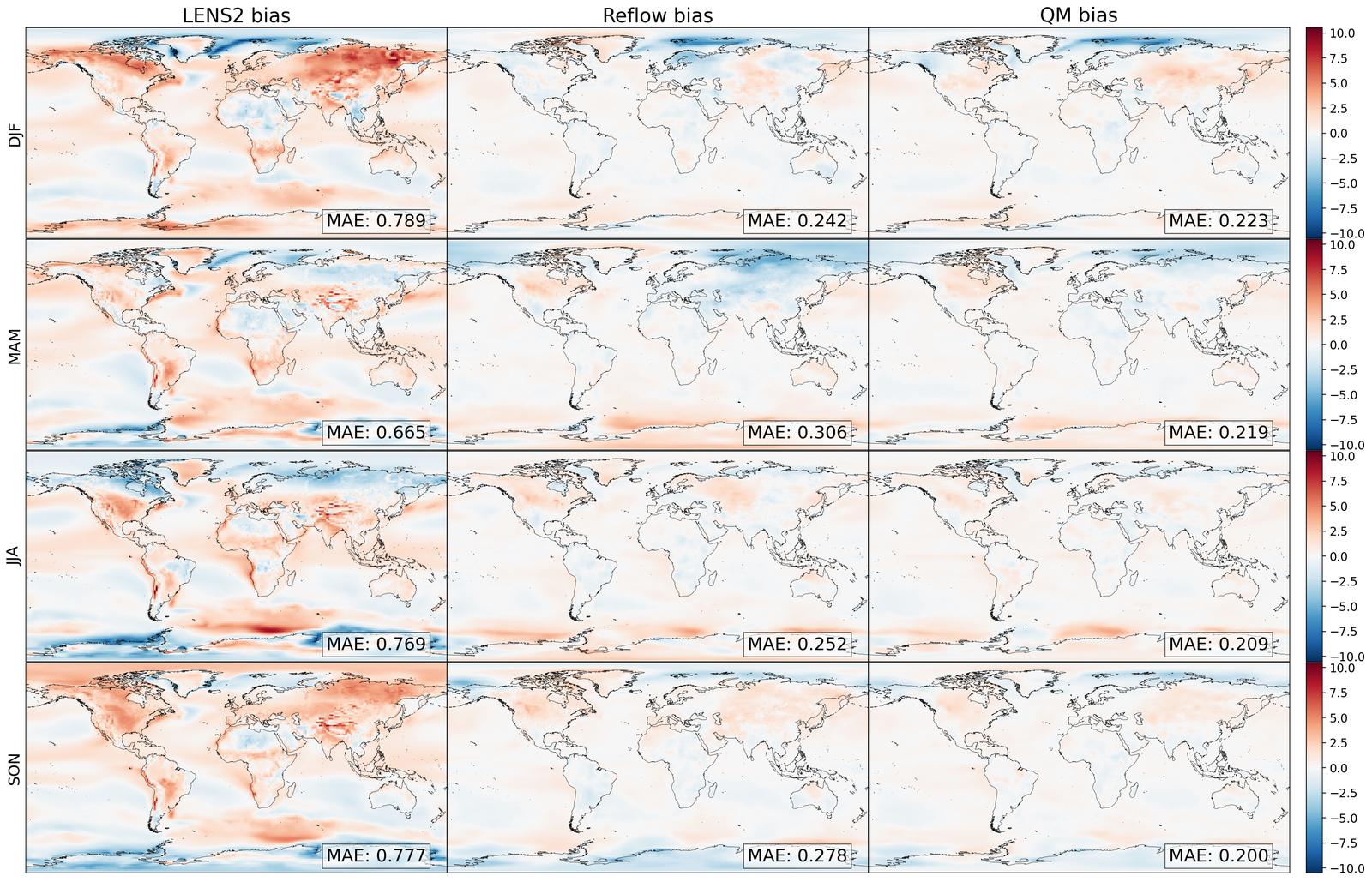

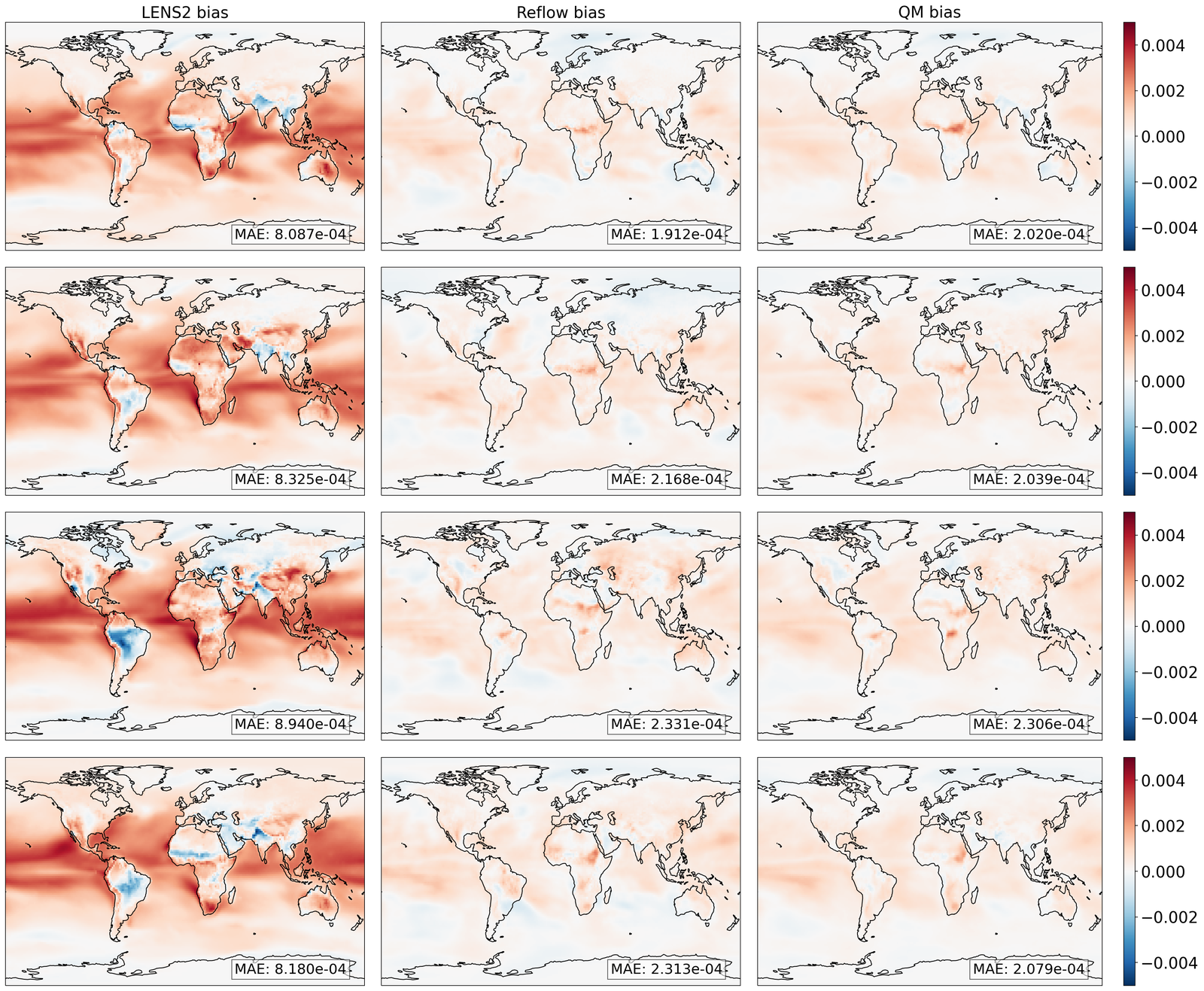

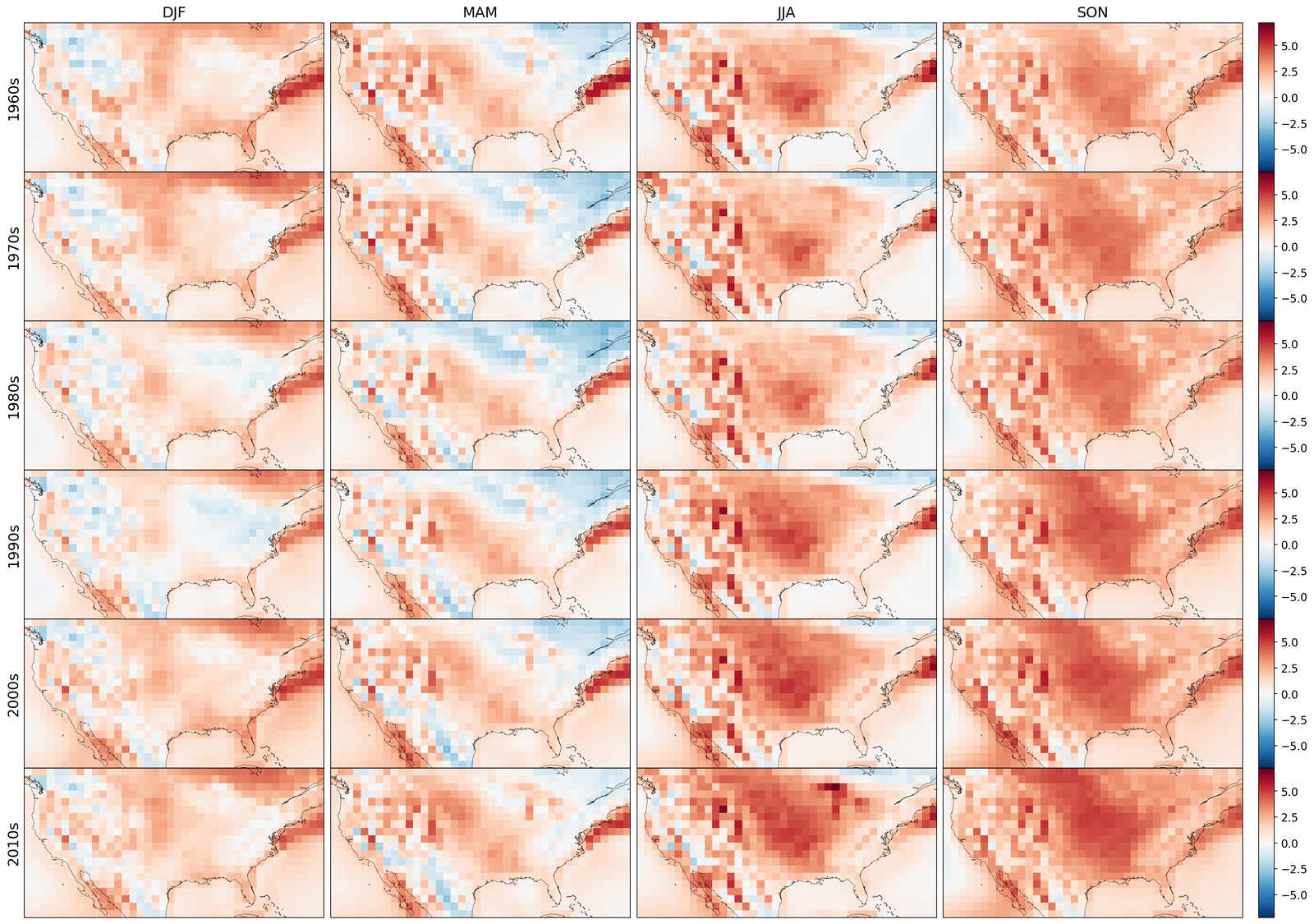

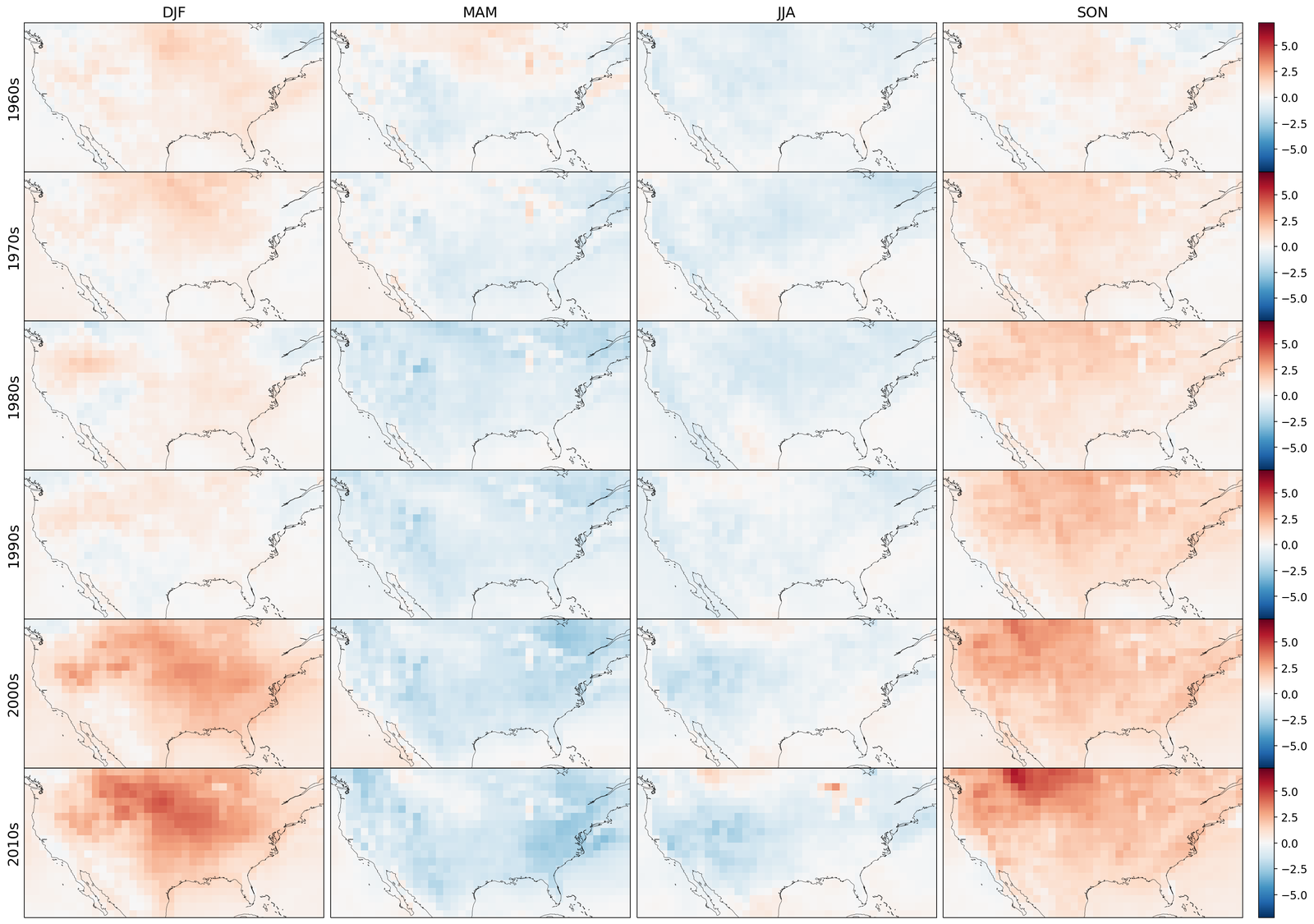

As shown in Table 1 and Figure 3, GenBCSR demonstrates strong performance in matching local pointwise marginal distributions, with mean absolute bias (MAB) capturing first-moment errors and Wasserstein distance (WD) providing a comprehensive measure of distribution discrepancies. Besides the four modeled variables, we evaluate two additional derived variables: relative humidity and heat index (S4.1). GenBCSR’s advantage over BCSD is particularly evident in these derived variables, while remaining competitive for directly predicted ones. This highlights GenBCSR’s superior ability to capture inter-variable correlations while accurately aligning marginal distributions. The improvements are most notable in regions such as the North West, Central US, and around the Great Lakes, as shown in Figure 3 (B and E).

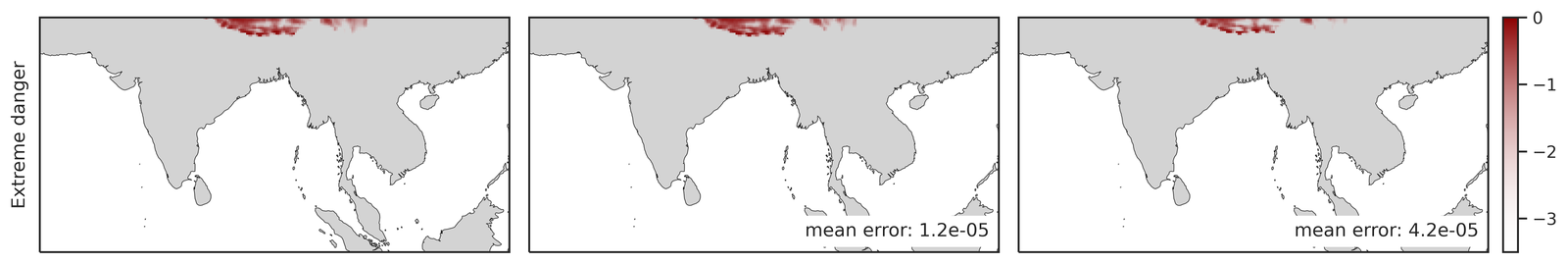

The advantage of GenBCSR becomes more pronounced when focusing on distribution tails, which are crucial for representing extreme weather events with significant real-world consequences. Table 1 shows that GenBCSR achieves lower MAE at the 99th percentile across most variables. This strength is further illustrated in Figure 3 (C), which depicts the probability of heat advisory conditions, defined by local heat indices exceeding the “danger" threshold of 312.6 K [59] (additional levels provided in S6). Across various tail thresholds, GenBCSR consistently exhibits significantly lower errors than BCSD, underscoring its robustness in predicting high-impact extremes.

The importance of multivariate debiasing is further emphasized through comparisons against alternative configurations. While QM-based debiasing combined with generative super-resolution (QMSR) reduces errors compared to no debiasing (SR) and is very effective for directly modeled variables, it still underperforms GenBCSR for derived variables and extreme tails. In contrast, perfect debiasing dramatically reduces errors (S5.2), reinforcing the critical role of accurate debiasing in improving model performance. These comparisons demonstrate that GenBCSR’s debiasing step allows for a better characterization of inter-variable correlations and tail statistics.

Superior spatio-temporal correlations and compound event statistics.

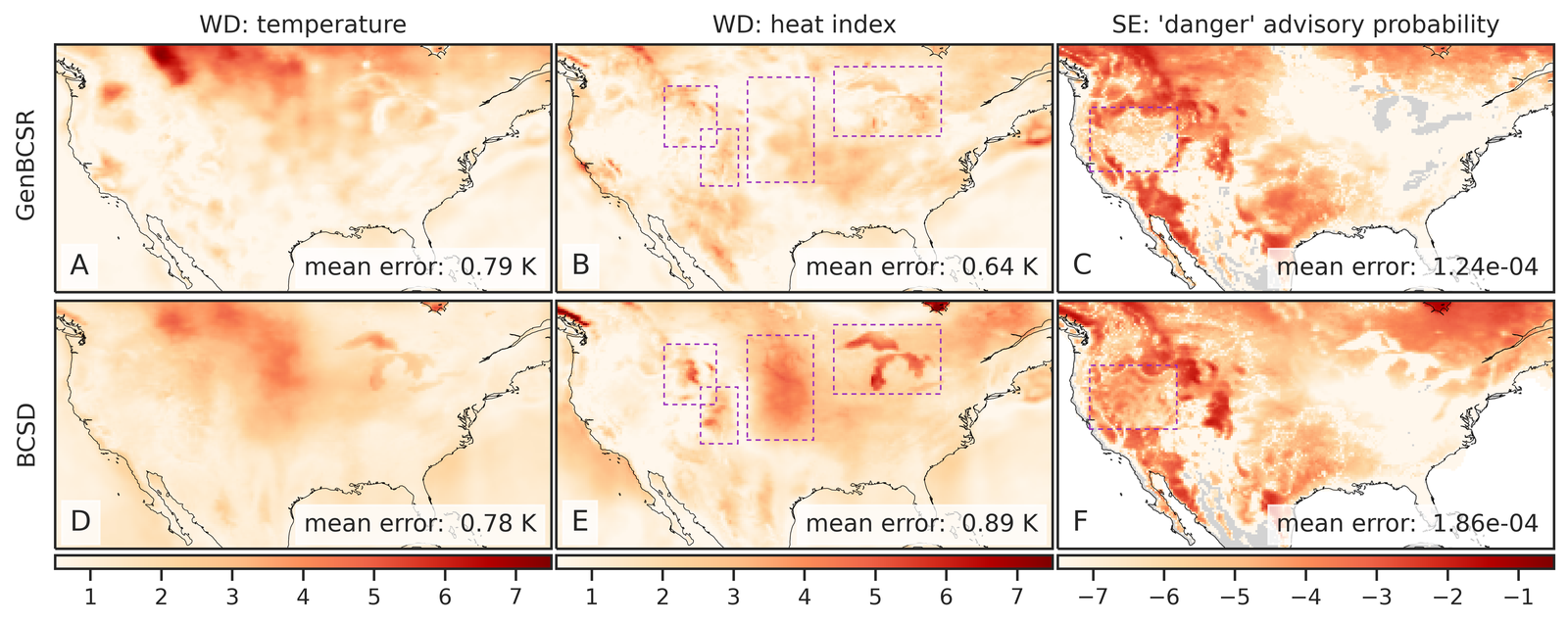

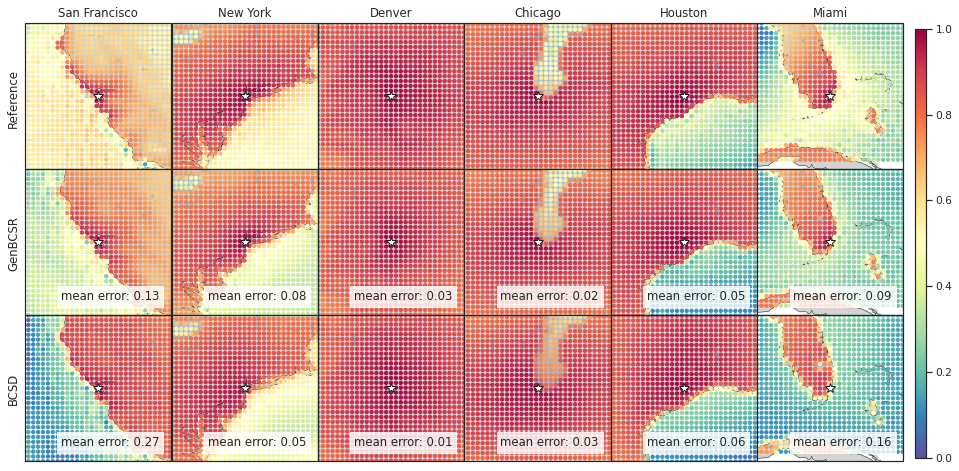

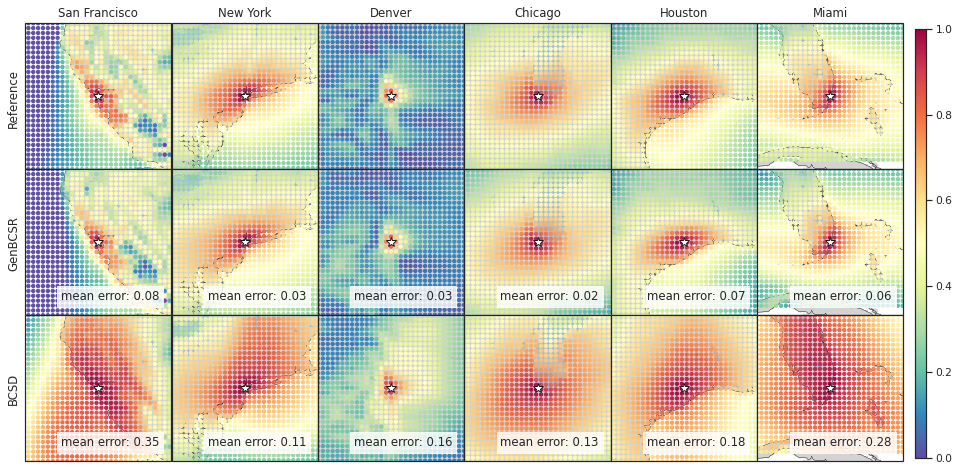

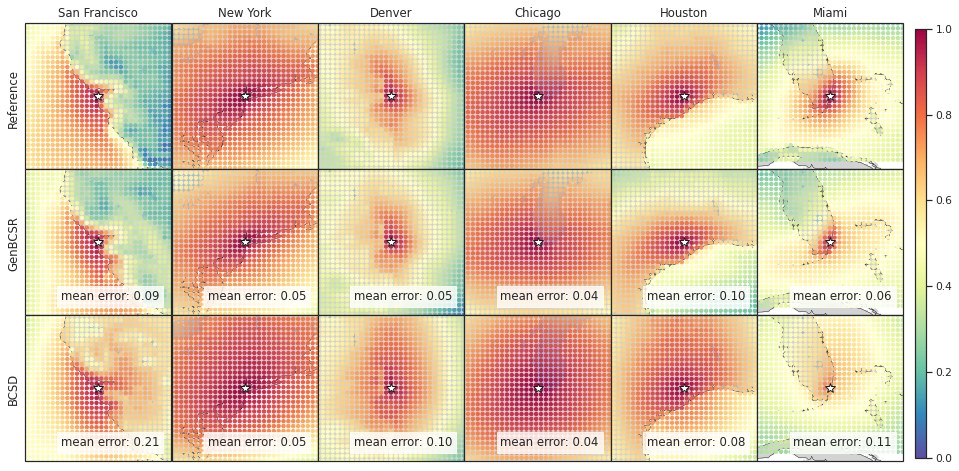

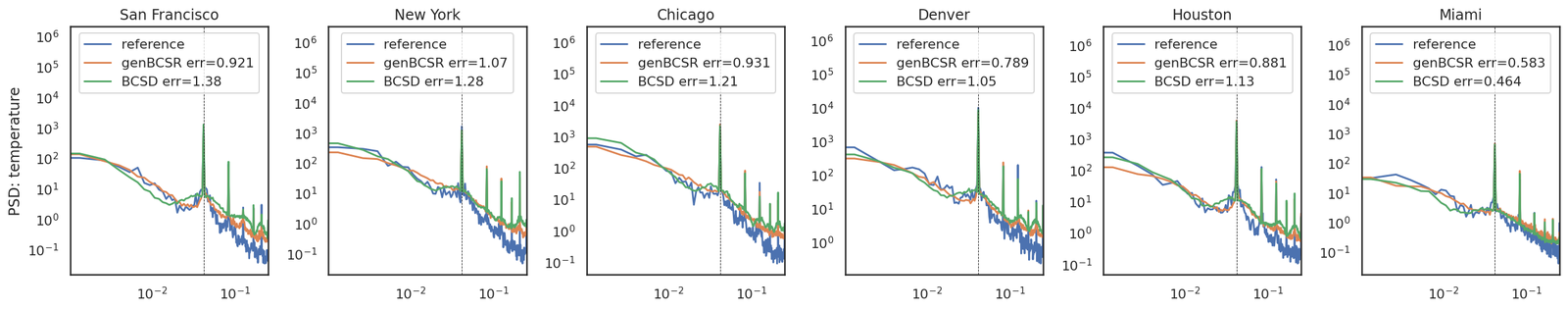

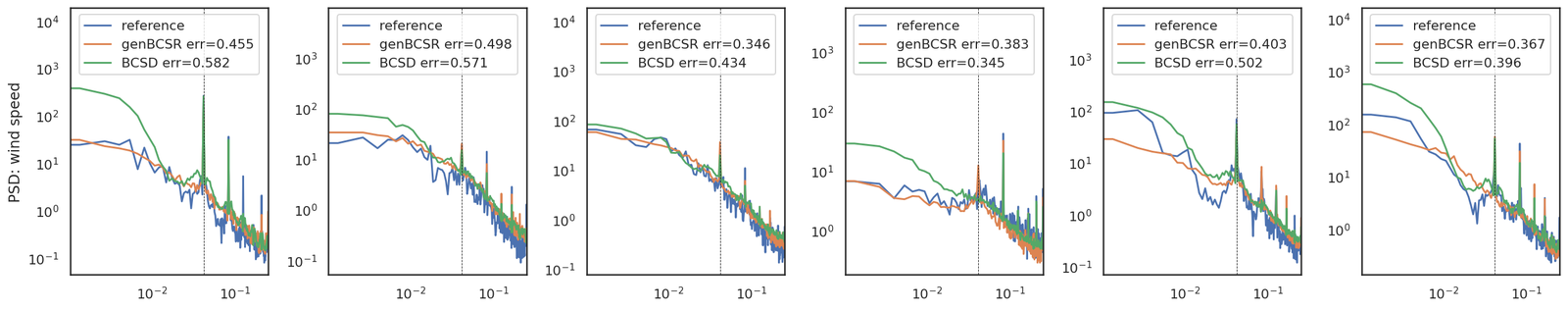

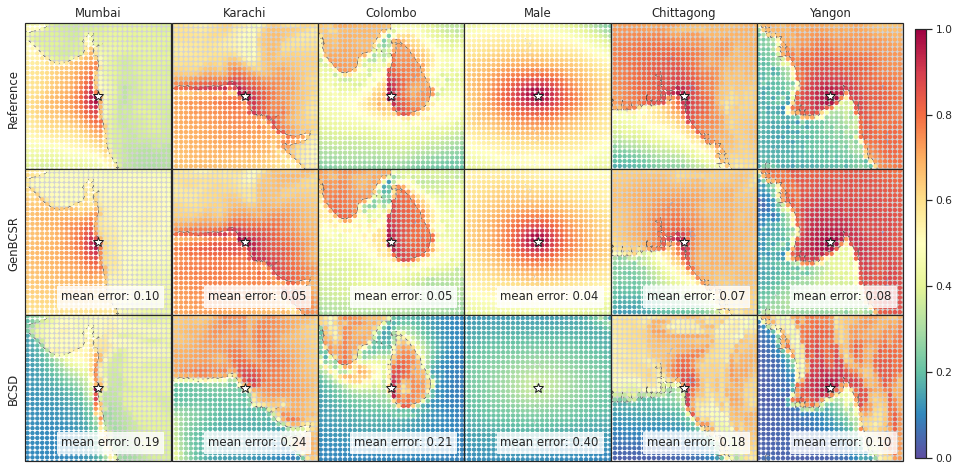

In addition to capturing inter-variable correlations, GenBCSR demonstrates significant improvements in representing spatial and temporal correlations. Figure 4 (A-I) illustrates the spatial correlation of surface wind speed at selected U.S. cities relative to nearby locations. The patterns produced by GenBCSR align closely with ERA5 observations, accurately reflecting the influence of geographic features and regional meteorology. Unlike BCSD, which produces overly smoothed correlations and misses finer regional details, GenBCSR effectively preserves local variability and resolves complex spatial structures.

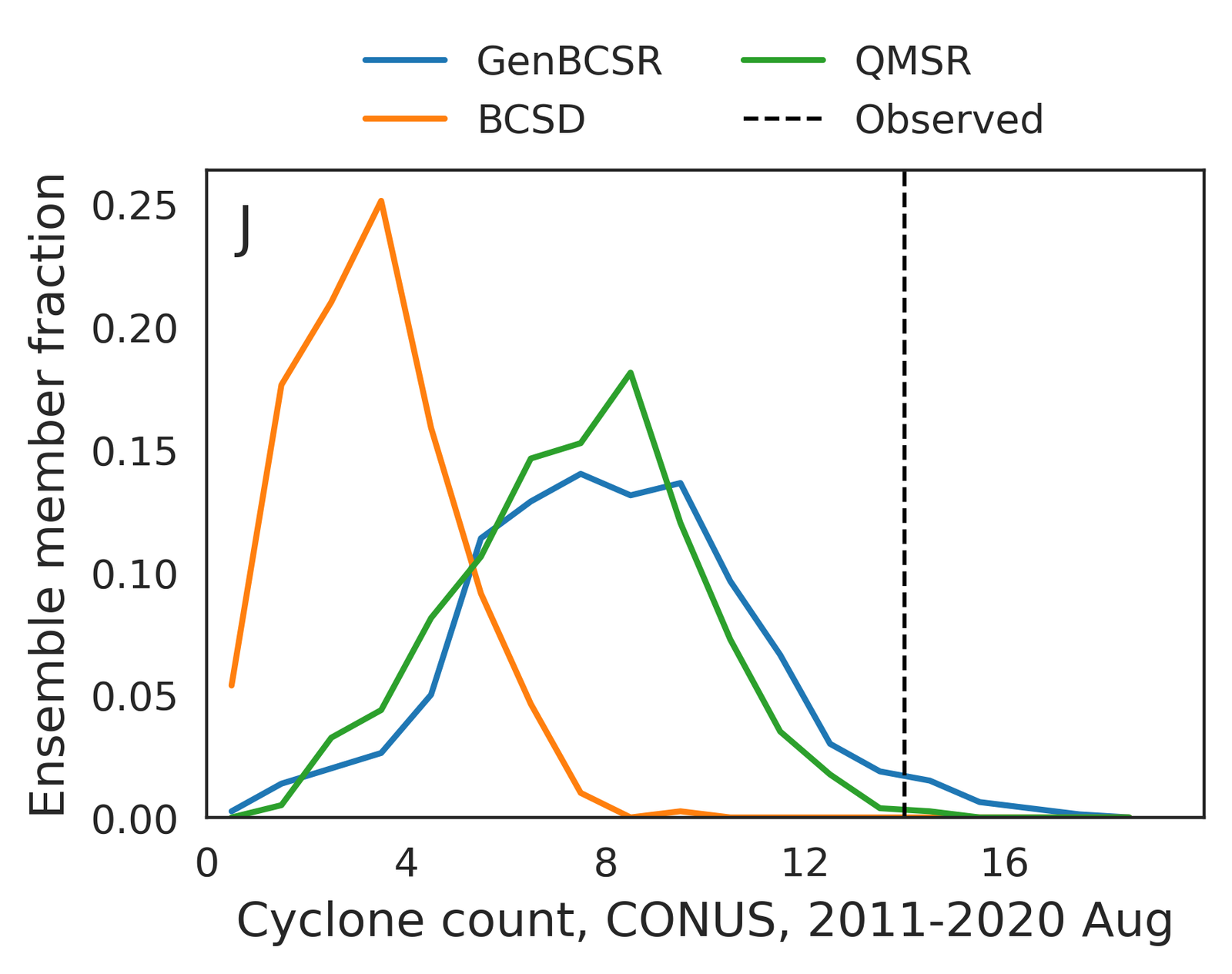

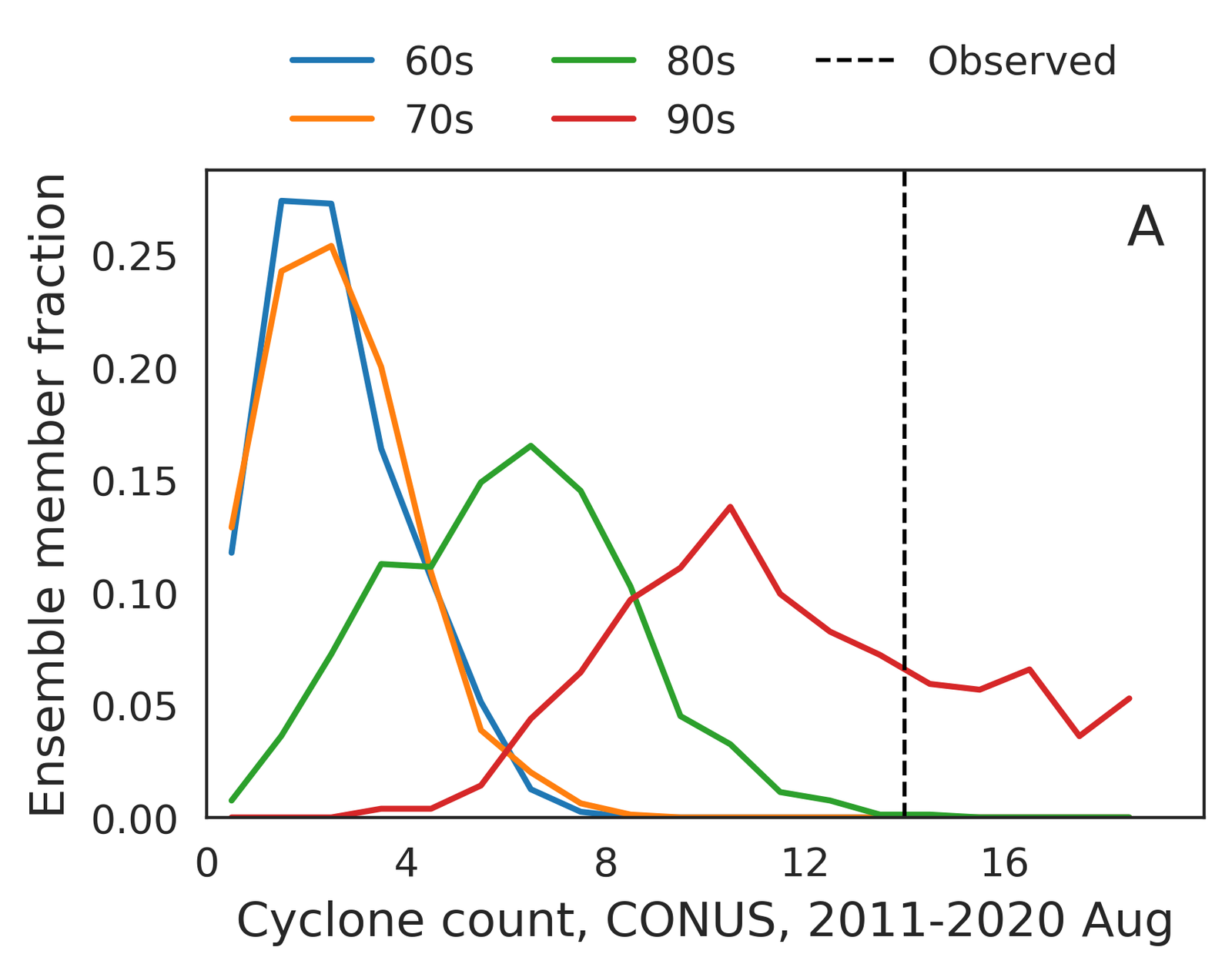

Tropical cyclones, with their pronounced spatial-temporal dependencies, serve as a representative example of GenBCSR’s proficiency in modeling compound event statistics. As shown in Figure 4 (J-K), when using TempestExtremes [103] to detect tropical cyclones, GenBCSR yields improved count statistics compared to the BCSD baseline, bringing them closer to those observed in ERA5. These improvements are largely attributed to the spatio-temporal upsampling in our approach, which, when combined with QM, also results in noticeable enhancements in the cyclone counts.

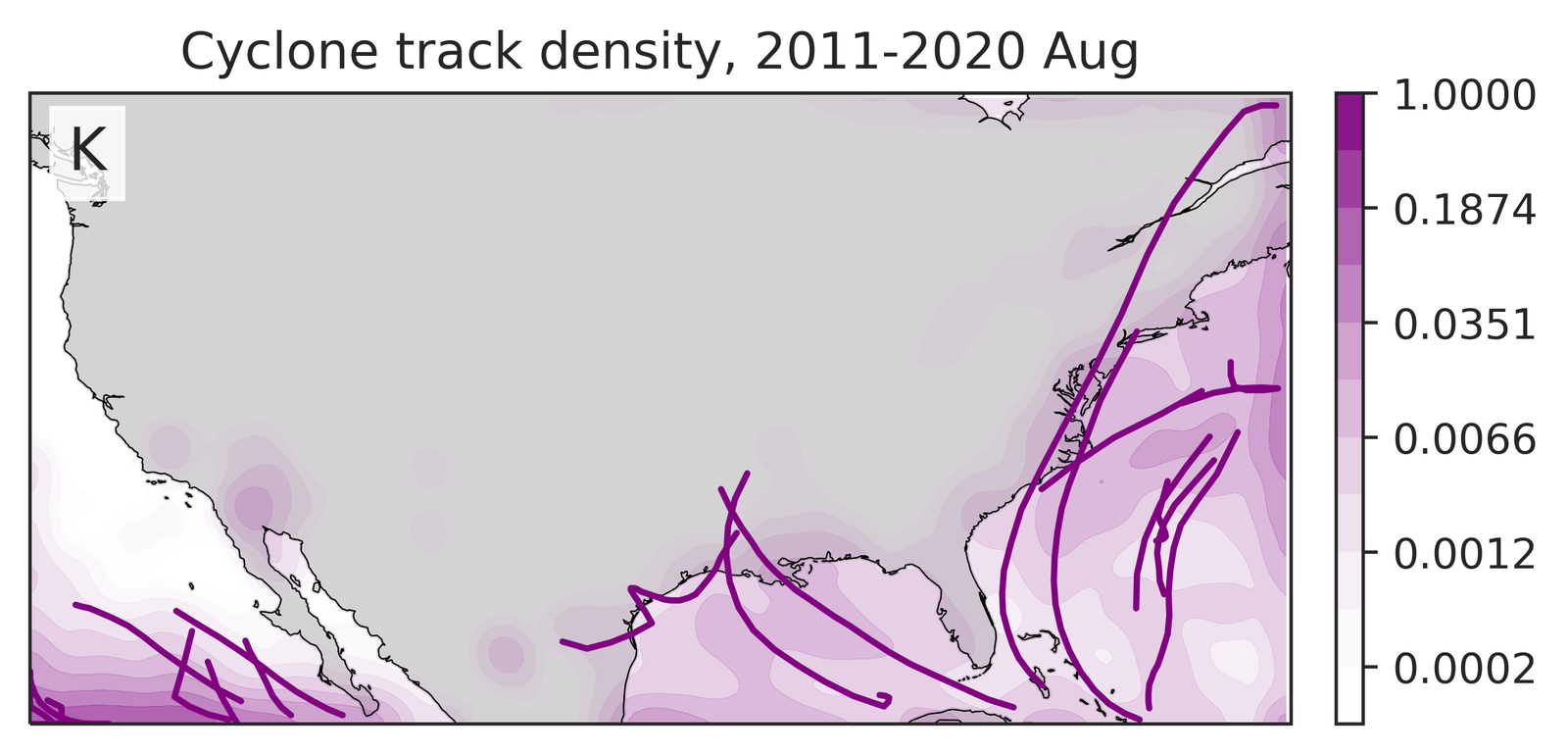

Prolonged heat streaks represent another example of compound events with significant impacts on human activities. Figure 4 (L-N) presents the predicted probabilities for heat streaks, defined as daily maximum temperatures exceeding climatology for multiple consecutive days. GenBCSR demonstrates a clear advantage over BCSD, capturing the frequency and intensity of these extreme events with significantly greater accuracy (additional results in S6). This improvement reflects GenBCSR’s ability to retain critical temporal dependencies, offering a more reliable representation of persistent extremes that are essential for understanding and adapting to climate impacts.

Robust to out-of-distribution shift due to nonstationarity

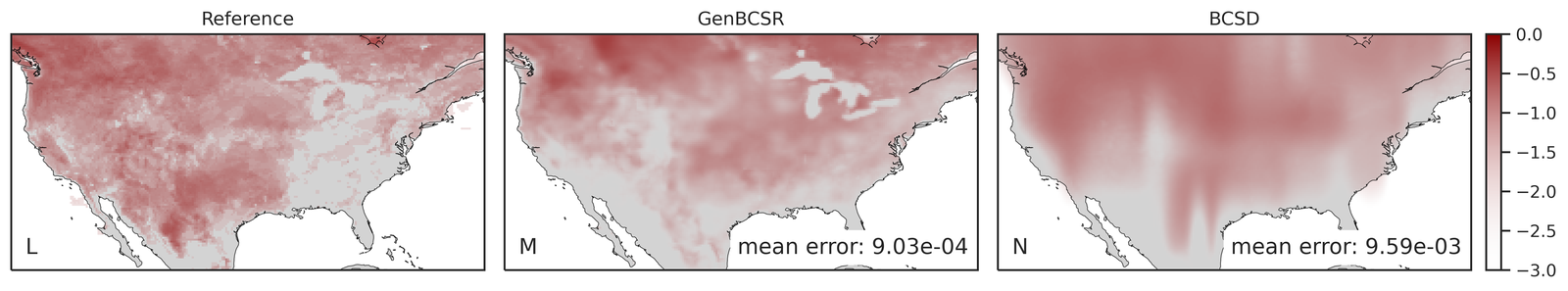

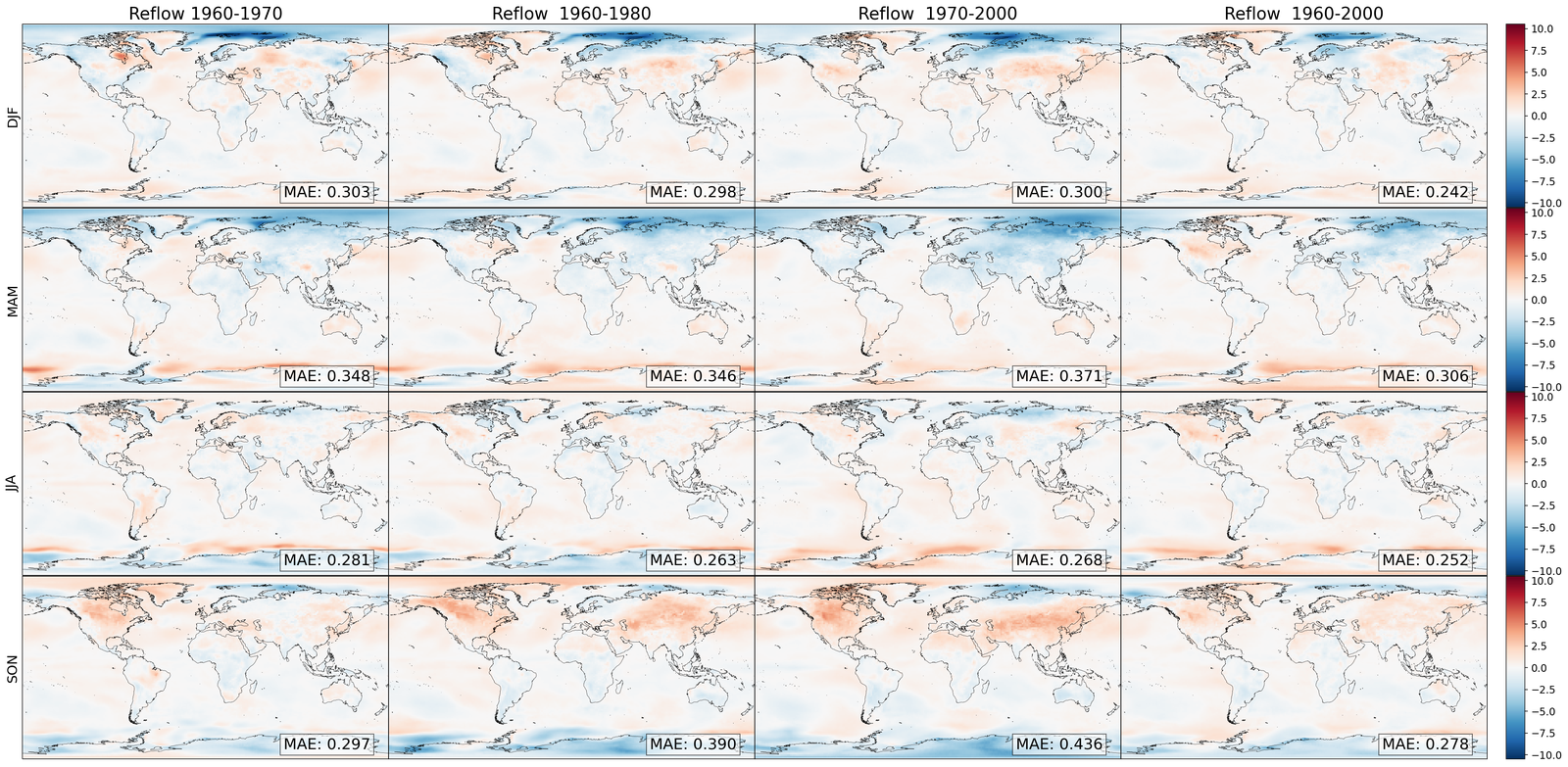

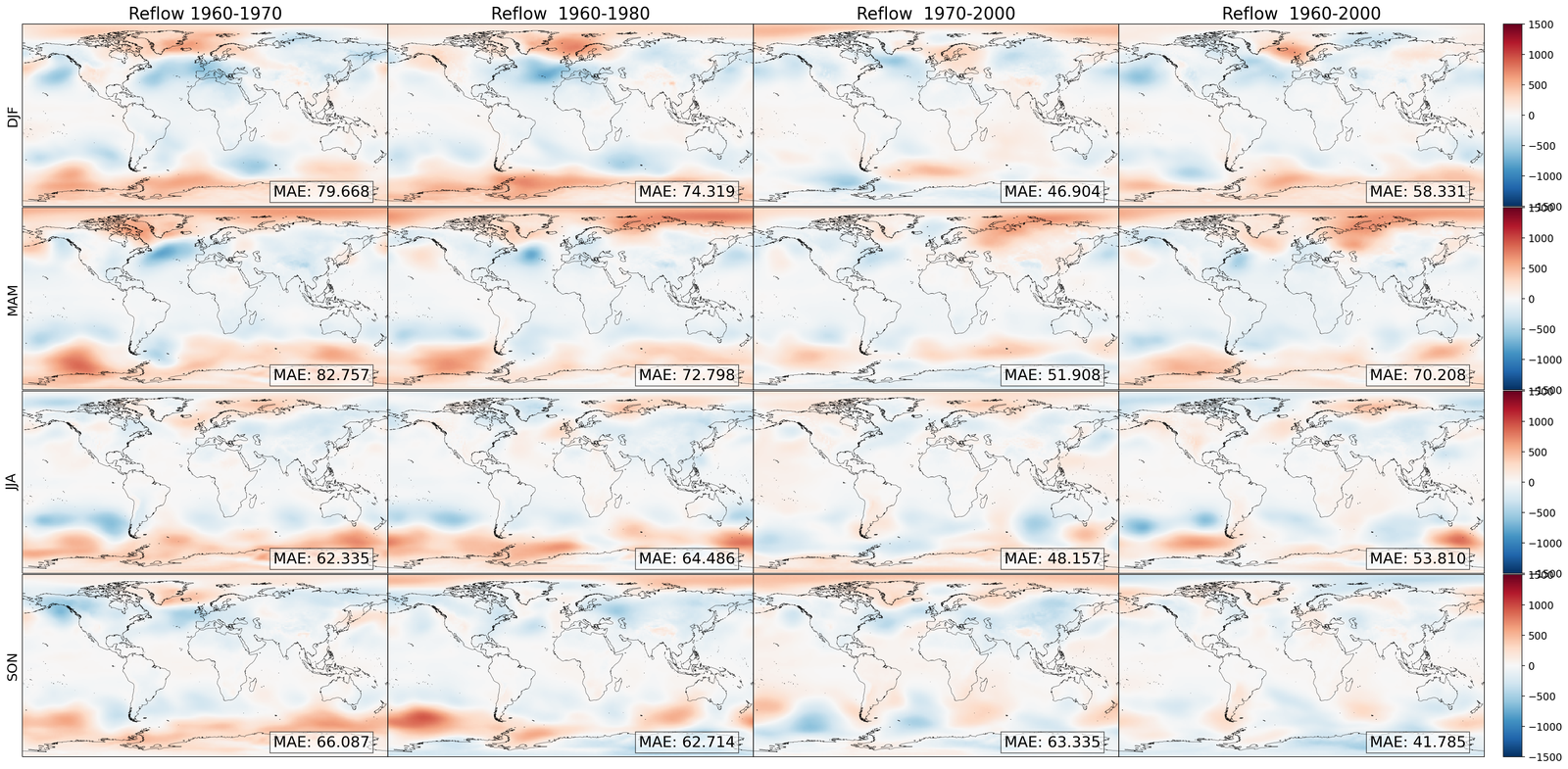

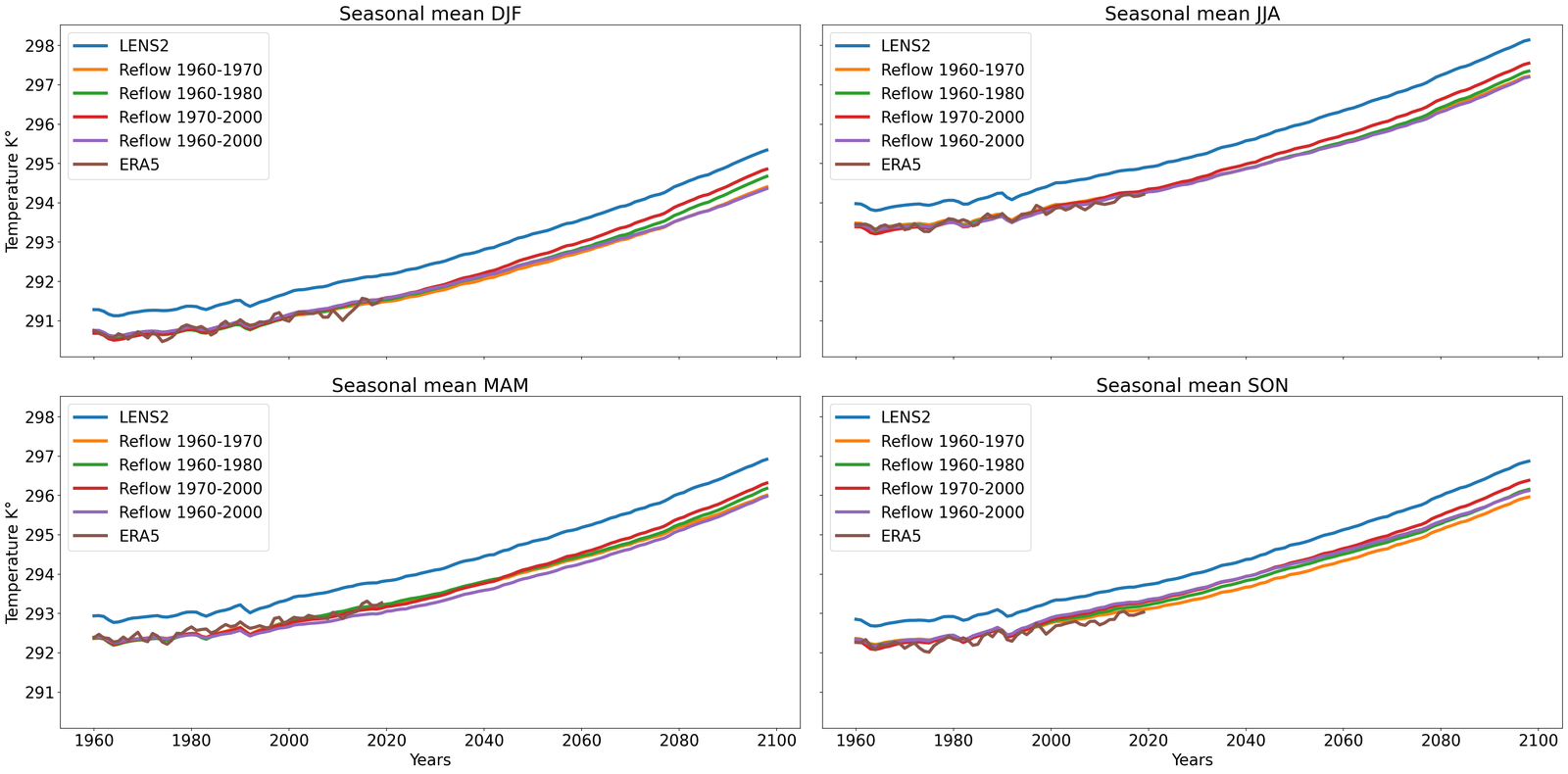

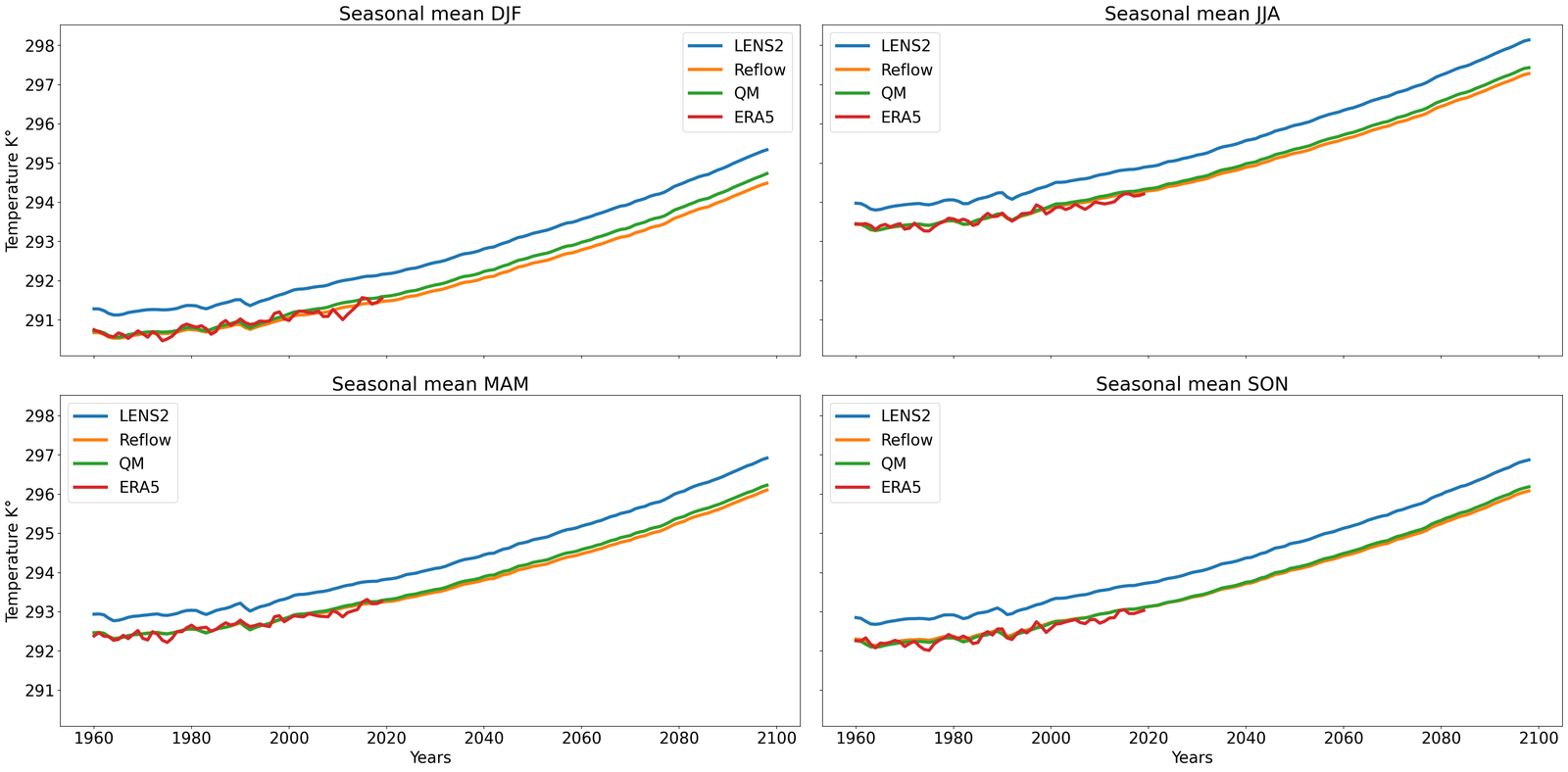

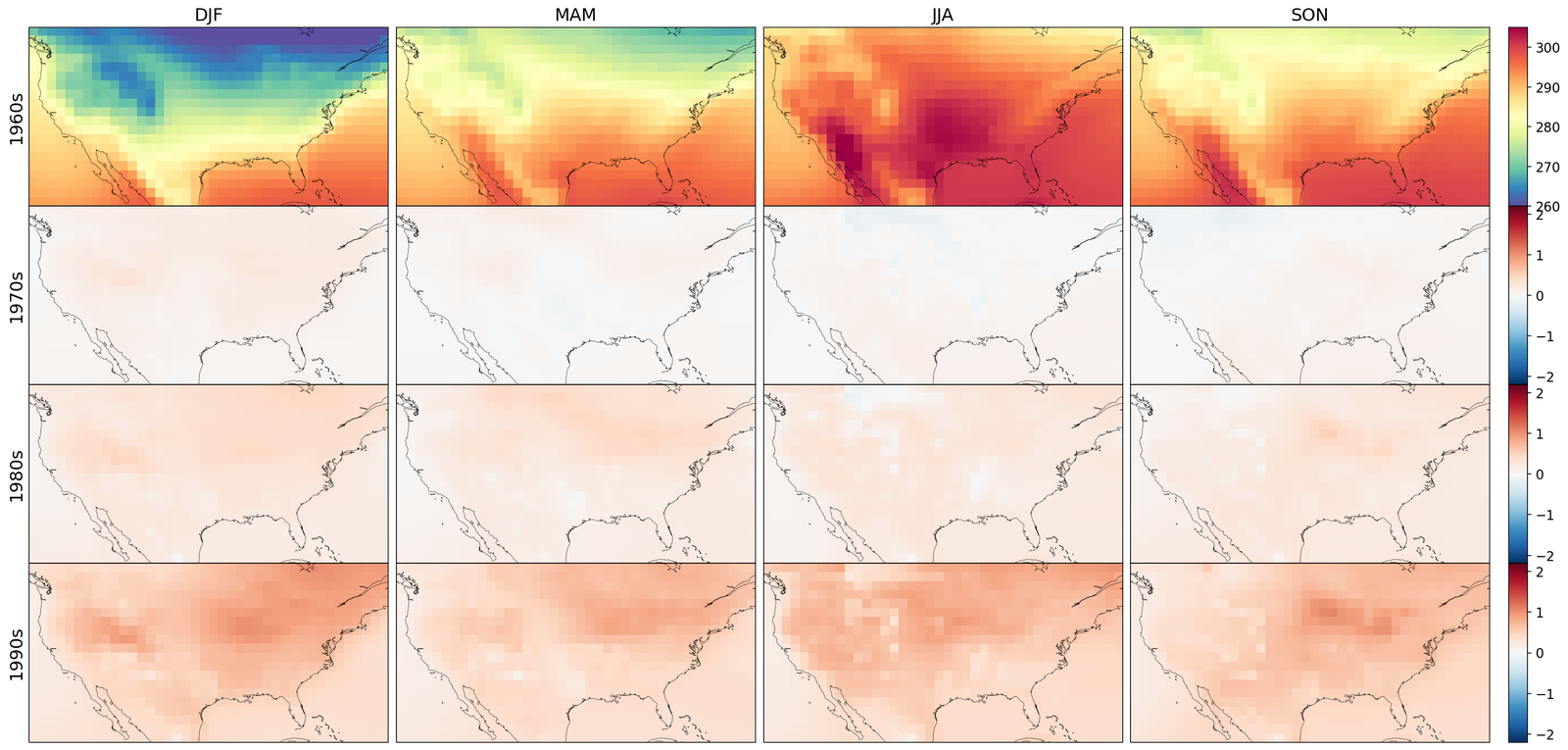

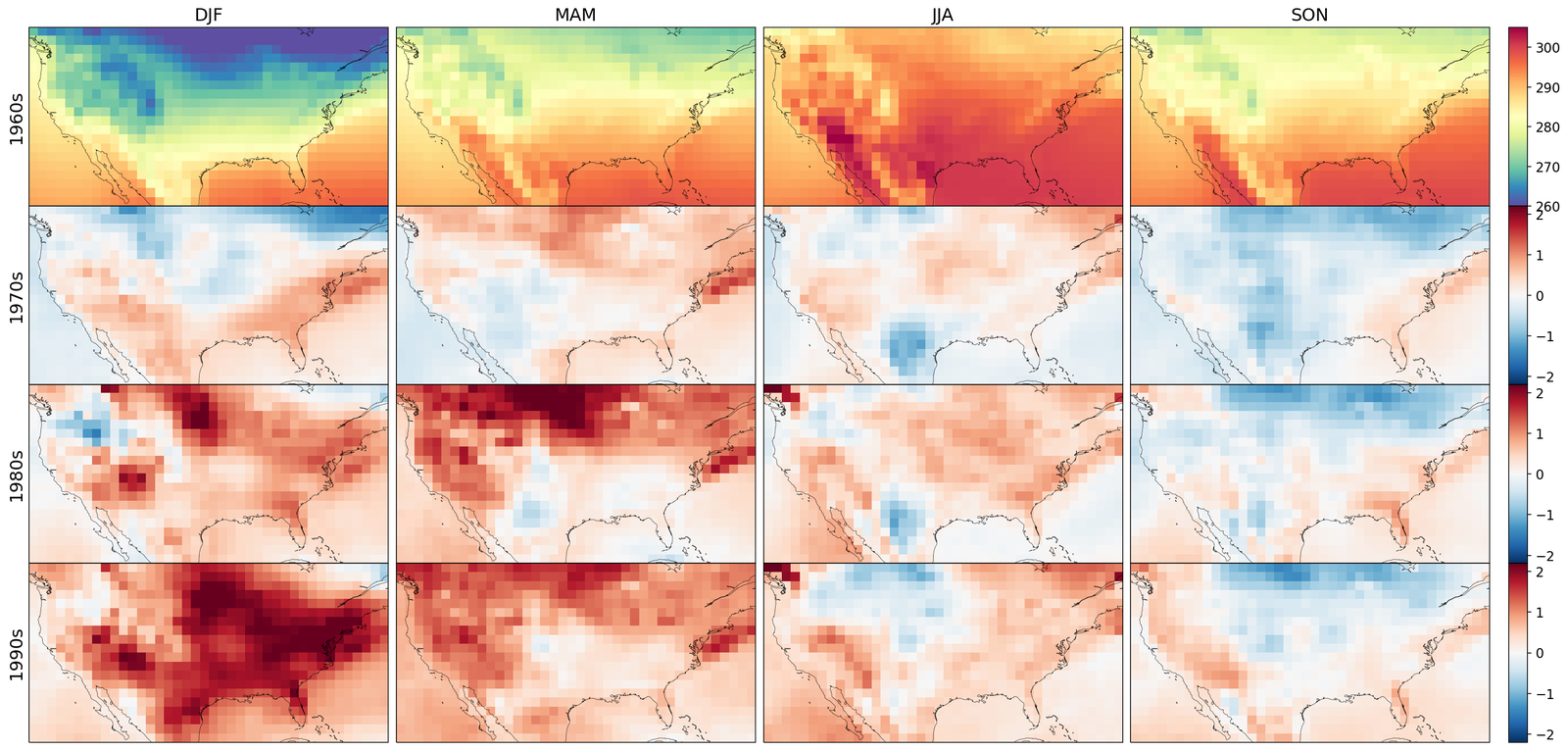

As climate is non-stationary, it is key for learning methods, optimized on past historical data, to be robust to distribution shifts. In particular, preserving climate change signals is crucial as statistical blending can erase the signal in climate projection ensembles [58]. To this end, we demonstrate that the bias-correction procedure in our method can preserve well long-term trends, attributing to learning a velocity field to remove the bias.

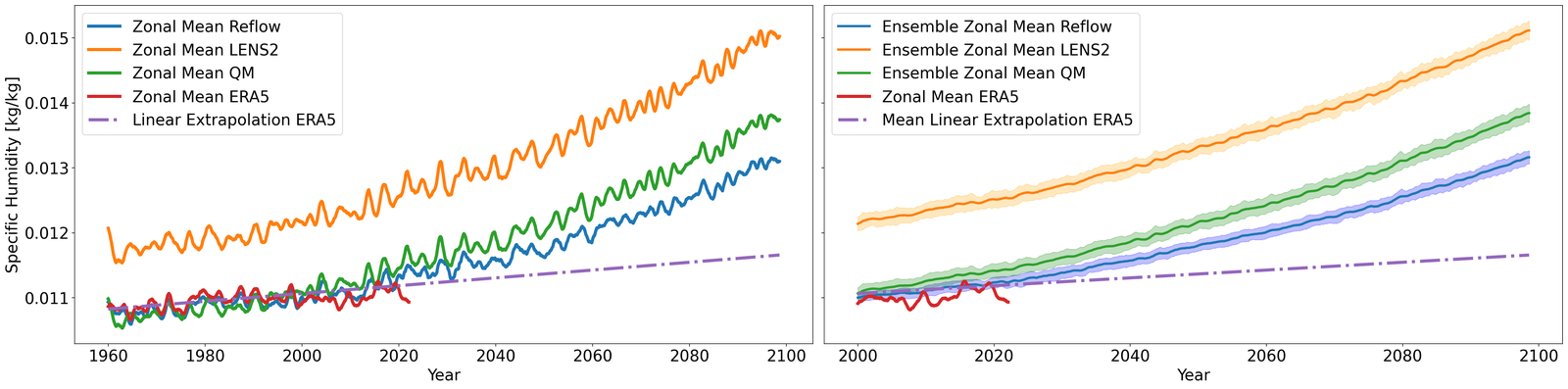

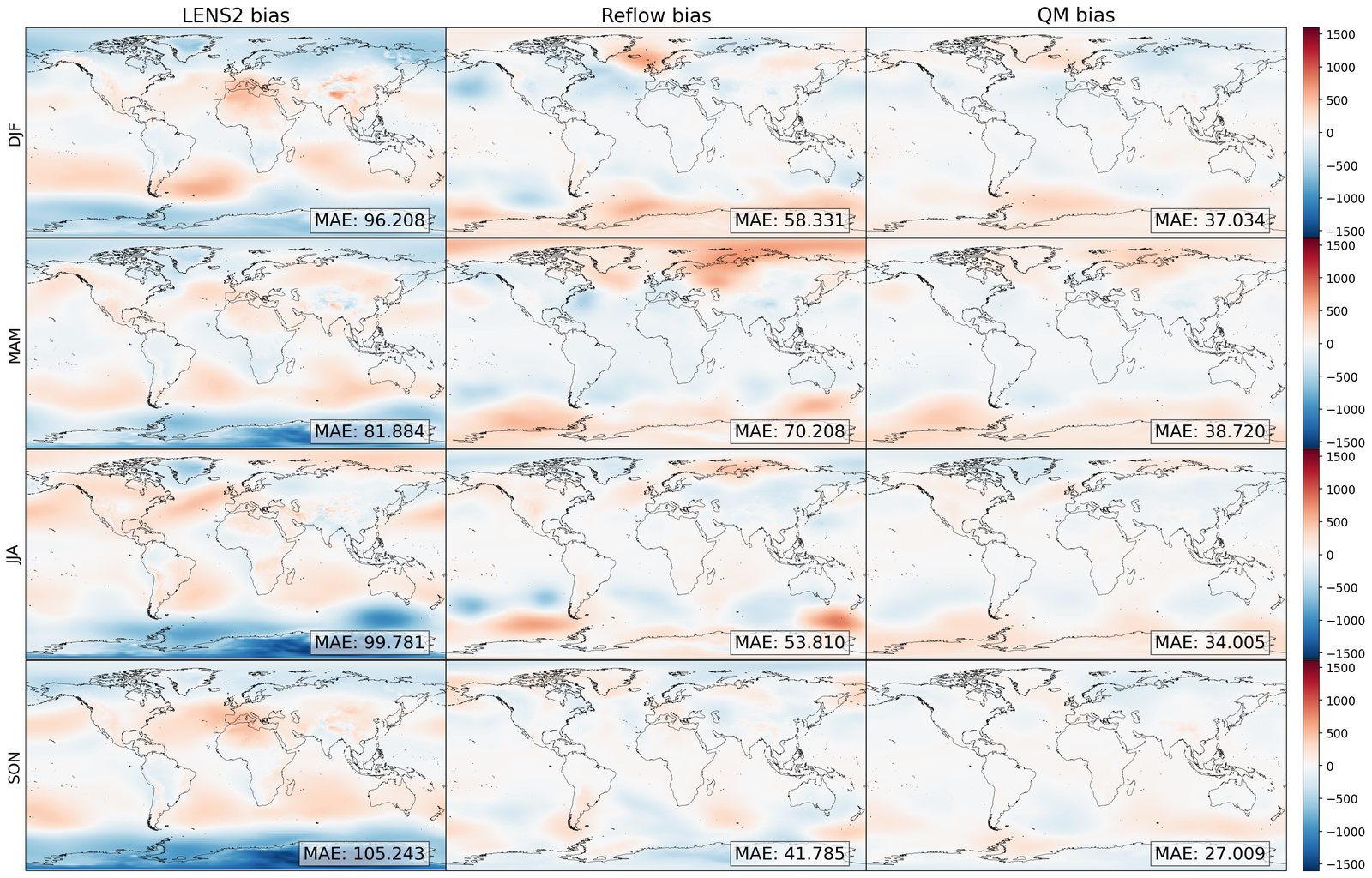

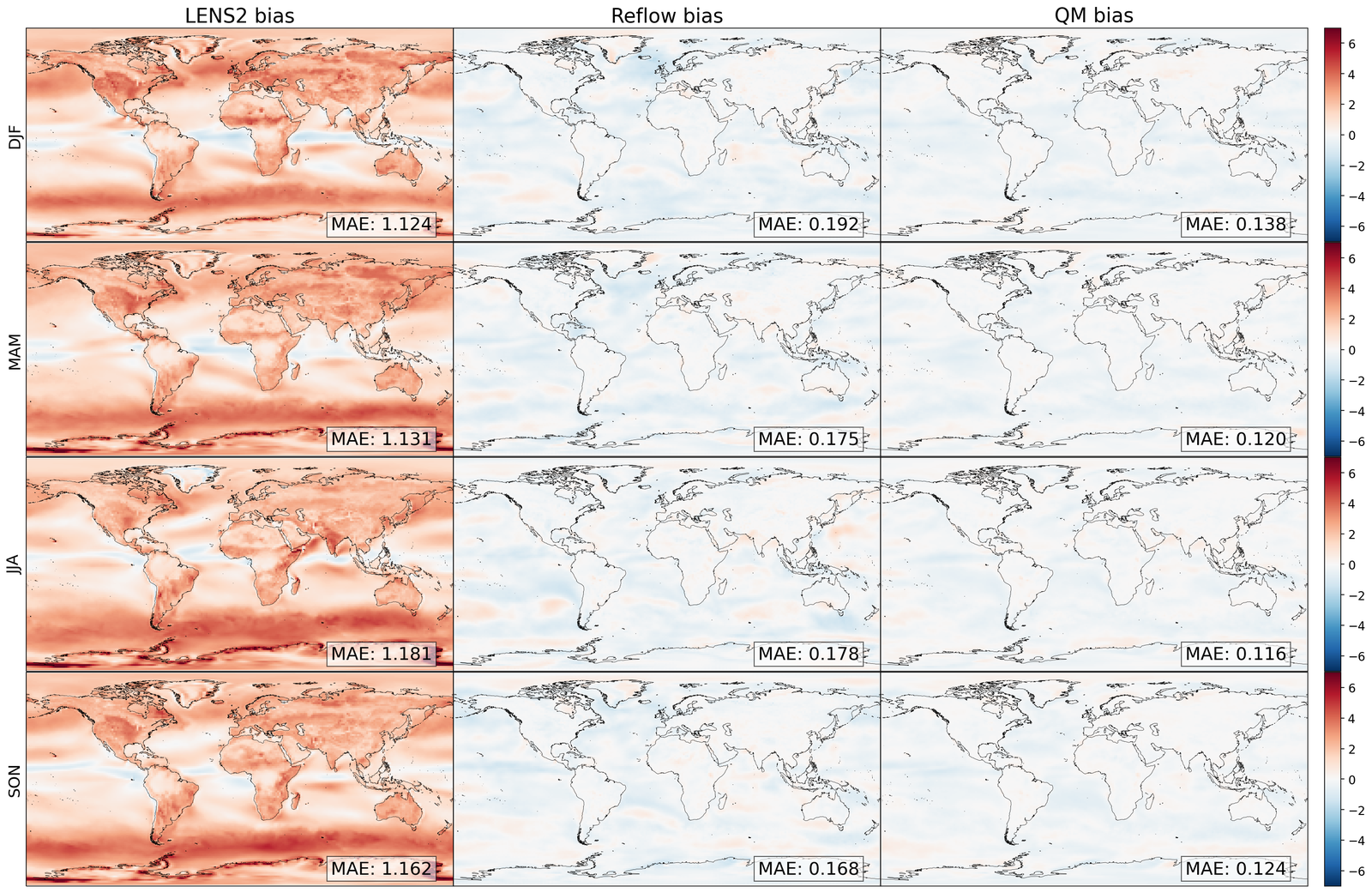

The results are compiled in Figure 5. There, we compute the projected zonal mean 2 meter temperatures (between °N and °S), time-averaged with a rolling window of one year (defined in S7.1.1), for the LENS2 ensemble, the bias-corrected samples (without super-resolution) using GenBCSR and QM, and the low-resolution ERA5 ground truth. In Figure 5 (left), we observe that the statistics of the debiased trajectory (from one of the ensemble members used for training) match the statistics of ERA5 for the training period (1960-2000), and then follow a similar trend to LENS2 for the next 60 years. In Figure 5(right), we observe that when debiasing the full LENS2 ensemble, the evaluation and testing statistics of ERA5 are within one standard deviation of the mean debiased statistics, which are then driven by the LENS2 statistics going forward.

It is not surprising to note that QM can also preserve the trend as it matches marginal (single-variate) distributions. However, importantly, it is worthwhile to observe that learning a high-dimensional velocity field as in equation 6 to match high-dimensional distributions is robust to the distribution shifts (after the training period). Furthermore, we note that simple linear extrapolation used from ERA5 data predicts a slower increase in zonal mean temperature, whereas a quadratic extrapolation predicts a faster increase. Viewing those two as massively simplified versions of mapping between distributions, we see the benefits of learning highly nonlinear mapping through the rectified approach equation 7, highlighting the need for preserving complex climate change signals involving multiple variables [58]. See S7.1 for extra results, including seasonal variability, and bias.

Finally, we briefly mention that this preservation of long-term trends can pass through the super-resolution stage so that the statistics in the final outputs also correlate with the trends, an important property we need to have for the method to be applicable to future climate projections. See Figure S6 in SI for details.

4 Discussion

We introduce a generative spatiotemporal statistical downscaling framework for unpaired data. Our methodology stems from a novel factorization of statistical downscaling into debiasing (or manifold alignment) and upsampling, in which each step is instantiated by a highly tailored generative model. Our approach addresses key limitations of prior methods for unpaired data, such as BCSD, by capturing both the local marginal distributions and inter-variable spatio-temporal correlations more effectively. By leveraging spatial interpolation and a diffusion-based residual generator, our model can represent fine-scale structures and temporal dynamics that are often overlooked by traditional techniques. This allows for more accurate and realistic downscaling of climate variables from coarse-resolution simulations to high-resolution outputs.

The practical implications of our method are significant, particularly for downstream applications where localized climate predictions are critical and paired data is not available. For instance, accurate spatial correlation modeling can improve renewable energy planning by better forecasting wind patterns for optimized wind farm placement, along with better spatial correlation of the heat index for improved electrical grid planning, especially for high-voltage power lines [20]. Additionally, the ability to capture inter-variable correlations, such as those between temperature and humidity, is essential for predicting the heat index, which has direct applications in public health, food production [17], energy demand forecasting [19, 20], and disaster preparedness [5]. Furthermore, our model’s improved temporal correlations enhance the ability to predict extreme events, such as prolonged heat waves, with significantly greater accuracy than BCSD, offering more reliable insights for managing heat-related risks [60, 61].

Looking forward, the current approach opens up several opportunities for further methodological development. One promising direction is the integration of additional auxiliary datasets, such as satellite-derived data or local observational networks, to improve the model’s robustness in regions with sparse data. Another direction is downscale data from an ensemble of climate models as we only require unpaired data and only the bias correction step relies on the physics of the climate models, thus providing localized forecasts capturing not only the internal variability of each model, but also the variability across different climate models. These developments have the potential to further refine the downscaling of climate simulations, making the method applicable to a broader range of variables, climate models, and geographic regions. Ultimately, this work contributes to the growing body of research on improving the resolution and accuracy of climate predictions, with far-reaching impacts in fields such as climate change mitigation, resource management, and environmental risk assessment.

Acknowledgement

We thank Lizao Li and Stephan Hoyer for productive discussions and Daniel Worrall for feedback on the manuscript. For the LENS2 dataset, we acknowledge the CESM2 Large Ensemble Community Project and the supercomputing resources provided by the IBS Center for Climate Physics in South Korea. ERA5 data [62] were downloaded from the Copernicus Climate Change Service [63]. The results contain modified Copernicus Climate Change Service information. Neither the European Commission nor ECMWF is responsible for any use that may be made of the Copernicus information or data it contains. We thank Tyler Russell for managing data acquisition and other internal business processes.

References

- \bibcommenthead

- [1] Balaji, V. et al. Are general circulation models obsolete? Proceedings of the National Academy of Sciences 119, e2202075119 (2022). URL https://www.pnas.org/doi/abs/10.1073/pnas.2202075119.

- [2] Courant, R., Friedrichs, K. & Lewy, H. Über die partiellen differenzengleichungen der mathematischen physik. Mathematische annalen 100, 32–74 (1928).

- [3] Schneider, T. et al. Climate goals and computing the future of clouds. Nature Climate Change 7, 3–5 (2017).

- [4] Knutson, T. R. et al. Dynamical downscaling projections of twenty-first-century Atlantic hurricane activity: CMIP3 and CMIP5 model-based scenarios. Journal of Climate 26, 6591–6617 (2013).

- [5] Goss, M. et al. Climate change is increasing the likelihood of extreme autumn wildfire conditions across California. Environmental Research Letters 15, 094016 (2020).

- [6] Christensen, J. H., Boberg, F., Christensen, O. B. & Lucas-Picher, P. On the need for bias correction of regional climate change projections of temperature and precipitation. Geophysical Research Letters 35 (2008). URL https://agupubs.onlinelibrary.wiley.com/doi/abs/10.1029/2008GL035694.

- [7] Zelinka, M. D. et al. Causes of higher climate sensitivity in cmip6 models. Geophysical Research Letters 47, e2019GL085782 (2020). URL https://agupubs.onlinelibrary.wiley.com/doi/abs/10.1029/2019GL085782. E2019GL085782 10.1029/2019GL085782.

- [8] Pepin, N. et al. Elevation-dependent warming in mountain regions of the world. Nature Climate Change 5, 424–430 (2015).

- [9] Grotch, S. L. & MacCracken, M. C. The use of general circulation models to predict regional climatic change. Journal of Climate 4, 286 – 303 (1991). URL https://journals.ametsoc.org/view/journals/clim/4/3/1520-0442_1991_004_0286_tuogcm_2_0_co_2.xml.

- [10] Hall, A. Projecting regional change. Science 346, 1461–1462 (2014). URL https://www.science.org/doi/abs/10.1126/science.aaa0629.

- [11] Diffenbaugh, N. S., Pal, J. S., Trapp, R. J. & Giorgi, F. Fine-scale processes regulate the response of extreme events to global climate change. Proceedings of the National Academy of Sciences 102, 15774–15778 (2005).

- [12] Gutowski, W. J. et al. The ongoing need for high-resolution regional climate models: Process understanding and stakeholder information. Bulletin of the American Meteorological Society 101, E664–E683 (2020).

- [13] Gutmann, E. et al. An intercomparison of statistical downscaling methods used for water resource assessments in the united states. Water Resources Research 50, 7167–7186 (2014). URL https://agupubs.onlinelibrary.wiley.com/doi/abs/10.1002/2014WR015559.

- [14] Hwang, S. & Graham, W. D. Assessment of alternative methods for statistically downscaling daily gcm precipitation outputs to simulate regional streamflow. JAWRA Journal of the American Water Resources Association 50, 1010–1032 (2014). URL https://onlinelibrary.wiley.com/doi/abs/10.1111/jawr.12154.

- [15] Naveena, N. et al. Statistical downscaling in maximum temperature future climatology. AIP Conference Proceedings 2357 (2022). URL https://doi.org/10.1063/5.0081087. 030026.

- [16] Abatzoglou, J. T. & Brown, T. J. A comparison of statistical downscaling methods suited for wildfire applications. International Journal of Climatology 32, 772–780 (2012). URL https://rmets.onlinelibrary.wiley.com/doi/abs/10.1002/joc.2312.

- [17] Wang, B. et al. Sources of uncertainty for wheat yield projections under future climate are site-specific. Nature Food 1, 720–728 (2020).

- [18] Teutschbein, C. & Seibert, J. Bias correction of regional climate model simulations for hydrological climate-change impact studies: Review and evaluation of different methods. Journal of Hydrology 456-457, 12–29 (2012).

- [19] Damiani, A., Ishizaki, N. N., Sasaki, H., Feron, S. & Cordero, R. R. Exploring super-resolution spatial downscaling of several meteorological variables and potential applications for photovoltaic power. Scientific Reports 14, 7254 (2024).

- [20] Dumas, M., Kc, B. & Cunliff, C. I. Extreme weather and climate vulnerabilities of the electric grid: A summary of environmental sensitivity quantification methods. Tech. Rep., Oak Ridge National Lab.(ORNL), Oak Ridge, TN (United States) (2019).

- [21] Wilby, R. L. et al. Statistical downscaling of general circulation model output: A comparison of methods. Water Resources Research 34, 2995–3008 (1998). URL https://agupubs.onlinelibrary.wiley.com/doi/abs/10.1029/98WR02577.

- [22] Wilby, R. L. et al. Integrated modelling of climate change impacts on water resources and quality in a lowland catchment: River kennet, uk. J. Hydrol. 330, 204–220 (2006).

- [23] Bischoff, T. & Deck, K. Unpaired downscaling of fluid flows with diffusion bridges. Artificial Intelligence for the Earth Systems 3, e230039 (2024).

- [24] Hammoud, M. A. E. R., Titi, E. S., Hoteit, I. & Knio, O. Cdanet: A physics-informed deep neural network for downscaling fluid flows. Journal of Advances in Modeling Earth Systems 14, e2022MS003051 (2022).

- [25] Harris, L., McRae, A. T., Chantry, M., Dueben, P. D. & Palmer, T. N. A generative deep learning approach to stochastic downscaling of precipitation forecasts. Journal of Advances in Modeling Earth Systems 14, e2022MS003120 (2022).

- [26] Price, I. & Rasp, S. Increasing the accuracy and resolution of precipitation forecasts using deep generative models, 10555–10571 (PMLR, 2022).

- [27] Harder, P. et al. Generating physically-consistent high-resolution climate data with hard-constrained neural networks. arXiv preprint arXiv:2208.05424 (2022).

- [28] Mardani, M. et al. Residual corrective diffusion modeling for km-scale atmospheric downscaling. arXiv preprint arXiv:2309.15214 (2023).

- [29] Lopez-Gomez, I. et al. Dynamical-generative downscaling of climate model ensembles. arXiv preprint arXiv:2410.01776 (2024).

- [30] Danabasoglu, G. et al. The community earth system model version 2 (cesm2). Journal of Advances in Modeling Earth Systems 12, e2019MS001916 (2020). URL https://agupubs.onlinelibrary.wiley.com/doi/abs/10.1029/2019MS001916. E2019MS001916 2019MS001916.

- [31] Hersbach, H. et al. The ERA5 global reanalysis. Quarterly Journal of the Royal Meteorological Society 146, 1999–2049 (2020). URL https://rmets.onlinelibrary.wiley.com/doi/abs/10.1002/qj.3803.

- [32] Dixon, K. W. et al. Evaluating the stationarity assumption in statistically downscaled climate projections: is past performance an indicator of future results? Climatic Change 135, 395–408 (2016).

- [33] Huang, X., Swain, D. L. & Hall, A. D. Future precipitation increase from very high resolution ensemble downscaling of extreme atmospheric river storms in california. Science Advances 6, eaba1323 (2020). URL https://www.science.org/doi/abs/10.1126/sciadv.aba1323.

- [34] Doblas-Reyes, F. et al. Linking Global to Regional Climate Change., 1363–1512 (Cambridge University Press, Cambridge, United Kingdom, 2021).

- [35] Pan, B. et al. Learning to correct climate projection biases. Journal of Advances in Modeling Earth Systems 13, e2021MS002509 (2021).

- [36] Dong, C., Loy, C. C., He, K. & Tang, X. Fleet, D., Pajdla, T., Schiele, B. & Tuytelaars, T. (eds) Learning a deep convolutional network for image super-resolution. (eds Fleet, D., Pajdla, T., Schiele, B. & Tuytelaars, T.) Computer Vision – ECCV 2014, 184–199 (Springer International Publishing, Cham, 2014).

- [37] Cheng, J. et al. Reslap: Generating high-resolution climate prediction through image super-resolution. IEEE Access 8, 39623–39634 (2020).

- [38] Vandal, T. et al. DeepSD: Generating high resolution climate change projections through single image super-resolution, KDD ’17, 1663–1672 (Association for Computing Machinery, New York, NY, USA, 2017). URL https://doi.org/10.1145/3097983.3098004.

- [39] Candès, E. J. & Fernandez-Granda, C. Towards a mathematical theory of super-resolution. Communications on Pure and Applied Mathematics 67, 906–956 (2014). URL https://onlinelibrary.wiley.com/doi/abs/10.1002/cpa.21455.

- [40] Kolmogorov, A. N. A refinement of previous hypotheses concerning the local structure of turbulence in a viscous incompressible fluid at high reynolds number. Journal of Fluid Mechanics 13, 82–85 (1962).

- [41] Wan, Z. Y. et al. Debias coarsely, sample conditionally: Statistical downscaling through optimal transport and probabilistic diffusion models. Advances in Neural Information Processing Systems 36, 47749–47763 (2023).

- [42] Mémoli, F. Gromov–wasserstein distances and the metric approach to object matching. Foundations of computational mathematics 11, 417–487 (2011).

- [43] Liu, X., Gong, C. & Liu, Q. Flow straight and fast: Learning to generate and transfer data with rectified flow (2023). URL https://openreview.net/forum?id=XVjTT1nw5z.

- [44] Villani, C. Optimal transport: old and new (Springer Berlin Heidelberg, 2009).

- [45] Karras, T., Aittala, M., Aila, T. & Laine, S. Elucidating the design space of diffusion-based generative models. Advances in neural information processing systems 35, 26565–26577 (2022).

- [46] Bar-Tal, O. et al. Lumiere: A space-time diffusion model for video generation. arXiv preprint arXiv:2401.12945 (2024).

- [47] Rodgers, K. B. et al. Ubiquity of human-induced changes in climate variability. Earth System Dynamics 12, 1393–1411 (2021). URL https://esd.copernicus.org/articles/12/1393/2021/.

- [48] Majda, A. J. Challenges in climate science and contemporary applied mathematics. Communications on Pure and Applied Mathematics 65, 920–948 (2012).

- [49] O’Neill, B. C. et al. The scenario model intercomparison project (ScenarioMIP) for CMIP6. Geoscientific Model Development 9, 3461–3482 (2016).

- [50] Molinaro, R. et al. Generative AI for fast and accurate statistical computation of fluids. arXiv preprint arXiv:2409.18359 (2024).

- [51] Song, Y. & Ermon, S. Improved techniques for training score-based generative models. Advances in neural information processing systems 33, 12438–12448 (2020).

- [52] Gong, B., Shi, Y., Sha, F. & Grauman, K. Geodesic flow kernel for unsupervised domain adaptation, 2066–2073 (IEEE, 2012).

- [53] Deck, K. & Bischoff, T. Easing color shifts in score-based diffusion models. arXiv preprint arXiv:2306.15832 (2023).

- [54] Song, Y. et al. Score-based generative modeling through stochastic differential equations. CoRR abs/2011.13456 (2020). URL https://arxiv.org/abs/2011.13456.

- [55] Wood, A. W., Maurer, E. P., Kumar, A. & Lettenmaier, D. P. Long-range experimental hydrologic forecasting for the eastern united states. Journal of Geophysical Research: Atmospheres 107, ACL–6 (2002).

- [56] Wood, A. W., Leung, L. R., Sridhar, V. & Lettenmaier, D. Hydrologic implications of dynamical and statistical approaches to downscaling climate model outputs. Climatic change 62, 189–216 (2004).

- [57] Thrasher, B., Maurer, E. P., McKellar, C. & Duffy, P. B. Technical note: Bias correcting climate model simulated daily temperature extremes with quantile mapping. Hydrology and Earth System Sciences 16, 3309–3314 (2012).

- [58] Chandel, V. S., Bhatia, U., Ganguly, A. R. & Ghosh, S. State-of-the-art bias correction of climate models misrepresent climate science and misinform adaptation. Environmental Research Letters 19, 094052 (2024).

- [59] National Oceanic and Atmospheric Administration (NOAA). Heat forecast tools. URL https://www.weather.gov/safety/heat-index.

- [60] Delworth, T. L., Mahlman, J. D. & Knutson, T. R. Changes in heat index associated with CO2-induced global warming. Climatic change 43, 369–386 (1999).

- [61] Dahl, K., Licker, R., Abatzoglou, J. T. & Declet-Barreto, J. Increased frequency of and population exposure to extreme heat index days in the united states during the 21st century. Environmental research communications 1, 075002 (2019).

- [62] Hersbach, H. et al. ERA5 hourly data on single levels from 1940 to present (2023).

- [63] Copernicus Climate Change Service, Climate Data Store. ERA5 hourly data on single levels from 1940 to present (2023).

- [64] Charalampopoulos, A.-T., Zhang, S., Harrop, B., Leung, L.-y. R. & Sapsis, T. Statistics of extreme events in coarse-scale climate simulations via machine learning correction operators trained on nudged datasets. arXiv preprint arXiv:2304.02117 (2023).

- [65] Candès, E. J. & Fernandez-Granda, C. Super-resolution from noisy data. Journal of Fourier Analysis and Applications 19, 1229–1254 (2013). URL https://doi.org/10.1007/s00041-013-9292-3.

- [66] Hua, Y. & Sarkar, T. K. On SVD for estimating generalized eigenvalues of singular matrix pencil in noise. IEEE Trans. Sig. Proc. 39, 892–900 (1991).

- [67] Roy, R. & Kailath, T. ESPRIT-estimation of signal parameters via rotational invariance techniques. IEEE Transactions on acoustics, speech, and signal processing 37, 984–995 (1989).

- [68] Schmidt, R. Multiple emitter location and signal parameter estimation. IEEE transactions on antennas and propagation 34, 276–280 (1986).

- [69] Vandal, T., Kodra, E. & Ganguly, A. R. Intercomparison of machine learning methods for statistical downscaling: the case of daily and extreme precipitation. Theoretical and Applied Climatology 137, 557–570 (2019).

- [70] Maraun, D. Bias correction, quantile mapping, and downscaling: Revisiting the inflation issue. Journal of Climate 26, 2137 – 2143 (2013). URL https://journals.ametsoc.org/view/journals/clim/26/6/jcli-d-12-00821.1.xml.

- [71] Hessami, M., Gachon, P., Ouarda, T. B. & St-Hilaire, A. Automated regression-based statistical downscaling tool. Environmental Modelling and Software 23, 813–834 (2008). URL https://www.sciencedirect.com/science/article/pii/S1364815207001867.

- [72] Bortoli, V. D., Thornton, J., Heng, J. & Doucet, A. Beygelzimer, A., Dauphin, Y., Liang, P. & Vaughan, J. W. (eds) Diffusion schrödinger bridge with applications to score-based generative modeling. (eds Beygelzimer, A., Dauphin, Y., Liang, P. & Vaughan, J. W.) Advances in Neural Information Processing Systems (2021). URL https://openreview.net/forum?id=9BnCwiXB0ty.

- [73] Choi, J., Kim, S., Jeong, Y., Gwon, Y. & Yoon, S. Ilvr: Conditioning method for denoising diffusion probabilistic models. arXiv preprint arXiv:2108.02938 (2021).

- [74] Meng, C. et al. SDEdit: Guided image synthesis and editing with stochastic differential equations (2021).

- [75] Park, T., Efros, A. A., Zhang, R. & Zhu, J.-Y. Contrastive learning for unpaired image-to-image translation, 319–345 (Springer, 2020).

- [76] Sasaki, H., Willcocks, C. G. & Breckon, T. P. UNIT-DDPM: Unpaired image translation with denoising diffusion probabilistic models. arXiv preprint arXiv:2104.05358 (2021).

- [77] Su, X., Song, J., Meng, C. & Ermon, S. Dual diffusion implicit bridges for image-to-image translation (2023). URL https://openreview.net/forum?id=5HLoTvVGDe.

- [78] Wu, C. H. & De la Torre, F. Unifying diffusion models’ latent space, with applications to cyclediffusion and guidance. arXiv preprint arXiv:2210.05559 (2022).

- [79] Zhao, M., Bao, F., Li, C. & Zhu, J. Egsde: Unpaired image-to-image translation via energy-guided stochastic differential equations. arXiv preprint arXiv:2207.06635 (2022).

- [80] Grover, A., Chute, C., Shu, R., Cao, Z. & Ermon, S. Alignflow: Cycle consistent learning from multiple domains via normalizing flows (2019). URL https://openreview.net/forum?id=S1lNELLKuN.

- [81] Groenke, B., Madaus, L. & Monteleoni, C. Climalign: Unsupervised statistical downscaling of climate variables via normalizing flows, CI2020, 60–66 (Association for Computing Machinery, New York, NY, USA, 2021). URL https://doi.org/10.1145/3429309.3429318.

- [82] Cuturi, M. Sinkhorn distances: Lightspeed computation of optimal transport. Advances in neural information processing systems 26 (2013).

- [83] Courty, N., Flamary, R., Habrard, A. & Rakotomamonjy, A. Joint distribution optimal transportation for domain adaptation. Advances in neural information processing systems 30 (2017).

- [84] Flamary, R., Courty, N., Tuia, D. & Rakotomamonjy, A. Optimal transport for domain adaptation. IEEE Trans. Pattern Anal. Mach. Intell 1 (2016).

- [85] Papadakis, N. Optimal transport for image processing. Ph.D. thesis, Université de Bordeaux (2015).

- [86] Tian, C., Zhang, X., Lin, J. C.-W., Zuo, W. & Zhang, Y. Generative adversarial networks for image super-resolution: A survey. arXiv preprint arXiv:2204.13620 (2022).

- [87] Lugmayr, A., Danelljan, M., Van Gool, L. & Timofte, R. SRFlow: Learning the super-resolution space with normalizing flow (2020).

- [88] Dhariwal, P. & Nichol, A. Diffusion models beat gans on image synthesis. Advances in Neural Information Processing Systems 34, 8780–8794 (2021).

- [89] Li, H. et al. Srdiff: Single image super-resolution with diffusion probabilistic models. Neurocomputing 479, 47–59 (2022). URL https://www.sciencedirect.com/science/article/pii/S0925231222000522.

- [90] Finzi, M. A., Boral, A., Wilson, A. G., Sha, F. & Zepeda-Núñez, L. Krause, A. et al. (eds) User-defined event sampling and uncertainty quantification in diffusion models for physical dynamical systems. (eds Krause, A. et al.) Proceedings of the 40th International Conference on Machine Learning, Vol. 202 of Proceedings of Machine Learning Research, 10136–10152 (PMLR, 2023). URL https://proceedings.mlr.press/v202/finzi23a.html.

- [91] Amos, B., Xu, L. & Kolter, J. Z. Precup, D. & Teh, Y. W. (eds) Input convex neural networks. (eds Precup, D. & Teh, Y. W.) Proceedings of the 34th International Conference on Machine Learning, Vol. 70 of Proceedings of Machine Learning Research, 146–155 (PMLR, 2017). URL https://proceedings.mlr.press/v70/amos17b.html.

- [92] Bao, F., Li, C., Cao, Y. & Zhu, J. All are worth words: a vit backbone for score-based diffusion models (2022). URL https://openreview.net/forum?id=WfkBiPO5dsG.

- [93] Anderson, B. D. Reverse-time diffusion equation models. Stochastic Processes and their Applications 12, 313–326 (1982).

- [94] Efron, B. Tweedie’s formula and selection bias. Journal of the American Statistical Association 106, 1602–1614 (2011).

- [95] Ho, J. & Salimans, T. Classifier-free diffusion guidance (2022). URL https://arxiv.org/abs/2207.12598. 2207.12598.

- [96] Wood, A. W., Maurer, E. P., Kumar, A. & Lettenmaier, D. P. Long-range experimental hydrologic forecasting for the eastern United States. Journal of Geophysical Research: Atmospheres 107, ACL 6–1–ACL 6–15 (2002).

- [97] Danabasoglu, G. et al. The community earth system model version 2 (CESM2). Journal of Advances in Modeling Earth Systems 12, e2019MS001916 (2020).

- [98] Eyring, V. et al. Overview of the coupled model intercomparison project phase 6 (CMIP6) experimental design and organization. Geoscientific model development 9, 1937–1958 (2016).

- [99] Fasullo, J. T. et al. An overview of the E3SM version 2 large ensemble and comparison to other E3SM and CESM large ensembles. Earth system dynamics 15, 367–386 (2024).

- [100] Soci, C. et al. The ERA5 global reanalysis from 1940 to 2022. Quarterly journal of the Royal Meteorological Society. Royal Meteorological Society (Great Britain) 150, 4014–4048 (2024).

- [101] Rothfusz, L. P. The heat index “equation” (or, more than you ever wanted to know about heat index). Technical Attachment SR 90-23, NWS Southern Region Headquarters (1990).

- [102] National Weather Service. Heat index equation. https://www.wpc.ncep.noaa.gov/html/heatindex_equation.shtml. Accessed: 2024-12-08.

- [103] Ullrich, P. A. et al. Tempestextremes v2. 1: A community framework for feature detection, tracking, and analysis in large datasets. Geoscientific Model Development 14, 5023–5048 (2021).

Appendix S1 Related work

The most direct approach to super-resolve low-resolution data is to learn a low- to high-resolution mapping via paired data when it is possible to collect such data [29]. For complex dynamical systems, as the one arising from climate simulations, several methods carefully manipulate high- and low-resolution models, either by nudging or by enforcing boundary conditions, to produce paired data without introducing spectral biases [64, 32]. Alternatively, if one has strong prior knowledge about the downsampling process, optimization methods can solve an inverse problem to directly estimate the high-resolution data, leveraging prior assumptions such as sparsity in compressive sensing [65, 39] or translation invariance [66, 67, 68].

In the climate projection setting, there is no straightforward way to obtain paired data due to the nature of the problem (i.e., turbulent flows, with characteristically different statistics across a large span of spatiotemporal scales). In the weather and climate literature (see [69] for an extensive overview), prior knowledge can be exploited to downscale specific variables [21]. One of the most predominant methods of this type is bias correction and spatial disaggregation (BCSD), which combines traditional spline interpolation with a quantile matching bias correction [70] and a disaggregation step , and linear models [71]. Recently, several studies have used ML to downscale physical quantities such as precipitation [38], but without quantifying the uncertainty of the prediction. Yet, to our knowledge, a generally applicable method to downscale arbitrary variables under an assumption of unpaired data is lacking.

Another difficulty is to remove the bias in the low resolution data. This is an instance of domain adaptation, a topic popularly studied in computer vision. Recent work has used generative models such as GANs and diffusion models to bridge the gap between two domains [23, 72, 73, 74, 35, 75, 76, 77, 78, 79]. A popular domain alignment method dubbed AlignFlow [80] was used in [81] for downscaling weather data. This approach learns normalizing flows for source and target data of the same dimension, and uses a common latent space to move across domains. The advantage of those methods is that they do not require training data from two domains in correspondence. Many of those approaches are related to optimal transport (OT), a rigorous mathematical framework for learning maps between two domains without paired data [44].

In fact, BCSD also relies in optimal transport in the bias correction step, which is instantiated by quantile mapping (QM). Quantile mapping is the solution of the one-dimensional OT problem, which applies to each pixel and variable independently. Recent computational advances in OT for discrete (i.e., empirical) measures [82] have resulted in a wide set of methods for domain adaptation [83, 84]. Despite their empirical success with careful choices of regularization, their use alone for high-dimensional images has remained limited [85].

GenBCSR uses diffusion models to perform super-resolution after a debiasing step implemented using a flow-matching algorithm. We avoid common issues from GANs [86] and flow-based methods [87], which include over-smoothing, mode collapse, and large model footprints [88, 89]. Also, due to the debiasing map, which matches the low-frequency content in distribution (see Figure 1 (a) of [41]), we do not need to explicitly impose that the low-frequency power spectra of the two datasets match, as some competing methods do [23]. Compared to formulations that perform upsampling and debiasing simultaneously [23, 38], our framework performs these two tasks separately by training each step separately, one global debiasing model and one super-resolution model for each target geographical region888Given enough computational resources, one can, in principle, super-resolve globally.

Lastly, in comparison to other two-stage approaches [23, 81], debiasing is conducted at low resolutions, which is less expensive, as it is performed on a much smaller space, and more efficient, as it is not hampered by spurious biases introduced by interpolation techniques such as linear interpolation, which, unless properly filtered, may incur aliasing. Also, compared to [41], the linear downsampling map is fixed, as we use a conditional diffusion model whose score function is trained with knowledge of the conditioning (which is an input of the score function), i.e., a-priori conditioning, instead of training an unconditional model and only conditioning at inference time by modifying the score function [90], i.e., a-posteriori conditioning. As in our case, the map is non-local (instead of a decimation mask), directly using the approach in [41], which leverages [90], would incur a higher computational and memory footprint burden, as the SVD of needs to be explicitly constructed, stored, and applied at each inference time. Another difference with [23, 81, 41] is the temporal super-resolution, as these methods only sample snapshots, thus they do not impose temporal coherence in the resulting sequences.

S1.1 Optimal Transport Solvers

Solving the optimal transport problem has attracted a wealth of attention in recent years with many methods developed to solve it. Among them perhaps the most popular are the Sinkhorn-type algorithms [82] with Sinkhorn barycenters; however, due to its nature as a kernel-based method, it often struggles with generalization, which is crucial in our case, as we will encounter distributional shifts for future climate snapshots. This assumption is one of the main difference between this work and [41] where the underlying systems posses a stationary distribution. One alternative is using the dual formulation of (5) as the minimization over convex functions parameterized by a neural network [91]. Unfortunately, this formulation tends to struggle for large dimensional systems as the ones considered in this work.

In contrast with other generative methodologies such as GANs [81, 80], rectified flow is a dynamical model. The model predicts a vector field in which an ODE is solved for the alignment. By allowing it to evolve in an artificial flow time, the overall transformation has greater capacity, while mitigating the mode collapse phenomenon, as the trajectories can not intersect in phase space.

Appendix S2 Method details

S2.1 Bias correction

We provide more details on how the debiasing or bias correction is performed. Here denotes a biased low-resolution snapshot, and denotes an unbiased low-resolution one, where is the image of through the linear downsampling map (see Figure 1). The space of biased low-resolution dataset is given by a collection of trajectories from the LENS2 ensemble dataset, each trajectory, which we denote by (such that , has slightly different spatio-temporal statistics so we seek to leverage this difference to further extract statistical performance from our debiasing step. We characterize the statistics of each trajectory using their mean and standard deviation in the training set, namely and , which are estimated using the samples within the training range. The space of the unbiased low-resolution snapshot , is given by the daily means of the ERA5 historical data regridded to ° resolution. We denote the mean and standard deviation of the set as and respectively.

To render the training more efficient, we normalize both the input and output data following: for , and , then we seek to find the smallest deviation between the two anomalies. In general, and have similar spacial structure, in which orography seems to be the dominant features, thus computing a transport map between the non-normalized variables ( and ), and the normalized ones ( and ) yield similar results, albeit with the latter empirically capturing better statistics. This is a typical example of using simple statistical methods to extract as much as possible information, and then leverage neural network models to capture the deviations. We define the map as follows. For an input stemming from a trajectory , we first normalize it using the statistics of the trajectory during (for the training years) . Then we solve an evolution problem whose vector field is parameterized by a neural network, and where the last two entries induce a family of vector fields and the initial condition condition, , is given by the normalized input. Finally, we identify the solution of the ODE at the terminal time to the normalized output, i.e., , which is then de-normalized resulting in , where is the Hadamard product.

In this case the ODE, which takes as an initial condition is given by

| (S1) |

To train we use the rectified flow approach, namely,

| (S2) |

where , is the set of couplings with the correct marginals, and are the indexes of the training trajectories instantiated by the different LENS2 ensemble members. The choice of the coupling is essential to obtain a correct mapping. This coupling encodes some of the physical information, in particular, seasonality is included in the coupling by sampling data pairs that correspond to similar time-stamps (up to a couple of years) for both LENS2 and ERA5 samples. In addition, time-coherence is also included in this step, as the training batches are composed of a few chunks of contiguous data in time, which are fed to the network during training. We observed that using a fully randomized sample of the training data often lead to overfitting, which was attenuated by feeding chunked data. Empirically we found that using chunks of 8 contiguous days provides good performance on the validation set, avoiding overfitting and preserving the climate change signal. This methodology is also adapted to consider the case in which is composed of several subsets (trajectories) with different (but close) statistical properties as we sample snapshots corresponding to different ensemble members to snapshots of a single ERA5 trajectory. Once the model is trained, we solve (S1) using a adaptive Runge-Kutta solver, which allows us to align the simulated climate manifold to the weather manifold. See S7.2 for a visualization.

S2.1.1 Model architecture

For the architecture we use a U-ViT [92], with four levels. The input to the network are three 3-tensors, , , and ; each of dimensions plus a scalar corresponding to the evolution time . Here the four channels correspond to the surface fields being modeled as shown in Table S3. The output is one 3-tensor corresponding to the instant velocity of . In this case, , and are used as conditioning vectors. After some processing, they are concatenated to along the channel dimension as shown in

Resize and aggregation layers for encoding

As the spatial dimensions of input tensors, , are not easily amenable to downsampling, i.e, they are not multiples of small prime numbers, we use a resize layer at the beginning. The resize layers performs a cubic interpolation to obtain a 3-tensor of dimensions , followed by a convolutional network with lat-lon boundary conditions: periodic in the longitudinal dimension (using the jax.numpy.pad function with wrapr mode) and constant padding in the latitudinal dimension, which repeats the value at the end of the array (using the jax.numpy.pad function with edge mode).

For the inputs, the convolutional network works as an dealiasing step. It has a kernel size of , which we write as:

| (S3) |

where denotes a convolutional layer with filters, kernel size and stride .

The conditioning inputs, i.e., the statistics , and , go through a slightly different process: they are are also interpolated, but they go trough a shallow convolutional network composed of one convolutional layers followed by a normalization layer with a swish activation function, and another convolutional layer. Here, both convolutional layers have a kernel size . The first has an embedding dimension of as it acts as an anti-aliasing layer while the second has an embedding dimension of as it seeks to project the information into the embedding space. In summary, we have

| (S4) | |||

| (S5) |

Then all the fields are concatenated along the channel dimension, i.e.,

| (S6) |

of dimensions . The last dimension comes from the concatenation of a tensor with channel-dimension , and two other with a channel-dimension of .

Downsampling stack

After the inputs are rescaled, projected and concatenated, we feed the composite fields to an U-ViT. For the downsampling stack we use levels, at each level we downsample by a factor two in each dimension, while increasing the number of channels by a factor of two, so we only have a mild compression as we descend through the stack.

The first layer takes the output of the merge and resizing, and we perform a projection

| (S7) |

where is the latent input from the encoding step. Then is successively downsampled using a convolution with stride , and an embedding dimension of , where is the level of the U-Net.

| (S8) |

where is the number of resnet at each level. The output of the downsampled embedding is then then processed by a sequence of resnet blocks following:

| (S9) |

where , the number of channels at each level, Dop is dropout layer with probability , here runs from to . In addition, time embedding , is processes with a Fourier embedding layer with a dimension of , which is then used in conjunction with a FiLM layer following

| (S10) |

where are non-trainable frequencies evenly spaced on a logarithmic scale between and , and . Finally, GN stands for a group normalization layer with groups.

Attention Core

For the attention core we use a Vision Transformer with 2D position encoding, heads, a token size of and normalized query and keys inside the attention module.

Upsampling stack. The upsampling stack takes the downsampled latent variables and sequentially increases their resolution while merging them with skip connections until the original resolution is reached. This process, within the U-ViT model, is completely different from the super-resolution stage of the framework as shown in Figure 1, which is treated in detail in S2.2 The upsampling stack contains the same number of levels and residual blocks as the downsampling one. At each level, it adds the corresponding skip connection in the upsampling stack:

| (S11) |

followed by the same blocks defined in (S9), followed by an upsampling block

| (S12) |

where the channel2space operator expands the channels into blocks locally, effectively increasing the spatial resolution by in each direction.

Decoding and Resizing. We apply a final block to the output of the upsampling stack.

| (S13) |

followed by a resizing layer as the one defined in (S3), with number of channels equal to the number of input fields. This operation brings back the output to the size of the input.

S2.1.2 Hyperparameters

Table S1 shows the set of hyperparameters used for the denoiser architecture, as well as those applied during the training and sampling phases of the rectified flow model. We also include the optimization algorithm used for minimizing (S2), along with the learning rate scheduler and weighting.

| Debias architecture (output shape: ) | |

|---|---|

| Downsampling ratios (spatial) | [2, 2, 2, 2] |

| Number of residual blocks per resolution | 6 |

| Hidden channels | [128, 256, 512, 1024] |

| Axial attention layers in resolution | [False, False, False, True] |

| Training | |

| Device | TPUv5e, |

| Duration | M steps |

| Batch size | (with data parallelism) |

| Learning rate | cosine annealed (peak=, end=), 1K linear warm-up steps |

| Gradient clipping | max norm = 0.6 |

| Time sampling | |

| Condition dropout probability () | 0.5 |

| Inference | |

| Device | Nvidia A100 |

| Integrator | Runge-Kutta 4th order |

| Solver number of steps | |

S2.1.3 Training, evaluation and test data

We trained the debiasing stage of GenBCSR using 4 LENS2 members cmip6_1001_001, cmip6_1021_002, cmip6_1041_003, and cmip6_1061_004, using data from to . We point out that these members share the same forcing [47], but different initializations to sample internal variability. Debiasing is performed with respect to the coarse-grained ERA5 data for the same period.

For model selection we used the following 14 LENS2 members: cmip6_1001_001, cmip6_1041_003, cmip6_1081_005, cmip6_1121_007, cmip6_1231_001, cmip6_1231_003, cmip6_1231_005, cmip6_1231_007, smb_1011_001, smbb_1301_011, cmip6_1281_001, cmip6_1301_003, smbb_1251_013, and smbb_1301_020, using data from to .

For testing we use the full 100-member LENS2 ensemble from to . The full ensemble used for testing contains members with different forcings and perturbations.

S2.1.4 Computational cost

Training the rectified flow model took approximately three days using four TPUv5e nodes (32 cores total), with one sample per core. Each host loaded a sequence of eight contiguous daily snapshots per iteration, which were then distributed among the cores.

For inference, the debiasing step took around one hour to process each 140-year ensemble member on a host with 16 A100 GPUs. Debiasing the full 100-member LENS2ensemble for 140 years took about four hours using 25 nodes, each equipped with 16 A100 GPUs.

S2.2 Super-resolution

S2.2.1 Diffusion model formulation

In this section, we provide a brief high-level description of the generic diffusion-based generative modeling framework. While different variants exist, we mostly follow that of [45] and refer interested readers to its Appendix for a detailed explanation of the methodology.

Diffusion models are a type of generative model that work by gradually adding Gaussian noise to real data until they become indistinguishable from pure noise (forward process). The unique power of these models is their ability to reverse this process, starting from noise and progressively refining it to create new samples that resemble the original data (backward process, or sampling).

Mathematically, we describe the forward diffusion process as a stochastic differential equation (SDE)

| (S14) |

where is a prescribed noise schedule and a strictly increasing function of the diffusion time (note: to be distinguished from real physical time ), denotes its derivative with respect to , and is the standard Wiener process. The linearity of the forward SDE implies that the distribution of is Gaussian given :

| (S15) |

with mean and diagonal covariance . For , i.e. the maximum diffusion time, we impose such that can be faithfully approximated by the isotropic Gaussian .

The main underpinning of diffusion models is that there exists a backward SDE, which, when integrated from to , induces the same marginal distributions as those from the forward SDE (S14) [93, 54]:

| (S16) |

In other words, with full knowledge of (S16) one can easily draw samples to use as the initial condition and run a SDE solver to obtain the corresponding , which resembles a real sample from . However, in (S16), the term , also known as the score function, is not directly known. Thus, the primary machine learning task associated with diffusion models is centered around expressing and approximating the score function with a neural network, whose parameters are learned from data. Specifically, the form of parameterization is inspired by Tweedie’s formula [94]:

| (S17) |

where is a denoising neural network that predicts the clean data sample given a noisy sample ( is drawn from a standard Gaussian ). Training involves sampling both data samples and diffusion times , and optimizing parameters with respect to the mean denoising loss defined by

| (S18) |

where denotes the loss weight for noise level . We use the weighting scheme proposed in [45] as well as the pre-conditioning strategies therein to improve training stability.

At sampling time, the score function in SDE (S16) is substituted with the learned denoising network using expression (S17).

In the case that an input is required, i.e. sampling from conditional distribution , the input is incorporated by the denoiser as an additional input. Classifier-free guidance (CFG) [95] may be employed to trade off between maintaining coherence with the conditional input and increasing coverage of the target distribution. Concretely, CFG is implemented through modifying the denoising function at sampling time:

| (S19) |

where is a scalar that controls the guidance strength (increasing means paying more attention to ) and denotes the null conditioning input (i.e., a zero-filled tensor with the same shape as ), such that represents unconditional denoising. The unconditional and conditional denoisers are trained jointly using the same neural network model, by randomly dropping the conditioning input from training samples with probability .

S2.2.2 Input and output normalization

As part of (8), we construct a diffusion model to sample from the conditional distribution , where denotes the residual between the high-resolution target and the interpolated low-resolution climate input . A substantial portion of the variability in is due to its strong seasonal and diurnal periodicity. To avoid learning these predictable patterns and direct the model’s focus toward capturing non-trivial interactions, we learn to sample , the residual normalized by its climatological mean and standard deviation computed over the training dataset:

| (S20) |

The input is also strongly seasonal. However, we do not remove its seasonal components and instead normalize with respect to its date-agnostic mean and standard deviation:

| (S21) |

which ensures that the model is still able to to leverage the seasonality in the input signals when deriving its output.

In summary, samples are obtained as

| (S22) |

where denotes the sampling function (solving the reverse-time SDE end-to-end) for given the climatology-normalized coarse-resolution input , and a noise realization .

S2.2.3 Model architecture

The diffusion model denoiser is implemented using a U-ViT, which consists of a downsampling and a upsampling stack, each composed of convolutional and axial attention layers. The denoiser takes as inputs noised samples , the conditioning inputs , and the noise level . The output is the climatology-normalized residual sample

| (S23) |

The output samples span 60 degrees in longitude, 30 degrees in latitude (covering the region of interest, such as CONUS) and 3 days in time, leading to tensor shape (for quarter degree spatial and bi-hourly temporal resolutions), whose dimensions representing time, longitude, latitude and variable dimensions respectively. is a noisy version of and thus share the same size. also has the same number of dimensions, but is in lower resolution with shape , while is a scalar.

Encoding. The input is merged with the noisy sample . We first apply an encoding block

| (S24) |

which first transfers to the same shape as through interpolation (cubic in space and nearest neighbor in time), followed by a layer normalization (LN), sigmoid linear unit (SiLU) activation and a spatial convolutional layer (parameters inside the brackets indicate output feature dimension, kernel size and stride respectively) that encode the input into latent features. The latent features are concatenated with in the channel dimension and projected by a convolutional layer to form the input to the subsequent downsampling stack:

| (S25) |

Downsampling stack. The downsampling stack consists of a sequence of levels, each at a coarser resolution than the previous. Each level, indexed by , further comprises a strided convolutional layer that applies spatial downsampling

| (S26) |

followed by 4 residual blocks defined by

| (S27) |

where denotes the index of the residual block. FiLM is a linear modulation layer

| (S28) |

where are non-trainable embedding frequencies evenly spaced on a logarithmic scale.

At higher downsampling levels (corresponding to lower resolutions), we additionally apply a sequence of axial multi-head attention (MHA) layers along each dimension (both spatial and time) at the end of each block, defined by

| (S29) |

where denotes the axis over which attention is applied. The fact that attention is sequentially applied one dimension at a time ensures that the architecture scales favorably as the input dimensions increase.

The outputs from each block are collected and fed into the upsampling stack as skip connections, similar to the approach used in classical U-Net architectures.