Stochastic Mutual Information Gradient Estimation for Dimensionality Reduction Networks

Abstract

Feature ranking and selection is a widely used approach in various applications of supervised dimensionality reduction in discriminative machine learning. Nevertheless there exists significant evidence on feature ranking and selection algorithms based on any criterion leading to potentially sub-optimal solutions for class separability. In that regard, we introduce emerging information theoretic feature transformation protocols as an end-to-end neural network training approach. We present a dimensionality reduction network (MMINet) training procedure based on the stochastic estimate of the mutual information gradient. The network projects high-dimensional features onto an output feature space where lower dimensional representations of features carry maximum mutual information with their associated class labels. Furthermore, we formulate the training objective to be estimated non-parametrically with no distributional assumptions. We experimentally evaluate our method with applications to high-dimensional biological data sets, and relate it to conventional feature selection algorithms to form a special case of our approach.

keywords:

feature projection, dimensionality reduction, neural networks, information theoretic learning, mutual information, stochastic gradient estimation, MMINet1 Introduction

In supervised discriminative model learning, given a finite number of training data samples, optimal exploitation of the information content in the extracted features with respect to their class conditions is essential. Applications in various research fields have developed different domain-specific methods for feature learning and subsequent supervised model training [28, 26, 24]. Many exploratory applications in practice are further characterized by high-dimensional feature representations where the dimensionality reduction problem is to be addressed.

One traditional approach towards supervised dimensionality reduction is feature selection, referring to the process of selecting the most class-informative subset from the high-dimensional feature set and discarding others [16]. Particularly, feature selection based on information theoretic criteria (e.g., maximum mutual information) have shown significant promise in earlier studies [2, 25]. Although selecting a class-relevant subset of features leads to intuitively interpretable and preferable learning algorithms, feature ranking and selection algorithms are known to potentially yield sub-optimal solutions due to their inability to thoroughly assess feature dependencies [10, 44]. In that regard, feature transformation based dimensionality reduction methods provide a more robust alternative [16], which have been also studied in the form of information theoretic projections or rotations [43, 19, 11].

These latter studies constitute the basis of our current work, in which we address the problem of learning feature transformations based on a maximum mutual information criterion between transformed features and their associated class labels using artificial neural networks. Beyond exhaustively aiming to estimate the mutual information quantity between continuous valued features and discrete valued class labels across training data samples [38, 14], we claim that feature transformations under a maximum mutual information criterion can be obtained by using a stochastic estimate of the gradient of the mutual information. This feature transformation approach can be further realized as a dimensionality reduction neural network which: (1) can be trained via standard gradient descent, (2) reduces the inference time to a single forward pass through the learned network, and (3) simplifies the overall supervised dimensionality reduction problem by alleviating the need for heuristic and sub-optimal feature selection algorithms.

In this paper we present MMINet, a generic dimensionality reduction neural network training procedure based on maximum mutual information criterion between the network-transformed features and their associated class labels. We derive a stochastic estimate of the gradient of the mutual information between the continuous valued projected feature random variables and discrete valued class labels, and use this stochastic quantity for the loss function in artificial neural network learning. Furthermore, we formulate the training objective non-parametrically, relying on non-parametric kernel density estimations to approximate projected feature space class-conditional probability densities. We interpret our approach as determining a manifold on which transformations of the original features carry maximal mutual information with the class labels. Subsequently, feature selection becomes a special sparse solution case of all possible solutions that MMINet can provide when it is restricted to a single linear layer architecture. For our empirical assessments, we demonstrate our results on publicly available high-dimensional biological microarray datasets for cancer diagnostics, in comparison to several conventional feature selection methods.

The remainder of this article is organized as follows. In the upcoming Section 2 we briefly present related work on feature selection and feature transformation based dimensionality reduction approaches, as well as some recent information theoretic neural network training studies. We then describe the proposed MMINet approach on feature transformation learning neural networks with maximum mutual information criterion in Section 3. As part of our experimental studies in Section 4, we initially illustrate the limitations of a simple feature selection approach with a toy example in Section 4.1. In Section 4.2 we describe both the synthetically generated and the diagnostic biological data sets that we used in our empirical assessments. Subsequently we describe our implementations and present our results in Sections 4.3 and 4.4. We conclude the article with a discussion of our methodology, results, current limitations and potential improvements.

2 Related Work

Supervised dimensionality reduction by feature selection refers to selecting the most class-informative feature subset from a high-dimensional feature set based on a defined optimality criterion to maximize class separability [16]. A theoretically optimal dimensionality reduction procedure for a specified classifier is to iteratively adjust a pre-determined feature dimensionality reduction framework until the best cross validated decoding accuracy is achieved, which are known as the wrapper methods (see Figure 1(a)). One well-known example is the support vector machine (SVM) recursive feature elimination (RFE) approach [17]. SVM-RFE is a wrapper feature selection method around an SVM classifier which uses backward elimination of features with the smallest model weights. Intuitively, as the dimensionality and amount of training data increases, wrapper methods become computationally cumbersome and time consuming for model learning. Filter methods provide an alternative in the form of feature ranking and subset selection algorithms based on a pre-defined optimality criterion (see Figure 1(b)). In particular, feature selection based on information theoretic criteria, where salient statistical properties of features can be exploited by a probabilistic dependence measure, have shown significant promise in supervised dimensionality reduction [2, 25, 35].

Feature selection methods offer the advantage of preserving original representations of the variables. This subsequently translates to sustaining better and easier model interpretability, and makes them preferable depending on the learning application domain [15, 27]. Nevertheless there exists significant evidence on feature ranking and selection algorithms leading to potentially sub-optimal solutions for class separability [10, 44]. This argument can be simply illustrated by considering the case where two redundant features can become informative jointly (as will be shown in Section 4.1). Accordingly, feature transformation based dimensionality reduction methods can provide a more robust and viable alternative [16, 20] (see Figure 1(c)), which are also demonstrated in the form of information theoretic linear projections or rotations [43, 19, 30, 47, 11]. We motivate our study in the light of these work, where we aim to use standard gradient descent based artificial neural network training and inference pipelines to perform nonlinear maximum mutual information based feature transformations. We previously explored this idea for neurophysiological feature transformations in brain-computer interfaces [32], which we re-address here in the context of neural networks.

Recently a different line of work focused on estimating mutual information of high dimensional continuous variables over neural networks, initially proposed as mutual information neural estimation (MINE) [3]. From an unsupervised representation learning perspective [21] extended MINE to learn powerful lower dimensional data representations that perform well on a variety of tasks, by maximizing the estimated mutual information between the input and output of a deep neural network encoder. More recently [45] proposed to estimate the gradient of mutual information rather than itself for similar representation learning setups, which was argued to provide a more stable estimate for unsupervised representation learning. Yet, these studies are particularly interested in learning unsupervised deep representations of continuous high-dimensional random variables from an information theoretic perspective, which are however being successfully translated into the convention of artificial neural networks.

Going further towards application domains, neural network based information theoretic metric estimators also demonstrated significant promise in various uses within diverse artificial intelligence settings. One of such use cases include medical dialogue systems for automatic diagnosis [46], where mutual information estimation models are embedded within a policy learning framework to enhance the reward function and encourage the model to select the most discriminative symptoms to make a diagnosis. Another example extends disentangled representation learning models by an information theoretic formulation for image classification and retrieval problems in computer vision [39]. Potential contemporary use cases can further extend to mobile cloud computing applications [6], as well as end-to-end deep learning models for communication systems with efficient mutual information based encoding [13].

3 MMINet: Information Theoretic Dimensionality Reduction Neural Network

3.1 Problem Statement

Let denote the finite training data set where is a sample of a continuous valued random variable , and is a sample of a discrete valued random variable , indicating the discrete class label for . From a dimensionality reduction perspective, the objective is to find a mapping network such that the high -dimensional input feature space is mapped to a lower -dimensional transformed feature space while maximizing the mutual information between the transformed data and corresponding class labels based on the observations, as expressed by Equation (1).

| (1) |

where the continuous random variable has transformed data samples in a -dimensional feature space, denotes the parameters of the mapping , and denotes the function space for possible feature mappings .

In Bayesian optimal classification, upper and lower bounds on the probability of error in estimating a discrete valued random variable from an observational random variable can be determined by information theoretic criteria (i.e., Fano’s lower bound inequality [12] and Hellman-Raviv upper bound on Bayes error [18]). Specifically, these bounds suggest that the lowest possible Bayes error of any given classifier can be achieved when the mutual information between the random variables and is maximized (cf. [32, 43]).

3.2 Learning with Maximum Mutual Information Criterion

Mutual information between the continuous random variable and the discrete random variable is defined as: , which also can be expressed by Equation (2).

| (2) |

To solve the objective in Equation (1), exact estimation of the mutual information quantity is not necessary. Instead, we are only interested in adaptively estimating the optimal feature mapping network parameters under maximum mutual information criterion. Motivated by similar work from information theory [9, 5], we approach the optimization problem stochastically. As illustrated in Figure 2, the network parameters will be iteratively updated based on the instantaneous estimate of the gradient of mutual information at each iteration (i.e., ), which we define as the stochastic mutual information gradient (SMIG).

During this network training procedure, in fact we approximate the true gradient of the mutual information stochastically, and perform parameter updates based on the SMIG evaluated with the instantaneous sample and the values of at iteration . This stochastic estimate quantity can be obtained by dropping the expectation operation over from the true gradient given in Equation (3).

| (3) |

Subsequently, the expression for SMIG at iteration can be denoted by Equation (4).

| (4) |

In the neural network training process, consistently with Figure 2, we simply use as the instantaneous loss to be backpropagated over the network for parameter updates at iteration . Applying the Bayes’ Theorem, the instantaneous loss estimate from Equation (4) can be expressed via Equation (5).

| (5) |

where the class priors will be empirically determined over the training data samples, and at each iteration will be approximated via non-parametric kernel density estimations [36] on class conditional distributions of the transformed data samples expressed as in Equation (6).

| (6) |

where index iterates over the training samples of the conditioned class and denotes the number of samples in that class. Since a continuously differentiable kernel choice is necessary for proper evaluation of the gradients, we use Gaussian kernels as denoted in Equation (7).

| (7) |

with the kernel bandwidth matrix H determined using Silverman’s rule of thumb [40]. Finally, note that the SMIG in Equation (4) is a biased estimator of the true gradient of mutual information in Equation (3), since it is based on kernel density estimators with finite samples which are biased estimators [34]. An increase in the training data sample size per class can yield better class conditional kernel density estimates [22] that can be exploited during the neural network optimization process.

4 Experimental Studies

4.1 An Illustrative Example

We first demonstrate a simple example on how feature selection can lead to confounding results regarding class separability as we highlighted in Section 1. We will illustrate a two-class classification problem with two-dimensional data distributions such that there is significant overlap in distributions when an individual feature is selected (see Figure 3). While class distributions are easily separable when both features are considered together, a feature selection between the two dimensions will lead to significant information loss.

We subsequently show the projection results using a simple MMINet architecture with a single linear (dense) layer , where is a one by two projection array. We observe that maximum mutual information criterion based linear feature transformation ensures minimum probability of error based on the available training data samples. This example illustrates one setting on how feature selection can lead to sub-optimal solutions for class separability.

4.2 Experimental Data

We evaluate our information theoretic dimensionality reduction approach on two different types of datasets. Firstly we perform feasibility assessments on a synthetically generated dataset, and later conduct experiments using three diagnostic biological microarray datasets.

4.2.1 Synthetically Generated Data

Preliminary evaluations of our approach are performed using an artificially generated dataset with regards to a well-known basis for comparison of learning algorithms [41]. We use the Monk3 Dataset, from the MONK’s problems [41], which handles a binary classification task where 432 data samples are described by features . For each data sample, binary class labels are obtained by the following logical operation: . From the 432 data samples, 5% have noisy labels. Overall, the problem implies that there are only three relevant features to infer the class label and the remaining three features are redundant.

4.2.2 High-Dimensional Diagnostic Biological Data

We perform further empirical assessments using high-dimensional biological microarray data from the following three datasets: (1) Breast Cancer Wisconsin Diagnostic Dataset [7] consisting of 569 samples of 30 dimensional features extracted from digitized images of a fine needle aspirate of a breast mass, describing cell characteristics where the cell is either classified as malignant or benign, (2) Glioma Dataset [31] containing 50 samples of four class data (i.e., cancer/non-cancer glioblastomas or oligodendrogliomas) defined by high-dimensional microarray gene expression data of 4434 features, (3) Lung Carcinoma Dataset [4] containing 203 samples in five classes adenocarcinomas, squamous cell lung carcinomas, pulmonary carcinoids, small-cell lung carcinomas and normal lung, defined by 3312 mRNA gene expression variables.

4.3 Implementations

We evaluate MMINet feature transformation method in comparison to four supervised feature selection methods: (1) feature selection based on fisher score as a similarity based approach [8], (2) minimum redundancy maximum relevance (mRMR) feature selection [35] as an information theoretic feature ranking and selection criterion, (3) -SVM as a sparse regularization based method that utilizes an -norm regularizer on a linear support vector machine (SVM) classifier [48], (4) SVM-RFE as a wrapper feature selection method around an SVM classifier with recursive feature elimination (RFE) where features with lowest SVM weights are recursively eliminated backwards [17].

For our MMINet implementations111Codes are available at: https://github.com/oozdenizci/MMIDimReduction we used the Chainer deep learning framework [42]. Stochastic model training was performed by considering one instantaneous sample at a time (i.e., one sample per batch) for one complete pass across the whole training dataset (i.e., one epoch), and we employed momentum stochastic gradient descent [37]. The neural network architecture was arbitrarily defined as a two hidden layer network with ELU activations following the hidden layer outputs. Dimensionalities of the dense layers were chosen to be to , to , and to . All features were standardized by removing the mean and scaling to unit variance. After dimensionality reduction (i.e., feature selection or MMINet transformation), for classification purposes we used linear SVM classifiers and reported averaged 5-fold cross-validation accuracies in all experiments.

4.4 Results

Results with the synthetically generated Monk3 Dataset [41] demonstrated higher accuracies with MMINet in several experiments. We performed dimensionality reduction (from ) and 5-fold cross-validated classification of the 432 data samples for output dimensionalities of . For , MMINet yields the highest average accuracy of , with regards to with mRMR and with the other methods. Considering that the dimensionality reduction problem handles six input features, we observed that several of the feature selection methods identified the same feature as the most informative to construct , which consistently resulted in this decoding accuracy. Similar behavior is observed for , where MMINet yields a accuracy, whereas all feature selection methods selected the same two features and yield an average accuracy of . Finally for , MMINet yields , -SVM and SVM-RFE yields , and the other two methods yield an average accuracy of . This indicates that for selection of three features, in almost all cross-validation folds feature selection methods choose the truly relevant features, while MMINet transformations also yield comparable results. The overall upper accuracy range is further dependent on our choice to use a linear classifier for this problem. We did not increase the output feature dimensionality higher than three due to the nature of the constructed artificial dataset.

| Breast Cancer [7] | Glioma [31] | Lung Carcinoma [4] | |

|---|---|---|---|

| number of classes | |||

| number of data samples | |||

| Fisher Score | |||

| mRMR | |||

| -SVM | |||

| SVM-RFE | |||

| MMINet |

Regarding our experiments with high-dimensional diagnostic biological data, Table 1 presents averaged 5-fold cross-validation accuracies for the cases where output feature dimensionality is chosen equal to the number of classes for consistency across methods. MMINet yields accuracies of for binary classification with the Breast Cancer Wisconsin Diagnostic Dataset [7], for 4-class classification with the Glioma Dataset [31], and for 5-class classification with the Lung Carcinoma Dataset [4], all relatively higher than the compared feature selection methods. We observe that our feature transformation approach provides a performance upper bound to several feature selection methods in classification, based on the same classifier modality. We argue this to be due to feature selection algorithms being more restricted and simply resemble to sparse linear projection solutions when MMINet would be constrained to have a single dense layer.

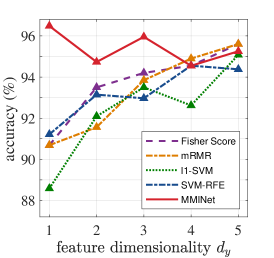

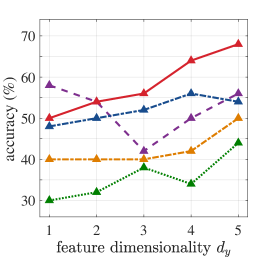

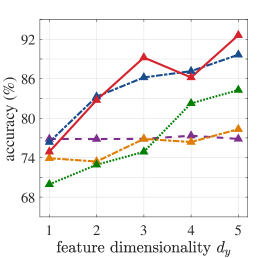

Figure 4 demonstrates an extension of the results in Table 1, where we vary the output feature dimensionality for all datasets. We observe in almost all cases that MMINet continues to provide a better performance than the other methods. Mainly SVM-RFE, a wrapper method, is competitive with MMINet as anticipated due to the classifier-oriented nature of the algorithm. Note that we did not arbitrarily increase the number of dimensions too high for MMINet, since the method relies on -dimensional kernel density estimators at the output feature space and higher dimensional density estimates are known to be unstable [40].

5 Discussion

We present a supervised dimensionality reduction network training procedure based on the stochastic estimate of the mutual information gradient. Based on the construction of the objective function, at the network output feature space the transformed features and their associated class labels carry maximum mutual information. Complete process is formulated non-parametrically based on kernel density estimates which approximate class-conditional densities in the projected feature space. We demonstrate our approach empirically using pilot experimental biological data, where feature selection algorithms are widely popular approaches for dimensionality reduction. We interpret our approach to be a more general solution than maximum mutual information based feature selection algorithms. Such selection algorithms resemble to sparse linear projection solutions when MMINet is constrained to have a single dense layer.

It is well known that the ultimate objective in Equation (1) is hard to estimate due to entangling multiple continuous and discrete random variables where continuous random variables can have infinitely large positive or negative entropy values, whereas the entropy of a discrete random variable is always positive [38, 14]. Due to the fact that there is not a global solution to optimize this objective, it is important to note that the stopping criteria is an important factor in our model training. For our current implementations we did not optimize this aspect by using a validation set based stopping criterion, which could further improve the robustness of the approach.

We stress the importance of the distinction between our study and conventional discriminative neural network training protocols. Such discriminative networks are trained end-to-end using raw data to minimize negative log-likelihood as a measure of classification error minimization based on a training data set. On the other hand, our approach is a general supervised feature dimensionality reduction and lower-dimensional feature space learning method which relies on maximum mutual information criterion. Therefore we did not perform comparisons of the dimensionality reduction methods to discriminative neural networks such that a comparable basis is maintained.

Going beyond multilayer perceptrons, stochastic training of the MMINet framework can also embed any deep neural network architecture for lower dimensional representation learning. It is important to note that in contrast to feature selection methods, which preserve the original representations of feature variables, our transformation based approach will deeply modify features onto a new feature space. In combination with the theoretical advancements on gradient-based methods of neural network interpretability (e.g., layer-wise relevance propagation [1, 29]), obtained synergies across features as highlighted by high-dimensional feature relevances can yield significant insights based on the application domain. Such feature-synergy based ideas were particularly found interesting for feature learning in brain interfacing as we studied earlier [32, 33], as well as gene expression data analysis [23] in consistency with their biological interpretations.

Acknowledgments

Our work is supported by NSF (IIS-1149570, CNS-1544895, IIS-1715858), DHHS (90RE5017-02-01), and NIH (R01DC009834).

References

- Bach et al. [2015] Bach, S., Binder, A., Montavon, G., Klauschen, F., Müller, K.-R., & Samek, W. (2015). On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PloS one, 10, e0130140.

- Battiti [1994] Battiti, R. (1994). Using mutual information for selecting features in supervised neural net learning. IEEE Transactions on Neural Networks, 5, 537–550.

- Belghazi et al. [2018] Belghazi, M. I., Baratin, A., Rajeshwar, S., Ozair, S., Bengio, Y., Courville, A., & Hjelm, D. (2018). Mutual information neural estimation. In International Conference on Machine Learning (pp. 531–540).

- Bhattacharjee et al. [2001] Bhattacharjee, A. et al. (2001). Classification of human lung carcinoma by mRNA expression profiling reveals distinct adenocarcinoma subclasses. PNAS, 98, 13790–13795.

- Chen et al. [2008] Chen, B., Hu, J., Li, H., & Sun, Z. (2008). Adaptive filtering under maximum mutual information criterion. Neurocomputing, 71, 3680–3684.

- Ciobanu et al. [2019] Ciobanu, R.-I., Dobre, C., Bălănescu, M., & Suciu, G. (2019). Data and task offloading in collaborative mobile fog-based networks. IEEE Access, 7, 104405–104422.

- Dua & Graff [2019] Dua, D., & Graff, C. (2019). UCI machine learning repository. URL: http://archive.ics.uci.edu/ml.

- Duda et al. [2012] Duda, R. O., Hart, P. E., & Stork, D. G. (2012). Pattern Classification. John Wiley & Sons.

- Erdogmus et al. [2003] Erdogmus, D., Hild, K. E., & Principe, J. C. (2003). Online entropy manipulation: Stochastic information gradient. IEEE Signal Processing Letters, 10, 242–245.

- Erdogmus et al. [2008] Erdogmus, D., Ozertem, U., & Lan, T. (2008). Information theoretic feature selection and projection. In Speech, Audio, Image and Biomedical Signal Processing using Neural Networks (pp. 1–22).

- Faivishevsky & Goldberger [2012] Faivishevsky, L., & Goldberger, J. (2012). Dimensionality reduction based on non-parametric mutual information. Neurocomputing, 80, 31–37.

- Fano [1961] Fano, R. M. (1961). Transmission of information: A statistical theory of communications.

- Fritschek et al. [2019] Fritschek, R., Schaefer, R. F., & Wunder, G. (2019). Deep learning for channel coding via neural mutual information estimation. In IEEE 20th International Workshop on Signal Processing Advances in Wireless Communications (pp. 1–5).

- Gao et al. [2017] Gao, W., Kannan, S., Oh, S., & Viswanath, P. (2017). Estimating mutual information for discrete-continuous mixtures. In Advances in Neural Information Processing Systems (pp. 5986–5997).

- Garrett et al. [2003] Garrett, D., Peterson, D. A., Anderson, C. W., & Thaut, M. H. (2003). Comparison of linear, nonlinear, and feature selection methods for EEG signal classification. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 11, 141–144.

- Guyon & Elisseeff [2003] Guyon, I., & Elisseeff, A. (2003). An introduction to variable and feature selection. Journal of Machine Learning Research, 3, 1157–1182.

- Guyon et al. [2002] Guyon, I., Weston, J., Barnhill, S., & Vapnik, V. (2002). Gene selection for cancer classification using support vector machines. Machine Learning, 46, 389–422.

- Hellman & Raviv [1970] Hellman, M., & Raviv, J. (1970). Probability of error, equivocation, and the Chernoff bound. IEEE Transactions on Information Theory, 16, 368–372.

- Hild et al. [2006] Hild, K. E., Erdogmus, D., Torkkola, K., & Principe, J. C. (2006). Feature extraction using information–theoretic learning. IEEE Transactions on Pattern Analysis and Machine Intelligence, 28, 1385–1392.

- Hinton & Salakhutdinov [2006] Hinton, G. E., & Salakhutdinov, R. R. (2006). Reducing the dimensionality of data with neural networks. Science, 313, 504–507.

- Hjelm et al. [2018] Hjelm, R. D., Fedorov, A., Lavoie-Marchildon, S., Grewal, K., Bachman, P., Trischler, A., & Bengio, Y. (2018). Learning deep representations by mutual information estimation and maximization. arXiv preprint arXiv:1808.06670, .

- Hwang et al. [1994] Hwang, J.-N., Lay, S.-R., & Lippman, A. (1994). Nonparametric multivariate density estimation: a comparative study. IEEE Transactions on Signal Processing, 42, 2795–2810.

- Jacob et al. [2009] Jacob, L., Obozinski, G., & Vert, J.-P. (2009). Group lasso with overlap and graph lasso. In Proceedings of the 26th Annual International Conference on Machine Learning (pp. 433–440). ACM.

- Jiang et al. [2016] Jiang, C., Zhang, H., Ren, Y., Han, Z., Chen, K.-C., & Hanzo, L. (2016). Machine learning paradigms for next-generation wireless networks. IEEE Wireless Communications, 24, 98–105.

- Kwak & Choi [2002] Kwak, N., & Choi, C. H. (2002). Input feature selection by mutual information based on parzen window. IEEE Transactions on Pattern Analysis and Machine Intelligence, 24, 1667–1671.

- Larranaga et al. [2006] Larranaga, P. et al. (2006). Machine learning in bioinformatics. Briefings in Bioinformatics, 7, 86–112.

- Lazar et al. [2012] Lazar, C., Taminau, J., Meganck, S., Steenhoff, D., Coletta, A., Molter, C., de Schaetzen, V., Duque, R., Bersini, H., & Nowe, A. (2012). A survey on filter techniques for feature selection in gene expression microarray analysis. IEEE/ACM Transactions on Computational Biology and Bioinformatics, 9, 1106–1119.

- Lemm et al. [2011] Lemm, S., Blankertz, B., Dickhaus, T., & Müller, K.-R. (2011). Introduction to machine learning for brain imaging. NeuroImage, 56, 387–399.

- Montavon et al. [2018] Montavon, G., Samek, W., & Müller, K.-R. (2018). Methods for interpreting and understanding deep neural networks. Digital Signal Processing, 73, 1–15.

- Nenadic [2007] Nenadic, Z. (2007). Information discriminant analysis: Feature extraction with an information-theoretic objective. IEEE Transactions on Pattern Analysis and Machine Intelligence, 29, 1394–1407.

- Nutt et al. [2003] Nutt, C. L. et al. (2003). Gene expression-based classification of malignant gliomas correlates better with survival than histological classification. Cancer research, 63, 1602–1607.

- Özdenizci & Erdoğmuş [2020] Özdenizci, O., & Erdoğmuş, D. (2020). Information theoretic feature transformation learning for brain interfaces. IEEE Transactions on Biomedical Engineering, 67, 69–78.

- Özdenizci et al. [2020] Özdenizci, O., Wang, Y., Koike-Akino, T., & Erdoğmuş, D. (2020). Learning invariant representations from EEG via adversarial inference. IEEE Access, 8, 27074–27085.

- Parzen [1962] Parzen, E. (1962). On estimation of a probability density function and mode. The Annals of Mathematical Statistics, 33, 1065–1076.

- Peng et al. [2005] Peng, H., Long, F., & Ding, C. (2005). Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Transactions on Pattern Analysis and Machine Intelligence, 27, 1226–1238.

- Principe et al. [2000] Principe, J. C., Xu, D., Fisher, J., & Haykin, S. (2000). Information theoretic learning. Unsupervised Adaptive Filtering, 1, 265–319.

- Qian [1999] Qian, N. (1999). On the momentum term in gradient descent learning algorithms. Neural Networks, 12, 145–151.

- Ross [2014] Ross, B. C. (2014). Mutual information between discrete and continuous data sets. PloS one, 9, e87357.

- Sanchez et al. [2019] Sanchez, E. H., Serrurier, M., & Ortner, M. (2019). Learning disentangled representations via mutual information estimation. arXiv preprint arXiv:1912.03915, .

- Silverman [1986] Silverman, B. W. (1986). Density Estimation for Statistics and Data Analysis. Chapman & Hall.

- Thrun et al. [1991] Thrun, S. B. et al. (1991). The MONK’s problems a performance comparison of different learning algorithms, .

- Tokui et al. [2015] Tokui, S., Oono, K., Hido, S., & Clayton, J. (2015). Chainer: a next-generation open source framework for deep learning. In Proceedings of Workshop on Machine Learning Systems in the Twenty-ninth Annual Conference on Neural Information Processing Systems (pp. 1–6). volume 5.

- Torkkola [2003] Torkkola, K. (2003). Feature extraction by non-parametric mutual information maximization. Journal of Machine Learning Research, 3, 1415–1438.

- Torkkola [2008] Torkkola, K. (2008). Information-theoretic methods. In Feature Extraction (pp. 167–185). Springer.

- Wen et al. [2020] Wen, L., Zhou, Y., He, L., Zhou, M., & Xu, Z. (2020). Mutual information gradient estimation for representation learning. arXiv preprint arXiv:2005.01123, .

- Xia et al. [2020] Xia, Y., Zhou, J., Shi, Z., Lu, C., & Huang, H. (2020). Generative adversarial regularized mutual information policy gradient framework for automatic diagnosis. In Proceedings of the AAAI Conference on Artificial Intelligence (pp. 1062–1069). volume 34.

- Zhang et al. [2010] Zhang, H., Guan, C., & Ang, K. K. (2010). An information theoretic linear discriminant analysis method. In International Conference on Pattern Recognition (pp. 4182–4185).

- Zhu et al. [2004] Zhu, J., Rosset, S., Tibshirani, R., & Hastie, T. J. (2004). 1-norm support vector machines. In Advances in Neural Information Processing Systems (pp. 49–56).