Stochastic Neural Networks with Infinite Width are Deterministic

Abstract

This work theoretically studies stochastic neural networks, a main type of neural network in use. We prove that as the width of an optimized stochastic neural network tends to infinity, its predictive variance on the training set decreases to zero. Our theory justifies the common intuition that adding stochasticity to the model can help regularize the model by introducing an averaging effect. Two common examples that our theory can be relevant to are neural networks with dropout and Bayesian latent variable models in a special limit. Our result thus helps better understand how stochasticity affects the learning of neural networks and potentially design better architectures for practical problems.

1 Introduction

Applications of neural networks have achieved great success in various fields. A major extension of the standard neural networks is to make them stochastic, namely, to make the output a random function of the input. In a broad sense, stochastic neural networks include neural networks trained with dropout (Srivastava et al.,, 2014; Gal and Ghahramani,, 2016), Bayesian networks (Mackay,, 1992), variational autoencoders (VAE) (Kingma and Welling,, 2013), and generative adversarial networks (Goodfellow et al.,, 2014). In this work, we formulate a rather broad definition of a stochastic neural network in Section 3. There are many reasons why one wants to make a neural network stochastic. Two main reasons are (1) regularization and (2) distribution modeling. Since neural networks with stochastic latent layers are more difficult to train, stochasticity is believed to help regularize the model and prevent memorization of samples (Srivastava et al.,, 2014). The second reason is easier to understand from the perspective of latent variable models. By making the network stochastic, one implicitly assumes that there exist latent random variables that generate the data through some unknown function. Therefore, by sampling these latent variables, we are performing a Monte Carlo sampling from the underlying data distribution, which allows us to model the underlying data distribution by a neural network. This type of logic is often invoked to motivate the VAE and GAN. Therefore, stochastic networks are of both practical and theoretical importance to study. However, most existing works on stochastic nets are empirical in nature, and almost no theory exists to explain how stochastic nets work from a unified perspective.

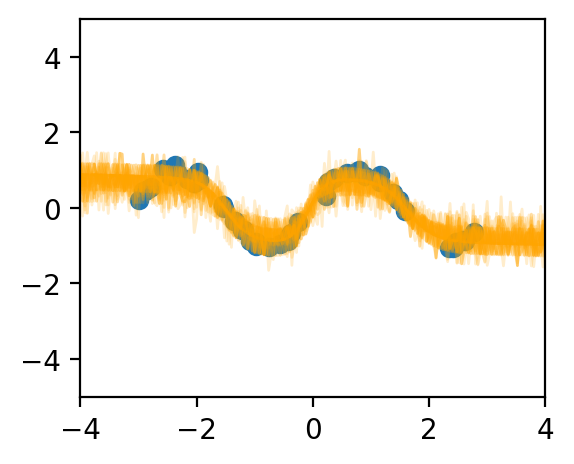

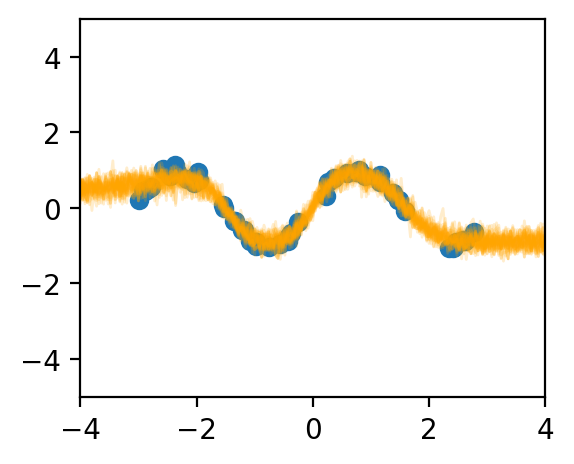

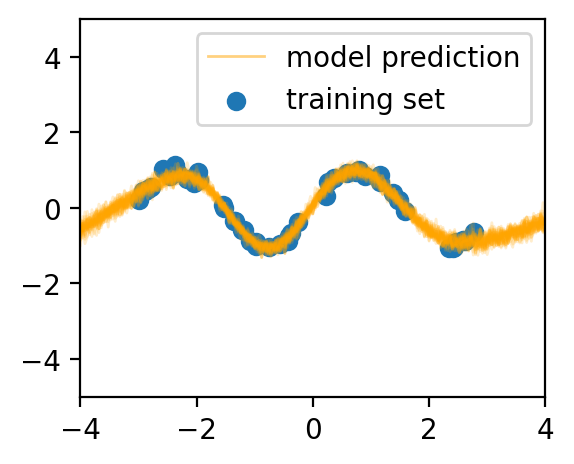

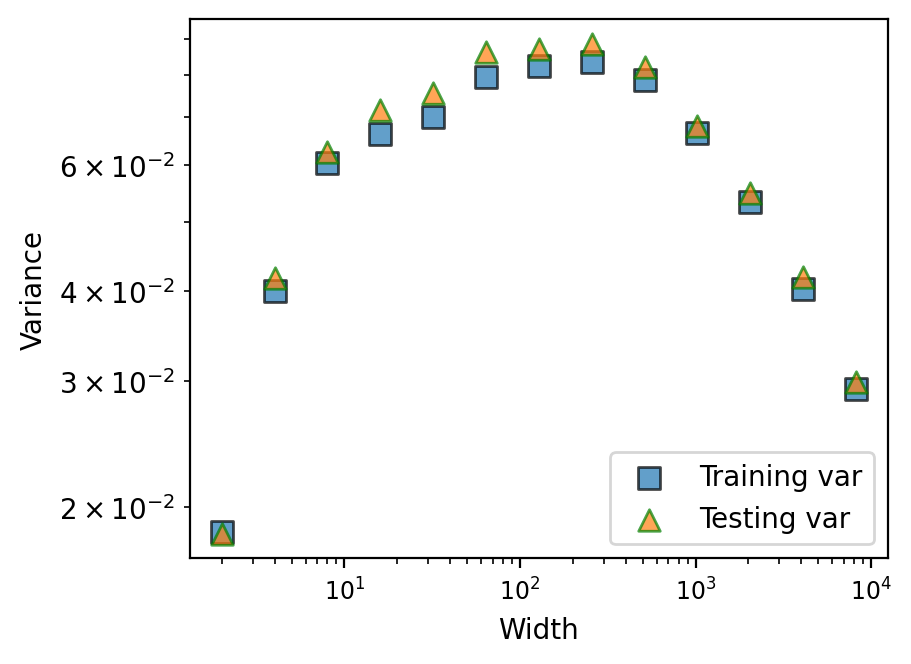

In this work, we theoretically study the stochastic neural networks. We prove that as the width of an optimized stochastic net increases to infinity, its predictive variance decreases to zero on the training set, a result we believe to have both important practical and theoretical implications. Two common examples that our theory can apply to are dropout and VAEs. See Figure 1 for an illustration of this effect. Along with this proof, we propose a novel theoretical framework and lay out a potential path for future theoretical research on stochastic nets. This framework allows us to abstract away the specific definitions of contemporary architectures of neural networks and makes our result applicable to a family of functions that includes many common neural networks as a strict subset.

2 Related Works

For most tasks, the quantity we would like to model is the conditional probability of the target for a given input , . This implies that good Bayesian inference can only be made if the statistics of are correctly modeled. For example, stochastic neural networks are expected to have well-calibrated uncertainty estimates, a trait that is highly desirable for practical safe and reliable applications (Wilson and Izmailov,, 2020; Gawlikowski et al.,, 2021; Izmailov et al.,, 2021). This expectation means that a well-trained stochastic network should have a predictive variance that matches the actual level of randomness in the labeling. However, despite the extensive exploration of empirical techniques, almost no work theoretically studies the capability of a neural network to model the statistics of data distribution correctly, and our work fills in an important gap in this field of research. Two applications we consider in this work are dropout (Srivastava et al.,, 2014), which can be interpreted a stochastic technique for approximate Bayesian inference, and VAE (Kingma and Welling,, 2013), which is among the main Bayesian deep learning methods in use.

Theoretically, while a unified approach is lacking, some previous works exist to separately study different stochastic techniques in deep learning. A series of recent works approaches the VAE loss theoretically (Dai and Wipf,, 2019). Another line of recent works analyzes linear models trained with VAE to study the commonly encountered mode collapse problem of VAE (Lucas et al.,, 2019; Koehler et al.,, 2021). In the case of dropout, Gal and Ghahramani, (2016) establishes the connection between the dropout technique and Bayesian learning. Another series of work extensively studied the dropout technique with a linear network (Cavazza et al.,, 2018; Mianjy and Arora,, 2019; Arora et al.,, 2020) and showed that dropout effectively controls the rank of the learned solution and approximates a data-dependent regularization.

The investigation of neural network behaviour in the extreme width limit is also relevant to our work (Neal,, 1996; Lee et al.,, 2018; Jacot et al.,, 2018; Matthews et al.,, 2018; Lee et al.,, 2019; Matthews et al.,, 2018; Novak et al.,, 2018; Garriga-Alonso et al.,, 2018; Allen-Zhu et al.,, 2018; Khan et al.,, 2019; Agrawal et al.,, 2020), which establishes some equivalence between neural networks and Gaussian processes (GPs) (Rasmussen,, 2003). Meanwhile, several theoretical studies (Jacot et al.,, 2018; Lee et al.,, 2018; Chizat et al.,, 2018) demonstrated that for infinite-width networks, the distribution of functions induced by gradient descent during training could also be described as a GP with the neural tangent kernel (NTK) kernel (Jacot et al.,, 2018), which facilitates the study of deep neural networks using theoretical tools from kernel methods. Our work studies the property of the global minimum of an infinite-width network and does not rely on the NNGP or NTK formalism. Also, both lines of research are not directly relevant for studying trained stochastic networks at the global minimum.

3 Main Result

In this section, we present and discuss our main result. Notation-wise, let denote a matrix, denote its -th row viewed as a vector. Let be any vector, and denote its -th element; however, when involves a complicated expression, we denote its -th element as for clarity.

3.1 Problem Setting

We first introduce two basic assumptions of the network structure.

Assumption 1.

(Neural networks can be decomposed into Lipshitz-continuous blocks.) Let be a neural network. We assume that there exist functions and such that , where denotes functional composition. Additionally, both and are Lipshitz-continuous.

Throughout this work, the component functions of a network are called a block, which can be seen as a generalization of a layer. It is appropriate to call the input block and the output block. Because the Lipshitz constant of a neural network can be upper bounded by the product of the largest eigenvalue of each weight matrix times the Lipshitz constant of the non-linearity, the assumption that every block is Lipshitz-continuous applies to all existing networks with fixed weights and with Lipshitz-continuous activation functions (such as ReLU, tanh, Swish (Ramachandran et al.,, 2017), Snake (Ziyin et al.,, 2020) etc.).

If we restrict ourselves to feedforward architectures, we can discuss the meaning of an ”increasing width” without much ambiguity. However, in our work, since the definition of blocks (layers) is abstract, it is not immediately clear what it means to ”increase the width of a block.” The following definition makes it clear that one needs to specify a sequence of blocks to define an increasing width.111Also, note that this definition of ”width” makes it possible to define different ways of ”increasing” the width and is thus more general than the standard procedure of simply increasing the output dimension of the corresponding linear transformation.

Definition 1.

(Models with an increasing width.) Each block of a neural network is labeled with two indices . Let ; we write if for all , and . Moreover, to every block , there corresponds a countable set of blocks . For a block , its corresponding block set is denoted as . Also, to every sequence of blocks , there also corresponds a sequence of parameter sets such that is parametrized by . The corresponding parameter set of block is denoted as .

Note that if , and , must be equal to ; namely, specifying constrains the input dimension of the next block. It is appropriate to call the width of the block . Since each block is parametrized by its own parameter set, the union of all parameter sets is the parameter set of the neural network : . Since every block comes with the respective indices and equipped with its own parameter set, we omit specifying the indices and the parameter set when unnecessary. The next assumption specifies what it means to have a larger width.

Assumption 2.

(A model with larger width can express a model with smaller width.) Let be a block and its block set. Each block in is associated with a set of parameters such that for any pair of functions , any fixed , and any mappings from , there exists parameters such that for all and .

This assumption can be seen as a constraint on the types of block sets we can choose. As a concrete example, the following proposition shows that the block set induced by a linear layer with arbitrary input and output dimensions followed by an element-wise non-linearity satisfies our assumption.

Proposition 1.

Let where is an element-wise function, , and . Then, satisfies Assumption 2.

Proof. Consider two functions and in . Let be an arbitrary mapping from It suffices to show that there exist and such that for all . For a matrix , we use to denote the -th row of . By definition, this condition is equivalent to

| (1) |

which is achieved by setting and , where is the -th row of .

Now, we are ready to define a stochastic neural network.

Definition 2.

(Stochastic Neural Networks) A neural network is said to be a stochastic neural network with stochastic block if is a function of and a random vector , and the corresponding deterministic function satisfies Assumption 2, where .

Namely, a stochastic network becomes a proper neural network when averaged over the noise of the stochastic block. To proceed, we make the following assumption about the randomness in the stochastic layer.

Assumption 3.

(Uncorrelated noise) For a stochastic block , , where is a diagonal matrix and for all .

This assumption applies to standard stochastic techniques in deep learning, such as dropout or the reparametrization trick used in approximate Bayesian deep learning. Lastly, we assume the following condition for the architecture.

Assumption 4.

(Stochastic block is followed by linear transformation.) Let be the stochastic neural network under consideration, and let be the stochastic layer. We assume that for all , for a fixed function with parameter set , where and bias for a fixed integer . In our main result, we further assume that for notational conciseness.

In other words, we assume that the second block can always be decomposed as , such that is an optimizable linear transformation. This is the only architectural assumption we make. In principle, this can be replaced by weaker assumptions. However, we leave this as an important future work because Assumption 4 is sufficient for the purpose of this work and is general enough for the applications we consider (such as dropout and VAE). We also stress that the condition that starts with a linear transformation does not mean that the first actual layer of is linear. Instead, can be followed by an arbitrary Lipshitz activation function as is usual in practice; in our definition, if it exists, this following activation is assumed into the definition of .

The actual rather restrictive assumption in Assumption 4 is that the function has a fixed input dimension (like a “bottleneck”). In practice, when one scales up the model, it is often the case that one wants to scale up the width of all other layers simultaneously. For the first block, this is allowed by assumption 2.222For example, if is a multilayer perceptron, it is easy to check that assumption 2 is satisfied if one increases the intermediate layers of simultaneously. We note that this bottleneck assumption is mainly for notational concision. In the appendix A.3, we show that one can also extend the result to the case when the input dimension (and the intermediate dimensions) of also increases as one increases the width of the stochastic layer.

Notation Summary. To summarize, we require a network to be decomposed into two blocks: and is a stochastic block. Each block is associated with its indices, which specify its input and output dimensions, and a parameter set that we optimize over. For example, we can write a block as to specify that is the -th block in a neural network, is a mapping from to , and that its parameters are . However, for notational conciseness and better readability, we omit some of the specifications when the context is clear. For the parameter , we abuse the notation a little. Sometimes, we view as a set and discuss its unions and subsets; for example, let ; then, we say that the parameter set of is the union of the parameter set of and : . Alternatively, we also view as a vector in a subset of the real space, so that we can look for the minimizer in such a space (in expressions such as ).

3.2 Convergence without Prior

In this work, we restrict our theoretical result to the MSE loss. Consider an arbitrary training set , when training the deterministic network, we want to find

| (2) |

It is convenient to write as .

An overparametrized network can be defined as a network that can achieve zero training loss on such a dataset.

Definition 3.

A neural network is said to be overparametrized for a non-negative differentiable loss function if there exists such that . For a stochastic neural network with stochastic block , is said to be overparametrized if is overparametrized, where is the expectation operation that averages over .333We note that our result can be easily generalized to some cases when zero training loss is not achievable. For example, when there is degeneracy in the data (when the same comes with two different labels), zero training loss is not reachable, but our result can be generalized to such a case.

Namely, for a stochastic network, we say that it is overparametrized if its deterministic part is overparametrized. When there is no degeneracy in the data (if , then ), zero training loss can be achieved for a wide enough neural network, and this definition is essentially equivalent to assuming that there is no data degeneracy and is thus not a strong limitation of our result.

With a stochastic block, the training loss becomes (due to the sampling of the hidden representation)

| (3) |

Note that this loss function can still be reduced to if for all with probability .

With these definitions at hand, we are ready to state our main result.

Theorem 1.

Let the neural network under consideration satisfy Assumptions 1 ,2, 4 and 3, and assume that the loss function is given by Eq. (3). Let be a sequence of stochastic networks such that, for fixed integers , with stochastic block . Let be overparameterized for all for some . Let be a global minimum of the loss function. Then, for all in the training set,

| (4) |

Proof Sketch. The full proof is given in Appendix Section A.1. In the proof, we denote the term as . Let be the global minimizer of . Then, for any , by definition of the global minimum,

| (5) |

If , we have , which implies that for all . By bias-variance decomposition of the MSE, this, in turn, implies that for all . Therefore, it is sufficient to construct a sequence of such that . The rest of the proof shows that, with the architecture assumptions we made, such a network can indeed be constructed. In particular, the architectural assumptions allow us to make independent copies of the output of the stochastic block and the linear transformation after it allows us to average over such independent copies to recover the mean with a vanishing variance, which can then be shown to be able to achieve zero loss.

Remark.

Note that the parameter set that we optimize is different for different ; we do not attach an index to in the proof for notational conciseness. In plain words, our main result states that for all in the training set, an overparametrized stochastic neural network at its global minimum has zero predictive variance in the limit . The condition that the width is a multiple of is not essential and is only used for making the proof concise. One can prove the same result without requiring . Also, one might find the restriction to the MSE loss restrictive. However, it is worth noting that our result is quite strong because it applies to an arbitrary latent noise distribution whose second moment exists. It is possible to prove a similar result for a broader class of loss functions if we place more restrictions on the distribution of the latent noise (such as the existence of higher moments).

At a high level, one might wonder why the optimized model achieves zero variance. Our results suggest that the form of the loss function may be crucial. The MSE loss can be decomposed into a bias term and a variance term:

| (6) |

Minimizing the MSE loss involves both minimizing the bias term and the variance term, and the key step of our proof involves showing that a neural network with a sufficient width can reduce the variance to zero. We thus conjecture that convergence to a zero-variance model can be generally true for a broad class of loss functions. For example, one possible candidate for this function class is the set of convex loss functions, which favor a mean solution more than a solution with variance (by Jensen’s inequality), and a neural network is then encouraged to converge to such solutions so as to minimize the variance. However, identifying this class of loss functions is beyond the scope of the present work, and we leave it as an important future step. Lastly, we also stress that the main results are not a trivial consequence of the MSE being convex. When the model is linear, it is rather straightforward to show that the variance reduces to zero in the large-width limit because taking the expectation of the model output is equivalent to taking the expectation of the latent noise. However, this is not trivial to prove for a genuine neural network because the net is, in general, a nonlinear and nonconvex function of the latent noise .

3.3 Application to Dropout

Definition 4.

A stochastic block is said to be a -dropout layer if , where is a deterministic block, and are independent random variables such that with probability and with probability .

Since the noise of dropout is independent, one can immediately apply the main theorem and obtain the following corollary.

Corollary 1.

For any , an optimized stochastic network with an infinite width -dropout layer has zero variance on the training set.

Our result thus formally proves the intuitive hypothesis in the original dropout paper (Srivastava et al.,, 2014) that applying dropout to training has the effect of encouraging an averaging effect in the latent space.

3.4 Convergence with a Prior

We now extend our result to the case where a soft prior constraint exists in the loss function. The model setting of this section is equivalent to a special type of Bayesian latent variable model (e.g., a VAE) whose prior strength decreases towards zero as the width of the model is increased.444In the context of VAE, many previous works have noticed that the VAE has problems with its prediction variance (Dai and Wipf,, 2019; Wang and Ziyin,, 2022). However, none of the previous works discusses the behavior of a VAE when its width tends to infinity. The result in this section is more general and involved than Theorem 1 because the soft prior constraint matches the latent variable to the prior distribution in addition to the MSE loss. The existence of the prior term regularizes the model and prevents a perfect fitting of the original MSE loss, and the main message of this section is to show that even in the presence of such a (weak) regularization term, a vanishing variance can still emerge, but relatively slowly.

While it is natural that a latent variable model can be decomposed into two blocks, where is the decoder, and is an encoder, we require one additional condition. Namely, the stochastic block ends with a linear transformation layer (which is satisfied by a standard VAE encoder (Kingma and Welling,, 2013), for example).

Definition 5.

A stochastic block is said to be a encoder block if , where is the Hadamard product, , are linear transformations and is the bias of , is a deterministic block, and are uncorrelated random variables with zero mean and unit variance.

Note that we explicitly require the weight matrix to come with a bias. For the other linear transformations, we have omitted the bias term for notational simplicity. One can check that this definition is consistent with Definition 2. Namely, a net with an encoder block is indeed a type of stochastic net. When the training loss only consists of the MSE, it should follow as an immediate corollary of Theorem 1 that the variance converges to zero. However, the prior term complicates the problem. The following definition specifies the type of the prior loss under our consideration.

Definition 6.

(Prior-Regularized Loss.) Let , such that is a encoder block. A loss function is said to be a prior-regularized loss function if , where is given by Eq. (3) and , where , and are differentiable functions that are equal to zero if for all , and .

We have abstracted away the actual details of the definitions of the prior loss. For our purpose, it is sufficient to say that the equation means that the loss function encourages the posterior to have a zero mean and encourages a unit variance. As an example, one can check that the standard ELBO loss for VAE satisfies this definition. With this architecture, we prove a similar result. The proof is given in Section A.2.

Theorem 2.

Assuming that the neural networks under consideration satisfy Assumptions 1 ,2, 4, and the stochastic block is a encoder block and satisfies Assumption 3, and that the loss function is a prior-regularized loss with parameter , let be fixed integers, and be a sequence of stochastic networks with stochastic block . Let be overparameterized for all for some . Let be the global minimum of the loss function. Then, for all in the training set,

| (7) |

Remark.

One should also be careful when interpreting this result. Strictly speaking, this result shows that, conditioning on a fixed input to the encoder, an optimized latent variable model (such as a VAE) outputs a deterministic prediction, independent of the sampling of the hidden representation. Strictly speaking, this does not mean that the generation will be deterministic because it is also often assumed that the outputs of the decoder obey another independent, say, Gaussian distribution with a predetermined variance. However, it is hardly the case for practitioners to separately inject a Gaussian noise to the generated data (Doersch,, 2021), and so our result is relevant for practical situations. Also, the condition implies that cannot increase too fast with the hidden width.

One might wonder whether a vanishing prior strength makes this setting trivial. The proof shows that it is far from trivial and that even a prior with vanishing strength can have a very strong influence on how fast the variance decays towards zero. The proof suggests that if the prior strength decays as , the variance should decay roughly as . Namely, the smaller the , the slower the rate of convergence towards . Additionally, the fastest exponent at which the variance can decay is , significantly smaller than the case for Theorem 1, where the proof suggests an exponent of . This means that even a vanishing regularization strength can have a strong impact on the prediction variance of the model. Quantitatively, as approaches zero, the variance can decay arbitrarily slowly. Qualitatively, our result implies that having a regularization term or not qualitatively changes the nature of the global minima of the stochastic model. Also, we note that our problem setting is formally equivalent to a generalized555It is generalized because the MSE is not necessarily a reconstruction loss. -VAE (Higgins et al.,, 2016) with . While it is rarely the case for practitioners to scale towards zero, we stress that the main value of Theorem 2 is the theoretical insight it offers on the qualitative distinction between having a prior term and having not.

4 Numerical Simulations

We perform experiments with nonlinear neural networks to demonstrate the studied effect of vanishing variance. The first part describes the illustrative experiment presented at the beginning of the paper. The second part experimentally demonstrates that a dropout network and VAE have a vanishing prediction variance on the training set as the width tends to infinity. Additional experiments performed with weight decay and SGD are presented in the appendix.

4.1 Illustration

In this experiment, we let the target function be for uniformly sampled from the domain . The target is corrupted by a weak noise . The model is a feedforward network with tanh activation with the number of neurons where , and a dropout with dropout probability is applied in the hidden layer. See Figure 1.

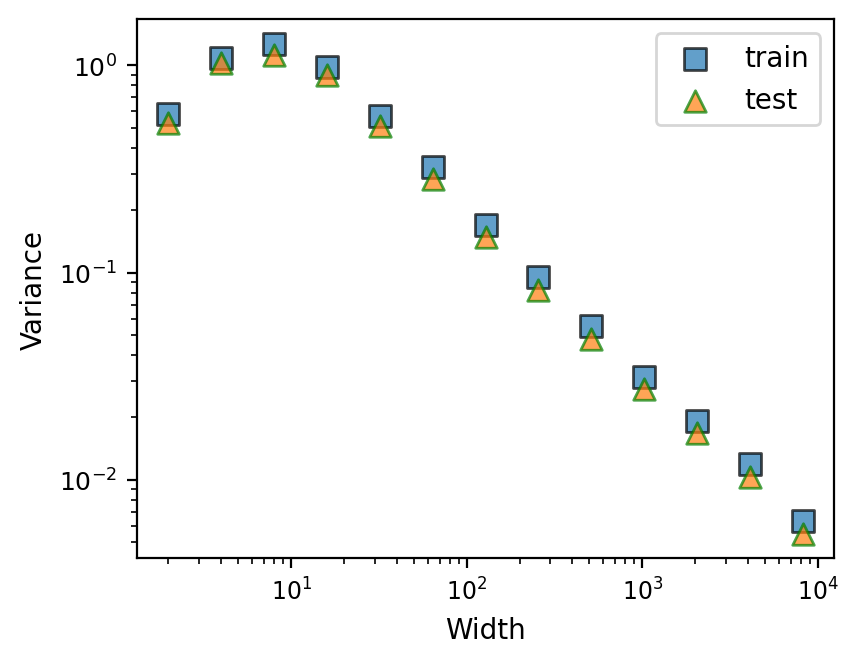

4.2 Dropout

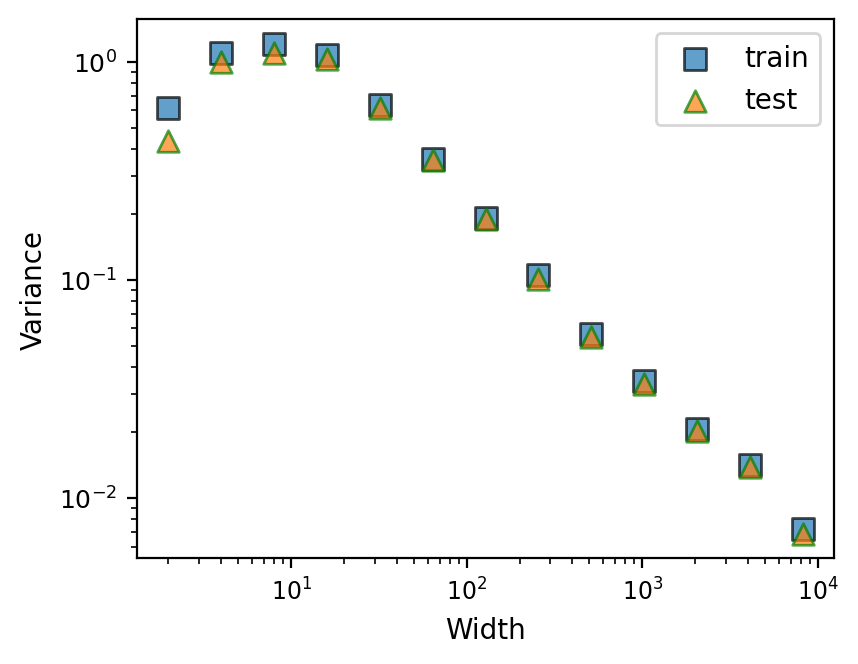

We now systematically explore the prediction variance of a model trained with dropout. As an extension of the previous experiment, we let both the input and the target be vectors such that . The target function is for , where and the noise is also a vector. We let and sample data point pairs for training. The model is a three-layer MLP with ReLU activation functions, with the number of neurons , where is the width of the hidden layer. Dropout is applied to the post-activation values of the hidden layer. In the experiments, we set the dropout probability to be 0.1, and we independently sample outputs times to estimate the prediction variance. Training proceeds with Adam for steps, with an initial learning rate of , and the learning rate is decreased by a factor of 10 every step. See Figure 2(a) for a log-log plot of width vs. prediction variance. We see that the prediction variance of the model on the training set decreases towards zero as the width increases, as our theory predicts. For completeness, we also plot the prediction variance for an independently sampled test set for completeness. We see that, for this task, the prediction variance of the test points agree well with that of the training set. A linear regression on the slope of the tail of the width-variance curve shows that the variances decrease roughly as , close to what our proof suggests (); we hypothesize that the exponent is slightly smaller than because the training is stopped at a finite time and the model has not fully reached the global minimum.666Moreover, with a finite-learning rate, SGD is a biased estimator of a minimum (Ziyin et al., 2021a, )..

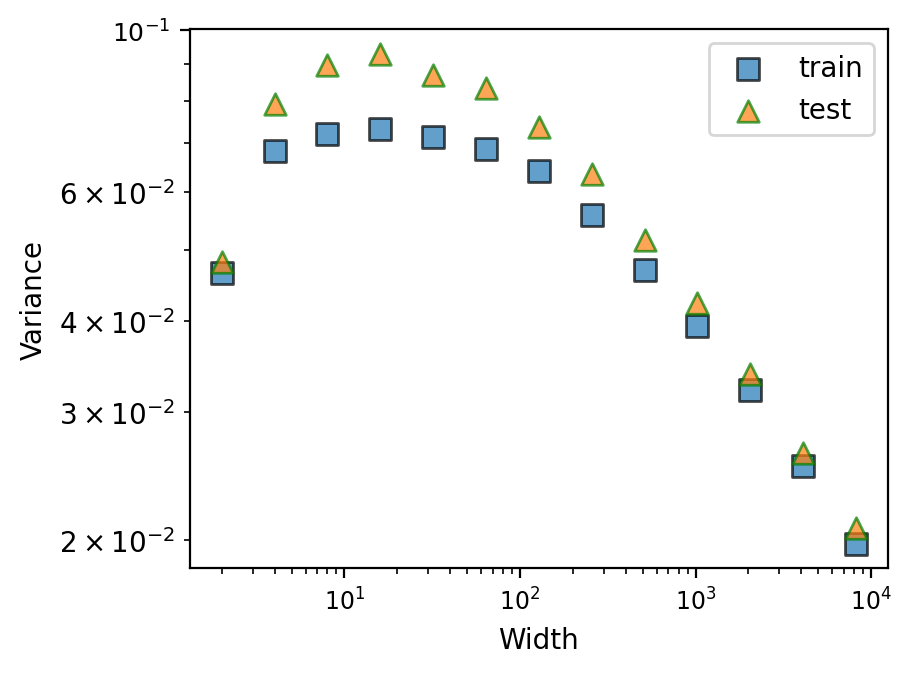

4.3 Variational Autoencoder

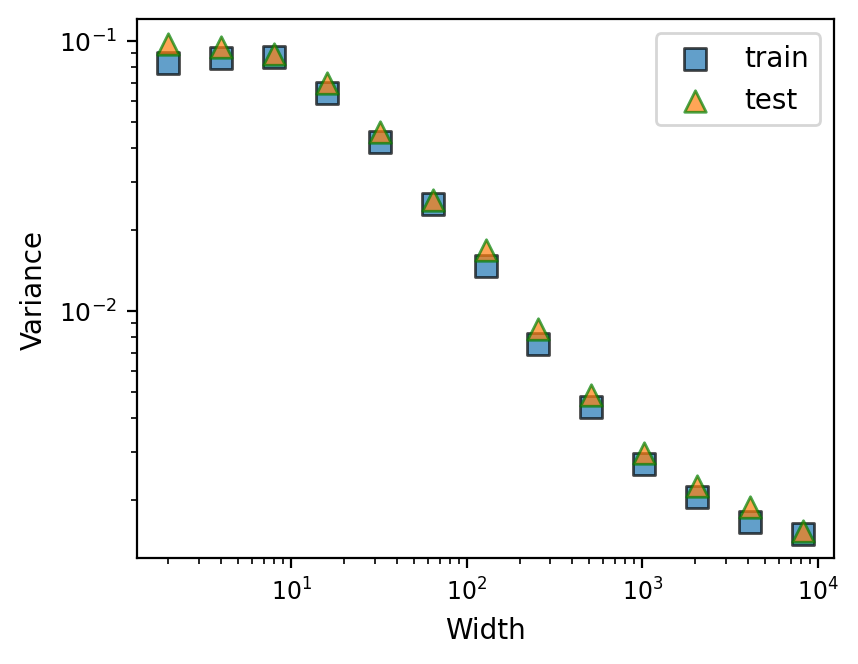

In this section, we conduct experiment on a VAE with latent Gaussian noise. The input data is sampled from a standard Gaussian distribution with . We generate data points for training. The VAE employs a standard encoder-decoder architecture with ReLU nonlinearity. The encoder is a two-layer feedforward network with neurons . The decoder is also a two-layer feedforward network with architecture . Note that our theory requires the prior term not to increase with the width; we, therefore, choose . The training objective is the minus standard Evidence Lower Bound (ELBO) composed of reconstruction error and the KL divergence between the parameterized variable and standard Gaussian. We independently sample outputs times to estimate the prediction variance for estimating the variance. The results in Fig. 2(b) show that the variances of both training and test set decrease as the width increases and follow the same pattern.

4.4 Experiments with Weight Decay

Weight decay often has the Bayesian interpretation of imposing a normal distribution prior over the variables, and sometimes it is believed to prevent the model from making a deterministic prediction (Gal and Ghahramani,, 2016). We, therefore, also perform the same experiments as in the previous two subsections, with a weight decay strength of . See Appendix Sec. B.1 for the results. We notice that the experimental result is similar and that applying weight decay is not sufficient to prevent the model from reaching a vanishing variance. Conventionally, we expect the prediction of a dropout net to be of order and not directly dependent on the width (Gal and Ghahramani,, 2016); however, this experiment suggests that width may wield a stronger influence on the prediction variance than the weight decay strength. Since a model cannot reach the actual global minimum when weight decay is applied, this experiment is beyond the applicability of our theory; therefore, this result suggests a conjecture that even in a local minimum, it can still be highly likely for stochastic models to reach a solution with vanishing variance, and proving or disproving this conjecture can be an important future task.

5 Discussion

In this work, we theoretically studied the prediction variance of stochastic neural networks. We showed that when the loss function satisfies certain conditions and under mild architectural conditions, the prediction variance of a stochastic net on the training set tends to zero as the width of the stochastic layer tends to infinity. Our theory offers a precise mathematical explanation to a frequently quoted anecdotal problem of a stochastic network, that the neural networks can be too expressive such that adding noise to the latent layers is not sufficient to make a network capable of modeling a distribution well (Higgins et al.,, 2016; Burgess et al.,, 2018; Dai and Wipf,, 2019). Our result also offers a formal justification of the original intuition behind the dropout technique. From a practical point of view, our result suggests that it is generally nontrivial to train a model whose prediction variance matches the true variance of the data. One potential fix is to design a loss function that encourages a nonvanishing prediction variance, which is an interesting future problem. There are two major limitations of the present approach. First of all, our result can only be applied to data points in the training set, and it is unclear what we can say about the test set. Even though experiments on non-linear neural networks suggest that the test variance may also decrease towards zero, it is unclear under what condition this is the case, and it is an important topic to investigate in the future. The second limitation is that we have only studied the global minimum, and it is important to study whether or under what condition the variance also vanishes for a local minimum.

References

- Agrawal et al., (2020) Agrawal, D., Papamarkou, T., and Hinkle, J. (2020). Wide neural networks with bottlenecks are deep gaussian processes. Journal of Machine Learning Research, 21(175).

- Allen-Zhu et al., (2018) Allen-Zhu, Z., Li, Y., and Liang, Y. (2018). Learning and generalization in overparameterized neural networks, going beyond two layers. arXiv preprint arXiv:1811.04918.

- Arora et al., (2020) Arora, R., Bartlett, P., Mianjy, P., and Srebro, N. (2020). Dropout: Explicit forms and capacity control.

- Burgess et al., (2018) Burgess, C. P., Higgins, I., Pal, A., Matthey, L., Watters, N., Desjardins, G., and Lerchner, A. (2018). Understanding disentangling in -vae. arXiv preprint arXiv:1804.03599.

- Cavazza et al., (2018) Cavazza, J., Morerio, P., Haeffele, B., Lane, C., Murino, V., and Vidal, R. (2018). Dropout as a low-rank regularizer for matrix factorization. In International Conference on Artificial Intelligence and Statistics, pages 435–444. PMLR.

- Chizat et al., (2018) Chizat, L., Oyallon, E., and Bach, F. (2018). On lazy training in differentiable programming. arXiv preprint arXiv:1812.07956.

- Dai and Wipf, (2019) Dai, B. and Wipf, D. (2019). Diagnosing and enhancing vae models. arXiv preprint arXiv:1903.05789.

- Doersch, (2021) Doersch, C. (2021). Tutorial on variational autoencoders.

- Gal and Ghahramani, (2016) Gal, Y. and Ghahramani, Z. (2016). Dropout as a bayesian approximation: Representing model uncertainty in deep learning. In international conference on machine learning, pages 1050–1059. PMLR.

- Garriga-Alonso et al., (2018) Garriga-Alonso, A., Rasmussen, C. E., and Aitchison, L. (2018). Deep convolutional networks as shallow gaussian processes. arXiv preprint arXiv:1808.05587.

- Gawlikowski et al., (2021) Gawlikowski, J., Tassi, C. R. N., Ali, M., Lee, J., Humt, M., Feng, J., Kruspe, A., Triebel, R., Jung, P., Roscher, R., et al. (2021). A survey of uncertainty in deep neural networks. arXiv preprint arXiv:2107.03342.

- Goodfellow et al., (2014) Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., and Bengio, Y. (2014). Generative adversarial nets. Advances in neural information processing systems, 27.

- Higgins et al., (2016) Higgins, I., Matthey, L., Pal, A., Burgess, C., Glorot, X., Botvinick, M., Mohamed, S., and Lerchner, A. (2016). beta-vae: Learning basic visual concepts with a constrained variational framework.

- Izmailov et al., (2021) Izmailov, P., Vikram, S., Hoffman, M. D., and Wilson, A. G. (2021). What are bayesian neural network posteriors really like? arXiv preprint arXiv:2104.14421.

- Jacot et al., (2018) Jacot, A., Gabriel, F., and Hongler, C. (2018). Neural tangent kernel: Convergence and generalization in neural networks. arXiv preprint arXiv:1806.07572.

- Khan et al., (2019) Khan, M. E. E., Immer, A., Abedi, E., and Korzepa, M. (2019). Approximate inference turns deep networks into gaussian processes. Advances in Neural Information Processing Systems, 32.

- Kingma and Welling, (2013) Kingma, D. P. and Welling, M. (2013). Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114.

- Koehler et al., (2021) Koehler, F., Mehta, V., Risteski, A., and Zhou, C. (2021). Variational autoencoders in the presence of low-dimensional data: landscape and implicit bias. arXiv preprint arXiv:2112.06868.

- Lee et al., (2018) Lee, J., Bahri, Y., Novak, R., Schoenholz, S. S., Pennington, J., and Sohl-Dickstein, J. (2018). Deep neural networks as gaussian processes. In International Conference on Learning Representations.

- Lee et al., (2019) Lee, J., Xiao, L., Schoenholz, S., Bahri, Y., Novak, R., Sohl-Dickstein, J., and Pennington, J. (2019). Wide neural networks of any depth evolve as linear models under gradient descent. Advances in neural information processing systems, 32:8572–8583.

- Liu et al., (2021) Liu, K., Ziyin, L., and Ueda, M. (2021). Noise and fluctuation of finite learning rate stochastic gradient descent.

- Lucas et al., (2019) Lucas, J., Tucker, G., Grosse, R., and Norouzi, M. (2019). Don’t blame the elbo! a linear vae perspective on posterior collapse.

- Mackay, (1992) Mackay, D. J. C. (1992). Bayesian methods for adaptive models. PhD thesis, California Institute of Technology.

- Matthews et al., (2018) Matthews, A. G. d. G., Rowland, M., Hron, J., Turner, R. E., and Ghahramani, Z. (2018). Gaussian process behaviour in wide deep neural networks. arXiv preprint arXiv:1804.11271.

- Mianjy and Arora, (2019) Mianjy, P. and Arora, R. (2019). On dropout and nuclear norm regularization. In International Conference on Machine Learning, pages 4575–4584. PMLR.

- Neal, (1996) Neal, R. (1996). Bayesian learning for neural networks. Lecture Notes in Statistics.

- Novak et al., (2018) Novak, R., Xiao, L., Lee, J., Bahri, Y., Yang, G., Hron, J., Abolafia, D. A., Pennington, J., and Sohl-Dickstein, J. (2018). Bayesian deep convolutional networks with many channels are gaussian processes. arXiv preprint arXiv:1810.05148.

- Ramachandran et al., (2017) Ramachandran, P., Zoph, B., and Le, Q. V. (2017). Searching for activation functions.

- Rasmussen, (2003) Rasmussen, C. E. (2003). Gaussian processes in machine learning. In Summer school on machine learning, pages 63–71. Springer.

- Srivastava et al., (2014) Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I., and Salakhutdinov, R. (2014). Dropout: a simple way to prevent neural networks from overfitting. The journal of machine learning research, 15(1):1929–1958.

- Wang and Ziyin, (2022) Wang, Z. and Ziyin, L. (2022). Posterior collapse of a linear latent variable model. arXiv preprint arXiv:2205.04009.

- Wilson and Izmailov, (2020) Wilson, A. G. and Izmailov, P. (2020). Bayesian deep learning and a probabilistic perspective of generalization. arXiv preprint arXiv:2002.08791.

- Ziyin et al., (2020) Ziyin, L., Hartwig, T., and Ueda, M. (2020). Neural networks fail to learn periodic functions and how to fix it. arXiv preprint arXiv:2006.08195.

- (34) Ziyin, L., Li, B., Simon, J. B., and Ueda, M. (2021a). Sgd may never escape saddle points.

- (35) Ziyin, L., Liu, K., Mori, T., and Ueda, M. (2021b). Strength of minibatch noise in sgd. arXiv preprint arXiv:2102.05375.

Appendix A Proofs and Theoretical Concerns

A.1 Proof of Theorem 1

Proof. In the proof, we denote the term as . Let be the global minimizer of . Then, for any , we have by definition

| (8) |

If , we have , which implies that for all . By the bias-variance decomposition of the MSE, this, in turn, implies that for all . Therefore, it is sufficient to construct a sequence of such that .

Now, we construct such a . Let denote the deterministic counterpart of . By definition and by Assumption 1, we have

| (9) | ||||

| (10) |

By the architecture assumption (assumption 4), there exists a function parametered by a parameter set and a linear transformation such that we can further decompose the two neural networks as

| (11) | ||||

| (12) |

where for a fixed integer and is a linear transformation belonging to the parameter set of . Note that, by definition, the parameter set of the block is .

Let be a global minimum of :

| (13) |

and, by the assumption of overparametrization, we also have for all .

We now specify the parameters for for . By assumption 2, we can find parameters such that for . Namely, we choose the parameters such that the expected output of the stochastic block are identical copies of the output of the overparametrized deterministic model with width .

Since is a linear transformation, one can factorize it as a product of two matrices such that , where and :

| (14) | ||||

| (15) |

Now, note that by definition, the function for any coincides with the block of . Namely, such that , and we let and .

Now, the last step is to specify . We let , where if and otherwise. Namely, is nothing but an averaging matrix that sums and rescales the deterministic layer by a factor of .

To summarize, our specification defines the following stochastic neural network:

| (16) |

By definition of and , , and are independent for different . Moreover, because has variance by assumption, has variance .

Now, as ,

| (17) |

where denotes convergence in mean square. Because is a Lipshitz continuous function by Assumption 1,

| (18) |

This implies that the expectation of the constructed model with increasing width converge to that of the overparametrized determinstic model (convergence in mean square implies convergence in mean). Therefore, defining our model as , we obtain

| (19) |

Therefore, we have, by the bias-variance decomposition for MSE:

| (20) |

Both terms converges to for all , and so the sum of two terms converge to . This finishes the proof.

A.2 Proof of Theorem 2

Before the proof, we first comment that the proof is quite similar to the previous case. The difference lies in how we construct the model so as to reduce the training loss to zero as

Proof. In the proof, we denote the term as . Let be the global minimizer of . Then, for any , we have by definition

| (21) |

If , we have , which implies that for all . This, in turn, implies that for all because both the reconstruction loss and the prior are non-negative. Therefore, it is sufficient to construct a sequence of such that .

Let denote the deterministic counterpart of , and let be a global minimum of . By the definition of a neural network, we can write

| (22) | ||||

| (23) |

By the assumption of overparametrization, for all .

By the architecture assumption, there exists a function parametrized by a parameter set such that we can further decompose the two neural networks as

| (24) | ||||

| (25) |

where for a fixed integer and is a linear transformation belonging to the parameter set of , and is the linear stochastic layer. Now, the parameter set of the network is .

By definition, the loss takes the form

| (26) |

We first let and , which immediately minimizes the variance part of of the loss: for all . By assumption, for , is overparametrized, and one can find such that .

For , we let for a positive scalar . Namely, we copy the rows of the matrix so that the expected output of the stochastic block are identical copies of the output of the overparametrized deterministic model with width , rescaled by a factor .777Note that this factor of is one crucial difference from the previous proof. This factor of will be crucial for reducing to 0.

Since is a linear transformation, one can factorize it as a product of two matrices such that where and :

| (27) | ||||

| (28) |

Now, by definition, the function such that , and we let and . Again, notice that we have rescaled the matrix by a factor of .

Now, the last step is specify . We let where if and otherwise. Namely, sums and rescales the deterministic layer by a factor of . This transformation has an averaging effect.

To summarize, our specification defines the following stochastic neural network:

| (29) |

By definition of and , , and are independent for different . Moreover, because has variance by assumption, has variance . We let , where , and, therefore, has variance which vanishes to as increases.

At the same time, because is a differentiable function of ,

| (30) | ||||

| (31) | ||||

| (32) |

where the last line follows from the condition , which holds by assumption.888Namely, with this construction, the variance part of the loss scales as and the prior part of the loss scales as . The sum of the two terms are minimized if , or, .

Now, as ,

| (33) |

where denotes convergence in mean square. Because is a Lipshitz continuous function,

| (34) |

This implies that the expectation of the model with increasing width converge to that of the overparametrized deterministic model (convergence in mean square implies convergence in mean. Therefore, defining our model as

| (35) |

Therefore, defining our model as , we have, by the bias-variance decomposition for MSE:

| (36) |

which converges to . This finishes the proof.

A.3 Removing the Bottleneck Constraint for

In this section, we prove a version of Theorem 1 to demonstrate how one can remove the Bottleneck constraint of . A similarly generalized version of Theorem 2 can also be proved using following the same steps, and so we leave that as an exercise to the readers. To begin, we first need an extended version of Assumption 5.

Assumption 5.

(A model with larger width can express a model with smaller width II.) Additionally, let denote a subset of (namely, and for all , there exists such that ). Let be a block and its block set. Each block in is associated with a set of parameters such that for any pair of functions , any fixed , and any mappings from , there exists parameters such that for all .

The original Assumption 2 only specifies what it means to have a larger output dimension. This extended version, in addition, says what it means to have a larger input dimension for a block. We note that this additional condition is quite general and is satisfied by the usual structures, such as a fully connected layer. As the original Assumption 2, this assumption also agrees with the standard intuitive understanding of what it means to have a larger width.

With this additional assumption, we can remove the bottleneck requirement in the original Assumption 4. Formally, we now require the following weak condition for the architecture.

Assumption 6.

( can be further decomposed into two blocks) Let be the stochastic neural network under consideration, and let be the stochastic layer. We assume that for all , for a block with its block set . is a linear transformation with the standard block set (see Proposition 1).

This generalized assumption effectively means that the model can be decomposed into three blocks:

| (37) |

With these extended assumptions, we can prove a more general version of Theorem 1. In comparison to the original Theorem 1, this theorem effectively allows one to increase the width of all the layers that and implicitly contain simultaneously.

Theorem 3.

Let the neural network under consideration satisfy Assumptions 1 ,2, 5, 6 and 3, and assume that the loss function is given by Eq. (3). Let be a sequence of stochastic networks, for fixed integers , with stochastic block . Additionally, let be an monotonically increasing function of such that for ,

| (38) |

Let be overparameterized for all for some . Let be a global minimum of the loss function. Then, for all in the training set,

| (39) |

Proof. In the proof, we denote the term as . Let be the global minimizer of . Then, for any , we have by definition

| (40) |

If , we have , which implies that for all . By the bias-variance decomposition of the MSE, this, in turn, implies that for all . Therefore, it is sufficient to construct a sequence of such that .

Now, we construct such a . Let denote the deterministic counterpart of . By definition and by Assumption 1, we have

| (41) | ||||

| (42) |

By the architecture assumption (assumption 6), there exists a function parametered by a parameter set and a linear transformation such that we can further decompose the two neural networks as

| (43) | ||||

| (44) |

where for a fixed integer and is a linear transformation belonging to the parameter set of . Note that, by definition, the parameter set of the block is .

Let be a global minimum of :

| (45) |

and, by the assumption of overparametrization, we also have for all .

We now specify the parameters for for . By assumption 2, we can find parameters such that for . Namely, we choose the parameters such that the expected output of the stochastic block are identical copies of the output of the overparametrized deterministic model with width .

Since is a linear transformation, we factorize it as a product of two matrices such that , where and :

| (46) | ||||

| (47) |

Now, note that by assumption 5, for any subset of any , there exists such that , where is the corresponding block of . For , we let the beginning columns of the same as , and the remaining columns :

| (48) |

With this choice, it follows that for any , for the block of .

Now, the last step is to specify . We let , where if and otherwise. Namely, is nothing but an averaging matrix that sums and rescales the deterministic layer by a factor of .

To summarize, our specification defines the following stochastic neural network:

| (49) |

By definition of and , , and are independent for different . Moreover, because has variance by assumption, has variance .

Now, as ,

| (50) |

where denotes convergence in mean square. Because is a Lipshitz continuous function by Assumption 1,

| (51) |

This implies that the expectation of the constructed model with increasing width converge to that of the overparametrized determinstic model (convergence in mean square implies convergence in mean). Therefore, defining our model as , we obtain

| (52) |

Therefore, we have, by the bias-variance decomposition for MSE:

| (53) |

Both terms converges to for all , and so the sum of two terms converge to . This finishes the proof.

Appendix B Additional Experiments

B.1 Weight decay

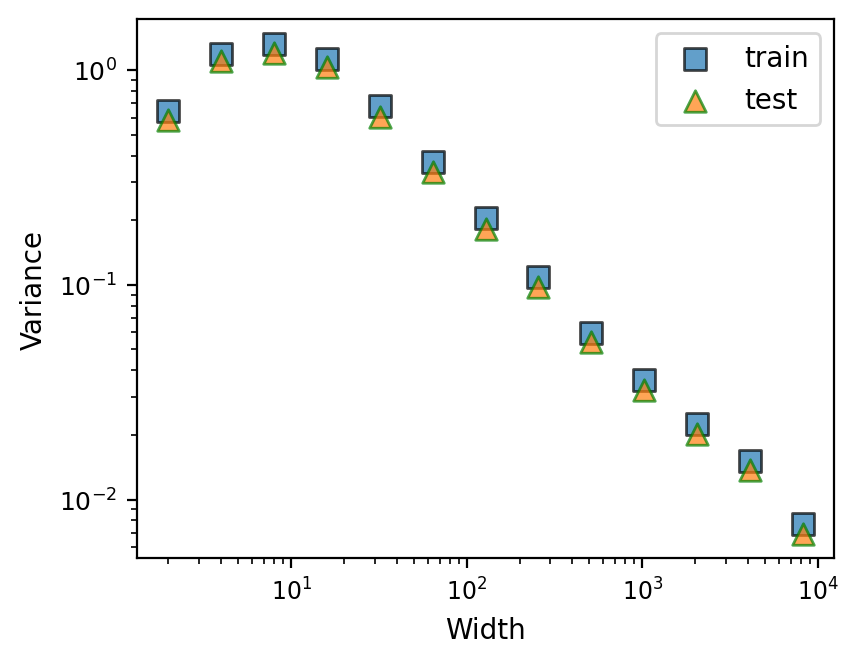

This part of experiment has been described in the main text. See Figure 3. We see that even with weight decay, the prediction variance drops towards zero unhindered.

B.2 Training with SGD

Since our result only depends on the global minimum of the loss function, one expects to also find that the prediction variance to decrease with a different optimization procedure. In this section, we perform the same experiment with SGD. See Figure 4. We see that, for dropout, the result is similar to the case with Adam. For VAE, the result is a little more subtle in the tail, where the decrease in variance slows down. We hypothesize that it is because the SGD algorithm increase in fluctuation and reduce in stability as the width of the hidden layer increases (Liu et al.,, 2021; Ziyin et al., 2021b, ), which causes the prediction variance to increase and partially offsets the effect due to averaging of the parameters.