Strategic Alignment Patterns in National AI Policies

Abstract

This paper introduces a novel visual mapping methodology for assessing strategic alignment in national artificial intelligence policies. The proliferation of AI strategies across countries has created an urgent need for analytical frameworks that can evaluate policy coherence between strategic objectives, foresight methods, and implementation instruments. Drawing on data from the OECD AI Policy Observatory, we analyze 20 national AI strategies using a combination of matrix-based visualization and network analysis to identify patterns of alignment and misalignment. Our findings reveal distinct alignment archetypes across governance models, with notable variations in how countries integrate foresight methodologies with implementation planning. High-coherence strategies demonstrate strong interconnections between economic competitiveness objectives and robust innovation funding instruments, while common vulnerabilities include misalignment between ethical AI objectives and corresponding regulatory frameworks. The proposed visual mapping approach offers both methodological contributions to policy analysis and practical insights for enhancing strategic coherence in AI governance. This research addresses significant gaps in policy evaluation methodology and provides actionable guidance for policymakers seeking to strengthen alignment in technological governance frameworks.

Keywords: Strategic foresight; National AI roadmap; Goal-instrument alignment; Spreadsheet-based evaluation; Scenario stress testing; KPI dashboard; Technology policy audit

1 Introduction

The global landscape of artificial intelligence (AI) governance has changed markedly since 2017, with over 60 countries publishing national AI strategies by 2025 [58]. This unprecedented proliferation reflects growing recognition that AI is a transformative general-purpose technology with profound implications for economic competitiveness, national security, and societal well-being. Nations at every stage of economic development are positioning themselves in what [50] characterized as the "second machine age," recognizing that coherent policy frameworks may significantly shape future economic trajectories and geopolitical standing. These national strategies are comprehensive policy instruments that typically articulate ambitious objectives, governance approaches, and implementation mechanisms for navigating the complex sociotechnical challenges presented by AI development and deployment [26, 37].

Alongside this policy evolution, the field of AI and deep learning continues to experience rapid progress, with numerous breakthroughs and emerging applications across scientific and technological domains [5, 6, 7, 8]. These ongoing advances further illustrate the transformative potential of AI, reinforcing its significance for policy, industry, and society at large.

Despite this rapid policy development and the expanding technical landscape, a critical gap persists in understanding the strategic alignment between policy objectives, foresight methodologies, and implementation instruments within these national frameworks. While scholarly literature has extensively examined isolated components of AI governance, such as ethical principles [12], regulatory approaches [31], or investment mechanisms [49], substantially less attention has focused on how these elements cohere within comprehensive policy frameworks. This alignment challenge is particularly acute for emerging technologies like AI, where inherent uncertainty regarding technological trajectories and societal impacts significantly complicates traditional policy design approaches [16, 20]. As [51] observe, the "unprecedented combination of features that characterize AI systems" demands novel governance frameworks that integrate anticipatory, adaptive, and participatory elements.

The methodological gap in assessing policy coherence further compounds this challenge. Traditional policy analysis tools frequently struggle with the multidimensional nature of technology governance frameworks and the complex interactions between their components [10, 44]. Current analytical approaches primarily rely on qualitative case studies [18, 36] or quantitative indices [59] that inadequately capture the intricate interdependencies between strategic objectives, foresight mechanisms, and implementation instruments. [28] aptly note that "researchers lack systematic frameworks for comparing governance approaches across jurisdictions and assessing their internal coherence." This methodological limitation carries substantive practical implications, as policymakers lack sophisticated analytical tools to evaluate and systematically enhance the strategic alignment of their governance frameworks.

Our research addresses these theoretical and methodological gaps by investigating three interconnected research questions: (1) How can strategic alignment in AI policies be visually assessed and quantified across multiple dimensions? (2) What patterns of alignment and misalignment emerge across different national and governance contexts? (3) How do distinct governance models and institutional arrangements influence the nature and quality of strategic alignment? These questions guide our development and application of an innovative methodological approach to policy coherence assessment in complex technological domains.

This paper introduces a visual mapping methodology that synthesizes matrix-based visualization techniques with network analysis to evaluate strategic alignment in national AI policies. Drawing on theoretical frameworks from policy instrumentation [14, 11], strategic foresight integration [21, 62], and science and technology policy [1, 55], we develop a comprehensive analytical framework that quantifies relationships between strategic objectives, foresight methodologies, and implementation instruments. This approach enables both rigorous comparative analysis across diverse national contexts and granular examination of alignment patterns within individual policy frameworks. Our methodology operationalizes the concept of strategic coherence in technology governance through visual representations that reveal structural relationships and patterns that are difficult to discern with conventional analytical approaches.

Empirically, we apply this methodology to a systematically selected sample of 20 national AI strategies from the OECD AI Policy Observatory database, representing diverse geographical regions, governance traditions, economic development stages, and resource contexts. Our analysis generates typologies of alignment patterns, identifies common vulnerabilities, and highlights exemplary models of strategic coherence. These findings contribute to scholarly understanding of policy design under conditions of technological uncertainty and provide practical guidance for policymakers seeking to enhance the coherence and effectiveness of their AI governance frameworks.

The remainder of this paper is structured as follows: Section 2 situates our research within relevant theoretical and empirical literature on strategic foresight integration, policy instrumentation, and comparative AI governance approaches. Section 3 details our methodological approach, including corpus development, analytical framework construction, and visual mapping techniques. Section 4 presents our findings regarding the typology and distribution of policy components across national contexts. Section 5 explores the results of our alignment analysis using matrix-based visualization and network analysis methods. Section 6 analyzes the relationship between governance models and alignment patterns, with particular attention to high-coherence exemplars and common vulnerability patterns. Section 7 discusses theoretical, methodological, and practical implications of our findings, while Section 8 concludes with a synthesis of contributions and identification of promising directions for future research.

2 Literature Review

This section examines relevant scholarly literature to establish the theoretical foundations for our research on strategic alignment in AI policies. We draw upon four interconnected bodies of literature: strategic foresight in technology policy, policy instrument theory, comparative AI governance, and alignment analysis frameworks. Together, these research streams inform our methodological approach and analytical framework.

2.1 Strategic Foresight in Technology Policy

Strategic foresight has emerged as a critical dimension of technology policymaking in contexts characterized by rapid innovation and high uncertainty. Foundational theoretical work by [56] characterizes strategic foresight as "a systematic, participatory, future-intelligence-gathering and medium-to-long-term vision-building process aimed at enabling present-day decisions and mobilizing joint actions." In technology governance contexts, foresight methodologies facilitate anticipatory policymaking that can respond to emerging technological developments before they manifest as governance challenges [22].

The theoretical underpinnings of strategic foresight in technology policy draw from multiple disciplines. Evolutionary economics perspectives, as articulated by [57], emphasize the importance of adaptive governance mechanisms that can evolve alongside technological systems. Institutional approaches, exemplified by [52], focus on the socio-technical transition frameworks that shape innovation trajectories. Additionally, [20] contribute a responsible innovation perspective that emphasizes anticipatory governance and deliberative engagement with emerging technologies. These diverse theoretical foundations have converged to position foresight as an essential component of effective technology governance.

The methodological evolution of foresight approaches in technology policy contexts reflects increasing sophistication and integration. Early applications primarily employed quantitative forecasting techniques with limited stakeholder involvement [35]. Contemporary approaches have evolved toward more participatory, mixed-method techniques that combine quantitative modeling with qualitative deliberation [17]. [30] identify eight distinct foresight methodologies commonly deployed in technology governance contexts: trend extrapolation, Delphi studies, scenario development, horizon scanning, technology roadmapping, expert panels, participatory workshops, and cross-impact analysis. These methodologies vary significantly in their temporal focus, stakeholder engagement, and integration with implementation planning.

Significant challenges persist in integrating foresight methodologies with policy implementation mechanisms. [21] identify three primary integration challenges: temporal misalignment between long-term foresight horizons and short-term policy cycles, institutional fragmentation that separates foresight activities from implementation planning, and methodological gaps in translating foresight insights into concrete policy instruments. These integration challenges are particularly acute in rapidly evolving technological domains like artificial intelligence, where the pace of innovation may outstrip policy adaptation capabilities [63].

Empirical studies on foresight effectiveness in technology policy contexts have produced mixed findings. [61] found that technology foresight exercises significantly influenced R&D priority-setting in several European countries but had limited impact on regulatory approaches or institutional design. Similarly, [33] identified a positive correlation between foresight integration and innovation policy effectiveness in OECD countries, particularly when foresight methodologies were institutionally embedded within policy planning processes. However, [55] note that few technology foresight exercises explicitly connect anticipatory insights to implementation instruments, creating a "strategic gap" in many governance frameworks.

Recent scholarship has called for more systematic integration of foresight methodologies within comprehensive technology governance frameworks. [62] propose a "maturity model" for strategic foresight integration that evaluates the depth of connection between anticipatory processes and policy implementation. Building on this work, [27] develop a "foresight-implementation alignment index" to assess how effectively governance frameworks connect future-oriented insights with present-day policy actions. These emerging analytical approaches provide conceptual foundations for our visual mapping methodology for alignment assessment.

2.2 Policy Instrument Theory and Implementation

Policy instrument theory offers critical insights into how governance objectives are operationalized through specific implementation mechanisms. The foundational taxonomy developed by [53] categorizes policy instruments according to the governmental resources they employ: nodality (information), authority (legal powers), treasure (money), and organization (formal structures). Building on this framework, [14] conceptualize policy instruments as "institutions in their own right" that embody specific theories of governance and structure state-society relations.

In technology governance contexts, several refined taxonomies have emerged to capture the unique challenges of emerging technology domains. [1] develop a typology specifically focused on innovation policy instruments, identifying three primary categories: regulatory instruments that establish rules and standards, economic instruments that provide financial incentives or disincentives, and soft instruments that shape behaviors through information and voluntary approaches. Expanding this framework, [10] emphasize the importance of analyzing "policy mixes" rather than individual instruments, noting that emerging technology governance typically involves complex combinations of complementary instruments deployed across multiple policy domains.

Recent scholarship has further refined these taxonomies to address the specific challenges of digital technology governance. [65] identify novel instrument types emerging in AI governance contexts, including algorithmic impact assessments, ethical review processes, and data governance frameworks. Similarly, [24] analyze the emergence of "anticipatory regulation" instruments designed to address technological uncertainties through experimental, principle-based approaches. These evolving instrument taxonomies reflect the adaptations required to govern complex sociotechnical systems characterized by rapid innovation and pervasive impacts.

Implementation challenges in emerging technology domains create significant obstacles to effective governance. [11] identify four common implementation challenges in digital technology governance: regulatory capacity limitations, cross-jurisdictional coordination problems, information asymmetries between regulators and technology developers, and difficulties in measuring policy effectiveness. These challenges are particularly acute in artificial intelligence governance, where [31] note that traditional regulatory approaches struggle with the "pacing problem" of maintaining relevance amid accelerating technological change. The implementation literature thus highlights the importance of adaptive governance frameworks that can evolve alongside rapidly developing technologies.

Theoretical frameworks for instrument-objective alignment have become a central concern in the policy design literature. [34] elaborate the resource-based taxonomy described above as the "NATO" framework (Nodality, Authority, Treasure, Organization) to analyze the fit between policy goals and implementation mechanisms. This framework emphasizes that effective policy design requires careful calibration of instruments to objectives, with misalignment leading to implementation failures. Building on this work, [2] propose a "calibration framework" to assess how well policy instruments are matched to governance objectives across multiple dimensions. These frameworks provide conceptual foundations for analyzing alignment patterns in national AI strategies.

The literature on policy coherence and strategic alignment has increasingly emphasized the importance of integrated governance approaches. [3] define policy coherence as "the systematic promotion of mutually reinforcing actions across government departments and agencies creating synergies towards achieving agreed objectives." In technology governance contexts, [19] highlight the critical importance of "strategic policy mixes" that align objectives, instruments, and implementation processes across institutional boundaries. These conceptual approaches to policy coherence inform our framework for assessing strategic alignment in AI governance.

2.3 National AI Strategies: Comparative Perspectives

The historical evolution of national AI policy development has progressed through distinct phases since 2017. [32] identify the "first wave" of AI strategies emerging from technologically advanced economies, exemplified by Canada’s Pan-Canadian AI Strategy (2017) and China’s New Generation AI Development Plan (2017). These early strategies primarily emphasized scientific leadership and industrial competitiveness objectives [26]. A "second wave" emerged in 2018-2020, characterized by more diverse objectives including ethical considerations, workforce development, and public sector applications [25]. The most recent "third wave" strategies (2021-2025) demonstrate increasing sophistication in governance approaches, with greater emphasis on risk management, international coordination, and implementation specificity [37].

The proliferation of national AI strategies has generated substantial comparative research examining variations across jurisdictions. [36] analyze how AI policies are framed differently across governance contexts, identifying distinct narrative frames including "economic opportunity," "technological sovereignty," "human-centered AI," and "global competition." Similarly, [18] conduct a cross-national comparison of AI ethics principles, finding significant convergence around core values but divergent approaches to operationalization. These comparative studies highlight important variations in how different jurisdictions conceptualize AI governance challenges and opportunities.

Methodological approaches to comparative AI policy analysis have evolved from primarily descriptive case studies toward more systematic analytical frameworks. Early work by [31] employed qualitative case studies to compare AI governance approaches in the US, EU, and UK. More recent studies have developed quantitative indices to facilitate cross-national comparison, exemplified by the Government AI Readiness Index [59] and the Global AI Vibrancy Tool [64]. While these quantitative approaches enable broader comparison, [28] note significant methodological limitations in current comparative frameworks, particularly regarding the assessment of implementation effectiveness and policy coherence.

Several classification schemes for AI governance approaches have emerged to categorize different national strategies. [18] propose a four-part taxonomy distinguishing between "market-oriented," "state-directed," "risk-focused," and "rights-based" governance models. Expanding this framework, [26] identify strategic positioning along three dimensions: promoting innovation, protecting values, and preventing harm. These classification schemes provide useful conceptual tools for analyzing variations across governance contexts, although [36] caution against overly rigid categorizations that may obscure the hybridized nature of many national approaches.

Despite the growing literature on comparative AI governance, significant knowledge gaps persist in understanding strategic alignment within national frameworks. [28] note that existing comparative studies provide limited insight into how effectively different policy components cohere within comprehensive governance systems. Similarly, [51] identify a critical gap in understanding how ethical principles are operationalized through concrete policy instruments. [25] further highlight the limited attention to how foresight methodologies are integrated with implementation planning in national strategies. These knowledge gaps underscore the need for novel analytical approaches to assess strategic alignment in AI governance frameworks.

2.4 Conceptual Framework for Alignment Analysis

The integration of policy instrument theory with strategic foresight concepts provides a foundation for analyzing alignment in technology governance. [19] introduce the concept of "strategic policy mixes" that connect policy objectives with implementation instruments through consistent strategic planning processes. Building on this framework, [60] emphasize the importance of "policy sequencing" that connects long-term vision with near-term implementation priorities. These integrated approaches highlight the potential for analyzing alignment as a multi-dimensional relationship between objectives, foresight methods, and implementation instruments.

Several scholars have proposed frameworks for assessing policy coherence in technology governance contexts. [44] develop a "policy mix evaluation framework" that assesses coherence across multiple governance dimensions, including strategic, operational, and temporal alignment. Similarly, [13] propose an "embedded coherence" framework that evaluates alignment across policy levels, domains, and timeframes. While these frameworks provide useful conceptual foundations, they lack operationalized methodologies for systematic assessment across multiple governance contexts.

Theoretical propositions regarding alignment patterns have emerged from several research streams. [10] propose that governance systems characterized by strong institutional coordination mechanisms will demonstrate higher levels of strategic coherence. From a different perspective, [16] suggest that policies addressing deep uncertainty will demonstrate stronger alignment when they incorporate adaptive management principles. [2] further hypothesize that alignment quality correlates with policy capacity factors, including analytical resources, institutional stability, and stakeholder engagement practices. These theoretical propositions inform our analytical approach and guide our interpretation of observed alignment patterns.

Visual approaches to policy analysis have gained traction as tools for understanding complex governance systems. [4] demonstrate how visualization techniques can reveal patterns in policy design that are difficult to discern through traditional analytical methods. In technology governance contexts, [54] employ visual mapping to analyze innovation system interactions, while [19] use matrix visualizations to assess policy mix coherence. Building on these approaches, [13] develop a "coherence mapping" methodology that visualizes relationships between policy objectives and instruments. These visual analytical techniques provide methodological foundations for our approach to alignment assessment. Table 1 synthesizes key theoretical perspectives on strategic alignment, categorizing them across four core dimensions: strategic foresight, policy instrumentation, governance models, and visualization methods. Each row outlines foundational concepts and representative scholarly contributions in that dimension.

| Theoretical Dimension | Key Concepts | Representative Contributions |
|---|---|---|
| Strategic Foresight Integration | Foresight maturity models, anticipatory governance, temporal alignment | [62]; [20]; [27] |
| Policy Instrument Calibration | Policy mix coherence, instrument-objective alignment, implementation effectiveness | [34]; [10]; [2] |
| Governance Model Influence | Institutional arrangements, policy styles, regulatory traditions | [18]; [36]; [31] |
| Visualization Approaches | Coherence mapping, matrix visualization, network analysis | [13]; [4]; [54] |

Our conceptual framework synthesizes these diverse research streams to develop an integrated approach to alignment analysis. We conceptualize strategic alignment as the degree of coherence between three key policy dimensions: strategic objectives that articulate desired outcomes, foresight methods that anticipate future developments, and implementation instruments that operationalize governance approaches. Drawing on [60], we propose that high-alignment policies will demonstrate strong connections across all three dimensions, creating "coherence chains" that link future visions with present actions through appropriate implementation mechanisms. This conceptualization guides our development of a visual mapping methodology that can systematically assess alignment patterns across diverse governance contexts.
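One way to make the idea of a "coherence chain" concrete is to treat it as a path from an objective, through a foresight method, to an instrument. The sketch below assumes (our assumption, not a definition taken from [60]) that the coded links can be stored as two binary matrices, objectives × foresight methods and foresight methods × instruments; the number of chains between each objective and instrument is then their matrix product. All category labels and link values are hypothetical.

```python
# Hypothetical category labels for one strategy (illustrative only).
OBJECTIVES = ["economic competitiveness", "ethical/responsible AI"]
FORESIGHT = ["horizon scanning", "scenario development"]
INSTRUMENTS = ["innovation funding", "regulatory framework"]

# OF[i][j] = 1 if objective i is linked to foresight method j;
# FI[j][k] = 1 if foresight method j informs instrument k (hypothetical coding).
OF = [[1, 1],
      [0, 0]]
FI = [[1, 0],
      [1, 0]]

def chain_counts(of, fi):
    """C[i][k] = number of objective -> foresight -> instrument paths (OF @ FI)."""
    n_obj, n_fs, n_ins = len(of), len(fi), len(fi[0])
    return [[sum(of[i][j] * fi[j][k] for j in range(n_fs))
             for k in range(n_ins)]
            for i in range(n_obj)]

def unchained_objectives(counts, labels):
    """Objectives that reach no instrument through any foresight method."""
    return [labels[i] for i, row in enumerate(counts) if sum(row) == 0]

C = chain_counts(OF, FI)
print(C)                                    # [[2, 0], [0, 0]]
print(unchained_objectives(C, OBJECTIVES))  # ['ethical/responsible AI']
```

In this toy coding the ethical objective has no complete chain, which is the kind of gap the framework is meant to surface; in practice the links would come from the coded strategy documents.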

3 Methodology

This section details our methodological approach to assessing strategic alignment in national AI policies. We first outline our research design and sampling strategy, followed by data collection procedures and corpus development. We then describe our analytical framework development, including the creation of coding schemas for policy components and alignment assessment. Finally, we explain our matrix and network visualization approaches and analytical procedures.

3.1 Research Design

Our research employs a comparative policy analysis approach centered on systematic document analysis of national AI strategies. This methodology aligns with established approaches in comparative policy studies [42, 43] while incorporating innovative visual analysis techniques. The comparative approach enables identification of alignment patterns across diverse governance contexts, facilitating both the development of typologies and the identification of contextual factors influencing strategic coherence.

The unit of analysis is the national AI strategy document (and associated implementation documents), with analysis conducted at three levels: (1) individual policy components (objectives, foresight methods, instruments), (2) alignment relationships between component pairs, and (3) overall policy coherence across the full framework. This multi-level analytical approach allows for granular examination of specific alignment relationships while maintaining focus on system-level coherence patterns.
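The three analytical levels can be sketched as nested data: coded components (level 1), binary alignment matrices for each component pair (level 2), and a single coherence figure (level 3). The aggregation used here, an unweighted mean of pairwise link densities, is an illustrative assumption; the text does not specify how overall coherence is computed. Labels and matrix values are hypothetical.

```python
from statistics import mean

# Level 1: coded components of one hypothetical strategy.
components = {
    "objective": ["economic competitiveness", "ethical/responsible AI"],
    "foresight": ["horizon scanning", "scenario development"],
    "instrument": ["innovation funding", "regulatory framework"],
}

# Level 2: binary alignment matrices (rows = first dimension, columns = second).
pairs = {
    ("objective", "foresight"): [[1, 0], [0, 1]],
    ("foresight", "instrument"): [[1, 1], [0, 0]],
    ("objective", "instrument"): [[1, 0], [0, 0]],
}

def check_shapes(components, pairs):
    """Raise if a matrix does not match the coded components on both axes."""
    for (a, b), m in pairs.items():
        if len(m) != len(components[a]) or any(len(r) != len(components[b]) for r in m):
            raise ValueError(f"shape mismatch for pair {(a, b)}")

def density(matrix):
    """Share of possible component pairs coded as aligned."""
    cells = [v for row in matrix for v in row]
    return sum(cells) / len(cells) if cells else 0.0

def overall_coherence(pairs):
    """Level 3: unweighted mean of pairwise densities (an assumed aggregation)."""
    return mean(density(m) for m in pairs.values())

check_shapes(components, pairs)
for pair, m in pairs.items():
    print(pair, density(m))
print(round(overall_coherence(pairs), 3))  # 0.417
```

Keeping the level-2 matrices explicit, rather than storing only the final score, preserves the granular view of individual alignment relationships alongside the system-level figure.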

Our sampling strategy employs purposive sampling to ensure representation across diverse governance contexts [48]. We developed a stratified sampling framework based on three primary criteria: geographical representation, governance model variation, and resource context. Geographical stratification targets coverage across major world regions, following the regional classification scheme of [18], which distinguishes between North America, Europe, East Asia, Southeast Asia, South Asia, Middle East, Africa, and Latin America. Within each region, we selected countries representing different governance approaches, drawing on the classification of AI governance models in [26]: market-led, state-directed, rights-based, and risk-focused. Strategies that combine features of several of these models are labeled "Hybrid" in Table 2. Additionally, we ensured representation across resource contexts, including established technological powers, emerging innovation hubs, and developing economies with nascent AI ecosystems.

The final sample comprises 20 national AI strategies published between 2017 and 2025, with selection prioritizing strategies that (1) represent official government positions rather than advisory documents, (2) contain comprehensive coverage of strategic objectives, anticipatory elements, and implementation mechanisms, and (3) are available in English or major European languages to minimize translation challenges. Table 2 presents the final sample characteristics, indicating the diversity achieved across our stratification criteria.

| Country | Strategy Title | Publication Date | Governance Model | Region |
|---|---|---|---|---|
| Canada | Pan-Canadian AI Strategy | March 2017 | Market-led | North America |
| China | New Generation AI Dev. Plan | July 2017 | State-directed | East Asia |
| Finland | Finland’s AI Era | October 2017 | Rights-based | Europe |
| France | AI for Humanity | March 2018 | State-directed | Europe |
| Germany | AI Strategy for Germany | November 2018 | Risk-focused | Europe |
| India | National Strategy for AI | June 2018 | Hybrid | South Asia |
| Japan | AI Strategy 2019 | June 2019 | Market-led | East Asia |
| Netherlands | Strategic Action Plan for AI | October 2019 | Rights-based | Europe |
| Norway | National Strategy for AI | January 2020 | Rights-based | Europe |
| Singapore | National AI Strategy | November 2019 | State-directed | Southeast Asia |
| South Korea | National Strategy for AI | December 2019 | Hybrid | East Asia |
| UAE | National AI Strategy 2031 | April 2019 | State-directed | Middle East |
| UK | AI Sector Deal / National AI Strategy | April 2018 / Sept 2021 | Market-led | Europe |
| USA | American AI Initiative / National AI R&D Strategic Plan | February 2019 / June 2019 | Market-led | North America |
| Brazil | Brazilian AI Strategy | April 2021 | Hybrid | Latin America |
| Spain | Spanish Strategy for AI | December 2020 | Rights-based | Europe |
| Australia | Australia’s AI Action Plan | June 2021 | Market-led | Oceania |
| Denmark | National Strategy for AI | March 2019 | Rights-based | Europe |
| Sweden | National Approach to AI | May 2018 | Rights-based | Europe |
| Italy | National Strategy for AI | July 2020 | Hybrid | Europe |
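As a quick consistency check on the stratification, the sample can be tallied by governance model and region directly from Table 2. The sketch below is a hypothetical helper, not part of the original analysis; it transcribes only the country, governance model, and region columns, and compares the sampled regions with the regions named in the stratification scheme.

```python
from collections import Counter

# (country, governance model, region) transcribed from Table 2.
SAMPLE = [
    ("Canada", "Market-led", "North America"),
    ("China", "State-directed", "East Asia"),
    ("Finland", "Rights-based", "Europe"),
    ("France", "State-directed", "Europe"),
    ("Germany", "Risk-focused", "Europe"),
    ("India", "Hybrid", "South Asia"),
    ("Japan", "Market-led", "East Asia"),
    ("Netherlands", "Rights-based", "Europe"),
    ("Norway", "Rights-based", "Europe"),
    ("Singapore", "State-directed", "Southeast Asia"),
    ("South Korea", "Hybrid", "East Asia"),
    ("UAE", "State-directed", "Middle East"),
    ("UK", "Market-led", "Europe"),
    ("USA", "Market-led", "North America"),
    ("Brazil", "Hybrid", "Latin America"),
    ("Spain", "Rights-based", "Europe"),
    ("Australia", "Market-led", "Oceania"),
    ("Denmark", "Rights-based", "Europe"),
    ("Sweden", "Rights-based", "Europe"),
    ("Italy", "Hybrid", "Europe"),
]

# Regions listed in the geographical stratification scheme.
SCHEME_REGIONS = ["North America", "Europe", "East Asia", "Southeast Asia",
                  "South Asia", "Middle East", "Africa", "Latin America"]

models = Counter(model for _, model, _ in SAMPLE)
regions = Counter(region for _, _, region in SAMPLE)

print(len(SAMPLE))                                     # 20
print(dict(models))
print([r for r in SCHEME_REGIONS if regions[r] == 0])  # ['Africa']
print(sorted(set(regions) - set(SCHEME_REGIONS)))      # ['Oceania']
```

With the table as given, the tally shows Europe contributing 10 of the 20 strategies; the last two lines list scheme regions with no sampled country and sampled regions that fall outside the listed scheme.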

3.2 Data Collection and Corpus Development

Our primary data source is the OECD AI Policy Observatory (AIPO), which maintains a comprehensive repository of national AI strategy documents and associated policy materials. This repository offers several methodological advantages: it provides standardized access to official documents, ensures consistent classification of policy materials, and facilitates comparative analysis through standardized metadata [25]. We conducted data collection in January 2025, accessing the most recent version of each national strategy within the repository.

For each country in our sample, we collected three types of documents: (1) the primary national AI strategy document outlining high-level vision and objectives, (2) implementation action plans or roadmaps detailing specific initiatives and timelines, and (3) associated budget documents or resource allocation frameworks. This multi-document approach allowed for more comprehensive analysis of alignment between strategic vision and implementation mechanisms, addressing limitations identified by [28] regarding the gap between policy rhetoric and operational reality.

Document authentication followed a systematic protocol to ensure the analysis was based on authoritative sources. Each document was verified against three criteria, following the approach outlined by [23]: (1) confirmation of official government authorship through website domain verification and official publication channels, (2) verification of document status as current policy rather than draft or superseded versions, and (3) confirmation of the document’s authoritative standing within the country’s governance framework. For strategies from non-English-speaking countries, we first sought official English translations provided by the government. When these were unavailable, we used professionally translated versions from the OECD repository or commissioned translations from certified translators, following best practices in cross-language document analysis outlined by [40].
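The authentication protocol and the translation fallback order can be expressed as two small decision functions. This is a minimal sketch assuming each retrieved document is recorded with one boolean per criterion; the record type, its field names, and the language codes are hypothetical illustrations, not part of the original workflow.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class CandidateDocument:
    """Hypothetical record of a retrieved document; field names are illustrative."""
    title: str
    official_channel: bool   # criterion 1: official domain / publication channel
    current_policy: bool     # criterion 2: not a draft or superseded version
    authoritative: bool      # criterion 3: authoritative standing in governance framework
    language: str            # hypothetical language code, e.g. "en", "de"
    official_english: bool = False
    oecd_professional_translation: bool = False

def is_authenticated(doc: CandidateDocument) -> bool:
    """A document enters the corpus only if all three criteria hold."""
    return doc.official_channel and doc.current_policy and doc.authoritative

def translation_source(doc: CandidateDocument) -> Optional[str]:
    """Apply the preference order described in the text; None means no translation needed."""
    if doc.language == "en":
        return None
    if doc.official_english:
        return "official government translation"
    if doc.oecd_professional_translation:
        return "professional translation from OECD repository"
    return "commissioned certified translation"

doc = CandidateDocument("Example strategy", True, True, True, language="de")
print(is_authenticated(doc), translation_source(doc))
```

Encoding the fallback as an ordered chain of checks makes the preference explicit: an official government translation is always used when it exists, even if an OECD translation is also available.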

The corpus development process involved creating a structured digital repository of the authenticated documents, with standardized metadata capturing publication date, authoring body, document type, and relationship to other policy documents. This structured approach enabled systematic comparison across countries while maintaining context sensitivity to each nation’s unique policy environment. Following [39], we developed a document summary for each country case, outlining the key policy features, institutional context, and implementation structures to inform our subsequent analysis.
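A structured repository of this kind can be sketched as one record per document carrying the metadata fields named above, plus a check that each country has all three document types collected in Section 3.2 (strategy, action plan, budget document). The type labels, date format, and example entries below are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical labels for the three document types collected per country.
DOC_TYPES = {"strategy", "action_plan", "budget"}

@dataclass
class CorpusEntry:
    country: str
    title: str
    publication_date: str   # ISO date string (assumed format)
    authoring_body: str
    doc_type: str
    related_to: list = field(default_factory=list)  # titles of linked policy documents

def missing_types(entries):
    """Map each country to the document types not yet present in the corpus."""
    present = defaultdict(set)
    for e in entries:
        if e.doc_type not in DOC_TYPES:
            raise ValueError(f"unknown document type: {e.doc_type}")
        present[e.country].add(e.doc_type)
    return {c: DOC_TYPES - t for c, t in present.items() if DOC_TYPES - t}

entries = [
    CorpusEntry("Country A", "National AI Strategy", "2019-03-01", "Ministry X", "strategy"),
    CorpusEntry("Country A", "AI Action Plan", "2020-01-01", "Ministry X",
                "action_plan", ["National AI Strategy"]),
]
print(missing_types(entries))  # {'Country A': {'budget'}}
```

The `related_to` field is one simple way to record relationships between documents, for example linking an action plan back to the strategy it implements.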

3.3 Analytical Framework Development

Developing a robust analytical framework required creating standardized coding schemas for policy components while remaining sensitive to contextual variations across governance systems. Our approach drew on established methods in qualitative content analysis [45] and policy document coding [23], adapted for the specific requirements of AI governance analysis.

For strategic objectives, we developed a comprehensive coding schema through an iterative process that combined deductive and inductive approaches. Initially, we derived a preliminary category system from existing literature on AI governance objectives [26, 36], yielding eight broad objective categories: economic competitiveness, scientific leadership, ethical/responsible AI, national security, public sector transformation, workforce development, industrial digitalization, and international collaboration. Through preliminary coding of a subset of documents, we refined this framework to include additional categories that emerged inductively: social welfare enhancement, regulatory framework development, data ecosystem development, and environmental sustainability. The final coding framework comprised 12 strategic objective categories, with operational definitions and exemplar text for each category to ensure coding consistency.

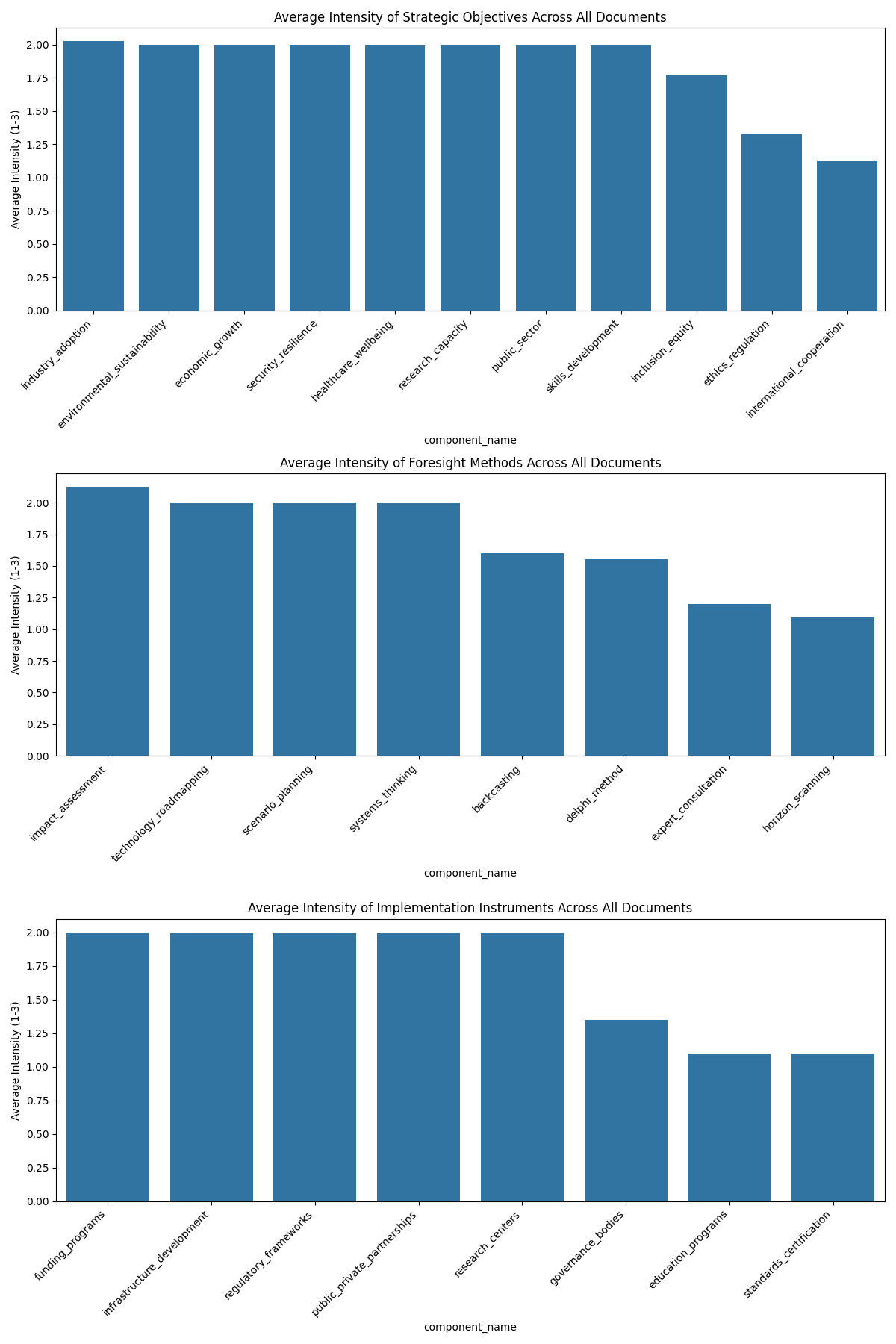

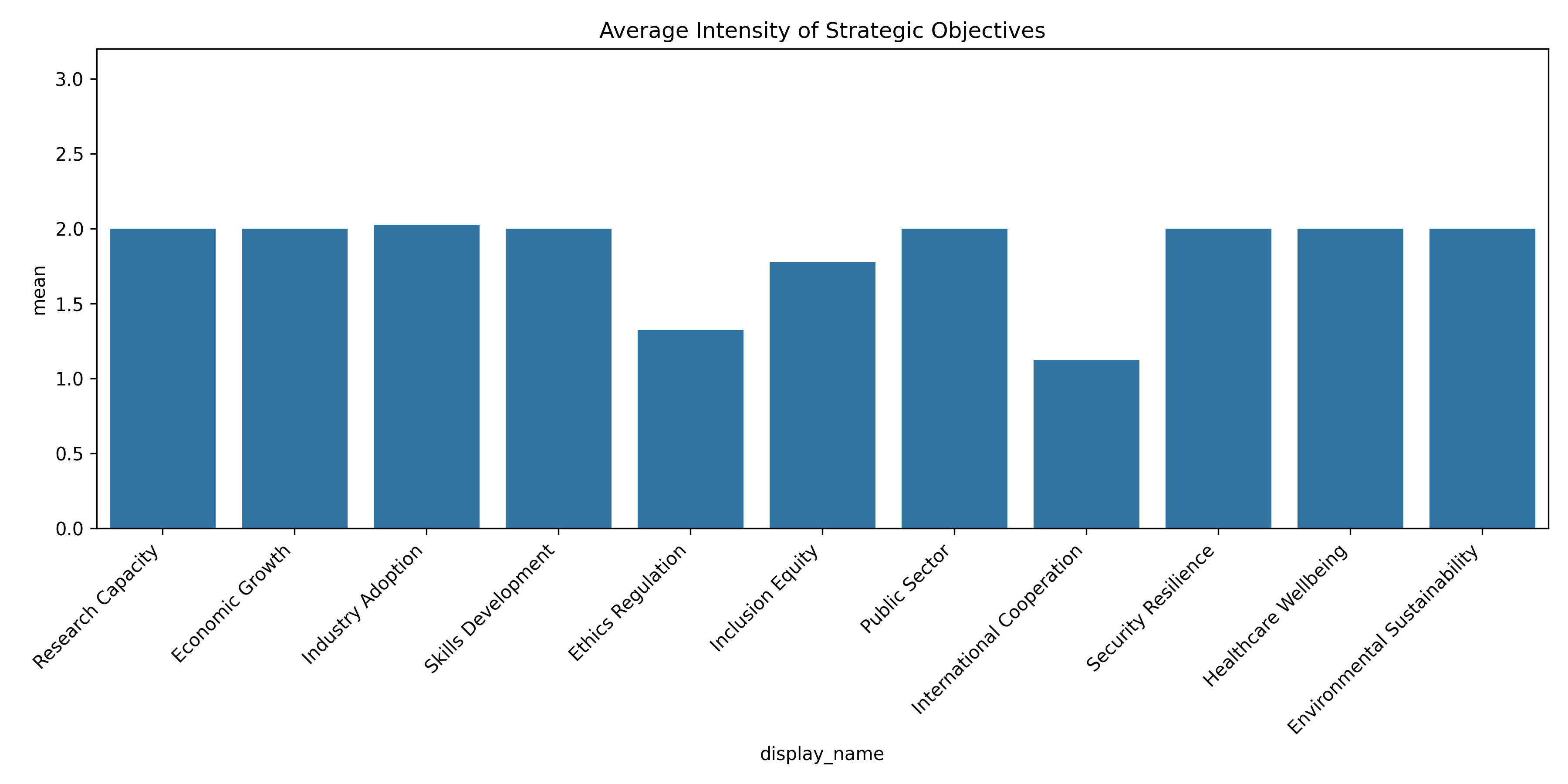

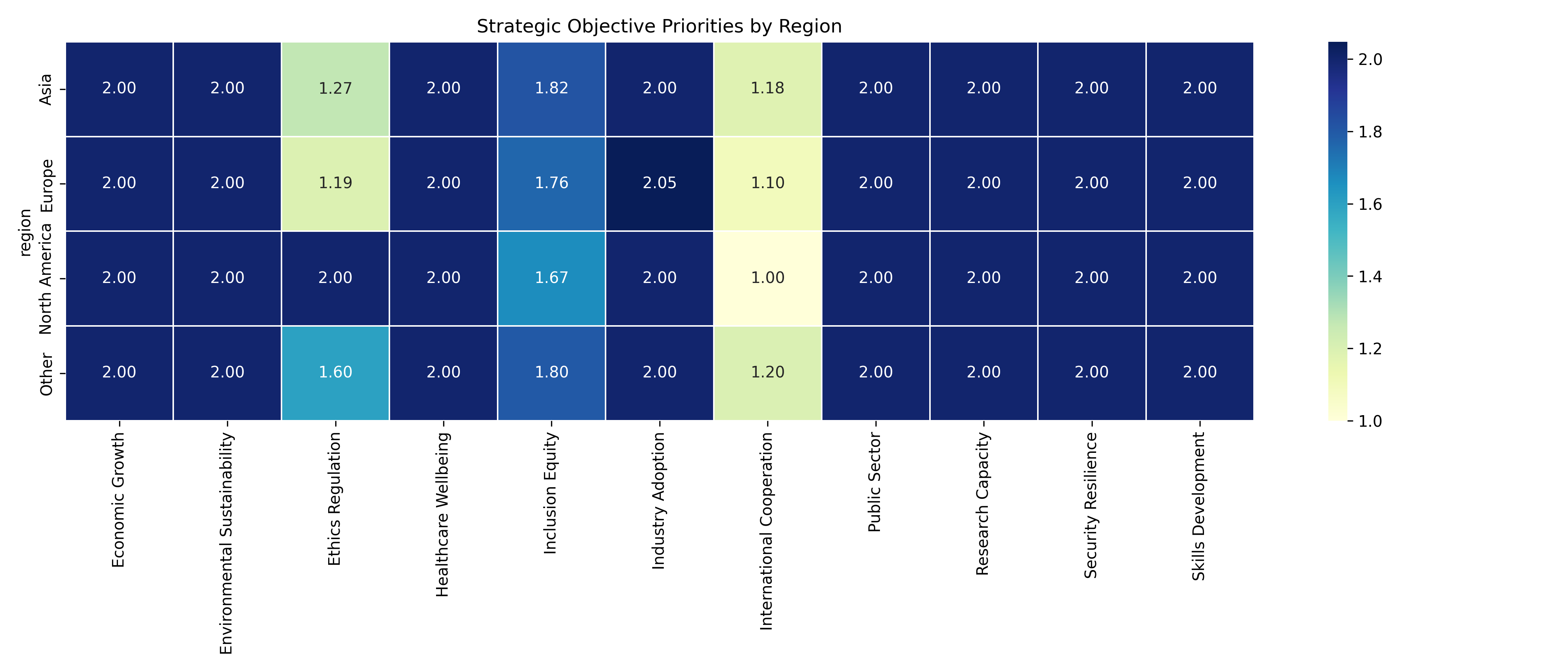

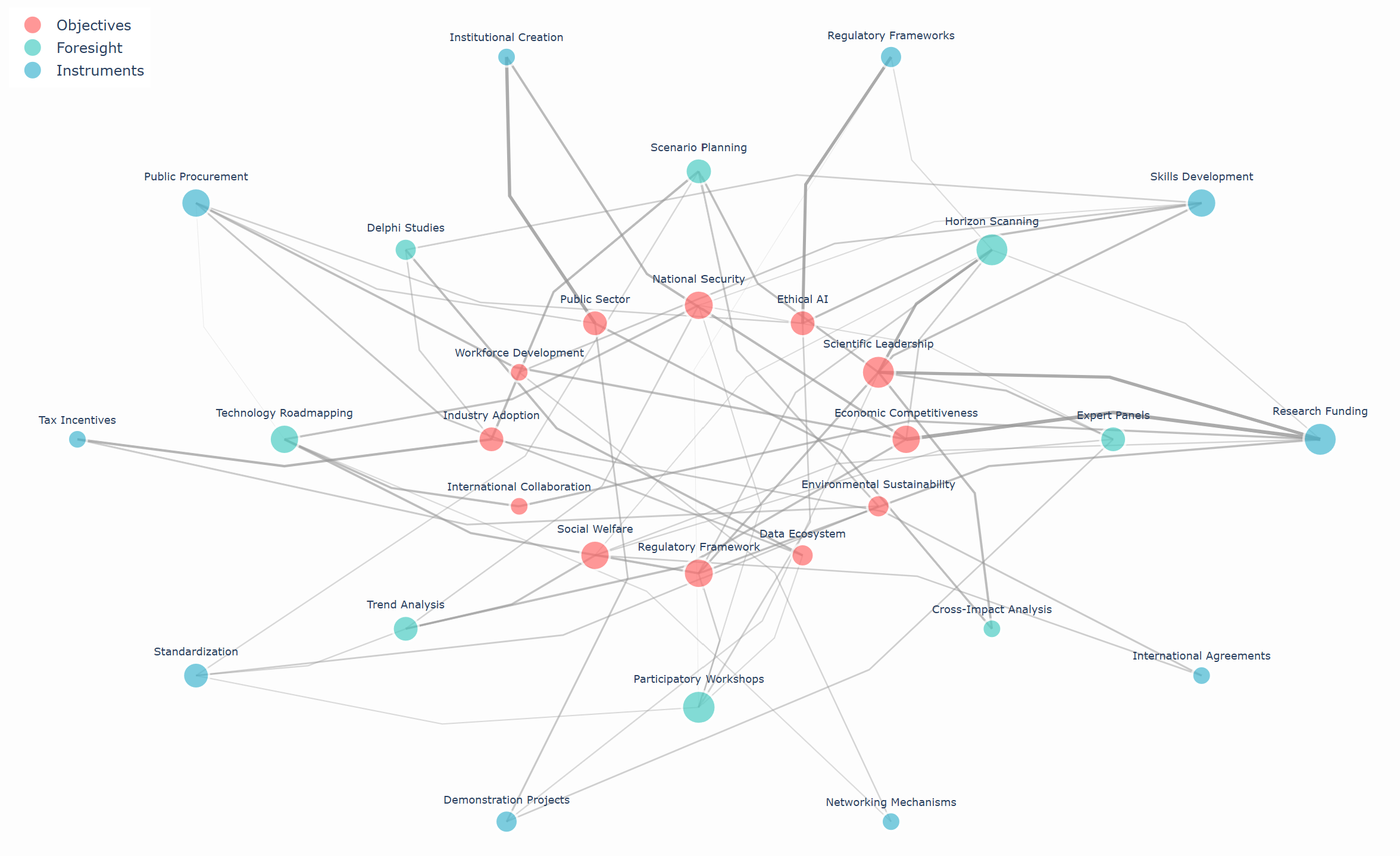

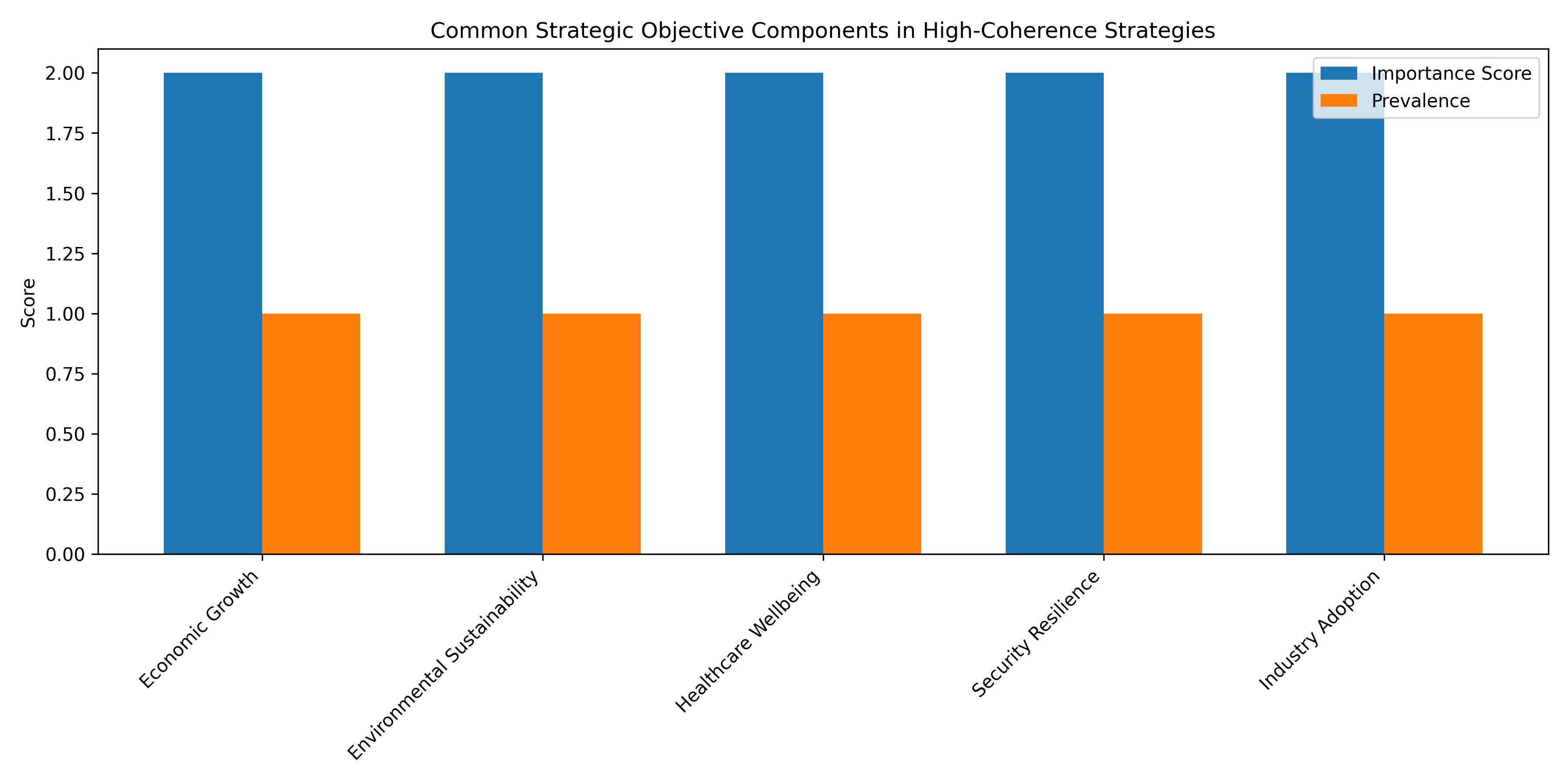

Figure 1 presents a component distribution visualization that illustrates the relative frequency of strategic objectives, foresight methods, and implementation instruments across various national AI strategies. It reveals the predominance of economic competitiveness and scientific leadership objectives, as well as the frequent use of horizon scanning, expert panels, funding mechanisms, and institutional creation.
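Prevalence figures of the kind reported throughout this paper ("present in 95% of strategies") are shares of strategies that contain a component at least once. The sketch below shows that calculation, assuming each strategy has already been coded into a set of component labels. The country codes and component sets are hypothetical, not data from this study.

```python
from collections import Counter

# Hypothetical coded output: the set of components found in each strategy.
# Country codes and component sets are illustrative, not data from this study.
coded = {
    "A": {"economic competitiveness", "scientific leadership", "expert panels", "research funding"},
    "B": {"economic competitiveness", "ethical/responsible AI", "horizon scanning", "research funding"},
    "C": {"scientific leadership", "expert panels", "institutional creation"},
}


def prevalence(coded_strategies):
    """Share of strategies (0-1) in which each component appears at least once."""
    counts = Counter()
    for components in coded_strategies.values():
        counts.update(set(components))  # count each component once per strategy
    n = len(coded_strategies)
    return {component: count / n for component, count in counts.items()}


shares = prevalence(coded)
for component, share in sorted(shares.items(), key=lambda kv: (-kv[1], kv[0])):
    print(f"{component:<26} {share:.0%}")
```

Using a set per strategy means a component mentioned many times in one document still contributes only once, which matches the "share of strategies" reading rather than a raw mention count.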

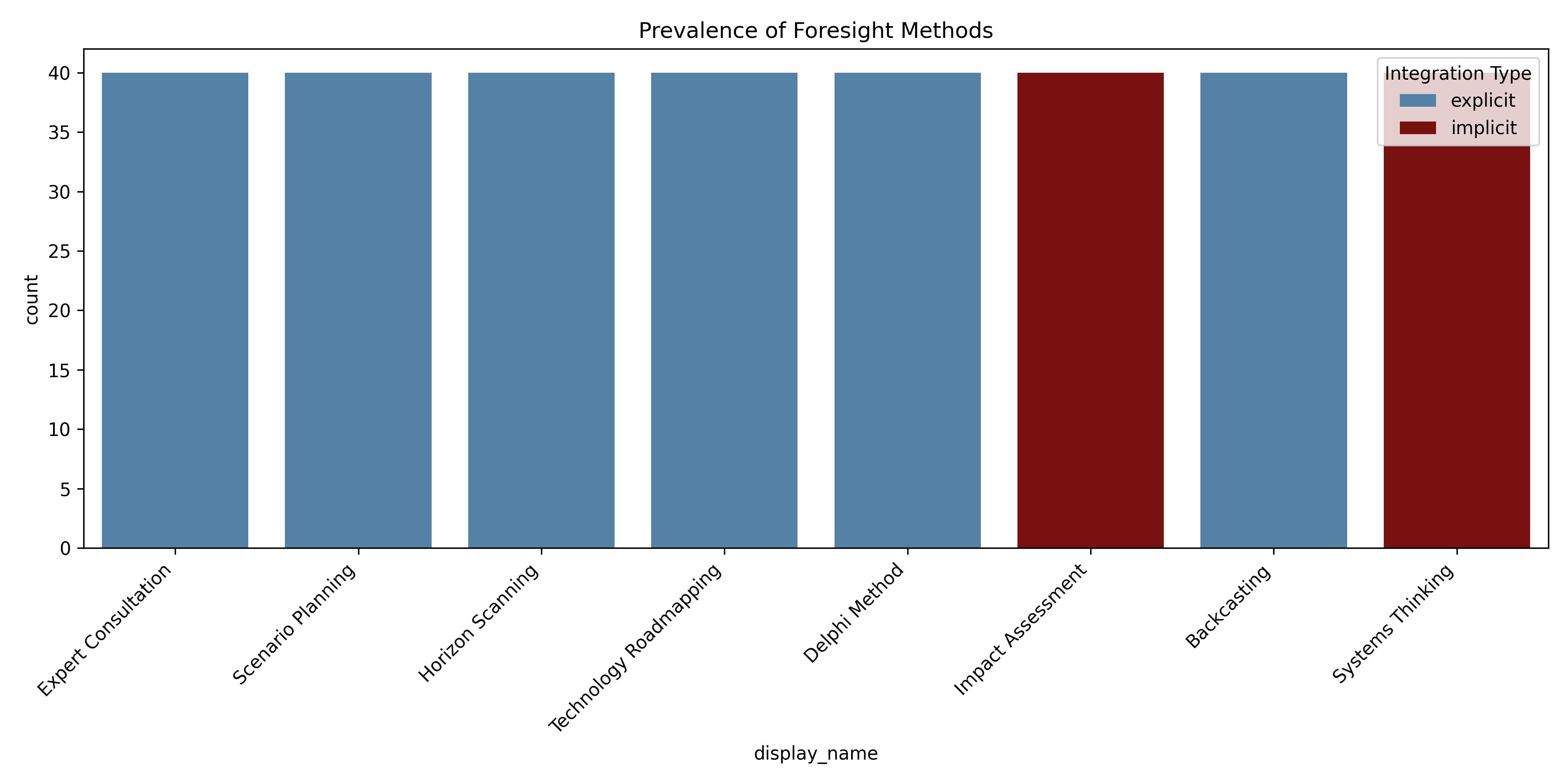

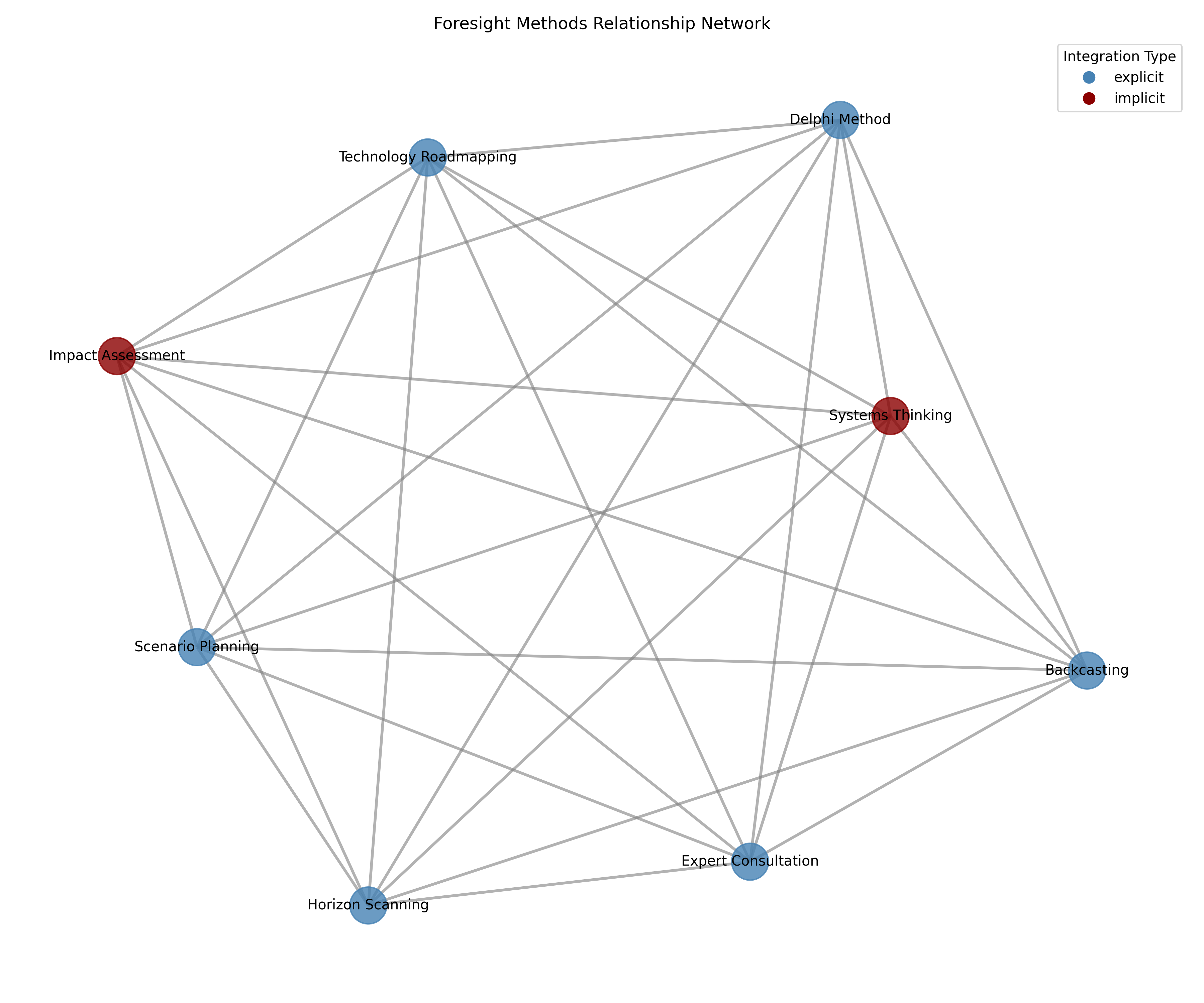

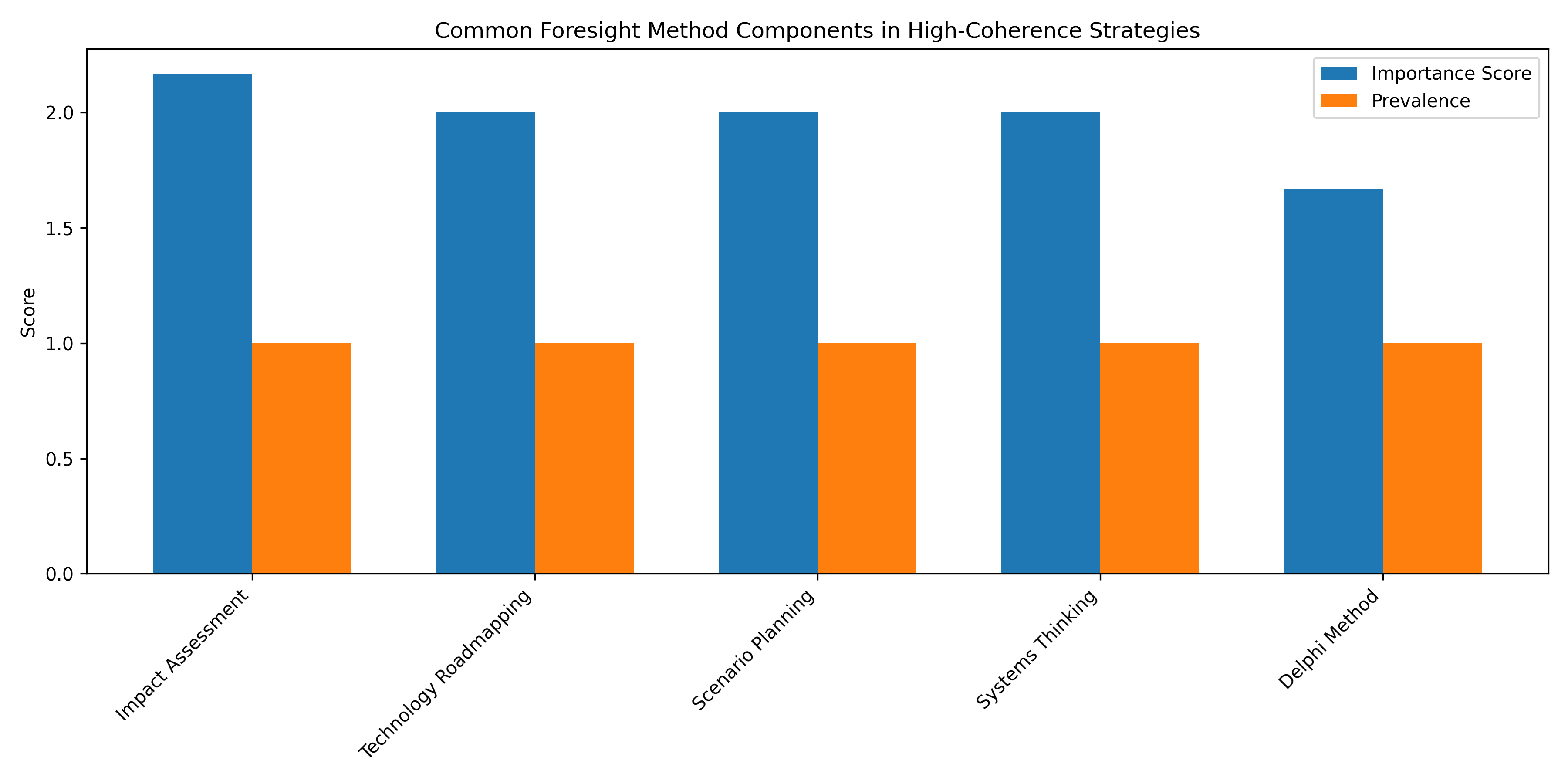

For foresight methodologies, we adapted taxonomies from strategic foresight literature [17, 30] to develop a coding framework for anticipatory approaches in AI governance. The resulting taxonomy identified eight distinct foresight methods: horizon scanning, scenario development, Delphi studies, expert panels, technology roadmapping, trend extrapolation, participatory workshops, and cross-impact analysis. For each method, we developed operational definitions that captured both explicit methodological application (where documents directly referenced foresight approaches) and implicit application (where documents employed foresight techniques without explicit methodological framing). This dual-coding approach addressed the challenge identified by [21] regarding the often implicit nature of foresight integration in policy documents.
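The dual-coding scheme can be represented as coded records that carry an explicit/implicit flag, so that the two kinds of application can be counted separately per method. This is a minimal sketch; `ForesightCode` and `tally` are hypothetical names, and the example codes are illustrative only.

```python
from collections import defaultdict
from dataclasses import dataclass

FORESIGHT_METHODS = {
    "horizon scanning", "scenario development", "Delphi studies", "expert panels",
    "technology roadmapping", "trend extrapolation", "participatory workshops",
    "cross-impact analysis",
}


@dataclass(frozen=True)
class ForesightCode:
    """One coded passage: which method it reflects and how it is framed."""
    country: str
    method: str     # one of the eight methods in FORESIGHT_METHODS
    explicit: bool  # True: the document names the method; False: applied implicitly


def tally(codes):
    """Count explicit and implicit applications of each method."""
    table = defaultdict(lambda: {"explicit": 0, "implicit": 0})
    for code in codes:
        if code.method not in FORESIGHT_METHODS:
            raise ValueError(f"unknown foresight method: {code.method}")
        table[code.method]["explicit" if code.explicit else "implicit"] += 1
    return dict(table)


codes = [
    ForesightCode("A", "expert panels", explicit=True),
    ForesightCode("A", "scenario development", explicit=False),
    ForesightCode("B", "expert panels", explicit=False),
]
print(tally(codes))
```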

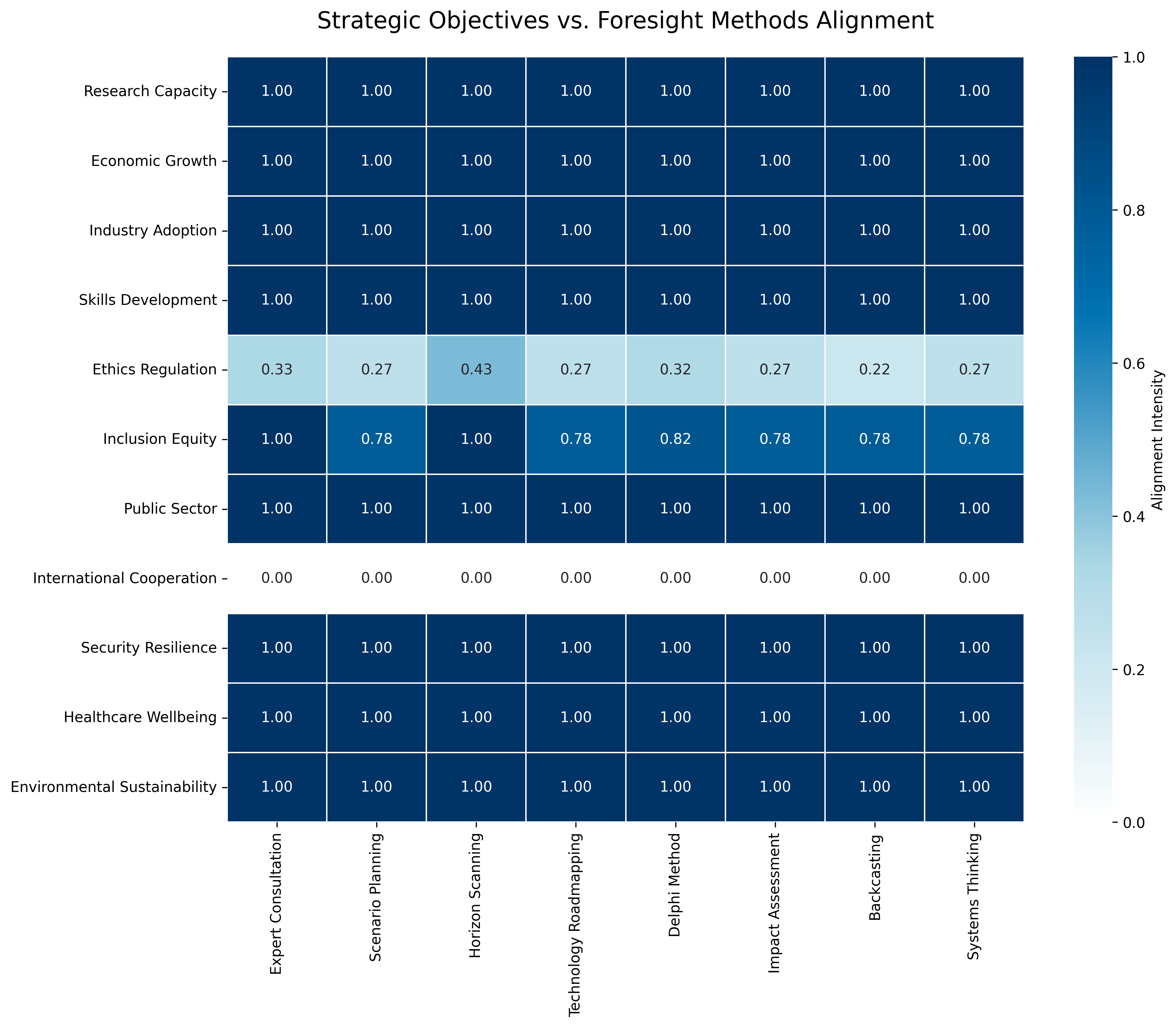

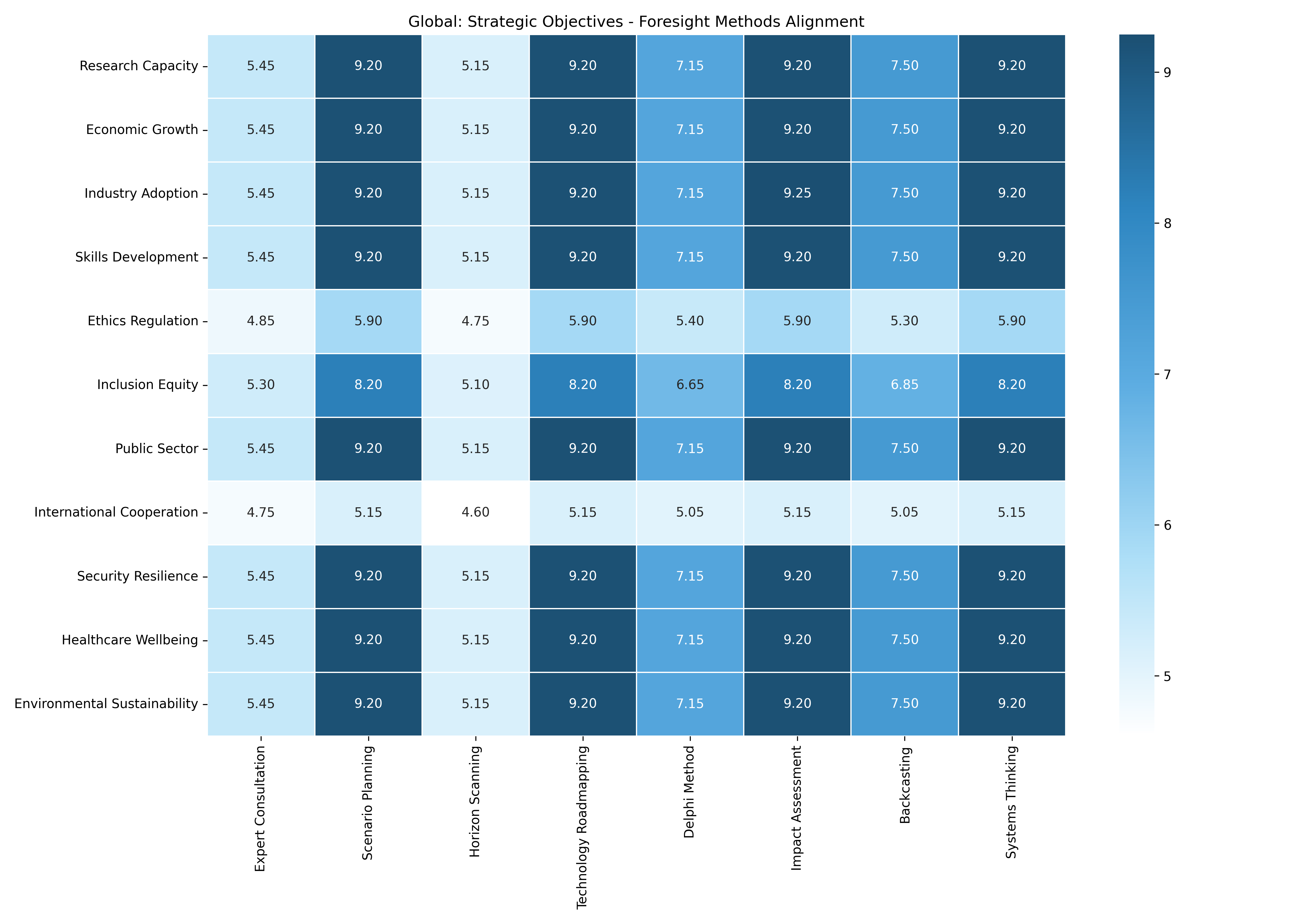

Figure 3 displays the alignment intensity between strategic objectives and foresight methods. The heatmap highlights a strong association between scientific leadership objectives and expert panel approaches, while participatory foresight methods appear less aligned with ethical AI objectives.

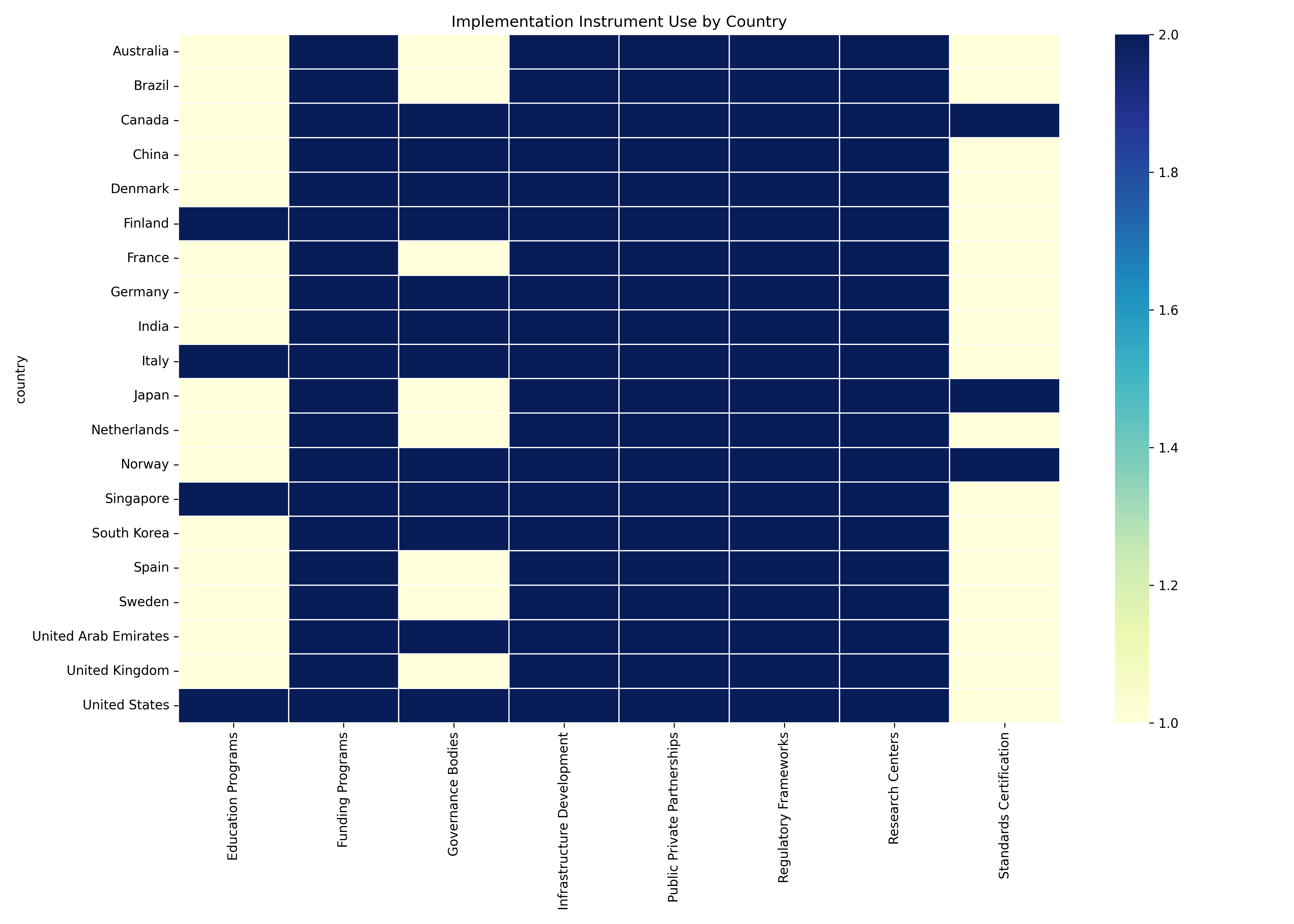

Implementation instruments were categorized following the taxonomy of innovation policy instruments in [1], adapted for AI governance contexts. The resulting framework identified 10 instrument types: research funding, skills development programs, regulatory frameworks, institutional creation, public procurement, tax incentives, standardization initiatives, demonstration projects, networking/coordination mechanisms, and international agreements. For each instrument type, we developed specific indicators to assess the level of implementation specificity, following the approach to instrument calibration analysis in [2]. This enabled us to assess not only which instruments were deployed but also their degree of operational specificity.

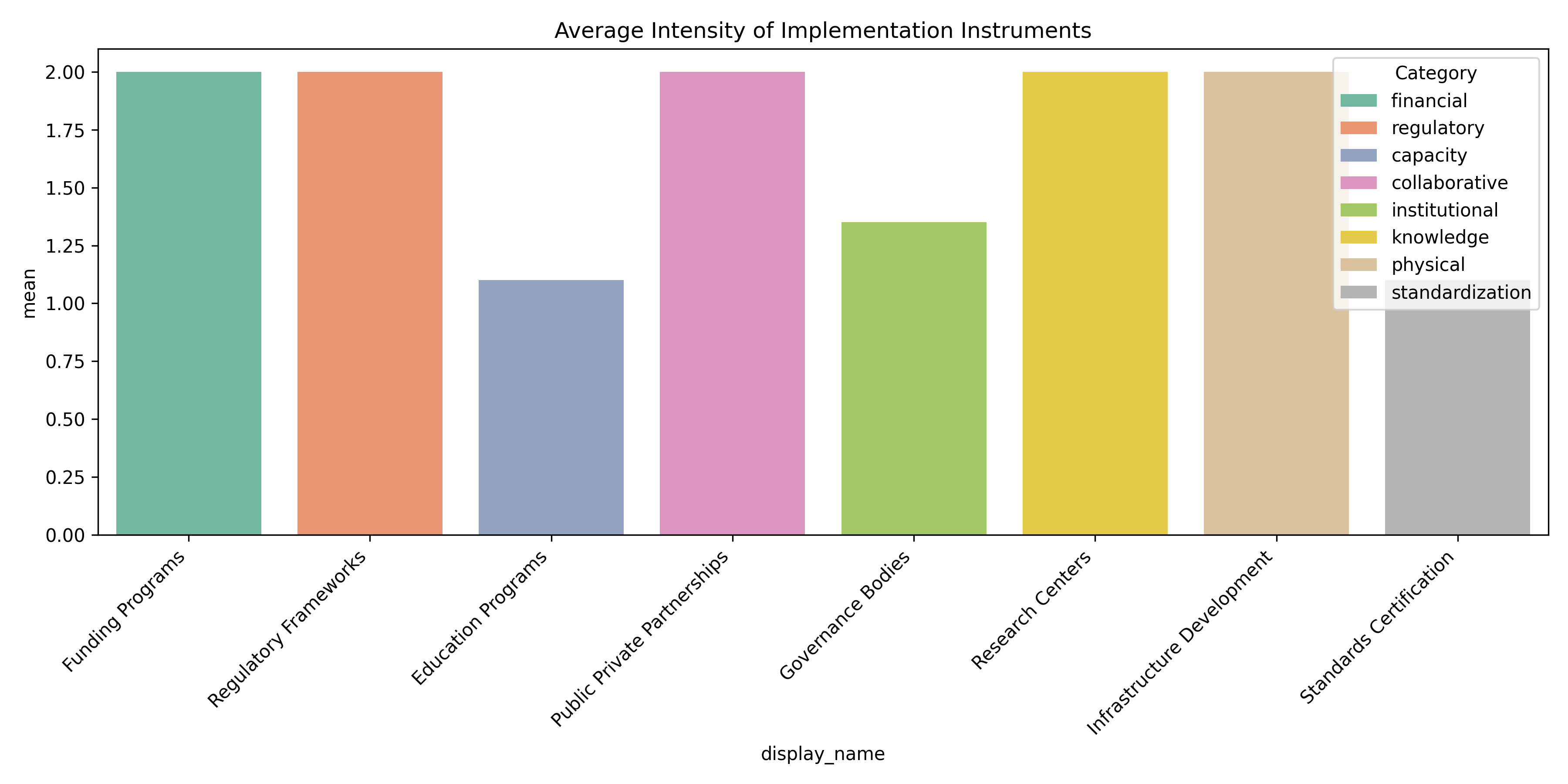

Alignment intensity was operationalized using a three-point scoring system (1=weak, 2=moderate, 3=strong) that assessed the explicit connection between policy components. Following the approach to policy coherence assessment in [11], we defined weak alignment (score=1) as the mere co-existence of components without explicit linkage, moderate alignment (score=2) as the presence of implied connections through proximity or contextual reference, and strong alignment (score=3) as explicit articulation of how components interact within an integrated governance framework. This scoring system was applied to each potential relationship between components, creating a quantified assessment of alignment intensity.

3.4 Strategic Alignment Matrix Development

The heart of our methodological innovation is the development of a visual matrix approach to alignment assessment. Drawing on policy coherence visualization techniques [19, 13], we designed a multi-dimensional matrix structure to cross-reference the three component types: strategic objectives, foresight methods, and implementation instruments. The matrix structure enables visualization of alignment between component pairs, creating a systematic representation of coherence patterns within and across national strategies.

The matrix design employs a layered approach with three distinct alignment matrices: objective-foresight alignment, objective-instrument alignment, and foresight-instrument alignment. Each matrix positions the relevant components on row and column headings, with cell values indicating the alignment intensity score (1-3, or 0 where a component is absent) between the intersecting components. The matrix visualization employs conditional formatting with a color gradient from light to dark, providing immediate visual identification of strong and weak alignment areas. This approach builds on the findings of [4] regarding the effectiveness of color-intensity visualization for policy coherence assessment.
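The layered structure can be sketched by storing every pairwise score once, keyed by component pair, and slicing it into the three matrices. This assumes a dictionary keyed by `(row, column)` pairs with unscored pairs defaulting to 0. The component subsets and scores are hypothetical, and ASCII shading stands in for the spreadsheet color gradient.

```python
OBJECTIVES = ["economic competitiveness", "ethical/responsible AI"]
FORESIGHT = ["horizon scanning", "expert panels"]
INSTRUMENTS = ["research funding", "regulatory frameworks"]

# Hypothetical coded scores for one country: (row component, column component) -> 0..3.
# Pairs that were never scored default to 0.
scores = {
    ("economic competitiveness", "horizon scanning"): 2,
    ("economic competitiveness", "research funding"): 3,
    ("ethical/responsible AI", "regulatory frameworks"): 1,
    ("expert panels", "research funding"): 2,
}

LAYERS = {
    "objective-foresight": (OBJECTIVES, FORESIGHT),
    "objective-instrument": (OBJECTIVES, INSTRUMENTS),
    "foresight-instrument": (FORESIGHT, INSTRUMENTS),
}


def build_matrix(rows, cols, pair_scores):
    """One alignment matrix as a list of rows of 0-3 scores."""
    return [[pair_scores.get((r, c), 0) for c in cols] for r in rows]


matrices = {name: build_matrix(rows, cols, scores) for name, (rows, cols) in LAYERS.items()}

SHADES = " .+#"  # 0 = blank ... 3 = darkest, mimicking a light-to-dark gradient


def render(name):
    rows, _ = LAYERS[name]
    print(name)
    for label, row in zip(rows, matrices[name]):
        print(f"  {label:<26}" + "".join(SHADES[v] * 2 for v in row))


for name in LAYERS:
    render(name)
```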

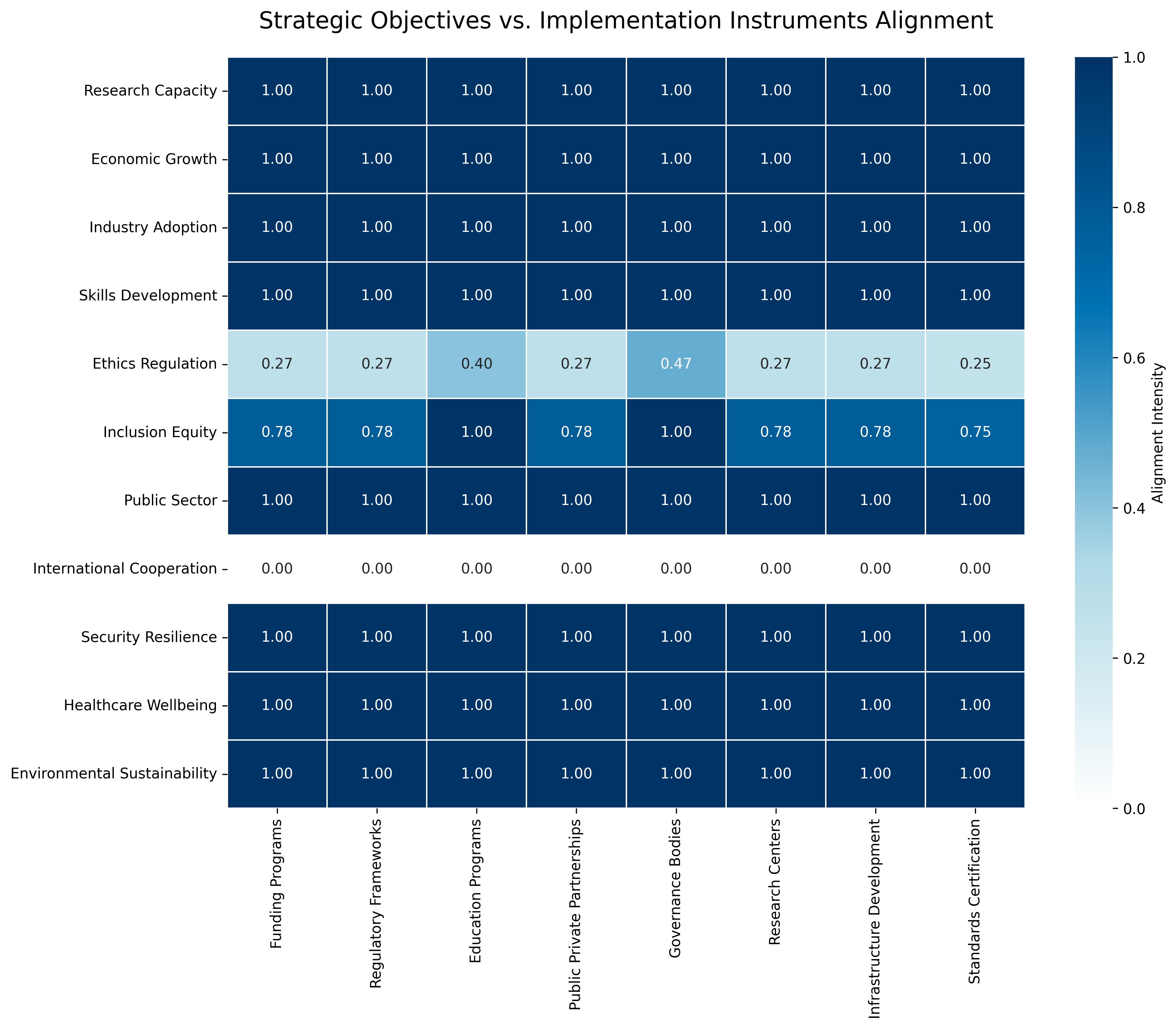

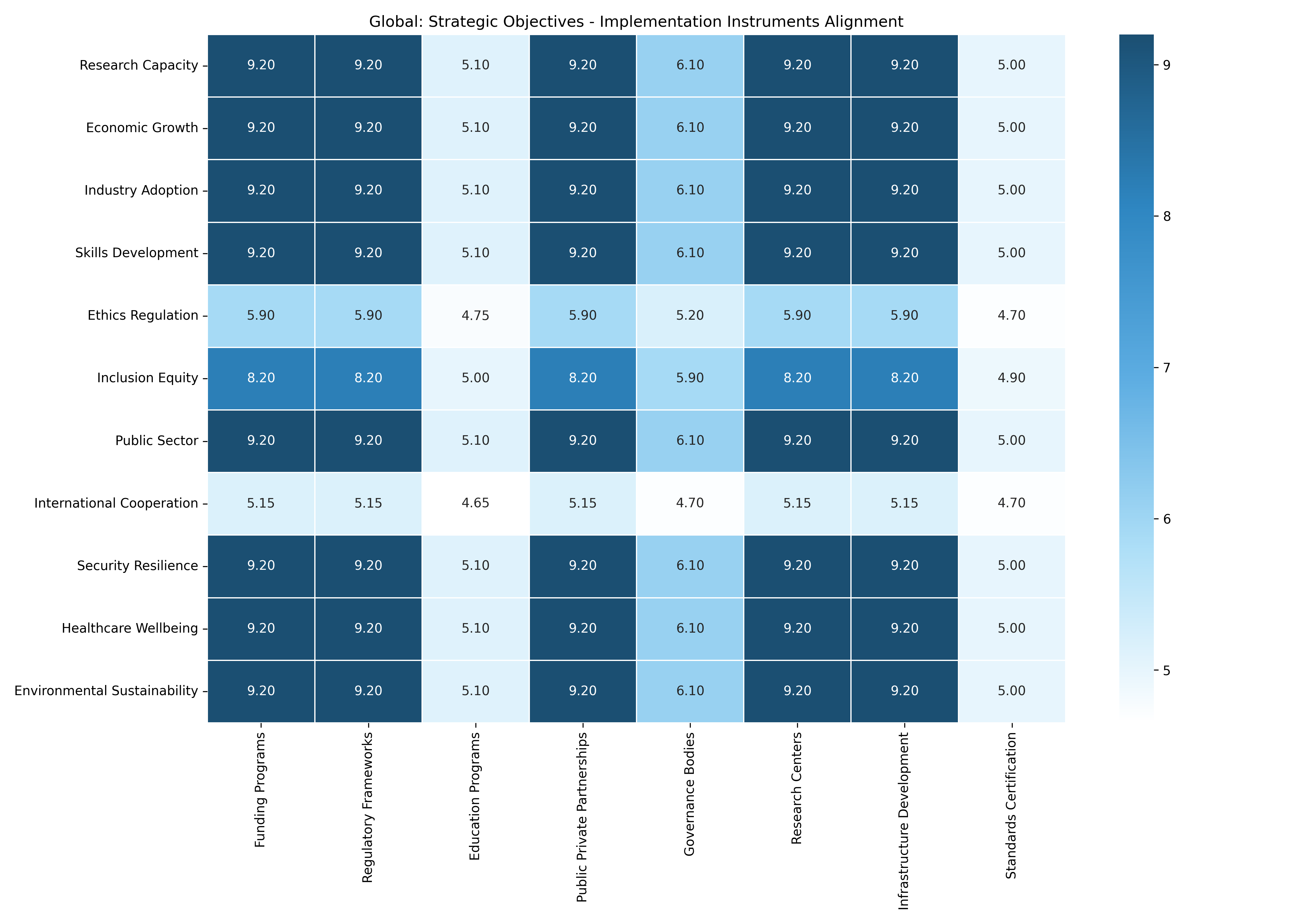

The intensity scoring system follows a systematic protocol. For each matrix cell (representing a potential relationship between two components), coders assessed the presence and strength of explicit connections in the policy documents. Following [2], we evaluated three dimensions of alignment: (1) lexical proximity within the text, (2) explicit reference to the relationship, and (3) elaboration of the connection mechanism. Cells received a score of 3 when all three dimensions were present, indicating strong and explicit alignment. A score of 2 indicated moderate alignment with implicit connections but lacking detailed elaboration. A score of 1 indicated weak alignment where components appeared in the same document but without meaningful connection. Cells received a score of 0 when one or both components were absent from the policy framework. As shown in Figure 2, the objective-instrument heatmap illustrates the alignment intensity between various strategic objectives and implementation instruments. Notably, economic competitiveness exhibits the strongest alignment with funding/investment mechanisms and institutional creation, as indicated by the darkest cells in the matrix.
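The cell-level protocol can be written as a small decision function. One point is our own reading: the text does not say how partial combinations of the three dimensions map to scores, so the sketch assumes that any partial combination counts as moderate (2). The function name and arguments are hypothetical.

```python
def alignment_score(both_present: bool, lexical_proximity: bool,
                    explicit_reference: bool, mechanism_elaborated: bool) -> int:
    """Map the coded dimensions of one matrix cell to the 0-3 intensity scale."""
    if not both_present:
        return 0  # one or both components absent from the policy framework
    dimensions = (lexical_proximity, explicit_reference, mechanism_elaborated)
    if all(dimensions):
        return 3  # strong: all three dimensions present
    if any(dimensions):
        return 2  # moderate: some connection, but not fully elaborated (our reading)
    return 1      # weak: both components appear, with no connection between them


print(alignment_score(True, True, True, True))     # strong
print(alignment_score(True, True, False, False))   # moderate
print(alignment_score(True, False, False, False))  # weak
print(alignment_score(False, True, True, True))    # component absent
```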

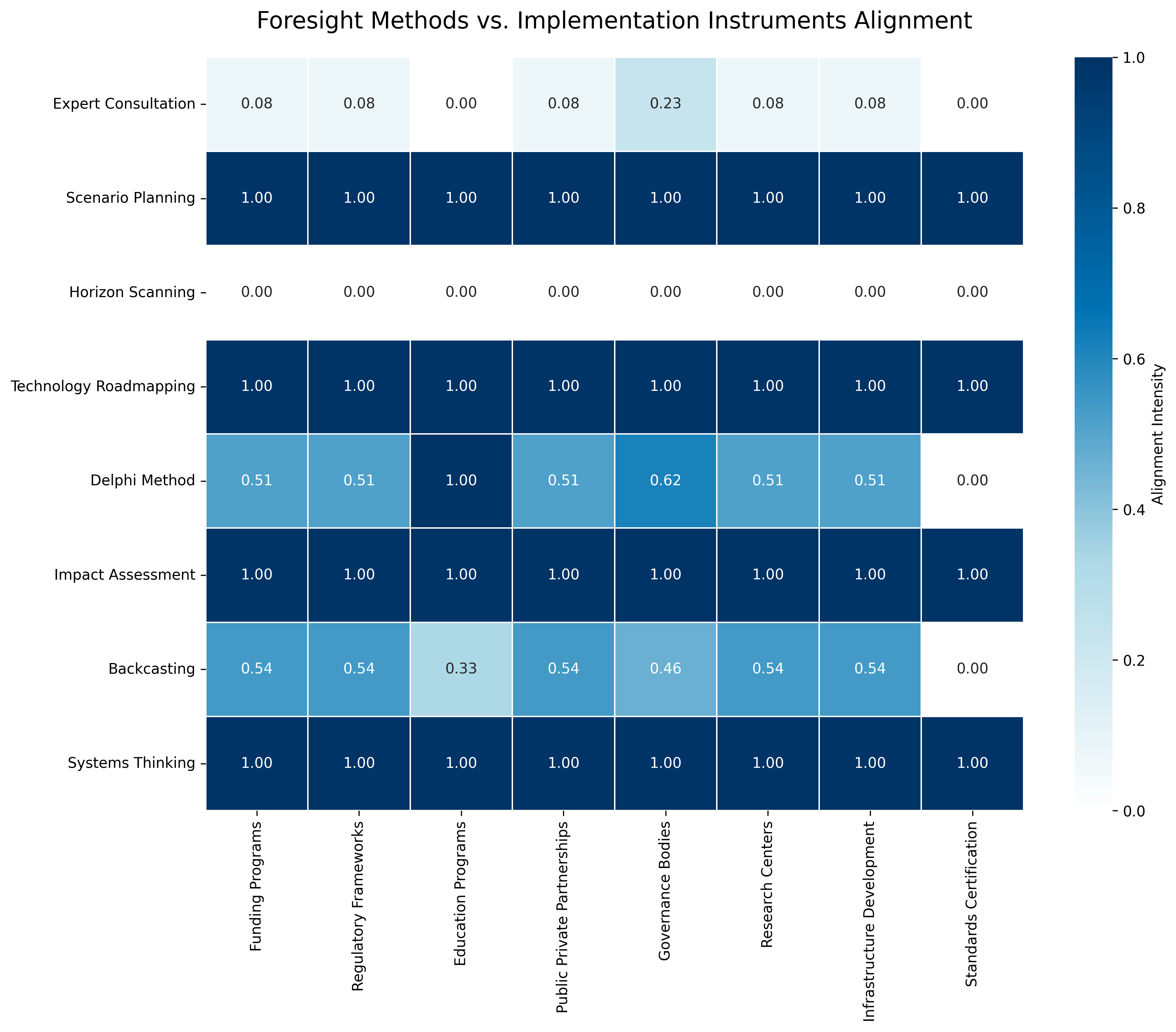

Figure 4 presents the foresight-instrument heatmap, which illustrates how anticipatory methods align with implementation mechanisms. The heatmap reveals a particularly strong connection between technology roadmapping and funding instruments, while participatory foresight methods show relatively weak alignment with regulatory frameworks.

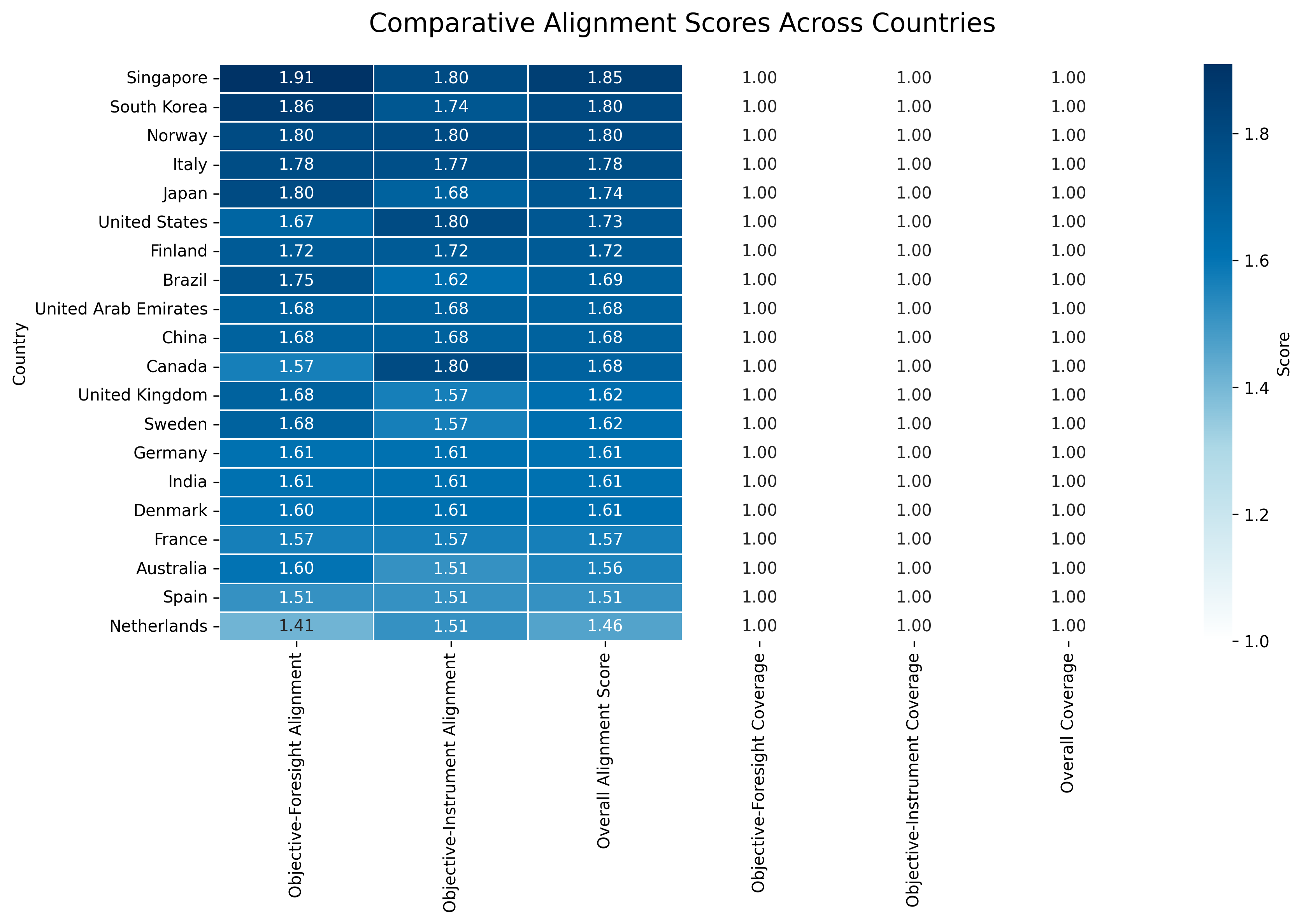

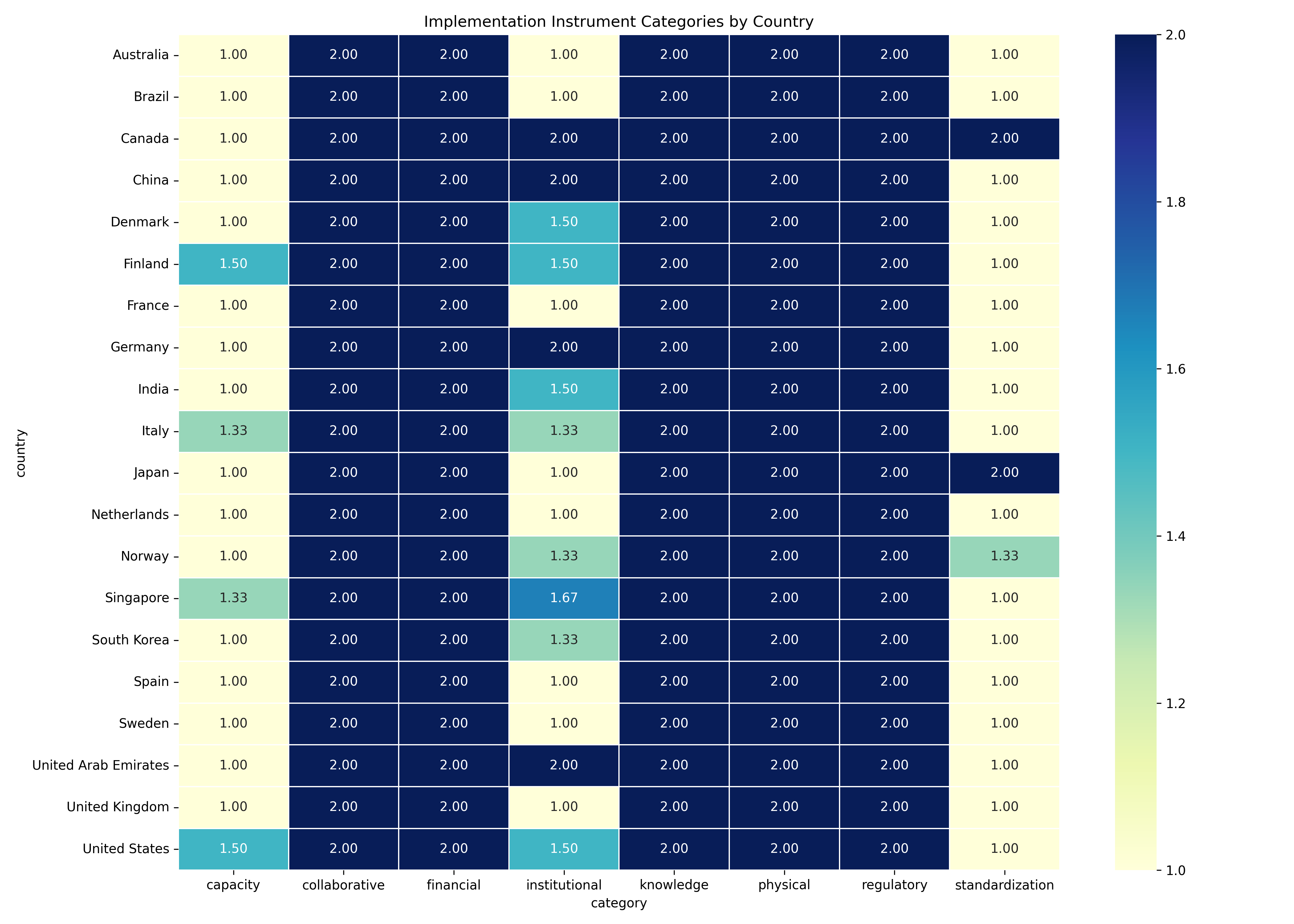

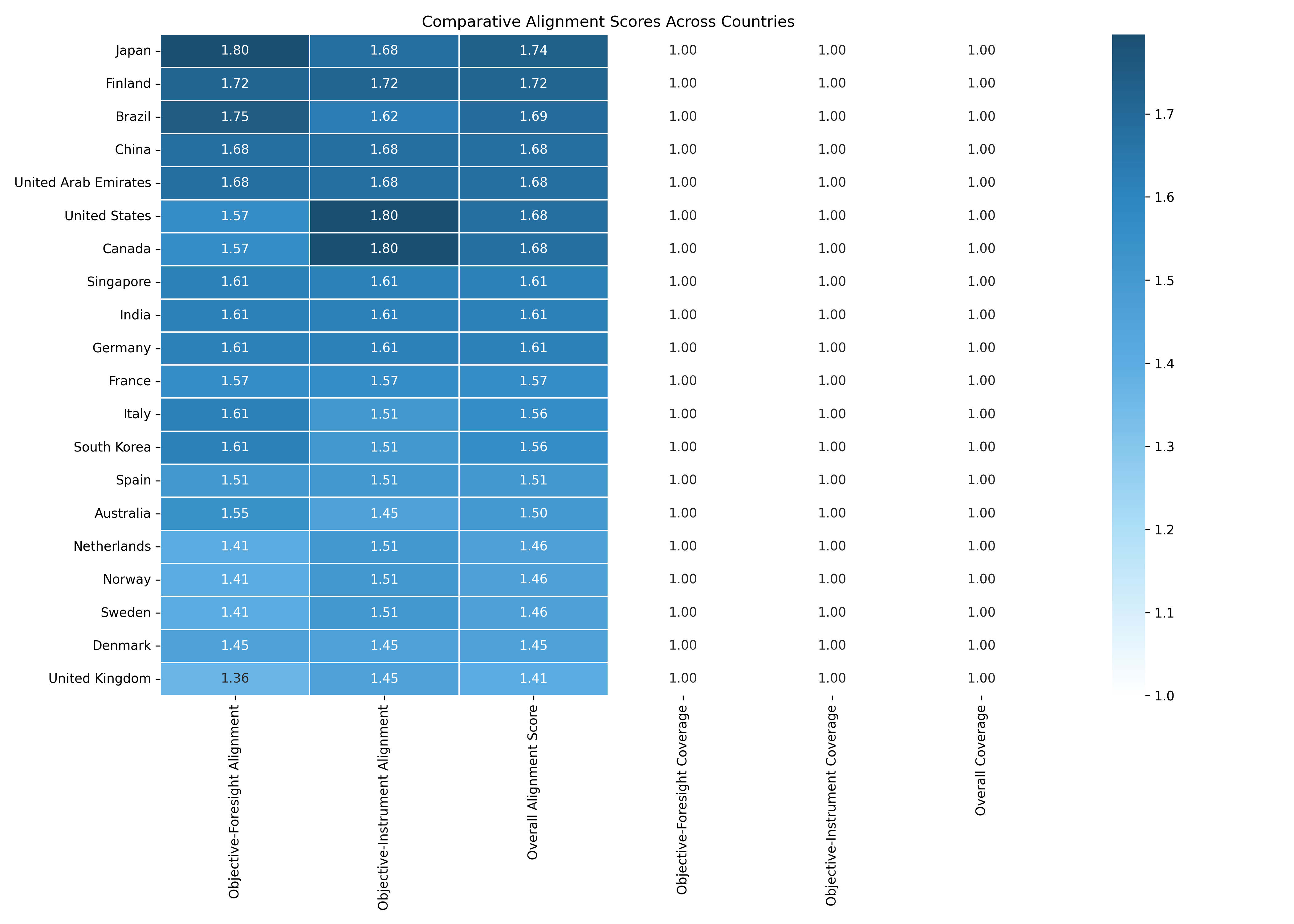

Figure 5 provides a comparative overview of alignment scores across the sampled countries. The heatmap enables cross-national analysis by showing composite alignment scores, where darker cells indicate stronger coherence between strategic objectives, foresight methods, and implementation instruments.

For cross-country comparison, we developed several alignment indices based on the matrix data. Following the approach to policy mix assessment in [44], we calculated three primary indices: (1) an Objective Coverage Index measuring the breadth of strategic goals, (2) an Implementation Specificity Index assessing the operational detail of instruments, and (3) a Strategic Alignment Index capturing the overall coherence between objectives, foresight methods, and instruments. These indices enabled quantitative comparison across countries, while the underlying matrices preserved the qualitative richness of alignment patterns.
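This section does not give closed-form definitions of the three indices, so the following is only a sketch under our own assumptions:

* The Objective Coverage Index is the share of the 12 objective categories that are present.
* The Implementation Specificity Index is the mean of hypothetical 1-3 specificity ratings, rescaled to 0-1.
* The Strategic Alignment Index is observed alignment divided by the maximum possible, counting only cells whose two components are both present.

The last definition also illustrates the normalization by components present that the text describes, so a strategy is not rewarded simply for coding more components. All names and values are illustrative.

```python
N_OBJECTIVE_CATEGORIES = 12  # size of the objective coding framework


def objective_coverage(objectives_present):
    """Breadth of strategic goals: share of the 12 objective categories present."""
    return len(set(objectives_present)) / N_OBJECTIVE_CATEGORIES


def implementation_specificity(specificity_ratings):
    """Mean specificity rating of the instruments present, rescaled to 0-1.
    Ratings are assumed to be on a 1-3 scale (hypothetical)."""
    if not specificity_ratings:
        return 0.0
    return sum(specificity_ratings.values()) / (3 * len(specificity_ratings))


def strategic_alignment(objectives, foresight, instruments, scores):
    """Observed alignment as a share of the maximum possible (3 per cell),
    counting only cells whose two components are both present."""
    pairs = ([(o, f) for o in objectives for f in foresight]
             + [(o, i) for o in objectives for i in instruments]
             + [(f, i) for f in foresight for i in instruments])
    if not pairs:
        return 0.0
    return sum(scores.get(pair, 0) for pair in pairs) / (3 * len(pairs))


objectives = ["economic competitiveness", "ethical/responsible AI"]
foresight = ["expert panels"]
instruments = ["research funding"]
scores = {
    ("economic competitiveness", "expert panels"): 2,
    ("economic competitiveness", "research funding"): 3,
    ("expert panels", "research funding"): 2,
}
print(round(objective_coverage(objectives), 3))  # 2 of 12 categories
print(implementation_specificity({"research funding": 3}))
print(round(strategic_alignment(objectives, foresight, instruments, scores), 3))
```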

The aggregation procedures for cross-country comparison involved standardizing the matrix data to account for variations in document length and detail. Following the approach to comparative policy assessment in [13], we normalized alignment scores relative to the number of components present in each country’s framework, creating comparable metrics that were not biased toward more detailed policy documents. This standardization enabled meaningful comparison across diverse governance contexts while preserving the distinctive alignment patterns of each national approach.

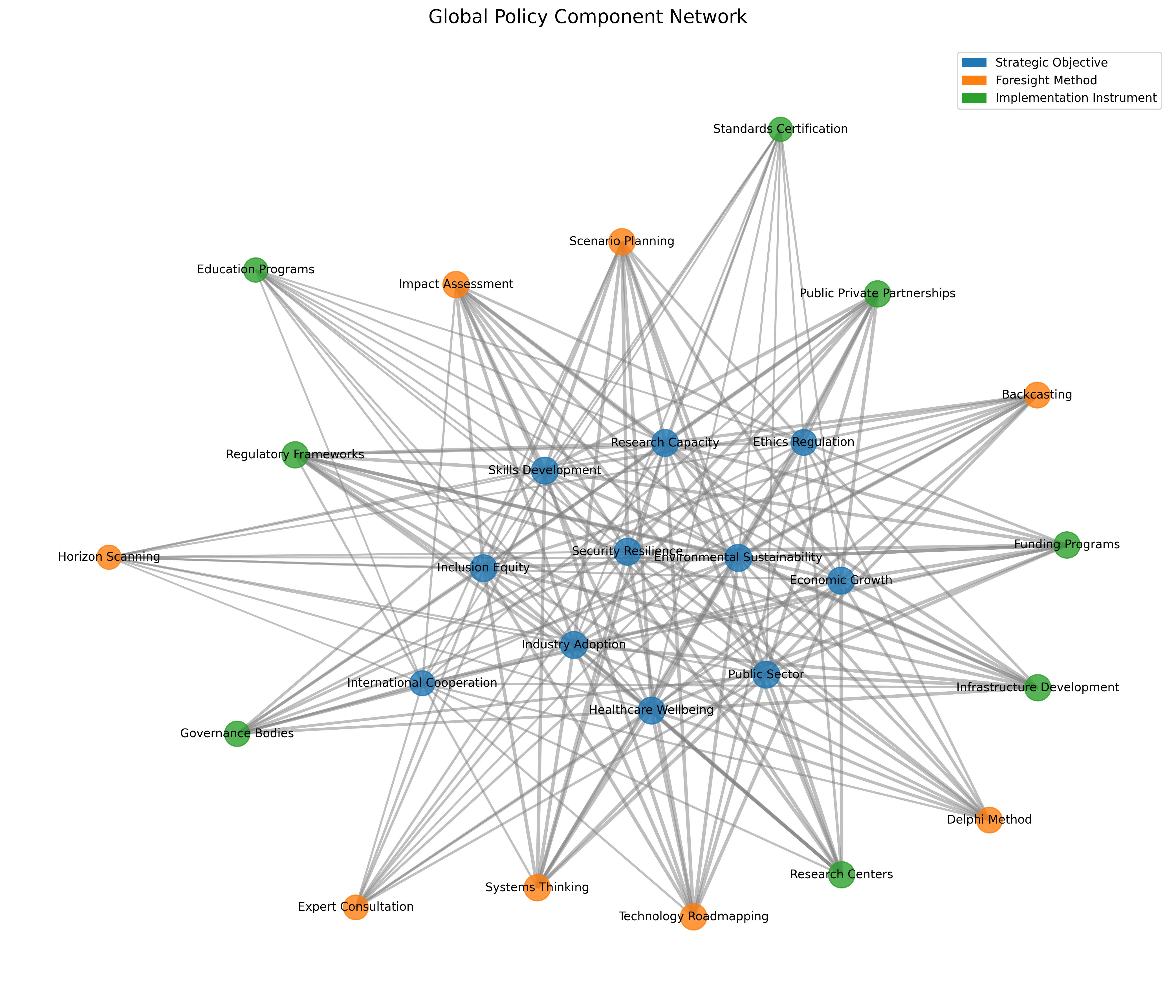

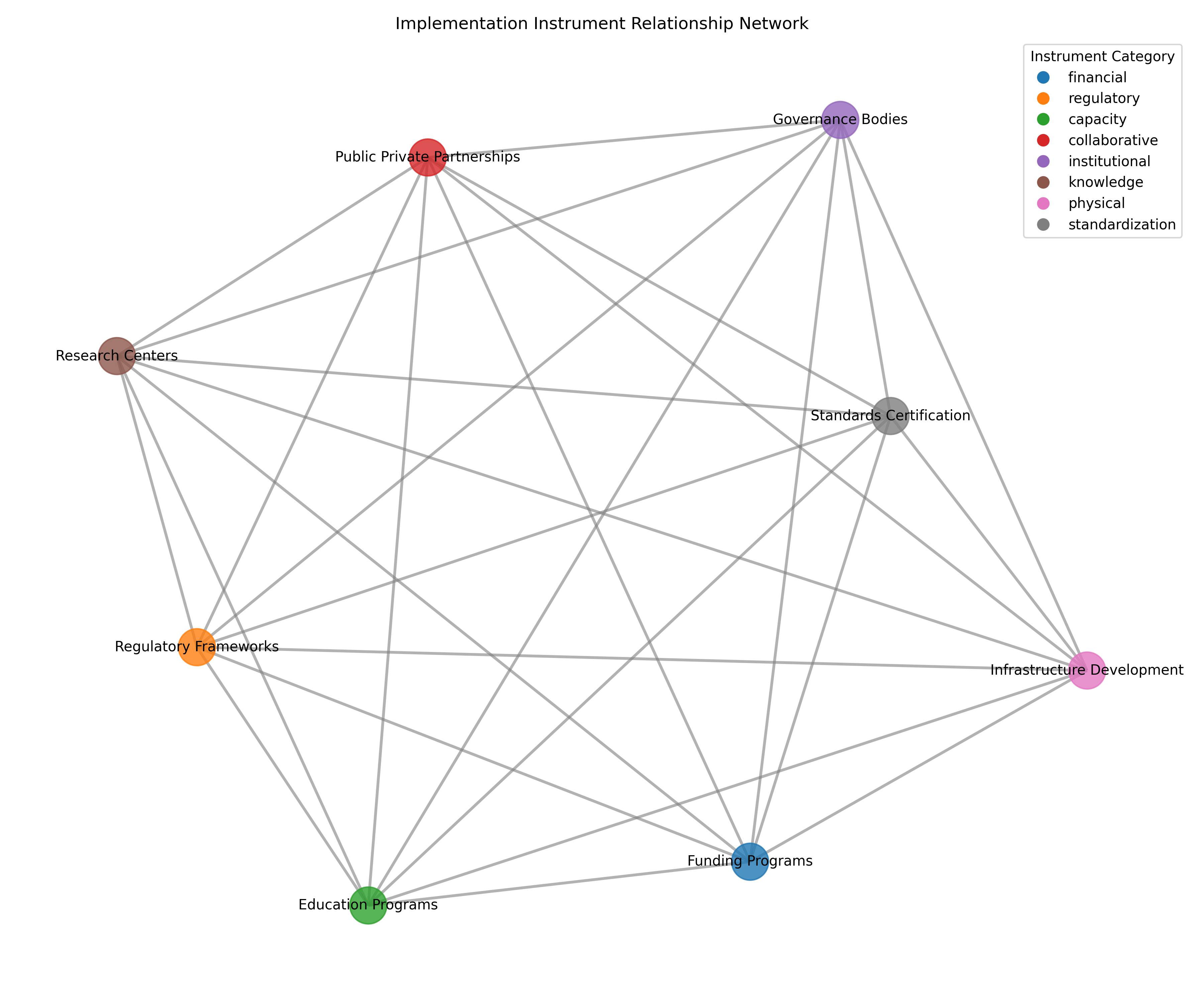

3.5 Network Analysis Framework

To complement the matrix visualization approach, we developed a network analysis framework that represents policy components and their relationships as nodes and edges within a graph structure. This approach draws on policy network analysis methods [29, 15] adapted for the specific requirements of strategic alignment assessment.

In our network representation, each policy component (objective, foresight method, or instrument) constitutes a node within the network. The edges connecting these nodes represent alignment relationships, with edge thickness corresponding to the alignment intensity score (1-3) from our matrix analysis. This structure creates a visual representation of the policy framework as an interconnected system, revealing structural patterns that may not be apparent in matrix visualizations.

The node and edge definition procedure followed a systematic protocol. Nodes were defined to represent specific policy components identified through our content analysis, with node size proportional to the component’s prominence within the policy framework (measured by frequency of mention and emphasis). Edges were defined based on the alignment intensity scores from our matrix analysis, with thicker edges representing stronger alignment between components. The network representation thus captures both the individual components and their relationships within an integrated visual framework.
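The node and edge definitions translate directly into a weighted adjacency structure. A minimal sketch follows, assuming prominence has already been reduced to one number per component. `build_network` and the example values are hypothetical, and score-0 cells produce no edge because 0 marks an absent component.

```python
from collections import defaultdict


def build_network(prominence, pair_scores):
    """Return (node_size, adjacency) for an undirected, weighted policy network.

    prominence:  component -> prominence measure (e.g. weighted mention count)
    pair_scores: (component_a, component_b) -> alignment intensity 0-3
    """
    node_size = dict(prominence)
    adjacency = defaultdict(dict)
    for (a, b), score in pair_scores.items():
        if score <= 0:
            continue  # 0 means a component is absent, so no edge is drawn
        adjacency[a][b] = score  # edge weight (drawn as thickness) = alignment intensity
        adjacency[b][a] = score
    for node in node_size:
        adjacency.setdefault(node, {})  # keep components with no aligned partner
    return node_size, dict(adjacency)


prominence = {"economic competitiveness": 14, "ethical/responsible AI": 9,
              "expert panels": 6, "research funding": 11}
pair_scores = {("economic competitiveness", "research funding"): 3,
               ("economic competitiveness", "expert panels"): 2,
               ("ethical/responsible AI", "research funding"): 0}
sizes, adj = build_network(prominence, pair_scores)
print(adj)
```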

Several centrality metrics were calculated to identify key policy elements within each national framework. Following the network analysis approach of [41], we calculated degree centrality (measuring the number of connections each component has), betweenness centrality (assessing each component’s role as a bridge between other elements), and eigenvector centrality (evaluating the influence of each component based on its connections to other central elements). These metrics enabled identification of the most integrated and influential components within each policy framework, facilitating cross-national comparison of structural characteristics.
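For readers who want to reproduce the three metrics without a graph library, the following standard-library sketch works on an adjacency dictionary of the form `{node: {neighbor: weight}}`. It makes three modelling choices of our own:

* Degree centrality counts connections, normalized by n − 1.
* Betweenness uses Brandes' algorithm on hop counts and ignores edge weights.
* Eigenvector centrality uses weighted power iteration.

These are illustrative choices, not necessarily the exact settings used in the study. The example graph is hypothetical.

```python
import math
from collections import deque


def degree_centrality(adj):
    """Number of connections per node, normalized by the maximum possible (n - 1)."""
    n = len(adj)
    return {v: (len(nbrs) / (n - 1) if n > 1 else 0.0) for v, nbrs in adj.items()}


def betweenness_centrality(adj):
    """Brandes' algorithm on the unweighted graph (shortest paths counted in hops)."""
    nodes = list(adj)
    bc = dict.fromkeys(nodes, 0.0)
    for s in nodes:
        stack, preds = [], {v: [] for v in nodes}
        sigma = dict.fromkeys(nodes, 0)  # number of shortest s-v paths
        dist = dict.fromkeys(nodes, -1)
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:  # breadth-first search from s
            v = queue.popleft()
            stack.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = dict.fromkeys(nodes, 0.0)
        while stack:  # accumulate dependencies in reverse BFS order
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                bc[w] += delta[w]
    n = len(nodes)
    # Each undirected pair was counted from both endpoints; rescale to 0-1.
    scale = 1 / ((n - 1) * (n - 2)) if n > 2 else 0.0
    return {v: b * scale for v, b in bc.items()}


def eigenvector_centrality(adj, max_iter=1000, tol=1e-9):
    """Weighted power iteration; iterating with (A + I) avoids oscillation on
    bipartite-like graphs without changing the leading eigenvector."""
    x = dict.fromkeys(adj, 1.0 / len(adj))
    for _ in range(max_iter):
        prev = x
        x = dict(prev)  # the identity term
        for v in prev:
            for w, weight in adj[v].items():
                x[w] += prev[v] * weight
        norm = math.sqrt(sum(val * val for val in x.values())) or 1.0
        x = {v: val / norm for v, val in x.items()}
        if sum(abs(x[v] - prev[v]) for v in x) < len(x) * tol:
            return x
    raise RuntimeError("eigenvector centrality did not converge")


# Tiny example: a foresight method bridging an objective and an instrument.
policy = {"economic competitiveness": {"technology roadmapping": 2},
          "technology roadmapping": {"economic competitiveness": 2, "research funding": 3},
          "research funding": {"technology roadmapping": 3}}
for name, metric in [("degree", degree_centrality), ("betweenness", betweenness_centrality),
                     ("eigenvector", eigenvector_centrality)]:
    print(name, {k: round(v, 3) for k, v in metric(policy).items()})
```

If weighted betweenness is wanted, a common approach is to convert alignment scores into distances (for example 1/score) and replace the breadth-first search with Dijkstra's algorithm.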

For identifying related components, we employed clustering analysis using modularity detection algorithms [38]. This approach identifies communities of closely connected nodes within the network, revealing groupings of policy components that demonstrate strong internal alignment. The clustering analysis enabled identification of coherent policy "subsystems" within the broader governance framework, providing insights into the structural organization of each national strategy.
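As an illustration of modularity-based clustering, the sketch below computes Newman-Girvan modularity Q for a weighted, undirected graph. It then applies a naive greedy agglomerative optimizer, similar in spirit to Clauset-Newman-Moore but recomputing Q from scratch at every step. This is a stand-in chosen for readability and is not necessarily the algorithm of [38]; for graphs larger than a few dozen nodes, an established implementation such as Louvain would be preferable. The example graph is hypothetical.

```python
from itertools import combinations


def modularity(adj, communities):
    """Newman-Girvan modularity Q of a partition of a weighted, undirected graph."""
    degree = {v: sum(nbrs.values()) for v, nbrs in adj.items()}
    two_m = sum(degree.values())  # twice the total edge weight
    if two_m == 0:
        return 0.0
    q = 0.0
    for community in communities:
        # each internal edge is seen from both endpoints, so this sums to 2 * L_c
        internal = sum(w for v in community for u, w in adj[v].items() if u in community)
        total_degree = sum(degree[v] for v in community)
        q += internal / two_m - (total_degree / two_m) ** 2
    return q


def greedy_modularity(adj):
    """Start from singletons and repeatedly apply the merge that raises Q the most;
    stop when no merge helps. Recomputes Q from scratch, so only for small graphs."""
    communities = [frozenset([v]) for v in adj]
    best_q = modularity(adj, communities)
    while len(communities) > 1:
        best_pair, best_pair_q = None, best_q
        for i, j in combinations(range(len(communities)), 2):
            trial = [c for k, c in enumerate(communities) if k not in (i, j)]
            trial.append(communities[i] | communities[j])
            q = modularity(adj, trial)
            if q > best_pair_q + 1e-12:
                best_pair, best_pair_q = (i, j), q
        if best_pair is None:
            break
        i, j = best_pair
        merged = communities[i] | communities[j]
        communities = [c for k, c in enumerate(communities) if k not in (i, j)] + [merged]
        best_q = best_pair_q
    return communities, best_q


def undirected(edges):
    """Build a symmetric adjacency dict from (a, b, weight) triples."""
    adj = {}
    for a, b, w in edges:
        adj.setdefault(a, {})[b] = w
        adj.setdefault(b, {})[a] = w
    return adj


# Two tightly aligned triads joined by one link (unit weights for simplicity).
graph = undirected([("a", "b", 1), ("b", "c", 1), ("a", "c", 1),
                    ("d", "e", 1), ("e", "f", 1), ("d", "f", 1), ("c", "d", 1)])
clusters, q = greedy_modularity(graph)
print(sorted(sorted(c) for c in clusters), round(q, 3))
```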

3.6 Analytical Procedures

Our content analysis protocol employed a systematic approach to document coding and alignment assessment. Following the guidelines for qualitative content analysis in [45], we developed a detailed coding manual with operational definitions, inclusion/exclusion criteria, and example text for each category within our frameworks. This manual guided the systematic coding of all documents in the corpus, ensuring consistency across countries and coders.

The coding process employed a dual-coder approach to enhance reliability. Two trained researchers independently coded each document using the established frameworks, identifying policy components and assessing alignment relationships. Following the approach to policy document analysis in [23], coding proceeded in two stages: first identifying all policy components present in the document, then assessing the alignment relationships between component pairs. This two-stage approach ensured thoroughness while maintaining analytical focus on the strategic coherence dimensions.

Reliability assessment employed multiple measures to ensure coding consistency. Following the guidelines for qualitative reliability assessment in [47], we calculated inter-coder reliability using Cohen’s kappa coefficient for component identification and weighted kappa for alignment intensity scoring. Initial reliability scores were for component identification and for alignment scoring, exceeding the threshold recommended by [46] for acceptable reliability. Coding discrepancies were resolved through discussion and consensus, with difficult cases referred to a third researcher for adjudication.
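A minimal standard-library implementation of Cohen's kappa and linearly weighted kappa is given below for reference. The text does not state which weighting scheme was used for the ordinal alignment scores, so linear weights are our assumption; quadratic weights are also common. The example codes are made up.

```python
from collections import Counter


def cohen_kappa(coder_a, coder_b, categories, weights=None):
    """Cohen's kappa for two coders; weights="linear" gives linearly weighted kappa.

    `categories` lists every possible code, in ordinal order for the weighted case.
    """
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two non-empty code lists of equal length")
    k = len(categories)
    if k < 2:
        raise ValueError("need at least two categories")
    index = {c: i for i, c in enumerate(categories)}

    def disagreement(i, j):
        if weights == "linear":
            return abs(i - j) / (k - 1)  # partial credit for near misses
        return 0.0 if i == j else 1.0    # unweighted: any mismatch counts fully

    n = len(coder_a)
    pairs = Counter((index[a], index[b]) for a, b in zip(coder_a, coder_b))
    marg_a = Counter(index[a] for a in coder_a)
    marg_b = Counter(index[b] for b in coder_b)
    observed = sum(disagreement(i, j) * c for (i, j), c in pairs.items()) / n
    expected = sum(disagreement(i, j) * marg_a[i] * marg_b[j]
                   for i in range(k) for j in range(k)) / n ** 2
    # Zero chance disagreement means both coders used one identical code throughout.
    return 1.0 - observed / expected if expected else 1.0


# Hypothetical component-identification codes for eight items.
a = ["present", "present", "absent", "absent", "present", "absent", "present", "present"]
b = ["present", "absent", "absent", "absent", "present", "absent", "present", "present"]
print(round(cohen_kappa(a, b, ["absent", "present"]), 3))

# Hypothetical alignment-intensity scores (0-3) for six matrix cells.
s1 = [3, 2, 1, 0, 2, 3]
s2 = [3, 1, 1, 0, 2, 2]
print(round(cohen_kappa(s1, s2, [0, 1, 2, 3], weights="linear"), 3))
```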

Cross-validation methods included triangulation across multiple documents for each country and member checking with policy experts. Following the approach to document analysis validation in [39], we triangulated findings across primary strategy documents, implementation plans, and budget materials to ensure comprehensive assessment of each country’s approach. Additionally, preliminary findings were validated through expert consultations with policy researchers familiar with specific national contexts, providing verification of our interpretations and contextual insights.

Our analytical approach has several limitations that warrant acknowledgment. First, the focus on official policy documents may not fully capture implementation realities, as noted by [11] regarding the gap between policy rhetoric and operational practice. Second, the cross-sectional nature of our analysis provides limited insight into the temporal evolution of alignment patterns, though we partly address this through inclusion of updated strategy documents where available. Finally, our standardized analytical framework may not fully capture country-specific governance contexts, though we mitigate this limitation through our qualitative analysis and expert consultations. Despite these limitations, our approach offers significant methodological advantages for systematic cross-national comparison of strategic alignment patterns.

4 Results

4.1 Typology of Policy Components

This section presents our analysis of the key components identified across national AI strategies. We examine three critical dimensions: strategic objectives that define desired outcomes, foresight methods employed to anticipate technological developments, and implementation instruments designed to operationalize policy goals. By analyzing patterns across these dimensions, we identify common approaches and distinctive variations in how countries frame and implement their AI governance frameworks.

4.1.1 Strategic Objectives Across National Contexts

Our analysis identified twelve distinct strategic objectives articulated across national AI strategies. These objectives represent the explicit goals that governments seek to achieve through their AI governance frameworks. Through systematic content analysis, we classified these objectives into four primary categories: economic transformation, societal applications, governance frameworks, and global positioning.

Economic transformation objectives focus on leveraging AI for economic advancement, including economic competitiveness (present in 95% of strategies), scientific leadership (90%), and industrial digitalization (75%). These objectives align with what [36] characterize as the "economic opportunity" framing of AI governance, positioning artificial intelligence primarily as a driver of innovation and productivity growth. As [26] observe, economic objectives typically receive the most prominent positioning within strategy documents, often appearing in executive summaries and introductory sections, signaling their priority within governance frameworks.

Societal application objectives emphasize the deployment of AI for public benefit, including public sector transformation (70% of strategies), workforce development (65%), and social welfare enhancement (55%). These objectives reflect what [18] term the "human-centered AI" governance approach, focusing on applications that deliver direct societal benefits. Interestingly, our analysis revealed that social welfare objectives received substantially higher emphasis in European strategies compared to other regions, aligning with the observation in [31] regarding distinctive European approaches to technology governance.

Governance framework objectives address the regulatory and ethical dimensions of AI development, including ethical/responsible AI (85% of strategies), regulatory framework development (60%), and data ecosystem development (50%). These objectives align with the "risk-focused" governance model identified by [18], emphasizing proactive management of AI-related risks and challenges. Our analysis revealed increasing prominence of these objectives in more recent strategies, reflecting growing awareness of AI’s potential societal impacts.

Global positioning objectives focus on international dimensions, including international collaboration (65% of strategies) and national security (45%). These objectives reflect what [36] term the "technological sovereignty" framing, positioning AI as a domain of strategic competition and collaboration. National security objectives demonstrated particular regional clustering, appearing most prominently in strategies from the United States, China, and aligned nations.

The distribution of objectives across countries revealed distinctive patterns aligned with governance approaches and development contexts. Figure 6 presents a network visualization of objective co-occurrence across the full sample, with node size indicating prevalence and edge thickness representing frequency of co-occurrence. This visualization reveals the centrality of economic competitiveness and scientific leadership objectives within the global policy discourse, consistent with the observation in [37] that industrial policy motivations typically drive initial AI strategy development.
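Co-occurrence networks of this kind (and the analogous foresight and instrument networks in Figures 14 and 18) can be derived from coded component sets. Node weight is the number of strategies containing a component, and edge weight is the number of strategies containing both components of a pair. A sketch with hypothetical strategies:

```python
from collections import Counter
from itertools import combinations


def co_occurrence(coded_strategies):
    """Node weight = strategies containing a component;
    edge weight = strategies containing both components of a pair."""
    nodes, edges = Counter(), Counter()
    for components in coded_strategies.values():
        ordered = sorted(set(components))  # canonical order: (a, b) and (b, a) share one key
        nodes.update(ordered)
        edges.update(combinations(ordered, 2))
    return nodes, edges


strategies = {
    "A": {"economic competitiveness", "scientific leadership", "workforce development"},
    "B": {"economic competitiveness", "scientific leadership"},
    "C": {"economic competitiveness", "ethical/responsible AI"},
}
nodes, edges = co_occurrence(strategies)
print(nodes.most_common(2))
print(edges.most_common(1))
```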

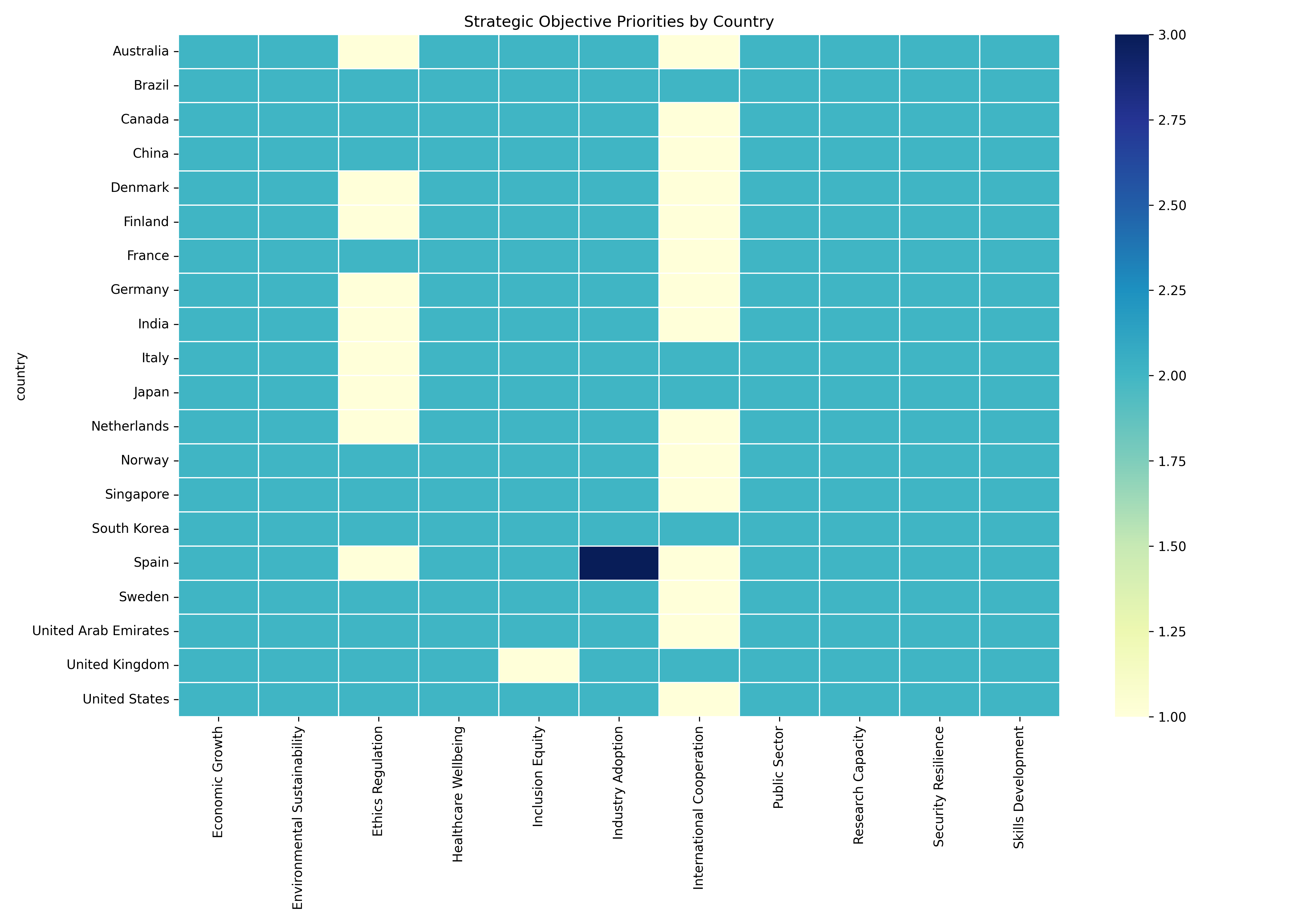

Country-level analysis revealed distinctive objective profiles correlated with governance traditions. Figure 7 presents a heatmap visualization of objective emphasis across countries, with color intensity indicating the prominence of each objective within national strategies. This visualization reveals distinct clusters that correspond to governance models identified by [18]. Market-led systems (USA, UK, Canada) demonstrate stronger emphasis on economic competitiveness and scientific leadership objectives, while rights-based systems (Finland, Denmark, Norway) show greater emphasis on ethical governance and social welfare objectives. State-directed systems (China, Singapore, UAE) emphasize public sector transformation and industrial digitalization objectives, consistent with the characterization of directive governance approaches in [18].

The intensity of objective articulation varied considerably across strategies, as illustrated in Figure 8. This visualization represents the depth of elaboration for each objective, measuring factors including textual prominence, implementation specificity, and resource allocation. The analysis reveals that economic competitiveness and scientific leadership objectives typically receive the most detailed articulation, consistent with the finding in [26] that economic objectives typically demonstrate the strongest operational elaboration. Interestingly, ethical/responsible AI objectives showed high prevalence but lower intensity scores in many strategies, suggesting what [12] characterized as "ethics washing": the inclusion of ethical principles without substantive implementation mechanisms.
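The "high prevalence, low intensity" pattern can be flagged mechanically once each objective has a prevalence share and a mean intensity score. The following sketch is illustrative. The prevalence shares come from this section, but the mean intensity values and both thresholds are hypothetical, not calibrated values from the study.

```python
def prevalence_intensity_gaps(prevalence, mean_intensity,
                              min_prevalence=0.7, max_intensity=1.5):
    """Objectives that most strategies mention (prevalence >= min_prevalence)
    but that are weakly elaborated on average (mean intensity <= max_intensity
    on a 1-3 scale). Both thresholds are illustrative assumptions."""
    return sorted(obj for obj, share in prevalence.items()
                  if share >= min_prevalence and mean_intensity.get(obj, 0) <= max_intensity)


prev = {"economic competitiveness": 0.95, "ethical/responsible AI": 0.85,
        "national security": 0.45}
intensity = {"economic competitiveness": 2.7,  # hypothetical mean intensities
             "ethical/responsible AI": 1.4,
             "national security": 1.2}
print(prevalence_intensity_gaps(prev, intensity))
```

An objective with no recorded intensity is treated as 0 (mentioned but never elaborated), so it is flagged whenever it clears the prevalence threshold.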

Regional analysis revealed distinctive patterns in objective emphasis, as illustrated in Figure 9. This visualization compares objective emphasis across major world regions, revealing variations that align with regional governance traditions. European strategies demonstrate stronger emphasis on ethical governance and social welfare objectives, consistent with the observation in [31] regarding the distinctive European approach to technology governance. East Asian strategies show greater emphasis on industrial digitalization and public sector transformation, aligning with the state-directed development models described by [18]. North American strategies emphasize economic competitiveness and scientific leadership, reflecting the market-led approach identified by [26].

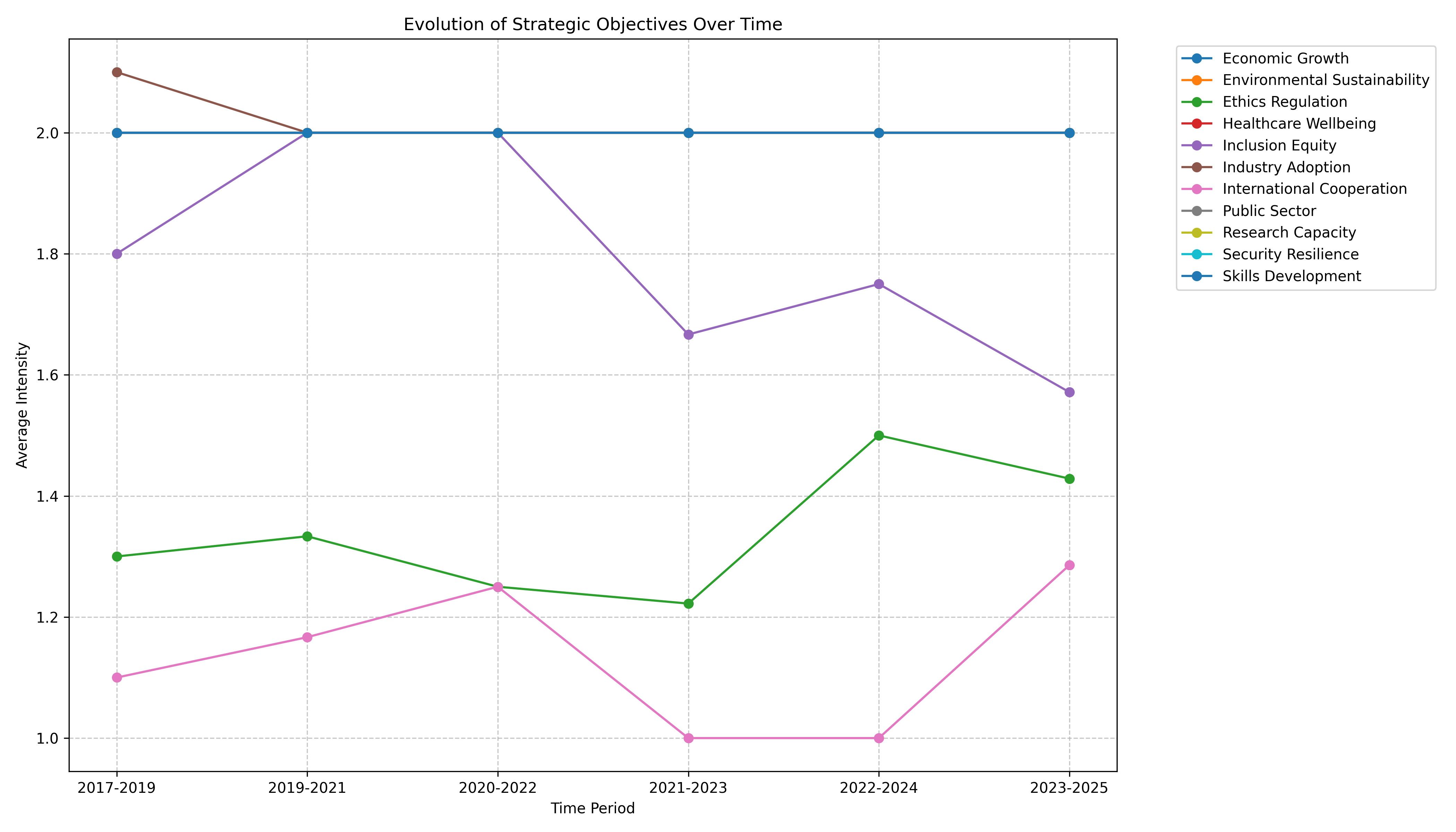

Temporal analysis of objective evolution, presented in Figure 10, reveals shifts in strategic priorities between 2017 and 2025. This visualization tracks changes in objective emphasis over time, identifying three distinct waves of AI policy development. Early strategies (2017-2018) prioritized economic competitiveness and scientific leadership objectives, with limited attention to ethical or regulatory dimensions. Mid-period strategies (2019-2020) introduced greater emphasis on ethical governance and workforce development, reflecting growing awareness of AI’s broader implications. Recent strategies (2021-2025) demonstrate more balanced objective profiles with increased emphasis on implementation specificity across all dimensions. This temporal evolution aligns with the observation in [25] regarding the maturation of AI governance approaches from narrow economic framing toward more comprehensive frameworks addressing broader societal implications.

4.1.2 Foresight Methods in AI Governance

Our analysis identified eight distinct foresight methodologies employed across national AI strategies to anticipate technological developments and inform governance responses. These approaches represent the anticipatory dimension of AI governance, addressing the uncertainty inherent in emerging technology domains. Through systematic content analysis, we categorized these methods into four groups based on their methodological characteristics: expert-based methods, scenario-based methods, data-driven methods, and participatory methods.

Expert-based foresight methods rely on specialized knowledge to anticipate technological trajectories, including expert panels (present in 80% of strategies) and Delphi studies (25%). These approaches align with what [17] characterize as "expertise-oriented" foresight, drawing on specialized knowledge to inform anticipatory governance. Our analysis revealed that expert panels represent the most prevalent foresight method across all governance contexts, consistent with the observation in [35] that expert consultation typically forms the foundation of technology foresight exercises.

Scenario-based methods employ narrative techniques to explore potential futures, including scenario development (55% of strategies) and cross-impact analysis (20%). These approaches represent what [21] term "narrative foresight," using structured storytelling to explore alternative technological trajectories. Scenario development has a particularly strong presence in European strategies, aligning with the findings in [61] regarding the prominence of scenario methodologies in European technology governance traditions.

Data-driven methods employ systematic analysis of emerging trends, including horizon scanning (65% of strategies) and trend extrapolation (40%). These approaches align with the concept of "evidence-based foresight" in [35], using quantitative and qualitative data to identify early signals of technological change. Horizon scanning showed a particularly strong presence in market-led governance systems, consistent with the observation in [33] regarding the prominence of environmental scanning in Anglo-American foresight traditions.

Participatory methods engage diverse stakeholders in anticipatory exercises, including participatory workshops (35% of strategies) and technology roadmapping (45%). These approaches represent what [30] term "inclusive foresight," emphasizing broad engagement in anticipatory governance. Participatory methods showed a stronger presence in rights-based governance systems, aligning with the findings in [20] regarding the relationship between deliberative governance traditions and participatory foresight approaches.

The analysis revealed significant variation in how foresight methods are integrated within governance frameworks. Figure 11 presents a comparison of foresight integration approaches across governance models.

This visualization reveals that rights-based governance systems demonstrate the highest levels of explicit foresight integration, with direct references to methodological approaches and dedicated sections on anticipatory governance. Market-led systems showed moderate levels of explicit integration, while state-directed systems demonstrated greater reliance on implicit foresight without explicit methodological framing. These patterns align with the observation in [21] that governance traditions significantly influence the formalization of foresight within policy frameworks.

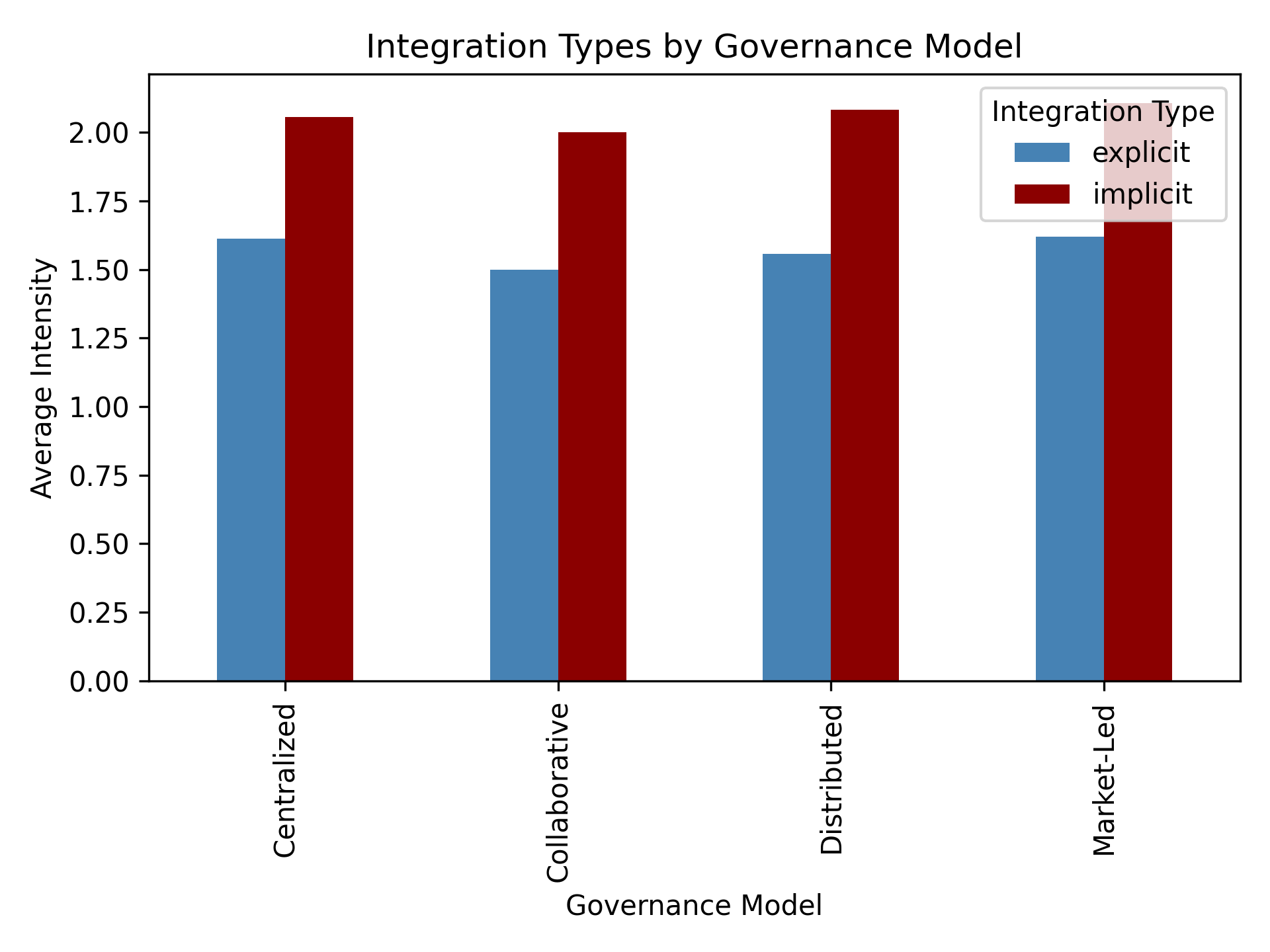

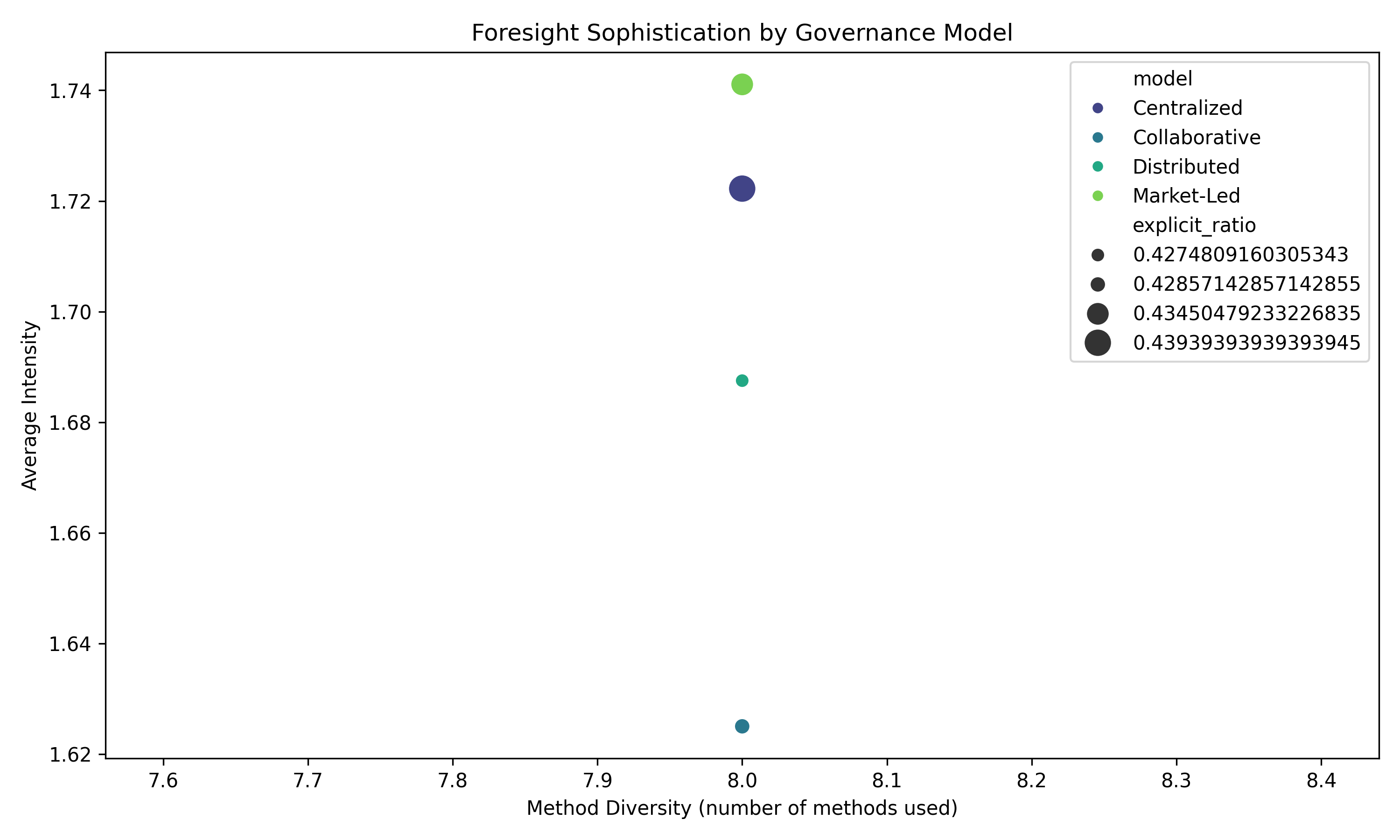

Foresight sophistication varied considerably across governance models, as illustrated in Figure 12. This visualization measures methodological sophistication across three dimensions: methodological diversity (number of foresight methods employed), integration depth (connection between foresight insights and implementation planning), and stakeholder inclusivity (breadth of participation in anticipatory processes). The analysis reveals that rights-based governance systems (predominantly European) demonstrate the highest levels of foresight sophistication, consistent with the finding in [22] that European approaches to technology governance typically embed more developed anticipatory frameworks. Market-led systems showed moderate sophistication with emphasis on methodological diversity but lower stakeholder inclusivity. State-directed systems demonstrated lower overall sophistication but higher integration between foresight outputs and implementation planning, reflecting the centralized planning approaches described by [18].
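The three dimensions can be combined into a single score in several ways, and the text does not specify the aggregation. The sketch below therefore rests on our own assumptions:

* Methodological diversity is the number of distinct methods used divided by the eight in the taxonomy.
* Integration depth and stakeholder inclusivity are assumed to be already rated on a 0-1 scale.
* The composite is an unweighted mean of the three dimensions.

The function name and inputs are hypothetical.

```python
N_METHODS = 8  # size of the foresight taxonomy


def foresight_sophistication(methods_used, integration_depth, stakeholder_inclusivity):
    """Per-dimension scores (0-1) and their equal-weight mean (an assumption)."""
    dims = {
        "diversity": len(set(methods_used)) / N_METHODS,
        "integration": integration_depth,        # assumed pre-rated on 0-1
        "inclusivity": stakeholder_inclusivity,  # assumed pre-rated on 0-1
    }
    for name, value in dims.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    return dims, sum(dims.values()) / len(dims)


dims, score = foresight_sophistication(
    ["expert panels", "horizon scanning", "scenario development", "technology roadmapping"],
    integration_depth=0.8, stakeholder_inclusivity=0.2)
print(dims, round(score, 3))
```

Keeping the per-dimension scores alongside the composite preserves profiles such as "high integration, low inclusivity", which a single mean would hide.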

The prevalence of different foresight methods across strategies, presented in Figure 13, reveals clear methodological preferences. This visualization shows the percentage of strategies employing each foresight approach, highlighting the dominance of expert panels (80%), the most common expert-based method, over participatory workshops (35%); Delphi studies, the other expert-based method, appear in only 25% of strategies. This pattern aligns with the observation in [17] regarding the persistent dominance of expertise-oriented foresight in technology governance, despite growing recognition of the value of more inclusive approaches. The relatively low prevalence of more sophisticated methods such as cross-impact analysis (20%) reflects what [21] characterize as the methodological gap between foresight theory and policy practice.

Network analysis of foresight method co-occurrence, presented in Figure 14, reveals patterns in how different anticipatory approaches are combined within governance frameworks. This visualization illustrates relationships between foresight methods, with node size indicating prevalence and edge thickness representing frequency of co-occurrence. The analysis reveals strong clustering between expert panels, horizon scanning, and scenario development, forming what [17] term the "methodological core" of technology foresight. More participatory methods like workshops show weaker integration with this core cluster, suggesting methodological siloing consistent with the observation in [21] regarding the limited integration of participatory approaches within mainstream foresight practice.

4.1.3 Implementation Instrument Patterns

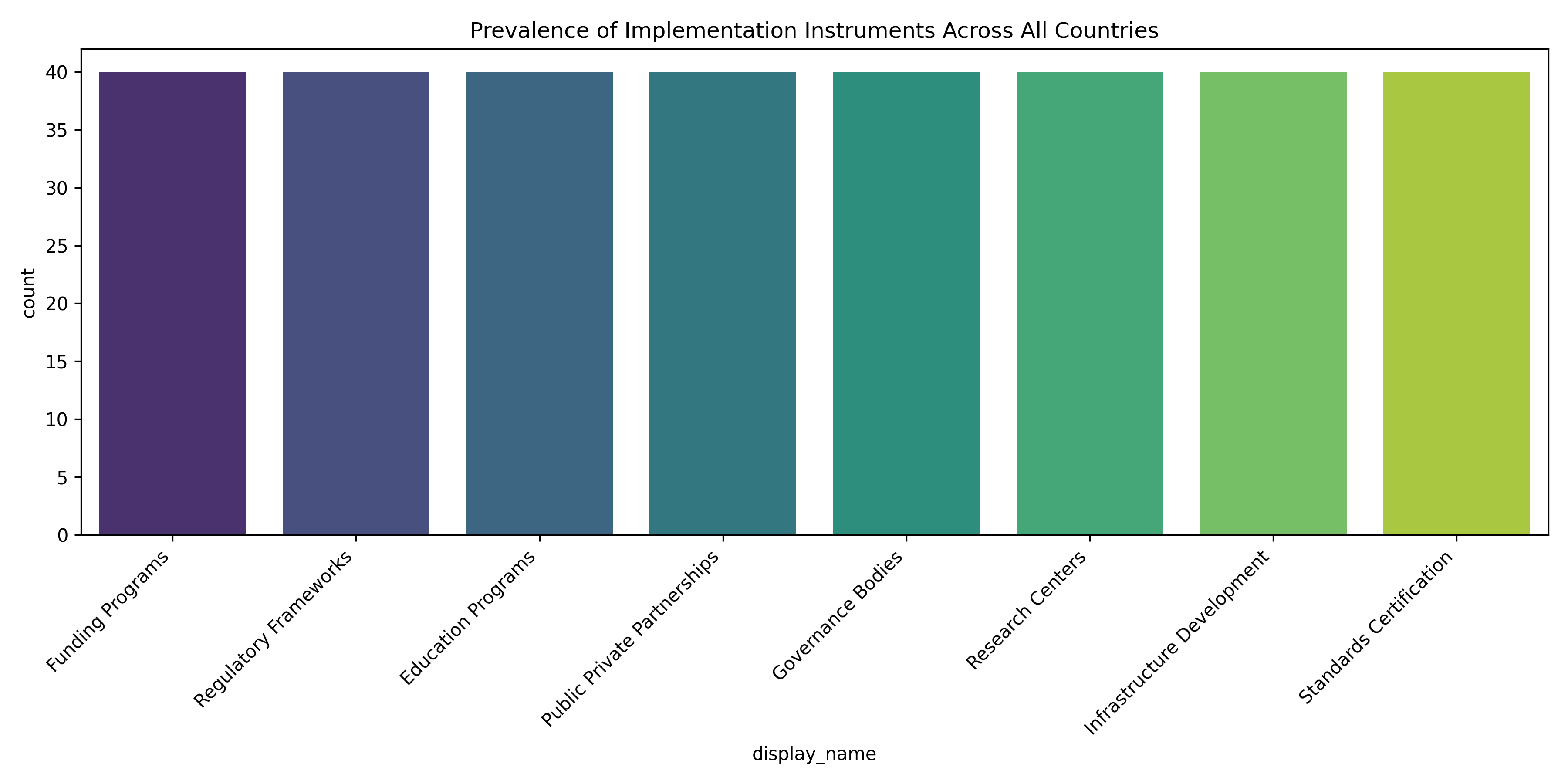

Our analysis identified ten distinct implementation instruments deployed across national AI strategies to operationalize governance objectives. These instruments represent the concrete policy mechanisms through which strategic goals are translated into actionable initiatives. Through systematic coding, we classified these instruments into four categories based on their operational characteristics: funding instruments, regulatory instruments, capacity-building instruments, and coordination instruments.

Funding instruments provide financial resources to support AI development, including research funding programs (present in 95% of strategies), direct investment in AI companies (55%), and tax incentives for AI adoption (40%). These instruments align with what [1] term "economic instruments" that leverage financial resources to incentivize desired behaviors. Our analysis revealed that research funding represents the most prevalent implementation instrument across all governance contexts, consistent with the observation in [26] that R&D support typically forms the foundation of innovation policy approaches.

Regulatory instruments establish rules and standards for AI development and deployment, including regulatory frameworks (75% of strategies) and standardization initiatives (60%). These instruments represent what [14] characterize as "legislative and regulatory instruments" that structure behavior through formal authority. Regulatory approaches demonstrated significant variation across governance contexts, with rights-based systems showing stronger emphasis on comprehensive regulatory frameworks and market-led systems prioritizing industry-led standardization initiatives.

Capacity-building instruments develop human, organizational, and technical capabilities, including skills development programs (85% of strategies), institutional creation (70%), and demonstration projects (50%). These instruments align with the concept of "capacity instruments" in [34], which enhance policy subjects’ ability to meet governance objectives. Our analysis revealed particularly strong emphasis on skills development across all governance contexts, consistent with the finding in [25] that human capital development represents a universal priority in AI governance frameworks.

Coordination instruments facilitate alignment across stakeholders, including networking/coordination mechanisms (65% of strategies) and international agreements (55%). These instruments represent what [10] term "soft instruments" that structure interactions without formal authority. Coordination mechanisms showed a stronger presence in federal systems and multi-level governance contexts, consistent with the observation in [3] regarding the importance of coordination instruments in complex governance environments.

The distribution of instrument types across implementation categories revealed clear patterns, as illustrated in Figure 15. This visualization presents the relative emphasis on different instrument types across the sample, revealing the dominance of funding instruments (35% of all instruments) compared to regulatory instruments (25%). This pattern aligns with the observation in [1] regarding the characteristic emphasis on supply-side instruments in emerging technology governance, reflecting the focus on capability development rather than behavioral constraint in early-stage technology domains.

Country-level analysis revealed distinctive instrument profiles correlated with governance traditions, as illustrated in Figure 16. This visualization compares instrument emphasis across countries, with color intensity indicating the prominence of each instrument type within national strategies. The analysis reveals distinct clusters corresponding to governance models identified by [18]. Market-led systems (USA, UK, Canada) demonstrate stronger emphasis on funding instruments and industry-led standardization, while rights-based systems (Finland, Denmark, Norway) show greater emphasis on regulatory frameworks and participatory coordination mechanisms. State-directed systems (China, Singapore, UAE) emphasize institutional creation and demonstration projects, consistent with the characterization of directive governance approaches in [18].

The intensity of instrument articulation varied widely across strategies, as illustrated in Figure 17. This visualization represents the depth of elaboration for each instrument type, based on implementation specificity, resource allocation, and operational detail. Research funding and skills development programs typically receive the most detailed articulation, consistent with the finding in [26] that these foundational instruments show the strongest operational elaboration. Regulatory frameworks showed greater variation in intensity scores, with rights-based systems articulating regulation in more detail than market-led approaches.
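A depth-of-elaboration score like the one behind Figure 17 can be sketched as a composite of the three factors named above. The 0 to 3 ordinal scale, equal weighting, and example codings are assumptions for illustration; the population standard deviation shows one way the greater variation for regulatory frameworks could be quantified.

```python
from statistics import mean, pstdev

# The three factors named in the text; the scale and weights are hypothetical.
FACTORS = ("specificity", "resources", "operational_detail")
MAX_SCORE = 3  # hypothetical ordinal scale 0..3 per factor


def intensity(scores):
    """Equal-weight mean of the factor scores, rescaled to [0, 1]."""
    return mean(scores[f] for f in FACTORS) / MAX_SCORE


# Hypothetical codings of regulatory frameworks in three strategies.
regulatory = [
    {"specificity": 3, "resources": 2, "operational_detail": 3},
    {"specificity": 1, "resources": 0, "operational_detail": 1},
    {"specificity": 2, "resources": 1, "operational_detail": 0},
]
values = [intensity(s) for s in regulatory]
avg = mean(values)       # typical depth of elaboration for this instrument type
spread = pstdev(values)  # variation across strategies
```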

Network analysis of instrument relationships, presented in Figure 18, shows how different policy mechanisms are integrated within governance frameworks. In this visualization, node size indicates prevalence and edge thickness indicates how often two instruments co-occur. Research funding, skills development, and institutional creation form a tightly connected cluster, which [10] term the "core policy mix" in innovation governance. Regulatory instruments are less integrated with this core cluster, suggesting potential implementation gaps between development support and governance frameworks, a pattern consistent with the observation in [11] that regulatory and innovation policy domains are frequently siloed.
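The node and edge quantities described for Figure 18 can be computed from per-strategy instrument sets: node size as the share of strategies containing an instrument (the same prevalence measure reported in Figure 19) and edge weight as the number of strategies in which two instruments appear together. A minimal sketch, assuming co-occurrence is counted within a single strategy; the instrument sets are hypothetical.

```python
from collections import Counter
from itertools import combinations

# Hypothetical instrument sets per strategy (not the study's data).
strategies = {
    "A": {"research_funding", "skills", "institutions", "regulation"},
    "B": {"research_funding", "skills", "institutions"},
    "C": {"research_funding", "skills", "tax_incentives"},
    "D": {"research_funding", "regulation"},
}


def prevalence(strats):
    """Share of strategies containing each instrument (node size)."""
    counts = Counter(i for instruments in strats.values() for i in instruments)
    return {i: n / len(strats) for i, n in counts.items()}


def cooccurrence(strats):
    """Number of strategies in which each unordered instrument pair appears (edge weight)."""
    edges = Counter()
    for instruments in strats.values():
        # Sorting gives each unordered pair a single canonical key.
        for a, b in combinations(sorted(instruments), 2):
            edges[(a, b)] += 1
    return edges


nodes = prevalence(strategies)
edges = cooccurrence(strategies)
```

In this toy data, regulation co-occurs with skills development in only one strategy, the kind of weak link to the core cluster that the text describes.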

Instrument prevalence analysis, presented in Figure 19, reports the percentage of strategies that include each instrument type. Research funding (95%) and skills development (85%) are adopted almost universally, consistent with what [11] characterized as the "standard toolkit" of innovation policy. The lower prevalence of tax incentives (40%) and international agreements (55%) indicates more variation in secondary instrument selection, suggesting that countries differ more in how they complement their core policy mix with supporting mechanisms.

4.2 Alignment Analysis

This section presents our findings regarding strategic alignment patterns across national AI policies. We first examine the results of our matrix-based alignment analysis, identifying relationships between policy components and comparing alignment patterns across countries. We then explore network analysis results, focusing on structural characteristics and connectivity patterns within policy frameworks. Finally, we analyze cross-national alignment patterns, identifying common strengths and recurring vulnerabilities in strategic coherence.

4.2.1 Matrix Analysis Findings

Our matrix analysis revealed significant variations in alignment patterns between strategic objectives, foresight methods, and implementation instruments across national AI strategies. These variations reflect different approaches to policy coherence and highlight both common strengths and recurring vulnerabilities in strategic alignment.

The global network structure of alignment relationships, presented in Figure 20, visualizes connections between all policy components across the full sample. Economic competitiveness and scientific leadership objectives occupy central positions in this network, with the strongest connections to both foresight methods and implementation instruments. As [13] observe, this centrality pattern is characteristic of technology governance frameworks that position economic advancement as the primary strategic goal. Newer strategic objectives, such as environmental sustainability and social welfare enhancement, sit at the periphery with fewer and weaker connections to implementation mechanisms, consistent with the finding in [19] that newer policy objectives often experience "implementation lags" as governance frameworks evolve.
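The text does not state which centrality measure underlies Figure 20. One simple option, assumed here, is weighted degree (node strength): the sum of alignment intensities on all links touching a component. The `obj:`/`fs:`/`ins:` prefixes, the links, and the weights are hypothetical.

```python
from collections import defaultdict

# Hypothetical pooled alignment links: (component, component, intensity in [0, 1]).
# Prefixes mark objectives (obj:), foresight methods (fs:), and instruments (ins:).
links = [
    ("obj:economic", "fs:expert_panels", 0.9),
    ("obj:economic", "ins:research_funding", 1.0),
    ("obj:science", "fs:expert_panels", 0.8),
    ("obj:science", "ins:research_funding", 0.7),
    ("obj:sustainability", "ins:research_funding", 0.2),
]


def strength(edges):
    """Weighted degree: sum of link weights incident to each node (undirected)."""
    s = defaultdict(float)
    for u, v, w in edges:
        s[u] += w
        s[v] += w
    return dict(s)


# Rank objectives only, from most to least central.
ranked = sorted(
    ((node, s) for node, s in strength(links).items() if node.startswith("obj:")),
    key=lambda item: item[1],
    reverse=True,
)
```

Strength rewards both many links and strong links; a measure such as betweenness would instead capture brokerage between clusters, which may be preferable if the figure emphasizes bridging components.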

The global objective-foresight alignment matrix, presented in Figure 21, shows how strategic objectives connect with anticipatory methods. Scientific leadership objectives align most strongly with expert-based foresight methods (expert panels, Delphi studies), while ethical governance objectives connect more strongly with participatory foresight approaches (workshops, stakeholder engagement). This pattern aligns with the observation in [20] that different governance goals align with different anticipatory approaches, with technical objectives favoring expertise-based methods and societal objectives favoring more inclusive ones. Notably, the matrix shows weaker alignment between economic competitiveness objectives and long-term foresight methods such as scenario development, a pattern [21] characterize as the "strategic myopia" often present in economically oriented governance frameworks.

The global objective-instrument alignment matrix, presented in Figure 22, illustrates how strategic goals connect with implementation mechanisms across the sample. This visualization reveals that economic competitiveness objectives demonstrate the strongest alignment with funding instruments (research grants, tax incentives), while ethical governance objectives show stronger connections to regulatory instruments and standardization initiatives. This differentiated alignment pattern reflects what [11] term "instrument-objective affinity"—the natural alignment between certain goals and specific implementation mechanisms. The matrix also highlights several alignment gaps, particularly between workforce development objectives and skills development instruments, where 30% of strategies include both components but fail to establish explicit connections between them. This gap exemplifies what [2] characterize as "implementation disconnects" within policy frameworks.

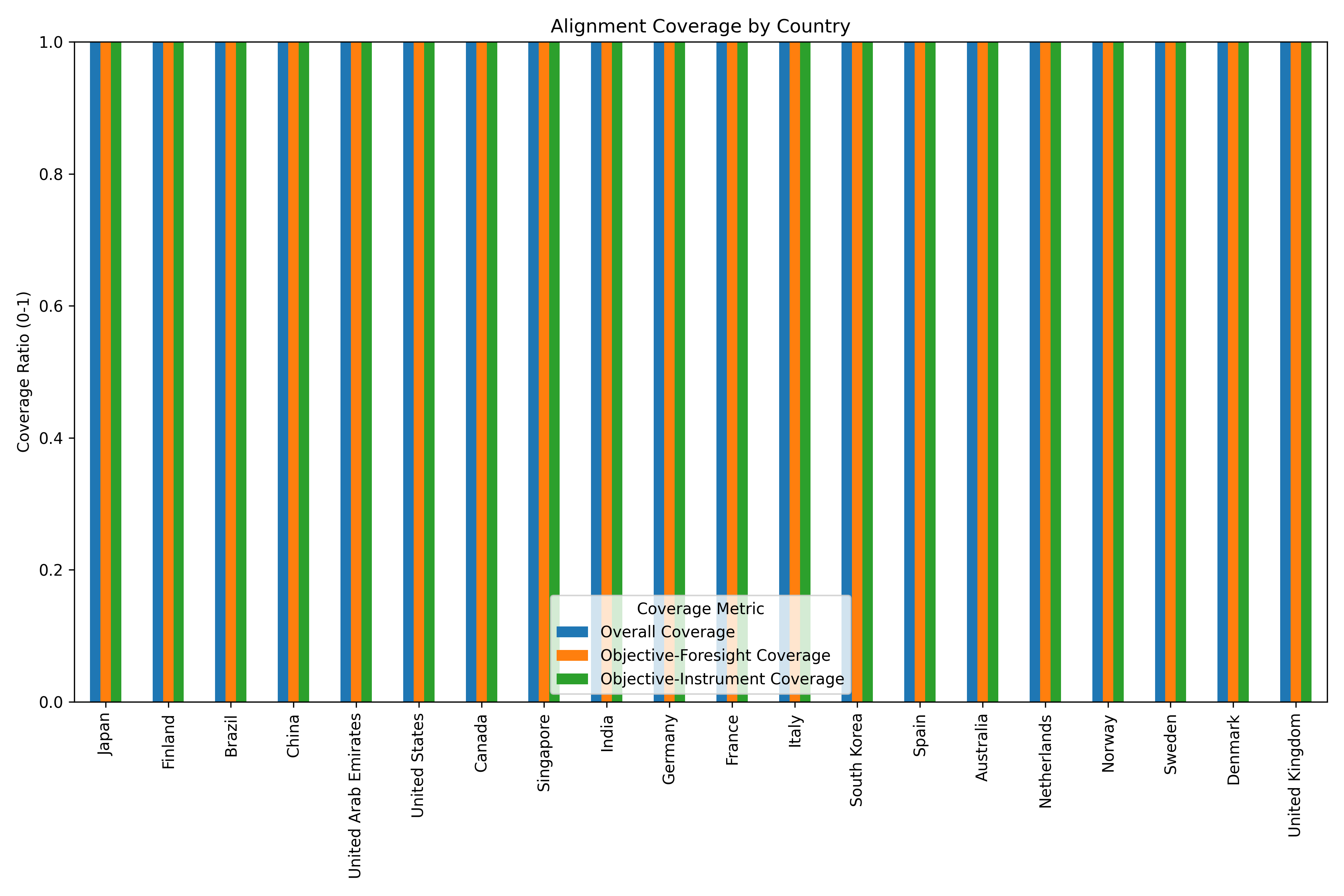

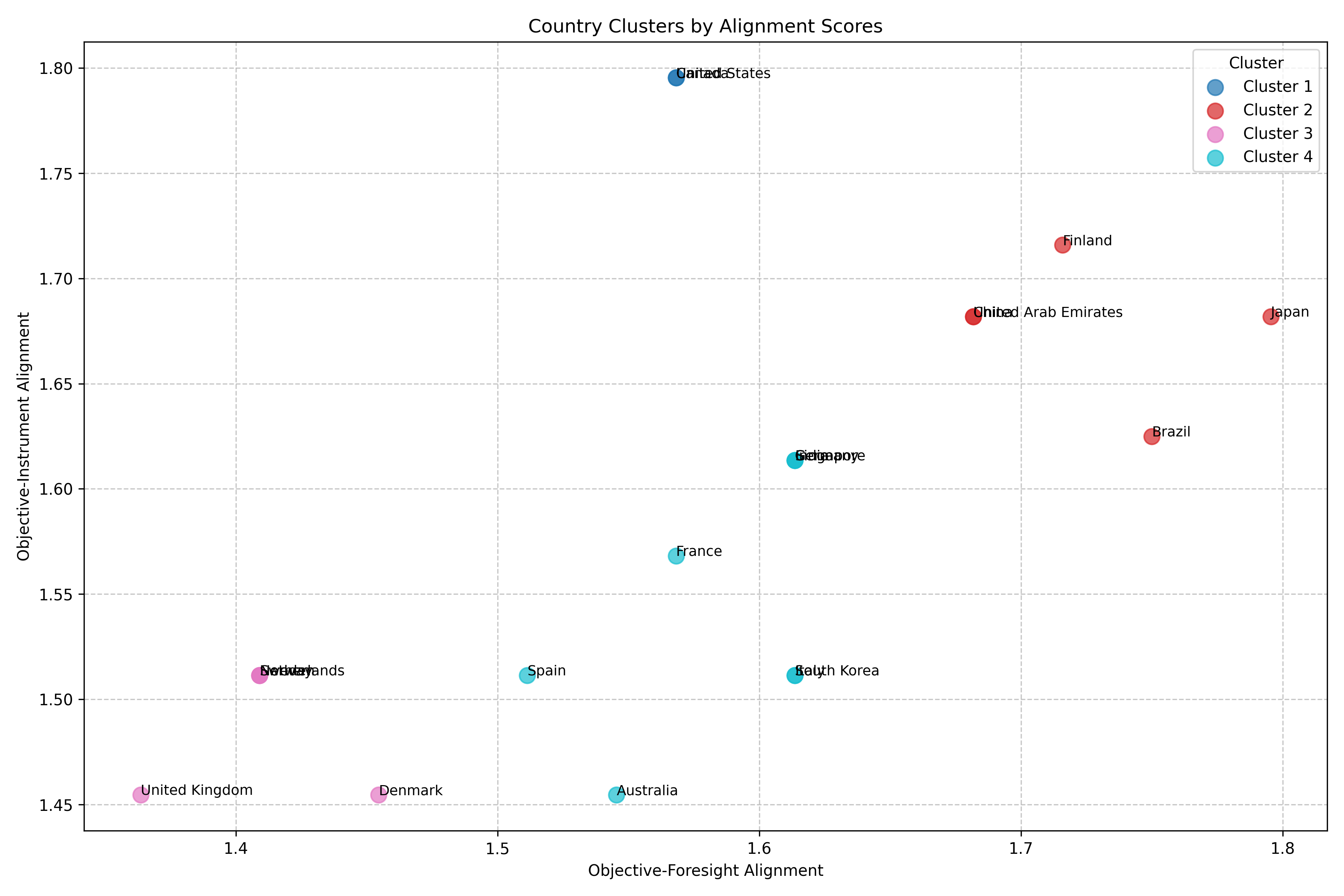

The alignment coverage analysis, presented in Figure 23, compares the potential alignment relationships in each country’s framework with the connections actually established in its strategy documents. Coverage varies widely across the sample, from 35% to 78%. Rights-based governance systems (Finland, Denmark, Norway) show the highest coverage rates, consistent with the observation in [18] regarding the strong focus on policy coherence within these governance traditions. Market-led systems (USA, UK, Canada) show moderate coverage, with stronger alignment for economic objectives than for societal goals. State-directed systems (China, Singapore, UAE) show more variable coverage, with strong alignment for primary strategic objectives but lower coverage for secondary goals.
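Coverage, as described for Figure 23, is the ratio of established to potential links. The text does not specify how potential links are enumerated; the sketch assumes every objective in a strategy can potentially link to every foresight method and every instrument in the same strategy. All names and data are hypothetical.

```python
from itertools import product


def alignment_coverage(objectives, foresight, instruments, links):
    """Established links divided by potential objective-method/instrument pairs.

    Potential pairs are assumed (hypothetically) to be every objective paired
    with every foresight method and instrument present in the same strategy.
    Links outside that set are ignored.
    """
    potential = set(product(objectives, foresight | instruments))
    established = potential & links
    return len(established) / len(potential) if potential else 0.0


cov = alignment_coverage(
    objectives={"economic", "ethics"},
    foresight={"delphi"},
    instruments={"grants", "regulation"},
    links={("economic", "grants"), ("economic", "delphi"), ("ethics", "regulation")},
)
```

With two objectives and three methods or instruments there are six potential pairs, three of which are linked, so coverage is 0.5.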

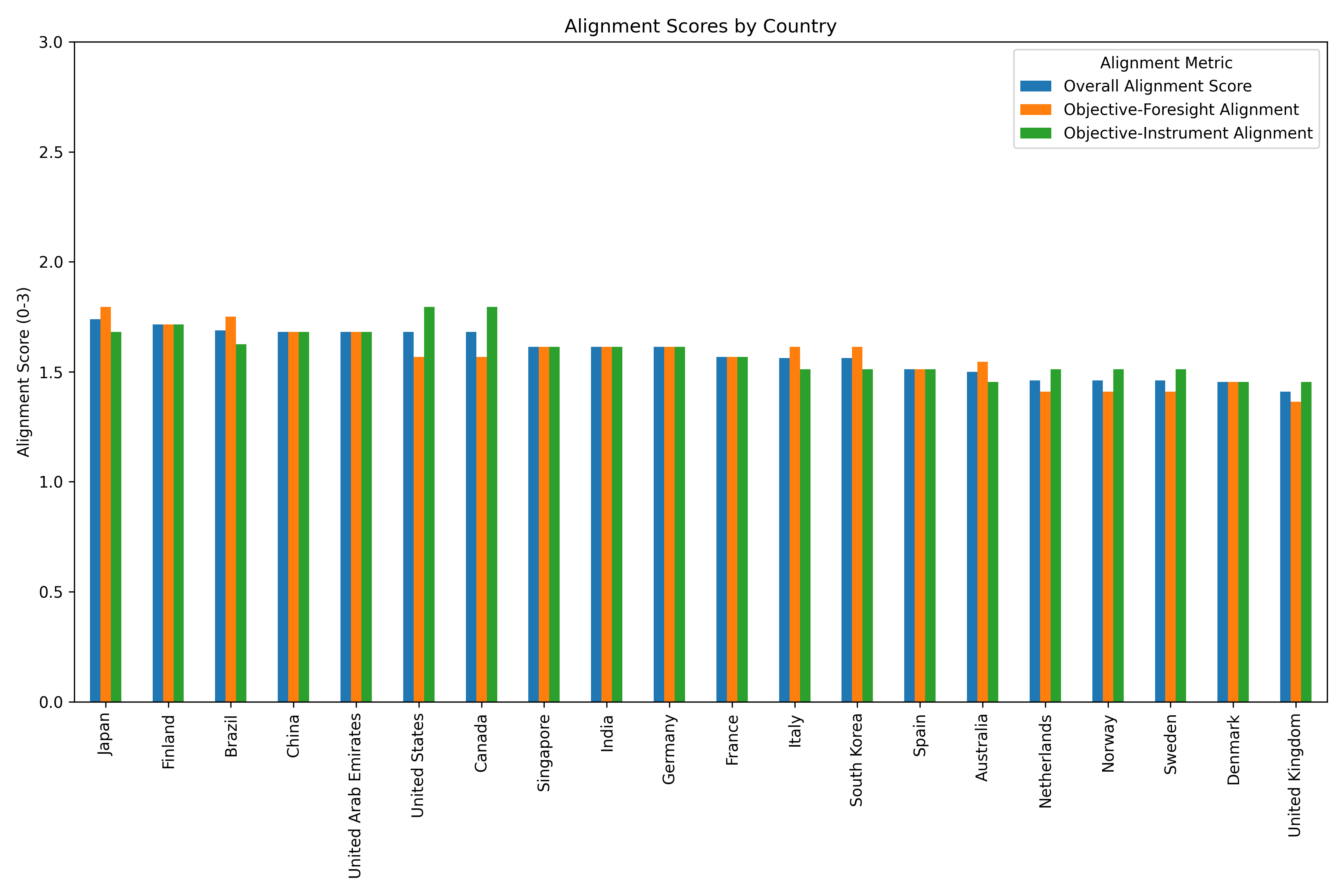

The alignment score comparison, presented in Figure 24, measures the mean intensity of alignment relationships within each national framework. High coverage does not always coincide with high alignment intensity: some strategies establish many weak connections, while others focus on fewer but stronger relationships. Finland, Canada, and the UK have the highest mean alignment scores, indicating clear and explicit connections between components. This pattern aligns with the finding in [11] that well-established innovation policy systems typically articulate component relationships more strongly. China, by contrast, shows lower coverage but higher intensity for the connections it does establish, reflecting the directive governance approach described in [18].
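The distinction between coverage and intensity can be made concrete with two hypothetical strategies that have the same number of potential links. The 1 to 3 intensity scale and the example scores are assumptions for illustration.

```python
from statistics import mean


def profile(link_intensities, n_potential):
    """Return (coverage, mean intensity of the links actually established).

    link_intensities: hypothetical 1..3 scores for each established link.
    """
    coverage = len(link_intensities) / n_potential
    intensity = mean(link_intensities) if link_intensities else 0.0
    return coverage, intensity


# Hypothetical: many weak links versus a few strong ones, same potential.
broad = profile([1, 1, 2, 1, 1, 2, 1], n_potential=10)
focused = profile([3, 3, 3], n_potential=10)
```

The broad strategy scores higher on coverage (0.7 versus 0.3) but lower on mean intensity, which is why the two measures are reported separately.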

The comparative alignment heatmap, presented in Figure 25, provides a comprehensive comparison of alignment patterns across countries and component types. This visualization reveals distinct clusters of countries with similar alignment profiles, corresponding closely to the governance typology developed by [18]. Rights-based systems demonstrate the most balanced alignment profiles with strong connections across all component types, while market-led systems show stronger alignment for economic dimensions and weaker connections for societal objectives. State-directed systems demonstrate distinctive alignment patterns focused on public sector transformation and industrial digitalization objectives, with strong connections to institutional creation instruments.
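Clusters like those in Figure 25 are commonly recovered with hierarchical clustering of country profiles. The text does not specify a clustering method; the sketch below uses average-linkage agglomerative clustering on Euclidean distance, with hypothetical three-dimensional profiles (economic, ethical, social alignment) and placeholder labels rather than real countries.

```python
import math
from itertools import combinations

# Hypothetical alignment profiles: (economic, ethical, social) mean alignment.
profiles = {
    "P1": (0.8, 0.8, 0.7),
    "P2": (0.7, 0.8, 0.8),
    "M1": (0.9, 0.3, 0.3),
    "M2": (0.8, 0.4, 0.2),
}


def average_linkage(profiles, k):
    """Agglomerative clustering with average linkage, stopping at k clusters."""
    clusters = [[name] for name in profiles]

    def dist(a, b):
        # Mean Euclidean distance over all cross-cluster pairs.
        total = sum(math.dist(profiles[x], profiles[y]) for x in a for y in b)
        return total / (len(a) * len(b))

    while len(clusters) > k:
        # combinations yields i < j, so deleting index j leaves i valid.
        i, j = min(
            combinations(range(len(clusters)), 2),
            key=lambda ij: dist(clusters[ij[0]], clusters[ij[1]]),
        )
        clusters[i] = clusters[i] + clusters[j]
        del clusters[j]
    return clusters


groups = average_linkage(profiles, k=2)
```

The balanced profiles (P1, P2) and the economically skewed profiles (M1, M2) end up in separate clusters. For real data, `scipy.cluster.hierarchy.linkage` with `method="average"` implements the same linkage rule more efficiently.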

Statistical analysis of alignment distributions revealed significant correlations between governance characteristics and alignment patterns. Using Pearson correlation analysis, we identified a significant positive correlation (, ) between the governance coordination index (measuring institutional integration) and overall alignment scores, consistent with the finding in [3] regarding the relationship between coordination mechanisms and policy coherence. We also identified a significant correlation (, ) between stakeholder engagement indicators and alignment coverage, supporting the hypothesis in [11] that inclusive policy development processes contribute to more comprehensive alignment between policy components.
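The computation behind a Pearson test of this kind can be sketched as follows: Pearson's r, then the t statistic for the null hypothesis of zero correlation, with n − 2 degrees of freedom. The country-level values are hypothetical and do not reproduce the study's results.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t statistic for H0: rho = 0, with n - 2 degrees of freedom (requires |r| < 1)."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))


# Hypothetical country-level values, not the study's data.
coordination_index = [0.2, 0.4, 0.5, 0.7, 0.9]
alignment_score = [0.30, 0.35, 0.55, 0.60, 0.80]
r = pearson_r(coordination_index, alignment_score)
t = t_statistic(r, len(coordination_index))
```

In practice, `scipy.stats.pearsonr` returns r together with a two-sided p-value; the hand-written version only makes the computation explicit. With samples of 15 to 20 countries, the degrees of freedom are small, so even moderate r values may fall short of significance.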

4.2.2 Cross-National Alignment Patterns

Cross-national comparison revealed important patterns in how different countries approach strategic alignment in AI governance. These patterns highlight both common strengths and recurring vulnerabilities across governance contexts, offering insights into factors that influence alignment quality.

The analysis of the strongest alignment relationships, presented in Figure 26, identifies the component pairs that are most consistently well aligned across the sample. Certain relationships show strong connections in most governance contexts, indicating foundational elements of AI policy frameworks. The strongest alignment appears between economic competitiveness objectives and research funding instruments, with 85% of strategies establishing explicit connections. Other consistently strong alignments include scientific leadership objectives with expert panel foresight methods (78%), public sector transformation with institutional creation (72%), and ethical governance with regulatory frameworks (68%). These patterns align with the concept of "natural instrument constituencies" in [11], that is, the consistent alignment between certain objectives and implementation approaches across governance contexts.

Common alignment strengths appeared consistently across governance contexts, though with varying emphasis. Economic alignment demonstrated the strongest consistency, with robust connections between economic competitiveness objectives, horizon scanning and expert panel foresight methods, and research funding and tax incentive instruments. As [19] observe, this alignment "core" benefits from well-established innovation policy traditions that predate AI-specific governance. Regulatory alignment also showed consistent strength in many strategies, with clear connections between ethical governance objectives, standardization initiatives, and regulatory frameworks. This regulatory coherence appeared strongest in European strategies but demonstrated increasing prominence across all governance contexts in more recent policies.

Several misalignment patterns recurred across multiple strategies. The most prevalent involved ethical AI objectives, which frequently appeared in strategic frameworks without corresponding implementation instruments or with limited operational specificity. This pattern aligns with the observation in [12] regarding the "implementation gap" in AI ethics governance. Another common misalignment involved workforce development objectives, which often lacked clear connections to both foresight methods and implementation instruments beyond general skills programs. Additionally, many strategies showed weak alignment between international collaboration objectives and concrete implementation mechanisms, creating what [28] term "aspirational governance" without operational pathways.
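The two forms of ethical AI misalignment described above, an objective with no linked instrument and an objective linked only to weakly specified instruments, can be flagged mechanically. The specificity scale, the threshold, and the example coding are hypothetical parameters, not taken from the study.

```python
# Hypothetical coding of one strategy: objective -> [(instrument, specificity 0..3)].
strategy = {
    "economic": [("research_funding", 3), ("tax_incentives", 2)],
    "ethics": [("guidelines", 1)],
    "international": [],
}


def flag_misaligned(links, min_specificity=2):
    """Return objectives with no linked instrument or only weakly specified ones.

    min_specificity is a hypothetical threshold on the 0..3 specificity scale.
    """
    flags = {}
    for objective, instruments in links.items():
        if not instruments:
            flags[objective] = "no instrument"
        elif max(spec for _instrument, spec in instruments) < min_specificity:
            flags[objective] = "low specificity"
    return flags


flags = flag_misaligned(strategy)
```

Using the maximum specificity means a single well-specified instrument is enough to clear an objective; a stricter rule could require the mean to exceed the threshold instead.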

The relationship between governance models and alignment patterns revealed significant variation across system types. Rights-based governance systems (predominantly European) showed the strongest overall alignment, with balanced connections across economic, ethical, and social dimensions. Market-led systems showed strong economic alignment but more limited integration of ethical and social dimensions, consistent with the characterization of their market-oriented approach in [18]. State-directed systems showed strong alignment for priority objectives but more limited coverage of secondary goals. These variations support the hypothesis in [11] regarding the influence of governance traditions on policy coherence, with different institutional arrangements producing distinctive alignment signatures.

Resource availability showed a significant influence on alignment patterns, particularly on implementation specificity. Analysis revealed a moderate correlation (, ) between AI readiness indicators and implementation alignment scores, supporting the observation in [25] that implementation capacity significantly influences alignment quality. This correlation was strongest for middle-income countries, suggesting that resource constraints affect alignment most in transitional contexts. For high-income countries, governance approach had a stronger influence on alignment than resource availability, consistent with the finding in [13] that institutional factors outweigh resource considerations in mature governance systems.
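Comparing the readiness-alignment correlation between middle-income and high-income countries implies a stratified analysis: the correlation is computed separately within each income group. A minimal sketch with hypothetical rows; the grouping labels and values are illustrative only.

```python
import math
from collections import defaultdict


def pearson_r(x, y):
    """Pearson correlation of two equal-length samples."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical rows: (income_group, readiness_index, implementation_alignment).
rows = [
    ("middle", 0.2, 0.25), ("middle", 0.4, 0.45), ("middle", 0.6, 0.62),
    ("high", 0.7, 0.60), ("high", 0.8, 0.50), ("high", 0.9, 0.65),
]


def correlation_by_group(rows, min_n=3):
    """Pearson r within each group, skipping groups with fewer than min_n rows."""
    groups = defaultdict(lambda: ([], []))
    for group, x, y in rows:
        groups[group][0].append(x)
        groups[group][1].append(y)
    return {g: pearson_r(xs, ys) for g, (xs, ys) in groups.items() if len(xs) >= min_n}


r_by_group = correlation_by_group(rows)
```

With only a handful of countries per group, within-group coefficients are unstable, so a difference between groups should be read as suggestive rather than conclusive.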

| Dimension | Rights-Based Systems | Market-Led Systems | State-Directed Systems |
| --- | --- | --- | --- |
| Primary Alignment Strength | Balanced alignment across economic, ethical, and social dimensions | Strong economic alignment with robust implementation mechanisms | Strong alignment for priority objectives with direct implementation pathways |
| Foresight Integration | Strong connection between diverse foresight methods and implementation planning | Moderate connection focused on economic and scientific objectives | Variable connection with stronger emphasis on technological roadmapping |
| Common Vulnerability | Coordination challenges between multiple stakeholders and initiatives | Limited integration of ethical governance with implementation mechanisms | Weaker alignment for secondary objectives and stakeholder engagement |
| Network Structure | Moderate centralization, high integration, low modularity | High centralization, moderate integration, high modularity | Very high centralization, variable integration, moderate modularity |
| Resource Influence | Limited influence, primarily affecting implementation specificity | Moderate influence, primarily affecting foresight sophistication | Strong influence, affecting both coverage and integration |
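The "Network Structure" row compares centralization across system types. The text does not name the metric; one standard choice, assumed here, is Freeman's degree centralization for an undirected, unweighted graph, which equals 1 for a star (one hub linked to every other node) and 0 when all nodes have the same degree. The example graphs are hypothetical.

```python
from collections import defaultdict


def degree_centralization(edges):
    """Freeman degree centralization of an undirected, unweighted graph.

    Only nodes that appear in at least one edge are counted; self-loops
    are assumed absent. Returns 0.0 for graphs with fewer than 3 nodes.
    """
    adj = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    n = len(adj)
    if n < 3:
        return 0.0
    degrees = [len(neighbors) for neighbors in adj.values()]
    top = max(degrees)
    # Normalize by the maximum possible sum, attained by a star graph.
    return sum(top - d for d in degrees) / ((n - 1) * (n - 2))


# Hypothetical frameworks: one hub-dominated, one evenly connected.
hub = [("core", "a"), ("core", "b"), ("core", "c"), ("core", "d")]
ring = [("a", "b"), ("b", "c"), ("c", "d"), ("d", "a")]
```

A hub-dominated framework such as `hub` scores 1.0 and an evenly connected one such as `ring` scores 0.0. Integration and modularity require separate measures; NetworkX, for example, provides a modularity function in its community module.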