Strong contraction mapping and topological non-convex optimization

Abstract

The strong contraction mapping, a self-mapping that the range is always a subset of the domain, admits a unique fixed-point which can be pinned down by the iteration of the mapping. We introduce a topological non-convex optimization method as an application of strong contraction mapping to achieve global minimum convergence. The strength of the approach is its robustness to local minima and initial point position.

I Introduction

Calculus of variation plays a critical role in the modern calculation. Mostly, the function of interest cannot be obtained directly but makes a functional attain its extremum. This leads people to construct different functionals according to different questions, such as the least action principle, Fermat’s principle, maximum likelihood estimation, finite element analysis, machine learning and so forth. These methods provide us the routine to transfer the original problem to an optimization problem, which makes optimization methods have been used almost everywhere.

However, the popular gradient-based optimization methods be applied to a non-convex function with many local minima is unprincipled. They face great challenges of finding the global minimum point of the function. Because the information from the derivative of a single point is not sufficient to decide the global geometrical property of the function. In the gradient-based methods, the domain of the searching area is divided into several subsets with regards to different local minima. And usually, iterating points will converge to one local minimum depends on where the initial point starts from. For a successful minimum point convergence, the initial point luckily happens to be sufficiently near the global minimum point.

It is the very time to think outside box and try to cultivate some method other than gradient-based methods. Before that, let us first think about why gradient-based optimization methods fail the task of global minimum convergence. Let (X,d) be a metric space and T:X X is a self-mapping. For the inequality that,

| (1) |

if T is called contractive; if T is called nonexpansive; if , T is called Lipschitz continuousHusain ; Latif . The gradient-based methods are usually nonexpansive mapping. The fixed point may exist but is not unique for a general situation. For instance, if the objective function has many local minima, for the gradient descent method mapping , any local minimum is a fixed point accordingly. From the perspective of spectra of a bounded operator, for a nonexpansive mapping, any minimum of the objective function is an eigenvector of the eigenvalue equation , in which . In the optimization problem, nonexpansive mapping sometimes works but their weakness is obvious.

It is worth to note that to summarize the condition for the existence of fixed-point of non-expansive mapping or Lipschitz continuous mapping is a great challenge. Topologically, to prove the existence of fixed-point of the contraction mapping is relatively easier than to prove the existence of fixed-point of non-expansive mapping or Lipschitz continuous mapping. For contraction mapping, the set of the range is shrinking. While, for non-expansive mapping or Lipschitz continuous mapping, the change of range set can involve complicated transformation and rotationAhues ; Rudin . There is no straightforward way to find the fixed-point of mapping. The fixed-point(or fixed-points) of Lipschitz continuous mapping, even if it exists, can cunningly hide inside an inflating volume. Because both the existence and uniqueness of solution are important in optimization problem so that the contractive mapping is more favored than the nonexpansive mapping.

The well-known Banach fixed-point theorem, as the first fixed-point theorem on contraction mapping, plays an important role in solving linear or nonlinear system. But for optimization problems, the condition of contraction mapping that , which usually requires convexity of the function, is hard to be applied to non-convex optimization problem. In this study, we are trying to extend the Banach fixed-point theorem to an applicable method for optimization problems, which is called strong contraction mapping.

Strong contraction mapping is a surjection self-mapping that always maps to the subset of its domain. We will prove that strong contraction mapping admits a unique fixed-point, explain how to build an optimization method as an application of strong contraction mapping and illustrate why its fixed-point is the global minimum point of the objective function.

II Strong contraction mapping and the fixed-point

Recall the definition of diameter of a metric space .

Definition 1.

Let be a metric space. And the metric measurement refers to the maximum distance between two points in the vector space X Fred :

| (2) |

Definition 2.

Let be a complete metric space. Then a mapping is called weak contraction mapping on if the range of mapping T is always a subset of its domain during the iteration, namely, and there exists a such that .

This contraction mapping is called strong because the requirement is looser than what in Banach fixed-point theorem, which doesn’t require the distance between two points getting smaller and smaller but the diameter of the range of the mapping getting smaller and eventually shrinking to a point. Thereafter, the inequality is included by the inequality as a special case.

Theorem 1.

Let be a non-empty complete metric space with strong contraction mapping . Then T admits a unique fixed-point in such that .

To prove the Theorem.1, one can follow the same logic of Banach fixed-point theorem proofBanach but substitute the inequality with . Let be arbitrary initial point and define a sequence as: .

Lemma 1.1.

is a Cauchy sequence in and hence converges to a limit in .

Proof.Let such that .

Let be arbitrary, since , we can find a large such that

Hence, by choosing large enough:

Thus, is Cauchy sequence.

As long as is bounded, the convergence is guaranteed, which is independent of the choice of .

Lemma 1.2.

is a fixed-point of in .

Proof.

Thus,.

Definition 3.

is a fixed-set of T in X.

Lemma 1.3.

is the only fixed-point of in , the only element of and the diameter .

Proof. Suppose there exists another fixed-point y that , then choose the subspace that both the and are the only elements in . By definition, so that, both the and are elements in , namely,

Since is a non-empty complete metric space and the diameter , has a single element .

Thus .

In this section, we have proven the existence and uniqueness of fixed-point of strong contraction mapping. Compared with contraction map, the strong contraction map can address a much wider range of problems as the requirement is looser than . Different from , the inequality doesn’t require in sequence must move closer to each other for every step but confine the range of mapping to be smaller and smaller. Thereafter, the sequence is Cauchy sequence and converge to the fixed-point. It is worth to mention if some mapping is not a strong contraction mapping. Still, one can think about whether or is a strong contraction mapping to find a way around. If that is the case, then the iteration of or yields a fixed-point.

III Optimization algorithm implementation

After the discussion of strong contraction mapping, let us think about how to construct an optimization algorithm occupied with the property of strong contraction mapping.

The objective function is a mapping defined on X, .

Definition 4.

And an affine hyperplane is a subset of of the form

where is a linear functional that does not vanish identically and is a given constantBrezis .

To overcome the dilemma that the optimization method may be saturated by local minima, intuitively, one can utilize a hyperplane that parallels to the domain to cut the objective function such that the intersection between the hyperplane and the function are contours. They reflect the global geometrical property of the function. The difficulty is how to iterate the hyperplane to move downwards and decide the position of the global minimum point.

Definition 5.

Contours is a subset C of of the form

Observing that contours will divide the objective function into two parts. One is higher than the height of contours and the other is lower than the height of contours. Now, our task is to map to a point lower than the height of contours. As a numerical method, instead of getting all points of contours or symbolic expression of contours, we want to get n roots on the contours using root finding algorithm, that is

| (3) |

where, indicates th iterate and indicate th root.

First, provide one arbitrary initial point to the function and calculate the height of contours at the height of the initial point;

If the objective function is non-convex, as a consequence, to map to a point lower than the height of contours cannot be achieved by simply averaging the roots on contours. However, the objective function mostly can be decomposed into many locally convex subsets. We can map to a point lower than the height of contours by averaging the roots belong to the same locally convex subsets and iterate hyperplane downwards.

A function is convex, if for every and , we have the inequality Bertsekas

| (4) |

The inequality indicates the rate of convergence. The smaller q is, the higher rate of convergence is achieved. To achieve high rate of convergence, it is important to extend the Inequality.4 to the situation with regards to many points ,

By induction,

| (5) |

which is Jensen’s Inequality Chandler ,

| (6) |

where,

When we apply the Jensen’s Inequality.6 to the objective function . Let ,for , the Jensen’s Inequality.6 turns to be a strict inequality, that is

| (7) |

Let , that is

Therefore, it is important to check whether two roots belong to the same locally convex subset and traverse all roots, decompose them into these locally convex subsets and get averages accordingly. Based on the Inequality.4, one practical way to achieve that is to pair every two roots on contours and scan function’s value along the segment between the two roots and check whether there exists a point higher than contours’ height. Traverse all the roots and apply this examination on them, then we can decompose the roots with regards to different locally convex subsets. To check whether two roots belong to the same convex subset, number of random points along the segment between two roots are checked whether higher than the contour’s level or notSchachter ; Tseng . If the Inequality.4 always holds for times of test, then we believe the two roots locate at the same locally convex subset and store them together.

After the set of roots being decomposed into several locally convex subsets, the average of roots in the same locally convex subset is always lower than the contours’ height due to Jensen’s Inequality.6.

Theorem 2.

Provided there is a unique global minimum point of the objective function , then the fixed-point of the strong contraction mapping is the global minimum point of the function.

Since the iterating point is always lower than ,

Hence, there exists a such that,

As goes to infinity, then

Because the fixed-point is at the same height as the global minimum point and the global minimum point is unique. Thus, the fixed-point coincides with the global minimum point. The iteration yields the fixed-point that solves the equation .Taylor

Rewrite the mapping that ,which is averaging of roots that locate at the same locally convex subset,

| (8) |

Then, like a Russian doll, the dynamical system can be explicitly written as an expansion of iterates as

| (9) |

After the set of roots are decomposed into several convex subsets, the averages of roots with regards to each subset are calculated and the lowest one is returned as an update point from each iterate. Thereafter, the remaining calculation is to repeat the iterate over and over until convergence and return the converged point as the global minimum. The decomposition of roots set provided a divide-and-conquer method to transfer the original problem to a number of subproblems and solve them recursively. Cormen

In summary, the main procedure of topological non-convex optimization method is decomposed into the following steps: 1. Given the initial guess point for the objective function and calculate the contour level ; 2. Solve the equation and get number of roots. Decompose the set of roots into several convex subsets, return the lowest average of roots as an update point from each iterate; 3. Repeat the above iterate until convergence.

According to the previous discussion, the mechanism of the proposed optimization method has posted some restrictions to the objective function. In summary, it requests the objective function to be continuous, occupied with a unique global minimum point, can be decomposed into many locally convex components and its domain is non-empty complete. And the good news is the most of real-world non-convex optimization problems can satisfy these prerequisites.

IV Experiments on Sphere, McCormick and Ackley functions

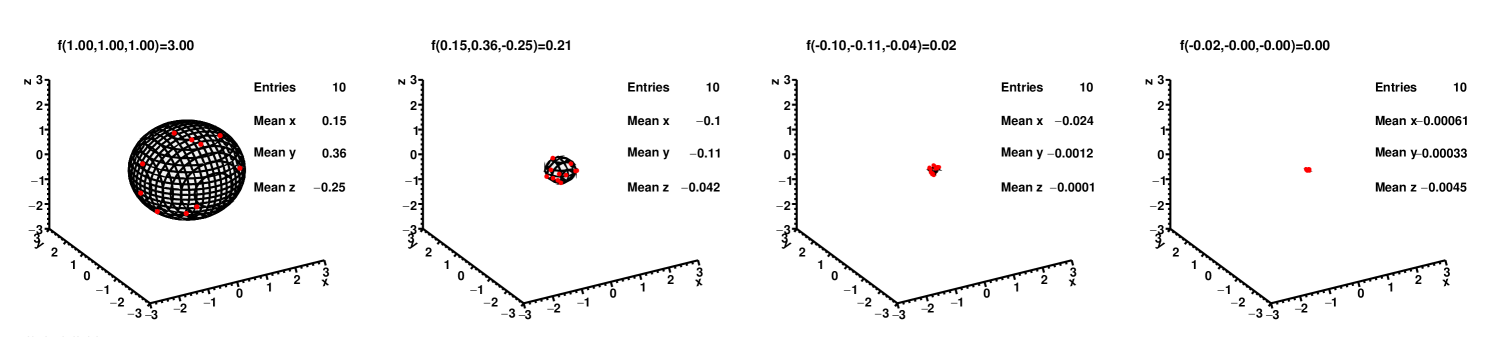

First of all, the optimization algorithm has been tested on a convex function the Sphere function . The minimum is (0,0,0), where . The iterations of roots and contours is shown in FIG.1 and the update of searching point is shown in TABLE.1.

| iterate | updating point | height of contour |

|---|---|---|

| 0 | (1.00,1.00,1.00) | 3.0000 |

| 1 | (0.15,0.36,-0.25) | 0.2146 |

| 2 | (-0.1,-0.11,-0.042) | 0.0237 |

| 3 | (-0.024,-0.0012,-0.0001) | 0.0004 |

| 4 | (-0.0061,-0.00033,-0.0045) |

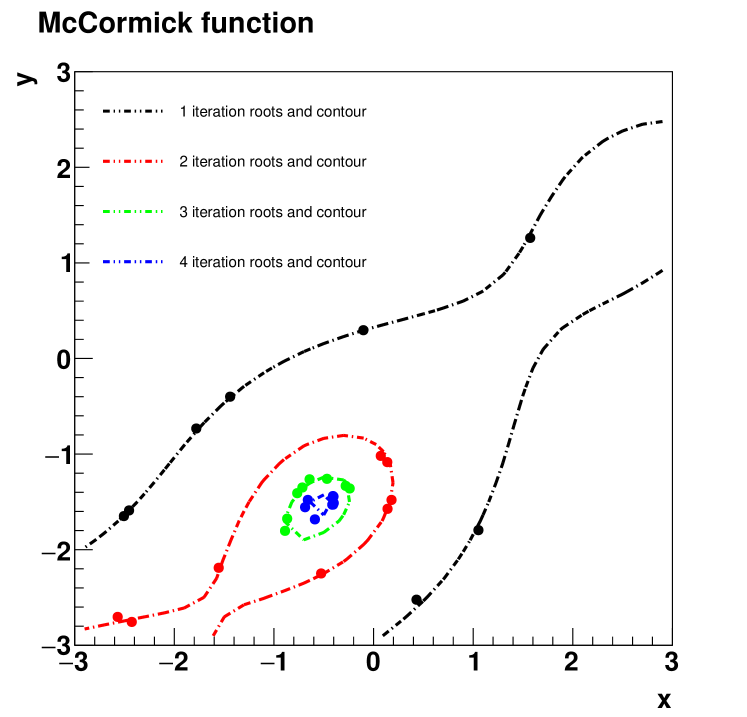

Then, we test the optimization algorithm on the McCormick function. And the first 4 iterates of roots and contour is shown in FIG.2 and the detailed iteration of the searching point from the numerical calculation is shown in TABLE.2. The test result indicate the average of roots can move towards the global minimum point (-0.54719,-1.54719), where .

| iterate | updating point | height of contour |

|---|---|---|

| 0 | (2.00000,2.0000) | 2.2431975047 |

| 1 | (-0.6409,-0.8826) | -1.1857067055 |

| 2 | (-0.8073,-1.8803) | -1.7770492114 |

| 3 | (-0.5962,-1.4248) | -1.8814760998 |

| 4 | (-0.4785,-1.5162) | -1.9074191216 |

| 5 | (-0.5640,-1.5686) | -1.9125755974 |

| 6 | (-0.5561,-1.5467) | -1.9131043354 |

| 7 | (-0.5474,-1.5465) | -1.9132219834 |

| 8 | (-0.5473,-1.5472) |

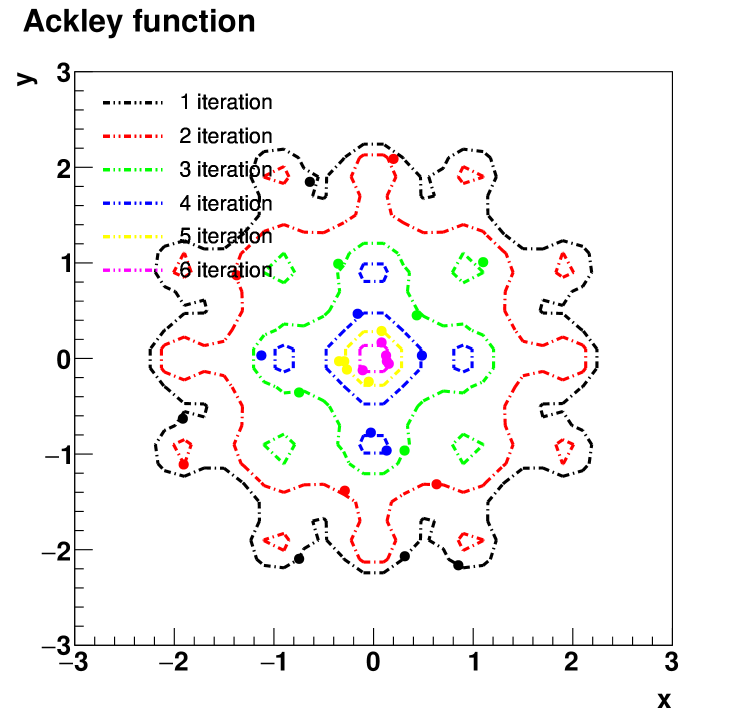

Ackley function would be a nightmare for most gradient-based methods. Nevertheless, the algorithm has been tested on Ackley function where the global minimum locates at (0,0) that . And the first 6 iterates of roots and contours are shown in FIG.3 and the minimum point (-0.00000034,0.00000003) return by the algorithm is shown in TABLE.3. The test result shows that the optimization algorithm is robust to local minima and able to achieve the global minimum convergence. The quest to locate the global minimum pays off handsomely.

| iterate | updating point | height of contour |

|---|---|---|

| 0 | (2.00000000,2.00000000) | 6.59359908 |

| 1 | (-0.78076083,-1.34128187) | 5.82036224 |

| 2 | (-0.35105371,-0.62030933) | 4.11933422 |

| 3 | (-0.20087095,0.38105138) | 3.09359564 |

| 4 | (0.06032320,-0.88101860) | 2.17077104 |

| ⋮ | ⋮ | ⋮ |

| 15 | (0.00000404,-0.00000130) | 0.00001199 |

| 16 | (-0.00000194,-0.00000079) | 0.00000591 |

| 17 | (-0.00000034,0.00000003) |

The observation from these experiments is that the size of contour become smaller and smaller during the iterative process and eventually converge to a point, which is the global minimum point of the function.

V Conclusion

We introduced the definition of the strong contraction mapping and the existence and uniqueness of its fixed-point in this paper. As an extension of Banach fixed-point theorem, the iteration of strong contraction mapping is a Cauchy sequence and yields the unique fixed-point, which perfectly fit with the task of optimization. The global minimum convergence regardless of local minima and initial point position is a very significant strength for the optimization algorithm. And we illustrated how to implement an optimization method occupied with strong contraction mapping property. This topological optimization method finds a way around that even if the objective function is non-convex, still, we can decompose it into many convex components and take advantage of convexity of a locally convex component to pin down the global minimum point. This optimization method has been tested on Sphere, McCormick and Ackley functions and successfully achieved the global minimum convergence as expected. These experiments demonstrate the contours’ shrinking and the iterating point’s approaching the global minimum point. We look forward to extending our study to the higher dimensional situation and believe that the optimization method works for that in principle.

References

- (1) Husain, T., and Abdul Latif. ”Fixed points of multivalued nonexpansive maps.” International Journal of Mathematics and Mathematical Sciences 14.3 (1991): 421-430.

- (2) Latif, Abdul, and Ian Tweddle. ”On multivalued f-nonexpansive maps.” Demonstratio Mathematica 32.3 (1999): 565-574.

- (3) Ahues, Mario, Alain Largillier, and Balmohan Limaye. Spectral computations for bounded operators. Chapman and Hall/CRC, 2001.

- (4) Rudin, Walter. ”Functional analysis. 1991.” Internat. Ser. Pure Appl. Math (1991).

- (5) Brezis, Haim. Functional analysis, Sobolev spaces and partial differential equations. Springer Science Business Media, 2010.

- (6) Croom, Fred H. Principles of topology. Courier Dover Publications, 2016.

- (7) Kiwiel, K. C. (2001). Convergence and efficiency of subgradient methods for quasiconvex minimization. Mathematical programming, 90(1), 1-25.

- (8) Banach, S. (1922). Sur les opérations dans les ensembles abstraits et leur application aux équations intégrales. Fund math, 3(1), 133-181.

- (9) Branciari, A. (2002). A fixed point theorem for mappings satisfying a general contractive condition of integral type. International Journal of Mathematics and Mathematical Sciences, 29(9), .

- (10) Taylor, A. E., & Lay, D. C. (1958). (Vol. 2). New York: Wiley.

- (11) Schachter, Bruce. ”Decomposition of polygons into convex sets.” IEEE Transactions on Computers 11 (1978): 1078-1082.

- (12) Tseng, Paul. ”Applications of a splitting algorithm to decomposition in convex programming and variational inequalities.” SIAM Journal on Control and Optimization 29.1 (1991): 119-138.

- (13) Arveson, W. (2006). A short course on spectral theory (Vol. 209). Springer Science Business Media.

- (14) Blanchard, P.; Devaney, R. L.; Hall, G. R. (2006). Differential Equations. London: Thompson. pp. 96–111. ISBN 0-495-01265-3.

- (15) Bertsekas, Dimitri (2003). Convex Analysis and Optimization. Athena Scientific.

- (16) Chandler, David. ”Introduction to modern statistical mechanics.” Introduction to Modern Statistical Mechanics, by David Chandler, pp. 288. Foreword by David Chandler. Oxford University Press, Sep 1987. ISBN-10: 0195042778. ISBN-13: 9780195042771 (1987): 288.

- (17) Cormen, Thomas H., Charles E. Leiserson, Ronald L. Rivest, and Clifford Stein. Introduction to algorithms. MIT press, 2009.