21215 \lmcsheadingLABEL:LastPageDec. 09, 2022May 20, 2025

This paper is an extended version of [PPRT22].

[a,b] [a,b] [c] [a]

Structural Reductions and Stutter Sensitive Properties

Abstract.

Verification of properties expressed as -regular languages such as LTL can benefit hugely from stutter insensitivity, using a diverse set of reduction strategies. However properties that are not stutter invariant, for instance due to the use of the neXt operator of LTL or to some form of counting in the logic, are not covered by these techniques in general.

We propose in this paper to study a weaker property than stutter insensitivity. In a stutter insensitive language both adding and removing stutter to a word does not change its acceptance, any stuttering can be abstracted away; by decomposing this equivalence relation into two implications we obtain weaker conditions. We define a shortening insensitive language where any word that stutters less than a word in the language must also belong to the language. A lengthening insensitive language has the dual property. A semi-decision procedure is then introduced to reliably prove shortening insensitive properties or deny lengthening insensitive properties while working with a reduction of a system. A reduction has the property that it can only shorten runs. Lipton’s transaction reductions or Petri net agglomerations are examples of eligible structural reduction strategies.

We also propose to use a partition of a property language into its stutter insensitive, shortening insensitive, lengthening insensitive and length sensitive parts; this lets us apply at least some structural reductions even when working with arbitrary properties.

An implementation and experimental evidence are provided showing that most non-random properties sensitive to stutter are actually shortening or lengthening insensitive. Performance of experiments on a large (random) benchmark from the model-checking competition indicates that, despite being a semi-decision procedure, the approach can still improve state of the art verification tools.

Key words and phrases:

model-checking, structural reductions, stutter-sensitivity, omega-automata1. Introduction

Model checking is an automatic verification technique for proving the correctness of systems that have finite state abstractions. Properties can for instance be expressed using the popular Linear-time Temporal Logic (LTL) [Var07]. To verify LTL properties, the automata-theoretic approach [Var07] builds a product between a Büchi automaton representing the negation of the LTL formula and the reachable state graph of the system (seen as a set of infinite runs). This approach has been used successfully to verify both hardware and software components[KPR04, DR18], but it suffers from the so called “state explosion problem”: as the number of state variables in the system increases, the size of the system state space grows exponentially.

One way to tackle this issue is to consider structural reductions. Structural reductions take their roots in the work of Lipton [Lip75] (with extensions by Lamport [LS89, Lam90]) and Berthelot [Ber85]. Nowadays, these reductions are still considered as an attractive way to alleviate the state explosion problem [Laa18, BLBDZ19, Thi20]. Structural reductions strive to fuse structurally “adjacent” events into a single atomic step, leading to fewer interleavings of independent events and fewer observable behaviors in the resulting system. An example of such a structural reduction is shown on Figure 1 where actions are progressively grouped (see Section 3.1 for a more detailed presentation). It can be observed that the Kripke structure representing the state space of the program is significantly simplified.

[width=]fig/fig

Traditionally structural reductions construct a smaller system that preserves properties such as deadlock freedom, liveness, reachability [HP06], and stutter insensitive temporal logic [PP00] such as the fragment of LTL without the next operator LTL∖X. The verification of a stutter insensitive property on a given system does not depend on whether non observable events (i.e. that do not update atomic propositions) are abstracted or not. On Fig 1 both instructions "" and "" of thread are non observable.

This paper shows that structural reductions can in fact be used even for fragments of LTL that are not stutter insensitive. We identify two fragments that we call shortening insensitive (if a word is in the language, then so is any version that stutters less) or lengthening insensitive (if a word is in the language, then so is any version that stutters more). Based on this classification we introduce two semi-decision procedures that provide a reliable verdict only in one direction: e.g. presence of counter examples is reliable for lengthening insensitive properties, but absence is not. We also propose to decompose properties to partition their language into stutter insensitive, shortening insensitive and lengthening insensitive parts, allowing one to use structural reductions even if the property is not globally insensitive to length.

The paper is structured as follows, Section 2 presents the definitions and notations relevant to our setting in an abstract manner, focusing on the level of description of a language. Section 3 instantiates these definitions in the more concrete setting of LTL verification. Section 4 provides experimental evidence supporting the claim that the method we propose is both applicable to many formulae and can significantly improve state of the art model-checkers. Section 5 introduces several extensions to the approach, providing new material with respect to [PPRT22]. Some related work is presented in Section 6 before concluding.

[width=0.7]fig/figure

2. Definitions

In this section we first introduce in Section 2.1 a “shorter than” partial order relation on infinite words, based on the number of repetitions or stutter in the word. This partial order gives us in Section 2.2 the notions of shortening and lengthening insensitive language, which are shown to be weaker versions of classical stutter insensitivity in Section 2.3. We then define in Section 2.4 the reduction of a language which contains a shorter representative of each word in the original language. Finally we show that we can use a semi-decision procedure to verify shortening or lengthening insensitive properties using a reduction of a system.

2.1. A “Shorter than” relation for infinite words

[Word] A word over a finite alphabet is an infinite sequence of symbols in . We canonically denote a word using one of the two forms:

-

•

(plain word) with for all , , and , or

-

•

(-word) with and for all , , and for , and . represents an infinite stutter on the final symbol of the word.

The set of all words over alphabet is denoted .

These notations using a power notation for repetitions of a symbol in a word are introduced to highlight stuttering. We force the symbols to alternate to ensure we have a canonical representation: with a suffix (not starting by symbol ), the word must be represented as and not . To represent a word of the form we use an -word: .

[Shorter than] A plain word is shorter than a plain word if and only if for all , . For two -words and , is shorter than if and only if for all , .

We denote this relation on words as .

For instance, for any given suffix , . Note that as well, but that and are incomparable. -words are incomparable with plain words.

The relation is a partial order on words.

Proof.

The relation is clearly reflexive (), anti-symmetric (, ) and transitive (). The order is partial since some words (such as and presented above) are incomparable. ∎

[Stutter equivalence] A word is stutter equivalent to , denoted as if and only if there exists a shorter word such that . This relation is an equivalence relation thus partitioning words of into equivalence classes.

We denote the equivalence class of a word and denote the shortest word in that equivalence class.

For any given word there is a shortest representative in that is the word where no symbol is ever consecutively repeated more than once (until the for an -word). By definition all words that are comparable to are stutter equivalent to each other, since can play the role of in the definition of stutter equivalence, giving us an equivalence relation: it is reflexive, symmetric and transitive.

For instance, with denoting a suffix, would be the shortest representative of any word of of the form . We can see by this definition that, despite being incomparable, since and .

2.2. Sensitivity of a language to the length of words

[Language] A language over a finite alphabet is a set of words over , hence . We denote by the complement of a language .

In the literature, most studies that exploit a form of stuttering are focused on stutter insensitive languages [PPH96, Val90, Pel94, GW94, HP06]. In a stutter insensitive language , duplicating any letter (also called stuttering) or removing any duplicate letter from a word of must produce another word of . In other words, all stutter equivalent words in a class must be either in the language or outside it. Let us introduce weaker variants of this property, which were originally presented in [PPRT22].

[Shortening insensitive] A language is shortening insensitive if and only if for any word it contains, all shorter words such that also belong to .

For instance, a shortening insensitive language that contains the word must also contain shorter words , and . If it contains it also contains , and .

[Lengthening insensitive] A language is lengthening insensitive if and only if for any word it contains, all longer words such that also belong to .

For instance, a lengthening insensitive language that contains the word must also contain all longer words , …, and more generally words of the form with and . If it contains the shortest representative of an equivalence class, it contains all words in the stutter equivalence class.

While stutter insensitive languages have been heavily studied, there is, to our knowledge, no study on what reductions are possible if only one direction holds, i.e. the language is shortening or lengthening insensitive, but not both. A shortening insensitive language is essentially asking for something to happen before a certain deadline or stuttering “too much”. A lengthening insensitive language is asking for something to happen at the earliest at a certain date or after having stuttered at least a certain number of times. Figure 2 represents these situations graphically.

2.3. Relationship to stutter insensitive logic

A language is both shortening and lengthening insensitive if and only if it is stutter insensitive (see Fig. 2). This fact is already used in [MD15] to identify a stutter insensitive language using only its automaton. Furthermore since stutter equivalent classes of words are entirely inside or outside a stutter insensitive language, a language is stutter insensitive if and only if the complement language is stutter insensitive.

However, if we look at sensitivity to length and how it interacts with the complement operation, we find a dual relationship where the complement of a shortening insensitive language is lengthening insensitive and vice versa.

A language is shortening insensitive if and only if the complement language is lengthening insensitive.

Proof.

Let be shortening insensitive. Let be a word in the complement of . Any word such that must also belong to , since if it belonged to the shortening insensitive , would also belong to . Hence is lengthening insensitive. The converse implication can be proved using the same reasoning. ∎

If we look at Figure 2, the dual effect of complement on the sensitivity of the language to length is apparent: if gray and white are switched we can see that is lengthening insensitive and shortening insensitive.

2.4. When is visiting shorter words enough?

[Reduction] Let be a reduction function such that for every in . The reduction by of a language is .

Note that the partial order is not strict so that the image of a word may be the word itself, hence the identity function is a reduction function. In most cases however we expect the reduction function to map many words of the original language to a single shorter word of the reduced language. Note that given any two reduction functions and , is also a reduction function, therefore is still a reduction of . Hence chaining reduction rules still produces a reduction. As we will discuss in Section 3.1 structural reductions of a specification such as Lipton’s transaction reduction [Lip75, Laa18] or Petri net agglomerations [Ber85, Thi20] (see also Section 3.6) induce a reduction at the language level. In Fig. 1 fusing statements into a single atomic step in the program induces a reduction of the language.

Theorem 1 (Reduced Emptiness Checks).

Let be a reduction function. Given two languages and ,

-

•

if is shortening insensitive, then

-

•

if is lengthening insensitive, then .

Proof 2.1.

(Shortening insensitive ) Assume that so there does not exists . Because is shortening insensitive, it is impossible that any word with belongs to . (Lengthening insensitive ) At least one word is in and . Let with , since is lengthening insensitive and , must also belong to and thus to .

With this theorem original to this paper we now can build a semi-decision procedure that is able to prove some lengthening or disprove some shortening insensitive properties using a reduction of a system. In practice, the language will be the language of the negation of a property, and and will be respectively the language of a system and the language of its reduced version.

3. Application to Verification

We now introduce the more concrete setting of LTL verification to exploit the theoretical results on languages and their shortening/lengthening sensitivity developed in Section 2.

3.1. Kripke Structure

From the point of view of LTL verification with a state-based logic, executions of a system (also called runs) are seen as infinite words over the alphabet , where is a set of atomic propositions that may be true or false in each state. So each symbol in a run gives the truth value of all of the atomic propositions in that state of the execution, and each time an action happens we progress in the run to the next symbol. Some actions of the system update the truth value of atomic propositions, but some actions can leave them unchanged, which corresponds to stuttering.

[Kripke Structure Syntax] Let designate a set of atomic propositions. A Kripke structure over is a tuple where is the set of states, is the transition relation, is the state labeling function, and is the initial state. {defi}[Kripke Structure Semantics] The language of a Kripke structure is defined over the alphabet . It contains all runs of the form where is the initial state of and , either , or if is a deadlock state such that then .

All system executions are considered maximal, so that they are represented by infinite runs. If the system can deadlock or terminate in some way, we can extend these finite words by an infinite stutter on the last symbol of the word to obtain a run.

Example. Subfigure (1) of Figure 1 depicts a program where each thread ( and ) has three reachable positions (we consider that each instruction is atomic). In this example we assume that the only observable atomic propositions are (true when ) and (true when ). The variable is not observed.

Subfigure (2) of Figure 1 depicts the reachable states of this system as a Kripke structure. Actions of thread (which do not modify the value of or ) are horizontal while actions of thread are vertical. While each thread has 3 reachable positions, the emission of the message by must precede the reception by so that some situations are unreachable. Based on Definition 3.1 we can compute the language of this system. It consists of three words: when thread goes first , with an interleaving , and when thread goes first .

In subfigure (3) of Figure 1, the actions ""of thread are fused into a single atomic operation. This is possible because action of thread is stuttering (it cannot affect either or ) and is non-interfering with other events (it neither enables nor disables any event other than subsequent instruction "chan.send(z)"). The language of this smaller KS is a reduction of the language of the original system. It contains two runs: thread goes first and thread goes first .

In subfigure (4) of Figure 1, the already fused action ""of thread is further fused with the chan.recv(); action of thread . This leads to a smaller KS whose language is still a reduction of the original system now containing a single run: . This simple example shows the power of structural reductions, when they are applicable, with a reduction of the initial language to a single word.

3.2. Automata theoretic verification

Let us consider the problem of model-checking of an -regular property (such as one described by an LTL formula) on a system using the automata-theoretic approach [Var07]. In this approach, we wish to answer the problem of language inclusion: do all runs of the system belong to the language of the property ? To do this, when the property is an -regular language (e.g. an LTL or PSL formula), we first negate the property , then build a (variant of) a Büchi automaton whose language111Because computing the complement of a Büchi automaton is worse than exponential in the worst case [Yan08, SV12], syntactically negating and producing an automaton is preferable when is derived from e.g. an LTL formula. consists of all the runs that do not satisfy i.e. . We then perform a synchronized product between this Büchi automaton and the Kripke structure corresponding to the system’s state space (where is defined to satisfy ). Either the language of the product is empty , and the property is thus true of this system, or the product is non empty, and from any run in the language of the product we can build a counter-example to the property.

We will consider in the rest of the paper that the shortening or lengthening insensitive language of Definitions 2.2 and 2.2 is given as an omega-regular language or Büchi automaton typically obtained from the negation of an LTL property, and that the reduction of Definition 2.4 is applied to a language that corresponds to all runs in a Kripke structure typically capturing the state space of a system.

LTL verification with reductions. With Theorem 1, a shortening insensitive property shown to be true on the reduction (empty intersection with the language of the negation of the property) is also true of the original system. A lengthening insensitive property shown to be false on the reduction (non-empty intersection with the language of the negation of the property, hence counter-examples exist) is also false in the original system. Unfortunately, our procedure cannot prove using a reduction that a shortening insensitive property is false, or that a lengthening insensitive property is true. We offer a semi-decision procedure.

3.3. Detection of language sensitivity

We now present a strategy to decide if a given property expressed as a Büchi automaton is shortening insensitive, lengthening insensitive, or both.

This section relies heavily on the operations introduced and discussed at length in [MD15]. The authors define two syntactic transformations and of a transition-based generalized Büchi automaton (TGBA) that can be built from any LTL formula to represent its language [Cou99]. TGBA are a variant of Büchi automata where the acceptance conditions are placed on edges rather than states of the automaton.

The closure operation decreases stutter, it adds to the language any word that is shorter than a word in the language. Informally, the strategy consists in detecting when a sequence is possible and adding an edge , hence its name for “closure”. The self-loopization operation increases stutter, it adds to the language any run that is longer than a run in the language. Informally, the strategy consists in adding a self-loop to any state so that we can always decide to repeat a letter (and stay in that state) rather than progress in the automaton, hence its name for “self-loop”. More formally and .

Using these operations [MD15] shows that there are several possible ways to test if an omega-regular language (encoded as a Büchi automaton) is stutter insensitive: essentially applying either of the operations or should leave the language unchanged. This allows one to recognize that a property is stutter insensitive even though it syntactically contains e.g. the neXt operator of LTL.

For instance is stutter insensitive if and only if . The full test is thus simply reduced to a language emptiness check testing that both and operations leave the language of the automaton unchanged.

Indeed for stutter insensitive languages, all or none of the runs belonging to a given stutter equivalence class of runs must belong to the language . In other words, if shortening or lengthening a run can make it switch from belonging to to belonging to , the language is stutter sensitive. This is apparent on Figure 2.

We want weaker conditions here, but we can reuse the and operations developed for testing stutter insensitivity. Indeed for an automaton encoding a shortening insensitive language, should hold. Conversely if encodes a lengthening insensitive language, should hold. We express these tests as emptiness checks on a product in the following way.

Theorem 2.

[Testing sensitivity] Let designate a Büchi automaton, and designate its complement.

, if and only if defines a shortening insensitive language.

if and only if defines a lengthening insensitive language.

Proof 3.1.

The expression is equivalent to . The lengthening insensitive case is similar.

Thanks to property 2.3, and in the spirit of [MD15] we could also test the complement of a language for the dual property if that is more efficient, i.e. if and only if defines a shortening insensitive language and similarly iff is lengthening insensitive. We did not really investigate these alternatives as the complexity of the test was already negligible in all of our experiments.

Overall complexity of these tests is dominated by the complement operation on the automaton to compute ; this operation is worst case exponential in the size of . However, when the automaton is obtained by translating a LTL formula , we can compute the automaton for the negation of instead of the complement , avoiding this exponential. The operation only introduces new edges, so is worst case quadratic in the number of states of . The operation unfortunately may require adding new states, to allow to stutter on the last seen valuation of the system (a valuation is in ), hence is worst case exponential over the number of atomic propositions in , but formulas in our experiments do not have more than 5 atomic propositions so this complexity does not dominate the procedure. The emptiness check computes the product of two automata and which is worst case linear to the product of the sizes of the two automata.

3.4. Semi Decision Procedure

With these elements we can describe a semi decision procedure to verify a property on a system represented by a Kripke structure .

-

(1)

Decide if is shortening insensitive or lengthening insensitive using Theorem 2. If neither is true, abort the procedure.

-

(2)

Reduce the system using the atomic propositions of as observed alphabet . Thus is a reduction in the sense of Definition 2.4.

-

(3)

Use a model-checker to verify whether the reduced system satisfies , i.e. whether .

-

(4)

If satisfies and is shortening insensitive or does not satisfy and is lengthening insensitive, conclude.

-

(5)

Otherwise abort the procedure, and run a model-checker using the original system and property.

Note that the complexity of this procedure is dominated by the model-checking step, since is typically extremely large in front of . If the reduction is successful, can be up to exponentially smaller than , but this depends on the size of the observed alphabet as well as on the system definition. The experiments presented in Section 4 confirm that in most cases verifying on the reduced system is much cheaper than doing model-checking on the original system.

3.5. Agglomeration of events produces shorter runs

Structural reductions are one of the possible strategies to reduce the complexity of analyzing a system. Depending on the input formalism the terminology used is different, but the main results remain stable.

In [Lip75] transaction reduction consists in fusing two adjacent actions of a thread (or even across threads in recent versions such as [Laa18] ). The first action must not modify atomic properties and must commutate with any action of other threads. Fusing these actions leads to shorter runs, where a stutter is lost. In the program of Fig. 1, "z=40" is enabled from the initial state and must happen before "chan.send(z)", but it commutes with instructions of thread and is not observable. Hence the language built with an atomicity assumption on "z=40;chan.send(z)" is indeed a reduction of .

Let us reason at the level of a Kripke structure. The goal of such reductions is to structurally detect the following situation in language : let designate a run (not necessarily in the language). Then there must exist two indexes and such that for any natural number , , is in the language. In other words, the set of runs must be included in the language. This corresponds to an event that does not impact the truth value of atomic propositions (it stutters) and can be freely commuted with any event that occurs between indexes and in the run. This event is simply constrained to occur at the earliest at index in the run and at the latest at index . In Fig. 1 the event "z=40" can happen as early as in the initial state, and must occur before "chan.send(z)" and thus matches this definition.

Note that these runs are all stutter equivalent, but are incomparable by the shorter than relation (e.g. are incomparable). In this situation, a reduction can choose to only represent the run (e.g. represents all these runs) instead of any of these runs. This run was not originally in the language in general, but it is indeed shorter than any of the runs so it matches definition 2.4 for a reduction. Note that the stutter-equivalent class of does contain all these longer runs so that in a stutter insensitive context, examining is enough to conclude for any of the runs in . This is why usage of structural reductions is compatible with verification of a logic such as and has been proposed for that express purpose in the literature [PP00, HP06, Laa18].

Thus transaction reductions [Lip75, Laa18] as well as both pre-agglomeration and post-agglomeration of Petri nets [PP00, EHP05, HP06, Thi20] produce a system whose language is a reduction of the language of the original system.

A formal definition involves a) introducing the syntax of a formalism and b) its semantics in terms of language, then c) defining the reduction rule, and d) proving its effect is a reduction at the language level. The exercise is not particularly difficult, and the definition of reduction rules mostly fall into the category above, where a non observable event that happens at the earliest at point in the run and at the latest at point in the run is abstracted from the trace. As an example, we provide in Section 3.6 such a proof for structural agglomerations rules on Petri nets.

Our experimental Section 4.2 uses slightly extended versions of these rules (defined in [Thi20]) for (potentially partial) pre and post-agglomeration. That paper presents structural reductions rules from which we selected the rules valid in the context of LTL verification. Only one rule preserving stutter insensitive LTL was not compatible with our approach since it does not produce a reduction at the language level: rule “Redundant transitions” proposes that if two transitions and have the same combined effect as a transition , and firing enables , can be discarded from the net. This reduces the number of edges in the underlying representing the state space, but does not affect reachability of states. However, it selects as representative a run involving both and that is longer than the one using in the original net, it is thus not legitimate to use it in our strategy (although it remains valid for ). Rules “Pre agglomeration” and “Post agglomeration” are the most powerful rules of [Thi20] that we are able to apply in our context. They are known to preserve (but not full LTL) and their effect is a reduction at the language level, hence we can use them when dealing with shortening/lengthening insensitive formulae.

3.6. Petri net agglomeration rules

This section presents agglomeration rules for Petri nets, and shows that this transformation of the structure of a net leads to a reduction of the associated language in the sense of Definition 2.4. All of this section is new content with respect to [PPRT22].

[Structure] A Petri net is a tuple where is the finite set of places, is the finite set of transitions, and represent the pre and post incidence matrices, and is the initial marking.

Notations. We use (resp. ) to designate a place (resp. transition) or its index dependent on the context. We represent markings as vectors of natural numbers with entries. We also describe and , for any given transition , as vectors with entries. In vector spaces, we use to denote , and we use sum with the usual element-wise definition. Since a Petri net is bipartite graph, we note (resp. ) the pre set (resp. post set) of a node (place or transition). For example, the pre set of place is .

[Semantics] The semantics of a Petri net is given by the firing rule that relates pairs of markings: in any marking , if satisfies , then with . Given a set of atomic propositions and an evaluation function , we can associate to a net the corresponding Kripke structure over .

An atomic proposition is a Boolean formula (using ) built from comparisons () of arbitrary weighted sum of place markings to another sum or a constant, e.g. , with and . The support of a property expressed over is the set of places whose marking is truly used in an atomic predicate, i.e. such that at least one comparison atom has a non zero in a sum. The support of the property defines the subset of invisible or stuttering transitions satisfying . Hence, given a labeling function , we can guarantee that and any , if then . With respect to stutter, the atomic propositions are only interested in the projection of reachable markings over the variables in the support, values of places in are not observable in markings. A small support means more potential reductions, as rules mostly cannot apply to observed places or their neighborhood.

Agglomeration in a place consists in discarding and its surrounding transitions (pre and post set of ) to build instead a transition for every element in the Cartesian product that represents the effect of the sequence of firing a transition in then immediately a transition in . This “acceleration” of tokens in reduces interleaving in the state space, but can preserve properties of interest if is chosen correctly. This type of reduction has been heavily studied [HP06, Laa18] as it forms a common ground between structural reductions, partial order reductions and techniques that stem from transaction reduction.

We present here two rules that can be used to safely decide if a place can be agglomerated. Figure 3 shows these rules graphically, using the classical graphical notation for Petri nets.

[Pre and Post Agglomeration] Let designate a Petri net and be the support of a property on this net. Let be a place of satisfying:

| not in support | |

| initially unmarked | |

| distinct feeders and consumers | |

| feeders produce a single token in | |

| consumers require a single token in |

Pre agglomeration in is possible if it also the case that:

Post agglomeration in is possible if it also the case that:

In either of these cases, we agglomerate place leading to a net where

-

•

we discard all transitions in and place from the net, and

-

•

we add a new transition such that and

Pre agglomeration (see Fig. 3(a)) looks for a place such that all predecessor transitions are stuttering, have as single output place, and once enabled cannot be disabled by firing any other transition (hence they commute freely with other transitions that do not consume in ). With these conditions, we can always “delay” the firing of until it becomes relevant to enable a transition that consumes from . This will yield a Petri net whose language is a reduction of the original one.

Conversely, Post agglomeration (see Fig. 3(b)) looks for a place such that all successor transitions are stuttering, and have as single input place. With these conditions, tokens that arrive in are always free to choose where they want to go, and nothing can prevent them from going there. Instead of waiting in until we take this decision, we can make this choice immediately after firing a transition that places a token in . This again will yield a Petri net whose language is a reduction of the original one.

[width=.48]fig/preagglo1 \includestandalone[width=.48]fig/preagglo2

[width=.48]fig/postagglo1 \includestandalone[width=.48]fig/postagglo2

Let be a Petri net, and a place of this net satisfying one of the agglomeration criterion. The agglomeration in of produces a net whose language is a reduction of the language of .

Proof 3.2.

(Sketch) Consider an execution of of the form: where , and , . For pre-agglomeration of in , the image of this execution in the agglomerated net is of the form: where the firing of has shifted to the right until it is fused with the subsequent firing of . Indeed, we have the strong diamond property that states commutativity of with any of the transitions between and in the execution. Let be such a transition, if such that then . Indeed cannot be disabled by any transition once it is enabled (due to “strongly quasi persistent” property), and firing of cannot enable any transition not in (since ). The semantics of a Petri net also induce that firing before or after lead to the same marking. Furthermore, the underlying run in the agglomerated net is shorter (by one repetition) than the original run, because is guaranteed to stutter (it is one of the constraints that ).

If the execution of the original net contains a firing of transition but not of , where , and , , the image is the execution where the transition is never fired. Since is stuttering, this corresponding run is shorter than the original (by one repetition).

If the execution does not contain any firing of a transition in , the image of the execution is the execution itself which is legitimate since identity is a reduction. Lastly, the divergent free constraint ensures that cannot be fired infinitely many times in succession so that the original net cannot exhibit an execution of the form (ending on infinitely many firings of ) that would no longer exist in the agglomerated net.

A similar reasoning is possible for post agglomeration, given an execution where , and , of the original net, the image in the agglomerated net is where the firing of has shifted to the left until it is fused with the preceding firing of . Again the diamond property applies, is enabled as soon as fires and cannot subsequently be disabled by other transitions. Because is constrained to be stuttering, the corresponding run is shorter than the original one.

4. Experimentation

4.1. A Study of Properties

This section provides an empirical study of the applicability of the techniques presented in this paper to LTL properties found in the literature. To achieve this we explored several LTL benchmarks [EH00, SB00, DAC98, HJM+21, KBG+21]. Some work [EH00, SB00] summarizes the typical properties that users express in LTL. The formulae of this benchmark have been extracted directly from the literature. Dwyer et al. [DAC98] propose property specification patterns, expressed in several logics including LTL. These patterns have been extracted by analysing 447 formulae coming from real world projects. The RERS challenge [HJM+21] presents generated formulae inspired from real world reactive systems. The MCC [KBG+21] benchmark establishes a huge database of LTL formulae in the form of Petri net models coming from sources with random LTL formulae for each one. These formulae use up to state-based atomic propositions (e.g. "" or " is fireable"), limit the nesting depth of temporal operators to and are filtered by the organizers in order to be non trivial. Since these formulae come with a concrete system we were able to use this benchmark to also provide performance results for our approach in Section 4.2. We retained model/formula pairs from this benchmark; the missing were rejected due to parse limitations of our tool when the model size is excessive ( transitions). This set of roughly formulae exhibiting real patterns and random ones lets us evaluate if the fragment of LTL that we consider is common in practice. Table 1 summarizes, for each benchmark, the number and percentage of formulae that are either stuttering insensitive, lengthening insensitive, or shortening insensitive. The sum of both shortening and lengthening formulae represents more than one third (and up to 60 percent) of the formulae of these benchmarks.

Concerning the polarity, although lengthening insensitive formulae seem to appear more frequently, most of these benchmarks actually contain each formula in both positive and negative forms (we retained only one) so that the summed percentage might be more relevant as a metric since lengthening insensitivity of is equivalent to shortening insensitivity of . Analysis of the human generated Dwyer patterns [DAC98] reveals that shortening/lengthening insensitive formulae mostly come from the patterns precedence chain, response chain and constrained chain. These properties specify causal relation between events, which are observable as causal relations between observably different states (that might be required to strictly follow each other), but this causality chain is not impacted by non observable events.

| Benchmark | Total | SI | LI | ShI | LS |

|---|---|---|---|---|---|

| Dwyer et al. [DAC98] | 55 | 32 (58%) | 13 (24%) | 9 (16%) | 1 (2%) |

| Spot [EH00, DAC98, SB00] | 94 | 63 (67%) | 17 (18%) | 11 (12%) | 3 (3%) |

| RERS [HJM+21]. | 2050 | 714 (35%) | 777 (38%) | 559 (27%) | 0 |

| MCC [KBG+21] | 43989 | 24462 (56%) | 6837 (16%) | 5390 (12%) | 7300 (17%) |

4.2. A Study of Performances

Benchmark Setup. Among the LTL benchmarks presented in Table 1, we opted for the MCC benchmark to evaluate the techniques presented in this paper. This benchmark seems relevant since (1) it contains both academic and industrial models, (2) it has a huge set of (random) formulae and (3) includes models so that we could measure the effect of the approach in a model-checking setting. The model-checking competition (MCC) is an annual event in its edition in 2021 where competing tools are evaluated on a large benchmark. We use the formulae and models from the latest edition of the contest, where Tapaal [DJJ+12] was awarded the gold medal and ITS-Tools [Thi15] was silver in the LTL category of the contest. We evaluate both of these tools in the following performance measures, showing that our strategy is agnostic to the back-end analysis engine. Our experimental setup consists in two steps.

-

(1)

Parse the model and formula pair, and analyze the sensitivity of the formula. When the formula is shortening or lengthening insensitive (but not both) output two model/formula pairs: reduced and original. The “original” version does also benefit from structural simplification rules (e.g. removing redundant places and transitions, implicit places that are always sufficiently marked to fire related transitions…), but we apply only rules that are compatible with full LTL (in particular not enabling agglomeration rules). The “reduced” version additionally benefits from rules that are reductions at the language level, in the sense of Definition 2.4. These rules mainly include pre and post agglomeration, that also can enable even more structural simplification rules, since we iterate all applicable reduction rules to a fixed point. The original and reduced model/formula pairs that result from this procedure are then exported in the same format the contest uses. This step was implemented within ITS-tools.

-

(2)

Run an MCC compatible tool on both the reduced and original versions of each model/formula pair and record the time performance and the verdict.

For the first step, using Spot [MD15], we detect that a formula is either shortening or lengthening insensitive for 99.81% of formulae in less than 1 second. After this analysis, we obtain 12 227 model/formula pairs where the formula is either shortening insensitive or lengthening insensitive (but not both). Among these pairs, in cases (24.6%) none of the structural reduction rules we use were applicable. For more details on the precise structural reductions, see the companion artifact paper [TMRPAP24]. Since our strategy does not improve such cases, we retain the remaining 9 222 (75.4%) model/formula pairs in the performance plots of Figure 4. We measured that on average 34.19% of the places and 32.69% of the transitions of the models were discarded by reduction rules with respect to the “original” model, though the spread is high as there are models that are almost fully reducible and some that are barely so. Application of reduction rules is in complexity related to the size of the structure of the net and takes less than 20 seconds to compute in 95.5% of the models. We are able to treat examples (21% of the original model/formula pairs of the MCC) using reductions. All these formulae until now could not be handled using reduction techniques.

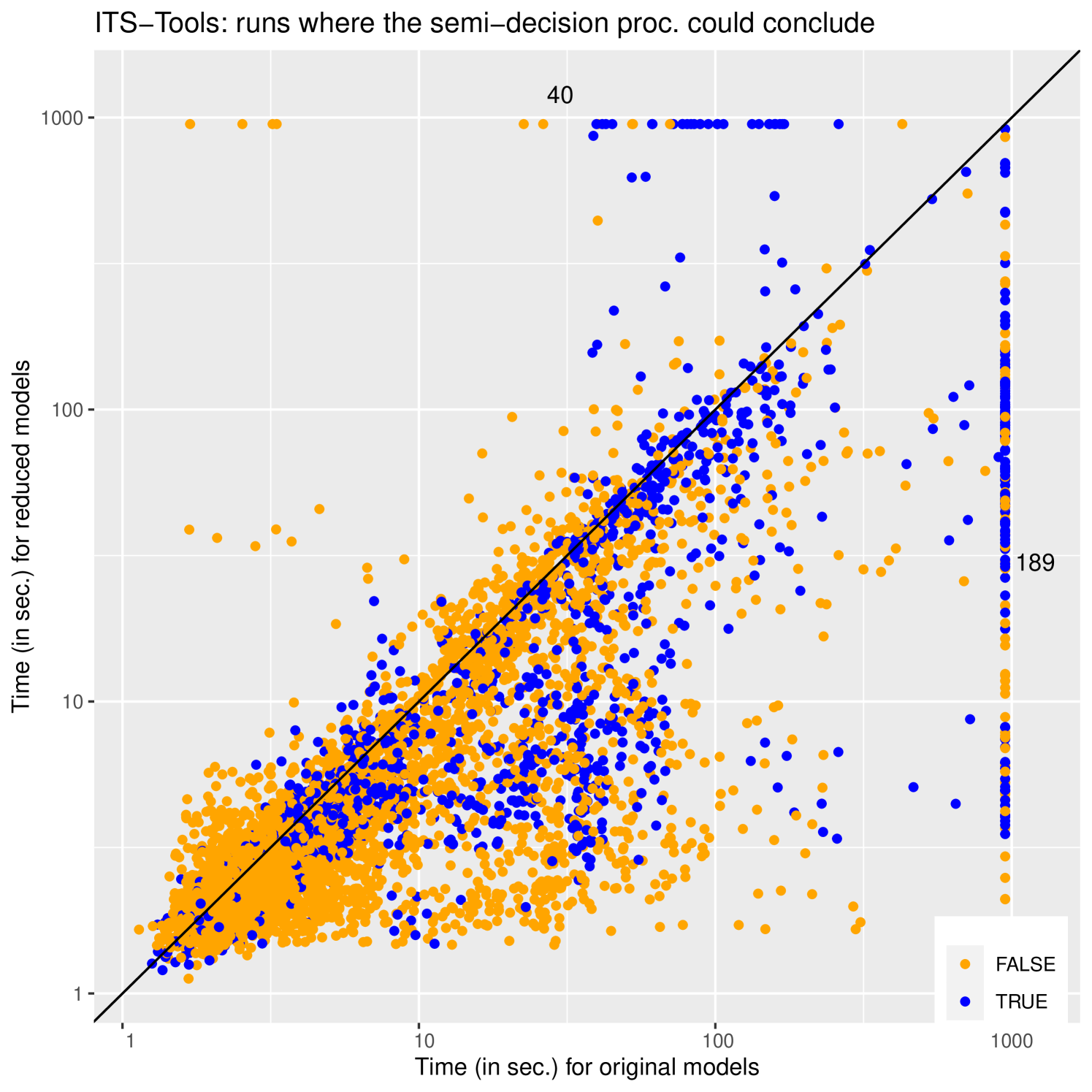

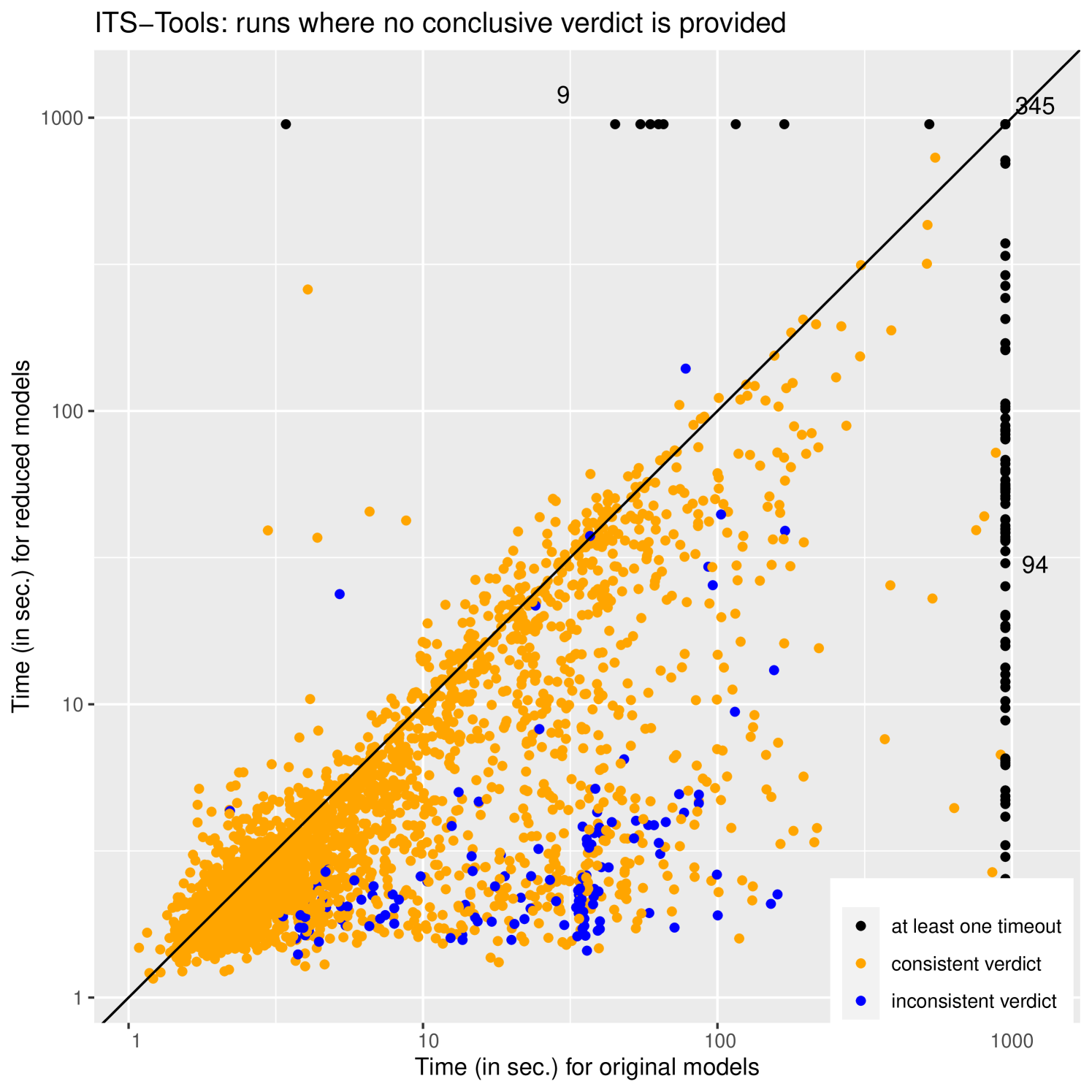

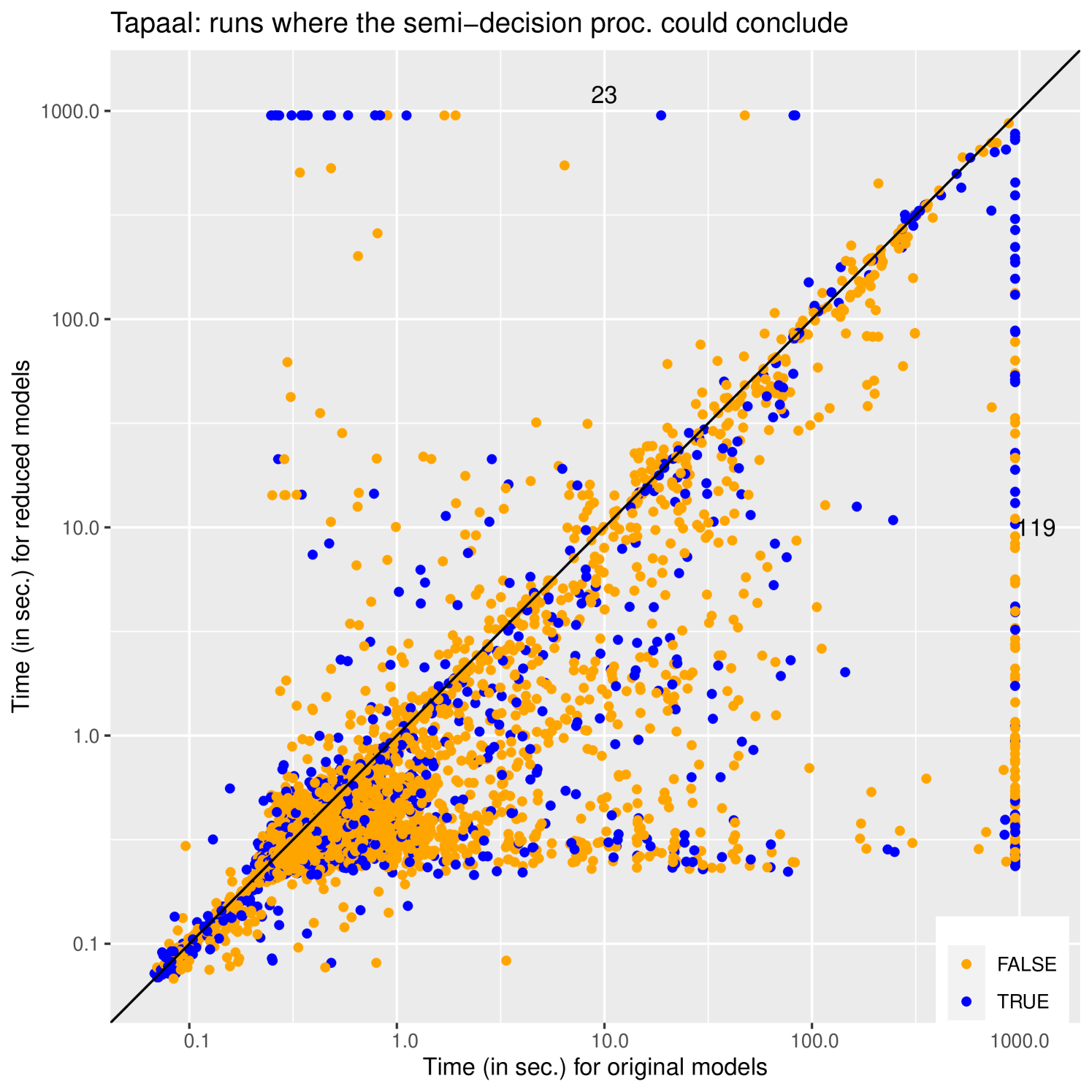

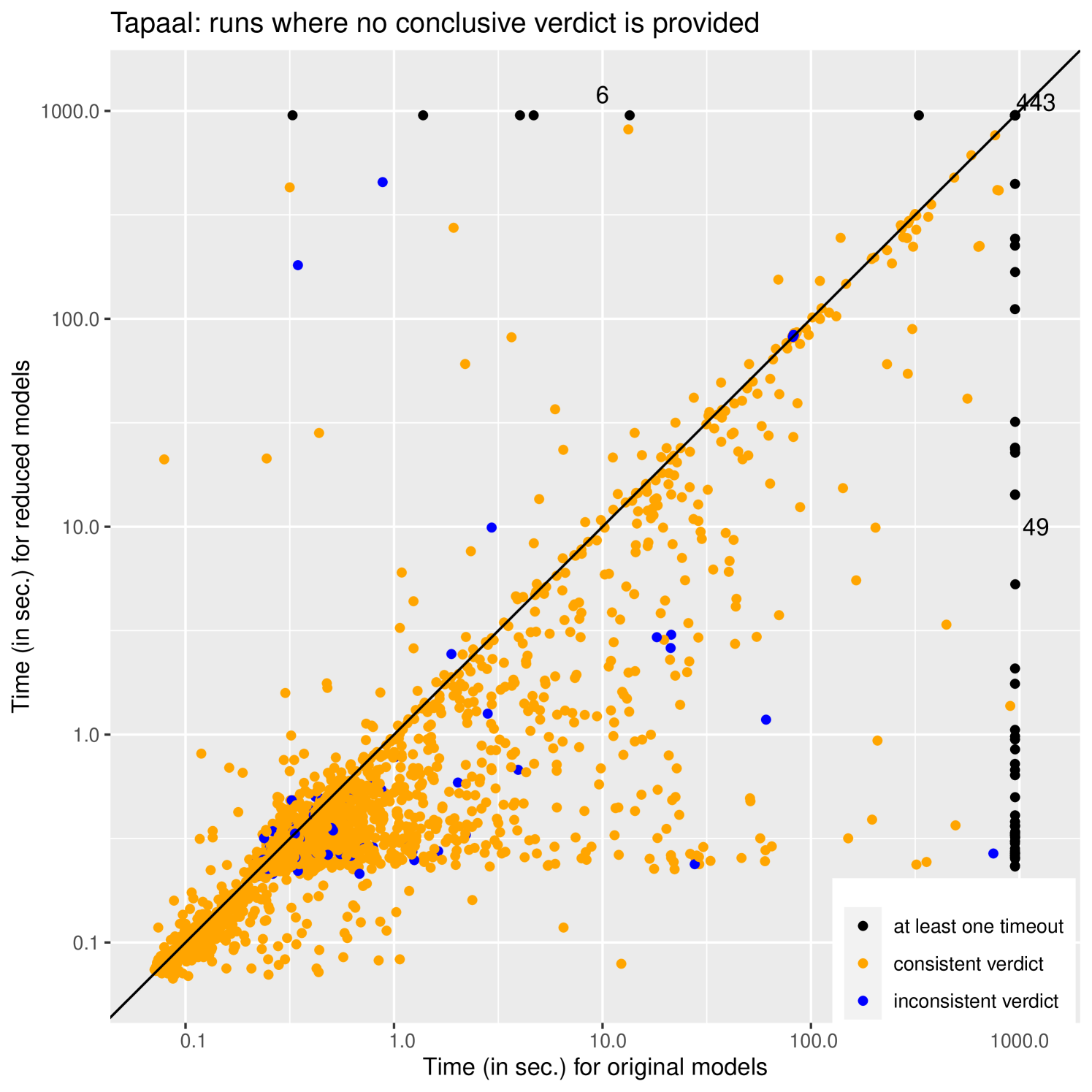

For the second step, we measured the solution time for both reduced and original model/formula pairs using the two best tools of the MCC’2021 contest. A full tool using our strategy might optimistically first run on the reduced model/formula pair hoping for a definitive answer, but we recommend the use of a portfolio approach where the first definitive answer is kept. In these experiments we neutrally measured the time for taking a semi-decision on the reduced model vs. the time for taking a (complete) decision on the original model. We then classify the results into two sets, decidable instances are shown on the left of Fig. 4 and instances that are not decidable (by our procedure) are on the right. On “decidable instances” our semi-decision procedure could have concluded reliably because the system satisfies the formula and the property is shortening insensitive, or the system does not satisfy the formula and the property is lengthening insensitive. Non decidable instances shown on the right are those where the verdict on the reduced model is not to be trusted (or both the original and reduced procedures timed out).

With this workflow we show that our approach is generic and can be easily implemented on top of any MCC compatible model-checking tool. All experiments were run with a 950 seconds timeout (close to 15 minutes, which is generous when the contest offers 1 hour for 16 properties). We used a heterogeneous cluster of machines with four cores allocated to each experiment, and ensured that experiments concerning reduced and original versions of a given model/formula are comparable (by running them on the same architecture). To help reproducibility of these experiments, a software artifact is presented in [TMRPAP24], and available from the CodeOcean platform https://doi.org/10.24433/CO.6846969.v1.

Figure 4 presents the results of these experiments. The results are all presented as log-log scatter plots opposing a run on the original to a run on the reduced model/formula pair. Each dot represents an experiment on a model/formula pair; a dot below the diagonal indicates that the reduced version was faster to solve, while a point above it indicates a case where the reduced model actually took longer to solve than the original (fortunately there are relatively few)222An example found in the benchmark is a formula requiring , original system has no states satisfying 3 steps from initial state, but the reduced model does; and it takes a very long sequence of steps to reinvalidate . Points that timeout for one (or both) of the approaches are plotted on the line at 950 seconds, we also indicate the number of points that are in this line (or corner) next to it.

The plots on the left (a) and (c) correspond to “decidable instances” while those on the right are not decidable by our procedure. The two plots on the top correspond to the performance of ITS-tools, while those on the bottom give the results with Tapaal. The general form of the results with both tools is quite similar confirming that our strategy is indeed responsible for the measured gains in performance and that they are reproducible. Reduced problems are generally easier to solve than the original. This gain is in the best case exponential as is visible through the existence of spread of points reaching out horizontally in this log-log space (particularly on the Tapaal plots).

The colors on the decidable instances reflect whether the verdict was true or false. For false properties a counter-example was found by both procedures interrupting the search, and while the search space of a reduced model is a priori smaller, heuristics and even luck can play a role in finding a counter-example early. True answers on the other hand generally require a full exploration of the state space so that the reductions should play a major role in reducing the complexity of model-checking. The existence of True answers where the reduction fails is surprising at first, but a smaller Kripke structure does not necessarily induce a smaller product as happens sometimes in this large benchmark (and in other reduction techniques such as stubborn sets [Val90]). On the other hand the points aligned to the right of the plots a) and c) (189 for ITS-tools and 119 for Tapaal) correspond to cases where our procedure improved these state of the art tools, allowing one to reach a conclusion when the original method fails.

The plots on the right use orange to denote cases where the verdict on the reduced and original models were the same; on these points the procedures had comparable behaviors (either exploring a whole state space or exhibiting a counter-example). The blue color denotes points where the two procedures disagree, with several blue points above the diagonal reflecting cases where the reduced procedure explored the whole state space and thought the property was true while the original procedure found a counter-example (this is the worst case). Surprisingly, even though on these non decidable plots b) and d) our procedure should not be trusted, it mostly agrees (in 95% of the cases) with the decision reached on the original.

Out of the 9222 experiments in total, for ITS-tools 5901 runs reached a trusted decision (64 %), 2927 instances reached an untrusted verdict (32 %), and the reduced procedure timed out in 394 instances (4 %). Tapaal reached a trusted decision in 5866 instances (64 %), 2884 instances reached an untrusted verdict (31 %), and the reduced procedure timed out in 472 instances (5 %). On this benchmark of formulae we thus reached a trusted decision in almost two thirds of the cases using the reduced procedure.

5. Extensions and Perspectives

In this section we investigate how to improve the results obtained in the previous sections, thus extending the presentation of [PPRT22]. The main extension considered is to focus on fragments of a language that satisfy the shortening/lengthening/stutter insensitivity criterion, even when the language as a whole is length sensitive. We first introduce tools to partition a property language into these fragments and present experimental evaluation on a benchmark of LTL formulae. We then present possible approaches to confirm non trustworthy answers of the current semi decision procedure, one of which turns out to be applicable to a much wider range of properties. All of this section is new content with respect to [PPRT22].

5.1. Partition of a Language

The idea is to partition the language of the (negation of the) property into four parts: the stutter insensitive part, the pure shortening insensitive part, the pure lengthening insensitive part, and the pure fully length sensitive part. This is a partition of the language, hence the “pure” qualifier indicates that there is no overlap, e.g. the pure shortening insensitive part does not contain any word of the stutter insensitive part of the original language.

Given this partition, we can use the most efficient available procedure to check emptiness of the product of the system with each part. For the first three parts in particular, we can work with a reduction of the system. The stutter insensitive part benefits from a full decision procedure when working with the reduction, and the partly length insensitive parts can use the semi-decision procedure of Theorem 1.

Length-sensitive partition of a language.

Given a language , we define:

-

•

The stutter insensitive part of noted is defined as the largest subset of that is stutter insensitive. More precisely ,

-

•

The pure shortening insensitive part of noted is the largest subset of that is shortening insensitive but does not intersect with . More precisely

-

•

The pure lengthening insensitive part of noted is the largest subset of that is lengthening insensitive but does not intersect with . More precisely

-

•

The pure fully length sensitive part of noted is the largest subset of that is shortening and lengthening sensitive but does not intersect with the previous parts. More precisely

To compute this partition when the language is represented by a Buchi automaton , we can reuse the closure () and self-loopization () syntactic transformations of an automaton introduced in Section 3.3.

Computing the length-sensitive partition of a language.

Let designate a Büchi automaton recognizing language .

-

•

Let , let , let , then

-

•

Let ,

-

–

Let , let , let , then .

-

–

Let , let , let , then .

-

–

-

•

Finally,

The automaton produced in each case matches Definition 5.1, e.g. when recognizes language . The reasoning behind these definitions is illustrated in Figure 5. For each equivalence class, we simply reason on three distinct but comparable words and such that . The question for each of these words is whether they belong to the language of or not. This gives us cases to consider, but some cases are redundant, e.g. the case where and belong to but doesn’t is homogeneous to the case where belongs to but neither nor do. Figure 5 thus only represents the significantly different cases for a given equivalence class of runs.

[width=]fig/SIpart

[width=]fig/ShortPart

It can be noted that it is quite easy to adapt the steps to compute less strict versions of these “partitions”, e.g. extracting the sublanguage can be done using equations for computing (see Fig. 5) but substituting for in all equations. This involves fewer complement operations on automata.

Unfortunately, computing the complement of an automaton is worst case exponential in the size of , and the procedure described here uses several complementations to implement set difference: . At least when the automaton comes from an LTL property, we can compute instead of computing the complement . The other complement operations in our procedure seem unavoidable at this stage however. Despite this high worst case complexity, the complexity of model-checking as a whole is usually dominated by the size of the Kripke structure, which can be substantially reduced by structural reductions. Hence a model-checking procedure using the partition and structural reductions where possible might still be competitive with the default procedure that cannot use structural reductions at all unless the property is fully stutter insensitive.

5.2. Experiments with Partitioning

As preliminary experimentation we computed these partitions on the LTL formulae of Section 4.1 333The total number of formulae in the MCC dataset do not match those in Table 1 since this experiment was run with a more recent version of ITS-Tools (release ) that can parse more model/formula pairs of this benchmark ( up from out of model/formula pairs in the full MCC data set).. Results are presented in Table 2.

| Bench. | Total | TO+MO | SI | LI | w/ | ShI | w/ | LS | w/ | w/ | w/ | w/ |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Dwyer | 55 | 0 | 32 | 13 | 13 | 9 | 9 | 1 | 1 | 1 | 1 | 0 |

| Spot | 93 | 1+0 | 63 | 17 | 17 | 11 | 11 | 2 | 2 | 2 | 2 | 1 |

| RERS | 2050 | 0 | 714 | 777 | 777 | 559 | 559 | 0 | 0 | 0 | 0 | 0 |

| MCC | 44252 | 328+40 | 26146 | 4996 | 4994 | 6161 | 6146 | 6581 | 6527 | 6498 | 6573 | 2799 |

This table shows that:

-

•

An overwhelming majority (over in all cases, and of non random formulae) of shortening or lengthening insensitive properties contain a stutter insensitive sublanguage (column ). This perhaps explain the high consistency of results on non decidable instances of Fig 4. This observation is also true of fully length sensitive properties , with over of these properties containing a stutter insensitive sublanguage. At least for this part of the language, strategies using structural reductions and/or partial order reduction are possible.

-

•

While computing the partition of a language can theoretically be prohibitively expensive, in practice in most cases it is possible to do so. We fail to compute the partition for only one property of the benchmark, and less than one percent of the MCC full dataset. These measures use a timeout of 15 seconds, which we consider negligible in most cases in the full model-checking procedure that has timeout of the order of several minutes.

-

•

Most length sensitive languages (classified as “LS”) are actually a combination of a lengthening insensitive and a shortening insensitive part, typically with some stuttering insensitive behavior added on top (in over of cases), but do not actually contain an pure fully length sensitive part. Only of the length sensitive properties of the MCC actually contain a fully sensitive part, which only represents of all model/formula pairs in the MCC (that ITS-Tools could parse). These percentages fall to practically zero on non random formulae.

Overall the results in this table are very encouraging. Studying fragments of the language using the most efficient strategy for each part seems to be a promising approach, particularly for the properties for which we would currently default to a study on the non reduced version of the model.

5.3. Extending the language

This section is independent of the two previous sections, and presents a new complementary approach that can help to validate cases where there are no counter-examples.

For any language , by extension of the closure operation on automaton, let .

Given two languages and , and a reduction function for such that is a reduction of ,

Proof 5.1.

Because , we are guaranteed that, in any stutter equivalent class , all words of the reduced language in this class (if any exist) are strictly longer than any word in hence they are strictly longer than any word in . Since only contains words that are shorter or equal to words of the original language, also must contain only words that are strictly longer than any word in .

This observation gives us a new semi-decision procedure (only able to prove absence of counter-examples) that uses a structurally reduced model and can be applied to arbitrary properties. Of course, it is only really relevant for lengthening insensitive or length sensitive properties as stutter insensitive properties enjoy a full decision procedure and shortening insensitive properties are not modified by the operation (by definition). Note that for these shortening insensitive properties, we already had established in Theorem 1 that when the product () is empty, the result is reliable.

5.4. Revisiting the decision procedure

A revisited model-checking approach exploiting the new features introduced in this section, and applicable to arbitrary properties could be:

-

(1)

First, optimistically compute the product of the property and the reduced .

-

(2)

If the property language is SI, LI or ShI and the verdict is reliable, conclude.

-

(3)

Otherwise, try to confirm the verdict obtained:

-

•

If the product is empty, try to confirm using the product of and the reduced . If this product is empty, conclude.

-

•

If the product is not empty, try to confirm the existence of a reliable counter-example in the product of with the reduced . If it exists conclude.

-

•

-

(4)

If these tests fail, we can still try to compute the result for the part of the language on the reduced system (since this is a full and reliable decision procedure) and proceed (if the product is empty, otherwise we have a trusted counter-example) to verify the remaining parts of the language on the original .

Overall for length sensitive properties, separate analysis of the parts of the language on the reduced (using the most appropriate decision procedure) remains possible, thus extending the scope of properties where at least part of the analysis can be performed using structural reductions.

While the outlined approach has not yet been implemented, the experiments on partitioning of Table 2 indicate that there is a lot of room for these strategies to work (over of properties in the benchmark contain an part). Moreover the high correlation () between untrusted verdicts of the semi-decision procedure (see Fig. 4 B) and D)) and actual verdicts seems to indicate that an approach seeking to confirm untrusted verdicts could be very successful.

6. Related Work

Partial order vs structural reductions. Partial order reduction (POR) [PPH96, Val90, Pel94, GW94] is a very popular approach to combat state explosion for stutter insensitive formulae. These approaches use diverse strategies (stubborn sets, ample sets, sleep sets…) to consider only a subset of events at each step of the model-checking while still ensuring that at least one representative of each stutter equivalent class of runs is explored. Because the preservation criterion is based on equivalence classes of runs, this family of approaches is limited only to the stutter insensitive fragment of LTL (see Fig.2). However the structural reduction rules used in this paper are compatible and can be stacked with POR when the formula is stutter insensitive; this is the setting in which most structural reduction rules were originally defined. Hence in the approach where we study either fully formulas or simply the fragment of the language of the property separately, POR can be stacked on top of our approach for studying this fragment.

Structural reductions in the literature. The structural reductions rules we used in the performance evaluation are defined on Petri nets where the literature on the subject is rich [Ber85, PP00, EHP05, HP06, BLBDZ19, Thi20]. However there are other formalism where similar reduction rules have been defined such as [PPR08] using “atomic” blocks in Promela, transaction reductions for the widely encompassing intermediate language PINS of LTSmin [Laa18], and even in the context of multi-threaded programs [FQ03]. All these approaches are structural or syntactic, and they are run prior to model-checking per se.

Non structural reductions in the literature. Other strategies have been proposed that instead of structurally reducing the system, dynamically build an abstraction of the Kripke structure where less observable stuttering occurs. These strategies build a whose language is a reduction of the language of the original (in the sense of Def. 2.4), that can then be presented to the emptiness check algorithm with the negation of the formula. They are thus also compatible with the approach proposed in this paper. Such strategies include the Covering Step Graph (CSG) construction of [VM97] where a “step” is performed (instead of firing a single event) that includes several independent transitions. The Symbolic Observation Graph of [KP08] is another example where states of the original are computed (using BDDs) and aggregated as long as the atomic proposition values do not evolve; in practice it exhibits to the emptiness check only shortest runs in each equivalence class hence it is a reduction.

7. Conclusion

To combat the state space explosion problem that LTL model-checking meets, structural reductions have been proposed that syntactically compact the model so that it exhibits fewer interleavings of non observable actions. Prior to this work, all of these approaches were limited to the stutter insensitive fragment of the logic. We bring a semi-decision procedure that widens the applicability of these strategies to formula that are shortening insensitive or lengthening insensitive. The experimental evidence presented shows that the fragment of the logic covered by these new categories is quite useful in practice. An extensive measure using the models, formulae and the two best tools of the model-checking competition 2021 shows that our strategy can improve the decision power of state of the art tools, and confirm that in the best case an exponential speedup of the decision procedure can be attained. We further proposed in this paper approaches that seek to confirm untrusted semi-decision verdicts using a partition of the language, and an approach based on extending the (arbitrary) property language to obtain a procedure that could reliably conclude that the product is empty while working with a reduced system. We also identified several other strategies that are compatible with our approach since they construct a reduced language.

References

- [Ber85] Gérard Berthelot. Checking properties of nets using transformation. In Applications and Theory in Petri Nets, volume 222 of LNCS, pages 19–40. Springer, 1985.

- [BLBDZ19] Bernard Berthomieu, Didier Le Botlan, and Silvano Dal Zilio. Counting Petri net markings from reduction equations. International Journal on Software Tools for Technology Transfer, April 2019.

- [CGJ+00] Edmund M. Clarke, Orna Grumberg, Somesh Jha, Yuan Lu, and Helmut Veith. Counterexample-guided abstraction refinement. In CAV, volume 1855 of LNCS, pages 154–169. Springer, 2000.

- [Cou99] Jean-Michel Couvreur. On-the-fly verification of linear temporal logic. In World Congress on Formal Methods, volume 1708 of Lecture Notes in Computer Science, pages 253–271. Springer, 1999.

- [DAC98] Matthew B. Dwyer, George S. Avrunin, and James C. Corbett. Property specification patterns for finite-state verification. In Mark Ardis, editor, Proceedings of the 2nd Workshop on Formal Methods in Software Practice (FMSP’98), pages 7–15. ACM Press, March 1998. doi:10.1145/298595.298598.

- [DJJ+12] Alexandre David, Lasse Jacobsen, Morten Jacobsen, Kenneth Yrke Jørgensen, Mikael H. Møller, and Jiří Srba. TAPAAL 2.0: Integrated development environment for timed-arc Petri nets. In Cormac Flanagan and Barbara König, editors, Tools and Algorithms for the Construction and Analysis of Systems, pages 492–497, Berlin, Heidelberg, 2012. Springer Berlin Heidelberg.

- [DR18] Rohit Dureja, , and Kristin Yvonne Rozier. More scalable LTL model checking via discovering design-space dependencies (). In Proceedings of the 24th International Conference on Tools and Algorithms for the Construction and Analysis of Systems (TACAS’24), pages 309–327, Cham, 2018. Springer International Publishing. doi:10.1007/978-3-319-89960-2_17.

- [EH00] Kousha Etessami and Gerard J. Holzmann. Optimizing Büchi automata. In C. Palamidessi, editor, Proceedings of the 11th International Conference on Concurrency Theory (Concur’00), volume 1877 of LNCS, pages 153–167, Pennsylvania, USA, 2000. Springer-Verlag.

- [EHP05] Sami Evangelista, Serge Haddad, and Jean-François Pradat-Peyre. Syntactical colored Petri nets reductions. In ATVA, volume 3707 of LNCS, pages 202–216. Springer, 2005.

- [FQ03] Cormac Flanagan and Shaz Qadeer. A type and effect system for atomicity. In PLDI, pages 338–349. ACM, 2003.

- [GW94] Patrice Godefroid and Pierre Wolper. A partial approach to model checking. Inf. Comput., 110(2):305–326, 1994.

- [HJM+21] Falk Howar, Marc Jasper, Malte Mues, David Schmidt, and Bernhard Steffen. The rers challenge: towards controllable and scalable benchmark synthesis. International Journal on Software Tools for Technology Transfer, pages 1–14, 06 2021. doi:10.1007/s10009-021-00617-z.

- [HP06] Serge Haddad and Jean-François Pradat-Peyre. New efficient Petri nets reductions for parallel programs verification. Parallel Processing Letters, 16(1):101–116, 2006.

- [KBG+21] F. Kordon, P. Bouvier, H. Garavel, L. M. Hillah, F. Hulin-Hubard, N. Amat., E. Amparore, B. Berthomieu, S. Biswal, D. Donatelli, F. Galla, , S. Dal Zilio, P. G. Jensen, C. He, D. Le Botlan, S. Li, , J. Srba, Y. Thierry-Mieg, A. Walner, and K. Wolf. Complete Results for the 2021 Edition of the Model Checking Contest. http://mcc.lip6.fr/2021/results.php, June 2021.

- [KP08] Kais Klai and Denis Poitrenaud. MC-SOG: an LTL model checker based on symbolic observation graphs. In Kees M. van Hee and Rüdiger Valk, editors, Applications and Theory of Petri Nets, 29th International Conference, PETRI NETS 2008, Xi’an, China, June 23-27, 2008. Proceedings, volume 5062 of LNCS, pages 288–306. Springer, 2008.

- [KPR04] Raman Kazhamiakin, Marco Pistore, and Marco Roveri. Formal verification of requirements using SPIN: A case study on web services. In SEFM, pages 406–415. IEEE Computer Society, 2004.

- [Laa18] Alfons Laarman. Stubborn transaction reduction. In NFM, volume 10811 of LNCS, pages 280–298. Springer, 2018.

- [Lam90] Leslie Lamport. A theorem on atomicity in distributed algorithms. Distributed Comput., 4:59–68, 1990.

- [Lip75] Richard J. Lipton. Reduction: A method of proving properties of parallel programs. Commun. ACM, 18(12):717–721, 1975.

- [LS89] Leslie Lamport and Fred B. Schneider. Pretending atomicity. SRC Research Report 44, May 1989. URL: https://www.microsoft.com/en-us/research/publication/pretending-atomicity/.

- [MD15] Thibaud Michaud and Alexandre Duret-Lutz. Practical stutter-invariance checks for -regular languages. In SPIN, volume 9232 of LNCS, pages 84–101. Springer, 2015.

- [Pel94] Doron A. Peled. Combining partial order reductions with on-the-fly model-checking. In CAV, volume 818 of LNCS, pages 377–390. Springer, 1994.

- [PP00] Denis Poitrenaud and Jean-François Pradat-Peyre. Pre- and post-agglomerations for LTL model checking. In ICATPN, volume 1825 of LNCS, pages 387–408. Springer, 2000.

- [PPH96] Doron A. Peled, Vaughan R. Pratt, and Gerard J. Holzmann, editors. Partial Order Methods in Verification, Proceedings of a DIMACS Workshop, 1996, volume 29 of DIMACS Series in Discrete Mathematics and Theoretical Computer Science. DIMACS/AMS, 1996.

- [PPR08] Christophe Pajault, Jean-François Pradat-Peyre, and Pierre Rousseau. Adapting Petri nets reductions to Promela specifications. In FORTE, volume 5048 of LNCS, pages 84–98. Springer, 2008.

- [PPRT22] Emmanuel Paviot-Adet, Denis Poitrenaud, Etienne Renault, and Yann Thierry-Mieg. LTL under reductions with weaker conditions than stutter invariance. In FORTE, volume 13273 of Lecture Notes in Computer Science, pages 170–187. Springer, 2022.

- [SB00] Fabio Somenzi and Roderick Bloem. Efficient Büchi automata for LTL formulæ. In Proceedings of the 12th International Conference on Computer Aided Verification (CAV’00), volume 1855 of LNCS, pages 247–263, Chicago, Illinois, USA, 2000. Springer-Verlag.

- [SV12] Sven Schewe and Thomas Varghese. Tight bounds for the determinisation and complementation of generalised Büchi automata. In Supratik Chakraborty and Madhavan Mukund, editors, Proceedings of the 10th International Symposium on Automated Technology for Verification and Analysis (ATVA’12), volume 7561 of Lecture Notes in Computer Science, pages 42–56. Springer, October 2012. doi:10.1007/978-3-642-33386-6_5.

- [Thi15] Yann Thierry-Mieg. Symbolic model-checking using ITS-tools. In TACAS, volume 9035 of LNCS, pages 231–237. Springer, 2015.

- [Thi20] Yann Thierry-Mieg. Structural reductions revisited. In Petri Nets, volume 12152 of LNCS, pages 303–323. Springer, 2020.

- [TMRPAP24] Yann Thierry-Mieg, Etienne Renault, Emmanuel Paviot-Adet, and Denis Poitrenaud. A model-checker exploiting structural reductions even with stutter sensitive ltlimage 1. Science of Computer Programming, 235, 2024. doi:10.1016/j.scico.2024.103089.

- [Val90] Antti Valmari. A stubborn attack on state explosion. In CAV, volume 531 of LNCS, pages 156–165. Springer, 1990.

- [Var07] Moshe Y. Vardi. Automata-theoretic model checking revisited. In VMCAI, volume 4349 of LNCS, pages 137–150. Springer, 2007.

- [VM97] François Vernadat and François Michel. Covering step graph preserving failure semantics. In Pierre Azéma and Gianfranco Balbo, editors, Application and Theory of Petri Nets 1997, 18th International Conference, ICATPN ’97, Toulouse, France, June 23-27, 1997, Proceedings, volume 1248 of LNCS, pages 253–270. Springer, 1997.

- [Yan08] Qiqi Yan. Lower bounds for complementation of omega-automata via the full automata technique. Logical Methods in Computer Science, 4(1), March 2008.