Sub-Gaussian Error Bounds for Hypothesis Testing

††thanks: This research was supported in part by the US National Science Foundation under grant HDR: TRIPODS 19-34884.

Abstract

We interpret likelihood-based test functions from a geometric perspective where the Kullback-Leibler (KL) divergence is adopted to quantify the distance from a distribution to another. Such a test function can be seen as a sub-Gaussian random variable, and we propose a principled way to calculate its corresponding sub-Gaussian norm. Then an error bound for binary hypothesis testing can be obtained in terms of the sub-Gaussian norm and the KL divergence, which is more informative than Pinsker’s bound when the significance level is prescribed. For -ary hypothesis testing, we also derive an error bound which is complementary to Fano’s inequality by being more informative when the number of hypotheses or the sample size is not large.

I Introduction

Hypothesis testing is one central task in statistics. One of its simplest forms is the binary case: given independent and identically distributed (i.i.d.) random variables , one wants to infer whether the null hypothesis or the alternative hypothesis is true. The binary case serves as an important starting point from which further results can be established, in the settings of both classical and quantum hypothesis testing [1, 2]. With , one can construct the empirical distribution , where is the Dirac measure that puts unit mass at . Adopting the Kullback-Leibler (KL) divergence as a distance from to or , one can construct a test function as

| (1) |

where is the indicator function, serves as a threshold beyond which the decision that is closer to than to is made, and is the KL divergence from probability to probability if . Conventionally, if is not absolutely continuous with respect to , then . Note is discrete; hence if both and are discrete with the same support, (1) is well defined. Denote the densities of and with respect to the counting measure as and , respectively, and we have

| (2) |

In fact, in this case, (1) is equivalent to the test function for the likelihood ratio test [4]

| (3) |

where . In the case that both and are continuous, the KL divergence difference is not well defined. Nonetheless, the technically tricky part is the term “,” where we use to denote the density of with respect to the Lebesgue measure as if it had one. But it appears twice and is cancelled out formally. We might conveniently define the KL divergence difference in this case as (2), and still find the equivalence between (1) and (3). Using the KL divergence in the context of hypothesis testing can be beneficial. Firstly, it provides a clear geometric meaning to the likelihood ratio test, as well as to the general idea underlying hypothesis testing. Secondly, it also offers a geometric, or even physical, interpretation of the lower bound for the resulting statistical errors, as shown below.

Under the null hypothesis , the type I error rate (or the significance level) that is incurred by applying (1) for a fixed is

| (4) |

where is the product probability measure for under . In practice, by prescribing the significance level, for example, letting , one can derive the corresponding and determine the desired test function. However, in this work, our focus is to find a test function at given , we mainly deal with the case that is fixed, and is obtained in a somewhat passive way. Thanks to the Neyman-Pearson lemma [3], the likelihood ratio test is known to be optimal in the sense of statistical power. Hence, given the incurred , test function (1) has the minimal type II error rate among all possible test functions with the corresponding type I error rate no gretaer than :

| (5) |

where is the product probability measure for under the alternative hypothesis .

Controlling statistical errors is of practical importance; however, typically one cannot suppress both types of error simultaneously. Under our i.i.d. setting, a classical result, based on Pinsker’s inequality, concerning the error bound for any (measurable) test function is that [4]

| (6) |

This result is striking in that without going into the details of calculating and , one can have a nontrivial lower bound of their sum in terms of the KL divergence between two candidate probabilities, as long as the right-hand side of (6) is greater than 0. For a fixed , this bound is solely determined by , which reflects the “distance” from to . This result also has a significant physical meaning. At a nonequilibrium steady state, if denotes the probability associated with observing a stochastic trajectory in the forward process, and in the backward process, then the theory of stochastic thermodynamics tells us that is equivalent to the average entropy production in the forward process, which is always nonnegative [5, 6]. Hence, if one wishes to infer the arrow of time based on observations, then Pinsker’s result (6) implies that the chance of making an error is high if is small. In fact, we know that at equilibrium, and one cannot tell the arrow of time at all; hypothesis testing is just random guess in this case.

While (6) is useful, can we have a tighter and thus more informative bound? In this work, we will show that by taking advantage of the sub-Gaussian property of [7, 8], one can derive a bound (17) on statistical errors in terms of its sub-Gaussian norm (as well as the KL divergence from to ). We name such an error bound as “sub-Gaussian” to highlight this fact. It turns out that it is a tighter bound than (6) in the sense that it provides a greater lower bound for (or for at any given ). In practice, a small is commonly set as the significance level, and our result can hopefully be more relevant. Moreover, in the case of -ary hypothesis testing where hypotheses are present, we also derive a bound (24) for making incorrect decisions, which is complementary to the celebrated Fano’s inequality [9] when the number of hypotheses or the sample size is not large. The error bounds presented in this work are universal and easily applicable. We hope these findings can help better quantify errors in various statistical practices involving hypothesis testing.

II Main Results

We will first introduce the sub-Gaussian norm of . Then error bounds in the binary and -ary cases are established, respectively.

II-A Sub-Gaussian norm of

Sub-Gaussian random variables are natural generalizations of Gaussian ones. The so-called sub-Gaussian property can be defined in several different but equivalent ways [7, 8]. In this work, we pick one that suits most for our purposes.

Definition 1.

A random variable with probability law is called sub-Gaussian if there exists such that its central moment generating function satisfies

Definition 2.

The associated sub-Gaussian norm of with respect to is defined as

Remark 1.

is a well defined norm for the centered variable [6]. It is the same for a location family of random variables that have different means but are otherwise identical. Also, is equal to the -Orlicz norm of up to a numerical constant factor.

Lemma 1.

A bounded random variable is sub-Gaussian. In particular, if almost surely with respect to , then .

Test function (1) is an indicator function and takes on values in ; hence it is bounded. No matter what the law of is, is always sub-Gaussian by Lemma 1 with a uniform upper bound of its sub-Gaussian norm that

| (7) |

However, if is fixed as a result of some being used in (1), then a more informative sub-Gaussian norm for can be obtained under the situation that . In this case, by (4),

and one can explicitly write

Using , one can rewrite the sub-Gaussian property as

| (8) |

Since is sub-Gaussian, there exists such that at any , we have , which is the maximal value of . This fact implies and . The latter poses a constraint on ’s under which (8) holds:

| (9) |

Since , we know the minimal universal for all is , consistent with (7).

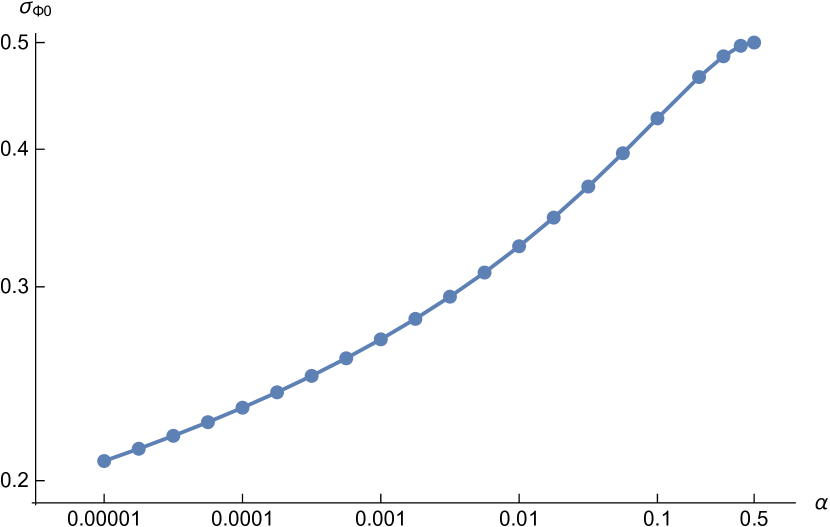

For a specific , the minimal that makes (8) valid is denoted as , which is defined to be the sub-Gaussian norm of under the law , the push forward probability measure of induced by . We may also simply state that is the sub-Gaussian norm of under . The norm can be numerically obtained in a principled way, as summarized in the following theorem.

Theorem 1.

For , besides the trivial solution with any , the equations

| (12) |

have only one nontrivial solution where . The sub-Gaussian norm of under is . For , .

Proof.

We will consider three cases based on the value of .

Case I: . In this case, can be obtained directly by noticing

Hence by direct inspection, .

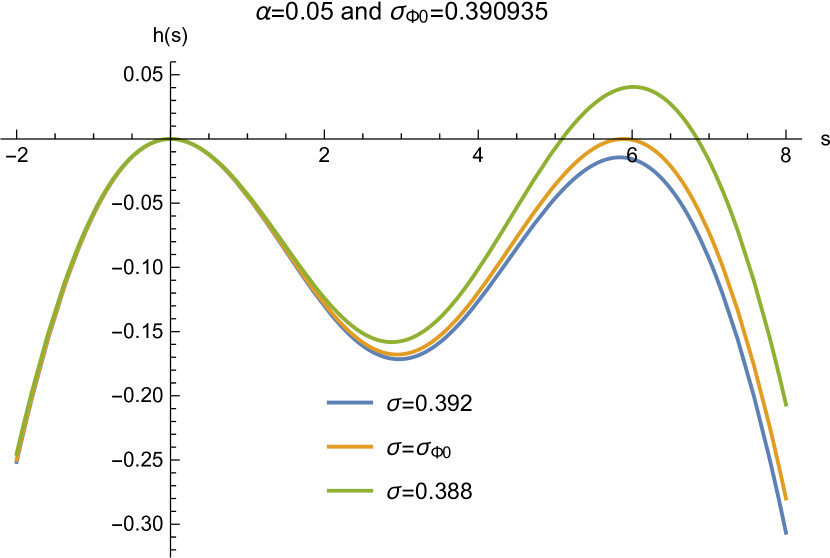

Case II: . Before diving into the proof, we briefly address the main idea first. Given , the function depends on both and . Requiring its maximum to be no greater than 0 at some naturally leads to two conditions that and , which are just (12). It is expected that can be obtained from the corresponding nontrivial solutions, since it is the minimal that satisfies (8). Fig. 1 confirms this intuition, where is assumed for illustration. By tuning to some , one can see the maximum of at some can be exactly equal to 0, i.e., . Also at this , is tangent to the -axis, indicating that . Hence .

Now we turn to the proof. It is trivial that for any , attains its maximal value 0 at , no matter what is. This does not provide much useful information of . To proceed, we need a nontrivial local maximum of at some . Our first observation is that when , there is no local maximum achieved for , because for all . To see this, let , , and , then we have that

where (hence ) is used in the first inequality, the second inequality is due to for , and the last inequality is given by (9) since we have already known is sub-Gaussian. This result indicates that the nontrivial maximum, if any, can only be found at some .

For , following similar steps, we obtain

and the condition then implies

It is straightforward to check that is a positive, monotonically increasing, and strongly convex function. Hence it can intersect the straight line at no more than two points. Note , and . The intercept of is by (9), and the slope is greater than 0. Hence by tuning , it is always possible to make and intersect twice. Denote these two points as and , respectively, with . As shown in Fig. 1, is the minimum between two maxima and . Then further requiring at some , which is attainable since is known to be sub-Gaussian, we obtain , and Theorem 1 for the part is proved.

Case III: . Note or is invariant under the transformations and . Hence is the same for and .

Combining all three cases, we have proved Theorem 1. ∎

II-B Sub-Gaussian bound for binary hypothesis testing

Lemma 2.

Consider two general probability measures and on a common measurable space. Suppose , and let be the density of with respect to . Let be a sub-Gaussian random variable which is a function of that has law or . Then we have

| (13) |

where denotes the sub-Gaussian norm of with respect to the push forward measure .

Recently, there have been several works with findings similar to Theorem 2, in the context of nonequilibrium statistical physics [6], data exploration or model bias analysis [10, 11], or uncertainty quantification for stochastic processes [12]. They can, however, be analyzed in a unified way based on the spirit in [13].

Proof.

We have assumed and . The associated entropy functional of with respect to is defined as . It is straightforward to find that

| (14) |

On the other hand, by the variational representation of , we have that

| (15) |

where is a measurable function. We have and .

Theorem 2.

Suppose , and denote the sub-Gaussian norm of test function (1) under the null hypothesis as . Then we have

| (16) |

Proof.

Let , and , and due to the i.i.d. setting, . Then the proof is completed by letting in Lemma 2. ∎

Corollary 1.

One has

| (17) |

Remark 2.

Corollary 1 can be relaxed by replacing the sub-Gaussian norm with one of its upper bounds. In fact, if we use the universal upper bound provided by (7), then Corollary 1 reduces to Pinsker’s classical result (6). However, our bound is always stronger in general. In particular, when controlling is more important than controlling , one might set to put more emphasis on it. Hence for the same sample size , the larger is, the smaller and are, resulting in a tighter bound for .

Remark 3.

There is another inequality from Theorem 2 that . But it is somewhat trivial because the bound is greater than 1 and in general does not provide much useful information. For example, one can always accept , and for this trivial decision rule, , but by definition. Hence , and the extra term is not informative at all.

Remark 4.

Suppose also , which is the usual case in hypothesis testing. Then by symmetry, it is straightforward to have

| (18) |

where is the sub-Gaussian norm of under , and it is a function of . This result is nontrivially different than (17), not only because different norms are applied, but also because the KL divergence is not symmetric in two involved probabilities. Given (18), we can either bound when is given or bound in an implicit way when is given.

II-C Sub-Gaussian bound for -ary hypothesis testing

A generalization of our result to the -ary hypothesis testing can be obtained. Suppose there are hypotheses, represented by the corresponding probability distributions . Suppose from one of such distributions , data points are drawn independently. Our task is to infer the hypothesis index from data. Similar to (1), let us consider the test function for the -th hypothesis as

with in the binary case. We will consider the case that for all . Unlike in the binary case where can be adopted to intentionally render a small , the test function here is purely likelihood-based without any prescribed preference over any particular hypothesis. It is known that this approach minimizes in the binary case (the Bayes classifier). From such test functions , one can construct a random vector . Assume there always exists a single index such that

holds for all . In this case, , and for . Since is random, we expect that may differ for each realization. However, it is almost surely with respect to all ’s that

| (19) |

Under hypotheses, we can construct a matrix, denoted , that encodes the error incurred in testing:

| (23) |

where the matrix element . By (19), the row sum of is 1. The diagonal elements of are actually the probabilities that the underlying hypothesis is correctly identified. In other words, the probability of making an incorrect decision when the data are generated from the th hypothesis is . We denote . The following theorem provides a lower bound to that is complementary to Fano’s inequality.

Theorem 3.

Suppose for all . For any , we have

| (24) |

where is the sub-Gaussian norm of with respect to the th hypothesis.

Proof.

First note that is sub-Gaussian since it takes on values in . If is fixed, then the sub-Gaussian norm can be calculated similarly as in the binary case. Even is unknown, by (2), we can formally have

| (25) |

Summing over and combining (19), we find

Finally, we arrive at (24) by rearranging the terms. Hence the proof is completed. ∎

If we aim at lower bounding , then using the sub-Gaussian norm in Theorem 3 seems not useful practically, since itself depends on . Nonetheless, due to the universal upper bound (7), we can have a relaxed version of (24) as in the corollary below.

Corollary 2.

For any , we have

| (26) |

or, using the mean square root of KL divergences, we have

| (27) |

Furthermore, if holds for each pair of and , then

| (28) |

Remark 6.

It is interesting to compare (28) with Fano’s inequality [9], which, under the same assumption that all KL divergences are uniformly bounded by , states that

| (29) |

As evidenced by the scalings of and in (28) and (29), respectively, there is a region that our result outperforms Fano’s in the sense that it provides a greater lower bound for . Qualitatively, this happens when at least one of the number of hypotheses and the sample size is not large. For example, when , Fano’s inequality is trivial, while our result can still work nontrivially.

III Conclusion and discussion

In this work, by using the sub-Gaussian property of test functions, we uncover two universal error bounds in terms of the sub-Gaussian norm and the Kullback-Leibler divergence. In the case of binary hypothesis testing, our bound (17) is always tighter than Pinsker’s bound (6) for any given . In the case of -ary hypothesis testing, our result (24) is complementary to Fano’s inequality (29) by providing a more informative bound when the number of hypotheses or the sample size is not large.

Given the universality of our results, we hope, with possible generalizations, they can find potential applications in fields ranging from clinical trials to quantum state discrimination. In particular, the quantum extension of these bounds is of special interest. Due to the experimental cost, it may be important to quantify statistical errors in the presence of a limited number of observations, and nonasymptotic rather than asymptotic results are thus more relevant. Both our bounds hold for any finite sample size, and can hopefully be helpful in such cases.

Acknowledgment

YW gratefully thank Prof. Dan Nettleton for helpful discussions that stimulated this work and Prof. Huaiqing Wu for a careful review of the manuscript and insightful comments.

References

- [1] R. W. Keener, Theoretical Statistics: Topics for a Core Course. New York, NY, USA: Springer, 2010.

- [2] M. Hayashi, Quantum Information Theory: A Mathematical Foundation, 2nd ed. New York, NY, USA: Springer-Verlag, 2017.

- [3] J. Neyman and E. S. Pearson, IX, “On the problem of the most efficient tests of statistical hypotheses,” Phil. Trans. R. Soc. London, vol. A231, pp. 289–337, April 1933.

- [4] T. M. Cover and J. A. Thomas, Elements of Information Theory. Hoboken, NJ, USA: John Wiley & Sons, 1991.

- [5] U. Seifert, “Entropy production along a stochastic trajectory and an integral fluctuation theorem,” Phys. Rev. Lett., vol. 95, no.4, July 2005, Art. no. 040602.

- [6] Y. Wang, “Sub-Gaussian and subexponential fluctuation-response inequalities,” Phys. Rev. E, vol. 102, no. 5, November 2020, Art. no. 052105.

- [7] M. J. Wainwright, High-Dimensional Statistics: A Non-Asymptotic Viewpoint. Cambridge, U.K.: Cambridge University Press, 2019.

- [8] R. Vershynin, High-Dimensional Probability: An Introduction with Applications in Data Science. Cambridge, U.K.: Cambridge University Press, 2018.

- [9] R. Fano, Transmission of Information: A Statistical Theory of Communications. Cambridge, MA, USA: M.I.T. Press, 1961.

- [10] D. Russo and J. Zou, “How much does your data exploration overfit? Controlling bias via information usage,” IEEE Trans. Inf. Theory, vol. 66, no. 1, pp. 302–323, January 2020.

- [11] K. Gourgoulias, M. A. Katsoulakis, L. Rey-Bellet, and J. Wang, “How biased is your model? Concentration inequalities, information and model bias,” IEEE Trans. Inf. Theory, vol. 66, no. 5, pp. 3079–3097, May 2020.

- [12] J. Birrell and L. Rey-Bellet, “Uncertainty quantification for Markov processes via variational principles and functional inequalities,” SIAM/ASA J. Uncertain. Quantif. vol. 8, no. 2, pp. 539–572, April 2020.

- [13] S. G. Bobkov and F. Götze, “Exponential integrability and transportation cost related to logarithmic Sobolev inequalities,” J. Funct. Anal., vol. 163, no. 1, pp. 1–28, April 1999.