Superlinear Precision and Memory in Simple Population Codes

Abstract

The brain constructs population codes to represent stimuli through widely distributed patterns of activity across neurons. An important figure of merit of population codes is how much information about the original stimulus can be decoded from them. Fisher information is widely used to quantify coding precision and specify optimal codes, because of its relationship to mean squared error (MSE) under certain assumptions. When neural firing is sparse, however, optimizing Fisher information can result in codes that are highly sub-optimal in terms of MSE. We find that this discrepancy arises from the non-local component of error not accounted for by the Fisher information. Using this insight, we construct optimal population codes by directly minimizing the MSE. We study the scaling properties of MSE with coding parameters, focusing on the tuning curve width. We find that the optimal tuning curve width for coding no longer scales as the inverse population size, and the quadratic scaling of precision with system size predicted by Fisher information alone no longer holds. However, superlinearity is still preserved with only a logarithmic slowdown. We derive analogous results for networks storing the memory of a stimulus through continuous attractor dynamics, and show that similar scaling properties optimize memory and representation.

Information about sensory stimuli or motor variables is often encoded in the joint activity of large populations of neurons. In a classic form of such population coding, neurons fire selectively with a “bump” of elevated activity around a certain preferred value of the encoded variable. Such bump codes, because of their ubiquity in the brain [1, 2, 3, 4, 5] and amenability to quantitative analysis, have been the subject of intense theoretical scrutiny [6, 7, 8, 9, 10, 11].

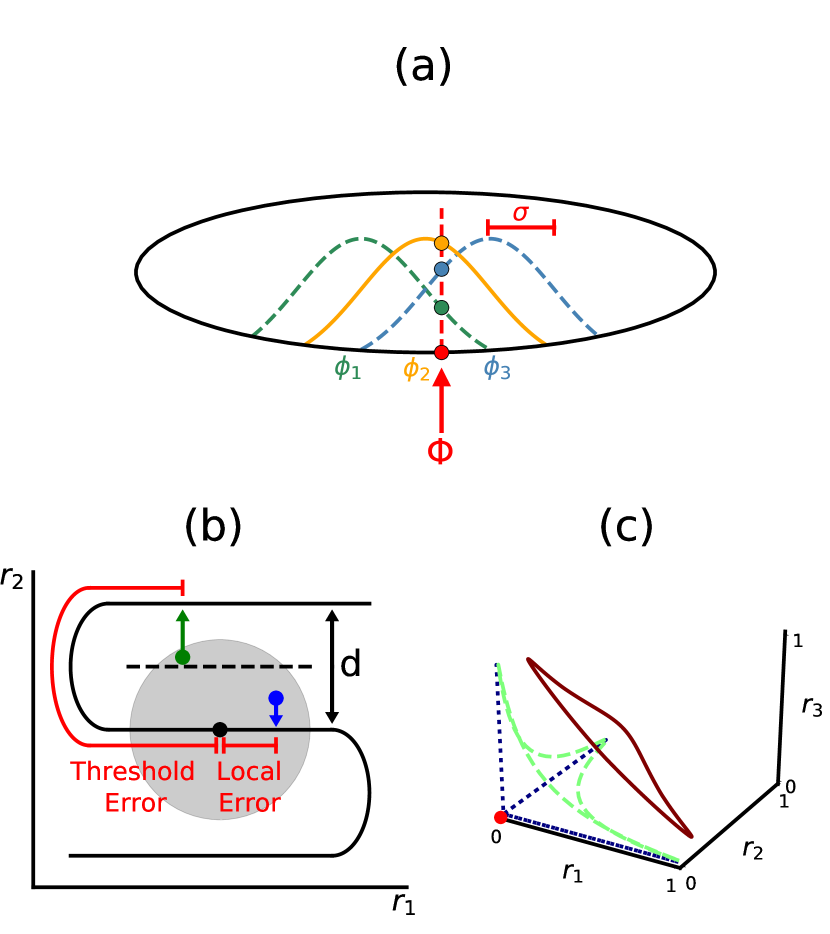

Fig. 1a shows a schematic of a bump code, where a network of neurons encodes a one-dimensional periodic stimulus parametrized by angle . When the stimulus is presented, each neuron independently fires Poisson spikes at a rate :

| (1) |

Here, is the peak firing rate and is a unimodal function with width and the peak value of 1 at . We set the baseline firing rate , but later find that this assumption can be relaxed. The preferred angles ’s of the neurons are evenly distributed across the stimulus domain, such that . Equation (1), which maps each stimulus value to the expected response of a neuron, is known as the neuronal tuning curve. Such unimodal tuning curves are widely observed in sensory and motor peripheries and even in cognitive areas [12, 13, 14]. If the spikes are collected for time while the stimulus remains present, the number of spikes fired by each neuron will be distributed as . The population response constitutes the neural encoding of stimulus .

A natural measure of encoding performance in a population code is the mean squared-error (MSE) in estimating the stimulus from the populaton response. In general, MSE is difficult to compute thus a common approach has been to instead compute the Fisher information (FI) [15, 16, 17, 18, 19, 20]:

| (2) |

For any unbiased estimator , the MSE is bounded from below by the inverse of FI via the Cramér-Rao bound. [21, 22]:

| (3) |

Averaging over all possible stimulus values, the quantity is a lower bound on overall MSE.

In general, a bigger population will enable more accurate decoding. However, neurons are costly to maintain so the brain may optimize other coding parameters. A parameter of particular interest is the tuning curve width . For bump codes representing a scalar variable, FI grows as [16, 23, 24]. Thus, if remained constant, FI would scale linearly with .

While it may appear that we can achieve infinite precision by sending , this is not the case because the relevant quantity, , diverges from in the regime in which the tuning curves are too narrow to span the space between each neuron’s bump center (i.e., the support of the tuning curves does not cover the stimulus domain). The correct FI-optimal that minimizes the former actually scales as [25], and so the optimal FI scaling of precision is superlinear, scaling as .

Unfortunately, the Cramér-Rao bound is guaranteed to be “tight” only when the number of samples collected for the estimate tends to infinity. When the number of spikes obtained from the population is small, the inverse FI can severely underestimate the true MSE, as demonstrated in [26, 27, 25]. This finding calls into question whether any superlinear scaling of MSE is actually achievable in bump codes. Thus, we are left with two open questions which we answer in this Letter: What is the MSE-optimal scaling of tuning width in classical bump codes? And, is superlinear coding possible?

A natural way to start is to ask when the inverse FI and MSE become decoupled. For this, we adopt a geometric view of coding. A redundant code of an analog variable may be viewed as an embedding of a lower-dimensional manifold (encoded variable) into a higher-dimensional space (coding variables, i.e. neural response). Thus, our bump population code for a scalar variable is an embedding of a line into the -dimensional activity state space . In Fig. 1b, the solid black coding line corresponds to the noiseless neural responses as a parametric function of the encoded variable . Noise in the neural responses perturbs the network state away from the coding line, and a decoder must map the perturbed state back onto it. When the noise is small, a good decoder can map the state back to the vicinity of the original point on the coding line; the small remaining errors are local. When the noise magnitude exceeds a threshold value, the perturbed state, and thus its reconstructed estimate, is closer to a distant point on the coding line. Such errors are called threshold errors [28]. Both types of error contribute to the MSE, but FI only takes into account of local errors. Thus, when the threshold errors proliferate, the MSE grows and parts ways from the inverse FI.

The tuning curve width affects the layout, length, and spacing of the coding line, and through them the probability of threshold errors. If the total volume of state space is held fixed (equivalent to fixing the minimum and maximum firing rate of neurons and the number of neurons) as the tuning curve is narrowed, the coding line increases in length, resulting in a smaller local error as a fraction of the range of the variable. However, the longer coding line is packed more closely near the axes of the space and near the origin (Fig. 1c). As we see below, this increases threshold error probability.

We now use the above insight to heuristically derive a simple analytic expression for how MSE scales with network size in bump population codes. The total MSE can be written as a sum of two terms, arising from local and threshold errors:

| (4) |

where () corresponds to the expected value of local (threshold) squared errors and is the probability of threshold errors.

As noted above, for unimodal tuning curves, the FI for a one-dimensional stimulus has the following scaling:

| (5) |

where is a prefactor dependent on and , which is fixed. We assume that is accurately described by the Cramér-Rao bound and that the regime of interest satisfies . We will subsequently verify the consistency of this assumption.

We next consider the threshold error term. If at any stimulus value few neurons respond and do so with small rates, there is a finite probability that no spikes will be fired. In such a trial, the decoder must guess an angle from no data, resulting in a large error of . This is the manifestation of threshold error in our system, and corresponds to the intersection of a noise ball with the origin in the geometric view of Fig. 1c. To reduce such error, the neurons must code redundantly in the sense of multiple neurons covering the same angular space to ensure that at least some will respond for any stimulus. It is thus expected that the optimal width will decrease more slowly than to ensure that the threshold errors remain comparable to the inevitable local errors.

The equation for the MSE now becomes:

| (6) |

where is the probability that no spikes are fired by any neurons during the observation time and is the mean-squared error in the case of random guessing over the circle. For broad enough tuning curves, the total firing rate in the population is nearly independent of stimulus value and is given by , where is a constant determined by the tuning curve shape, e.g. for Gaussian [27]. Thus, we have . Above, we made the assumption that decreases more slowly than . This implies both that fluctuations in is negligible and . The scaling of optimal width that we presently derive is consistent with this assumption.

From Equation (6), the value of that minimizes MSE is readily computed to be

| (7) | ||||

| (8) |

With this form for , the optimal MSE then scales as

| (9) |

Due to in the numerator, the first term is dominant for large and scales as . This provides the optimal scaling of MSE for neurons with unimodal tuning curves encoding a one-dimensional stimulus. An important note is that since the first term corresponds to the local error, the Cramér-Rao bound is in fact asymptotically tight with the optimal scaling in Equation (8). This furthermore implies that the extra error, i.e. the absolute difference between and , the inverse FI at the optimal tuning width, should scale as .

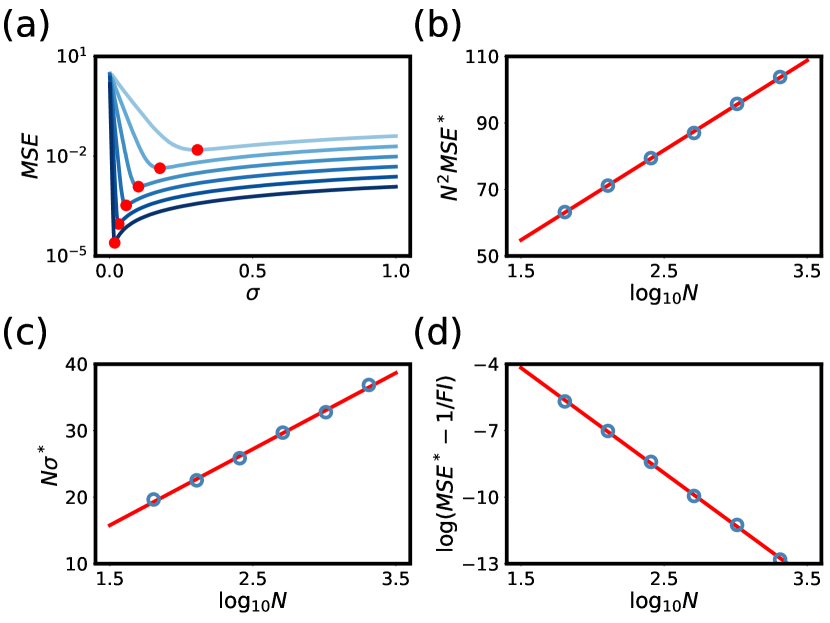

To test these predictions, we performed simulations of the encoding/decoding process that employed maximum likelihood decoding of neurons with Gaussian tuning curves. We chose various system sizes and measured MSE as a function of tuning curve width for each value of (Fig. 2a). To check the consistency with our predictions, we rescaled the minimal MSE for each and plotted versus (Fig. 2b), which should be linear if the predicted scaling is correct. We similarly rescaled the optimal tuning curve width and plotted versus (Fig. 2c), which should also be linear. We finally plotted the extra threshold error beyond the local error (Fig. 2d). In all cases, the predicted scaling was observed. These numerical results together provide strong evidence that our simple estimate captures the correct asymptotic behavior and that indeed can scale nearly as , with only a logarithmic slowdown. Moreover, the optimal tuning curve width scales as , an adjustment from the Fisher information-derived scaling. We emphasize that this is a general result for any unimodal symmetric tuning curves as long as neurons are homogeneous, densely distributed, and fire independent Poisson spikes with zero baseline.

We decided to test whether the result still holds if the neurons are assumed to have a small (compared to the maximum) nonzero baseline firing rate. The above derivation cannot be reused because the main cause of threshold errors is now no longer non-response of the stimulus-driven neurons but higher responses by neurons far from the stimulus. Nevertheless, a heuristic derivation suggests that the basic scaling relations still remain the same, and numerical results also corroborate this. Details can be found in the Supplemental Material.

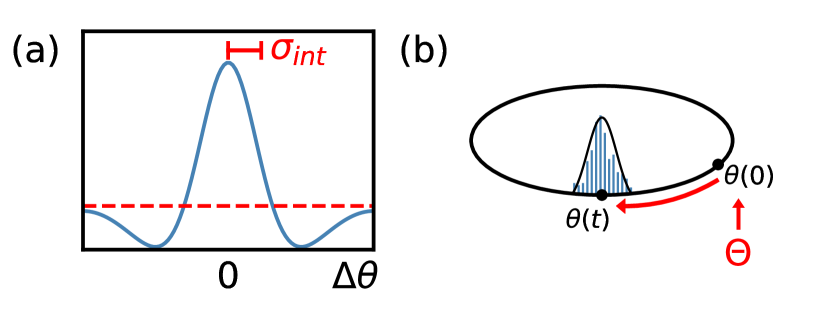

The brain also uses population representations to store short-term memory of continuous variables, which can be maintained as the dynamical attractor states of a continuous attractor network [29, 30, 31]. The performance of such memory networks is also known to be bounded by FI [32]. This motivated us to ask whether the considerations discussed above may also apply in a memory setting. For direct comparison, we consider neurons storing a periodic one-dimensional variable, through bump-like activity profiles. To achieve a persistent bump in the absence of a stimulus, the neurons are arranged in a ring and each neuron interacts with others via short range excitatory and long range inhibitory connections (Fig. 3a). The excitatory interaction width plays an analogous role to the tuning curve width we considered earlier. The result of this connectivity structure is that a persistent bump of local activity is dynamically maintained around an initial location determined by the external input stimulus (which is subsequently taken away) [33, 34]. Due to neural noise, the bump location does not remain stationary, but rather performs diffusive motion away from its initial location [32] (Fig. 3b). This diffusion amounts to erasure of the stored information (initial stimulus location), and a desirable memory network would have lower diffusivity.

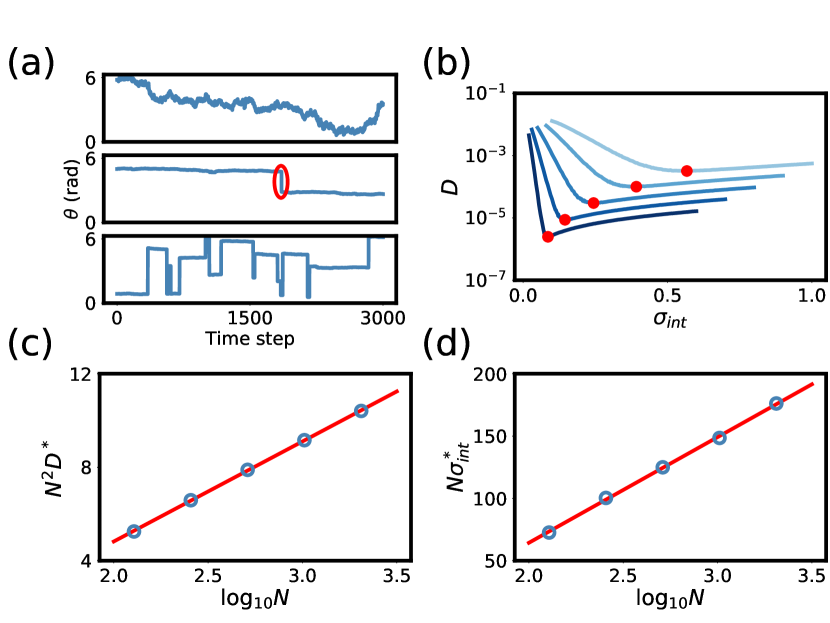

In fact, we find that the motion is diffusive only for large enough interaction width. For smaller interaction width, diffusive motion is interrupted by large jumps where the activity bump stochastically shrinks and spontaneously reassembles in a potentially distant location (Fig. 4a). These non-local jumps can be understood as the dynamical analogue of threshold errors in the sensory network. Clearly, such large jumps are catastrophic for memory performance. Thus a similar trade-off exists for the memory network: decreasing the interaction width tightens the bump and decreases its diffusivity, but it also increases the chance of complete destabilization and non-local reformation of the bump.

We performed dynamical simulations for networks of various sizes and varied . For each network, we computed the diffusion constant as the slope of mean squared displacement versus time for short durations, averaged over the entire simulation. Although the motion was not always purely diffusive, we can nonetheless extract an effective diffusion constant in this fashion, and mean squared displacement was linear over short times for all networks. From the results (Fig. 4b), we found the minimum diffusivity and the corresponding optimal interaction width and plotted and versus (Fig. 4c-4d). In both cases the scaling is linear, indicating that the optimal memory network exhibits the same scaling as we found for the sensory system.

Thus, it is found that the decomposition of error into local and non-local terms is a phenomenon common to both population coding and short-term memory. Fisher information, being a partial derivative, is fundamentally a local quantity and can only take into account of the first term. Neglecting the existence of the non-local source of error inevitably leads to failure of any FI-based approach to characterizing and optimizing model performance. Conversely, we hope to have demonstrated that FI can still be used fruitfully if it is complemented with an appropriate characterization of the remaining error.

References

- Georgopoulos et al. [1982] A. P. Georgopoulos, J. F. Kalaska, R. Caminiti, and J. T. Massey, On the relations between the direction of two-dimensional arm movements and cell discharge in primate motor cortex, The Journal of Neuroscience 2, 1527 (1982).

- Lee et al. [1988] C. Lee, W. H. Rohrer, and D. L. Sparks, Population coding of saccadic eye movements by neurons in the superior colliculus, Nature 332, 357 (1988).

- Taube [1998] J. S. Taube, Head direction cells and the neurophysiological basis for a sense of direction, Progress in Neurobiology 55, 225 (1998).

- Wimmer et al. [2014] K. Wimmer, D. Q. Nykamp, C. Constantinidis, and A. Compte, Bump attractor dynamics in prefrontal cortex explains behavioral precision in spatial working memory, Nature Neuroscience 17, 431 (2014).

- Kim et al. [2017] S. S. Kim, H. Rouault, S. Druckmann, and V. Jayaraman, Ring attractor dynamics in the Drosophilia central brain, Science 356, 849 (2017).

- Georgopoulos et al. [1986] A. P. Georgopoulos, A. B. Schwartz, and R. E. Kettner, Neuronal population coding of movement direction, Science 233, 1416 (1986).

- Salinas and Abbott [1994] E. Salinas and L. F. Abbott, Vector Reconstruction from Firing Rates, Journal of Computational Neuroscience 1, 89 (1994).

- Snippe [1996] H. P. Snippe, Parameter Extraction from Population Codes: A Critical Assessment, Neural Computation 8, 511 (1996).

- Oram et al. [1998] M. W. Oram, P. Földiák, D. I. Perrett, and F. Sengpiel, The ’Ideal Homunculus’: decoding neural population signals, Trends in Neurosciences 21, 259 (1998).

- Pouget et al. [2000] A. Pouget, P. Dayan, and R. Zemel, Information Processing with Population Codes, Nature Reviews Neuroscience 1, 125 (2000).

- Sompolinsky et al. [2001] H. Sompolinsky, H. Yoon, K. Kang, and M. Shamir, Population coding in neuronal systems with correlated noise, Physical Review E 64, 051904 (2001).

- Schwartz et al. [1988] A. B. Schwartz, R. E. Kettner, and A. P. Georgopoulos, Primate Motor Cortex and Free Arm Movements to Visual Targets in Three-Dimensional Space. I. Relations Between Single Cell Discharge and Direction of Movement, The Journal of Neuroscience 8, 2913 (1988).

- Miller and Jacobs [1991] J. P. Miller and G. A. Jacobs, Representation of Sensory Information in the Cricket Cercal Sensory System. I. Response Properties of the Primary Interneurons, Journal of Neurophysiology 66, 1680 (1991).

- Young and Yamane [1992] M. P. Young and S. Yamane, Sparse Population Coding of Faces in the Inferotemporal Cortex, Science 256, 1327 (1992).

- Paradiso [1988] M. A. Paradiso, A Theory for the Use of Visual Orientation Information which Exploits the Columnar Structure of Striate Cortex, Biological Cybernetics 58, 35 (1988).

- Seung and Sompolinsky [1993] H. S. Seung and H. Sompolinsky, Simple models for reading neuronal population codes, Proceedings of the National Academy of Sciences 90, 10749 (1993).

- Abbott and Dayan [1999] L. F. Abbott and P. Dayan, The effect of correlated variability on the accuracy of a population code, Neural Computation 11, 91 (1999).

- Harper and McAlpine [2004] N. S. Harper and D. McAlpine, Optimal neural population coding of an auditory spatial cue, Nature 430, 682 (2004).

- Toyoizumi et al. [2006] T. Toyoizumi, K. Aihara, and S. I. Amari, Fisher Information for Spike-Based Population Decoding, Physical Review Letters 97, 098102 (2006).

- Yarrow et al. [2012] S. Yarrow, E. Challis, and P. Seriès, Fisher and Shannon Information in Finite Neural Populations, Neural Computation 24, 1740 (2012).

- Rao [1945] C. R. Rao, Information and the accuracy attainable in the estimation of statistical parameters, Bulletin of the Calcutta Mathematica Society 37, 81 (1945).

- Cramér [1946] H. Cramér, Mathematical Methods of Statistics (Princeton University Press, 1946).

- Zhang and Sejnowski [1993] K. Zhang and T. J. Sejnowski, Neuronal Tuning: To Sharpen or Broaden?, Neural Computation 11 (1993).

- Dayan and Abbott [2001] P. Dayan and L. F. Abbott, Theoretical Neuroscience (MIT Press, 2001).

- Berens et al. [2011] P. Berens, A. S. Ecker, S. Gerwinn, A. S. Tolias, and M. Bethge, Reassessing optimal neural population codes with neurometric functions, Proceedings of the National Academy of Sciences 108, 4423 (2011).

- Bethge et al. [2002] M. Bethge, D. Rotermund, and K. Pawelzik, Optimal Short Term Population Coding: When Fisher Information Fails, Neural Computation 14, 2317 (2002).

- Yaeli and Meir [2010] S. Yaeli and R. Meir, Error-based analysis of optimal tuning functions explains phenomena observed in sensory neurons, Frontiers in Computational Neuroscience 4, 130 (2010).

- Yoo et al. [2016] Y. Yoo, O. O. Koyluoglu, S. Vishwanath, and I. Fiete, Multi-periodic neural coding for adaptive information transfer, Theoretical Computer Science 633, 37 (2016).

- Seung [1996] H. S. Seung, How the brain keeps the eyes still, Proceedings of the National Academy of Sciences 93, 13339 (1996).

- Camperi and Wang [1998] M. Camperi and X. Wang, A Model of Visuospatial Working Memory in Prefrontal Cortex: Recurrent Network and Cellular Bistability, Journal of Computational Neuroscience 5, 383 (1998).

- Compte et al. [2000] A. Compte, N. Brunel, P. S. Goldman-Rakic, and X. Wang, Synaptic Mechanisms and Network Dynamics Underlying Spatial Working Memory in a Cortical Network Model, Cerebral Cortex 10, 910 (2000).

- Burak and Fiete [2002] Y. Burak and I. Fiete, Fundamental limits on persistent activity in networks of noisy neurons, Proceedings of the National Academy of Sciences 109, 17645 (2002).

- Skaggs et al. [1995] W. E. Skaggs, J. J. Knierim, H. S. Kudrimoti, and B. L. McNaughton, A model of the neural basis of the rat’s sense of direction, Advances in Neural Information Processing Systems 7, 173 (1995).

- Zhang [1996] K. Zhang, Representation of Spatial Orientation by the Intrinsic Dynamics of the Head-Direction Cell Ensemble: A Theory, The Journal of Neuroscience 16, 2112 (1996).